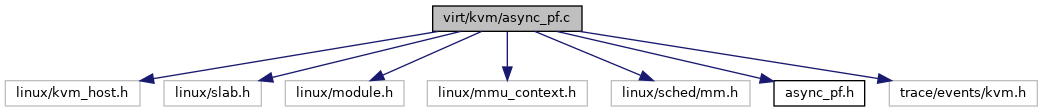

```c
#include <linux/kvm_host.h>
#include <linux/slab.h>
#include <linux/module.h>
#include <linux/mmu_context.h>
#include <linux/sched/mm.h>
#include "async_pf.h"
#include <trace/events/kvm.h>
```


◆ async_pf_execute()

`static void async_pf_execute(struct work_struct *work)`

Definition at line 45 of file async_pf.c.

```c
	struct kvm_async_pf *apf =
		container_of(work, struct kvm_async_pf, work);
	struct mm_struct *mm = apf->mm;
	struct kvm_vcpu *vcpu = apf->vcpu;
	unsigned long addr = apf->addr;
	gpa_t cr2_or_gpa = apf->cr2_or_gpa;
	/* ... */
	get_user_pages_remote(mm, addr, 1, FOLL_WRITE, NULL, &locked);
	/* ... */
	if (IS_ENABLED(CONFIG_KVM_ASYNC_PF_SYNC))
		kvm_arch_async_page_present(vcpu, apf);

	spin_lock(&vcpu->async_pf.lock);
	first = list_empty(&vcpu->async_pf.done);
	list_add_tail(&apf->link, &vcpu->async_pf.done);
	/* ... */
	spin_unlock(&vcpu->async_pf.lock);

	if (!IS_ENABLED(CONFIG_KVM_ASYNC_PF_SYNC) && first)
		kvm_arch_async_page_present_queued(vcpu);
	/* ... */
	trace_kvm_async_pf_completed(addr, cr2_or_gpa);

	__kvm_vcpu_wake_up(vcpu);
```

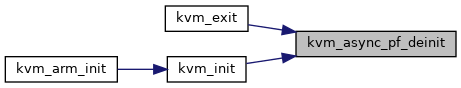
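In `async_pf_execute()`, the completed item is appended to `vcpu->async_pf.done` while `async_pf.lock` is held, and `kvm_arch_async_page_present_queued()` is called only when `first` is true, that is, only when the list was empty before the append (and `CONFIG_KVM_ASYNC_PF_SYNC` is off). The following is a minimal single-threaded user-space sketch of that edge-triggered notification. It is not kernel code: `struct item`, `struct done_queue`, `complete_item()`, `pop_item()` and the `notify_count` counter standing in for the arch hook are all hypothetical, and the spinlock appears only in comments.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for struct kvm_async_pf: only a FIFO link. */
struct item {
	struct item *next;
};

/* Hypothetical stand-in for the "done" side of vcpu->async_pf. */
struct done_queue {
	struct item *head, *tail;  /* plays the role of async_pf.done, oldest first */
	int notify_count;          /* counts calls to the "present_queued" hook */
};

static void done_queue_init(struct done_queue *q)
{
	q->head = q->tail = NULL;
	q->notify_count = 0;
}

/*
 * Models the completion step of async_pf_execute(). Single-threaded model:
 * the kernel holds async_pf.lock around the emptiness test and the append.
 */
static void complete_item(struct done_queue *q, struct item *it)
{
	bool first;

	assert(it != NULL);
	/* spin_lock(&vcpu->async_pf.lock) in the kernel */
	first = (q->head == NULL);      /* list_empty(&vcpu->async_pf.done) */
	it->next = NULL;                /* list_add_tail(&apf->link, ...) */
	if (q->tail)
		q->tail->next = it;
	else
		q->head = it;
	q->tail = it;
	/* spin_unlock(&vcpu->async_pf.lock) in the kernel */

	/* Notify only on the empty -> non-empty transition, after "unlocking". */
	if (first)
		q->notify_count++;
}

/* Models the consumer removing the oldest completed item (NULL if none). */
static struct item *pop_item(struct done_queue *q)
{
	struct item *it = q->head;

	if (it) {
		q->head = it->next;
		if (q->head == NULL)
			q->tail = NULL;
	}
	return it;
}
```

Completing two items back to back bumps `notify_count` once; after the consumer empties the queue, the next completion bumps it again. Presumably the hook only needs to fire on the empty-to-non-empty transition because the consumer, `kvm_check_async_pf_completion()`, drains `done` in a loop rather than handling one item per notification.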

◆ kvm_async_pf_deinit()

`void kvm_async_pf_deinit(void)`

Definition at line 32 of file async_pf.c.

References `async_pf_cache`.

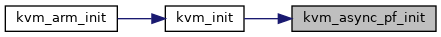

◆ kvm_async_pf_init()

`int kvm_async_pf_init(void)`

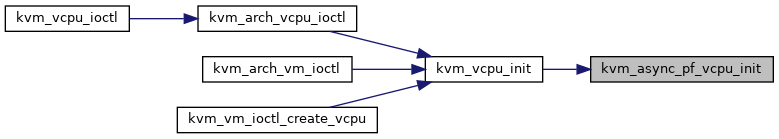

◆ kvm_async_pf_vcpu_init()

`void kvm_async_pf_vcpu_init(struct kvm_vcpu *vcpu)`

Definition at line 38 of file async_pf.c.

```c
	INIT_LIST_HEAD(&vcpu->async_pf.done);
	INIT_LIST_HEAD(&vcpu->async_pf.queue);
	spin_lock_init(&vcpu->async_pf.lock);
```
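`kvm_async_pf_vcpu_init()` only initializes two empty list heads and the lock. Each `struct kvm_async_pf` is later threaded onto `async_pf.queue` through its `queue` member and onto `async_pf.done` through its `link` member (see the `list_add_tail()` and `list_first_entry()` calls in the other listings), and `async_pf_execute()` recovers the enclosing object from its embedded `work_struct` with `container_of()`. The user-space sketch below re-implements those idioms in simplified form to show how one object can sit on two lists at once. `struct list_node`, `list_init()`, `list_append()`, `list_remove()`, `list_is_empty()`, `CONTAINER_OF()` and `struct work_item` are hypothetical names, not the kernel's `<linux/list.h>` API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Simplified, hypothetical analogue of the kernel's struct list_head. */
struct list_node {
	struct list_node *next, *prev;
};

/* Recover the enclosing object from a pointer to one of its members. */
#define CONTAINER_OF(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* An empty list is a head whose next and prev point back to itself. */
static void list_init(struct list_node *head)
{
	head->next = head;
	head->prev = head;
}

static bool list_is_empty(const struct list_node *head)
{
	return head->next == head;
}

/* Insert node just before head, i.e. at the tail of the circular list. */
static void list_append(struct list_node *node, struct list_node *head)
{
	node->prev = head->prev;
	node->next = head;
	head->prev->next = node;
	head->prev = node;
}

/*
 * Unlink node and leave it self-linked (the kernel's list_del() instead
 * poisons the pointers).
 */
static void list_remove(struct list_node *node)
{
	node->prev->next = node->next;
	node->next->prev = node->prev;
	node->next = node;
	node->prev = node;
}

/* Hypothetical work item that can be on two lists, like struct kvm_async_pf. */
struct work_item {
	unsigned long addr;
	struct list_node queue;  /* membership in the "queue" list */
	struct list_node link;   /* membership in the "done" list */
};
```

Because the list nodes are embedded in the item, adding an item to a list never allocates; removing it from one list (here `queue`) leaves its membership in the other (`done`) untouched.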

◆ kvm_async_pf_wakeup_all()

`int kvm_async_pf_wakeup_all(struct kvm_vcpu *vcpu)`

Definition at line 223 of file async_pf.c.

```c
	struct kvm_async_pf *work;
	/* ... */
	if (!list_empty_careful(&vcpu->async_pf.done))
		/* ... */
	work->wakeup_all = true;
	INIT_LIST_HEAD(&work->queue);

	spin_lock(&vcpu->async_pf.lock);
	first = list_empty(&vcpu->async_pf.done);
	list_add_tail(&work->link, &vcpu->async_pf.done);
	spin_unlock(&vcpu->async_pf.lock);

	if (!IS_ENABLED(CONFIG_KVM_ASYNC_PF_SYNC) && first)
		kvm_arch_async_page_present_queued(vcpu);

	vcpu->async_pf.queued++;
```

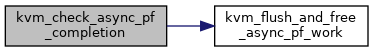

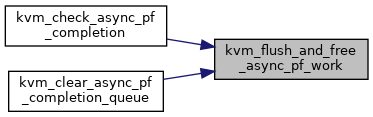

◆ kvm_check_async_pf_completion()

`void kvm_check_async_pf_completion(struct kvm_vcpu *vcpu)`

Definition at line 158 of file async_pf.c.

```c
	struct kvm_async_pf *work;

	while (!list_empty_careful(&vcpu->async_pf.done) &&
	       kvm_arch_can_dequeue_async_page_present(vcpu)) {
		spin_lock(&vcpu->async_pf.lock);
		work = list_first_entry(&vcpu->async_pf.done, typeof(*work),
					/* ... */
		list_del(&work->link);
		spin_unlock(&vcpu->async_pf.lock);

		kvm_arch_async_page_ready(vcpu, work);
		if (!IS_ENABLED(CONFIG_KVM_ASYNC_PF_SYNC))
			kvm_arch_async_page_present(vcpu, work);

		list_del(&work->queue);
		vcpu->async_pf.queued--;
```

References `kvm_flush_and_free_async_pf_work()`.

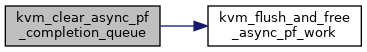
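`kvm_check_async_pf_completion()` drains `done` from the head, one item per iteration, and stops as soon as the list is empty or `kvm_arch_can_dequeue_async_page_present()` returns false; each dequeued item is also removed from `queue`, and `queued` is decremented. A minimal single-threaded model of that loop follows, assuming a fixed-size ring buffer in place of the kernel lists and a caller-supplied predicate in place of the arch hook. `struct vcpu_model`, `push_done()`, `check_completion()` and `budget_allows()` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_ITEMS 8

/* Hypothetical per-vCPU state for the model. */
struct vcpu_model {
	unsigned int queued;           /* mirrors vcpu->async_pf.queued */
	unsigned long done[MAX_ITEMS]; /* ring buffer standing in for async_pf.done */
	size_t done_head;              /* index of the oldest completed item */
	size_t done_len;               /* number of completed items */
	unsigned long last_addr;       /* address of the last item handled */
	size_t handled_total;          /* items that reached the "ready" step */
};

/* Stand-in for kvm_arch_can_dequeue_async_page_present(). */
typedef bool (*can_dequeue_fn)(const struct vcpu_model *v, void *ctx);

/* Append a completed address at the tail, as async_pf_execute() would. */
static void push_done(struct vcpu_model *v, unsigned long addr)
{
	assert(v->done_len < MAX_ITEMS);
	v->done[(v->done_head + v->done_len) % MAX_ITEMS] = addr;
	v->done_len++;
}

/* Models the while loop of kvm_check_async_pf_completion(). */
static size_t check_completion(struct vcpu_model *v, can_dequeue_fn can_dequeue,
			       void *ctx)
{
	size_t handled = 0;

	while (v->done_len > 0 && can_dequeue(v, ctx)) {
		/* In the kernel, async_pf.lock is held only for this unlink. */
		unsigned long addr = v->done[v->done_head];
		v->done_head = (v->done_head + 1) % MAX_ITEMS;
		v->done_len--;

		/* The arch "ready"/"present" callbacks would run here, unlocked. */
		v->last_addr = addr;
		v->handled_total++;

		assert(v->queued > 0);
		v->queued--;  /* the item also leaves async_pf.queue */
		handled++;
	}
	return handled;
}

/* Example predicate: allow at most *(int *)ctx more dequeues. */
static bool budget_allows(const struct vcpu_model *v, void *ctx)
{
	int *budget = ctx;

	(void)v;
	if (*budget <= 0)
		return false;
	(*budget)--;
	return true;
}
```

With a budget of two, a first call handles two of three completed items and leaves `queued` at 1; a later call picks up the remainder. Because the predicate is consulted before every item, the arch side can stop the drain partway through without losing anything still on `done`.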

◆ kvm_clear_async_pf_completion_queue()

`void kvm_clear_async_pf_completion_queue(struct kvm_vcpu *vcpu)`

Definition at line 113 of file async_pf.c.

```c
	spin_lock(&vcpu->async_pf.lock);
	/* ... */
	while (!list_empty(&vcpu->async_pf.queue)) {
		struct kvm_async_pf *work =
			list_first_entry(&vcpu->async_pf.queue,
					 typeof(*work), queue);
		list_del(&work->queue);
		/* ... */
		spin_unlock(&vcpu->async_pf.lock);
#ifdef CONFIG_KVM_ASYNC_PF_SYNC
		flush_work(&work->work);
		/* ... */
		if (cancel_work_sync(&work->work)) {
		/* ... */
		spin_lock(&vcpu->async_pf.lock);
	/* ... */
	while (!list_empty(&vcpu->async_pf.done)) {
		struct kvm_async_pf *work =
			list_first_entry(&vcpu->async_pf.done,
					 typeof(*work), link);
		list_del(&work->link);

		spin_unlock(&vcpu->async_pf.lock);
		/* ... */
		spin_lock(&vcpu->async_pf.lock);
	/* ... */
	spin_unlock(&vcpu->async_pf.lock);

	vcpu->async_pf.queued = 0;
```

◆ kvm_flush_and_free_async_pf_work()

`static void kvm_flush_and_free_async_pf_work(struct kvm_async_pf *work)`

Definition at line 92 of file async_pf.c.

```c
	/* ... */
	if (work->wakeup_all)
		WARN_ON_ONCE(work->work.func);
	/* ... */
		flush_work(&work->work);
```

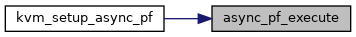

◆ kvm_setup_async_pf()

`bool kvm_setup_async_pf(struct kvm_vcpu *vcpu, gpa_t cr2_or_gpa, unsigned long hva, struct kvm_arch_async_pf *arch)`

Definition at line 184 of file async_pf.c.

```c
	struct kvm_async_pf *work;

	if (vcpu->async_pf.queued >= ASYNC_PF_PER_VCPU)
		/* ... */
	if (unlikely(kvm_is_error_hva(hva)))
		/* ... */
	work = kmem_cache_zalloc(async_pf_cache, GFP_NOWAIT | __GFP_NOWARN);
	/* ... */
	work->wakeup_all = false;
	/* ... */
	work->cr2_or_gpa = cr2_or_gpa;
	/* ... */
	work->mm = current->mm;
	/* ... */
	list_add_tail(&work->queue, &vcpu->async_pf.queue);
	vcpu->async_pf.queued++;
	work->notpresent_injected = kvm_arch_async_page_not_present(vcpu, work);

	schedule_work(&work->work);
```

References `async_pf_execute()`.

◆ async_pf_cache

`static struct kmem_cache *async_pf_cache`