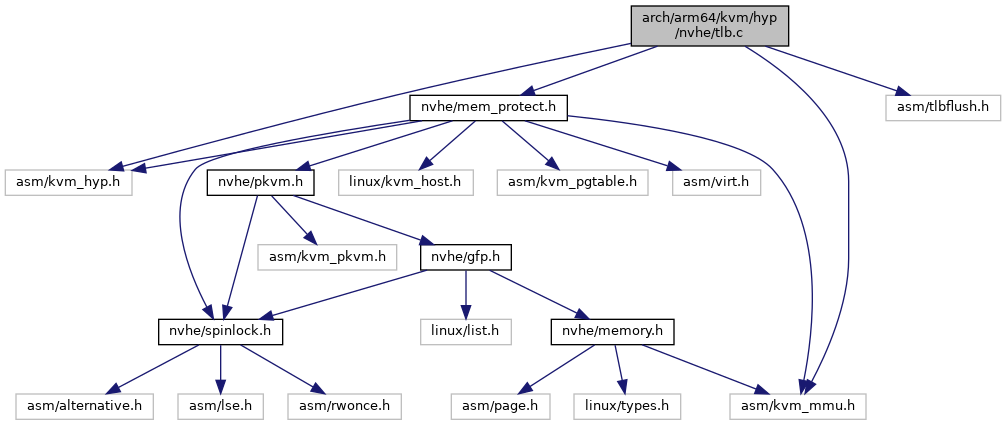

#include <asm/kvm_hyp.h>

#include <asm/kvm_mmu.h>

#include <asm/tlbflush.h>

#include <nvhe/mem_protect.h>

Go to the source code of this file.

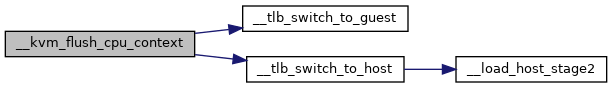

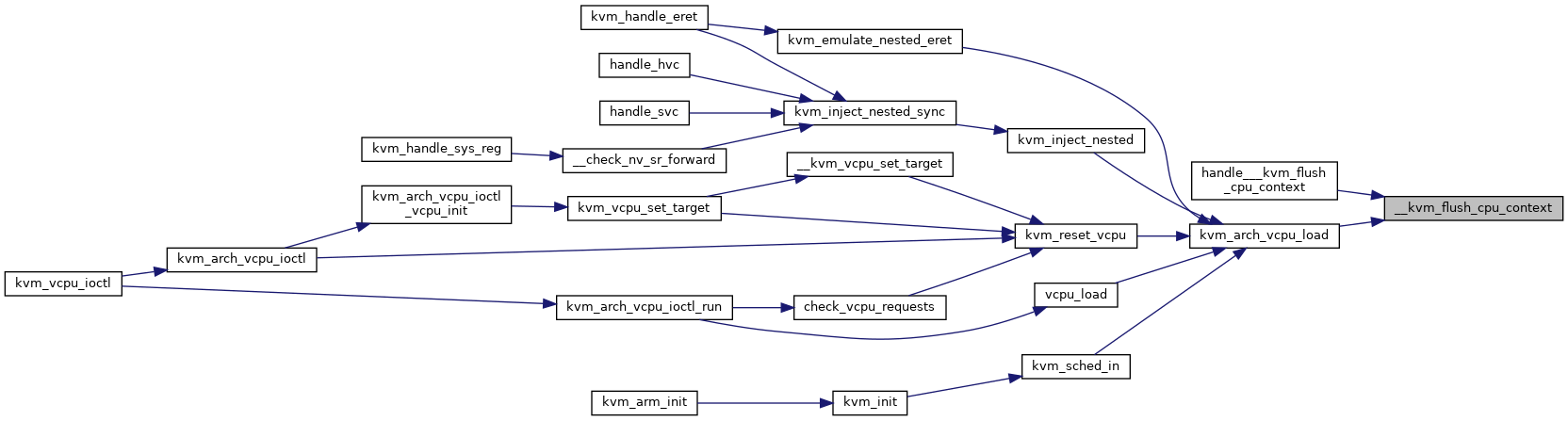

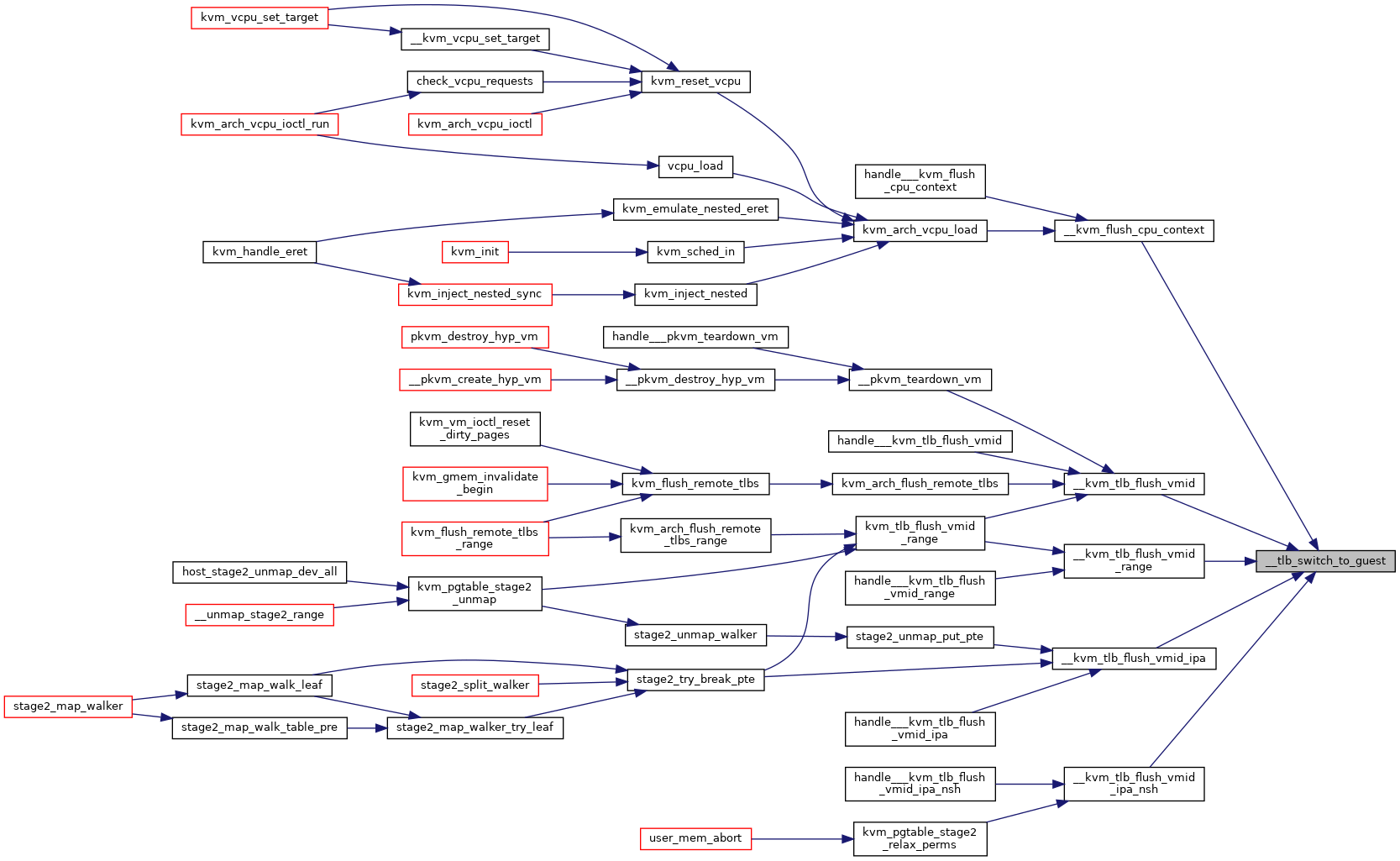

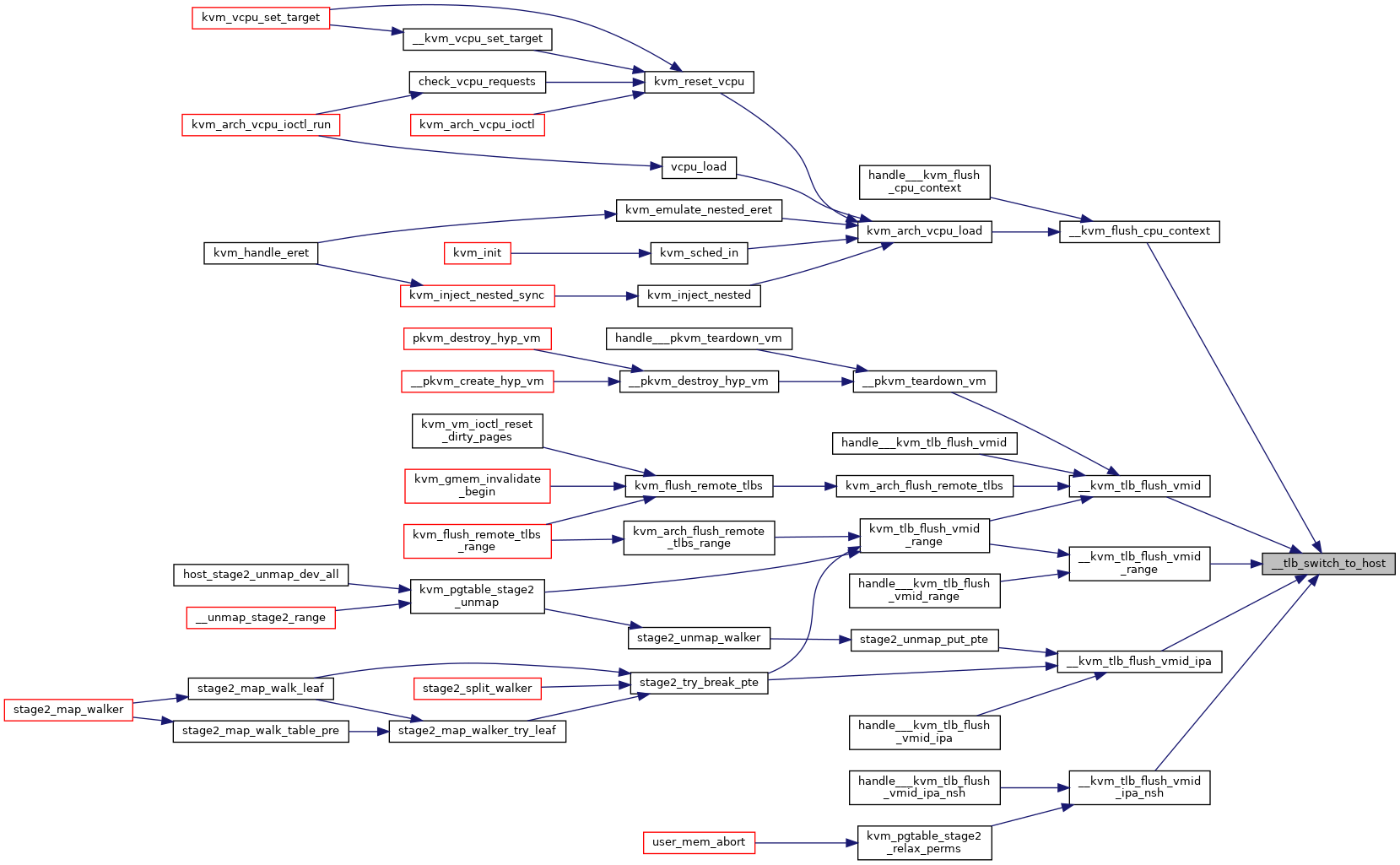

◆ __kvm_flush_cpu_context()

void __kvm_flush_cpu_context(struct kvm_s2_mmu *mmu)

Definition at line 182 of file tlb.c.

190 asm volatile("ic iallu");

static void __tlb_switch_to_guest(struct kvm_s2_mmu *mmu, struct tlb_inv_context *cxt, bool nsh)

static void __tlb_switch_to_host(struct tlb_inv_context *cxt)

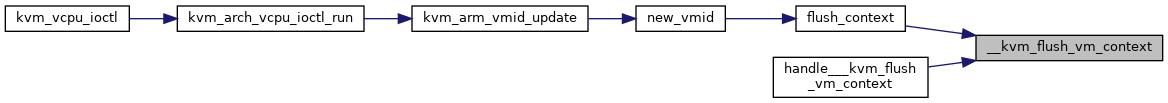

◆ __kvm_flush_vm_context()

void __kvm_flush_vm_context(void)

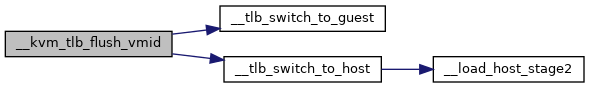

◆ __kvm_tlb_flush_vmid()

void __kvm_tlb_flush_vmid(struct kvm_s2_mmu *mmu)

Definition at line 168 of file tlb.c.

175 __tlbi(vmalls12e1is);

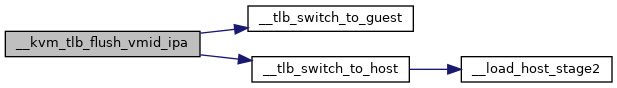

◆ __kvm_tlb_flush_vmid_ipa()

void __kvm_tlb_flush_vmid_ipa(struct kvm_s2_mmu *mmu, phys_addr_t ipa, int level)

Definition at line 81 of file tlb.c.

95 __tlbi_level(ipas2e1is, ipa, level);
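The call above passes an IPA and a level hint to a TLBI-by-IPA operation. As a rough illustration of what such an operand can look like, here is a minimal user-space sketch. It assumes the Arm architecture's operand layout for the by-IPA TLBI instructions (address in 4 KiB units in the low bits, a 4-bit TTL hint in bits [47:44] made of a granule code and a level). All `sketch_`/`SKETCH_` names are hypothetical, and the code does not claim to mirror the kernel's `__tlbi_level` macro, which also depends on CPU feature checks not shown in this listing.

```c
#include <stdint.h>

/* Assumed operand layout: bits [47:44] hold the TTL hint,
 * the low bits hold the IPA shifted right by 12. */
#define SKETCH_TTL_SHIFT 44
#define SKETCH_TTL_MASK  (UINT64_C(0xf) << SKETCH_TTL_SHIFT)

/* Granule codes for TTL[3:2] (assumed): 1 = 4K, 2 = 16K, 3 = 64K. */
enum sketch_granule {
	SKETCH_TG_4K  = 1,
	SKETCH_TG_16K = 2,
	SKETCH_TG_64K = 3,
};

/* Build an illustrative TLBI-by-IPA operand from an IPA and a level hint. */
static uint64_t sketch_tlbi_ipa_operand(uint64_t ipa,
					enum sketch_granule tg, int level)
{
	uint64_t arg = ipa >> 12;	/* address field is in 4 KiB units */

	arg &= ~SKETCH_TTL_MASK;	/* start with TTL = 0, meaning "no hint" */
	if (level >= 0 && level <= 3) {
		/* TTL[3:2] = granule code, TTL[1:0] = table level */
		uint64_t ttl = ((uint64_t)tg << 2) | (uint64_t)level;

		arg |= ttl << SKETCH_TTL_SHIFT;
	}
	return arg;
}
```

In this sketch, an out-of-range level leaves the TTL field at zero, so the operand carries only the address and no level hint.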

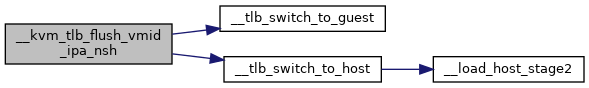

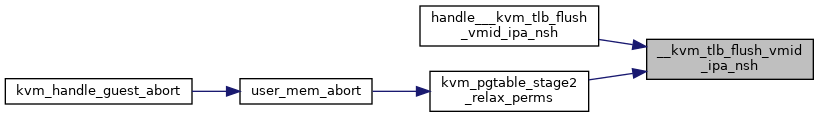

◆ __kvm_tlb_flush_vmid_ipa_nsh()

void __kvm_tlb_flush_vmid_ipa_nsh(struct kvm_s2_mmu *mmu, phys_addr_t ipa, int level)

Definition at line 111 of file tlb.c.

125 __tlbi_level(ipas2e1, ipa, level);

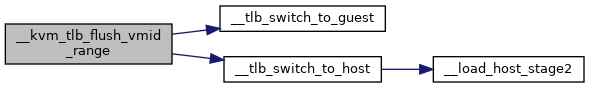

◆ __kvm_tlb_flush_vmid_range()

void __kvm_tlb_flush_vmid_range(struct kvm_s2_mmu *mmu, phys_addr_t start, unsigned long pages)

Definition at line 141 of file tlb.c.

145 unsigned long stride;

152 start = round_down(start, stride);

157 __flush_s2_tlb_range_op(ipas2e1is, start, pages, stride,
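The excerpt aligns `start` down to a multiple of `stride` before the range operation. The listing does not show how `stride` is chosen, so the sketch below simply assumes a 4 KiB stride. `sketch_round_down` and `SKETCH_PAGE_SIZE` are hypothetical names for illustration; the kernel's own `round_down` is a macro and is not reproduced here.

```c
#include <stdint.h>

#define SKETCH_PAGE_SIZE UINT64_C(4096)	/* assumption: 4 KiB stride */

/*
 * Round x down to a multiple of align.
 * align must be a non-zero power of two, so that (align - 1) is a
 * mask of the low bits to clear.
 */
static uint64_t sketch_round_down(uint64_t x, uint64_t align)
{
	return x & ~(align - 1);
}
```

For example, `sketch_round_down(0x12345, SKETCH_PAGE_SIZE)` yields `0x12000`, and an address that is already aligned is returned unchanged.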

◆ __tlb_switch_to_guest()

static void __tlb_switch_to_guest(struct kvm_s2_mmu *mmu, struct tlb_inv_context *cxt, bool nsh)

Definition at line 17 of file tlb.c.

43 if (cpus_have_final_cap(ARM64_WORKAROUND_SPECULATIVE_AT)) {

53 val = cxt->tcr = read_sysreg_el1(SYS_TCR);

54 val |= TCR_EPD1_MASK | TCR_EPD0_MASK;

55 write_sysreg_el1(val, SYS_TCR);

65 __load_stage2(mmu, kern_hyp_va(mmu->arch));

66 asm(ALTERNATIVE("isb", "nop", ARM64_WORKAROUND_SPECULATIVE_AT));

◆ __tlb_switch_to_host()

Definition at line 69 of file tlb.c.

73 if (cpus_have_final_cap(ARM64_WORKAROUND_SPECULATIVE_AT)) {

77 write_sysreg_el1(cxt->tcr, SYS_TCR);

static __always_inline void __load_host_stage2(void)
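The excerpts from `__tlb_switch_to_guest()` and `__tlb_switch_to_host()` show a save-and-restore pattern: the current TCR value is stored in the context, the EPD0 and EPD1 bits are set, and the saved value is written back later. The following user-space sketch models only that pattern, with a plain variable standing in for the system register. The bit positions (EPD0 at bit 7, EPD1 at bit 23) follow the architectural TCR_EL1 layout. All `sketch_` names are hypothetical, and the capability checks, barriers, and stage-2 loading of the real code are deliberately left out.

```c
#include <stdint.h>

/* Architectural TCR_EL1 bit positions: EPD0 is bit 7, EPD1 is bit 23. */
#define SKETCH_TCR_EPD0 (UINT64_C(1) << 7)
#define SKETCH_TCR_EPD1 (UINT64_C(1) << 23)

/* Hypothetical stand-in for the saved-state context. */
struct sketch_ctx {
	uint64_t tcr;
};

/* Plain variable standing in for the real system register. */
static uint64_t sketch_tcr_el1;

/* Save the current value, then disable both stage-1 table walks. */
static void sketch_switch_to_guest(struct sketch_ctx *cxt)
{
	uint64_t val = cxt->tcr = sketch_tcr_el1;

	val |= SKETCH_TCR_EPD1 | SKETCH_TCR_EPD0;
	sketch_tcr_el1 = val;
}

/* Restore the value saved by sketch_switch_to_guest(). */
static void sketch_switch_to_host(const struct sketch_ctx *cxt)
{
	sketch_tcr_el1 = cxt->tcr;
}
```

Keeping the saved value in a caller-provided context, rather than in a global, lets each invalidation sequence restore exactly what it found on entry.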