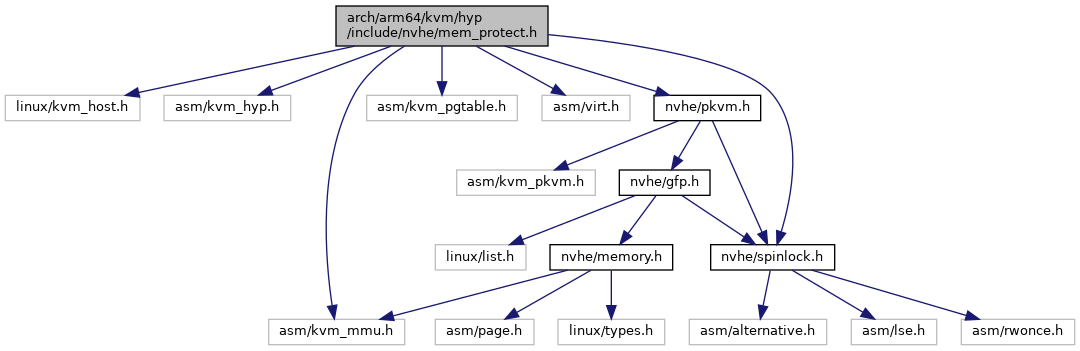

#include <linux/kvm_host.h>

#include <asm/kvm_hyp.h>

#include <asm/kvm_mmu.h>

#include <asm/kvm_pgtable.h>

#include <asm/virt.h>

#include <nvhe/pkvm.h>

#include <nvhe/spinlock.h>

Classes

| struct | host_mmu |

Macros

| #define | PKVM_PAGE_STATE_PROT_MASK (KVM_PGTABLE_PROT_SW0 \| KVM_PGTABLE_PROT_SW1) |

Enumerations

| enum | pkvm_page_state { PKVM_PAGE_OWNED = 0ULL, PKVM_PAGE_SHARED_OWNED = KVM_PGTABLE_PROT_SW0, PKVM_PAGE_SHARED_BORROWED = KVM_PGTABLE_PROT_SW1, __PKVM_PAGE_RESERVED, PKVM_NOPAGE } |

| enum | pkvm_component_id { PKVM_ID_HOST, PKVM_ID_HYP, PKVM_ID_FFA } |

Functions

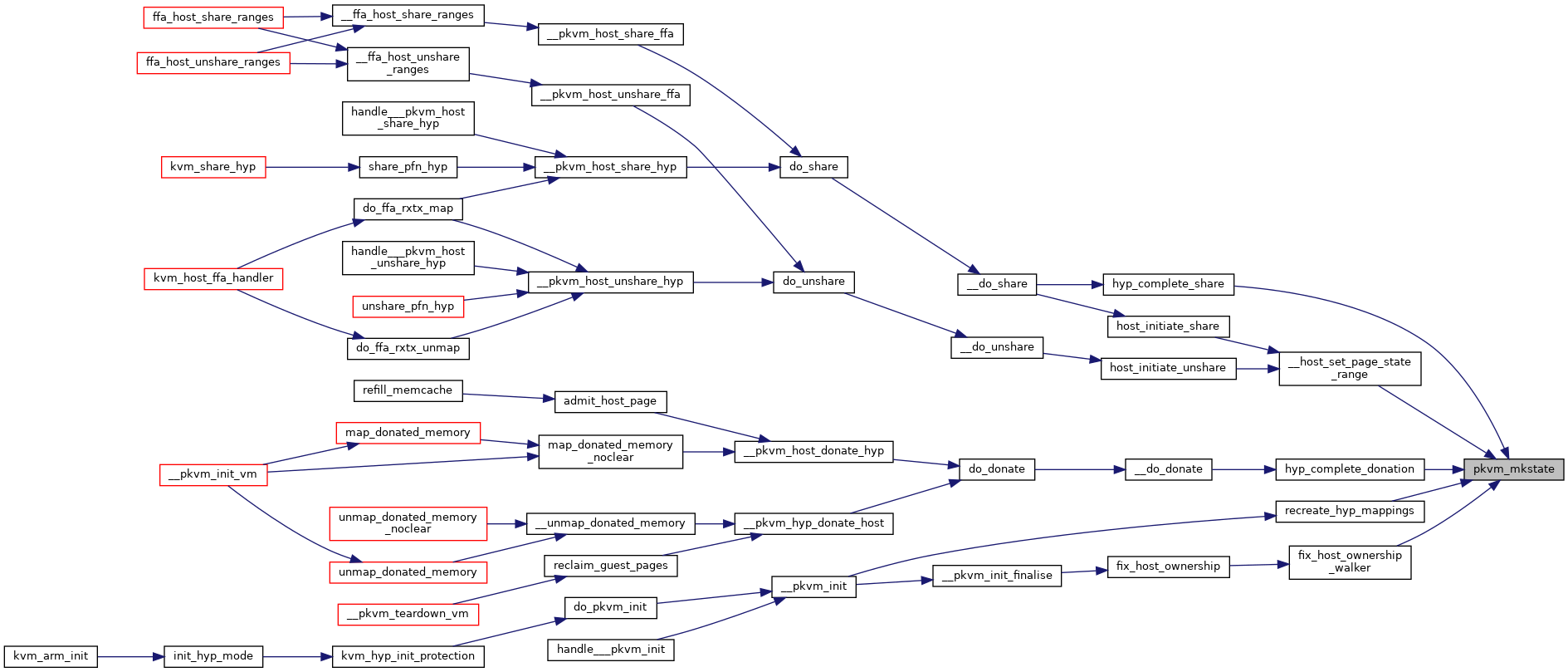

| static enum kvm_pgtable_prot | pkvm_mkstate (enum kvm_pgtable_prot prot, enum pkvm_page_state state) |

| static enum pkvm_page_state | pkvm_getstate (enum kvm_pgtable_prot prot) |

| int | __pkvm_prot_finalize (void) |

| int | __pkvm_host_share_hyp (u64 pfn) |

| int | __pkvm_host_unshare_hyp (u64 pfn) |

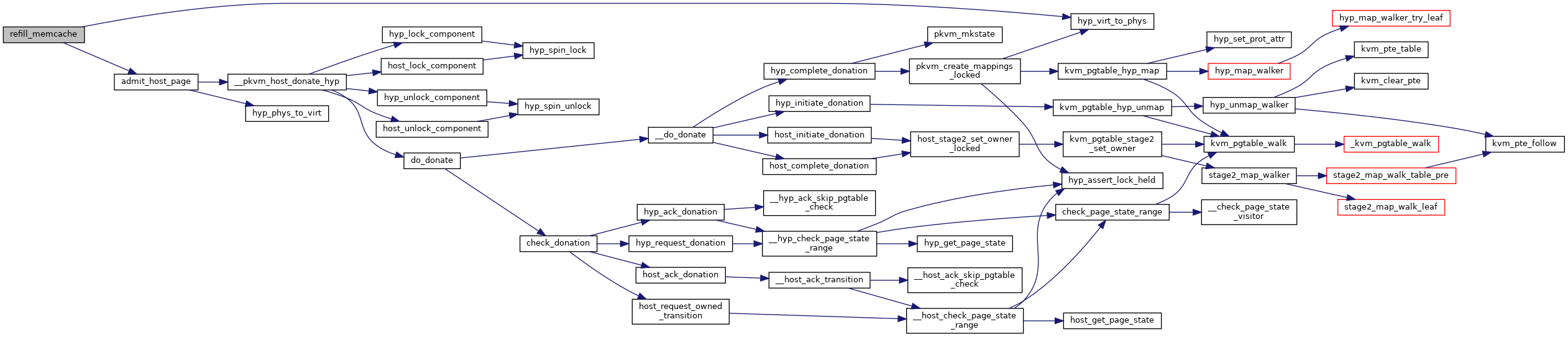

| int | __pkvm_host_donate_hyp (u64 pfn, u64 nr_pages) |

| int | __pkvm_hyp_donate_host (u64 pfn, u64 nr_pages) |

| int | __pkvm_host_share_ffa (u64 pfn, u64 nr_pages) |

| int | __pkvm_host_unshare_ffa (u64 pfn, u64 nr_pages) |

| bool | addr_is_memory (phys_addr_t phys) |

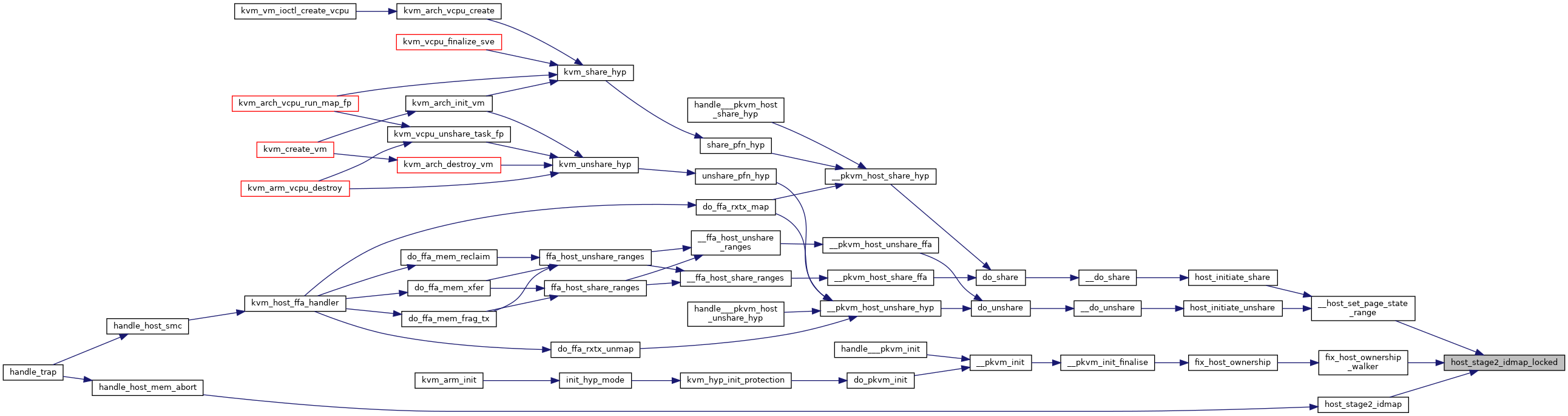

| int | host_stage2_idmap_locked (phys_addr_t addr, u64 size, enum kvm_pgtable_prot prot) |

| int | host_stage2_set_owner_locked (phys_addr_t addr, u64 size, u8 owner_id) |

| int | kvm_host_prepare_stage2 (void *pgt_pool_base) |

| int | kvm_guest_prepare_stage2 (struct pkvm_hyp_vm *vm, void *pgd) |

| void | handle_host_mem_abort (struct kvm_cpu_context *host_ctxt) |

| int | hyp_pin_shared_mem (void *from, void *to) |

| void | hyp_unpin_shared_mem (void *from, void *to) |

| void | reclaim_guest_pages (struct pkvm_hyp_vm *vm, struct kvm_hyp_memcache *mc) |

| int | refill_memcache (struct kvm_hyp_memcache *mc, unsigned long min_pages, struct kvm_hyp_memcache *host_mc) |

| static __always_inline void | __load_host_stage2 (void) |

Variables

| struct host_mmu | host_mmu |

| unsigned long | hyp_nr_cpus |

Macro Definition Documentation

◆ PKVM_PAGE_STATE_PROT_MASK

| #define PKVM_PAGE_STATE_PROT_MASK (KVM_PGTABLE_PROT_SW0 \| KVM_PGTABLE_PROT_SW1) |

Definition at line 36 of file mem_protect.h.

Enumeration Type Documentation

◆ pkvm_component_id

| enum pkvm_component_id |

◆ pkvm_page_state

| enum pkvm_page_state |

| Enumerator | |
|---|---|
| PKVM_PAGE_OWNED | |
| PKVM_PAGE_SHARED_OWNED | |
| PKVM_PAGE_SHARED_BORROWED | |
| __PKVM_PAGE_RESERVED | |
| PKVM_NOPAGE | |

Definition at line 25 of file mem_protect.h.
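The two software bits named in PKVM_PAGE_STATE_PROT_MASK appear to carry the pkvm_page_state of a mapping alongside its ordinary protection bits. The sketch below illustrates how helpers such as pkvm_mkstate() and pkvm_getstate() could encode and decode that state under this assumption. The DEMO_* names and bit positions are hypothetical stand-ins chosen so the example compiles outside the kernel; the real values come from <asm/kvm_pgtable.h>.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-ins for enum kvm_pgtable_prot bits (assumed positions). */
typedef uint64_t demo_prot_t;
#define DEMO_PROT_R   (UINT64_C(1) << 0)
#define DEMO_PROT_W   (UINT64_C(1) << 1)
#define DEMO_PROT_SW0 (UINT64_C(1) << 55)
#define DEMO_PROT_SW1 (UINT64_C(1) << 56)

/* Mirrors PKVM_PAGE_STATE_PROT_MASK: the bits reserved for the page state. */
#define DEMO_PAGE_STATE_PROT_MASK (DEMO_PROT_SW0 | DEMO_PROT_SW1)

/* Mirrors the first three pkvm_page_state enumerators. */
#define DEMO_PAGE_OWNED           UINT64_C(0)
#define DEMO_PAGE_SHARED_OWNED    DEMO_PROT_SW0
#define DEMO_PAGE_SHARED_BORROWED DEMO_PROT_SW1

/* Replace whatever state is stored in prot with the given state. */
static inline demo_prot_t demo_mkstate(demo_prot_t prot, demo_prot_t state)
{
	return (prot & ~DEMO_PAGE_STATE_PROT_MASK) | state;
}

/* Extract only the state bits from prot. */
static inline demo_prot_t demo_getstate(demo_prot_t prot)
{
	return prot & DEMO_PAGE_STATE_PROT_MASK;
}

/* Round trip: the state survives and the R/W bits are left untouched. */
static inline void demo_check_roundtrip(void)
{
	demo_prot_t prot = demo_mkstate(DEMO_PROT_R | DEMO_PROT_W,
					DEMO_PAGE_SHARED_OWNED);

	assert(demo_getstate(prot) == DEMO_PAGE_SHARED_OWNED);
	assert((prot & ~DEMO_PAGE_STATE_PROT_MASK) == (DEMO_PROT_R | DEMO_PROT_W));
}
```

Because PKVM_PAGE_OWNED is 0ULL, a protection value with neither software bit set decodes as owned, so in this sketch the default state needs no extra bits.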

Function Documentation

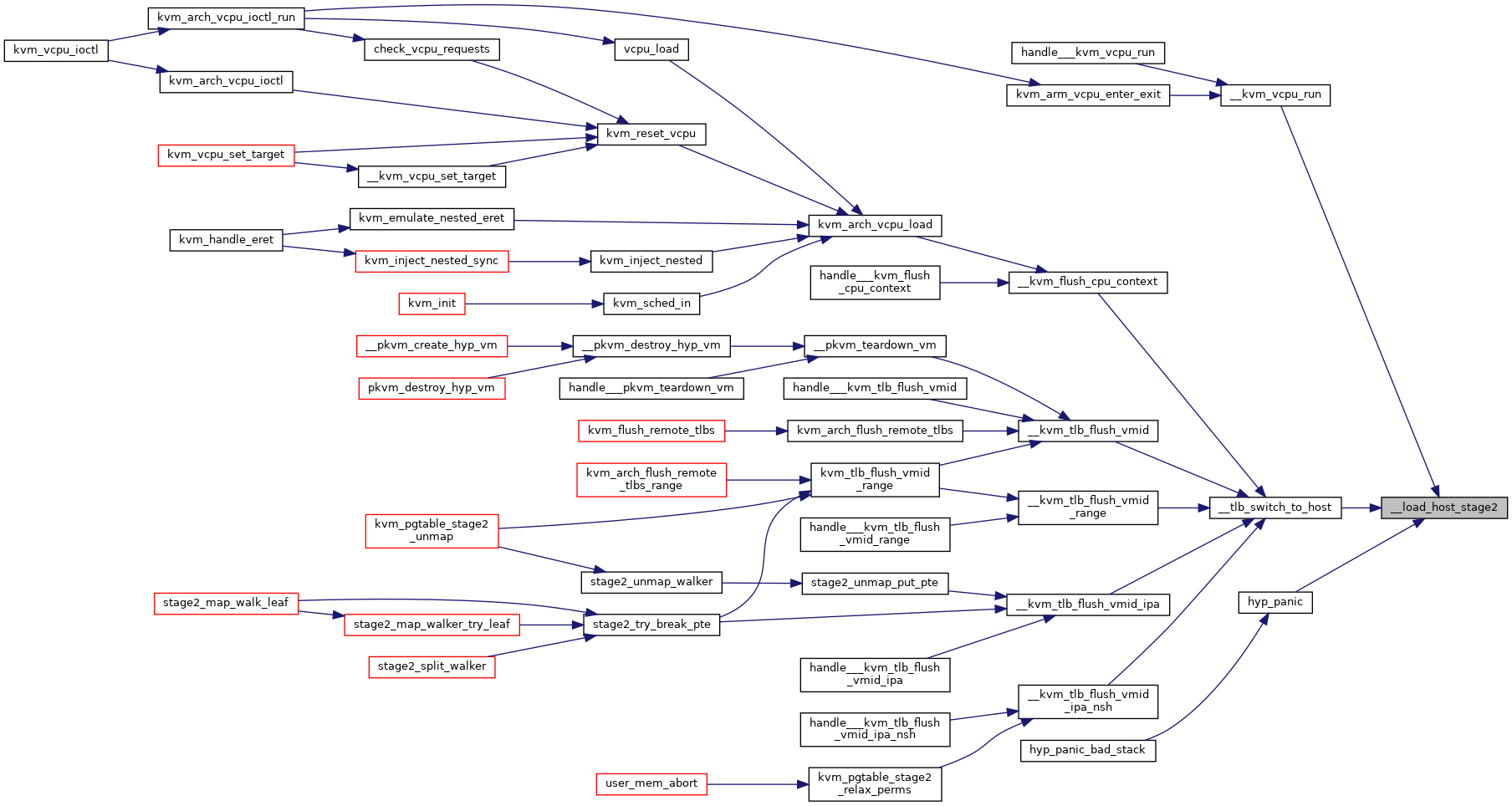

◆ __load_host_stage2()

| static __always_inline void __load_host_stage2 (void) |

Definition at line 86 of file mem_protect.h.

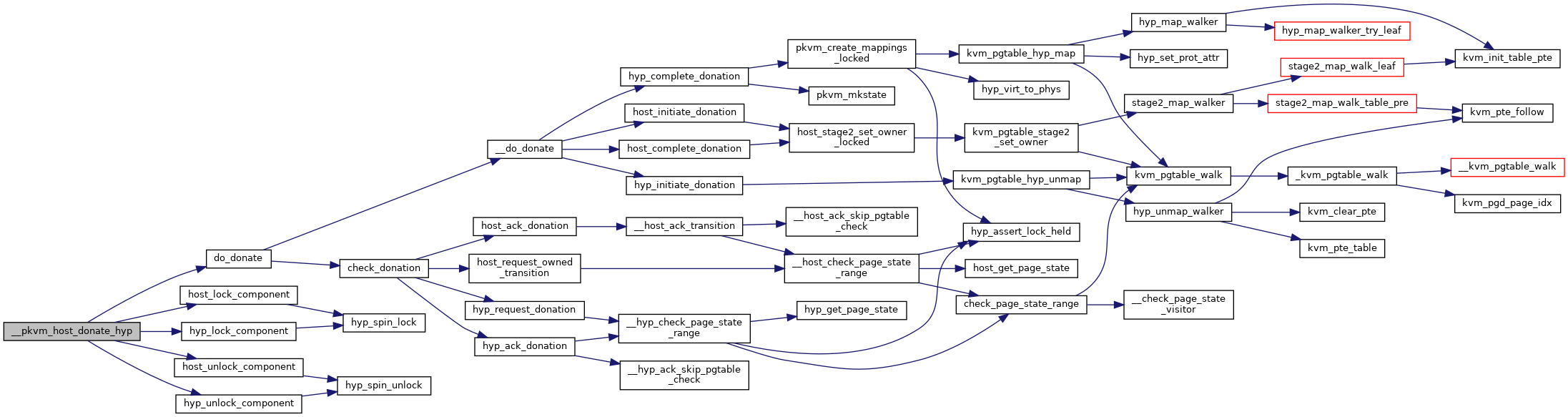

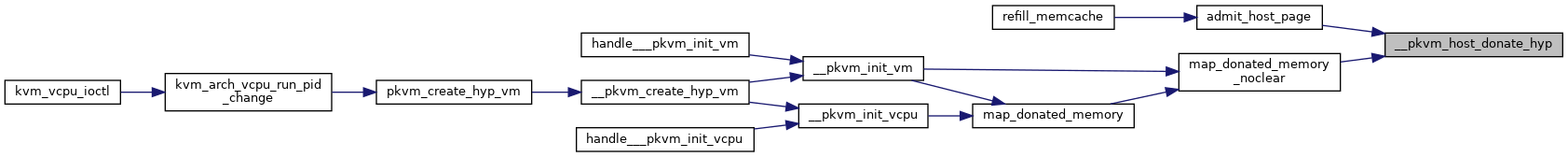

◆ __pkvm_host_donate_hyp()

| int __pkvm_host_donate_hyp (u64 pfn, u64 nr_pages) |

Definition at line 1152 of file mem_protect.c.
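Several of these entry points, including __pkvm_host_donate_hyp(), describe memory as a starting page frame number plus a page count. As a rough illustration, the sketch below converts such a pair into a physical byte range. The 4 KiB page size (DEMO_PAGE_SHIFT of 12) and all demo_* names are assumptions made for this example; the kernel's actual page size depends on its configuration.

```c
#include <stdint.h>

#define DEMO_PAGE_SHIFT 12 /* assumption: 4 KiB pages */

/* Half-open physical range [start, end), hypothetical helper type. */
struct demo_phys_range {
	uint64_t start; /* first byte covered */
	uint64_t end;   /* one past the last byte covered */
};

/* Convert a (pfn, nr_pages) pair into the byte range it spans. */
static inline struct demo_phys_range demo_pfn_range(uint64_t pfn, uint64_t nr_pages)
{
	struct demo_phys_range r;

	r.start = pfn << DEMO_PAGE_SHIFT;
	r.end = r.start + (nr_pages << DEMO_PAGE_SHIFT);
	return r;
}
```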

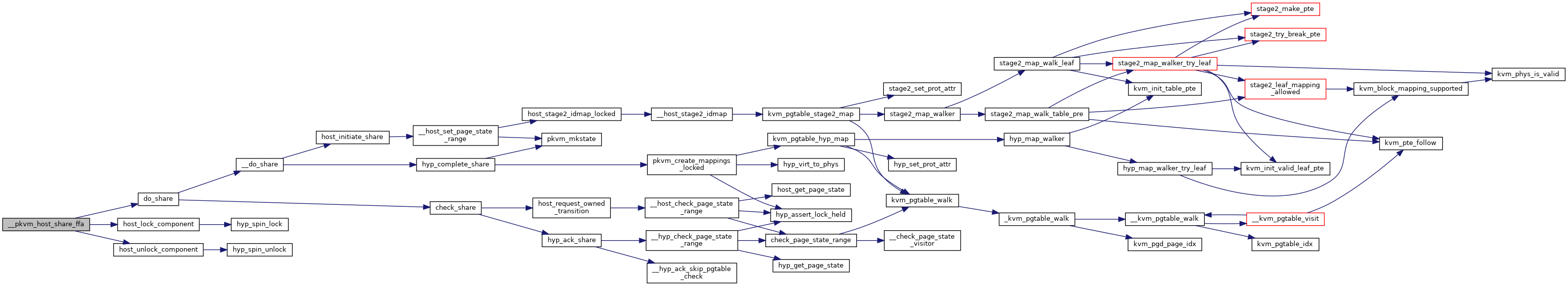

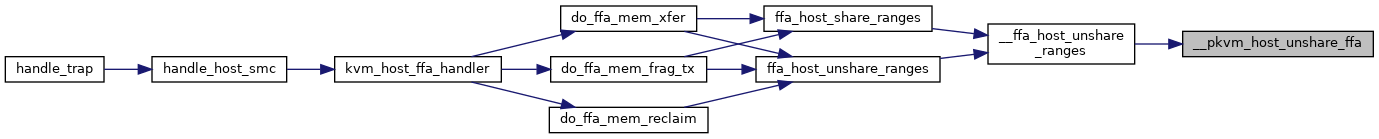

◆ __pkvm_host_share_ffa()

| int __pkvm_host_share_ffa (u64 pfn, u64 nr_pages) |

Definition at line 1261 of file mem_protect.c.

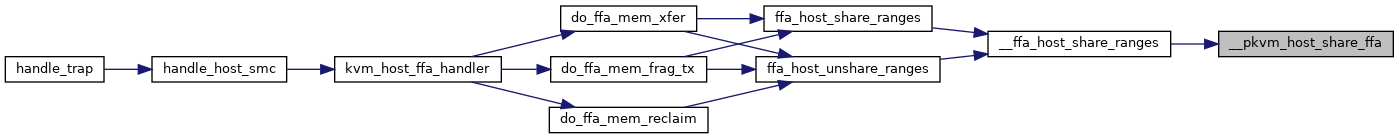

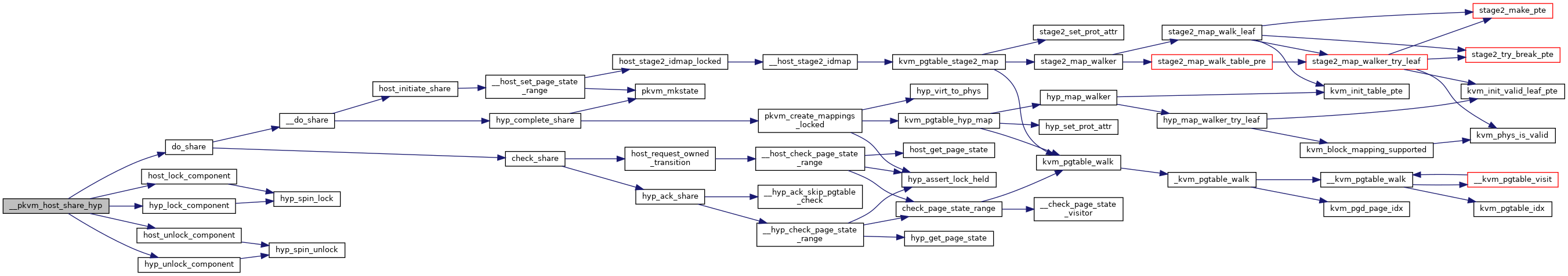

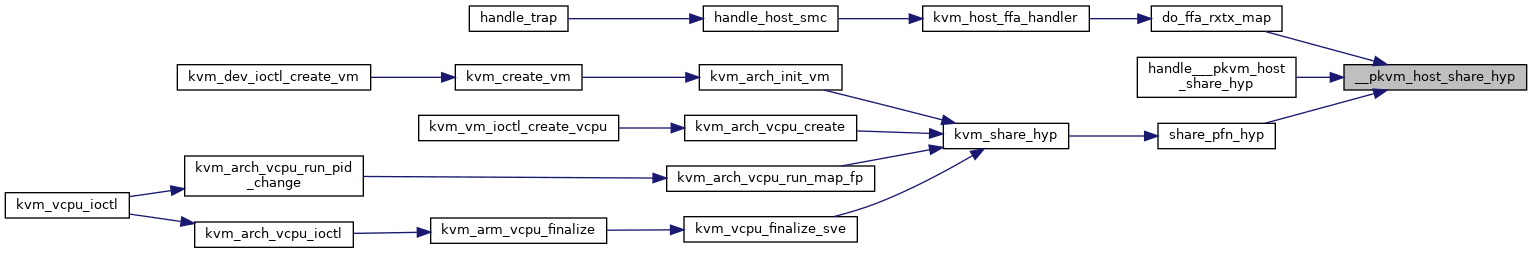

◆ __pkvm_host_share_hyp()

| int __pkvm_host_share_hyp (u64 pfn) |

Definition at line 1086 of file mem_protect.c.

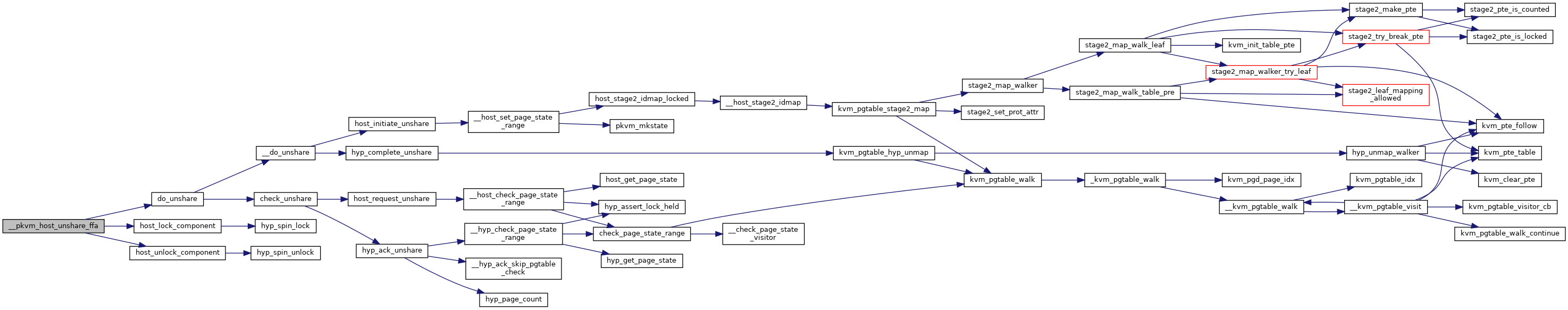

◆ __pkvm_host_unshare_ffa()

| int __pkvm_host_unshare_ffa (u64 pfn, u64 nr_pages) |

Definition at line 1284 of file mem_protect.c.

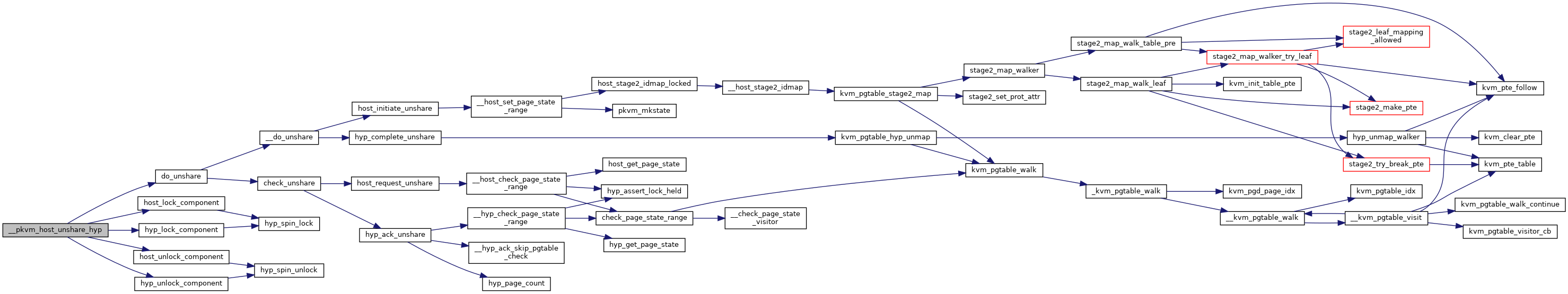

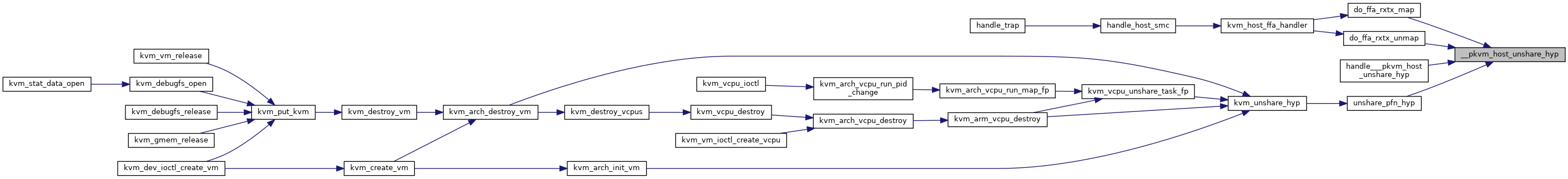

◆ __pkvm_host_unshare_hyp()

| int __pkvm_host_unshare_hyp (u64 pfn) |

Definition at line 1119 of file mem_protect.c.

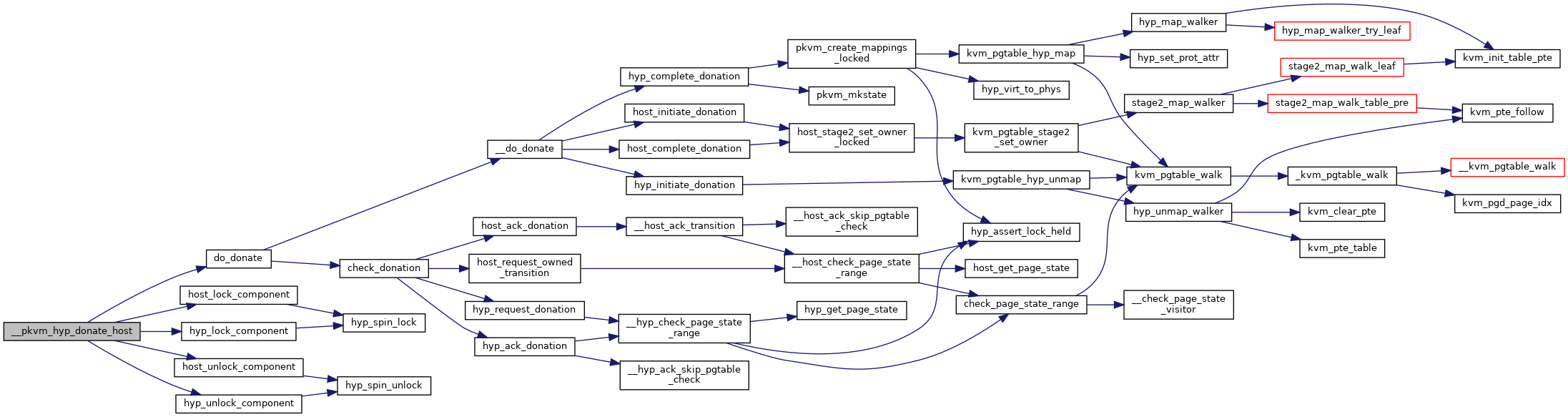

◆ __pkvm_hyp_donate_host()

| int __pkvm_hyp_donate_host (u64 pfn, u64 nr_pages) |

Definition at line 1184 of file mem_protect.c.

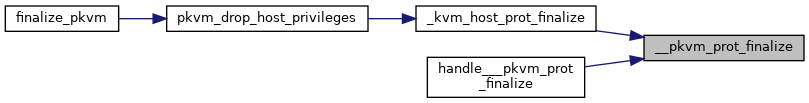

◆ __pkvm_prot_finalize()

| int __pkvm_prot_finalize (void) |

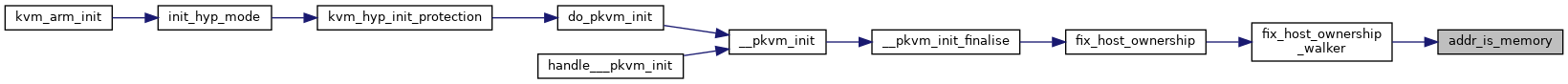

◆ addr_is_memory()

| bool addr_is_memory (phys_addr_t phys) |

Definition at line 378 of file mem_protect.c.

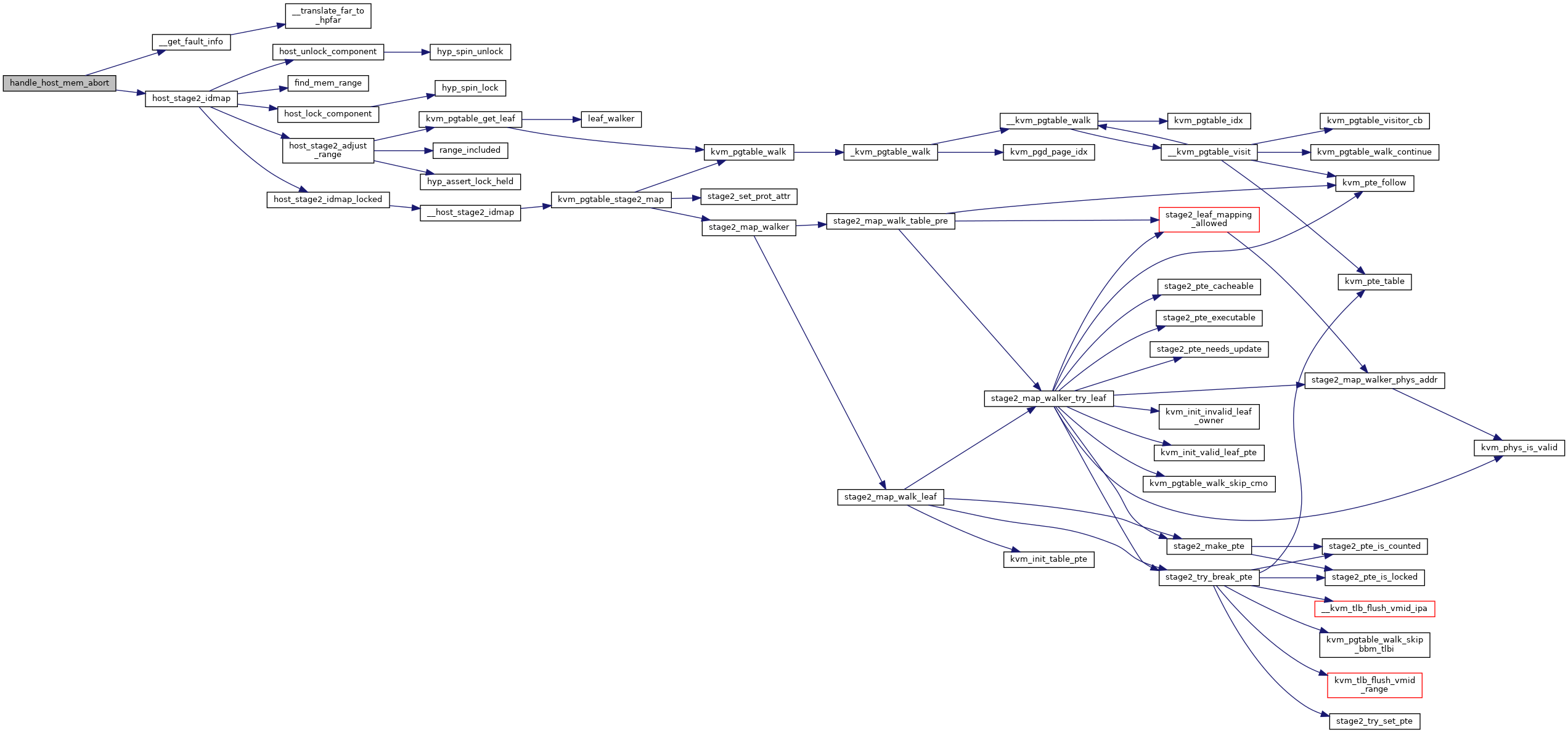

◆ handle_host_mem_abort()

| void handle_host_mem_abort (struct kvm_cpu_context *host_ctxt) |

Definition at line 529 of file mem_protect.c.

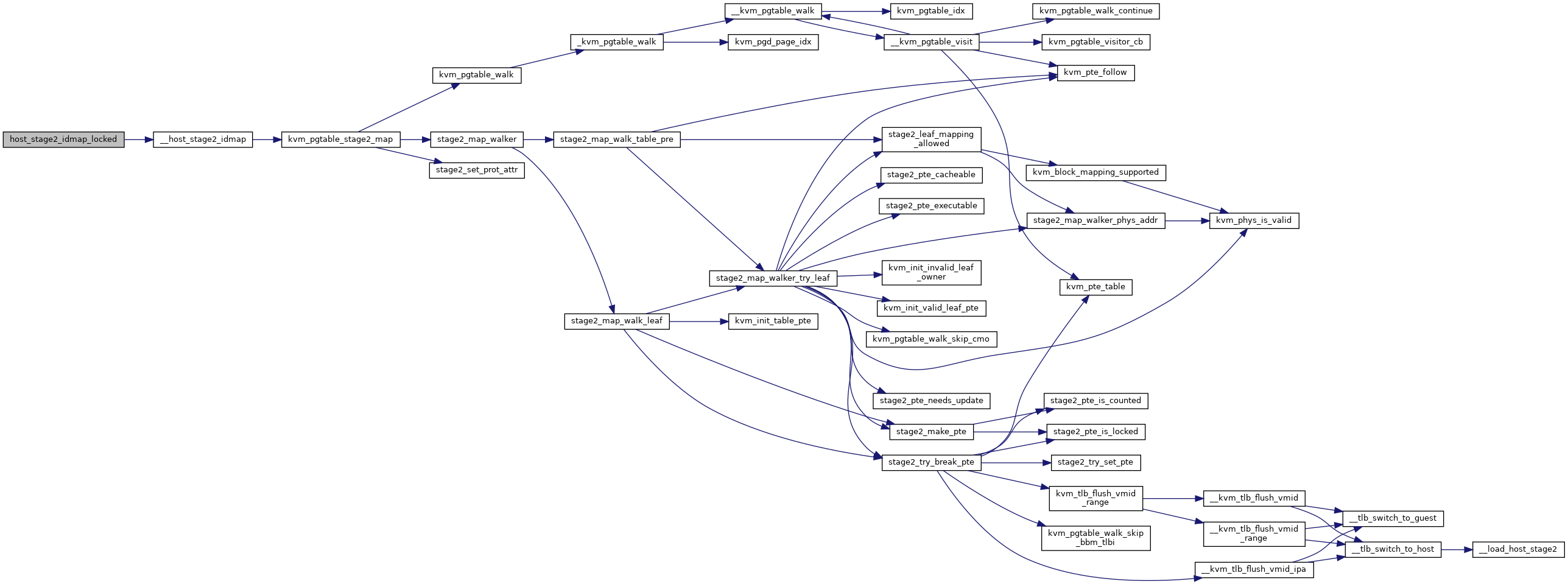

◆ host_stage2_idmap_locked()

| int host_stage2_idmap_locked (phys_addr_t addr, u64 size, enum kvm_pgtable_prot prot) |

Definition at line 474 of file mem_protect.c.

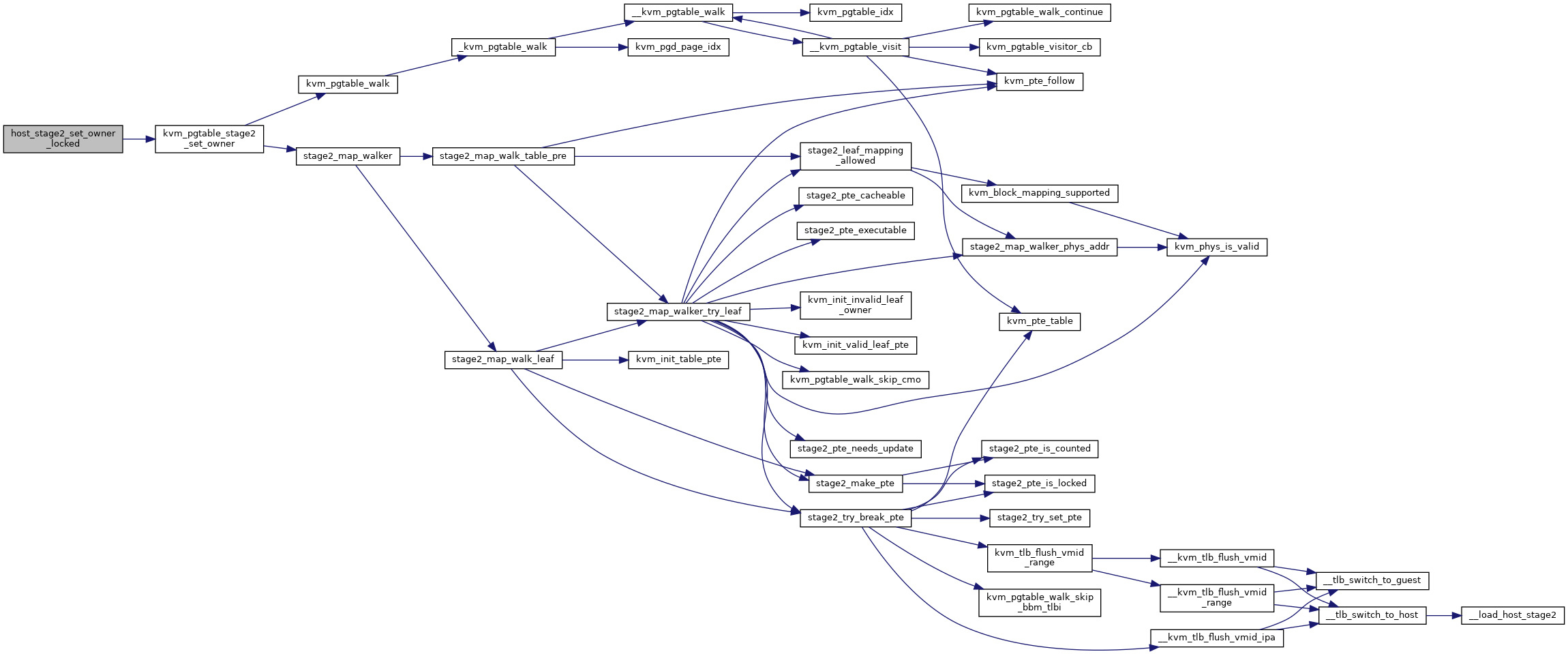

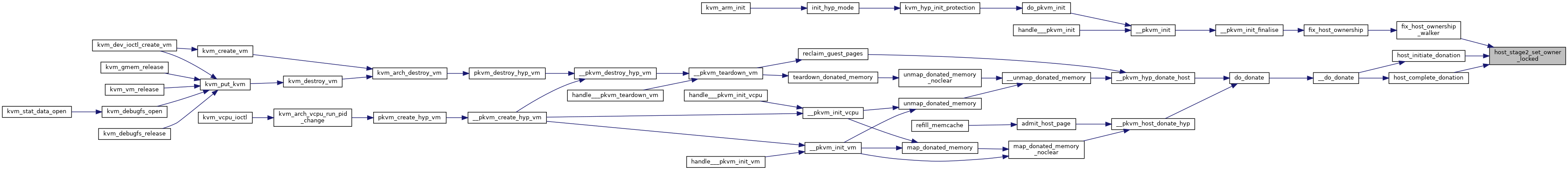

◆ host_stage2_set_owner_locked()

| int host_stage2_set_owner_locked (phys_addr_t addr, u64 size, u8 owner_id) |

Definition at line 480 of file mem_protect.c.

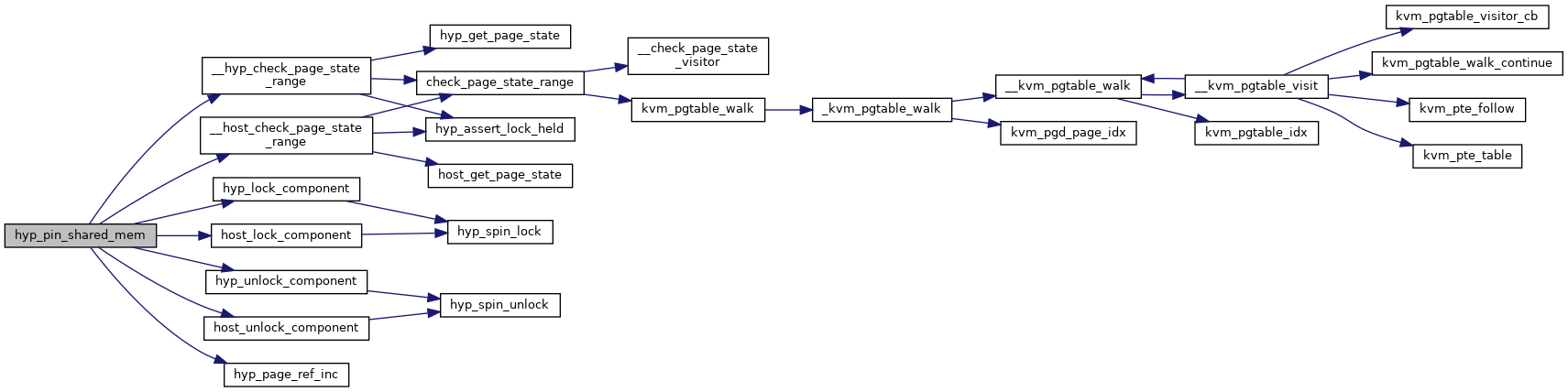

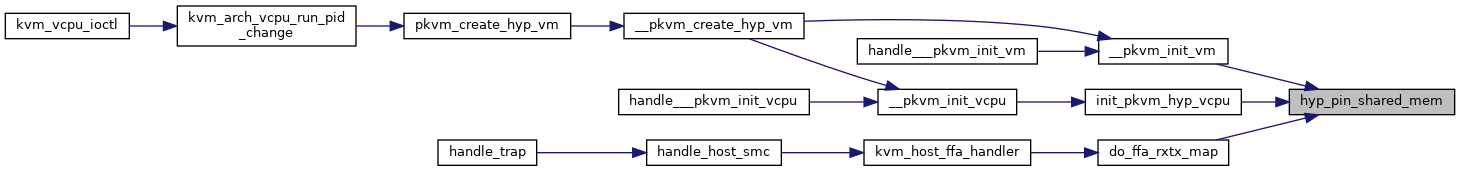

◆ hyp_pin_shared_mem()

| int hyp_pin_shared_mem (void *from, void *to) |

Definition at line 1216 of file mem_protect.c.

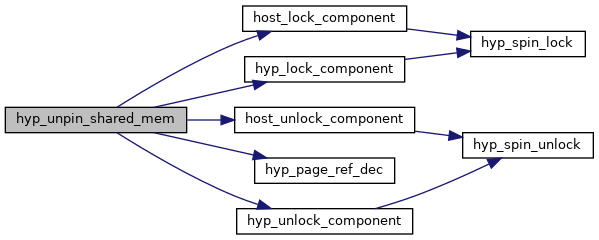

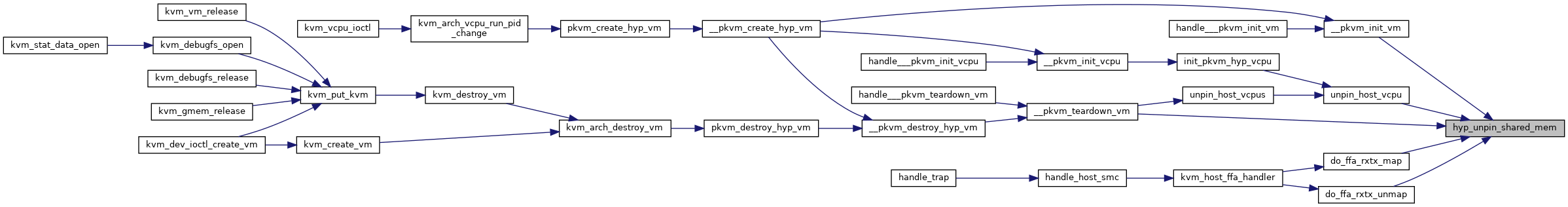

◆ hyp_unpin_shared_mem()

| void hyp_unpin_shared_mem (void *from, void *to) |

Definition at line 1246 of file mem_protect.c.

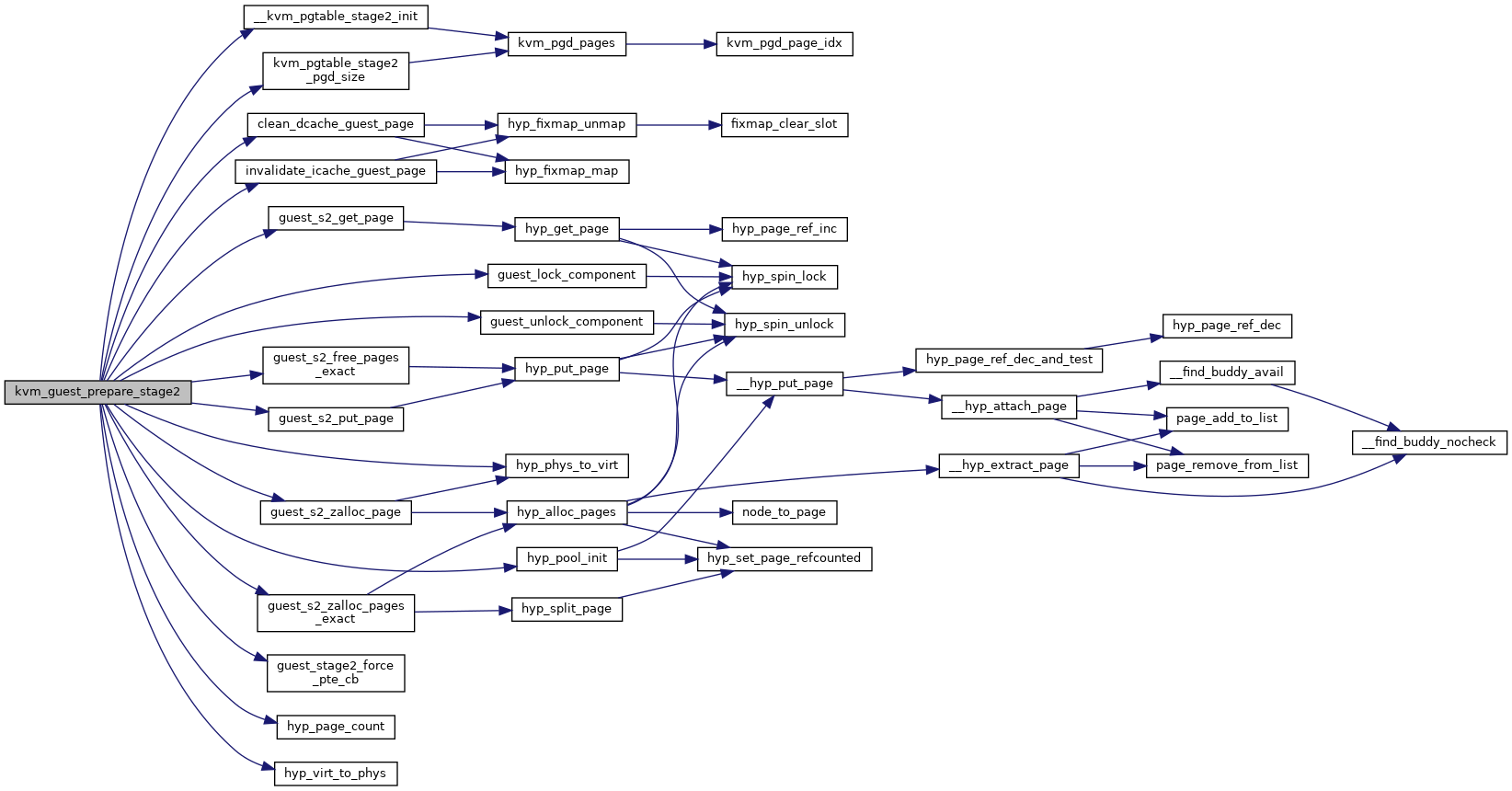

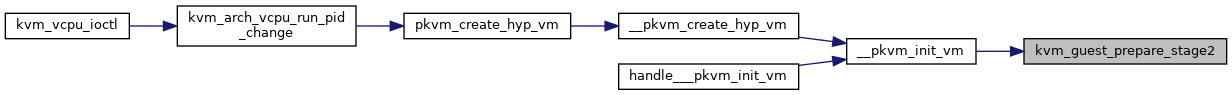

◆ kvm_guest_prepare_stage2()

| int kvm_guest_prepare_stage2 (struct pkvm_hyp_vm *vm, void *pgd) |

Definition at line 232 of file mem_protect.c.

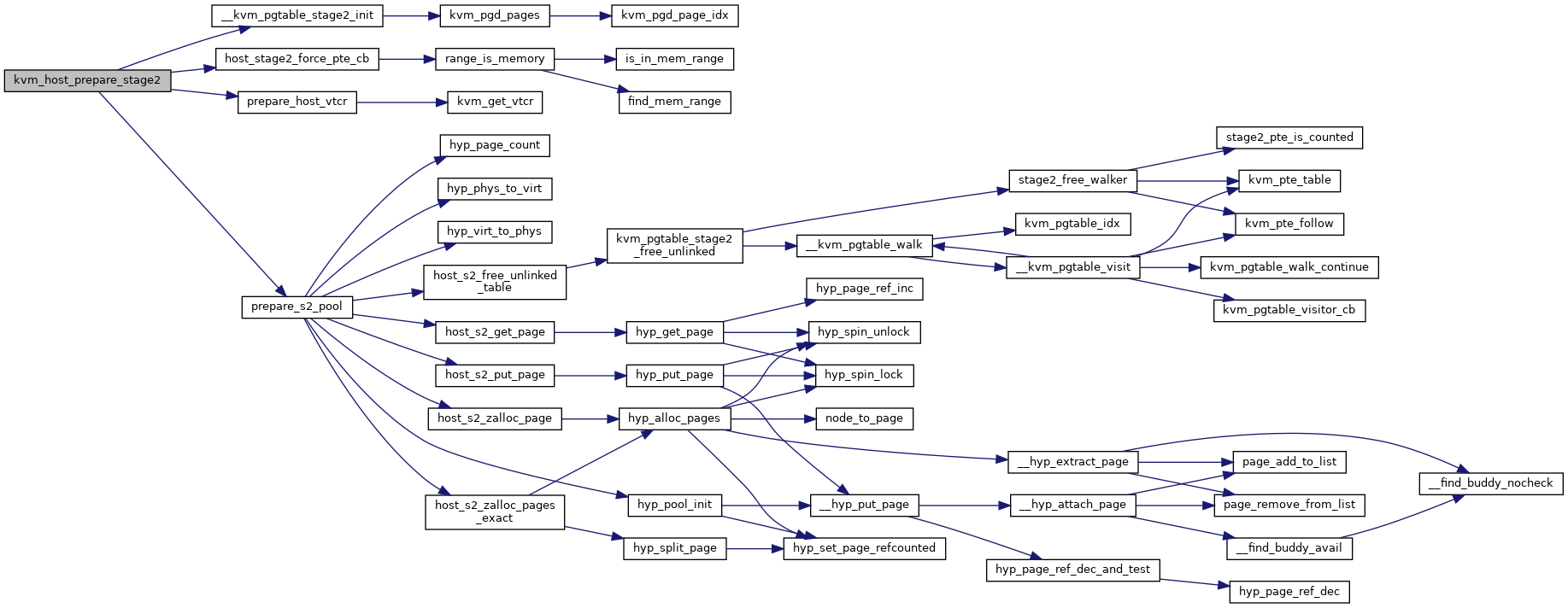

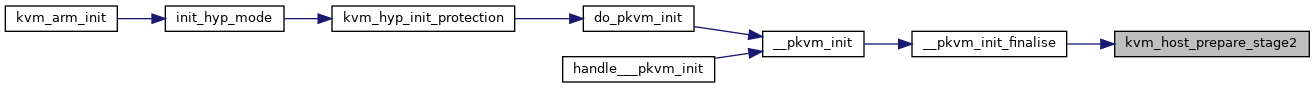

◆ kvm_host_prepare_stage2()

| int kvm_host_prepare_stage2 (void *pgt_pool_base) |

Definition at line 138 of file mem_protect.c.

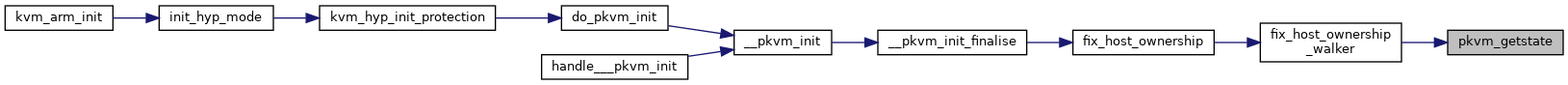

◆ pkvm_getstate()

| static inline enum pkvm_page_state pkvm_getstate (enum kvm_pgtable_prot prot) |

◆ pkvm_mkstate()

| static inline enum kvm_pgtable_prot pkvm_mkstate (enum kvm_pgtable_prot prot, enum pkvm_page_state state) |

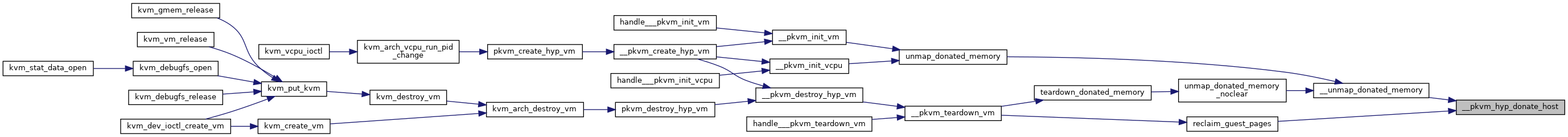

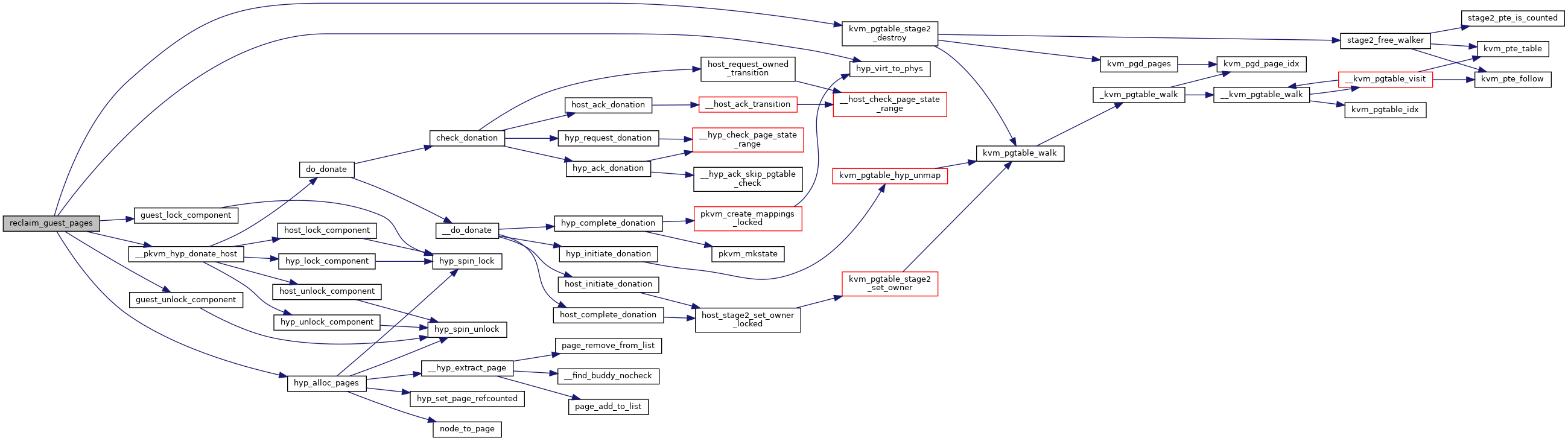

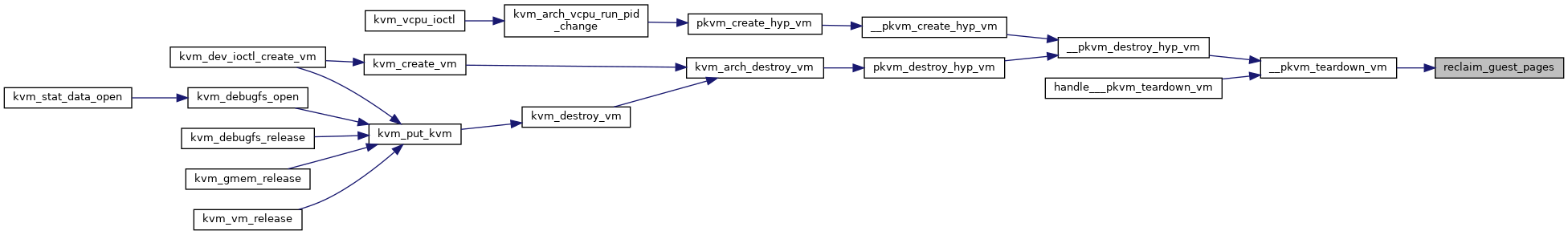

◆ reclaim_guest_pages()

| void reclaim_guest_pages (struct pkvm_hyp_vm *vm, struct kvm_hyp_memcache *mc) |

Definition at line 269 of file mem_protect.c.

◆ refill_memcache()

| int refill_memcache (struct kvm_hyp_memcache *mc, unsigned long min_pages, struct kvm_hyp_memcache *host_mc) |

Variable Documentation

◆ host_mmu

Definition at line 1 of file mem_protect.c.