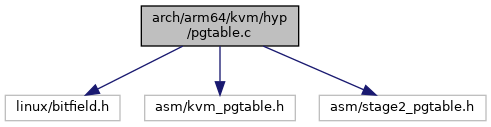

#include <linux/bitfield.h>

#include <asm/kvm_pgtable.h>

#include <asm/stage2_pgtable.h>


Classes

| struct | kvm_pgtable_walk_data |

| struct | leaf_walk_data |

| struct | hyp_map_data |

| struct | stage2_map_data |

| struct | stage2_attr_data |

| struct | stage2_age_data |

Functions

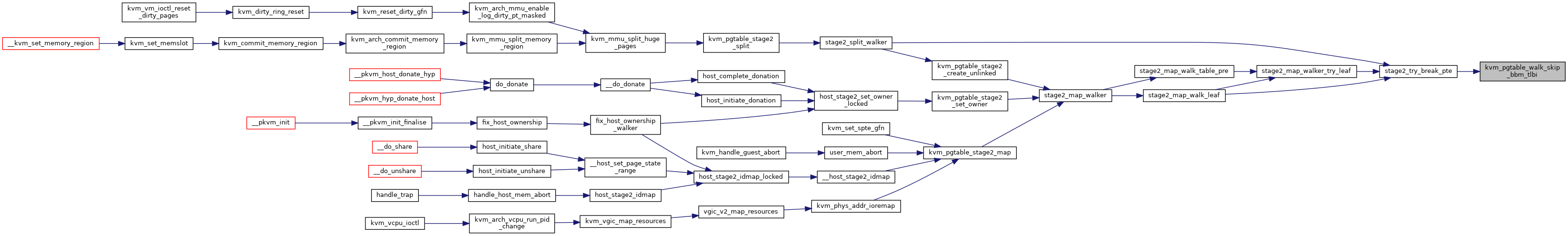

| static bool | kvm_pgtable_walk_skip_bbm_tlbi (const struct kvm_pgtable_visit_ctx *ctx) |

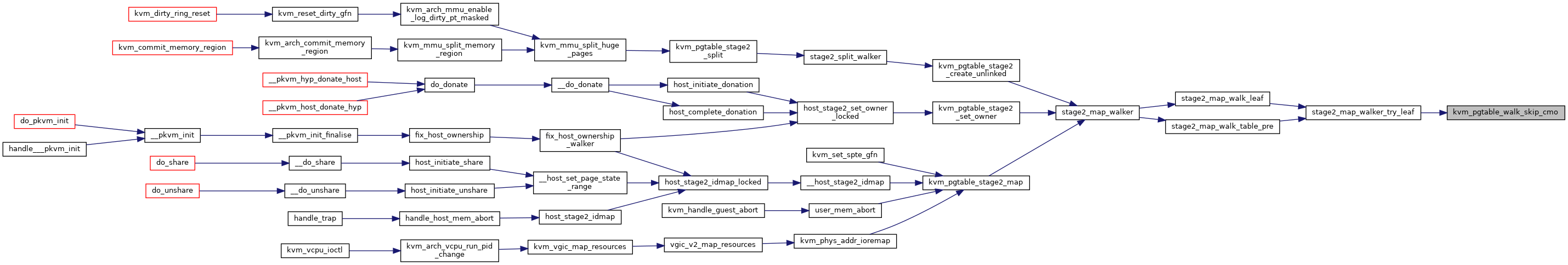

| static bool | kvm_pgtable_walk_skip_cmo (const struct kvm_pgtable_visit_ctx *ctx) |

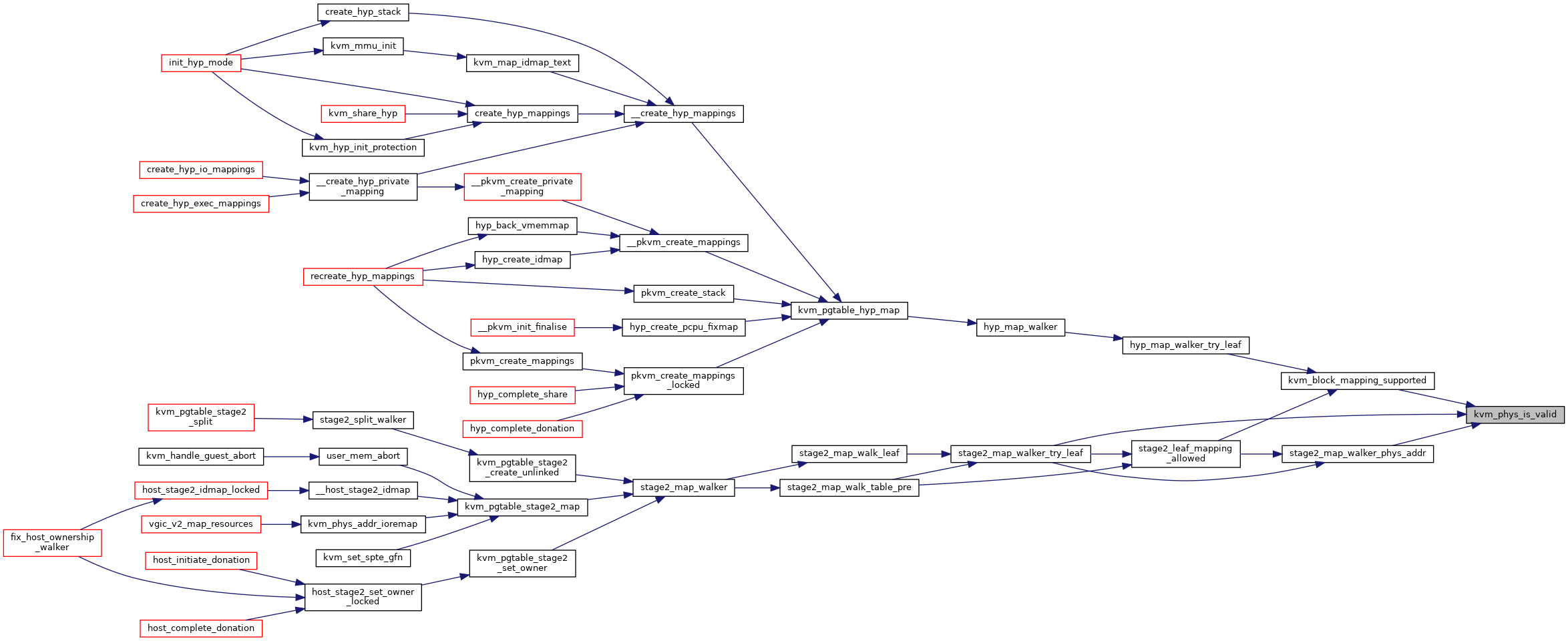

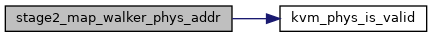

| static bool | kvm_phys_is_valid (u64 phys) |

| static bool | kvm_block_mapping_supported (const struct kvm_pgtable_visit_ctx *ctx, u64 phys) |

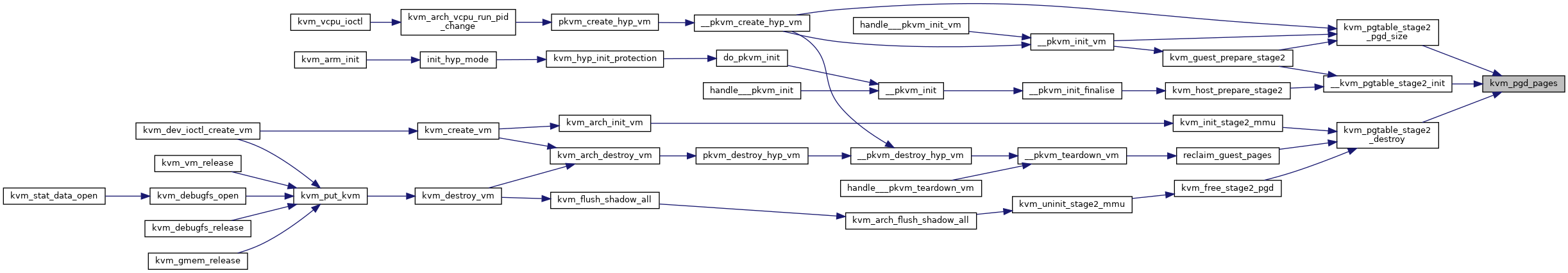

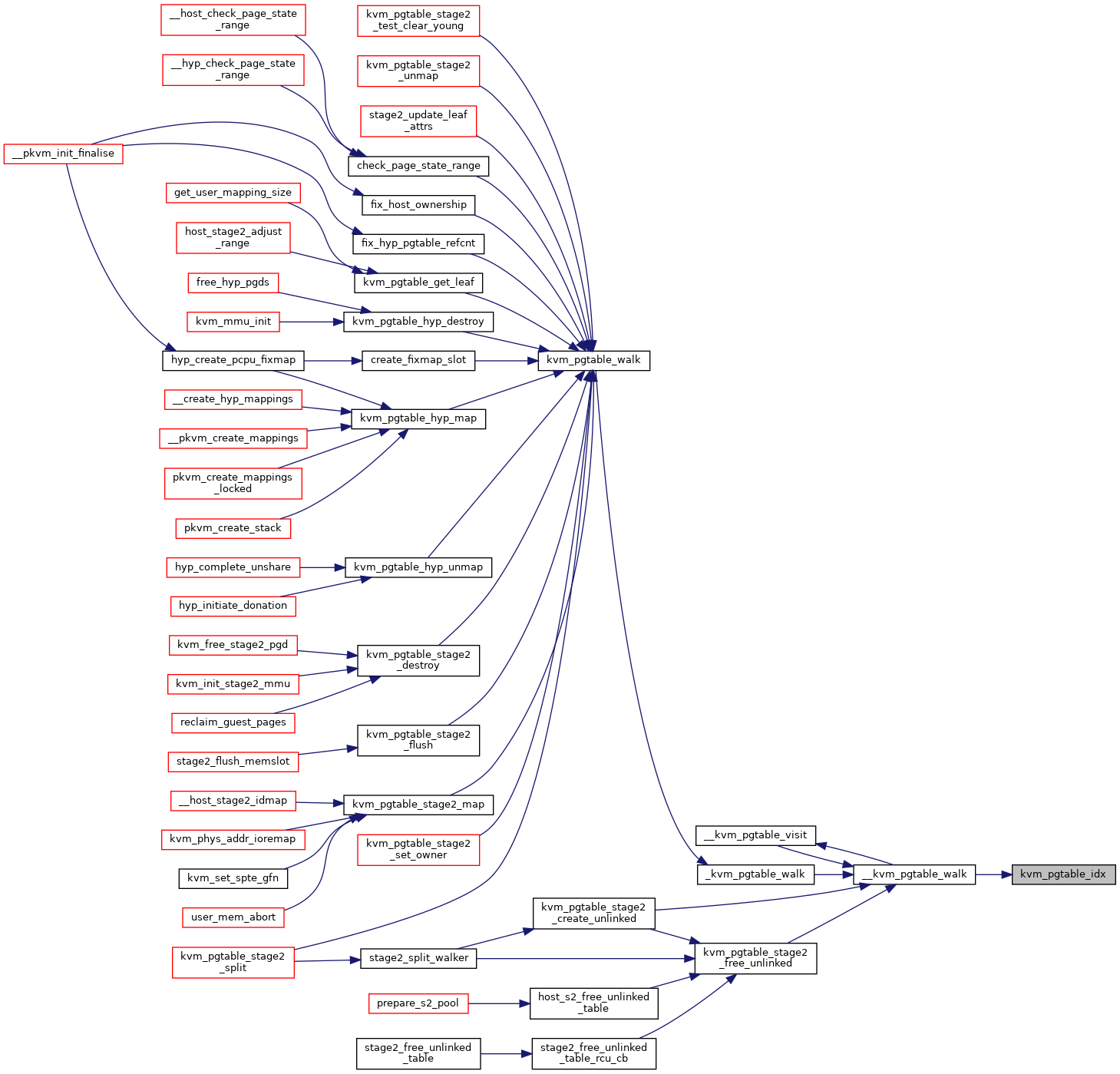

| static u32 | kvm_pgtable_idx (struct kvm_pgtable_walk_data *data, s8 level) |

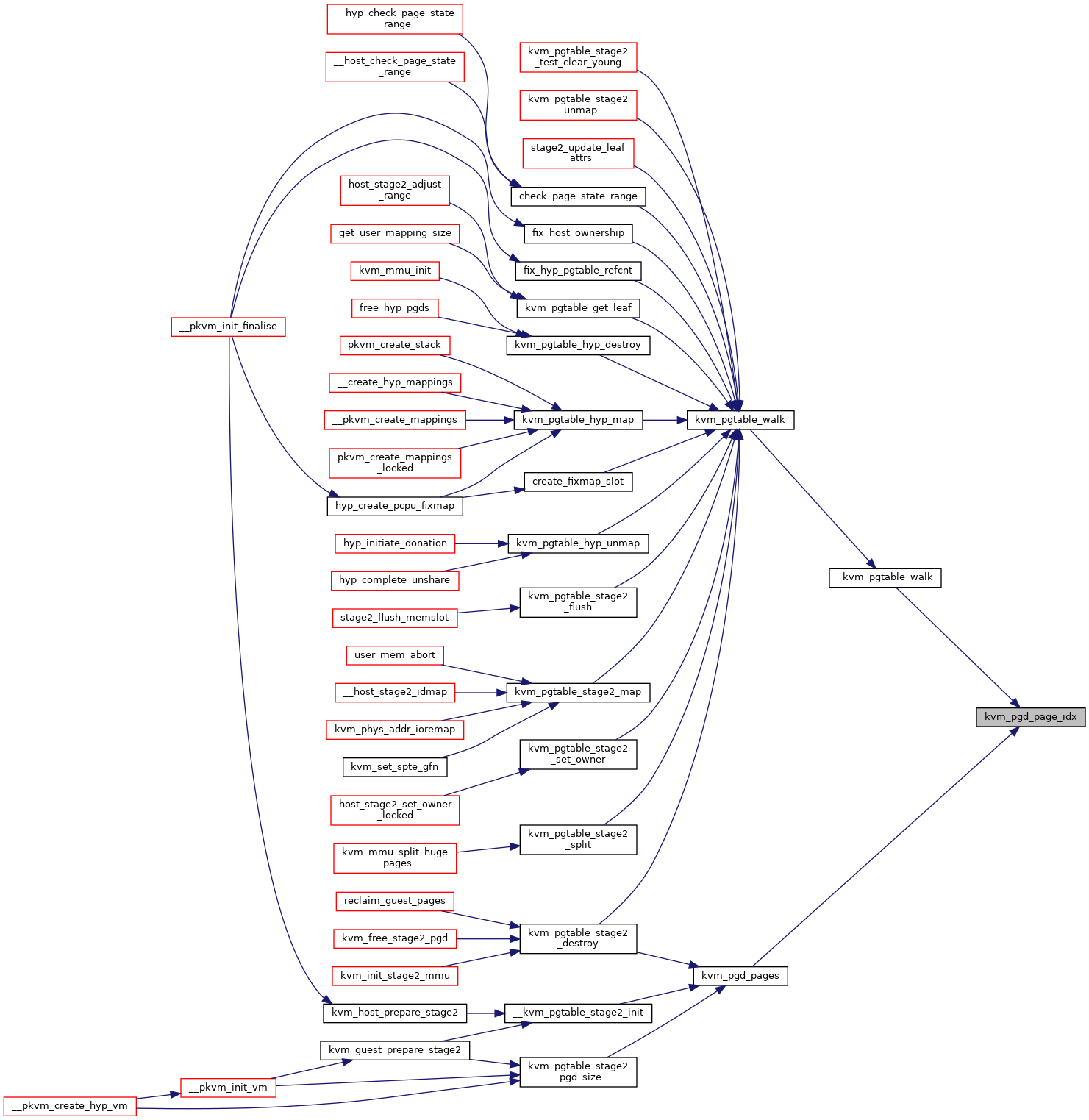

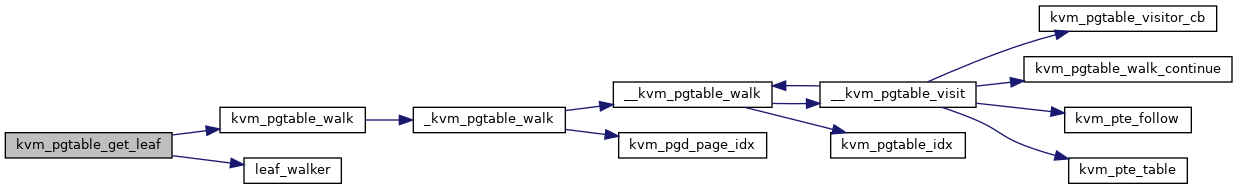

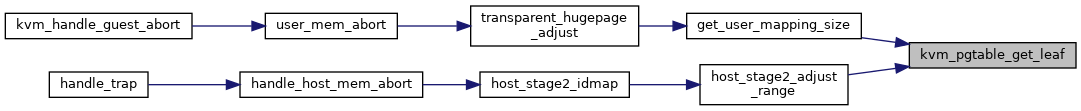

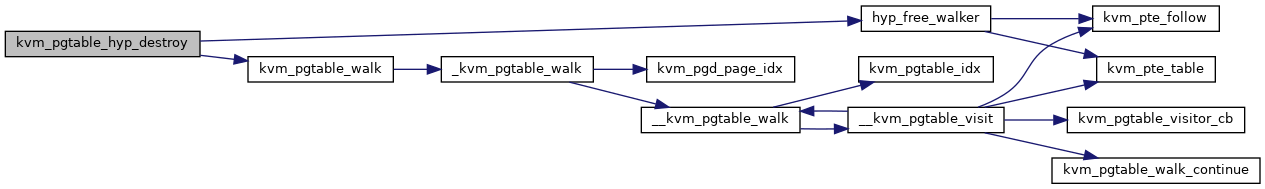

| static u32 | kvm_pgd_page_idx (struct kvm_pgtable *pgt, u64 addr) |

| static u32 | kvm_pgd_pages (u32 ia_bits, s8 start_level) |

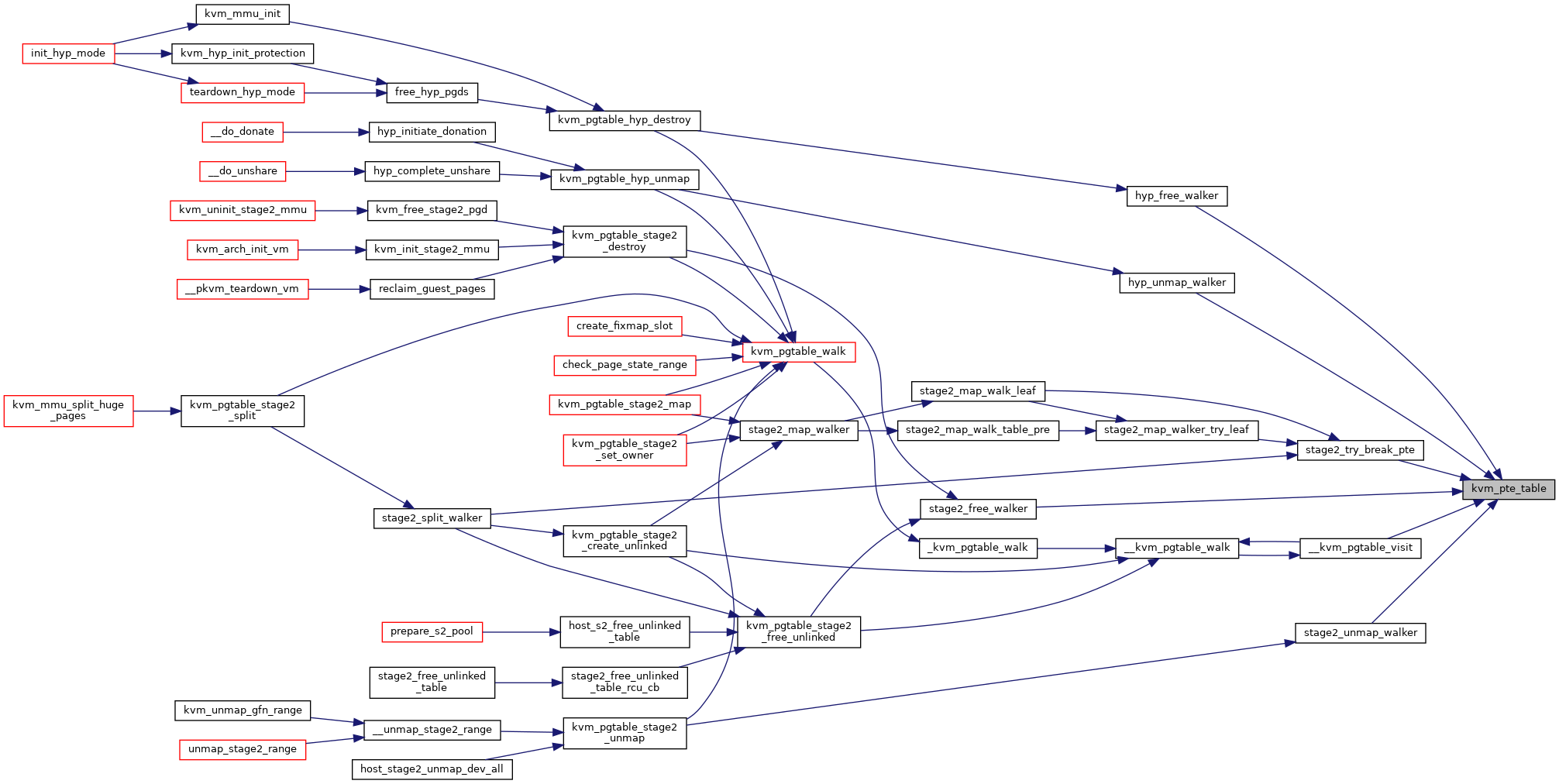

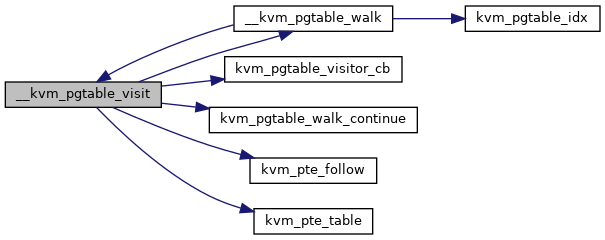

| static bool | kvm_pte_table (kvm_pte_t pte, s8 level) |

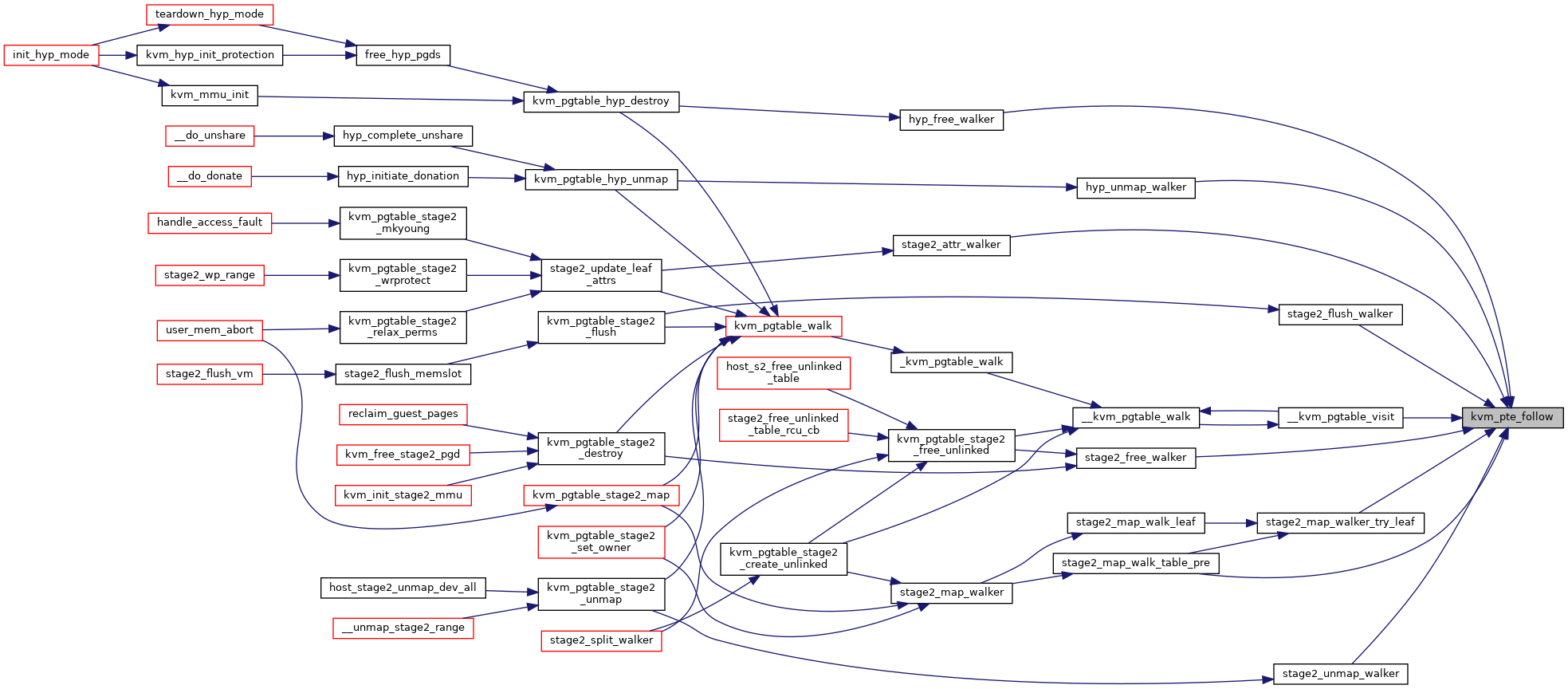

| static kvm_pte_t * | kvm_pte_follow (kvm_pte_t pte, struct kvm_pgtable_mm_ops *mm_ops) |

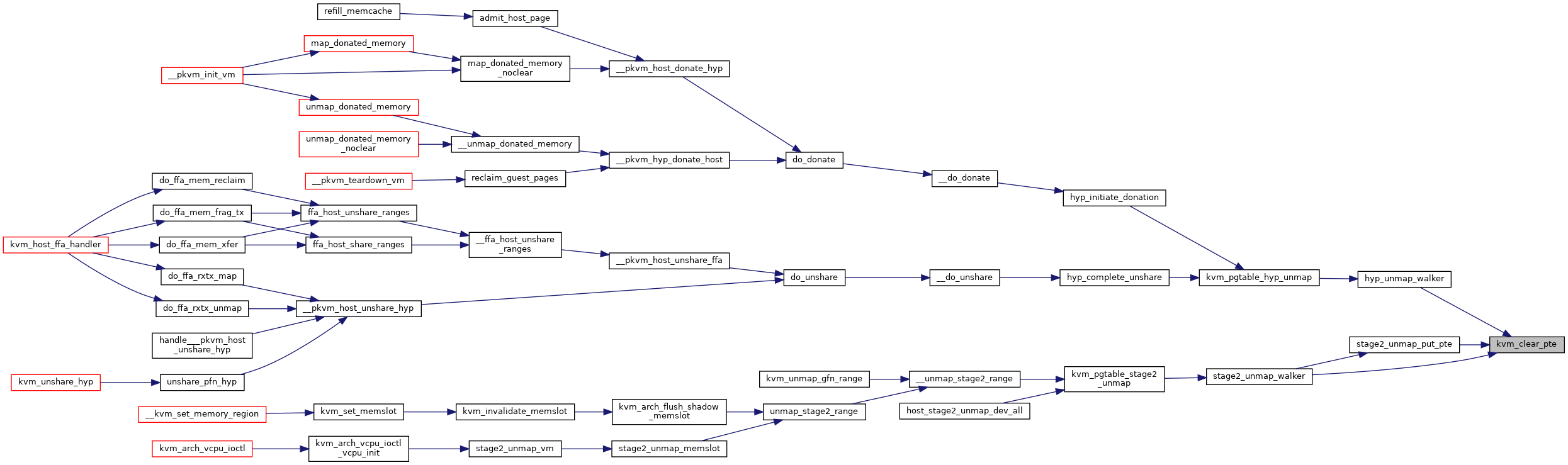

| static void | kvm_clear_pte (kvm_pte_t *ptep) |

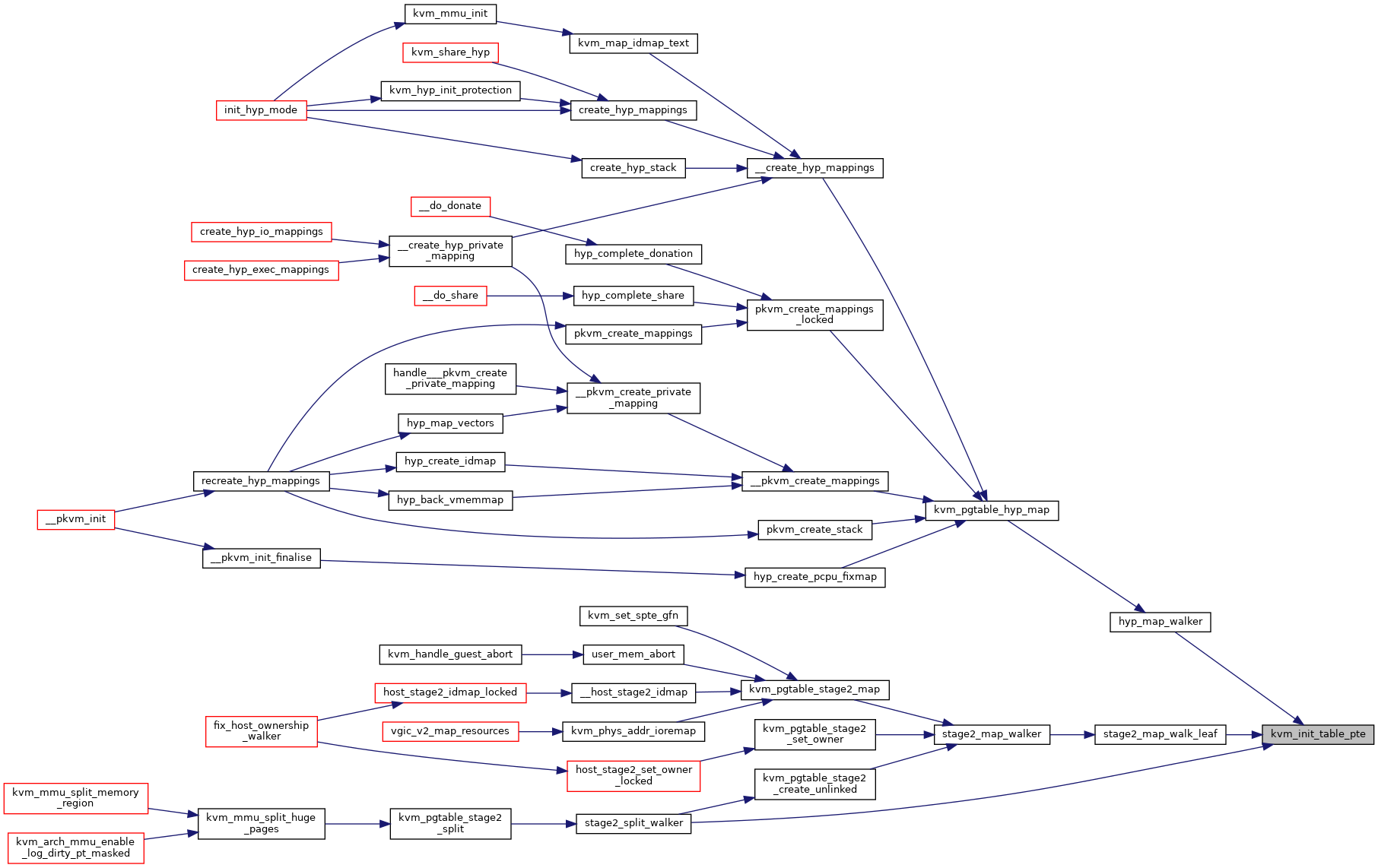

| static kvm_pte_t | kvm_init_table_pte (kvm_pte_t *childp, struct kvm_pgtable_mm_ops *mm_ops) |

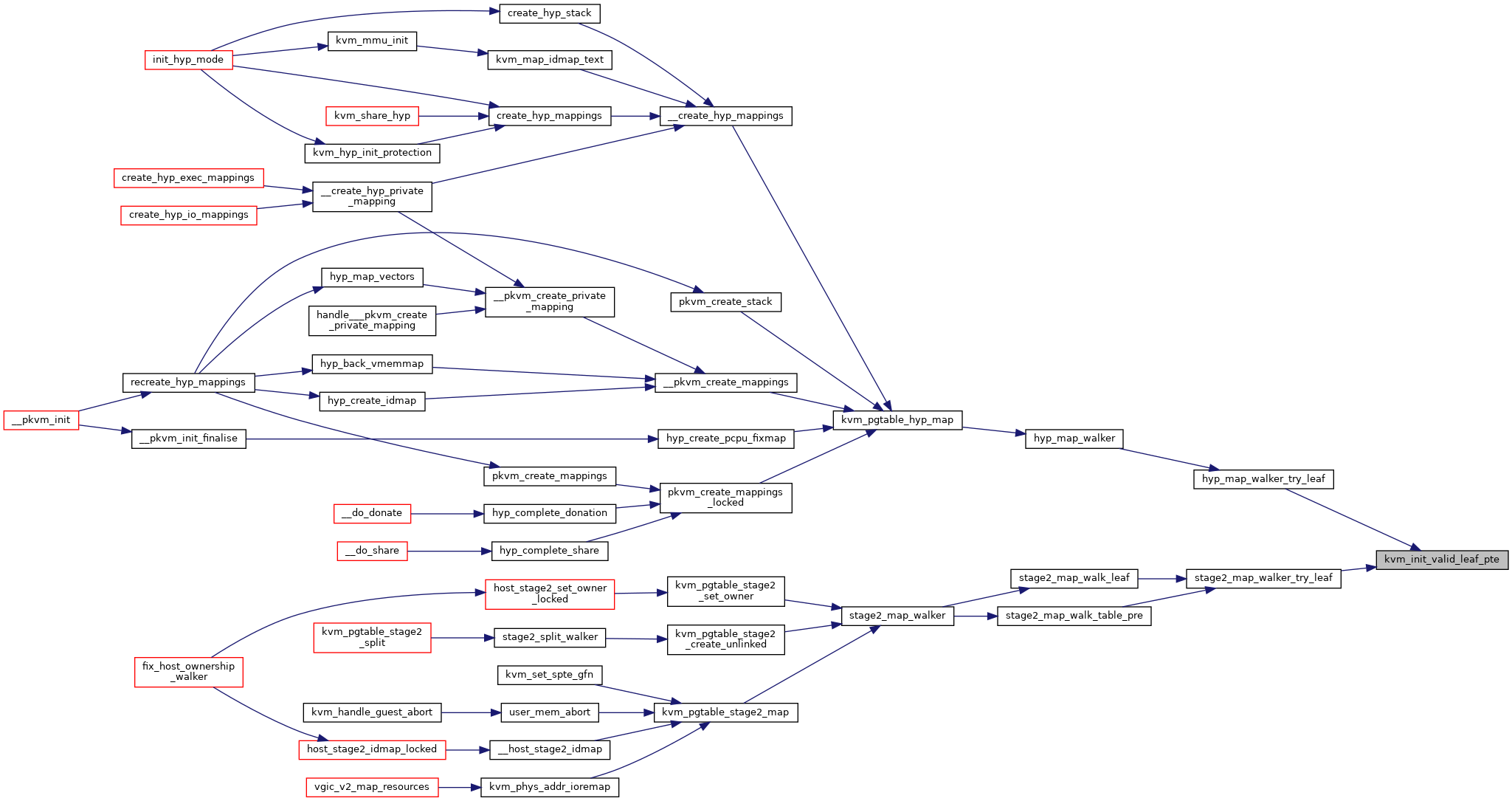

| static kvm_pte_t | kvm_init_valid_leaf_pte (u64 pa, kvm_pte_t attr, s8 level) |

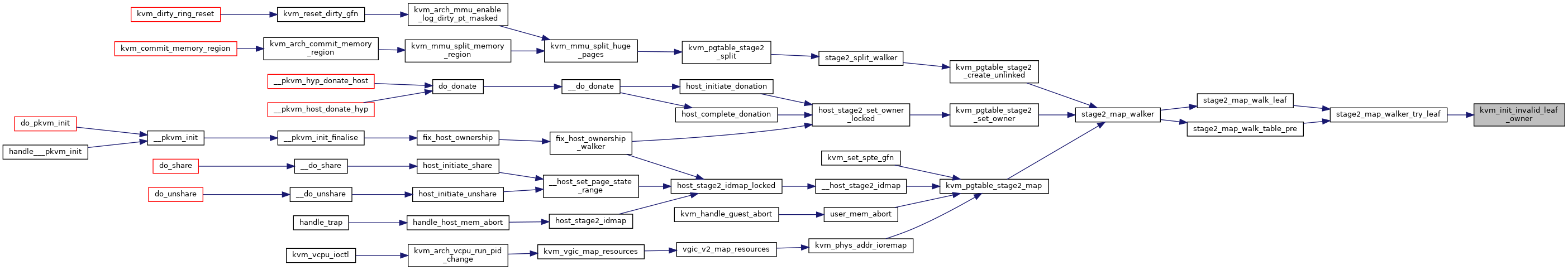

| static kvm_pte_t | kvm_init_invalid_leaf_owner (u8 owner_id) |

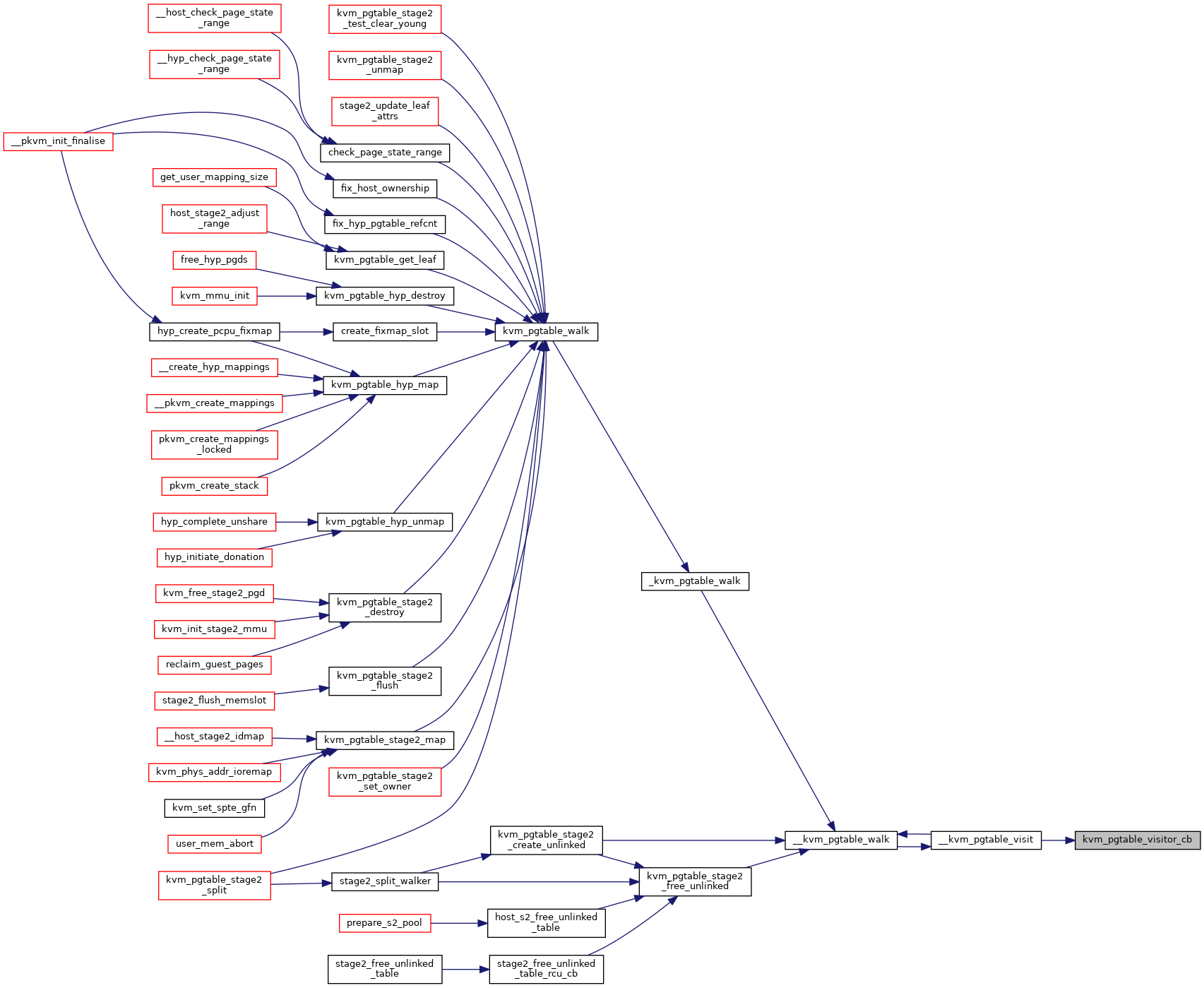

| static int | kvm_pgtable_visitor_cb (struct kvm_pgtable_walk_data *data, const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

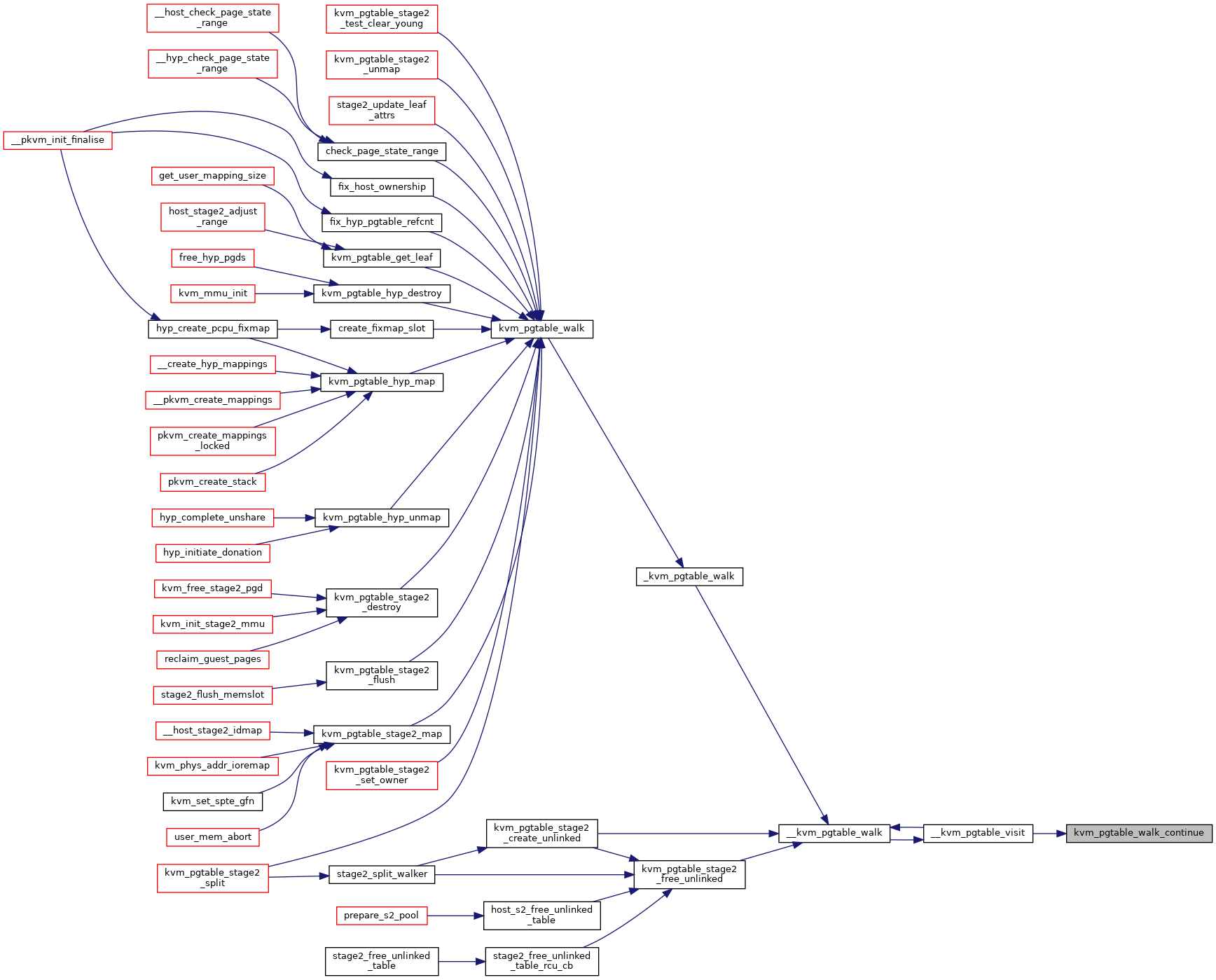

| static bool | kvm_pgtable_walk_continue (const struct kvm_pgtable_walker *walker, int r) |

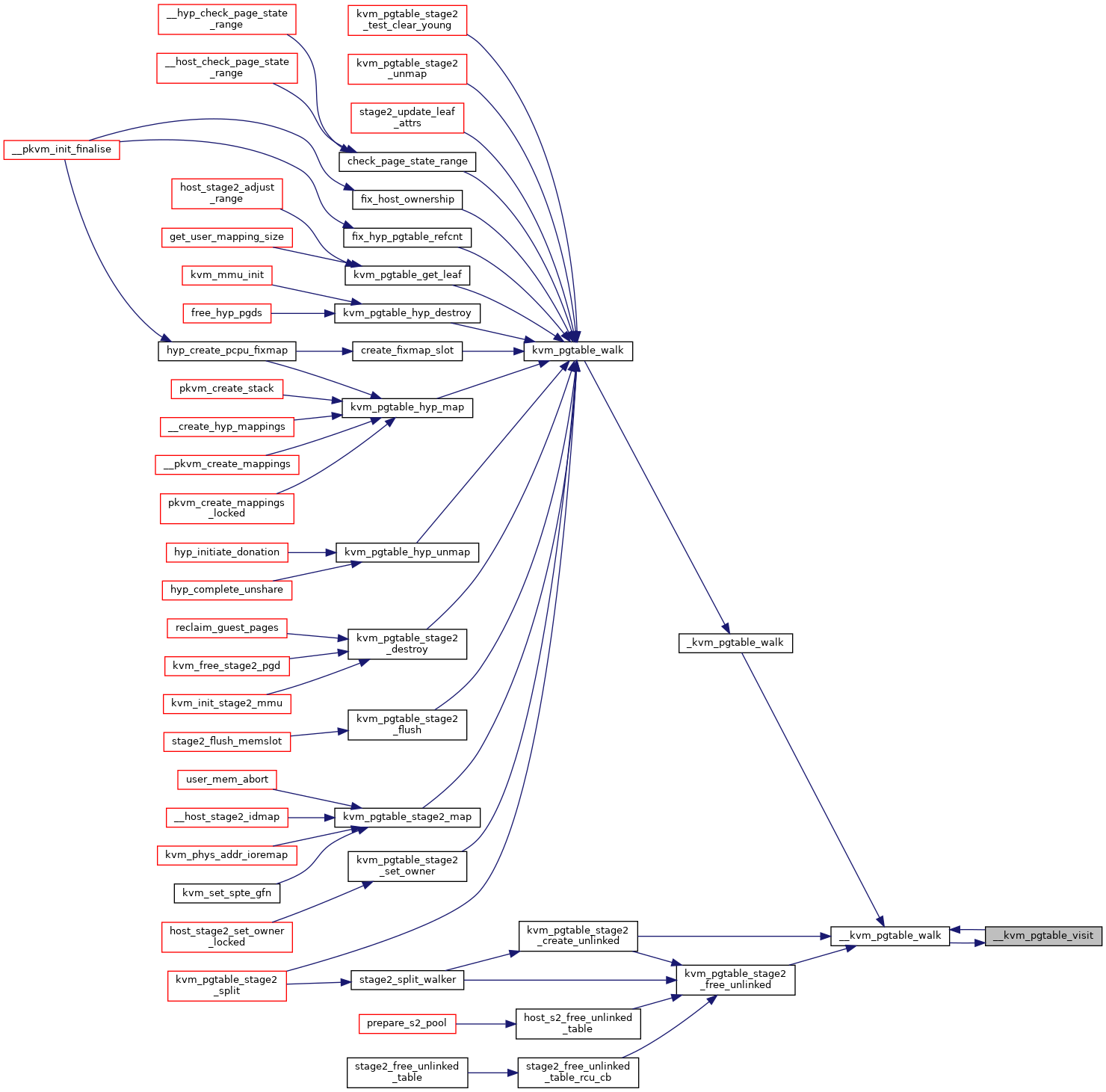

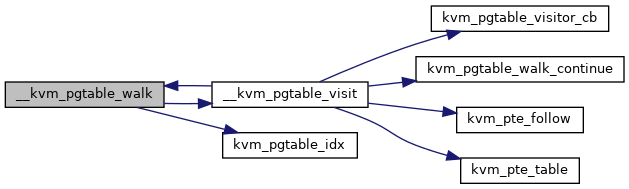

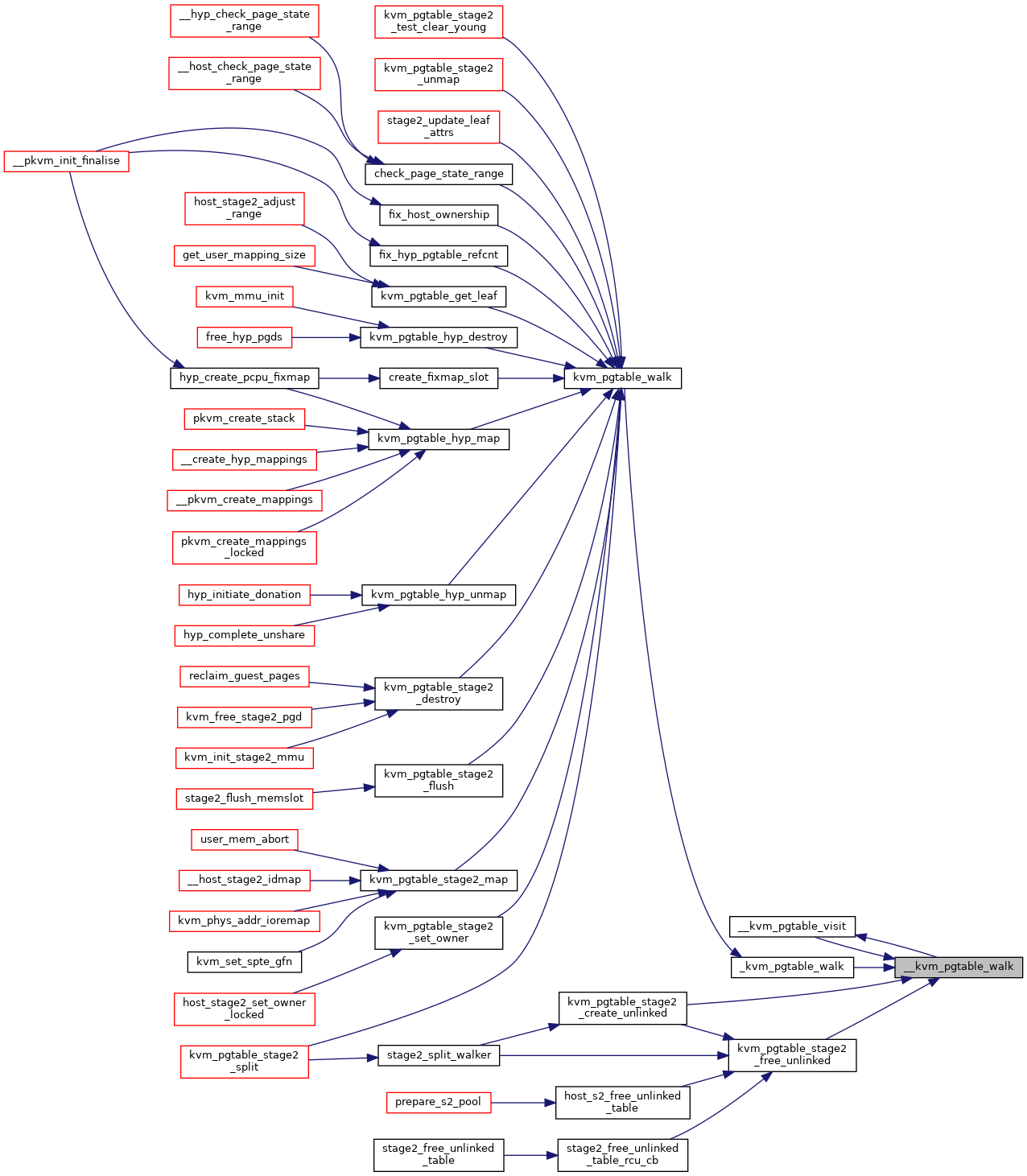

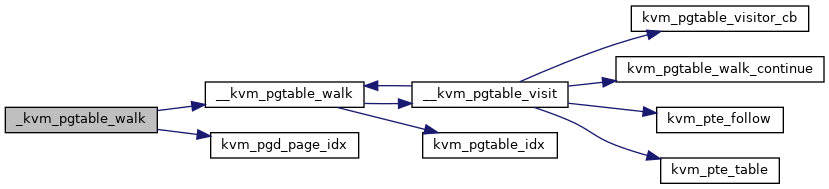

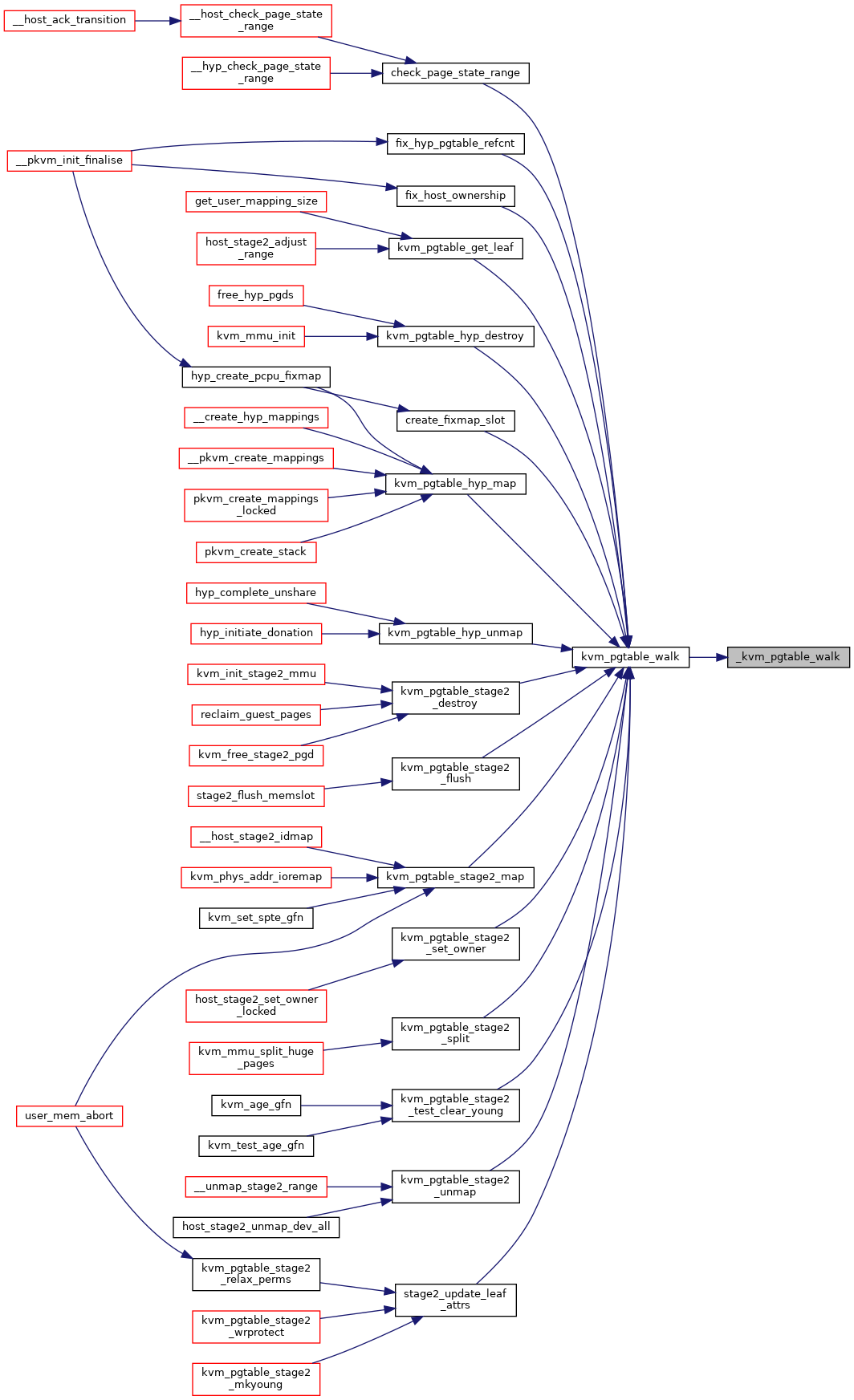

| static int | __kvm_pgtable_walk (struct kvm_pgtable_walk_data *data, struct kvm_pgtable_mm_ops *mm_ops, kvm_pteref_t pgtable, s8 level) |

| static int | __kvm_pgtable_visit (struct kvm_pgtable_walk_data *data, struct kvm_pgtable_mm_ops *mm_ops, kvm_pteref_t pteref, s8 level) |

| static int | _kvm_pgtable_walk (struct kvm_pgtable *pgt, struct kvm_pgtable_walk_data *data) |

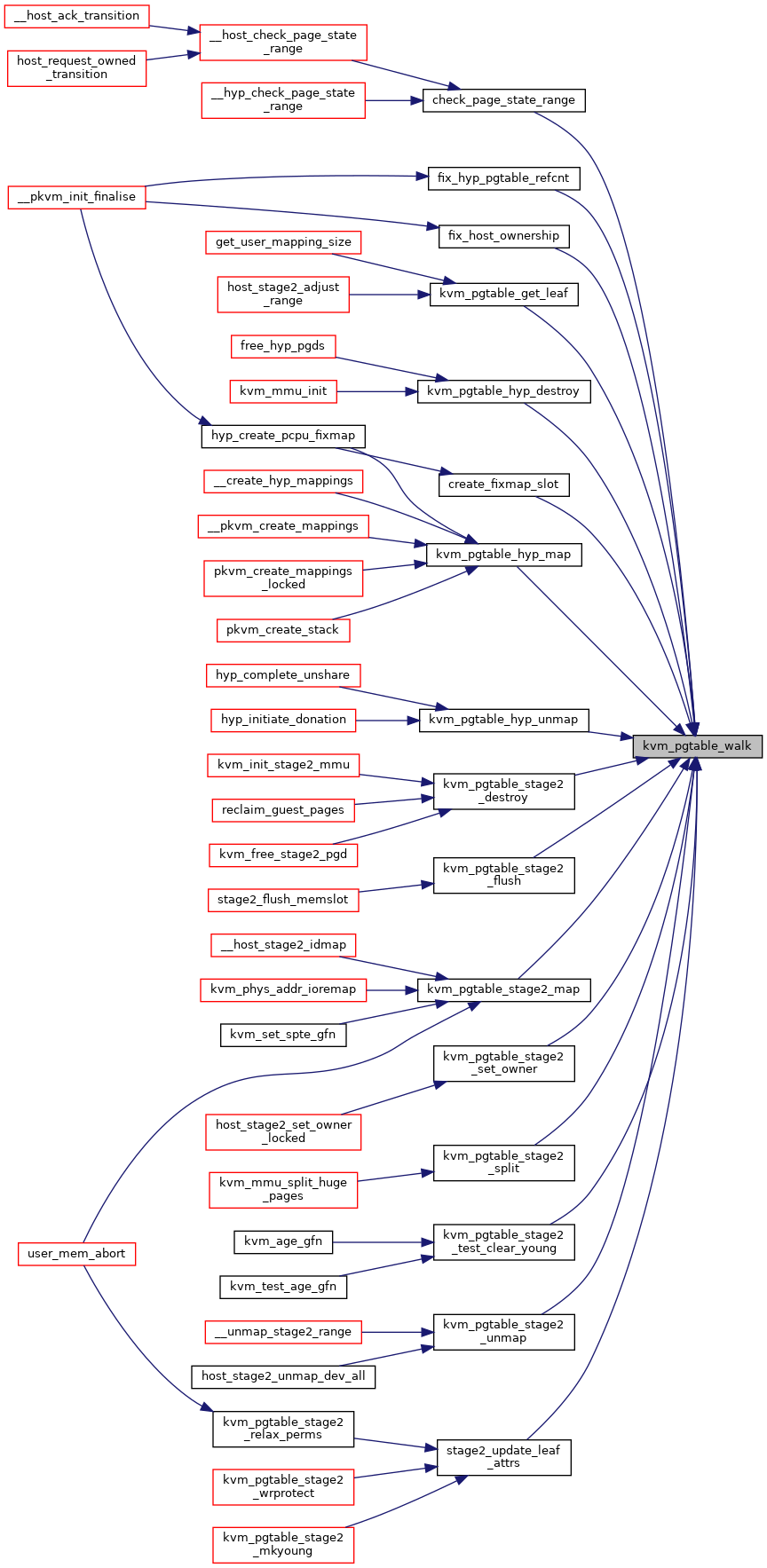

| int | kvm_pgtable_walk (struct kvm_pgtable *pgt, u64 addr, u64 size, struct kvm_pgtable_walker *walker) |

| static int | leaf_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

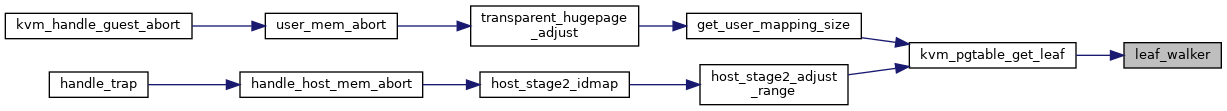

| int | kvm_pgtable_get_leaf (struct kvm_pgtable *pgt, u64 addr, kvm_pte_t *ptep, s8 *level) |

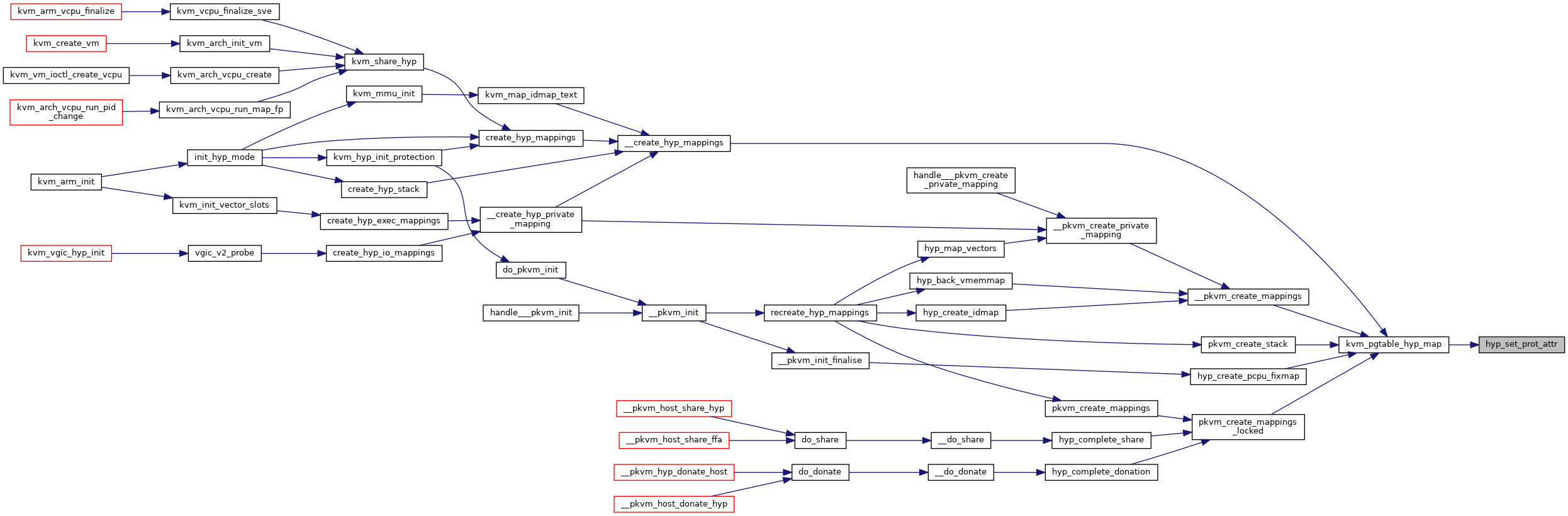

| static int | hyp_set_prot_attr (enum kvm_pgtable_prot prot, kvm_pte_t *ptep) |

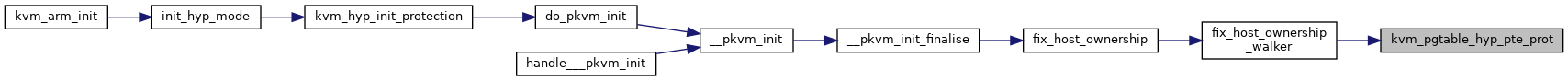

| enum kvm_pgtable_prot | kvm_pgtable_hyp_pte_prot (kvm_pte_t pte) |

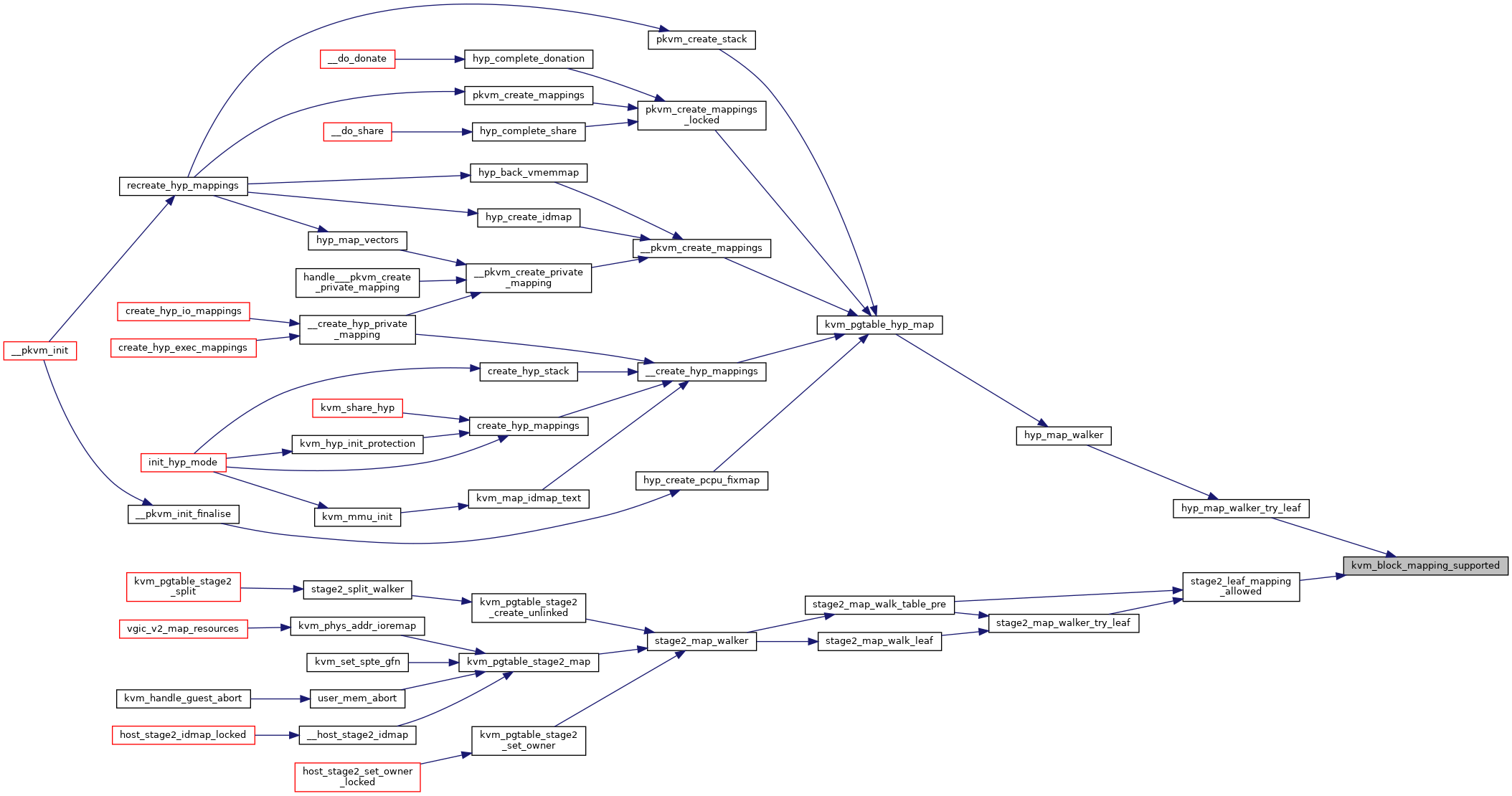

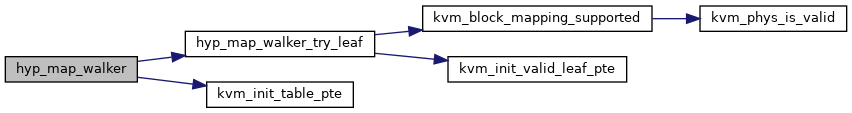

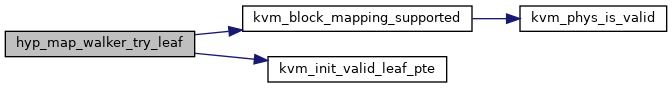

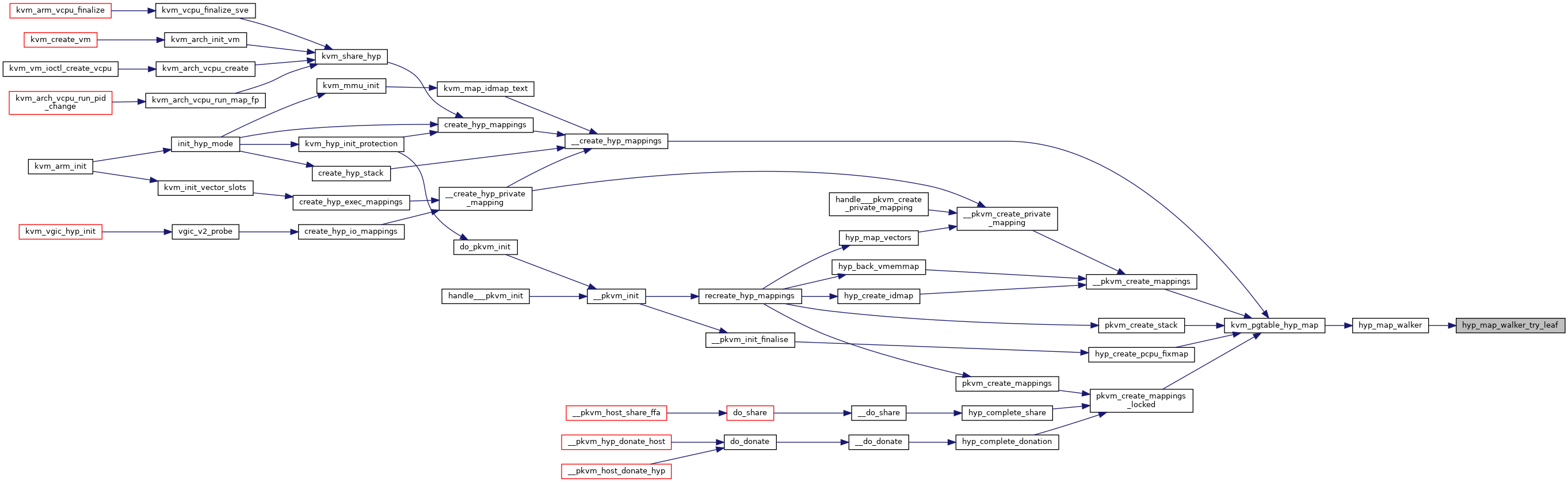

| static bool | hyp_map_walker_try_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct hyp_map_data *data) |

| static int | hyp_map_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

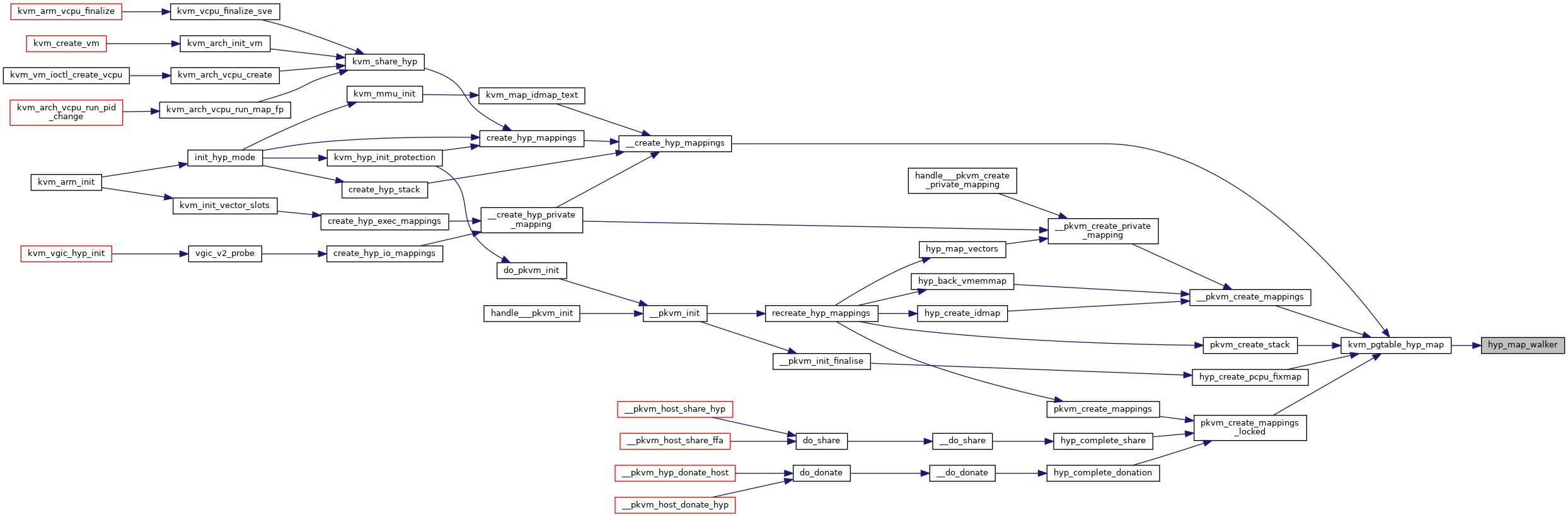

| int | kvm_pgtable_hyp_map (struct kvm_pgtable *pgt, u64 addr, u64 size, u64 phys, enum kvm_pgtable_prot prot) |

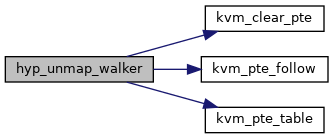

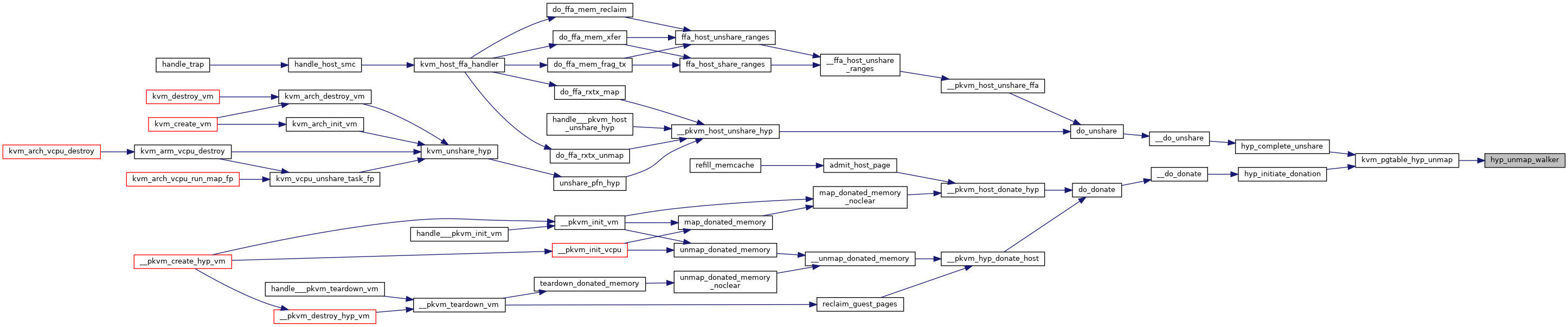

| static int | hyp_unmap_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

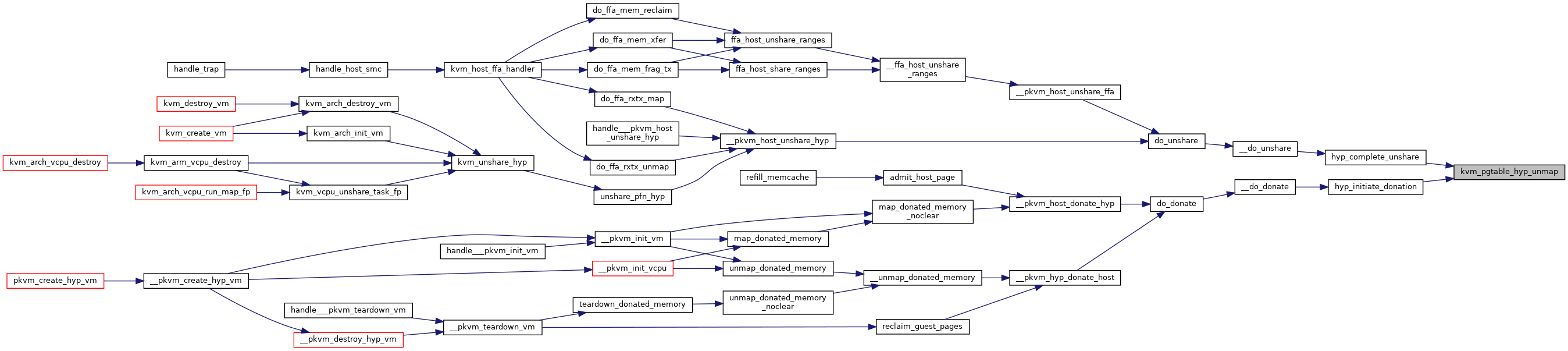

| u64 | kvm_pgtable_hyp_unmap (struct kvm_pgtable *pgt, u64 addr, u64 size) |

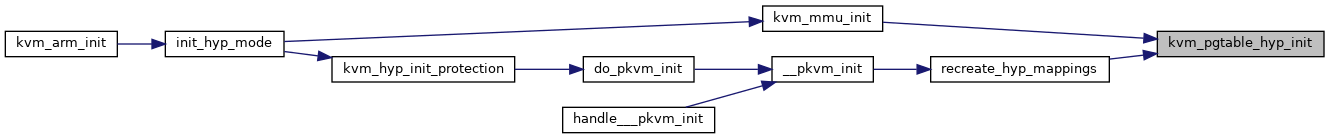

| int | kvm_pgtable_hyp_init (struct kvm_pgtable *pgt, u32 va_bits, struct kvm_pgtable_mm_ops *mm_ops) |

| static int | hyp_free_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

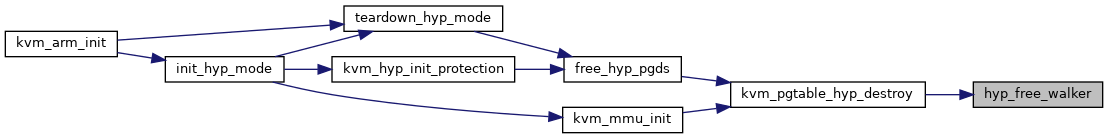

| void | kvm_pgtable_hyp_destroy (struct kvm_pgtable *pgt) |

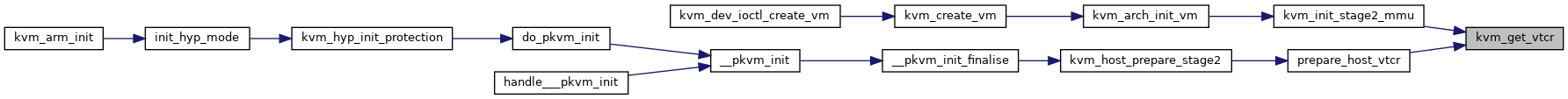

| u64 | kvm_get_vtcr (u64 mmfr0, u64 mmfr1, u32 phys_shift) |

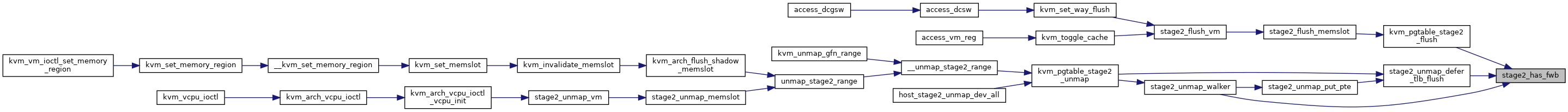

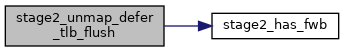

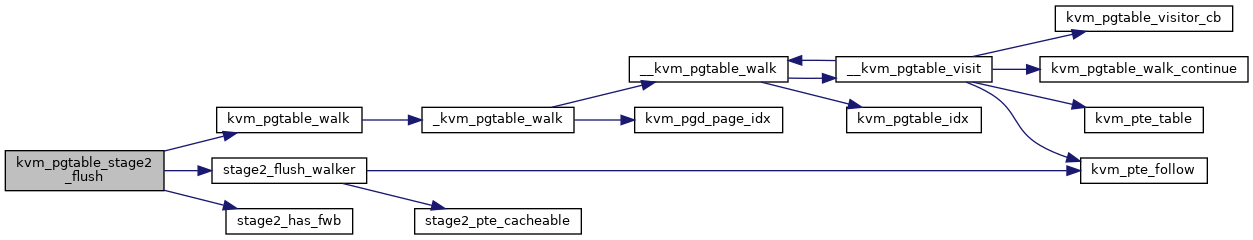

| static bool | stage2_has_fwb (struct kvm_pgtable *pgt) |

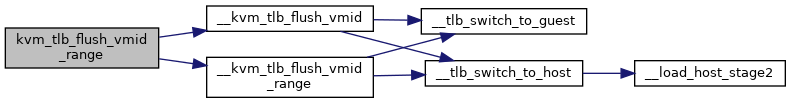

| void | kvm_tlb_flush_vmid_range (struct kvm_s2_mmu *mmu, phys_addr_t addr, size_t size) |

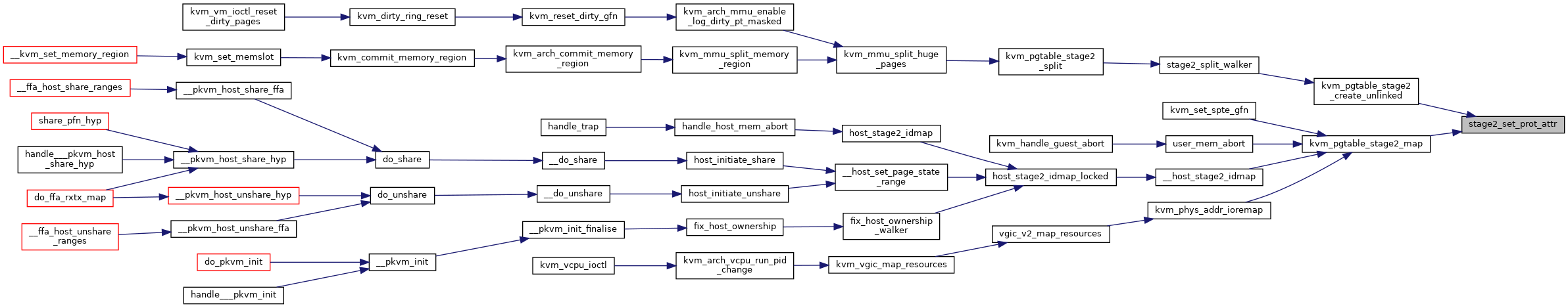

| static int | stage2_set_prot_attr (struct kvm_pgtable *pgt, enum kvm_pgtable_prot prot, kvm_pte_t *ptep) |

| enum kvm_pgtable_prot | kvm_pgtable_stage2_pte_prot (kvm_pte_t pte) |

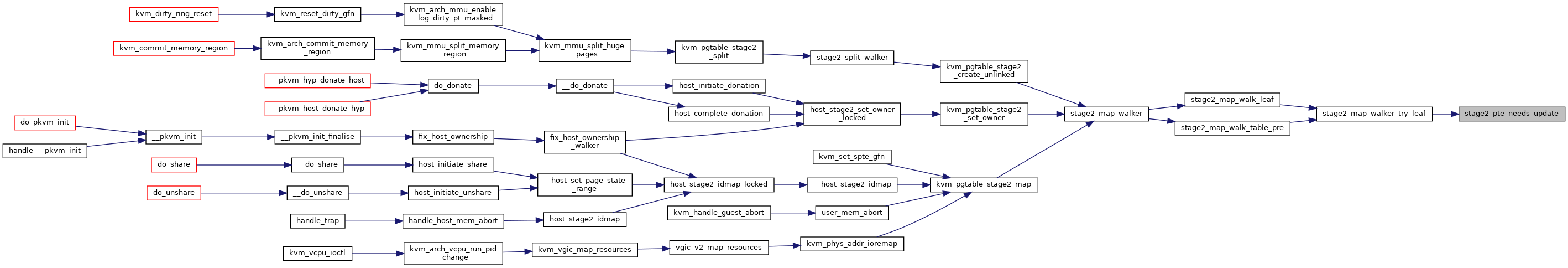

| static bool | stage2_pte_needs_update (kvm_pte_t old, kvm_pte_t new) |

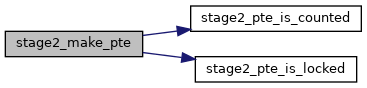

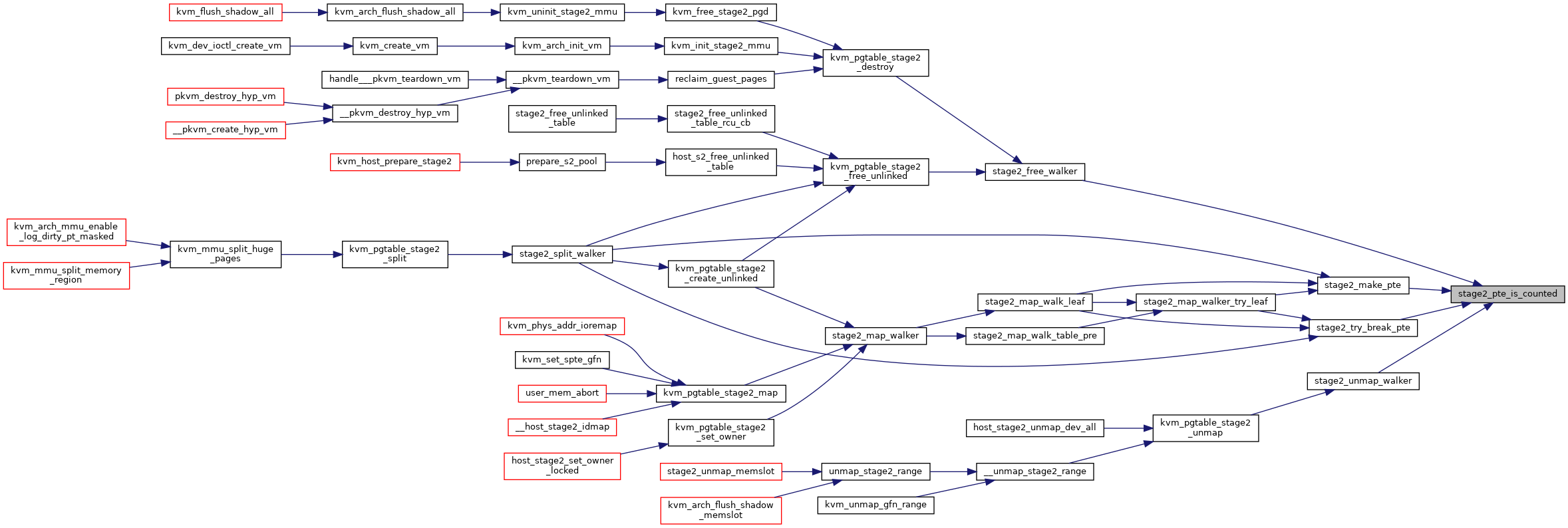

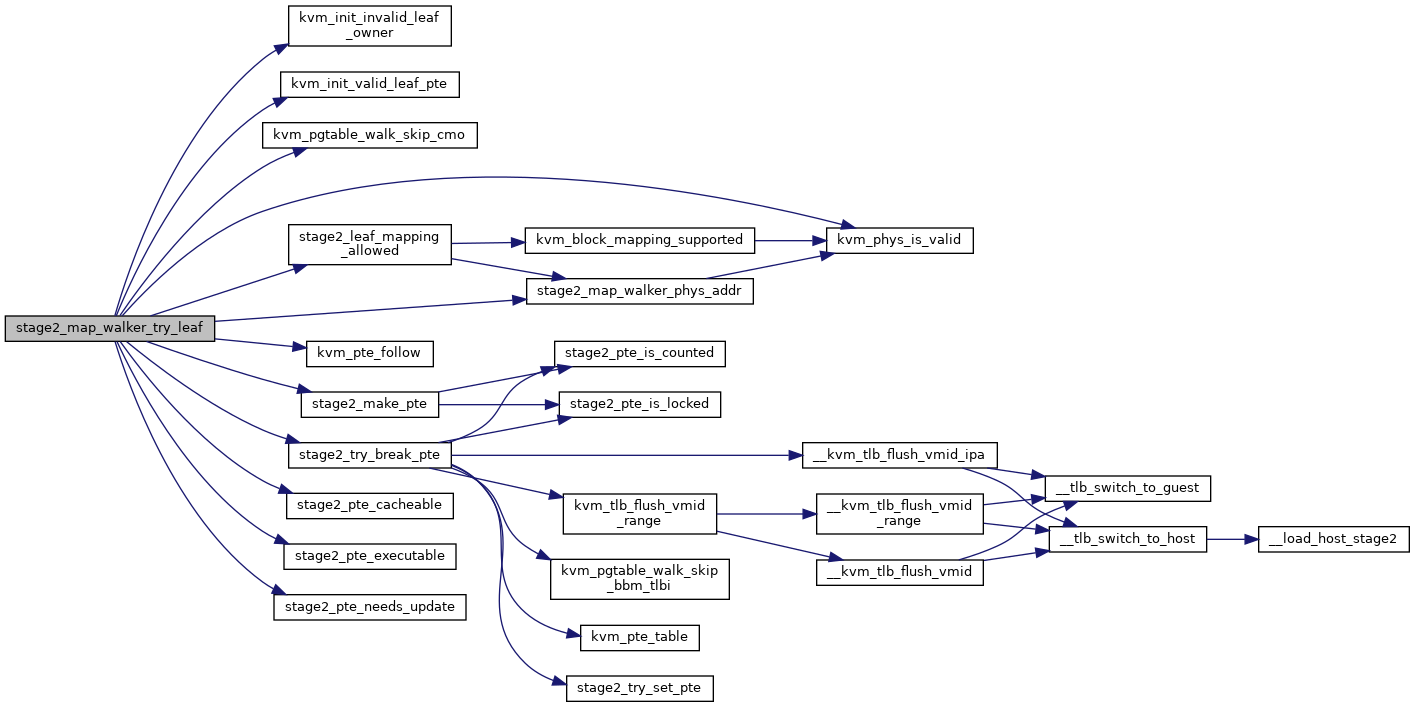

| static bool | stage2_pte_is_counted (kvm_pte_t pte) |

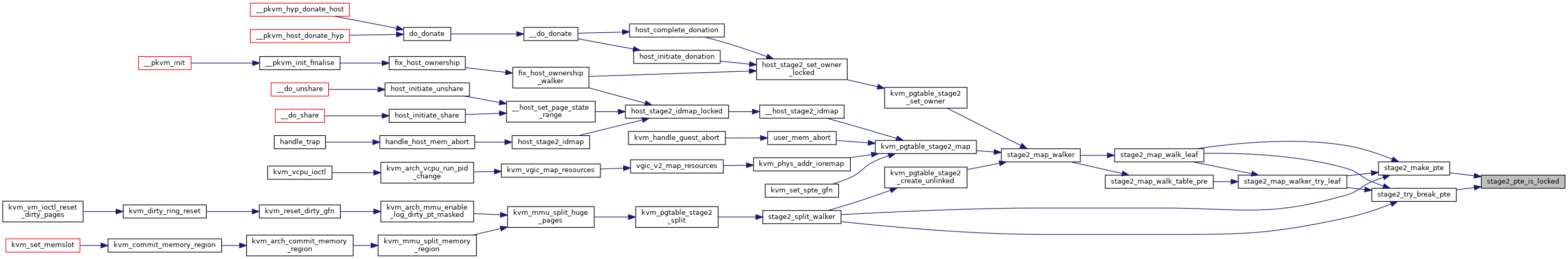

| static bool | stage2_pte_is_locked (kvm_pte_t pte) |

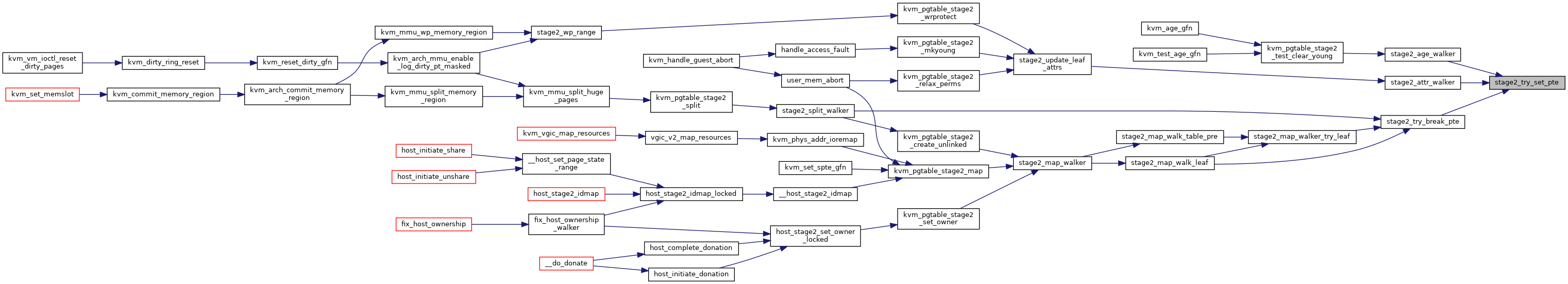

| static bool | stage2_try_set_pte (const struct kvm_pgtable_visit_ctx *ctx, kvm_pte_t new) |

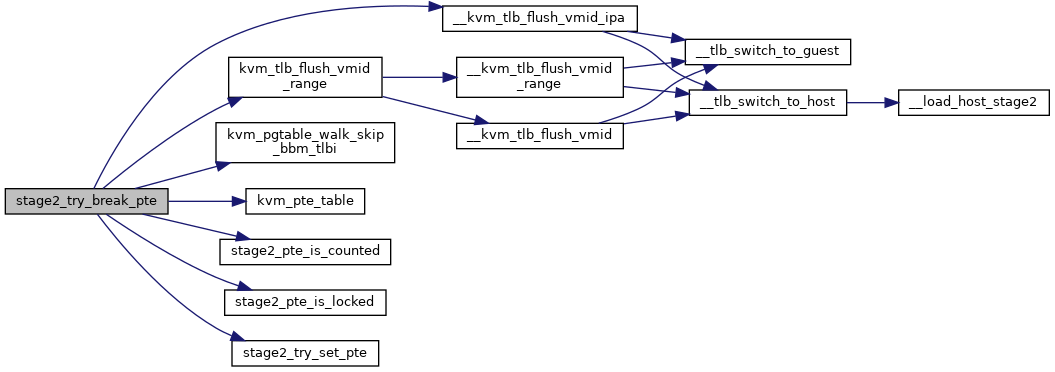

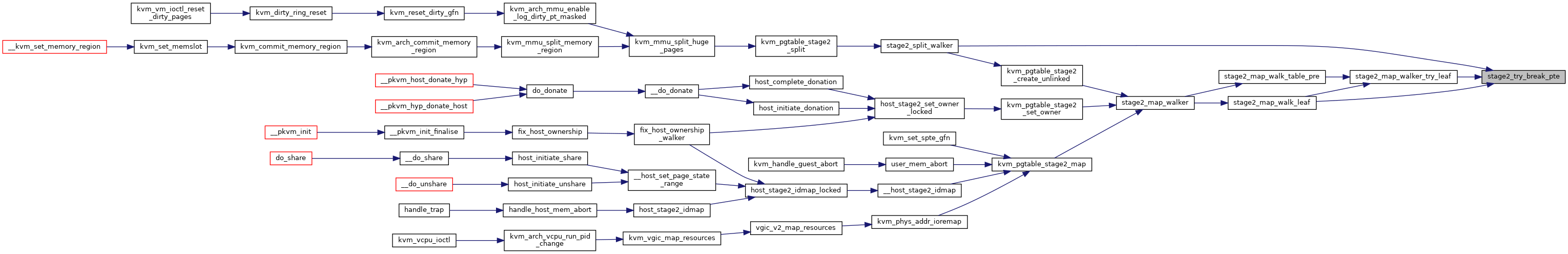

| static bool | stage2_try_break_pte (const struct kvm_pgtable_visit_ctx *ctx, struct kvm_s2_mmu *mmu) |

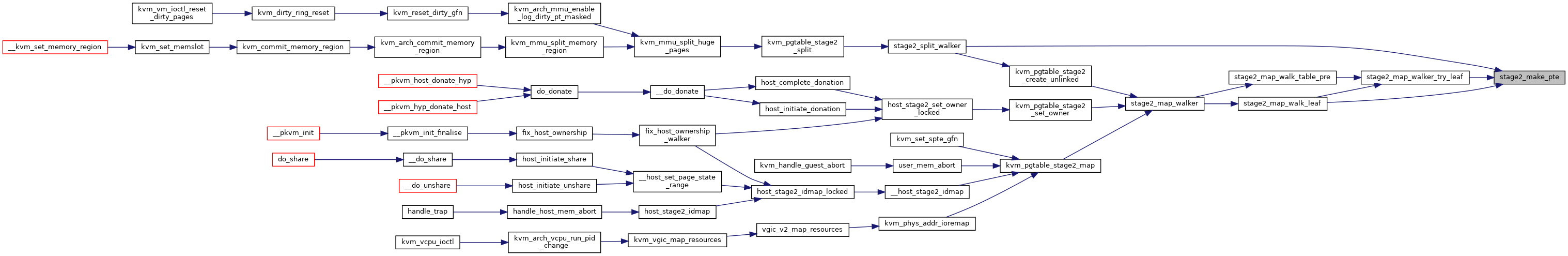

| static void | stage2_make_pte (const struct kvm_pgtable_visit_ctx *ctx, kvm_pte_t new) |

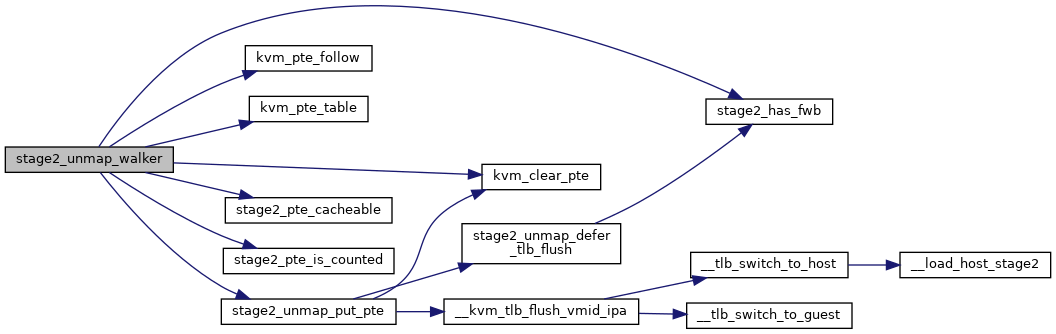

| static bool | stage2_unmap_defer_tlb_flush (struct kvm_pgtable *pgt) |

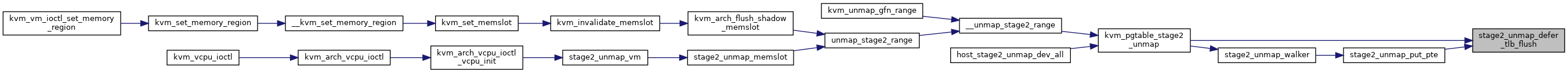

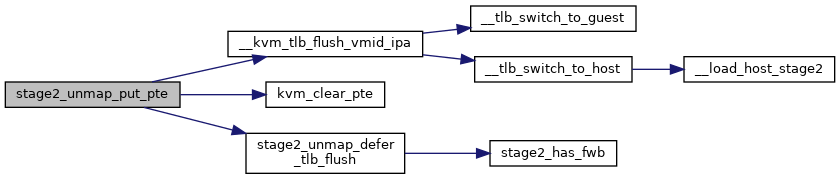

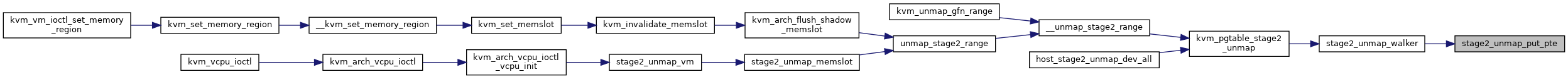

| static void | stage2_unmap_put_pte (const struct kvm_pgtable_visit_ctx *ctx, struct kvm_s2_mmu *mmu, struct kvm_pgtable_mm_ops *mm_ops) |

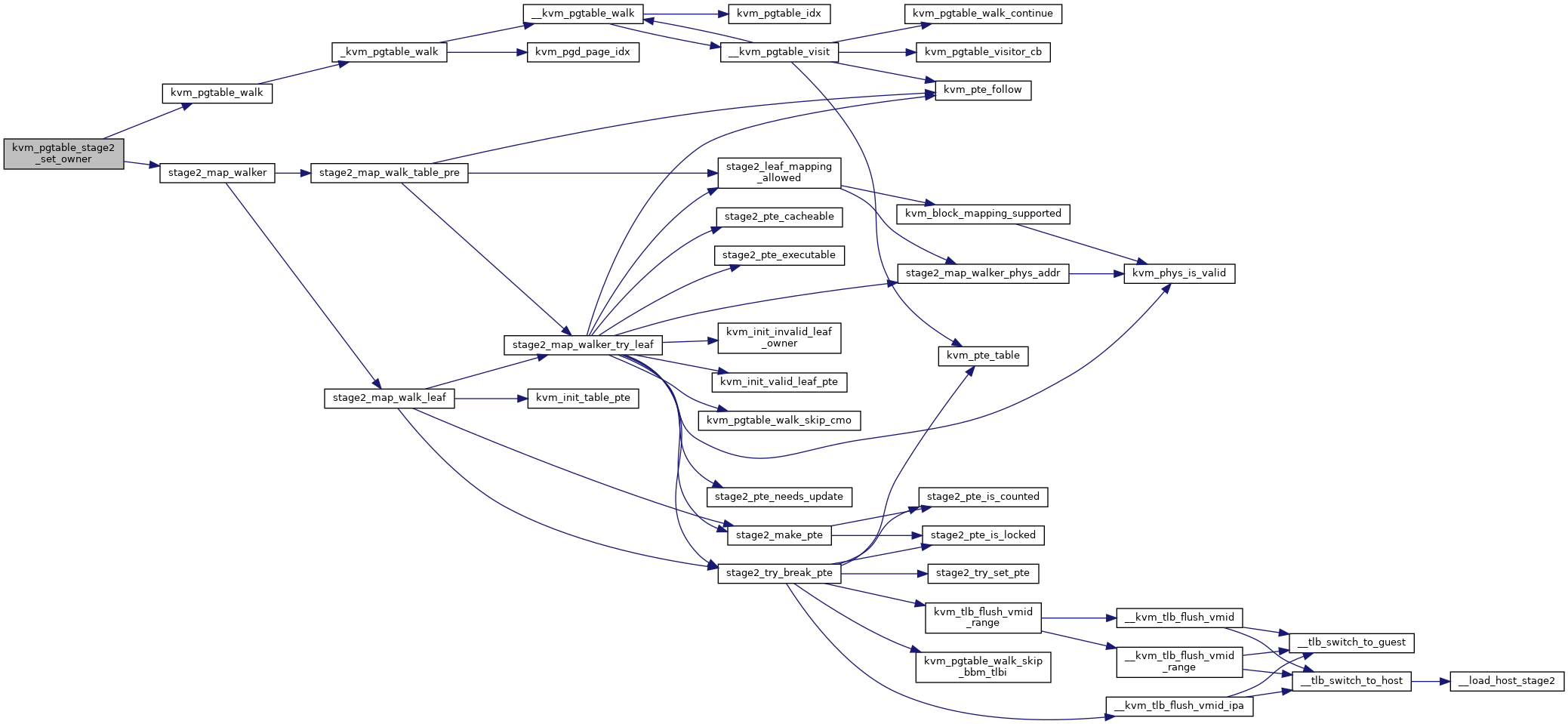

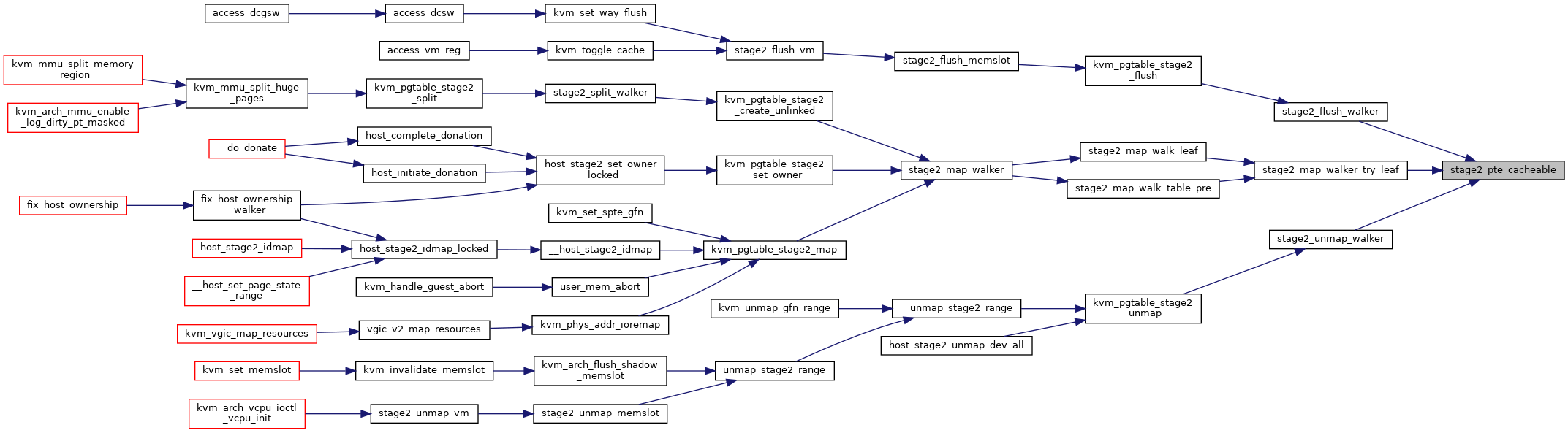

| static bool | stage2_pte_cacheable (struct kvm_pgtable *pgt, kvm_pte_t pte) |

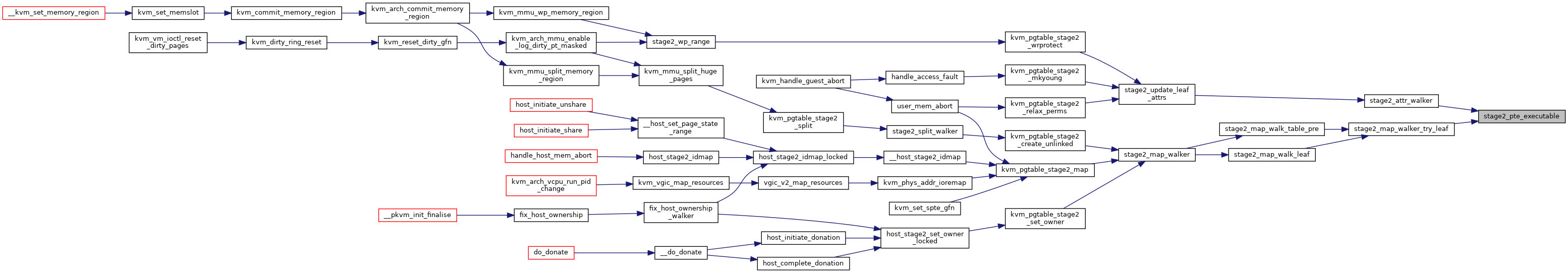

| static bool | stage2_pte_executable (kvm_pte_t pte) |

| static u64 | stage2_map_walker_phys_addr (const struct kvm_pgtable_visit_ctx *ctx, const struct stage2_map_data *data) |

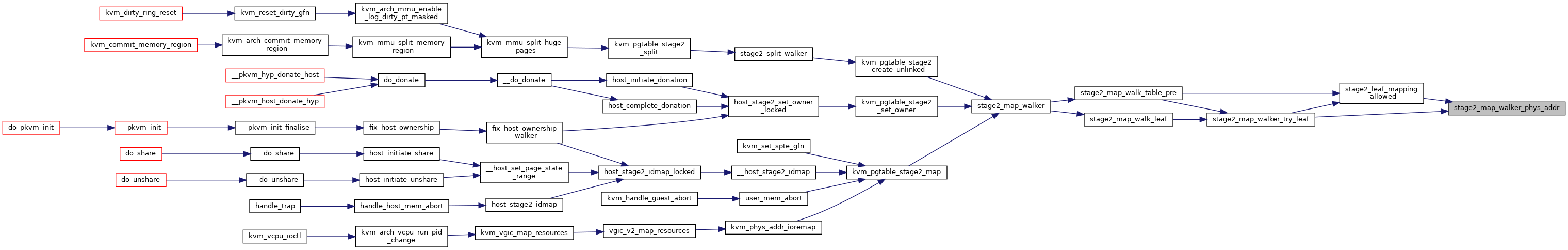

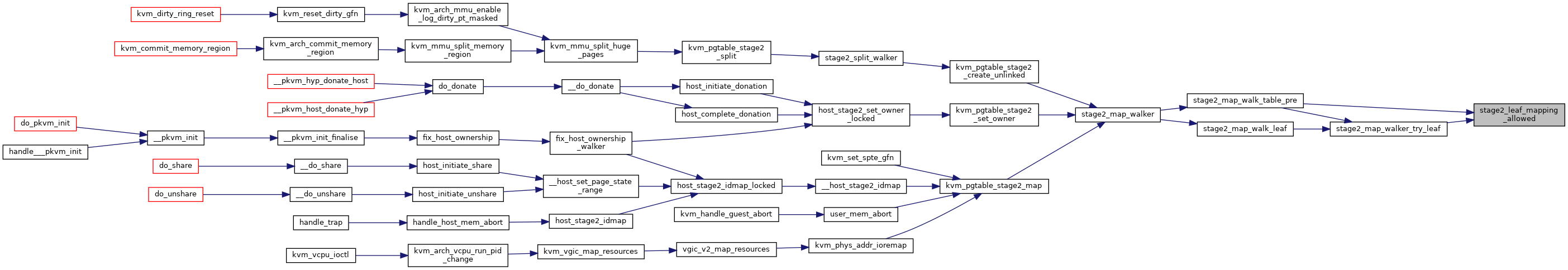

| static bool | stage2_leaf_mapping_allowed (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |

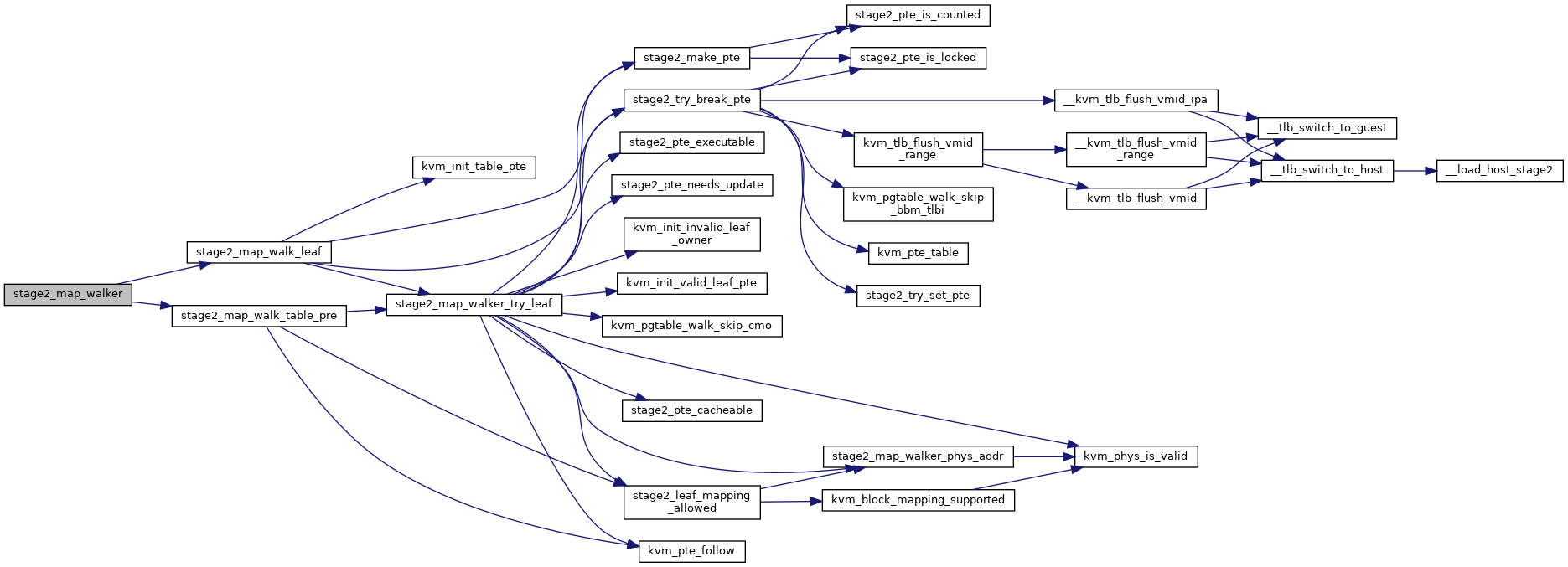

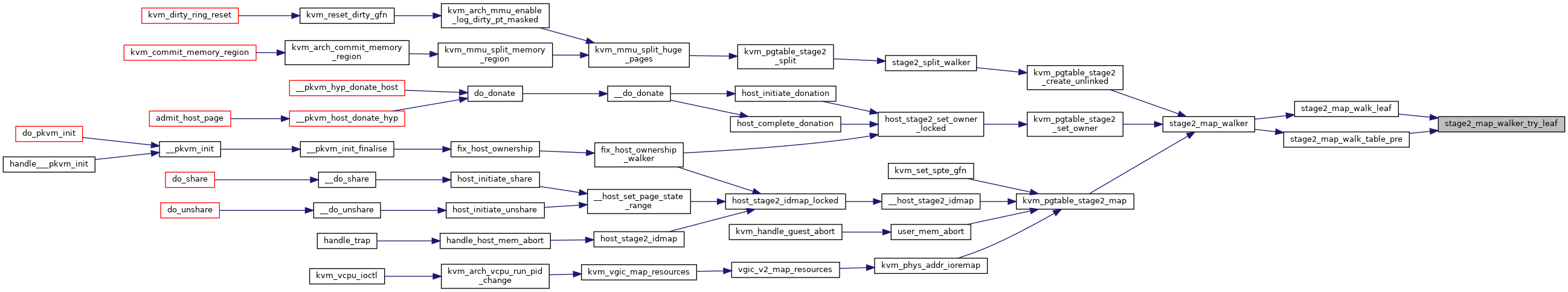

| static int | stage2_map_walker_try_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |

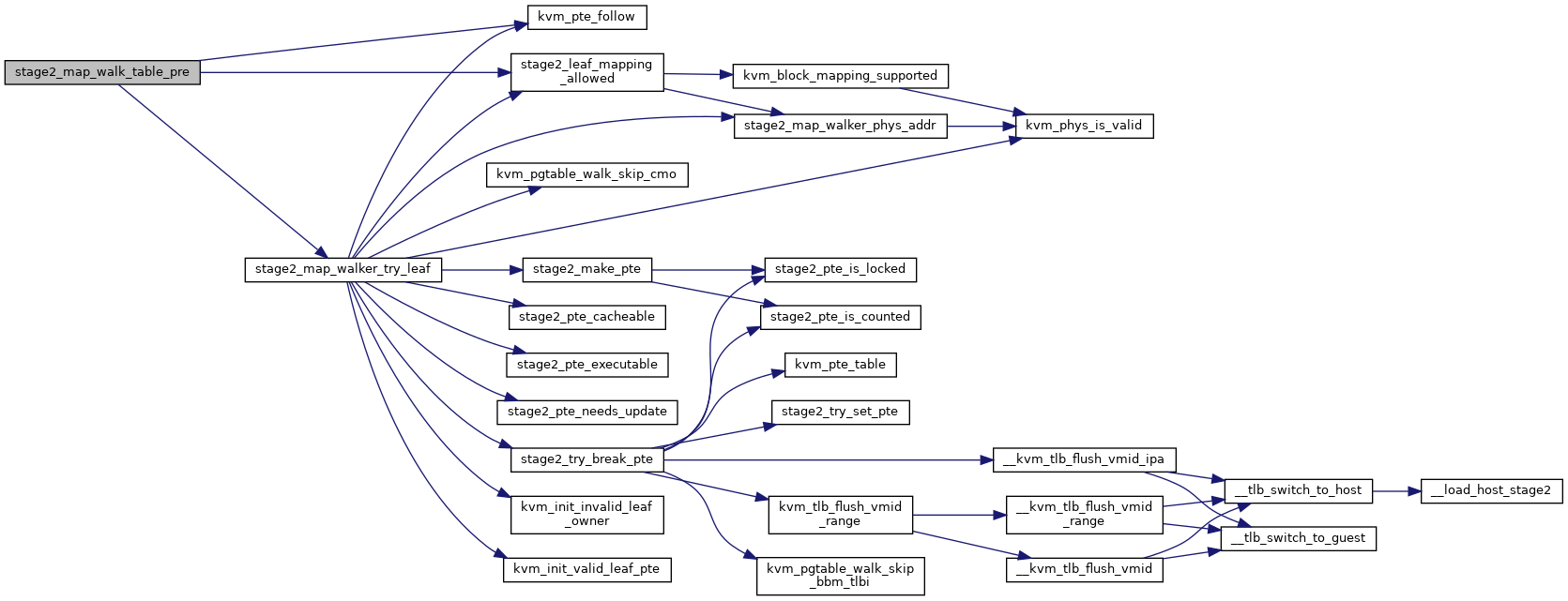

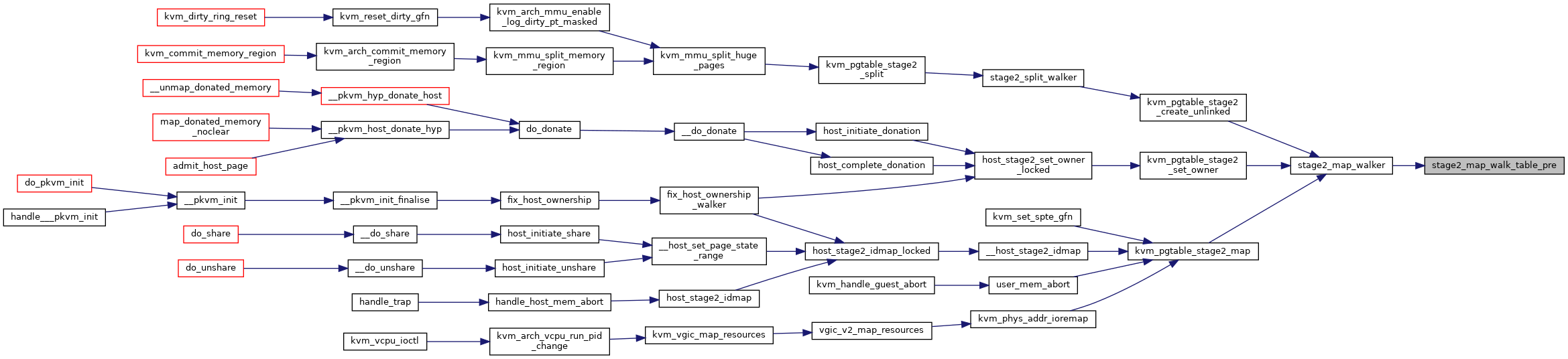

| static int | stage2_map_walk_table_pre (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |

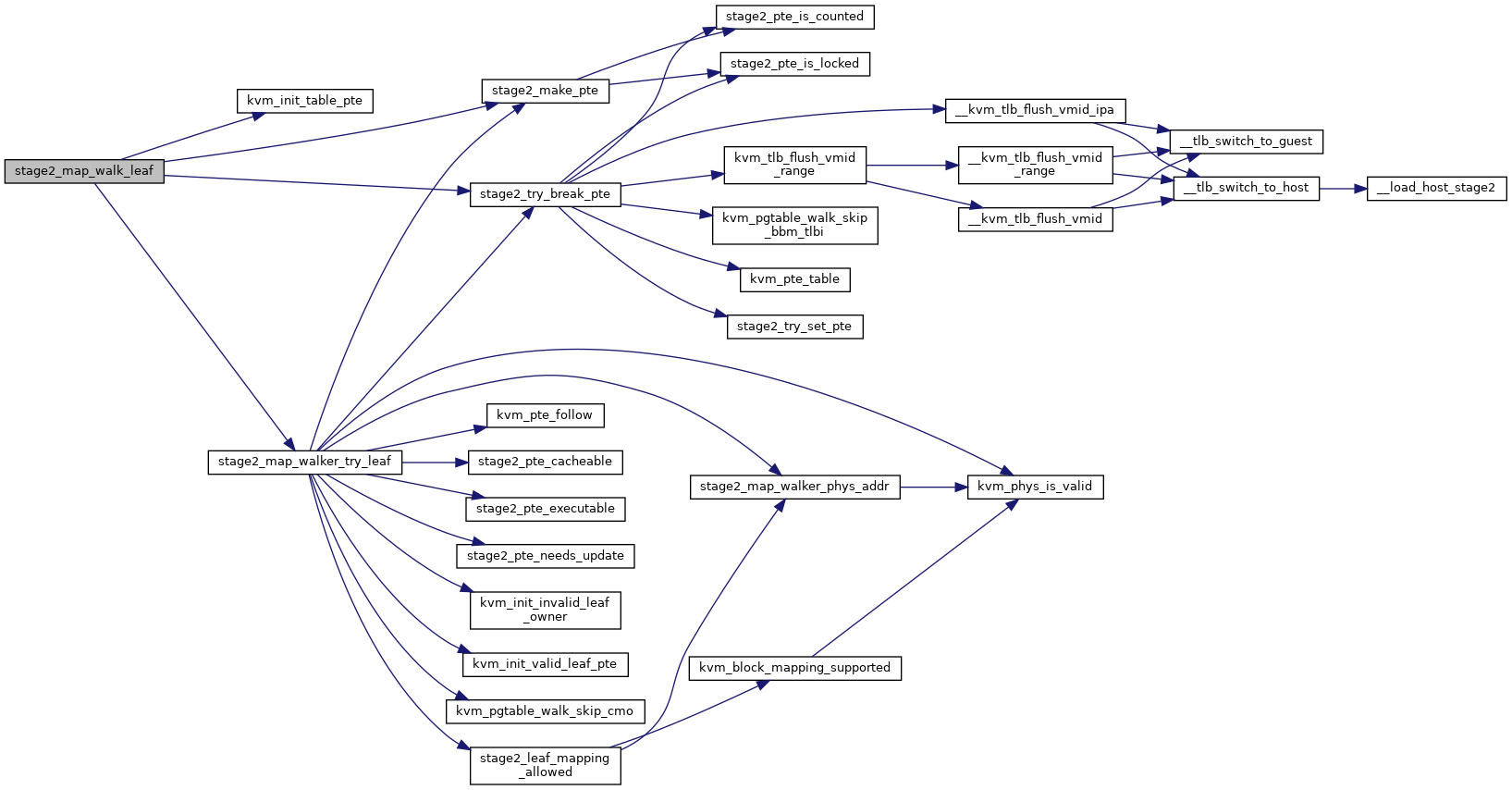

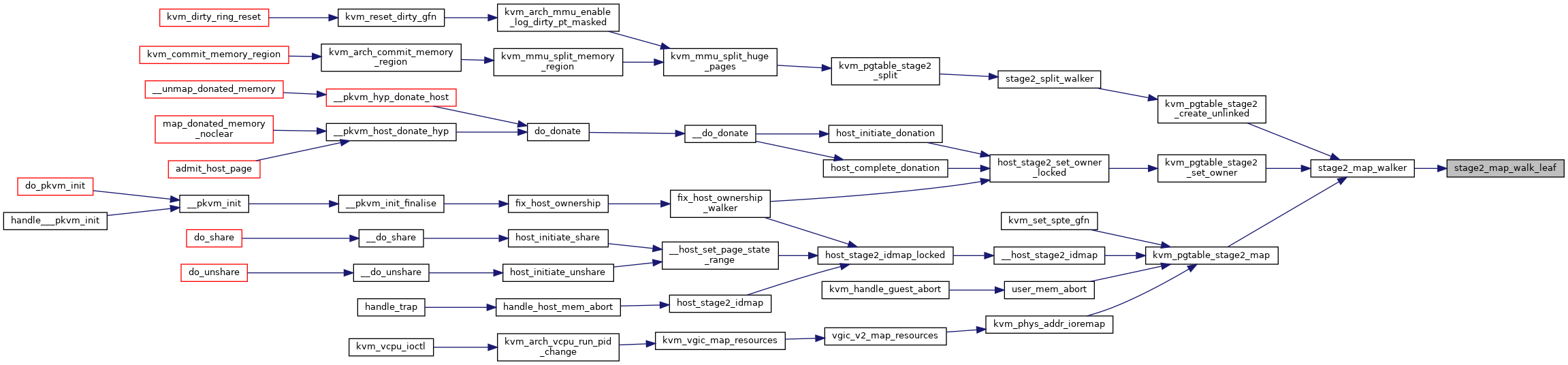

| static int | stage2_map_walk_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |

| static int | stage2_map_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

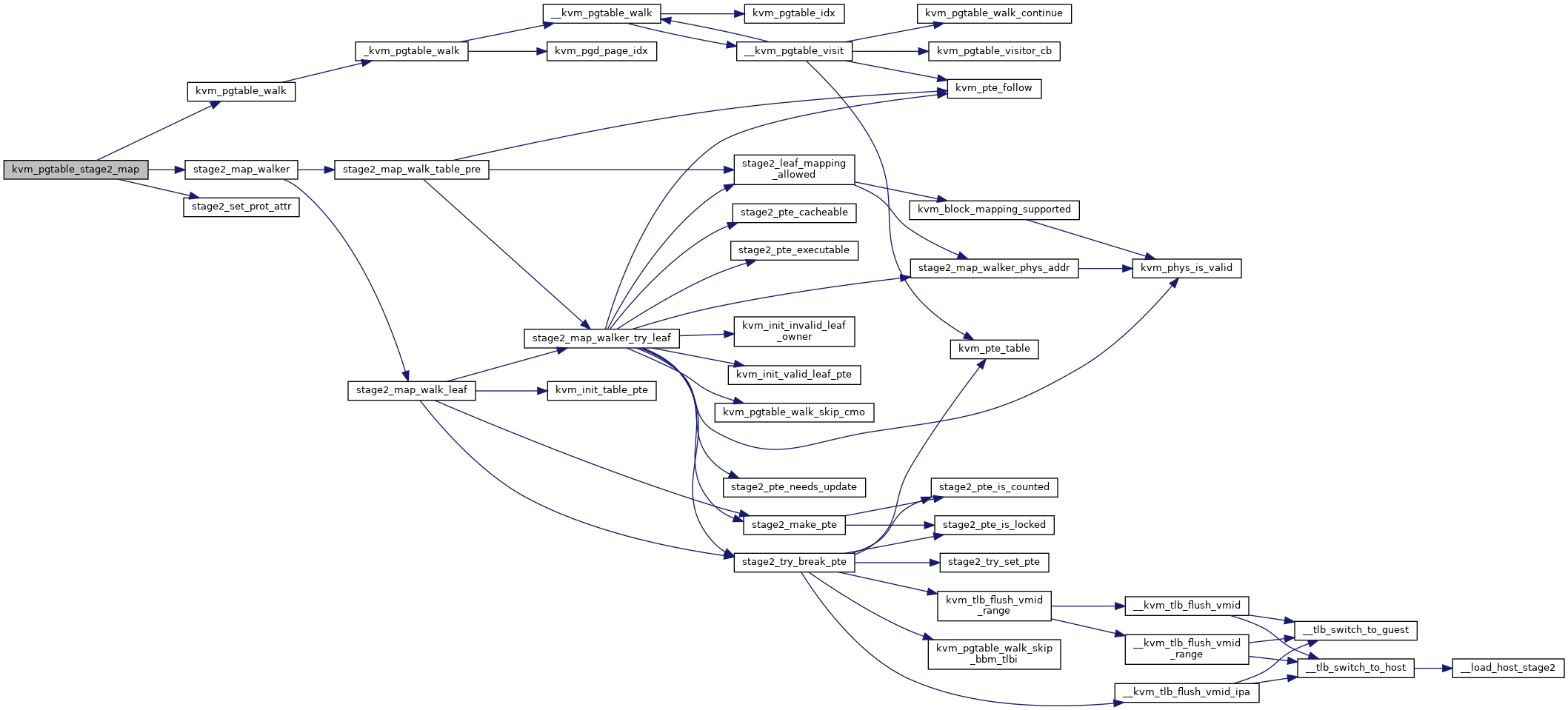

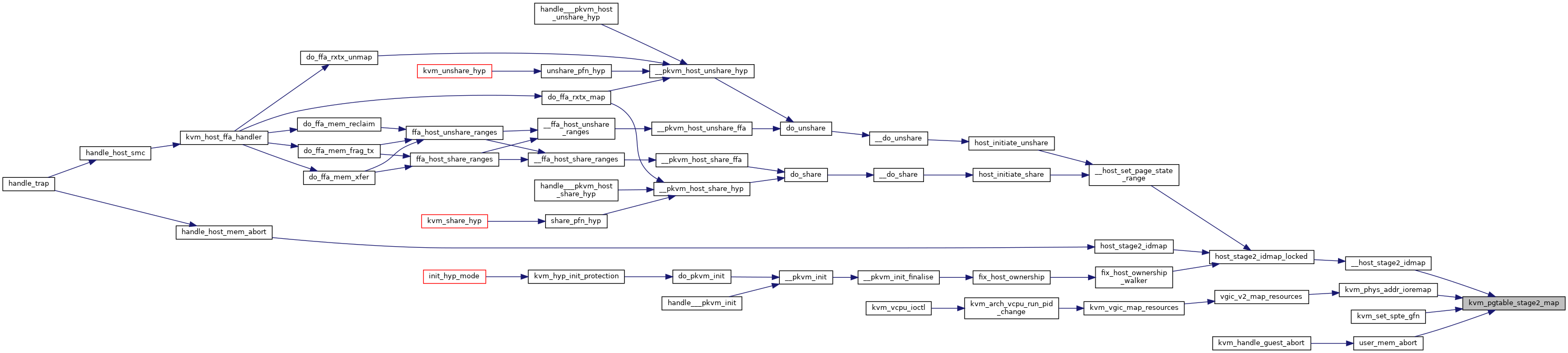

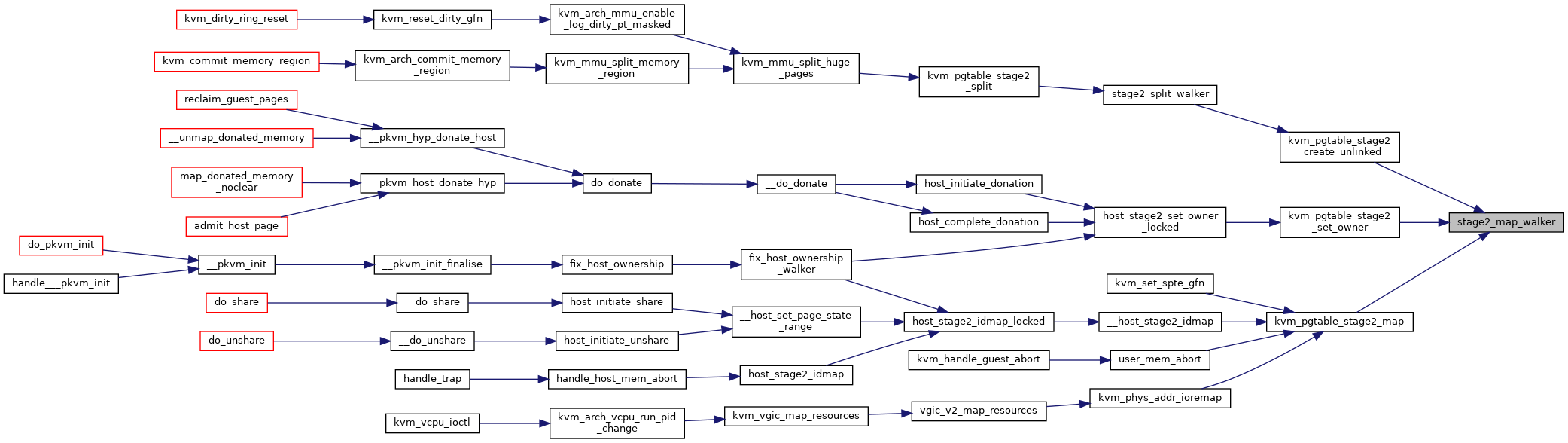

| int | kvm_pgtable_stage2_map (struct kvm_pgtable *pgt, u64 addr, u64 size, u64 phys, enum kvm_pgtable_prot prot, void *mc, enum kvm_pgtable_walk_flags flags) |

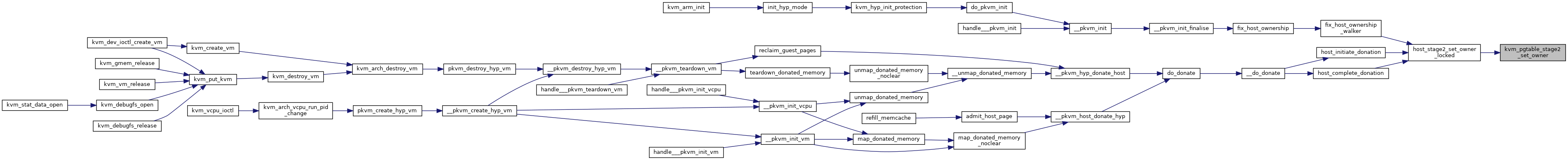

| int | kvm_pgtable_stage2_set_owner (struct kvm_pgtable *pgt, u64 addr, u64 size, void *mc, u8 owner_id) |

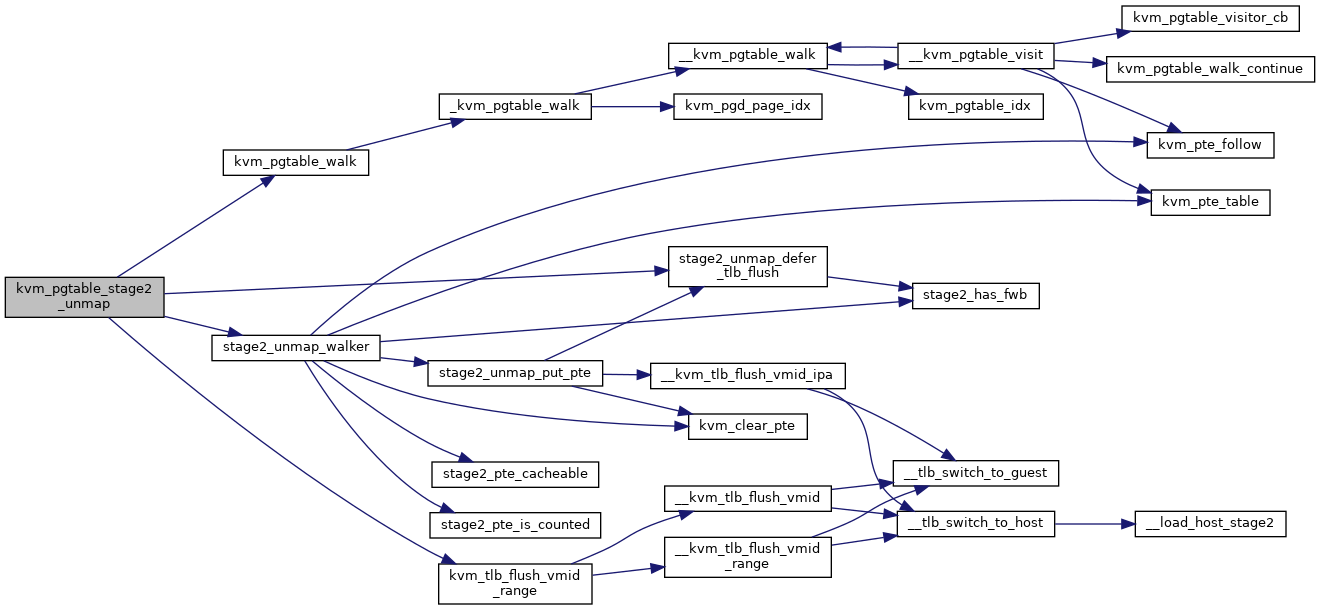

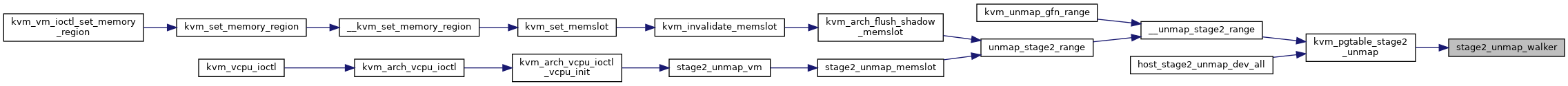

| static int | stage2_unmap_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

| int | kvm_pgtable_stage2_unmap (struct kvm_pgtable *pgt, u64 addr, u64 size) |

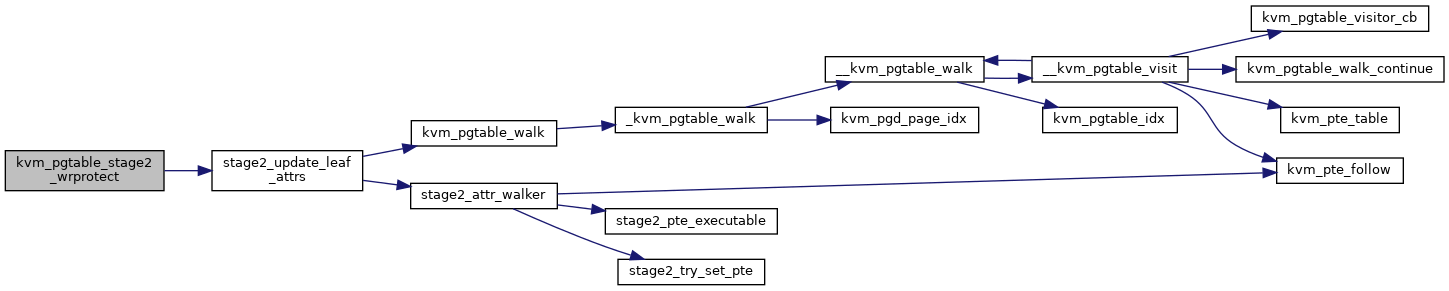

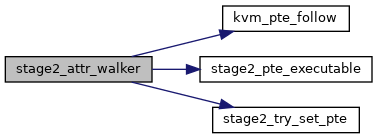

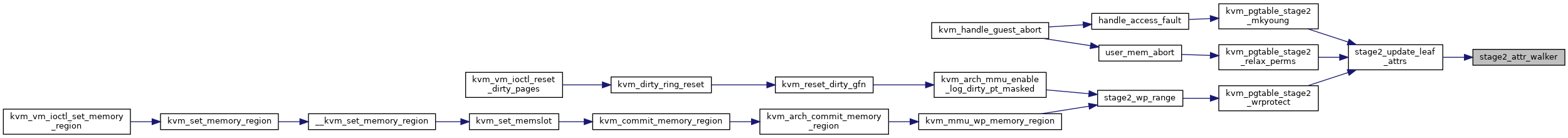

| static int | stage2_attr_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

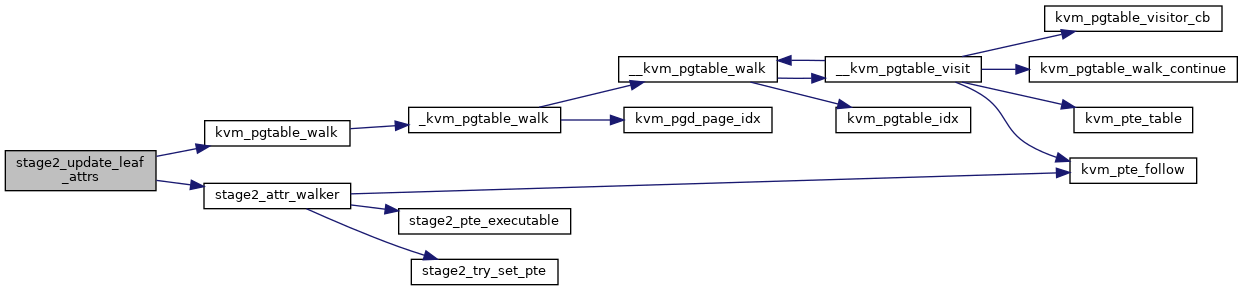

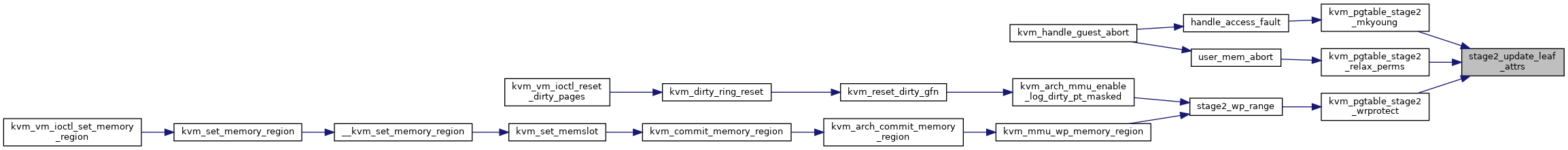

| static int | stage2_update_leaf_attrs (struct kvm_pgtable *pgt, u64 addr, u64 size, kvm_pte_t attr_set, kvm_pte_t attr_clr, kvm_pte_t *orig_pte, s8 *level, enum kvm_pgtable_walk_flags flags) |

| int | kvm_pgtable_stage2_wrprotect (struct kvm_pgtable *pgt, u64 addr, u64 size) |

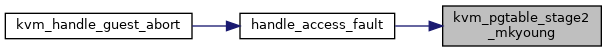

| kvm_pte_t | kvm_pgtable_stage2_mkyoung (struct kvm_pgtable *pgt, u64 addr) |

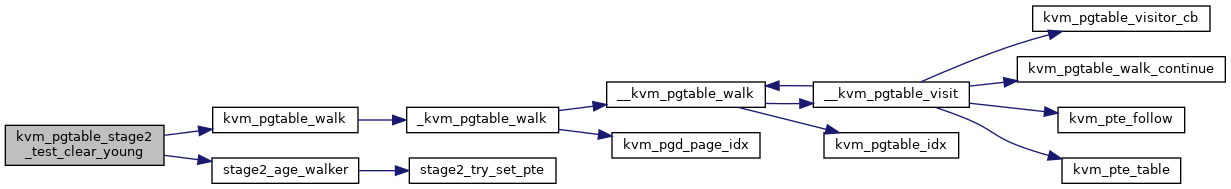

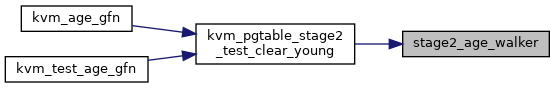

| static int | stage2_age_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

| bool | kvm_pgtable_stage2_test_clear_young (struct kvm_pgtable *pgt, u64 addr, u64 size, bool mkold) |

| int | kvm_pgtable_stage2_relax_perms (struct kvm_pgtable *pgt, u64 addr, enum kvm_pgtable_prot prot) |

| static int | stage2_flush_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

| int | kvm_pgtable_stage2_flush (struct kvm_pgtable *pgt, u64 addr, u64 size) |

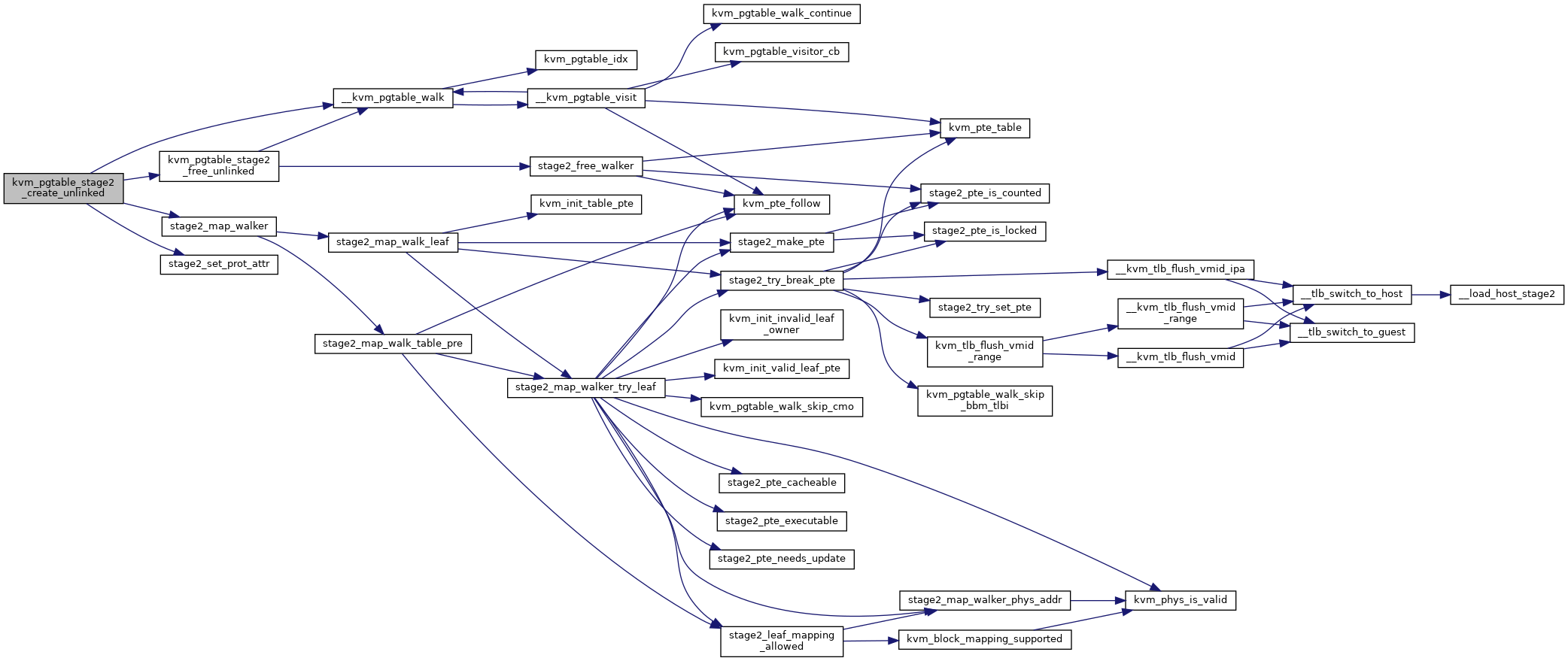

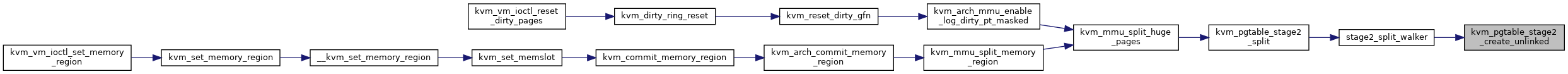

| kvm_pte_t * | kvm_pgtable_stage2_create_unlinked (struct kvm_pgtable *pgt, u64 phys, s8 level, enum kvm_pgtable_prot prot, void *mc, bool force_pte) |

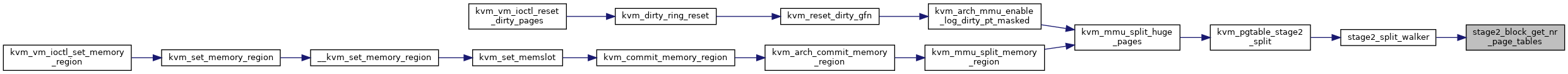

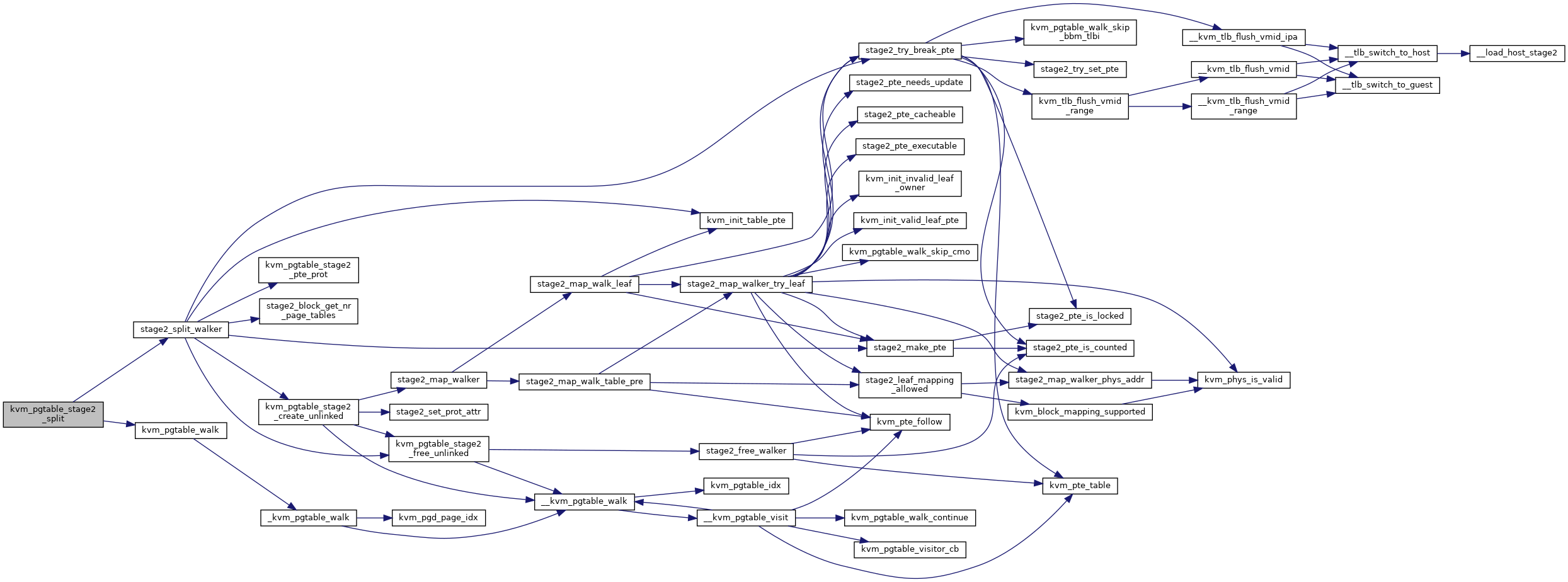

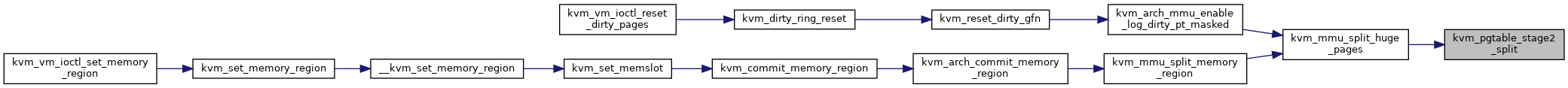

| static int | stage2_block_get_nr_page_tables (s8 level) |

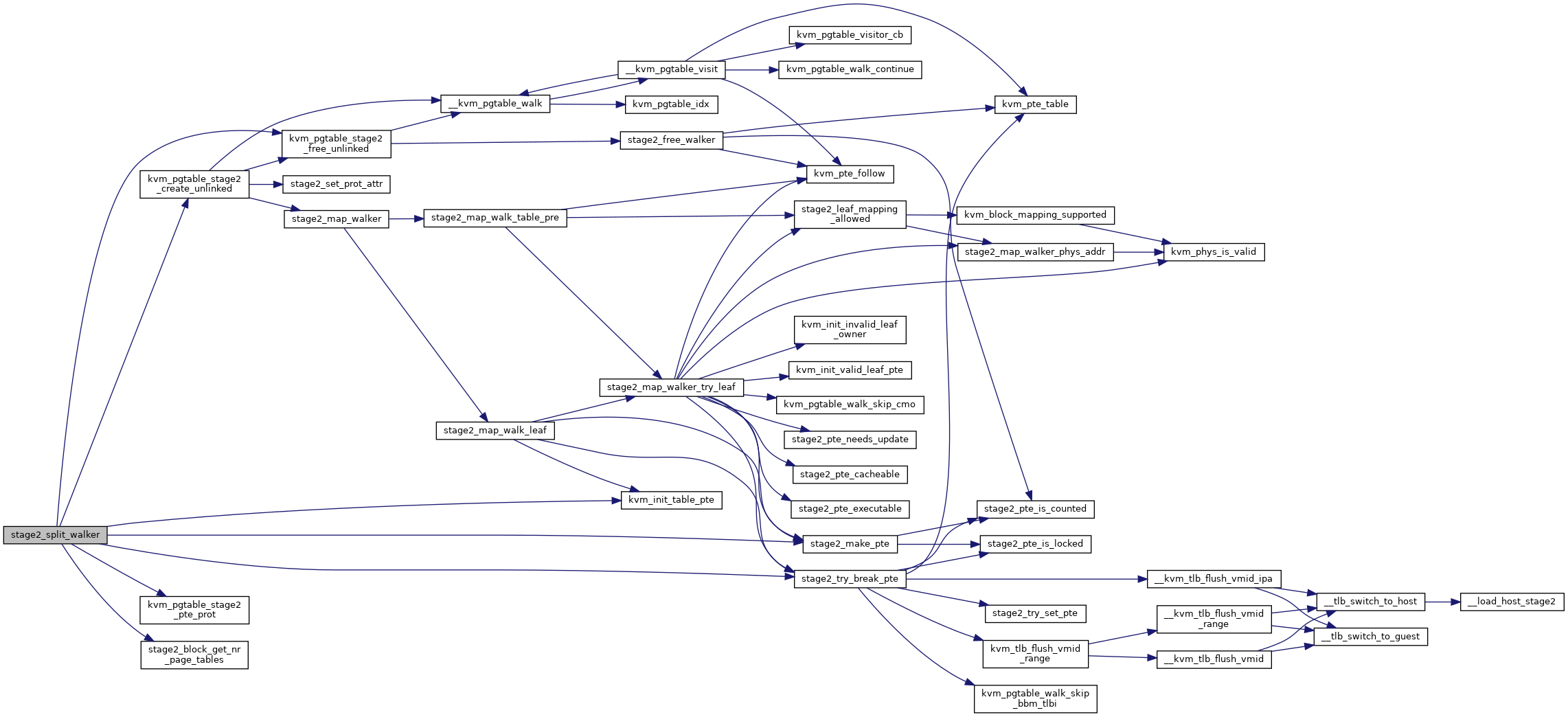

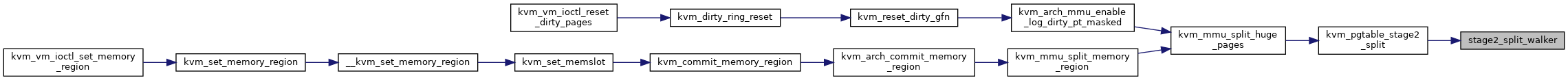

| static int | stage2_split_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

| int | kvm_pgtable_stage2_split (struct kvm_pgtable *pgt, u64 addr, u64 size, struct kvm_mmu_memory_cache *mc) |

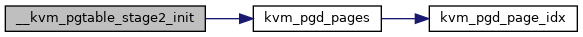

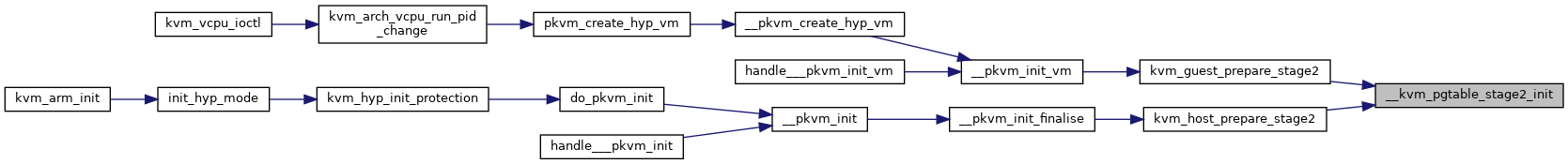

| int | __kvm_pgtable_stage2_init (struct kvm_pgtable *pgt, struct kvm_s2_mmu *mmu, struct kvm_pgtable_mm_ops *mm_ops, enum kvm_pgtable_stage2_flags flags, kvm_pgtable_force_pte_cb_t force_pte_cb) |

| size_t | kvm_pgtable_stage2_pgd_size (u64 vtcr) |

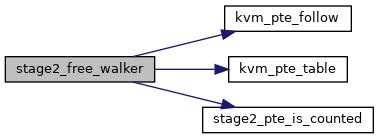

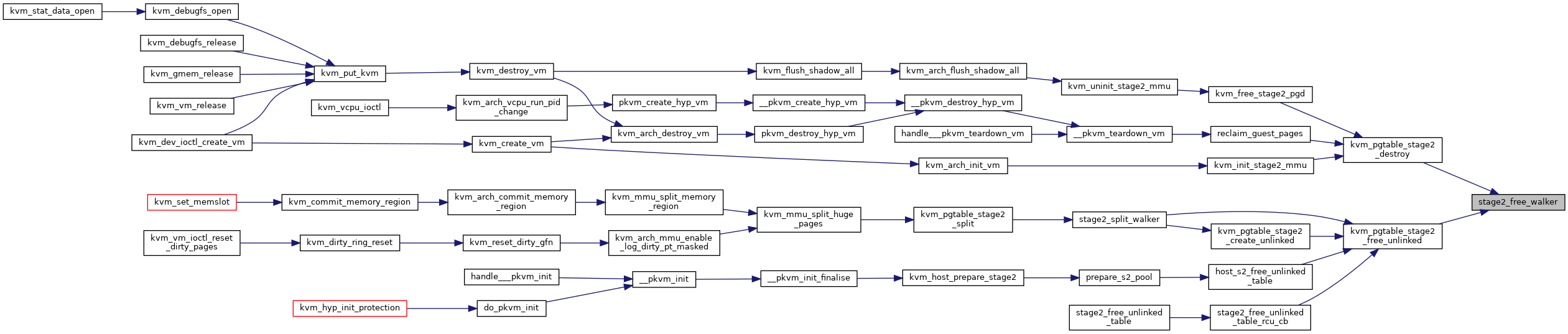

| static int | stage2_free_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

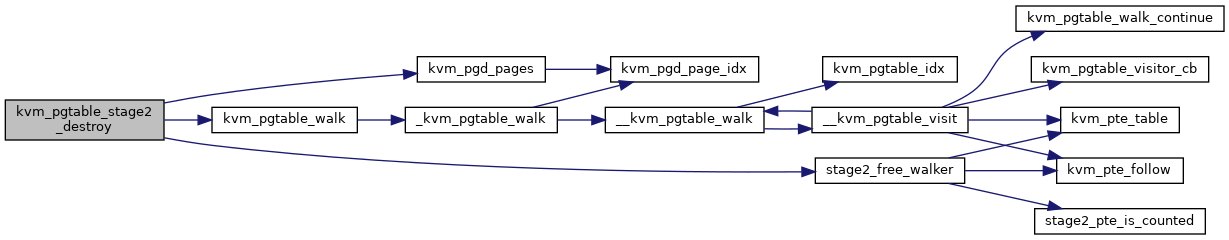

| void | kvm_pgtable_stage2_destroy (struct kvm_pgtable *pgt) |

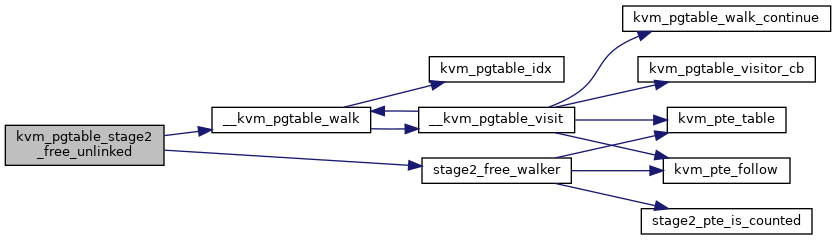

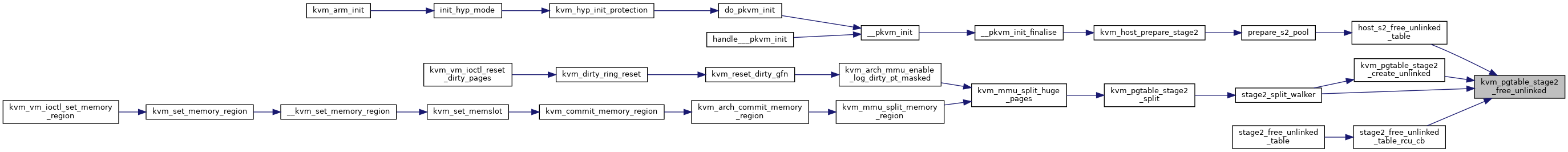

| void | kvm_pgtable_stage2_free_unlinked (struct kvm_pgtable_mm_ops *mm_ops, void *pgtable, s8 level) |

Macro Definition Documentation

◆ KVM_INVALID_PTE_LOCKED

◆ KVM_INVALID_PTE_OWNER_MASK

◆ KVM_MAX_OWNER_ID

◆ KVM_PTE_LEAF_ATTR_HI

◆ KVM_PTE_LEAF_ATTR_HI_S1_GP

◆ KVM_PTE_LEAF_ATTR_HI_S1_XN

◆ KVM_PTE_LEAF_ATTR_HI_S2_XN

◆ KVM_PTE_LEAF_ATTR_HI_SW

◆ KVM_PTE_LEAF_ATTR_LO

◆ KVM_PTE_LEAF_ATTR_LO_S1_AF

◆ KVM_PTE_LEAF_ATTR_LO_S1_AP

◆ KVM_PTE_LEAF_ATTR_LO_S1_AP_RO

| #define KVM_PTE_LEAF_ATTR_LO_S1_AP_RO ({ cpus_have_final_cap(ARM64_KVM_HVHE) ? 2 : 3; }) |

◆ KVM_PTE_LEAF_ATTR_LO_S1_AP_RW

| #define KVM_PTE_LEAF_ATTR_LO_S1_AP_RW ({ cpus_have_final_cap(ARM64_KVM_HVHE) ? 0 : 1; }) |
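The two macros above pick a different stage-1 access-permission encoding depending on whether the hVHE capability is present. The following is a minimal userspace sketch of that selection, not the kernel code: `model_has_hvhe` is a hypothetical stand-in for `cpus_have_final_cap(ARM64_KVM_HVHE)`, and placing the AP field at bits [7:6] is an assumption made for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumption: the stage-1 AP field occupies bits [7:6] of the descriptor. */
#define S1_AP_SHIFT 6
#define S1_AP_MASK  (UINT64_C(3) << S1_AP_SHIFT)

/* Hypothetical stand-in for cpus_have_final_cap(ARM64_KVM_HVHE). */
static bool model_has_hvhe;

/* Same selection as the two macros above: the encodings differ under hVHE. */
static uint64_t s1_ap_ro(void) { return model_has_hvhe ? 2 : 3; }
static uint64_t s1_ap_rw(void) { return model_has_hvhe ? 0 : 1; }

/* Place an AP value into a descriptor, replacing any previous AP bits. */
static uint64_t s1_set_ap(uint64_t pte, bool writable)
{
	uint64_t ap = writable ? s1_ap_rw() : s1_ap_ro();

	assert(ap <= 3);
	return (pte & ~S1_AP_MASK) | (ap << S1_AP_SHIFT);
}
```

With `model_has_hvhe` cleared, `s1_set_ap(0, false)` yields the value 3 in the AP field; with it set, the same call yields 2.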

◆ KVM_PTE_LEAF_ATTR_LO_S1_ATTRIDX

◆ KVM_PTE_LEAF_ATTR_LO_S1_SH

◆ KVM_PTE_LEAF_ATTR_LO_S1_SH_IS

◆ KVM_PTE_LEAF_ATTR_LO_S2_AF

◆ KVM_PTE_LEAF_ATTR_LO_S2_MEMATTR

◆ KVM_PTE_LEAF_ATTR_LO_S2_S2AP_R

◆ KVM_PTE_LEAF_ATTR_LO_S2_S2AP_W

◆ KVM_PTE_LEAF_ATTR_LO_S2_SH

◆ KVM_PTE_LEAF_ATTR_LO_S2_SH_IS

◆ KVM_PTE_LEAF_ATTR_S2_PERMS

| #define KVM_PTE_LEAF_ATTR_S2_PERMS |

◆ KVM_PTE_TYPE

◆ KVM_PTE_TYPE_BLOCK

◆ KVM_PTE_TYPE_PAGE

◆ KVM_PTE_TYPE_TABLE

◆ KVM_S2_MEMATTR

| #define KVM_S2_MEMATTR(pgt, attr) PAGE_S2_MEMATTR(attr, stage2_has_fwb(pgt)) |

Function Documentation

◆ __kvm_pgtable_stage2_init()

| int __kvm_pgtable_stage2_init | ( | struct kvm_pgtable * | pgt, |

| struct kvm_s2_mmu * | mmu, | ||

| struct kvm_pgtable_mm_ops * | mm_ops, | ||

| enum kvm_pgtable_stage2_flags | flags, | ||

| kvm_pgtable_force_pte_cb_t | force_pte_cb | ||

| ) |

◆ __kvm_pgtable_visit()

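| static inline int __kvm_pgtable_visit (struct kvm_pgtable_walk_data *data, struct kvm_pgtable_mm_ops *mm_ops, kvm_pteref_t pteref, s8 level) |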

Definition at line 211 of file pgtable.c.

◆ __kvm_pgtable_walk()

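| static int __kvm_pgtable_walk (struct kvm_pgtable_walk_data *data, struct kvm_pgtable_mm_ops *mm_ops, kvm_pteref_t pgtable, s8 level) |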

Definition at line 277 of file pgtable.c.

◆ _kvm_pgtable_walk()

| static int _kvm_pgtable_walk (struct kvm_pgtable *pgt, struct kvm_pgtable_walk_data *data) |

Definition at line 301 of file pgtable.c.

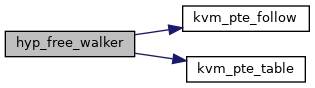

◆ hyp_free_walker()

| static int hyp_free_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

◆ hyp_map_walker()

| static int hyp_map_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

Definition at line 465 of file pgtable.c.

◆ hyp_map_walker_try_leaf()

| static bool hyp_map_walker_try_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct hyp_map_data *data) |

Definition at line 444 of file pgtable.c.

◆ hyp_set_prot_attr()

| static int hyp_set_prot_attr (enum kvm_pgtable_prot prot, kvm_pte_t *ptep) |

◆ hyp_unmap_walker()

| static int hyp_unmap_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

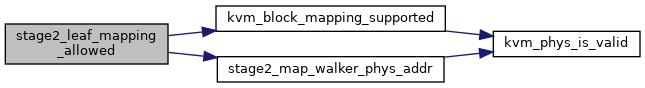

◆ kvm_block_mapping_supported()

| static bool kvm_block_mapping_supported (const struct kvm_pgtable_visit_ctx *ctx, u64 phys) |

◆ kvm_clear_pte()

| static void kvm_clear_pte (kvm_pte_t *ptep) |

◆ kvm_get_vtcr()

| u64 kvm_get_vtcr | ( | u64 | mmfr0, |

| u64 | mmfr1, | ||

| u32 | phys_shift | ||

| ) |

◆ kvm_init_invalid_leaf_owner()

| static kvm_pte_t kvm_init_invalid_leaf_owner (u8 owner_id) |

◆ kvm_init_table_pte()

| static kvm_pte_t kvm_init_table_pte (kvm_pte_t *childp, struct kvm_pgtable_mm_ops *mm_ops) |

◆ kvm_init_valid_leaf_pte()

| static kvm_pte_t kvm_init_valid_leaf_pte (u64 pa, kvm_pte_t attr, s8 level) |

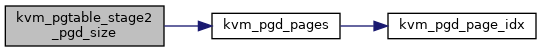

◆ kvm_pgd_page_idx()

| static u32 kvm_pgd_page_idx (struct kvm_pgtable *pgt, u64 addr) |

◆ kvm_pgd_pages()

| static u32 kvm_pgd_pages (u32 ia_bits, s8 start_level) |

◆ kvm_pgtable_get_leaf()

| int kvm_pgtable_get_leaf | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| kvm_pte_t * | ptep, | ||

| s8 * | level | ||

| ) |

Definition at line 361 of file pgtable.c.

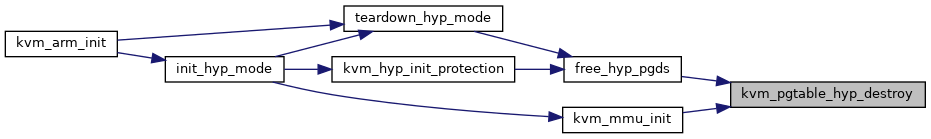

◆ kvm_pgtable_hyp_destroy()

| void kvm_pgtable_hyp_destroy | ( | struct kvm_pgtable * | pgt | ) |

Definition at line 607 of file pgtable.c.

◆ kvm_pgtable_hyp_init()

| int kvm_pgtable_hyp_init | ( | struct kvm_pgtable * | pgt, |

| u32 | va_bits, | ||

| struct kvm_pgtable_mm_ops * | mm_ops | ||

| ) |

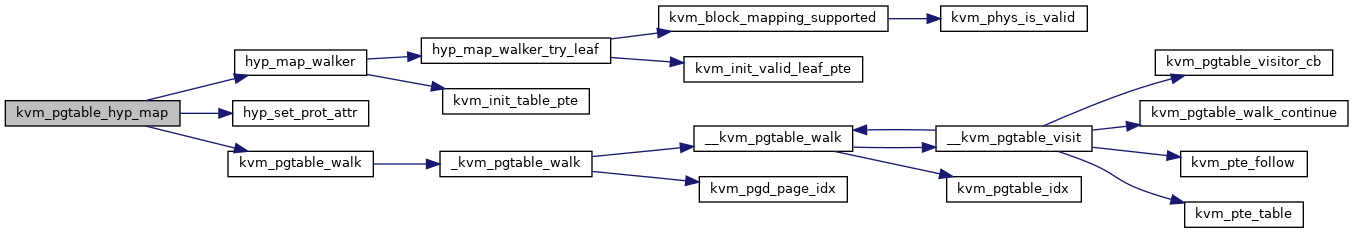

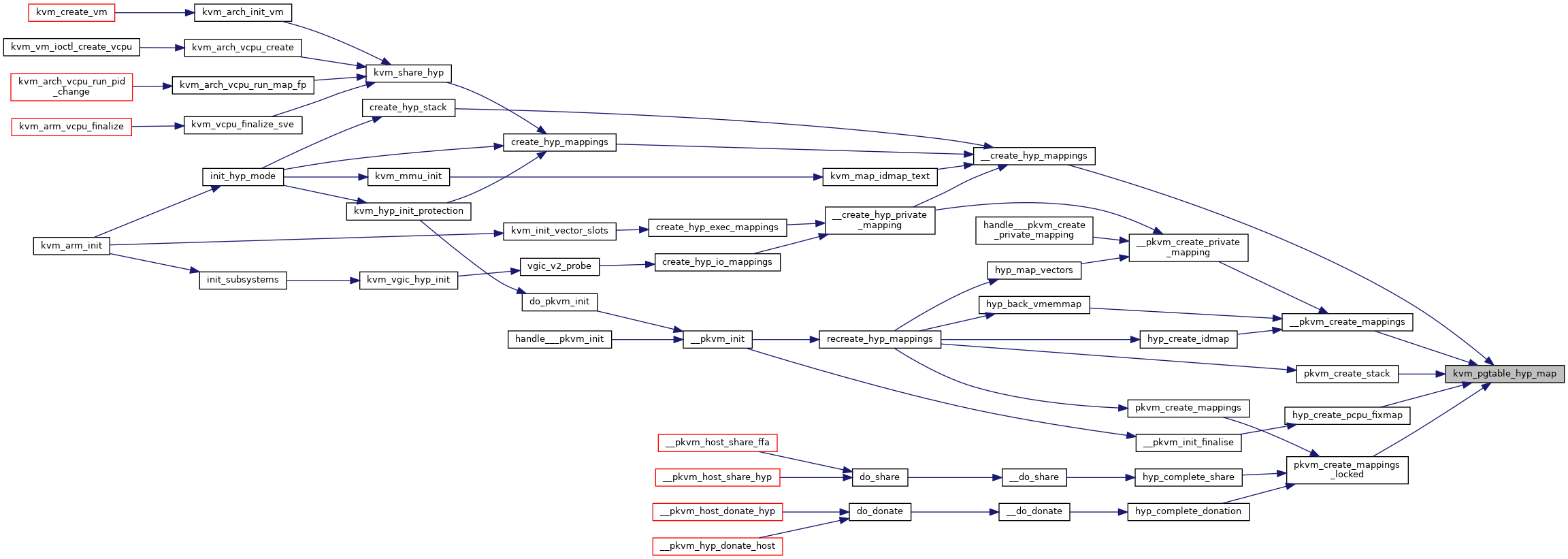

◆ kvm_pgtable_hyp_map()

| int kvm_pgtable_hyp_map | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| u64 | phys, | ||

| enum kvm_pgtable_prot | prot | ||

| ) |

Definition at line 489 of file pgtable.c.

◆ kvm_pgtable_hyp_pte_prot()

| enum kvm_pgtable_prot kvm_pgtable_hyp_pte_prot | ( | kvm_pte_t | pte | ) |

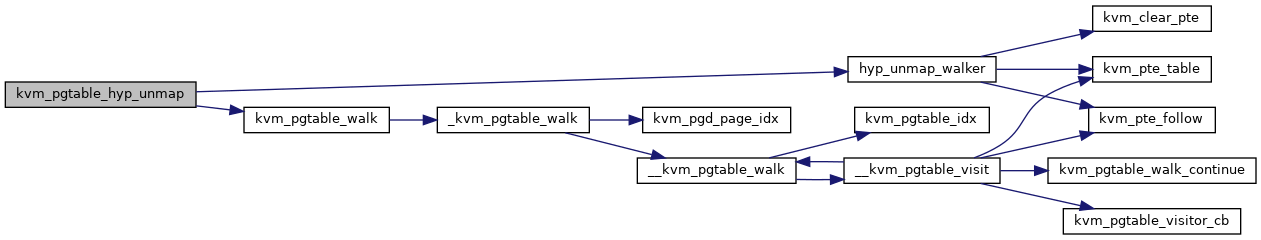

◆ kvm_pgtable_hyp_unmap()

| u64 kvm_pgtable_hyp_unmap | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size | ||

| ) |

Definition at line 552 of file pgtable.c.

◆ kvm_pgtable_idx()

| static u32 kvm_pgtable_idx (struct kvm_pgtable_walk_data *data, s8 level) |
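This page gives only the prototype of `kvm_pgtable_idx()`, so the following is a hedged sketch of how a per-level table index is conventionally derived on arm64, not a copy of the function. It assumes a 4 KiB granule, 8-byte descriptors and levels 0 to 3; all `model_*` and `MODEL_*` names are hypothetical, and the sketch takes the address directly rather than reading it from the walk data.

```c
#include <assert.h>
#include <stdint.h>

/* Assumptions: 4 KiB granule, 8-byte descriptors, levels 0..3. */
#define MODEL_PAGE_SHIFT   12
#define MODEL_PTRS_PER_TBL (1u << (MODEL_PAGE_SHIFT - 3))   /* 512 entries */

/* Number of address bits resolved below a given level. */
static unsigned int model_level_shift(int level)
{
	assert(level >= 0 && level <= 3);
	return (MODEL_PAGE_SHIFT - 3) * (4 - level) + 3;
}

/* Index of the descriptor covering addr in a table at the given level. */
static uint32_t model_pgtable_idx(uint64_t addr, int level)
{
	return (uint32_t)((addr >> model_level_shift(level)) &
			  (MODEL_PTRS_PER_TBL - 1));
}
```

Under these assumptions the shifts for levels 3, 2, 1 and 0 are 12, 21, 30 and 39, so each level consumes nine bits of the input address.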

◆ kvm_pgtable_stage2_create_unlinked()

| kvm_pte_t* kvm_pgtable_stage2_create_unlinked | ( | struct kvm_pgtable * | pgt, |

| u64 | phys, | ||

| s8 | level, | ||

| enum kvm_pgtable_prot | prot, | ||

| void * | mc, | ||

| bool | force_pte | ||

| ) |

Definition at line 1378 of file pgtable.c.

◆ kvm_pgtable_stage2_destroy()

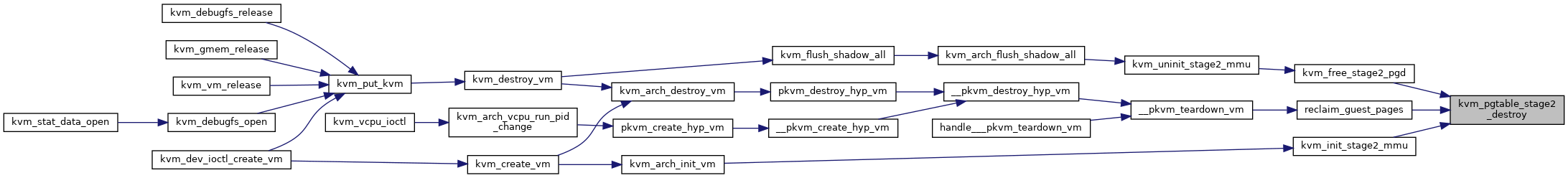

| void kvm_pgtable_stage2_destroy | ( | struct kvm_pgtable * | pgt | ) |

Definition at line 1586 of file pgtable.c.

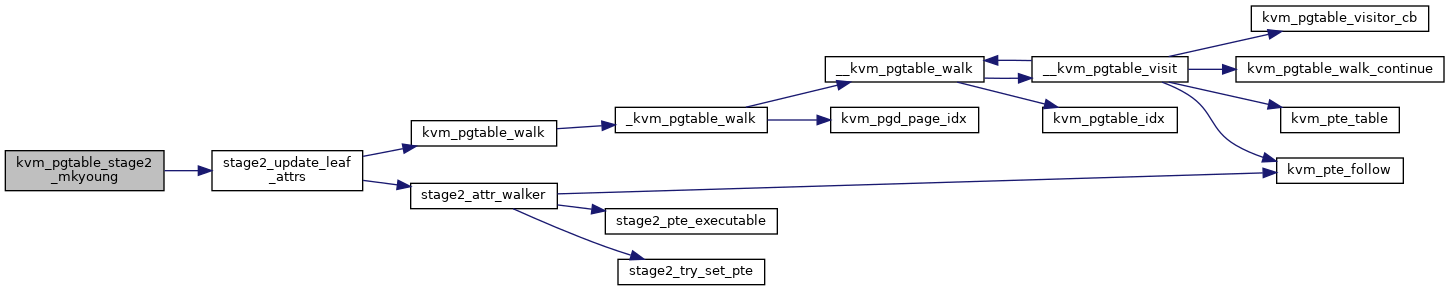

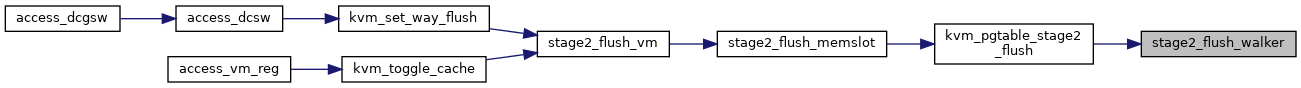

◆ kvm_pgtable_stage2_flush()

| int kvm_pgtable_stage2_flush | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size | ||

| ) |

Definition at line 1364 of file pgtable.c.

◆ kvm_pgtable_stage2_free_unlinked()

| void kvm_pgtable_stage2_free_unlinked | ( | struct kvm_pgtable_mm_ops * | mm_ops, |

| void * | pgtable, | ||

| s8 | level | ||

| ) |

◆ kvm_pgtable_stage2_map()

| int kvm_pgtable_stage2_map | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| u64 | phys, | ||

| enum kvm_pgtable_prot | prot, | ||

| void * | mc, | ||

| enum kvm_pgtable_walk_flags | flags | ||

| ) |

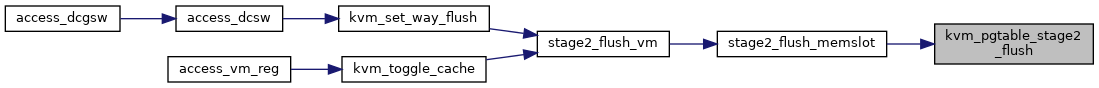

◆ kvm_pgtable_stage2_mkyoung()

| kvm_pte_t kvm_pgtable_stage2_mkyoung | ( | struct kvm_pgtable * | pgt, |

| u64 | addr | ||

| ) |

Definition at line 1257 of file pgtable.c.

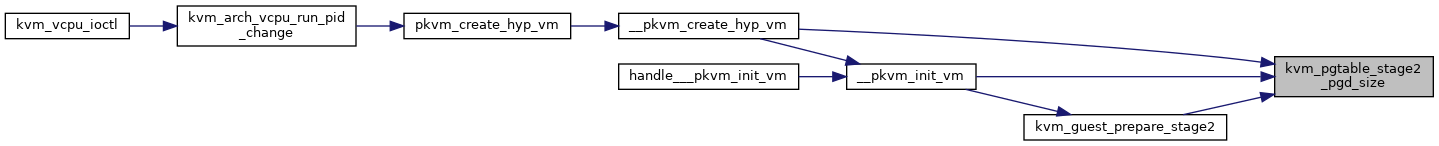

◆ kvm_pgtable_stage2_pgd_size()

| size_t kvm_pgtable_stage2_pgd_size | ( | u64 | vtcr | ) |

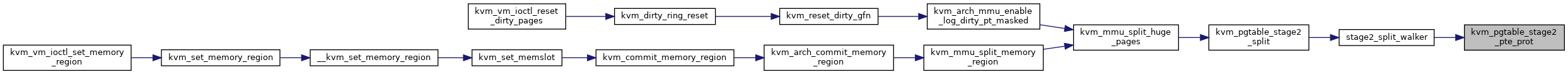

◆ kvm_pgtable_stage2_pte_prot()

| enum kvm_pgtable_prot kvm_pgtable_stage2_pte_prot | ( | kvm_pte_t | pte | ) |

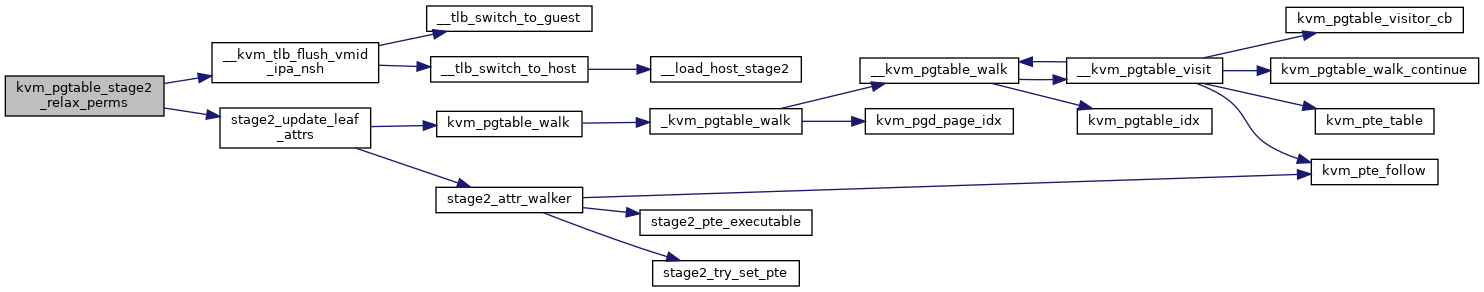

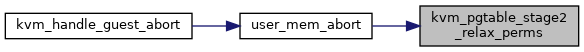

◆ kvm_pgtable_stage2_relax_perms()

| int kvm_pgtable_stage2_relax_perms | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| enum kvm_pgtable_prot | prot | ||

| ) |

◆ kvm_pgtable_stage2_set_owner()

| int kvm_pgtable_stage2_set_owner | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| void * | mc, | ||

| u8 | owner_id | ||

| ) |

◆ kvm_pgtable_stage2_split()

| int kvm_pgtable_stage2_split | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| struct kvm_mmu_memory_cache * | mc | ||

| ) |

Definition at line 1521 of file pgtable.c.

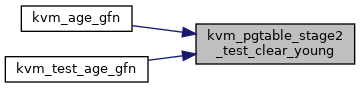

◆ kvm_pgtable_stage2_test_clear_young()

| bool kvm_pgtable_stage2_test_clear_young | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| bool | mkold | ||

| ) |

Definition at line 1306 of file pgtable.c.

◆ kvm_pgtable_stage2_unmap()

| int kvm_pgtable_stage2_unmap | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size | ||

| ) |

Definition at line 1160 of file pgtable.c.

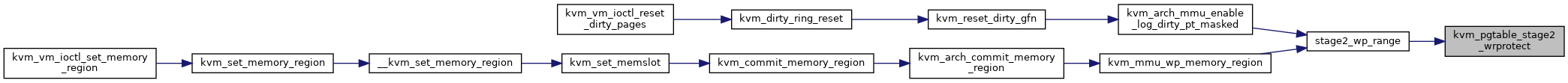

◆ kvm_pgtable_stage2_wrprotect()

| int kvm_pgtable_stage2_wrprotect | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size | ||

| ) |

◆ kvm_pgtable_visitor_cb()

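| static int kvm_pgtable_visitor_cb (struct kvm_pgtable_walk_data *data, const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |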

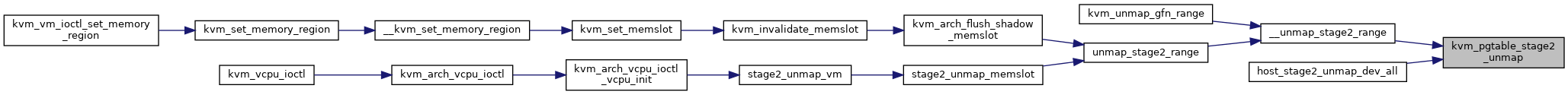

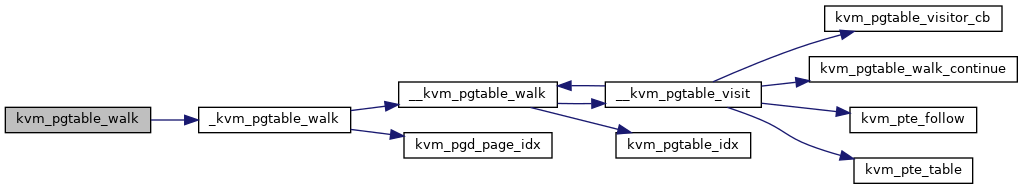

◆ kvm_pgtable_walk()

| int kvm_pgtable_walk | ( | struct kvm_pgtable * | pgt, |

| u64 | addr, | ||

| u64 | size, | ||

| struct kvm_pgtable_walker * | walker | ||

| ) |

Definition at line 324 of file pgtable.c.
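`kvm_pgtable_walk()` drives a caller-supplied walker whose callback receives a visit context and a visit flag, as the walker prototypes on this page show. The sketch below is a simplified userspace model of that visitor pattern, offered as an illustration only: the toy table layout, the `model_*` names and the whole-table traversal are assumptions, and the real function walks an address range and handles entries that change during a visit.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Visit kinds, modelled on leaf, table-pre and table-post visit points. */
enum model_walk_flags {
	MODEL_WALK_LEAF       = 1 << 0,
	MODEL_WALK_TABLE_PRE  = 1 << 1,
	MODEL_WALK_TABLE_POST = 1 << 2,
};

#define MODEL_ENTRIES 4

/* A toy entry: either a leaf value or a pointer to a child table. */
struct model_entry {
	struct model_entry *child;  /* non-NULL means "table entry" */
	uint64_t value;             /* payload for leaf entries */
};

typedef int (*model_visit_fn)(struct model_entry *e, int level,
			      enum model_walk_flags visit, void *arg);

struct model_walker {
	model_visit_fn cb;
	void *arg;
	int flags;                  /* which visit kinds the callback wants */
};

/* Recursive walk: pre-visit tables, descend, post-visit; visit leaves once. */
static int model_walk(struct model_entry *table, int level,
		      const struct model_walker *w)
{
	assert(table && w && w->cb);
	for (size_t i = 0; i < MODEL_ENTRIES; i++) {
		struct model_entry *e = &table[i];
		int ret = 0;

		if (!e->child) {
			if (w->flags & MODEL_WALK_LEAF)
				ret = w->cb(e, level, MODEL_WALK_LEAF, w->arg);
			if (ret)
				return ret;
			continue;
		}
		if (w->flags & MODEL_WALK_TABLE_PRE)
			ret = w->cb(e, level, MODEL_WALK_TABLE_PRE, w->arg);
		if (!ret)
			ret = model_walk(e->child, level + 1, w);
		if (!ret && (w->flags & MODEL_WALK_TABLE_POST))
			ret = w->cb(e, level, MODEL_WALK_TABLE_POST, w->arg);
		if (ret)
			return ret;  /* a non-zero return stops the walk */
	}
	return 0;
}

/* Example callback: count the leaves whose value is non-zero. */
static int model_count_leaves(struct model_entry *e, int level,
			      enum model_walk_flags visit, void *arg)
{
	(void)level;
	(void)visit;
	if (e->value)
		(*(int *)arg)++;
	return 0;
}
```

A caller fills in a `struct model_walker` with the callback, an opaque argument and the visit kinds it wants, then calls `model_walk()` on the root table. Keeping per-operation state behind `arg` is the same role the `*_data` structures listed under Classes appear to play for the walkers in this file.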

◆ kvm_pgtable_walk_continue()

| static bool kvm_pgtable_walk_continue (const struct kvm_pgtable_walker *walker, int r) |

◆ kvm_pgtable_walk_skip_bbm_tlbi()

| static bool kvm_pgtable_walk_skip_bbm_tlbi (const struct kvm_pgtable_visit_ctx *ctx) |

◆ kvm_pgtable_walk_skip_cmo()

| static bool kvm_pgtable_walk_skip_cmo (const struct kvm_pgtable_visit_ctx *ctx) |

◆ kvm_phys_is_valid()

| static bool kvm_phys_is_valid (u64 phys) |

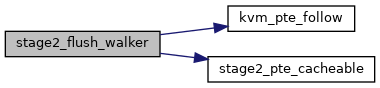

◆ kvm_pte_follow()

| static kvm_pte_t * kvm_pte_follow (kvm_pte_t pte, struct kvm_pgtable_mm_ops *mm_ops) |

◆ kvm_pte_table()

| static bool kvm_pte_table (kvm_pte_t pte, s8 level) |

◆ kvm_tlb_flush_vmid_range()

| void kvm_tlb_flush_vmid_range | ( | struct kvm_s2_mmu * | mmu, |

| phys_addr_t | addr, | ||

| size_t | size | ||

| ) |

◆ leaf_walker()

| static int leaf_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

◆ stage2_age_walker()

| static int stage2_age_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

Definition at line 1277 of file pgtable.c.

◆ stage2_attr_walker()

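| static int stage2_attr_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |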

Definition at line 1184 of file pgtable.c.

◆ stage2_block_get_nr_page_tables()

| static int stage2_block_get_nr_page_tables (s8 level) |

◆ stage2_flush_walker()

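| static int stage2_flush_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |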

Definition at line 1349 of file pgtable.c.

◆ stage2_free_walker()

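| static int stage2_free_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |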

◆ stage2_has_fwb()

| static bool stage2_has_fwb (struct kvm_pgtable *pgt) |

◆ stage2_leaf_mapping_allowed()

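| static bool stage2_leaf_mapping_allowed (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |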

Definition at line 927 of file pgtable.c.

◆ stage2_make_pte()

| static void stage2_make_pte (const struct kvm_pgtable_visit_ctx *ctx, kvm_pte_t new) |

◆ stage2_map_walk_leaf()

| static int stage2_map_walk_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |

Definition at line 1000 of file pgtable.c.

◆ stage2_map_walk_table_pre()

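| static int stage2_map_walk_table_pre (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |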

Definition at line 982 of file pgtable.c.

◆ stage2_map_walker()

| static int stage2_map_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |

Definition at line 1046 of file pgtable.c.

◆ stage2_map_walker_phys_addr()

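| static u64 stage2_map_walker_phys_addr (const struct kvm_pgtable_visit_ctx *ctx, const struct stage2_map_data *data) |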

◆ stage2_map_walker_try_leaf()

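| static int stage2_map_walker_try_leaf (const struct kvm_pgtable_visit_ctx *ctx, struct stage2_map_data *data) |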

Definition at line 938 of file pgtable.c.

◆ stage2_pte_cacheable()

| static bool stage2_pte_cacheable (struct kvm_pgtable *pgt, kvm_pte_t pte) |

◆ stage2_pte_executable()

| static bool stage2_pte_executable (kvm_pte_t pte) |

◆ stage2_pte_is_counted()

| static bool stage2_pte_is_counted (kvm_pte_t pte) |

◆ stage2_pte_is_locked()

| static bool stage2_pte_is_locked (kvm_pte_t pte) |

◆ stage2_pte_needs_update()

| static bool stage2_pte_needs_update (kvm_pte_t old, kvm_pte_t new) |

◆ stage2_set_prot_attr()

| static int stage2_set_prot_attr (struct kvm_pgtable *pgt, enum kvm_pgtable_prot prot, kvm_pte_t *ptep) |

◆ stage2_split_walker()

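| static int stage2_split_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |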

Definition at line 1452 of file pgtable.c.

◆ stage2_try_break_pte()

| static bool stage2_try_break_pte (const struct kvm_pgtable_visit_ctx *ctx, struct kvm_s2_mmu *mmu) |

stage2_try_break_pte() - Invalidates a pte according to the 'break-before-make' requirements of the architecture.

@ctx: context of the visited pte.

@mmu: stage-2 mmu.

Returns: true if the pte was successfully broken.

If the removed pte was valid, performs the necessary serialization and TLB invalidation for the old value. For counted ptes, drops the reference count on the containing table page.

Definition at line 810 of file pgtable.c.
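The break-before-make sequence described above can be illustrated with a small userspace model built on C11 atomics. This is a sketch under stated assumptions rather than the kernel implementation: the lock-marker bit position, the counters standing in for TLB invalidation and the table page's reference count, and every `model_*` / `MODEL_*` name are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define MODEL_PTE_VALID  UINT64_C(1)          /* bit 0: entry is valid */
#define MODEL_PTE_LOCKED (UINT64_C(1) << 10)  /* assumed lock marker in an invalid entry */

struct model_ctx {
	_Atomic uint64_t *ptep;  /* entry being visited */
	uint64_t old;            /* value observed when the visit started */
	int tlb_invalidations;   /* stand-in for the real TLB maintenance */
	int refcount;            /* stand-in for the table page's reference count */
};

/* Break: swap the observed value for the locked marker, then clean up. */
static bool model_try_break(struct model_ctx *ctx)
{
	uint64_t expected = ctx->old;

	assert(!(expected & MODEL_PTE_LOCKED));
	/* Fails if another walker changed the entry since it was read. */
	if (!atomic_compare_exchange_strong(ctx->ptep, &expected, MODEL_PTE_LOCKED))
		return false;
	if (ctx->old & MODEL_PTE_VALID)
		ctx->tlb_invalidations++;  /* the old translation may be cached */
	if (ctx->old)
		ctx->refcount--;           /* modelled: any non-zero entry is "counted" */
	return true;
}

/* Make: publish the new entry (which also unlocks it), counting it if non-zero. */
static void model_make(struct model_ctx *ctx, uint64_t new_pte)
{
	if (new_pte)
		ctx->refcount++;
	atomic_store_explicit(ctx->ptep, new_pte, memory_order_release);
}
```

In this model a second `model_try_break()` on the same context fails, because the entry no longer holds the value recorded in `old`; that is the behaviour the "Returns" line describes for a pte that could not be broken.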

◆ stage2_try_set_pte()

| static bool stage2_try_set_pte (const struct kvm_pgtable_visit_ctx *ctx, kvm_pte_t new) |

◆ stage2_unmap_defer_tlb_flush()

| static bool stage2_unmap_defer_tlb_flush (struct kvm_pgtable *pgt) |

◆ stage2_unmap_put_pte()

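| static void stage2_unmap_put_pte (const struct kvm_pgtable_visit_ctx *ctx, struct kvm_s2_mmu *mmu, struct kvm_pgtable_mm_ops *mm_ops) |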

◆ stage2_unmap_walker()

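| static int stage2_unmap_walker (const struct kvm_pgtable_visit_ctx *ctx, enum kvm_pgtable_walk_flags visit) |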

Definition at line 1117 of file pgtable.c.

◆ stage2_update_leaf_attrs()

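| static int stage2_update_leaf_attrs (struct kvm_pgtable *pgt, u64 addr, u64 size, kvm_pte_t attr_set, kvm_pte_t attr_clr, kvm_pte_t *orig_pte, s8 *level, enum kvm_pgtable_walk_flags flags) |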

Definition at line 1221 of file pgtable.c.