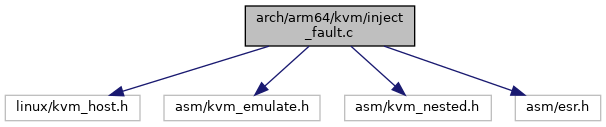

#include <linux/kvm_host.h>

#include <asm/kvm_emulate.h>

#include <asm/kvm_nested.h>

#include <asm/esr.h>

◆ DFSR_FSC_EXTABT_LPAE

| #define DFSR_FSC_EXTABT_LPAE 0x10 |

◆ DFSR_FSC_EXTABT_nLPAE

| #define DFSR_FSC_EXTABT_nLPAE 0x08 |

◆ DFSR_LPAE

◆ TTBCR_EAE

| #define TTBCR_EAE BIT(31) |

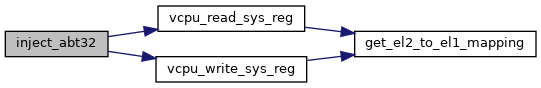

◆ inject_abt32()

| static void inject_abt32(struct kvm_vcpu *vcpu, bool is_pabt, u32 addr) |

Definition at line 128 of file inject_fault.c.

144 kvm_pend_exception(vcpu, EXCEPT_AA32_IABT);

145 far &= GENMASK(31, 0);

146 far |= (u64)addr << 32;

149 kvm_pend_exception(vcpu, EXCEPT_AA32_DABT);

150 far &= GENMASK(63, 32);
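The masking in the excerpt suggests that a single 64-bit FAR value carries both AArch32 fault addresses: the instruction-side address in bits 63:32 and the data-side address in bits 31:0. The following is a minimal standalone sketch of that packing, not the kernel code itself. `pack_far32` and `GENMASK64` are hypothetical names, and the final OR in the data-abort branch is an assumption because that line is not part of the excerpt.

```c
#include <stdbool.h>
#include <stdint.h>

/* Stand-in for the kernel's GENMASK(h, l), restricted to 64-bit values. */
#define GENMASK64(h, l) \
	((~UINT64_C(0) >> (63 - (h))) & (~UINT64_C(0) << (l)))

/*
 * Hypothetical helper: return the FAR value after recording a 32-bit
 * fault address, leaving the other half of the register untouched.
 */
static uint64_t pack_far32(uint64_t far, bool is_pabt, uint32_t addr)
{
	if (is_pabt) {
		far &= GENMASK64(31, 0);	/* keep the data-side half */
		far |= (uint64_t)addr << 32;	/* instruction fault address */
	} else {
		far &= GENMASK64(63, 32);	/* keep the instruction-side half */
		far |= addr;			/* assumed: not shown in the excerpt */
	}
	return far;
}
```

With this layout, injecting a prefetch abort never disturbs a previously recorded data fault address, and vice versa.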

#define DFSR_FSC_EXTABT_LPAE

#define DFSR_FSC_EXTABT_nLPAE

u64 vcpu_read_sys_reg(const struct kvm_vcpu *vcpu, int reg)

void vcpu_write_sys_reg(struct kvm_vcpu *vcpu, u64 val, int reg)

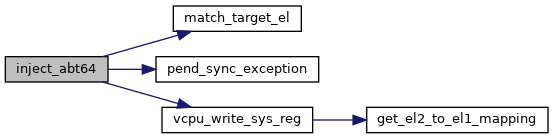

◆ inject_abt64()

| static void inject_abt64(struct kvm_vcpu *vcpu, bool is_iabt, unsigned long addr) |

Definition at line 57 of file inject_fault.c.

59 unsigned long cpsr = *vcpu_cpsr(vcpu);

60 bool is_aarch32 = vcpu_mode_is_32bit(vcpu);

69 if (kvm_vcpu_trap_il_is32bit(vcpu))

76 if (is_aarch32 || (cpsr & PSR_MODE_MASK) == PSR_MODE_EL0t)

77 esr |= (ESR_ELx_EC_IABT_LOW << ESR_ELx_EC_SHIFT);

79 esr |= (ESR_ELx_EC_IABT_CUR << ESR_ELx_EC_SHIFT);

82 esr |= ESR_ELx_EC_DABT_LOW << ESR_ELx_EC_SHIFT;

84 esr |= ESR_ELx_FSC_EXTABT;
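The ESR construction above can be illustrated in isolation. The sketch below assumes the architectural ESR_ELx encodings (EC field at bit 26, IL at bit 25, external abort FSC of 0x10) and assumes that the data-abort OR on line 82 is guarded by a `!is_iabt` check that the excerpt does not show. `build_abt64_esr` and its parameters are hypothetical names. Because the data-abort class values differ from the instruction-abort ones only in bit 2, ORing in `EC_DABT_LOW` turns "instruction abort, lower EL" into "data abort, lower EL" and "instruction abort, current EL" into "data abort, current EL".

```c
#include <stdbool.h>
#include <stdint.h>

/* ESR_ELx field encodings as defined by the Arm architecture. */
#define EC_SHIFT	26
#define EC_IABT_LOW	UINT64_C(0x20)
#define EC_IABT_CUR	UINT64_C(0x21)
#define EC_DABT_LOW	UINT64_C(0x24)
#define ESR_IL		(UINT64_C(1) << 25)
#define FSC_EXTABT	UINT64_C(0x10)

/*
 * Hypothetical helper mirroring the excerpt's steps:
 *   il_32bit      - the trapped instruction was 32 bits long
 *   from_lower_el - the guest ran in AArch32 or at EL0
 */
static uint64_t build_abt64_esr(bool is_iabt, bool from_lower_el, bool il_32bit)
{
	uint64_t esr = 0;

	if (il_32bit)
		esr |= ESR_IL;

	if (from_lower_el)
		esr |= EC_IABT_LOW << EC_SHIFT;
	else
		esr |= EC_IABT_CUR << EC_SHIFT;

	/* Assumed guard: only data aborts get the extra class bit. */
	if (!is_iabt)
		esr |= EC_DABT_LOW << EC_SHIFT;

	/* Report the abort as a synchronous external abort. */
	esr |= FSC_EXTABT;

	return esr;
}
```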

static bool match_target_el(struct kvm_vcpu *vcpu, unsigned long target)

static void pend_sync_exception(struct kvm_vcpu *vcpu)

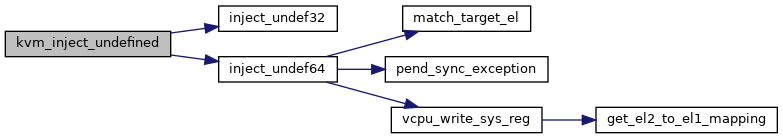

◆ inject_undef32()

| static void inject_undef32(struct kvm_vcpu *vcpu) |

Definition at line 119 of file inject_fault.c.

121 kvm_pend_exception(vcpu, EXCEPT_AA32_UND);

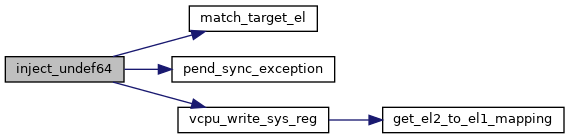

◆ inject_undef64()

| static void inject_undef64(struct kvm_vcpu *vcpu) |

Definition at line 95 of file inject_fault.c.

97 u64 esr = (ESR_ELx_EC_UNKNOWN << ESR_ELx_EC_SHIFT);

105 if (kvm_vcpu_trap_il_is32bit(vcpu))

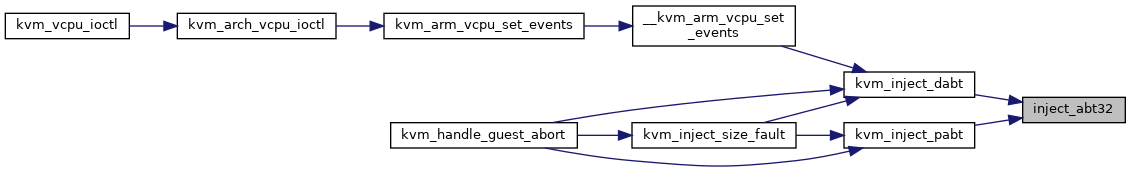

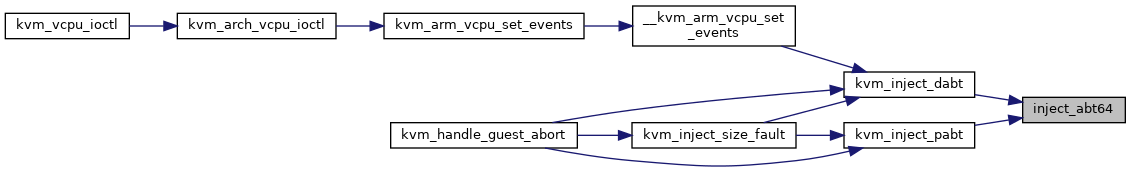

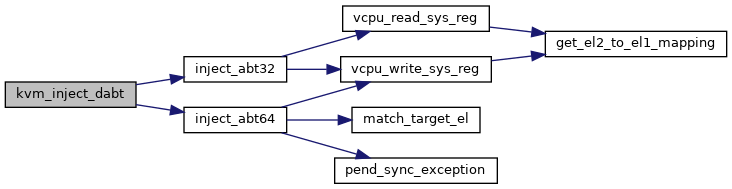

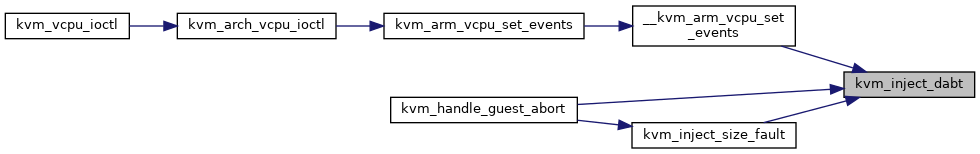

◆ kvm_inject_dabt()

| void kvm_inject_dabt(struct kvm_vcpu *vcpu, unsigned long addr) |

kvm_inject_dabt - inject a data abort into the guest

@vcpu: The VCPU to receive the data abort

@addr: The address to report in the DFAR

It is assumed that this code is called from the VCPU thread and that the VCPU therefore is not currently executing guest code.

Definition at line 166 of file inject_fault.c.

168 if (vcpu_el1_is_32bit(vcpu))

static void inject_abt32(struct kvm_vcpu *vcpu, bool is_pabt, u32 addr)

static void inject_abt64(struct kvm_vcpu *vcpu, bool is_iabt, unsigned long addr)

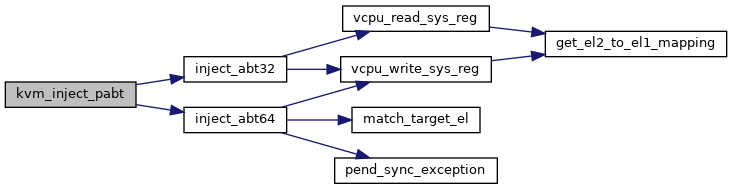

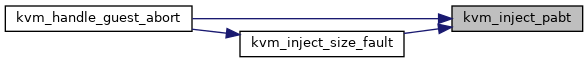

◆ kvm_inject_pabt()

| void kvm_inject_pabt(struct kvm_vcpu *vcpu, unsigned long addr) |

kvm_inject_pabt - inject a prefetch abort into the guest

@vcpu: The VCPU to receive the prefetch abort

@addr: The address to report in the IFAR

It is assumed that this code is called from the VCPU thread and that the VCPU therefore is not currently executing guest code.

Definition at line 182 of file inject_fault.c.

184 if (vcpu_el1_is_32bit(vcpu))

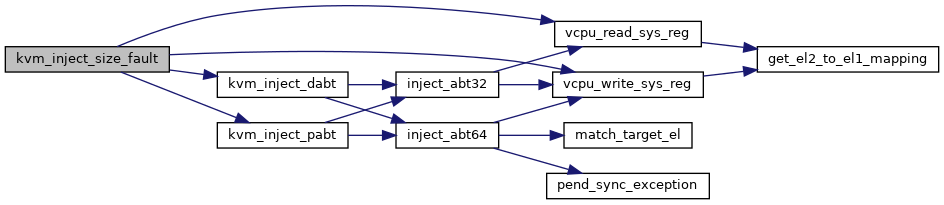

◆ kvm_inject_size_fault()

| void kvm_inject_size_fault(struct kvm_vcpu *vcpu) |

Definition at line 190 of file inject_fault.c.

192 unsigned long addr, esr;

194 addr = kvm_vcpu_get_fault_ipa(vcpu);

195 addr |= kvm_vcpu_get_hfar(vcpu) & GENMASK(11, 0);

197 if (kvm_vcpu_trap_is_iabt(vcpu))

209 if (vcpu_el1_is_32bit(vcpu) &&

214 esr &= ~GENMASK_ULL(5, 0);
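Two bit manipulations stand out in this excerpt: the fault address is assembled from the faulting IPA plus the low 12 bits of the faulting virtual address, and the low six bits of the ESR (the fault status code) are cleared afterwards. The sketch below shows both as standalone helpers. `size_fault_addr` and `clear_fsc` are hypothetical names, and the code assumes the IPA passed in is aligned to 4 KiB so that its low 12 bits are zero.

```c
#include <stdint.h>

/*
 * Hypothetical helper: combine a page-aligned IPA with the in-page
 * offset taken from the faulting virtual address (bits 11:0).
 */
static uint64_t size_fault_addr(uint64_t fault_ipa, uint64_t hfar)
{
	return fault_ipa | (hfar & UINT64_C(0xfff));
}

/*
 * Hypothetical helper: clear ESR bits 5:0, i.e. set the fault status
 * code to 0 while leaving the exception class and other fields intact.
 */
static uint64_t clear_fsc(uint64_t esr)
{
	return esr & ~UINT64_C(0x3f);
}
```

An FSC of 0 encodes an address size fault at level 0, which matches the function's name.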

void kvm_inject_dabt(struct kvm_vcpu *vcpu, unsigned long addr)

void kvm_inject_pabt(struct kvm_vcpu *vcpu, unsigned long addr)

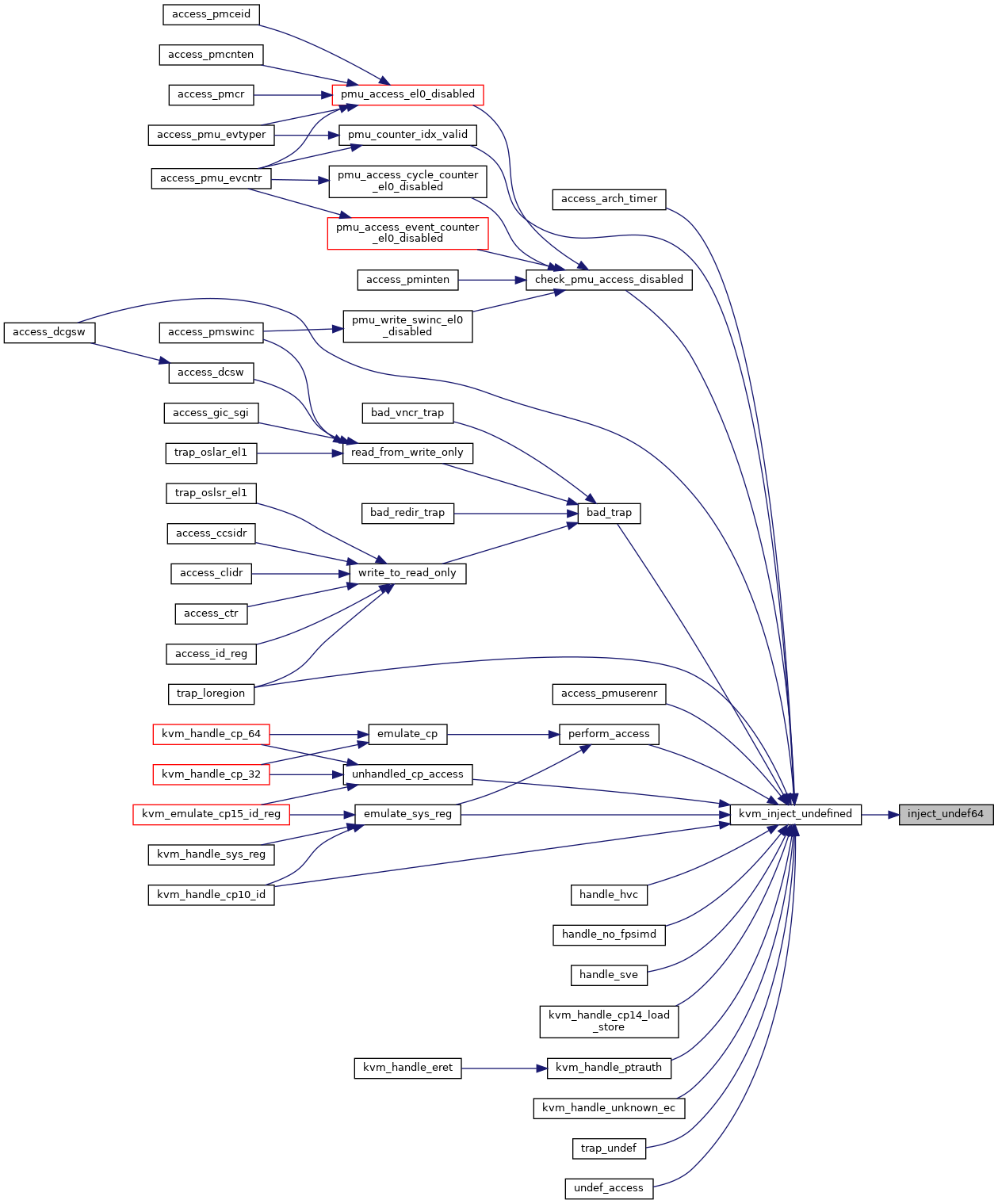

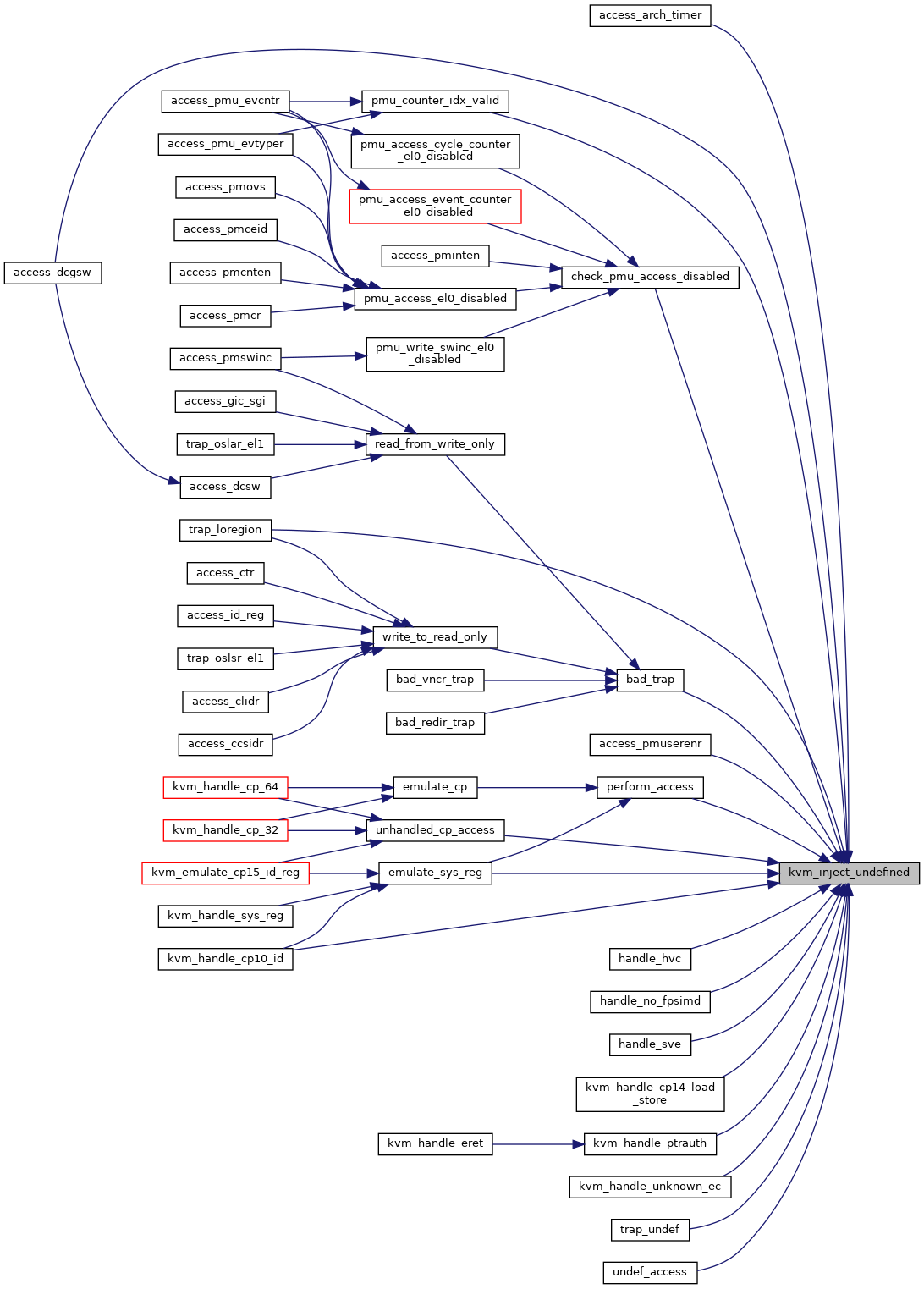

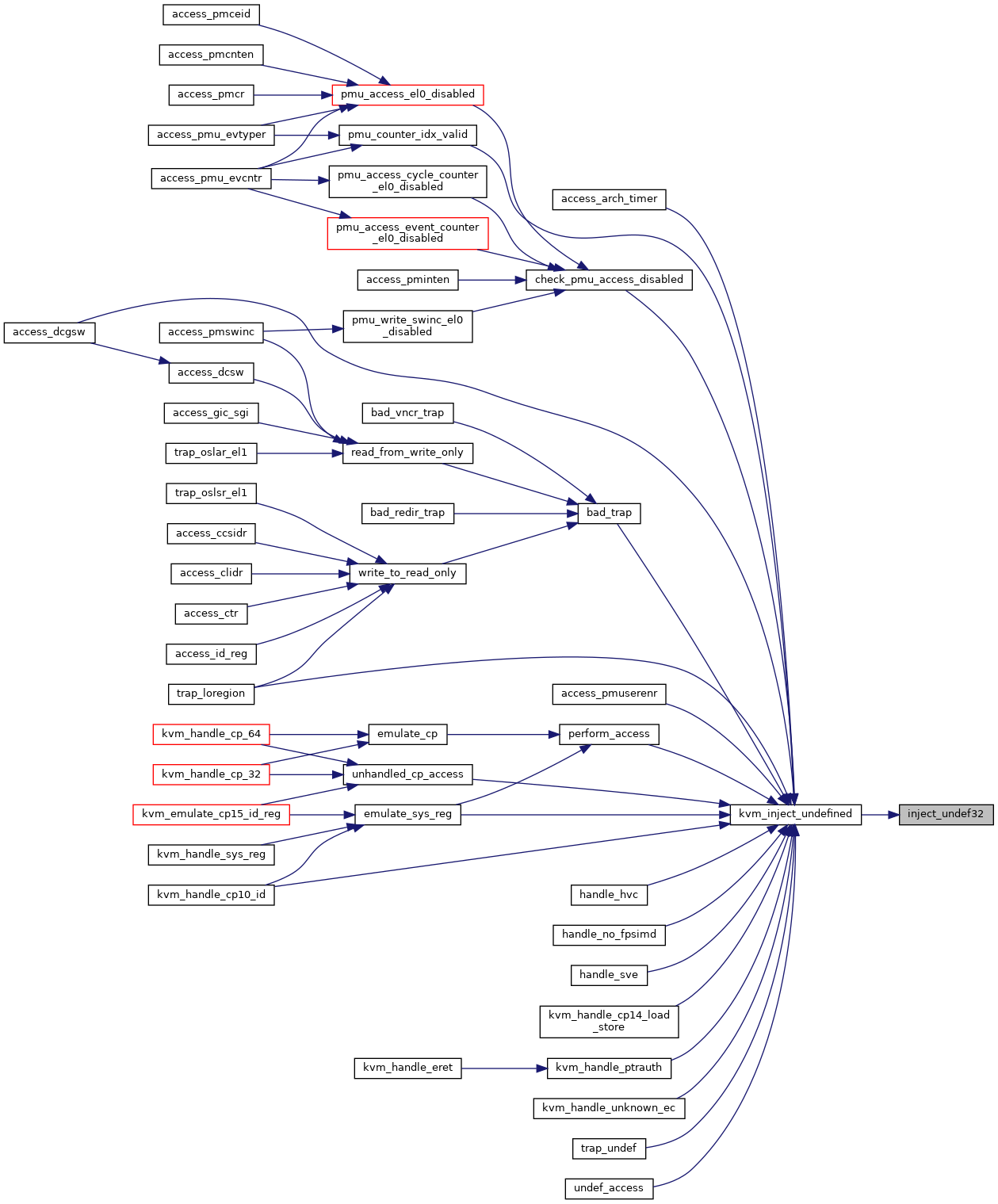

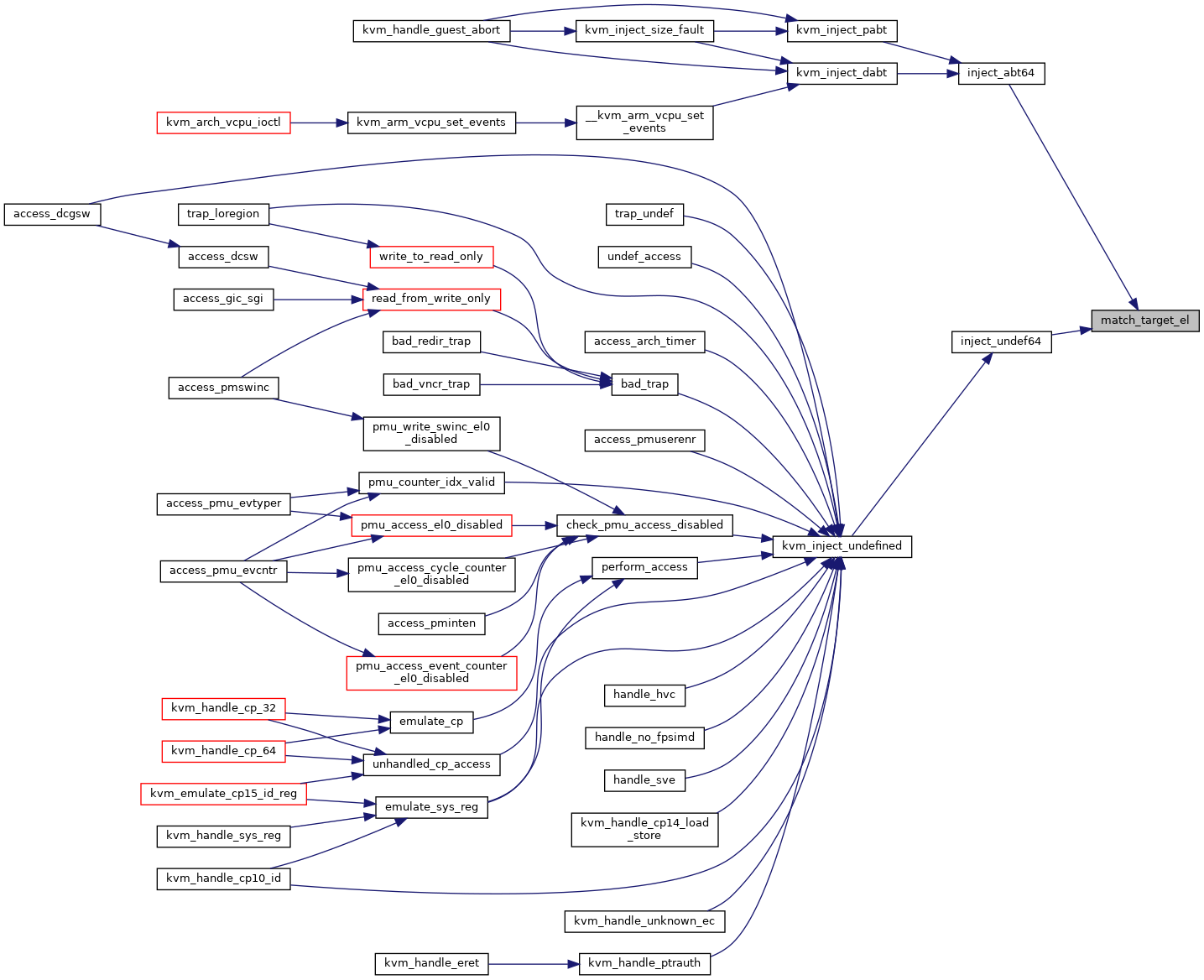

◆ kvm_inject_undefined()

| void kvm_inject_undefined(struct kvm_vcpu *vcpu) |

kvm_inject_undefined - inject an undefined instruction into the guest

@vcpu: The vCPU in which to inject the exception

It is assumed that this code is called from the VCPU thread and that the VCPU therefore is not currently executing guest code.

Definition at line 225 of file inject_fault.c.

227 if (vcpu_el1_is_32bit(vcpu))

static void inject_undef64(struct kvm_vcpu *vcpu)

static void inject_undef32(struct kvm_vcpu *vcpu)

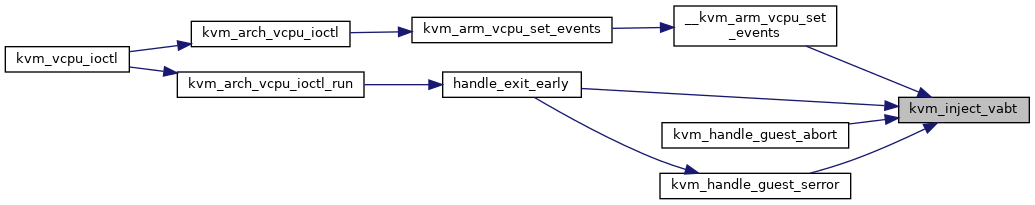

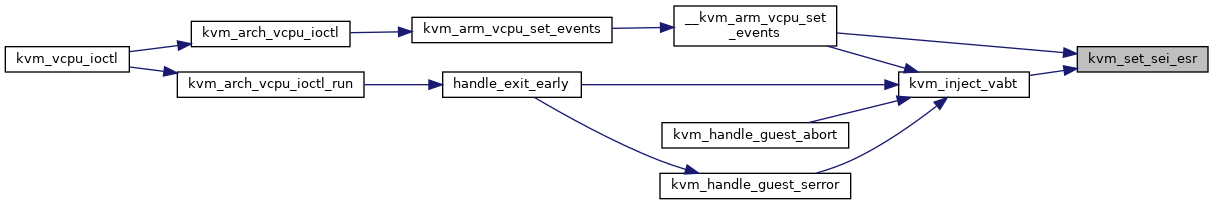

◆ kvm_inject_vabt()

| void kvm_inject_vabt(struct kvm_vcpu *vcpu) |

kvm_inject_vabt - inject an async abort / SError into the guest

@vcpu: The VCPU to receive the exception

It is assumed that this code is called from the VCPU thread and that the VCPU therefore is not currently executing guest code.

Systems with the RAS Extensions specify an imp-def ESR (ISV/IDS = 1) with the remaining ISS all-zeros so that this error is not interpreted as an uncategorized RAS error. Without the RAS Extensions we can't specify an ESR value, so the CPU generates an imp-def value.

Definition at line 251 of file inject_fault.c.

void kvm_set_sei_esr(struct kvm_vcpu *vcpu, u64 esr)

◆ kvm_set_sei_esr()

| void kvm_set_sei_esr(struct kvm_vcpu *vcpu, u64 esr) |

Definition at line 233 of file inject_fault.c.

235 vcpu_set_vsesr(vcpu, esr & ESR_ELx_ISS_MASK);

236 *vcpu_hcr(vcpu) |= HCR_VSE;
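The two statements above record a syndrome for the virtual SError and then mark it pending. The sketch below models that with a toy structure in place of the real vCPU state. `struct toy_vcpu` and `toy_set_sei_esr` are hypothetical, and the constants assume the architectural layout: the ISS field occupies ESR bits 24:0 and VSE is bit 8 of HCR_EL2.

```c
#include <stdint.h>

#define ISS_MASK	UINT64_C(0x1ffffff)	/* ESR bits 24:0 */
#define HCR_VSE_BIT	(UINT64_C(1) << 8)	/* virtual SError pending */

/* Toy stand-in for the two pieces of vCPU state touched here. */
struct toy_vcpu {
	uint64_t vsesr;	/* syndrome the guest will see for the SError */
	uint64_t hcr;	/* hypervisor configuration bits */
};

static void toy_set_sei_esr(struct toy_vcpu *vcpu, uint64_t esr)
{
	/* Only the ISS portion of the syndrome is kept. */
	vcpu->vsesr = esr & ISS_MASK;
	/* Setting VSE leaves every other configuration bit unchanged. */
	vcpu->hcr |= HCR_VSE_BIT;
}
```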

◆ match_target_el()

| static bool match_target_el(struct kvm_vcpu *vcpu, unsigned long target) |

Definition at line 52 of file inject_fault.c.

54 return (vcpu_get_flag(vcpu, EXCEPT_MASK) == target);

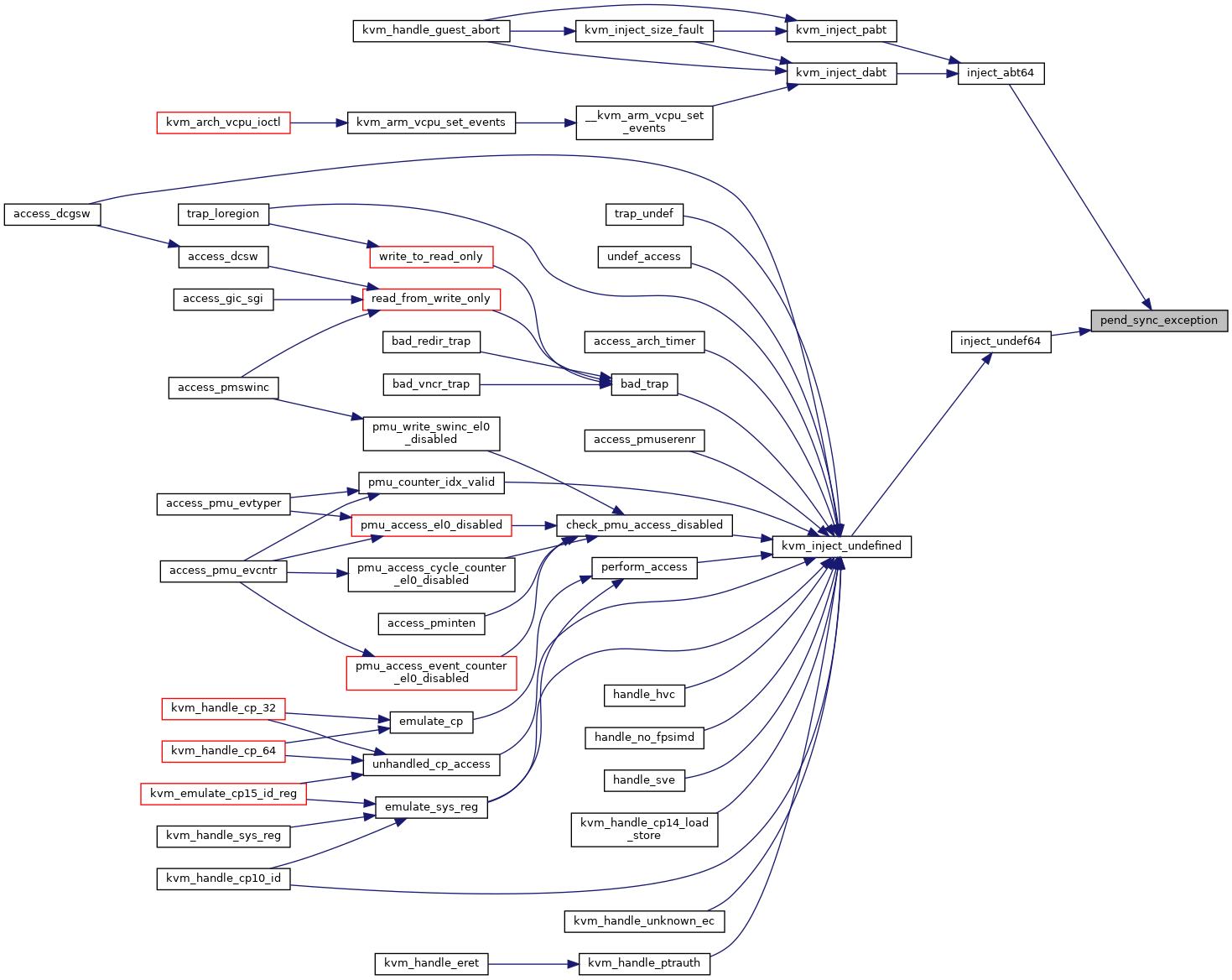

◆ pend_sync_exception()

| static void pend_sync_exception(struct kvm_vcpu *vcpu)  |

Definition at line 18 of file inject_fault.c.

21 if (likely(!vcpu_has_nv(vcpu))) {

22 kvm_pend_exception(vcpu, EXCEPT_AA64_EL1_SYNC);

32 switch(*vcpu_cpsr(vcpu) & PSR_MODE_MASK) {

35 kvm_pend_exception(vcpu, EXCEPT_AA64_EL2_SYNC);

39 kvm_pend_exception(vcpu, EXCEPT_AA64_EL1_SYNC);

42 if (vcpu_el2_tge_is_set(vcpu))

43 kvm_pend_exception(vcpu, EXCEPT_AA64_EL2_SYNC);

45 kvm_pend_exception(vcpu, EXCEPT_AA64_EL1_SYNC);
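The excerpt omits the `case` labels of the switch, so the routing can only be inferred from the order of the `kvm_pend_exception()` calls. The sketch below is one plausible reading, offered as an assumption rather than a transcription: without nested virtualization the exception always targets EL1; otherwise EL2 modes target EL2, EL1 modes target EL1, and EL0 targets EL2 only when TGE is set. `sync_exception_target` and `enum target_el` are hypothetical names, the mode encodings are the architectural PSTATE.M values, and the `default` branch is a placeholder.

```c
#include <stdbool.h>
#include <stdint.h>

/* Architectural AArch64 PSTATE.M encodings. */
#define MODE_EL0t	0x0
#define MODE_EL1t	0x4
#define MODE_EL1h	0x5
#define MODE_EL2t	0x8
#define MODE_EL2h	0x9
#define MODE_MASK	0xf

enum target_el { TARGET_EL1, TARGET_EL2 };

/* Hypothetical helper: choose the EL that receives a synchronous exception. */
static enum target_el sync_exception_target(bool has_nv, uint64_t cpsr, bool tge)
{
	/* Common case: no nested virtualization, everything goes to EL1. */
	if (!has_nv)
		return TARGET_EL1;

	switch (cpsr & MODE_MASK) {
	case MODE_EL2h:
	case MODE_EL2t:
		return TARGET_EL2;
	case MODE_EL1h:
	case MODE_EL1t:
		return TARGET_EL1;
	case MODE_EL0t:
		/* With TGE set, EL0 exceptions are routed to EL2. */
		return tge ? TARGET_EL2 : TARGET_EL1;
	default:
		/* Placeholder: handling of other modes is not shown in the excerpt. */
		return TARGET_EL1;
	}
}
```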