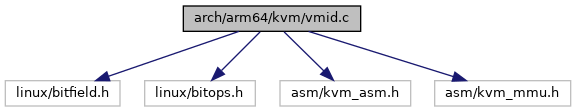

#include <linux/bitfield.h>

#include <linux/bitops.h>

#include <asm/kvm_asm.h>

#include <asm/kvm_mmu.h>


◆ idx2vmid

◆ NUM_USER_VMIDS

◆ vmid2idx

#define vmid2idx(vmid) ((vmid) & ~VMID_MASK)
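
The macro above keeps only the index bits of a VMID value. A minimal user-space sketch of that split follows. The 8-bit width, the `SKETCH_`/`sketch_` names, the mask layout, and the two helper functions are assumptions made for the example and are not taken from vmid.c.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed VMID width for this example only. */
#define SKETCH_VMID_BITS 8u

/* Assumed mask layout: generation bits set, index bits clear. */
#define SKETCH_VMID_MASK (~((UINT64_C(1) << SKETCH_VMID_BITS) - 1))

/* Same shape as the documented macro: keep only the index bits. */
#define sketch_vmid2idx(vmid) ((vmid) & ~SKETCH_VMID_MASK)

/* Hypothetical helper: the generation part of a VMID value. */
static uint64_t sketch_generation_of(uint64_t vmid)
{
    return vmid & SKETCH_VMID_MASK;
}

/* Hypothetical helper: nonzero when two values share a generation. */
static int sketch_same_generation(uint64_t a, uint64_t b)
{
    /* Any difference above the index bits means a different generation. */
    return ((a ^ b) >> SKETCH_VMID_BITS) == 0;
}
```

With these assumptions, the value 0x305 splits into generation 0x300 and index 0x05.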

◆ VMID_ACTIVE_INVALID

◆ VMID_FIRST_VERSION

◆ vmid_gen_match

◆ VMID_MASK

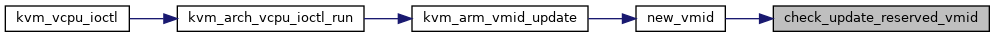

◆ check_update_reserved_vmid()

static bool check_update_reserved_vmid(u64 vmid, u64 newvmid)

Definition at line 72 of file vmid.c.

82 for_each_possible_cpu(cpu) {

83 if (per_cpu(reserved_vmids, cpu) == vmid) {

85 per_cpu(reserved_vmids, cpu) = newvmid;
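
The excerpt above walks every possible CPU and rewrites any reserved slot that matches `vmid`. The following user-space sketch models that loop with a plain array. The CPU count, the `sketch_` names, and the exact return semantics (true when at least one slot matched) are assumptions, since the excerpt omits the surrounding lines.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_NR_CPUS 4 /* assumed CPU count for the example */

/* Stand-in for the per-CPU reserved_vmids variable. */
static uint64_t sketch_reserved_vmids[SKETCH_NR_CPUS];

static bool sketch_check_update_reserved(uint64_t vmid, uint64_t newvmid)
{
    bool hit = false;

    /* Visit every slot: more than one CPU may hold the same value. */
    for (int cpu = 0; cpu < SKETCH_NR_CPUS; cpu++) {
        if (sketch_reserved_vmids[cpu] == vmid) {
            hit = true;
            sketch_reserved_vmids[cpu] = newvmid;
        }
    }
    return hit;
}
```

The loop does not stop at the first match, so every CPU that reserved the old value ends up holding the new one.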

◆ DEFINE_PER_CPU() [1/2]

static DEFINE_PER_CPU(atomic64_t, active_vmids)

◆ DEFINE_PER_CPU() [2/2]

static DEFINE_PER_CPU(u64, reserved_vmids)

◆ DEFINE_RAW_SPINLOCK()

static DEFINE_RAW_SPINLOCK(cpu_vmid_lock)

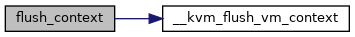

◆ flush_context()

static void flush_context(void)

Definition at line 45 of file vmid.c.

52 for_each_possible_cpu(cpu) {

53 vmid = atomic64_xchg_relaxed(&per_cpu(active_vmids, cpu), 0);

57 vmid = per_cpu(reserved_vmids, cpu);

59 per_cpu(reserved_vmids, cpu) = vmid;

void __kvm_flush_vm_context(void)

static unsigned long * vmid_map
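
The `flush_context()` excerpt shows each CPU's active VMID being exchanged for 0 and a value being written back to `reserved_vmids`. A user-space sketch of that rollover step follows, using C11 atomics and a bool array in place of the kernel bitmap. The sizes, the `sketch_` names, and the `vmid == 0` fallback condition are assumptions (the excerpt skips that line). The hypervisor TLB flush that the real function references is left out.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_NR_CPUS   2                        /* assumed */
#define SKETCH_VMID_BITS 4u                       /* assumed */
#define SKETCH_NUM_VMIDS (1u << SKETCH_VMID_BITS)

static _Atomic uint64_t sketch_active[SKETCH_NR_CPUS]; /* active_vmids stand-in */
static uint64_t sketch_reserved[SKETCH_NR_CPUS];       /* reserved_vmids stand-in */
static bool sketch_map[SKETCH_NUM_VMIDS];              /* one flag per index */

static uint64_t sketch_idx(uint64_t vmid)
{
    return vmid & (SKETCH_NUM_VMIDS - 1);
}

static void sketch_flush_context(void)
{
    /* Start the new generation with an empty allocation map. */
    memset(sketch_map, 0, sizeof(sketch_map));

    for (int cpu = 0; cpu < SKETCH_NR_CPUS; cpu++) {
        /* Take the active VMID and leave 0 behind. */
        uint64_t vmid = atomic_exchange_explicit(&sketch_active[cpu], 0,
                                                 memory_order_relaxed);

        /* Assumed: with nothing active, keep the earlier reservation. */
        if (vmid == 0)
            vmid = sketch_reserved[cpu];

        sketch_map[sketch_idx(vmid)] = true;
        sketch_reserved[cpu] = vmid;
    }
    /* The kernel function also triggers a TLB flush here; omitted. */
}
```

After the call, every VMID that was running or reserved on some CPU keeps its index marked as taken, so the new generation cannot hand it to another VM.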

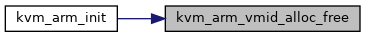

◆ kvm_arm_vmid_alloc_free()

void __init kvm_arm_vmid_alloc_free(void)

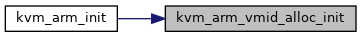

◆ kvm_arm_vmid_alloc_init()

int __init kvm_arm_vmid_alloc_init(void)

Definition at line 180 of file vmid.c.

unsigned int __ro_after_init kvm_arm_vmid_bits

static atomic64_t vmid_generation

#define VMID_FIRST_VERSION

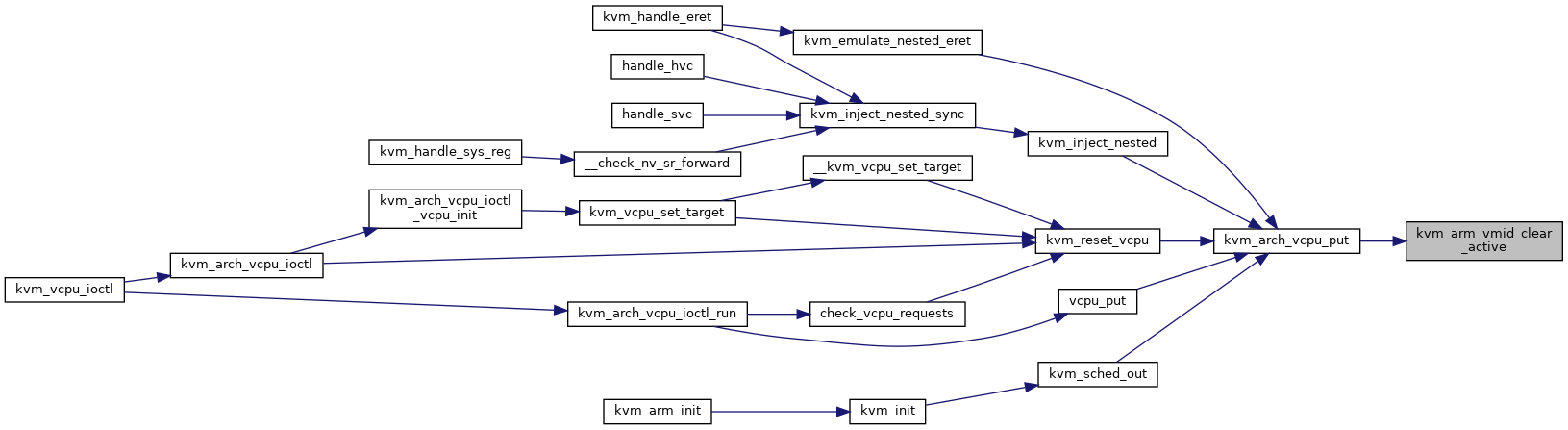

◆ kvm_arm_vmid_clear_active()

void kvm_arm_vmid_clear_active(void)

Definition at line 133 of file vmid.c.

#define VMID_ACTIVE_INVALID

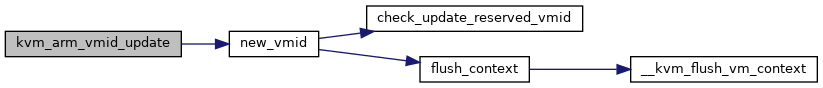

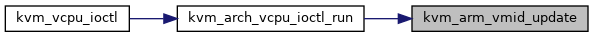

◆ kvm_arm_vmid_update()

bool kvm_arm_vmid_update(struct kvm_vmid *kvm_vmid)

Definition at line 138 of file vmid.c.

141 u64 vmid, old_active_vmid;

142 bool updated = false;

144 vmid = atomic64_read(&kvm_vmid->id);

156 old_active_vmid = atomic64_read(this_cpu_ptr(&active_vmids));

158 0 != atomic64_cmpxchg_relaxed(this_cpu_ptr(&active_vmids),

159 old_active_vmid, vmid))

162 raw_spin_lock_irqsave(&cpu_vmid_lock, flags);

165 vmid = atomic64_read(&kvm_vmid->id);

171 atomic64_set(this_cpu_ptr(&active_vmids), vmid);

172 raw_spin_unlock_irqrestore(&cpu_vmid_lock, flags);

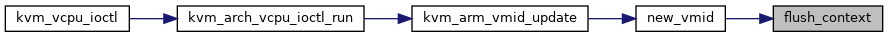

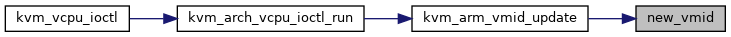

static u64 new_vmid(struct kvm_vmid *kvm_vmid)

#define vmid_gen_match(vmid)
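
The `kvm_arm_vmid_update()` excerpt suggests two paths: a lock-free compare-and-exchange on the per-CPU active VMID, then a locked path that re-reads the VMID and may allocate a new one. The sketch below models this for a single CPU in user space. The `sketch_` names, the 4-bit width, the fast-path guard conditions, and the placeholder allocator are all assumptions. The spinlock is left out because the model is single-threaded.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_VMID_BITS 4u /* assumed */
#define SKETCH_IDX_MASK  ((UINT64_C(1) << SKETCH_VMID_BITS) - 1)

static _Atomic uint64_t sketch_generation = UINT64_C(1) << SKETCH_VMID_BITS;
static _Atomic uint64_t sketch_active_vmid; /* models one CPU only */

static bool sketch_gen_match(uint64_t vmid)
{
    return ((vmid ^ atomic_load(&sketch_generation)) >> SKETCH_VMID_BITS) == 0;
}

/* Placeholder allocator: reuse the old index (or 1) in the current
 * generation. This is not the allocation policy of new_vmid(). */
static uint64_t sketch_new_vmid(_Atomic uint64_t *id)
{
    uint64_t idx = atomic_load(id) & SKETCH_IDX_MASK;
    uint64_t vmid;

    if (idx == 0)
        idx = 1;
    vmid = atomic_load(&sketch_generation) | idx;
    atomic_store(id, vmid);
    return vmid;
}

static bool sketch_vmid_update(_Atomic uint64_t *id)
{
    bool updated = false;
    uint64_t vmid = atomic_load(id);
    uint64_t old_active = atomic_load(&sketch_active_vmid);

    /* Fast path (guard conditions assumed): publish the VMID without a lock. */
    if (old_active != 0 && sketch_gen_match(vmid)) {
        uint64_t seen = old_active;

        atomic_compare_exchange_strong_explicit(&sketch_active_vmid, &seen,
                                                vmid, memory_order_relaxed,
                                                memory_order_relaxed);
        /* seen == 0 means a rollover cleared the slot in the meantime. */
        if (seen != 0)
            return false;
    }

    /* Slow path: the kernel takes cpu_vmid_lock around this part. */
    vmid = atomic_load(id);
    if (!sketch_gen_match(vmid)) {
        vmid = sketch_new_vmid(id);
        updated = true;
    }
    atomic_store(&sketch_active_vmid, vmid);
    return updated;
}
```

In this model the function returns true only when the caller's VMID changed, which happens on first use and after a generation change.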

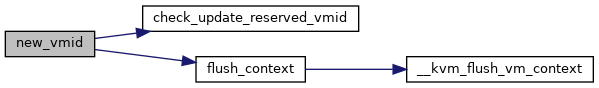

◆ new_vmid()

static u64 new_vmid(struct kvm_vmid *kvm_vmid)

Definition at line 92 of file vmid.c.

94 static u32 cur_idx = 1;

95 u64 vmid = atomic64_read(&kvm_vmid->id);

99 u64 newvmid = generation | (vmid & ~VMID_MASK);

102 atomic64_set(&kvm_vmid->id, newvmid);

107 atomic64_set(&kvm_vmid->id, newvmid);

128 atomic64_set(&kvm_vmid->id, vmid);

static bool check_update_reserved_vmid(u64 vmid, u64 newvmid)

static void flush_context(void)
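
The `new_vmid()` excerpt combines a generation with an index and stores the result in `kvm_vmid->id`. The sketch below shows one way a generation-plus-bitmap allocator of this kind can work in user space. It is a simplified model: the 2-bit width, the `sketch_` names, the linear search, and the rollover step that simply clears the map are assumptions. Reserved-VMID handling through `check_update_reserved_vmid()` and `flush_context()` is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_VMID_BITS 2u                       /* tiny on purpose */
#define SKETCH_NUM_VMIDS (1u << SKETCH_VMID_BITS) /* indices 0..3 */

static uint64_t sketch_generation = SKETCH_NUM_VMIDS; /* first generation */
static bool sketch_map[SKETCH_NUM_VMIDS];             /* allocation flags */
static uint32_t sketch_cur_idx = 1;                   /* index 0 is never handed out */

/* Linear scan standing in for a bitmap search. */
static uint32_t sketch_next_free(uint32_t start)
{
    for (uint32_t i = start; i < SKETCH_NUM_VMIDS; i++)
        if (!sketch_map[i])
            return i;
    return SKETCH_NUM_VMIDS; /* nothing free */
}

static uint64_t sketch_new_vmid(uint64_t *id)
{
    uint64_t old = *id;
    uint32_t idx;

    /* Prefer the old index when it is still free in this generation. */
    if (old != 0) {
        idx = (uint32_t)(old & (SKETCH_NUM_VMIDS - 1));
        if (!sketch_map[idx]) {
            sketch_map[idx] = true;
            *id = sketch_generation | idx;
            return *id;
        }
    }

    idx = sketch_next_free(sketch_cur_idx);
    if (idx == SKETCH_NUM_VMIDS) {
        /* Out of indices: bump the generation and start with an empty map. */
        sketch_generation += SKETCH_NUM_VMIDS;
        memset(sketch_map, 0, sizeof(sketch_map));
        idx = sketch_next_free(1);
    }

    sketch_map[idx] = true;
    sketch_cur_idx = idx;
    *id = sketch_generation | idx;
    return *id;
}
```

With three usable indices, the fourth allocation in this model forces a generation change, and older VMIDs are then refreshed into the new generation on their next request.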

◆ kvm_arm_vmid_bits

◆ vmid_generation

static atomic64_t vmid_generation

◆ vmid_map