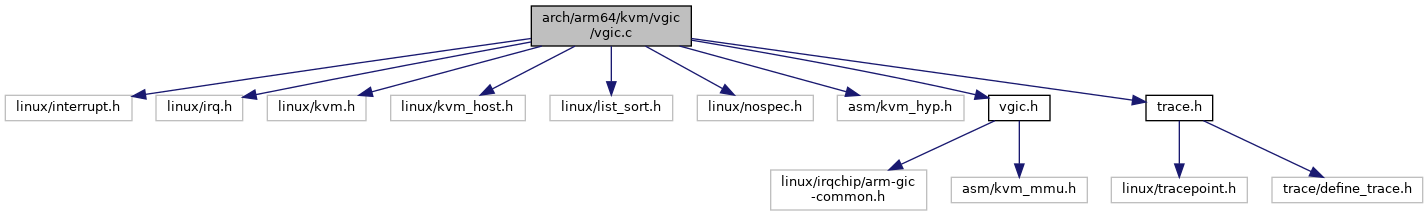

#include <linux/interrupt.h>
#include <linux/irq.h>
#include <linux/kvm.h>
#include <linux/kvm_host.h>
#include <linux/list_sort.h>
#include <linux/nospec.h>
#include <asm/kvm_hyp.h>
#include "vgic.h"
#include "trace.h"


| Macros | |
| --- | --- |
| #define | CREATE_TRACE_POINTS |

Functions | |

| static struct vgic_irq * | vgic_get_lpi (struct kvm *kvm, u32 intid) |

| struct vgic_irq * | vgic_get_irq (struct kvm *kvm, struct kvm_vcpu *vcpu, u32 intid) |

| static void | vgic_irq_release (struct kref *ref) |

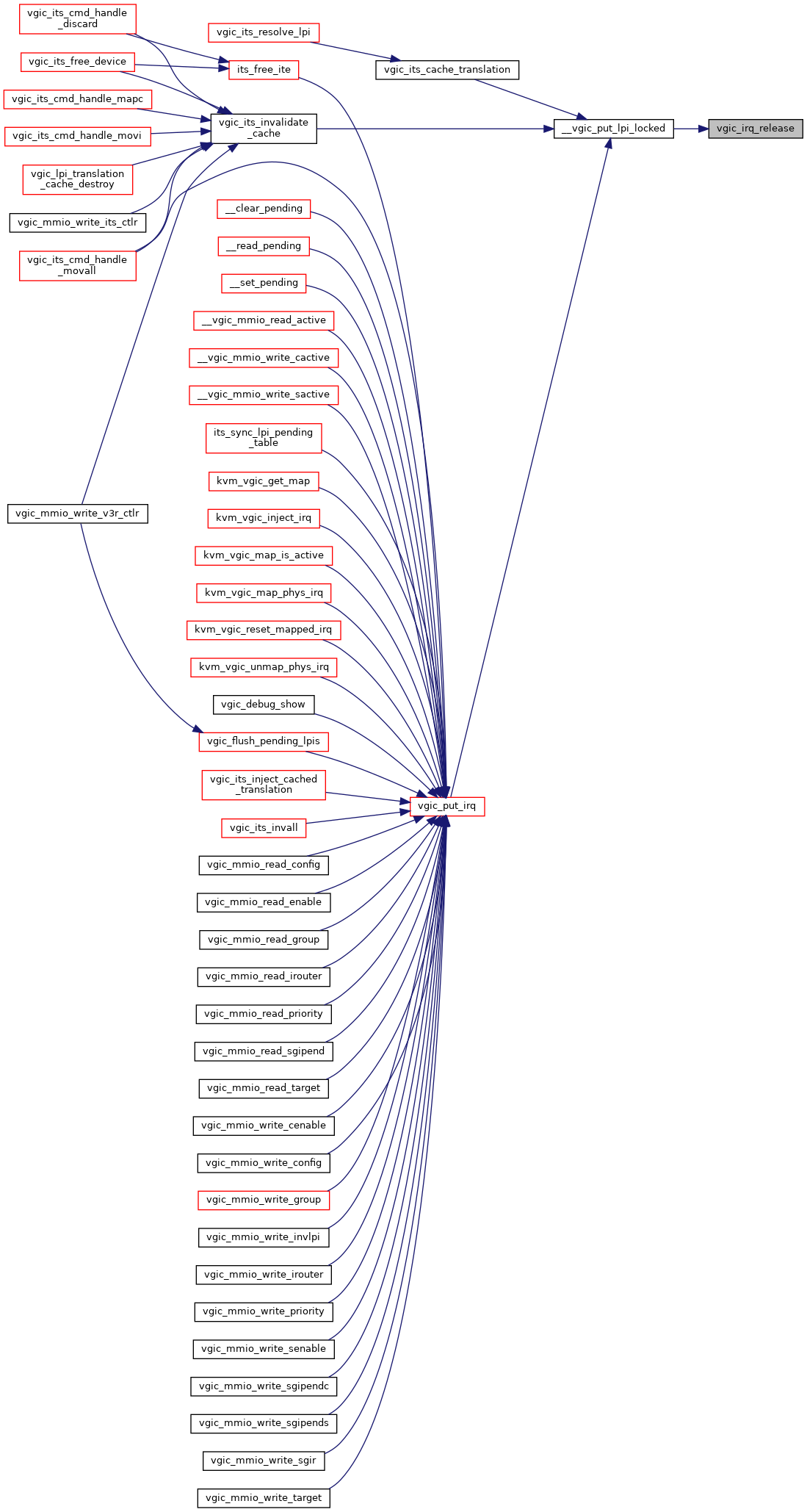

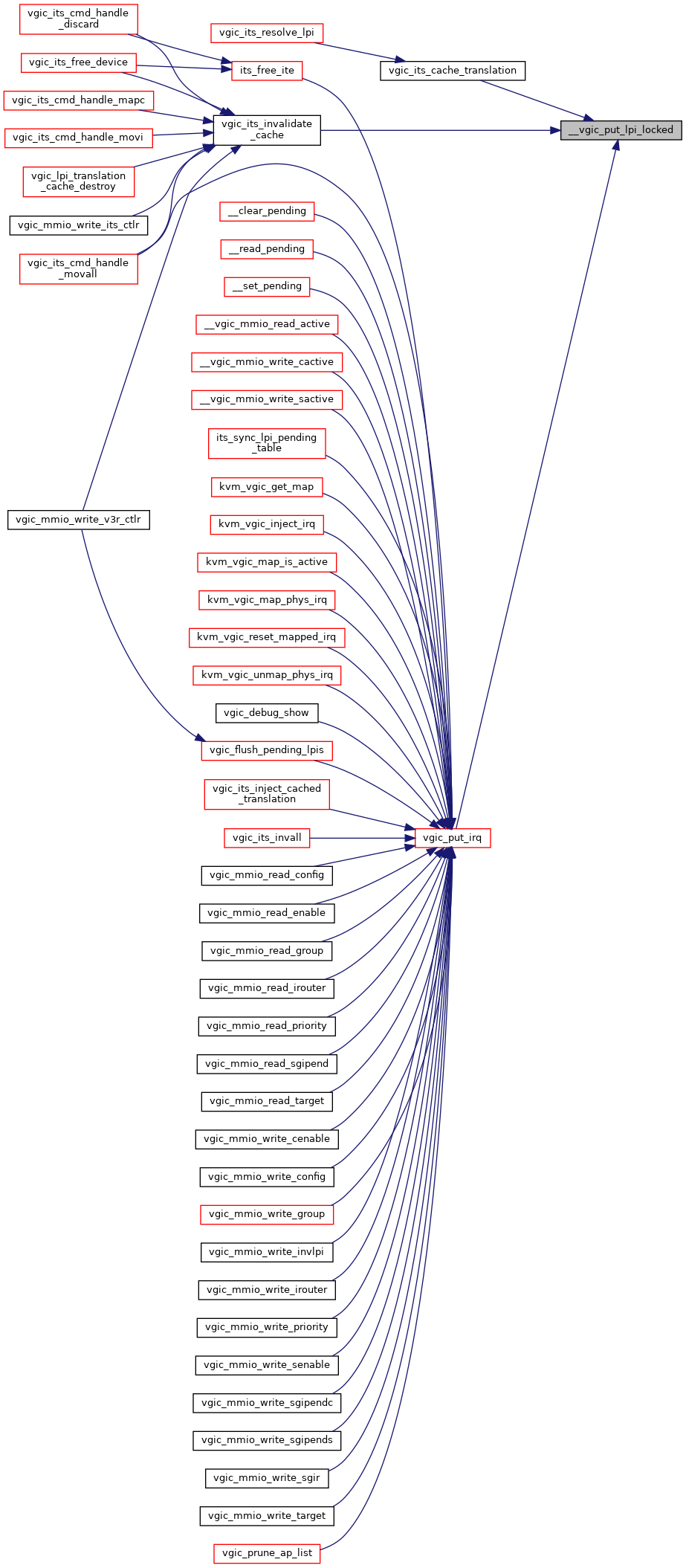

| void | __vgic_put_lpi_locked (struct kvm *kvm, struct vgic_irq *irq) |

| void | vgic_put_irq (struct kvm *kvm, struct vgic_irq *irq) |

| void | vgic_flush_pending_lpis (struct kvm_vcpu *vcpu) |

| void | vgic_irq_set_phys_pending (struct vgic_irq *irq, bool pending) |

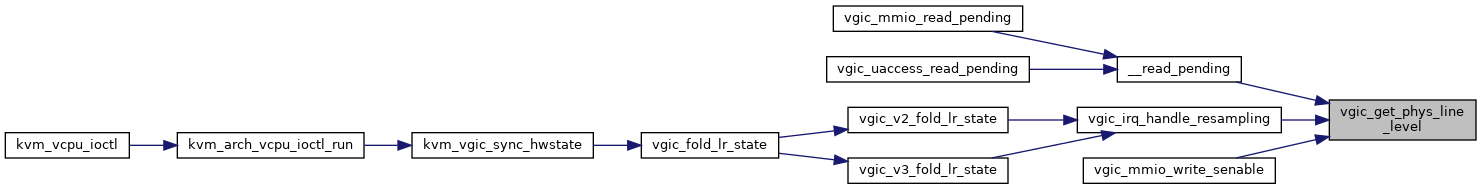

| bool | vgic_get_phys_line_level (struct vgic_irq *irq) |

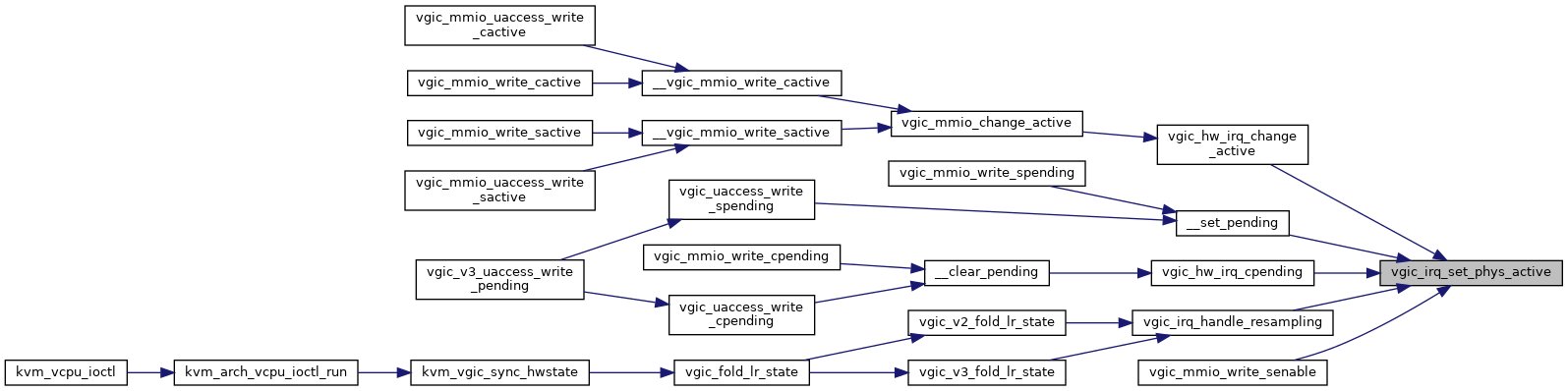

| void | vgic_irq_set_phys_active (struct vgic_irq *irq, bool active) |

| static struct kvm_vcpu * | vgic_target_oracle (struct vgic_irq *irq) |

| static int | vgic_irq_cmp (void *priv, const struct list_head *a, const struct list_head *b) |

| static void | vgic_sort_ap_list (struct kvm_vcpu *vcpu) |

| static bool | vgic_validate_injection (struct vgic_irq *irq, bool level, void *owner) |

| bool | vgic_queue_irq_unlock (struct kvm *kvm, struct vgic_irq *irq, unsigned long flags) |

| int | kvm_vgic_inject_irq (struct kvm *kvm, struct kvm_vcpu *vcpu, unsigned int intid, bool level, void *owner) |

| static int | kvm_vgic_map_irq (struct kvm_vcpu *vcpu, struct vgic_irq *irq, unsigned int host_irq, struct irq_ops *ops) |

| static void | kvm_vgic_unmap_irq (struct vgic_irq *irq) |

| int | kvm_vgic_map_phys_irq (struct kvm_vcpu *vcpu, unsigned int host_irq, u32 vintid, struct irq_ops *ops) |

| void | kvm_vgic_reset_mapped_irq (struct kvm_vcpu *vcpu, u32 vintid) |

| int | kvm_vgic_unmap_phys_irq (struct kvm_vcpu *vcpu, unsigned int vintid) |

| int | kvm_vgic_get_map (struct kvm_vcpu *vcpu, unsigned int vintid) |

| int | kvm_vgic_set_owner (struct kvm_vcpu *vcpu, unsigned int intid, void *owner) |

| static void | vgic_prune_ap_list (struct kvm_vcpu *vcpu) |

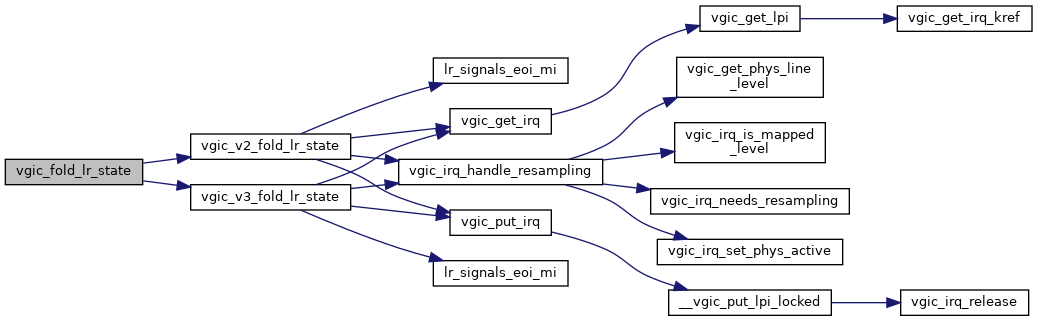

| static void | vgic_fold_lr_state (struct kvm_vcpu *vcpu) |

| static void | vgic_populate_lr (struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr) |

| static void | vgic_clear_lr (struct kvm_vcpu *vcpu, int lr) |

| static void | vgic_set_underflow (struct kvm_vcpu *vcpu) |

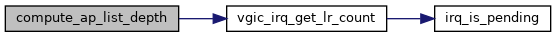

| static int | compute_ap_list_depth (struct kvm_vcpu *vcpu, bool *multi_sgi) |

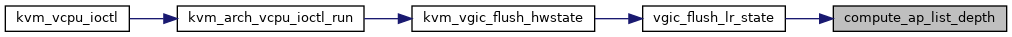

| static void | vgic_flush_lr_state (struct kvm_vcpu *vcpu) |

| static bool | can_access_vgic_from_kernel (void) |

| static void | vgic_save_state (struct kvm_vcpu *vcpu) |

| void | kvm_vgic_sync_hwstate (struct kvm_vcpu *vcpu) |

| static void | vgic_restore_state (struct kvm_vcpu *vcpu) |

| void | kvm_vgic_flush_hwstate (struct kvm_vcpu *vcpu) |

| void | kvm_vgic_load (struct kvm_vcpu *vcpu) |

| void | kvm_vgic_put (struct kvm_vcpu *vcpu) |

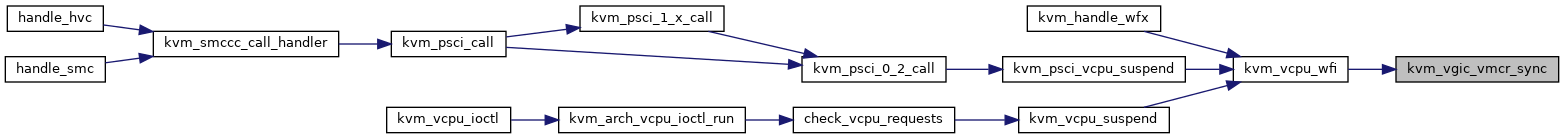

| void | kvm_vgic_vmcr_sync (struct kvm_vcpu *vcpu) |

| int | kvm_vgic_vcpu_pending_irq (struct kvm_vcpu *vcpu) |

| void | vgic_kick_vcpus (struct kvm *kvm) |

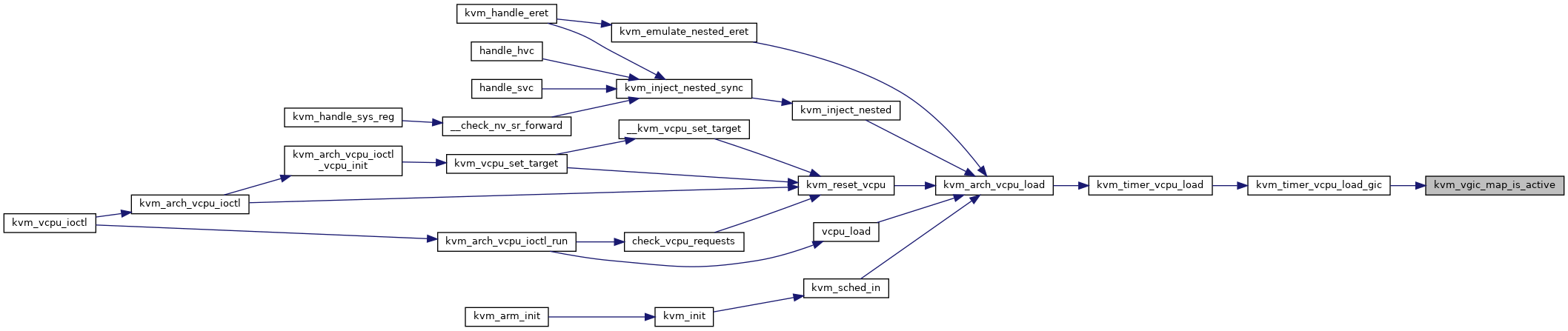

| bool | kvm_vgic_map_is_active (struct kvm_vcpu *vcpu, unsigned int vintid) |

| void | vgic_irq_handle_resampling (struct vgic_irq *irq, bool lr_deactivated, bool lr_pending) |

| Variables | |
| --- | --- |
| struct vgic_global kvm_vgic_global_state | __ro_after_init |

Macro Definition Documentation

◆ CREATE_TRACE_POINTS

Function Documentation

◆ __vgic_put_lpi_locked()

void __vgic_put_lpi_locked(struct kvm *kvm, struct vgic_irq *irq)

Definition at line 126 of file vgic.c.

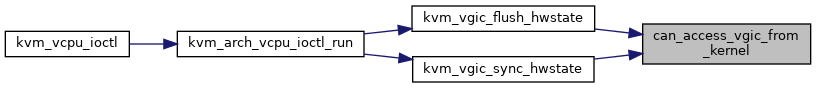

◆ can_access_vgic_from_kernel()

static inline bool can_access_vgic_from_kernel(void)

◆ compute_ap_list_depth()

static int compute_ap_list_depth(struct kvm_vcpu *vcpu, bool *multi_sgi)

Definition at line 771 of file vgic.c.

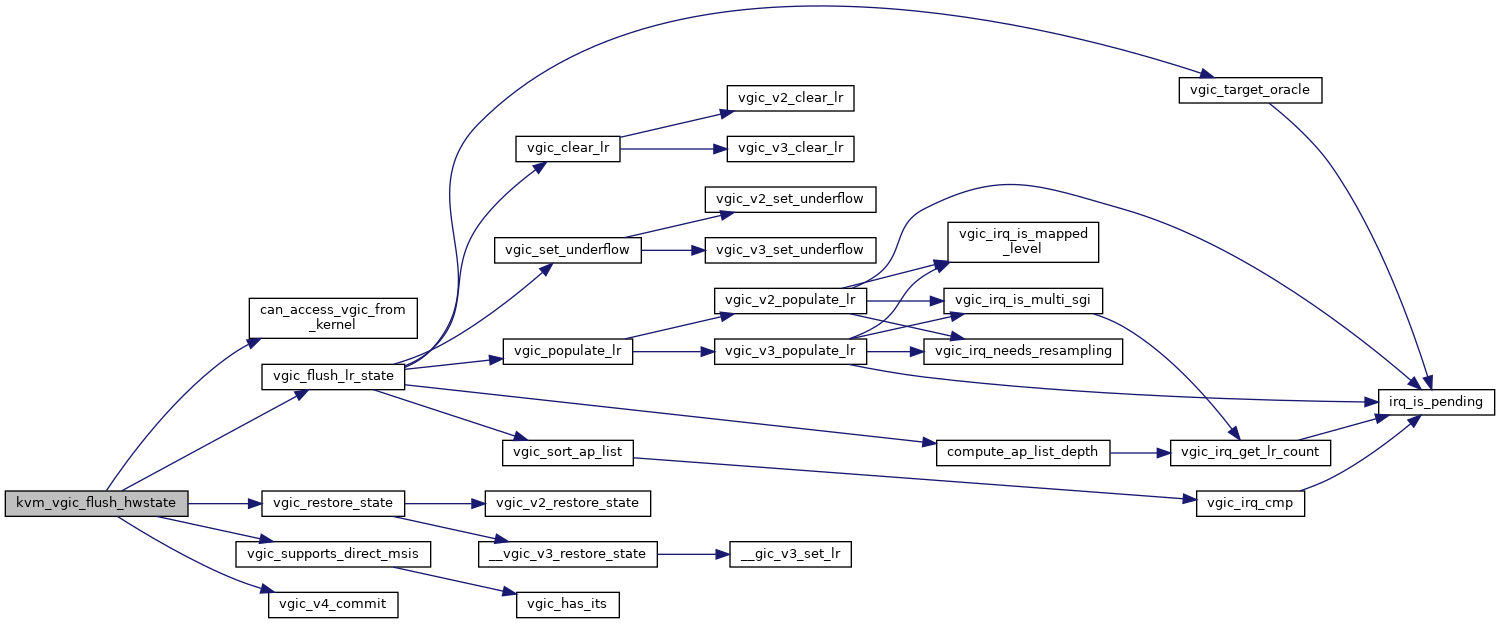

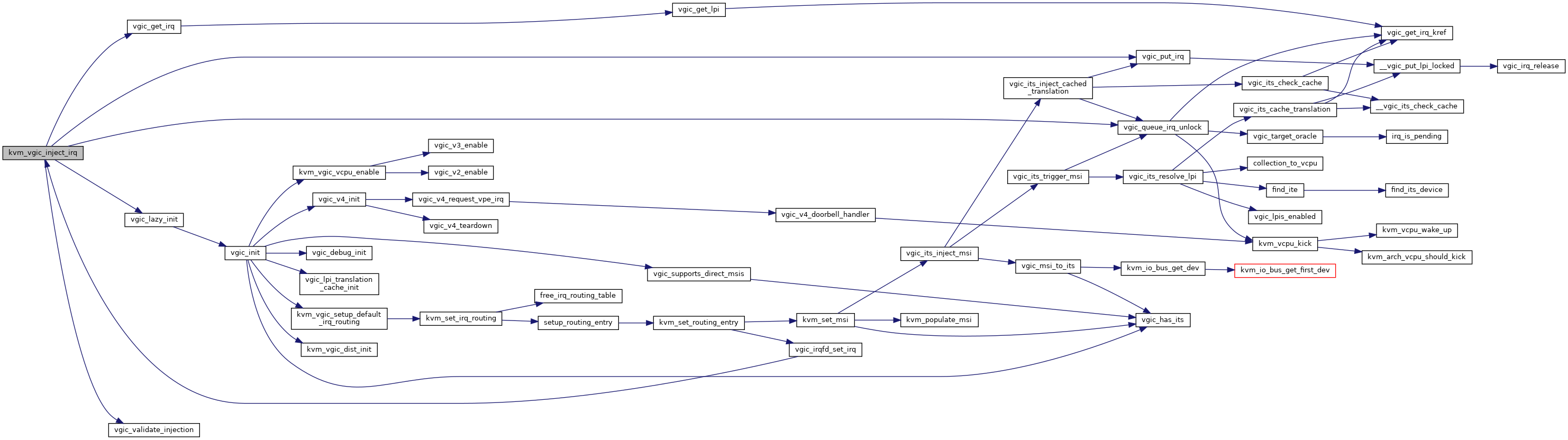

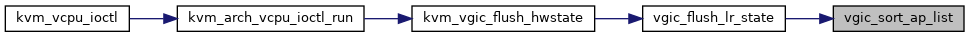

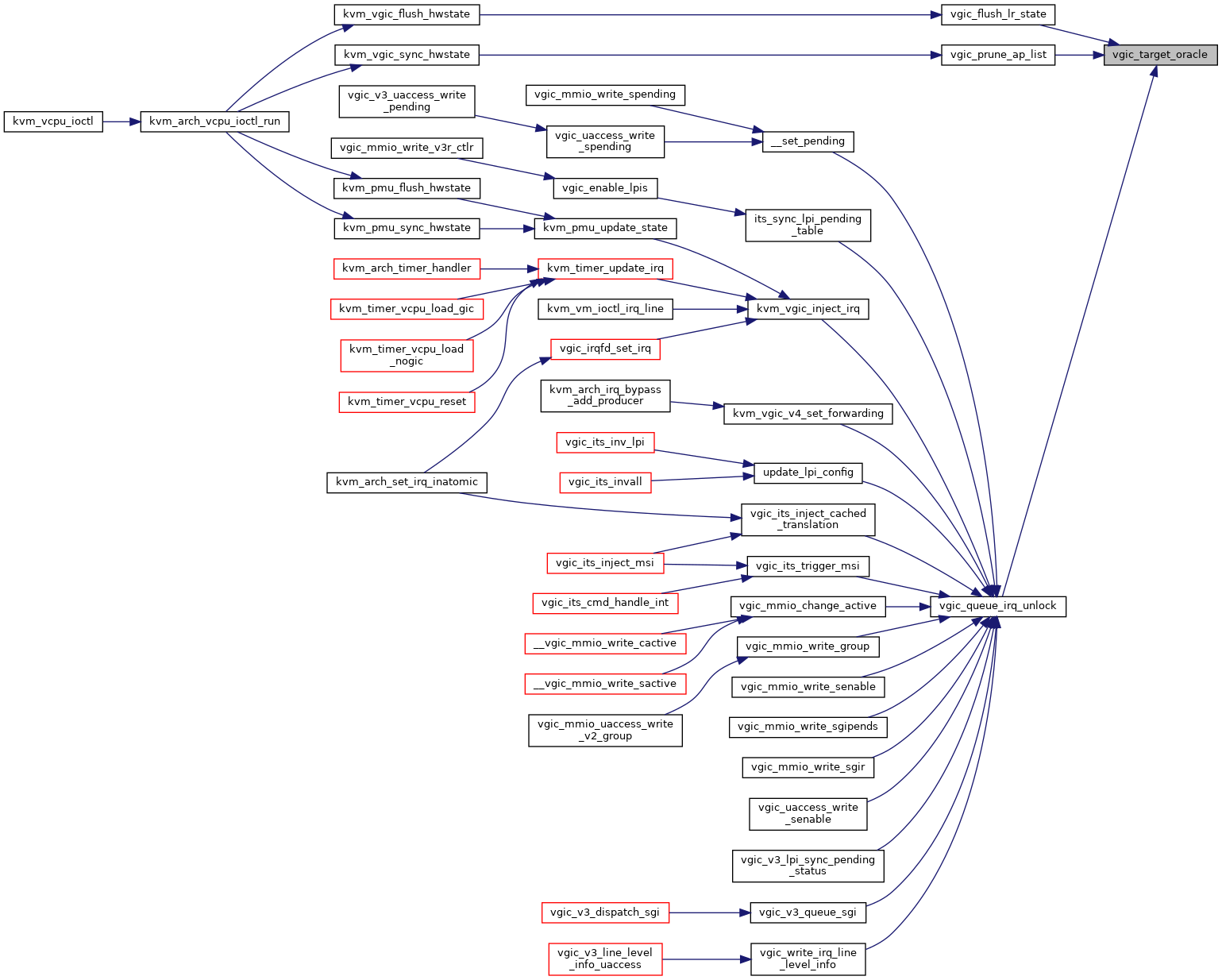

◆ kvm_vgic_flush_hwstate()

void kvm_vgic_flush_hwstate(struct kvm_vcpu *vcpu)

Definition at line 905 of file vgic.c.

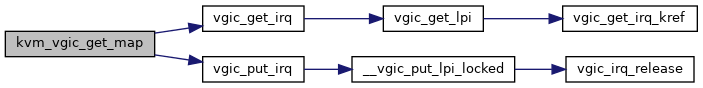

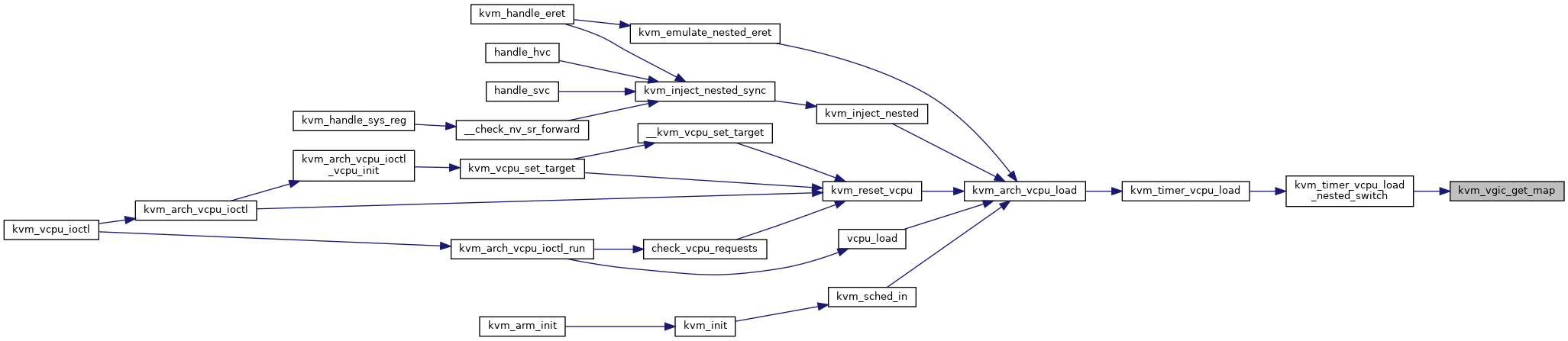

◆ kvm_vgic_get_map()

int kvm_vgic_get_map(struct kvm_vcpu *vcpu, unsigned int vintid)

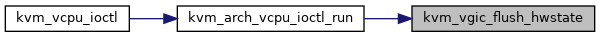

◆ kvm_vgic_inject_irq()

int kvm_vgic_inject_irq(struct kvm *kvm, struct kvm_vcpu *vcpu, unsigned int intid, bool level, void *owner)

kvm_vgic_inject_irq - Inject an IRQ from a device to the vgic

@kvm: The VM structure pointer

@vcpu: The CPU for PPIs or NULL for global interrupts

@intid: The INTID to inject a new state to.

@level: For edge-triggered interrupts, true triggers the interrupt and false is ignored. For level-sensitive interrupts, true raises the input signal and false lowers it.

@owner: The opaque pointer to the owner of the IRQ being raised, used to verify that the caller is allowed to inject this IRQ. Userspace injections have owner == NULL.

The VGIC is not concerned with whether devices are active-LOW or active-HIGH for level-sensitive interrupts. Think of @level as 1 meaning HIGH and 0 meaning LOW, with all devices treated as active-HIGH.

Definition at line 439 of file vgic.c.
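The @level and @owner rules above can be modeled in a few lines. This is a hedged, simplified sketch, not the code in vgic.c: `model_irq`, `model_validate_injection` and `model_inject` are hypothetical names, locking and reference counting are omitted, and the assumption that a level-sensitive injection which does not change the line level is dropped is this sketch's own. It loosely plays the role that the static helper vgic_validate_injection has in this file.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, reduced model of an emulated interrupt; the real
 * struct vgic_irq carries many more fields and its own lock. */
enum model_irq_config { MODEL_CONFIG_EDGE, MODEL_CONFIG_LEVEL };

struct model_irq {
	enum model_irq_config config;
	bool line_level;    /* last level seen on a level-sensitive input */
	bool pending_latch; /* latched pending state for edge-triggered IRQs */
	void *owner;        /* NULL for userspace-injected interrupts */
};

/* Decide whether an injection with this (level, owner) changes anything. */
static bool model_validate_injection(const struct model_irq *irq, bool level,
				     void *owner)
{
	if (irq->owner != owner)
		return false;                    /* caller does not own this IRQ */

	switch (irq->config) {
	case MODEL_CONFIG_LEVEL:
		return irq->line_level != level; /* react only to level changes */
	case MODEL_CONFIG_EDGE:
		return level;                    /* true triggers, false is ignored */
	}
	return false;
}

/* Apply an injection; returns true if the interrupt state was updated. */
static bool model_inject(struct model_irq *irq, bool level, void *owner)
{
	assert(irq != NULL);
	if (!model_validate_injection(irq, level, owner))
		return false;

	if (irq->config == MODEL_CONFIG_LEVEL)
		irq->line_level = level;   /* the line follows the input signal */
	else
		irq->pending_latch = true; /* an edge latches the pending state */
	return true;
}
```

Under these assumptions, an edge-triggered injection with `level == false` leaves the interrupt untouched, while a level-sensitive one only records transitions of the line.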

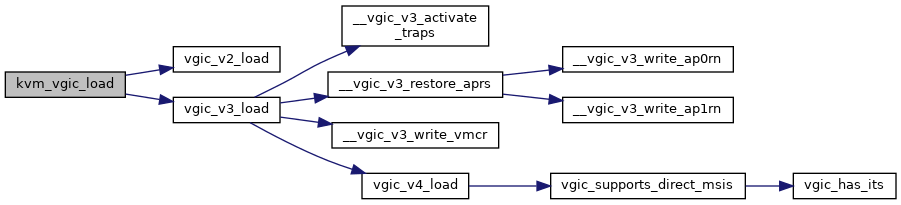

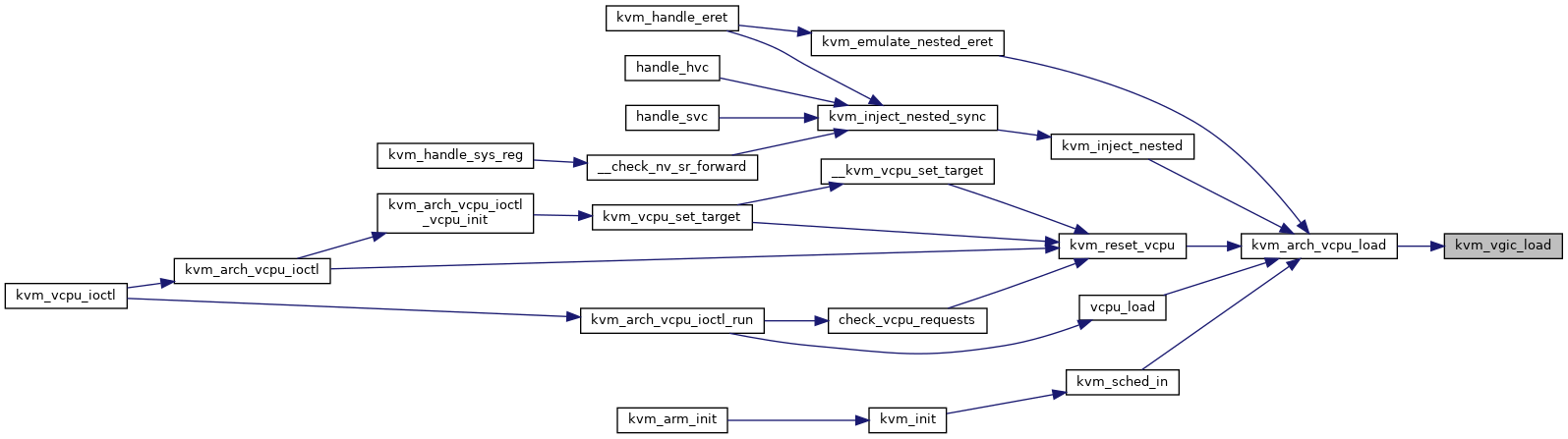

◆ kvm_vgic_load()

void kvm_vgic_load(struct kvm_vcpu *vcpu)

◆ kvm_vgic_map_irq()

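static int kvm_vgic_map_irq(struct kvm_vcpu *vcpu, struct vgic_irq *irq, unsigned int host_irq, struct irq_ops *ops)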

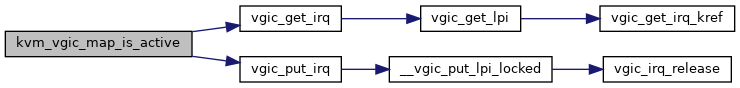

◆ kvm_vgic_map_is_active()

bool kvm_vgic_map_is_active(struct kvm_vcpu *vcpu, unsigned int vintid)

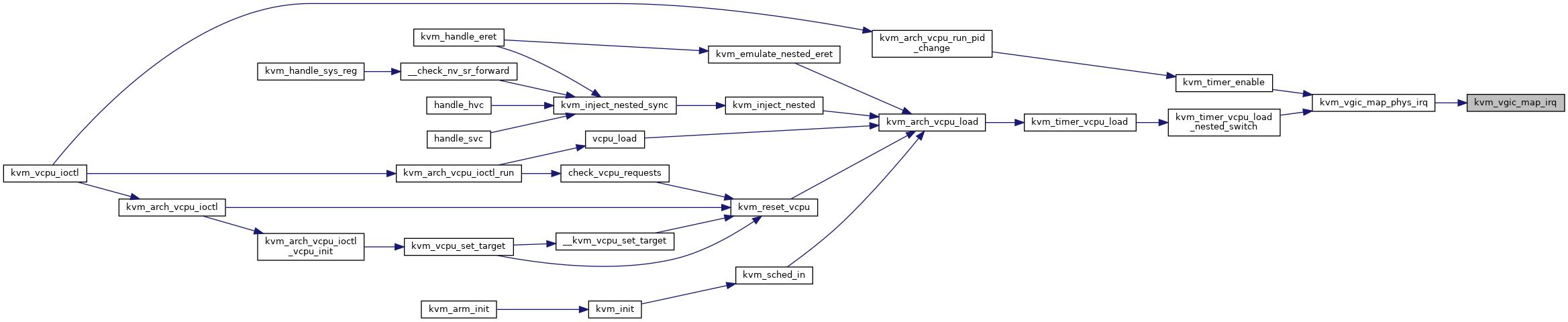

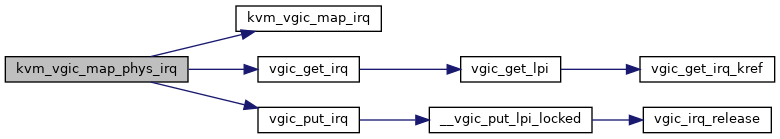

◆ kvm_vgic_map_phys_irq()

int kvm_vgic_map_phys_irq(struct kvm_vcpu *vcpu, unsigned int host_irq, u32 vintid, struct irq_ops *ops)

Definition at line 514 of file vgic.c.

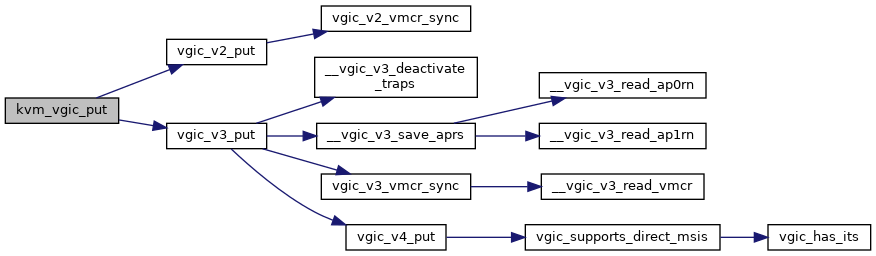

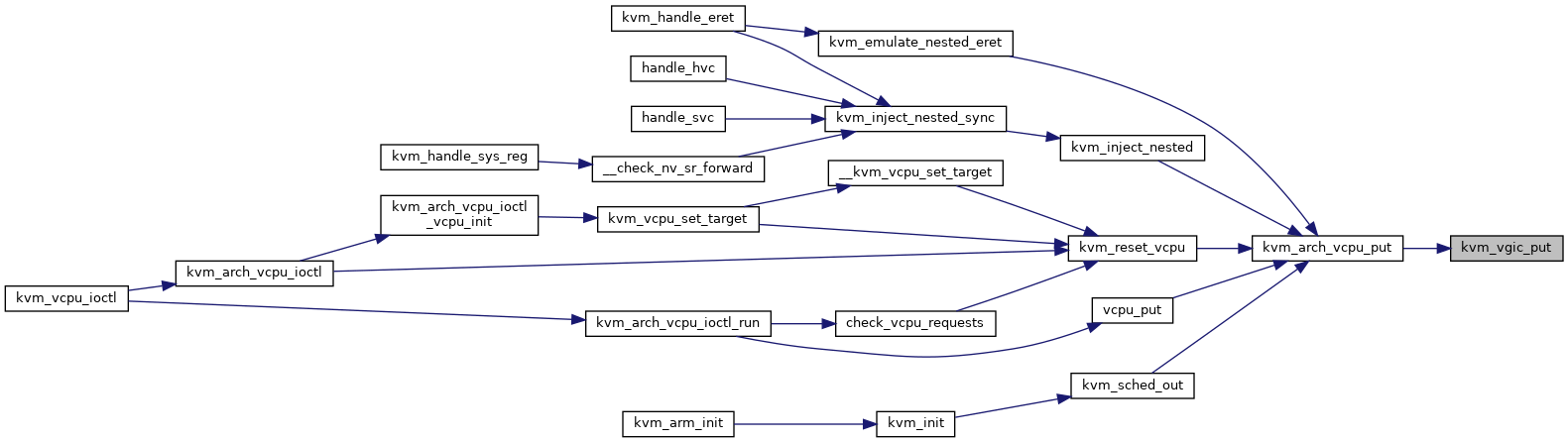

◆ kvm_vgic_put()

void kvm_vgic_put(struct kvm_vcpu *vcpu)

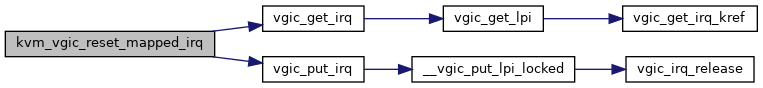

◆ kvm_vgic_reset_mapped_irq()

void kvm_vgic_reset_mapped_irq(struct kvm_vcpu *vcpu, u32 vintid)

kvm_vgic_reset_mapped_irq - Reset a mapped IRQ

@vcpu: The VCPU pointer

@vintid: The INTID of the interrupt

Reset the active and pending states of a mapped interrupt. Kernel subsystems that inject mapped interrupts should reset their interrupt lines when the VM is reset.

Definition at line 540 of file vgic.c.

◆ kvm_vgic_set_owner()

int kvm_vgic_set_owner(struct kvm_vcpu *vcpu, unsigned int intid, void *owner)

kvm_vgic_set_owner - Set the owner of an interrupt for a VM

@vcpu: Pointer to the VCPU (used for PPIs)

@intid: The virtual INTID identifying the interrupt (PPI or SPI)

@owner: Opaque pointer to the owner

Returns 0 if @intid is not already used by another in-kernel device and the owner is set; otherwise returns an error code.

Definition at line 601 of file vgic.c.
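The ownership rule above behaves like a first-claimer-wins check. This is a hedged sketch under stated assumptions, not the vgic.c code: `model_owned_irq` and `model_set_owner` are hypothetical, re-claiming by the same owner is treated as success, and returning `-EEXIST` for a conflicting owner is an assumption (the description only says "an error code"). The real function additionally validates @intid and locks the interrupt.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical stand-in for the owner field of an interrupt. */
struct model_owned_irq {
	void *owner; /* NULL means no in-kernel device has claimed it */
};

/* Claim @irq for @owner. Returns 0 on success (including when @owner
 * already holds it) and -EEXIST if a different owner holds it. */
static int model_set_owner(struct model_owned_irq *irq, void *owner)
{
	assert(irq != NULL);
	if (irq->owner && irq->owner != owner)
		return -EEXIST; /* already used by another in-kernel device */
	irq->owner = owner;
	return 0;
}
```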

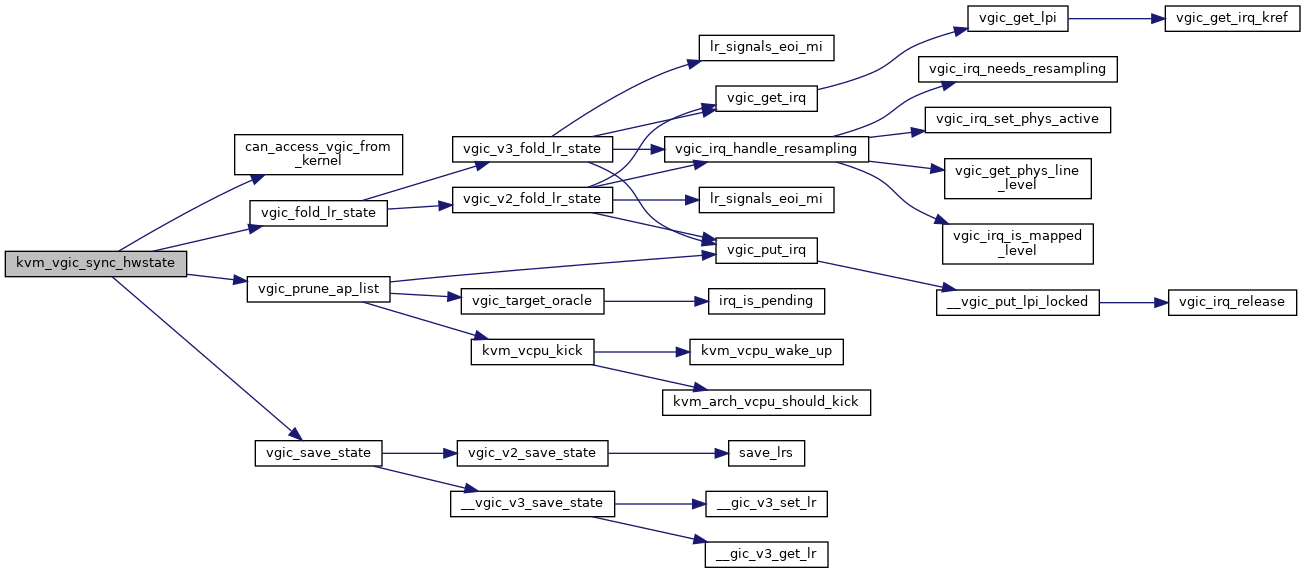

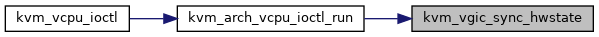

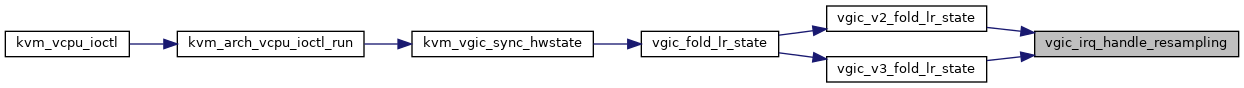

◆ kvm_vgic_sync_hwstate()

void kvm_vgic_sync_hwstate(struct kvm_vcpu *vcpu)

◆ kvm_vgic_unmap_irq()

static inline void kvm_vgic_unmap_irq(struct vgic_irq *irq)

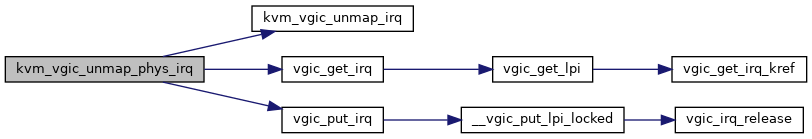

◆ kvm_vgic_unmap_phys_irq()

int kvm_vgic_unmap_phys_irq(struct kvm_vcpu *vcpu, unsigned int vintid)

◆ kvm_vgic_vcpu_pending_irq()

int kvm_vgic_vcpu_pending_irq(struct kvm_vcpu *vcpu)

Definition at line 971 of file vgic.c.

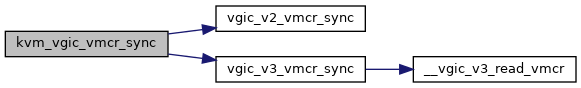

◆ kvm_vgic_vmcr_sync()

void kvm_vgic_vmcr_sync(struct kvm_vcpu *vcpu)

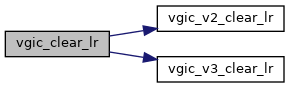

◆ vgic_clear_lr()

static inline void vgic_clear_lr(struct kvm_vcpu *vcpu, int lr)

◆ vgic_flush_lr_state()

static void vgic_flush_lr_state(struct kvm_vcpu *vcpu)

Definition at line 797 of file vgic.c.

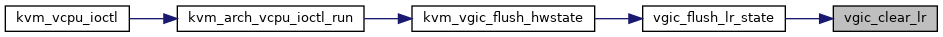

◆ vgic_flush_pending_lpis()

void vgic_flush_pending_lpis(struct kvm_vcpu *vcpu)

◆ vgic_fold_lr_state()

static inline void vgic_fold_lr_state(struct kvm_vcpu *vcpu)

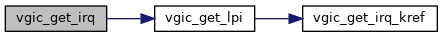

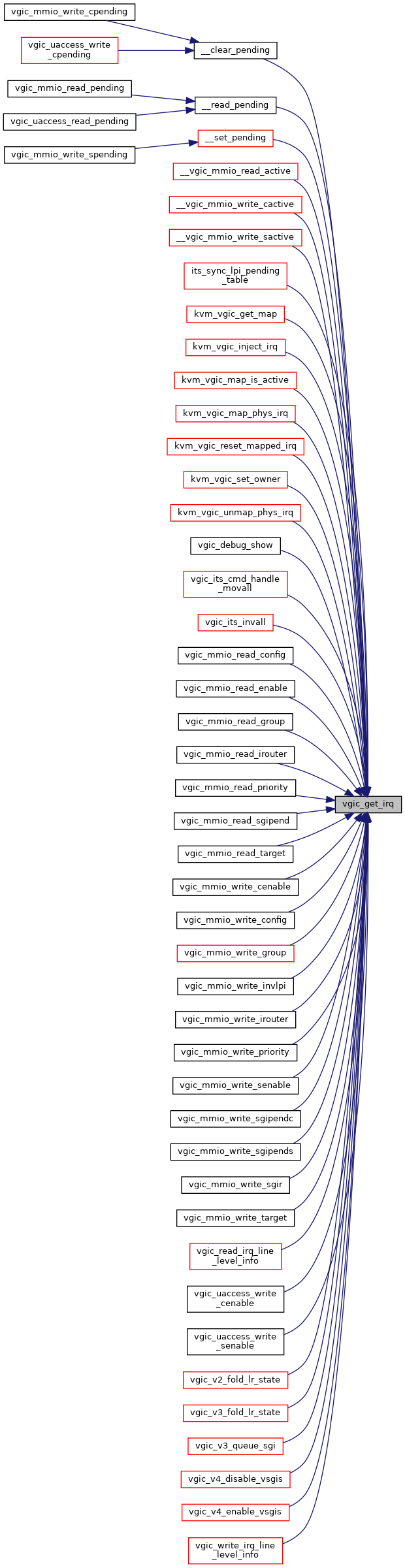

◆ vgic_get_irq()

struct vgic_irq *vgic_get_irq(struct kvm *kvm, struct kvm_vcpu *vcpu, u32 intid)

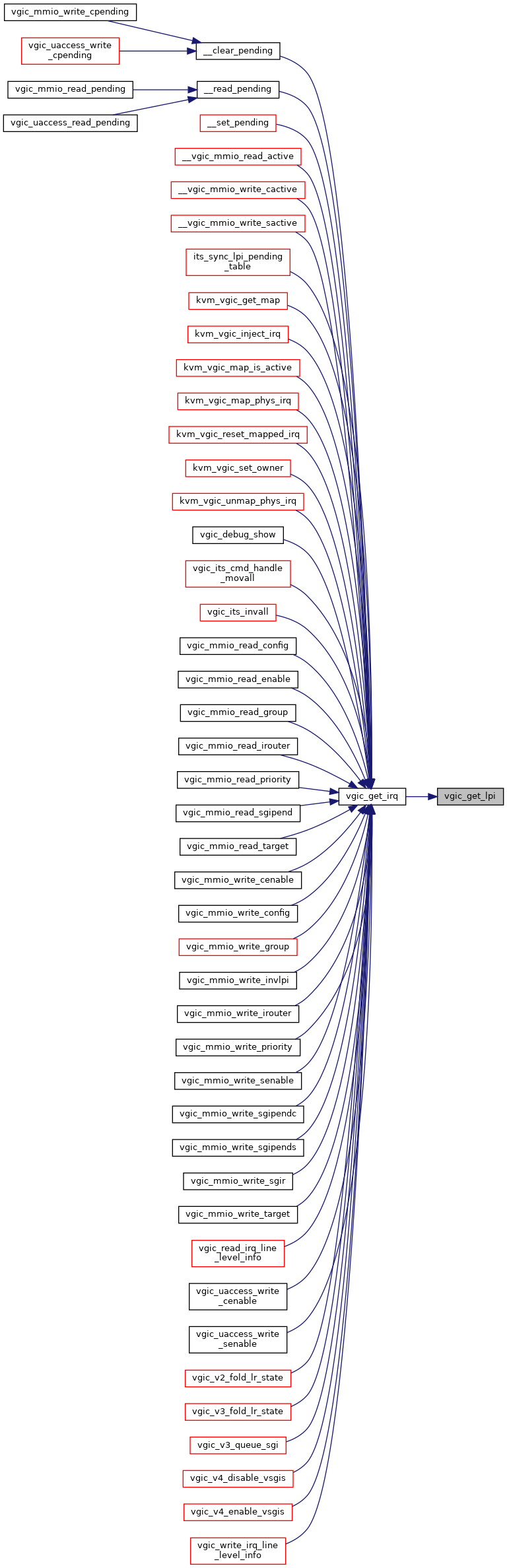

◆ vgic_get_lpi()

static struct vgic_irq *vgic_get_lpi(struct kvm *kvm, u32 intid)

◆ vgic_get_phys_line_level()

bool vgic_get_phys_line_level(struct vgic_irq *irq)

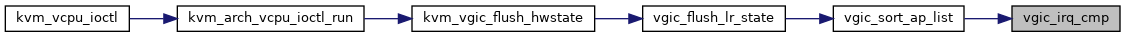

◆ vgic_irq_cmp()

static int vgic_irq_cmp(void *priv, const struct list_head *a, const struct list_head *b)

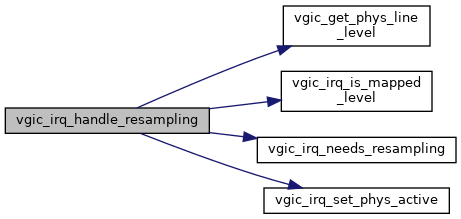

◆ vgic_irq_handle_resampling()

void vgic_irq_handle_resampling(struct vgic_irq *irq, bool lr_deactivated, bool lr_pending)

Definition at line 1060 of file vgic.c.

◆ vgic_irq_release()

static void vgic_irq_release(struct kref *ref)

◆ vgic_irq_set_phys_active()

void vgic_irq_set_phys_active(struct vgic_irq *irq, bool active)

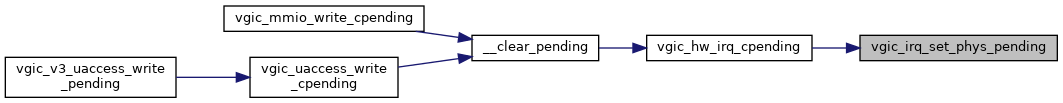

◆ vgic_irq_set_phys_pending()

void vgic_irq_set_phys_pending(struct vgic_irq *irq, bool pending)

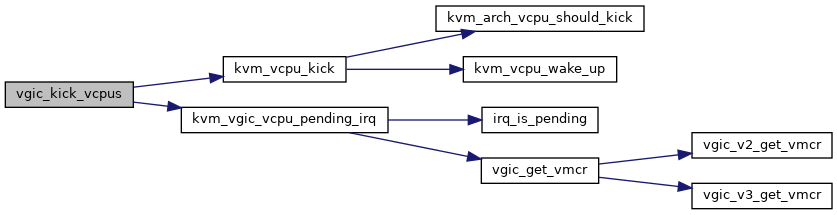

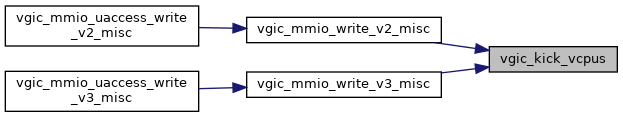

◆ vgic_kick_vcpus()

void vgic_kick_vcpus(struct kvm *kvm)

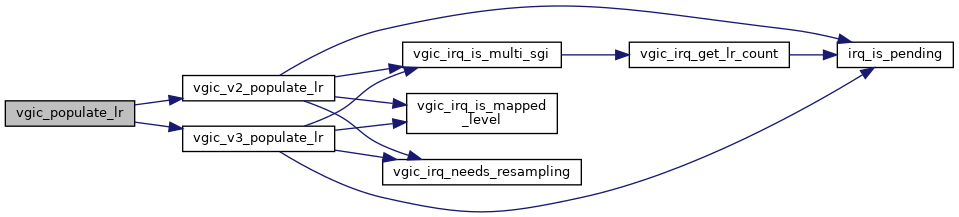

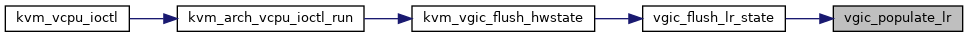

◆ vgic_populate_lr()

static inline void vgic_populate_lr(struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr)

Definition at line 743 of file vgic.c.

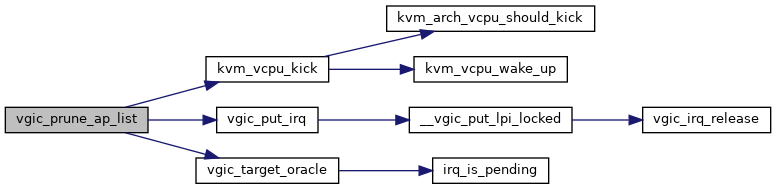

◆ vgic_prune_ap_list()

static void vgic_prune_ap_list(struct kvm_vcpu *vcpu)

vgic_prune_ap_list - Remove non-relevant interrupts from the list

@vcpu: The VCPU pointer

Walk the list of "interesting" interrupts and prune those that will not need to be considered in the near future.

Definition at line 633 of file vgic.c.
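The pruning pass above is, at its core, an in-place filter over a linked list. The following is a hedged sketch of that pattern only: `model_ap_entry`, `model_still_interesting` and `model_prune_ap_list` are hypothetical, and "still interesting" is reduced here to "pending or active", an assumption of the sketch. The real function works on the kernel's `list_head`-based ap_list, takes per-interrupt locks, and can move entries to another vCPU instead of simply dropping them.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical list node standing in for one entry on a vCPU's ap_list. */
struct model_ap_entry {
	int intid;
	bool pending;
	bool active;
	struct model_ap_entry *next;
};

/* Sketch criterion: an entry stays interesting while pending or active. */
static bool model_still_interesting(const struct model_ap_entry *e)
{
	return e->pending || e->active;
}

/* Unlink every entry that no longer needs attention and return how many
 * were removed. Nodes are caller-owned and are not freed here. */
static int model_prune_ap_list(struct model_ap_entry **head)
{
	int removed = 0;

	assert(head != NULL);
	while (*head) {
		struct model_ap_entry *e = *head;

		if (model_still_interesting(e)) {
			head = &e->next; /* keep the entry and advance */
		} else {
			*head = e->next; /* splice the entry out */
			e->next = NULL;
			removed++;
		}
	}
	return removed;
}
```

Walking with a pointer-to-pointer avoids special-casing removal of the first node.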

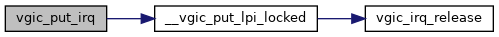

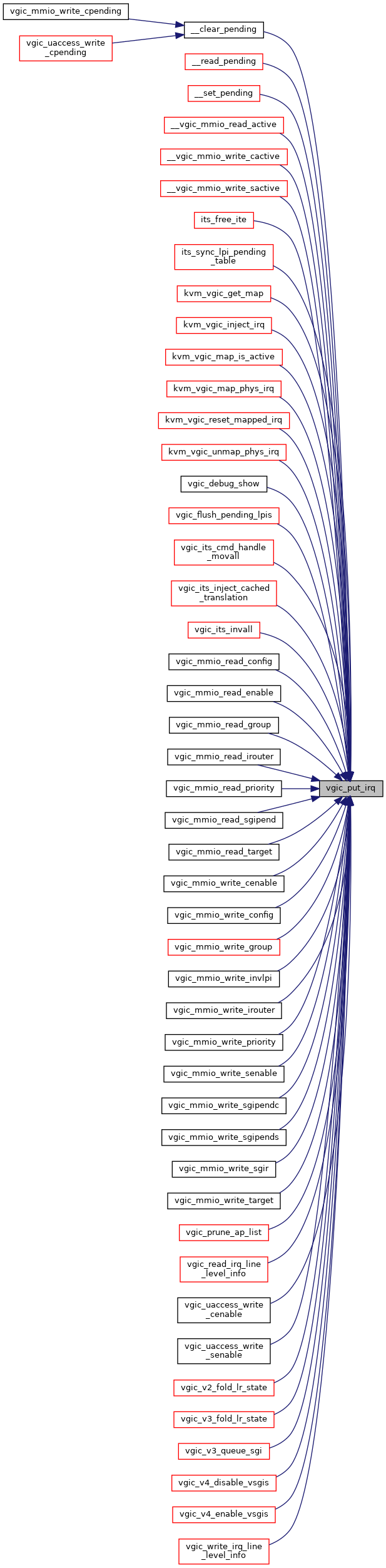

◆ vgic_put_irq()

void vgic_put_irq(struct kvm *kvm, struct vgic_irq *irq)

Definition at line 139 of file vgic.c.

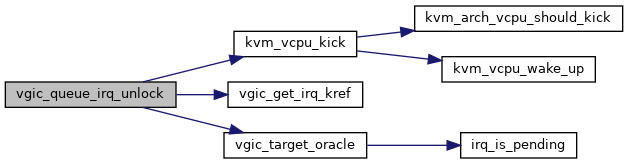

◆ vgic_queue_irq_unlock()

bool vgic_queue_irq_unlock(struct kvm *kvm, struct vgic_irq *irq, unsigned long flags)

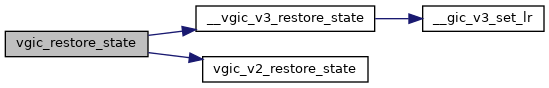

◆ vgic_restore_state()

static inline void vgic_restore_state(struct kvm_vcpu *vcpu)

Definition at line 896 of file vgic.c.

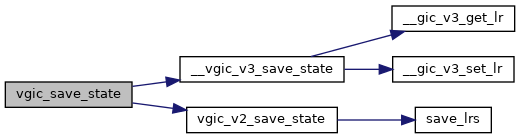

◆ vgic_save_state()

static inline void vgic_save_state(struct kvm_vcpu *vcpu)

Definition at line 866 of file vgic.c.

◆ vgic_set_underflow()

static inline void vgic_set_underflow(struct kvm_vcpu *vcpu)

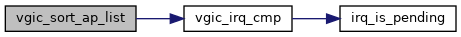

◆ vgic_sort_ap_list()

static void vgic_sort_ap_list(struct kvm_vcpu *vcpu)

Definition at line 299 of file vgic.c.

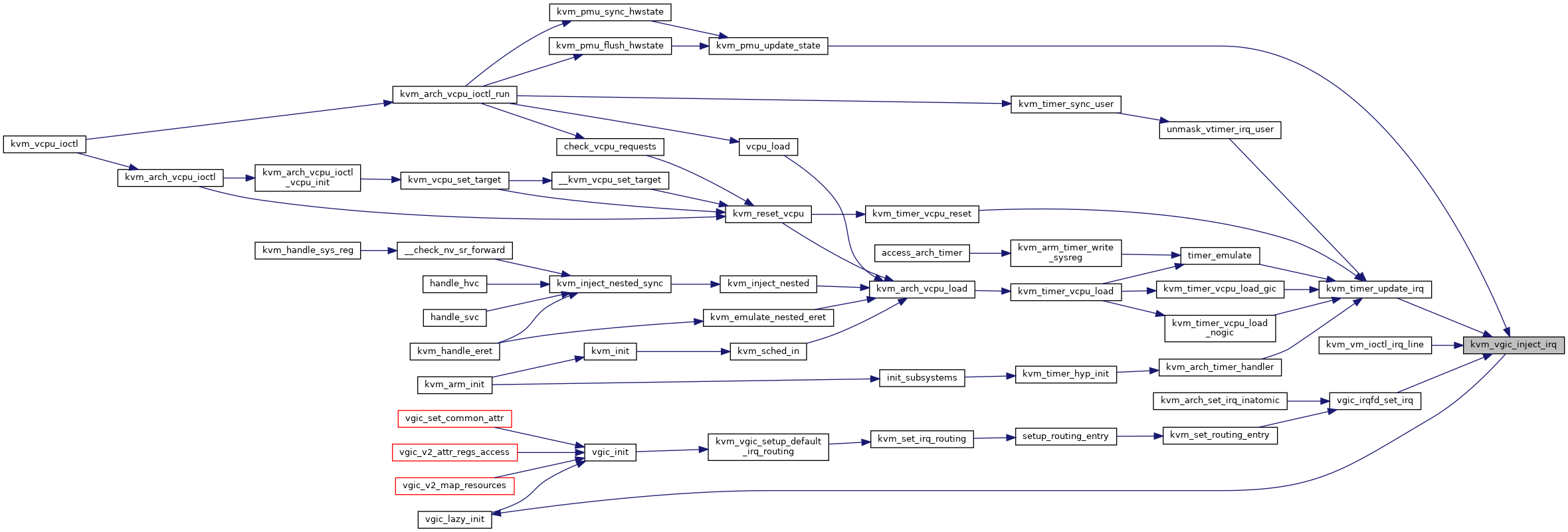

◆ vgic_target_oracle()

static struct kvm_vcpu *vgic_target_oracle(struct vgic_irq *irq)

vgic_target_oracle - compute the target vcpu for an irq

@irq: The irq to route. Must already be locked.

Based on the current state of the interrupt (enabled, pending, active, vcpu and target_vcpu), compute the next vcpu this should be given to. Return NULL if this shouldn't be injected at all.

Requires the IRQ lock to be held.

Definition at line 216 of file vgic.c.
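The routing decision described above can be sketched as a small pure function over the state fields it names (enabled, pending, active, vcpu, target_vcpu). This is a hedged model, not the kernel source: `model_vm`, `model_vcpu`, `model_oracle_irq` and `model_target_oracle` are hypothetical, the IRQ lock the description requires is omitted, and two rules are assumptions of the sketch: an active interrupt stays on the vCPU that already holds it, and a disabled distributor suppresses delivery of pending interrupts.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical reduced types standing in for struct kvm, struct kvm_vcpu
 * and struct vgic_irq. */
struct model_vm {
	bool dist_enabled; /* stand-in for "distributor enabled" */
};

struct model_vcpu {
	int id;
	struct model_vm *vm;
};

struct model_oracle_irq {
	bool enabled;
	bool pending;
	bool active;
	struct model_vcpu *vcpu;        /* vCPU currently holding it, or NULL */
	struct model_vcpu *target_vcpu; /* configured routing target */
};

/* Return the vCPU the interrupt should be queued on, or NULL if none. */
static struct model_vcpu *model_target_oracle(const struct model_oracle_irq *irq)
{
	assert(irq != NULL);

	/* An active interrupt stays where it is, else goes to its target. */
	if (irq->active)
		return irq->vcpu ? irq->vcpu : irq->target_vcpu;

	/* Enabled and pending: route to the configured target, unless the
	 * distributor is disabled (sketch assumption). */
	if (irq->enabled && irq->pending) {
		if (irq->target_vcpu && !irq->target_vcpu->vm->dist_enabled)
			return NULL;
		return irq->target_vcpu;
	}

	/* Neither active nor enabled and pending: do not queue it anywhere. */
	return NULL;
}
```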

◆ vgic_validate_injection()

static bool vgic_validate_injection(struct vgic_irq *irq, bool level, void *owner)

Variable Documentation

◆ __ro_after_init

struct vgic_global kvm_vgic_global_state __ro_after_init