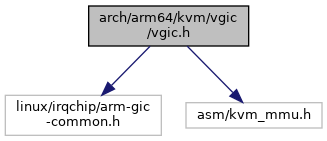

#include <linux/irqchip/arm-gic-common.h>
#include <asm/kvm_mmu.h>

Classes

| struct | vgic_vmcr |

| struct | vgic_reg_attr |

Functions

| static u32 | vgic_get_implementation_rev (struct kvm_vcpu *vcpu) |

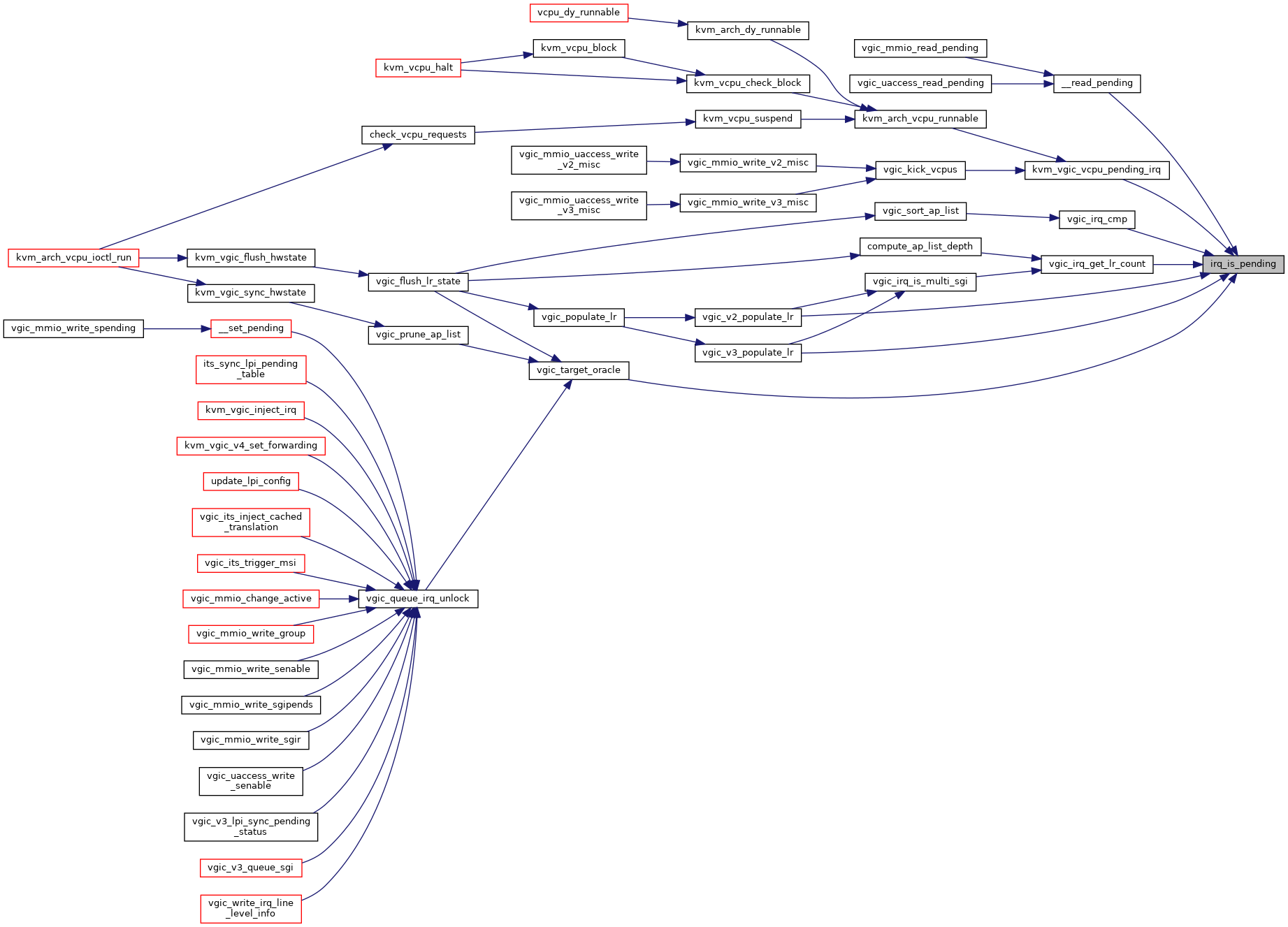

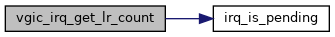

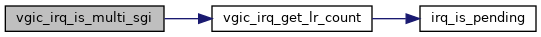

| static bool | irq_is_pending (struct vgic_irq *irq) |

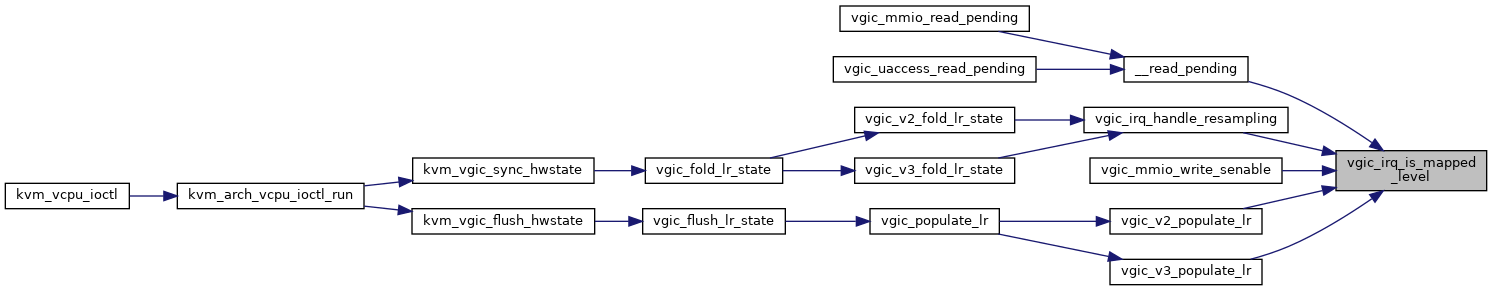

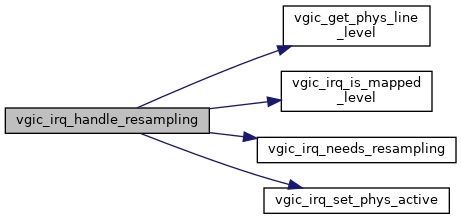

| static bool | vgic_irq_is_mapped_level (struct vgic_irq *irq) |

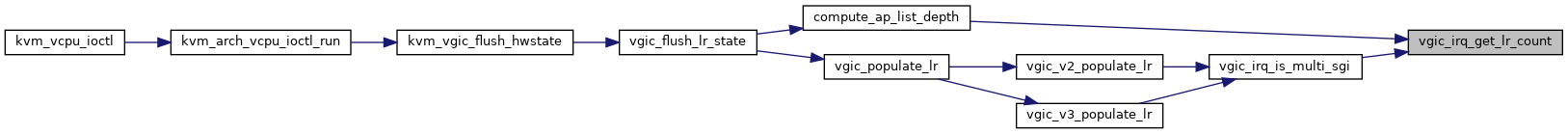

| static int | vgic_irq_get_lr_count (struct vgic_irq *irq) |

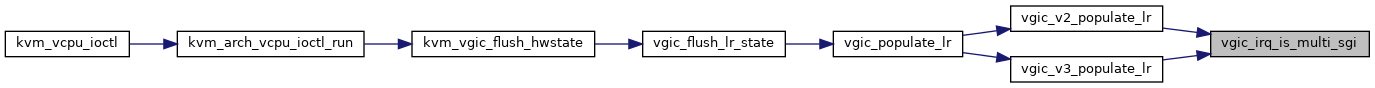

| static bool | vgic_irq_is_multi_sgi (struct vgic_irq *irq) |

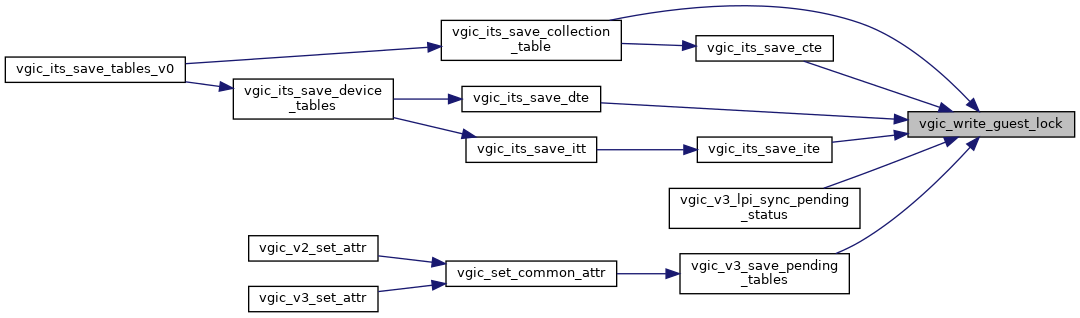

| static int | vgic_write_guest_lock (struct kvm *kvm, gpa_t gpa, const void *data, unsigned long len) |

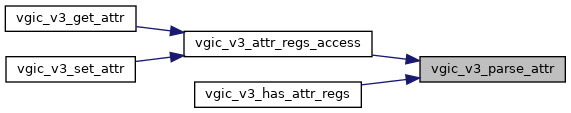

| int | vgic_v3_parse_attr (struct kvm_device *dev, struct kvm_device_attr *attr, struct vgic_reg_attr *reg_attr) |

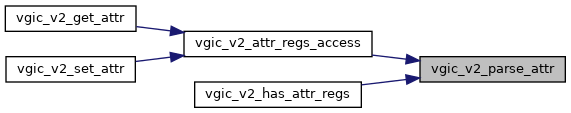

| int | vgic_v2_parse_attr (struct kvm_device *dev, struct kvm_device_attr *attr, struct vgic_reg_attr *reg_attr) |

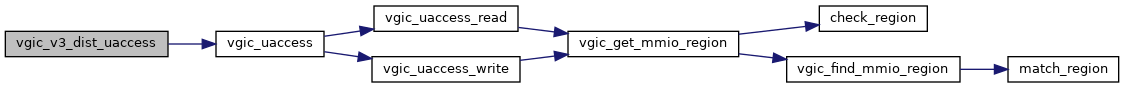

| const struct vgic_register_region * | vgic_get_mmio_region (struct kvm_vcpu *vcpu, struct vgic_io_device *iodev, gpa_t addr, int len) |

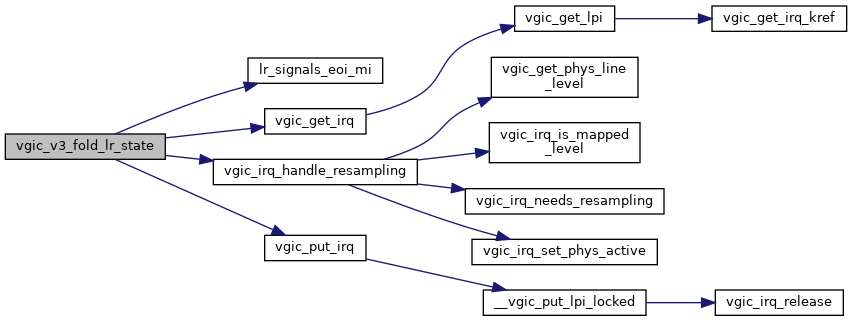

| struct vgic_irq * | vgic_get_irq (struct kvm *kvm, struct kvm_vcpu *vcpu, u32 intid) |

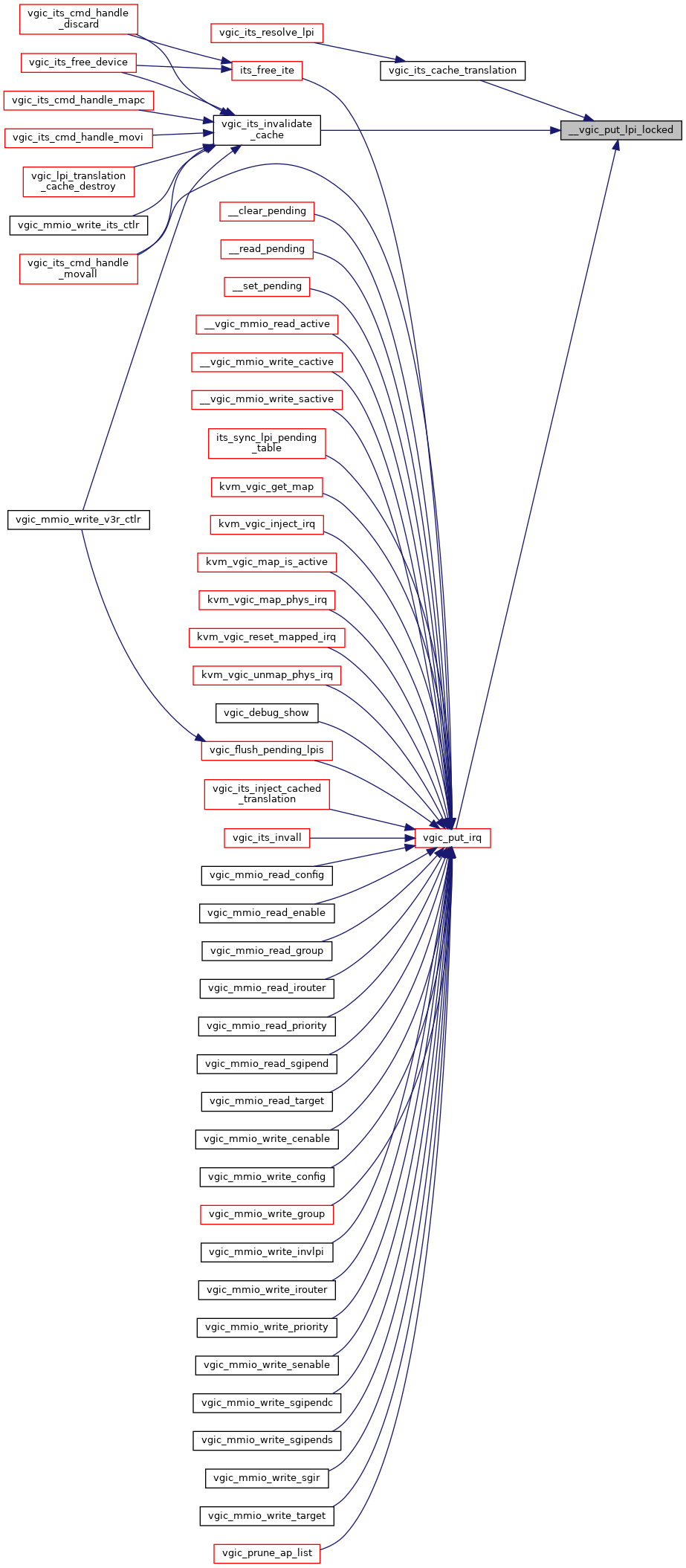

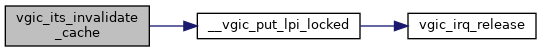

| void | __vgic_put_lpi_locked (struct kvm *kvm, struct vgic_irq *irq) |

| void | vgic_put_irq (struct kvm *kvm, struct vgic_irq *irq) |

| bool | vgic_get_phys_line_level (struct vgic_irq *irq) |

| void | vgic_irq_set_phys_pending (struct vgic_irq *irq, bool pending) |

| void | vgic_irq_set_phys_active (struct vgic_irq *irq, bool active) |

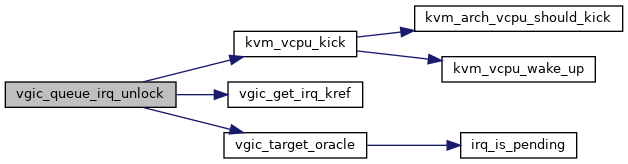

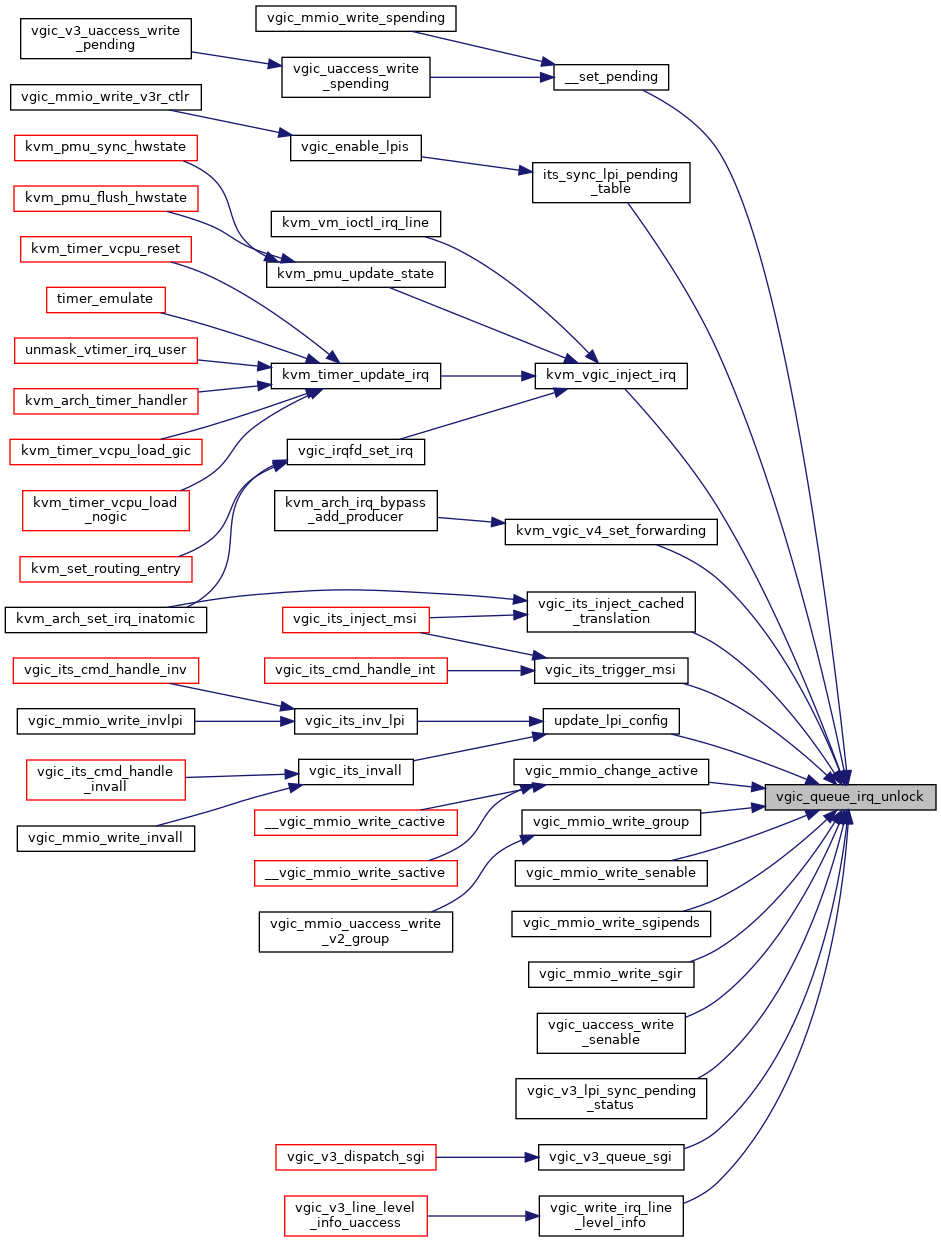

| bool | vgic_queue_irq_unlock (struct kvm *kvm, struct vgic_irq *irq, unsigned long flags) |

| void | vgic_kick_vcpus (struct kvm *kvm) |

| void | vgic_irq_handle_resampling (struct vgic_irq *irq, bool lr_deactivated, bool lr_pending) |

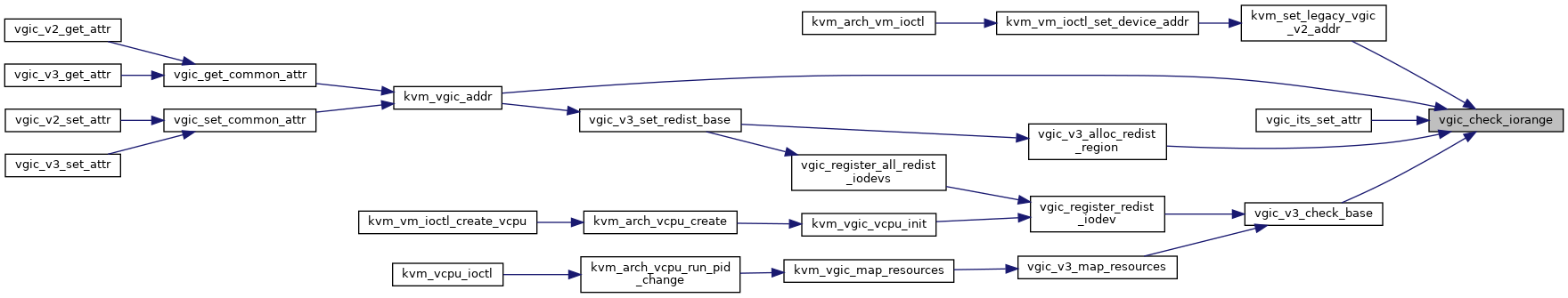

| int | vgic_check_iorange (struct kvm *kvm, phys_addr_t ioaddr, phys_addr_t addr, phys_addr_t alignment, phys_addr_t size) |

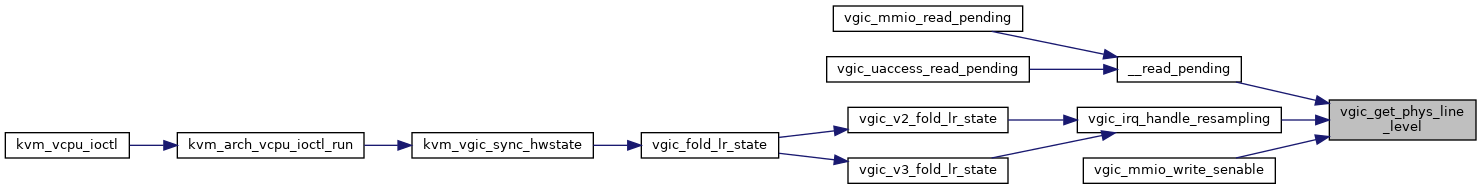

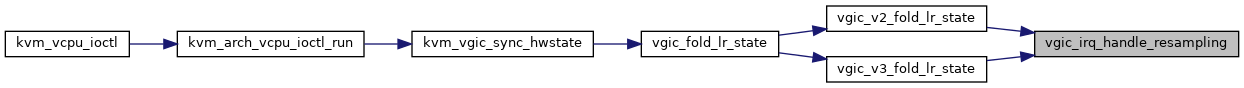

| void | vgic_v2_fold_lr_state (struct kvm_vcpu *vcpu) |

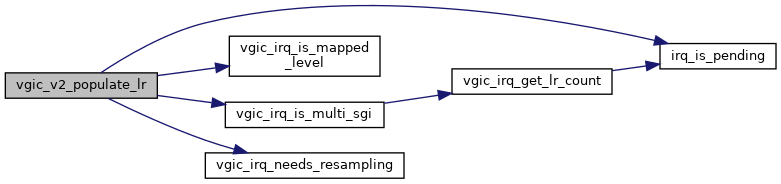

| void | vgic_v2_populate_lr (struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr) |

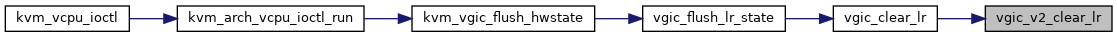

| void | vgic_v2_clear_lr (struct kvm_vcpu *vcpu, int lr) |

| void | vgic_v2_set_underflow (struct kvm_vcpu *vcpu) |

| int | vgic_v2_has_attr_regs (struct kvm_device *dev, struct kvm_device_attr *attr) |

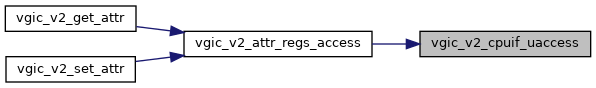

| int | vgic_v2_dist_uaccess (struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val) |

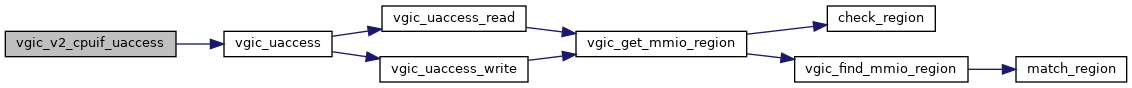

| int | vgic_v2_cpuif_uaccess (struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val) |

| void | vgic_v2_set_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

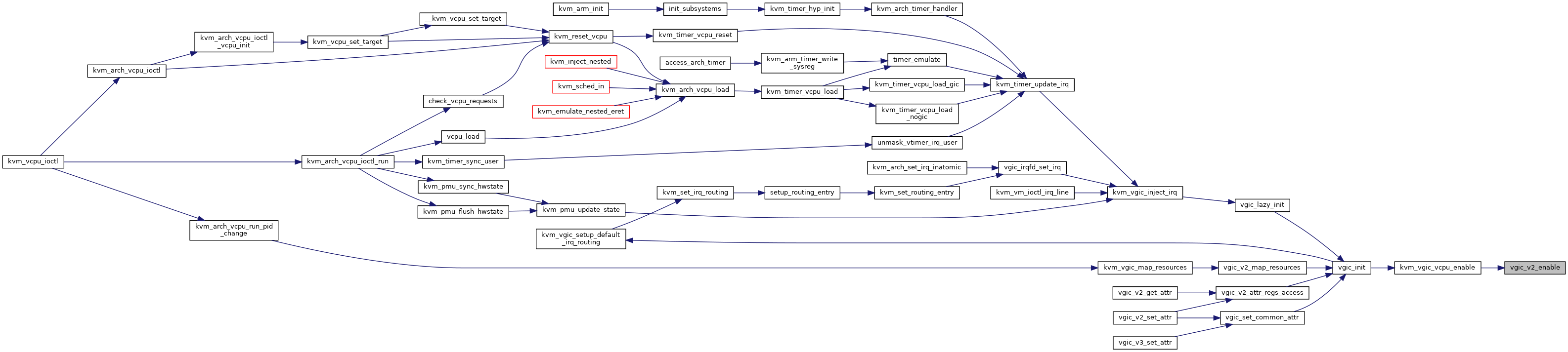

| void | vgic_v2_get_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

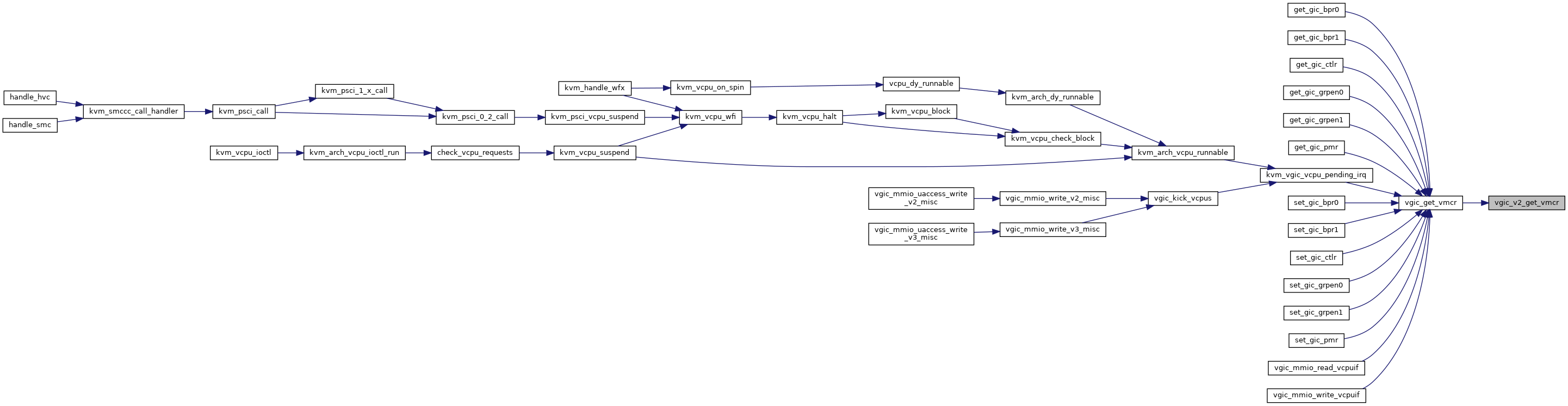

| void | vgic_v2_enable (struct kvm_vcpu *vcpu) |

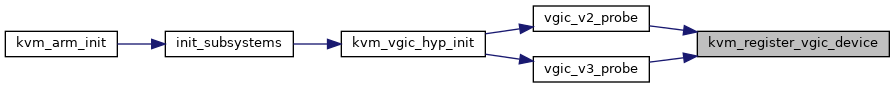

| int | vgic_v2_probe (const struct gic_kvm_info *info) |

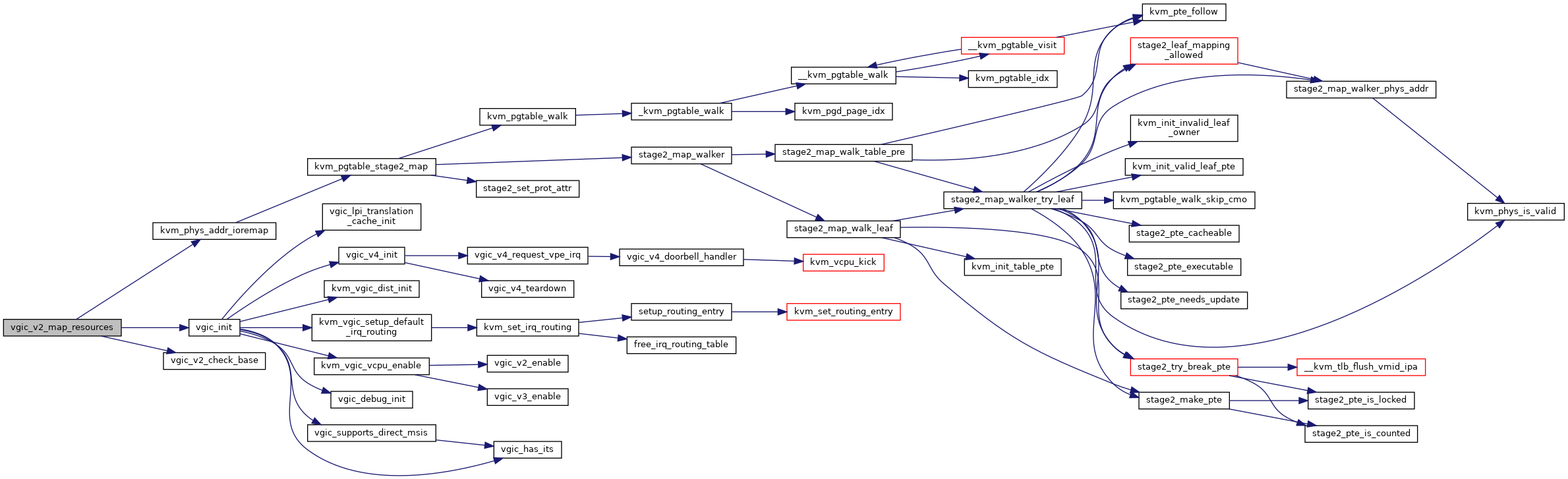

| int | vgic_v2_map_resources (struct kvm *kvm) |

| int | vgic_register_dist_iodev (struct kvm *kvm, gpa_t dist_base_address, enum vgic_type) |

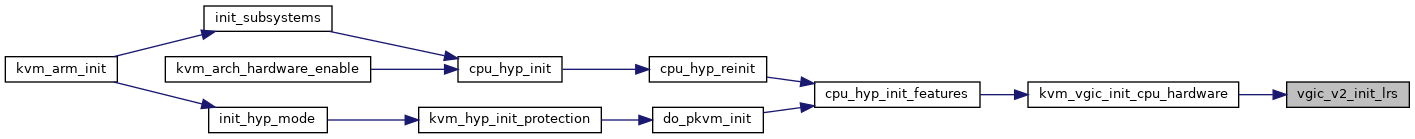

| void | vgic_v2_init_lrs (void) |

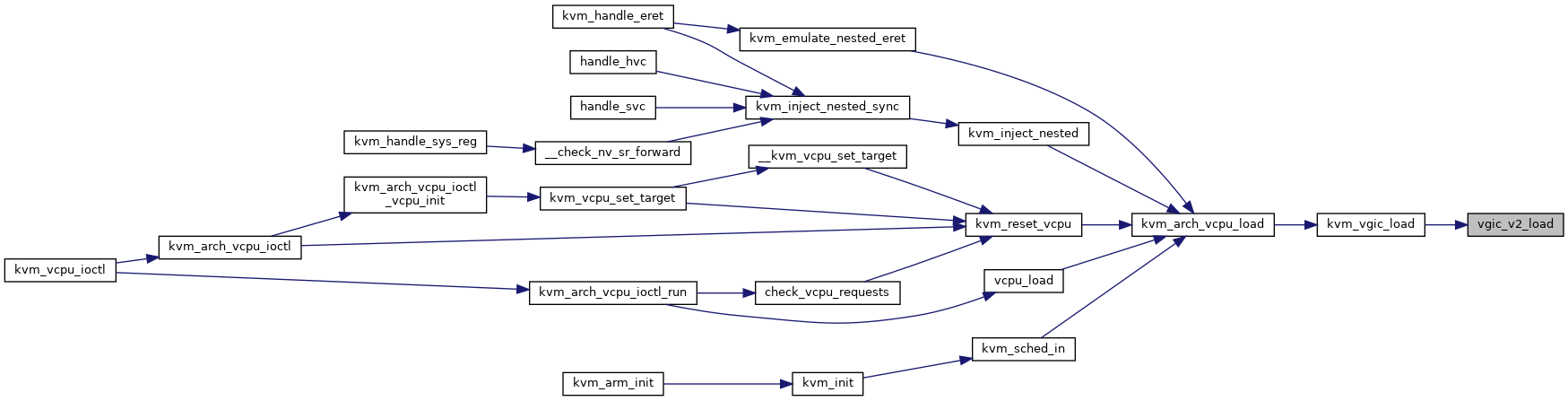

| void | vgic_v2_load (struct kvm_vcpu *vcpu) |

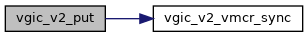

| void | vgic_v2_put (struct kvm_vcpu *vcpu) |

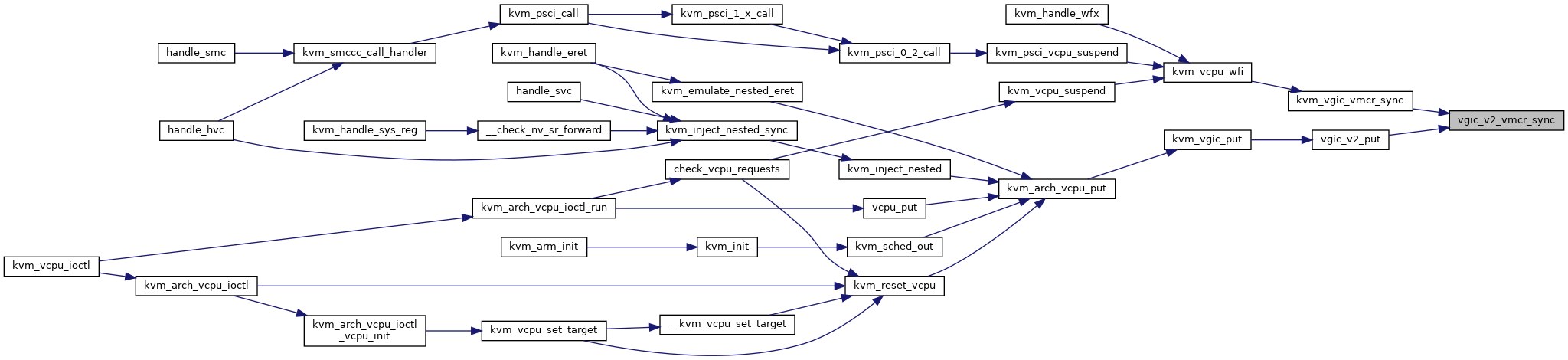

| void | vgic_v2_vmcr_sync (struct kvm_vcpu *vcpu) |

| void | vgic_v2_save_state (struct kvm_vcpu *vcpu) |

| void | vgic_v2_restore_state (struct kvm_vcpu *vcpu) |

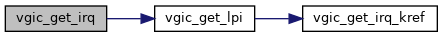

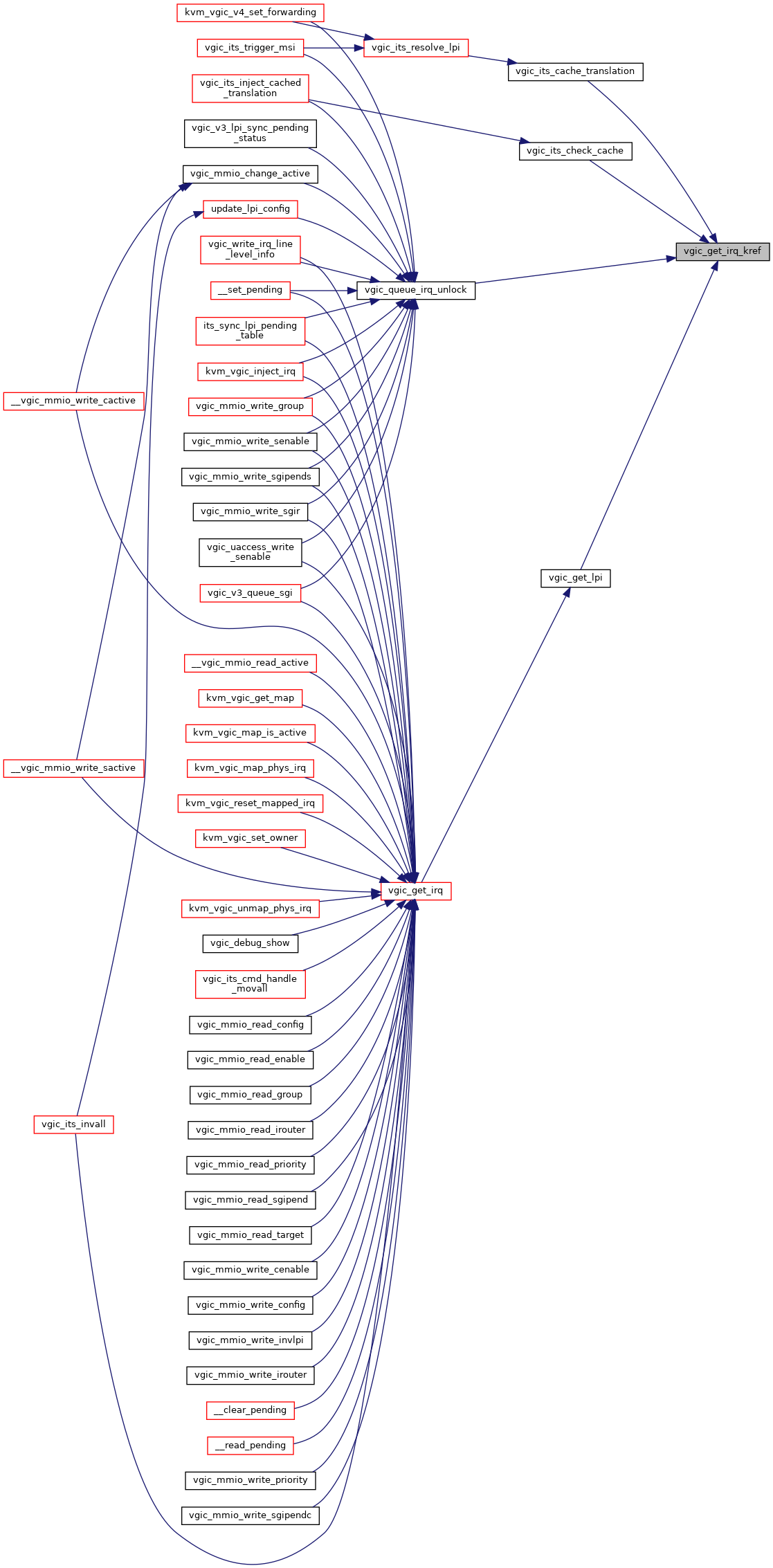

| static void | vgic_get_irq_kref (struct vgic_irq *irq) |

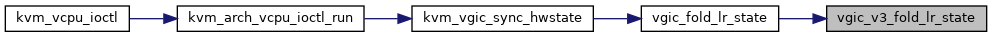

| void | vgic_v3_fold_lr_state (struct kvm_vcpu *vcpu) |

| void | vgic_v3_populate_lr (struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr) |

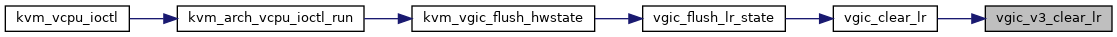

| void | vgic_v3_clear_lr (struct kvm_vcpu *vcpu, int lr) |

| void | vgic_v3_set_underflow (struct kvm_vcpu *vcpu) |

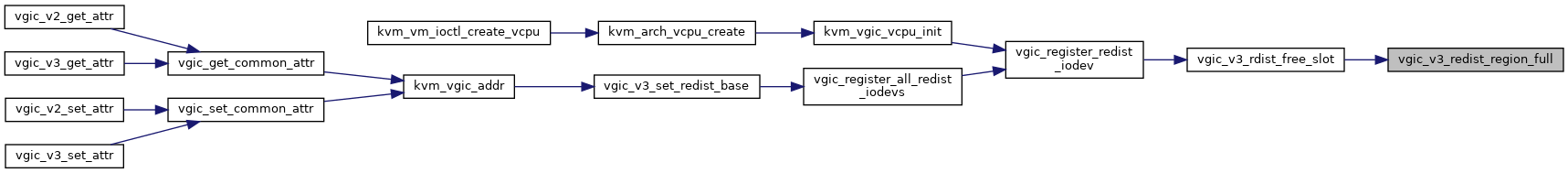

| void | vgic_v3_set_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

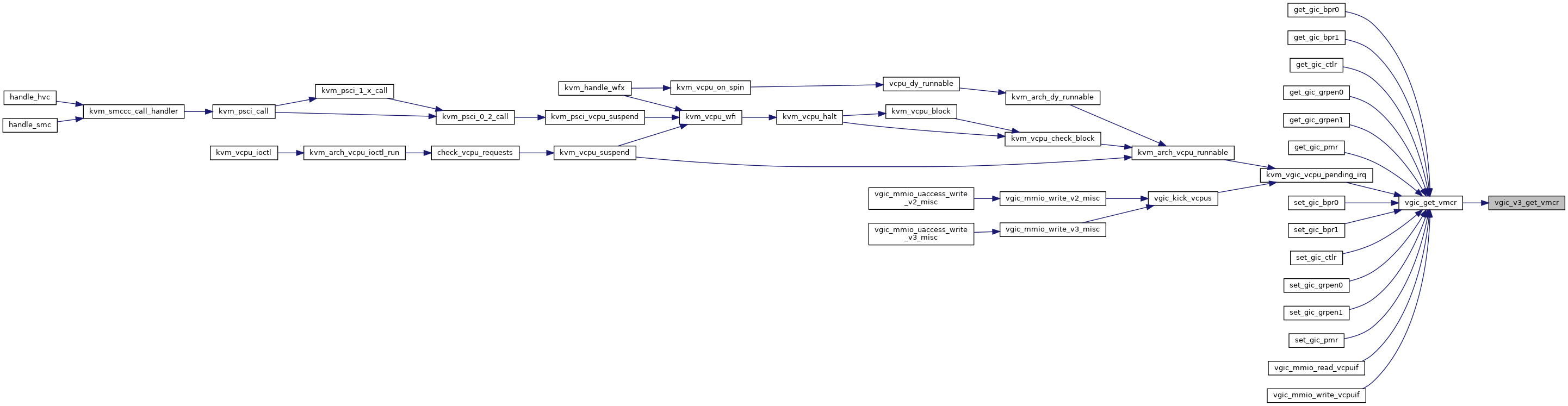

| void | vgic_v3_get_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

| void | vgic_v3_enable (struct kvm_vcpu *vcpu) |

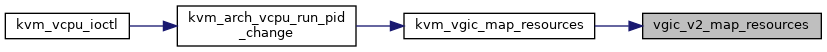

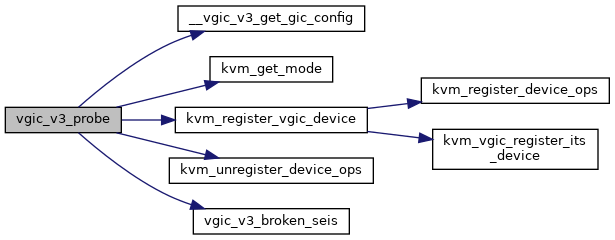

| int | vgic_v3_probe (const struct gic_kvm_info *info) |

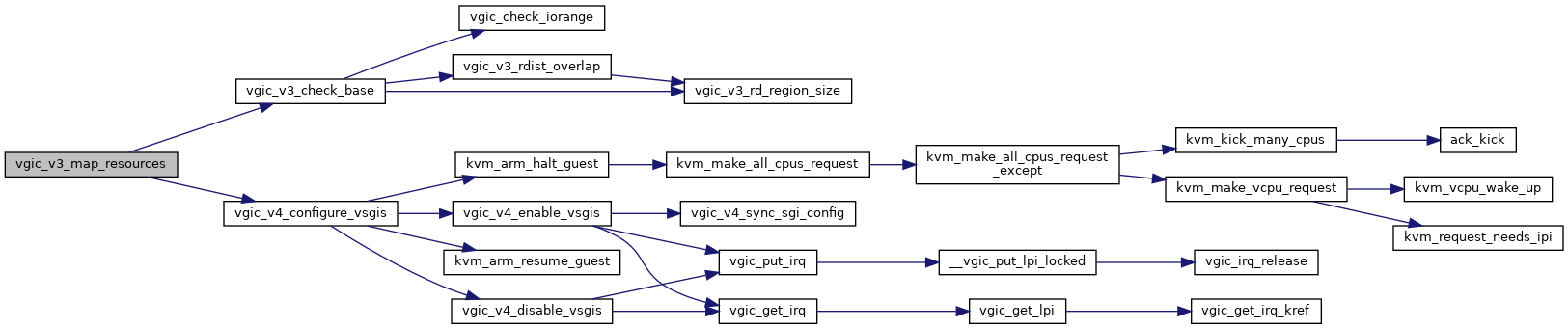

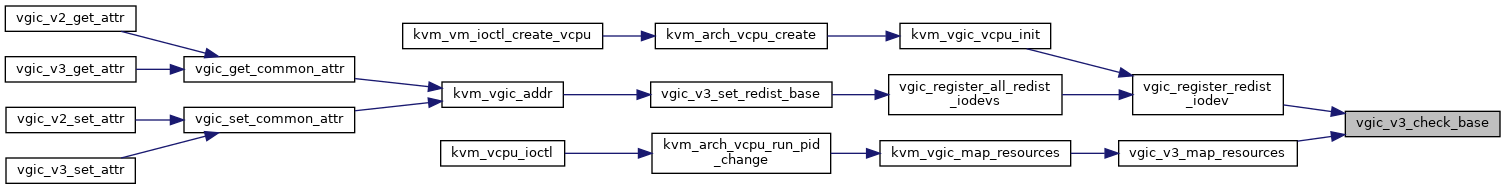

| int | vgic_v3_map_resources (struct kvm *kvm) |

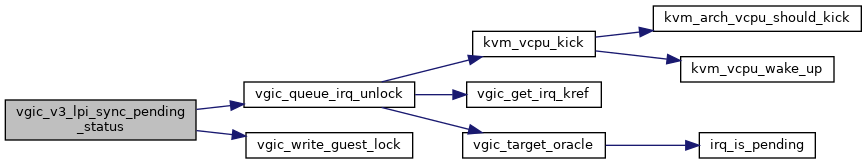

| int | vgic_v3_lpi_sync_pending_status (struct kvm *kvm, struct vgic_irq *irq) |

| int | vgic_v3_save_pending_tables (struct kvm *kvm) |

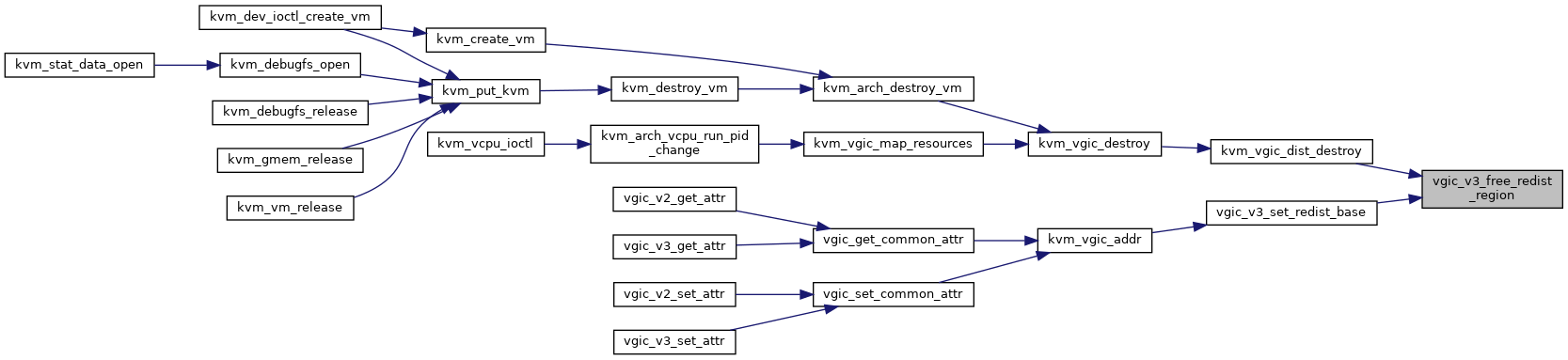

| int | vgic_v3_set_redist_base (struct kvm *kvm, u32 index, u64 addr, u32 count) |

| int | vgic_register_redist_iodev (struct kvm_vcpu *vcpu) |

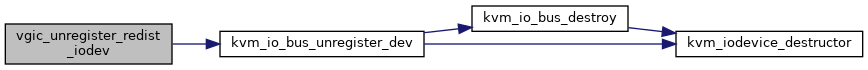

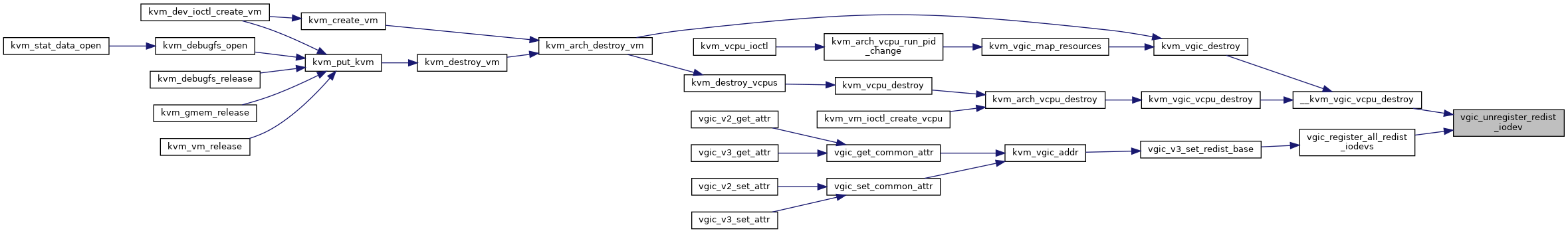

| void | vgic_unregister_redist_iodev (struct kvm_vcpu *vcpu) |

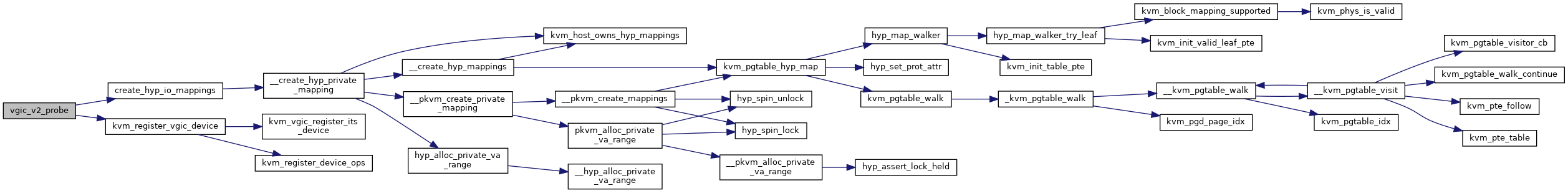

| bool | vgic_v3_check_base (struct kvm *kvm) |

| void | vgic_v3_load (struct kvm_vcpu *vcpu) |

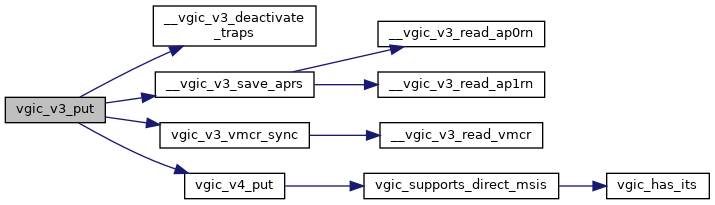

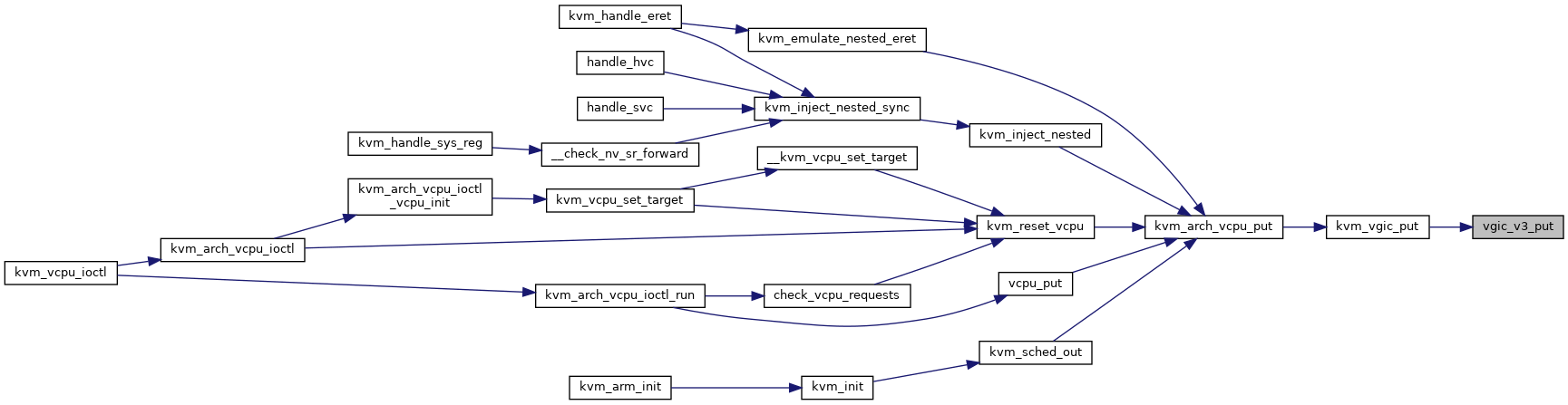

| void | vgic_v3_put (struct kvm_vcpu *vcpu) |

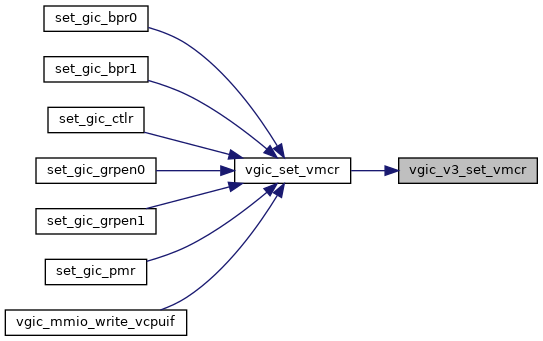

| void | vgic_v3_vmcr_sync (struct kvm_vcpu *vcpu) |

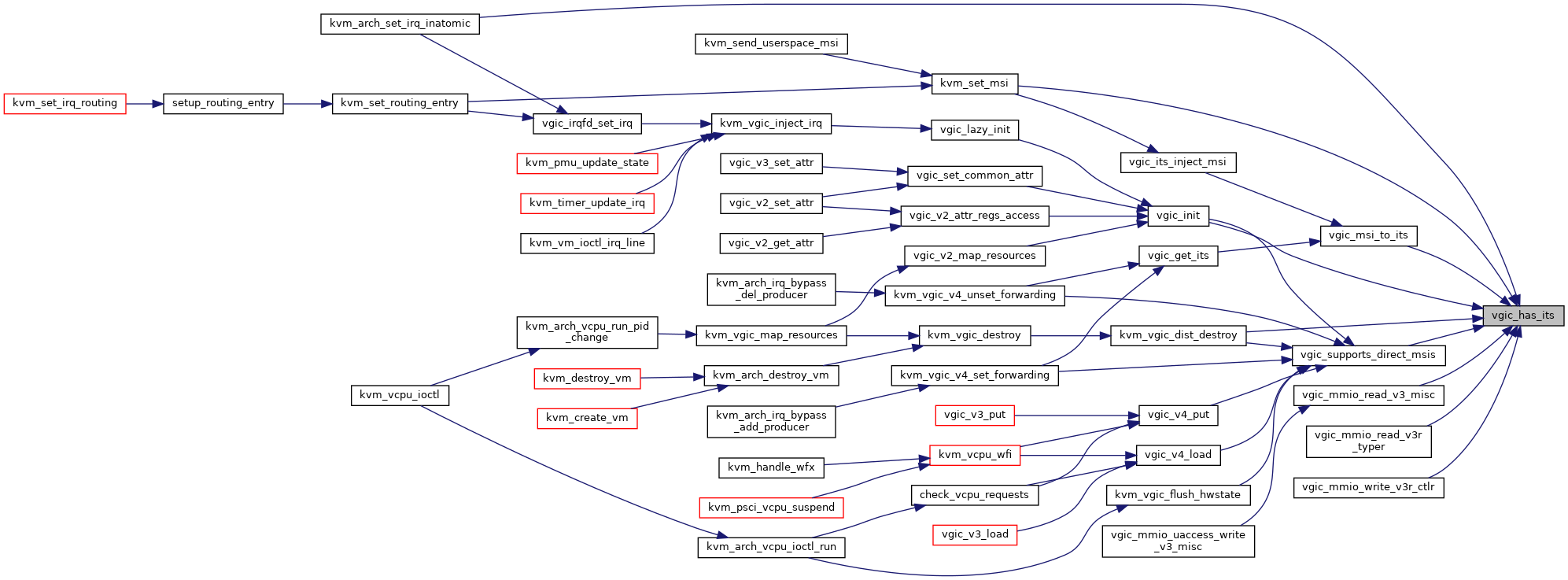

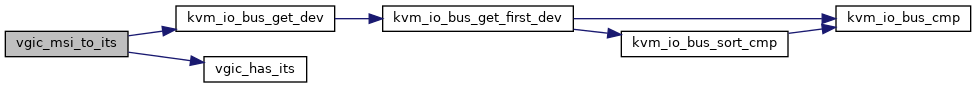

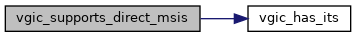

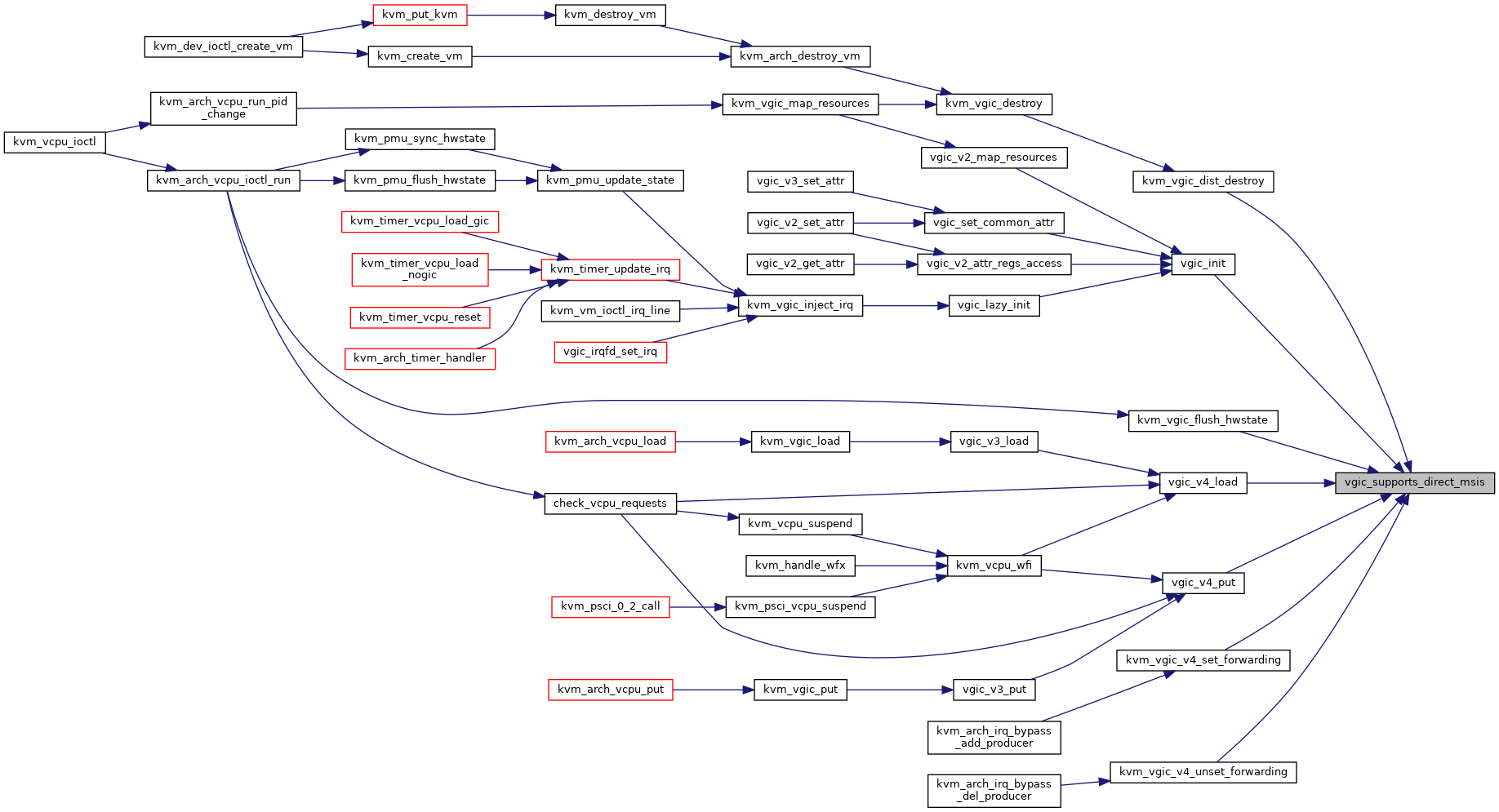

| bool | vgic_has_its (struct kvm *kvm) |

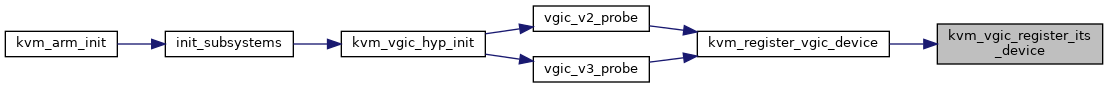

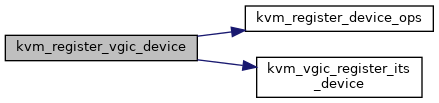

| int | kvm_vgic_register_its_device (void) |

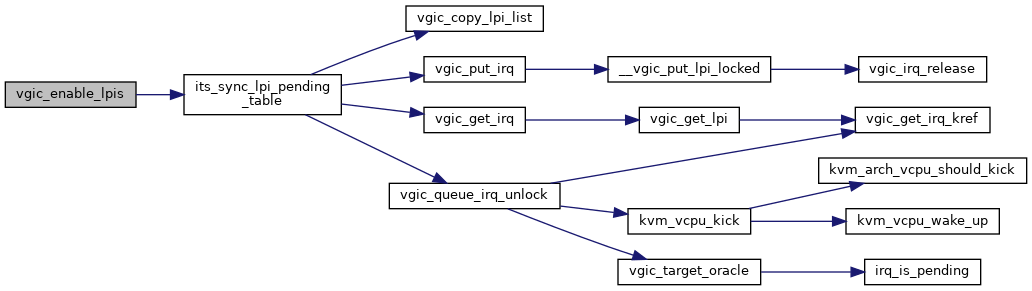

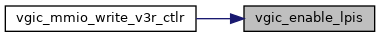

| void | vgic_enable_lpis (struct kvm_vcpu *vcpu) |

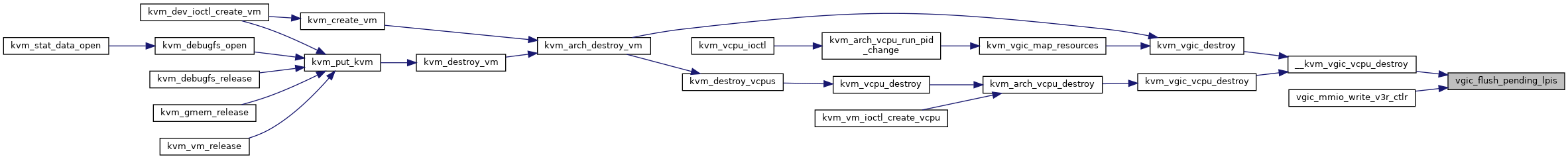

| void | vgic_flush_pending_lpis (struct kvm_vcpu *vcpu) |

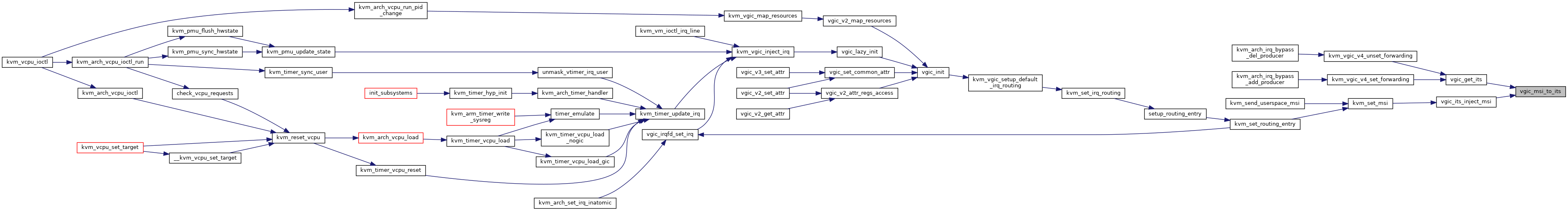

| int | vgic_its_inject_msi (struct kvm *kvm, struct kvm_msi *msi) |

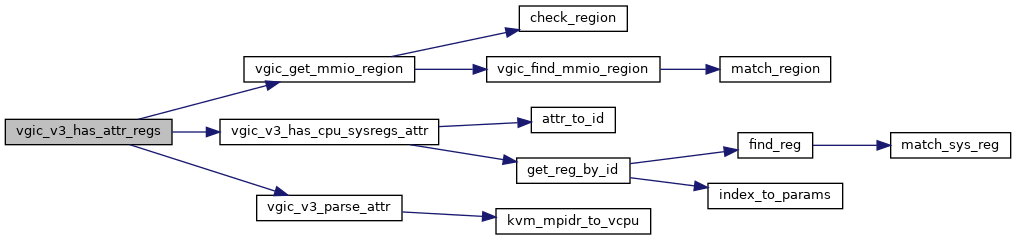

| int | vgic_v3_has_attr_regs (struct kvm_device *dev, struct kvm_device_attr *attr) |

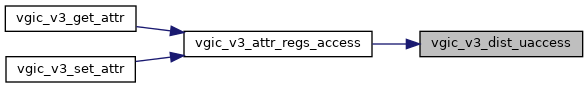

| int | vgic_v3_dist_uaccess (struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val) |

| int | vgic_v3_redist_uaccess (struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val) |

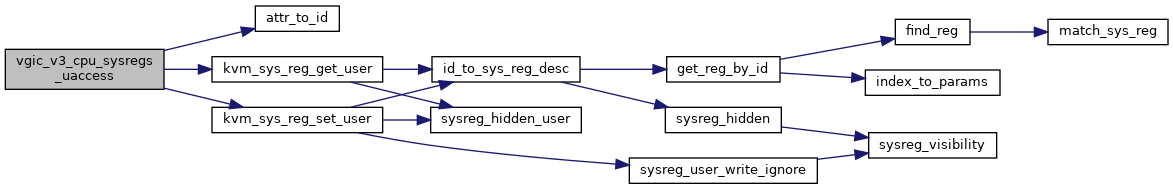

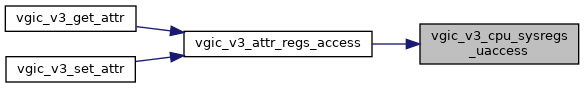

| int | vgic_v3_cpu_sysregs_uaccess (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr, bool is_write) |

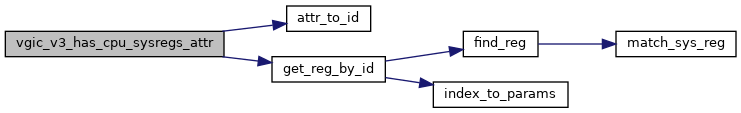

| int | vgic_v3_has_cpu_sysregs_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| int | vgic_v3_line_level_info_uaccess (struct kvm_vcpu *vcpu, bool is_write, u32 intid, u32 *val) |

| int | kvm_register_vgic_device (unsigned long type) |

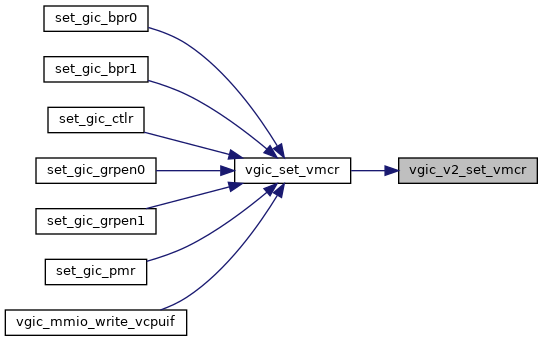

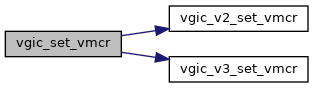

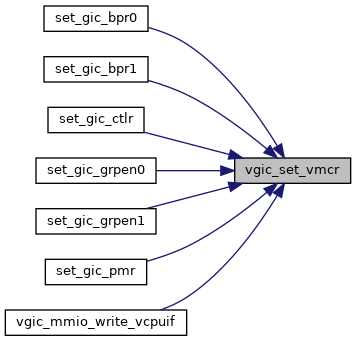

| void | vgic_set_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

| void | vgic_get_vmcr (struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr) |

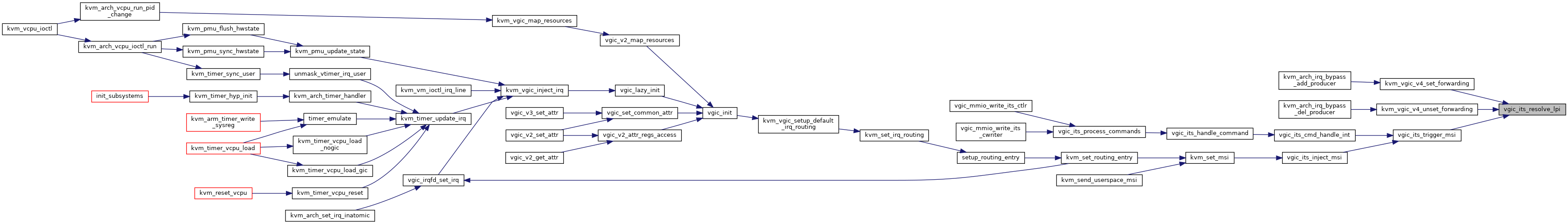

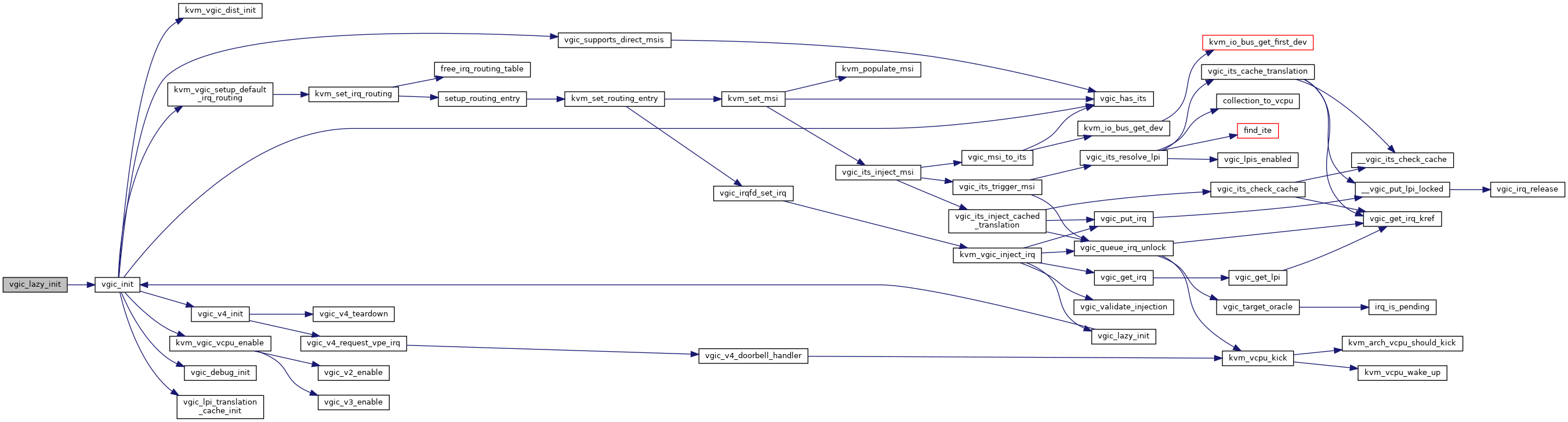

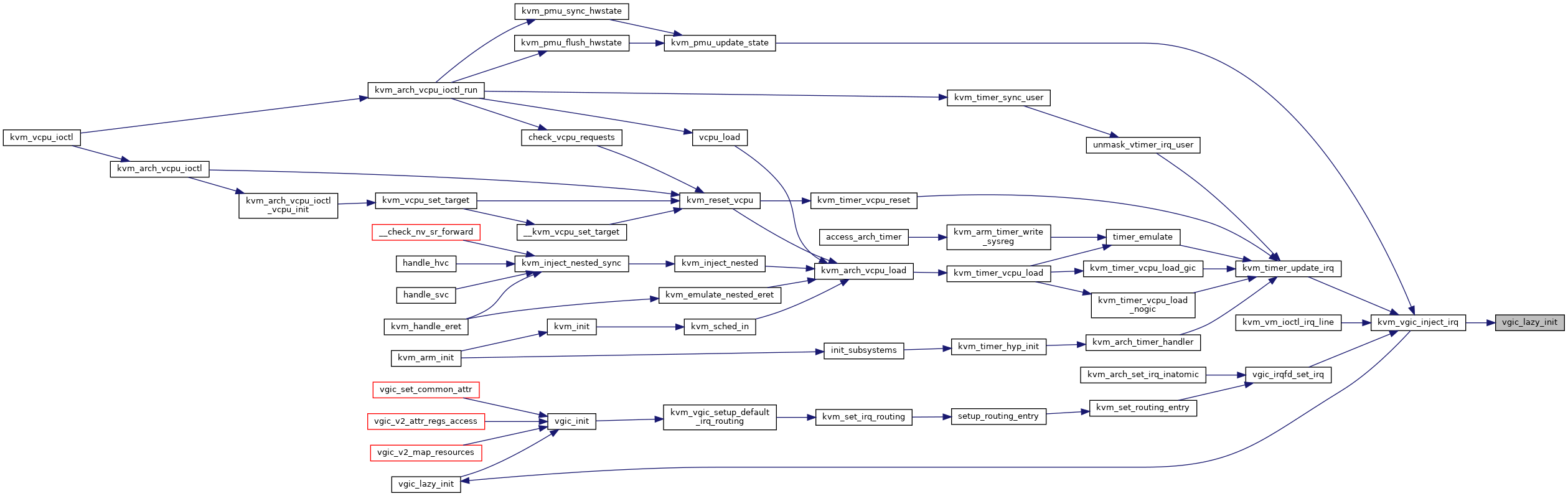

| int | vgic_lazy_init (struct kvm *kvm) |

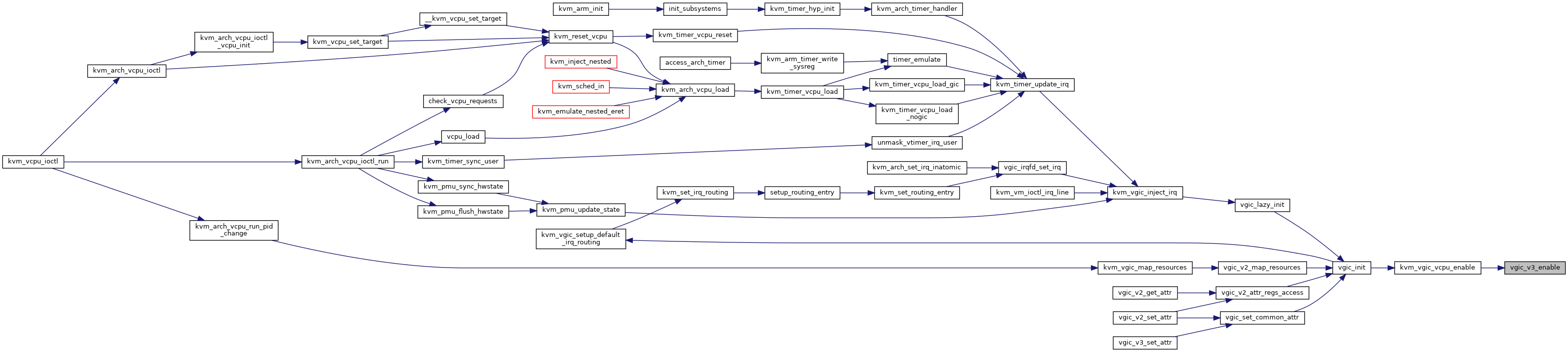

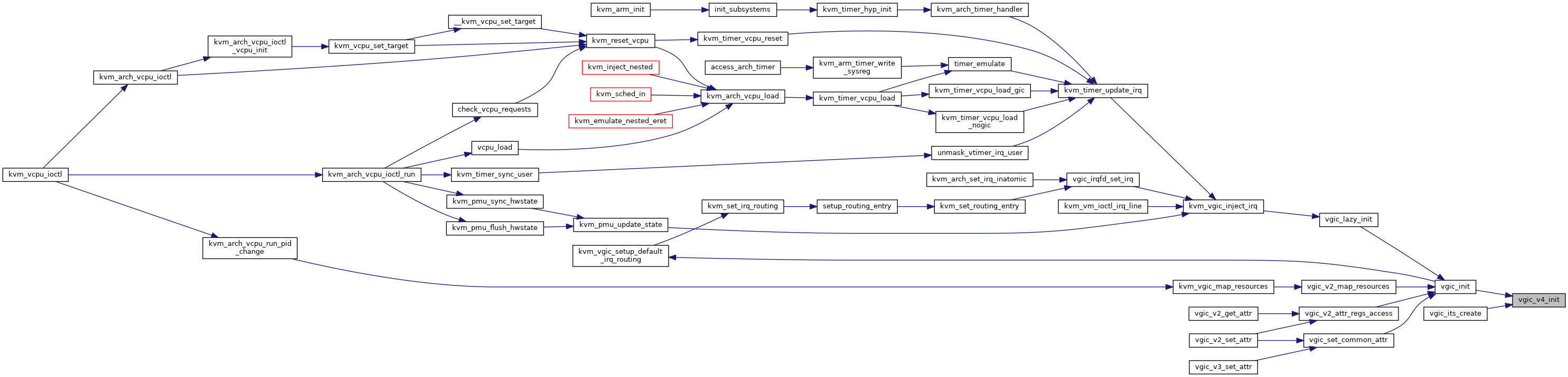

| int | vgic_init (struct kvm *kvm) |

| void | vgic_debug_init (struct kvm *kvm) |

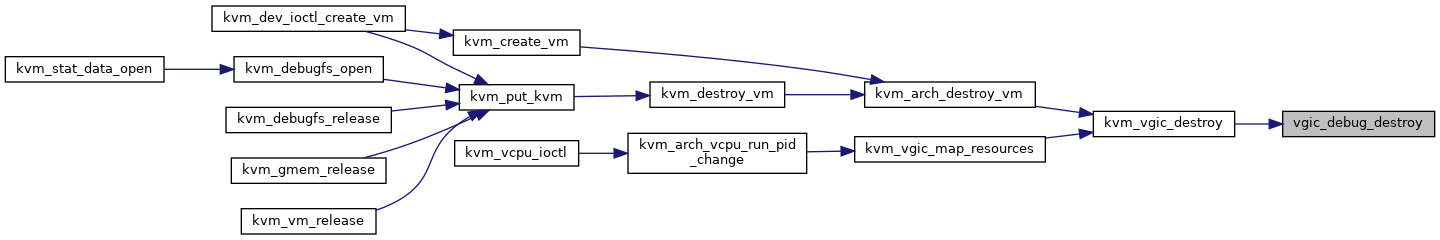

| void | vgic_debug_destroy (struct kvm *kvm) |

| static int | vgic_v3_max_apr_idx (struct kvm_vcpu *vcpu) |

| static bool | vgic_v3_redist_region_full (struct vgic_redist_region *region) |

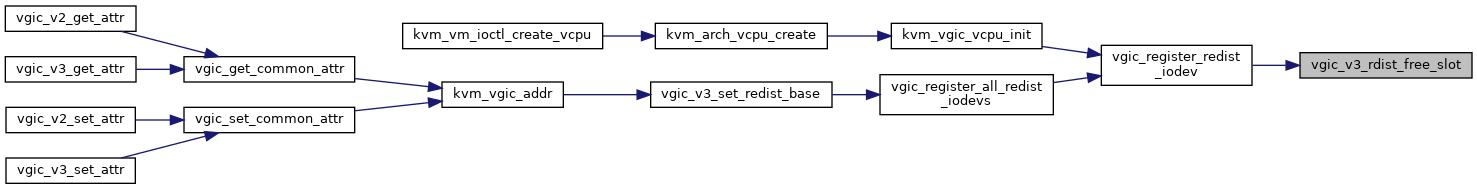

| struct vgic_redist_region * | vgic_v3_rdist_free_slot (struct list_head *rdregs) |

| static size_t | vgic_v3_rd_region_size (struct kvm *kvm, struct vgic_redist_region *rdreg) |

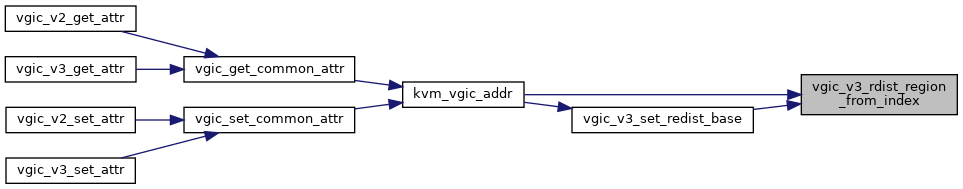

| struct vgic_redist_region * | vgic_v3_rdist_region_from_index (struct kvm *kvm, u32 index) |

| void | vgic_v3_free_redist_region (struct vgic_redist_region *rdreg) |

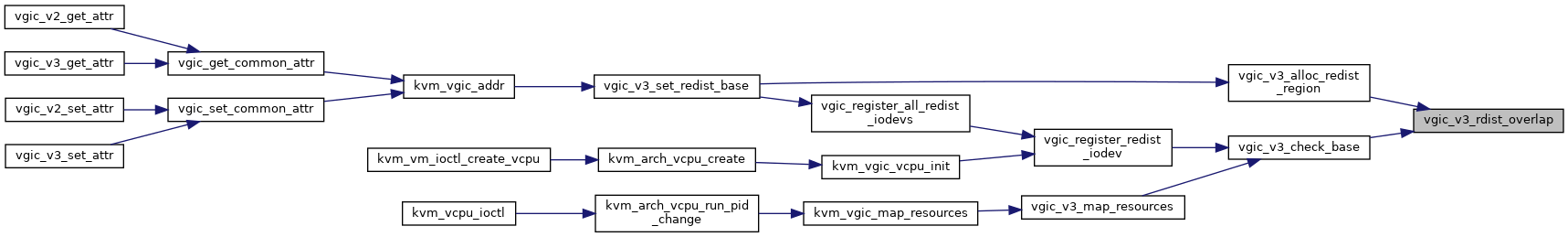

| bool | vgic_v3_rdist_overlap (struct kvm *kvm, gpa_t base, size_t size) |

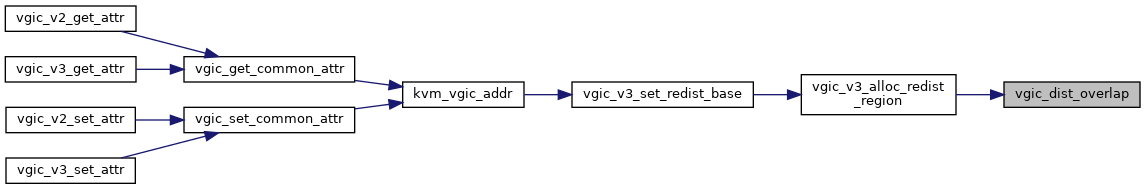

| static bool | vgic_dist_overlap (struct kvm *kvm, gpa_t base, size_t size) |

| bool | vgic_lpis_enabled (struct kvm_vcpu *vcpu) |

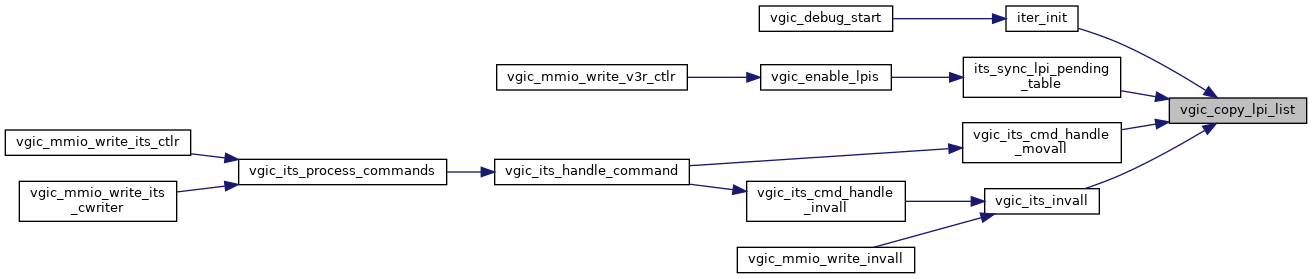

| int | vgic_copy_lpi_list (struct kvm *kvm, struct kvm_vcpu *vcpu, u32 **intid_ptr) |

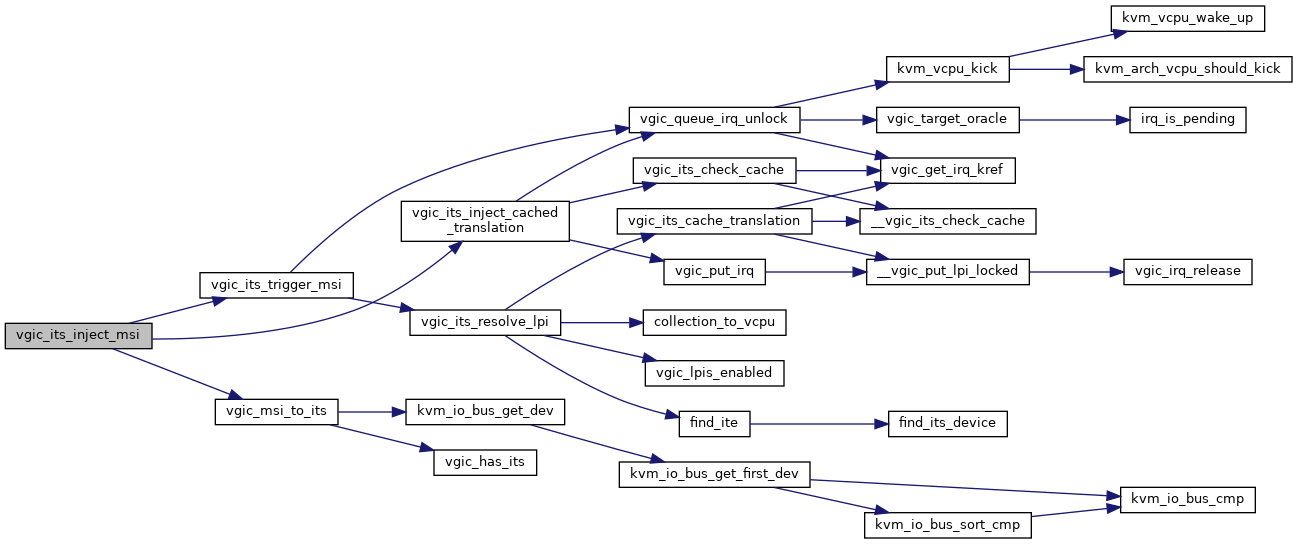

| int | vgic_its_resolve_lpi (struct kvm *kvm, struct vgic_its *its, u32 devid, u32 eventid, struct vgic_irq **irq) |

| struct vgic_its * | vgic_msi_to_its (struct kvm *kvm, struct kvm_msi *msi) |

| int | vgic_its_inject_cached_translation (struct kvm *kvm, struct kvm_msi *msi) |

| void | vgic_lpi_translation_cache_init (struct kvm *kvm) |

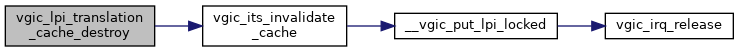

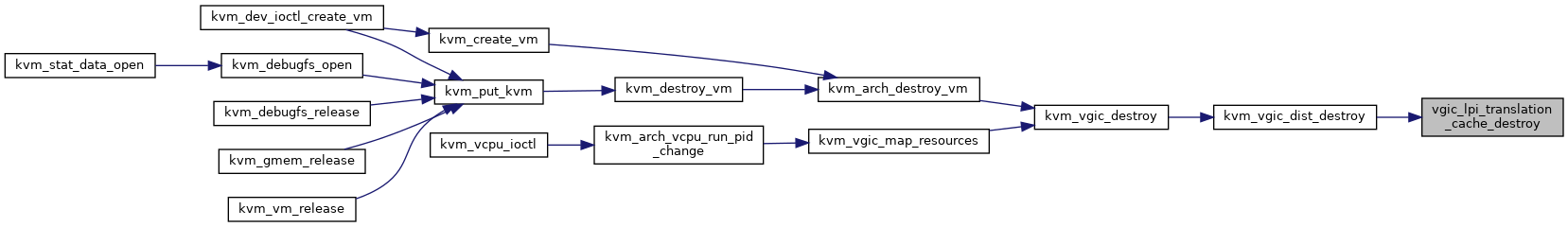

| void | vgic_lpi_translation_cache_destroy (struct kvm *kvm) |

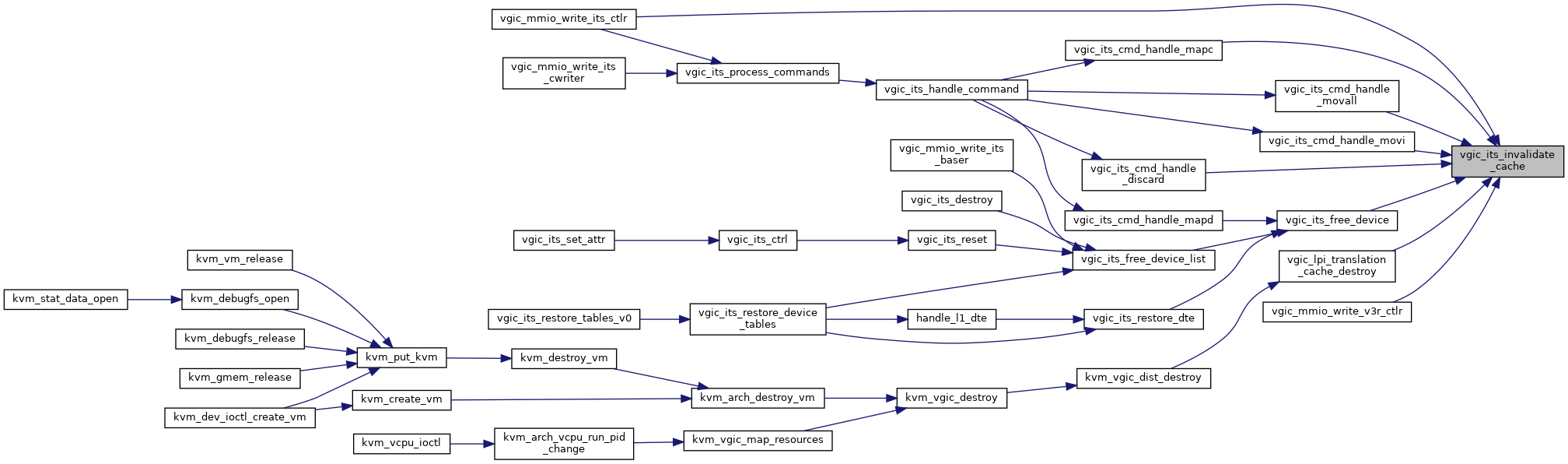

| void | vgic_its_invalidate_cache (struct kvm *kvm) |

| int | vgic_its_inv_lpi (struct kvm *kvm, struct vgic_irq *irq) |

| int | vgic_its_invall (struct kvm_vcpu *vcpu) |

| bool | vgic_supports_direct_msis (struct kvm *kvm) |

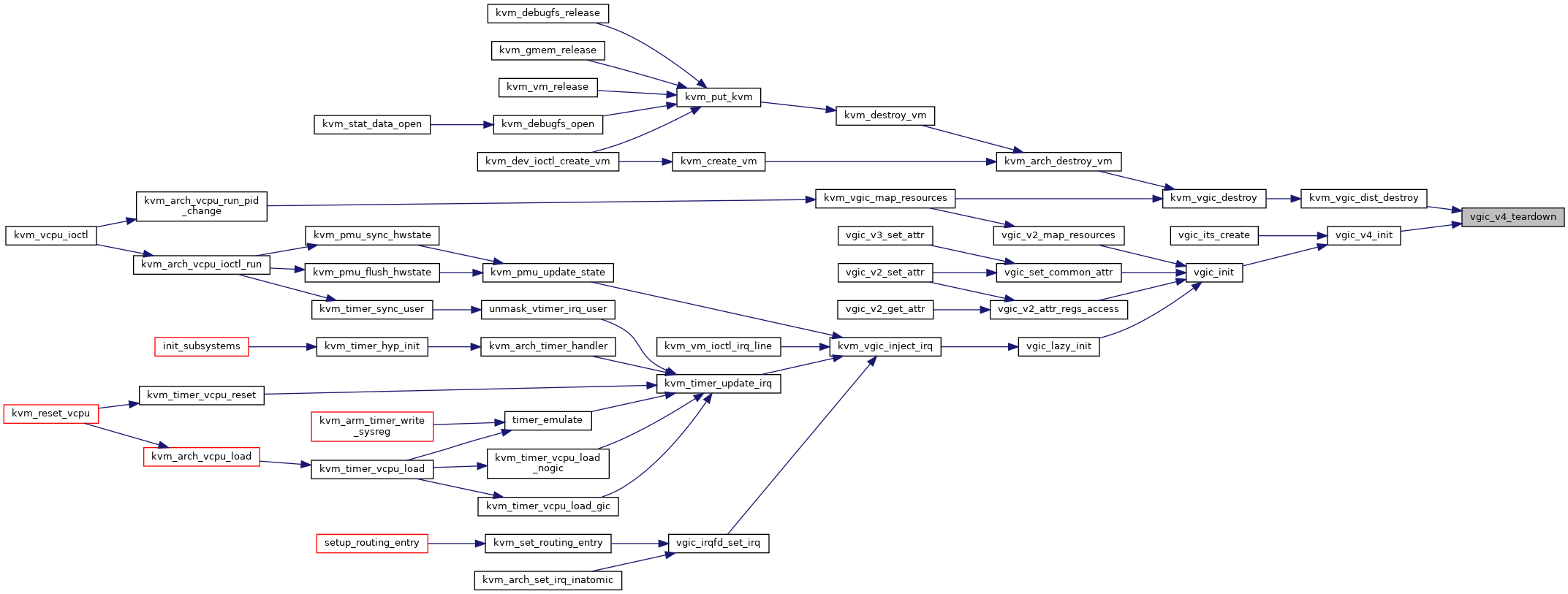

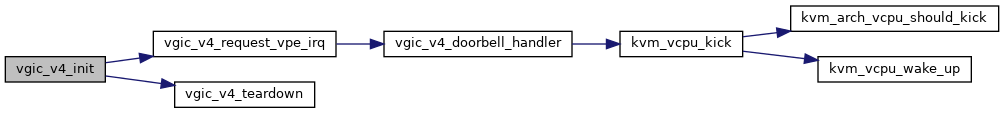

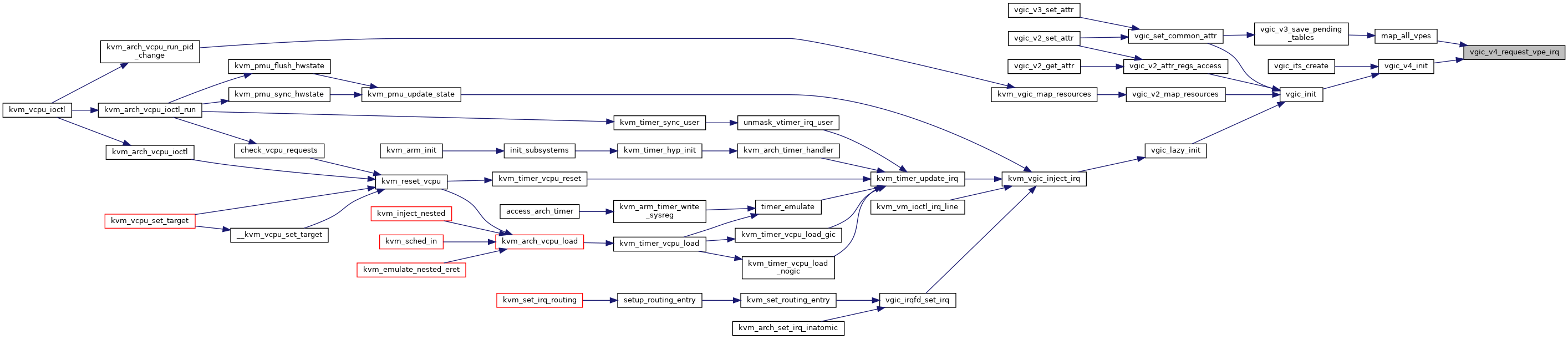

| int | vgic_v4_init (struct kvm *kvm) |

| void | vgic_v4_teardown (struct kvm *kvm) |

| void | vgic_v4_configure_vsgis (struct kvm *kvm) |

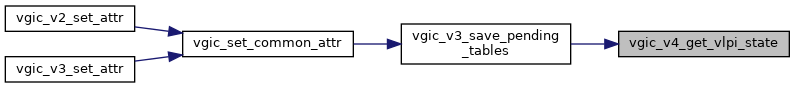

| void | vgic_v4_get_vlpi_state (struct vgic_irq *irq, bool *val) |

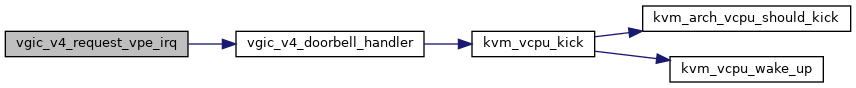

| int | vgic_v4_request_vpe_irq (struct kvm_vcpu *vcpu, int irq) |

Macro Definition Documentation

◆ DEBUG_SPINLOCK_BUG_ON

◆ IMPLEMENTER_ARM

◆ INTERRUPT_ID_BITS_ITS

◆ INTERRUPT_ID_BITS_SPIS

◆ IS_VGIC_ADDR_UNDEF

| #define IS_VGIC_ADDR_UNDEF | ( | _x | ) | ((_x) == VGIC_ADDR_UNDEF) |

◆ KVM_DEV_ARM_VGIC_SYSREG_MASK

| #define KVM_DEV_ARM_VGIC_SYSREG_MASK |

◆ KVM_ITS_CTE_ICID_MASK

◆ KVM_ITS_CTE_RDBASE_SHIFT

◆ KVM_ITS_CTE_VALID_MASK

◆ KVM_ITS_CTE_VALID_SHIFT

◆ KVM_ITS_DTE_ITTADDR_MASK

◆ KVM_ITS_DTE_ITTADDR_SHIFT

◆ KVM_ITS_DTE_NEXT_MASK

◆ KVM_ITS_DTE_NEXT_SHIFT

◆ KVM_ITS_DTE_SIZE_MASK

◆ KVM_ITS_DTE_VALID_MASK

◆ KVM_ITS_DTE_VALID_SHIFT

◆ KVM_ITS_ITE_ICID_MASK

◆ KVM_ITS_ITE_NEXT_SHIFT

◆ KVM_ITS_ITE_PINTID_MASK

◆ KVM_ITS_ITE_PINTID_SHIFT

◆ KVM_ITS_L1E_ADDR_MASK

◆ KVM_ITS_L1E_VALID_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_CRM_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_CRM_SHIFT

◆ KVM_REG_ARM_VGIC_SYSREG_CRN_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_CRN_SHIFT

◆ KVM_REG_ARM_VGIC_SYSREG_OP0_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_OP0_SHIFT

◆ KVM_REG_ARM_VGIC_SYSREG_OP1_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_OP1_SHIFT

◆ KVM_REG_ARM_VGIC_SYSREG_OP2_MASK

◆ KVM_REG_ARM_VGIC_SYSREG_OP2_SHIFT

◆ KVM_VGIC_V3_RDIST_BASE_MASK

◆ KVM_VGIC_V3_RDIST_COUNT_MASK

◆ KVM_VGIC_V3_RDIST_COUNT_SHIFT

◆ KVM_VGIC_V3_RDIST_FLAGS_MASK

◆ KVM_VGIC_V3_RDIST_FLAGS_SHIFT

◆ KVM_VGIC_V3_RDIST_INDEX_MASK

◆ PRODUCT_ID_KVM

◆ VGIC_ADDR_UNDEF

◆ VGIC_AFFINITY_0_MASK

| #define VGIC_AFFINITY_0_MASK (0xffUL << VGIC_AFFINITY_0_SHIFT) |

◆ VGIC_AFFINITY_0_SHIFT

◆ VGIC_AFFINITY_1_MASK

| #define VGIC_AFFINITY_1_MASK (0xffUL << VGIC_AFFINITY_1_SHIFT) |

◆ VGIC_AFFINITY_1_SHIFT

◆ VGIC_AFFINITY_2_MASK

| #define VGIC_AFFINITY_2_MASK (0xffUL << VGIC_AFFINITY_2_SHIFT) |

◆ VGIC_AFFINITY_2_SHIFT

◆ VGIC_AFFINITY_3_MASK

| #define VGIC_AFFINITY_3_MASK (0xffUL << VGIC_AFFINITY_3_SHIFT) |
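The four `VGIC_AFFINITY_n_MASK` definitions each select one 8-bit field of a packed affinity value. The sketch below shows how such a field could be extracted. The numeric shift values (0, 8, 16, 24) are an assumption, since this page lists the `VGIC_AFFINITY_n_SHIFT` names without their values, and `vgic_affinity_field` is a hypothetical helper, not part of vgic.h.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed shift values: this page names the macros but not their numbers. */
#define VGIC_AFFINITY_0_SHIFT 0
#define VGIC_AFFINITY_1_SHIFT 8
#define VGIC_AFFINITY_2_SHIFT 16
#define VGIC_AFFINITY_3_SHIFT 24

/* Mask definitions as shown on this page. */
#define VGIC_AFFINITY_0_MASK (0xffUL << VGIC_AFFINITY_0_SHIFT)
#define VGIC_AFFINITY_1_MASK (0xffUL << VGIC_AFFINITY_1_SHIFT)
#define VGIC_AFFINITY_2_MASK (0xffUL << VGIC_AFFINITY_2_SHIFT)
#define VGIC_AFFINITY_3_MASK (0xffUL << VGIC_AFFINITY_3_SHIFT)

/* Hypothetical helper: return the 8-bit affinity field at `level` (0..3). */
static inline uint8_t vgic_affinity_field(unsigned long reg, unsigned int level)
{
	static const unsigned int shift[] = {
		VGIC_AFFINITY_0_SHIFT, VGIC_AFFINITY_1_SHIFT,
		VGIC_AFFINITY_2_SHIFT, VGIC_AFFINITY_3_SHIFT,
	};
	static const unsigned long mask[] = {
		VGIC_AFFINITY_0_MASK, VGIC_AFFINITY_1_MASK,
		VGIC_AFFINITY_2_MASK, VGIC_AFFINITY_3_MASK,
	};

	assert(level <= 3);
	/* Mask first, then shift the selected byte down to bit 0. */
	return (uint8_t)((reg & mask[level]) >> shift[level]);
}
```

Under these assumed shifts, a packed value of `0x04030201UL` yields `0x01` at level 0 and `0x04` at level 3.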

◆ VGIC_AFFINITY_3_SHIFT

◆ VGIC_AFFINITY_LEVEL

| #define VGIC_AFFINITY_LEVEL | ( | reg, level | ) |

◆ vgic_irq_is_sgi

| #define vgic_irq_is_sgi | ( | intid | ) | ((intid) < VGIC_NR_SGIS) |
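As a small illustration of the macro above, the sketch below counts how many interrupt IDs in a list fall in the SGI range. `VGIC_NR_SGIS` is defined elsewhere and its value is not shown on this page; the value 16 used here is an assumption, and `example_count_sgis` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VGIC_NR_SGIS 16 /* assumed value; not defined on this page */
#define vgic_irq_is_sgi(intid) ((intid) < VGIC_NR_SGIS)

/* Hypothetical helper: count the interrupt IDs that are SGIs. */
static size_t example_count_sgis(const uint32_t *intids, size_t n)
{
	size_t count = 0;

	assert(intids != NULL || n == 0);
	for (size_t i = 0; i < n; i++) {
		if (vgic_irq_is_sgi(intids[i]))
			count++;
	}
	return count;
}
```

The macro is a plain range check, so under the assumed limit IDs 0 through 15 classify as SGIs and everything from 16 upward does not.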

◆ VGIC_PRI_BITS

◆ VGIC_TO_MPIDR

| #define VGIC_TO_MPIDR | ( | val | ) |

Function Documentation

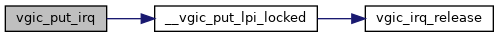

◆ __vgic_put_lpi_locked()

| void __vgic_put_lpi_locked | ( | struct kvm *kvm, struct vgic_irq *irq | ) |

Definition at line 126 of file vgic.c.

◆ irq_is_pending()

| static bool irq_is_pending | ( | struct vgic_irq *irq | ) | inline static |

◆ kvm_register_vgic_device()

| int kvm_register_vgic_device | ( | unsigned long | type | ) |

Definition at line 316 of file vgic-kvm-device.c.

◆ kvm_vgic_register_its_device()

| int kvm_vgic_register_its_device | ( | void | ) |

◆ vgic_check_iorange()

| int vgic_check_iorange | ( | struct kvm *kvm, phys_addr_t ioaddr, phys_addr_t addr, phys_addr_t alignment, phys_addr_t size | ) |

◆ vgic_copy_lpi_list()

| int vgic_copy_lpi_list | ( | struct kvm *kvm, struct kvm_vcpu *vcpu, u32 **intid_ptr | ) |

Definition at line 319 of file vgic-its.c.

◆ vgic_debug_destroy()

| void vgic_debug_destroy | ( | struct kvm * | kvm | ) |

◆ vgic_debug_init()

| void vgic_debug_init | ( | struct kvm * | kvm | ) |

◆ vgic_dist_overlap()

| static bool vgic_dist_overlap | ( | struct kvm *kvm, gpa_t base, size_t size | ) | inline static |

◆ vgic_enable_lpis()

| void vgic_enable_lpis | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1866 of file vgic-its.c.

◆ vgic_flush_pending_lpis()

| void vgic_flush_pending_lpis | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 152 of file vgic.c.

◆ vgic_get_implementation_rev()

| static u32 vgic_get_implementation_rev | ( | struct kvm_vcpu *vcpu | ) | inline static |

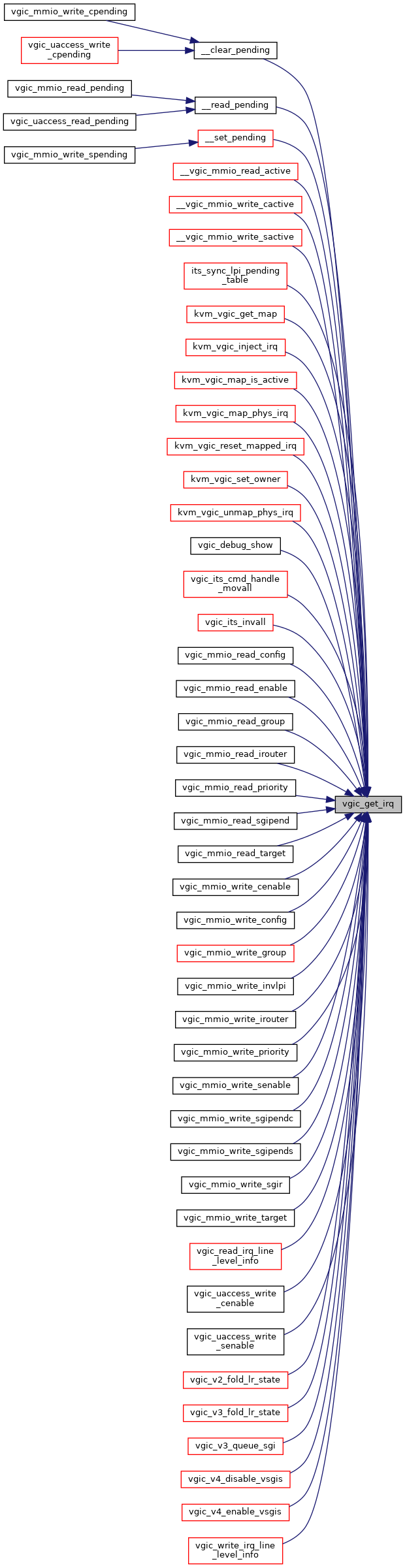

◆ vgic_get_irq()

| struct vgic_irq *vgic_get_irq | ( | struct kvm *kvm, struct kvm_vcpu *vcpu, u32 intid | ) |

◆ vgic_get_irq_kref()

| static void vgic_get_irq_kref | ( | struct vgic_irq *irq | ) | inline static |

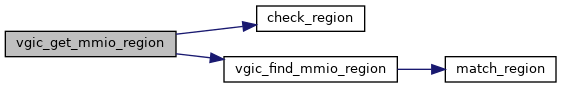

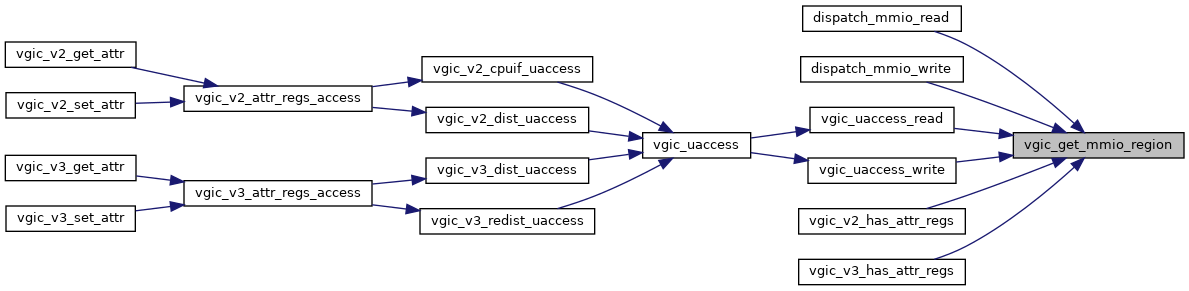

◆ vgic_get_mmio_region()

| const struct vgic_register_region *vgic_get_mmio_region | ( | struct kvm_vcpu *vcpu, struct vgic_io_device *iodev, gpa_t addr, int len | ) |

Definition at line 950 of file vgic-mmio.c.

◆ vgic_get_phys_line_level()

| bool vgic_get_phys_line_level | ( | struct vgic_irq * | irq | ) |

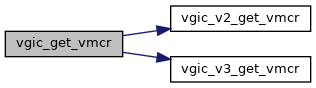

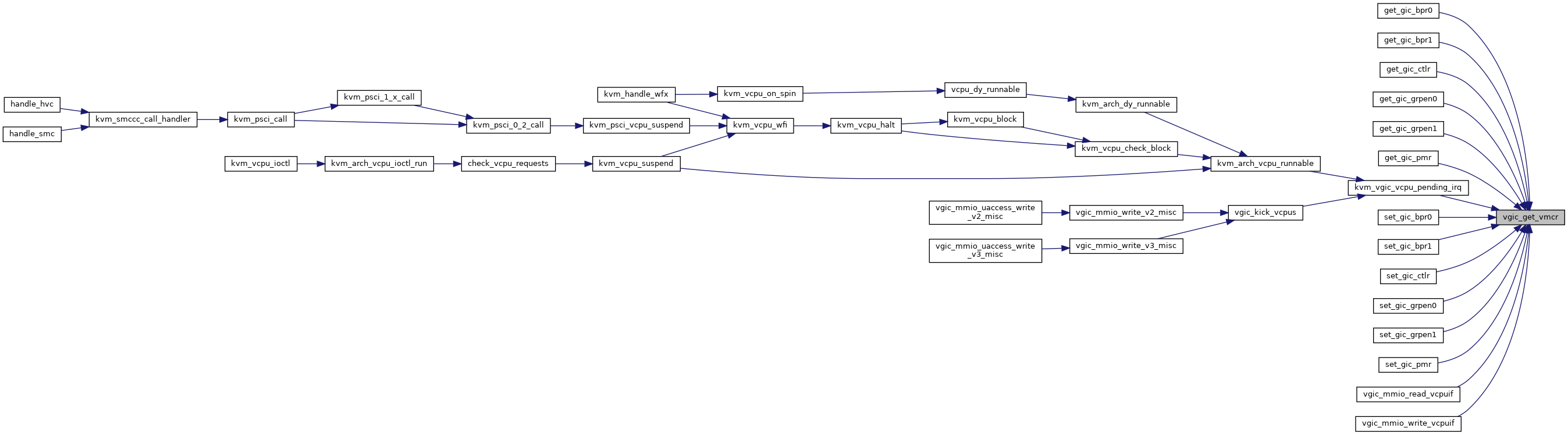

◆ vgic_get_vmcr()

| void vgic_get_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

Definition at line 851 of file vgic-mmio.c.

◆ vgic_has_its()

| bool vgic_has_its | ( | struct kvm * | kvm | ) |

◆ vgic_init()

| int vgic_init | ( | struct kvm * | kvm | ) |

Definition at line 262 of file vgic-init.c.

◆ vgic_irq_get_lr_count()

| static int vgic_irq_get_lr_count | ( | struct vgic_irq *irq | ) | inline static |

◆ vgic_irq_handle_resampling()

| void vgic_irq_handle_resampling | ( | struct vgic_irq *irq, bool lr_deactivated, bool lr_pending | ) |

Definition at line 1060 of file vgic.c.

◆ vgic_irq_is_mapped_level()

| static bool vgic_irq_is_mapped_level | ( | struct vgic_irq *irq | ) | inline static |

◆ vgic_irq_is_multi_sgi()

| static bool vgic_irq_is_multi_sgi | ( | struct vgic_irq *irq | ) | inline static |

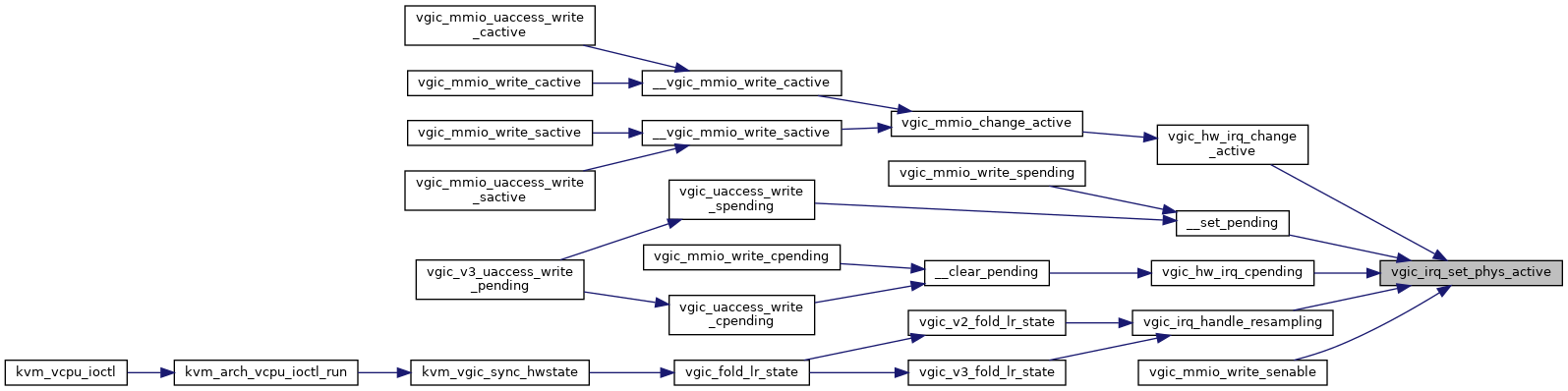

◆ vgic_irq_set_phys_active()

| void vgic_irq_set_phys_active | ( | struct vgic_irq *irq, bool active | ) |

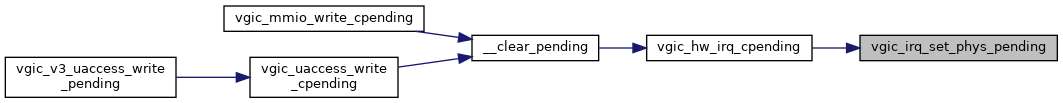

◆ vgic_irq_set_phys_pending()

| void vgic_irq_set_phys_pending | ( | struct vgic_irq *irq, bool pending | ) |

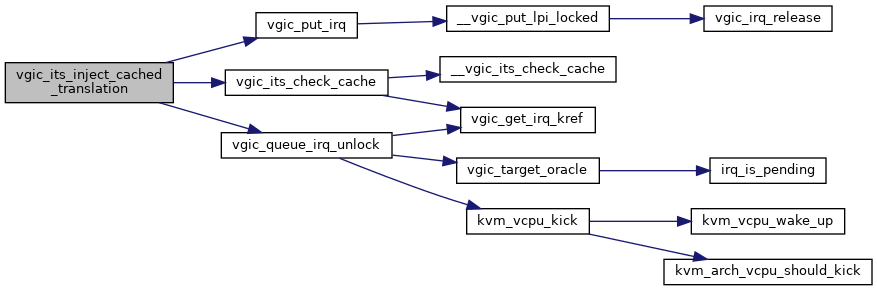

◆ vgic_its_inject_cached_translation()

| int vgic_its_inject_cached_translation | ( | struct kvm *kvm, struct kvm_msi *msi | ) |

Definition at line 765 of file vgic-its.c.

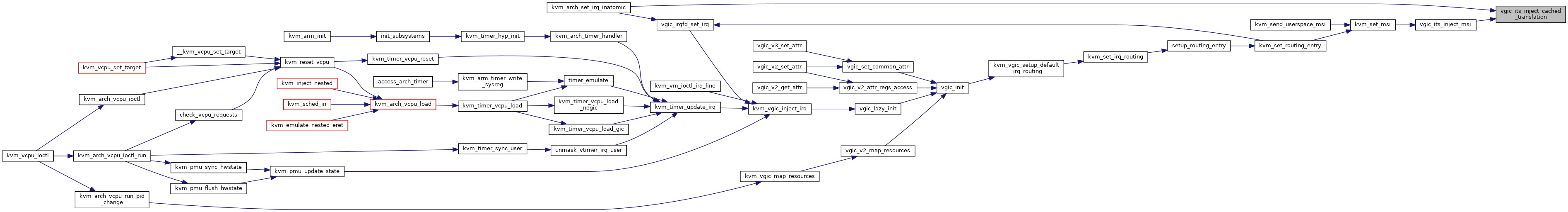

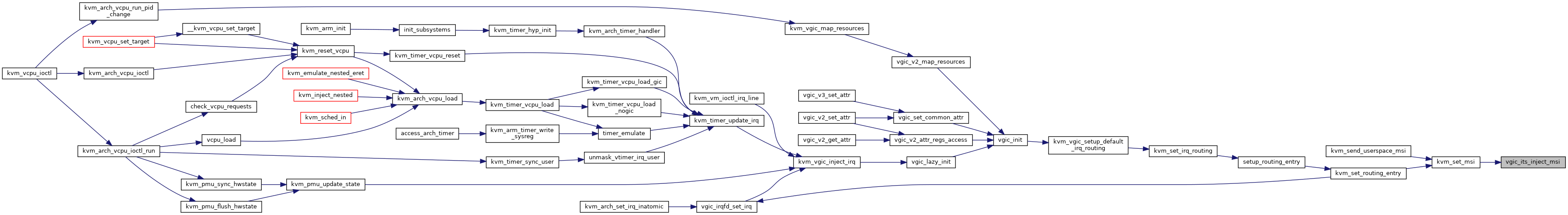

◆ vgic_its_inject_msi()

| int vgic_its_inject_msi | ( | struct kvm *kvm, struct kvm_msi *msi | ) |

Definition at line 790 of file vgic-its.c.

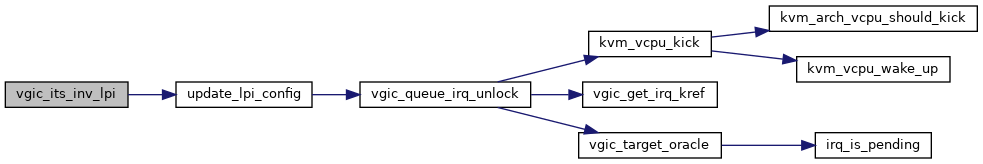

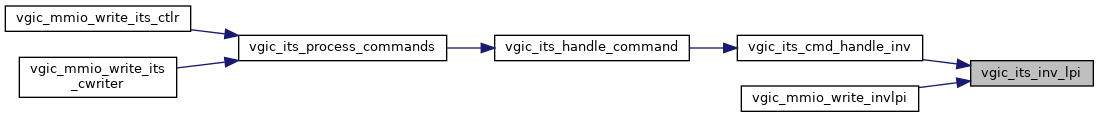

◆ vgic_its_inv_lpi()

| int vgic_its_inv_lpi | ( | struct kvm *kvm, struct vgic_irq *irq | ) |

Definition at line 1323 of file vgic-its.c.

◆ vgic_its_invalidate_cache()

| void vgic_its_invalidate_cache | ( | struct kvm * | kvm | ) |

Definition at line 659 of file vgic-its.c.

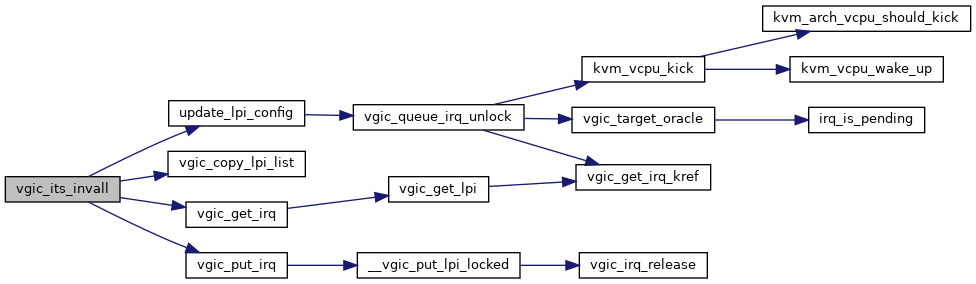

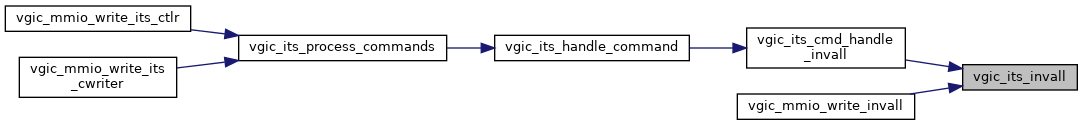

◆ vgic_its_invall()

| int vgic_its_invall | ( | struct kvm_vcpu * | vcpu | ) |

vgic_its_invall - invalidate all LPIs targeting a given vcpu

@vcpu: the vcpu for which the RD is targeted by an invalidation

Contrary to the INVALL command, this targets a RD instead of a collection, and we don't need to hold the its_lock, since no ITS is involved here.

Definition at line 1355 of file vgic-its.c.

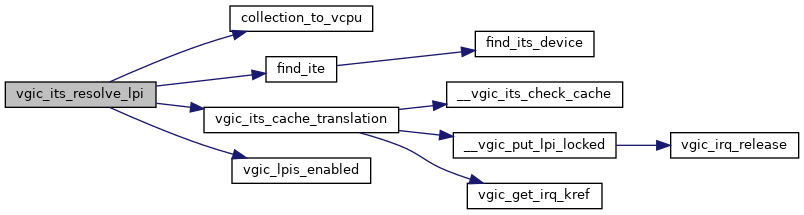

◆ vgic_its_resolve_lpi()

| int vgic_its_resolve_lpi | ( | struct kvm *kvm, struct vgic_its *its, u32 devid, u32 eventid, struct vgic_irq **irq | ) |

Definition at line 682 of file vgic-its.c.

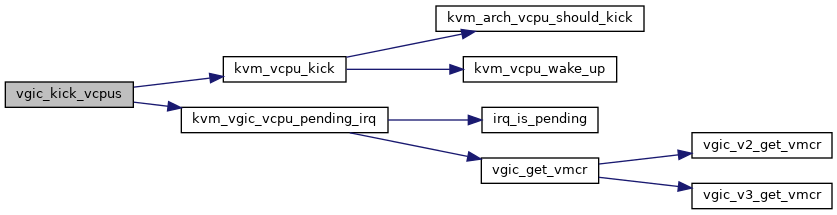

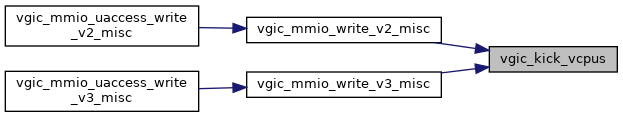

◆ vgic_kick_vcpus()

| void vgic_kick_vcpus | ( | struct kvm * | kvm | ) |

◆ vgic_lazy_init()

| int vgic_lazy_init | ( | struct kvm * | kvm | ) |

vgic_lazy_init: Lazy init is only allowed if the GIC exposed to the guest is a GICv2. A GICv3 must be explicitly initialized by userspace using the KVM_DEV_ARM_VGIC_GRP_CTRL KVM_DEVICE group.

@kvm: kvm struct pointer

Definition at line 423 of file vgic-init.c.
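The rule described above can be sketched as a small standalone model. This is not the kernel implementation: every type and function name below (`model_vgic`, `model_vgic_init`, `model_vgic_lazy_init`) is hypothetical, locking is omitted, and the `-EBUSY` error code is an assumption made for the illustration.

```c
#include <errno.h>
#include <stdbool.h>

/* Hypothetical stand-ins for the guest-visible GIC model. */
enum model_vgic_type { MODEL_VGIC_V2, MODEL_VGIC_V3 };

struct model_vgic {
	enum model_vgic_type type;
	bool initialized;
};

/* Stand-in for the full initialization path; always succeeds here. */
static int model_vgic_init(struct model_vgic *vgic)
{
	vgic->initialized = true;
	return 0;
}

/*
 * Lazy init: a no-op once initialized, automatic for GICv2 only.
 * Any other model must have been initialized explicitly beforehand.
 */
static int model_vgic_lazy_init(struct model_vgic *vgic)
{
	if (vgic->initialized)
		return 0;
	if (vgic->type != MODEL_VGIC_V2)
		return -EBUSY; /* assumed error code for this sketch */
	return model_vgic_init(vgic);
}
```

In this model an uninitialized GICv2 is initialized on first use, while an uninitialized GICv3 is rejected and left untouched until it is initialized explicitly.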

◆ vgic_lpi_translation_cache_destroy()

| void vgic_lpi_translation_cache_destroy | ( | struct kvm * | kvm | ) |

Definition at line 1927 of file vgic-its.c.

◆ vgic_lpi_translation_cache_init()

| void vgic_lpi_translation_cache_init | ( | struct kvm * | kvm | ) |

◆ vgic_lpis_enabled()

| bool vgic_lpis_enabled | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_msi_to_its()

| struct vgic_its *vgic_msi_to_its | ( | struct kvm *kvm, struct kvm_msi *msi | ) |

Definition at line 708 of file vgic-its.c.

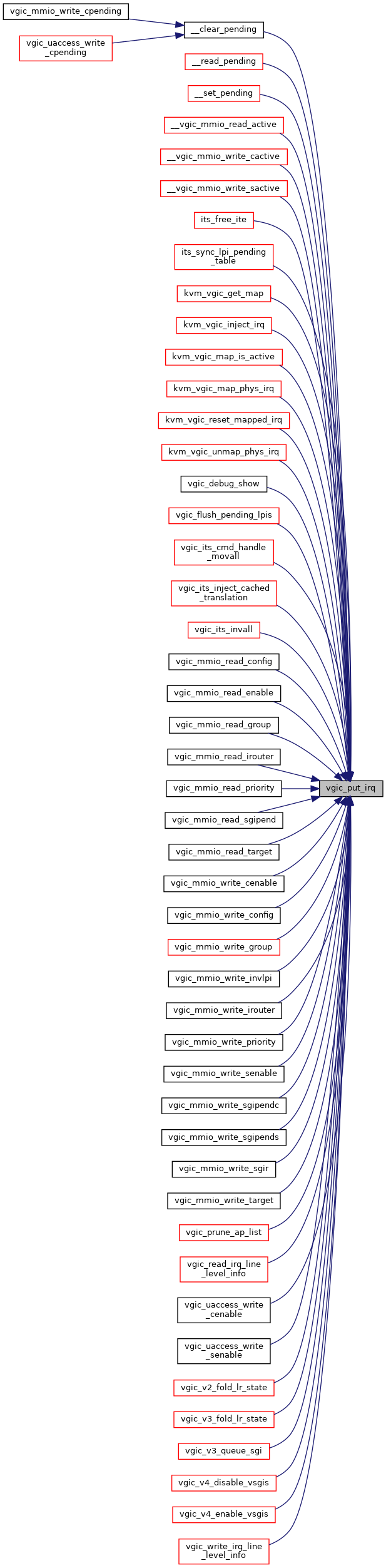

◆ vgic_put_irq()

| void vgic_put_irq | ( | struct kvm *kvm, struct vgic_irq *irq | ) |

◆ vgic_queue_irq_unlock()

| bool vgic_queue_irq_unlock | ( | struct kvm *kvm, struct vgic_irq *irq, unsigned long flags | ) |

Definition at line 336 of file vgic.c.

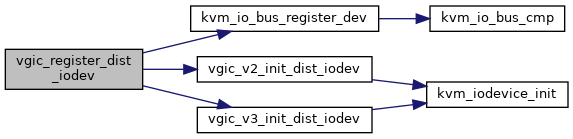

◆ vgic_register_dist_iodev()

| int vgic_register_dist_iodev | ( | struct kvm *kvm, gpa_t dist_base_address, enum vgic_type | ) |

Definition at line 1080 of file vgic-mmio.c.

◆ vgic_register_redist_iodev()

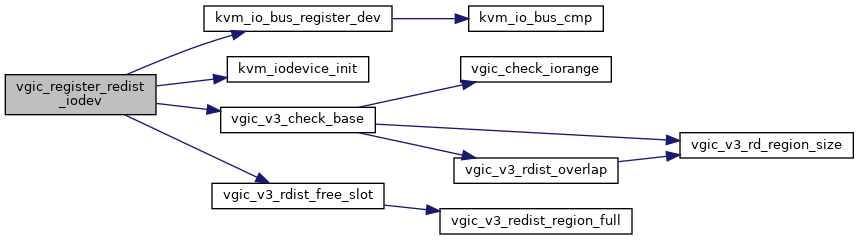

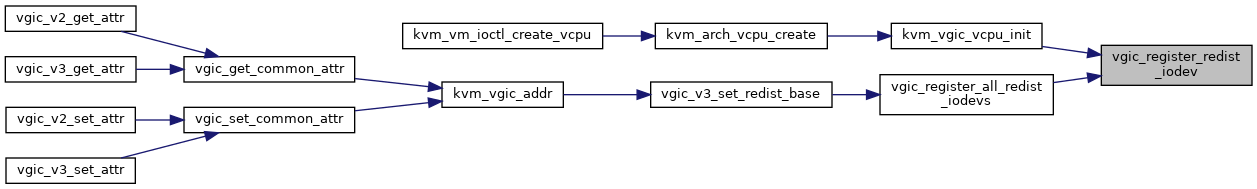

| int vgic_register_redist_iodev | ( | struct kvm_vcpu * | vcpu | ) |

vgic_register_redist_iodev - register a single redist iodev

@vcpu: The VCPU to which the redistributor belongs

Register a KVM iodev for this VCPU's redistributor using the address provided.

Return 0 on success, -ERRNO otherwise.

Definition at line 746 of file vgic-mmio-v3.c.

◆ vgic_set_vmcr()

| void vgic_set_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

Definition at line 843 of file vgic-mmio.c.

◆ vgic_supports_direct_msis()

| bool vgic_supports_direct_msis | ( | struct kvm * | kvm | ) |

Definition at line 51 of file vgic-mmio-v3.c.

◆ vgic_unregister_redist_iodev()

| void vgic_unregister_redist_iodev | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 805 of file vgic-mmio-v3.c.

◆ vgic_v2_clear_lr()

| void vgic_v2_clear_lr | ( | struct kvm_vcpu *vcpu, int lr | ) |

◆ vgic_v2_cpuif_uaccess()

| int vgic_v2_cpuif_uaccess | ( | struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val | ) |

Definition at line 539 of file vgic-mmio-v2.c.

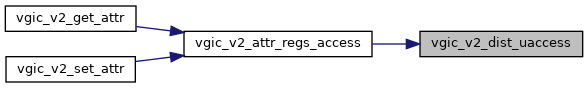

◆ vgic_v2_dist_uaccess()

| int vgic_v2_dist_uaccess | ( | struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val | ) |

Definition at line 551 of file vgic-mmio-v2.c.

◆ vgic_v2_enable()

| void vgic_v2_enable | ( | struct kvm_vcpu * | vcpu | ) |

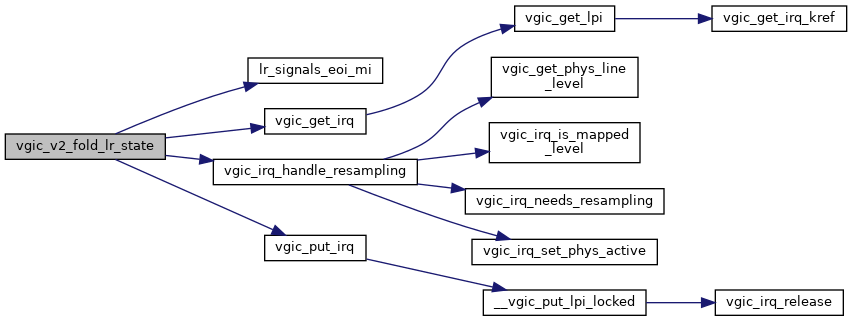

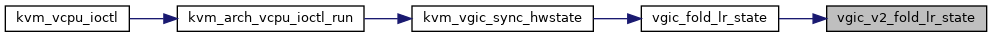

◆ vgic_v2_fold_lr_state()

| void vgic_v2_fold_lr_state | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 49 of file vgic-v2.c.

◆ vgic_v2_get_vmcr()

| void vgic_v2_get_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

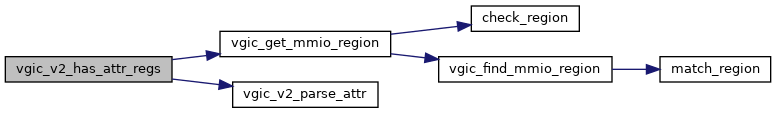

◆ vgic_v2_has_attr_regs()

| int vgic_v2_has_attr_regs | ( | struct kvm_device *dev, struct kvm_device_attr *attr | ) |

Definition at line 497 of file vgic-mmio-v2.c.

◆ vgic_v2_init_lrs()

| void vgic_v2_init_lrs | ( | void | ) |

◆ vgic_v2_load()

| void vgic_v2_load | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v2_map_resources()

| int vgic_v2_map_resources | ( | struct kvm * | kvm | ) |

Definition at line 289 of file vgic-v2.c.

◆ vgic_v2_parse_attr()

| int vgic_v2_parse_attr | ( | struct kvm_device *dev, struct kvm_device_attr *attr, struct vgic_reg_attr *reg_attr | ) |

◆ vgic_v2_populate_lr()

| void vgic_v2_populate_lr | ( | struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr | ) |

◆ vgic_v2_probe()

| int vgic_v2_probe | ( | const struct gic_kvm_info * | info | ) |

vgic_v2_probe - probe for a VGICv2 compatible interrupt controller

@info: pointer to the GIC description

Returns 0 if the VGICv2 has been probed successfully, or an error code otherwise.

Definition at line 337 of file vgic-v2.c.

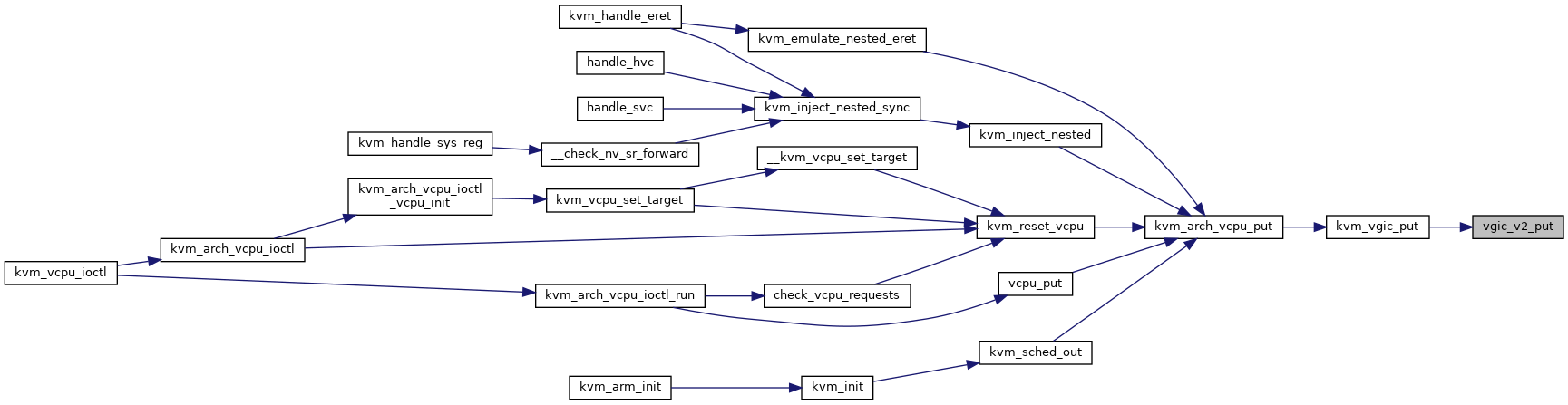

◆ vgic_v2_put()

| void vgic_v2_put | ( | struct kvm_vcpu * | vcpu | ) |

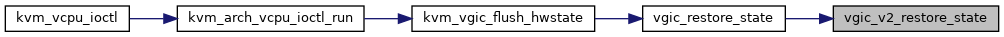

◆ vgic_v2_restore_state()

| void vgic_v2_restore_state | ( | struct kvm_vcpu * | vcpu | ) |

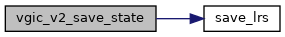

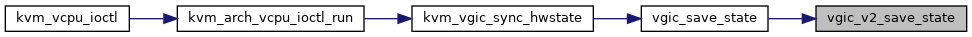

◆ vgic_v2_save_state()

| void vgic_v2_save_state | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v2_set_underflow()

| void vgic_v2_set_underflow | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v2_set_vmcr()

| void vgic_v2_set_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

◆ vgic_v2_vmcr_sync()

| void vgic_v2_vmcr_sync | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v3_check_base()

| bool vgic_v3_check_base | ( | struct kvm * | kvm | ) |

Definition at line 477 of file vgic-v3.c.

◆ vgic_v3_clear_lr()

| void vgic_v3_clear_lr | ( | struct kvm_vcpu *vcpu, int lr | ) |

◆ vgic_v3_cpu_sysregs_uaccess()

| int vgic_v3_cpu_sysregs_uaccess | ( | struct kvm_vcpu *vcpu, struct kvm_device_attr *attr, bool is_write | ) |

Definition at line 351 of file vgic-sys-reg-v3.c.

◆ vgic_v3_dist_uaccess()

| int vgic_v3_dist_uaccess | ( | struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val | ) |

Definition at line 1095 of file vgic-mmio-v3.c.

◆ vgic_v3_enable()

| void vgic_v3_enable | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 260 of file vgic-v3.c.

◆ vgic_v3_fold_lr_state()

| void vgic_v3_fold_lr_state | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v3_free_redist_region()

| void vgic_v3_free_redist_region | ( | struct vgic_redist_region * | rdreg | ) |

◆ vgic_v3_get_vmcr()

| void vgic_v3_get_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

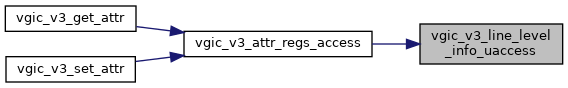

◆ vgic_v3_has_attr_regs()

| int vgic_v3_has_attr_regs | ( | struct kvm_device *dev, struct kvm_device_attr *attr | ) |

Definition at line 956 of file vgic-mmio-v3.c.

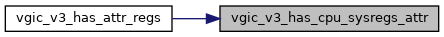

◆ vgic_v3_has_cpu_sysregs_attr()

| int vgic_v3_has_cpu_sysregs_attr | ( | struct kvm_vcpu *vcpu, struct kvm_device_attr *attr | ) |

Definition at line 342 of file vgic-sys-reg-v3.c.

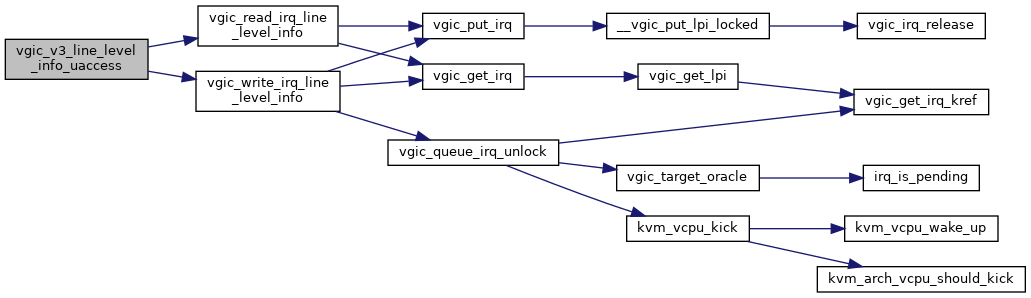

◆ vgic_v3_line_level_info_uaccess()

| int vgic_v3_line_level_info_uaccess | ( | struct kvm_vcpu *vcpu, bool is_write, u32 intid, u32 *val | ) |

Definition at line 1117 of file vgic-mmio-v3.c.

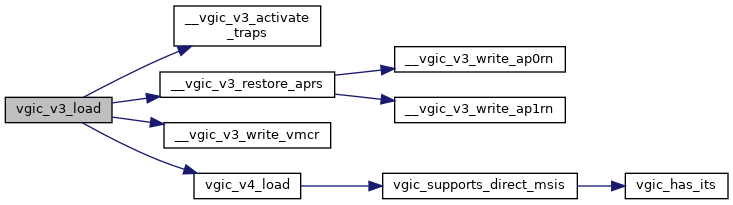

◆ vgic_v3_load()

| void vgic_v3_load | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 720 of file vgic-v3.c.

◆ vgic_v3_lpi_sync_pending_status()

| int vgic_v3_lpi_sync_pending_status | ( | struct kvm *kvm, struct vgic_irq *irq | ) |

Definition at line 305 of file vgic-v3.c.

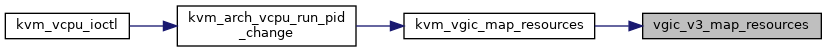

◆ vgic_v3_map_resources()

| int vgic_v3_map_resources | ( | struct kvm * | kvm | ) |

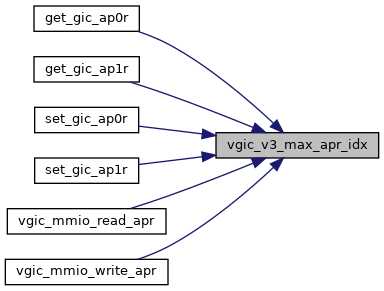

◆ vgic_v3_max_apr_idx()

| static int vgic_v3_max_apr_idx | ( | struct kvm_vcpu *vcpu | ) | inline static |

◆ vgic_v3_parse_attr()

| int vgic_v3_parse_attr | ( | struct kvm_device *dev, struct kvm_device_attr *attr, struct vgic_reg_attr *reg_attr | ) |

Definition at line 474 of file vgic-kvm-device.c.

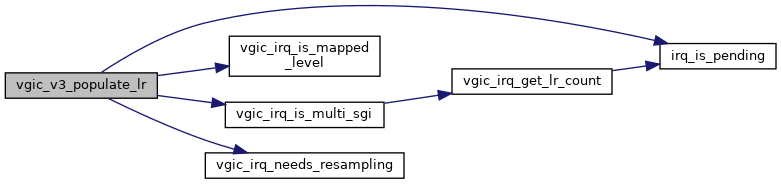

◆ vgic_v3_populate_lr()

| void vgic_v3_populate_lr | ( | struct kvm_vcpu *vcpu, struct vgic_irq *irq, int lr | ) |

◆ vgic_v3_probe()

| int vgic_v3_probe | ( | const struct gic_kvm_info * | info | ) |

vgic_v3_probe - probe for a VGICv3 compatible interrupt controller

@info: pointer to the GIC description

Returns 0 if the VGICv3 has been probed successfully, or an error code otherwise.

Definition at line 632 of file vgic-v3.c.

◆ vgic_v3_put()

| void vgic_v3_put | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 748 of file vgic-v3.c.

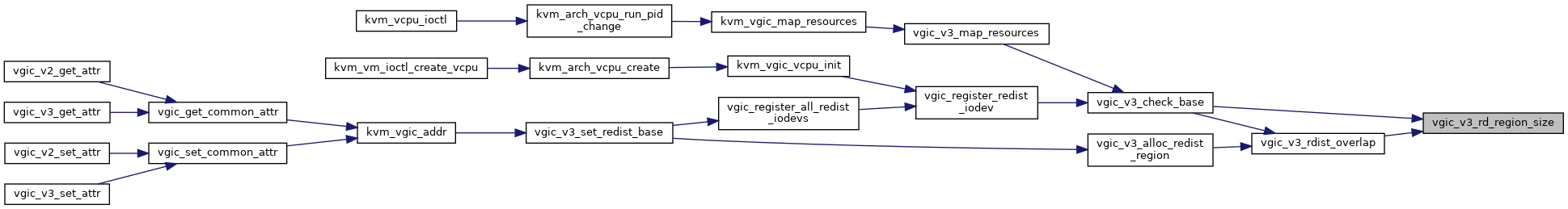

◆ vgic_v3_rd_region_size()

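| static size_t vgic_v3_rd_region_size | ( | struct kvm *kvm, struct vgic_redist_region *rdreg | ) | inline static |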

◆ vgic_v3_rdist_free_slot()

| struct vgic_redist_region* vgic_v3_rdist_free_slot | ( | struct list_head * | rd_regions | ) |

vgic_v3_rdist_free_slot - Look up registered rdist regions and identify one which has free space to put a new rdist region.

@rd_regions: redistributor region list head

A redistributor region maps n redistributors, where n = region size / (2 x 64kB). The stride between redistributors is 0, and regions are filled in index order.

Return: the redist region handle, if any, that has space to map a new rdist region.

Definition at line 513 of file vgic-v3.c.
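The capacity formula and fill order described above can be sketched with a simplified model. The struct layout and all `model_*` names below are hypothetical and do not reproduce `struct vgic_redist_region`. The sketch also assumes that regions sit in an array already sorted by index and that every region has a fixed, nonzero capacity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Each redistributor occupies two 64kB frames. */
#define MODEL_REDIST_SIZE (2u * 64u * 1024u)

/* Hypothetical, simplified region descriptor. */
struct model_rd_region {
	uint32_t index;      /* position in the fill order */
	uint64_t base;       /* guest physical base address */
	uint32_t count;      /* capacity, in redistributors */
	uint32_t free_index; /* next unused slot; equals count when full */
};

/* n = region size / (2 x 64kB) */
static uint32_t model_rd_capacity(uint64_t region_size)
{
	return (uint32_t)(region_size / MODEL_REDIST_SIZE);
}

static bool model_rd_region_full(const struct model_rd_region *r)
{
	return r->free_index >= r->count;
}

/* Return the first region, in index order, with a free slot, or NULL. */
static struct model_rd_region *
model_rdist_free_slot(struct model_rd_region *regions, size_t n)
{
	assert(regions != NULL || n == 0);
	for (size_t i = 0; i < n; i++) {
		if (!model_rd_region_full(&regions[i]))
			return &regions[i];
	}
	return NULL;
}
```

With a stride of 0, the slot at `free_index` in the chosen region would start at `base + free_index * MODEL_REDIST_SIZE`.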

◆ vgic_v3_rdist_overlap()

| bool vgic_v3_rdist_overlap | ( | struct kvm *kvm, gpa_t base, size_t size | ) |

vgic_v3_rdist_overlap - check if a region overlaps with any existing redistributor region

@kvm: kvm handle

@base: base of the region

@size: size of region

Return: true if there is an overlap

Definition at line 460 of file vgic-v3.c.
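An overlap check of this kind is usually a half-open interval test applied to each registered region. The sketch below illustrates that general technique under stated assumptions: `model_range` and both functions are hypothetical names, regions are held in a plain array rather than the kernel's list, and address arithmetic is assumed not to wrap around.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical region descriptor covering [base, base + size). */
struct model_range {
	uint64_t base;
	uint64_t size;
};

/* Two half-open ranges overlap when each starts before the other ends. */
static bool model_ranges_overlap(uint64_t base, uint64_t size,
				 const struct model_range *r)
{
	return base < r->base + r->size && r->base < base + size;
}

/* True if [base, base + size) overlaps any of the n registered regions. */
static bool model_rdist_overlap(const struct model_range *regions, size_t n,
				uint64_t base, uint64_t size)
{
	for (size_t i = 0; i < n; i++) {
		if (model_ranges_overlap(base, size, &regions[i]))
			return true;
	}
	return false;
}
```

Because the ranges are half-open, a candidate that starts exactly where an existing region ends is not reported as overlapping.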

◆ vgic_v3_rdist_region_from_index()

| struct vgic_redist_region *vgic_v3_rdist_region_from_index | ( | struct kvm *kvm, u32 index | ) |

◆ vgic_v3_redist_region_full()

| static bool vgic_v3_redist_region_full | ( | struct vgic_redist_region *region | ) | inline static |

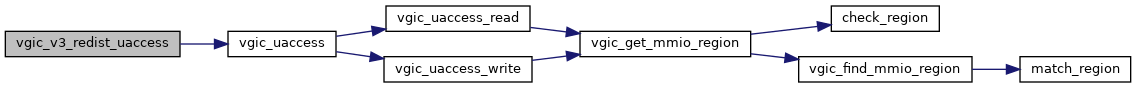

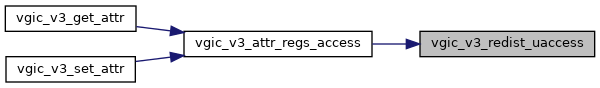

◆ vgic_v3_redist_uaccess()

| int vgic_v3_redist_uaccess | ( | struct kvm_vcpu *vcpu, bool is_write, int offset, u32 *val | ) |

Definition at line 1106 of file vgic-mmio-v3.c.

◆ vgic_v3_save_pending_tables()

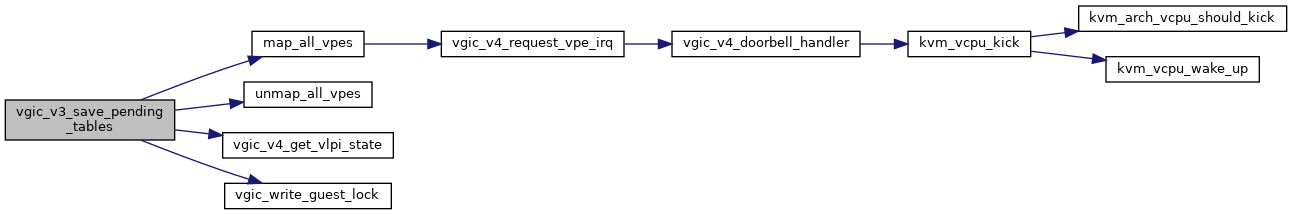

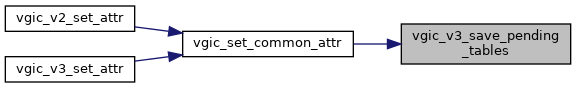

| int vgic_v3_save_pending_tables | ( | struct kvm * | kvm | ) |

vgic_v3_save_pending_tables - Save the pending tables into guest RAM

The kvm lock and all vcpu locks must be held.

Definition at line 377 of file vgic-v3.c.

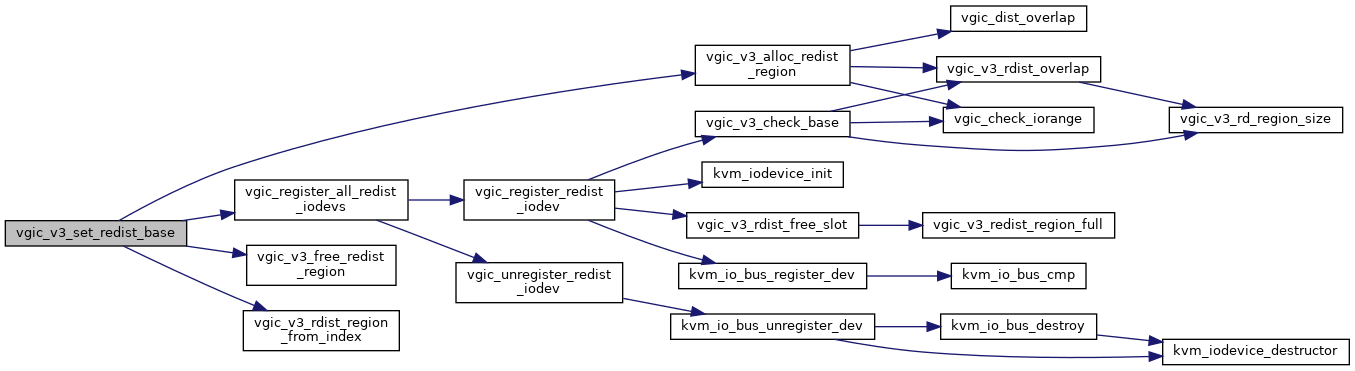

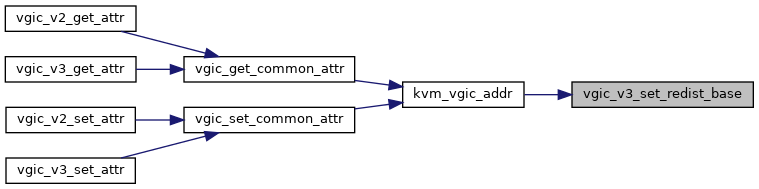

◆ vgic_v3_set_redist_base()

| int vgic_v3_set_redist_base | ( | struct kvm *kvm, u32 index, u64 addr, u32 count | ) |

Definition at line 928 of file vgic-mmio-v3.c.

◆ vgic_v3_set_underflow()

| void vgic_v3_set_underflow | ( | struct kvm_vcpu * | vcpu | ) |

◆ vgic_v3_set_vmcr()

| void vgic_v3_set_vmcr | ( | struct kvm_vcpu *vcpu, struct vgic_vmcr *vmcr | ) |

◆ vgic_v3_vmcr_sync()

| void vgic_v3_vmcr_sync | ( | struct kvm_vcpu * | vcpu | ) |

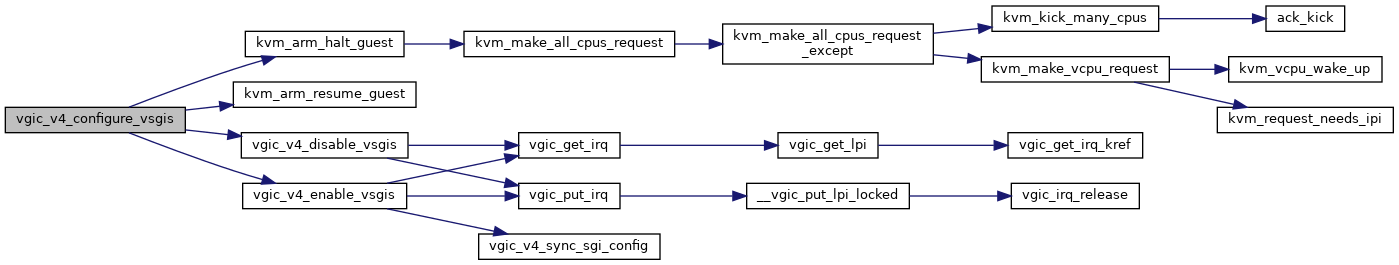

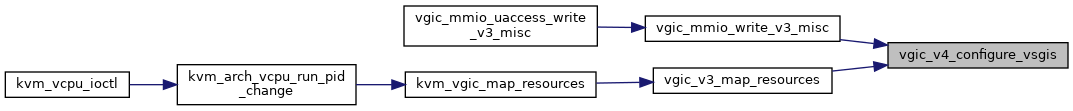

◆ vgic_v4_configure_vsgis()

| void vgic_v4_configure_vsgis | ( | struct kvm * | kvm | ) |

Definition at line 187 of file vgic-v4.c.

◆ vgic_v4_get_vlpi_state()

| void vgic_v4_get_vlpi_state | ( | struct vgic_irq *irq, bool *val | ) |

◆ vgic_v4_init()

| int vgic_v4_init | ( | struct kvm * | kvm | ) |

vgic_v4_init - Initialize the GICv4 data structures

@kvm: Pointer to the VM being initialized

We may be called each time a vITS is created, or when the vgic is initialized. In both cases, the number of vcpus should now be fixed.

Definition at line 239 of file vgic-v4.c.

◆ vgic_v4_request_vpe_irq()

| int vgic_v4_request_vpe_irq | ( | struct kvm_vcpu *vcpu, int irq | ) |

Definition at line 226 of file vgic-v4.c.

◆ vgic_v4_teardown()

| void vgic_v4_teardown | ( | struct kvm * | kvm | ) |