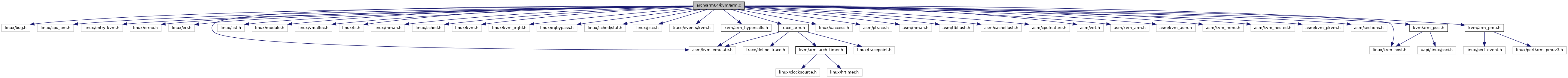

#include <linux/bug.h>

#include <linux/cpu_pm.h>

#include <linux/entry-kvm.h>

#include <linux/errno.h>

#include <linux/err.h>

#include <linux/kvm_host.h>

#include <linux/list.h>

#include <linux/module.h>

#include <linux/vmalloc.h>

#include <linux/fs.h>

#include <linux/mman.h>

#include <linux/sched.h>

#include <linux/kvm.h>

#include <linux/kvm_irqfd.h>

#include <linux/irqbypass.h>

#include <linux/sched/stat.h>

#include <linux/psci.h>

#include <trace/events/kvm.h>

#include "trace_arm.h"

#include <linux/uaccess.h>

#include <asm/ptrace.h>

#include <asm/mman.h>

#include <asm/tlbflush.h>

#include <asm/cacheflush.h>

#include <asm/cpufeature.h>

#include <asm/virt.h>

#include <asm/kvm_arm.h>

#include <asm/kvm_asm.h>

#include <asm/kvm_mmu.h>

#include <asm/kvm_nested.h>

#include <asm/kvm_pkvm.h>

#include <asm/kvm_emulate.h>

#include <asm/sections.h>

#include <kvm/arm_hypercalls.h>

#include <kvm/arm_pmu.h>

#include <kvm/arm_psci.h>


Macros

| #define | CREATE_TRACE_POINTS |

| #define | init_psci_0_1_impl_state(config, what) config.psci_0_1_ ## what ## _implemented = psci_ops.what |

Functions

| DECLARE_KVM_HYP_PER_CPU (unsigned long, kvm_hyp_vector) | |

| DEFINE_PER_CPU (unsigned long, kvm_arm_hyp_stack_page) | |

| DECLARE_KVM_NVHE_PER_CPU (struct kvm_nvhe_init_params, kvm_init_params) | |

| DECLARE_KVM_NVHE_PER_CPU (struct kvm_cpu_context, kvm_hyp_ctxt) | |

| static | DEFINE_PER_CPU (unsigned char, kvm_hyp_initialized) |

| DEFINE_STATIC_KEY_FALSE (userspace_irqchip_in_use) | |

| bool | is_kvm_arm_initialised (void) |

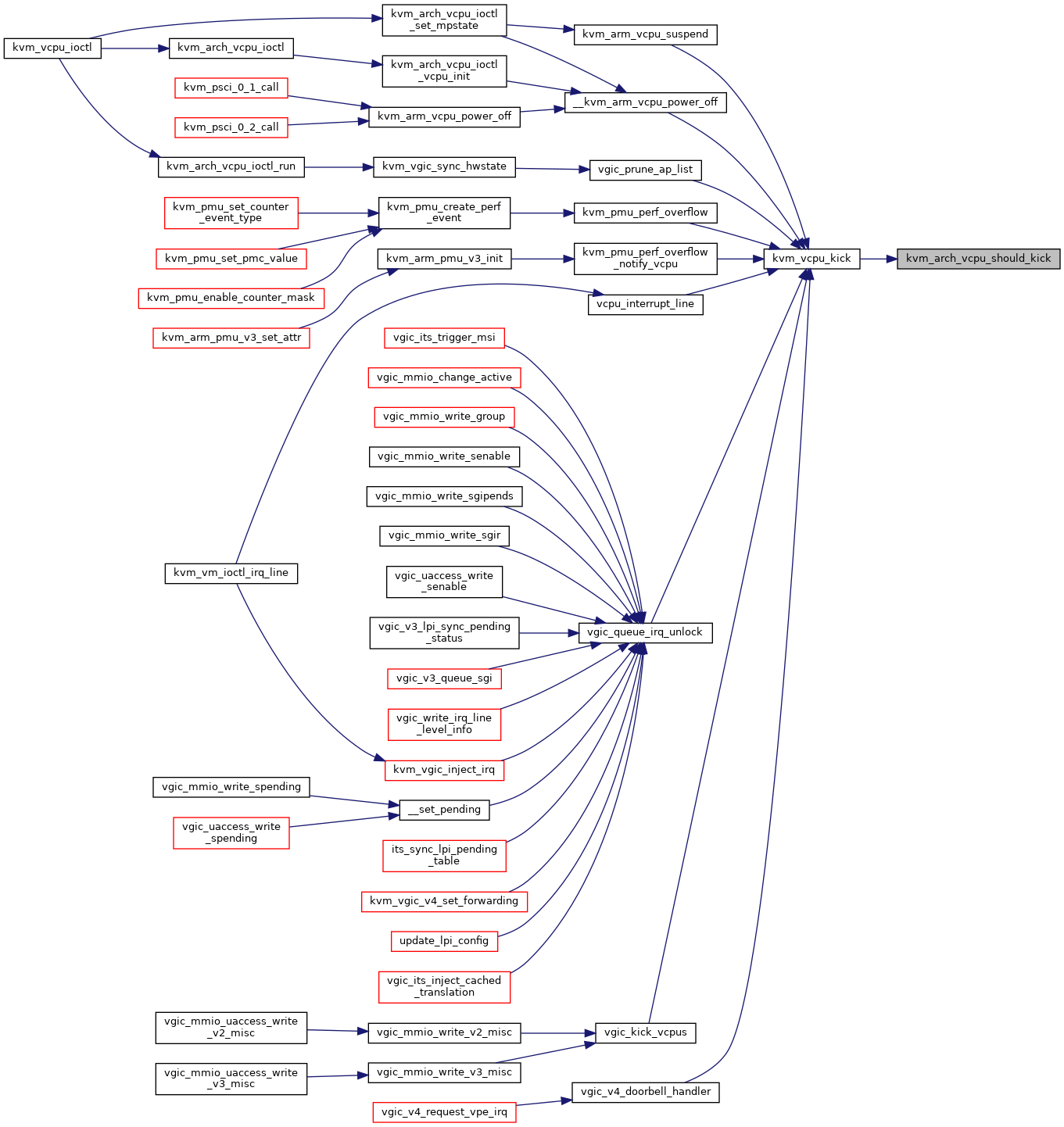

| int | kvm_arch_vcpu_should_kick (struct kvm_vcpu *vcpu) |

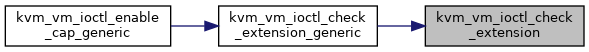

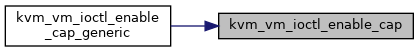

| int | kvm_vm_ioctl_enable_cap (struct kvm *kvm, struct kvm_enable_cap *cap) |

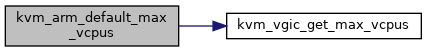

| static int | kvm_arm_default_max_vcpus (void) |

| int | kvm_arch_init_vm (struct kvm *kvm, unsigned long type) |

| vm_fault_t | kvm_arch_vcpu_fault (struct kvm_vcpu *vcpu, struct vm_fault *vmf) |

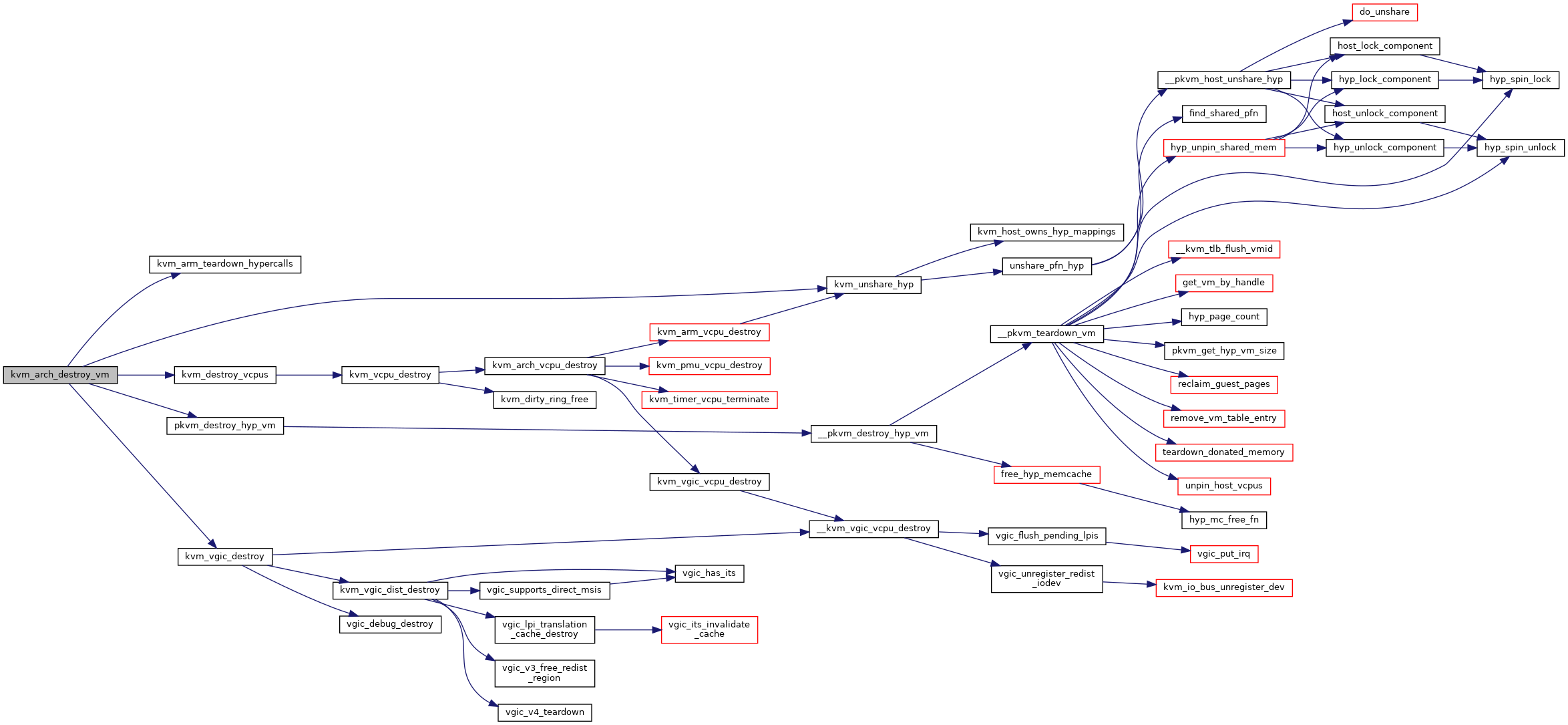

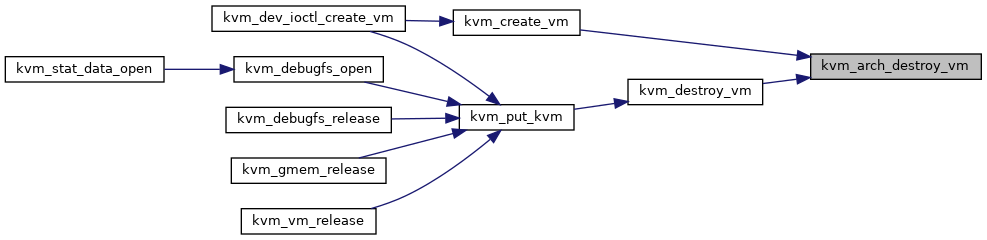

| void | kvm_arch_destroy_vm (struct kvm *kvm) |

| int | kvm_vm_ioctl_check_extension (struct kvm *kvm, long ext) |

| long | kvm_arch_dev_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

| struct kvm * | kvm_arch_alloc_vm (void) |

| int | kvm_arch_vcpu_precreate (struct kvm *kvm, unsigned int id) |

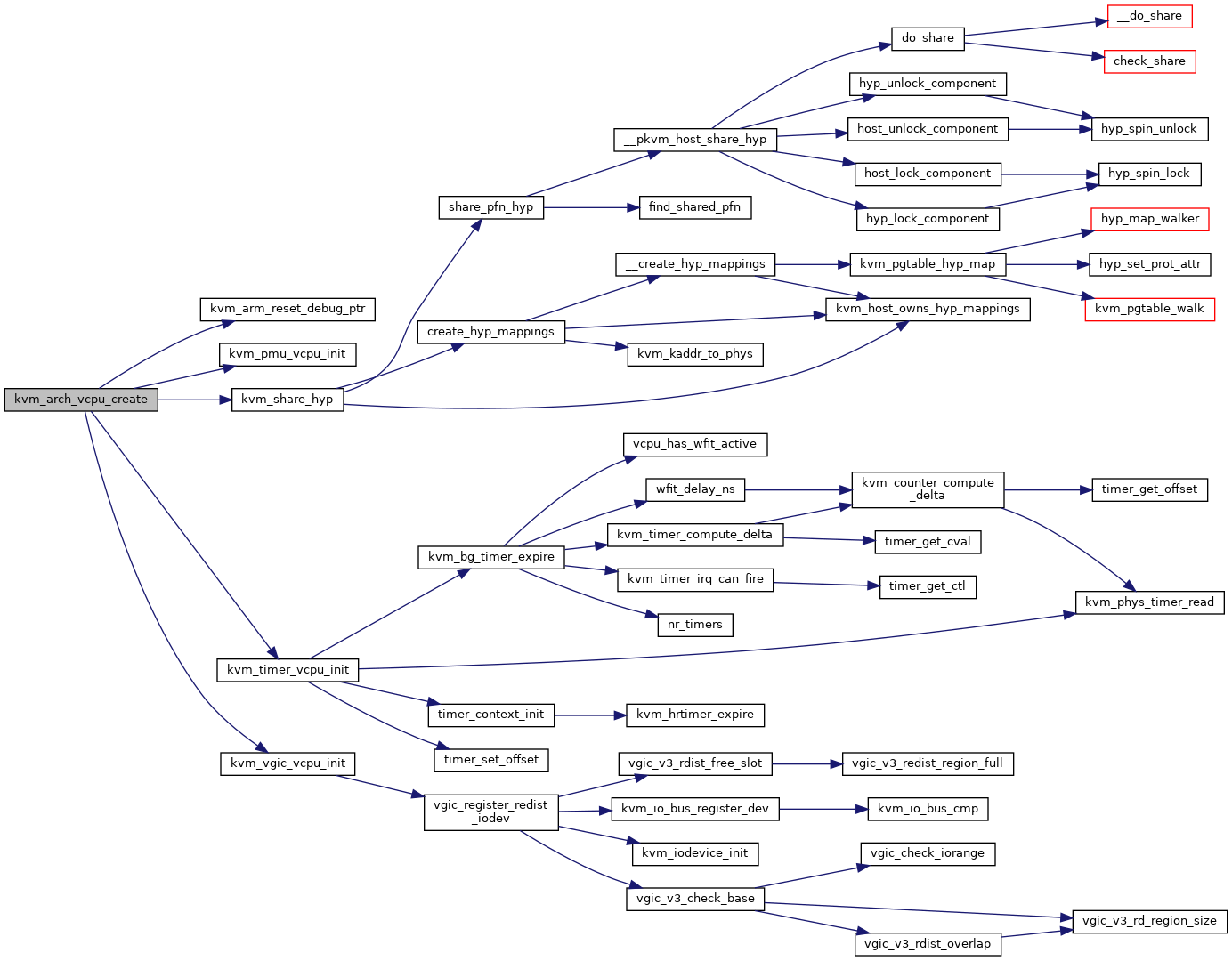

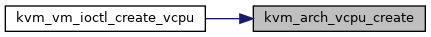

| int | kvm_arch_vcpu_create (struct kvm_vcpu *vcpu) |

| void | kvm_arch_vcpu_postcreate (struct kvm_vcpu *vcpu) |

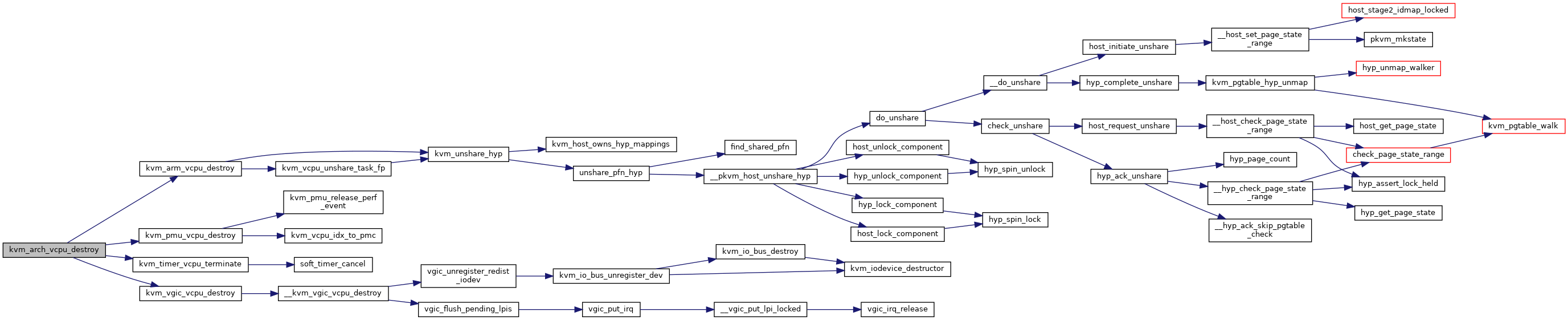

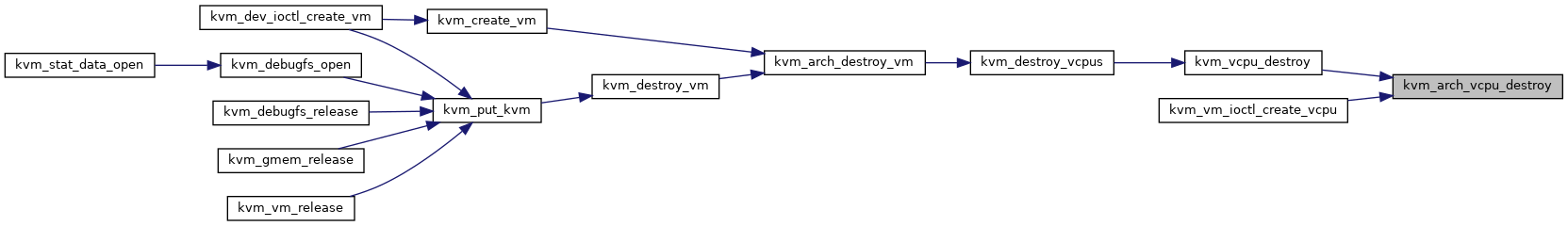

| void | kvm_arch_vcpu_destroy (struct kvm_vcpu *vcpu) |

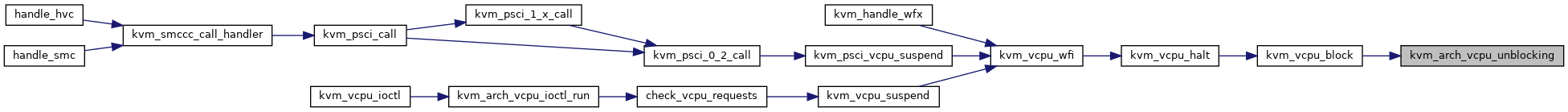

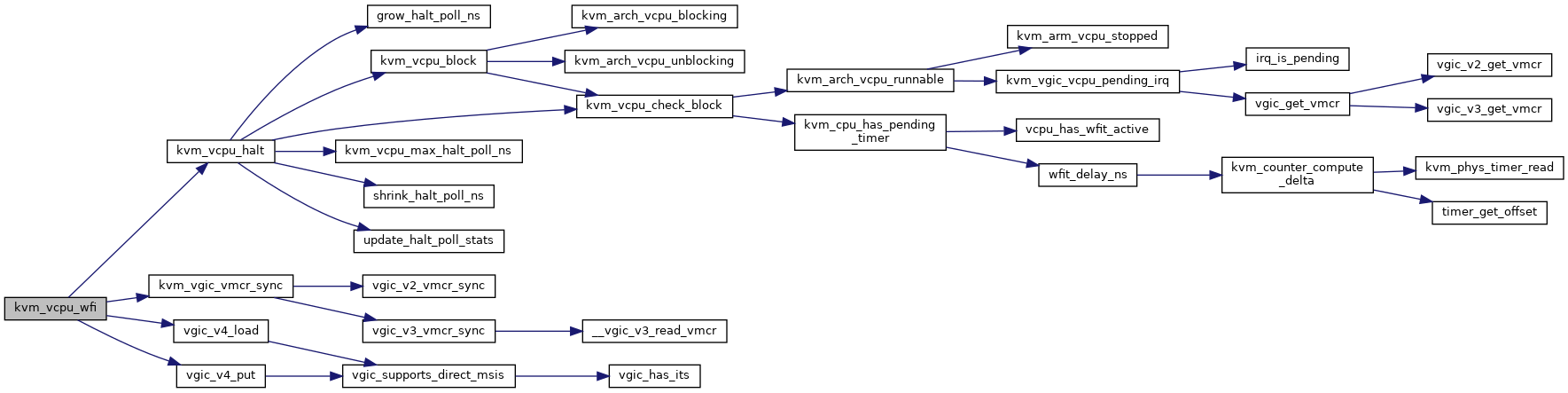

| void | kvm_arch_vcpu_blocking (struct kvm_vcpu *vcpu) |

| void | kvm_arch_vcpu_unblocking (struct kvm_vcpu *vcpu) |

| void | kvm_arch_vcpu_load (struct kvm_vcpu *vcpu, int cpu) |

| void | kvm_arch_vcpu_put (struct kvm_vcpu *vcpu) |

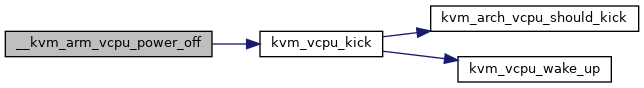

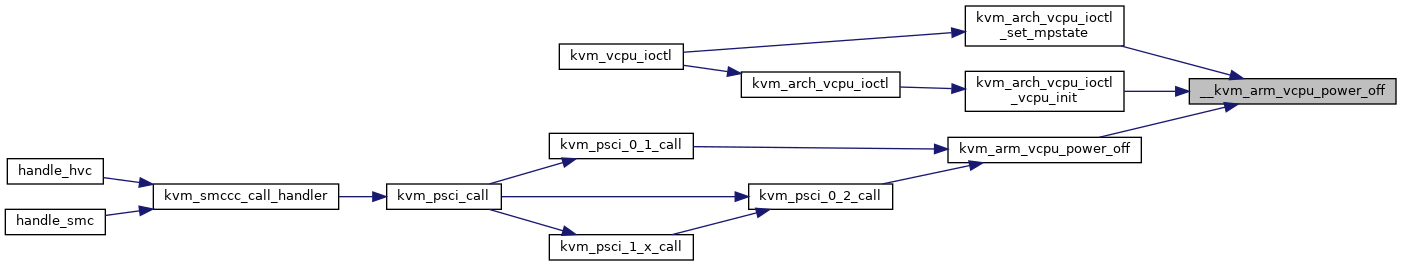

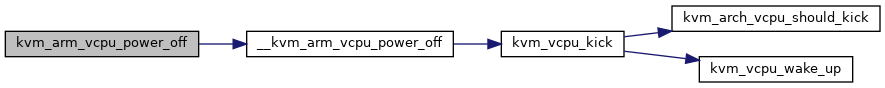

| static void | __kvm_arm_vcpu_power_off (struct kvm_vcpu *vcpu) |

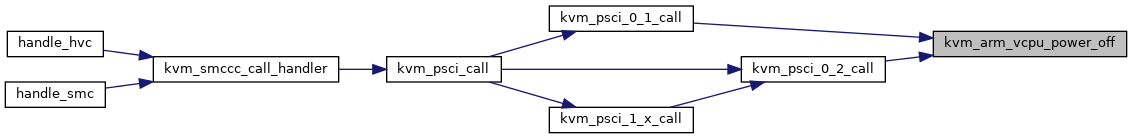

| void | kvm_arm_vcpu_power_off (struct kvm_vcpu *vcpu) |

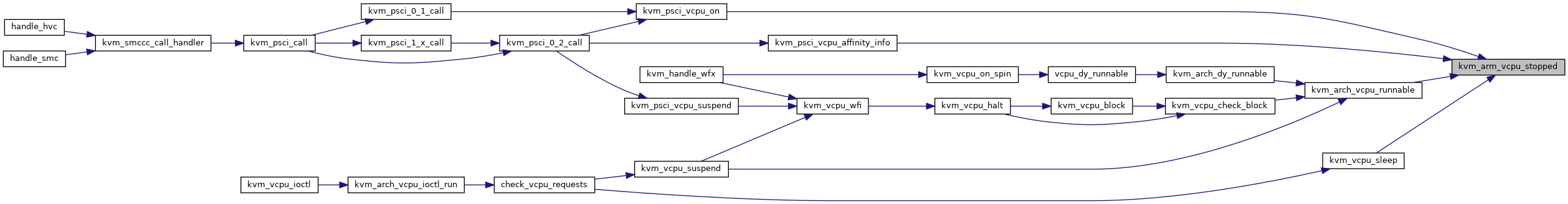

| bool | kvm_arm_vcpu_stopped (struct kvm_vcpu *vcpu) |

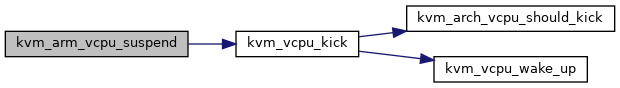

| static void | kvm_arm_vcpu_suspend (struct kvm_vcpu *vcpu) |

| static bool | kvm_arm_vcpu_suspended (struct kvm_vcpu *vcpu) |

| int | kvm_arch_vcpu_ioctl_get_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

| int | kvm_arch_vcpu_ioctl_set_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

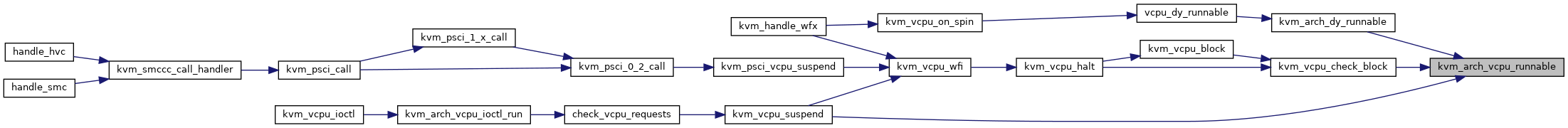

| int | kvm_arch_vcpu_runnable (struct kvm_vcpu *v) |

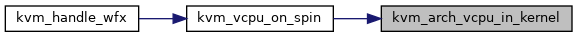

| bool | kvm_arch_vcpu_in_kernel (struct kvm_vcpu *vcpu) |

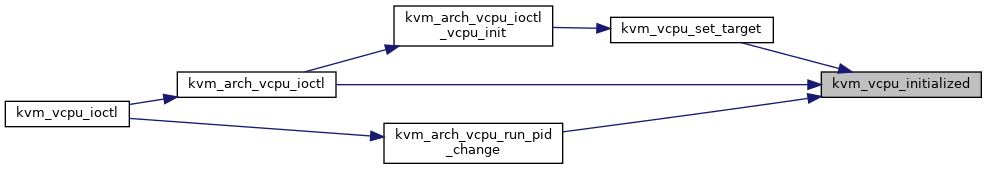

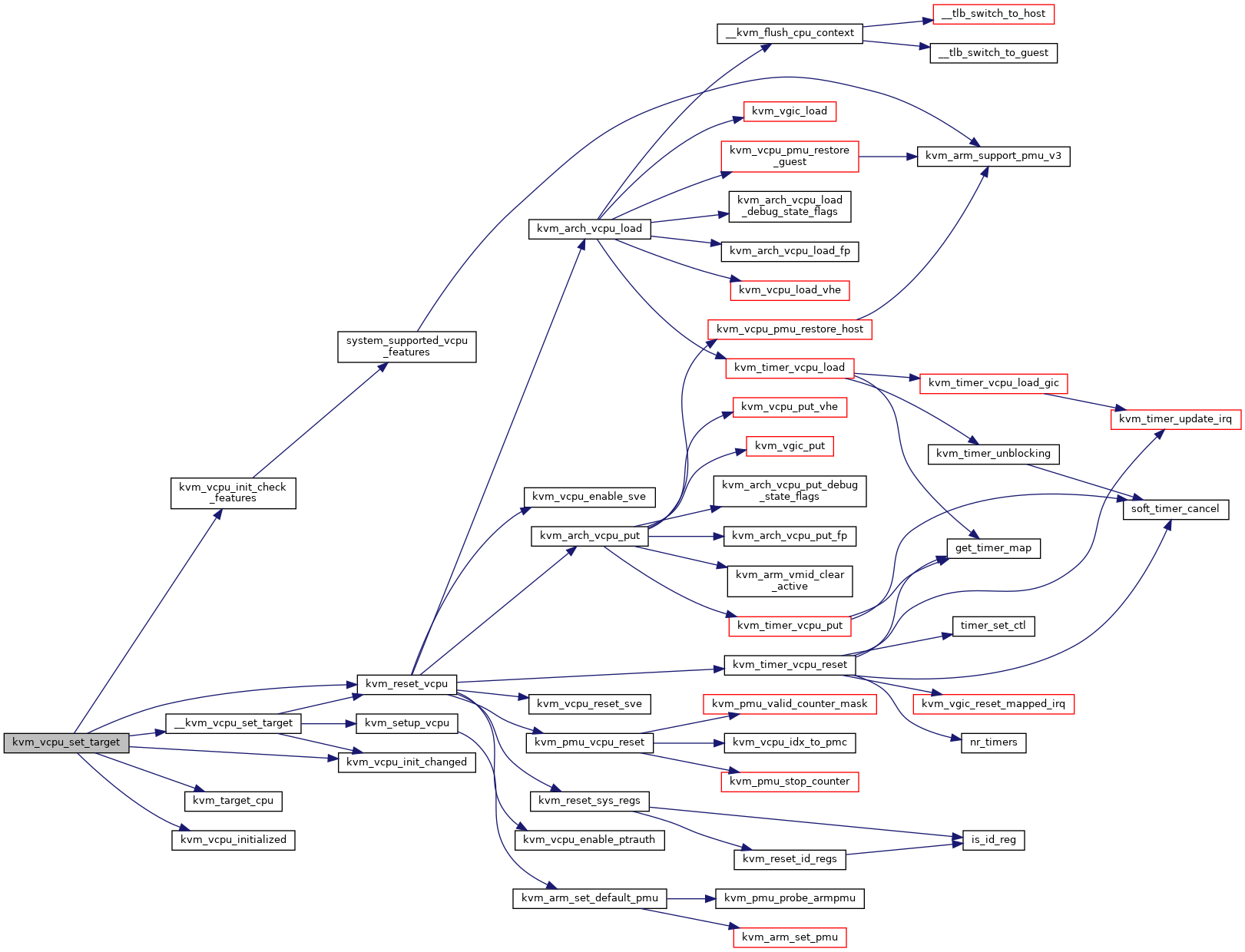

| static int | kvm_vcpu_initialized (struct kvm_vcpu *vcpu) |

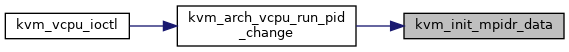

| static void | kvm_init_mpidr_data (struct kvm *kvm) |

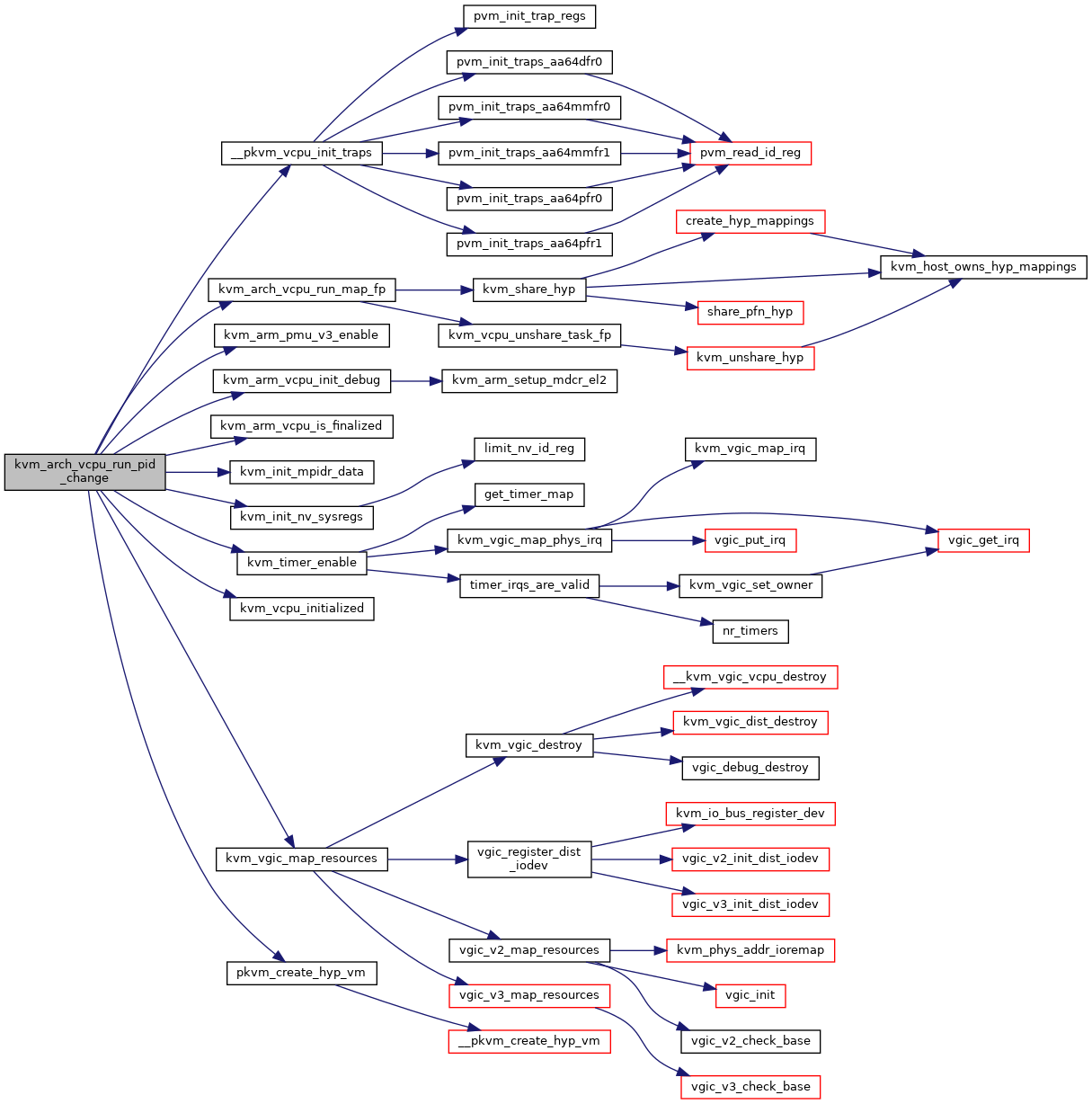

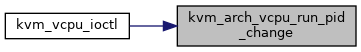

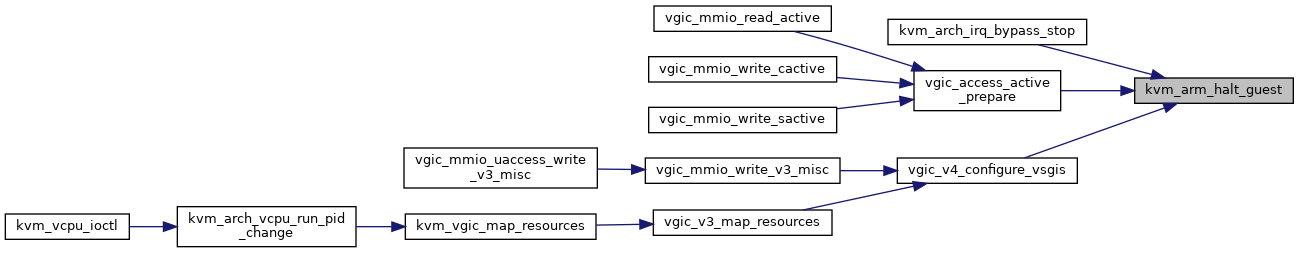

| int | kvm_arch_vcpu_run_pid_change (struct kvm_vcpu *vcpu) |

| bool | kvm_arch_intc_initialized (struct kvm *kvm) |

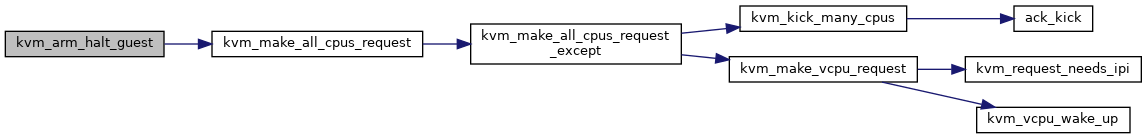

| void | kvm_arm_halt_guest (struct kvm *kvm) |

| void | kvm_arm_resume_guest (struct kvm *kvm) |

| static void | kvm_vcpu_sleep (struct kvm_vcpu *vcpu) |

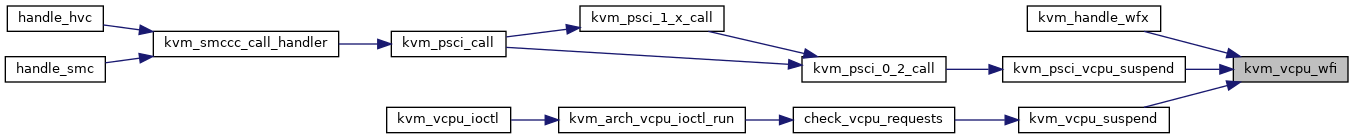

| void | kvm_vcpu_wfi (struct kvm_vcpu *vcpu) |

| static int | kvm_vcpu_suspend (struct kvm_vcpu *vcpu) |

| static int | check_vcpu_requests (struct kvm_vcpu *vcpu) |

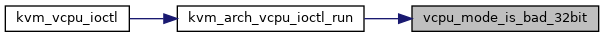

| static bool | vcpu_mode_is_bad_32bit (struct kvm_vcpu *vcpu) |

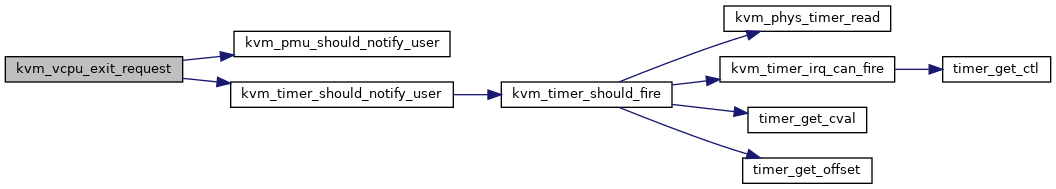

| static bool | kvm_vcpu_exit_request (struct kvm_vcpu *vcpu, int *ret) |

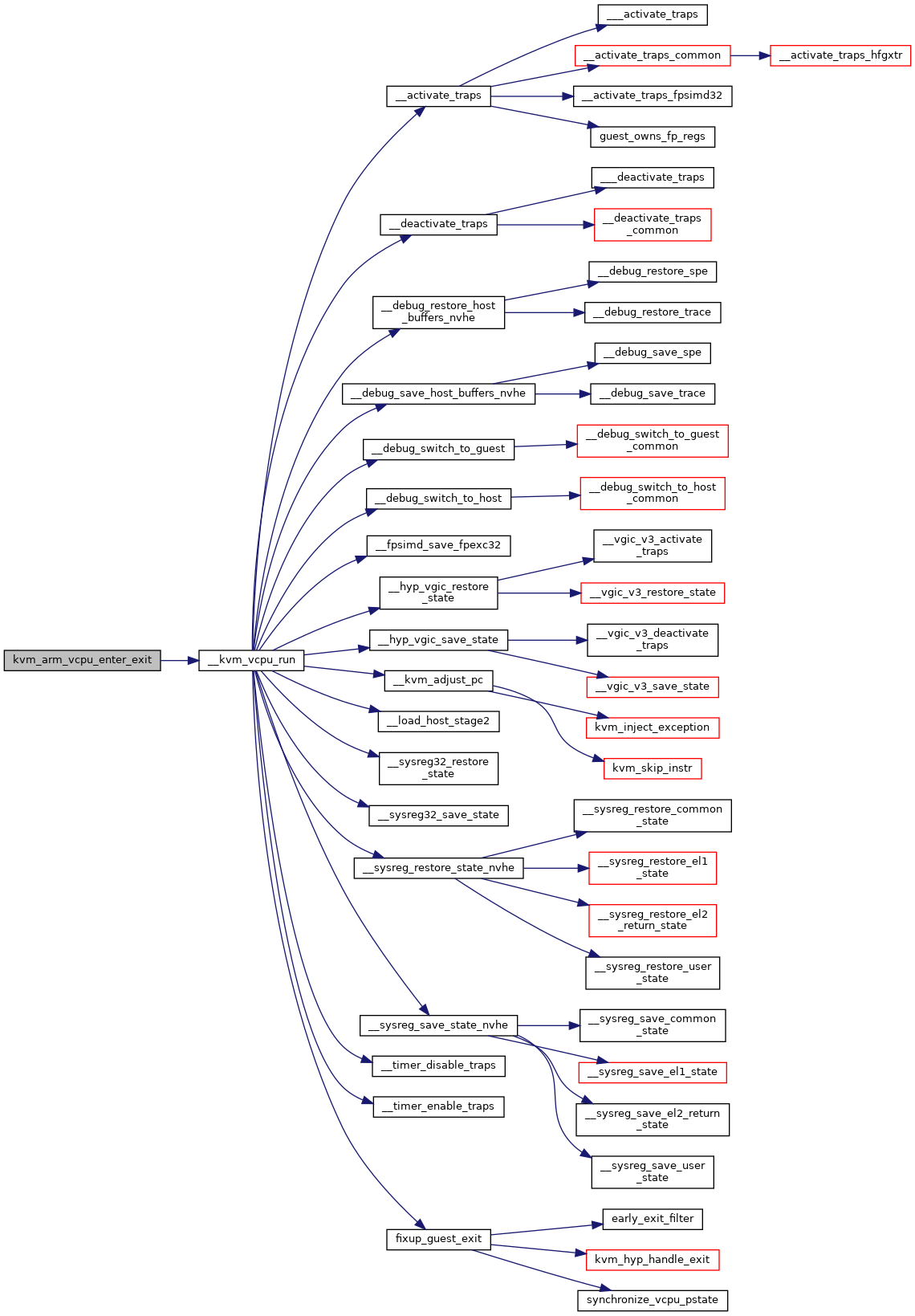

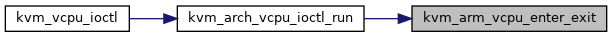

| static int noinstr | kvm_arm_vcpu_enter_exit (struct kvm_vcpu *vcpu) |

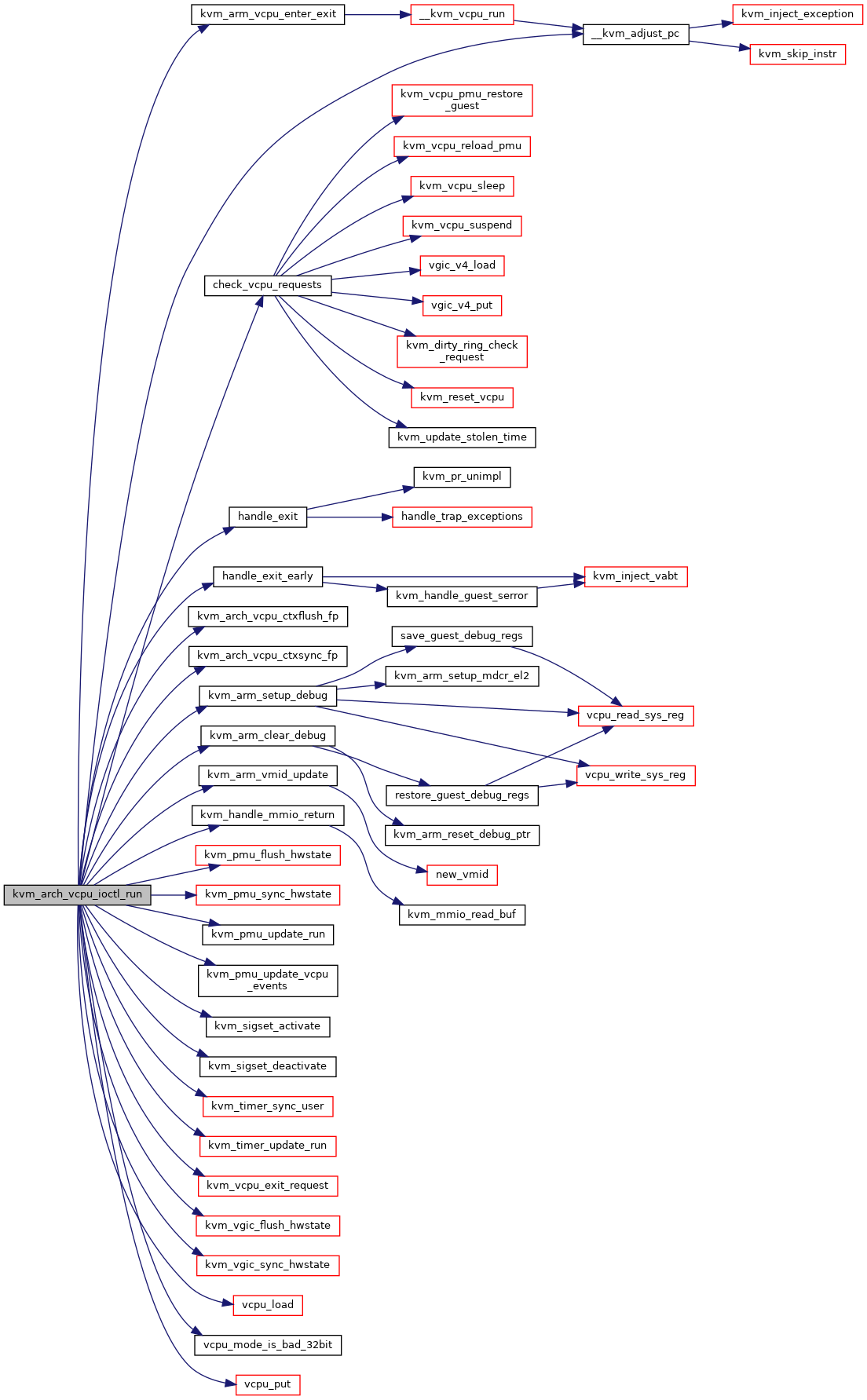

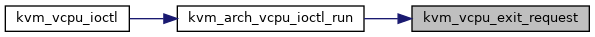

| int | kvm_arch_vcpu_ioctl_run (struct kvm_vcpu *vcpu) |

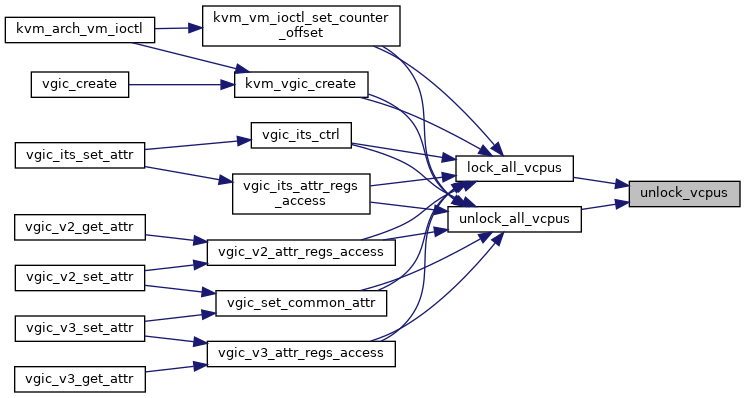

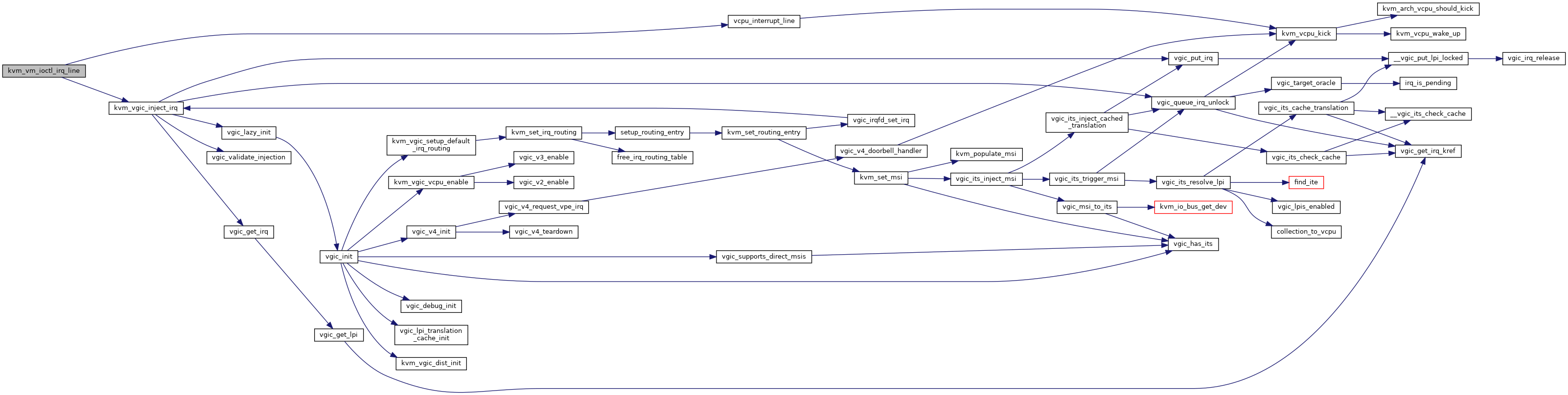

| static int | vcpu_interrupt_line (struct kvm_vcpu *vcpu, int number, bool level) |

| int | kvm_vm_ioctl_irq_line (struct kvm *kvm, struct kvm_irq_level *irq_level, bool line_status) |

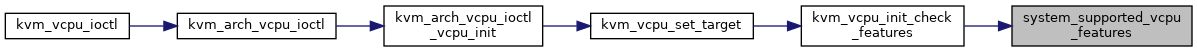

| static unsigned long | system_supported_vcpu_features (void) |

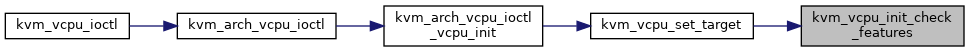

| static int | kvm_vcpu_init_check_features (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

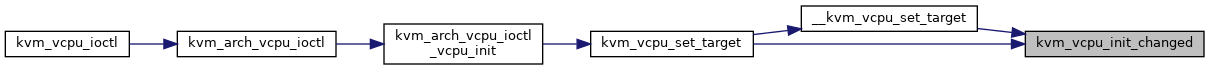

| static bool | kvm_vcpu_init_changed (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

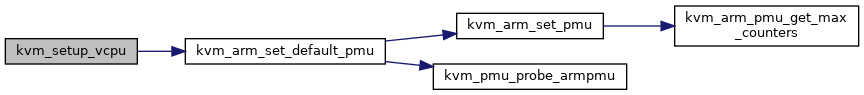

| static int | kvm_setup_vcpu (struct kvm_vcpu *vcpu) |

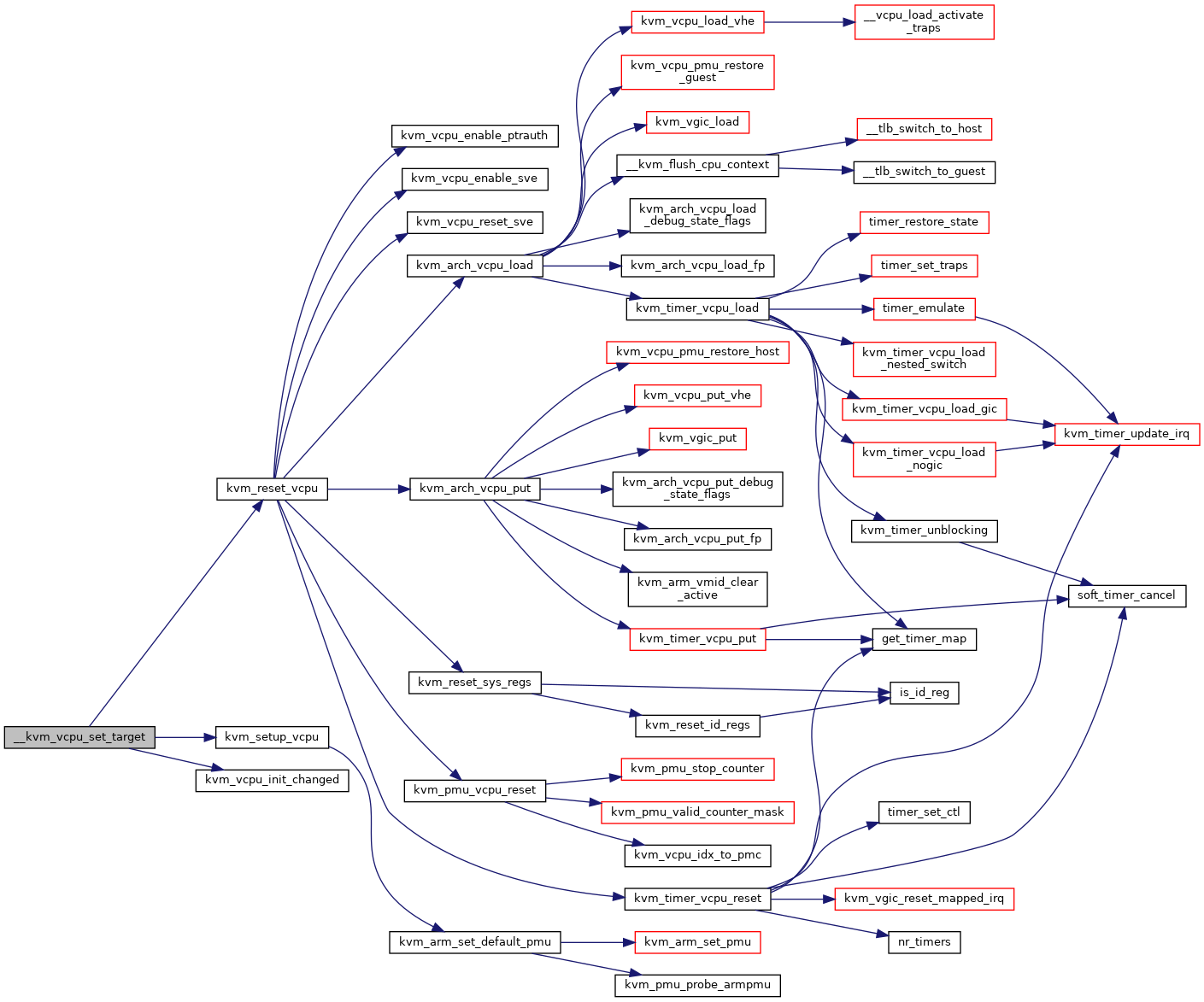

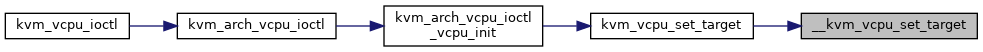

| static int | __kvm_vcpu_set_target (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

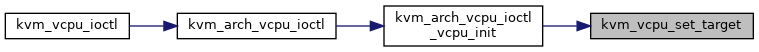

| static int | kvm_vcpu_set_target (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

| static int | kvm_arch_vcpu_ioctl_vcpu_init (struct kvm_vcpu *vcpu, struct kvm_vcpu_init *init) |

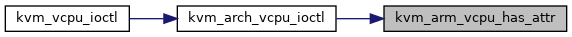

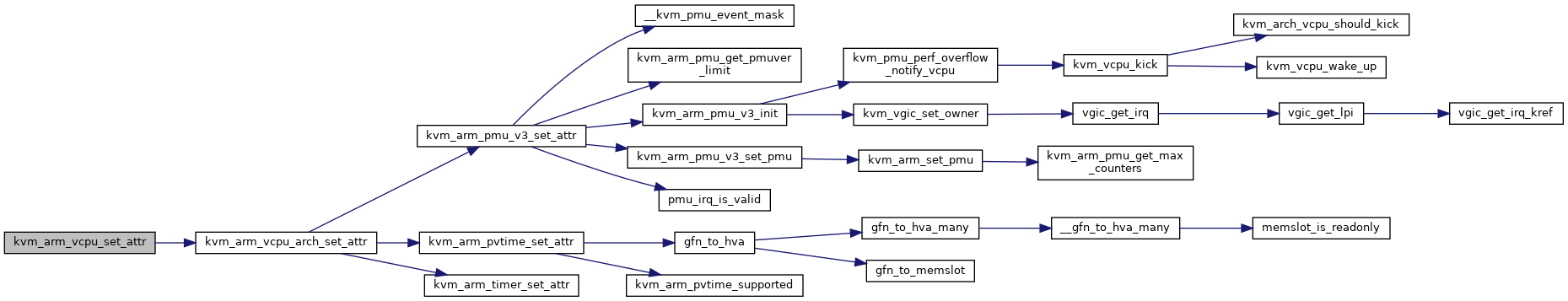

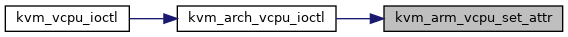

| static int | kvm_arm_vcpu_set_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

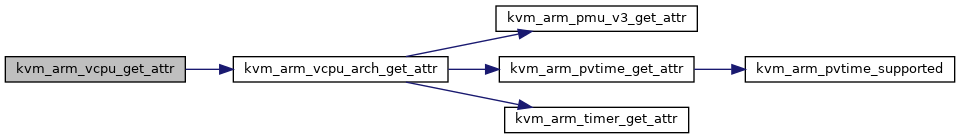

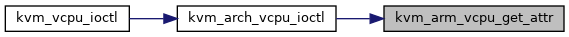

| static int | kvm_arm_vcpu_get_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

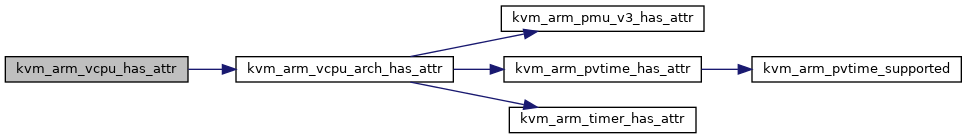

| static int | kvm_arm_vcpu_has_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

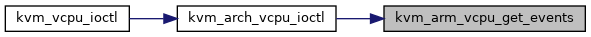

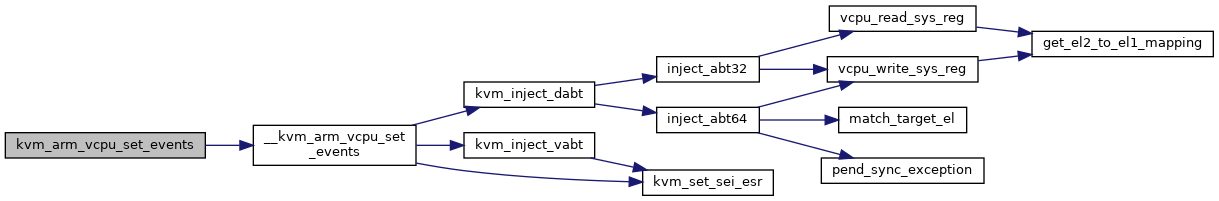

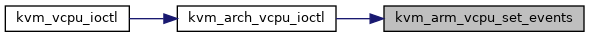

| static int | kvm_arm_vcpu_get_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

| static int | kvm_arm_vcpu_set_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

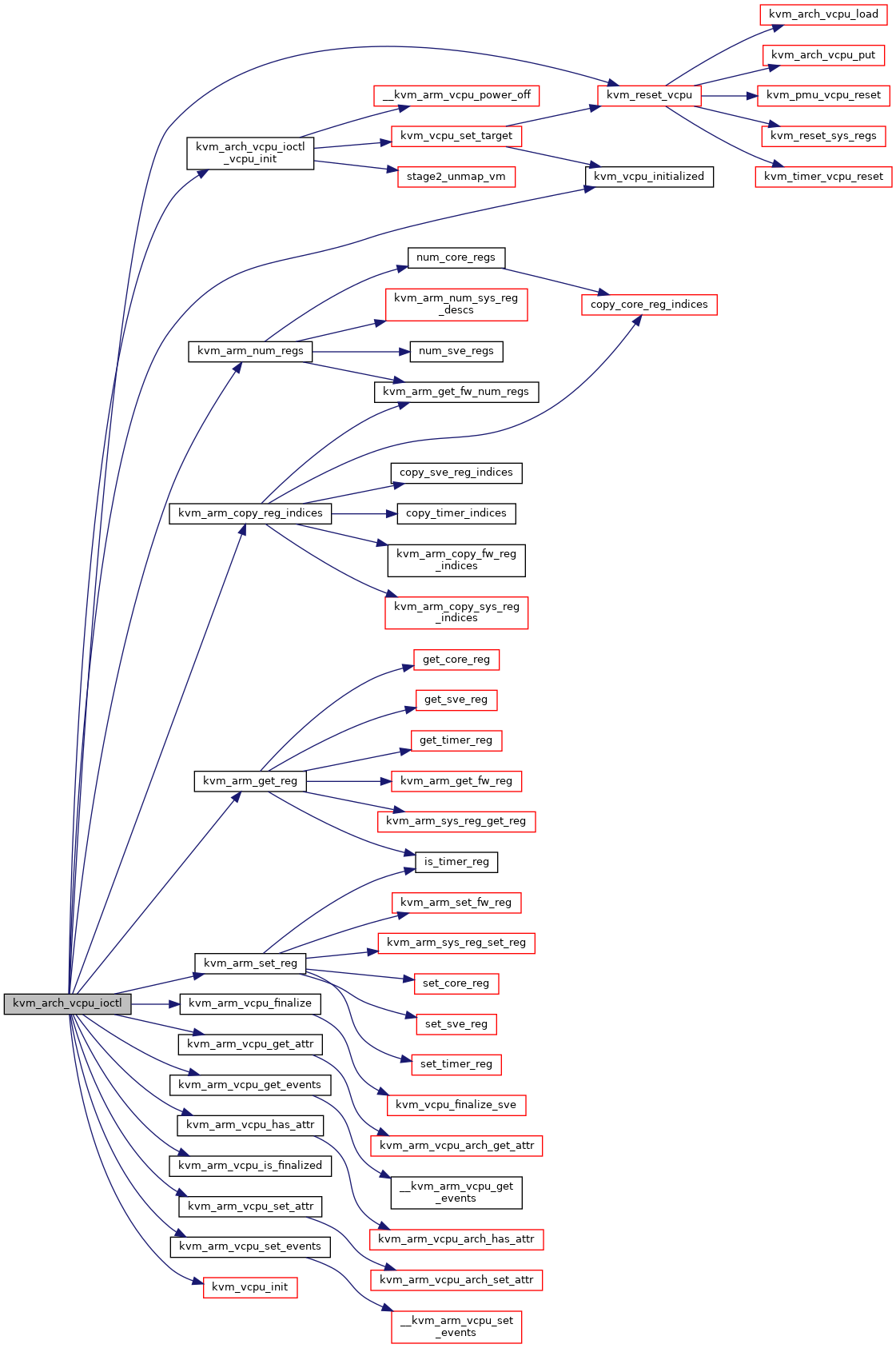

| long | kvm_arch_vcpu_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

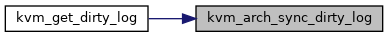

| void | kvm_arch_sync_dirty_log (struct kvm *kvm, struct kvm_memory_slot *memslot) |

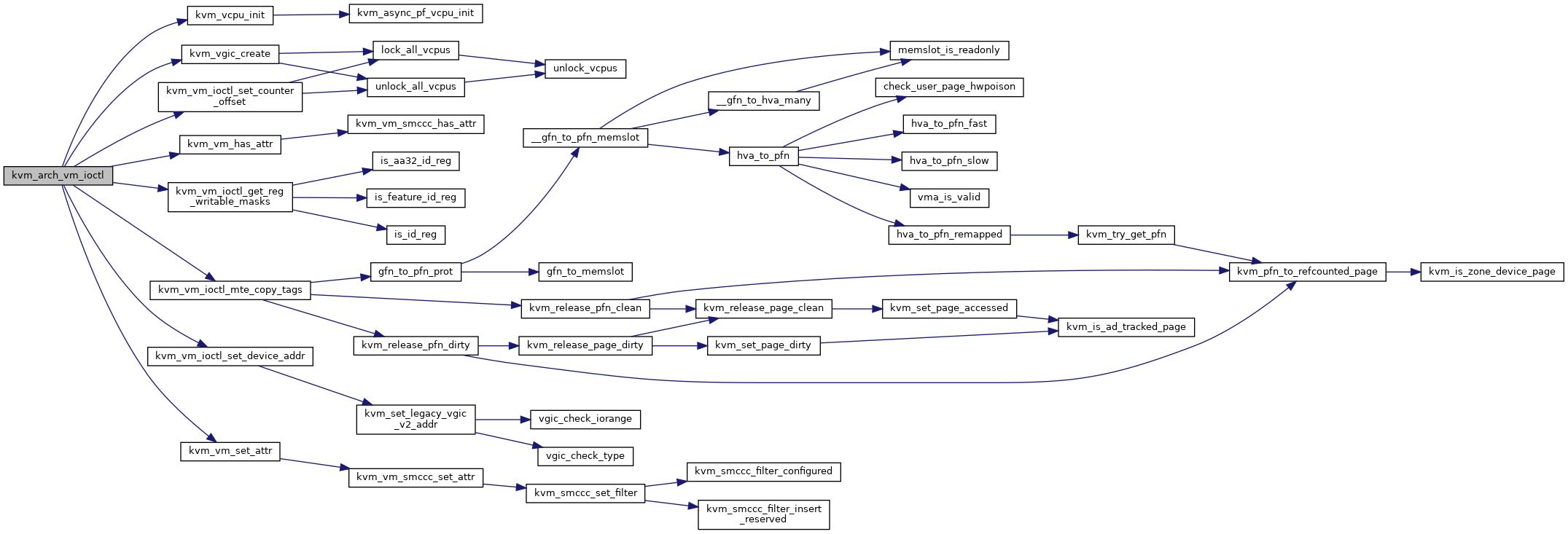

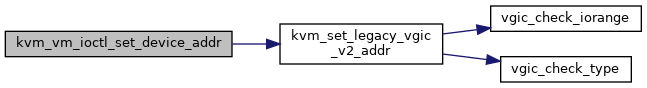

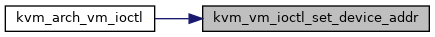

| static int | kvm_vm_ioctl_set_device_addr (struct kvm *kvm, struct kvm_arm_device_addr *dev_addr) |

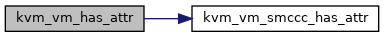

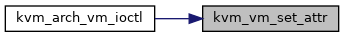

| static int | kvm_vm_has_attr (struct kvm *kvm, struct kvm_device_attr *attr) |

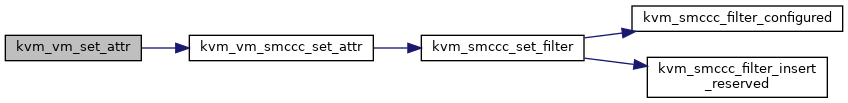

| static int | kvm_vm_set_attr (struct kvm *kvm, struct kvm_device_attr *attr) |

| int | kvm_arch_vm_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

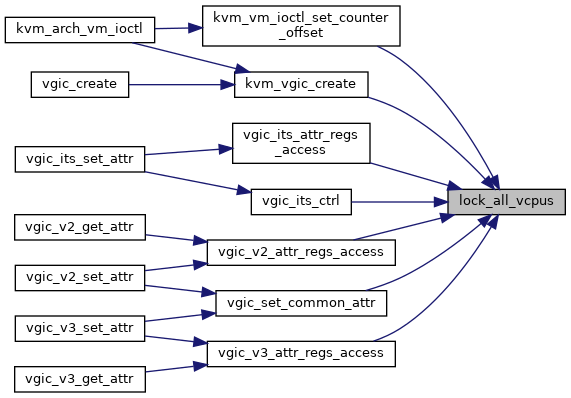

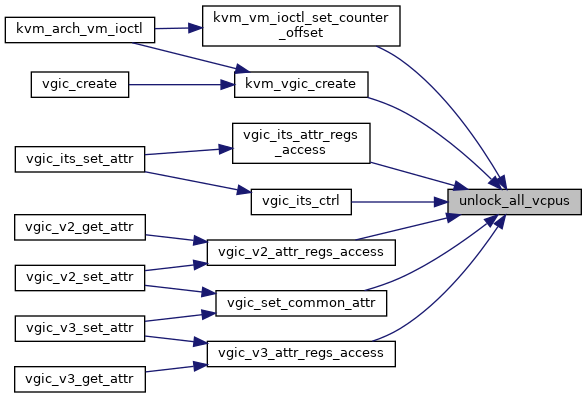

| static void | unlock_vcpus (struct kvm *kvm, int vcpu_lock_idx) |

| void | unlock_all_vcpus (struct kvm *kvm) |

| bool | lock_all_vcpus (struct kvm *kvm) |

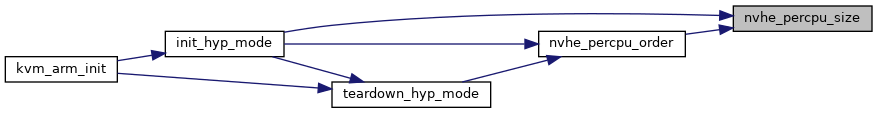

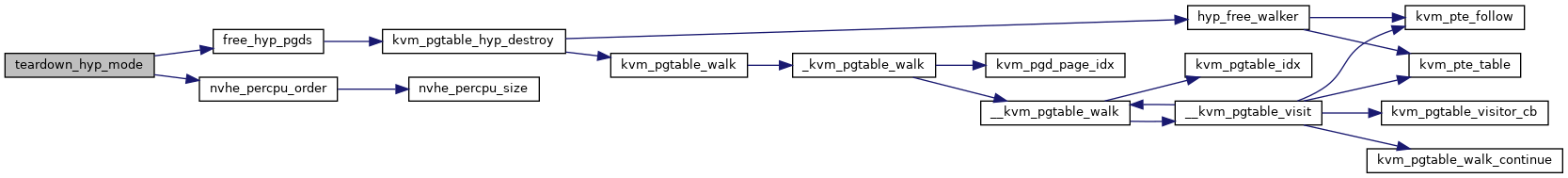

| static unsigned long | nvhe_percpu_size (void) |

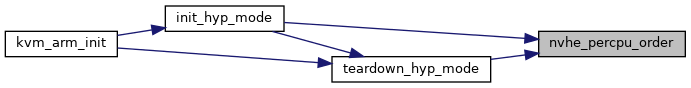

| static unsigned long | nvhe_percpu_order (void) |

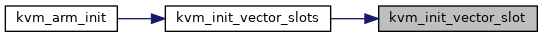

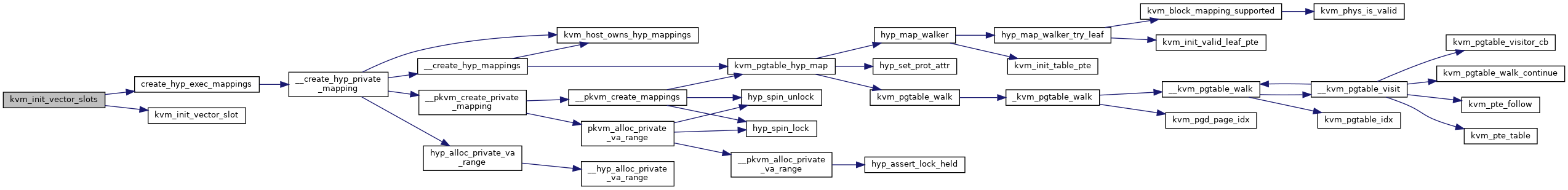

| static void | kvm_init_vector_slot (void *base, enum arm64_hyp_spectre_vector slot) |

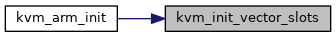

| static int | kvm_init_vector_slots (void) |

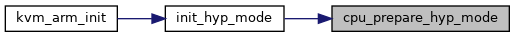

| static void __init | cpu_prepare_hyp_mode (int cpu, u32 hyp_va_bits) |

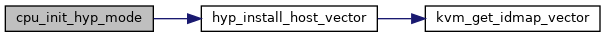

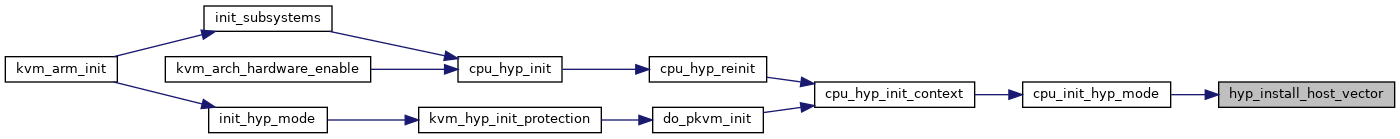

| static void | hyp_install_host_vector (void) |

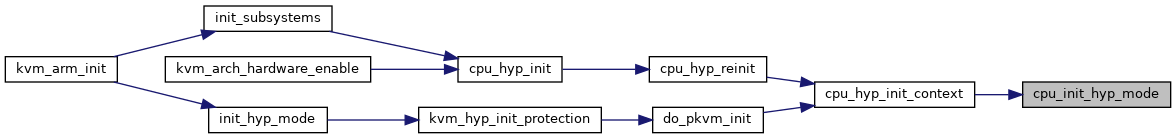

| static void | cpu_init_hyp_mode (void) |

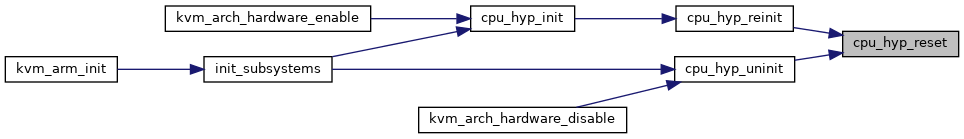

| static void | cpu_hyp_reset (void) |

| static void | cpu_set_hyp_vector (void) |

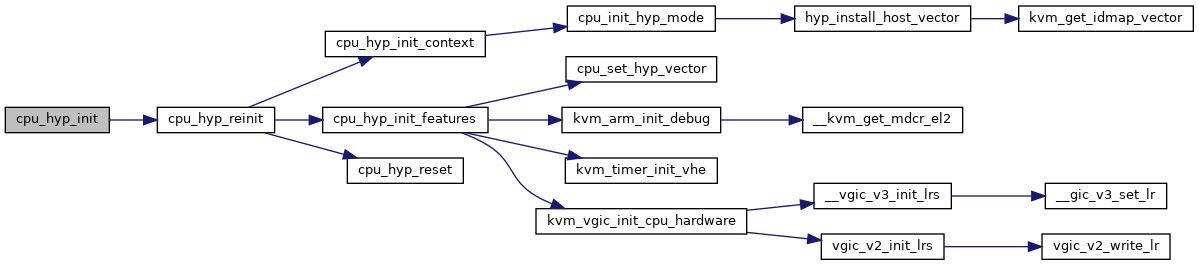

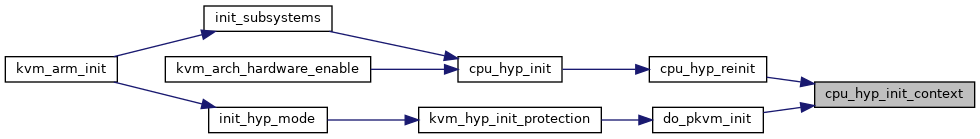

| static void | cpu_hyp_init_context (void) |

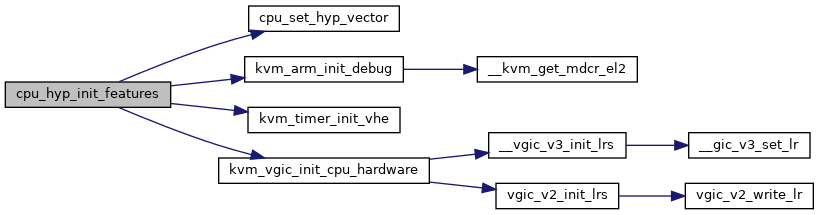

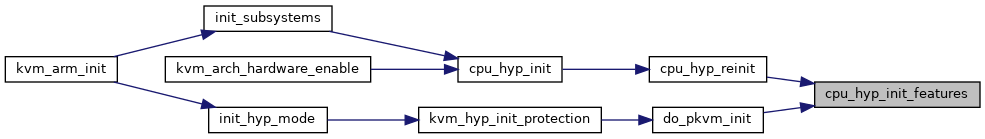

| static void | cpu_hyp_init_features (void) |

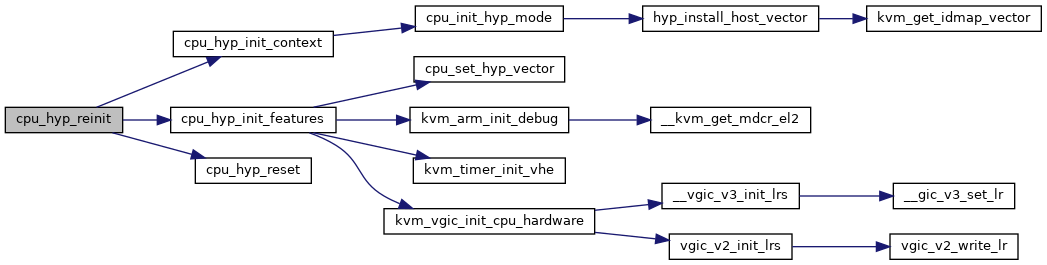

| static void | cpu_hyp_reinit (void) |

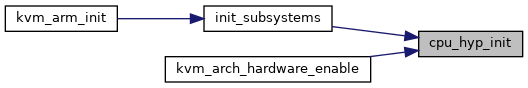

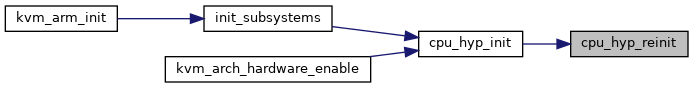

| static void | cpu_hyp_init (void *discard) |

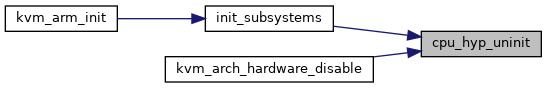

| static void | cpu_hyp_uninit (void *discard) |

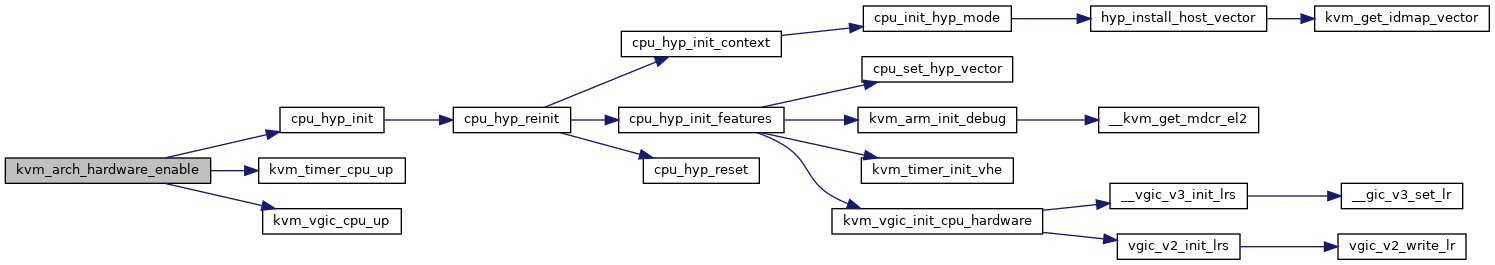

| int | kvm_arch_hardware_enable (void) |

| void | kvm_arch_hardware_disable (void) |

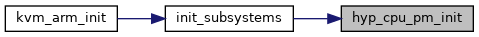

| static void __init | hyp_cpu_pm_init (void) |

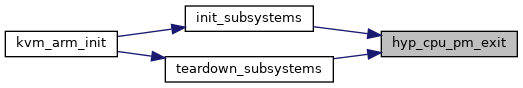

| static void __init | hyp_cpu_pm_exit (void) |

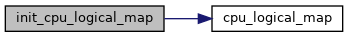

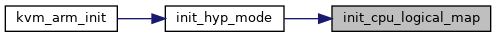

| static void __init | init_cpu_logical_map (void) |

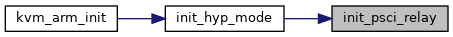

| static bool __init | init_psci_relay (void) |

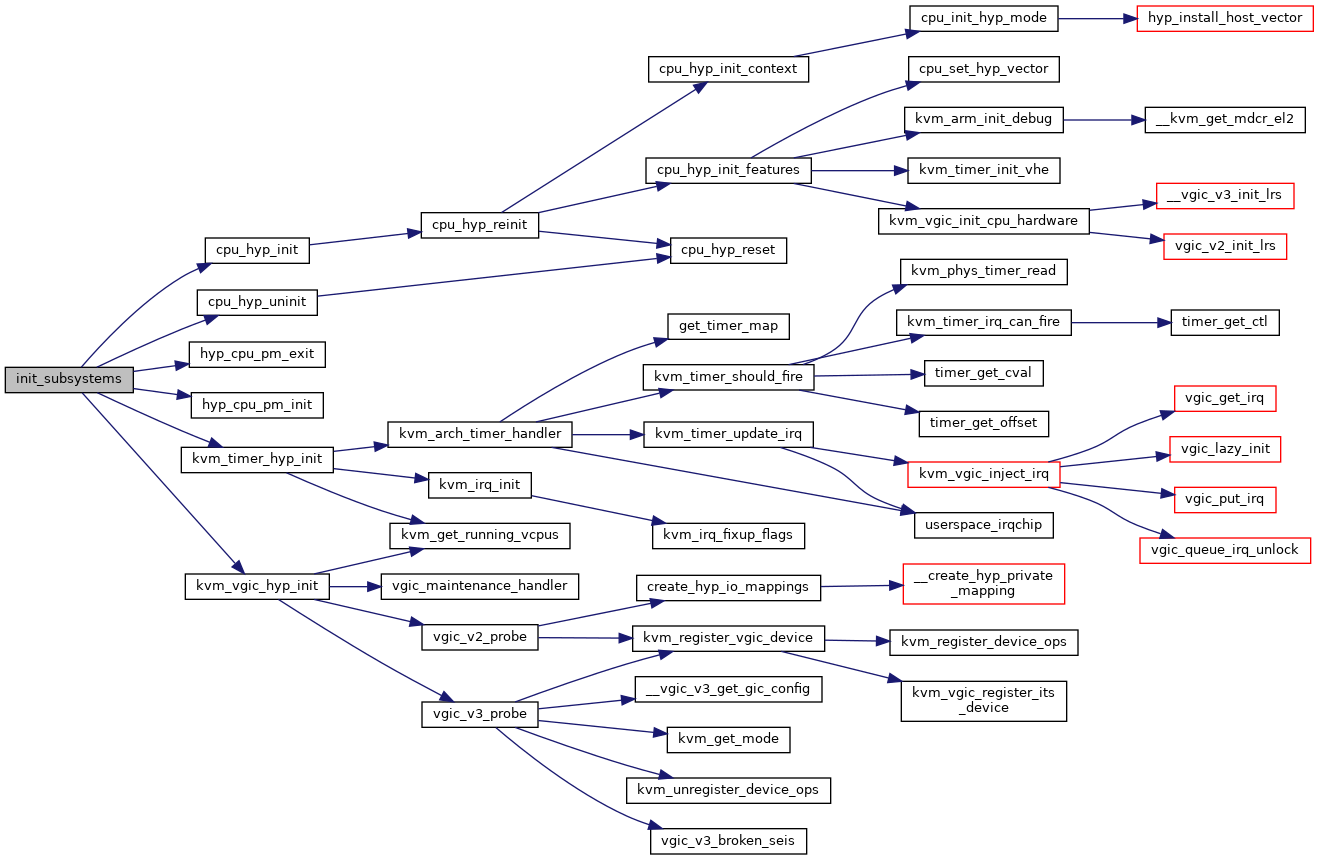

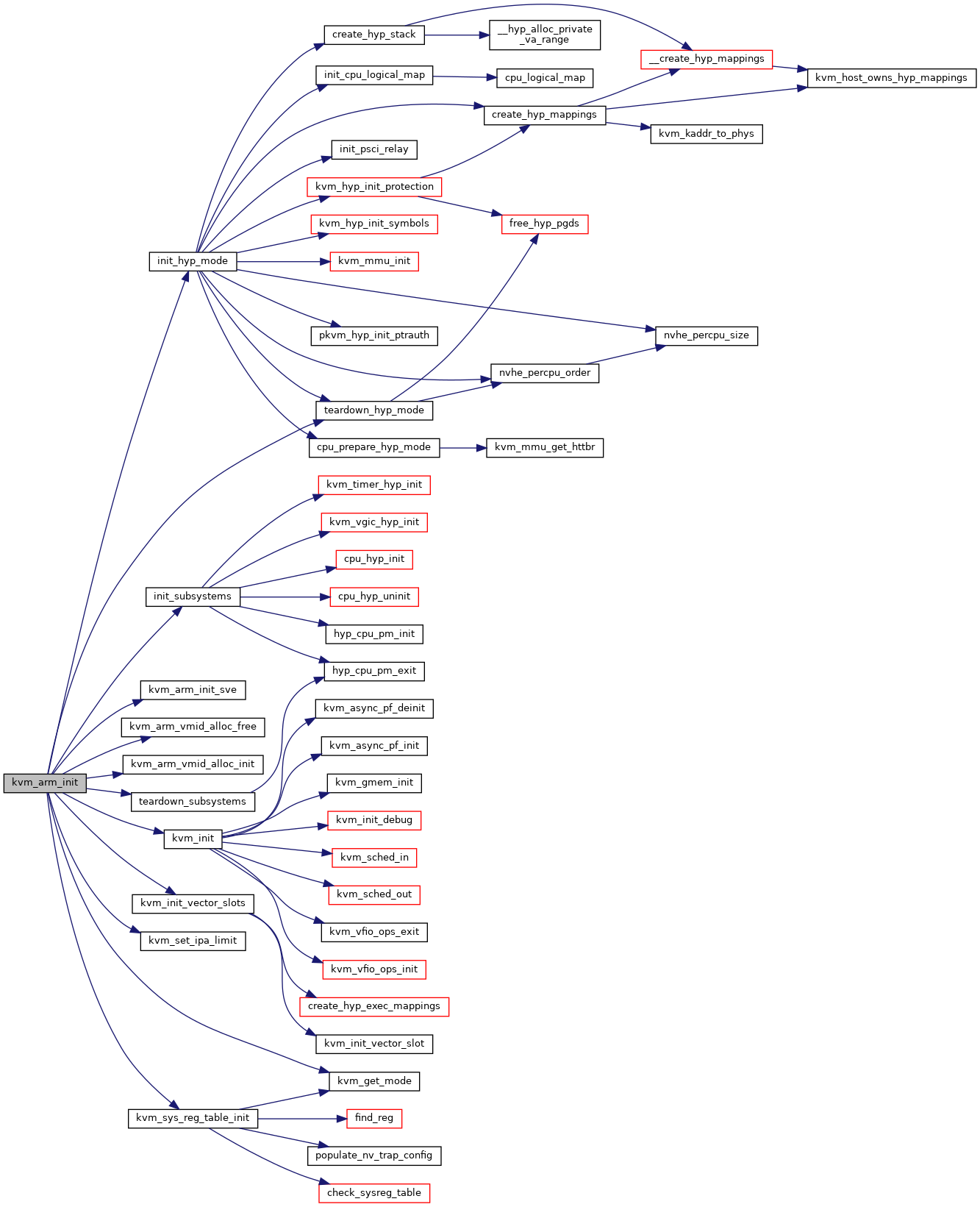

| static int __init | init_subsystems (void) |

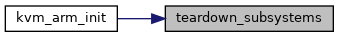

| static void __init | teardown_subsystems (void) |

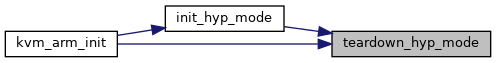

| static void __init | teardown_hyp_mode (void) |

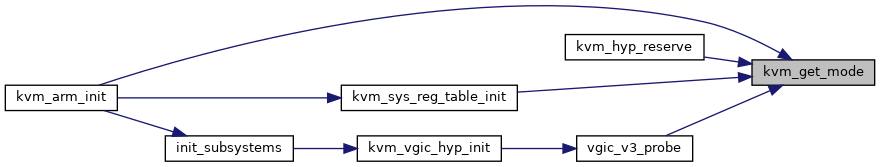

| static int __init | do_pkvm_init (u32 hyp_va_bits) |

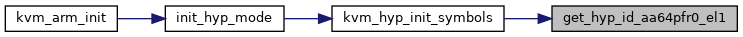

| static u64 | get_hyp_id_aa64pfr0_el1 (void) |

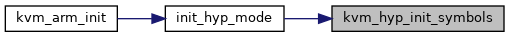

| static void | kvm_hyp_init_symbols (void) |

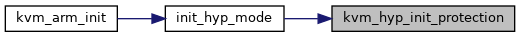

| static int __init | kvm_hyp_init_protection (u32 hyp_va_bits) |

| static void | pkvm_hyp_init_ptrauth (void) |

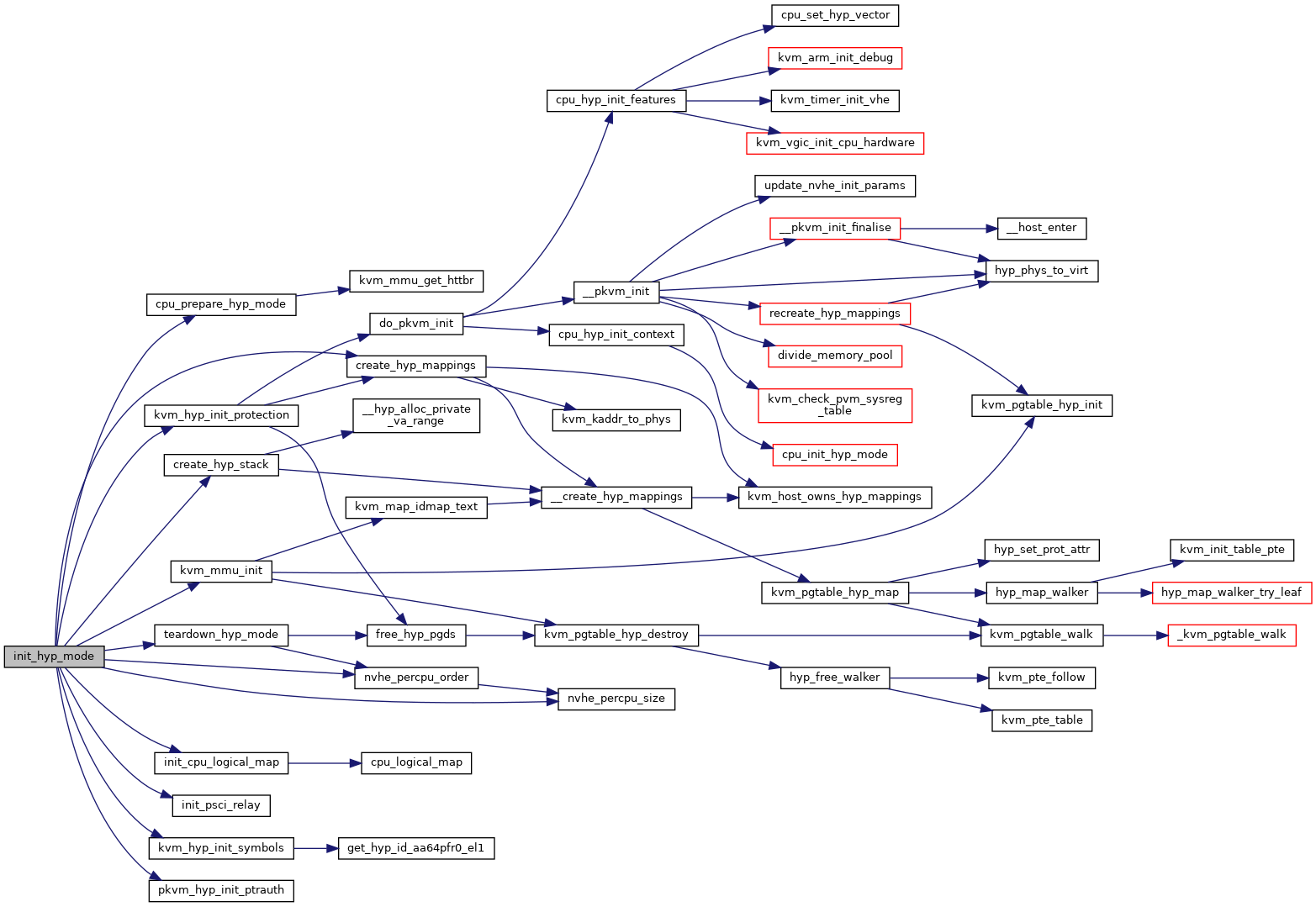

| static int __init | init_hyp_mode (void) |

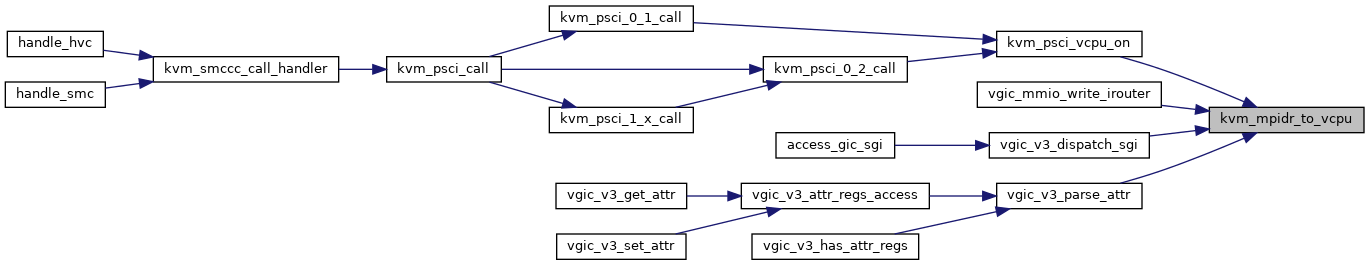

| struct kvm_vcpu * | kvm_mpidr_to_vcpu (struct kvm *kvm, unsigned long mpidr) |

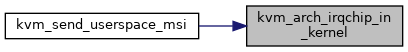

| bool | kvm_arch_irqchip_in_kernel (struct kvm *kvm) |

| bool | kvm_arch_has_irq_bypass (void) |

| int | kvm_arch_irq_bypass_add_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

| void | kvm_arch_irq_bypass_del_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

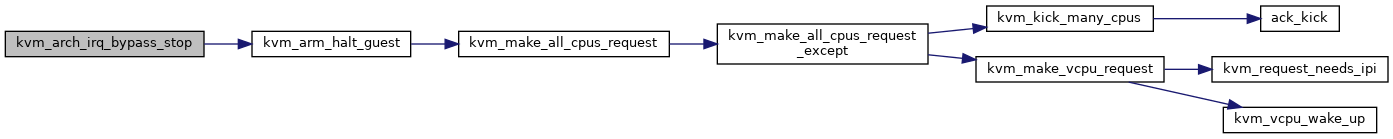

| void | kvm_arch_irq_bypass_stop (struct irq_bypass_consumer *cons) |

| void | kvm_arch_irq_bypass_start (struct irq_bypass_consumer *cons) |

| static __init int | kvm_arm_init (void) |

| static int __init | early_kvm_mode_cfg (char *arg) |

| early_param ("kvm-arm.mode", early_kvm_mode_cfg) | |

| enum kvm_mode | kvm_get_mode (void) |

| module_init (kvm_arm_init) | |

Variables

| static enum kvm_mode | kvm_mode = KVM_MODE_DEFAULT |

| static bool | vgic_present |

| static bool | kvm_arm_initialised |

| static void * | hyp_spectre_vector_selector [BP_HARDEN_EL2_SLOTS] |

Macro Definition Documentation

◆ CREATE_TRACE_POINTS

◆ init_psci_0_1_impl_state

| #define | init_psci_0_1_impl_state(config, what) config.psci_0_1_ ## what ## _implemented = psci_ops.what |
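
The macro relies on the preprocessor's `##` token-pasting operator to build a field name from its `what` argument. The following is a minimal standalone sketch of that expansion. The types `demo_psci_ops` and `demo_config`, the function `demo_cpu_on`, and the local `psci_ops` variable are hypothetical stand-ins introduced for illustration and are not the kernel's definitions.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-ins for illustration only; the kernel's real
 * psci_ops and configuration structures are defined elsewhere. */
struct demo_psci_ops {
	int (*cpu_on)(unsigned long cpuid);
	int (*cpu_off)(unsigned int state);
};

struct demo_config {
	bool psci_0_1_cpu_on_implemented;
	bool psci_0_1_cpu_off_implemented;
};

static int demo_cpu_on(unsigned long cpuid)
{
	(void)cpuid;
	return 0;
}

/* Only cpu_on is provided; cpu_off is left NULL. */
static struct demo_psci_ops psci_ops = { .cpu_on = demo_cpu_on };

/* Same shape as the macro documented above. */
#define init_psci_0_1_impl_state(config, what) \
	config.psci_0_1_ ## what ## _implemented = psci_ops.what

static struct demo_config demo_build_config(void)
{
	struct demo_config config = { false, false };

	/* Expands to: config.psci_0_1_cpu_on_implemented = psci_ops.cpu_on; */
	init_psci_0_1_impl_state(config, cpu_on);
	/* Expands to: config.psci_0_1_cpu_off_implemented = psci_ops.cpu_off; */
	init_psci_0_1_impl_state(config, cpu_off);
	return config;
}
```

In this sketch each function pointer is converted to `bool` on assignment, so a NULL callback yields `false` and a populated one yields `true`.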

Function Documentation

◆ __kvm_arm_vcpu_power_off()

| static void | __kvm_arm_vcpu_power_off (struct kvm_vcpu *vcpu) |

◆ __kvm_vcpu_set_target()

| static int | __kvm_vcpu_set_target (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

Definition at line 1341 of file arm.c.

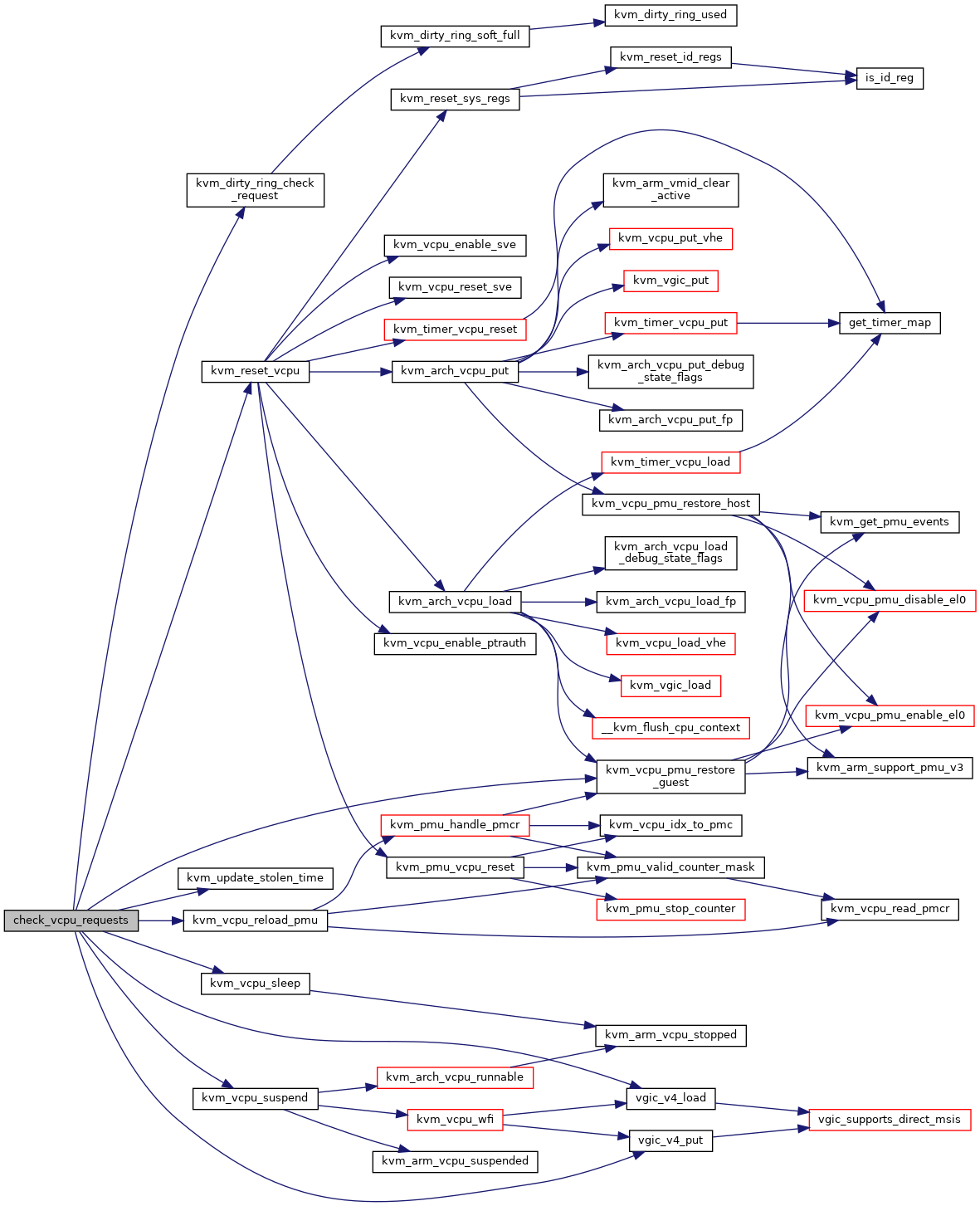

◆ check_vcpu_requests()

| static int | check_vcpu_requests (struct kvm_vcpu *vcpu) |

check_vcpu_requests - check and handle pending vCPU requests

@vcpu: the VCPU pointer

Return: 1 if we should enter the guest; 0 if we should exit to userspace; < 0 if we should exit to userspace, where the return value indicates an error.
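
The three-way return convention can be illustrated from the caller's side. This is a sketch only: `demo_action` and `demo_classify_request_result` are hypothetical names, not kernel API, and the sketch assumes that any positive value is treated like the documented value 1.

```c
/* Hypothetical caller-side outcomes; names are illustrative only. */
enum demo_action {
	DEMO_ENTER_GUEST,
	DEMO_EXIT_TO_USERSPACE,
	DEMO_EXIT_WITH_ERROR,
};

/* Map the documented return convention (1, 0, < 0) to an action.
 * Assumption: any positive value is handled like 1. */
static enum demo_action demo_classify_request_result(int ret)
{
	if (ret > 0)
		return DEMO_ENTER_GUEST;
	if (ret == 0)
		return DEMO_EXIT_TO_USERSPACE;
	return DEMO_EXIT_WITH_ERROR; /* ret carries a negative error code */
}
```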

Definition at line 838 of file arm.c.

◆ cpu_hyp_init()

| static void | cpu_hyp_init (void *discard) |

◆ cpu_hyp_init_context()

| static void | cpu_hyp_init_context (void) |

◆ cpu_hyp_init_features()

| static void | cpu_hyp_init_features (void) |

◆ cpu_hyp_reinit()

| static void | cpu_hyp_reinit (void) |

◆ cpu_hyp_reset()

| static void | cpu_hyp_reset (void) |

◆ cpu_hyp_uninit()

| static void | cpu_hyp_uninit (void *discard) |

◆ cpu_init_hyp_mode()

| static void | cpu_init_hyp_mode (void) |

◆ cpu_prepare_hyp_mode()

| static void __init | cpu_prepare_hyp_mode (int cpu, u32 hyp_va_bits) |

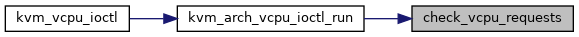

◆ cpu_set_hyp_vector()

| static void | cpu_set_hyp_vector (void) |

Definition at line 1950 of file arm.c.

◆ DECLARE_KVM_HYP_PER_CPU()

| DECLARE_KVM_HYP_PER_CPU (unsigned long, kvm_hyp_vector) | |

◆ DECLARE_KVM_NVHE_PER_CPU() [1/2]

| DECLARE_KVM_NVHE_PER_CPU (struct kvm_cpu_context, kvm_hyp_ctxt) | |

◆ DECLARE_KVM_NVHE_PER_CPU() [2/2]

| DECLARE_KVM_NVHE_PER_CPU (struct kvm_nvhe_init_params, kvm_init_params) | |

◆ DEFINE_PER_CPU() [1/2]

| static | DEFINE_PER_CPU (unsigned char, kvm_hyp_initialized) |

◆ DEFINE_PER_CPU() [2/2]

| DEFINE_PER_CPU (unsigned long, kvm_arm_hyp_stack_page) | |

◆ DEFINE_STATIC_KEY_FALSE()

| DEFINE_STATIC_KEY_FALSE | ( | userspace_irqchip_in_use | ) |

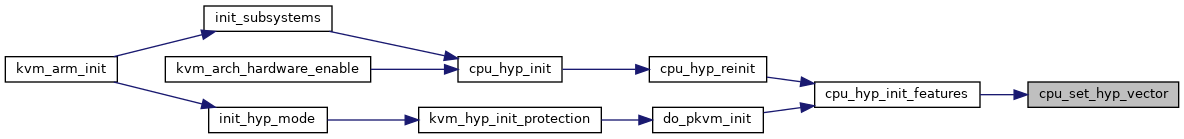

◆ do_pkvm_init()

| static int __init | do_pkvm_init (u32 hyp_va_bits) |

Definition at line 2198 of file arm.c.

◆ early_kvm_mode_cfg()

| static int __init | early_kvm_mode_cfg (char *arg) |

◆ early_param()

| early_param ("kvm-arm.mode", early_kvm_mode_cfg) | |

◆ get_hyp_id_aa64pfr0_el1()

| static u64 | get_hyp_id_aa64pfr0_el1 (void) |

◆ hyp_cpu_pm_exit()

| static inline void __init | hyp_cpu_pm_exit (void) |

◆ hyp_cpu_pm_init()

| static inline void __init | hyp_cpu_pm_init (void) |

◆ hyp_install_host_vector()

| static void | hyp_install_host_vector (void) |

◆ init_cpu_logical_map()

| static void __init | init_cpu_logical_map (void) |

◆ init_hyp_mode()

| static int __init | init_hyp_mode (void) |

Definition at line 2298 of file arm.c.

◆ init_psci_relay()

| static bool __init | init_psci_relay (void) |

Definition at line 2107 of file arm.c.

◆ init_subsystems()

| static int __init | init_subsystems (void) |

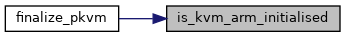

◆ is_kvm_arm_initialised()

| bool is_kvm_arm_initialised | ( | void | ) |

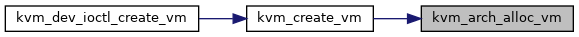

◆ kvm_arch_alloc_vm()

| struct kvm* kvm_arch_alloc_vm | ( | void | ) |

◆ kvm_arch_destroy_vm()

| void kvm_arch_destroy_vm | ( | struct kvm * | kvm | ) |

kvm_arch_destroy_vm - destroy the VM data structure

@kvm: pointer to the KVM struct

Definition at line 198 of file arm.c.

◆ kvm_arch_dev_ioctl()

| long | kvm_arch_dev_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

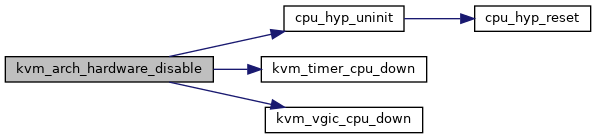

◆ kvm_arch_hardware_disable()

| void kvm_arch_hardware_disable | ( | void | ) |

◆ kvm_arch_hardware_enable()

| int kvm_arch_hardware_enable | ( | void | ) |

◆ kvm_arch_has_irq_bypass()

| bool kvm_arch_has_irq_bypass | ( | void | ) |

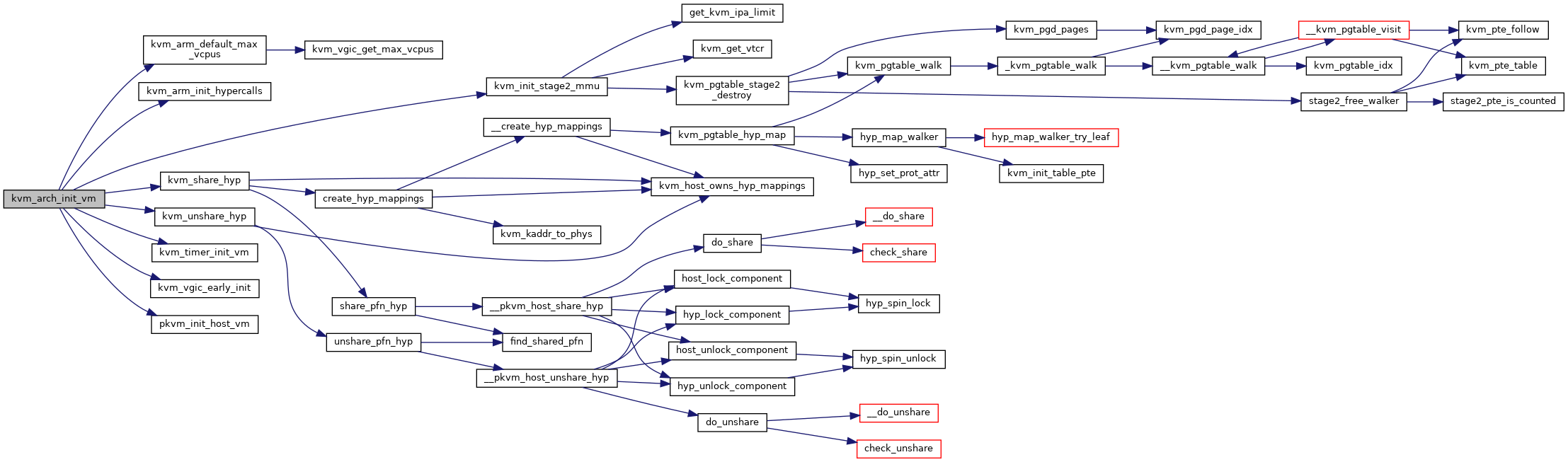

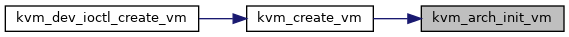

◆ kvm_arch_init_vm()

| int | kvm_arch_init_vm (struct kvm *kvm, unsigned long type) |

kvm_arch_init_vm - initializes a VM data structure

@kvm: pointer to the KVM struct

Definition at line 136 of file arm.c.

◆ kvm_arch_intc_initialized()

| bool | kvm_arch_intc_initialized (struct kvm *kvm) |

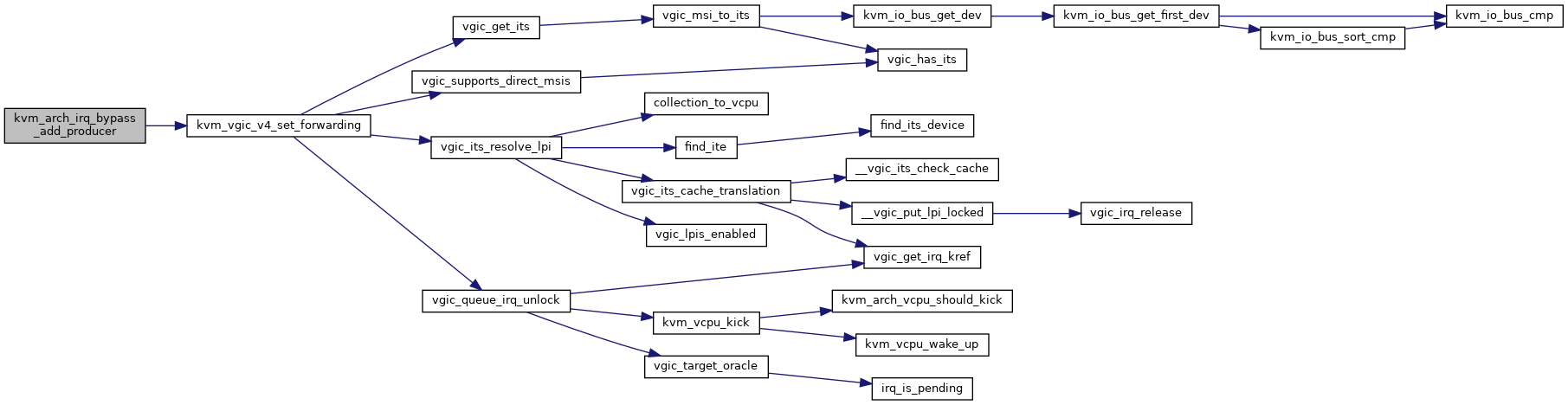

◆ kvm_arch_irq_bypass_add_producer()

| int | kvm_arch_irq_bypass_add_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

Definition at line 2495 of file arm.c.

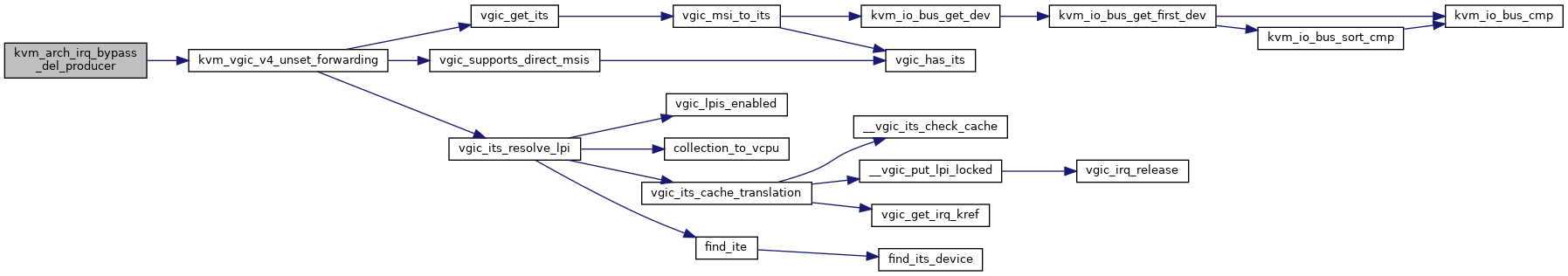

◆ kvm_arch_irq_bypass_del_producer()

| void | kvm_arch_irq_bypass_del_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

Definition at line 2504 of file arm.c.

◆ kvm_arch_irq_bypass_start()

| void kvm_arch_irq_bypass_start | ( | struct irq_bypass_consumer * | cons | ) |

◆ kvm_arch_irq_bypass_stop()

| void kvm_arch_irq_bypass_stop | ( | struct irq_bypass_consumer * | cons | ) |

◆ kvm_arch_irqchip_in_kernel()

| bool kvm_arch_irqchip_in_kernel | ( | struct kvm * | kvm | ) |

◆ kvm_arch_sync_dirty_log()

| void | kvm_arch_sync_dirty_log (struct kvm *kvm, struct kvm_memory_slot *memslot) |

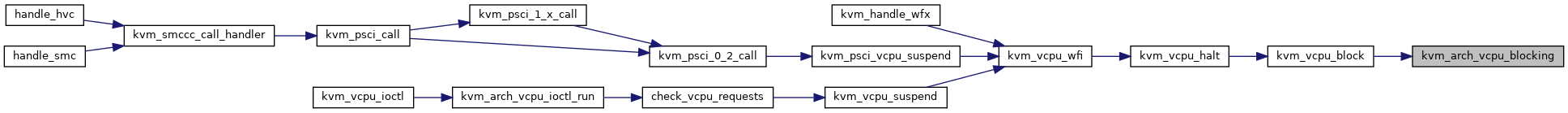

◆ kvm_arch_vcpu_blocking()

| void kvm_arch_vcpu_blocking | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_create()

| int kvm_arch_vcpu_create | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_destroy()

| void kvm_arch_vcpu_destroy | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 404 of file arm.c.

◆ kvm_arch_vcpu_fault()

| vm_fault_t | kvm_arch_vcpu_fault (struct kvm_vcpu *vcpu, struct vm_fault *vmf) |

◆ kvm_arch_vcpu_in_kernel()

| bool kvm_arch_vcpu_in_kernel | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_ioctl()

| long | kvm_arch_vcpu_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

Definition at line 1516 of file arm.c.

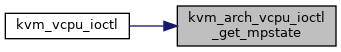

◆ kvm_arch_vcpu_ioctl_get_mpstate()

| int | kvm_arch_vcpu_ioctl_get_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

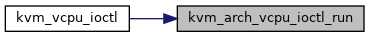

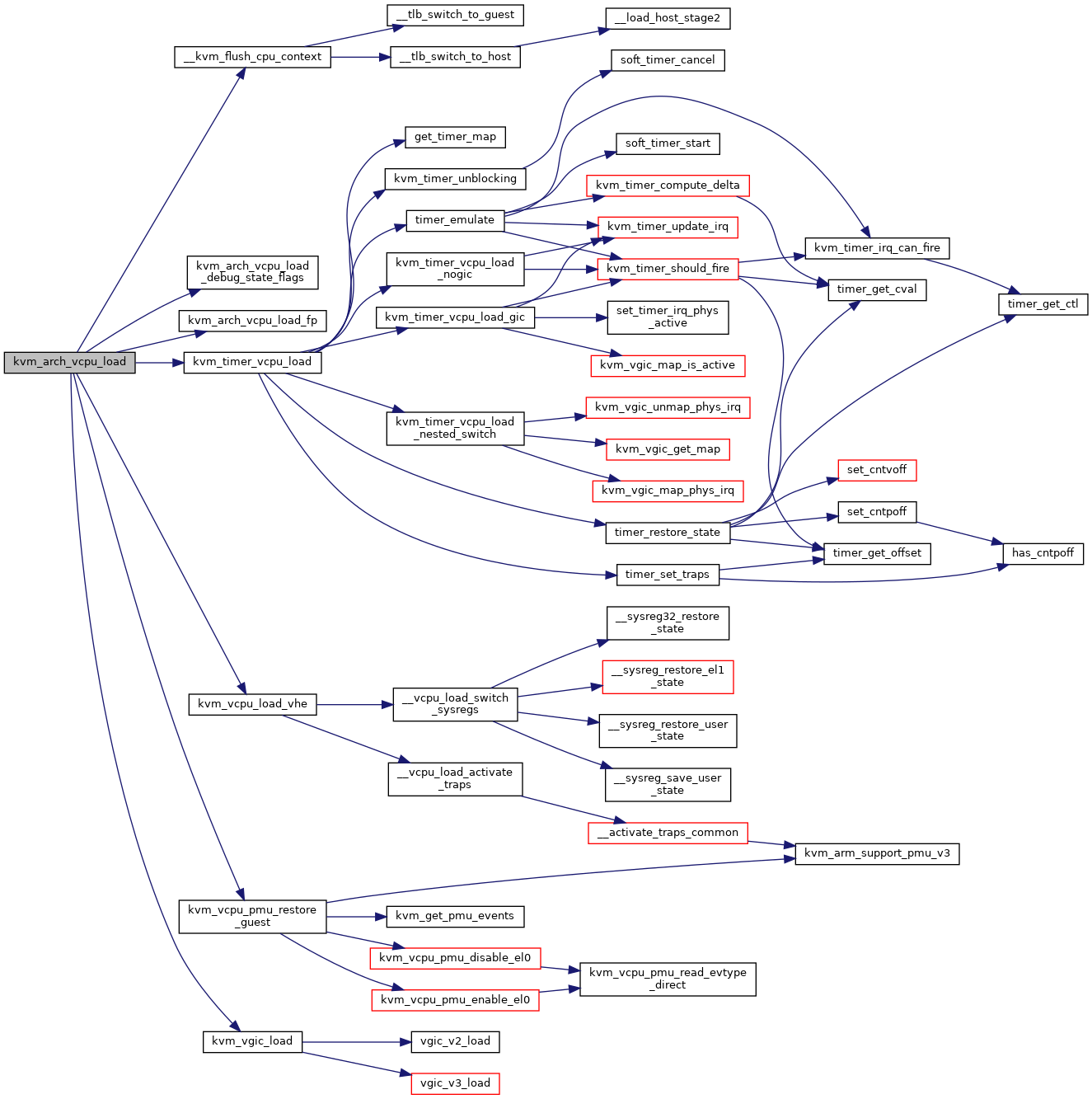

◆ kvm_arch_vcpu_ioctl_run()

| int kvm_arch_vcpu_ioctl_run | ( | struct kvm_vcpu * | vcpu | ) |

kvm_arch_vcpu_ioctl_run - the main VCPU run function to execute guest code

@vcpu: The VCPU pointer

This function is invoked through the KVM_RUN ioctl issued from user space. It executes VM code in a loop until the time slice for the process is used up or some emulation is needed from user space, in which case the function returns 0 with the kvm_run structure filled in with the data required for the requested emulation.

Definition at line 965 of file arm.c.

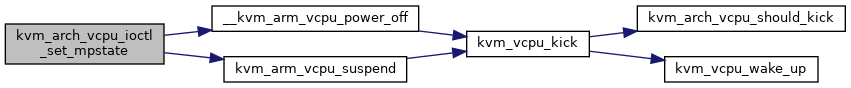

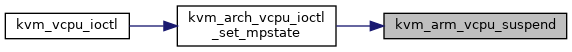

◆ kvm_arch_vcpu_ioctl_set_mpstate()

| int | kvm_arch_vcpu_ioctl_set_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

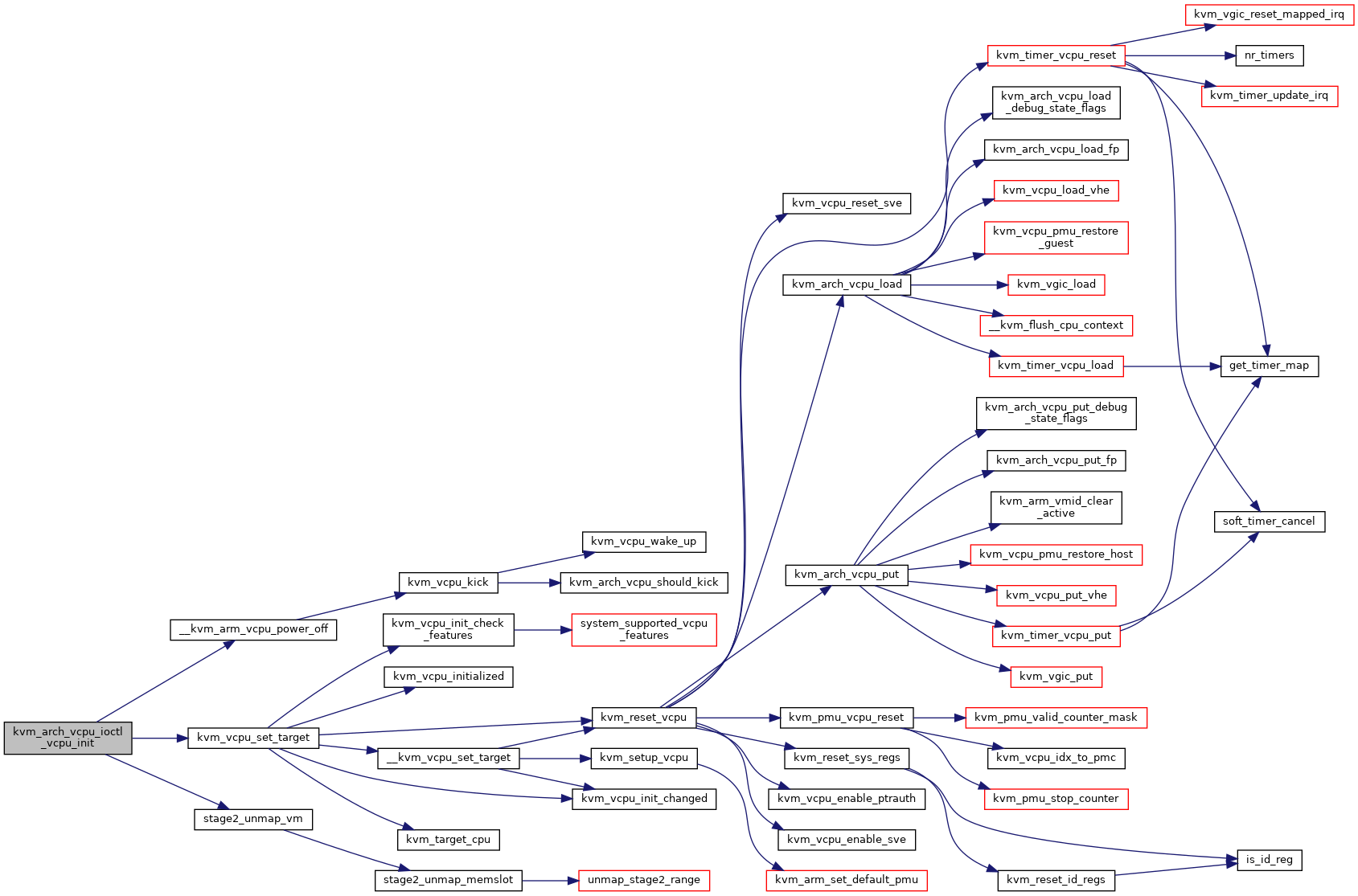

◆ kvm_arch_vcpu_ioctl_vcpu_init()

| static int | kvm_arch_vcpu_ioctl_vcpu_init (struct kvm_vcpu *vcpu, struct kvm_vcpu_init *init) |

Definition at line 1394 of file arm.c.

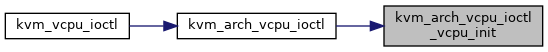

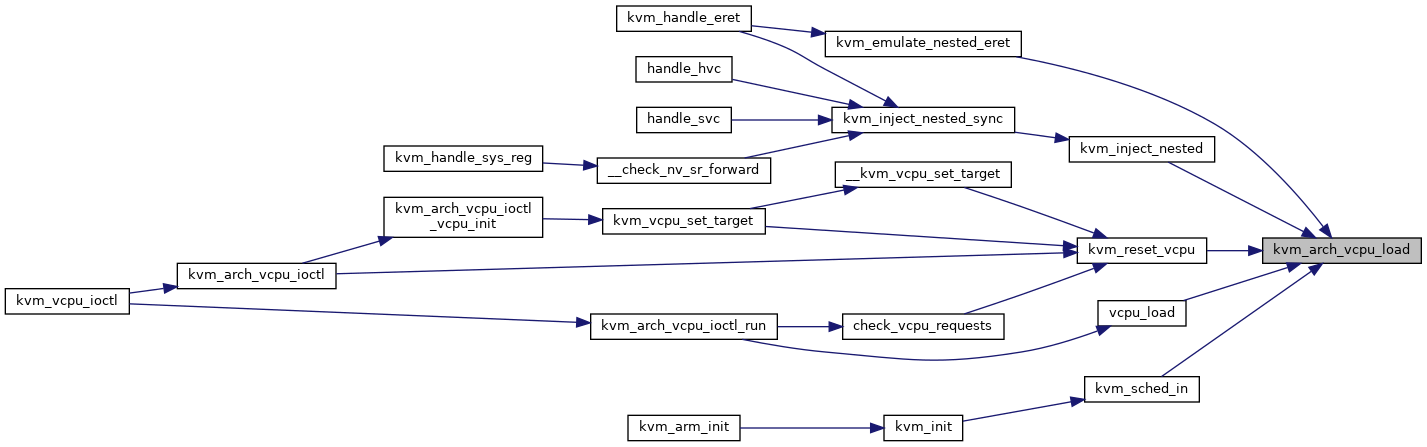

◆ kvm_arch_vcpu_load()

| void | kvm_arch_vcpu_load (struct kvm_vcpu *vcpu, int cpu) |

Definition at line 426 of file arm.c.

◆ kvm_arch_vcpu_postcreate()

| void kvm_arch_vcpu_postcreate | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_precreate()

| int | kvm_arch_vcpu_precreate (struct kvm *kvm, unsigned int id) |

◆ kvm_arch_vcpu_put()

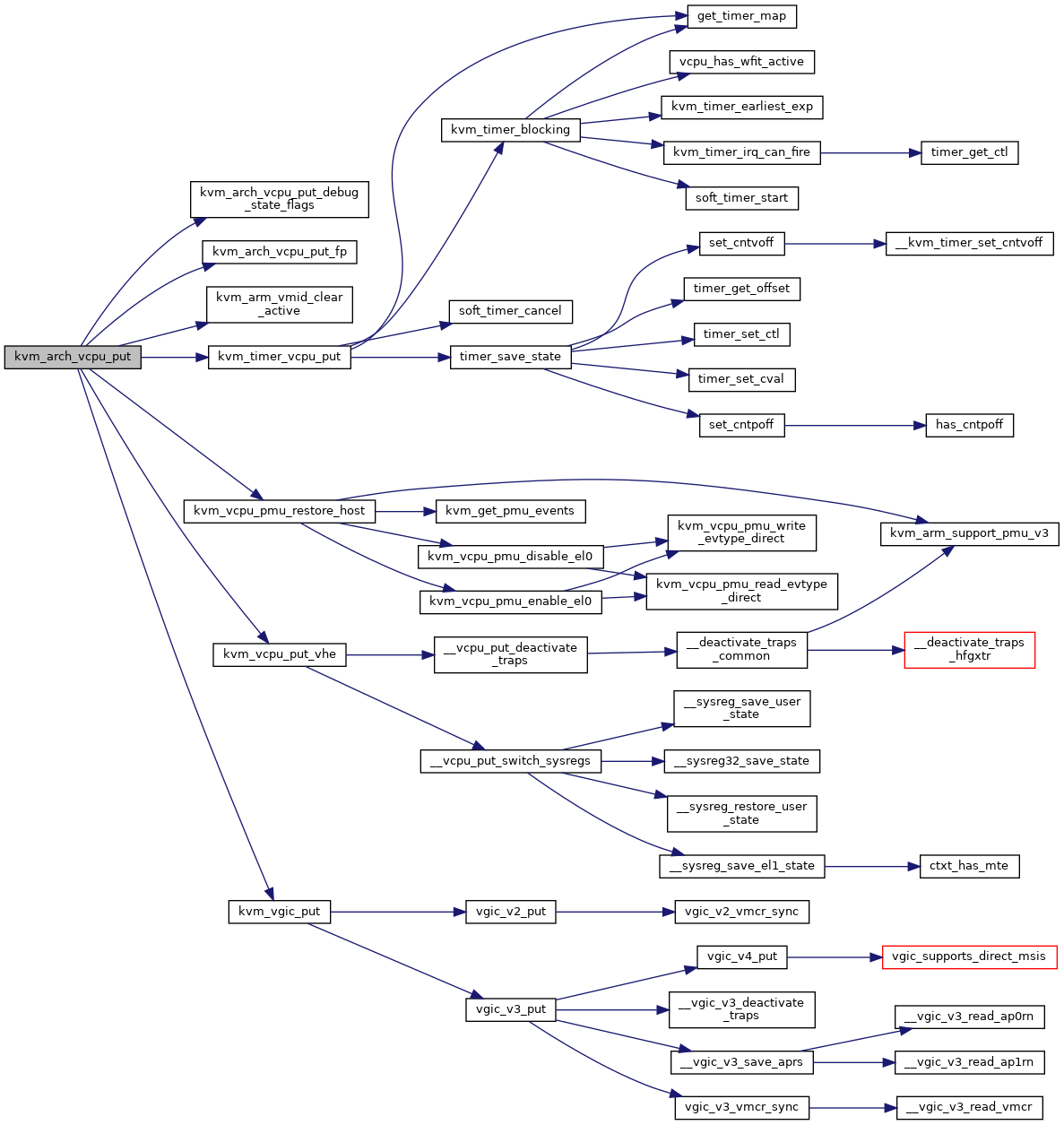

| void kvm_arch_vcpu_put | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 472 of file arm.c.

◆ kvm_arch_vcpu_run_pid_change()

| int kvm_arch_vcpu_run_pid_change | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_runnable()

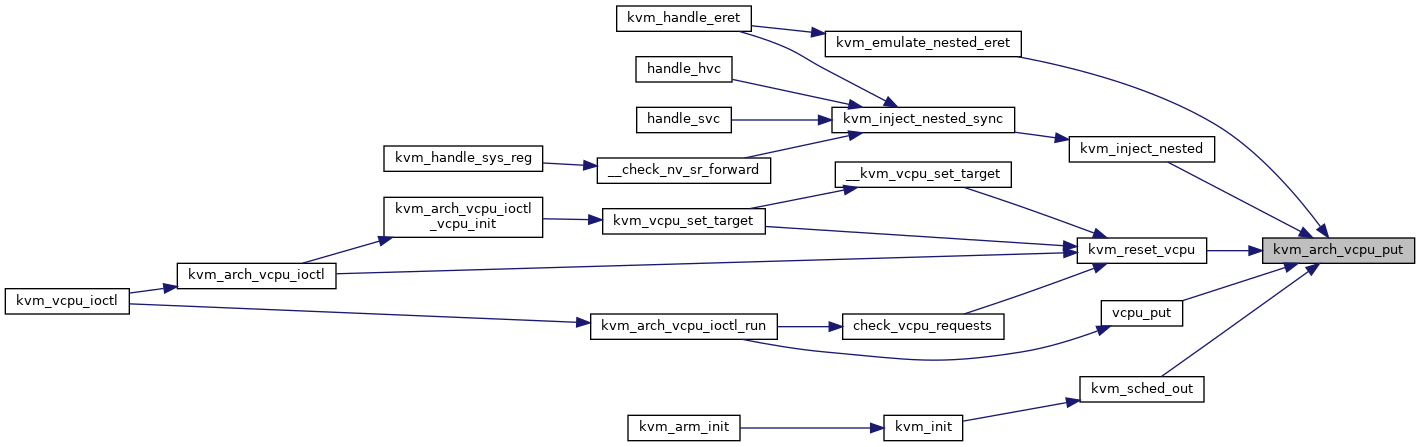

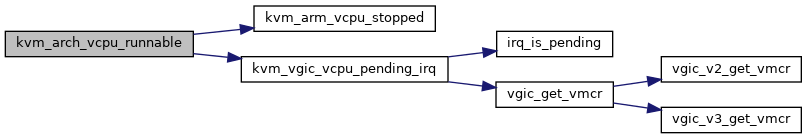

| int kvm_arch_vcpu_runnable | ( | struct kvm_vcpu * | v | ) |

kvm_arch_vcpu_runnable - determine if the vcpu can be scheduled

@v: The VCPU pointer

If the guest CPU is not waiting for interrupts or an interrupt line is asserted, the CPU is by definition runnable.
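
The rule stated above reduces to a simple predicate. The sketch below models only that sentence. `demo_vcpu` and its fields are hypothetical simplifications, and the actual function may consult additional vCPU state that is not described on this page.

```c
#include <stdbool.h>

/* Hypothetical, simplified vCPU state for illustration only. */
struct demo_vcpu {
	bool waiting_for_interrupt; /* vCPU is idle, waiting for an interrupt */
	bool irq_line_asserted;     /* at least one interrupt line is asserted */
};

/* Runnable if the vCPU is not waiting, or if an interrupt line is asserted. */
static bool demo_vcpu_runnable(const struct demo_vcpu *v)
{
	return !v->waiting_for_interrupt || v->irq_line_asserted;
}
```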

Definition at line 559 of file arm.c.

◆ kvm_arch_vcpu_should_kick()

| int kvm_arch_vcpu_should_kick | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vcpu_unblocking()

| void kvm_arch_vcpu_unblocking | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arch_vm_ioctl()

| int | kvm_arch_vm_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

Definition at line 1683 of file arm.c.

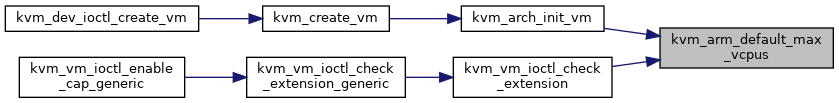

◆ kvm_arm_default_max_vcpus()

| static int | kvm_arm_default_max_vcpus (void) |

◆ kvm_arm_halt_guest()

| void kvm_arm_halt_guest | ( | struct kvm * | kvm | ) |

Definition at line 719 of file arm.c.

◆ kvm_arm_init()

| static __init int | kvm_arm_init (void) |

Definition at line 2531 of file arm.c.

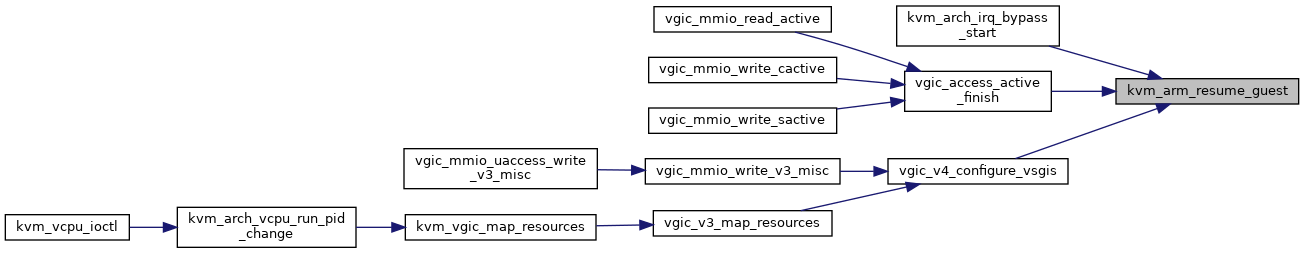

◆ kvm_arm_resume_guest()

| void kvm_arm_resume_guest | ( | struct kvm * | kvm | ) |

◆ kvm_arm_vcpu_enter_exit()

| static int noinstr | kvm_arm_vcpu_enter_exit (struct kvm_vcpu *vcpu) |

◆ kvm_arm_vcpu_get_attr()

| static int | kvm_arm_vcpu_get_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

Definition at line 1462 of file arm.c.

◆ kvm_arm_vcpu_get_events()

| static int | kvm_arm_vcpu_get_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

Definition at line 1490 of file arm.c.

◆ kvm_arm_vcpu_has_attr()

| static int | kvm_arm_vcpu_has_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

Definition at line 1476 of file arm.c.

◆ kvm_arm_vcpu_power_off()

| void kvm_arm_vcpu_power_off | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arm_vcpu_set_attr()

| static int | kvm_arm_vcpu_set_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

Definition at line 1448 of file arm.c.

◆ kvm_arm_vcpu_set_events()

| static int | kvm_arm_vcpu_set_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

Definition at line 1498 of file arm.c.

◆ kvm_arm_vcpu_stopped()

| bool kvm_arm_vcpu_stopped | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_arm_vcpu_suspend()

| static void | kvm_arm_vcpu_suspend (struct kvm_vcpu *vcpu) |

◆ kvm_arm_vcpu_suspended()

| static bool | kvm_arm_vcpu_suspended (struct kvm_vcpu *vcpu) |

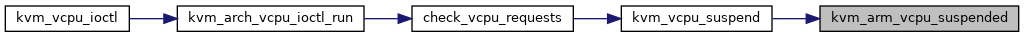

◆ kvm_get_mode()

| enum kvm_mode kvm_get_mode | ( | void | ) |

◆ kvm_hyp_init_protection()

| static int __init | kvm_hyp_init_protection (u32 hyp_va_bits) |

◆ kvm_hyp_init_symbols()

| static void | kvm_hyp_init_symbols (void) |

◆ kvm_init_mpidr_data()

| static void | kvm_init_mpidr_data (struct kvm *kvm) |

◆ kvm_init_vector_slot()

| static void | kvm_init_vector_slot (void *base, enum arm64_hyp_spectre_vector slot) |

◆ kvm_init_vector_slots()

| static int | kvm_init_vector_slots (void) |

Definition at line 1818 of file arm.c.

◆ kvm_mpidr_to_vcpu()

| struct kvm_vcpu * | kvm_mpidr_to_vcpu (struct kvm *kvm, unsigned long mpidr) |

◆ kvm_setup_vcpu()

| static int | kvm_setup_vcpu (struct kvm_vcpu *vcpu) |

◆ kvm_vcpu_exit_request()

| static bool | kvm_vcpu_exit_request (struct kvm_vcpu *vcpu, int *ret) |

kvm_vcpu_exit_request - returns true if the VCPU should not enter the guest

@vcpu: The VCPU pointer

@ret: Pointer to write optional return code

Returns: true if the VCPU needs to return to a preemptible + interruptible context and skip guest entry.

This function disambiguates between two different types of exits: exits to a preemptible + interruptible kernel context and exits to userspace. For an exit to userspace, this function will write the return code to ret and return true. For an exit to preemptible + interruptible kernel context (i.e. check for pending work and re-enter), return true without writing to ret.
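
The contract described above (return true for both kinds of exit, but write to ret only for an exit to userspace) can be sketched as follows. The enum `demo_exit_kind` and the function `demo_exit_request` are hypothetical and only model the documented behaviour. They do not reproduce how the kernel decides which kind of exit applies.

```c
#include <stdbool.h>

/* Hypothetical classification of what is pending before guest entry. */
enum demo_exit_kind {
	DEMO_NO_EXIT,        /* nothing pending: proceed with guest entry */
	DEMO_EXIT_KERNEL,    /* handle pending work in the kernel, then retry */
	DEMO_EXIT_USERSPACE, /* return to userspace with a return code */
};

/*
 * Returns true if guest entry should be skipped. *ret is written only
 * for an exit to userspace and is left untouched otherwise.
 */
static bool demo_exit_request(enum demo_exit_kind kind, int userspace_code,
			      int *ret)
{
	switch (kind) {
	case DEMO_EXIT_USERSPACE:
		*ret = userspace_code;
		return true;
	case DEMO_EXIT_KERNEL:
		return true;
	default:
		return false;
	}
}
```

A caller following this pattern would initialise the return code before the call, so that an untouched value signals "re-check pending work and try again".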

Definition at line 905 of file arm.c.

◆ kvm_vcpu_init_changed()

| static bool | kvm_vcpu_init_changed (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

◆ kvm_vcpu_init_check_features()

| static int | kvm_vcpu_init_check_features (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

Definition at line 1273 of file arm.c.

◆ kvm_vcpu_initialized()

| static int | kvm_vcpu_initialized (struct kvm_vcpu *vcpu) |

◆ kvm_vcpu_set_target()

| static int | kvm_vcpu_set_target (struct kvm_vcpu *vcpu, const struct kvm_vcpu_init *init) |

Definition at line 1371 of file arm.c.

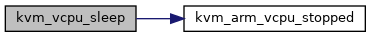

◆ kvm_vcpu_sleep()

| static void | kvm_vcpu_sleep (struct kvm_vcpu *vcpu) |

◆ kvm_vcpu_suspend()

| static int | kvm_vcpu_suspend (struct kvm_vcpu *vcpu) |

◆ kvm_vcpu_wfi()

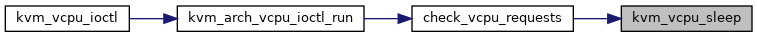

| void kvm_vcpu_wfi | ( | struct kvm_vcpu * | vcpu | ) |

kvm_vcpu_wfi - emulate Wait-For-Interrupt behavior

@vcpu: The VCPU pointer

Suspend execution of a vCPU until a valid wake event is detected, i.e. until the vCPU is runnable. The vCPU may or may not be scheduled out, depending on when a wake event arrives, e.g. there may already be a pending wake event.
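
The point that the vCPU "may or may not be scheduled out" can be shown with a toy model. Everything here is hypothetical (`demo_wfi_vcpu`, `demo_wake_pending`, `demo_vcpu_wfi`). A counter stands in for the passage of time until a wake event arrives, and no real blocking or scheduling takes place.

```c
#include <stdbool.h>

/* Hypothetical toy state; not the kernel's vCPU structure. */
struct demo_wfi_vcpu {
	unsigned int ticks_until_wake; /* 0 means a wake event is already pending */
	unsigned int times_blocked;    /* how often the vCPU was "scheduled out" */
};

static bool demo_wake_pending(const struct demo_wfi_vcpu *v)
{
	return v->ticks_until_wake == 0;
}

/* Wait until a wake event is pending; skip blocking if one already is. */
static void demo_vcpu_wfi(struct demo_wfi_vcpu *v)
{
	while (!demo_wake_pending(v)) {
		v->times_blocked++;    /* stand-in for being scheduled out */
		v->ticks_until_wake--; /* time passes until the event arrives */
	}
}
```

With `ticks_until_wake` set to 0 the loop body never runs, which corresponds to the case where a wake event is already pending and the vCPU is not scheduled out at all.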

Definition at line 769 of file arm.c.

◆ kvm_vm_has_attr()

| static int | kvm_vm_has_attr (struct kvm *kvm, struct kvm_device_attr *attr) |

Definition at line 1663 of file arm.c.

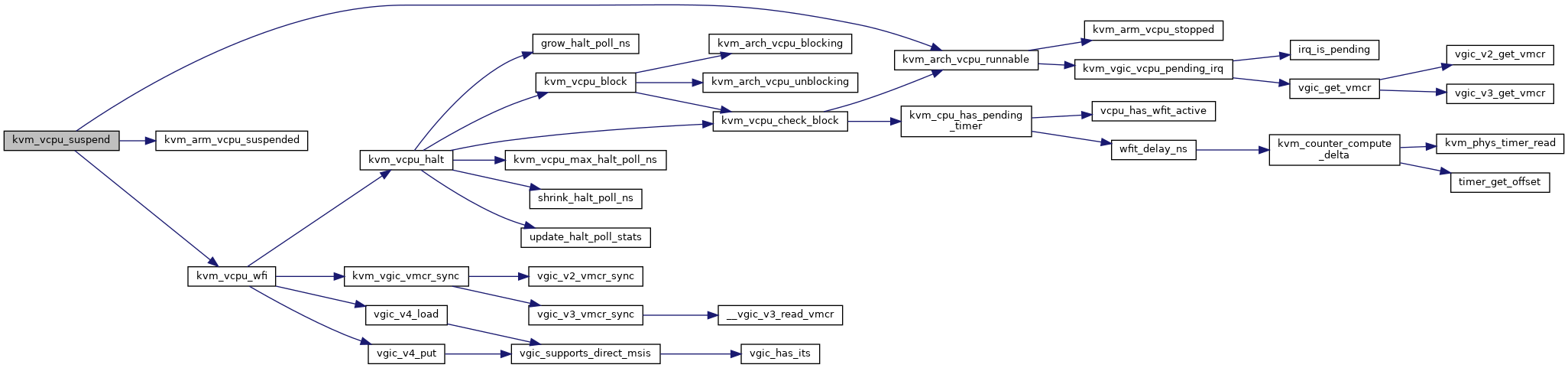

◆ kvm_vm_ioctl_check_extension()

| int | kvm_vm_ioctl_check_extension (struct kvm *kvm, long ext) |

◆ kvm_vm_ioctl_enable_cap()

| int | kvm_vm_ioctl_enable_cap (struct kvm *kvm, struct kvm_enable_cap *cap) |

Definition at line 72 of file arm.c.

◆ kvm_vm_ioctl_irq_line()

| int | kvm_vm_ioctl_irq_line (struct kvm *kvm, struct kvm_irq_level *irq_level, bool line_status) |

Definition at line 1196 of file arm.c.

◆ kvm_vm_ioctl_set_device_addr()

| static int | kvm_vm_ioctl_set_device_addr (struct kvm *kvm, struct kvm_arm_device_addr *dev_addr) |

Definition at line 1650 of file arm.c.

◆ kvm_vm_set_attr()

| static int | kvm_vm_set_attr (struct kvm *kvm, struct kvm_device_attr *attr) |

Definition at line 1673 of file arm.c.

◆ lock_all_vcpus()

| bool lock_all_vcpus | ( | struct kvm * | kvm | ) |

◆ module_init()

| module_init | ( | kvm_arm_init | ) |

◆ nvhe_percpu_order()

| static unsigned long | nvhe_percpu_order (void) |

◆ nvhe_percpu_size()

| static unsigned long | nvhe_percpu_size (void) |

◆ pkvm_hyp_init_ptrauth()

| static void | pkvm_hyp_init_ptrauth (void) |

◆ system_supported_vcpu_features()

| static unsigned long | system_supported_vcpu_features (void) |

◆ teardown_hyp_mode()

| static void __init | teardown_hyp_mode (void) |

◆ teardown_subsystems()

| static void __init | teardown_subsystems (void) |

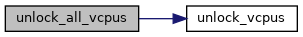

◆ unlock_all_vcpus()

| void unlock_all_vcpus | ( | struct kvm * | kvm | ) |

◆ unlock_vcpus()

| static void | unlock_vcpus (struct kvm *kvm, int vcpu_lock_idx) |

◆ vcpu_interrupt_line()

| static int | vcpu_interrupt_line (struct kvm_vcpu *vcpu, int number, bool level) |

◆ vcpu_mode_is_bad_32bit()

| static bool | vcpu_mode_is_bad_32bit (struct kvm_vcpu *vcpu) |

Variable Documentation

◆ hyp_spectre_vector_selector

| static void * | hyp_spectre_vector_selector [BP_HARDEN_EL2_SLOTS] |