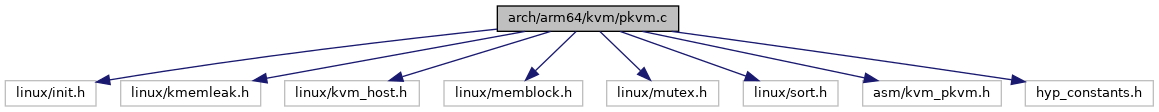

#include <linux/init.h>
#include <linux/kmemleak.h>
#include <linux/kvm_host.h>
#include <linux/memblock.h>
#include <linux/mutex.h>
#include <linux/sort.h>
#include <asm/kvm_pkvm.h>
#include "hyp_constants.h"

Include dependency graph for pkvm.c:


Functions | |

| DEFINE_STATIC_KEY_FALSE (kvm_protected_mode_initialized) | |

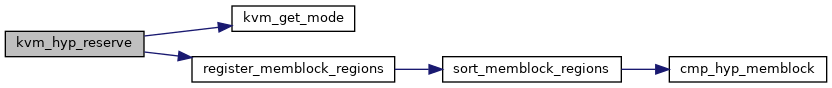

| static int | cmp_hyp_memblock (const void *p1, const void *p2) |

| static void __init | sort_memblock_regions (void) |

| static int __init | register_memblock_regions (void) |

| void __init | kvm_hyp_reserve (void) |

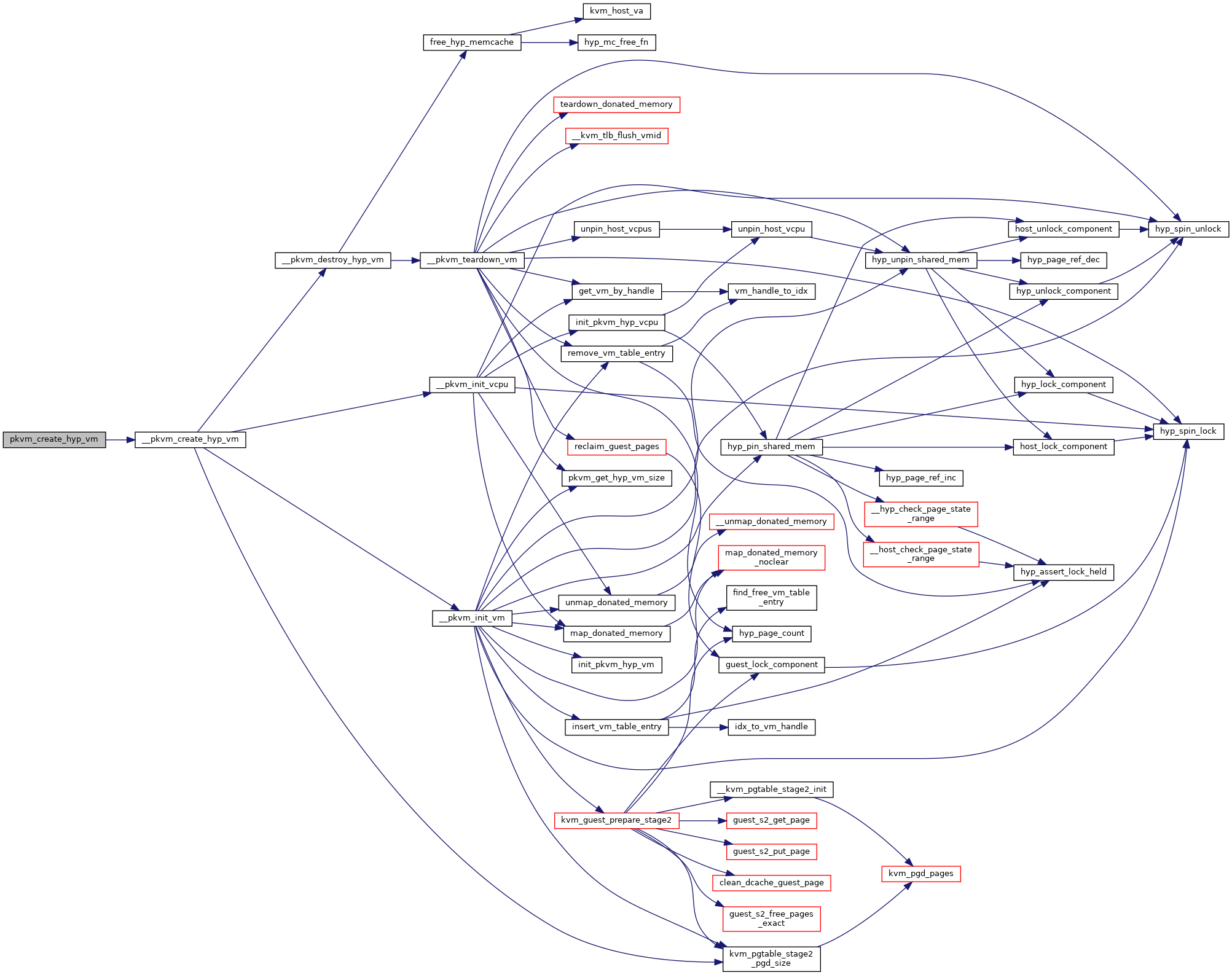

| static void | __pkvm_destroy_hyp_vm (struct kvm *host_kvm) |

| static int | __pkvm_create_hyp_vm (struct kvm *host_kvm) |

| int | pkvm_create_hyp_vm (struct kvm *host_kvm) |

| void | pkvm_destroy_hyp_vm (struct kvm *host_kvm) |

| int | pkvm_init_host_vm (struct kvm *host_kvm) |

| static void __init | _kvm_host_prot_finalize (void *arg) |

| static int __init | pkvm_drop_host_privileges (void) |

| static int __init | finalize_pkvm (void) |

| device_initcall_sync (finalize_pkvm) | |

Variables

| Type | Name |
| --- | --- |
| static struct memblock_region * | hyp_memory = kvm_nvhe_sym(hyp_memory) |
| static unsigned int * | hyp_memblock_nr_ptr = &kvm_nvhe_sym(hyp_memblock_nr) |
| phys_addr_t | hyp_mem_base |
| phys_addr_t | hyp_mem_size |

Function Documentation

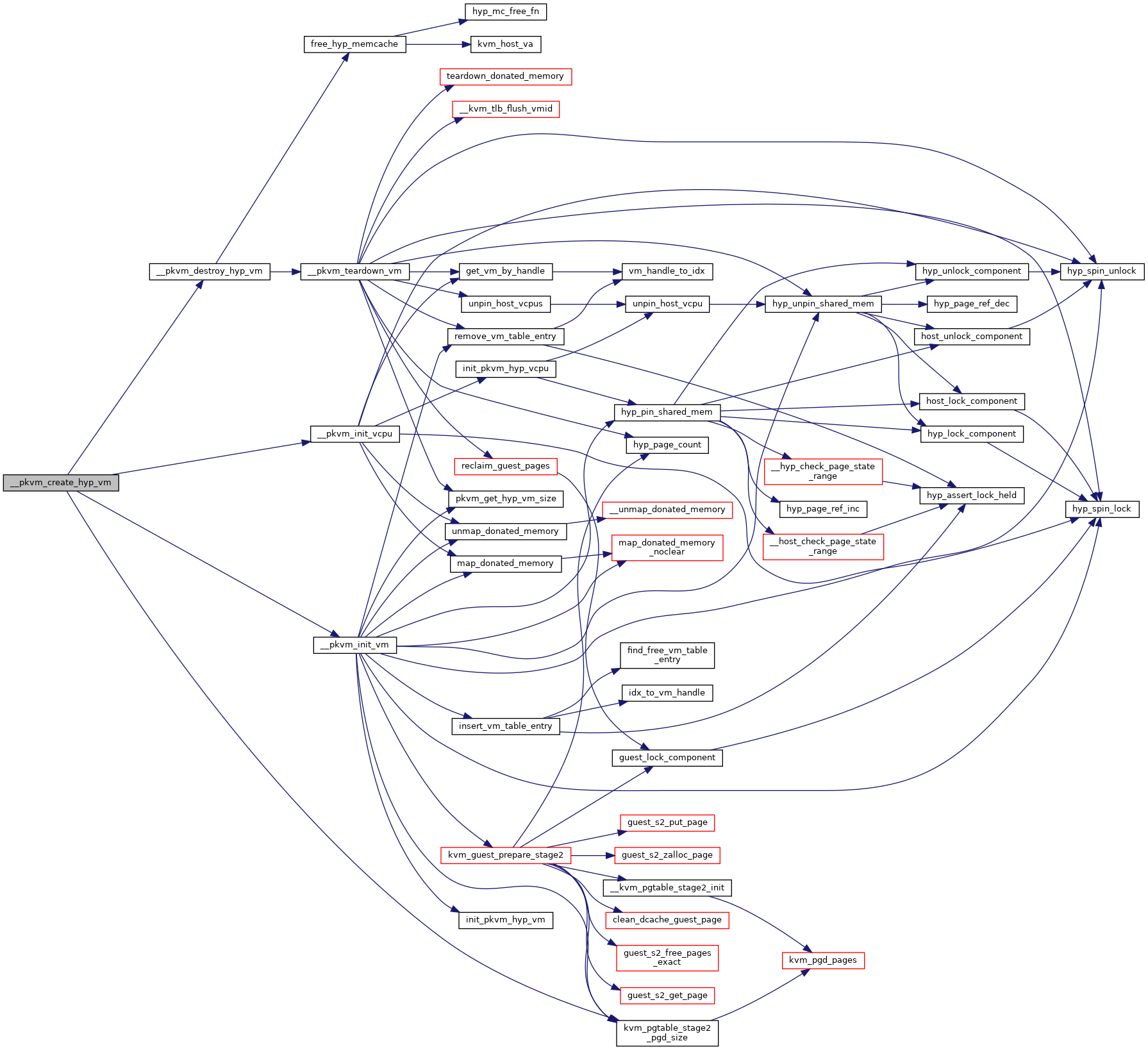

◆ __pkvm_create_hyp_vm()

| static int __pkvm_create_hyp_vm | ( | struct kvm * | host_kvm | ) |

Definition at line 125 of file pkvm.c.

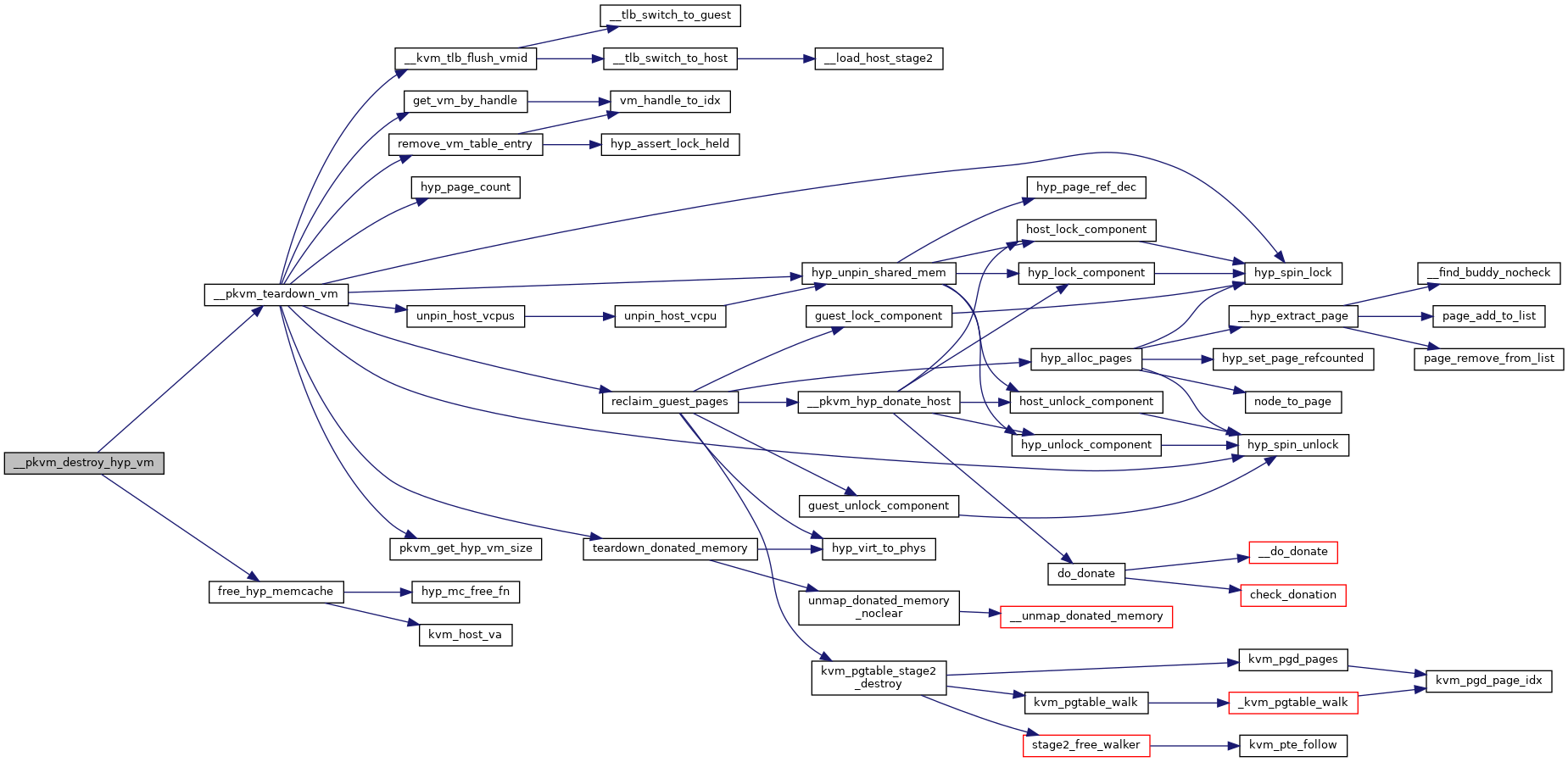

int __pkvm_init_vm(struct kvm *host_kvm, unsigned long vm_hva, unsigned long pgd_hva)

Definition: pkvm.c:470

int __pkvm_init_vcpu(pkvm_handle_t handle, struct kvm_vcpu *host_vcpu, unsigned long vcpu_hva)

Definition: pkvm.c:539

Here is the call graph for this function:

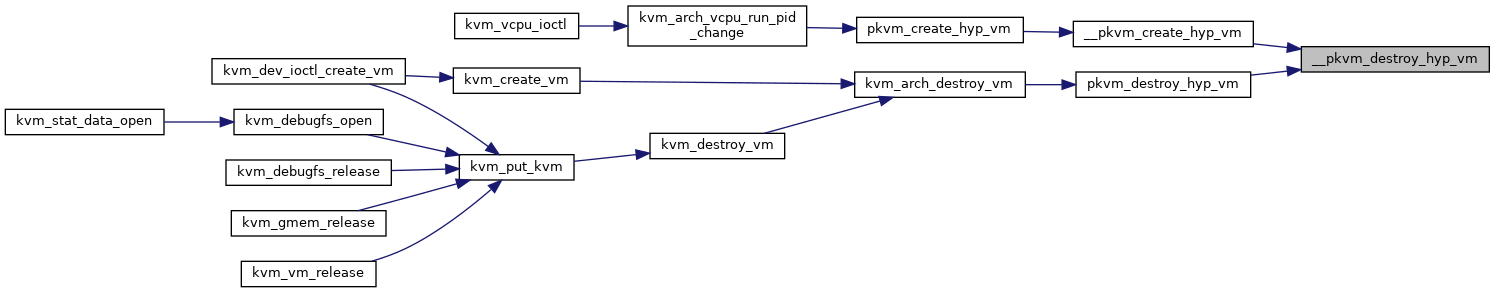

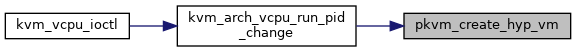

Here is the caller graph for this function:

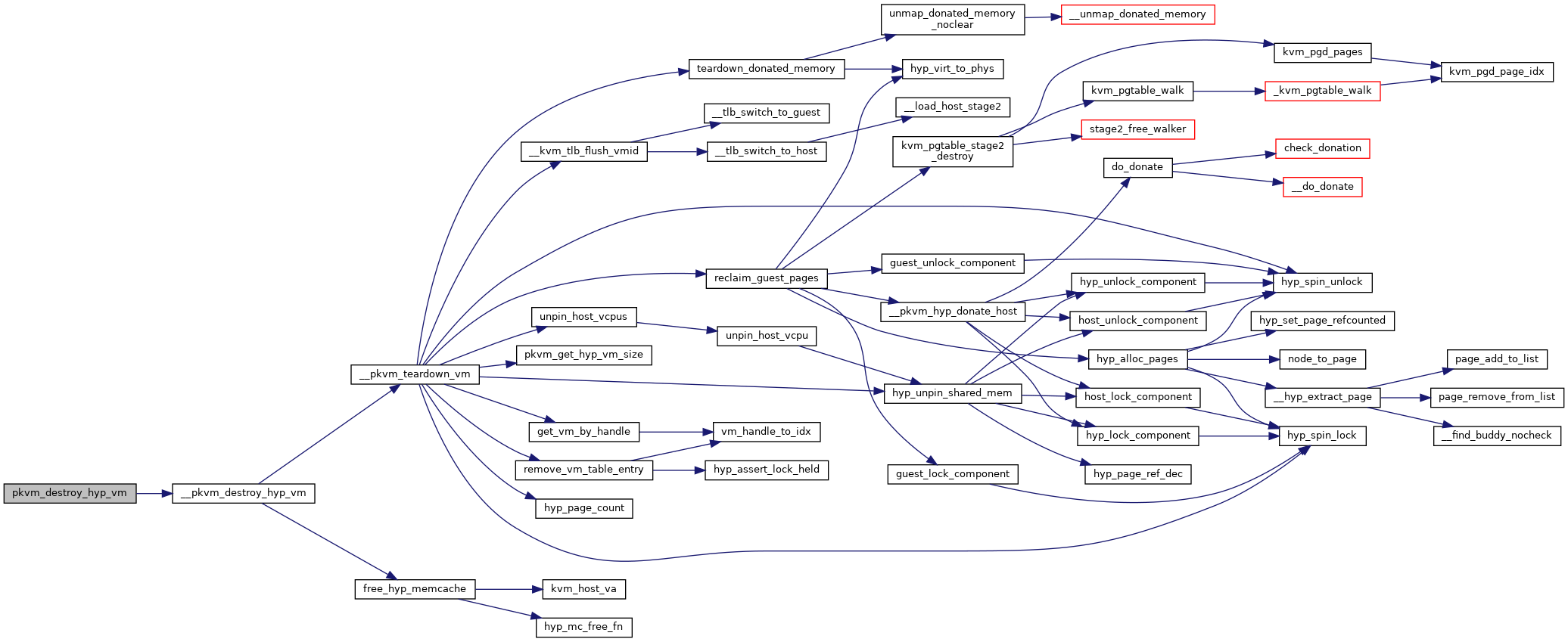

◆ __pkvm_destroy_hyp_vm()

| static void __pkvm_destroy_hyp_vm | ( | struct kvm * | host_kvm | ) |

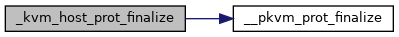

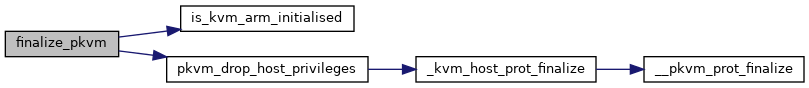

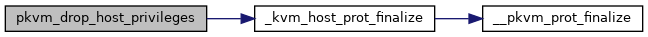

◆ _kvm_host_prot_finalize()

| static void __init _kvm_host_prot_finalize | ( | void * | arg | ) |

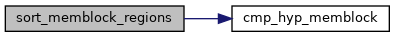

◆ cmp_hyp_memblock()

| static int cmp_hyp_memblock | ( | const void *p1, const void *p2 | ) |
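This page gives only the signature of cmp_hyp_memblock(), which has the shape of a comparison callback for a generic sort routine. The following is a minimal user-space sketch of that pattern, not the kernel implementation. It assumes the regions are ordered by ascending base address, which this page does not state. `struct region`, `cmp_region()` and `sort_regions()` are hypothetical names introduced for illustration, and the standard C `qsort()` stands in for the kernel's sort helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-in for struct memblock_region; only base and size are modeled. */
struct region {
	uint64_t base;
	uint64_t size;
};

/*
 * Comparator with the same signature shape as cmp_hyp_memblock().
 * Assumption: regions are ordered by ascending base address.
 */
static int cmp_region(const void *p1, const void *p2)
{
	const struct region *r1 = p1;
	const struct region *r2 = p2;

	/* Compare instead of subtracting, so 64-bit bases cannot overflow an int. */
	return (r1->base > r2->base) - (r1->base < r2->base);
}

/* User-space analogue of a "sort the region array" helper, built on qsort(). */
static void sort_regions(struct region *regions, size_t nr)
{
	assert(regions != NULL || nr == 0);
	if (nr > 1)
		qsort(regions, nr, sizeof(*regions), cmp_region);
}
```

Calling `sort_regions()` on an array of three regions with bases 0x8000, 0x1000 and 0x4000 reorders them to 0x1000, 0x4000, 0x8000, with each size staying attached to its base.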

◆ DEFINE_STATIC_KEY_FALSE()

| DEFINE_STATIC_KEY_FALSE | ( | kvm_protected_mode_initialized | ) |

◆ device_initcall_sync()

| device_initcall_sync | ( | finalize_pkvm | ) |

◆ finalize_pkvm()

| static int __init finalize_pkvm | ( | void | ) |

◆ kvm_hyp_reserve()

| void __init kvm_hyp_reserve | ( | void | ) |

◆ pkvm_create_hyp_vm()

| int pkvm_create_hyp_vm | ( | struct kvm * | host_kvm | ) |

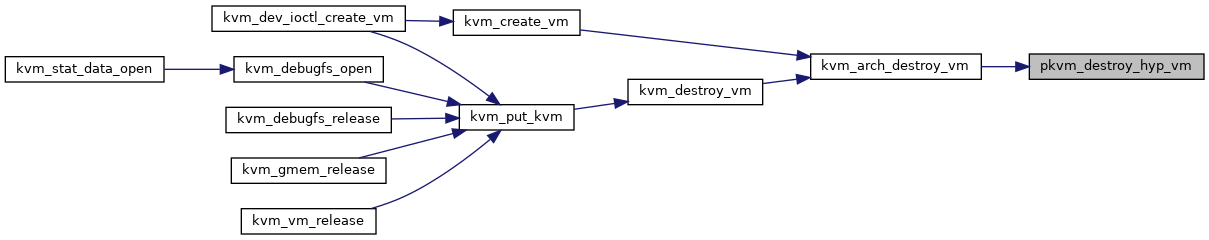

◆ pkvm_destroy_hyp_vm()

| void pkvm_destroy_hyp_vm | ( | struct kvm * | host_kvm | ) |

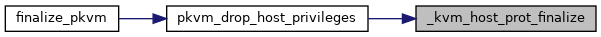

◆ pkvm_drop_host_privileges()

| static int __init pkvm_drop_host_privileges | ( | void | ) |

◆ pkvm_init_host_vm()

| int pkvm_init_host_vm | ( | struct kvm * | host_kvm | ) |

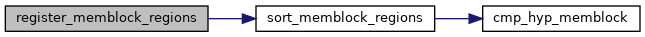

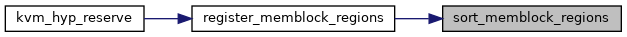

◆ register_memblock_regions()

| static int __init register_memblock_regions | ( | void | ) |

◆ sort_memblock_regions()

| static void __init sort_memblock_regions | ( | void | ) |

Variable Documentation

◆ hyp_mem_base

◆ hyp_mem_size

◆ hyp_memblock_nr_ptr

| static unsigned int * hyp_memblock_nr_ptr = &kvm_nvhe_sym(hyp_memblock_nr) |