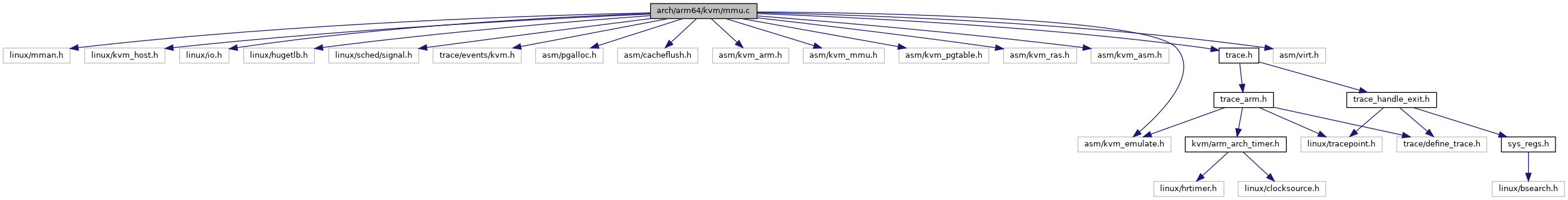

`#include <linux/mman.h>`
`#include <linux/kvm_host.h>`
`#include <linux/io.h>`
`#include <linux/hugetlb.h>`
`#include <linux/sched/signal.h>`
`#include <trace/events/kvm.h>`
`#include <asm/pgalloc.h>`
`#include <asm/cacheflush.h>`
`#include <asm/kvm_arm.h>`
`#include <asm/kvm_mmu.h>`
`#include <asm/kvm_pgtable.h>`
`#include <asm/kvm_ras.h>`
`#include <asm/kvm_asm.h>`
`#include <asm/kvm_emulate.h>`
`#include <asm/virt.h>`
`#include "trace.h"`

Classes

| Type | Name |
|---|---|
| `struct` | `hyp_shared_pfn` |

Macros

| Type | Definition |
|---|---|
| `#define` | `stage2_apply_range_resched(mmu, addr, end, fn) stage2_apply_range(mmu, addr, end, fn, true)` |

Functions | |

| static | DEFINE_MUTEX (kvm_hyp_pgd_mutex) |

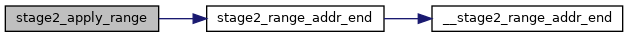

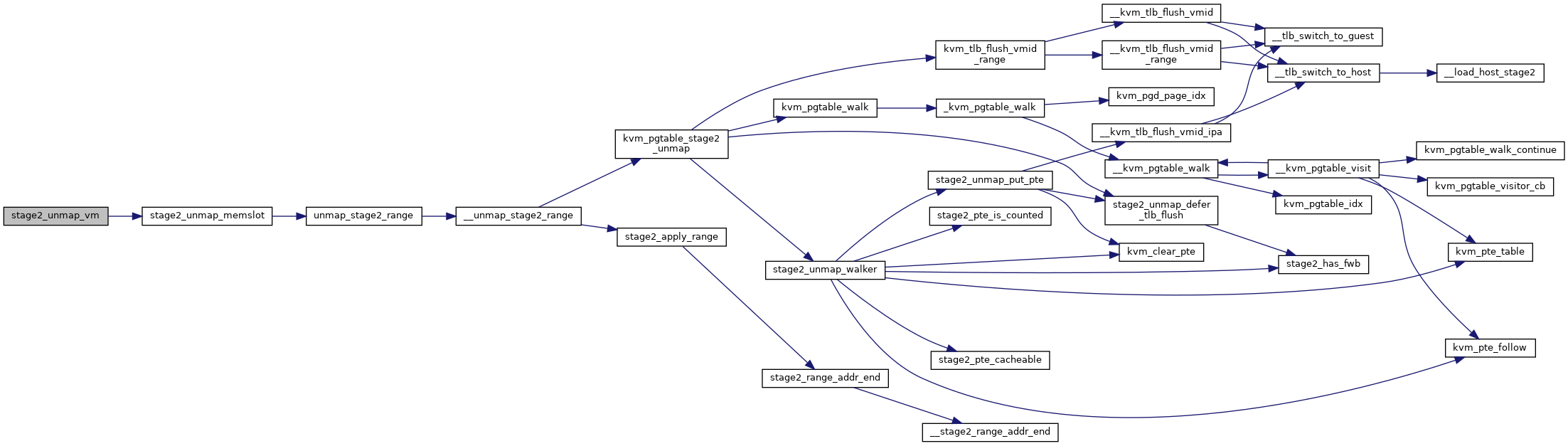

| static phys_addr_t | __stage2_range_addr_end (phys_addr_t addr, phys_addr_t end, phys_addr_t size) |

| static phys_addr_t | stage2_range_addr_end (phys_addr_t addr, phys_addr_t end) |

| static int | stage2_apply_range (struct kvm_s2_mmu *mmu, phys_addr_t addr, phys_addr_t end, int(*fn)(struct kvm_pgtable *, u64, u64), bool resched) |

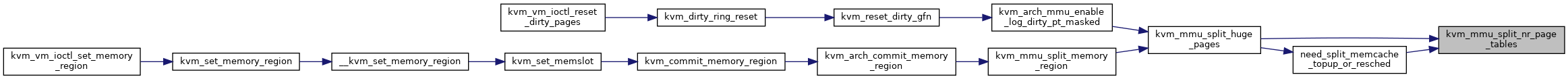

| static int | kvm_mmu_split_nr_page_tables (u64 range) |

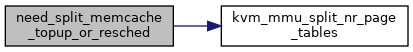

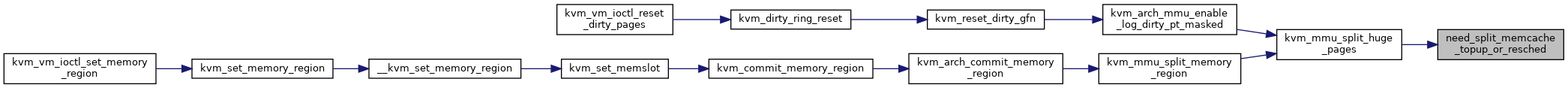

| static bool | need_split_memcache_topup_or_resched (struct kvm *kvm) |

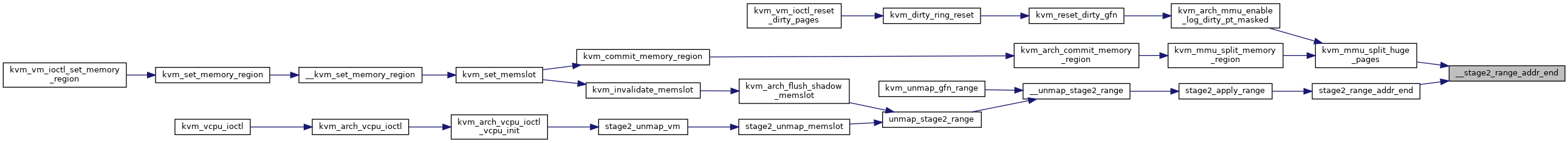

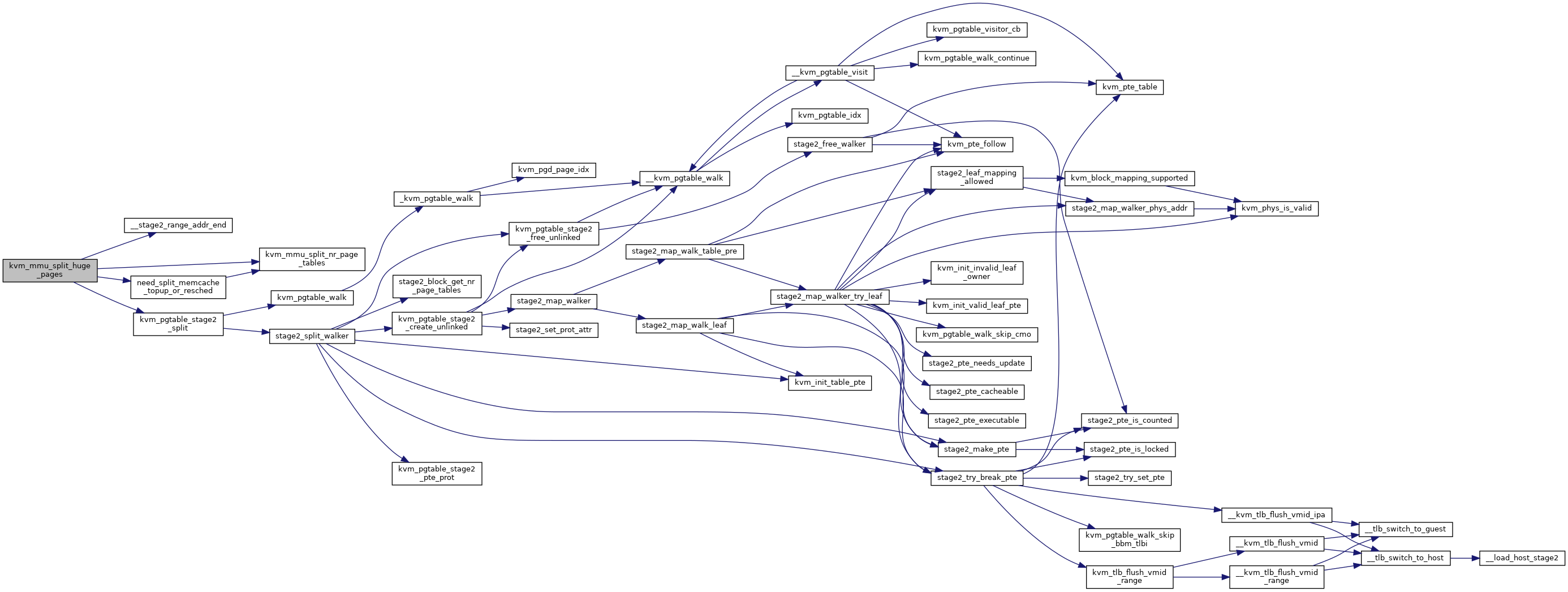

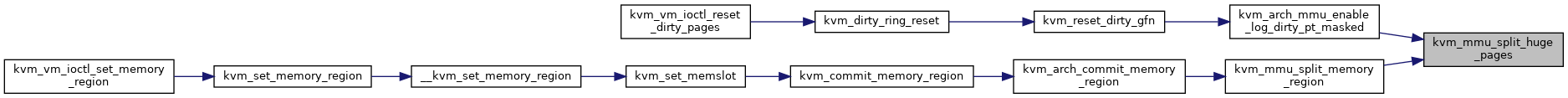

| static int | kvm_mmu_split_huge_pages (struct kvm *kvm, phys_addr_t addr, phys_addr_t end) |

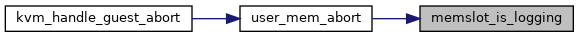

| static bool | memslot_is_logging (struct kvm_memory_slot *memslot) |

| int | kvm_arch_flush_remote_tlbs (struct kvm *kvm) |

| int | kvm_arch_flush_remote_tlbs_range (struct kvm *kvm, gfn_t gfn, u64 nr_pages) |

| static bool | kvm_is_device_pfn (unsigned long pfn) |

| static void * | stage2_memcache_zalloc_page (void *arg) |

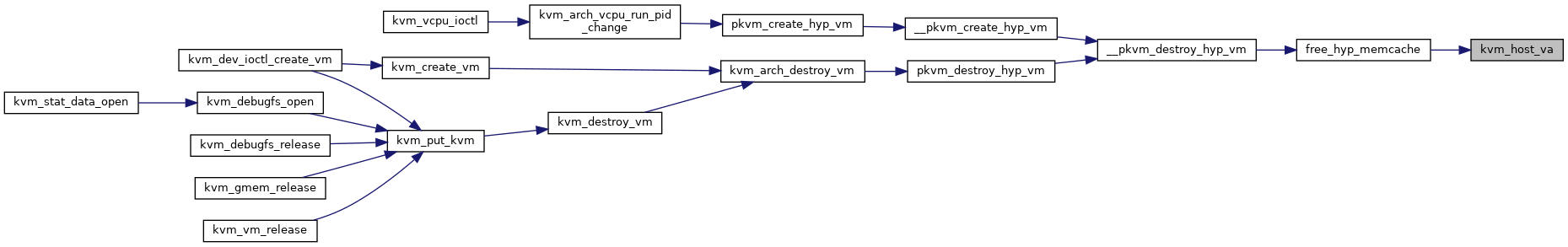

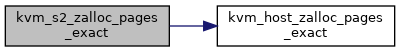

| static void * | kvm_host_zalloc_pages_exact (size_t size) |

| static void * | kvm_s2_zalloc_pages_exact (size_t size) |

| static void | kvm_s2_free_pages_exact (void *virt, size_t size) |

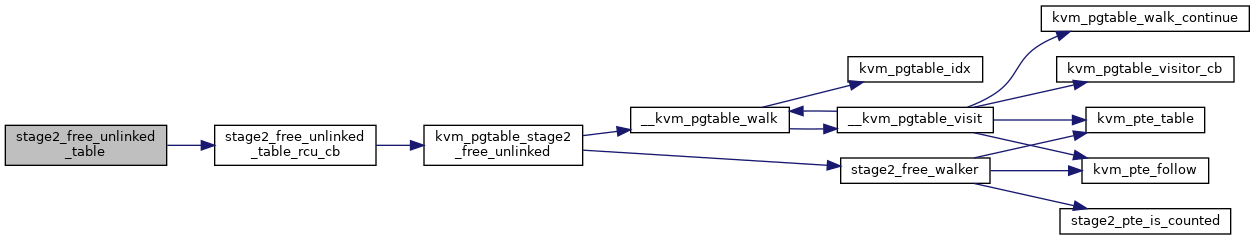

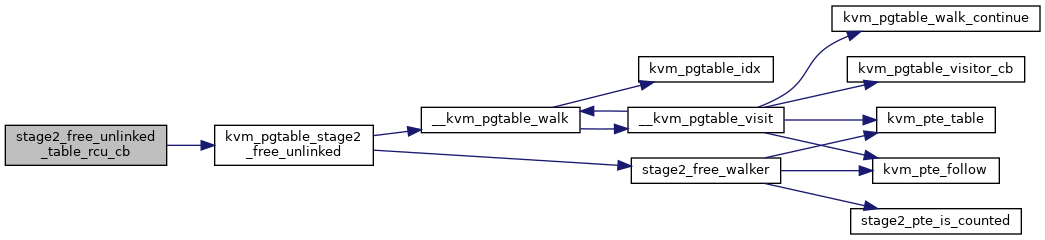

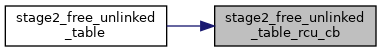

| static void | stage2_free_unlinked_table_rcu_cb (struct rcu_head *head) |

| static void | stage2_free_unlinked_table (void *addr, s8 level) |

| static void | kvm_host_get_page (void *addr) |

| static void | kvm_host_put_page (void *addr) |

| static void | kvm_s2_put_page (void *addr) |

| static int | kvm_host_page_count (void *addr) |

| static phys_addr_t | kvm_host_pa (void *addr) |

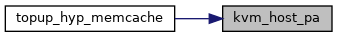

| static void * | kvm_host_va (phys_addr_t phys) |

| static void | clean_dcache_guest_page (void *va, size_t size) |

| static void | invalidate_icache_guest_page (void *va, size_t size) |

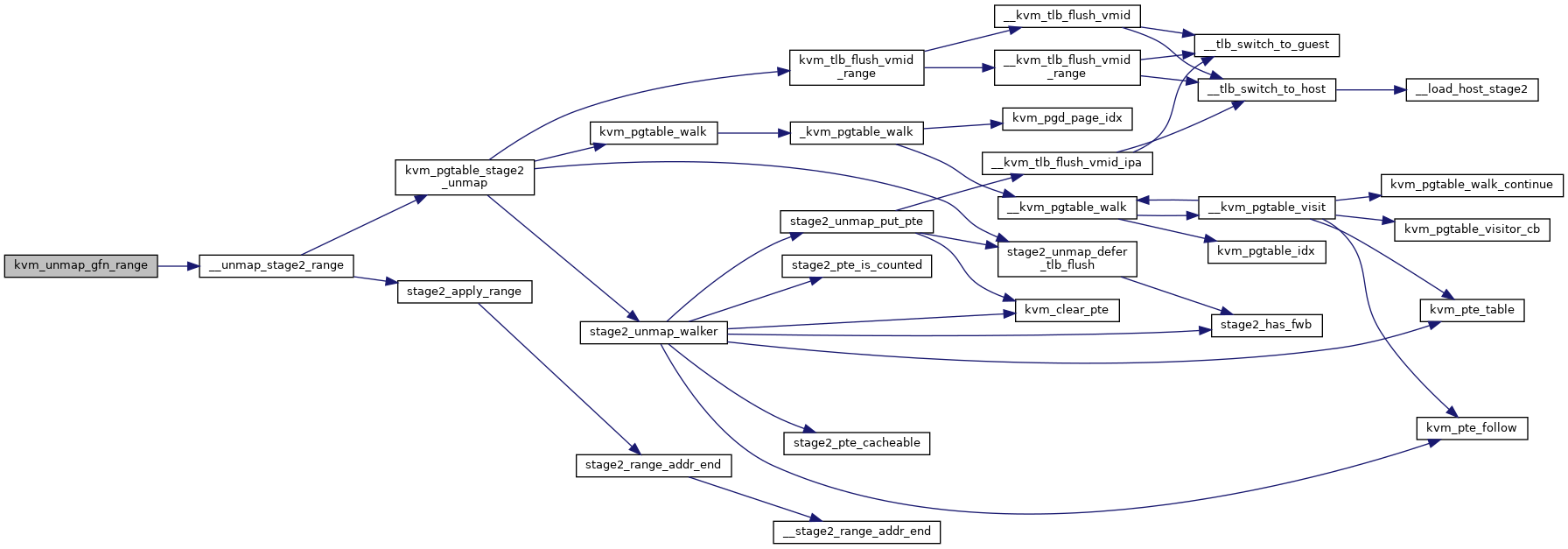

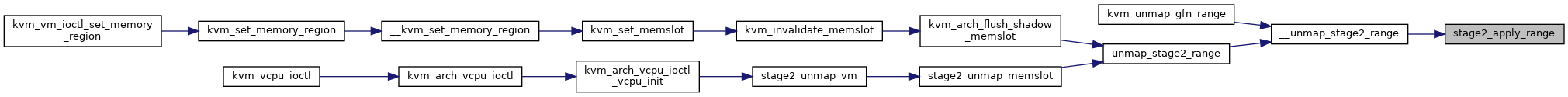

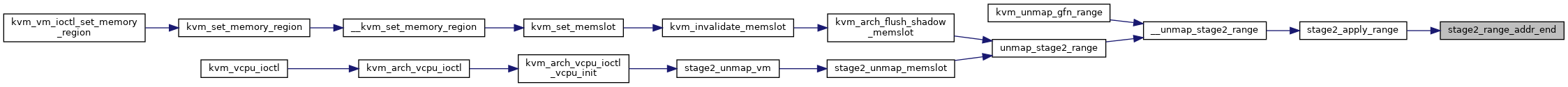

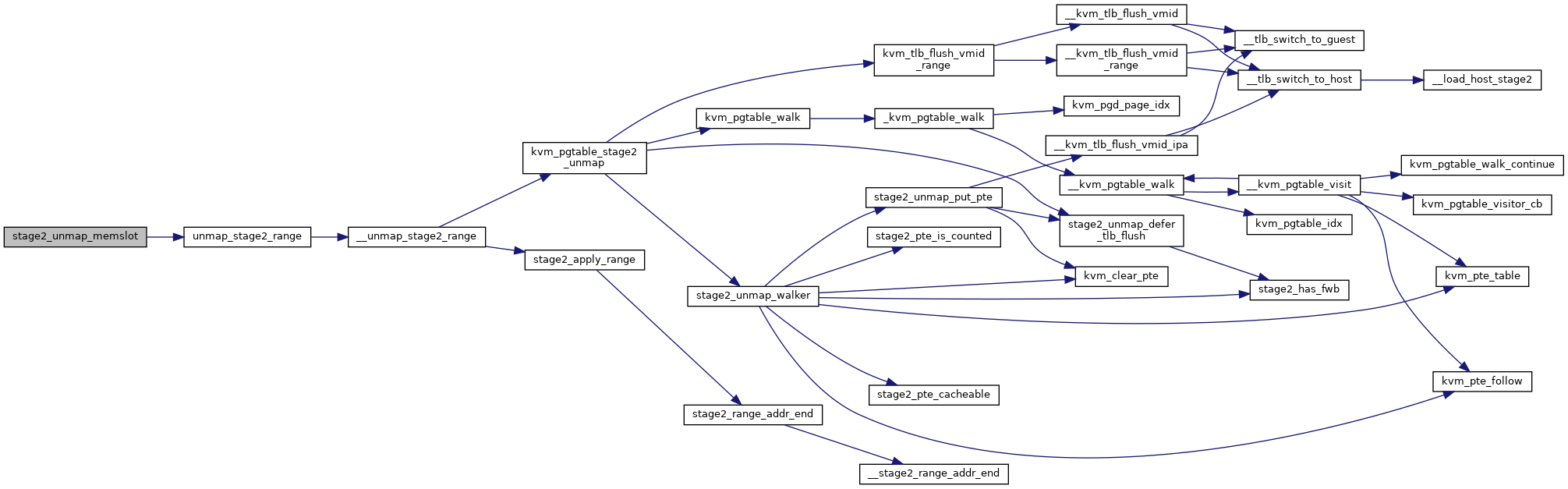

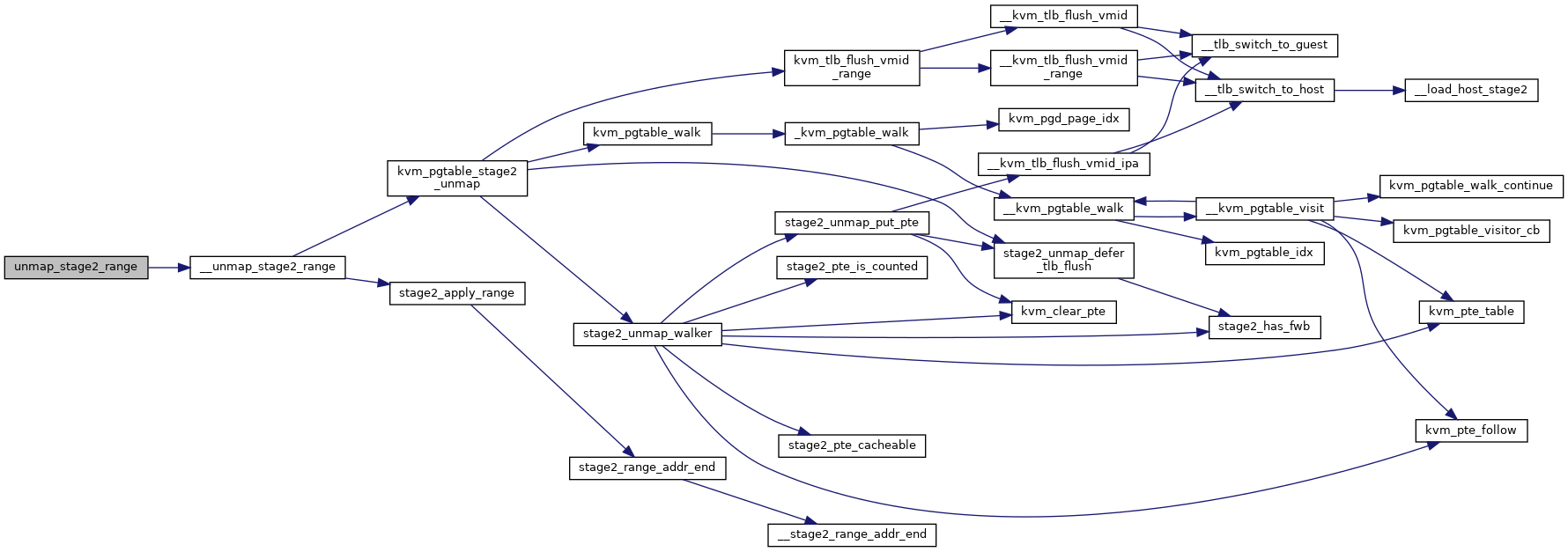

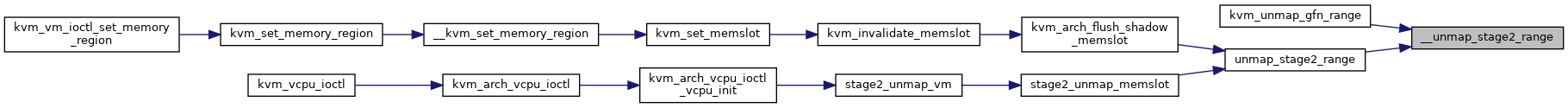

| static void | __unmap_stage2_range (struct kvm_s2_mmu *mmu, phys_addr_t start, u64 size, bool may_block) |

| static void | unmap_stage2_range (struct kvm_s2_mmu *mmu, phys_addr_t start, u64 size) |

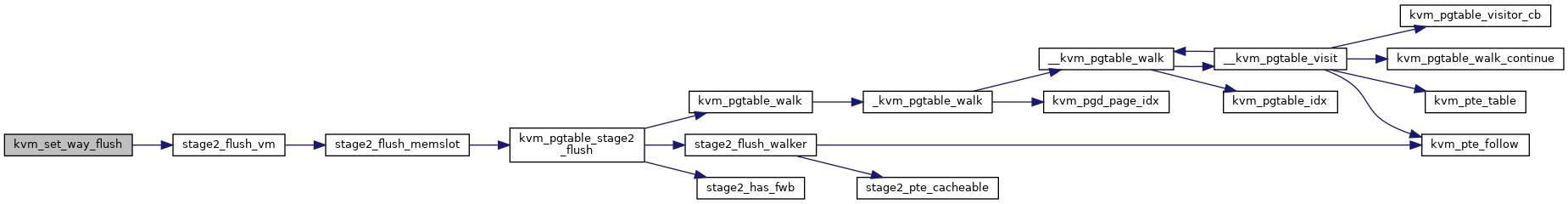

| static void | stage2_flush_memslot (struct kvm *kvm, struct kvm_memory_slot *memslot) |

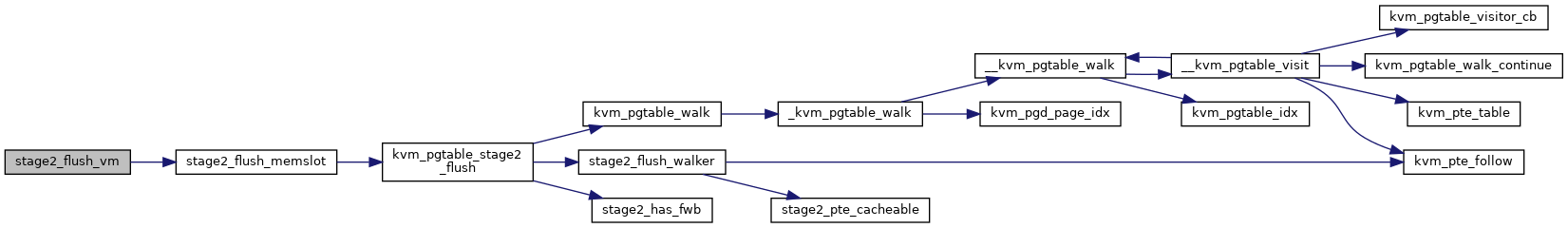

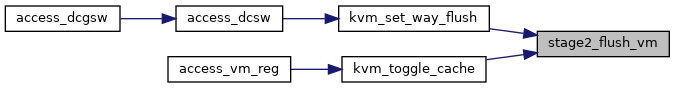

| static void | stage2_flush_vm (struct kvm *kvm) |

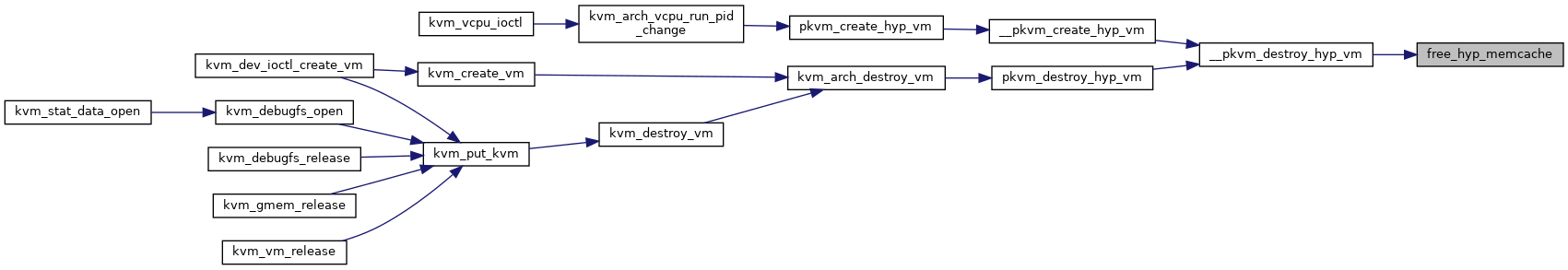

| void __init | free_hyp_pgds (void) |

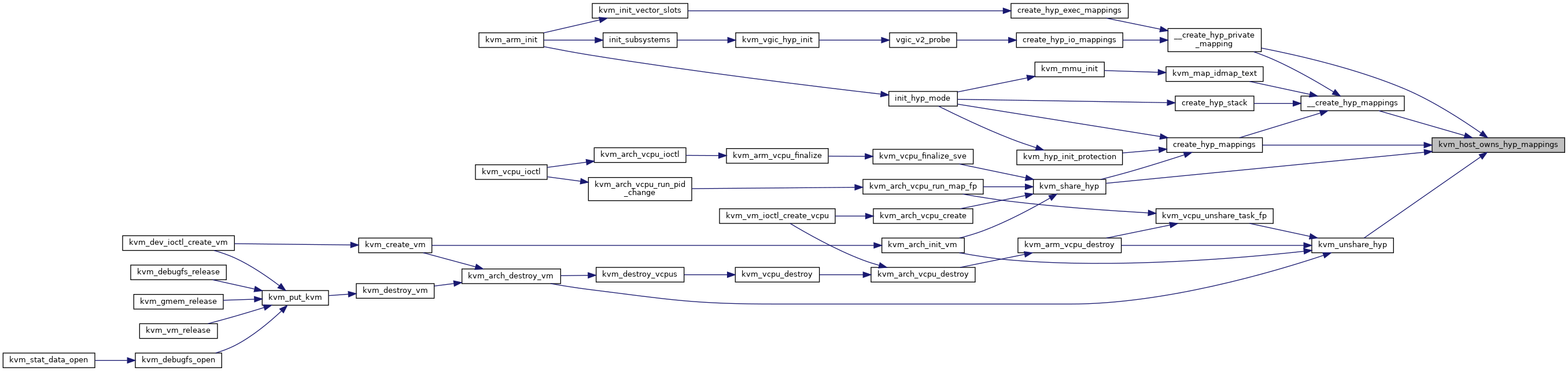

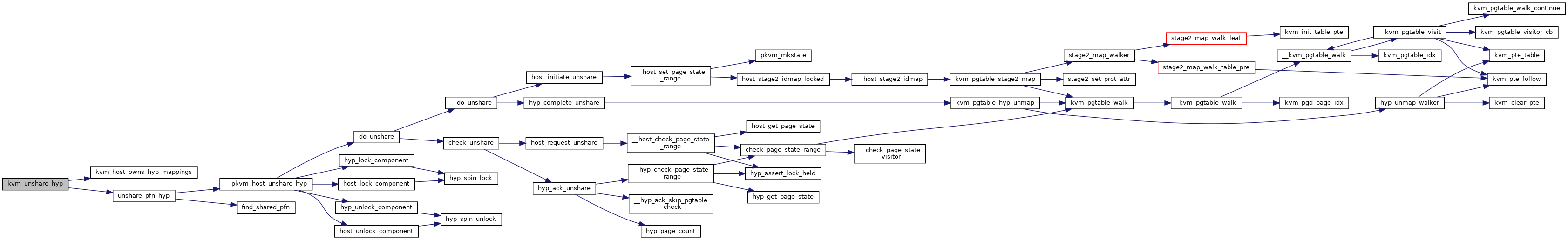

| static bool | kvm_host_owns_hyp_mappings (void) |

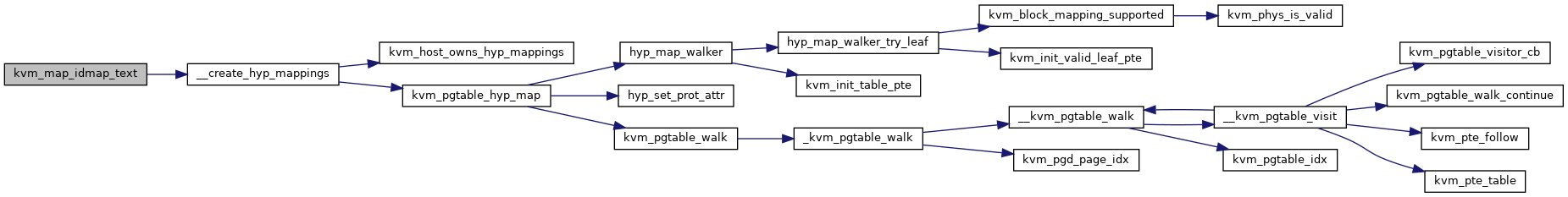

| int | __create_hyp_mappings (unsigned long start, unsigned long size, unsigned long phys, enum kvm_pgtable_prot prot) |

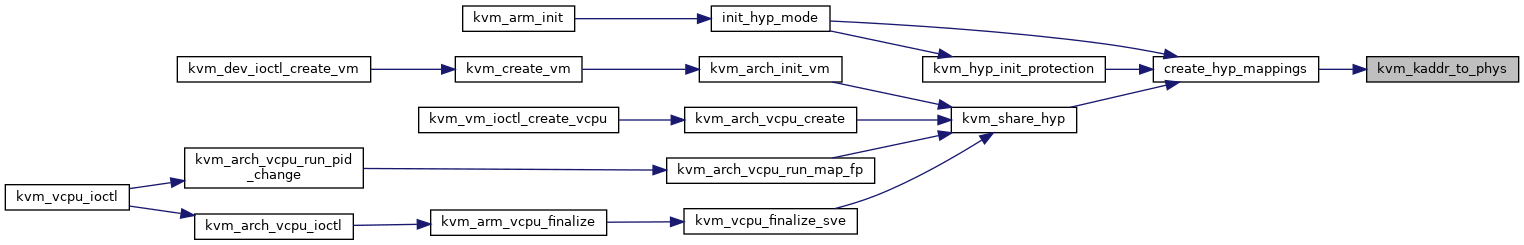

| static phys_addr_t | kvm_kaddr_to_phys (void *kaddr) |

| static | DEFINE_MUTEX (hyp_shared_pfns_lock) |

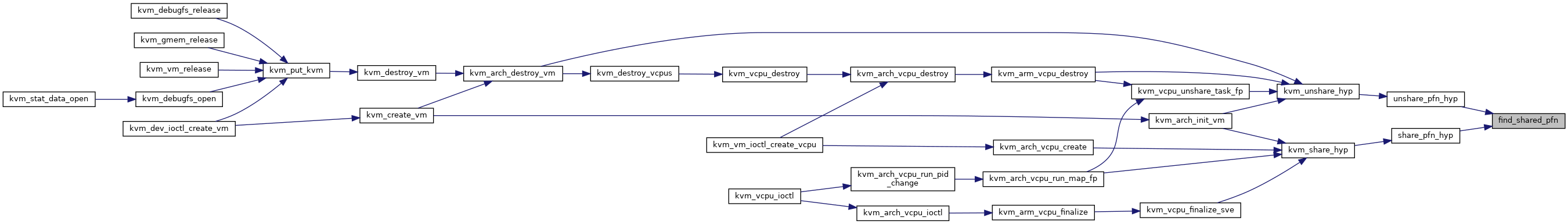

| static struct hyp_shared_pfn * | find_shared_pfn (u64 pfn, struct rb_node ***node, struct rb_node **parent) |

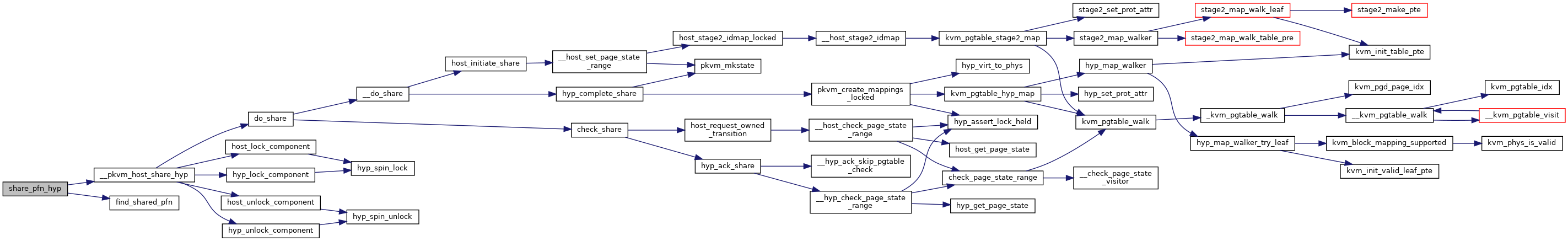

| static int | share_pfn_hyp (u64 pfn) |

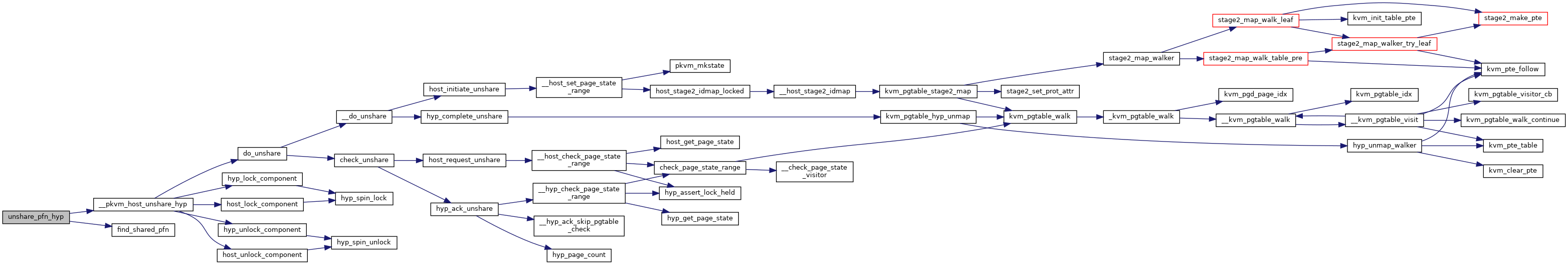

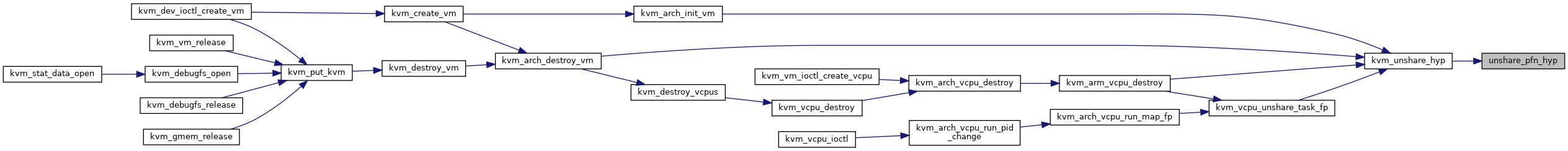

| static int | unshare_pfn_hyp (u64 pfn) |

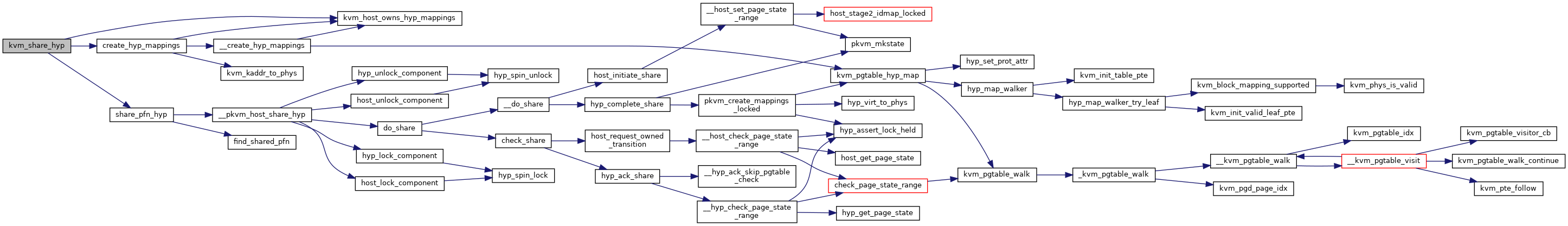

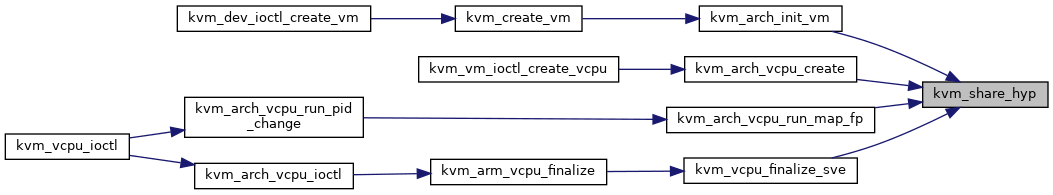

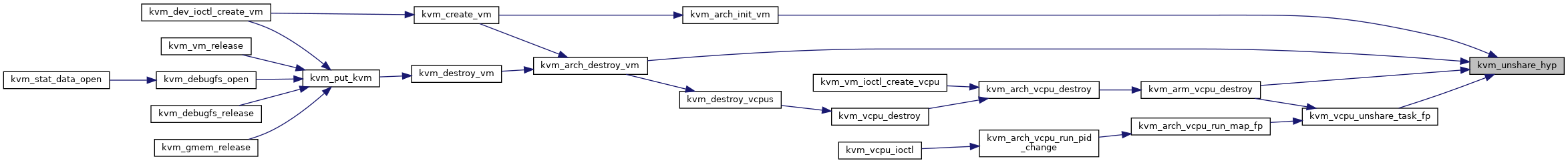

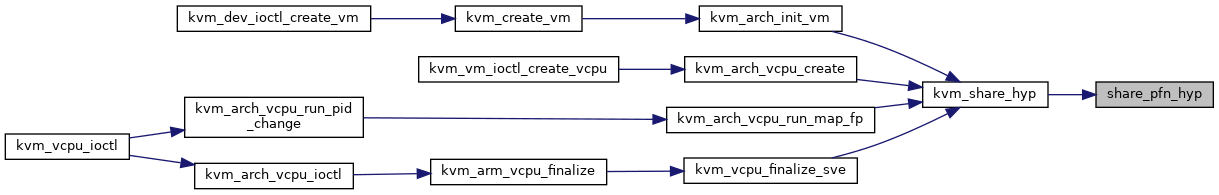

| int | kvm_share_hyp (void *from, void *to) |

| void | kvm_unshare_hyp (void *from, void *to) |

| int | create_hyp_mappings (void *from, void *to, enum kvm_pgtable_prot prot) |

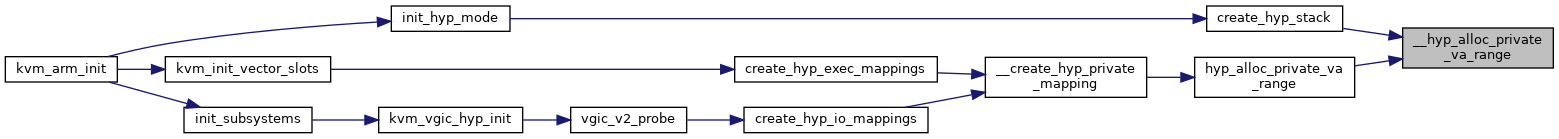

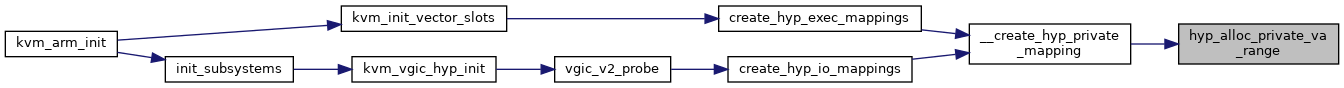

| static int | __hyp_alloc_private_va_range (unsigned long base) |

| int | hyp_alloc_private_va_range (size_t size, unsigned long *haddr) |

| static int | __create_hyp_private_mapping (phys_addr_t phys_addr, size_t size, unsigned long *haddr, enum kvm_pgtable_prot prot) |

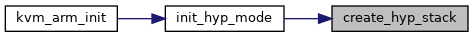

| int | create_hyp_stack (phys_addr_t phys_addr, unsigned long *haddr) |

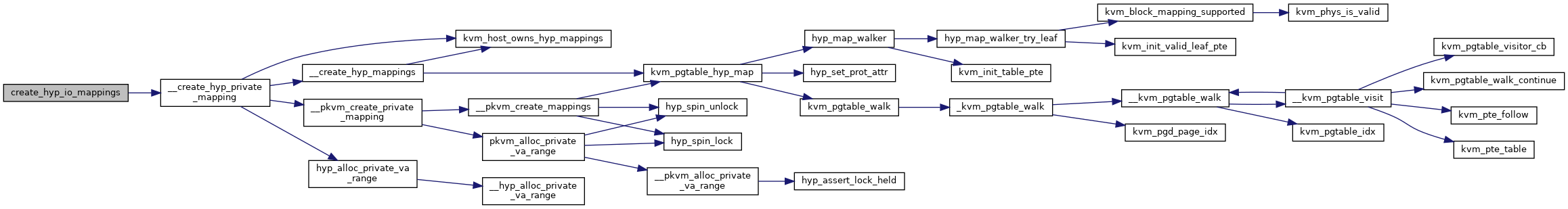

| int | create_hyp_io_mappings (phys_addr_t phys_addr, size_t size, void __iomem **kaddr, void __iomem **haddr) |

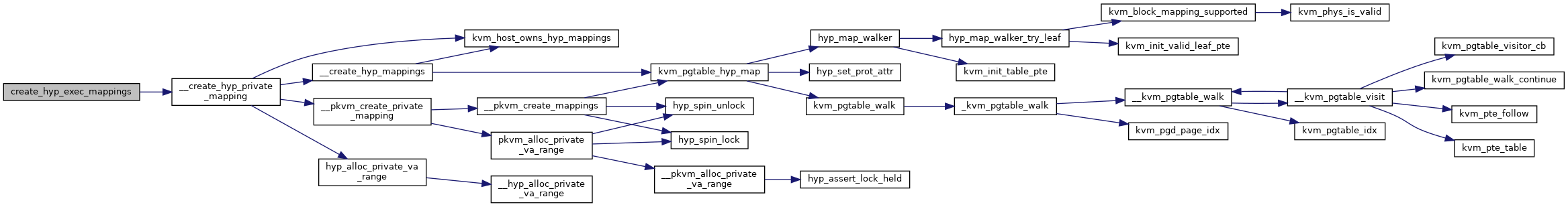

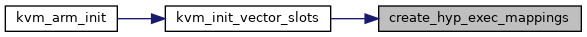

| int | create_hyp_exec_mappings (phys_addr_t phys_addr, size_t size, void **haddr) |

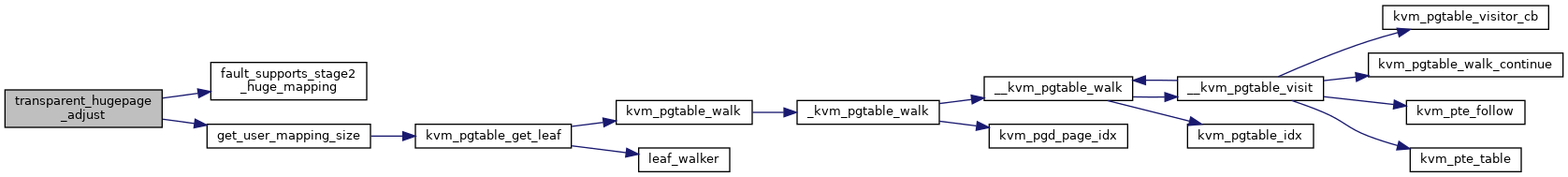

| static int | get_user_mapping_size (struct kvm *kvm, u64 addr) |

| int | kvm_init_stage2_mmu (struct kvm *kvm, struct kvm_s2_mmu *mmu, unsigned long type) |

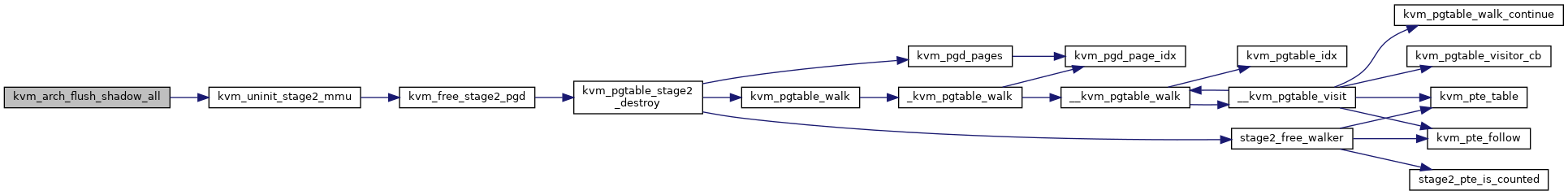

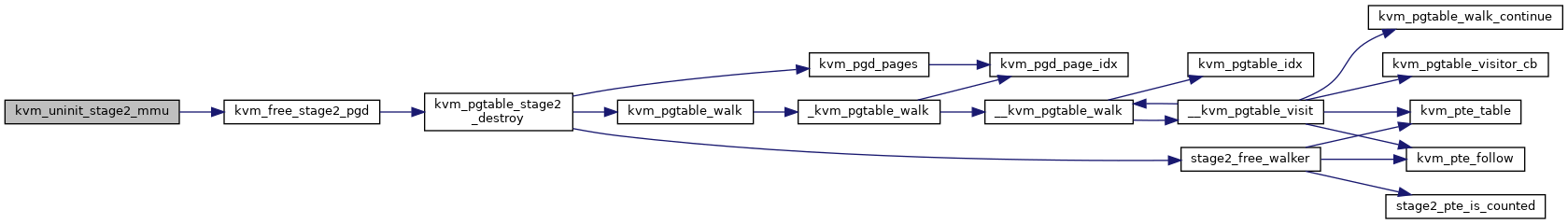

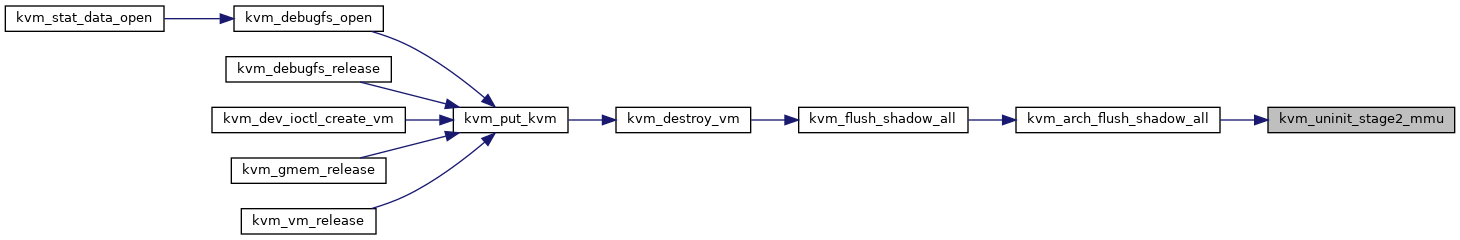

| void | kvm_uninit_stage2_mmu (struct kvm *kvm) |

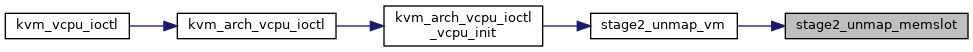

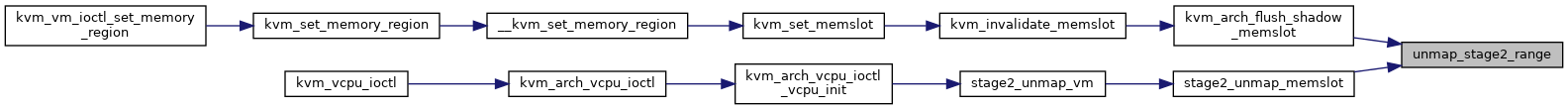

| static void | stage2_unmap_memslot (struct kvm *kvm, struct kvm_memory_slot *memslot) |

| void | stage2_unmap_vm (struct kvm *kvm) |

| void | kvm_free_stage2_pgd (struct kvm_s2_mmu *mmu) |

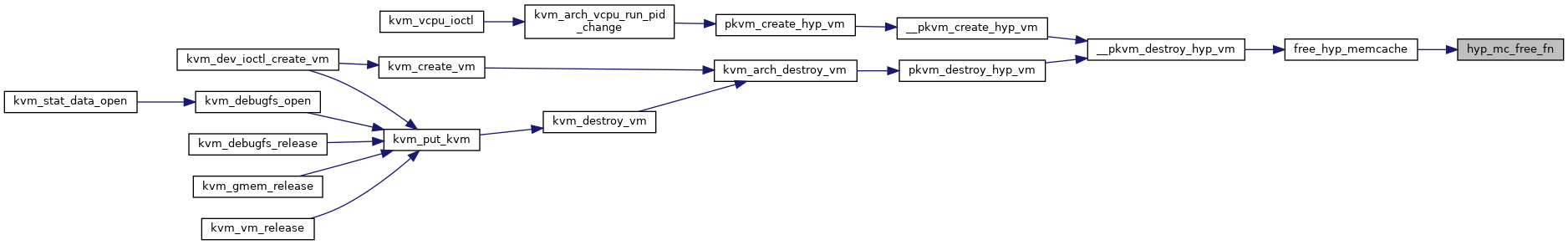

| static void | hyp_mc_free_fn (void *addr, void *unused) |

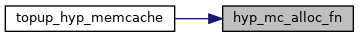

| static void * | hyp_mc_alloc_fn (void *unused) |

| void | free_hyp_memcache (struct kvm_hyp_memcache *mc) |

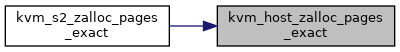

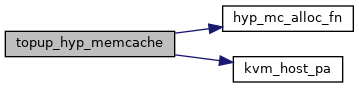

| int | topup_hyp_memcache (struct kvm_hyp_memcache *mc, unsigned long min_pages) |

| int | kvm_phys_addr_ioremap (struct kvm *kvm, phys_addr_t guest_ipa, phys_addr_t pa, unsigned long size, bool writable) |

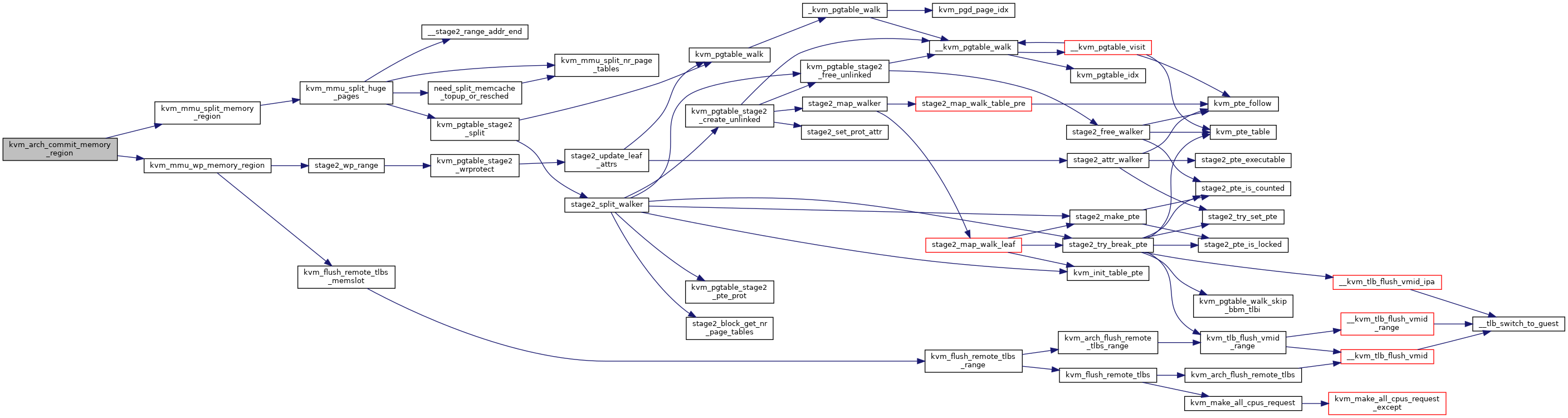

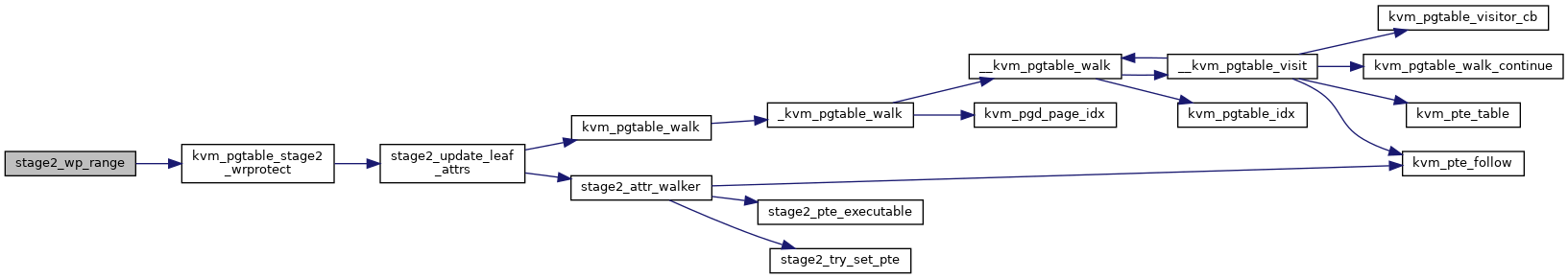

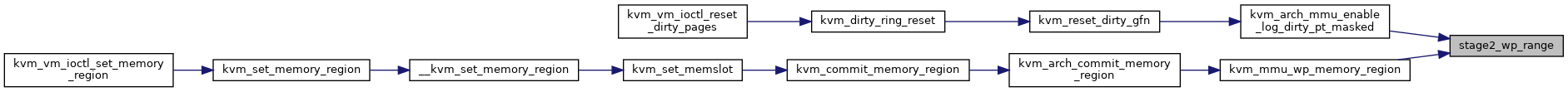

| static void | stage2_wp_range (struct kvm_s2_mmu *mmu, phys_addr_t addr, phys_addr_t end) |

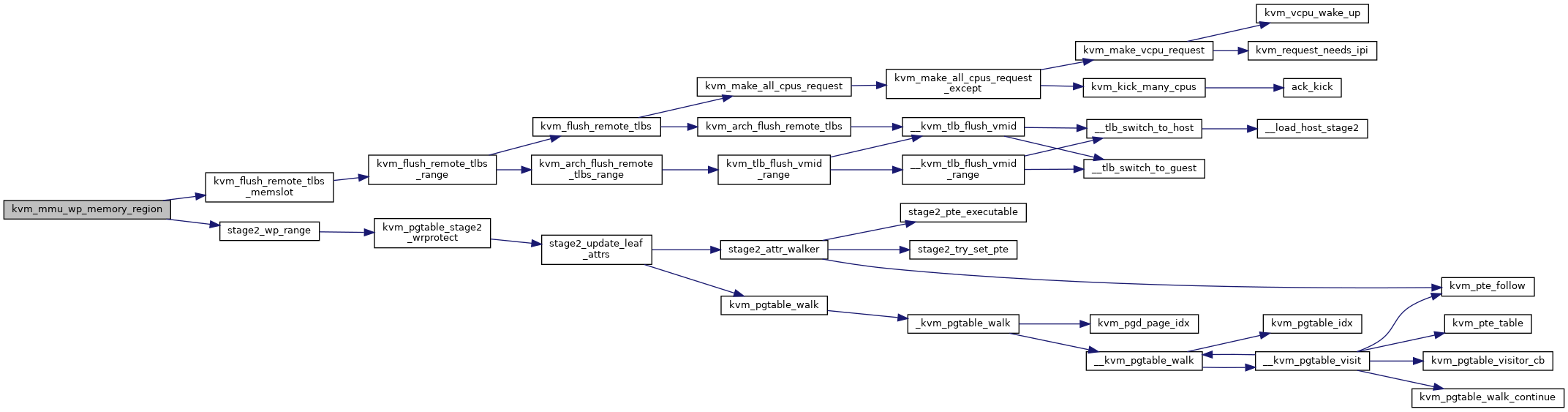

| static void | kvm_mmu_wp_memory_region (struct kvm *kvm, int slot) |

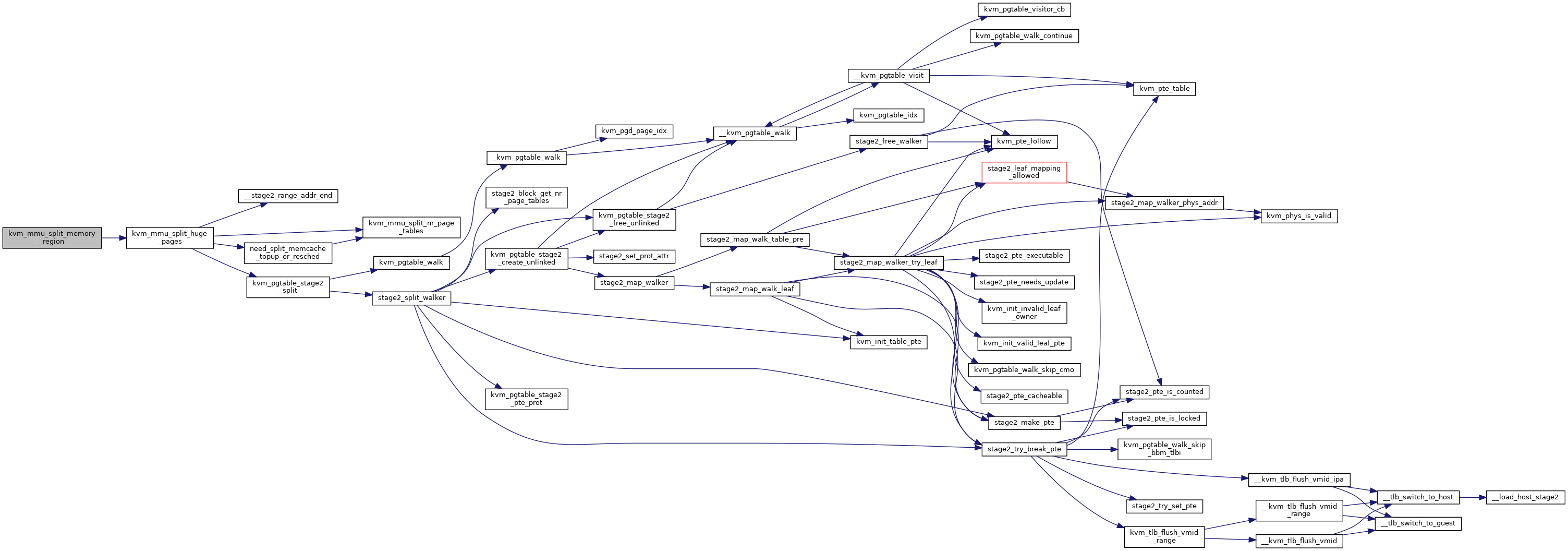

| static void | kvm_mmu_split_memory_region (struct kvm *kvm, int slot) |

| void | kvm_arch_mmu_enable_log_dirty_pt_masked (struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn_offset, unsigned long mask) |

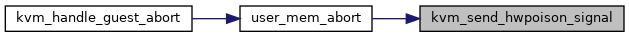

| static void | kvm_send_hwpoison_signal (unsigned long address, short lsb) |

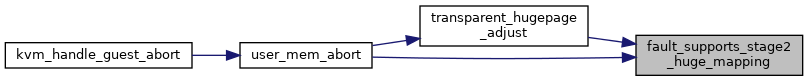

| static bool | fault_supports_stage2_huge_mapping (struct kvm_memory_slot *memslot, unsigned long hva, unsigned long map_size) |

| static long | transparent_hugepage_adjust (struct kvm *kvm, struct kvm_memory_slot *memslot, unsigned long hva, kvm_pfn_t *pfnp, phys_addr_t *ipap) |

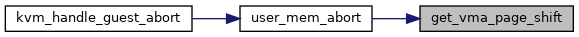

| static int | get_vma_page_shift (struct vm_area_struct *vma, unsigned long hva) |

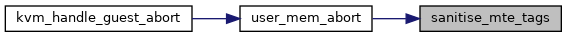

| static void | sanitise_mte_tags (struct kvm *kvm, kvm_pfn_t pfn, unsigned long size) |

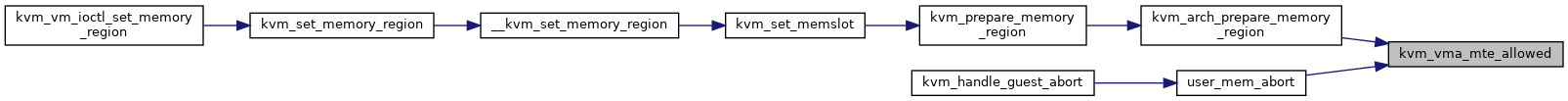

| static bool | kvm_vma_mte_allowed (struct vm_area_struct *vma) |

| static int | user_mem_abort (struct kvm_vcpu *vcpu, phys_addr_t fault_ipa, struct kvm_memory_slot *memslot, unsigned long hva, bool fault_is_perm) |

| static void | handle_access_fault (struct kvm_vcpu *vcpu, phys_addr_t fault_ipa) |

| int | kvm_handle_guest_abort (struct kvm_vcpu *vcpu) |

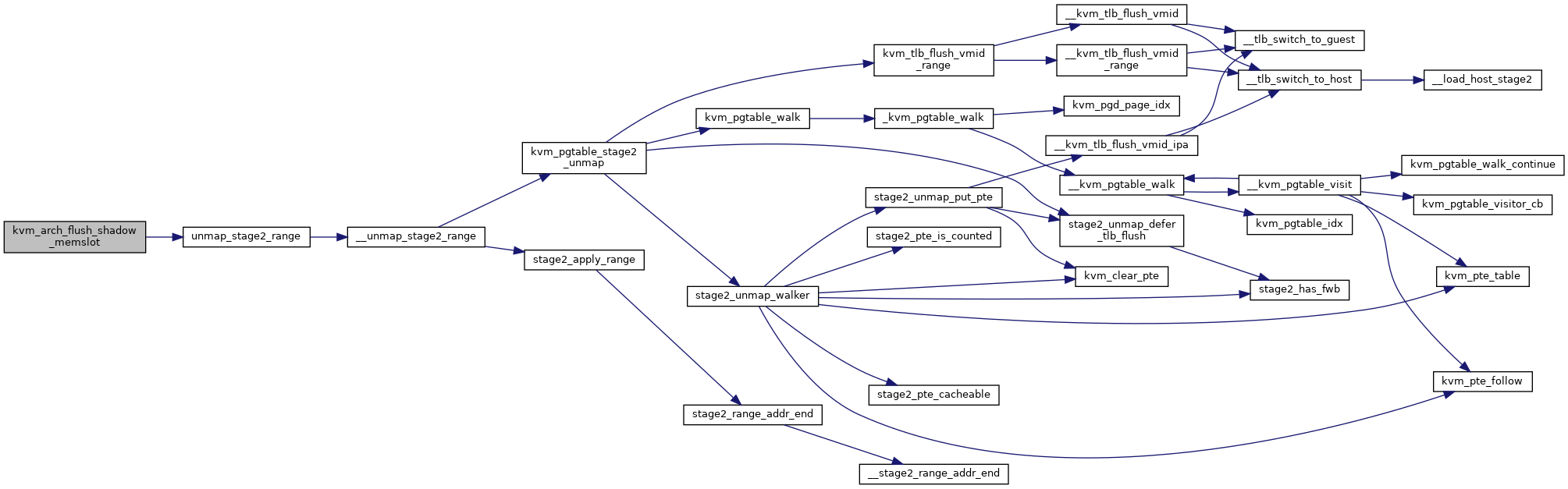

| bool | kvm_unmap_gfn_range (struct kvm *kvm, struct kvm_gfn_range *range) |

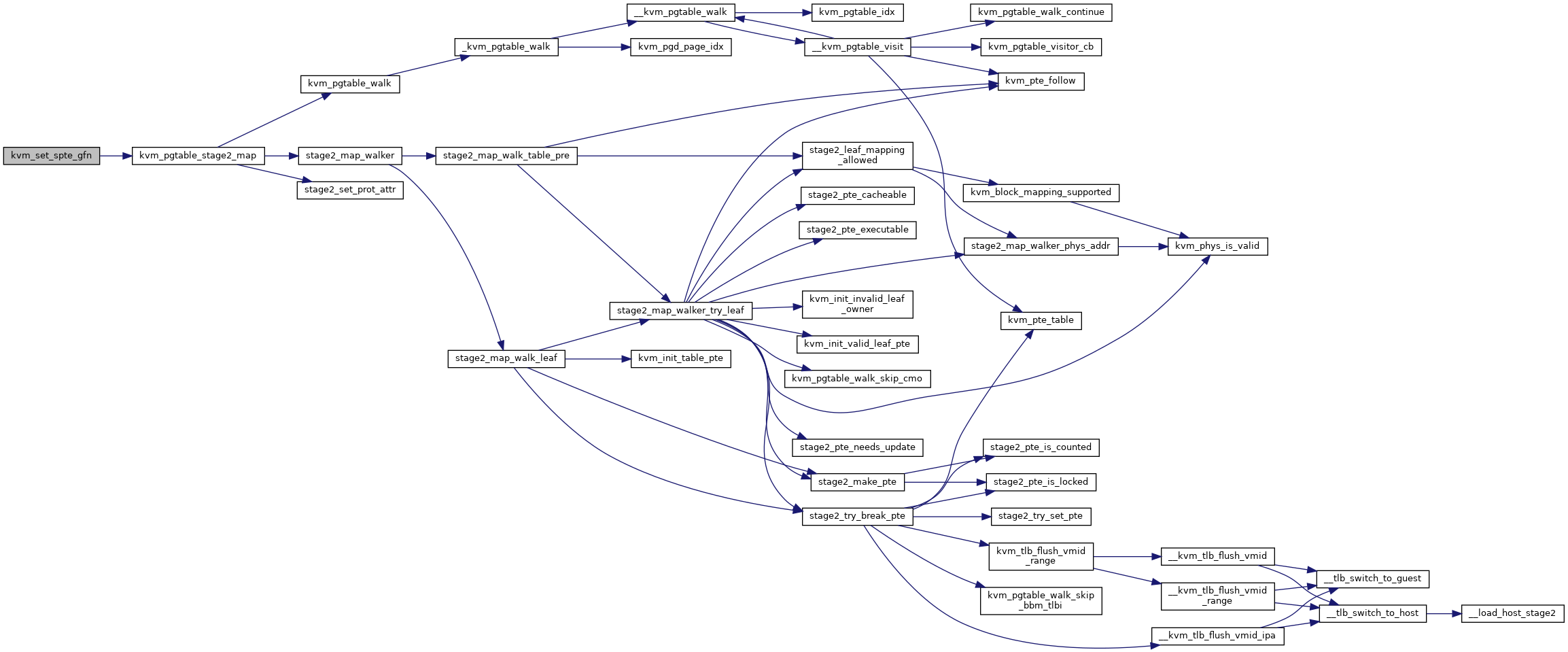

| bool | kvm_set_spte_gfn (struct kvm *kvm, struct kvm_gfn_range *range) |

| bool | kvm_age_gfn (struct kvm *kvm, struct kvm_gfn_range *range) |

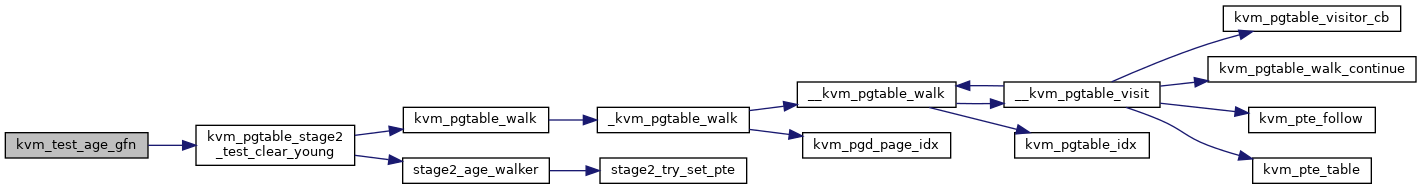

| bool | kvm_test_age_gfn (struct kvm *kvm, struct kvm_gfn_range *range) |

| phys_addr_t | kvm_mmu_get_httbr (void) |

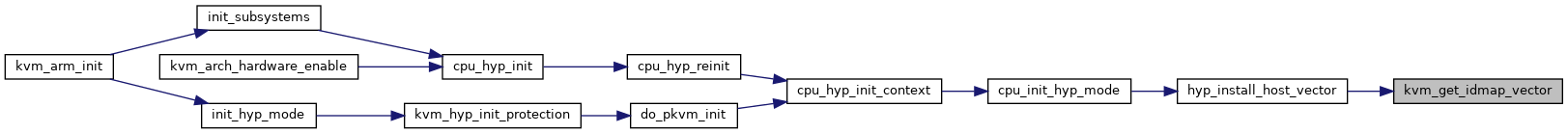

| phys_addr_t | kvm_get_idmap_vector (void) |

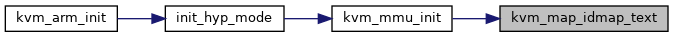

| static int | kvm_map_idmap_text (void) |

| static void * | kvm_hyp_zalloc_page (void *arg) |

| int __init | kvm_mmu_init (u32 *hyp_va_bits) |

| void | kvm_arch_commit_memory_region (struct kvm *kvm, struct kvm_memory_slot *old, const struct kvm_memory_slot *new, enum kvm_mr_change change) |

| int | kvm_arch_prepare_memory_region (struct kvm *kvm, const struct kvm_memory_slot *old, struct kvm_memory_slot *new, enum kvm_mr_change change) |

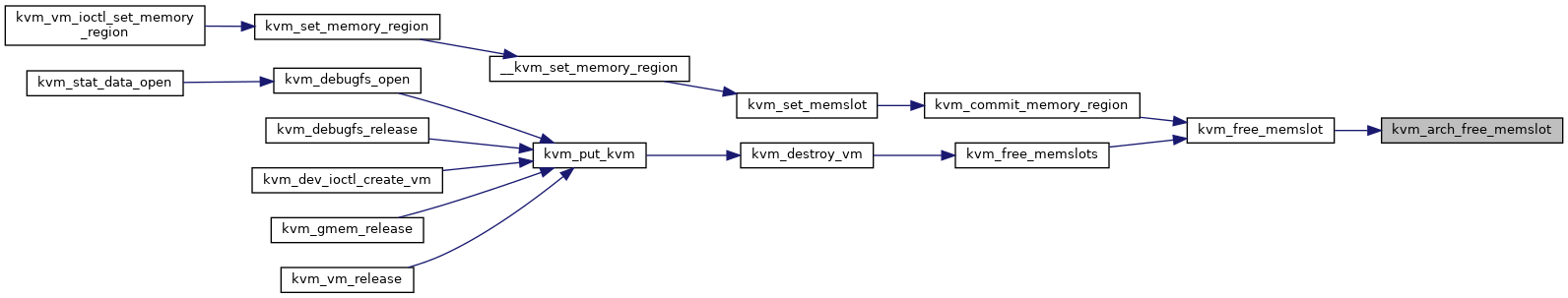

| void | kvm_arch_free_memslot (struct kvm *kvm, struct kvm_memory_slot *slot) |

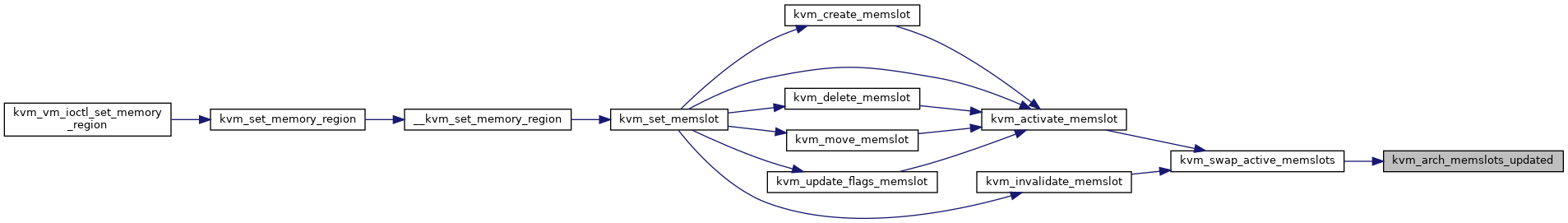

| void | kvm_arch_memslots_updated (struct kvm *kvm, u64 gen) |

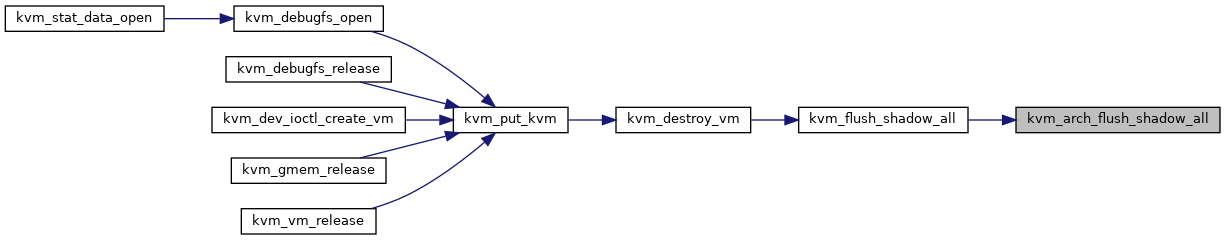

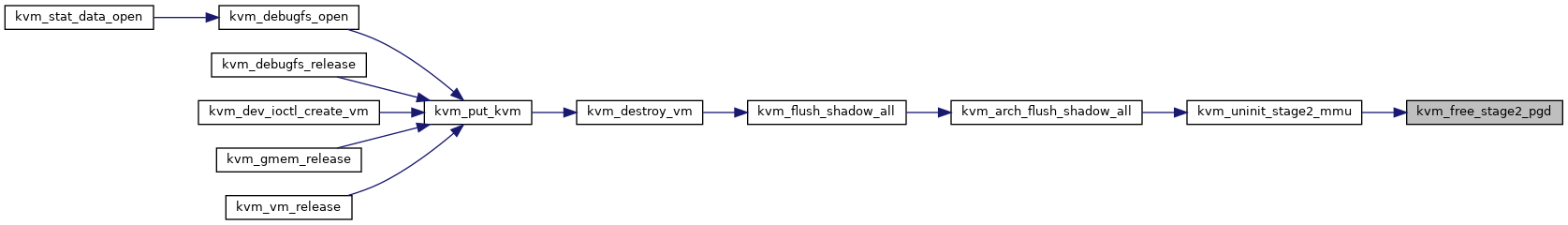

| void | kvm_arch_flush_shadow_all (struct kvm *kvm) |

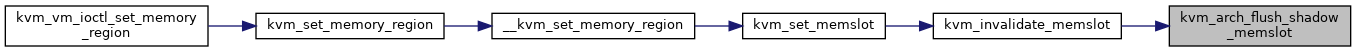

| void | kvm_arch_flush_shadow_memslot (struct kvm *kvm, struct kvm_memory_slot *slot) |

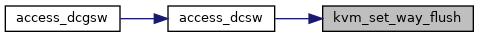

| void | kvm_set_way_flush (struct kvm_vcpu *vcpu) |

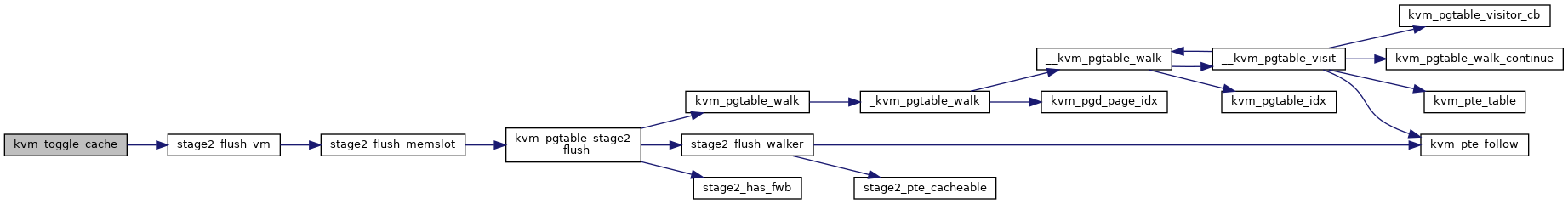

| void | kvm_toggle_cache (struct kvm_vcpu *vcpu, bool was_enabled) |

Variables

| Type | Name |
|---|---|
| `static struct kvm_pgtable *` | `hyp_pgtable` |
| `static unsigned long __ro_after_init` | `hyp_idmap_start` |
| `static unsigned long __ro_after_init` | `hyp_idmap_end` |
| `static phys_addr_t __ro_after_init` | `hyp_idmap_vector` |
| `static unsigned long __ro_after_init` | `io_map_base` |
| `static struct kvm_pgtable_mm_ops` | `kvm_s2_mm_ops` |
| `static struct rb_root` | `hyp_shared_pfns = RB_ROOT` |
| `static struct kvm_pgtable_mm_ops` | `kvm_user_mm_ops` |
| `static struct kvm_pgtable_mm_ops` | `kvm_hyp_mm_ops` |

Macro Definition Documentation

◆ stage2_apply_range_resched

`#define stage2_apply_range_resched(mmu, addr, end, fn) stage2_apply_range(mmu, addr, end, fn, true)`
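The macro only fixes `resched` to `true`. The chunk-by-chunk walk that this selects can be modelled in userspace. The sketch below is a simplified, hypothetical model, not the kernel code. `sk_range_addr_end()` follows the clamping that `__stage2_range_addr_end()` is understood to perform. The chunk size is an explicit parameter, whereas the kernel derives it from the page-table geometry. The `resched` callback stands in for conditionally rescheduling on `mmu_lock` between chunks.

```c
#include <assert.h>
#include <stdint.h>

typedef uint64_t sk_phys_addr_t;

/* End of the chunk starting at addr: the next multiple of size, clamped
 * to end. Comparing boundary - 1 with end - 1 keeps the clamp correct
 * when end has wrapped to 0 at the top of the address space. */
static sk_phys_addr_t sk_range_addr_end(sk_phys_addr_t addr,
					sk_phys_addr_t end,
					sk_phys_addr_t size)
{
	sk_phys_addr_t boundary = (addr + size) & ~(size - 1);

	return (boundary - 1 < end - 1) ? boundary : end;
}

/* Apply fn to [addr, end) one chunk at a time, stopping at the first
 * error. resched (optional) runs between chunks, never after the last. */
static int sk_apply_range(sk_phys_addr_t addr, sk_phys_addr_t end,
			  sk_phys_addr_t size,
			  int (*fn)(sk_phys_addr_t, sk_phys_addr_t, void *),
			  void (*resched)(void *), void *ctx)
{
	sk_phys_addr_t next;
	int ret;

	assert(size != 0 && (size & (size - 1)) == 0); /* power of two */
	do {
		next = sk_range_addr_end(addr, end, size);
		ret = fn(addr, next - addr, ctx);
		if (ret)
			break;
		if (resched && next != end)
			resched(ctx);
	} while (addr = next, addr != end);

	return ret;
}

/* Example callbacks: count chunks, bytes and reschedule points. */
struct sk_stats { int chunks, rescheds; sk_phys_addr_t bytes; };

static int sk_count_chunk(sk_phys_addr_t addr, sk_phys_addr_t len, void *ctx)
{
	struct sk_stats *s = ctx;

	(void)addr;
	s->chunks++;
	s->bytes += len;
	return 0;
}

static void sk_count_resched(void *ctx)
{
	((struct sk_stats *)ctx)->rescheds++;
}
```

The hook runs only between chunks, never after the last one, so no reschedule happens once the work is already complete.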

Function Documentation

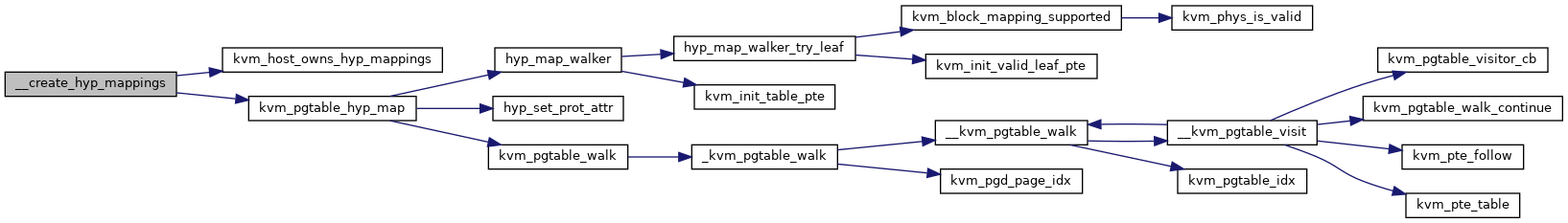

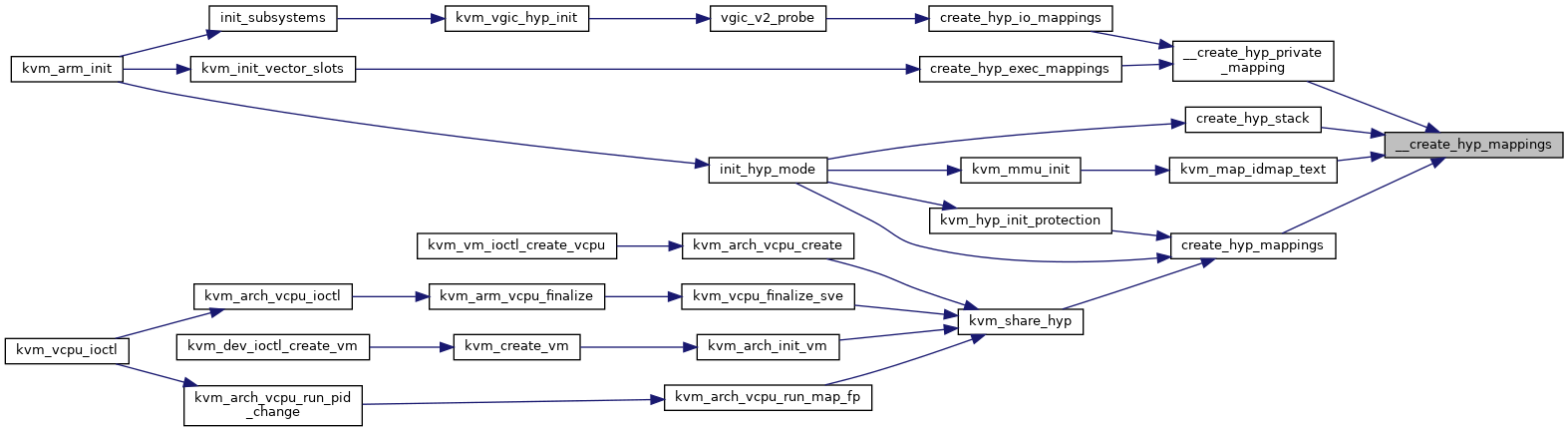

◆ __create_hyp_mappings()

`int __create_hyp_mappings(unsigned long start, unsigned long size, unsigned long phys, enum kvm_pgtable_prot prot)`

Definition at line 404 of file mmu.c.

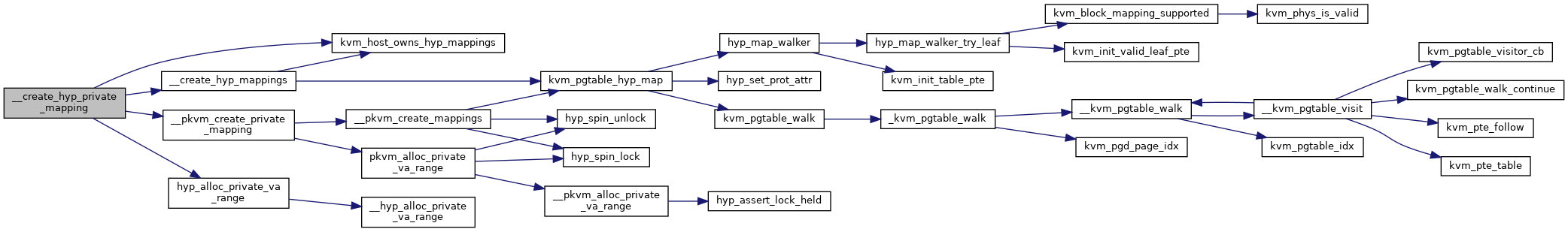

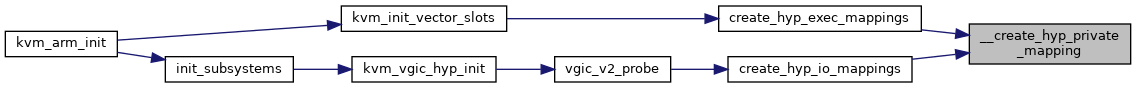

◆ __create_hyp_private_mapping()

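`static int __create_hyp_private_mapping(phys_addr_t phys_addr, size_t size, unsigned long *haddr, enum kvm_pgtable_prot prot)`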

Definition at line 661 of file mmu.c.

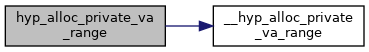

◆ __hyp_alloc_private_va_range()

`static int __hyp_alloc_private_va_range(unsigned long base)`

◆ __stage2_range_addr_end()

`static phys_addr_t __stage2_range_addr_end(phys_addr_t addr, phys_addr_t end, phys_addr_t size)`

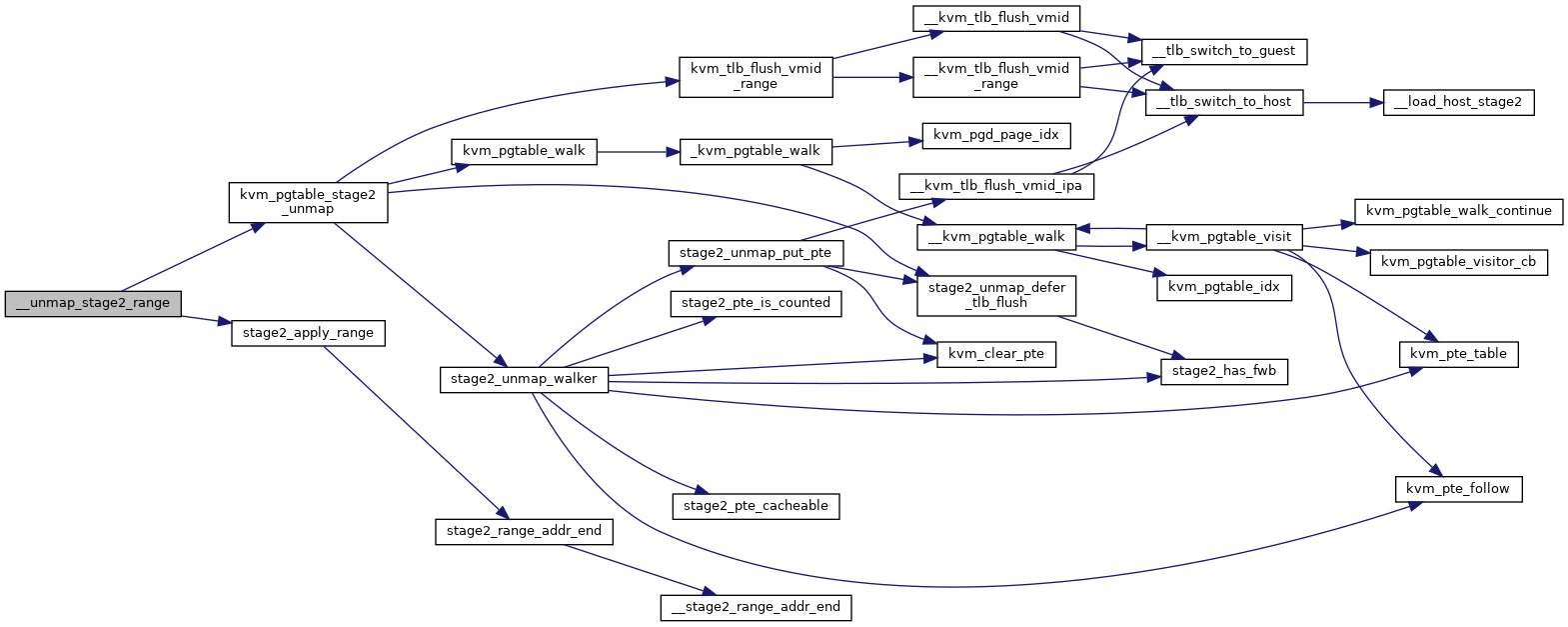

◆ __unmap_stage2_range()

`static void __unmap_stage2_range(struct kvm_s2_mmu *mmu, phys_addr_t start, u64 size, bool may_block)`

unmap_stage2_range - Clear stage-2 page table entries to unmap a range

| Parameter | Description |
|---|---|
| `mmu` | The KVM stage-2 MMU pointer |
| `start` | The intermediate physical base address of the range to unmap |
| `size` | The size of the area to unmap |
| `may_block` | Whether or not we are permitted to block |

Clears a range of stage-2 mappings and lowers the various reference counts. The caller must hold mmu_lock, except when freeing the stage-2 PGD before the VM is destroyed. Otherwise another faulting vCPU could modify the page tables behind our back.

Definition at line 319 of file mmu.c.

◆ clean_dcache_guest_page()

`static void clean_dcache_guest_page(void *va, size_t size)`

◆ create_hyp_exec_mappings()

`int create_hyp_exec_mappings(phys_addr_t phys_addr, size_t size, void **haddr)`

create_hyp_exec_mappings - Map an executable range into HYP

| Parameter | Description |
|---|---|
| `phys_addr` | The physical start address which gets mapped |
| `size` | Size of the region being mapped |
| `haddr` | HYP VA for this mapping |

Definition at line 778 of file mmu.c.

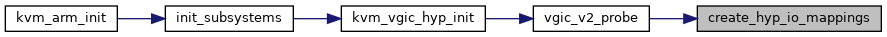

◆ create_hyp_io_mappings()

`int create_hyp_io_mappings(phys_addr_t phys_addr, size_t size, void __iomem **kaddr, void __iomem **haddr)`

create_hyp_io_mappings - Map IO into both kernel and HYP

| Parameter | Description |
|---|---|
| `phys_addr` | The physical start address which gets mapped |
| `size` | Size of the region being mapped |
| `kaddr` | Kernel VA for this mapping |
| `haddr` | HYP VA for this mapping |

Definition at line 740 of file mmu.c.
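As we understand the implementation, when the kernel itself runs at EL2 (VHE) the kernel mapping can simply be reused. Otherwise the HYP side comes from a private VA range, and an unaligned physical address must keep its offset within the page. The sketch below models only that address arithmetic. `sk_private_map()` and its `va_base` parameter are hypothetical, the VA reservation is assumed to have happened already, and 4 KiB pages are assumed.

```c
#include <assert.h>
#include <stdint.h>

#define SK_PAGE_SIZE UINT64_C(4096)   /* assumed 4 KiB pages */

struct sk_hyp_map {
	uint64_t map_va;    /* page-aligned start of the new HYP mapping */
	uint64_t map_phys;  /* page-aligned physical start */
	uint64_t map_size;  /* whole pages covered */
	uint64_t haddr;     /* address handed back to the caller */
};

/* Map a possibly unaligned [phys, phys + size) device region at the
 * page-aligned HYP VA va_base. The returned haddr keeps phys's in-page
 * offset, so haddr and phys refer to the same byte. */
static struct sk_hyp_map sk_private_map(uint64_t phys, uint64_t size,
					uint64_t va_base)
{
	uint64_t off = phys & (SK_PAGE_SIZE - 1);
	struct sk_hyp_map m;

	assert((va_base & (SK_PAGE_SIZE - 1)) == 0);
	m.map_size = (size + off + SK_PAGE_SIZE - 1) & ~(SK_PAGE_SIZE - 1);
	m.map_phys = phys - off;
	m.map_va = va_base;
	m.haddr = va_base + off;
	return m;
}
```

A 0x200-byte region starting at in-page offset 0xf00 crosses a page boundary, so it needs two pages even though it is smaller than one.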

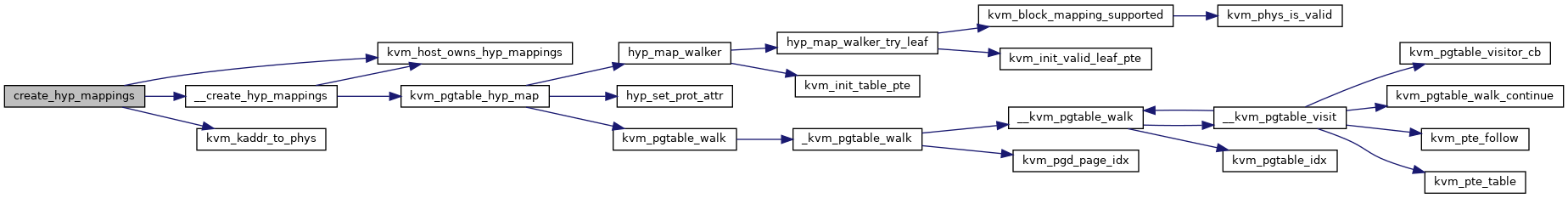

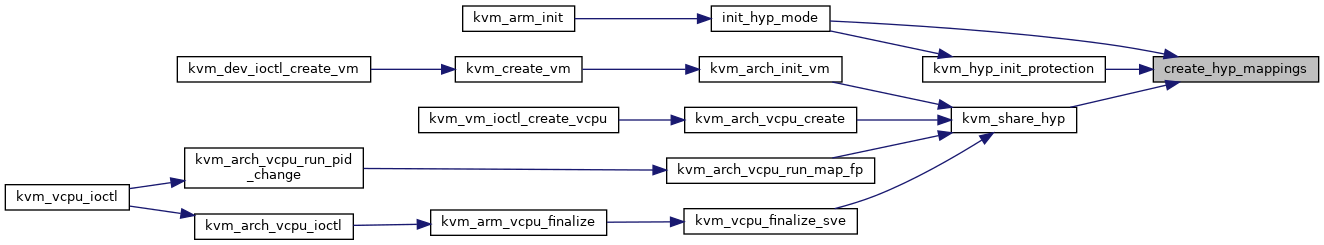

◆ create_hyp_mappings()

`int create_hyp_mappings(void *from, void *to, enum kvm_pgtable_prot prot)`

create_hyp_mappings - Duplicate a kernel virtual address range in Hyp mode

| Parameter | Description |
|---|---|
| `from` | The virtual kernel start address of the range |
| `to` | The virtual kernel end address of the range (exclusive) |
| `prot` | The protection to be applied to this range |

The Hyp-mode mapping uses the same virtual address as the kernel mapping (modulo HYP_PAGE_OFFSET) and points to the same underlying physical pages.

Definition at line 574 of file mmu.c.
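The range handling described above can be sketched as a per-page loop. First widen `[from, to)` to page boundaries, then give each page a Hyp VA derived from its kernel VA. `sk_kern_to_hyp()` below is a hypothetical stand-in for the real, boot-time-patched conversion: it masks the address to `va_bits` bits and adds an offset. The sketch assumes 4 KiB pages.

```c
#include <assert.h>
#include <stdint.h>

#define SK_PAGE_SIZE UINT64_C(4096)            /* assumed 4 KiB pages */
#define SK_PAGE_MASK (~(SK_PAGE_SIZE - 1))

/* Hypothetical kernel-VA -> Hyp-VA conversion: keep the low va_bits
 * bits of the address and add a fixed offset. */
static uint64_t sk_kern_to_hyp(uint64_t kva, unsigned va_bits,
			       uint64_t hyp_offset)
{
	assert(va_bits > 0 && va_bits < 64);
	return (kva & ((UINT64_C(1) << va_bits) - 1)) + hyp_offset;
}

/* Widen [from, to) to page boundaries and visit it one page at a time,
 * as a per-page mapping loop would. Returns the page count and stores
 * the Hyp VA chosen for the first page. */
static unsigned long sk_hyp_pages(uint64_t from, uint64_t to,
				  unsigned va_bits, uint64_t hyp_offset,
				  uint64_t *first_hyp_va)
{
	uint64_t start = from & SK_PAGE_MASK;
	uint64_t end = (to + SK_PAGE_SIZE - 1) & SK_PAGE_MASK;
	unsigned long n = 0;

	assert(from < to);
	for (uint64_t va = start; va < end; va += SK_PAGE_SIZE) {
		/* A real implementation would map Hyp VA -> phys(va) here. */
		if (n == 0)
			*first_hyp_va = sk_kern_to_hyp(va, va_bits, hyp_offset);
		n++;
	}
	return n;
}
```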

◆ create_hyp_stack()

`int create_hyp_stack(phys_addr_t phys_addr, unsigned long *haddr)`

◆ DEFINE_MUTEX() [1/2]

`static DEFINE_MUTEX(kvm_hyp_pgd_mutex)`

◆ DEFINE_MUTEX() [2/2]

`static DEFINE_MUTEX(hyp_shared_pfns_lock)`

◆ fault_supports_stage2_huge_mapping()

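`static bool fault_supports_stage2_huge_mapping(struct kvm_memory_slot *memslot, unsigned long hva, unsigned long map_size)`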

◆ find_shared_pfn()

`static struct hyp_shared_pfn *find_shared_pfn(u64 pfn, struct rb_node ***node, struct rb_node **parent)`

◆ free_hyp_memcache()

`void free_hyp_memcache(struct kvm_hyp_memcache *mc)`

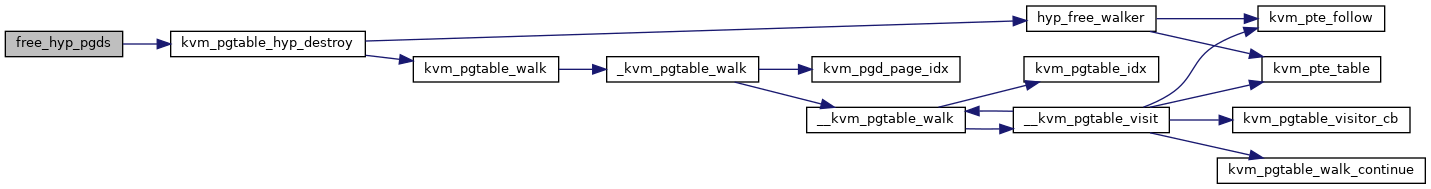

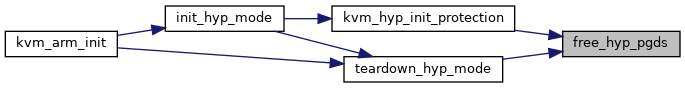

◆ free_hyp_pgds()

`void __init free_hyp_pgds(void)`

free_hyp_pgds - free Hyp-mode page tables

Definition at line 372 of file mmu.c.

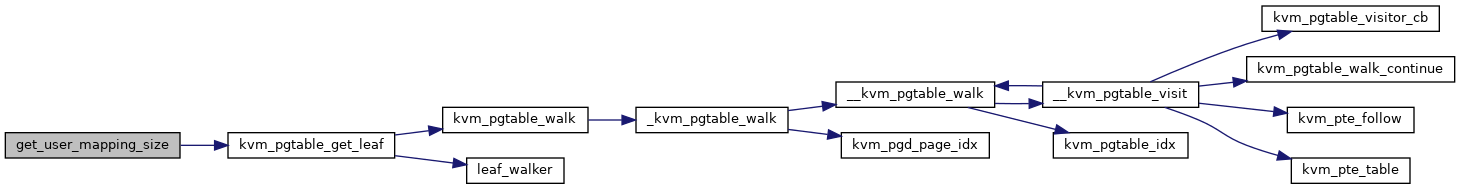

◆ get_user_mapping_size()

`static int get_user_mapping_size(struct kvm *kvm, u64 addr)`

Definition at line 802 of file mmu.c.

◆ get_vma_page_shift()

`static int get_vma_page_shift(struct vm_area_struct *vma, unsigned long hva)`

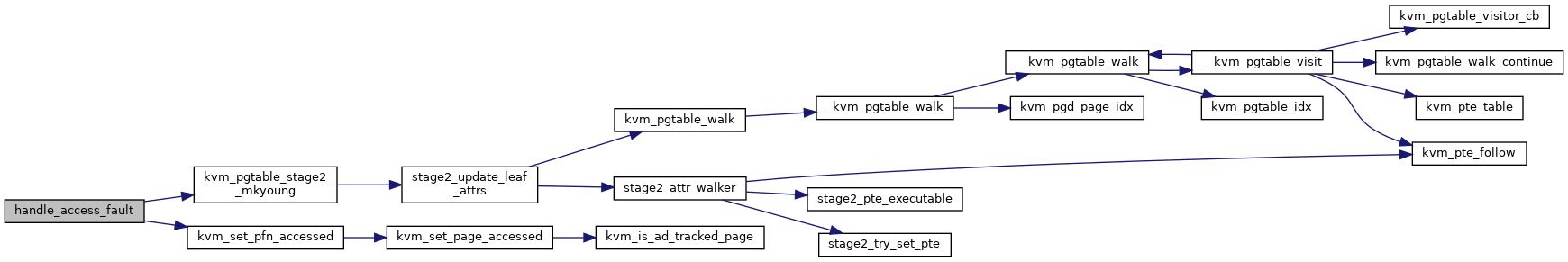

◆ handle_access_fault()

`static void handle_access_fault(struct kvm_vcpu *vcpu, phys_addr_t fault_ipa)`

Definition at line 1592 of file mmu.c.

◆ hyp_alloc_private_va_range()

`int hyp_alloc_private_va_range(size_t size, unsigned long *haddr)`

hyp_alloc_private_va_range - Allocate a private VA range

| Parameter | Description |
|---|---|
| `size` | The size of the VA range to reserve |
| `haddr` | The hypervisor virtual start address of the allocation |

The private virtual address (VA) range is allocated below io_map_base and aligned based on the order of @size.

Return: 0 on success or a negative error code on failure.

Definition at line 633 of file mmu.c.
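The placement rule can be illustrated with plain arithmetic. Page-align the size and subtract it from `io_map_base`. Then round the result down to `PAGE_SIZE << get_order(size)` and move `io_map_base` down so that the next allocation lands below this one. In the sketch below, `sk_get_order()` reimplements the order computation. The `floor` parameter is a hypothetical lower bound that stands in for the kernel's own overflow check, which is not modelled. The sketch assumes 4 KiB pages.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

#define SK_PAGE_SIZE UINT64_C(4096)   /* assumed 4 KiB pages */

/* Smallest order such that (PAGE_SIZE << order) >= size. */
static unsigned sk_get_order(uint64_t size)
{
	unsigned order = 0;

	while ((SK_PAGE_SIZE << order) < size)
		order++;
	return order;
}

/* Reserve a range just below *io_map_base, aligned on
 * PAGE_SIZE << get_order(size), and move *io_map_base down so the next
 * reservation lands below this one. */
static int sk_alloc_private_va(uint64_t *io_map_base, uint64_t floor,
			       uint64_t size, uint64_t *haddr)
{
	uint64_t align, base;

	assert(size > 0 && *io_map_base >= floor);
	size = (size + SK_PAGE_SIZE - 1) & ~(SK_PAGE_SIZE - 1);  /* PAGE_ALIGN */
	if (size > *io_map_base - floor)
		return -ENOMEM;

	base = *io_map_base - size;
	align = SK_PAGE_SIZE << sk_get_order(size);
	base &= ~(align - 1);                                     /* ALIGN_DOWN */
	if (base < floor)
		return -ENOMEM;

	*io_map_base = base;
	*haddr = base;
	return 0;
}
```

For a 12 KiB request below `0x100000`, the page-aligned base `0xFD000` is rounded down to the 16 KiB boundary `0xFC000`.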

◆ hyp_mc_alloc_fn()

`static void *hyp_mc_alloc_fn(void *unused)`

◆ hyp_mc_free_fn()

`static void hyp_mc_free_fn(void *addr, void *unused)`

◆ invalidate_icache_guest_page()

`static void invalidate_icache_guest_page(void *va, size_t size)`

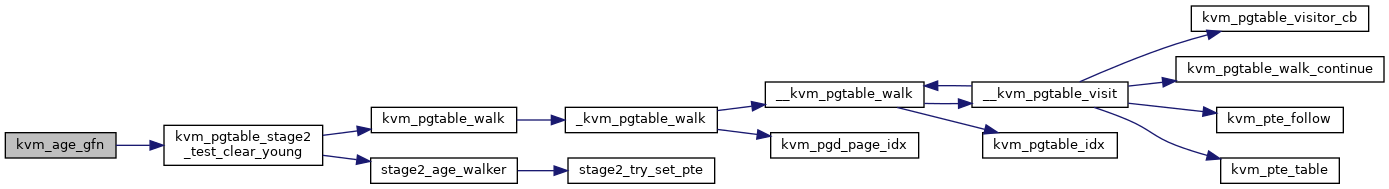

◆ kvm_age_gfn()

`bool kvm_age_gfn(struct kvm *kvm, struct kvm_gfn_range *range)`

Definition at line 1799 of file mmu.c.

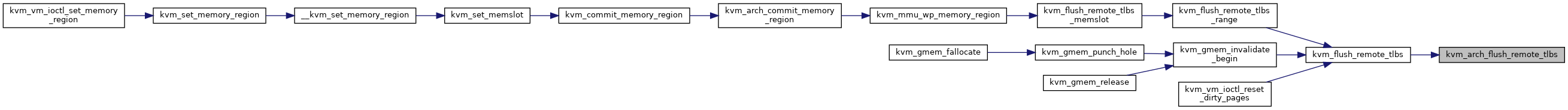

◆ kvm_arch_commit_memory_region()

`void kvm_arch_commit_memory_region(struct kvm *kvm, struct kvm_memory_slot *old, const struct kvm_memory_slot *new, enum kvm_mr_change change)`

Definition at line 1946 of file mmu.c.

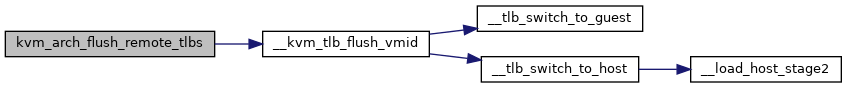

◆ kvm_arch_flush_remote_tlbs()

`int kvm_arch_flush_remote_tlbs(struct kvm *kvm)`

kvm_arch_flush_remote_tlbs() - Flush all VM TLB entries for v7/8

| Parameter | Description |
|---|---|
| `kvm` | Pointer to the kvm structure |

Interface to the HYP function that flushes all TLB entries for the VM.

Definition at line 169 of file mmu.c.

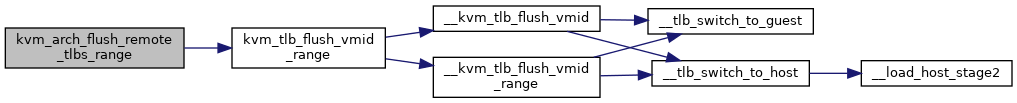

◆ kvm_arch_flush_remote_tlbs_range()

`int kvm_arch_flush_remote_tlbs_range(struct kvm *kvm, gfn_t gfn, u64 nr_pages)`

Definition at line 175 of file mmu.c.

◆ kvm_arch_flush_shadow_all()

`void kvm_arch_flush_shadow_all(struct kvm *kvm)`

◆ kvm_arch_flush_shadow_memslot()

`void kvm_arch_flush_shadow_memslot(struct kvm *kvm, struct kvm_memory_slot *slot)`

◆ kvm_arch_free_memslot()

`void kvm_arch_free_memslot(struct kvm *kvm, struct kvm_memory_slot *slot)`

◆ kvm_arch_memslots_updated()

`void kvm_arch_memslots_updated(struct kvm *kvm, u64 gen)`

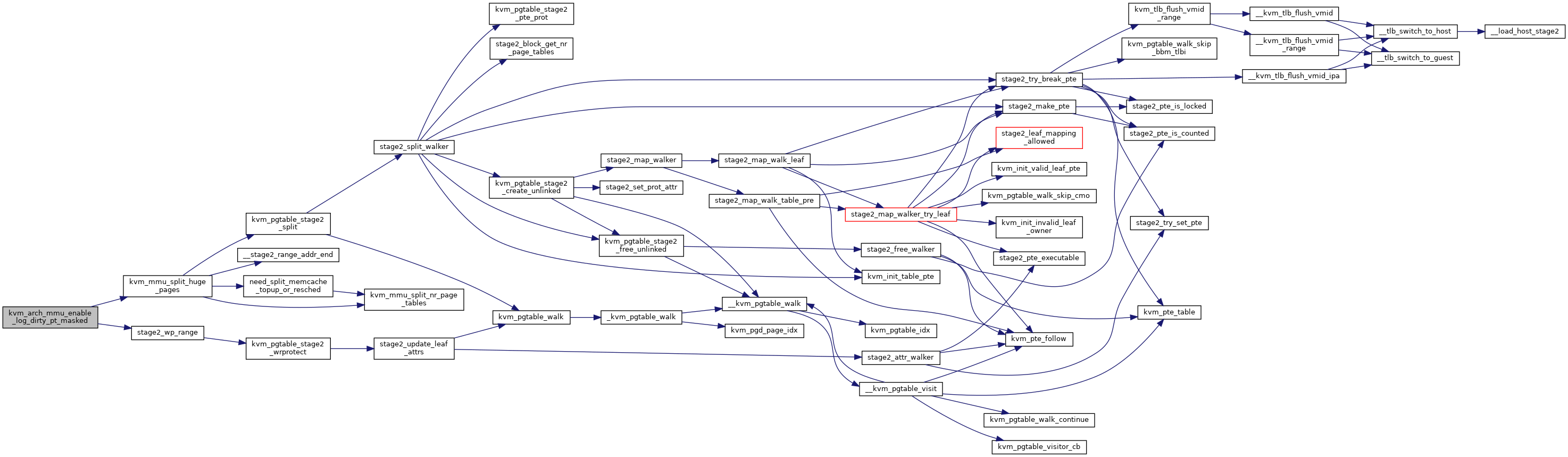

◆ kvm_arch_mmu_enable_log_dirty_pt_masked()

`void kvm_arch_mmu_enable_log_dirty_pt_masked(struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn_offset, unsigned long mask)`

Definition at line 1185 of file mmu.c.

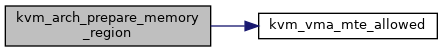

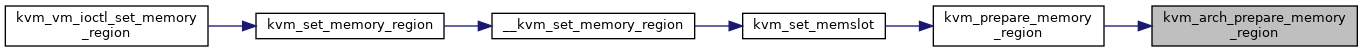

◆ kvm_arch_prepare_memory_region()

`int kvm_arch_prepare_memory_region(struct kvm *kvm, const struct kvm_memory_slot *old, struct kvm_memory_slot *new, enum kvm_mr_change change)`

Definition at line 1990 of file mmu.c.

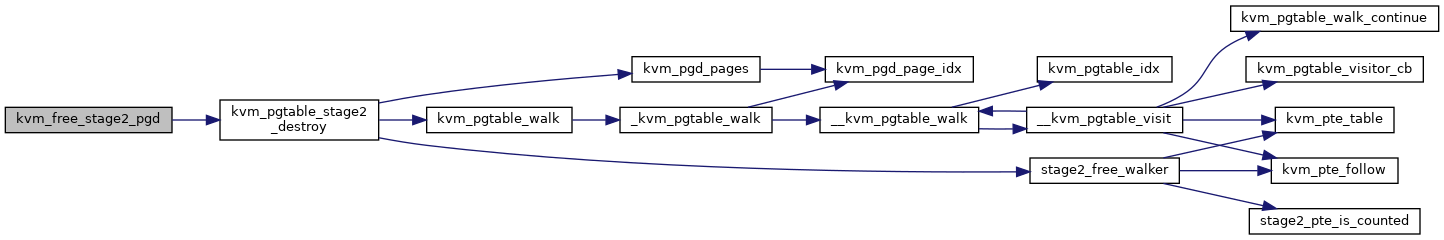

◆ kvm_free_stage2_pgd()

`void kvm_free_stage2_pgd(struct kvm_s2_mmu *mmu)`

Definition at line 1011 of file mmu.c.

◆ kvm_get_idmap_vector()

`phys_addr_t kvm_get_idmap_vector(void)`

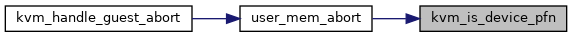

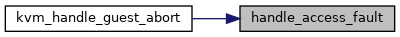

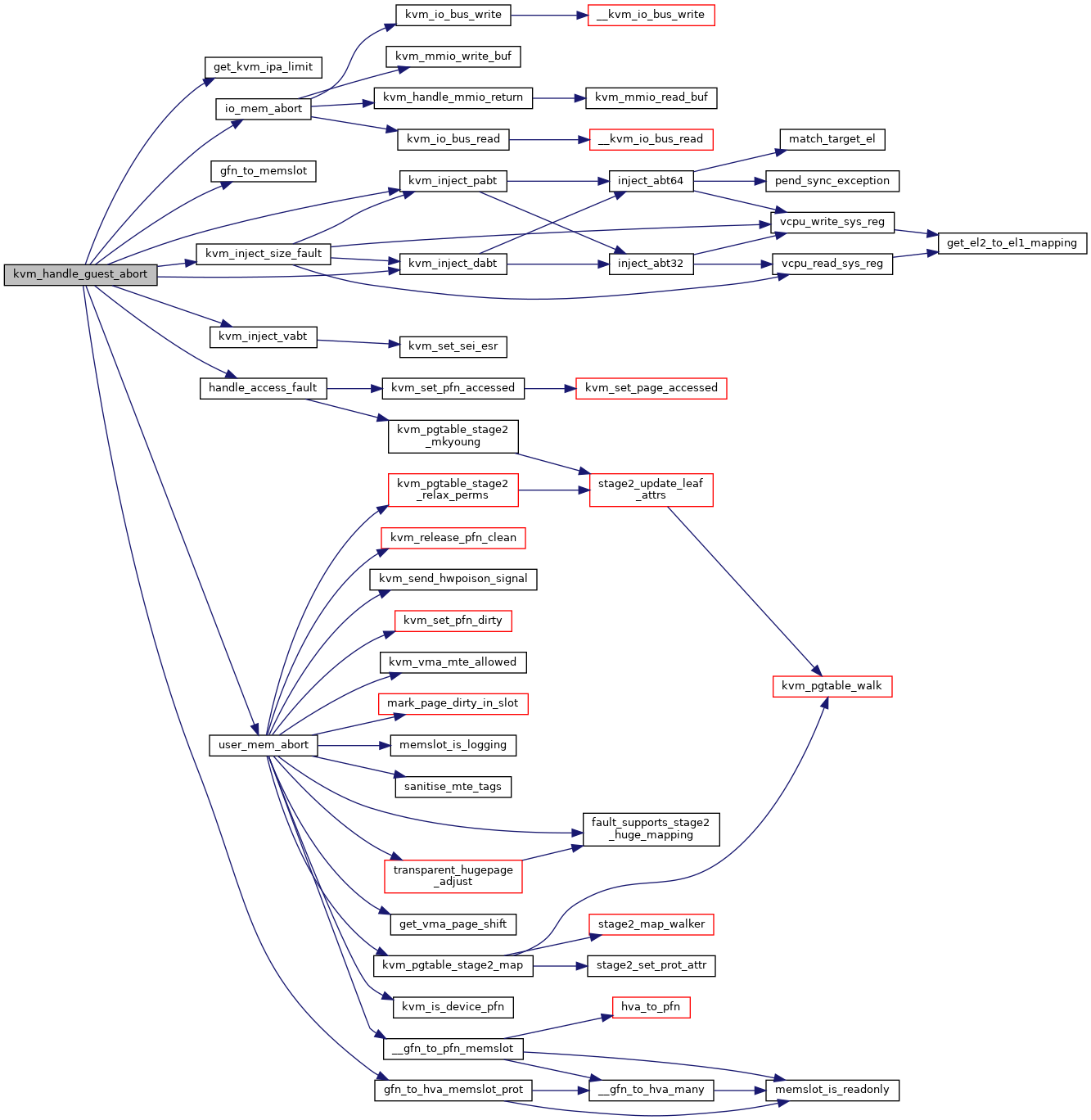

◆ kvm_handle_guest_abort()

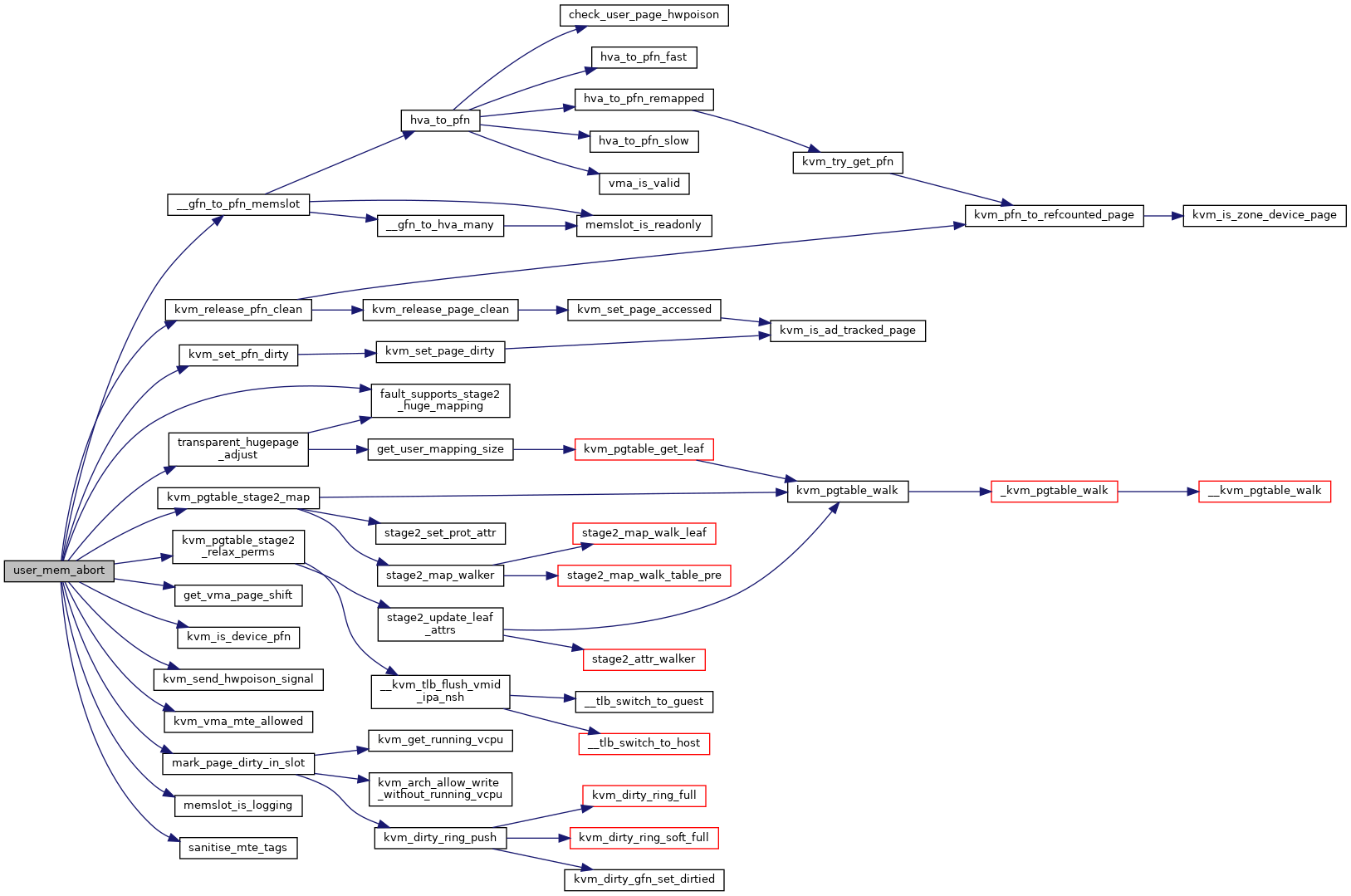

| int kvm_handle_guest_abort | ( | struct kvm_vcpu * | vcpu | ) |

kvm_handle_guest_abort - Handle all second-stage aborts

| Parameter | Description |
|---|---|
| `vcpu` | The VCPU pointer |

Any abort that reaches the host is almost certainly caused by a missing second-stage translation table entry. This means one of two things. Either the guest simply needs more memory and we must allocate an appropriate page, or the guest tried to access I/O memory, which is emulated by user space. The distinction depends on the IPA that caused the fault and on whether user space registered this memory region as standard RAM.

Definition at line 1619 of file mmu.c.
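The RAM-versus-I/O decision described above reduces to looking up the faulting IPA in the registered memory regions. This sketch keeps only that decision. `struct sk_memslot` and `sk_classify_abort()` are hypothetical. Permission faults, read-only slots and the other cases the real handler distinguishes are ignored.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical memslot: an IPA range userspace registered as RAM. */
struct sk_memslot {
	uint64_t base_ipa;
	uint64_t size;
};

enum sk_abort_action {
	SK_MAP_RAM,      /* back the IPA with a page and map it */
	SK_EMULATE_MMIO, /* hand the access to userspace for emulation */
};

/* Decide how to handle a stage-2 translation fault at fault_ipa. */
static enum sk_abort_action sk_classify_abort(const struct sk_memslot *slots,
					      size_t nr_slots,
					      uint64_t fault_ipa)
{
	assert(slots != NULL || nr_slots == 0);
	for (size_t i = 0; i < nr_slots; i++) {
		/* Subtraction form avoids overflow at the top of the range. */
		if (fault_ipa >= slots[i].base_ipa &&
		    fault_ipa - slots[i].base_ipa < slots[i].size)
			return SK_MAP_RAM;
	}
	return SK_EMULATE_MMIO;
}
```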

◆ kvm_host_get_page()

`static void kvm_host_get_page(void *addr)`

◆ kvm_host_owns_hyp_mappings()

`static bool kvm_host_owns_hyp_mappings(void)`

◆ kvm_host_pa()

`static phys_addr_t kvm_host_pa(void *addr)`

◆ kvm_host_page_count()

`static int kvm_host_page_count(void *addr)`

◆ kvm_host_put_page()

`static void kvm_host_put_page(void *addr)`

◆ kvm_host_va()

`static void *kvm_host_va(phys_addr_t phys)`

◆ kvm_host_zalloc_pages_exact()

`static void *kvm_host_zalloc_pages_exact(size_t size)`

◆ kvm_hyp_zalloc_page()

`static void *kvm_hyp_zalloc_page(void *arg)`

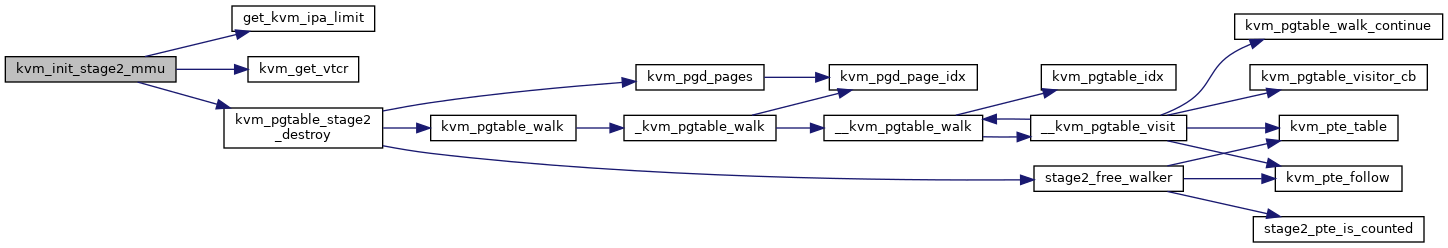

◆ kvm_init_stage2_mmu()

`int kvm_init_stage2_mmu(struct kvm *kvm, struct kvm_s2_mmu *mmu, unsigned long type)`

kvm_init_stage2_mmu - Initialise a S2 MMU structure

| Parameter | Description |
|---|---|
| `kvm` | The pointer to the KVM structure |
| `mmu` | The pointer to the S2 MMU structure |
| `type` | The machine type of the virtual machine |

Allocates only the stage-2 hardware PGD level table(s). No locking is needed here, because this function is only called when the VM is created, which can happen only once.

Definition at line 868 of file mmu.c.
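Part of interpreting `@type` is choosing the guest IPA size. The sketch below reflects our reading of that validation. The low byte of `type` selects the IPA size in bits, 0 selects a 40-bit default, and values below 32 bits or above the host limit are rejected. The constants are copied into hypothetical `SK_*` names and should be checked against your kernel tree. The host limit is a plain parameter, and protected-KVM handling is omitted.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

/* Values as understood by this sketch; verify against your tree. */
#define SK_IPA_SIZE_MASK 0xffUL   /* KVM_VM_TYPE_ARM_IPA_SIZE_MASK */
#define SK_DEFAULT_IPA   40u      /* KVM_PHYS_SHIFT */
#define SK_MIN_IPA       32u      /* ARM64_MIN_PARANGE_BITS */

/* Return the IPA size in bits for a VM type, or -EINVAL.
 * ipa_limit is the host's supported maximum. */
static int sk_ipa_bits_for_type(unsigned long type, unsigned ipa_limit)
{
	unsigned shift;

	assert(ipa_limit >= SK_MIN_IPA);
	if (type & ~SK_IPA_SIZE_MASK)
		return -EINVAL;         /* unknown bits set in the type */

	shift = (unsigned)(type & SK_IPA_SIZE_MASK);
	if (shift == 0)
		shift = SK_DEFAULT_IPA; /* 0 selects the legacy default */
	else if (shift < SK_MIN_IPA)
		return -EINVAL;

	if (shift > ipa_limit)
		return -EINVAL;
	return (int)shift;
}
```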

◆ kvm_is_device_pfn()

`static bool kvm_is_device_pfn(unsigned long pfn)`

◆ kvm_kaddr_to_phys()

`static phys_addr_t kvm_kaddr_to_phys(void *kaddr)`

◆ kvm_map_idmap_text()

`static int kvm_map_idmap_text(void)`

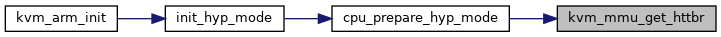

◆ kvm_mmu_get_httbr()

`phys_addr_t kvm_mmu_get_httbr(void)`

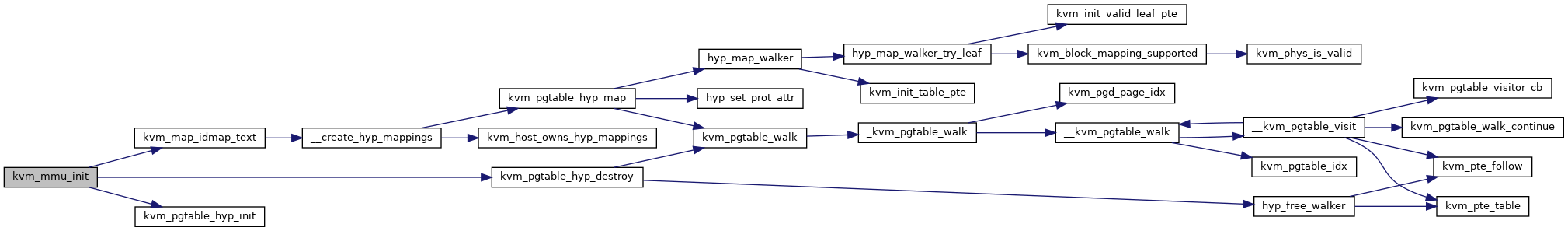

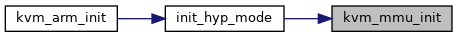

◆ kvm_mmu_init()

`int __init kvm_mmu_init(u32 *hyp_va_bits)`

Definition at line 1858 of file mmu.c.

◆ kvm_mmu_split_huge_pages()

`static int kvm_mmu_split_huge_pages(struct kvm *kvm, phys_addr_t addr, phys_addr_t end)`

Definition at line 114 of file mmu.c.

◆ kvm_mmu_split_memory_region()

`static void kvm_mmu_split_memory_region(struct kvm *kvm, int slot)`

kvm_mmu_split_memory_region() - Split the stage-2 blocks of a memory slot into PAGE_SIZE pages

| Parameter | Description |
|---|---|
| `kvm` | The KVM pointer |
| `slot` | The memory slot to split |

Acquires kvm->mmu_lock. Called with the kvm->slots_lock mutex held, which serializes operations on VM memory regions.

Definition at line 1155 of file mmu.c.
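Splitting needs table pages allocated in advance. For sizing that supply, the file declares `kvm_mmu_split_nr_page_tables(u64 range)`. The sketch below shows the arithmetic as we understand it. It assumes a 4 KiB granule (2 MiB PMD blocks, 1 GiB PUD blocks) and a block-aligned range. The count is one new table per PUD block, to hold its PMD entries, plus one per PMD block, to hold its PTEs. The `have_pud_blocks` flag is a hypothetical parameter for granules without level-1 blocks.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed 4 KiB granule geometry. */
#define SK_PMD_SIZE (UINT64_C(1) << 21)   /* 2 MiB */
#define SK_PUD_SIZE (UINT64_C(1) << 30)   /* 1 GiB */

static uint64_t sk_div_round_up(uint64_t n, uint64_t d)
{
	return (n + d - 1) / d;
}

/* Table pages needed to break every block in a block-aligned range of
 * `range` bytes down to PAGE_SIZE mappings. */
static uint64_t sk_split_nr_page_tables(uint64_t range, int have_pud_blocks)
{
	uint64_t n = 0;

	assert(range > 0);
	if (have_pud_blocks)
		n += sk_div_round_up(range, SK_PUD_SIZE); /* tables for PMDs */
	n += sk_div_round_up(range, SK_PMD_SIZE);         /* tables for PTEs */
	return n;
}
```

Splitting a single 1 GiB block therefore needs 1 + 512 = 513 table pages.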

◆ kvm_mmu_split_nr_page_tables()

`static int kvm_mmu_split_nr_page_tables(u64 range)`

◆ kvm_mmu_wp_memory_region()

`static void kvm_mmu_wp_memory_region(struct kvm *kvm, int slot)`

kvm_mmu_wp_memory_region() - Write protect the stage-2 entries of a memory slot

| Parameter | Description |
|---|---|
| `kvm` | The KVM pointer |
| `slot` | The memory slot to write protect |

Called to start logging dirty pages after a KVM_MEM_LOG_DIRTY_PAGES operation has been performed on the memory region. When this function returns, all present PUD, PMD and PTE entries in the memory region are write protected. The dirty page log can then be read.

Acquires kvm->mmu_lock. Called with the kvm->slots_lock mutex held, which serializes operations on VM memory regions.

Definition at line 1128 of file mmu.c.
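The dirty-logging flow described above can be shown with a toy model. Every page in the slot is write protected. The first write to a page then faults, which records the page in the dirty bitmap and makes it writable again, so later writes to that page do not fault. Everything in the sketch (`struct sk_slot`, its 64-page size, the function names) is hypothetical. It models the idea, not KVM's page-table code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SK_NPAGES 64  /* toy slot size; fits one 64-bit dirty word */

struct sk_slot {
	uint64_t base_gfn;
	bool writable[SK_NPAGES];  /* pretend stage-2 write permission */
	uint64_t dirty;            /* bit i set = page i was written */
};

/* IPA range covered by the slot, as [start, end). */
static void sk_slot_range(const struct sk_slot *s, unsigned page_shift,
			  uint64_t *start, uint64_t *end)
{
	*start = s->base_gfn << page_shift;
	*end = (s->base_gfn + SK_NPAGES) << page_shift;
}

/* Start logging: write protect every page in the slot. */
static void sk_wp_slot(struct sk_slot *s)
{
	for (int i = 0; i < SK_NPAGES; i++)
		s->writable[i] = false;
}

/* Guest write to page idx. A write to a protected page "faults": the
 * page is marked dirty and made writable again. Returns true if the
 * write faulted. */
static bool sk_guest_write(struct sk_slot *s, int idx)
{
	assert(idx >= 0 && idx < SK_NPAGES);
	if (s->writable[idx])
		return false;
	s->dirty |= UINT64_C(1) << idx;
	s->writable[idx] = true;
	return true;
}
```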

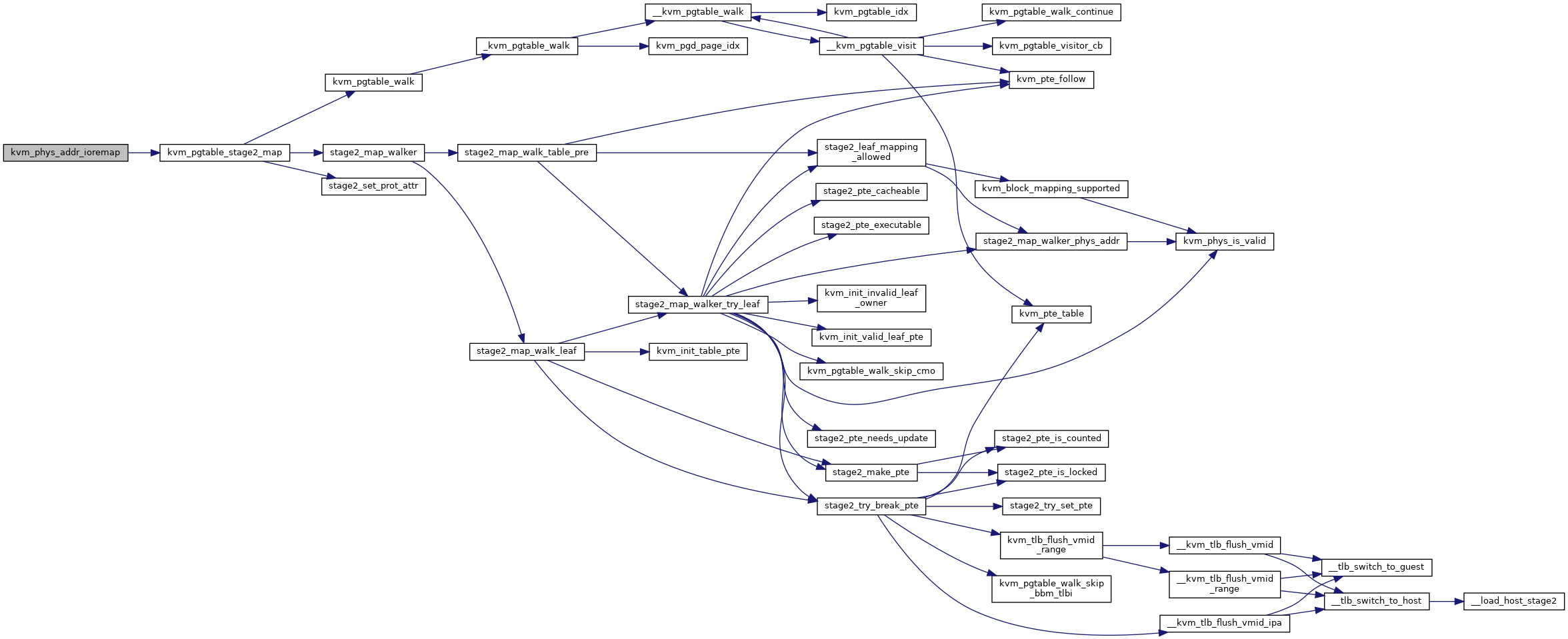

◆ kvm_phys_addr_ioremap()

`int kvm_phys_addr_ioremap(struct kvm *kvm, phys_addr_t guest_ipa, phys_addr_t pa, unsigned long size, bool writable)`

kvm_phys_addr_ioremap - Map a device range to a guest IPA

| Parameter | Description |
|---|---|
| `kvm` | The KVM pointer |
| `guest_ipa` | The IPA at which to insert the mapping |
| `pa` | The physical address of the device |
| `size` | The size of the mapping |
| `writable` | Whether or not to create a writable mapping |

Definition at line 1066 of file mmu.c.
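A mapping like this can be built one page at a time. The sketch below assumes 4 KiB pages and a page-aligned `pa`. It rounds an unaligned `guest_ipa` down and grows the size by the same amount. Instead of writing a real stage-2 table, it records each (IPA, PA) pair in a hypothetical `struct sk_pte` array. The page-table cache top-up that a real implementation needs before each page is only noted in a comment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SK_PAGE_SIZE UINT64_C(4096)   /* assumed 4 KiB pages */

struct sk_pte { uint64_t ipa, pa; int writable; };

/* Map [guest_ipa, guest_ipa + size) onto device memory at pa, one page
 * at a time. Returns the number of entries written to out. */
static size_t sk_ioremap(uint64_t guest_ipa, uint64_t pa, uint64_t size,
			 int writable, struct sk_pte *out, size_t max_out)
{
	size_t n = 0;

	assert((pa & (SK_PAGE_SIZE - 1)) == 0);
	size += guest_ipa & (SK_PAGE_SIZE - 1);   /* cover the partial page */
	guest_ipa &= ~(SK_PAGE_SIZE - 1);

	for (uint64_t addr = guest_ipa; addr < guest_ipa + size;
	     addr += SK_PAGE_SIZE, pa += SK_PAGE_SIZE) {
		assert(n < max_out);
		/* A real implementation tops up its page-table cache here. */
		out[n].ipa = addr;
		out[n].pa = pa;
		out[n].writable = writable;
		n++;
	}
	return n;
}
```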

◆ kvm_s2_free_pages_exact()

`static void kvm_s2_free_pages_exact(void *virt, size_t size)`

◆ kvm_s2_put_page()

`static void kvm_s2_put_page(void *addr)`

◆ kvm_s2_zalloc_pages_exact()

`static void *kvm_s2_zalloc_pages_exact(size_t size)`

◆ kvm_send_hwpoison_signal()

`static void kvm_send_hwpoison_signal(unsigned long address, short lsb)`

◆ kvm_set_spte_gfn()

`bool kvm_set_spte_gfn(struct kvm *kvm, struct kvm_gfn_range *range)`

◆ kvm_set_way_flush()

`void kvm_set_way_flush(struct kvm_vcpu *vcpu)`

◆ kvm_share_hyp()

`int kvm_share_hyp(void *from, void *to)`

◆ kvm_test_age_gfn()

`bool kvm_test_age_gfn(struct kvm *kvm, struct kvm_gfn_range *range)`

◆ kvm_toggle_cache()

`void kvm_toggle_cache(struct kvm_vcpu *vcpu, bool was_enabled)`

◆ kvm_uninit_stage2_mmu()

`void kvm_uninit_stage2_mmu(struct kvm *kvm)`

◆ kvm_unmap_gfn_range()

`bool kvm_unmap_gfn_range(struct kvm *kvm, struct kvm_gfn_range *range)`

◆ kvm_unshare_hyp()

`void kvm_unshare_hyp(void *from, void *to)`

◆ kvm_vma_mte_allowed()

`static bool kvm_vma_mte_allowed(struct vm_area_struct *vma)`

◆ memslot_is_logging()

`static bool memslot_is_logging(struct kvm_memory_slot *memslot)`

◆ need_split_memcache_topup_or_resched()

`static bool need_split_memcache_topup_or_resched(struct kvm *kvm)`

◆ sanitise_mte_tags()

`static void sanitise_mte_tags(struct kvm *kvm, kvm_pfn_t pfn, unsigned long size)`

◆ share_pfn_hyp()

`static int share_pfn_hyp(u64 pfn)`

◆ stage2_apply_range()

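`static int stage2_apply_range(struct kvm_s2_mmu *mmu, phys_addr_t addr, phys_addr_t end, int (*fn)(struct kvm_pgtable *, u64, u64), bool resched)`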

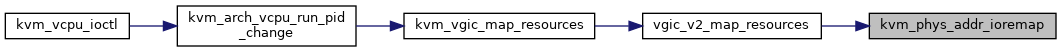

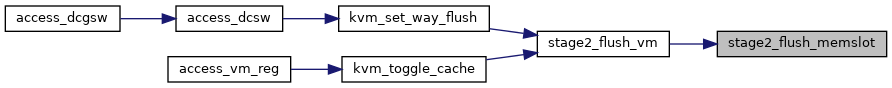

◆ stage2_flush_memslot()

`static void stage2_flush_memslot(struct kvm *kvm, struct kvm_memory_slot *memslot)`

Definition at line 336 of file mmu.c.

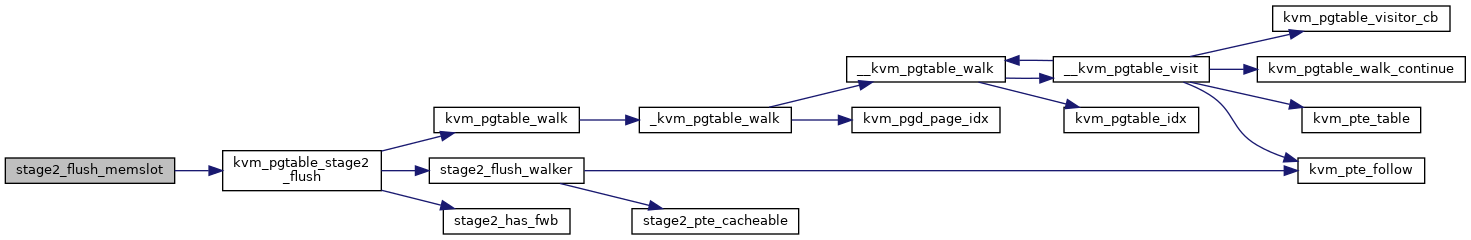

◆ stage2_flush_vm()

`static void stage2_flush_vm(struct kvm *kvm)`

stage2_flush_vm - Invalidate cache for pages mapped in stage 2

| Parameter | Description |
|---|---|
| `kvm` | The struct kvm pointer |

Walks the stage-2 page tables and invalidates any cache lines backing memory already mapped to the VM.

Definition at line 352 of file mmu.c.

◆ stage2_free_unlinked_table()

`static void stage2_free_unlinked_table(void *addr, s8 level)`

◆ stage2_free_unlinked_table_rcu_cb()

`static void stage2_free_unlinked_table_rcu_cb(struct rcu_head *head)`

Definition at line 222 of file mmu.c.

◆ stage2_memcache_zalloc_page()

`static void *stage2_memcache_zalloc_page(void *arg)`

◆ stage2_range_addr_end()

`static phys_addr_t stage2_range_addr_end(phys_addr_t addr, phys_addr_t end)`

◆ stage2_unmap_memslot()

`static void stage2_unmap_memslot(struct kvm *kvm, struct kvm_memory_slot *memslot)`

◆ stage2_unmap_vm()

`void stage2_unmap_vm(struct kvm *kvm)`

stage2_unmap_vm - Unmap stage-2 RAM mappings

| Parameter | Description |
|---|---|
| `kvm` | The struct kvm pointer |

Walks the memory regions and unmaps any regular RAM backing memory already mapped to the VM.

Definition at line 992 of file mmu.c.
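The walk described above can be modelled as follows. Intersect the slot's host virtual address range with each backing VMA and skip the VMAs that are not regular RAM. As we understand it, those are the PFN-mapped (device) VMAs. Translate every remaining piece back to a guest physical address for unmapping. The structures and `sk_unmap_slot()` are hypothetical, and the VMAs are assumed sorted and non-overlapping.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for a VMA, a memslot and an unmap request. */
struct sk_vma { uint64_t start, end; bool pfnmap; };
struct sk_slot { uint64_t gpa, hva, size; };
struct sk_unmap { uint64_t gpa, size; };

static uint64_t sk_max(uint64_t a, uint64_t b) { return a > b ? a : b; }
static uint64_t sk_min(uint64_t a, uint64_t b) { return a < b ? a : b; }

/* Record a stage-2 unmap for each part of the slot that is backed by a
 * regular (non-PFN-mapped) VMA. Returns the number of unmaps. */
static size_t sk_unmap_slot(const struct sk_slot *slot,
			    const struct sk_vma *vmas, size_t nr_vmas,
			    struct sk_unmap *out, size_t max_out)
{
	uint64_t reg_end = slot->hva + slot->size;
	size_t n = 0;

	for (size_t i = 0; i < nr_vmas; i++) {
		uint64_t vs = sk_max(slot->hva, vmas[i].start);
		uint64_t ve = sk_min(reg_end, vmas[i].end);

		if (vs >= ve || vmas[i].pfnmap)
			continue;     /* no overlap, or device memory */
		assert(n < max_out);
		out[n].gpa = slot->gpa + (vs - slot->hva);
		out[n].size = ve - vs;
		n++;
	}
	return n;
}
```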

◆ stage2_wp_range()

`static void stage2_wp_range(struct kvm_s2_mmu *mmu, phys_addr_t addr, phys_addr_t end)`

stage2_wp_range() - Write protect a stage-2 memory region range

| Parameter | Description |
|---|---|
| `mmu` | The KVM stage-2 MMU pointer |
| `addr` | Start address of the range |
| `end` | End address of the range |

Definition at line 1110 of file mmu.c.
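Callers supply `@addr` and `@end`. When pages are re-protected after the dirty log is read, our understanding is that `kvm_arch_mmu_enable_log_dirty_pt_masked()` converts one word of the dirty bitmap into the smallest range covering its set bits. The sketch below reproduces that conversion. It uses the GCC/Clang builtins `__builtin_ctzll` and `__builtin_clzll` in place of the kernel's `__ffs()` and `__fls()`. `sk_mask_to_range()` is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* Turn a dirty-log mask (bit i = page gfn_offset + i of the slot) into
 * the smallest [start, end) IPA range covering every set bit. */
static void sk_mask_to_range(uint64_t slot_base_gfn, uint64_t gfn_offset,
			     uint64_t mask, unsigned page_shift,
			     uint64_t *start, uint64_t *end)
{
	uint64_t base_gfn = slot_base_gfn + gfn_offset;
	unsigned first, last;

	assert(mask != 0);                               /* ctz/clz undefined for 0 */
	first = (unsigned)__builtin_ctzll(mask);         /* lowest set bit */
	last = 63u - (unsigned)__builtin_clzll(mask);    /* highest set bit */

	*start = (base_gfn + first) << page_shift;
	*end = (base_gfn + last + 1) << page_shift;
}
```

Clean pages that lie between the lowest and highest set bits also fall inside the range, so a sparse mask protects more pages than it strictly names.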

◆ topup_hyp_memcache()

`int topup_hyp_memcache(struct kvm_hyp_memcache *mc, unsigned long min_pages)`

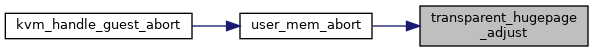

◆ transparent_hugepage_adjust()

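`static long transparent_hugepage_adjust(struct kvm *kvm, struct kvm_memory_slot *memslot, unsigned long hva, kvm_pfn_t *pfnp, phys_addr_t *ipap)`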

Definition at line 1284 of file mmu.c.

◆ unmap_stage2_range()

`static void unmap_stage2_range(struct kvm_s2_mmu *mmu, phys_addr_t start, u64 size)`

◆ unshare_pfn_hyp()

`static int unshare_pfn_hyp(u64 pfn)`

◆ user_mem_abort()

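`static int user_mem_abort(struct kvm_vcpu *vcpu, phys_addr_t fault_ipa, struct kvm_memory_slot *memslot, unsigned long hva, bool fault_is_perm)`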

Definition at line 1377 of file mmu.c.

Variable Documentation

◆ hyp_idmap_end

`static unsigned long __ro_after_init hyp_idmap_end`

◆ hyp_idmap_start

`static unsigned long __ro_after_init hyp_idmap_start`

◆ hyp_idmap_vector

`static phys_addr_t __ro_after_init hyp_idmap_vector`

◆ hyp_pgtable

`static struct kvm_pgtable *hyp_pgtable`

◆ hyp_shared_pfns

`static struct rb_root hyp_shared_pfns = RB_ROOT`

◆ io_map_base

`static unsigned long __ro_after_init io_map_base`

◆ kvm_hyp_mm_ops

`static struct kvm_pgtable_mm_ops kvm_hyp_mm_ops`

◆ kvm_s2_mm_ops

`static struct kvm_pgtable_mm_ops kvm_s2_mm_ops`

◆ kvm_user_mm_ops

`static struct kvm_pgtable_mm_ops kvm_user_mm_ops`