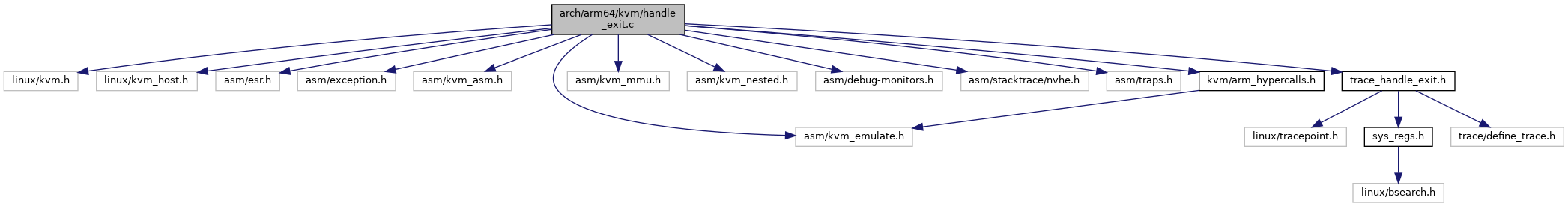

#include <linux/kvm.h>
#include <linux/kvm_host.h>
#include <asm/esr.h>
#include <asm/exception.h>
#include <asm/kvm_asm.h>
#include <asm/kvm_emulate.h>
#include <asm/kvm_mmu.h>
#include <asm/kvm_nested.h>
#include <asm/debug-monitors.h>
#include <asm/stacktrace/nvhe.h>
#include <asm/traps.h>
#include <kvm/arm_hypercalls.h>
#include "trace_handle_exit.h"


| Macros | |
|---|---|
| #define | CREATE_TRACE_POINTS |

| Typedefs | |
|---|---|
| typedef int(* | exit_handle_fn) (struct kvm_vcpu *) |

Functions | |

| static void | kvm_handle_guest_serror (struct kvm_vcpu *vcpu, u64 esr) |

| static int | handle_hvc (struct kvm_vcpu *vcpu) |

| static int | handle_smc (struct kvm_vcpu *vcpu) |

| static int | handle_no_fpsimd (struct kvm_vcpu *vcpu) |

| static int | kvm_handle_wfx (struct kvm_vcpu *vcpu) |

| static int | kvm_handle_guest_debug (struct kvm_vcpu *vcpu) |

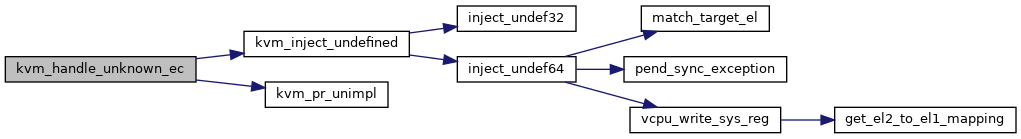

| static int | kvm_handle_unknown_ec (struct kvm_vcpu *vcpu) |

| static int | handle_sve (struct kvm_vcpu *vcpu) |

| static int | kvm_handle_ptrauth (struct kvm_vcpu *vcpu) |

| static int | kvm_handle_eret (struct kvm_vcpu *vcpu) |

| static int | handle_svc (struct kvm_vcpu *vcpu) |

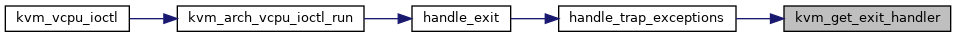

| static exit_handle_fn | kvm_get_exit_handler (struct kvm_vcpu *vcpu) |

| static int | handle_trap_exceptions (struct kvm_vcpu *vcpu) |

| int | handle_exit (struct kvm_vcpu *vcpu, int exception_index) |

| void | handle_exit_early (struct kvm_vcpu *vcpu, int exception_index) |

| void __noreturn __cold | nvhe_hyp_panic_handler (u64 esr, u64 spsr, u64 elr_virt, u64 elr_phys, u64 par, uintptr_t vcpu, u64 far, u64 hpfar) |

| Variables | |
|---|---|
| static exit_handle_fn | arm_exit_handlers [] |

Macro Definition Documentation

◆ CREATE_TRACE_POINTS

#define CREATE_TRACE_POINTS

Definition at line 26 of file handle_exit.c.

Typedef Documentation

◆ exit_handle_fn

typedef int(* exit_handle_fn) (struct kvm_vcpu *)

Definition at line 29 of file handle_exit.c.
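The typedef above, together with arm_exit_handlers and kvm_get_exit_handler listed on this page, suggests a table of function pointers that is indexed to pick a handler. The following is a minimal user-space sketch of that pattern, not the kernel's code. Every `demo_`/`DEMO_` name is hypothetical, the return values are arbitrary markers, and indexing by the exception class field in ESR bits [31:26] is Arm architectural background assumed here rather than something this page states.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct kvm_vcpu; only holds a syndrome value. */
struct demo_vcpu {
	uint64_t esr;
};

/* Same shape as exit_handle_fn: takes the vcpu pointer, returns an int. */
typedef int (*demo_exit_handle_fn)(struct demo_vcpu *);

/* Assumption: the exception class is the 6-bit field at ESR bits [31:26]. */
#define DEMO_EC_SHIFT 26
#define DEMO_EC_MASK  0x3fu
#define DEMO_EC_MAX   0x3f

/* Illustrative handlers; the return values are arbitrary markers. */
static int demo_handle_unknown(struct demo_vcpu *vcpu) { (void)vcpu; return -1; }
static int demo_handle_wfx(struct demo_vcpu *vcpu)     { (void)vcpu; return 1; }
static int demo_handle_hvc(struct demo_vcpu *vcpu)     { (void)vcpu; return 2; }

/* Sparse table: slots not listed here stay NULL. */
static demo_exit_handle_fn demo_exit_handlers[DEMO_EC_MAX + 1] = {
	[0x01] = demo_handle_wfx, /* WFx exception class */
	[0x16] = demo_handle_hvc, /* HVC (AArch64) exception class */
};

/* Look up the handler for this exit, falling back for empty slots. */
static demo_exit_handle_fn demo_get_exit_handler(struct demo_vcpu *vcpu)
{
	unsigned int ec = (unsigned int)((vcpu->esr >> DEMO_EC_SHIFT) & DEMO_EC_MASK);
	demo_exit_handle_fn fn = demo_exit_handlers[ec];

	return fn ? fn : demo_handle_unknown;
}

/* Dispatch one trapped exception through the table. */
static int demo_handle_trap(struct demo_vcpu *vcpu)
{
	return demo_get_exit_handler(vcpu)(vcpu);
}
```

In this sketch, calling demo_handle_trap() on a vcpu whose esr carries class 0x16 reaches demo_handle_hvc, while any class without an entry falls back to demo_handle_unknown. The NULL check stands in for a default entry so the example needs no compiler extension for range initializers.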

Function Documentation

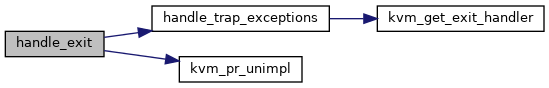

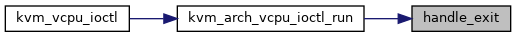

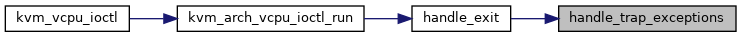

◆ handle_exit()

int handle_exit (struct kvm_vcpu *vcpu, int exception_index)

Definition at line 322 of file handle_exit.c.

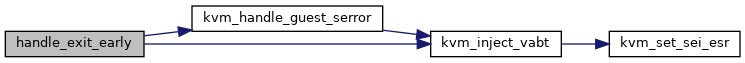

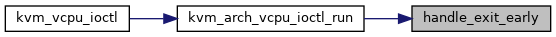

◆ handle_exit_early()

void handle_exit_early (struct kvm_vcpu *vcpu, int exception_index)

Definition at line 366 of file handle_exit.c.

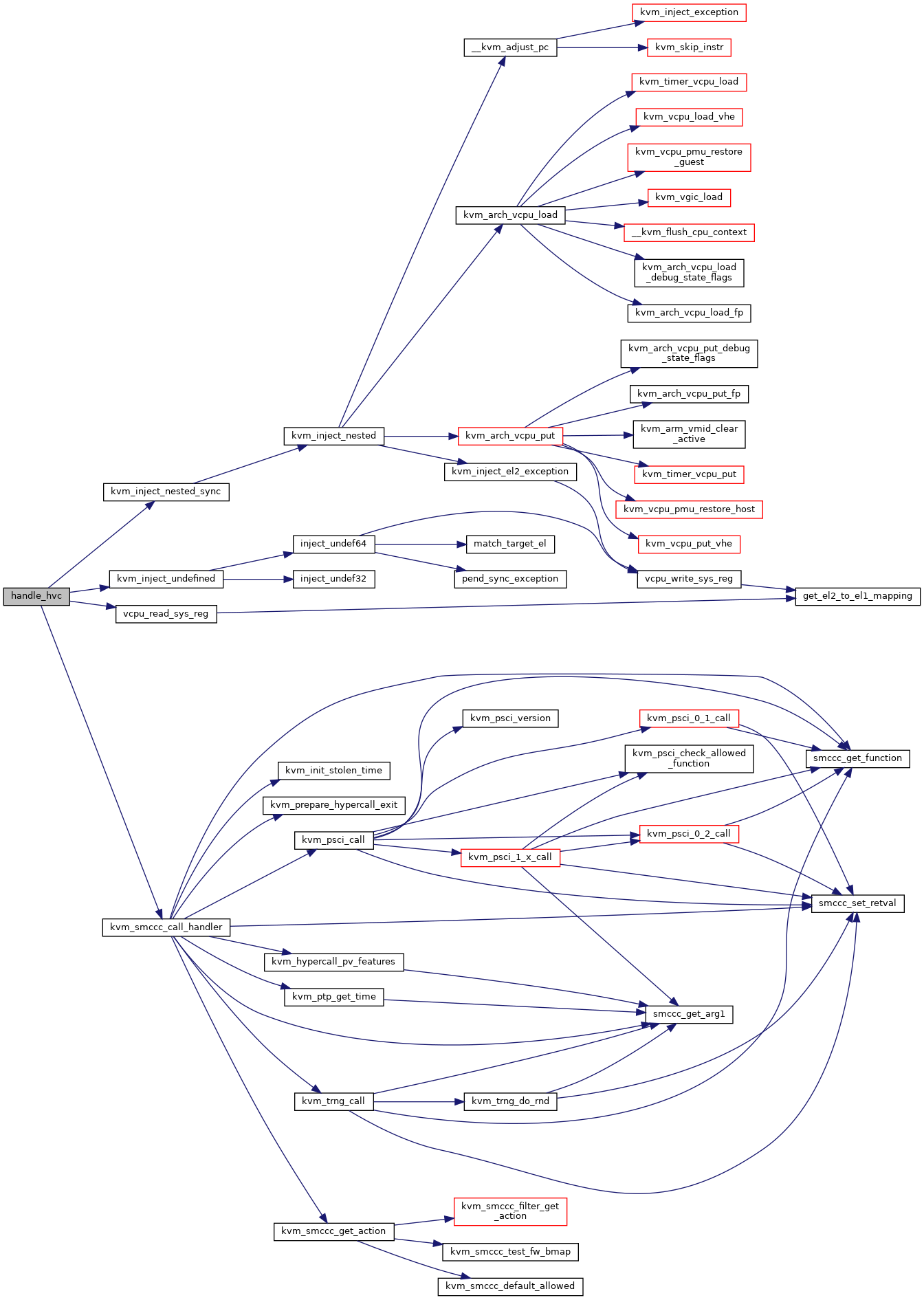

◆ handle_hvc()

static int handle_hvc (struct kvm_vcpu *vcpu)

Definition at line 37 of file handle_exit.c.

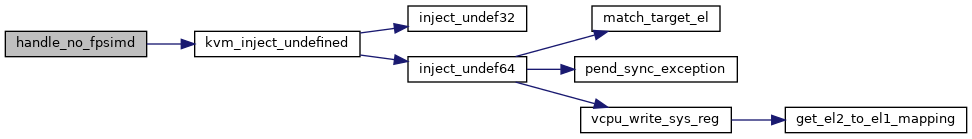

◆ handle_no_fpsimd()

static int handle_no_fpsimd (struct kvm_vcpu *vcpu)

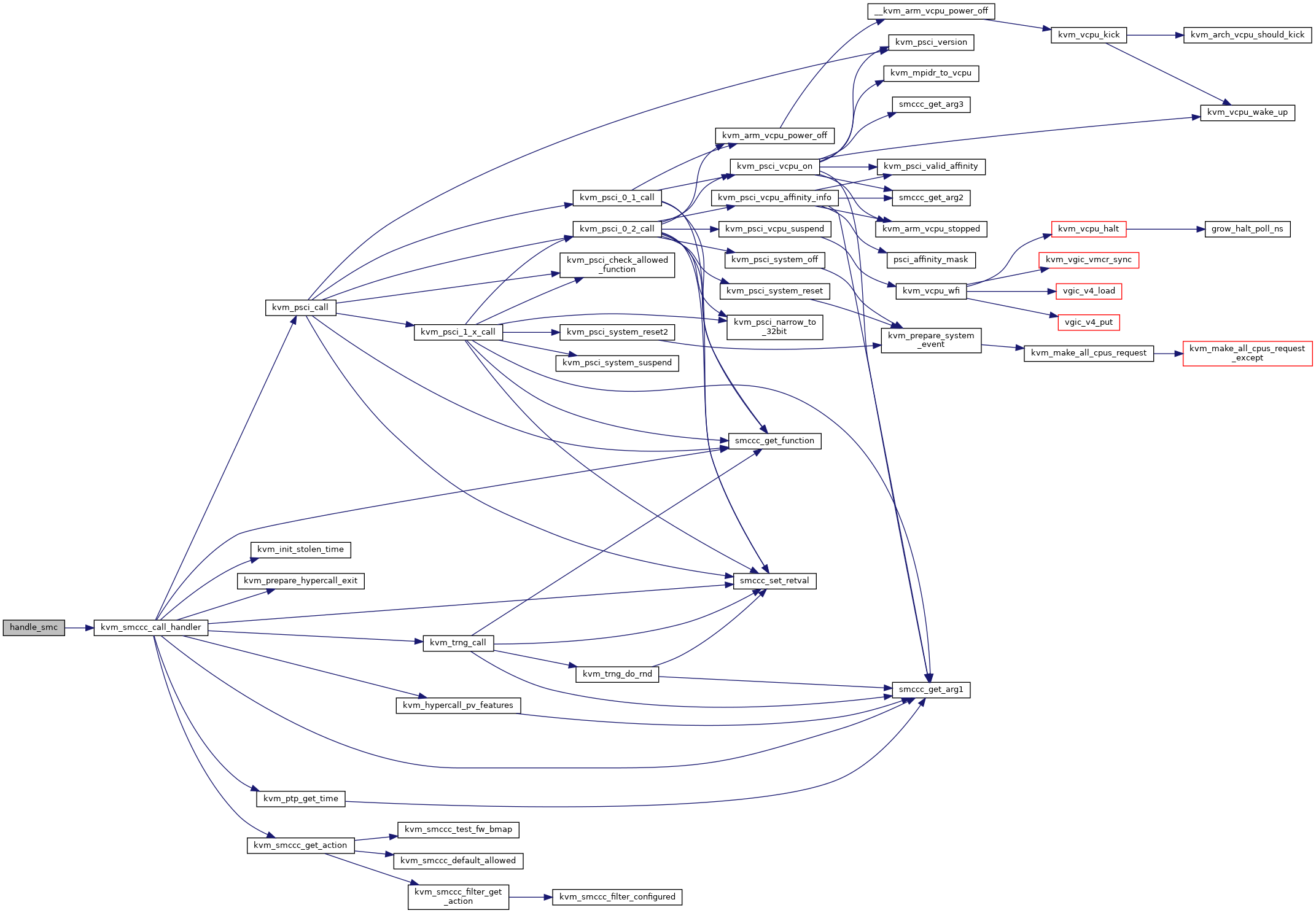

◆ handle_smc()

static int handle_smc (struct kvm_vcpu *vcpu)

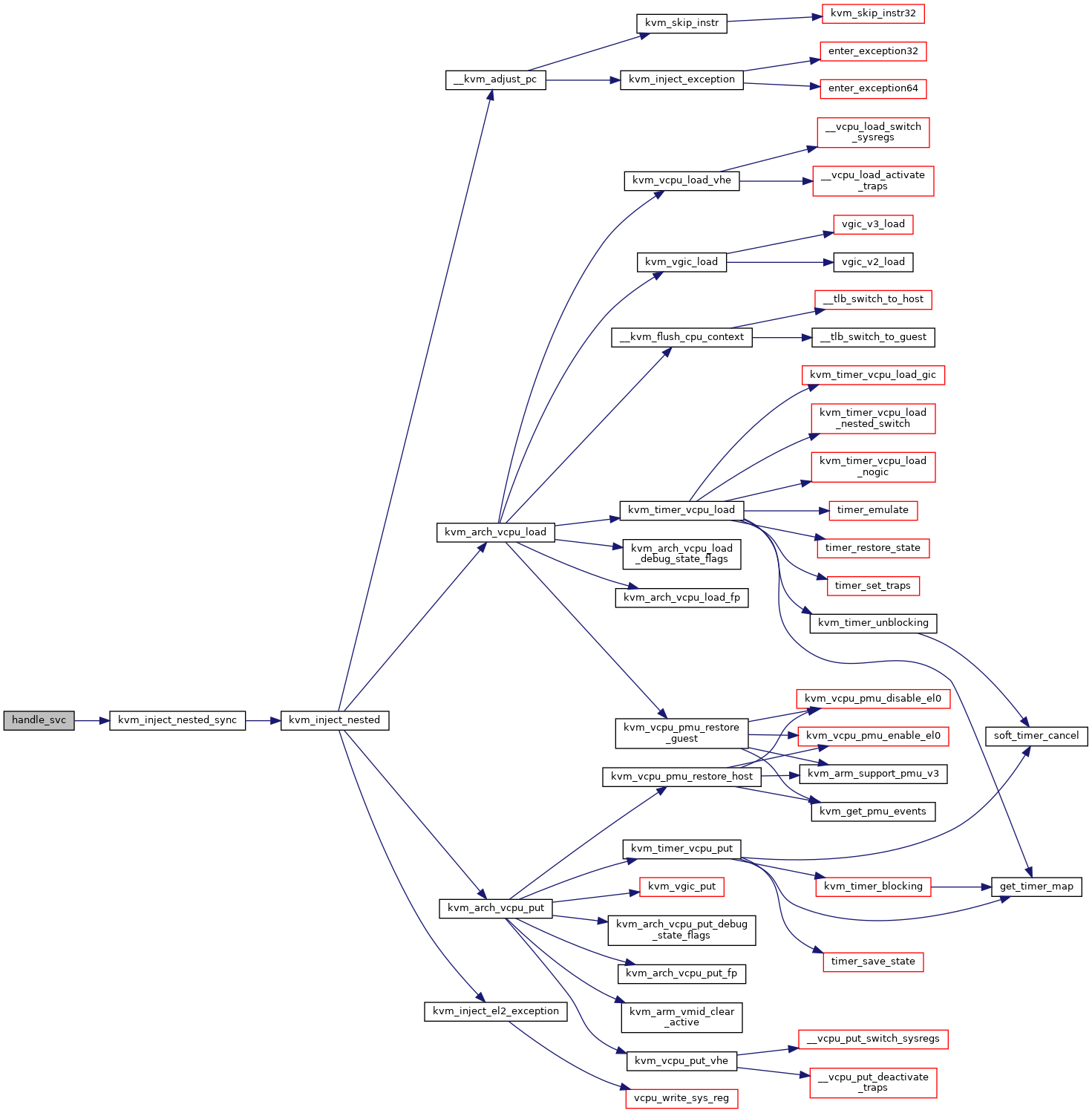

◆ handle_svc()

static int handle_svc (struct kvm_vcpu *vcpu)

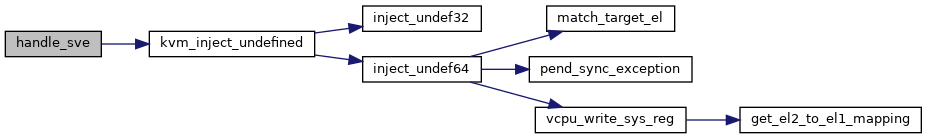

◆ handle_sve()

static int handle_sve (struct kvm_vcpu *vcpu)

◆ handle_trap_exceptions()

static int handle_trap_exceptions (struct kvm_vcpu *vcpu)

Definition at line 297 of file handle_exit.c.

◆ kvm_get_exit_handler()

static exit_handle_fn kvm_get_exit_handler (struct kvm_vcpu *vcpu)

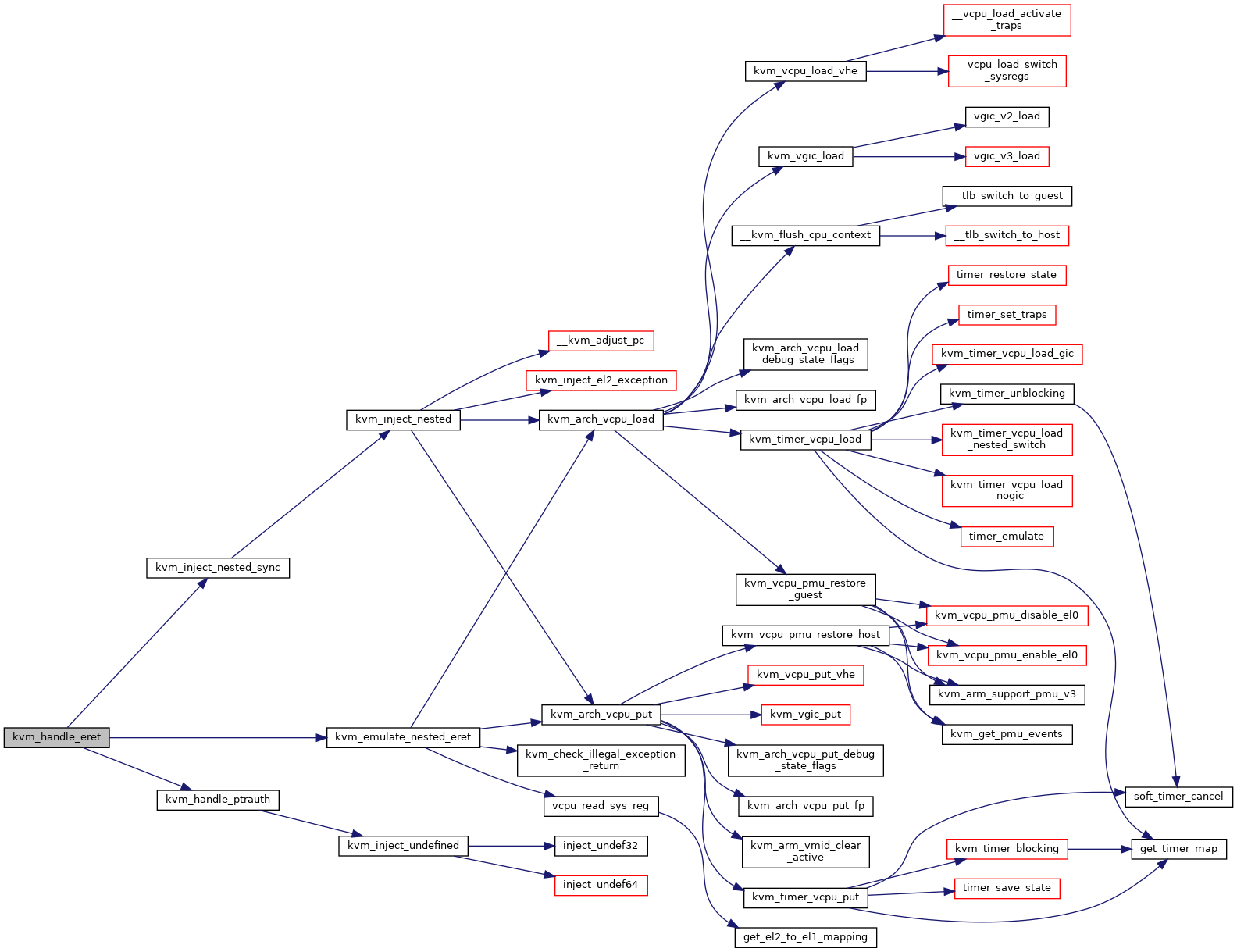

◆ kvm_handle_eret()

static int kvm_handle_eret (struct kvm_vcpu *vcpu)

Definition at line 220 of file handle_exit.c.

◆ kvm_handle_guest_debug()

static int kvm_handle_guest_debug (struct kvm_vcpu *vcpu)

kvm_handle_guest_debug - handle a debug exception instruction

@vcpu: the vcpu pointer

We route all debug exceptions through the same handler. If both the guest and host are using the same debug facilities, it is up to userspace to re-inject the correct exception for guest delivery.

Returns: 0 (while setting vcpu->run->exit_reason)

Definition at line 166 of file handle_exit.c.
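The behaviour described for kvm_handle_guest_debug (one handler for every debug exception, returning 0 after setting vcpu->run->exit_reason) can be illustrated with a small user-space sketch. All `dbg_`/`DBG_` names are hypothetical stand-ins rather than kernel definitions, and copying the syndrome into the run structure is an assumption about what userspace would need in order to re-inject the exception.

```c
#include <stdint.h>

/* Hypothetical exit reason codes; not the kernel's KVM_EXIT_* values. */
#define DBG_EXIT_UNKNOWN 0u
#define DBG_EXIT_DEBUG   1u

/* Hypothetical stand-in for the structure shared with userspace. */
struct dbg_run {
	uint32_t exit_reason;
	uint64_t debug_syndrome; /* assumption: userspace wants the raw syndrome */
};

/* Hypothetical stand-in for struct kvm_vcpu. */
struct dbg_vcpu {
	struct dbg_run *run;
	uint64_t esr;
};

/*
 * Single handler for all debug exceptions: record why the guest stopped,
 * pass along the syndrome, and return 0 so the caller hands control to
 * userspace instead of resuming the guest.
 */
static int dbg_handle_guest_debug(struct dbg_vcpu *vcpu)
{
	vcpu->run->exit_reason = DBG_EXIT_DEBUG;
	vcpu->run->debug_syndrome = vcpu->esr;
	return 0;
}
```

Under these assumptions, userspace sees DBG_EXIT_DEBUG in exit_reason after the call and can inspect debug_syndrome to decide whether the exception belongs to the guest.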

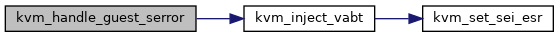

◆ kvm_handle_guest_serror()

static void kvm_handle_guest_serror (struct kvm_vcpu *vcpu, u64 esr)

Definition at line 31 of file handle_exit.c.

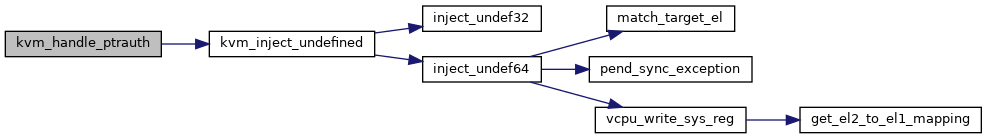

◆ kvm_handle_ptrauth()

static int kvm_handle_ptrauth (struct kvm_vcpu *vcpu)

Definition at line 214 of file handle_exit.c.

◆ kvm_handle_unknown_ec()

static int kvm_handle_unknown_ec (struct kvm_vcpu *vcpu)

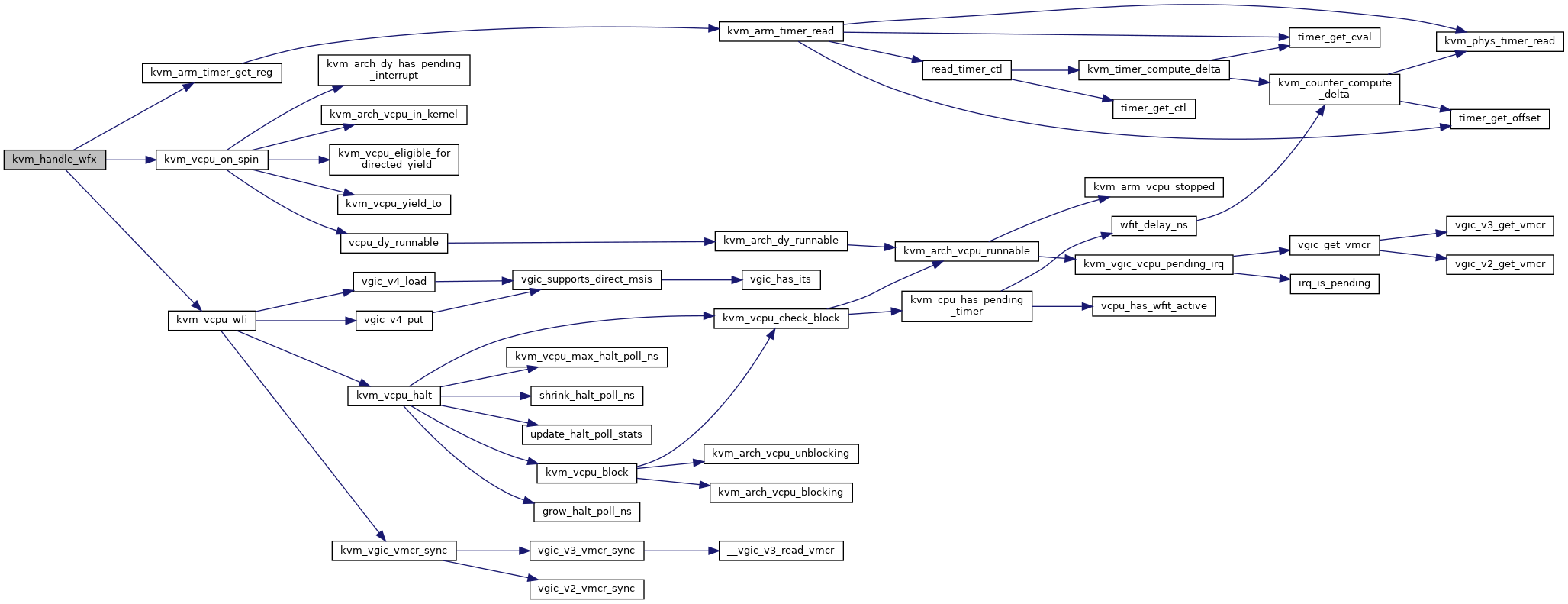

◆ kvm_handle_wfx()

static int kvm_handle_wfx (struct kvm_vcpu *vcpu)

kvm_handle_wfx - handle a wait-for-interrupt or wait-for-event instruction executed by a guest

@vcpu: the vcpu pointer

- WFE[T]: Yield the CPU and come back to this vcpu when the scheduler decides to.
- WFI: Simply call kvm_vcpu_halt(), which will halt execution of world-switches and schedule other host processes until there is an incoming IRQ or FIQ to the VM.
- WFIT: Same as WFI, with a timed wakeup implemented as a background timer.

WF{I,E}T can immediately return if the deadline has already expired.

Definition at line 114 of file handle_exit.c.
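The cases described for kvm_handle_wfx can be summarised as a small decision function. This is a hedged user-space sketch of the described policy only, not the kernel implementation: the `demo_`/`DEMO_` names are hypothetical, and treating a deadline as expired when `now >= deadline` is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical classification of the trapped instruction. */
enum demo_wfx_kind { DEMO_WFI, DEMO_WFE, DEMO_WFIT, DEMO_WFET };

/* Hypothetical outcomes mirroring the cases in the description. */
enum demo_wfx_action {
	DEMO_RETURN_NOW, /* timed variant whose deadline has already expired */
	DEMO_YIELD,      /* WFE[T]: give up the CPU until rescheduled */
	DEMO_HALT,       /* WFI: block until an interrupt arrives */
	DEMO_HALT_TIMED  /* WFIT: block, with a timer for the deadline */
};

/* Only the T variants carry a deadline. */
static bool demo_wfx_is_timed(enum demo_wfx_kind kind)
{
	return kind == DEMO_WFIT || kind == DEMO_WFET;
}

/* Decide what to do for one trapped WFx instruction. */
static enum demo_wfx_action demo_wfx_decide(enum demo_wfx_kind kind,
					     uint64_t now, uint64_t deadline)
{
	/* Assumption: "expired" means the current time has reached the deadline. */
	if (demo_wfx_is_timed(kind) && now >= deadline)
		return DEMO_RETURN_NOW;

	switch (kind) {
	case DEMO_WFE:
	case DEMO_WFET:
		return DEMO_YIELD;
	case DEMO_WFIT:
		return DEMO_HALT_TIMED;
	case DEMO_WFI:
	default:
		return DEMO_HALT;
	}
}
```

The deadline check comes first so that an expired WFIT or WFET returns immediately, matching the note that WF{I,E}T can return at once; untimed WFI and WFE ignore the deadline argument entirely.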

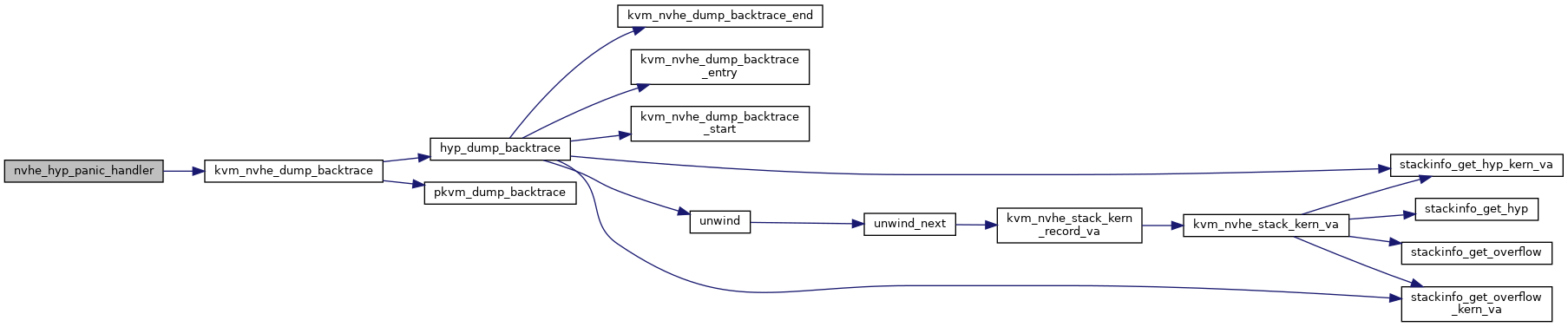

◆ nvhe_hyp_panic_handler()

void __noreturn __cold nvhe_hyp_panic_handler (u64 esr, u64 spsr, u64 elr_virt, u64 elr_phys, u64 par, uintptr_t vcpu, u64 far, u64 hpfar)

Definition at line 386 of file handle_exit.c.

Variable Documentation

◆ arm_exit_handlers

static exit_handle_fn arm_exit_handlers []

Definition at line 255 of file handle_exit.c.