#include <hyp/switch.h>

#include <hyp/sysreg-sr.h>

#include <linux/arm-smccc.h>

#include <linux/kvm_host.h>

#include <linux/types.h>

#include <linux/jump_label.h>

#include <uapi/linux/psci.h>

#include <kvm/arm_psci.h>

#include <asm/barrier.h>

#include <asm/cpufeature.h>

#include <asm/kprobes.h>

#include <asm/kvm_asm.h>

#include <asm/kvm_emulate.h>

#include <asm/kvm_hyp.h>

#include <asm/kvm_mmu.h>

#include <asm/fpsimd.h>

#include <asm/debug-monitors.h>

#include <asm/processor.h>

#include <nvhe/fixed_config.h>

#include <nvhe/mem_protect.h>

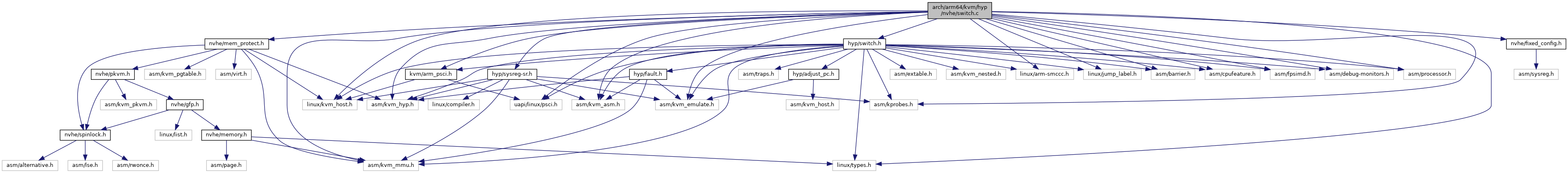

Include dependency graph for switch.c:


| Macros | |
|---|---|
| #define | __pmu_switch_to_guest(v) ({ false; }) |
| #define | __pmu_switch_to_host(v) do {} while (0) |

| Functions | |
|---|---|
| | DEFINE_PER_CPU (struct kvm_host_data, kvm_host_data) |
| | DEFINE_PER_CPU (struct kvm_cpu_context, kvm_hyp_ctxt) |
| | DEFINE_PER_CPU (unsigned long, kvm_hyp_vector) |
| void | kvm_nvhe_prepare_backtrace (unsigned long fp, unsigned long pc) |
| static void | __activate_traps (struct kvm_vcpu *vcpu) |
| static void | __deactivate_traps (struct kvm_vcpu *vcpu) |
| static void | __hyp_vgic_save_state (struct kvm_vcpu *vcpu) |
| static void | __hyp_vgic_restore_state (struct kvm_vcpu *vcpu) |
| static bool | kvm_handle_pvm_sys64 (struct kvm_vcpu *vcpu, u64 *exit_code) |
| static const exit_handler_fn * | kvm_get_exit_handler_array (struct kvm_vcpu *vcpu) |
| static void | early_exit_filter (struct kvm_vcpu *vcpu, u64 *exit_code) |
| int | __kvm_vcpu_run (struct kvm_vcpu *vcpu) |
| asmlinkage void __noreturn | hyp_panic (void) |
| asmlinkage void __noreturn | hyp_panic_bad_stack (void) |
| asmlinkage void | kvm_unexpected_el2_exception (void) |

| Variables | |
|---|---|
| static const exit_handler_fn | hyp_exit_handlers [] |
| static const exit_handler_fn | pvm_exit_handlers [] |

Macro Definition Documentation

◆ __pmu_switch_to_guest

◆ __pmu_switch_to_host
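With the definitions listed in the Macros table, both PMU hooks are stubs: __pmu_switch_to_guest(v) is a GNU statement expression that always evaluates to false, and __pmu_switch_to_host(v) expands to an empty statement, so neither uses its argument. The sketch below assumes a caller that stores the guest-side result and calls the host-side hook only when that result was true; the fake_vcpu type and the run_once() helper are hypothetical. It needs GCC or Clang, because statement expressions are a compiler extension.

```c
#include <assert.h>
#include <stdbool.h>

/* The two stub macros exactly as listed in the Macros table. */
#define __pmu_switch_to_guest(v) ({ false; })
#define __pmu_switch_to_host(v) do {} while (0)

/* Hypothetical stand-in for struct kvm_vcpu; only passed as an argument. */
struct fake_vcpu {
	int id;
};

/* Hypothetical caller: returns whether a host-side PMU switch was needed. */
static bool run_once(struct fake_vcpu *vcpu)
{
	bool pmu_switch_needed;

	/* Statement expression (GCC/Clang extension): always yields false. */
	pmu_switch_needed = __pmu_switch_to_guest(vcpu);

	/* ... the guest would run here ... */

	if (pmu_switch_needed)
		__pmu_switch_to_host(vcpu); /* expands to an empty statement */

	return pmu_switch_needed;
}
```

Because the guest-side hook is a constant false, a compiler can drop the host-side branch entirely.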

Function Documentation

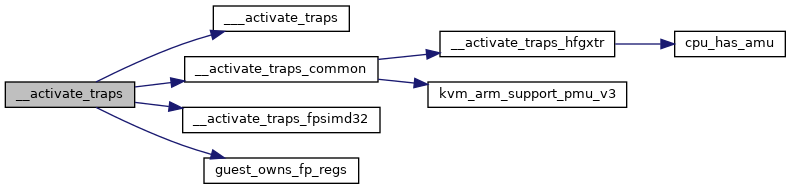

◆ __activate_traps()

static void __activate_traps(struct kvm_vcpu *vcpu)

Definition at line 39 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| static void __activate_traps_common(struct kvm_vcpu *vcpu) | switch.h:207 |
| static void __activate_traps_fpsimd32(struct kvm_vcpu *vcpu) | switch.h:57 |

Here is the call graph for this function:

Here is the caller graph for this function:

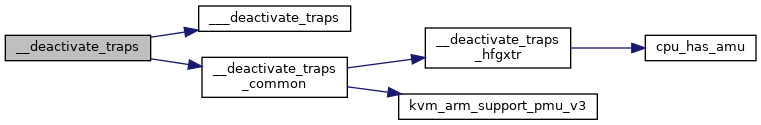

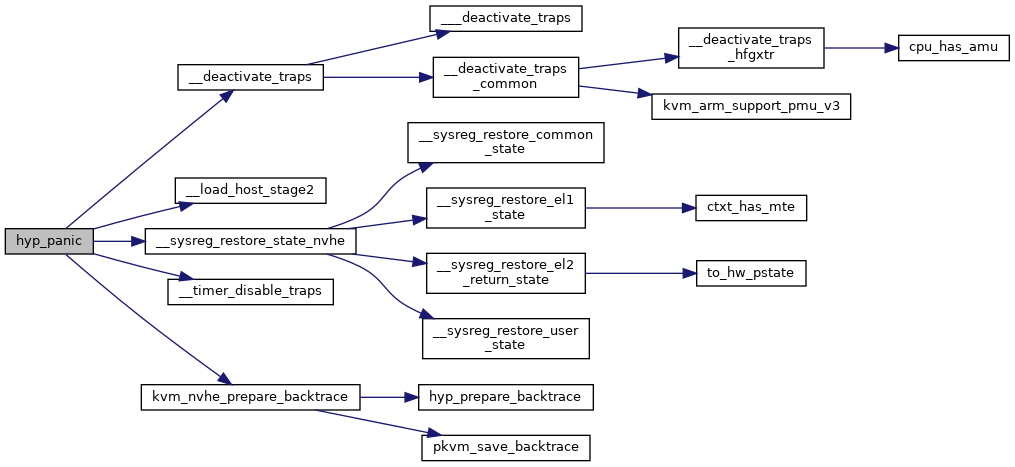

◆ __deactivate_traps()

static void __deactivate_traps(struct kvm_vcpu *vcpu)

Definition at line 84 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| static void __deactivate_traps_common(struct kvm_vcpu *vcpu) | switch.h:249 |

Here is the call graph for this function:

Here is the caller graph for this function:

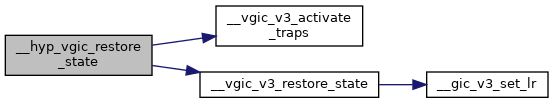

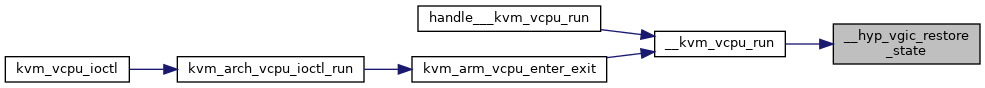

◆ __hyp_vgic_restore_state()

static void __hyp_vgic_restore_state(struct kvm_vcpu *vcpu)

Definition at line 125 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| struct vgic_global kvm_vgic_global_state | |
| void __vgic_v3_restore_state(struct vgic_v3_cpu_if *cpu_if) | vgic-v3-sr.c:234 |
| void __vgic_v3_activate_traps(struct vgic_v3_cpu_if *cpu_if) | vgic-v3-sr.c:260 |

Here is the call graph for this function:

Here is the caller graph for this function:

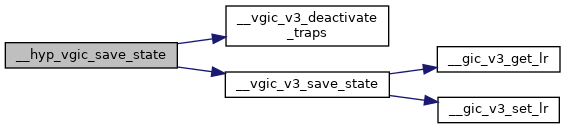

◆ __hyp_vgic_save_state()

static void __hyp_vgic_save_state(struct kvm_vcpu *vcpu)

Definition at line 116 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| void __vgic_v3_save_state(struct vgic_v3_cpu_if *cpu_if) | vgic-v3-sr.c:199 |
| void __vgic_v3_deactivate_traps(struct vgic_v3_cpu_if *cpu_if) | vgic-v3-sr.c:307 |

Here is the call graph for this function:

Here is the caller graph for this function:

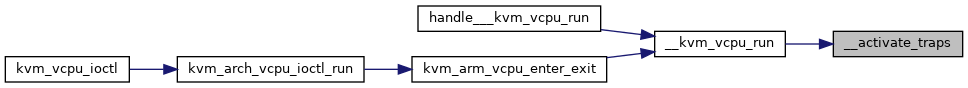

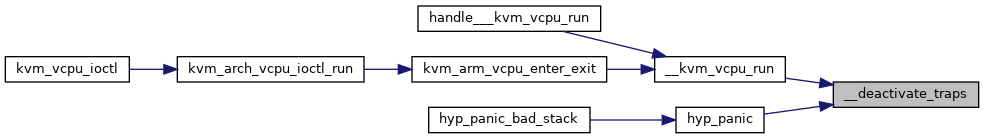

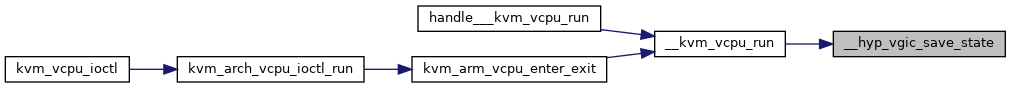

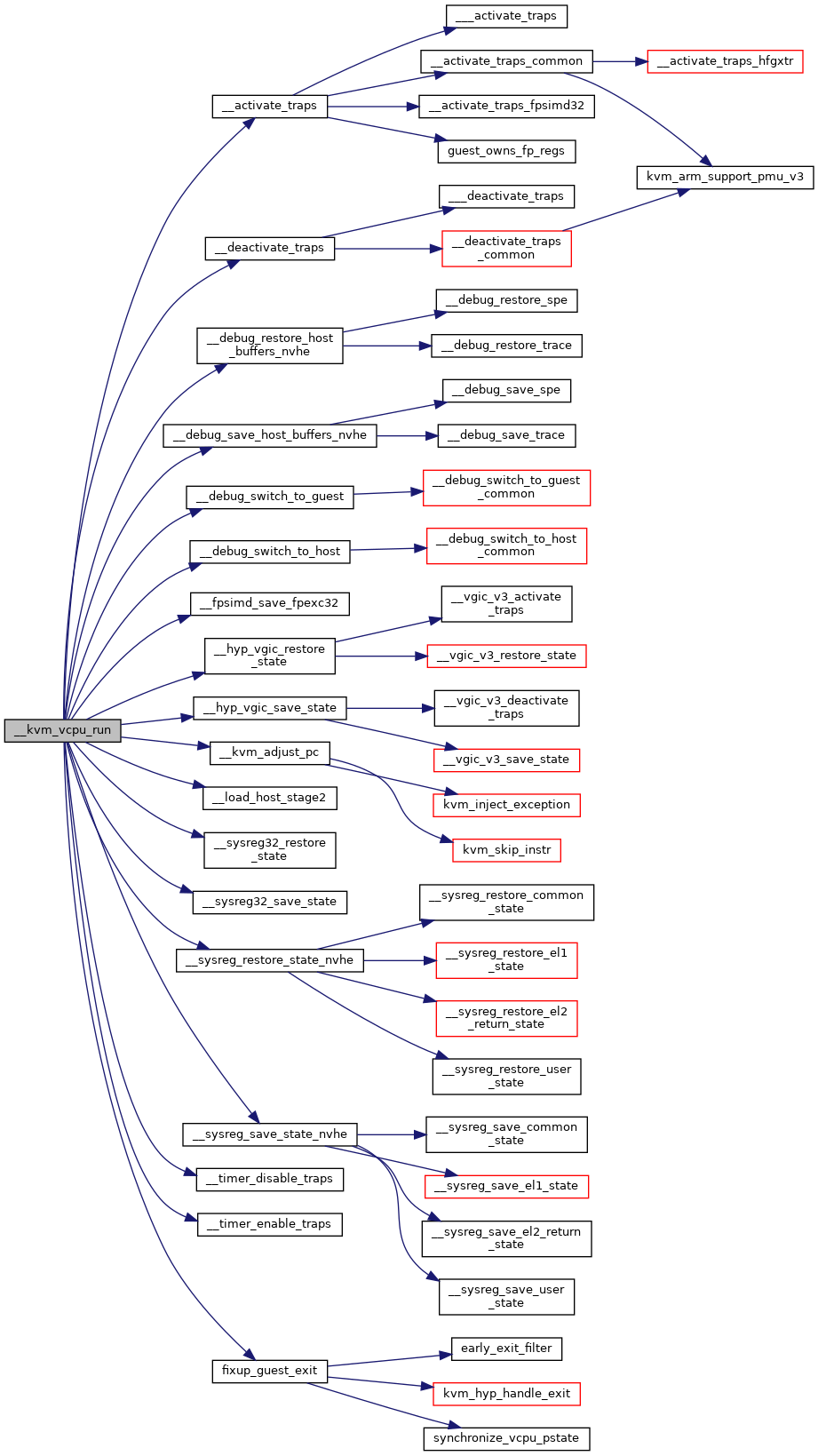

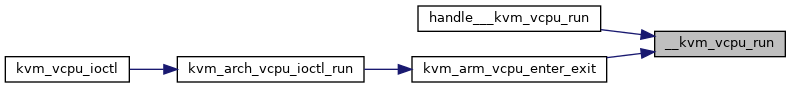
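The symbols referenced by the two vGIC helpers (kvm_vgic_global_state plus four vgic-v3-sr.c routines) suggest that both are gated on a global GICv3 capability and that they mirror each other. The sketch below assumes that restore activates the GICv3 traps before loading the guest state, while save stores the state before deactivating the traps. The gicv3_cpuif flag, the stub routines and the call log are hypothetical and exist only to record the order.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical stand-in for the GICv3 capability in kvm_vgic_global_state. */
static bool gicv3_cpuif = true;

/* Call log used to check the ordering. */
static const char *calls[8];
static int ncalls;

static void record(const char *name)
{
	assert(ncalls < 8);
	calls[ncalls++] = name;
}

static bool call_is(int i, const char *name)
{
	return i < ncalls && strcmp(calls[i], name) == 0;
}

/* Stubs standing in for the vgic-v3-sr.c routines. */
static void vgic_v3_save_state(void)       { record("save_state"); }
static void vgic_v3_deactivate_traps(void) { record("deactivate_traps"); }
static void vgic_v3_activate_traps(void)   { record("activate_traps"); }
static void vgic_v3_restore_state(void)    { record("restore_state"); }

/* Leaving the guest: save state first, then drop the traps. */
static void hyp_vgic_save_state(void)
{
	if (gicv3_cpuif) {
		vgic_v3_save_state();
		vgic_v3_deactivate_traps();
	}
}

/* Entering the guest: install the traps first, then load state. */
static void hyp_vgic_restore_state(void)
{
	if (gicv3_cpuif) {
		vgic_v3_activate_traps();
		vgic_v3_restore_state();
	}
}
```

Under this assumption the two helpers unwind each other like a stack, and both become no-ops when GICv3 system-register support is absent.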

◆ __kvm_vcpu_run()

int __kvm_vcpu_run(struct kvm_vcpu *vcpu)

Definition at line 248 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| void __debug_restore_host_buffers_nvhe(struct kvm_vcpu *vcpu) | debug-sr.c:97 |
| void __debug_save_host_buffers_nvhe(struct kvm_vcpu *vcpu) | debug-sr.c:82 |
| static void __hyp_vgic_restore_state(struct kvm_vcpu *vcpu) | switch.c:125 |
| static void __hyp_vgic_save_state(struct kvm_vcpu *vcpu) | switch.c:116 |
| void __sysreg_restore_state_nvhe(struct kvm_cpu_context *ctxt) | sysreg-sr.c:29 |
| void __sysreg_save_state_nvhe(struct kvm_cpu_context *ctxt) | sysreg-sr.c:21 |
| static bool fixup_guest_exit(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:693 |
| static void __fpsimd_save_fpexc32(struct kvm_vcpu *vcpu) | switch.h:49 |
| static void __sysreg32_restore_state(struct kvm_vcpu *vcpu) | sysreg-sr.h:229 |
| static void __sysreg32_save_state(struct kvm_vcpu *vcpu) | sysreg-sr.h:212 |

Here is the call graph for this function:

Here is the caller graph for this function:
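The symbols referenced by __kvm_vcpu_run outline a world switch: save the host context, install the guest context, run the guest, then undo everything in reverse. That fixup_guest_exit() returns bool suggests a loop that re-enters the guest for as long as an exit can be handled at EL2. The sketch below models only that control flow under this assumption; vcpu_sketch, guest_enter(), fixup_guest_exit() and the trap helpers are hypothetical stand-ins, and context save/restore appears only as comments.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical vCPU: a count of exits that can be fixed up in place. */
struct vcpu_sketch {
	int exits_left;    /* exits the fixup path will still absorb */
	int entries;       /* number of guest entries performed */
	bool traps_active;
};

static void activate_traps(struct vcpu_sketch *v)   { v->traps_active = true; }
static void deactivate_traps(struct vcpu_sketch *v) { v->traps_active = false; }

/* Stand-in for entering the guest; returns a fake exit code. */
static unsigned long guest_enter(struct vcpu_sketch *v)
{
	assert(v->traps_active); /* the guest only runs with traps configured */
	v->entries++;
	return 0x1;
}

/* Stand-in for fixup_guest_exit(): true means "handled, re-enter guest". */
static bool fixup_guest_exit(struct vcpu_sketch *v, unsigned long *exit_code)
{
	(void)exit_code;
	if (v->exits_left > 0) {
		v->exits_left--;
		return true;
	}
	return false;
}

static unsigned long vcpu_run_sketch(struct vcpu_sketch *v)
{
	unsigned long exit_code;

	/* Host context would be saved and guest context restored here. */
	activate_traps(v);

	do {
		exit_code = guest_enter(v);
	} while (fixup_guest_exit(v, &exit_code));

	/* Guest context would be saved and host context restored here. */
	deactivate_traps(v);
	return exit_code;
}
```

Exits that the fixup path absorbs never reach the host; only the final exit code is returned to the caller.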

◆ DEFINE_PER_CPU() [1/3]

DEFINE_PER_CPU(struct kvm_cpu_context, kvm_hyp_ctxt)

◆ DEFINE_PER_CPU() [2/3]

DEFINE_PER_CPU(struct kvm_host_data, kvm_host_data)

◆ DEFINE_PER_CPU() [3/3]

DEFINE_PER_CPU(unsigned long, kvm_hyp_vector)
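DEFINE_PER_CPU gives every CPU its own instance of a variable. The kernel keeps such variables in a dedicated per-CPU area rather than in an ordinary array, so the sketch below is only a conceptual model of kvm_hyp_vector: the array, NR_CPUS_SKETCH and hyp_vector_slot() are hypothetical, with hyp_vector_slot() standing in for kernel accessors such as per_cpu().

```c
#include <assert.h>

/* Hypothetical CPU count for the sketch. */
#define NR_CPUS_SKETCH 4

/*
 * Conceptual model only: one slot per CPU, mirroring
 * DEFINE_PER_CPU(unsigned long, kvm_hyp_vector).
 */
static unsigned long kvm_hyp_vector_sketch[NR_CPUS_SKETCH];

/* Hypothetical accessor standing in for per_cpu(kvm_hyp_vector, cpu). */
static unsigned long *hyp_vector_slot(unsigned int cpu)
{
	assert(cpu < NR_CPUS_SKETCH);
	return &kvm_hyp_vector_sketch[cpu];
}
```

Writes through one CPU's slot never affect another CPU's copy, which is the property the per-CPU definitions rely on.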

◆ early_exit_filter()

static void early_exit_filter(struct kvm_vcpu *vcpu, u64 *exit_code)

◆ hyp_panic()

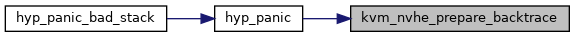

asmlinkage void __noreturn hyp_panic(void)

Definition at line 362 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| void kvm_nvhe_prepare_backtrace(unsigned long fp, unsigned long pc) | stacktrace.c:152 |

Here is the call graph for this function:

Here is the caller graph for this function:

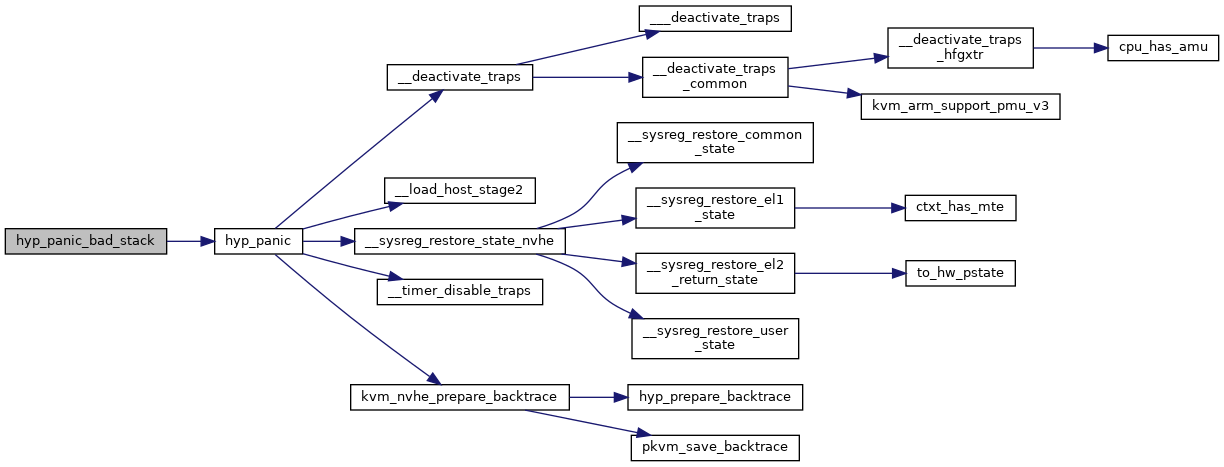

◆ hyp_panic_bad_stack()

asmlinkage void __noreturn hyp_panic_bad_stack(void)

◆ kvm_get_exit_handler_array()

static const exit_handler_fn *kvm_get_exit_handler_array(struct kvm_vcpu *vcpu)

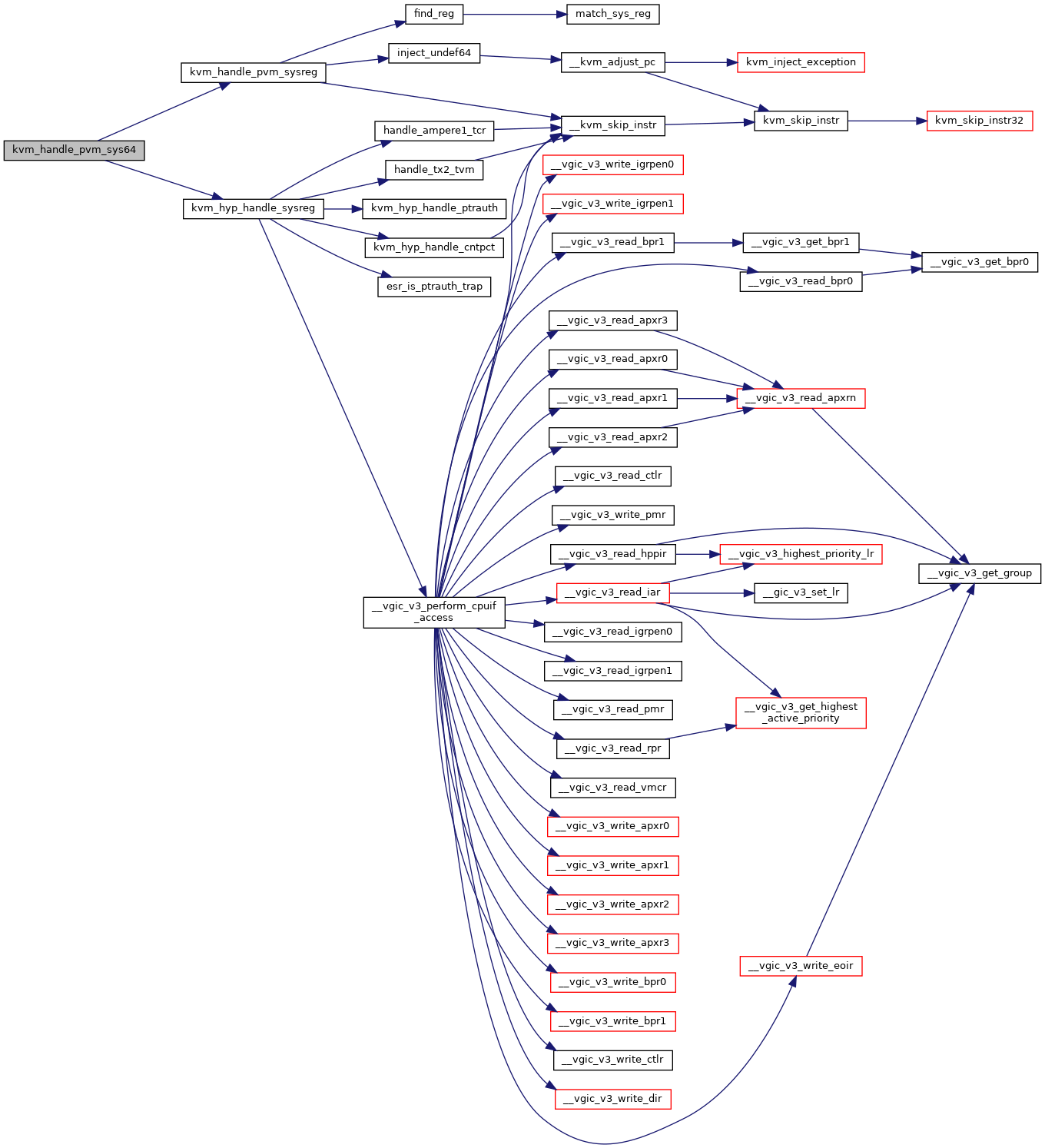

◆ kvm_handle_pvm_sys64()

static bool kvm_handle_pvm_sys64(struct kvm_vcpu *vcpu, u64 *exit_code)

Definition at line 174 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| bool kvm_handle_pvm_sysreg(struct kvm_vcpu *vcpu, u64 *exit_code) | sys_regs.c:474 |
| static bool kvm_hyp_handle_sysreg(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:573 |

Here is the call graph for this function:

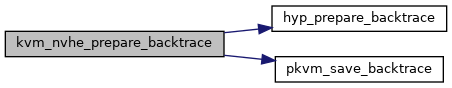
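kvm_handle_pvm_sys64 references both kvm_hyp_handle_sysreg (switch.h) and kvm_handle_pvm_sysreg (sys_regs.c). A minimal sketch, assuming it tries the generic EL2 system-register handler first and falls back to the protected-VM handler through a short-circuiting ||. Both handler bodies are hypothetical and simply claim one made-up register number each.

```c
#include <assert.h>
#include <stdbool.h>

typedef unsigned long long u64;

/* Hypothetical vCPU carrying only the trapped system-register number. */
struct vcpu_sketch {
	unsigned int sysreg;
	int handled_by; /* 0 = none, 1 = generic, 2 = pVM-specific */
};

/* Stand-in for the generic EL2 fast path: claims only register 1. */
static bool generic_sysreg(struct vcpu_sketch *v, u64 *exit_code)
{
	(void)exit_code;
	if (v->sysreg != 1)
		return false;
	v->handled_by = 1;
	return true;
}

/* Stand-in for the protected-VM handler: claims only register 2. */
static bool pvm_sysreg(struct vcpu_sketch *v, u64 *exit_code)
{
	(void)exit_code;
	if (v->sysreg != 2)
		return false;
	v->handled_by = 2;
	return true;
}

/* The fallback is only consulted when the generic handler declines. */
static bool handle_pvm_sys64(struct vcpu_sketch *v, u64 *exit_code)
{
	return generic_sysreg(v, exit_code) || pvm_sysreg(v, exit_code);
}
```

A false result from both handlers would leave the exit for the host, matching the bool convention shared by every exit_handler_fn.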

◆ kvm_nvhe_prepare_backtrace()

void kvm_nvhe_prepare_backtrace(unsigned long fp, unsigned long pc)

Definition at line 152 of file stacktrace.c.

| Referenced symbol | Definition |
|---|---|
| static void pkvm_save_backtrace(unsigned long fp, unsigned long pc) | stacktrace.c:138 |
| static void hyp_prepare_backtrace(unsigned long fp, unsigned long pc) | stacktrace.c:26 |

Here is the call graph for this function:

Here is the caller graph for this function:

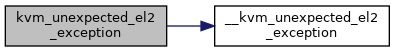
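kvm_nvhe_prepare_backtrace references two backends, pkvm_save_backtrace and hyp_prepare_backtrace. The sketch below assumes it picks one depending on whether protected KVM is enabled, presumably because in protected mode the host cannot read hypervisor memory, so the hypervisor has to save the trace itself. The protected_mode flag, the recorded globals and the _sketch functions are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for "is protected KVM enabled?". */
static bool protected_mode;

/* Records which backend ran and the frame it received. */
static int backend_used; /* 1 = pkvm_save, 2 = hyp_prepare */
static unsigned long last_fp, last_pc;

static void pkvm_save_backtrace_sketch(unsigned long fp, unsigned long pc)
{
	backend_used = 1;
	last_fp = fp;
	last_pc = pc;
}

static void hyp_prepare_backtrace_sketch(unsigned long fp, unsigned long pc)
{
	backend_used = 2;
	last_fp = fp;
	last_pc = pc;
}

/* Dispatch on the mode; both backends receive the same fp/pc pair. */
static void prepare_backtrace_sketch(unsigned long fp, unsigned long pc)
{
	if (protected_mode)
		pkvm_save_backtrace_sketch(fp, pc);
	else
		hyp_prepare_backtrace_sketch(fp, pc);
}
```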

◆ kvm_unexpected_el2_exception()

asmlinkage void kvm_unexpected_el2_exception(void)

Definition at line 393 of file switch.c.

| Referenced symbol | Definition |
|---|---|
| static void __kvm_unexpected_el2_exception(void) | switch.h:748 |

Here is the call graph for this function:

Variable Documentation

◆ hyp_exit_handlers

static const exit_handler_fn hyp_exit_handlers[]

Initial value:

= {

[0 ... ESR_ELx_EC_MAX] = NULL,

[ESR_ELx_EC_CP15_32] = kvm_hyp_handle_cp15_32,

[ESR_ELx_EC_SYS64] = kvm_hyp_handle_sysreg,

[ESR_ELx_EC_SVE] = kvm_hyp_handle_fpsimd,

[ESR_ELx_EC_FP_ASIMD] = kvm_hyp_handle_fpsimd,

[ESR_ELx_EC_IABT_LOW] = kvm_hyp_handle_iabt_low,

[ESR_ELx_EC_DABT_LOW] = kvm_hyp_handle_dabt_low,

[ESR_ELx_EC_WATCHPT_LOW] = kvm_hyp_handle_watchpt_low,

[ESR_ELx_EC_PAC] = kvm_hyp_handle_ptrauth,

[ESR_ELx_EC_MOPS] = kvm_hyp_handle_mops,

}

| Referenced symbol | Definition |
|---|---|
| static bool kvm_hyp_handle_iabt_low(struct kvm_vcpu *vcpu, u64 *exit_code) __alias(kvm_hyp_handle_memory_fault) | |
| static bool kvm_hyp_handle_fpsimd(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:330 |
| static bool kvm_hyp_handle_mops(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:300 |
| static bool kvm_hyp_handle_ptrauth(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:476 |
| static bool kvm_hyp_handle_dabt_low(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:617 |
| static bool kvm_hyp_handle_cp15_32(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.h:596 |
| static bool kvm_hyp_handle_watchpt_low(struct kvm_vcpu *vcpu, u64 *exit_code) __alias(kvm_hyp_handle_memory_fault) | |
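hyp_exit_handlers is a table indexed by exception class: the range designator [0 ... ESR_ELx_EC_MAX] = NULL (a GNU C extension) clears every slot, and later designators override individual classes. The sketch below assumes the dispatcher extracts the EC field (bits [31:26] of ESR_ELx), calls the matching handler if one exists, and treats a NULL slot or a false return as "exit to the host". Only two classes are modelled (0x18 is SYS64 and 0x24 is DABT_LOW in the Arm ESR encoding); vcpu_sketch, the handler bodies and dispatch_exit() are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef unsigned long long u64;

#define EC_SHIFT    26   /* ESR_ELx.EC occupies bits [31:26] */
#define EC_MAX      0x3f
#define EC_SYS64    0x18
#define EC_DABT_LOW 0x24

struct vcpu_sketch {
	u64 esr;     /* syndrome of the last exit */
	int handled; /* exits fixed up at EL2 */
};

typedef bool (*exit_handler_fn)(struct vcpu_sketch *, u64 *);

static bool handle_sysreg(struct vcpu_sketch *v, u64 *exit_code)
{
	(void)exit_code;
	v->handled++;
	return true;  /* pretend the access was emulated in place */
}

static bool handle_dabt_low(struct vcpu_sketch *v, u64 *exit_code)
{
	(void)v;
	(void)exit_code;
	return false; /* pretend this abort needs the host */
}

/* Every class defaults to NULL; selected classes get a handler. */
static const exit_handler_fn handlers[] = {
	[0 ... EC_MAX] = NULL,
	[EC_SYS64]     = handle_sysreg,
	[EC_DABT_LOW]  = handle_dabt_low,
};

/* True: handled, the guest can be re-entered. False: go to the host. */
static bool dispatch_exit(struct vcpu_sketch *v, u64 *exit_code)
{
	unsigned int ec = (v->esr >> EC_SHIFT) & EC_MAX;
	exit_handler_fn fn = handlers[ec];

	if (fn)
		return fn(v, exit_code);
	return false;
}
```

Sizing the array to EC_MAX + 1 entries means any 6-bit exception class is a valid index, so the lookup needs no bounds check.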

◆ pvm_exit_handlers

static const exit_handler_fn pvm_exit_handlers[]

Initial value:

= {

[0 ... ESR_ELx_EC_MAX] = NULL,

[ESR_ELx_EC_SYS64] = kvm_handle_pvm_sys64,

[ESR_ELx_EC_SVE] = kvm_handle_pvm_restricted,

[ESR_ELx_EC_FP_ASIMD] = kvm_hyp_handle_fpsimd,

[ESR_ELx_EC_IABT_LOW] = kvm_hyp_handle_iabt_low,

[ESR_ELx_EC_DABT_LOW] = kvm_hyp_handle_dabt_low,

[ESR_ELx_EC_WATCHPT_LOW] = kvm_hyp_handle_watchpt_low,

[ESR_ELx_EC_PAC] = kvm_hyp_handle_ptrauth,

[ESR_ELx_EC_MOPS] = kvm_hyp_handle_mops,

}

| Referenced symbol | Definition |
|---|---|
| bool kvm_handle_pvm_restricted(struct kvm_vcpu *vcpu, u64 *exit_code) | sys_regs.c:512 |
| static bool kvm_handle_pvm_sys64(struct kvm_vcpu *vcpu, u64 *exit_code) | switch.c:174 |
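Comparing the two initializers, pvm_exit_handlers has no ESR_ELx_EC_CP15_32 entry, routes ESR_ELx_EC_SYS64 to kvm_handle_pvm_sys64, and routes ESR_ELx_EC_SVE to kvm_handle_pvm_restricted instead of kvm_hyp_handle_fpsimd. kvm_get_exit_handler_array chooses between the tables per vCPU; the sketch below assumes the choice depends only on whether the vCPU belongs to a protected VM. Handler signatures are simplified to bool (void), and all handlers, tables and the is_protected field are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define EC_MAX     0x3f
#define EC_CP15_32 0x03
#define EC_SYS64   0x18

/* Simplified handler type; the real one takes (vcpu, exit_code). */
typedef bool (*exit_handler_fn)(void);

static bool hyp_cp15_32(void) { return true; }
static bool hyp_sysreg(void)  { return true; }
static bool pvm_sys64(void)   { return true; }

/* Non-protected table: AArch32 CP15 traps have a handler. */
static const exit_handler_fn hyp_table[] = {
	[0 ... EC_MAX] = NULL,
	[EC_CP15_32]   = hyp_cp15_32,
	[EC_SYS64]     = hyp_sysreg,
};

/* Protected table: no CP15_32 entry, SYS64 goes to the pVM handler. */
static const exit_handler_fn pvm_table[] = {
	[0 ... EC_MAX] = NULL,
	[EC_SYS64]     = pvm_sys64,
};

struct vcpu_sketch {
	bool is_protected; /* hypothetical stand-in for the pVM check */
};

static const exit_handler_fn *get_exit_handler_array(const struct vcpu_sketch *v)
{
	return v->is_protected ? pvm_table : hyp_table;
}
```

With a NULL slot, a CP15_32 trap from a protected vCPU is never handled at EL2 in this model and falls through to the host.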