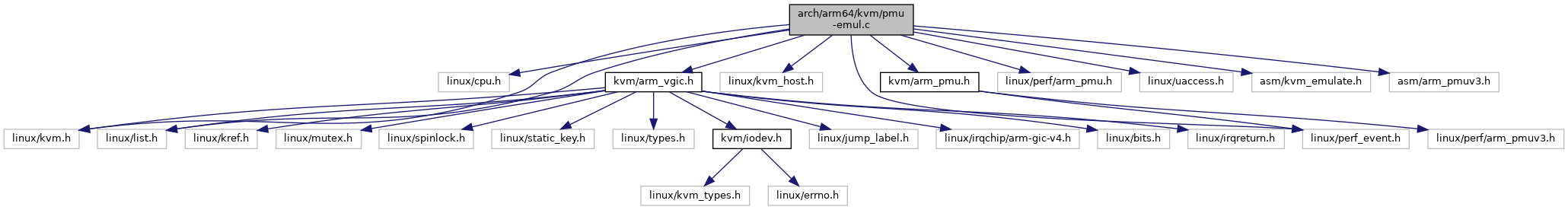

#include <linux/cpu.h>
#include <linux/kvm.h>
#include <linux/kvm_host.h>
#include <linux/list.h>
#include <linux/perf_event.h>
#include <linux/perf/arm_pmu.h>
#include <linux/uaccess.h>
#include <asm/kvm_emulate.h>
#include <kvm/arm_pmu.h>
#include <kvm/arm_vgic.h>
#include <asm/arm_pmuv3.h>

| Macros | |
|---|---|
| #define | PERF_ATTR_CFG1_COUNTER_64BIT BIT(0) |

Functions | |

| DEFINE_STATIC_KEY_FALSE (kvm_arm_pmu_available) | |

| static | LIST_HEAD (arm_pmus) |

| static | DEFINE_MUTEX (arm_pmus_lock) |

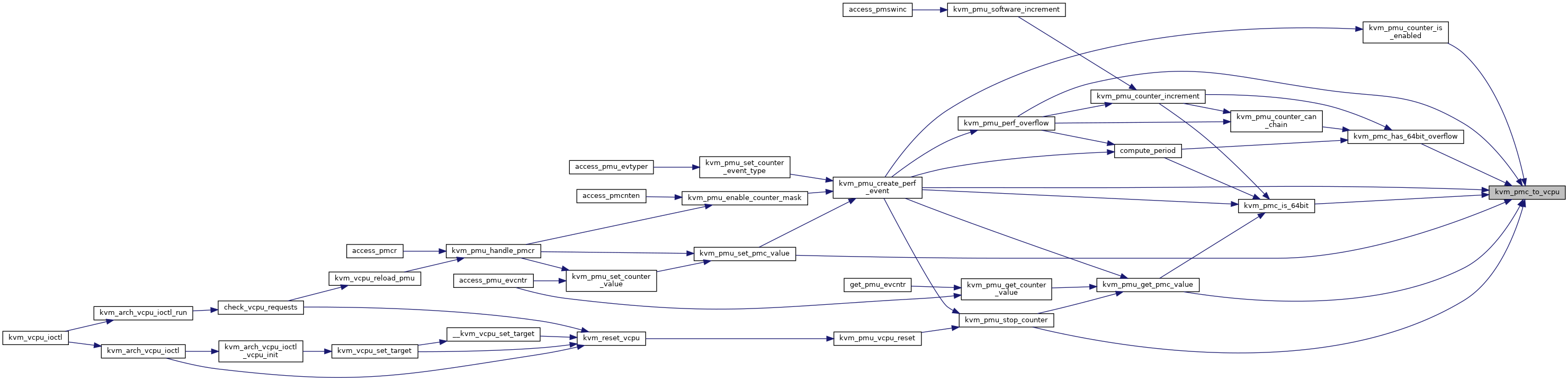

| static void | kvm_pmu_create_perf_event (struct kvm_pmc *pmc) |

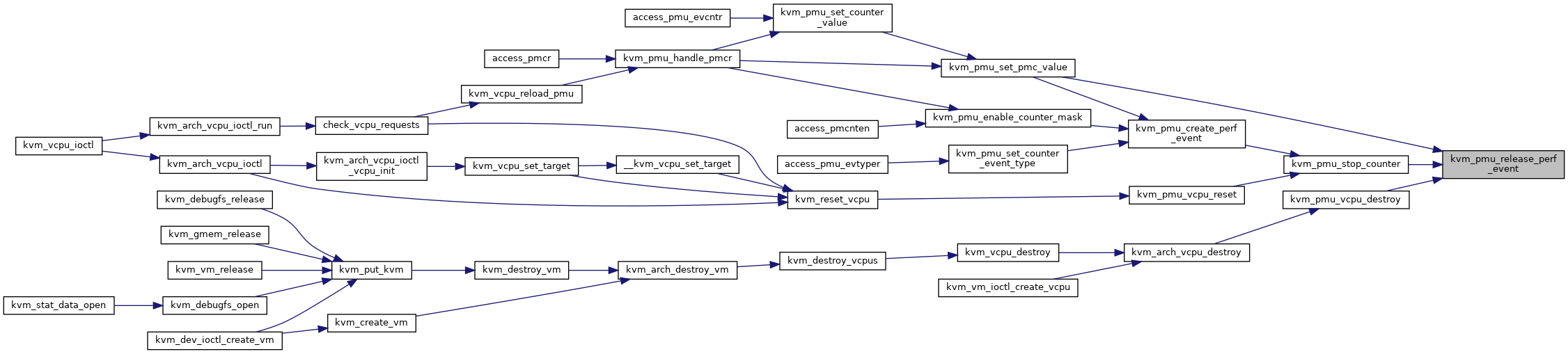

| static void | kvm_pmu_release_perf_event (struct kvm_pmc *pmc) |

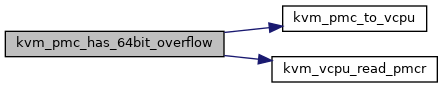

| static struct kvm_vcpu * | kvm_pmc_to_vcpu (const struct kvm_pmc *pmc) |

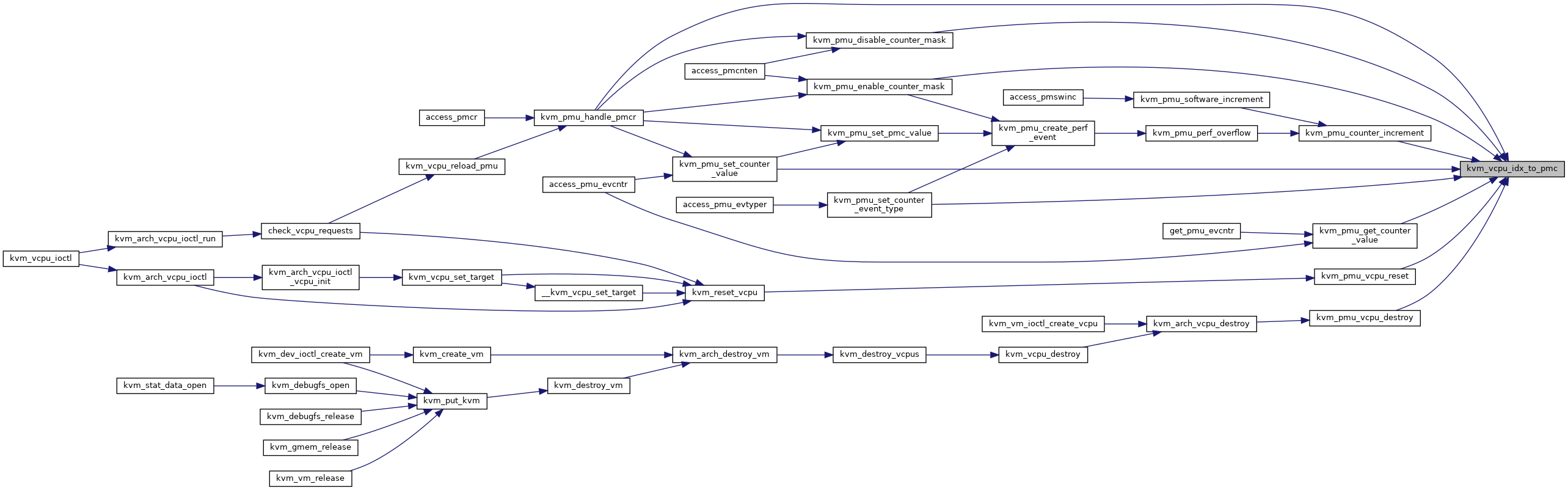

| static struct kvm_pmc * | kvm_vcpu_idx_to_pmc (struct kvm_vcpu *vcpu, int cnt_idx) |

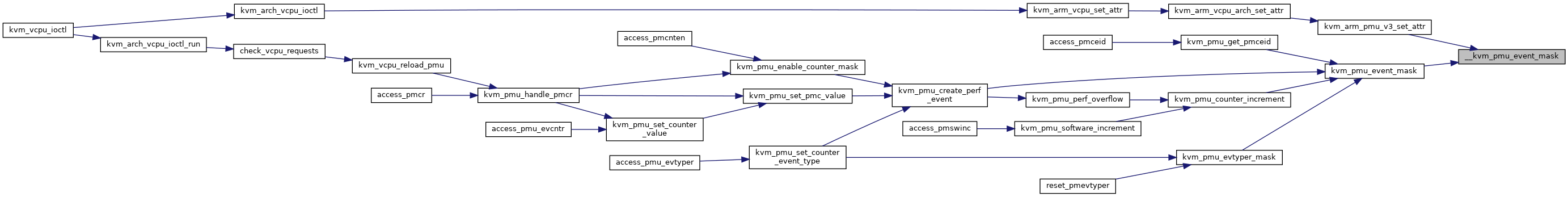

| static u32 | __kvm_pmu_event_mask (unsigned int pmuver) |

| static u32 | kvm_pmu_event_mask (struct kvm *kvm) |

| u64 | kvm_pmu_evtyper_mask (struct kvm *kvm) |

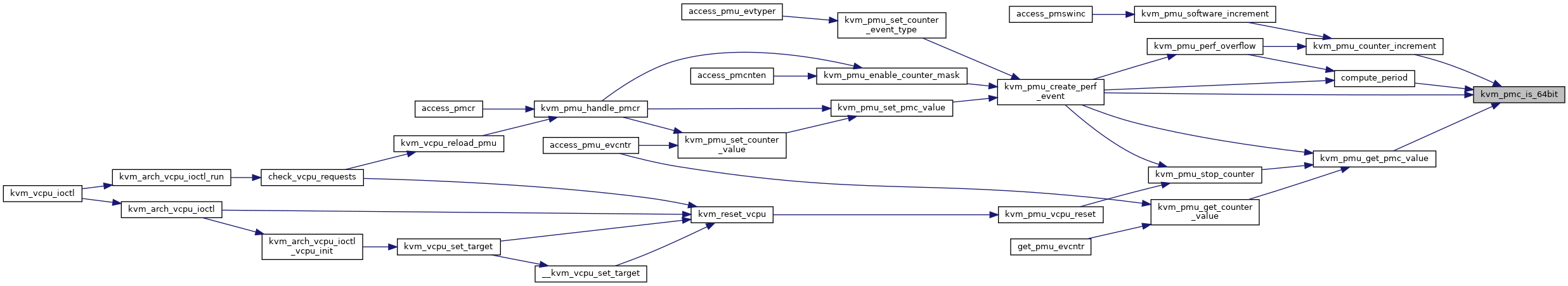

| static bool | kvm_pmc_is_64bit (struct kvm_pmc *pmc) |

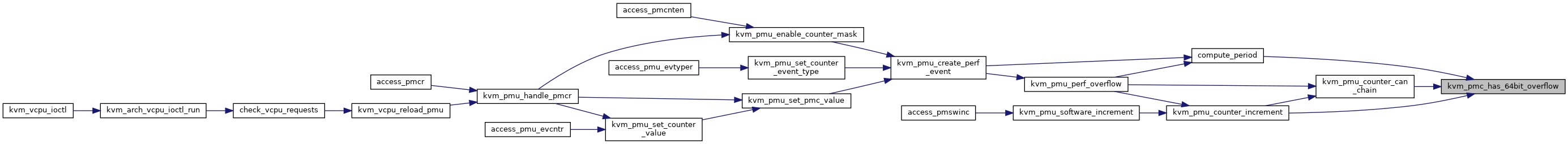

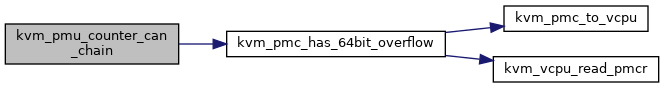

| static bool | kvm_pmc_has_64bit_overflow (struct kvm_pmc *pmc) |

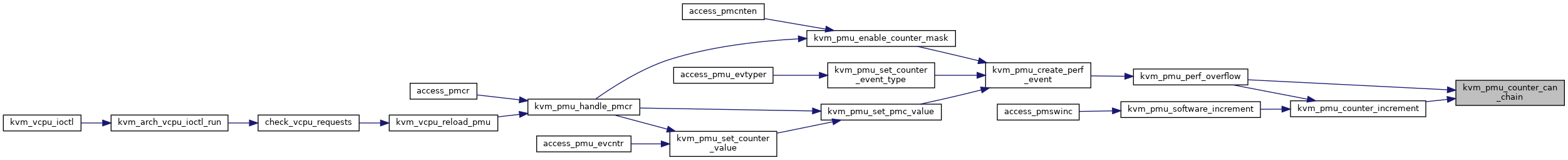

| static bool | kvm_pmu_counter_can_chain (struct kvm_pmc *pmc) |

| static u32 | counter_index_to_reg (u64 idx) |

| static u32 | counter_index_to_evtreg (u64 idx) |

| static u64 | kvm_pmu_get_pmc_value (struct kvm_pmc *pmc) |

| u64 | kvm_pmu_get_counter_value (struct kvm_vcpu *vcpu, u64 select_idx) |

| static void | kvm_pmu_set_pmc_value (struct kvm_pmc *pmc, u64 val, bool force) |

| void | kvm_pmu_set_counter_value (struct kvm_vcpu *vcpu, u64 select_idx, u64 val) |

| static void | kvm_pmu_stop_counter (struct kvm_pmc *pmc) |

| void | kvm_pmu_vcpu_init (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_vcpu_reset (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_vcpu_destroy (struct kvm_vcpu *vcpu) |

| u64 | kvm_pmu_valid_counter_mask (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_enable_counter_mask (struct kvm_vcpu *vcpu, u64 val) |

| void | kvm_pmu_disable_counter_mask (struct kvm_vcpu *vcpu, u64 val) |

| static u64 | kvm_pmu_overflow_status (struct kvm_vcpu *vcpu) |

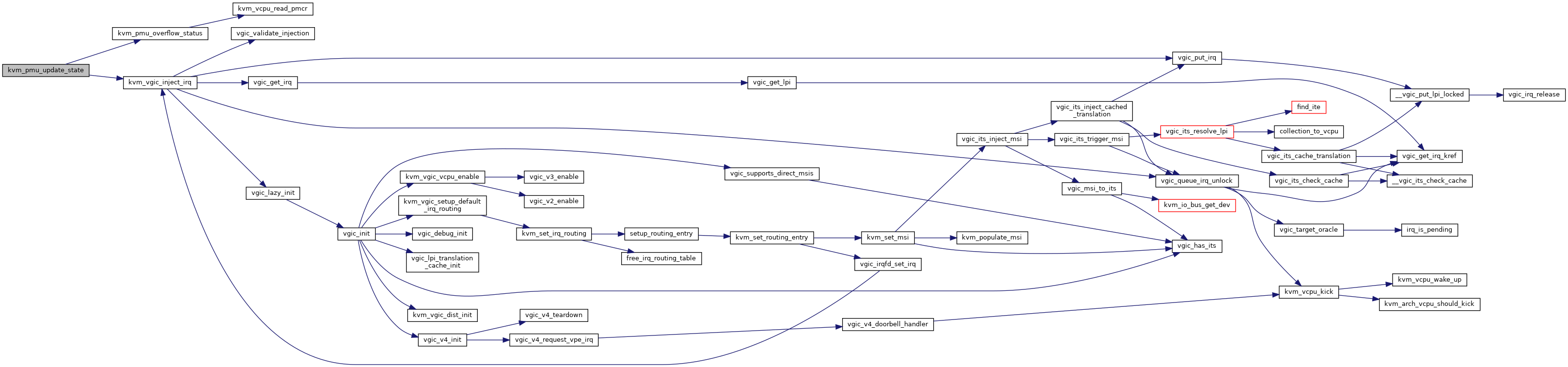

| static void | kvm_pmu_update_state (struct kvm_vcpu *vcpu) |

| bool | kvm_pmu_should_notify_user (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_update_run (struct kvm_vcpu *vcpu) |

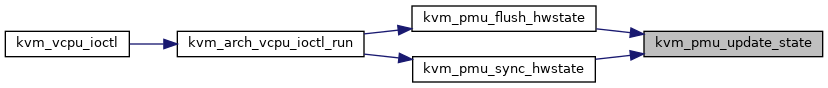

| void | kvm_pmu_flush_hwstate (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_sync_hwstate (struct kvm_vcpu *vcpu) |

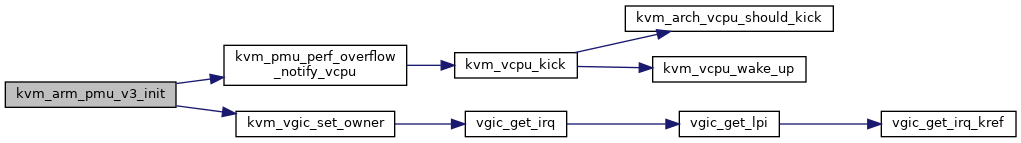

| static void | kvm_pmu_perf_overflow_notify_vcpu (struct irq_work *work) |

| static void | kvm_pmu_counter_increment (struct kvm_vcpu *vcpu, unsigned long mask, u32 event) |

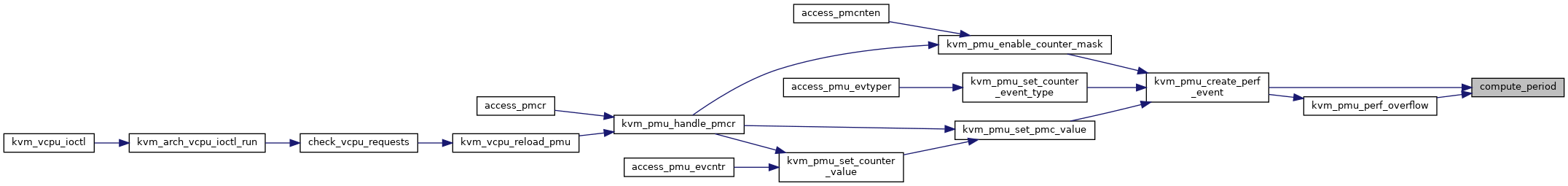

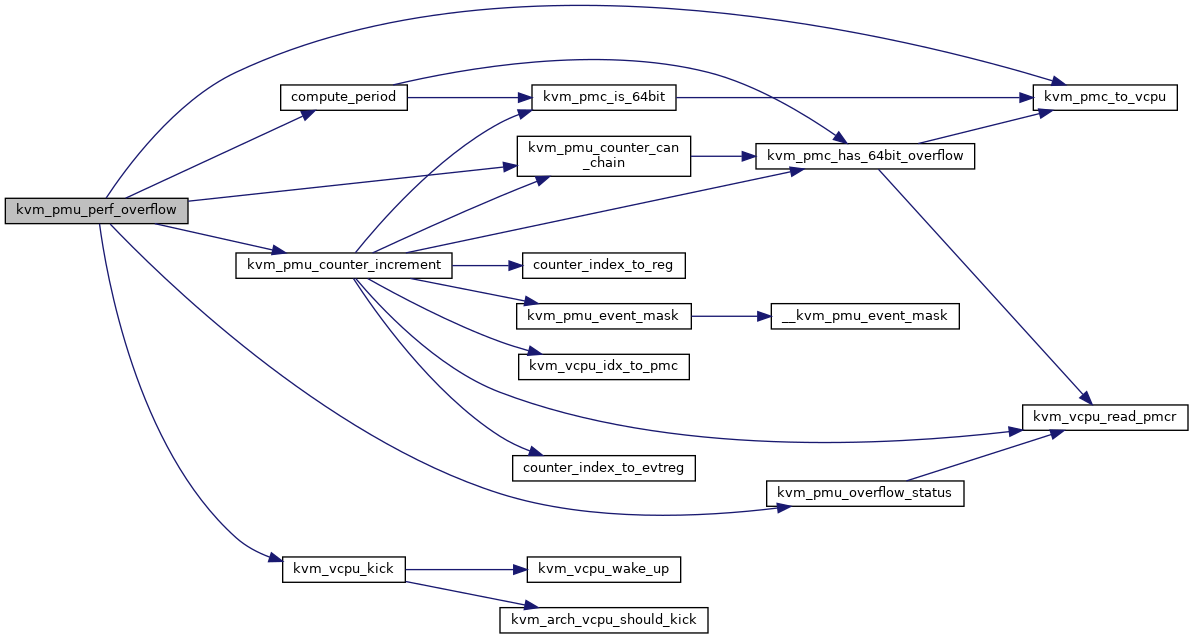

| static u64 | compute_period (struct kvm_pmc *pmc, u64 counter) |

| static void | kvm_pmu_perf_overflow (struct perf_event *perf_event, struct perf_sample_data *data, struct pt_regs *regs) |

| void | kvm_pmu_software_increment (struct kvm_vcpu *vcpu, u64 val) |

| void | kvm_pmu_handle_pmcr (struct kvm_vcpu *vcpu, u64 val) |

| static bool | kvm_pmu_counter_is_enabled (struct kvm_pmc *pmc) |

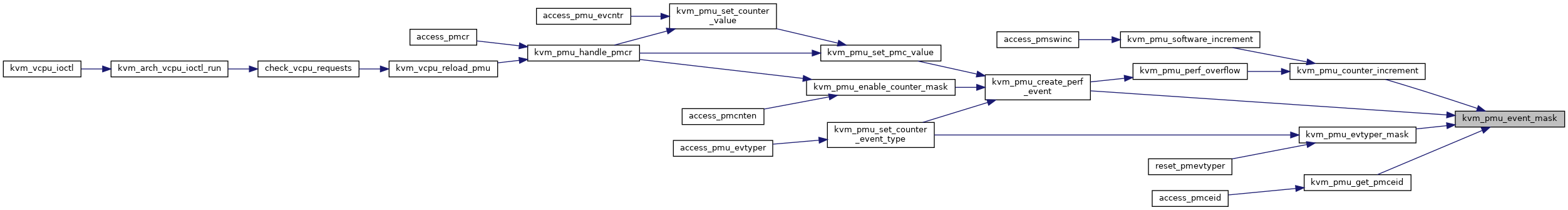

| void | kvm_pmu_set_counter_event_type (struct kvm_vcpu *vcpu, u64 data, u64 select_idx) |

| void | kvm_host_pmu_init (struct arm_pmu *pmu) |

| static struct arm_pmu * | kvm_pmu_probe_armpmu (void) |

| u64 | kvm_pmu_get_pmceid (struct kvm_vcpu *vcpu, bool pmceid1) |

| void | kvm_vcpu_reload_pmu (struct kvm_vcpu *vcpu) |

| int | kvm_arm_pmu_v3_enable (struct kvm_vcpu *vcpu) |

| static int | kvm_arm_pmu_v3_init (struct kvm_vcpu *vcpu) |

| static bool | pmu_irq_is_valid (struct kvm *kvm, int irq) |

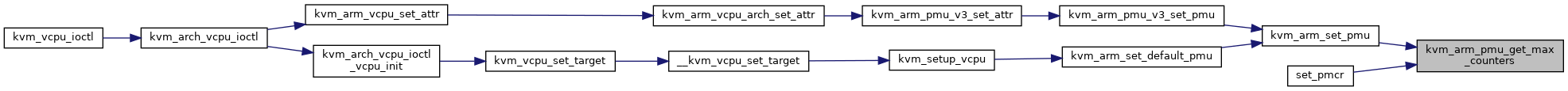

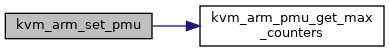

| u8 | kvm_arm_pmu_get_max_counters (struct kvm *kvm) |

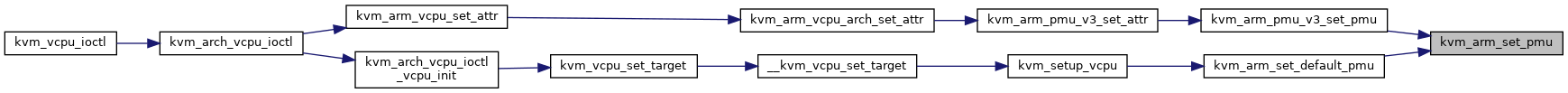

| static void | kvm_arm_set_pmu (struct kvm *kvm, struct arm_pmu *arm_pmu) |

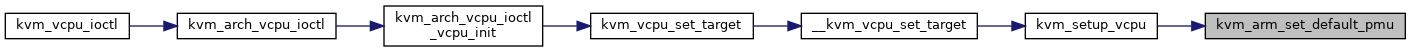

| int | kvm_arm_set_default_pmu (struct kvm *kvm) |

| static int | kvm_arm_pmu_v3_set_pmu (struct kvm_vcpu *vcpu, int pmu_id) |

| int | kvm_arm_pmu_v3_set_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| int | kvm_arm_pmu_v3_get_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| int | kvm_arm_pmu_v3_has_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| u8 | kvm_arm_pmu_get_pmuver_limit (void) |

| u64 | kvm_vcpu_read_pmcr (struct kvm_vcpu *vcpu) |

Macro Definition Documentation

◆ PERF_ATTR_CFG1_COUNTER_64BIT

| #define PERF_ATTR_CFG1_COUNTER_64BIT BIT(0) |

Definition at line 19 of file pmu-emul.c.

Function Documentation

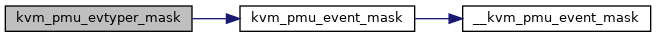

◆ __kvm_pmu_event_mask()

| static u32 __kvm_pmu_event_mask | ( | unsigned int | pmuver | ) |

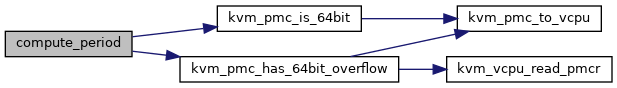

◆ compute_period()

| static u64 compute_period | ( | struct kvm_pmc * | pmc, | u64 | counter | ) |

Definition at line 481 of file pmu-emul.c.

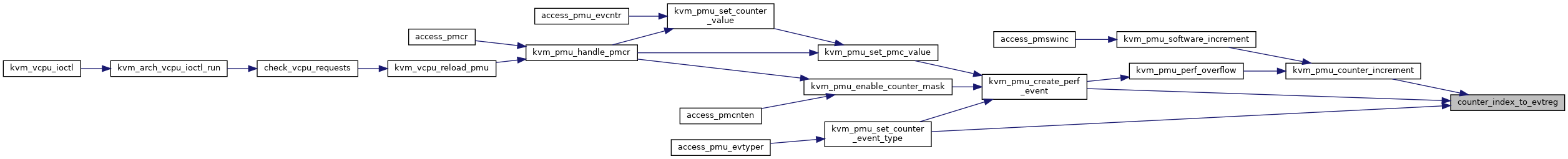

◆ counter_index_to_evtreg()

| static u32 counter_index_to_evtreg | ( | u64 | idx | ) |

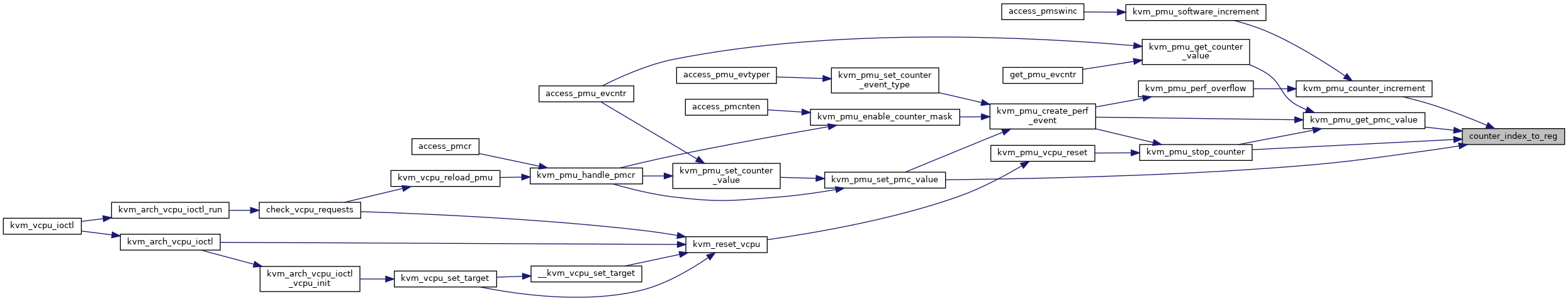

◆ counter_index_to_reg()

| static u32 counter_index_to_reg | ( | u64 | idx | ) |

◆ DEFINE_MUTEX()

| static DEFINE_MUTEX | ( | arm_pmus_lock | ) |

◆ DEFINE_STATIC_KEY_FALSE()

| DEFINE_STATIC_KEY_FALSE | ( | kvm_arm_pmu_available | ) |

◆ kvm_arm_pmu_get_max_counters()

| u8 kvm_arm_pmu_get_max_counters | ( | struct kvm * | kvm | ) |

kvm_arm_pmu_get_max_counters - Return the max number of PMU counters.
@kvm: The kvm pointer

Definition at line 908 of file pmu-emul.c.

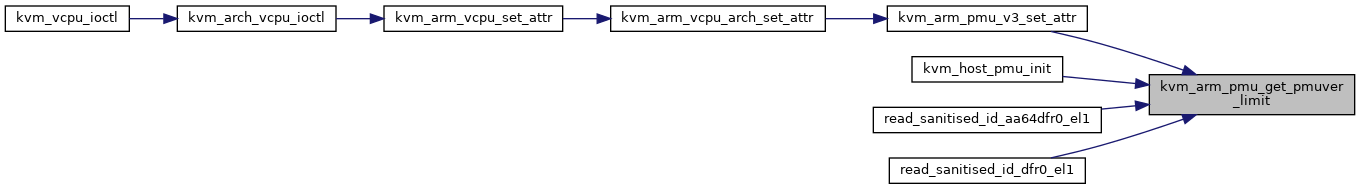

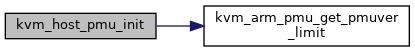

◆ kvm_arm_pmu_get_pmuver_limit()

| u8 kvm_arm_pmu_get_pmuver_limit | ( | void | ) |

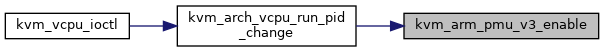

◆ kvm_arm_pmu_v3_enable()

| int kvm_arm_pmu_v3_enable | ( | struct kvm_vcpu * | vcpu | ) |

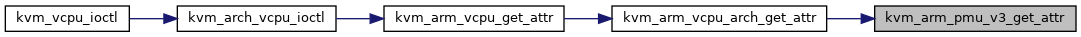

◆ kvm_arm_pmu_v3_get_attr()

| int kvm_arm_pmu_v3_get_attr | ( | struct kvm_vcpu * | vcpu, | struct kvm_device_attr * | attr | ) |

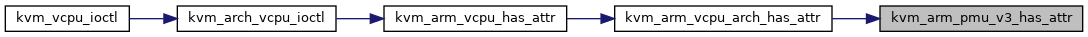

◆ kvm_arm_pmu_v3_has_attr()

| int kvm_arm_pmu_v3_has_attr | ( | struct kvm_vcpu * | vcpu, | struct kvm_device_attr * | attr | ) |

◆ kvm_arm_pmu_v3_init()

| static int kvm_arm_pmu_v3_init | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 849 of file pmu-emul.c.

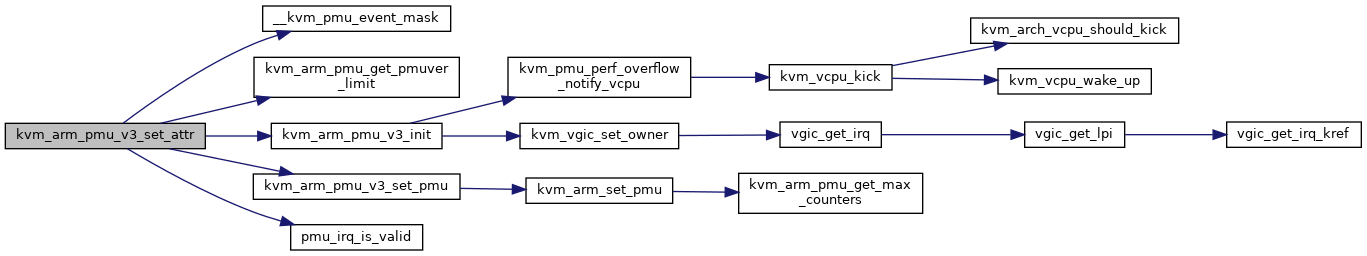

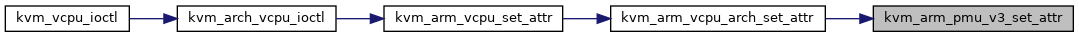

◆ kvm_arm_pmu_v3_set_attr()

| int kvm_arm_pmu_v3_set_attr | ( | struct kvm_vcpu * | vcpu, | struct kvm_device_attr * | attr | ) |

Definition at line 980 of file pmu-emul.c.

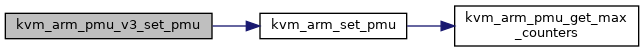

◆ kvm_arm_pmu_v3_set_pmu()

| static int kvm_arm_pmu_v3_set_pmu | ( | struct kvm_vcpu * | vcpu, | int | pmu_id | ) |

Definition at line 950 of file pmu-emul.c.

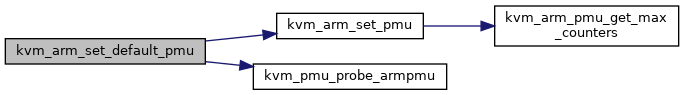

◆ kvm_arm_set_default_pmu()

| int kvm_arm_set_default_pmu | ( | struct kvm * | kvm | ) |

kvm_arm_set_default_pmu - No PMU has been set; select the default one.
@kvm: The kvm pointer

The observant among you will notice that the supported_cpus mask is not updated for the default PMU, even though the selected instance may well support only a subset of the cores in the system. This is intentional: it upholds the preexisting behavior on heterogeneous systems, where vCPUs can be scheduled on any core but the guest counters could stop working.

Definition at line 939 of file pmu-emul.c.
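
To make the heterogeneous-system caveat concrete, the sketch below models a PMU's supported_cpus as a 64-bit bitmask (an assumption for illustration; the kernel uses a cpumask) and checks whether the physical CPU a vCPU currently runs on is covered by that PMU. The names `pmu_model` and `pmu_counts_on_cpu` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of a host PMU instance: bit N set means that
 * physical CPU N is backed by this PMU. */
struct pmu_model {
	uint64_t supported_cpus;
};

/* Returns true if guest counters backed by this PMU can count while the
 * vCPU runs on physical CPU 'cpu'. Because KVM does not narrow the
 * supported_cpus mask for the default PMU, this can be false on
 * heterogeneous hosts. */
static bool pmu_counts_on_cpu(const struct pmu_model *pmu, unsigned int cpu)
{
	assert(cpu < 64); /* this bitmask model only covers 64 CPUs */
	return ((pmu->supported_cpus >> cpu) & 1) != 0;
}
```

For example, if the default PMU only covers CPUs 0-3 and the vCPU is scheduled on CPU 5, the check fails, which is the situation in which the guest counters could stop working.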

◆ kvm_arm_set_pmu()

| static void kvm_arm_set_pmu | ( | struct kvm * | kvm, | struct arm_pmu * | arm_pmu | ) |

Definition at line 919 of file pmu-emul.c.

◆ kvm_host_pmu_init()

| void kvm_host_pmu_init | ( | struct arm_pmu * | pmu | ) |

◆ kvm_pmc_has_64bit_overflow()

| static bool kvm_pmc_has_64bit_overflow | ( | struct kvm_pmc * | pmc | ) |

Definition at line 90 of file pmu-emul.c.

◆ kvm_pmc_is_64bit()

| static bool kvm_pmc_is_64bit | ( | struct kvm_pmc * | pmc | ) |

kvm_pmc_is_64bit - determine whether a counter is 64-bit
@pmc: counter context

Definition at line 84 of file pmu-emul.c.
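
A minimal sketch of the width rules, assuming the behavior of recent kernels and ignoring the nested-virtualization (EL2 counter) case. The cycle counter (index 31) is always 64 bits wide, while event counters are 64 bits wide only when the VM exposes PMUv3p5 or later. Whether a counter also *overflows* at 64 bits is a separate question, governed by PMCR_EL0.LC (bit 6) for the cycle counter and PMCR_EL0.LP (bit 7) for event counters. The struct and helper names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define CYCLE_IDX 31u          /* index of the cycle counter (PMCCNTR_EL0) */
#define PMCR_LC   (1ull << 6)  /* cycle counter overflows at 64 bits */
#define PMCR_LP   (1ull << 7)  /* event counters overflow at 64 bits */

/* Hypothetical, simplified counter context. */
struct pmc_model {
	unsigned int idx;  /* 0..30 event counters, 31 cycle counter */
	bool has_pmuv3p5;  /* does the VM expose PMUv3p5 or later? */
	uint64_t pmcr;     /* guest view of PMCR_EL0 */
};

/* Counter is 64 bits wide: always for the cycle counter,
 * otherwise only with PMUv3p5. */
static bool pmc_is_64bit(const struct pmc_model *pmc)
{
	return pmc->idx == CYCLE_IDX || pmc->has_pmuv3p5;
}

/* Counter overflows at 64 bits rather than at 32 bits. */
static bool pmc_has_64bit_overflow(const struct pmc_model *pmc)
{
	if (pmc->idx == CYCLE_IDX)
		return (pmc->pmcr & PMCR_LC) != 0;
	return (pmc->pmcr & PMCR_LP) != 0;
}
```

Keeping width and overflow point separate lets a 64-bit cycle counter still raise an overflow at each 32-bit wrap when LC is clear.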

◆ kvm_pmc_to_vcpu()

| static struct kvm_vcpu * kvm_pmc_to_vcpu | ( | const struct kvm_pmc * | pmc | ) |

◆ kvm_pmu_counter_can_chain()

| static bool kvm_pmu_counter_can_chain | ( | struct kvm_pmc * | pmc | ) |

Definition at line 98 of file pmu-emul.c.

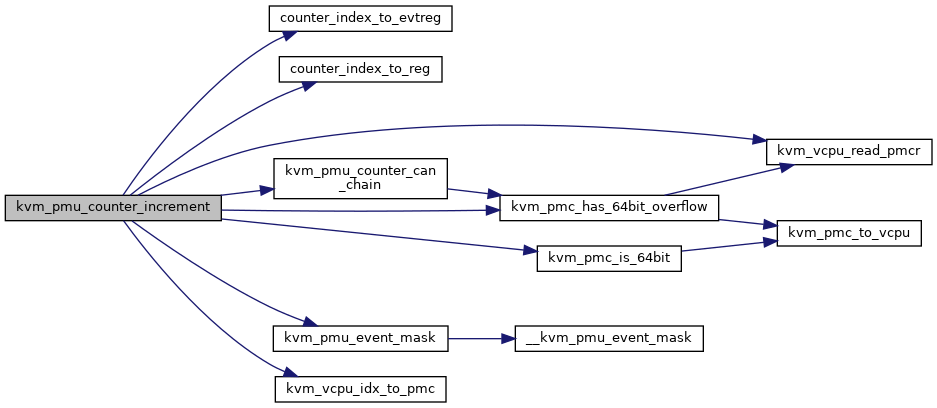

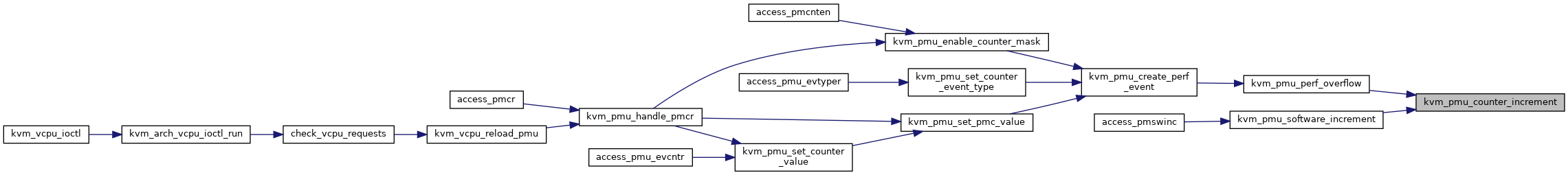

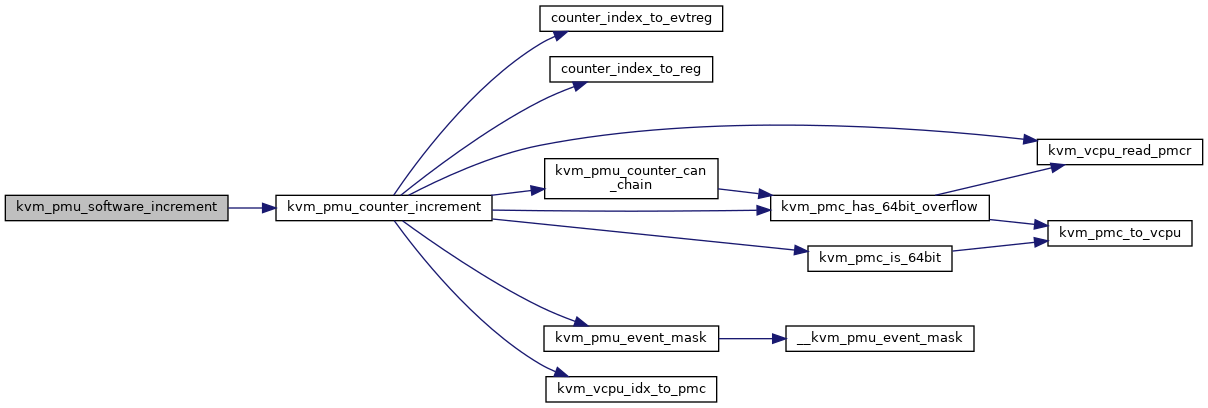

◆ kvm_pmu_counter_increment()

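| static void kvm_pmu_counter_increment | ( | struct kvm_vcpu * | vcpu, | unsigned long | mask, | u32 | event | ) |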

Definition at line 440 of file pmu-emul.c.

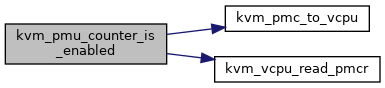

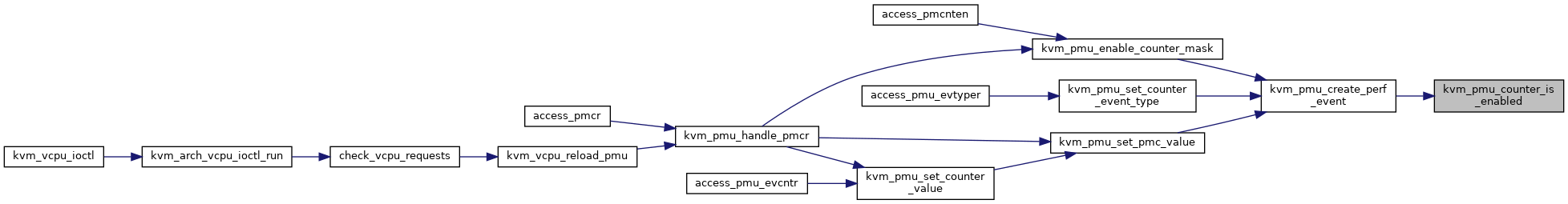

◆ kvm_pmu_counter_is_enabled()

| static bool kvm_pmu_counter_is_enabled | ( | struct kvm_pmc * | pmc | ) |

Definition at line 585 of file pmu-emul.c.

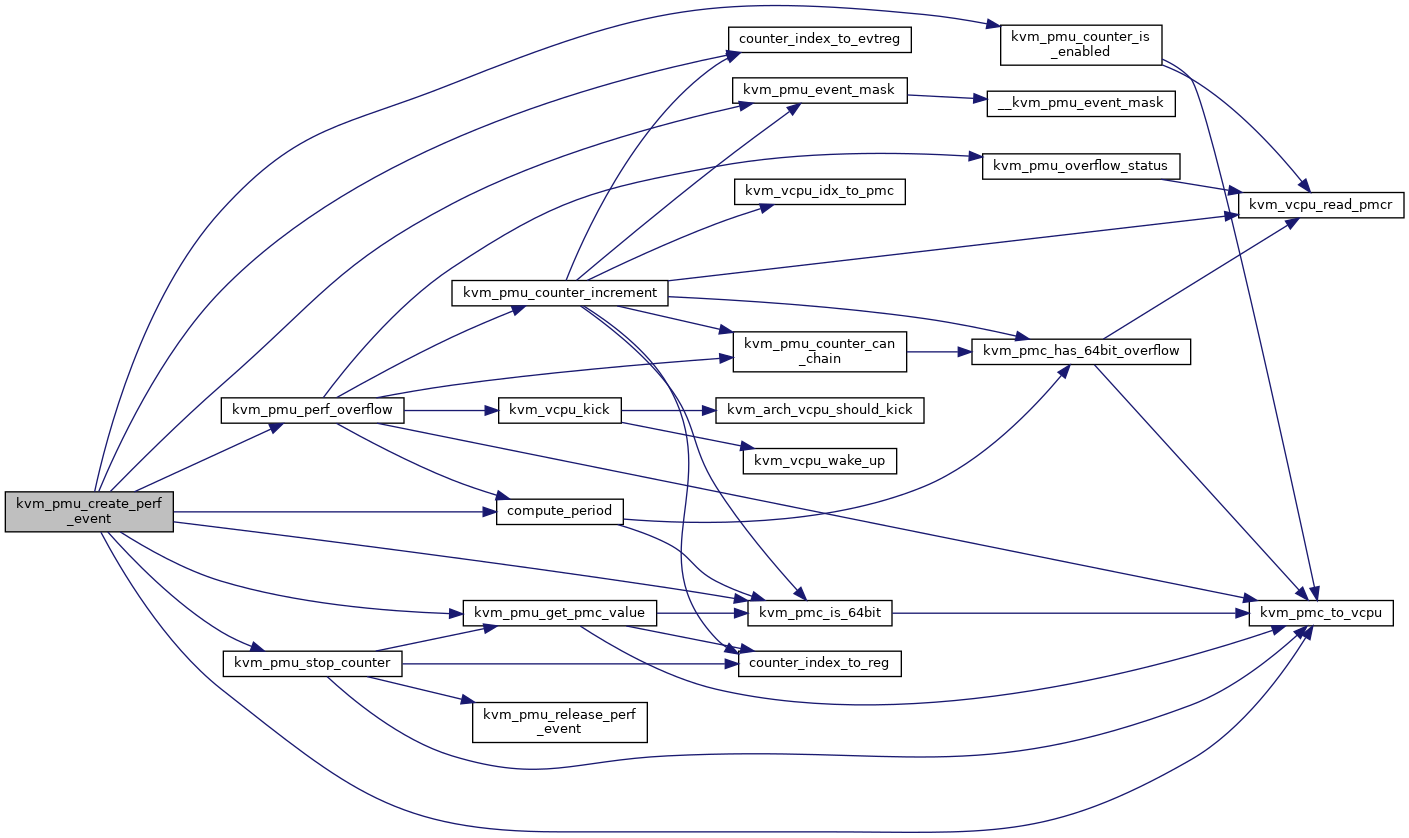

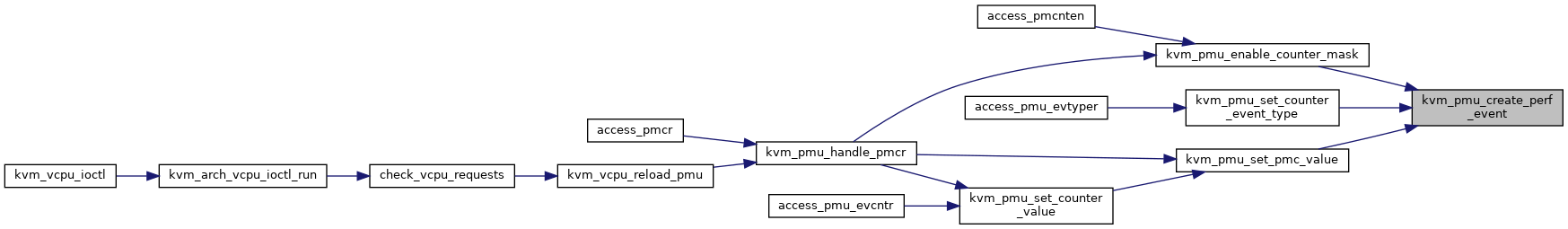

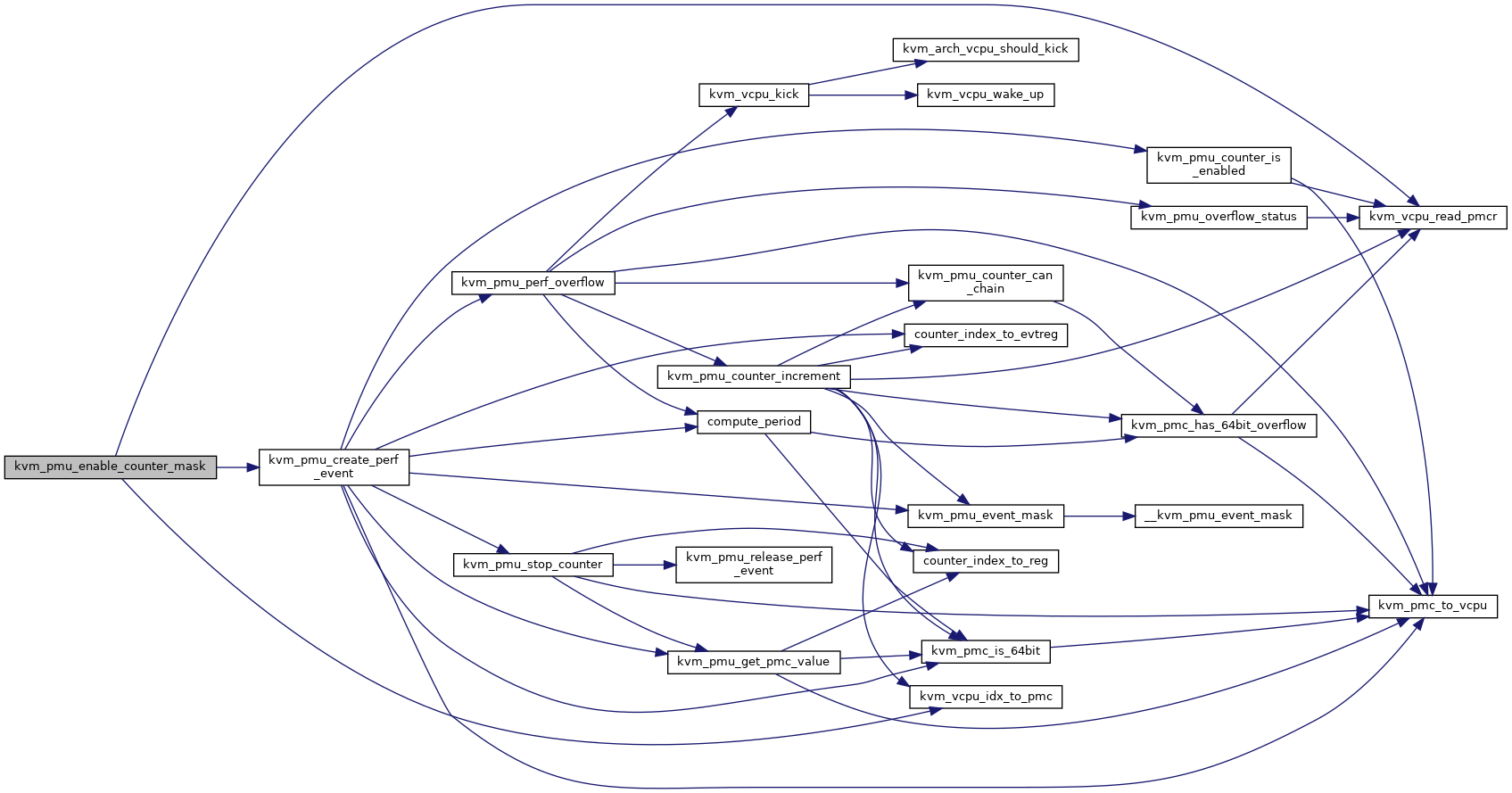

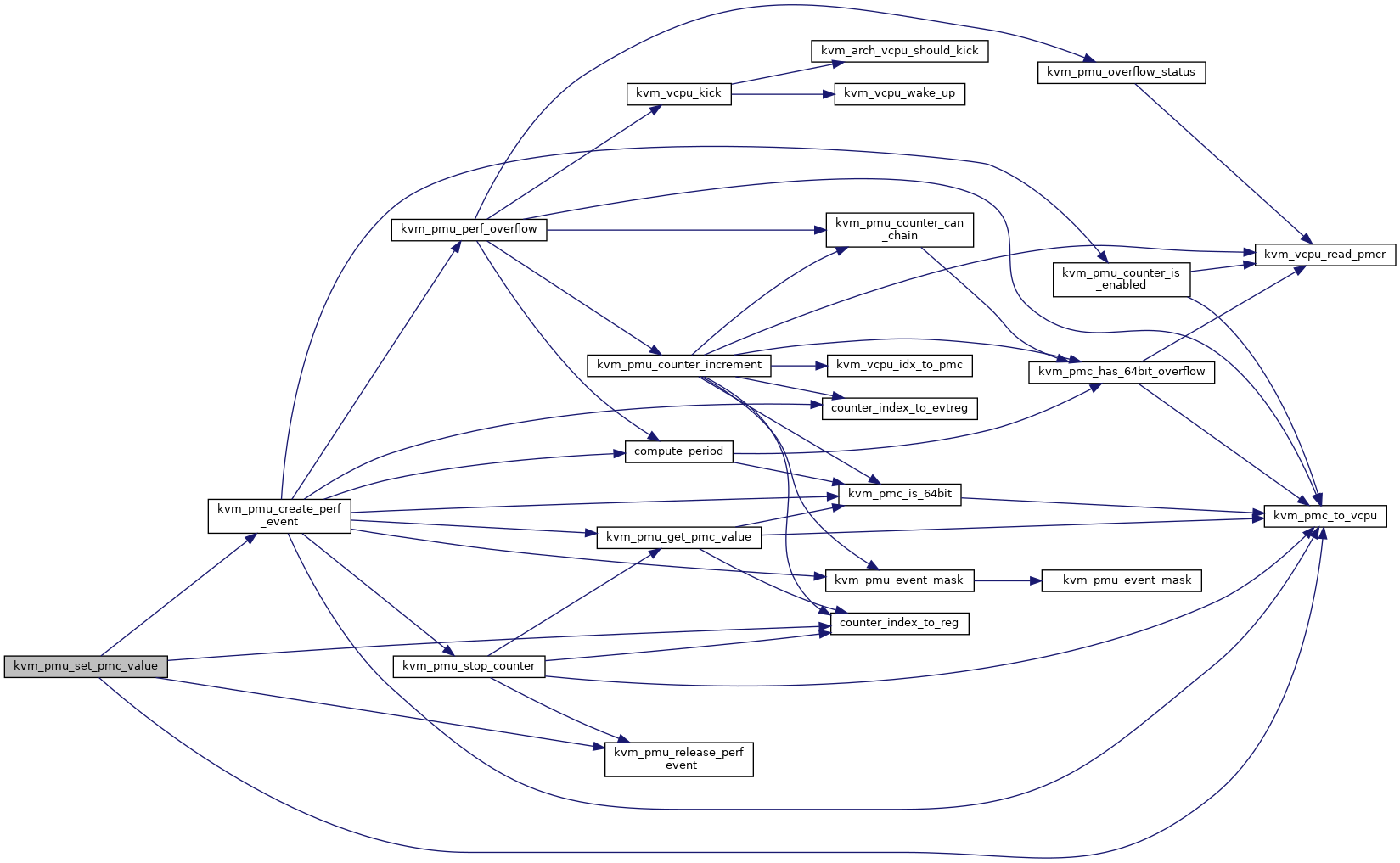

◆ kvm_pmu_create_perf_event()

| static void kvm_pmu_create_perf_event | ( | struct kvm_pmc * | pmc | ) |

kvm_pmu_create_perf_event - create a perf event for a counter
@pmc: Counter context

Definition at line 596 of file pmu-emul.c.

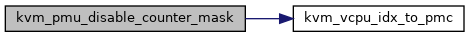

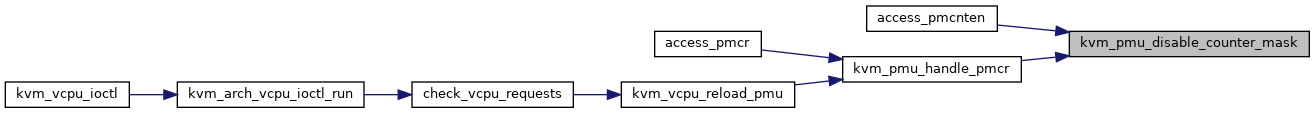

◆ kvm_pmu_disable_counter_mask()

| void kvm_pmu_disable_counter_mask | ( | struct kvm_vcpu * | vcpu, | u64 | val | ) |

kvm_pmu_disable_counter_mask - disable selected PMU counters
@vcpu: The vcpu pointer
@val: The value the guest writes to the PMCNTENCLR register

Calls perf_event_disable() on the perf event of each selected counter to stop it counting.

Definition at line 319 of file pmu-emul.c.

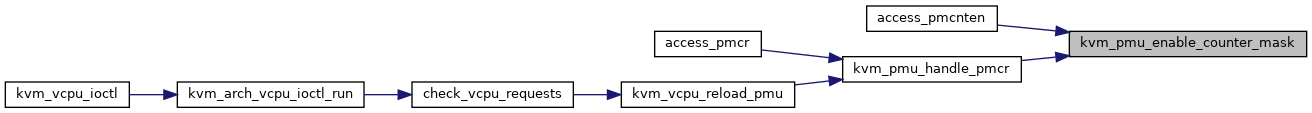

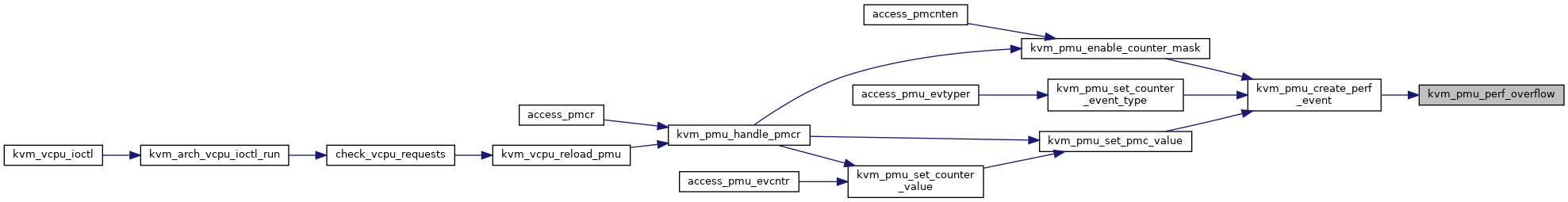

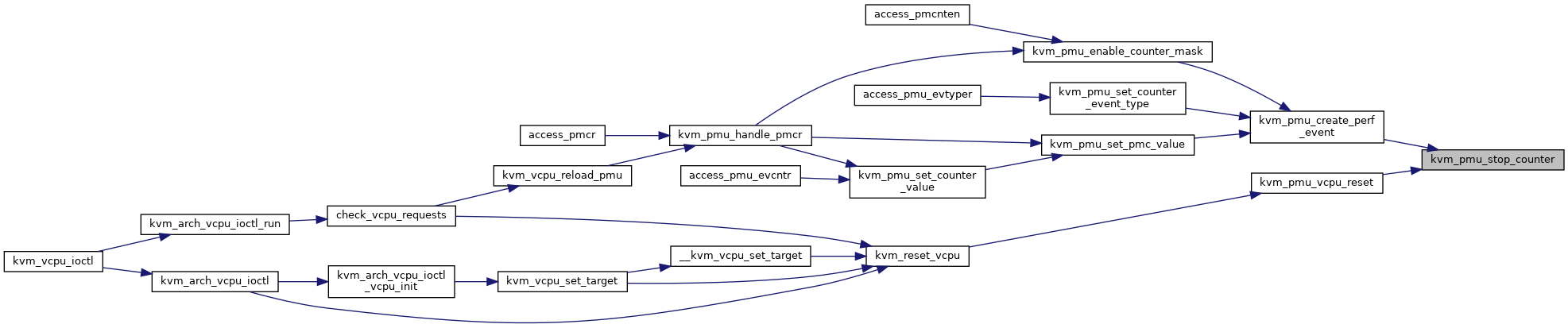

◆ kvm_pmu_enable_counter_mask()

| void kvm_pmu_enable_counter_mask | ( | struct kvm_vcpu * | vcpu, | u64 | val | ) |

kvm_pmu_enable_counter_mask - enable selected PMU counters
@vcpu: The vcpu pointer
@val: The value the guest writes to the PMCNTENSET register

Calls perf_event_enable() on the perf event of each selected counter to start it counting.

Definition at line 285 of file pmu-emul.c.
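
PMCNTENSET and PMCNTENCLR follow the usual Arm write-one-to-set and write-one-to-clear convention: a 1 bit enables or disables that counter, and a 0 bit leaves it alone. The sketch below shows only the register-level effect and leaves out the perf event calls. It assumes the written value is masked with the valid counter mask (bits 0..N-1 for event counters plus bit 31 for the cycle counter). The function names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical: apply a guest write to PMCNTENSET_EL0.
 * 1 bits enable counters, 0 bits have no effect, and bits outside
 * 'valid' (unimplemented counters) are ignored. */
static uint64_t pmcnten_write_set(uint64_t pmcnten, uint64_t val, uint64_t valid)
{
	return pmcnten | (val & valid);
}

/* Hypothetical: apply a guest write to PMCNTENCLR_EL0.
 * 1 bits disable counters, 0 bits have no effect. */
static uint64_t pmcnten_write_clr(uint64_t pmcnten, uint64_t val, uint64_t valid)
{
	return pmcnten & ~(val & valid);
}
```

Because zero bits are ignored, the guest can toggle individual counters without a read-modify-write sequence. KVM therefore only has to visit the set bits of @val when enabling or disabling the backing perf events.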

◆ kvm_pmu_event_mask()

| static u32 kvm_pmu_event_mask | ( | struct kvm * | kvm | ) |

Definition at line 55 of file pmu-emul.c.

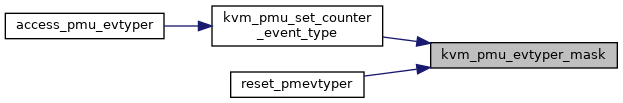

◆ kvm_pmu_evtyper_mask()

| u64 kvm_pmu_evtyper_mask | ( | struct kvm * | kvm | ) |

Definition at line 63 of file pmu-emul.c.

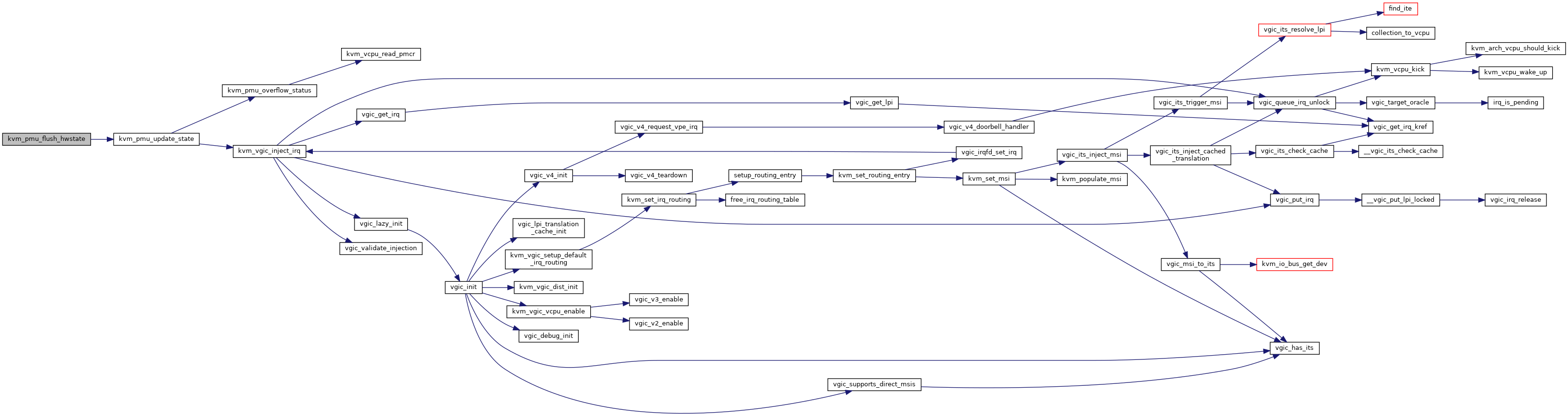

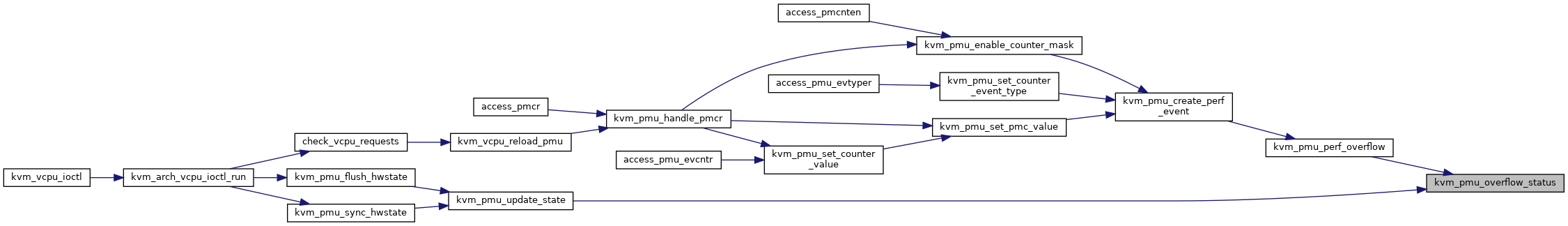

◆ kvm_pmu_flush_hwstate()

| void kvm_pmu_flush_hwstate | ( | struct kvm_vcpu * | vcpu | ) |

kvm_pmu_flush_hwstate - flush PMU state to the CPU
@vcpu: The vcpu pointer

Check if the PMU has overflowed while we were running in the host, and inject an interrupt if that was the case.

Definition at line 405 of file pmu-emul.c.
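
The "has the PMU overflowed" check reduces to a bitwise test over a few guest registers. A minimal sketch, assuming the non-nested case: the interrupt is pending when PMCR_EL0.E is set and at least one counter has both its overflow flag (PMOVSSET_EL0) and its interrupt enable (PMINTENSET_EL1) set. The exact masking differs between kernel versions (some also AND in PMCNTENSET_EL0), so treat this as an illustration. The struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define PMCR_E (1ull << 0) /* PMCR_EL0.E: global counter enable */

/* Hypothetical snapshot of the guest PMU registers involved. */
struct pmu_regs_model {
	uint64_t pmcr;       /* PMCR_EL0 */
	uint64_t pmovsset;   /* PMOVSSET_EL0: per-counter overflow flags */
	uint64_t pmintenset; /* PMINTENSET_EL1: per-counter interrupt enables */
};

/* Counters whose overflow should currently raise the PMU interrupt. */
static uint64_t overflow_status(const struct pmu_regs_model *r)
{
	if (!(r->pmcr & PMCR_E))
		return 0;
	return r->pmovsset & r->pmintenset;
}

/* Level of the (virtual) PMU interrupt line. */
static bool pmu_irq_level(const struct pmu_regs_model *r)
{
	return overflow_status(r) != 0;
}
```

In the kernel, kvm_pmu_update_state() performs this comparison for both the flush and sync paths and only updates the virtual interrupt when the level changes.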

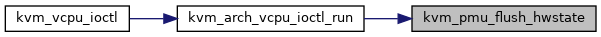

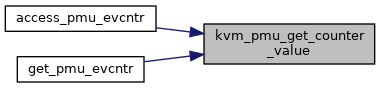

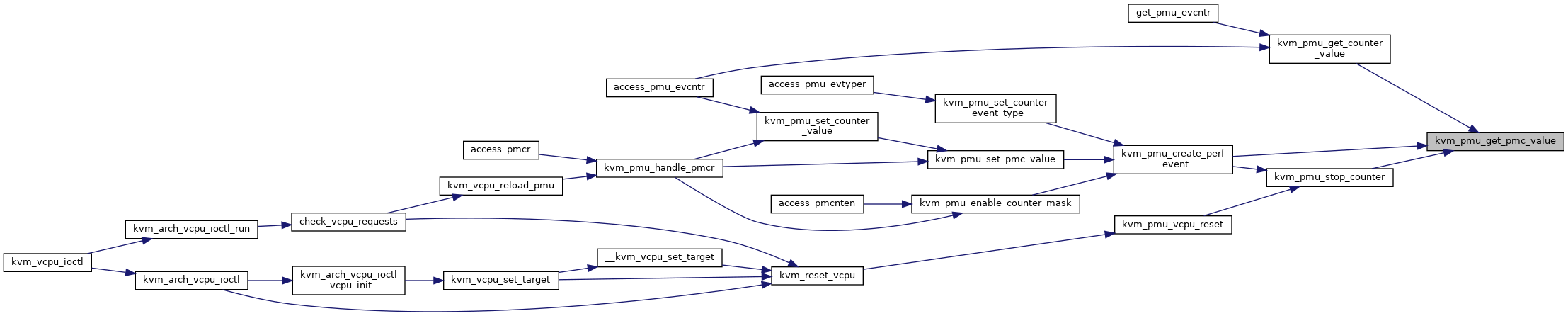

◆ kvm_pmu_get_counter_value()

| u64 kvm_pmu_get_counter_value | ( | struct kvm_vcpu * | vcpu, | u64 | select_idx | ) |

kvm_pmu_get_counter_value - get PMU counter value
@vcpu: The vcpu pointer
@select_idx: The counter index

Definition at line 141 of file pmu-emul.c.

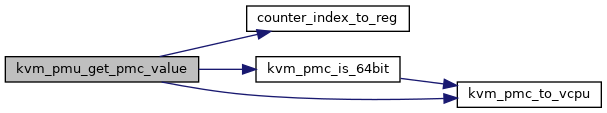

◆ kvm_pmu_get_pmc_value()

| static u64 kvm_pmu_get_pmc_value | ( | struct kvm_pmc * | pmc | ) |

Definition at line 114 of file pmu-emul.c.

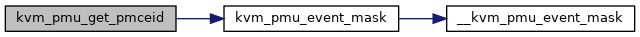

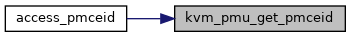

◆ kvm_pmu_get_pmceid()

| u64 kvm_pmu_get_pmceid | ( | struct kvm_vcpu * | vcpu, | bool | pmceid1 | ) |

Definition at line 760 of file pmu-emul.c.

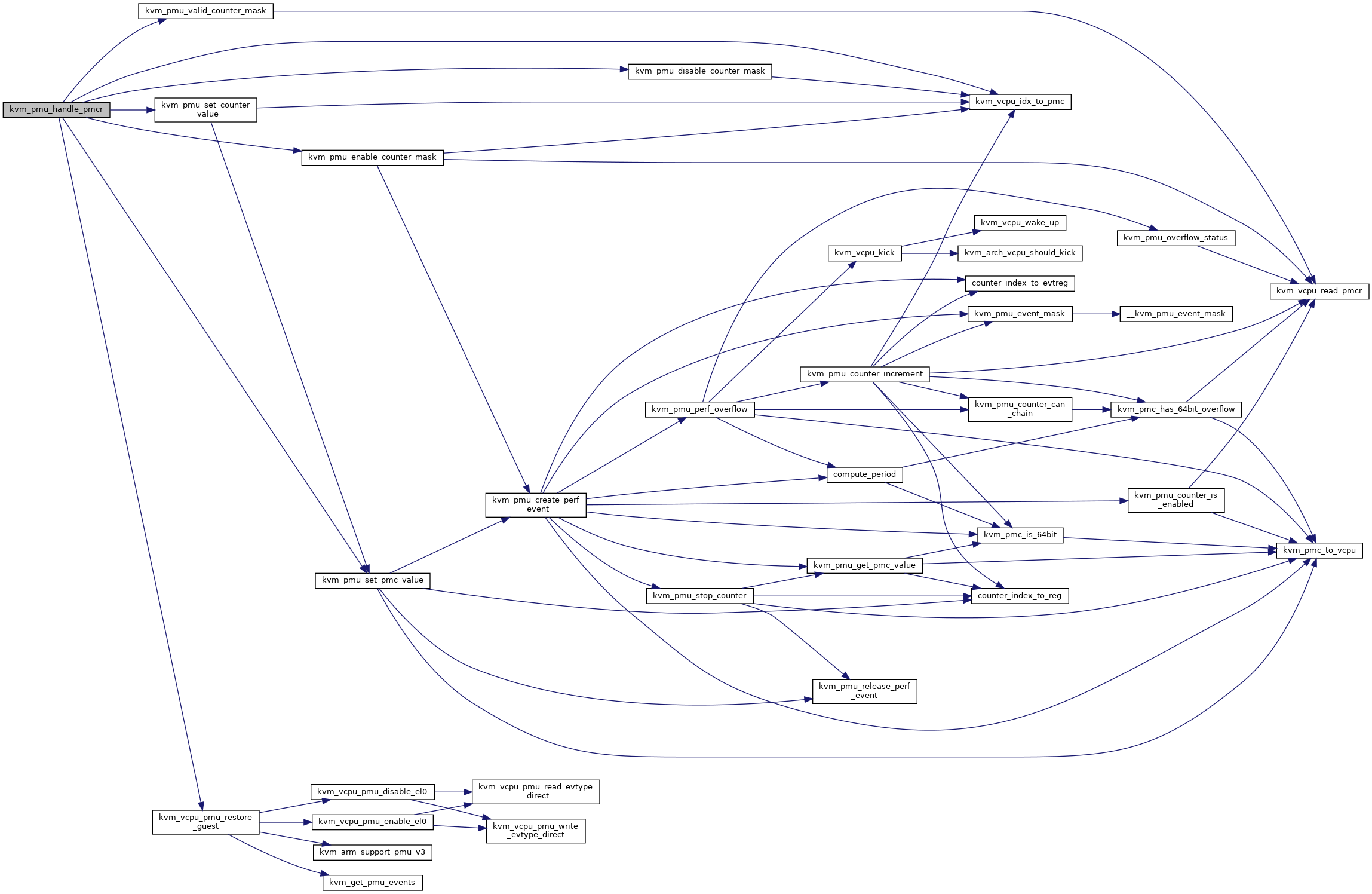

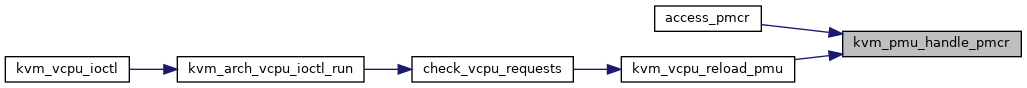

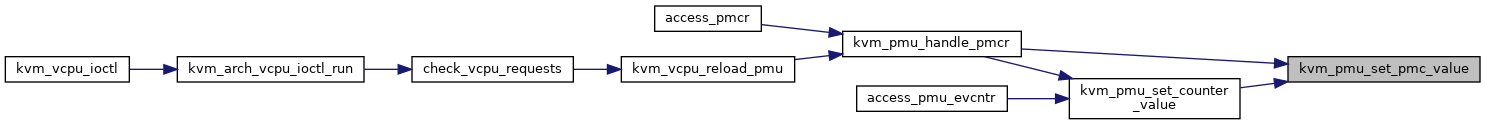

◆ kvm_pmu_handle_pmcr()

| void kvm_pmu_handle_pmcr | ( | struct kvm_vcpu * | vcpu, | u64 | val | ) |

kvm_pmu_handle_pmcr - handle PMCR register
@vcpu: The vcpu pointer
@val: The value the guest writes to the PMCR register

Definition at line 551 of file pmu-emul.c.
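
A guest write to PMCR_EL0 mixes persistent state with one-shot actions. E (bit 0) enables the counters. P (bit 1) and C (bit 2) reset the event counters and the cycle counter respectively, and are not stored. LP (bit 7) is RES0 without PMUv3p5. The sketch below models those effects on a simplified state. It assumes that P resets only the N implemented event counters and not the cycle counter. The struct and function names are hypothetical, and perf event reprogramming is reduced to a returned "E changed" flag.

```c
#include <stdbool.h>
#include <stdint.h>

#define PMCR_E    (1ull << 0) /* enable */
#define PMCR_P    (1ull << 1) /* reset event counters (action, not state) */
#define PMCR_C    (1ull << 2) /* reset cycle counter (action, not state) */
#define PMCR_LP   (1ull << 7) /* 64-bit event counter overflow (PMUv3p5) */
#define CYCLE_IDX 31u

/* Hypothetical, simplified guest PMU state. */
struct vpmu_model {
	uint64_t pmcr;
	uint64_t counters[32];          /* [0..30] event counters, [31] cycles */
	unsigned int nr_event_counters; /* PMCR_EL0.N as seen by the guest */
	bool has_pmuv3p5;
};

/* Apply a guest write to PMCR_EL0. Returns true when PMCR_EL0.E changed,
 * i.e. when the backing counters would need to be enabled or disabled. */
static bool handle_pmcr_write(struct vpmu_model *p, uint64_t val)
{
	bool e_changed;

	if (!p->has_pmuv3p5)
		val &= ~PMCR_LP;        /* LP is RES0 without PMUv3p5 */

	e_changed = ((p->pmcr ^ val) & PMCR_E) != 0;

	/* C and P trigger actions; they are not kept as register state. */
	p->pmcr = val & ~(PMCR_C | PMCR_P);

	if (val & PMCR_C)
		p->counters[CYCLE_IDX] = 0;

	if (val & PMCR_P)
		for (unsigned int i = 0; i < p->nr_event_counters && i < CYCLE_IDX; i++)
			p->counters[i] = 0;

	return e_changed;
}
```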

◆ kvm_pmu_overflow_status()

| static u64 kvm_pmu_overflow_status | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 339 of file pmu-emul.c.

◆ kvm_pmu_perf_overflow()

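| static void kvm_pmu_perf_overflow | ( | struct perf_event * | perf_event, | struct perf_sample_data * | data, | struct pt_regs * | regs | ) |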

When the perf event overflows, set the overflow status and inform the vcpu.

Definition at line 496 of file pmu-emul.c.
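
Each guest counter is backed by a perf event whose sample period is the distance from the current counter value to the next overflow. This makes perf's overflow callback fire exactly when the emulated counter would wrap. A minimal sketch of that arithmetic, modelled on compute_period() (kernel details may differ between versions): the period is the two's-complement negation of the counter, truncated to the overflow width. Here `overflow_64` stands in for "the counter is 64-bit and has 64-bit overflow enabled".

```c
#include <stdbool.h>
#include <stdint.h>

/* Number of increments until a counter holding 'counter' overflows.
 * 'overflow_64' selects 64-bit overflow; otherwise the counter is
 * treated as overflowing at the next 2^32 boundary.
 * Note: counter == 0 yields 0 here (a full 2^64 period is not
 * representable); this sketch leaves that edge case to the caller. */
static uint64_t compute_period(uint64_t counter, bool overflow_64)
{
	uint64_t val = -counter;      /* unsigned negation: 2^64 - counter */

	if (!overflow_64)
		val &= 0xffffffffull; /* distance to the next 2^32 boundary */
	return val;
}
```

When the callback fires, the same computation is reapplied to the new counter value so that the next callback lines up with the next wrap, and the counter's bit is set in PMOVSSET_EL0.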

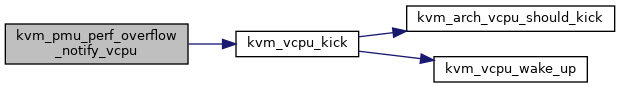

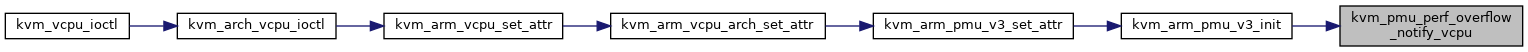

◆ kvm_pmu_perf_overflow_notify_vcpu()

| static void kvm_pmu_perf_overflow_notify_vcpu | ( | struct irq_work * | work | ) |

When the perf interrupt is an NMI, we cannot safely notify the vCPU corresponding to the event. This is why we need a callback to do it once we are outside of NMI context.

Definition at line 427 of file pmu-emul.c.
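
Userspace signal handlers face a similar constraint, since they may only perform async-signal-safe operations. As a loose analogy (this is not the kernel's irq_work API), the sketch below limits the restricted context to recording which vCPU needs attention in an atomic bitmask. A later, unrestricted context then drains the bitmask and performs the real notification. It assumes the 64-bit atomic is lock-free on the target; all names are hypothetical.

```c
#include <stdatomic.h>
#include <stdint.h>

/* Bit N set: vCPU N has a pending PMU overflow notification. */
static _Atomic uint64_t pending_notify;

/* Restricted context (NMI in the kernel, signal handler in userspace):
 * a single atomic update only, with no locks, allocation or wakeups.
 * vcpu_id must be < 64. */
static void overflow_in_restricted_context(unsigned int vcpu_id)
{
	atomic_fetch_or(&pending_notify, UINT64_C(1) << vcpu_id);
}

/* Normal context: claim all pending bits at once and notify each vCPU.
 * 'notify' stands in for kicking the vCPU and may be NULL.
 * Returns the number of vCPUs that had a pending notification. */
static unsigned int drain_pending(void (*notify)(unsigned int vcpu_id))
{
	uint64_t mask = atomic_exchange(&pending_notify, 0);
	unsigned int n = 0;

	for (unsigned int id = 0; id < 64; id++) {
		if (mask & (UINT64_C(1) << id)) {
			if (notify)
				notify(id);
			n++;
		}
	}
	return n;
}
```

Repeated overflows for the same vCPU before the drain collapse into a single notification, which is acceptable because the handler only needs to know that the vCPU must re-examine its PMU state.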

◆ kvm_pmu_probe_armpmu()

| static struct arm_pmu * kvm_pmu_probe_armpmu | ( | void | ) |

◆ kvm_pmu_release_perf_event()

| static void kvm_pmu_release_perf_event | ( | struct kvm_pmc * | pmc | ) |

kvm_pmu_release_perf_event - remove the perf event
@pmc: The PMU counter pointer

Definition at line 194 of file pmu-emul.c.

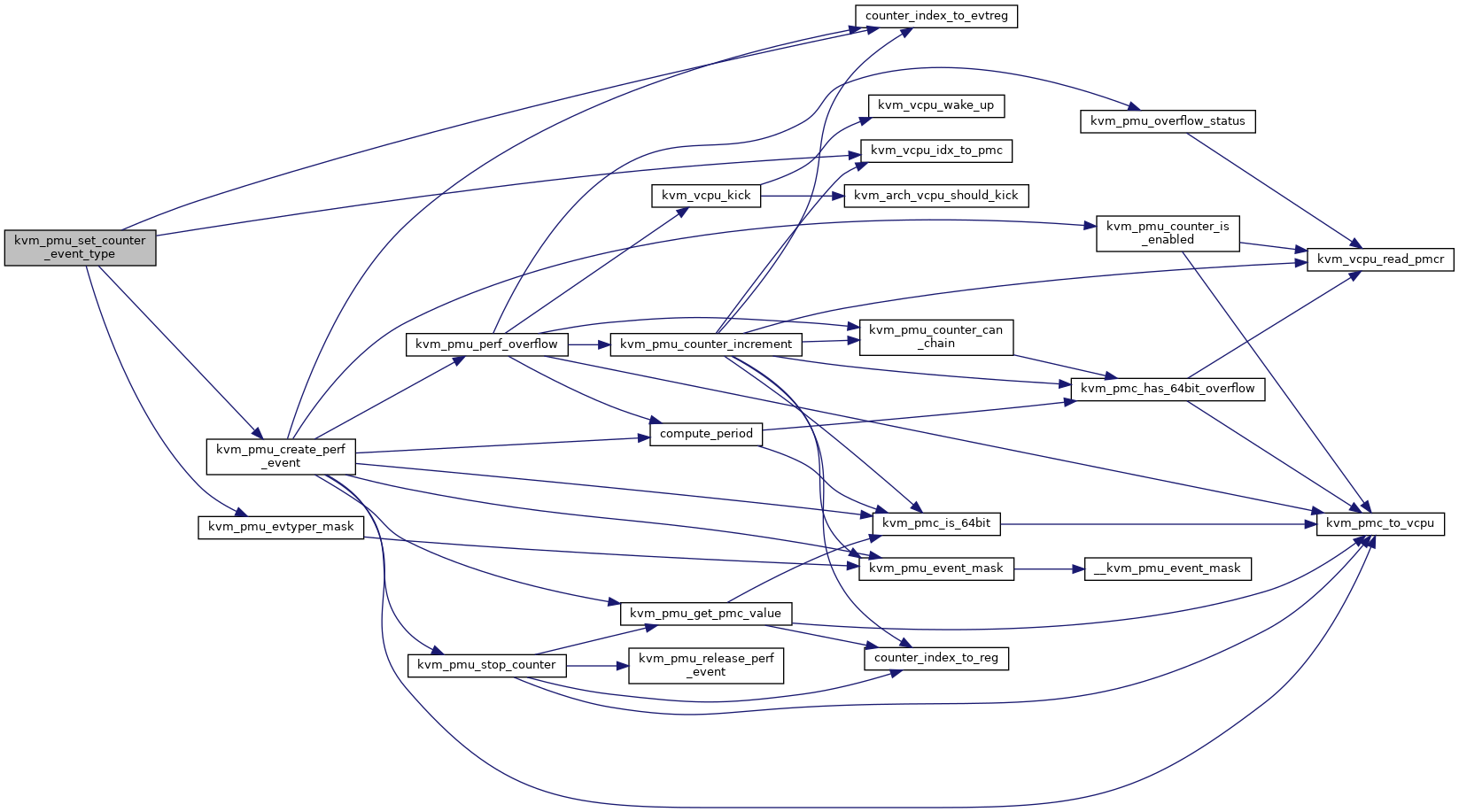

◆ kvm_pmu_set_counter_event_type()

| void kvm_pmu_set_counter_event_type | ( | struct kvm_vcpu * | vcpu, | u64 | data, | u64 | select_idx | ) |

kvm_pmu_set_counter_event_type - set selected counter to monitor some event
@vcpu: The vcpu pointer
@data: The data the guest writes to PMXEVTYPER_EL0
@select_idx: The index of the selected counter

When the guest OS accesses PMXEVTYPER_EL0, it wants to set up a PMC to count the event with the given hardware event number. Here we call the perf_event API to emulate this action and create a kernel perf event for it.

Definition at line 678 of file pmu-emul.c.
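
Only the low bits of the value written to PMXEVTYPER_EL0 carry the event number. That is 10 bits for the original PMUv3 and 16 bits from PMUv3p1 onwards (compare __kvm_pmu_event_mask()). The cycle counter always counts CPU_CYCLES (0x11). Two architectural events get no backing perf event: SW_INCR (0x00), which is emulated through PMSWINC_EL0 writes, and CHAIN (0x1E), which is emulated by counting overflows of the preceding even counter. A sketch under those assumptions; the macro and function names are hypothetical (the kernel uses ARMV8_PMUV3_PERFCTR_* constants), and event filtering is not modelled.

```c
#include <stdbool.h>
#include <stdint.h>

#define EVT_SW_INCR    0x00u
#define EVT_CPU_CYCLES 0x11u
#define EVT_CHAIN      0x1Eu
#define CYCLE_IDX      31u

/* Hypothetical: event-number mask for the guest's PMU version. */
static uint32_t event_mask(bool pmuv3p1_or_later)
{
	return pmuv3p1_or_later ? 0xffffu : 0x3ffu; /* 16 vs 10 bits */
}

/* Event number a counter should count after a PMXEVTYPER_EL0 write. */
static uint32_t select_event(unsigned int idx, uint64_t evtyper, bool v3p1)
{
	if (idx == CYCLE_IDX)
		return EVT_CPU_CYCLES; /* the cycle counter always counts cycles */
	return (uint32_t)(evtyper & event_mask(v3p1));
}

/* Whether the selected event needs a host perf event behind it. */
static bool needs_perf_event(uint32_t eventsel)
{
	return eventsel != EVT_SW_INCR && eventsel != EVT_CHAIN;
}
```

Masking before the comparison matters: filter and privilege bits in the upper part of PMXEVTYPER_EL0 must not turn, say, SW_INCR into an event that perf would try to count.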

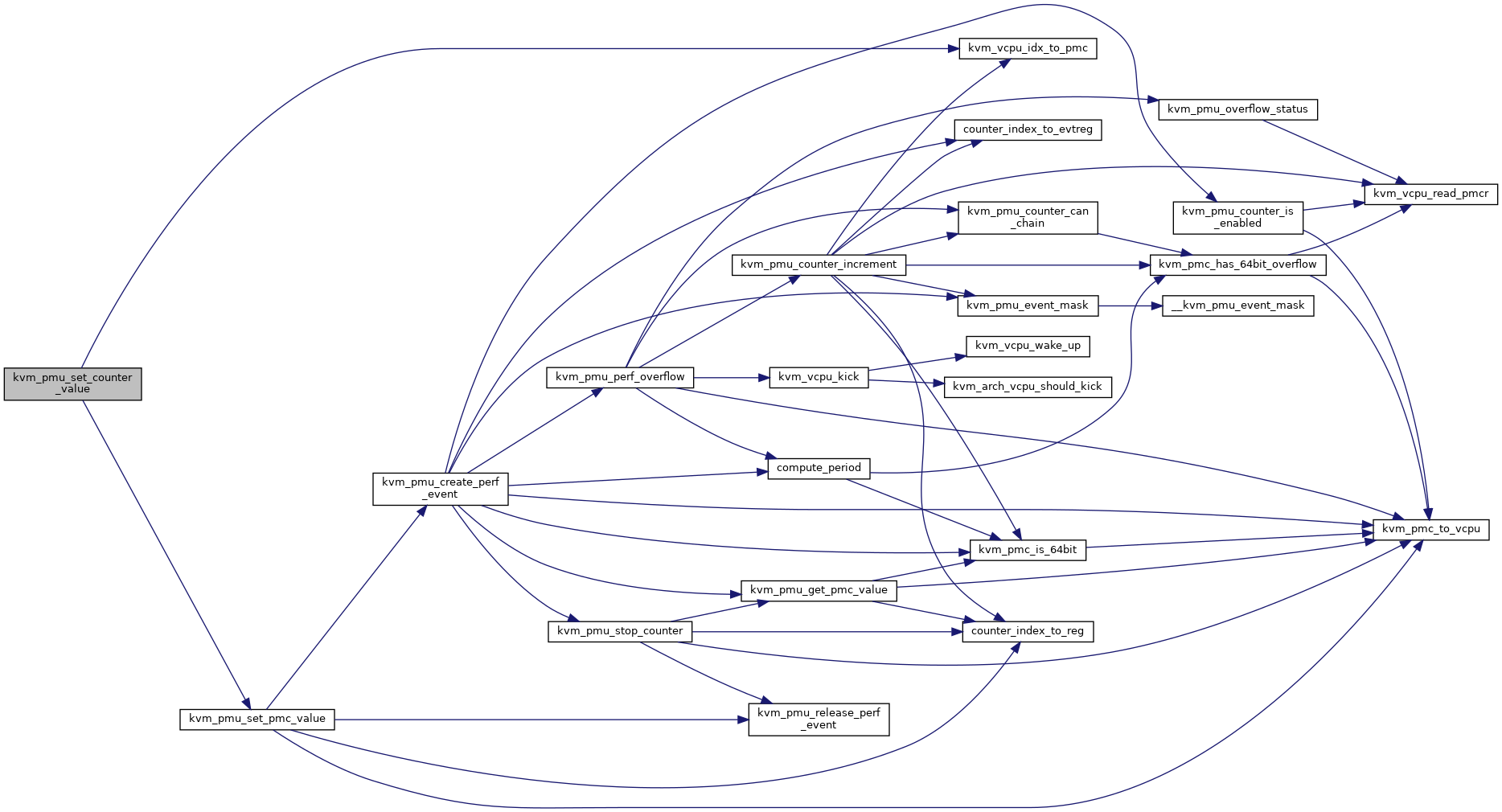

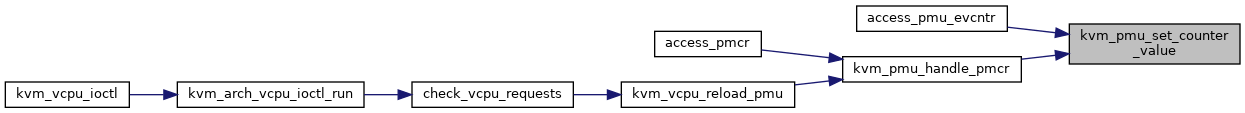

◆ kvm_pmu_set_counter_value()

| void kvm_pmu_set_counter_value | ( | struct kvm_vcpu * | vcpu, | u64 | select_idx, | u64 | val | ) |

kvm_pmu_set_counter_value - set PMU counter value
@vcpu: The vcpu pointer
@select_idx: The counter index
@val: The counter value

Definition at line 182 of file pmu-emul.c.

◆ kvm_pmu_set_pmc_value()

| static void kvm_pmu_set_pmc_value | ( | struct kvm_pmc * | pmc, | u64 | val, | bool | force | ) |

Definition at line 149 of file pmu-emul.c.

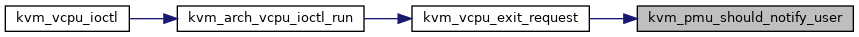

◆ kvm_pmu_should_notify_user()

| bool kvm_pmu_should_notify_user | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 373 of file pmu-emul.c.

◆ kvm_pmu_software_increment()

| void kvm_pmu_software_increment | ( | struct kvm_vcpu * | vcpu, | u64 | val | ) |

kvm_pmu_software_increment - perform a software increment
@vcpu: The vcpu pointer
@val: The value the guest writes to the PMSWINC register

Definition at line 541 of file pmu-emul.c.
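
A write to PMSWINC_EL0 increments every counter whose bit is set in the written value, subject to three conditions. The PMU must be enabled (PMCR_EL0.E), the counter must be enabled in PMCNTENSET_EL0, and the counter must be programmed with the SW_INCR event (0x00). A minimal sketch, assuming 32-bit event counters and no chaining (the kernel also handles 64-bit counters and propagates overflow into an odd CHAIN counter). All names are hypothetical.

```c
#include <stdint.h>

#define PMCR_E      (1ull << 0)
#define EVT_SW_INCR 0x00u
#define NR_EVT_MAX  31u /* event counters 0..30; 31 is the cycle counter */

/* Hypothetical, simplified guest PMU state (32-bit event counters only). */
struct swinc_model {
	uint64_t pmcr;                 /* PMCR_EL0 */
	uint64_t pmcntenset;           /* PMCNTENSET_EL0 */
	uint64_t pmovsset;             /* PMOVSSET_EL0 */
	uint32_t evtype[NR_EVT_MAX];   /* event number per counter */
	uint32_t counter[NR_EVT_MAX];  /* event counter values */
};

/* Apply a guest write of 'val' to PMSWINC_EL0. */
static void software_increment(struct swinc_model *p, uint64_t val)
{
	if (!(p->pmcr & PMCR_E))
		return;                     /* PMU globally disabled */

	val &= p->pmcntenset;               /* ignore disabled counters */

	for (unsigned int i = 0; i < NR_EVT_MAX; i++) {
		if (!(val & (1ull << i)) || p->evtype[i] != EVT_SW_INCR)
			continue;
		p->counter[i]++;            /* wraps modulo 2^32 */
		if (p->counter[i] == 0)
			p->pmovsset |= 1ull << i; /* record the overflow */
	}
}
```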

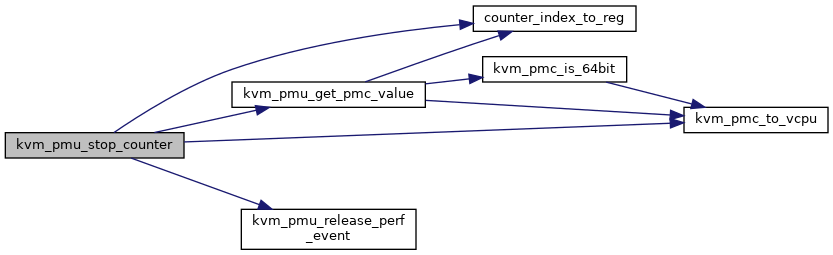

◆ kvm_pmu_stop_counter()

| static void kvm_pmu_stop_counter | ( | struct kvm_pmc * | pmc | ) |

kvm_pmu_stop_counter - stop PMU counter
@pmc: The PMU counter pointer

If this counter has been configured to monitor some event, release it here.

Definition at line 209 of file pmu-emul.c.
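
A counter backed by a perf event keeps its value in two parts: the value last saved in the vCPU's shadow register and the delta the perf event has accumulated since then. Stopping the counter folds the two together before the perf event is released, so no counts are lost. A minimal sketch of that bookkeeping, modelled on kvm_pmu_get_pmc_value() and kvm_pmu_stop_counter(). The struct is hypothetical, and `perf_delta` stands in for what perf_event_read_value() would return.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of one emulated counter. */
struct counter_model {
	uint64_t saved;      /* shadow register value */
	bool has_perf_event; /* is a host perf event currently attached? */
	uint64_t perf_delta; /* count accumulated by that perf event */
	bool is_64bit;       /* see kvm_pmc_is_64bit() */
};

/* Guest-visible value: saved part plus live perf delta, truncated to
 * 32 bits for counters that are not 64 bits wide. */
static uint64_t counter_value(const struct counter_model *c)
{
	uint64_t v = c->saved;

	if (c->has_perf_event)
		v += c->perf_delta;
	if (!c->is_64bit)
		v &= 0xffffffffull;
	return v;
}

/* Stop the counter: fold the live delta into the shadow register,
 * then drop the perf event. */
static void stop_counter(struct counter_model *c)
{
	if (!c->has_perf_event)
		return;
	c->saved = counter_value(c);
	c->has_perf_event = false;
	c->perf_delta = 0;
}
```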

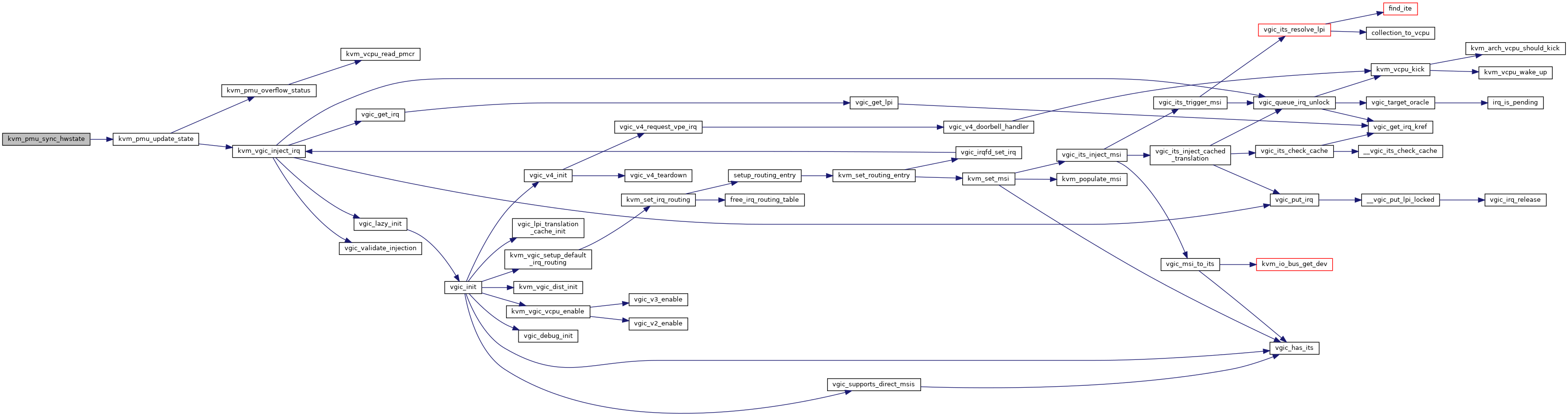

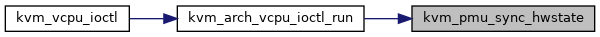

◆ kvm_pmu_sync_hwstate()

| void kvm_pmu_sync_hwstate | ( | struct kvm_vcpu * | vcpu | ) |

kvm_pmu_sync_hwstate - sync PMU state from the CPU
@vcpu: The vcpu pointer

Check if the PMU has overflowed while we were running in the guest, and inject an interrupt if that was the case.

Definition at line 417 of file pmu-emul.c.

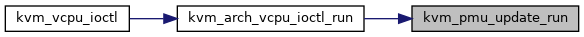

◆ kvm_pmu_update_run()

| void kvm_pmu_update_run | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_pmu_update_state()

| static void kvm_pmu_update_state | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 352 of file pmu-emul.c.

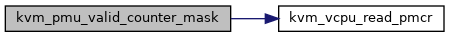

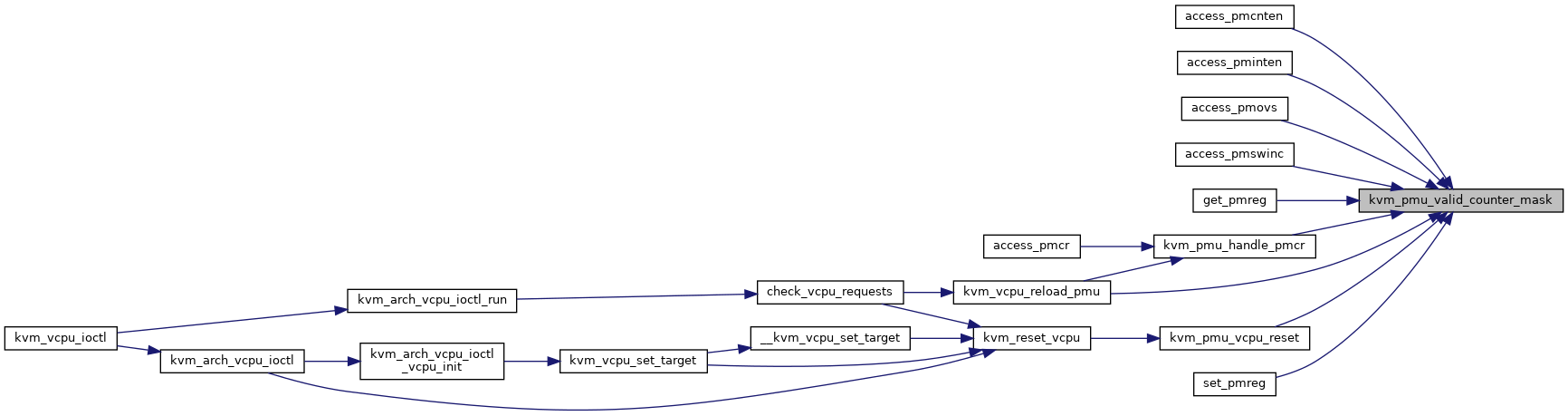

◆ kvm_pmu_valid_counter_mask()

| u64 kvm_pmu_valid_counter_mask | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 268 of file pmu-emul.c.

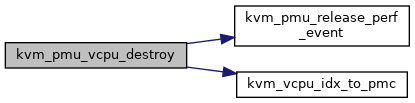

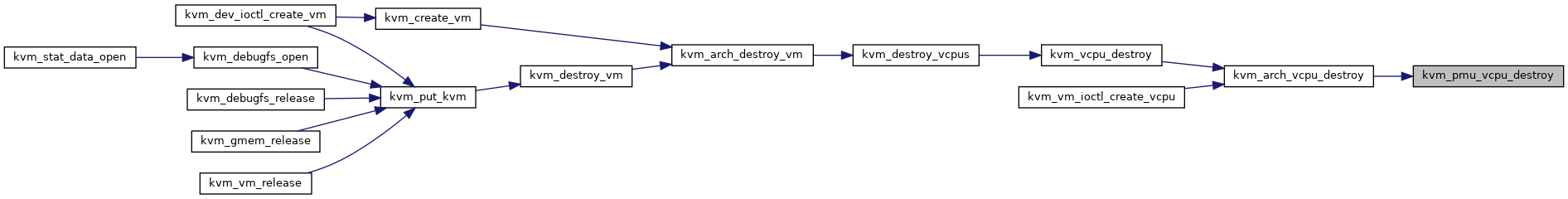

◆ kvm_pmu_vcpu_destroy()

| void kvm_pmu_vcpu_destroy | ( | struct kvm_vcpu * | vcpu | ) |

kvm_pmu_vcpu_destroy - free the PMU perf events for the vCPU
@vcpu: The vcpu pointer

Definition at line 259 of file pmu-emul.c.

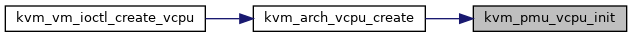

◆ kvm_pmu_vcpu_init()

| void kvm_pmu_vcpu_init | ( | struct kvm_vcpu * | vcpu | ) |

kvm_pmu_vcpu_init - assign PMU counter indices for the vCPU
@vcpu: The vcpu pointer

Definition at line 231 of file pmu-emul.c.

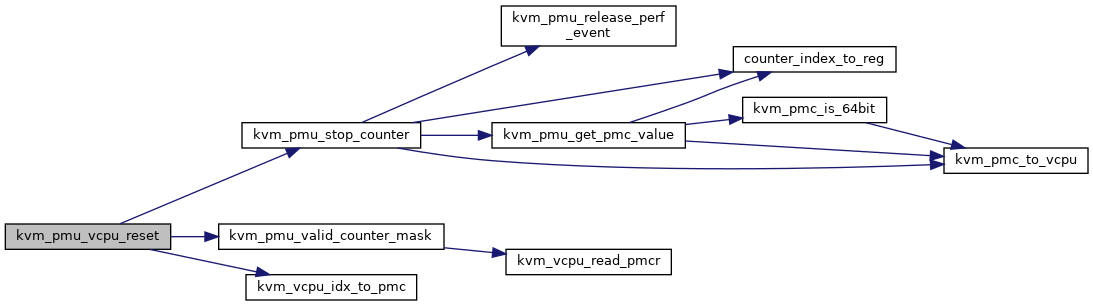

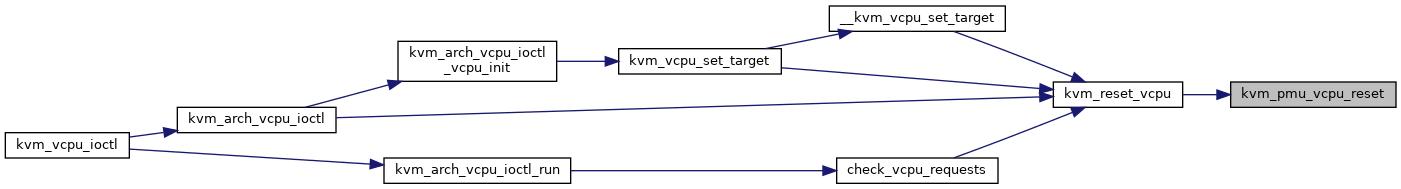

◆ kvm_pmu_vcpu_reset()

| void kvm_pmu_vcpu_reset | ( | struct kvm_vcpu * | vcpu | ) |

kvm_pmu_vcpu_reset - reset PMU state for the vCPU
@vcpu: The vcpu pointer

Definition at line 245 of file pmu-emul.c.

◆ kvm_vcpu_idx_to_pmc()

| static struct kvm_pmc * kvm_vcpu_idx_to_pmc | ( | struct kvm_vcpu * | vcpu, | int | cnt_idx | ) |

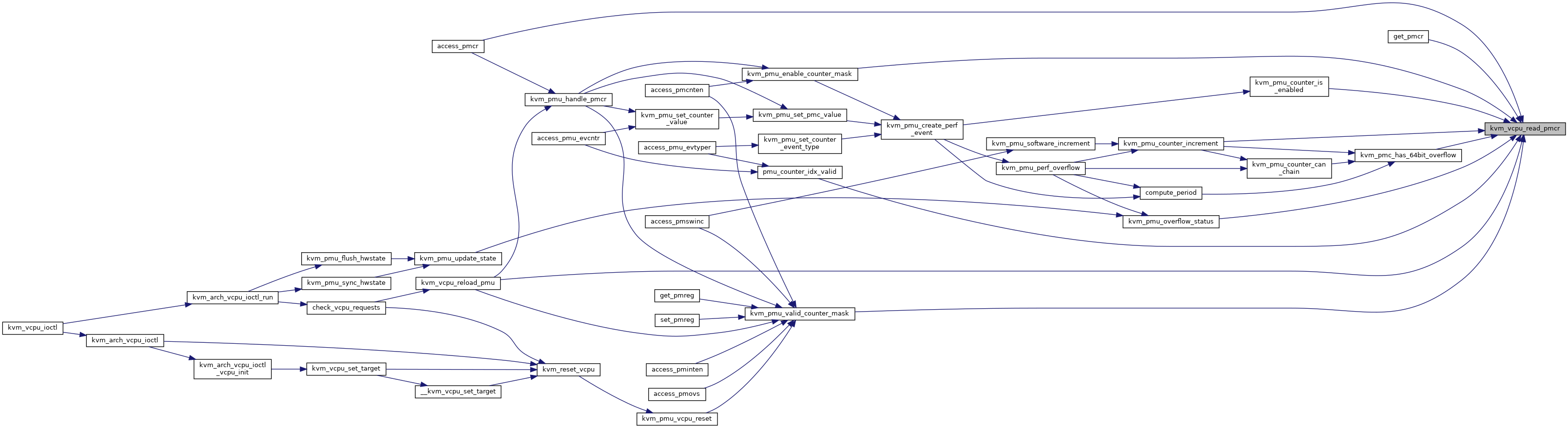

◆ kvm_vcpu_read_pmcr()

| u64 kvm_vcpu_read_pmcr | ( | struct kvm_vcpu * | vcpu | ) |

kvm_vcpu_read_pmcr - Read PMCR_EL0 register for the vCPU
@vcpu: The vcpu pointer

Definition at line 1136 of file pmu-emul.c.
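
PMCR_EL0.N (bits [15:11]) tells the guest how many event counters exist. In recent kernels, kvm_vcpu_read_pmcr() presents the VM's configured counter count in that field (bounded by kvm_arm_pmu_get_max_counters()). kvm_pmu_valid_counter_mask() then turns it into a bitmask of event counters 0..N-1 plus the cycle counter at bit 31. A sketch of both steps, assuming that field layout; the helper names are hypothetical.

```c
#include <stdint.h>

#define PMCR_N_SHIFT 11
#define PMCR_N_MASK  (0x1full << PMCR_N_SHIFT) /* PMCR_EL0.N, bits [15:11] */
#define CYCLE_IDX    31

/* Replace PMCR_EL0.N with the VM's configured number of event counters. */
static uint64_t pmcr_with_n(uint64_t pmcr, unsigned int n)
{
	return (pmcr & ~PMCR_N_MASK) |
	       (((uint64_t)n << PMCR_N_SHIFT) & PMCR_N_MASK);
}

/* Bits 0..N-1 for the event counters plus bit 31 for the cycle counter. */
static uint64_t valid_counter_mask(uint64_t pmcr)
{
	unsigned int n = (unsigned int)((pmcr & PMCR_N_MASK) >> PMCR_N_SHIFT);
	uint64_t events = n ? ((1ull << n) - 1) : 0;

	return events | (1ull << CYCLE_IDX);
}
```

Even with N = 0 the mask still contains bit 31, because the cycle counter is always present.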

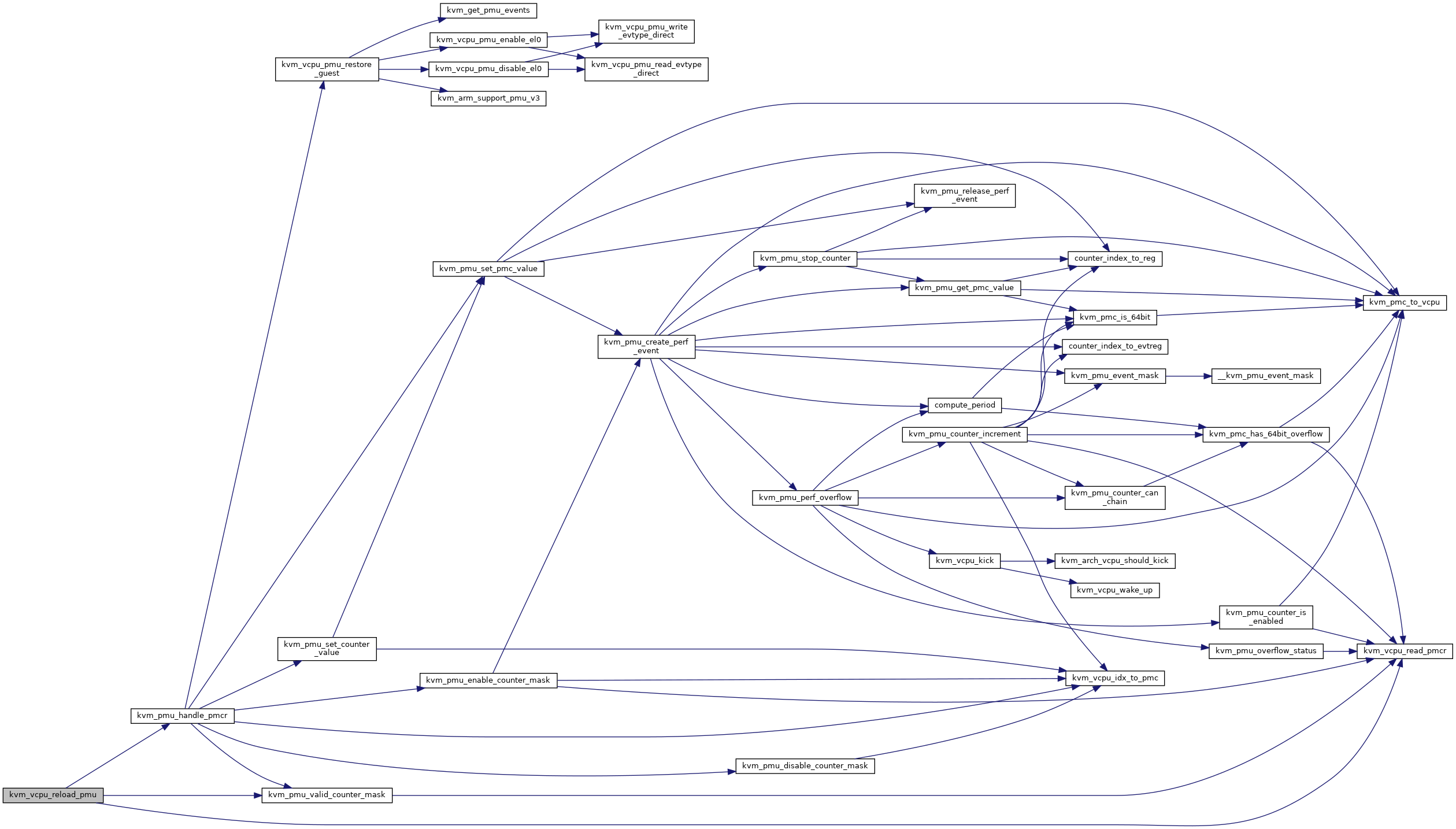

◆ kvm_vcpu_reload_pmu()

| void kvm_vcpu_reload_pmu | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 805 of file pmu-emul.c.

◆ LIST_HEAD()

| static LIST_HEAD | ( | arm_pmus | ) |

◆ pmu_irq_is_valid()

| static bool pmu_irq_is_valid | ( | struct kvm * | kvm, | int | irq | ) |