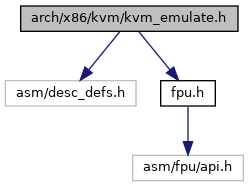

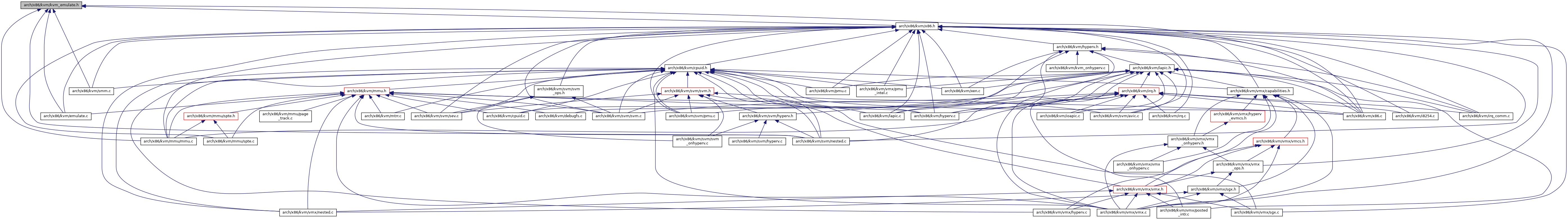

Go to the source code of this file.

Classes

| Type | Name |
|---|---|
| struct | x86_exception |
| struct | x86_instruction_info |
| struct | x86_emulate_ops |
| struct | operand |
| struct | fetch_cache |
| struct | read_cache |
| struct | x86_emulate_ctxt |

Typedefs

| Declaration |
|---|
| typedef void (*fastop_t)(struct fastop *) |

Functions

| Return type | Function |
|---|---|
| static bool | is_guest_vendor_intel(u32 ebx, u32 ecx, u32 edx) |
| static bool | is_guest_vendor_amd(u32 ebx, u32 ecx, u32 edx) |
| static bool | is_guest_vendor_hygon(u32 ebx, u32 ecx, u32 edx) |
| int | x86_decode_insn(struct x86_emulate_ctxt *ctxt, void *insn, int insn_len, int emulation_type) |
| bool | x86_page_table_writing_insn(struct x86_emulate_ctxt *ctxt) |
| void | init_decode_cache(struct x86_emulate_ctxt *ctxt) |
| int | x86_emulate_insn(struct x86_emulate_ctxt *ctxt) |
| int | emulator_task_switch(struct x86_emulate_ctxt *ctxt, u16 tss_selector, int idt_index, int reason, bool has_error_code, u32 error_code) |
| int | emulate_int_real(struct x86_emulate_ctxt *ctxt, int irq) |
| void | emulator_invalidate_register_cache(struct x86_emulate_ctxt *ctxt) |
| void | emulator_writeback_register_cache(struct x86_emulate_ctxt *ctxt) |
| bool | emulator_can_use_gpa(struct x86_emulate_ctxt *ctxt) |
| static ulong | reg_read(struct x86_emulate_ctxt *ctxt, unsigned nr) |
| static ulong * | reg_write(struct x86_emulate_ctxt *ctxt, unsigned nr) |
| static ulong * | reg_rmw(struct x86_emulate_ctxt *ctxt, unsigned nr) |

Macro Definition Documentation

◆ EMULATION_FAILED

| #define EMULATION_FAILED -1 |

Definition at line 505 of file kvm_emulate.h.

◆ EMULATION_INTERCEPTED

| #define EMULATION_INTERCEPTED 2 |

Definition at line 508 of file kvm_emulate.h.

◆ EMULATION_OK

| #define EMULATION_OK 0 |

Definition at line 506 of file kvm_emulate.h.

◆ EMULATION_RESTART

| #define EMULATION_RESTART 1 |

Definition at line 507 of file kvm_emulate.h.

◆ KVM_EMULATOR_BUG_ON

| #define KVM_EMULATOR_BUG_ON(cond, ctxt) |

Definition at line 377 of file kvm_emulate.h.

◆ NR_EMULATOR_GPRS

| #define NR_EMULATOR_GPRS 8 |

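Definition at line 304 of file kvm_emulate.h. The value 8 applies to 32-bit builds; when CONFIG_X86_64 is enabled the header defines NR_EMULATOR_GPRS as 16.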

◆ REPE_PREFIX

| #define REPE_PREFIX 0xf3 |

Definition at line 387 of file kvm_emulate.h.

◆ REPNE_PREFIX

| #define REPNE_PREFIX 0xf2 |

Definition at line 388 of file kvm_emulate.h.
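REPE_PREFIX (0xf3) and REPNE_PREFIX (0xf2) are legacy prefix bytes that precede the opcode. As a rough illustration of how a decoder might spot them, the sketch below scans the leading legacy prefixes of an instruction and remembers the last REP-family byte it sees. find_rep_prefix() and is_legacy_prefix() are hypothetical helpers, not functions from kvm_emulate.h or emulate.c, and REX/VEX handling is deliberately left out.

```c
#include <stddef.h>
#include <stdint.h>

/* Values as documented above for REPE_PREFIX / REPNE_PREFIX. */
#define REPE_PREFIX  0xf3
#define REPNE_PREFIX 0xf2

/* Hypothetical: nonzero for x86 legacy prefix bytes (REX/VEX not handled). */
static int is_legacy_prefix(uint8_t b)
{
	switch (b) {
	case 0xf0:                               /* LOCK */
	case 0xf2: case 0xf3:                    /* REPNE/REPNZ, REP/REPE/REPZ */
	case 0x26: case 0x2e: case 0x36: case 0x3e:
	case 0x64: case 0x65:                    /* segment overrides */
	case 0x66: case 0x67:                    /* operand-size, address-size */
		return 1;
	default:
		return 0;
	}
}

/*
 * Hypothetical: scan the legacy prefixes at the start of insn and return the
 * last REP-family prefix seen, or 0 if there is none. If both F2 and F3
 * appear, this sketch simply keeps the last one.
 */
static uint8_t find_rep_prefix(const uint8_t *insn, size_t len)
{
	uint8_t rep = 0;

	for (size_t i = 0; i < len && is_legacy_prefix(insn[i]); i++) {
		if (insn[i] == REPE_PREFIX || insn[i] == REPNE_PREFIX)
			rep = insn[i];
	}
	return rep;
}
```

For example, the bytes `f3 a4` (`rep movsb`) yield REPE_PREFIX, while a lone `90` (`nop`) yields 0.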

◆ X86EMUL_CMPXCHG_FAILED

| #define X86EMUL_CMPXCHG_FAILED 4 /* cmpxchg did not see expected value */ |

Definition at line 87 of file kvm_emulate.h.

◆ X86EMUL_CONTINUE

| #define X86EMUL_CONTINUE 0 |

Definition at line 81 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AMDisbetterI_ebx

| #define X86EMUL_CPUID_VENDOR_AMDisbetterI_ebx 0x69444d41 |

Definition at line 395 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AMDisbetterI_ecx

| #define X86EMUL_CPUID_VENDOR_AMDisbetterI_ecx 0x21726574 |

Definition at line 396 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AMDisbetterI_edx

| #define X86EMUL_CPUID_VENDOR_AMDisbetterI_edx 0x74656273 |

Definition at line 397 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AuthenticAMD_ebx

| #define X86EMUL_CPUID_VENDOR_AuthenticAMD_ebx 0x68747541 |

Definition at line 391 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AuthenticAMD_ecx

| #define X86EMUL_CPUID_VENDOR_AuthenticAMD_ecx 0x444d4163 |

Definition at line 392 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_AuthenticAMD_edx

| #define X86EMUL_CPUID_VENDOR_AuthenticAMD_edx 0x69746e65 |

Definition at line 393 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_CentaurHauls_ebx

| #define X86EMUL_CPUID_VENDOR_CentaurHauls_ebx 0x746e6543 |

Definition at line 407 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_CentaurHauls_ecx

| #define X86EMUL_CPUID_VENDOR_CentaurHauls_ecx 0x736c7561 |

Definition at line 408 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_CentaurHauls_edx

| #define X86EMUL_CPUID_VENDOR_CentaurHauls_edx 0x48727561 |

Definition at line 409 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_GenuineIntel_ebx

| #define X86EMUL_CPUID_VENDOR_GenuineIntel_ebx 0x756e6547 |

Definition at line 403 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_GenuineIntel_ecx

| #define X86EMUL_CPUID_VENDOR_GenuineIntel_ecx 0x6c65746e |

Definition at line 404 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_GenuineIntel_edx

| #define X86EMUL_CPUID_VENDOR_GenuineIntel_edx 0x49656e69 |

Definition at line 405 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_HygonGenuine_ebx

| #define X86EMUL_CPUID_VENDOR_HygonGenuine_ebx 0x6f677948 |

Definition at line 399 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_HygonGenuine_ecx

| #define X86EMUL_CPUID_VENDOR_HygonGenuine_ecx 0x656e6975 |

Definition at line 400 of file kvm_emulate.h.

◆ X86EMUL_CPUID_VENDOR_HygonGenuine_edx

| #define X86EMUL_CPUID_VENDOR_HygonGenuine_edx 0x6e65476e |

Definition at line 401 of file kvm_emulate.h.
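Each group of three X86EMUL_CPUID_VENDOR_* constants above is a 12-byte ASCII vendor string split across the registers returned by CPUID leaf 0. Each register holds four characters, least significant byte first, and the string reads in the order EBX, EDX, ECX. The sketch below rebuilds the string from the register values to show that layout; vendor_string() and put_le32() are hypothetical helpers, not part of kvm_emulate.h.

```c
#include <stdint.h>
#include <string.h>   /* strcmp, used when checking the result */

#define X86EMUL_CPUID_VENDOR_GenuineIntel_ebx 0x756e6547
#define X86EMUL_CPUID_VENDOR_GenuineIntel_ecx 0x6c65746e
#define X86EMUL_CPUID_VENDOR_GenuineIntel_edx 0x49656e69

/* Hypothetical: copy the 4 bytes of v into out, least significant byte first. */
static void put_le32(char *out, uint32_t v)
{
	for (int i = 0; i < 4; i++)
		out[i] = (char)((v >> (8 * i)) & 0xff);
}

/*
 * Hypothetical: rebuild the vendor string from CPUID leaf 0. The parameter
 * order (ebx, ecx, edx) follows the is_guest_vendor_*() signatures, but the
 * characters are laid out in EBX, EDX, ECX order.
 */
static void vendor_string(uint32_t ebx, uint32_t ecx, uint32_t edx, char out[13])
{
	put_le32(out, ebx);
	put_le32(out + 4, edx);
	put_le32(out + 8, ecx);
	out[12] = '\0';
}
```

Feeding in the three GenuineIntel constants produces "GenuineIntel"; the AuthenticAMD, CentaurHauls and HygonGenuine groups decode the same way.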

◆ X86EMUL_F_FETCH

| #define X86EMUL_F_FETCH BIT(1) |

Definition at line 93 of file kvm_emulate.h.

◆ X86EMUL_F_IMPLICIT

| #define X86EMUL_F_IMPLICIT BIT(2) |

Definition at line 94 of file kvm_emulate.h.

◆ X86EMUL_F_INVLPG

| #define X86EMUL_F_INVLPG BIT(3) |

Definition at line 95 of file kvm_emulate.h.

◆ X86EMUL_F_WRITE

| #define X86EMUL_F_WRITE BIT(0) |

Definition at line 92 of file kvm_emulate.h.
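The X86EMUL_F_* values are single-bit flags, so several can be combined in one flag word and tested independently. The sketch below shows that composition; BIT() is redefined locally to mirror the kernel macro, and is_write_access() and is_instruction_fetch() are hypothetical helpers, not part of the emulator's interface.

```c
#include <stdbool.h>

#define BIT(n) (1UL << (n))   /* local stand-in for the kernel's BIT() */

#define X86EMUL_F_WRITE    BIT(0)
#define X86EMUL_F_FETCH    BIT(1)
#define X86EMUL_F_IMPLICIT BIT(2)
#define X86EMUL_F_INVLPG   BIT(3)

/* Hypothetical: true if the flag word describes a write access. */
static bool is_write_access(unsigned long flags)
{
	return (flags & X86EMUL_F_WRITE) != 0;
}

/* Hypothetical: true if the flag word describes an instruction fetch. */
static bool is_instruction_fetch(unsigned long flags)
{
	return (flags & X86EMUL_F_FETCH) != 0;
}
```

For instance, `X86EMUL_F_WRITE | X86EMUL_F_IMPLICIT` has the value 0x5, reports a write, and does not report a fetch.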

◆ X86EMUL_INTERCEPTED

| #define X86EMUL_INTERCEPTED 6 /* Intercepted by nested VMCB/VMCS */ |

Definition at line 89 of file kvm_emulate.h.

◆ X86EMUL_IO_NEEDED

| #define X86EMUL_IO_NEEDED 5 /* IO is needed to complete emulation */ |

Definition at line 88 of file kvm_emulate.h.

◆ X86EMUL_PROPAGATE_FAULT

| #define X86EMUL_PROPAGATE_FAULT 2 /* propagate a generated fault to guest */ |

Definition at line 85 of file kvm_emulate.h.

◆ X86EMUL_RETRY_INSTR

| #define X86EMUL_RETRY_INSTR 3 /* retry the instruction for some reason */ |

Definition at line 86 of file kvm_emulate.h.

◆ X86EMUL_UNHANDLEABLE

| #define X86EMUL_UNHANDLEABLE 1 |

Definition at line 83 of file kvm_emulate.h.
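The page documents two families of result codes. X86EMUL_* values describe the outcome of individual emulator steps, and EMULATION_* values describe the outcome of a whole instruction. The sketch below shows one plausible way of folding a final X86EMUL_* status into an EMULATION_* result, assuming that only an unhandleable step counts as failure and that faults, retries and pending I/O are reported through the emulation context rather than the return value. fold_status() is hypothetical, and the EMULATION_RESTART case is not modelled.

```c
/* Per-step status codes, values as documented above. */
#define X86EMUL_CONTINUE        0
#define X86EMUL_UNHANDLEABLE    1
#define X86EMUL_PROPAGATE_FAULT 2
#define X86EMUL_RETRY_INSTR     3
#define X86EMUL_CMPXCHG_FAILED  4
#define X86EMUL_IO_NEEDED       5
#define X86EMUL_INTERCEPTED     6

/* Whole-instruction results, values as documented above. */
#define EMULATION_FAILED      -1
#define EMULATION_OK           0
#define EMULATION_RESTART      1
#define EMULATION_INTERCEPTED  2

/*
 * Hypothetical, simplified fold of a final X86EMUL_* status into an
 * EMULATION_* result. A nested-guest intercept is passed through, an
 * unhandleable instruction is a failure, and everything else is "OK" at
 * this level, with any pending fault or I/O left for the caller to inspect.
 */
static int fold_status(int rc)
{
	if (rc == X86EMUL_INTERCEPTED)
		return EMULATION_INTERCEPTED;
	return rc == X86EMUL_UNHANDLEABLE ? EMULATION_FAILED : EMULATION_OK;
}
```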

Typedef Documentation

◆ fastop_t

| typedef void (*fastop_t)(struct fastop *) |

Definition at line 293 of file kvm_emulate.h.

Enumeration Type Documentation

◆ x86_intercept

| enum x86_intercept |

Definition at line 442 of file kvm_emulate.h.

◆ x86_intercept_stage

| enum x86_intercept_stage |

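| Enumerator | Meaning |
|---|---|
| X86_ICTP_NONE | Zero value, so that a zero-initialized stage matches no intercept check |
| X86_ICPT_PRE_EXCEPT | Intercept check made before the emulator's exception checks |
| X86_ICPT_POST_EXCEPT | Intercept check made after the exception checks |
| X86_ICPT_POST_MEMACCESS | Intercept check made after the memory operand has been accessed |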

Definition at line 435 of file kvm_emulate.h.

◆ x86emul_mode

| enum x86emul_mode |

| Enumerator | Meaning |
|---|---|
| X86EMUL_MODE_REAL | Real mode |
| X86EMUL_MODE_VM86 | Virtual-8086 mode |
| X86EMUL_MODE_PROT16 | 16-bit protected mode |
| X86EMUL_MODE_PROT32 | 32-bit protected mode |
| X86EMUL_MODE_PROT64 | 64-bit (long) mode |

Definition at line 279 of file kvm_emulate.h.
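The mode determines the default operand and address sizes a decoder starts from before any 0x66/0x67 prefixes or REX.W are applied. The sketch below encodes the architectural defaults for each x86emul_mode value. default_sizes() is a hypothetical helper; a real decoder may also consult segment state, which this sketch ignores.

```c
enum x86emul_mode {
	X86EMUL_MODE_REAL,
	X86EMUL_MODE_VM86,
	X86EMUL_MODE_PROT16,
	X86EMUL_MODE_PROT32,
	X86EMUL_MODE_PROT64,
};

/* Hypothetical: default operand and address sizes, in bytes, for a mode. */
static void default_sizes(enum x86emul_mode mode, int *op_bytes, int *ad_bytes)
{
	switch (mode) {
	case X86EMUL_MODE_REAL:
	case X86EMUL_MODE_VM86:
	case X86EMUL_MODE_PROT16:
		*op_bytes = *ad_bytes = 2;
		break;
	case X86EMUL_MODE_PROT32:
		*op_bytes = *ad_bytes = 4;
		break;
	case X86EMUL_MODE_PROT64:
		*op_bytes = 4;   /* most 64-bit operands need REX.W */
		*ad_bytes = 8;
		break;
	}
}
```

The asymmetry in 64-bit mode (4-byte operands, 8-byte addresses) is the main reason the two sizes are tracked separately.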

Function Documentation

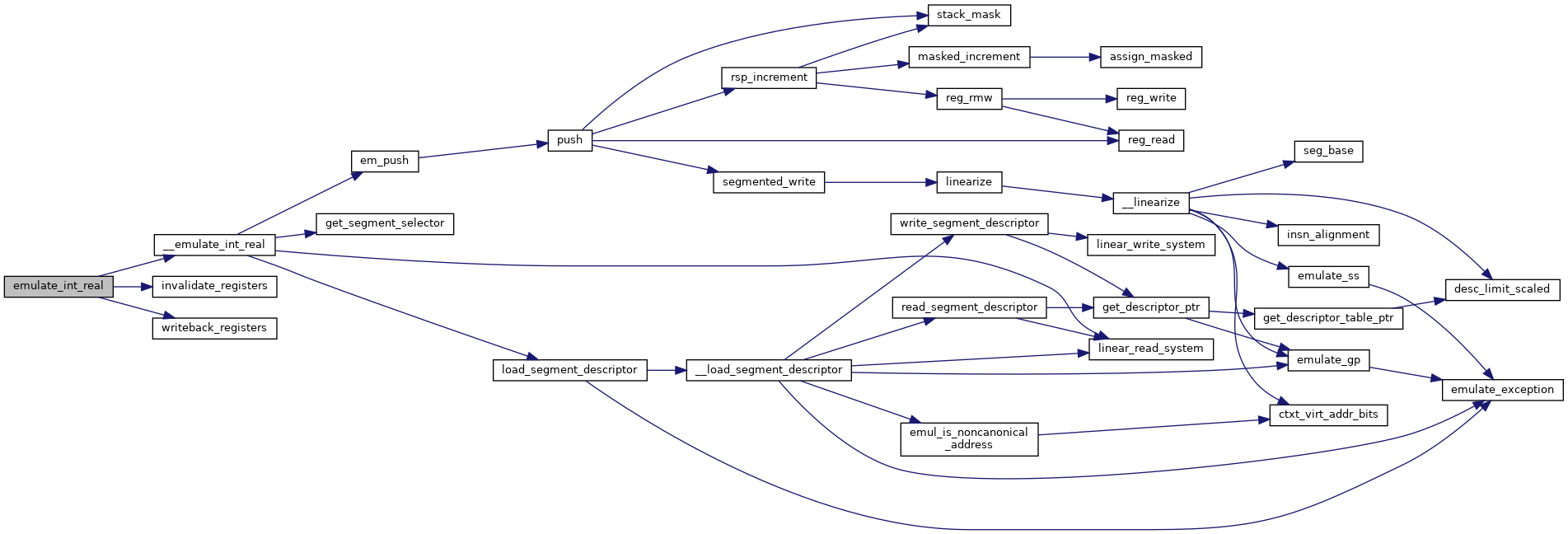

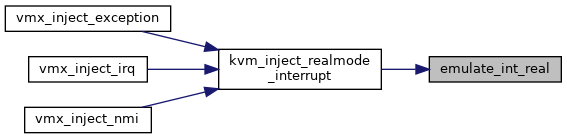

◆ emulate_int_real()

| int emulate_int_real(struct x86_emulate_ctxt *ctxt, int irq) |

Definition at line 2069 of file emulate.c.
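As a concrete picture of what delivering an interrupt in real mode involves, the sketch below models INT n in real-address mode as the Intel SDM describes it: push FLAGS, clear IF, TF and AC, push CS and IP, then load IP and CS from the 4-byte vector at IDT base + irq * 4. struct rm_cpu, int_real() and the memory helpers are hypothetical and are not KVM code, which works through struct x86_emulate_ctxt and its ops callbacks. The sketch skips limit checks and fault handling, and addresses simply wrap at 1 MiB.

```c
#include <stdint.h>

#define EFLAGS_TF (1u << 8)
#define EFLAGS_IF (1u << 9)
#define EFLAGS_AC (1u << 18)

/* Hypothetical, minimal real-mode CPU state for illustration only. */
struct rm_cpu {
	uint8_t  mem[1 << 20];   /* 1 MiB of guest memory */
	uint16_t cs, ip, ss, sp;
	uint32_t eflags;
	uint32_t idt_base;       /* IDTR base, 0 after reset */
};

static uint16_t rd16(const struct rm_cpu *c, uint32_t addr)
{
	return (uint16_t)(c->mem[addr & 0xfffff] |
			  (c->mem[(addr + 1) & 0xfffff] << 8));
}

static void wr16(struct rm_cpu *c, uint32_t addr, uint16_t v)
{
	c->mem[addr & 0xfffff] = (uint8_t)v;
	c->mem[(addr + 1) & 0xfffff] = (uint8_t)(v >> 8);
}

/* Push a 16-bit value onto SS:SP; SP wraps within the 64 KiB segment. */
static void push16(struct rm_cpu *c, uint16_t v)
{
	c->sp -= 2;
	wr16(c, ((uint32_t)c->ss << 4) + c->sp, v);
}

/* Hypothetical: deliver software interrupt irq in real mode. */
static void int_real(struct rm_cpu *c, uint8_t irq)
{
	uint32_t vec = c->idt_base + (uint32_t)irq * 4;

	push16(c, (uint16_t)c->eflags);
	c->eflags &= ~(EFLAGS_IF | EFLAGS_TF | EFLAGS_AC);
	push16(c, c->cs);
	push16(c, c->ip);

	c->ip = rd16(c, vec);       /* offset word comes first */
	c->cs = rd16(c, vec + 2);   /* segment word follows it */
}
```

Because struct rm_cpu embeds 1 MiB of memory, give it static storage rather than placing it on the stack.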

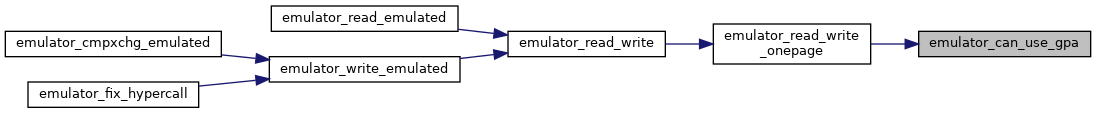

◆ emulator_can_use_gpa()

| bool emulator_can_use_gpa(struct x86_emulate_ctxt *ctxt) |

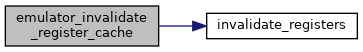

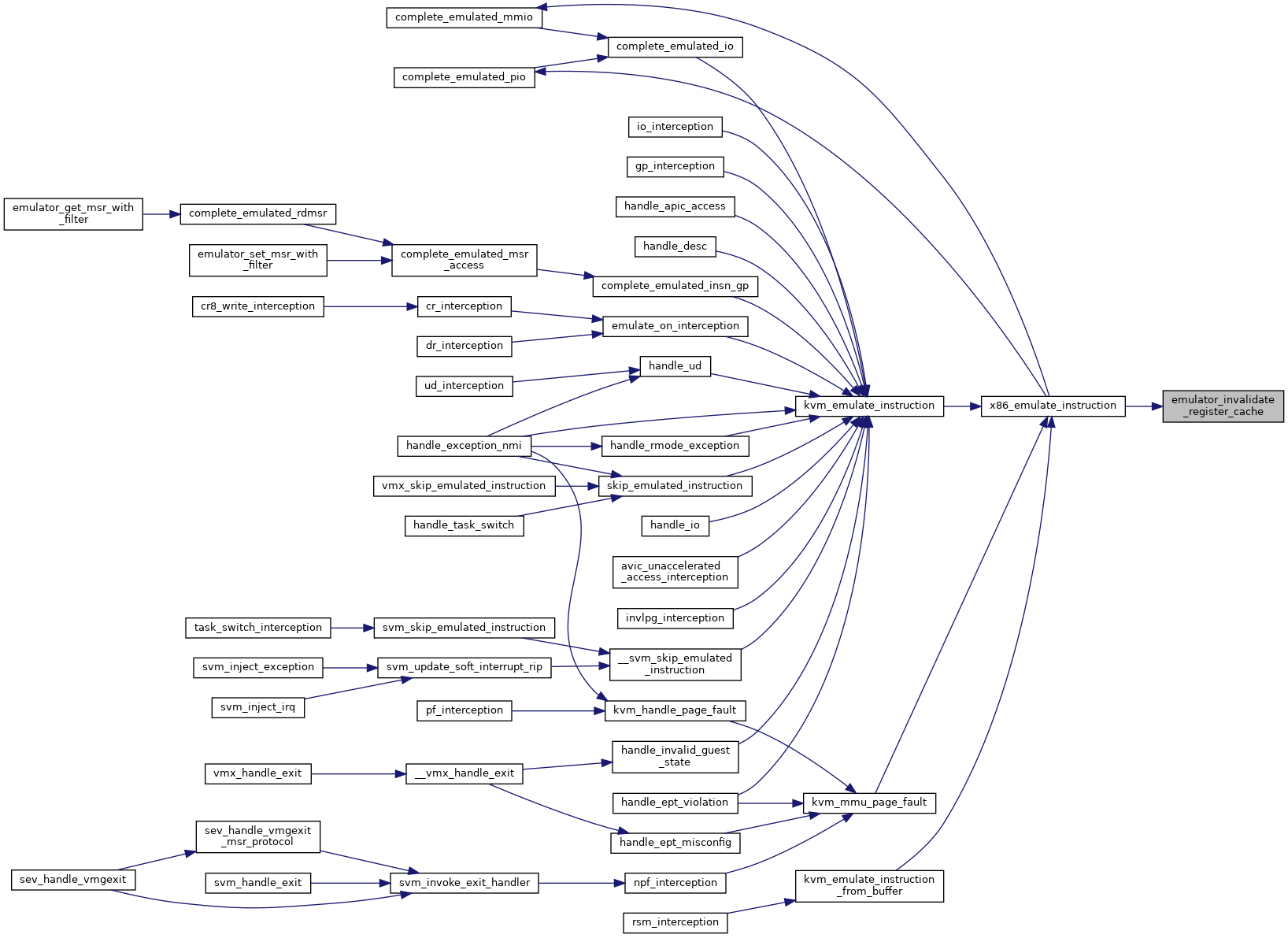

◆ emulator_invalidate_register_cache()

| void emulator_invalidate_register_cache(struct x86_emulate_ctxt *ctxt) |

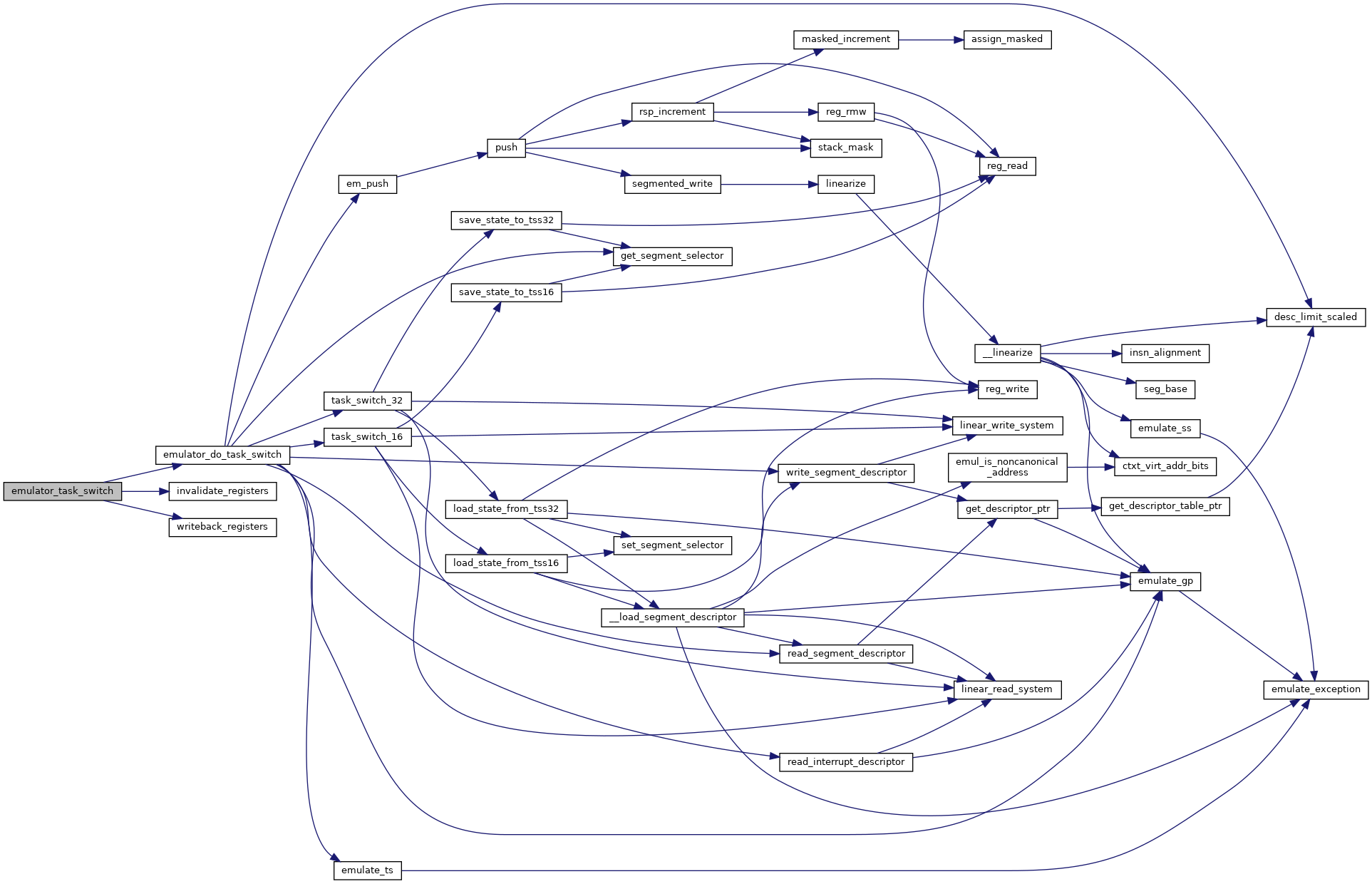

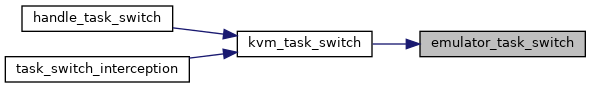

◆ emulator_task_switch()

| int emulator_task_switch(struct x86_emulate_ctxt *ctxt, u16 tss_selector, int idt_index, int reason, bool has_error_code, u32 error_code) |

Definition at line 3020 of file emulate.c.

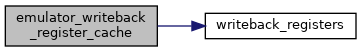

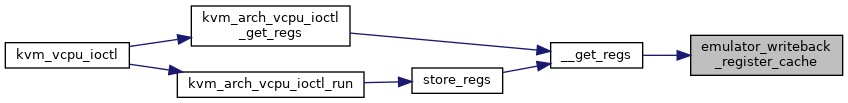

◆ emulator_writeback_register_cache()

| void emulator_writeback_register_cache(struct x86_emulate_ctxt *ctxt) |

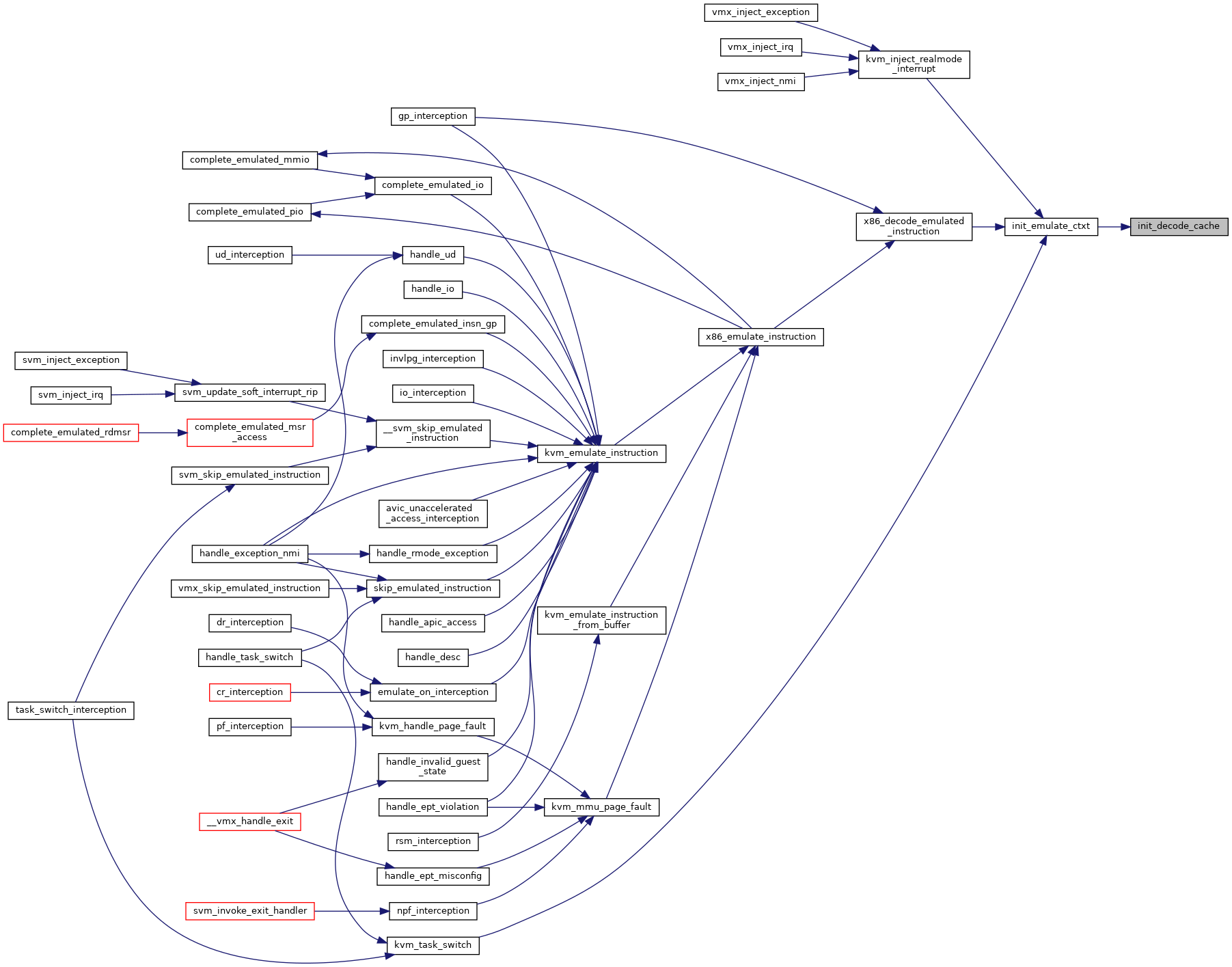

◆ init_decode_cache()

| void init_decode_cache(struct x86_emulate_ctxt *ctxt) |

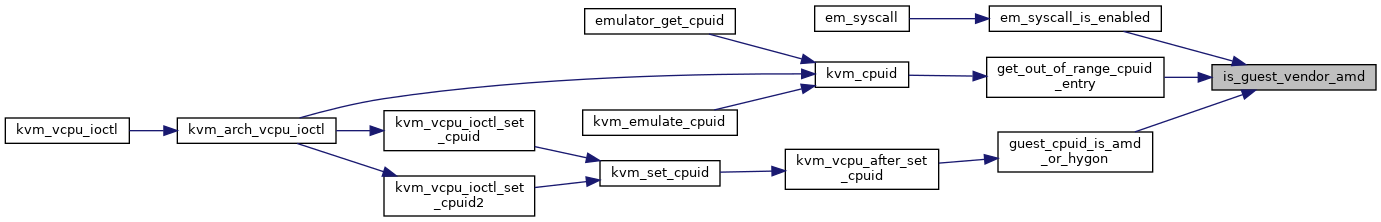

◆ is_guest_vendor_amd()

| static inline bool is_guest_vendor_amd(u32 ebx, u32 ecx, u32 edx) |

Definition at line 418 of file kvm_emulate.h.

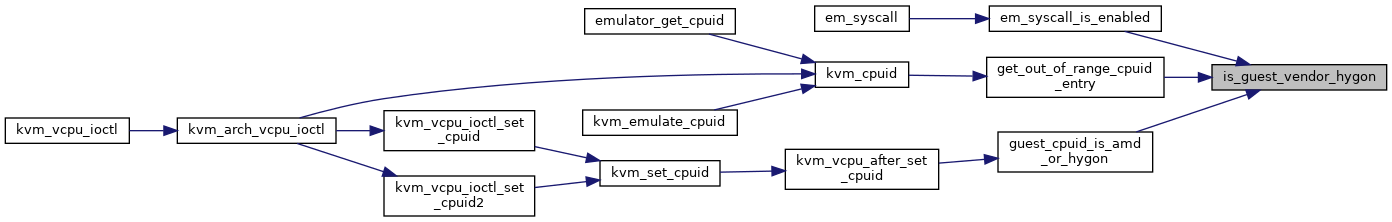

◆ is_guest_vendor_hygon()

| static inline bool is_guest_vendor_hygon(u32 ebx, u32 ecx, u32 edx) |

Definition at line 428 of file kvm_emulate.h.

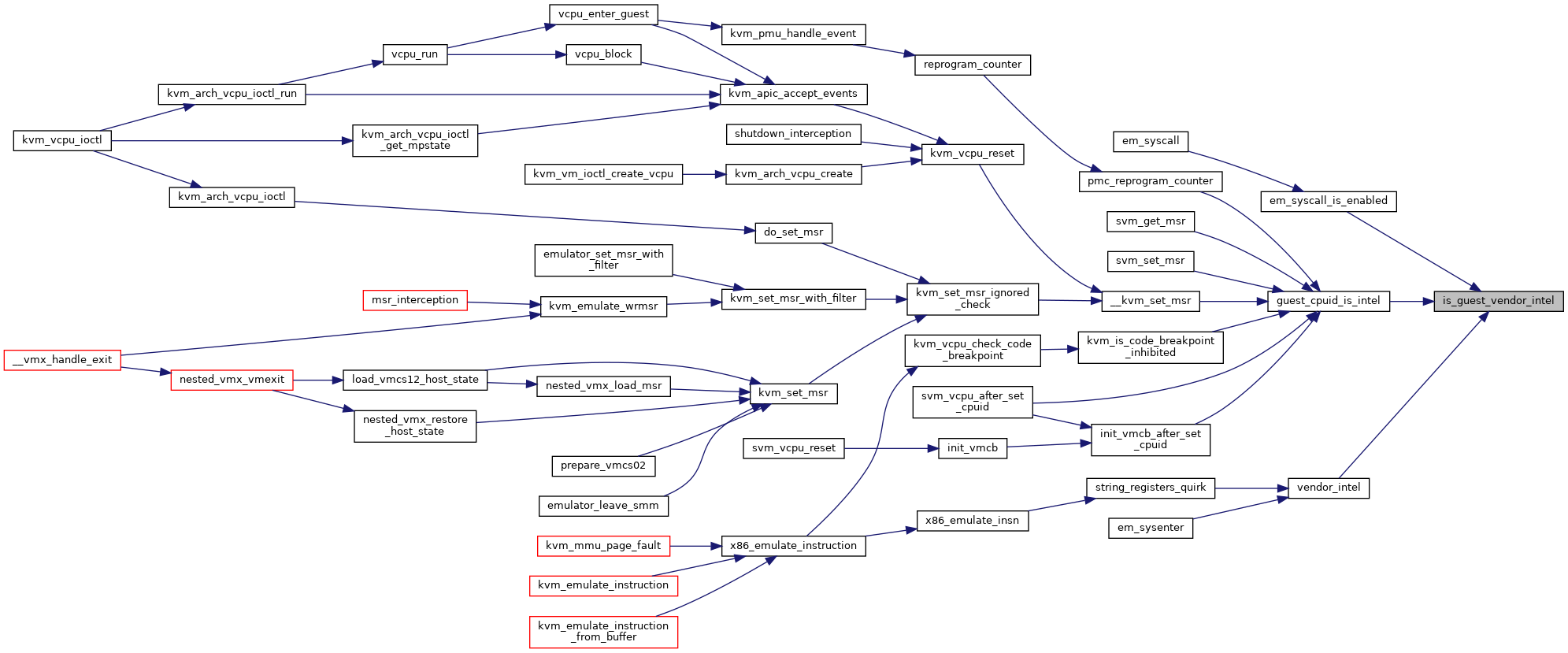

◆ is_guest_vendor_intel()

| static inline bool is_guest_vendor_intel(u32 ebx, u32 ecx, u32 edx) |

Definition at line 411 of file kvm_emulate.h.
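Given the vendor constants documented above, the three predicates can be pictured as exact comparisons of all three CPUID registers. The sketch below is a reconstruction under that assumption, with is_guest_vendor_amd() accepting both the AuthenticAMD and the AMDisbetter! strings, as the constant names suggest. The bodies are illustrative, and the header itself remains authoritative.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint32_t u32;

#define X86EMUL_CPUID_VENDOR_AuthenticAMD_ebx 0x68747541
#define X86EMUL_CPUID_VENDOR_AuthenticAMD_ecx 0x444d4163
#define X86EMUL_CPUID_VENDOR_AuthenticAMD_edx 0x69746e65
#define X86EMUL_CPUID_VENDOR_AMDisbetterI_ebx 0x69444d41
#define X86EMUL_CPUID_VENDOR_AMDisbetterI_ecx 0x21726574
#define X86EMUL_CPUID_VENDOR_AMDisbetterI_edx 0x74656273
#define X86EMUL_CPUID_VENDOR_HygonGenuine_ebx 0x6f677948
#define X86EMUL_CPUID_VENDOR_HygonGenuine_ecx 0x656e6975
#define X86EMUL_CPUID_VENDOR_HygonGenuine_edx 0x6e65476e
#define X86EMUL_CPUID_VENDOR_GenuineIntel_ebx 0x756e6547
#define X86EMUL_CPUID_VENDOR_GenuineIntel_ecx 0x6c65746e
#define X86EMUL_CPUID_VENDOR_GenuineIntel_edx 0x49656e69

/* Illustrative reconstruction: all three registers must match. */
static inline bool is_guest_vendor_intel(u32 ebx, u32 ecx, u32 edx)
{
	return ebx == X86EMUL_CPUID_VENDOR_GenuineIntel_ebx &&
	       ecx == X86EMUL_CPUID_VENDOR_GenuineIntel_ecx &&
	       edx == X86EMUL_CPUID_VENDOR_GenuineIntel_edx;
}

/* Illustrative reconstruction: accept either AMD vendor string. */
static inline bool is_guest_vendor_amd(u32 ebx, u32 ecx, u32 edx)
{
	return (ebx == X86EMUL_CPUID_VENDOR_AuthenticAMD_ebx &&
		ecx == X86EMUL_CPUID_VENDOR_AuthenticAMD_ecx &&
		edx == X86EMUL_CPUID_VENDOR_AuthenticAMD_edx) ||
	       (ebx == X86EMUL_CPUID_VENDOR_AMDisbetterI_ebx &&
		ecx == X86EMUL_CPUID_VENDOR_AMDisbetterI_ecx &&
		edx == X86EMUL_CPUID_VENDOR_AMDisbetterI_edx);
}

/* Illustrative reconstruction: HygonGenuine only. */
static inline bool is_guest_vendor_hygon(u32 ebx, u32 ecx, u32 edx)
{
	return ebx == X86EMUL_CPUID_VENDOR_HygonGenuine_ebx &&
	       ecx == X86EMUL_CPUID_VENDOR_HygonGenuine_ecx &&
	       edx == X86EMUL_CPUID_VENDOR_HygonGenuine_edx;
}
```

The parameters arrive as (ebx, ecx, edx), matching the documented signatures, even though the vendor string itself reads EBX, EDX, ECX.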

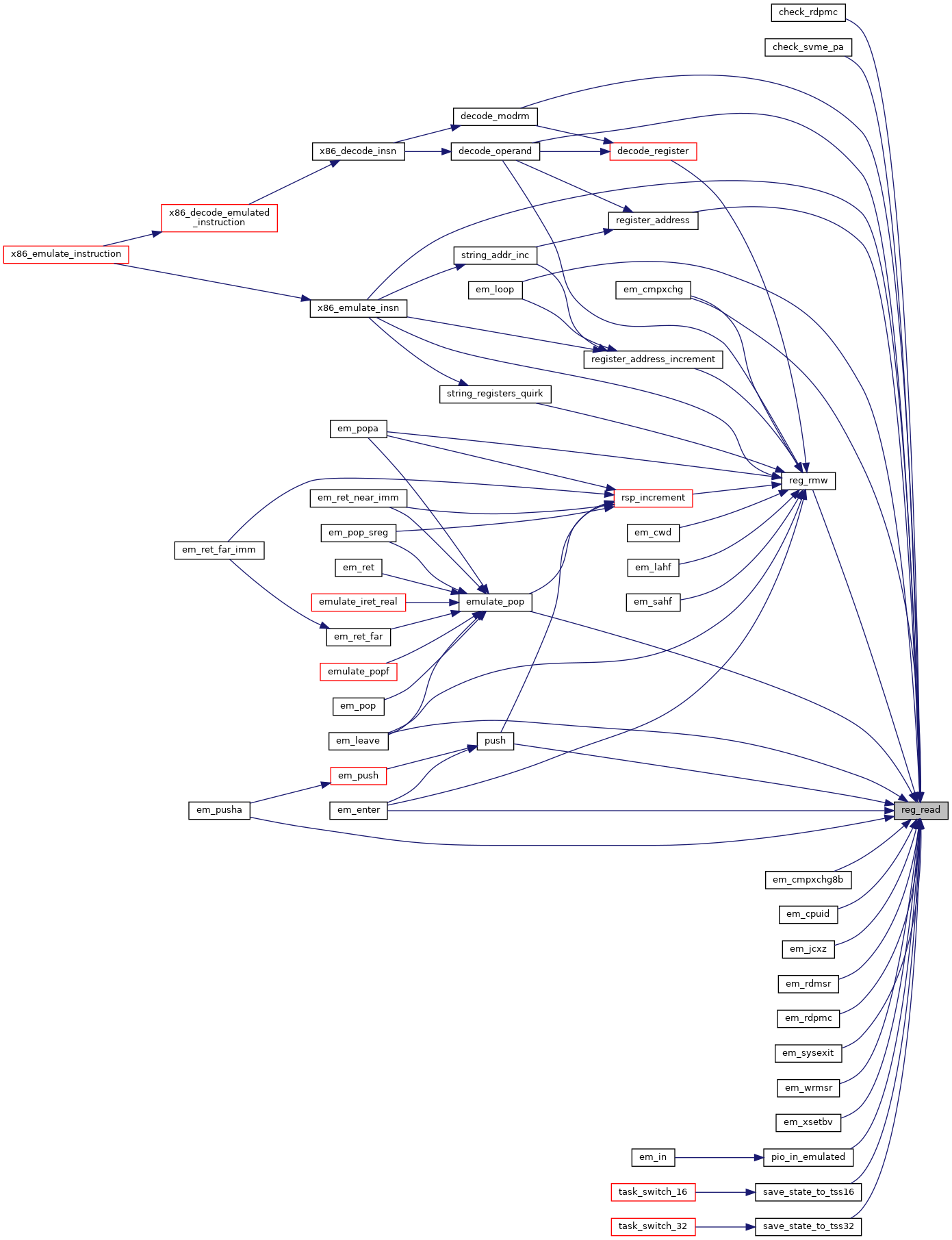

◆ reg_read()

| static inline ulong reg_read(struct x86_emulate_ctxt *ctxt, unsigned nr) |

Definition at line 519 of file kvm_emulate.h.

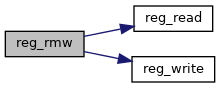

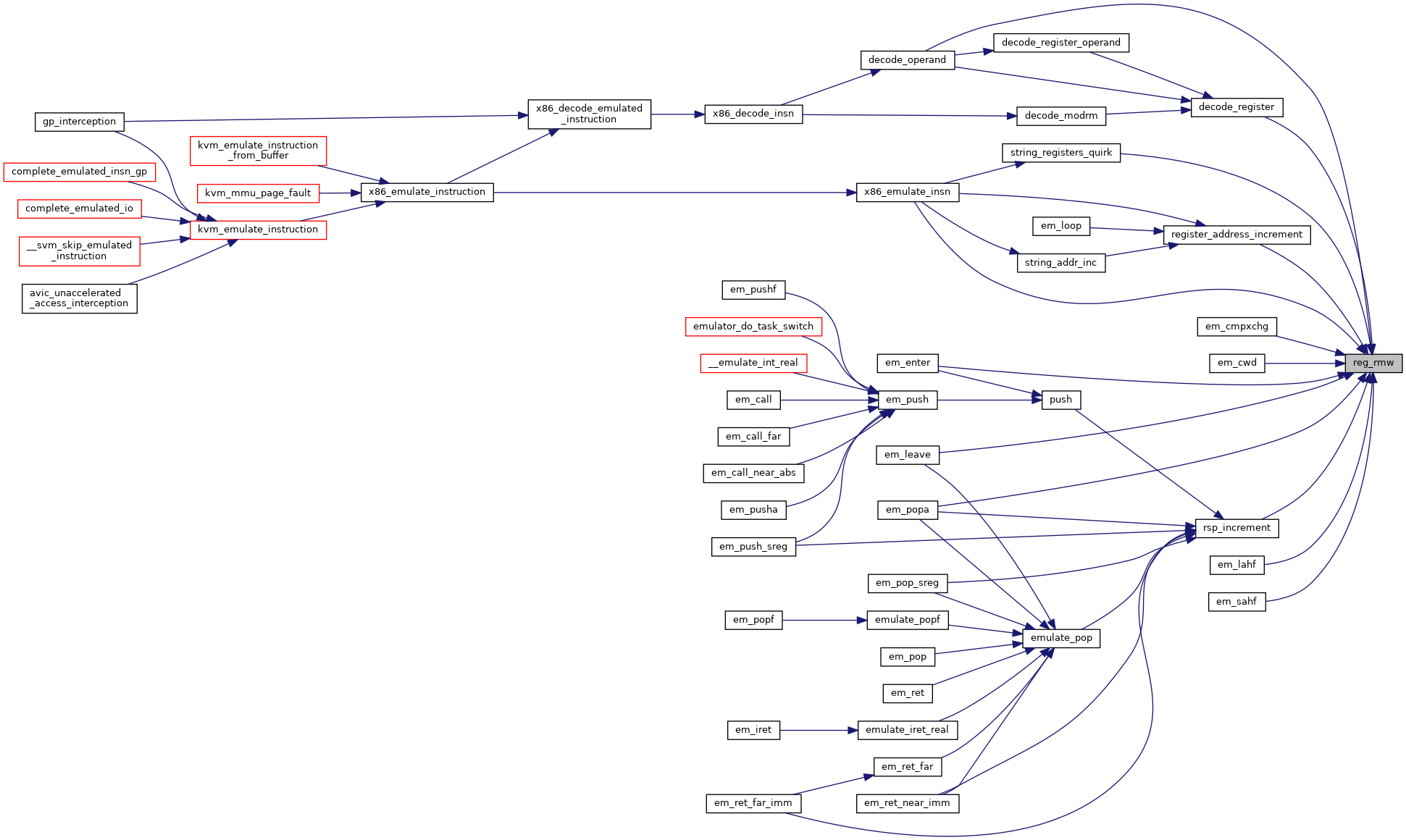

◆ reg_rmw()

| static inline ulong *reg_rmw(struct x86_emulate_ctxt *ctxt, unsigned nr) |

Definition at line 544 of file kvm_emulate.h.

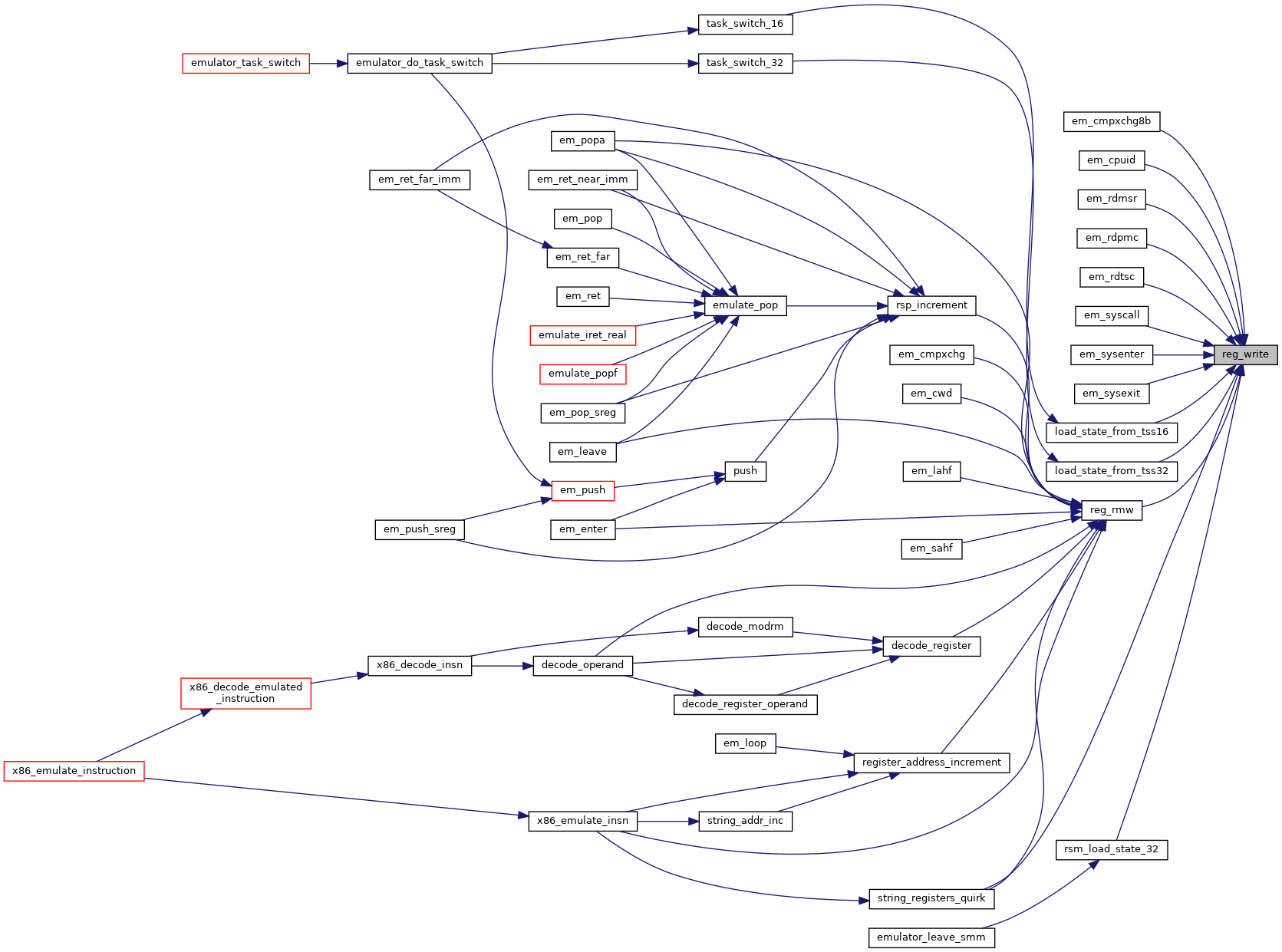

◆ reg_write()

| static inline ulong *reg_write(struct x86_emulate_ctxt *ctxt, unsigned nr) |
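reg_read(), reg_write() and reg_rmw(), together with emulator_invalidate_register_cache() and emulator_writeback_register_cache(), point to a lazily populated cache of guest GPRs. The sketch below models such a cache under stated assumptions: a valid bitmap marks slots that hold a current copy, and a dirty bitmap marks slots that must be written back. struct emu_ctxt and struct vcpu_regs are hypothetical stand-ins for struct x86_emulate_ctxt and the vCPU register file, writeback() and invalidate() are hypothetical counterparts of the two documented functions, and the range check is a plain assert().

```c
#include <assert.h>
#include <stdint.h>

#define NR_EMULATOR_GPRS 16   /* 16 with CONFIG_X86_64, 8 otherwise; 16 assumed */

typedef unsigned long ulong;

/* Hypothetical stand-in for the vCPU's architectural GPRs. */
struct vcpu_regs {
	ulong gpr[NR_EMULATOR_GPRS];
};

/* Hypothetical, cut-down model of the cache fields in an emulation context. */
struct emu_ctxt {
	struct vcpu_regs *vcpu;
	ulong _regs[NR_EMULATOR_GPRS];
	uint32_t regs_valid;   /* bit n: _regs[n] holds a current copy */
	uint32_t regs_dirty;   /* bit n: _regs[n] must be written back */
};

/* Fetch register nr from the backing store on first use only. */
static ulong reg_read(struct emu_ctxt *ctxt, unsigned nr)
{
	assert(nr < NR_EMULATOR_GPRS);
	if (!(ctxt->regs_valid & (1u << nr))) {
		ctxt->regs_valid |= 1u << nr;
		ctxt->_regs[nr] = ctxt->vcpu->gpr[nr];
	}
	return ctxt->_regs[nr];
}

/* Hand out a slot to overwrite; the old value is never fetched. */
static ulong *reg_write(struct emu_ctxt *ctxt, unsigned nr)
{
	assert(nr < NR_EMULATOR_GPRS);
	ctxt->regs_valid |= 1u << nr;
	ctxt->regs_dirty |= 1u << nr;
	return &ctxt->_regs[nr];
}

/* Read-modify-write: make the current value valid, then mark it dirty. */
static ulong *reg_rmw(struct emu_ctxt *ctxt, unsigned nr)
{
	reg_read(ctxt, nr);
	return reg_write(ctxt, nr);
}

/* Copy every dirty slot back to the backing store. */
static void writeback(struct emu_ctxt *ctxt)
{
	for (unsigned nr = 0; nr < NR_EMULATOR_GPRS; nr++)
		if (ctxt->regs_dirty & (1u << nr))
			ctxt->vcpu->gpr[nr] = ctxt->_regs[nr];
}

/* Forget all cached state so the next read refetches. */
static void invalidate(struct emu_ctxt *ctxt)
{
	ctxt->regs_valid = 0;
	ctxt->regs_dirty = 0;
}
```

The point of the split is that registers are fetched only when an instruction actually reads them, and only modified registers are copied back. reg_rmw() exists because a plain reg_write() would leave a partially updated register built on a stale value.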

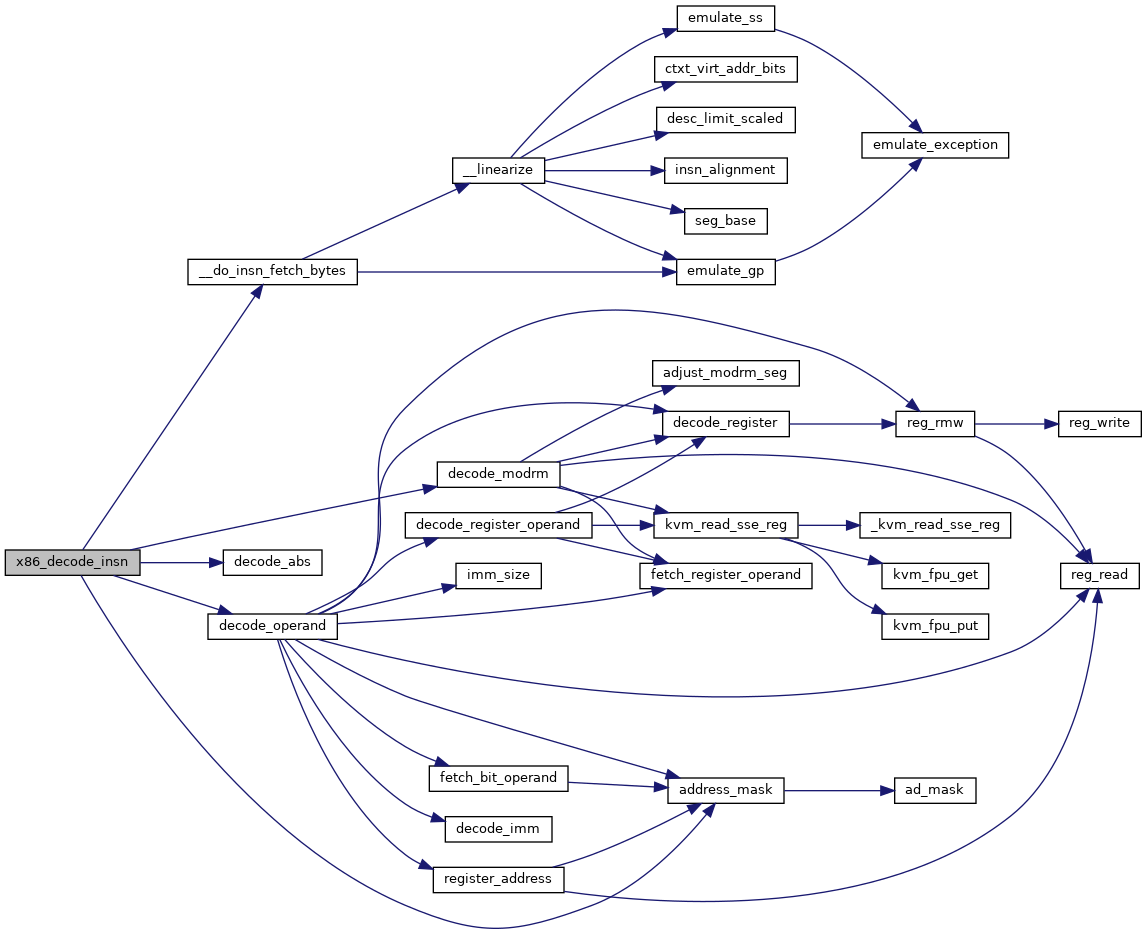

◆ x86_decode_insn()

| int x86_decode_insn(struct x86_emulate_ctxt *ctxt, void *insn, int insn_len, int emulation_type) |

Definition at line 4763 of file emulate.c.

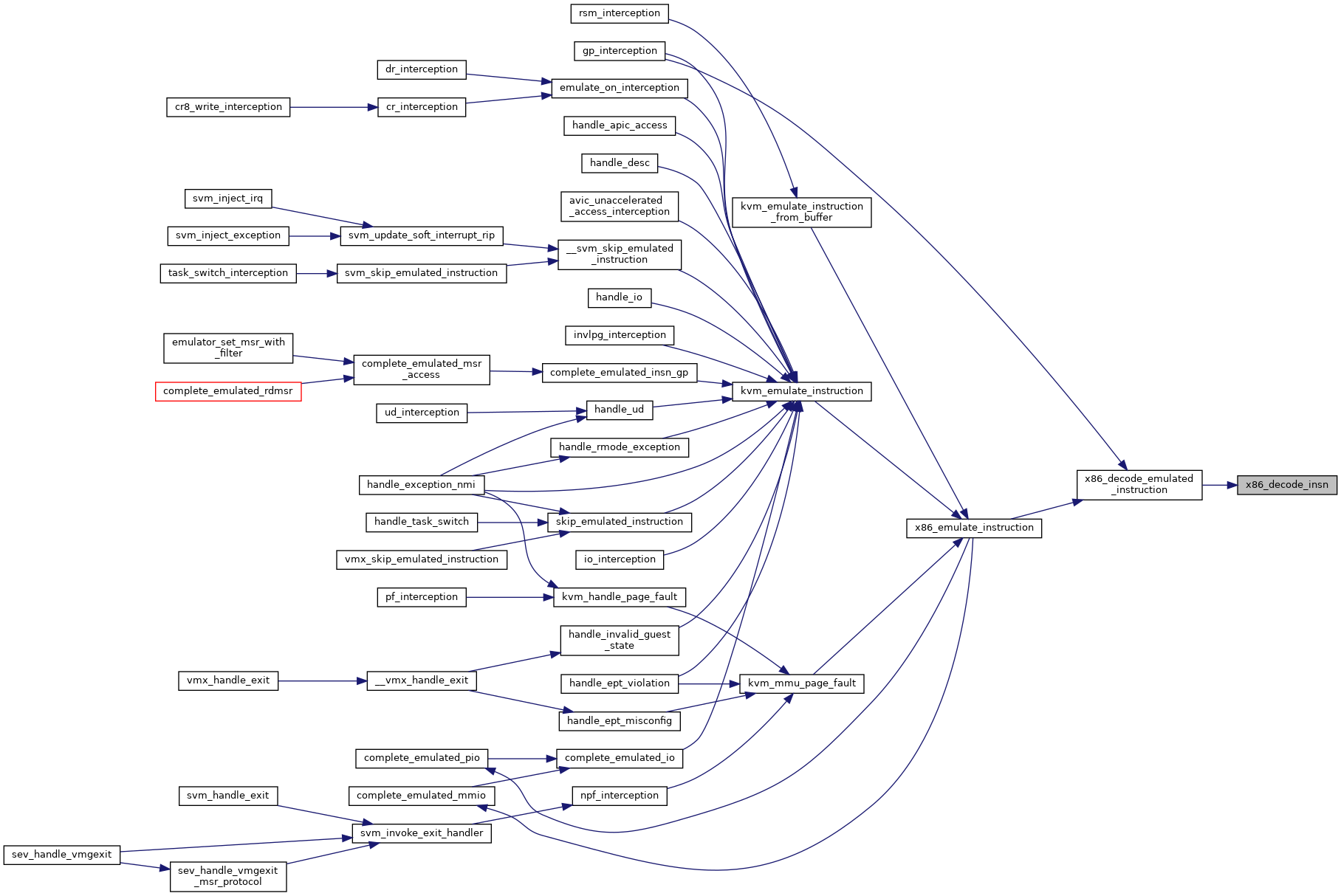

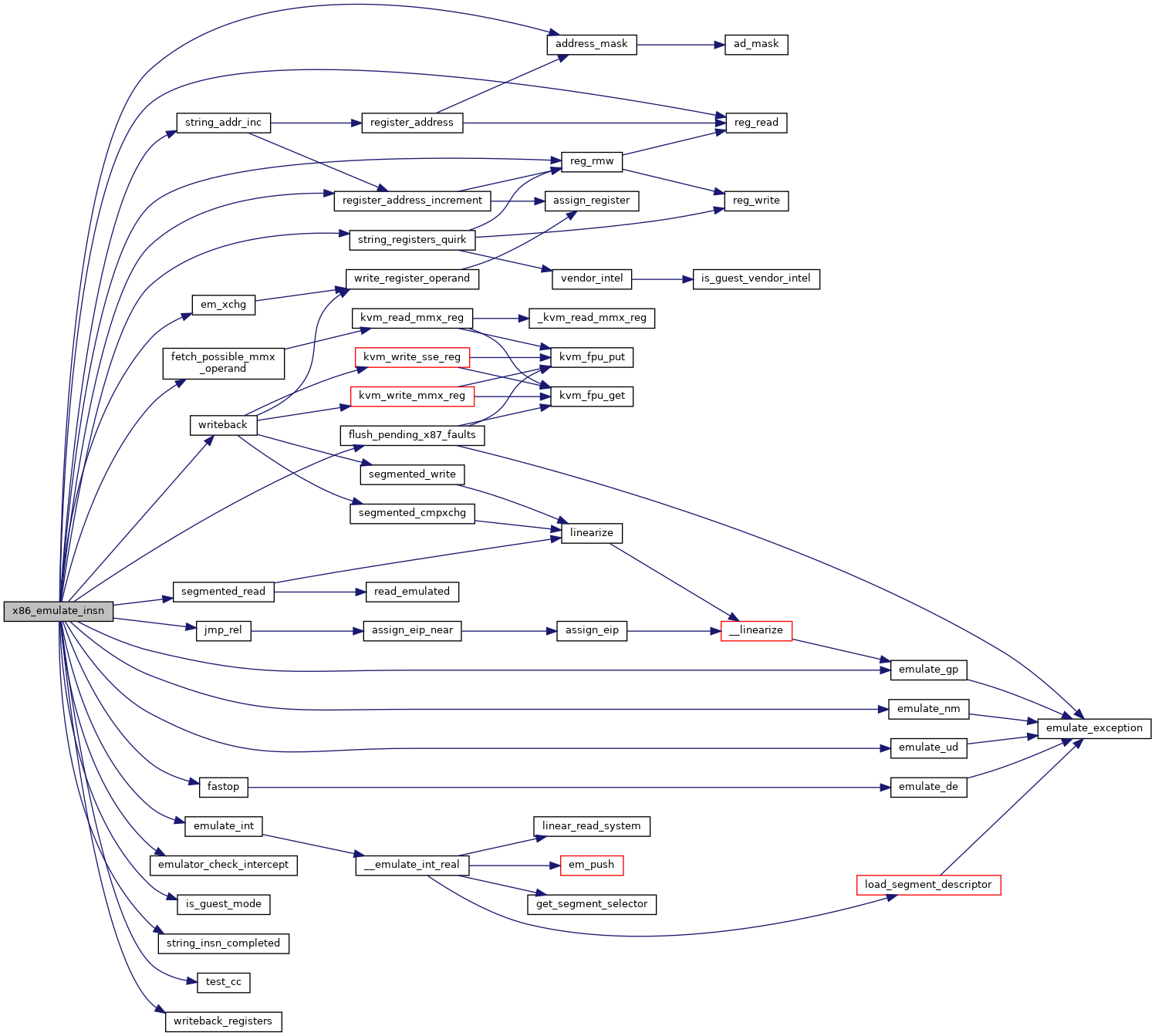

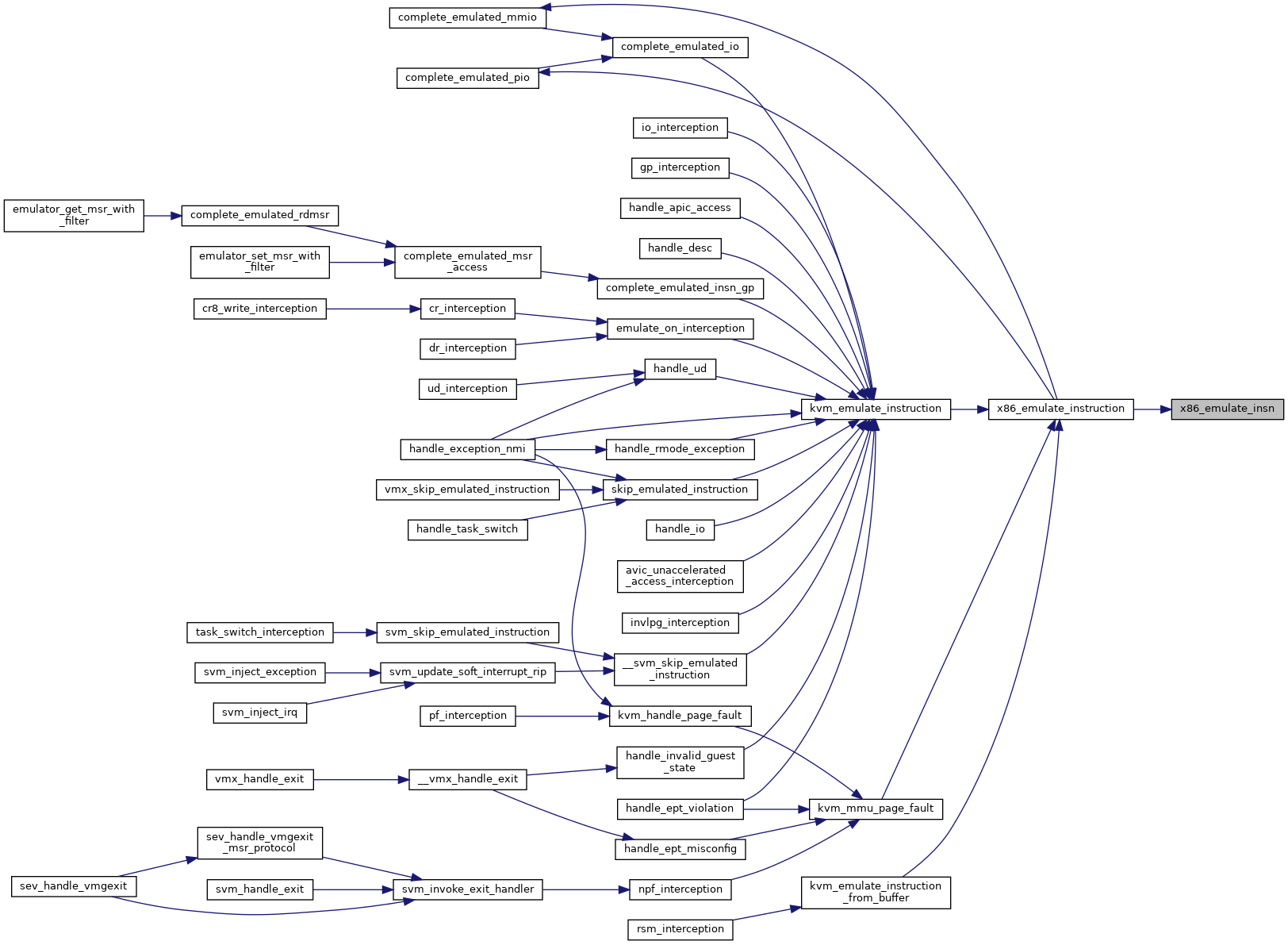

◆ x86_emulate_insn()

| int x86_emulate_insn(struct x86_emulate_ctxt *ctxt) |

Definition at line 5140 of file emulate.c.

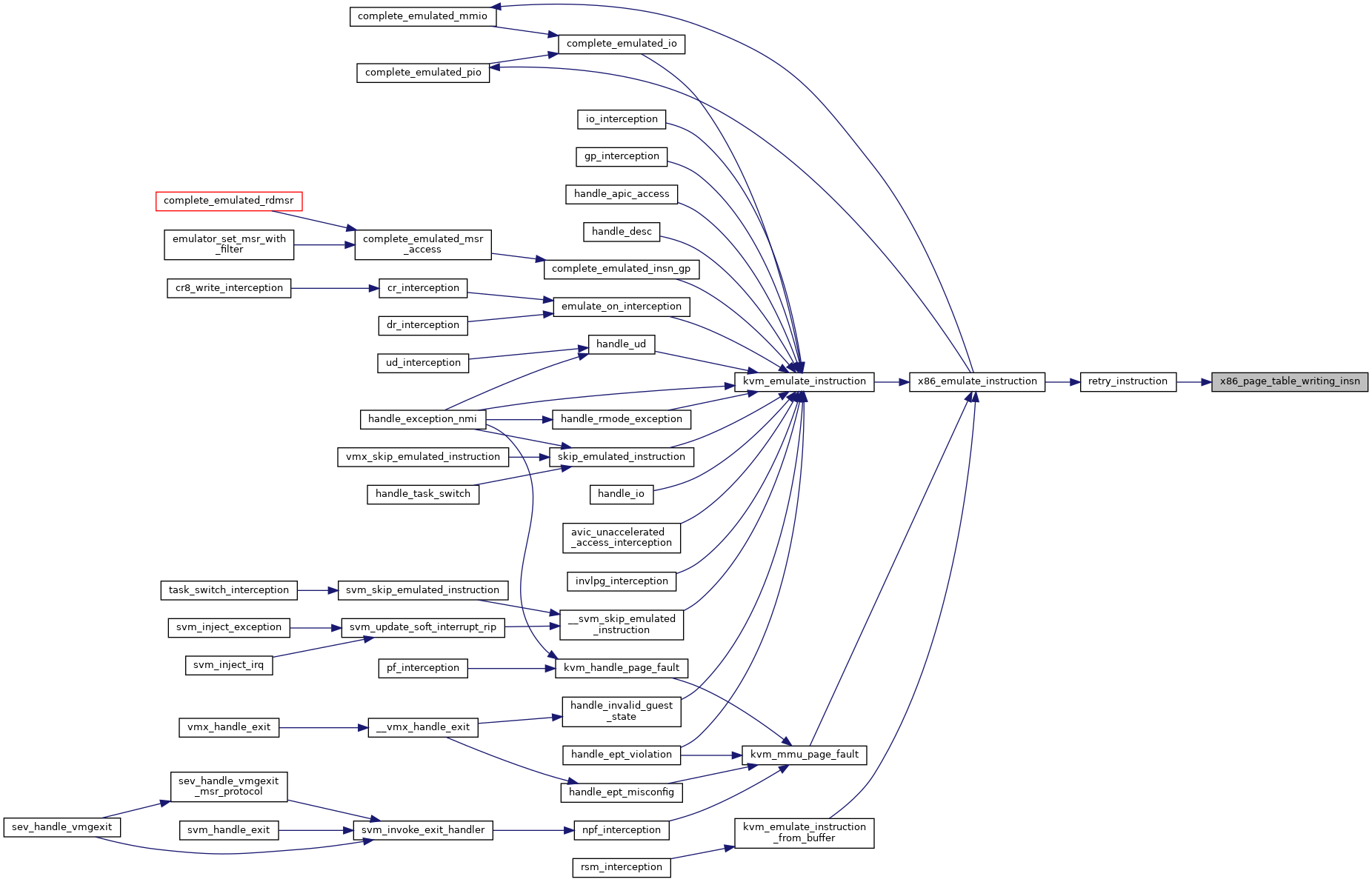

◆ x86_page_table_writing_insn()

| bool x86_page_table_writing_insn(struct x86_emulate_ctxt *ctxt) |