| Kind | Definition |
| --- | --- |
| #define | `pr_fmt(fmt) KBUILD_MODNAME ": " fmt` |
| #define | `OpNone 0ull` |
| #define | `OpImplicit 1ull /* No generic decode */` |
| #define | `OpReg 2ull /* Register */` |
| #define | `OpMem 3ull /* Memory */` |
| #define | `OpAcc 4ull /* Accumulator: AL/AX/EAX/RAX */` |
| #define | `OpDI 5ull /* ES:DI/EDI/RDI */` |
| #define | `OpMem64 6ull /* Memory, 64-bit */` |
| #define | `OpImmUByte 7ull /* Zero-extended 8-bit immediate */` |
| #define | `OpDX 8ull /* DX register */` |
| #define | `OpCL 9ull /* CL register (for shifts) */` |
| #define | `OpImmByte 10ull /* 8-bit sign extended immediate */` |
| #define | `OpOne 11ull /* Implied 1 */` |
| #define | `OpImm 12ull /* Sign extended up to 32-bit immediate */` |
| #define | `OpMem16 13ull /* Memory operand (16-bit). */` |
| #define | `OpMem32 14ull /* Memory operand (32-bit). */` |
| #define | `OpImmU 15ull /* Immediate operand, zero extended */` |
| #define | `OpSI 16ull /* SI/ESI/RSI */` |
| #define | `OpImmFAddr 17ull /* Immediate far address */` |
| #define | `OpMemFAddr 18ull /* Far address in memory */` |
| #define | `OpImmU16 19ull /* Immediate operand, 16 bits, zero extended */` |
| #define | `OpES 20ull /* ES */` |
| #define | `OpCS 21ull /* CS */` |
| #define | `OpSS 22ull /* SS */` |
| #define | `OpDS 23ull /* DS */` |
| #define | `OpFS 24ull /* FS */` |
| #define | `OpGS 25ull /* GS */` |
| #define | `OpMem8 26ull /* 8-bit zero extended memory operand */` |
| #define | `OpImm64 27ull /* Sign extended 16/32/64-bit immediate */` |
| #define | `OpXLat 28ull /* memory at BX/EBX/RBX + zero-extended AL */` |
| #define | `OpAccLo 29ull /* Low part of extended acc (AX/AX/EAX/RAX) */` |
| #define | `OpAccHi 30ull /* High part of extended acc (-/DX/EDX/RDX) */` |
| #define | `OpBits 5 /* Width of operand field */` |
| #define | `OpMask ((1ull << OpBits) - 1)` |
| #define | `ByteOp (1<<0) /* 8-bit operands. */` |
| #define | `DstShift 1` |
| #define | `ImplicitOps (OpImplicit << DstShift)` |
| #define | `DstReg (OpReg << DstShift)` |
| #define | `DstMem (OpMem << DstShift)` |
| #define | `DstAcc (OpAcc << DstShift)` |
| #define | `DstDI (OpDI << DstShift)` |
| #define | `DstMem64 (OpMem64 << DstShift)` |
| #define | `DstMem16 (OpMem16 << DstShift)` |
| #define | `DstImmUByte (OpImmUByte << DstShift)` |
| #define | `DstDX (OpDX << DstShift)` |
| #define | `DstAccLo (OpAccLo << DstShift)` |
| #define | `DstMask (OpMask << DstShift)` |
| #define | `SrcShift 6` |
| #define | `SrcNone (OpNone << SrcShift)` |
| #define | `SrcReg (OpReg << SrcShift)` |
| #define | `SrcMem (OpMem << SrcShift)` |
| #define | `SrcMem16 (OpMem16 << SrcShift)` |
| #define | `SrcMem32 (OpMem32 << SrcShift)` |
| #define | `SrcImm (OpImm << SrcShift)` |
| #define | `SrcImmByte (OpImmByte << SrcShift)` |
| #define | `SrcOne (OpOne << SrcShift)` |
| #define | `SrcImmUByte (OpImmUByte << SrcShift)` |
| #define | `SrcImmU (OpImmU << SrcShift)` |
| #define | `SrcSI (OpSI << SrcShift)` |
| #define | `SrcXLat (OpXLat << SrcShift)` |
| #define | `SrcImmFAddr (OpImmFAddr << SrcShift)` |
| #define | `SrcMemFAddr (OpMemFAddr << SrcShift)` |
| #define | `SrcAcc (OpAcc << SrcShift)` |
| #define | `SrcImmU16 (OpImmU16 << SrcShift)` |
| #define | `SrcImm64 (OpImm64 << SrcShift)` |
| #define | `SrcDX (OpDX << SrcShift)` |
| #define | `SrcMem8 (OpMem8 << SrcShift)` |
| #define | `SrcAccHi (OpAccHi << SrcShift)` |
| #define | `SrcMask (OpMask << SrcShift)` |

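The operand-type codes above are 5 bits wide (`OpBits`), and each operand has its own slot in the 64-bit flags word: destination at `DstShift` (1), source at `SrcShift` (6), and second source at `Src2Shift` (31, listed further down). A minimal sketch of packing and unpacking those slots, reusing a subset of the listed values; `pack_ops()` and `op_field()` are hypothetical helpers, not kernel functions, though the shift-and-mask pattern is the one the definitions imply.

```c
#include <assert.h>
#include <stdint.h>

/* Subset of the operand-type codes and shift amounts listed in the tables. */
#define OpReg     2ull
#define OpMem     3ull
#define OpCL      9ull
#define OpBits    5
#define OpMask    ((1ull << OpBits) - 1)
#define DstShift  1
#define SrcShift  6
#define Src2Shift 31

/* Hypothetical helper: extract one 5-bit operand-type field. */
static inline uint64_t op_field(uint64_t flags, unsigned shift)
{
    return (flags >> shift) & OpMask;
}

/* Hypothetical helper: pack destination, source and second-source types. */
static inline uint64_t pack_ops(uint64_t dst, uint64_t src, uint64_t src2)
{
    assert(dst <= OpMask && src <= OpMask && src2 <= OpMask);
    return (dst << DstShift) | (src << SrcShift) | (src2 << Src2Shift);
}
```

With these values, `pack_ops(OpMem, OpReg, OpCL)` yields the same bits as `DstMem | SrcReg | Src2CL`.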
| Kind | Definition |
| --- | --- |
| #define | `BitOp (1<<11)` |
| #define | `MemAbs (1<<12) /* Memory operand is absolute displacement */` |
| #define | `String (1<<13) /* String instruction (rep capable) */` |
| #define | `Stack (1<<14) /* Stack instruction (push/pop) */` |
| #define | `GroupMask (7<<15) /* Opcode uses one of the group mechanisms */` |
| #define | `Group (1<<15) /* Bits 3:5 of modrm byte extend opcode */` |
| #define | `GroupDual (2<<15) /* Alternate decoding of mod == 3 */` |
| #define | `Prefix (3<<15) /* Instruction varies with 66/f2/f3 prefix */` |
| #define | `RMExt (4<<15) /* Opcode extension in ModRM r/m if mod == 3 */` |
| #define | `Escape (5<<15) /* Escape to coprocessor instruction */` |
| #define | `InstrDual (6<<15) /* Alternate instruction decoding of mod == 3 */` |
| #define | `ModeDual (7<<15) /* Different instruction for 32/64 bit */` |
| #define | `Sse (1<<18) /* SSE Vector instruction */` |
| #define | `ModRM (1<<19)` |
| #define | `Mov (1<<20)` |
| #define | `Prot (1<<21) /* instruction generates #UD if not in prot-mode */` |
| #define | `EmulateOnUD (1<<22) /* Emulate if unsupported by the host */` |
| #define | `NoAccess (1<<23) /* Don't access memory (lea/invlpg/verr etc) */` |
| #define | `Op3264 (1<<24) /* Operand is 64b in long mode, 32b otherwise */` |
| #define | `Undefined (1<<25) /* No Such Instruction */` |
| #define | `Lock (1<<26) /* lock prefix is allowed for the instruction */` |
| #define | `Priv (1<<27) /* instruction generates #GP if current CPL != 0 */` |
| #define | `No64 (1<<28)` |
| #define | `PageTable (1 << 29) /* instruction used to write page table */` |
| #define | `NotImpl (1 << 30) /* instruction is not implemented */` |

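`GroupMask` reserves a 3-bit field at bit 15 that says how an opcode-table entry is refined. The sketch below covers only three of those mechanisms, following the comments in the table: `Group` indexes with ModRM bits 3..5, `GroupDual` uses the same index but a separate table when `mod == 3`, and `RMExt` indexes with the ModRM r/m bits. `pick_group_entry()` and `struct group_pick` are hypothetical; the kernel's decoder walks real sub-tables rather than returning an index.

```c
#include <assert.h>
#include <stdint.h>

/* Group-mechanism values copied from the table (3-bit field at bit 15). */
#define GroupMask (7 << 15)
#define Group     (1 << 15)
#define GroupDual (2 << 15)
#define RMExt     (4 << 15)

/* Hypothetical result: which sub-table to consult and at which index. */
struct group_pick {
    int use_mod3_table; /* only meaningful for GroupDual */
    unsigned index;     /* 0..7 */
};

/* Sketch of resolving one level of group decoding from a ModRM byte. */
static int pick_group_entry(uint32_t flags, uint8_t modrm, struct group_pick *out)
{
    unsigned mod = modrm >> 6;
    unsigned reg = (modrm >> 3) & 7;
    unsigned rm  = modrm & 7;

    out->use_mod3_table = 0;
    switch (flags & GroupMask) {
    case Group:      /* ModRM.reg (bits 3..5) extends the opcode */
        out->index = reg;
        return 0;
    case GroupDual:  /* same index, separate table when mod == 3 */
        out->index = reg;
        out->use_mod3_table = (mod == 3);
        return 0;
    case RMExt:      /* ModRM.rm selects the entry */
        out->index = rm;
        return 0;
    default:         /* Prefix, Escape, InstrDual, ModeDual: not covered */
        return -1;
    }
}
```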
| Kind | Definition |
| --- | --- |
| #define | `Src2Shift (31)` |
| #define | `Src2None (OpNone << Src2Shift)` |
| #define | `Src2Mem (OpMem << Src2Shift)` |
| #define | `Src2CL (OpCL << Src2Shift)` |
| #define | `Src2ImmByte (OpImmByte << Src2Shift)` |
| #define | `Src2One (OpOne << Src2Shift)` |
| #define | `Src2Imm (OpImm << Src2Shift)` |
| #define | `Src2ES (OpES << Src2Shift)` |
| #define | `Src2CS (OpCS << Src2Shift)` |
| #define | `Src2SS (OpSS << Src2Shift)` |
| #define | `Src2DS (OpDS << Src2Shift)` |
| #define | `Src2FS (OpFS << Src2Shift)` |
| #define | `Src2GS (OpGS << Src2Shift)` |
| #define | `Src2Mask (OpMask << Src2Shift)` |
| #define | `Mmx ((u64)1 << 40) /* MMX Vector instruction */` |
| #define | `AlignMask ((u64)7 << 41)` |
| #define | `Aligned ((u64)1 << 41) /* Explicitly aligned (e.g. MOVDQA) */` |
| #define | `Unaligned ((u64)2 << 41) /* Explicitly unaligned (e.g. MOVDQU) */` |
| #define | `Avx ((u64)3 << 41) /* Advanced Vector Extensions */` |
| #define | `Aligned16 ((u64)4 << 41) /* Aligned to 16 byte boundary (e.g. FXSAVE) */` |
| #define | `Fastop ((u64)1 << 44) /* Use opcode::u.fastop */` |
| #define | `NoWrite ((u64)1 << 45) /* No writeback */` |
| #define | `SrcWrite ((u64)1 << 46) /* Write back src operand */` |
| #define | `NoMod ((u64)1 << 47) /* Mod field is ignored */` |
| #define | `Intercept ((u64)1 << 48) /* Has valid intercept field */` |
| #define | `CheckPerm ((u64)1 << 49) /* Has valid check_perm field */` |
| #define | `PrivUD ((u64)1 << 51) /* #UD instead of #GP on CPL > 0 */` |
| #define | `NearBranch ((u64)1 << 52) /* Near branches */` |
| #define | `No16 ((u64)1 << 53) /* No 16 bit operand */` |
| #define | `IncSP ((u64)1 << 54) /* SP is incremented before ModRM calc */` |
| #define | `TwoMemOp ((u64)1 << 55) /* Instruction has two memory operand */` |
| #define | `IsBranch ((u64)1 << 56) /* Instruction is considered a branch. */` |
| #define | `DstXacc (DstAccLo \| SrcAccHi \| SrcWrite)` |
| #define | `X2(x...) x, x` |
| #define | `X3(x...) X2(x), x` |
| #define | `X4(x...) X2(x), X2(x)` |
| #define | `X5(x...) X4(x), x` |
| #define | `X6(x...) X4(x), X2(x)` |
| #define | `X7(x...) X4(x), X3(x)` |
| #define | `X8(x...) X4(x), X4(x)` |
| #define | `X16(x...) X8(x), X8(x)` |
| #define | `EFLG_RESERVED_ZEROS_MASK 0xffc0802a` |
| #define | `EFLAGS_MASK` |
| #define | `ON64(x)` |

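`EFLG_RESERVED_ZEROS_MASK` (`0xffc0802a`) selects EFLAGS bits 1, 3, 5, 15 and 22..31. Bit 1 is architecturally always set, so code that clears this mask has to set that bit again afterwards. A minimal sketch assuming that intent (our reading is that the real-mode IRET path does the equivalent); `sanitize_eflags()` and `EFLAGS_FIXED_BIT` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

#define EFLG_RESERVED_ZEROS_MASK 0xffc0802au /* value from the table */
#define EFLAGS_FIXED_BIT         0x00000002u /* hypothetical name: bit 1 reads as 1 */

/* Clear the reserved-zero bits, then force the always-one bit 1. */
static uint32_t sanitize_eflags(uint32_t eflags)
{
    eflags &= ~EFLG_RESERVED_ZEROS_MASK;
    return eflags | EFLAGS_FIXED_BIT;
}
```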
| Kind | Definition |
| --- | --- |
| #define | `FASTOP_SIZE 16` |
| #define | `__FOP_FUNC(name)` |
| #define | `FOP_FUNC(name) __FOP_FUNC(#name)` |
| #define | `__FOP_RET(name)` |
| #define | `FOP_RET(name) __FOP_RET(#name)` |
| #define | `__FOP_START(op, align)` |
| #define | `FOP_START(op) __FOP_START(op, FASTOP_SIZE)` |
| #define | `FOP_END ".popsection")` |
| #define | `__FOPNOP(name)` |
| #define | `FOPNOP() __FOPNOP(__stringify(__UNIQUE_ID(nop)))` |
| #define | `FOP1E(op, dst)` |
| #define | `FOP1EEX(op, dst) FOP1E(op, dst) _ASM_EXTABLE_TYPE_REG(10b, 11b, EX_TYPE_ZERO_REG, %%esi)` |
| #define | `FASTOP1(op)` |
| #define | `FASTOP1SRC2(op, name)` |
| #define | `FASTOP1SRC2EX(op, name)` |
| #define | `FOP2E(op, dst, src)` |
| #define | `FASTOP2(op)` |
| #define | `FASTOP2W(op)` |
| #define | `FASTOP2CL(op)` |
| #define | `FASTOP2R(op, name)` |
| #define | `FOP3E(op, dst, src, src2)` |
| #define | `FASTOP3WCL(op)` |
| #define | `FOP_SETCC(op)` |
| #define | `asm_safe(insn, inoutclob...)` |
| #define | `insn_fetch(_type, _ctxt)` |
| #define | `insn_fetch_arr(_arr, _size, _ctxt)` |
| #define | `VMWARE_PORT_VMPORT (0x5658)` |
| #define | `VMWARE_PORT_VMRPC (0x5659)` |

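`FASTOP_SIZE` (16) is the spacing of the per-size stubs that the `FASTOP*` macros emit. Assuming, as we understand `fastop()` does, that the byte, word, dword and qword variants are laid out in that order, the stub for an operand of `bytes` bytes sits at `log2(bytes) * FASTOP_SIZE` from the start of the block. A sketch of that offset computation; `fastop_stub_offset()` is hypothetical and uses the GCC/Clang builtin `__builtin_ctz` in place of the kernel's `__ffs()`.

```c
#include <assert.h>
#include <stddef.h>

#define FASTOP_SIZE 16 /* value from the table */

/*
 * Byte offset of the size-specific stub inside a fastop block, assuming
 * stubs are laid out in the order 8/16/32/64-bit, FASTOP_SIZE bytes apart.
 */
static size_t fastop_stub_offset(unsigned operand_bytes)
{
    assert(operand_bytes == 1 || operand_bytes == 2 ||
           operand_bytes == 4 || operand_bytes == 8);
    /* log2 of the operand size: 1->0, 2->1, 4->2, 8->3 */
    return (size_t)__builtin_ctz(operand_bytes) * FASTOP_SIZE;
}
```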
| Kind | Definition |
| --- | --- |
| #define | `D(_y) { .flags = (_y) }` |
| #define | `DI(_y, _i) { .flags = (_y)\|Intercept, .intercept = x86_intercept_##_i }` |
| #define | `DIP(_y, _i, _p)` |
| #define | `N D(NotImpl)` |
| #define | `EXT(_f, _e) { .flags = ((_f) \| RMExt), .u.group = (_e) }` |
| #define | `G(_f, _g) { .flags = ((_f) \| Group \| ModRM), .u.group = (_g) }` |
| #define | `GD(_f, _g) { .flags = ((_f) \| GroupDual \| ModRM), .u.gdual = (_g) }` |
| #define | `ID(_f, _i) { .flags = ((_f) \| InstrDual \| ModRM), .u.idual = (_i) }` |
| #define | `MD(_f, _m) { .flags = ((_f) \| ModeDual), .u.mdual = (_m) }` |
| #define | `E(_f, _e) { .flags = ((_f) \| Escape \| ModRM), .u.esc = (_e) }` |
| #define | `I(_f, _e) { .flags = (_f), .u.execute = (_e) }` |
| #define | `F(_f, _e) { .flags = (_f) \| Fastop, .u.fastop = (_e) }` |
| #define | `II(_f, _e, _i) { .flags = (_f)\|Intercept, .u.execute = (_e), .intercept = x86_intercept_##_i }` |
| #define | `IIP(_f, _e, _i, _p)` |
| #define | `GP(_f, _g) { .flags = ((_f) \| Prefix), .u.gprefix = (_g) }` |
| #define | `D2bv(_f) D((_f) \| ByteOp), D(_f)` |
| #define | `D2bvIP(_f, _i, _p) DIP((_f) \| ByteOp, _i, _p), DIP(_f, _i, _p)` |
| #define | `I2bv(_f, _e) I((_f) \| ByteOp, _e), I(_f, _e)` |
| #define | `F2bv(_f, _e) F((_f) \| ByteOp, _e), F(_f, _e)` |
| #define | `I2bvIP(_f, _e, _i, _p) IIP((_f) \| ByteOp, _e, _i, _p), IIP(_f, _e, _i, _p)` |
| #define | `F6ALU(_f, _e)` |

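The `D()`, `I()` and `*2bv()` helpers build opcode-table entries with designated initializers; the `2bv` variants emit an 8-bit form (`ByteOp`) followed by the full-size form. A reduced sketch of that pattern, assuming a simplified entry type: `struct opcode_sketch`, `struct x86_ctxt_stub` and `em_dummy()` are hypothetical, and the real entries keep the handler in a union (`.u.execute`) rather than a plain field.

```c
#include <assert.h>
#include <stdint.h>

#define ByteOp (1ull << 0)
#define DstReg (2ull << 1) /* OpReg << DstShift */
#define SrcMem (3ull << 6) /* OpMem << SrcShift */

struct x86_ctxt_stub; /* hypothetical stand-in for struct x86_emulate_ctxt */

/* Simplified, hypothetical version of an opcode-table entry. */
struct opcode_sketch {
    uint64_t flags;
    int (*execute)(struct x86_ctxt_stub *ctxt);
};

static int em_dummy(struct x86_ctxt_stub *ctxt) { (void)ctxt; return 0; }

/* Same shapes as the D(), I() and I2bv() helpers in the table. */
#define D(_y)        { .flags = (_y) }
#define I(_f, _e)    { .flags = (_f), .execute = (_e) }
#define I2bv(_f, _e) I((_f) | ByteOp, _e), I(_f, _e)

/* I2bv expands to two entries: the 8-bit form first, then the full-size form. */
static const struct opcode_sketch table[] = {
    I2bv(DstReg | SrcMem, em_dummy),
    D(0),
};
```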
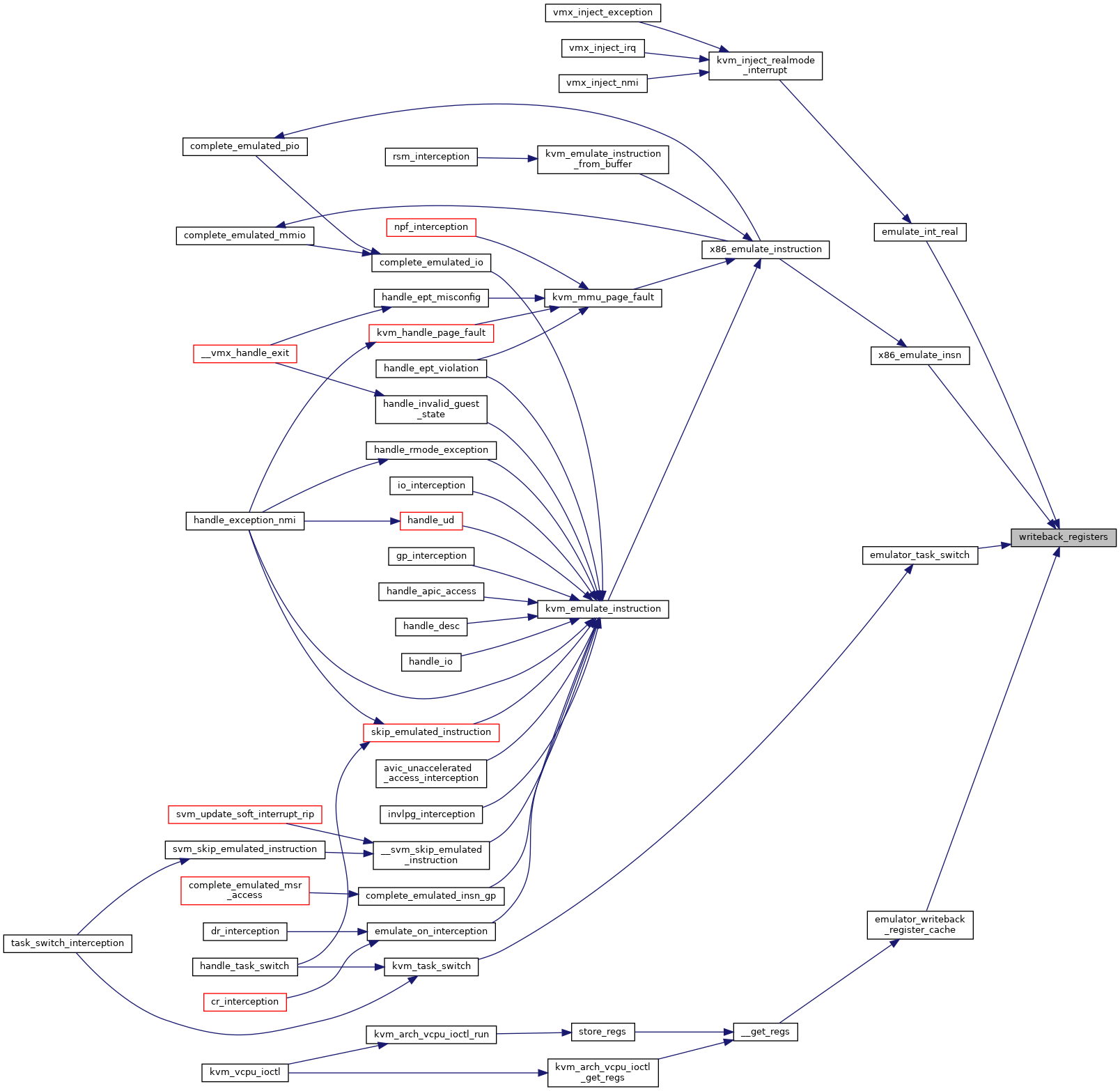

| static void | writeback_registers (struct x86_emulate_ctxt *ctxt) |

| |

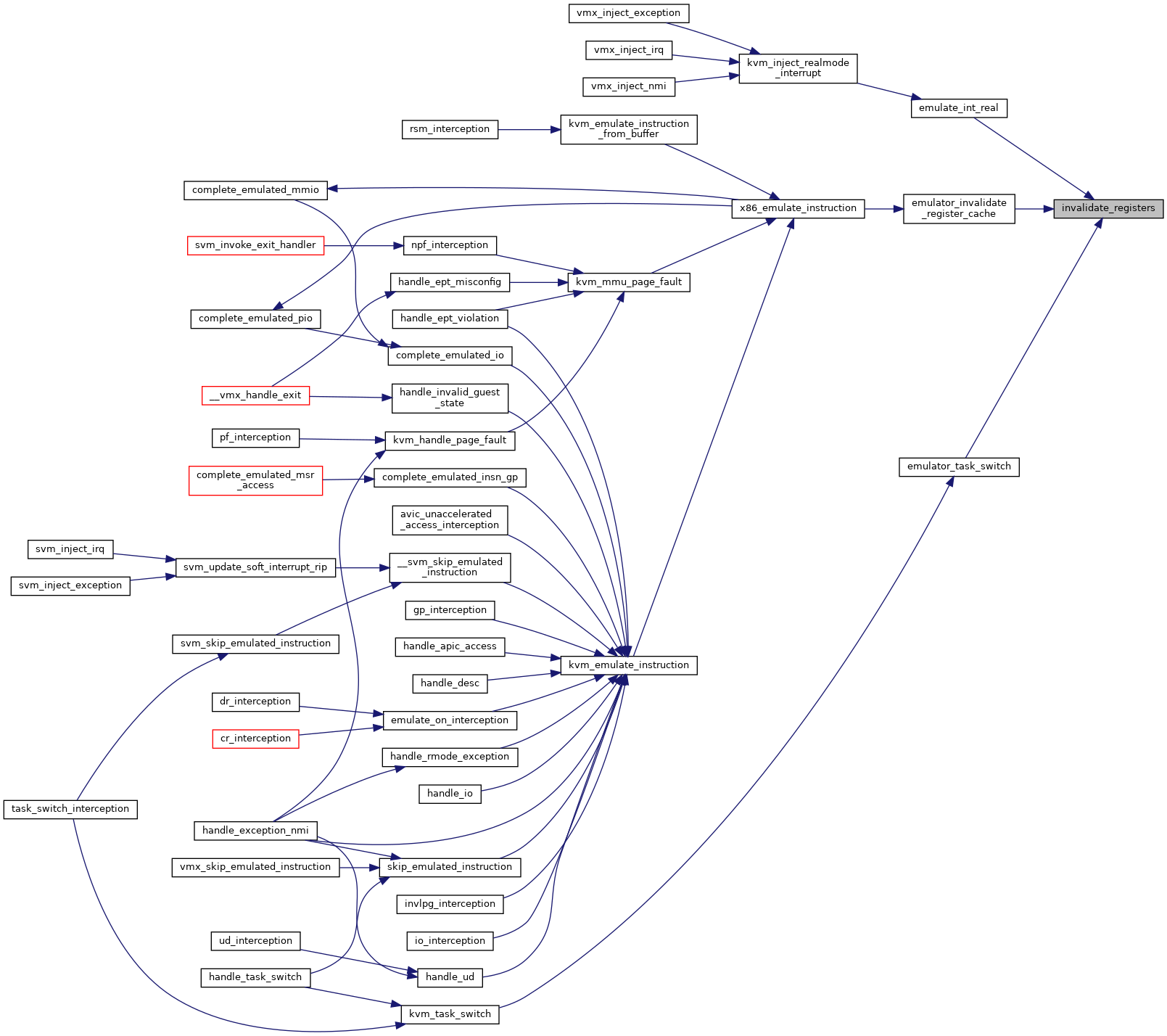

| static void | invalidate_registers (struct x86_emulate_ctxt *ctxt) |

| |

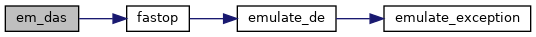

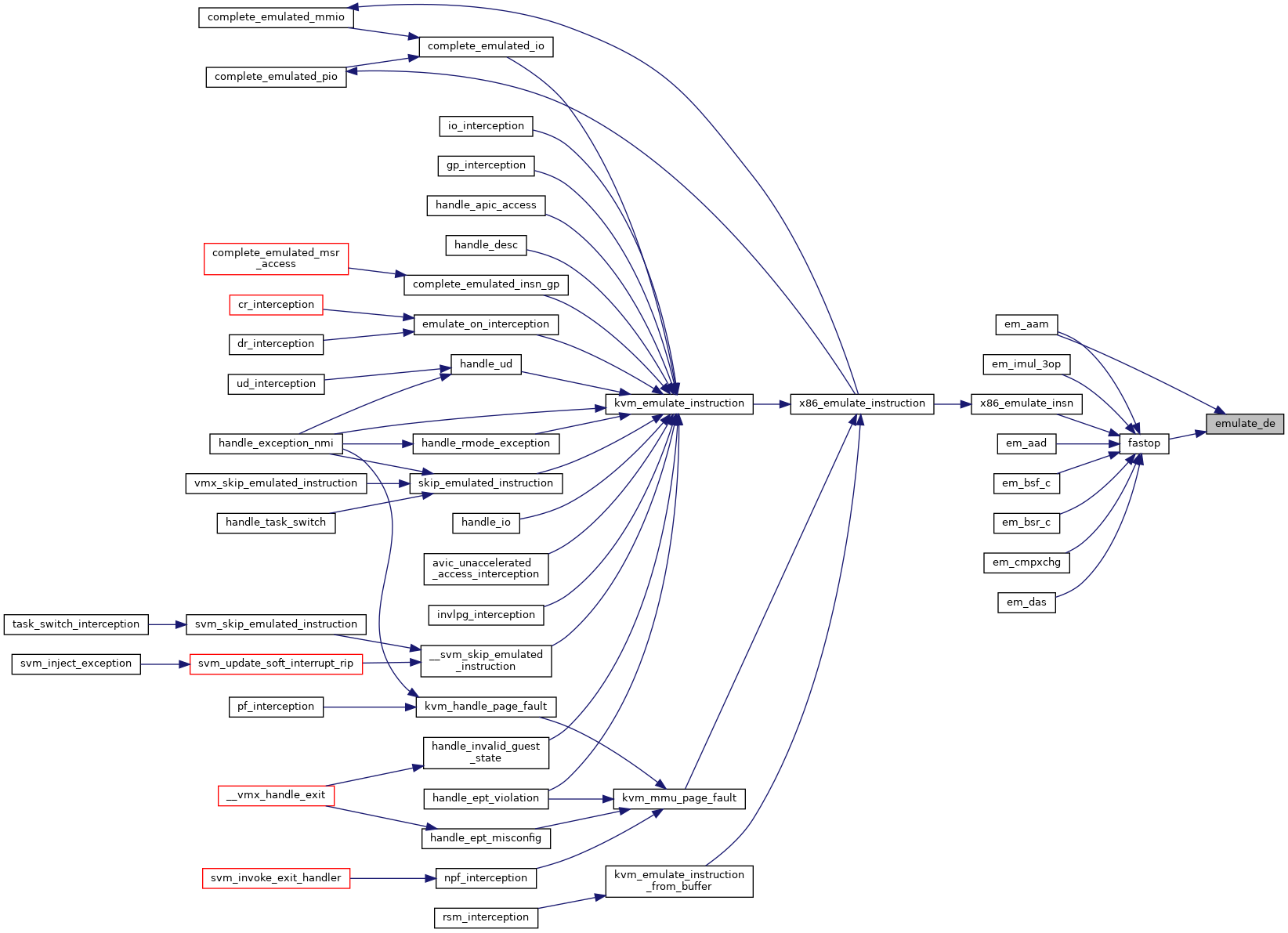

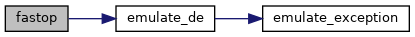

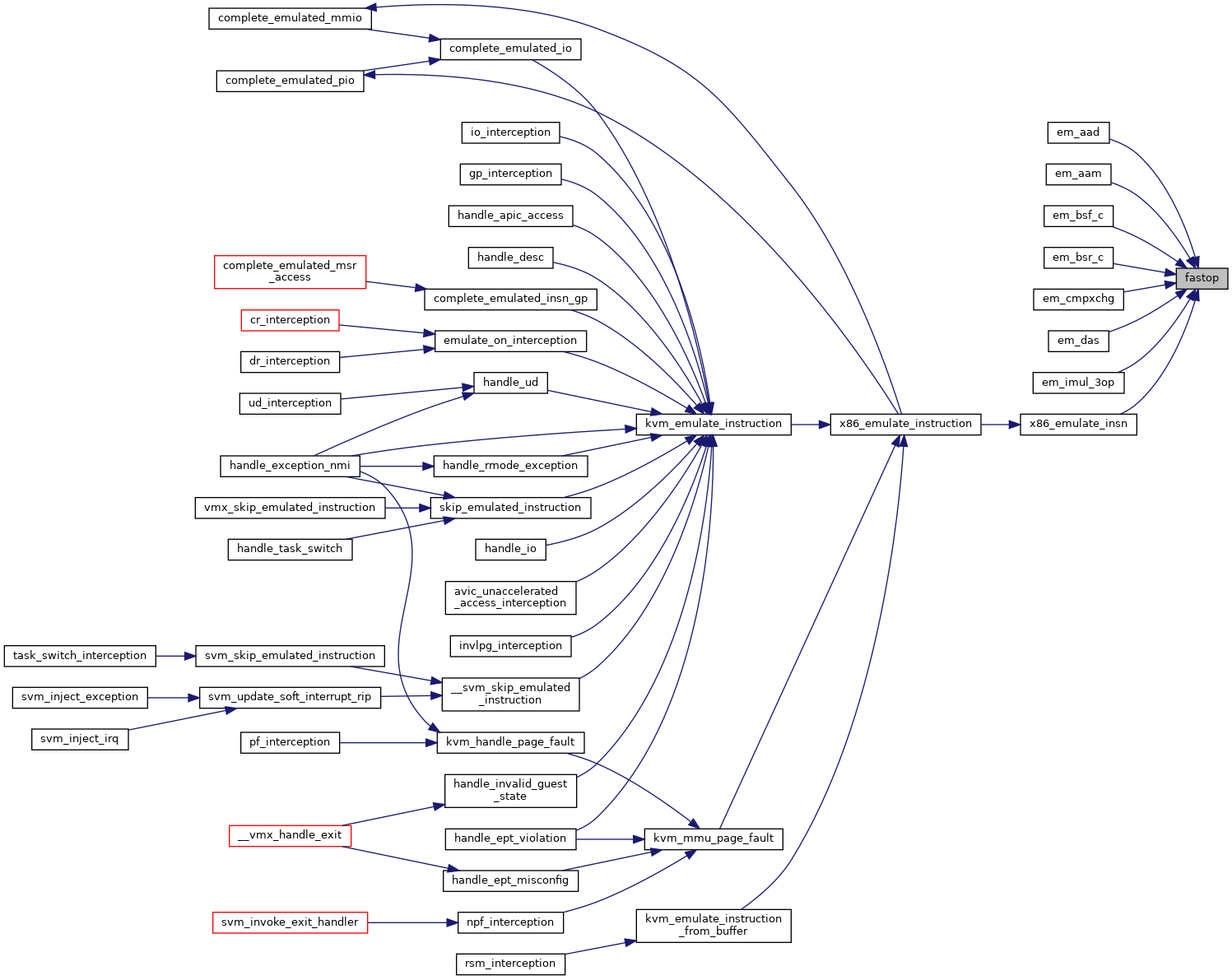

| static int | fastop (struct x86_emulate_ctxt *ctxt, fastop_t fop) |

| |

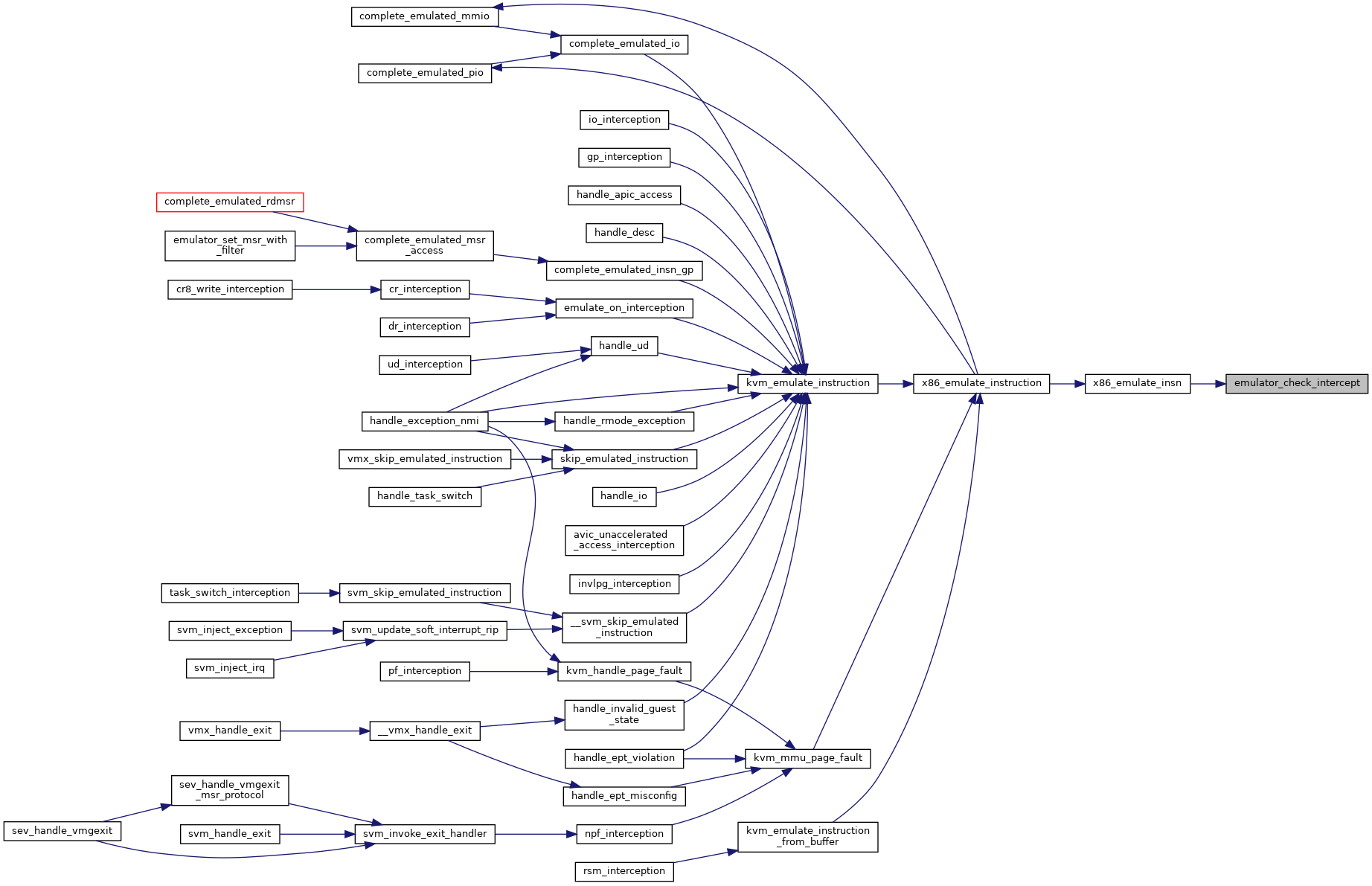

| static int | emulator_check_intercept (struct x86_emulate_ctxt *ctxt, enum x86_intercept intercept, enum x86_intercept_stage stage) |

| |

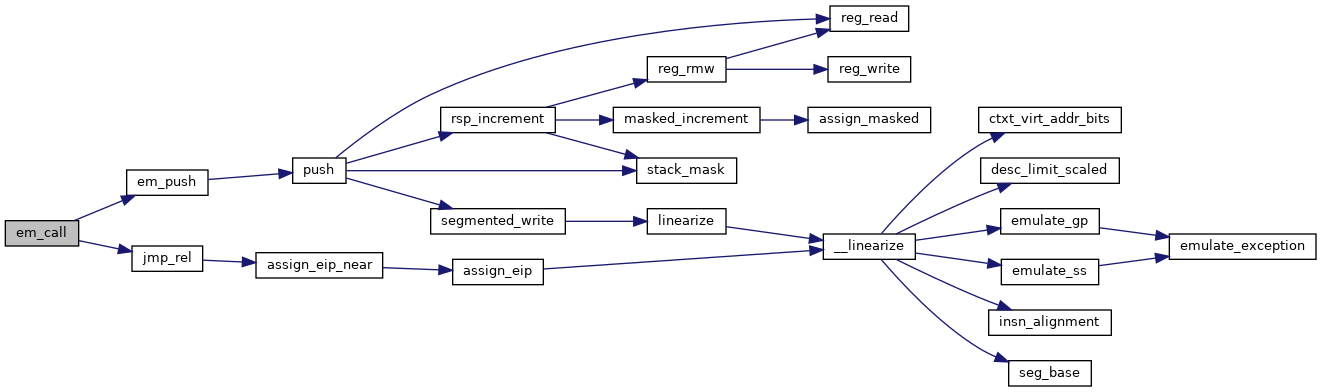

| static void | assign_masked (ulong *dest, ulong src, ulong mask) |

| |

| static void | assign_register (unsigned long *reg, u64 val, int bytes) |

| |

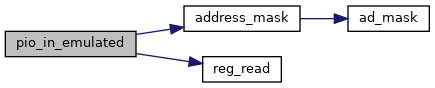

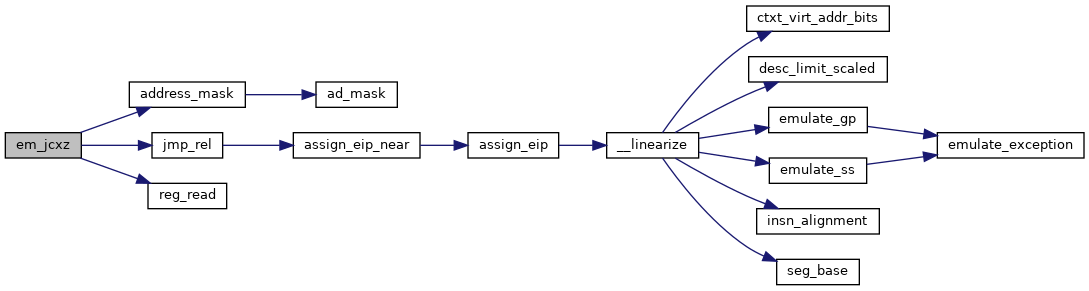

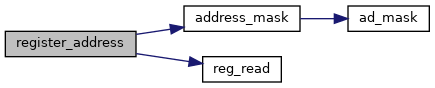

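`assign_register()` writes a value into a guest register according to operand size. A portable sketch of the semantics, assuming the usual x86-64 rules: 8- and 16-bit writes merge into the low bits and leave the rest untouched, while a 32-bit write zero-extends to 64 bits. The `_sketch` functions are hypothetical; the kernel version stores through narrowed pointers, which relies on a little-endian host.

```c
#include <assert.h>
#include <stdint.h>

/* Replace only the bits selected by mask (what assign_masked() is named for). */
static void assign_masked_sketch(uint64_t *dest, uint64_t src, uint64_t mask)
{
    *dest = (*dest & ~mask) | (src & mask);
}

/*
 * Register write by operand size: 1- and 2-byte writes merge into the low
 * bits, a 4-byte write zero-extends to 64 bits, an 8-byte write replaces all.
 */
static void assign_register_sketch(uint64_t *reg, uint64_t val, int bytes)
{
    switch (bytes) {
    case 1: assign_masked_sketch(reg, val, 0xffull); break;
    case 2: assign_masked_sketch(reg, val, 0xffffull); break;
    case 4: *reg = (uint32_t)val; break;
    case 8: *reg = val; break;
    default: assert(0 && "unsupported operand size");
    }
}
```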
| static unsigned long | ad_mask (struct x86_emulate_ctxt *ctxt) |

| |

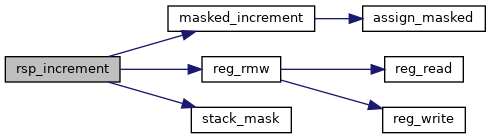

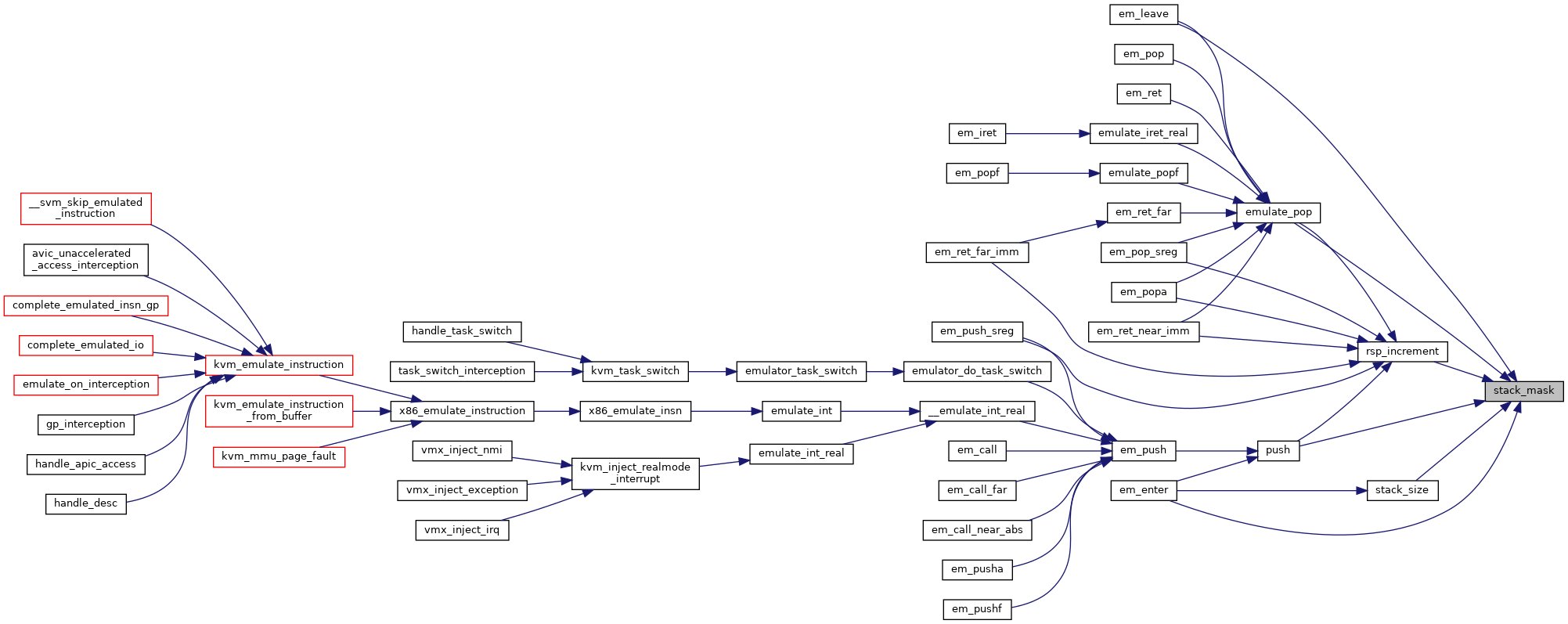

| static ulong | stack_mask (struct x86_emulate_ctxt *ctxt) |

| |

| static int | stack_size (struct x86_emulate_ctxt *ctxt) |

| |

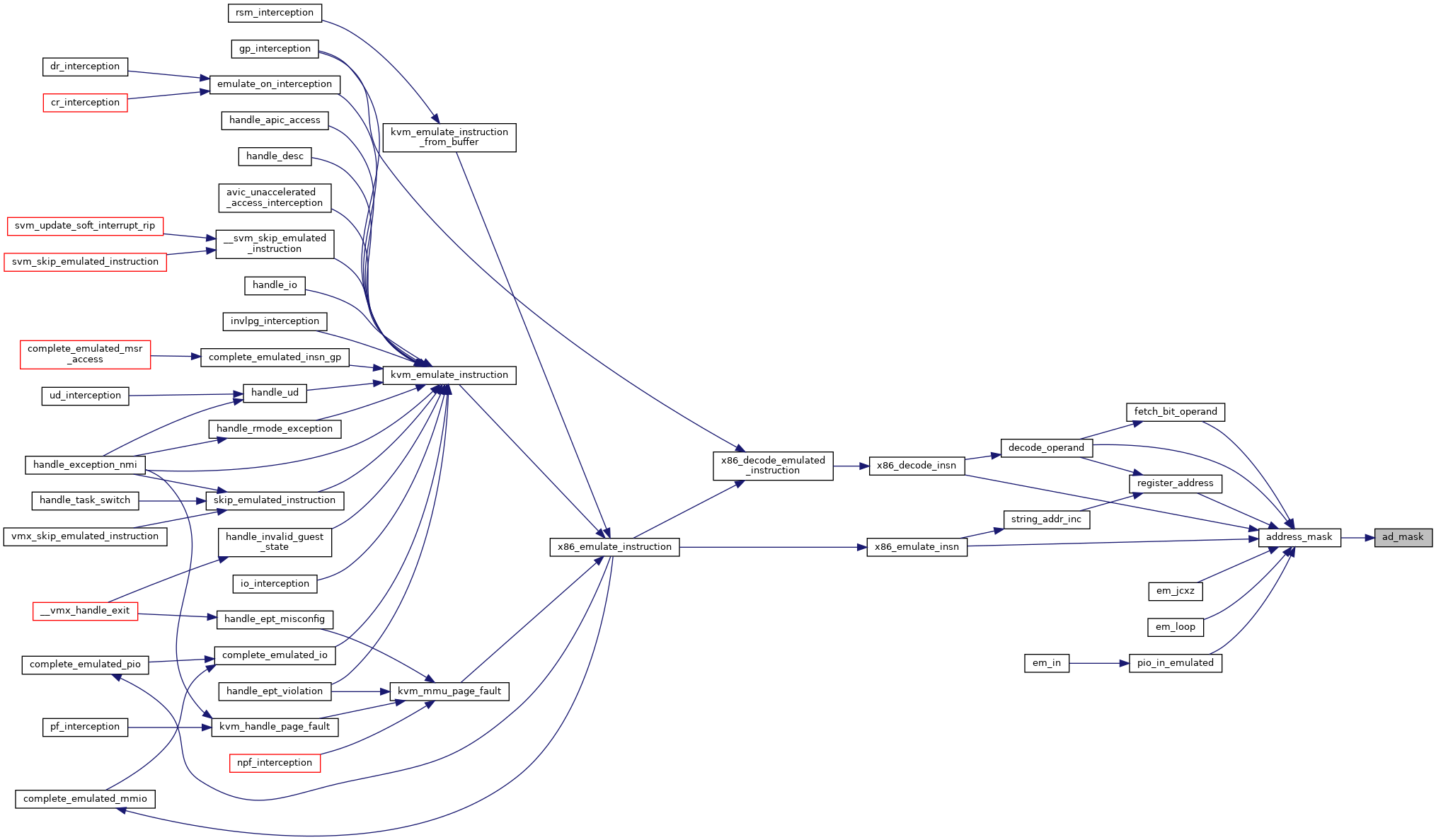

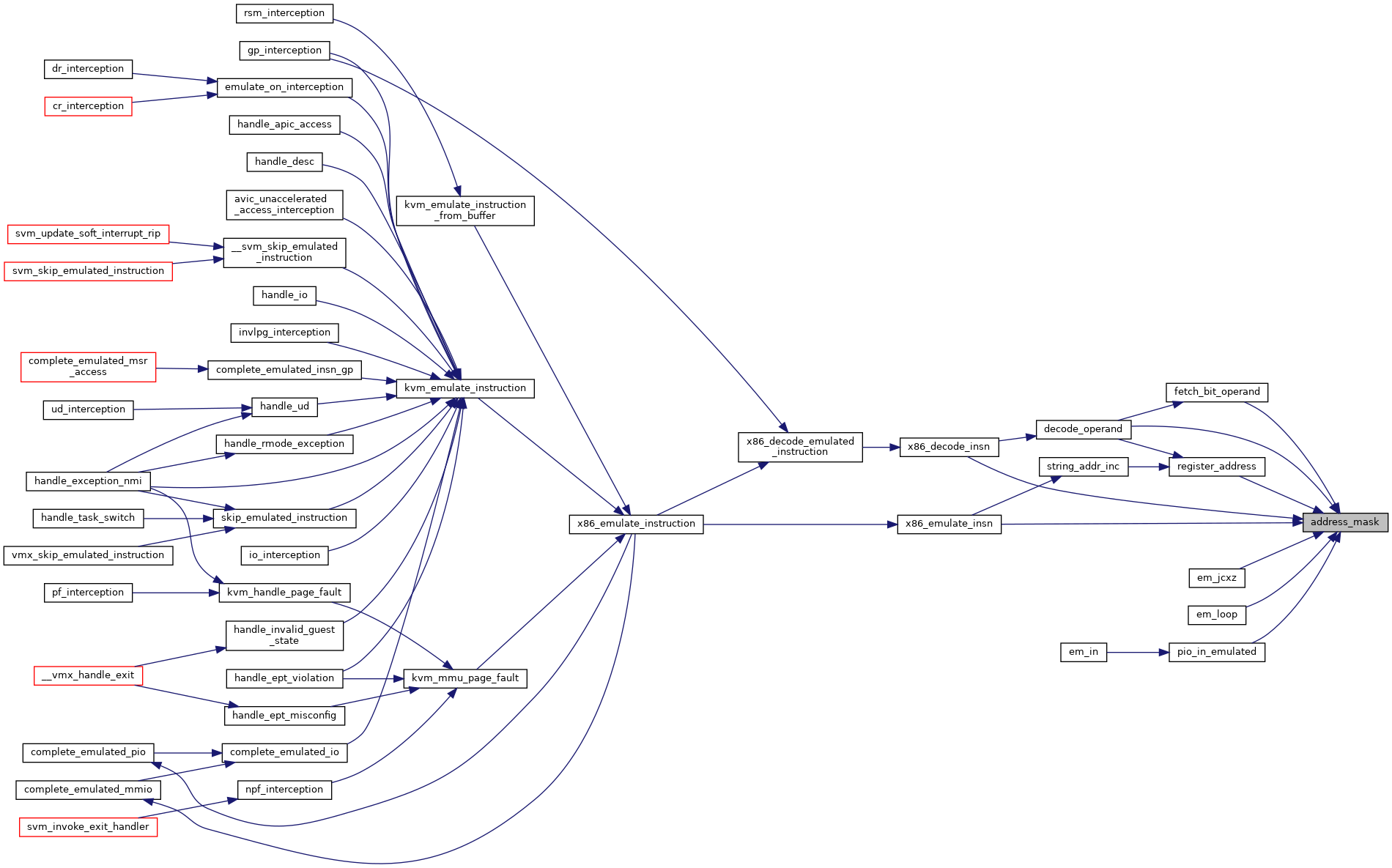

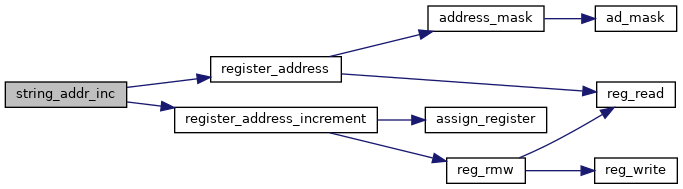

| static unsigned long | address_mask (struct x86_emulate_ctxt *ctxt, unsigned long reg) |

| |

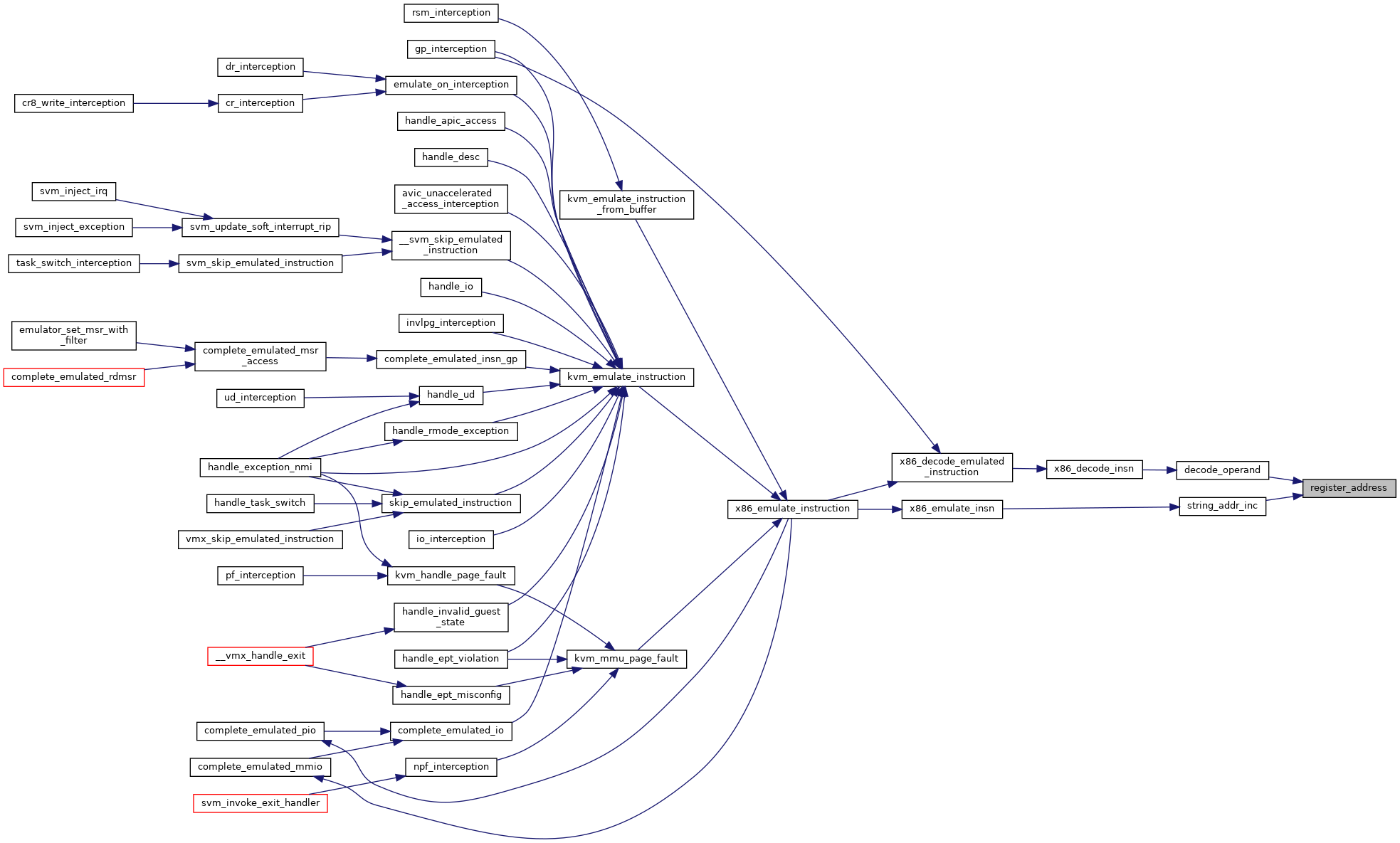

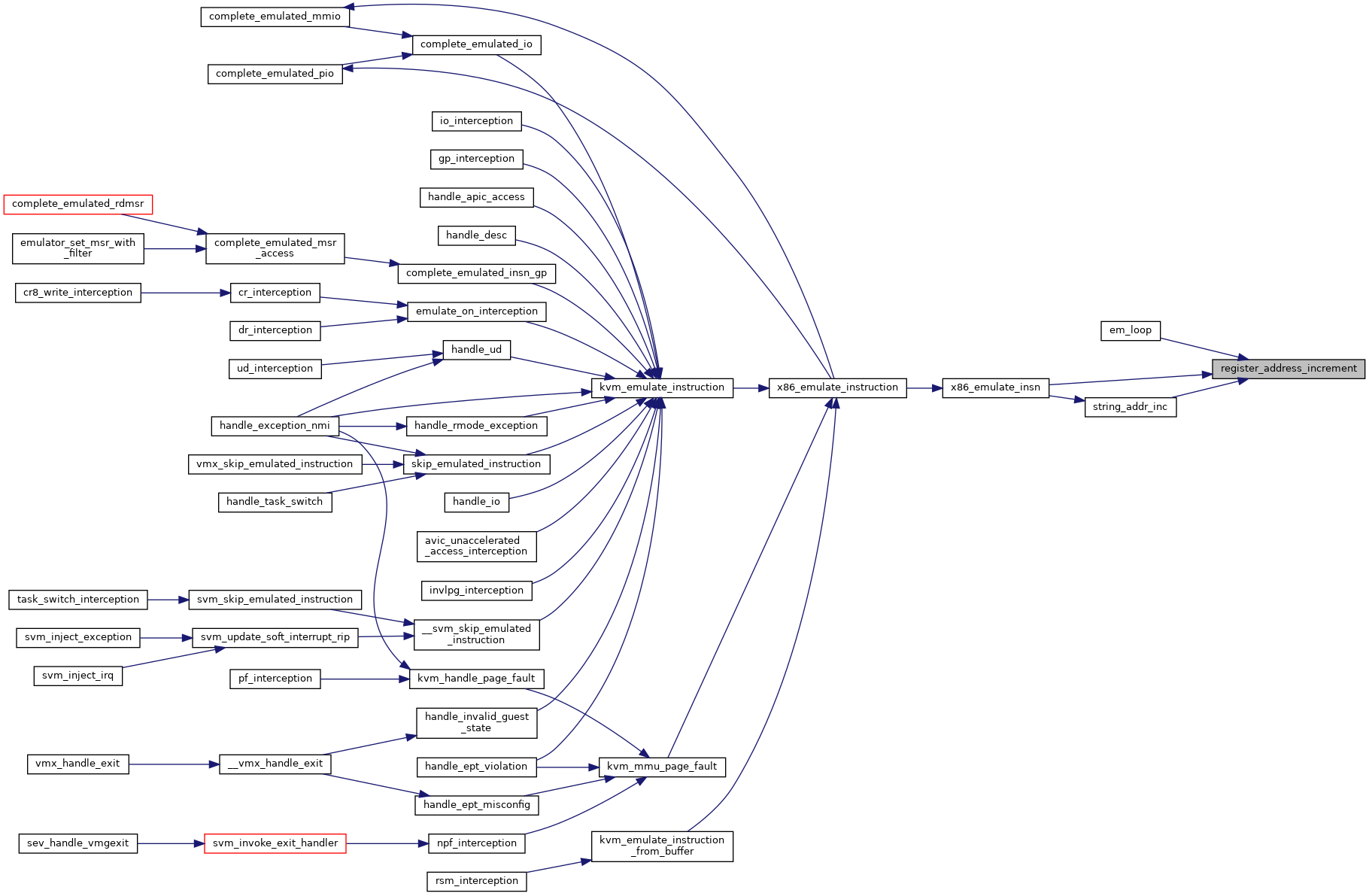

| static unsigned long | register_address (struct x86_emulate_ctxt *ctxt, int reg) |

| |

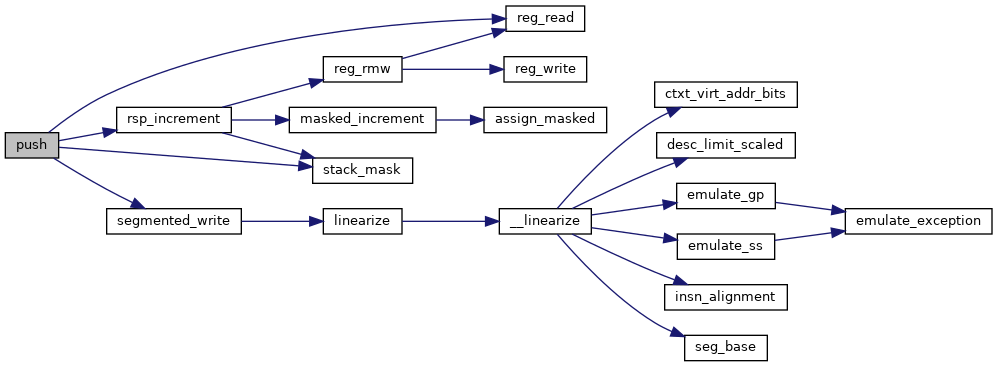

| static void | masked_increment (ulong *reg, ulong mask, int inc) |

| |

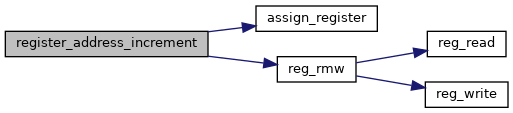

| static void | register_address_increment (struct x86_emulate_ctxt *ctxt, int reg, int inc) |

| |

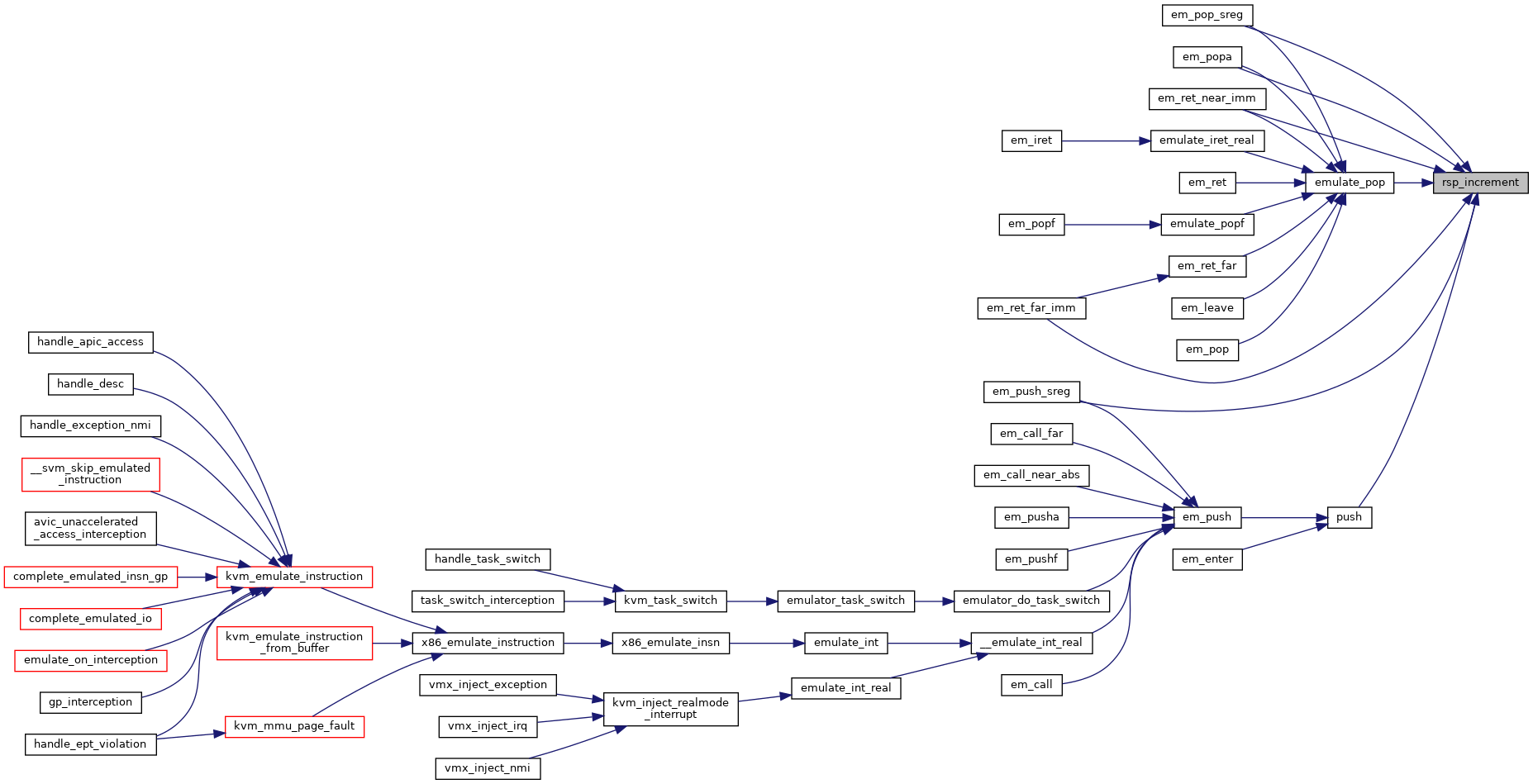

| static void | rsp_increment (struct x86_emulate_ctxt *ctxt, int inc) |

| |

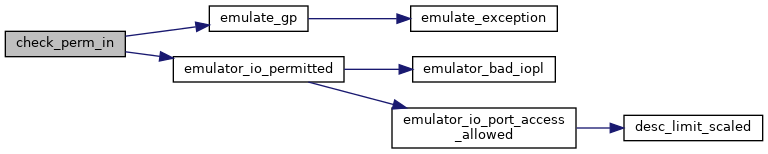

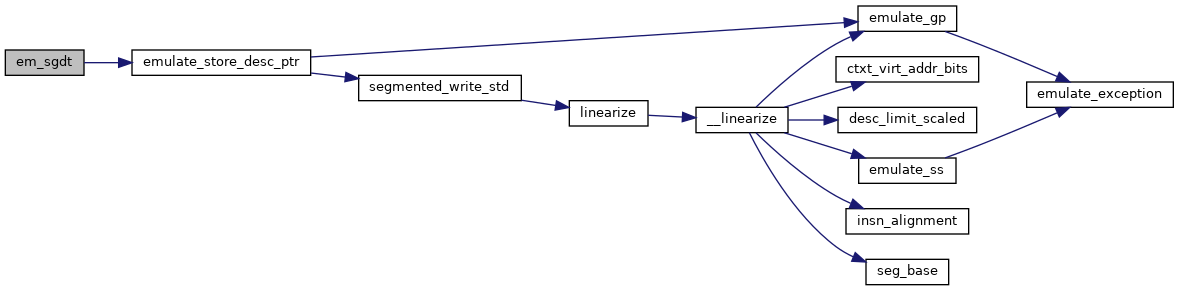

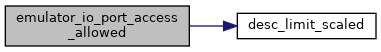

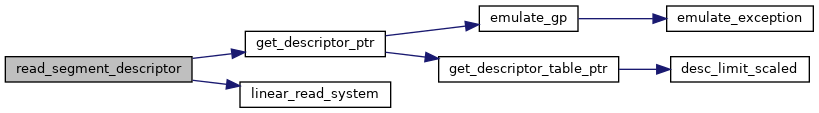

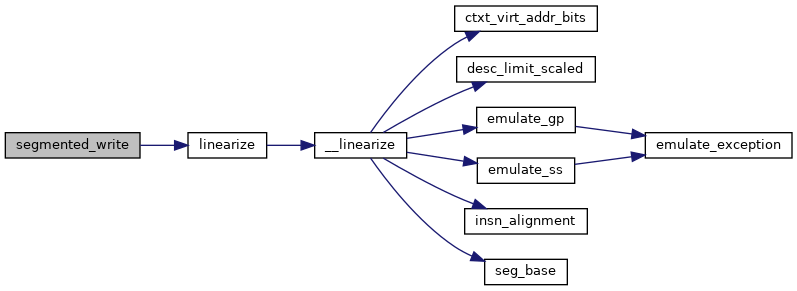

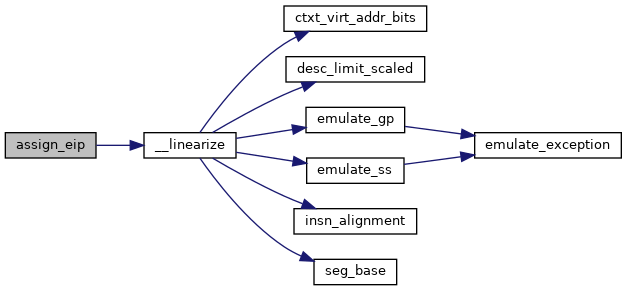

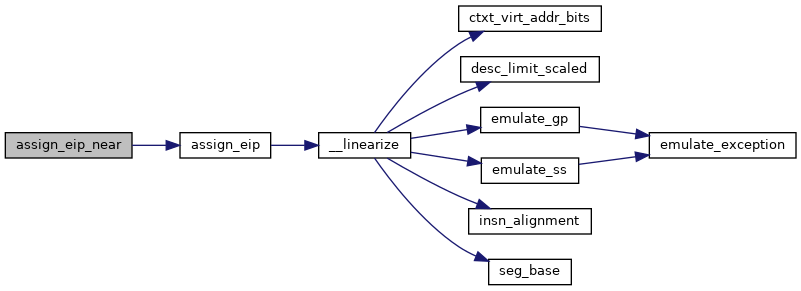

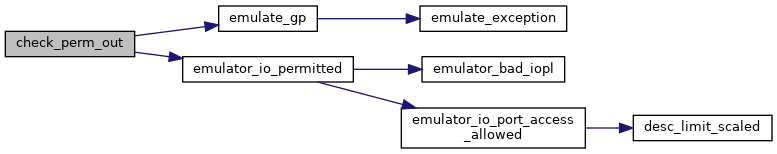

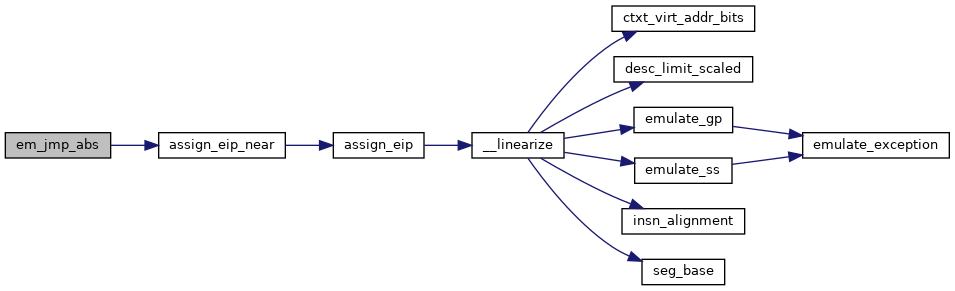

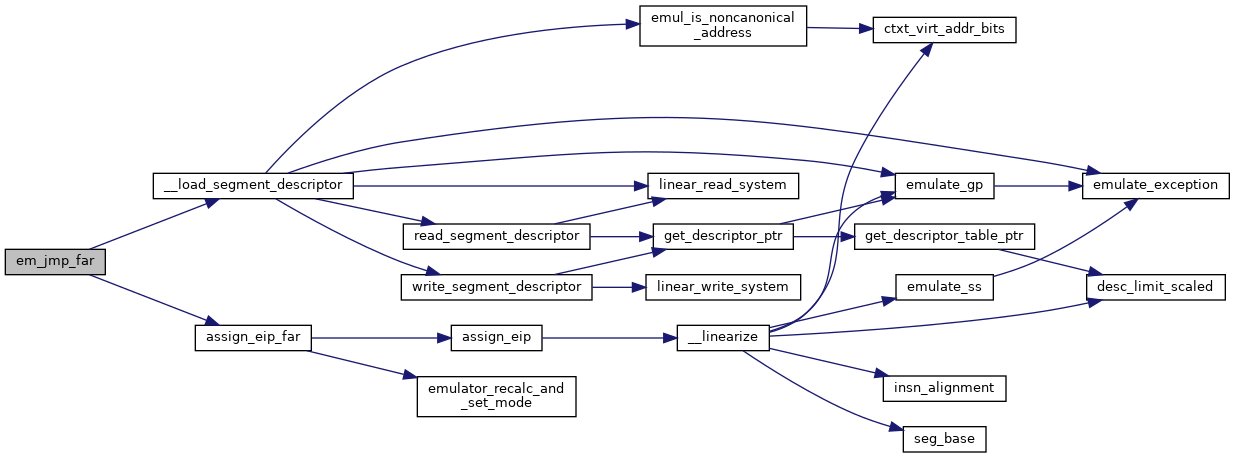

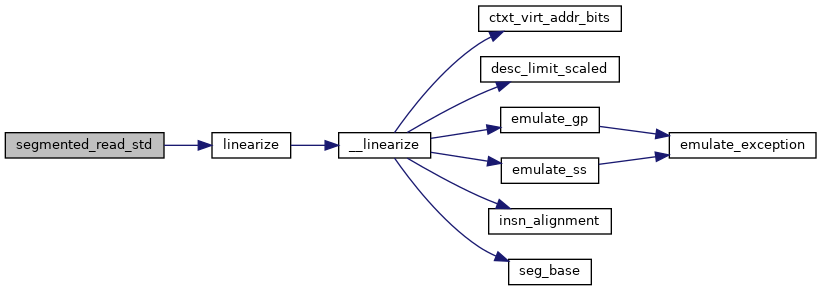

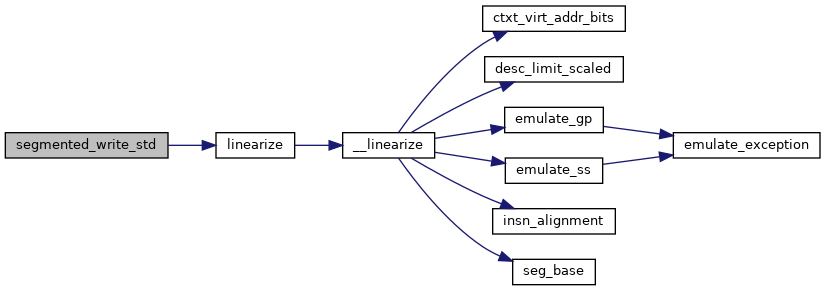

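`ad_mask()`, `address_mask()` and `masked_increment()` keep address-register arithmetic within the current address size, so that, for example, a 16-bit SP wraps inside its 64 KiB segment instead of carrying into the upper bits. A sketch under that assumption; the `_sketch` names are hypothetical, and the 8-byte case is handled explicitly because shifting a 64-bit value by 64 is undefined in C.

```c
#include <assert.h>
#include <stdint.h>

/* Address-size mask: 2 -> 0xffff, 4 -> 0xffffffff, 8 -> all ones. */
static uint64_t ad_mask_sketch(unsigned ad_bytes)
{
    assert(ad_bytes == 2 || ad_bytes == 4 || ad_bytes == 8);
    return ad_bytes == 8 ? ~0ull : (1ull << (ad_bytes * 8)) - 1;
}

/* Add inc to *reg, letting only the masked low bits change (wrap-around). */
static void masked_increment_sketch(uint64_t *reg, uint64_t mask, int inc)
{
    uint64_t sum = *reg + (uint64_t)(int64_t)inc;
    *reg = (*reg & ~mask) | (sum & mask);
}
```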
| static u32 | desc_limit_scaled (struct desc_struct *desc) |

| |

| static unsigned long | seg_base (struct x86_emulate_ctxt *ctxt, int seg) |

| |

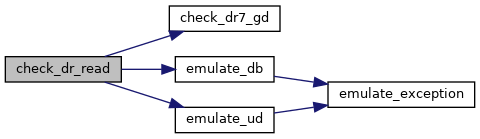

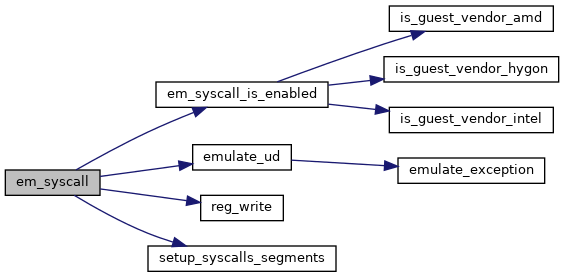

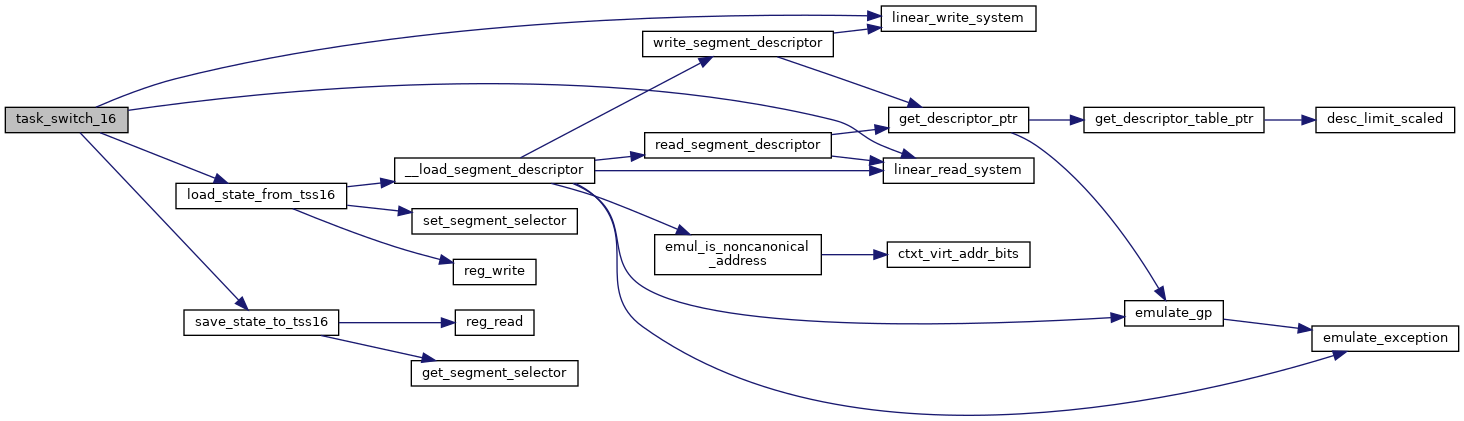

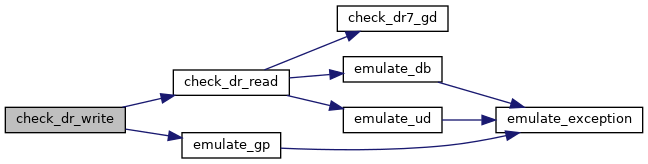

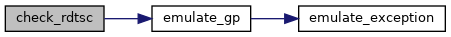

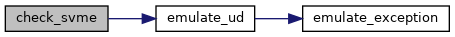

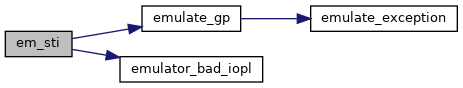

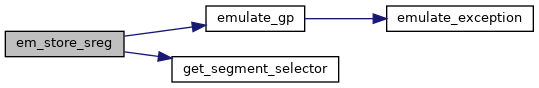

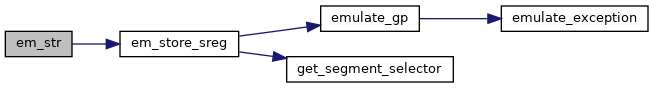

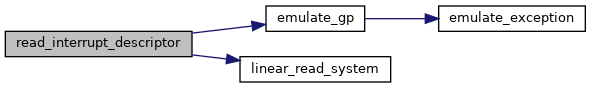

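`desc_limit_scaled()` turns a descriptor's 20-bit limit into a byte limit. With the granularity bit set, the limit counts 4 KiB units, so it is shifted left by 12 and the low 12 bits are filled with ones. A sketch with a hypothetical, simplified descriptor type (`struct seg_desc_sketch`):

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified descriptor: 20-bit limit plus granularity bit. */
struct seg_desc_sketch {
    uint32_t limit;  /* 20 significant bits */
    unsigned g : 1;  /* 1 = limit counts 4 KiB pages */
};

/* Effective byte limit: with G set, shift by 12 and fill the low 12 bits. */
static uint32_t desc_limit_scaled_sketch(const struct seg_desc_sketch *d)
{
    uint32_t limit = d->limit & 0xfffff;
    return d->g ? (limit << 12) | 0xfff : limit;
}
```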
| static int | emulate_exception (struct x86_emulate_ctxt *ctxt, int vec, u32 error, bool valid) |

| |

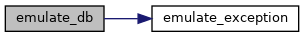

| static int | emulate_db (struct x86_emulate_ctxt *ctxt) |

| |

| static int | emulate_gp (struct x86_emulate_ctxt *ctxt, int err) |

| |

| static int | emulate_ss (struct x86_emulate_ctxt *ctxt, int err) |

| |

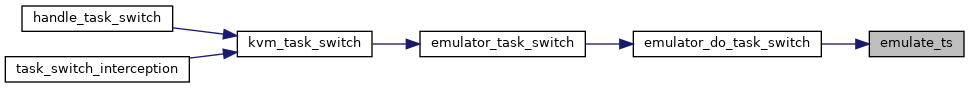

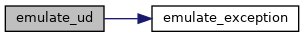

| static int | emulate_ud (struct x86_emulate_ctxt *ctxt) |

| |

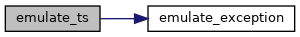

| static int | emulate_ts (struct x86_emulate_ctxt *ctxt, int err) |

| |

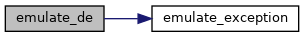

| static int | emulate_de (struct x86_emulate_ctxt *ctxt) |

| |

| static int | emulate_nm (struct x86_emulate_ctxt *ctxt) |

| |

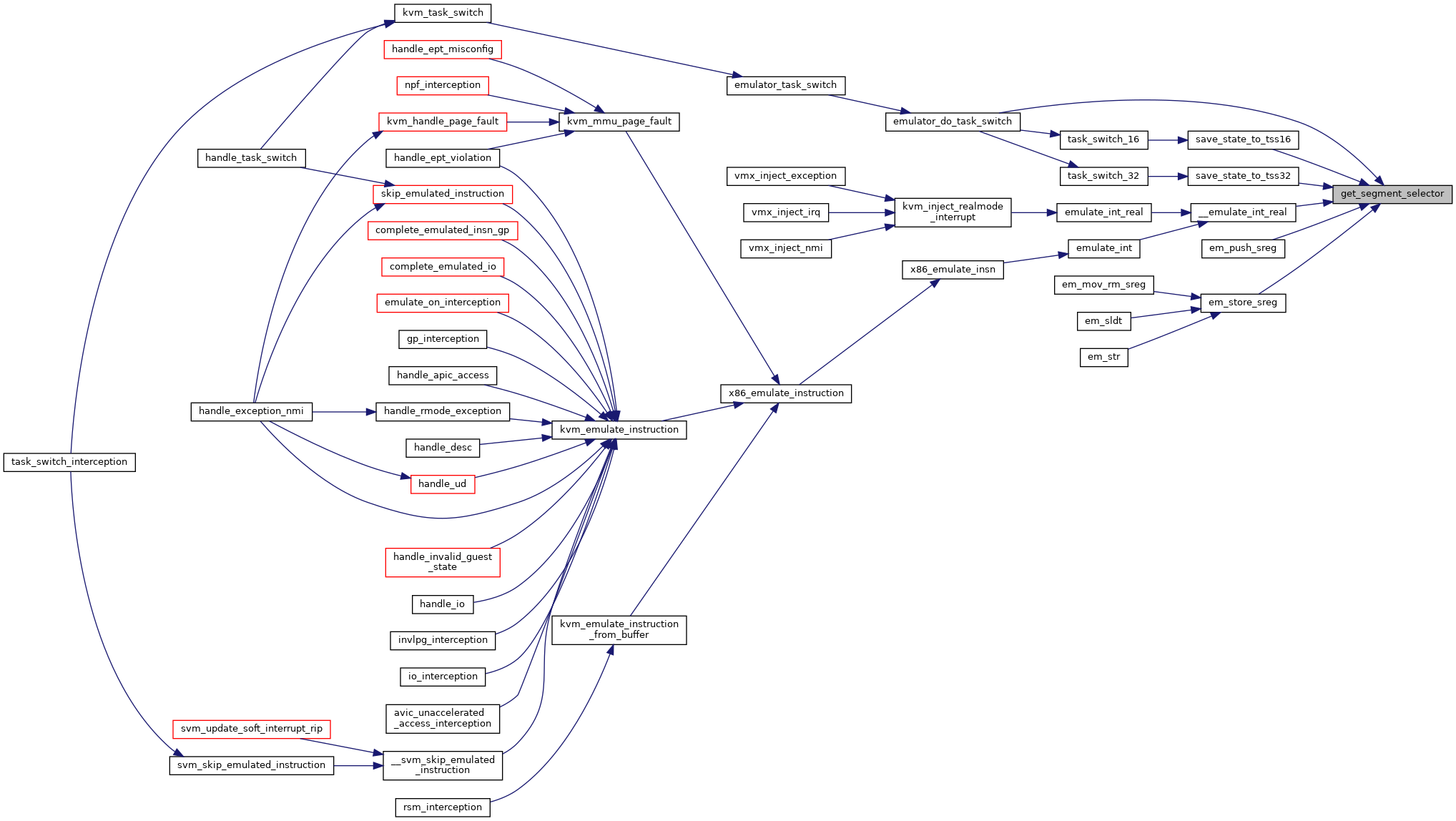

| static u16 | get_segment_selector (struct x86_emulate_ctxt *ctxt, unsigned seg) |

| |

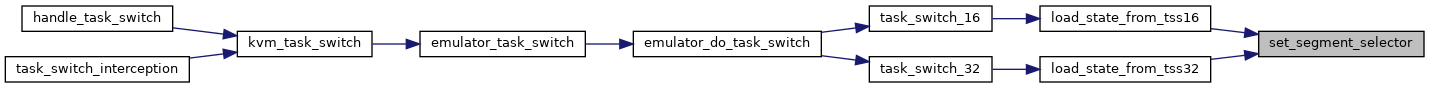

| static void | set_segment_selector (struct x86_emulate_ctxt *ctxt, u16 selector, unsigned seg) |

| |

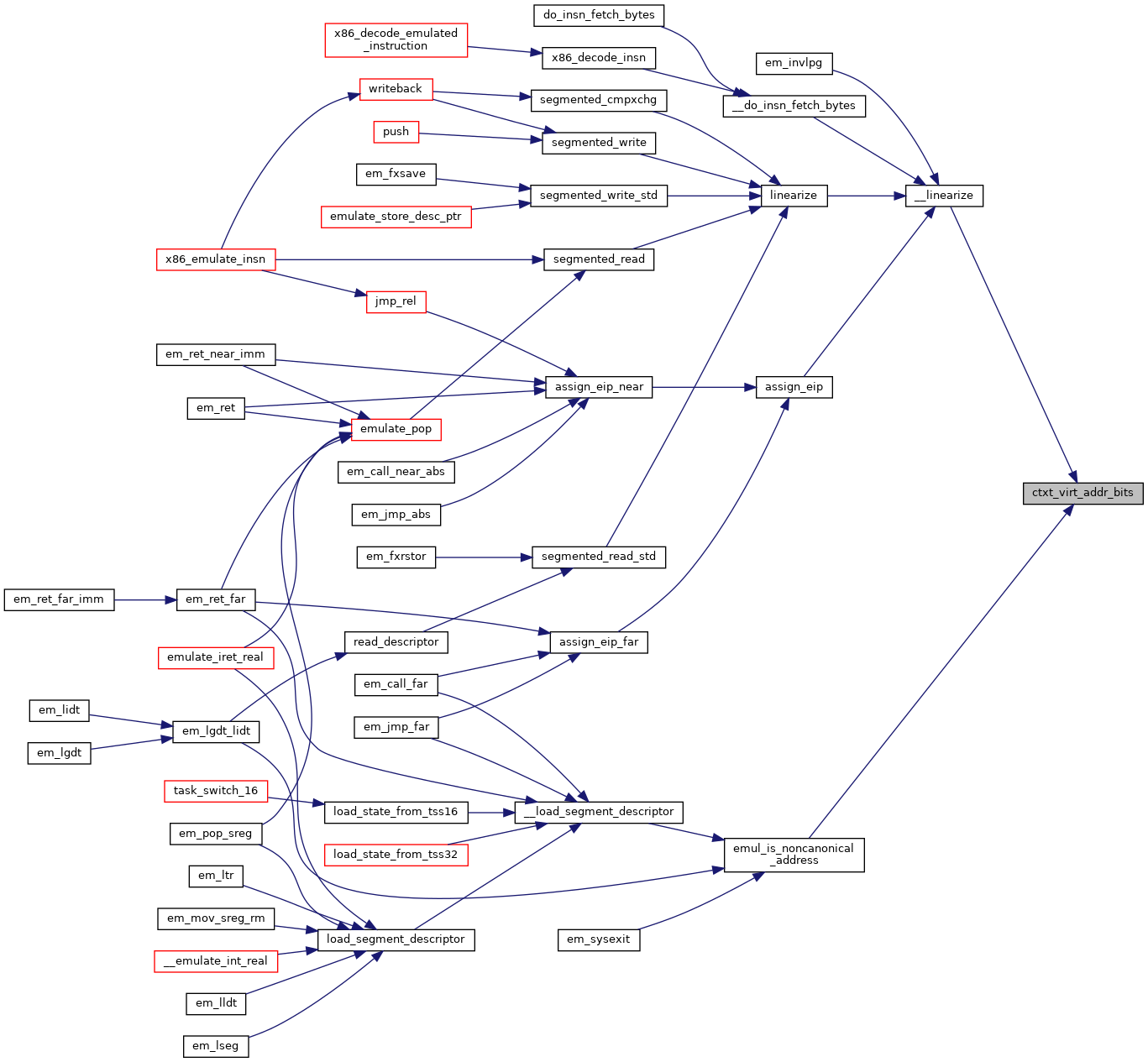

| static u8 | ctxt_virt_addr_bits (struct x86_emulate_ctxt *ctxt) |

| |

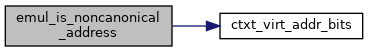

| static bool | emul_is_noncanonical_address (u64 la, struct x86_emulate_ctxt *ctxt) |

| |

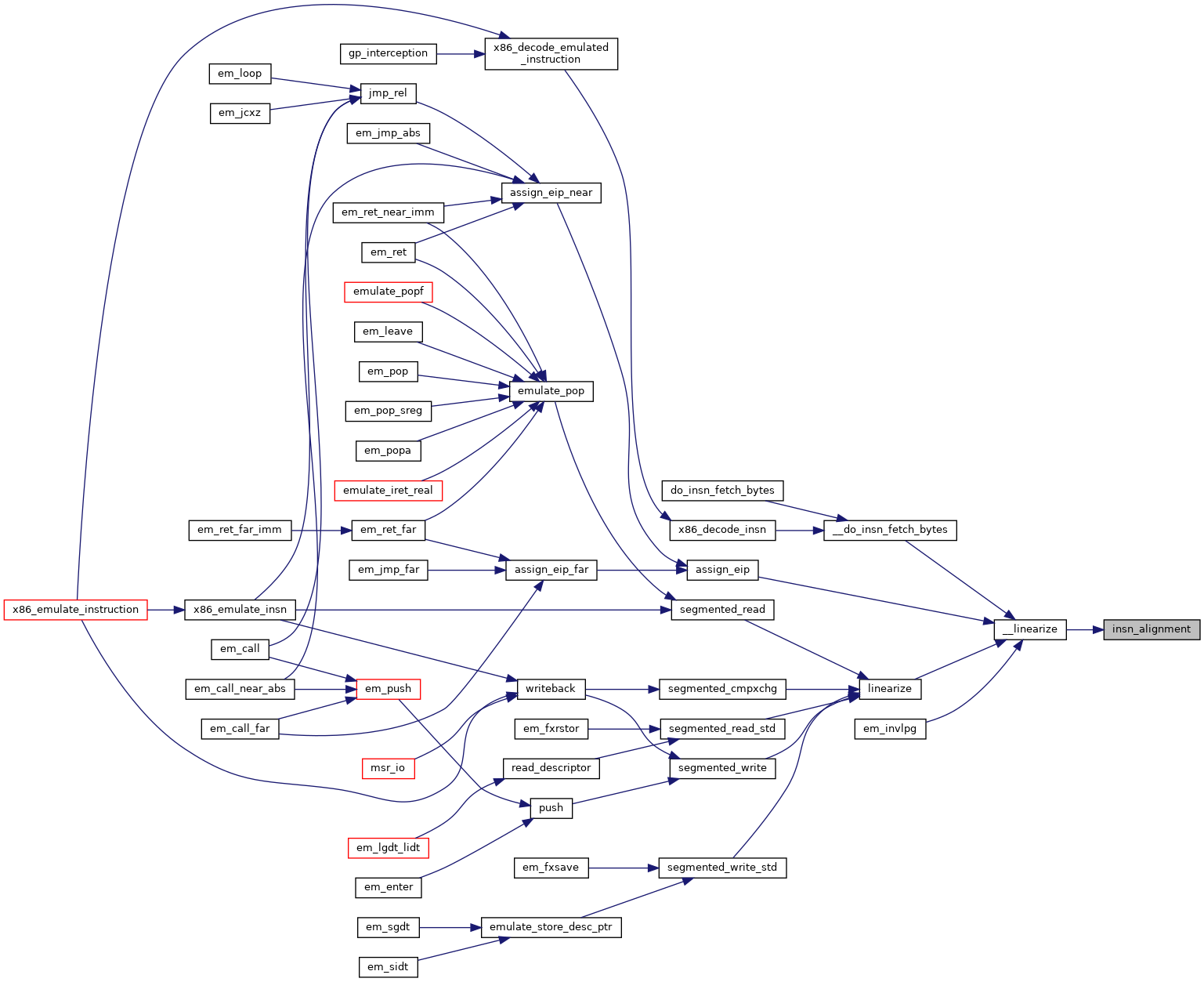

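`ctxt_virt_addr_bits()` and `emul_is_noncanonical_address()` decide whether a linear address is canonical: all bits above the top implemented bit must copy it, with 48 implemented bits under 4-level paging and 57 under 5-level paging (CR4.LA57). A sketch of the check by sign extension; `is_canonical_sketch()` is hypothetical, and the signed conversion and arithmetic right shift rely on GCC/Clang behaviour.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/*
 * An address is canonical when bits 63..(vaddr_bits-1) all equal bit
 * (vaddr_bits-1). vaddr_bits is 48 with 4-level paging, 57 with LA57.
 */
static bool is_canonical_sketch(uint64_t la, unsigned vaddr_bits)
{
    unsigned shift = 64 - vaddr_bits;
    /* Sign-extend from bit vaddr_bits-1 (arithmetic shift on GCC/Clang). */
    int64_t ext = (int64_t)(la << shift) >> shift;
    return (uint64_t)ext == la;
}
```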
| static unsigned | insn_alignment (struct x86_emulate_ctxt *ctxt, unsigned size) |

| |

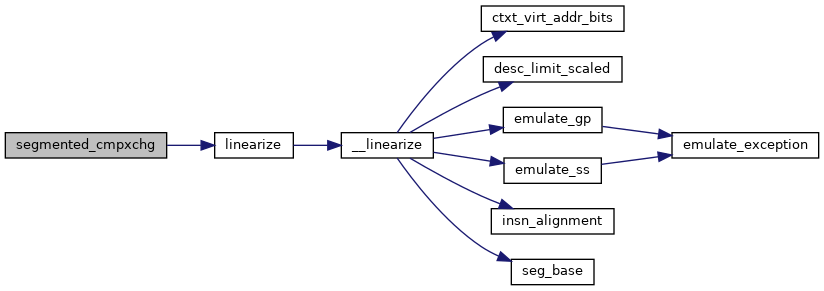

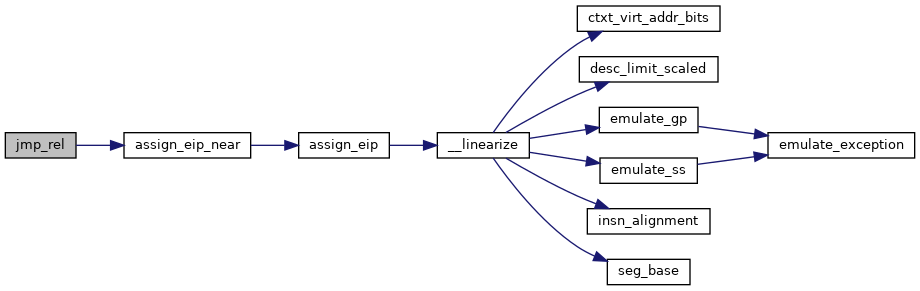

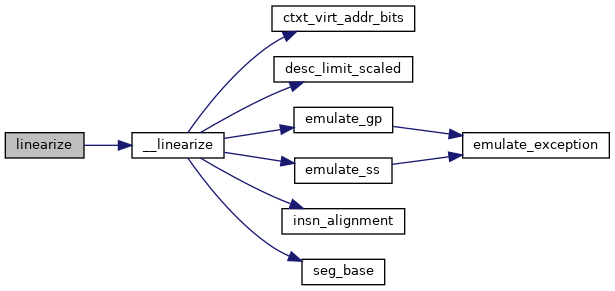

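`insn_alignment()` decides how strictly a memory operand must be aligned, driven by the `AlignMask` field in the flags (`Aligned`, `Unaligned`, `Avx`, `Aligned16`). The sketch below follows our reading of that policy: accesses under 16 bytes are never checked, `Unaligned` and `Avx` accept any address, `Aligned16` requires 16 bytes, and everything else requires natural alignment. `insn_alignment_sketch()` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Alignment-field values copied from the table (3 bits at bit 41). */
#define AlignMask ((uint64_t)7 << 41)
#define Aligned   ((uint64_t)1 << 41)
#define Unaligned ((uint64_t)2 << 41)
#define Avx       ((uint64_t)3 << 41)
#define Aligned16 ((uint64_t)4 << 41)

/* Required alignment (in bytes) for a memory access of the given size. */
static unsigned insn_alignment_sketch(uint64_t flags, unsigned size)
{
    if (size < 16)          /* small accesses: no alignment enforced */
        return 1;
    switch (flags & AlignMask) {
    case Unaligned:
    case Avx:
        return 1;
    case Aligned16:
        return 16;
    case Aligned:
    default:                /* otherwise: natural alignment */
        return size;
    }
}
```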
| static __always_inline int | __linearize (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, unsigned *max_size, unsigned size, enum x86emul_mode mode, ulong *linear, unsigned int flags) |

| |

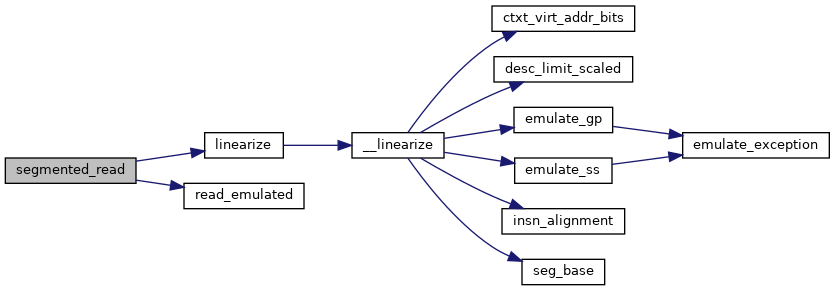

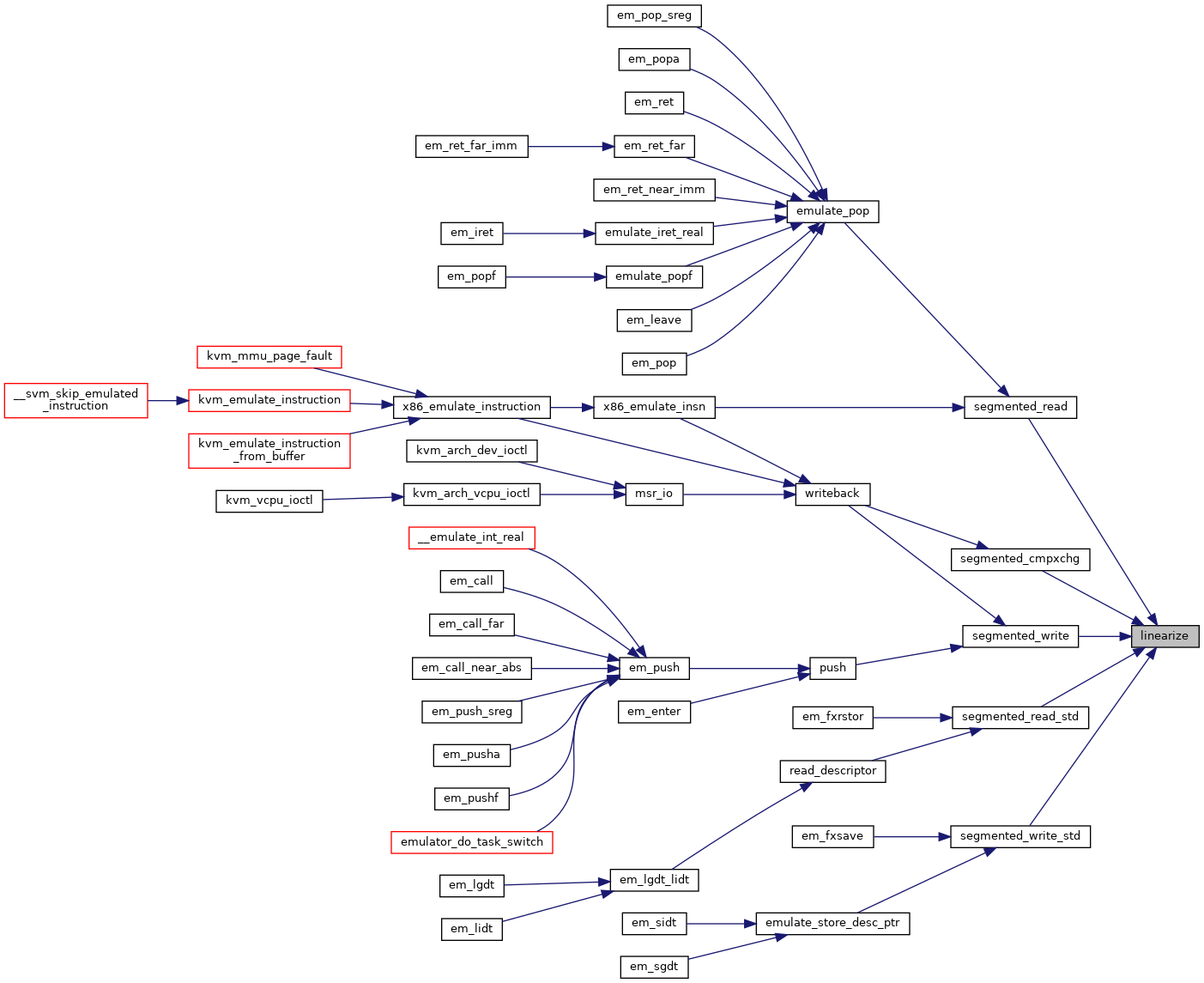

| static int | linearize (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, unsigned size, bool write, ulong *linear) |

| |

| static int | assign_eip (struct x86_emulate_ctxt *ctxt, ulong dst) |

| |

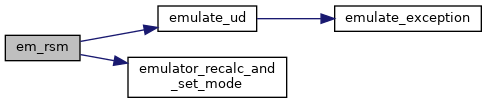

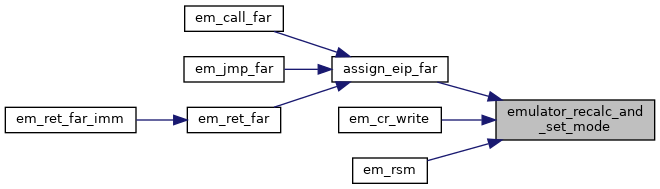

| static int | emulator_recalc_and_set_mode (struct x86_emulate_ctxt *ctxt) |

| |

| static int | assign_eip_near (struct x86_emulate_ctxt *ctxt, ulong dst) |

| |

| static int | assign_eip_far (struct x86_emulate_ctxt *ctxt, ulong dst) |

| |

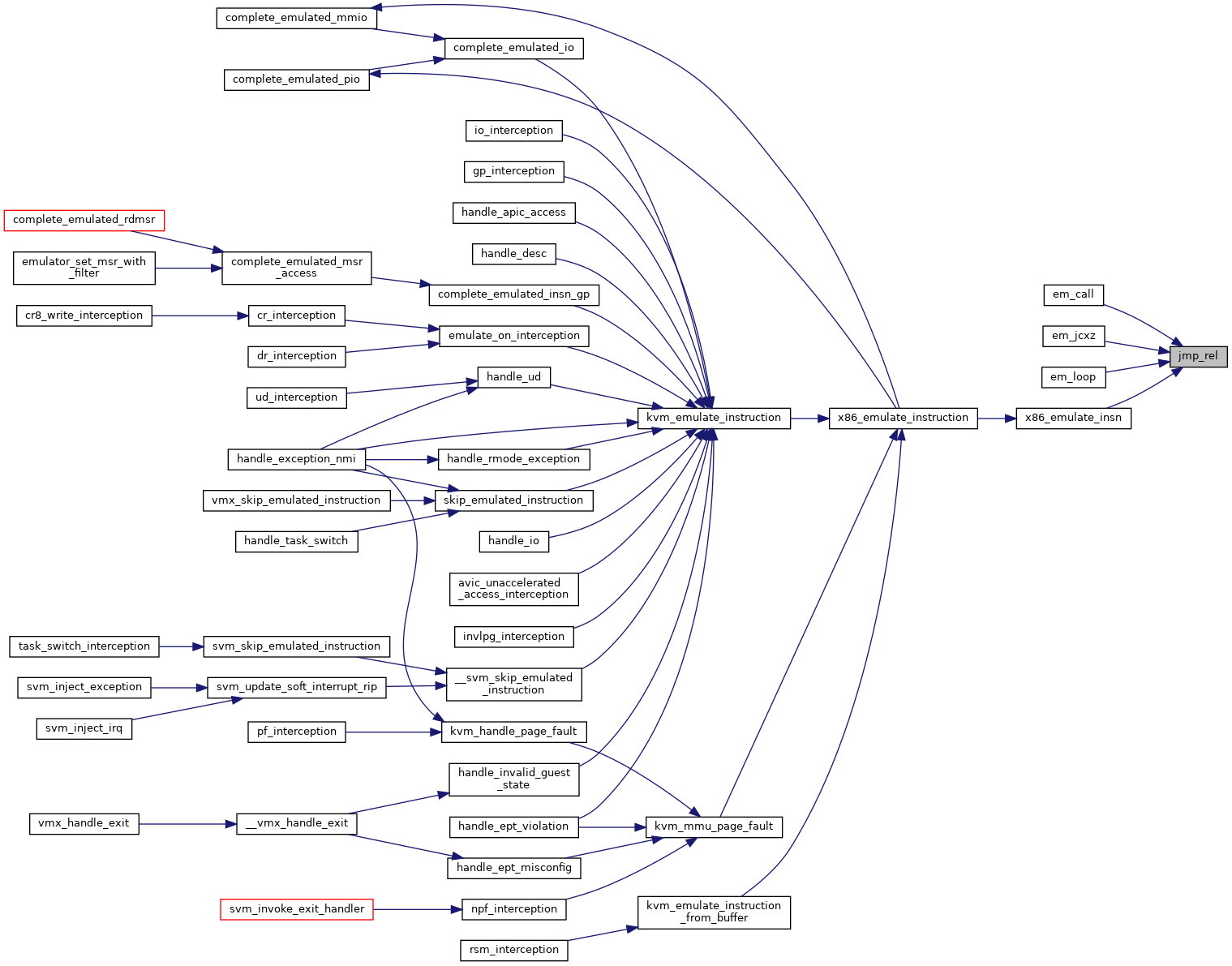

| static int | jmp_rel (struct x86_emulate_ctxt *ctxt, int rel) |

| |

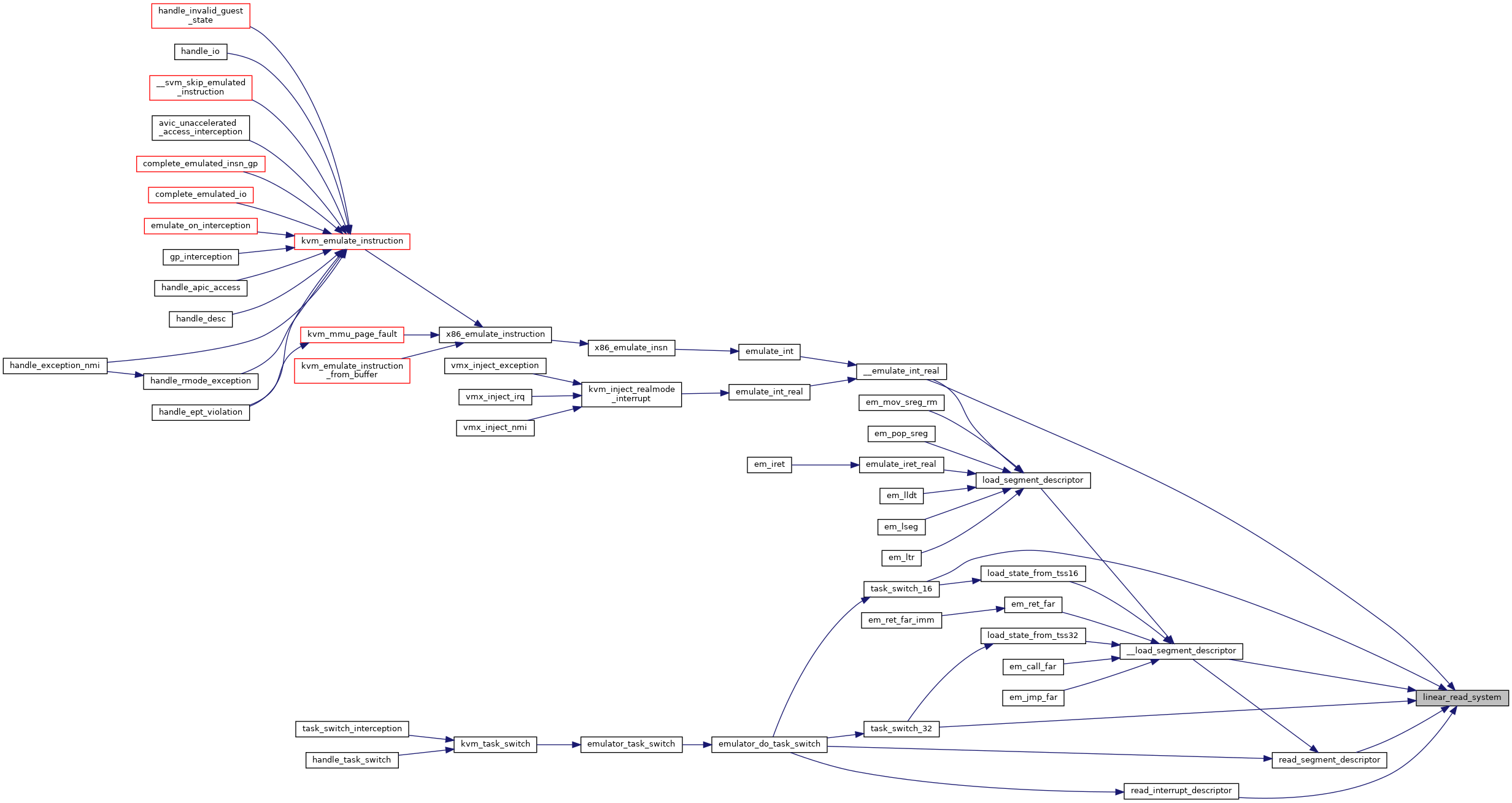

| static int | linear_read_system (struct x86_emulate_ctxt *ctxt, ulong linear, void *data, unsigned size) |

| |

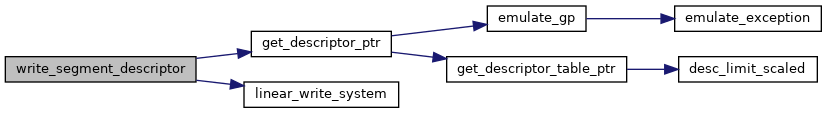

| static int | linear_write_system (struct x86_emulate_ctxt *ctxt, ulong linear, void *data, unsigned int size) |

| |

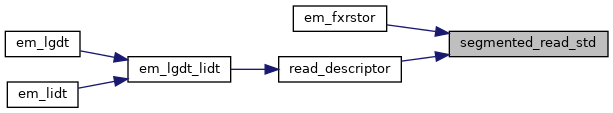

| static int | segmented_read_std (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, void *data, unsigned size) |

| |

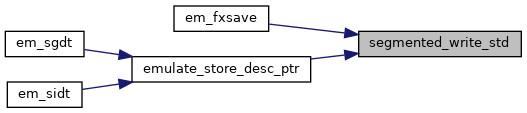

| static int | segmented_write_std (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, void *data, unsigned int size) |

| |

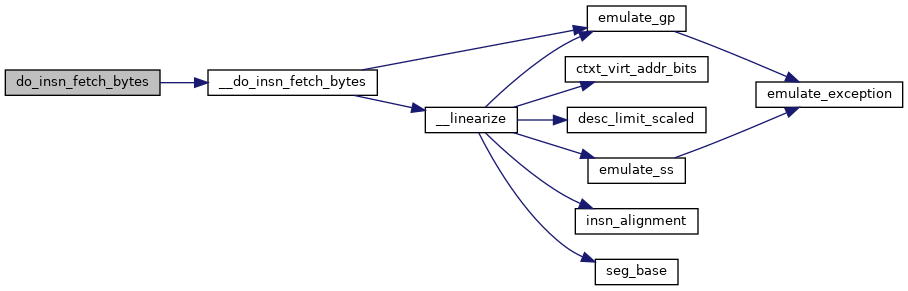

| static int | __do_insn_fetch_bytes (struct x86_emulate_ctxt *ctxt, int op_size) |

| |

| static __always_inline int | do_insn_fetch_bytes (struct x86_emulate_ctxt *ctxt, unsigned size) |

| |

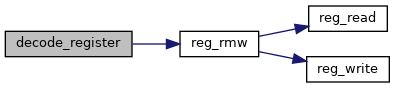

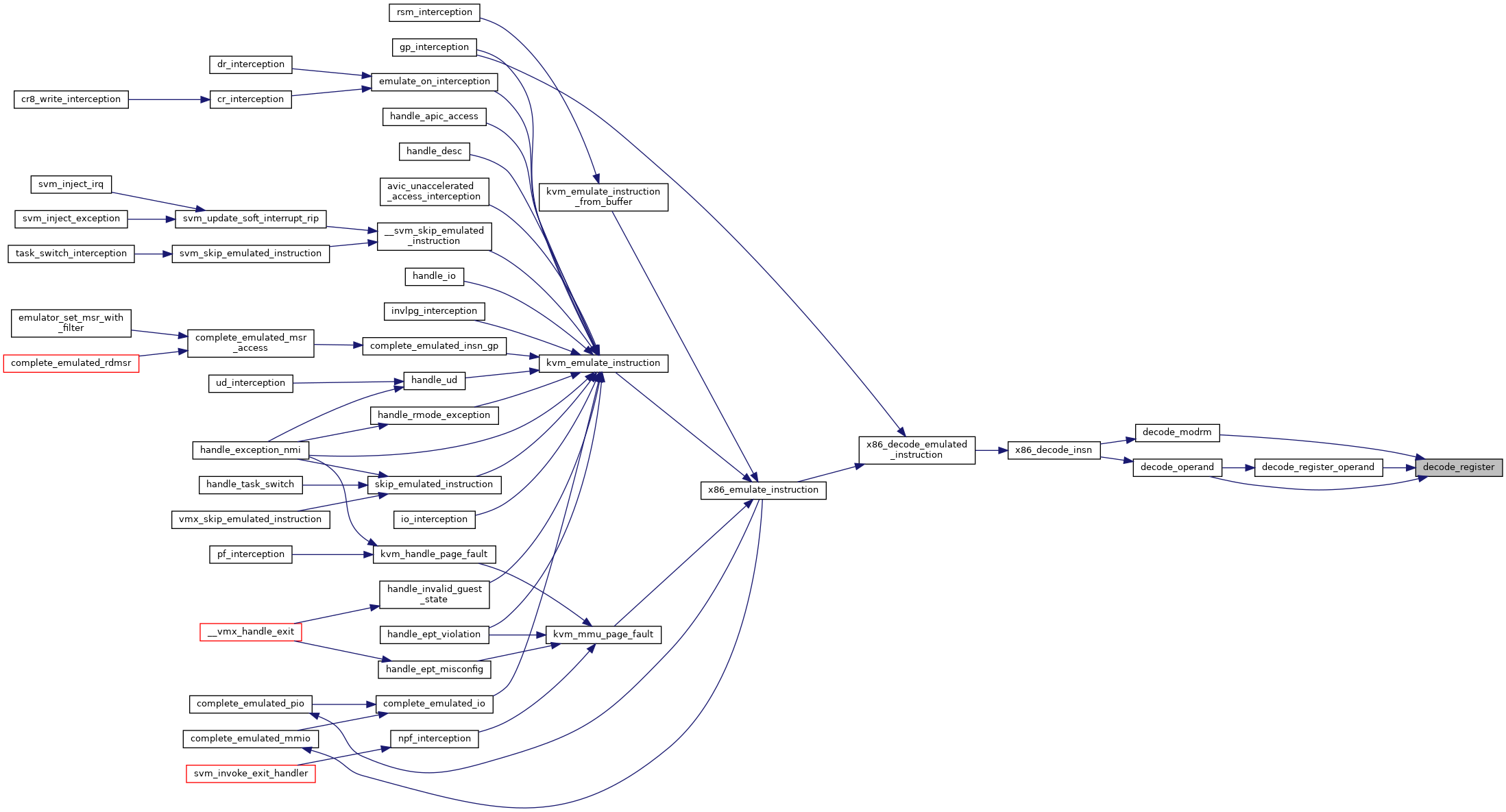

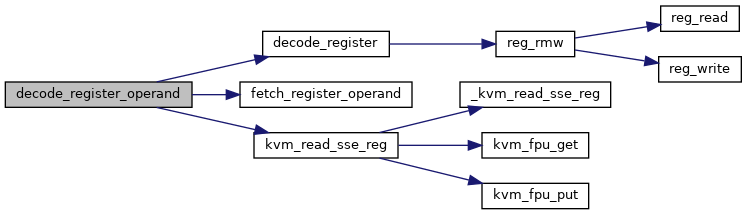

| static void * | decode_register (struct x86_emulate_ctxt *ctxt, u8 modrm_reg, int byteop) |

| |

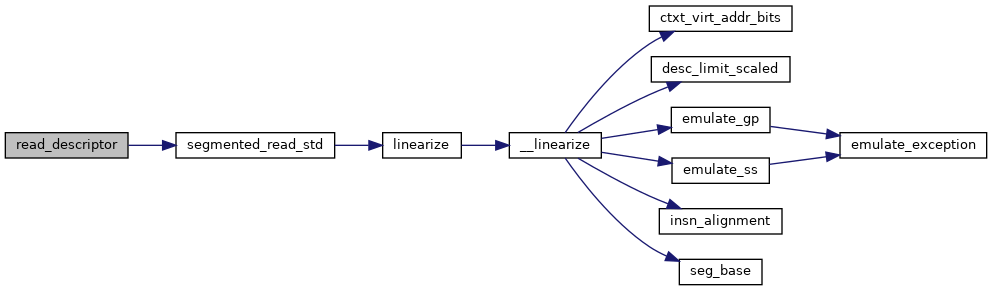

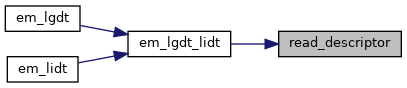

| static int | read_descriptor (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, u16 *size, unsigned long *address, int op_bytes) |

| |

| | FASTOP2 (add) |

| |

| | FASTOP2 (or) |

| |

| | FASTOP2 (adc) |

| |

| | FASTOP2 (sbb) |

| |

| | FASTOP2 (and) |

| |

| | FASTOP2 (sub) |

| |

| | FASTOP2 (xor) |

| |

| | FASTOP2 (cmp) |

| |

| | FASTOP2 (test) |

| |

| | FASTOP1SRC2 (mul, mul_ex) |

| |

| | FASTOP1SRC2 (imul, imul_ex) |

| |

| | FASTOP1SRC2EX (div, div_ex) |

| |

| | FASTOP1SRC2EX (idiv, idiv_ex) |

| |

| | FASTOP3WCL (shld) |

| |

| | FASTOP3WCL (shrd) |

| |

| | FASTOP2W (imul) |

| |

| | FASTOP1 (not) |

| |

| | FASTOP1 (neg) |

| |

| | FASTOP1 (inc) |

| |

| | FASTOP1 (dec) |

| |

| | FASTOP2CL (rol) |

| |

| | FASTOP2CL (ror) |

| |

| | FASTOP2CL (rcl) |

| |

| | FASTOP2CL (rcr) |

| |

| | FASTOP2CL (shl) |

| |

| | FASTOP2CL (shr) |

| |

| | FASTOP2CL (sar) |

| |

| | FASTOP2W (bsf) |

| |

| | FASTOP2W (bsr) |

| |

| | FASTOP2W (bt) |

| |

| | FASTOP2W (bts) |

| |

| | FASTOP2W (btr) |

| |

| | FASTOP2W (btc) |

| |

| | FASTOP2 (xadd) |

| |

| | FASTOP2R (cmp, cmp_r) |

| |

| static int | em_bsf_c (struct x86_emulate_ctxt *ctxt) |

| |

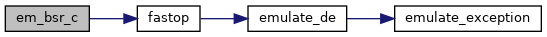

| static int | em_bsr_c (struct x86_emulate_ctxt *ctxt) |

| |

| static __always_inline u8 | test_cc (unsigned int condition, unsigned long flags) |

| |

| static void | fetch_register_operand (struct operand *op) |

| |

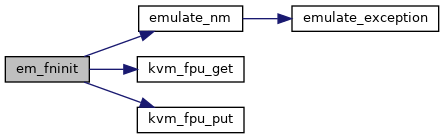

| static int | em_fninit (struct x86_emulate_ctxt *ctxt) |

| |

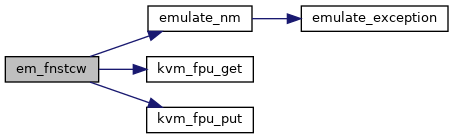

| static int | em_fnstcw (struct x86_emulate_ctxt *ctxt) |

| |

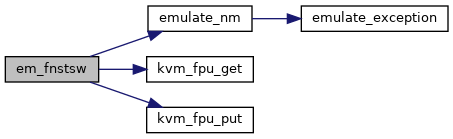

| static int | em_fnstsw (struct x86_emulate_ctxt *ctxt) |

| |

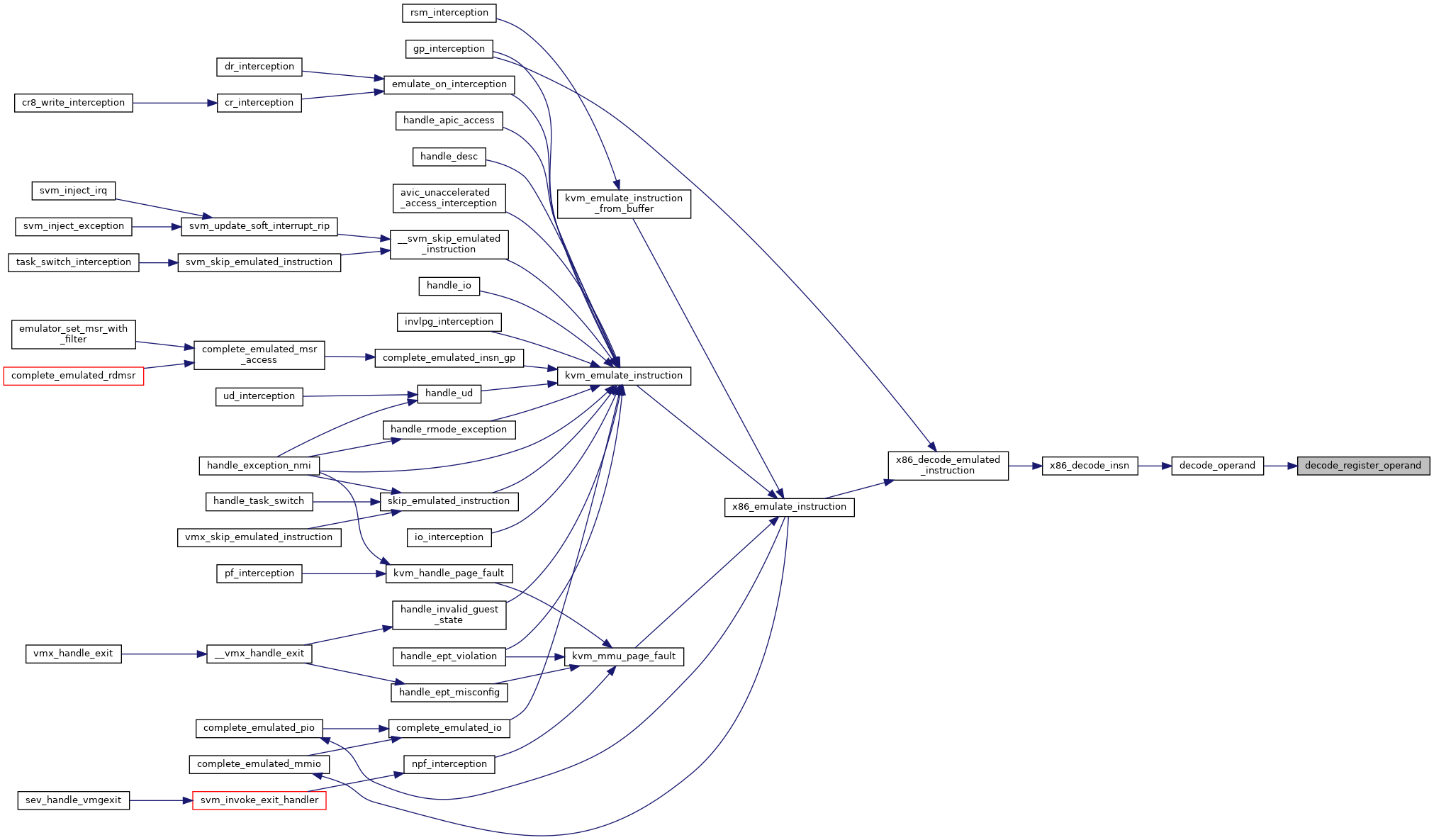

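`test_cc()` evaluates an x86 condition code against a flags value. The kernel does this by running host `SETcc` stubs generated with `FOP_SETCC`; the sketch below is a swapped-in pure-C alternative, not the kernel's technique. It relies on the architectural encoding in which each even condition code tests a predicate and the following odd code negates it. `test_cc_sketch()` and the `FLAG_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Architectural EFLAGS bit positions. */
#define FLAG_CF (1u << 0)
#define FLAG_PF (1u << 2)
#define FLAG_ZF (1u << 6)
#define FLAG_SF (1u << 7)
#define FLAG_OF (1u << 11)

/* Evaluate the condition in the low nibble of a Jcc/SETcc/CMOVcc opcode. */
static bool test_cc_sketch(unsigned condition, unsigned long flags)
{
    bool cf = flags & FLAG_CF, pf = flags & FLAG_PF, zf = flags & FLAG_ZF;
    bool sf = flags & FLAG_SF, of = flags & FLAG_OF;
    bool r;

    switch ((condition & 0xf) >> 1) {
    case 0:  r = of; break;                 /* O  / NO */
    case 1:  r = cf; break;                 /* B  / AE */
    case 2:  r = zf; break;                 /* E  / NE */
    case 3:  r = cf || zf; break;           /* BE / A  */
    case 4:  r = sf; break;                 /* S  / NS */
    case 5:  r = pf; break;                 /* P  / NP */
    case 6:  r = sf != of; break;           /* L  / GE */
    default: r = zf || (sf != of); break;   /* LE / G  */
    }
    return (condition & 1) ? !r : r;        /* odd codes negate */
}
```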
| static void | decode_register_operand (struct x86_emulate_ctxt *ctxt, struct operand *op) |

| |

| static void | adjust_modrm_seg (struct x86_emulate_ctxt *ctxt, int base_reg) |

| |

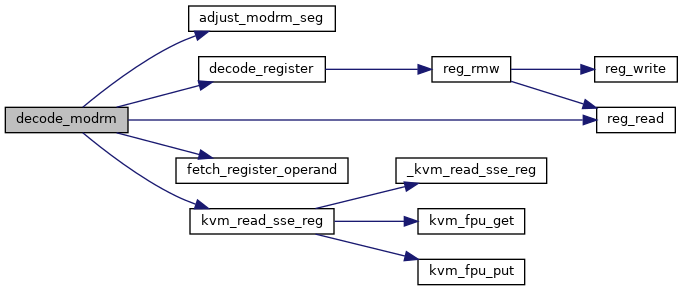

| static int | decode_modrm (struct x86_emulate_ctxt *ctxt, struct operand *op) |

| |

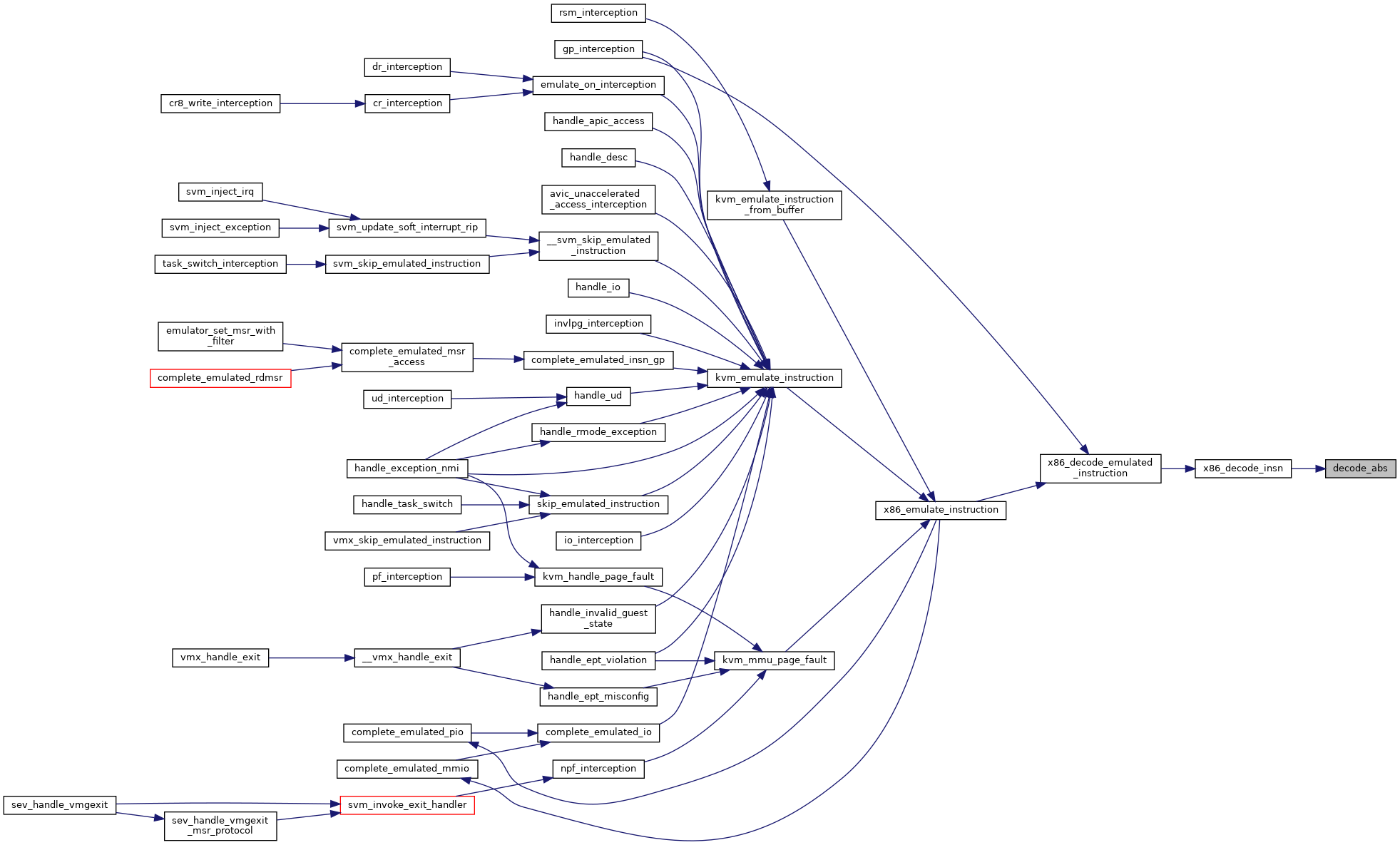

| static int | decode_abs (struct x86_emulate_ctxt *ctxt, struct operand *op) |

| |

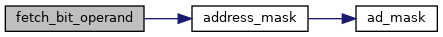

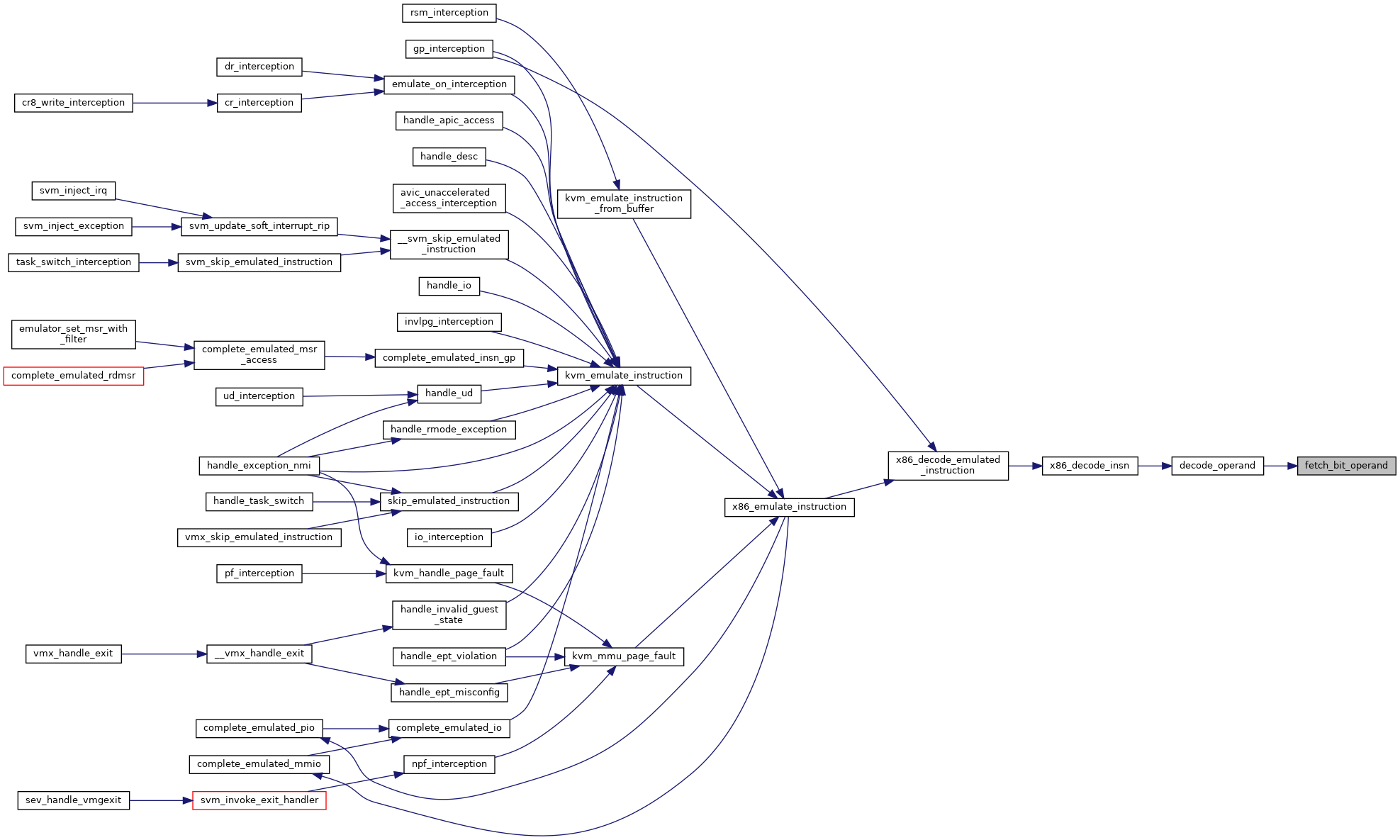

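`decode_modrm()` begins by splitting the ModRM byte into `mod` (bits 7..6), `reg` (bits 5..3) and `rm` (bits 2..0), and extending `reg` and `rm` to four bits with REX.R and REX.B. A minimal sketch of just that split; `struct modrm_fields` and `split_modrm()` are hypothetical, and SIB bytes, displacements and 16-bit addressing are out of scope.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical decoded view of a ModRM byte plus REX.R / REX.B. */
struct modrm_fields {
    unsigned mod; /* 0..3 */
    unsigned reg; /* 0..15 with REX.R */
    unsigned rm;  /* 0..15 with REX.B */
};

static struct modrm_fields split_modrm(uint8_t modrm, uint8_t rex)
{
    struct modrm_fields f;

    f.reg = (rex << 1) & 8;   /* REX.R (bit 2) becomes reg bit 3 */
    f.rm  = (rex << 3) & 8;   /* REX.B (bit 0) becomes rm bit 3 */
    f.mod = (modrm & 0xc0) >> 6;
    f.reg |= (modrm & 0x38) >> 3;
    f.rm  |= modrm & 0x07;
    return f;
}
```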
| static void | fetch_bit_operand (struct x86_emulate_ctxt *ctxt) |

| |

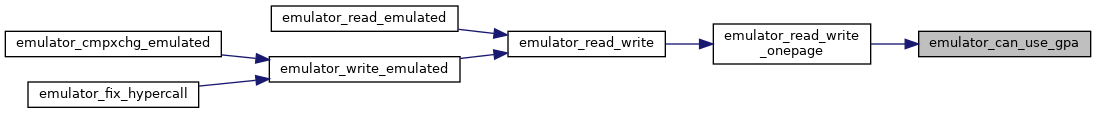

| static int | read_emulated (struct x86_emulate_ctxt *ctxt, unsigned long addr, void *dest, unsigned size) |

| |

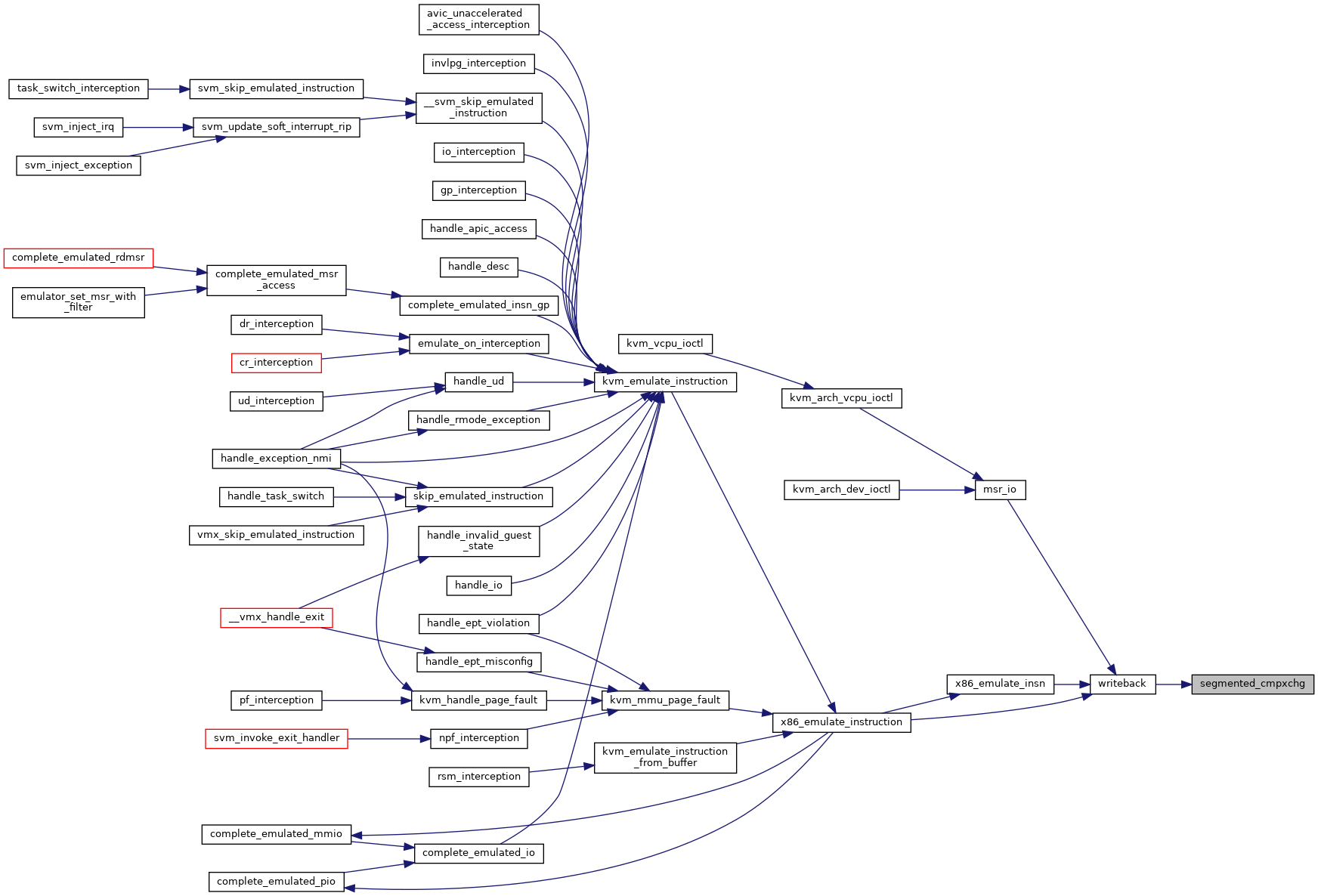

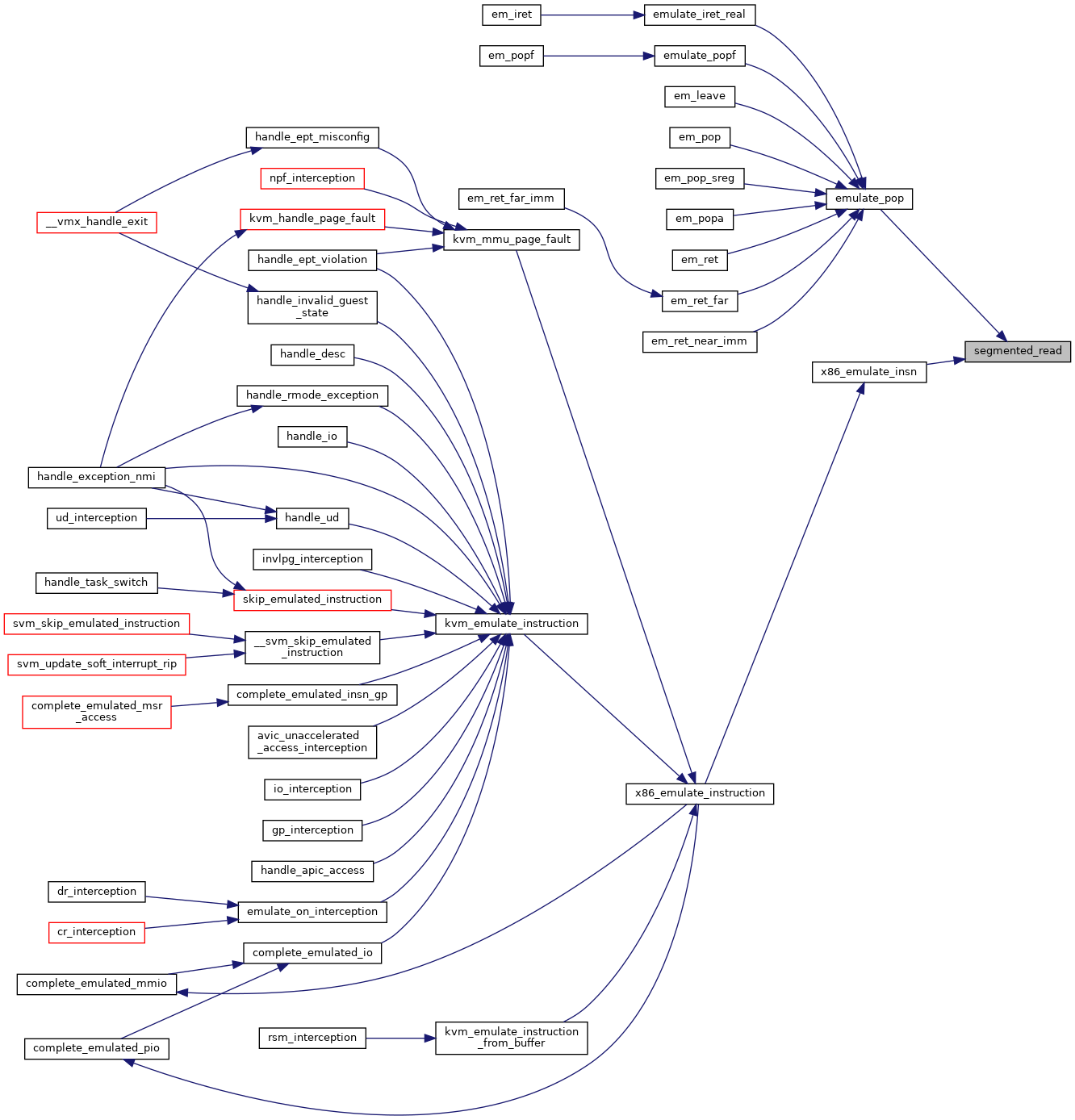

| static int | segmented_read (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, void *data, unsigned size) |

| |

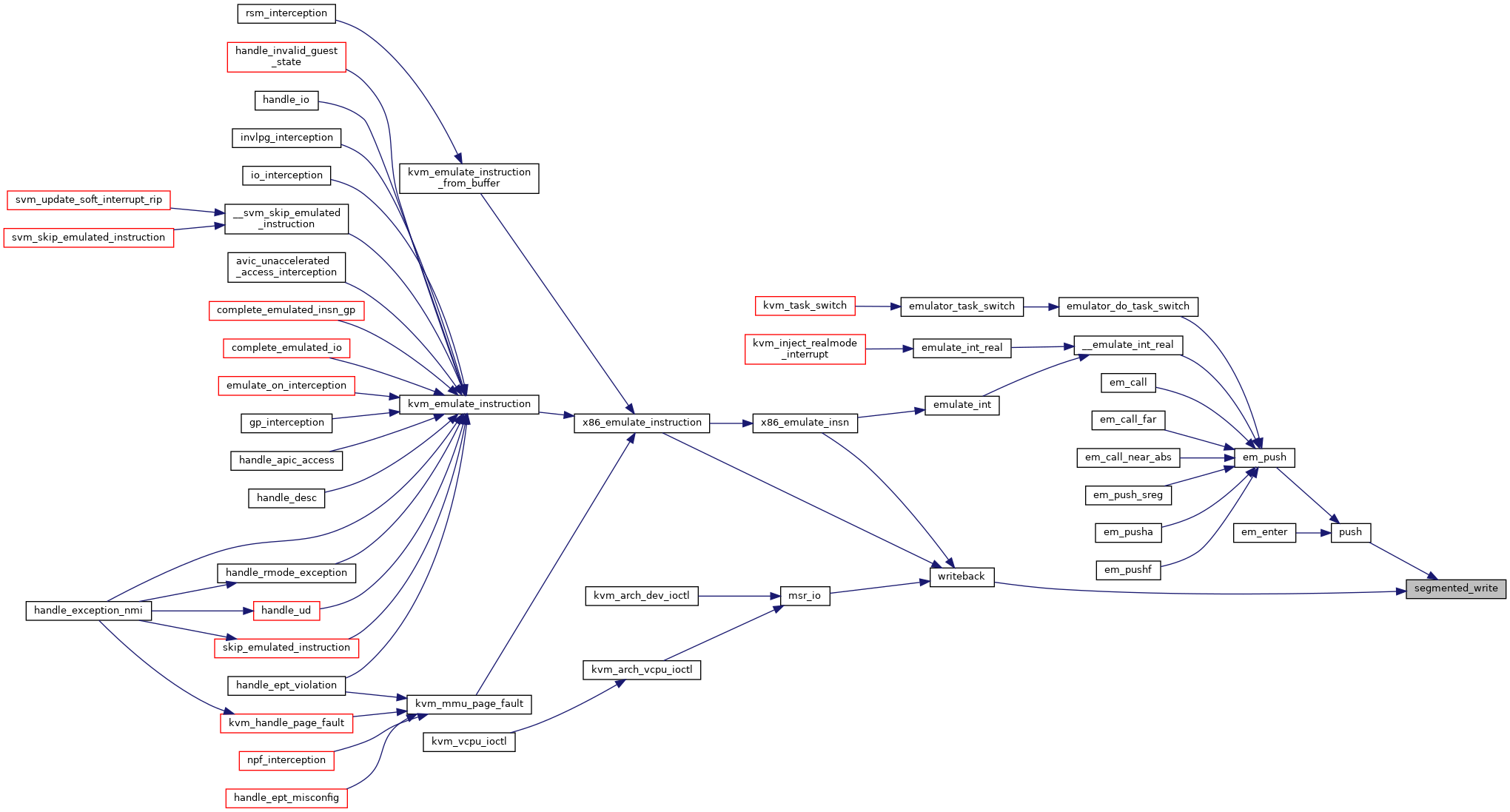

| static int | segmented_write (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, const void *data, unsigned size) |

| |

| static int | segmented_cmpxchg (struct x86_emulate_ctxt *ctxt, struct segmented_address addr, const void *orig_data, const void *data, unsigned size) |

| |

| static int | pio_in_emulated (struct x86_emulate_ctxt *ctxt, unsigned int size, unsigned short port, void *dest) |

| |

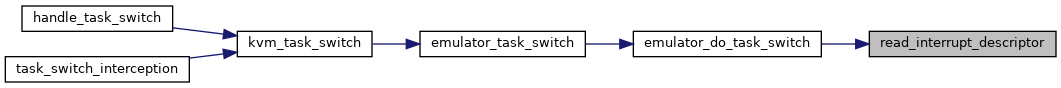

| static int | read_interrupt_descriptor (struct x86_emulate_ctxt *ctxt, u16 index, struct desc_struct *desc) |

| |

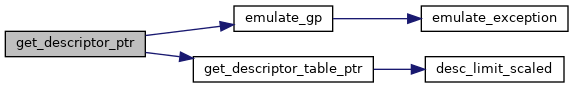

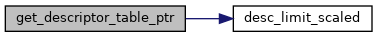

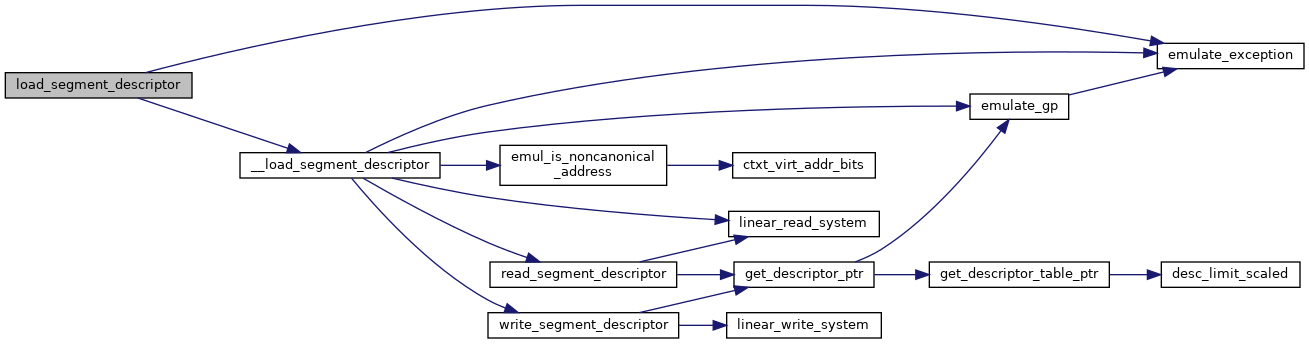

| static void | get_descriptor_table_ptr (struct x86_emulate_ctxt *ctxt, u16 selector, struct desc_ptr *dt) |

| |

| static int | get_descriptor_ptr (struct x86_emulate_ctxt *ctxt, u16 selector, ulong *desc_addr_p) |

| |

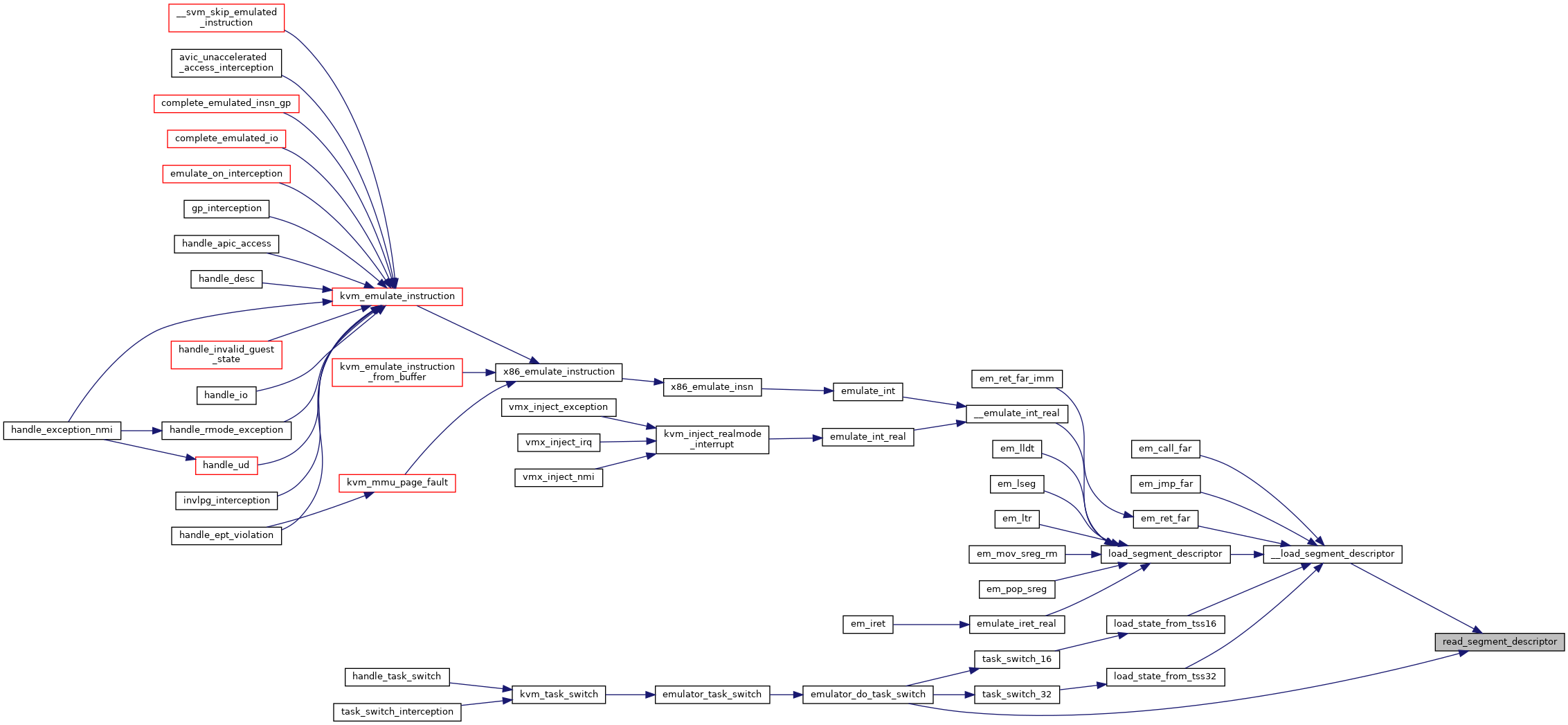

| static int | read_segment_descriptor (struct x86_emulate_ctxt *ctxt, u16 selector, struct desc_struct *desc, ulong *desc_addr_p) |

| |

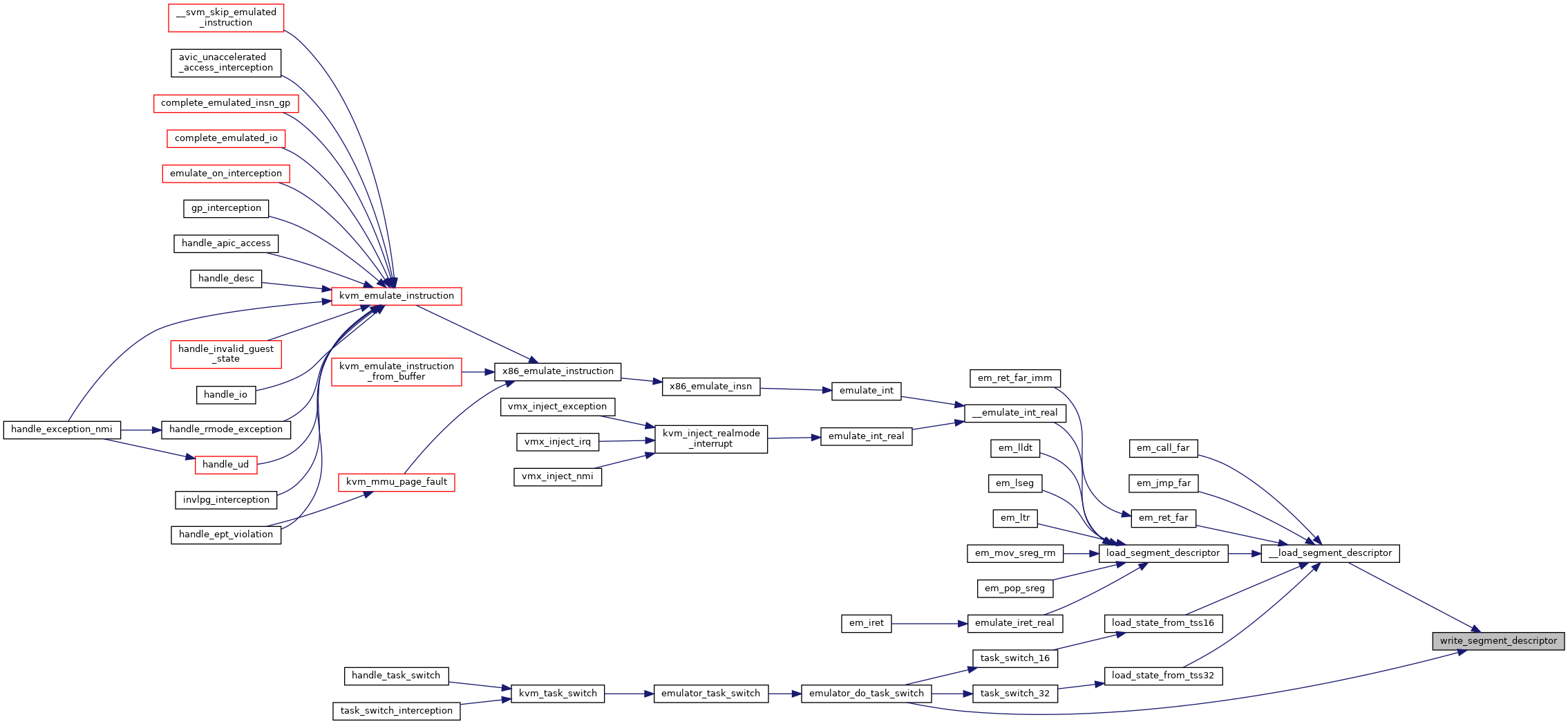

| static int | write_segment_descriptor (struct x86_emulate_ctxt *ctxt, u16 selector, struct desc_struct *desc) |

| |

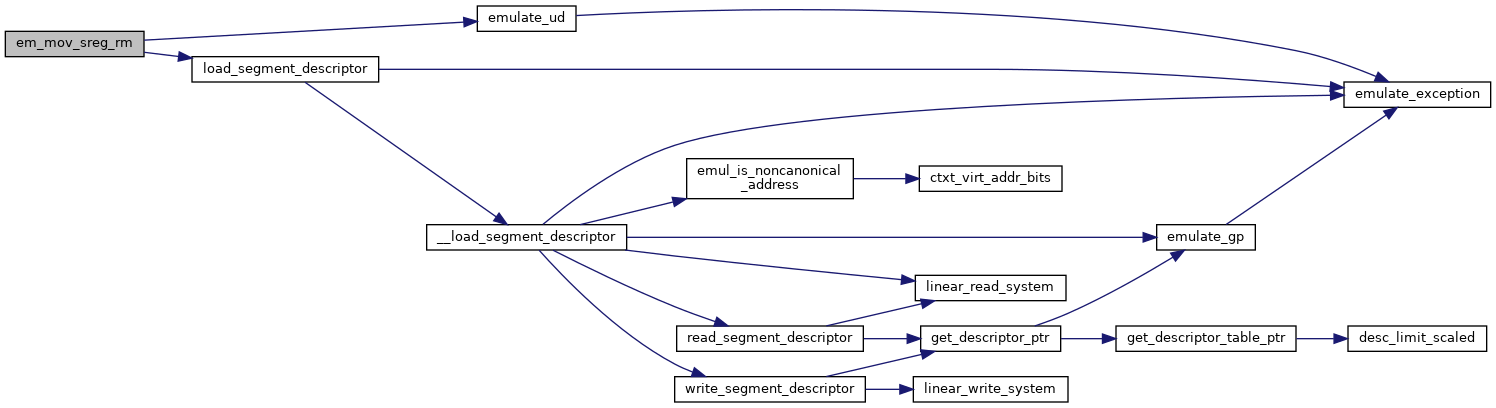

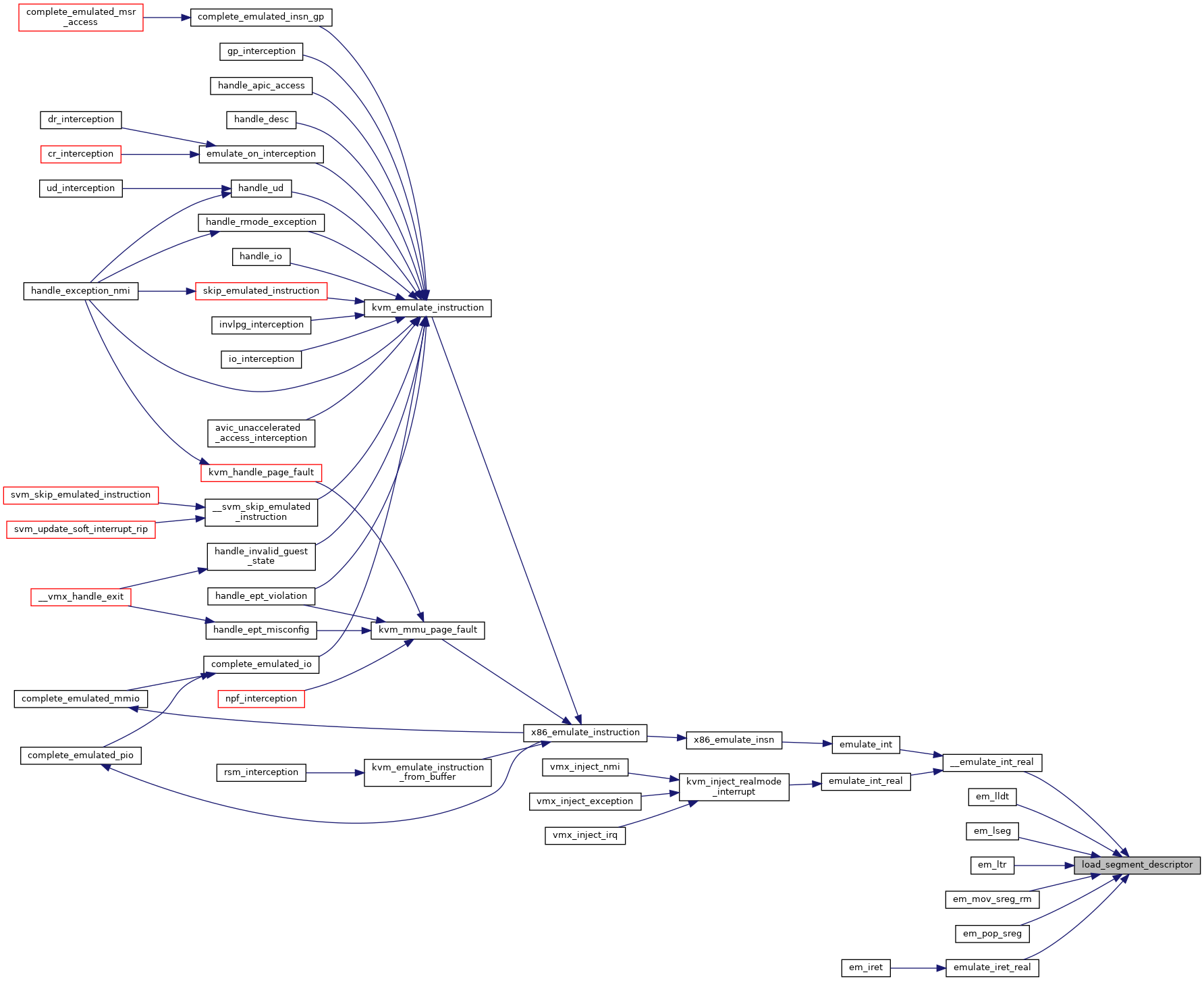

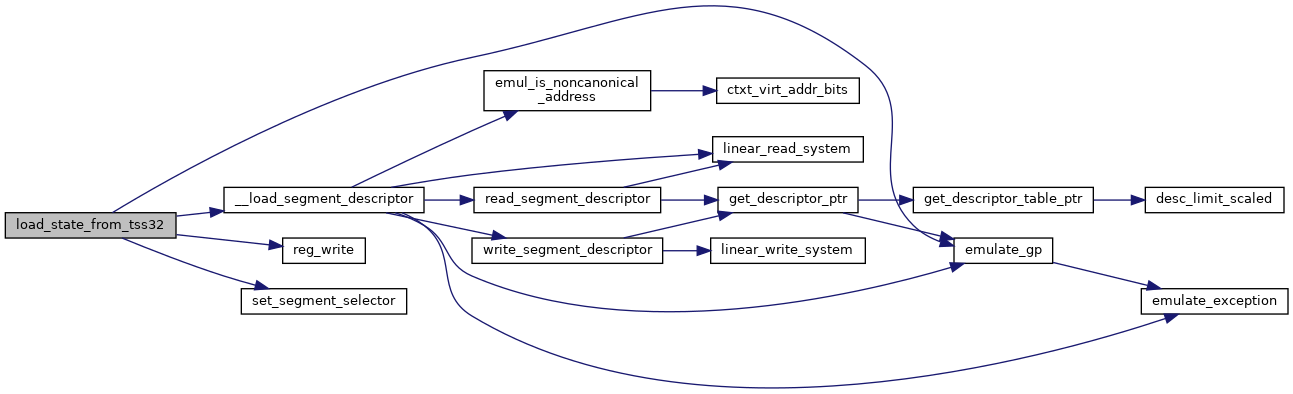

| static int | __load_segment_descriptor (struct x86_emulate_ctxt *ctxt, u16 selector, int seg, u8 cpl, enum x86_transfer_type transfer, struct desc_struct *desc) |

| |

| static int | load_segment_descriptor (struct x86_emulate_ctxt *ctxt, u16 selector, int seg) |

| |

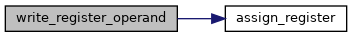

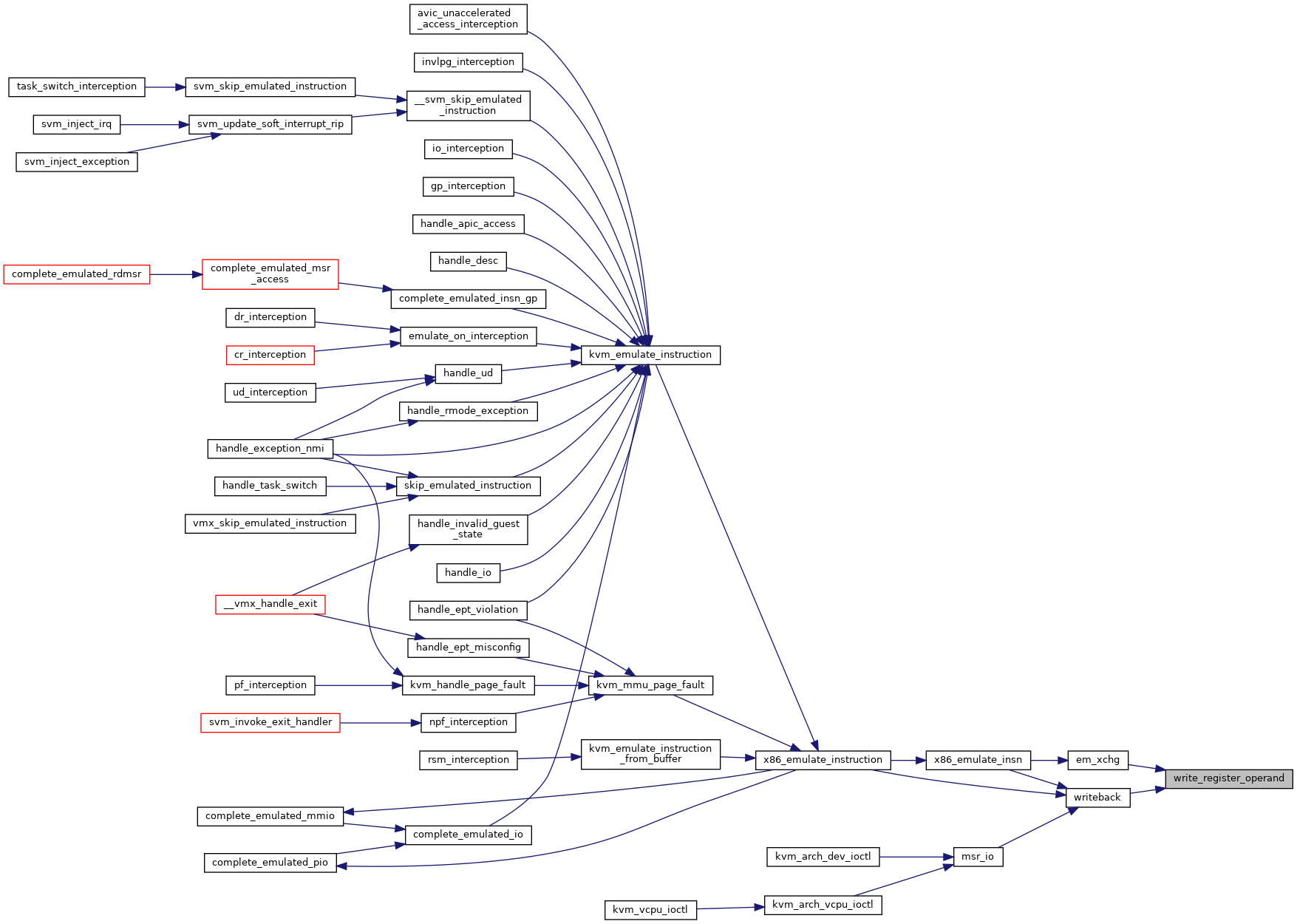

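`get_descriptor_ptr()` locates a segment descriptor from a selector: bits 15..3 index 8-byte entries, bit 2 chooses the GDT or LDT, and bits 1..0 hold the RPL. The whole entry must lie within the table limit. A sketch assuming the caller has already picked the right table (which `get_descriptor_table_ptr()` does from bit 2); `struct table_ptr_sketch` and `descriptor_addr_sketch()` are hypothetical, and real code raises `#GP` with `selector & 0xfffc` as the error code instead of returning `false`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for a descriptor-table base and limit. */
struct table_ptr_sketch {
    uint64_t address;
    uint16_t size;    /* limit: offset of the last valid byte */
};

/* Compute the address of the 8-byte descriptor named by selector. */
static bool descriptor_addr_sketch(const struct table_ptr_sketch *dt,
                                   uint16_t selector, uint64_t *addr)
{
    uint32_t index = selector >> 3;   /* drop RPL (bits 1..0) and TI (bit 2) */

    if (dt->size < index * 8 + 7)     /* last byte of the entry past the limit */
        return false;
    *addr = dt->address + (uint64_t)index * 8;
    return true;
}
```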
| static void | write_register_operand (struct operand *op) |

| |

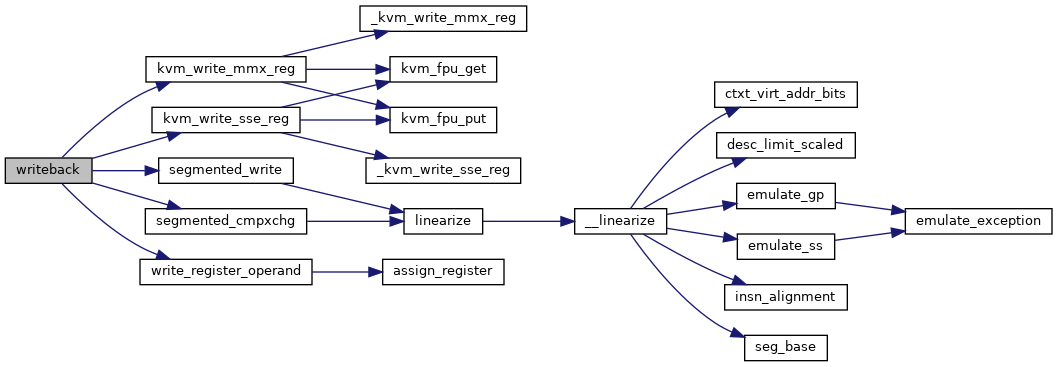

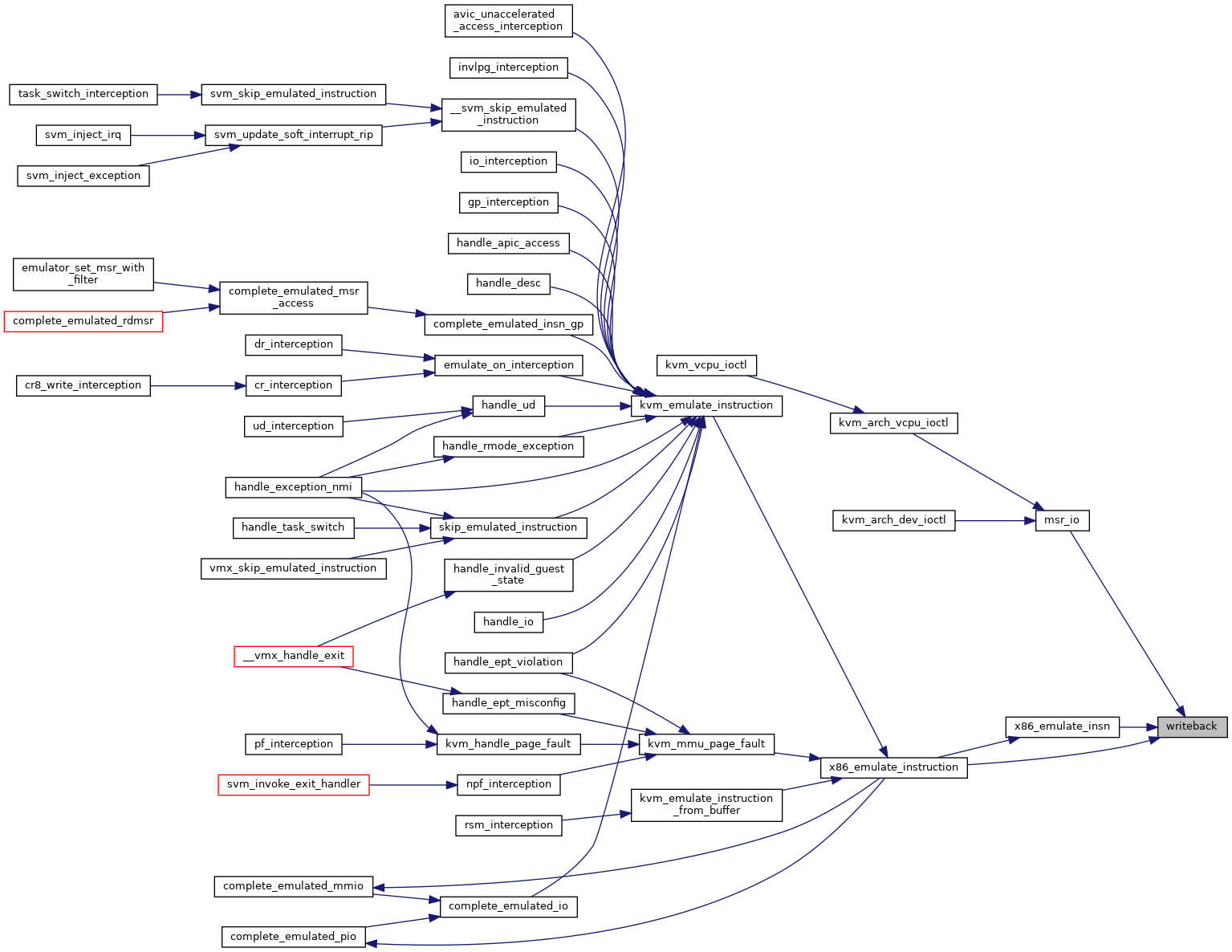

| static int | writeback (struct x86_emulate_ctxt *ctxt, struct operand *op) |

| |

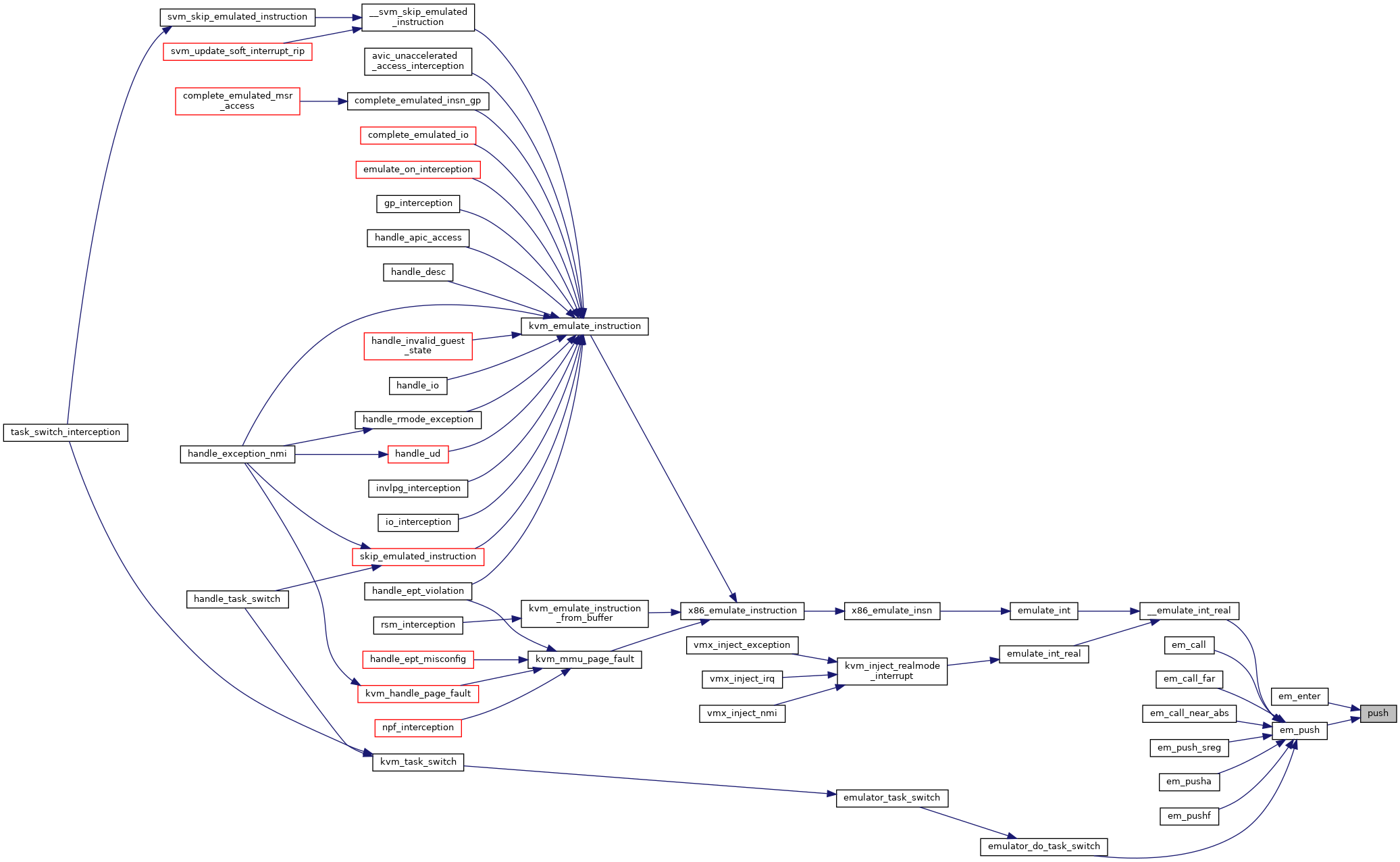

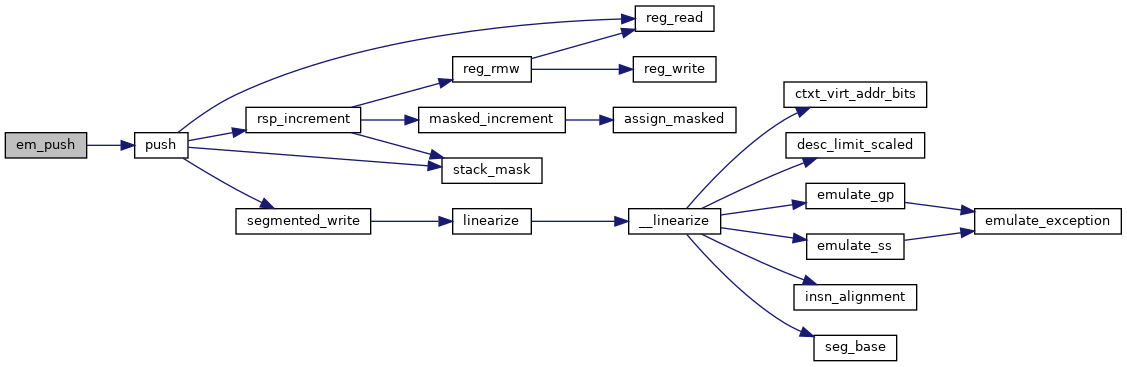

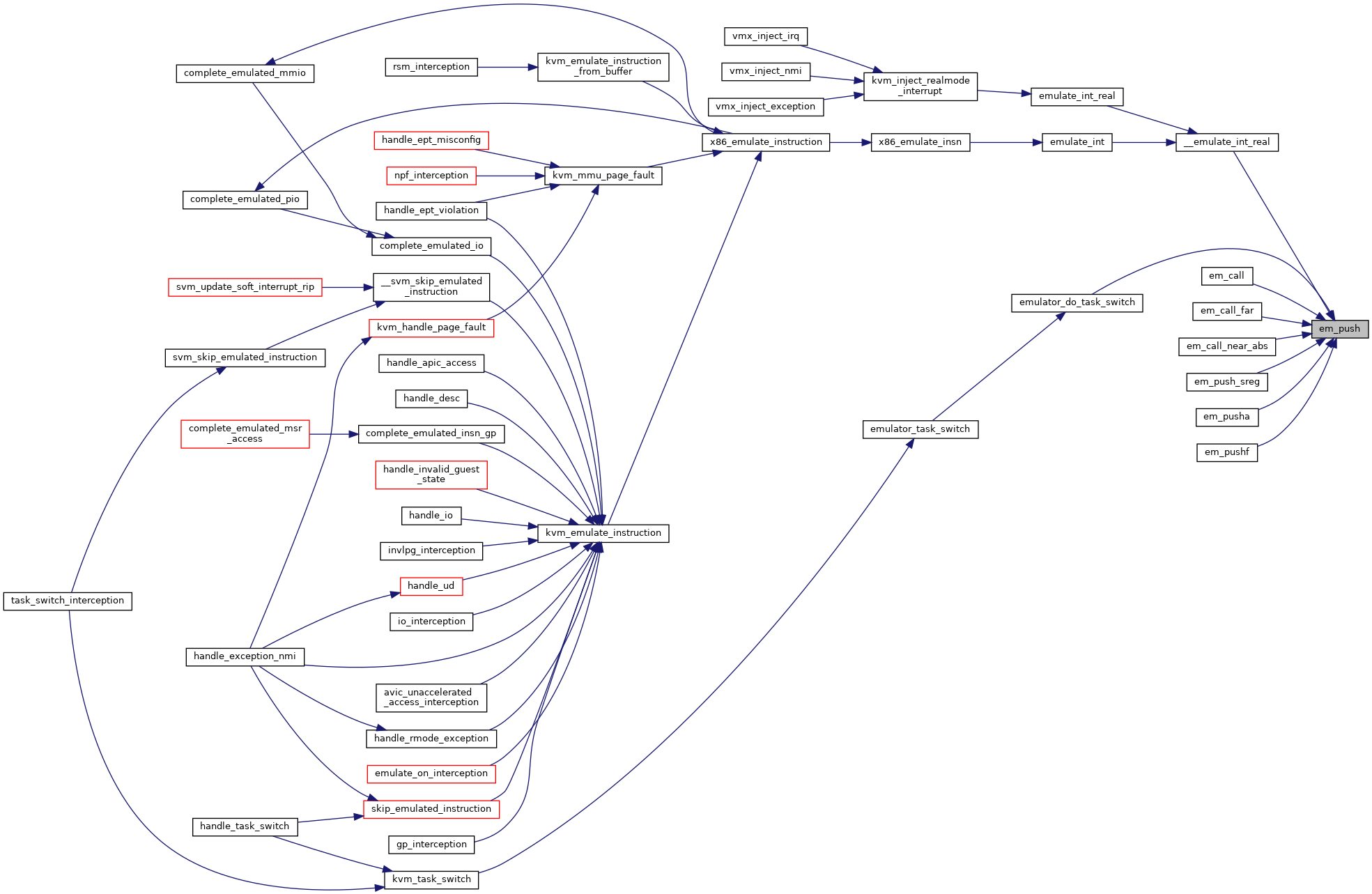

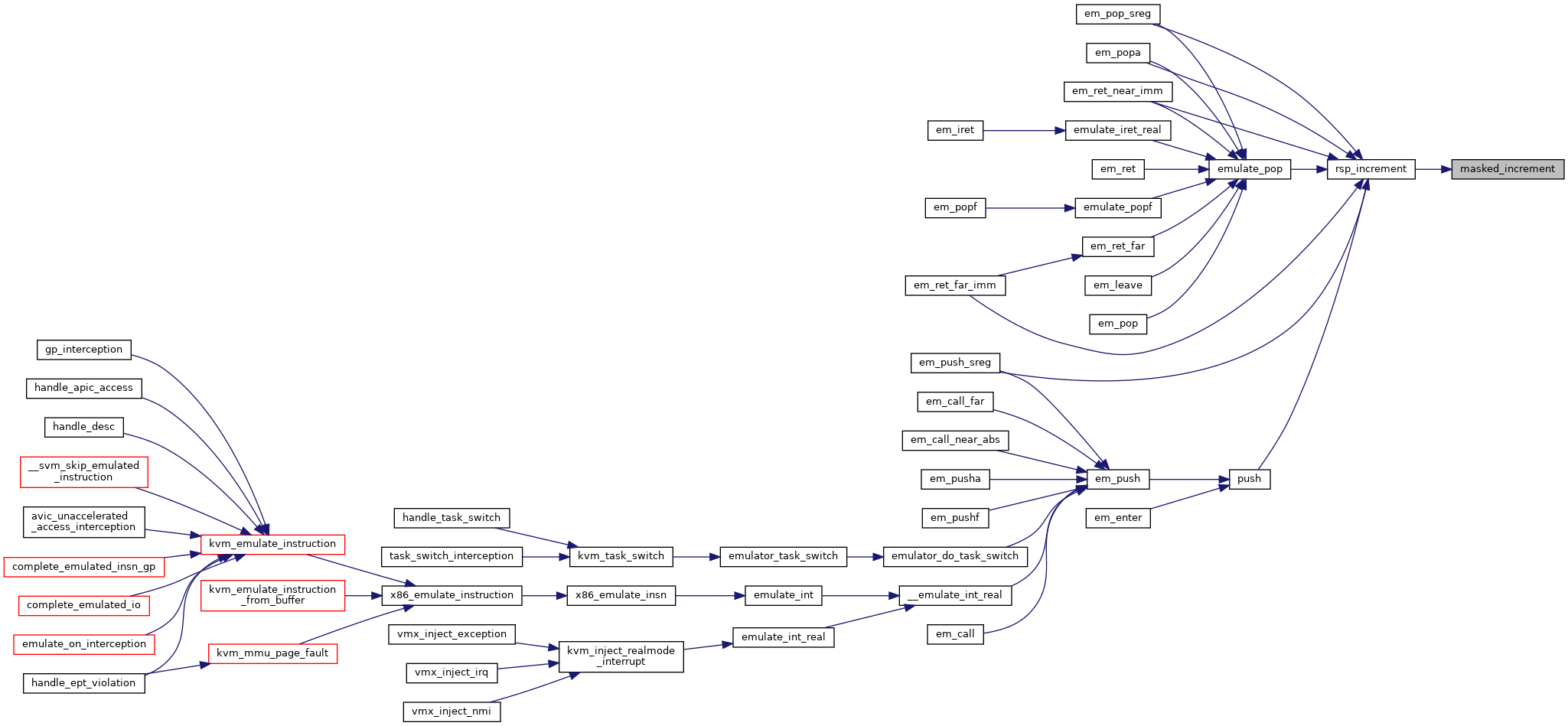

| static int | push (struct x86_emulate_ctxt *ctxt, void *data, int bytes) |

| |

| static int | em_push (struct x86_emulate_ctxt *ctxt) |

| |

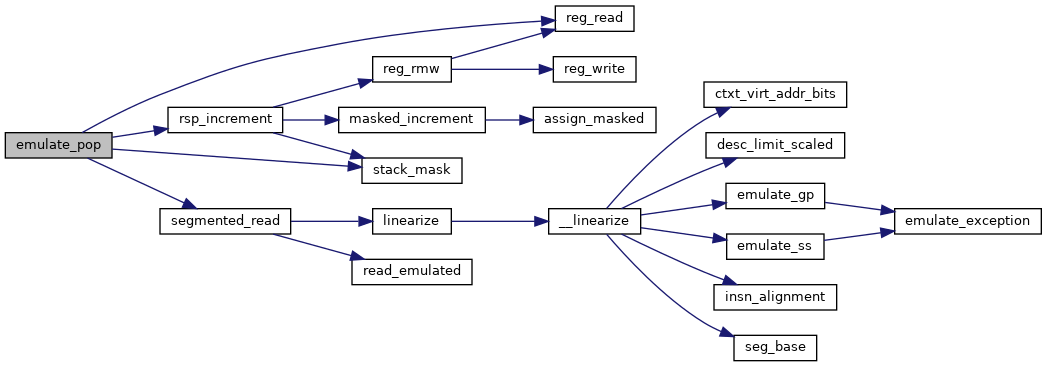

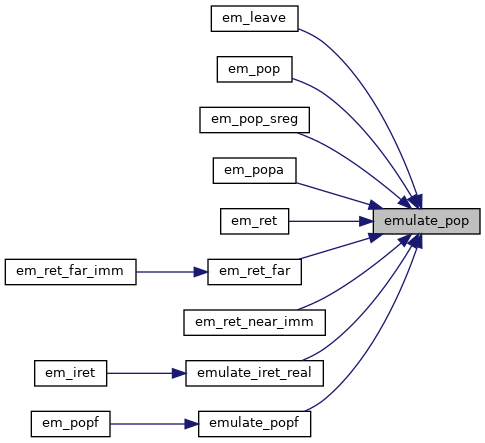

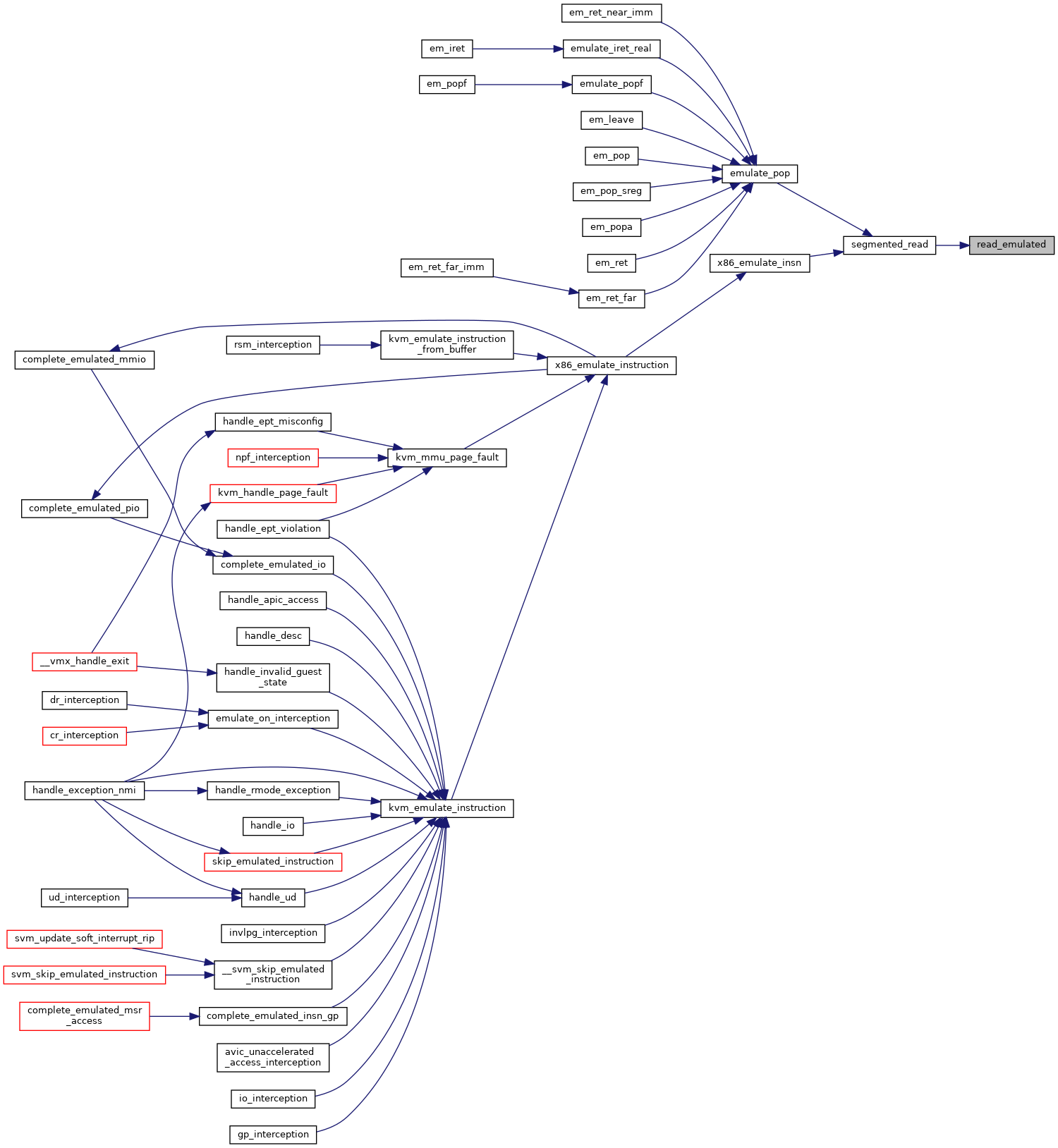

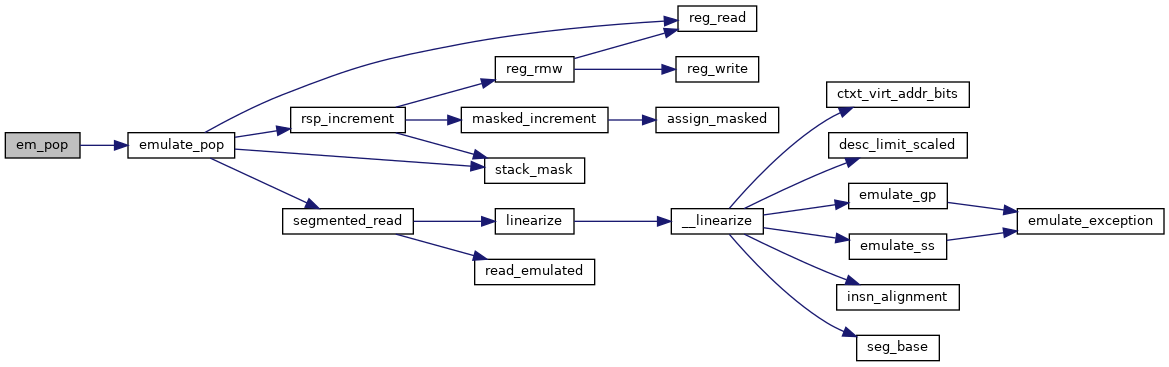

| static int | emulate_pop (struct x86_emulate_ctxt *ctxt, void *dest, int len) |

| |

| static int | em_pop (struct x86_emulate_ctxt *ctxt) |

| |

| static int | emulate_popf (struct x86_emulate_ctxt *ctxt, void *dest, int len) |

| |

| static int | em_popf (struct x86_emulate_ctxt *ctxt) |

| |

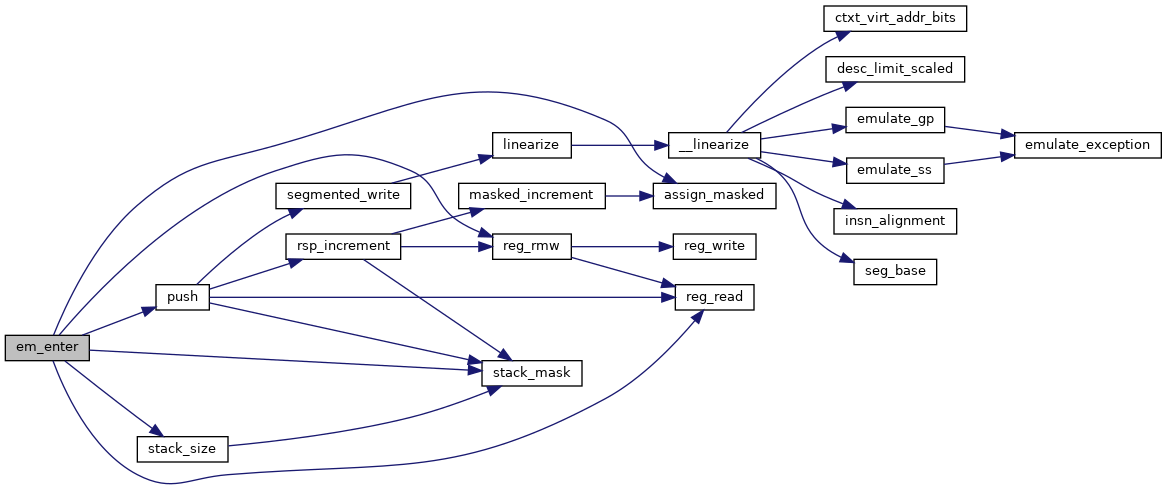

| static int | em_enter (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_leave (struct x86_emulate_ctxt *ctxt) |

| |

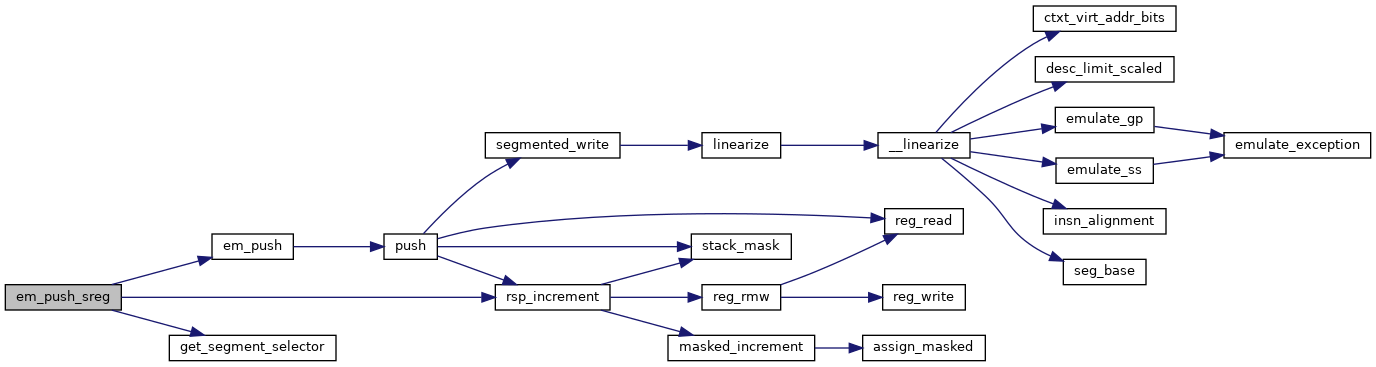

| static int | em_push_sreg (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_pop_sreg (struct x86_emulate_ctxt *ctxt) |

| |

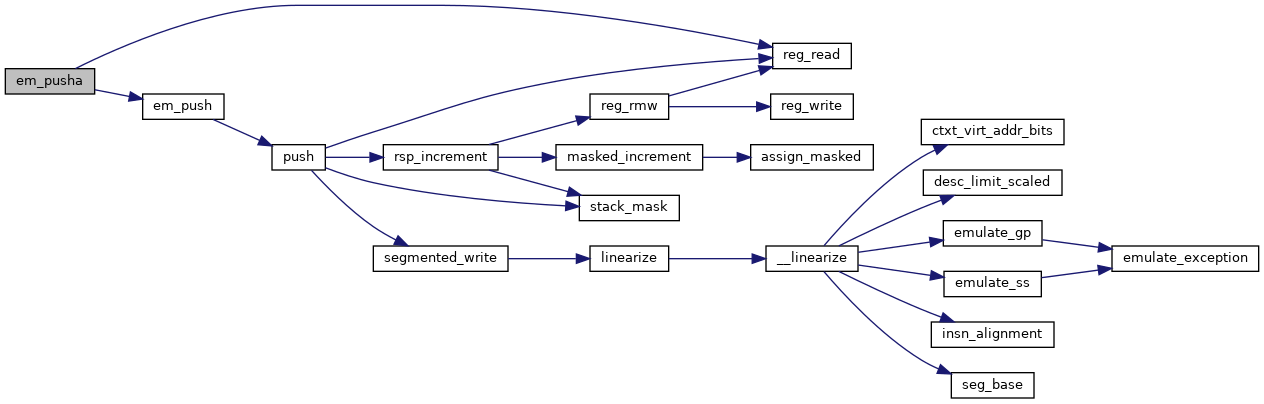

| static int | em_pusha (struct x86_emulate_ctxt *ctxt) |

| |

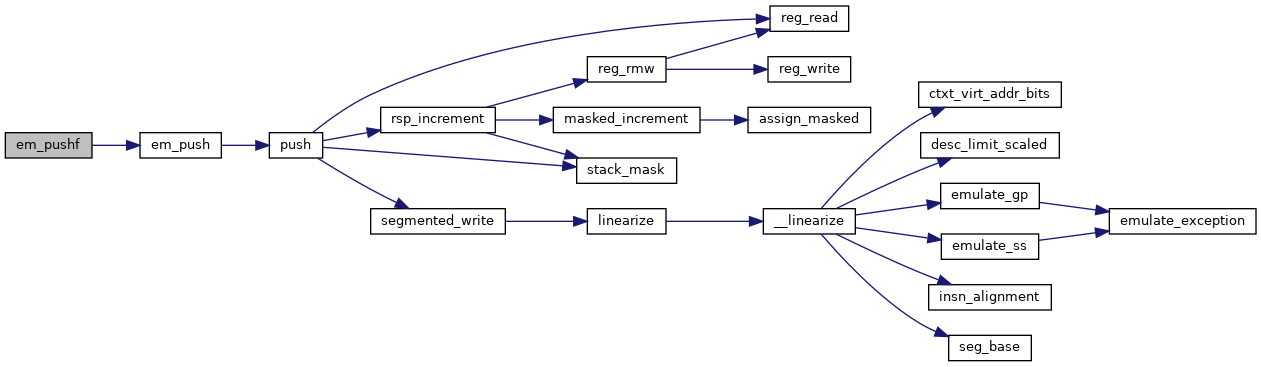

| static int | em_pushf (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_popa (struct x86_emulate_ctxt *ctxt) |

| |

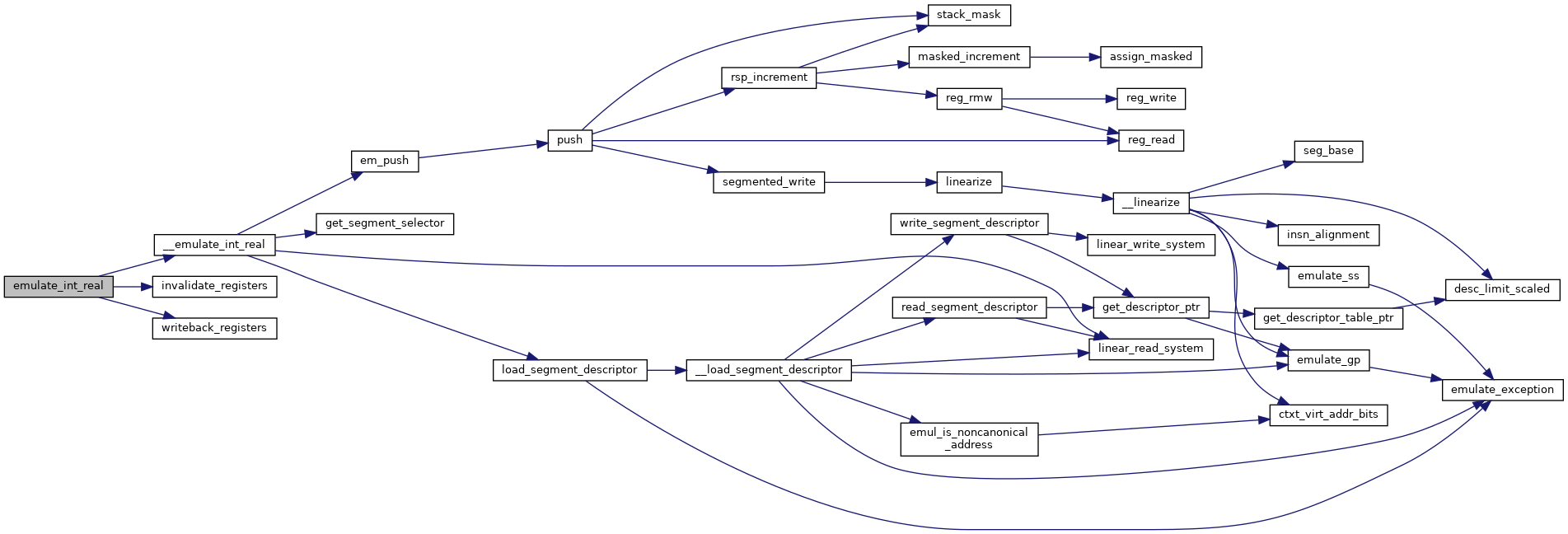

| static int | __emulate_int_real (struct x86_emulate_ctxt *ctxt, int irq) |

| |

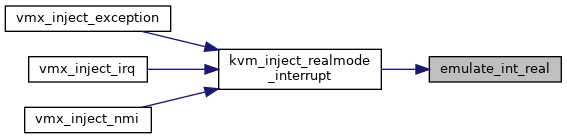

| int | emulate_int_real (struct x86_emulate_ctxt *ctxt, int irq) |

| |

| static int | emulate_int (struct x86_emulate_ctxt *ctxt, int irq) |

| |

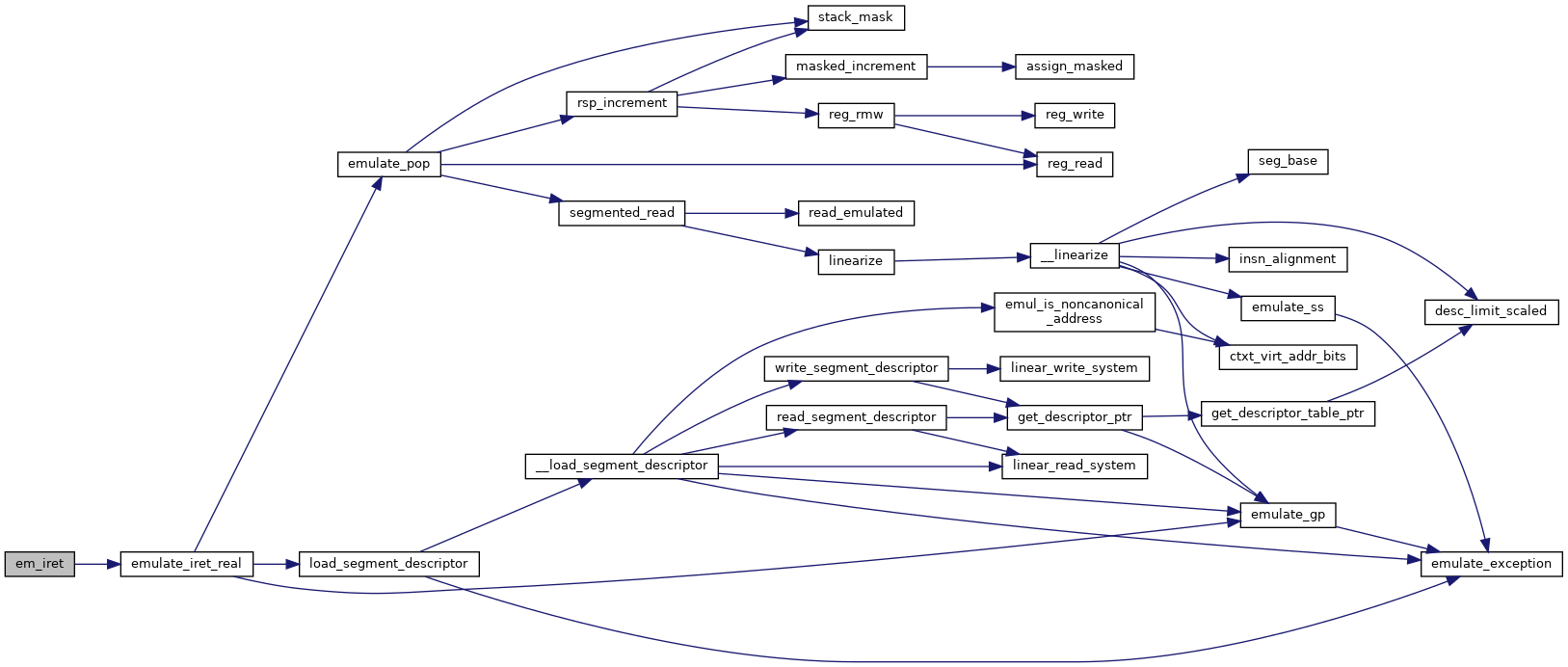

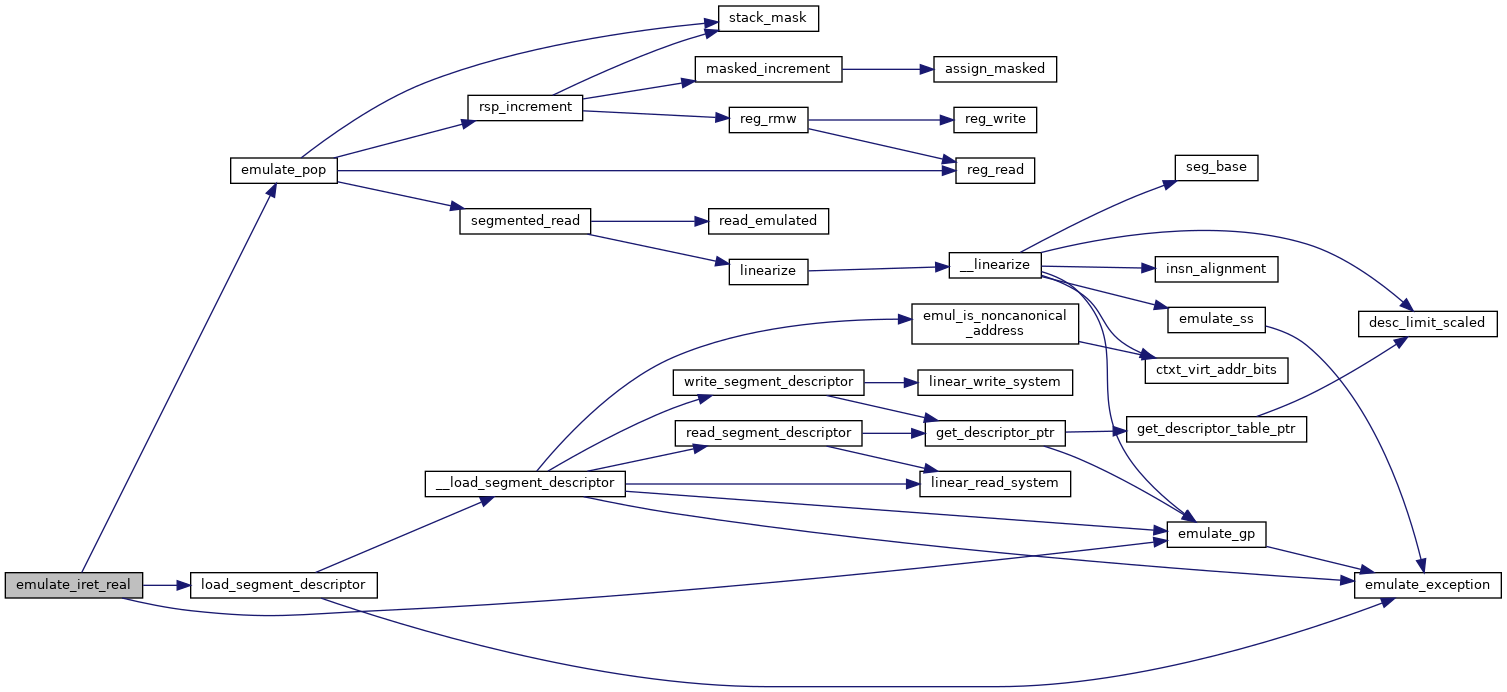

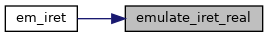

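As we understand it, `__emulate_int_real()` pushes FLAGS, CS and IP, clears IF, TF and AC, and then loads the handler's CS:IP from the real-mode interrupt vector table, where entry `n` is 4 bytes at `base + n * 4` (IP first, then CS). A sketch of the table lookup alone, over a flat byte array standing in for guest memory; `rd16()` and `ivt_lookup()` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Read a little-endian 16-bit value from a byte buffer. */
static uint16_t rd16(const uint8_t *mem, uint32_t off)
{
    return (uint16_t)(mem[off] | (mem[off + 1] << 8));
}

/* IVT entry n sits at idt_base + n * 4: handler IP at +0, CS at +2. */
static void ivt_lookup(const uint8_t *mem, uint32_t idt_base, uint8_t irq,
                       uint16_t *cs, uint16_t *ip)
{
    uint32_t entry = idt_base + ((uint32_t)irq << 2);

    *ip = rd16(mem, entry);
    *cs = rd16(mem, entry + 2);
}
```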
| static int | emulate_iret_real (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_iret (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_jmp_far (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_jmp_abs (struct x86_emulate_ctxt *ctxt) |

| |

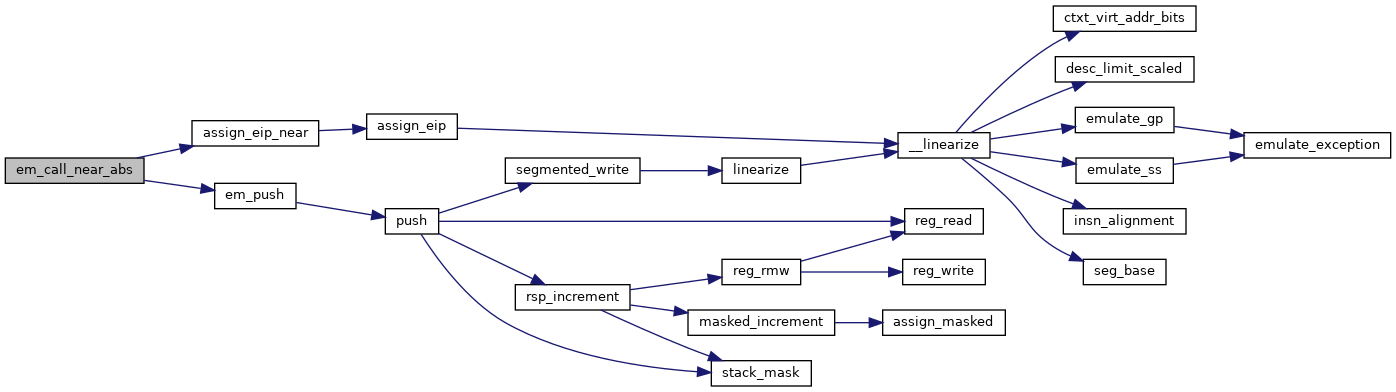

| static int | em_call_near_abs (struct x86_emulate_ctxt *ctxt) |

| |

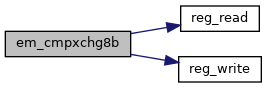

| static int | em_cmpxchg8b (struct x86_emulate_ctxt *ctxt) |

| |

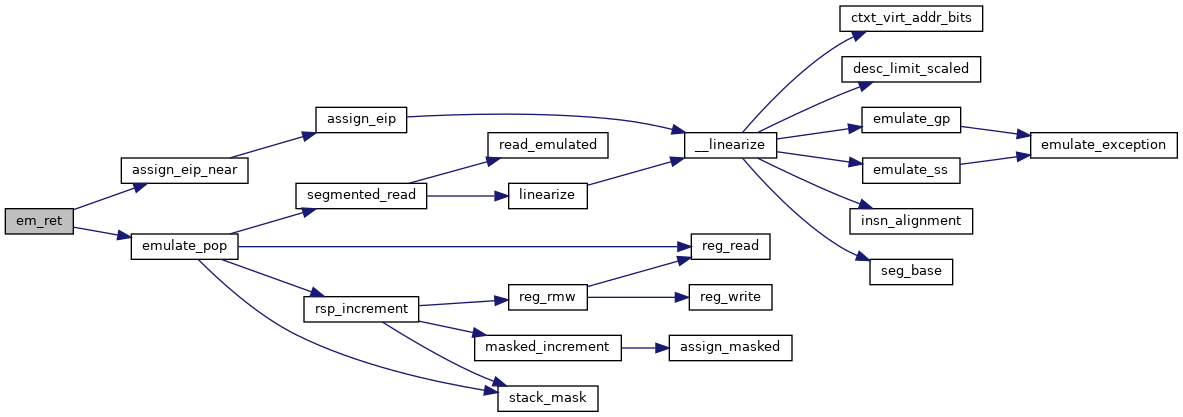

| static int | em_ret (struct x86_emulate_ctxt *ctxt) |

| |

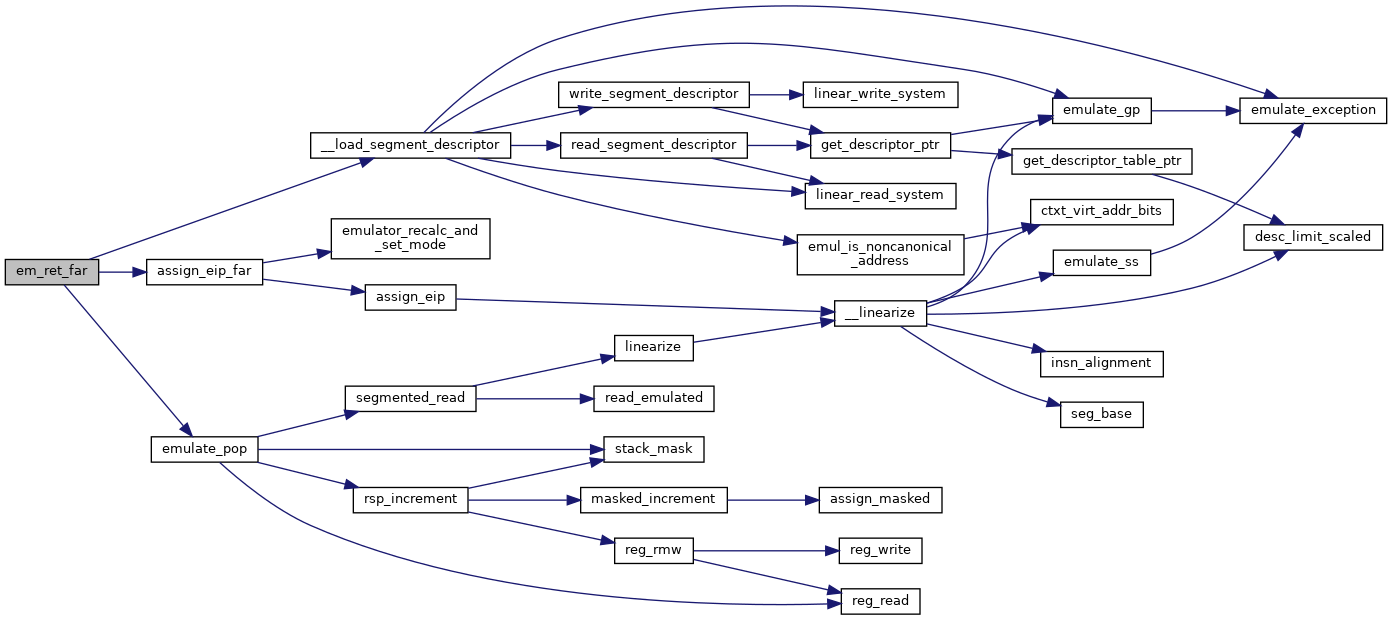

| static int | em_ret_far (struct x86_emulate_ctxt *ctxt) |

| |

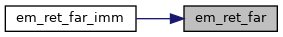

| static int | em_ret_far_imm (struct x86_emulate_ctxt *ctxt) |

| |

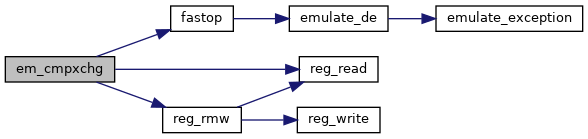

| static int | em_cmpxchg (struct x86_emulate_ctxt *ctxt) |

| |

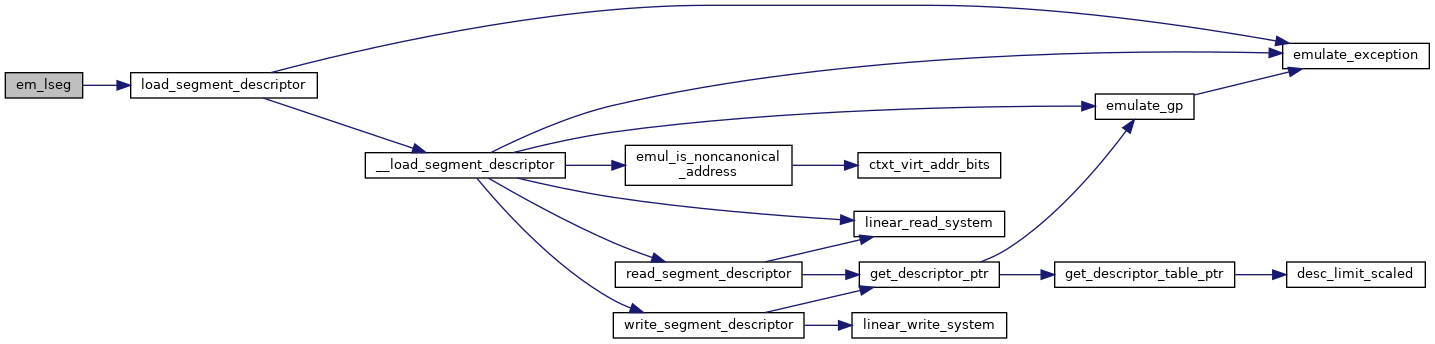

| static int | em_lseg (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_rsm (struct x86_emulate_ctxt *ctxt) |

| |

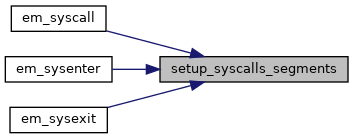

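`em_cmpxchg8b()` implements CMPXCHG8B: compare EDX:EAX with the 64-bit memory operand; on a match, store ECX:EBX and set ZF, otherwise load the operand into EDX:EAX and clear ZF. A non-atomic sketch of that data flow with a hypothetical register bundle (`struct regs_sketch`); it says nothing about how the emulator performs the locked memory update.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical register bundle; only the low 32 bits of each GPR are used. */
struct regs_sketch { uint64_t rax, rbx, rcx, rdx; bool zf; };

/* Plain (non-atomic) model of CMPXCHG8B on *m64. */
static void cmpxchg8b_sketch(struct regs_sketch *r, uint64_t *m64)
{
    uint64_t old = *m64;

    if ((uint32_t)old != (uint32_t)r->rax ||
        (uint32_t)(old >> 32) != (uint32_t)r->rdx) {
        r->rax = (uint32_t)old;           /* mismatch: load into EDX:EAX */
        r->rdx = (uint32_t)(old >> 32);
        r->zf = false;
    } else {
        *m64 = ((uint64_t)(uint32_t)r->rcx << 32) | (uint32_t)r->rbx;
        r->zf = true;                     /* match: store ECX:EBX */
    }
}
```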
| static void | setup_syscalls_segments (struct desc_struct *cs, struct desc_struct *ss) |

| |

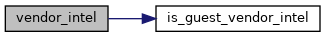

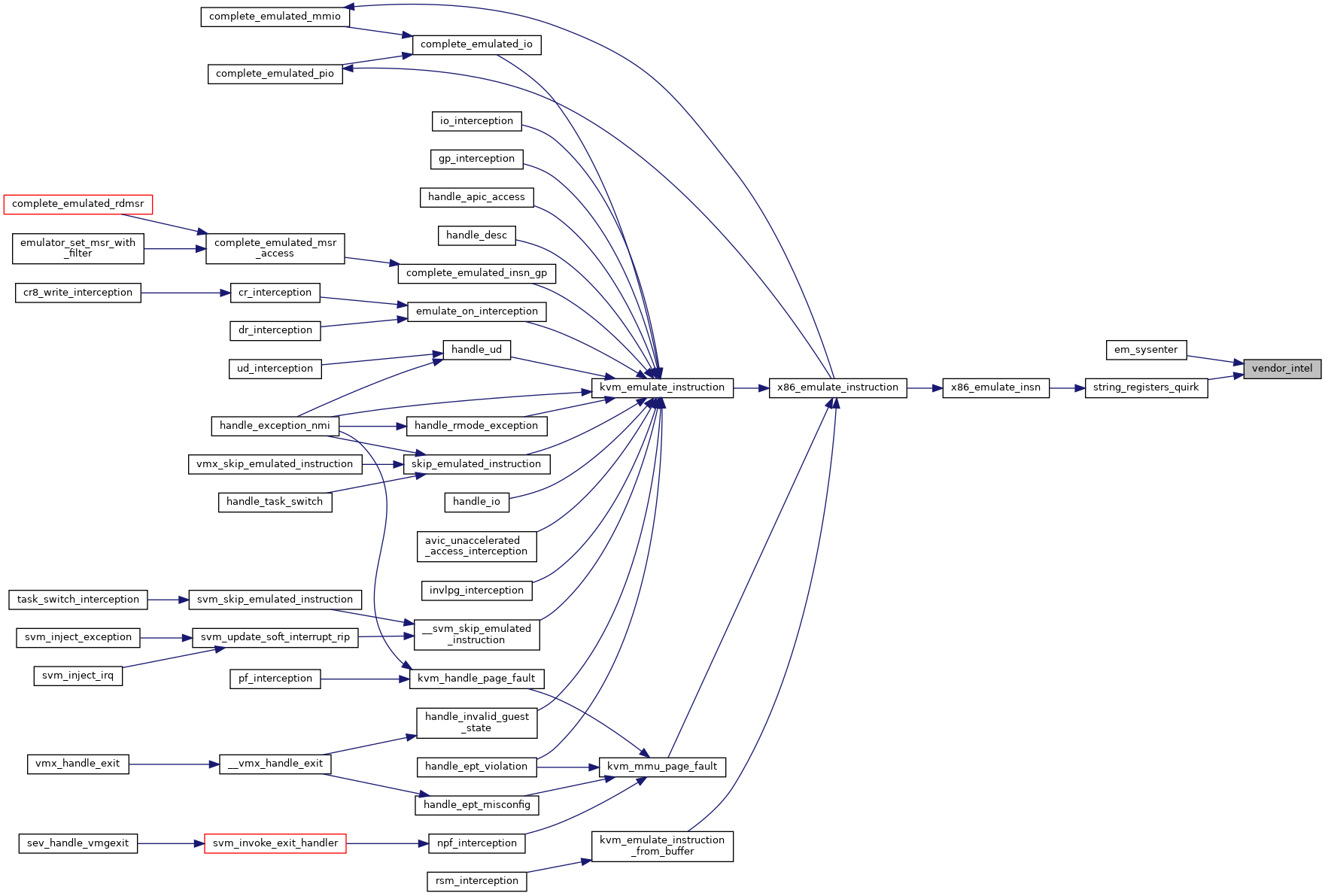

| static bool | vendor_intel (struct x86_emulate_ctxt *ctxt) |

| |

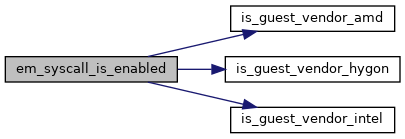

| static bool | em_syscall_is_enabled (struct x86_emulate_ctxt *ctxt) |

| |

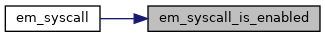

| static int | em_syscall (struct x86_emulate_ctxt *ctxt) |

| |

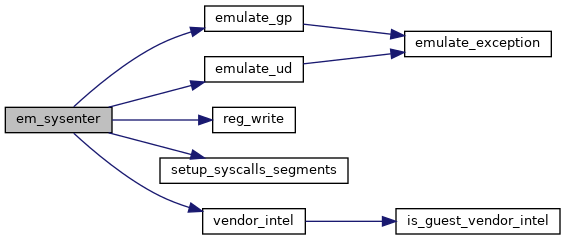

| static int | em_sysenter (struct x86_emulate_ctxt *ctxt) |

| |

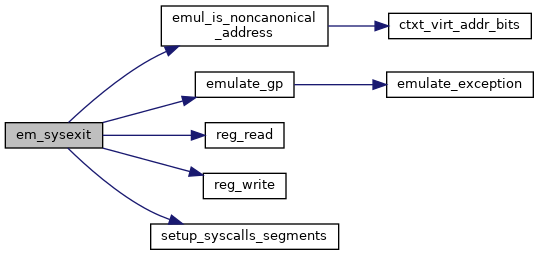

| static int | em_sysexit (struct x86_emulate_ctxt *ctxt) |

| |

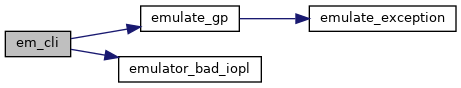

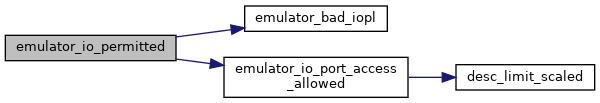

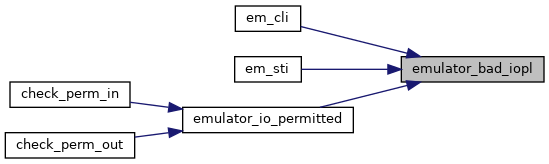

| static bool | emulator_bad_iopl (struct x86_emulate_ctxt *ctxt) |

| |

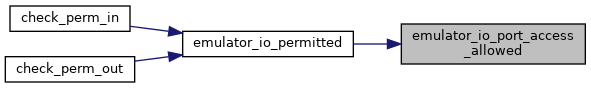

| static bool | emulator_io_port_access_allowed (struct x86_emulate_ctxt *ctxt, u16 port, u16 len) |

| |

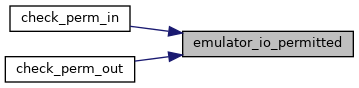

| static bool | emulator_io_permitted (struct x86_emulate_ctxt *ctxt, u16 port, u16 len) |

| |

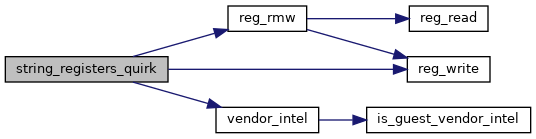

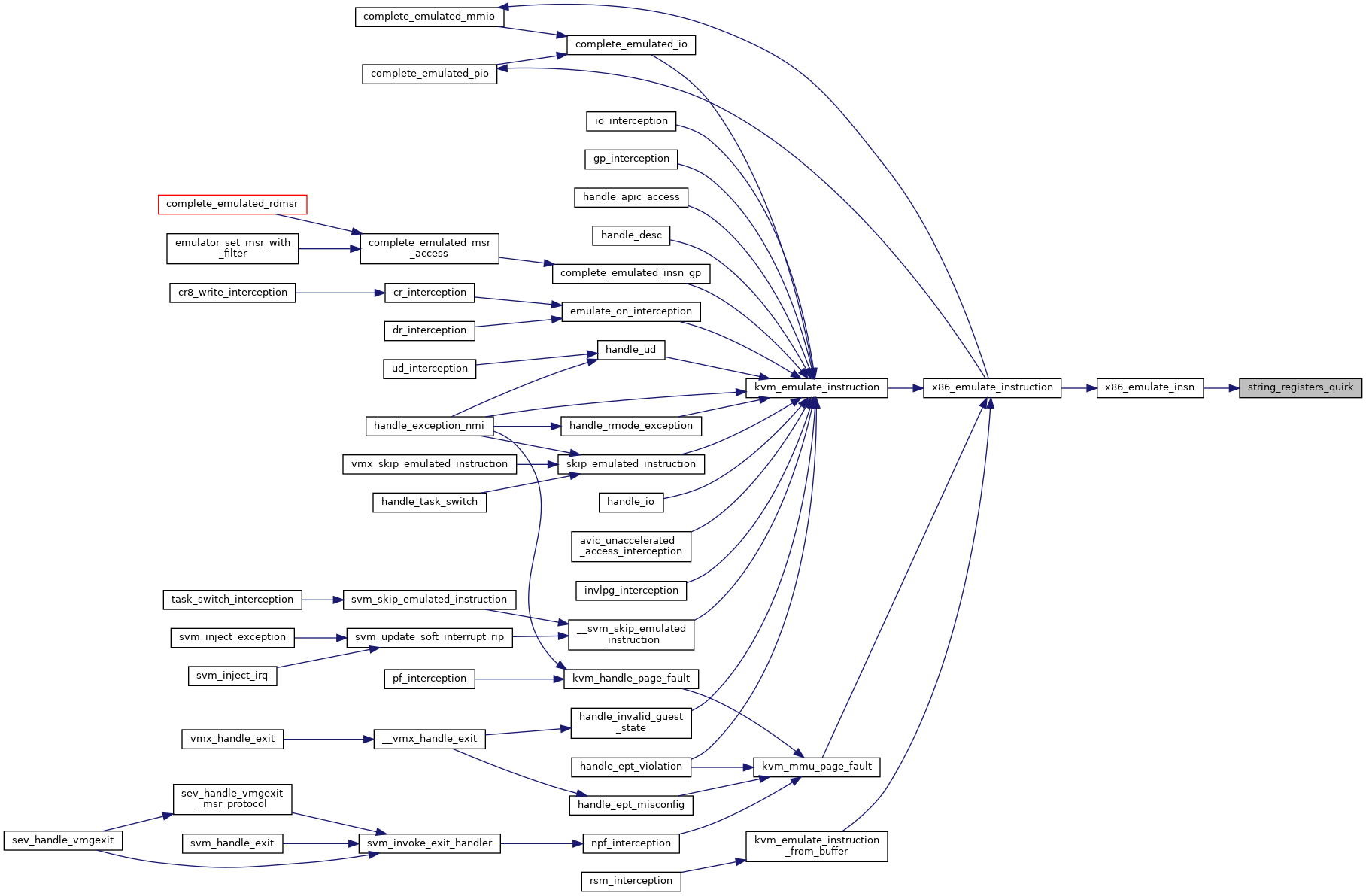

| static void | string_registers_quirk (struct x86_emulate_ctxt *ctxt) |

| |

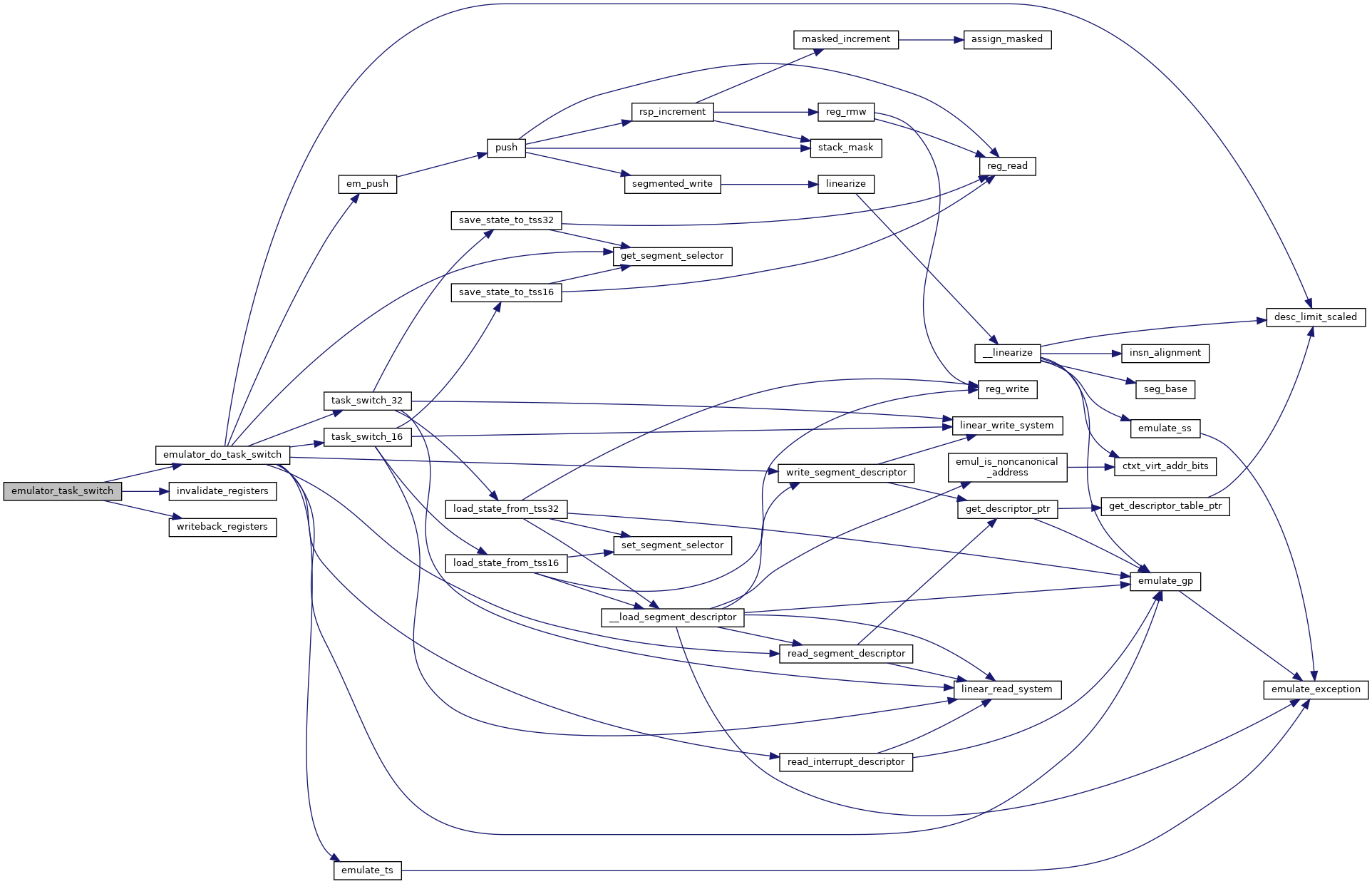

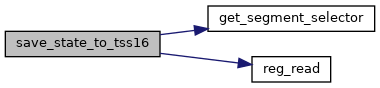

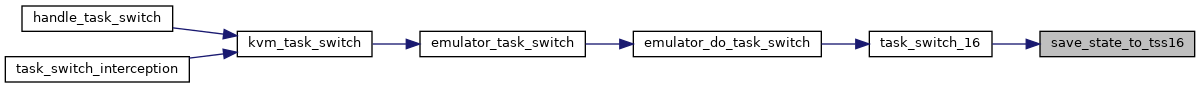

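`emulator_io_port_access_allowed()` consults the I/O permission bitmap in the TSS. The 16-bit bitmap offset sits at byte 102 of a 32-bit TSS, each port has one bit, and a set bit denies access; two bytes are read because a multi-byte access can straddle a byte boundary. A sketch over a TSS image in a byte array; `io_bitmap_allows()` is hypothetical, and unlike our reading of the kernel check it also requires the second bitmap byte to lie inside the limit, so the sketch never reads past its buffer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* tss: TSS bytes; tss_limit: scaled limit (offset of the last valid byte). */
static bool io_bitmap_allows(const uint8_t *tss, uint32_t tss_limit,
                             uint16_t port, unsigned len)
{
    uint16_t bitmap_off, perm;
    uint32_t byte;

    if (tss_limit < 103)                  /* no room for the offset field */
        return false;
    bitmap_off = (uint16_t)(tss[102] | (tss[103] << 8));
    byte = (uint32_t)bitmap_off + port / 8;
    if (byte + 1 > tss_limit)             /* both bitmap bytes must be inside */
        return false;
    perm = (uint16_t)(tss[byte] | (tss[byte + 1] << 8));
    /* Allowed only if none of the len bits starting at this port are set. */
    return ((perm >> (port & 7)) & ((1u << len) - 1)) == 0;
}
```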
| static void | save_state_to_tss16 (struct x86_emulate_ctxt *ctxt, struct tss_segment_16 *tss) |

| |

| static int | load_state_from_tss16 (struct x86_emulate_ctxt *ctxt, struct tss_segment_16 *tss) |

| |

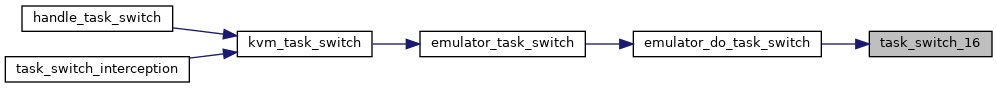

| static int | task_switch_16 (struct x86_emulate_ctxt *ctxt, u16 old_tss_sel, ulong old_tss_base, struct desc_struct *new_desc) |

| |

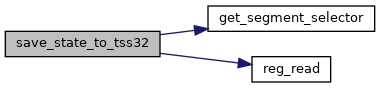

| static void | save_state_to_tss32 (struct x86_emulate_ctxt *ctxt, struct tss_segment_32 *tss) |

| |

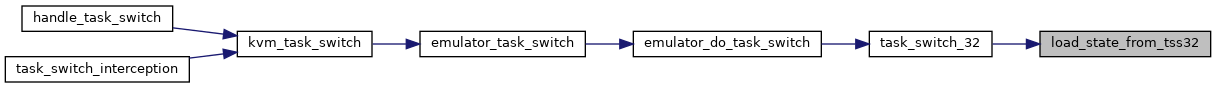

| static int | load_state_from_tss32 (struct x86_emulate_ctxt *ctxt, struct tss_segment_32 *tss) |

| |

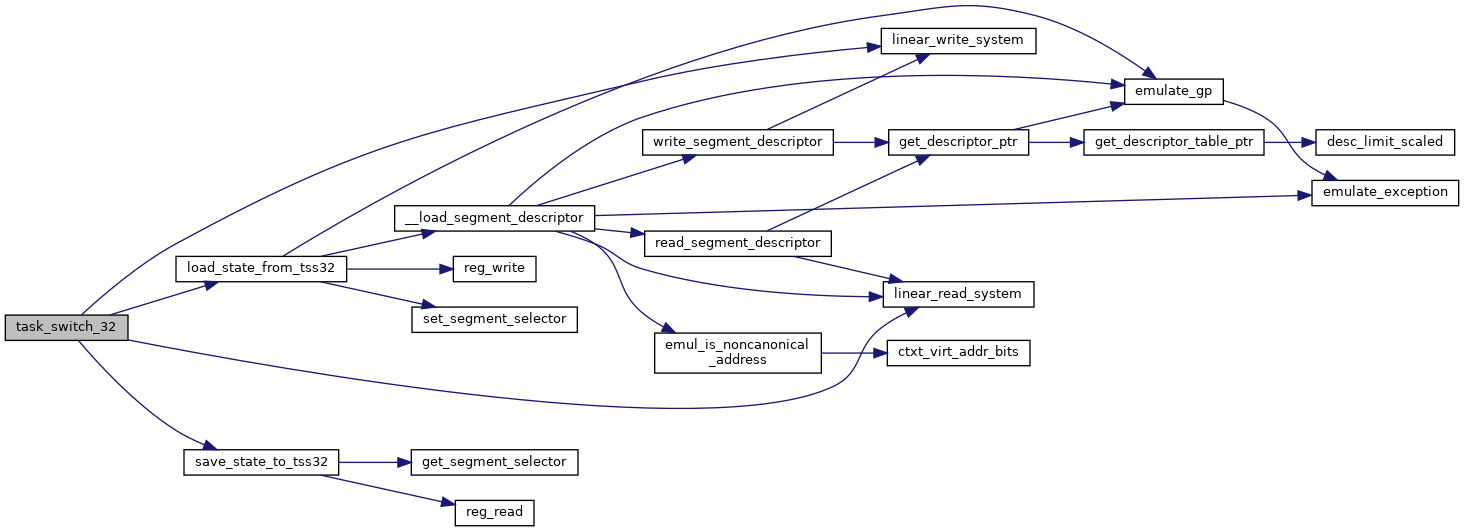

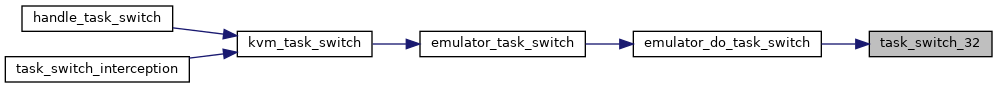

| static int | task_switch_32 (struct x86_emulate_ctxt *ctxt, u16 old_tss_sel, ulong old_tss_base, struct desc_struct *new_desc) |

| |

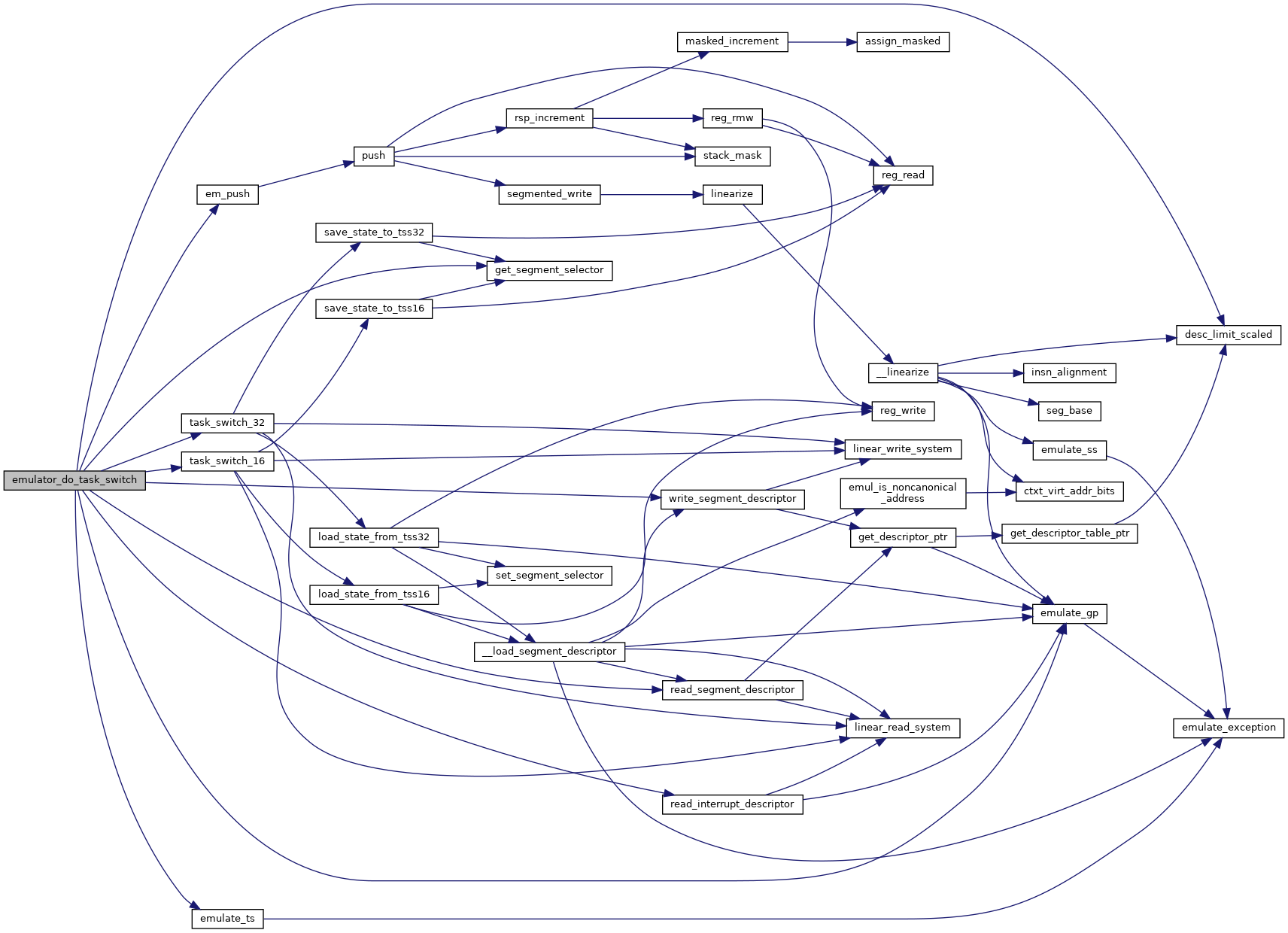

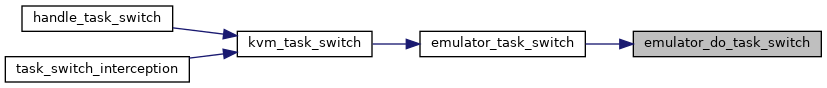

| static int | emulator_do_task_switch (struct x86_emulate_ctxt *ctxt, u16 tss_selector, int idt_index, int reason, bool has_error_code, u32 error_code) |

| |

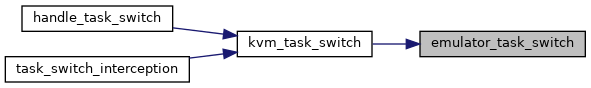

| int | emulator_task_switch (struct x86_emulate_ctxt *ctxt, u16 tss_selector, int idt_index, int reason, bool has_error_code, u32 error_code) |

| |

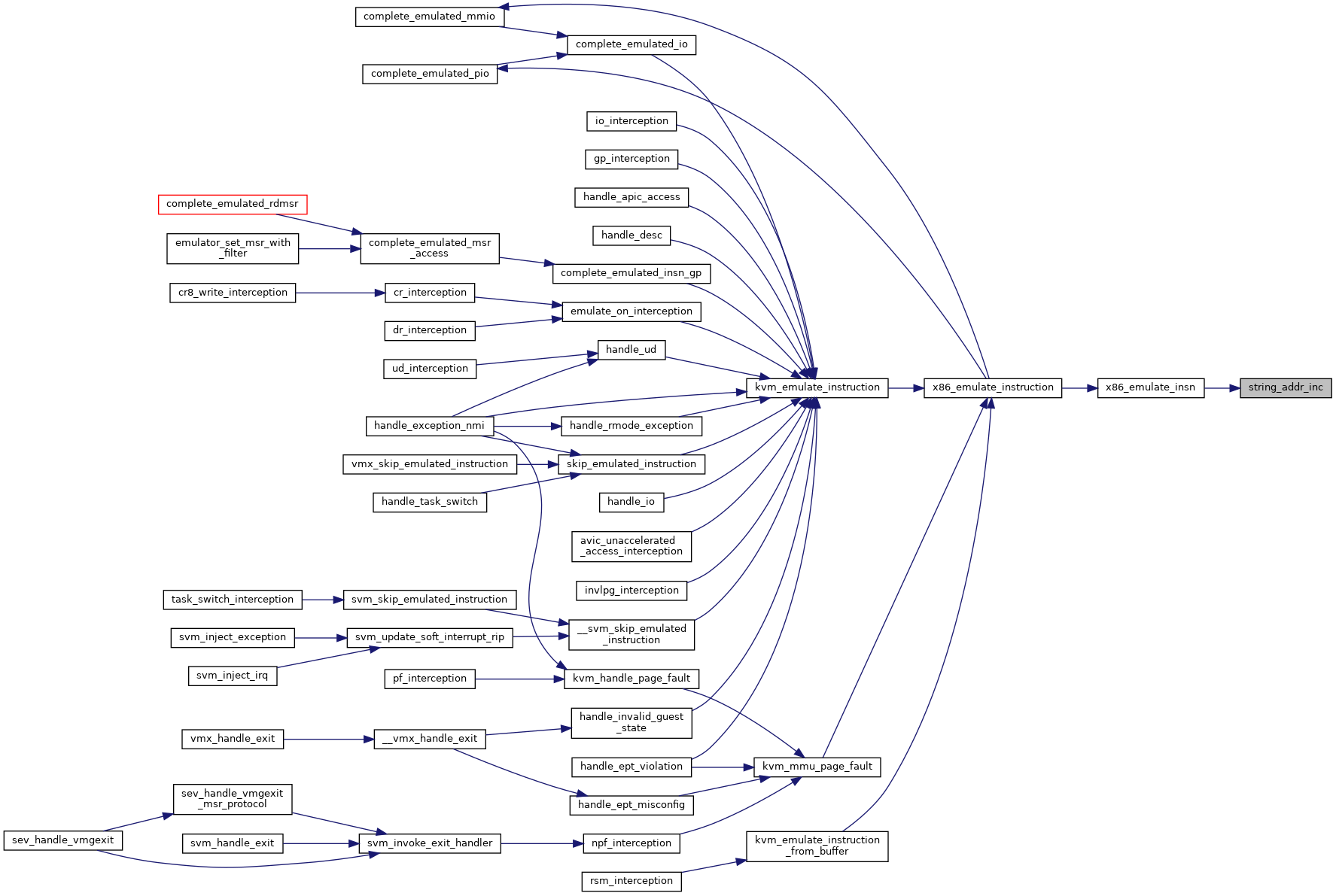

| static void | string_addr_inc (struct x86_emulate_ctxt *ctxt, int reg, struct operand *op) |

| |

| static int | em_das (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_aam (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_aad (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_call (struct x86_emulate_ctxt *ctxt) |

| |

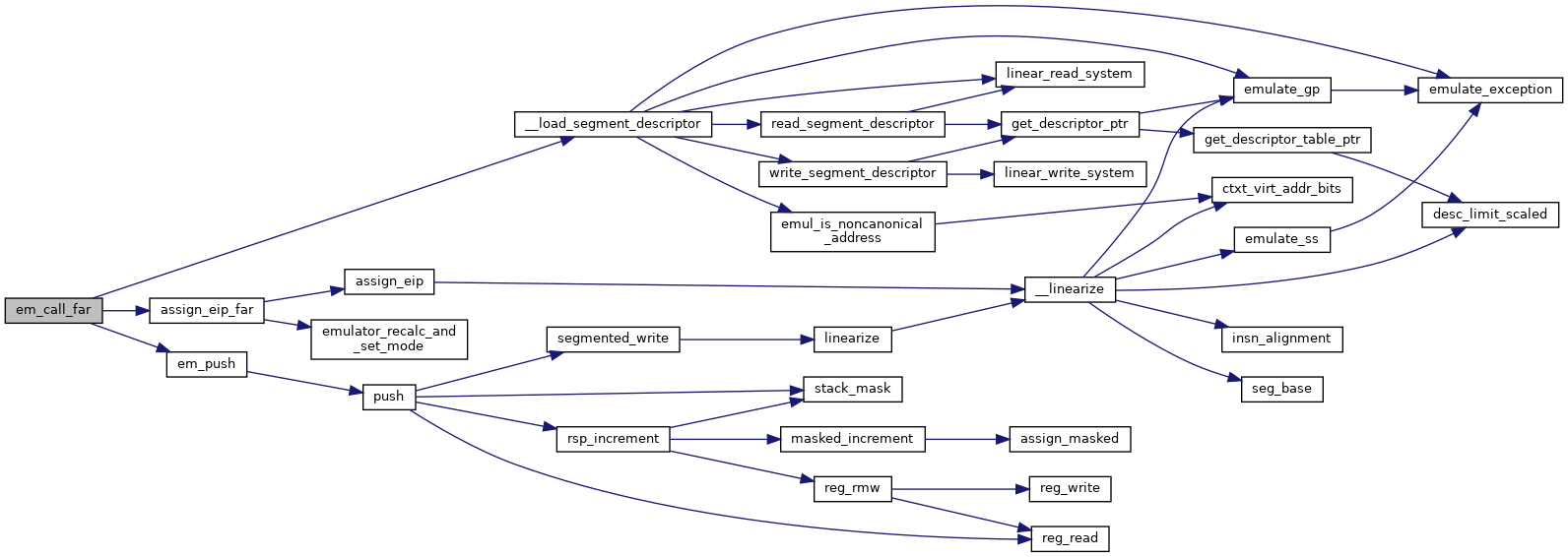

| static int | em_call_far (struct x86_emulate_ctxt *ctxt) |

| |

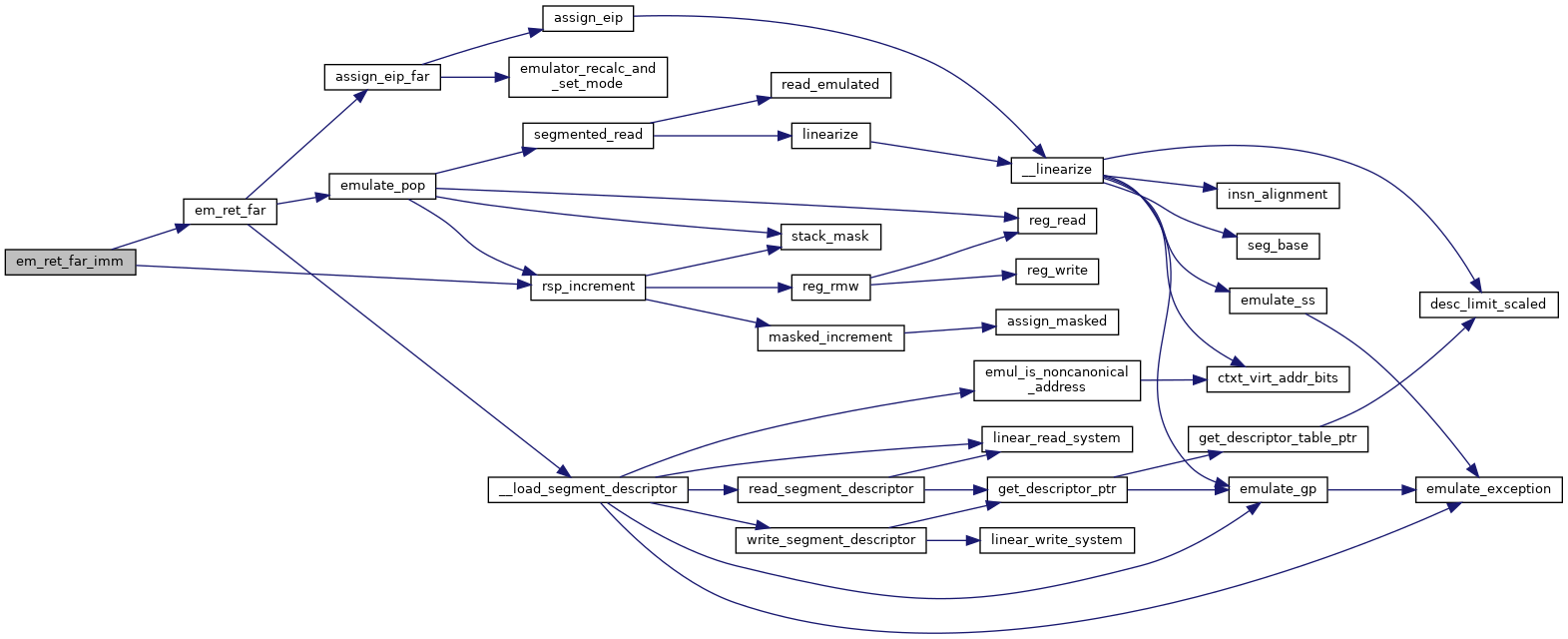

| static int | em_ret_near_imm (struct x86_emulate_ctxt *ctxt) |

| |

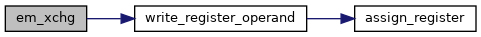

| static int | em_xchg (struct x86_emulate_ctxt *ctxt) |

| |

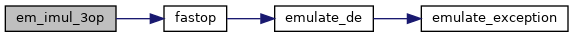

| static int | em_imul_3op (struct x86_emulate_ctxt *ctxt) |

| |

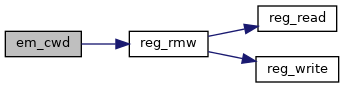

| static int | em_cwd (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_rdpid (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_rdtsc (struct x86_emulate_ctxt *ctxt) |

| |

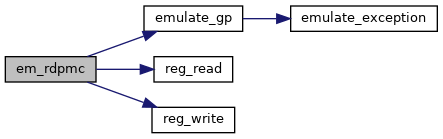

| static int | em_rdpmc (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_mov (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_movbe (struct x86_emulate_ctxt *ctxt) |

| |

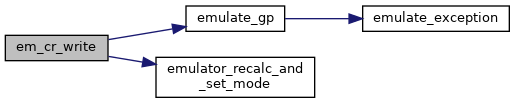

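`em_aam()` and `em_aad()` emulate the ASCII-adjust instructions. AAM divides AL by the immediate, leaving the quotient in AH and the remainder in AL (a zero divisor raises `#DE`, cf. `emulate_de()`), and AAD folds AH back in with `AL = AL + AH * imm` (mod 256) and clears AH. A sketch on a 16-bit AX value; both functions are hypothetical and skip flag updates.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* AAM imm8: AH = AL / imm, AL = AL % imm. Returns false for imm == 0 (#DE). */
static bool aam_sketch(uint16_t *ax, uint8_t imm)
{
    uint8_t al = *ax & 0xff;

    if (imm == 0)
        return false;
    *ax = (uint16_t)(((al / imm) << 8) | (al % imm));
    return true;
}

/* AAD imm8: AL = (AL + AH * imm) & 0xff, AH = 0. */
static void aad_sketch(uint16_t *ax, uint8_t imm)
{
    uint8_t al = *ax & 0xff, ah = *ax >> 8;

    *ax = (uint8_t)(al + ah * imm);
}
```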
| static int | em_cr_write (struct x86_emulate_ctxt *ctxt) |

| |

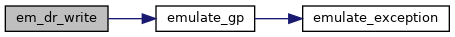

| static int | em_dr_write (struct x86_emulate_ctxt *ctxt) |

| |

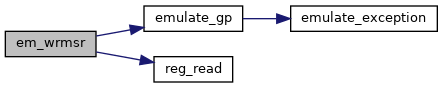

| static int | em_wrmsr (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_rdmsr (struct x86_emulate_ctxt *ctxt) |

| |

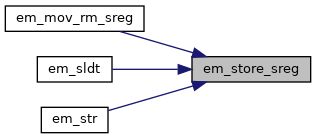

| static int | em_store_sreg (struct x86_emulate_ctxt *ctxt, int segment) |

| |

| static int | em_mov_rm_sreg (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_mov_sreg_rm (struct x86_emulate_ctxt *ctxt) |

| |

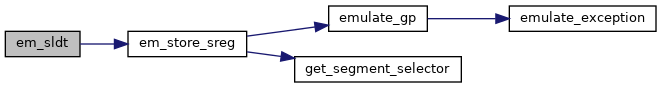

| static int | em_sldt (struct x86_emulate_ctxt *ctxt) |

| |

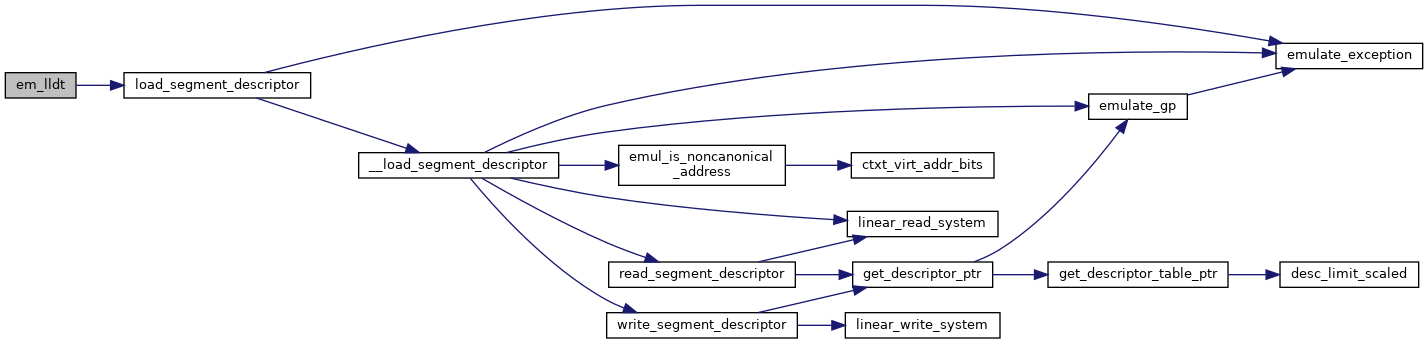

| static int | em_lldt (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_str (struct x86_emulate_ctxt *ctxt) |

| |

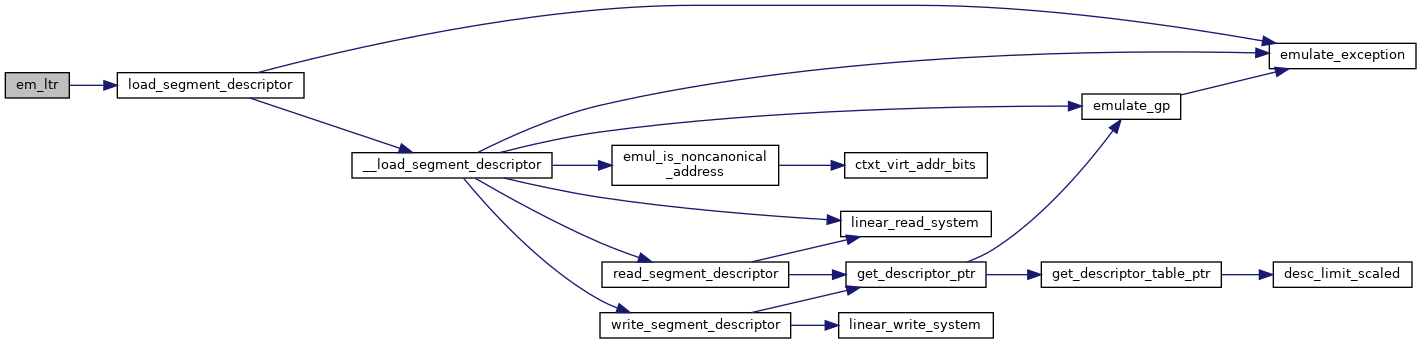

| static int | em_ltr (struct x86_emulate_ctxt *ctxt) |

| |

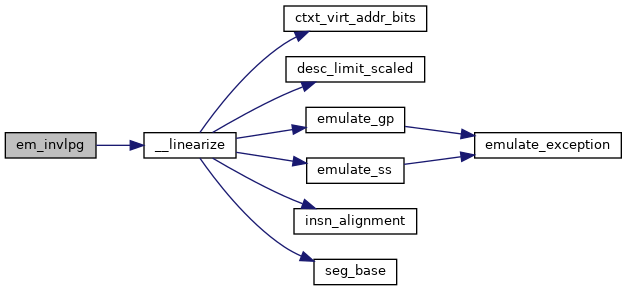

| static int | em_invlpg (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_clts (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_hypercall (struct x86_emulate_ctxt *ctxt) |

| |

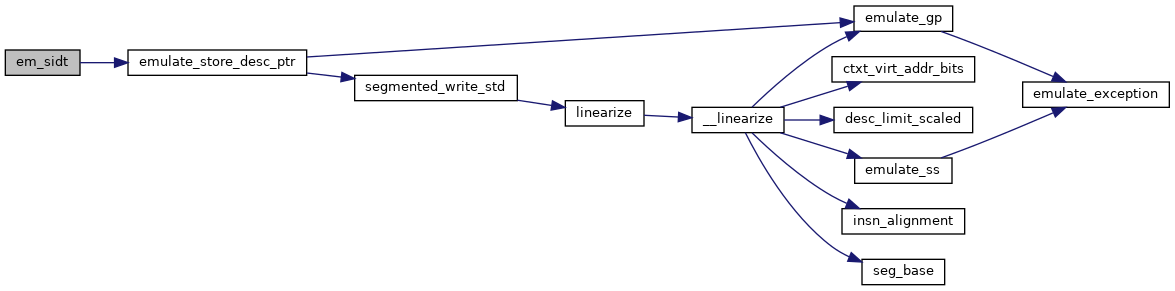

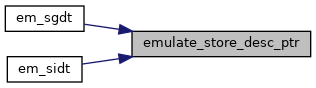

| static int | emulate_store_desc_ptr (struct x86_emulate_ctxt *ctxt, void(*get)(struct x86_emulate_ctxt *ctxt, struct desc_ptr *ptr)) |

| |

| static int | em_sgdt (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_sidt (struct x86_emulate_ctxt *ctxt) |

| |

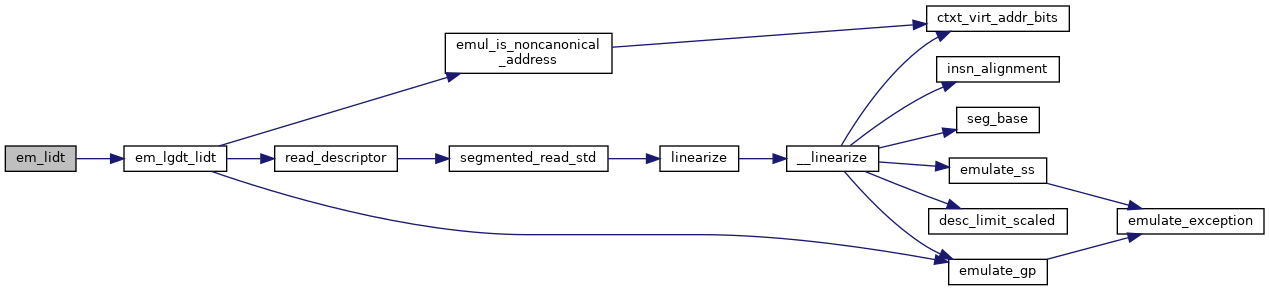

| static int | em_lgdt_lidt (struct x86_emulate_ctxt *ctxt, bool lgdt) |

| |

| static int | em_lgdt (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_lidt (struct x86_emulate_ctxt *ctxt) |

| |

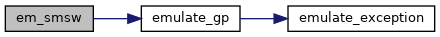

| static int | em_smsw (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_lmsw (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_loop (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_jcxz (struct x86_emulate_ctxt *ctxt) |

| |

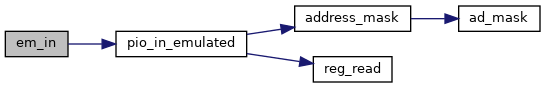

| static int | em_in (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_out (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_cli (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_sti (struct x86_emulate_ctxt *ctxt) |

| |

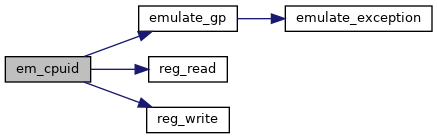

| static int | em_cpuid (struct x86_emulate_ctxt *ctxt) |

| |

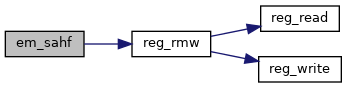

| static int | em_sahf (struct x86_emulate_ctxt *ctxt) |

| |

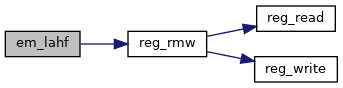

| static int | em_lahf (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_bswap (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_clflush (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_clflushopt (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_movsxd (struct x86_emulate_ctxt *ctxt) |

| |

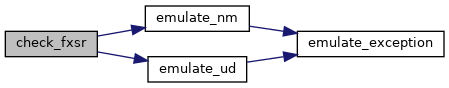

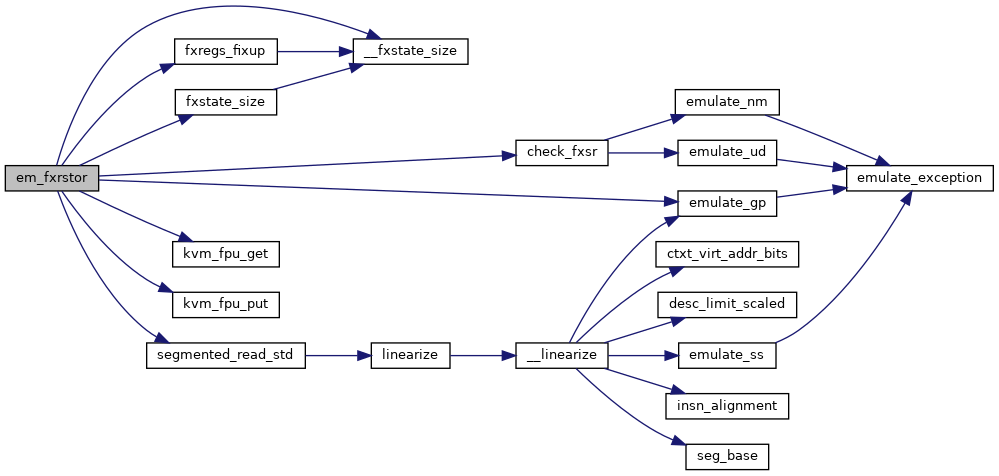

| static int | check_fxsr (struct x86_emulate_ctxt *ctxt) |

| |

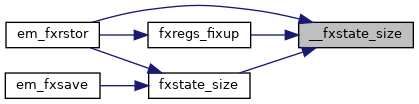

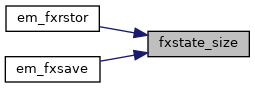

| static size_t | __fxstate_size (int nregs) |

| |

| static size_t | fxstate_size (struct x86_emulate_ctxt *ctxt) |

| |

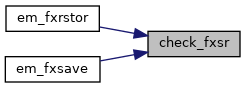

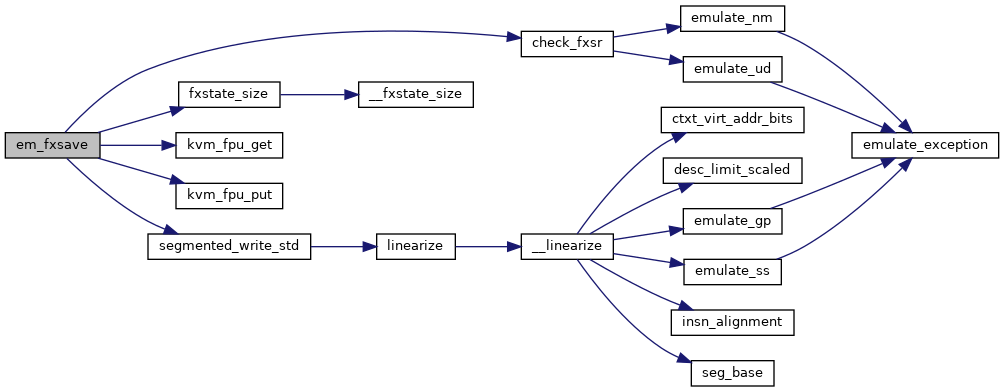

| static int | em_fxsave (struct x86_emulate_ctxt *ctxt) |

| |

| static noinline int | fxregs_fixup (struct fxregs_state *fx_state, const size_t used_size) |

| |

| static int | em_fxrstor (struct x86_emulate_ctxt *ctxt) |

| |

| static int | em_xsetbv (struct x86_emulate_ctxt *ctxt) |

| |

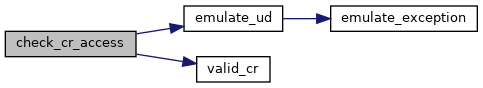

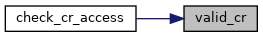

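`__fxstate_size()` and `fxstate_size()` limit how much of the 512-byte FXSAVE image is copied: the control/status fields and the x87/MMX registers occupy the first 160 bytes, followed by 16 bytes per XMM register. Assuming the register counts we read from `fxstate_size()` (16 in 64-bit mode, 8 when CR4.OSFXSR is set, otherwise none), a sketch; the function names and the `FXSAVE_XMM_OFFSET` constant are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Offset of XMM0 in the 512-byte FXSAVE image. */
#define FXSAVE_XMM_OFFSET 160

/* Bytes of the FXSAVE image covered when nregs XMM registers are included. */
static size_t fxstate_size_for(int nregs)
{
    return FXSAVE_XMM_OFFSET + (size_t)nregs * 16;
}

/* 16 XMM registers in 64-bit mode, 8 with CR4.OSFXSR set, none otherwise. */
static size_t fxstate_size_sketch(bool long_mode, bool cr4_osfxsr)
{
    if (long_mode)
        return fxstate_size_for(16);
    return fxstate_size_for(cr4_osfxsr ? 8 : 0);
}
```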
| static bool | valid_cr (int nr) |

| |

| static int | check_cr_access (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_dr7_gd (struct x86_emulate_ctxt *ctxt) |

| |

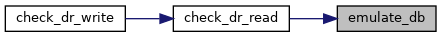

| static int | check_dr_read (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_dr_write (struct x86_emulate_ctxt *ctxt) |

| |

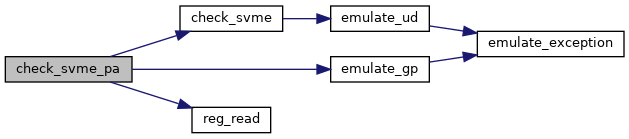

| static int | check_svme (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_svme_pa (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_rdtsc (struct x86_emulate_ctxt *ctxt) |

| |

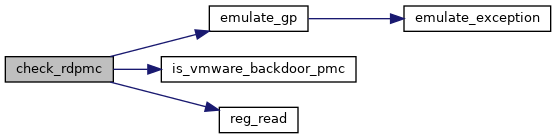

| static int | check_rdpmc (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_perm_in (struct x86_emulate_ctxt *ctxt) |

| |

| static int | check_perm_out (struct x86_emulate_ctxt *ctxt) |

| |

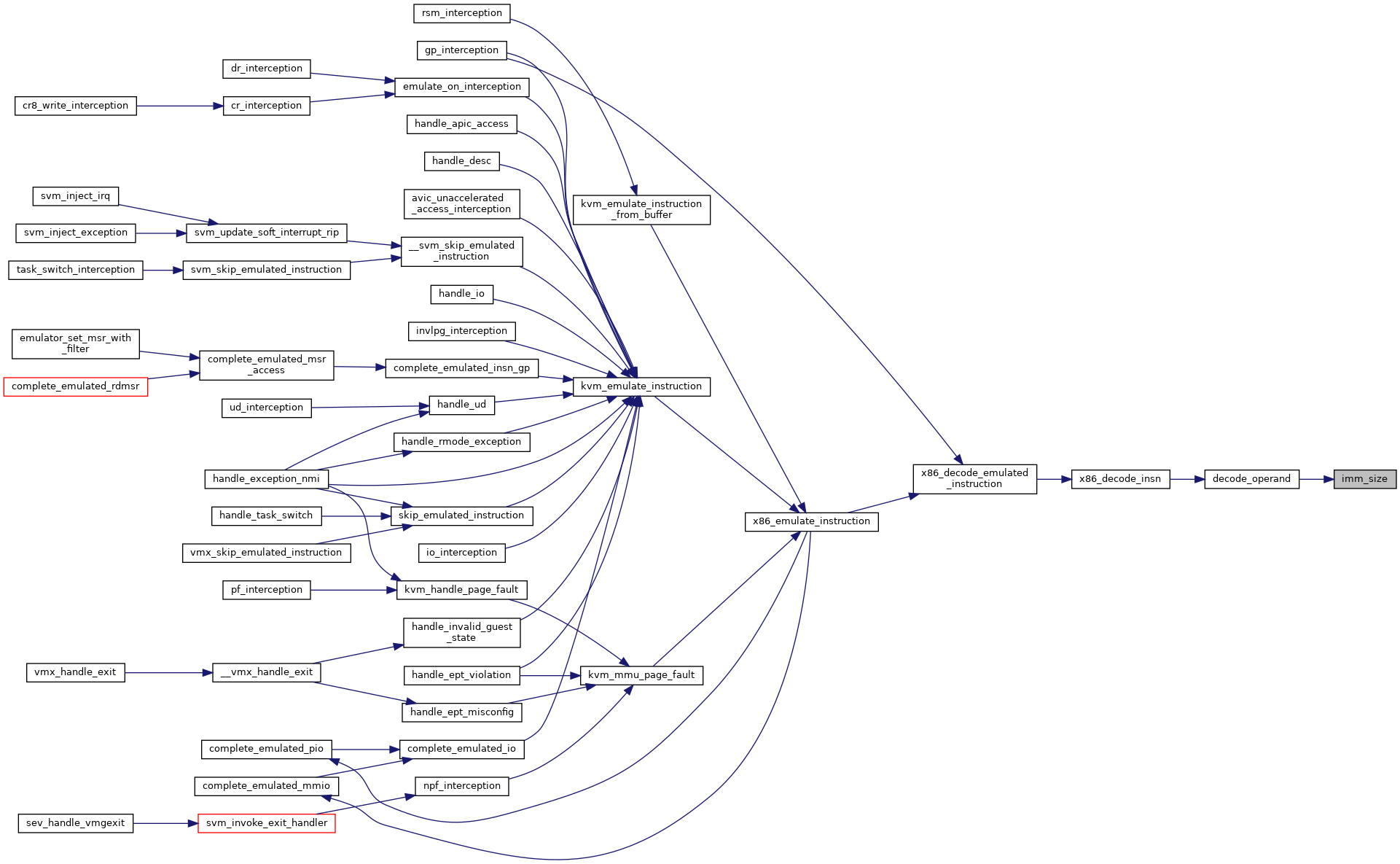

| static unsigned | imm_size (struct x86_emulate_ctxt *ctxt) |

| |

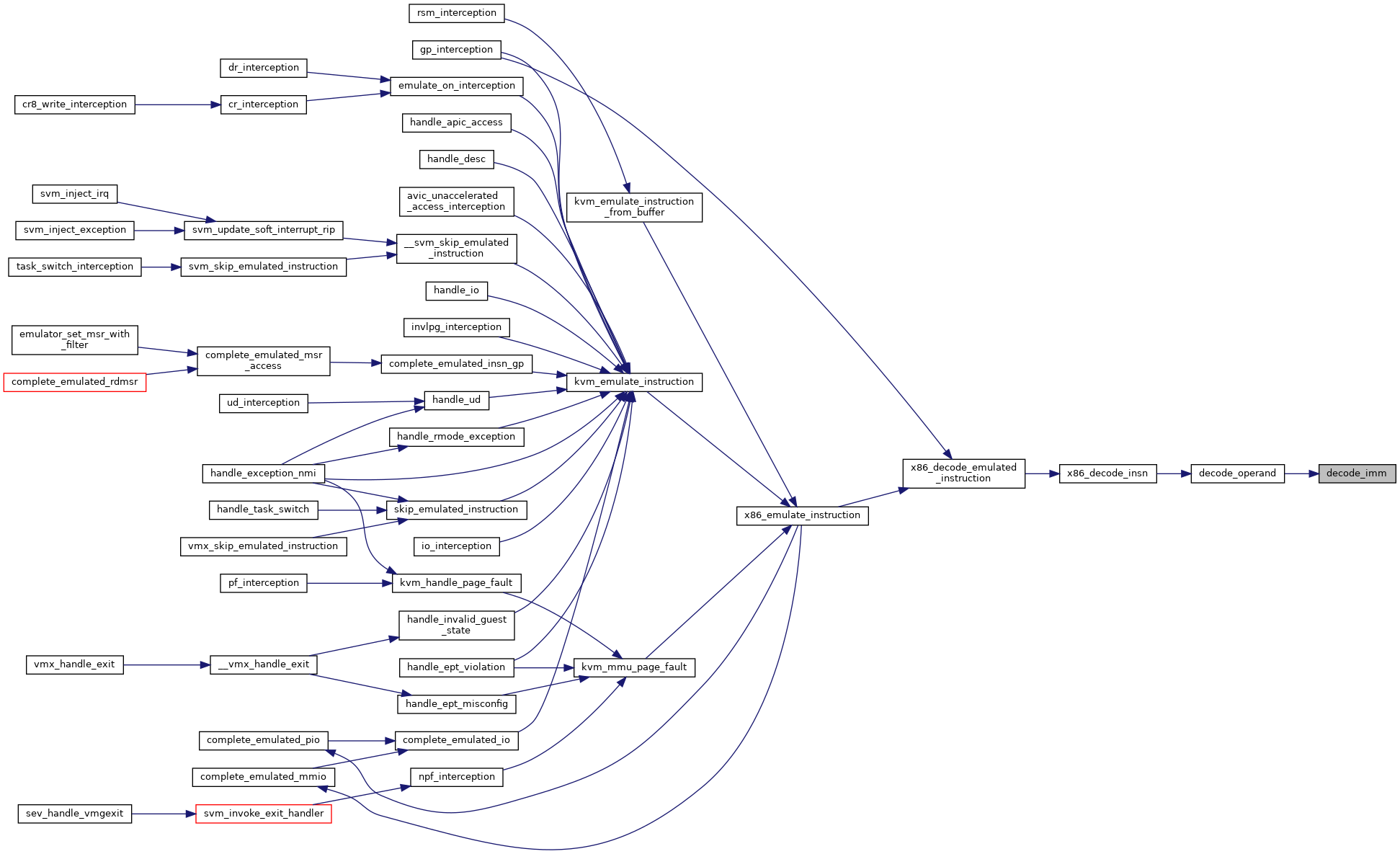

| static int | decode_imm (struct x86_emulate_ctxt *ctxt, struct operand *op, unsigned size, bool sign_extension) |

| |

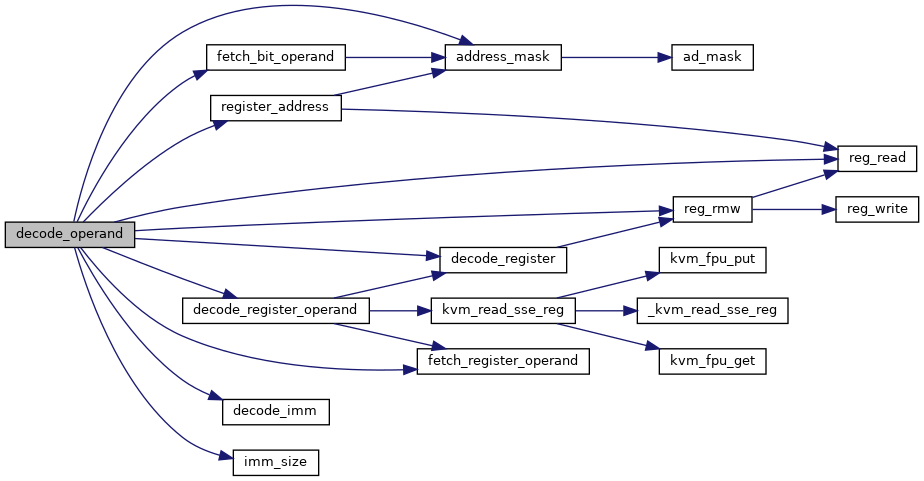

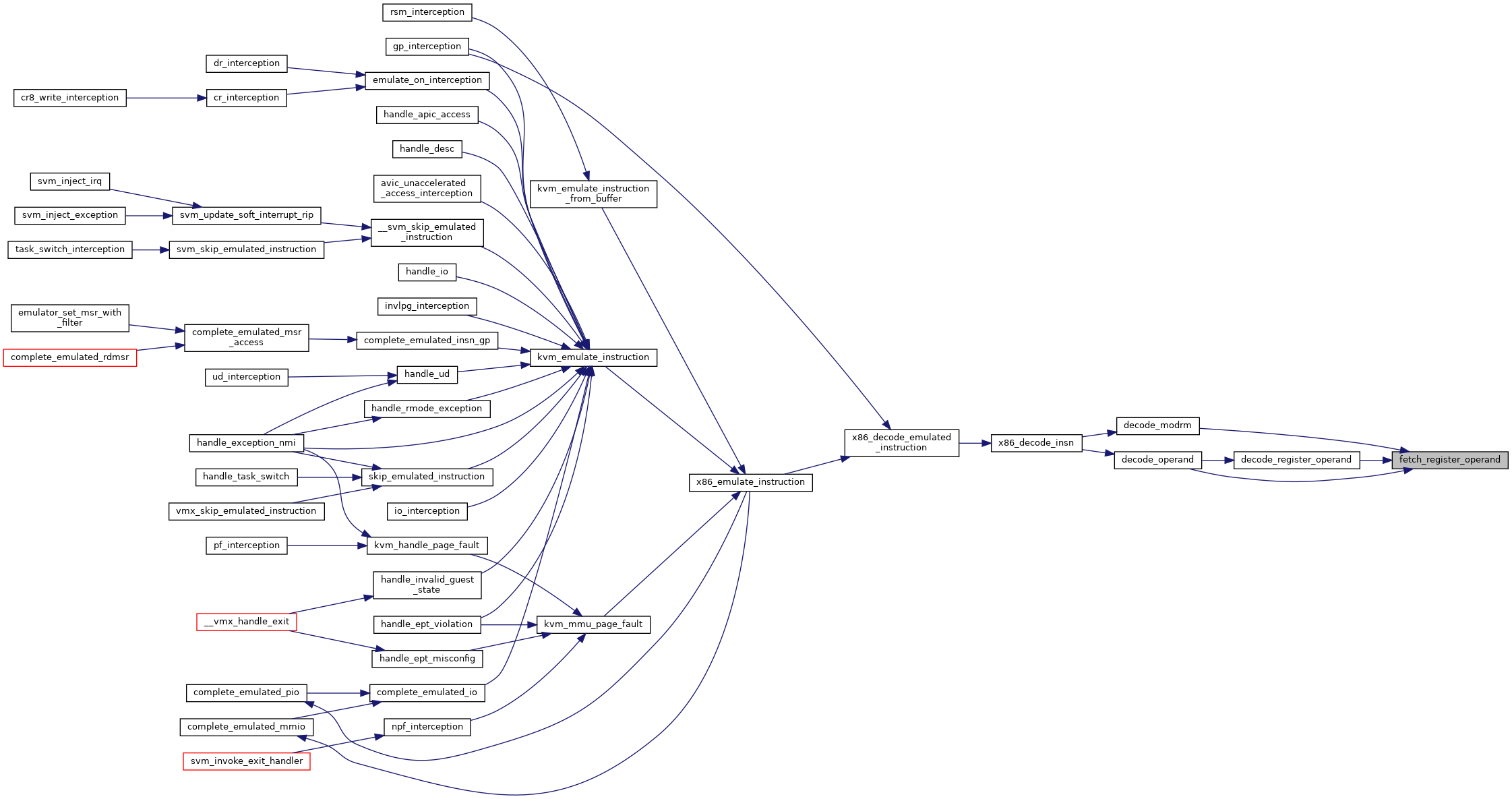

| static int | decode_operand (struct x86_emulate_ctxt *ctxt, struct operand *op, unsigned d) |

| |

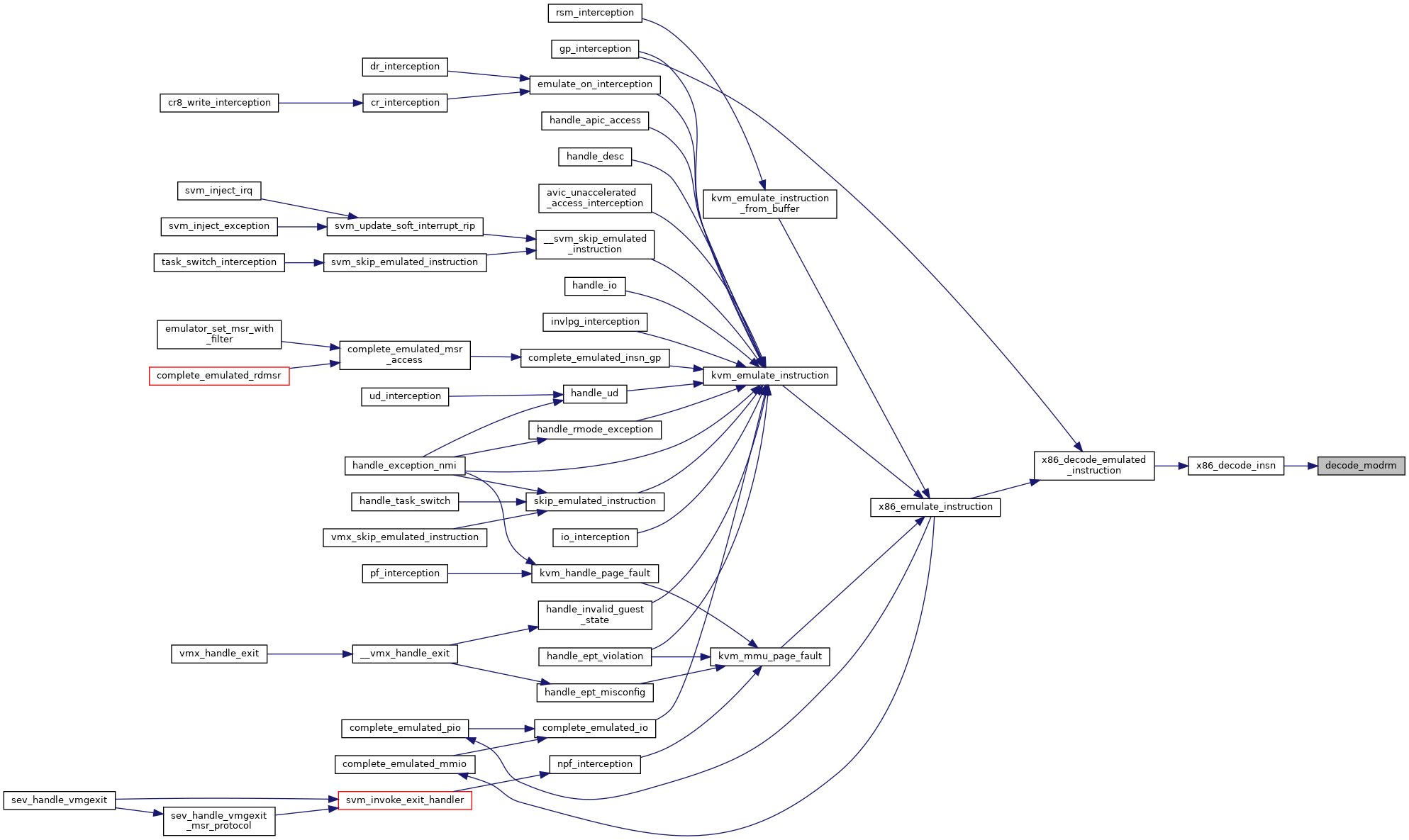

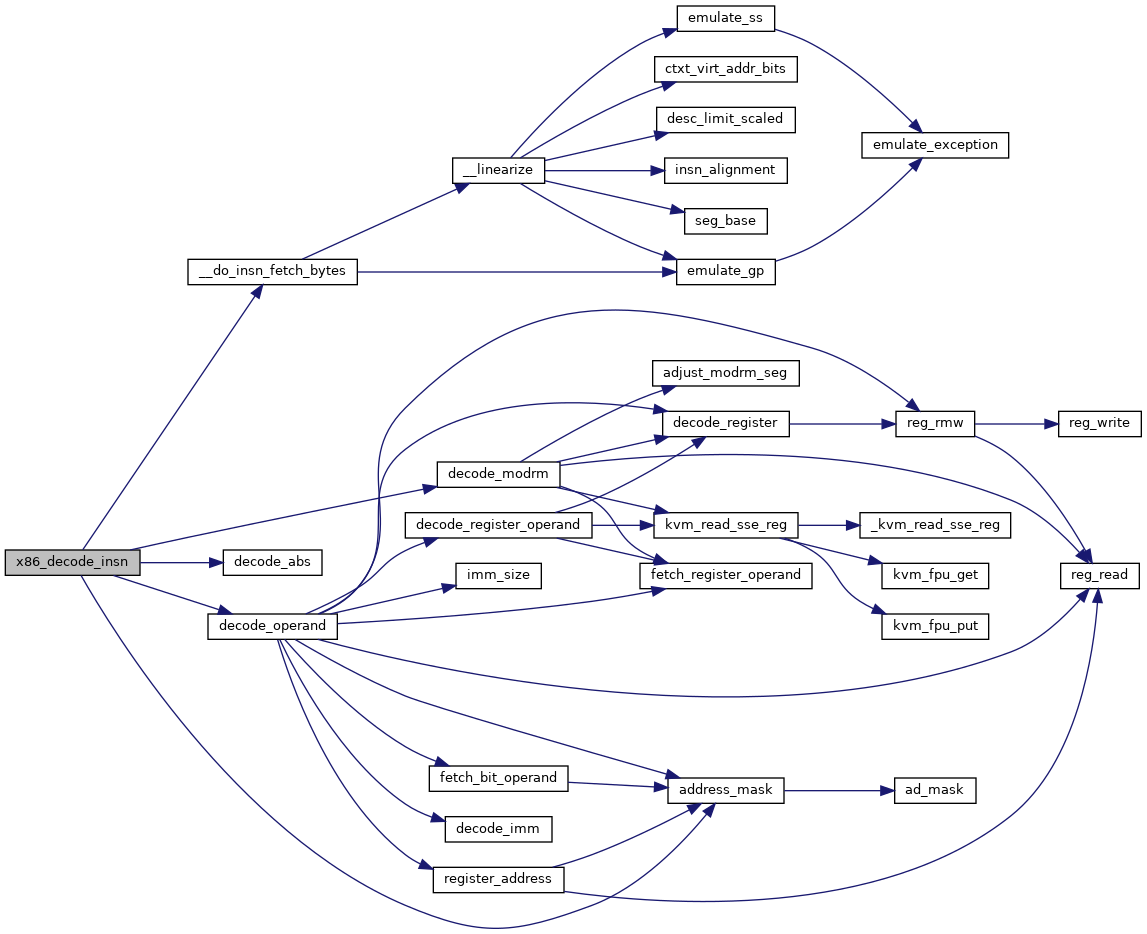

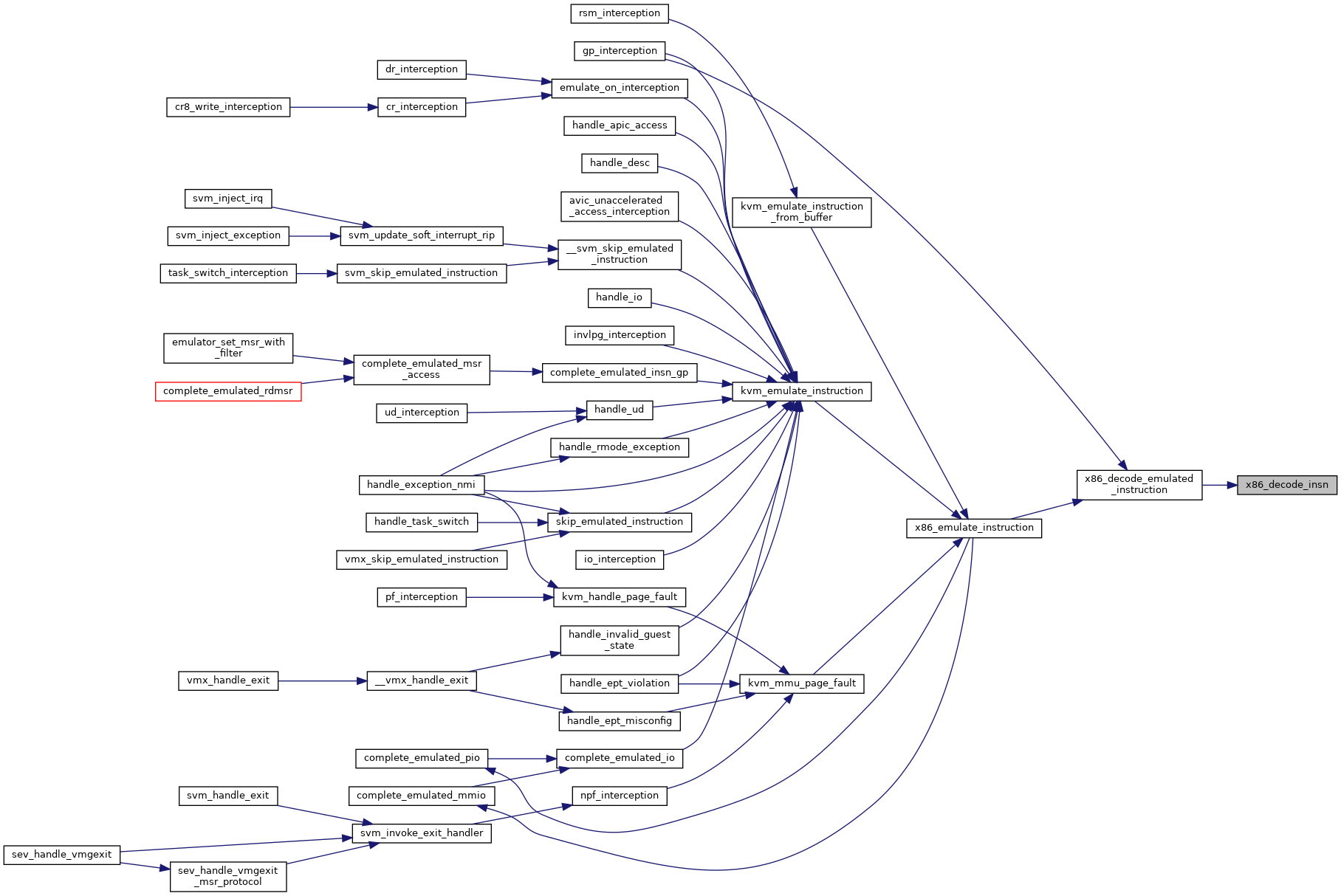

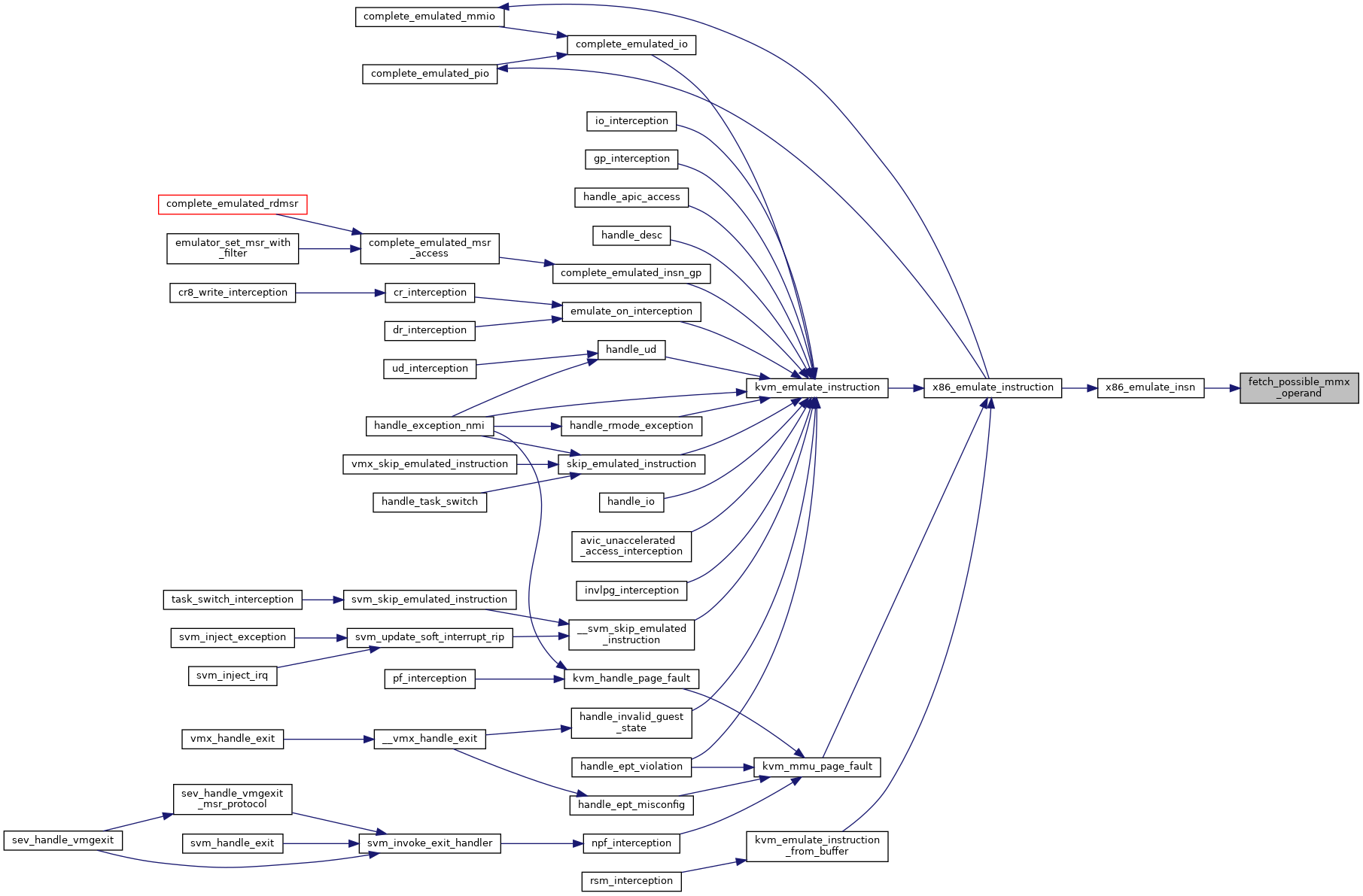

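`decode_imm()` fetches an immediate of the size chosen by `imm_size()` and then either keeps it sign-extended or masks it back to its width. A sketch over a little-endian byte buffer, limited to 1-, 2- and 4-byte immediates on the assumption (from our reading of `imm_size()`) that 64-bit operand sizes still use 4-byte immediates; `fetch_imm_sketch()` is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Read a 1-, 2- or 4-byte little-endian immediate at *pos, advance *pos,
 * and either sign-extend it to 64 bits or leave it zero-extended.
 */
static uint64_t fetch_imm_sketch(const uint8_t *buf, size_t *pos,
                                 unsigned size, bool sign_extension)
{
    uint64_t raw = 0;
    unsigned i;

    assert(size == 1 || size == 2 || size == 4);
    for (i = 0; i < size; i++)
        raw |= (uint64_t)buf[*pos + i] << (8 * i);
    *pos += size;

    if (sign_extension && ((raw >> (8 * size - 1)) & 1))
        raw |= ~0ull << (8 * size);   /* copy the sign bit upward */
    return raw;
}
```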
| int | x86_decode_insn (struct x86_emulate_ctxt *ctxt, void *insn, int insn_len, int emulation_type) |

| |

| bool | x86_page_table_writing_insn (struct x86_emulate_ctxt *ctxt) |

| |

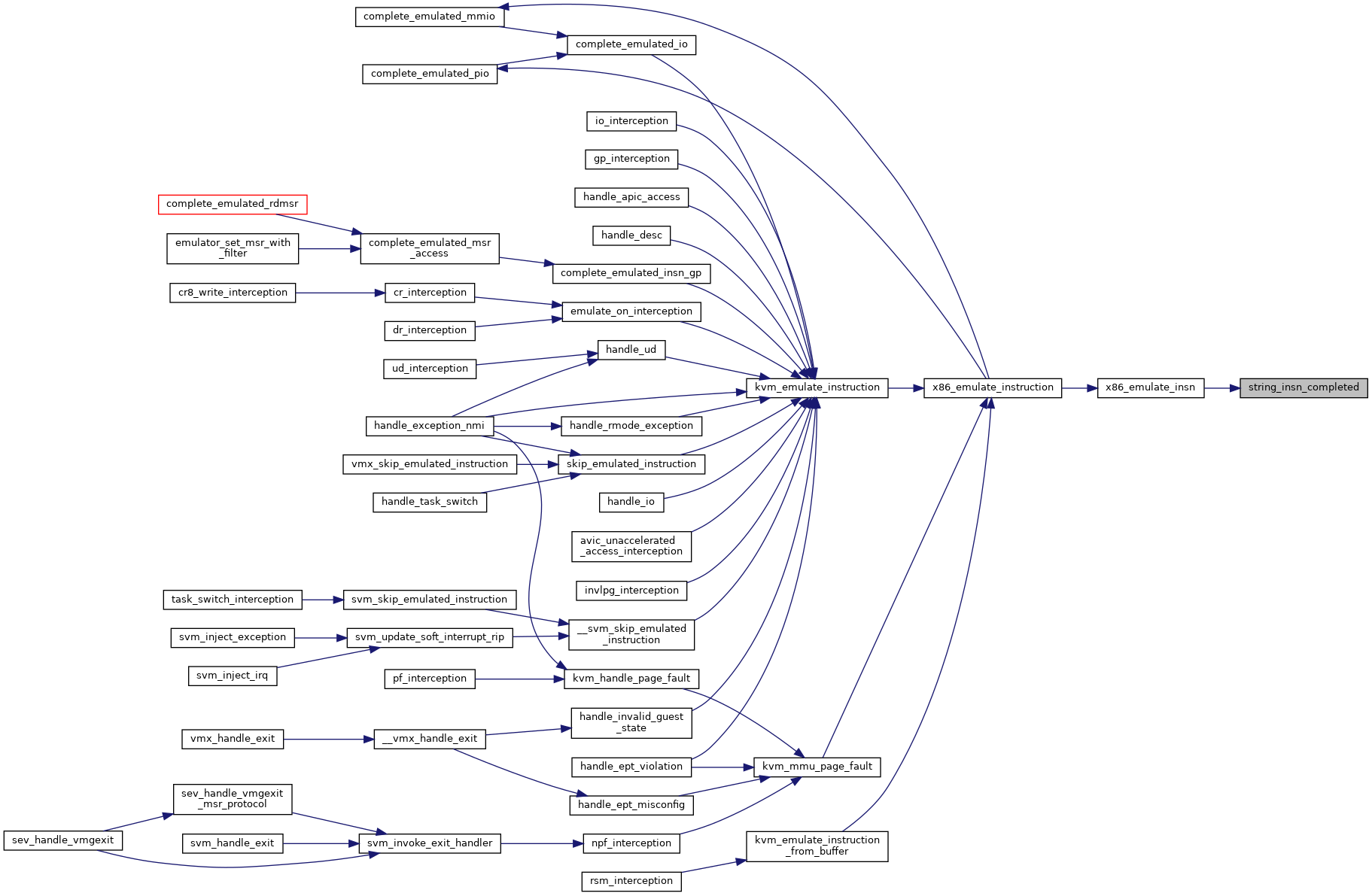

| static bool | string_insn_completed (struct x86_emulate_ctxt *ctxt) |

| |

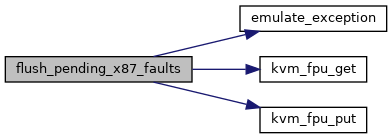

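`string_insn_completed()` handles the extra termination rule for `REPE`/`REPNE` string compares: CMPS (`0xa6`/`0xa7`) and SCAS (`0xae`/`0xaf`) also stop when ZF disagrees with the prefix. A sketch of that predicate alone, with the prefix bytes `0xf3` (REPE) and `0xf2` (REPNE); `rep_stops_on_zf()` and the macro names are hypothetical, and the RCX count check is left to the caller.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define REPNE_PREFIX 0xf2
#define REPE_PREFIX  0xf3
#define FLAG_ZF      (1u << 6)

/* REPE stops once ZF is clear, REPNE once ZF is set (CMPS/SCAS only). */
static bool rep_stops_on_zf(uint8_t opcode, uint8_t rep_prefix, uint32_t eflags)
{
    bool cmps_or_scas = opcode == 0xa6 || opcode == 0xa7 ||
                        opcode == 0xae || opcode == 0xaf;
    bool zf = eflags & FLAG_ZF;

    if (!cmps_or_scas)
        return false;
    return (rep_prefix == REPE_PREFIX && !zf) ||
           (rep_prefix == REPNE_PREFIX && zf);
}
```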
| static int | flush_pending_x87_faults (struct x86_emulate_ctxt *ctxt) |

| |

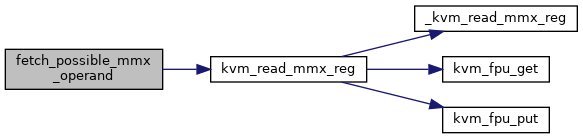

| static void | fetch_possible_mmx_operand (struct operand *op) |

| |

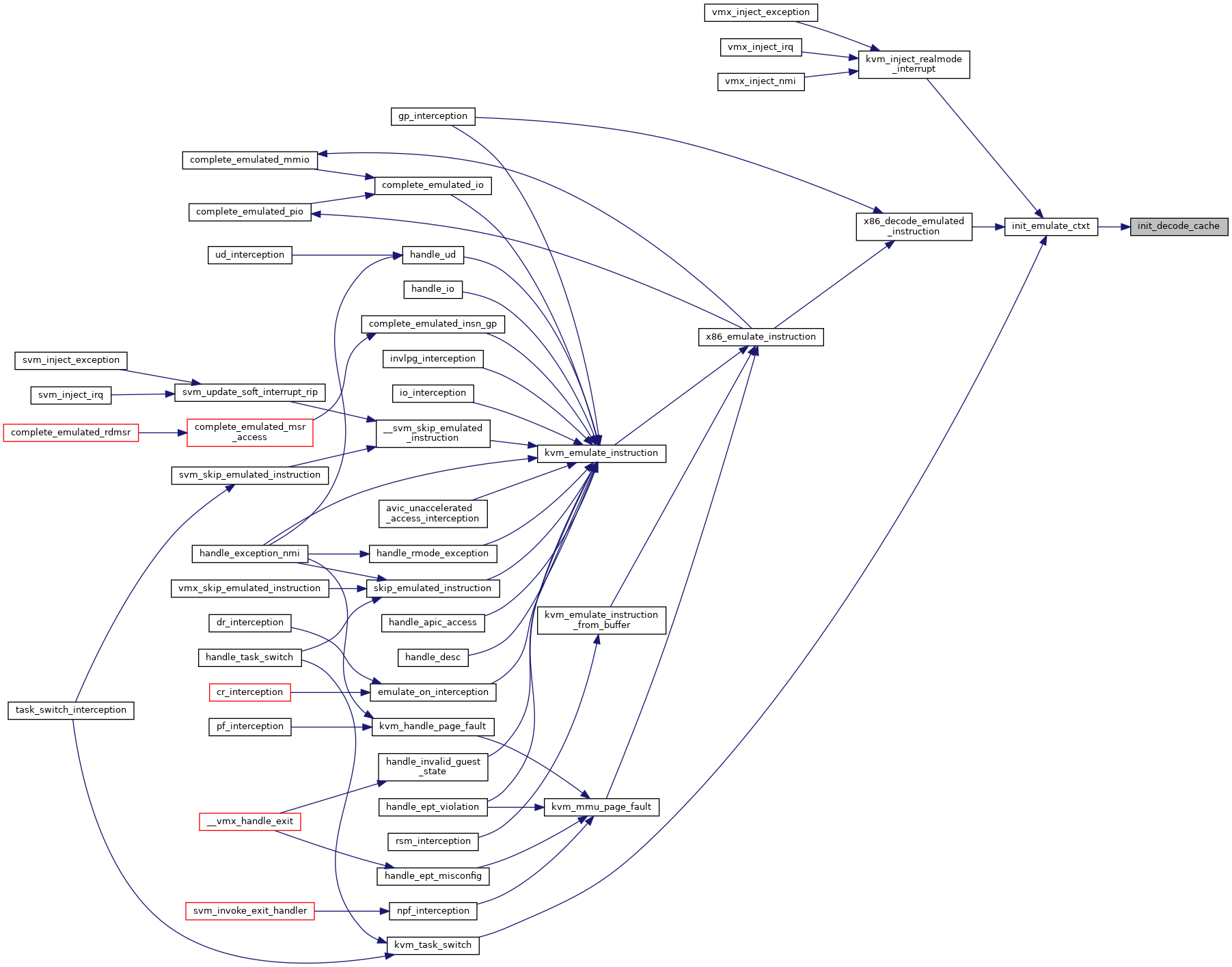

| void | init_decode_cache (struct x86_emulate_ctxt *ctxt) |

| |

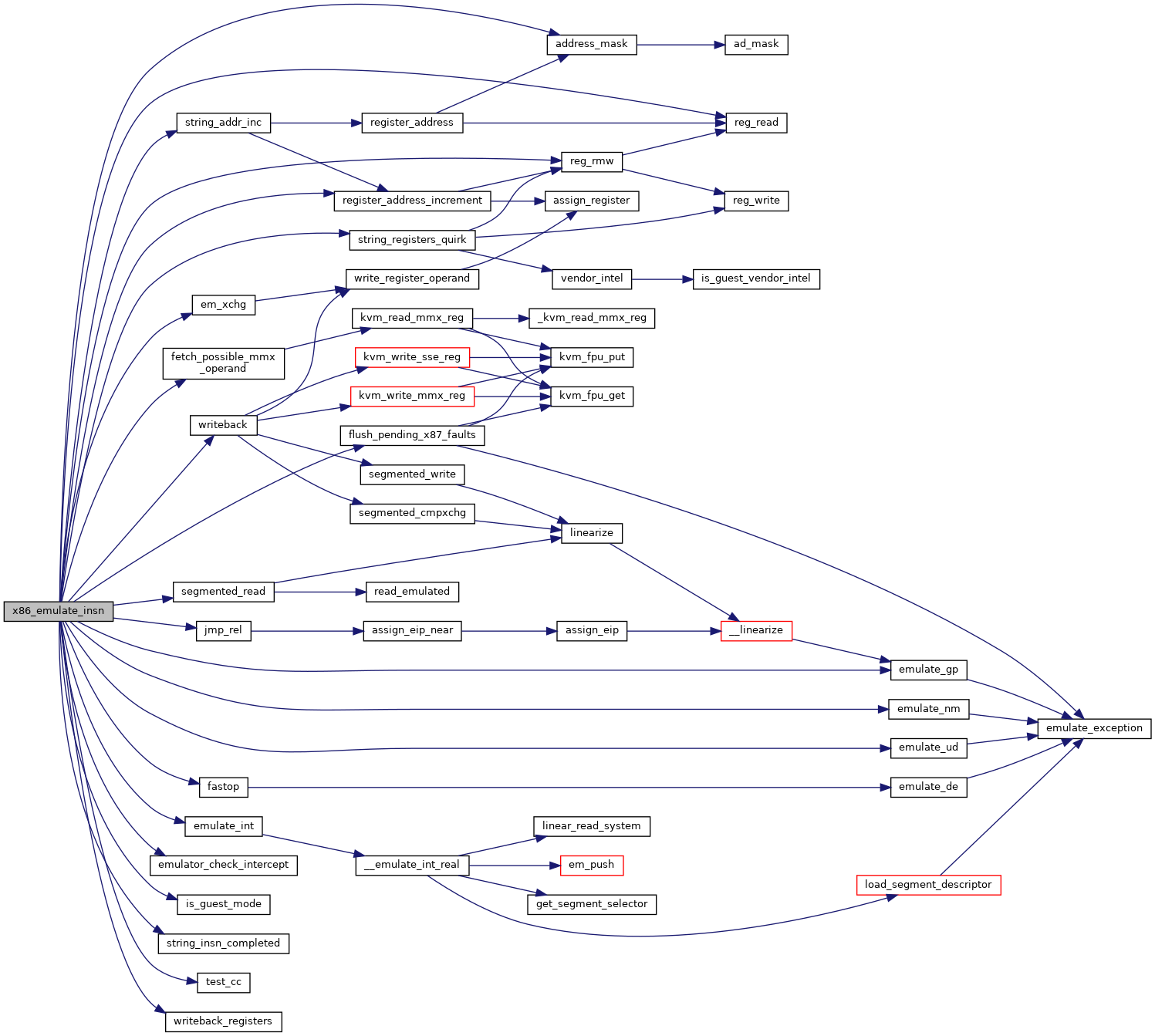

| int | x86_emulate_insn (struct x86_emulate_ctxt *ctxt) |

| |

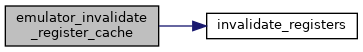

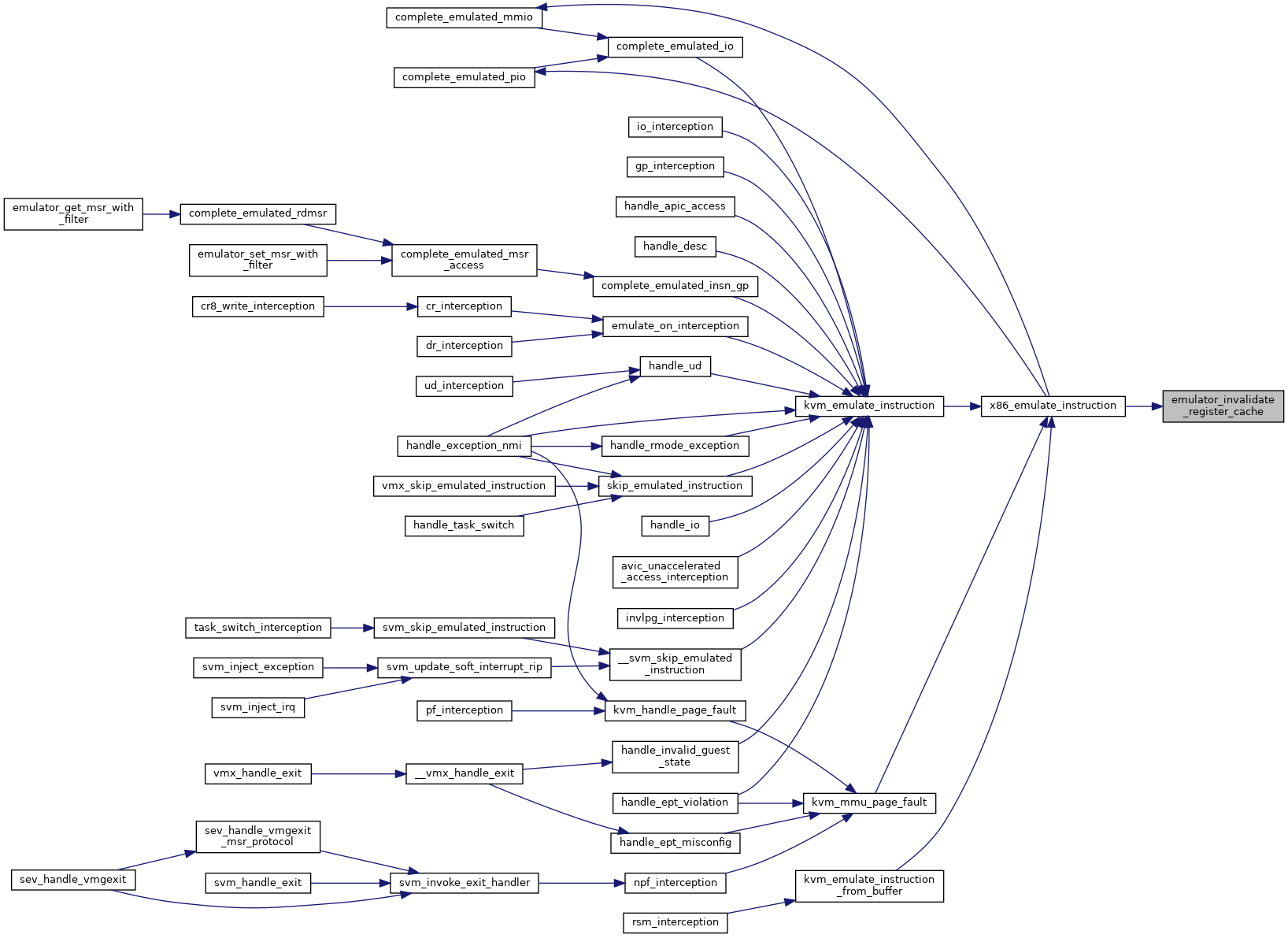

| void | emulator_invalidate_register_cache (struct x86_emulate_ctxt *ctxt) |

| |

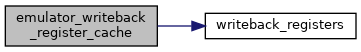

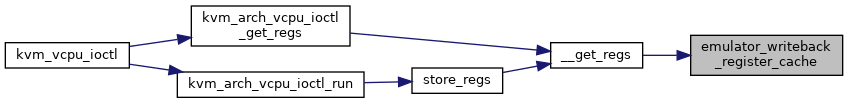

| void | emulator_writeback_register_cache (struct x86_emulate_ctxt *ctxt) |

| |

| bool | emulator_can_use_gpa (struct x86_emulate_ctxt *ctxt) |

| |