#include <linux/kvm_host.h>
#include "irq.h"
#include "ioapic.h"
#include "mmu.h"
#include "i8254.h"
#include "tss.h"
#include "kvm_cache_regs.h"
#include "kvm_emulate.h"
#include "mmu/page_track.h"
#include "x86.h"
#include "cpuid.h"
#include "pmu.h"
#include "hyperv.h"
#include "lapic.h"
#include "xen.h"
#include "smm.h"
#include <linux/clocksource.h>
#include <linux/interrupt.h>
#include <linux/kvm.h>
#include <linux/fs.h>
#include <linux/vmalloc.h>
#include <linux/export.h>
#include <linux/moduleparam.h>
#include <linux/mman.h>
#include <linux/highmem.h>
#include <linux/iommu.h>
#include <linux/cpufreq.h>
#include <linux/user-return-notifier.h>
#include <linux/srcu.h>
#include <linux/slab.h>
#include <linux/perf_event.h>
#include <linux/uaccess.h>
#include <linux/hash.h>
#include <linux/pci.h>
#include <linux/timekeeper_internal.h>
#include <linux/pvclock_gtod.h>
#include <linux/kvm_irqfd.h>
#include <linux/irqbypass.h>
#include <linux/sched/stat.h>
#include <linux/sched/isolation.h>
#include <linux/mem_encrypt.h>
#include <linux/entry-kvm.h>
#include <linux/suspend.h>
#include <linux/smp.h>
#include <trace/events/ipi.h>
#include <trace/events/kvm.h>
#include <asm/debugreg.h>
#include <asm/msr.h>
#include <asm/desc.h>
#include <asm/mce.h>
#include <asm/pkru.h>
#include <linux/kernel_stat.h>
#include <asm/fpu/api.h>
#include <asm/fpu/xcr.h>
#include <asm/fpu/xstate.h>
#include <asm/pvclock.h>
#include <asm/div64.h>
#include <asm/irq_remapping.h>
#include <asm/mshyperv.h>
#include <asm/hypervisor.h>
#include <asm/tlbflush.h>
#include <asm/intel_pt.h>
#include <asm/emulate_prefix.h>
#include <asm/sgx.h>
#include <clocksource/hyperv_timer.h>
#include "trace.h"
#include <asm/kvm-x86-ops.h>

Classes

| struct | kvm_user_return_msrs |

| struct | kvm_user_return_msrs::kvm_user_return_msr_values |

| struct | read_write_emulator_ops |

Macros

| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

| #define | CREATE_TRACE_POINTS |

| #define | MAX_IO_MSRS 256 |

| #define | KVM_MAX_MCE_BANKS 32 |

| #define | ERR_PTR_USR(e) ((void __user *)ERR_PTR(e)) |

| #define | emul_to_vcpu(ctxt) ((struct kvm_vcpu *)(ctxt)->vcpu) |

| #define | KVM_EXIT_HYPERCALL_VALID_MASK (1 << KVM_HC_MAP_GPA_RANGE) |

| #define | KVM_CAP_PMU_VALID_MASK KVM_PMU_CAP_DISABLE |

| #define | KVM_X2APIC_API_VALID_FLAGS |

| #define | KVM_X86_OP(func) |

| #define | KVM_X86_OP_OPTIONAL KVM_X86_OP |

| #define | KVM_X86_OP_OPTIONAL_RET0 KVM_X86_OP |

| #define | KVM_FEP_CLEAR_RFLAGS_RF BIT(1) |

| #define | KVM_MAX_NR_USER_RETURN_MSRS 16 |

| #define | KVM_SUPPORTED_XCR0 |

| #define | EXCPT_BENIGN 0 |

| #define | EXCPT_CONTRIBUTORY 1 |

| #define | EXCPT_PF 2 |

| #define | EXCPT_FAULT 0 |

| #define | EXCPT_TRAP 1 |

| #define | EXCPT_ABORT 2 |

| #define | EXCPT_INTERRUPT 3 |

| #define | EXCPT_DB 4 |

| #define | KVM_SUPPORTED_ARCH_CAP |

| #define | KVMCLOCK_UPDATE_DELAY msecs_to_jiffies(100) |

| #define | KVMCLOCK_SYNC_PERIOD (300 * HZ) |

| #define | SMT_RSB_MSG |

| #define | emulator_try_cmpxchg_user(t, ptr, old, new) (__try_cmpxchg_user((t __user *)(ptr), (t *)(old), *(t *)(new), efault ## t)) |

| #define | __KVM_X86_OP(func) static_call_update(kvm_x86_##func, kvm_x86_ops.func); |

| #define | KVM_X86_OP(func) WARN_ON(!kvm_x86_ops.func); __KVM_X86_OP(func) |

| #define | KVM_X86_OP_OPTIONAL __KVM_X86_OP |

| #define | KVM_X86_OP_OPTIONAL_RET0(func) |

| #define | __kvm_cpu_cap_has(UNUSED_, f) kvm_cpu_cap_has(f) |

Functions

| EXPORT_SYMBOL_GPL (kvm_caps) | |

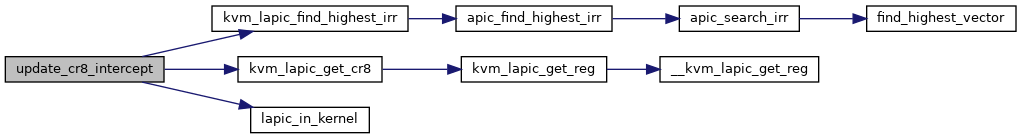

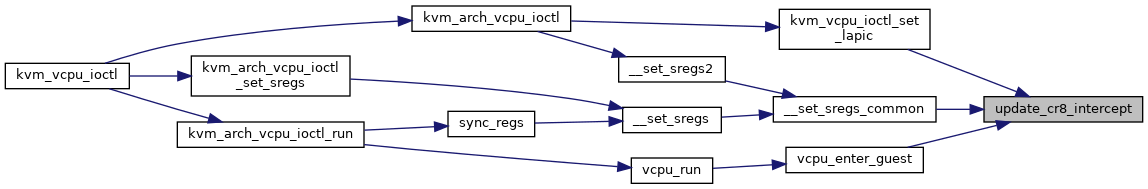

| static void | update_cr8_intercept (struct kvm_vcpu *vcpu) |

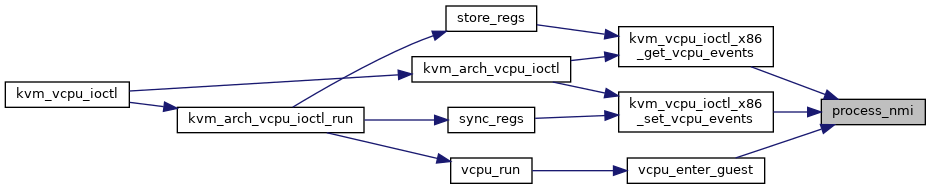

| static void | process_nmi (struct kvm_vcpu *vcpu) |

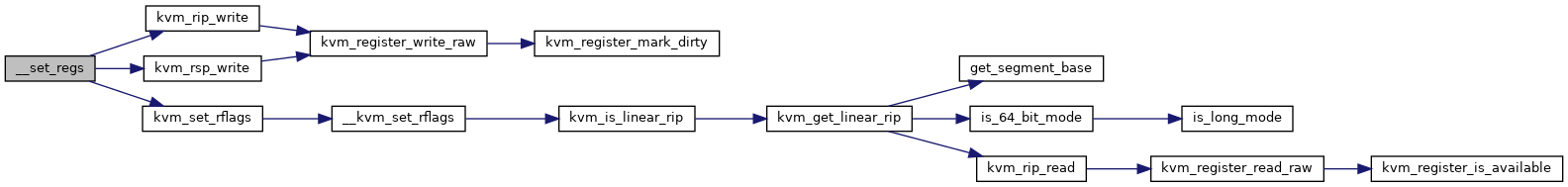

| static void | __kvm_set_rflags (struct kvm_vcpu *vcpu, unsigned long rflags) |

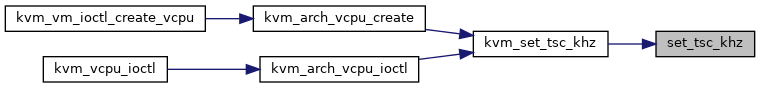

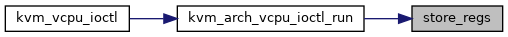

| static void | store_regs (struct kvm_vcpu *vcpu) |

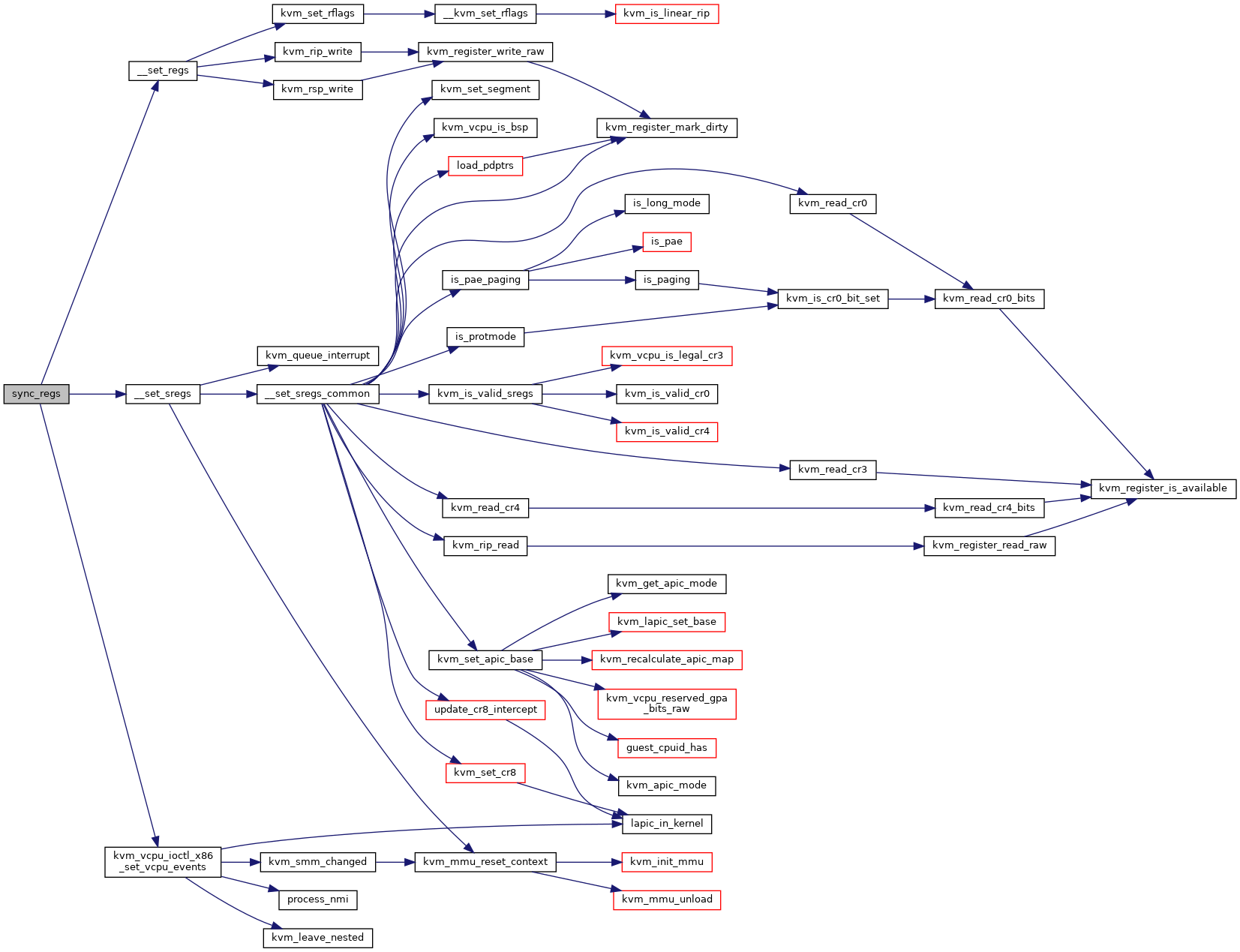

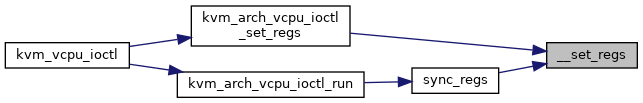

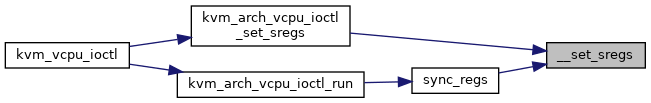

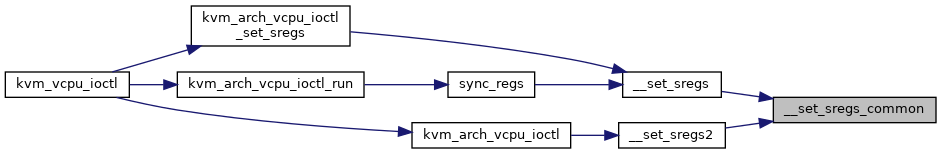

| static int | sync_regs (struct kvm_vcpu *vcpu) |

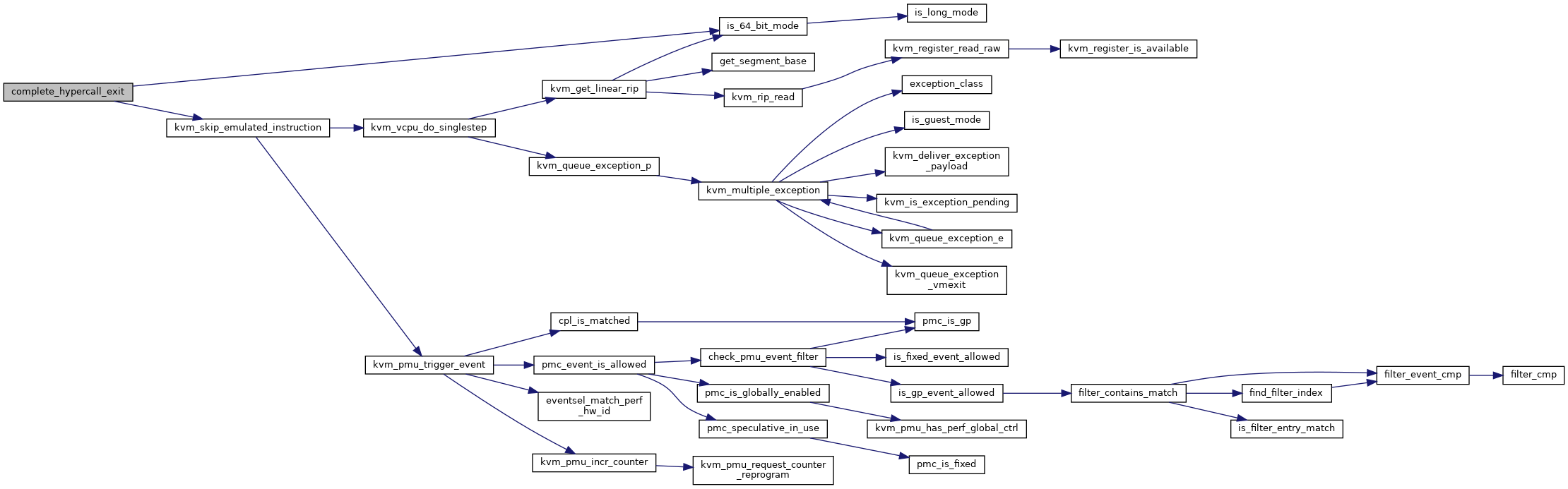

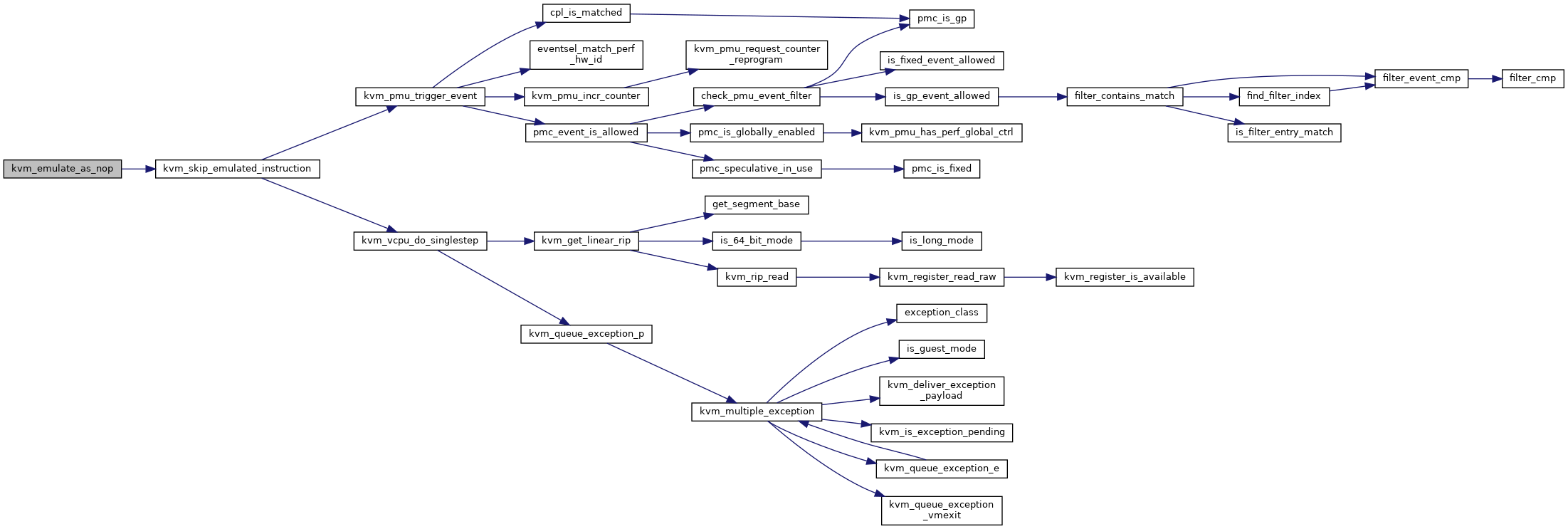

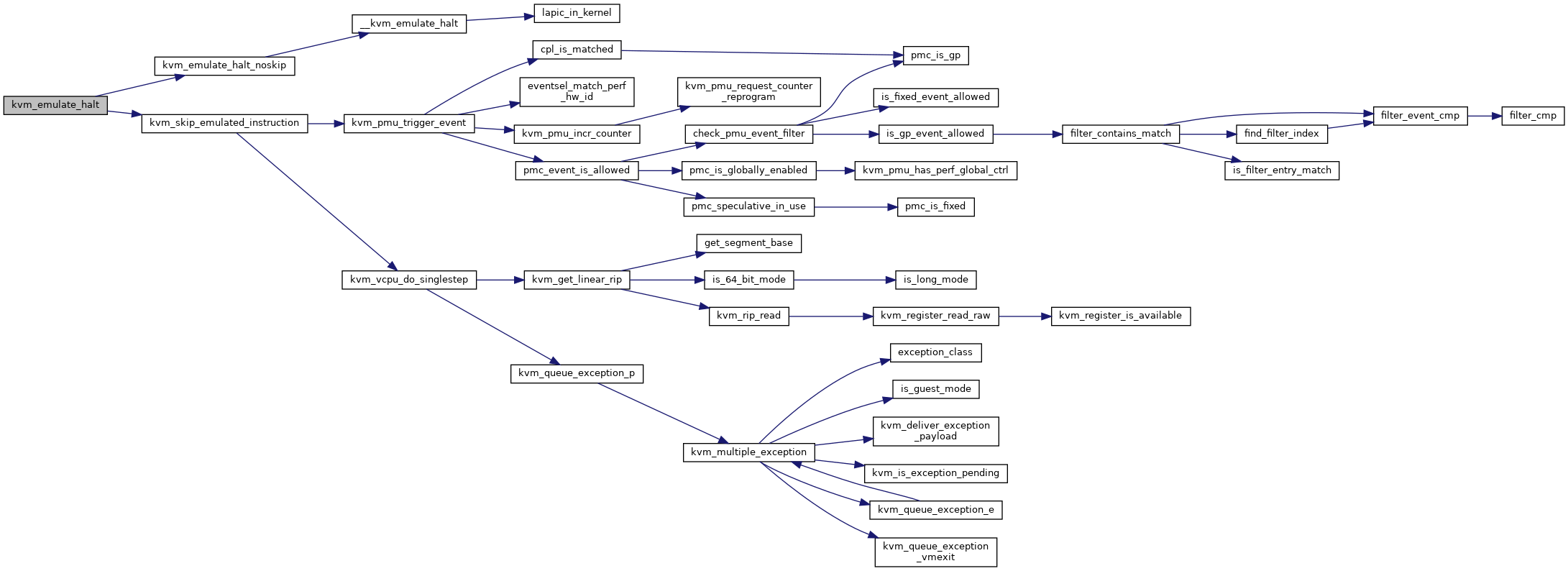

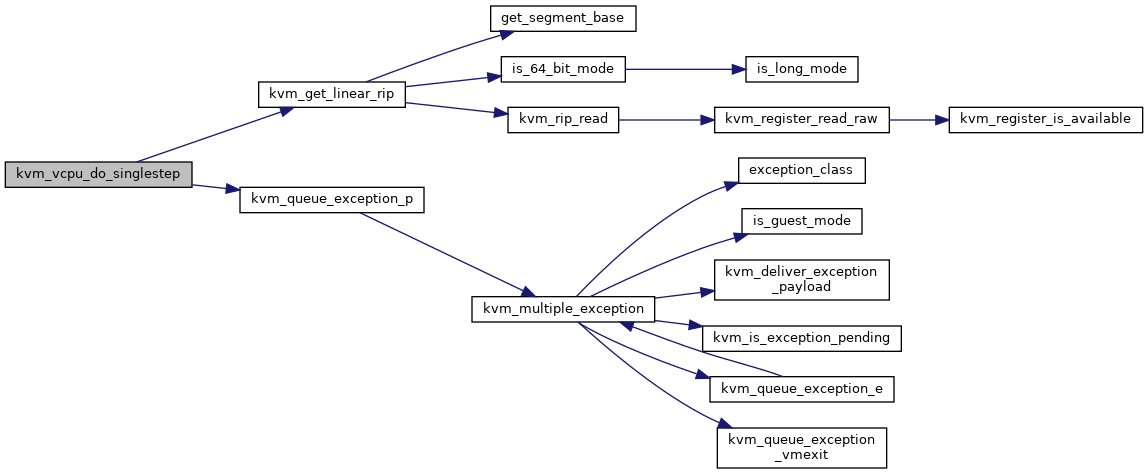

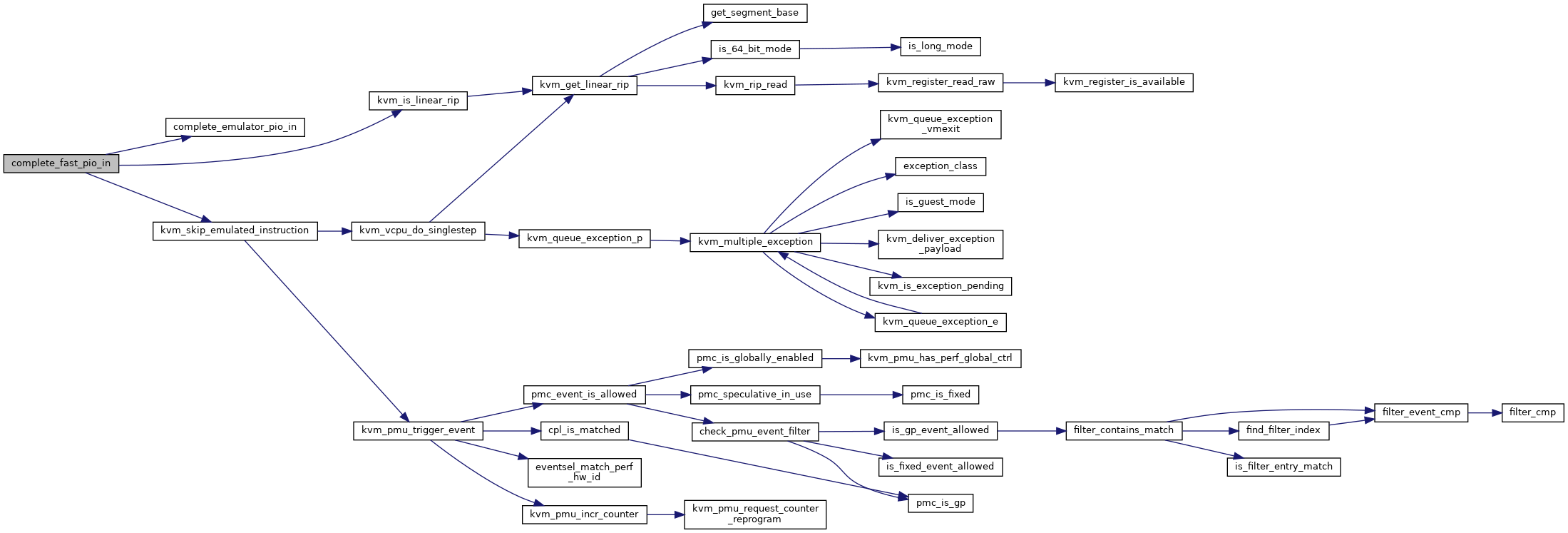

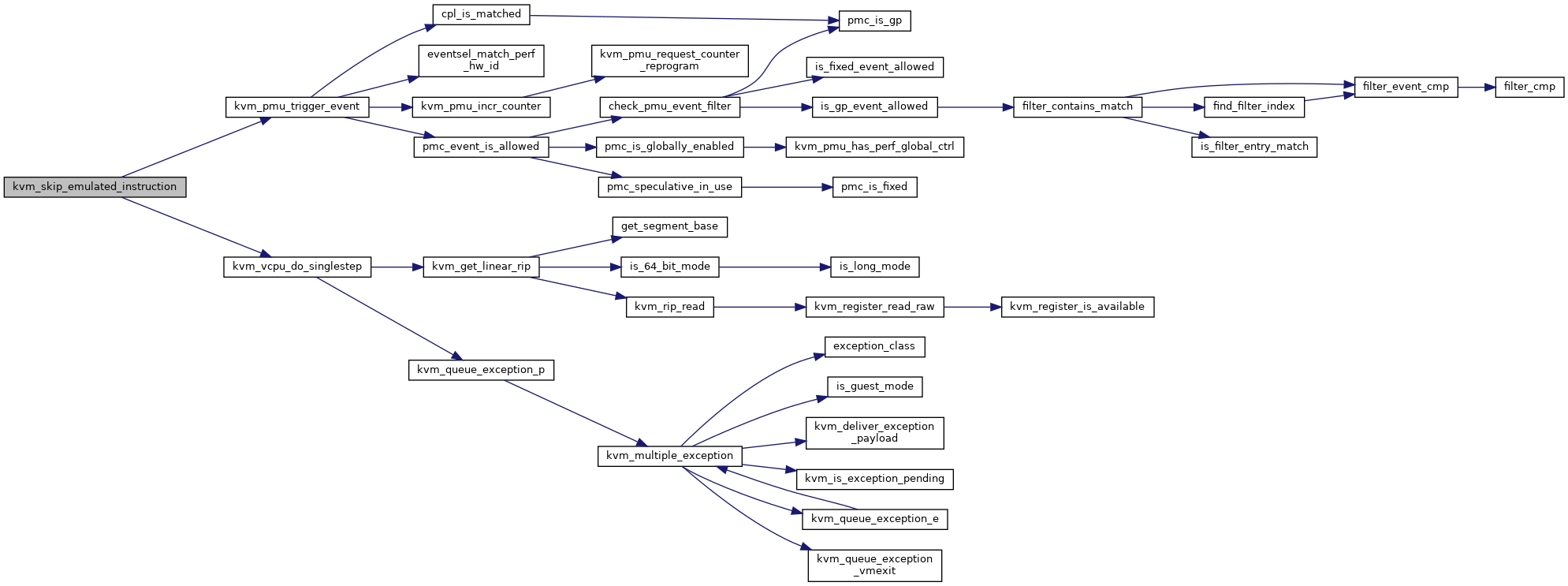

| static int | kvm_vcpu_do_singlestep (struct kvm_vcpu *vcpu) |

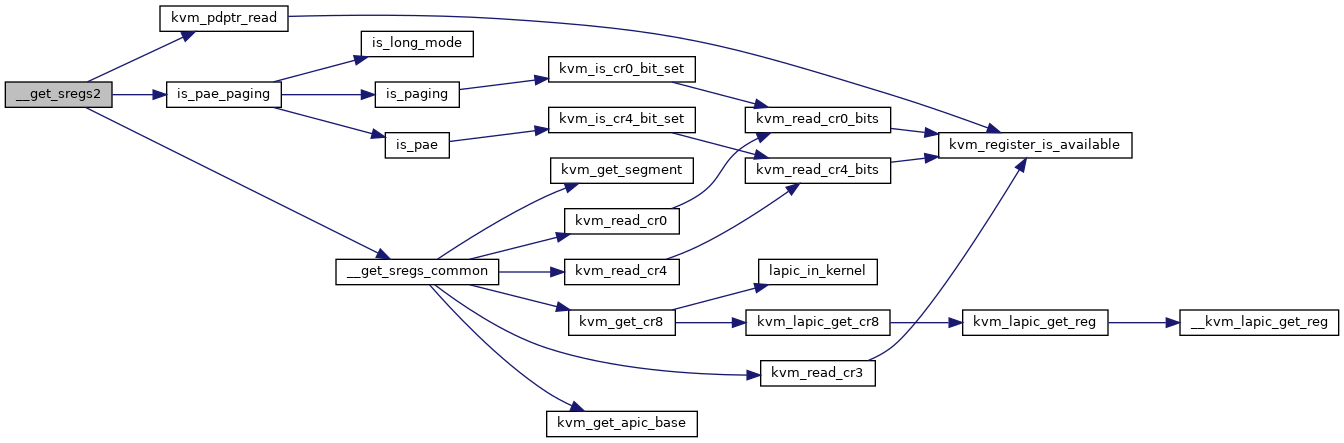

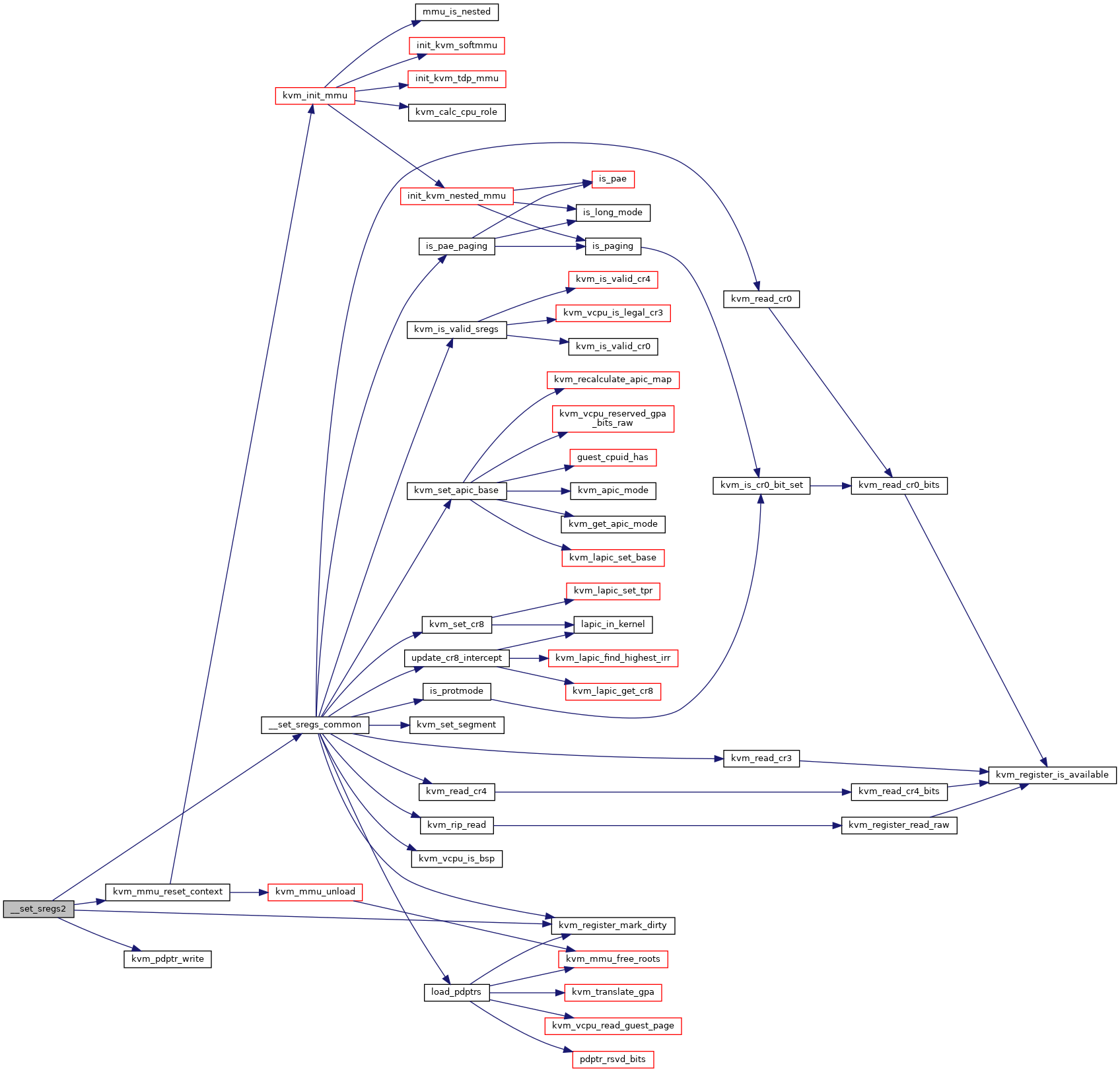

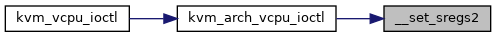

| static int | __set_sregs2 (struct kvm_vcpu *vcpu, struct kvm_sregs2 *sregs2) |

| static void | __get_sregs2 (struct kvm_vcpu *vcpu, struct kvm_sregs2 *sregs2) |

| static | DEFINE_MUTEX (vendor_module_lock) |

| EXPORT_STATIC_CALL_GPL (kvm_x86_get_cs_db_l_bits) | |

| EXPORT_STATIC_CALL_GPL (kvm_x86_cache_reg) | |

| module_param (ignore_msrs, bool, 0644) | |

| module_param (report_ignored_msrs, bool, 0644) | |

| EXPORT_SYMBOL_GPL (report_ignored_msrs) | |

| module_param (min_timer_period_us, uint, 0644) | |

| module_param (kvmclock_periodic_sync, bool, 0444) | |

| module_param (tsc_tolerance_ppm, uint, 0644) | |

| module_param (lapic_timer_advance_ns, int, 0644) | |

| module_param (vector_hashing, bool, 0444) | |

| module_param (enable_vmware_backdoor, bool, 0444) | |

| EXPORT_SYMBOL_GPL (enable_vmware_backdoor) | |

| module_param (force_emulation_prefix, int, 0644) | |

| module_param (pi_inject_timer, bint, 0644) | |

| EXPORT_SYMBOL_GPL (enable_pmu) | |

| module_param (enable_pmu, bool, 0444) | |

| module_param (eager_page_split, bool, 0644) | |

| module_param (mitigate_smt_rsb, bool, 0444) | |

| EXPORT_SYMBOL_GPL (kvm_nr_uret_msrs) | |

| EXPORT_SYMBOL_GPL (host_efer) | |

| EXPORT_SYMBOL_GPL (allow_smaller_maxphyaddr) | |

| EXPORT_SYMBOL_GPL (enable_apicv) | |

| EXPORT_SYMBOL_GPL (host_xss) | |

| EXPORT_SYMBOL_GPL (host_arch_capabilities) | |

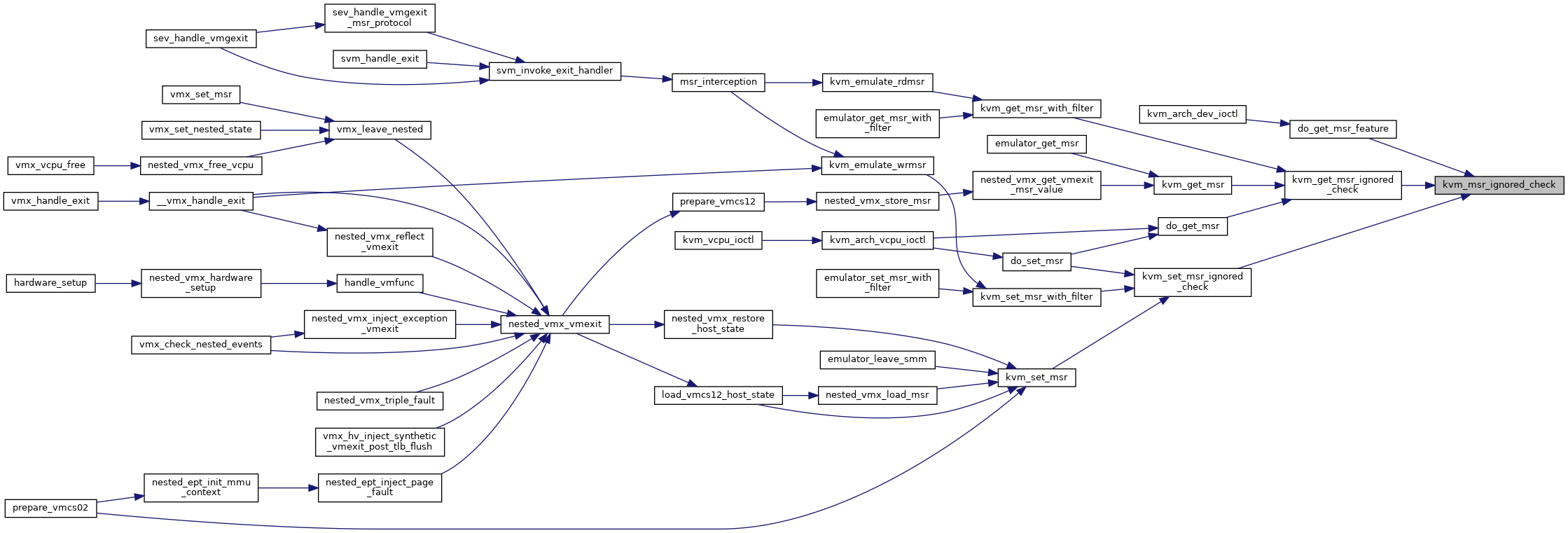

| static bool | kvm_msr_ignored_check (u32 msr, u64 data, bool write) |

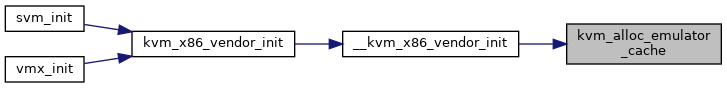

| static struct kmem_cache * | kvm_alloc_emulator_cache (void) |

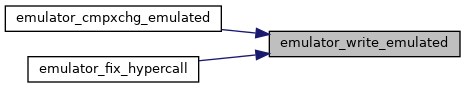

| static int | emulator_fix_hypercall (struct x86_emulate_ctxt *ctxt) |

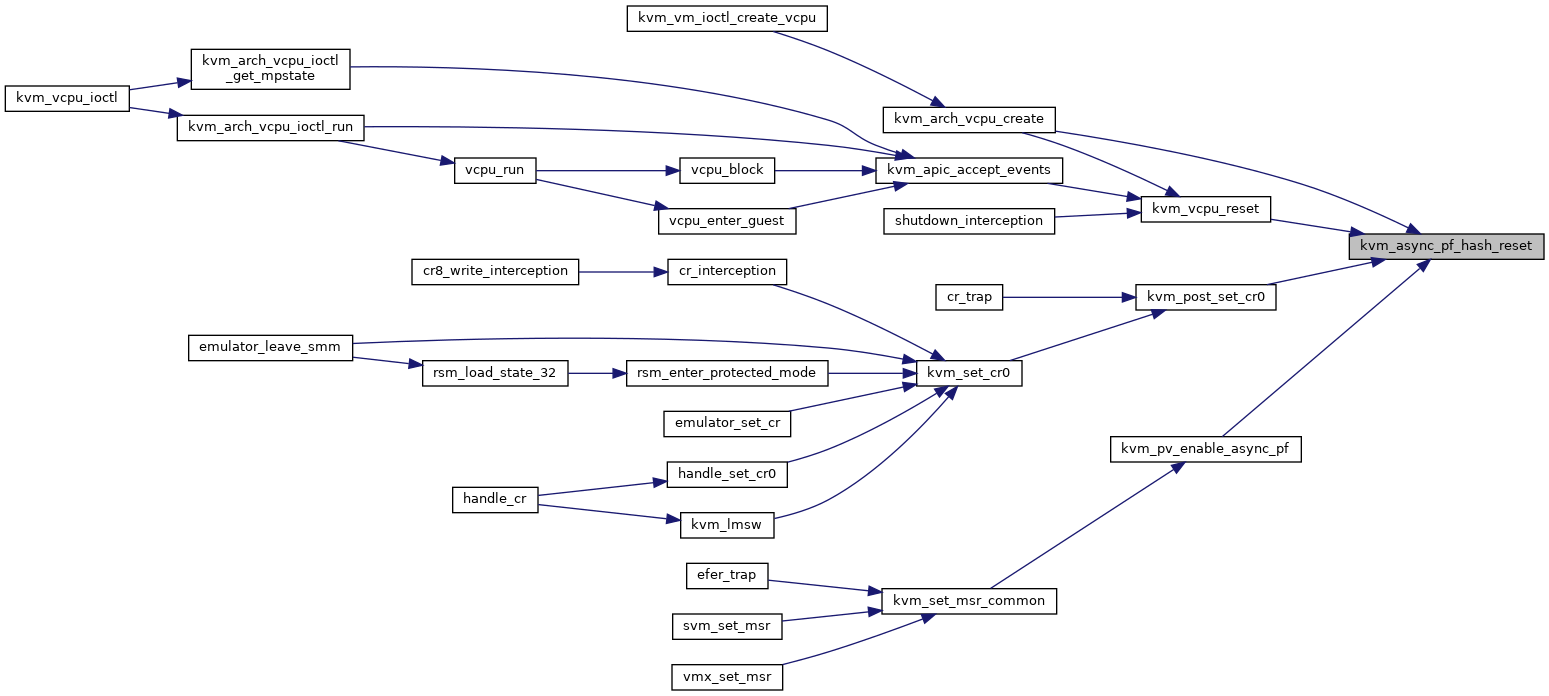

| static void | kvm_async_pf_hash_reset (struct kvm_vcpu *vcpu) |

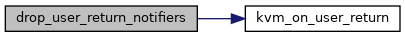

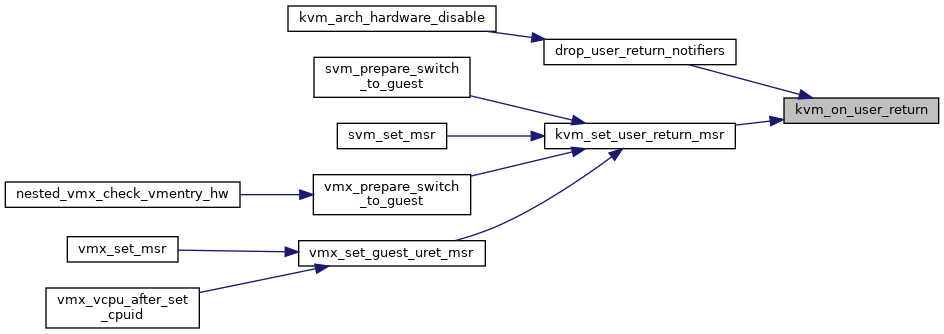

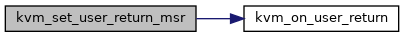

| static void | kvm_on_user_return (struct user_return_notifier *urn) |

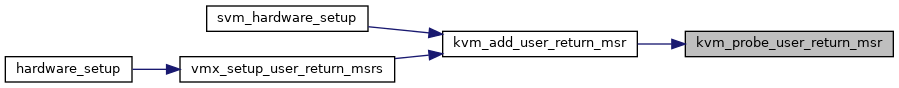

| static int | kvm_probe_user_return_msr (u32 msr) |

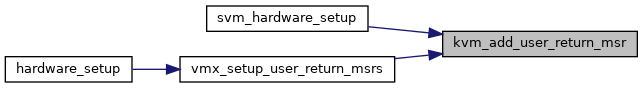

| int | kvm_add_user_return_msr (u32 msr) |

| EXPORT_SYMBOL_GPL (kvm_add_user_return_msr) | |

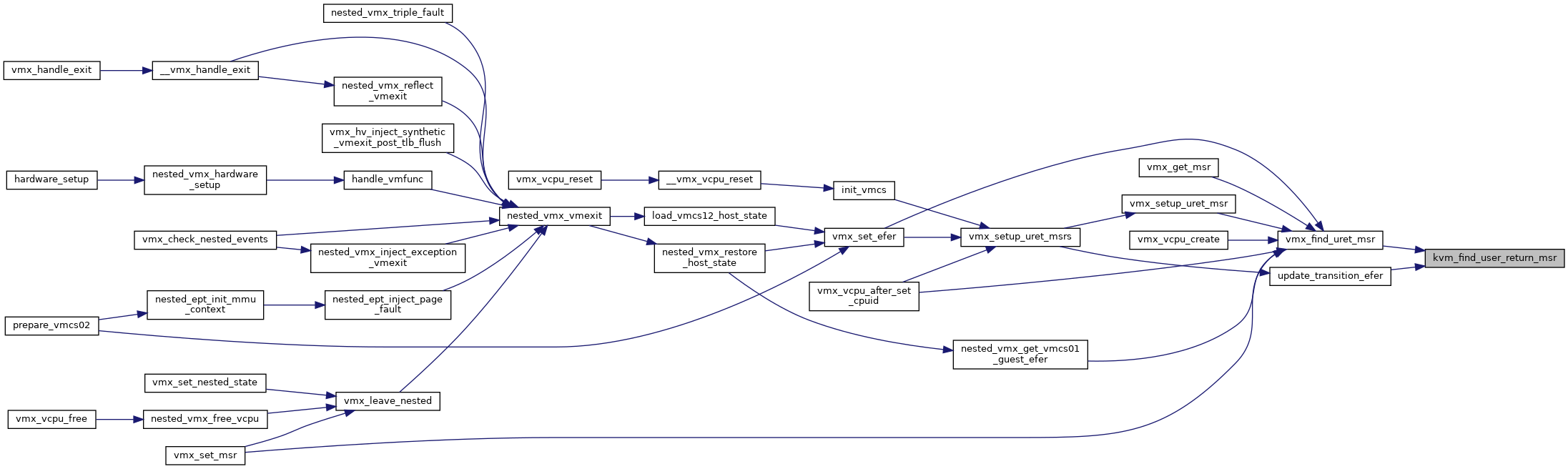

| int | kvm_find_user_return_msr (u32 msr) |

| EXPORT_SYMBOL_GPL (kvm_find_user_return_msr) | |

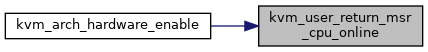

| static void | kvm_user_return_msr_cpu_online (void) |

| int | kvm_set_user_return_msr (unsigned slot, u64 value, u64 mask) |

| EXPORT_SYMBOL_GPL (kvm_set_user_return_msr) | |

| static void | drop_user_return_notifiers (void) |

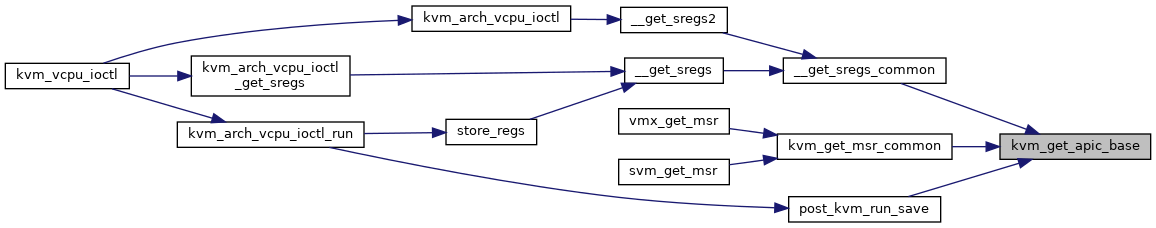

| u64 | kvm_get_apic_base (struct kvm_vcpu *vcpu) |

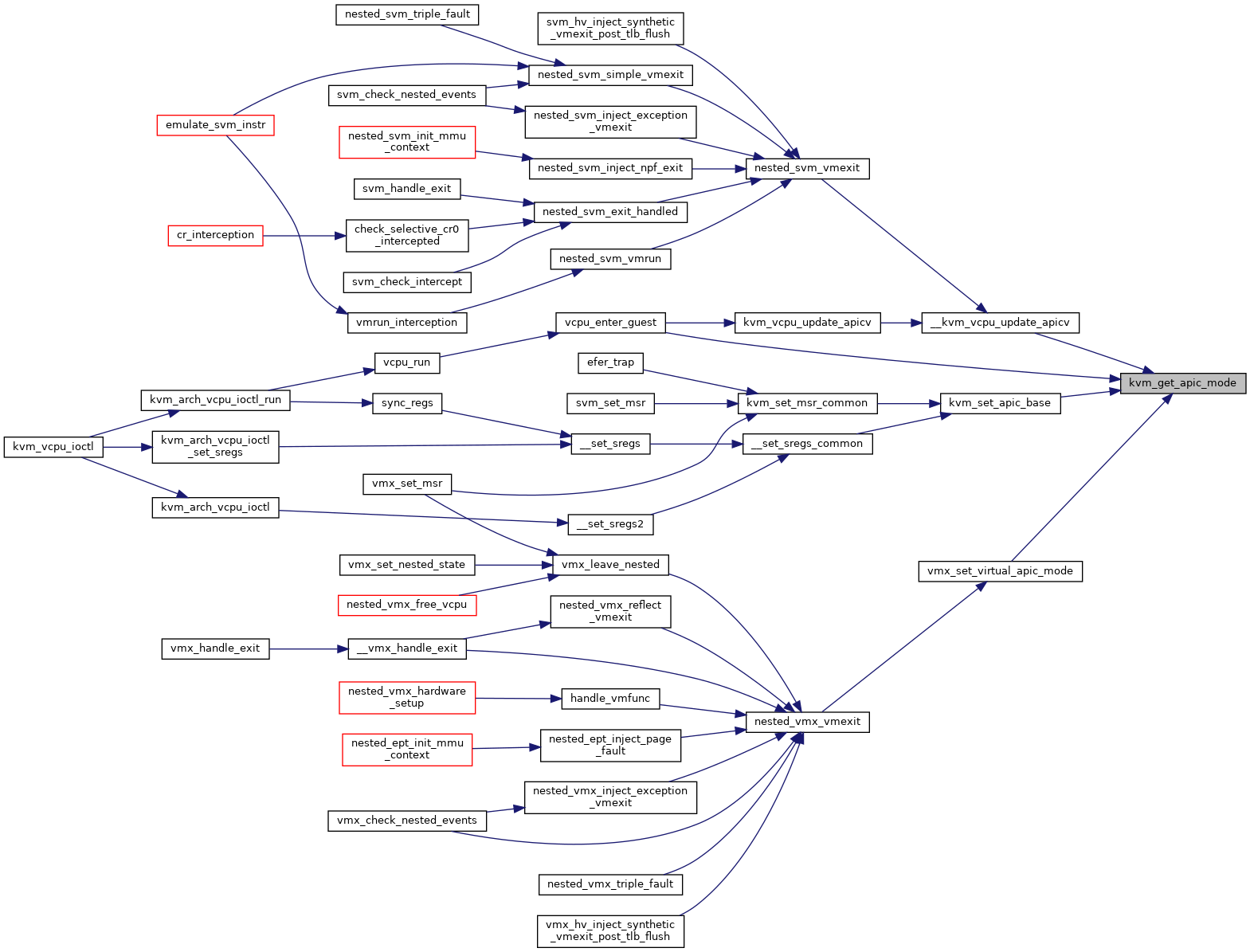

| enum lapic_mode | kvm_get_apic_mode (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_get_apic_mode) | |

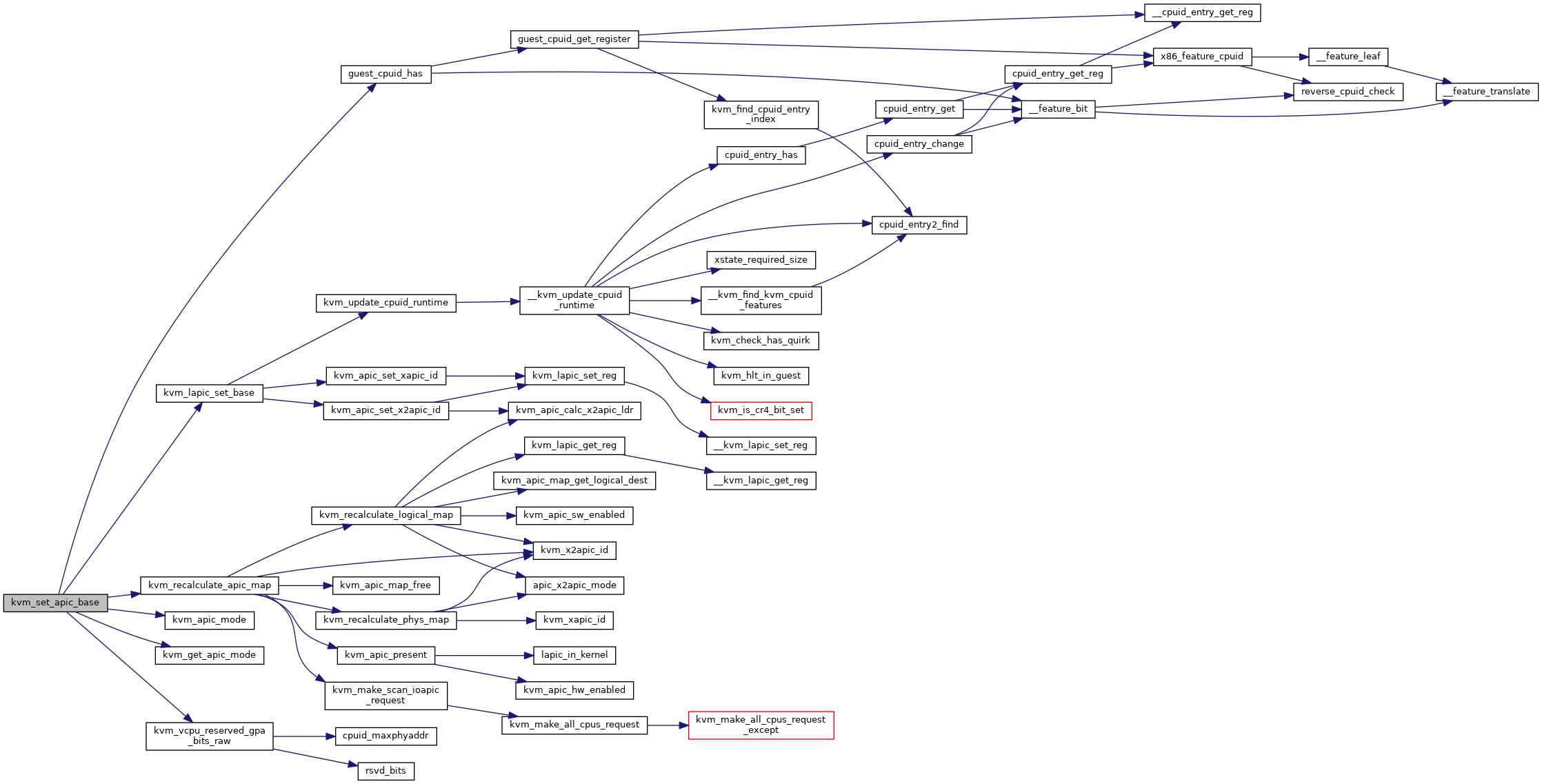

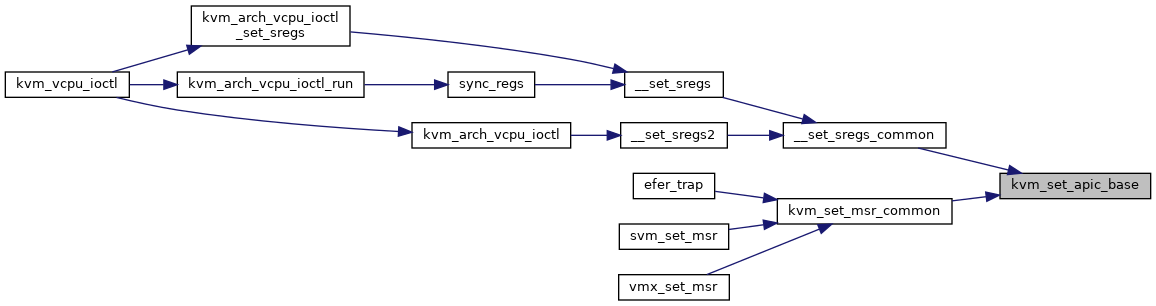

| int | kvm_set_apic_base (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

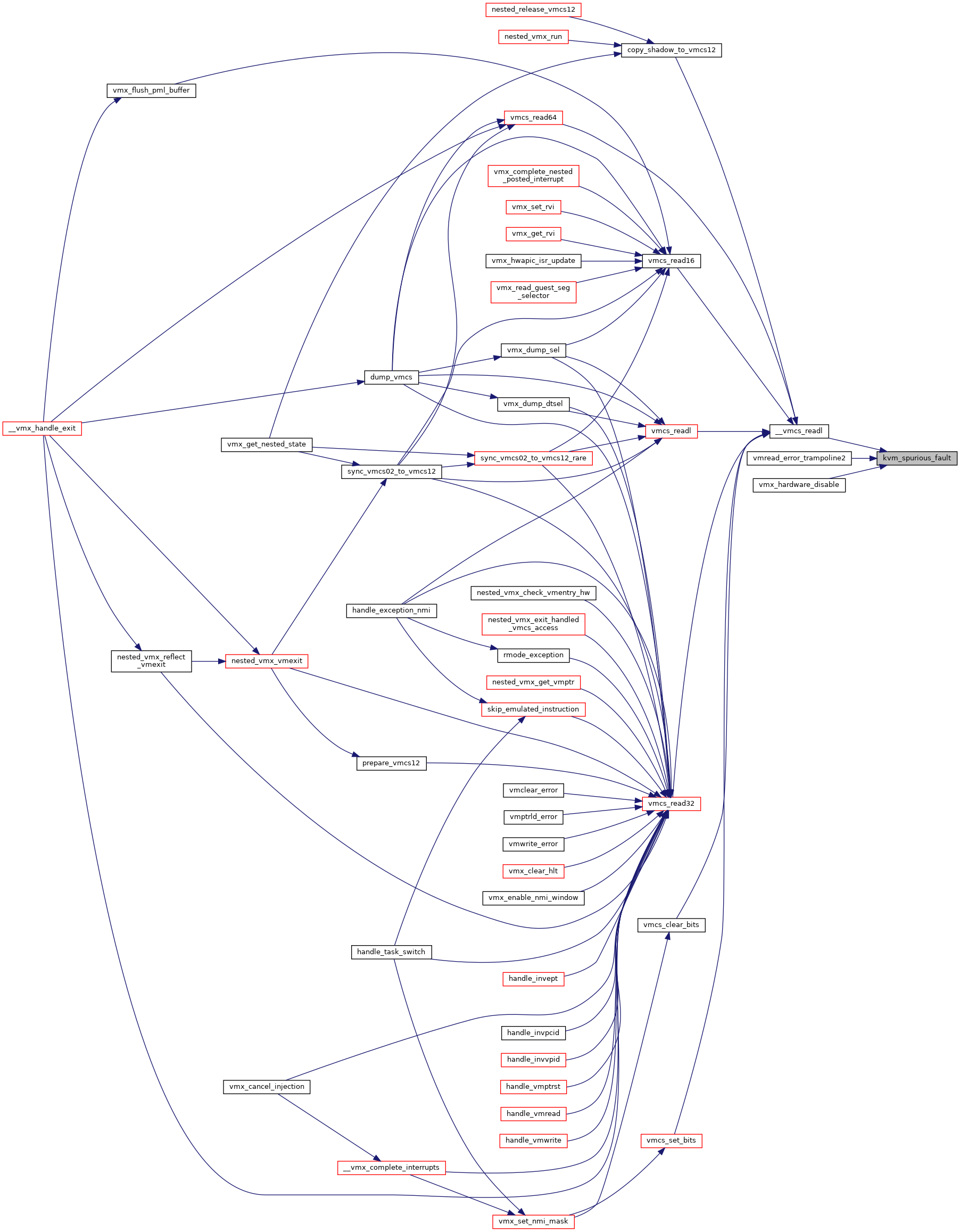

| noinstr void | kvm_spurious_fault (void) |

| EXPORT_SYMBOL_GPL (kvm_spurious_fault) | |

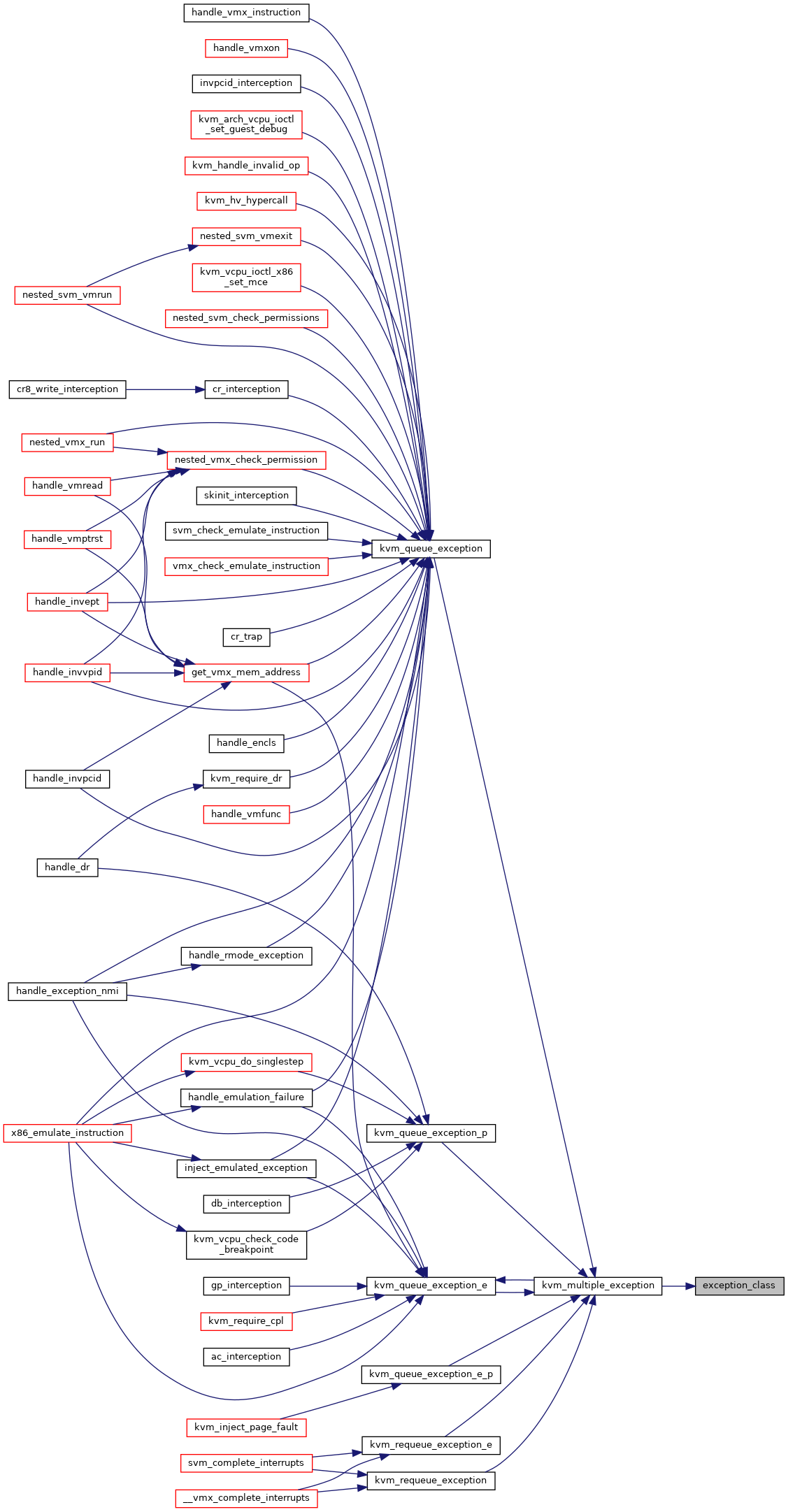

| static int | exception_class (int vector) |

| static int | exception_type (int vector) |

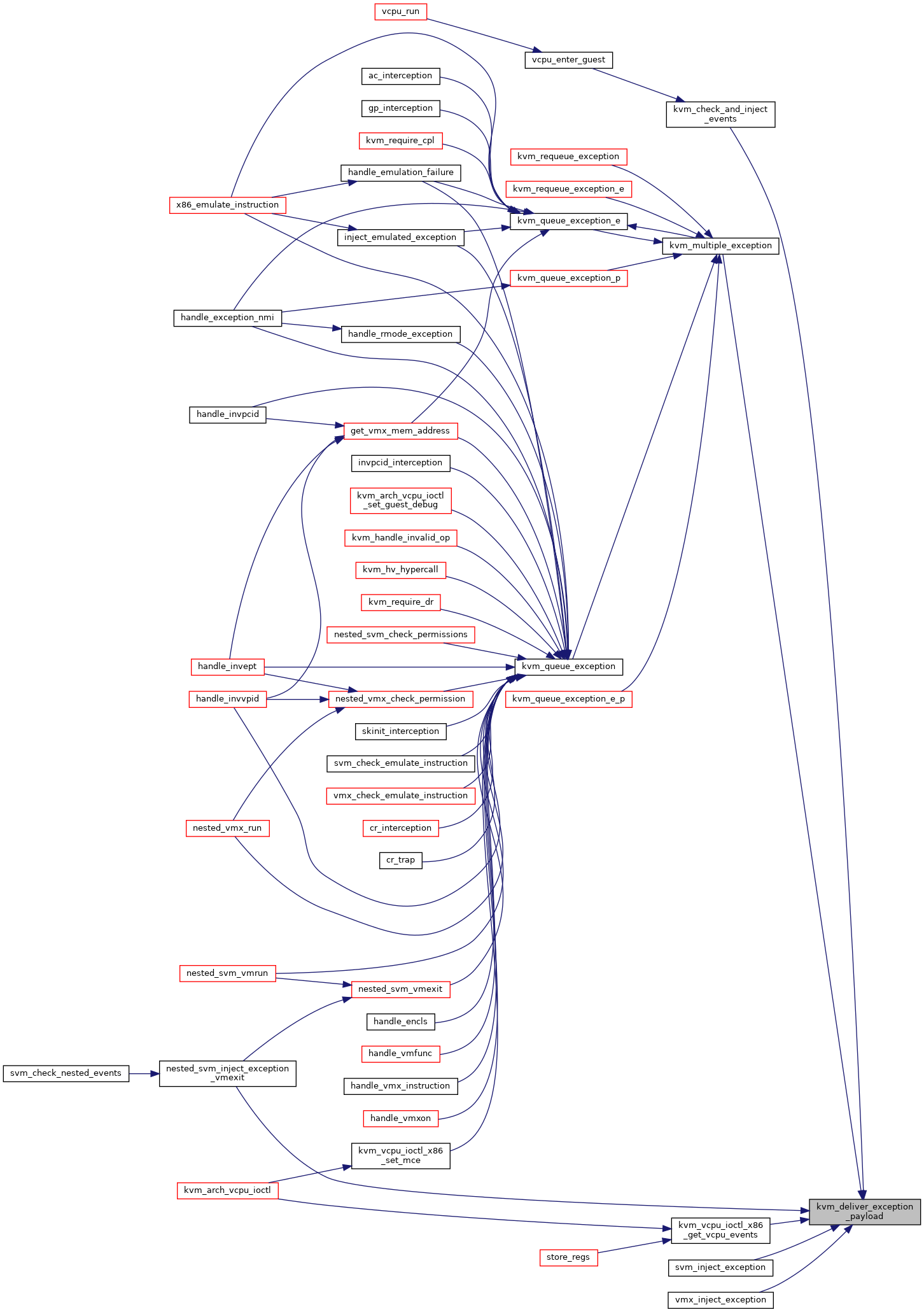

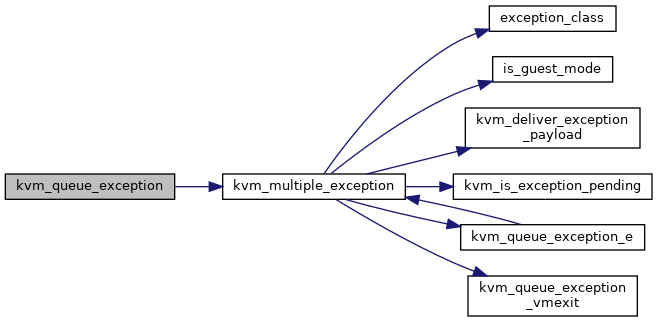

| void | kvm_deliver_exception_payload (struct kvm_vcpu *vcpu, struct kvm_queued_exception *ex) |

| EXPORT_SYMBOL_GPL (kvm_deliver_exception_payload) | |

| static void | kvm_queue_exception_vmexit (struct kvm_vcpu *vcpu, unsigned int vector, bool has_error_code, u32 error_code, bool has_payload, unsigned long payload) |

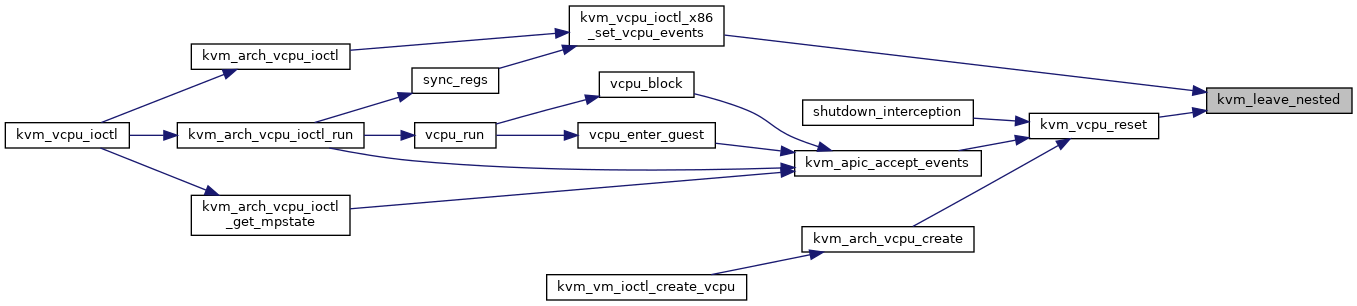

| static void | kvm_leave_nested (struct kvm_vcpu *vcpu) |

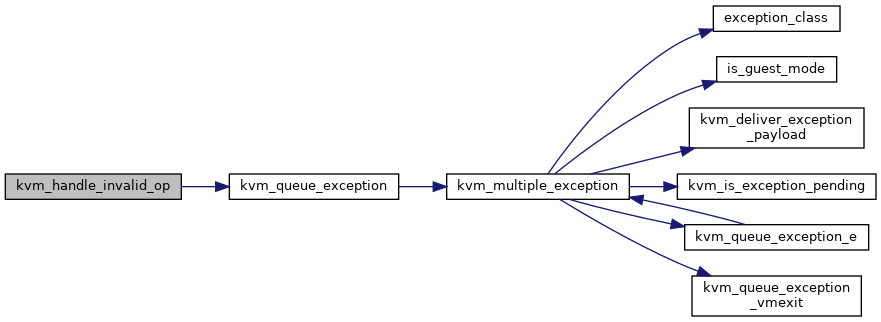

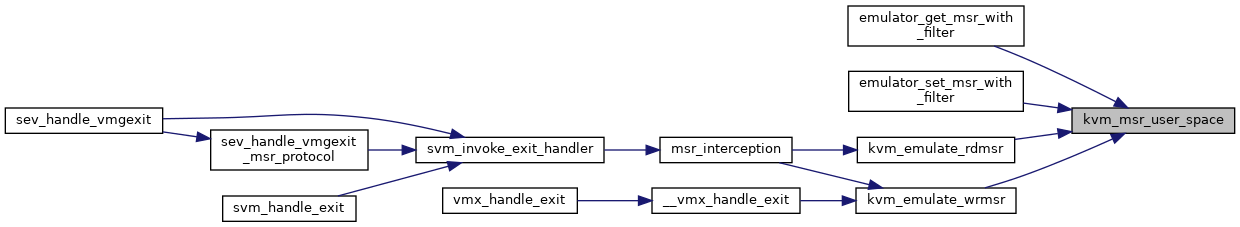

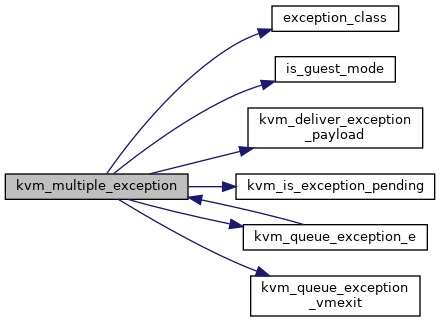

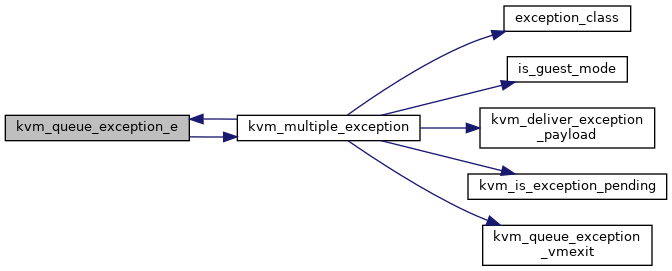

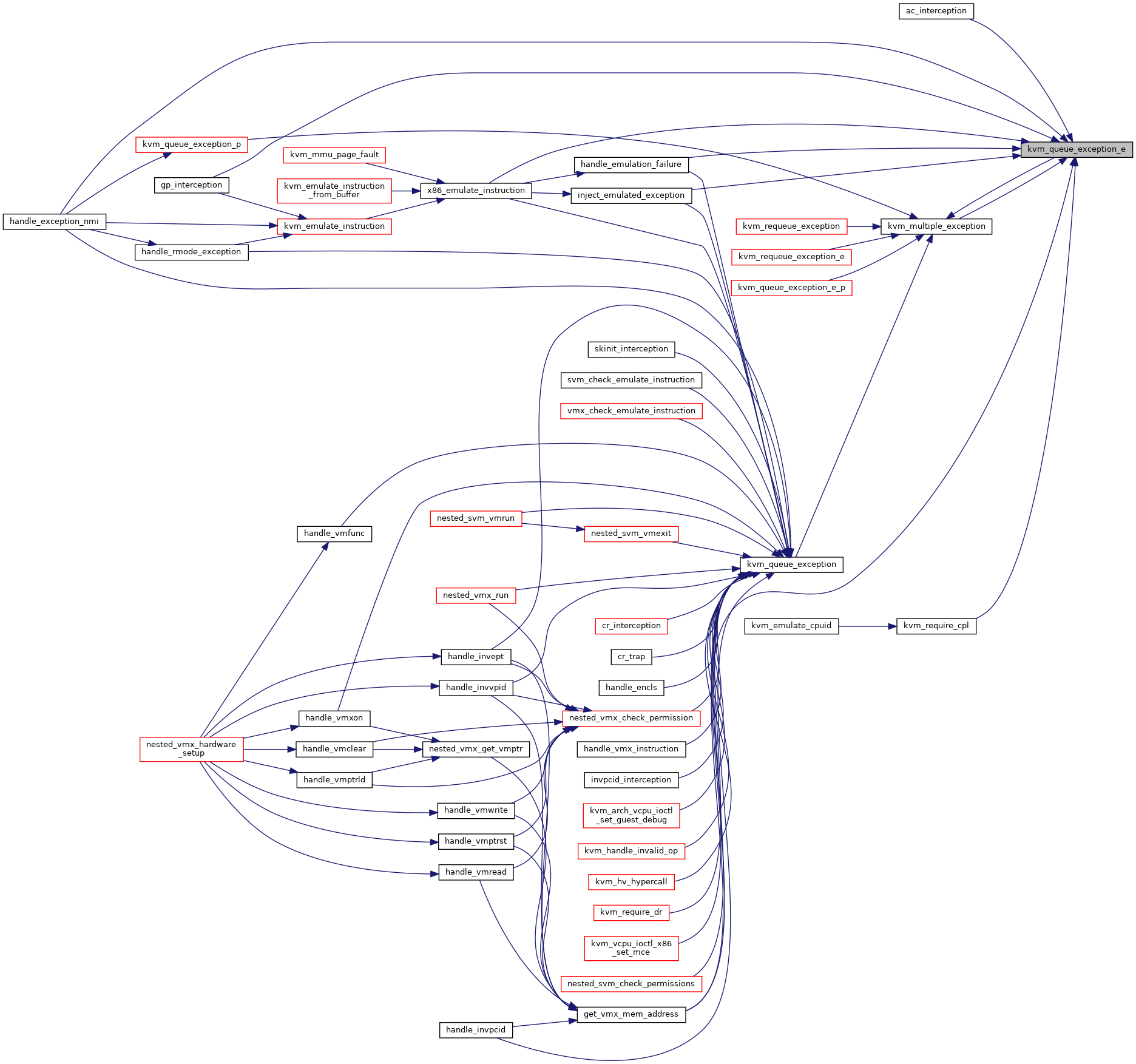

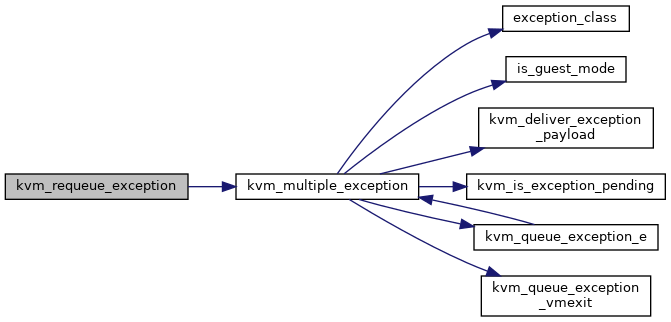

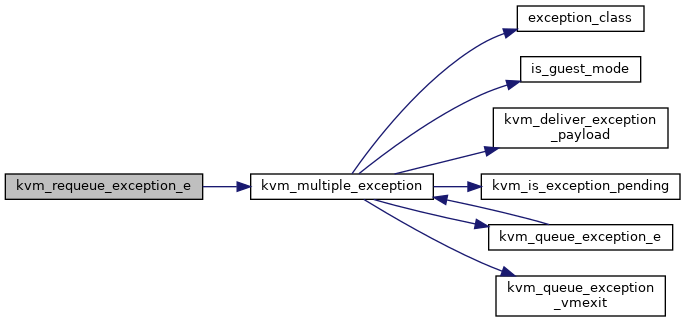

| static void | kvm_multiple_exception (struct kvm_vcpu *vcpu, unsigned nr, bool has_error, u32 error_code, bool has_payload, unsigned long payload, bool reinject) |

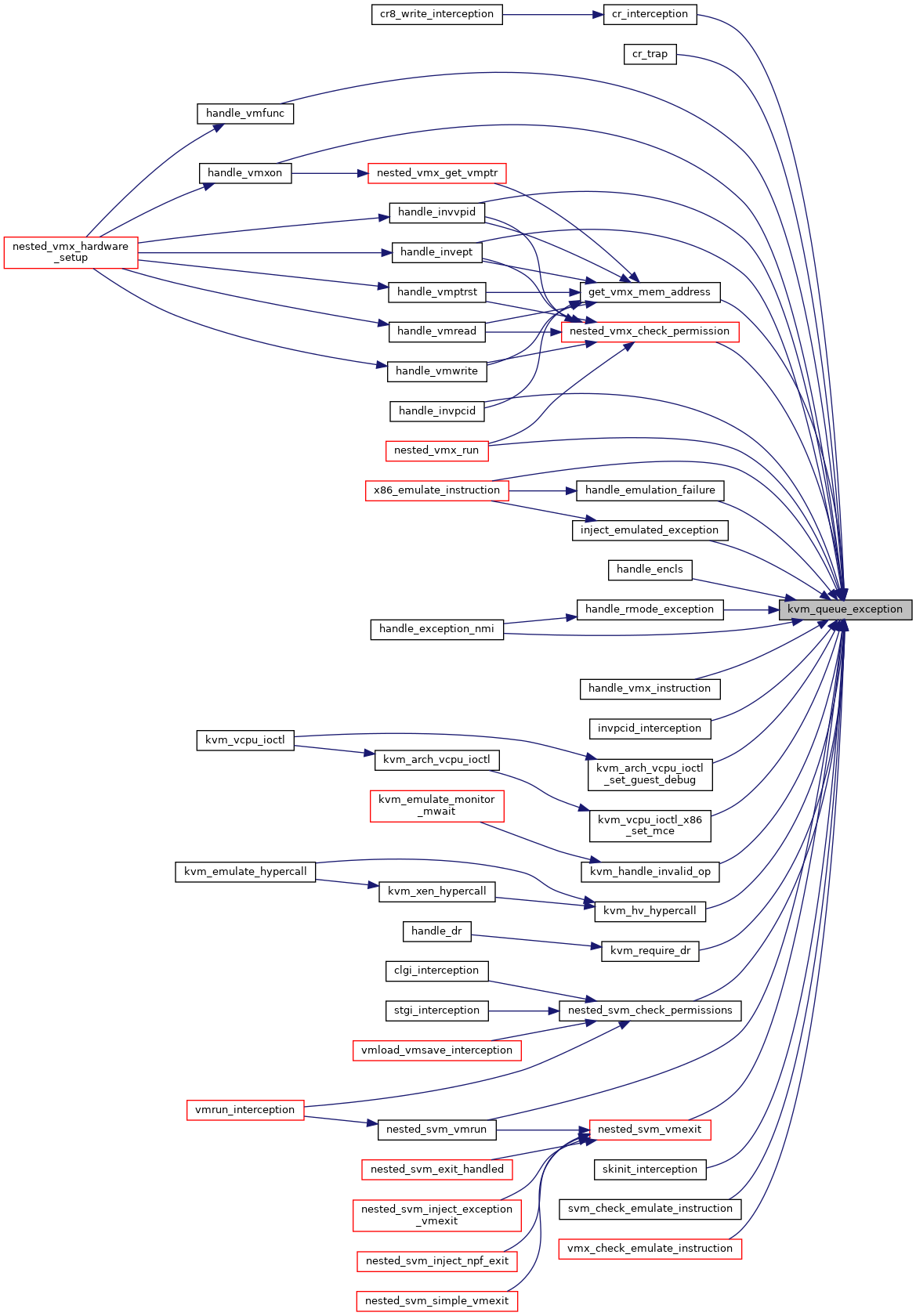

| void | kvm_queue_exception (struct kvm_vcpu *vcpu, unsigned nr) |

| EXPORT_SYMBOL_GPL (kvm_queue_exception) | |

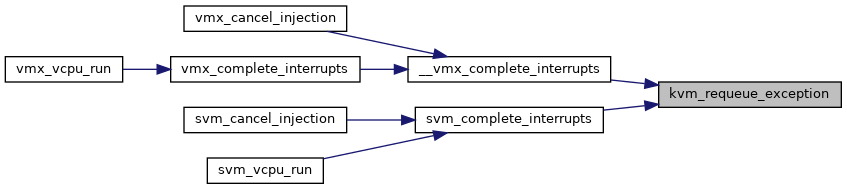

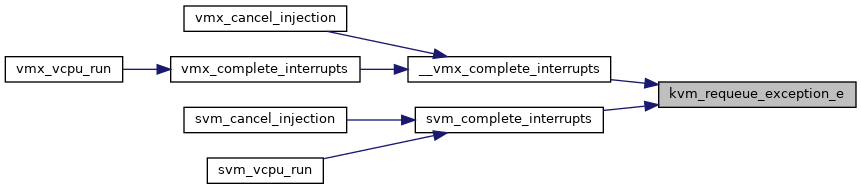

| void | kvm_requeue_exception (struct kvm_vcpu *vcpu, unsigned nr) |

| EXPORT_SYMBOL_GPL (kvm_requeue_exception) | |

| void | kvm_queue_exception_p (struct kvm_vcpu *vcpu, unsigned nr, unsigned long payload) |

| EXPORT_SYMBOL_GPL (kvm_queue_exception_p) | |

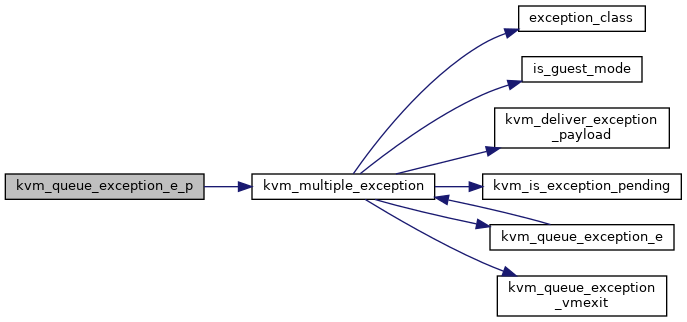

| static void | kvm_queue_exception_e_p (struct kvm_vcpu *vcpu, unsigned nr, u32 error_code, unsigned long payload) |

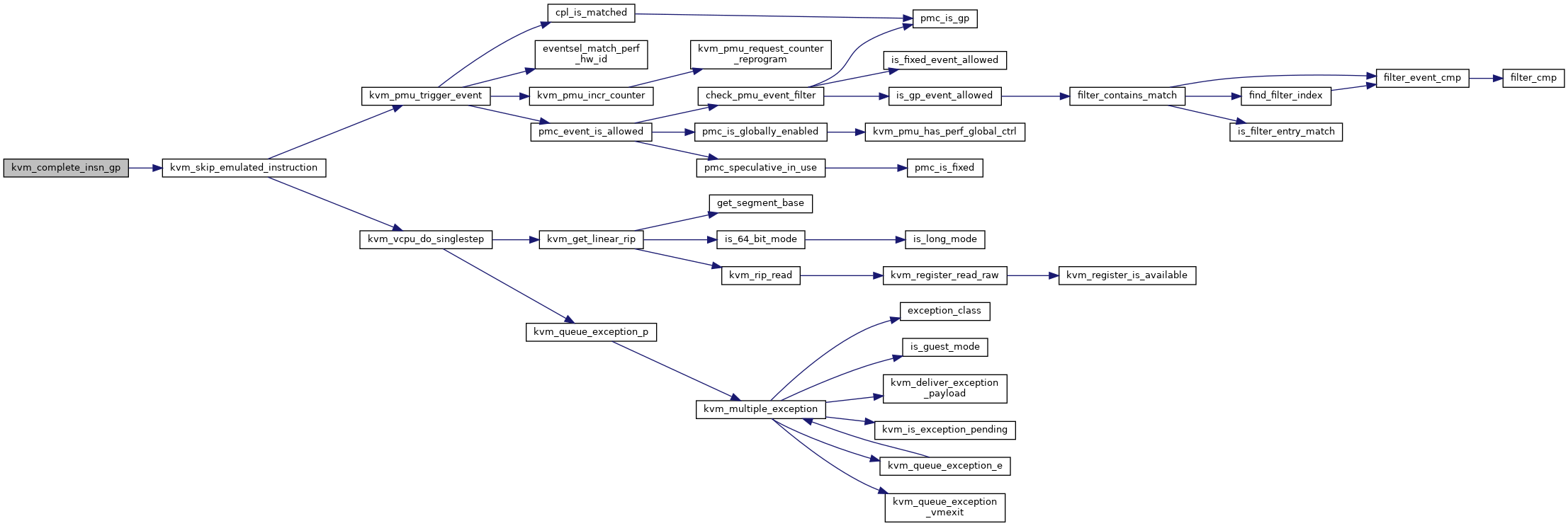

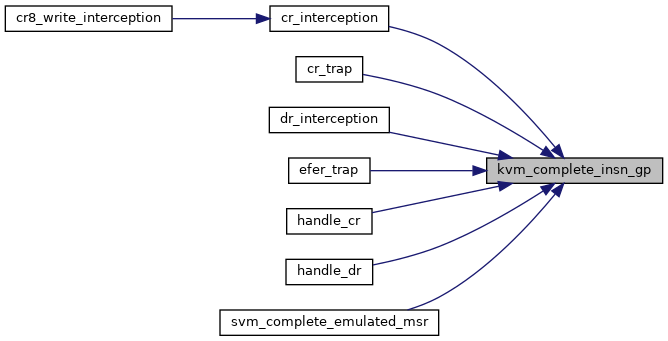

| int | kvm_complete_insn_gp (struct kvm_vcpu *vcpu, int err) |

| EXPORT_SYMBOL_GPL (kvm_complete_insn_gp) | |

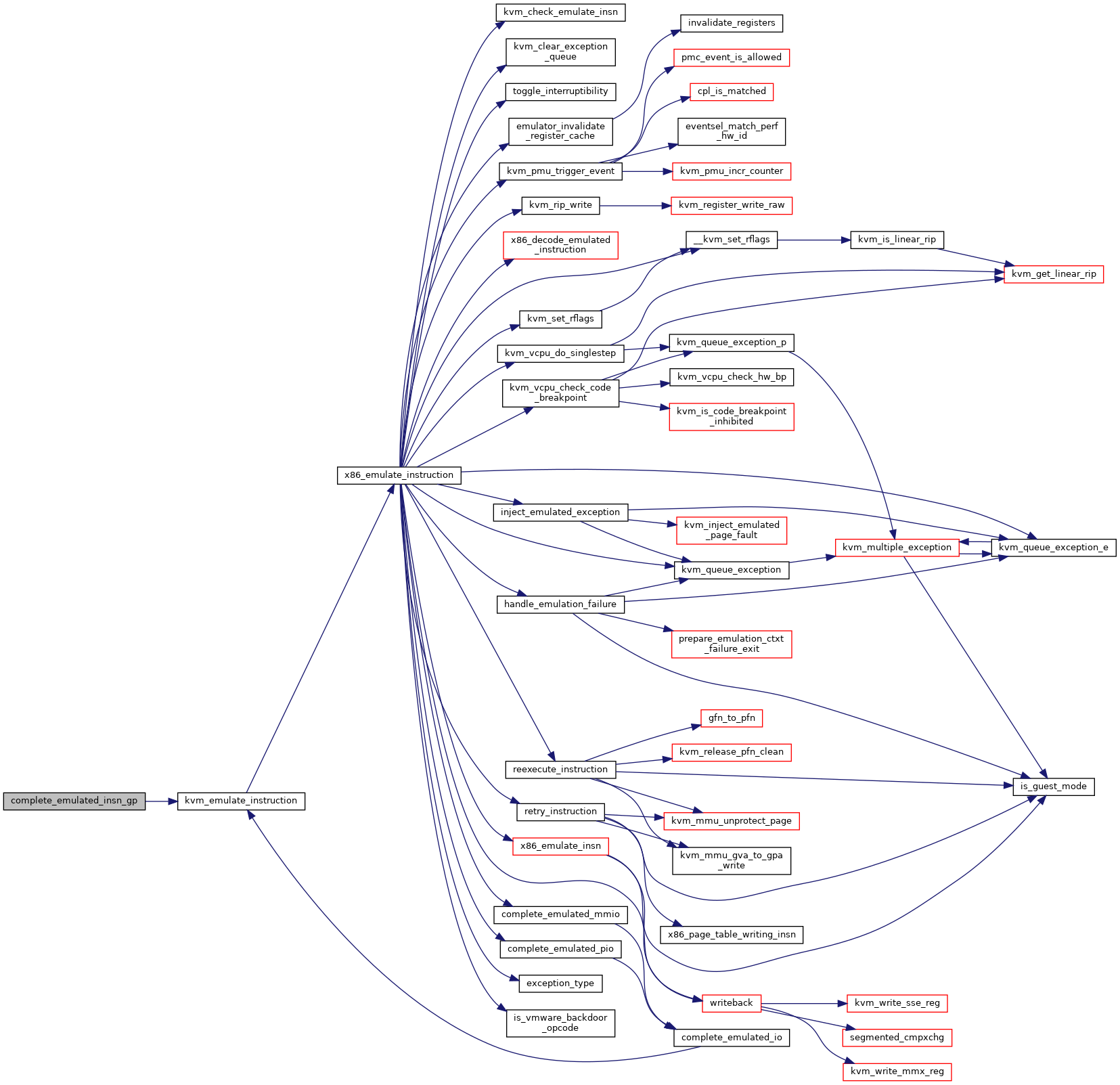

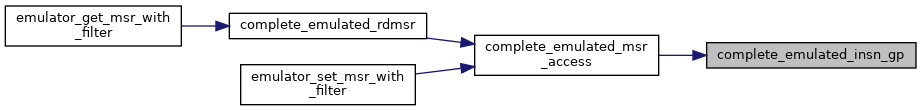

| static int | complete_emulated_insn_gp (struct kvm_vcpu *vcpu, int err) |

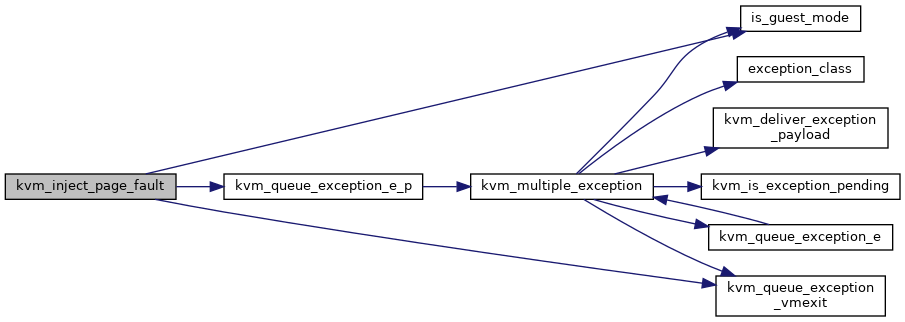

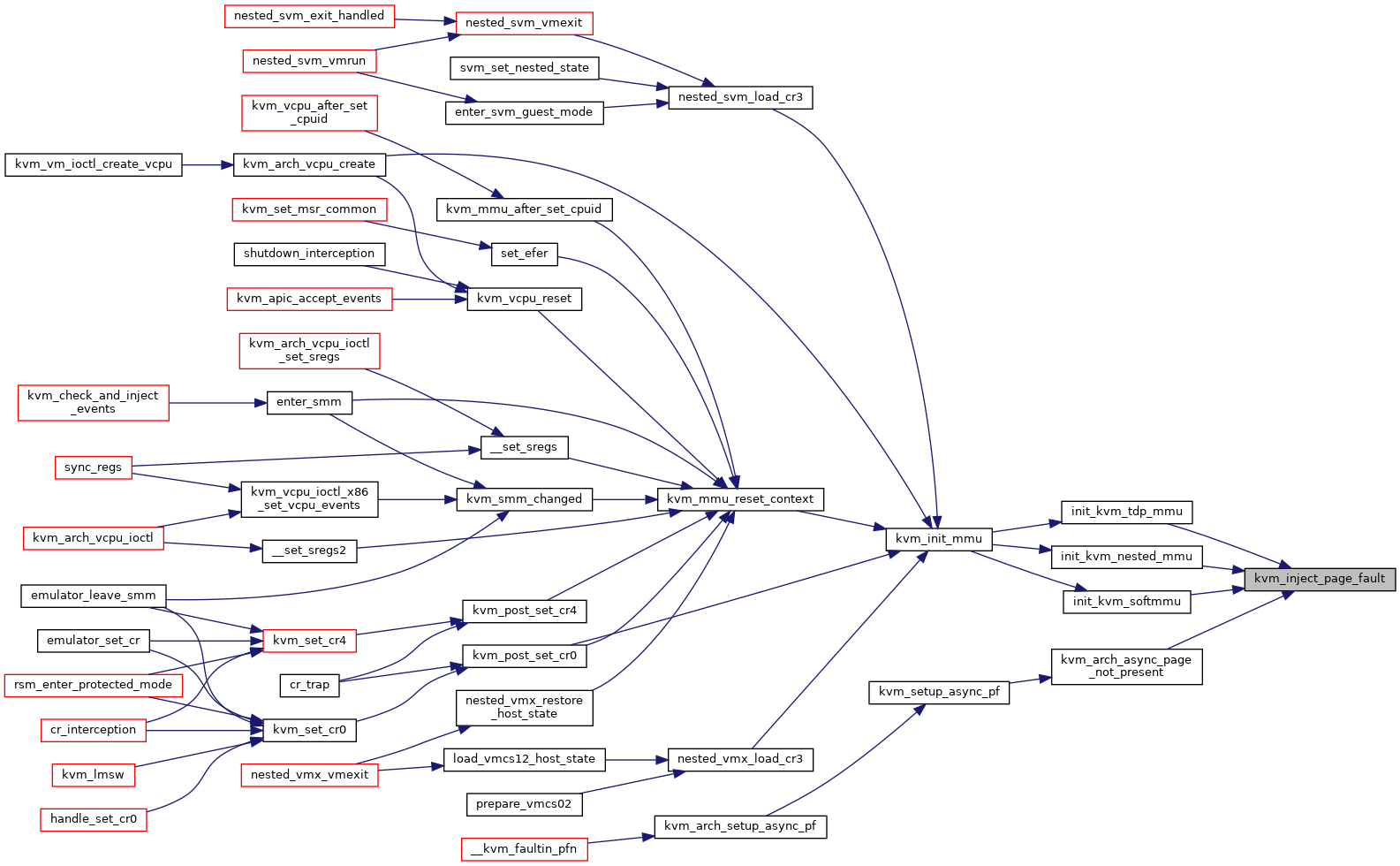

| void | kvm_inject_page_fault (struct kvm_vcpu *vcpu, struct x86_exception *fault) |

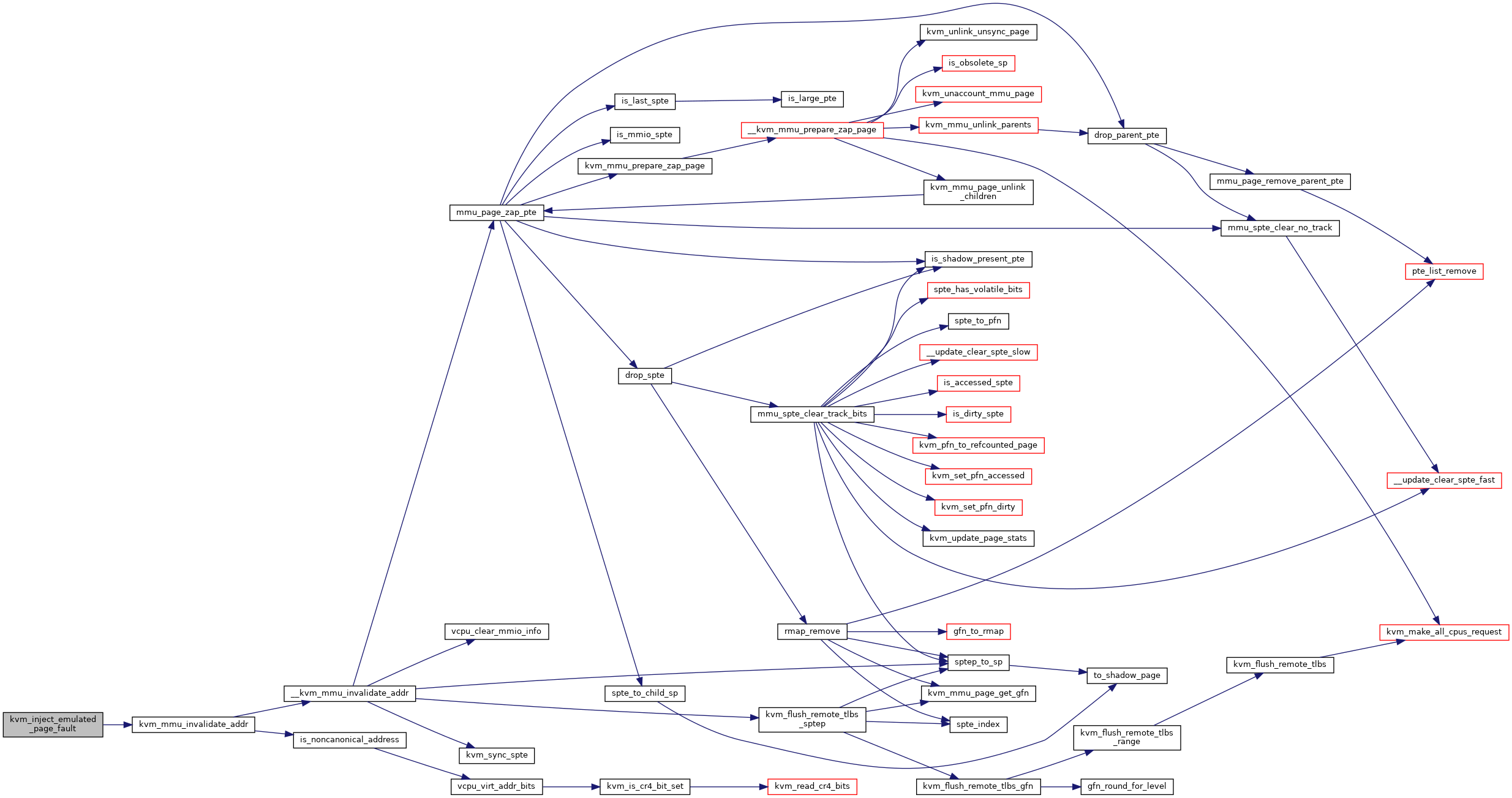

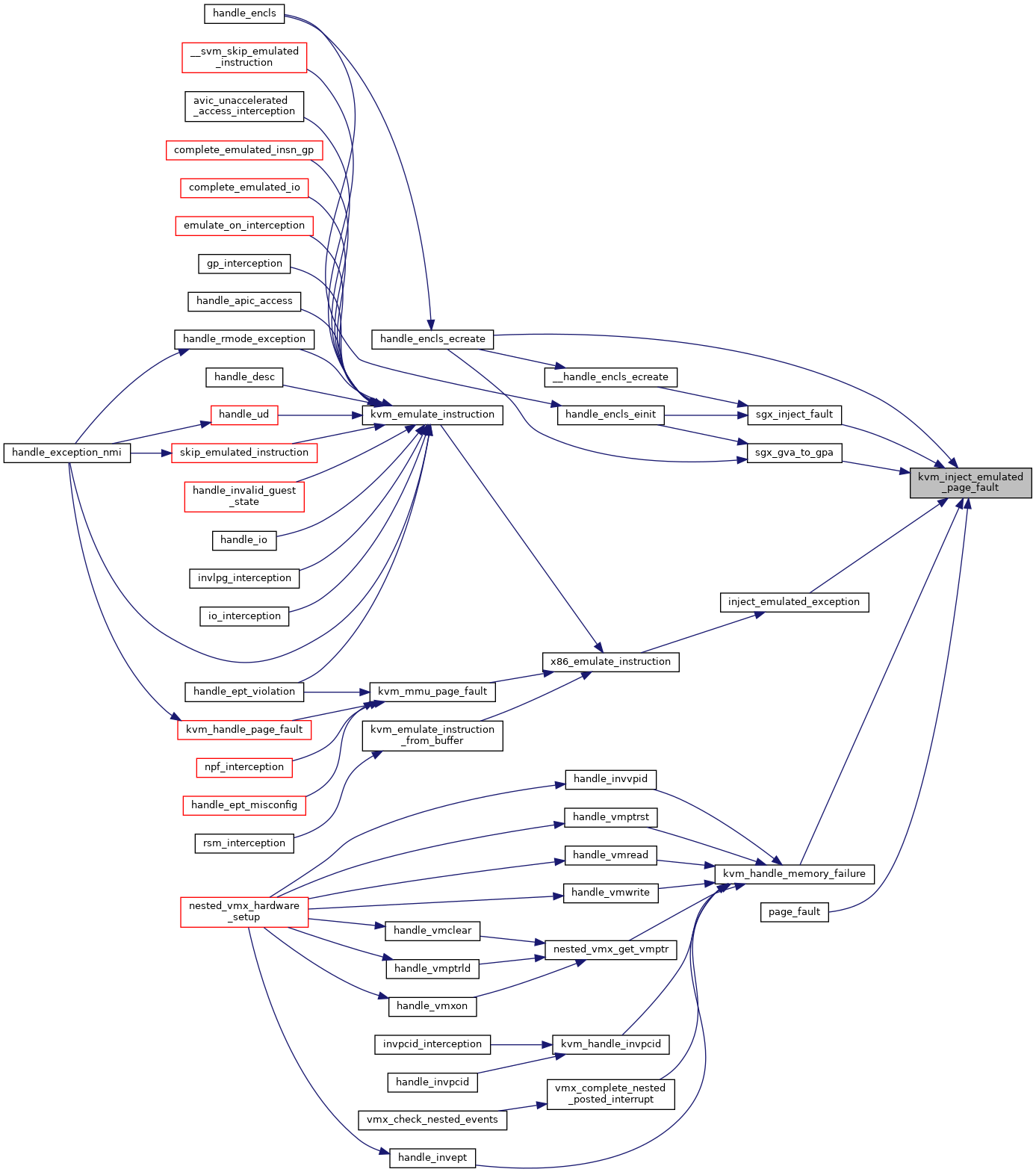

| void | kvm_inject_emulated_page_fault (struct kvm_vcpu *vcpu, struct x86_exception *fault) |

| EXPORT_SYMBOL_GPL (kvm_inject_emulated_page_fault) | |

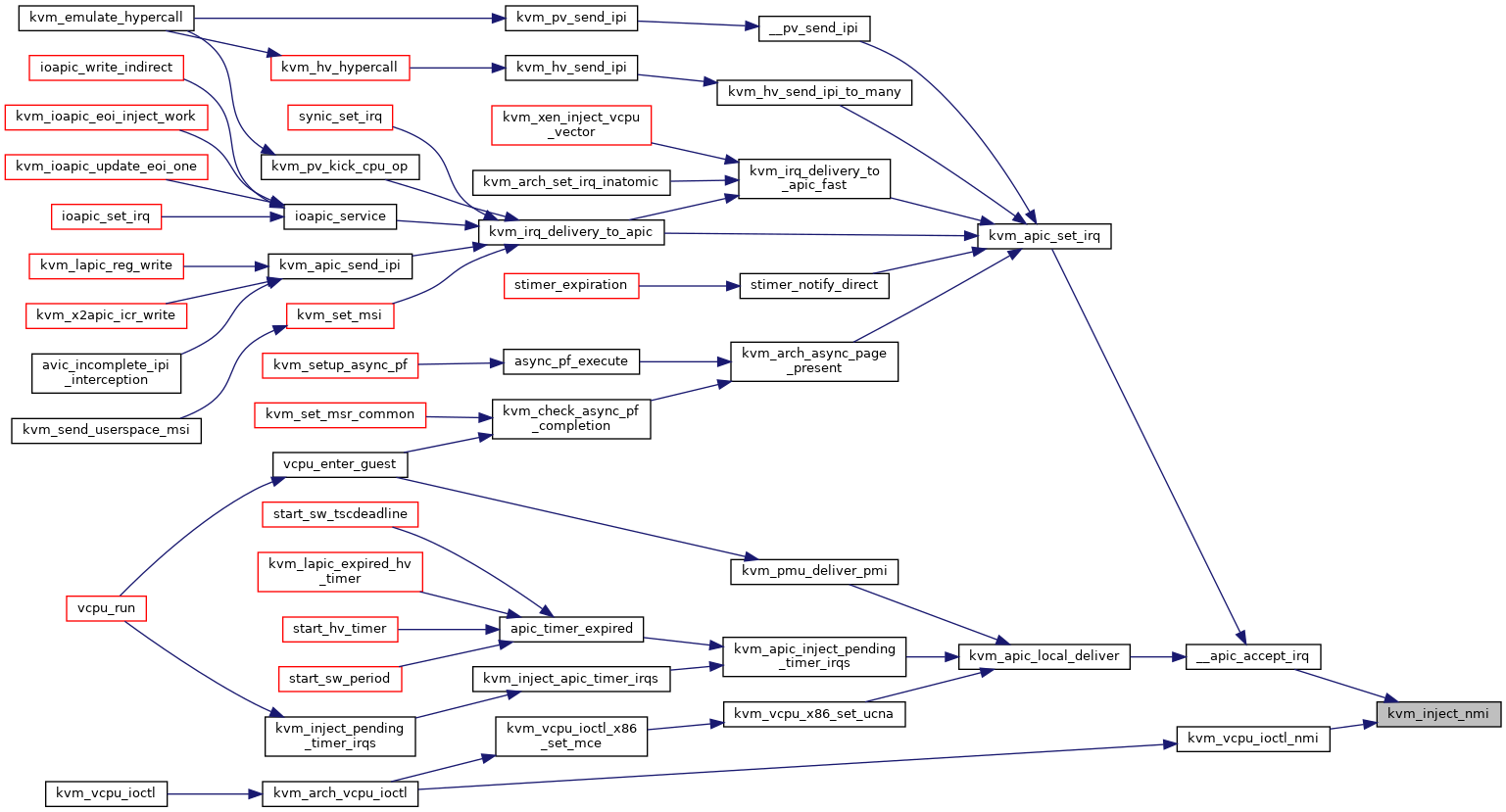

| void | kvm_inject_nmi (struct kvm_vcpu *vcpu) |

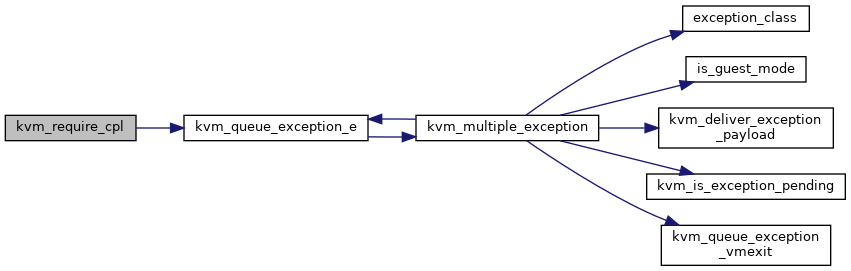

| void | kvm_queue_exception_e (struct kvm_vcpu *vcpu, unsigned nr, u32 error_code) |

| EXPORT_SYMBOL_GPL (kvm_queue_exception_e) | |

| void | kvm_requeue_exception_e (struct kvm_vcpu *vcpu, unsigned nr, u32 error_code) |

| EXPORT_SYMBOL_GPL (kvm_requeue_exception_e) | |

| bool | kvm_require_cpl (struct kvm_vcpu *vcpu, int required_cpl) |

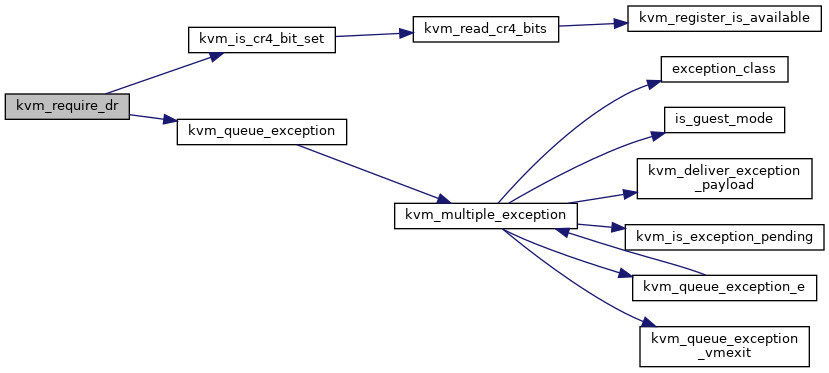

| bool | kvm_require_dr (struct kvm_vcpu *vcpu, int dr) |

| EXPORT_SYMBOL_GPL (kvm_require_dr) | |

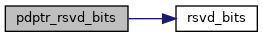

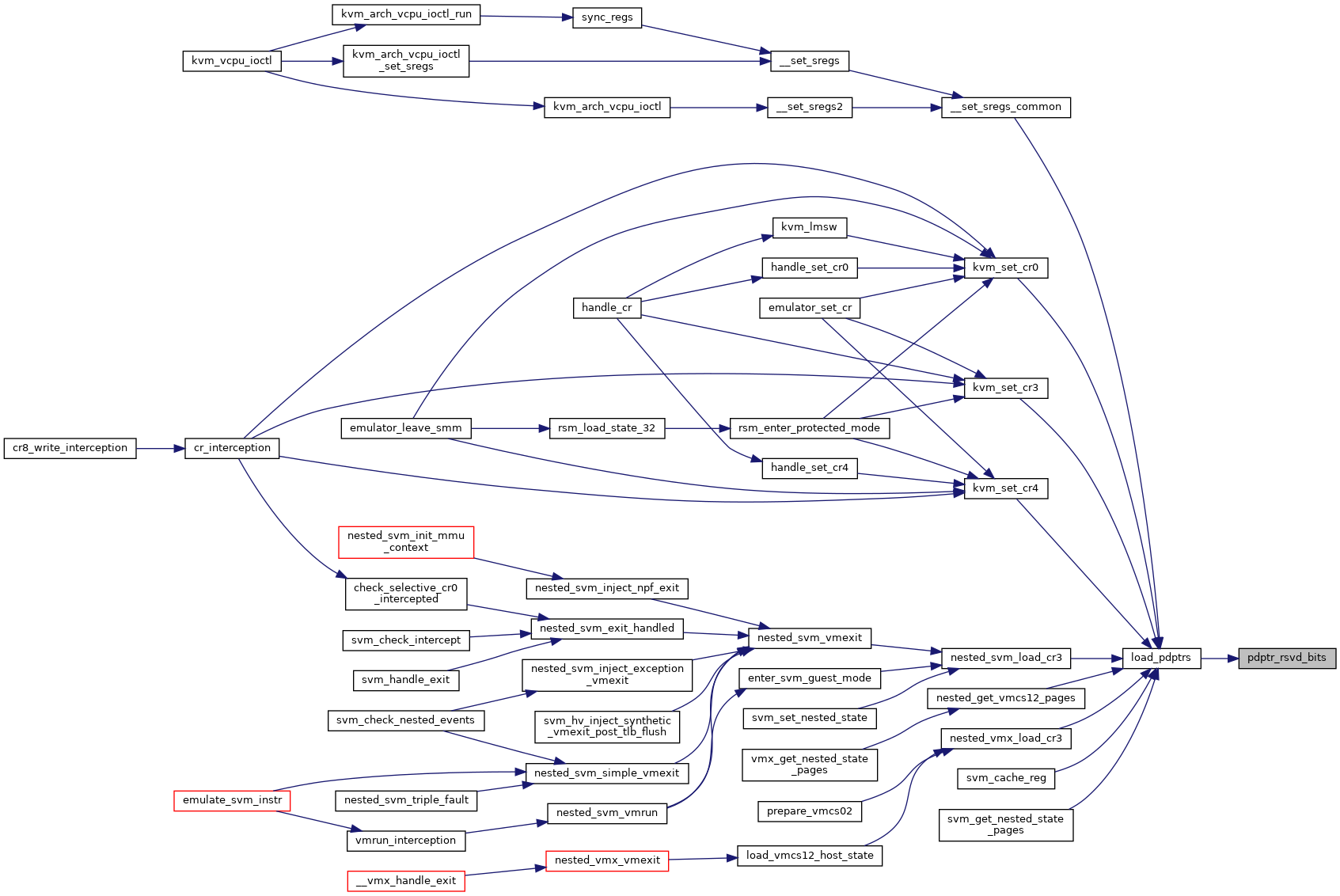

| static u64 | pdptr_rsvd_bits (struct kvm_vcpu *vcpu) |

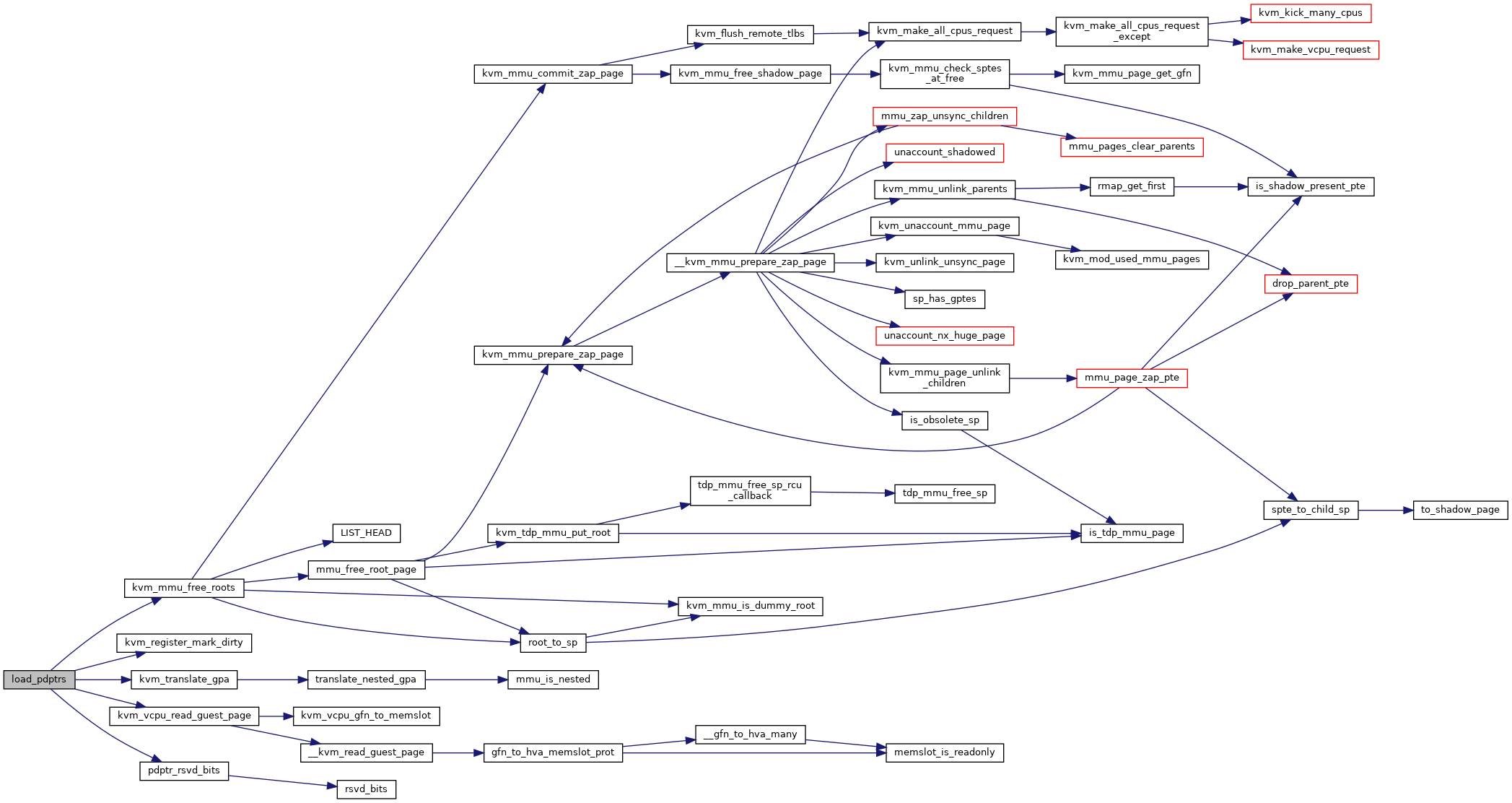

| int | load_pdptrs (struct kvm_vcpu *vcpu, unsigned long cr3) |

| EXPORT_SYMBOL_GPL (load_pdptrs) | |

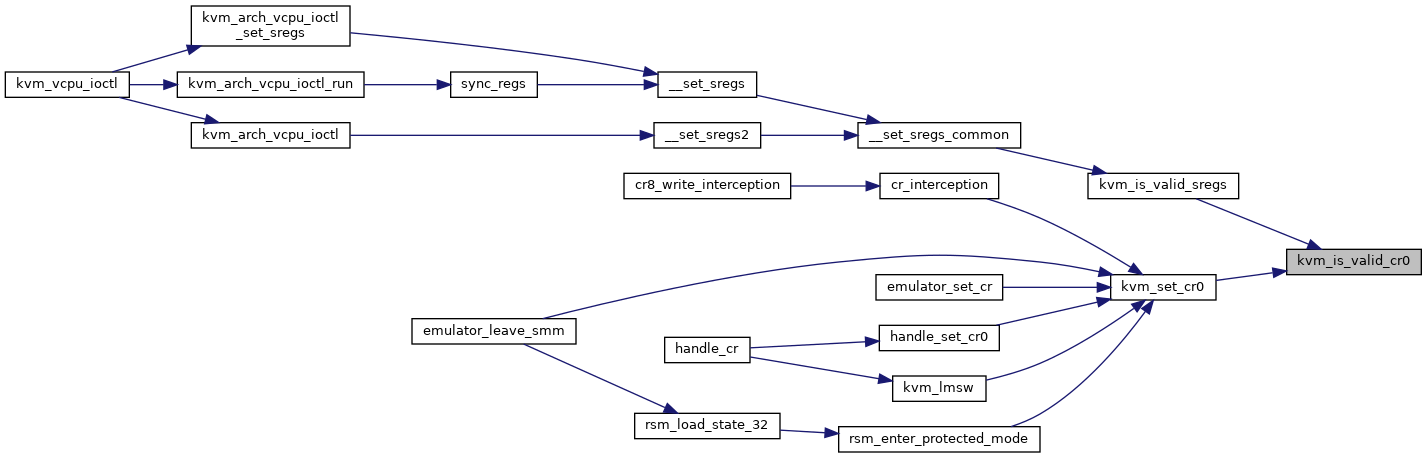

| static bool | kvm_is_valid_cr0 (struct kvm_vcpu *vcpu, unsigned long cr0) |

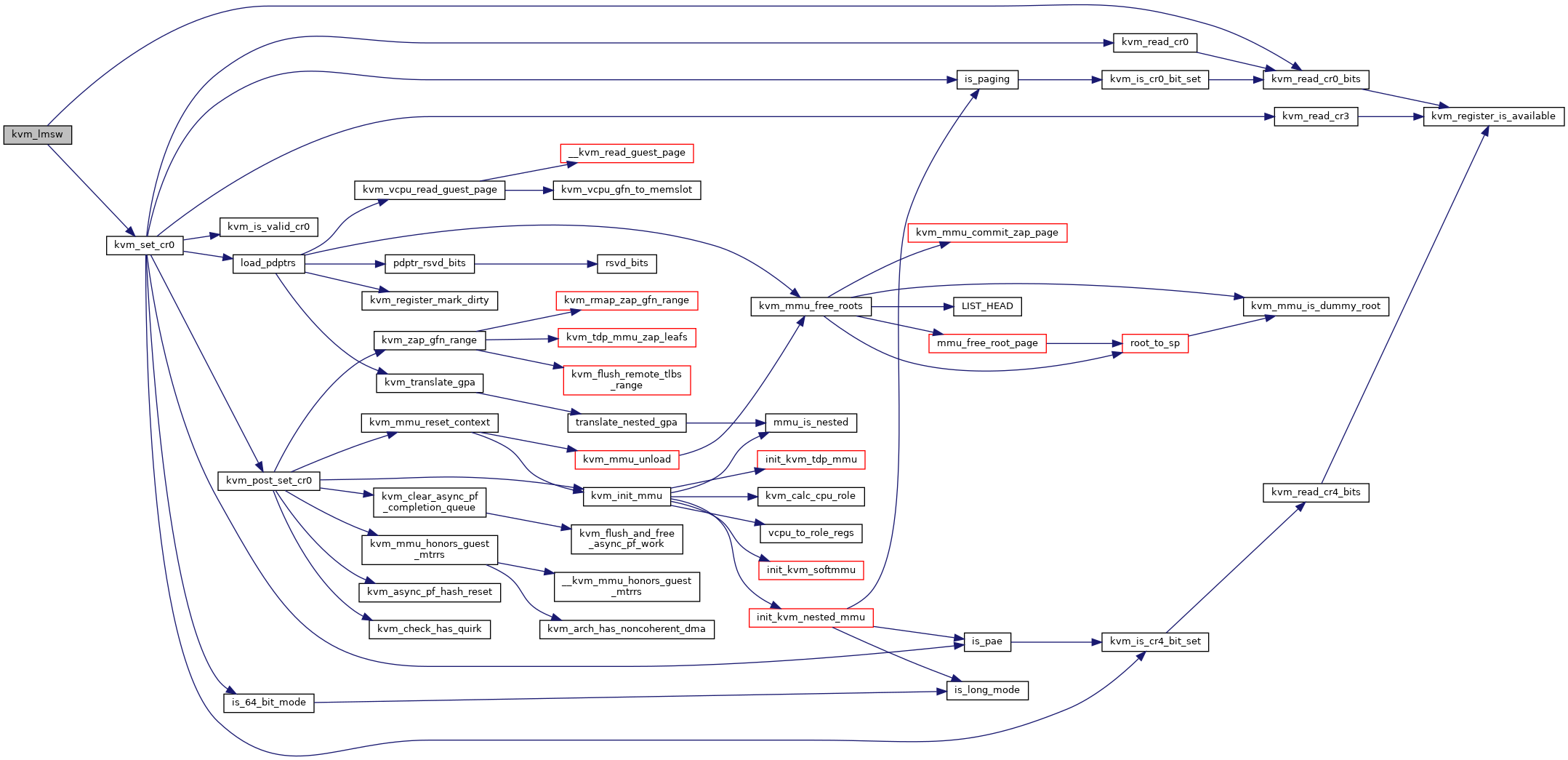

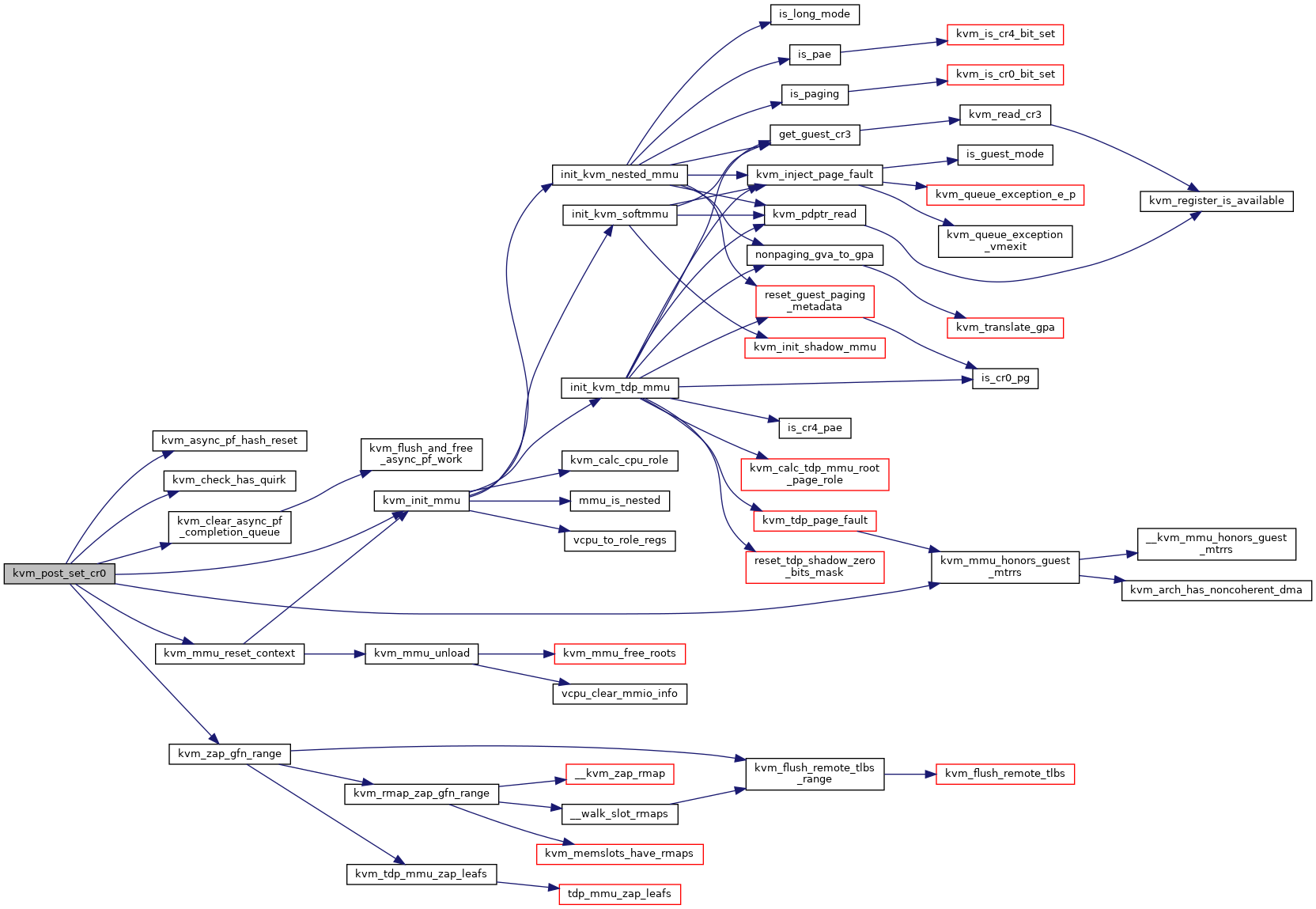

| void | kvm_post_set_cr0 (struct kvm_vcpu *vcpu, unsigned long old_cr0, unsigned long cr0) |

| EXPORT_SYMBOL_GPL (kvm_post_set_cr0) | |

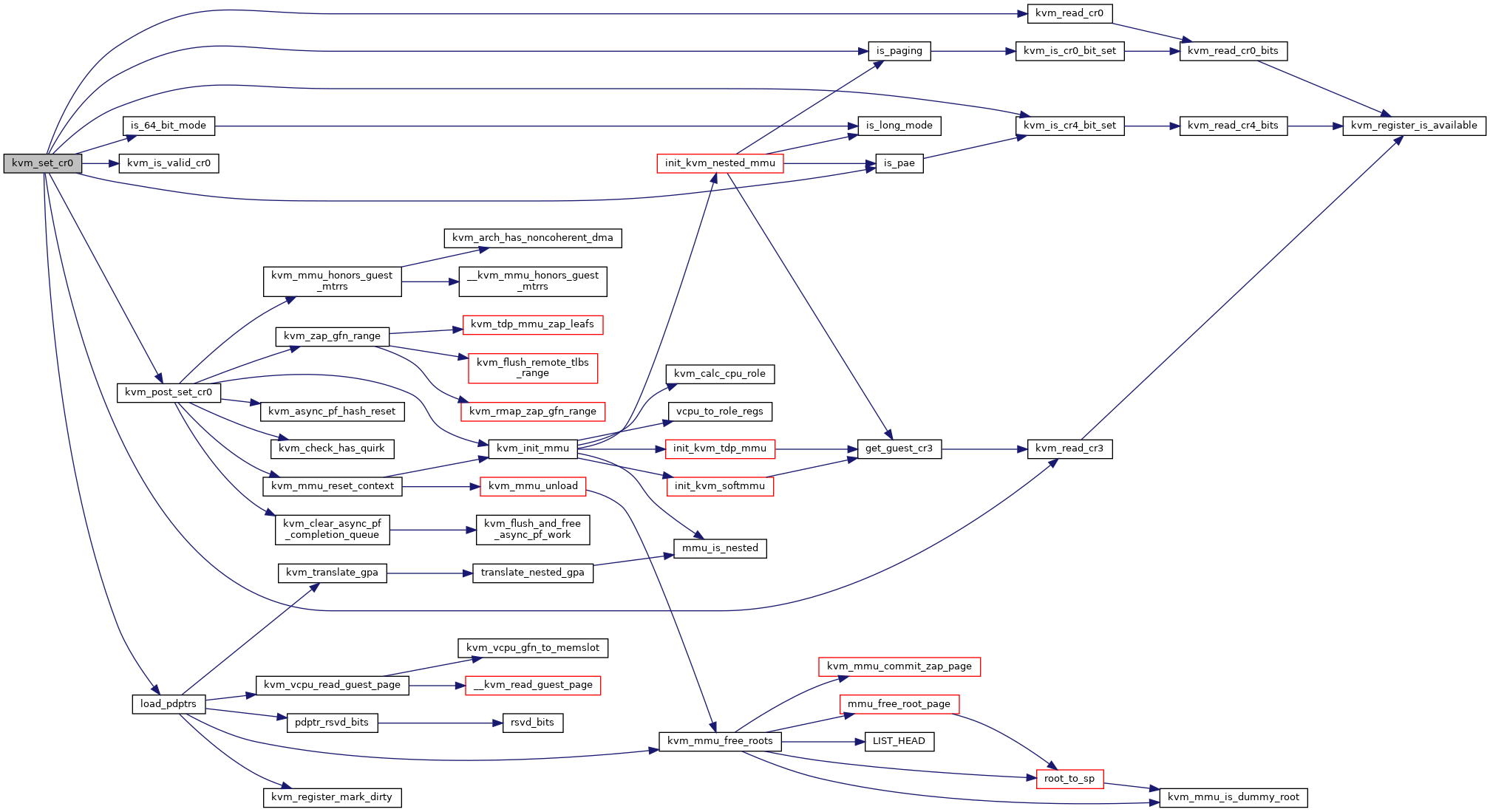

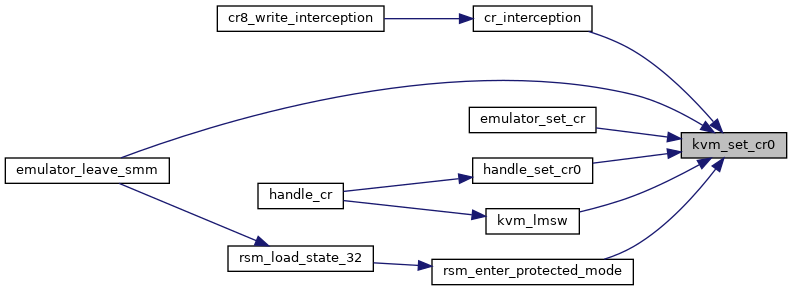

| int | kvm_set_cr0 (struct kvm_vcpu *vcpu, unsigned long cr0) |

| EXPORT_SYMBOL_GPL (kvm_set_cr0) | |

| void | kvm_lmsw (struct kvm_vcpu *vcpu, unsigned long msw) |

| EXPORT_SYMBOL_GPL (kvm_lmsw) | |

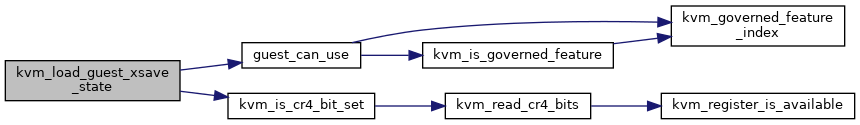

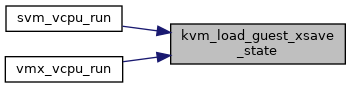

| void | kvm_load_guest_xsave_state (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_load_guest_xsave_state) | |

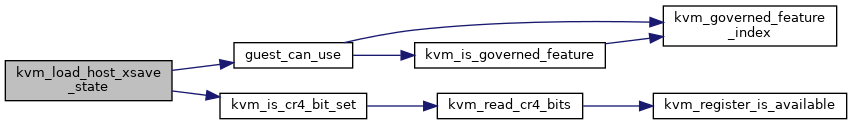

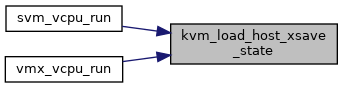

| void | kvm_load_host_xsave_state (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_load_host_xsave_state) | |

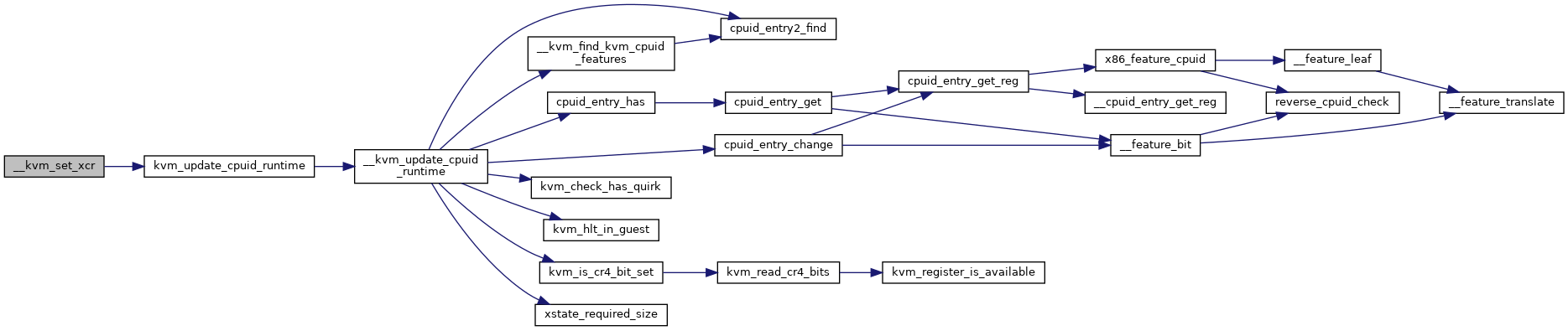

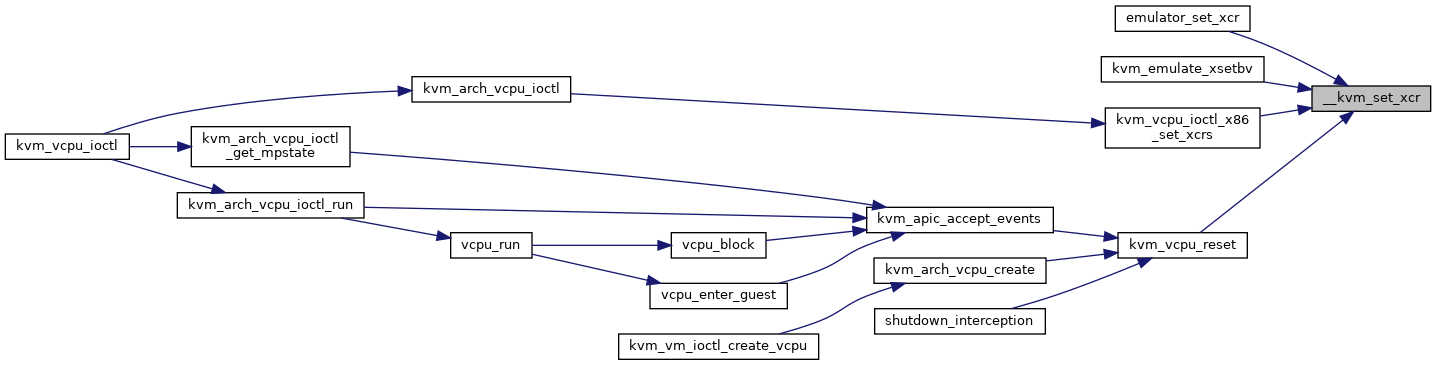

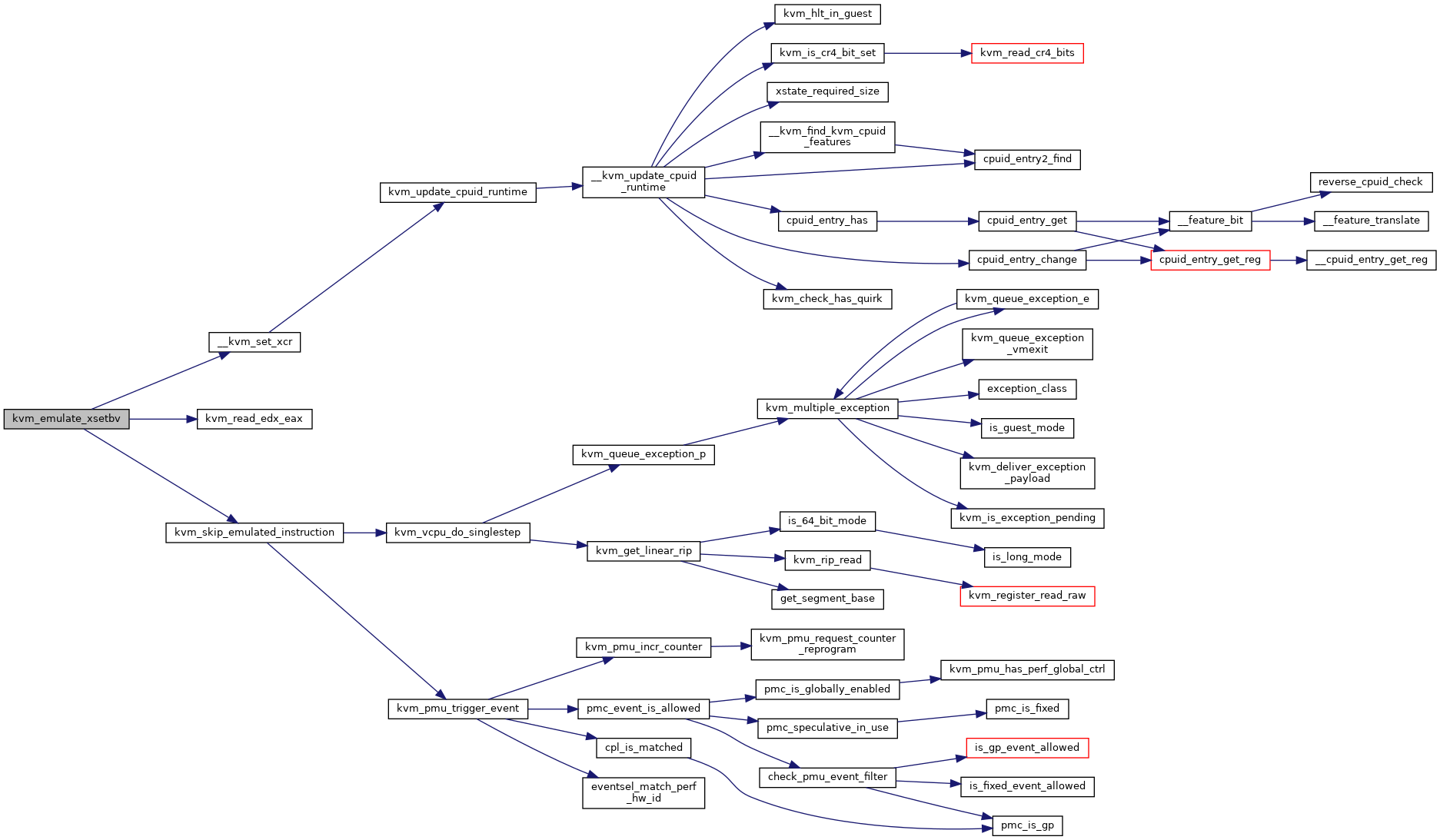

| static int | __kvm_set_xcr (struct kvm_vcpu *vcpu, u32 index, u64 xcr) |

| int | kvm_emulate_xsetbv (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_xsetbv) | |

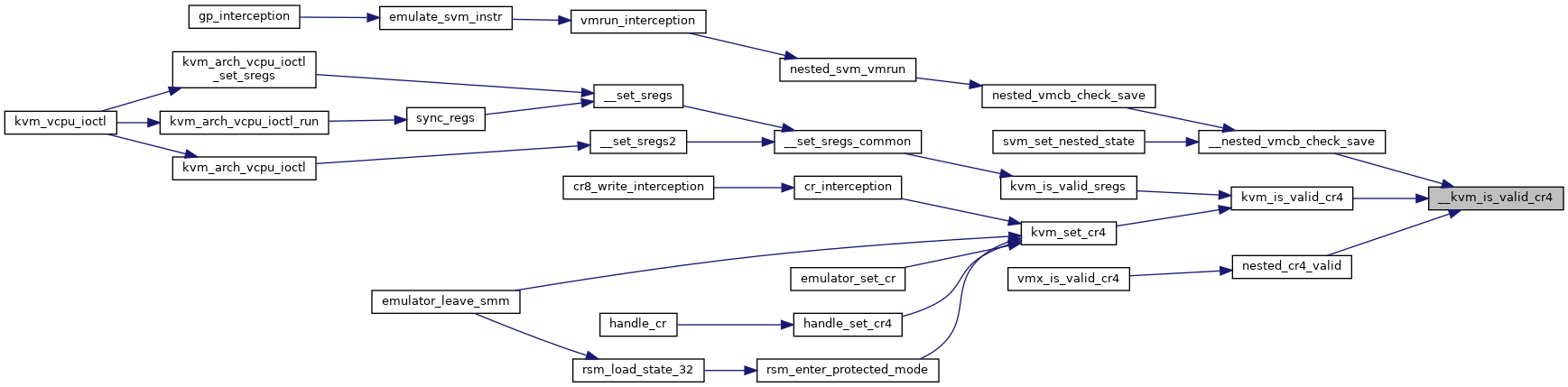

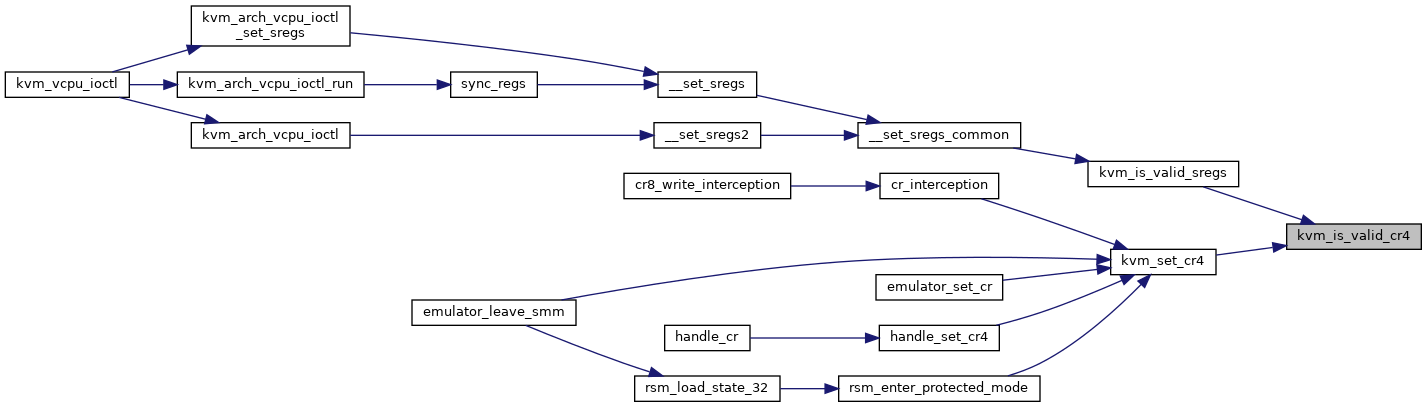

| bool | __kvm_is_valid_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

| EXPORT_SYMBOL_GPL (__kvm_is_valid_cr4) | |

| static bool | kvm_is_valid_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

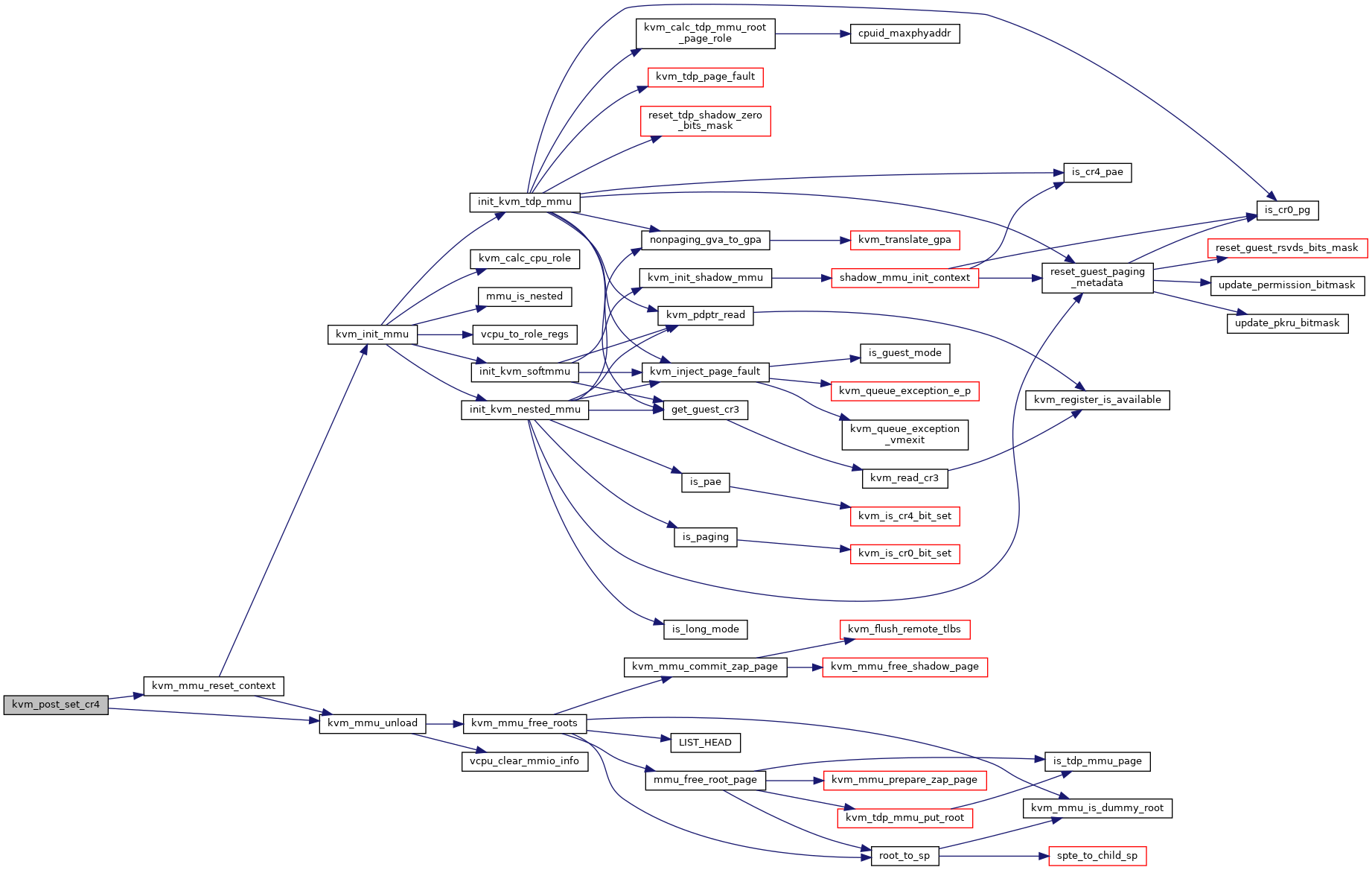

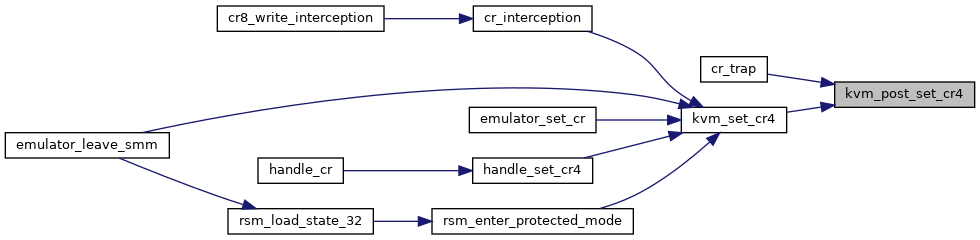

| void | kvm_post_set_cr4 (struct kvm_vcpu *vcpu, unsigned long old_cr4, unsigned long cr4) |

| EXPORT_SYMBOL_GPL (kvm_post_set_cr4) | |

| int | kvm_set_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

| EXPORT_SYMBOL_GPL (kvm_set_cr4) | |

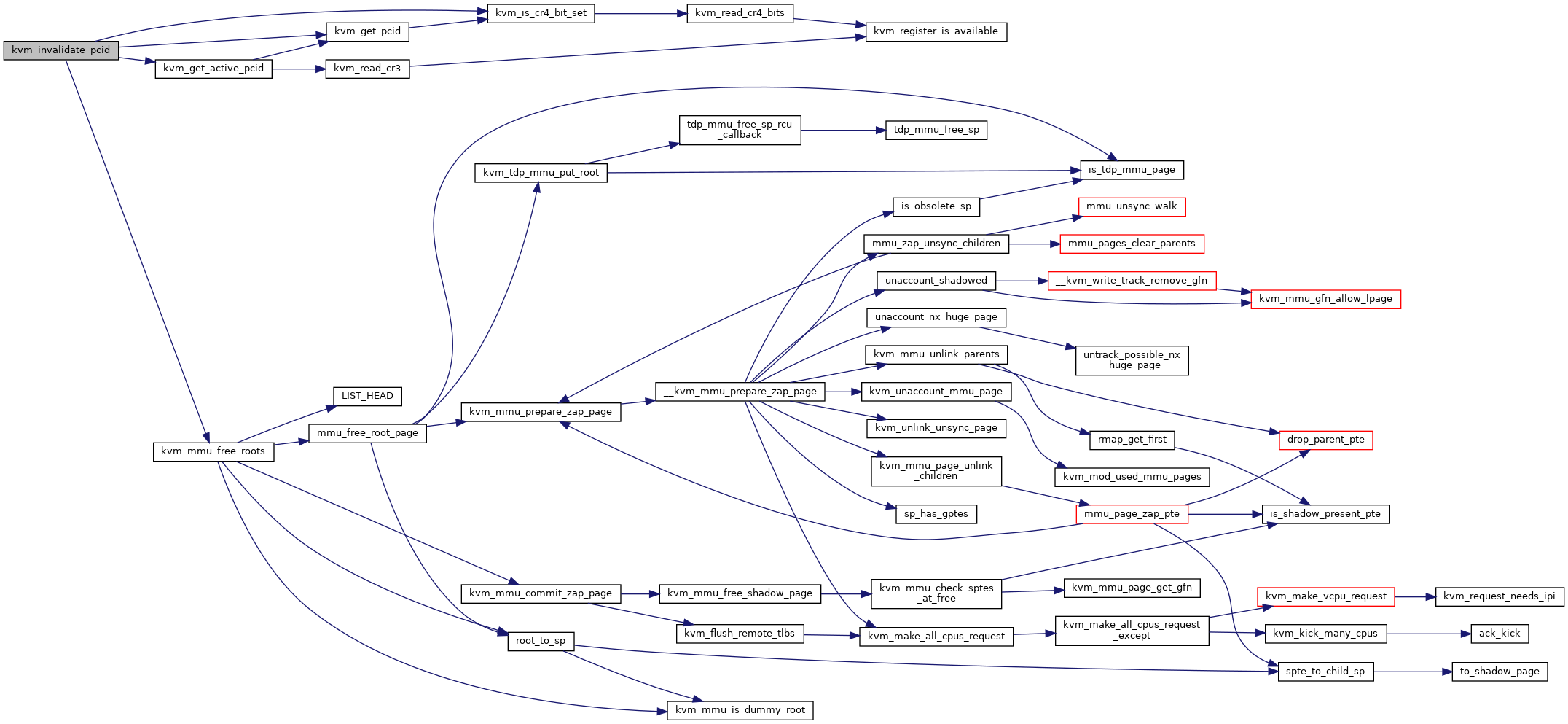

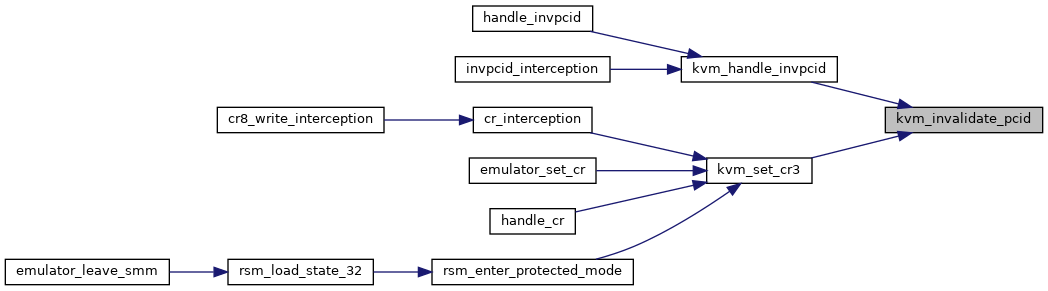

| static void | kvm_invalidate_pcid (struct kvm_vcpu *vcpu, unsigned long pcid) |

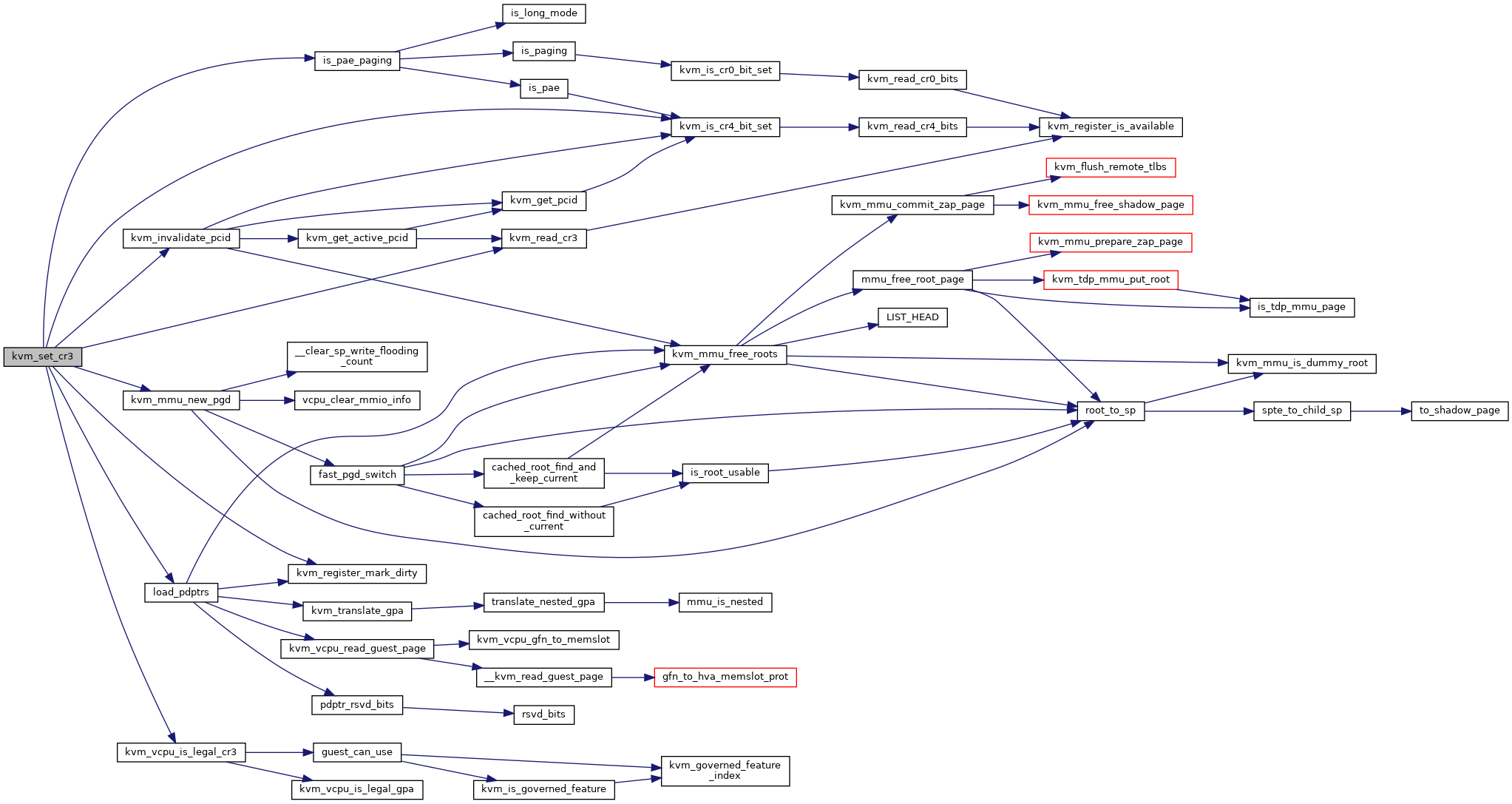

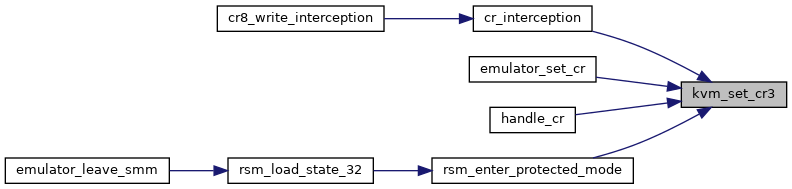

| int | kvm_set_cr3 (struct kvm_vcpu *vcpu, unsigned long cr3) |

| EXPORT_SYMBOL_GPL (kvm_set_cr3) | |

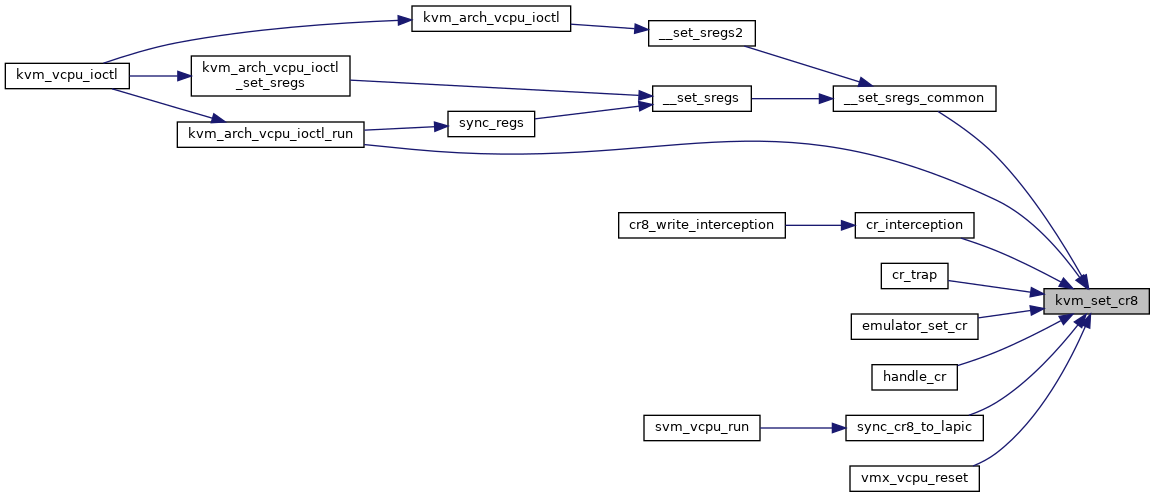

| int | kvm_set_cr8 (struct kvm_vcpu *vcpu, unsigned long cr8) |

| EXPORT_SYMBOL_GPL (kvm_set_cr8) | |

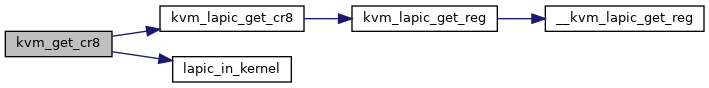

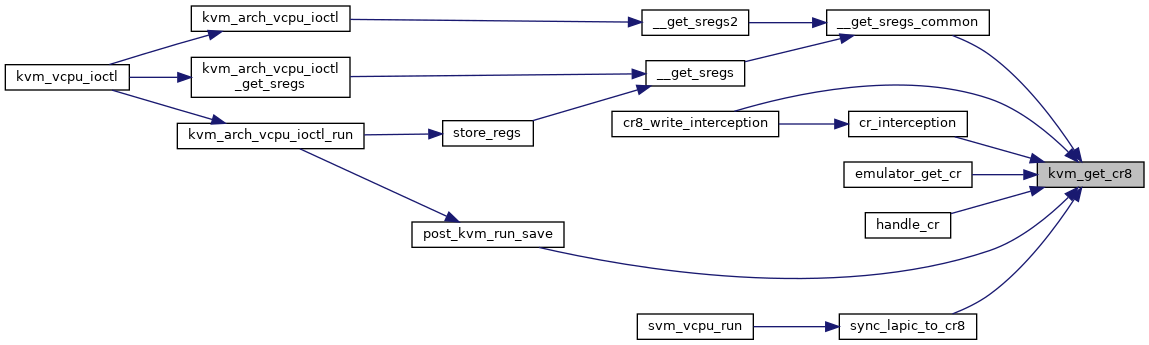

| unsigned long | kvm_get_cr8 (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_get_cr8) | |

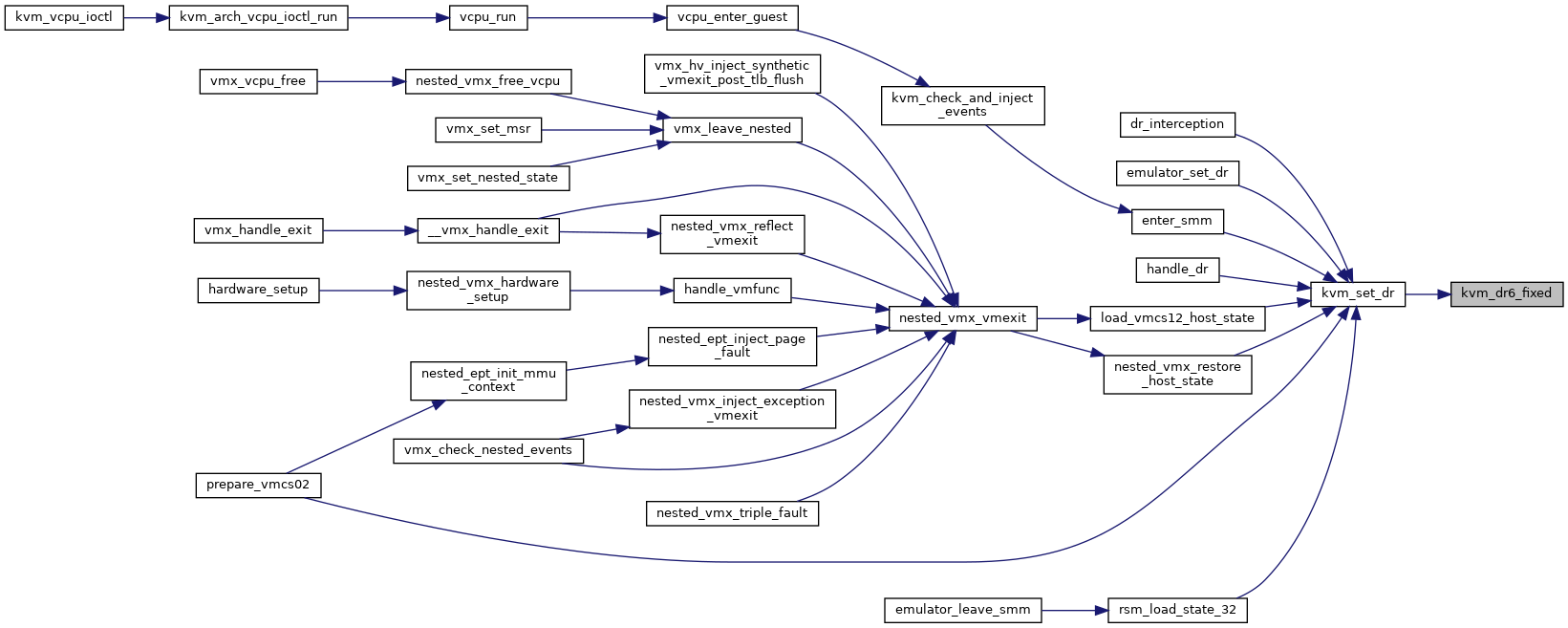

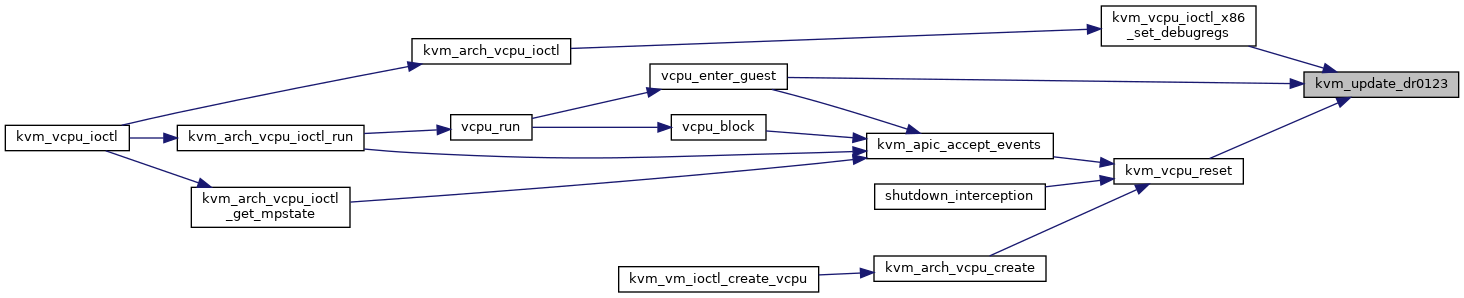

| static void | kvm_update_dr0123 (struct kvm_vcpu *vcpu) |

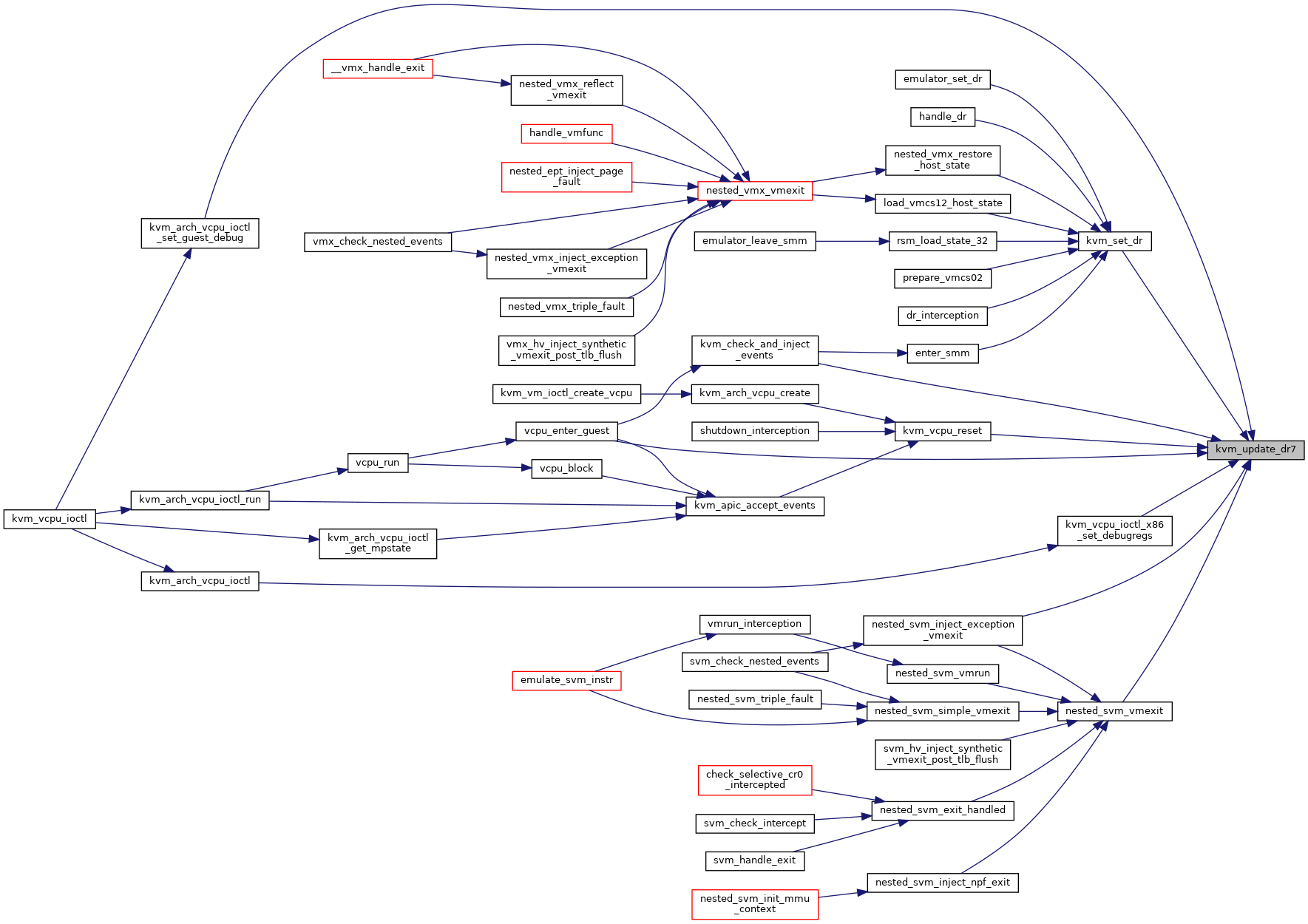

| void | kvm_update_dr7 (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_update_dr7) | |

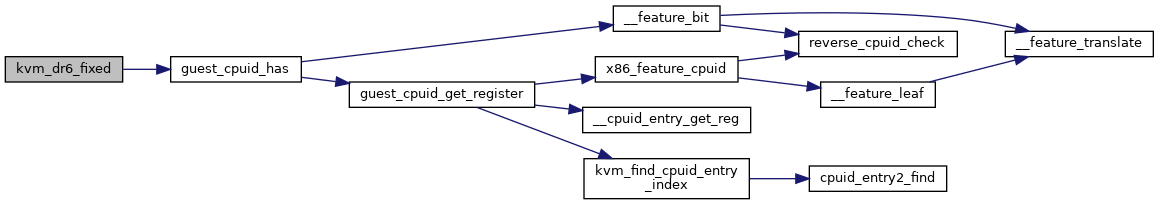

| static u64 | kvm_dr6_fixed (struct kvm_vcpu *vcpu) |

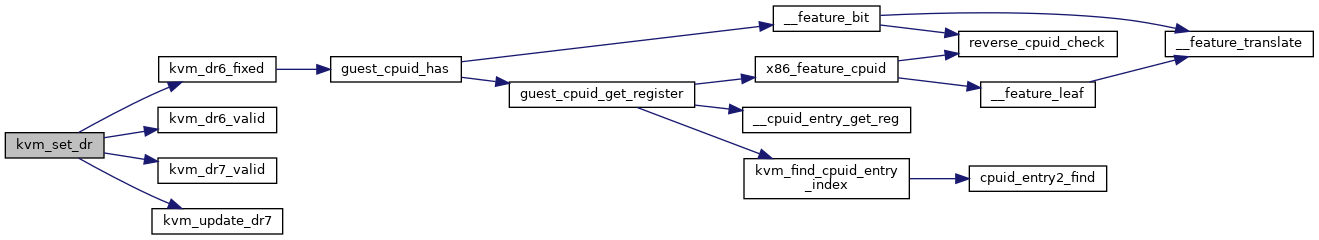

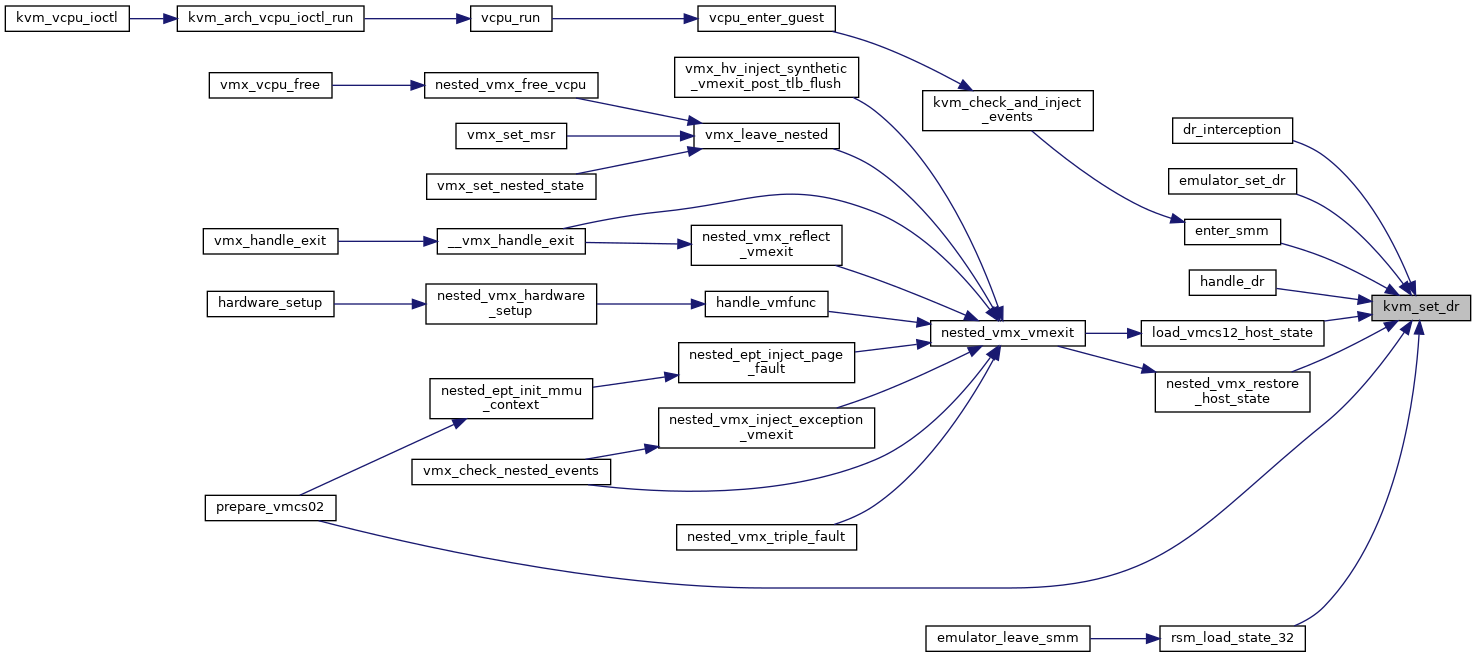

| int | kvm_set_dr (struct kvm_vcpu *vcpu, int dr, unsigned long val) |

| EXPORT_SYMBOL_GPL (kvm_set_dr) | |

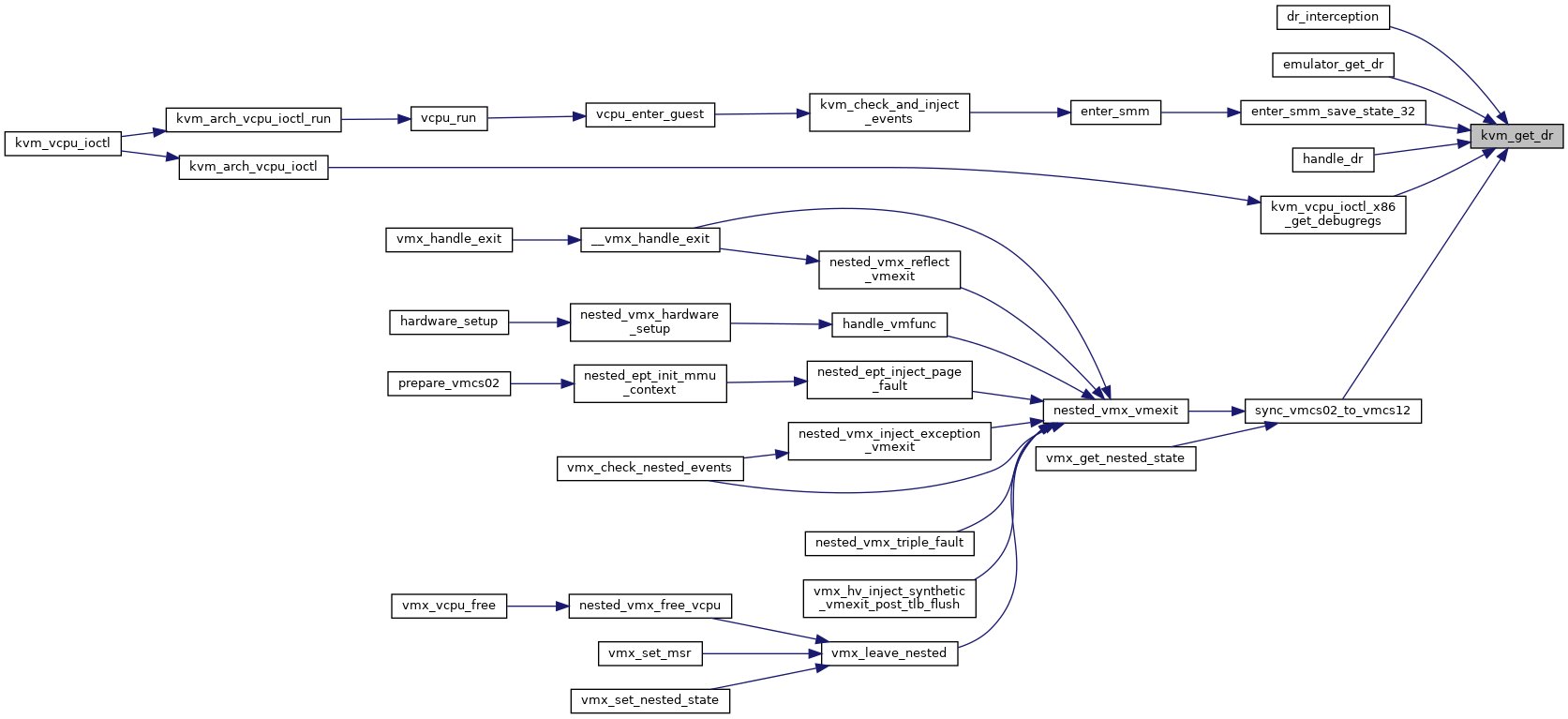

| void | kvm_get_dr (struct kvm_vcpu *vcpu, int dr, unsigned long *val) |

| EXPORT_SYMBOL_GPL (kvm_get_dr) | |

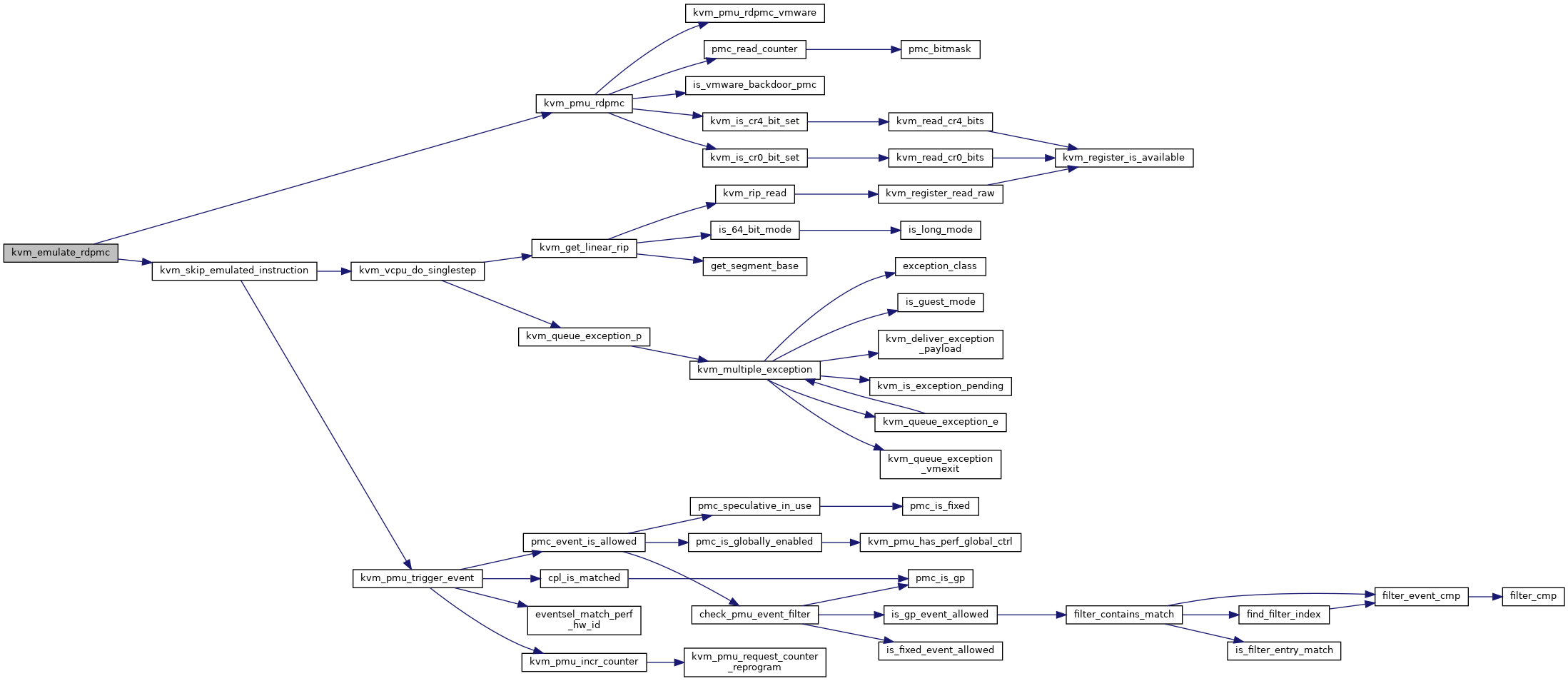

| int | kvm_emulate_rdpmc (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_rdpmc) | |

| static bool | kvm_is_immutable_feature_msr (u32 msr) |

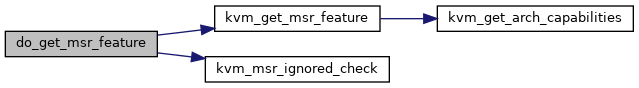

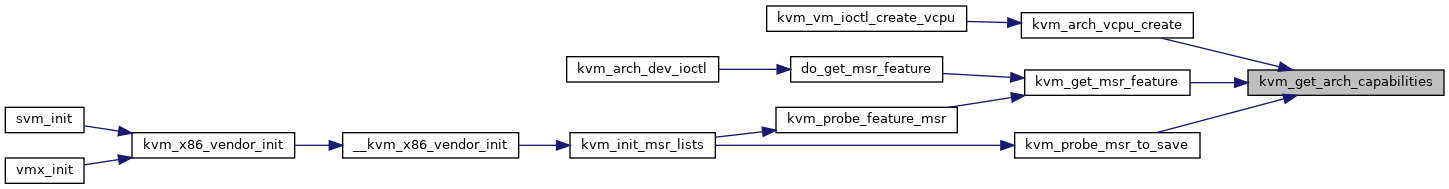

| static u64 | kvm_get_arch_capabilities (void) |

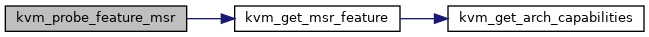

| static int | kvm_get_msr_feature (struct kvm_msr_entry *msr) |

| static int | do_get_msr_feature (struct kvm_vcpu *vcpu, unsigned index, u64 *data) |

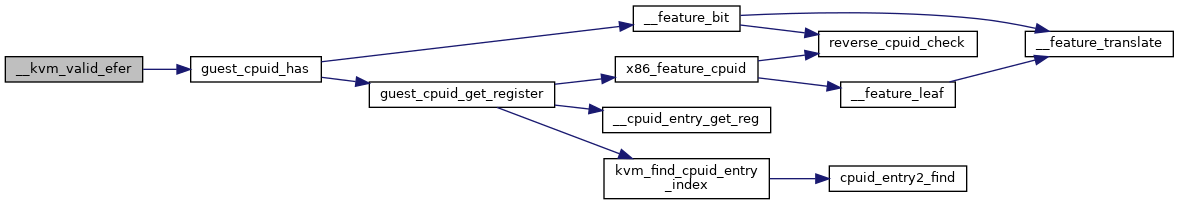

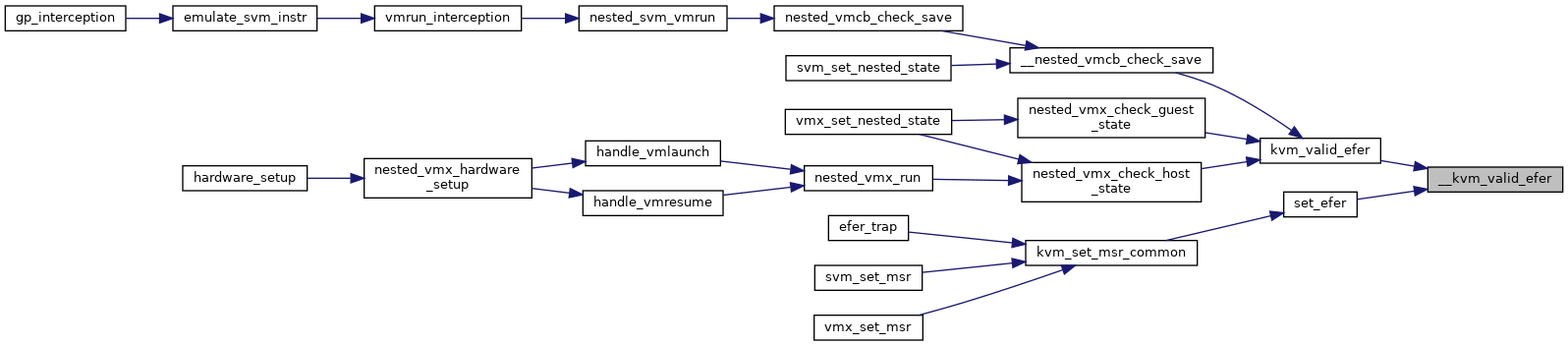

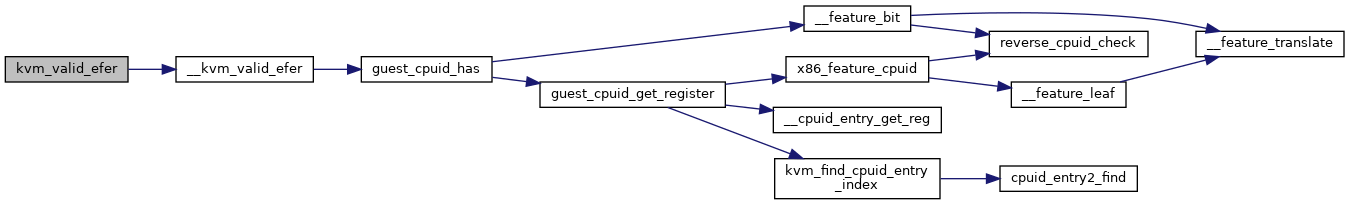

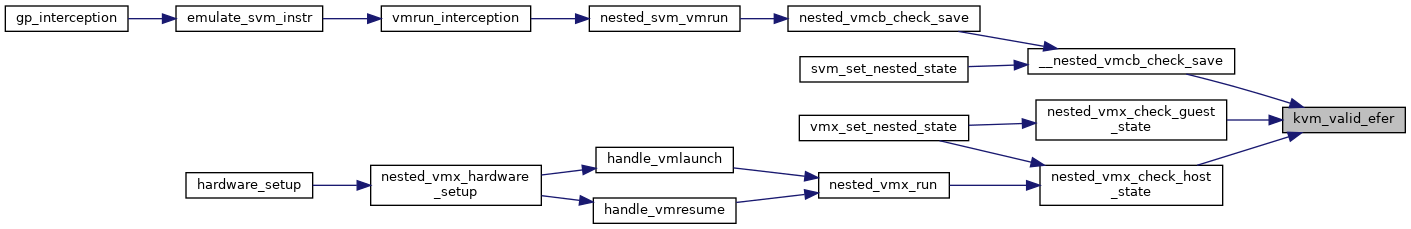

| static bool | __kvm_valid_efer (struct kvm_vcpu *vcpu, u64 efer) |

| bool | kvm_valid_efer (struct kvm_vcpu *vcpu, u64 efer) |

| EXPORT_SYMBOL_GPL (kvm_valid_efer) | |

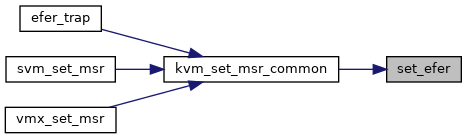

| static int | set_efer (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

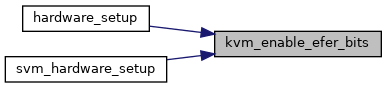

| void | kvm_enable_efer_bits (u64 mask) |

| EXPORT_SYMBOL_GPL (kvm_enable_efer_bits) | |

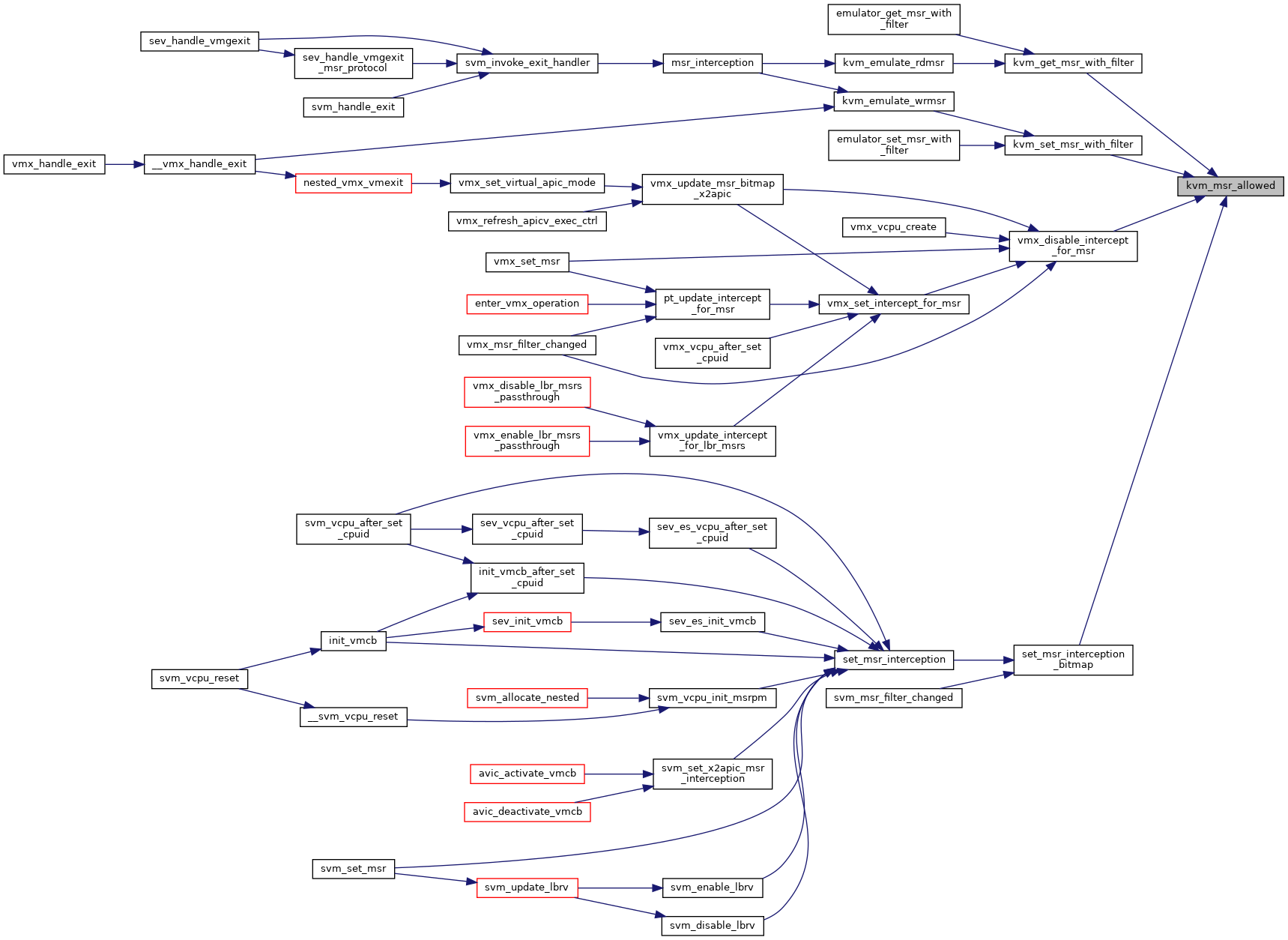

| bool | kvm_msr_allowed (struct kvm_vcpu *vcpu, u32 index, u32 type) |

| EXPORT_SYMBOL_GPL (kvm_msr_allowed) | |

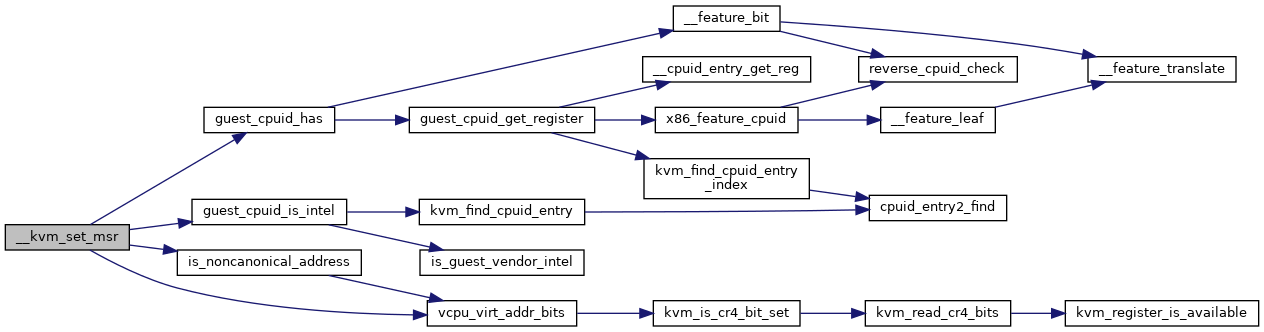

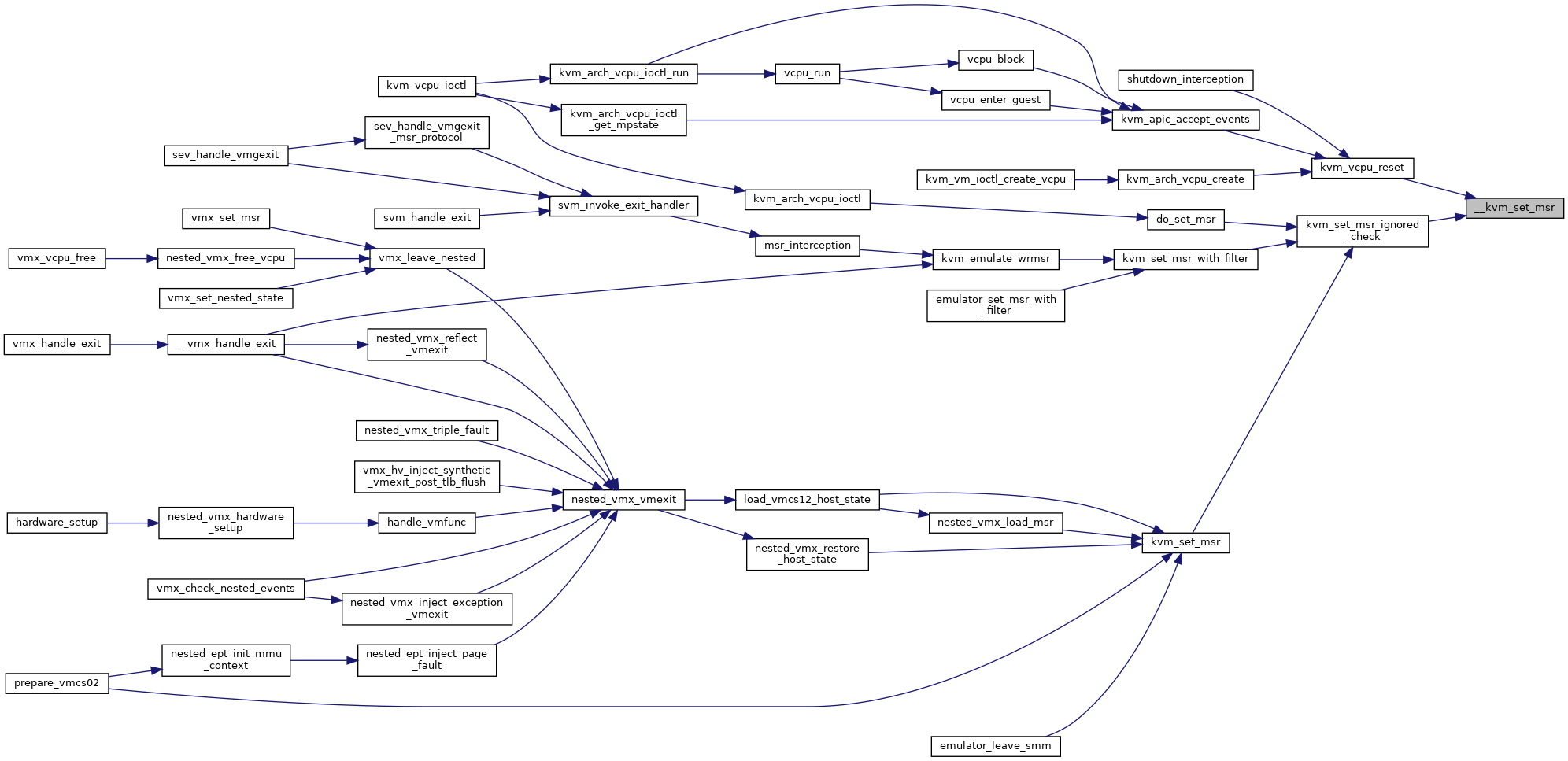

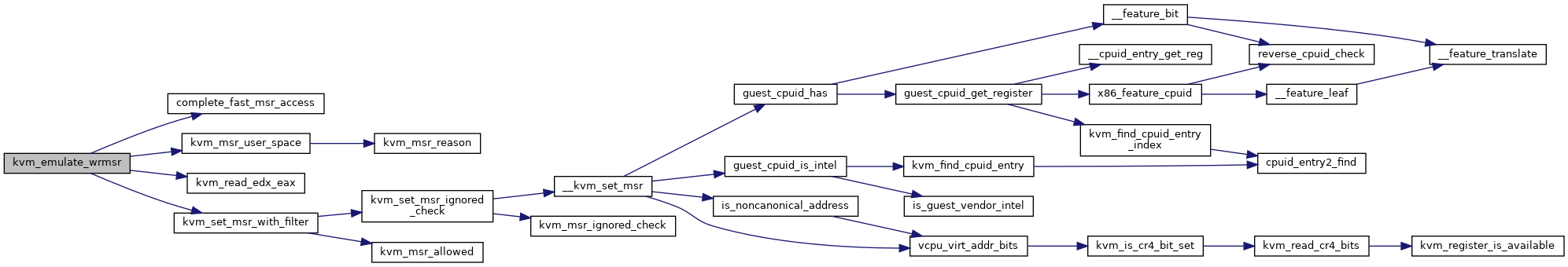

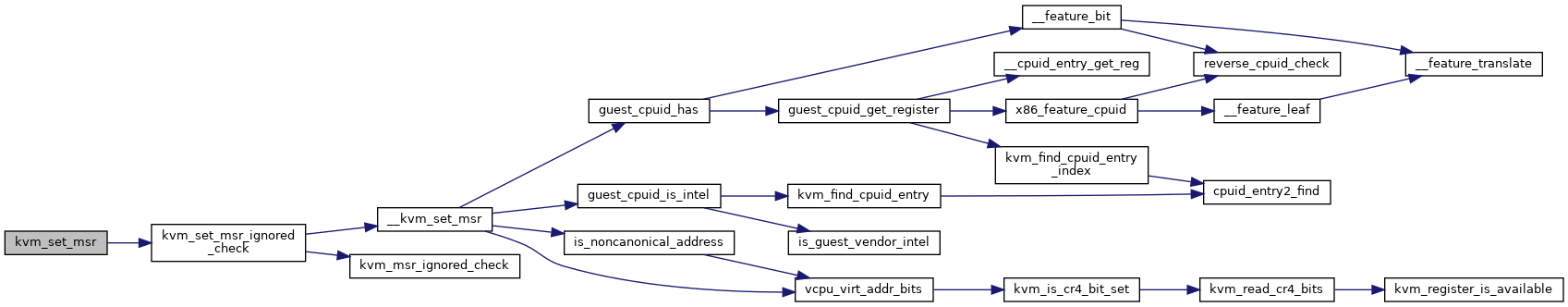

| static int | __kvm_set_msr (struct kvm_vcpu *vcpu, u32 index, u64 data, bool host_initiated) |

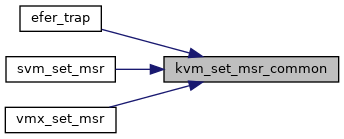

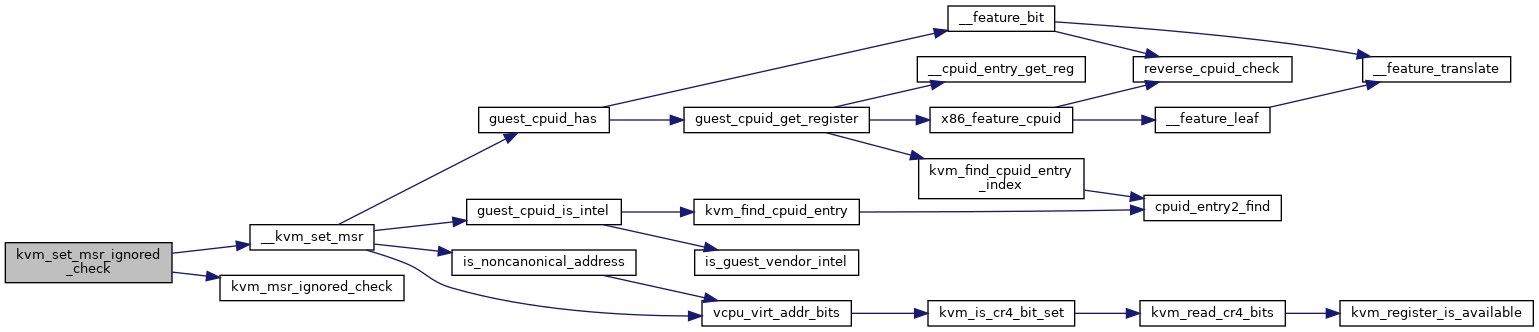

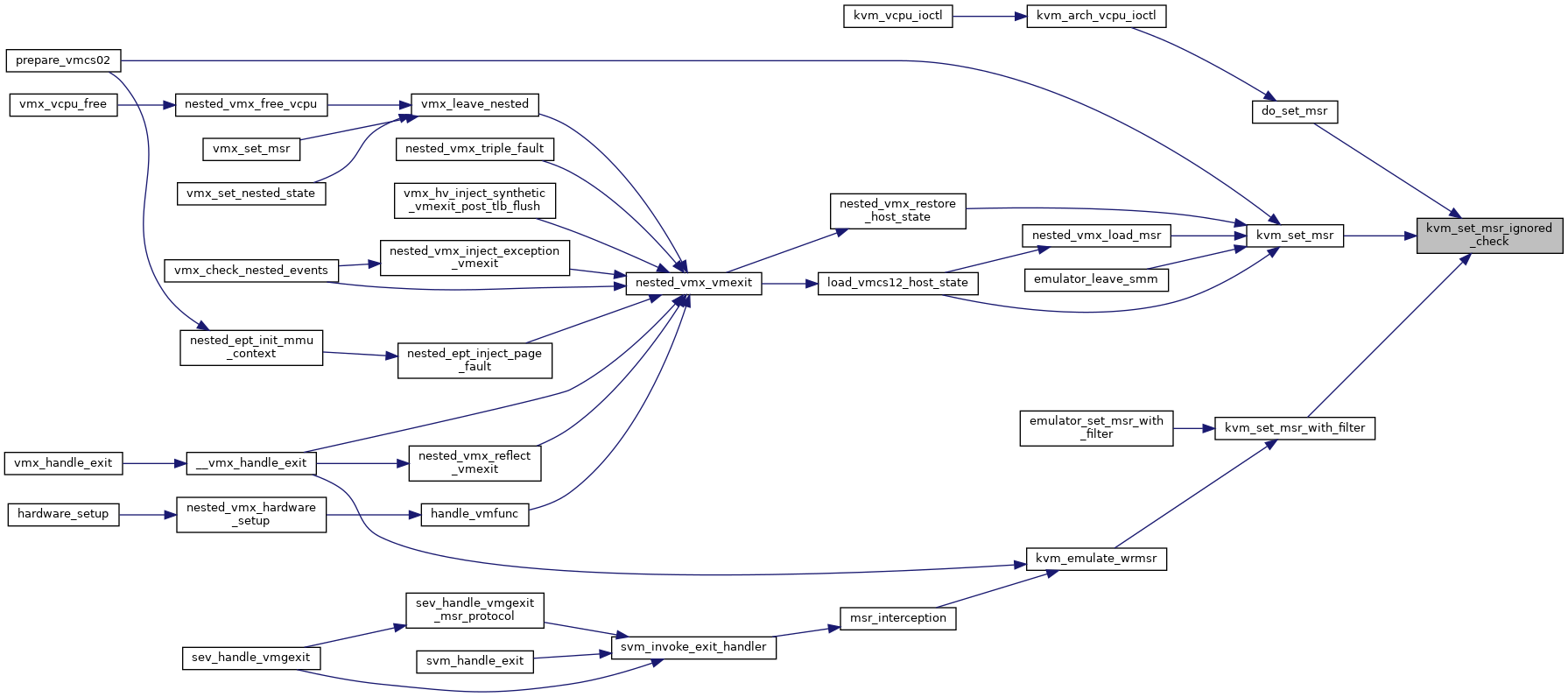

| static int | kvm_set_msr_ignored_check (struct kvm_vcpu *vcpu, u32 index, u64 data, bool host_initiated) |

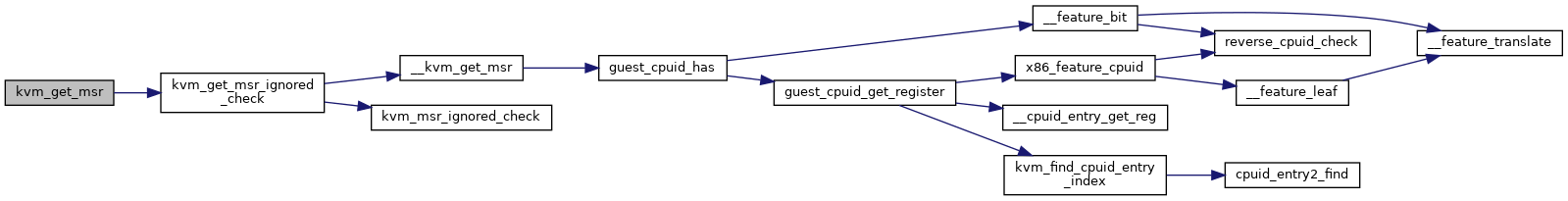

| int | __kvm_get_msr (struct kvm_vcpu *vcpu, u32 index, u64 *data, bool host_initiated) |

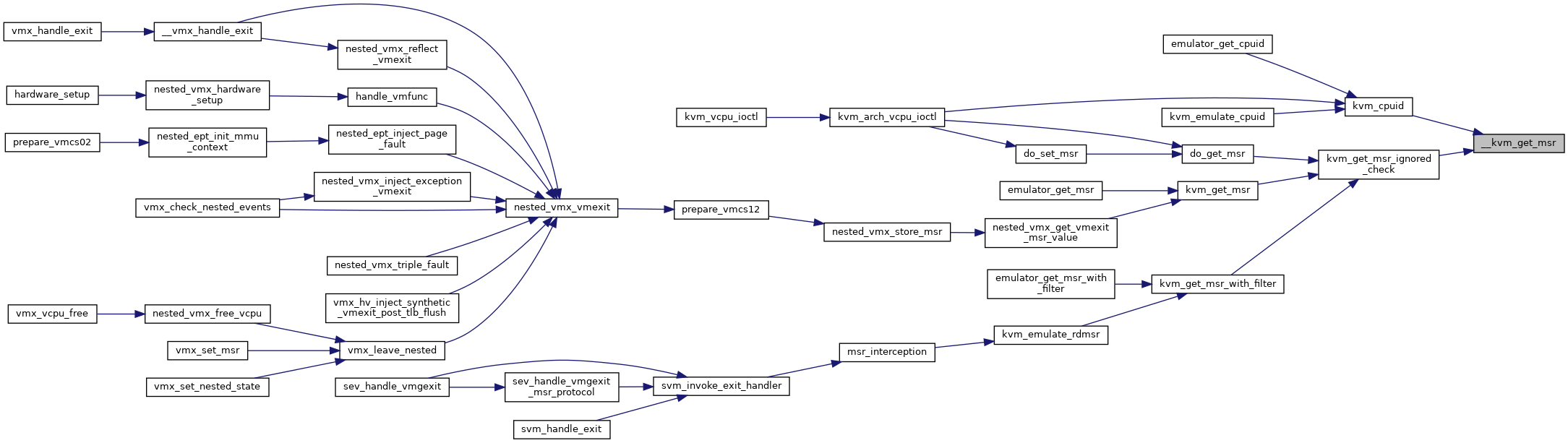

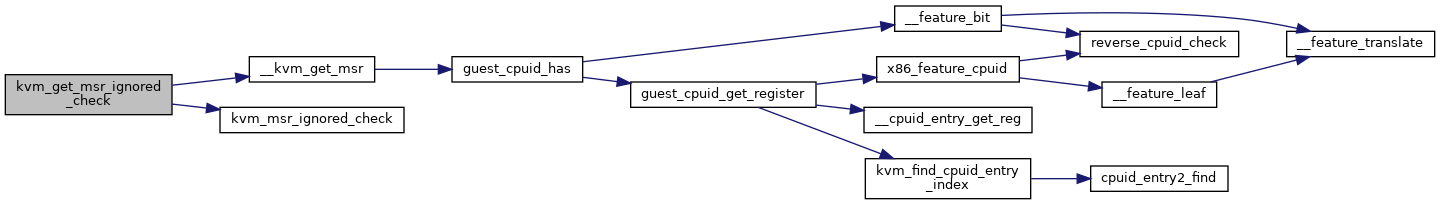

| static int | kvm_get_msr_ignored_check (struct kvm_vcpu *vcpu, u32 index, u64 *data, bool host_initiated) |

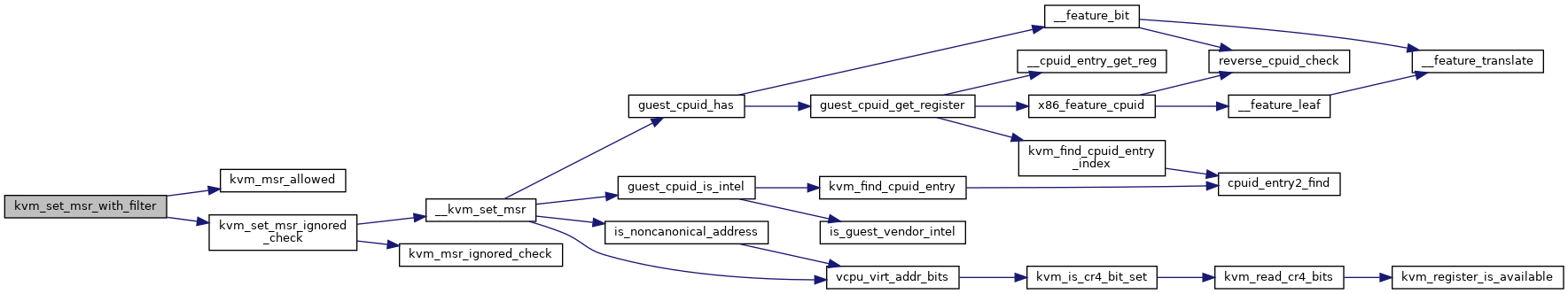

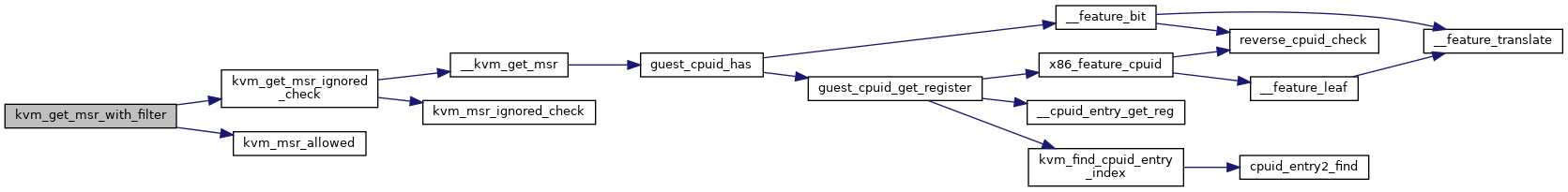

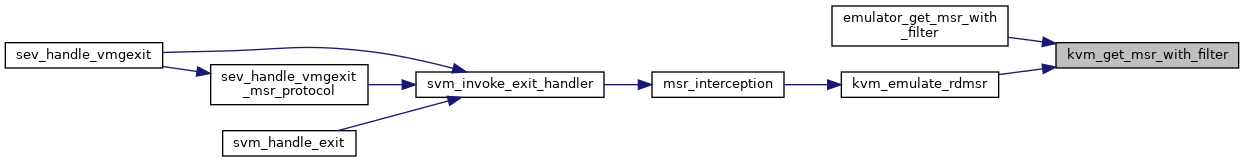

| static int | kvm_get_msr_with_filter (struct kvm_vcpu *vcpu, u32 index, u64 *data) |

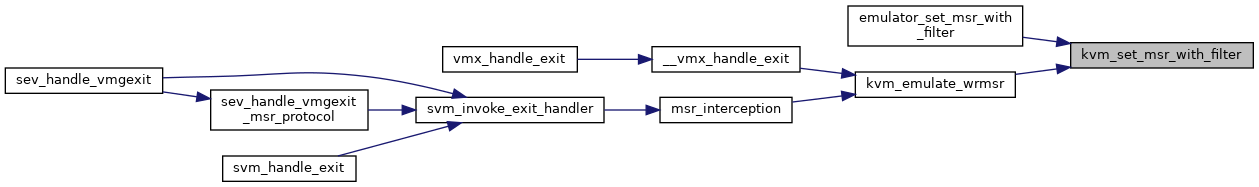

| static int | kvm_set_msr_with_filter (struct kvm_vcpu *vcpu, u32 index, u64 data) |

| int | kvm_get_msr (struct kvm_vcpu *vcpu, u32 index, u64 *data) |

| EXPORT_SYMBOL_GPL (kvm_get_msr) | |

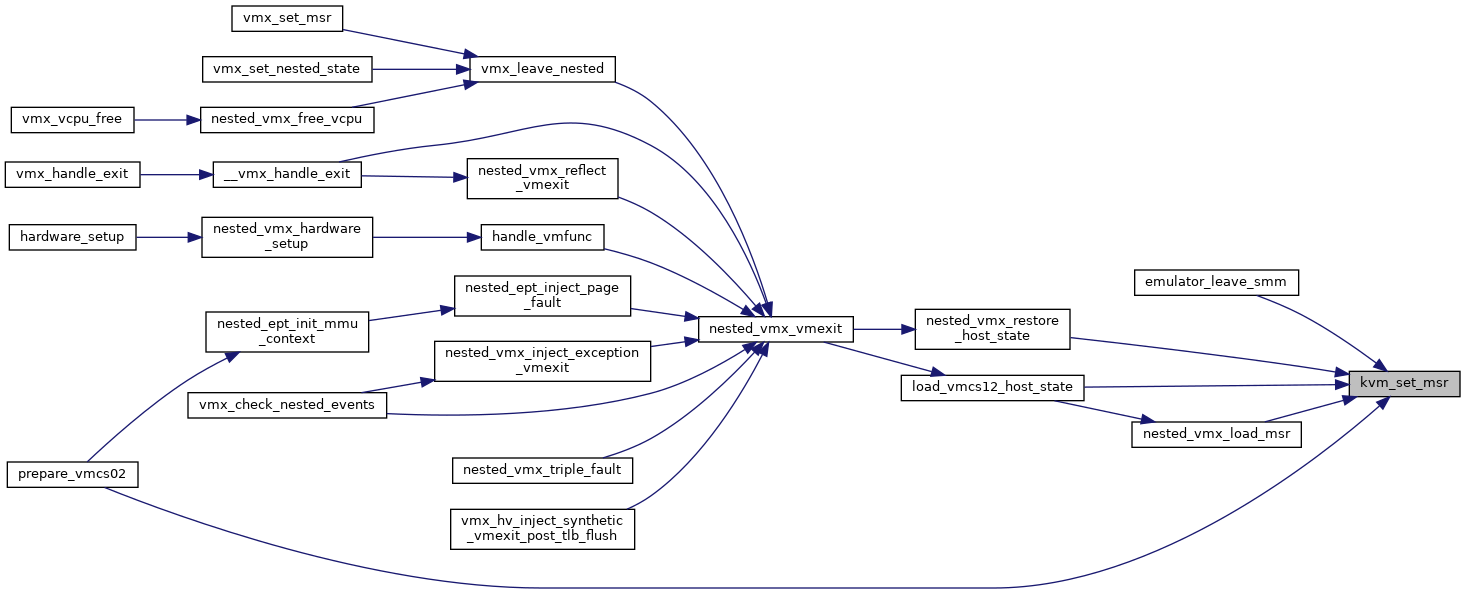

| int | kvm_set_msr (struct kvm_vcpu *vcpu, u32 index, u64 data) |

| EXPORT_SYMBOL_GPL (kvm_set_msr) | |

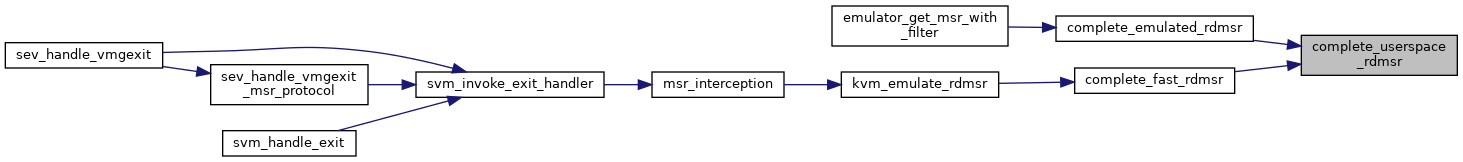

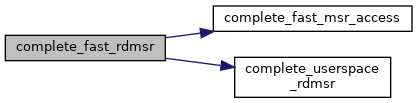

| static void | complete_userspace_rdmsr (struct kvm_vcpu *vcpu) |

| static int | complete_emulated_msr_access (struct kvm_vcpu *vcpu) |

| static int | complete_emulated_rdmsr (struct kvm_vcpu *vcpu) |

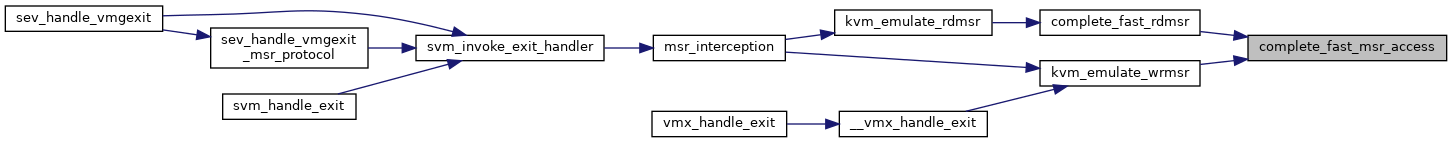

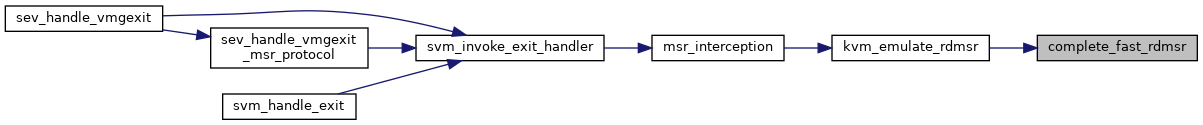

| static int | complete_fast_msr_access (struct kvm_vcpu *vcpu) |

| static int | complete_fast_rdmsr (struct kvm_vcpu *vcpu) |

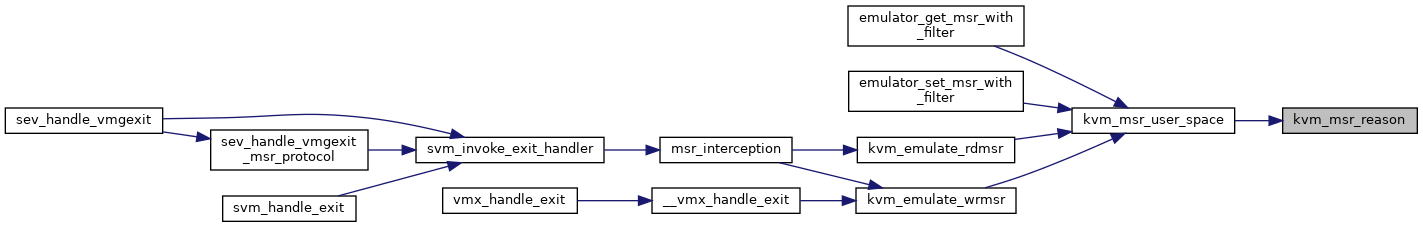

| static u64 | kvm_msr_reason (int r) |

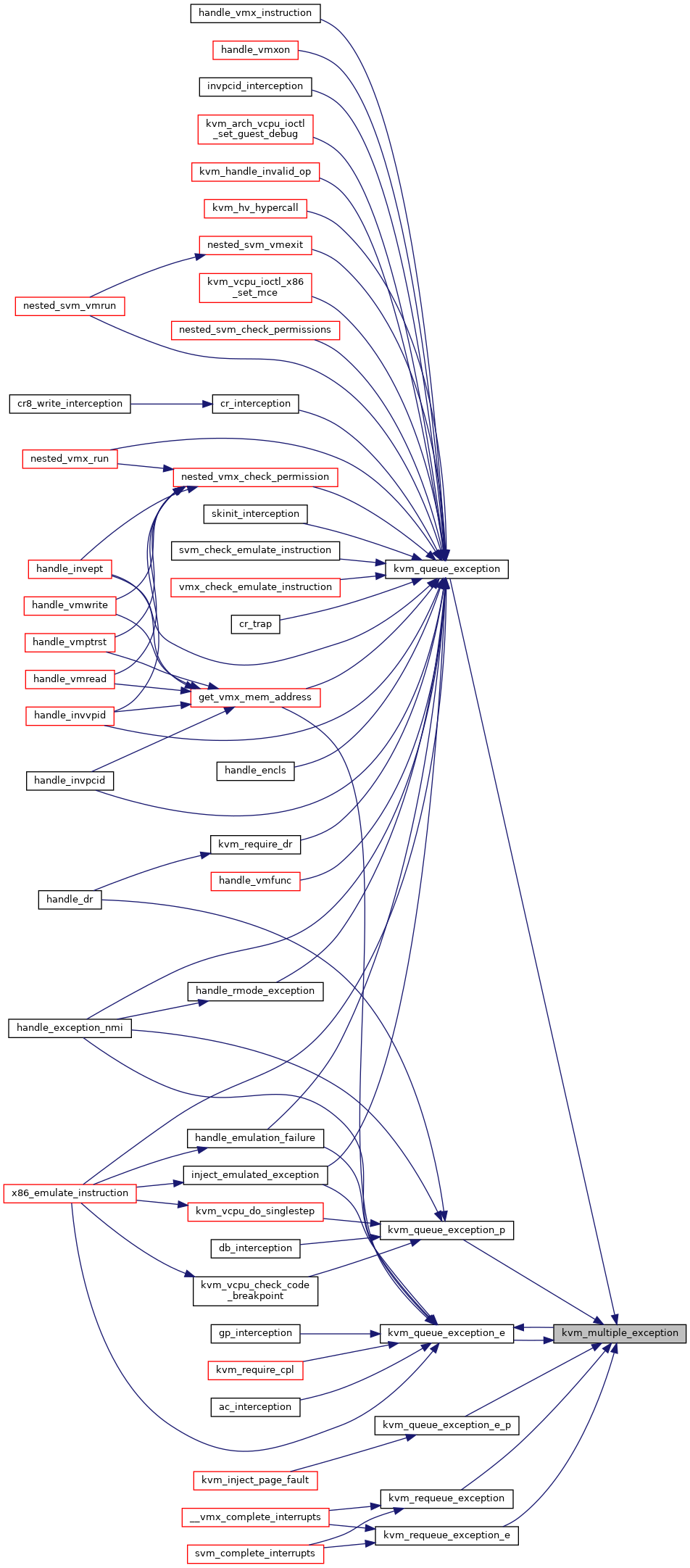

| static int | kvm_msr_user_space (struct kvm_vcpu *vcpu, u32 index, u32 exit_reason, u64 data, int(*completion)(struct kvm_vcpu *vcpu), int r) |

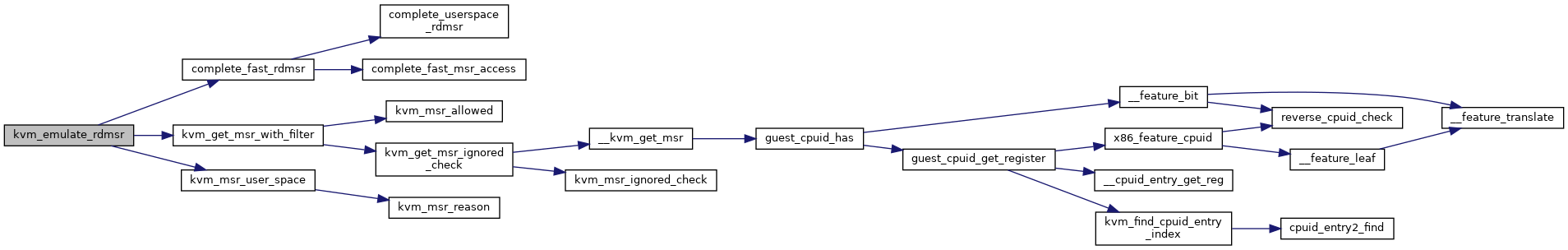

| int | kvm_emulate_rdmsr (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_rdmsr) | |

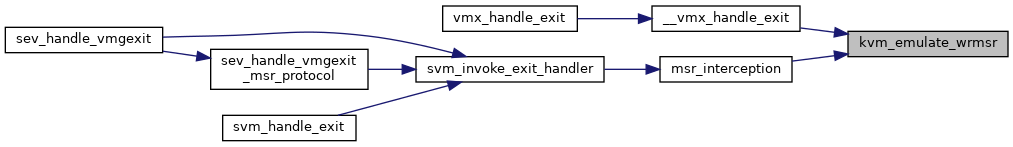

| int | kvm_emulate_wrmsr (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_wrmsr) | |

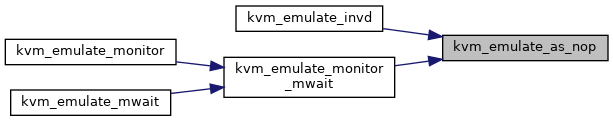

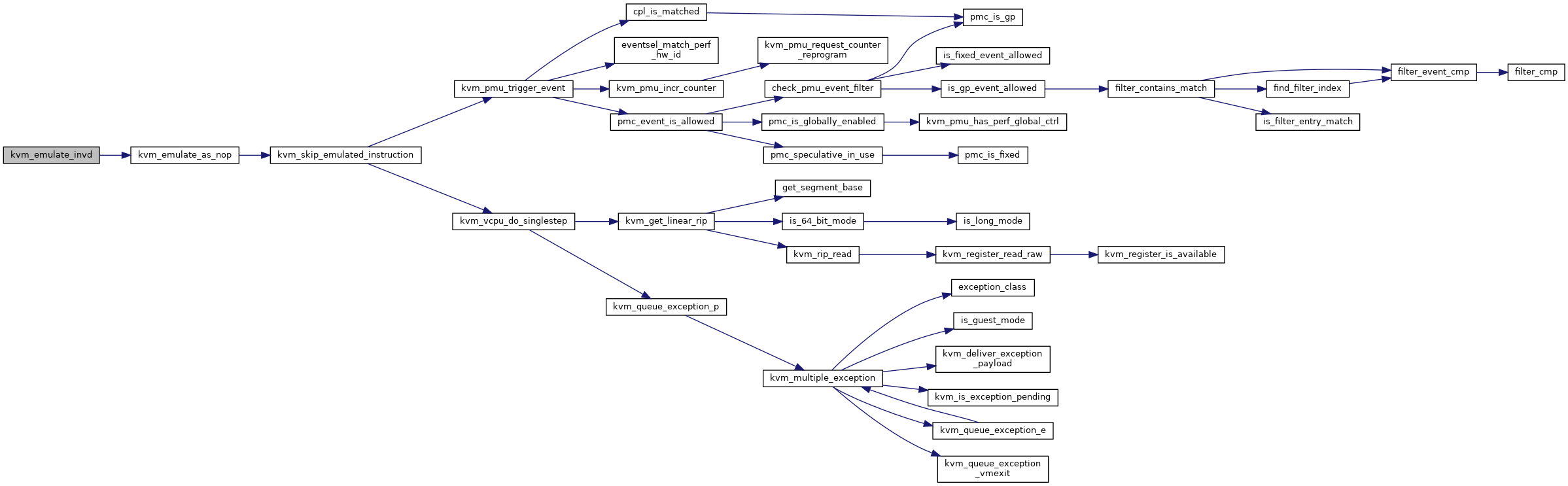

| int | kvm_emulate_as_nop (struct kvm_vcpu *vcpu) |

| int | kvm_emulate_invd (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_invd) | |

| int | kvm_handle_invalid_op (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_handle_invalid_op) | |

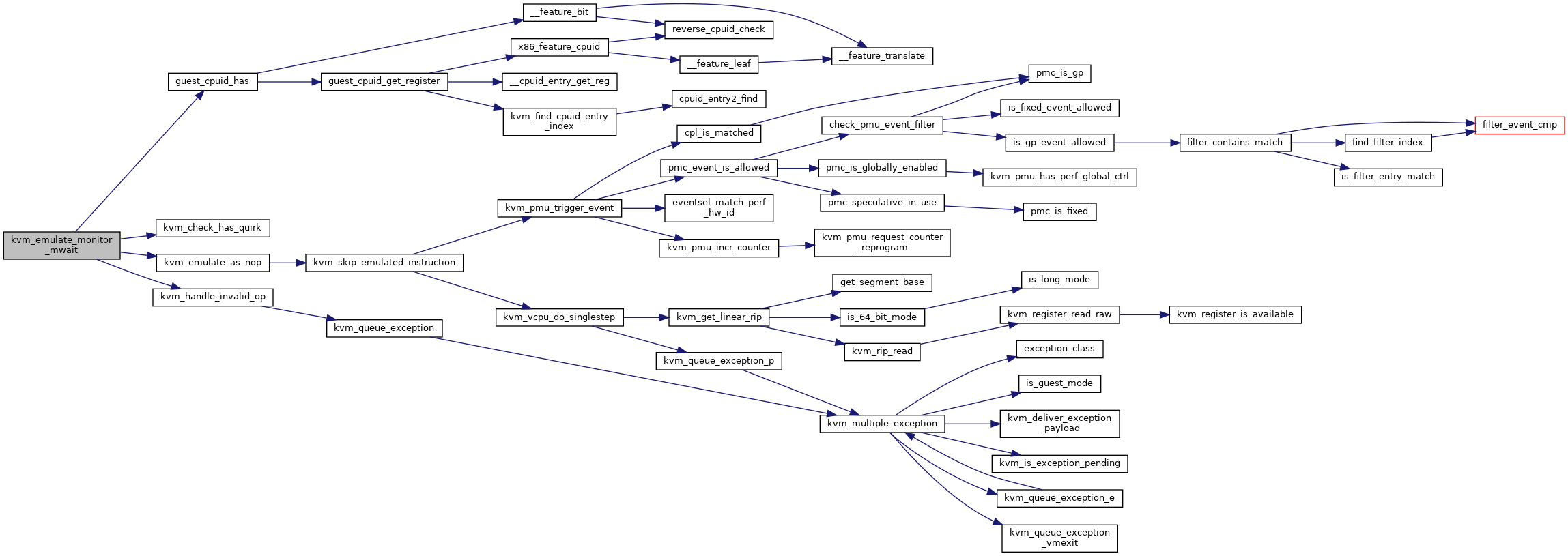

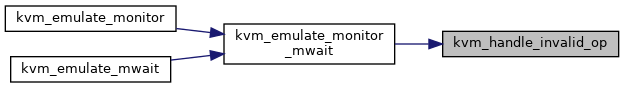

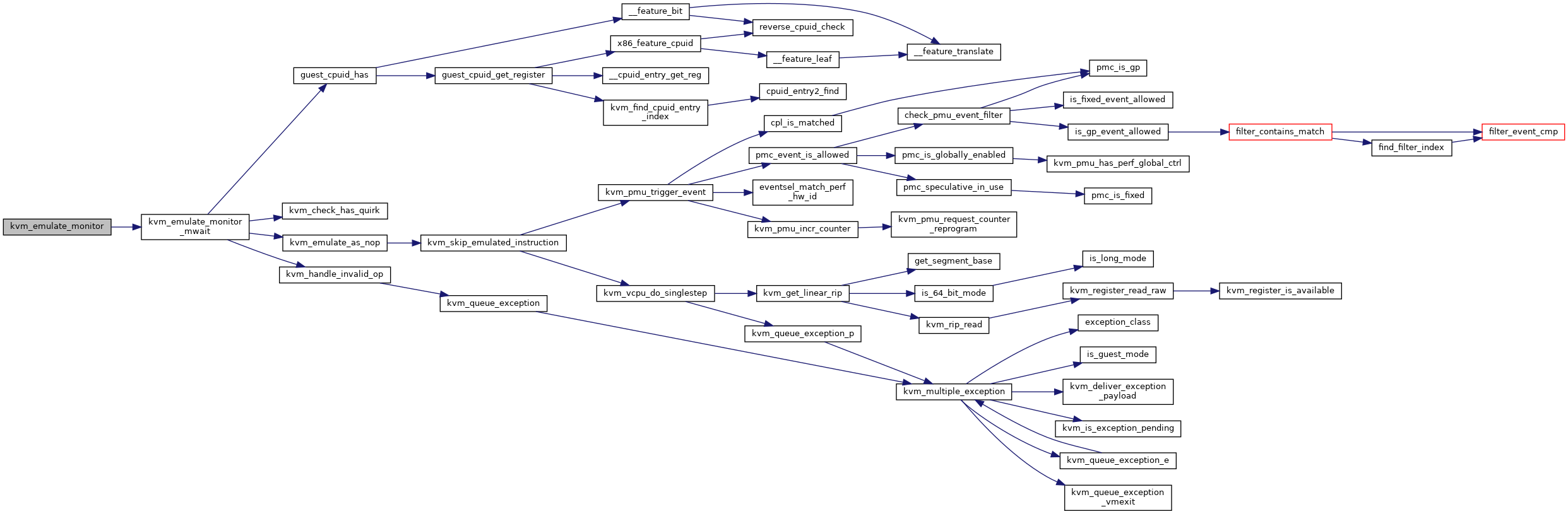

| static int | kvm_emulate_monitor_mwait (struct kvm_vcpu *vcpu, const char *insn) |

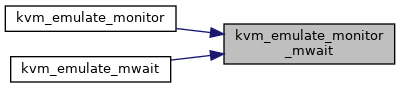

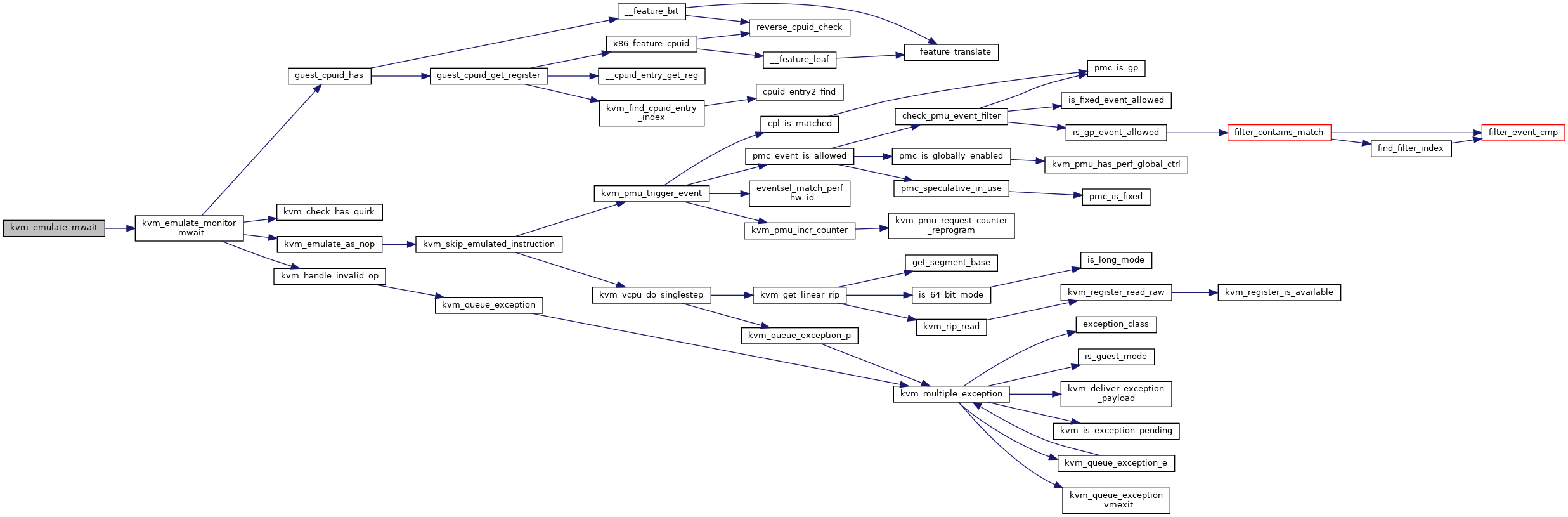

| int | kvm_emulate_mwait (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_mwait) | |

| int | kvm_emulate_monitor (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_monitor) | |

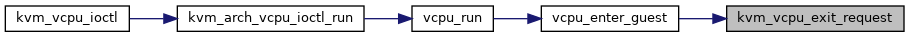

| static bool | kvm_vcpu_exit_request (struct kvm_vcpu *vcpu) |

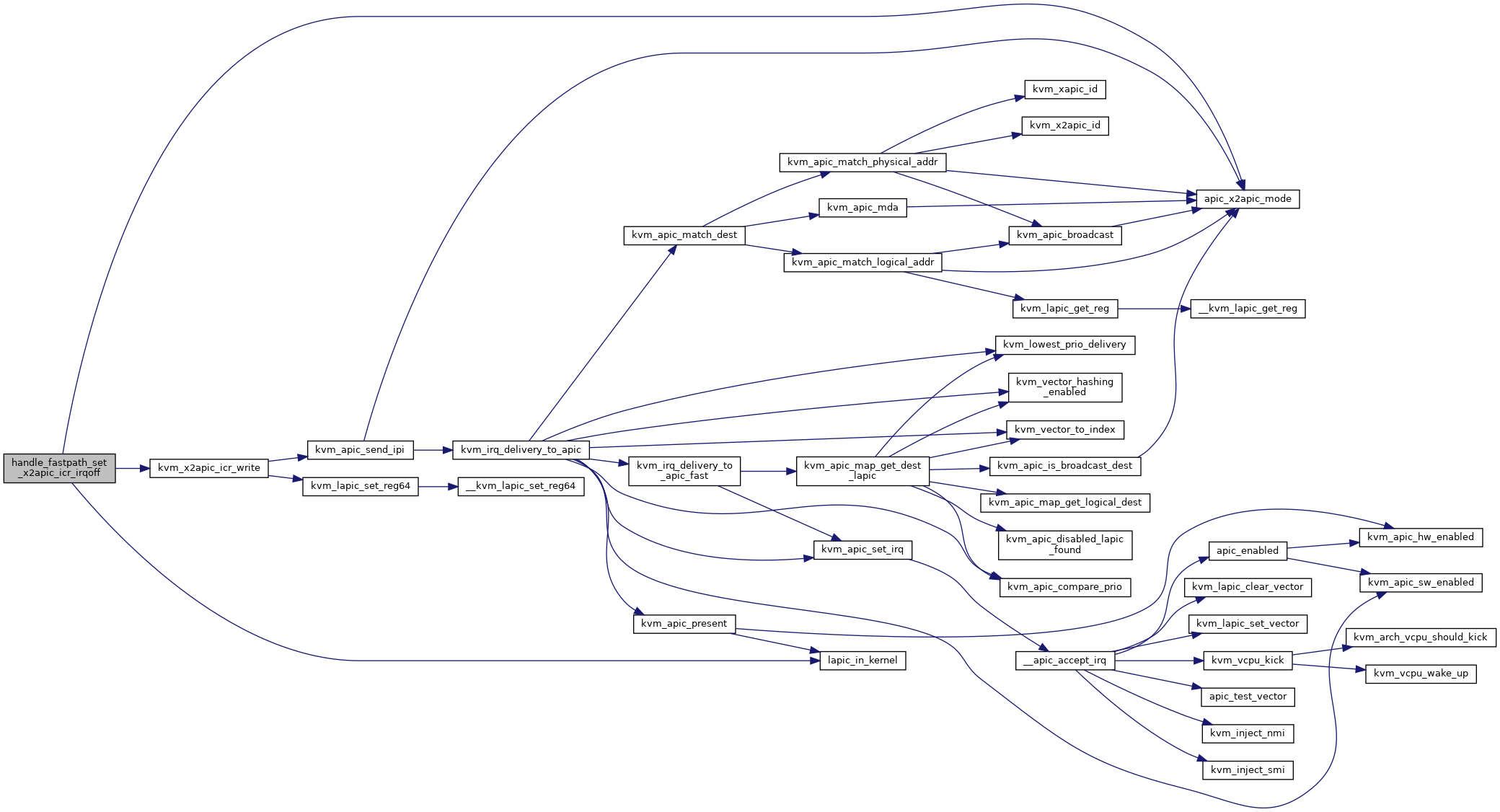

| static int | handle_fastpath_set_x2apic_icr_irqoff (struct kvm_vcpu *vcpu, u64 data) |

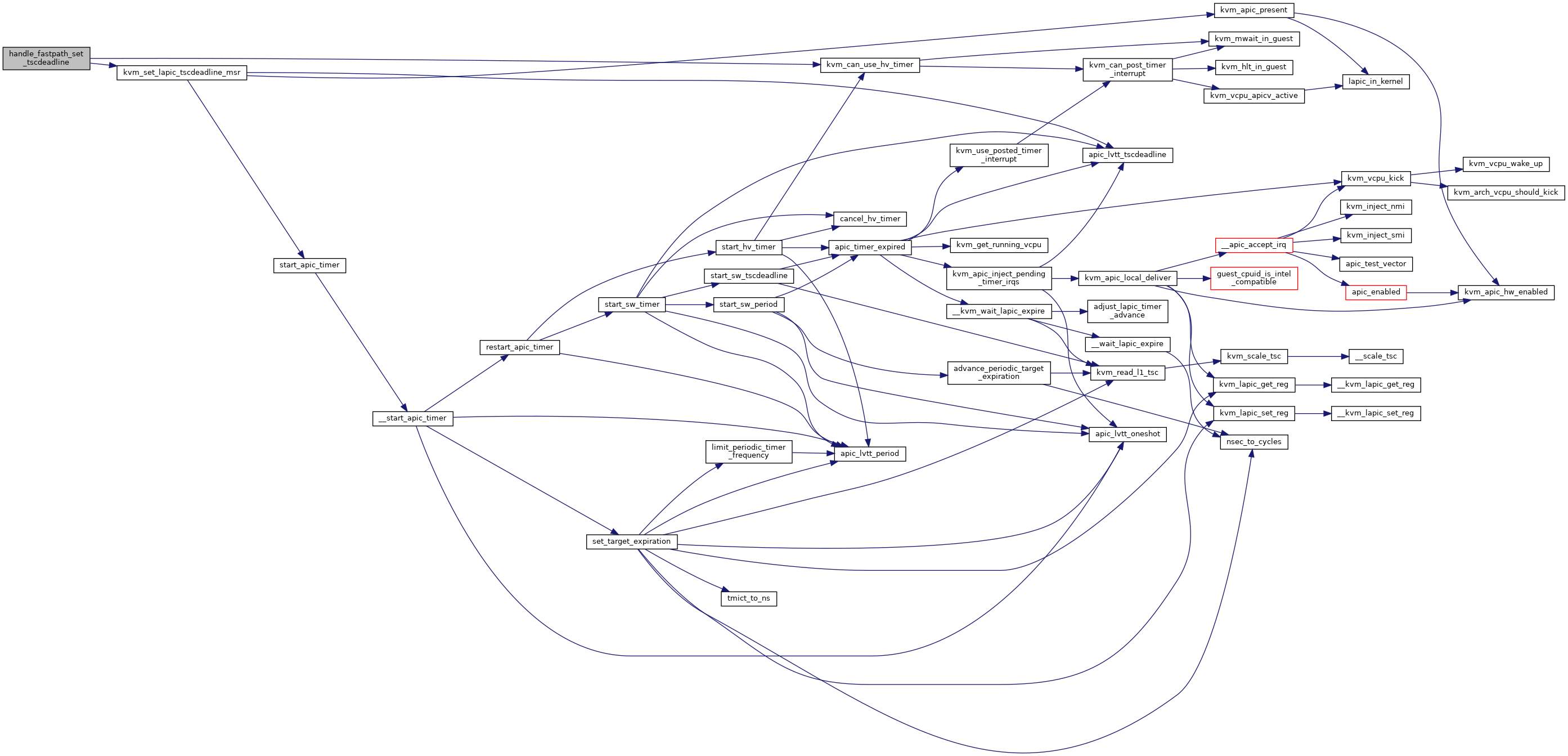

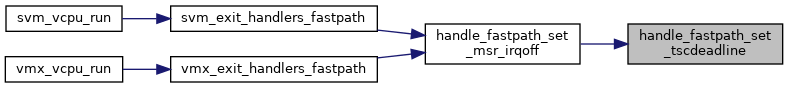

| static int | handle_fastpath_set_tscdeadline (struct kvm_vcpu *vcpu, u64 data) |

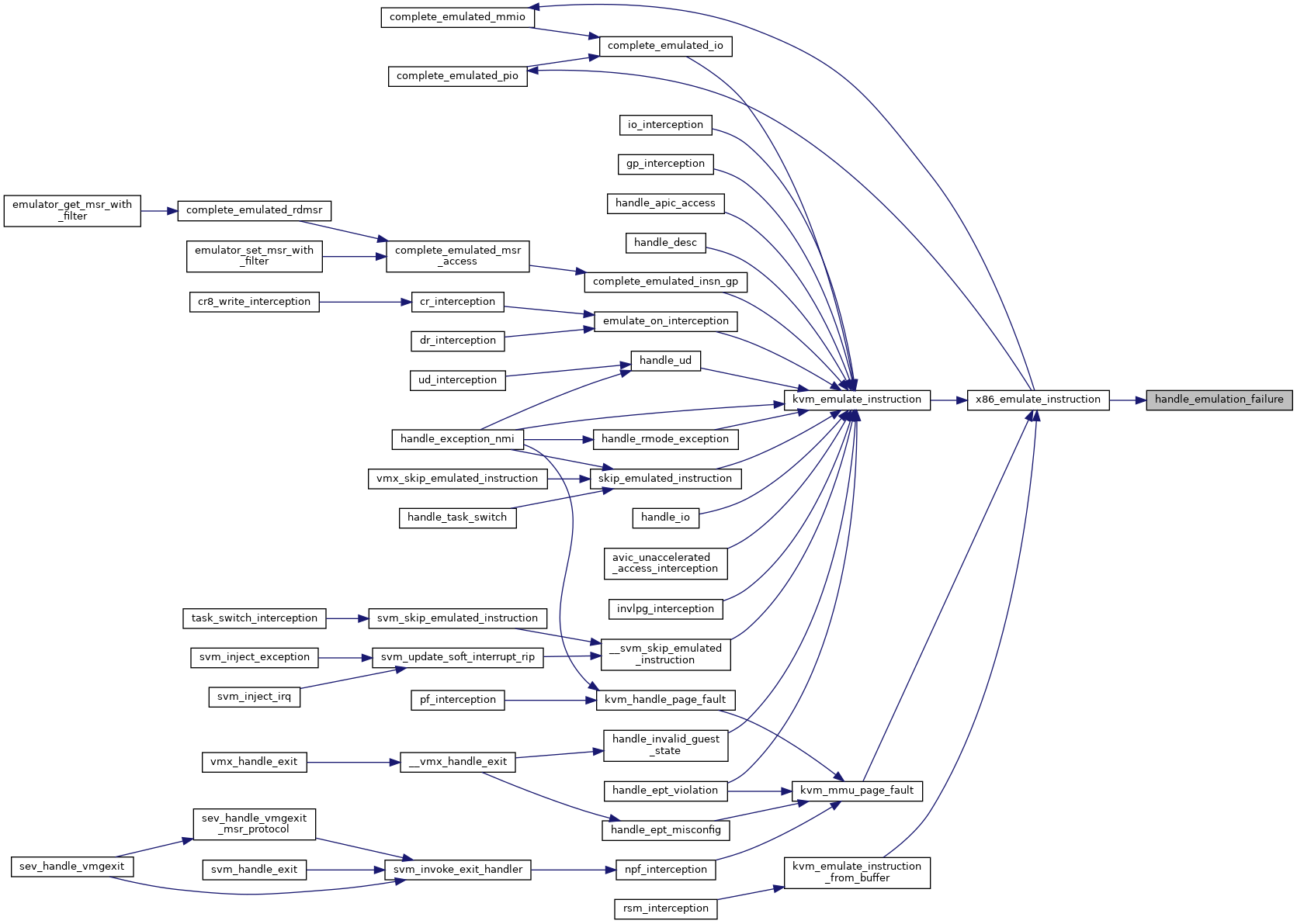

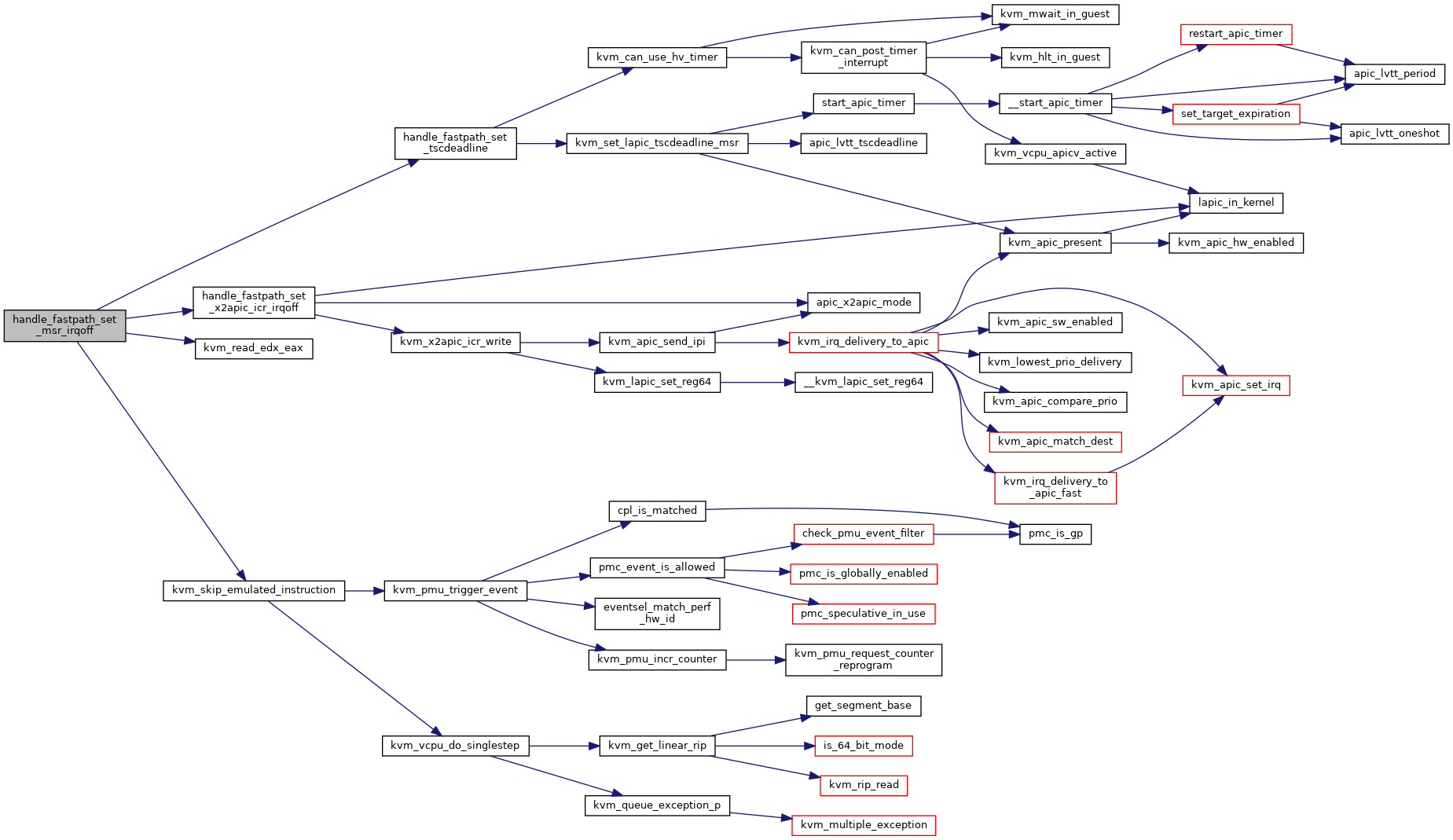

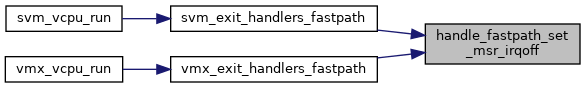

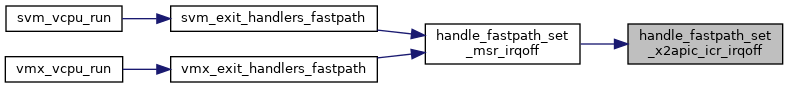

| fastpath_t | handle_fastpath_set_msr_irqoff (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (handle_fastpath_set_msr_irqoff) | |

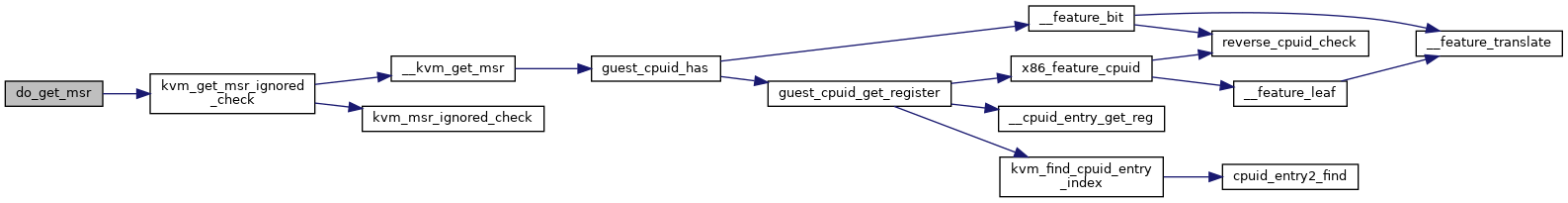

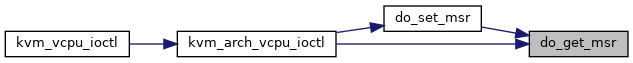

| static int | do_get_msr (struct kvm_vcpu *vcpu, unsigned index, u64 *data) |

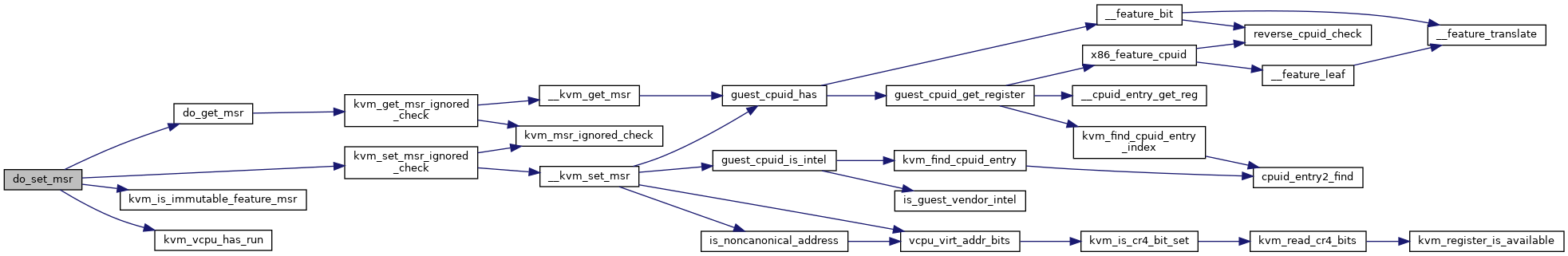

| static int | do_set_msr (struct kvm_vcpu *vcpu, unsigned index, u64 *data) |

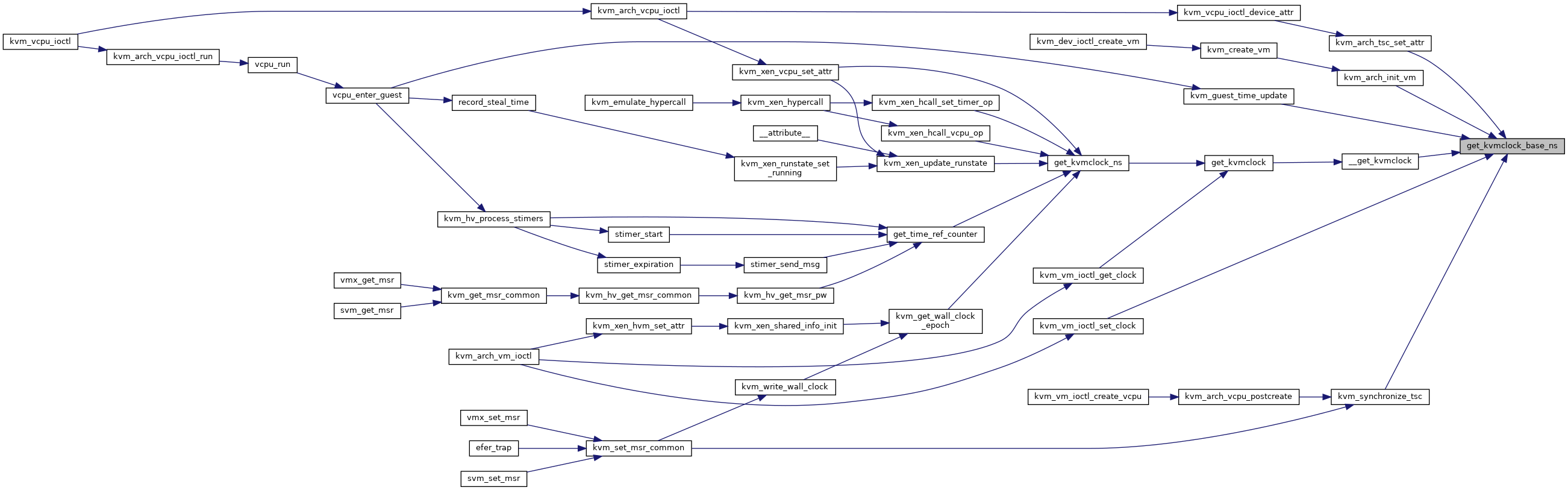

| static s64 | get_kvmclock_base_ns (void) |

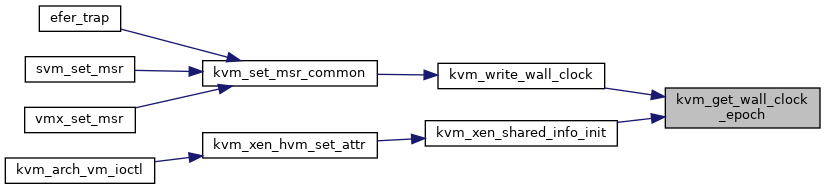

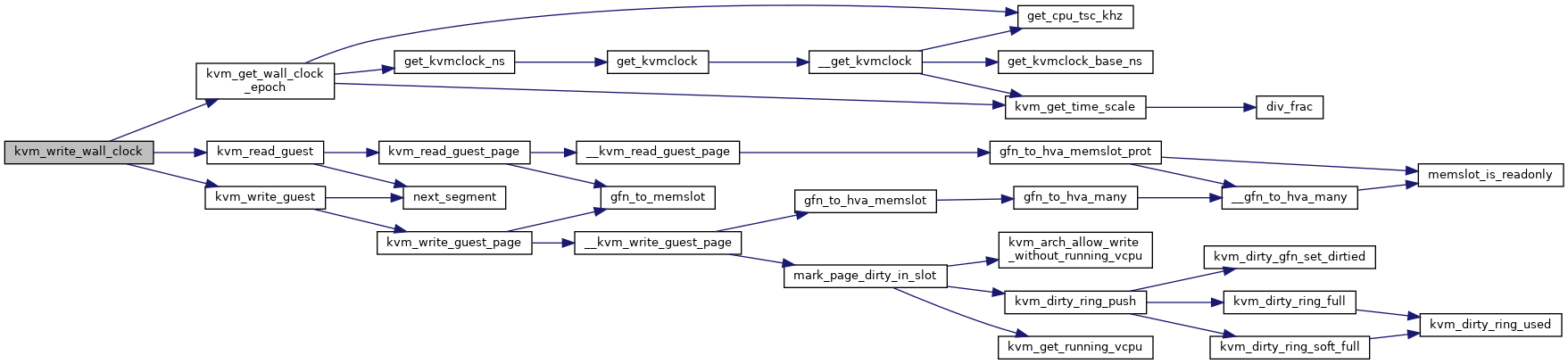

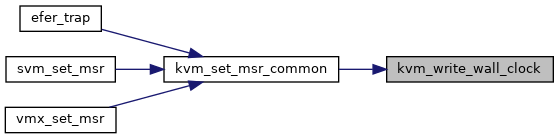

| static void | kvm_write_wall_clock (struct kvm *kvm, gpa_t wall_clock, int sec_hi_ofs) |

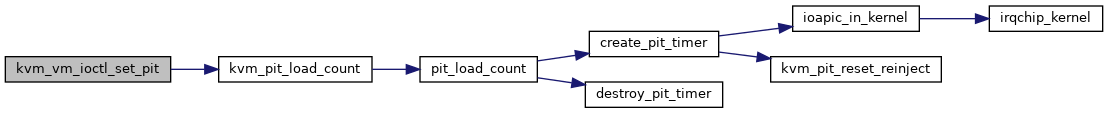

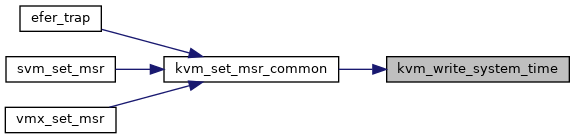

| static void | kvm_write_system_time (struct kvm_vcpu *vcpu, gpa_t system_time, bool old_msr, bool host_initiated) |

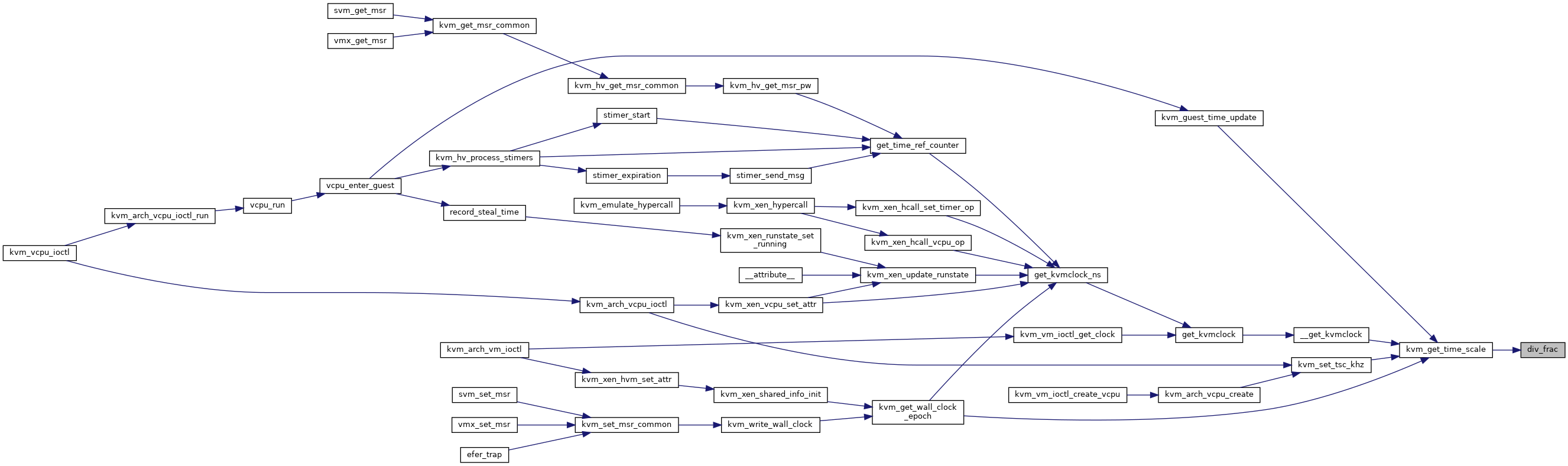

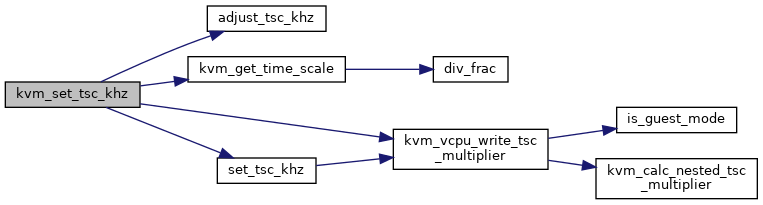

| static uint32_t | div_frac (uint32_t dividend, uint32_t divisor) |

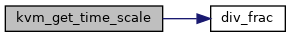

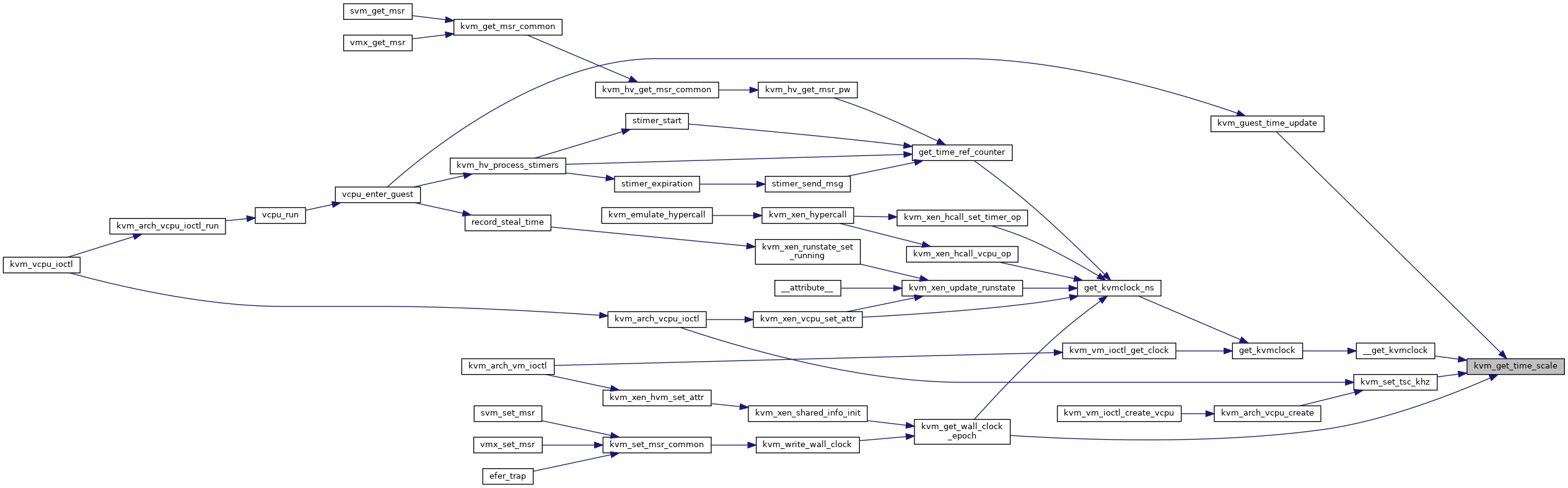

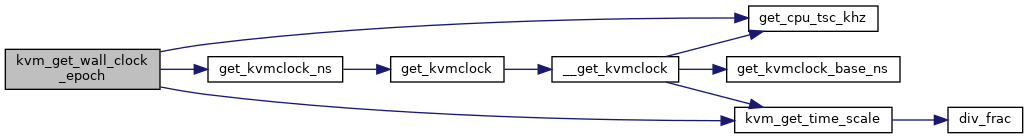

| static void | kvm_get_time_scale (uint64_t scaled_hz, uint64_t base_hz, s8 *pshift, u32 *pmultiplier) |

| static | DEFINE_PER_CPU (unsigned long, cpu_tsc_khz) |

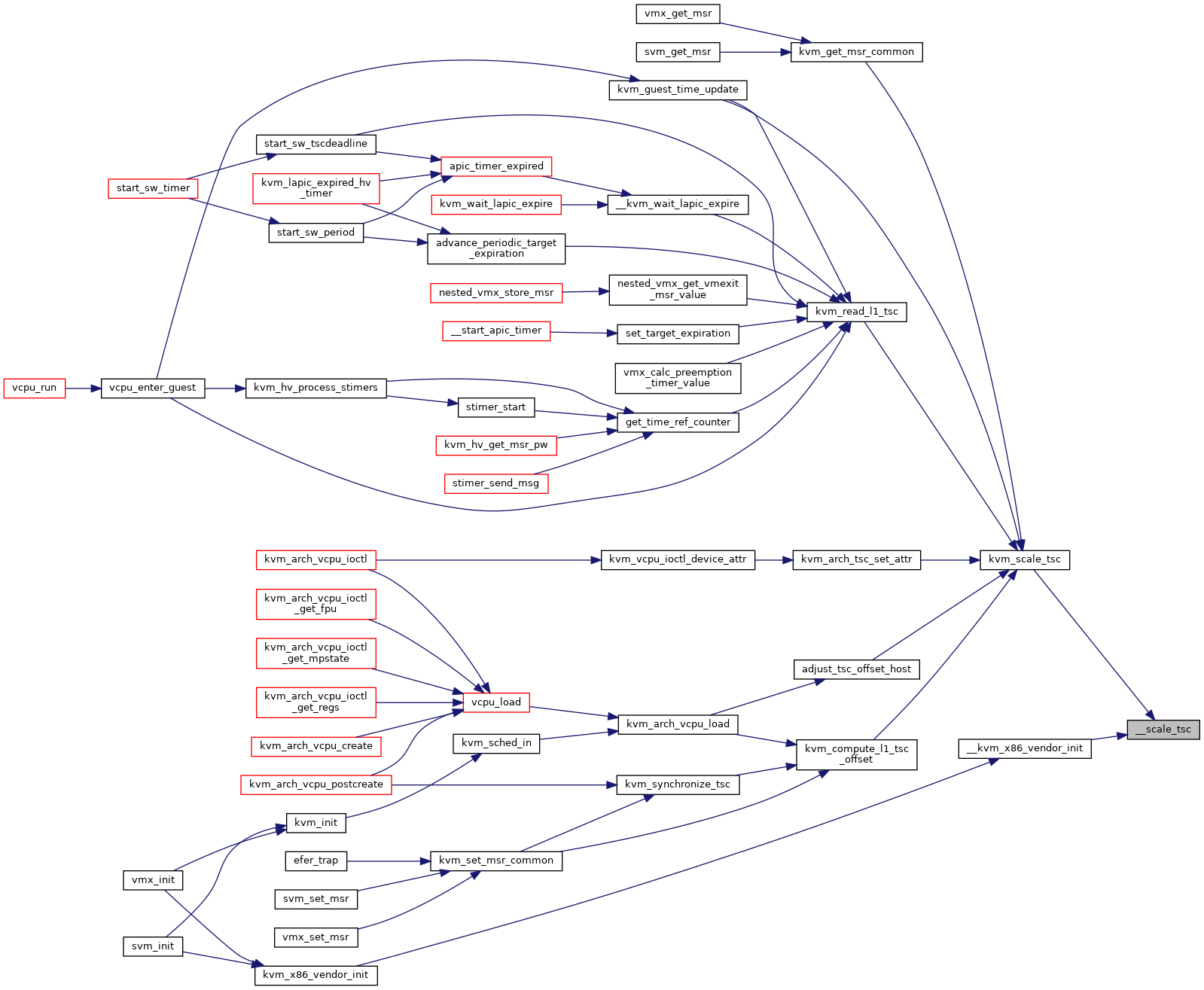

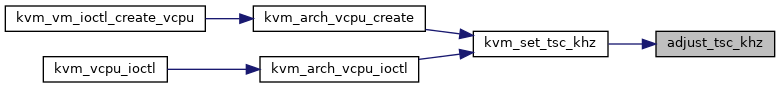

| static u32 | adjust_tsc_khz (u32 khz, s32 ppm) |

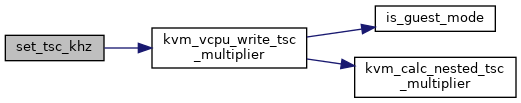

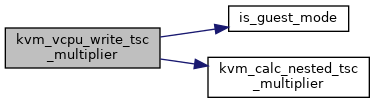

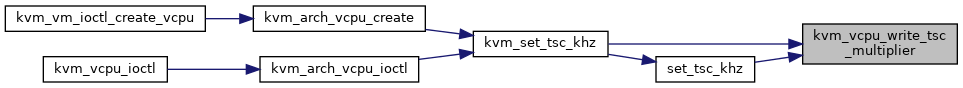

| static void | kvm_vcpu_write_tsc_multiplier (struct kvm_vcpu *vcpu, u64 l1_multiplier) |

| static int | set_tsc_khz (struct kvm_vcpu *vcpu, u32 user_tsc_khz, bool scale) |

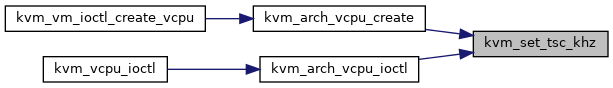

| static int | kvm_set_tsc_khz (struct kvm_vcpu *vcpu, u32 user_tsc_khz) |

| static u64 | compute_guest_tsc (struct kvm_vcpu *vcpu, s64 kernel_ns) |

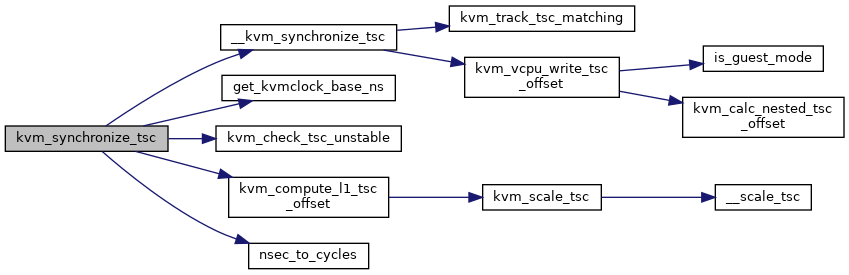

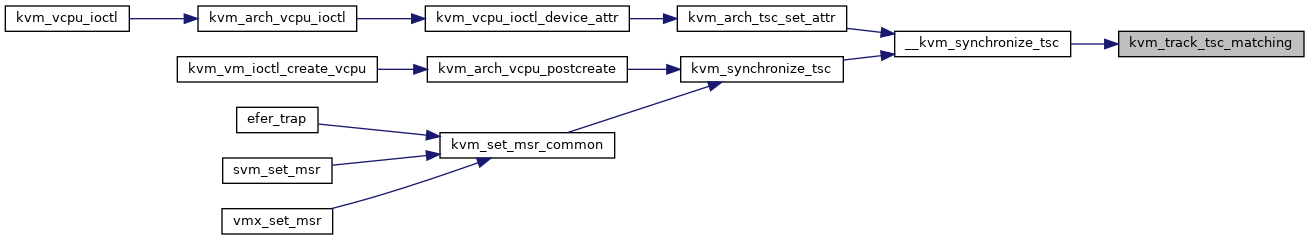

| static void | kvm_track_tsc_matching (struct kvm_vcpu *vcpu, bool new_generation) |

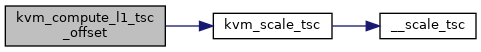

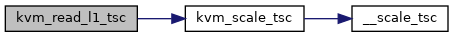

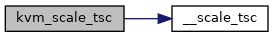

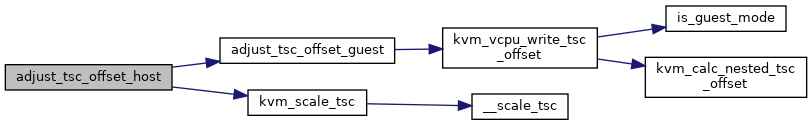

| static u64 | __scale_tsc (u64 ratio, u64 tsc) |

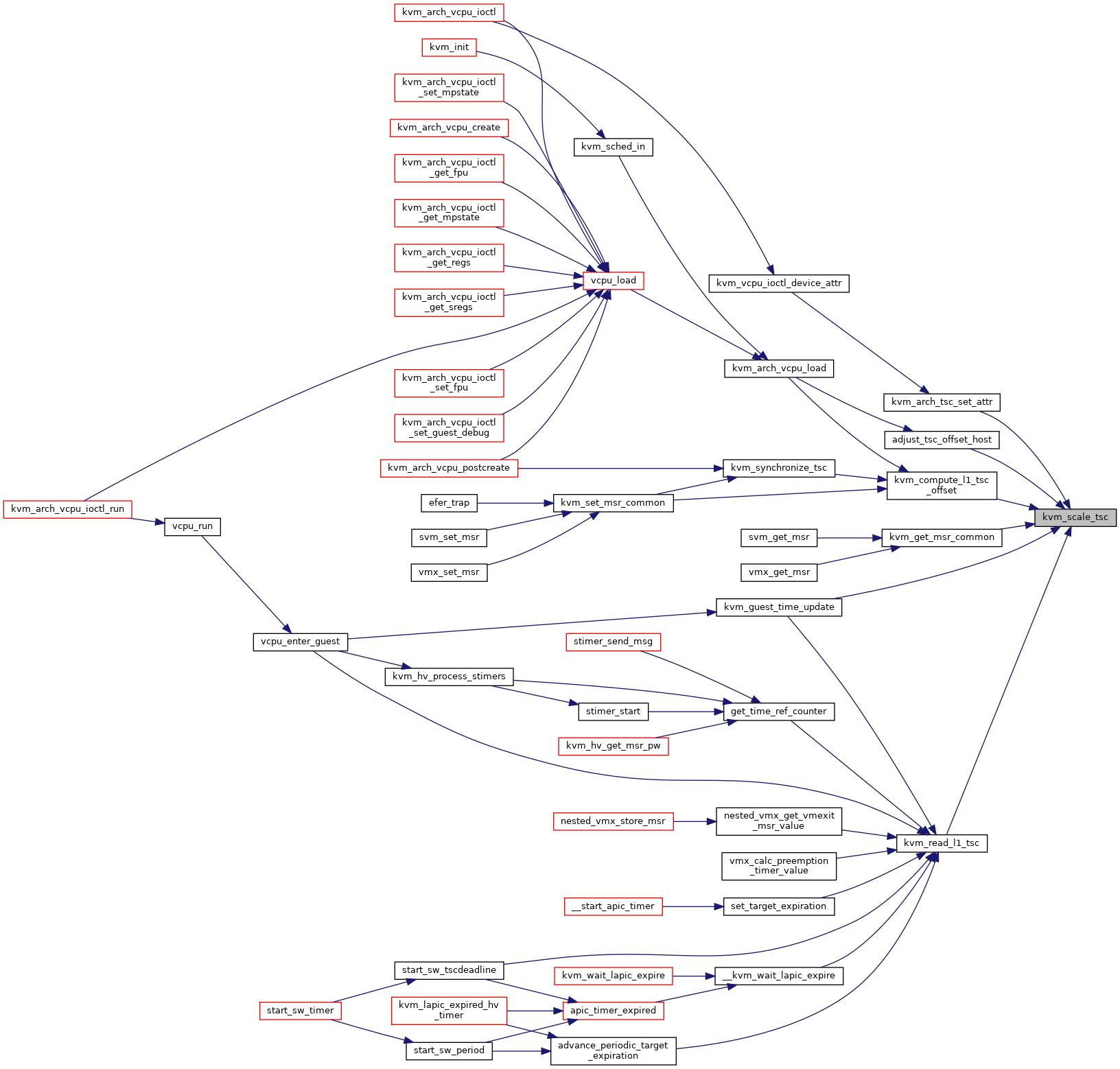

| u64 | kvm_scale_tsc (u64 tsc, u64 ratio) |

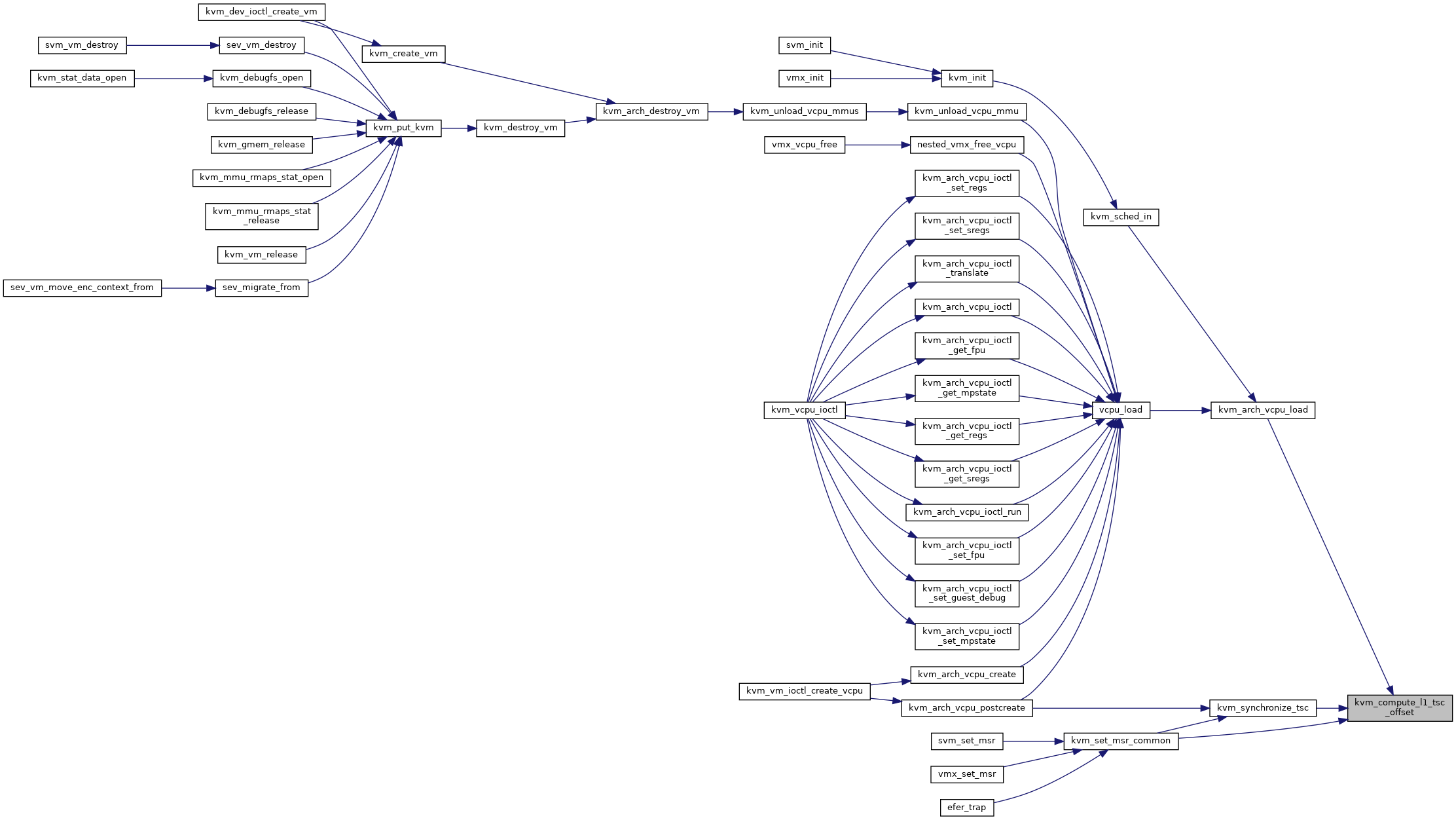

| static u64 | kvm_compute_l1_tsc_offset (struct kvm_vcpu *vcpu, u64 target_tsc) |

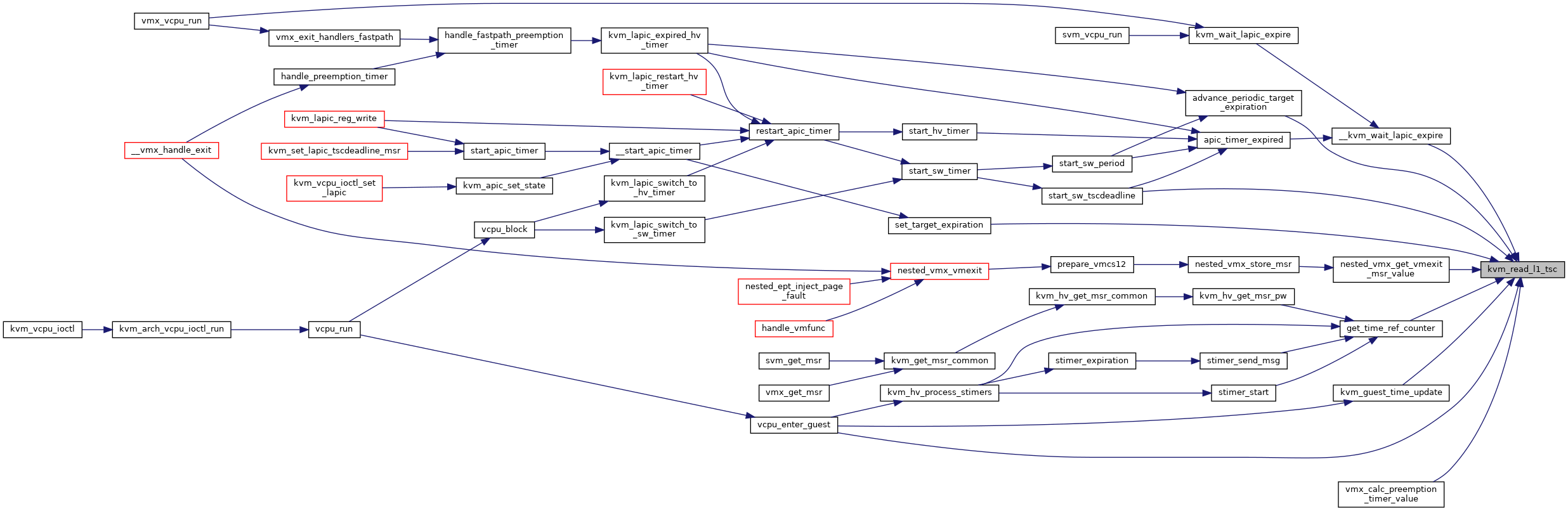

| u64 | kvm_read_l1_tsc (struct kvm_vcpu *vcpu, u64 host_tsc) |

| EXPORT_SYMBOL_GPL (kvm_read_l1_tsc) | |

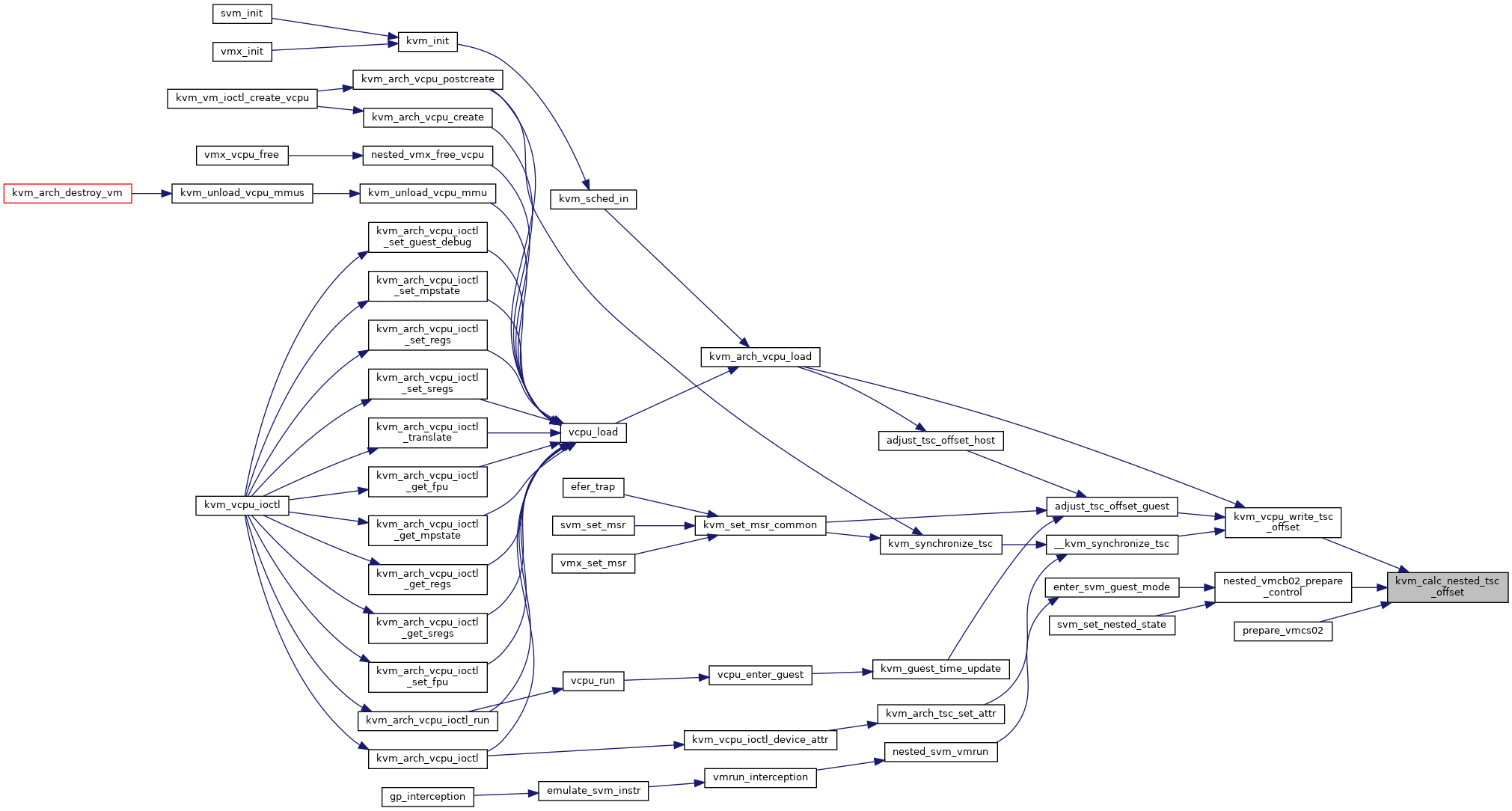

| u64 | kvm_calc_nested_tsc_offset (u64 l1_offset, u64 l2_offset, u64 l2_multiplier) |

| EXPORT_SYMBOL_GPL (kvm_calc_nested_tsc_offset) | |

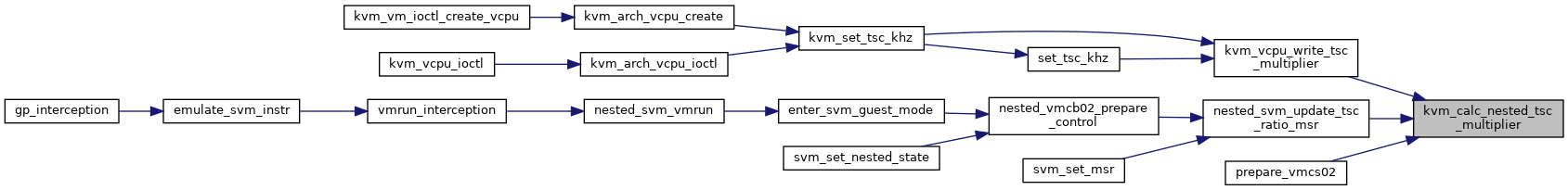

| u64 | kvm_calc_nested_tsc_multiplier (u64 l1_multiplier, u64 l2_multiplier) |

| EXPORT_SYMBOL_GPL (kvm_calc_nested_tsc_multiplier) | |

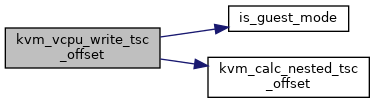

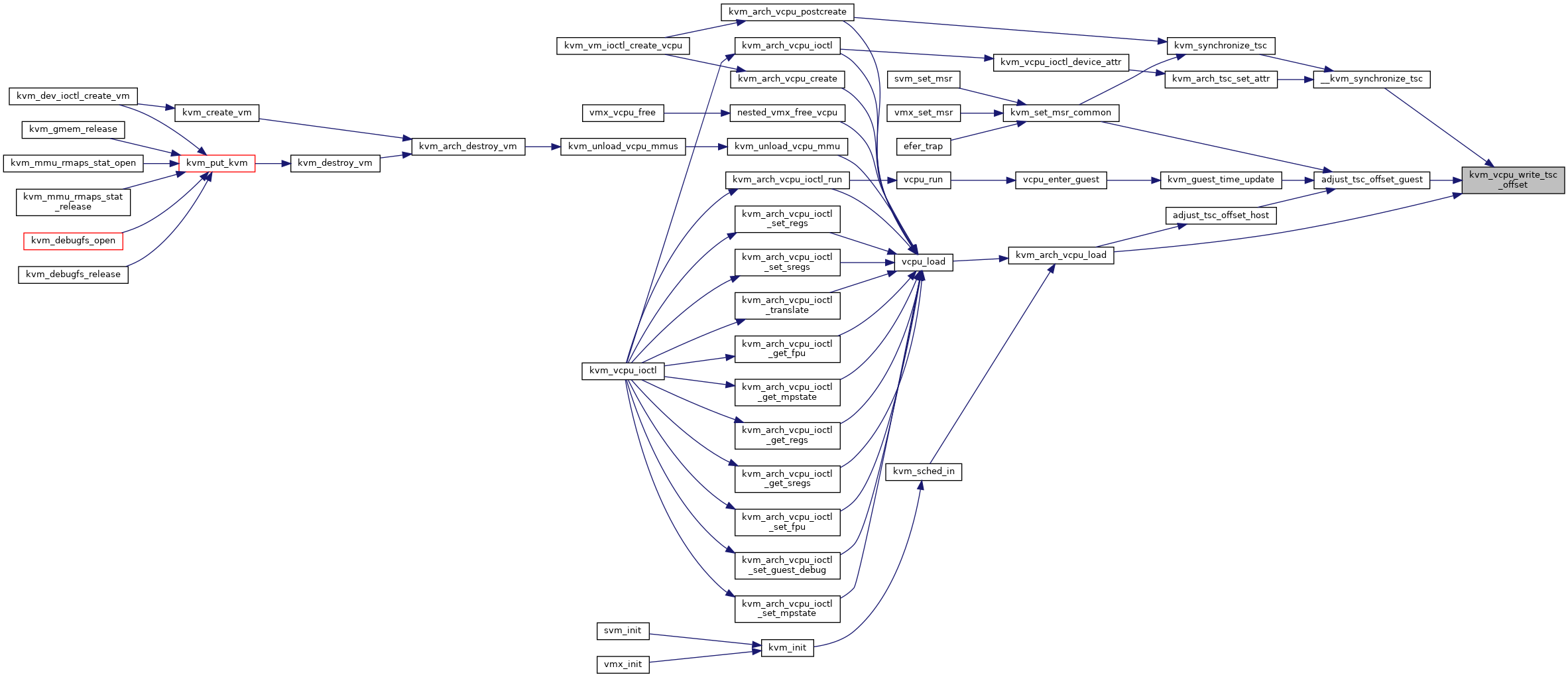

| static void | kvm_vcpu_write_tsc_offset (struct kvm_vcpu *vcpu, u64 l1_offset) |

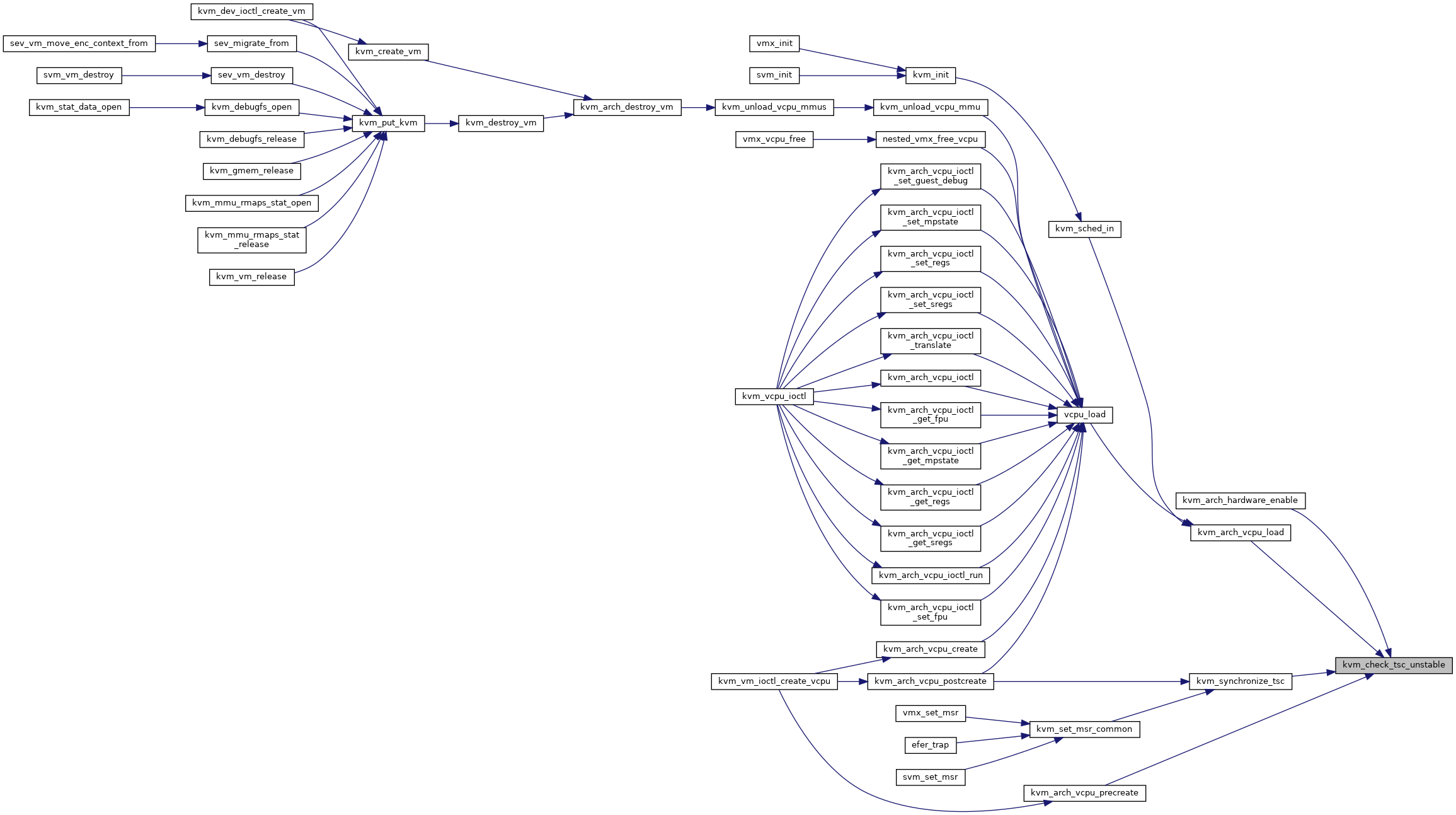

| static bool | kvm_check_tsc_unstable (void) |

| static void | __kvm_synchronize_tsc (struct kvm_vcpu *vcpu, u64 offset, u64 tsc, u64 ns, bool matched) |

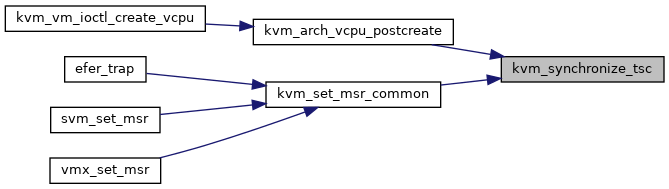

| static void | kvm_synchronize_tsc (struct kvm_vcpu *vcpu, u64 *user_value) |

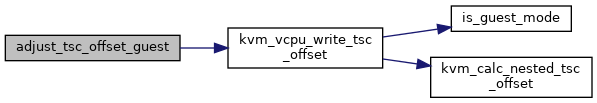

| static void | adjust_tsc_offset_guest (struct kvm_vcpu *vcpu, s64 adjustment) |

| static void | adjust_tsc_offset_host (struct kvm_vcpu *vcpu, s64 adjustment) |

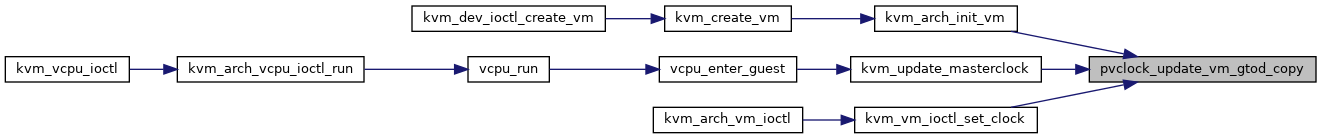

| static void | pvclock_update_vm_gtod_copy (struct kvm *kvm) |

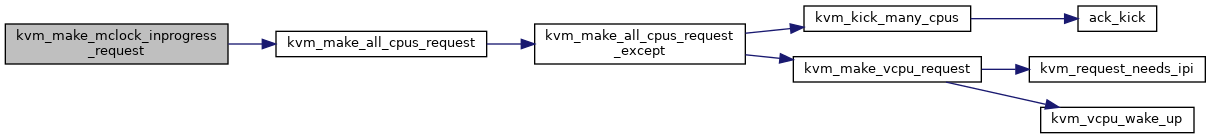

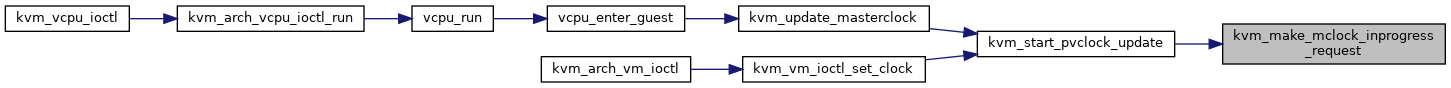

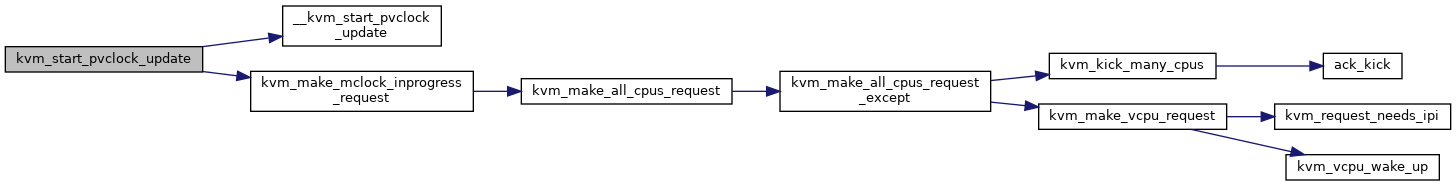

| static void | kvm_make_mclock_inprogress_request (struct kvm *kvm) |

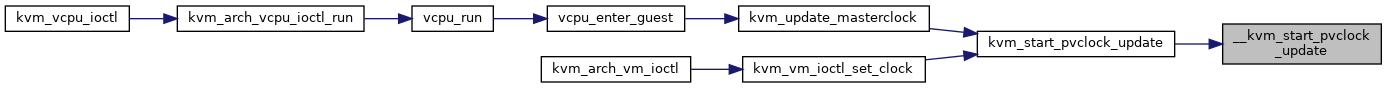

| static void | __kvm_start_pvclock_update (struct kvm *kvm) |

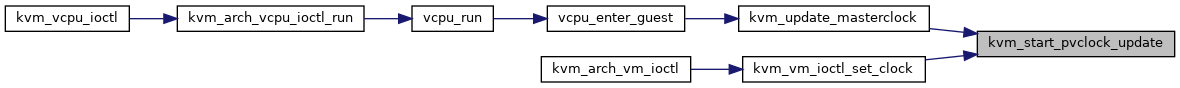

| static void | kvm_start_pvclock_update (struct kvm *kvm) |

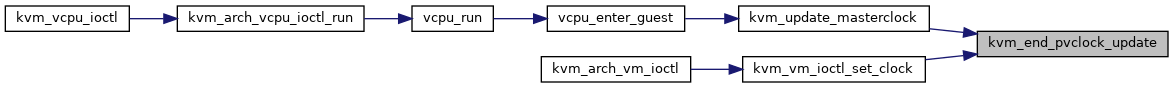

| static void | kvm_end_pvclock_update (struct kvm *kvm) |

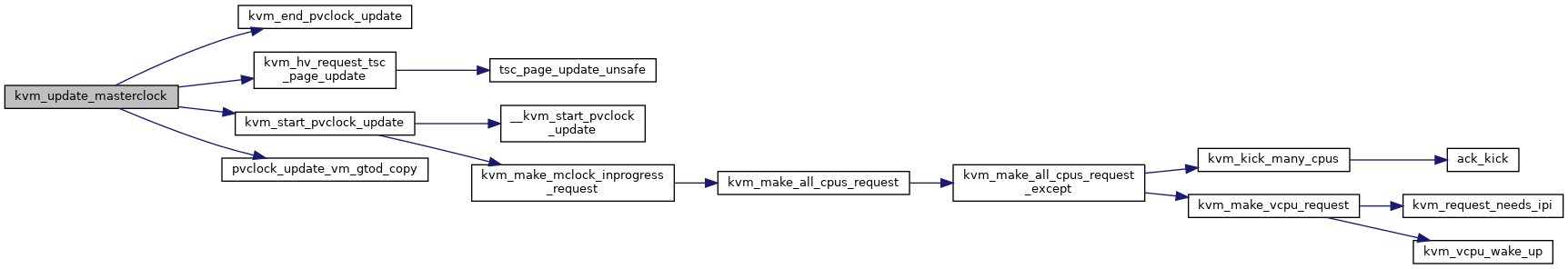

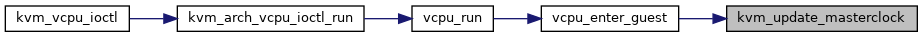

| static void | kvm_update_masterclock (struct kvm *kvm) |

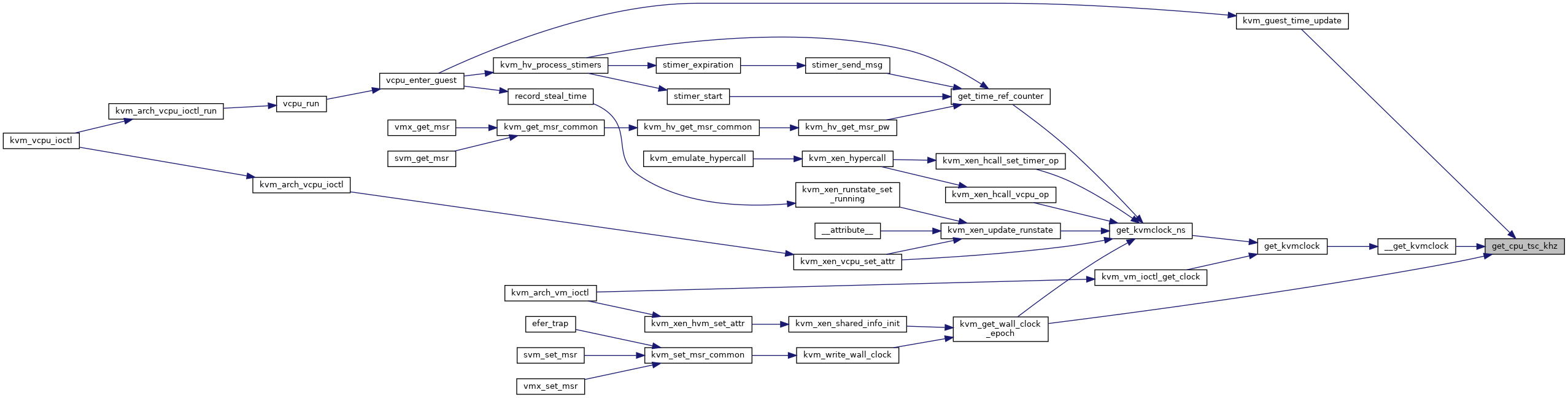

| static unsigned long | get_cpu_tsc_khz (void) |

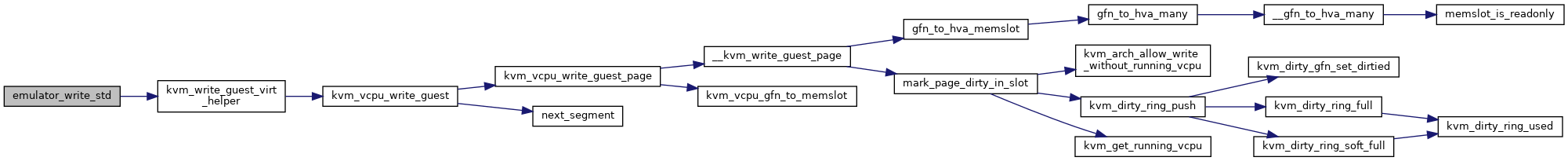

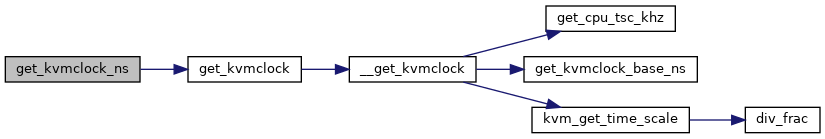

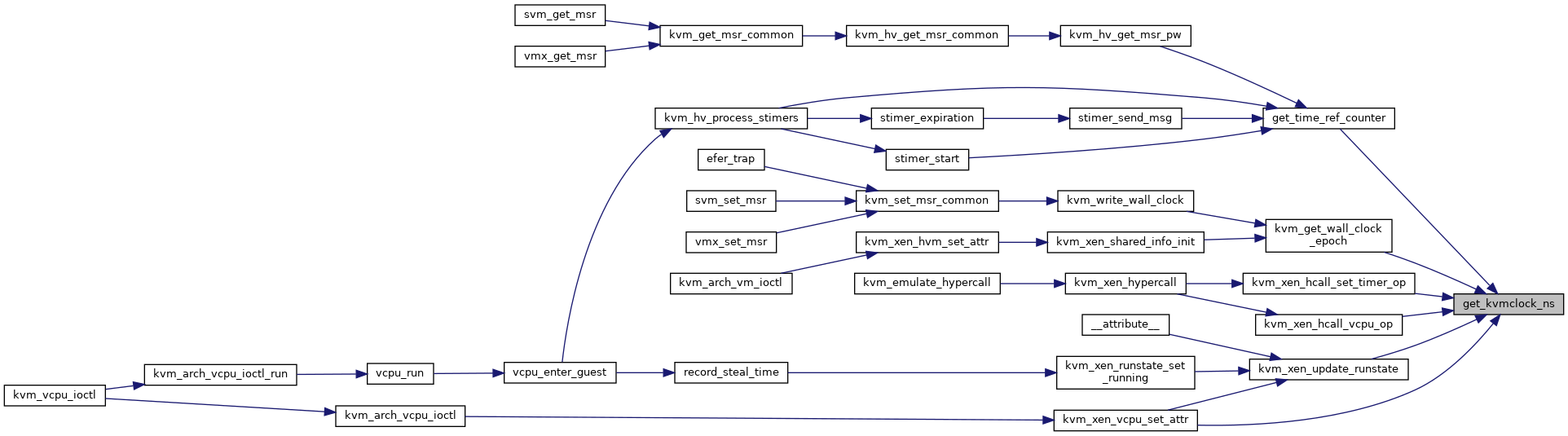

| static void | __get_kvmclock (struct kvm *kvm, struct kvm_clock_data *data) |

| static void | get_kvmclock (struct kvm *kvm, struct kvm_clock_data *data) |

| u64 | get_kvmclock_ns (struct kvm *kvm) |

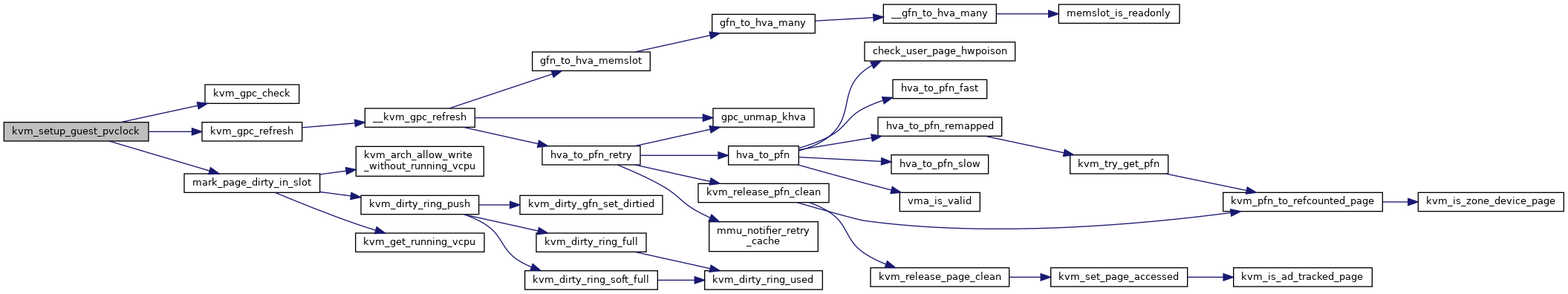

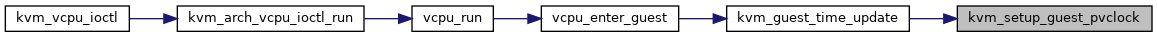

| static void | kvm_setup_guest_pvclock (struct kvm_vcpu *v, struct gfn_to_pfn_cache *gpc, unsigned int offset, bool force_tsc_unstable) |

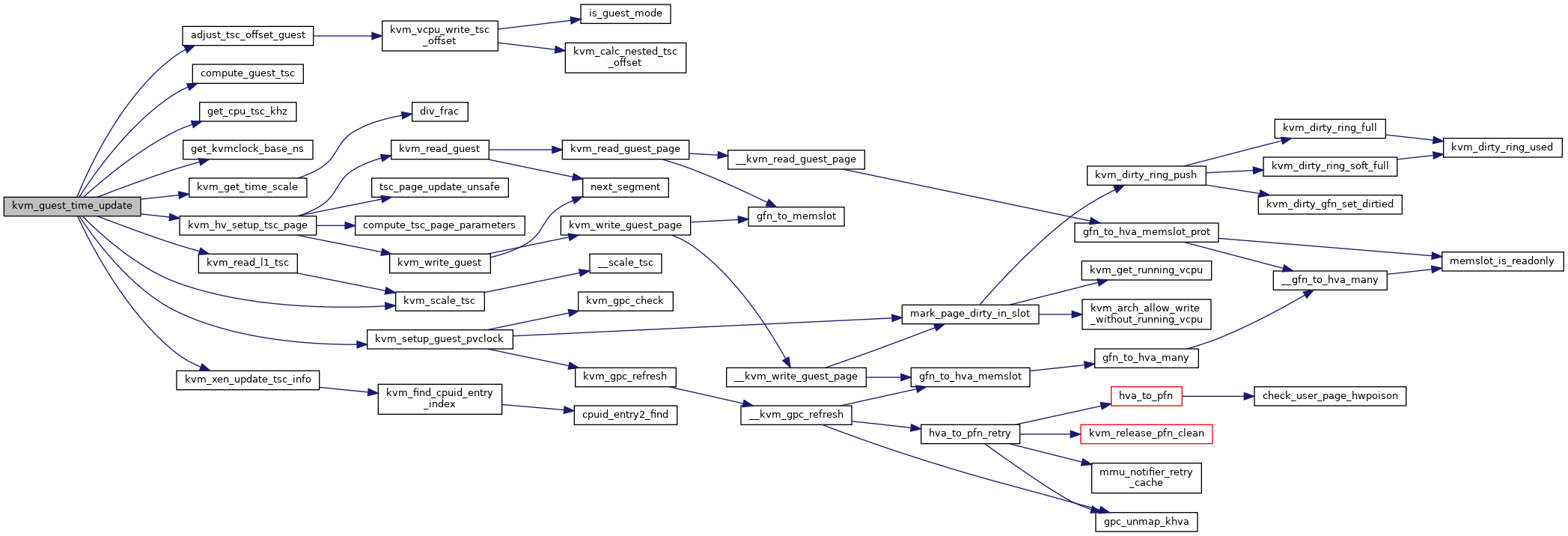

| static int | kvm_guest_time_update (struct kvm_vcpu *v) |

| uint64_t | kvm_get_wall_clock_epoch (struct kvm *kvm) |

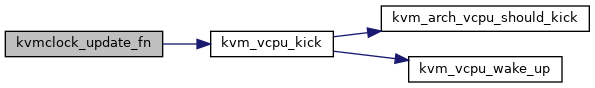

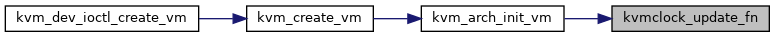

| static void | kvmclock_update_fn (struct work_struct *work) |

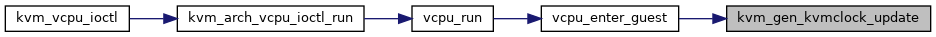

| static void | kvm_gen_kvmclock_update (struct kvm_vcpu *v) |

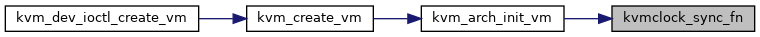

| static void | kvmclock_sync_fn (struct work_struct *work) |

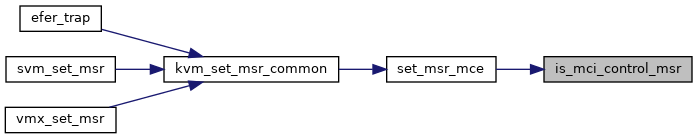

| static bool | is_mci_control_msr (u32 msr) |

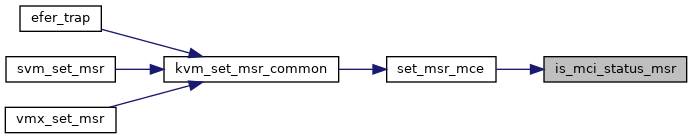

| static bool | is_mci_status_msr (u32 msr) |

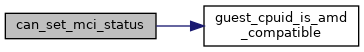

| static bool | can_set_mci_status (struct kvm_vcpu *vcpu) |

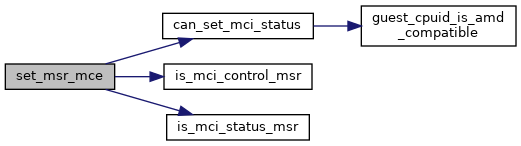

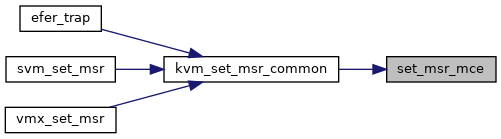

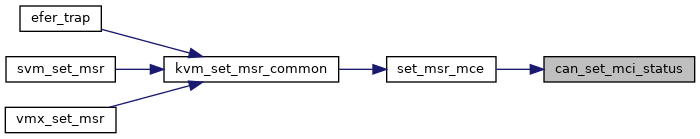

| static int | set_msr_mce (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

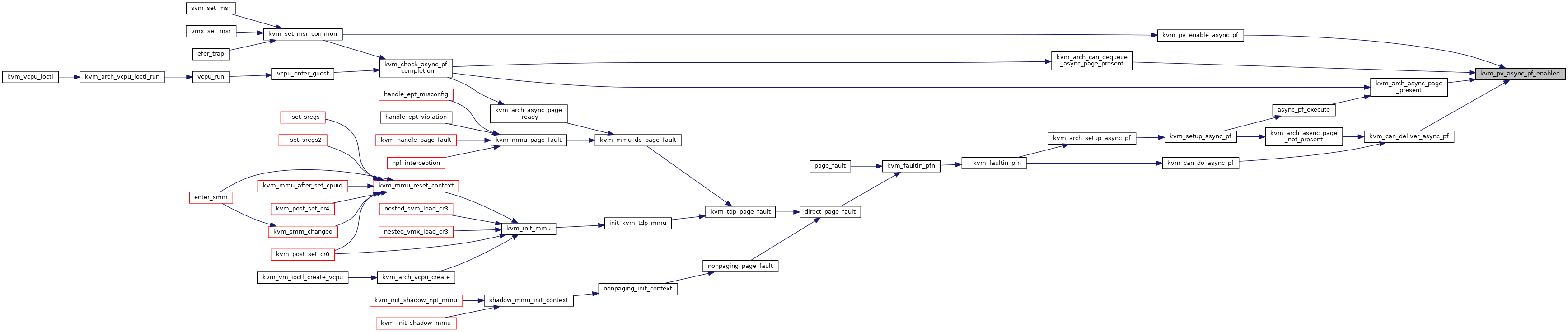

| static bool | kvm_pv_async_pf_enabled (struct kvm_vcpu *vcpu) |

| static int | kvm_pv_enable_async_pf (struct kvm_vcpu *vcpu, u64 data) |

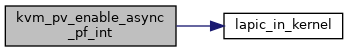

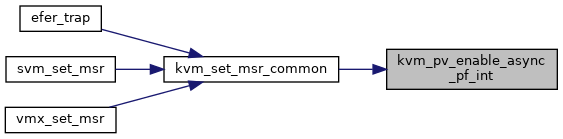

| static int | kvm_pv_enable_async_pf_int (struct kvm_vcpu *vcpu, u64 data) |

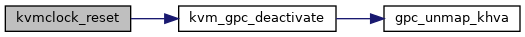

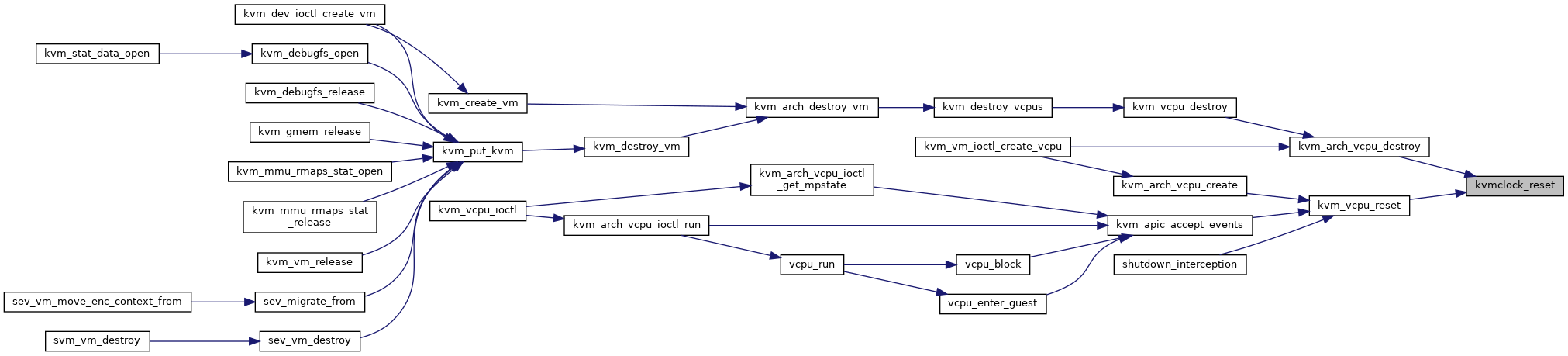

| static void | kvmclock_reset (struct kvm_vcpu *vcpu) |

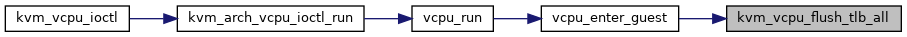

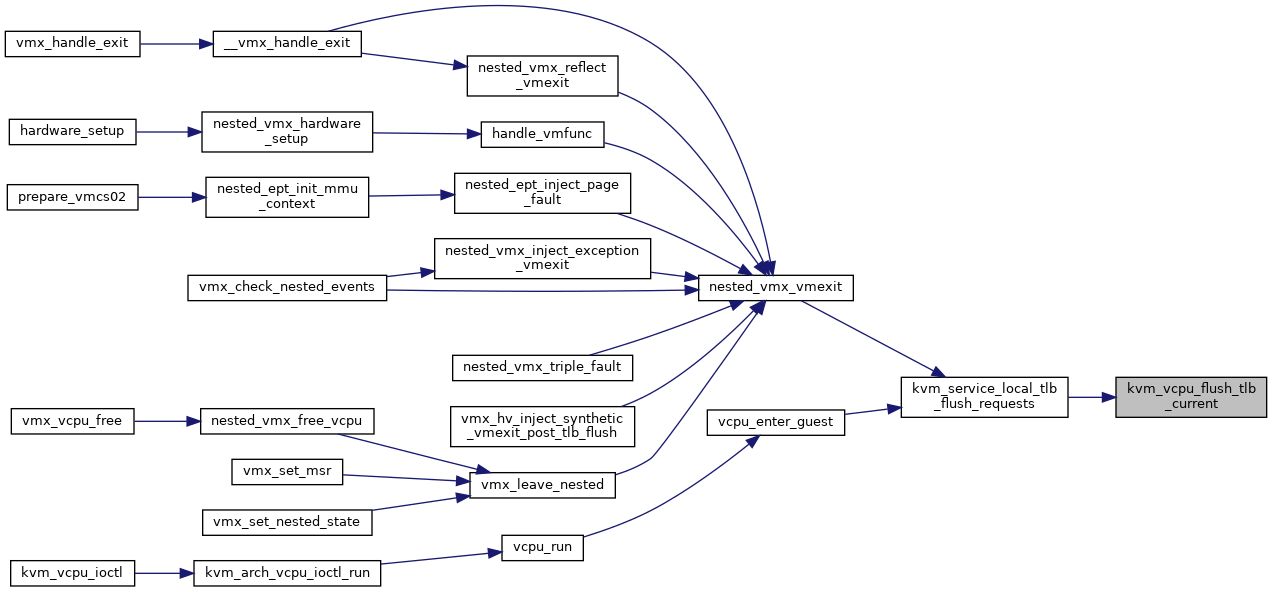

| static void | kvm_vcpu_flush_tlb_all (struct kvm_vcpu *vcpu) |

| static void | kvm_vcpu_flush_tlb_guest (struct kvm_vcpu *vcpu) |

| static void | kvm_vcpu_flush_tlb_current (struct kvm_vcpu *vcpu) |

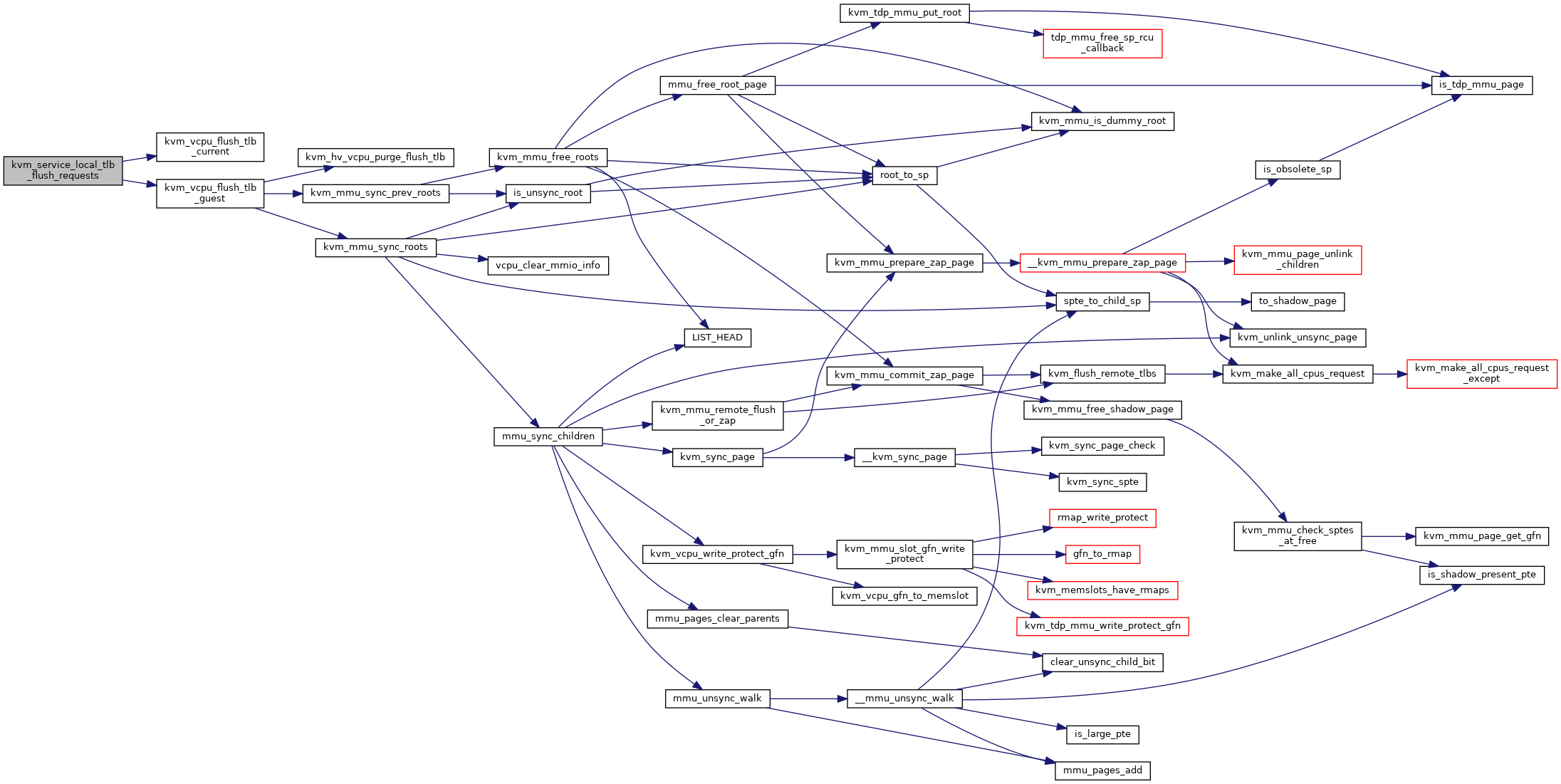

| void | kvm_service_local_tlb_flush_requests (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_service_local_tlb_flush_requests) | |

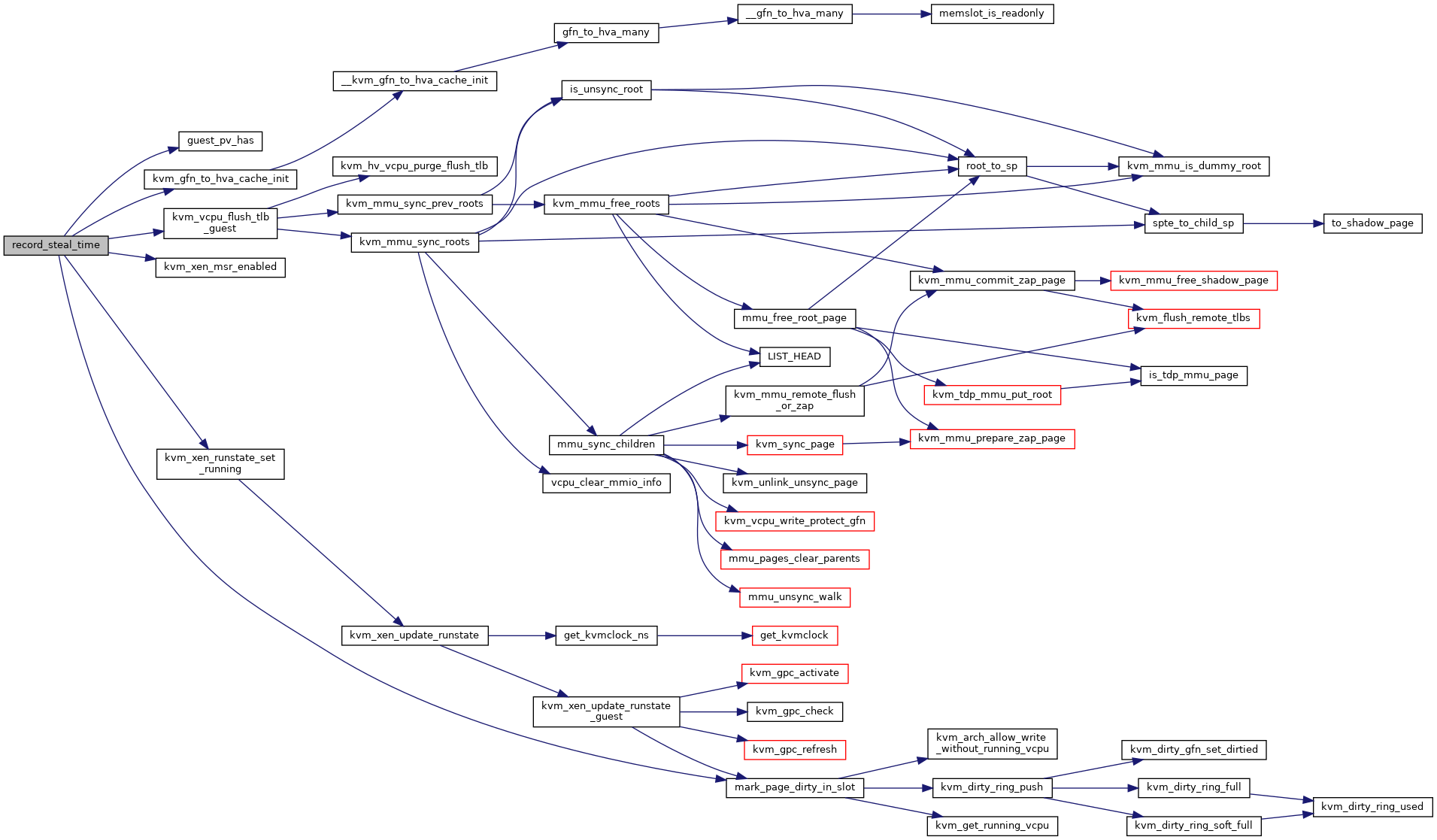

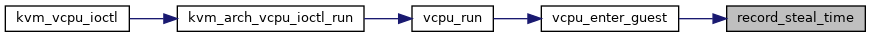

| static void | record_steal_time (struct kvm_vcpu *vcpu) |

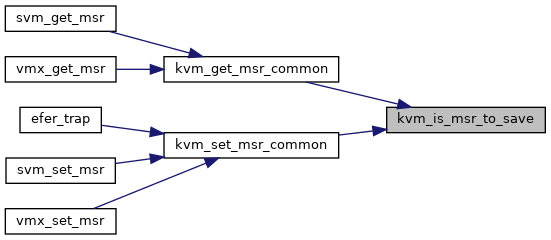

| static bool | kvm_is_msr_to_save (u32 msr_index) |

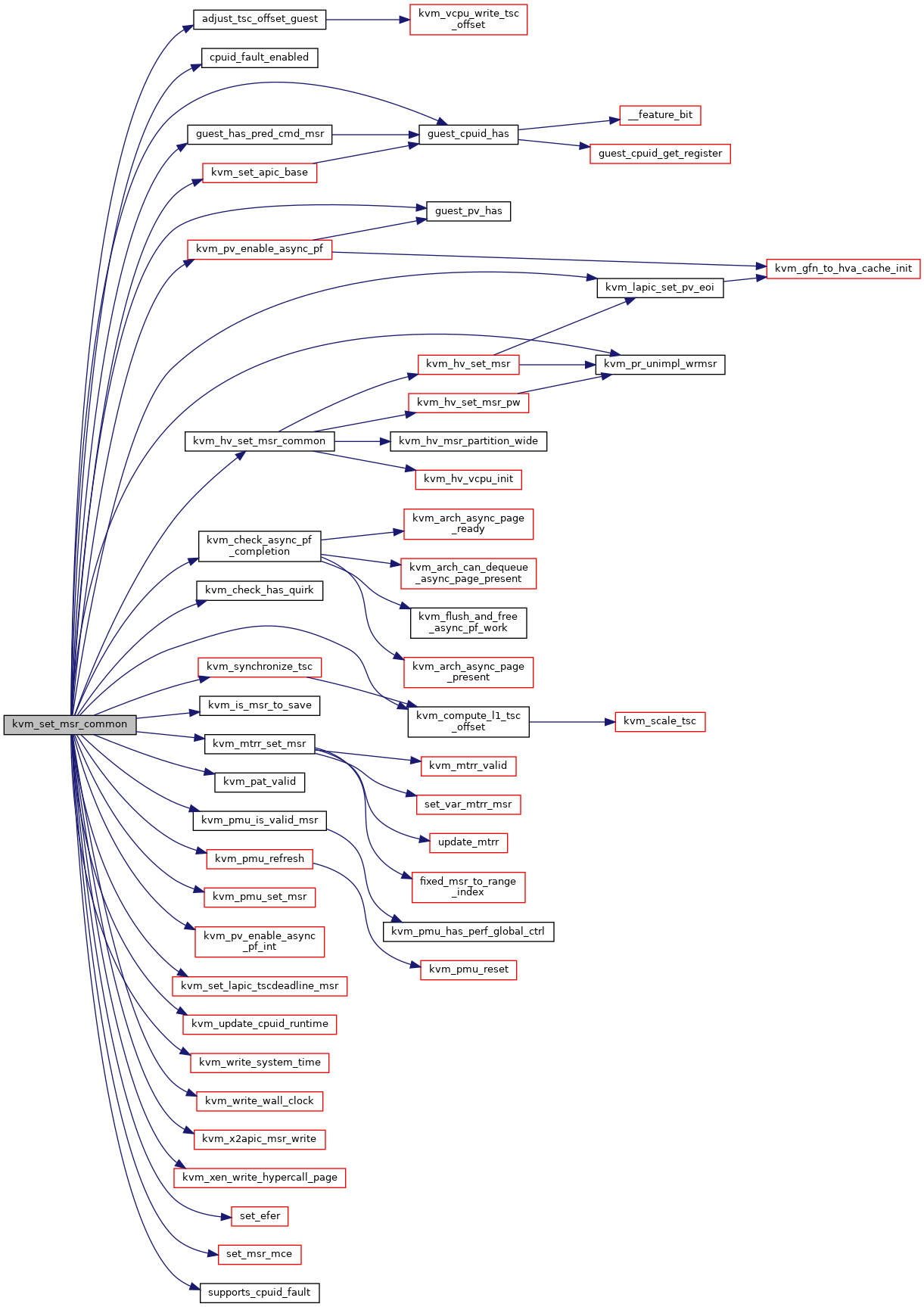

| int | kvm_set_msr_common (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

| EXPORT_SYMBOL_GPL (kvm_set_msr_common) | |

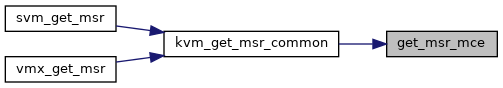

| static int | get_msr_mce (struct kvm_vcpu *vcpu, u32 msr, u64 *pdata, bool host) |

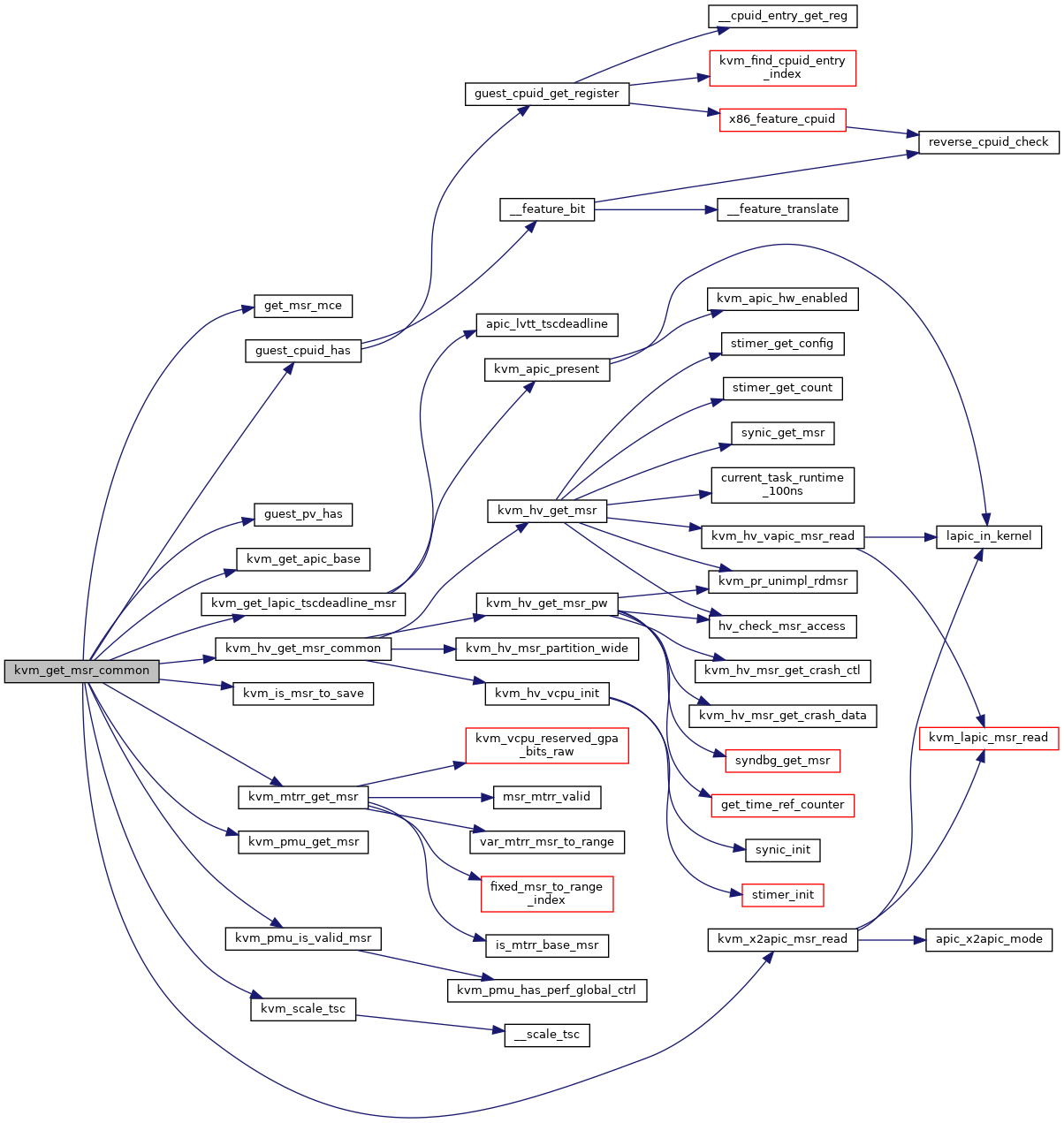

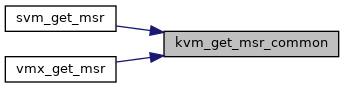

| int | kvm_get_msr_common (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

| EXPORT_SYMBOL_GPL (kvm_get_msr_common) | |

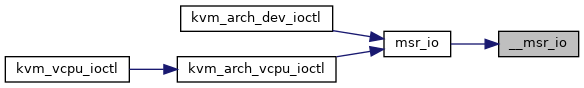

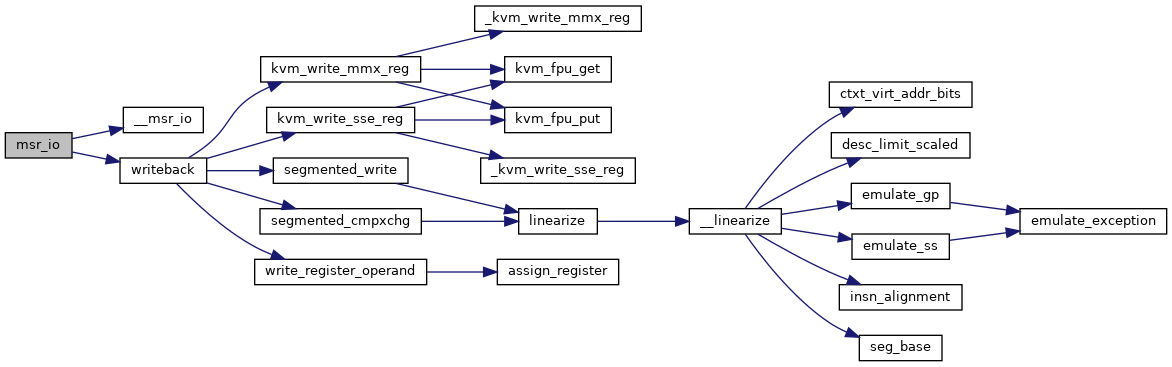

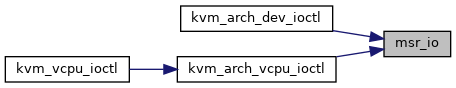

| static int | __msr_io (struct kvm_vcpu *vcpu, struct kvm_msrs *msrs, struct kvm_msr_entry *entries, int(*do_msr)(struct kvm_vcpu *vcpu, unsigned index, u64 *data)) |

| static int | msr_io (struct kvm_vcpu *vcpu, struct kvm_msrs __user *user_msrs, int(*do_msr)(struct kvm_vcpu *vcpu, unsigned index, u64 *data), int writeback) |

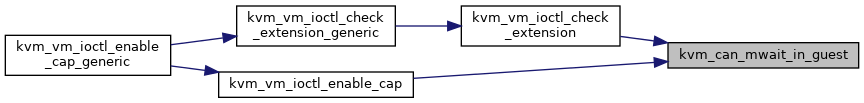

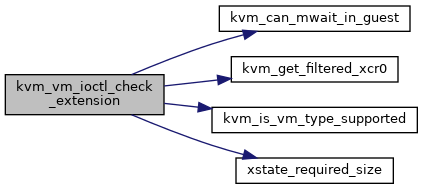

| static bool | kvm_can_mwait_in_guest (void) |

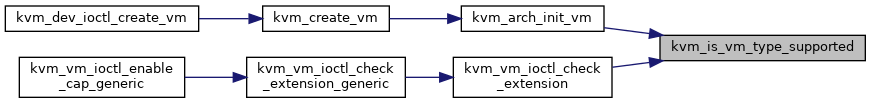

| static bool | kvm_is_vm_type_supported (unsigned long type) |

| int | kvm_vm_ioctl_check_extension (struct kvm *kvm, long ext) |

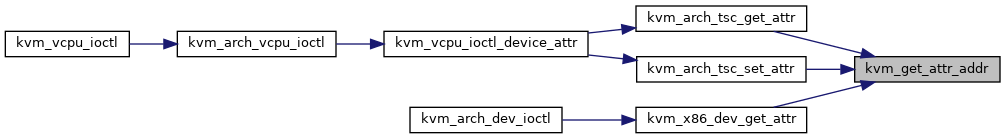

| static void __user * | kvm_get_attr_addr (struct kvm_device_attr *attr) |

| static int | kvm_x86_dev_get_attr (struct kvm_device_attr *attr) |

| static int | kvm_x86_dev_has_attr (struct kvm_device_attr *attr) |

| long | kvm_arch_dev_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

| static void | wbinvd_ipi (void *garbage) |

| static bool | need_emulate_wbinvd (struct kvm_vcpu *vcpu) |

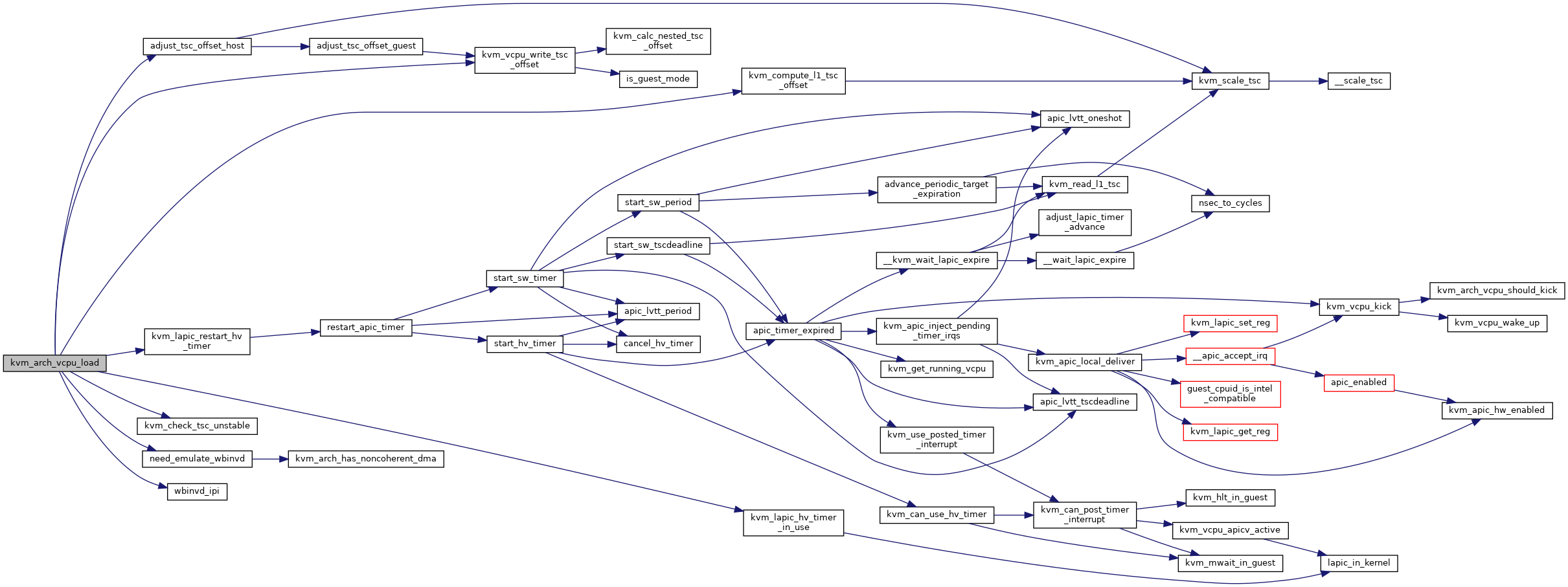

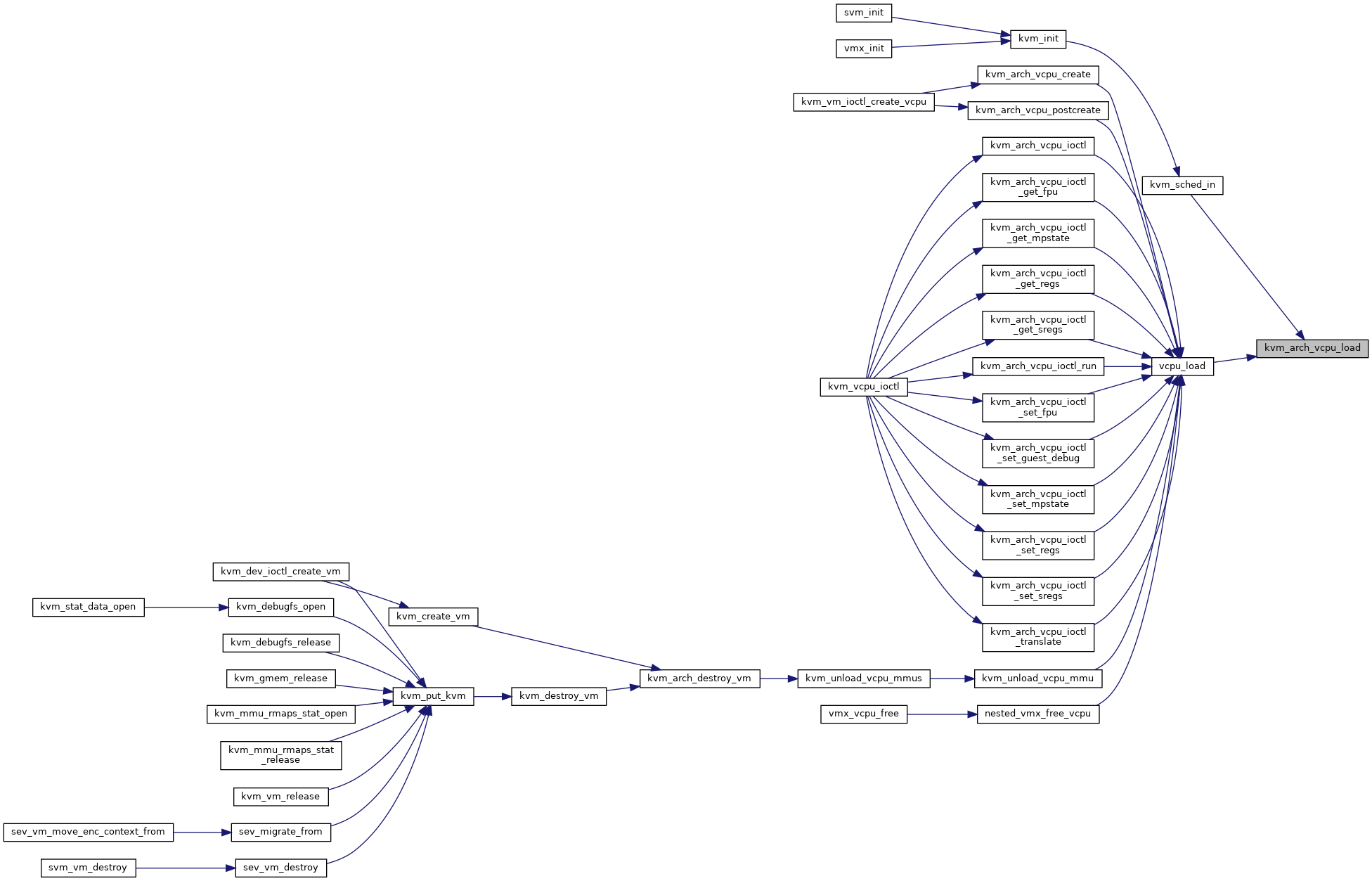

| void | kvm_arch_vcpu_load (struct kvm_vcpu *vcpu, int cpu) |

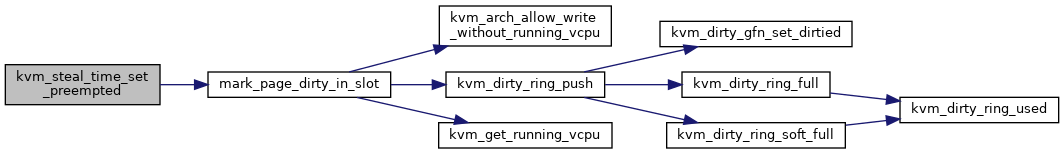

| static void | kvm_steal_time_set_preempted (struct kvm_vcpu *vcpu) |

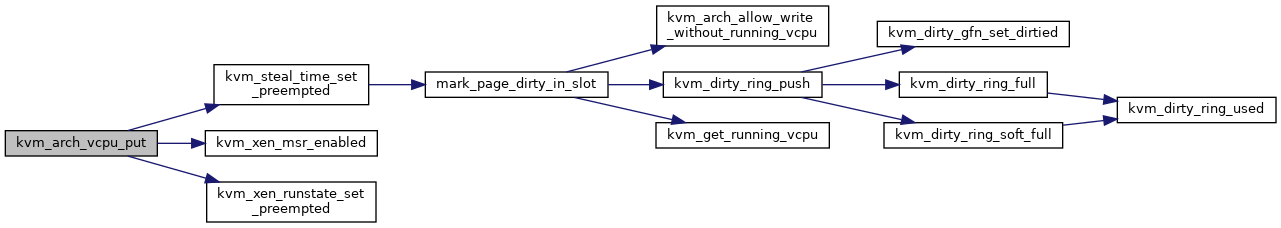

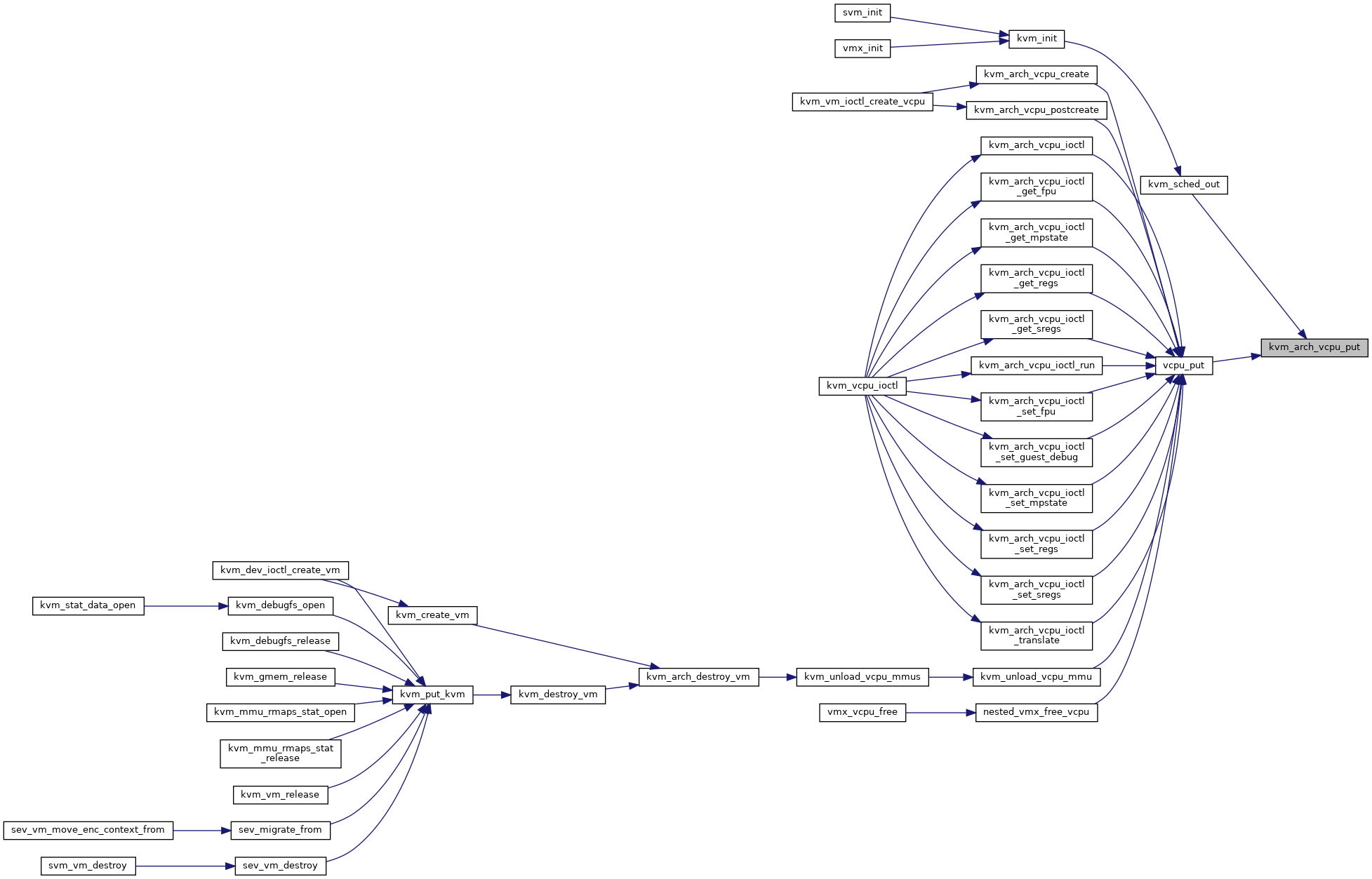

| void | kvm_arch_vcpu_put (struct kvm_vcpu *vcpu) |

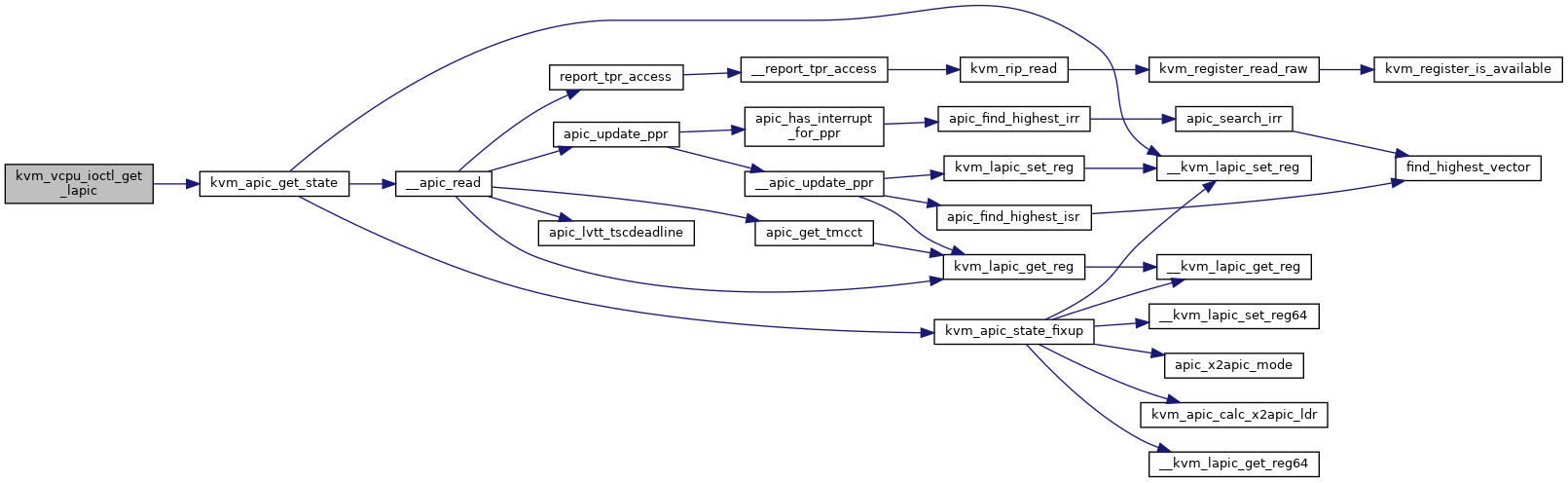

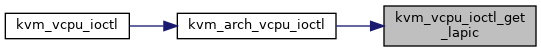

| static int | kvm_vcpu_ioctl_get_lapic (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

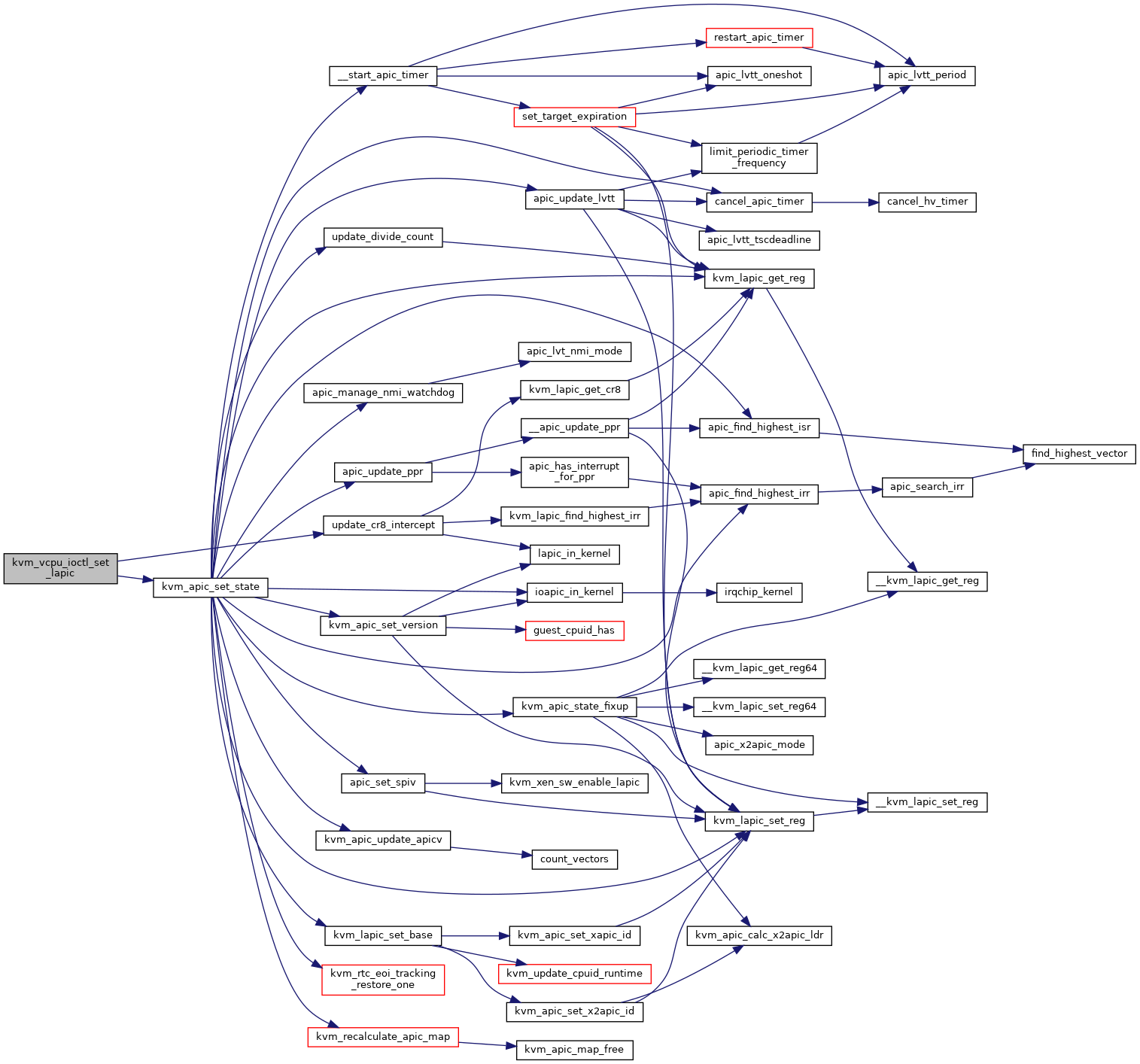

| static int | kvm_vcpu_ioctl_set_lapic (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

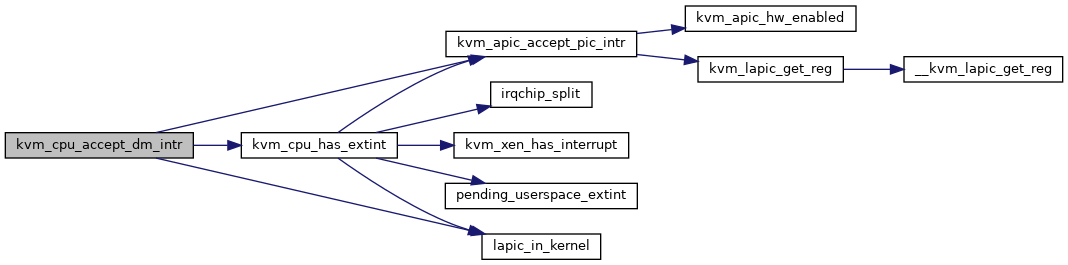

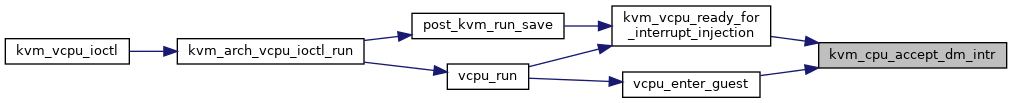

| static int | kvm_cpu_accept_dm_intr (struct kvm_vcpu *vcpu) |

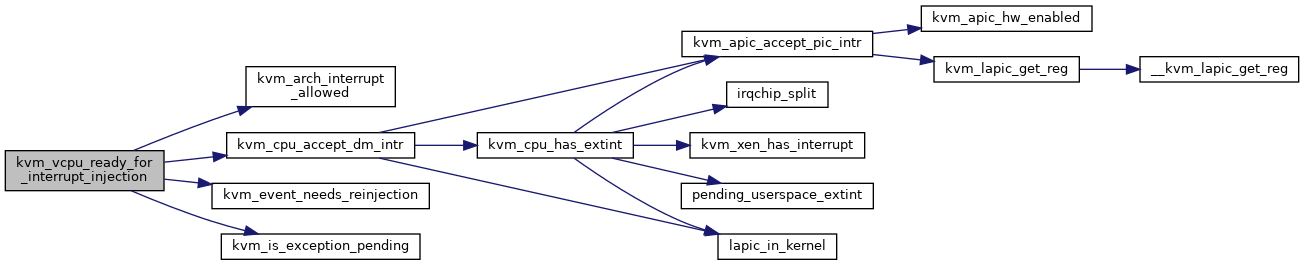

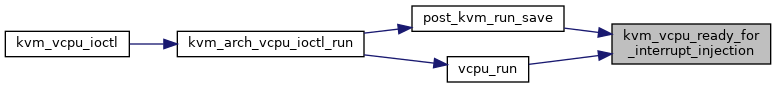

| static int | kvm_vcpu_ready_for_interrupt_injection (struct kvm_vcpu *vcpu) |

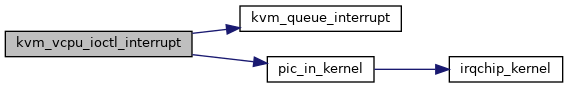

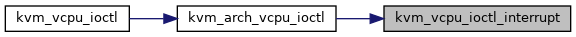

| static int | kvm_vcpu_ioctl_interrupt (struct kvm_vcpu *vcpu, struct kvm_interrupt *irq) |

| static int | kvm_vcpu_ioctl_nmi (struct kvm_vcpu *vcpu) |

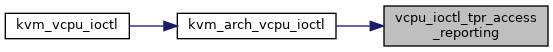

| static int | vcpu_ioctl_tpr_access_reporting (struct kvm_vcpu *vcpu, struct kvm_tpr_access_ctl *tac) |

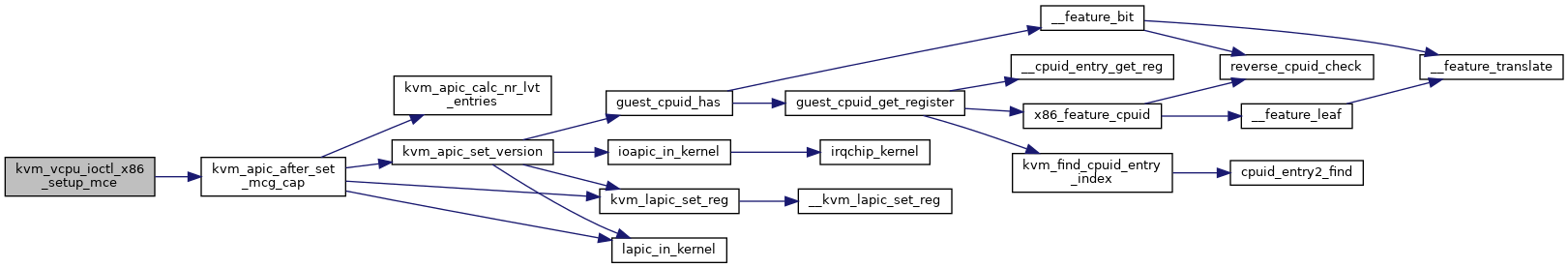

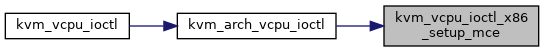

| static int | kvm_vcpu_ioctl_x86_setup_mce (struct kvm_vcpu *vcpu, u64 mcg_cap) |

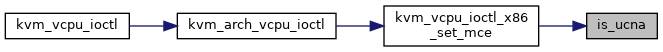

| static bool | is_ucna (struct kvm_x86_mce *mce) |

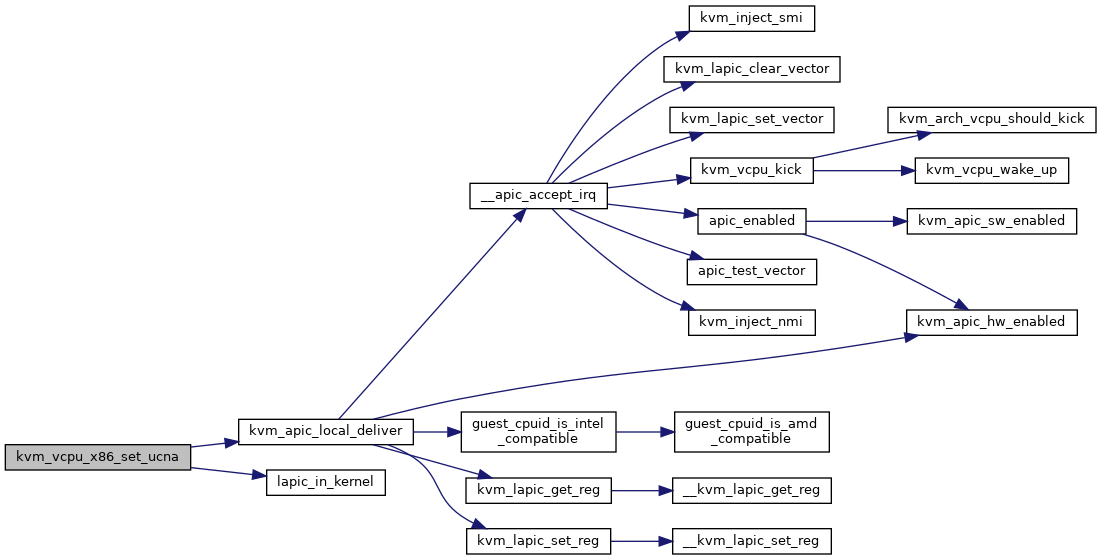

| static int | kvm_vcpu_x86_set_ucna (struct kvm_vcpu *vcpu, struct kvm_x86_mce *mce, u64 *banks) |

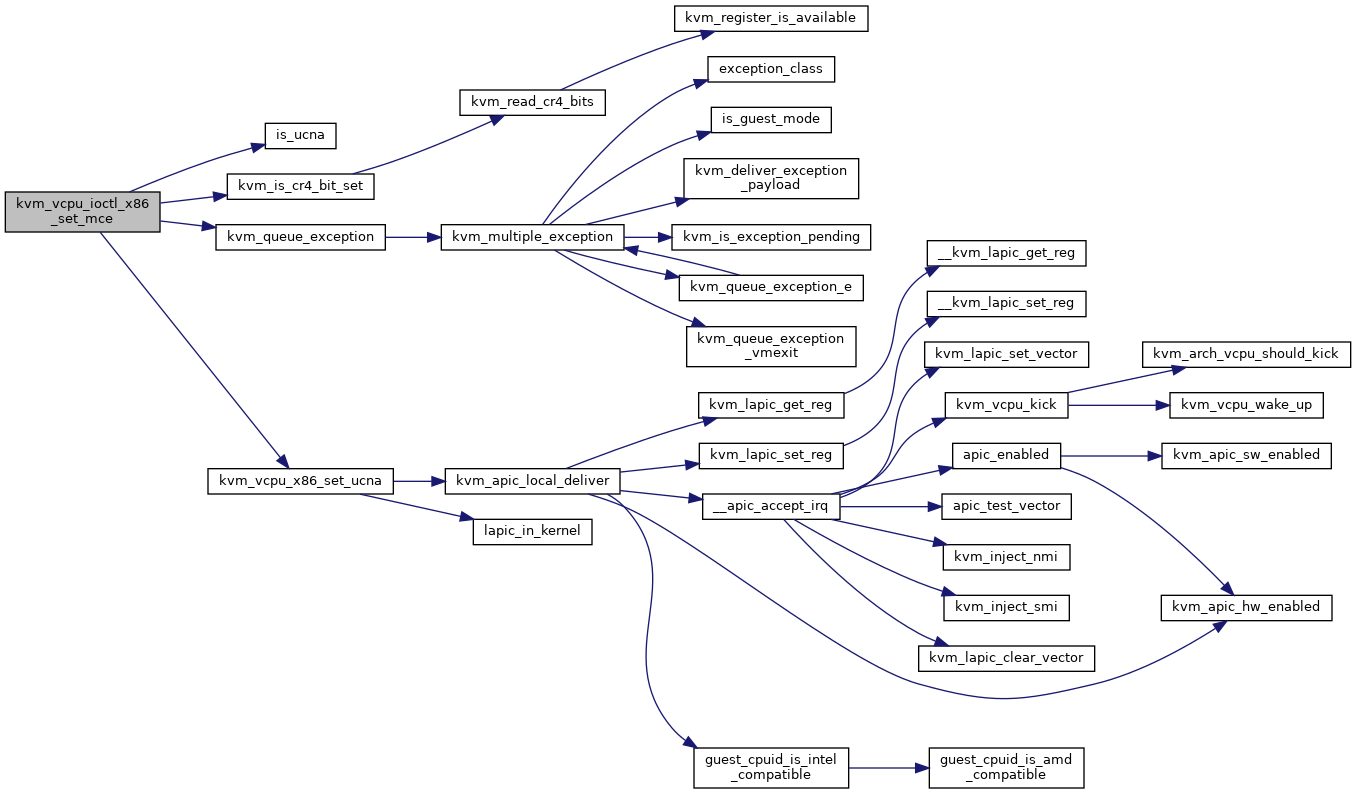

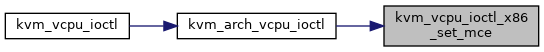

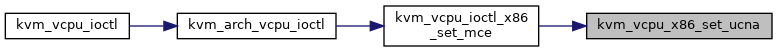

| static int | kvm_vcpu_ioctl_x86_set_mce (struct kvm_vcpu *vcpu, struct kvm_x86_mce *mce) |

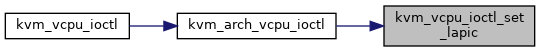

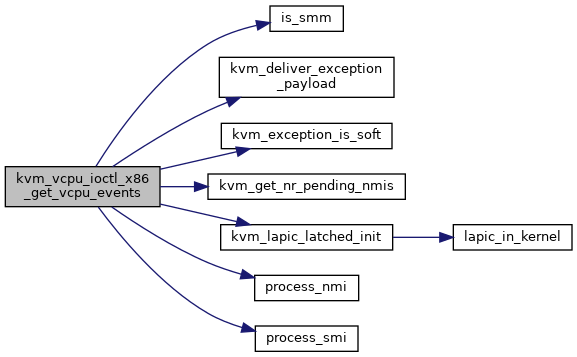

| static void | kvm_vcpu_ioctl_x86_get_vcpu_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

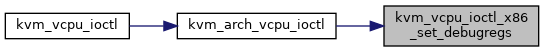

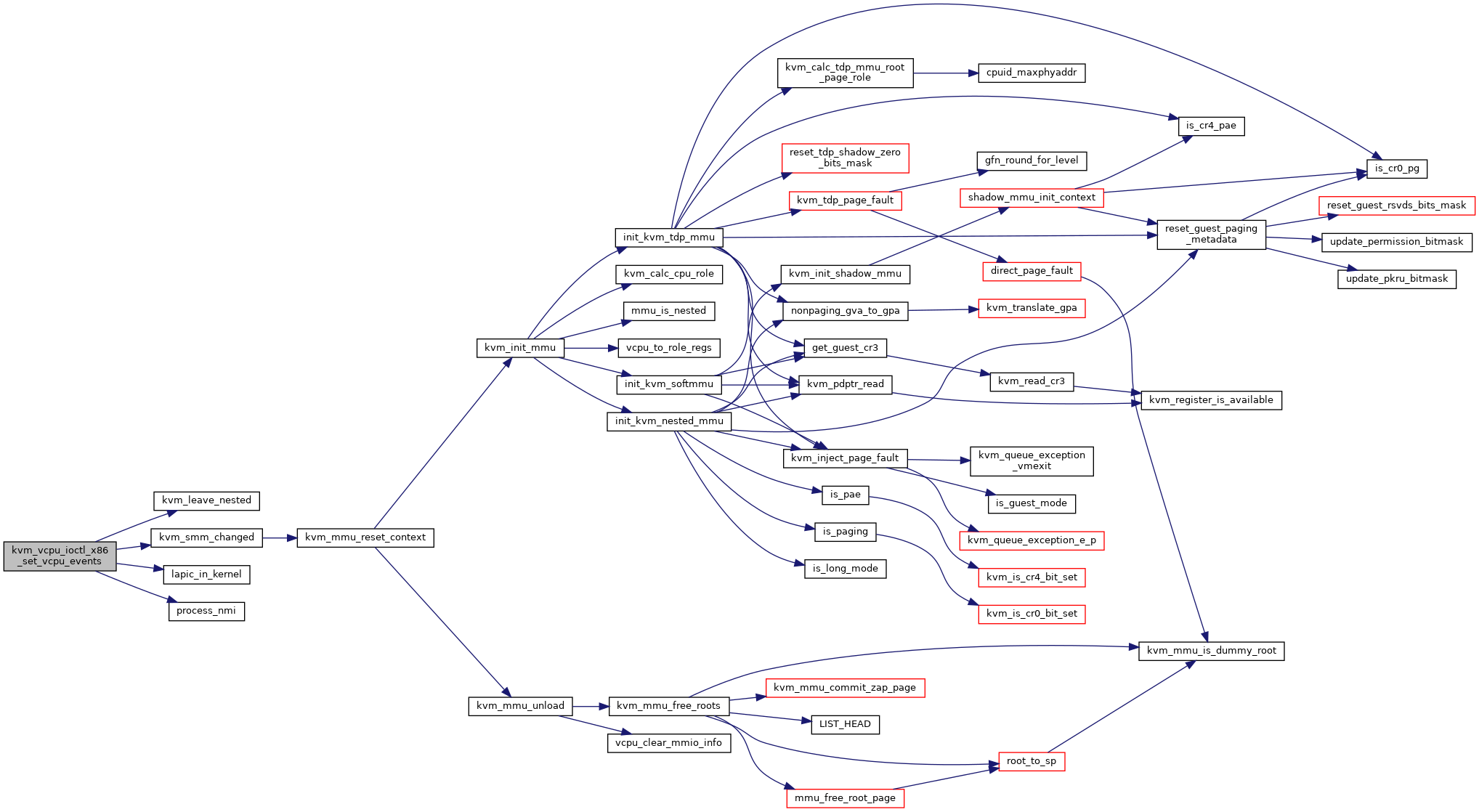

| static int | kvm_vcpu_ioctl_x86_set_vcpu_events (struct kvm_vcpu *vcpu, struct kvm_vcpu_events *events) |

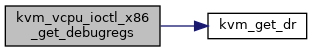

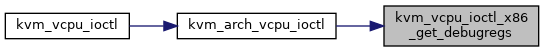

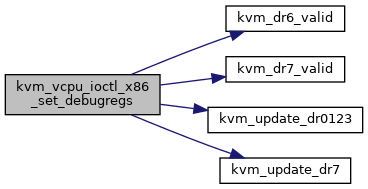

| static void | kvm_vcpu_ioctl_x86_get_debugregs (struct kvm_vcpu *vcpu, struct kvm_debugregs *dbgregs) |

| static int | kvm_vcpu_ioctl_x86_set_debugregs (struct kvm_vcpu *vcpu, struct kvm_debugregs *dbgregs) |

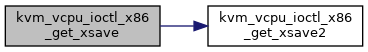

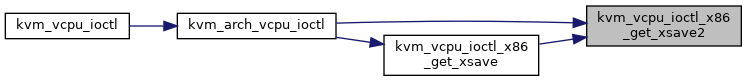

| static void | kvm_vcpu_ioctl_x86_get_xsave2 (struct kvm_vcpu *vcpu, u8 *state, unsigned int size) |

| static void | kvm_vcpu_ioctl_x86_get_xsave (struct kvm_vcpu *vcpu, struct kvm_xsave *guest_xsave) |

| static int | kvm_vcpu_ioctl_x86_set_xsave (struct kvm_vcpu *vcpu, struct kvm_xsave *guest_xsave) |

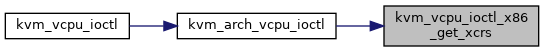

| static void | kvm_vcpu_ioctl_x86_get_xcrs (struct kvm_vcpu *vcpu, struct kvm_xcrs *guest_xcrs) |

| static int | kvm_vcpu_ioctl_x86_set_xcrs (struct kvm_vcpu *vcpu, struct kvm_xcrs *guest_xcrs) |

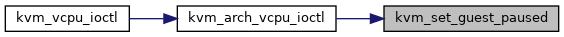

| static int | kvm_set_guest_paused (struct kvm_vcpu *vcpu) |

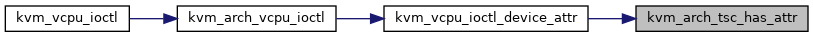

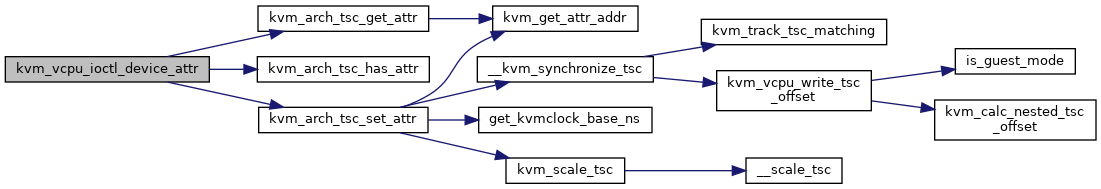

| static int | kvm_arch_tsc_has_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| static int | kvm_arch_tsc_get_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

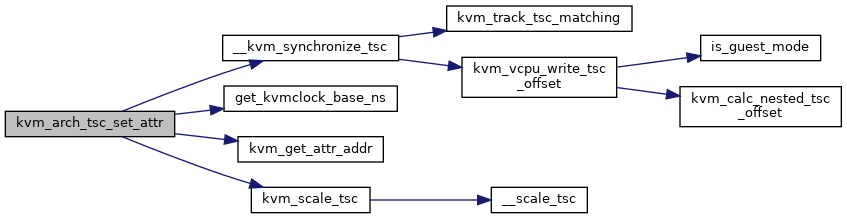

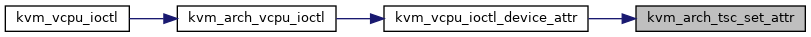

| static int | kvm_arch_tsc_set_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

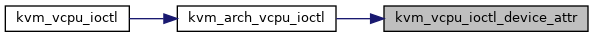

| static int | kvm_vcpu_ioctl_device_attr (struct kvm_vcpu *vcpu, unsigned int ioctl, void __user *argp) |

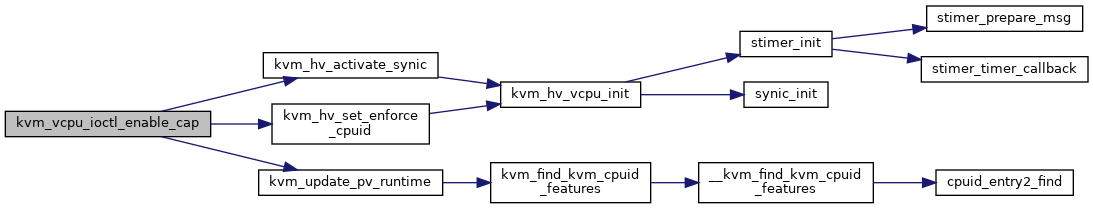

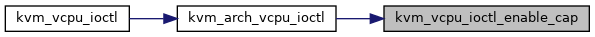

| static int | kvm_vcpu_ioctl_enable_cap (struct kvm_vcpu *vcpu, struct kvm_enable_cap *cap) |

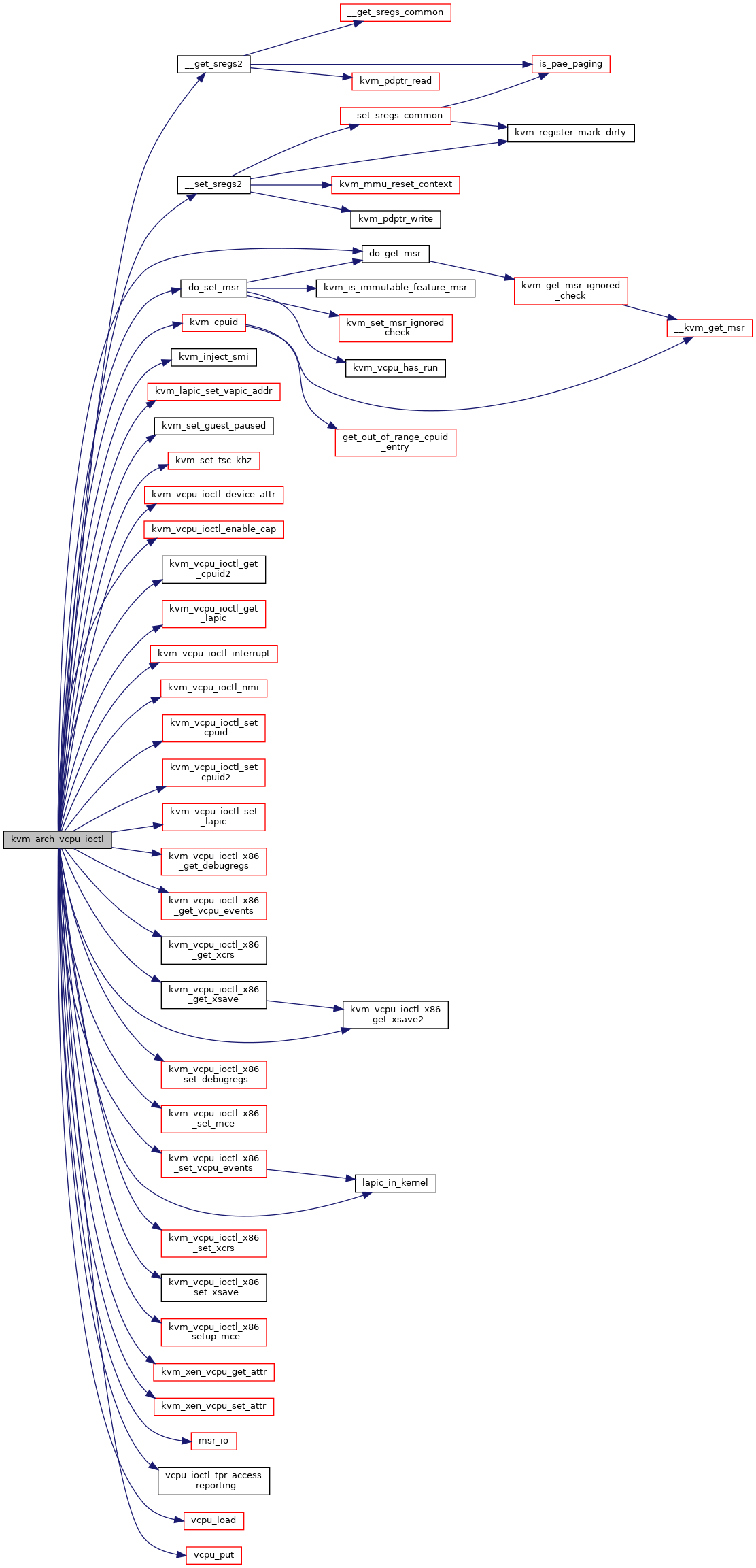

| long | kvm_arch_vcpu_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

| vm_fault_t | kvm_arch_vcpu_fault (struct kvm_vcpu *vcpu, struct vm_fault *vmf) |

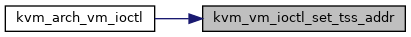

| static int | kvm_vm_ioctl_set_tss_addr (struct kvm *kvm, unsigned long addr) |

| static int | kvm_vm_ioctl_set_identity_map_addr (struct kvm *kvm, u64 ident_addr) |

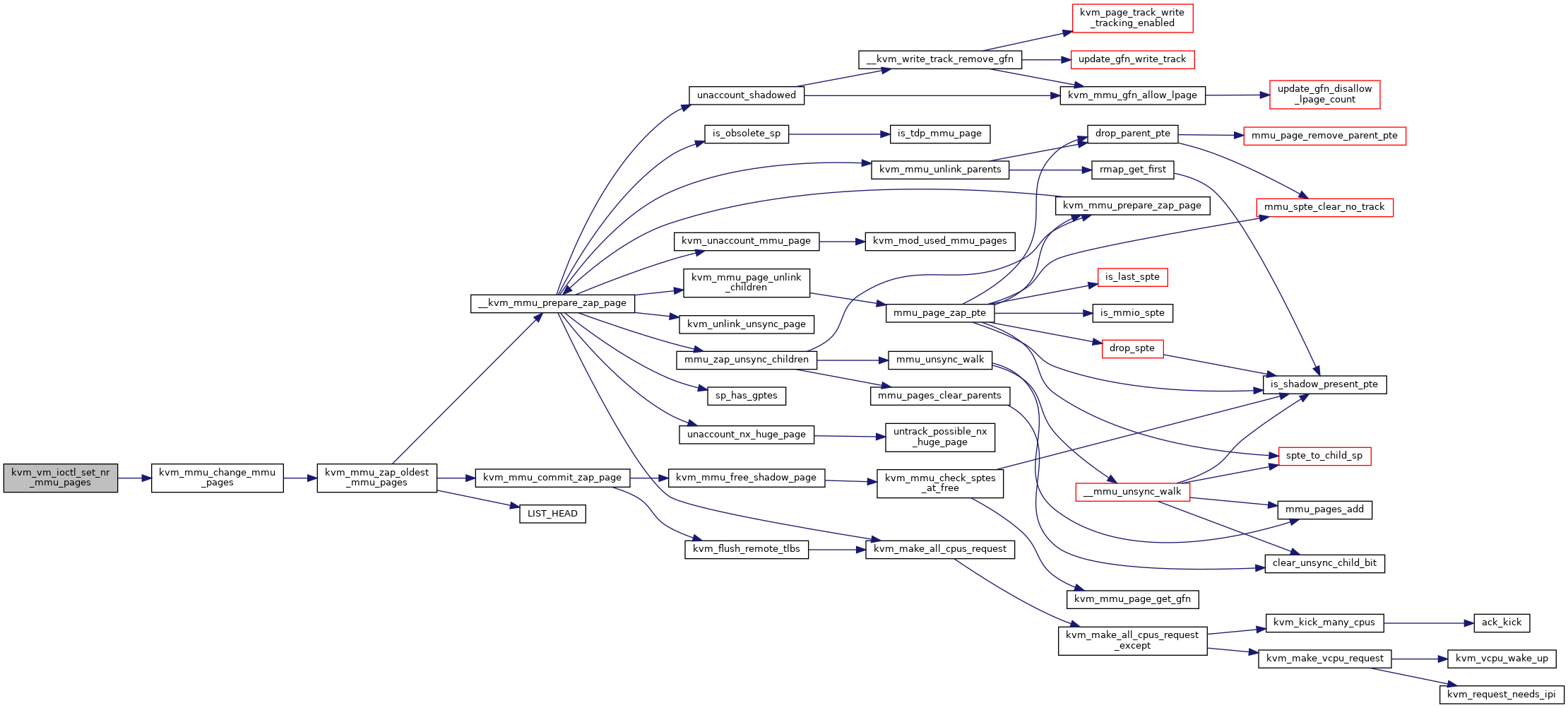

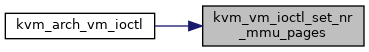

| static int | kvm_vm_ioctl_set_nr_mmu_pages (struct kvm *kvm, unsigned long kvm_nr_mmu_pages) |

| static int | kvm_vm_ioctl_get_irqchip (struct kvm *kvm, struct kvm_irqchip *chip) |

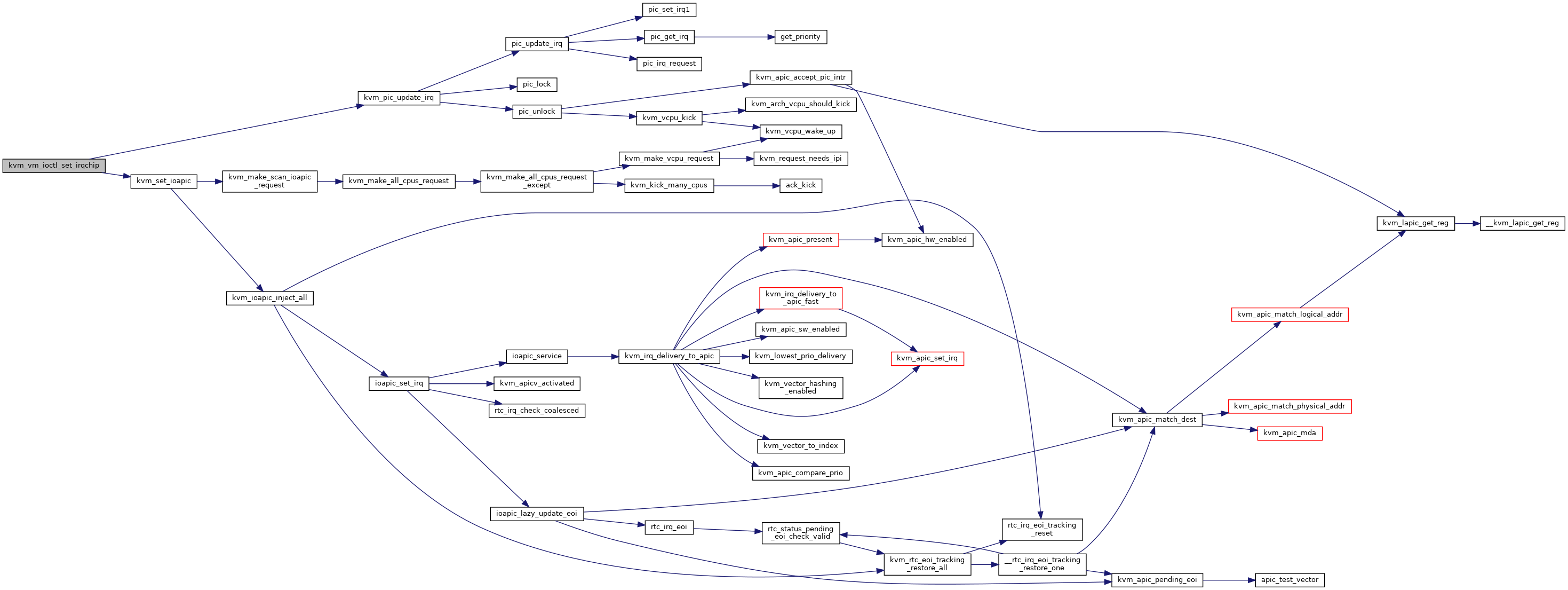

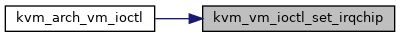

| static int | kvm_vm_ioctl_set_irqchip (struct kvm *kvm, struct kvm_irqchip *chip) |

| static int | kvm_vm_ioctl_get_pit (struct kvm *kvm, struct kvm_pit_state *ps) |

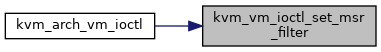

| static int | kvm_vm_ioctl_set_pit (struct kvm *kvm, struct kvm_pit_state *ps) |

| static int | kvm_vm_ioctl_get_pit2 (struct kvm *kvm, struct kvm_pit_state2 *ps) |

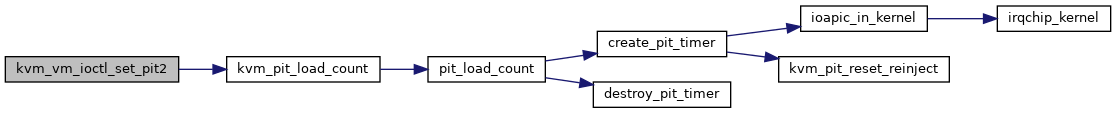

| static int | kvm_vm_ioctl_set_pit2 (struct kvm *kvm, struct kvm_pit_state2 *ps) |

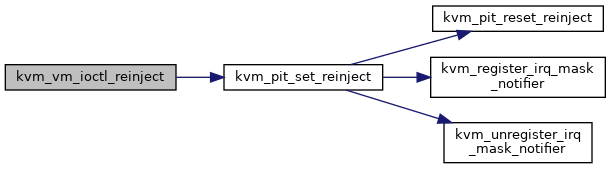

| static int | kvm_vm_ioctl_reinject (struct kvm *kvm, struct kvm_reinject_control *control) |

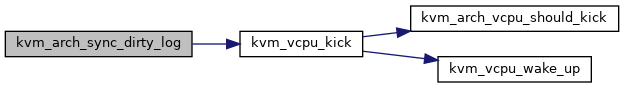

| void | kvm_arch_sync_dirty_log (struct kvm *kvm, struct kvm_memory_slot *memslot) |

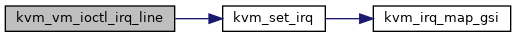

| int | kvm_vm_ioctl_irq_line (struct kvm *kvm, struct kvm_irq_level *irq_event, bool line_status) |

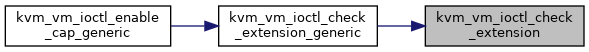

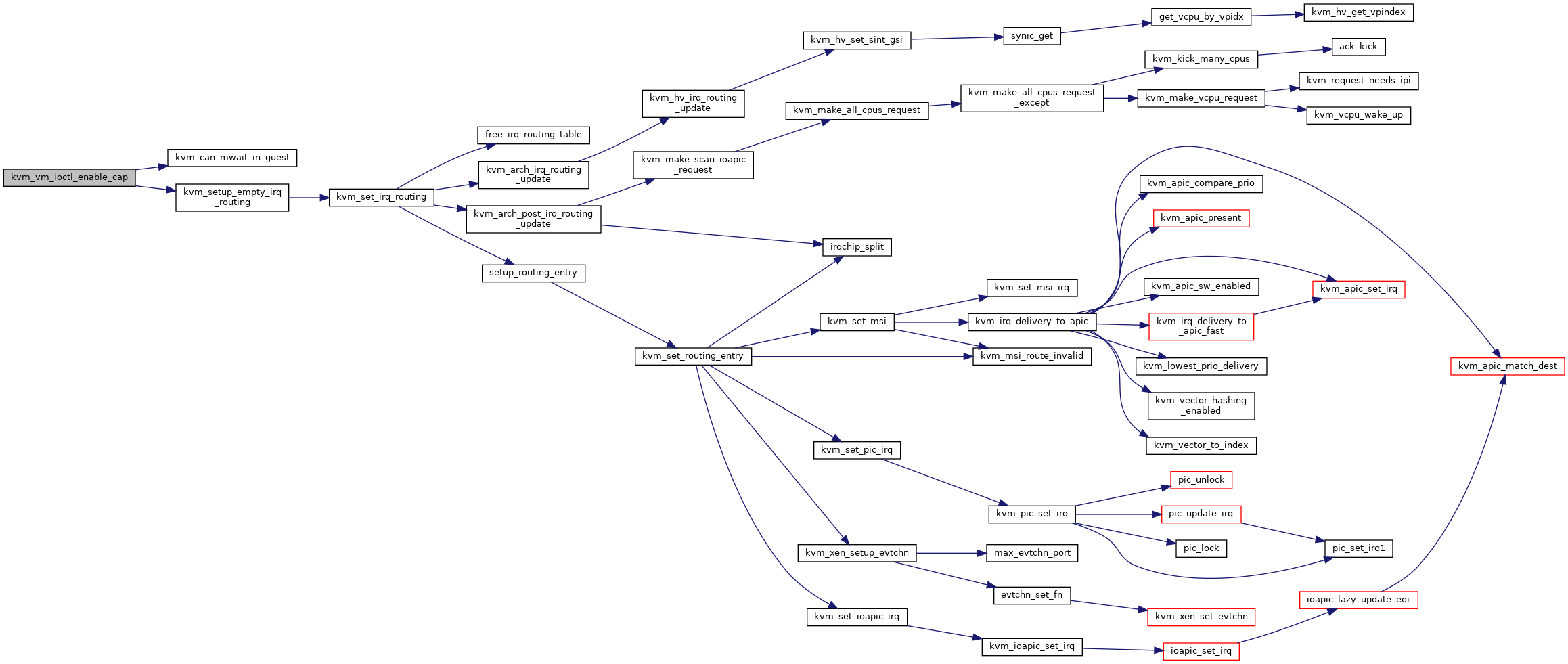

| int | kvm_vm_ioctl_enable_cap (struct kvm *kvm, struct kvm_enable_cap *cap) |

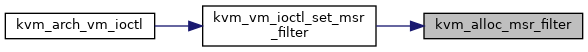

| static struct kvm_x86_msr_filter * | kvm_alloc_msr_filter (bool default_allow) |

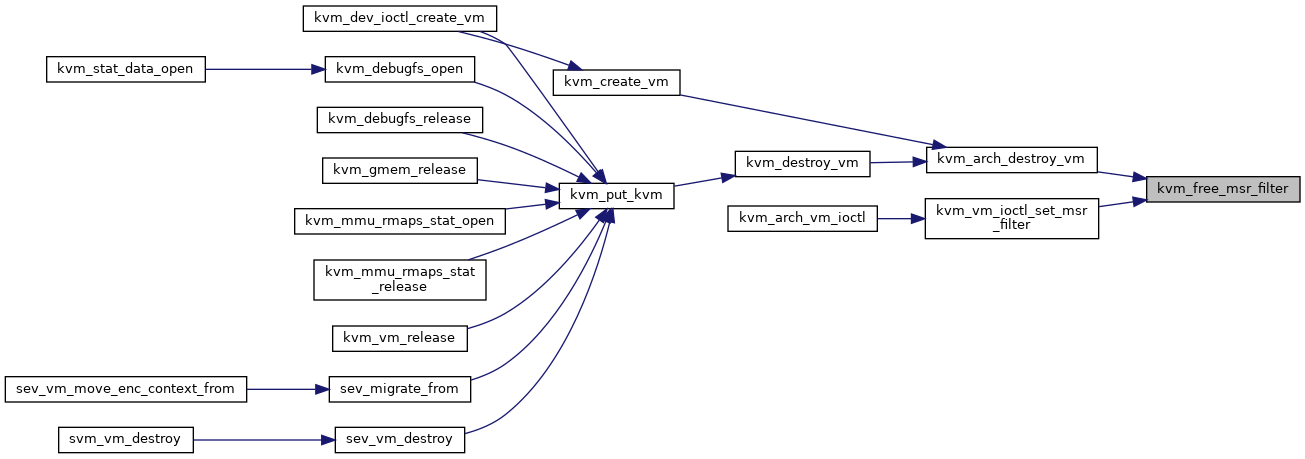

| static void | kvm_free_msr_filter (struct kvm_x86_msr_filter *msr_filter) |

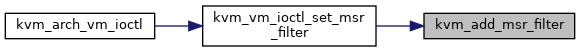

| static int | kvm_add_msr_filter (struct kvm_x86_msr_filter *msr_filter, struct kvm_msr_filter_range *user_range) |

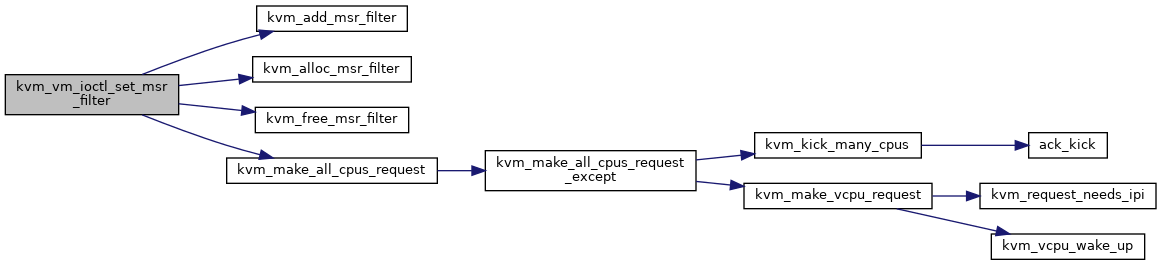

| static int | kvm_vm_ioctl_set_msr_filter (struct kvm *kvm, struct kvm_msr_filter *filter) |

| static int | kvm_vm_ioctl_get_clock (struct kvm *kvm, void __user *argp) |

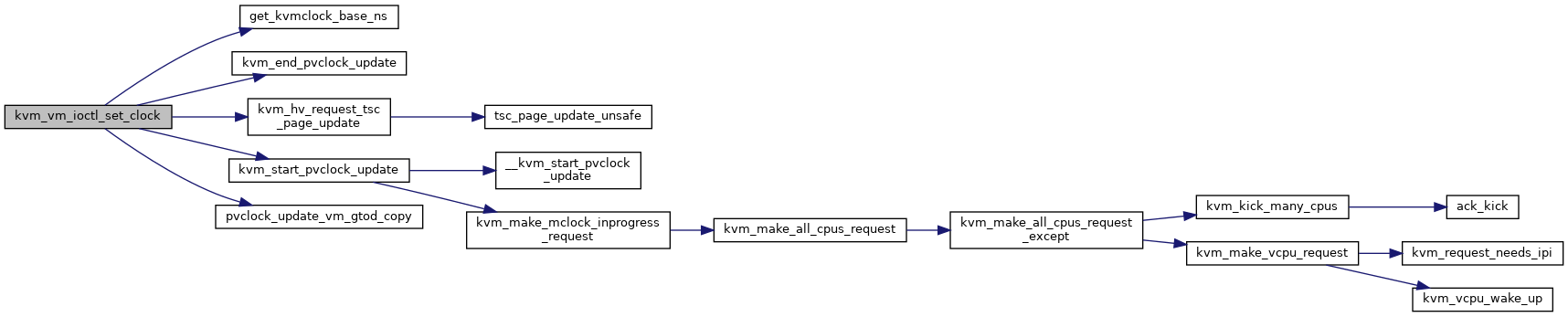

| static int | kvm_vm_ioctl_set_clock (struct kvm *kvm, void __user *argp) |

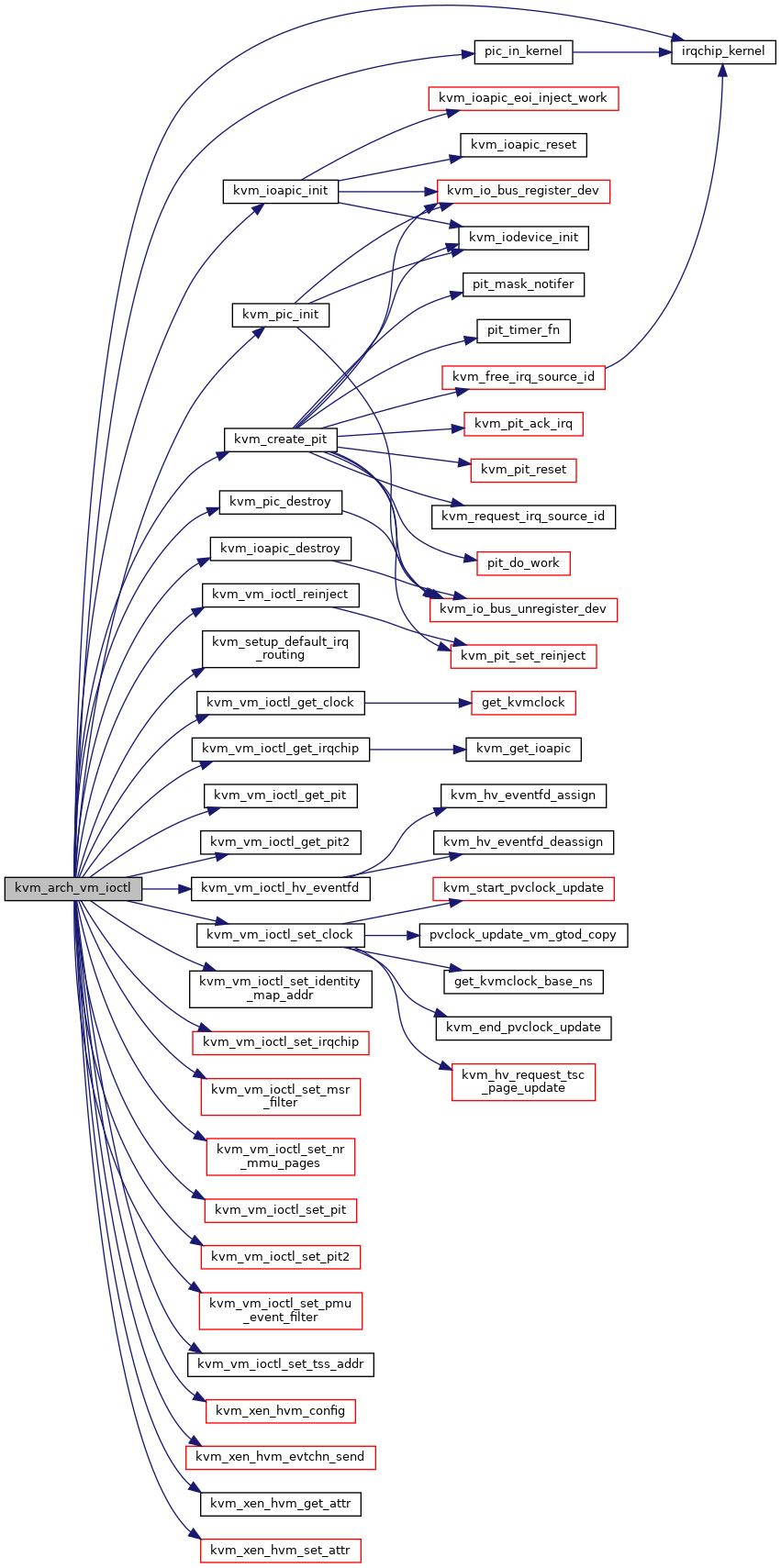

| int | kvm_arch_vm_ioctl (struct file *filp, unsigned int ioctl, unsigned long arg) |

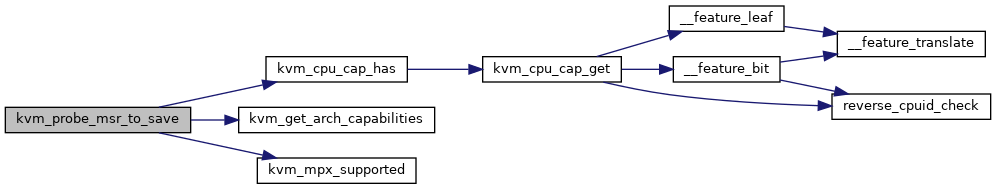

| static void | kvm_probe_feature_msr (u32 msr_index) |

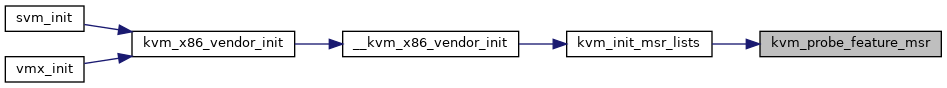

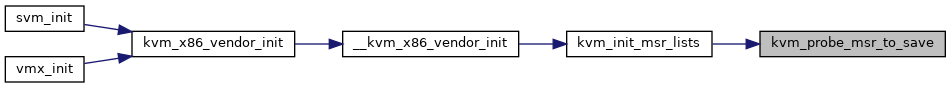

| static void | kvm_probe_msr_to_save (u32 msr_index) |

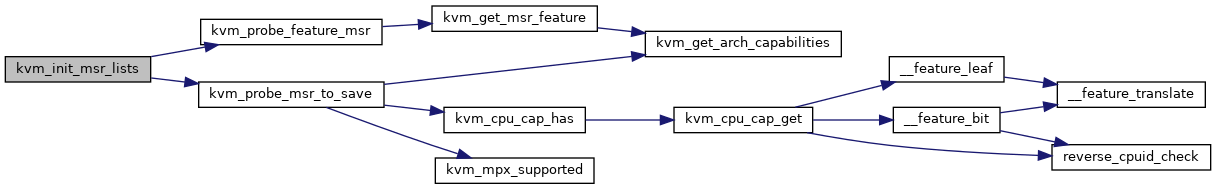

| static void | kvm_init_msr_lists (void) |

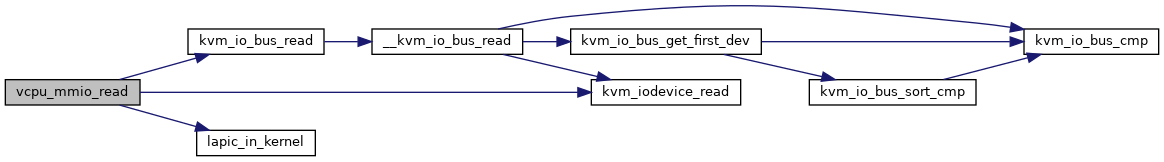

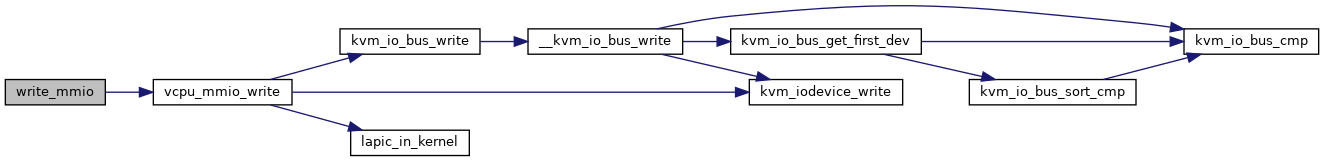

| static int | vcpu_mmio_write (struct kvm_vcpu *vcpu, gpa_t addr, int len, const void *v) |

| static int | vcpu_mmio_read (struct kvm_vcpu *vcpu, gpa_t addr, int len, void *v) |

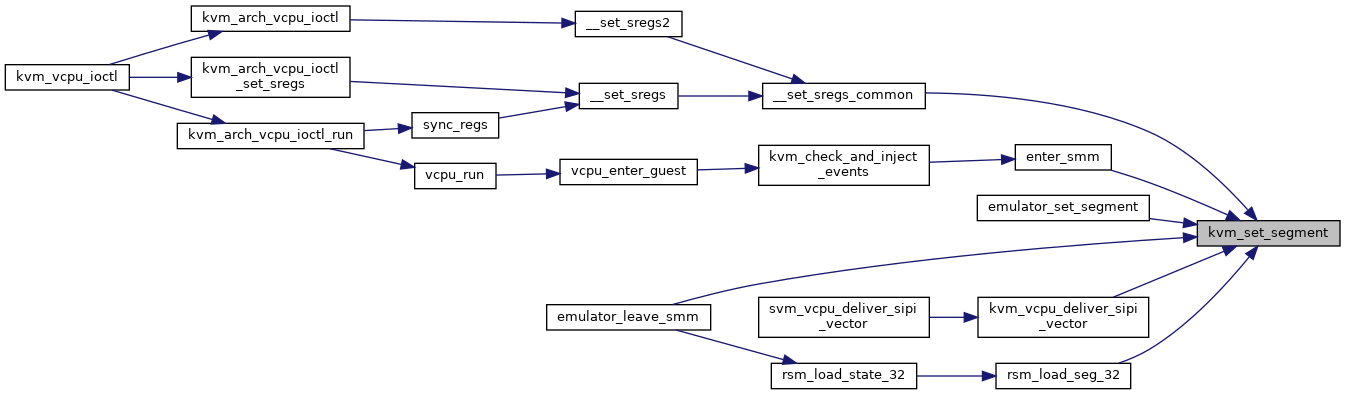

| void | kvm_set_segment (struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg) |

| void | kvm_get_segment (struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg) |

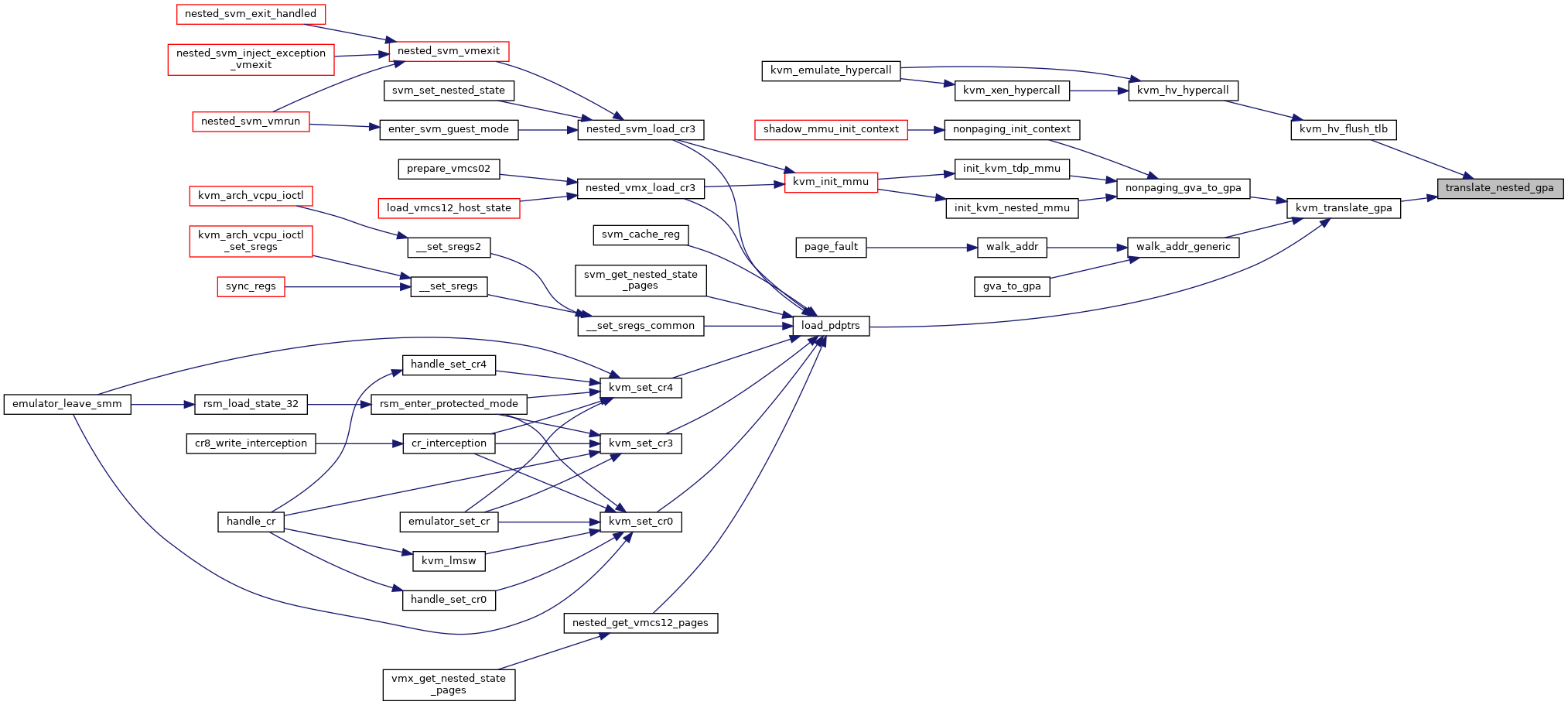

| gpa_t | translate_nested_gpa (struct kvm_vcpu *vcpu, gpa_t gpa, u64 access, struct x86_exception *exception) |

| gpa_t | kvm_mmu_gva_to_gpa_read (struct kvm_vcpu *vcpu, gva_t gva, struct x86_exception *exception) |

| EXPORT_SYMBOL_GPL (kvm_mmu_gva_to_gpa_read) | |

| gpa_t | kvm_mmu_gva_to_gpa_write (struct kvm_vcpu *vcpu, gva_t gva, struct x86_exception *exception) |

| EXPORT_SYMBOL_GPL (kvm_mmu_gva_to_gpa_write) | |

| gpa_t | kvm_mmu_gva_to_gpa_system (struct kvm_vcpu *vcpu, gva_t gva, struct x86_exception *exception) |

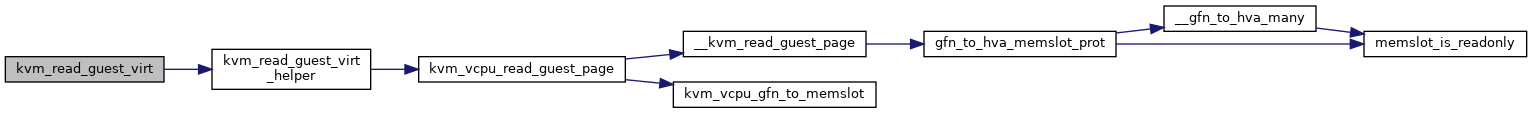

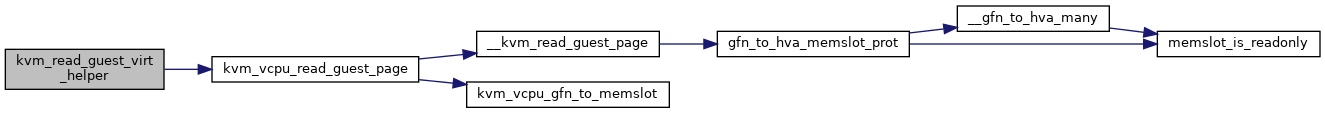

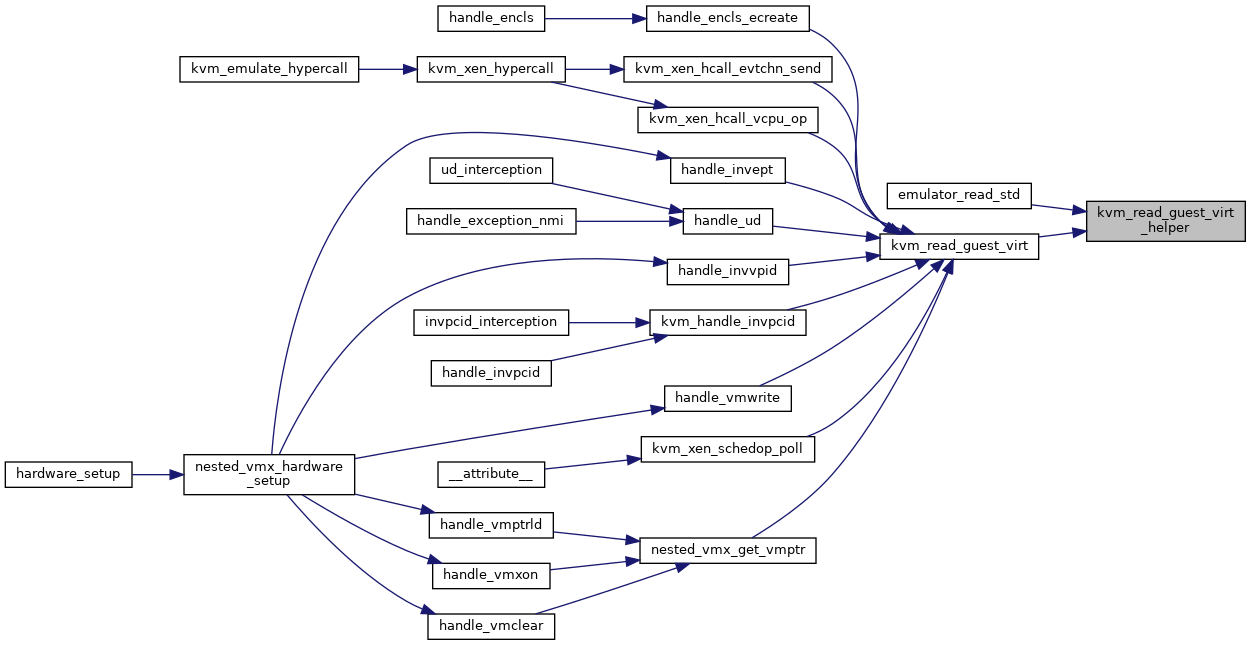

| static int | kvm_read_guest_virt_helper (gva_t addr, void *val, unsigned int bytes, struct kvm_vcpu *vcpu, u64 access, struct x86_exception *exception) |

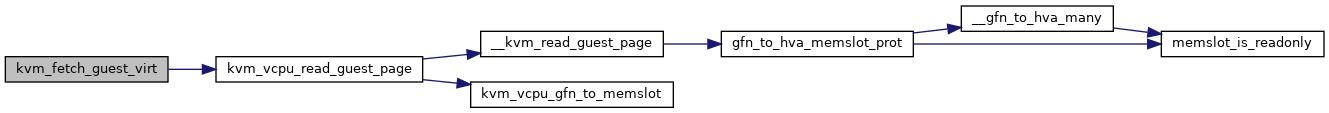

| static int | kvm_fetch_guest_virt (struct x86_emulate_ctxt *ctxt, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception) |

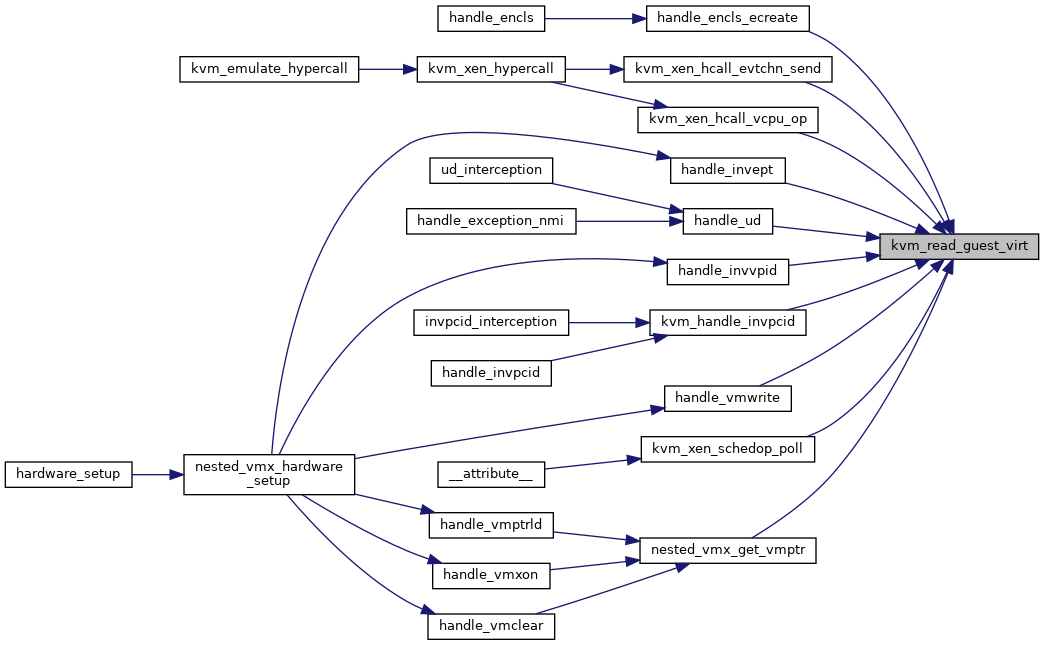

| int | kvm_read_guest_virt (struct kvm_vcpu *vcpu, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception) |

| EXPORT_SYMBOL_GPL (kvm_read_guest_virt) | |

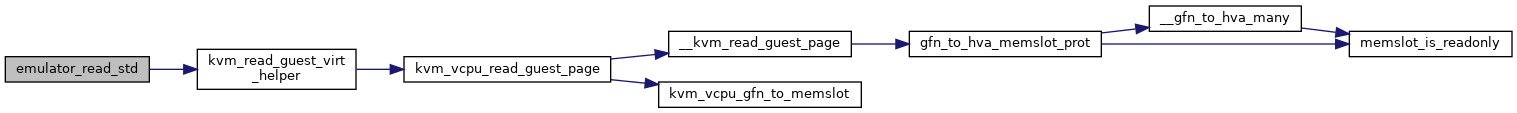

| static int | emulator_read_std (struct x86_emulate_ctxt *ctxt, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception, bool system) |

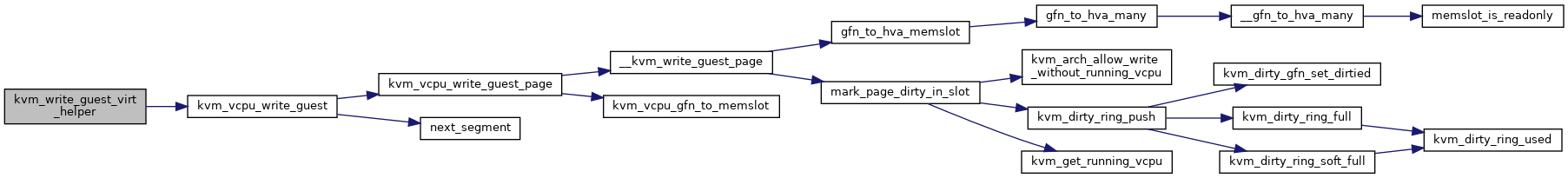

| static int | kvm_write_guest_virt_helper (gva_t addr, void *val, unsigned int bytes, struct kvm_vcpu *vcpu, u64 access, struct x86_exception *exception) |

| static int | emulator_write_std (struct x86_emulate_ctxt *ctxt, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception, bool system) |

| int | kvm_write_guest_virt_system (struct kvm_vcpu *vcpu, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception) |

| EXPORT_SYMBOL_GPL (kvm_write_guest_virt_system) | |

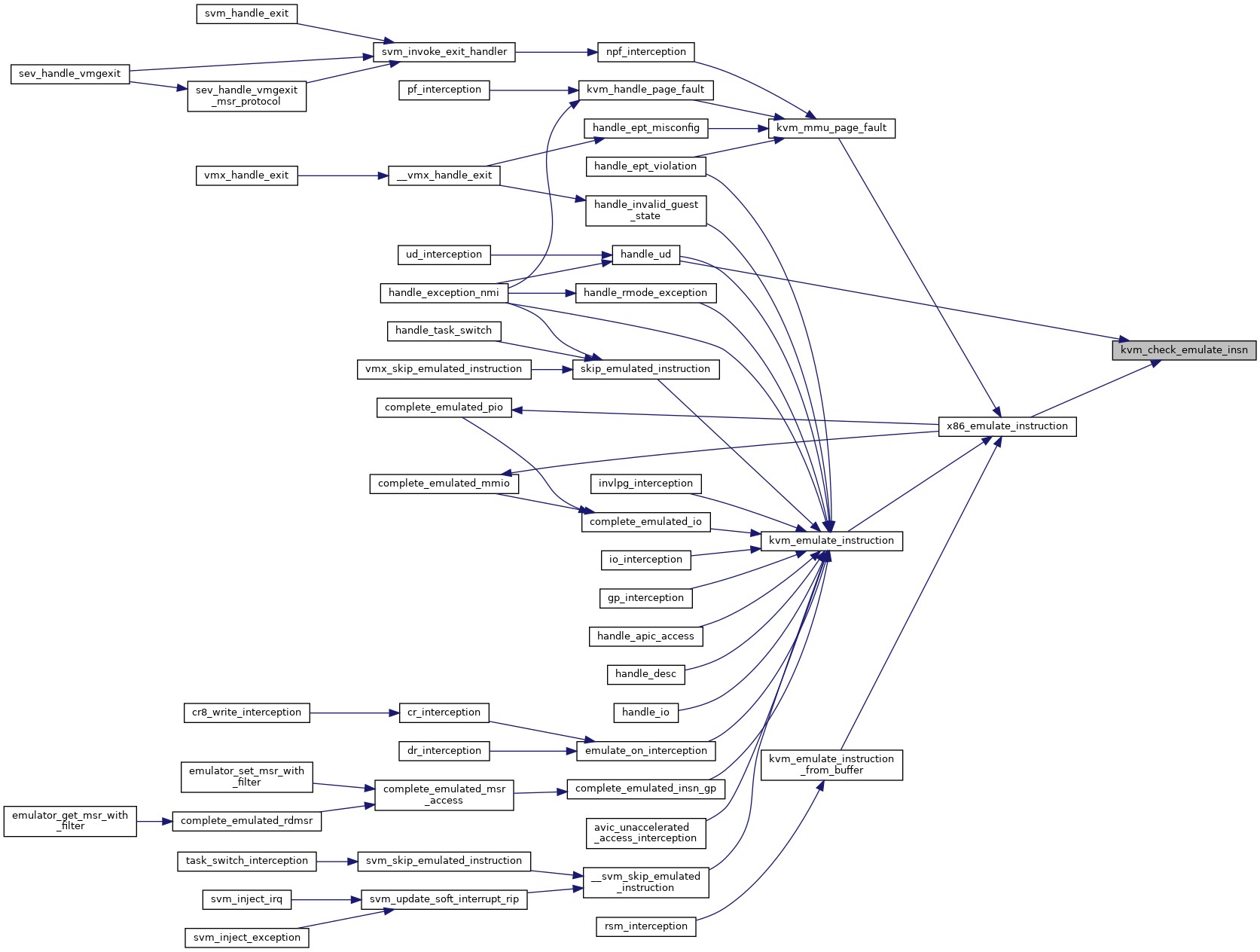

| static int | kvm_check_emulate_insn (struct kvm_vcpu *vcpu, int emul_type, void *insn, int insn_len) |

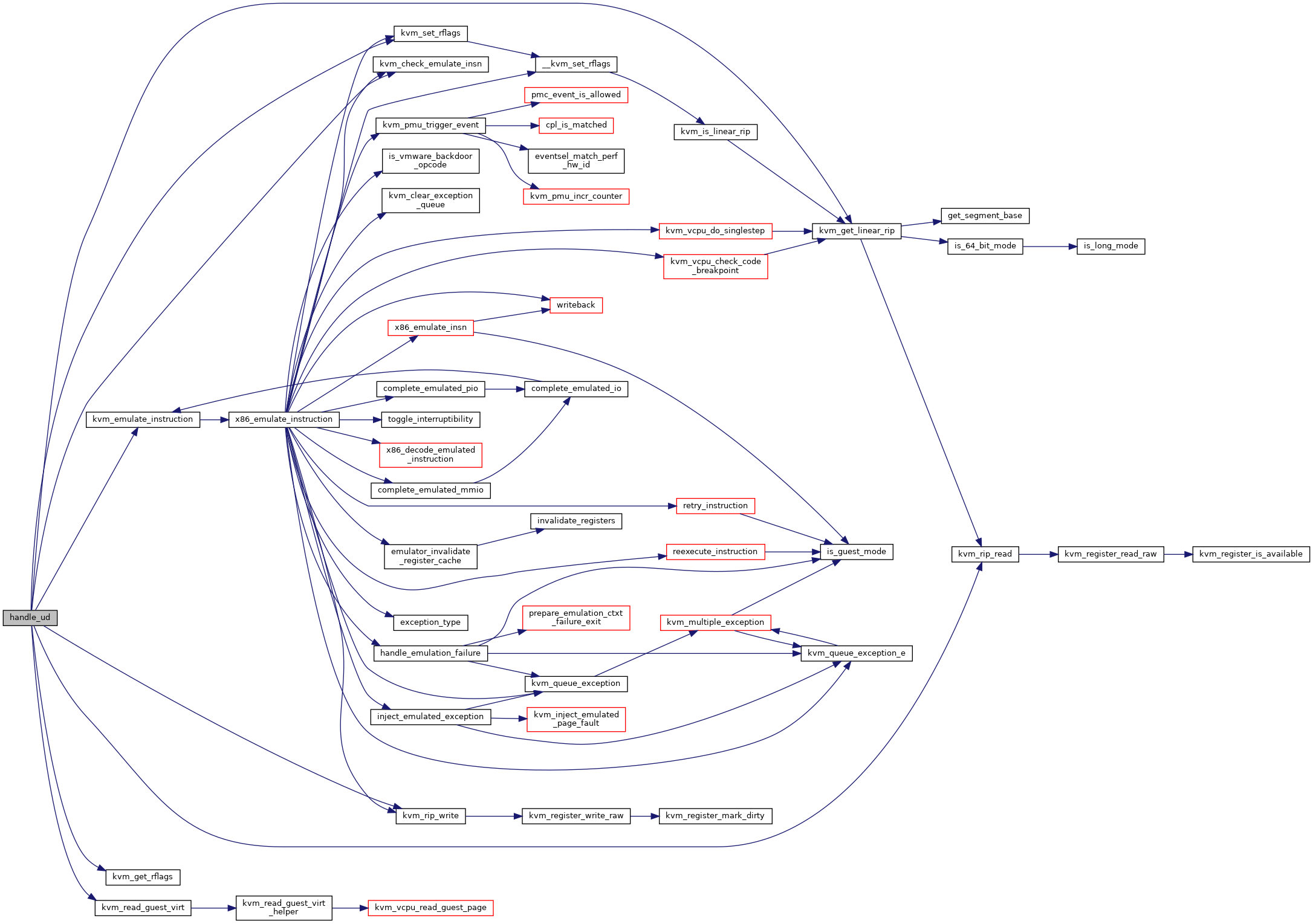

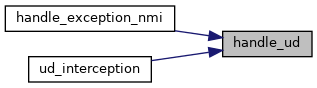

| int | handle_ud (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (handle_ud) | |

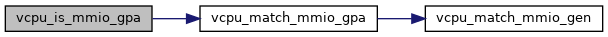

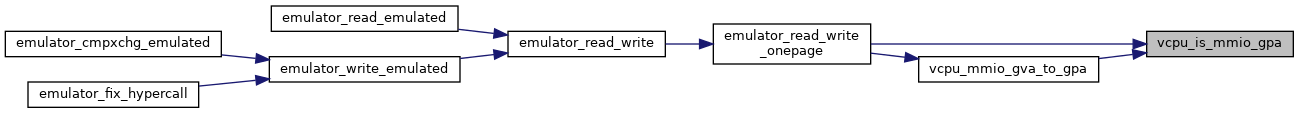

| static int | vcpu_is_mmio_gpa (struct kvm_vcpu *vcpu, unsigned long gva, gpa_t gpa, bool write) |

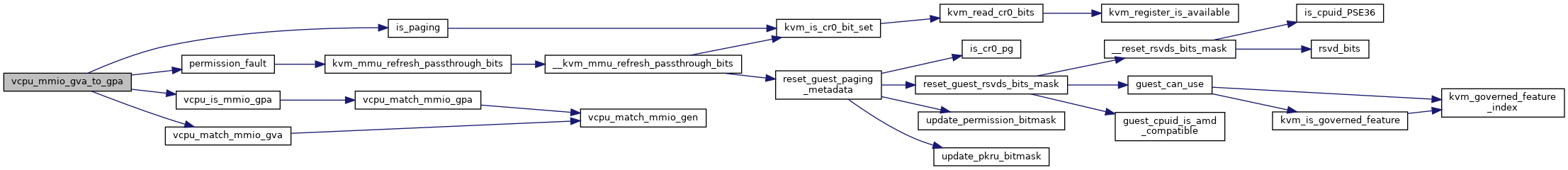

| static int | vcpu_mmio_gva_to_gpa (struct kvm_vcpu *vcpu, unsigned long gva, gpa_t *gpa, struct x86_exception *exception, bool write) |

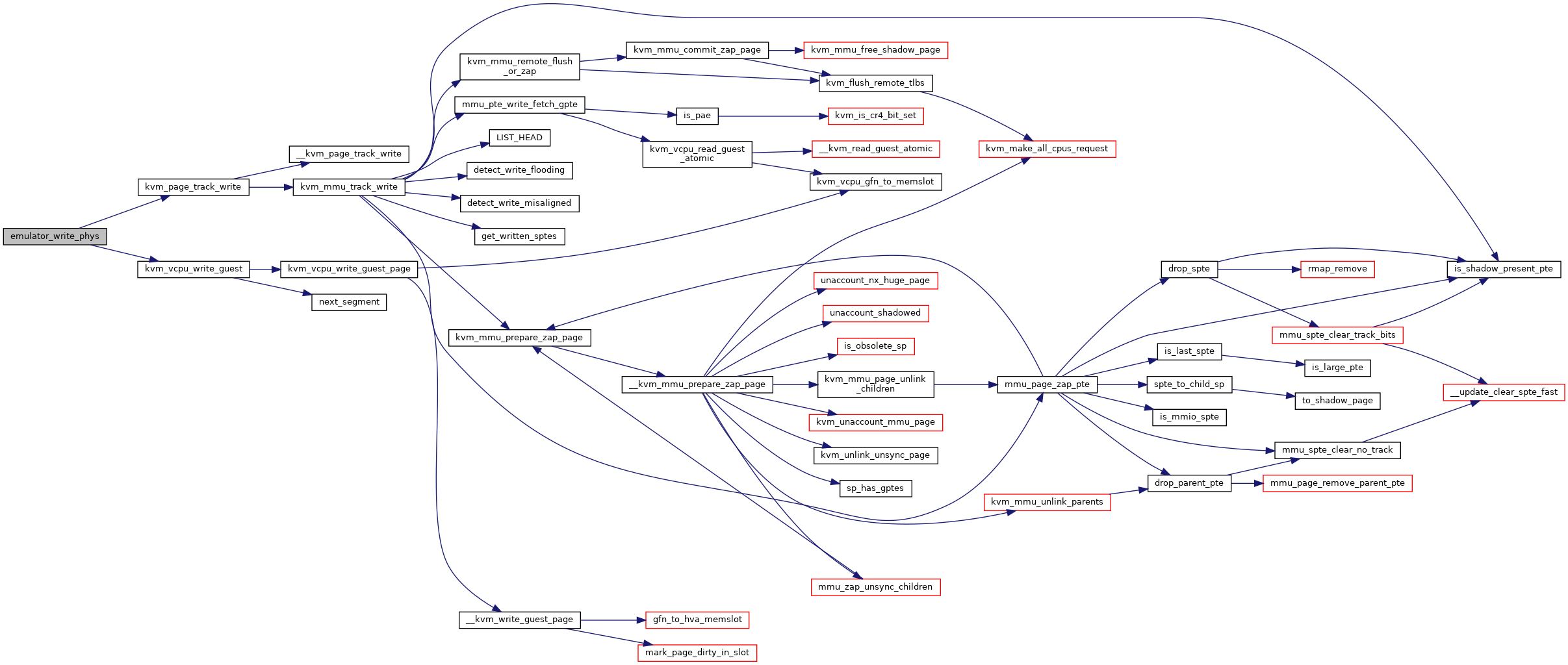

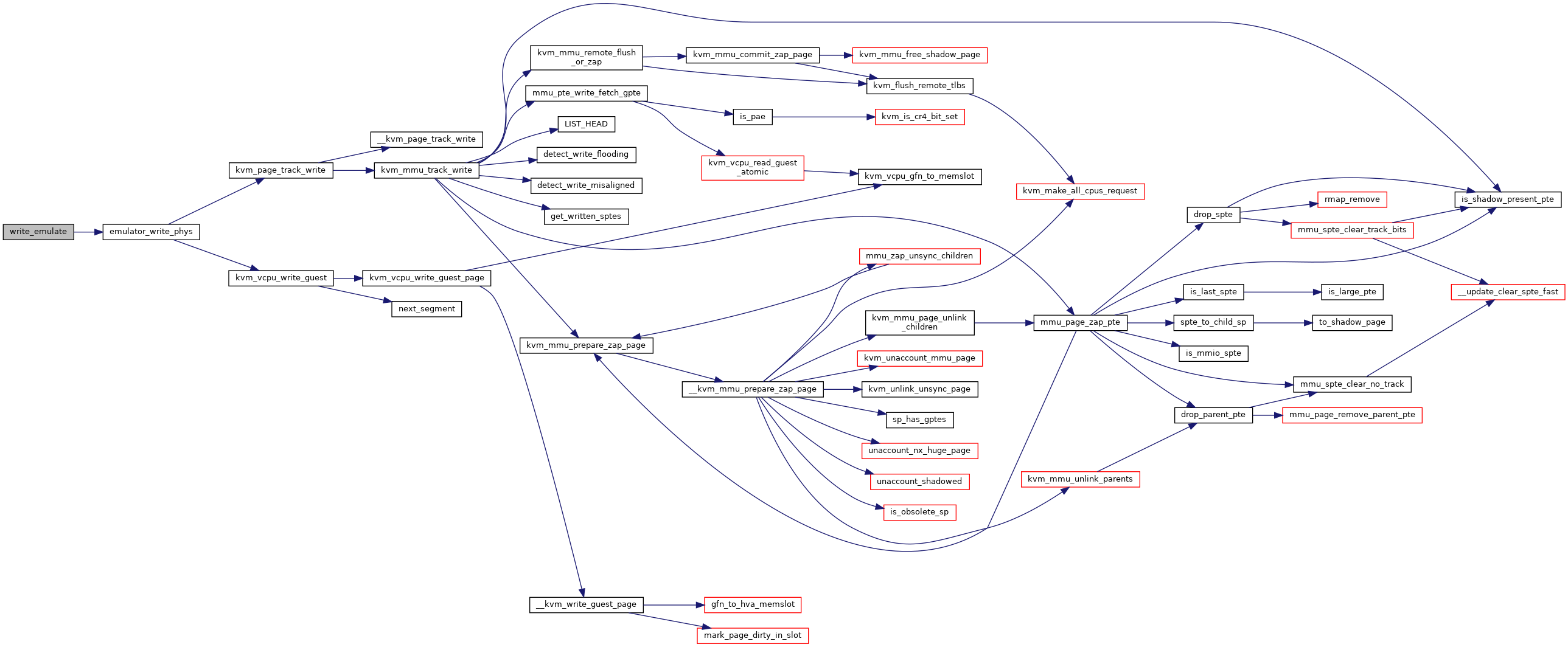

| int | emulator_write_phys (struct kvm_vcpu *vcpu, gpa_t gpa, const void *val, int bytes) |

| static int | read_prepare (struct kvm_vcpu *vcpu, void *val, int bytes) |

| static int | read_emulate (struct kvm_vcpu *vcpu, gpa_t gpa, void *val, int bytes) |

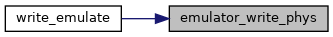

| static int | write_emulate (struct kvm_vcpu *vcpu, gpa_t gpa, void *val, int bytes) |

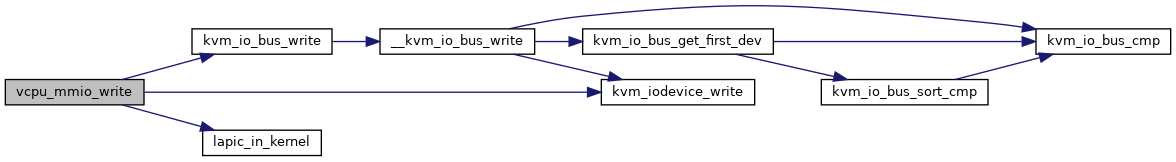

| static int | write_mmio (struct kvm_vcpu *vcpu, gpa_t gpa, int bytes, void *val) |

| static int | read_exit_mmio (struct kvm_vcpu *vcpu, gpa_t gpa, void *val, int bytes) |

| static int | write_exit_mmio (struct kvm_vcpu *vcpu, gpa_t gpa, void *val, int bytes) |

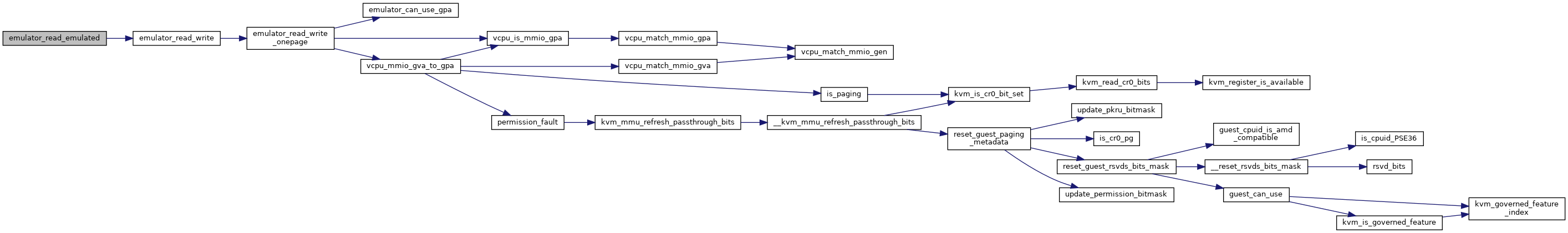

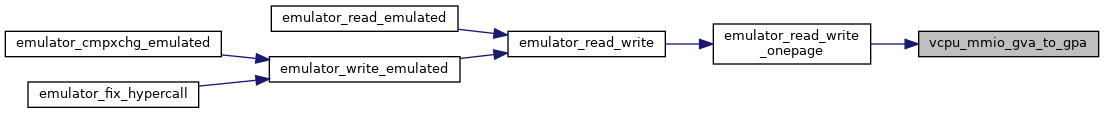

| static int | emulator_read_write_onepage (unsigned long addr, void *val, unsigned int bytes, struct x86_exception *exception, struct kvm_vcpu *vcpu, const struct read_write_emulator_ops *ops) |

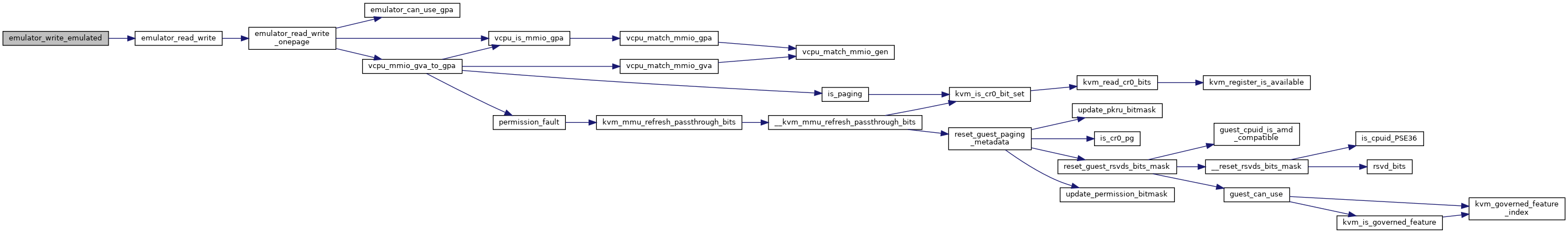

| static int | emulator_read_write (struct x86_emulate_ctxt *ctxt, unsigned long addr, void *val, unsigned int bytes, struct x86_exception *exception, const struct read_write_emulator_ops *ops) |

| static int | emulator_read_emulated (struct x86_emulate_ctxt *ctxt, unsigned long addr, void *val, unsigned int bytes, struct x86_exception *exception) |

| static int | emulator_write_emulated (struct x86_emulate_ctxt *ctxt, unsigned long addr, const void *val, unsigned int bytes, struct x86_exception *exception) |

| static int | emulator_cmpxchg_emulated (struct x86_emulate_ctxt *ctxt, unsigned long addr, const void *old, const void *new, unsigned int bytes, struct x86_exception *exception) |

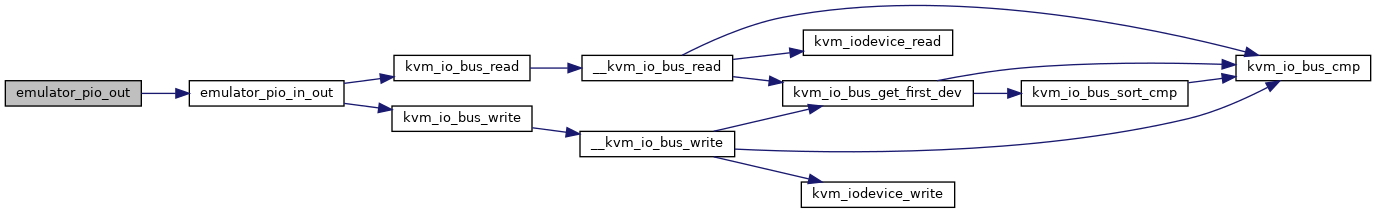

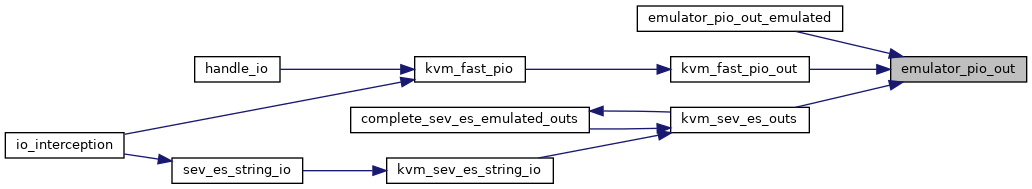

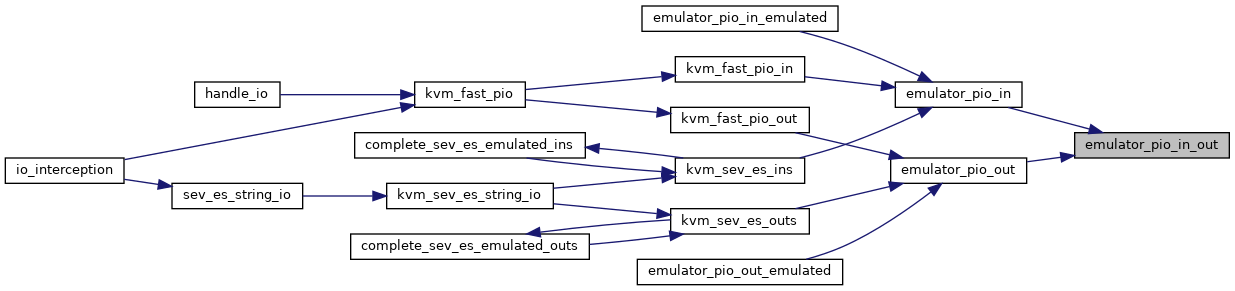

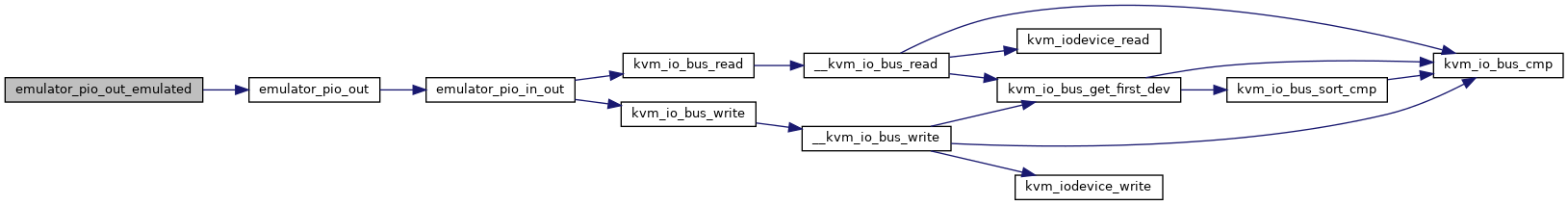

| static int | emulator_pio_in_out (struct kvm_vcpu *vcpu, int size, unsigned short port, void *data, unsigned int count, bool in) |

| static int | emulator_pio_in (struct kvm_vcpu *vcpu, int size, unsigned short port, void *val, unsigned int count) |

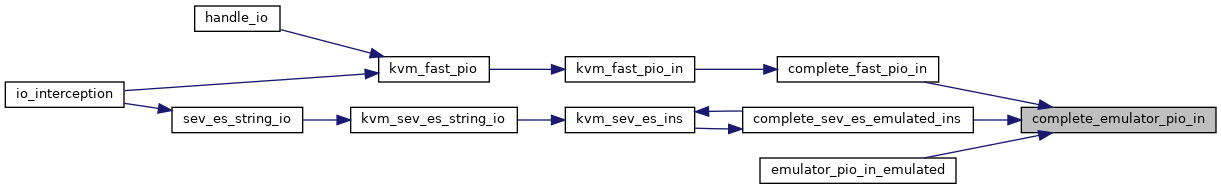

| static void | complete_emulator_pio_in (struct kvm_vcpu *vcpu, void *val) |

| static int | emulator_pio_in_emulated (struct x86_emulate_ctxt *ctxt, int size, unsigned short port, void *val, unsigned int count) |

| static int | emulator_pio_out (struct kvm_vcpu *vcpu, int size, unsigned short port, const void *val, unsigned int count) |

| static int | emulator_pio_out_emulated (struct x86_emulate_ctxt *ctxt, int size, unsigned short port, const void *val, unsigned int count) |

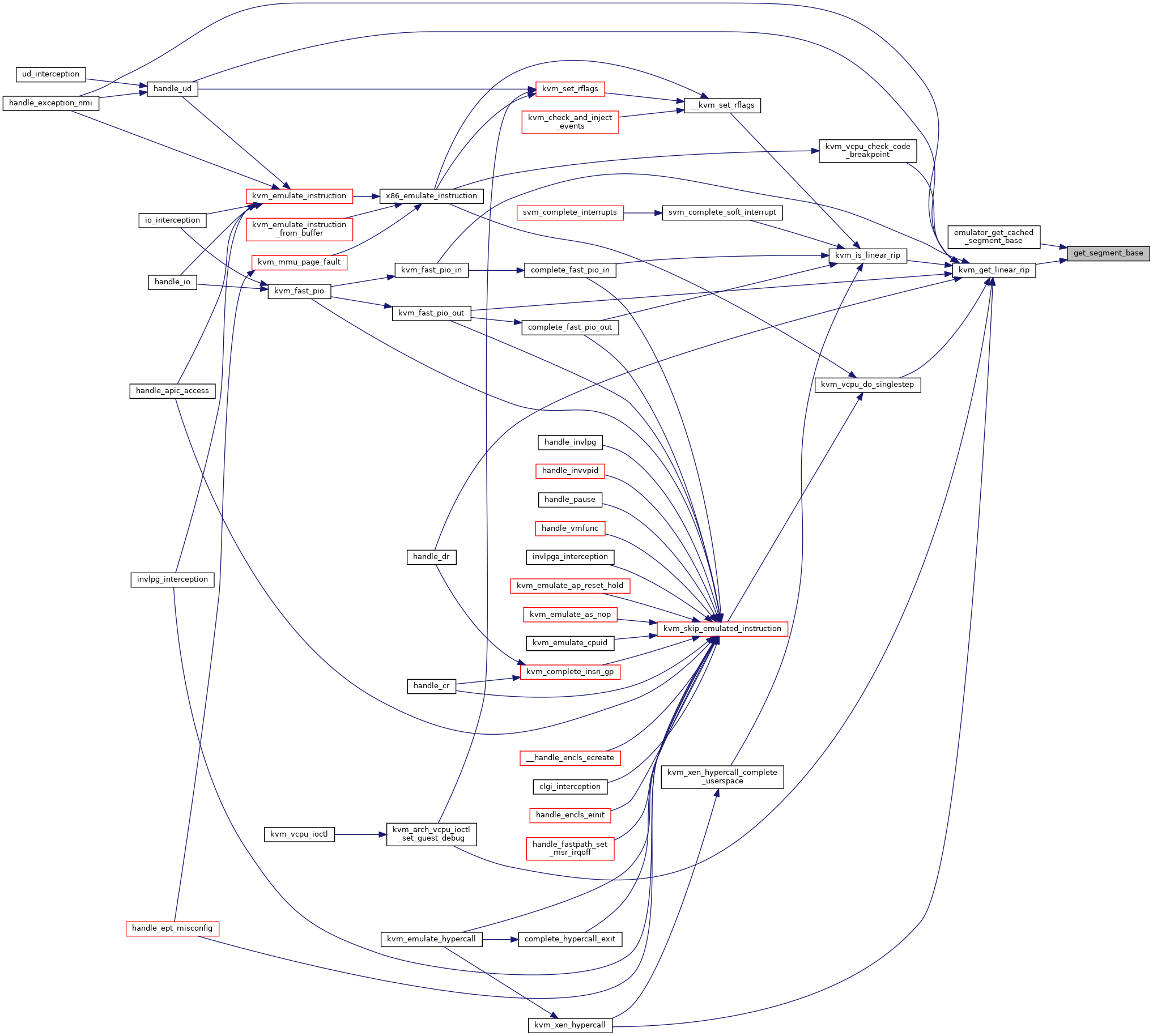

| static unsigned long | get_segment_base (struct kvm_vcpu *vcpu, int seg) |

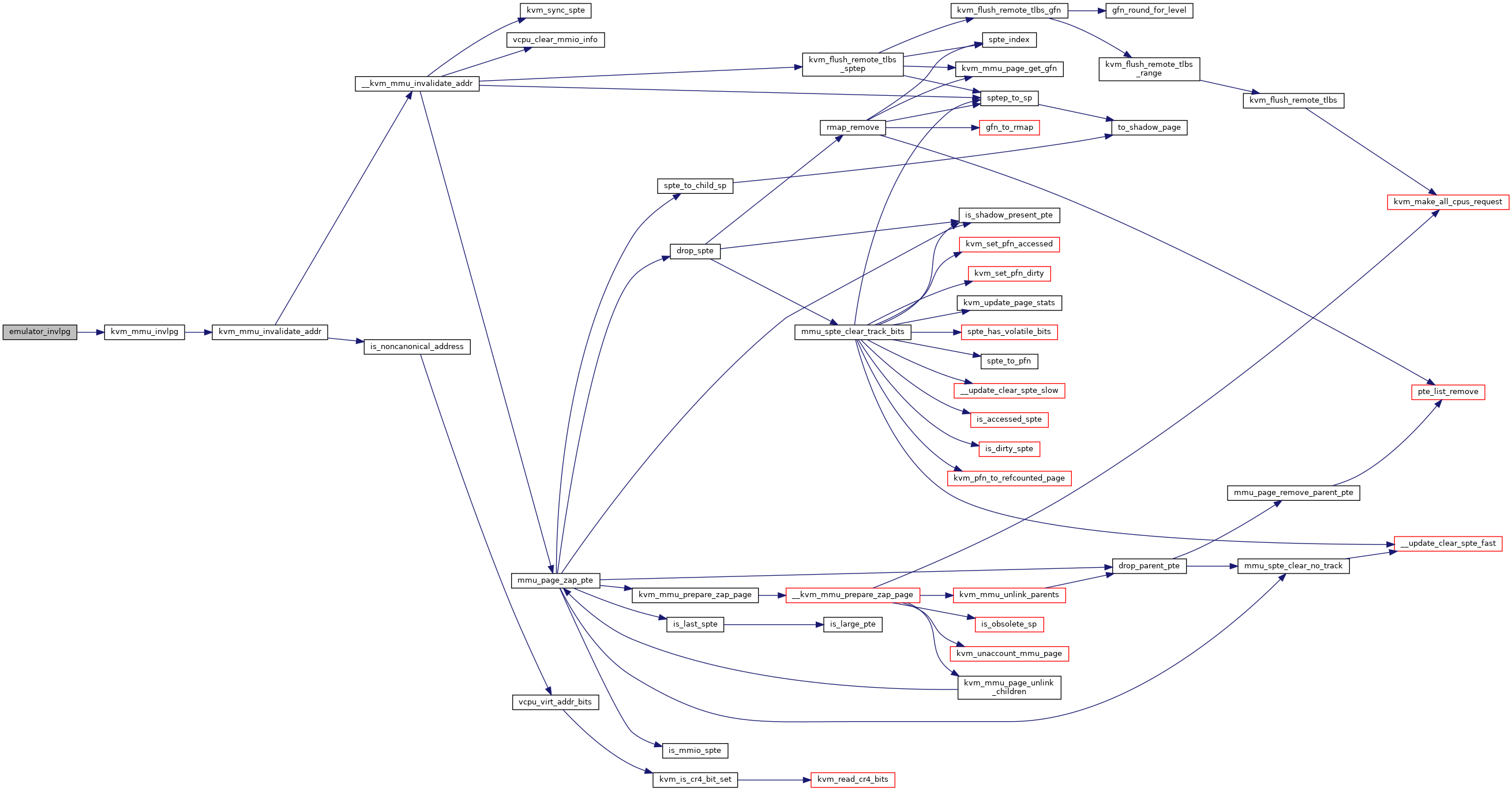

| static void | emulator_invlpg (struct x86_emulate_ctxt *ctxt, ulong address) |

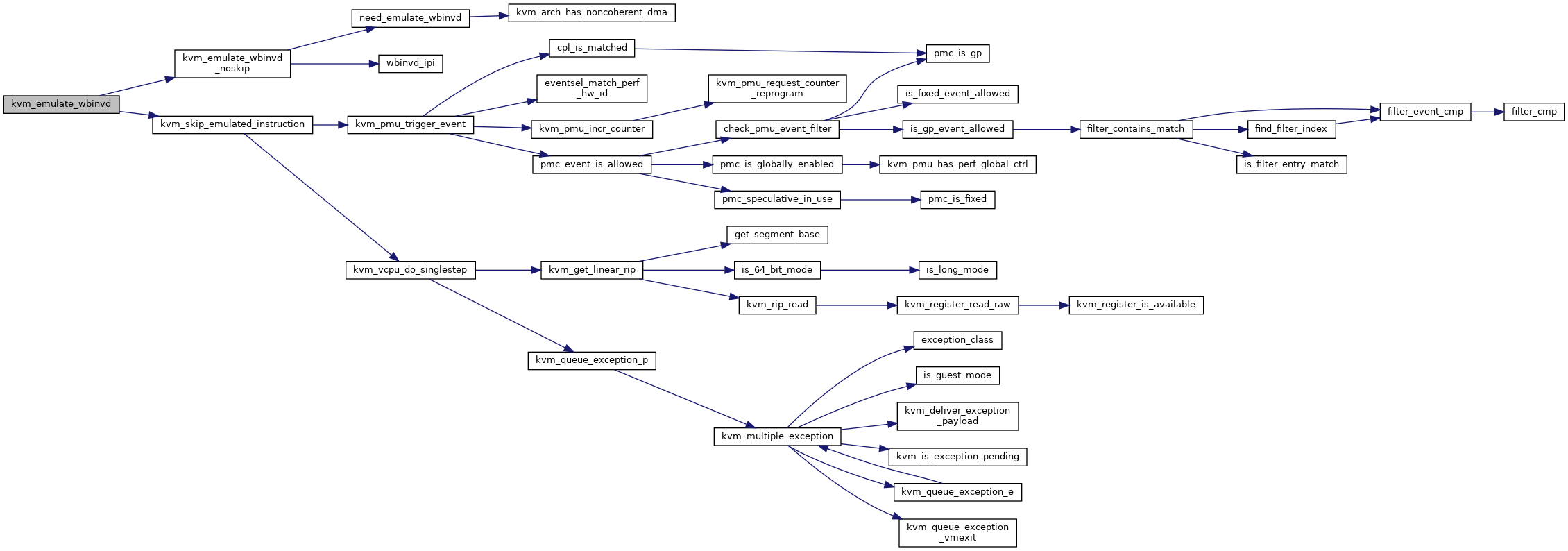

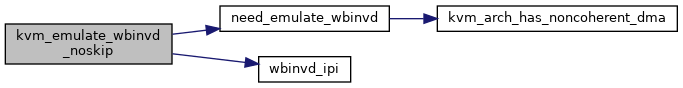

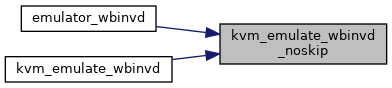

| static int | kvm_emulate_wbinvd_noskip (struct kvm_vcpu *vcpu) |

| int | kvm_emulate_wbinvd (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_wbinvd) | |

| static void | emulator_wbinvd (struct x86_emulate_ctxt *ctxt) |

| static void | emulator_get_dr (struct x86_emulate_ctxt *ctxt, int dr, unsigned long *dest) |

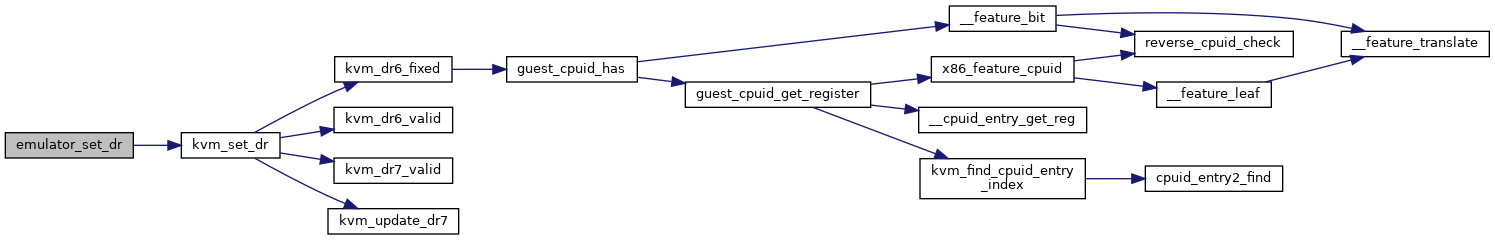

| static int | emulator_set_dr (struct x86_emulate_ctxt *ctxt, int dr, unsigned long value) |

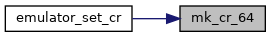

| static u64 | mk_cr_64 (u64 curr_cr, u32 new_val) |

| static unsigned long | emulator_get_cr (struct x86_emulate_ctxt *ctxt, int cr) |

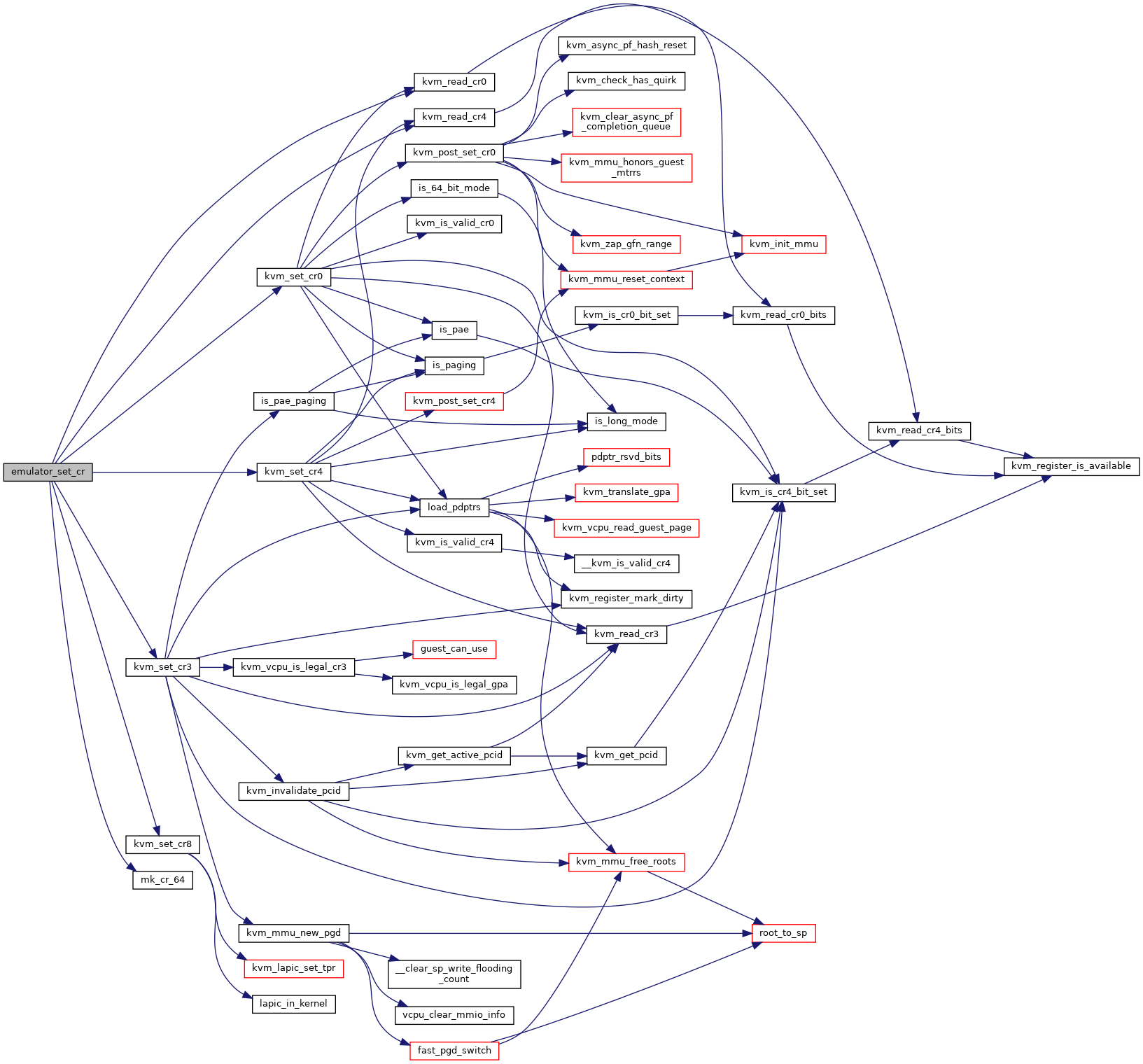

| static int | emulator_set_cr (struct x86_emulate_ctxt *ctxt, int cr, ulong val) |

| static int | emulator_get_cpl (struct x86_emulate_ctxt *ctxt) |

| static void | emulator_get_gdt (struct x86_emulate_ctxt *ctxt, struct desc_ptr *dt) |

| static void | emulator_get_idt (struct x86_emulate_ctxt *ctxt, struct desc_ptr *dt) |

| static void | emulator_set_gdt (struct x86_emulate_ctxt *ctxt, struct desc_ptr *dt) |

| static void | emulator_set_idt (struct x86_emulate_ctxt *ctxt, struct desc_ptr *dt) |

| static unsigned long | emulator_get_cached_segment_base (struct x86_emulate_ctxt *ctxt, int seg) |

| static bool | emulator_get_segment (struct x86_emulate_ctxt *ctxt, u16 *selector, struct desc_struct *desc, u32 *base3, int seg) |

| static void | emulator_set_segment (struct x86_emulate_ctxt *ctxt, u16 selector, struct desc_struct *desc, u32 base3, int seg) |

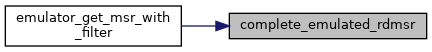

| static int | emulator_get_msr_with_filter (struct x86_emulate_ctxt *ctxt, u32 msr_index, u64 *pdata) |

| static int | emulator_set_msr_with_filter (struct x86_emulate_ctxt *ctxt, u32 msr_index, u64 data) |

| static int | emulator_get_msr (struct x86_emulate_ctxt *ctxt, u32 msr_index, u64 *pdata) |

| static int | emulator_check_pmc (struct x86_emulate_ctxt *ctxt, u32 pmc) |

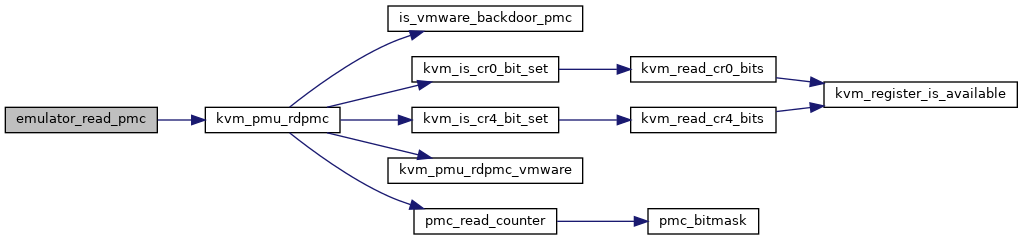

| static int | emulator_read_pmc (struct x86_emulate_ctxt *ctxt, u32 pmc, u64 *pdata) |

| static void | emulator_halt (struct x86_emulate_ctxt *ctxt) |

| static int | emulator_intercept (struct x86_emulate_ctxt *ctxt, struct x86_instruction_info *info, enum x86_intercept_stage stage) |

| static bool | emulator_get_cpuid (struct x86_emulate_ctxt *ctxt, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only) |

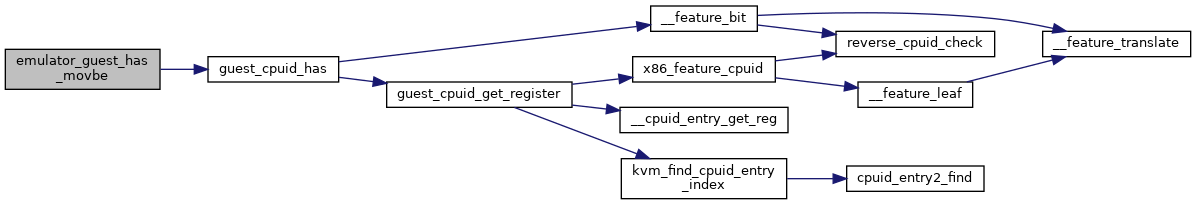

| static bool | emulator_guest_has_movbe (struct x86_emulate_ctxt *ctxt) |

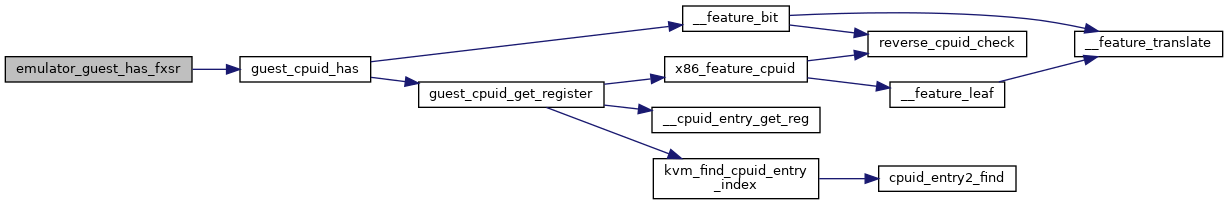

| static bool | emulator_guest_has_fxsr (struct x86_emulate_ctxt *ctxt) |

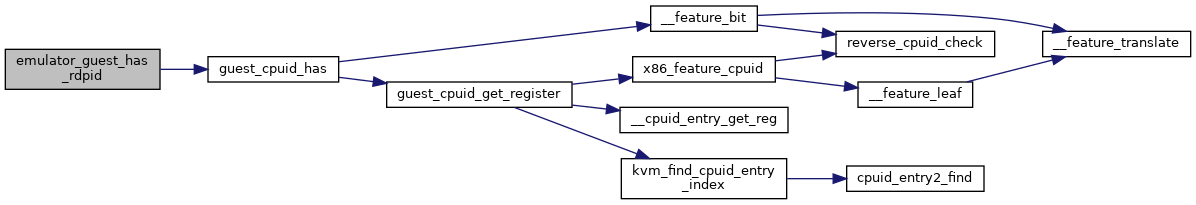

| static bool | emulator_guest_has_rdpid (struct x86_emulate_ctxt *ctxt) |

| static ulong | emulator_read_gpr (struct x86_emulate_ctxt *ctxt, unsigned reg) |

| static void | emulator_write_gpr (struct x86_emulate_ctxt *ctxt, unsigned reg, ulong val) |

| static void | emulator_set_nmi_mask (struct x86_emulate_ctxt *ctxt, bool masked) |

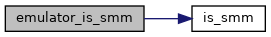

| static bool | emulator_is_smm (struct x86_emulate_ctxt *ctxt) |

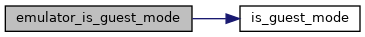

| static bool | emulator_is_guest_mode (struct x86_emulate_ctxt *ctxt) |

| static int | emulator_leave_smm (struct x86_emulate_ctxt *ctxt) |

| static void | emulator_triple_fault (struct x86_emulate_ctxt *ctxt) |

| static int | emulator_set_xcr (struct x86_emulate_ctxt *ctxt, u32 index, u64 xcr) |

| static void | emulator_vm_bugged (struct x86_emulate_ctxt *ctxt) |

| static gva_t | emulator_get_untagged_addr (struct x86_emulate_ctxt *ctxt, gva_t addr, unsigned int flags) |

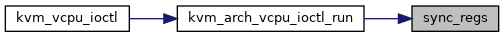

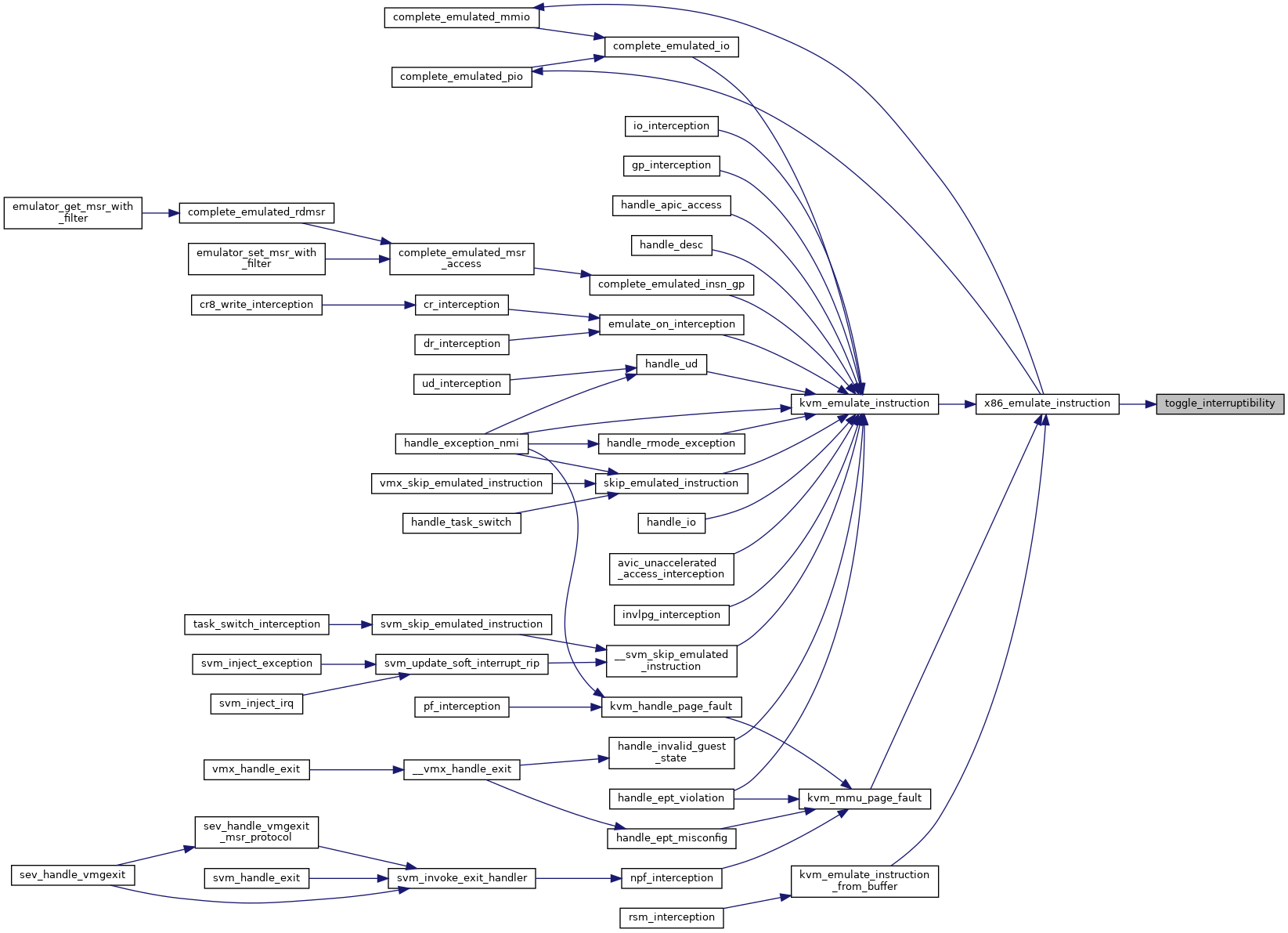

| static void | toggle_interruptibility (struct kvm_vcpu *vcpu, u32 mask) |

| static void | inject_emulated_exception (struct kvm_vcpu *vcpu) |

| static struct x86_emulate_ctxt * | alloc_emulate_ctxt (struct kvm_vcpu *vcpu) |

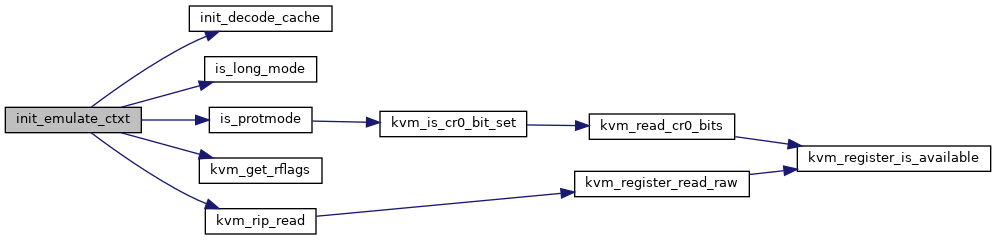

| static void | init_emulate_ctxt (struct kvm_vcpu *vcpu) |

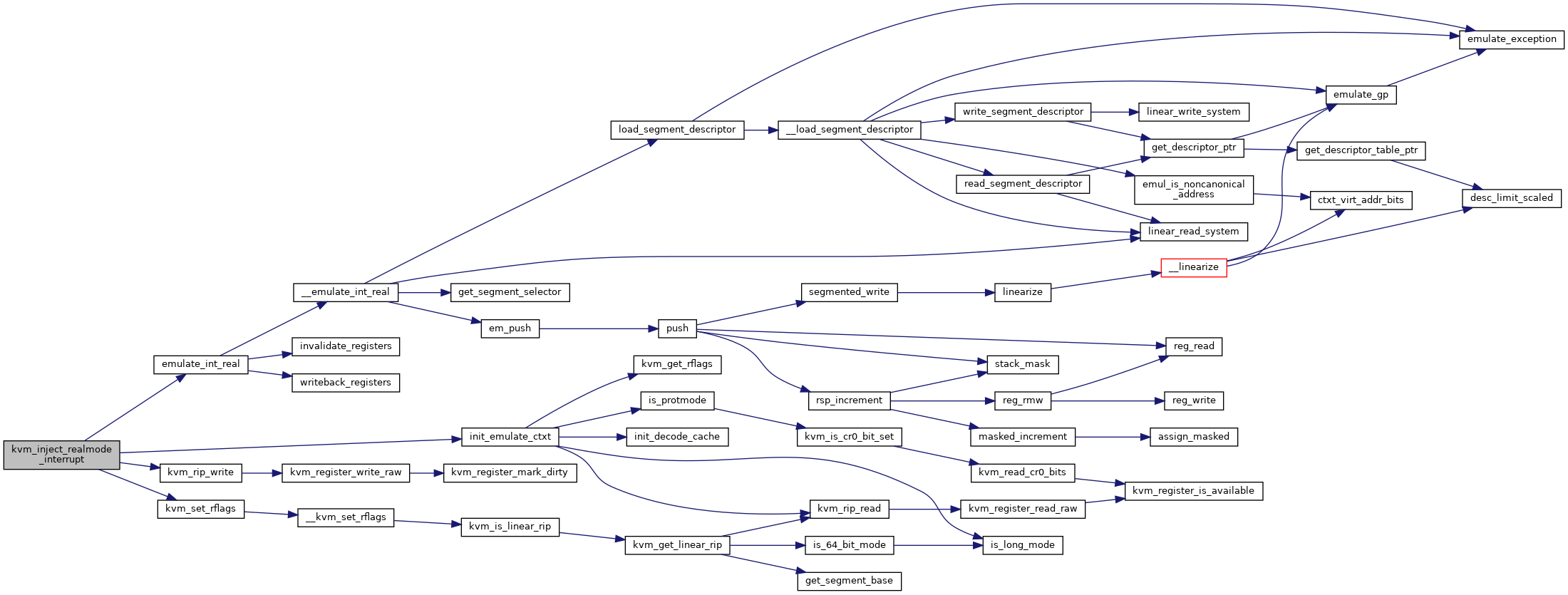

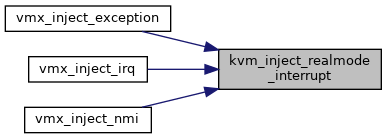

| void | kvm_inject_realmode_interrupt (struct kvm_vcpu *vcpu, int irq, int inc_eip) |

| EXPORT_SYMBOL_GPL (kvm_inject_realmode_interrupt) | |

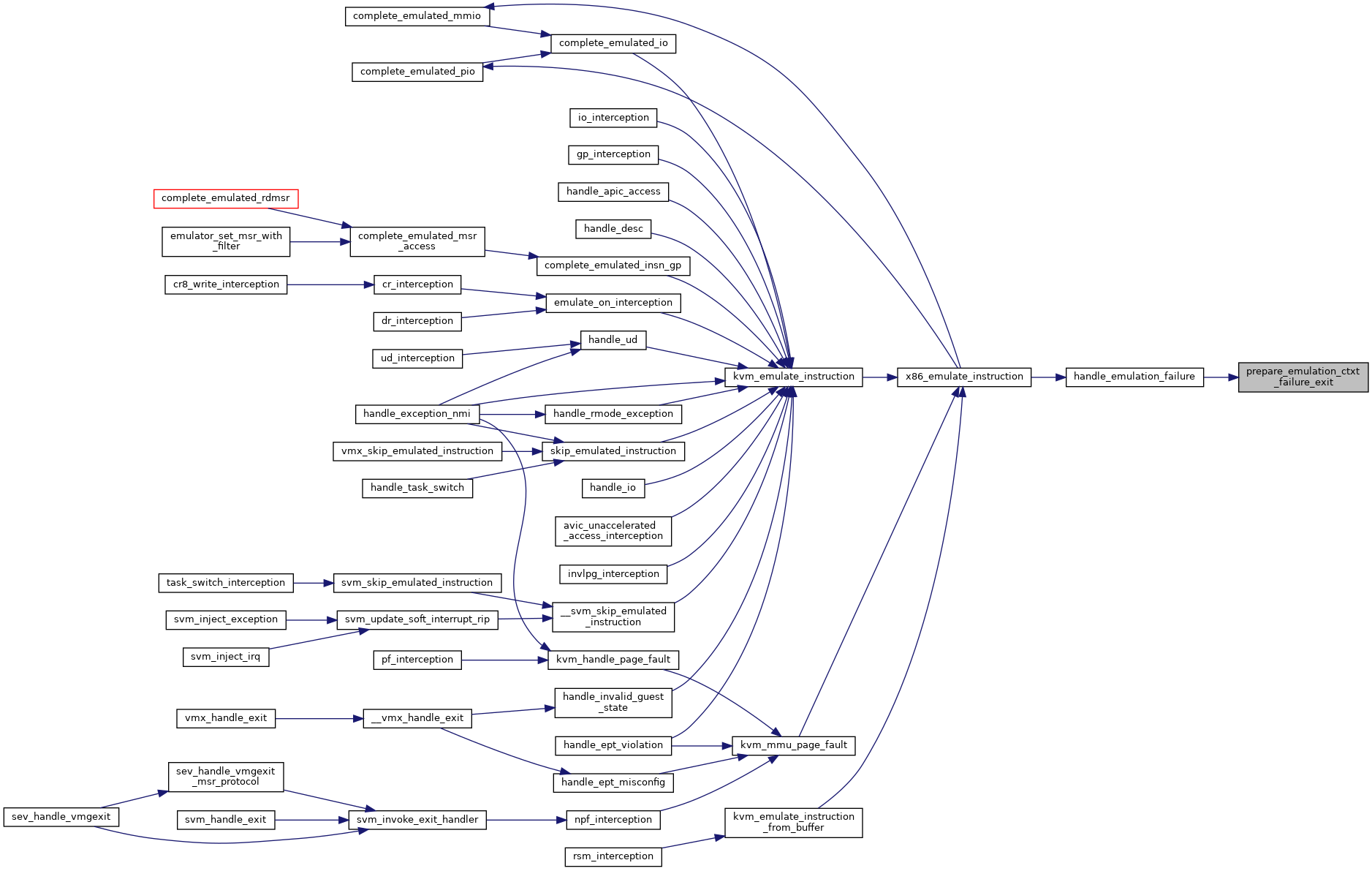

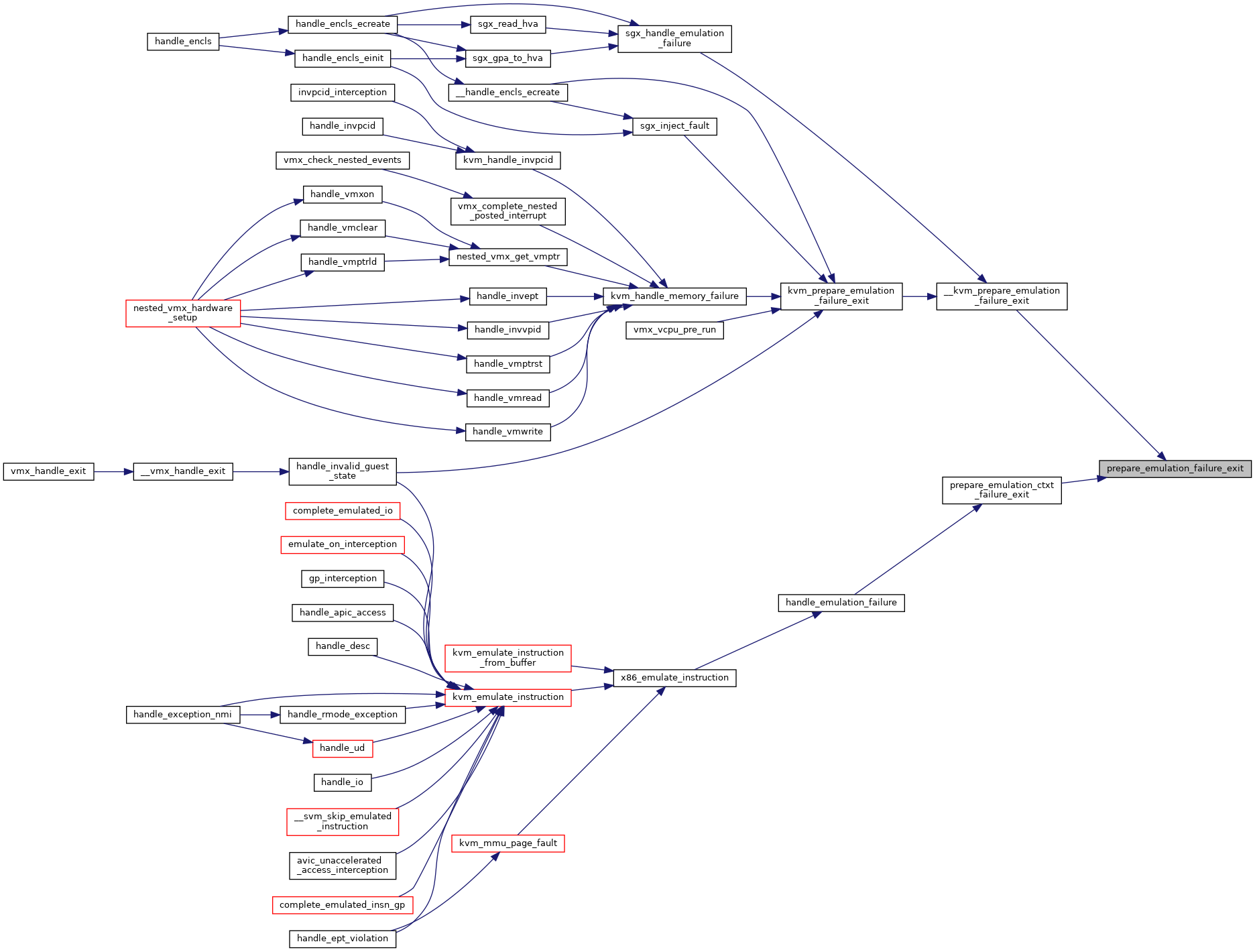

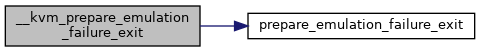

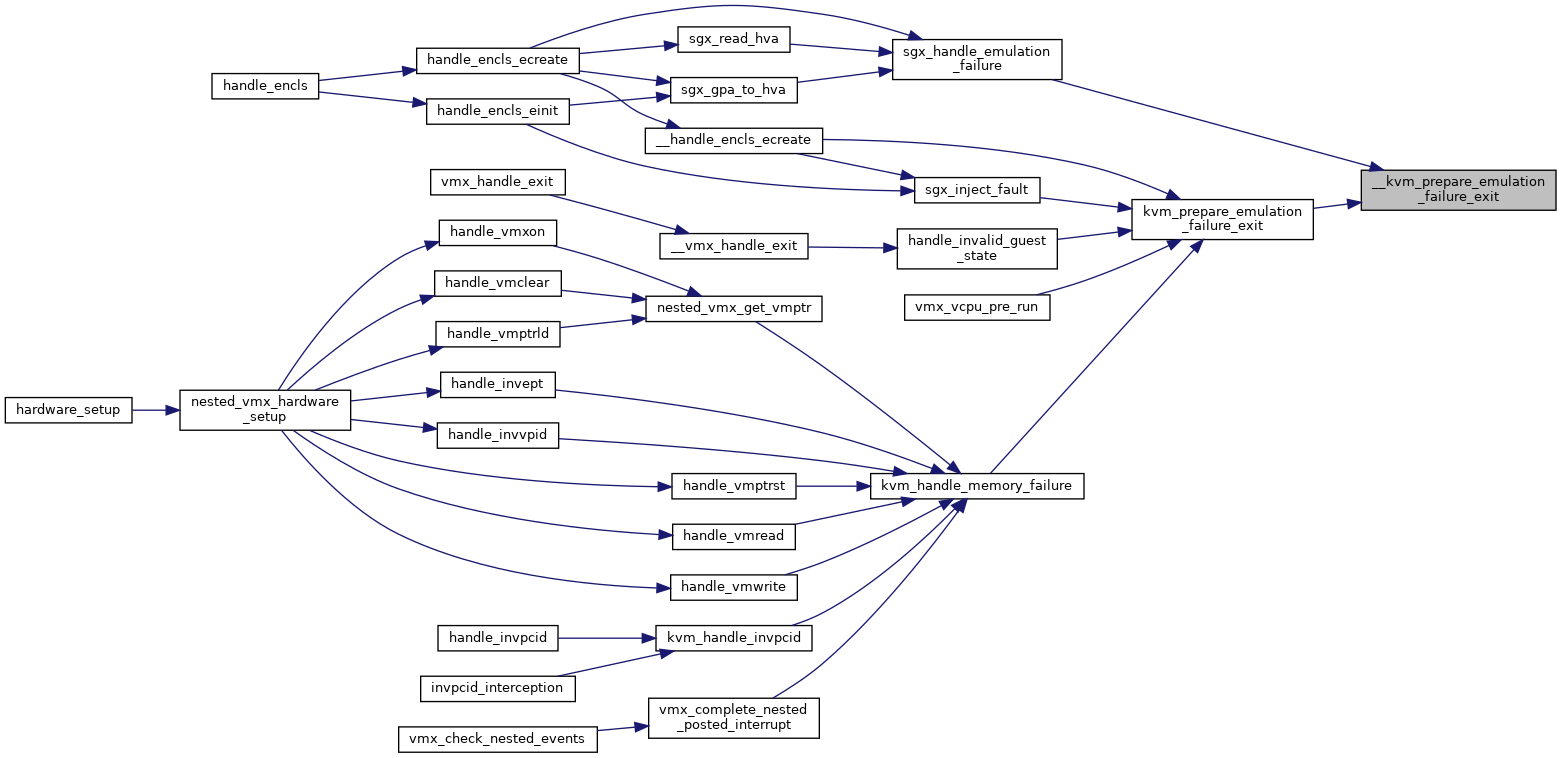

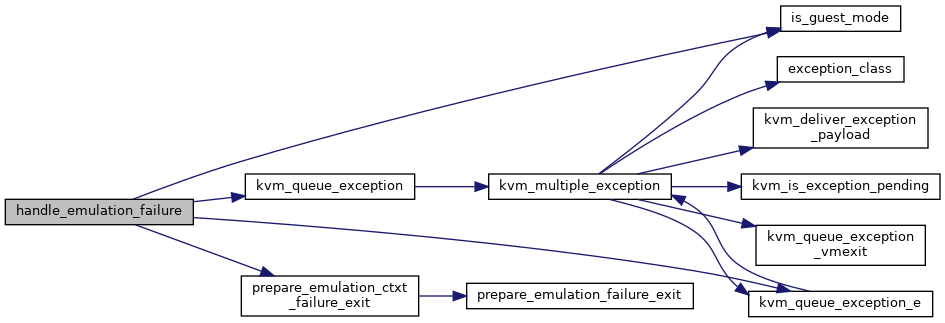

| static void | prepare_emulation_failure_exit (struct kvm_vcpu *vcpu, u64 *data, u8 ndata, u8 *insn_bytes, u8 insn_size) |

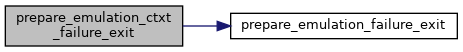

| static void | prepare_emulation_ctxt_failure_exit (struct kvm_vcpu *vcpu) |

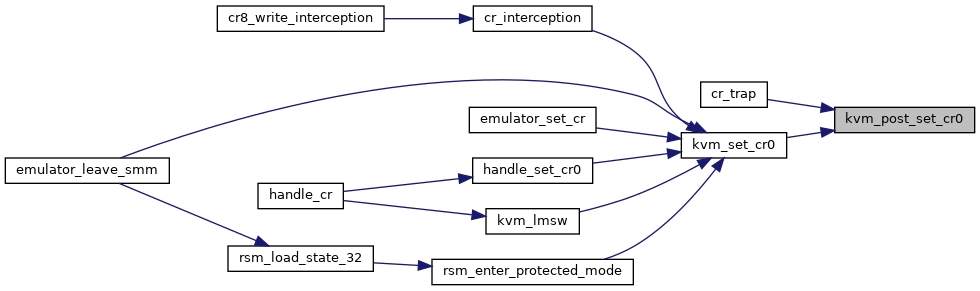

| void | __kvm_prepare_emulation_failure_exit (struct kvm_vcpu *vcpu, u64 *data, u8 ndata) |

| EXPORT_SYMBOL_GPL (__kvm_prepare_emulation_failure_exit) | |

| void | kvm_prepare_emulation_failure_exit (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_prepare_emulation_failure_exit) | |

| static int | handle_emulation_failure (struct kvm_vcpu *vcpu, int emulation_type) |

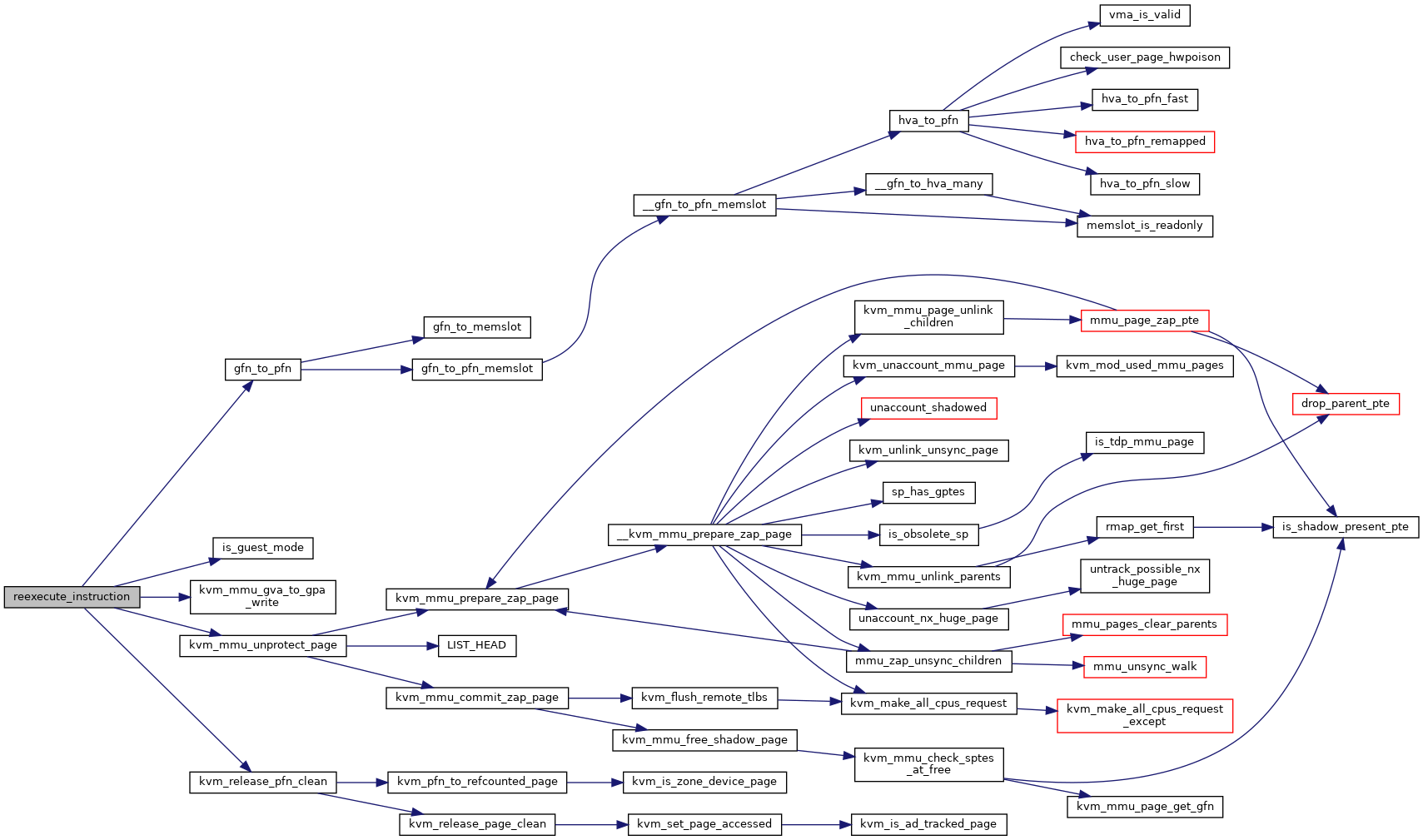

| static bool | reexecute_instruction (struct kvm_vcpu *vcpu, gpa_t cr2_or_gpa, int emulation_type) |

| static bool | retry_instruction (struct x86_emulate_ctxt *ctxt, gpa_t cr2_or_gpa, int emulation_type) |

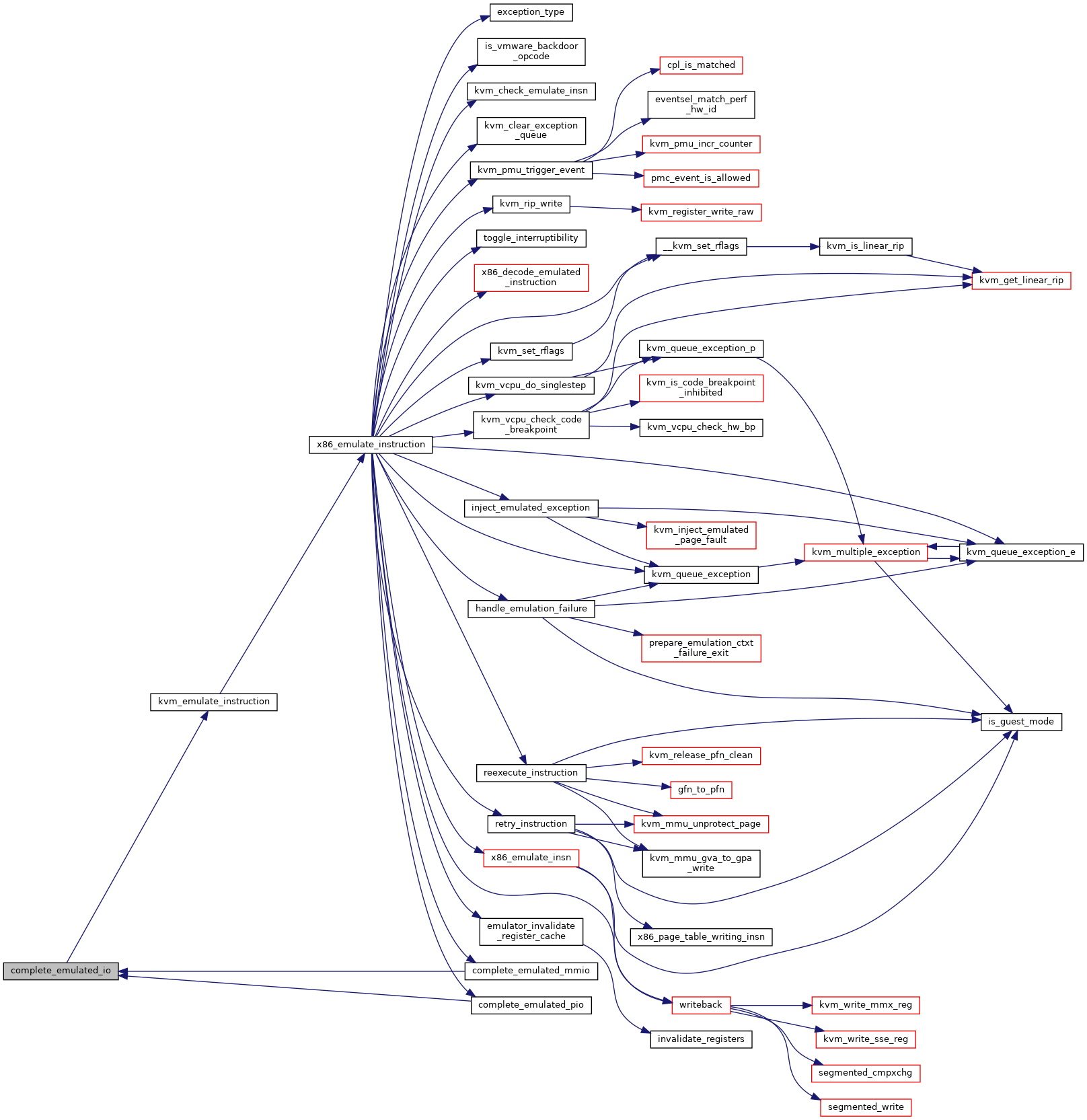

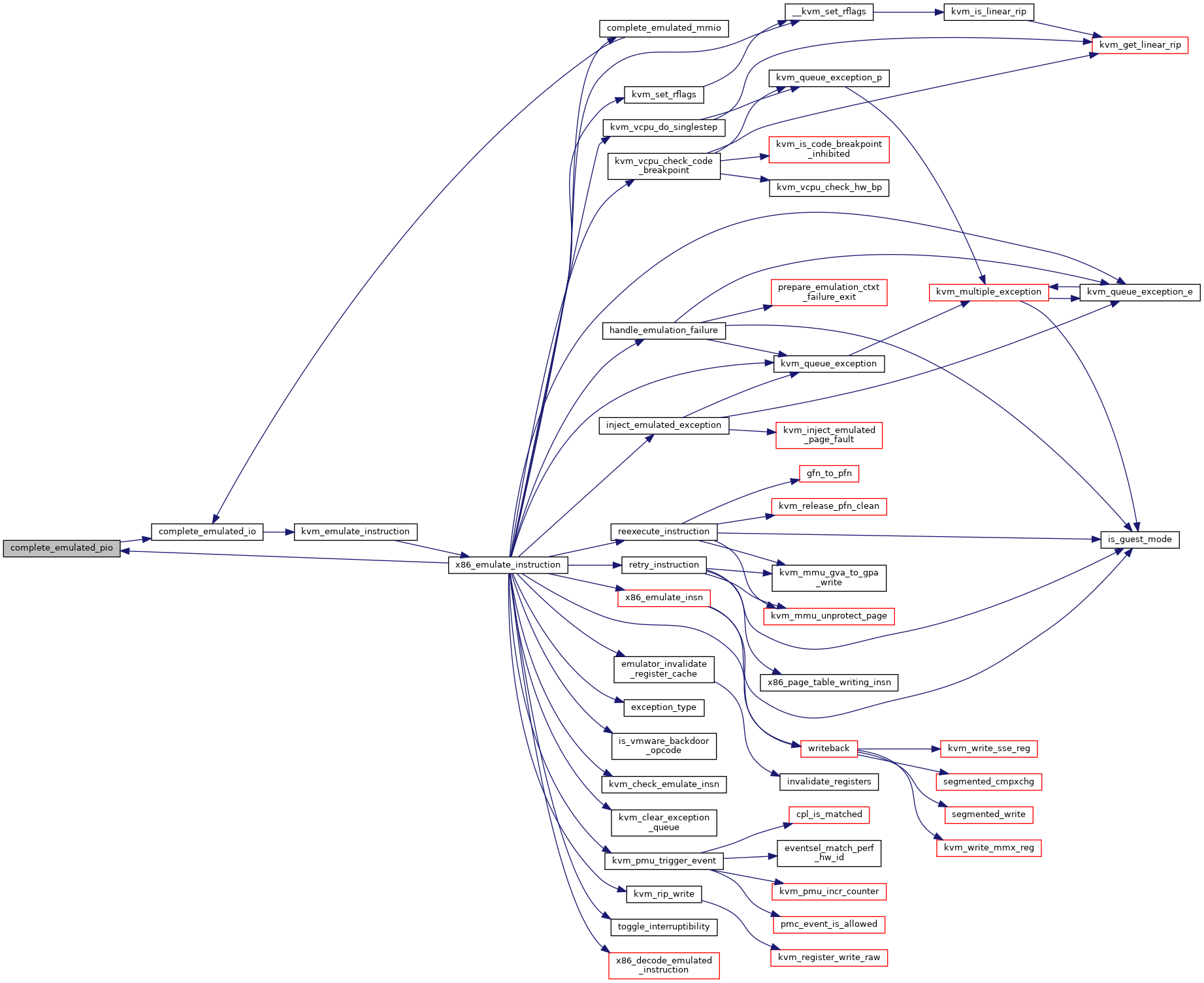

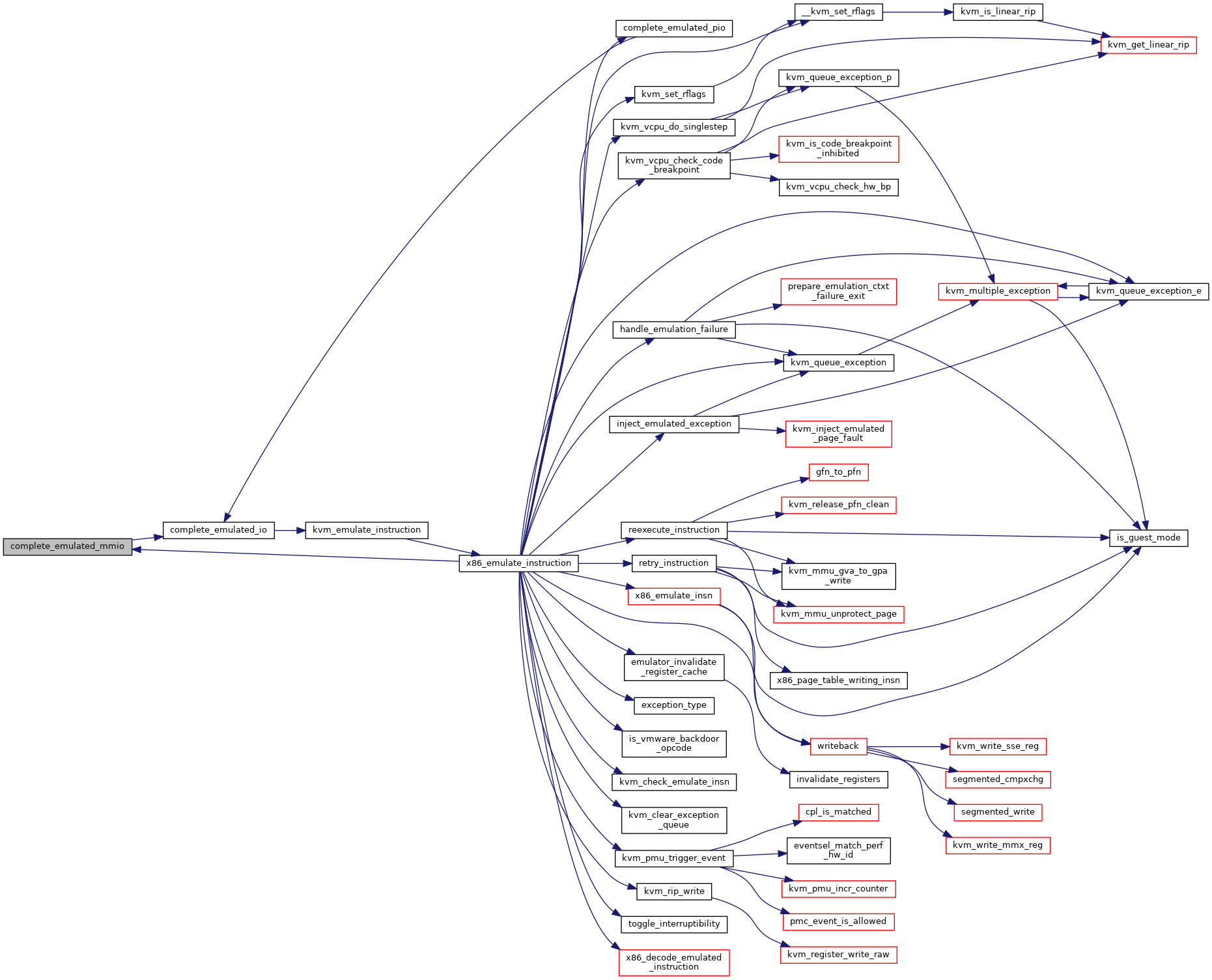

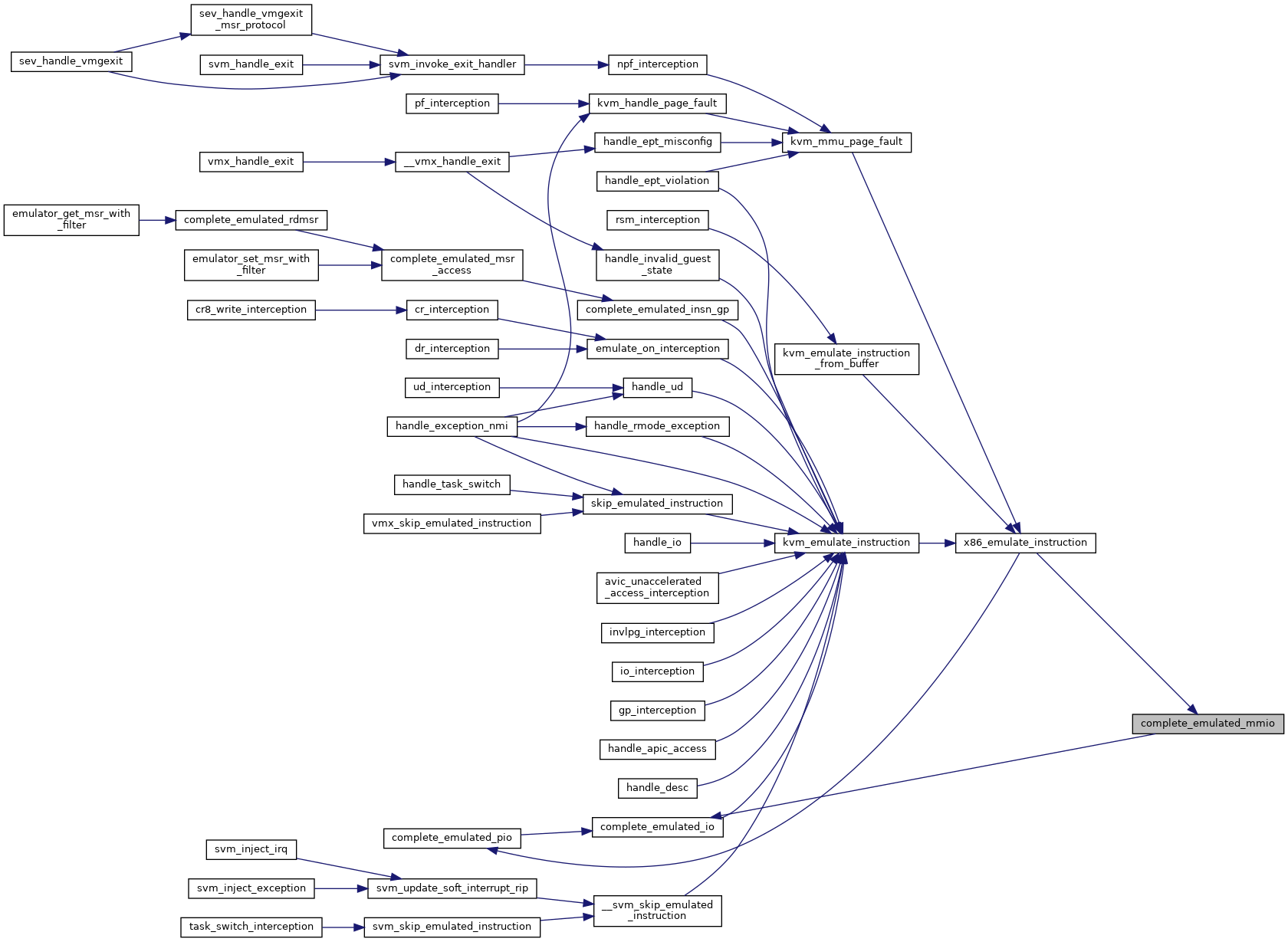

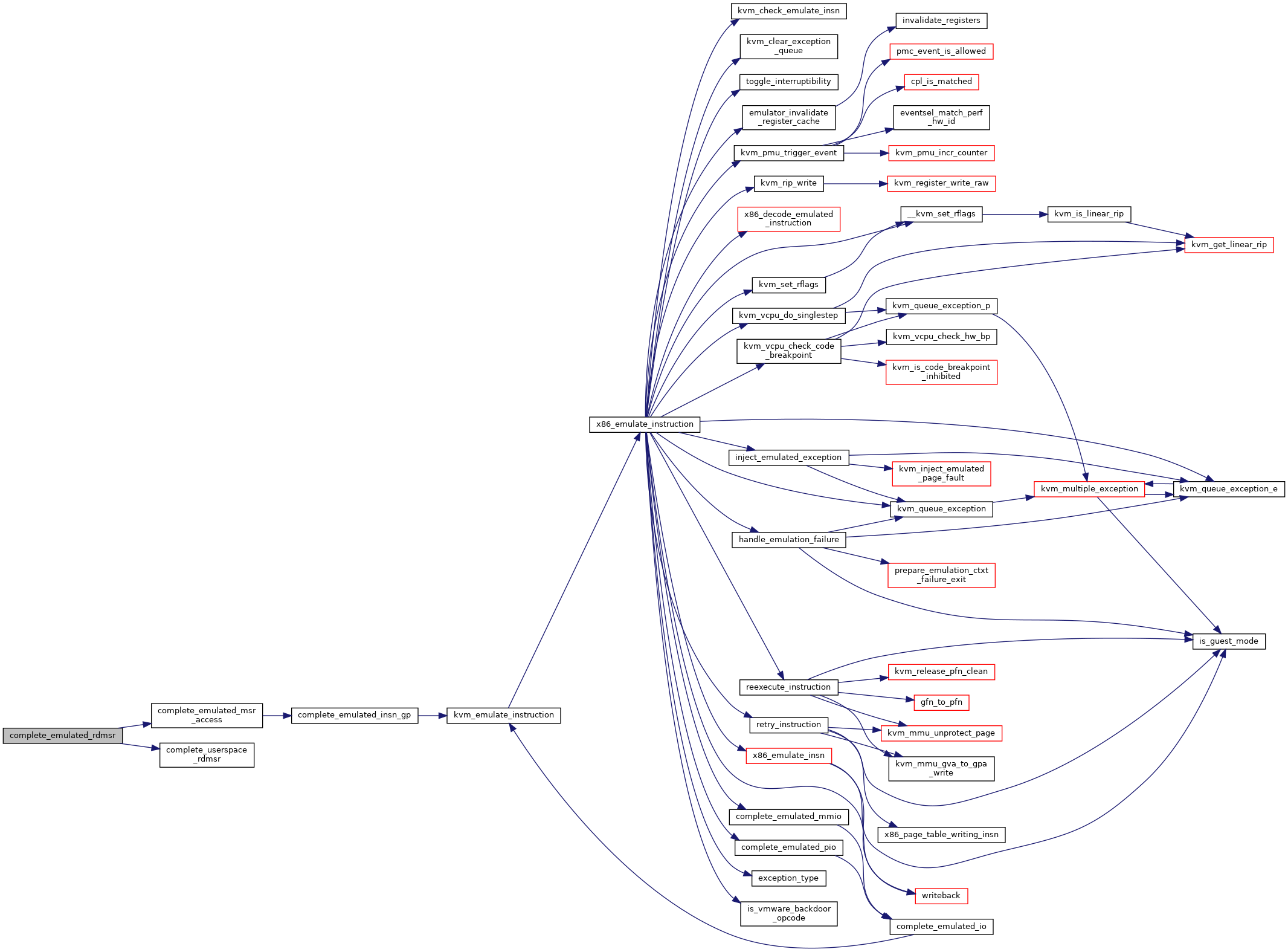

| static int | complete_emulated_mmio (struct kvm_vcpu *vcpu) |

| static int | complete_emulated_pio (struct kvm_vcpu *vcpu) |

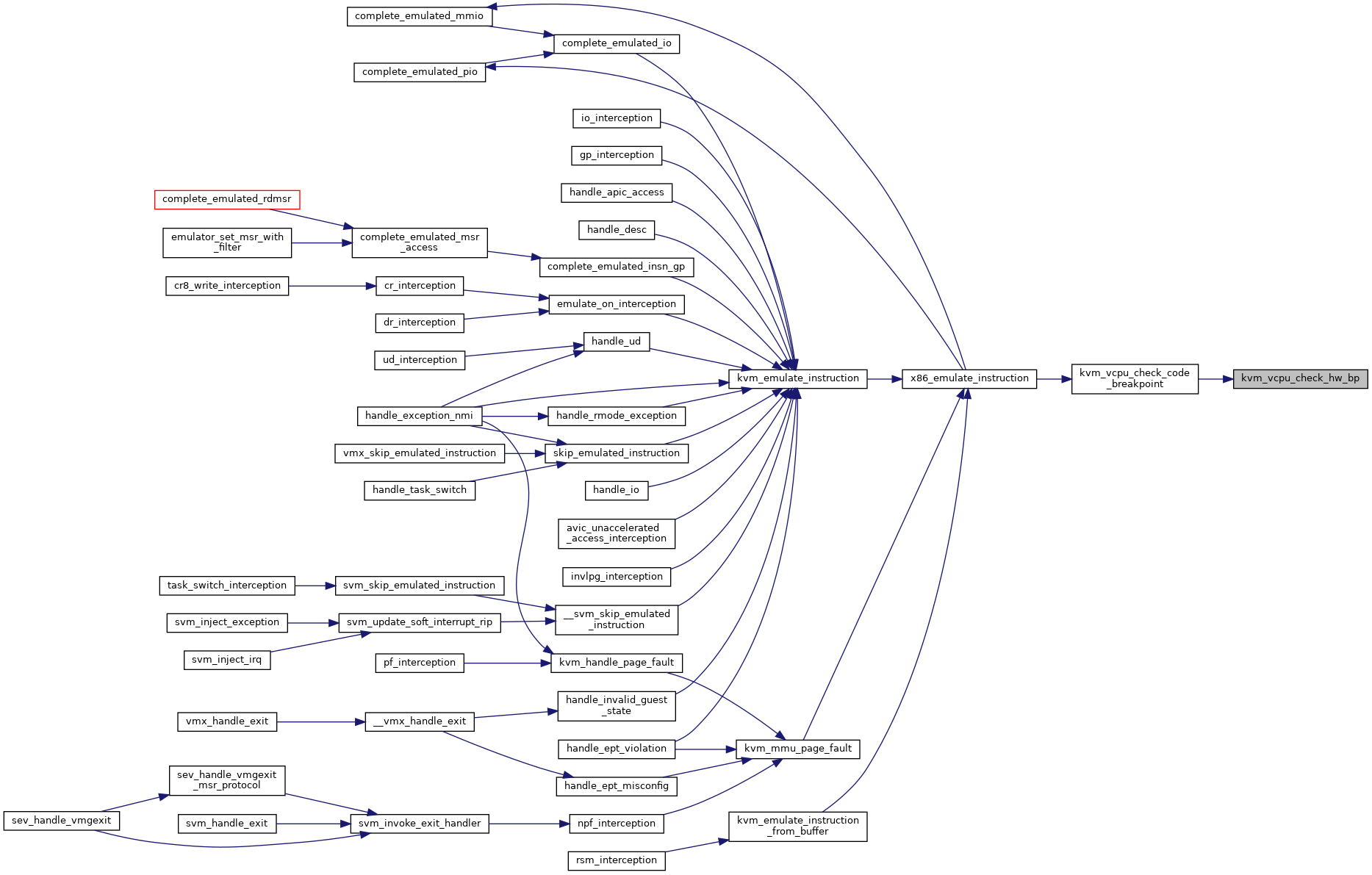

| static int | kvm_vcpu_check_hw_bp (unsigned long addr, u32 type, u32 dr7, unsigned long *db) |

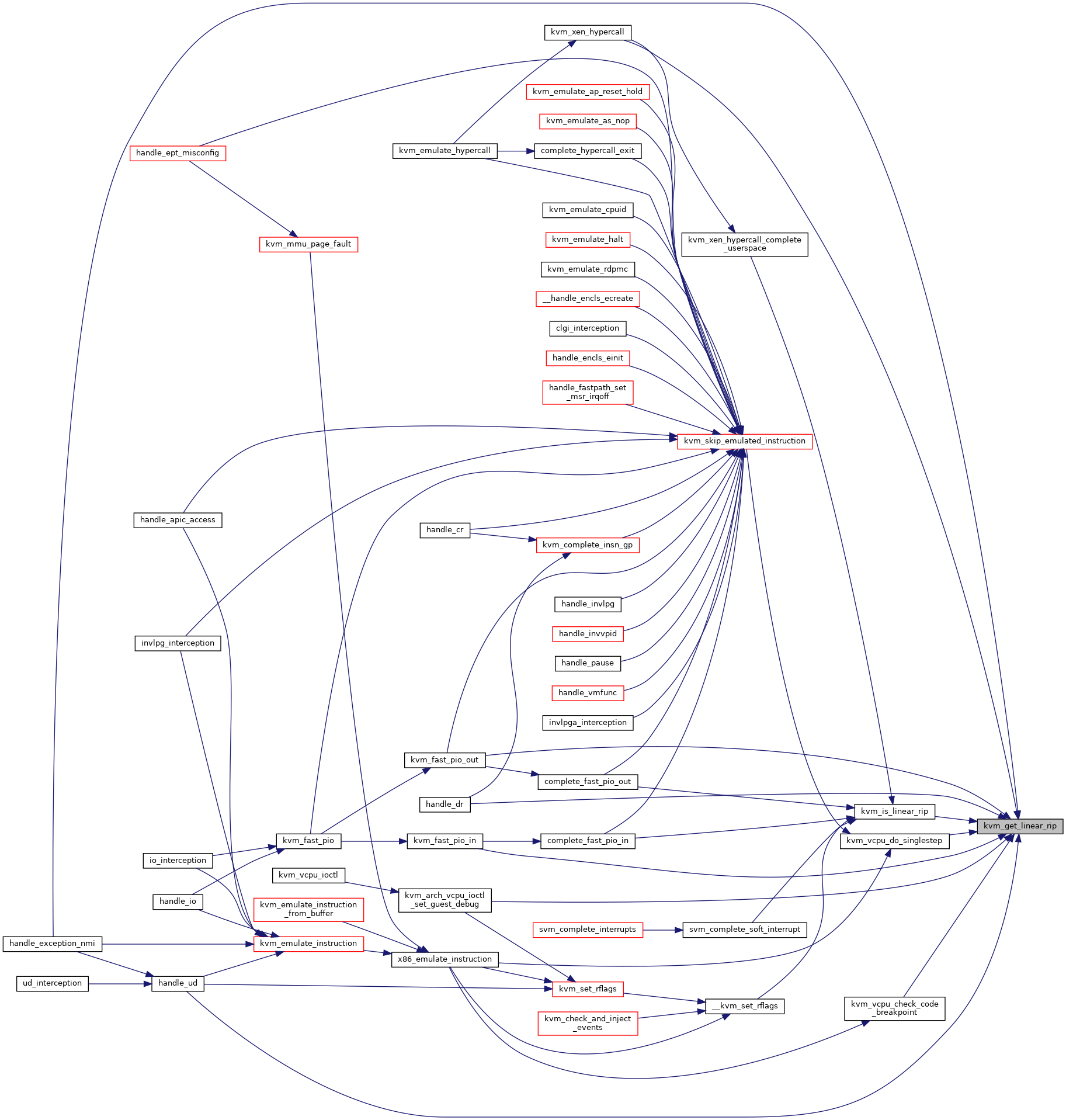

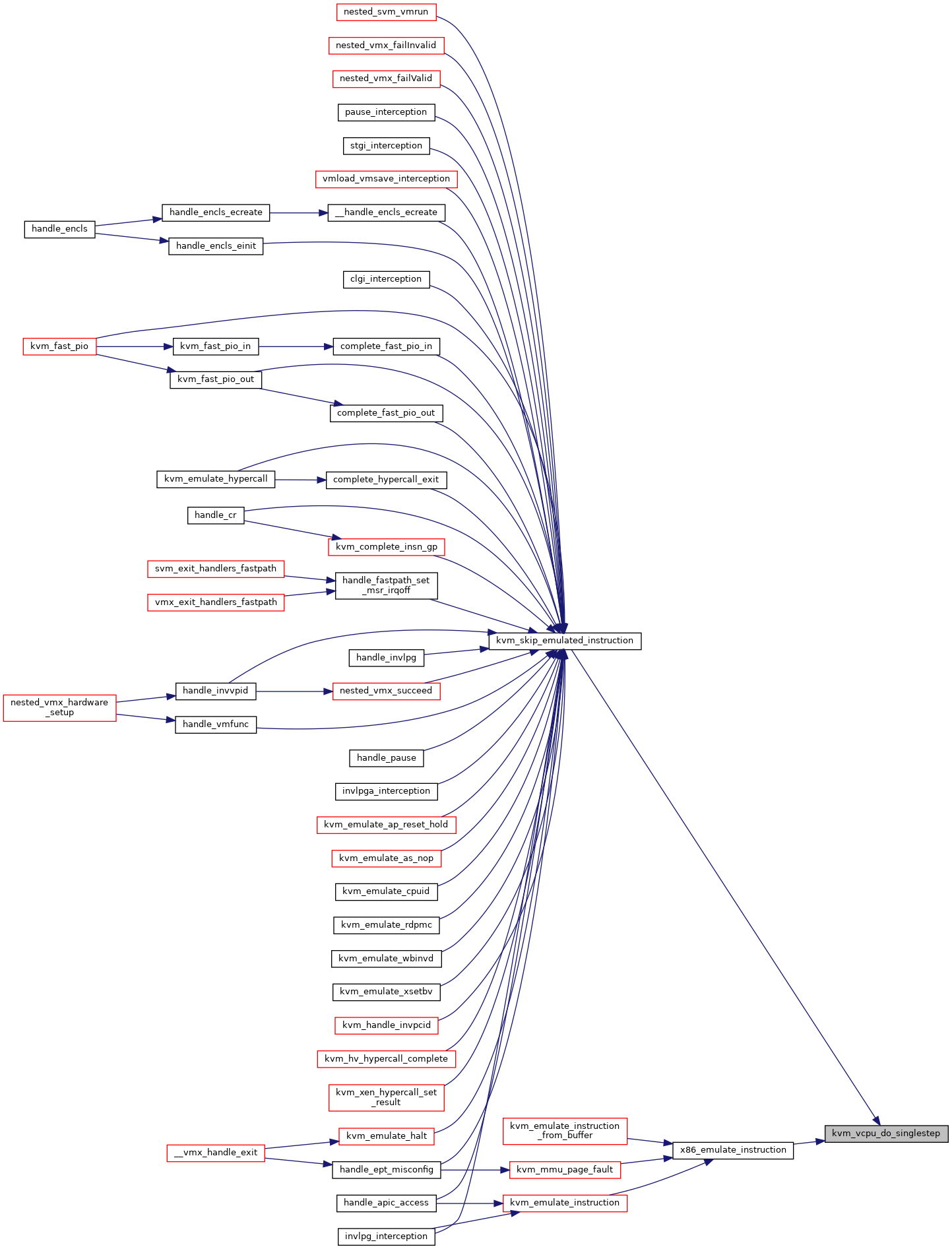

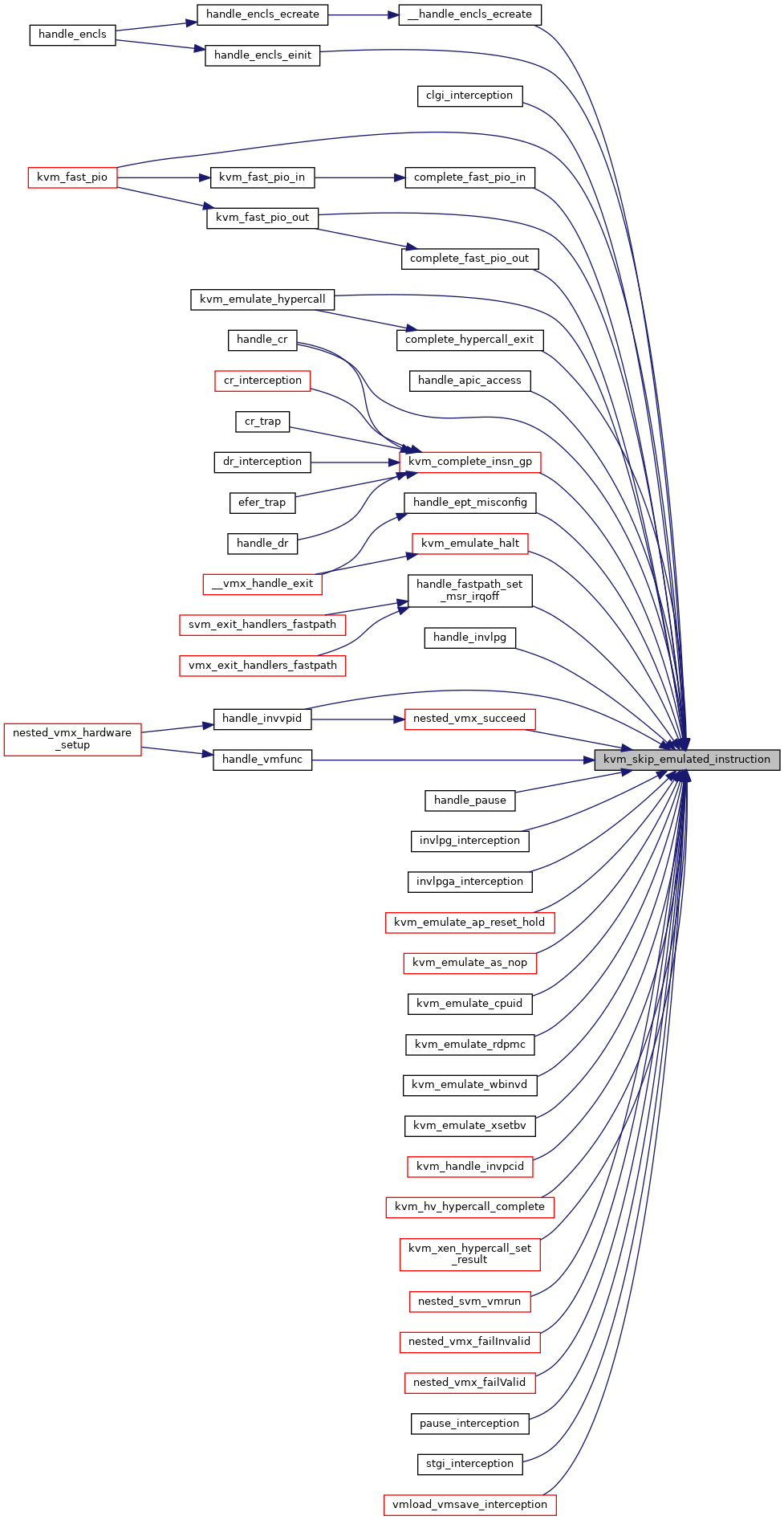

| int | kvm_skip_emulated_instruction (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_skip_emulated_instruction) | |

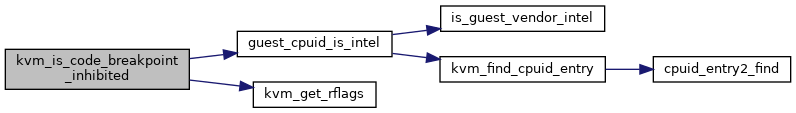

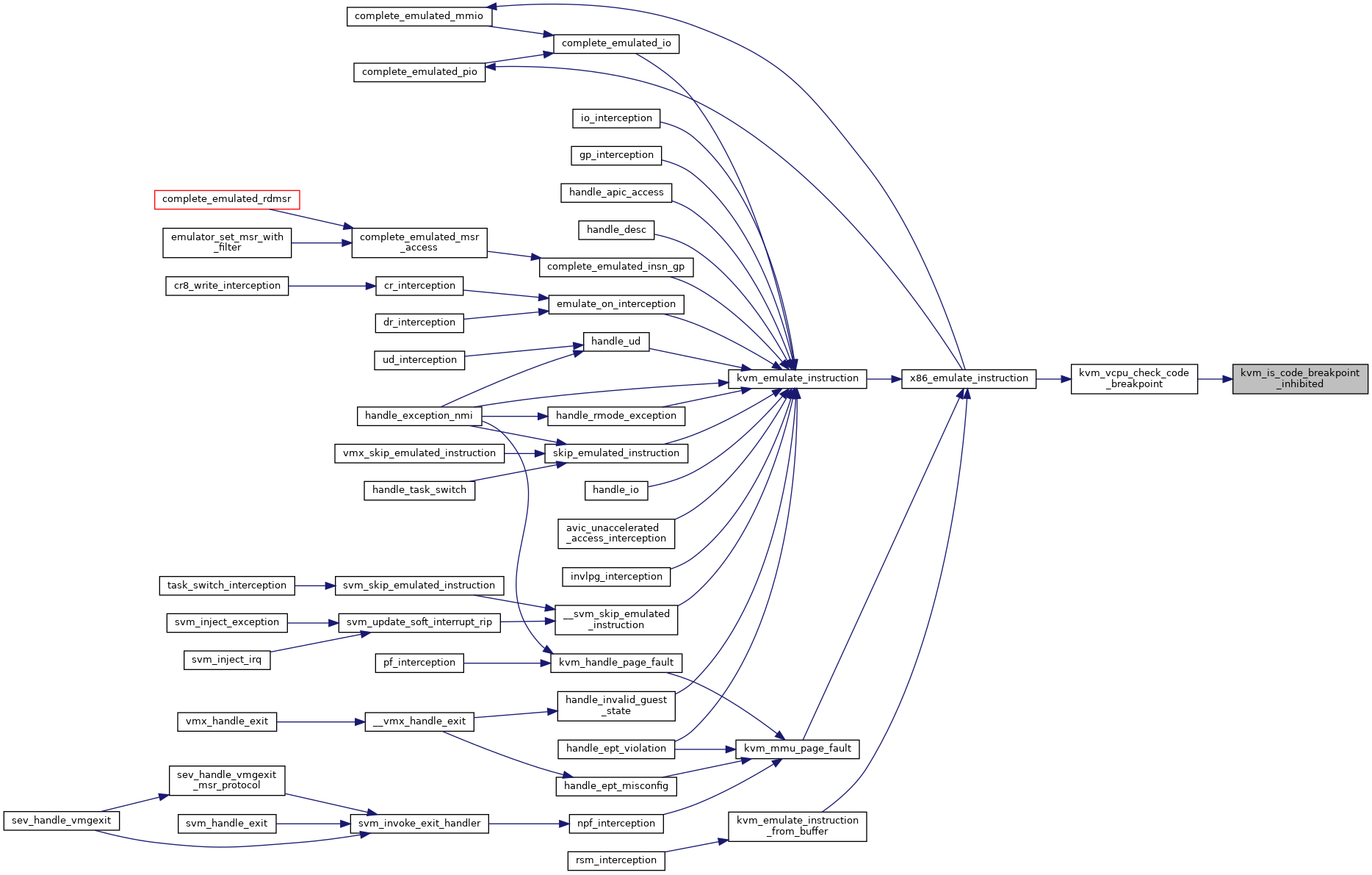

| static bool | kvm_is_code_breakpoint_inhibited (struct kvm_vcpu *vcpu) |

| static bool | kvm_vcpu_check_code_breakpoint (struct kvm_vcpu *vcpu, int emulation_type, int *r) |

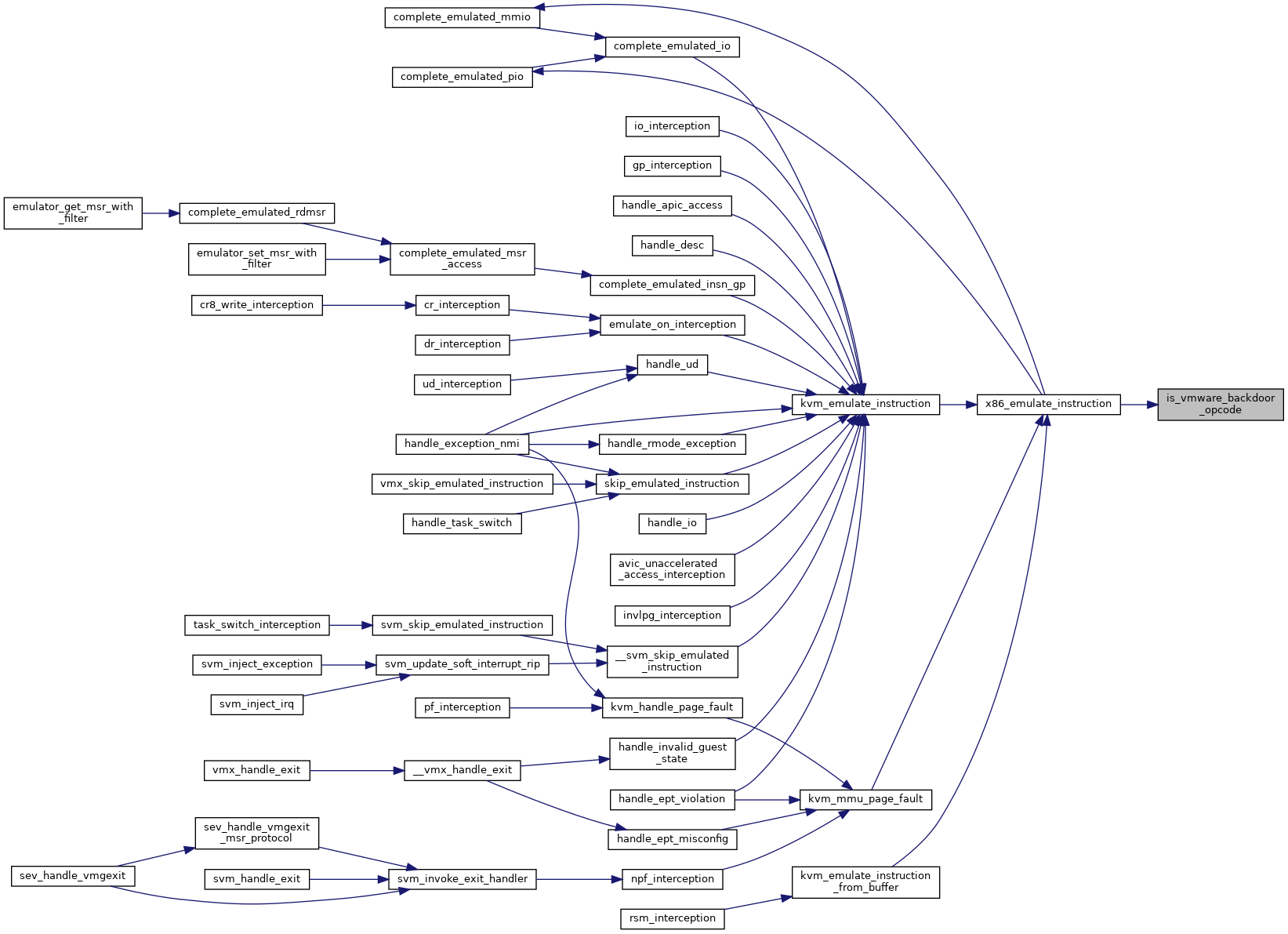

| static bool | is_vmware_backdoor_opcode (struct x86_emulate_ctxt *ctxt) |

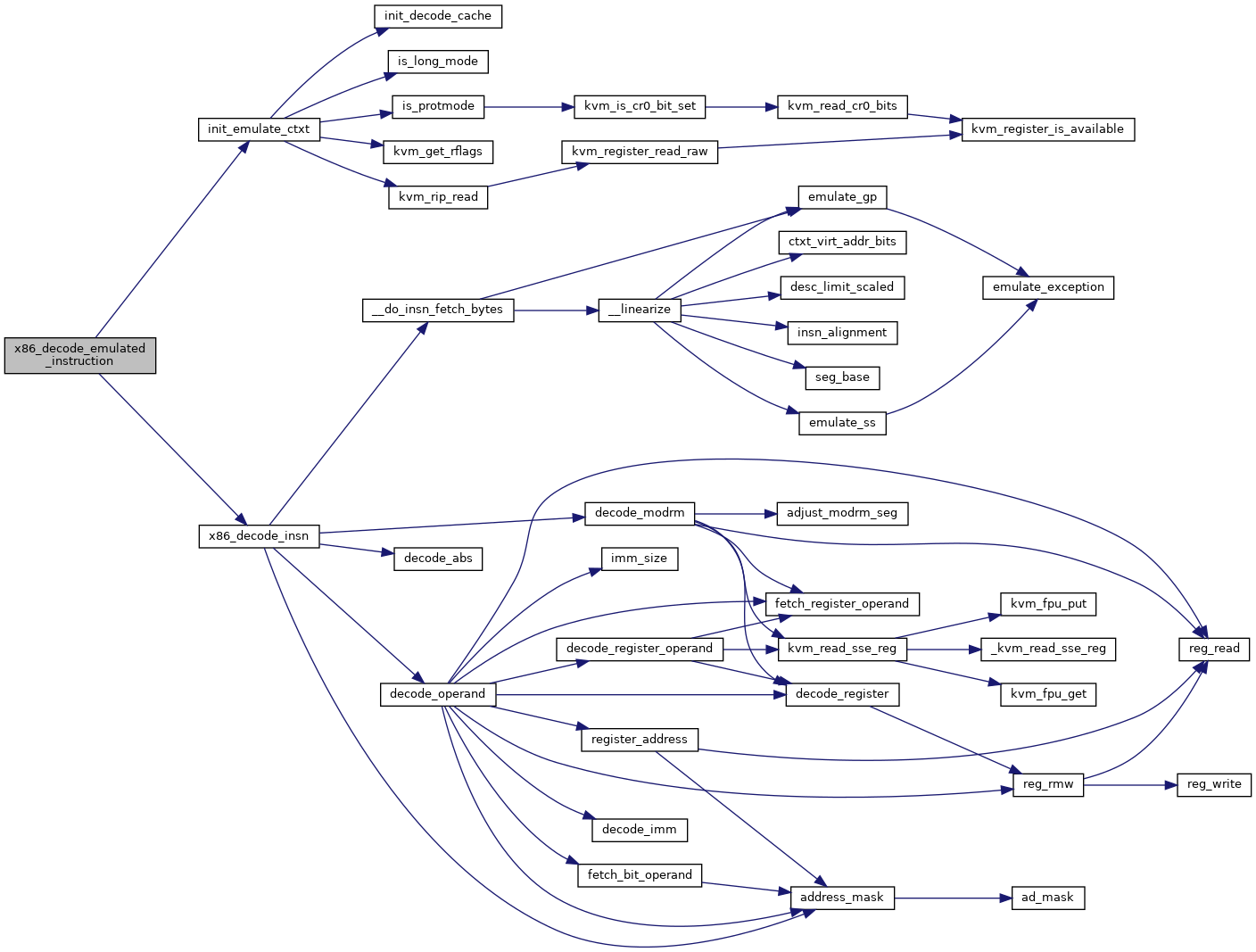

| int | x86_decode_emulated_instruction (struct kvm_vcpu *vcpu, int emulation_type, void *insn, int insn_len) |

| EXPORT_SYMBOL_GPL (x86_decode_emulated_instruction) | |

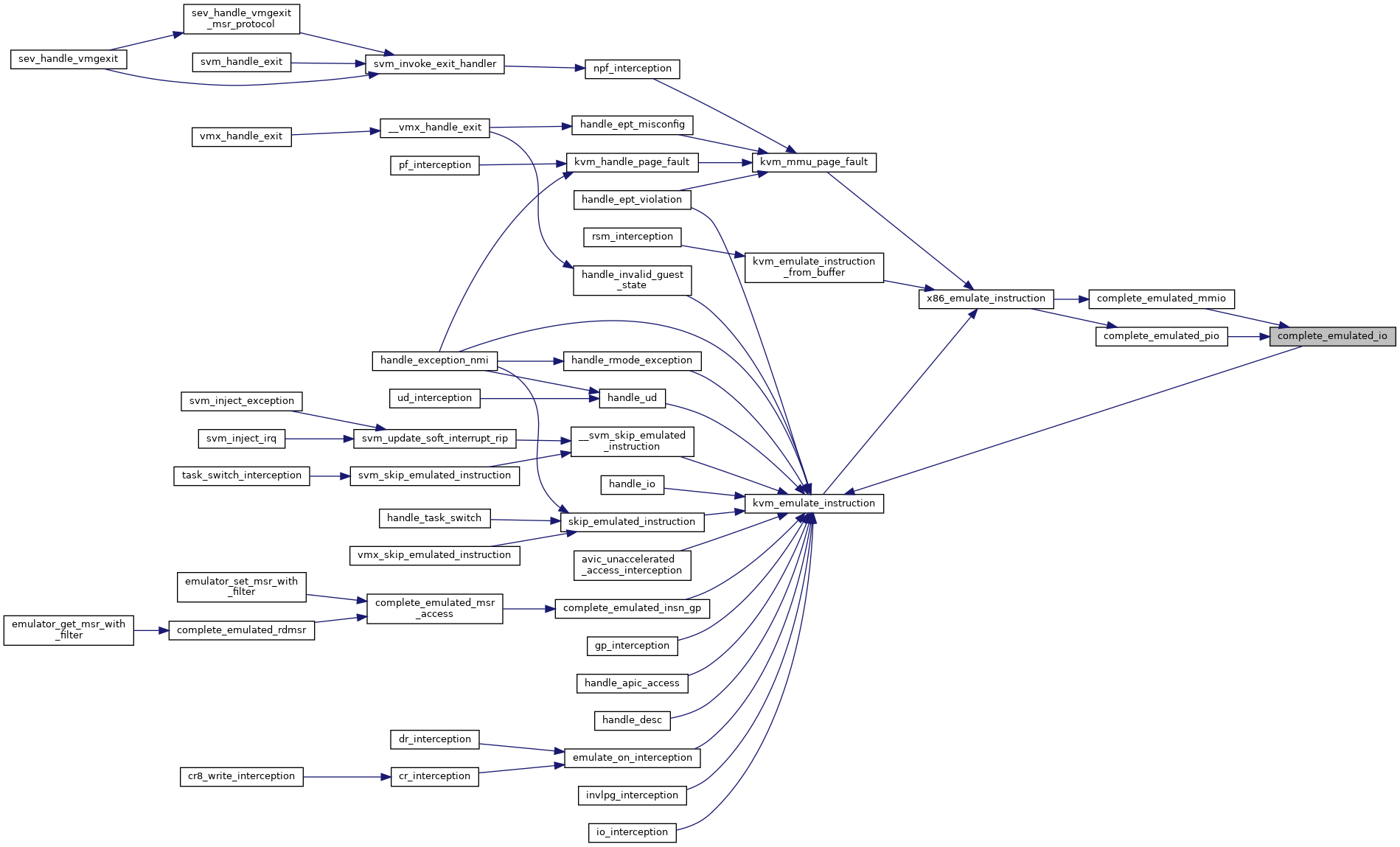

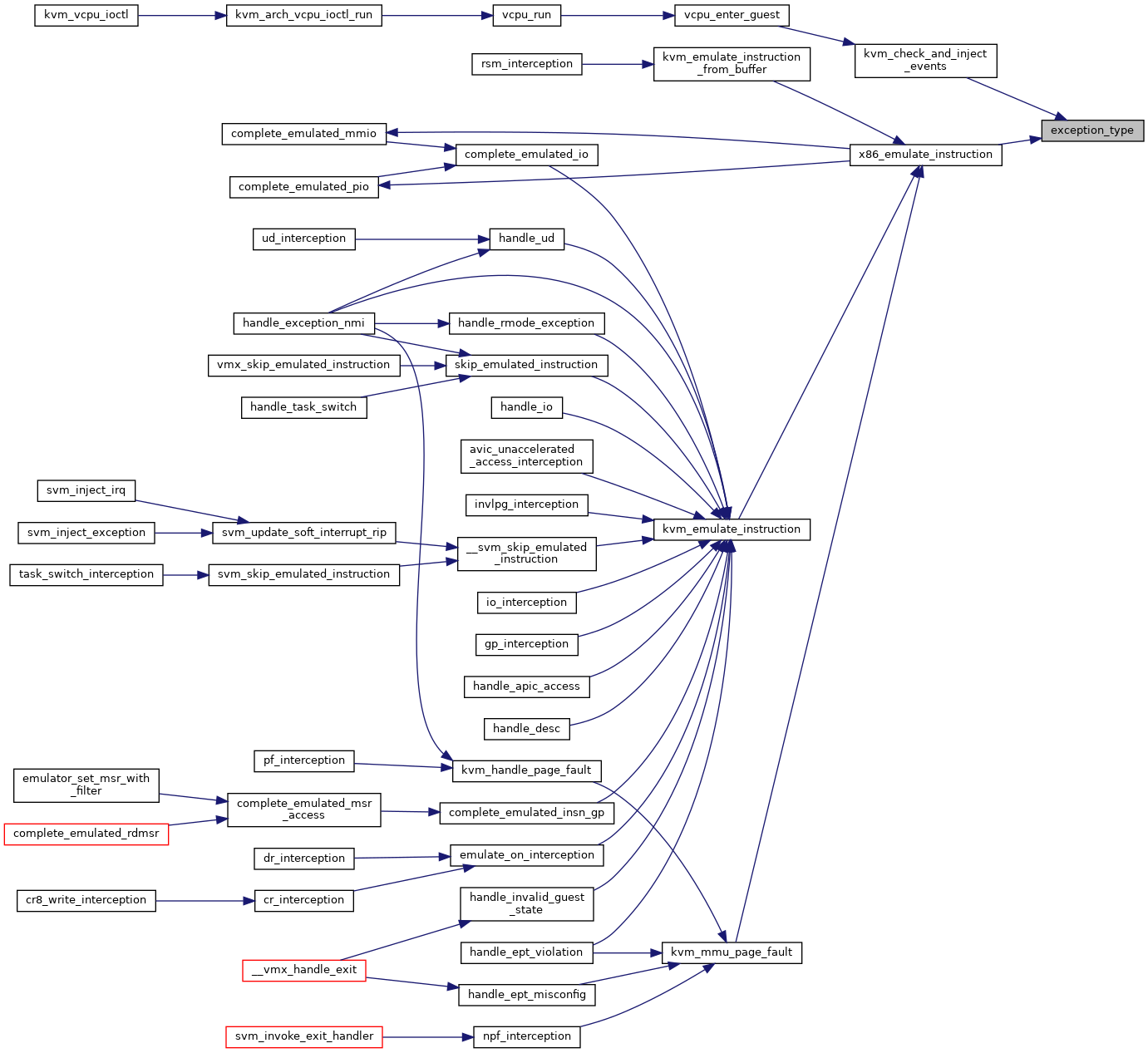

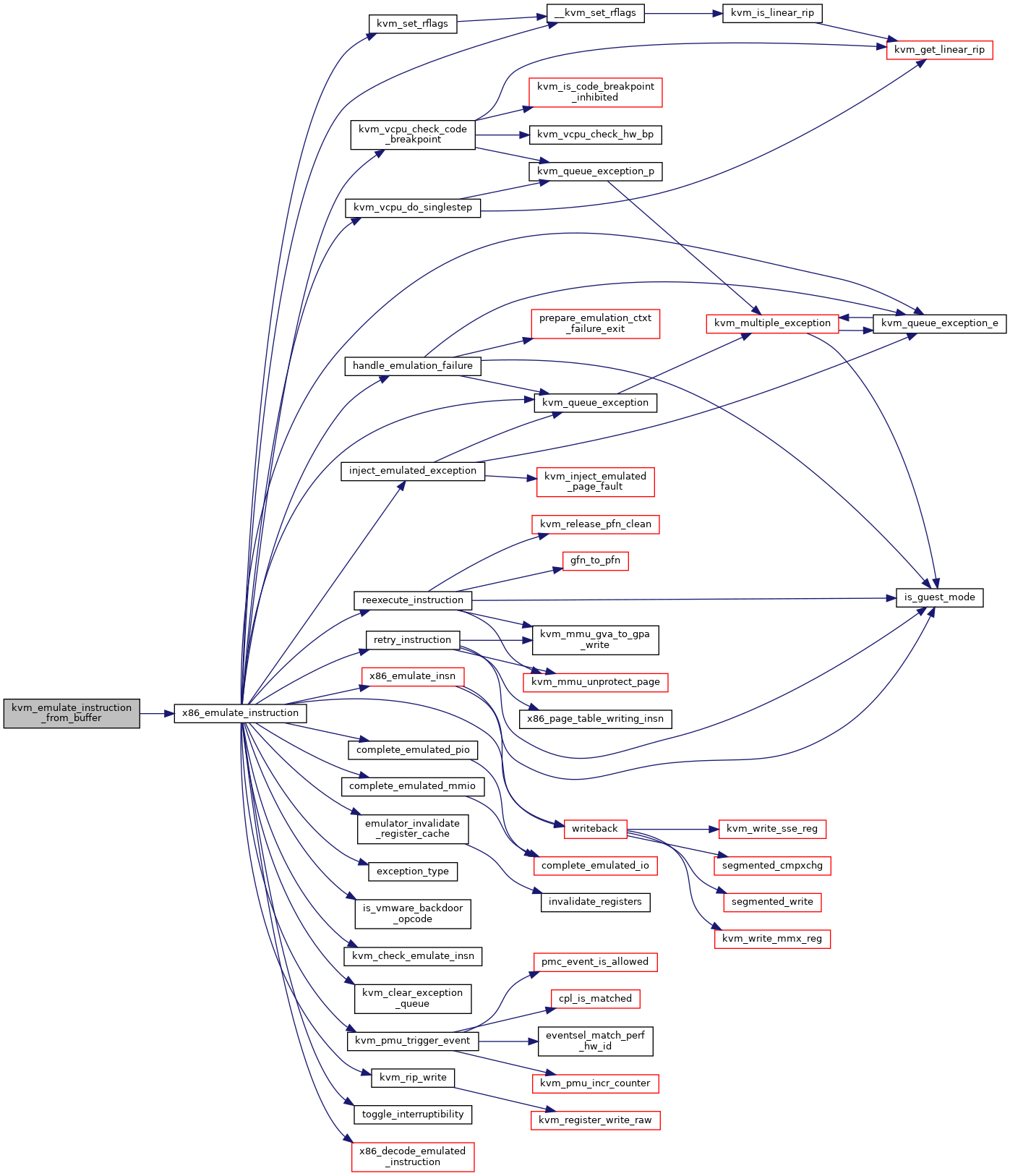

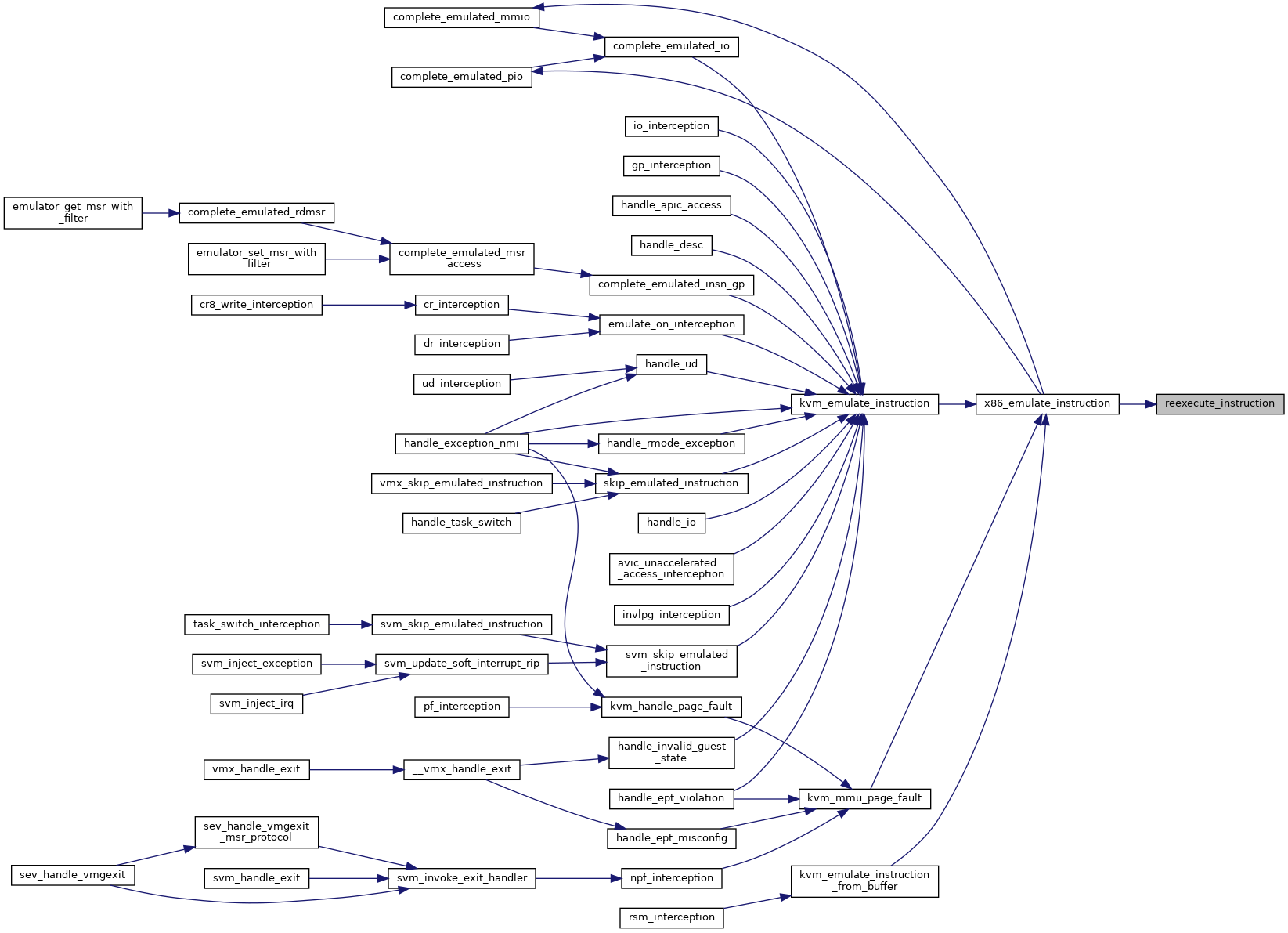

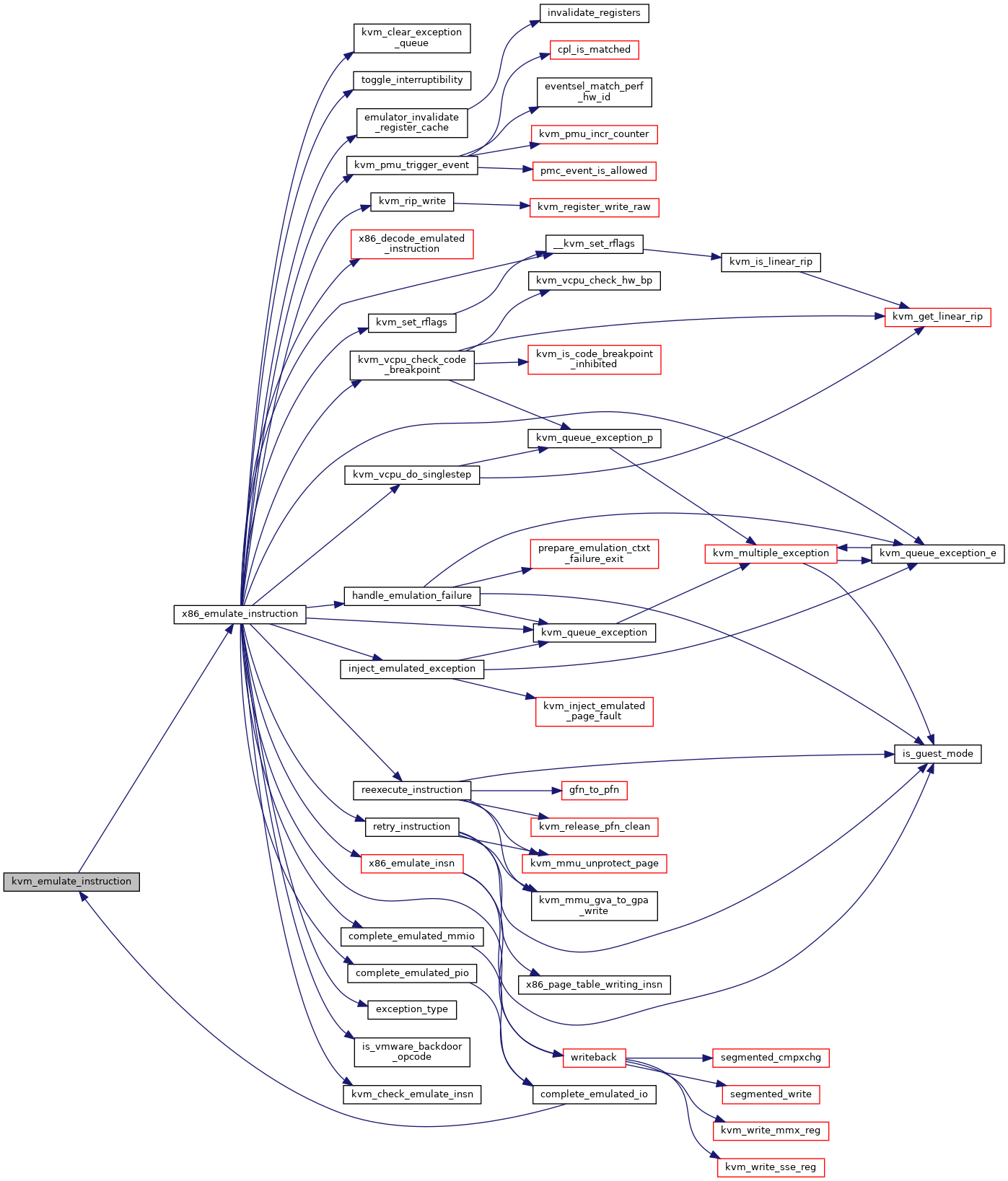

| int | x86_emulate_instruction (struct kvm_vcpu *vcpu, gpa_t cr2_or_gpa, int emulation_type, void *insn, int insn_len) |

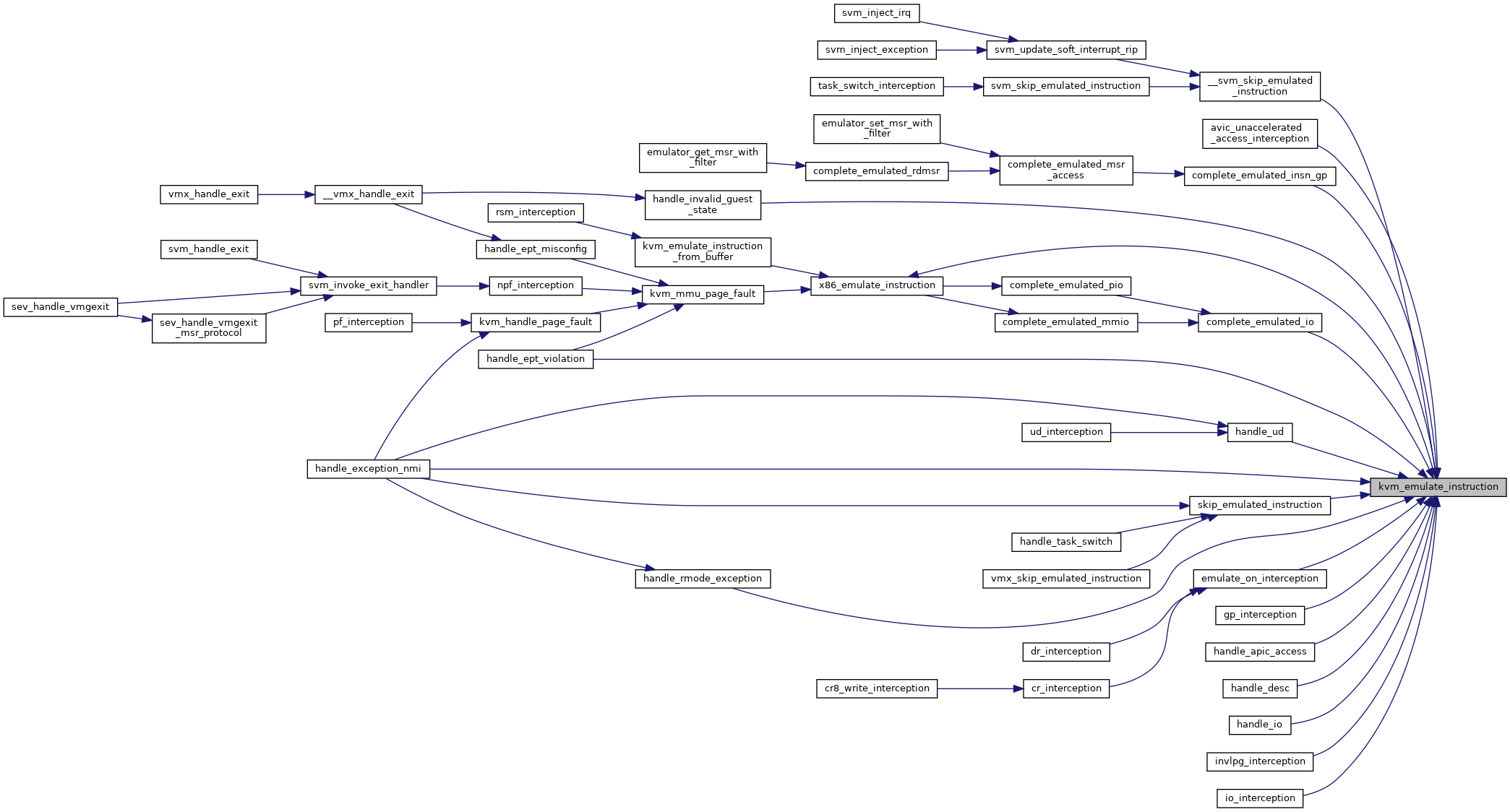

| int | kvm_emulate_instruction (struct kvm_vcpu *vcpu, int emulation_type) |

| EXPORT_SYMBOL_GPL (kvm_emulate_instruction) | |

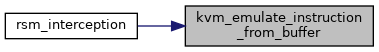

| int | kvm_emulate_instruction_from_buffer (struct kvm_vcpu *vcpu, void *insn, int insn_len) |

| EXPORT_SYMBOL_GPL (kvm_emulate_instruction_from_buffer) | |

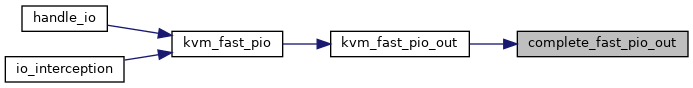

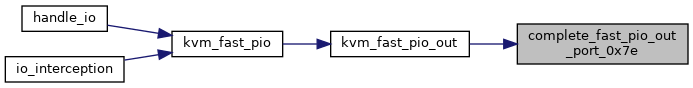

| static int | complete_fast_pio_out_port_0x7e (struct kvm_vcpu *vcpu) |

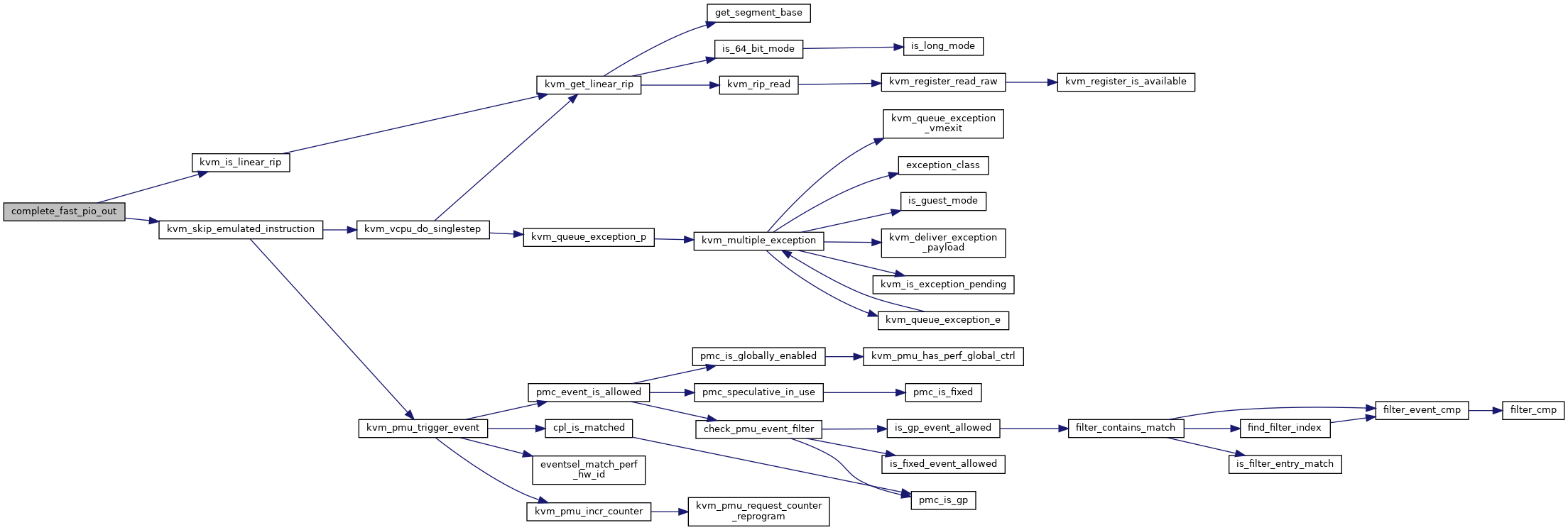

| static int | complete_fast_pio_out (struct kvm_vcpu *vcpu) |

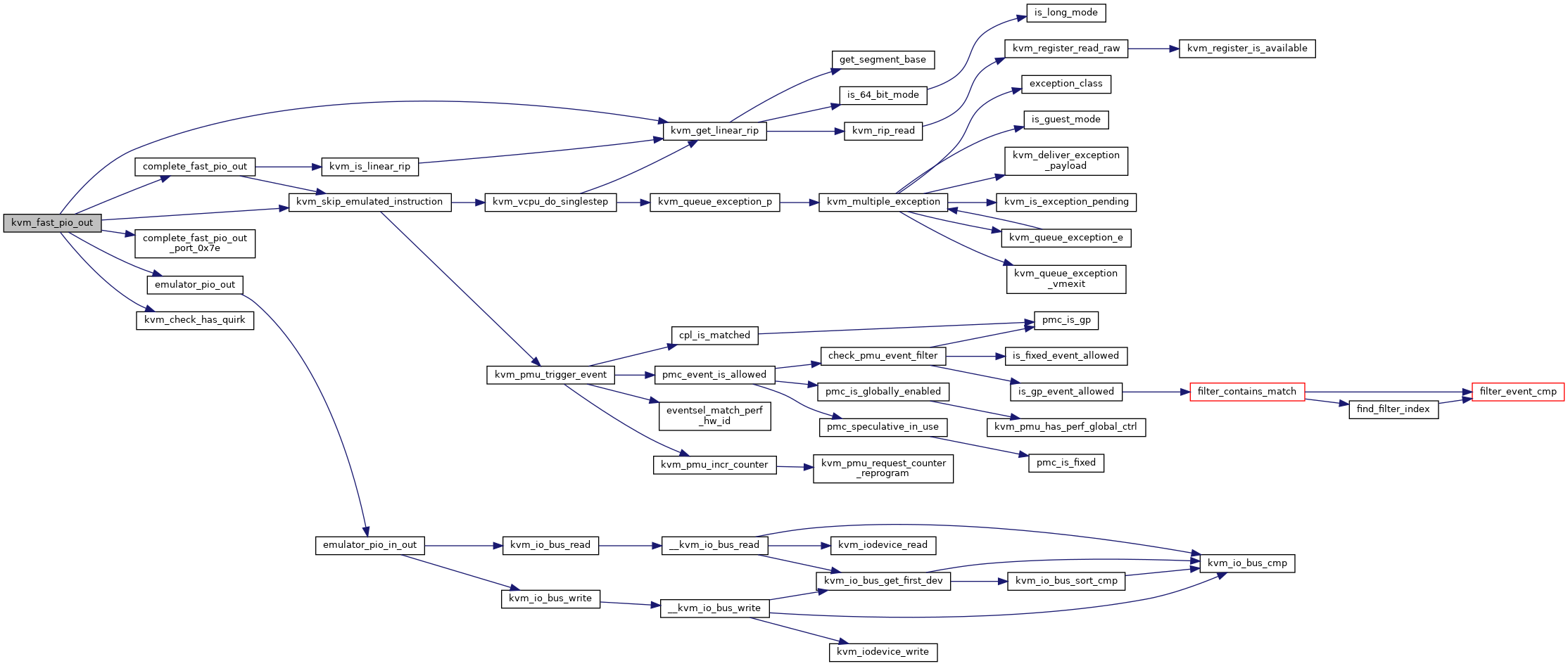

| static int | kvm_fast_pio_out (struct kvm_vcpu *vcpu, int size, unsigned short port) |

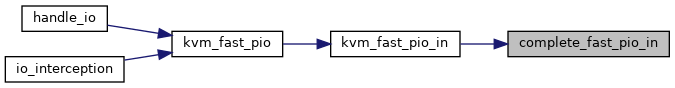

| static int | complete_fast_pio_in (struct kvm_vcpu *vcpu) |

| static int | kvm_fast_pio_in (struct kvm_vcpu *vcpu, int size, unsigned short port) |

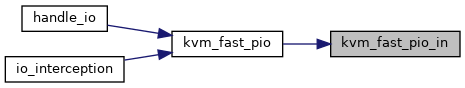

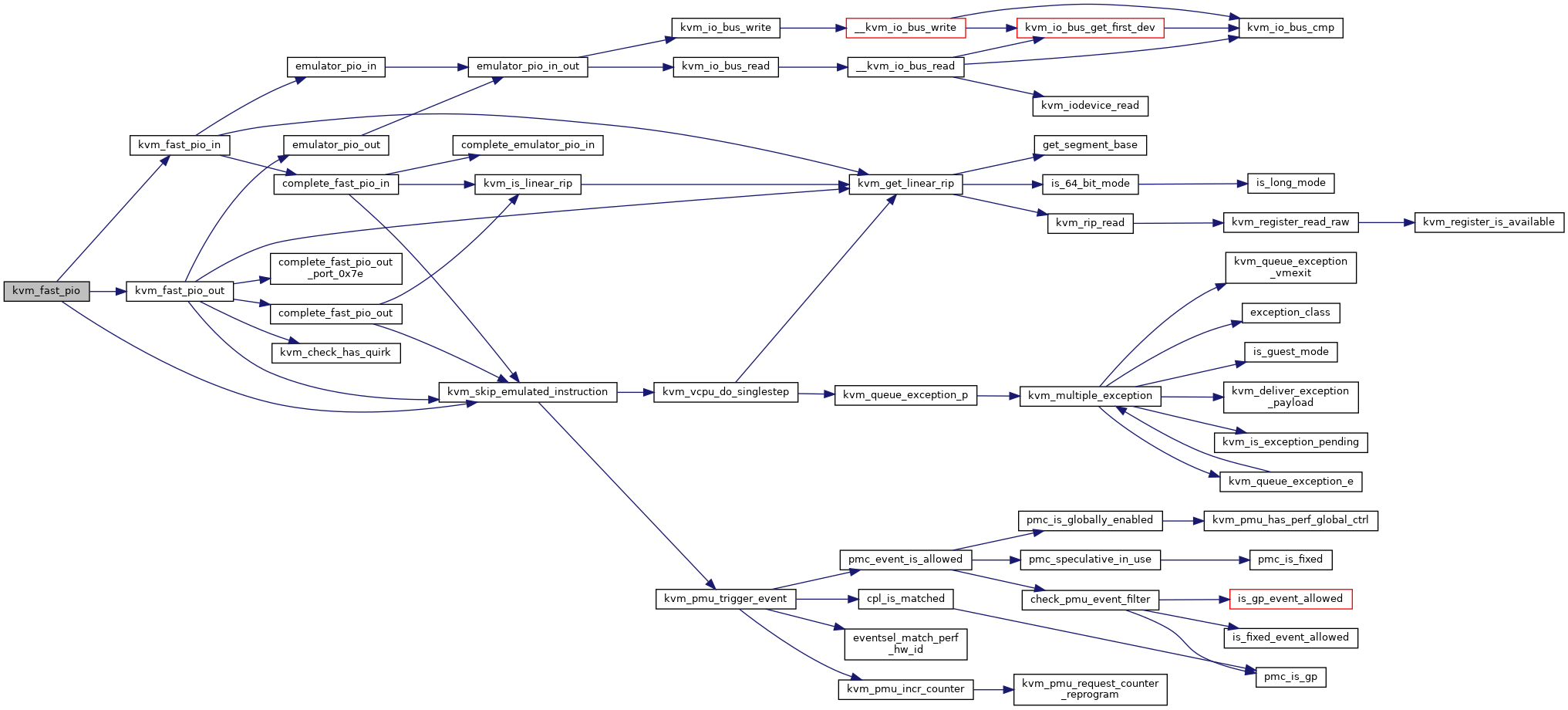

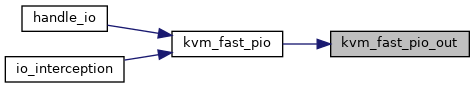

| int | kvm_fast_pio (struct kvm_vcpu *vcpu, int size, unsigned short port, int in) |

| EXPORT_SYMBOL_GPL (kvm_fast_pio) | |

| static int | kvmclock_cpu_down_prep (unsigned int cpu) |

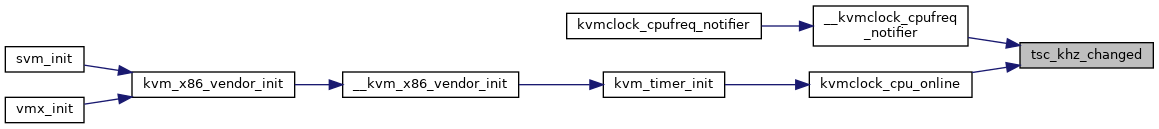

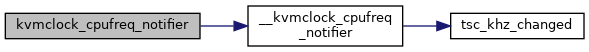

| static void | tsc_khz_changed (void *data) |

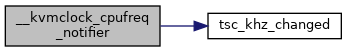

| static void | __kvmclock_cpufreq_notifier (struct cpufreq_freqs *freq, int cpu) |

| static int | kvmclock_cpufreq_notifier (struct notifier_block *nb, unsigned long val, void *data) |

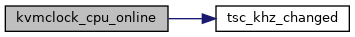

| static int | kvmclock_cpu_online (unsigned int cpu) |

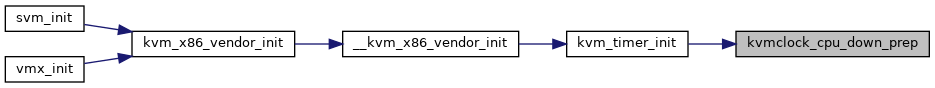

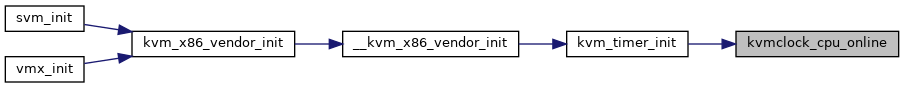

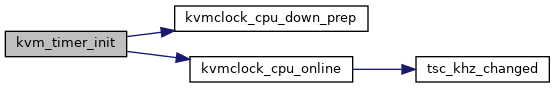

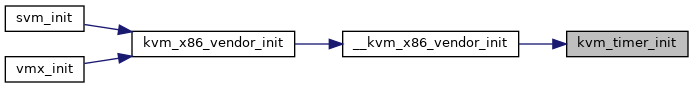

| static void | kvm_timer_init (void) |

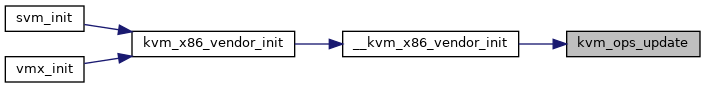

| static void | kvm_ops_update (struct kvm_x86_init_ops *ops) |

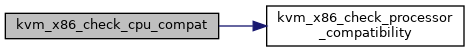

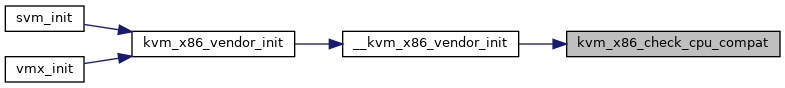

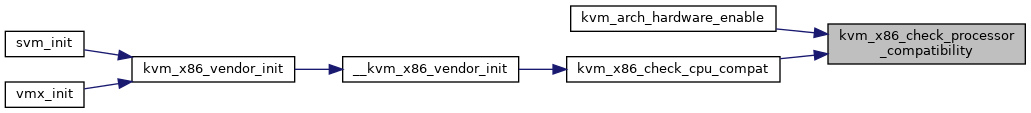

| static int | kvm_x86_check_processor_compatibility (void) |

| static void | kvm_x86_check_cpu_compat (void *ret) |

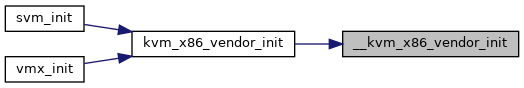

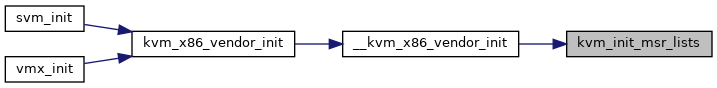

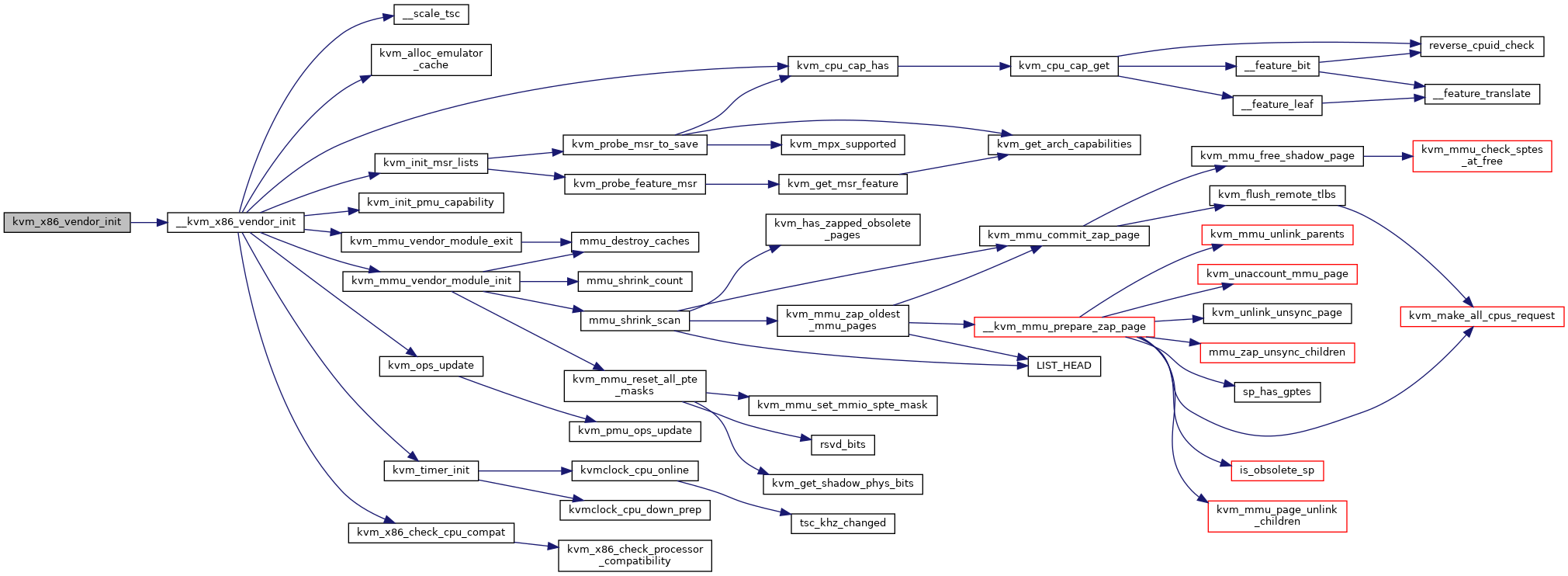

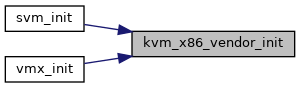

| static int | __kvm_x86_vendor_init (struct kvm_x86_init_ops *ops) |

| int | kvm_x86_vendor_init (struct kvm_x86_init_ops *ops) |

| EXPORT_SYMBOL_GPL (kvm_x86_vendor_init) | |

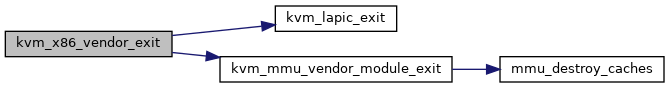

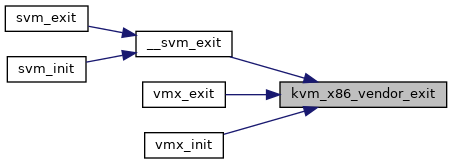

| void | kvm_x86_vendor_exit (void) |

| EXPORT_SYMBOL_GPL (kvm_x86_vendor_exit) | |

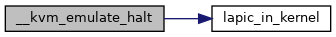

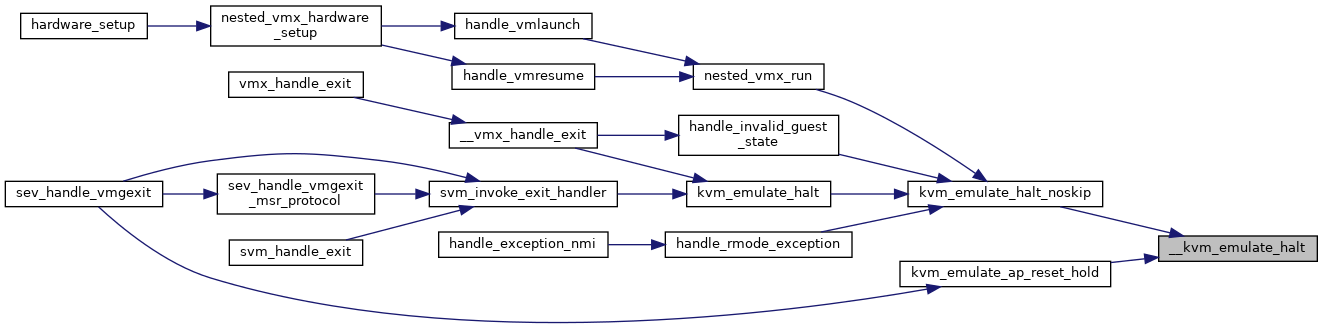

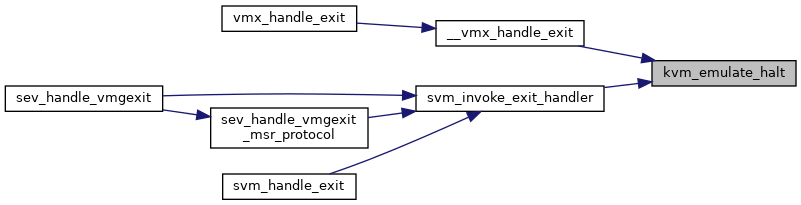

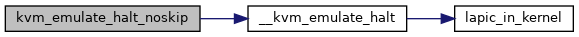

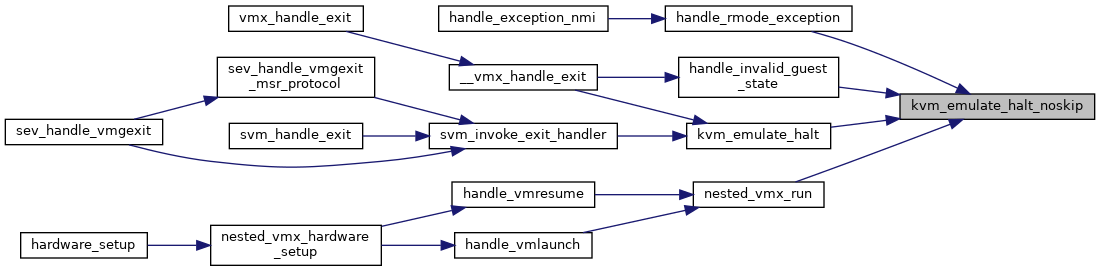

| static int | __kvm_emulate_halt (struct kvm_vcpu *vcpu, int state, int reason) |

| int | kvm_emulate_halt_noskip (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_halt_noskip) | |

| int | kvm_emulate_halt (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_halt) | |

| int | kvm_emulate_ap_reset_hold (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_ap_reset_hold) | |

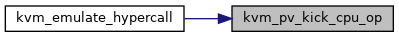

| static void | kvm_pv_kick_cpu_op (struct kvm *kvm, int apicid) |

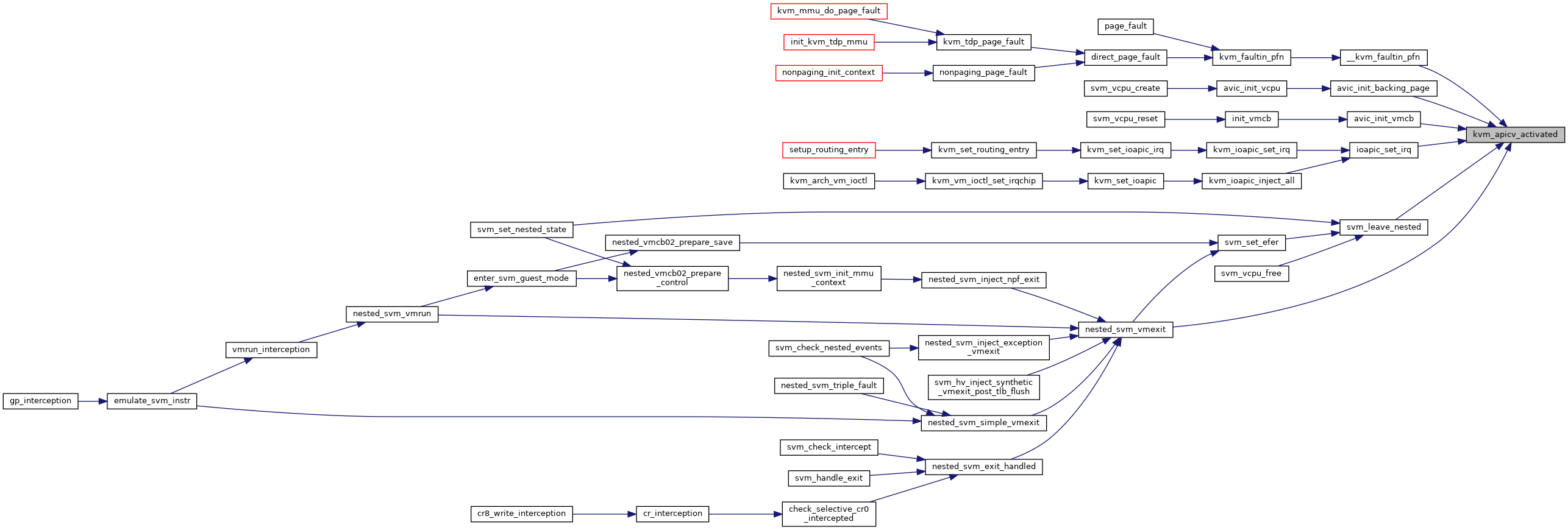

| bool | kvm_apicv_activated (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_apicv_activated) | |

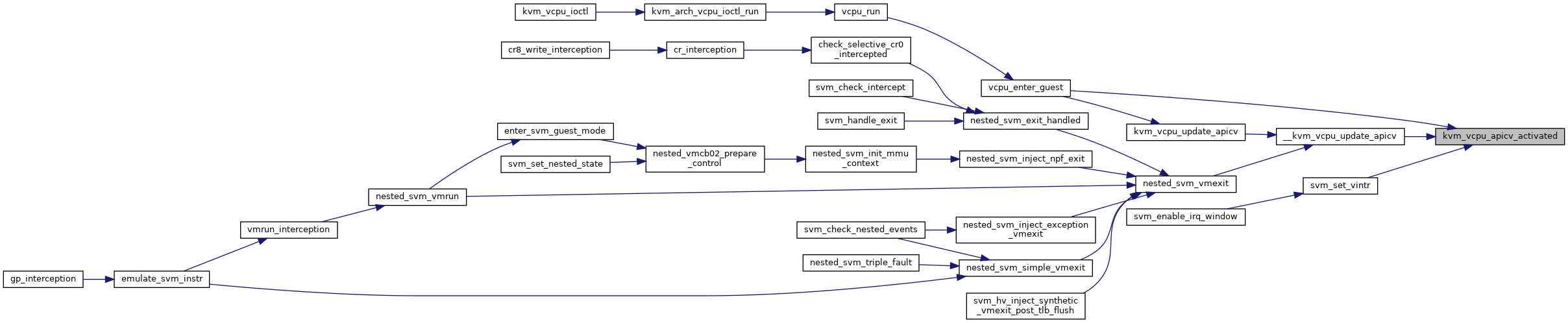

| bool | kvm_vcpu_apicv_activated (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_vcpu_apicv_activated) | |

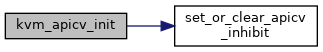

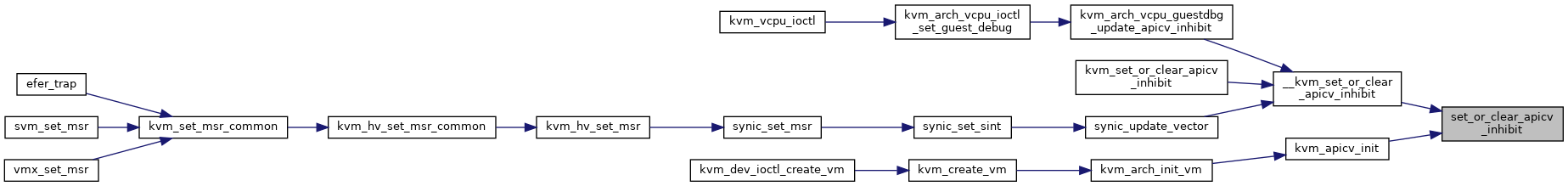

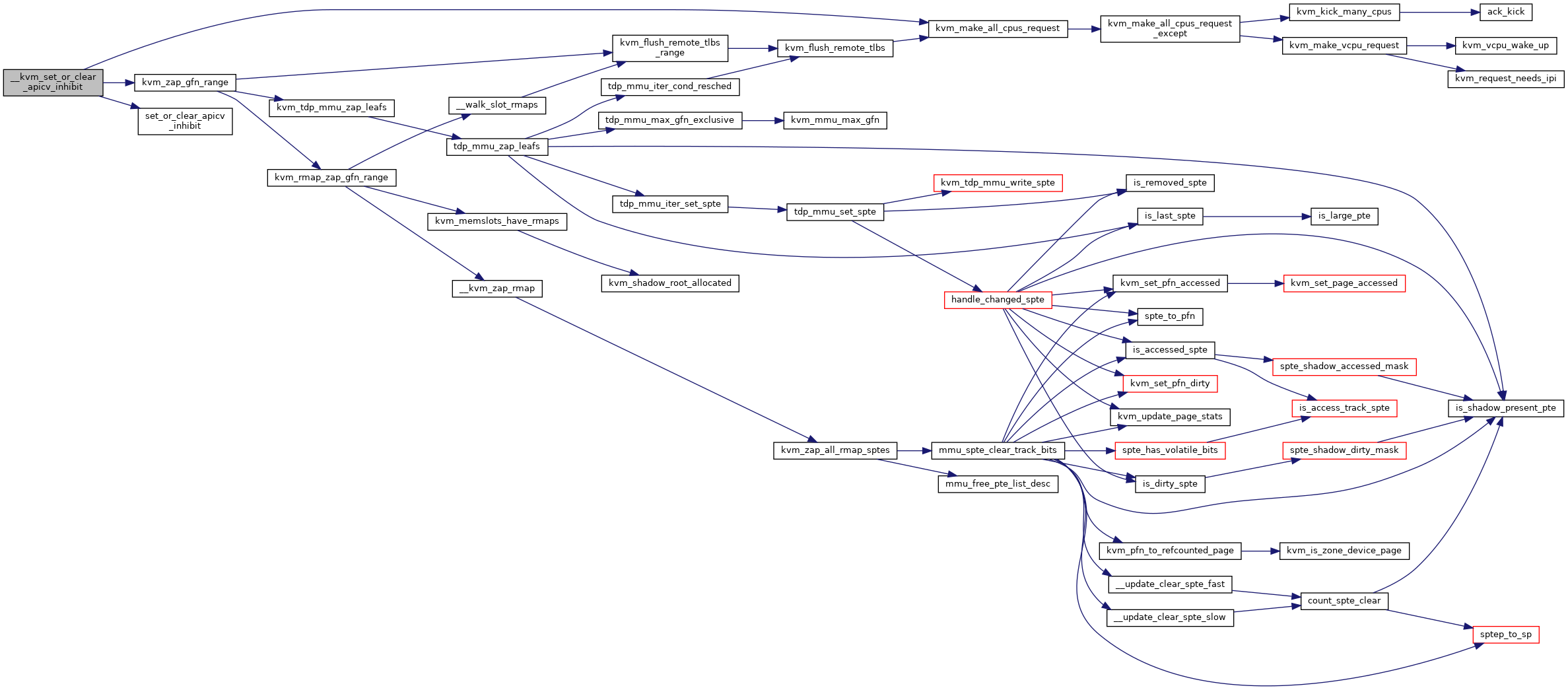

| static void | set_or_clear_apicv_inhibit (unsigned long *inhibits, enum kvm_apicv_inhibit reason, bool set) |

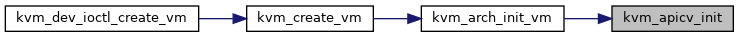

| static void | kvm_apicv_init (struct kvm *kvm) |

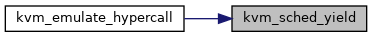

| static void | kvm_sched_yield (struct kvm_vcpu *vcpu, unsigned long dest_id) |

| static int | complete_hypercall_exit (struct kvm_vcpu *vcpu) |

| int | kvm_emulate_hypercall (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_emulate_hypercall) | |

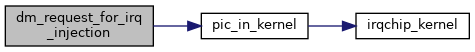

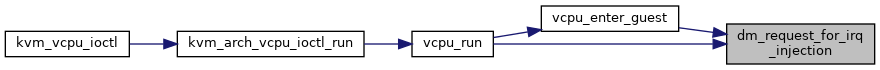

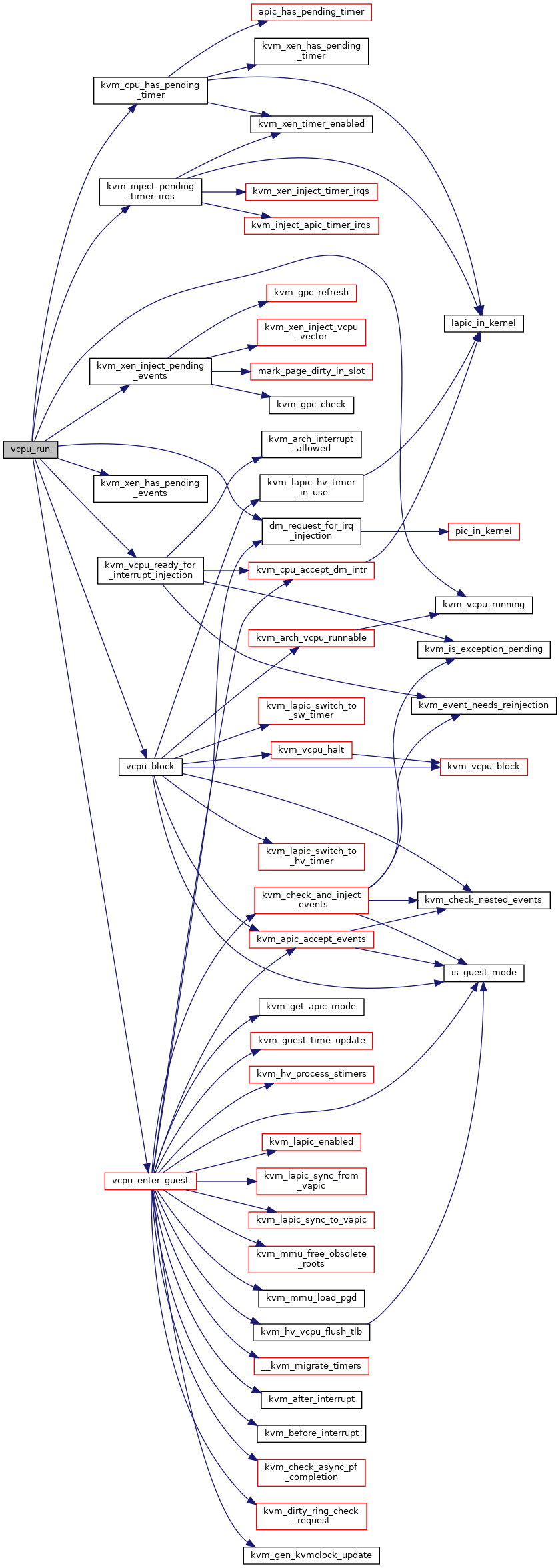

| static int | dm_request_for_irq_injection (struct kvm_vcpu *vcpu) |

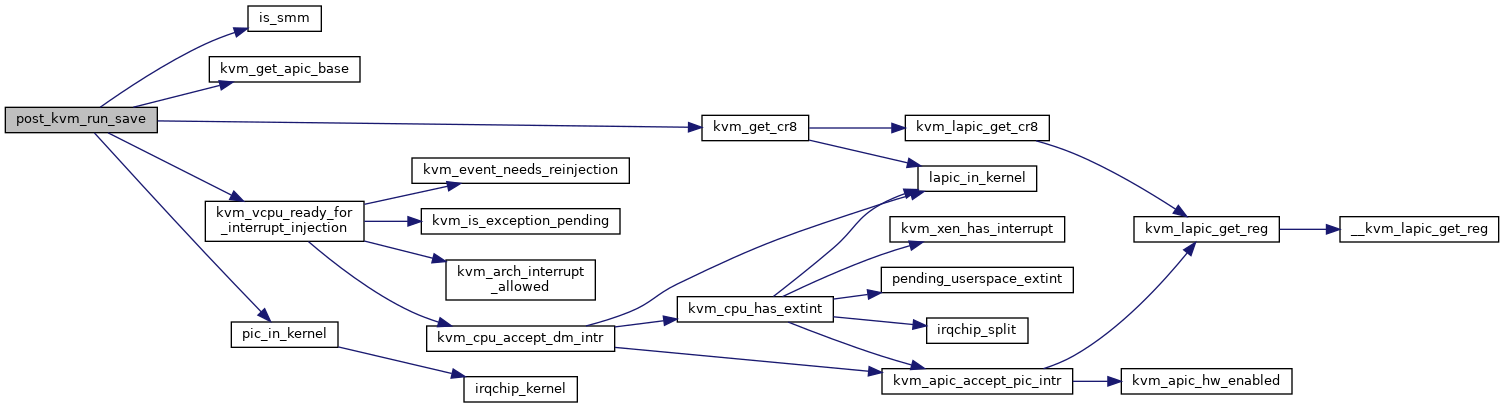

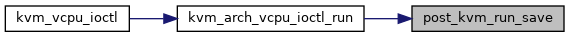

| static void | post_kvm_run_save (struct kvm_vcpu *vcpu) |

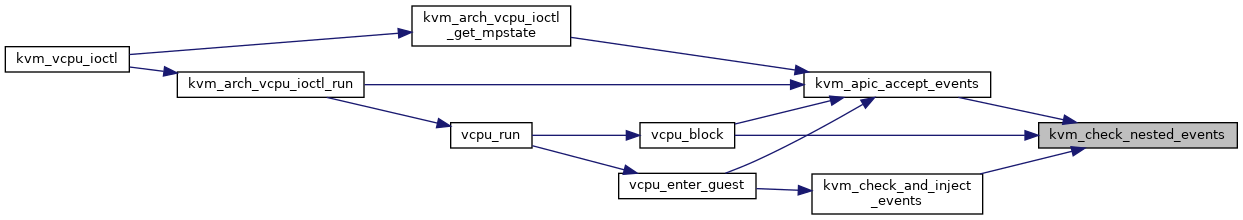

| int | kvm_check_nested_events (struct kvm_vcpu *vcpu) |

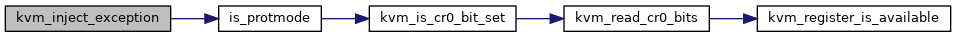

| static void | kvm_inject_exception (struct kvm_vcpu *vcpu) |

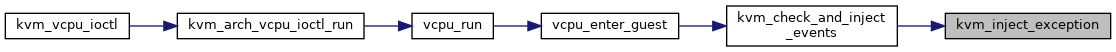

| static int | kvm_check_and_inject_events (struct kvm_vcpu *vcpu, bool *req_immediate_exit) |

| int | kvm_get_nr_pending_nmis (struct kvm_vcpu *vcpu) |

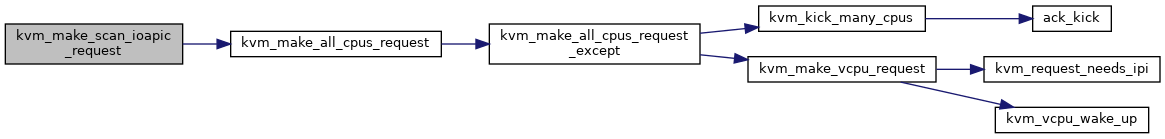

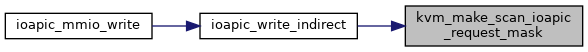

| void | kvm_make_scan_ioapic_request_mask (struct kvm *kvm, unsigned long *vcpu_bitmap) |

| void | kvm_make_scan_ioapic_request (struct kvm *kvm) |

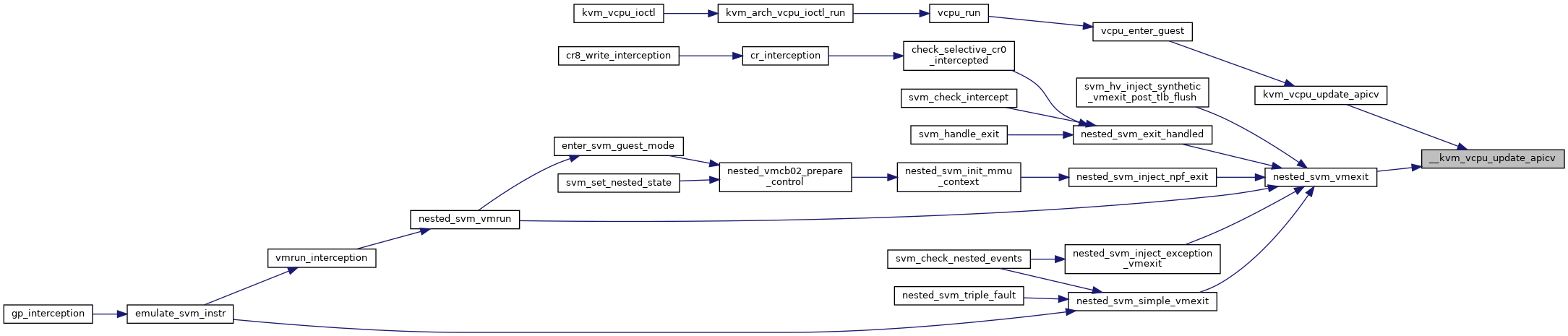

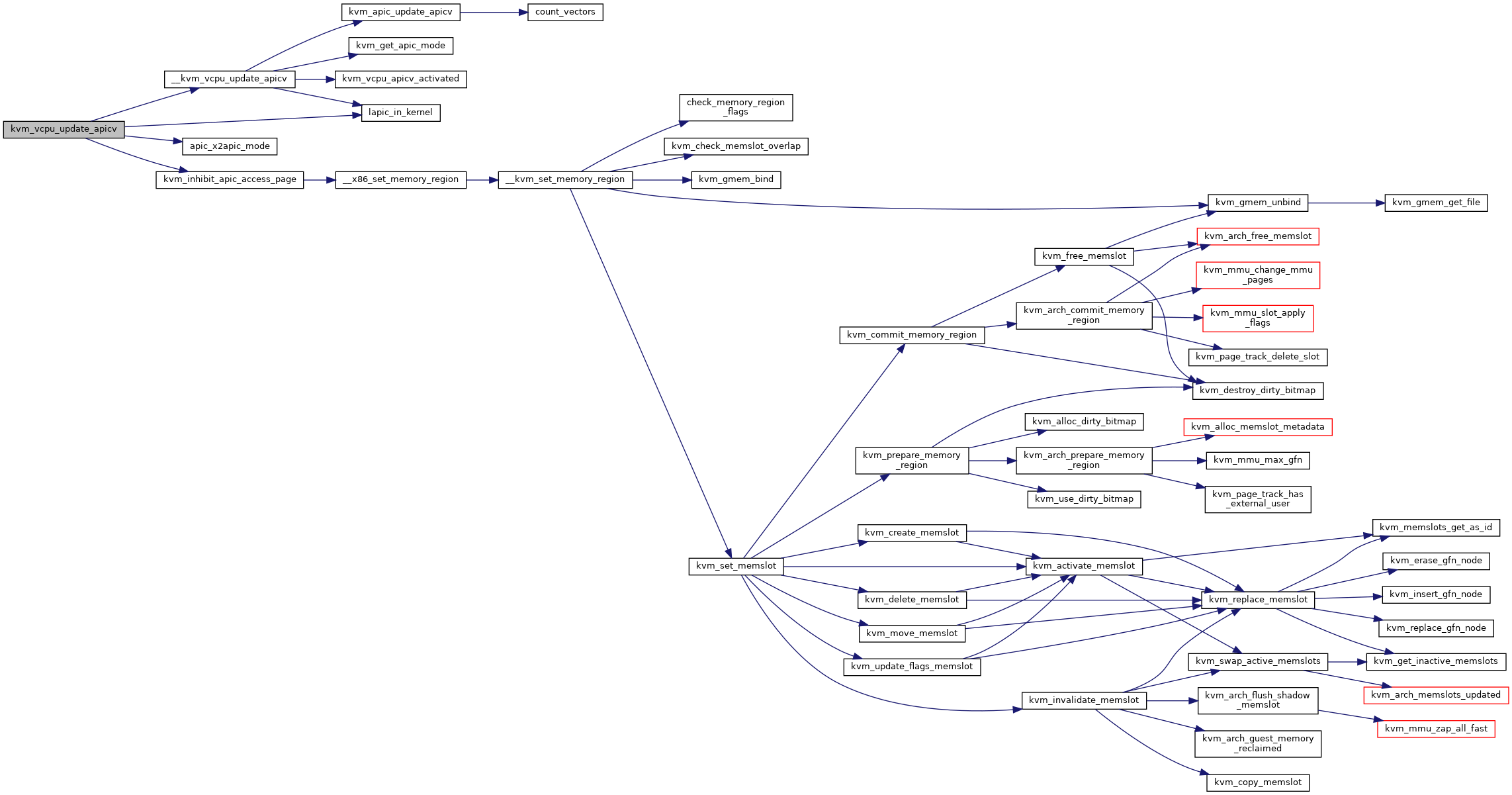

| void | __kvm_vcpu_update_apicv (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (__kvm_vcpu_update_apicv) | |

| static void | kvm_vcpu_update_apicv (struct kvm_vcpu *vcpu) |

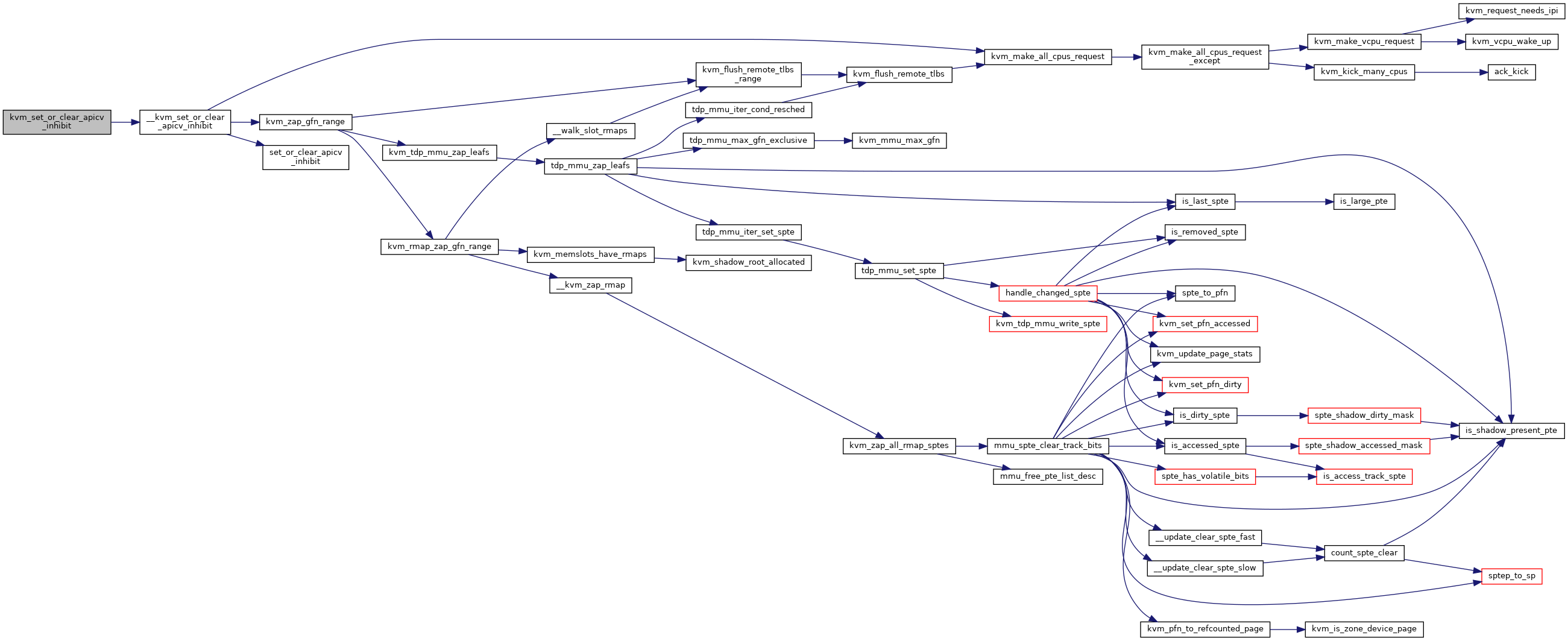

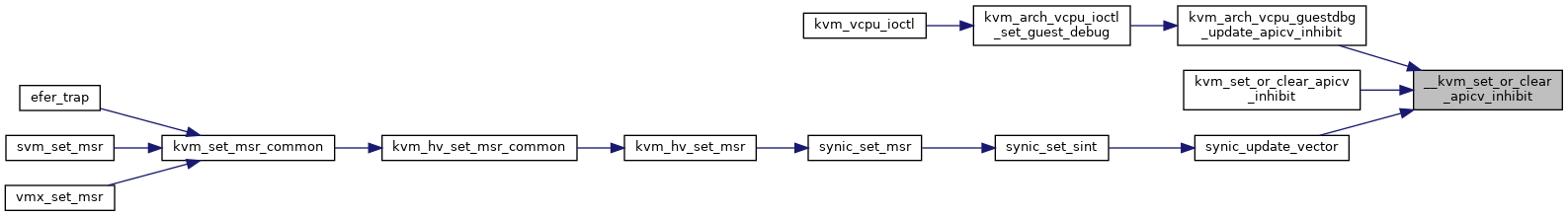

| void | __kvm_set_or_clear_apicv_inhibit (struct kvm *kvm, enum kvm_apicv_inhibit reason, bool set) |

| void | kvm_set_or_clear_apicv_inhibit (struct kvm *kvm, enum kvm_apicv_inhibit reason, bool set) |

| EXPORT_SYMBOL_GPL (kvm_set_or_clear_apicv_inhibit) | |

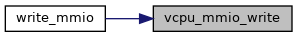

| static void | vcpu_scan_ioapic (struct kvm_vcpu *vcpu) |

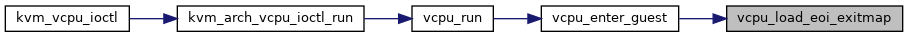

| static void | vcpu_load_eoi_exitmap (struct kvm_vcpu *vcpu) |

| void | kvm_arch_guest_memory_reclaimed (struct kvm *kvm) |

| static void | kvm_vcpu_reload_apic_access_page (struct kvm_vcpu *vcpu) |

| void | __kvm_request_immediate_exit (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (__kvm_request_immediate_exit) | |

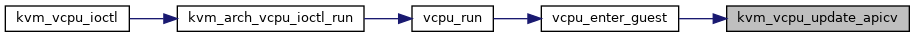

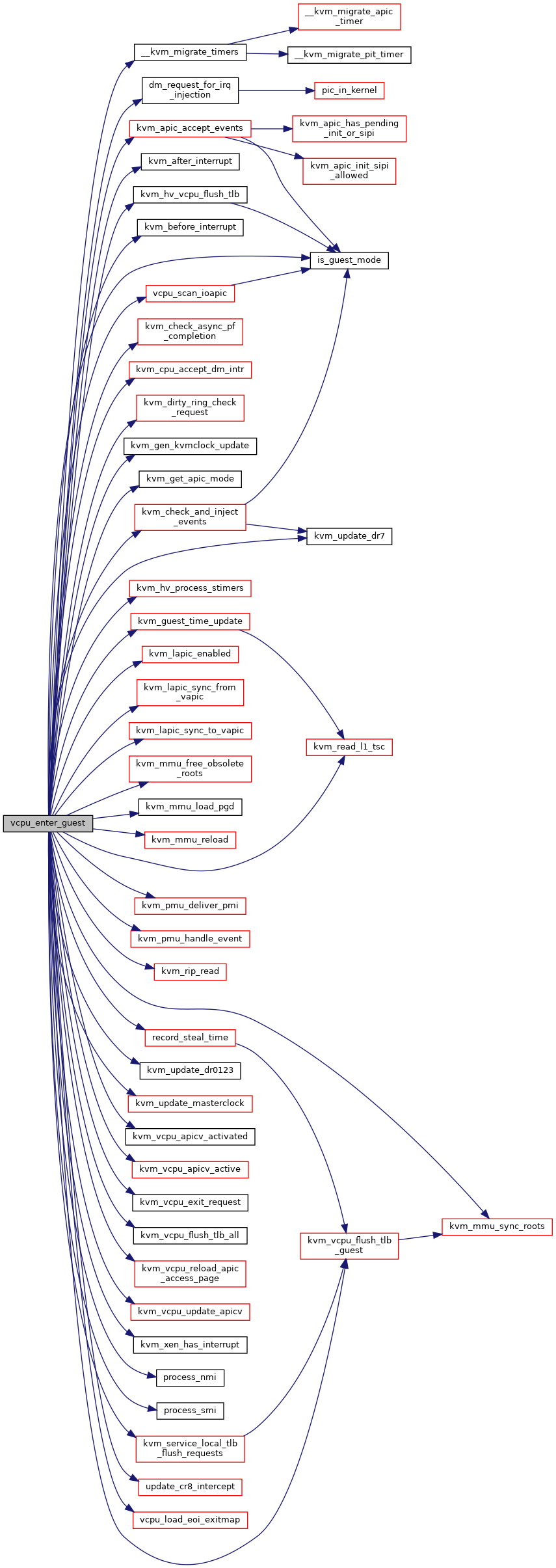

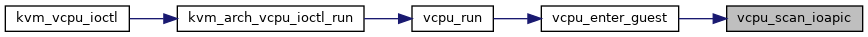

| static int | vcpu_enter_guest (struct kvm_vcpu *vcpu) |

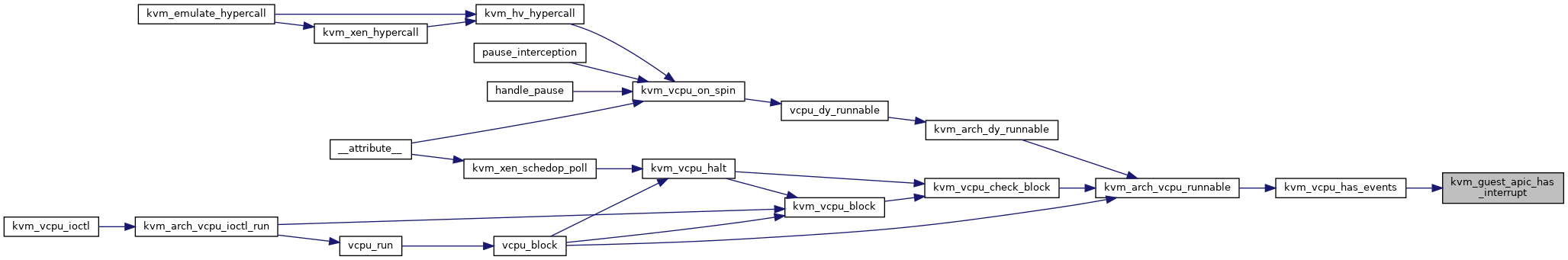

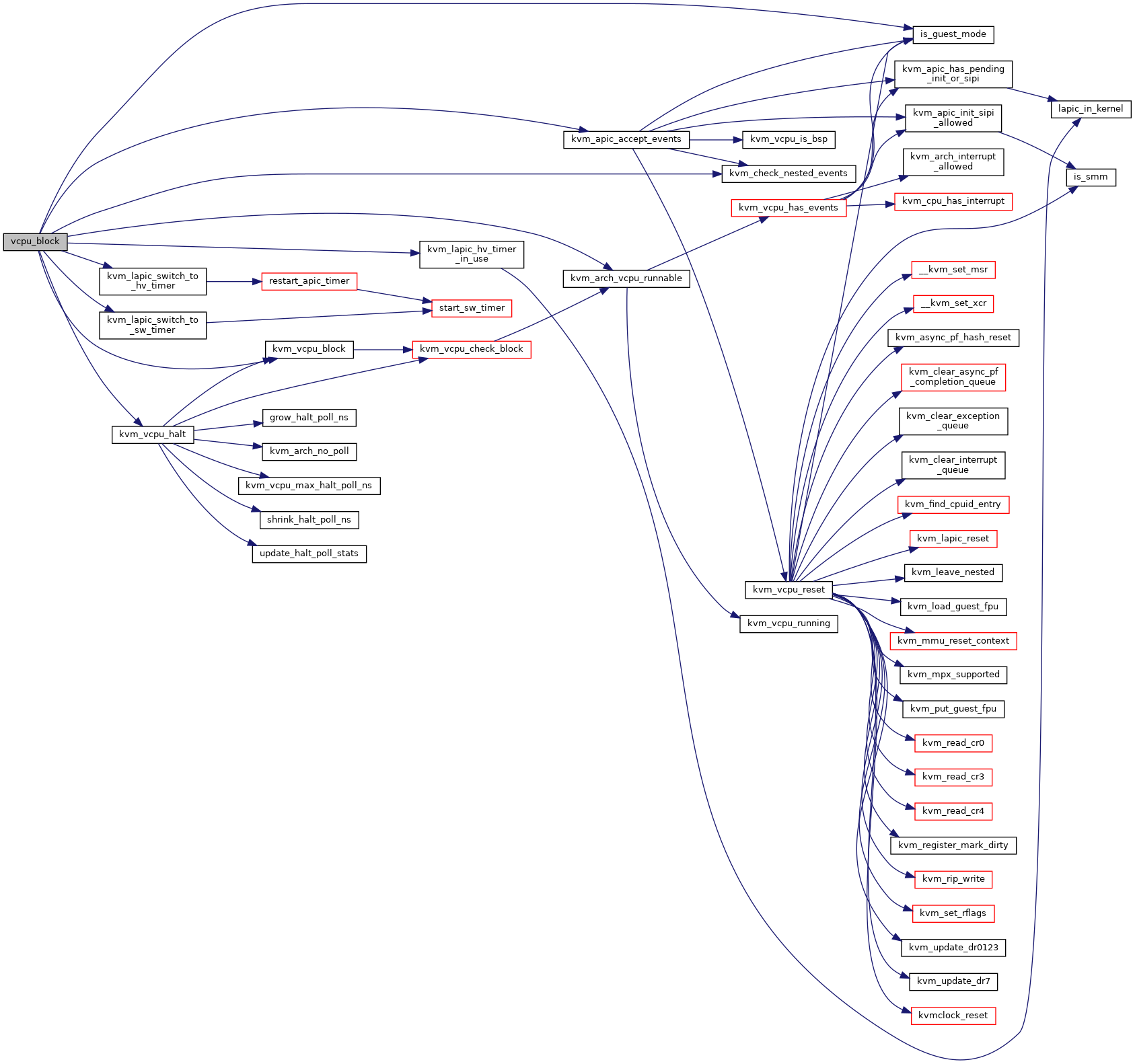

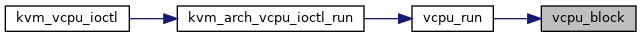

| static int | vcpu_block (struct kvm_vcpu *vcpu) |

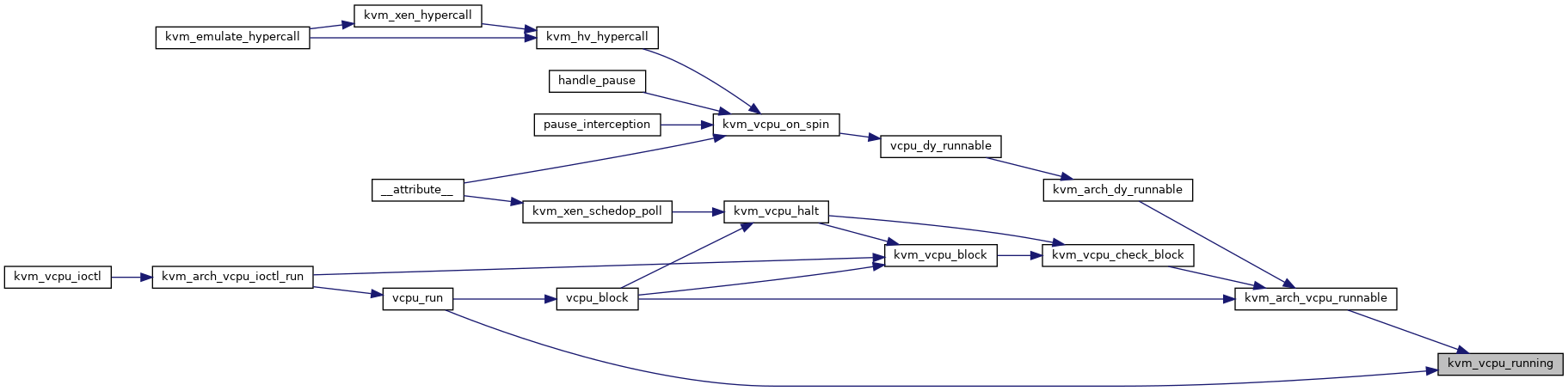

| static bool | kvm_vcpu_running (struct kvm_vcpu *vcpu) |

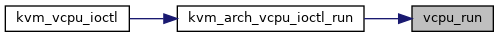

| static int | vcpu_run (struct kvm_vcpu *vcpu) |

| static int | complete_emulated_io (struct kvm_vcpu *vcpu) |

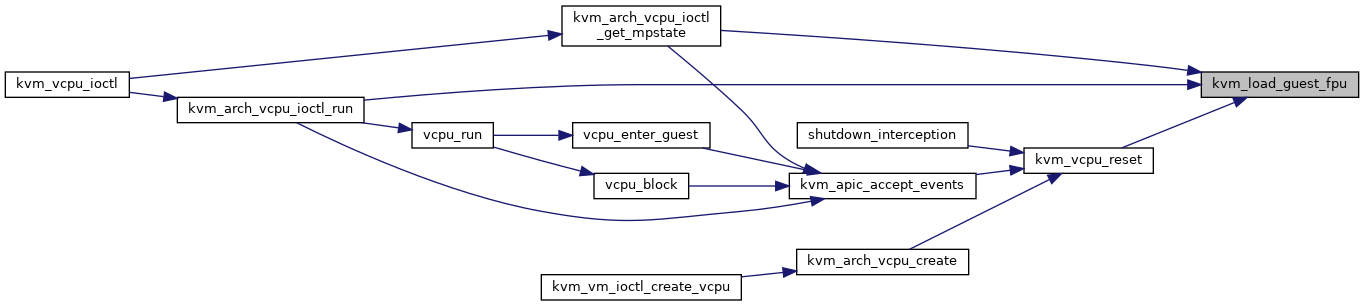

| static void | kvm_load_guest_fpu (struct kvm_vcpu *vcpu) |

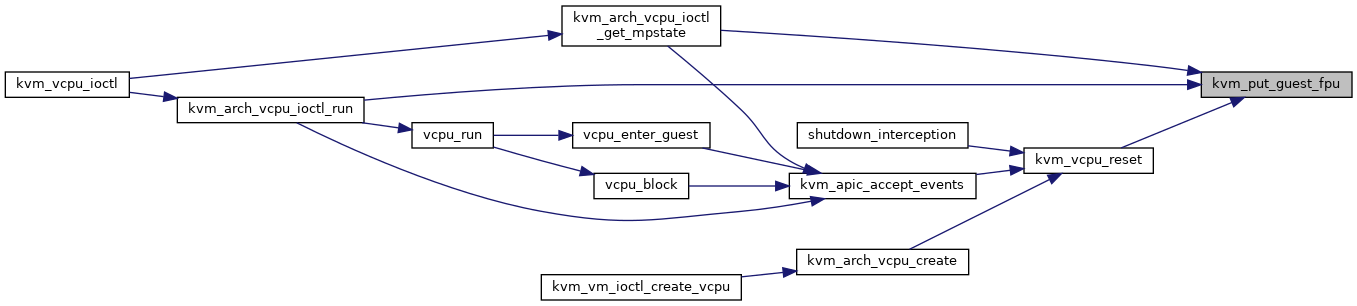

| static void | kvm_put_guest_fpu (struct kvm_vcpu *vcpu) |

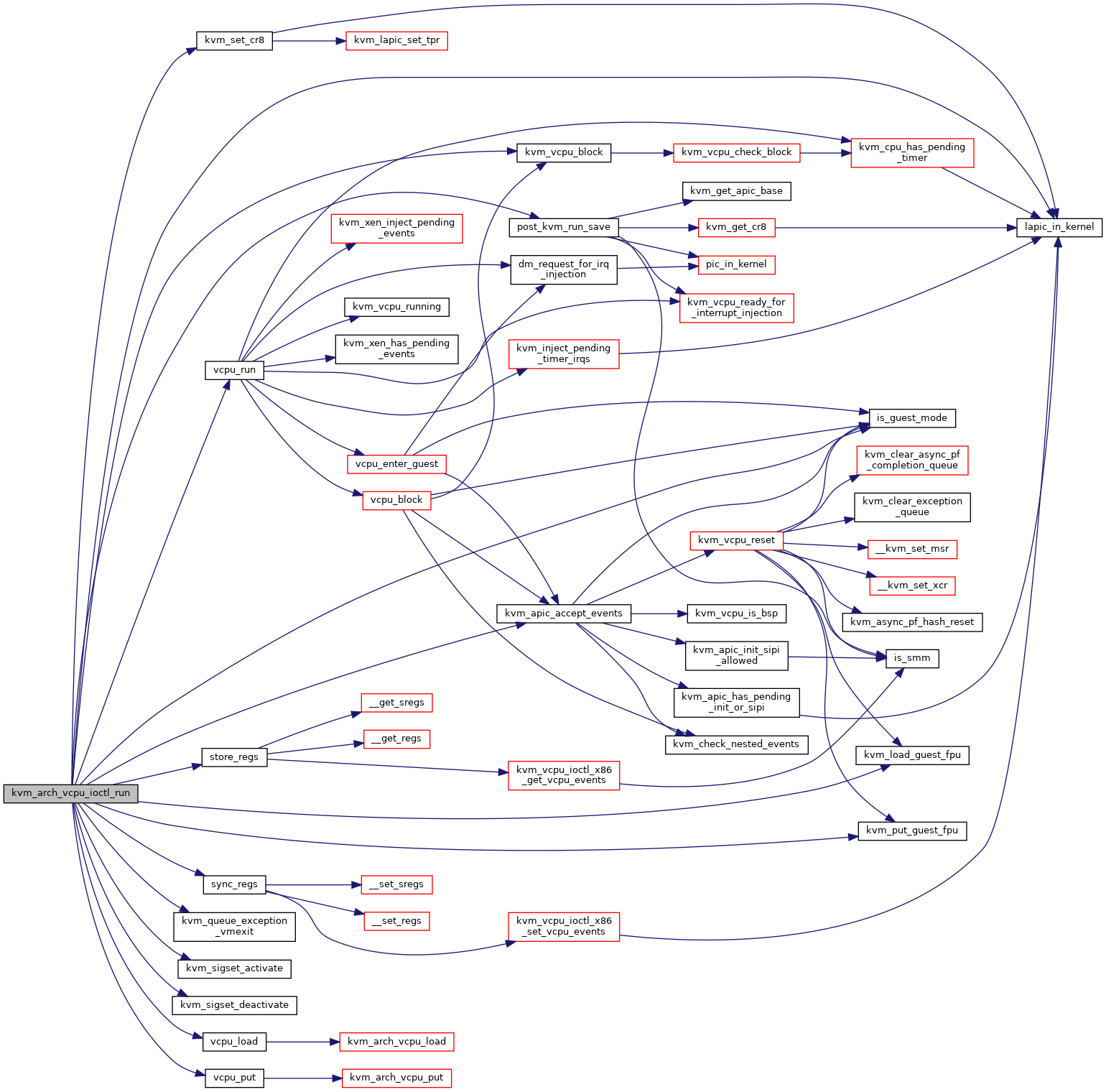

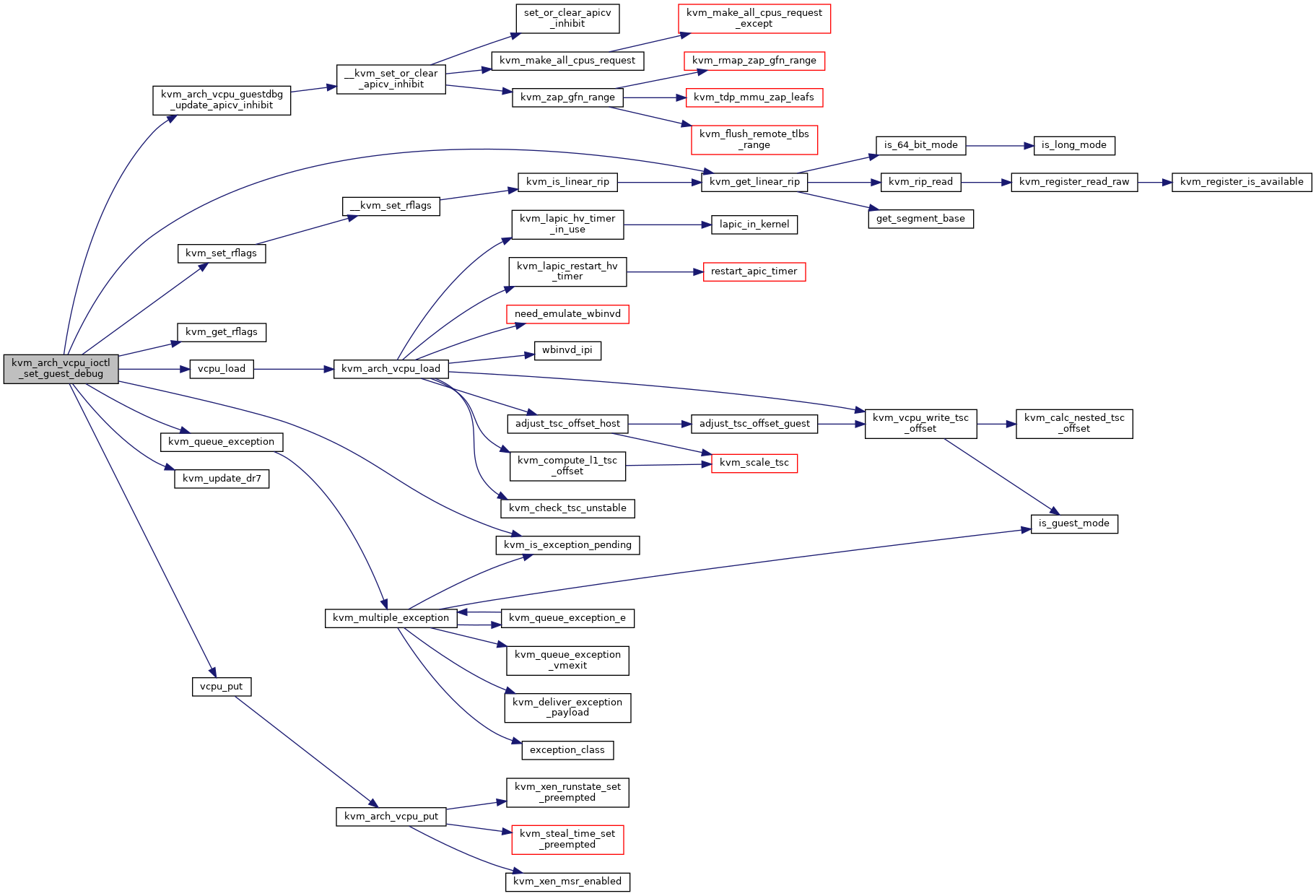

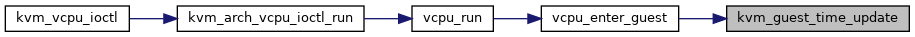

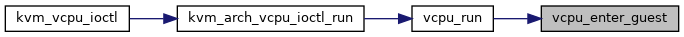

| int | kvm_arch_vcpu_ioctl_run (struct kvm_vcpu *vcpu) |

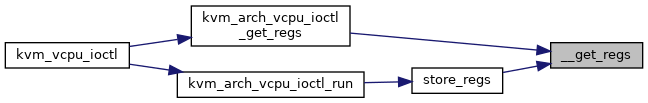

| static void | __get_regs (struct kvm_vcpu *vcpu, struct kvm_regs *regs) |

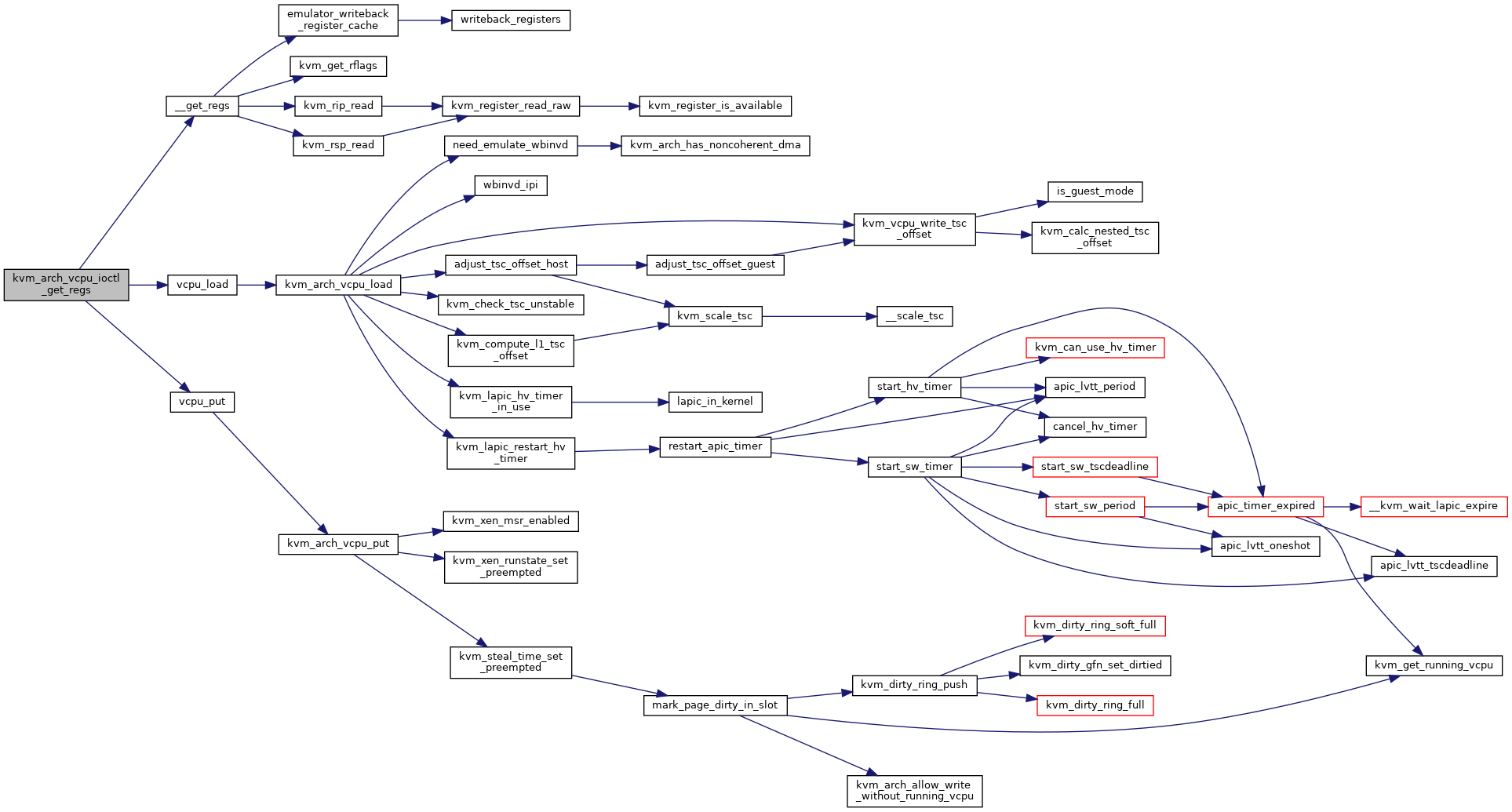

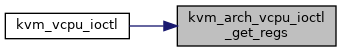

| int | kvm_arch_vcpu_ioctl_get_regs (struct kvm_vcpu *vcpu, struct kvm_regs *regs) |

| static void | __set_regs (struct kvm_vcpu *vcpu, struct kvm_regs *regs) |

| int | kvm_arch_vcpu_ioctl_set_regs (struct kvm_vcpu *vcpu, struct kvm_regs *regs) |

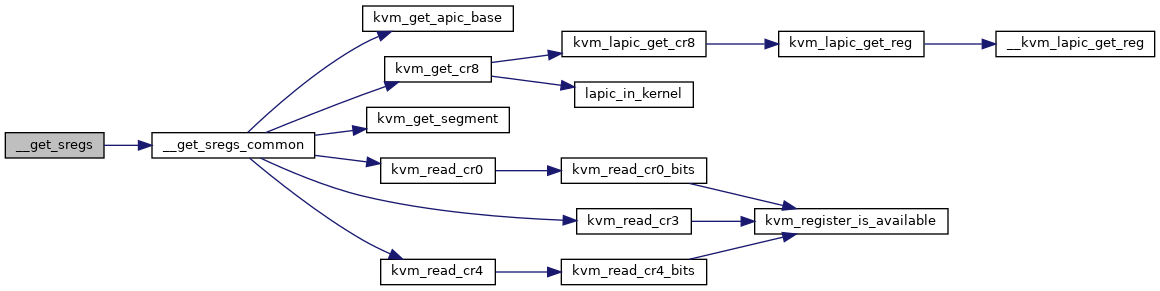

| static void | __get_sregs_common (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

| static void | __get_sregs (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

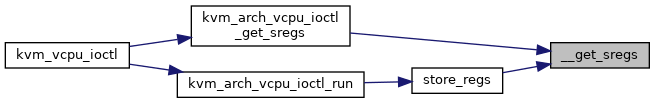

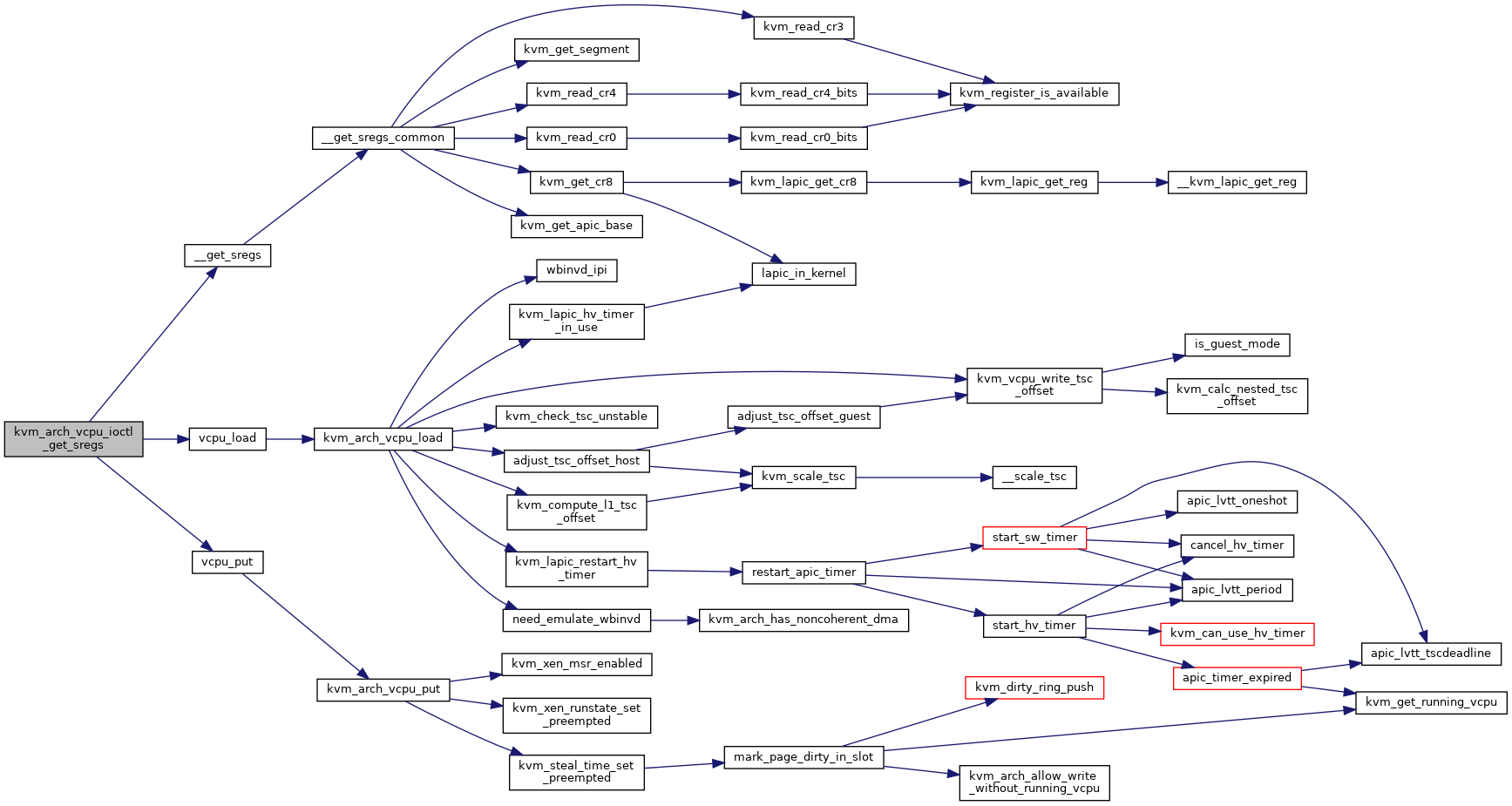

| int | kvm_arch_vcpu_ioctl_get_sregs (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

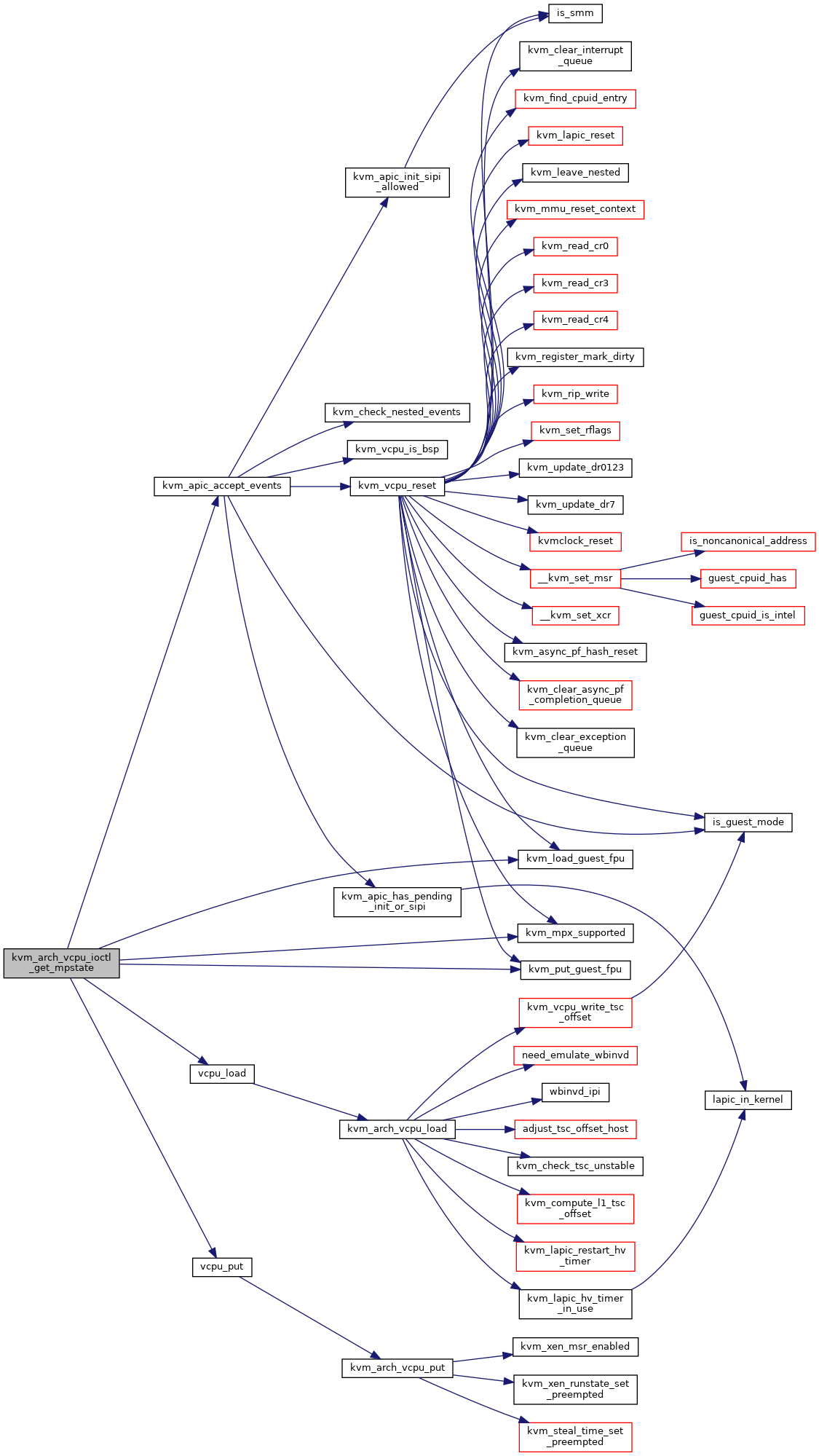

| int | kvm_arch_vcpu_ioctl_get_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

| int | kvm_arch_vcpu_ioctl_set_mpstate (struct kvm_vcpu *vcpu, struct kvm_mp_state *mp_state) |

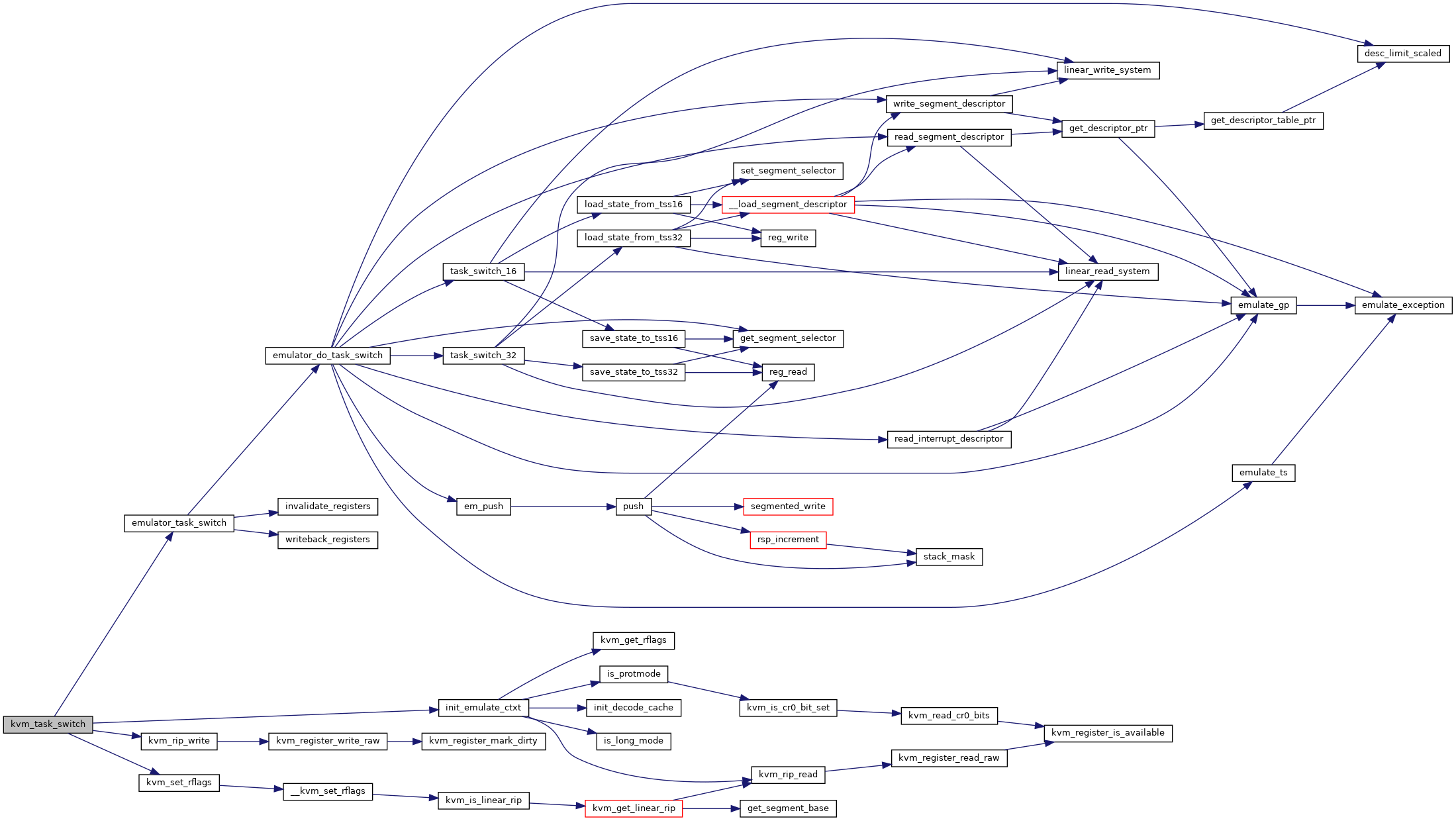

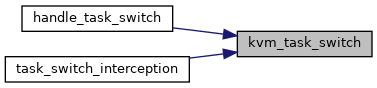

| int | kvm_task_switch (struct kvm_vcpu *vcpu, u16 tss_selector, int idt_index, int reason, bool has_error_code, u32 error_code) |

| EXPORT_SYMBOL_GPL (kvm_task_switch) | |

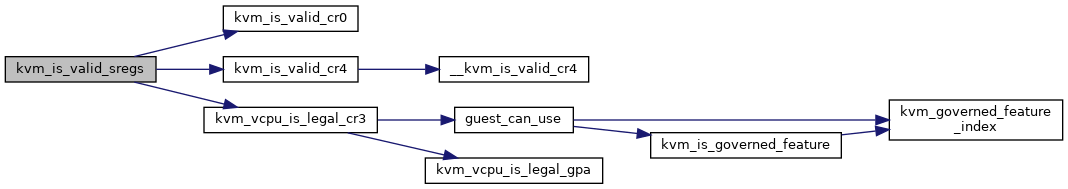

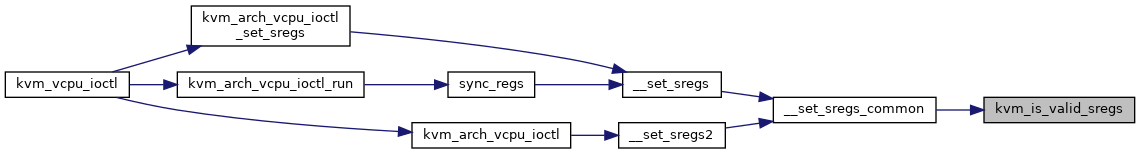

| static bool | kvm_is_valid_sregs (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

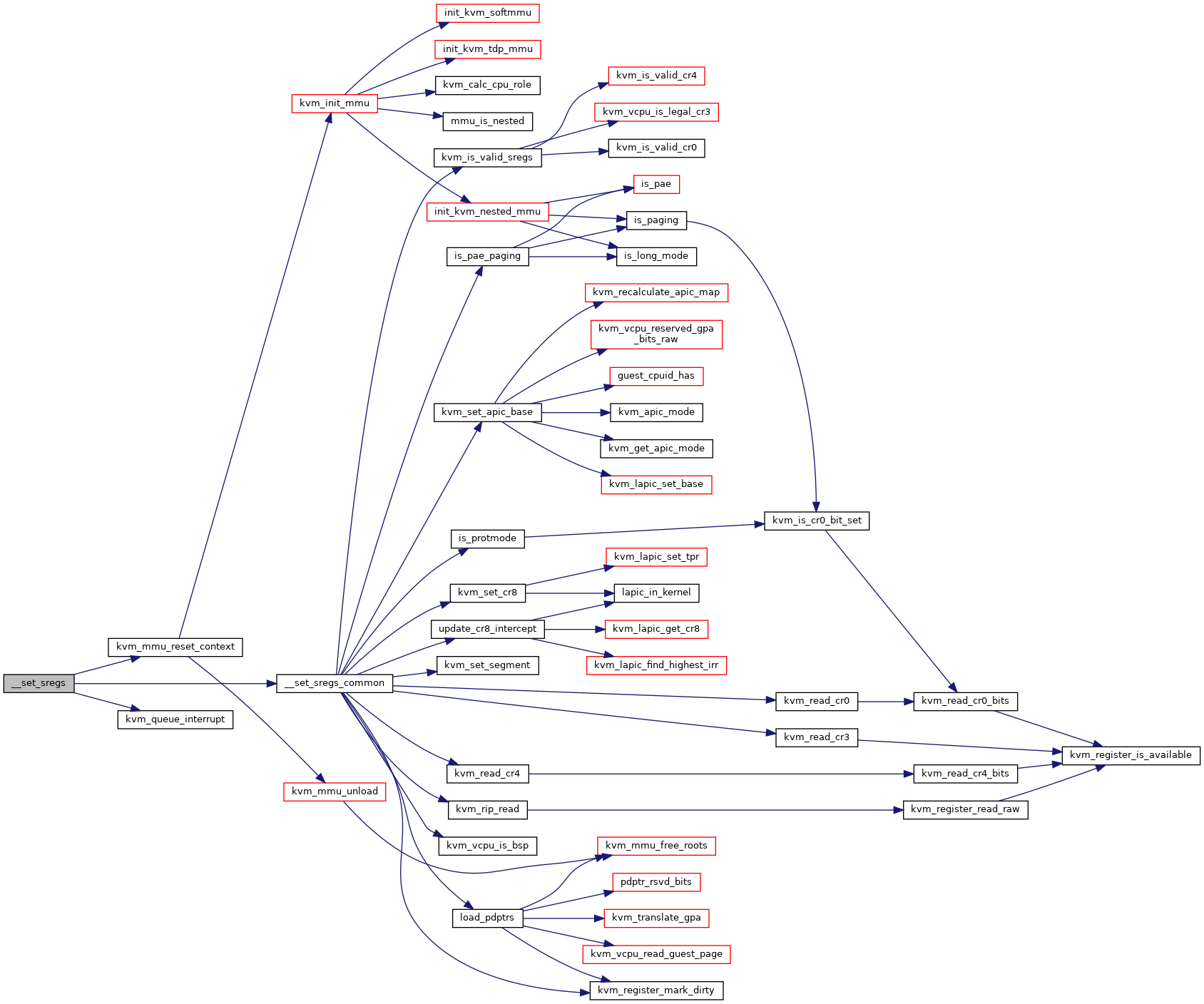

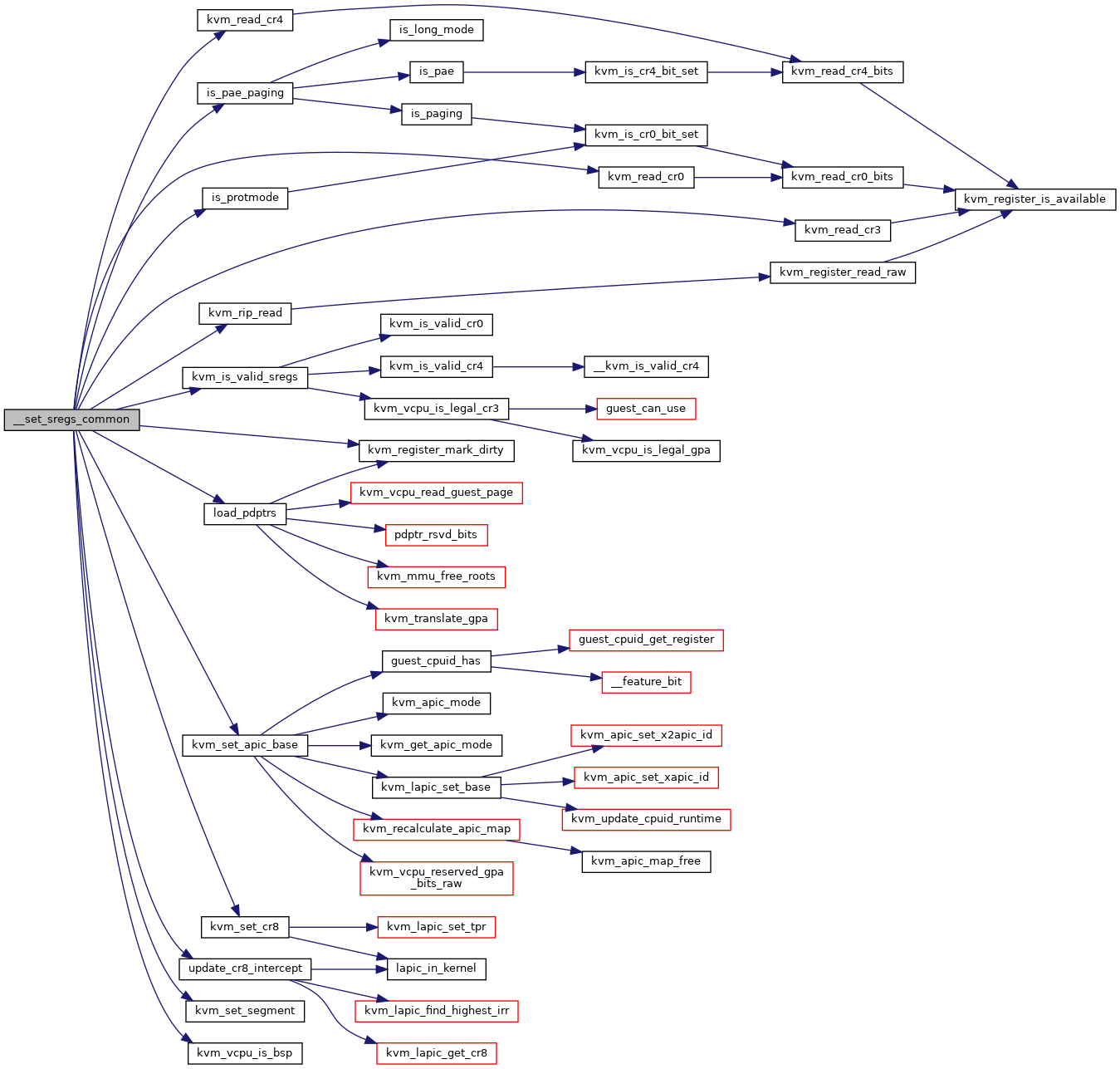

| static int | __set_sregs_common (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs, int *mmu_reset_needed, bool update_pdptrs) |

| static int | __set_sregs (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

| int | kvm_arch_vcpu_ioctl_set_sregs (struct kvm_vcpu *vcpu, struct kvm_sregs *sregs) |

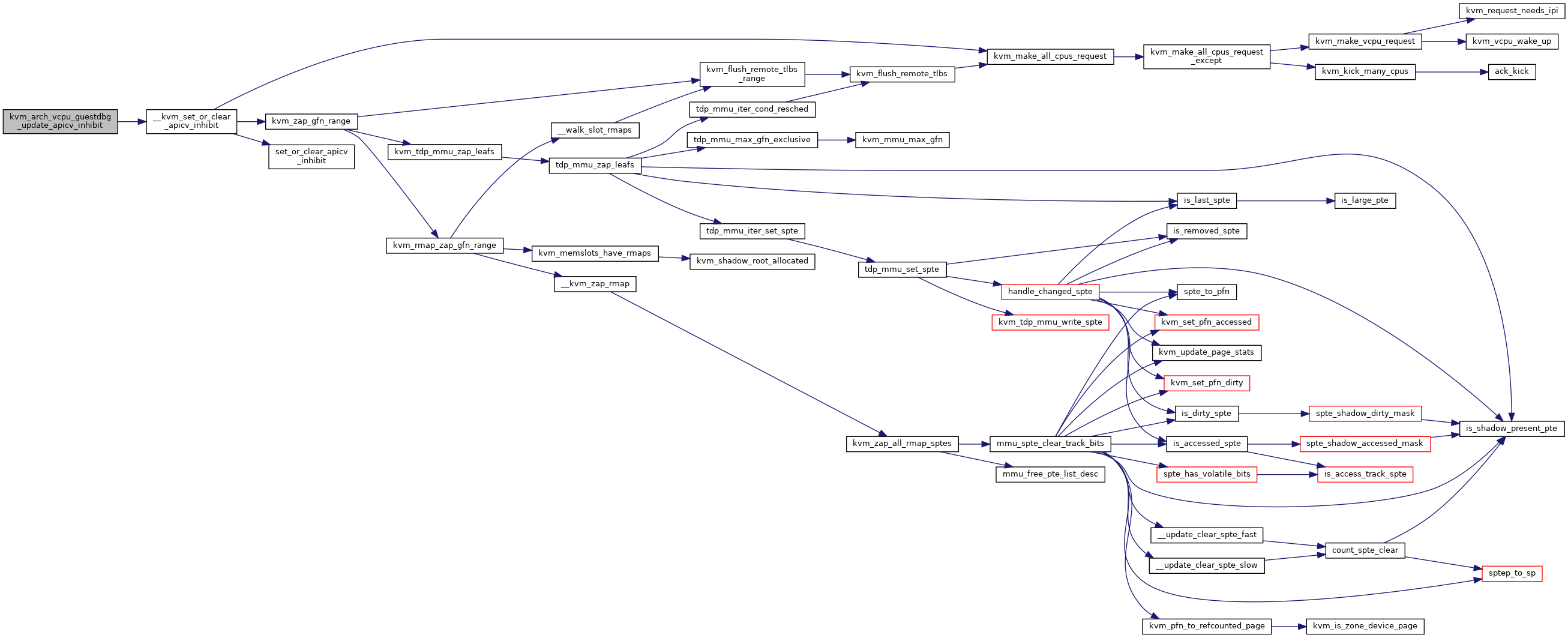

| static void | kvm_arch_vcpu_guestdbg_update_apicv_inhibit (struct kvm *kvm) |

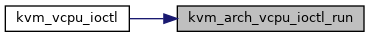

| int | kvm_arch_vcpu_ioctl_set_guest_debug (struct kvm_vcpu *vcpu, struct kvm_guest_debug *dbg) |

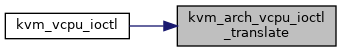

| int | kvm_arch_vcpu_ioctl_translate (struct kvm_vcpu *vcpu, struct kvm_translation *tr) |

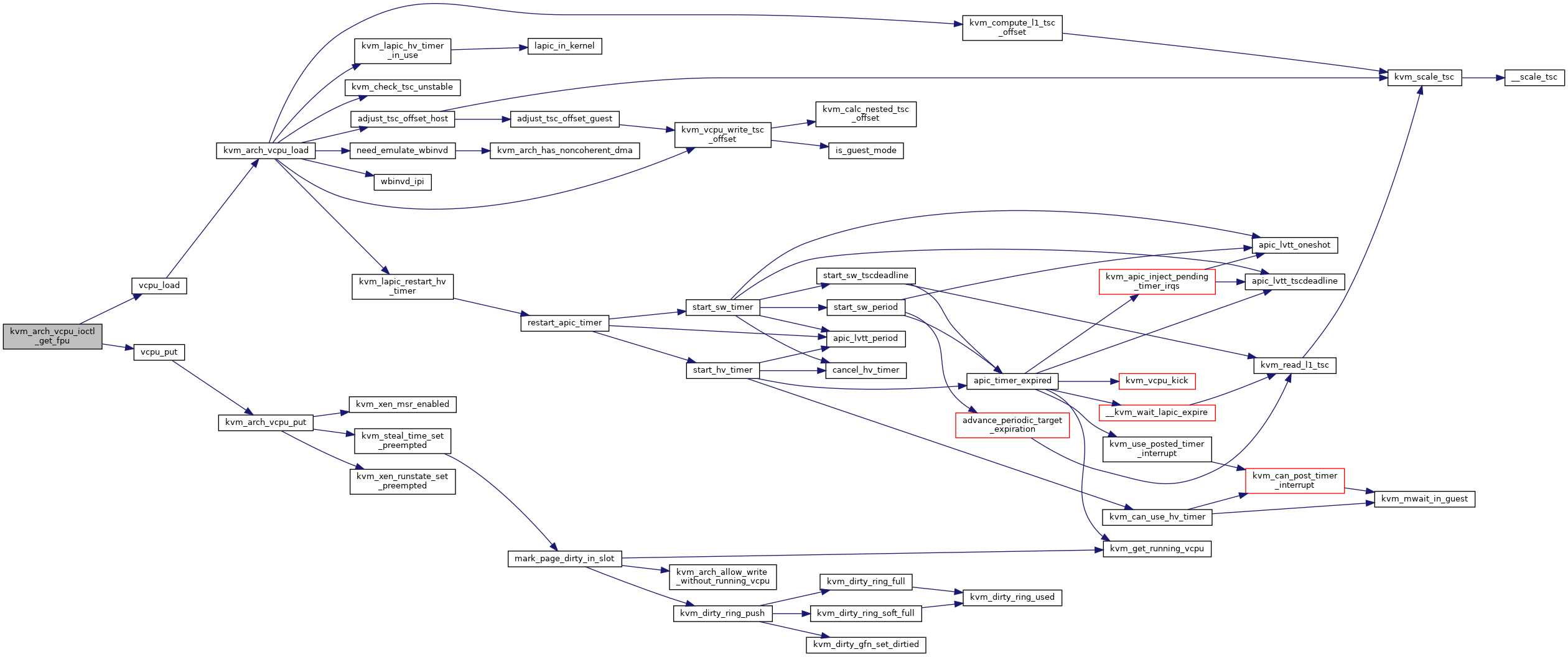

| int | kvm_arch_vcpu_ioctl_get_fpu (struct kvm_vcpu *vcpu, struct kvm_fpu *fpu) |

| int | kvm_arch_vcpu_ioctl_set_fpu (struct kvm_vcpu *vcpu, struct kvm_fpu *fpu) |

| int | kvm_arch_vcpu_precreate (struct kvm *kvm, unsigned int id) |

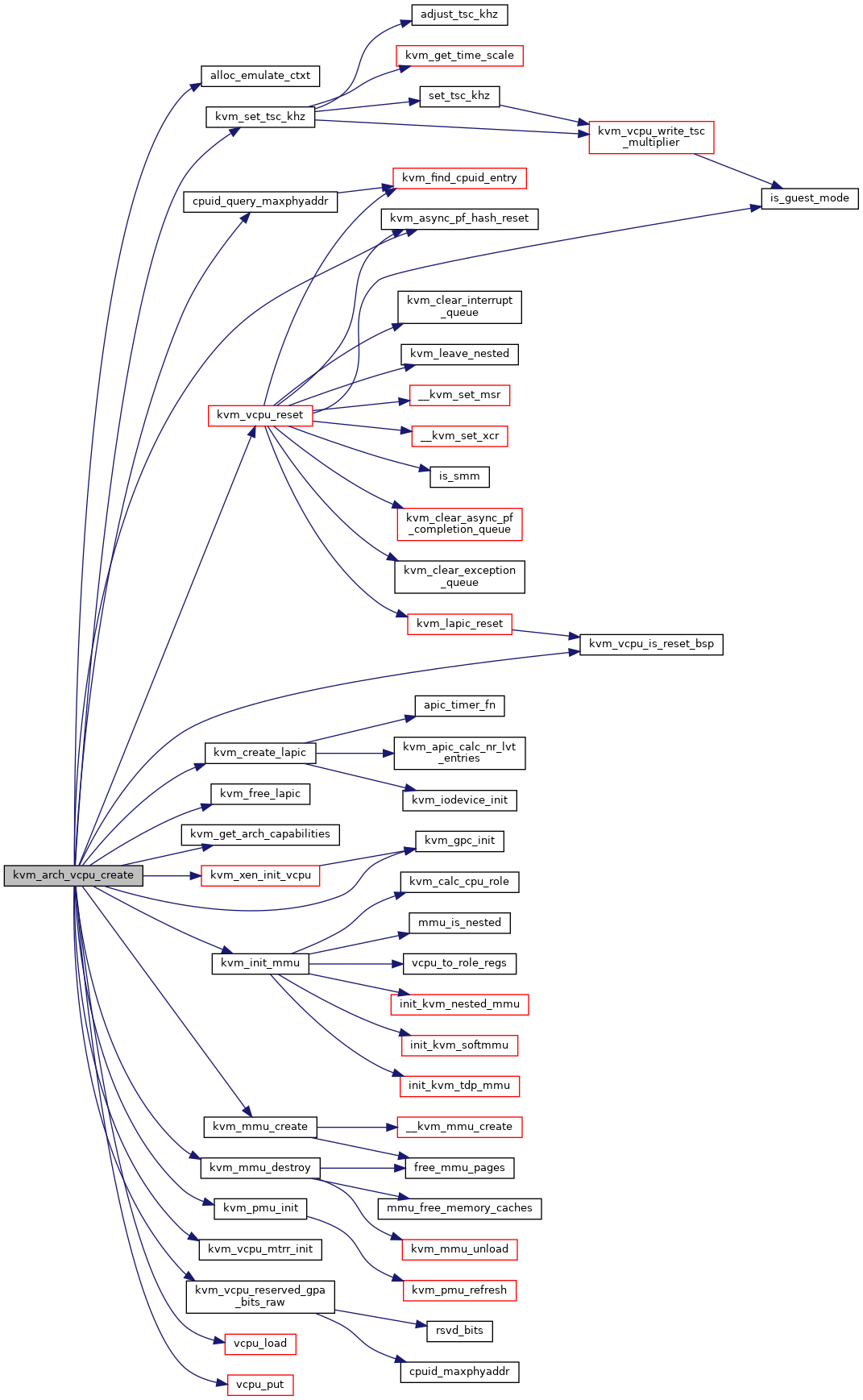

| int | kvm_arch_vcpu_create (struct kvm_vcpu *vcpu) |

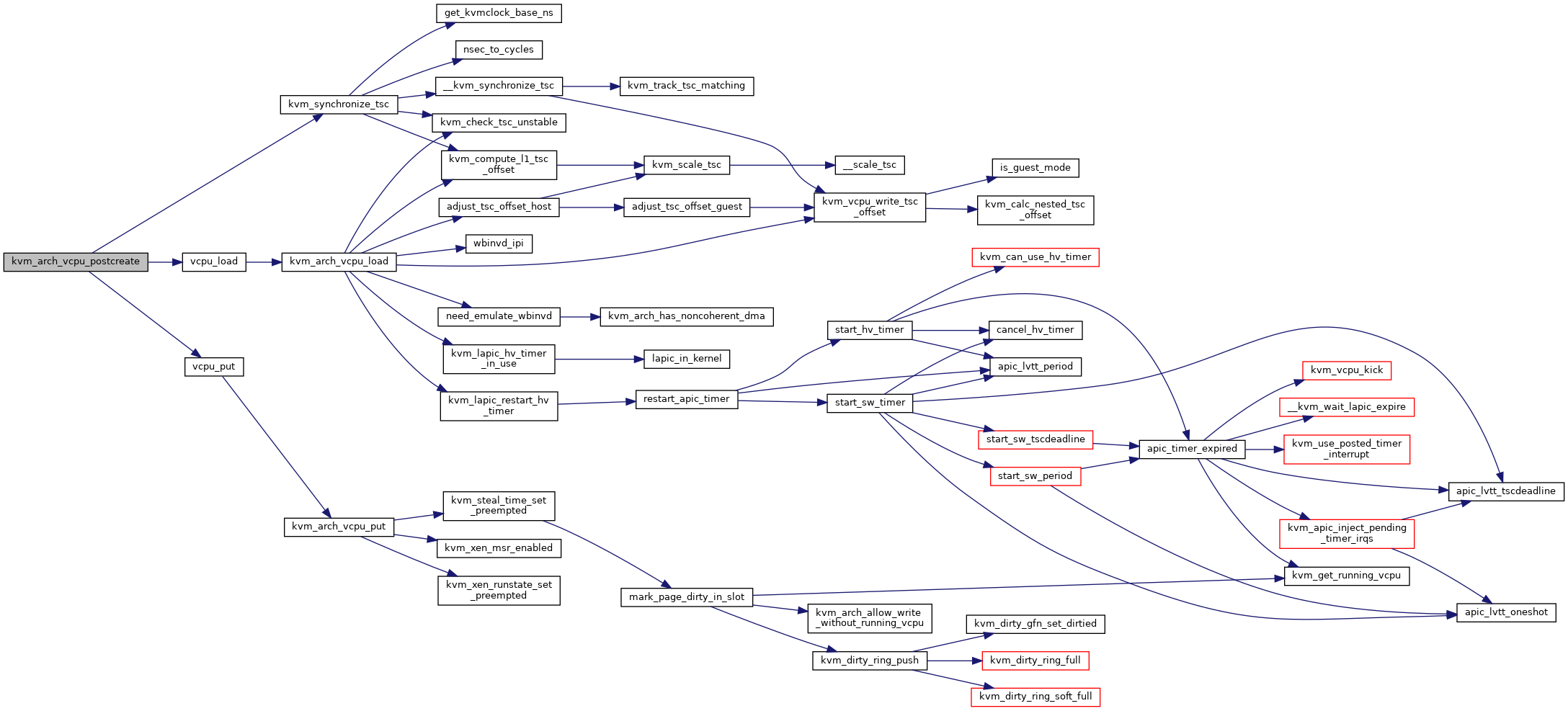

| void | kvm_arch_vcpu_postcreate (struct kvm_vcpu *vcpu) |

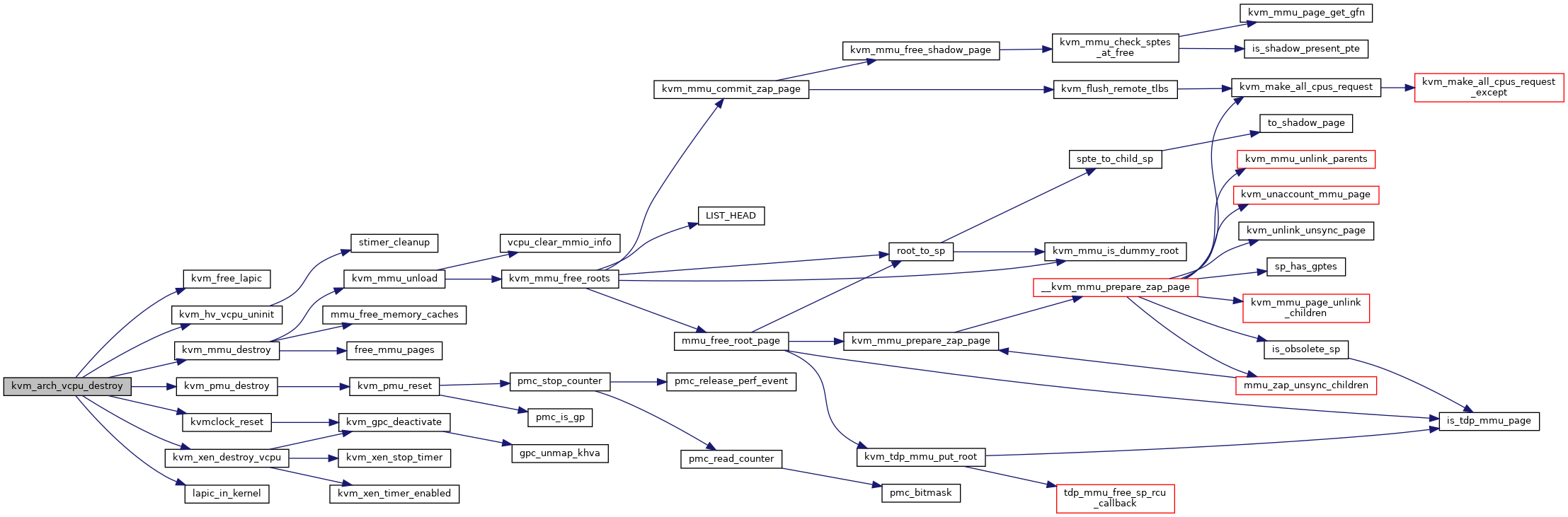

| void | kvm_arch_vcpu_destroy (struct kvm_vcpu *vcpu) |

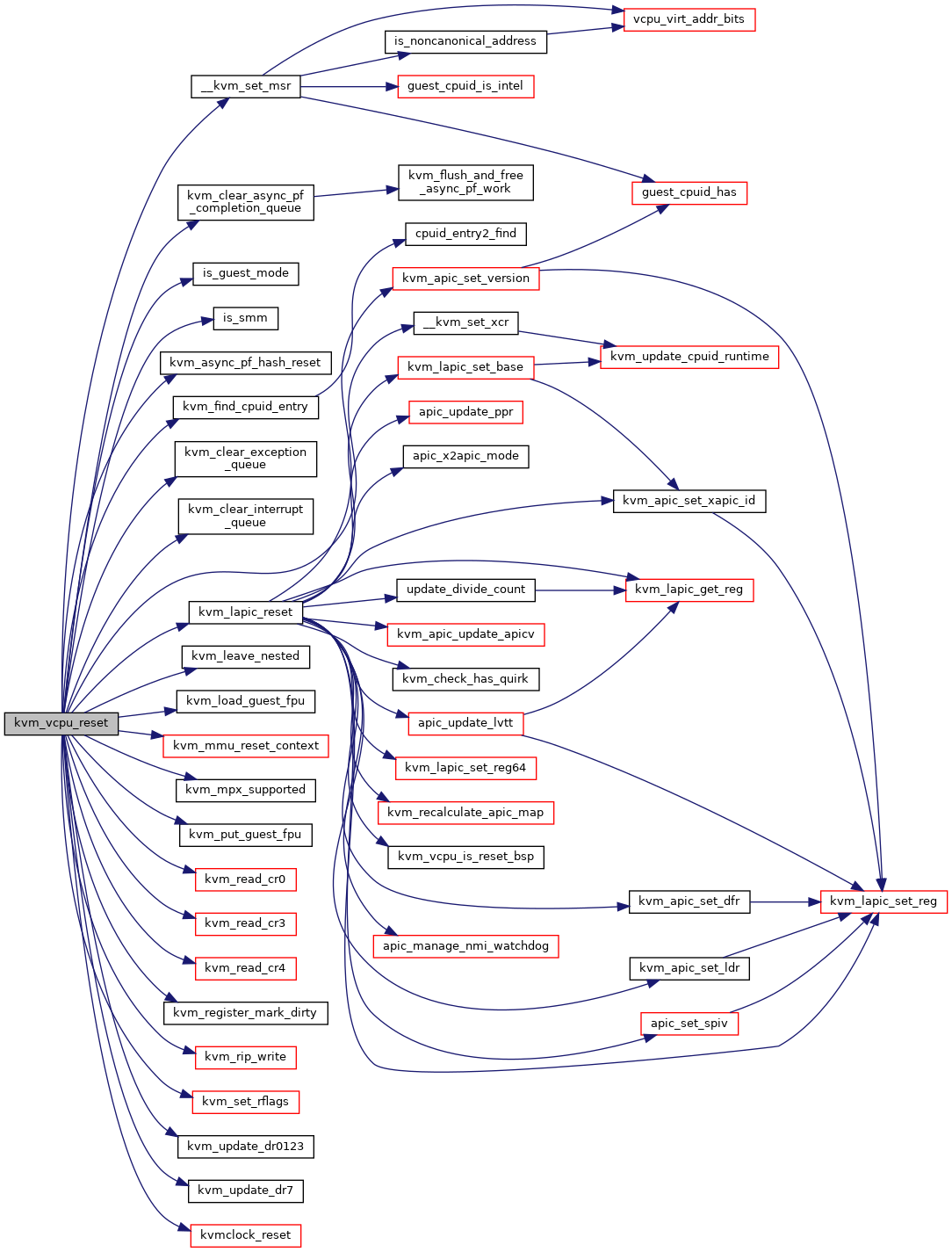

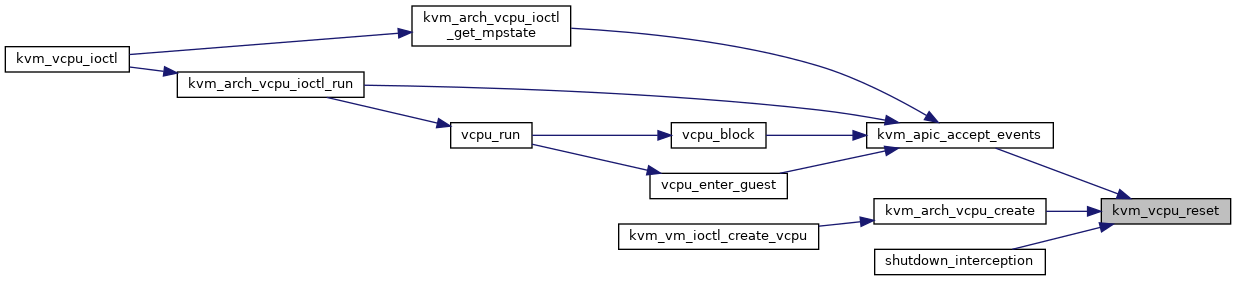

| void | kvm_vcpu_reset (struct kvm_vcpu *vcpu, bool init_event) |

| EXPORT_SYMBOL_GPL (kvm_vcpu_reset) | |

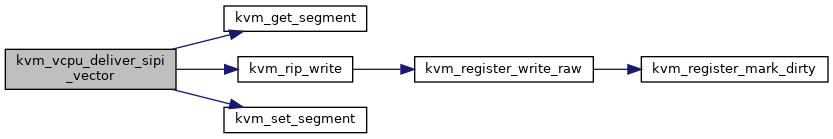

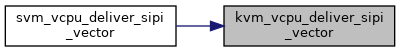

| void | kvm_vcpu_deliver_sipi_vector (struct kvm_vcpu *vcpu, u8 vector) |

| EXPORT_SYMBOL_GPL (kvm_vcpu_deliver_sipi_vector) | |

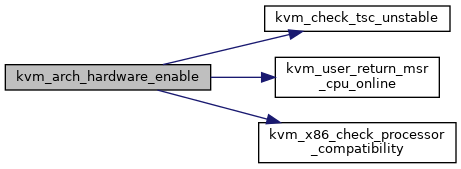

| int | kvm_arch_hardware_enable (void) |

| void | kvm_arch_hardware_disable (void) |

| bool | kvm_vcpu_is_reset_bsp (struct kvm_vcpu *vcpu) |

| bool | kvm_vcpu_is_bsp (struct kvm_vcpu *vcpu) |

| __read_mostly | DEFINE_STATIC_KEY_FALSE (kvm_has_noapic_vcpu) |

| EXPORT_SYMBOL_GPL (kvm_has_noapic_vcpu) | |

| void | kvm_arch_sched_in (struct kvm_vcpu *vcpu, int cpu) |

| void | kvm_arch_free_vm (struct kvm *kvm) |

| int | kvm_arch_init_vm (struct kvm *kvm, unsigned long type) |

| int | kvm_arch_post_init_vm (struct kvm *kvm) |

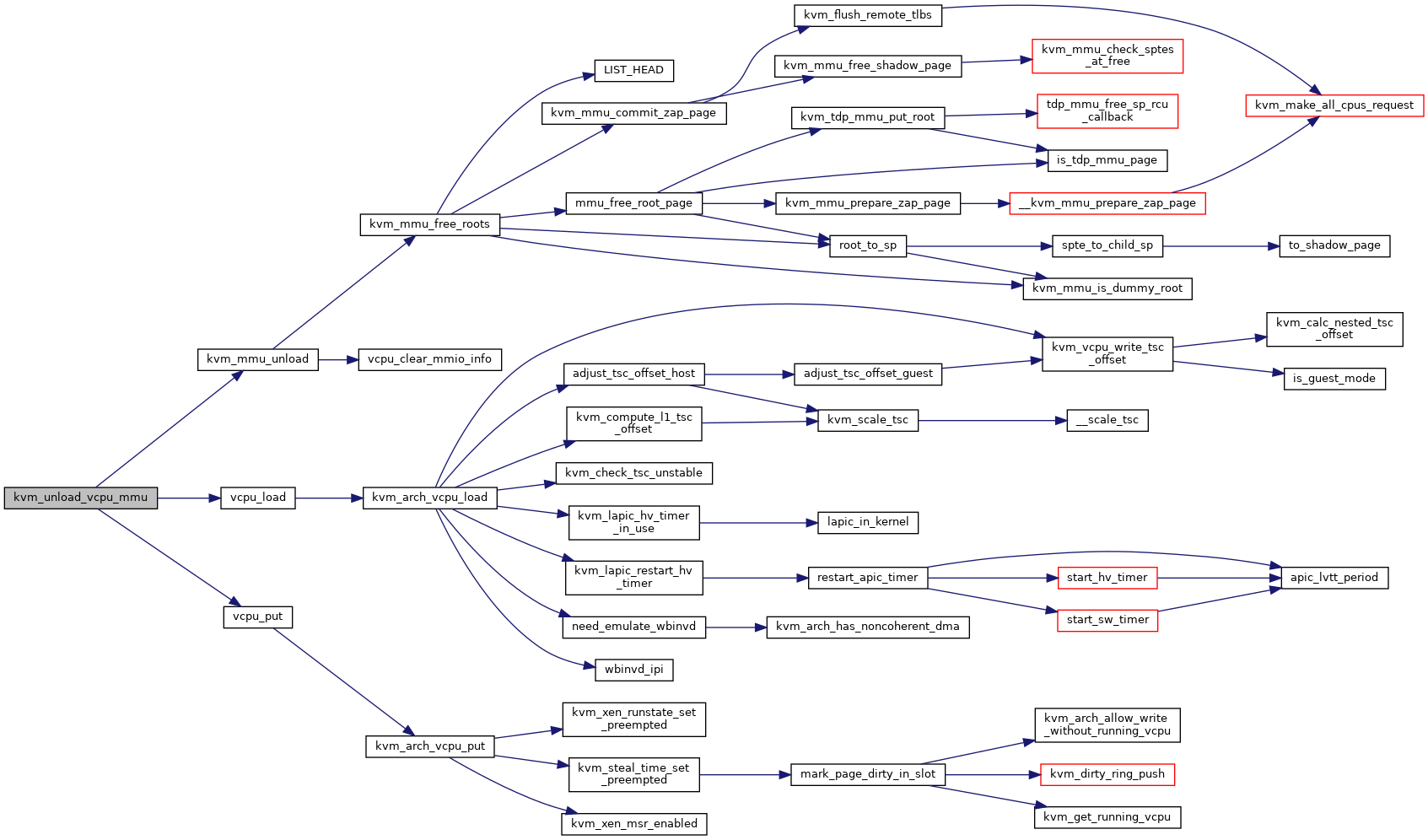

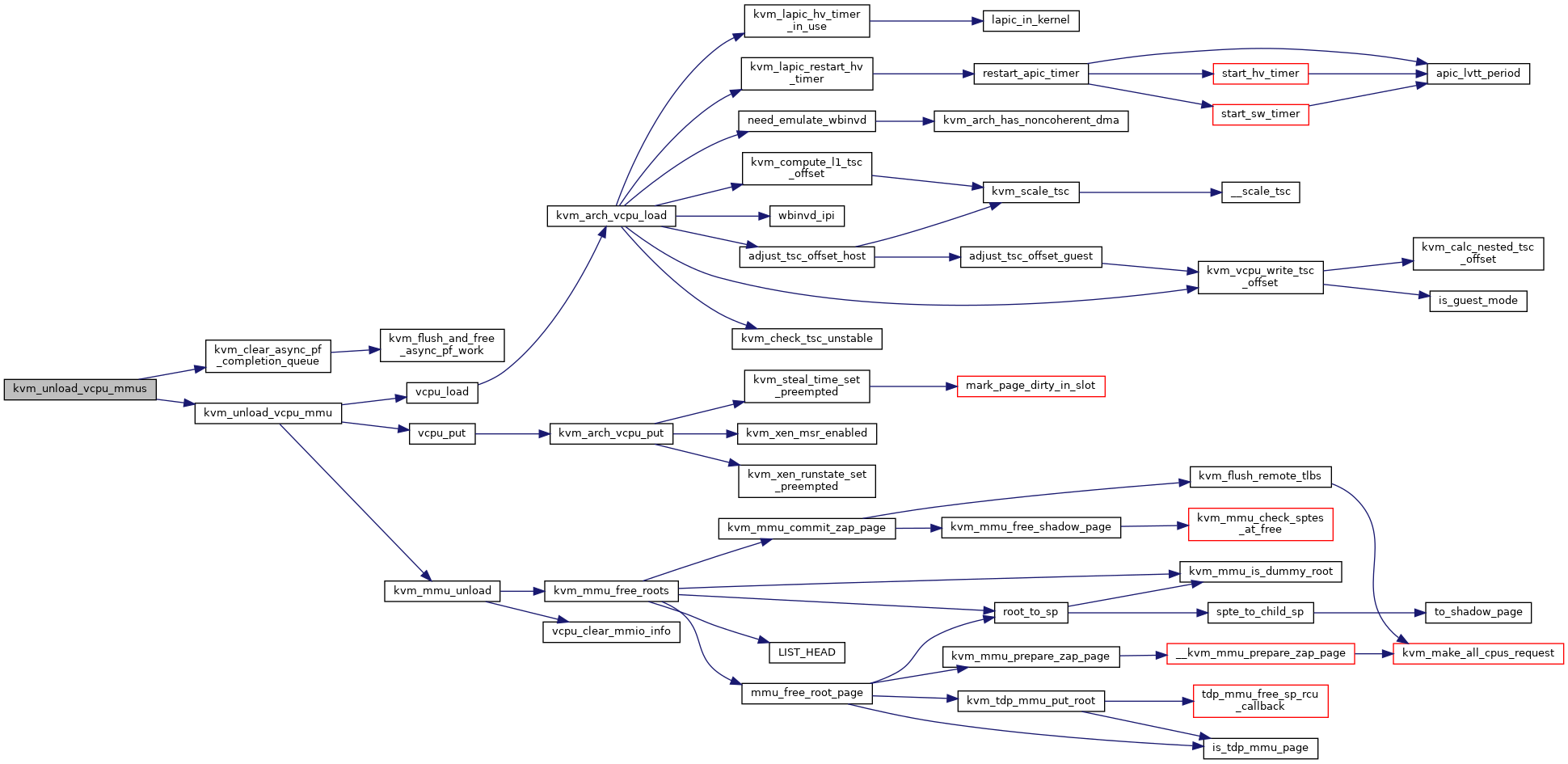

| static void | kvm_unload_vcpu_mmu (struct kvm_vcpu *vcpu) |

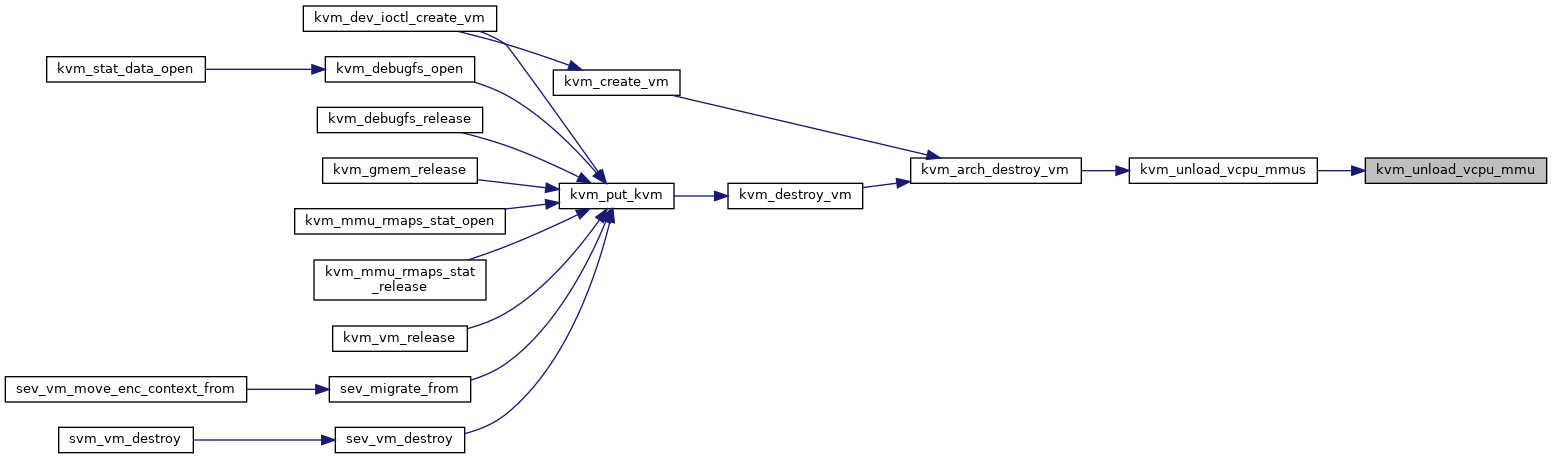

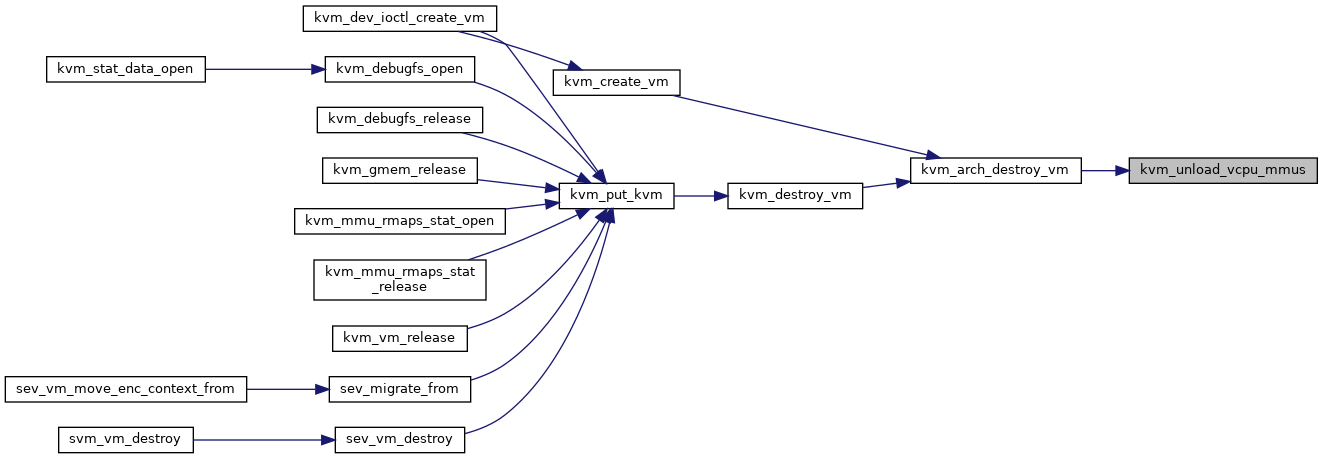

| static void | kvm_unload_vcpu_mmus (struct kvm *kvm) |

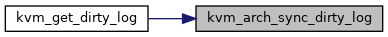

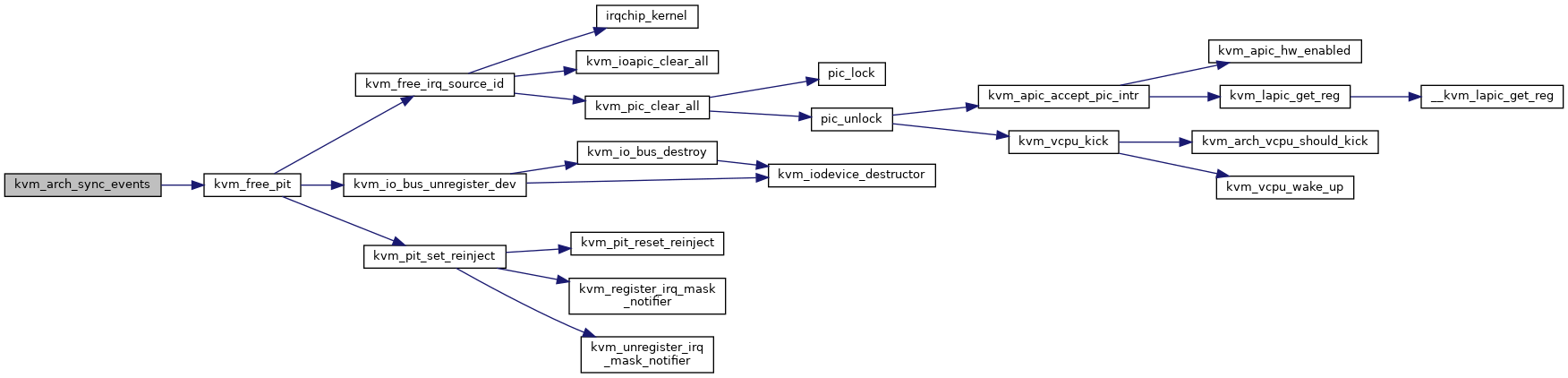

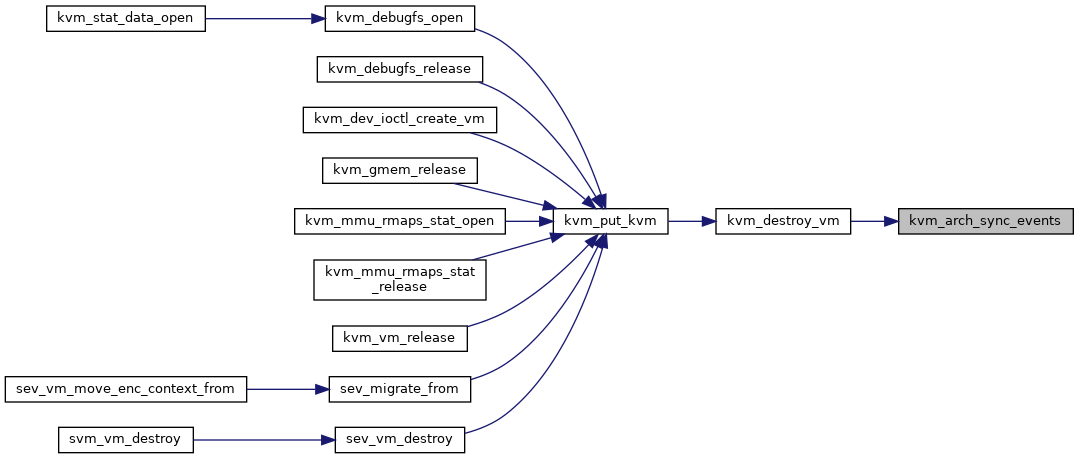

| void | kvm_arch_sync_events (struct kvm *kvm) |

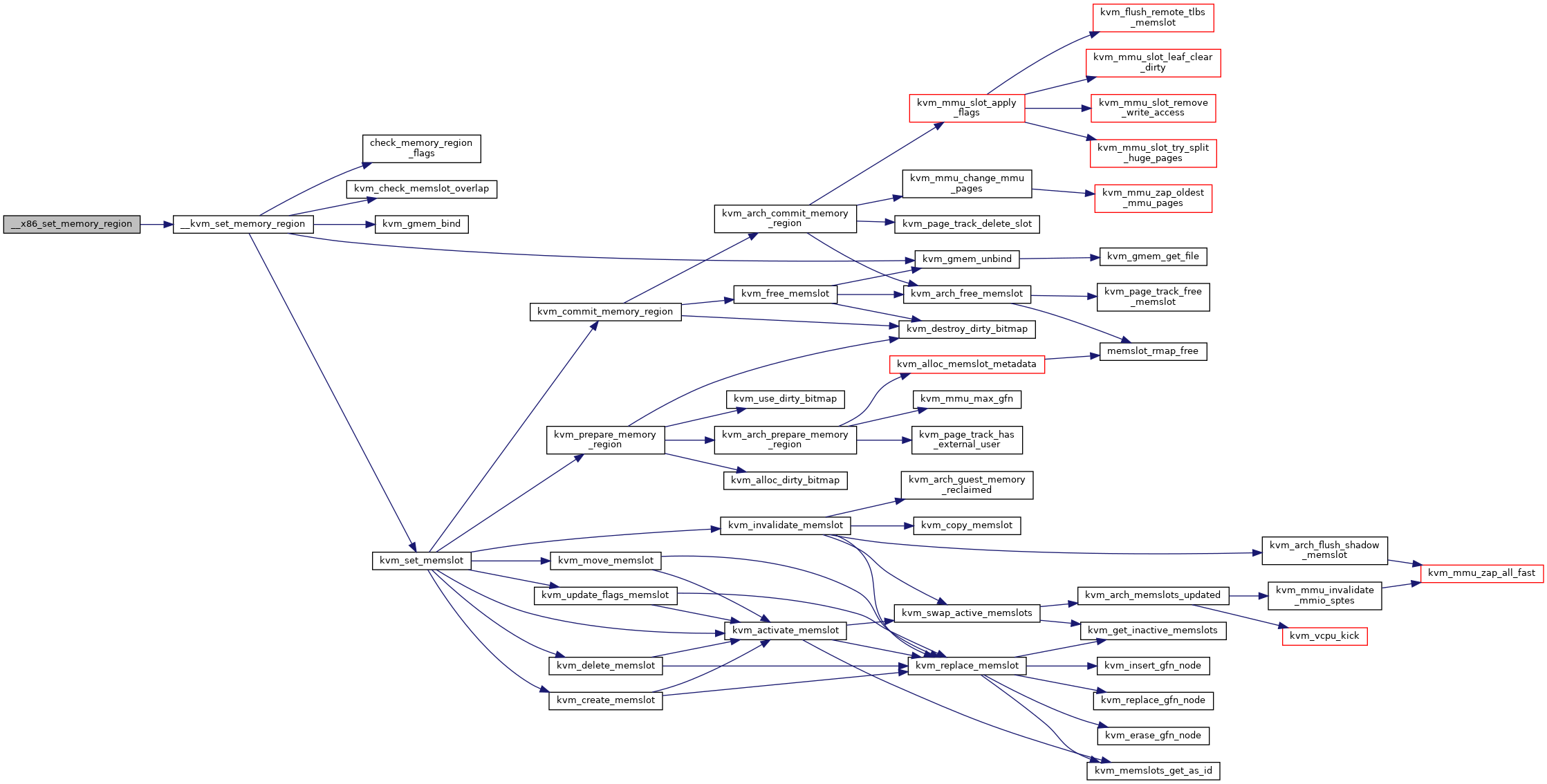

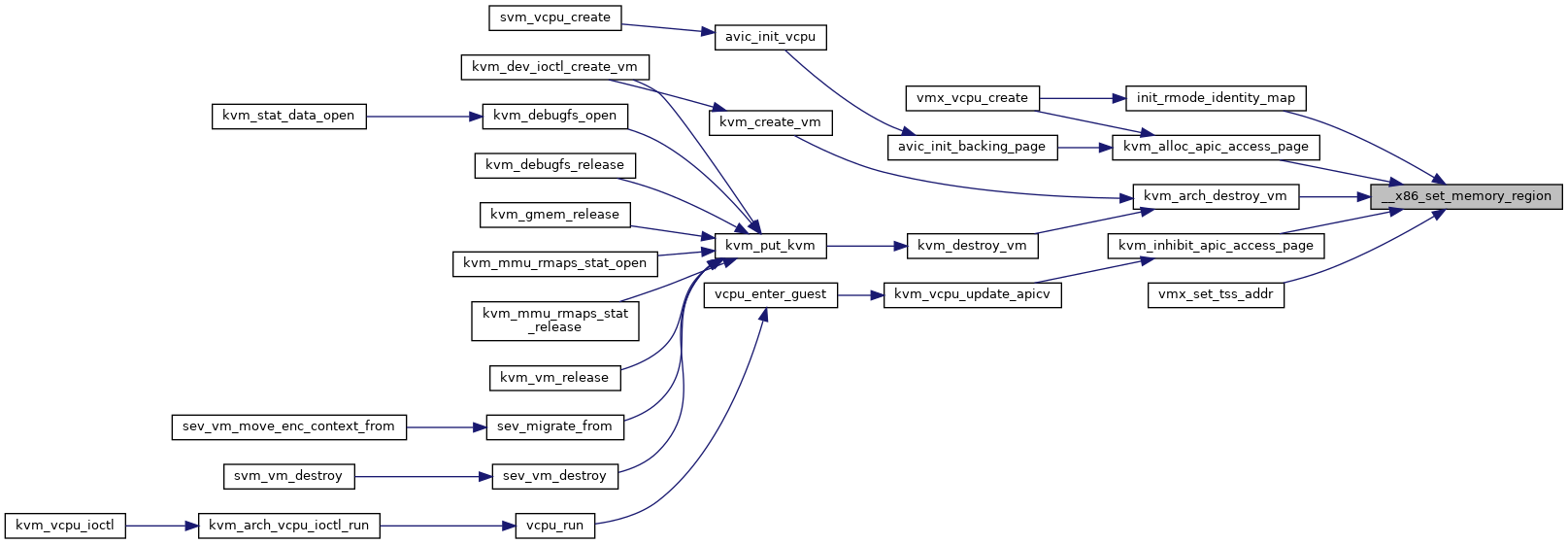

| void __user * | __x86_set_memory_region (struct kvm *kvm, int id, gpa_t gpa, u32 size) |

| EXPORT_SYMBOL_GPL (__x86_set_memory_region) | |

| void | kvm_arch_pre_destroy_vm (struct kvm *kvm) |

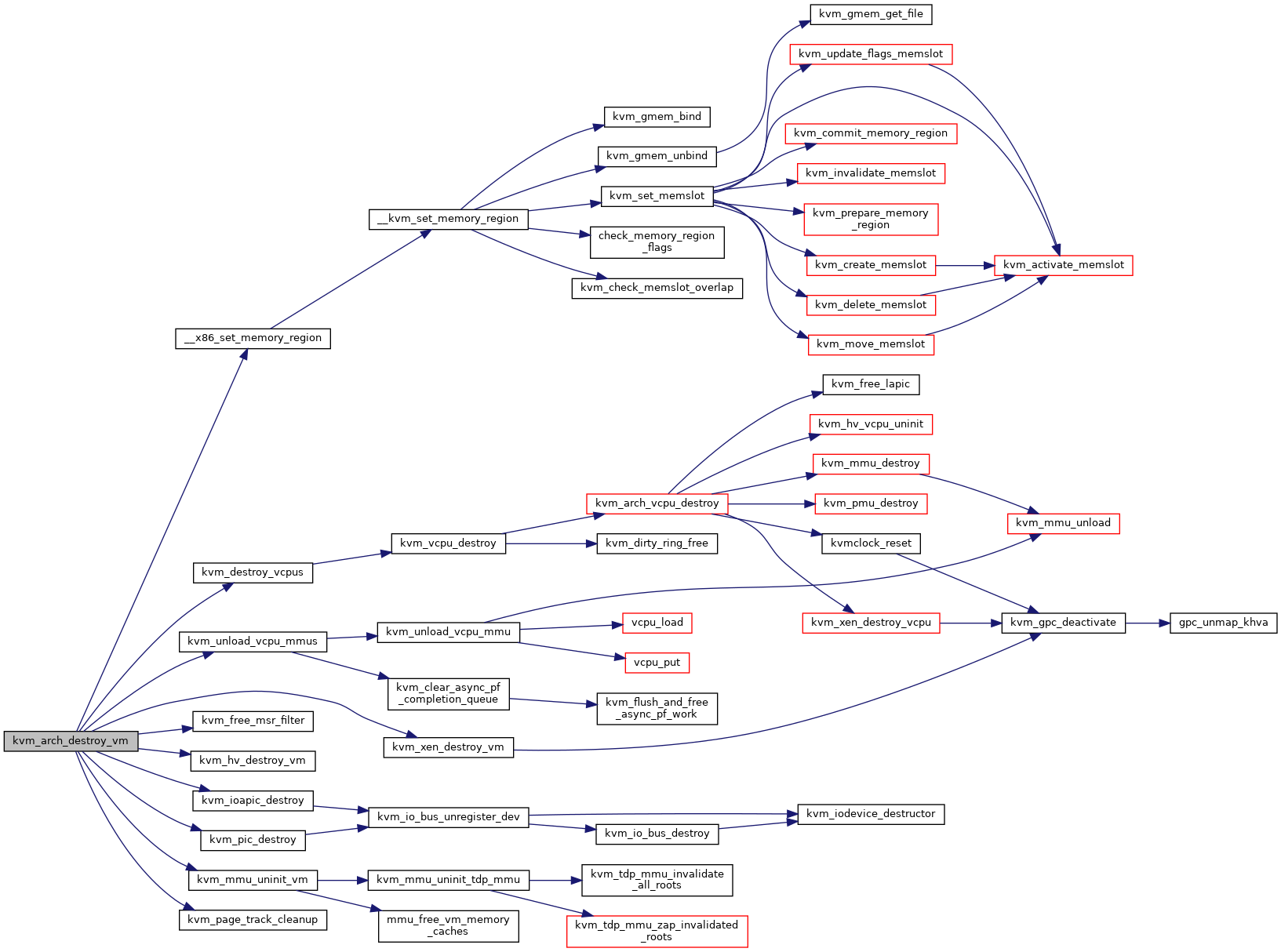

| void | kvm_arch_destroy_vm (struct kvm *kvm) |

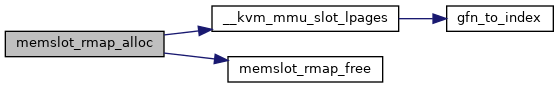

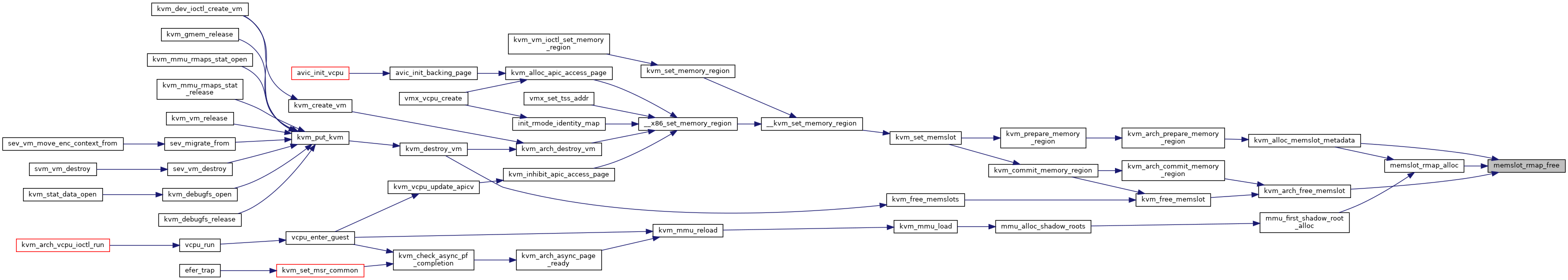

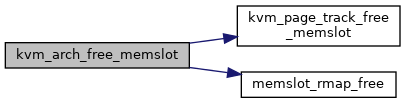

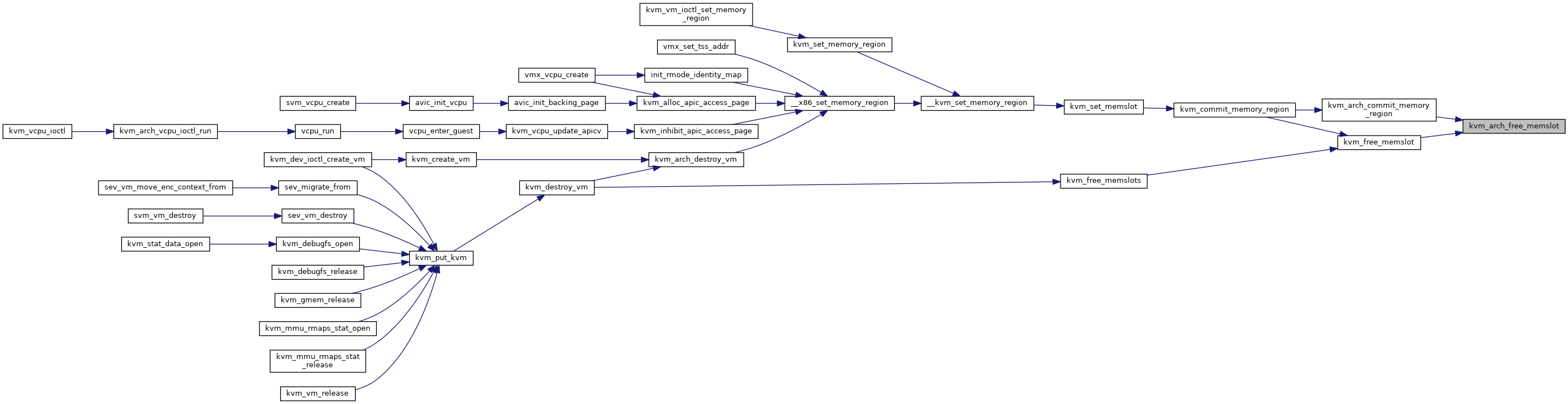

| static void | memslot_rmap_free (struct kvm_memory_slot *slot) |

| void | kvm_arch_free_memslot (struct kvm *kvm, struct kvm_memory_slot *slot) |

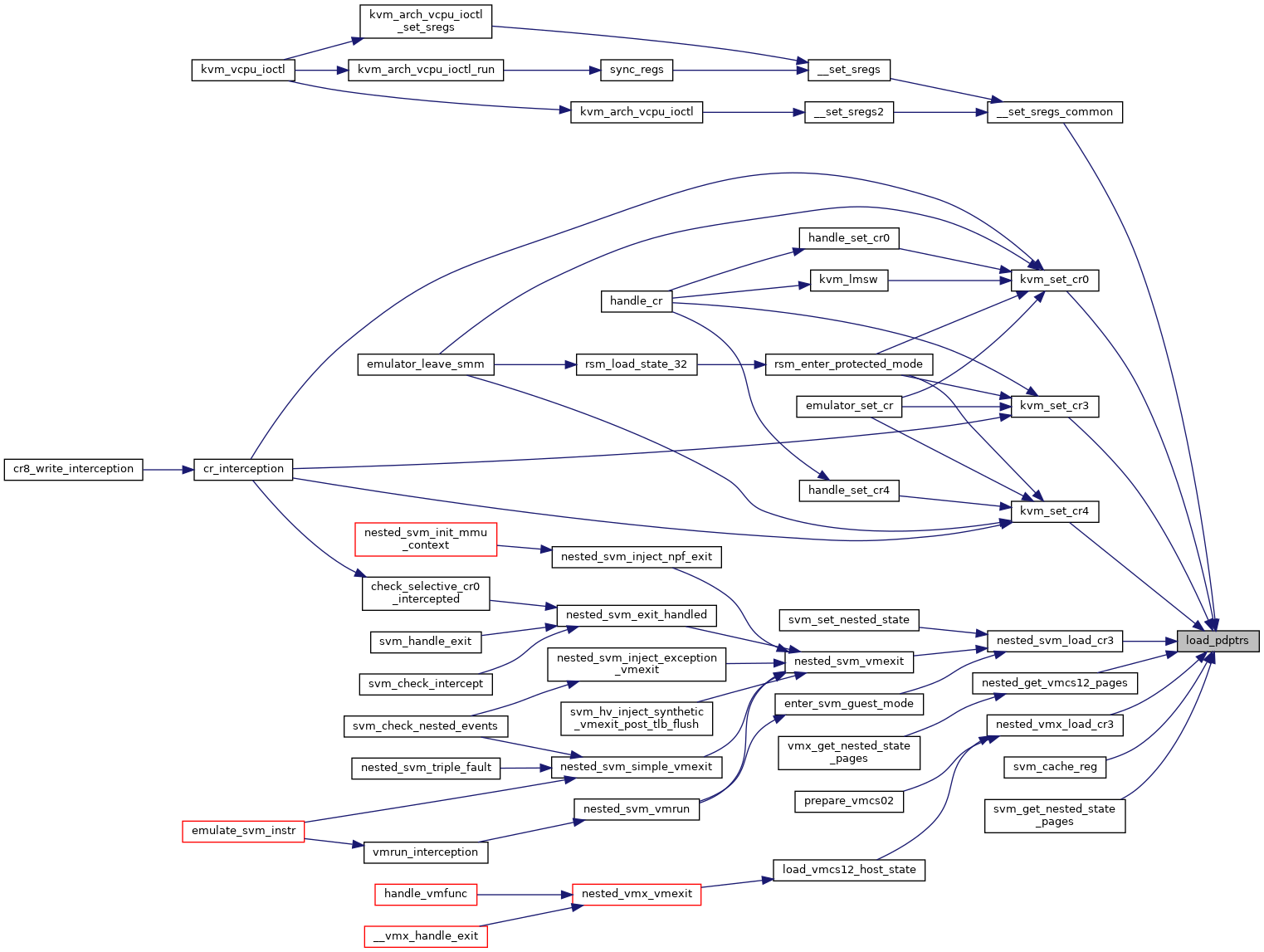

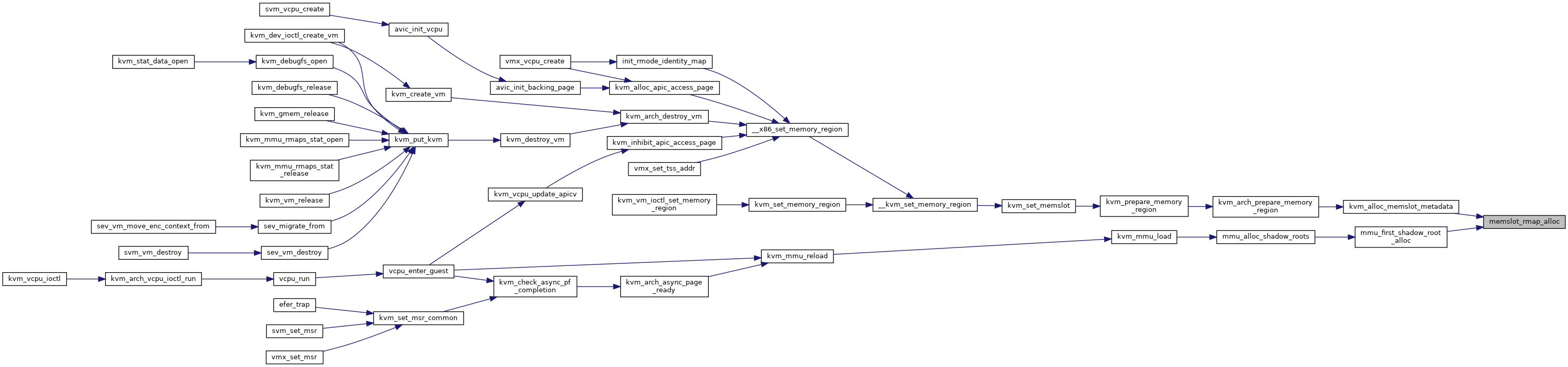

| int | memslot_rmap_alloc (struct kvm_memory_slot *slot, unsigned long npages) |

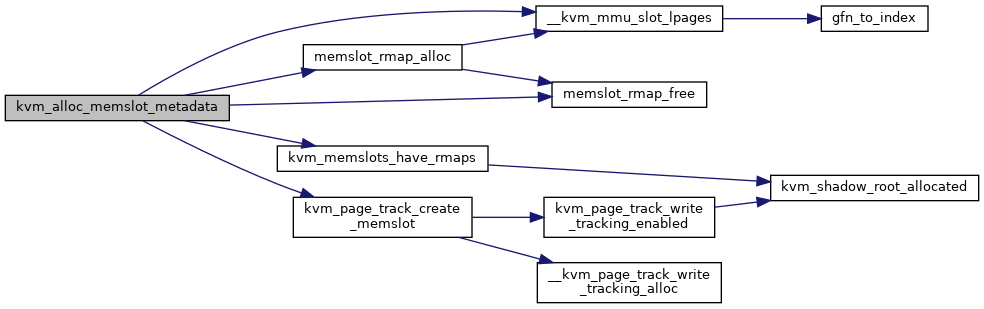

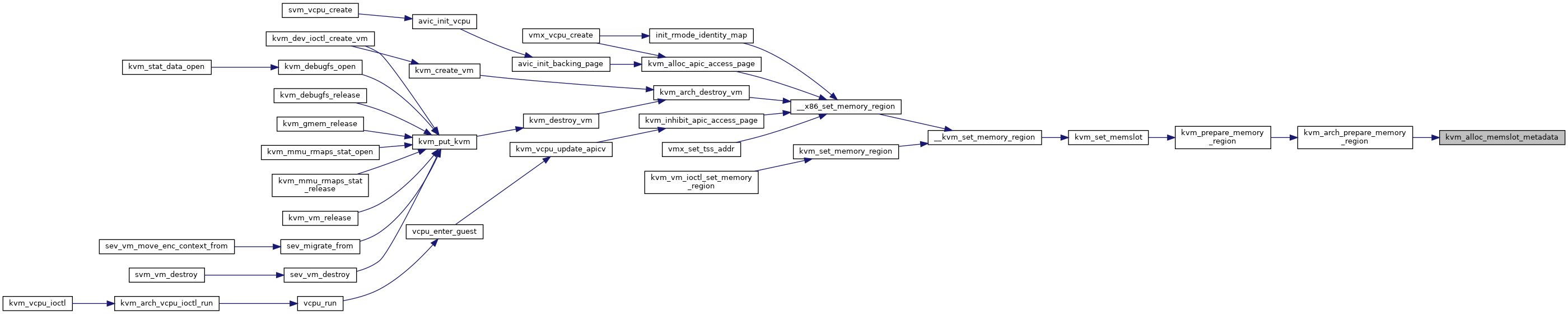

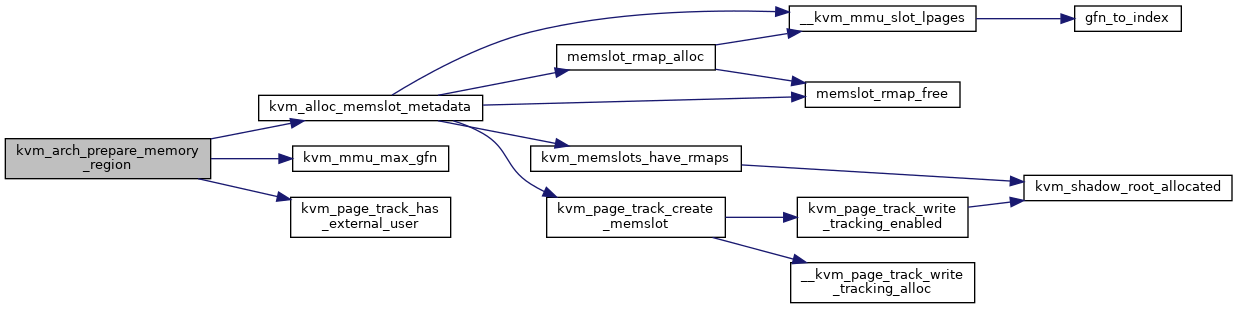

| static int | kvm_alloc_memslot_metadata (struct kvm *kvm, struct kvm_memory_slot *slot) |

| void | kvm_arch_memslots_updated (struct kvm *kvm, u64 gen) |

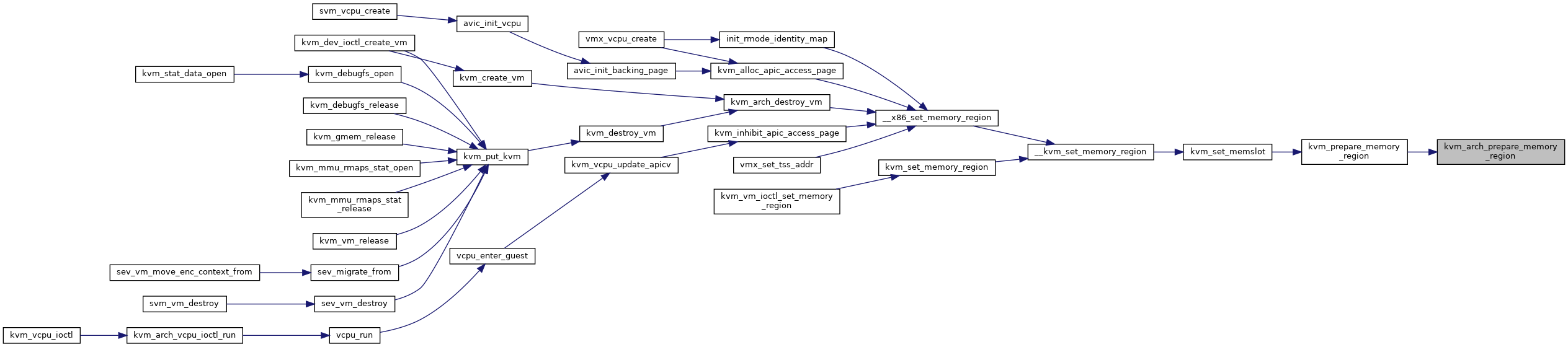

| int | kvm_arch_prepare_memory_region (struct kvm *kvm, const struct kvm_memory_slot *old, struct kvm_memory_slot *new, enum kvm_mr_change change) |

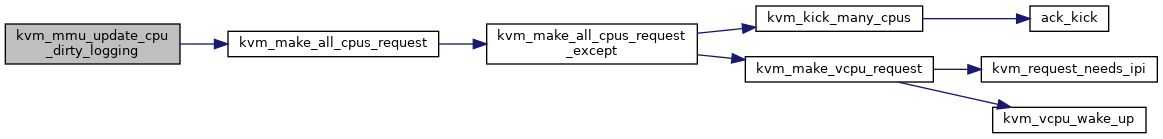

| static void | kvm_mmu_update_cpu_dirty_logging (struct kvm *kvm, bool enable) |

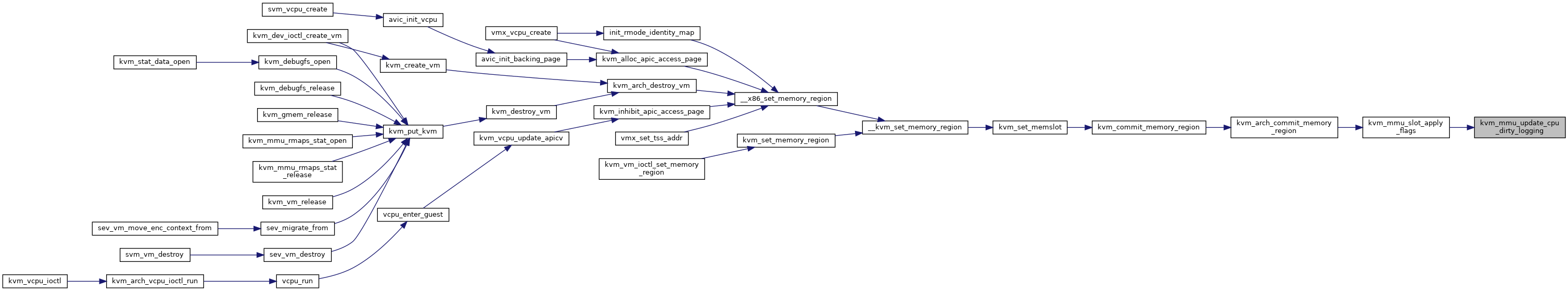

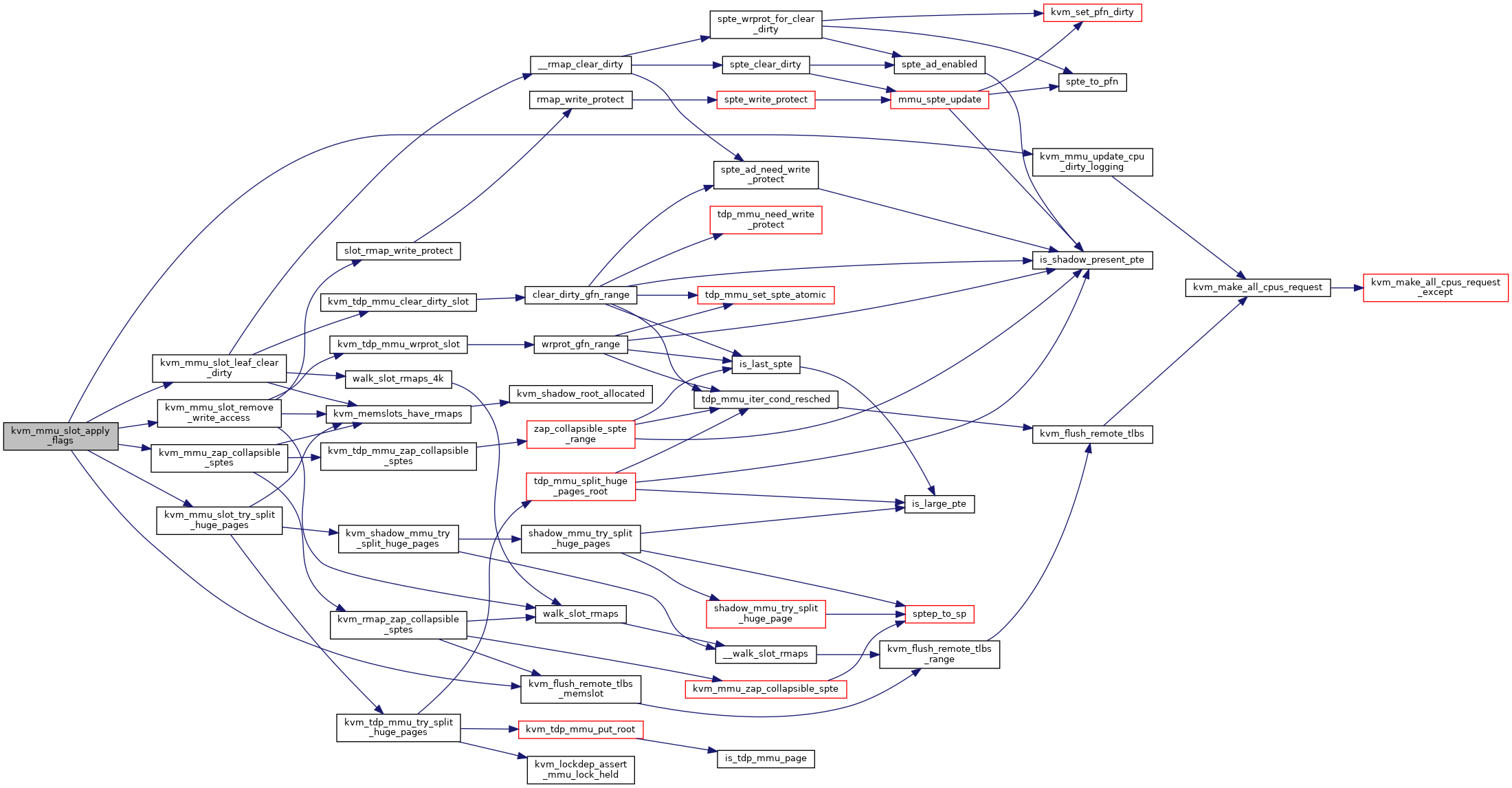

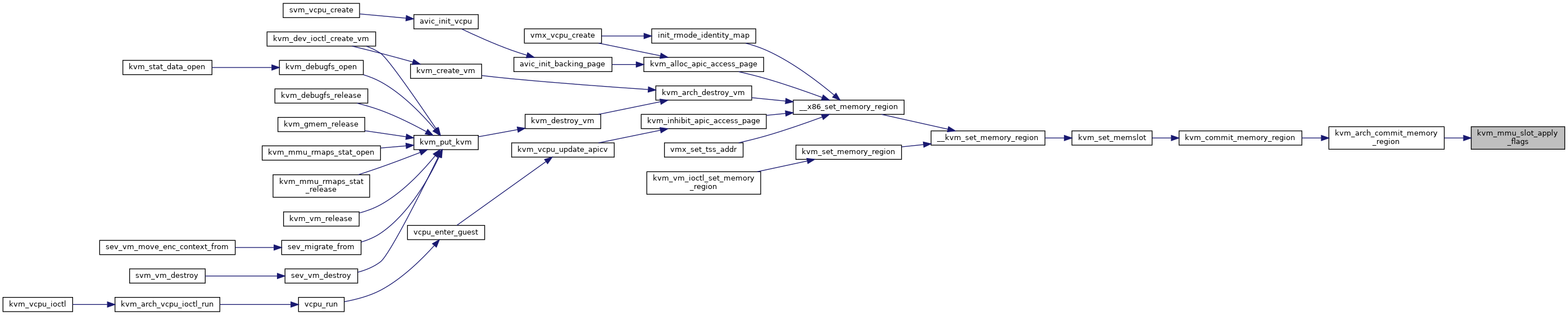

| static void | kvm_mmu_slot_apply_flags (struct kvm *kvm, struct kvm_memory_slot *old, const struct kvm_memory_slot *new, enum kvm_mr_change change) |

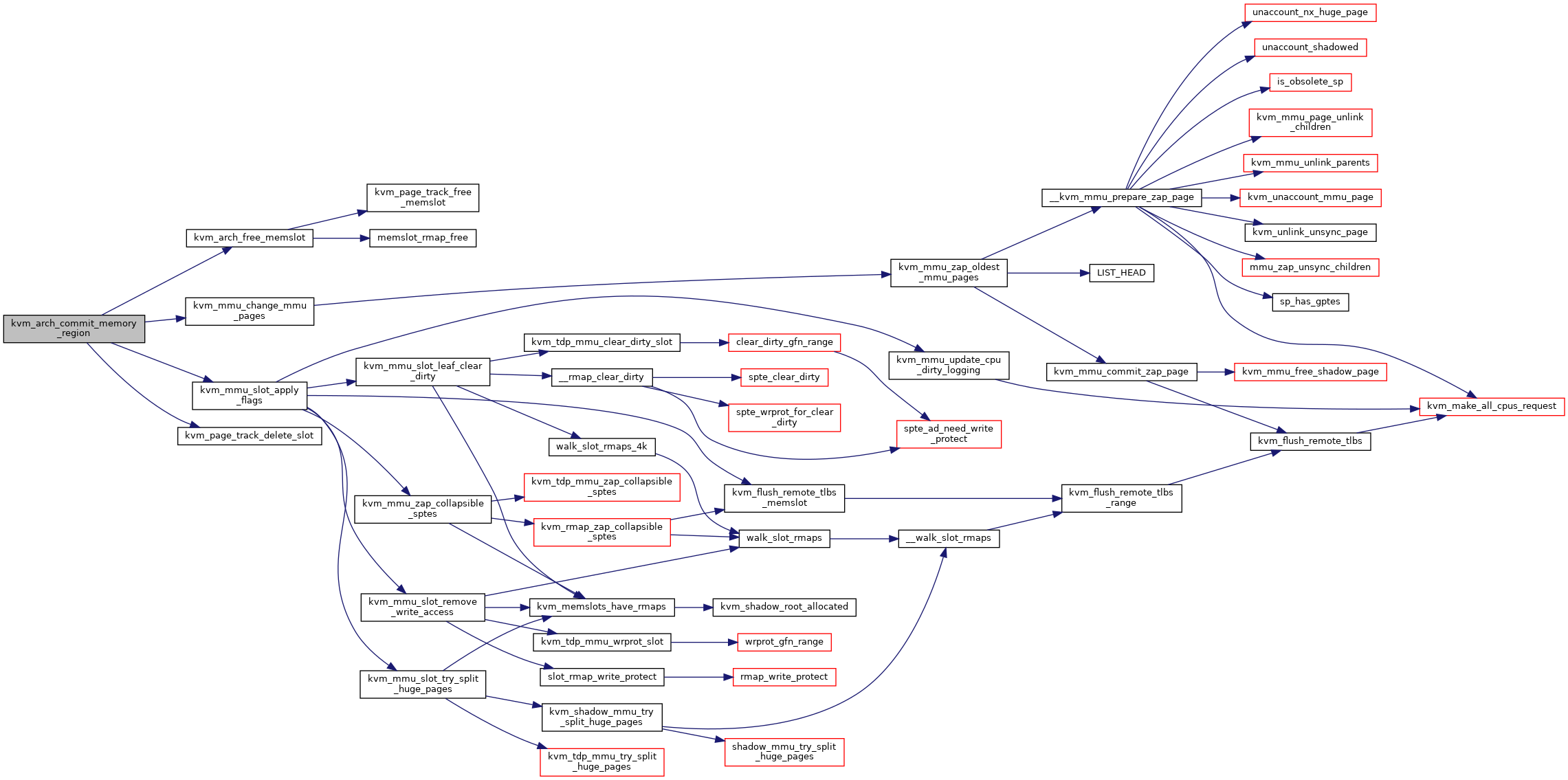

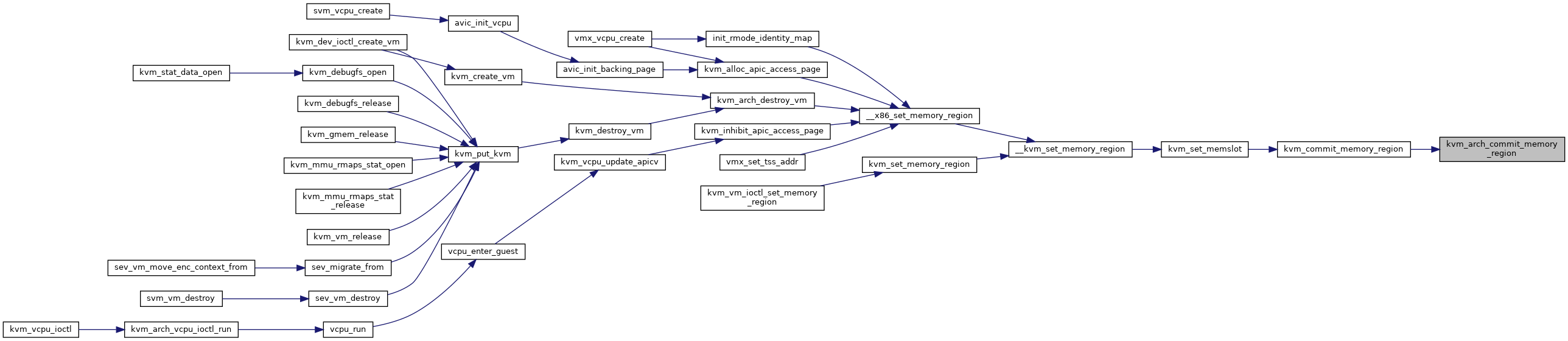

| void | kvm_arch_commit_memory_region (struct kvm *kvm, struct kvm_memory_slot *old, const struct kvm_memory_slot *new, enum kvm_mr_change change) |

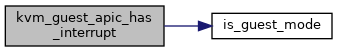

| static bool | kvm_guest_apic_has_interrupt (struct kvm_vcpu *vcpu) |

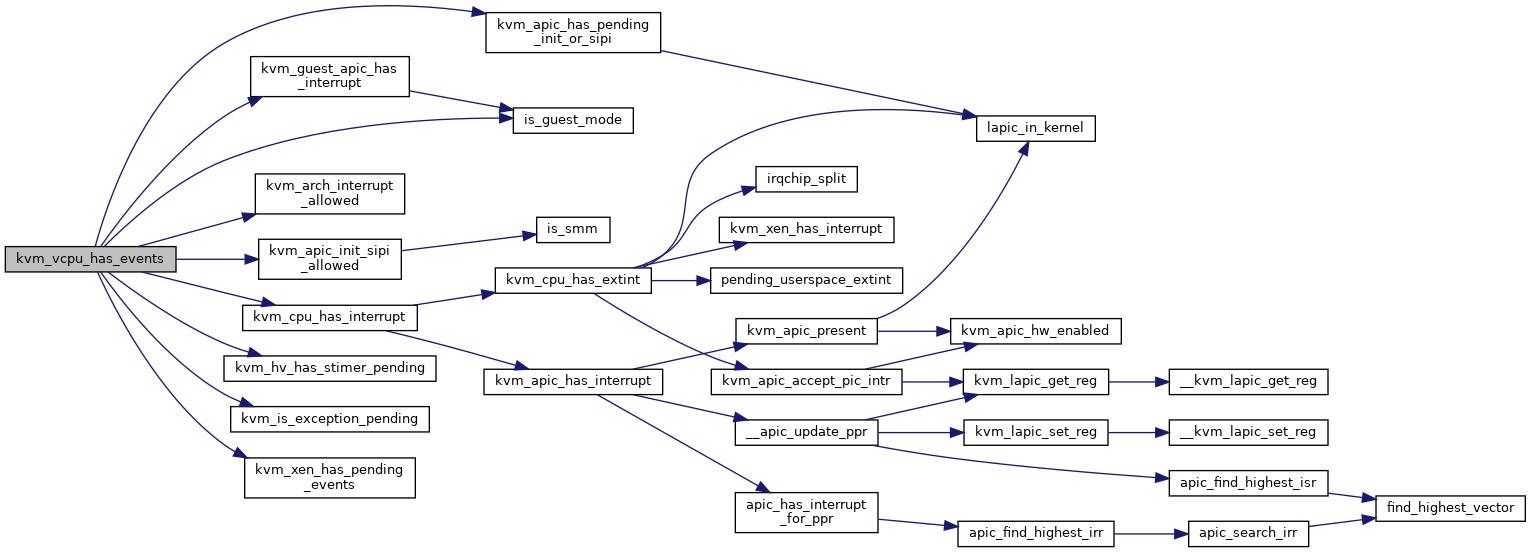

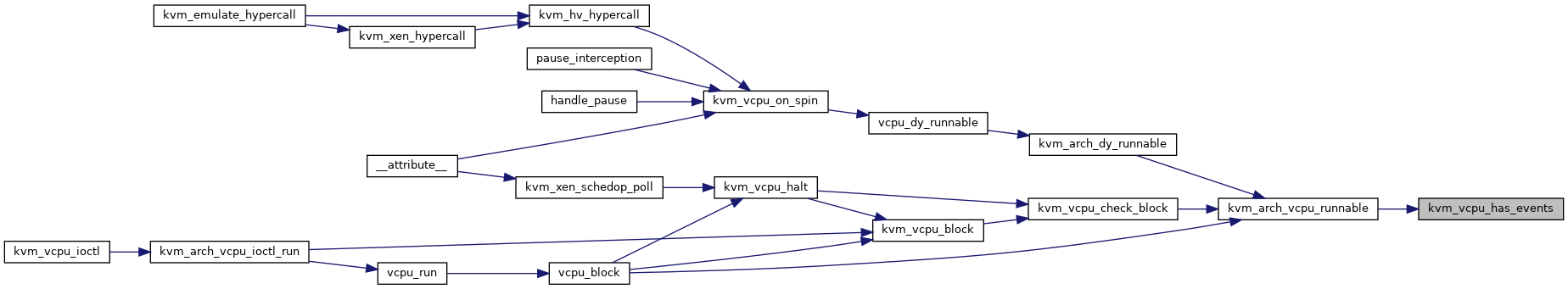

| static bool | kvm_vcpu_has_events (struct kvm_vcpu *vcpu) |

| int | kvm_arch_vcpu_runnable (struct kvm_vcpu *vcpu) |

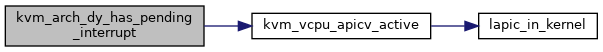

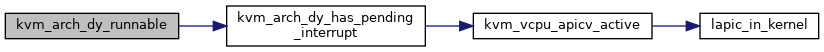

| bool | kvm_arch_dy_has_pending_interrupt (struct kvm_vcpu *vcpu) |

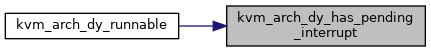

| bool | kvm_arch_dy_runnable (struct kvm_vcpu *vcpu) |

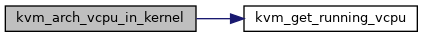

| bool | kvm_arch_vcpu_in_kernel (struct kvm_vcpu *vcpu) |

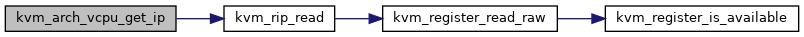

| unsigned long | kvm_arch_vcpu_get_ip (struct kvm_vcpu *vcpu) |

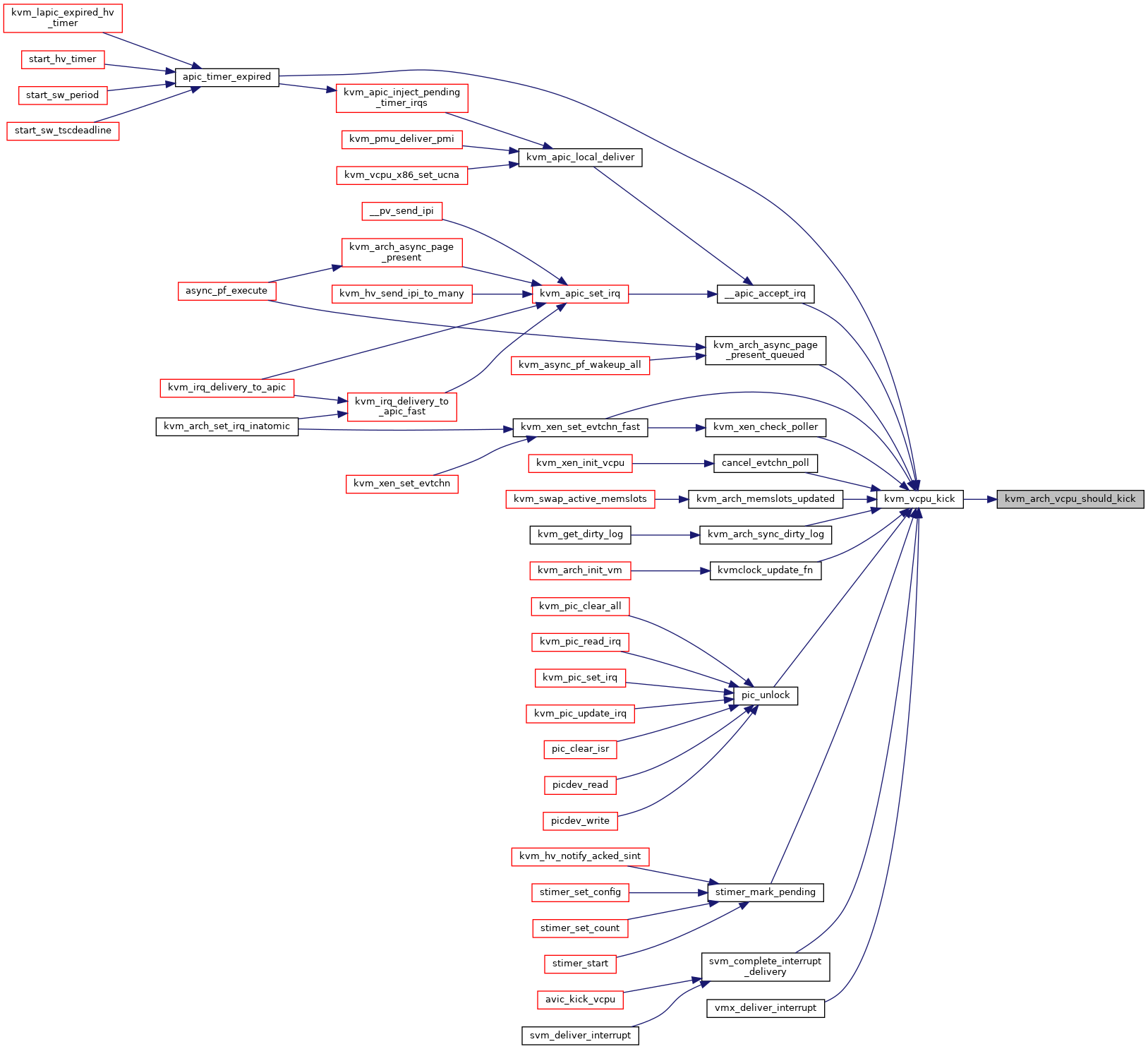

| int | kvm_arch_vcpu_should_kick (struct kvm_vcpu *vcpu) |

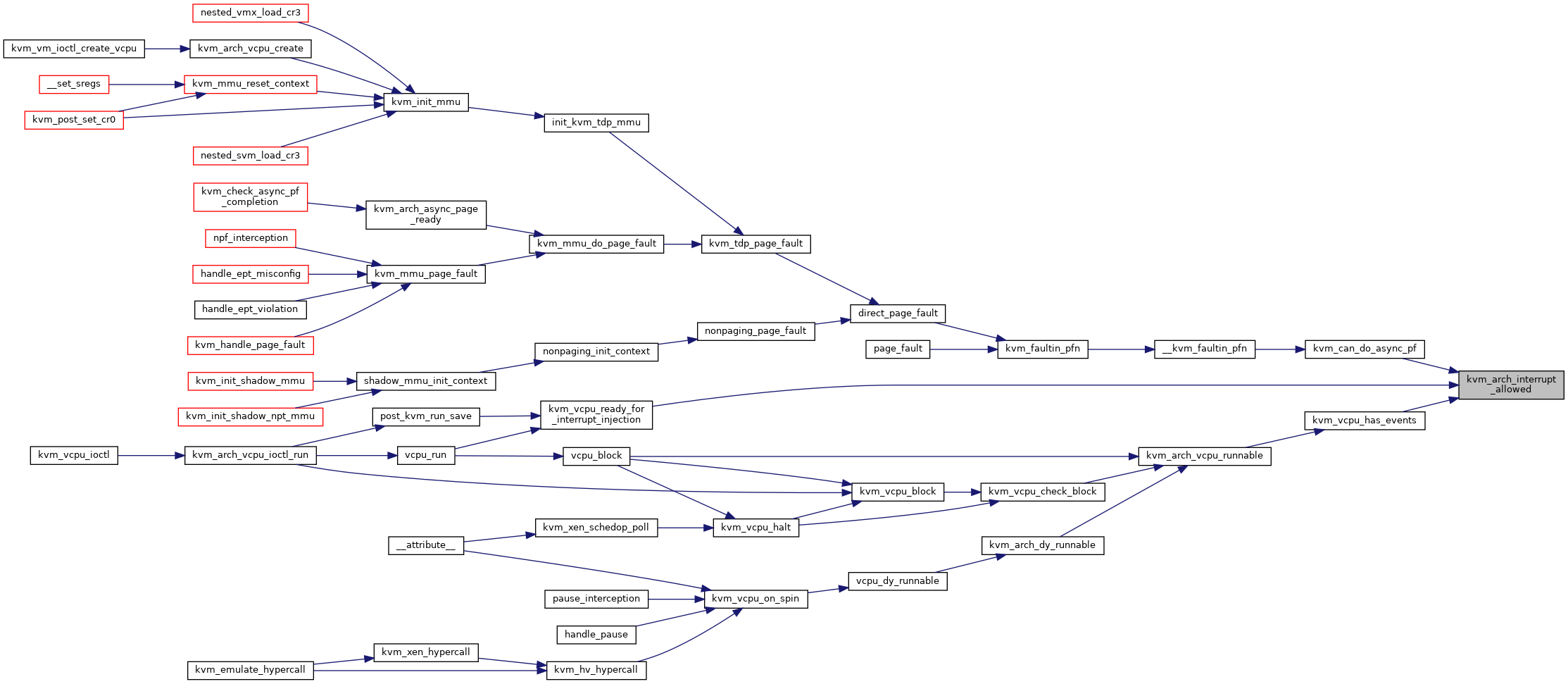

| int | kvm_arch_interrupt_allowed (struct kvm_vcpu *vcpu) |

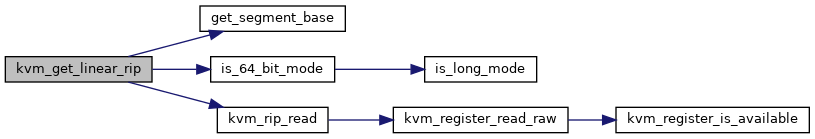

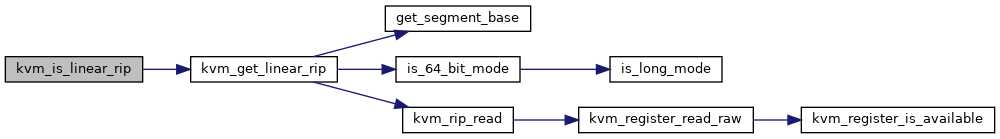

| unsigned long | kvm_get_linear_rip (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_get_linear_rip) | |

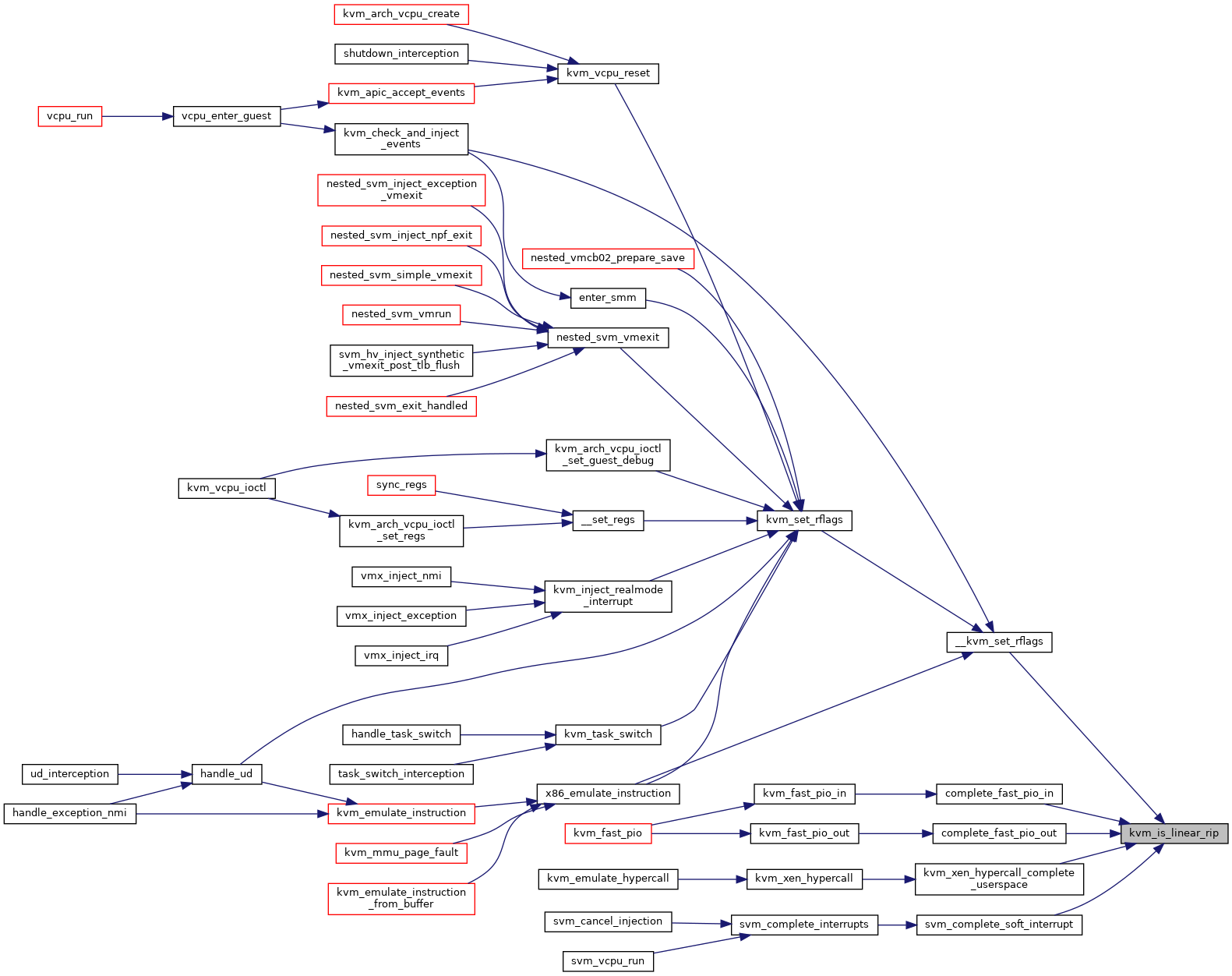

| bool | kvm_is_linear_rip (struct kvm_vcpu *vcpu, unsigned long linear_rip) |

| EXPORT_SYMBOL_GPL (kvm_is_linear_rip) | |

| unsigned long | kvm_get_rflags (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_get_rflags) | |

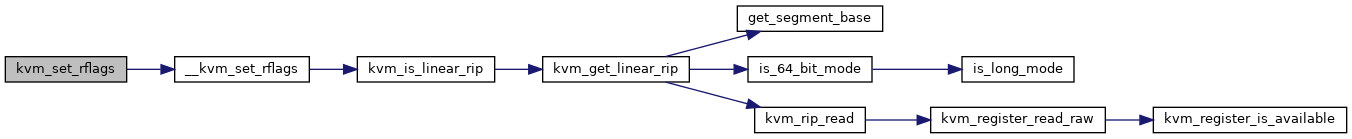

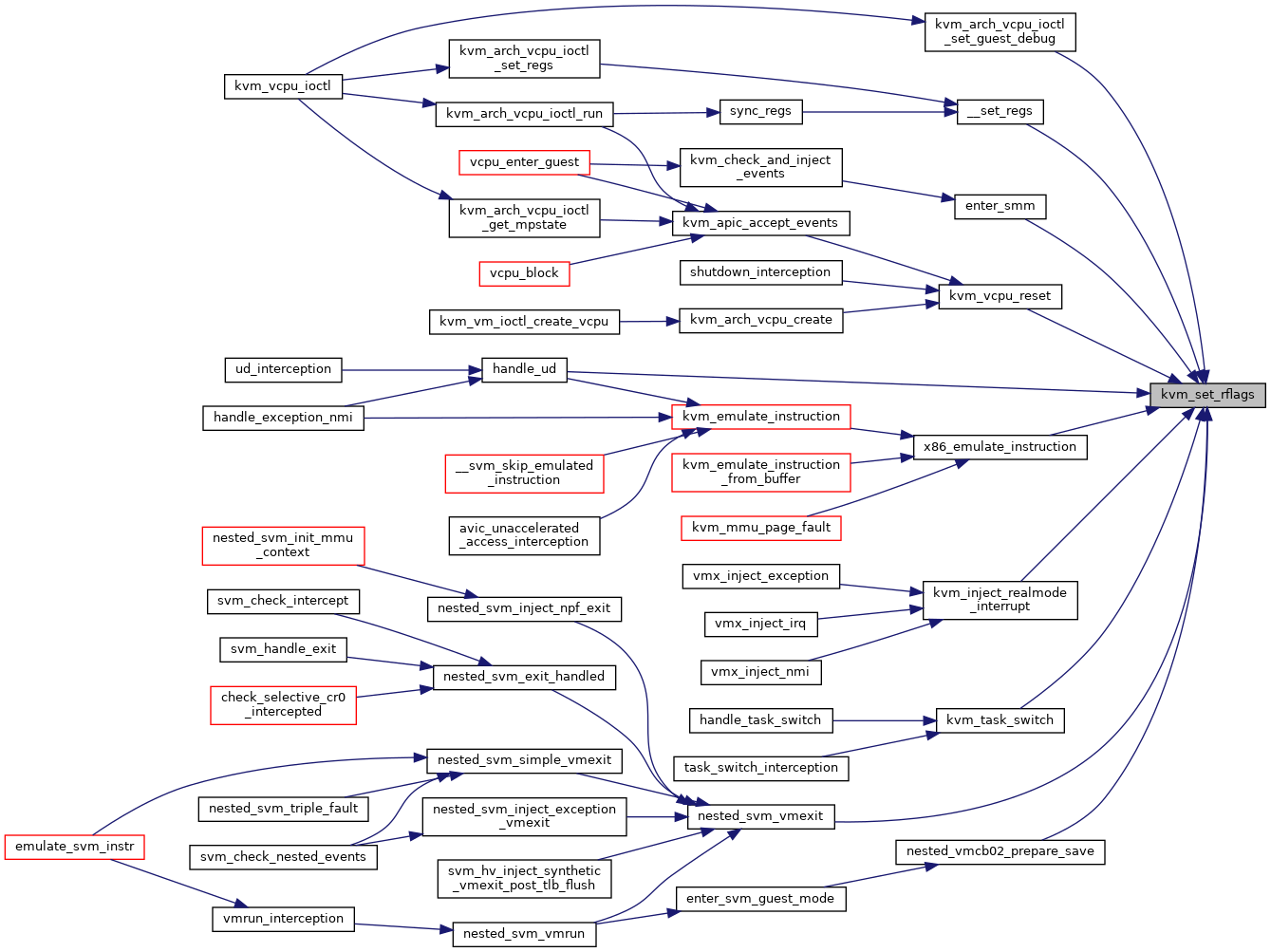

| void | kvm_set_rflags (struct kvm_vcpu *vcpu, unsigned long rflags) |

| EXPORT_SYMBOL_GPL (kvm_set_rflags) | |

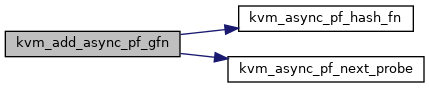

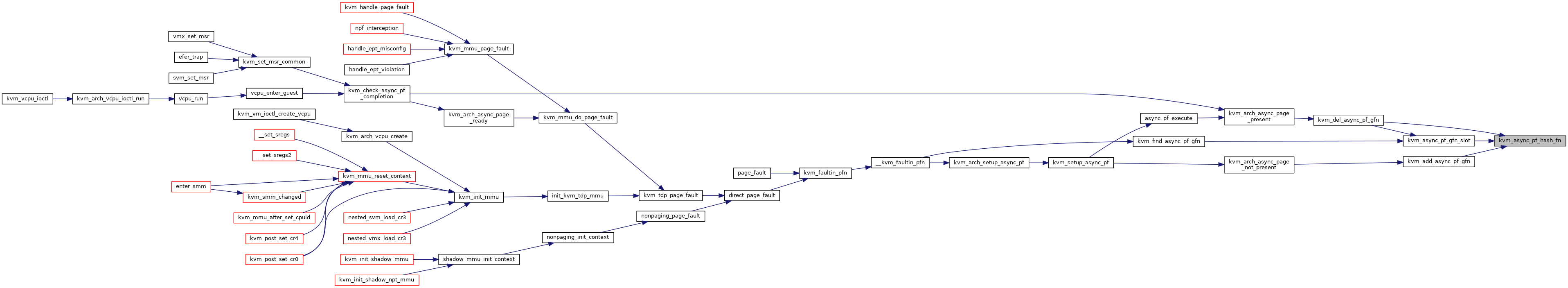

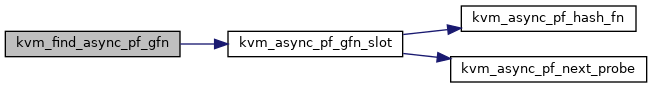

| static u32 | kvm_async_pf_hash_fn (gfn_t gfn) |

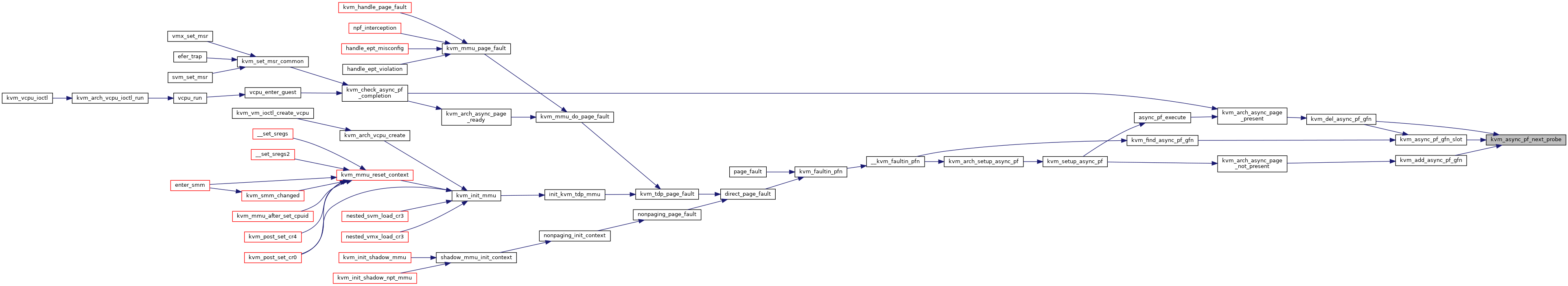

| static u32 | kvm_async_pf_next_probe (u32 key) |

| static void | kvm_add_async_pf_gfn (struct kvm_vcpu *vcpu, gfn_t gfn) |

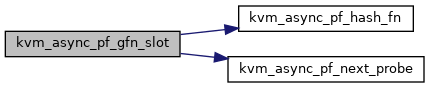

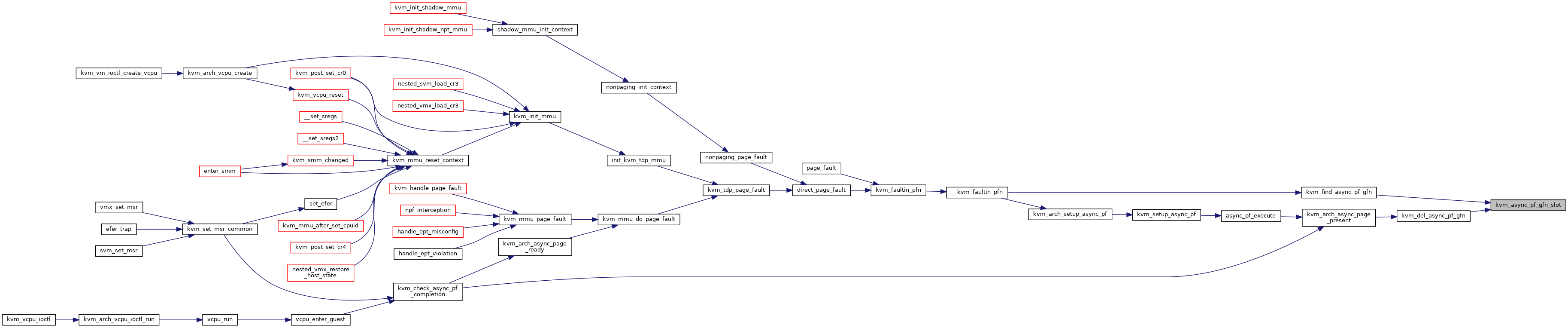

| static u32 | kvm_async_pf_gfn_slot (struct kvm_vcpu *vcpu, gfn_t gfn) |

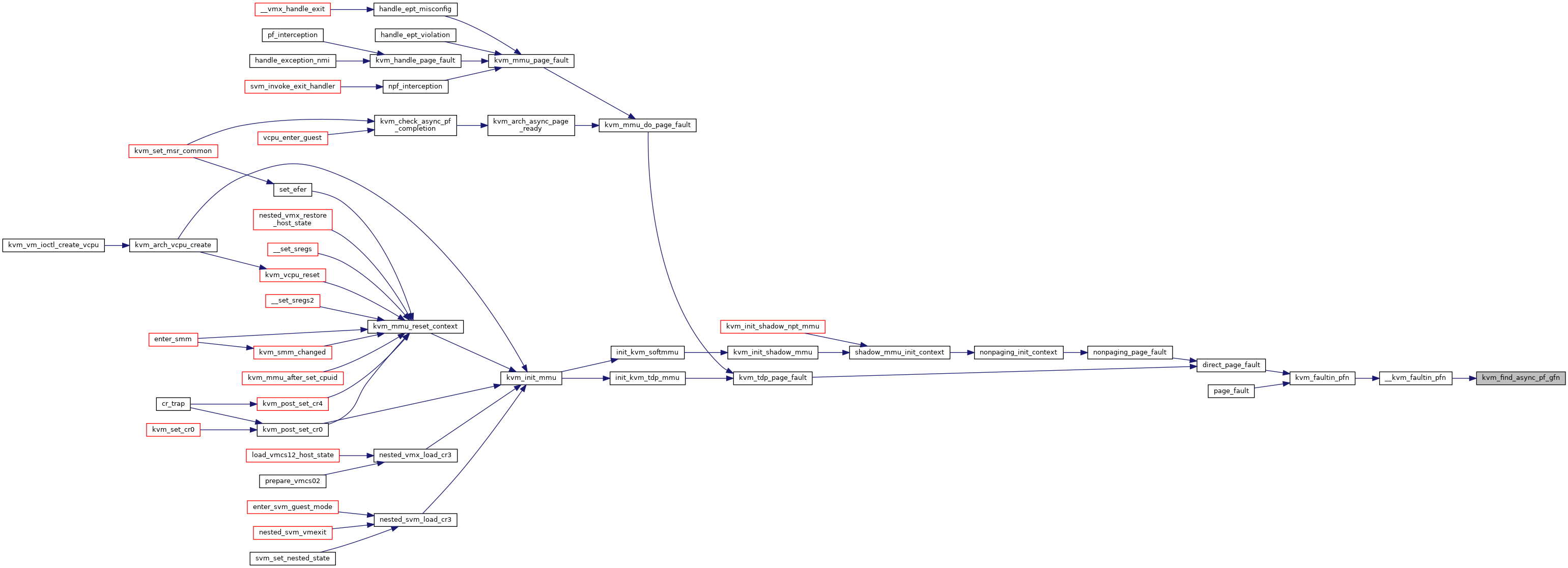

| bool | kvm_find_async_pf_gfn (struct kvm_vcpu *vcpu, gfn_t gfn) |

| static void | kvm_del_async_pf_gfn (struct kvm_vcpu *vcpu, gfn_t gfn) |

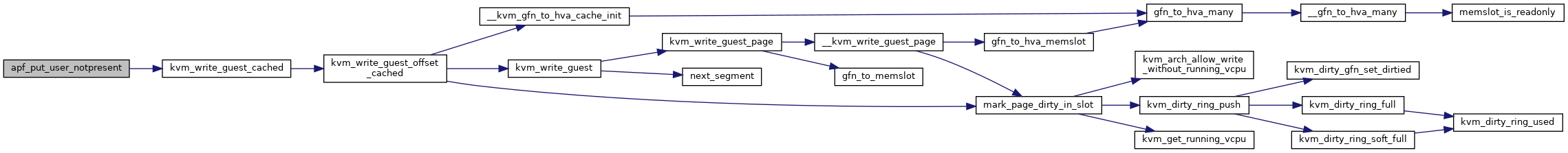

| static int | apf_put_user_notpresent (struct kvm_vcpu *vcpu) |

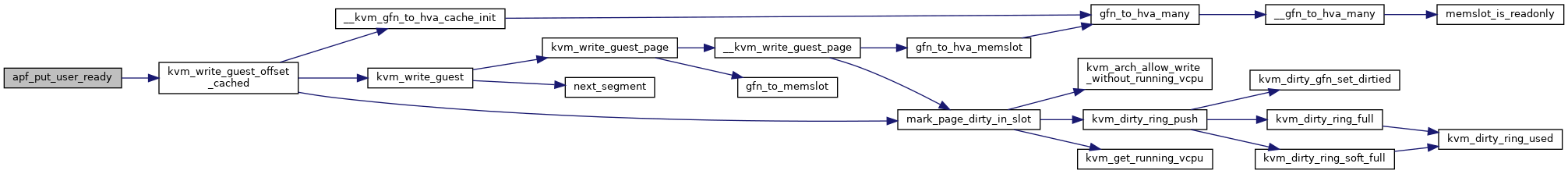

| static int | apf_put_user_ready (struct kvm_vcpu *vcpu, u32 token) |

| static bool | apf_pageready_slot_free (struct kvm_vcpu *vcpu) |

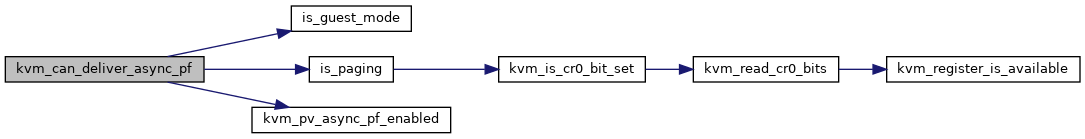

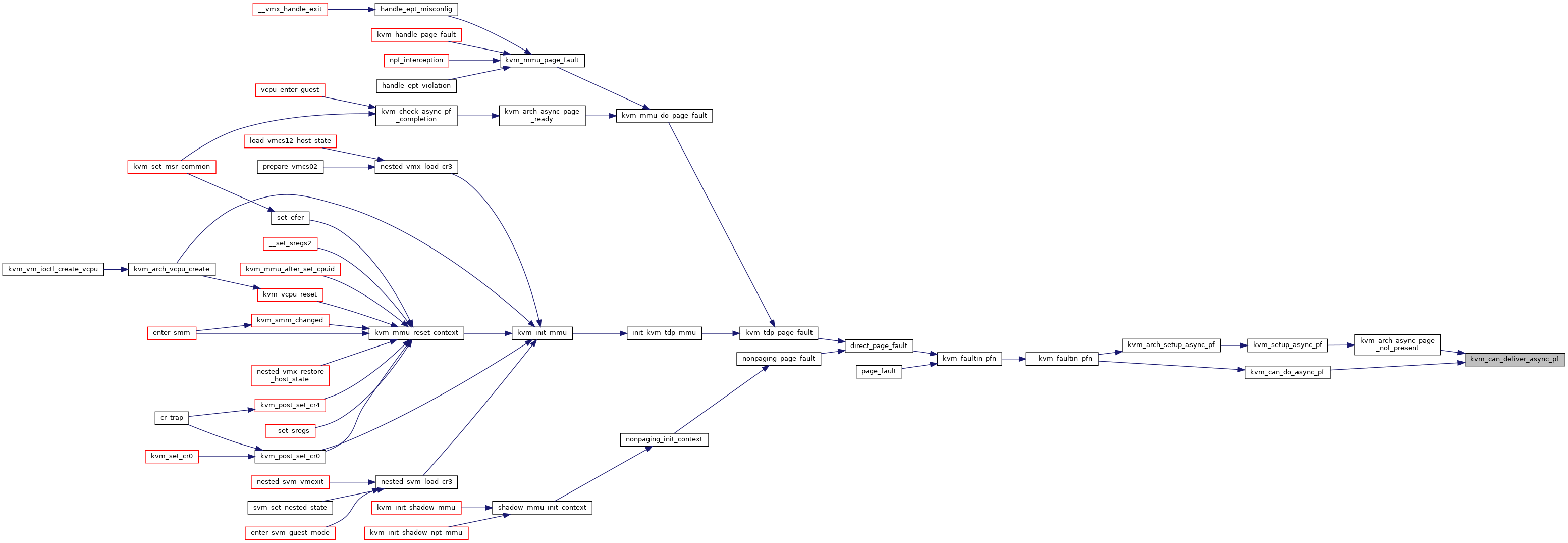

| static bool | kvm_can_deliver_async_pf (struct kvm_vcpu *vcpu) |

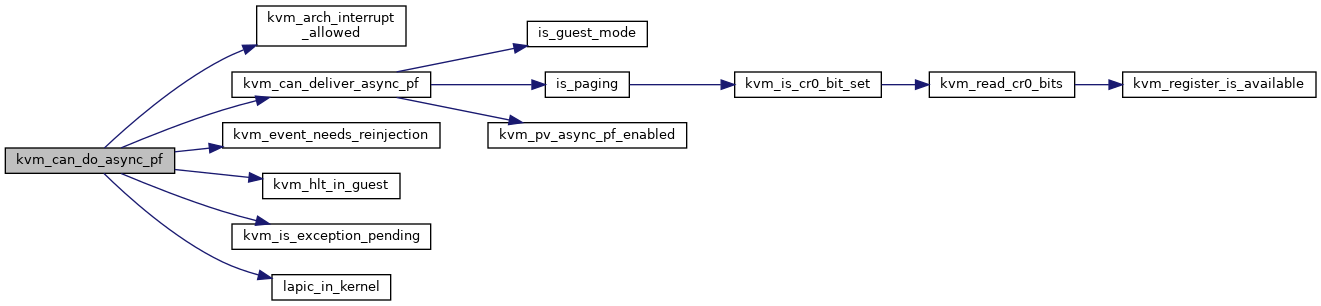

| bool | kvm_can_do_async_pf (struct kvm_vcpu *vcpu) |

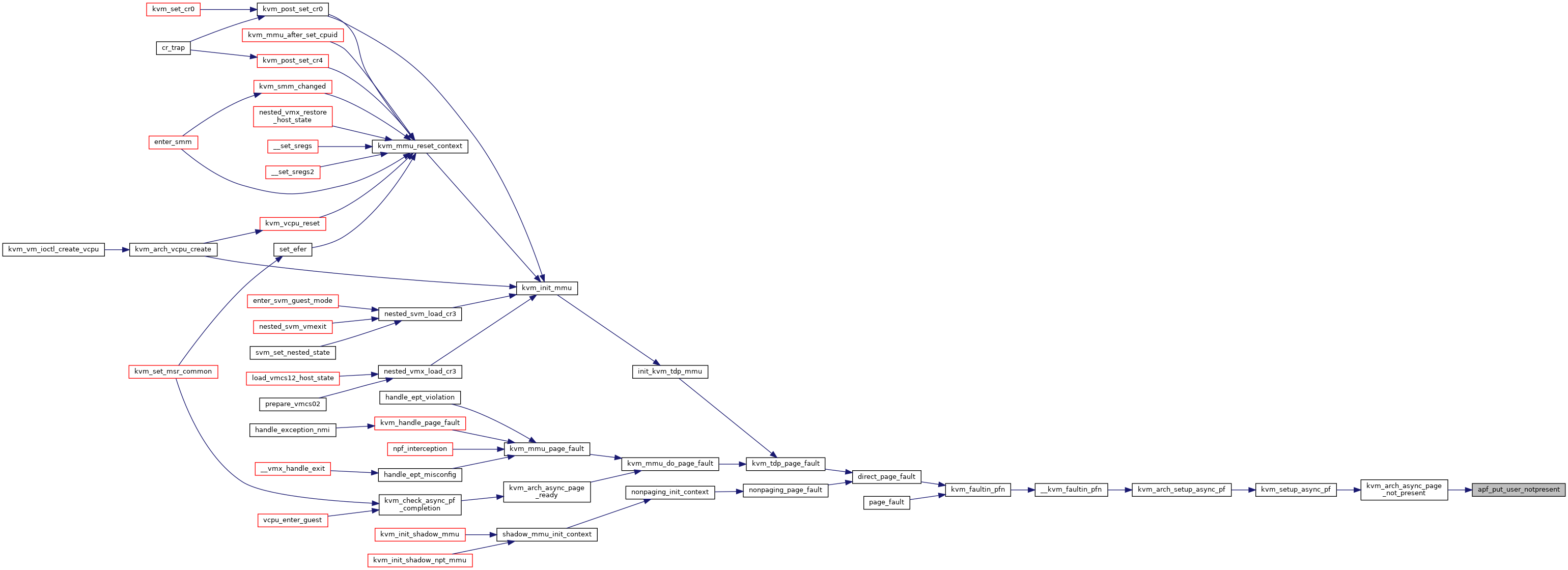

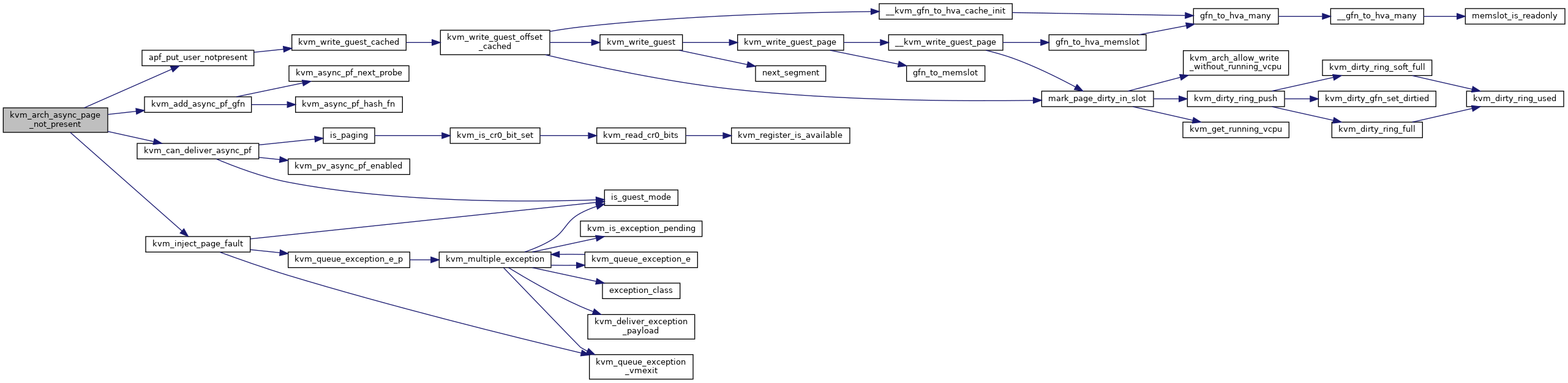

| bool | kvm_arch_async_page_not_present (struct kvm_vcpu *vcpu, struct kvm_async_pf *work) |

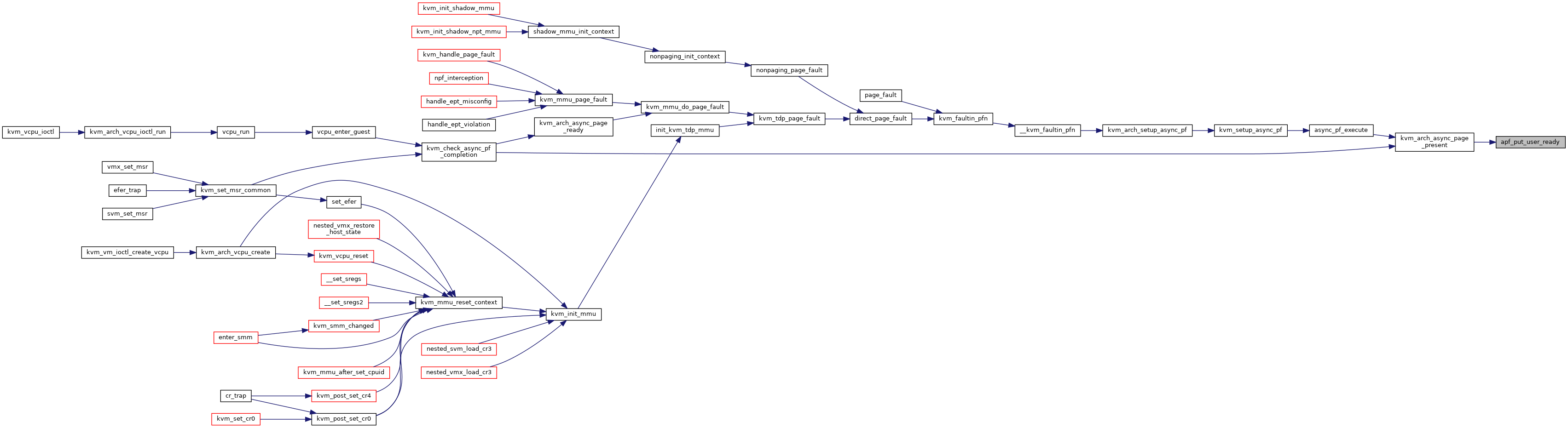

| void | kvm_arch_async_page_present (struct kvm_vcpu *vcpu, struct kvm_async_pf *work) |

| void | kvm_arch_async_page_present_queued (struct kvm_vcpu *vcpu) |

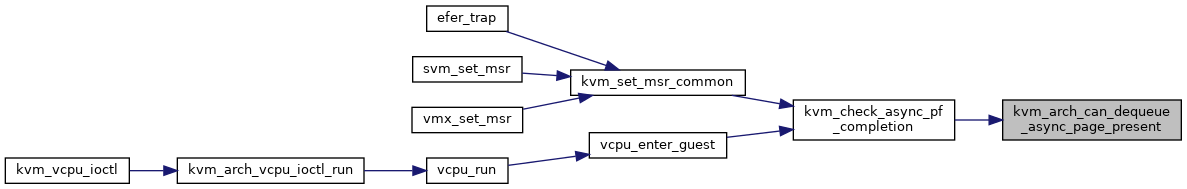

| bool | kvm_arch_can_dequeue_async_page_present (struct kvm_vcpu *vcpu) |

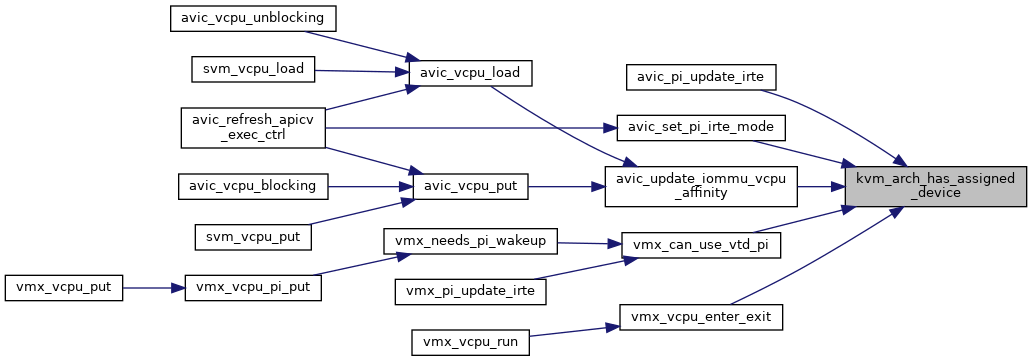

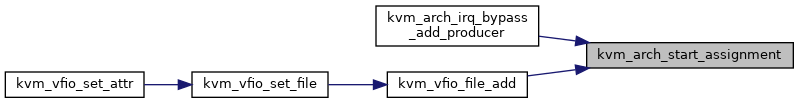

| void | kvm_arch_start_assignment (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_start_assignment) | |

| void | kvm_arch_end_assignment (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_end_assignment) | |

| bool noinstr | kvm_arch_has_assigned_device (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_has_assigned_device) | |

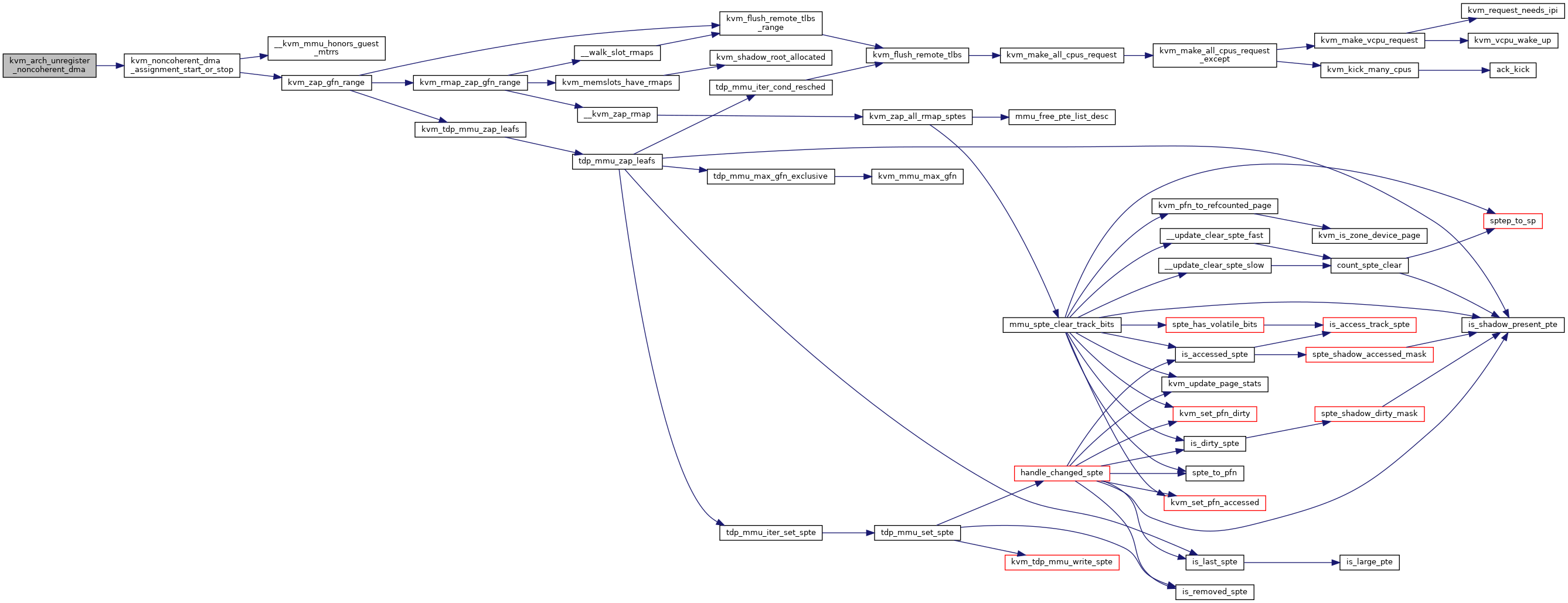

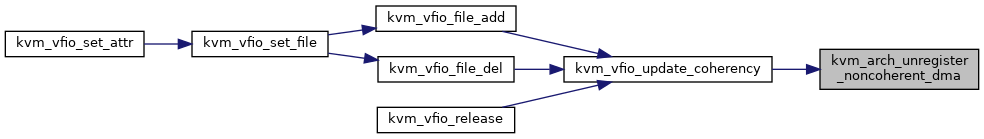

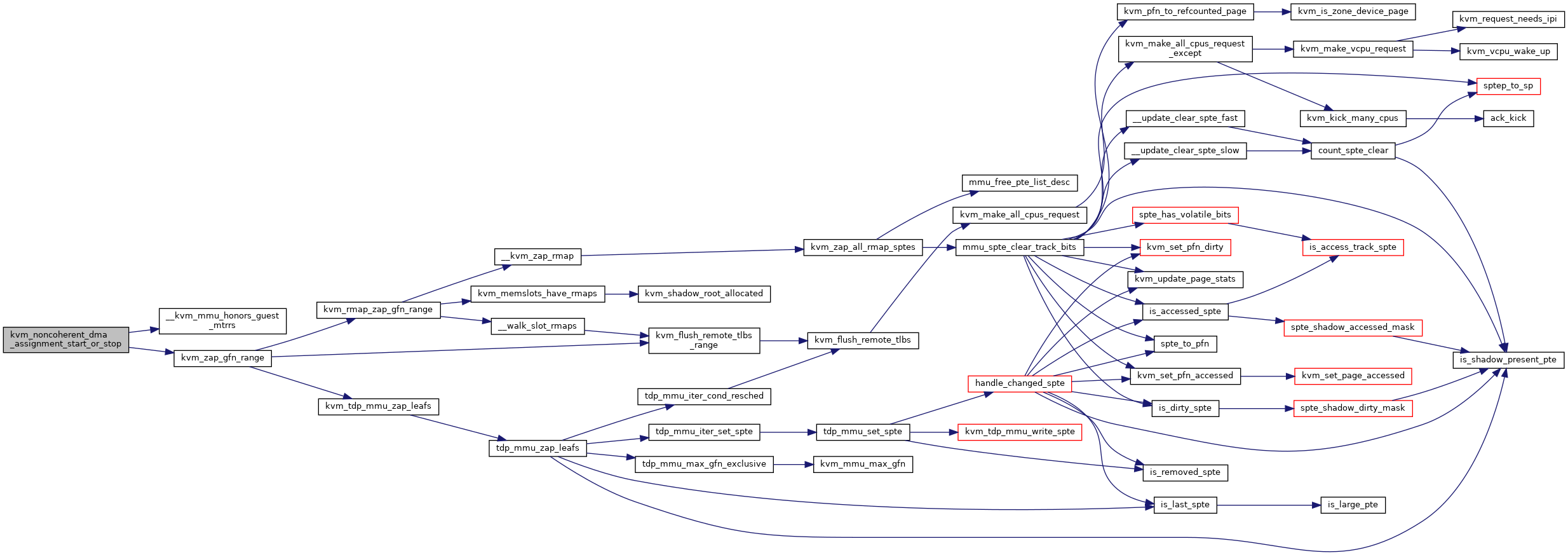

| static void | kvm_noncoherent_dma_assignment_start_or_stop (struct kvm *kvm) |

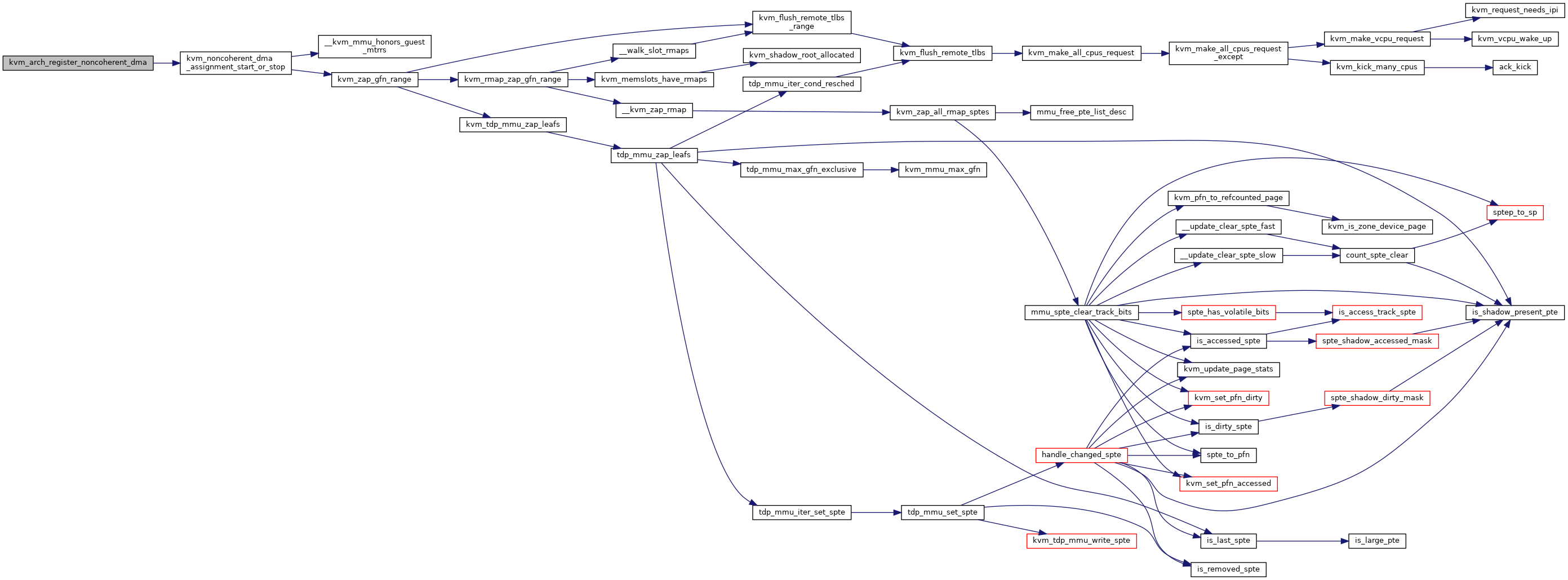

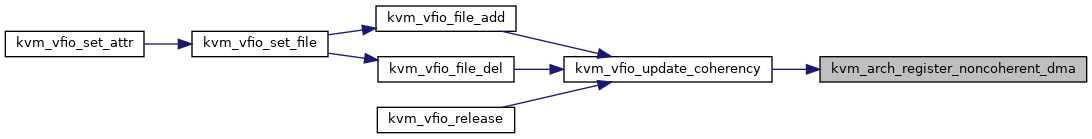

| void | kvm_arch_register_noncoherent_dma (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_register_noncoherent_dma) | |

| void | kvm_arch_unregister_noncoherent_dma (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_unregister_noncoherent_dma) | |

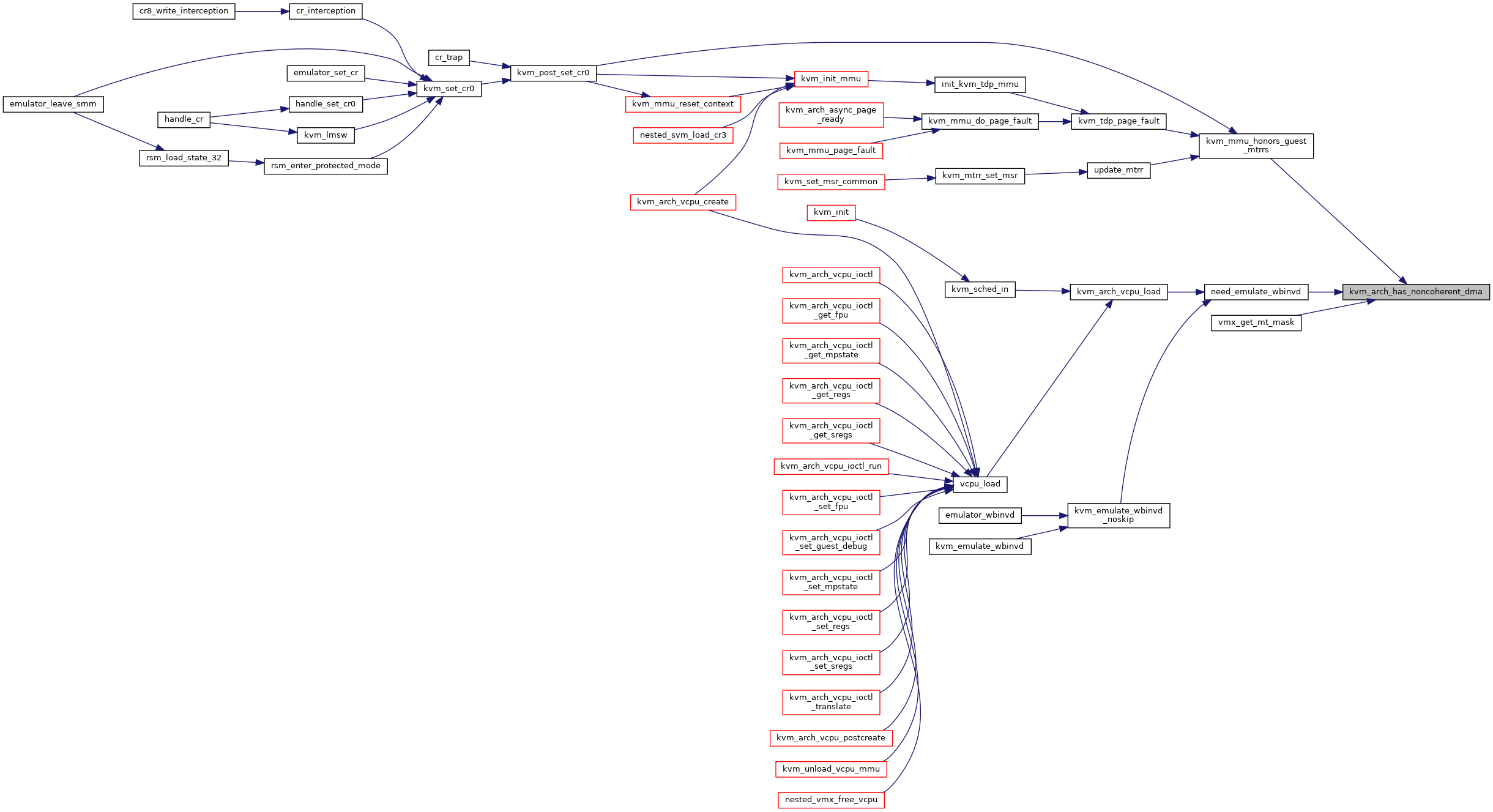

| bool | kvm_arch_has_noncoherent_dma (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_arch_has_noncoherent_dma) | |

| bool | kvm_arch_has_irq_bypass (void) |

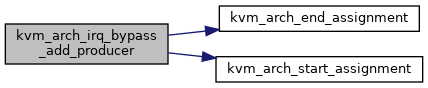

| int | kvm_arch_irq_bypass_add_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

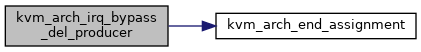

| void | kvm_arch_irq_bypass_del_producer (struct irq_bypass_consumer *cons, struct irq_bypass_producer *prod) |

| int | kvm_arch_update_irqfd_routing (struct kvm *kvm, unsigned int host_irq, uint32_t guest_irq, bool set) |

| bool | kvm_arch_irqfd_route_changed (struct kvm_kernel_irq_routing_entry *old, struct kvm_kernel_irq_routing_entry *new) |

| bool | kvm_vector_hashing_enabled (void) |

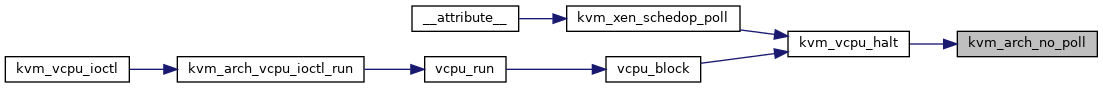

| bool | kvm_arch_no_poll (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_arch_no_poll) | |

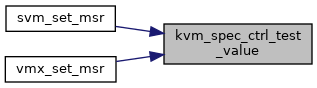

| int | kvm_spec_ctrl_test_value (u64 value) |

| EXPORT_SYMBOL_GPL (kvm_spec_ctrl_test_value) | |

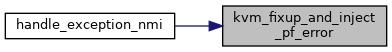

| void | kvm_fixup_and_inject_pf_error (struct kvm_vcpu *vcpu, gva_t gva, u16 error_code) |

| EXPORT_SYMBOL_GPL (kvm_fixup_and_inject_pf_error) | |

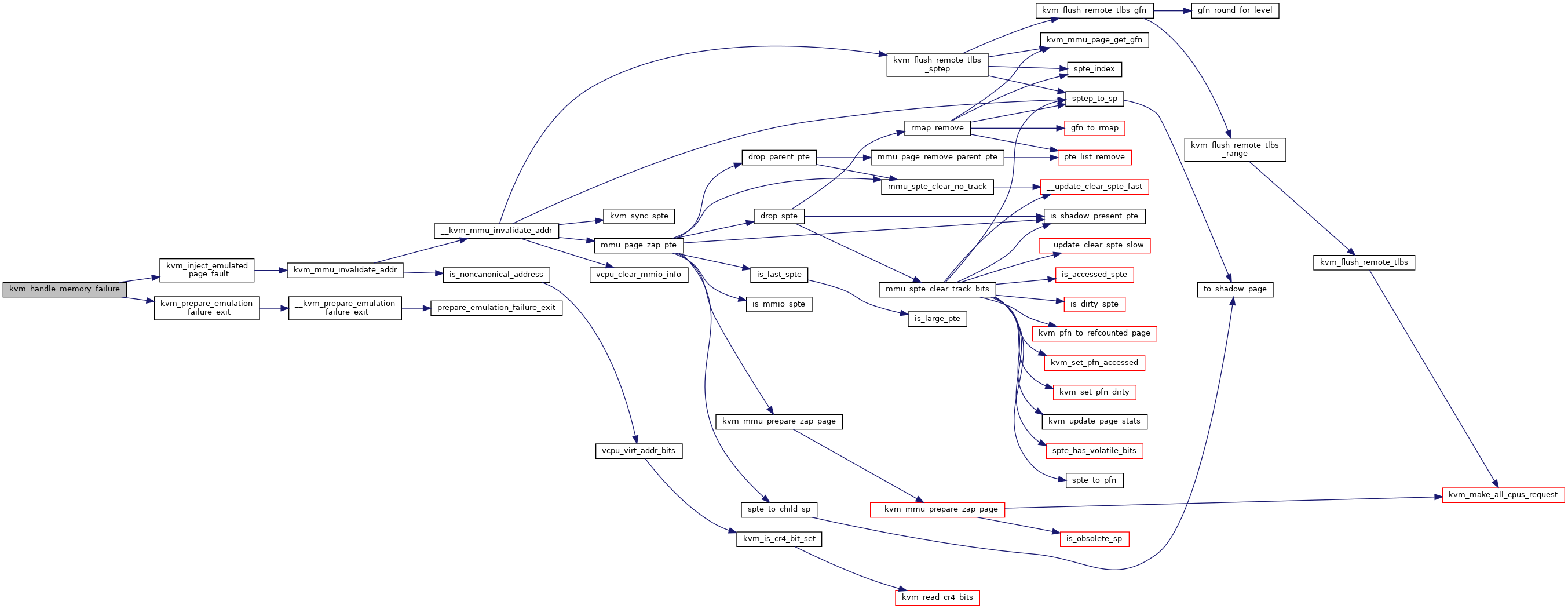

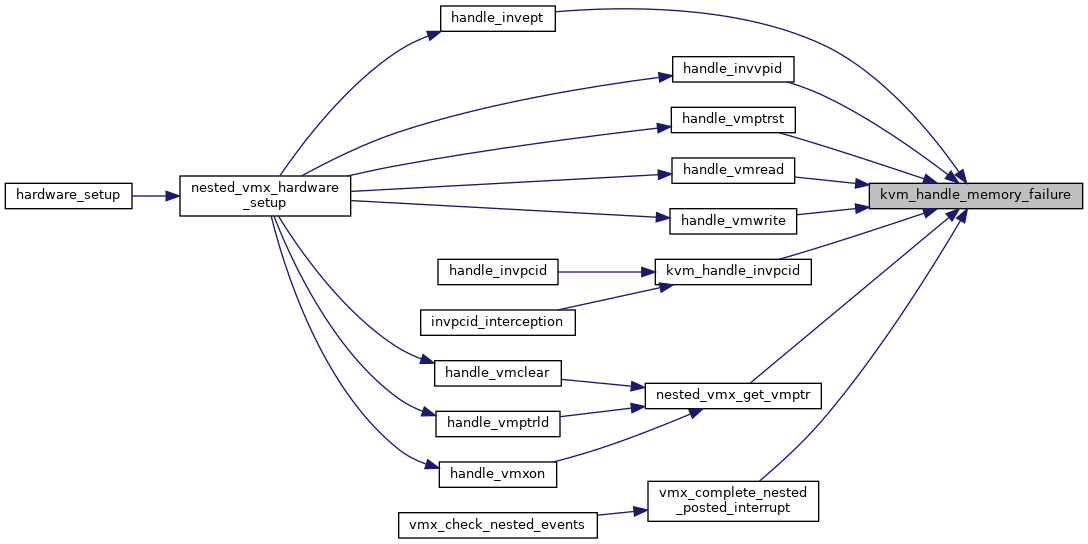

| int | kvm_handle_memory_failure (struct kvm_vcpu *vcpu, int r, struct x86_exception *e) |

| EXPORT_SYMBOL_GPL (kvm_handle_memory_failure) | |

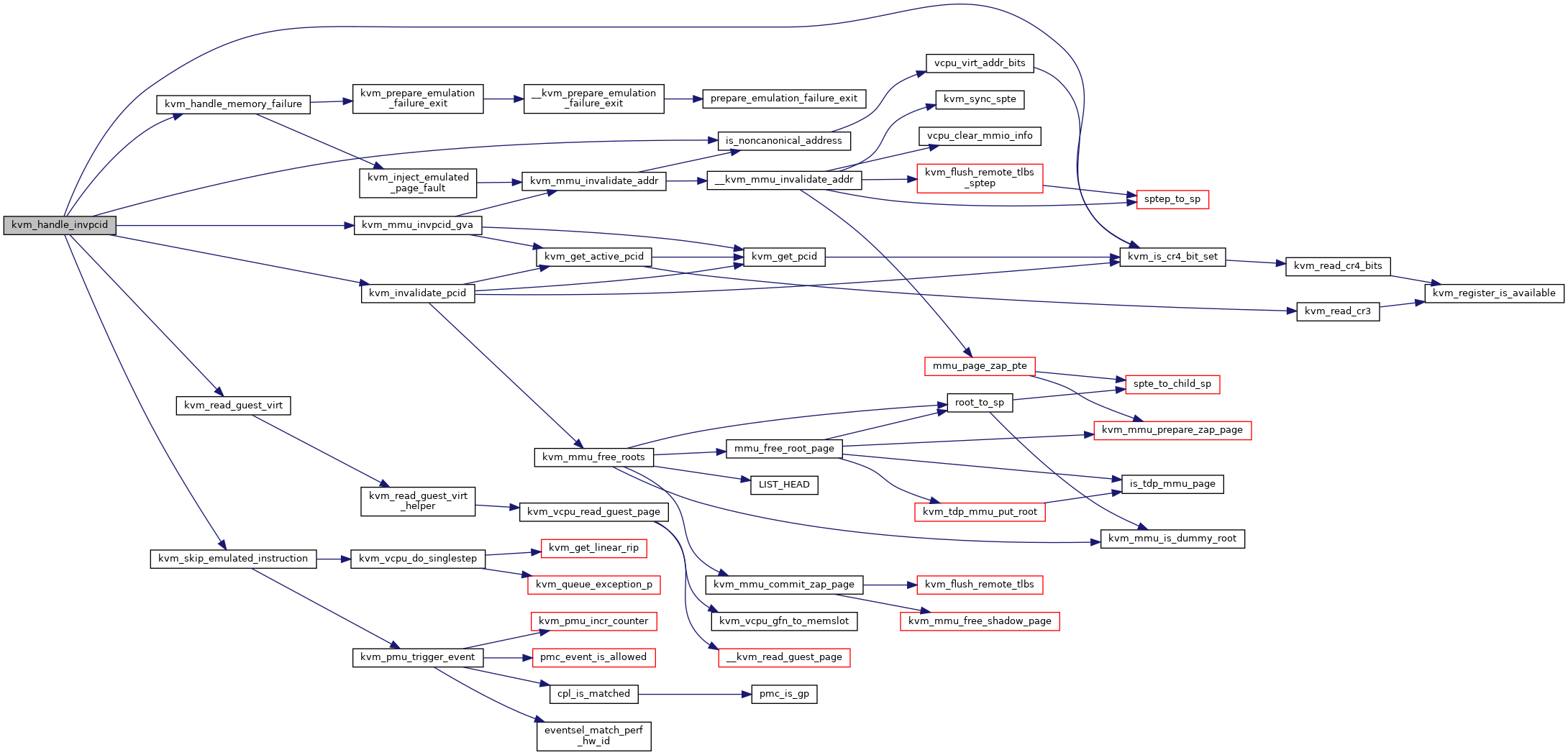

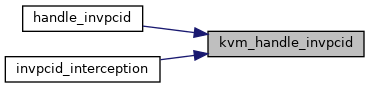

| int | kvm_handle_invpcid (struct kvm_vcpu *vcpu, unsigned long type, gva_t gva) |

| EXPORT_SYMBOL_GPL (kvm_handle_invpcid) | |

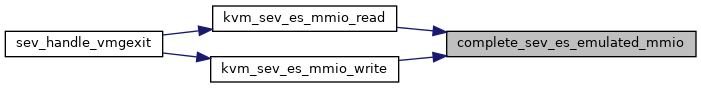

| static int | complete_sev_es_emulated_mmio (struct kvm_vcpu *vcpu) |

| int | kvm_sev_es_mmio_write (struct kvm_vcpu *vcpu, gpa_t gpa, unsigned int bytes, void *data) |

| EXPORT_SYMBOL_GPL (kvm_sev_es_mmio_write) | |

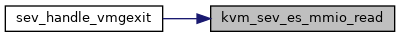

| int | kvm_sev_es_mmio_read (struct kvm_vcpu *vcpu, gpa_t gpa, unsigned int bytes, void *data) |

| EXPORT_SYMBOL_GPL (kvm_sev_es_mmio_read) | |

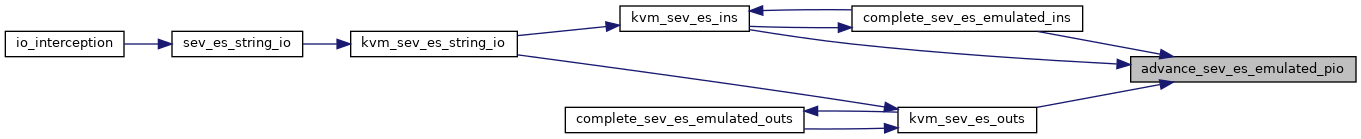

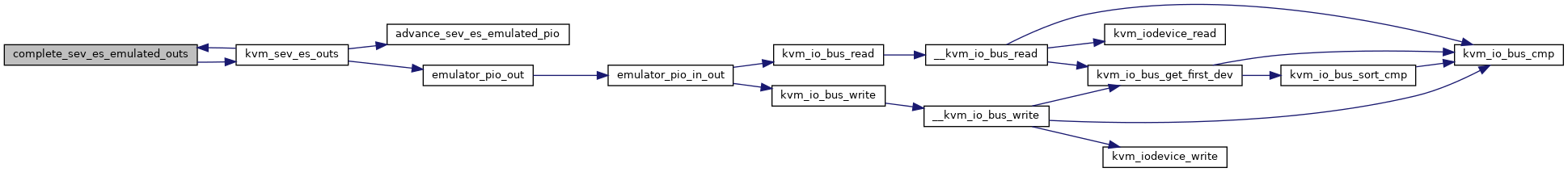

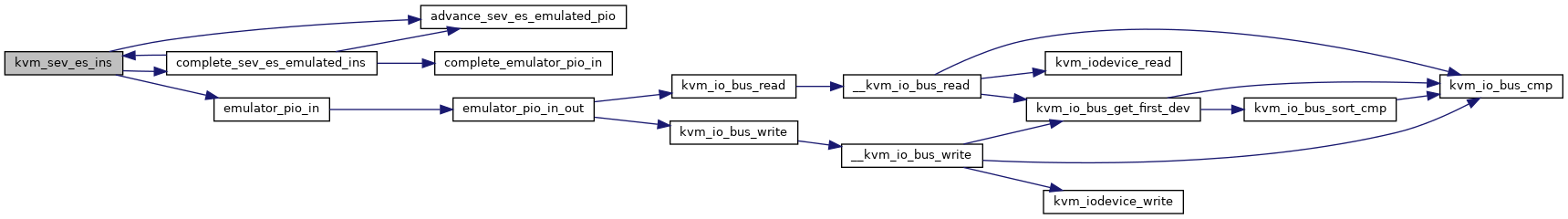

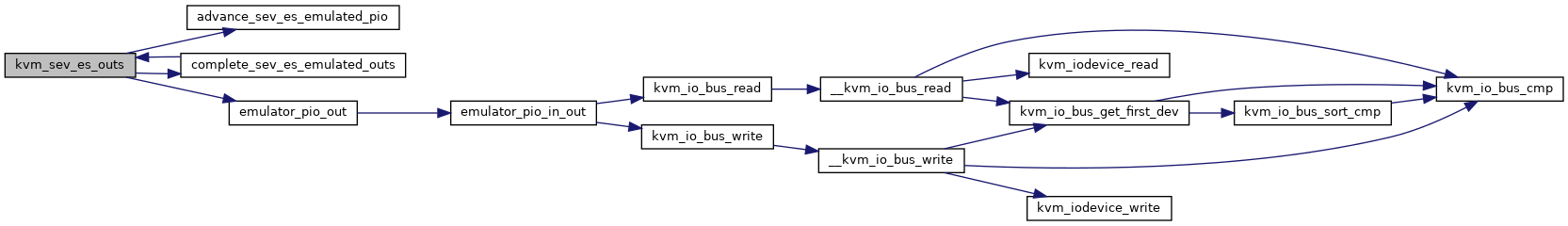

| static void | advance_sev_es_emulated_pio (struct kvm_vcpu *vcpu, unsigned count, int size) |

| static int | kvm_sev_es_outs (struct kvm_vcpu *vcpu, unsigned int size, unsigned int port) |

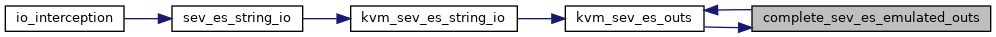

| static int | complete_sev_es_emulated_outs (struct kvm_vcpu *vcpu) |

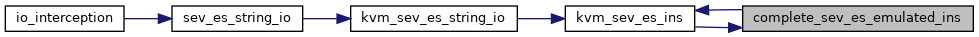

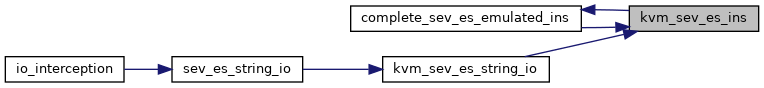

| static int | kvm_sev_es_ins (struct kvm_vcpu *vcpu, unsigned int size, unsigned int port) |

| static int | complete_sev_es_emulated_ins (struct kvm_vcpu *vcpu) |

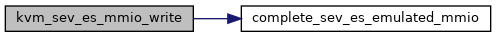

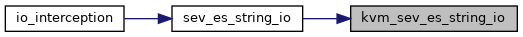

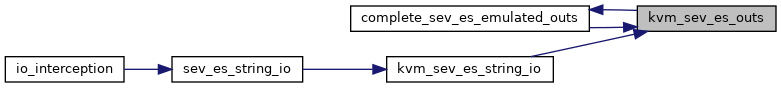

| int | kvm_sev_es_string_io (struct kvm_vcpu *vcpu, unsigned int size, unsigned int port, void *data, unsigned int count, int in) |

| EXPORT_SYMBOL_GPL (kvm_sev_es_string_io) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_entry) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_exit) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_fast_mmio) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_inj_virq) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_page_fault) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_msr) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_cr) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_vmenter) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_vmexit) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_vmexit_inject) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_intr_vmexit) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_vmenter_failed) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_invlpga) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_skinit) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_nested_intercepts) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_write_tsc_offset) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_ple_window_update) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_pml_full) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_pi_irte_update) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_avic_unaccelerated_access) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_avic_incomplete_ipi) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_avic_ga_log) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_avic_kick_vcpu_slowpath) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_avic_doorbell) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_apicv_accept_irq) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_vmgexit_enter) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_vmgexit_exit) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_vmgexit_msr_protocol_enter) | |

| EXPORT_TRACEPOINT_SYMBOL_GPL (kvm_vmgexit_msr_protocol_exit) | |

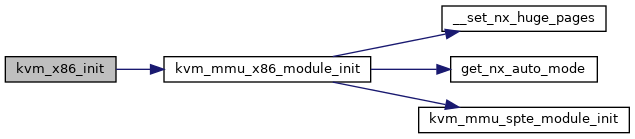

| static int __init | kvm_x86_init (void) |

| module_init (kvm_x86_init) | |

| static void __exit | kvm_x86_exit (void) |

| module_exit (kvm_x86_exit) | |

Macro Definition Documentation

◆ __kvm_cpu_cap_has

| #define __kvm_cpu_cap_has | ( | UNUSED_, f | ) | kvm_cpu_cap_has(f) |

◆ __KVM_X86_OP

| #define __KVM_X86_OP | ( | func | ) | static_call_update(kvm_x86_##func, kvm_x86_ops.func); |

◆ CREATE_TRACE_POINTS

◆ emul_to_vcpu

| #define emul_to_vcpu | ( | ctxt | ) | ((struct kvm_vcpu *)(ctxt)->vcpu) |

◆ emulator_try_cmpxchg_user

| #define emulator_try_cmpxchg_user | ( | t, ptr, old, new | ) | (__try_cmpxchg_user((t __user *)(ptr), (t *)(old), *(t *)(new), efault ## t)) |

◆ ERR_PTR_USR

◆ EXCPT_ABORT

◆ EXCPT_BENIGN

◆ EXCPT_CONTRIBUTORY

◆ EXCPT_DB

◆ EXCPT_FAULT

◆ EXCPT_INTERRUPT

◆ EXCPT_PF

◆ EXCPT_TRAP

◆ KVM_CAP_PMU_VALID_MASK

◆ KVM_EXIT_HYPERCALL_VALID_MASK

| #define KVM_EXIT_HYPERCALL_VALID_MASK (1 << KVM_HC_MAP_GPA_RANGE) |
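A "valid mask" such as this one is typically used to reject any request that sets bits outside the supported set. The following is a minimal userspace sketch of that check, not the code in `x86.c`: the `sketch_` names are hypothetical, and the bit position `12` is only an illustrative stand-in for `KVM_HC_MAP_GPA_RANGE`, whose real value comes from the kernel's UAPI headers.

```c
#include <errno.h>
#include <stdint.h>

/* Assumption: illustrative stand-in for KVM_HC_MAP_GPA_RANGE. */
#define SKETCH_HC_MAP_GPA_RANGE 12

/* Same shape as the macro above: one bit per supported hypercall number. */
#define SKETCH_EXIT_HYPERCALL_VALID_MASK (1ULL << SKETCH_HC_MAP_GPA_RANGE)

/*
 * Hypothetical helper: accept the requested bitmask only if every set bit
 * is also set in the valid mask; otherwise fail with -EINVAL and leave
 * *enabled untouched.
 */
static int sketch_enable_hypercall_exits(uint64_t requested, uint64_t *enabled)
{
    if (requested & ~SKETCH_EXIT_HYPERCALL_VALID_MASK)
        return -EINVAL;

    *enabled = requested;
    return 0;
}
```

Requesting only the supported bit succeeds, while any additional bit makes the whole request fail, so unsupported flags are never silently ignored.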

◆ KVM_FEP_CLEAR_RFLAGS_RF

◆ KVM_MAX_MCE_BANKS

◆ KVM_MAX_NR_USER_RETURN_MSRS

◆ KVM_SUPPORTED_ARCH_CAP

| #define KVM_SUPPORTED_ARCH_CAP |

◆ KVM_SUPPORTED_XCR0

| #define KVM_SUPPORTED_XCR0 |

◆ KVM_X2APIC_API_VALID_FLAGS

| #define KVM_X2APIC_API_VALID_FLAGS |

◆ KVM_X86_OP [1/2]

| #define KVM_X86_OP | ( | func | ) |

◆ KVM_X86_OP [2/2]

| #define KVM_X86_OP | ( | func | ) | WARN_ON(!kvm_x86_ops.func); __KVM_X86_OP(func) |

◆ KVM_X86_OP_OPTIONAL [1/2]

| #define KVM_X86_OP_OPTIONAL KVM_X86_OP |

◆ KVM_X86_OP_OPTIONAL [2/2]

| #define KVM_X86_OP_OPTIONAL __KVM_X86_OP |

◆ KVM_X86_OP_OPTIONAL_RET0 [1/2]

| #define KVM_X86_OP_OPTIONAL_RET0 KVM_X86_OP |

◆ KVM_X86_OP_OPTIONAL_RET0 [2/2]

| #define KVM_X86_OP_OPTIONAL_RET0 | ( | func | ) |
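The `[1/2]` and `[2/2]` entries above exist because `KVM_X86_OP` and its variants are redefined before each inclusion of `<asm/kvm-x86-ops.h>`, so that one list of operations expands to different code each time (the "X-macro" technique). The sketch below illustrates the idea in plain C under simplifying assumptions: it uses a list macro instead of a re-included header, and every `sketch_` name and the three operation names are hypothetical.

```c
#include <stddef.h>

/* Hypothetical list of operations; the kernel keeps its list in a header. */
#define SKETCH_OPS_LIST(OP) \
    OP(start)               \
    OP(stop)                \
    OP(reset)

/* First expansion: declare one function pointer per listed operation. */
struct sketch_ops {
#define SKETCH_DECLARE(name) int (*name)(void);
    SKETCH_OPS_LIST(SKETCH_DECLARE)
#undef SKETCH_DECLARE
};

/* Trivial callback used to populate the table. */
static int sketch_noop(void)
{
    return 0;
}

/*
 * Second expansion: visit every listed operation and count the ones left
 * NULL, loosely mirroring the WARN_ON(!kvm_x86_ops.func) check above.
 */
static int sketch_count_missing(const struct sketch_ops *ops)
{
    int missing = 0;

#define SKETCH_CHECK(name) if (!ops->name) missing++;
    SKETCH_OPS_LIST(SKETCH_CHECK)
#undef SKETCH_CHECK

    return missing;
}
```

Adding an operation to the list updates both the structure and the check at once, which is the main appeal of the pattern.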

◆ KVMCLOCK_SYNC_PERIOD

◆ KVMCLOCK_UPDATE_DELAY

◆ MAX_IO_MSRS

◆ pr_fmt

◆ SMT_RSB_MSG

| #define SMT_RSB_MSG |

Function Documentation

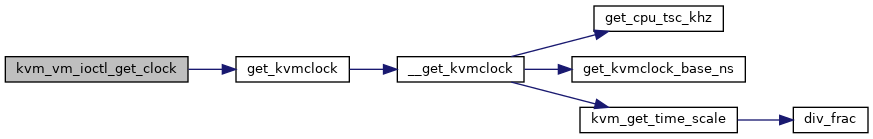

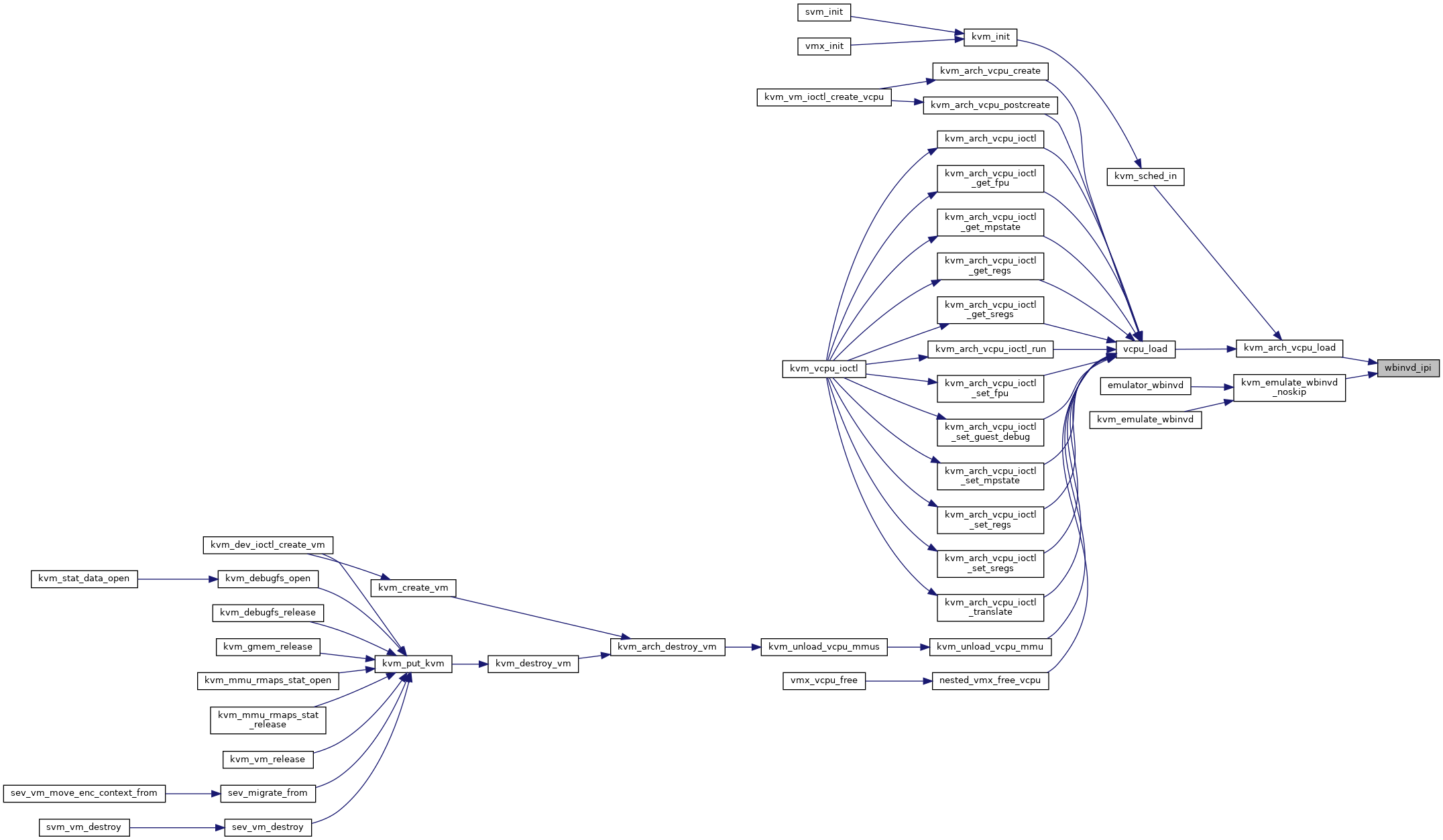

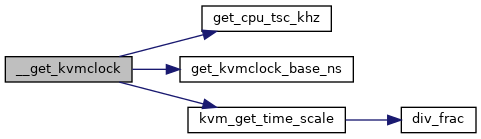

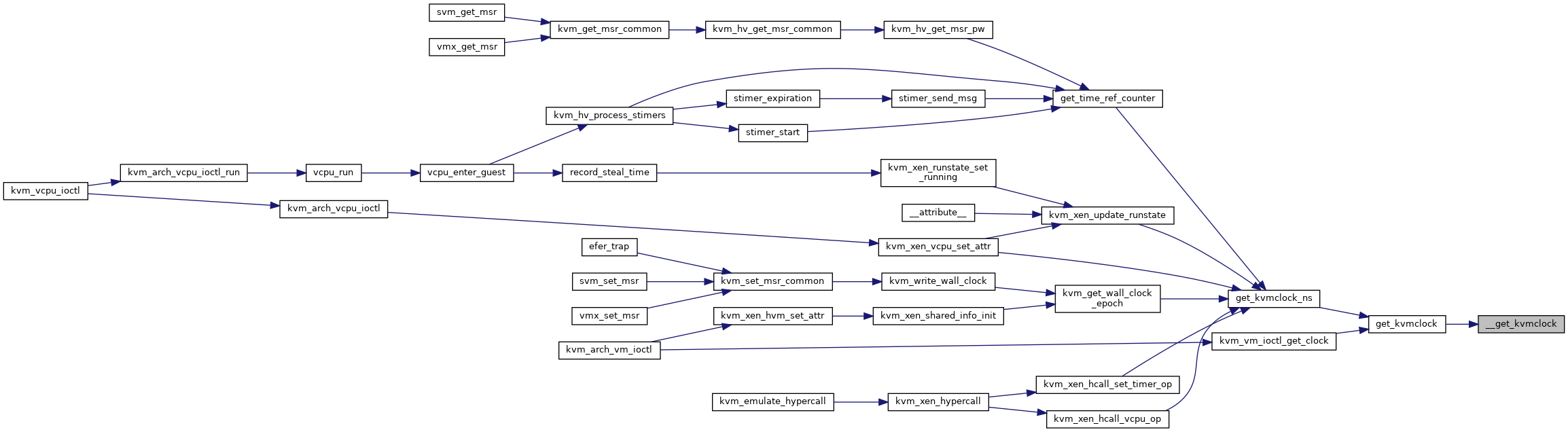

◆ __get_kvmclock()

|

static |

Definition at line 3059 of file x86.c.

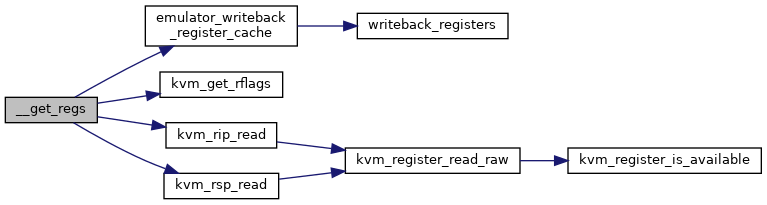

◆ __get_regs()

|

static |

Definition at line 11424 of file x86.c.

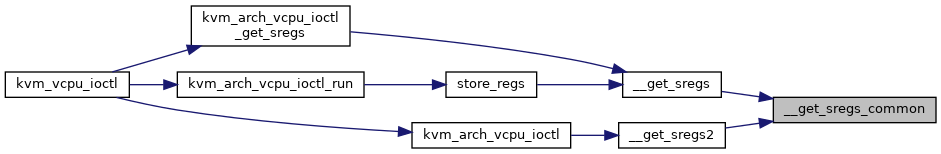

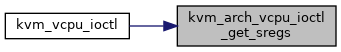

◆ __get_sregs()

|

static |

Definition at line 11544 of file x86.c.

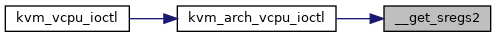

◆ __get_sregs2()

|

static |

Definition at line 11556 of file x86.c.

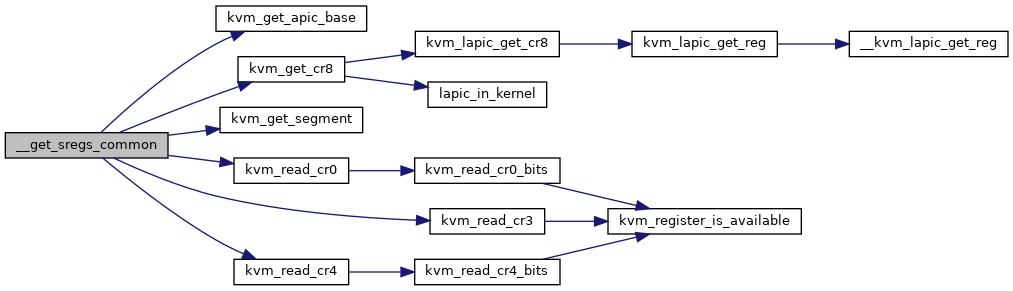

◆ __get_sregs_common()

|

static |

Definition at line 11509 of file x86.c.

◆ __kvm_emulate_halt()

|

static |

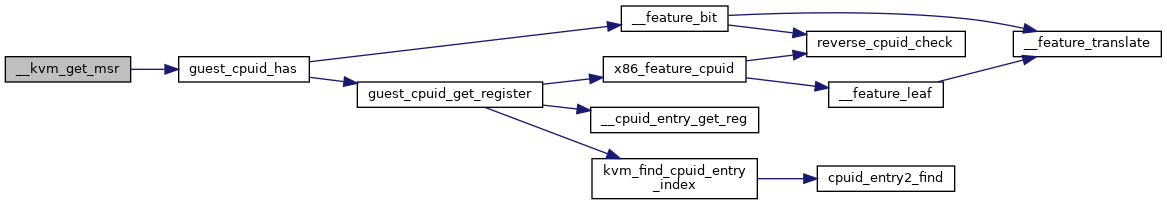

◆ __kvm_get_msr()

| int __kvm_get_msr | ( | struct kvm_vcpu *vcpu, u32 index, u64 *data, bool host_initiated | ) |

Definition at line 1925 of file x86.c.

◆ __kvm_is_valid_cr4()

| bool __kvm_is_valid_cr4 | ( | struct kvm_vcpu *vcpu, unsigned long cr4 | ) |

◆ __kvm_prepare_emulation_failure_exit()

| void __kvm_prepare_emulation_failure_exit | ( | struct kvm_vcpu *vcpu, u64 *data, u8 ndata | ) |

Definition at line 8720 of file x86.c.

◆ __kvm_request_immediate_exit()

| void __kvm_request_immediate_exit | ( | struct kvm_vcpu * | vcpu | ) |

◆ __kvm_set_msr()

|

static |

◆ __kvm_set_or_clear_apicv_inhibit()

| void __kvm_set_or_clear_apicv_inhibit | ( | struct kvm *kvm, enum kvm_apicv_inhibit reason, bool set | ) |

Definition at line 10585 of file x86.c.

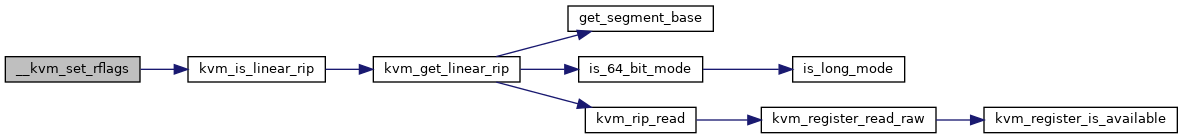

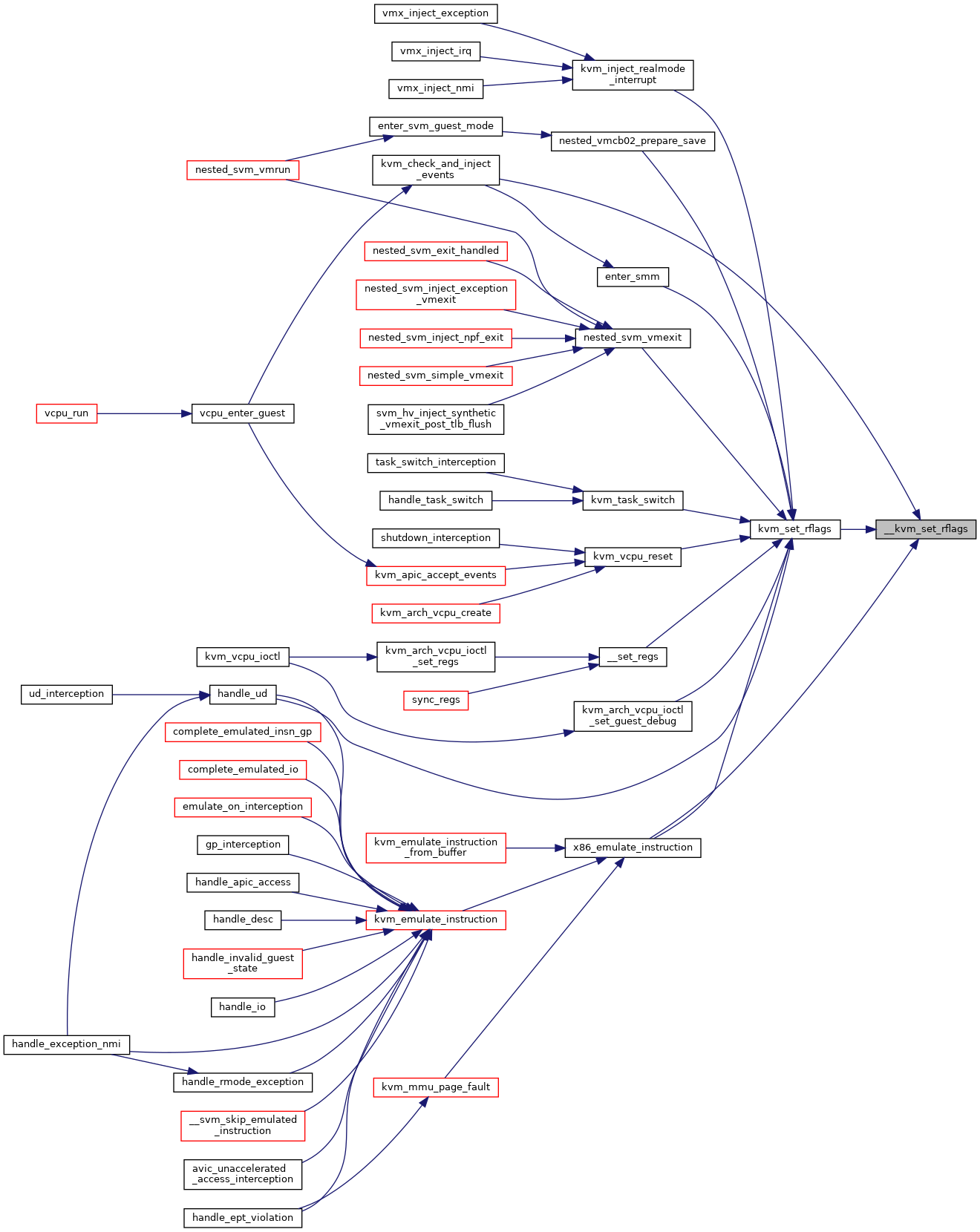

◆ __kvm_set_rflags()

|

static |

Definition at line 13181 of file x86.c.

◆ __kvm_set_xcr()

|

static |

◆ __kvm_start_pvclock_update()

|

static |

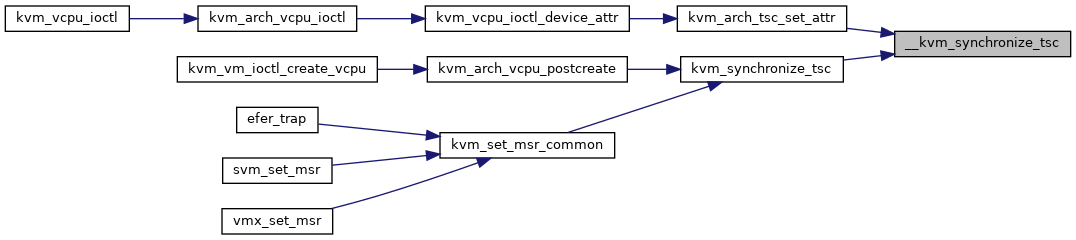

◆ __kvm_synchronize_tsc()

|

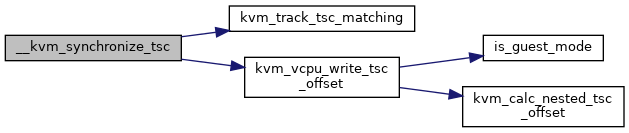

static |

Definition at line 2673 of file x86.c.

◆ __kvm_valid_efer()

|

static |

◆ __kvm_vcpu_update_apicv()

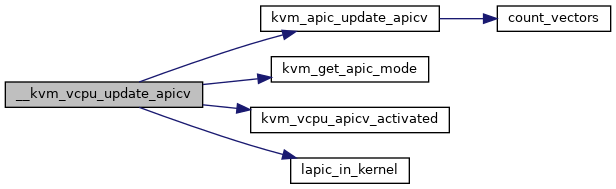

| void __kvm_vcpu_update_apicv | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 10525 of file x86.c.

◆ __kvm_x86_vendor_init()

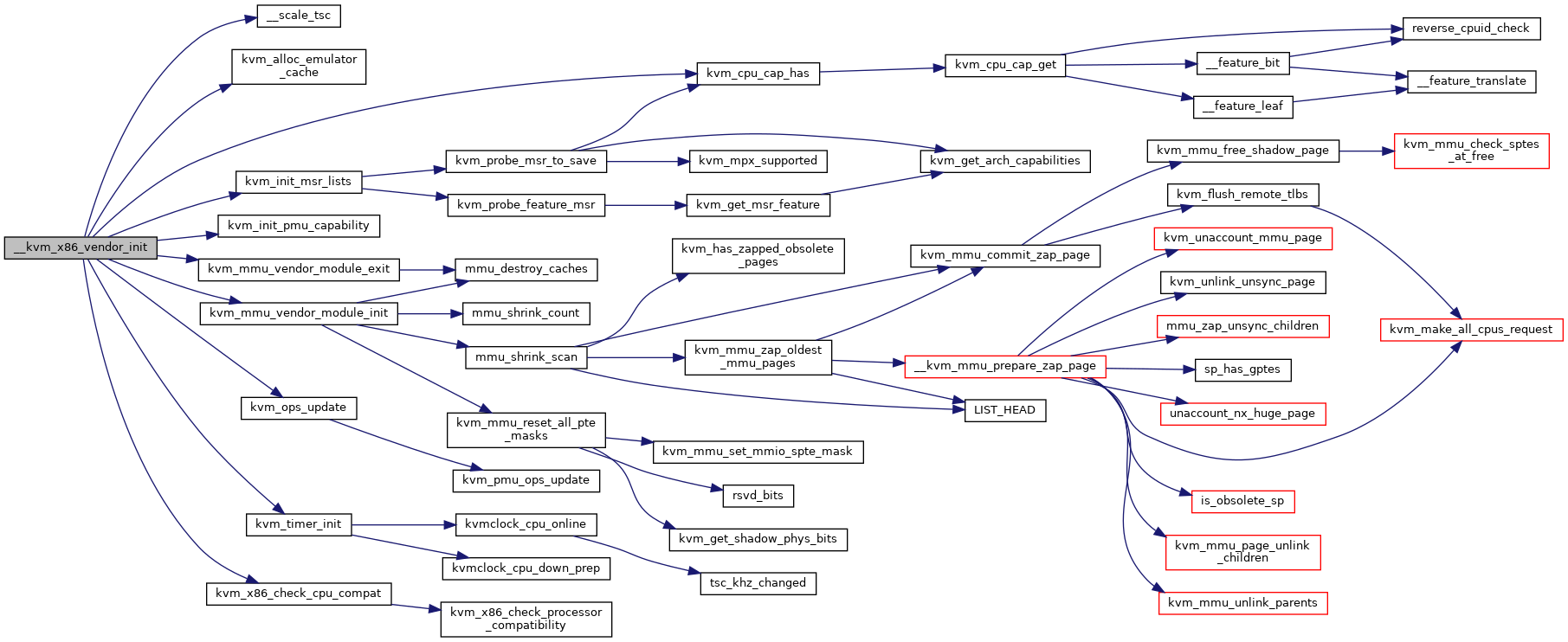

|

static |

Definition at line 9651 of file x86.c.

◆ __kvmclock_cpufreq_notifier()

|

static |

◆ __msr_io()

|

static |

◆ __scale_tsc()

|

inline static |

◆ __set_regs()

|

static |

Definition at line 11468 of file x86.c.

◆ __set_sregs()

|

static |

Definition at line 11777 of file x86.c.

◆ __set_sregs2()

|

static |

Definition at line 11803 of file x86.c.

◆ __set_sregs_common()

|

static |

Definition at line 11705 of file x86.c.

◆ __x86_set_memory_region()

| void __user* __x86_set_memory_region | ( | struct kvm *kvm, int id, gpa_t gpa, u32 size | ) |

__x86_set_memory_region: Set up a KVM internal memory slot

Parameters:

- @kvm: the kvm pointer to the VM.
- @id: the slot ID to set up.
- @gpa: the GPA at which to install the slot (unused when @size == 0).
- @size: the size of the slot. Set to zero to uninstall a slot.

This function helps to set up a KVM internal memory slot. Specify @size > 0 to install a new slot, or @size == 0 to uninstall a slot. The return value is one of the following:

- HVA: on success (uninstall will return a bogus HVA)
- -errno: on error

The caller should always use IS_ERR() to check the return value before use. Note that the KVM internal memory slots are guaranteed to remain valid and unchanged until the VM is destroyed, i.e., the GPA->HVA translation will not change. However, the HVA is a user address, i.e., its accessibility is not guaranteed, and it must be accessed via __copy_{to,from}_user().

Definition at line 12637 of file x86.c.
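The "pointer or -errno" return convention described above can be illustrated with a small userspace sketch. It is not kernel code: the `sketch_` helpers are hypothetical stand-ins for `ERR_PTR()`, `IS_ERR()` and `PTR_ERR()`, `sketch_set_region()` is an invented installer with made-up failure conditions, and the sketch assumes the usual convention that the top 4095 address values encode error codes. It also does not model the `__copy_{to,from}_user()` requirement.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: the highest 4095 pointer values are reserved for error codes. */
#define SKETCH_MAX_ERRNO 4095

/* Encode a negative errno value as a pointer. */
static inline void *sketch_err_ptr(long err)
{
    return (void *)(intptr_t)err;
}

/* True if the pointer falls in the reserved error range. */
static inline int sketch_is_err(const void *p)
{
    return (uintptr_t)p >= (uintptr_t)-SKETCH_MAX_ERRNO;
}

/* Recover the negative errno value from an error pointer. */
static inline long sketch_ptr_err(const void *p)
{
    return (long)(intptr_t)p;
}

static char sketch_backing[4096];

/* Hypothetical installer: returns a usable address or an encoded error. */
static void *sketch_set_region(int id, size_t size)
{
    if (id < 0)
        return sketch_err_ptr(-EINVAL);
    if (size > sizeof(sketch_backing))
        return sketch_err_ptr(-ENOMEM);
    return sketch_backing;
}

/* Caller side: check for an error before touching the returned address. */
static long sketch_install(int id, size_t size, void **hva_out)
{
    void *hva;

    assert(hva_out != NULL);

    hva = sketch_set_region(id, size);
    if (sketch_is_err(hva))
        return sketch_ptr_err(hva);

    *hva_out = hva;
    return 0;
}
```

The point of the pattern is that a plain NULL check is not enough: an encoded error is a non-NULL pointer, so the caller has to test for the error range explicitly.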

◆ adjust_tsc_khz()

|

static |

◆ adjust_tsc_offset_guest()

|

inline static |

◆ adjust_tsc_offset_host()

|

inline static |

Definition at line 2797 of file x86.c.

◆ advance_sev_es_emulated_pio()

|

static |

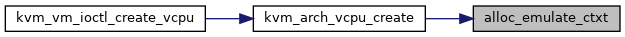

◆ alloc_emulate_ctxt()

|

static |

Definition at line 8596 of file x86.c.

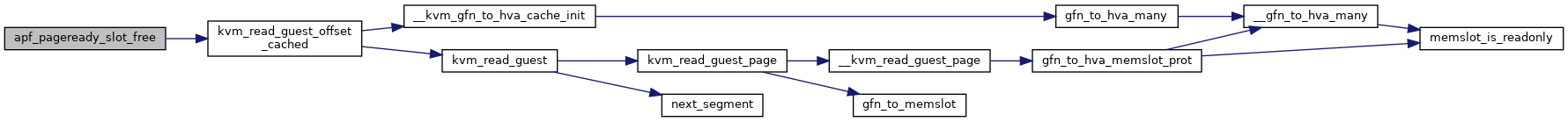

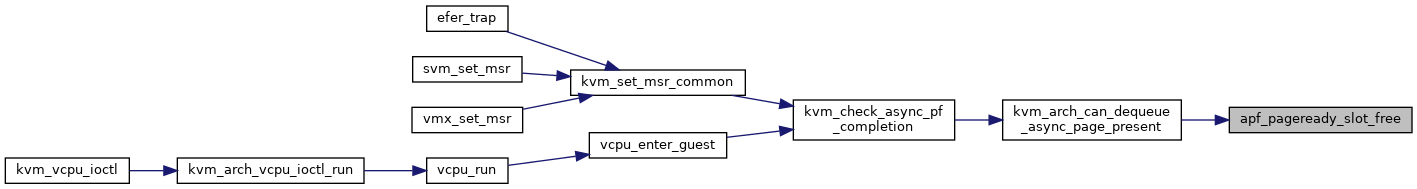

◆ apf_pageready_slot_free()

|

inline static |

Definition at line 13279 of file x86.c.

◆ apf_put_user_notpresent()

|

inline static |

Definition at line 13263 of file x86.c.

◆ apf_put_user_ready()

|

inline static |

Definition at line 13271 of file x86.c.

◆ can_set_mci_status()

|

static |

Definition at line 3422 of file x86.c.

◆ complete_emulated_insn_gp()

|

static |

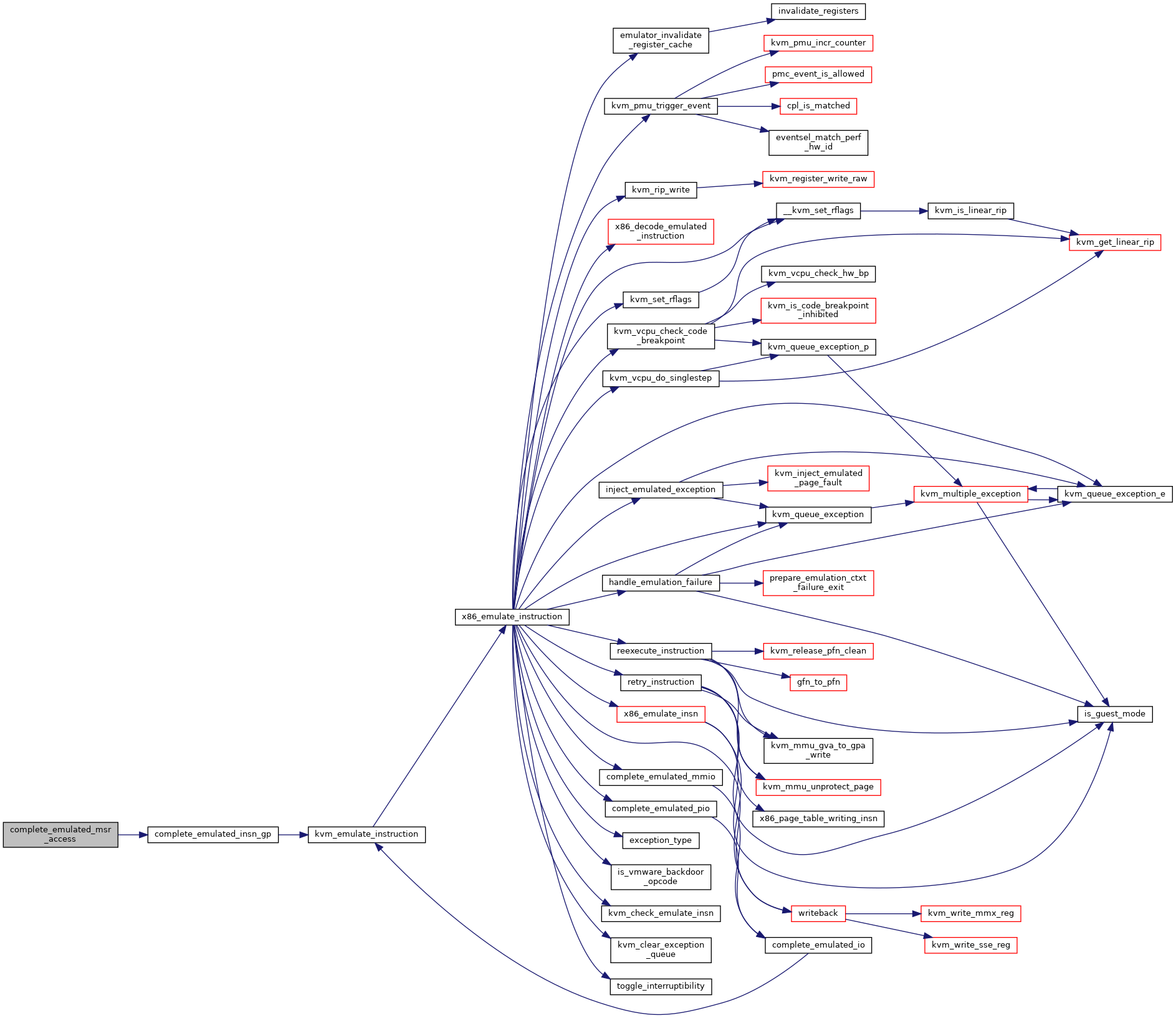

Definition at line 768 of file x86.c.

◆ complete_emulated_io()

|

inline static |

◆ complete_emulated_mmio()

|

static |

Definition at line 11249 of file x86.c.

◆ complete_emulated_msr_access()

|

static |

◆ complete_emulated_pio()

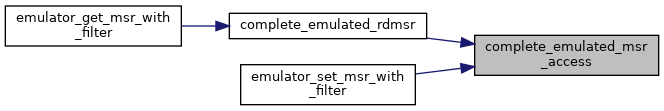

|

static |

◆ complete_emulated_rdmsr()

|

static |

Definition at line 2006 of file x86.c.

◆ complete_emulator_pio_in()

|

static |

◆ complete_fast_msr_access()

|

static |

◆ complete_fast_pio_in()

|

static |

Definition at line 9316 of file x86.c.

◆ complete_fast_pio_out()

|

static |

◆ complete_fast_pio_out_port_0x7e()

|

static |

◆ complete_fast_rdmsr()

|

static |

Definition at line 2017 of file x86.c.

◆ complete_hypercall_exit()

|

static |

◆ complete_sev_es_emulated_ins()

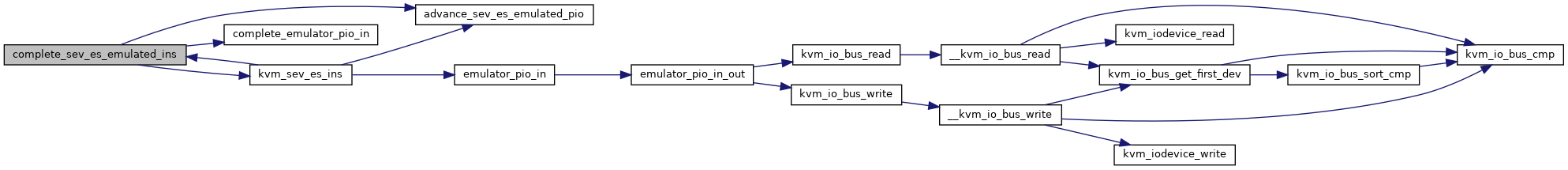

|

static |

Definition at line 13844 of file x86.c.

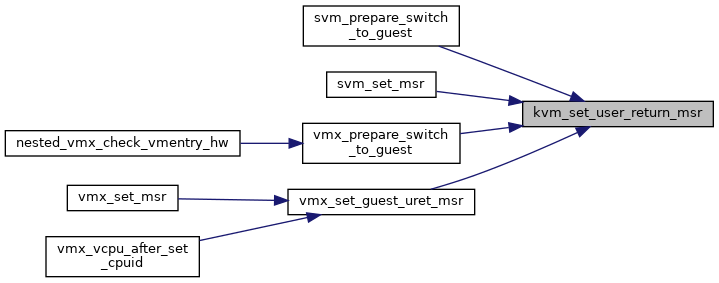

◆ complete_sev_es_emulated_mmio()

|

static |

Definition at line 13676 of file x86.c.

◆ complete_sev_es_emulated_outs()

|

static |

Definition at line 13808 of file x86.c.

◆ complete_userspace_rdmsr()

|

static |

◆ compute_guest_tsc()

|

static |

◆ DEFINE_MUTEX()

|

static |

◆ DEFINE_PER_CPU()

|

static |

◆ DEFINE_STATIC_KEY_FALSE()

| __read_mostly DEFINE_STATIC_KEY_FALSE | ( | kvm_has_noapic_vcpu | ) |

◆ div_frac()

|

static |

◆ dm_request_for_irq_injection()

|

static |

◆ do_get_msr()

|

static |

Definition at line 2224 of file x86.c.

◆ do_get_msr_feature()

|

static |

◆ do_set_msr()

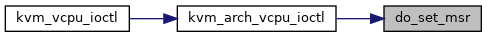

|

static |

Definition at line 2229 of file x86.c.

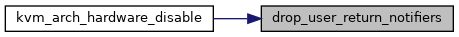

◆ drop_user_return_notifiers()

|

static |

◆ emulator_check_pmc()

|

static |

◆ emulator_cmpxchg_emulated()

|

static |

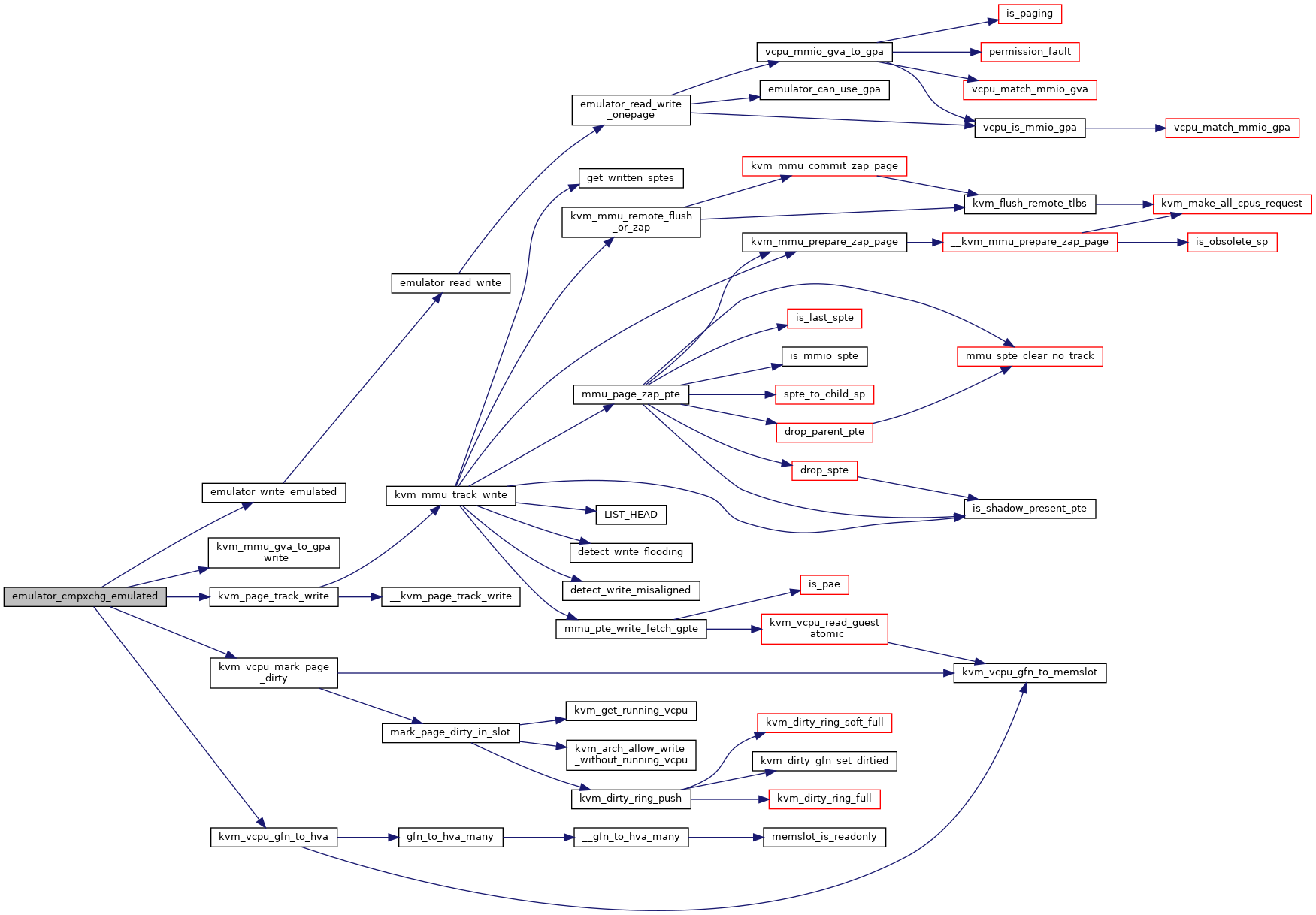

Definition at line 7953 of file x86.c.

◆ emulator_fix_hypercall()

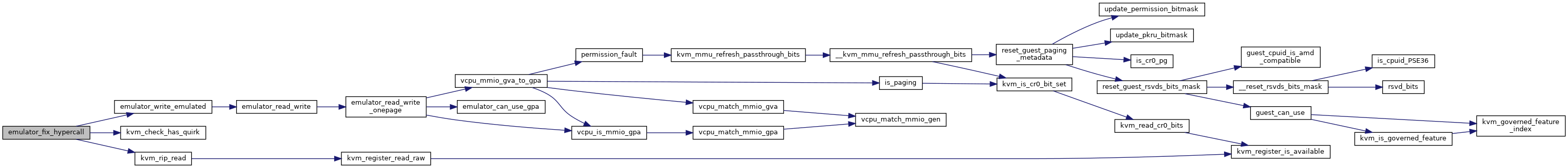

|

static |

◆ emulator_get_cached_segment_base()

|

static |

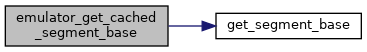

Definition at line 8284 of file x86.c.

◆ emulator_get_cpl()

|

static |

◆ emulator_get_cpuid()

|

static |

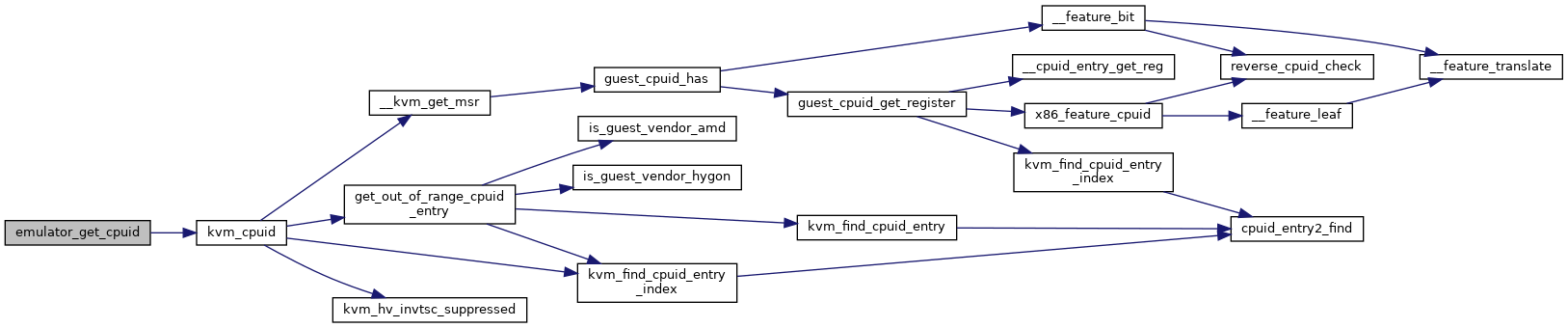

Definition at line 8435 of file x86.c.

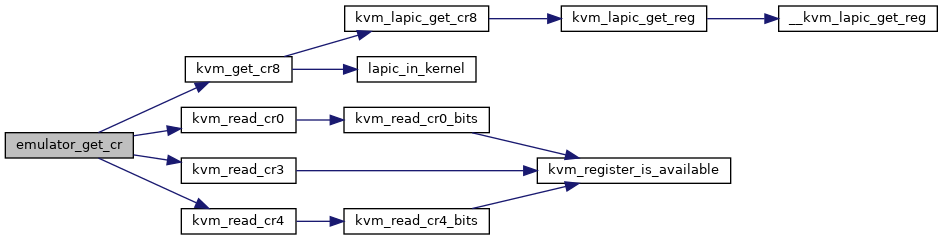

◆ emulator_get_cr()

|

static |

◆ emulator_get_dr()

|

static |

◆ emulator_get_gdt()

|

static |

◆ emulator_get_idt()

|

static |

◆ emulator_get_msr()

|

static |

◆ emulator_get_msr_with_filter()

|

static |

Definition at line 8356 of file x86.c.

◆ emulator_get_segment()

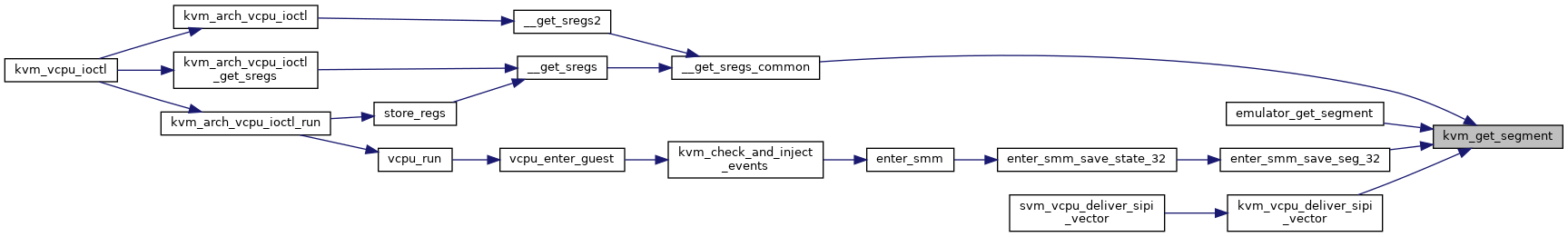

|

static |

◆ emulator_get_untagged_addr()

|

static |

◆ emulator_guest_has_fxsr()

|

static |

◆ emulator_guest_has_movbe()

|

static |

◆ emulator_guest_has_rdpid()

|

static |

◆ emulator_halt()

|

static |

◆ emulator_intercept()

|

static |

◆ emulator_invlpg()

|

static |

◆ emulator_is_guest_mode()

|

static |

◆ emulator_is_smm()

|

static |

◆ emulator_leave_smm()

|

static |

◆ emulator_pio_in()

|

static |

Definition at line 8087 of file x86.c.

◆ emulator_pio_in_emulated()

|

static |

Definition at line 8106 of file x86.c.

◆ emulator_pio_in_out()

|

static |

Definition at line 8036 of file x86.c.

◆ emulator_pio_out()

|

static |

◆ emulator_pio_out_emulated()

|

static |

Definition at line 8134 of file x86.c.

◆ emulator_read_emulated()

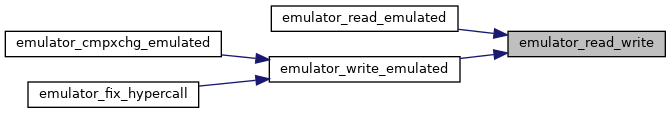

|

static |

Definition at line 7930 of file x86.c.

◆ emulator_read_gpr()

|

static |

Definition at line 8457 of file x86.c.

◆ emulator_read_pmc()

|

static |

◆ emulator_read_std()

|

static |

Definition at line 7590 of file x86.c.

◆ emulator_read_write()

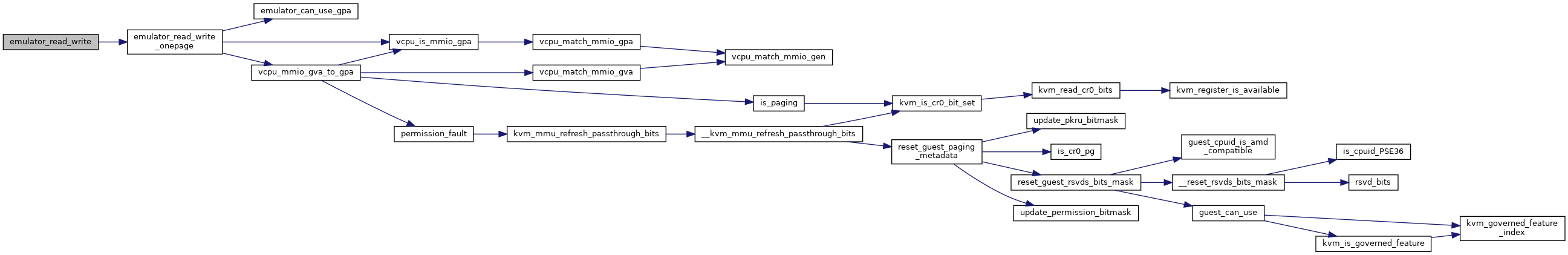

|

static |

Definition at line 7876 of file x86.c.

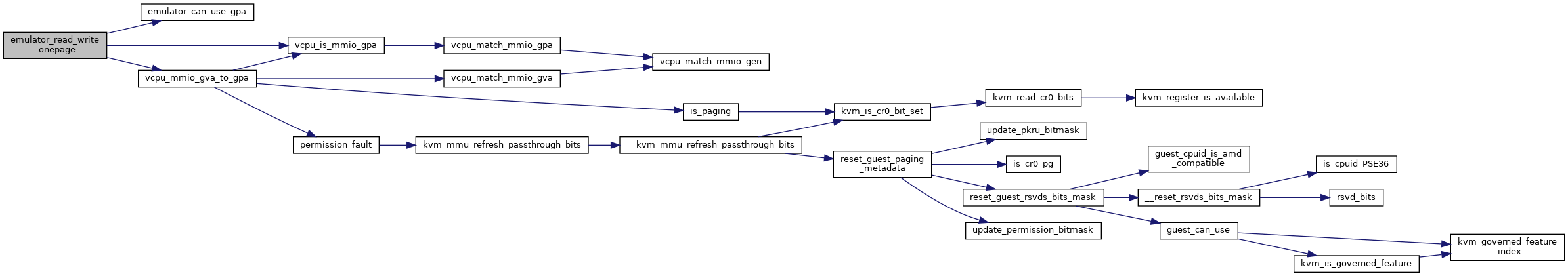

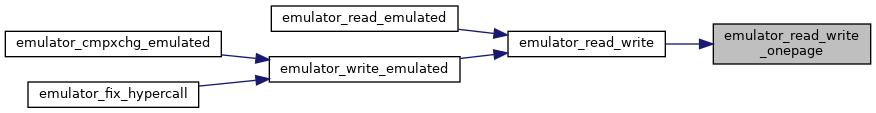

◆ emulator_read_write_onepage()

|

static |

Definition at line 7825 of file x86.c.

◆ emulator_set_cr()

|

static |

◆ emulator_set_dr()

|

static |

◆ emulator_set_gdt()

|

static |

◆ emulator_set_idt()

|

static |

◆ emulator_set_msr_with_filter()

|

static |

Definition at line 8379 of file x86.c.

◆ emulator_set_nmi_mask()

|

static |

◆ emulator_set_segment()

|

static |

◆ emulator_set_xcr()

|

static |

Definition at line 8495 of file x86.c.

◆ emulator_triple_fault()

|

static |

◆ emulator_vm_bugged()

|

static |

◆ emulator_wbinvd()

|

static |

Definition at line 8178 of file x86.c.

◆ emulator_write_emulated()

|

static |

◆ emulator_write_gpr()

|

static |

Definition at line 8462 of file x86.c.

◆ emulator_write_phys()

| int emulator_write_phys | ( | struct kvm_vcpu *vcpu, gpa_t gpa, const void *val, int bytes | ) |

Definition at line 7741 of file x86.c.

◆ emulator_write_std()

|

static |

Definition at line 7635 of file x86.c.

◆ exception_class()

|

static |

◆ exception_type()

|

static |

◆ EXPORT_STATIC_CALL_GPL() [1/2]

| EXPORT_STATIC_CALL_GPL | ( | kvm_x86_cache_reg | ) |

◆ EXPORT_STATIC_CALL_GPL() [2/2]

| EXPORT_STATIC_CALL_GPL | ( | kvm_x86_get_cs_db_l_bits | ) |

◆ EXPORT_SYMBOL_GPL() [1/107]

| EXPORT_SYMBOL_GPL | ( | __kvm_is_valid_cr4 | ) |

◆ EXPORT_SYMBOL_GPL() [2/107]

| EXPORT_SYMBOL_GPL | ( | __kvm_prepare_emulation_failure_exit | ) |

◆ EXPORT_SYMBOL_GPL() [3/107]

| EXPORT_SYMBOL_GPL | ( | __kvm_request_immediate_exit | ) |

◆ EXPORT_SYMBOL_GPL() [4/107]

| EXPORT_SYMBOL_GPL | ( | __kvm_vcpu_update_apicv | ) |

◆ EXPORT_SYMBOL_GPL() [5/107]

| EXPORT_SYMBOL_GPL | ( | __x86_set_memory_region | ) |

◆ EXPORT_SYMBOL_GPL() [6/107]

| EXPORT_SYMBOL_GPL | ( | allow_smaller_maxphyaddr | ) |

◆ EXPORT_SYMBOL_GPL() [7/107]

| EXPORT_SYMBOL_GPL | ( | enable_apicv | ) |

◆ EXPORT_SYMBOL_GPL() [8/107]

| EXPORT_SYMBOL_GPL | ( | enable_pmu | ) |

◆ EXPORT_SYMBOL_GPL() [9/107]

| EXPORT_SYMBOL_GPL | ( | enable_vmware_backdoor | ) |

◆ EXPORT_SYMBOL_GPL() [10/107]

| EXPORT_SYMBOL_GPL | ( | handle_fastpath_set_msr_irqoff | ) |

◆ EXPORT_SYMBOL_GPL() [11/107]

| EXPORT_SYMBOL_GPL | ( | handle_ud | ) |

◆ EXPORT_SYMBOL_GPL() [12/107]

| EXPORT_SYMBOL_GPL | ( | host_arch_capabilities | ) |

◆ EXPORT_SYMBOL_GPL() [13/107]

| EXPORT_SYMBOL_GPL | ( | host_efer | ) |

◆ EXPORT_SYMBOL_GPL() [14/107]

| EXPORT_SYMBOL_GPL | ( | host_xss | ) |

◆ EXPORT_SYMBOL_GPL() [15/107]

| EXPORT_SYMBOL_GPL | ( | kvm_add_user_return_msr | ) |

◆ EXPORT_SYMBOL_GPL() [16/107]

| EXPORT_SYMBOL_GPL | ( | kvm_apicv_activated | ) |

◆ EXPORT_SYMBOL_GPL() [17/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_end_assignment | ) |

◆ EXPORT_SYMBOL_GPL() [18/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_has_assigned_device | ) |

◆ EXPORT_SYMBOL_GPL() [19/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_has_noncoherent_dma | ) |

◆ EXPORT_SYMBOL_GPL() [20/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_no_poll | ) |

◆ EXPORT_SYMBOL_GPL() [21/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_register_noncoherent_dma | ) |

◆ EXPORT_SYMBOL_GPL() [22/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_start_assignment | ) |

◆ EXPORT_SYMBOL_GPL() [23/107]

| EXPORT_SYMBOL_GPL | ( | kvm_arch_unregister_noncoherent_dma | ) |

◆ EXPORT_SYMBOL_GPL() [24/107]

| EXPORT_SYMBOL_GPL | ( | kvm_calc_nested_tsc_multiplier | ) |

◆ EXPORT_SYMBOL_GPL() [25/107]

| EXPORT_SYMBOL_GPL | ( | kvm_calc_nested_tsc_offset | ) |

◆ EXPORT_SYMBOL_GPL() [26/107]

| EXPORT_SYMBOL_GPL | ( | kvm_caps | ) |

◆ EXPORT_SYMBOL_GPL() [27/107]

| EXPORT_SYMBOL_GPL | ( | kvm_complete_insn_gp | ) |

◆ EXPORT_SYMBOL_GPL() [28/107]

| EXPORT_SYMBOL_GPL | ( | kvm_deliver_exception_payload | ) |

◆ EXPORT_SYMBOL_GPL() [29/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_ap_reset_hold | ) |

◆ EXPORT_SYMBOL_GPL() [30/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_halt | ) |

◆ EXPORT_SYMBOL_GPL() [31/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_halt_noskip | ) |

◆ EXPORT_SYMBOL_GPL() [32/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_hypercall | ) |

◆ EXPORT_SYMBOL_GPL() [33/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_instruction | ) |

◆ EXPORT_SYMBOL_GPL() [34/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_instruction_from_buffer | ) |

◆ EXPORT_SYMBOL_GPL() [35/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_invd | ) |

◆ EXPORT_SYMBOL_GPL() [36/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_monitor | ) |

◆ EXPORT_SYMBOL_GPL() [37/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_mwait | ) |

◆ EXPORT_SYMBOL_GPL() [38/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_rdmsr | ) |

◆ EXPORT_SYMBOL_GPL() [39/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_rdpmc | ) |

◆ EXPORT_SYMBOL_GPL() [40/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_wbinvd | ) |

◆ EXPORT_SYMBOL_GPL() [41/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_wrmsr | ) |

◆ EXPORT_SYMBOL_GPL() [42/107]

| EXPORT_SYMBOL_GPL | ( | kvm_emulate_xsetbv | ) |

◆ EXPORT_SYMBOL_GPL() [43/107]

| EXPORT_SYMBOL_GPL | ( | kvm_enable_efer_bits | ) |

◆ EXPORT_SYMBOL_GPL() [44/107]

| EXPORT_SYMBOL_GPL | ( | kvm_fast_pio | ) |

◆ EXPORT_SYMBOL_GPL() [45/107]

| EXPORT_SYMBOL_GPL | ( | kvm_find_user_return_msr | ) |

◆ EXPORT_SYMBOL_GPL() [46/107]

| EXPORT_SYMBOL_GPL | ( | kvm_fixup_and_inject_pf_error | ) |

◆ EXPORT_SYMBOL_GPL() [47/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_apic_mode | ) |

◆ EXPORT_SYMBOL_GPL() [48/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_cr8 | ) |

◆ EXPORT_SYMBOL_GPL() [49/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_dr | ) |

◆ EXPORT_SYMBOL_GPL() [50/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_linear_rip | ) |

◆ EXPORT_SYMBOL_GPL() [51/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_msr | ) |

◆ EXPORT_SYMBOL_GPL() [52/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_msr_common | ) |

◆ EXPORT_SYMBOL_GPL() [53/107]

| EXPORT_SYMBOL_GPL | ( | kvm_get_rflags | ) |

◆ EXPORT_SYMBOL_GPL() [54/107]

| EXPORT_SYMBOL_GPL | ( | kvm_handle_invalid_op | ) |

◆ EXPORT_SYMBOL_GPL() [55/107]

| EXPORT_SYMBOL_GPL | ( | kvm_handle_invpcid | ) |

◆ EXPORT_SYMBOL_GPL() [56/107]

| EXPORT_SYMBOL_GPL | ( | kvm_handle_memory_failure | ) |

◆ EXPORT_SYMBOL_GPL() [57/107]

| EXPORT_SYMBOL_GPL | ( | kvm_has_noapic_vcpu | ) |

◆ EXPORT_SYMBOL_GPL() [58/107]

| EXPORT_SYMBOL_GPL | ( | kvm_inject_emulated_page_fault | ) |

◆ EXPORT_SYMBOL_GPL() [59/107]

| EXPORT_SYMBOL_GPL | ( | kvm_inject_realmode_interrupt | ) |

◆ EXPORT_SYMBOL_GPL() [60/107]

| EXPORT_SYMBOL_GPL | ( | kvm_is_linear_rip | ) |

◆ EXPORT_SYMBOL_GPL() [61/107]

| EXPORT_SYMBOL_GPL | ( | kvm_lmsw | ) |

◆ EXPORT_SYMBOL_GPL() [62/107]

| EXPORT_SYMBOL_GPL | ( | kvm_load_guest_xsave_state | ) |

◆ EXPORT_SYMBOL_GPL() [63/107]

| EXPORT_SYMBOL_GPL | ( | kvm_load_host_xsave_state | ) |

◆ EXPORT_SYMBOL_GPL() [64/107]

| EXPORT_SYMBOL_GPL | ( | kvm_mmu_gva_to_gpa_read | ) |

◆ EXPORT_SYMBOL_GPL() [65/107]

| EXPORT_SYMBOL_GPL | ( | kvm_mmu_gva_to_gpa_write | ) |

◆ EXPORT_SYMBOL_GPL() [66/107]

| EXPORT_SYMBOL_GPL | ( | kvm_msr_allowed | ) |

◆ EXPORT_SYMBOL_GPL() [67/107]

| EXPORT_SYMBOL_GPL | ( | kvm_nr_uret_msrs | ) |

◆ EXPORT_SYMBOL_GPL() [68/107]

| EXPORT_SYMBOL_GPL | ( | kvm_post_set_cr0 | ) |

◆ EXPORT_SYMBOL_GPL() [69/107]

| EXPORT_SYMBOL_GPL | ( | kvm_post_set_cr4 | ) |

◆ EXPORT_SYMBOL_GPL() [70/107]

| EXPORT_SYMBOL_GPL | ( | kvm_prepare_emulation_failure_exit | ) |

◆ EXPORT_SYMBOL_GPL() [71/107]

| EXPORT_SYMBOL_GPL | ( | kvm_queue_exception | ) |

◆ EXPORT_SYMBOL_GPL() [72/107]

| EXPORT_SYMBOL_GPL | ( | kvm_queue_exception_e | ) |

◆ EXPORT_SYMBOL_GPL() [73/107]

| EXPORT_SYMBOL_GPL | ( | kvm_queue_exception_p | ) |

◆ EXPORT_SYMBOL_GPL() [74/107]

| EXPORT_SYMBOL_GPL | ( | kvm_read_guest_virt | ) |

◆ EXPORT_SYMBOL_GPL() [75/107]

| EXPORT_SYMBOL_GPL | ( | kvm_read_l1_tsc | ) |

◆ EXPORT_SYMBOL_GPL() [76/107]

| EXPORT_SYMBOL_GPL | ( | kvm_requeue_exception | ) |

◆ EXPORT_SYMBOL_GPL() [77/107]

| EXPORT_SYMBOL_GPL | ( | kvm_requeue_exception_e | ) |

◆ EXPORT_SYMBOL_GPL() [78/107]

| EXPORT_SYMBOL_GPL | ( | kvm_require_dr | ) |

◆ EXPORT_SYMBOL_GPL() [79/107]

| EXPORT_SYMBOL_GPL | ( | kvm_service_local_tlb_flush_requests | ) |

◆ EXPORT_SYMBOL_GPL() [80/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_cr0 | ) |

◆ EXPORT_SYMBOL_GPL() [81/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_cr3 | ) |

◆ EXPORT_SYMBOL_GPL() [82/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_cr4 | ) |

◆ EXPORT_SYMBOL_GPL() [83/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_cr8 | ) |

◆ EXPORT_SYMBOL_GPL() [84/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_dr | ) |

◆ EXPORT_SYMBOL_GPL() [85/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_msr | ) |

◆ EXPORT_SYMBOL_GPL() [86/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_msr_common | ) |

◆ EXPORT_SYMBOL_GPL() [87/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_or_clear_apicv_inhibit | ) |

◆ EXPORT_SYMBOL_GPL() [88/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_rflags | ) |

◆ EXPORT_SYMBOL_GPL() [89/107]

| EXPORT_SYMBOL_GPL | ( | kvm_set_user_return_msr | ) |

◆ EXPORT_SYMBOL_GPL() [90/107]

| EXPORT_SYMBOL_GPL | ( | kvm_sev_es_mmio_read | ) |

◆ EXPORT_SYMBOL_GPL() [91/107]

| EXPORT_SYMBOL_GPL | ( | kvm_sev_es_mmio_write | ) |

◆ EXPORT_SYMBOL_GPL() [92/107]

| EXPORT_SYMBOL_GPL | ( | kvm_sev_es_string_io | ) |

◆ EXPORT_SYMBOL_GPL() [93/107]

| EXPORT_SYMBOL_GPL | ( | kvm_skip_emulated_instruction | ) |

◆ EXPORT_SYMBOL_GPL() [94/107]

| EXPORT_SYMBOL_GPL | ( | kvm_spec_ctrl_test_value | ) |

◆ EXPORT_SYMBOL_GPL() [95/107]

| EXPORT_SYMBOL_GPL | ( | kvm_spurious_fault | ) |

◆ EXPORT_SYMBOL_GPL() [96/107]

| EXPORT_SYMBOL_GPL | ( | kvm_task_switch | ) |

◆ EXPORT_SYMBOL_GPL() [97/107]

| EXPORT_SYMBOL_GPL | ( | kvm_update_dr7 | ) |

◆ EXPORT_SYMBOL_GPL() [98/107]

| EXPORT_SYMBOL_GPL | ( | kvm_valid_efer | ) |

◆ EXPORT_SYMBOL_GPL() [99/107]

| EXPORT_SYMBOL_GPL | ( | kvm_vcpu_apicv_activated | ) |

◆ EXPORT_SYMBOL_GPL() [100/107]

| EXPORT_SYMBOL_GPL | ( | kvm_vcpu_deliver_sipi_vector | ) |

◆ EXPORT_SYMBOL_GPL() [101/107]

| EXPORT_SYMBOL_GPL | ( | kvm_vcpu_reset | ) |

◆ EXPORT_SYMBOL_GPL() [102/107]

| EXPORT_SYMBOL_GPL | ( | kvm_write_guest_virt_system | ) |

◆ EXPORT_SYMBOL_GPL() [103/107]

| EXPORT_SYMBOL_GPL | ( | kvm_x86_vendor_exit | ) |

◆ EXPORT_SYMBOL_GPL() [104/107]

| EXPORT_SYMBOL_GPL | ( | kvm_x86_vendor_init | ) |

◆ EXPORT_SYMBOL_GPL() [105/107]

| EXPORT_SYMBOL_GPL | ( | load_pdptrs | ) |

◆ EXPORT_SYMBOL_GPL() [106/107]

| EXPORT_SYMBOL_GPL | ( | report_ignored_msrs | ) |

◆ EXPORT_SYMBOL_GPL() [107/107]

| EXPORT_SYMBOL_GPL | ( | x86_decode_emulated_instruction | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [1/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_apicv_accept_irq | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [2/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_avic_doorbell | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [3/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_avic_ga_log | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [4/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_avic_incomplete_ipi | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [5/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_avic_kick_vcpu_slowpath | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [6/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_avic_unaccelerated_access | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [7/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_cr | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [8/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_entry | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [9/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_exit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [10/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_fast_mmio | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [11/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_inj_virq | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [12/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_invlpga | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [13/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_msr | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [14/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_intercepts | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [15/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_intr_vmexit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [16/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_vmenter | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [17/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_vmenter_failed | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [18/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_vmexit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [19/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_nested_vmexit_inject | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [20/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_page_fault | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [21/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_pi_irte_update | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [22/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_ple_window_update | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [23/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_pml_full | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [24/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_skinit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [25/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_vmgexit_enter | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [26/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_vmgexit_exit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [27/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_vmgexit_msr_protocol_enter | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [28/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_vmgexit_msr_protocol_exit | ) |

◆ EXPORT_TRACEPOINT_SYMBOL_GPL() [29/29]

| EXPORT_TRACEPOINT_SYMBOL_GPL | ( | kvm_write_tsc_offset | ) |

◆ get_cpu_tsc_khz()

|

static |

◆ get_kvmclock()

|

static |