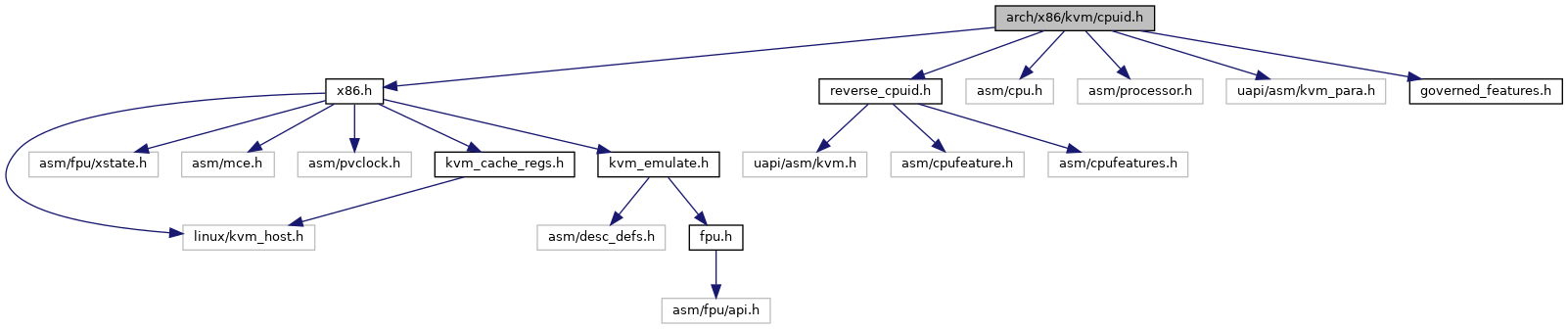

`#include "x86.h"`

`#include "reverse_cpuid.h"`

`#include <asm/cpu.h>`

`#include <asm/processor.h>`

`#include <uapi/asm/kvm_para.h>`

`#include "governed_features.h"`


Macros

| Kind | Definition |
|---|---|
| `#define` | `KVM_GOVERNED_FEATURE(x) KVM_GOVERNED_##x,` |
| `#define` | `KVM_GOVERNED_FEATURE(x) case x: return KVM_GOVERNED_##x;` |

Enumerations

| Kind | Definition |
|---|---|
| `enum` | `kvm_governed_features { KVM_NR_GOVERNED_FEATURES }` |

Functions | |

| void | kvm_set_cpu_caps (void) |

| void | kvm_update_cpuid_runtime (struct kvm_vcpu *vcpu) |

| void | kvm_update_pv_runtime (struct kvm_vcpu *vcpu) |

| struct kvm_cpuid_entry2 * | kvm_find_cpuid_entry_index (struct kvm_vcpu *vcpu, u32 function, u32 index) |

| struct kvm_cpuid_entry2 * | kvm_find_cpuid_entry (struct kvm_vcpu *vcpu, u32 function) |

| int | kvm_dev_ioctl_get_cpuid (struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries, unsigned int type) |

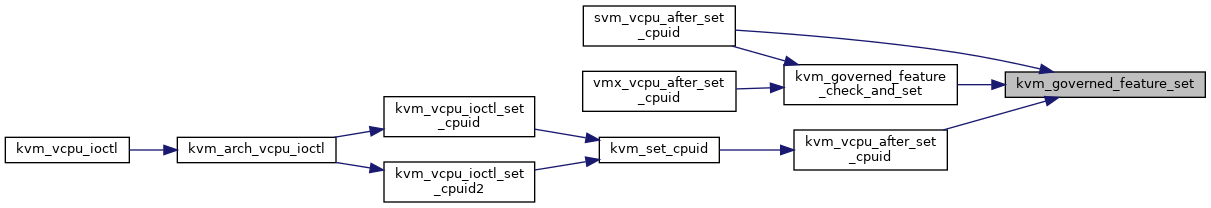

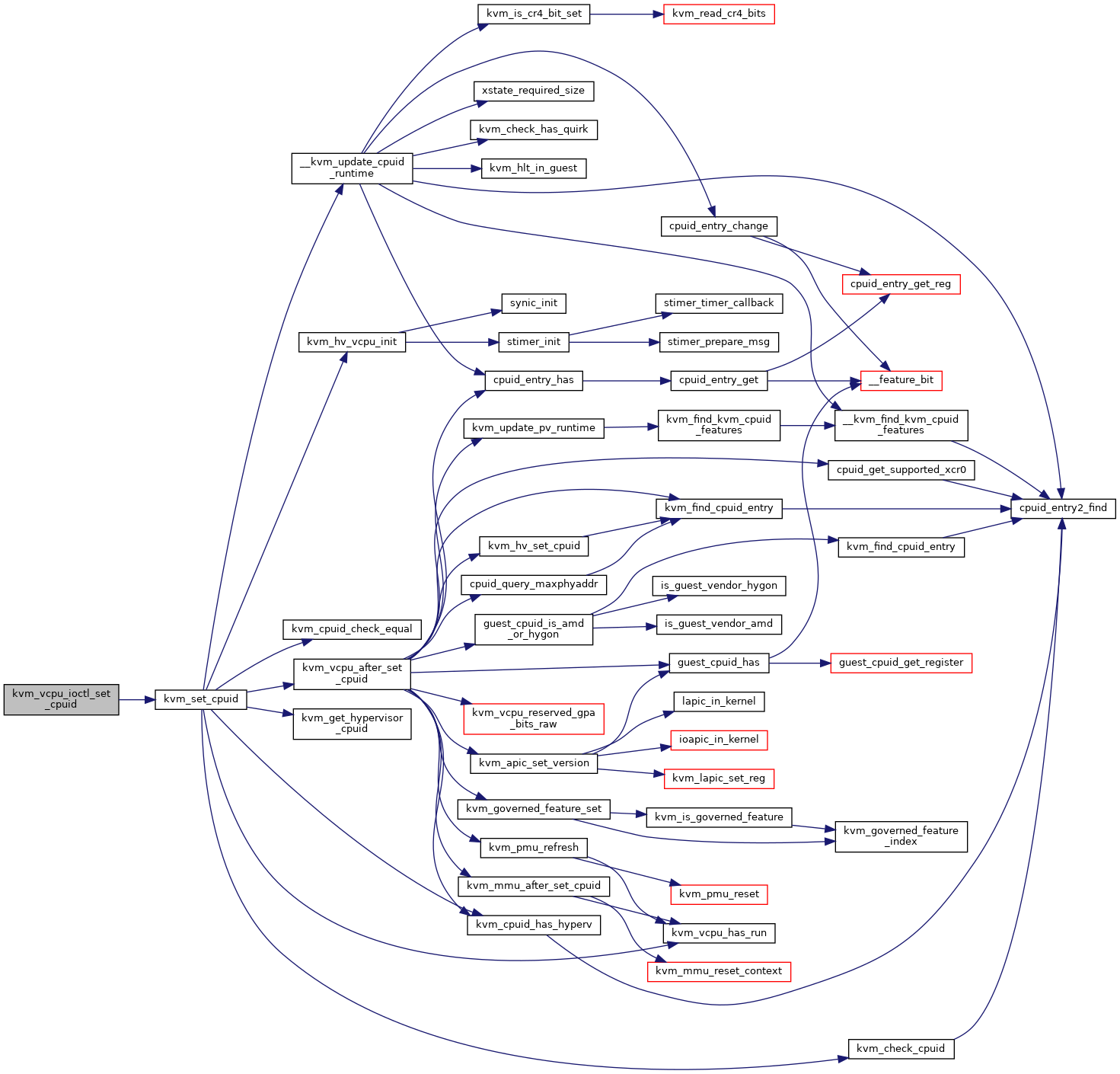

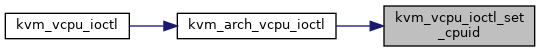

| int | kvm_vcpu_ioctl_set_cpuid (struct kvm_vcpu *vcpu, struct kvm_cpuid *cpuid, struct kvm_cpuid_entry __user *entries) |

| int | kvm_vcpu_ioctl_set_cpuid2 (struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries) |

| int | kvm_vcpu_ioctl_get_cpuid2 (struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries) |

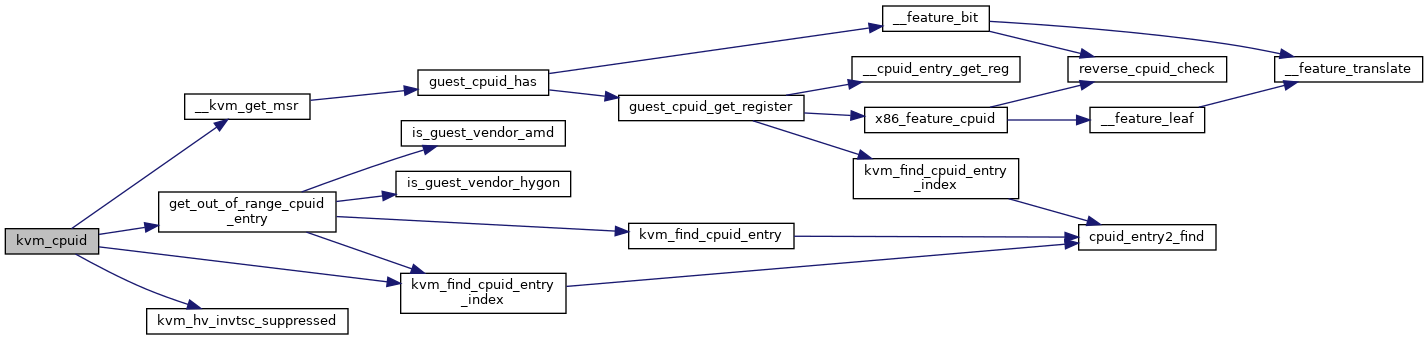

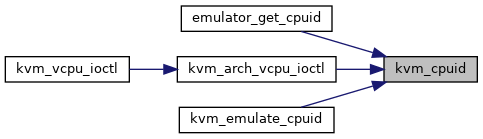

| bool | kvm_cpuid (struct kvm_vcpu *vcpu, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only) |

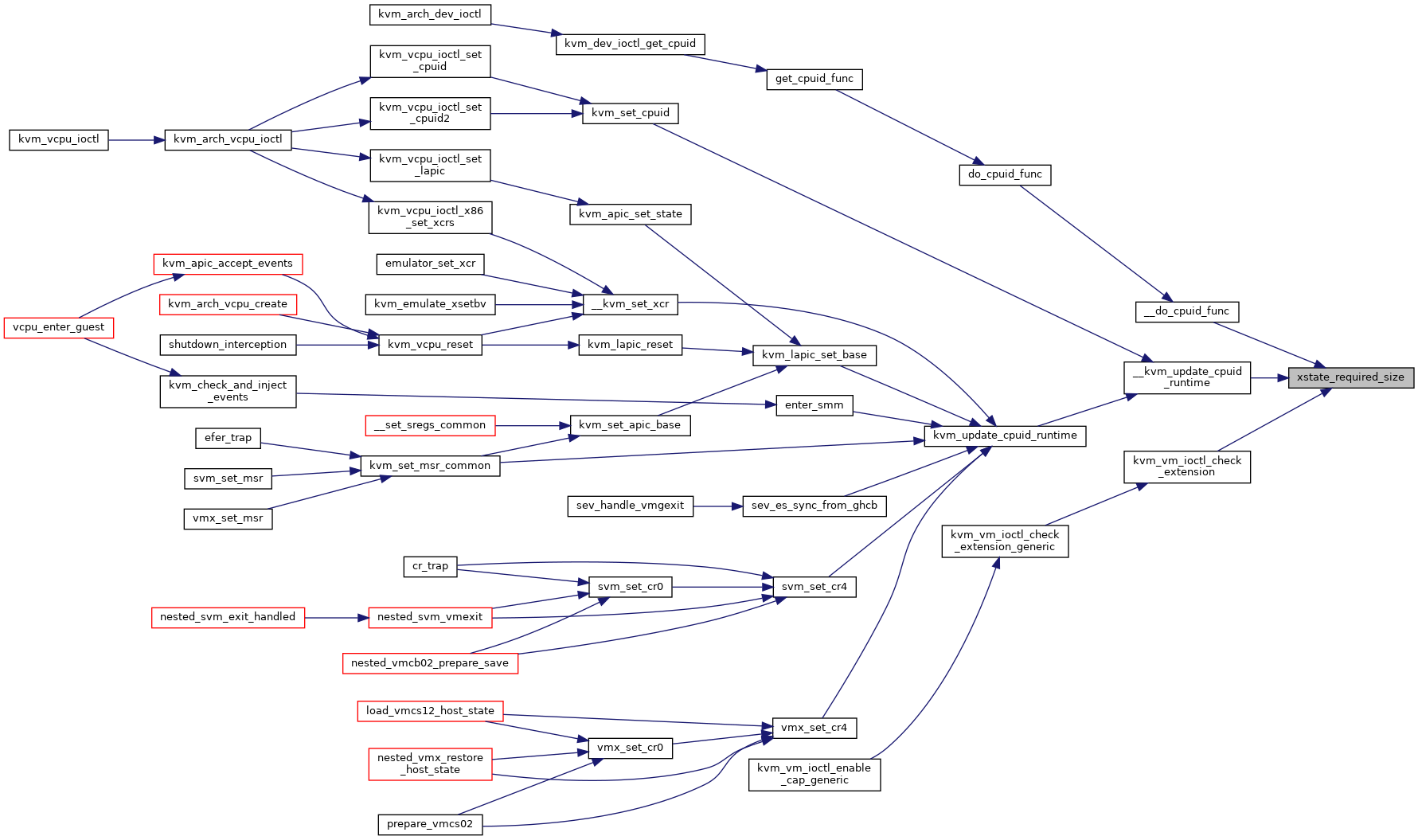

| u32 | xstate_required_size (u64 xstate_bv, bool compacted) |

| int | cpuid_query_maxphyaddr (struct kvm_vcpu *vcpu) |

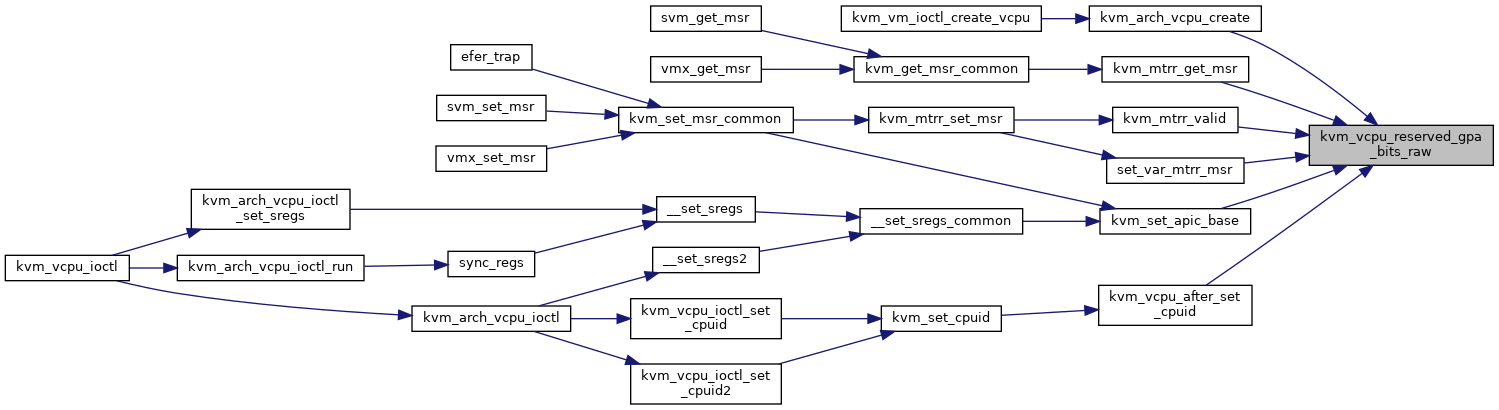

| u64 | kvm_vcpu_reserved_gpa_bits_raw (struct kvm_vcpu *vcpu) |

| static int | cpuid_maxphyaddr (struct kvm_vcpu *vcpu) |

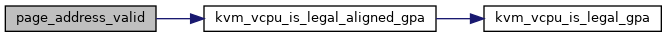

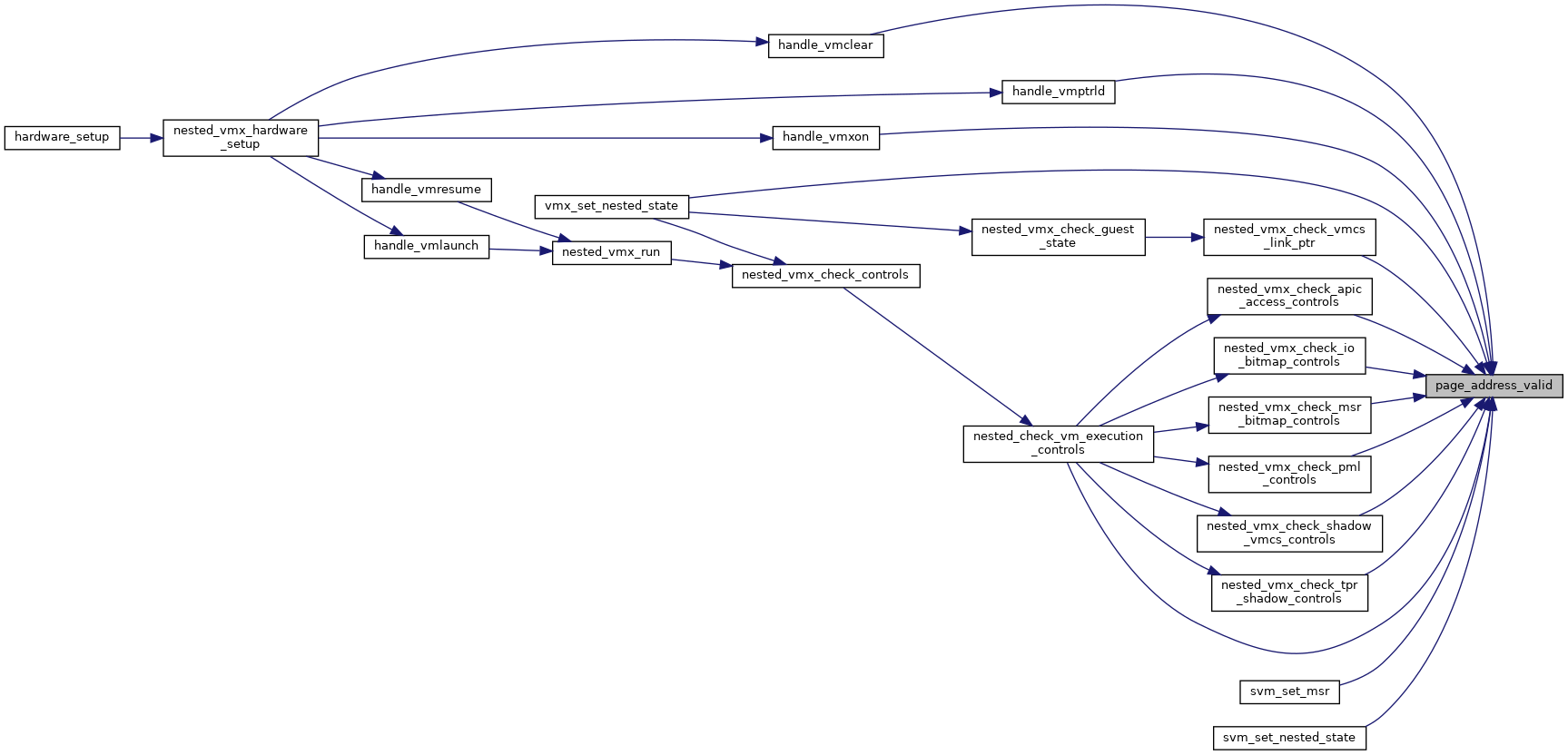

| static bool | kvm_vcpu_is_legal_gpa (struct kvm_vcpu *vcpu, gpa_t gpa) |

| static bool | kvm_vcpu_is_legal_aligned_gpa (struct kvm_vcpu *vcpu, gpa_t gpa, gpa_t alignment) |

| static bool | page_address_valid (struct kvm_vcpu *vcpu, gpa_t gpa) |

| static __always_inline void | cpuid_entry_override (struct kvm_cpuid_entry2 *entry, unsigned int leaf) |

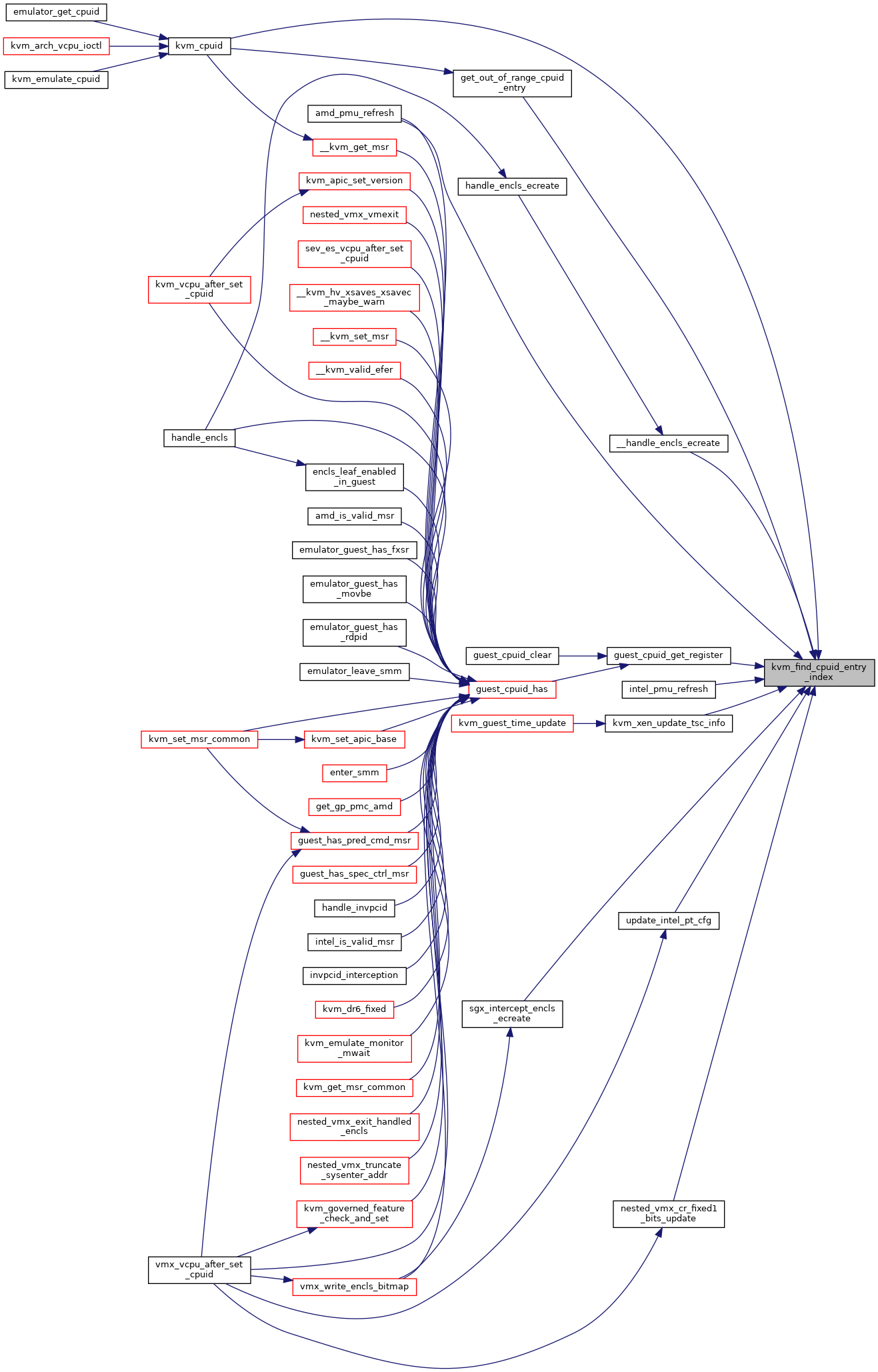

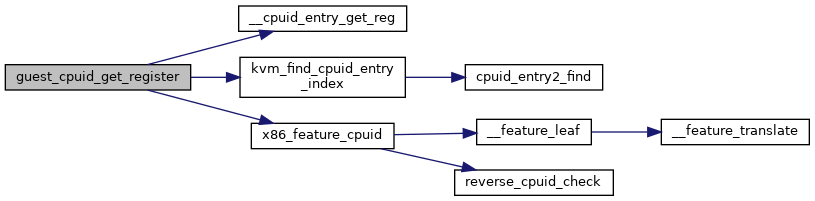

| static __always_inline u32 * | guest_cpuid_get_register (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

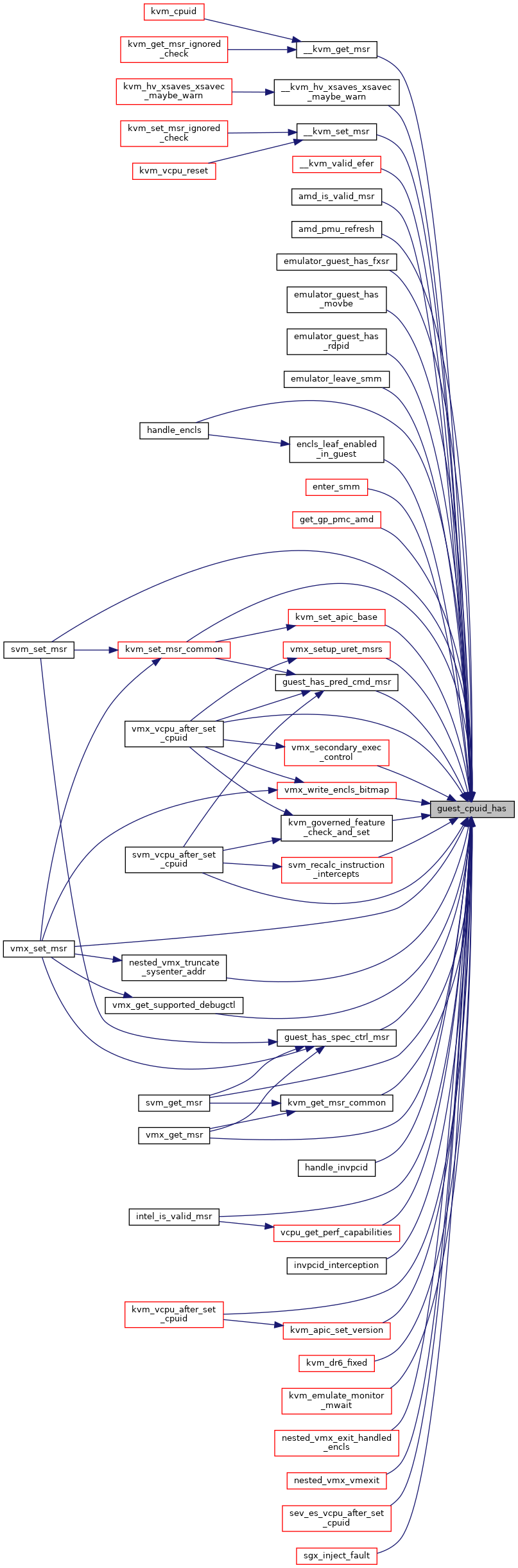

| static __always_inline bool | guest_cpuid_has (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

| static __always_inline void | guest_cpuid_clear (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

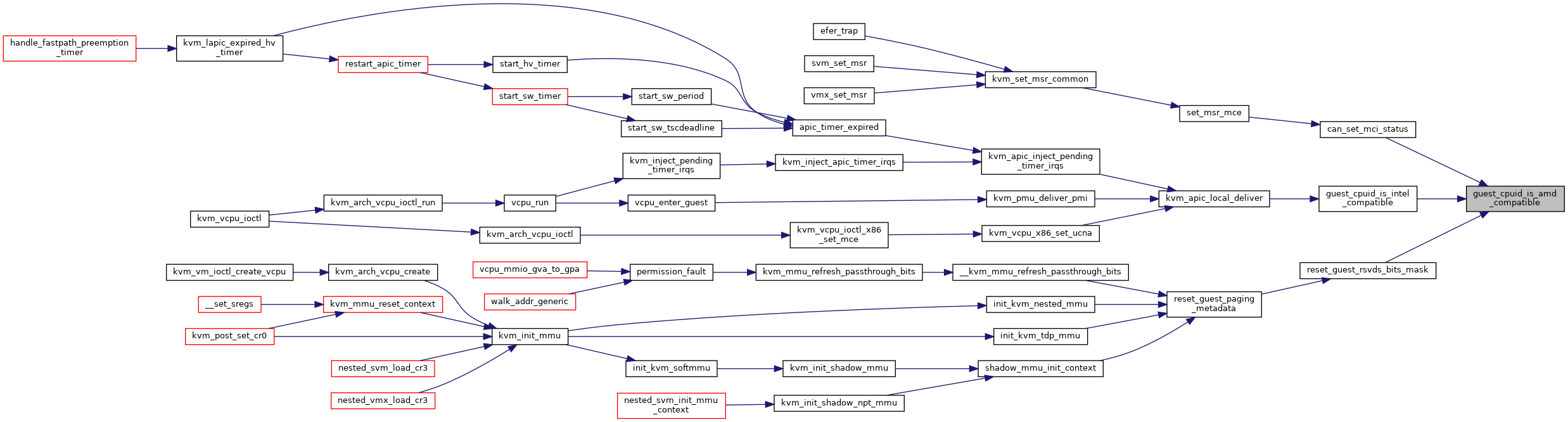

| static bool | guest_cpuid_is_amd_or_hygon (struct kvm_vcpu *vcpu) |

| static bool | guest_cpuid_is_intel (struct kvm_vcpu *vcpu) |

| static bool | guest_cpuid_is_amd_compatible (struct kvm_vcpu *vcpu) |

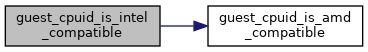

| static bool | guest_cpuid_is_intel_compatible (struct kvm_vcpu *vcpu) |

| static int | guest_cpuid_family (struct kvm_vcpu *vcpu) |

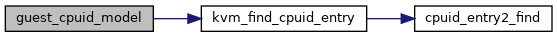

| static int | guest_cpuid_model (struct kvm_vcpu *vcpu) |

| static bool | cpuid_model_is_consistent (struct kvm_vcpu *vcpu) |

| static int | guest_cpuid_stepping (struct kvm_vcpu *vcpu) |

| static bool | guest_has_spec_ctrl_msr (struct kvm_vcpu *vcpu) |

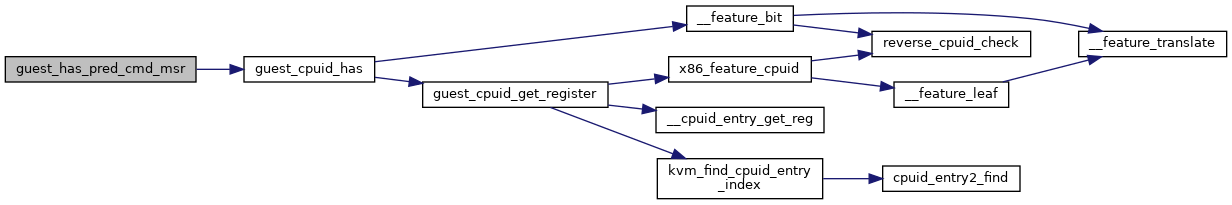

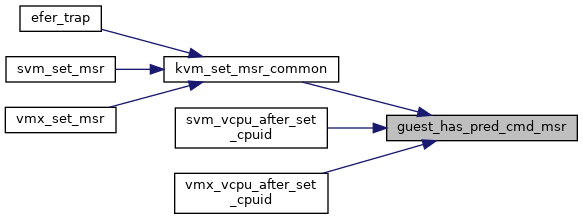

| static bool | guest_has_pred_cmd_msr (struct kvm_vcpu *vcpu) |

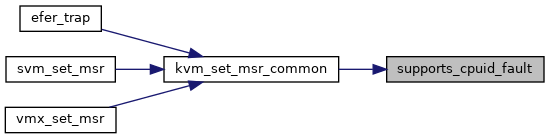

| static bool | supports_cpuid_fault (struct kvm_vcpu *vcpu) |

| static bool | cpuid_fault_enabled (struct kvm_vcpu *vcpu) |

| static __always_inline void | kvm_cpu_cap_clear (unsigned int x86_feature) |

| static __always_inline void | kvm_cpu_cap_set (unsigned int x86_feature) |

| static __always_inline u32 | kvm_cpu_cap_get (unsigned int x86_feature) |

| static __always_inline bool | kvm_cpu_cap_has (unsigned int x86_feature) |

| static __always_inline void | kvm_cpu_cap_check_and_set (unsigned int x86_feature) |

| static __always_inline bool | guest_pv_has (struct kvm_vcpu *vcpu, unsigned int kvm_feature) |

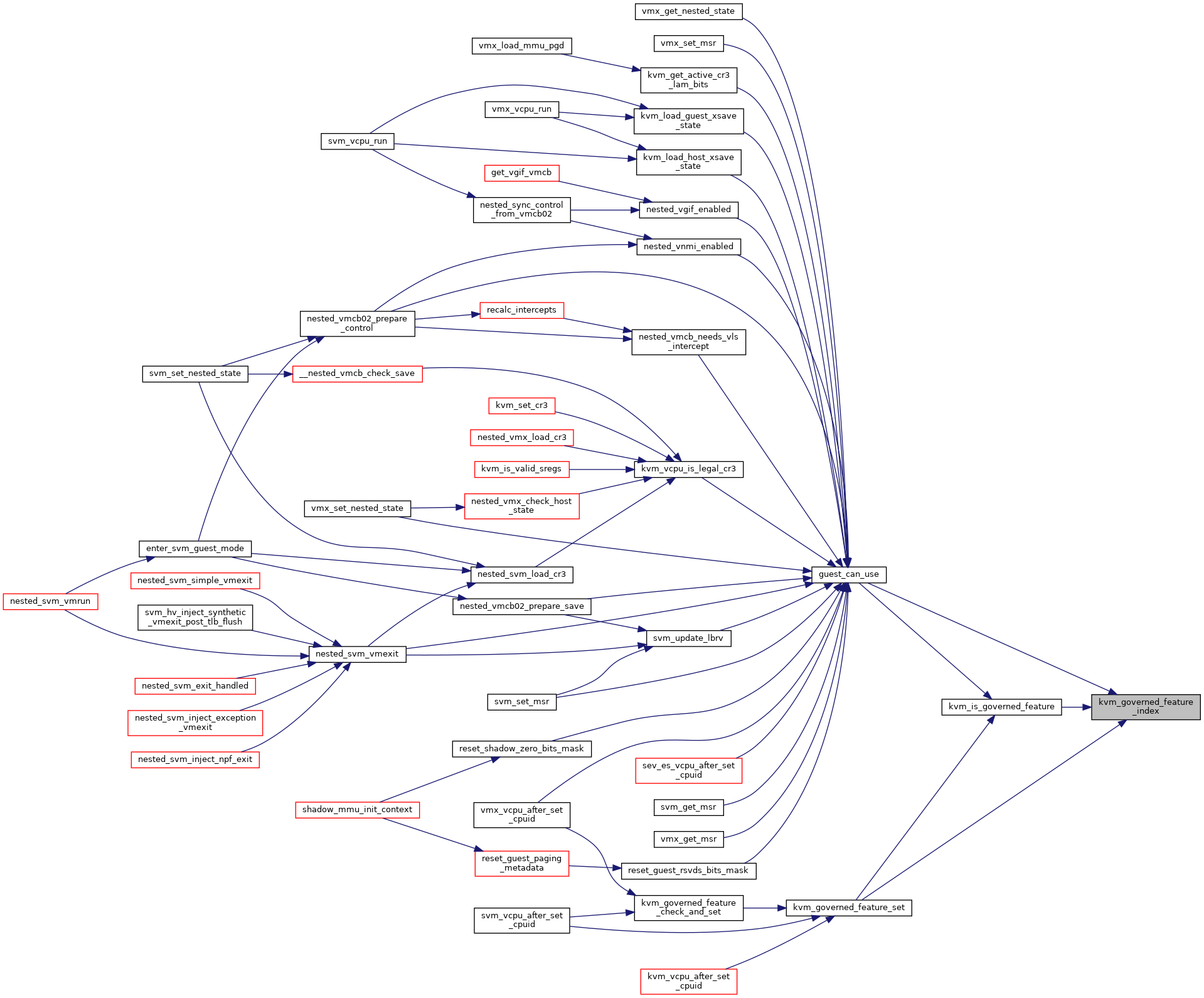

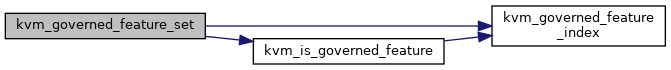

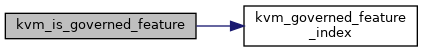

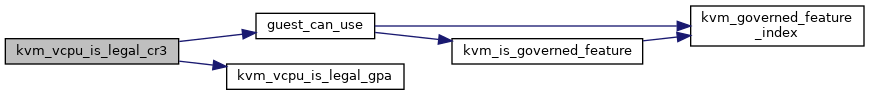

| static __always_inline int | kvm_governed_feature_index (unsigned int x86_feature) |

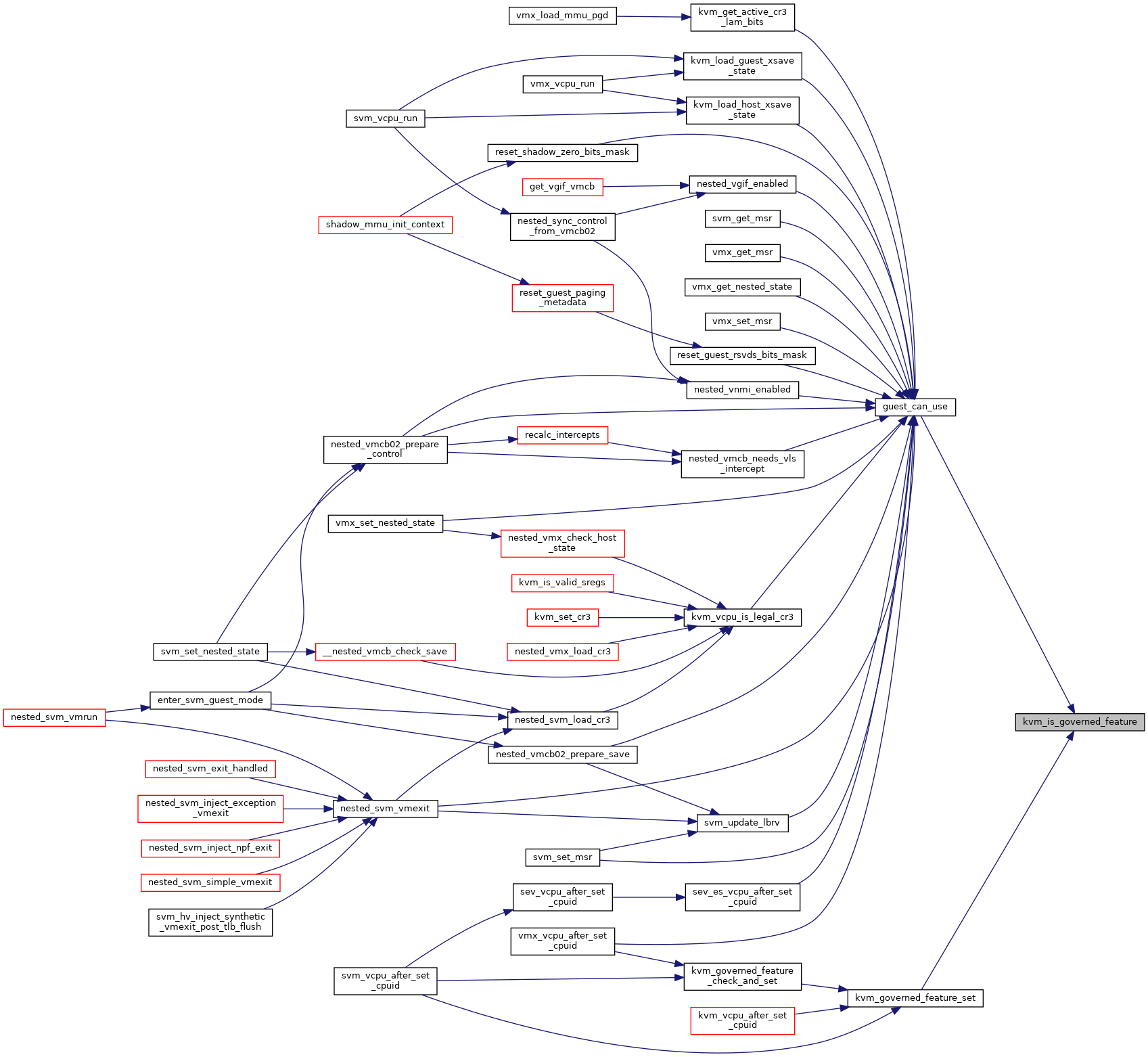

| static __always_inline bool | kvm_is_governed_feature (unsigned int x86_feature) |

| static __always_inline void | kvm_governed_feature_set (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

| static __always_inline void | kvm_governed_feature_check_and_set (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

| static __always_inline bool | guest_can_use (struct kvm_vcpu *vcpu, unsigned int x86_feature) |

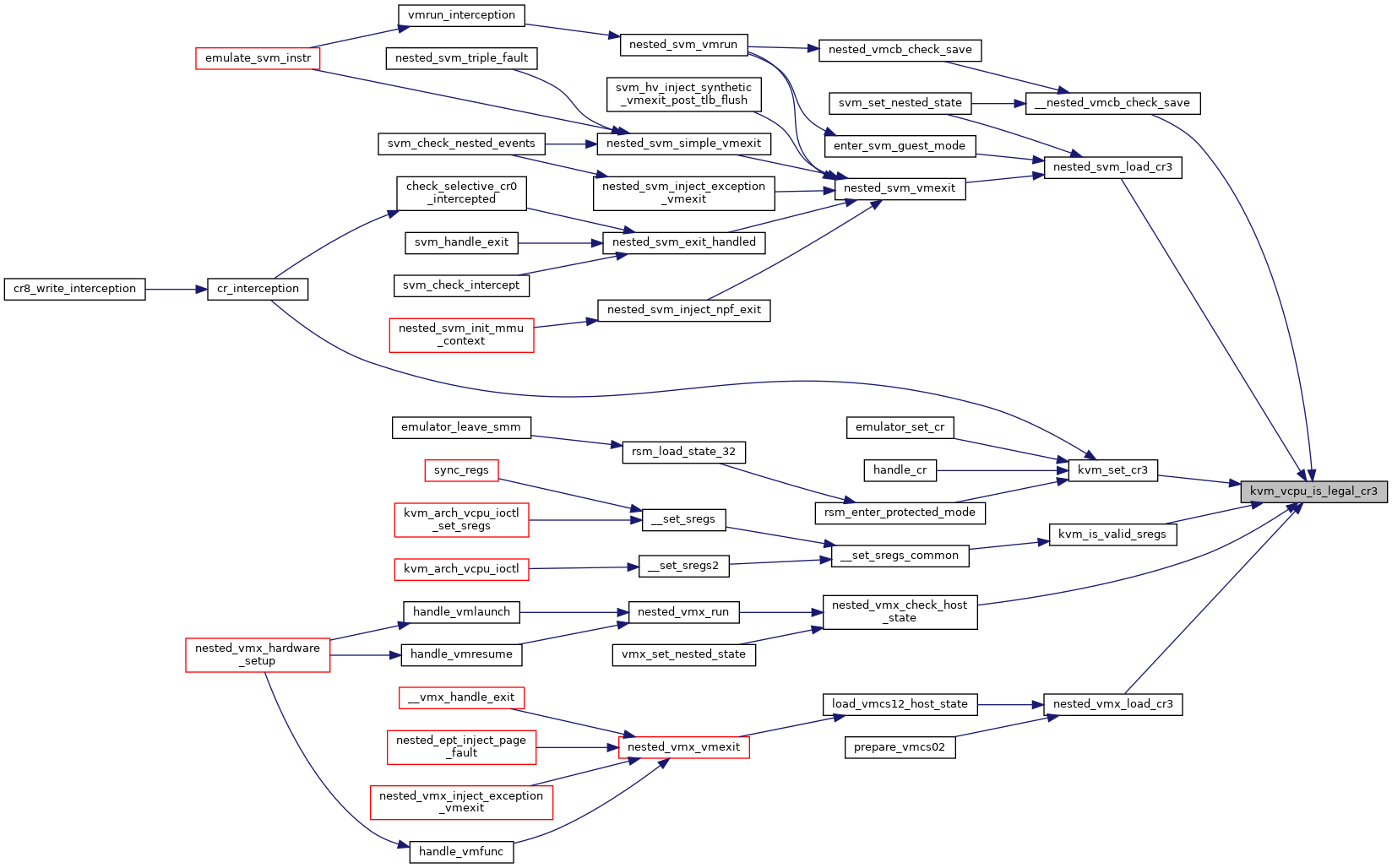

| static bool | kvm_vcpu_is_legal_cr3 (struct kvm_vcpu *vcpu, unsigned long cr3) |

Variables

| Type | Variable |
|---|---|
| `extern u32` | `kvm_cpu_caps[NR_KVM_CPU_CAPS] __read_mostly` |

Macro Definition Documentation

◆ KVM_GOVERNED_FEATURE [1/2]

`#define KVM_GOVERNED_FEATURE(x) KVM_GOVERNED_##x,`

◆ KVM_GOVERNED_FEATURE [2/2]

`#define KVM_GOVERNED_FEATURE(x) case x: return KVM_GOVERNED_##x;`

Enumeration Type Documentation

◆ kvm_governed_features
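The two KVM_GOVERNED_FEATURE definitions appear to be the halves of an X-macro: governed_features.h is expanded once with the first definition to fill enum kvm_governed_features, and once with the second inside the switch of kvm_governed_feature_index(). The userspace sketch below reproduces that pattern under stated assumptions: a list macro replaces the repeated #include so the block is self-contained, the FEAT_* numbers are hypothetical stand-ins for X86_FEATURE_* constants, and returning -1 for a feature that is not governed is assumed rather than taken from this page.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical feature numbers standing in for X86_FEATURE_* values. */
#define FEAT_ALPHA 17
#define FEAT_BRAVO 42
#define FEAT_CHARLIE 99

/* Hypothetical list playing the role of governed_features.h. */
#define GOVERNED_FEATURE_LIST(X) \
	X(FEAT_ALPHA)            \
	X(FEAT_BRAVO)

/* Expansion 1: one dense enumerator per governed feature, then a count. */
#define AS_ENUM(x) GOVERNED_##x,
enum governed_features {
	GOVERNED_FEATURE_LIST(AS_ENUM)
	NR_GOVERNED_FEATURES
};
#undef AS_ENUM

/* Expansion 2: map a sparse feature number to its dense enum index. */
static int governed_feature_index(unsigned int feature)
{
	switch (feature) {
#define AS_CASE(x) case x: return GOVERNED_##x;
	GOVERNED_FEATURE_LIST(AS_CASE)
#undef AS_CASE
	default:
		return -1; /* assumed: not a governed feature */
	}
}

static bool is_governed_feature(unsigned int feature)
{
	return governed_feature_index(feature) >= 0;
}
```

Because the enumerators are dense, the index can address a small per-vCPU bitmap even though the feature numbers themselves are sparse.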

Function Documentation

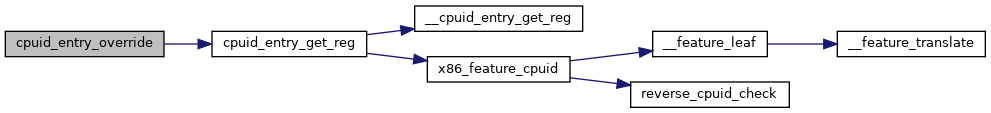

◆ cpuid_entry_override()

|

static |

Definition at line 61 of file cpuid.h.

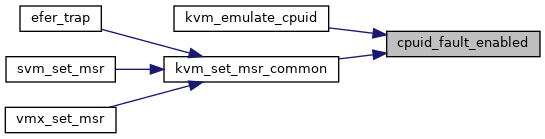

◆ cpuid_fault_enabled()

|

inlinestatic |

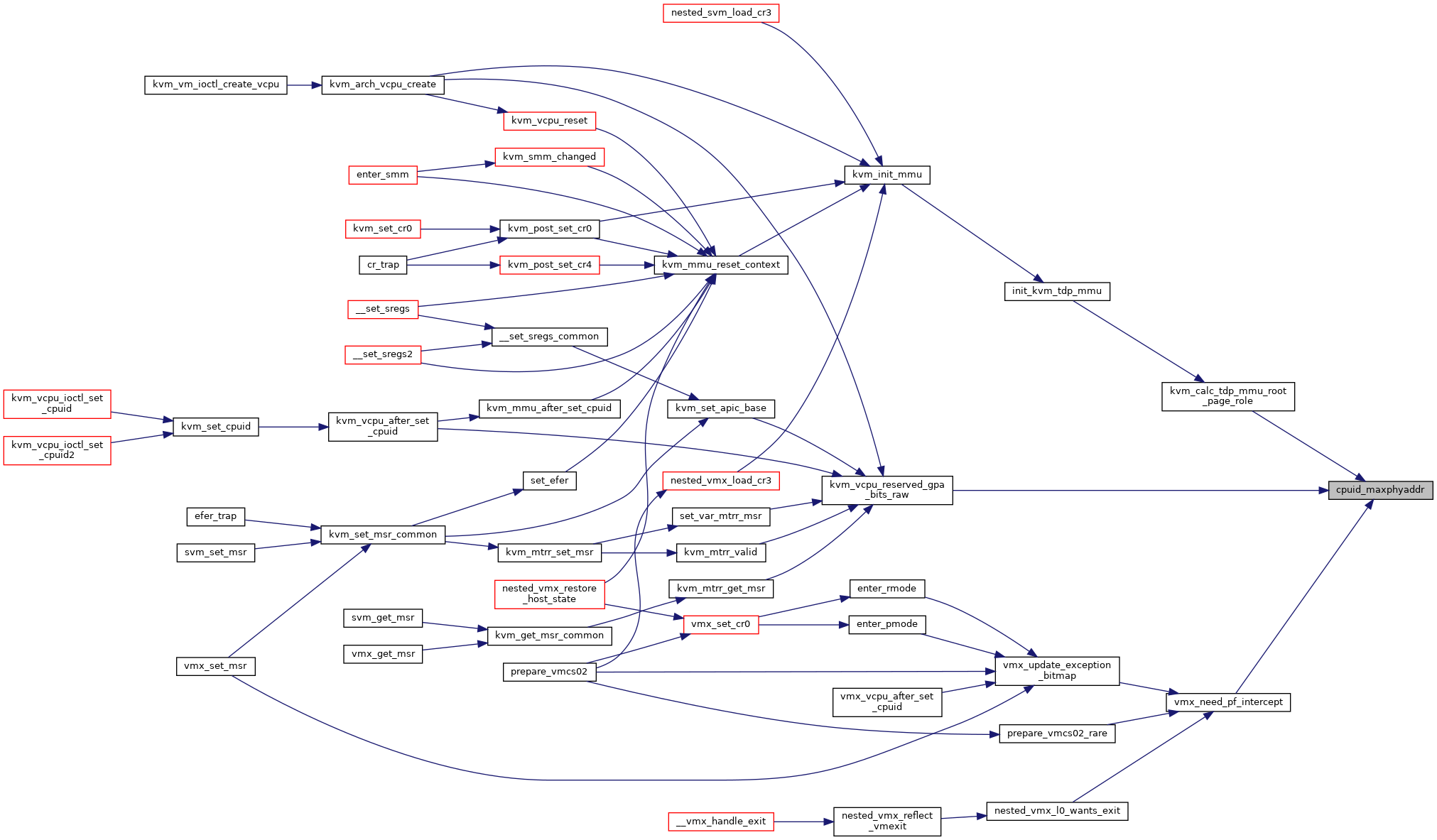

◆ cpuid_maxphyaddr()

|

inlinestatic |

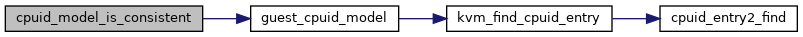

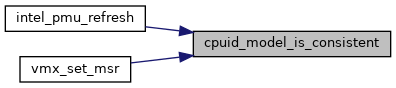

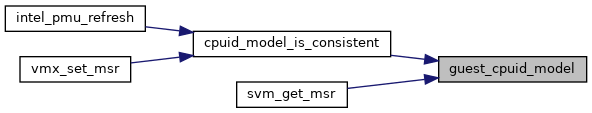

◆ cpuid_model_is_consistent()

|

inlinestatic |

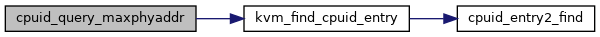

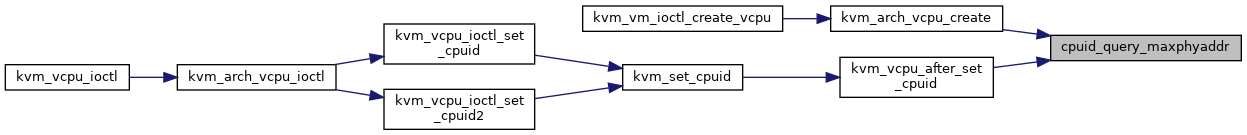

◆ cpuid_query_maxphyaddr()

| int cpuid_query_maxphyaddr | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 390 of file cpuid.c.
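cpuid_query_maxphyaddr() derives the guest's physical-address width (MAXPHYADDR) from the vCPU's CPUID table. The following is a userspace model of that lookup, assuming the architectural layout (leaf 0x80000000 EAX reports the highest extended leaf, leaf 0x80000008 EAX[7:0] holds the width) and a fallback of 36 bits when the guest does not expose that leaf. struct cpuid_entry and find_entry() are hypothetical simplifications of struct kvm_cpuid_entry2 and kvm_find_cpuid_entry().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, trimmed-down stand-in for struct kvm_cpuid_entry2. */
struct cpuid_entry {
	uint32_t function;
	uint32_t eax, ebx, ecx, edx;
};

/* Hypothetical helper: linear search by leaf, ignoring subleaves. */
static const struct cpuid_entry *find_entry(const struct cpuid_entry *e,
					    size_t n, uint32_t function)
{
	for (size_t i = 0; i < n; i++)
		if (e[i].function == function)
			return &e[i];
	return NULL;
}

static int query_maxphyaddr(const struct cpuid_entry *e, size_t n)
{
	const struct cpuid_entry *best = find_entry(e, n, 0x80000000u);

	/* Leaf 0x80000000 EAX is the highest supported extended leaf. */
	if (!best || best->eax < 0x80000008u)
		return 36; /* assumed default width */

	best = find_entry(e, n, 0x80000008u);
	if (!best)
		return 36;

	/* EAX[7:0] is the physical-address width in bits. */
	return best->eax & 0xff;
}
```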

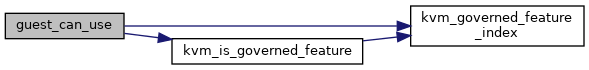

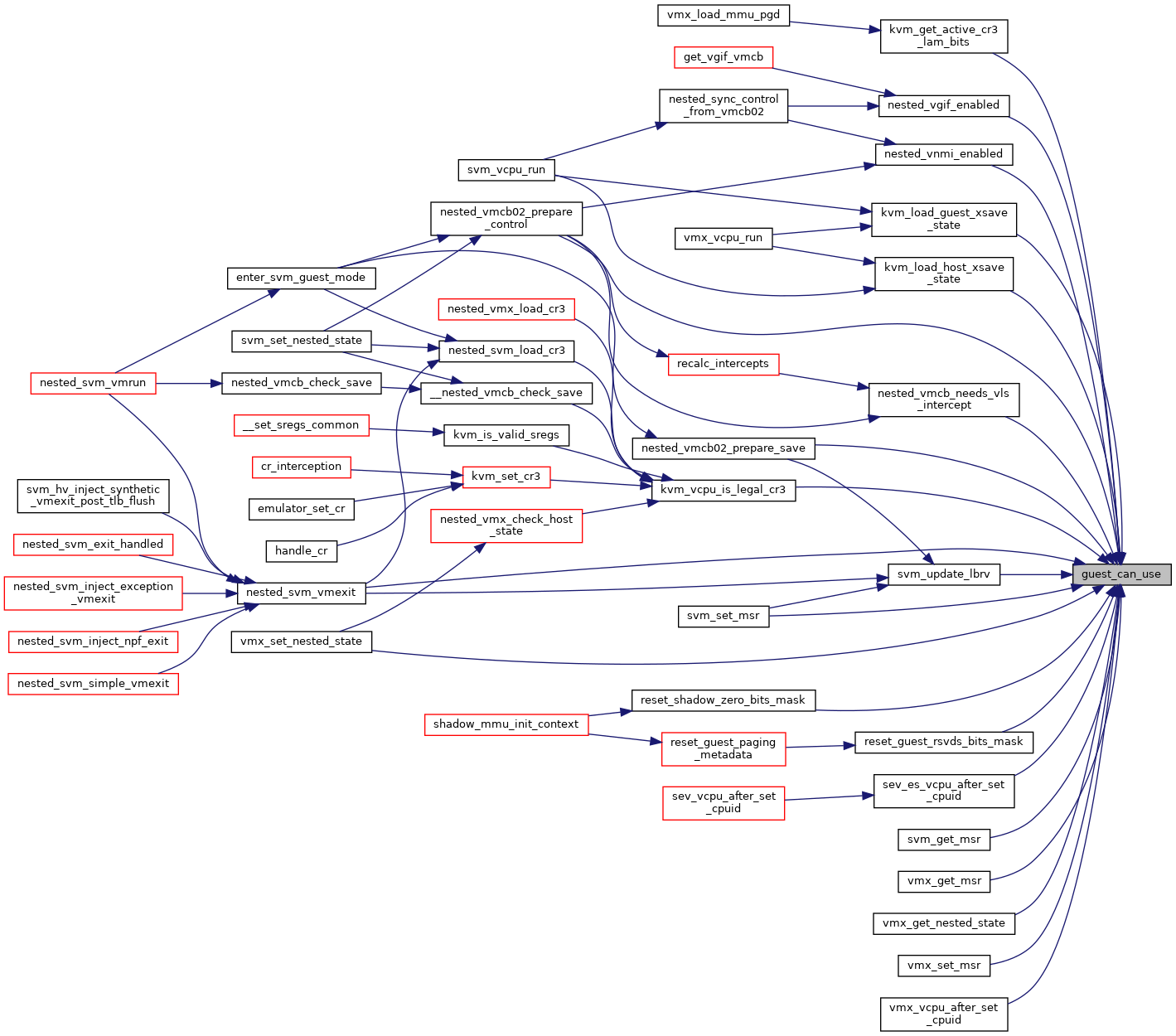

◆ guest_can_use()

|

static |

Definition at line 278 of file cpuid.h.

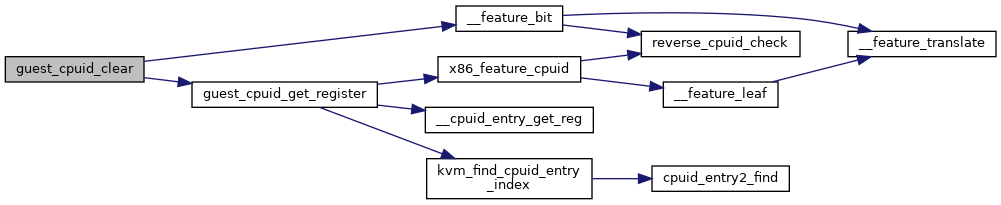

◆ guest_cpuid_clear()

|

static |

Definition at line 95 of file cpuid.h.

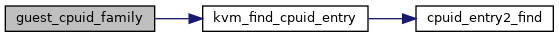

◆ guest_cpuid_family()

|

inlinestatic |

Definition at line 133 of file cpuid.h.

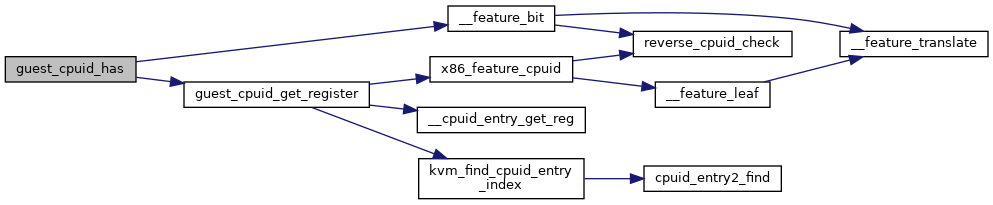

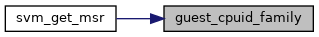

◆ guest_cpuid_get_register()

|

static |

Definition at line 70 of file cpuid.h.

◆ guest_cpuid_has()

`static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)`

static
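guest_cpuid_get_register() locates the guest CPUID register that holds a feature bit, guest_cpuid_has() tests that bit, and guest_cpuid_clear() clears it. The sketch below models the idea with a hypothetical feature descriptor (leaf, subleaf, register, bit) in place of the kernel's reverse-CPUID tables, and assumes a leaf missing from the guest's table means "feature absent".

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum reg { REG_EAX, REG_EBX, REG_ECX, REG_EDX };

/* Hypothetical stand-in for one guest CPUID entry. */
struct cpuid_entry {
	uint32_t function, index;
	uint32_t regs[4]; /* indexed by enum reg */
};

/* Hypothetical descriptor of where a feature bit lives. */
struct feature {
	uint32_t function, index;
	enum reg reg;
	unsigned int bit;
};

static uint32_t *get_register(struct cpuid_entry *e, size_t n,
			      struct feature f)
{
	for (size_t i = 0; i < n; i++)
		if (e[i].function == f.function && e[i].index == f.index)
			return &e[i].regs[f.reg];
	return NULL; /* leaf not present in the guest's table */
}

static bool cpuid_has(struct cpuid_entry *e, size_t n, struct feature f)
{
	uint32_t *reg = get_register(e, n, f);

	return reg && (*reg & (1u << f.bit));
}

static void cpuid_clear(struct cpuid_entry *e, size_t n, struct feature f)
{
	uint32_t *reg = get_register(e, n, f);

	if (reg)
		*reg &= ~(1u << f.bit);
}
```

Returning a pointer from the lookup lets the "has" and "clear" paths share one search.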

◆ guest_cpuid_is_amd_compatible()

`static bool guest_cpuid_is_amd_compatible(struct kvm_vcpu *vcpu)`

inline static

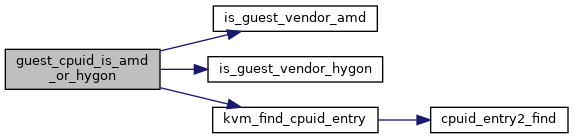

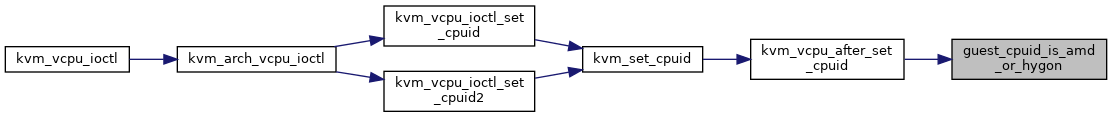

◆ guest_cpuid_is_amd_or_hygon()

|

inlinestatic |

Definition at line 105 of file cpuid.h.

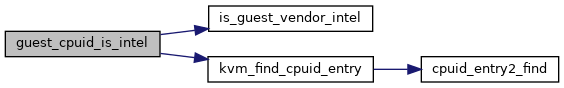

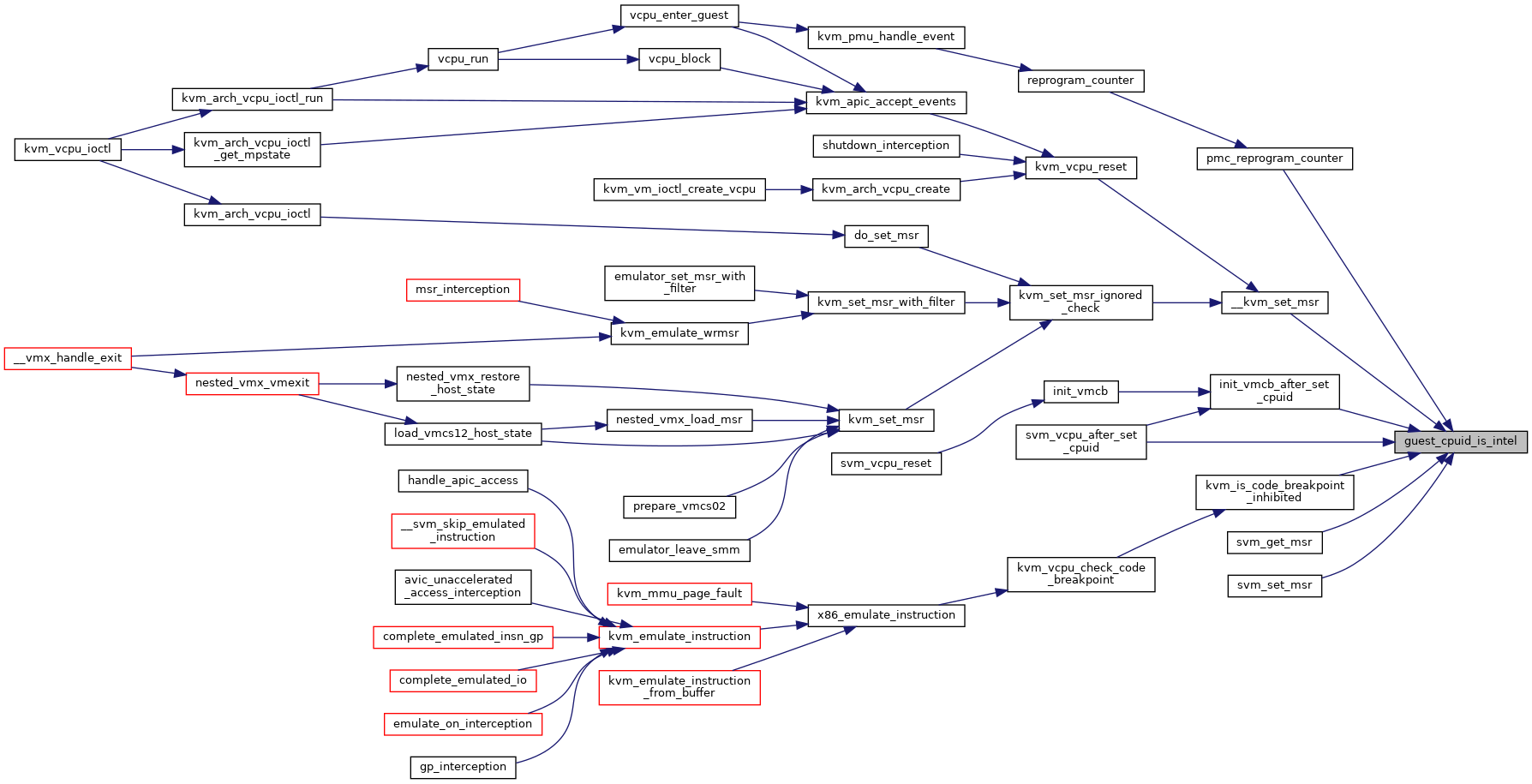

◆ guest_cpuid_is_intel()

|

inlinestatic |

Definition at line 115 of file cpuid.h.

◆ guest_cpuid_is_intel_compatible()

`static bool guest_cpuid_is_intel_compatible(struct kvm_vcpu *vcpu)`

inline static

Definition at line 128 of file cpuid.h.
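The vendor predicates look at the 12-byte vendor string that CPUID leaf 0 returns in EBX, EDX, ECX order. A sketch modelling only the Intel and AMD-or-Hygon checks, assuming a plain string comparison (the kernel compares against precomputed register constants instead) and a little-endian host as on x86; all helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Rebuild the vendor string from CPUID.0 EBX, EDX, ECX (little-endian). */
static void vendor_string(uint32_t ebx, uint32_t ecx, uint32_t edx,
			  char out[13])
{
	memcpy(out, &ebx, 4);
	memcpy(out + 4, &edx, 4);
	memcpy(out + 8, &ecx, 4);
	out[12] = '\0';
}

static bool vendor_is(uint32_t ebx, uint32_t ecx, uint32_t edx,
		      const char *name)
{
	char v[13];

	vendor_string(ebx, ecx, edx, v);
	return strcmp(v, name) == 0;
}

static bool is_intel(uint32_t ebx, uint32_t ecx, uint32_t edx)
{
	return vendor_is(ebx, ecx, edx, "GenuineIntel");
}

static bool is_amd_or_hygon(uint32_t ebx, uint32_t ecx, uint32_t edx)
{
	return vendor_is(ebx, ecx, edx, "AuthenticAMD") ||
	       vendor_is(ebx, ecx, edx, "HygonGenuine");
}
```

For example, "GenuineIntel" arrives as EBX = 0x756e6547, EDX = 0x49656e69, ECX = 0x6c65746e.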

◆ guest_cpuid_model()

`static int guest_cpuid_model(struct kvm_vcpu *vcpu)`

inline static

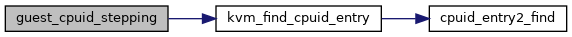

◆ guest_cpuid_stepping()

|

inlinestatic |
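guest_cpuid_family(), guest_cpuid_model() and guest_cpuid_stepping() decode the processor signature in CPUID leaf 1 EAX. The sketch below assumes they follow the standard x86 decoding rules: the extended family is added only when the base family is 0xF, and the extended model only for family 6 and above. The function names are local to the sketch.

```c
#include <assert.h>
#include <stdint.h>

static unsigned int sig_family(uint32_t sig)
{
	unsigned int family = (sig >> 8) & 0xf;

	if (family == 0xf)
		family += (sig >> 20) & 0xff; /* extended family */
	return family;
}

static unsigned int sig_model(uint32_t sig)
{
	unsigned int model = (sig >> 4) & 0xf;

	if (sig_family(sig) >= 0x6)
		model += ((sig >> 16) & 0xf) << 4; /* extended model */
	return model;
}

static unsigned int sig_stepping(uint32_t sig)
{
	return sig & 0xf;
}
```

For example, a signature of 0x000906EA decodes to family 6, model 0x9E, stepping 0xA, and 0x00800F11 decodes to family 0x17, model 1, stepping 1.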

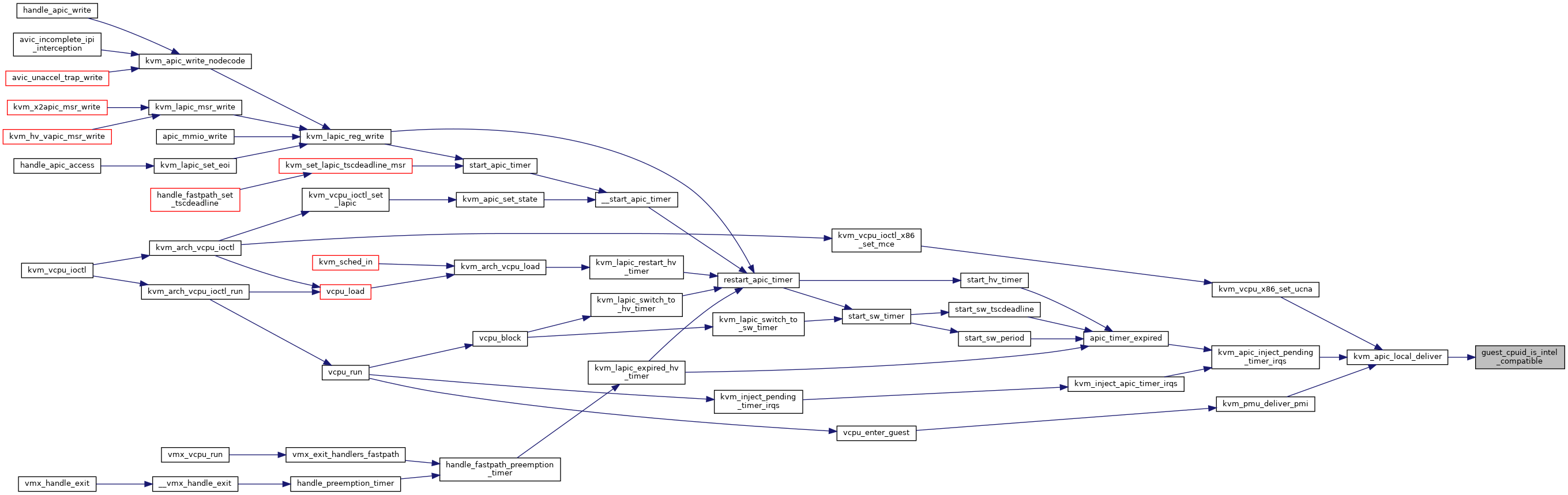

◆ guest_has_pred_cmd_msr()

|

inlinestatic |

Definition at line 179 of file cpuid.h.

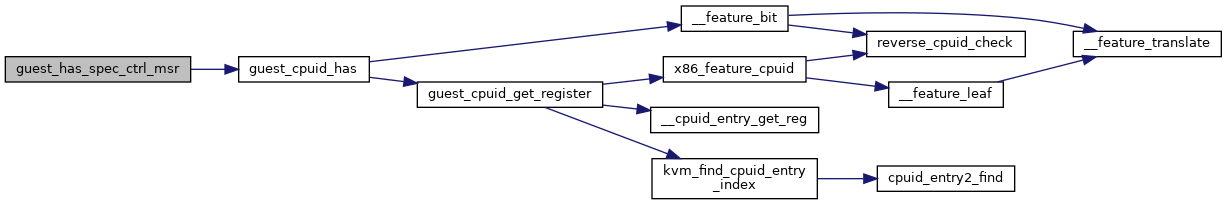

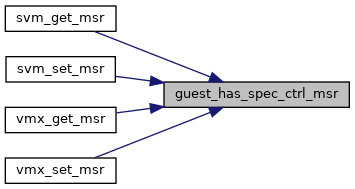

◆ guest_has_spec_ctrl_msr()

|

inlinestatic |

◆ guest_pv_has()

`static __always_inline bool guest_pv_has(struct kvm_vcpu *vcpu, unsigned int kvm_feature)`

static
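guest_pv_has() gates KVM paravirtual features that the guest sees through the KVM CPUID leaves. A sketch, assuming the check is applied only when userspace has asked KVM to enforce the PV feature bits (via KVM_CAP_ENFORCE_PV_FEATURE_CPUID) and otherwise permits everything; struct pv_cpuid is a hypothetical stand-in for the per-vCPU state.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for the vCPU's cached PV CPUID state. */
struct pv_cpuid {
	bool enforce;      /* is the PV feature mask enforced? */
	uint32_t features; /* KVM_FEATURE_* bits exposed to the guest */
};

static bool pv_has(const struct pv_cpuid *pv, unsigned int kvm_feature)
{
	/* Without enforcement, every PV feature is allowed. */
	if (!pv->enforce)
		return true;
	return pv->features & (1u << kvm_feature);
}
```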

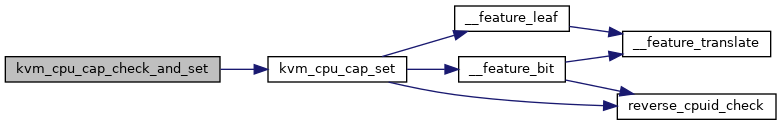

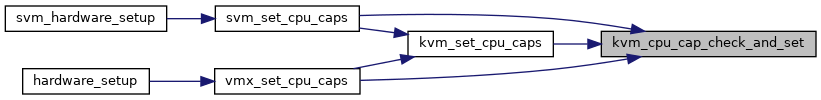

◆ kvm_cpu_cap_check_and_set()

|

static |

Definition at line 226 of file cpuid.h.

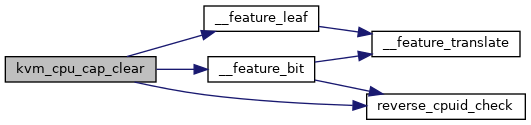

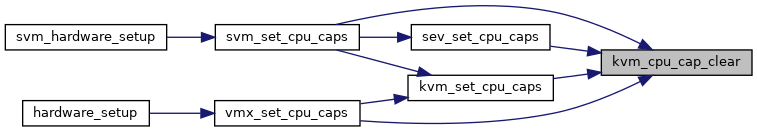

◆ kvm_cpu_cap_clear()

|

static |

Definition at line 197 of file cpuid.h.

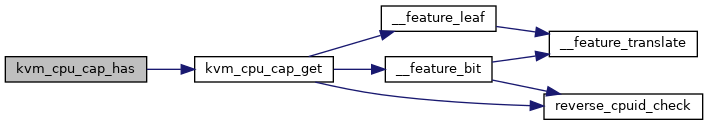

◆ kvm_cpu_cap_get()

|

static |

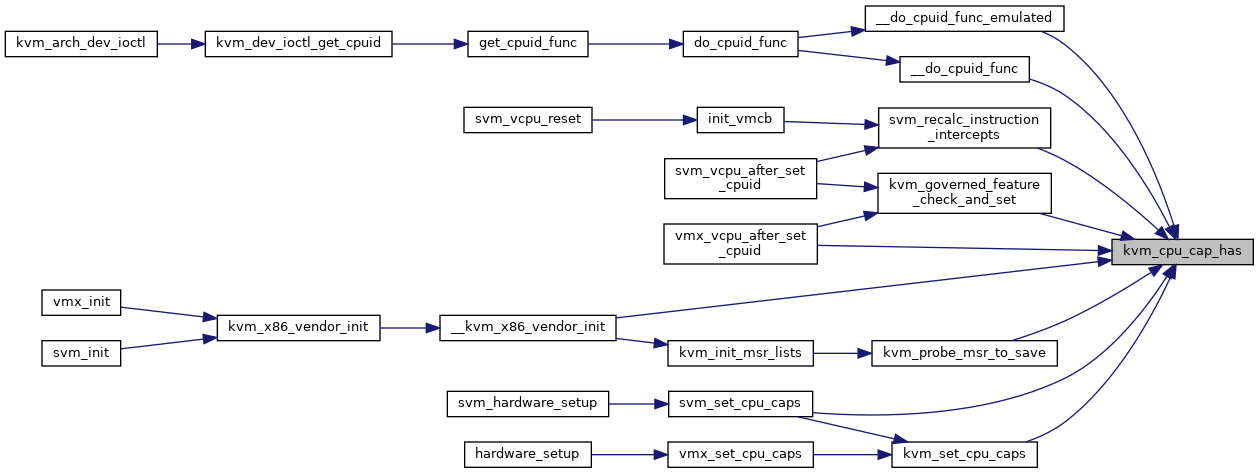

◆ kvm_cpu_cap_has()

|

static |

Definition at line 221 of file cpuid.h.

◆ kvm_cpu_cap_set()

`static __always_inline void kvm_cpu_cap_set(unsigned int x86_feature)`

static
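The kvm_cpu_cap_*() helpers manipulate kvm_cpu_caps[], the table of CPUID bits KVM is prepared to advertise. A simplified sketch, assuming a feature number encodes word * 32 + bit (the kernel additionally remaps words through reverse_cpuid.h) and that kvm_cpu_cap_check_and_set() sets a bit only when the host CPU has the feature; NR_CAP_WORDS and host_caps are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NR_CAP_WORDS 4 /* hypothetical table size */

static uint32_t cpu_caps[NR_CAP_WORDS];  /* bits KVM may expose */
static uint32_t host_caps[NR_CAP_WORDS]; /* bits the host CPU has */

static unsigned int cap_word(unsigned int f) { return f / 32; }
static uint32_t cap_bit(unsigned int f) { return 1u << (f % 32); }

static void cap_set(unsigned int f)
{
	assert(cap_word(f) < NR_CAP_WORDS);
	cpu_caps[cap_word(f)] |= cap_bit(f);
}

static void cap_clear(unsigned int f)
{
	assert(cap_word(f) < NR_CAP_WORDS);
	cpu_caps[cap_word(f)] &= ~cap_bit(f);
}

/* Returns the masked bit itself, not a normalized boolean. */
static uint32_t cap_get(unsigned int f)
{
	assert(cap_word(f) < NR_CAP_WORDS);
	return cpu_caps[cap_word(f)] & cap_bit(f);
}

static bool cap_has(unsigned int f)
{
	return cap_get(f) != 0;
}

/* Advertise the feature only if the host actually supports it. */
static void cap_check_and_set(unsigned int f)
{
	assert(cap_word(f) < NR_CAP_WORDS);
	if (host_caps[cap_word(f)] & cap_bit(f))
		cap_set(f);
}
```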

◆ kvm_cpuid()

`bool kvm_cpuid(struct kvm_vcpu *vcpu, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only)`

Definition at line 1531 of file cpuid.c.

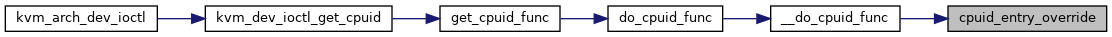

◆ kvm_dev_ioctl_get_cpuid()

| int kvm_dev_ioctl_get_cpuid | ( | struct kvm_cpuid2 * | cpuid, |

| struct kvm_cpuid_entry2 __user * | entries, | ||

| unsigned int | type | ||

| ) |

Definition at line 1404 of file cpuid.c.

◆ kvm_find_cpuid_entry()

`struct kvm_cpuid_entry2 *kvm_find_cpuid_entry(struct kvm_vcpu *vcpu, u32 function)`

Definition at line 1455 of file cpuid.c.

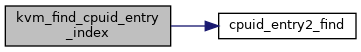

◆ kvm_find_cpuid_entry_index()

| struct kvm_cpuid_entry2* kvm_find_cpuid_entry_index | ( | struct kvm_vcpu * | vcpu, |

| u32 | function, | ||

| u32 | index | ||

| ) |
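kvm_find_cpuid_entry_index() looks up a leaf/subleaf pair in the vCPU's CPUID table, and kvm_find_cpuid_entry() serves leaves that have no subleaves. The sketch assumes the matching rule of the KVM_SET_CPUID2 ABI: the subleaf is compared only for entries flagged as index-significant. The struct and SIGNIFICANT_INDEX are local stand-ins; the real UAPI flag is KVM_CPUID_FLAG_SIGNIFCANT_INDEX (spelled that way in the header).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SIGNIFICANT_INDEX (1u << 0) /* mirrors the UAPI flag bit */

/* Hypothetical mirror of the leading fields of struct kvm_cpuid_entry2. */
struct cpuid_entry2 {
	uint32_t function;
	uint32_t index;
	uint32_t flags;
	uint32_t eax, ebx, ecx, edx;
};

static struct cpuid_entry2 *find_entry_index(struct cpuid_entry2 *e,
					     size_t n, uint32_t function,
					     uint32_t index)
{
	for (size_t i = 0; i < n; i++) {
		if (e[i].function != function)
			continue;
		/* The subleaf only matters for index-significant entries. */
		if (!(e[i].flags & SIGNIFICANT_INDEX) || e[i].index == index)
			return &e[i];
	}
	return NULL;
}
```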

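◆ kvm_governed_feature_check_and_set()

`static __always_inline void kvm_governed_feature_check_and_set(struct kvm_vcpu *vcpu, unsigned int x86_feature)`

static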

Definition at line 271 of file cpuid.h.

◆ kvm_governed_feature_index()

`static __always_inline int kvm_governed_feature_index(unsigned int x86_feature)`

static

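◆ kvm_governed_feature_set()

`static __always_inline void kvm_governed_feature_set(struct kvm_vcpu *vcpu, unsigned int x86_feature)`

static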

◆ kvm_is_governed_feature()

`static __always_inline bool kvm_is_governed_feature(unsigned int x86_feature)`

static
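The governed-feature helpers record, per vCPU, which features KVM has decided the guest may use. A sketch under the assumption that kvm_governed_feature_check_and_set() enables a feature only when KVM supports it and the guest's CPUID also advertises it, and that guest_can_use() then just tests the per-vCPU bit. All names are hypothetical, and the dense index passed in would come from kvm_governed_feature_index() in the real code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NR_GOVERNED 8 /* hypothetical; must stay <= 64 for one word */

/* Hypothetical per-vCPU state. */
struct vcpu_model {
	uint64_t governed_enabled;          /* one bit per governed feature */
	bool guest_cpuid_bit[NR_GOVERNED];  /* what the guest CPUID says */
};

/* Hypothetical: what KVM itself supports, by governed index. */
static bool kvm_supports[NR_GOVERNED];

static void governed_set(struct vcpu_model *v, int idx)
{
	assert(idx >= 0 && idx < NR_GOVERNED);
	v->governed_enabled |= UINT64_C(1) << idx;
}

static void governed_check_and_set(struct vcpu_model *v, int idx)
{
	assert(idx >= 0 && idx < NR_GOVERNED);
	/* Both KVM and the guest's CPUID must agree. */
	if (kvm_supports[idx] && v->guest_cpuid_bit[idx])
		governed_set(v, idx);
}

static bool can_use(const struct vcpu_model *v, int idx)
{
	assert(idx >= 0 && idx < NR_GOVERNED);
	return (v->governed_enabled >> idx) & 1;
}
```

Caching the decision as one bit keeps hot-path checks cheap compared with re-walking the CPUID table.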

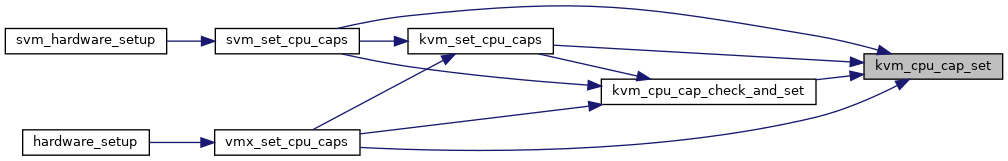

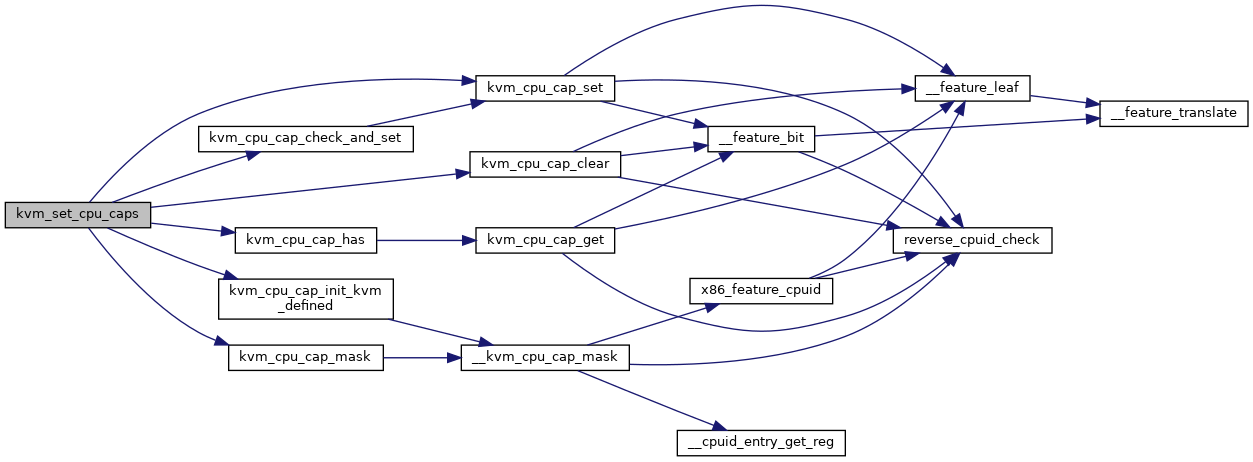

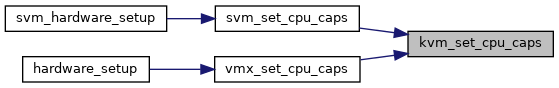

◆ kvm_set_cpu_caps()

| void kvm_set_cpu_caps | ( | void | ) |

Definition at line 585 of file cpuid.c.

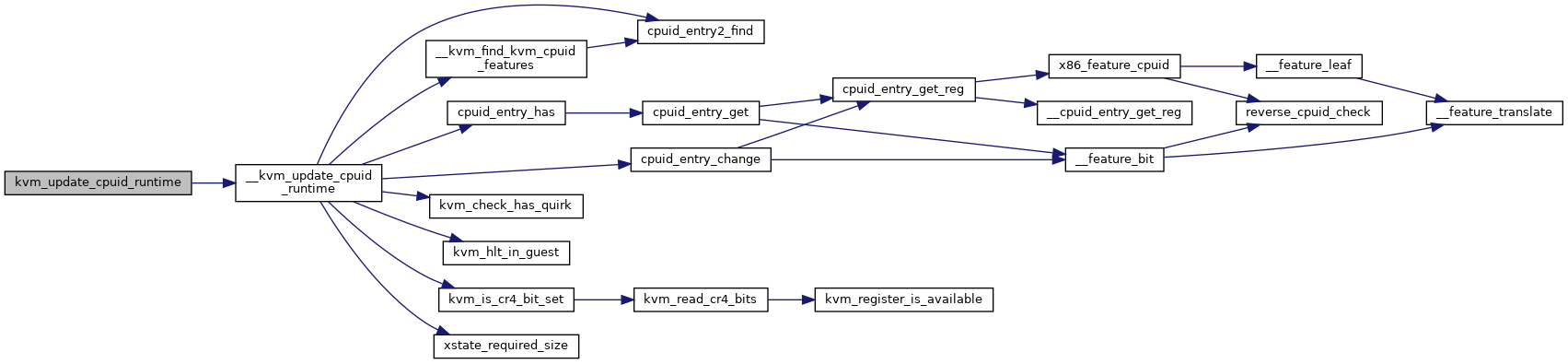

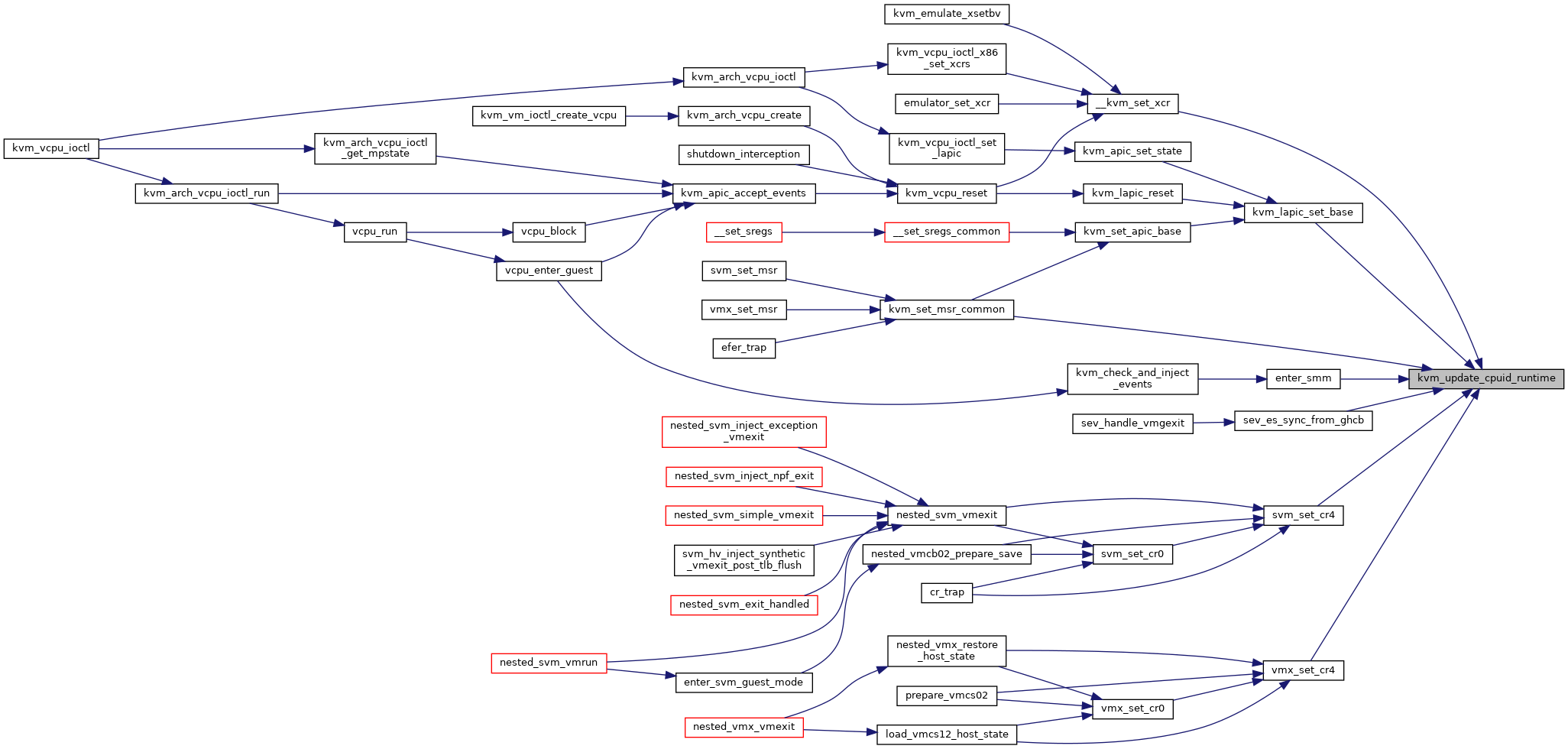

◆ kvm_update_cpuid_runtime()

| void kvm_update_cpuid_runtime | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 309 of file cpuid.c.

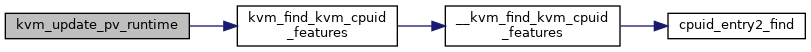

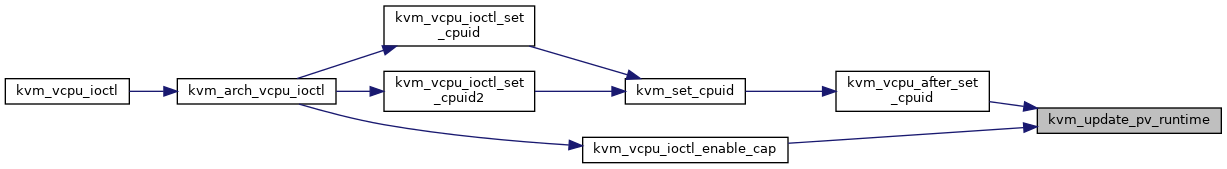

◆ kvm_update_pv_runtime()

| void kvm_update_pv_runtime | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 238 of file cpuid.c.

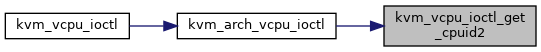

◆ kvm_vcpu_ioctl_get_cpuid2()

| int kvm_vcpu_ioctl_get_cpuid2 | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_cpuid2 * | cpuid, | ||

| struct kvm_cpuid_entry2 __user * | entries | ||

| ) |

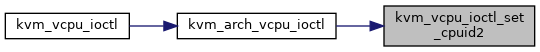

◆ kvm_vcpu_ioctl_set_cpuid()

| int kvm_vcpu_ioctl_set_cpuid | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_cpuid * | cpuid, | ||

| struct kvm_cpuid_entry __user * | entries | ||

| ) |

Definition at line 467 of file cpuid.c.

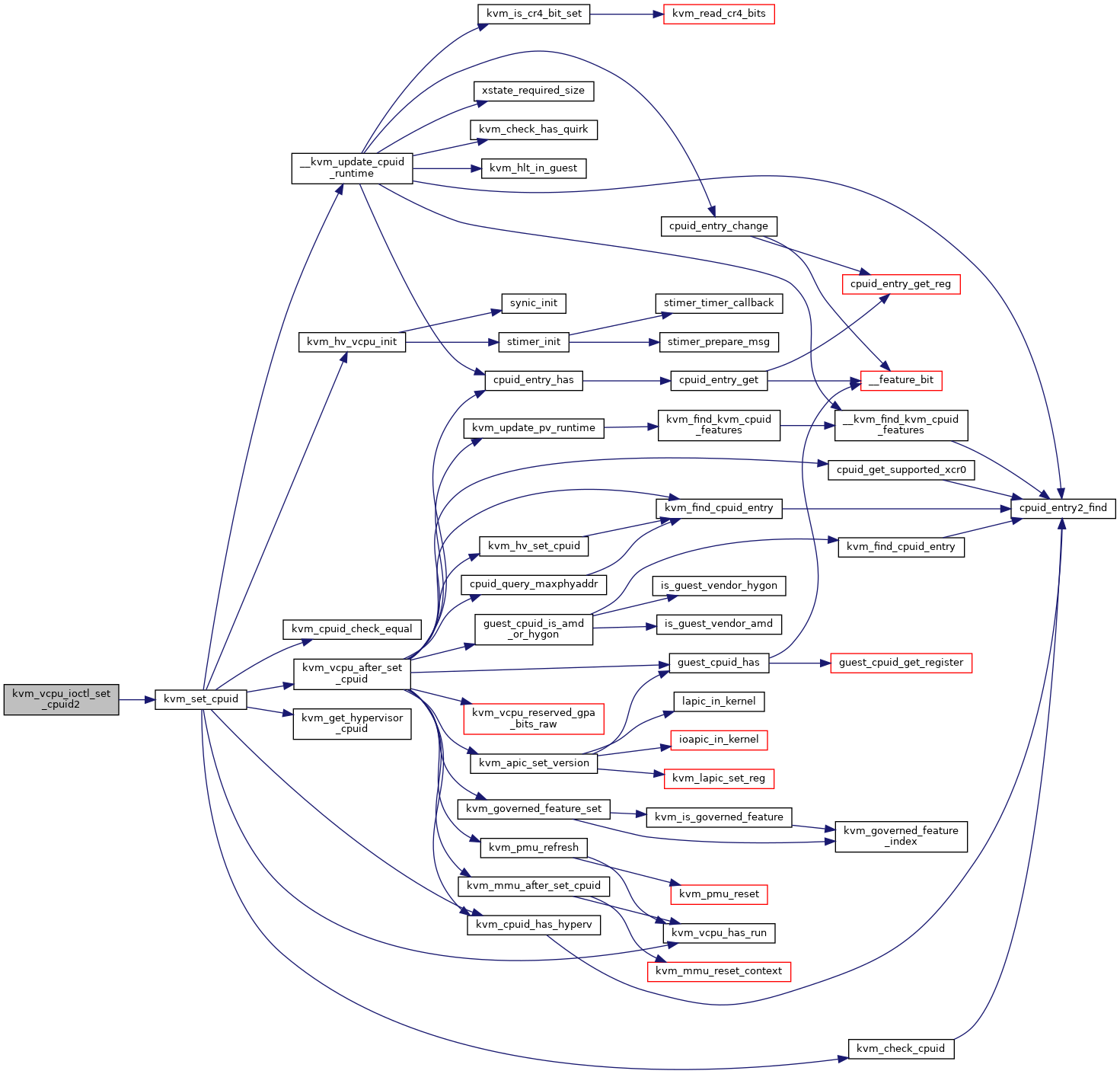

◆ kvm_vcpu_ioctl_set_cpuid2()

| int kvm_vcpu_ioctl_set_cpuid2 | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_cpuid2 * | cpuid, | ||

| struct kvm_cpuid_entry2 __user * | entries | ||

| ) |

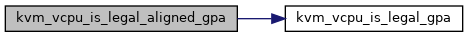

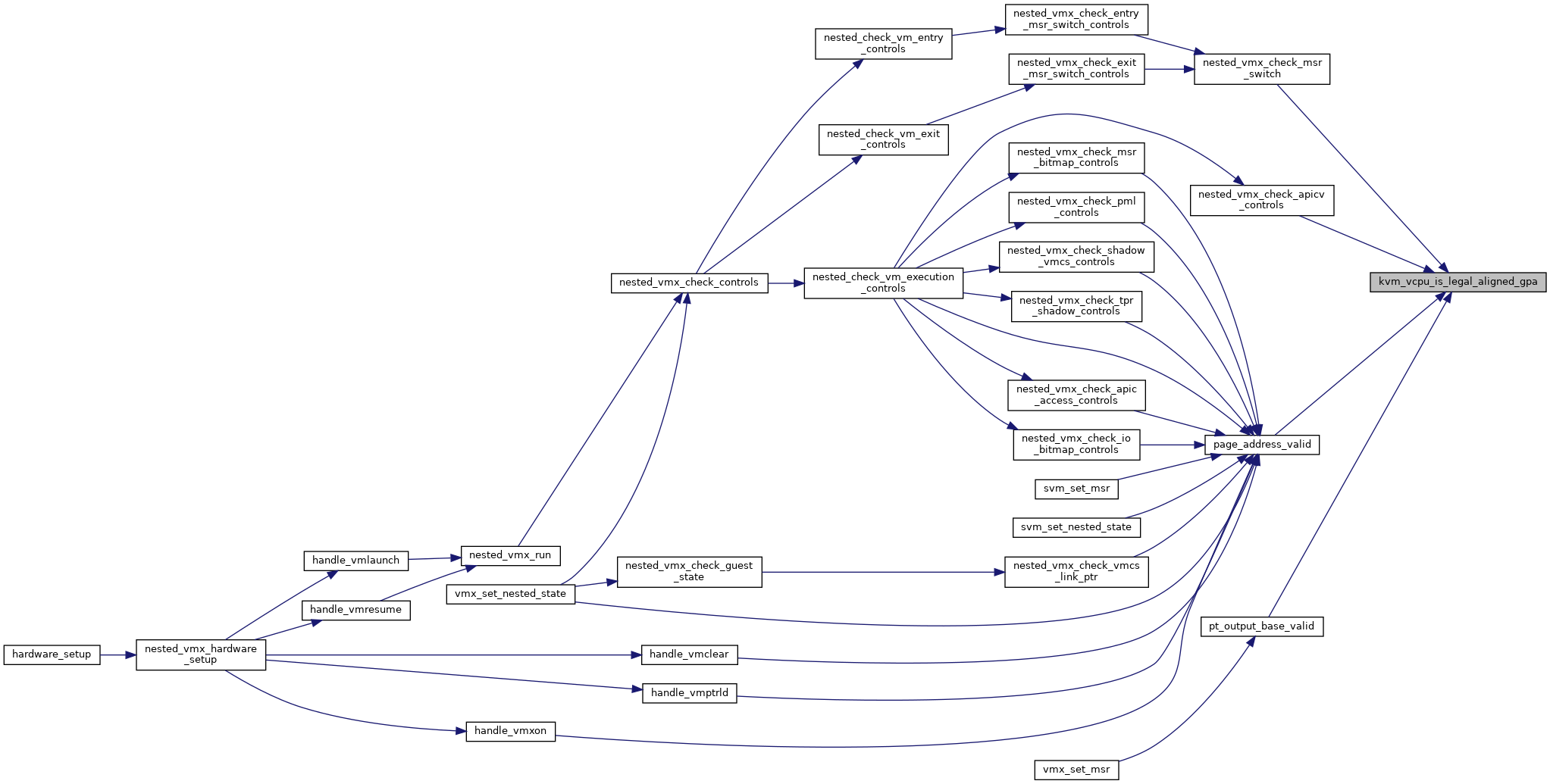

◆ kvm_vcpu_is_legal_aligned_gpa()

|

inlinestatic |

Definition at line 50 of file cpuid.h.

◆ kvm_vcpu_is_legal_cr3()

`static bool kvm_vcpu_is_legal_cr3(struct kvm_vcpu *vcpu, unsigned long cr3)`

inline static

Definition at line 287 of file cpuid.h.

◆ kvm_vcpu_is_legal_gpa()

`static bool kvm_vcpu_is_legal_gpa(struct kvm_vcpu *vcpu, gpa_t gpa)`

inline static

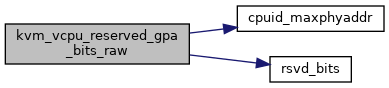

◆ kvm_vcpu_reserved_gpa_bits_raw()

| u64 kvm_vcpu_reserved_gpa_bits_raw | ( | struct kvm_vcpu * | vcpu | ) |

◆ page_address_valid()

`static bool page_address_valid(struct kvm_vcpu *vcpu, gpa_t gpa)`

inline static

Definition at line 56 of file cpuid.h.
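kvm_vcpu_is_legal_gpa(), kvm_vcpu_is_legal_aligned_gpa() and page_address_valid() reject guest physical addresses that set bits the guest cannot address. A sketch assuming the reserved mask covers bits MAXPHYADDR through 63, that alignments are powers of two, and a 4 KiB page size; the helper names and PAGE_SIZE_4K are local to the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SIZE_4K 4096u

/* Mask of GPA bits [maxphyaddr, 63], i.e. the reserved bits. */
static uint64_t reserved_gpa_bits(int maxphyaddr)
{
	assert(maxphyaddr > 0 && maxphyaddr <= 64);
	return maxphyaddr == 64 ? 0 : ~UINT64_C(0) << maxphyaddr;
}

static bool is_legal_gpa(uint64_t gpa, int maxphyaddr)
{
	return (gpa & reserved_gpa_bits(maxphyaddr)) == 0;
}

static bool is_legal_aligned_gpa(uint64_t gpa, uint64_t alignment,
				 int maxphyaddr)
{
	/* alignment is assumed to be a power of two */
	return (gpa & (alignment - 1)) == 0 && is_legal_gpa(gpa, maxphyaddr);
}

static bool page_address_ok(uint64_t gpa, int maxphyaddr)
{
	return is_legal_aligned_gpa(gpa, PAGE_SIZE_4K, maxphyaddr);
}
```

With MAXPHYADDR = 36, for instance, the reserved mask is 0xfffffff000000000.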

◆ supports_cpuid_fault()

`static bool supports_cpuid_fault(struct kvm_vcpu *vcpu)`

inline static
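supports_cpuid_fault() and cpuid_fault_enabled() relate to Intel's CPUID-faulting feature. A sketch, assuming the former tests bit 31 of the guest's emulated MSR_PLATFORM_INFO and the latter tests bit 0 of MSR_MISC_FEATURES_ENABLES; struct vcpu_msrs is a hypothetical stand-in for KVM's per-vCPU copies of those MSRs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PLATFORM_INFO_CPUID_FAULT (UINT64_C(1) << 31)
#define MISC_FEATURES_CPUID_FAULT (UINT64_C(1) << 0)

/* Hypothetical shadow copies of the guest-visible MSR values. */
struct vcpu_msrs {
	uint64_t platform_info;
	uint64_t misc_features_enables;
};

/* Is CPUID faulting offered to the guest at all? */
static bool cpuid_fault_supported(const struct vcpu_msrs *m)
{
	return m->platform_info & PLATFORM_INFO_CPUID_FAULT;
}

/* Has the guest switched CPUID faulting on? */
static bool cpuid_fault_on(const struct vcpu_msrs *m)
{
	return m->misc_features_enables & MISC_FEATURES_CPUID_FAULT;
}
```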

◆ xstate_required_size()

`u32 xstate_required_size(u64 xstate_bv, bool compacted)`
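xstate_required_size() returns how large an XSAVE buffer must be to hold the state components selected in xstate_bv. The sketch below assumes: the 512-byte legacy area plus the 64-byte XSAVE header are always counted; each extended component i contributes the size reported by CPUID.(EAX=0xD, ECX=i); in the standard format a component sits at its CPUID-reported offset, while in the compacted format components are packed in order and 64-byte aligned when flagged. The comps[] table replaces the CPUID query, and the values for component 3 are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define XSAVE_LEGACY_SIZE 512u
#define XSAVE_HDR_SIZE 64u

/* Illustrative stand-in for CPUID.(EAX=0xD, ECX=i) per component. */
struct xstate_comp {
	uint32_t size;   /* EAX: component size */
	uint32_t offset; /* EBX: standard-format offset */
	bool align64;    /* ECX[1]: 64-byte aligned when compacted */
};

static const struct xstate_comp comps[] = {
	[2] = { 256, 576, false }, /* AVX upper halves */
	[3] = { 64, 960, true },   /* hypothetical extra component */
};
#define NR_COMPS (sizeof(comps) / sizeof(comps[0]))

static uint32_t align_up(uint32_t v, uint32_t a)
{
	return (v + a - 1) & ~(a - 1);
}

static uint32_t xstate_size(uint64_t xstate_bv, bool compacted)
{
	uint32_t ret = XSAVE_LEGACY_SIZE + XSAVE_HDR_SIZE;

	/* Components 0 (x87) and 1 (SSE) live in the legacy area. */
	for (unsigned int i = 2; i < 64; i++) {
		if (!(xstate_bv & (UINT64_C(1) << i)))
			continue;
		assert(i < NR_COMPS); /* only modelled components */
		uint32_t offset = compacted
			? (comps[i].align64 ? align_up(ret, 64) : ret)
			: comps[i].offset;
		if (offset + comps[i].size > ret)
			ret = offset + comps[i].size;
	}
	return ret;
}
```

With x87, SSE and AVX enabled (xstate_bv = 0x7), both formats come to 576 + 256 = 832 bytes; the formats only diverge once a later component's standard offset leaves a gap.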

Variable Documentation

◆ kvm_cpu_caps

`extern u32 kvm_cpu_caps[NR_KVM_CPU_CAPS] __read_mostly`

extern