Functions

| static __always_inline u64 | rsvd_bits (int s, int e) |

| static gfn_t | kvm_mmu_max_gfn (void) |

| static u8 | kvm_get_shadow_phys_bits (void) |

| void | kvm_mmu_set_mmio_spte_mask (u64 mmio_value, u64 mmio_mask, u64 access_mask) |

| void | kvm_mmu_set_me_spte_mask (u64 me_value, u64 me_mask) |

| void | kvm_mmu_set_ept_masks (bool has_ad_bits, bool has_exec_only) |

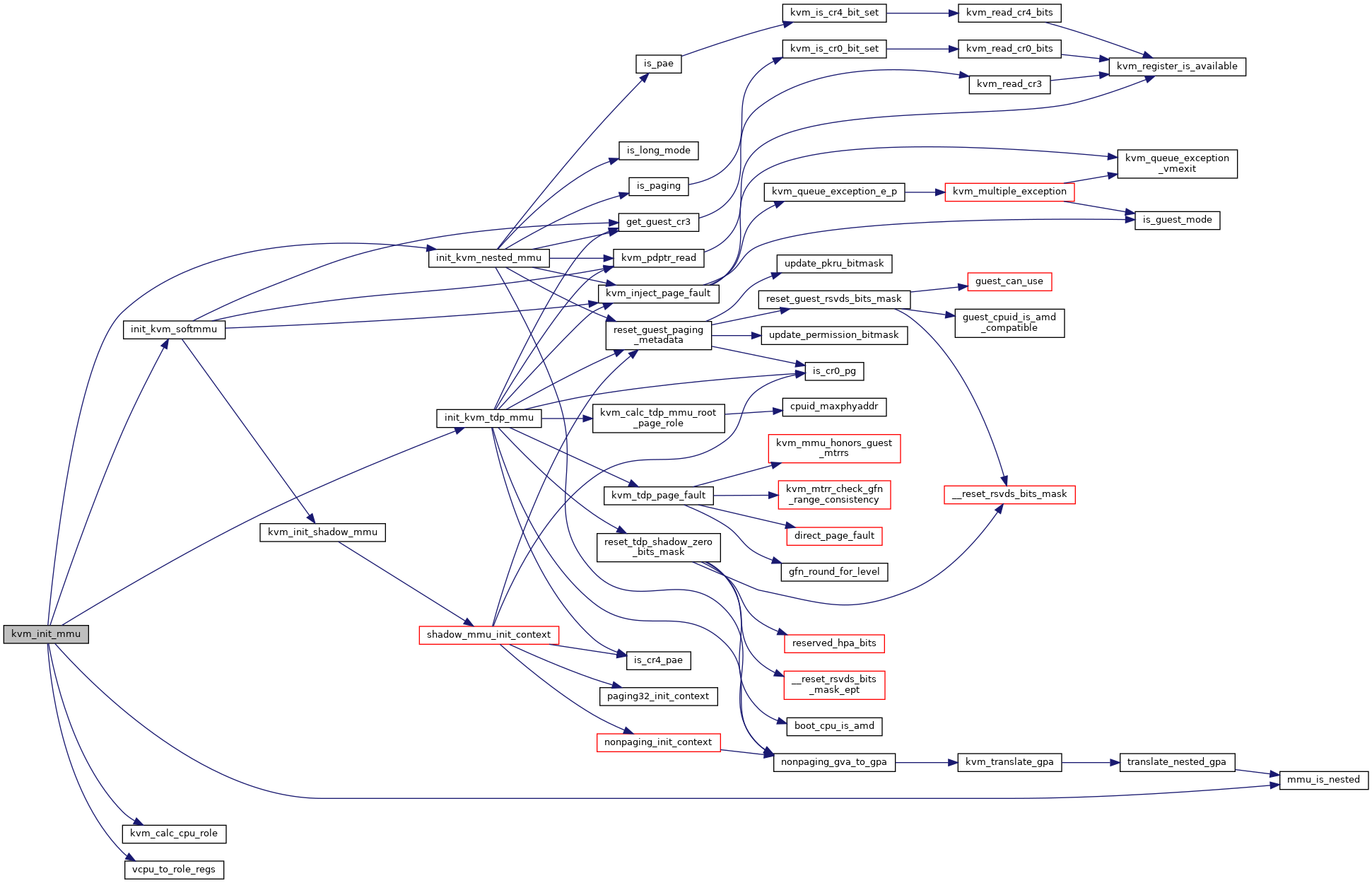

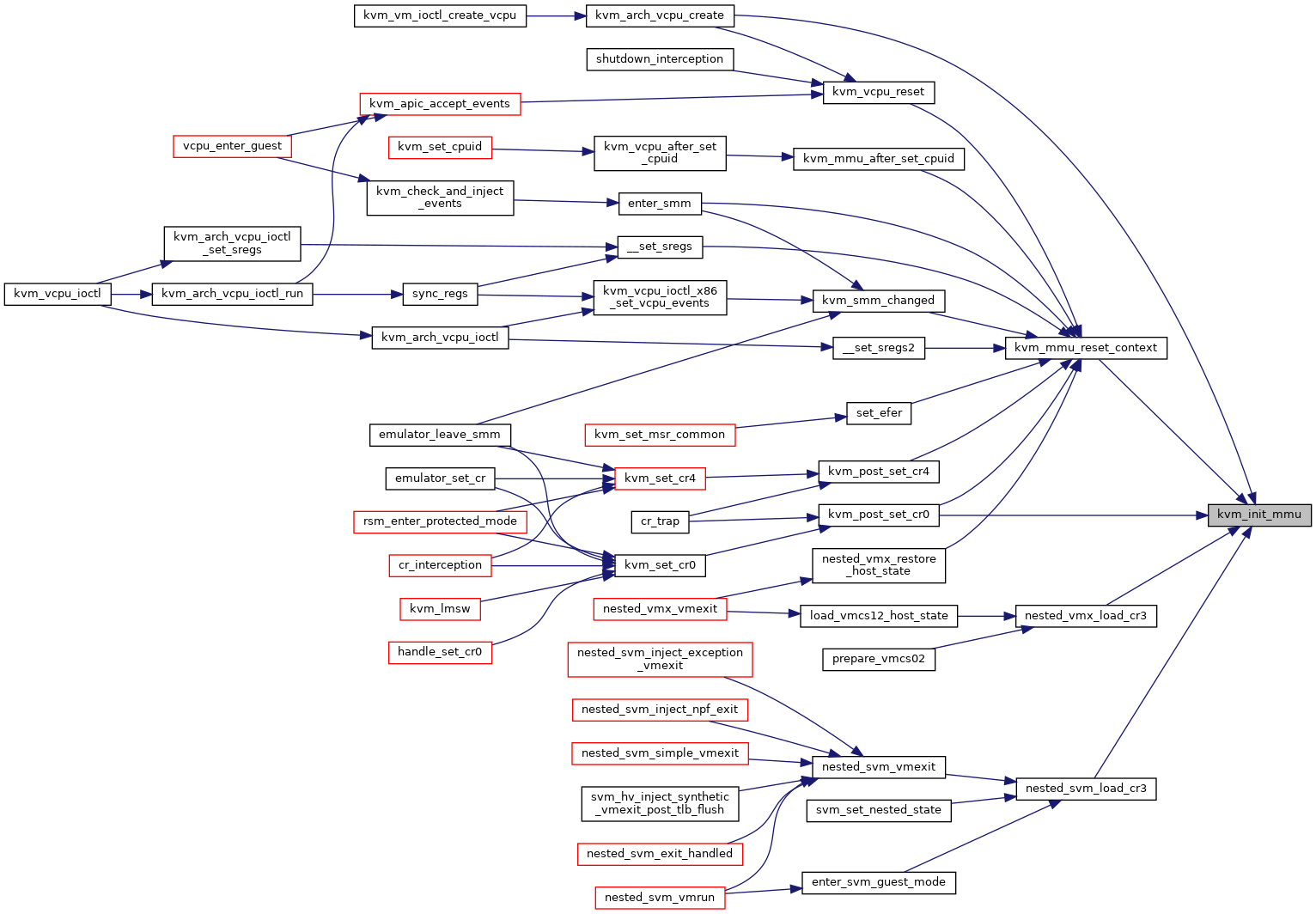

| void | kvm_init_mmu (struct kvm_vcpu *vcpu) |

| void | kvm_init_shadow_npt_mmu (struct kvm_vcpu *vcpu, unsigned long cr0, unsigned long cr4, u64 efer, gpa_t nested_cr3) |

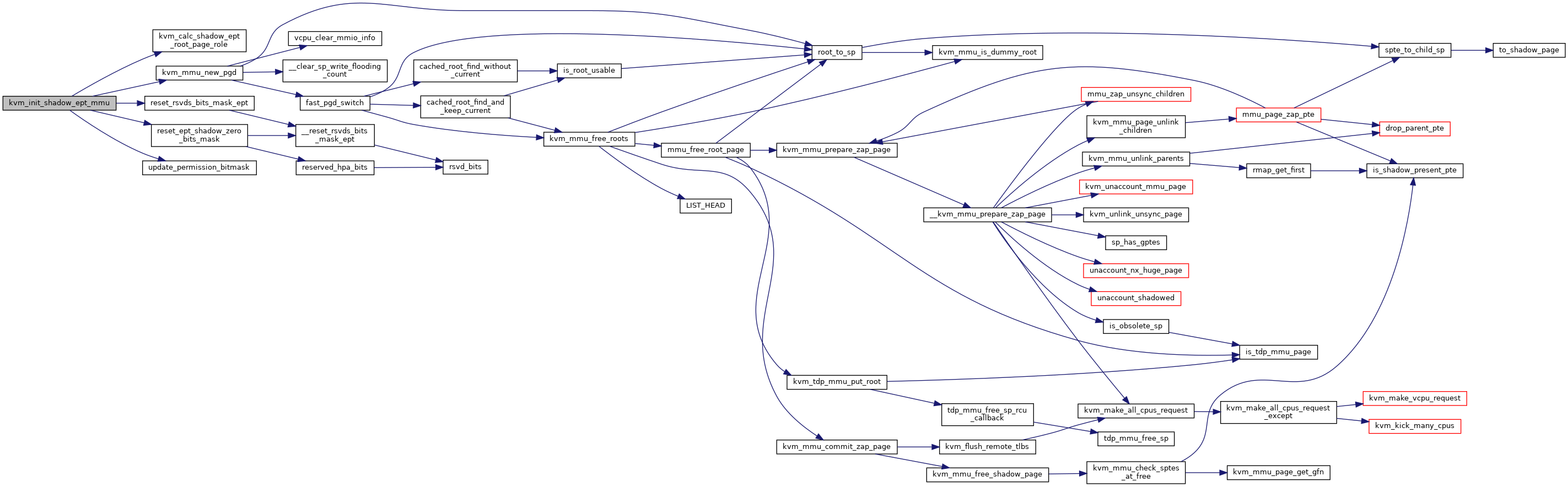

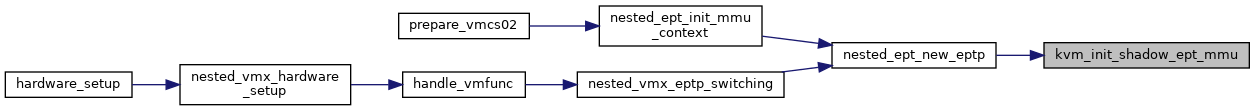

| void | kvm_init_shadow_ept_mmu (struct kvm_vcpu *vcpu, bool execonly, int huge_page_level, bool accessed_dirty, gpa_t new_eptp) |

| bool | kvm_can_do_async_pf (struct kvm_vcpu *vcpu) |

| int | kvm_handle_page_fault (struct kvm_vcpu *vcpu, u64 error_code, u64 fault_address, char *insn, int insn_len) |

| void | __kvm_mmu_refresh_passthrough_bits (struct kvm_vcpu *vcpu, struct kvm_mmu *mmu) |

| int | kvm_mmu_load (struct kvm_vcpu *vcpu) |

| void | kvm_mmu_unload (struct kvm_vcpu *vcpu) |

| void | kvm_mmu_free_obsolete_roots (struct kvm_vcpu *vcpu) |

| void | kvm_mmu_sync_roots (struct kvm_vcpu *vcpu) |

| void | kvm_mmu_sync_prev_roots (struct kvm_vcpu *vcpu) |

| void | kvm_mmu_track_write (struct kvm_vcpu *vcpu, gpa_t gpa, const u8 *new, int bytes) |

| static int | kvm_mmu_reload (struct kvm_vcpu *vcpu) |

| static unsigned long | kvm_get_pcid (struct kvm_vcpu *vcpu, gpa_t cr3) |

| static unsigned long | kvm_get_active_pcid (struct kvm_vcpu *vcpu) |

| static unsigned long | kvm_get_active_cr3_lam_bits (struct kvm_vcpu *vcpu) |

| static void | kvm_mmu_load_pgd (struct kvm_vcpu *vcpu) |

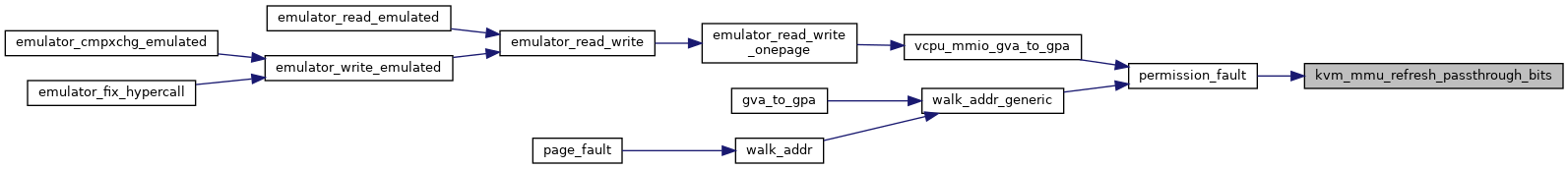

| static void | kvm_mmu_refresh_passthrough_bits (struct kvm_vcpu *vcpu, struct kvm_mmu *mmu) |

| static u8 | permission_fault (struct kvm_vcpu *vcpu, struct kvm_mmu *mmu, unsigned pte_access, unsigned pte_pkey, u64 access) |

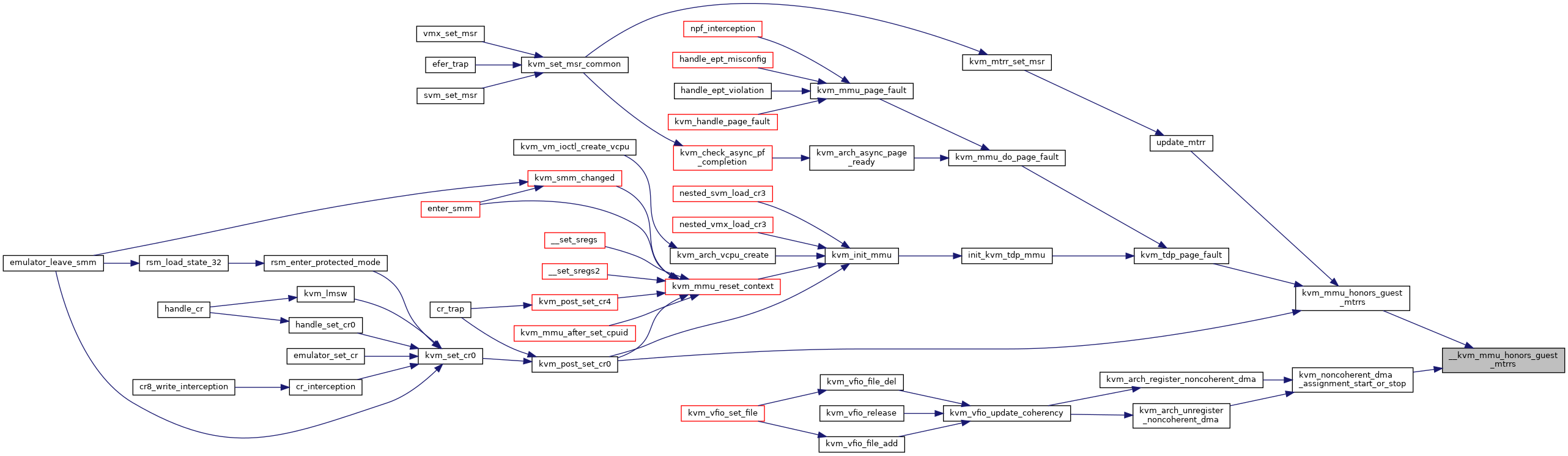

| bool | __kvm_mmu_honors_guest_mtrrs (bool vm_has_noncoherent_dma) |

| static bool | kvm_mmu_honors_guest_mtrrs (struct kvm *kvm) |

| void | kvm_zap_gfn_range (struct kvm *kvm, gfn_t gfn_start, gfn_t gfn_end) |

| int | kvm_arch_write_log_dirty (struct kvm_vcpu *vcpu) |

| int | kvm_mmu_post_init_vm (struct kvm *kvm) |

| void | kvm_mmu_pre_destroy_vm (struct kvm *kvm) |

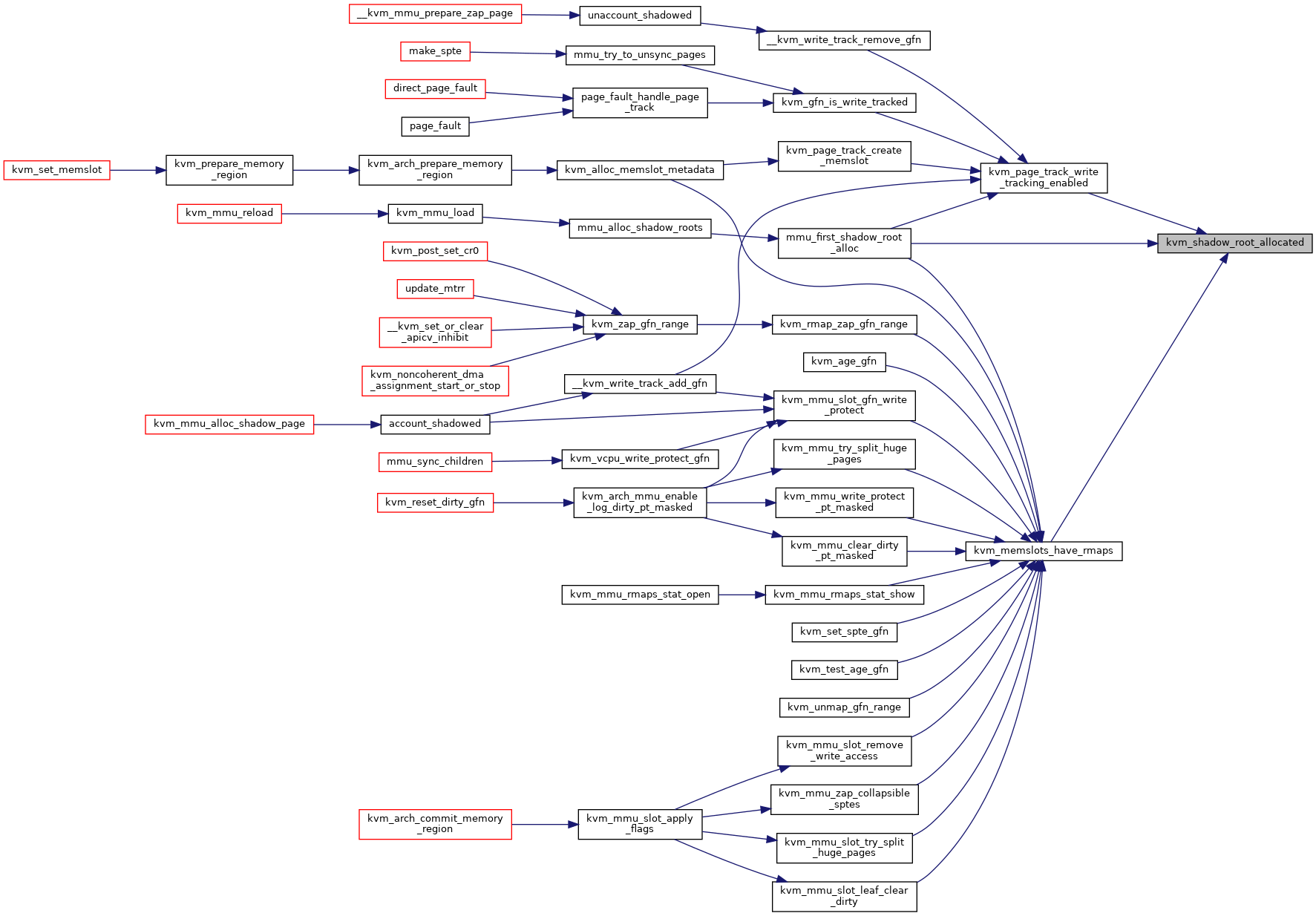

| static bool | kvm_shadow_root_allocated (struct kvm *kvm) |

| static bool | kvm_memslots_have_rmaps (struct kvm *kvm) |

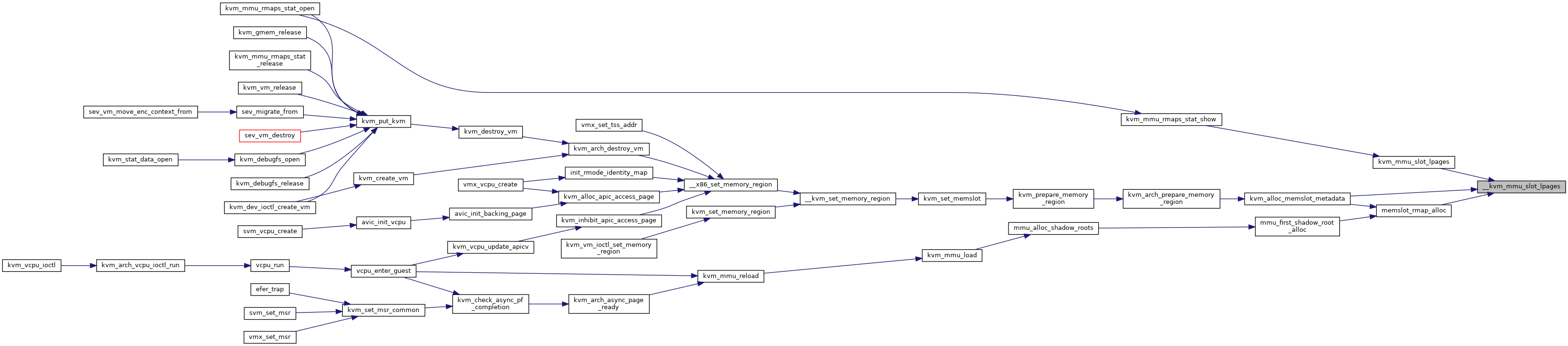

| static gfn_t | gfn_to_index (gfn_t gfn, gfn_t base_gfn, int level) |

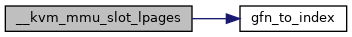

| static unsigned long | __kvm_mmu_slot_lpages (struct kvm_memory_slot *slot, unsigned long npages, int level) |

| static unsigned long | kvm_mmu_slot_lpages (struct kvm_memory_slot *slot, int level) |

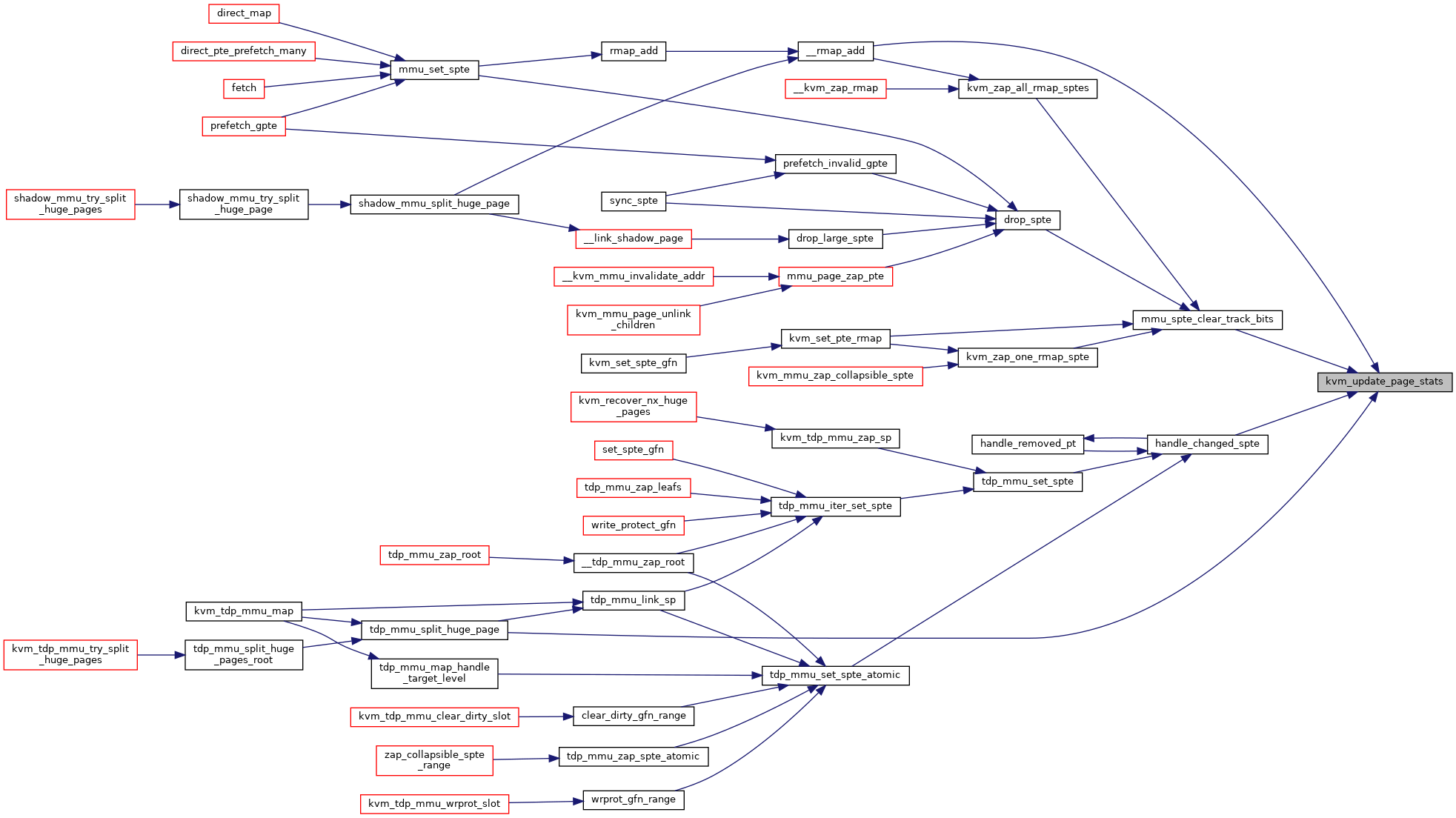

| static void | kvm_update_page_stats (struct kvm *kvm, int level, int count) |

| gpa_t | translate_nested_gpa (struct kvm_vcpu *vcpu, gpa_t gpa, u64 access, struct x86_exception *exception) |

| static gpa_t | kvm_translate_gpa (struct kvm_vcpu *vcpu, struct kvm_mmu *mmu, gpa_t gpa, u64 access, struct x86_exception *exception) |

Variables

| bool __read_mostly | enable_mmio_caching |

| u8 __read_mostly | shadow_phys_bits |

Macro Definition Documentation

◆ KVM_MMU_CR0_ROLE_BITS

◆ KVM_MMU_CR4_ROLE_BITS

| #define KVM_MMU_CR4_ROLE_BITS |

◆ KVM_MMU_EFER_ROLE_BITS

◆ PT32_ROOT_LEVEL

◆ PT32E_ROOT_LEVEL

◆ PT64_NX_MASK

| #define PT64_NX_MASK (1ULL << PT64_NX_SHIFT) |

◆ PT64_NX_SHIFT

◆ PT64_ROOT_4LEVEL

◆ PT64_ROOT_5LEVEL

◆ PT_ACCESSED_MASK

| #define PT_ACCESSED_MASK (1ULL << PT_ACCESSED_SHIFT) |

◆ PT_ACCESSED_SHIFT

◆ PT_DIR_PAT_MASK

| #define PT_DIR_PAT_MASK (1ULL << PT_DIR_PAT_SHIFT) |

◆ PT_DIR_PAT_SHIFT

◆ PT_DIRTY_MASK

| #define PT_DIRTY_MASK (1ULL << PT_DIRTY_SHIFT) |

◆ PT_DIRTY_SHIFT

◆ PT_GLOBAL_MASK

◆ PT_PAGE_SIZE_MASK

| #define PT_PAGE_SIZE_MASK (1ULL << PT_PAGE_SIZE_SHIFT) |

◆ PT_PAGE_SIZE_SHIFT

◆ PT_PAT_MASK

◆ PT_PAT_SHIFT

◆ PT_PCD_MASK

◆ PT_PRESENT_MASK

◆ PT_PWT_MASK

◆ PT_USER_MASK

| #define PT_USER_MASK (1ULL << PT_USER_SHIFT) |

◆ PT_USER_SHIFT

◆ PT_WRITABLE_MASK

| #define PT_WRITABLE_MASK (1ULL << PT_WRITABLE_SHIFT) |

◆ PT_WRITABLE_SHIFT
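The `PT_*_MASK` macros listed above all follow the same `1ULL << PT_*_SHIFT` pattern. The following is a minimal user-space sketch of how such masks are typically combined and tested. The shift values are not shown on this page; the ones used here are an assumption taken from the standard x86 page-table bit layout, and `pte_has_all()` and `pte_clear()` are hypothetical helpers introduced only for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed shift values: standard x86 page-table bit positions. */
#define PT_WRITABLE_SHIFT 1
#define PT_USER_SHIFT     2
#define PT_ACCESSED_SHIFT 5
#define PT_DIRTY_SHIFT    6
#define PT64_NX_SHIFT     63

/* Same form as the definitions documented above. */
#define PT_WRITABLE_MASK (1ULL << PT_WRITABLE_SHIFT)
#define PT_USER_MASK     (1ULL << PT_USER_SHIFT)
#define PT_ACCESSED_MASK (1ULL << PT_ACCESSED_SHIFT)
#define PT_DIRTY_MASK    (1ULL << PT_DIRTY_SHIFT)
#define PT64_NX_MASK     (1ULL << PT64_NX_SHIFT)

/* Hypothetical helper: true if every bit in mask is set in pte. */
static bool pte_has_all(uint64_t pte, uint64_t mask)
{
    return (pte & mask) == mask;
}

/* Hypothetical helper: return pte with the bits in mask cleared. */
static uint64_t pte_clear(uint64_t pte, uint64_t mask)
{
    return pte & ~mask;
}
```

Using `1ULL` rather than `1` keeps the shift well defined for bit 63, which is why `PT64_NX_MASK` can be built with the same pattern as the low bits.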

◆ tdp_mmu_enabled

Function Documentation

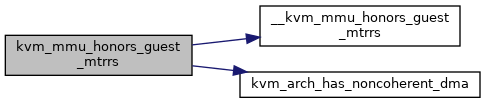

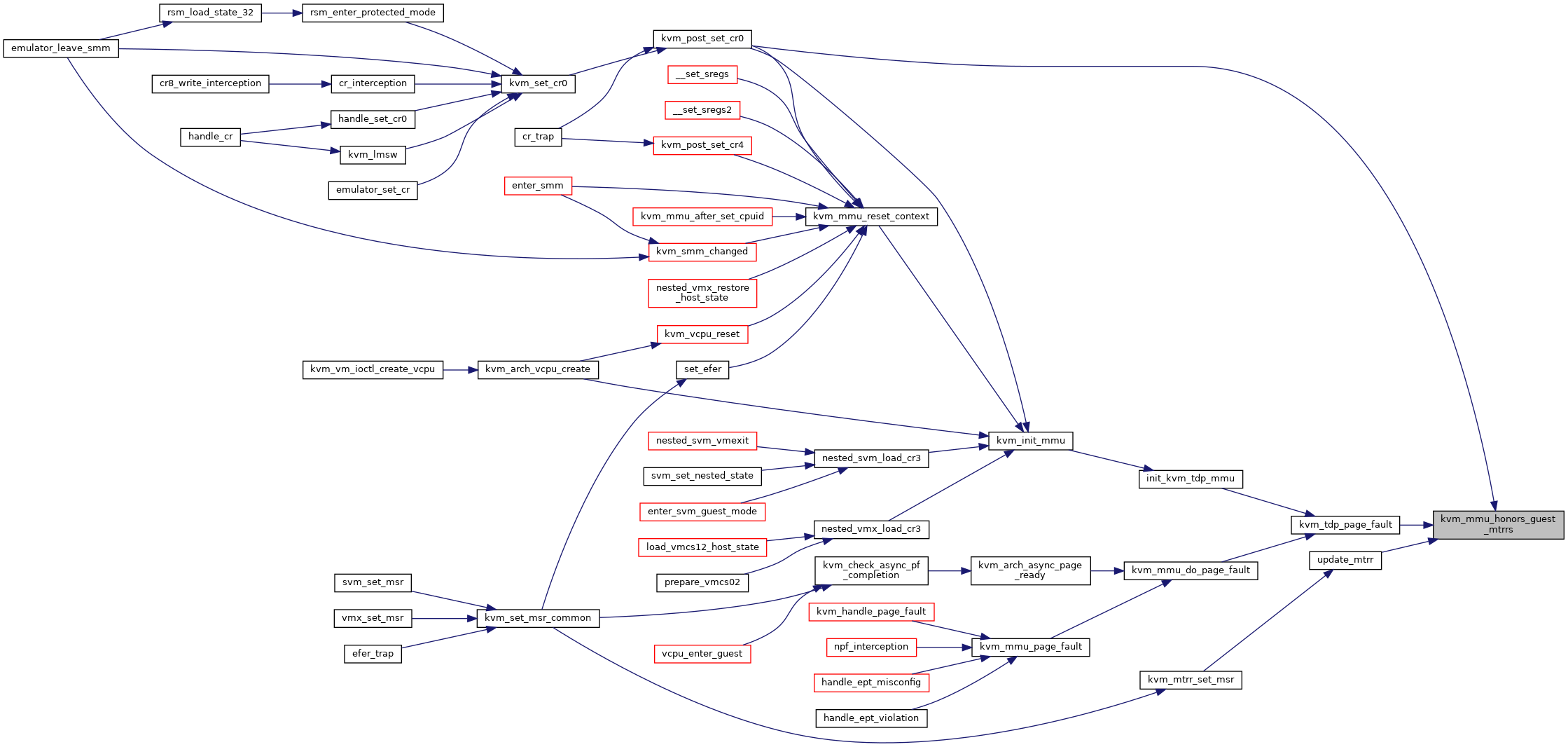

◆ __kvm_mmu_honors_guest_mtrrs()

| bool __kvm_mmu_honors_guest_mtrrs | ( | bool | vm_has_noncoherent_dma | ) |

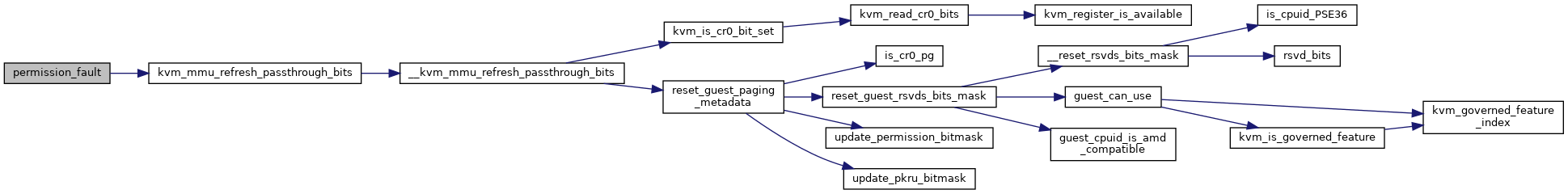

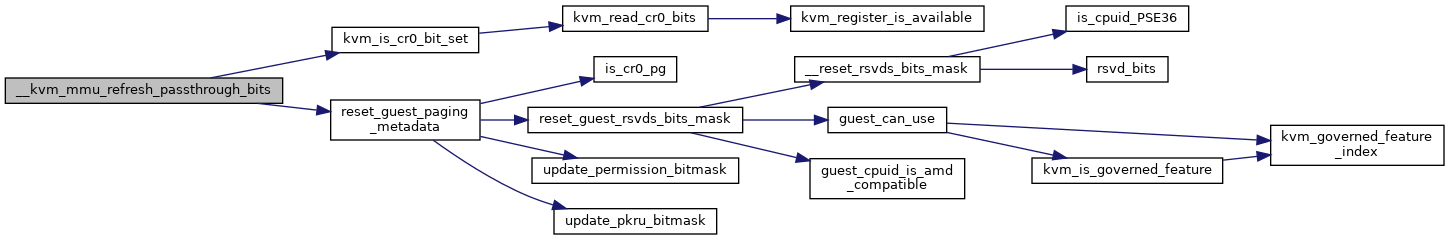

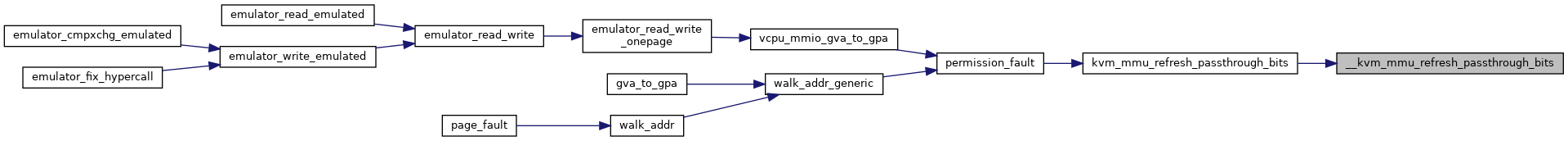

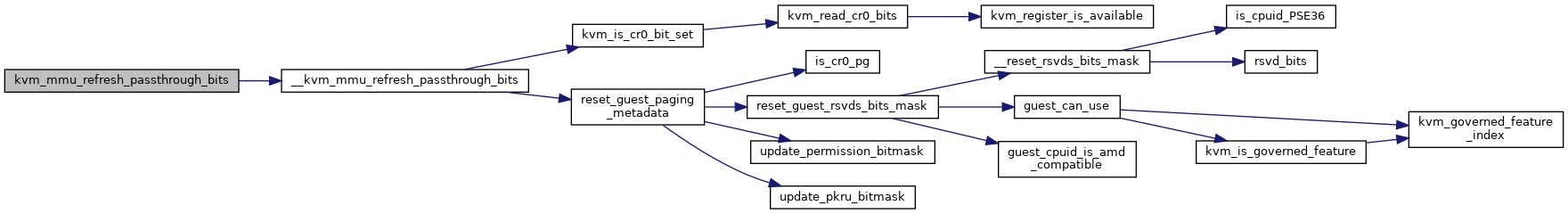

◆ __kvm_mmu_refresh_passthrough_bits()

| void __kvm_mmu_refresh_passthrough_bits | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_mmu * | mmu | ||

| ) |

Definition at line 5284 of file mmu.c.

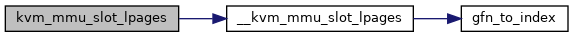

◆ __kvm_mmu_slot_lpages()

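| static unsigned long __kvm_mmu_slot_lpages | ( | struct kvm_memory_slot * | slot, |

| unsigned long | npages, | ||

| int | level | ||

| ) |

inline static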

◆ gfn_to_index()

| static gfn_t gfn_to_index | ( | gfn_t | gfn, |

| gfn_t | base_gfn, | ||

| int | level | ||

| ) |

inline static
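As a rough illustration of what a helper with this signature can compute, the sketch below returns the index of `gfn` relative to `base_gfn` at a given page-table level. It is a user-space approximation, not the kernel's definition: the `gfn_t` typedef, the `sketch_` names, and the assumption that each level above 4 KiB covers 9 more address bits (with level 1 being the 4 KiB level) are all introduced here for illustration.

```c
#include <stdint.h>

typedef uint64_t gfn_t; /* assumed: guest frame numbers are 64-bit */

/* Assumption: level 1 is the 4 KiB level; each higher level adds 9 bits. */
static inline unsigned int sketch_hpage_gfn_shift(int level)
{
    return (unsigned int)(level - 1) * 9;
}

/*
 * Index of gfn within a slot starting at base_gfn, counted in pages
 * of the given level. Both values are shifted before subtracting so
 * an unaligned base_gfn still yields a consistent index.
 */
static inline gfn_t sketch_gfn_to_index(gfn_t gfn, gfn_t base_gfn, int level)
{
    unsigned int shift = sketch_hpage_gfn_shift(level);

    return (gfn >> shift) - (base_gfn >> shift);
}
```

At level 1 the shift is zero, so the result is simply `gfn - base_gfn`.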

◆ kvm_arch_write_log_dirty()

| int kvm_arch_write_log_dirty | ( | struct kvm_vcpu * | vcpu | ) |

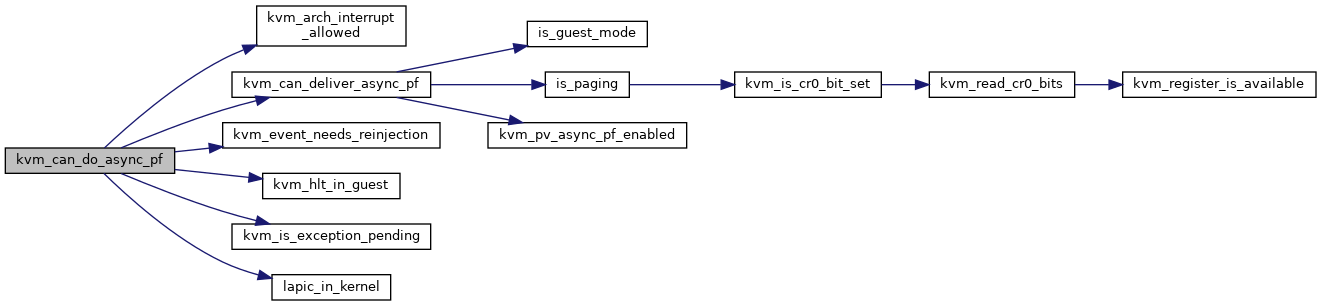

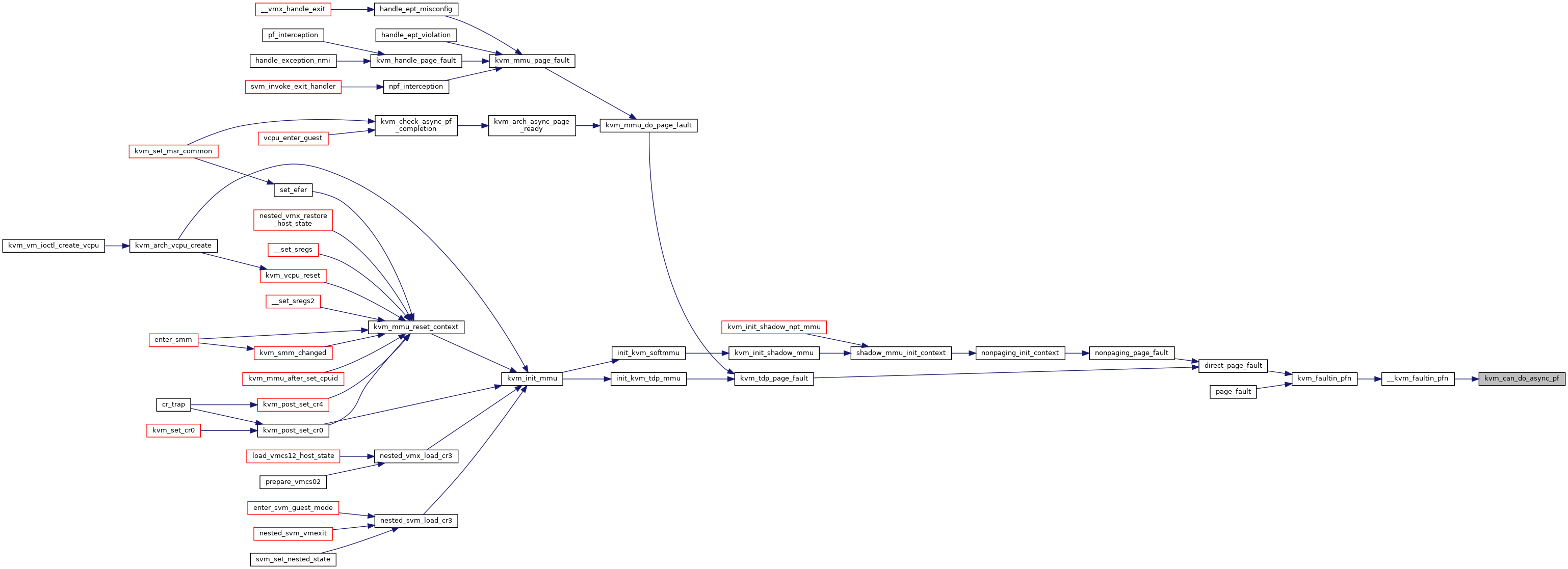

◆ kvm_can_do_async_pf()

| bool kvm_can_do_async_pf | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 13317 of file x86.c.

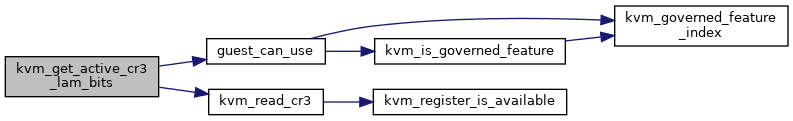

◆ kvm_get_active_cr3_lam_bits()

| static unsigned long kvm_get_active_cr3_lam_bits | ( | struct kvm_vcpu * | vcpu | ) |

inline static

Definition at line 149 of file mmu.h.

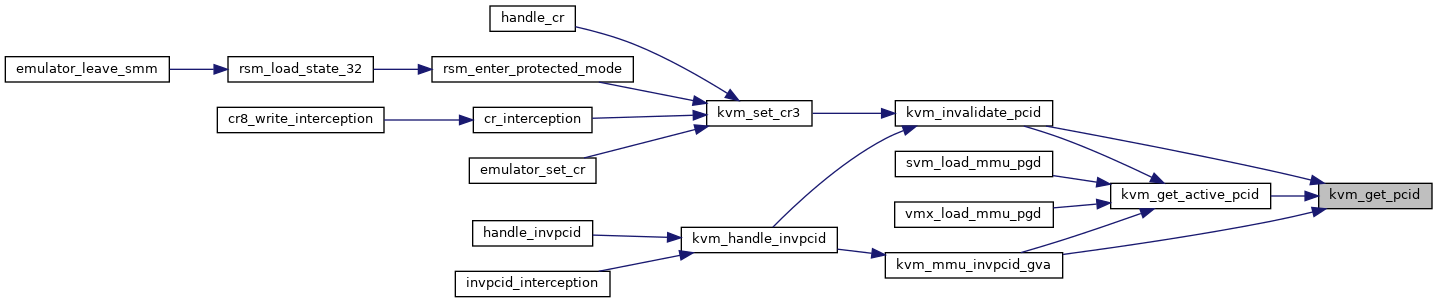

◆ kvm_get_active_pcid()

| static unsigned long kvm_get_active_pcid | ( | struct kvm_vcpu * | vcpu | ) |

inline static

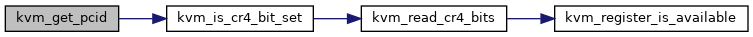

◆ kvm_get_pcid()

| static unsigned long kvm_get_pcid | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | cr3 | ||

| ) |

inline static

Definition at line 135 of file mmu.h.

◆ kvm_get_shadow_phys_bits()

| static u8 kvm_get_shadow_phys_bits | ( | void | ) |

inline static

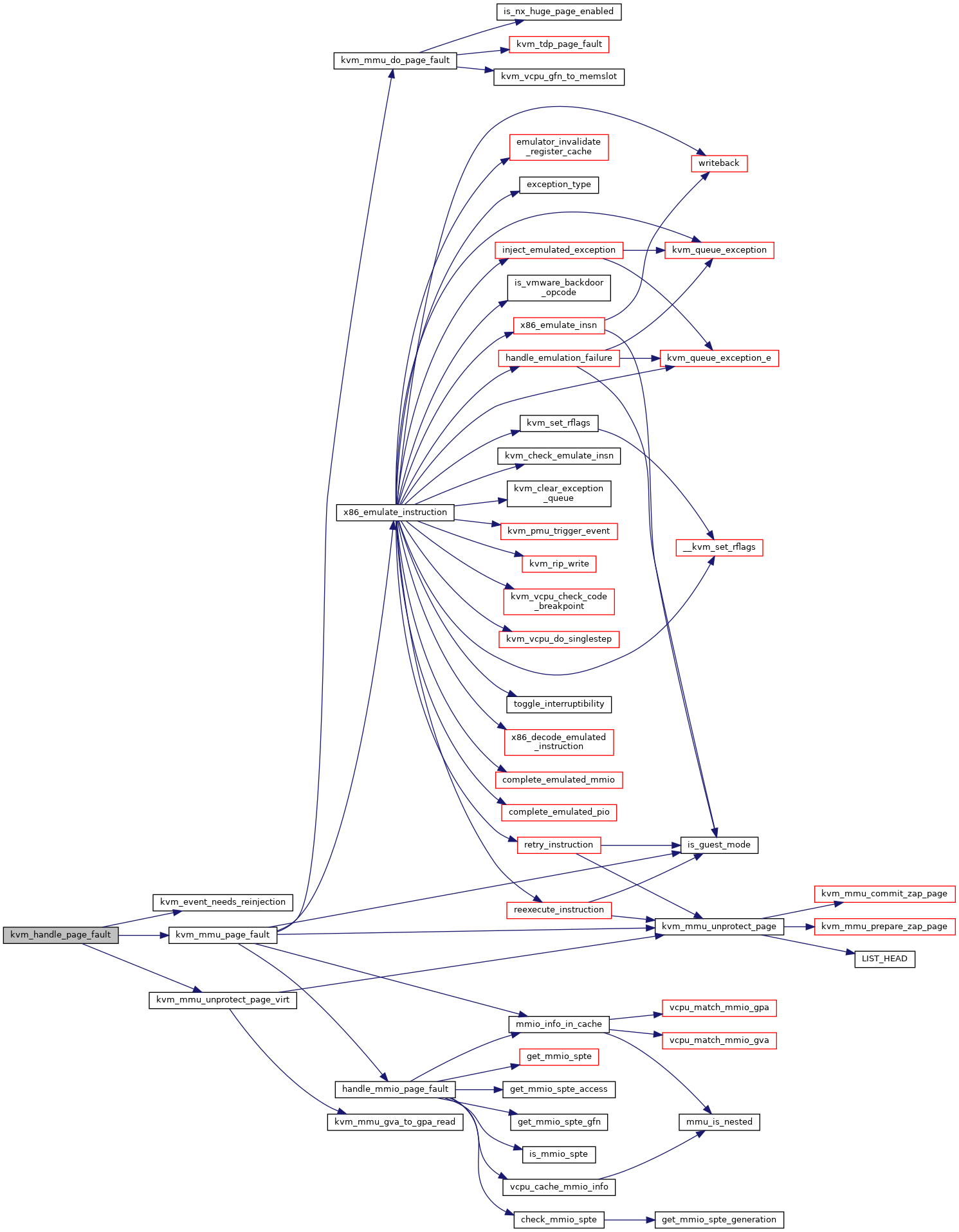

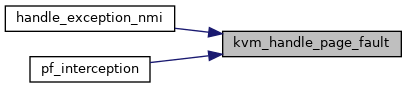

◆ kvm_handle_page_fault()

| int kvm_handle_page_fault | ( | struct kvm_vcpu * | vcpu, |

| u64 | error_code, | ||

| u64 | fault_address, | ||

| char * | insn, | ||

| int | insn_len | ||

| ) |

Definition at line 4540 of file mmu.c.

◆ kvm_init_mmu()

| void kvm_init_mmu | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 5538 of file mmu.c.

◆ kvm_init_shadow_ept_mmu()

| void kvm_init_shadow_ept_mmu | ( | struct kvm_vcpu * | vcpu, |

| bool | execonly, | ||

| int | huge_page_level, | ||

| bool | accessed_dirty, | ||

| gpa_t | new_eptp | ||

| ) |

Definition at line 5458 of file mmu.c.

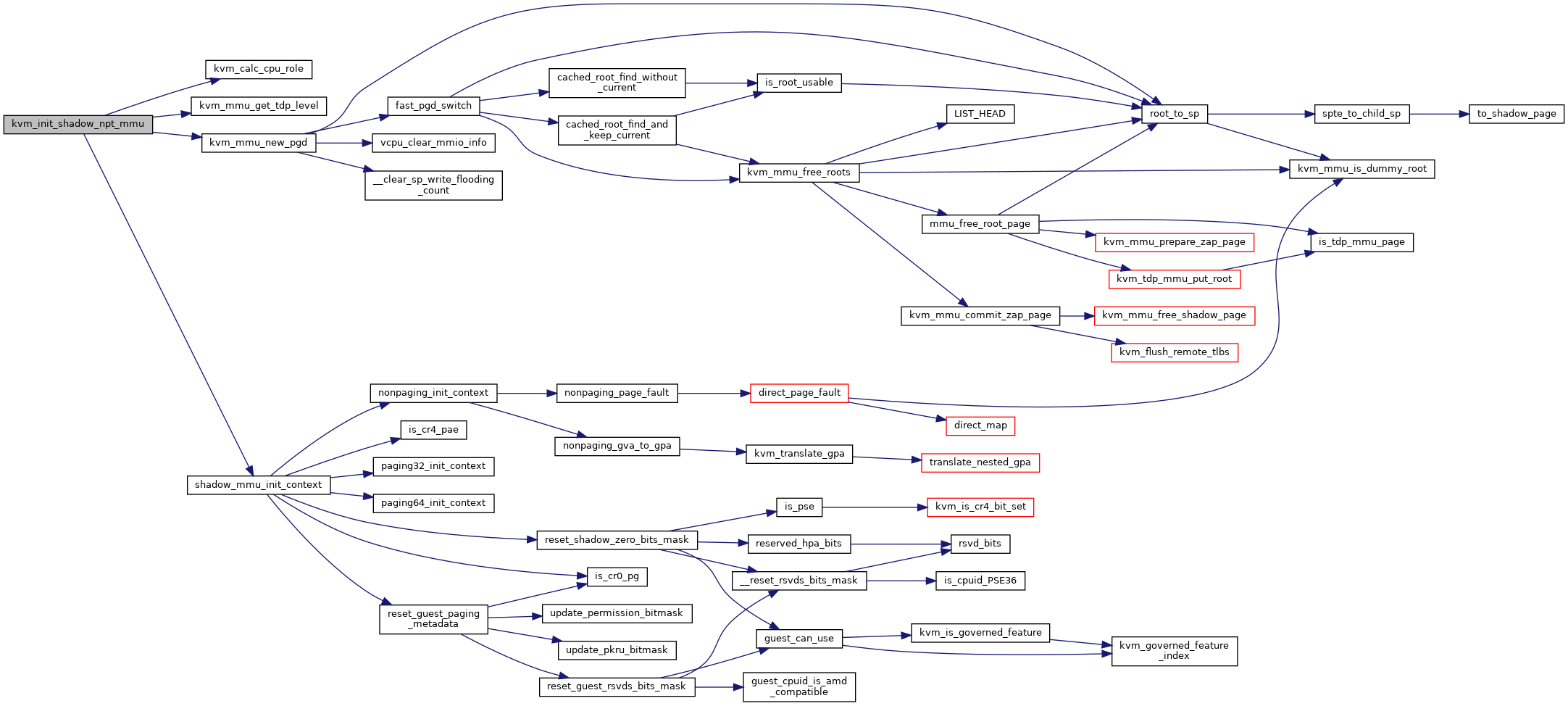

◆ kvm_init_shadow_npt_mmu()

| void kvm_init_shadow_npt_mmu | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr0, | ||

| unsigned long | cr4, | ||

| u64 | efer, | ||

| gpa_t | nested_cr3 | ||

| ) |

Definition at line 5407 of file mmu.c.

◆ kvm_memslots_have_rmaps()

| static bool kvm_memslots_have_rmaps | ( | struct kvm * | kvm | ) |

inline static

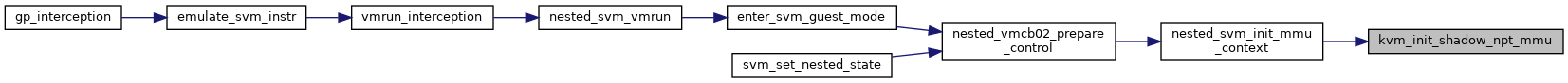

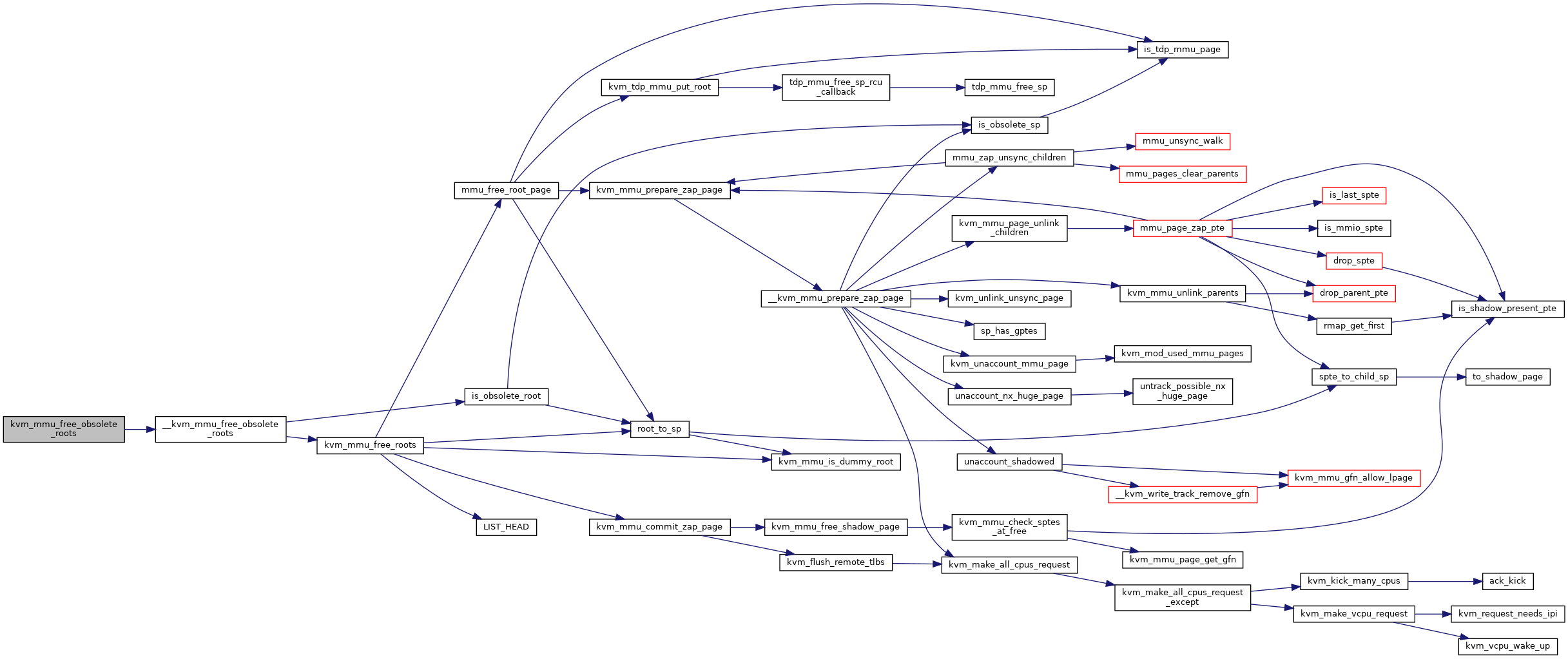

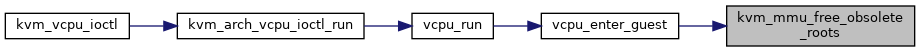

◆ kvm_mmu_free_obsolete_roots()

| void kvm_mmu_free_obsolete_roots | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 5676 of file mmu.c.

◆ kvm_mmu_honors_guest_mtrrs()

| static bool kvm_mmu_honors_guest_mtrrs | ( | struct kvm * | kvm | ) |

inline static

Definition at line 250 of file mmu.h.

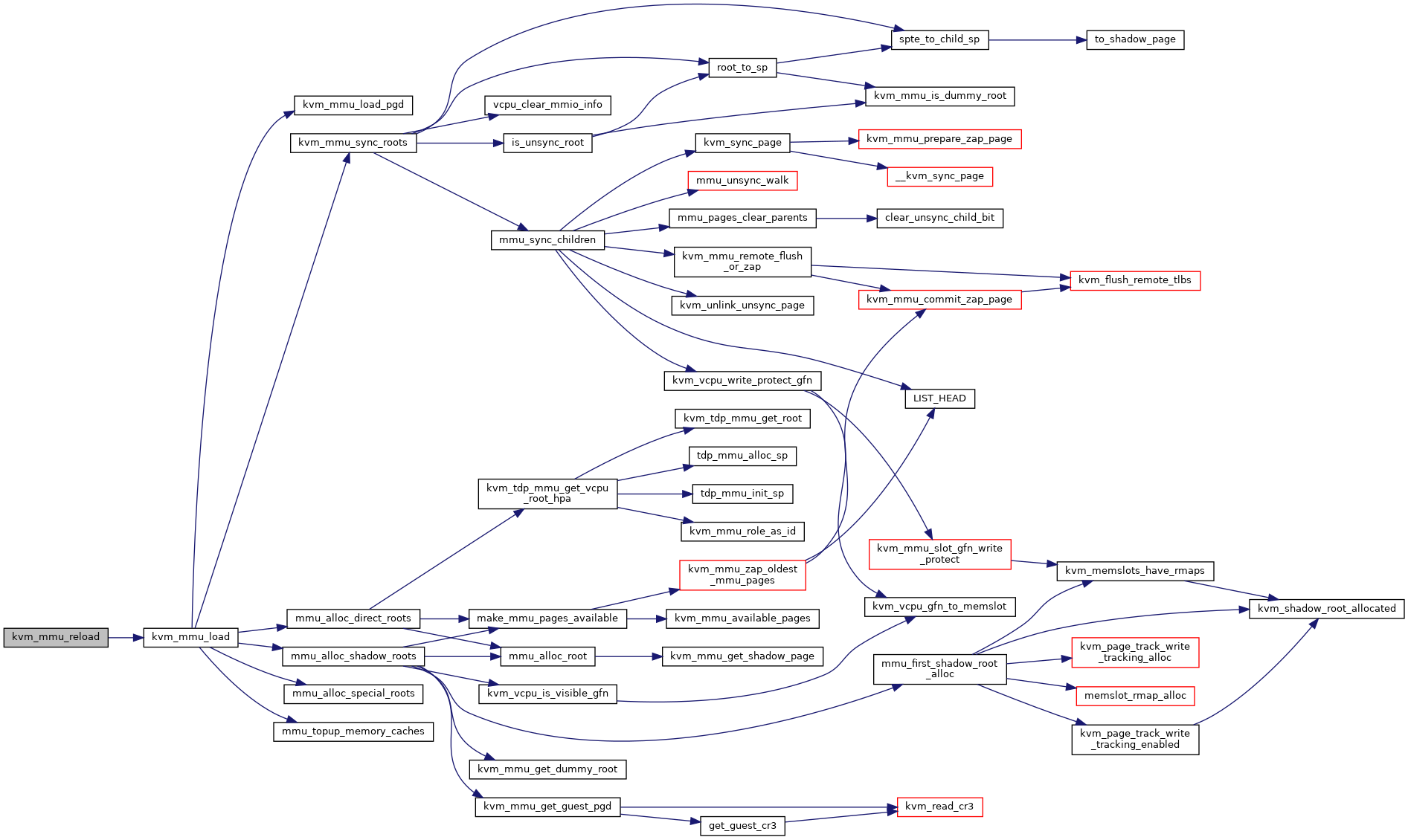

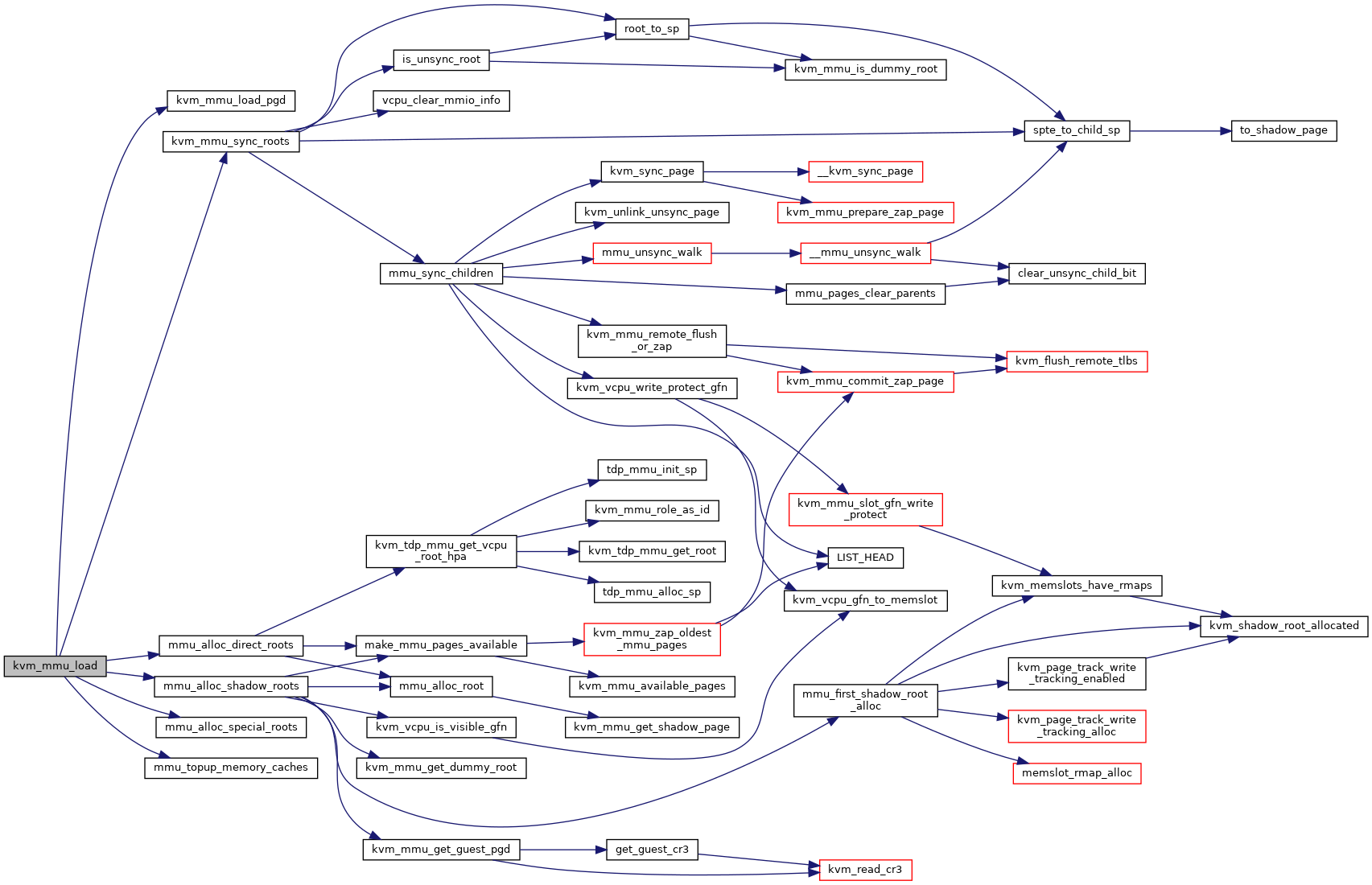

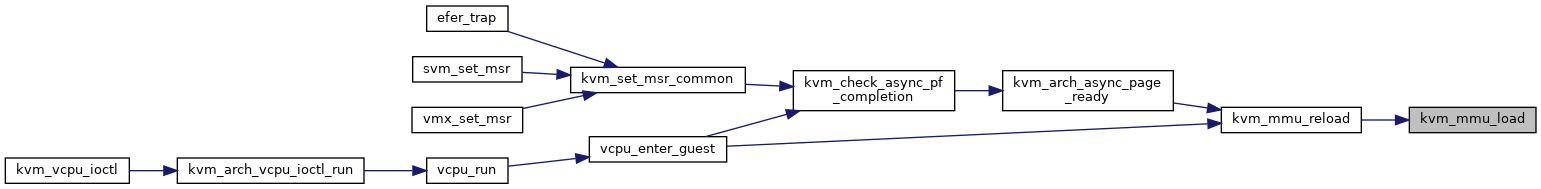

◆ kvm_mmu_load()

| int kvm_mmu_load | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 5588 of file mmu.c.

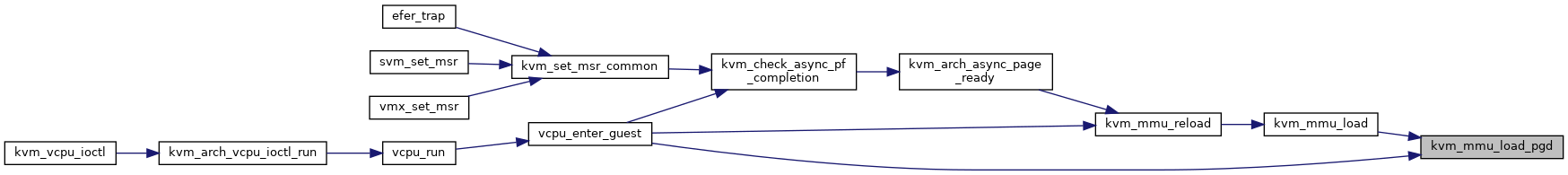

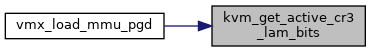

◆ kvm_mmu_load_pgd()

| static void kvm_mmu_load_pgd | ( | struct kvm_vcpu * | vcpu | ) |

inline static

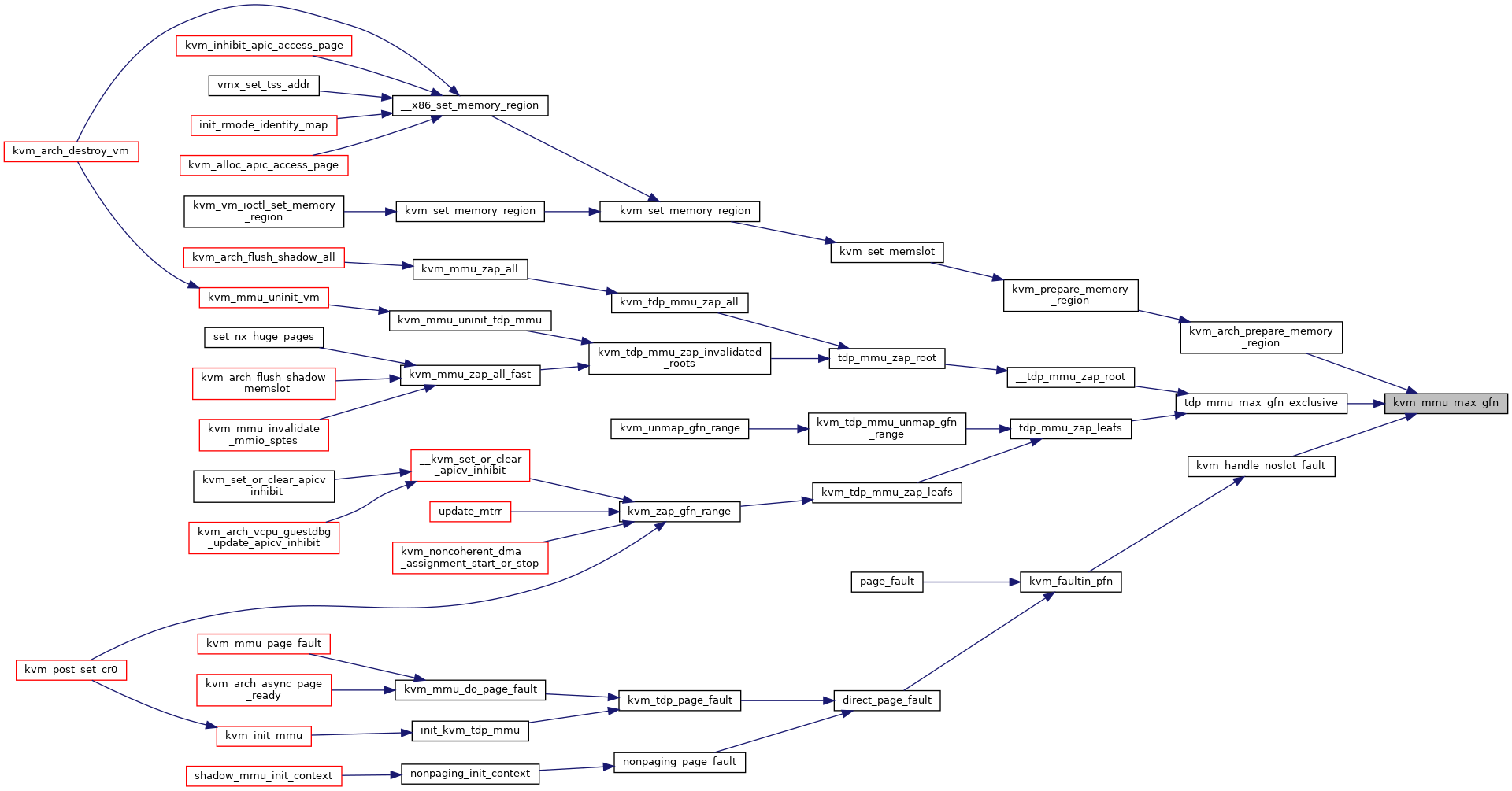

◆ kvm_mmu_max_gfn()

| static gfn_t kvm_mmu_max_gfn | ( | void | ) |

inline static

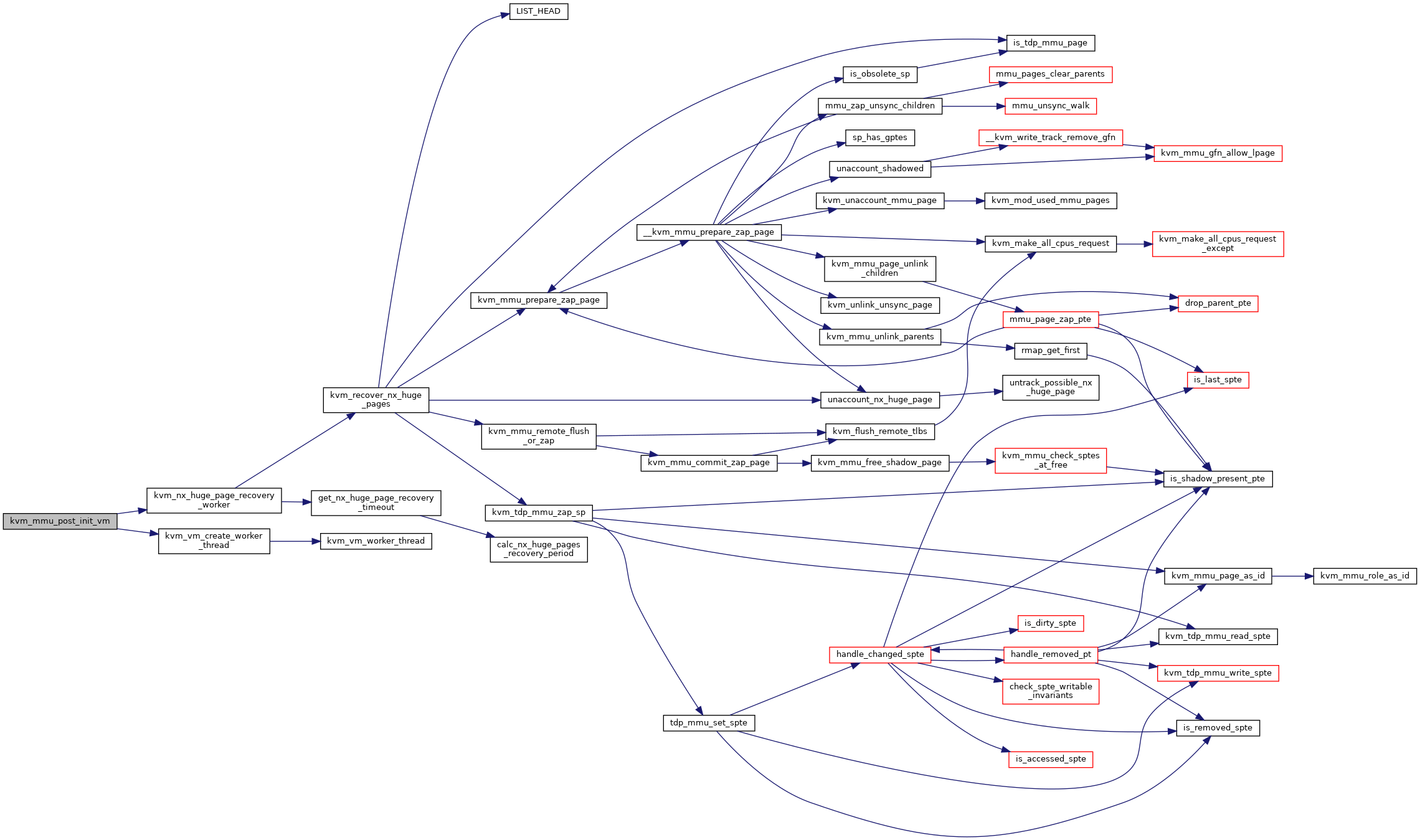

◆ kvm_mmu_post_init_vm()

| int kvm_mmu_post_init_vm | ( | struct kvm * | kvm | ) |

Definition at line 7279 of file mmu.c.

◆ kvm_mmu_pre_destroy_vm()

| void kvm_mmu_pre_destroy_vm | ( | struct kvm * | kvm | ) |

◆ kvm_mmu_refresh_passthrough_bits()

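| static void kvm_mmu_refresh_passthrough_bits | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_mmu * | mmu | ||

| ) |

inline static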

Definition at line 168 of file mmu.h.

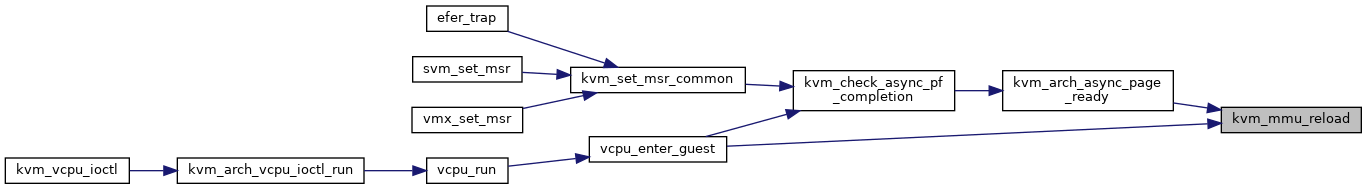

◆ kvm_mmu_reload()

| static int kvm_mmu_reload | ( | struct kvm_vcpu * | vcpu | ) |

inline static

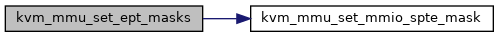

◆ kvm_mmu_set_ept_masks()

| void kvm_mmu_set_ept_masks | ( | bool | has_ad_bits, |

| bool | has_exec_only | ||

| ) |

Definition at line 425 of file spte.c.

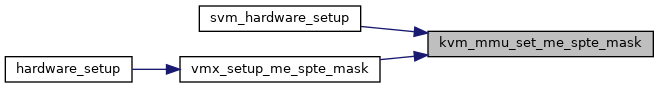

◆ kvm_mmu_set_me_spte_mask()

| void kvm_mmu_set_me_spte_mask | ( | u64 | me_value, |

| u64 | me_mask | ||

| ) |

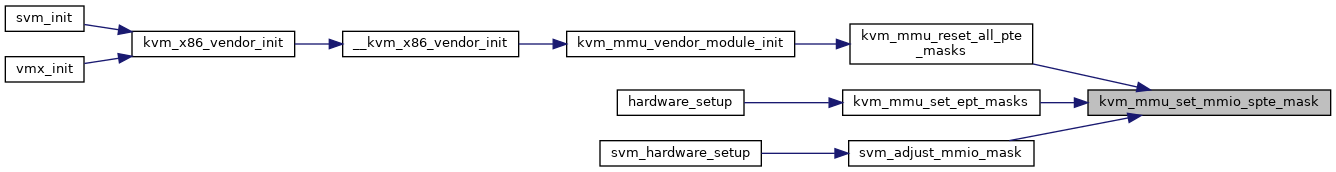

◆ kvm_mmu_set_mmio_spte_mask()

| void kvm_mmu_set_mmio_spte_mask | ( | u64 | mmio_value, |

| u64 | mmio_mask, | ||

| u64 | access_mask | ||

| ) |

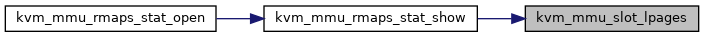

◆ kvm_mmu_slot_lpages()

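| static unsigned long kvm_mmu_slot_lpages | ( | struct kvm_memory_slot * | slot, |

| int | level | ||

| ) |

inline static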

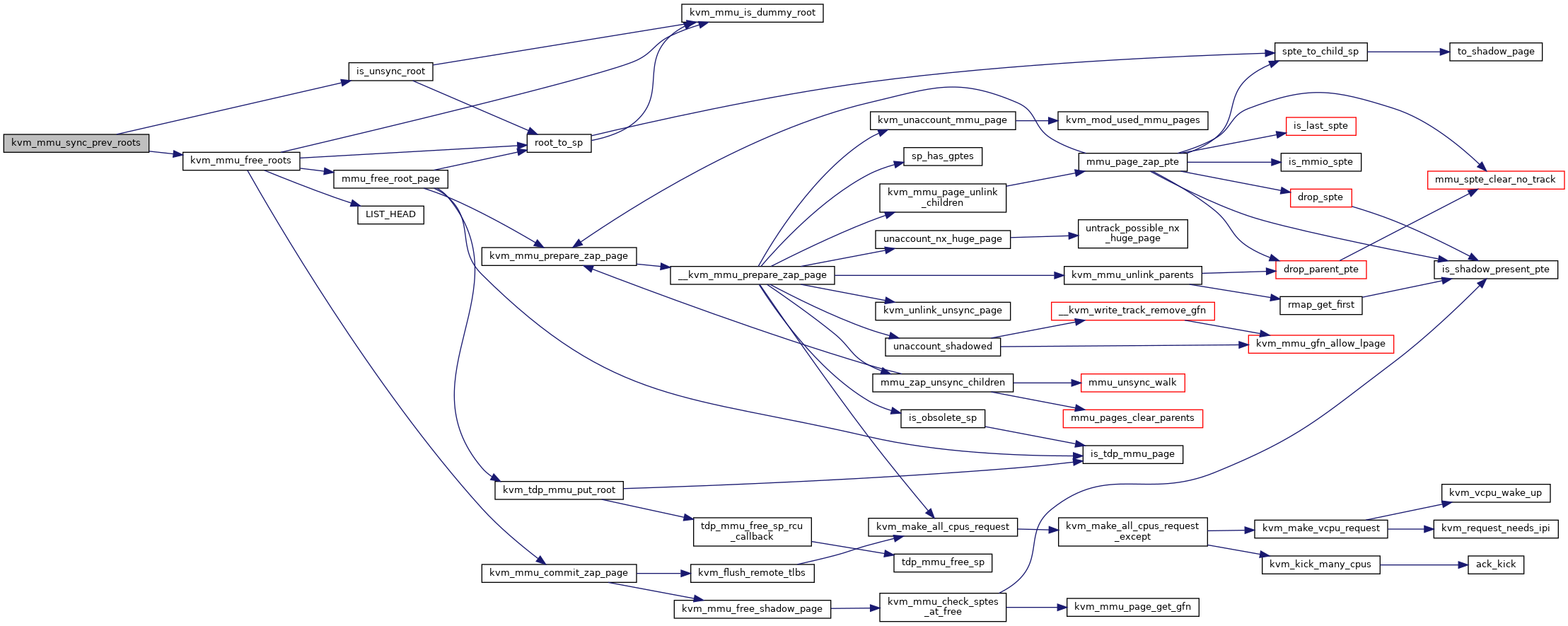

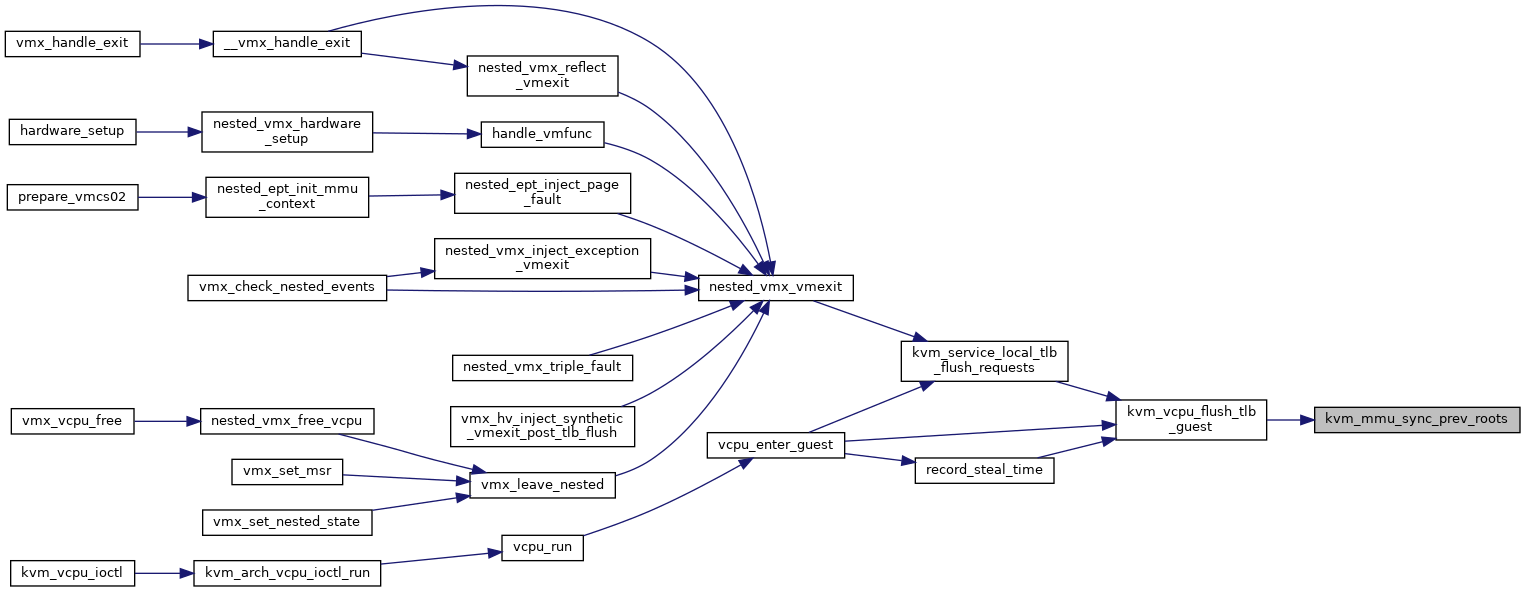

◆ kvm_mmu_sync_prev_roots()

| void kvm_mmu_sync_prev_roots | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 4062 of file mmu.c.

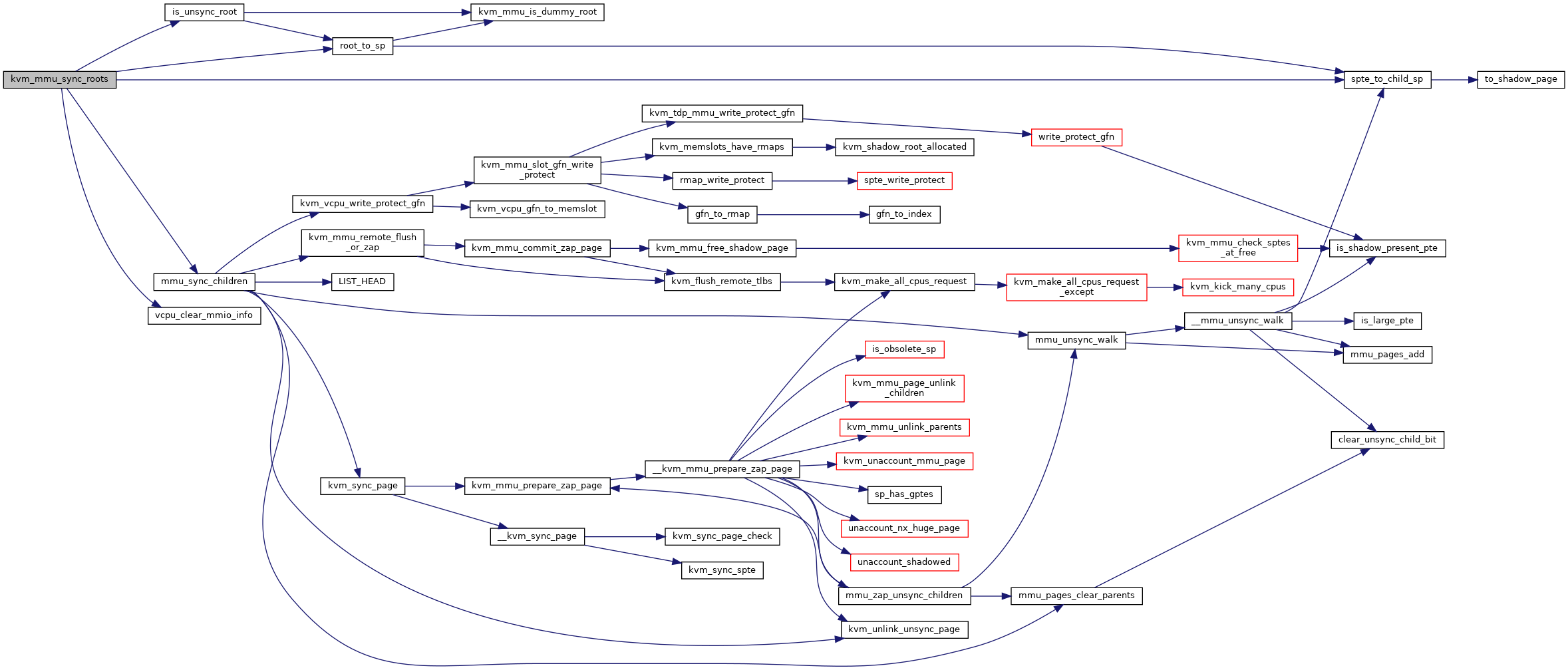

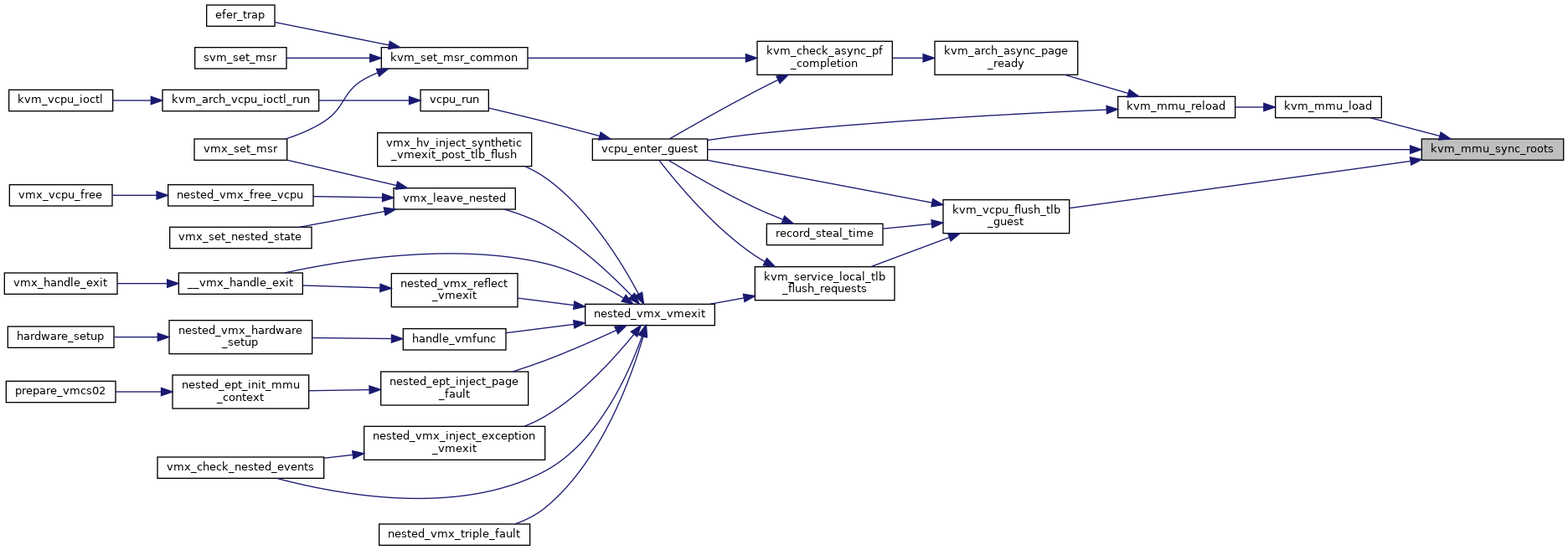

◆ kvm_mmu_sync_roots()

| void kvm_mmu_sync_roots | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 4021 of file mmu.c.

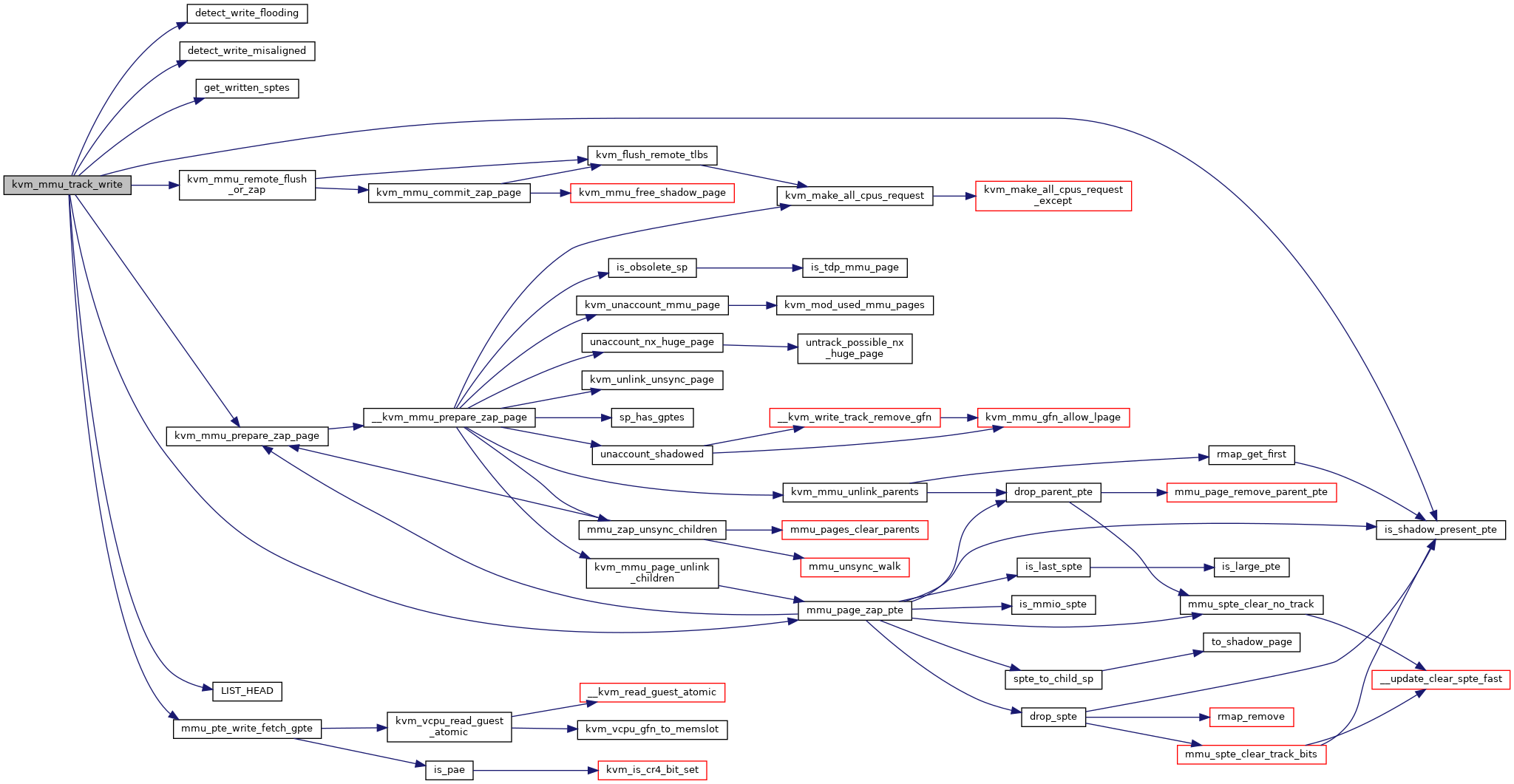

◆ kvm_mmu_track_write()

| void kvm_mmu_track_write | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | gpa, | ||

| const u8 * | new, | ||

| int | bytes | ||

| ) |

Definition at line 5781 of file mmu.c.

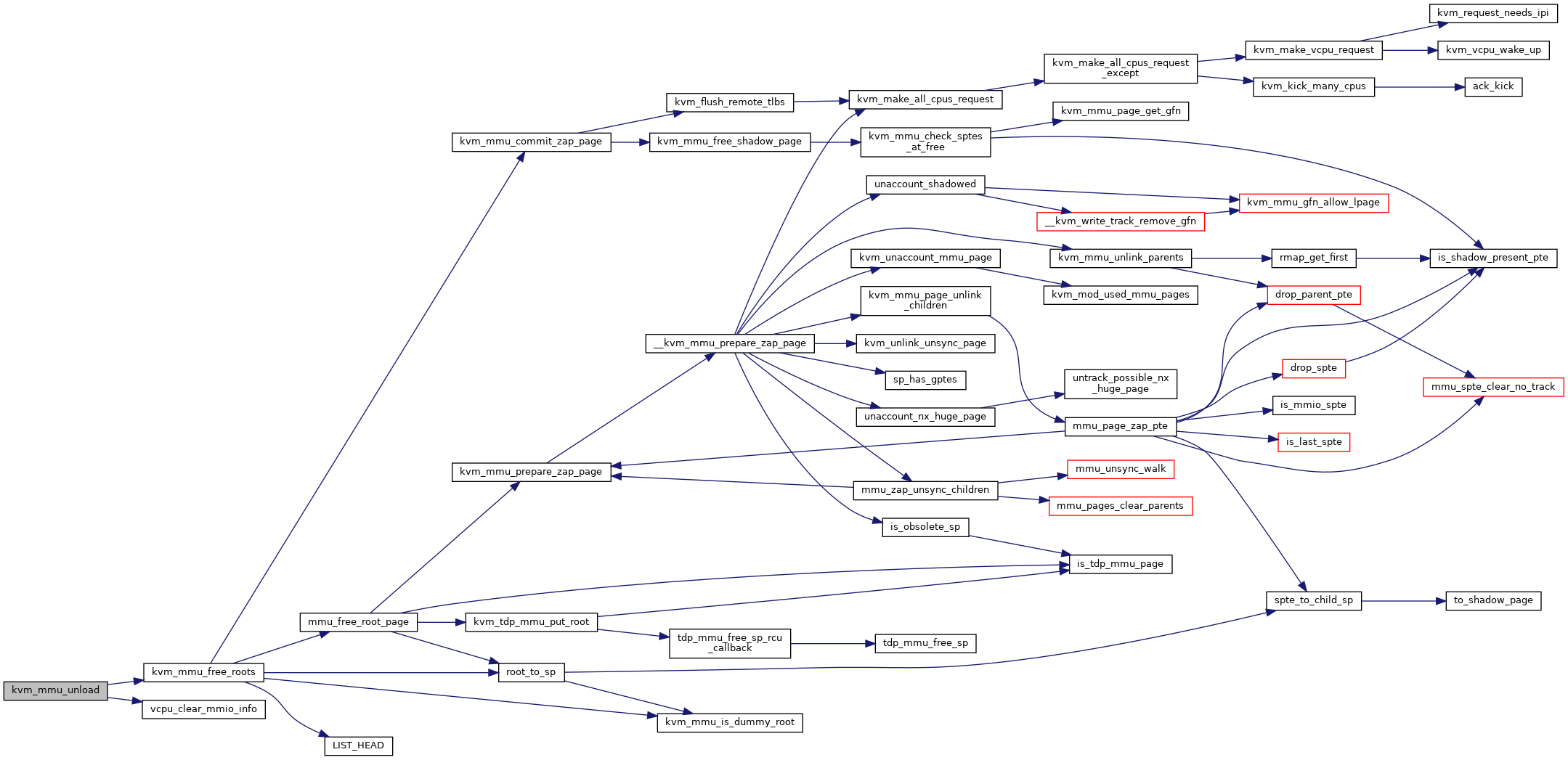

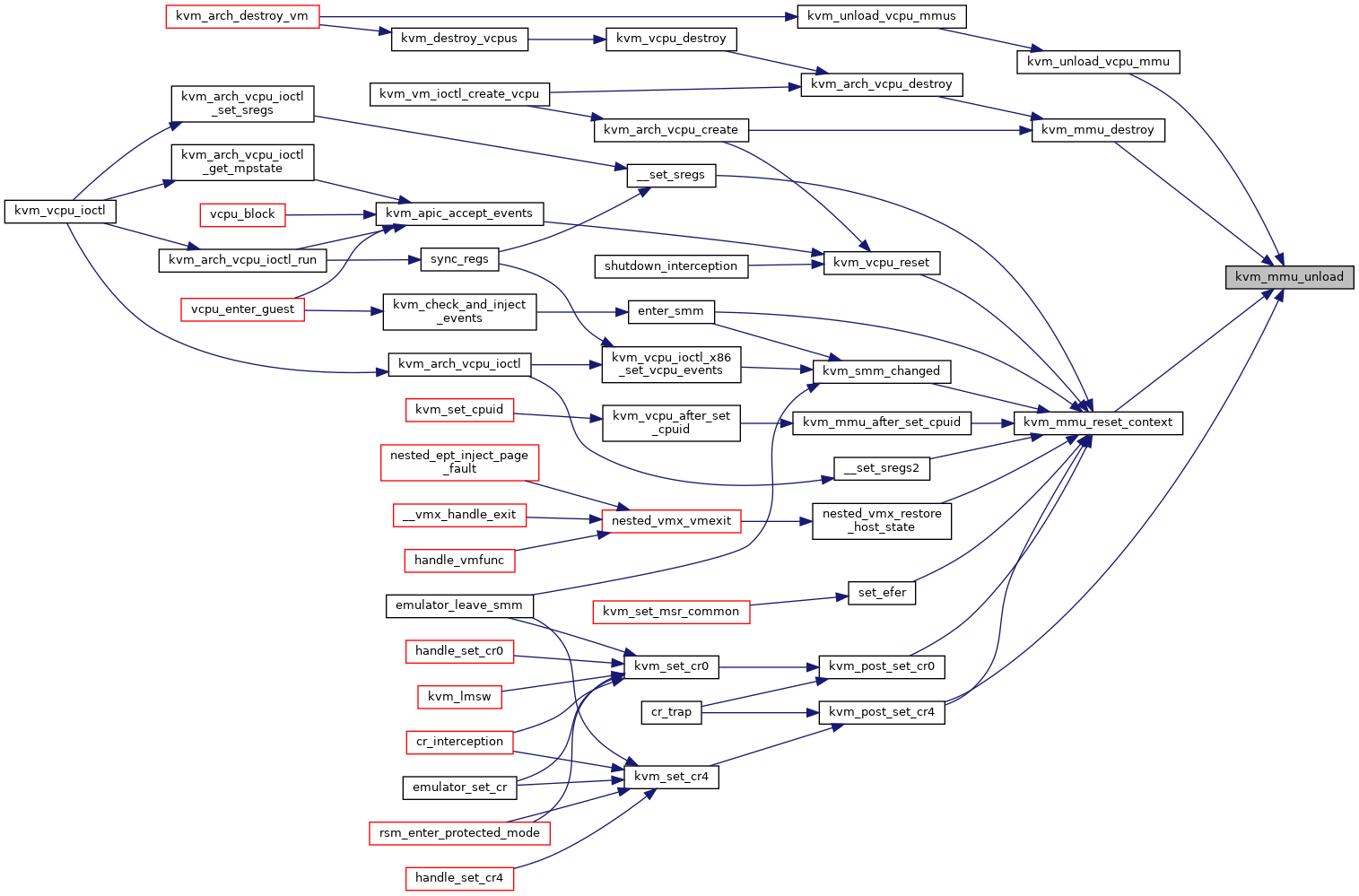

◆ kvm_mmu_unload()

| void kvm_mmu_unload | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_shadow_root_allocated()

| static bool kvm_shadow_root_allocated | ( | struct kvm * | kvm | ) |

inline static

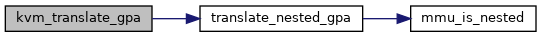

◆ kvm_translate_gpa()

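| static gpa_t kvm_translate_gpa | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_mmu * | mmu, | ||

| gpa_t | gpa, | ||

| u64 | access, | ||

| struct x86_exception * | exception | ||

| ) |

inline static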

Definition at line 313 of file mmu.h.

◆ kvm_update_page_stats()

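| static void kvm_update_page_stats | ( | struct kvm * | kvm, |

| int | level, | ||

| int | count | ||

| ) |

inline static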

◆ kvm_zap_gfn_range()

| void kvm_zap_gfn_range | ( | struct kvm * | kvm, |

| gfn_t | gfn_start, | ||

| gfn_t | gfn_end | ||

| ) |

Definition at line 6373 of file mmu.c.

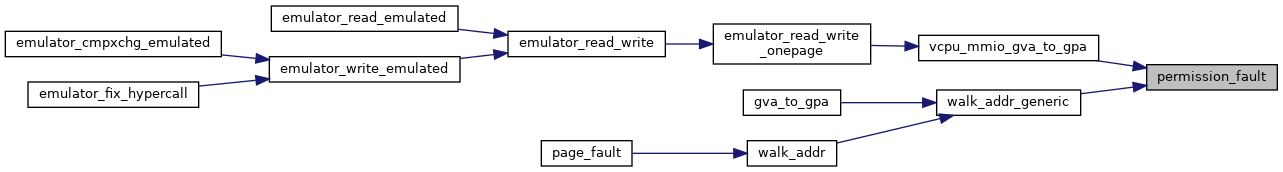

◆ permission_fault()

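| static u8 permission_fault | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_mmu * | mmu, | ||

| unsigned | pte_access, | ||

| unsigned | pte_pkey, | ||

| u64 | access | ||

| ) |

inline static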

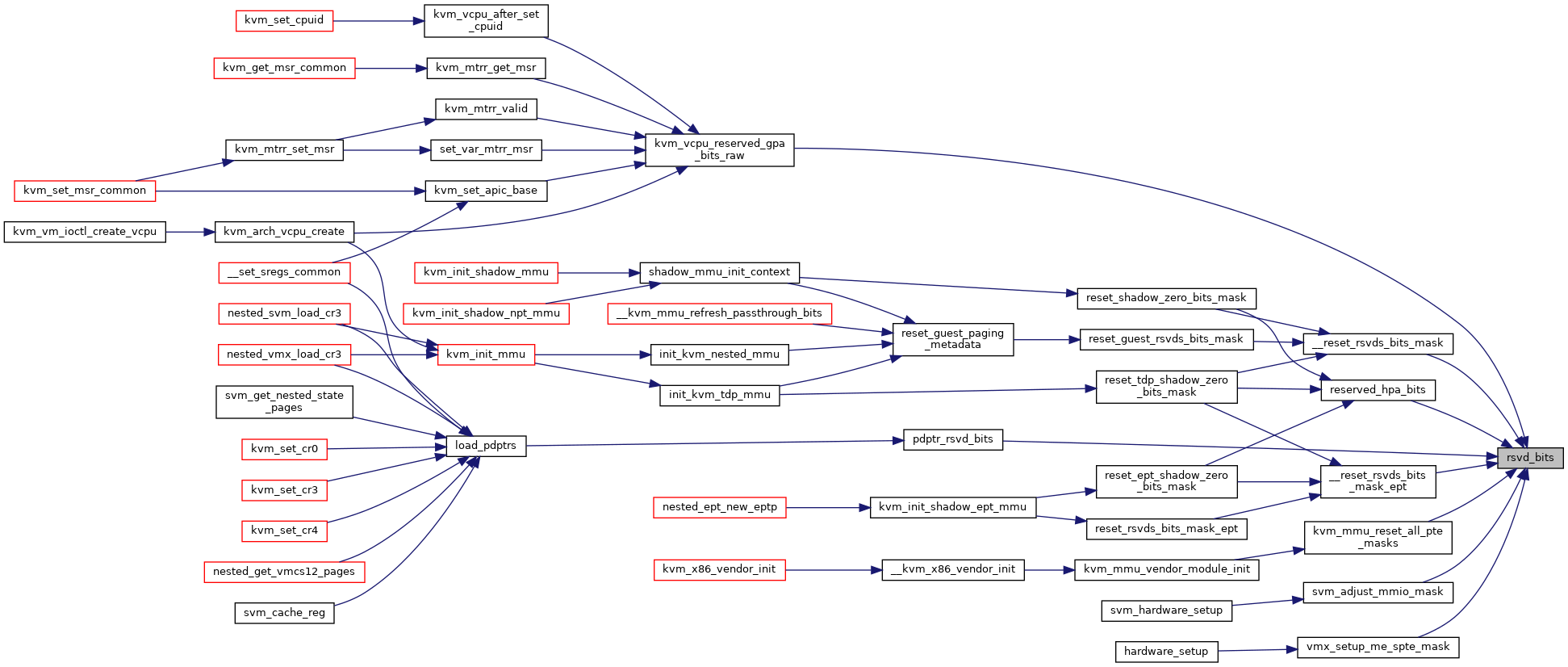

◆ rsvd_bits()

| static __always_inline u64 rsvd_bits | ( | int | s, |

| int | e | ||

| ) |

static

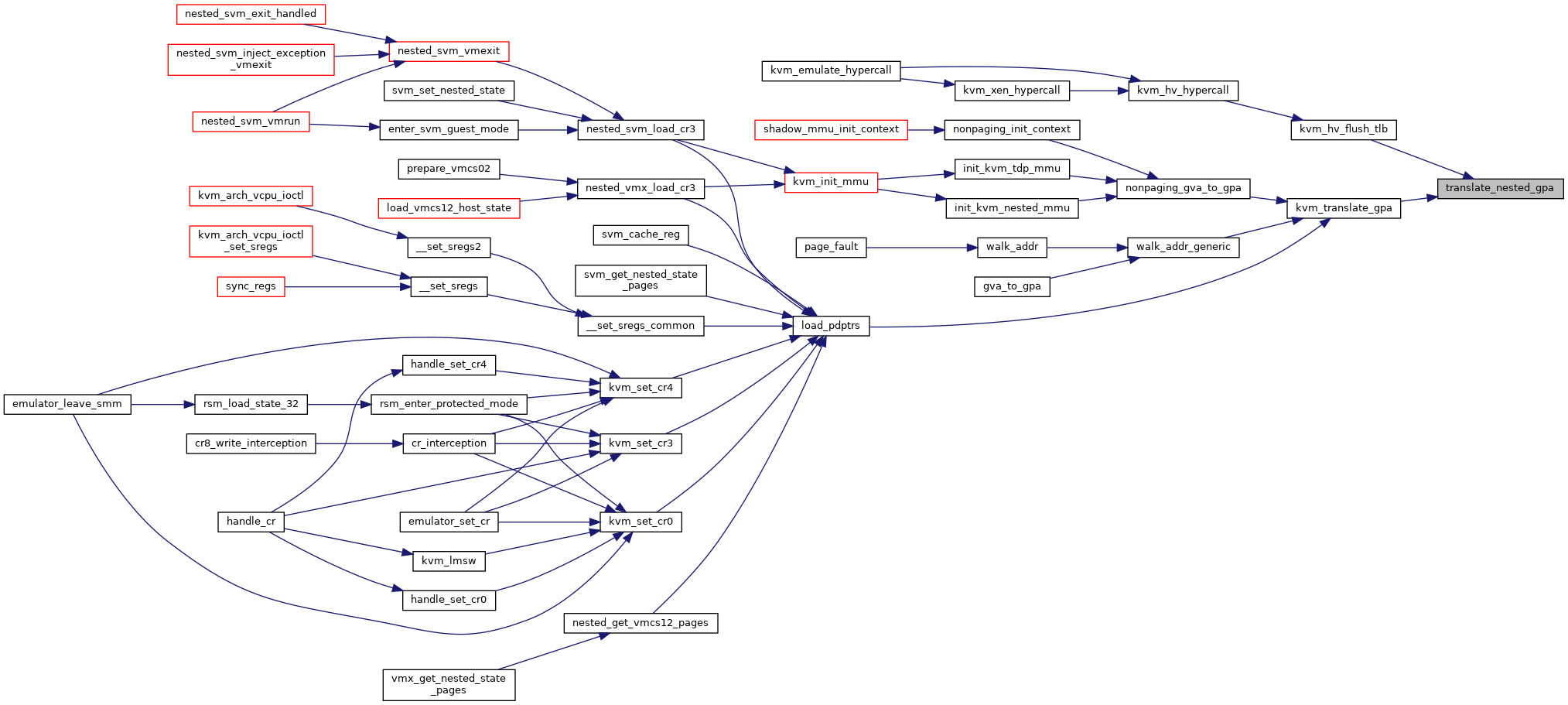

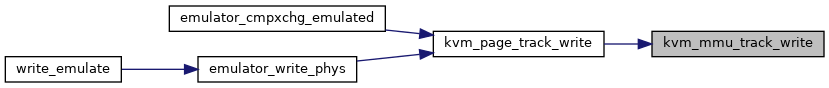
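The name and the `(int s, int e)` parameters suggest a helper that builds a mask covering bits `s` through `e` inclusive. The sketch below shows one way to compute such a mask in plain C. It is an assumption about the intent, not the definition from `mmu.h`: the `sketch_rsvd_bits` name is hypothetical, build-time checks are omitted, and callers are assumed to pass `0 <= s <= 63`.

```c
#include <stdint.h>

/*
 * Return a mask with bits s..e (inclusive) set, or 0 if e < s.
 * Assumes 0 <= s <= 63; e is clamped into 0..63.
 */
static inline uint64_t sketch_rsvd_bits(int s, int e)
{
    e &= 63;
    if (e < s)
        return 0;

    /*
     * (2ULL << n) - 1 sets the low n + 1 bits. Using 2ULL << n rather
     * than 1ULL << (n + 1) keeps the shift count below 64 when the
     * range spans all 64 bits; the unsigned wrap to 0 then yields ~0.
     */
    return ((2ULL << (e - s)) - 1) << s;
}
```

For example, `sketch_rsvd_bits(52, 62)` yields `0x7FF0000000000000`, a mask over eleven high bits of a 64-bit page-table entry.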

◆ translate_nested_gpa()

| gpa_t translate_nested_gpa | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | gpa, | ||

| u64 | access, | ||

| struct x86_exception * | exception | ||

| ) |

Variable Documentation

◆ enable_mmio_caching

| bool __read_mostly enable_mmio_caching |

extern

◆ shadow_phys_bits

| u8 __read_mostly shadow_phys_bits |

extern