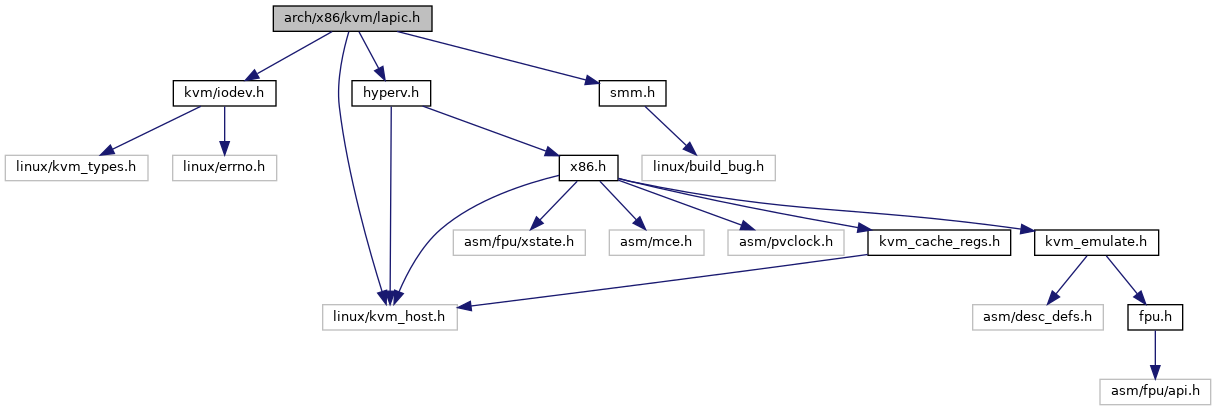

Go to the source code of this file.

Classes

| struct | kvm_timer |

| struct | kvm_lapic |

Macros

| #define | KVM_APIC_INIT 0 |

| #define | KVM_APIC_SIPI 1 |

| #define | APIC_SHORT_MASK 0xc0000 |

| #define | APIC_DEST_NOSHORT 0x0 |

| #define | APIC_DEST_MASK 0x800 |

| #define | APIC_BUS_CYCLE_NS 1 |

| #define | APIC_BUS_FREQUENCY (1000000000ULL / APIC_BUS_CYCLE_NS) |

| #define | APIC_BROADCAST 0xFF |

| #define | X2APIC_BROADCAST 0xFFFFFFFFul |

| #define | APIC_LVTx(x) ((x) == LVT_CMCI ? APIC_LVTCMCI : APIC_LVTT + 0x10 * (x)) |

| #define | VEC_POS(v) ((v) & (32 - 1)) |

| #define | REG_POS(v) (((v) >> 5) << 4) |
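The following is a minimal userspace sketch of how `VEC_POS()` and `REG_POS()` address a 256-bit IRR/ISR/TMR bank. It assumes the usual xAPIC layout, in which each bank is eight 32-bit registers spaced 16 bytes apart. `APIC_IRR` (0x200) comes from the architectural register map, not from this header. `set_vector()`, `clear_vector()` and `test_vector()` are hypothetical, non-atomic stand-ins for `kvm_lapic_set_vector()` and `kvm_lapic_clear_vector()`.

```c
#include <stdbool.h>
#include <stdint.h>

/* Copied from lapic.h: bit within a 32-bit register, byte offset of that register. */
#define VEC_POS(v) ((v) & (32 - 1))
#define REG_POS(v) (((v) >> 5) << 4)

#define APIC_IRR 0x200 /* architectural offset of IRR bits 0-31 (assumed) */

/* Hypothetical, non-atomic stand-ins for kvm_lapic_set_vector()/clear_vector().
 * 'bitmap' points at the first register of a bank, e.g. regs + APIC_IRR. */
static void set_vector(int vec, void *bitmap)
{
	uint32_t *reg = (uint32_t *)((char *)bitmap + REG_POS(vec));
	*reg |= 1u << VEC_POS(vec);
}

static void clear_vector(int vec, void *bitmap)
{
	uint32_t *reg = (uint32_t *)((char *)bitmap + REG_POS(vec));
	*reg &= ~(1u << VEC_POS(vec));
}

static bool test_vector(int vec, const void *bitmap)
{
	const uint32_t *reg = (const uint32_t *)((const char *)bitmap + REG_POS(vec));
	return (*reg >> VEC_POS(vec)) & 1u;
}
```

For vector 0x31, `REG_POS()` gives 0x10 and `VEC_POS()` gives 17, so the bit lands in the second IRR register at page offset 0x210.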

Enumerations

| enum | lapic_mode { LAPIC_MODE_DISABLED = 0 , LAPIC_MODE_INVALID = X2APIC_ENABLE , LAPIC_MODE_XAPIC = MSR_IA32_APICBASE_ENABLE , LAPIC_MODE_X2APIC = MSR_IA32_APICBASE_ENABLE | X2APIC_ENABLE } |

| enum | lapic_lvt_entry { LVT_TIMER , LVT_THERMAL_MONITOR , LVT_PERFORMANCE_COUNTER , LVT_LINT0 , LVT_LINT1 , LVT_ERROR , LVT_CMCI , KVM_APIC_MAX_NR_LVT_ENTRIES } |

Functions

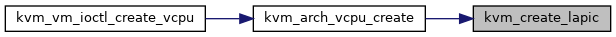

| int | kvm_create_lapic (struct kvm_vcpu *vcpu, int timer_advance_ns) |

| void | kvm_free_lapic (struct kvm_vcpu *vcpu) |

| int | kvm_apic_has_interrupt (struct kvm_vcpu *vcpu) |

| int | kvm_apic_accept_pic_intr (struct kvm_vcpu *vcpu) |

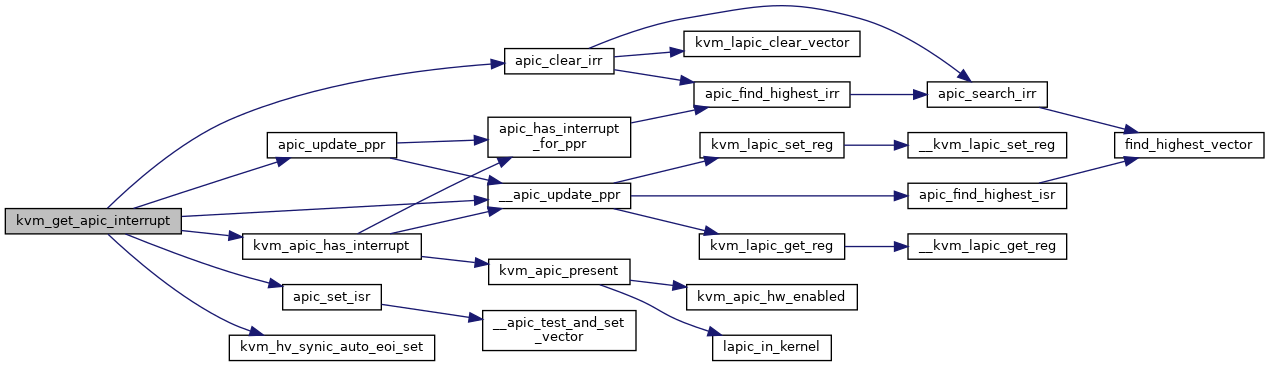

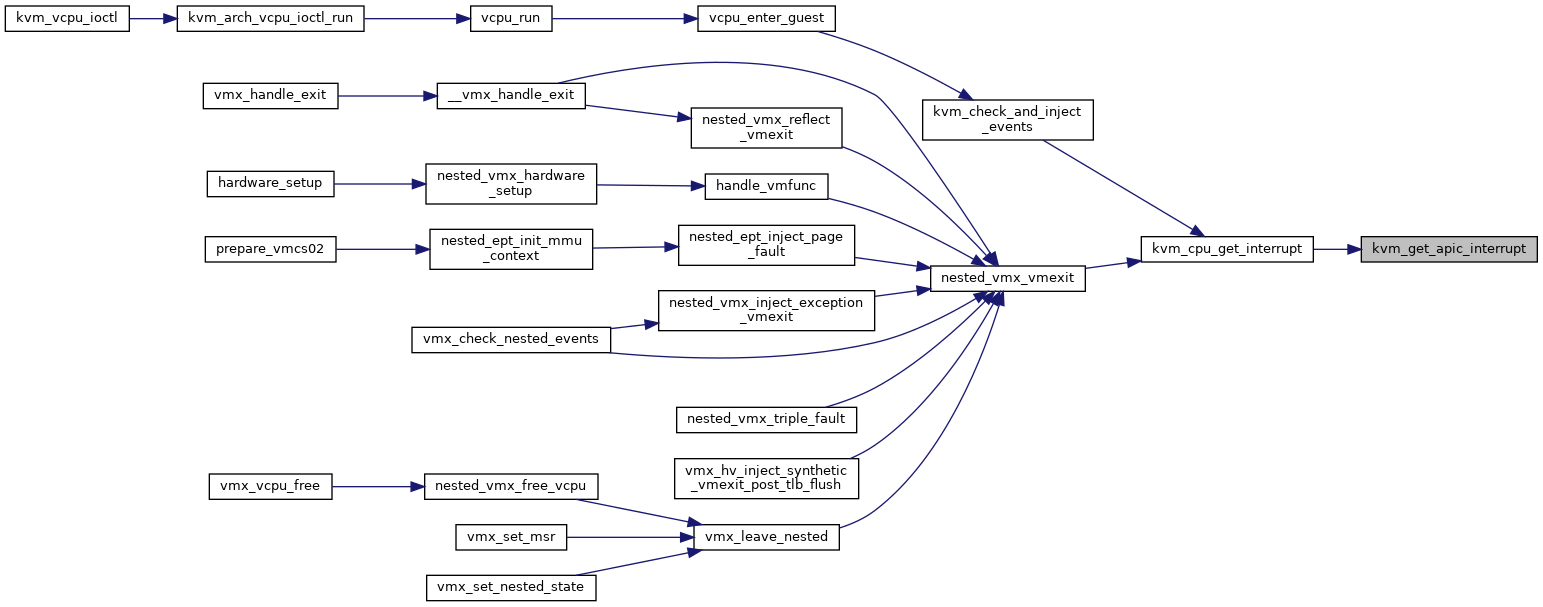

| int | kvm_get_apic_interrupt (struct kvm_vcpu *vcpu) |

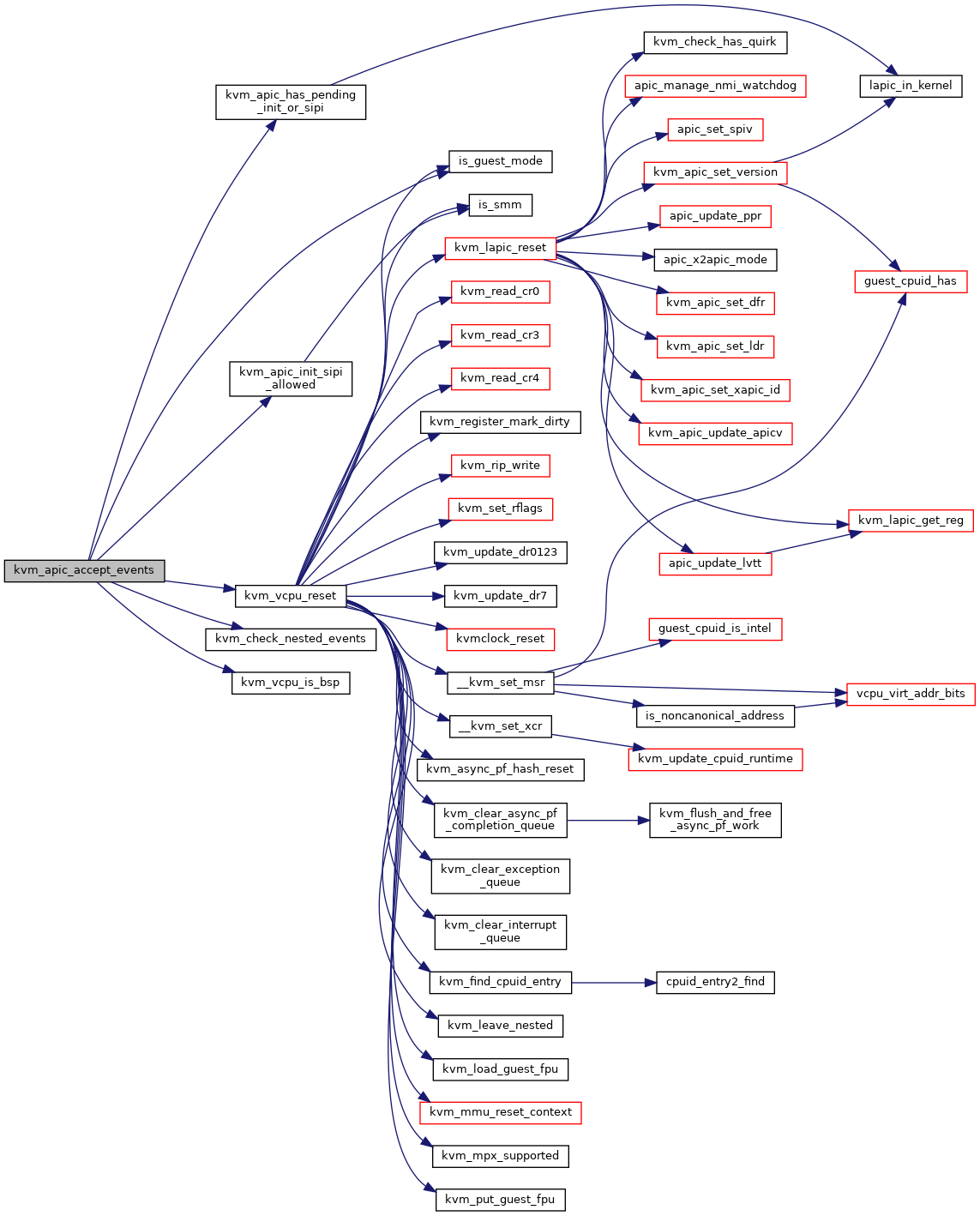

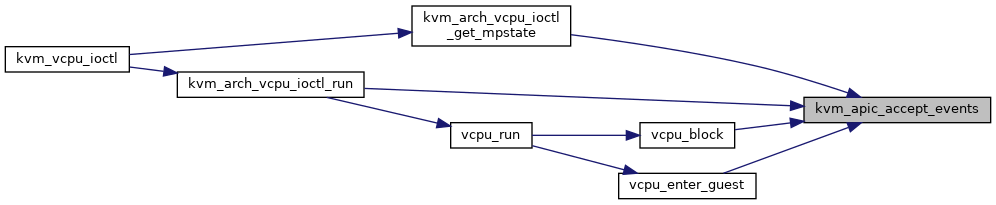

| int | kvm_apic_accept_events (struct kvm_vcpu *vcpu) |

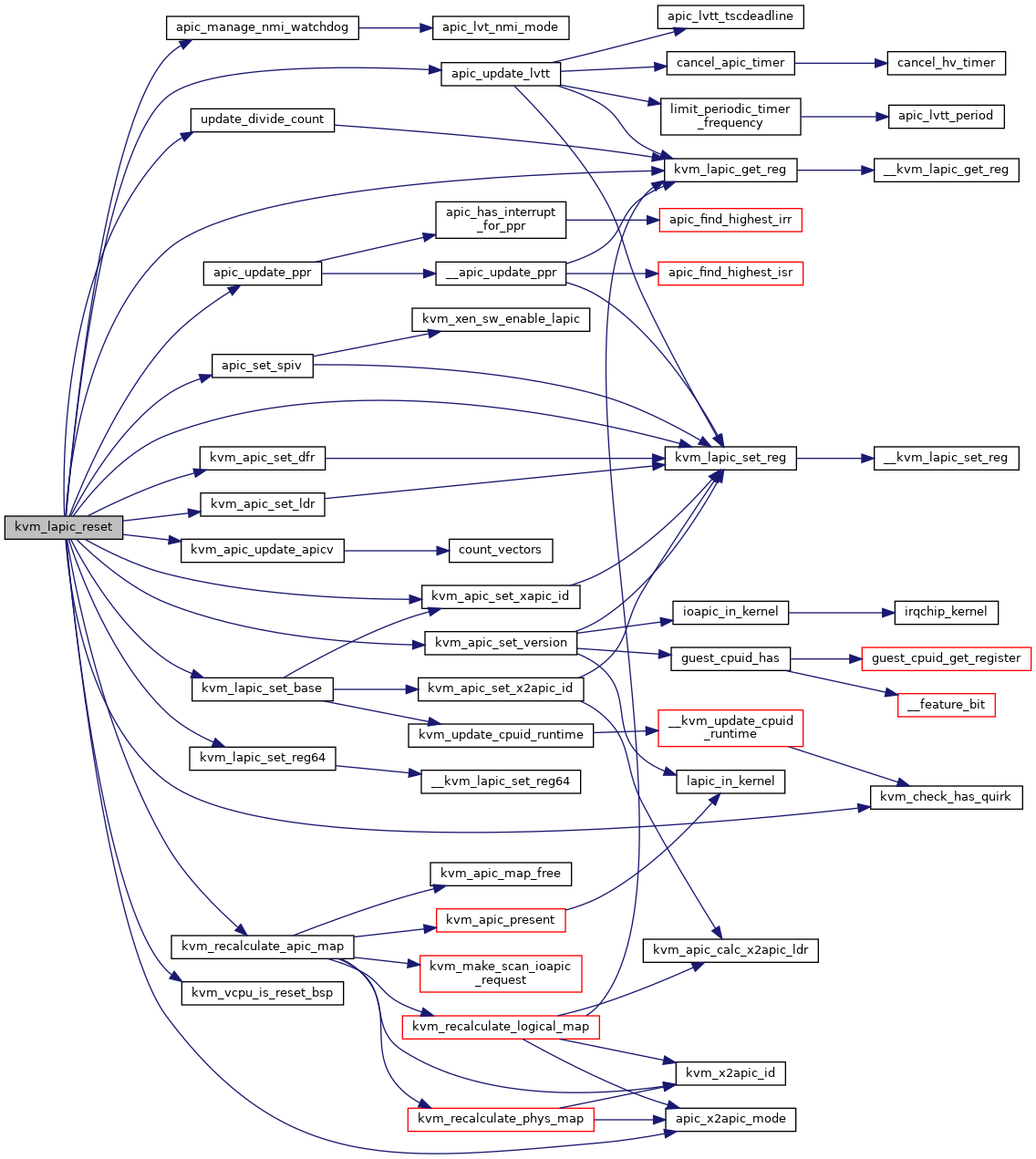

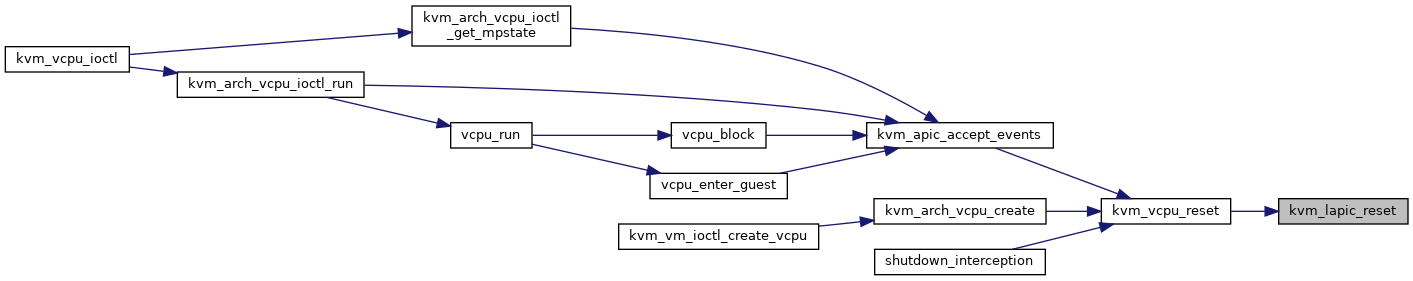

| void | kvm_lapic_reset (struct kvm_vcpu *vcpu, bool init_event) |

| u64 | kvm_lapic_get_cr8 (struct kvm_vcpu *vcpu) |

| void | kvm_lapic_set_tpr (struct kvm_vcpu *vcpu, unsigned long cr8) |

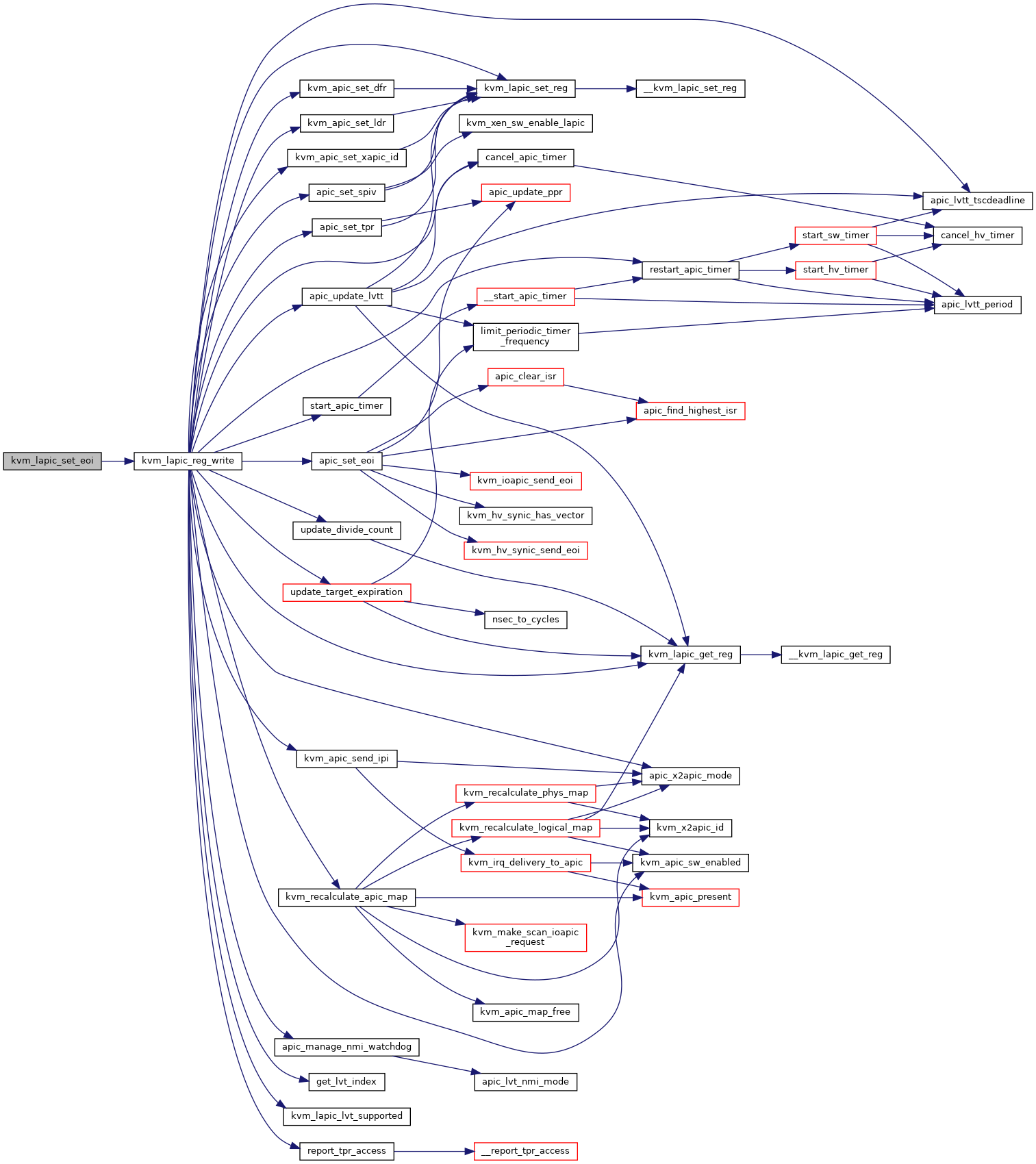

| void | kvm_lapic_set_eoi (struct kvm_vcpu *vcpu) |

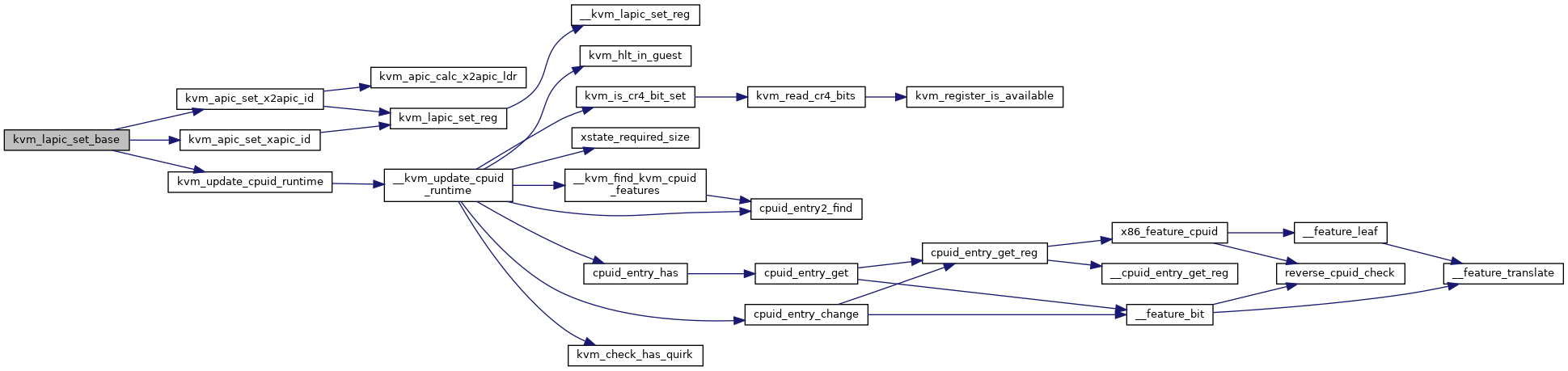

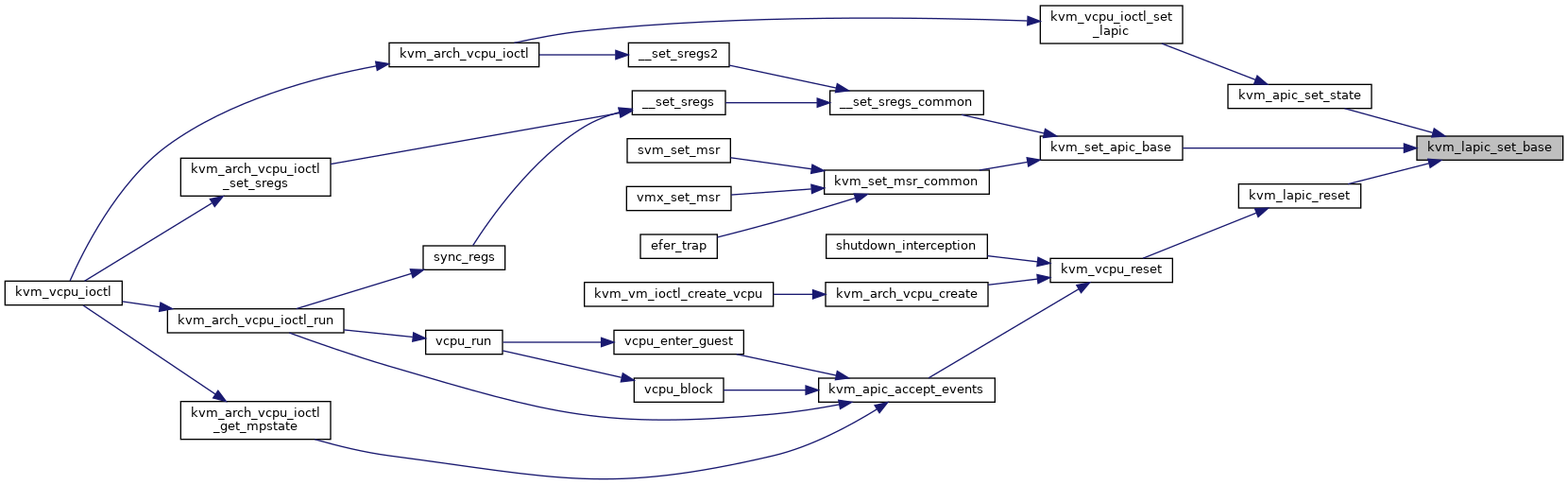

| void | kvm_lapic_set_base (struct kvm_vcpu *vcpu, u64 value) |

| u64 | kvm_lapic_get_base (struct kvm_vcpu *vcpu) |

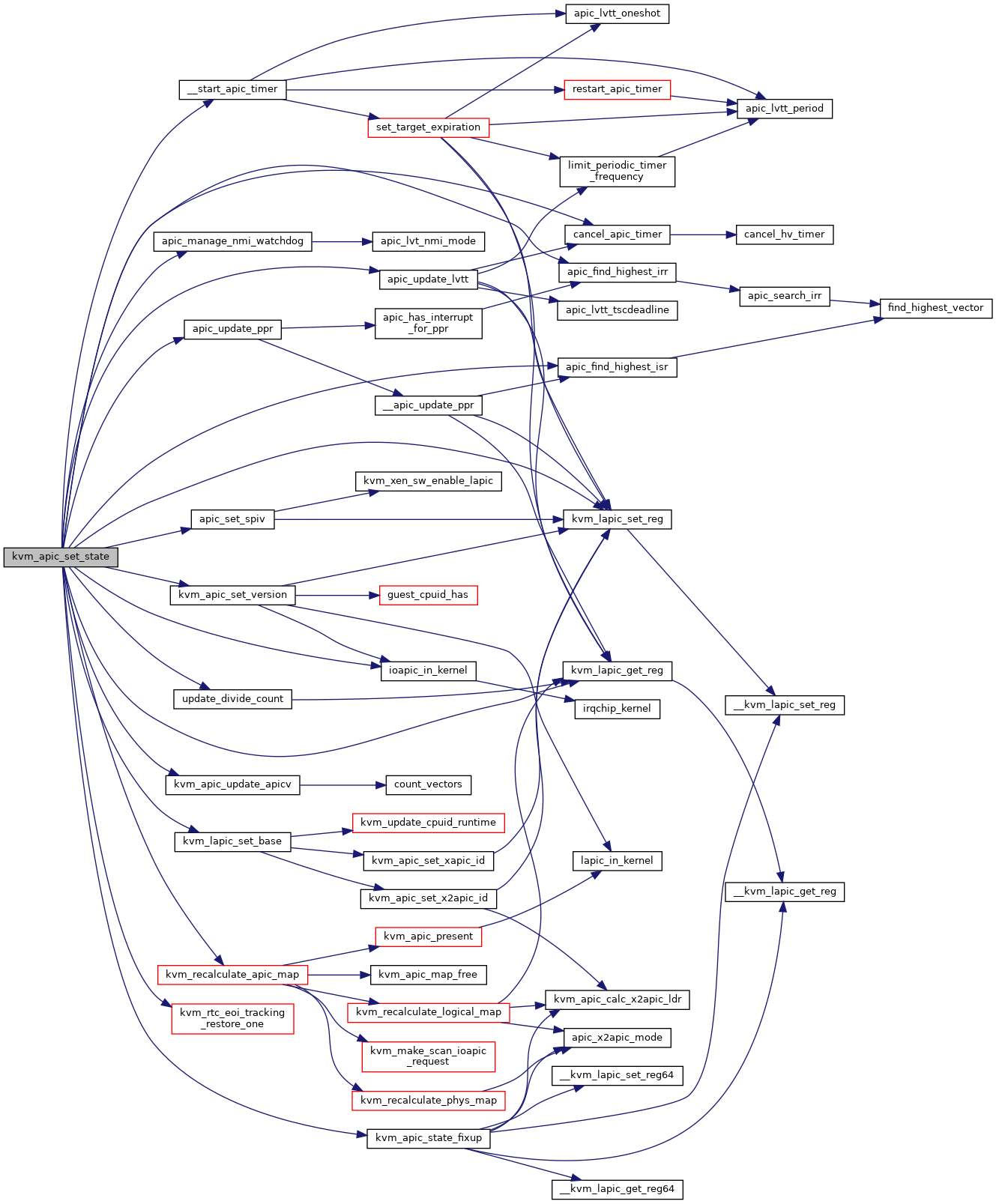

| void | kvm_recalculate_apic_map (struct kvm *kvm) |

| void | kvm_apic_set_version (struct kvm_vcpu *vcpu) |

| void | kvm_apic_after_set_mcg_cap (struct kvm_vcpu *vcpu) |

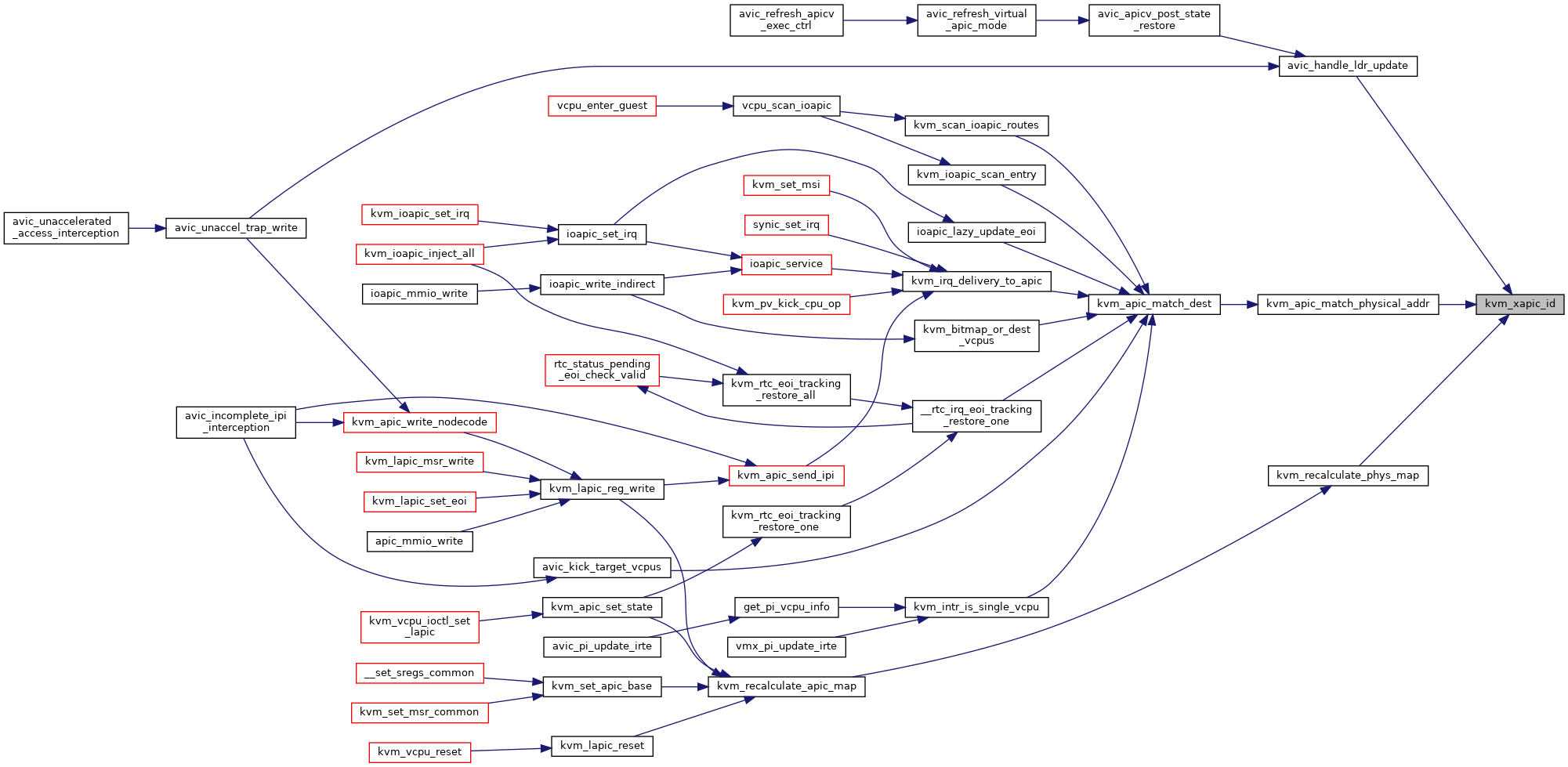

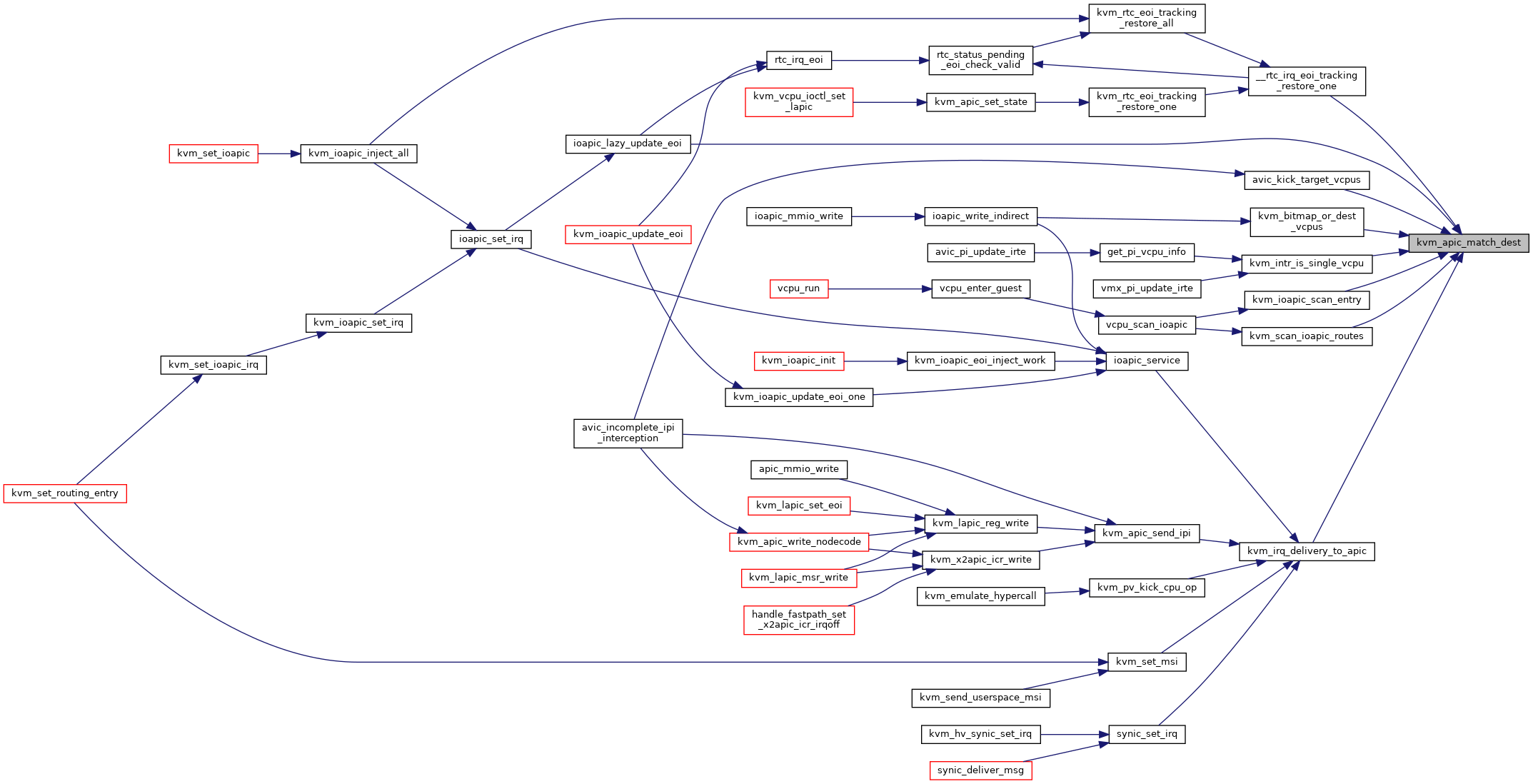

| bool | kvm_apic_match_dest (struct kvm_vcpu *vcpu, struct kvm_lapic *source, int shorthand, unsigned int dest, int dest_mode) |

| int | kvm_apic_compare_prio (struct kvm_vcpu *vcpu1, struct kvm_vcpu *vcpu2) |

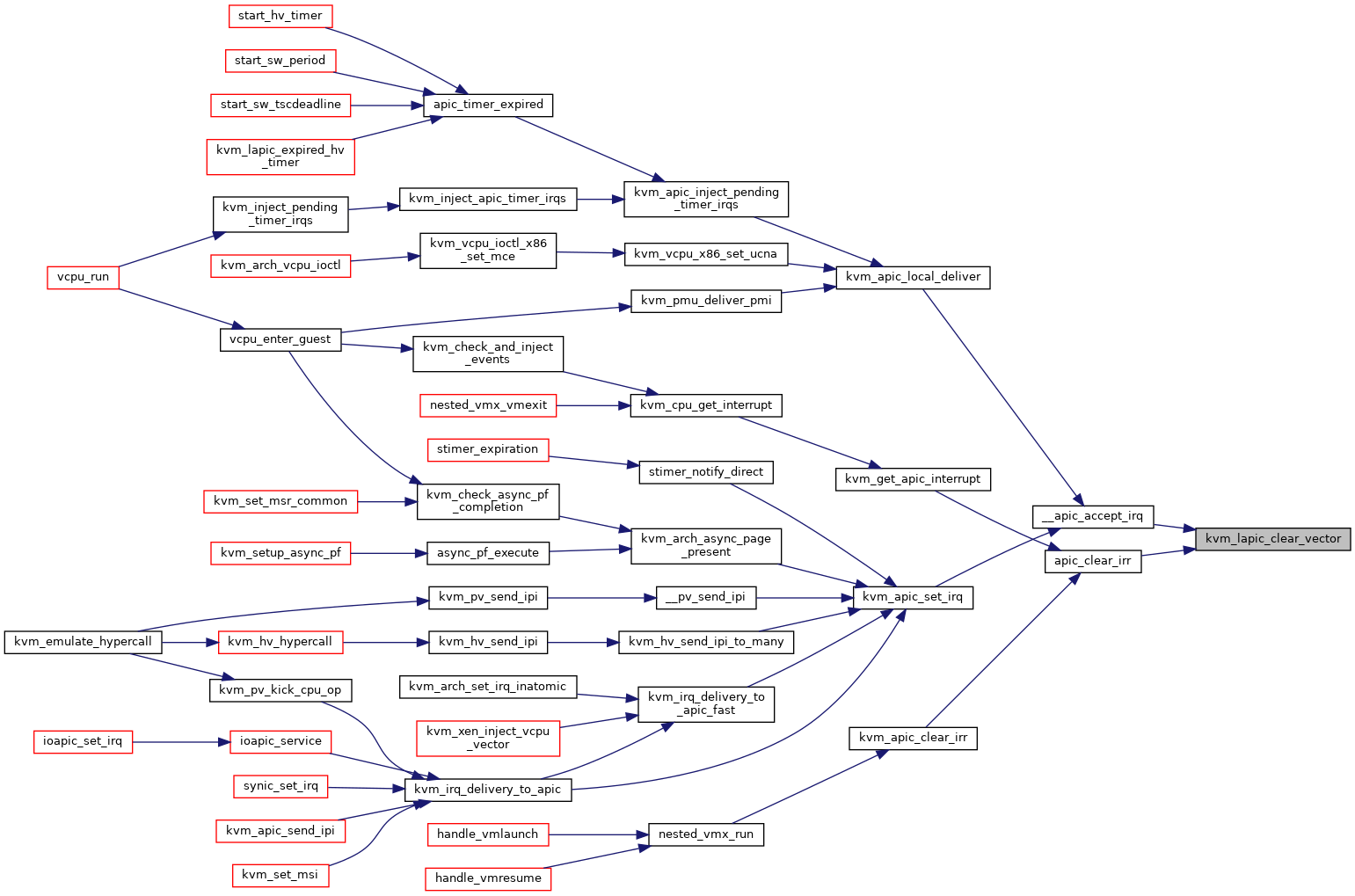

| void | kvm_apic_clear_irr (struct kvm_vcpu *vcpu, int vec) |

| bool | __kvm_apic_update_irr (u32 *pir, void *regs, int *max_irr) |

| bool | kvm_apic_update_irr (struct kvm_vcpu *vcpu, u32 *pir, int *max_irr) |

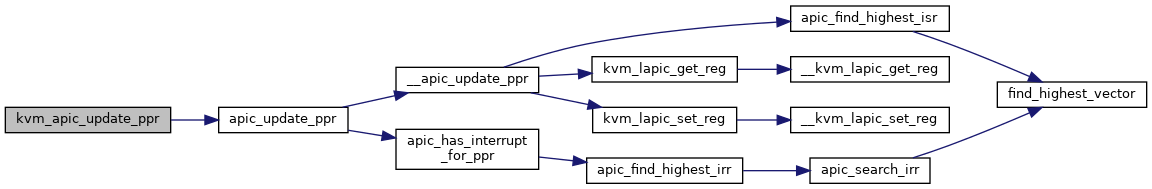

| void | kvm_apic_update_ppr (struct kvm_vcpu *vcpu) |

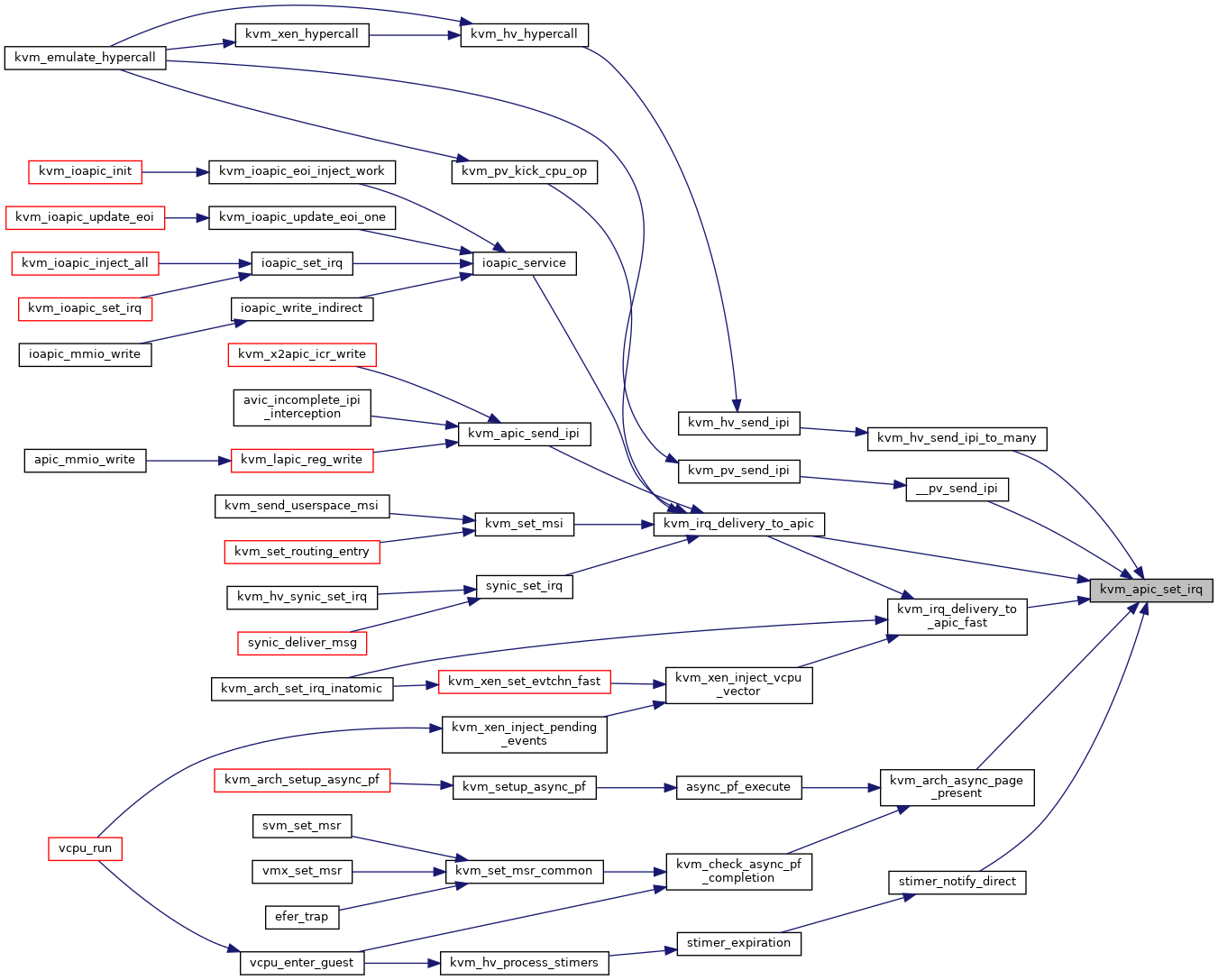

| int | kvm_apic_set_irq (struct kvm_vcpu *vcpu, struct kvm_lapic_irq *irq, struct dest_map *dest_map) |

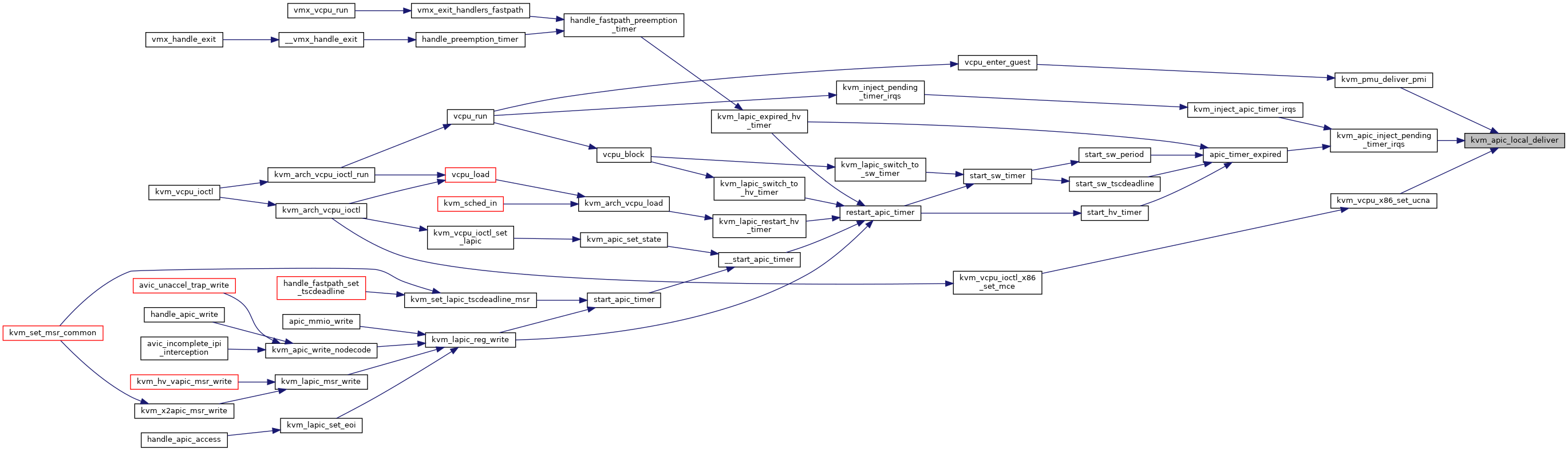

| int | kvm_apic_local_deliver (struct kvm_lapic *apic, int lvt_type) |

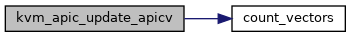

| void | kvm_apic_update_apicv (struct kvm_vcpu *vcpu) |

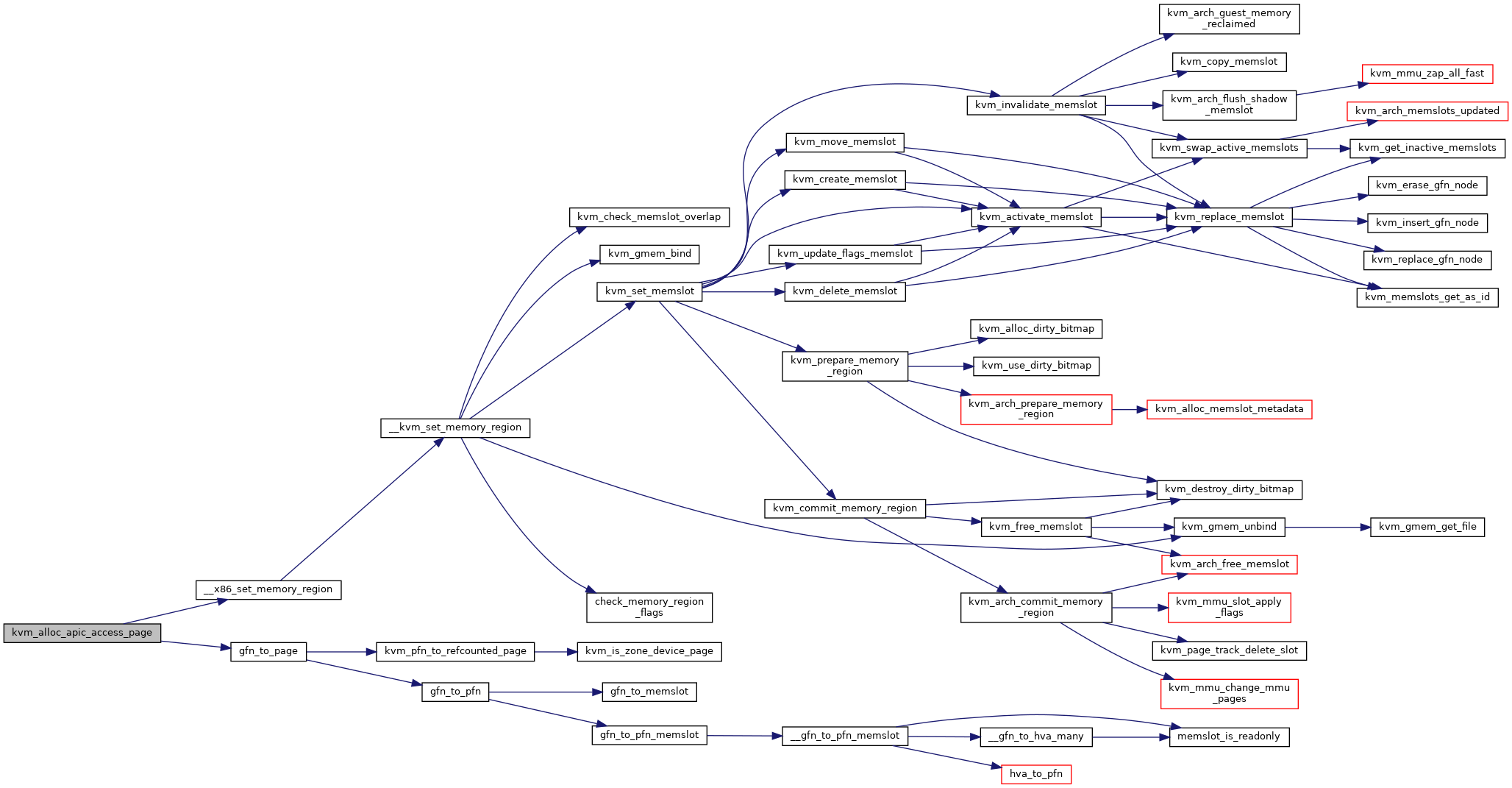

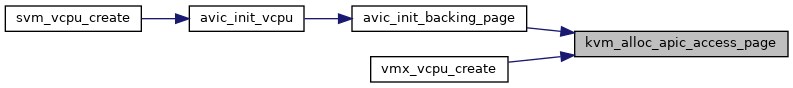

| int | kvm_alloc_apic_access_page (struct kvm *kvm) |

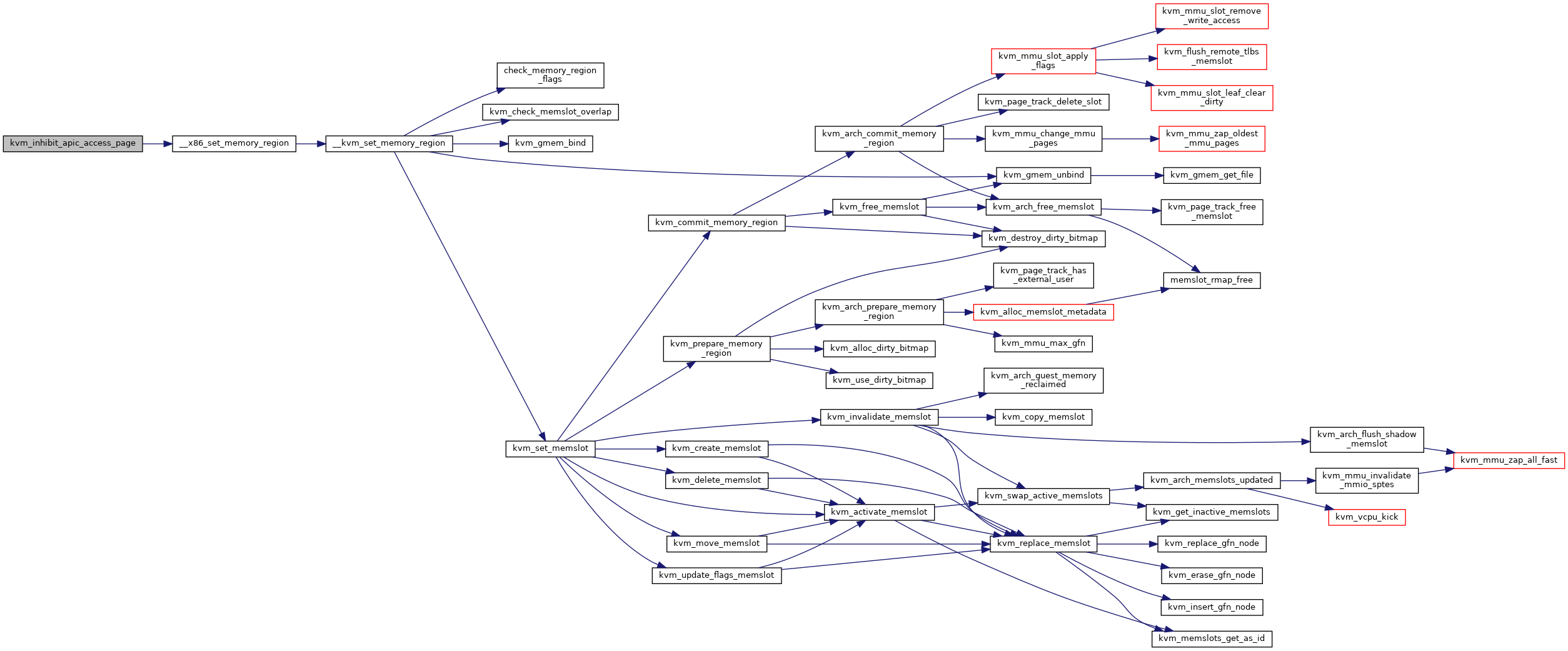

| void | kvm_inhibit_apic_access_page (struct kvm_vcpu *vcpu) |

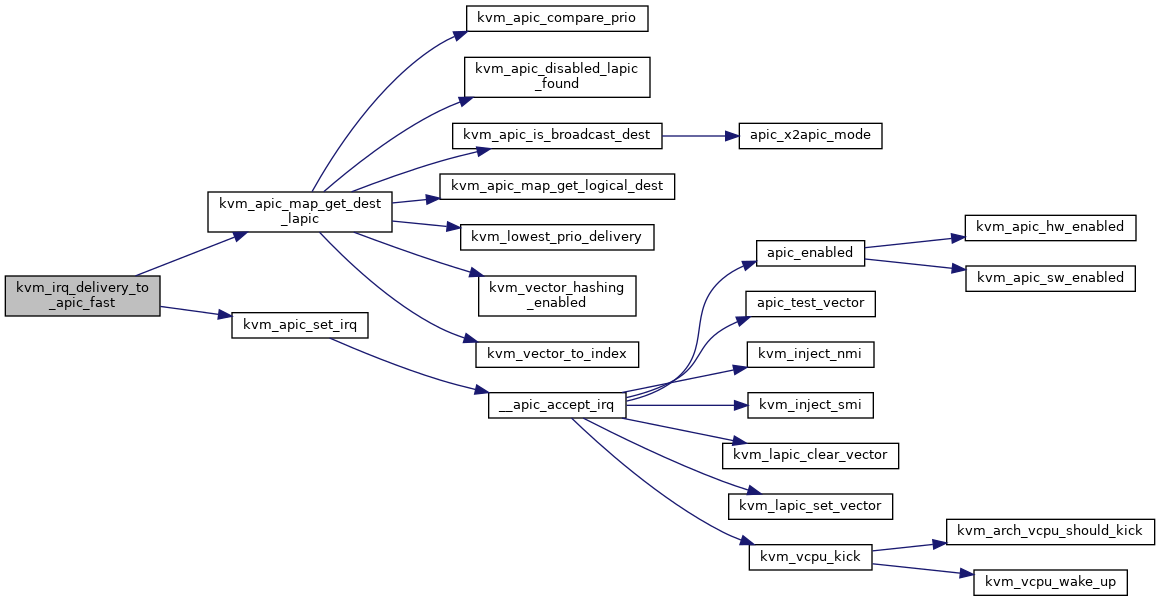

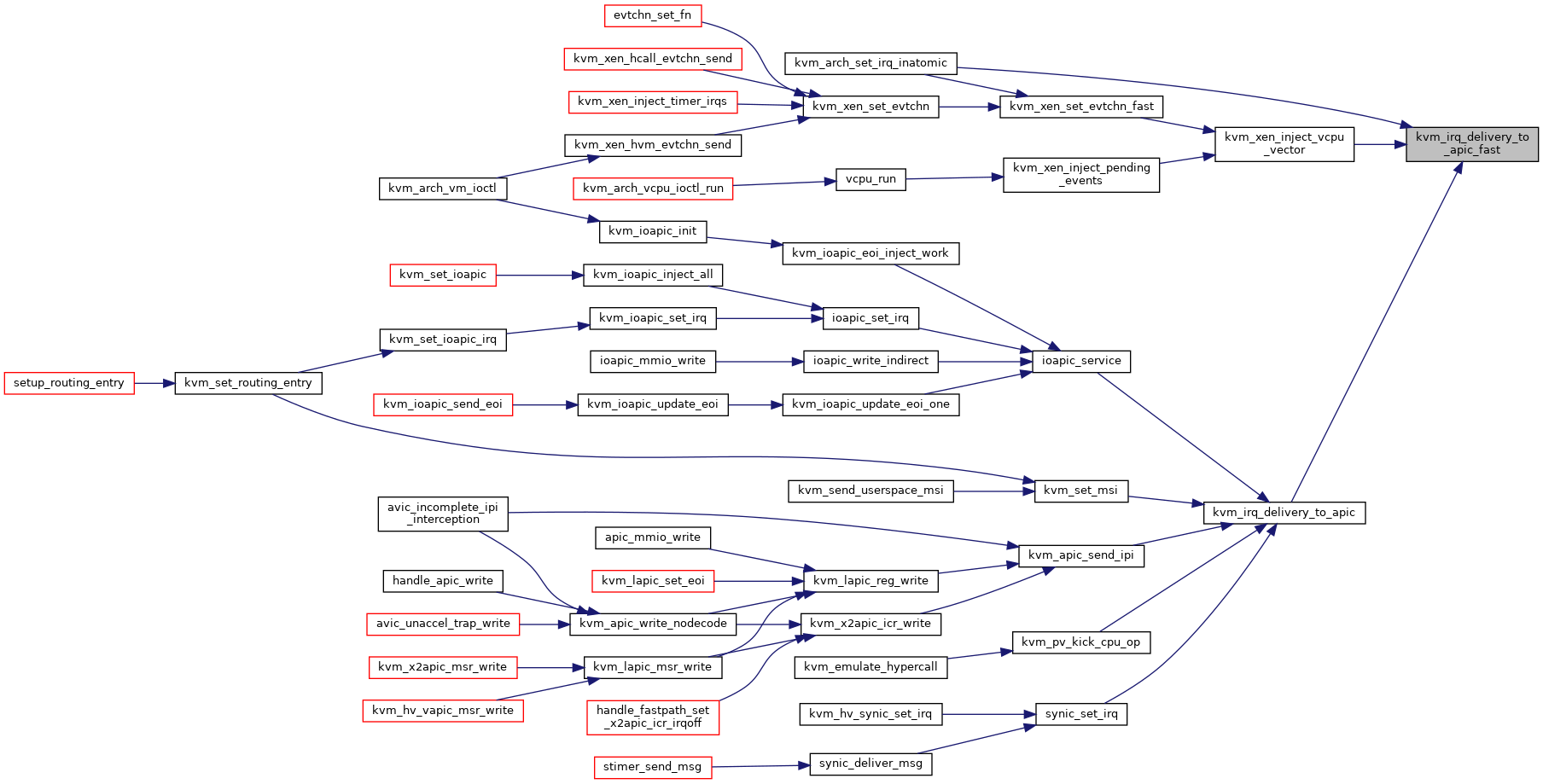

| bool | kvm_irq_delivery_to_apic_fast (struct kvm *kvm, struct kvm_lapic *src, struct kvm_lapic_irq *irq, int *r, struct dest_map *dest_map) |

| void | kvm_apic_send_ipi (struct kvm_lapic *apic, u32 icr_low, u32 icr_high) |

| u64 | kvm_get_apic_base (struct kvm_vcpu *vcpu) |

| int | kvm_set_apic_base (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

| int | kvm_apic_get_state (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

| int | kvm_apic_set_state (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

| enum lapic_mode | kvm_get_apic_mode (struct kvm_vcpu *vcpu) |

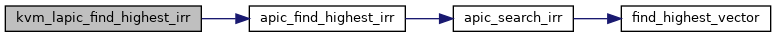

| int | kvm_lapic_find_highest_irr (struct kvm_vcpu *vcpu) |

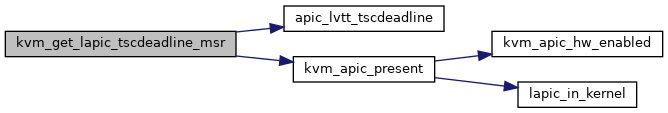

| u64 | kvm_get_lapic_tscdeadline_msr (struct kvm_vcpu *vcpu) |

| void | kvm_set_lapic_tscdeadline_msr (struct kvm_vcpu *vcpu, u64 data) |

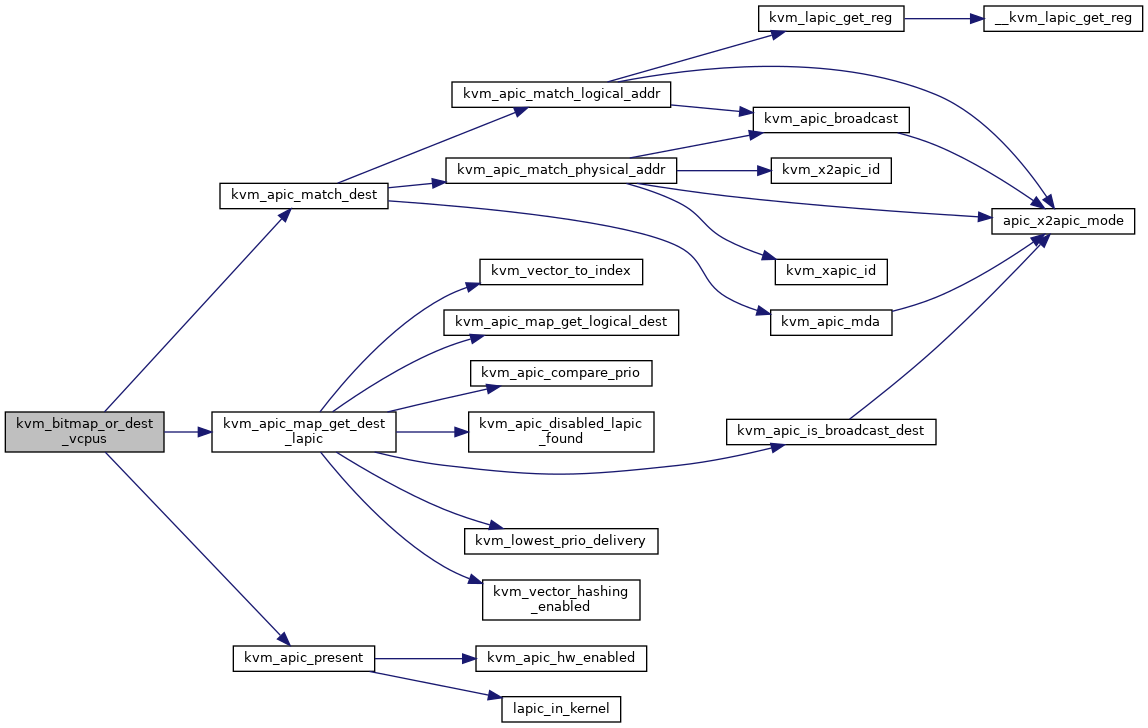

| void | kvm_apic_write_nodecode (struct kvm_vcpu *vcpu, u32 offset) |

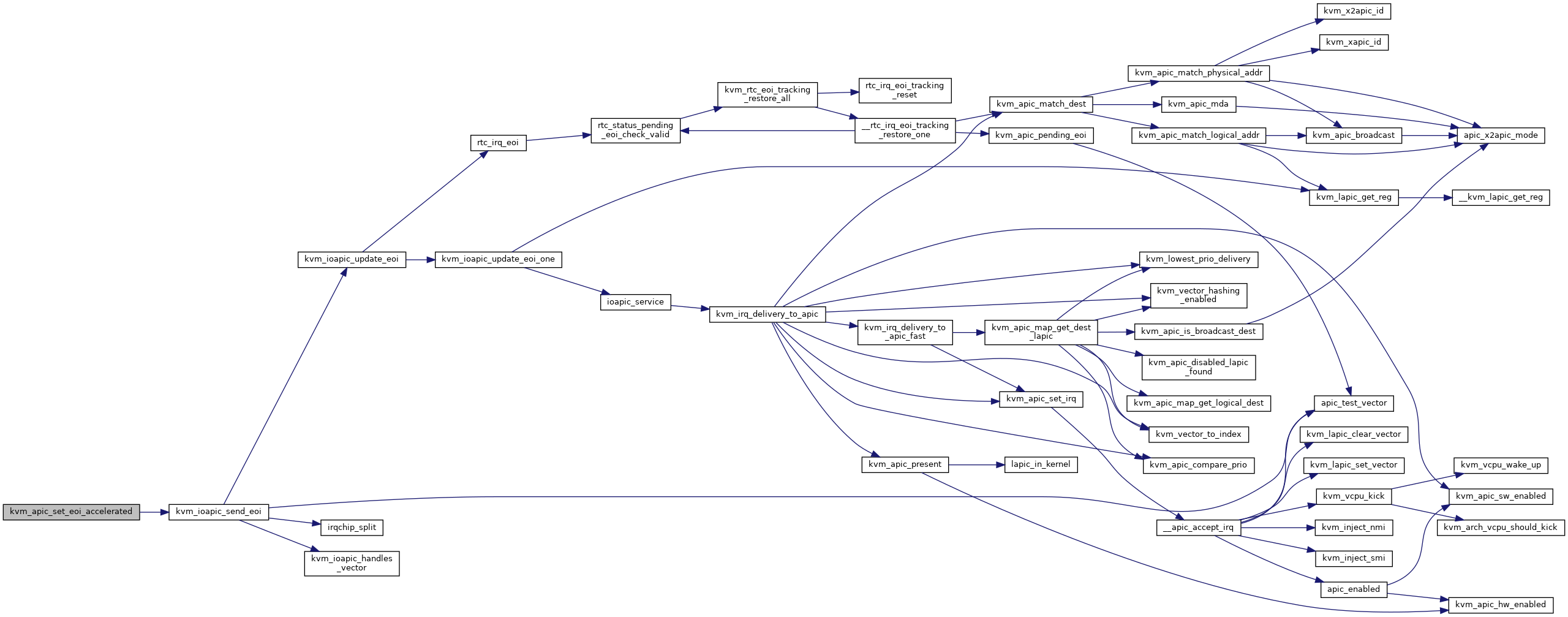

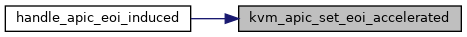

| void | kvm_apic_set_eoi_accelerated (struct kvm_vcpu *vcpu, int vector) |

| int | kvm_lapic_set_vapic_addr (struct kvm_vcpu *vcpu, gpa_t vapic_addr) |

| void | kvm_lapic_sync_from_vapic (struct kvm_vcpu *vcpu) |

| void | kvm_lapic_sync_to_vapic (struct kvm_vcpu *vcpu) |

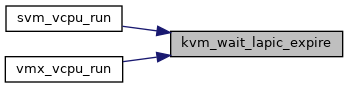

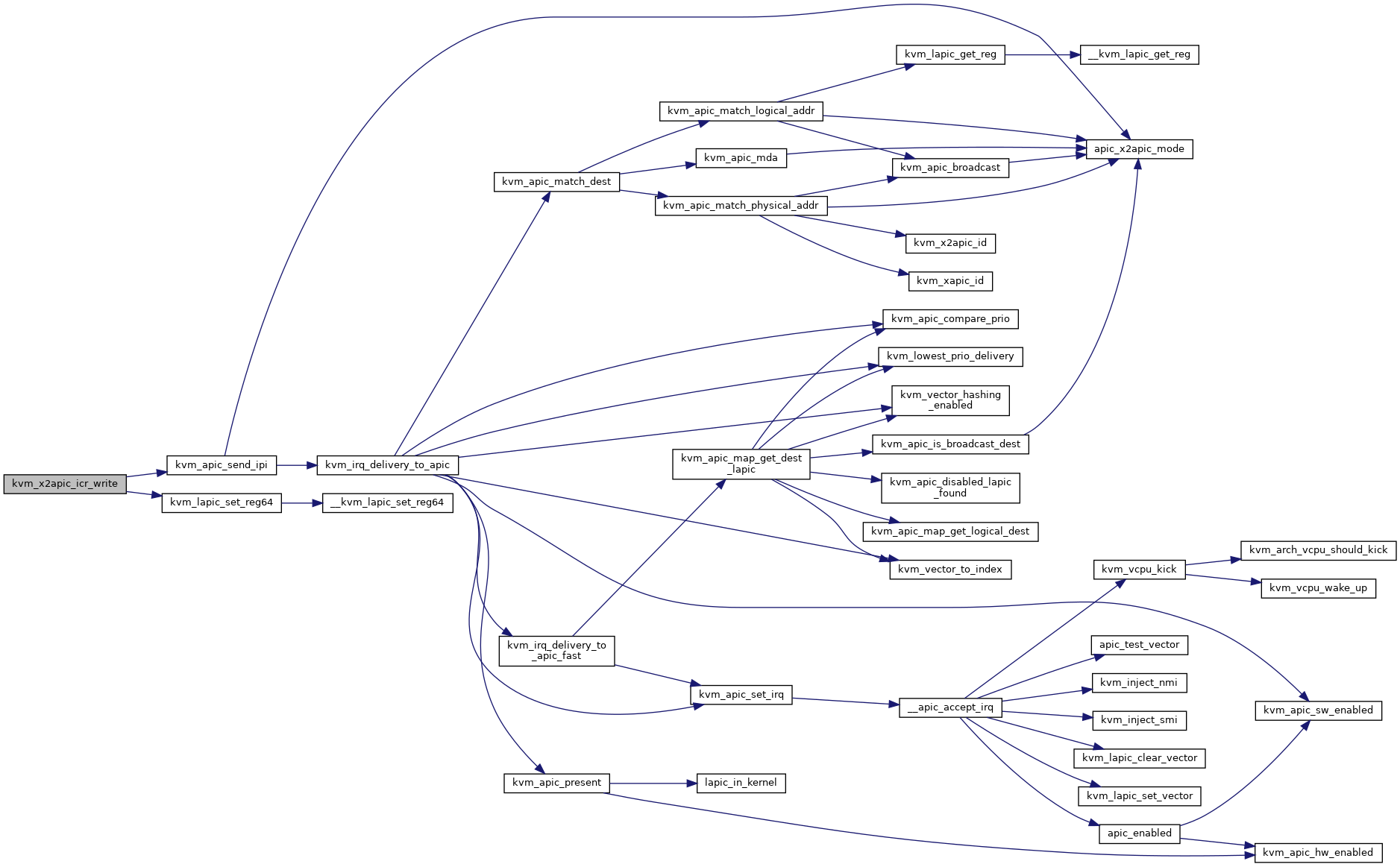

| int | kvm_x2apic_icr_write (struct kvm_lapic *apic, u64 data) |

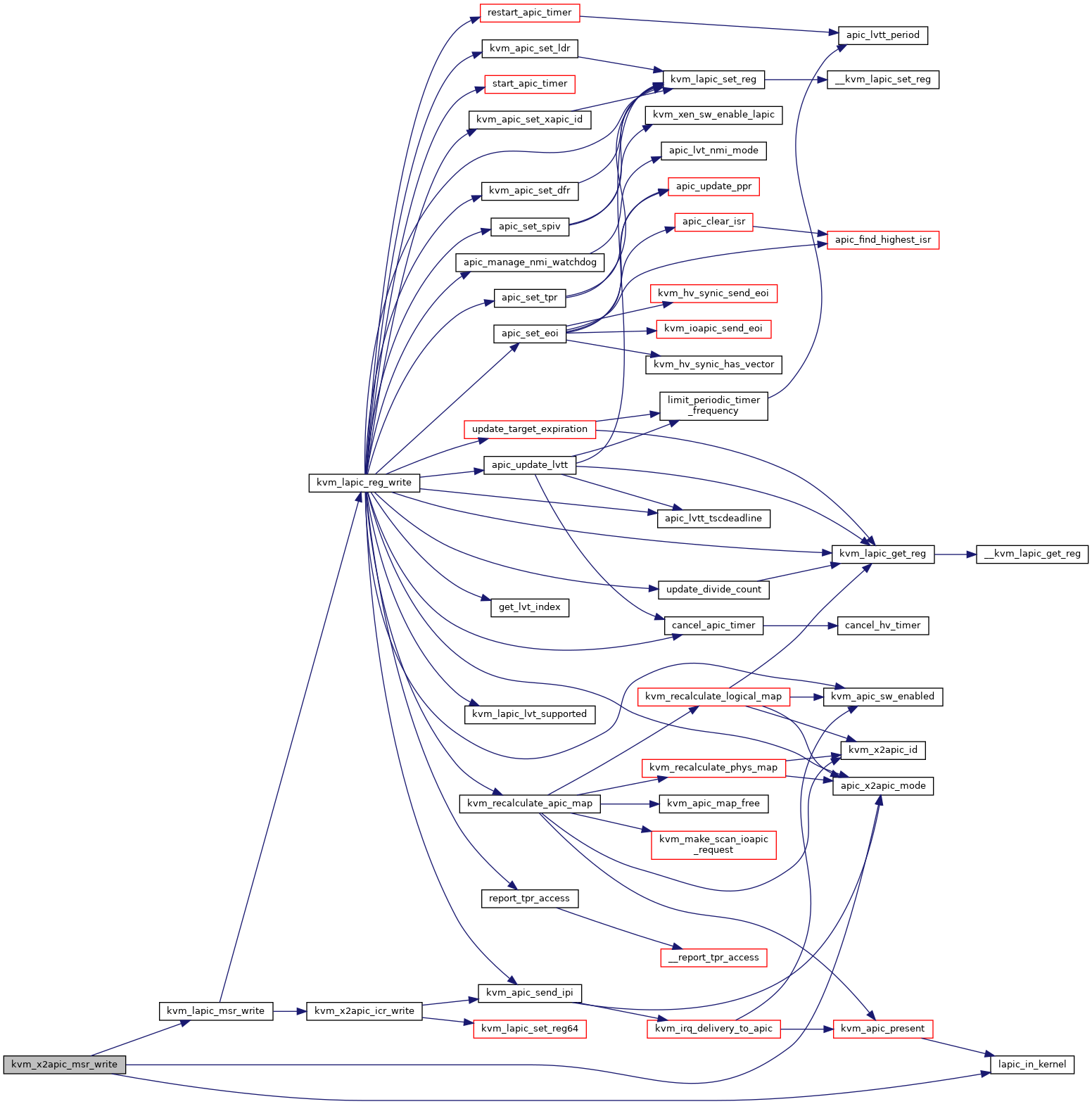

| int | kvm_x2apic_msr_write (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

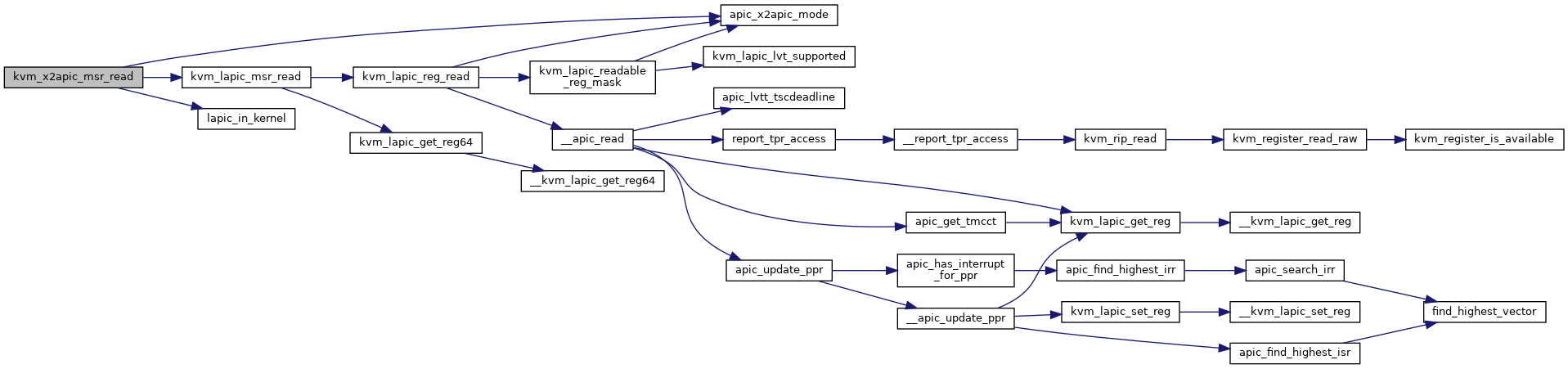

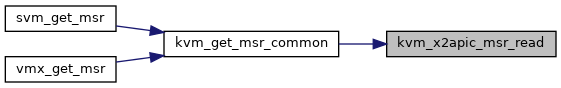

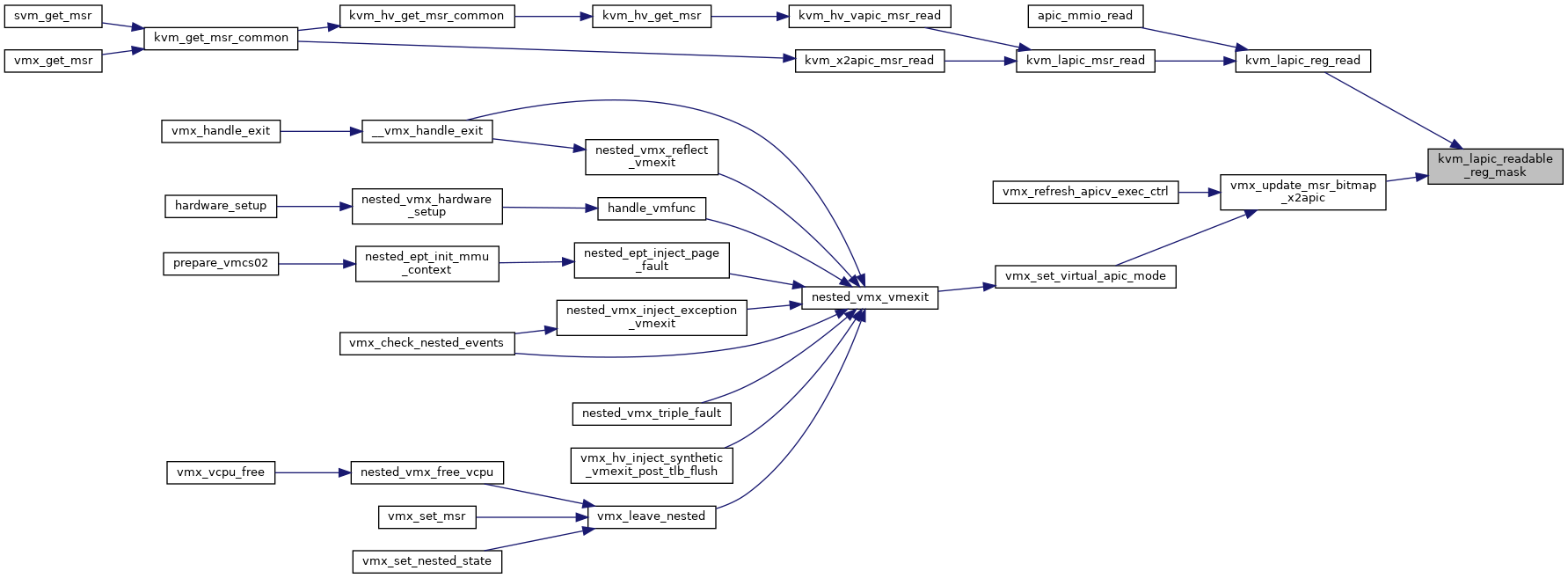

| int | kvm_x2apic_msr_read (struct kvm_vcpu *vcpu, u32 msr, u64 *data) |

| int | kvm_hv_vapic_msr_write (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| int | kvm_hv_vapic_msr_read (struct kvm_vcpu *vcpu, u32 msr, u64 *data) |

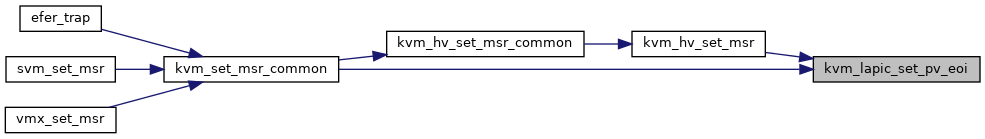

| int | kvm_lapic_set_pv_eoi (struct kvm_vcpu *vcpu, u64 data, unsigned long len) |

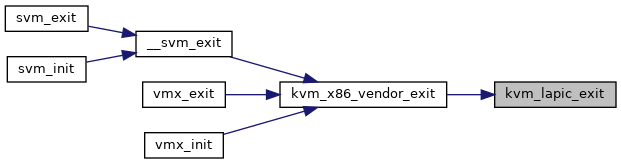

| void | kvm_lapic_exit (void) |

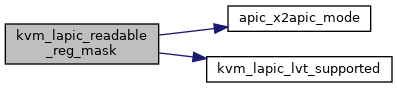

| u64 | kvm_lapic_readable_reg_mask (struct kvm_lapic *apic) |

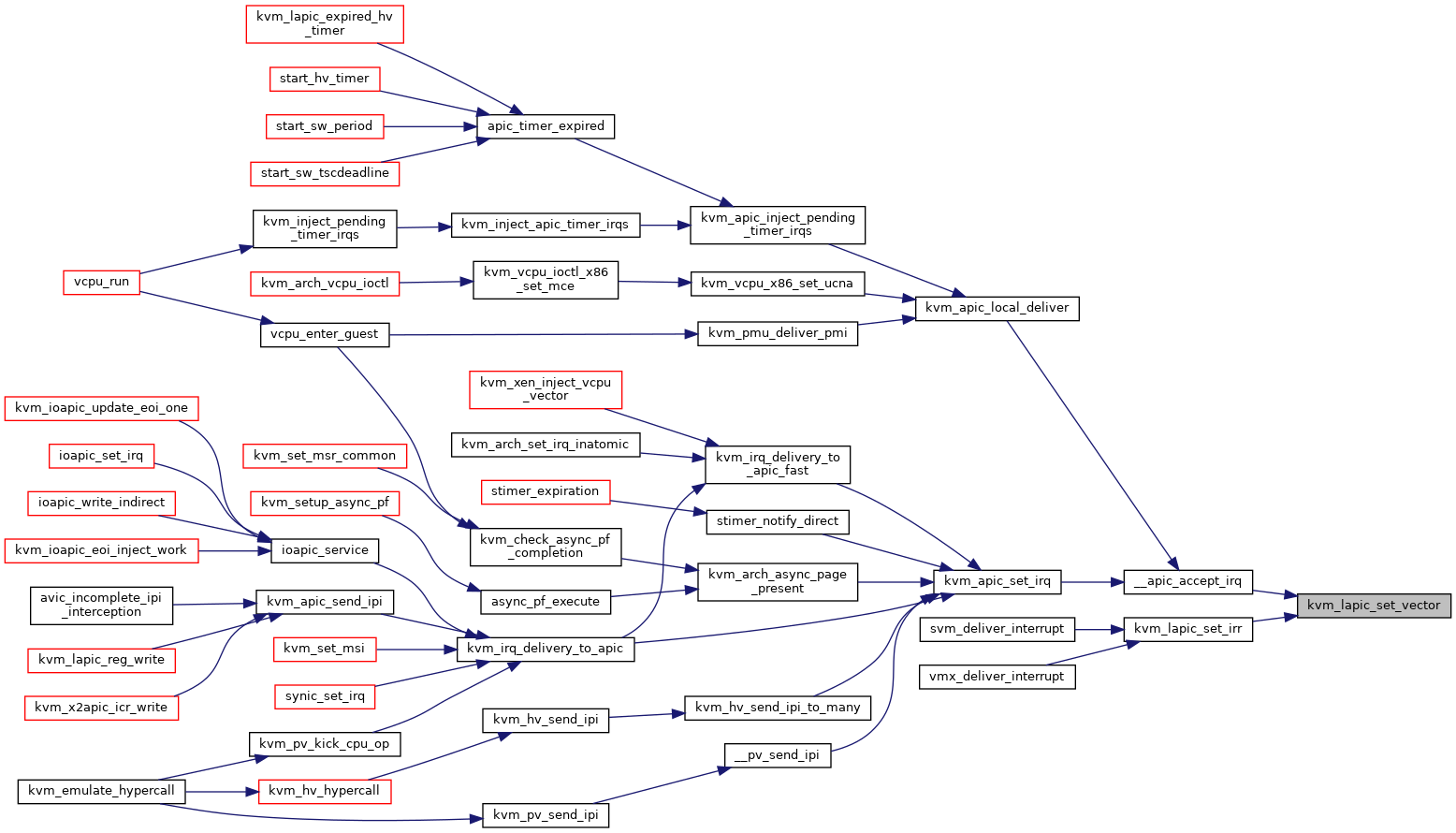

| static void | kvm_lapic_clear_vector (int vec, void *bitmap) |

| static void | kvm_lapic_set_vector (int vec, void *bitmap) |

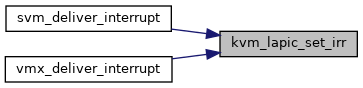

| static void | kvm_lapic_set_irr (int vec, struct kvm_lapic *apic) |

| static u32 | __kvm_lapic_get_reg (char *regs, int reg_off) |

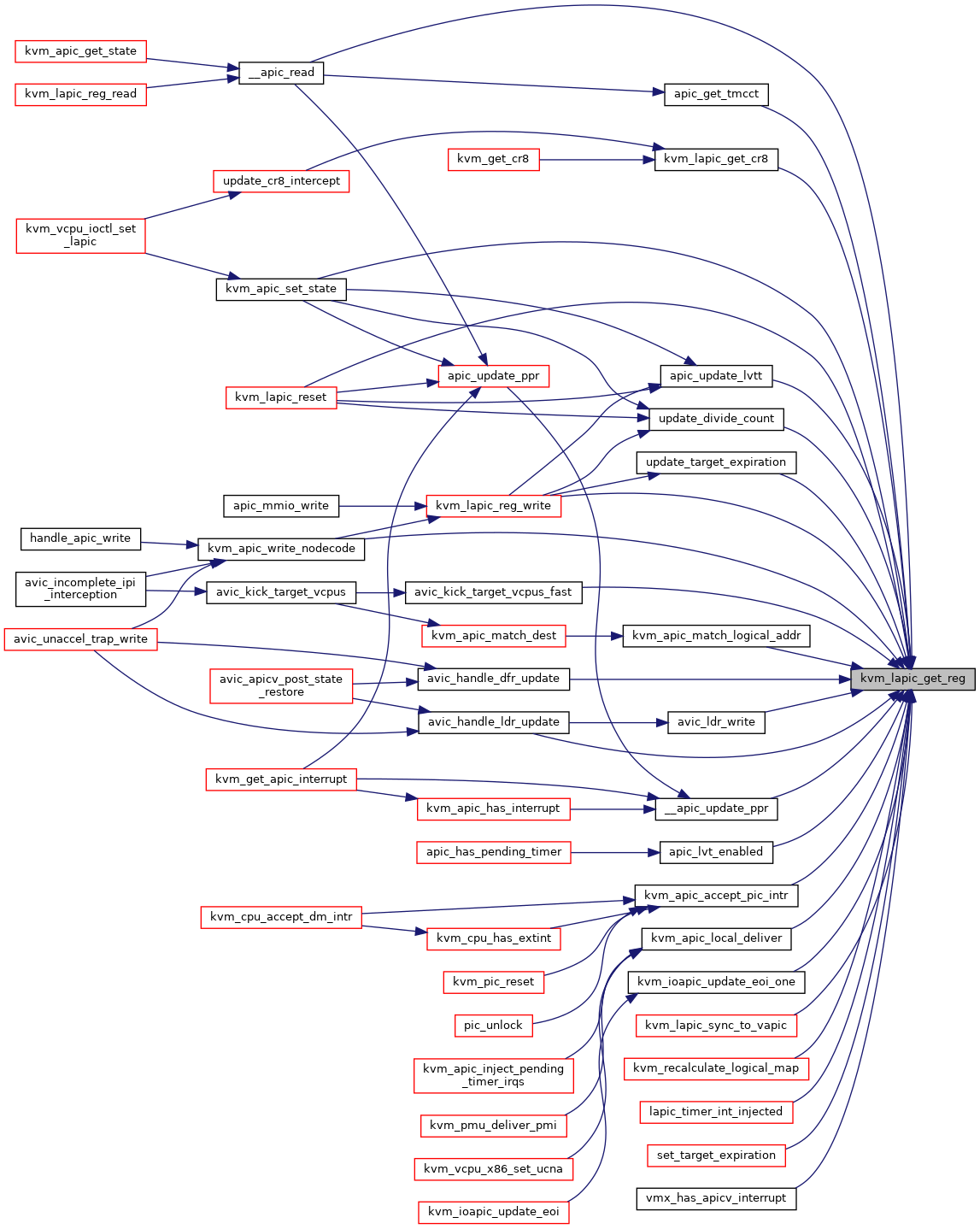

| static u32 | kvm_lapic_get_reg (struct kvm_lapic *apic, int reg_off) |

| DECLARE_STATIC_KEY_FALSE (kvm_has_noapic_vcpu) | |

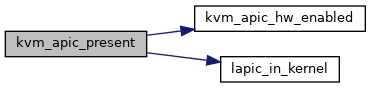

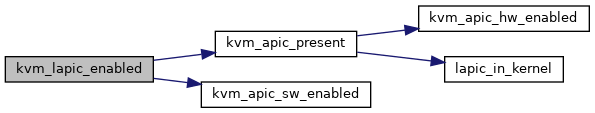

| static bool | lapic_in_kernel (struct kvm_vcpu *vcpu) |

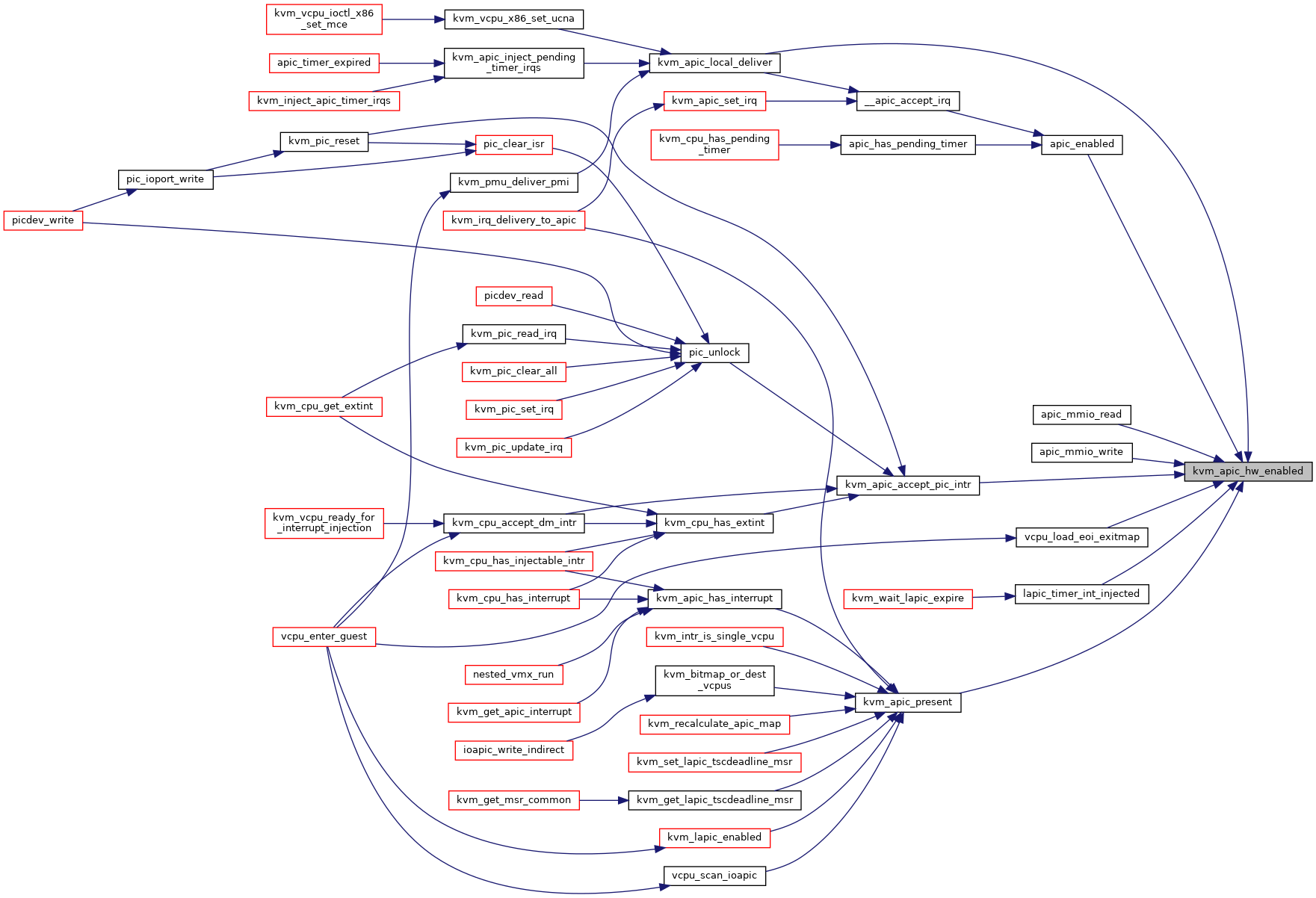

| static bool | kvm_apic_hw_enabled (struct kvm_lapic *apic) |

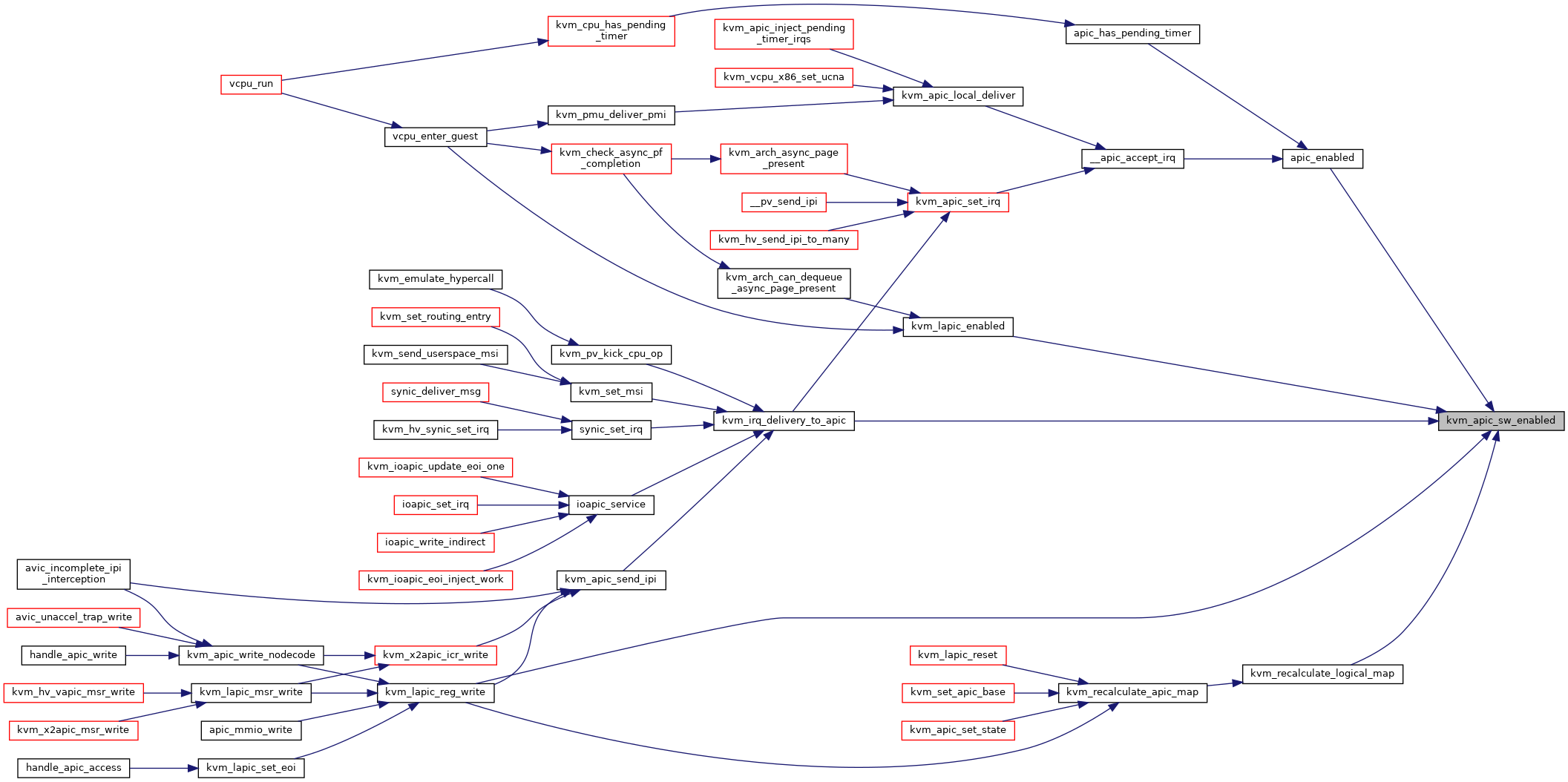

| static bool | kvm_apic_sw_enabled (struct kvm_lapic *apic) |

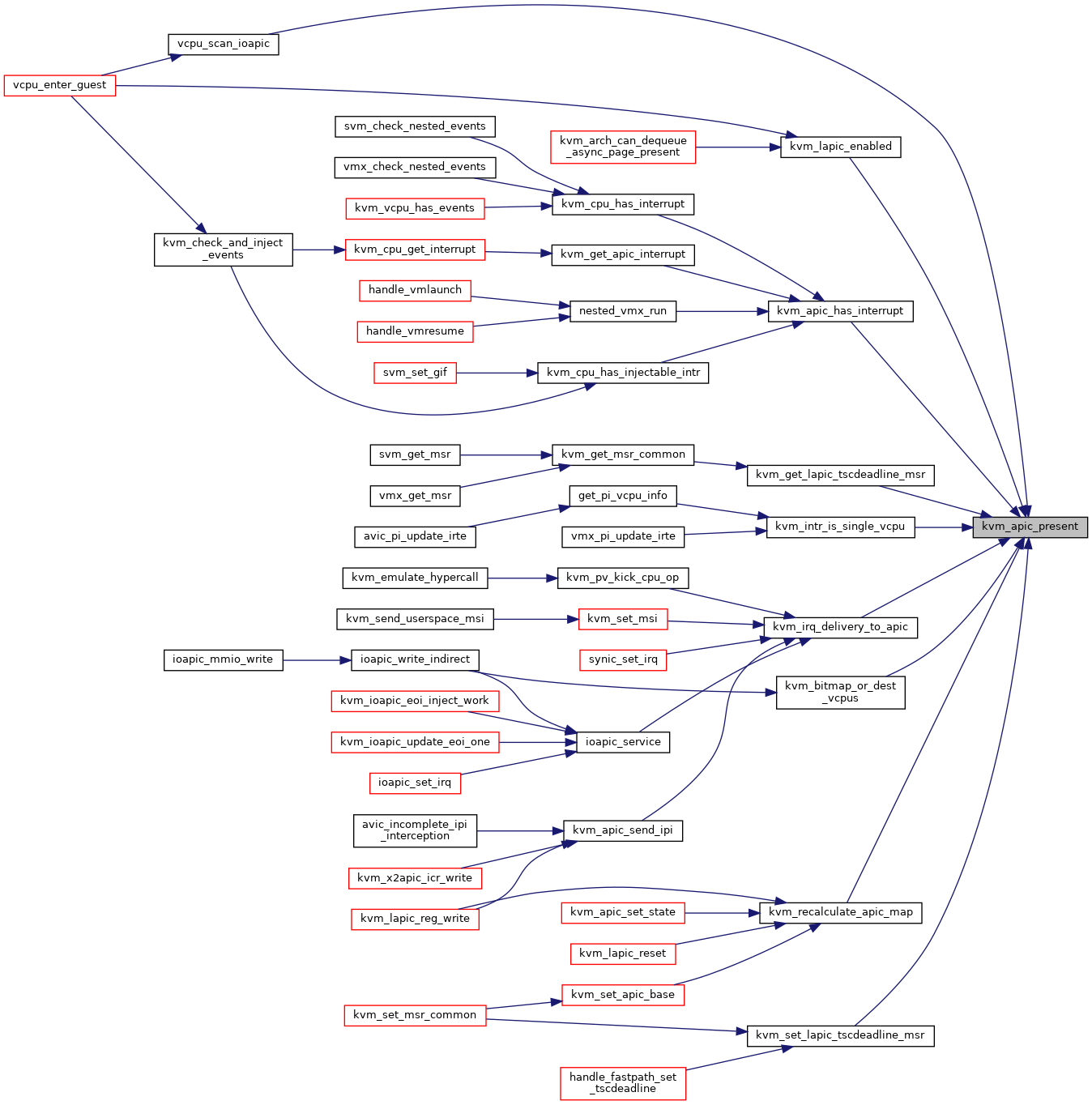

| static bool | kvm_apic_present (struct kvm_vcpu *vcpu) |

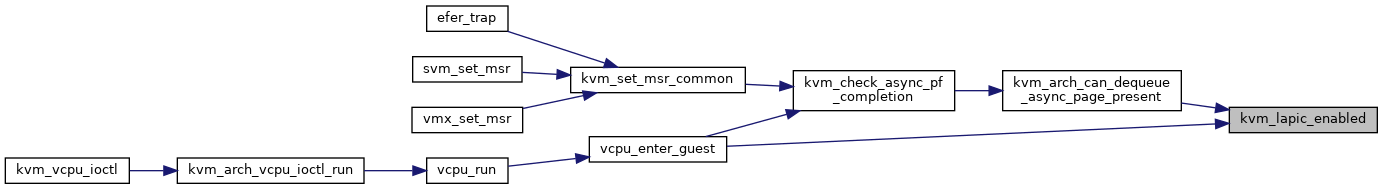

| static int | kvm_lapic_enabled (struct kvm_vcpu *vcpu) |

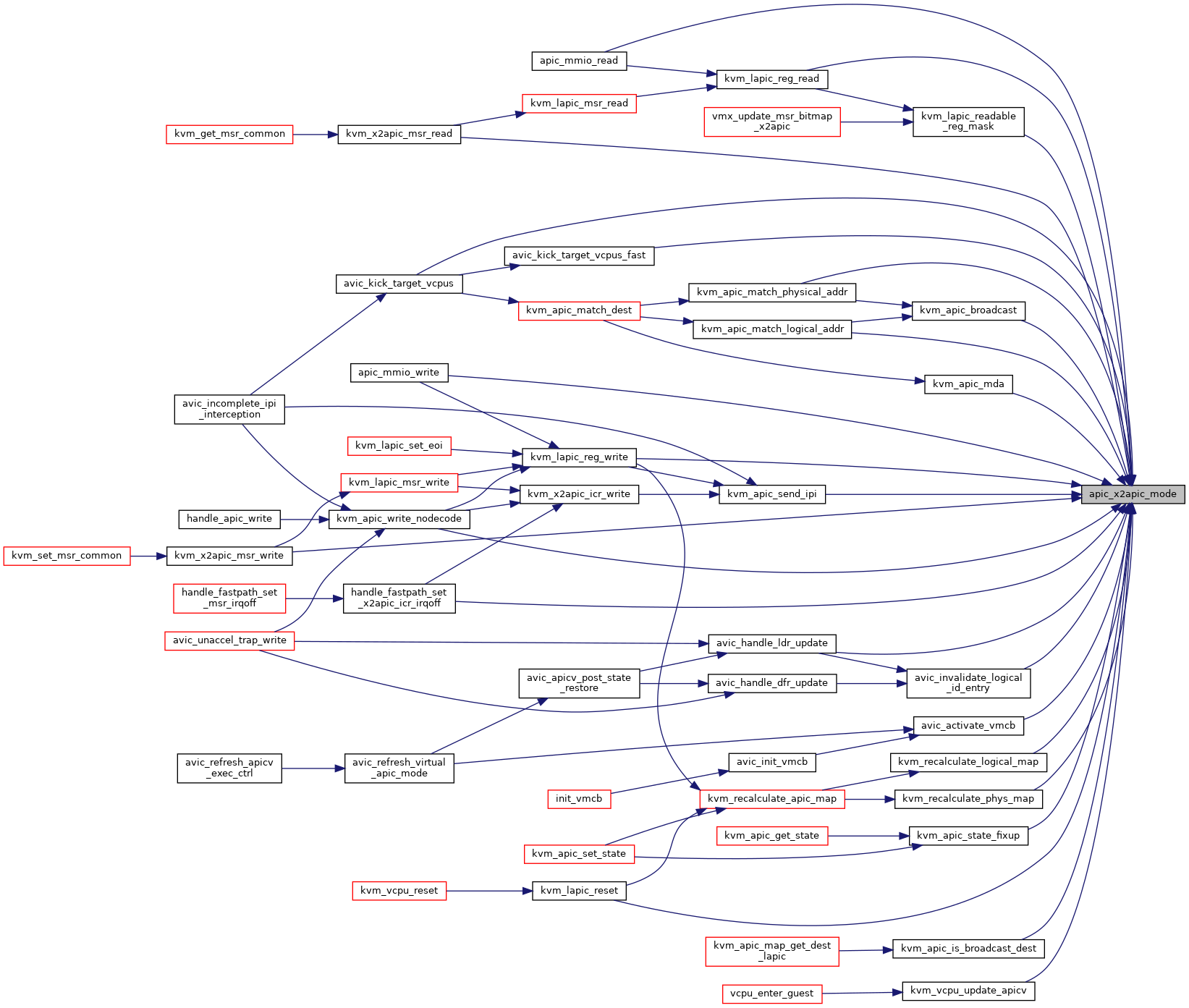

| static int | apic_x2apic_mode (struct kvm_lapic *apic) |

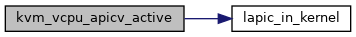

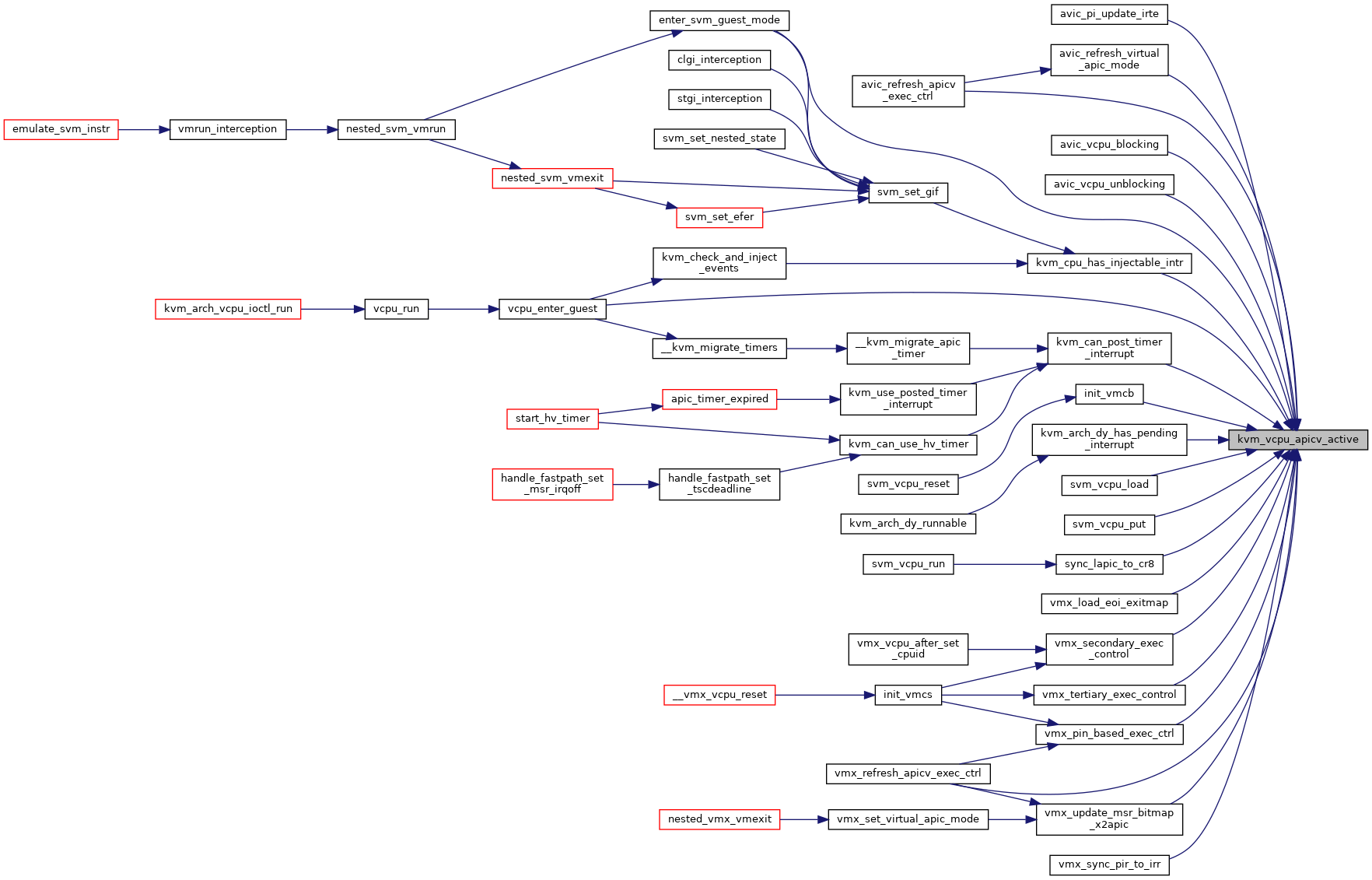

| static bool | kvm_vcpu_apicv_active (struct kvm_vcpu *vcpu) |

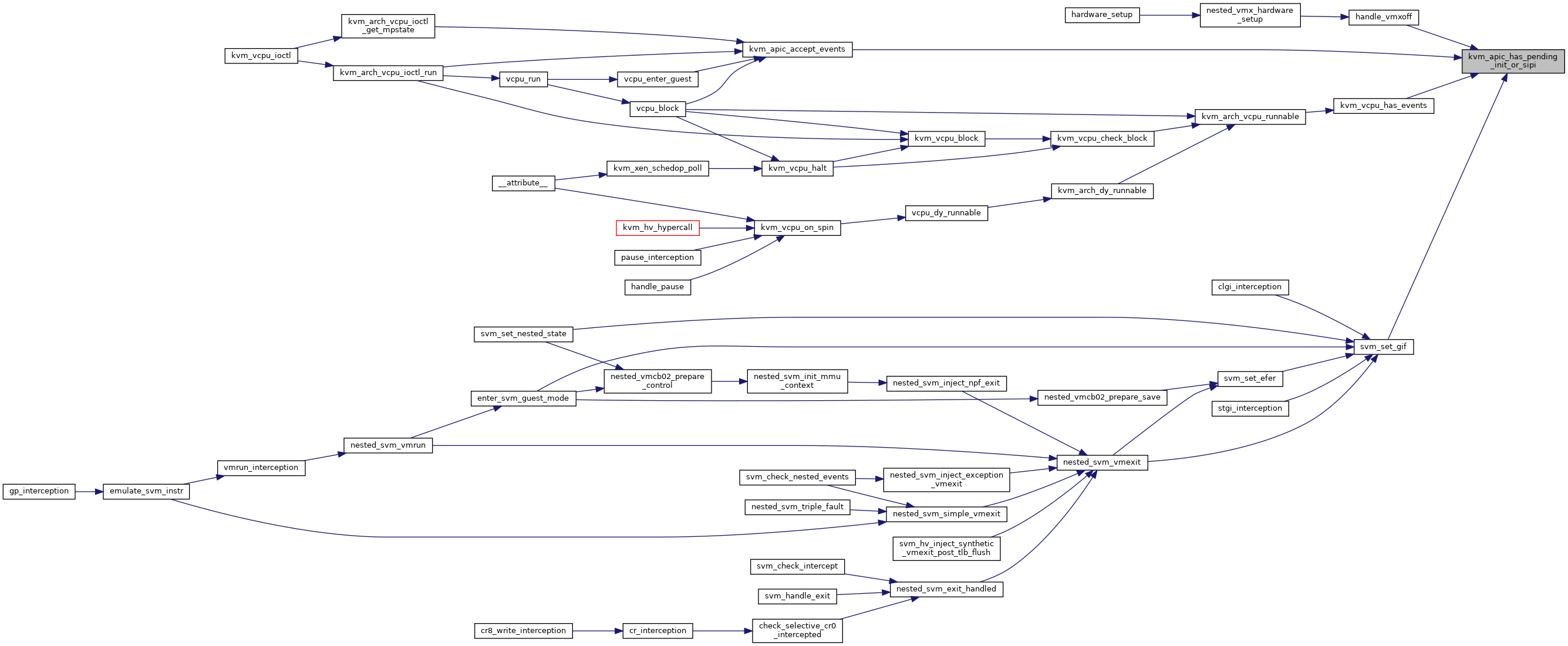

| static bool | kvm_apic_has_pending_init_or_sipi (struct kvm_vcpu *vcpu) |

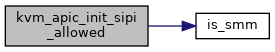

| static bool | kvm_apic_init_sipi_allowed (struct kvm_vcpu *vcpu) |

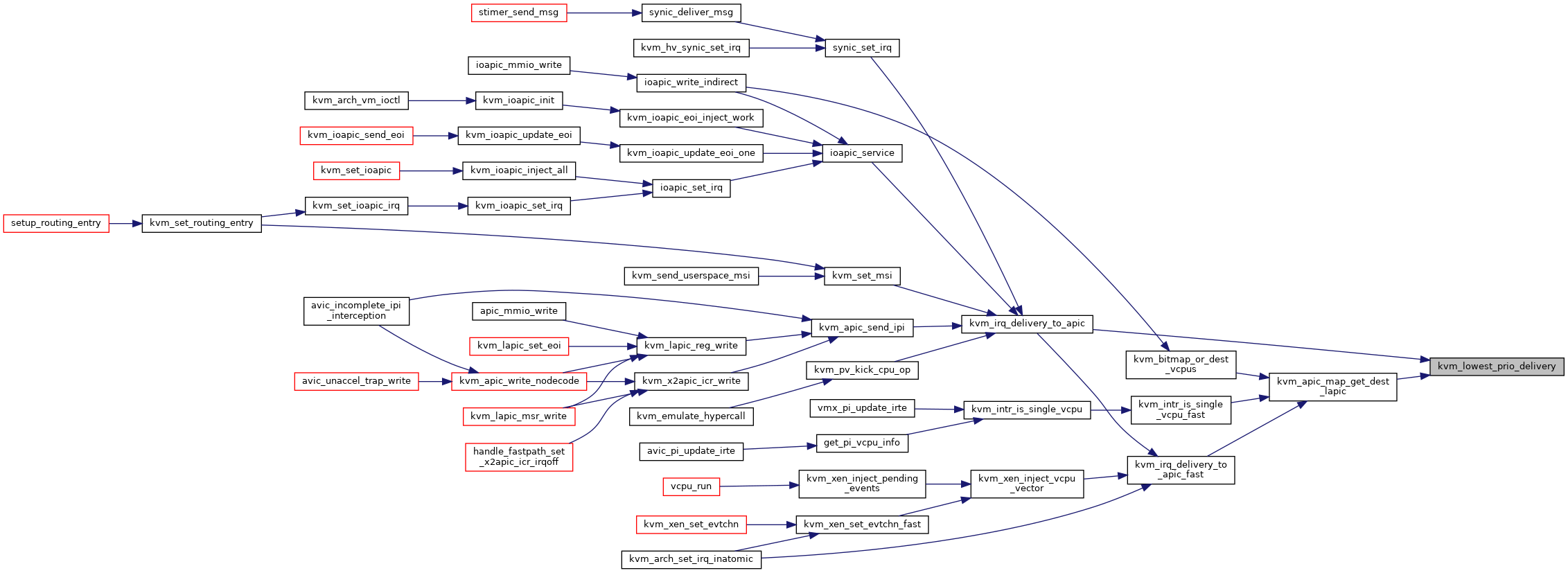

| static bool | kvm_lowest_prio_delivery (struct kvm_lapic_irq *irq) |

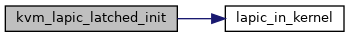

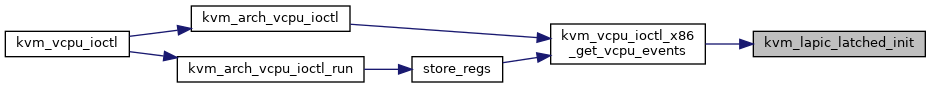

| static int | kvm_lapic_latched_init (struct kvm_vcpu *vcpu) |

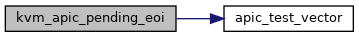

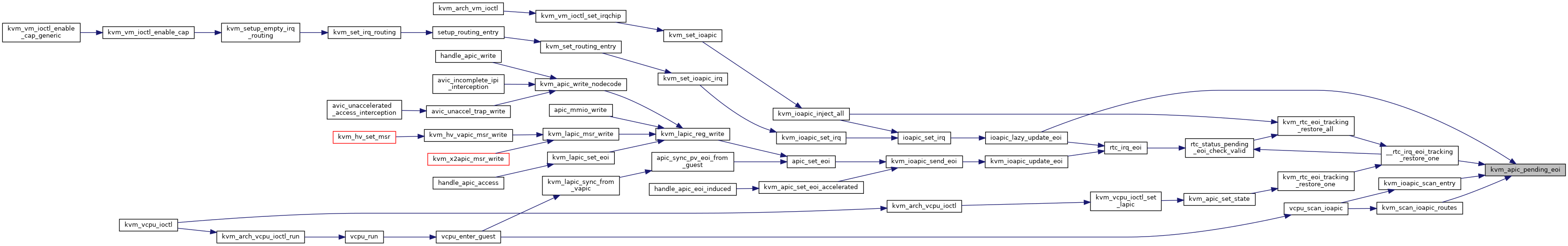

| bool | kvm_apic_pending_eoi (struct kvm_vcpu *vcpu, int vector) |

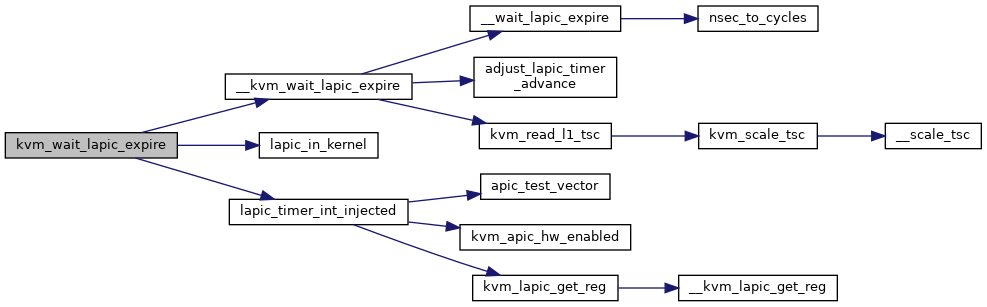

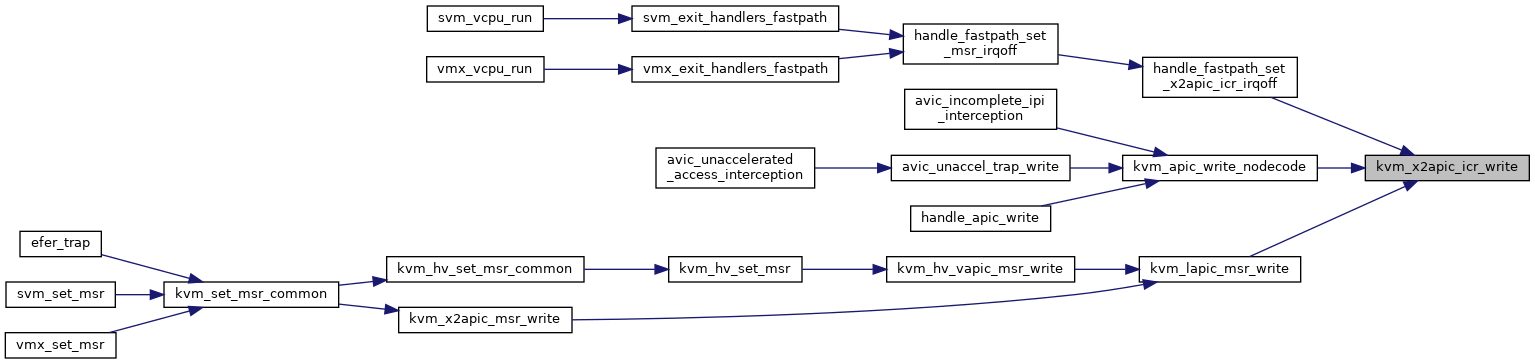

| void | kvm_wait_lapic_expire (struct kvm_vcpu *vcpu) |

| void | kvm_bitmap_or_dest_vcpus (struct kvm *kvm, struct kvm_lapic_irq *irq, unsigned long *vcpu_bitmap) |

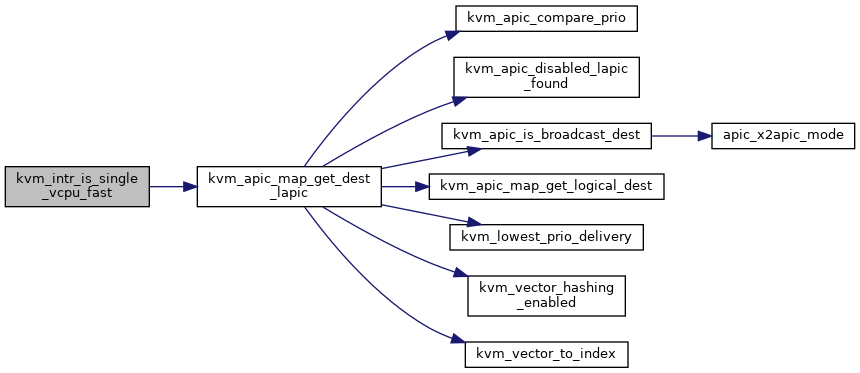

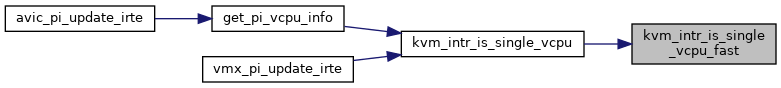

| bool | kvm_intr_is_single_vcpu_fast (struct kvm *kvm, struct kvm_lapic_irq *irq, struct kvm_vcpu **dest_vcpu) |

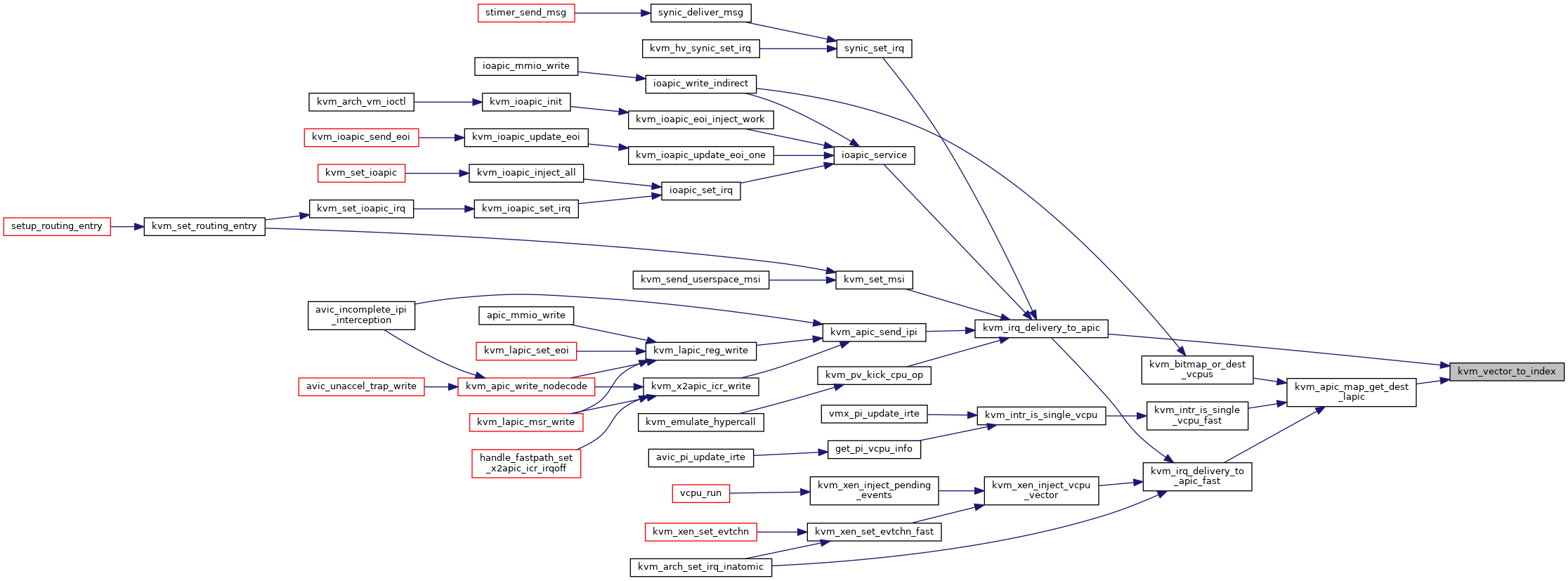

| int | kvm_vector_to_index (u32 vector, u32 dest_vcpus, const unsigned long *bitmap, u32 bitmap_size) |

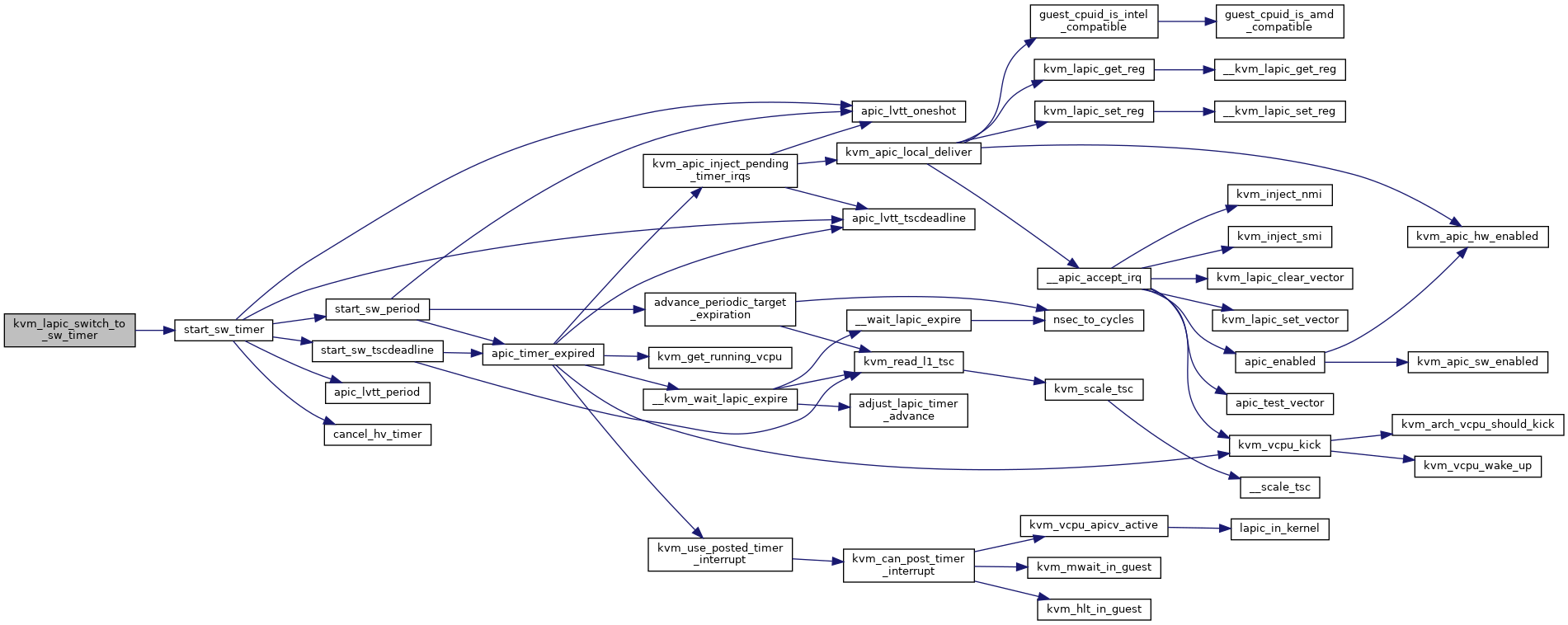

| void | kvm_lapic_switch_to_sw_timer (struct kvm_vcpu *vcpu) |

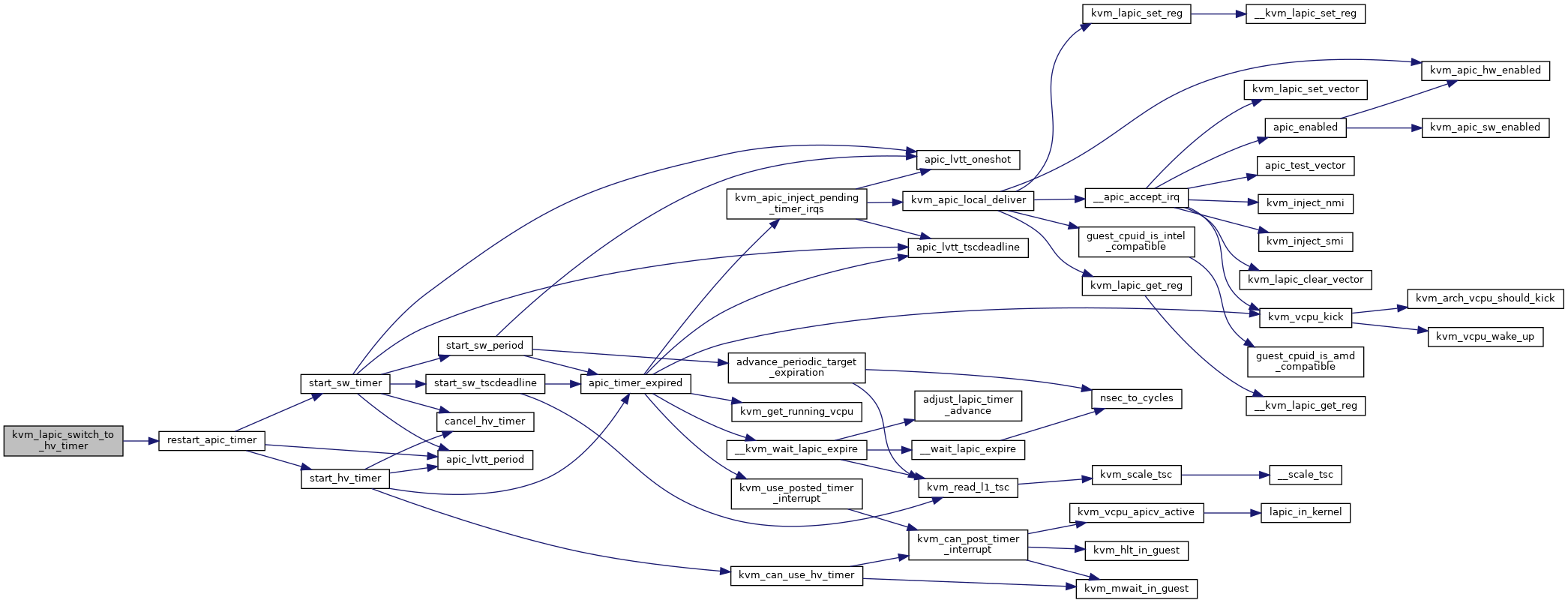

| void | kvm_lapic_switch_to_hv_timer (struct kvm_vcpu *vcpu) |

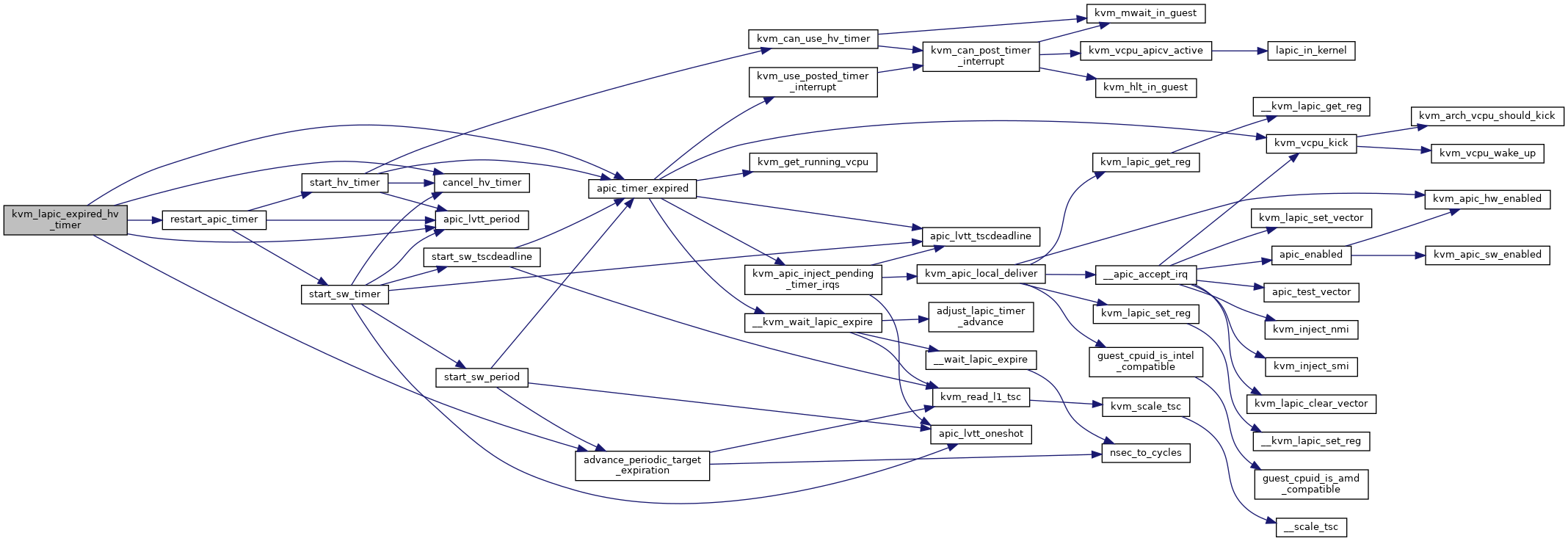

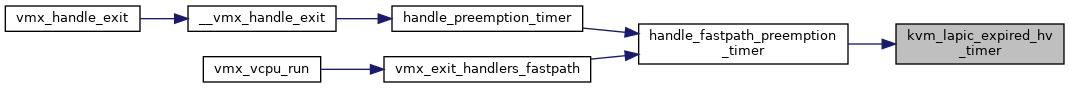

| void | kvm_lapic_expired_hv_timer (struct kvm_vcpu *vcpu) |

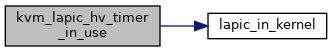

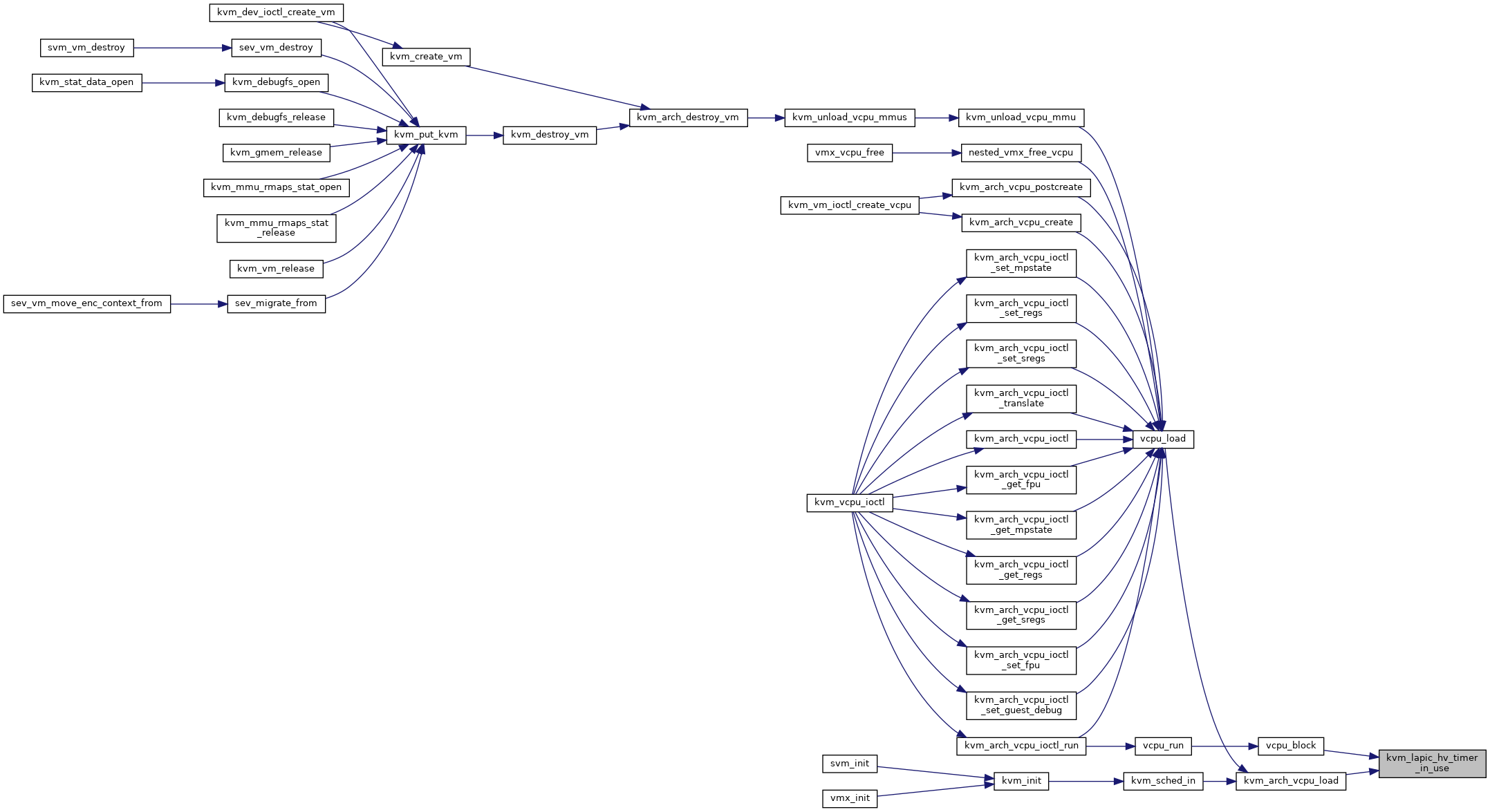

| bool | kvm_lapic_hv_timer_in_use (struct kvm_vcpu *vcpu) |

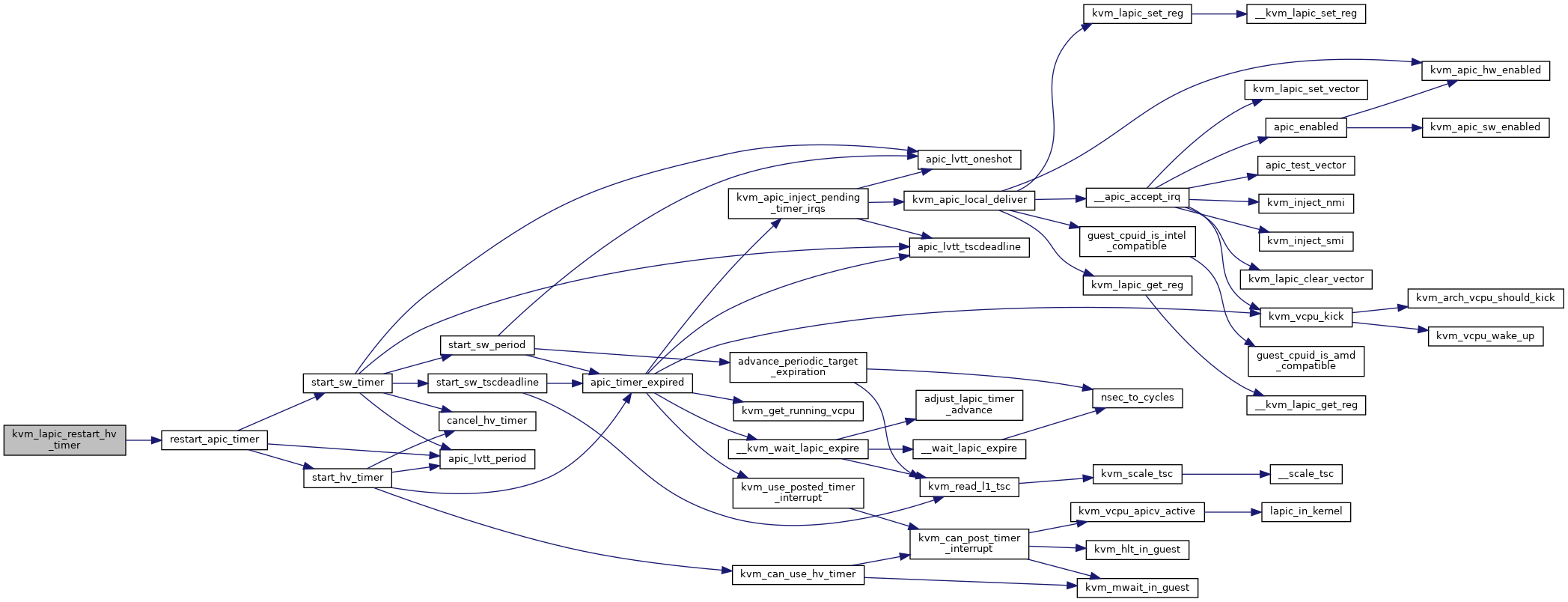

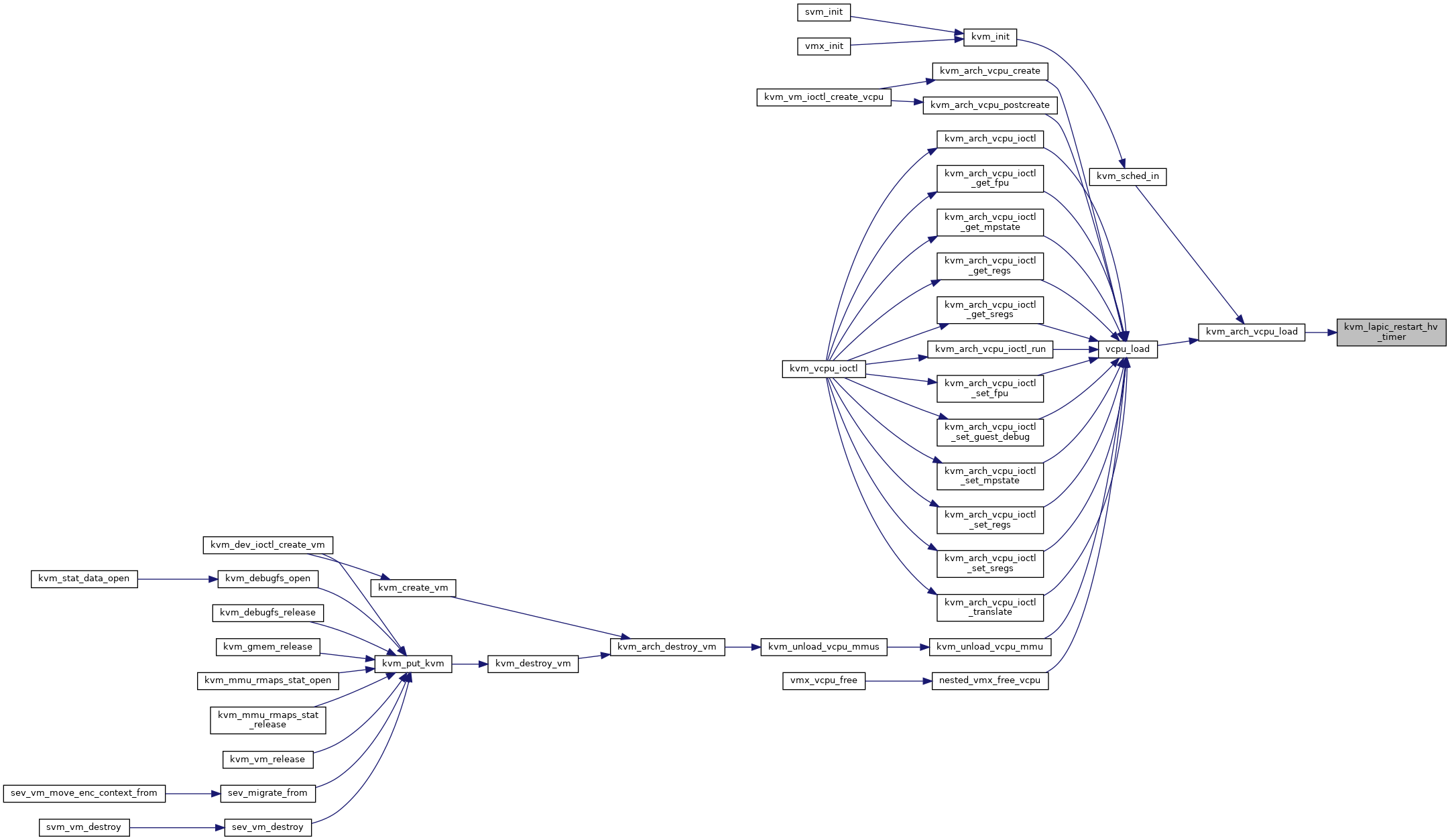

| void | kvm_lapic_restart_hv_timer (struct kvm_vcpu *vcpu) |

| bool | kvm_can_use_hv_timer (struct kvm_vcpu *vcpu) |

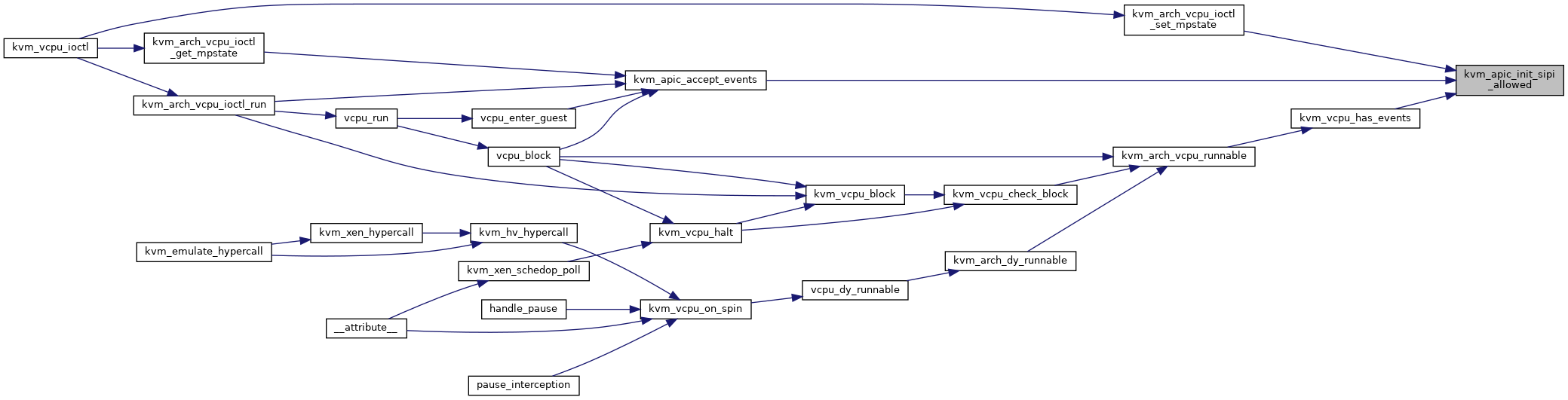

| static enum lapic_mode | kvm_apic_mode (u64 apic_base) |

| static u8 | kvm_xapic_id (struct kvm_lapic *apic) |

Variables

| struct static_key_false_deferred | apic_hw_disabled |

| struct static_key_false_deferred | apic_sw_disabled |

Macro Definition Documentation

◆ APIC_BROADCAST

| #define APIC_BROADCAST 0xFF |

◆ APIC_BUS_CYCLE_NS

| #define APIC_BUS_CYCLE_NS 1 |

◆ APIC_BUS_FREQUENCY

| #define APIC_BUS_FREQUENCY (1000000000ULL / APIC_BUS_CYCLE_NS) |
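With `APIC_BUS_CYCLE_NS` set to 1, the emulated APIC bus runs at 1 GHz, so one undivided timer tick lasts one nanosecond. The sketch below shows the resulting arithmetic for a one-shot timer. It assumes that the period is the initial count times the bus cycle times the divisor, and that the Divide Configuration Register (TDCR) uses the architectural encoding in bits 0, 1 and 3. `tdcr_to_divide_count()` and `tmict_to_ns()` are illustrative names, not a documented KVM API.

```c
#include <stdint.h>

/* Copied from lapic.h. */
#define APIC_BUS_CYCLE_NS 1
#define APIC_BUS_FREQUENCY (1000000000ULL / APIC_BUS_CYCLE_NS)

/* Decode TDCR bits 0, 1 and 3 into a divisor (assumed architectural encoding). */
static uint32_t tdcr_to_divide_count(uint32_t tdcr)
{
	uint32_t bits = tdcr & 0xf;
	uint32_t shift = ((bits & 0x3) | ((bits & 0x8) >> 1)) + 1;

	return 1u << (shift & 0x7); /* 0b1011 wraps to a shift of 0: divide-by-1 */
}

/* Nanoseconds until a one-shot timer loaded with initial count 'tmict' fires. */
static uint64_t tmict_to_ns(uint32_t tmict, uint32_t divide_count)
{
	return (uint64_t)tmict * APIC_BUS_CYCLE_NS * divide_count;
}
```

For example, an initial count of 1000 with divide-by-16 (TDCR = 0x3) gives a period of 16000 ns.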

◆ APIC_DEST_MASK

| #define APIC_DEST_MASK 0x800 |

◆ APIC_DEST_NOSHORT

| #define APIC_DEST_NOSHORT 0x0 |

◆ APIC_LVTx

| #define APIC_LVTx | ( | x | ) | ((x) == LVT_CMCI ? APIC_LVTCMCI : APIC_LVTT + 0x10 * (x)) |
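`APIC_LVTx()` turns an `enum lapic_lvt_entry` index into a register offset. The first six LVT registers are contiguous at 16-byte strides starting at `APIC_LVTT`, while the CMCI entry sits below them, hence the special case. The sketch copies the macro and enum from this header. The two offsets (`APIC_LVTT` = 0x320, `APIC_LVTCMCI` = 0x2f0) come from the architectural xAPIC register map and are assumed to match `asm/apicdef.h`; `lvt_offset()` is a hypothetical wrapper.

```c
#include <stdint.h>

/* Architectural xAPIC offsets (assumed to match asm/apicdef.h). */
#define APIC_LVTCMCI 0x2f0
#define APIC_LVTT    0x320

enum lapic_lvt_entry {
	LVT_TIMER,
	LVT_THERMAL_MONITOR,
	LVT_PERFORMANCE_COUNTER,
	LVT_LINT0,
	LVT_LINT1,
	LVT_ERROR,
	LVT_CMCI,
	KVM_APIC_MAX_NR_LVT_ENTRIES
};

/* Copied from lapic.h: entries 0-5 are contiguous from APIC_LVTT,
 * CMCI lives elsewhere and needs a special case. */
#define APIC_LVTx(x) ((x) == LVT_CMCI ? APIC_LVTCMCI : APIC_LVTT + 0x10 * (x))

/* Hypothetical wrapper returning the register offset of an LVT entry. */
static uint32_t lvt_offset(enum lapic_lvt_entry e)
{
	return APIC_LVTx(e);
}
```

So `LVT_LINT0` maps to 0x350 and `LVT_ERROR` to 0x370, while `LVT_CMCI` maps to 0x2f0.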

◆ APIC_SHORT_MASK

| #define APIC_SHORT_MASK 0xc0000 |

◆ KVM_APIC_INIT

| #define KVM_APIC_INIT 0 |

◆ KVM_APIC_SIPI

| #define KVM_APIC_SIPI 1 |

◆ REG_POS

| #define REG_POS | ( | v | ) | (((v) >> 5) << 4) |

◆ VEC_POS

| #define VEC_POS | ( | v | ) | ((v) & (32 - 1)) |

◆ X2APIC_BROADCAST

| #define X2APIC_BROADCAST 0xFFFFFFFFul |

Enumeration Type Documentation

◆ lapic_lvt_entry

| enum lapic_lvt_entry |

◆ lapic_mode

| enum lapic_mode |
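`enum lapic_mode` is laid out so that the mode can be read directly from the two mode bits of IA32_APIC_BASE: bit 11 (global enable) and bit 10 (x2APIC enable). The sketch below assumes those bit positions and shows how a helper in the style of `kvm_apic_mode()` could decode a raw base value. `apic_mode_from_base()` is a hypothetical name.

```c
#include <stdint.h>

/* IA32_APIC_BASE mode bits (assumed architectural positions). */
#define MSR_IA32_APICBASE_ENABLE (1 << 11)
#define X2APIC_ENABLE            (1 << 10)

/* Copied from lapic.h. */
enum lapic_mode {
	LAPIC_MODE_DISABLED = 0,
	LAPIC_MODE_INVALID  = X2APIC_ENABLE,
	LAPIC_MODE_XAPIC    = MSR_IA32_APICBASE_ENABLE,
	LAPIC_MODE_X2APIC   = MSR_IA32_APICBASE_ENABLE | X2APIC_ENABLE,
};

/* Keep only the two mode bits; every combination is an enumerator. */
static enum lapic_mode apic_mode_from_base(uint64_t apic_base)
{
	return (enum lapic_mode)(apic_base & (MSR_IA32_APICBASE_ENABLE | X2APIC_ENABLE));
}
```

The address and BSP bits are ignored, so 0xfee00900 decodes as xAPIC mode and 0xfee00d00 as x2APIC mode. The x2APIC bit without the global enable bit yields `LAPIC_MODE_INVALID`.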

Function Documentation

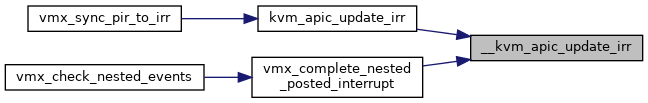

◆ __kvm_apic_update_irr()

| bool __kvm_apic_update_irr | ( | u32 * | pir, |

| void * | regs, | ||

| int * | max_irr | ||

| ) |

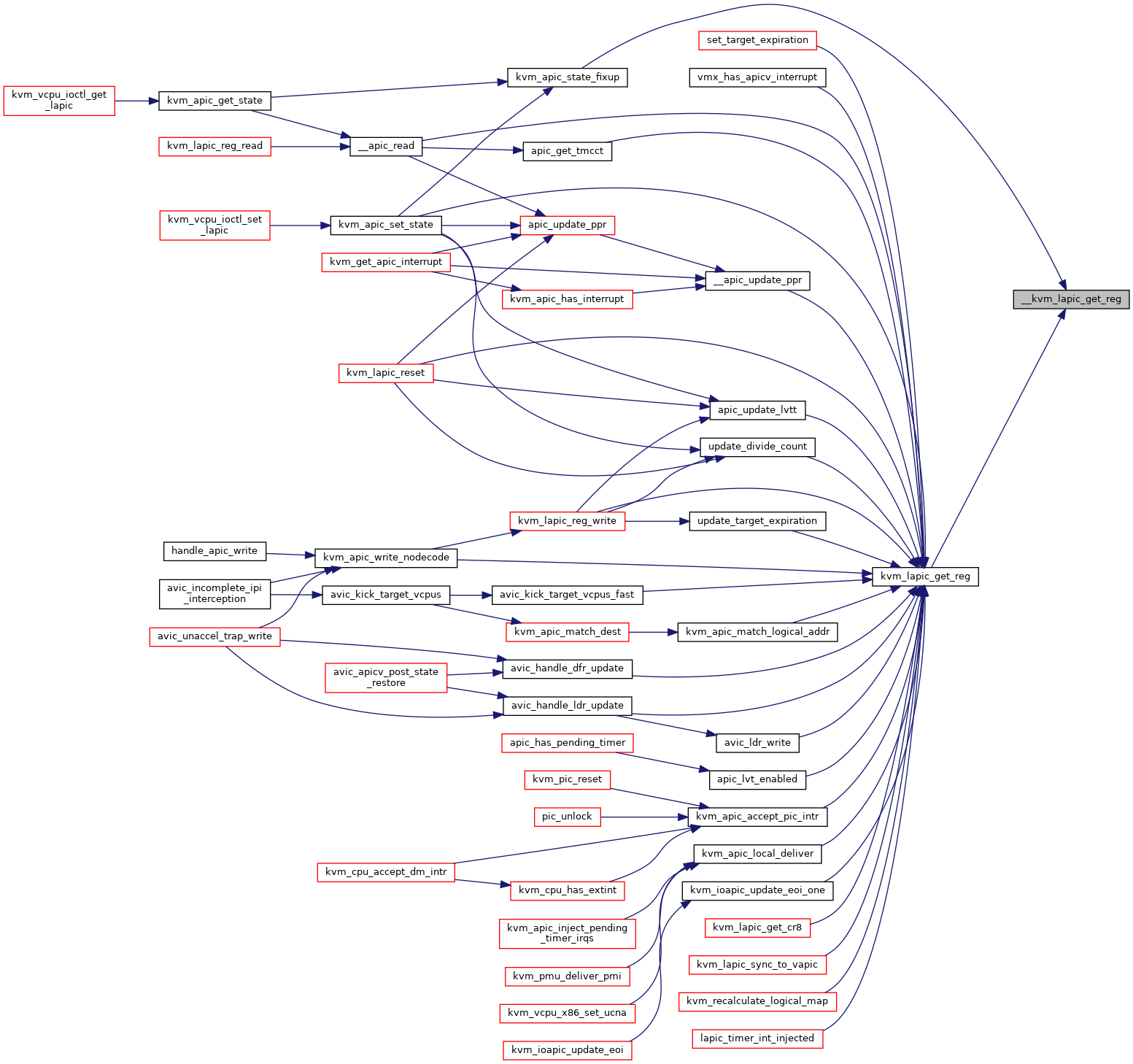

◆ __kvm_lapic_get_reg()

| static u32 __kvm_lapic_get_reg (char *regs, int reg_off) | inline static |

◆ apic_x2apic_mode()

| static int apic_x2apic_mode (struct kvm_lapic *apic) | inline static |

◆ DECLARE_STATIC_KEY_FALSE()

| DECLARE_STATIC_KEY_FALSE | ( | kvm_has_noapic_vcpu | ) |

◆ kvm_alloc_apic_access_page()

| int kvm_alloc_apic_access_page | ( | struct kvm * | kvm | ) |

Definition at line 2598 of file lapic.c.

◆ kvm_apic_accept_events()

| int kvm_apic_accept_events | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3263 of file lapic.c.

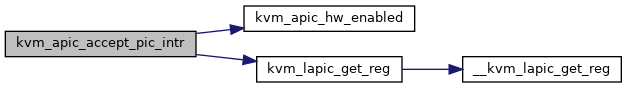

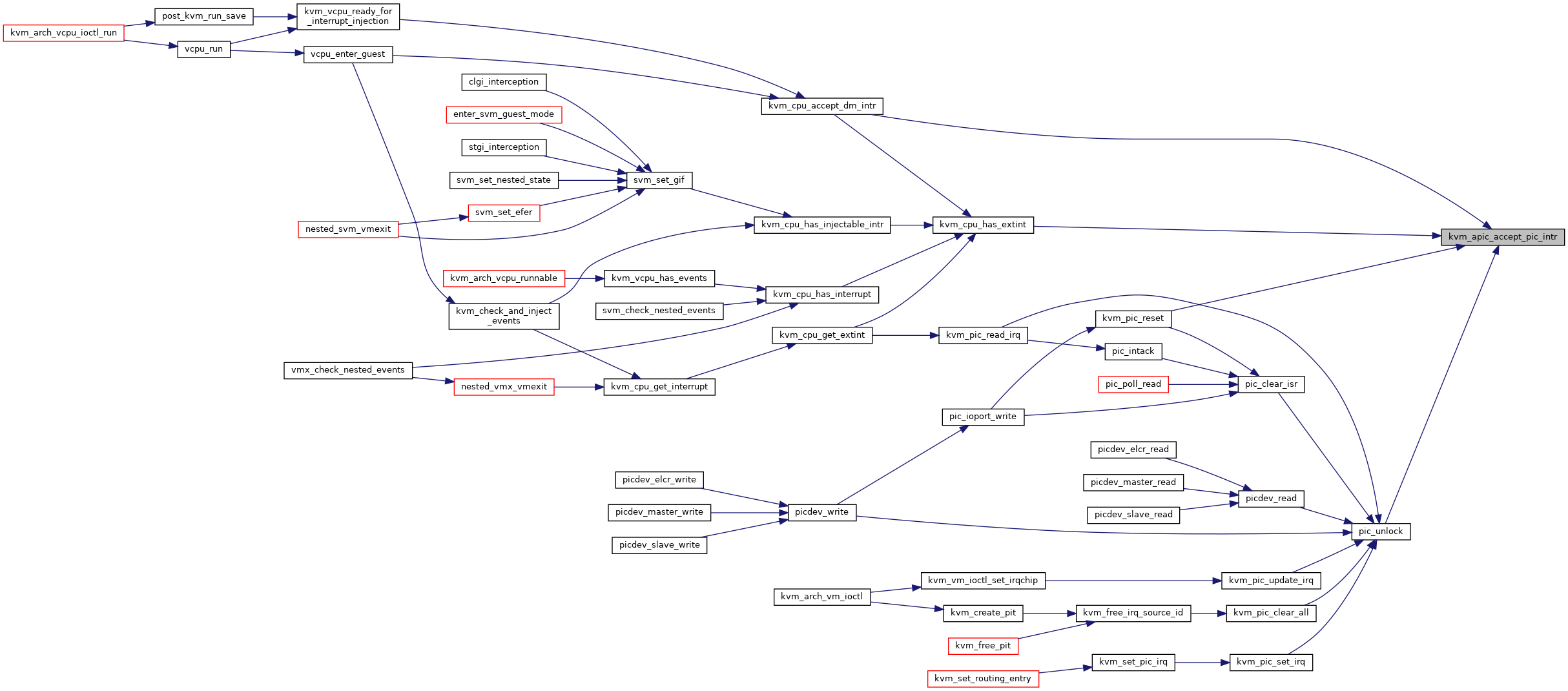

◆ kvm_apic_accept_pic_intr()

| int kvm_apic_accept_pic_intr | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2872 of file lapic.c.

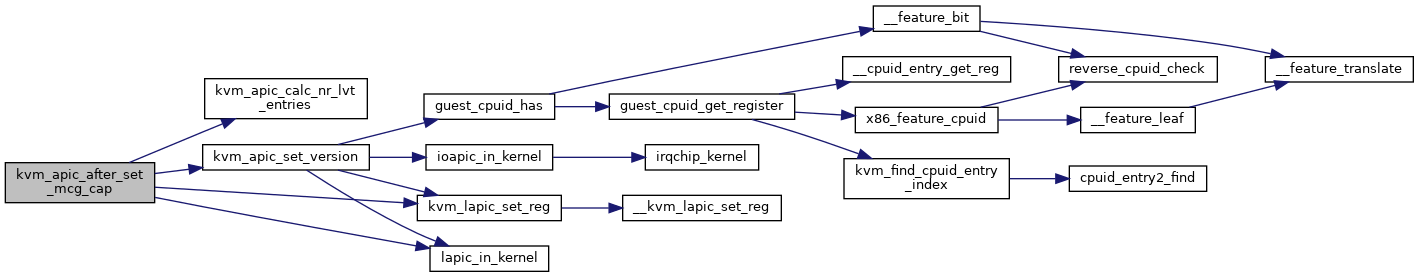

◆ kvm_apic_after_set_mcg_cap()

| void kvm_apic_after_set_mcg_cap | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 596 of file lapic.c.

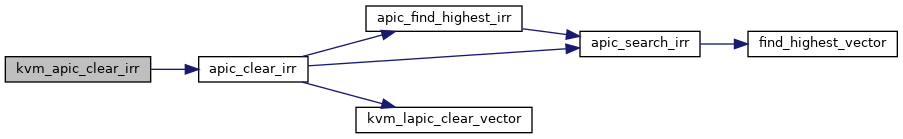

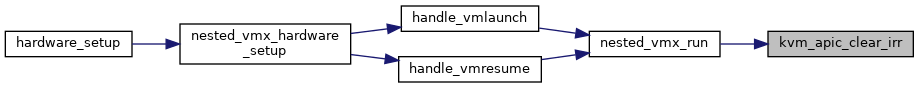

◆ kvm_apic_clear_irr()

| void kvm_apic_clear_irr | ( | struct kvm_vcpu * | vcpu, |

| int | vec | ||

| ) |

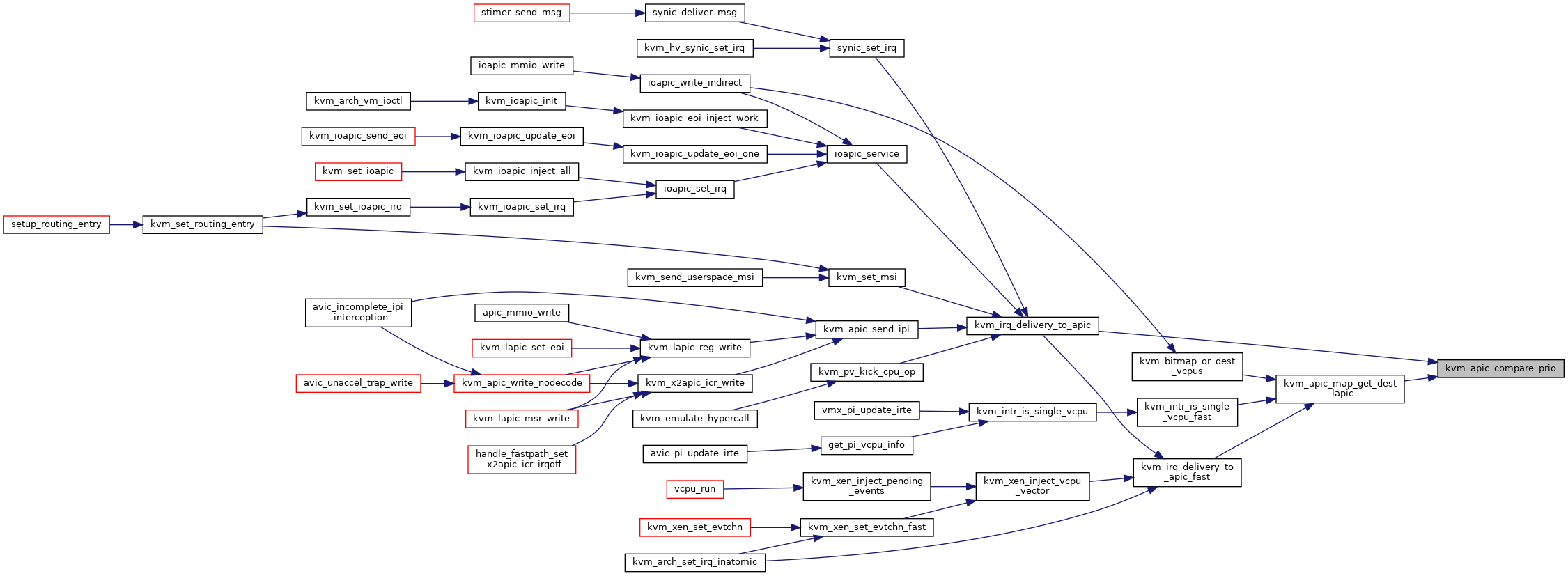

◆ kvm_apic_compare_prio()

| int kvm_apic_compare_prio | ( | struct kvm_vcpu * | vcpu1, |

| struct kvm_vcpu * | vcpu2 | ||

| ) |

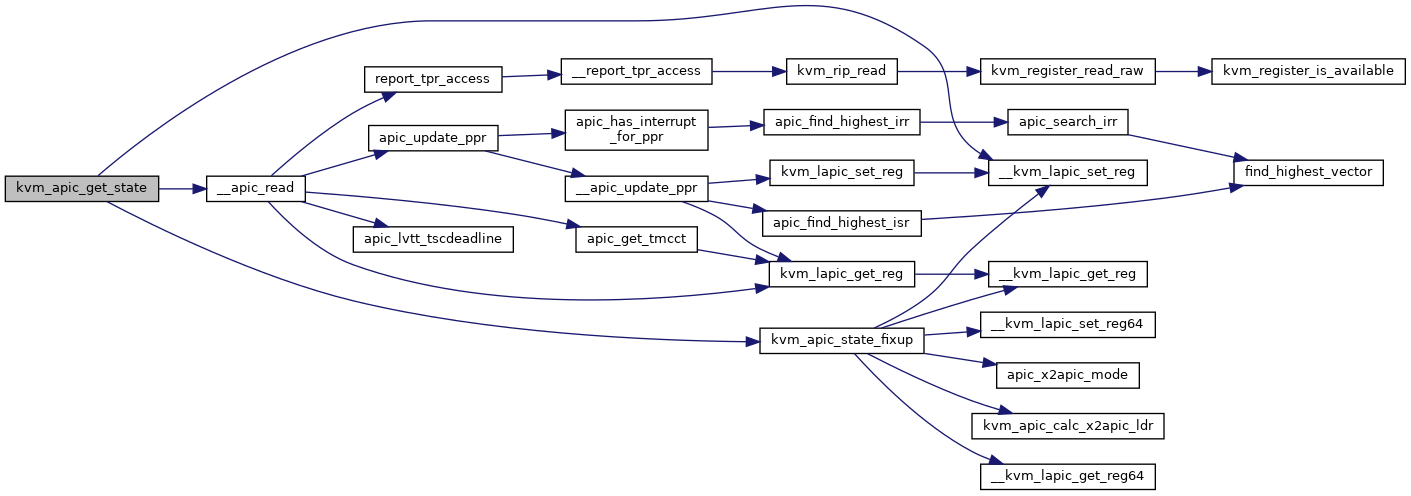

◆ kvm_apic_get_state()

| int kvm_apic_get_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_state * | s | ||

| ) |

Definition at line 2970 of file lapic.c.

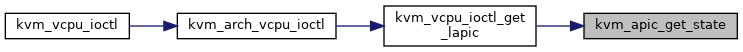

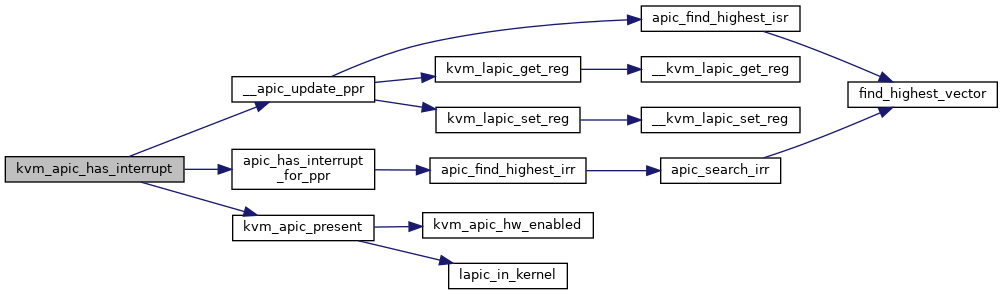

◆ kvm_apic_has_interrupt()

| int kvm_apic_has_interrupt | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2859 of file lapic.c.

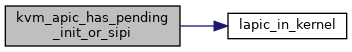

◆ kvm_apic_has_pending_init_or_sipi()

| static bool kvm_apic_has_pending_init_or_sipi (struct kvm_vcpu *vcpu) | inline static |

◆ kvm_apic_hw_enabled()

| static bool kvm_apic_hw_enabled (struct kvm_lapic *apic) | inline static |

◆ kvm_apic_init_sipi_allowed()

| static bool kvm_apic_init_sipi_allowed (struct kvm_vcpu *vcpu) | inline static |

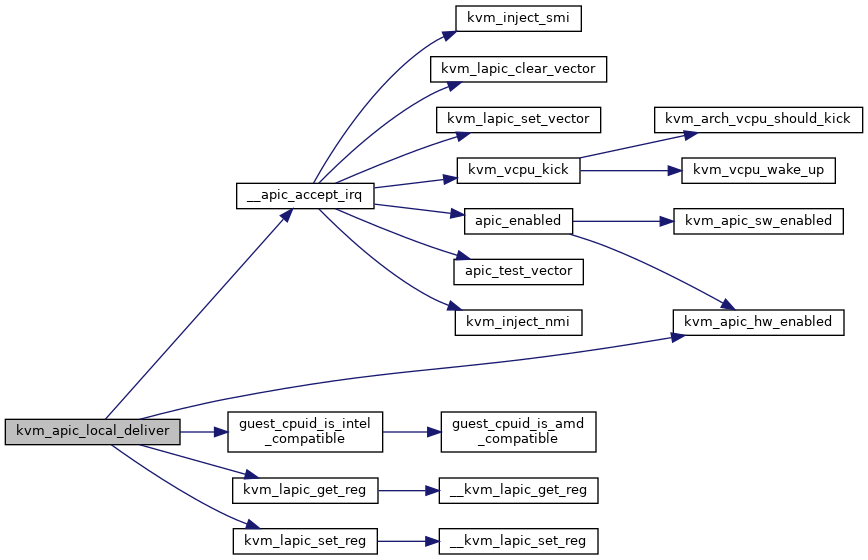

◆ kvm_apic_local_deliver()

| int kvm_apic_local_deliver | ( | struct kvm_lapic * | apic, |

| int | lvt_type | ||

| ) |

Definition at line 2762 of file lapic.c.

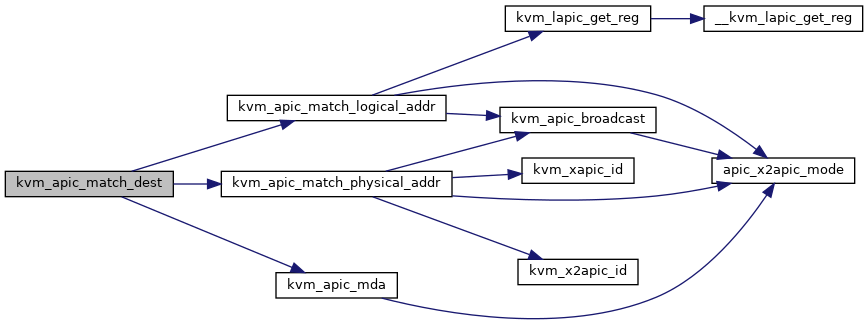

◆ kvm_apic_match_dest()

| bool kvm_apic_match_dest | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic * | source, | ||

| int | shorthand, | ||

| unsigned int | dest, | ||

| int | dest_mode | ||

| ) |

Definition at line 1067 of file lapic.c.
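The destination shorthand is encoded in ICR bits 19:18 and is extracted with `APIC_SHORT_MASK`. The following sketch shows how each shorthand value decides whether a target matches. It is a simplified model, not KVM's implementation: vCPUs are identified only by an 8-bit xAPIC ID, and for the no-shorthand case only physical destination mode (including the `APIC_BROADCAST` ID) is handled. `APIC_DEST_SELF`, `APIC_DEST_ALLINC` and `APIC_DEST_ALLBUT` are assumed from the architectural ICR encoding, and `match_dest()` is a hypothetical name.

```c
#include <stdbool.h>
#include <stdint.h>

#define APIC_DEST_NOSHORT 0x0     /* from lapic.h */
#define APIC_DEST_SELF    0x40000 /* assumed ICR encodings */
#define APIC_DEST_ALLINC  0x80000
#define APIC_DEST_ALLBUT  0xC0000
#define APIC_SHORT_MASK   0xc0000 /* from lapic.h */
#define APIC_BROADCAST    0xFF    /* from lapic.h */

/* Simplified model: does the vCPU with 'target_id' receive an IPI sent by
 * 'source_id' with ICR low word 'icr_low' and physical destination 'dest'? */
static bool match_dest(uint8_t target_id, uint8_t source_id,
		       uint32_t icr_low, uint8_t dest)
{
	switch (icr_low & APIC_SHORT_MASK) {
	case APIC_DEST_NOSHORT:
		return dest == APIC_BROADCAST || dest == target_id;
	case APIC_DEST_SELF:
		return target_id == source_id;
	case APIC_DEST_ALLINC:
		return true;
	case APIC_DEST_ALLBUT:
		return target_id != source_id;
	}
	return false; /* unreachable: the mask admits only the four cases above */
}
```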

◆ kvm_apic_mode()

| static enum lapic_mode kvm_apic_mode (u64 apic_base) | inline static |

◆ kvm_apic_pending_eoi()

| bool kvm_apic_pending_eoi | ( | struct kvm_vcpu * | vcpu, |

| int | vector | ||

| ) |

◆ kvm_apic_present()

| static bool kvm_apic_present (struct kvm_vcpu *vcpu) | inline static |

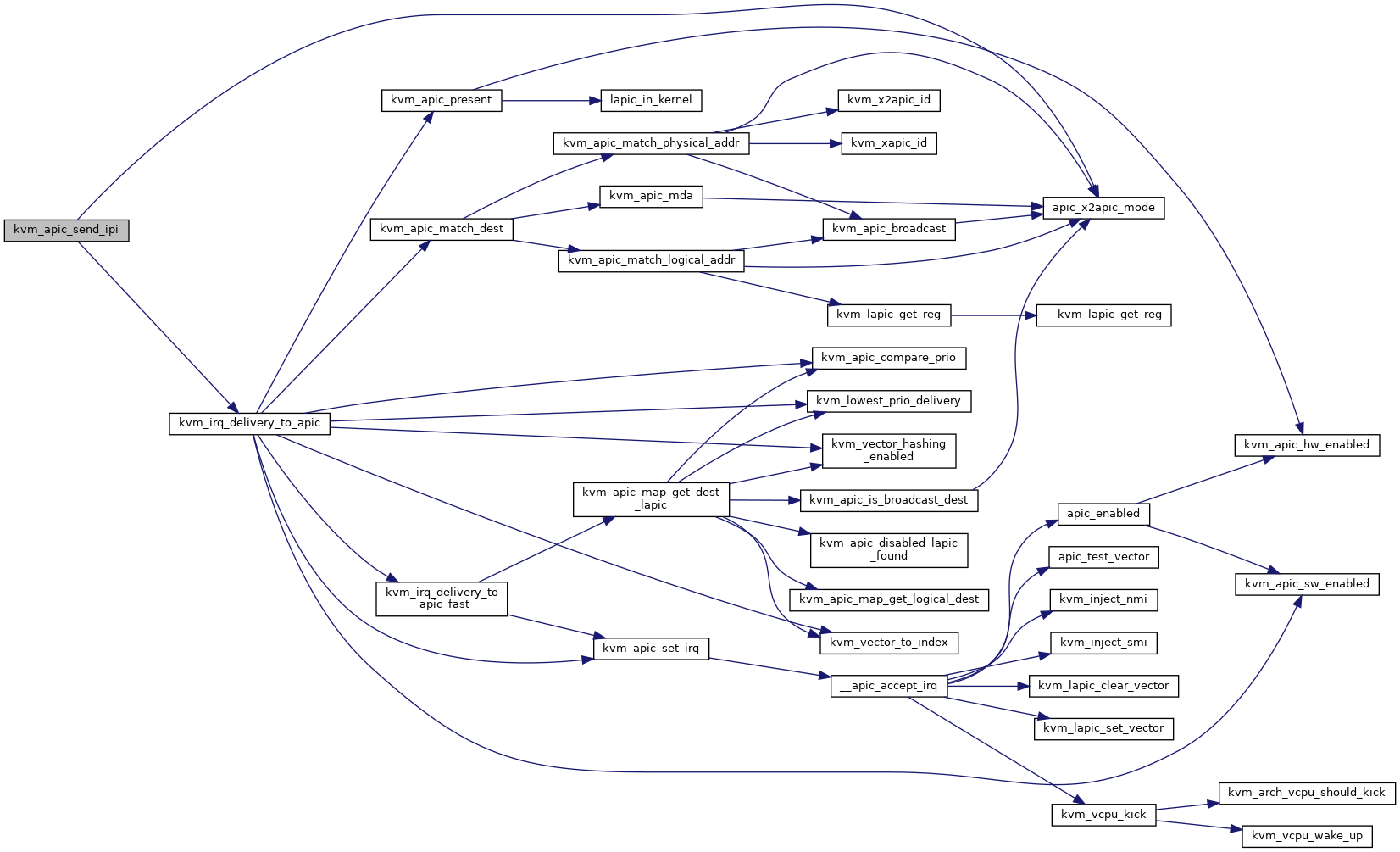

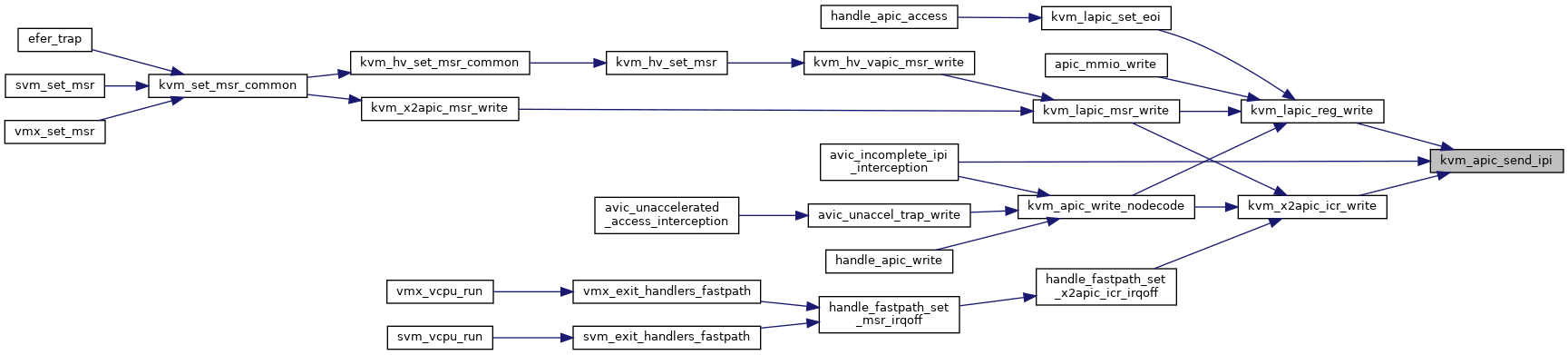

◆ kvm_apic_send_ipi()

| void kvm_apic_send_ipi | ( | struct kvm_lapic * | apic, |

| u32 | icr_low, | ||

| u32 | icr_high | ||

| ) |

Definition at line 1504 of file lapic.c.

◆ kvm_apic_set_eoi_accelerated()

| void kvm_apic_set_eoi_accelerated | ( | struct kvm_vcpu * | vcpu, |

| int | vector | ||

| ) |

Definition at line 1493 of file lapic.c.

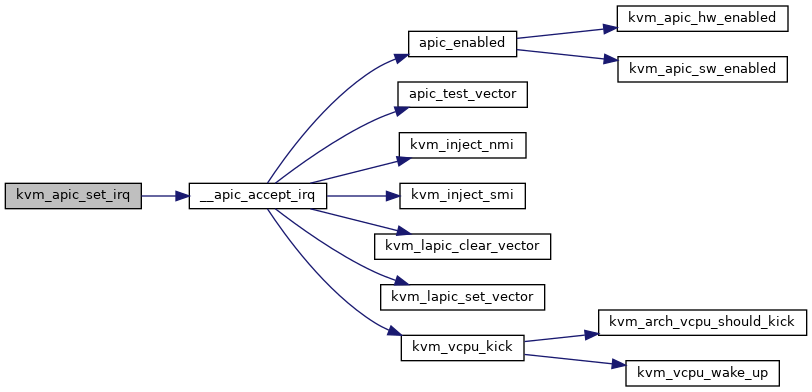

◆ kvm_apic_set_irq()

| int kvm_apic_set_irq | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_irq * | irq, | ||

| struct dest_map * | dest_map | ||

| ) |

Definition at line 823 of file lapic.c.

◆ kvm_apic_set_state()

| int kvm_apic_set_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_state * | s | ||

| ) |

Definition at line 2984 of file lapic.c.

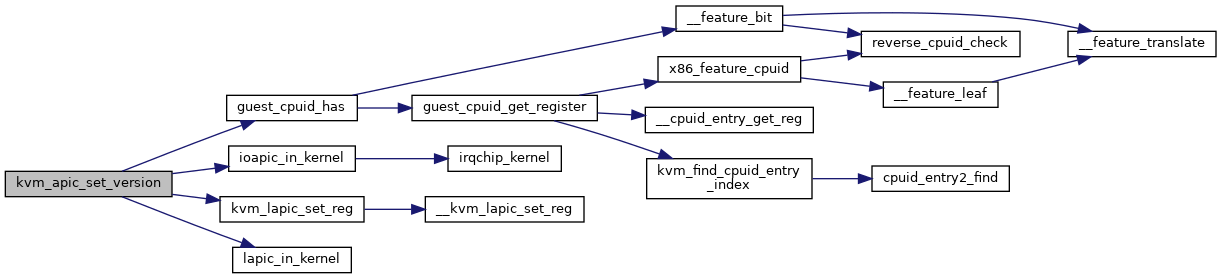

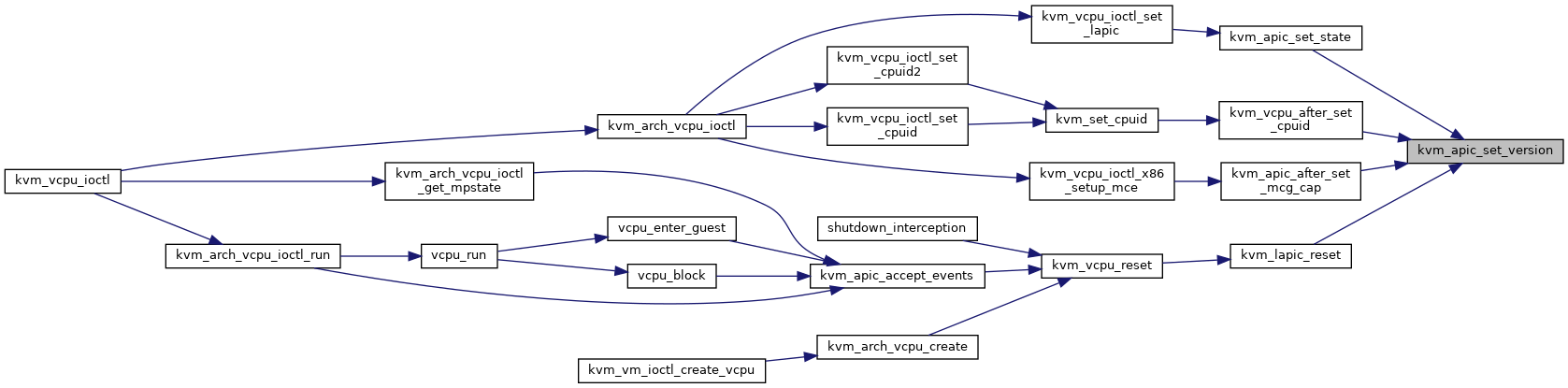

◆ kvm_apic_set_version()

| void kvm_apic_set_version | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 573 of file lapic.c.

◆ kvm_apic_sw_enabled()

| static bool kvm_apic_sw_enabled (struct kvm_lapic *apic) | inline static |

◆ kvm_apic_update_apicv()

| void kvm_apic_update_apicv | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_apic_update_irr()

| bool kvm_apic_update_irr | ( | struct kvm_vcpu * | vcpu, |

| u32 * | pir, | ||

| int * | max_irr | ||

| ) |

Definition at line 690 of file lapic.c.
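`kvm_apic_update_irr()` and `__kvm_apic_update_irr()` merge a posted-interrupt request (PIR) bitmap into the IRR held in the APIC register page and report the highest pending vector through `max_irr`. What follows is a simplified, single-threaded sketch of that merge. It assumes the IRR layout addressed by `REG_POS()` (eight 32-bit registers at 16-byte strides from offset 0x200), reads and zeroes each PIR word where the kernel would use an atomic exchange, and relies on the GCC/Clang builtin `__builtin_clz()`. `merge_pir_into_irr()` is a hypothetical name. The return value (true when the highest pending vector was newly posted by this call) is our reading of the kernel function, not a documented contract.

```c
#include <stdbool.h>
#include <stdint.h>

#define APIC_IRR 0x200 /* assumed architectural IRR offset */
#define APIC_VECTORS_PER_REG 32

/* Index of the most significant set bit; 'x' must be non-zero. */
static int fls32(uint32_t x)
{
	return 31 - __builtin_clz(x);
}

/* Non-atomic sketch: drain 'pir' into the IRR inside 'regs'. */
static bool merge_pir_into_irr(uint32_t pir[8], void *regs, int *max_irr)
{
	int max_updated_irr = -1;

	*max_irr = -1;
	for (int i = 0; i < 8; i++) {
		uint32_t *irr = (uint32_t *)((char *)regs + APIC_IRR + i * 0x10);
		uint32_t pir_val = pir[i];
		int vec = i * APIC_VECTORS_PER_REG;

		if (pir_val) {
			uint32_t prev = *irr;

			pir[i] = 0;          /* the kernel uses xchg() here */
			*irr |= pir_val;
			if (*irr != prev)    /* remember the highest newly set bit */
				max_updated_irr = fls32(*irr ^ prev) + vec;
		}
		if (*irr)                    /* later registers hold higher vectors */
			*max_irr = fls32(*irr) + vec;
	}
	return max_updated_irr != -1 && max_updated_irr == *max_irr;
}
```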

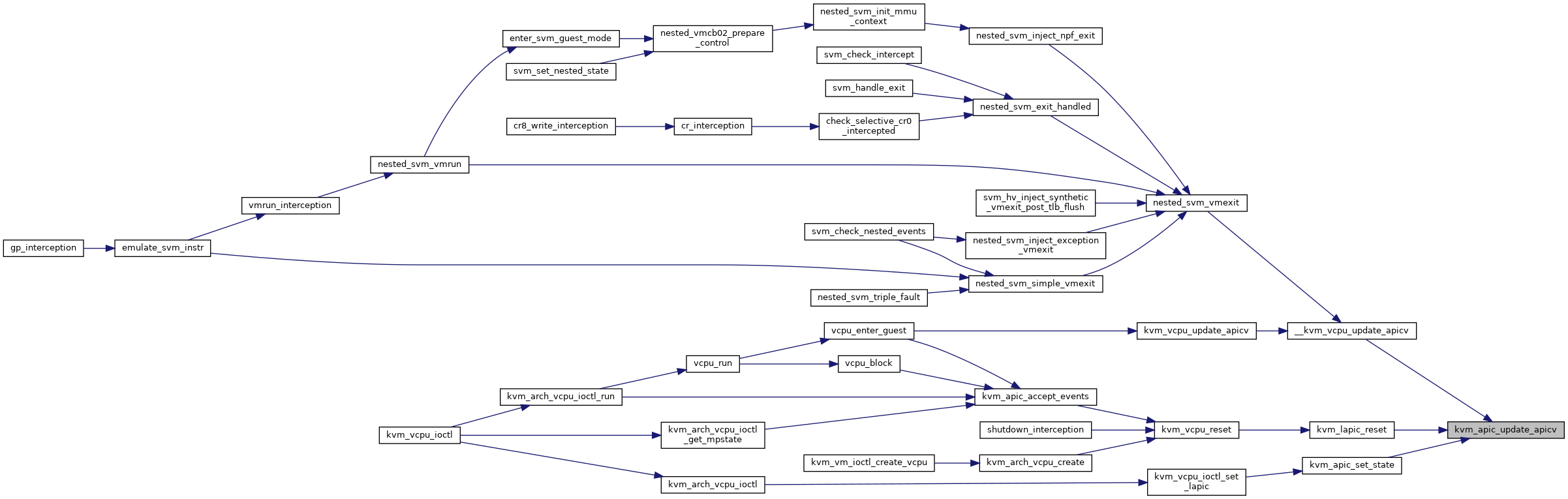

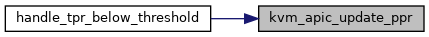

◆ kvm_apic_update_ppr()

| void kvm_apic_update_ppr | ( | struct kvm_vcpu * | vcpu | ) |
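`kvm_apic_update_ppr()` recomputes the Processor Priority Register. Under the architectural rule (Intel SDM), PPR takes the full TPR value when the TPR's priority class (bits 7:4) is at least the class of the highest in-service vector. Otherwise it takes that vector's class with bits 3:0 cleared. The sketch below covers only that rule and assumes `isrv` is the highest in-service vector, or -1 when none is in service. `compute_ppr()` is a hypothetical name.

```c
#include <stdint.h>

/* PPR from TPR and the highest in-service vector (-1 if the ISR is empty). */
static uint32_t compute_ppr(uint32_t tpr, int isrv)
{
	uint32_t isr_class = isrv < 0 ? 0 : (uint32_t)isrv & 0xf0;

	if ((tpr & 0xf0) >= isr_class)
		return tpr & 0xff;   /* TPR dominates: keep its sub-class too */
	return isr_class;            /* ISR dominates: class only */
}
```

For example, with TPR = 0x20 and vector 0x51 in service, PPR becomes 0x50, so only vectors in class 6 or above can be delivered.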

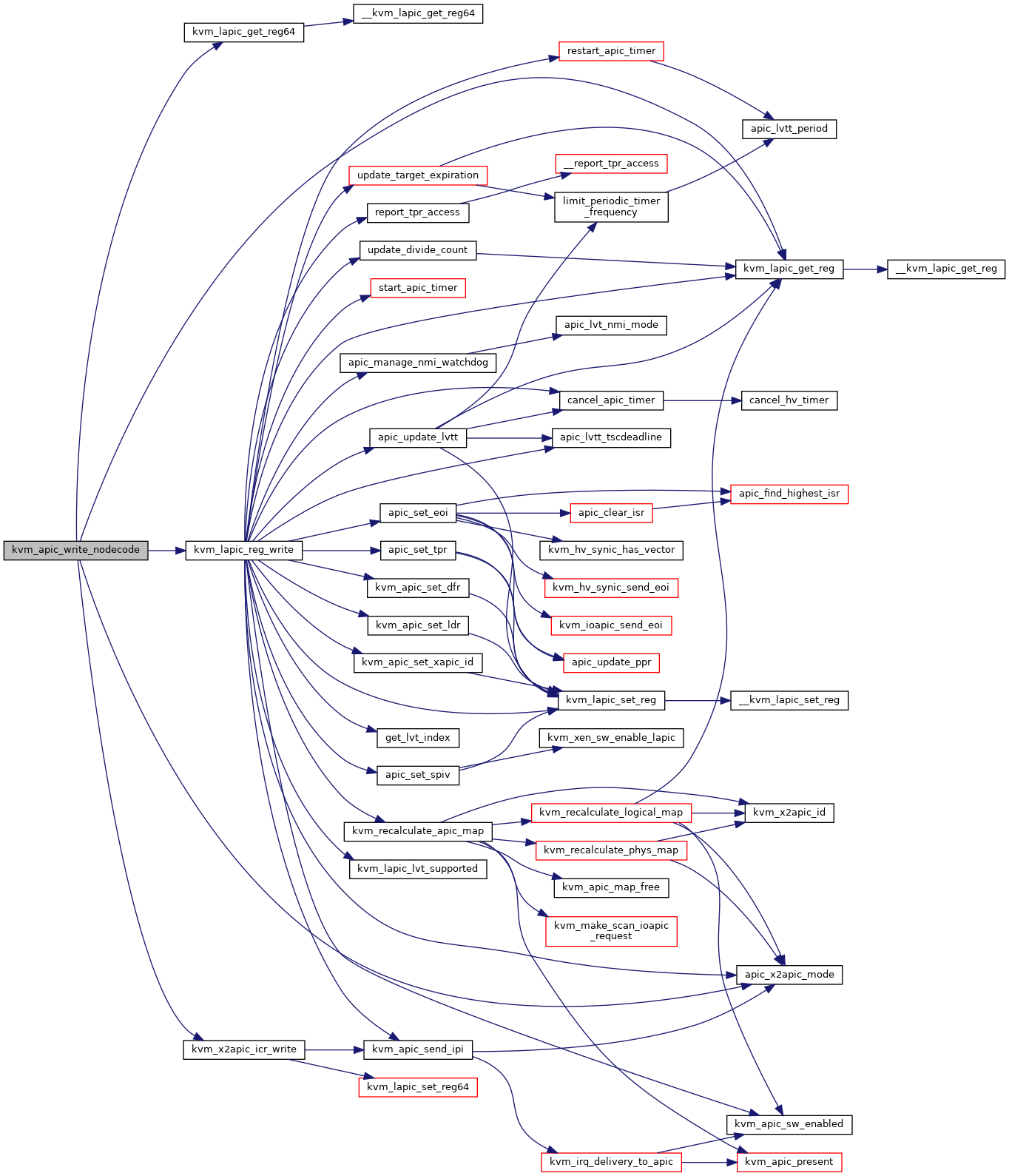

◆ kvm_apic_write_nodecode()

| void kvm_apic_write_nodecode | ( | struct kvm_vcpu * | vcpu, |

| u32 | offset | ||

| ) |

Definition at line 2446 of file lapic.c.

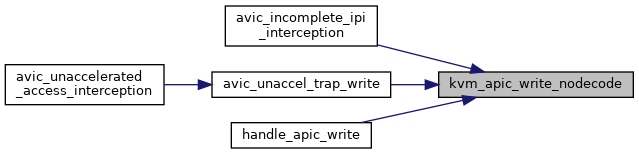

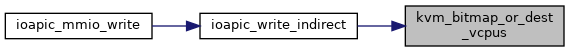

◆ kvm_bitmap_or_dest_vcpus()

| void kvm_bitmap_or_dest_vcpus | ( | struct kvm * | kvm, |

| struct kvm_lapic_irq * | irq, | ||

| unsigned long * | vcpu_bitmap | ||

| ) |

Definition at line 1394 of file lapic.c.

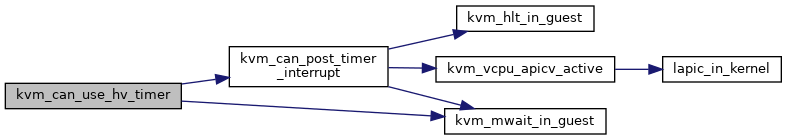

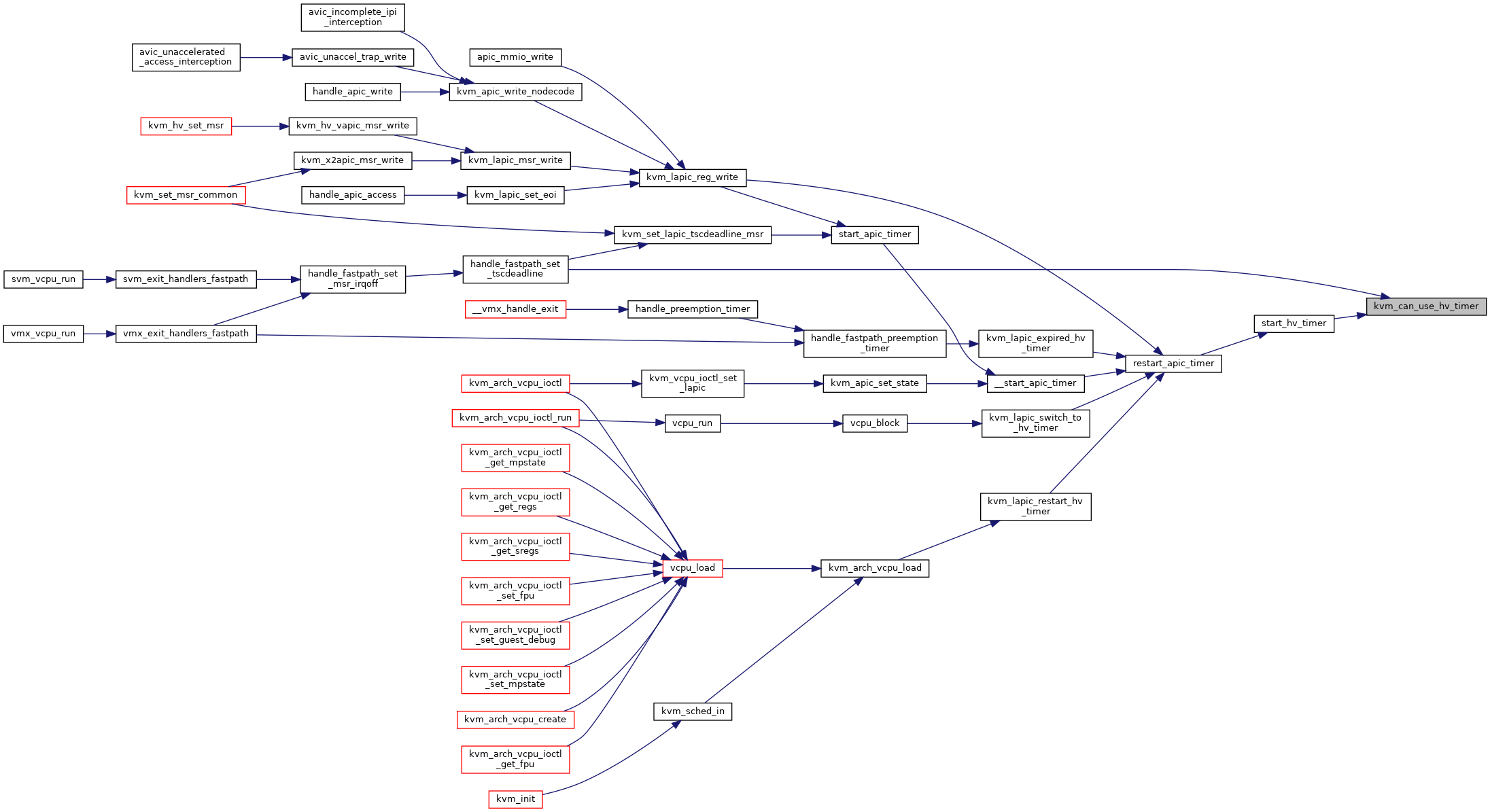

◆ kvm_can_use_hv_timer()

| bool kvm_can_use_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 154 of file lapic.c.

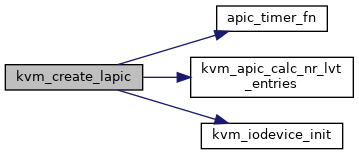

◆ kvm_create_lapic()

| int kvm_create_lapic | ( | struct kvm_vcpu * | vcpu, |

| int | timer_advance_ns | ||

| ) |

Definition at line 2810 of file lapic.c.

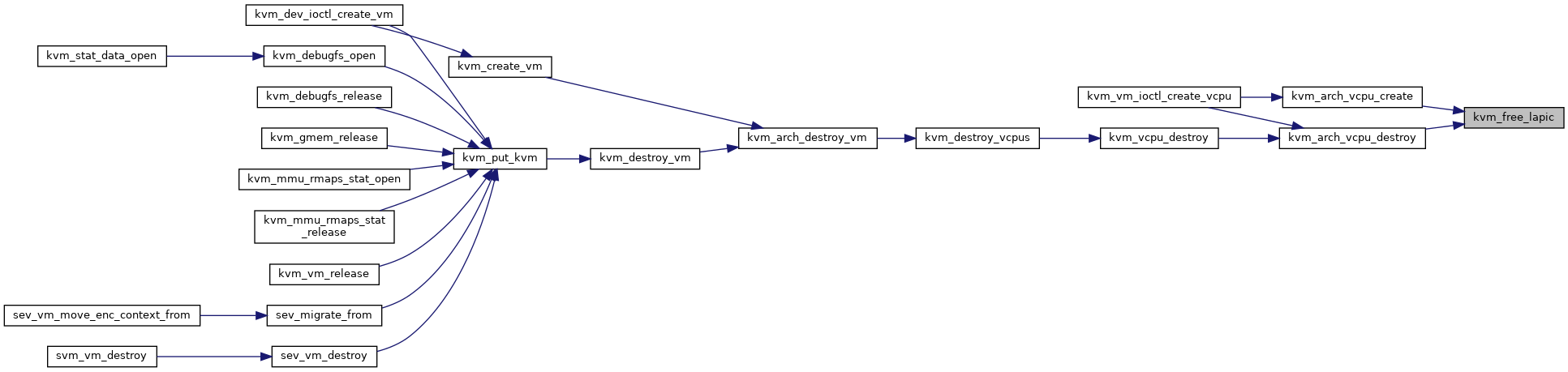

◆ kvm_free_lapic()

| void kvm_free_lapic | ( | struct kvm_vcpu * | vcpu | ) |

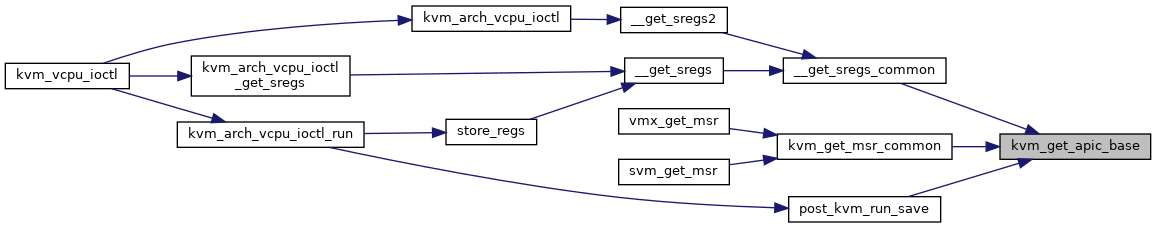

◆ kvm_get_apic_base()

| u64 kvm_get_apic_base | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_get_apic_interrupt()

| int kvm_get_apic_interrupt | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2894 of file lapic.c.

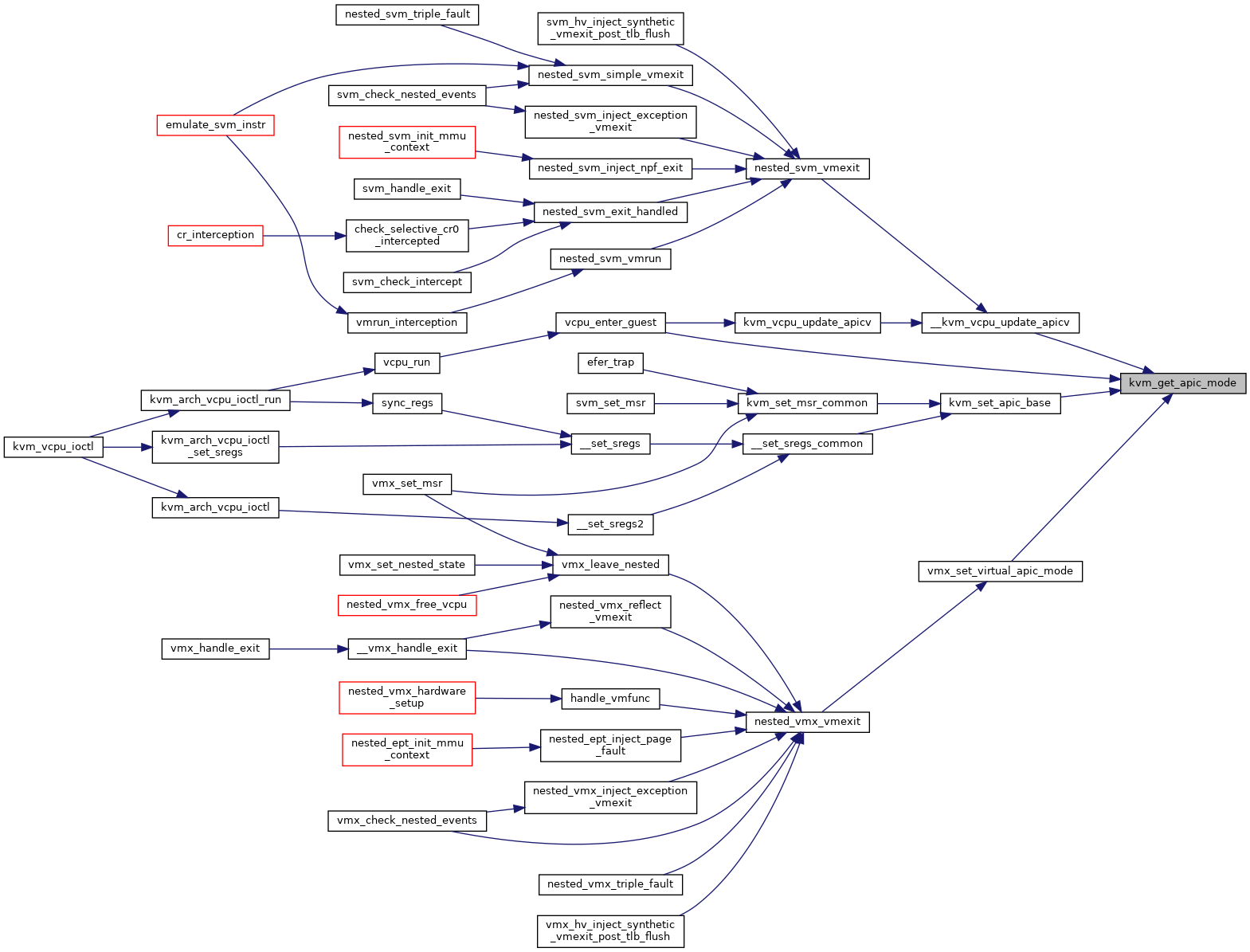

◆ kvm_get_apic_mode()

| enum lapic_mode kvm_get_apic_mode | ( | struct kvm_vcpu * | vcpu | ) |

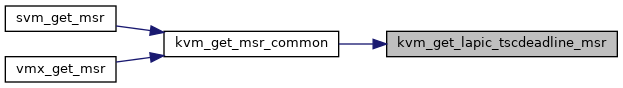

◆ kvm_get_lapic_tscdeadline_msr()

| u64 kvm_get_lapic_tscdeadline_msr | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2494 of file lapic.c.

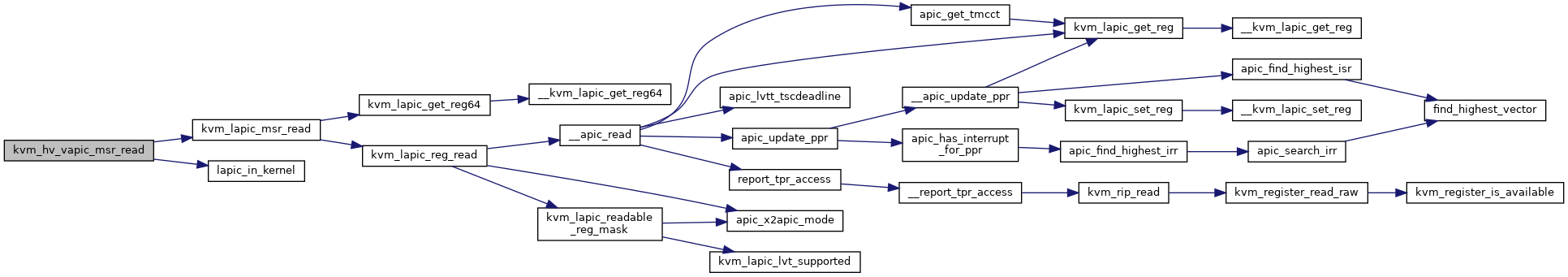

◆ kvm_hv_vapic_msr_read()

| int kvm_hv_vapic_msr_read | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 * | data | ||

| ) |

Definition at line 3229 of file lapic.c.

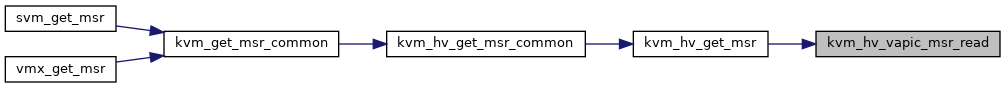

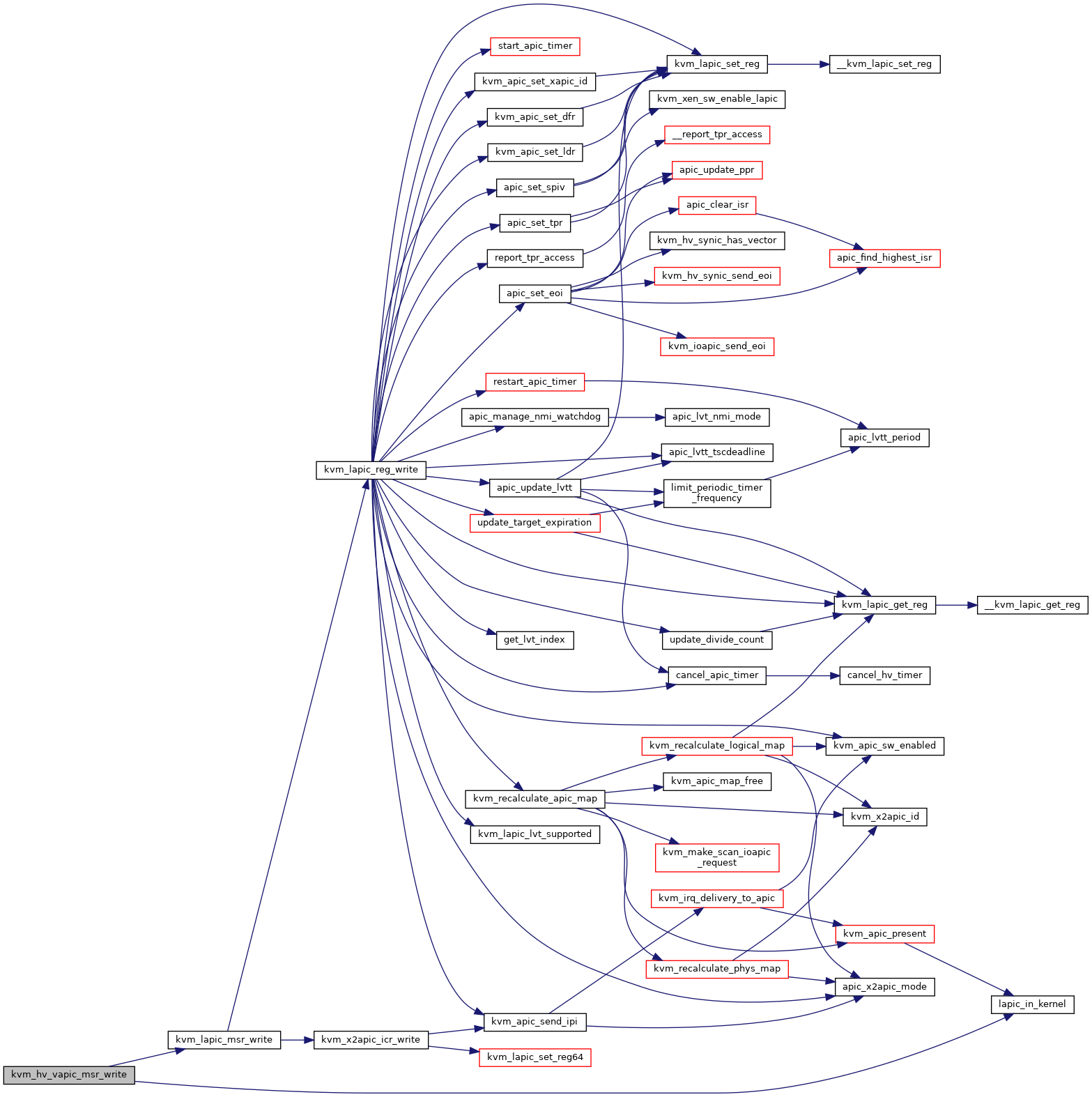

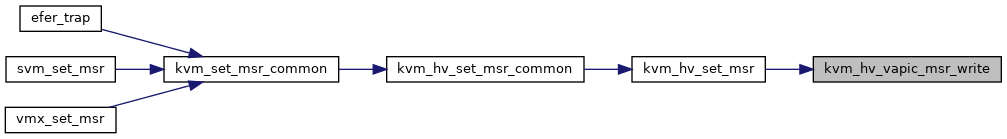

◆ kvm_hv_vapic_msr_write()

| int kvm_hv_vapic_msr_write | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 | data | ||

| ) |

Definition at line 3221 of file lapic.c.

◆ kvm_inhibit_apic_access_page()

| void kvm_inhibit_apic_access_page | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_intr_is_single_vcpu_fast()

| bool kvm_intr_is_single_vcpu_fast | ( | struct kvm * | kvm, |

| struct kvm_lapic_irq * | irq, | ||

| struct kvm_vcpu ** | dest_vcpu | ||

| ) |

◆ kvm_irq_delivery_to_apic_fast()

| bool kvm_irq_delivery_to_apic_fast | ( | struct kvm * | kvm, |

| struct kvm_lapic * | src, | ||

| struct kvm_lapic_irq * | irq, | ||

| int * | r, | ||

| struct dest_map * | dest_map | ||

| ) |

Definition at line 1208 of file lapic.c.

◆ kvm_lapic_clear_vector()

| static void kvm_lapic_clear_vector (int vec, void *bitmap) | inline static |

◆ kvm_lapic_enabled()

| static int kvm_lapic_enabled (struct kvm_vcpu *vcpu) | inline static |

◆ kvm_lapic_exit()

| void kvm_lapic_exit | ( | void | ) |

◆ kvm_lapic_expired_hv_timer()

| void kvm_lapic_expired_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2171 of file lapic.c.

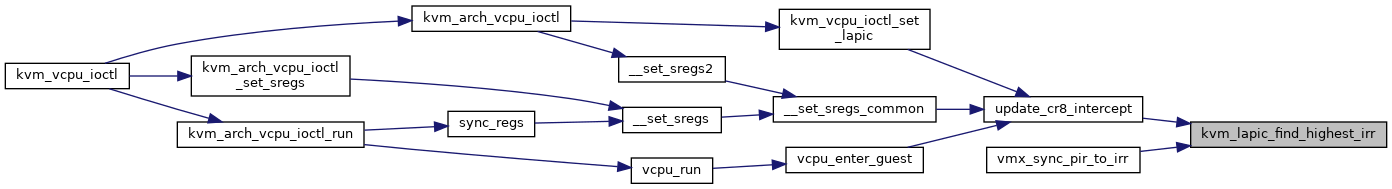

◆ kvm_lapic_find_highest_irr()

| int kvm_lapic_find_highest_irr | ( | struct kvm_vcpu * | vcpu | ) |
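`kvm_lapic_find_highest_irr()` reports the highest pending vector in the IRR. The sketch below shows a top-down scan over a 256-bit bank in the `REG_POS()` layout, returning -1 when nothing is pending. `find_highest_vector()` is a hypothetical helper, the 0x200 IRR offset used in the test is the architectural one, and `__builtin_clz()` assumes GCC or Clang.

```c
#include <stdint.h>

#define REG_POS(v) (((v) >> 5) << 4) /* from lapic.h */
#define MAX_APIC_VECTOR 256
#define APIC_VECTORS_PER_REG 32

/* Highest set vector in a bank starting at 'bitmap' (e.g. regs + APIC_IRR),
 * or -1 if the bank is empty. Scans from the top register down. */
static int find_highest_vector(const void *bitmap)
{
	for (int vec = MAX_APIC_VECTOR - APIC_VECTORS_PER_REG; vec >= 0;
	     vec -= APIC_VECTORS_PER_REG) {
		uint32_t reg = *(const uint32_t *)((const char *)bitmap + REG_POS(vec));

		if (reg)
			return 31 - __builtin_clz(reg) + vec;
	}
	return -1;
}
```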

◆ kvm_lapic_get_base()

| u64 kvm_lapic_get_base | ( | struct kvm_vcpu * | vcpu | ) |

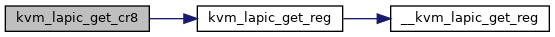

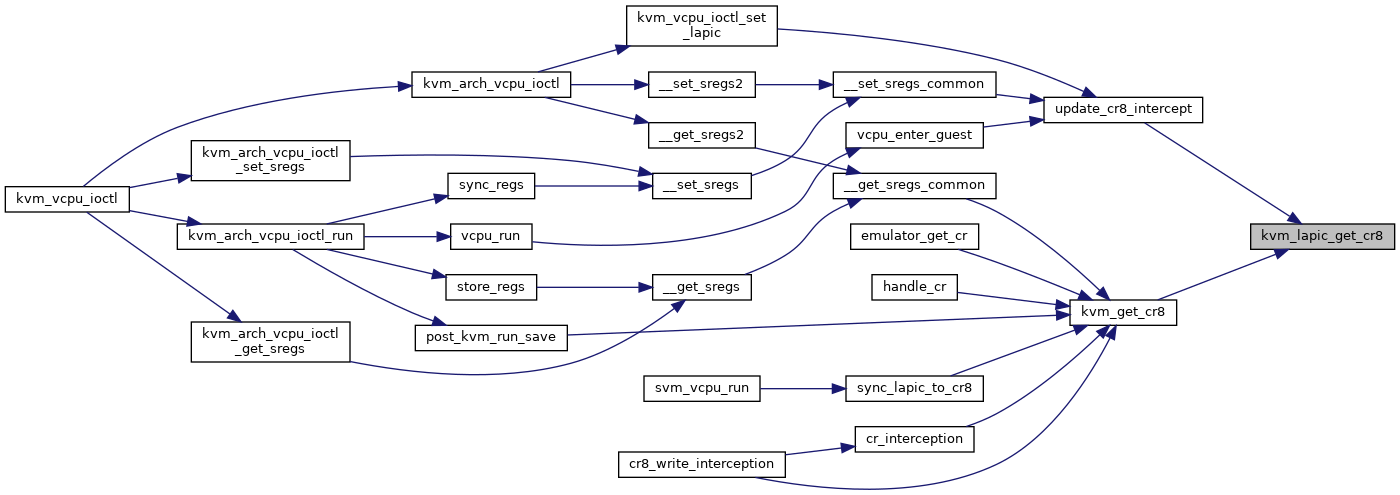

◆ kvm_lapic_get_cr8()

| u64 kvm_lapic_get_cr8 | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_get_reg()

| static u32 kvm_lapic_get_reg (struct kvm_lapic *apic, int reg_off) | inline static |

◆ kvm_lapic_hv_timer_in_use()

| bool kvm_lapic_hv_timer_in_use | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_latched_init()

| static int kvm_lapic_latched_init (struct kvm_vcpu *vcpu) | inline static |

◆ kvm_lapic_readable_reg_mask()

| u64 kvm_lapic_readable_reg_mask | ( | struct kvm_lapic * | apic | ) |

Definition at line 1607 of file lapic.c.
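`kvm_lapic_readable_reg_mask()` returns a 64-bit mask in which, as far as we can tell, each bit stands for one 16-byte register slot in the first 0x400 bytes of the APIC page. The sketch below illustrates that encoding with helper macros in the style of `APIC_REG_MASK()` and `APIC_REGS_MASK()` (treat the names as illustrative) and only a handful of registers. The register offsets are architectural, and `example_readable_mask()` and `reg_readable()` are hypothetical; the real mask covers many more registers and depends on the APIC mode.

```c
#include <stdbool.h>
#include <stdint.h>

/* One bit per 16-byte register slot below offset 0x400. */
#define APIC_REG_MASK(reg) (1ULL << ((reg) >> 4))
/* 'count' consecutive slots starting at 'first'. */
#define APIC_REGS_MASK(first, count) (APIC_REG_MASK(first) * ((1ULL << (count)) - 1))

/* Architectural xAPIC offsets (assumed). */
#define APIC_ID      0x20
#define APIC_TASKPRI 0x80
#define APIC_ISR     0x100
#define APIC_IRR     0x200

/* Hypothetical mask: ID, TPR and the eight ISR and IRR registers. */
static uint64_t example_readable_mask(void)
{
	return APIC_REG_MASK(APIC_ID) | APIC_REG_MASK(APIC_TASKPRI) |
	       APIC_REGS_MASK(APIC_ISR, 8) | APIC_REGS_MASK(APIC_IRR, 8);
}

/* Offsets at or beyond 0x400 have no slot; the check also avoids a 64-bit shift. */
static bool reg_readable(uint64_t mask, uint32_t offset)
{
	return offset < 0x400 && (mask & APIC_REG_MASK(offset));
}
```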

◆ kvm_lapic_reset()

| void kvm_lapic_reset | ( | struct kvm_vcpu * | vcpu, |

| bool | init_event | ||

| ) |

Definition at line 2669 of file lapic.c.

◆ kvm_lapic_restart_hv_timer()

| void kvm_lapic_restart_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_set_base()

| void kvm_lapic_set_base | ( | struct kvm_vcpu * | vcpu, |

| u64 | value | ||

| ) |

Definition at line 2530 of file lapic.c.

◆ kvm_lapic_set_eoi()

| void kvm_lapic_set_eoi | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_set_irr()

| static void kvm_lapic_set_irr (int vec, struct kvm_lapic *apic) | inline static |

◆ kvm_lapic_set_pv_eoi()

| int kvm_lapic_set_pv_eoi | ( | struct kvm_vcpu * | vcpu, |

| u64 | data, | ||

| unsigned long | len | ||

| ) |

Definition at line 3237 of file lapic.c.

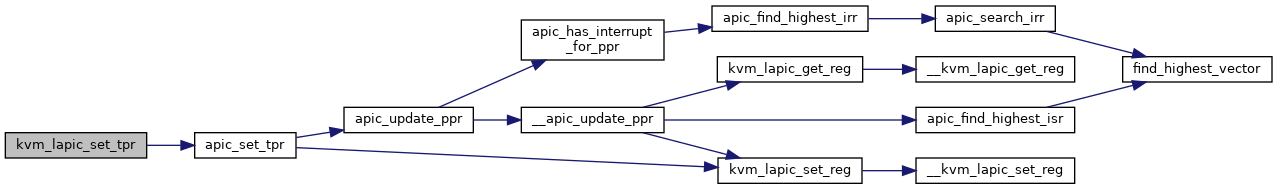

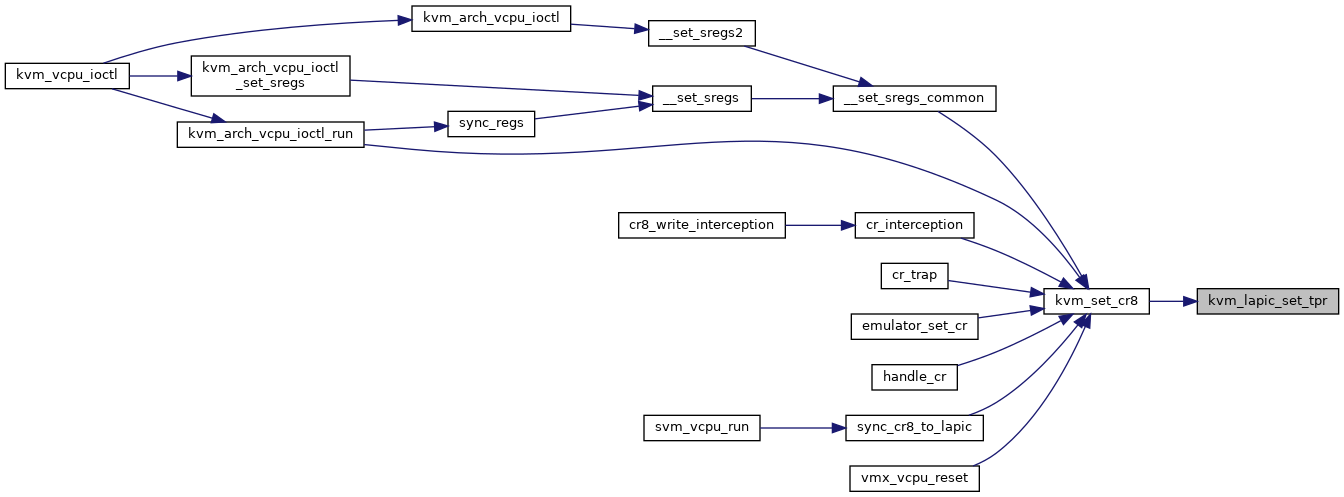

◆ kvm_lapic_set_tpr()

| void kvm_lapic_set_tpr | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr8 | ||

| ) |
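`kvm_lapic_get_cr8()` and `kvm_lapic_set_tpr()` translate between CR8 and the TPR register. Architecturally, CR8 bits 3:0 mirror TPR bits 7:4 (the priority class), so the TPR sub-class in bits 3:0 is not visible through CR8 and is written as zero. The two conversions are sketched below with hypothetical names.

```c
#include <stdint.h>

/* CR8 exposes only the TPR priority class (bits 7:4). */
static uint64_t tpr_to_cr8(uint32_t tpr)
{
	return (tpr & 0xf0) >> 4;
}

/* Writing CR8 sets the class and clears the sub-class bits. */
static uint32_t cr8_to_tpr(unsigned long cr8)
{
	return (uint32_t)((cr8 & 0x0f) << 4);
}
```

A TPR of 0x5a therefore reads back through CR8 as 5, and writing 5 to CR8 yields a TPR of 0x50.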

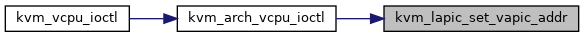

◆ kvm_lapic_set_vapic_addr()

| int kvm_lapic_set_vapic_addr | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | vapic_addr | ||

| ) |

◆ kvm_lapic_set_vector()

| static void kvm_lapic_set_vector (int vec, void *bitmap) | inline static |

◆ kvm_lapic_switch_to_hv_timer()

| void kvm_lapic_switch_to_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_switch_to_sw_timer()

| void kvm_lapic_switch_to_sw_timer | ( | struct kvm_vcpu * | vcpu | ) |

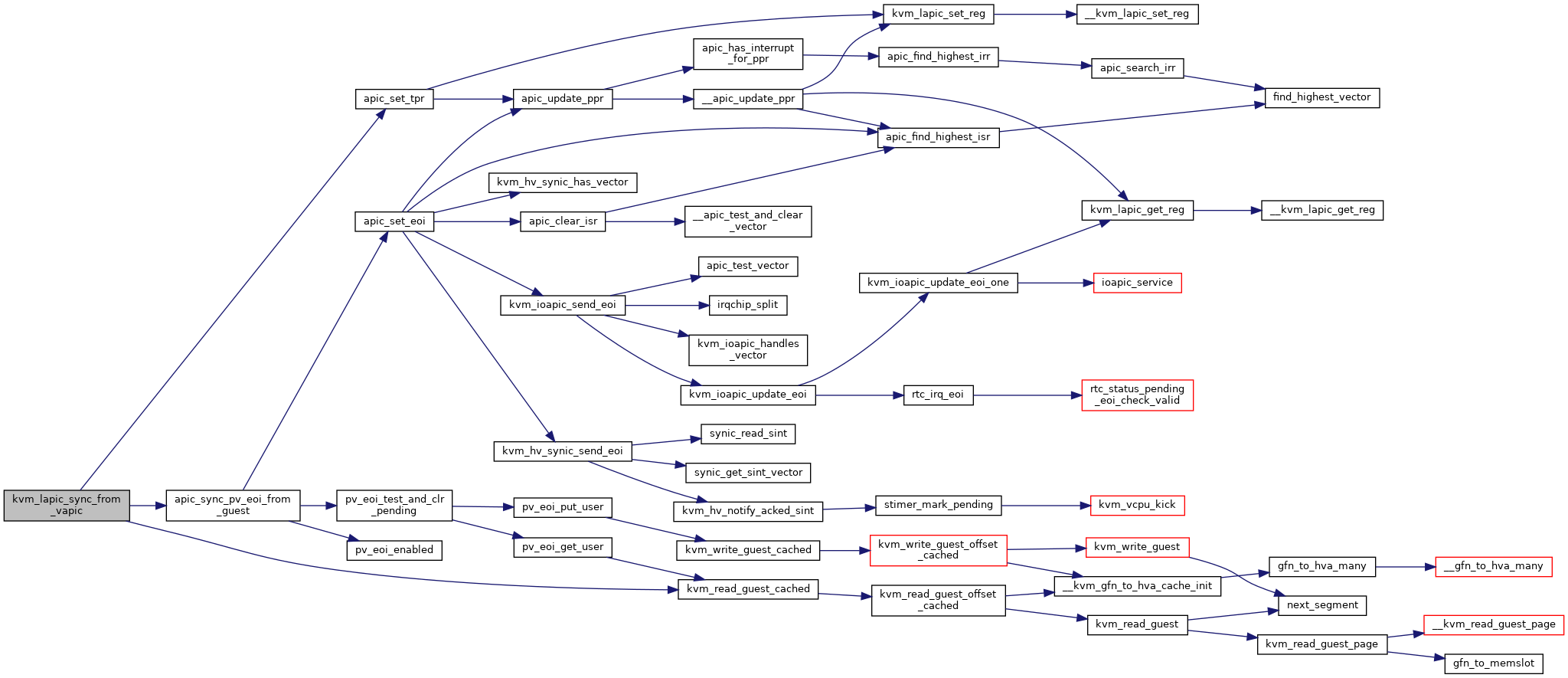

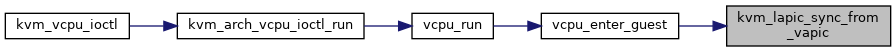

◆ kvm_lapic_sync_from_vapic()

| void kvm_lapic_sync_from_vapic | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3072 of file lapic.c.

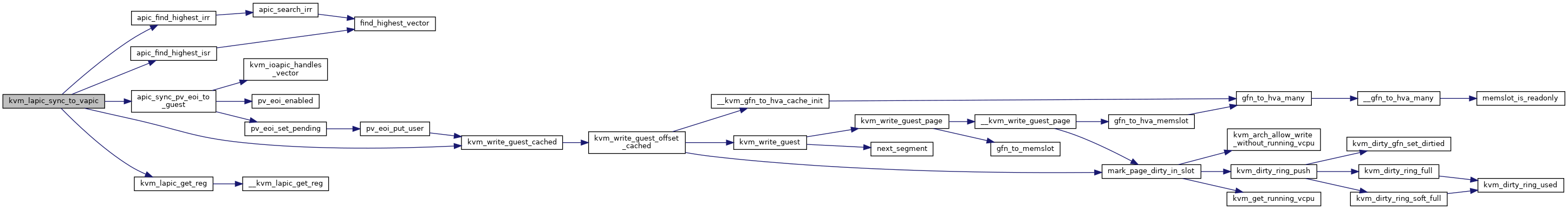

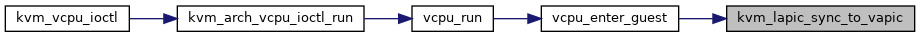

◆ kvm_lapic_sync_to_vapic()

| void kvm_lapic_sync_to_vapic | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3115 of file lapic.c.

◆ kvm_lowest_prio_delivery()

| static bool kvm_lowest_prio_delivery (struct kvm_lapic_irq *irq) | inline static |

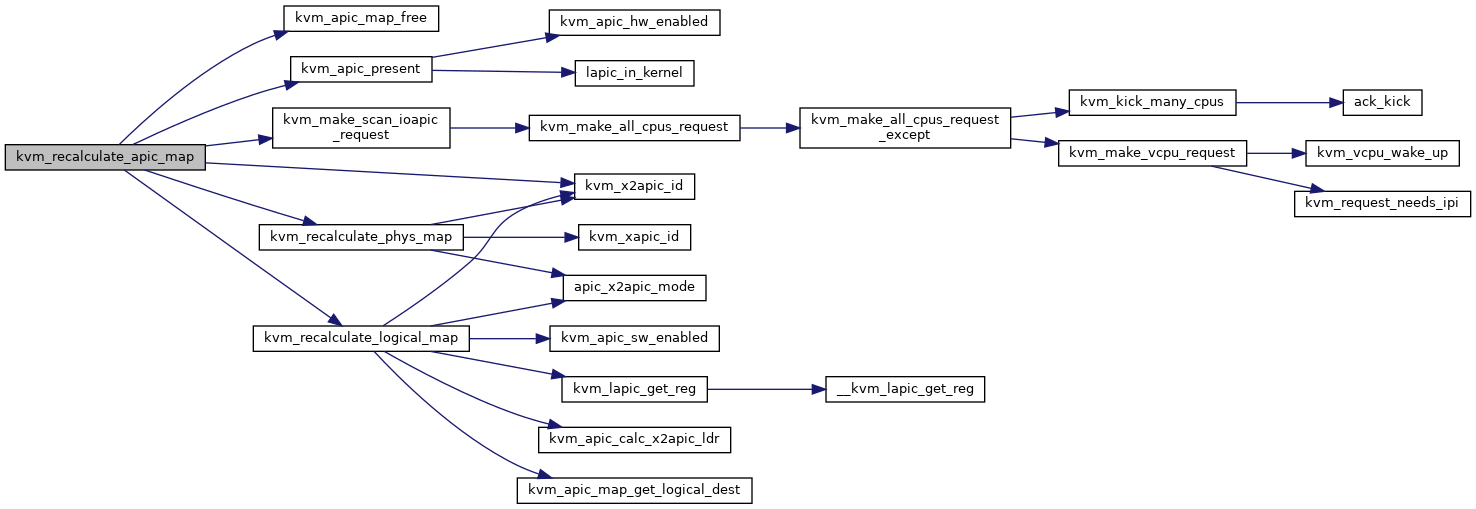

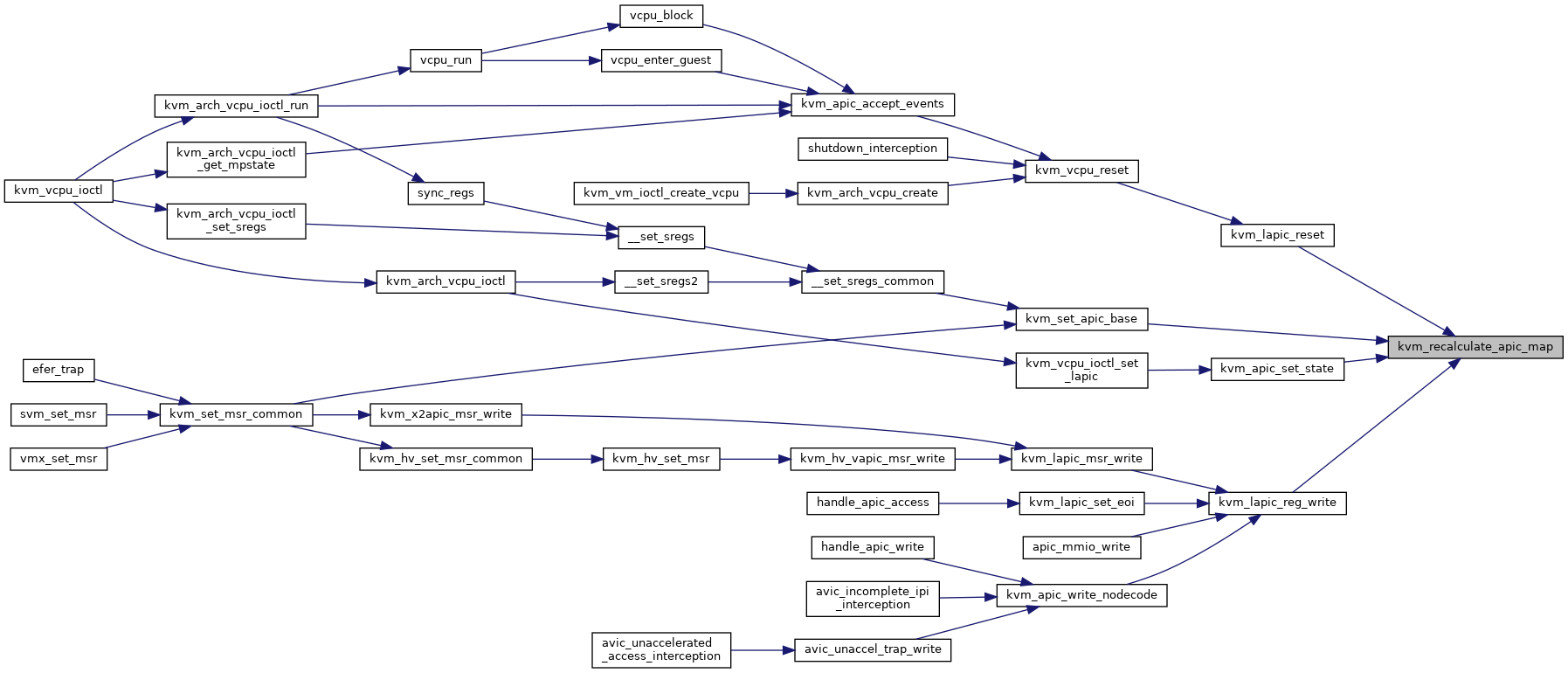

◆ kvm_recalculate_apic_map()

| void kvm_recalculate_apic_map | ( | struct kvm * | kvm | ) |

Definition at line 374 of file lapic.c.

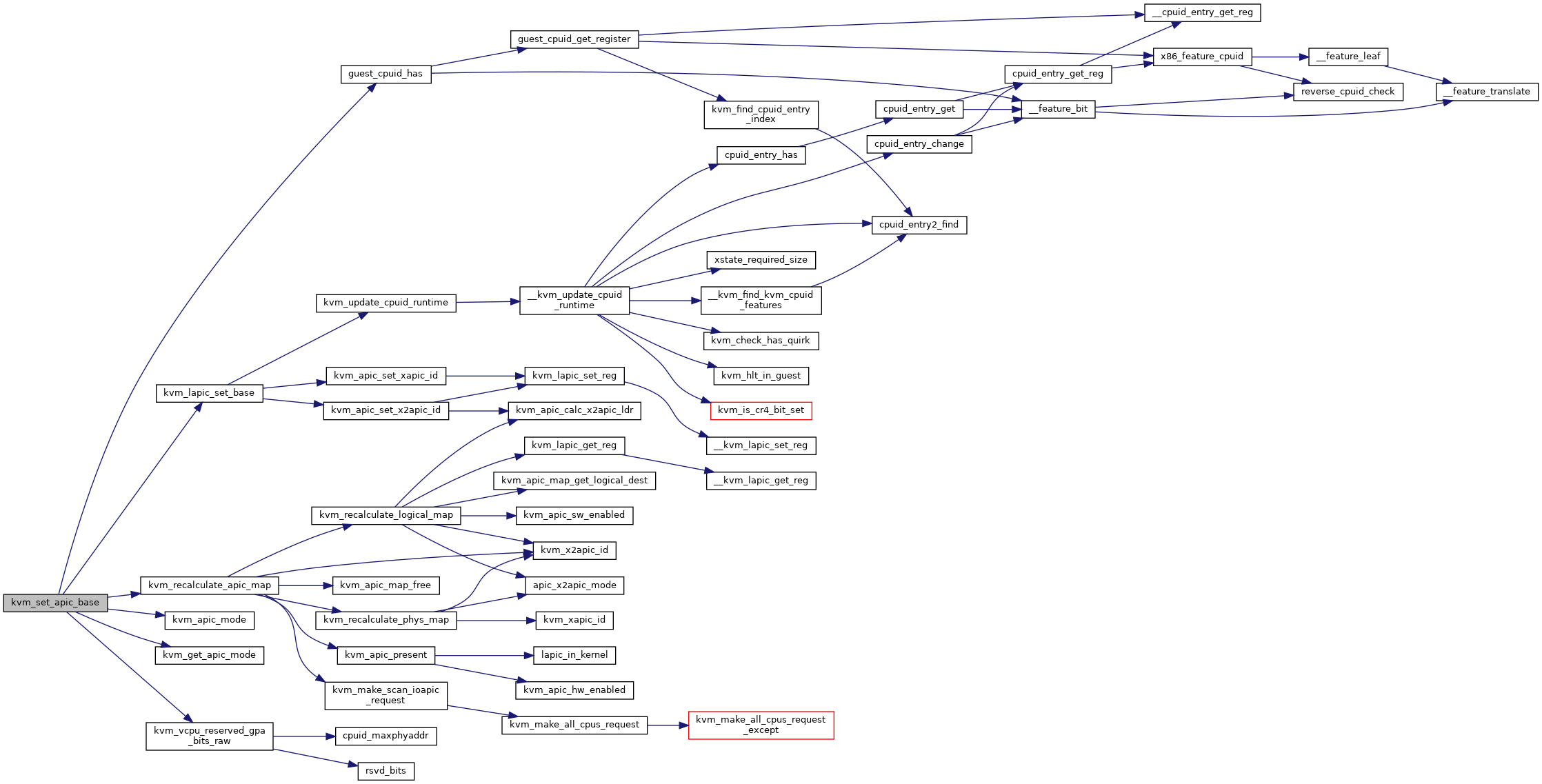

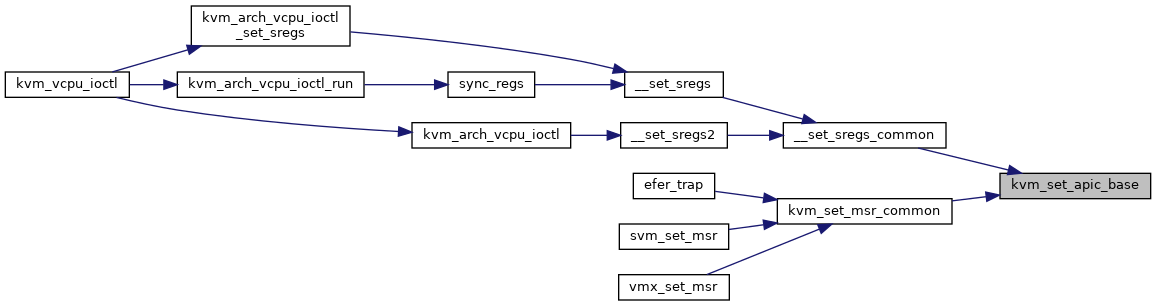

◆ kvm_set_apic_base()

| int kvm_set_apic_base | ( | struct kvm_vcpu * | vcpu, |

| struct msr_data * | msr_info | ||

| ) |

Definition at line 485 of file x86.c.
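`kvm_set_apic_base()` validates writes to IA32_APIC_BASE. The sketch below models only the mode-transition part under three assumptions: x2APIC mode may be entered only from xAPIC mode, it may be left only by disabling the APIC entirely, and host-initiated writes (for example during state restore) skip the transition checks. Reserved-bit and CPUID checks are omitted, and `apic_base_write_allowed()` is a hypothetical name.

```c
#include <stdbool.h>
#include <stdint.h>

#define MSR_IA32_APICBASE_ENABLE (1 << 11) /* assumed bit positions */
#define X2APIC_ENABLE            (1 << 10)

enum lapic_mode {
	LAPIC_MODE_DISABLED = 0,
	LAPIC_MODE_INVALID  = X2APIC_ENABLE,
	LAPIC_MODE_XAPIC    = MSR_IA32_APICBASE_ENABLE,
	LAPIC_MODE_X2APIC   = MSR_IA32_APICBASE_ENABLE | X2APIC_ENABLE,
};

static enum lapic_mode apic_mode(uint64_t base)
{
	return (enum lapic_mode)(base & (MSR_IA32_APICBASE_ENABLE | X2APIC_ENABLE));
}

/* Hypothetical check of an IA32_APIC_BASE write (mode bits only). */
static bool apic_base_write_allowed(uint64_t old_base, uint64_t new_base,
				    bool host_initiated)
{
	enum lapic_mode old_mode = apic_mode(old_base);
	enum lapic_mode new_mode = apic_mode(new_base);

	if (new_mode == LAPIC_MODE_INVALID)
		return false;
	if (host_initiated)
		return true;
	/* Leaving x2APIC requires disabling the APIC first. */
	if (old_mode == LAPIC_MODE_X2APIC && new_mode == LAPIC_MODE_XAPIC)
		return false;
	/* x2APIC can only be entered from xAPIC mode. */
	if (old_mode == LAPIC_MODE_DISABLED && new_mode == LAPIC_MODE_X2APIC)
		return false;
	return true;
}
```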

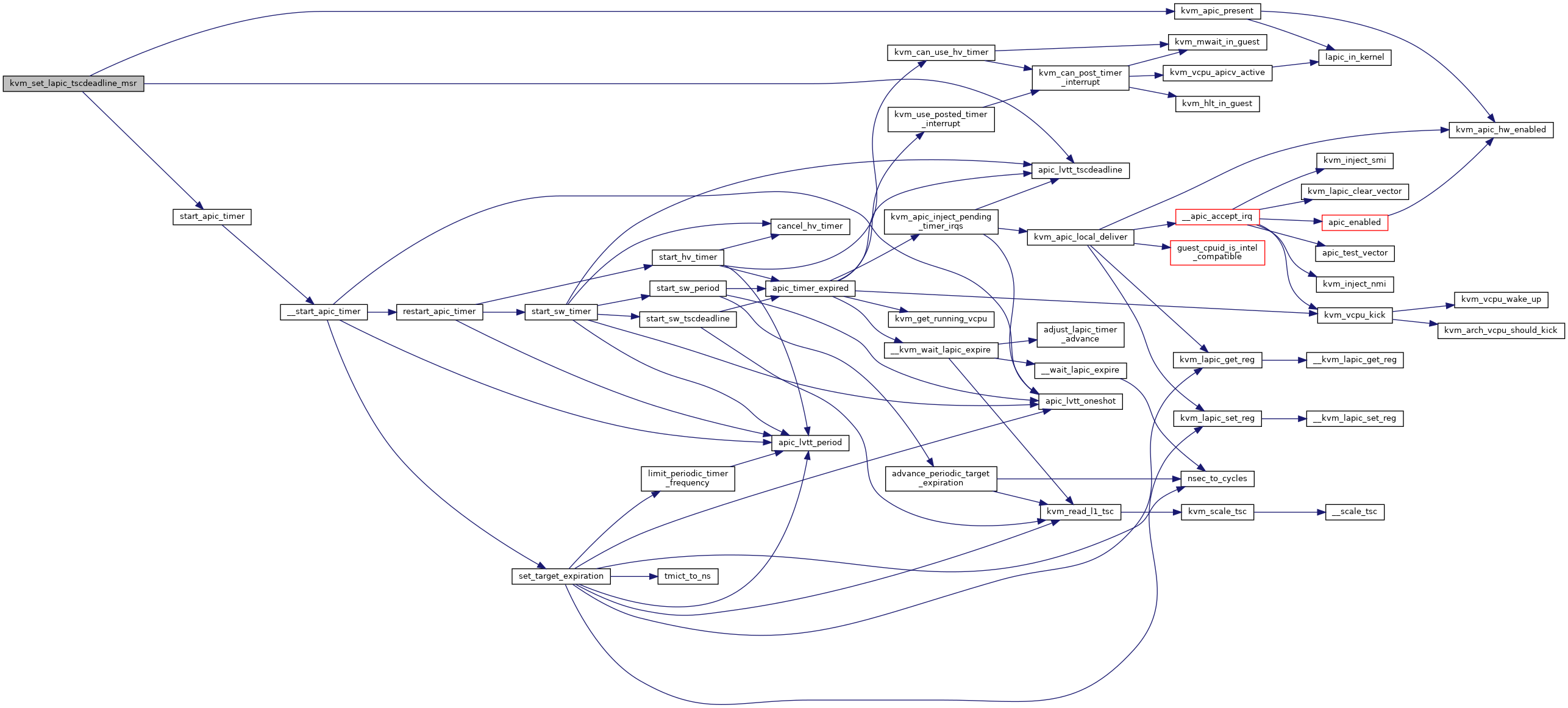

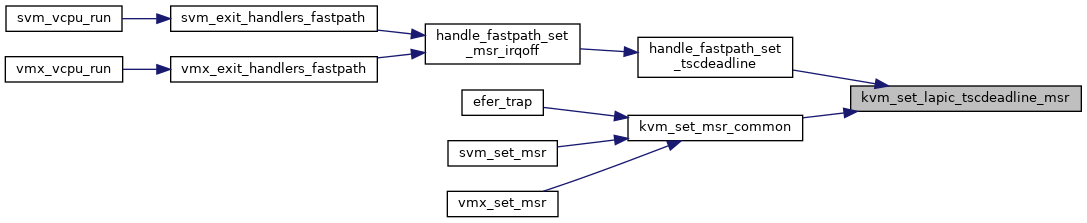

◆ kvm_set_lapic_tscdeadline_msr()

| void kvm_set_lapic_tscdeadline_msr | ( | struct kvm_vcpu * | vcpu, |

| u64 | data | ||

| ) |

◆ kvm_vcpu_apicv_active()

| static bool kvm_vcpu_apicv_active (struct kvm_vcpu *vcpu) | inline static |

◆ kvm_vector_to_index()

| int kvm_vector_to_index | ( | u32 | vector, |

| u32 | dest_vcpus, | ||

| const unsigned long * | bitmap, | ||

| u32 | bitmap_size | ||

| ) |
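`kvm_vector_to_index()` implements vector hashing for lowest-priority delivery. Given the candidate vCPUs as a bitmap, it picks the (vector mod dest_vcpus)-th set bit, counting from zero. The self-contained sketch below follows that scheme. `next_set_bit()` is a hypothetical stand-in for the kernel's `find_next_bit()`, and where the kernel treats running off the end of the bitmap as a bug, this version returns -1.

```c
#include <limits.h>
#include <stdint.h>

#define BITS_PER_LONG (sizeof(unsigned long) * CHAR_BIT)

/* Index of the first set bit at or after 'start', or 'size' if none. */
static uint32_t next_set_bit(const unsigned long *bitmap, uint32_t size,
			     uint32_t start)
{
	for (uint32_t i = start; i < size; i++)
		if (bitmap[i / BITS_PER_LONG] & (1UL << (i % BITS_PER_LONG)))
			return i;
	return size;
}

/* Pick the (vector % dest_vcpus)-th set bit of 'bitmap' (0-based). */
static int vector_to_index(uint32_t vector, uint32_t dest_vcpus,
			   const unsigned long *bitmap, uint32_t bitmap_size)
{
	uint32_t mod = vector % dest_vcpus;
	int idx = -1;

	for (uint32_t i = 0; i <= mod; i++) {
		uint32_t next = next_set_bit(bitmap, bitmap_size, (uint32_t)(idx + 1));

		if (next == bitmap_size)
			return -1; /* fewer candidates than dest_vcpus claims */
		idx = (int)next;
	}
	return idx;
}
```

With candidates {1, 4, 6}, vectors 0x30, 0x31 and 0x32 hash to vCPUs 1, 4 and 6 respectively, which spreads different vectors across the candidate set.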

◆ kvm_wait_lapic_expire()

| void kvm_wait_lapic_expire | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1869 of file lapic.c.

◆ kvm_x2apic_icr_write()

| int kvm_x2apic_icr_write | ( | struct kvm_lapic * | apic, |

| u64 | data | ||

| ) |

Definition at line 3155 of file lapic.c.
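In x2APIC mode the ICR is a single 64-bit MSR, with the destination in the upper 32 bits. `kvm_x2apic_icr_write()` hands the value to `kvm_apic_send_ipi()` as an `icr_low`/`icr_high` pair. The sketch below shows that split and decodes the low-word fields. The masks other than `APIC_DEST_MASK` and `APIC_SHORT_MASK` are assumed from the architectural ICR layout, and `decode_x2apic_icr()` and `struct icr_fields` are hypothetical.

```c
#include <stdint.h>

#define APIC_VECTOR_MASK 0xff     /* assumed: ICR bits 7:0 */
#define APIC_MODE_MASK   0x700    /* assumed: ICR bits 10:8 */
#define APIC_DEST_MASK   0x800    /* from lapic.h: set = logical mode */
#define APIC_SHORT_MASK  0xc0000  /* from lapic.h */

struct icr_fields {
	uint32_t icr_low, icr_high;
	uint8_t vector;
	uint32_t delivery_mode; /* left in place, as in ICR bits 10:8 */
	int logical;
	uint32_t shorthand;
	uint32_t dest;          /* full 32-bit x2APIC destination */
};

/* Split a 64-bit x2APIC ICR value and decode its low-word fields. */
static struct icr_fields decode_x2apic_icr(uint64_t data)
{
	struct icr_fields f;

	f.icr_low = (uint32_t)data;
	f.icr_high = (uint32_t)(data >> 32);
	f.vector = f.icr_low & APIC_VECTOR_MASK;
	f.delivery_mode = f.icr_low & APIC_MODE_MASK;
	f.logical = (f.icr_low & APIC_DEST_MASK) != 0;
	f.shorthand = f.icr_low & APIC_SHORT_MASK;
	f.dest = f.icr_high;
	return f;
}
```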

◆ kvm_x2apic_msr_read()

| int kvm_x2apic_msr_read | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 * | data | ||

| ) |

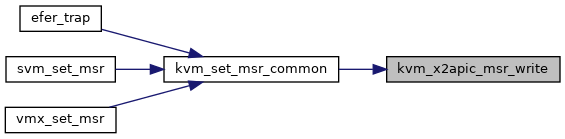

◆ kvm_x2apic_msr_write()

| int kvm_x2apic_msr_write | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 | data | ||

| ) |
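`kvm_x2apic_msr_read()` and `kvm_x2apic_msr_write()` map an x2APIC MSR onto the same register offsets used by the xAPIC MMIO page. Assuming the architectural x2APIC MSR range 0x800-0x8FF, the mapping is a subtraction and a 4-bit shift, as sketched below. `x2apic_msr_to_reg()` and `is_x2apic_msr()` are hypothetical names, and the per-register validity checks a real implementation needs are omitted.

```c
#include <stdbool.h>
#include <stdint.h>

#define APIC_BASE_MSR 0x800 /* first x2APIC MSR (assumed architectural) */

static bool is_x2apic_msr(uint32_t msr)
{
	return msr >= APIC_BASE_MSR && msr <= 0x8ff;
}

/* Each x2APIC MSR corresponds to one 16-byte slot of the xAPIC page. */
static uint32_t x2apic_msr_to_reg(uint32_t msr)
{
	return (msr - APIC_BASE_MSR) << 4;
}
```

For example, MSR 0x808 maps to the TPR at offset 0x80 and MSR 0x830 to the ICR at offset 0x300.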

◆ kvm_xapic_id()

| static u8 kvm_xapic_id (struct kvm_lapic *apic) | inline static |

◆ lapic_in_kernel()

| static bool lapic_in_kernel (struct kvm_vcpu *vcpu) | inline static |

Variable Documentation

◆ apic_hw_disabled

| struct static_key_false_deferred apic_hw_disabled | extern |

◆ apic_sw_disabled

| struct static_key_false_deferred apic_sw_disabled | extern |