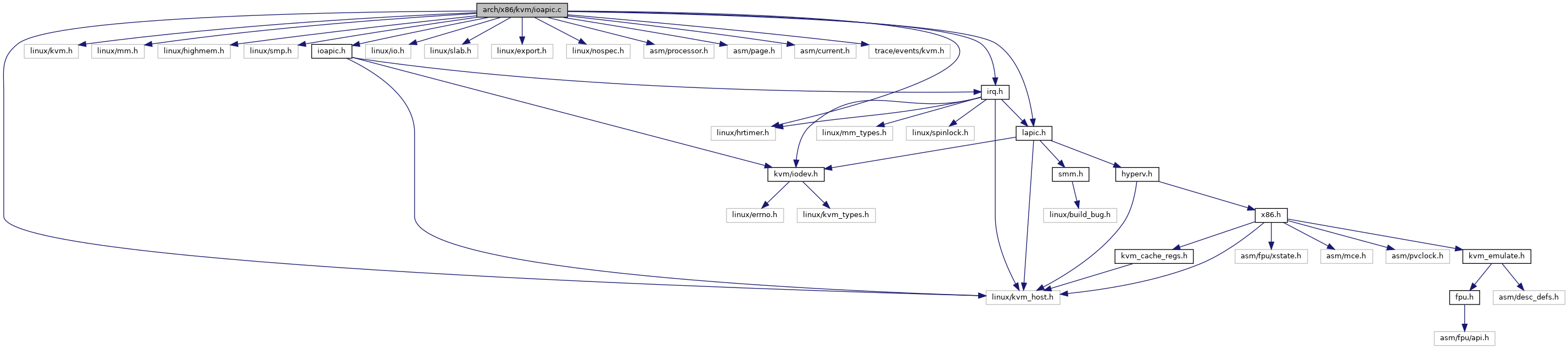

#include <linux/kvm_host.h>

#include <linux/kvm.h>

#include <linux/mm.h>

#include <linux/highmem.h>

#include <linux/smp.h>

#include <linux/hrtimer.h>

#include <linux/io.h>

#include <linux/slab.h>

#include <linux/export.h>

#include <linux/nospec.h>

#include <asm/processor.h>

#include <asm/page.h>

#include <asm/current.h>

#include <trace/events/kvm.h>

#include "ioapic.h"

#include "lapic.h"

#include "irq.h"

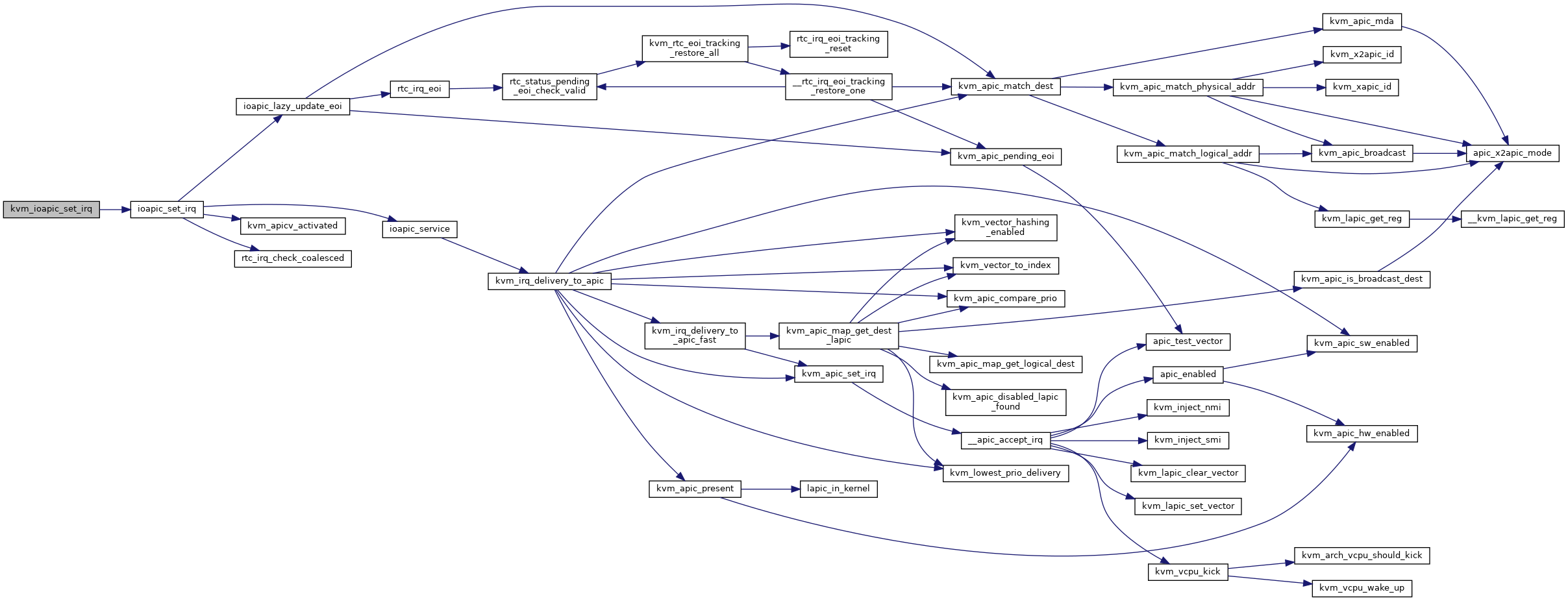

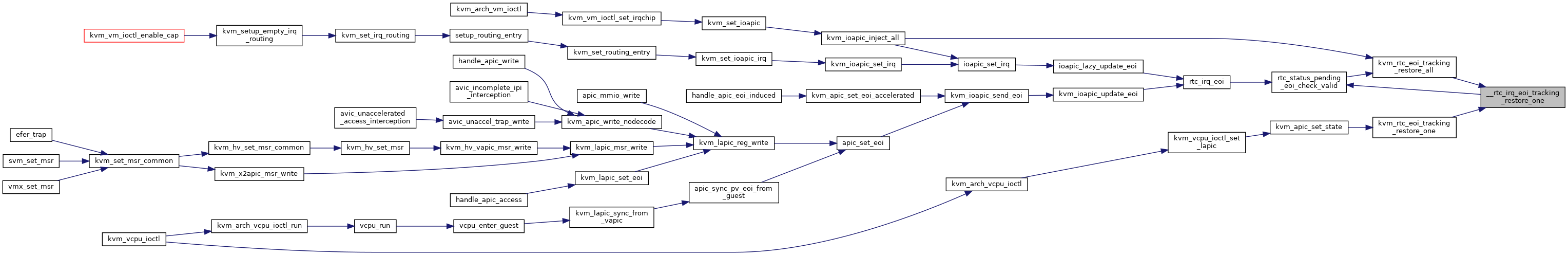

Include dependency graph for ioapic.c:


| Macros | |
|---|---|
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |
| #define | IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT 10000 |

| Functions | |
|---|---|
| static int | ioapic_service (struct kvm_ioapic *vioapic, int irq, bool line_status) |
| static void | kvm_ioapic_update_eoi_one (struct kvm_vcpu *vcpu, struct kvm_ioapic *ioapic, int trigger_mode, int pin) |
| static unsigned long | ioapic_read_indirect (struct kvm_ioapic *ioapic) |
| static void | rtc_irq_eoi_tracking_reset (struct kvm_ioapic *ioapic) |
| static void | kvm_rtc_eoi_tracking_restore_all (struct kvm_ioapic *ioapic) |
| static void | rtc_status_pending_eoi_check_valid (struct kvm_ioapic *ioapic) |
| static void | __rtc_irq_eoi_tracking_restore_one (struct kvm_vcpu *vcpu) |
| void | kvm_rtc_eoi_tracking_restore_one (struct kvm_vcpu *vcpu) |
| static void | rtc_irq_eoi (struct kvm_ioapic *ioapic, struct kvm_vcpu *vcpu, int vector) |
| static bool | rtc_irq_check_coalesced (struct kvm_ioapic *ioapic) |
| static void | ioapic_lazy_update_eoi (struct kvm_ioapic *ioapic, int irq) |
| static int | ioapic_set_irq (struct kvm_ioapic *ioapic, unsigned int irq, int irq_level, bool line_status) |
| static void | kvm_ioapic_inject_all (struct kvm_ioapic *ioapic, unsigned long irr) |
| void | kvm_ioapic_scan_entry (struct kvm_vcpu *vcpu, ulong *ioapic_handled_vectors) |
| void | kvm_arch_post_irq_ack_notifier_list_update (struct kvm *kvm) |
| static void | ioapic_write_indirect (struct kvm_ioapic *ioapic, u32 val) |
| int | kvm_ioapic_set_irq (struct kvm_ioapic *ioapic, int irq, int irq_source_id, int level, bool line_status) |
| void | kvm_ioapic_clear_all (struct kvm_ioapic *ioapic, int irq_source_id) |
| static void | kvm_ioapic_eoi_inject_work (struct work_struct *work) |
| void | kvm_ioapic_update_eoi (struct kvm_vcpu *vcpu, int vector, int trigger_mode) |
| static struct kvm_ioapic * | to_ioapic (struct kvm_io_device *dev) |
| static int | ioapic_in_range (struct kvm_ioapic *ioapic, gpa_t addr) |
| static int | ioapic_mmio_read (struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, void *val) |
| static int | ioapic_mmio_write (struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, const void *val) |
| static void | kvm_ioapic_reset (struct kvm_ioapic *ioapic) |
| int | kvm_ioapic_init (struct kvm *kvm) |
| void | kvm_ioapic_destroy (struct kvm *kvm) |
| void | kvm_get_ioapic (struct kvm *kvm, struct kvm_ioapic_state *state) |
| void | kvm_set_ioapic (struct kvm *kvm, struct kvm_ioapic_state *state) |

| Variables | |
|---|---|
| static const struct kvm_io_device_ops | ioapic_mmio_ops |

Macro Definition Documentation

◆ IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT

#define IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT 10000
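IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT protects against interrupt storms from guests that EOI a level-triggered interrupt without quieting its source. As we read the KVM source, kvm_ioapic_update_eoi_one() keeps a per-pin count of EOIs that find the line still asserted and unmasked. When that count reaches this value, it resets the counter and hands re-injection to delayed work (kvm_ioapic_eoi_inject_work()) instead of calling ioapic_service() immediately. The following is a minimal sketch of only that counter; struct toy_pin_state and eoi_should_defer() are hypothetical names, not KVM APIs.

```c
#include <assert.h>
#include <stdbool.h>

/* Value taken from the macro documented above. */
#define IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT 10000

/* Hypothetical per-pin state: length of the current "still asserted on EOI" streak. */
struct toy_pin_state {
	unsigned int successive_eoi;
};

/*
 * Called on EOI of a level-triggered pin. Returns true when re-injection
 * should be deferred (storm suspected), false when the pin should be
 * serviced right away or nothing is pending.
 */
static bool eoi_should_defer(struct toy_pin_state *s, bool masked, bool line_asserted)
{
	if (masked || !line_asserted) {
		s->successive_eoi = 0; /* streak broken: start counting again */
		return false;
	}
	if (++s->successive_eoi == IOAPIC_SUCCESSIVE_IRQ_MAX_COUNT) {
		s->successive_eoi = 0; /* defer once, then begin a new streak */
		return true;
	}
	return false;
}
```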

◆ pr_fmt

#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

Function Documentation

◆ __rtc_irq_eoi_tracking_restore_one()

static void __rtc_irq_eoi_tracking_restore_one(struct kvm_vcpu *vcpu)

Definition at line 109 of file ioapic.c.

static void rtc_status_pending_eoi_check_valid(struct kvm_ioapic *ioapic)

Definition: ioapic.c:103

bool kvm_apic_pending_eoi(struct kvm_vcpu *vcpu, int vector)

Definition: lapic.c:110

bool kvm_apic_match_dest(struct kvm_vcpu *vcpu, struct kvm_lapic *source, int shorthand, unsigned int dest, int dest_mode)

Definition: lapic.c:1067


union kvm_ioapic_redirect_entry redirtbl[IOAPIC_NUM_PINS]

Definition: ioapic.h:80


struct kvm_ioapic_redirect_entry::@1 fields
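Each redirtbl element is a union kvm_ioapic_redirect_entry. The union pairs a raw 64-bit value (bits) with a bitfield view named fields. The decoder below is a minimal sketch that extracts the same fields using explicit shifts and masks. It assumes the redirection-entry layout from the Intel 82093AA I/O APIC datasheet, which the bitfield is meant to mirror. struct redir_fields and decode_redir() are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Decoded view of one 64-bit redirection table entry (hypothetical type). */
struct redir_fields {
	uint8_t vector;         /* bits 0-7   */
	uint8_t delivery_mode;  /* bits 8-10  */
	bool dest_mode_logical; /* bit 11     */
	bool delivery_status;   /* bit 12     */
	bool polarity_low;      /* bit 13     */
	bool remote_irr;        /* bit 14     */
	bool level_triggered;   /* bit 15     */
	bool masked;            /* bit 16     */
	uint8_t dest_id;        /* bits 56-63 */
};

static struct redir_fields decode_redir(uint64_t bits)
{
	struct redir_fields f;

	f.vector = bits & 0xff;
	f.delivery_mode = (bits >> 8) & 0x7;
	f.dest_mode_logical = (bits >> 11) & 1;
	f.delivery_status = (bits >> 12) & 1;
	f.polarity_low = (bits >> 13) & 1;
	f.remote_irr = (bits >> 14) & 1;
	f.level_triggered = (bits >> 15) & 1;
	f.masked = (bits >> 16) & 1;
	f.dest_id = (bits >> 56) & 0xff;
	return f;
}
```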

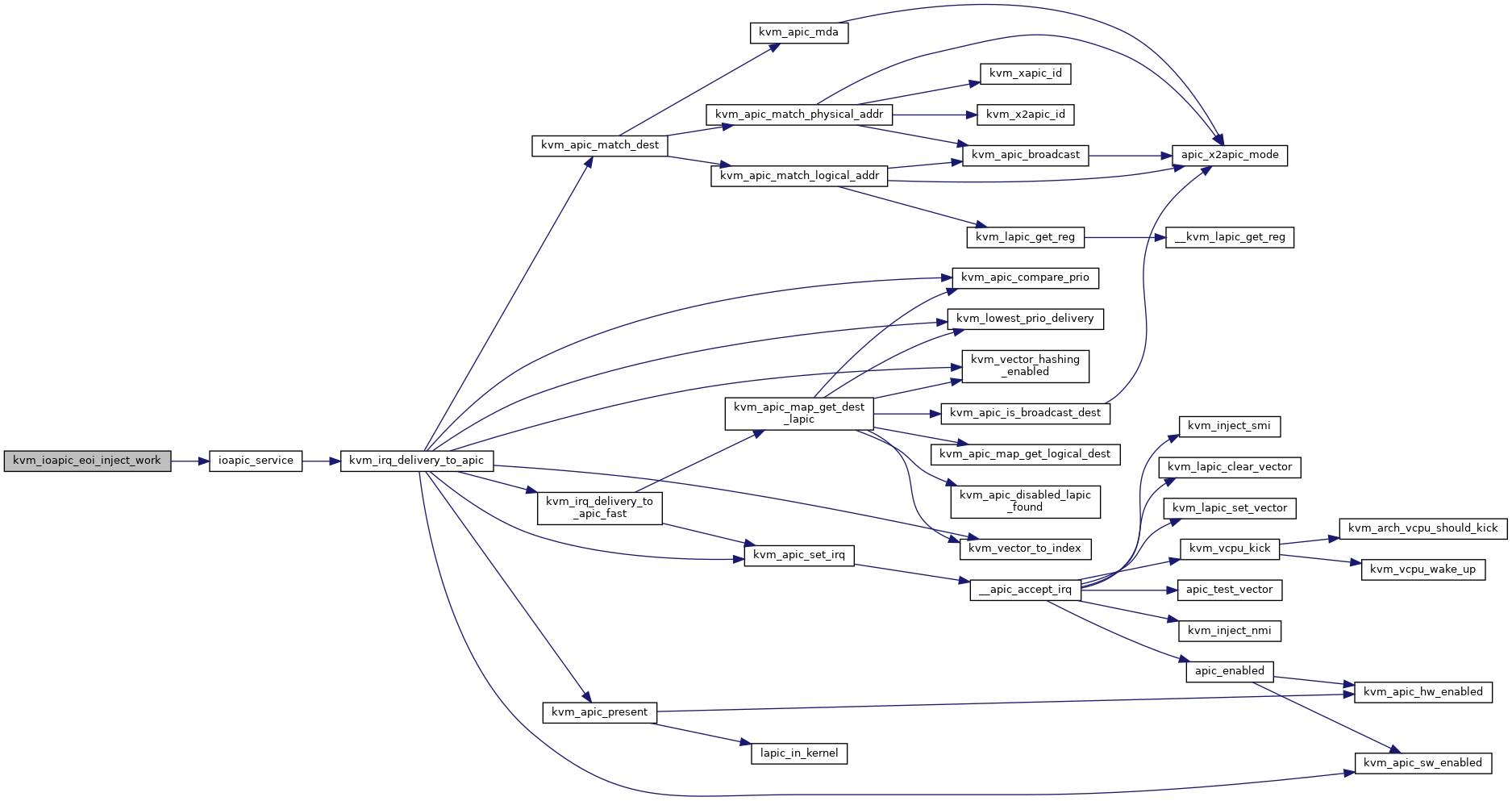

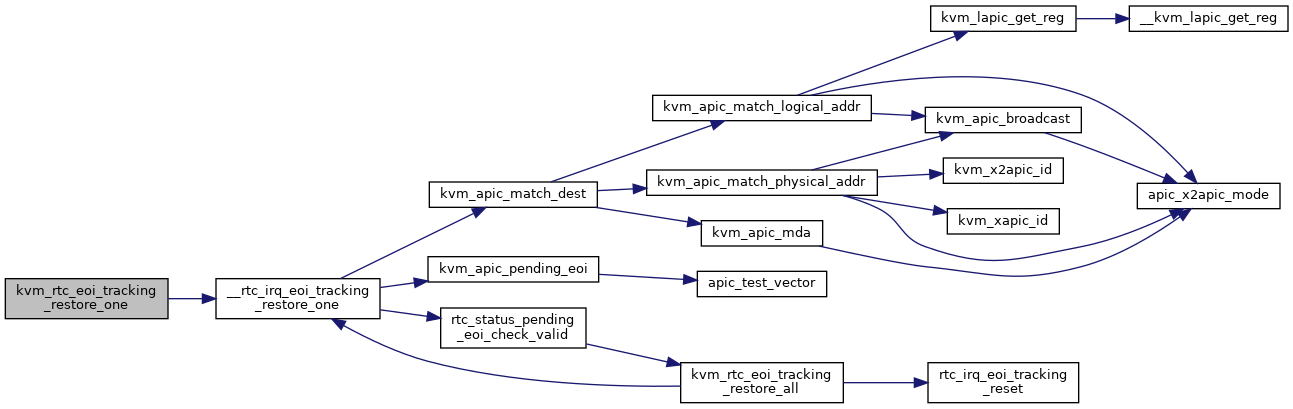

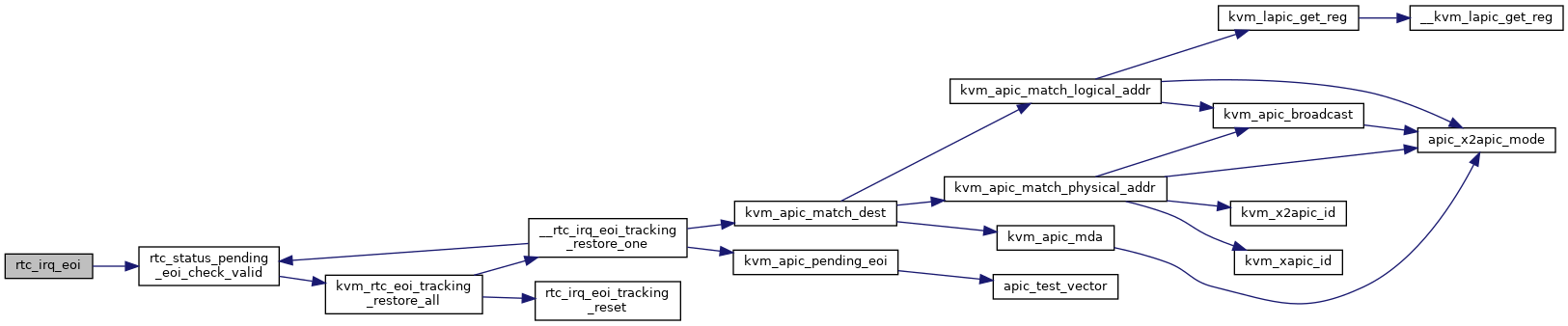

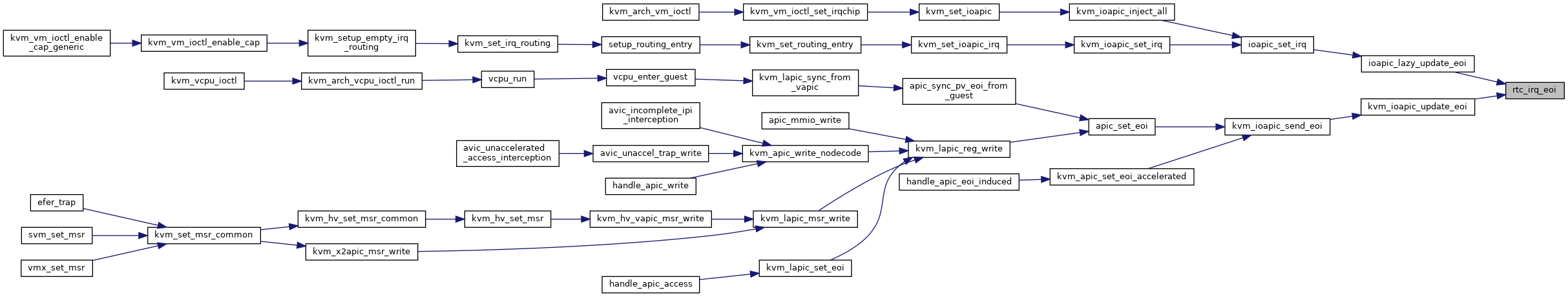

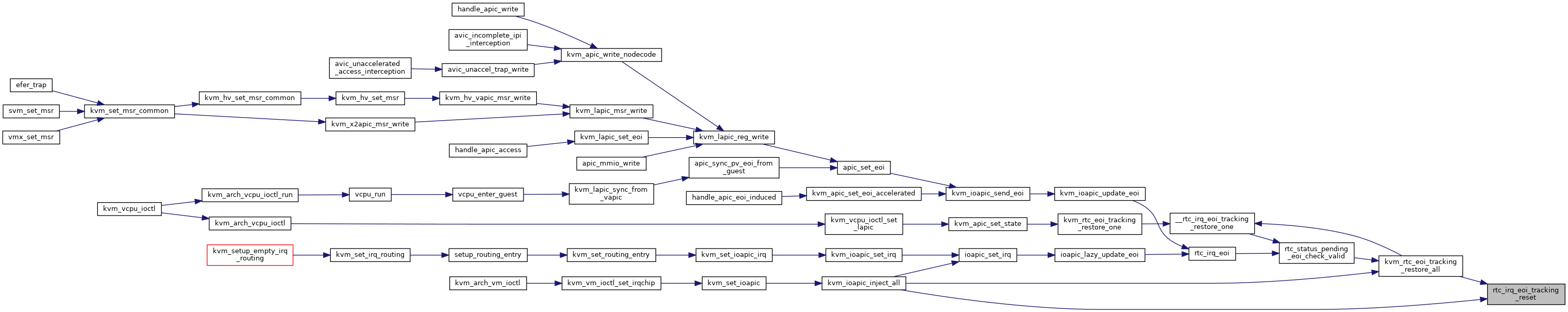

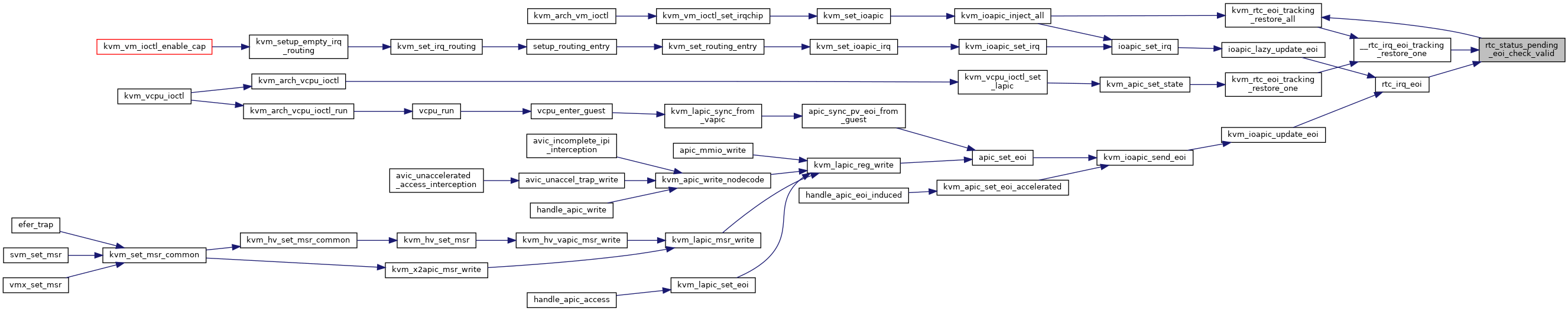

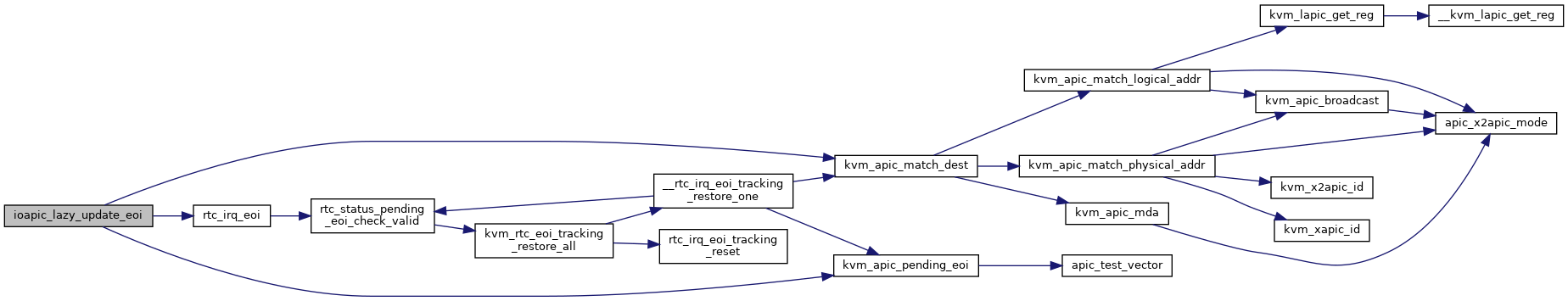

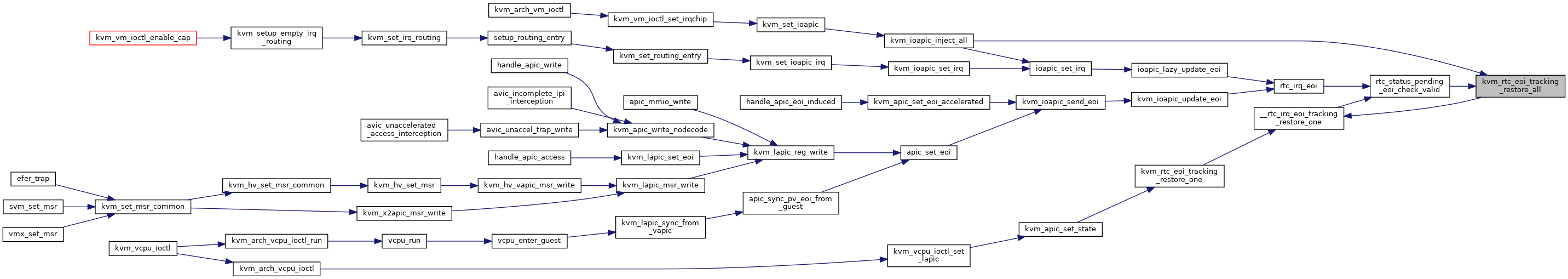

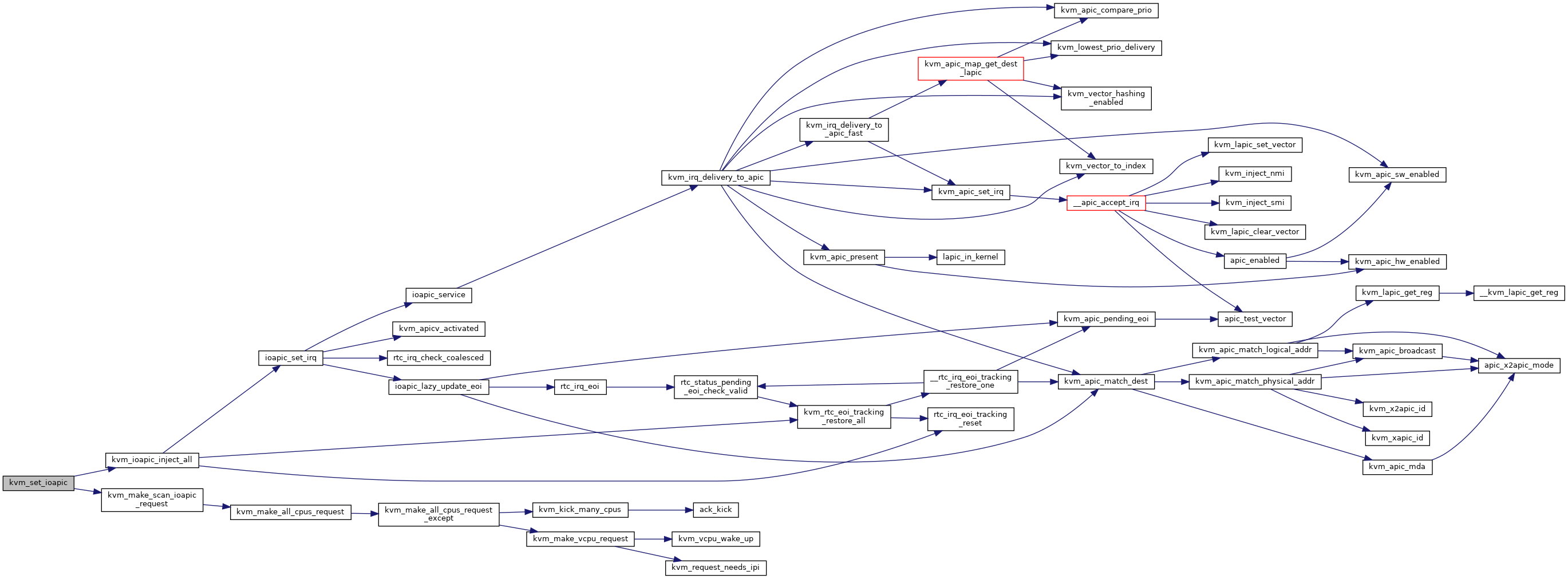

Here is the call graph for this function:

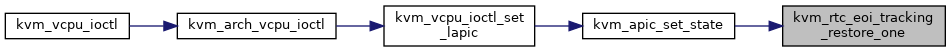

Here is the caller graph for this function:

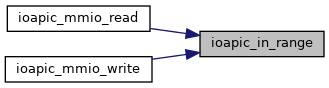

◆ ioapic_in_range()

static inline int ioapic_in_range(struct kvm_ioapic *ioapic, gpa_t addr)

Definition at line 601 of file ioapic.c.

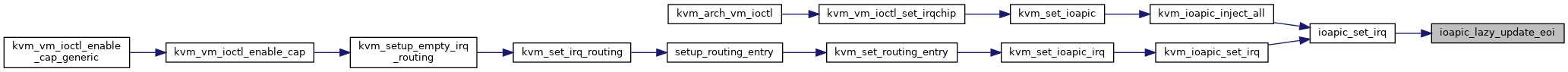

◆ ioapic_lazy_update_eoi()

static void ioapic_lazy_update_eoi(struct kvm_ioapic *ioapic, int irq)

Definition at line 184 of file ioapic.c.

static void rtc_irq_eoi(struct kvm_ioapic *ioapic, struct kvm_vcpu *vcpu, int vector)

Definition: ioapic.c:161


Here is the caller graph for this function:

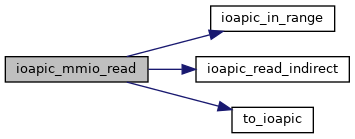

◆ ioapic_mmio_read()

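static int ioapic_mmio_read(struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, void *val)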

Definition at line 607 of file ioapic.c.

static unsigned long ioapic_read_indirect(struct kvm_ioapic *ioapic)

Definition: ioapic.c:58

static int ioapic_in_range(struct kvm_ioapic *ioapic, gpa_t addr)

Definition: ioapic.c:601

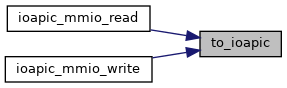

Here is the call graph for this function:

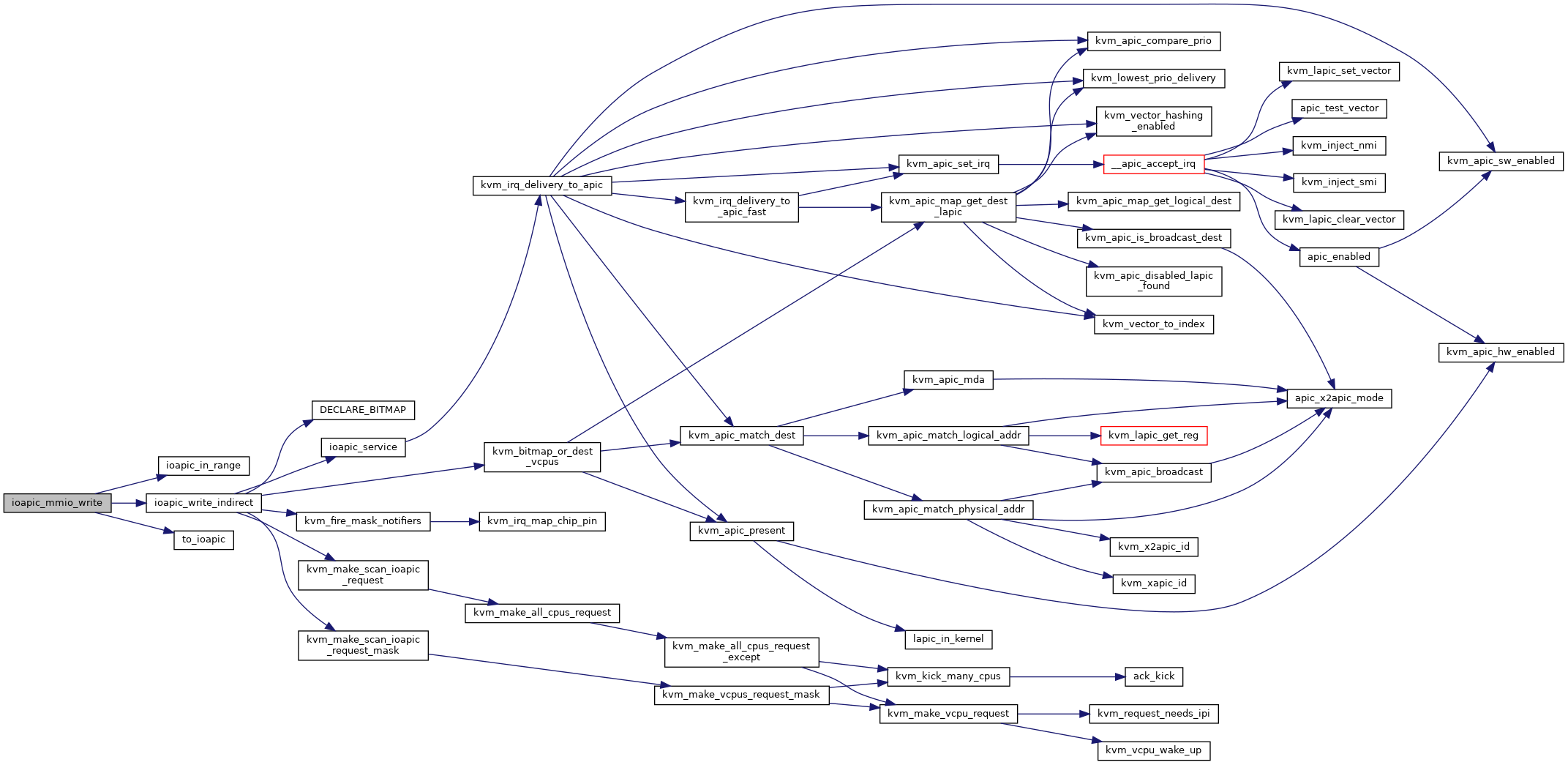

◆ ioapic_mmio_write()

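static int ioapic_mmio_write(struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, const void *val)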

Definition at line 649 of file ioapic.c.

static void ioapic_write_indirect(struct kvm_ioapic *ioapic, u32 val)

Definition: ioapic.c:316

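ioapic_mmio_read() and ioapic_mmio_write() handle only the accesses that ioapic_in_range() places inside the IOAPIC's MMIO window. The guest reaches every internal register through two offsets: IOREGSEL (0x00) latches a register index, and IOWIN (0x10) reads or writes the selected register. This minimal sketch makes three assumptions: the conventional 0xfec00000 base, a 0x100-byte window, and a flat 256-entry register file standing in for ioapic_read_indirect()/ioapic_write_indirect(). All toy_* names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_IOAPIC_BASE 0xfec00000ULL /* conventional default IOAPIC base */
#define TOY_IOAPIC_LEN  0x100         /* size of the MMIO window (assumed) */
#define TOY_REG_SELECT  0x00          /* IOREGSEL */
#define TOY_REG_WINDOW  0x10          /* IOWIN */

struct toy_ioapic {
	uint64_t base;
	uint8_t ioregsel;   /* index latched by writes to IOREGSEL */
	uint32_t regs[256]; /* flat indirect register file (simplification) */
};

/* Counterpart of ioapic_in_range(): is addr inside [base, base + LEN)? */
static bool toy_in_range(const struct toy_ioapic *io, uint64_t addr)
{
	return addr >= io->base && addr < io->base + TOY_IOAPIC_LEN;
}

/* Returns false if the access does not belong to this device. */
static bool toy_mmio_read(struct toy_ioapic *io, uint64_t addr, uint32_t *val)
{
	if (!toy_in_range(io, addr))
		return false;
	switch (addr & 0xff) { /* offset within the (256-byte aligned) window */
	case TOY_REG_SELECT: *val = io->ioregsel; break;
	case TOY_REG_WINDOW: *val = io->regs[io->ioregsel]; break;
	default:             *val = 0; break;
	}
	return true;
}

static bool toy_mmio_write(struct toy_ioapic *io, uint64_t addr, uint32_t val)
{
	if (!toy_in_range(io, addr))
		return false;
	switch (addr & 0xff) {
	case TOY_REG_SELECT: io->ioregsel = val & 0xff; break;
	case TOY_REG_WINDOW: io->regs[io->ioregsel] = val; break;
	default:             break; /* other offsets are ignored */
	}
	return true;
}
```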

◆ ioapic_read_indirect()

static unsigned long ioapic_read_indirect(struct kvm_ioapic *ioapic)

Definition at line 58 of file ioapic.c.

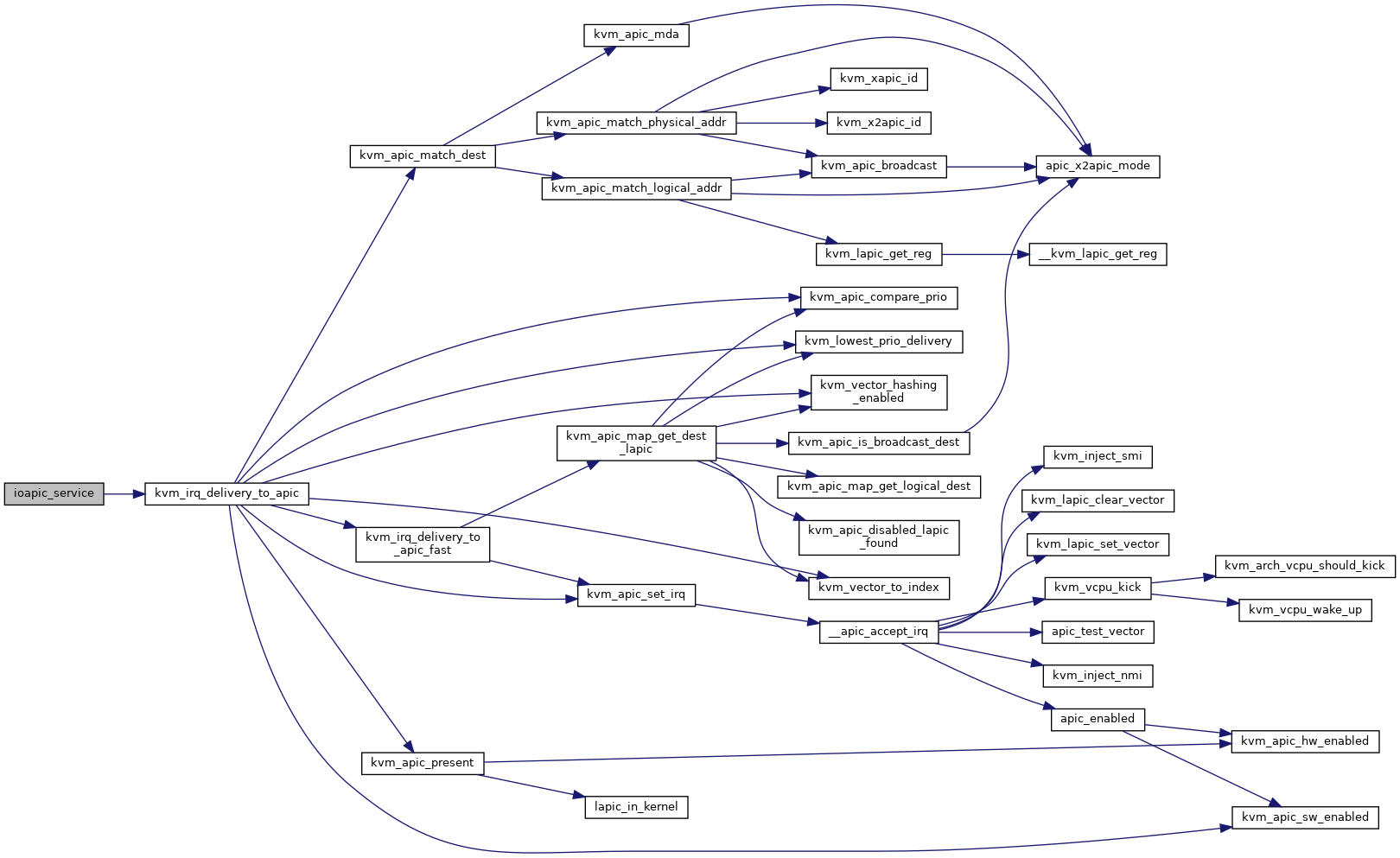
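ioapic_read_indirect() returns the register that IOREGSEL currently selects. This minimal sketch of that decoding assumes the 82093AA register numbering: 0x00 is the ID, 0x01 the version, 0x02 the arbitration ID, and 0x10 + 2n and 0x11 + 2n are the low and high halves of redirection entry n. It also assumes 24 pins and a version ID of 0x11. Like our reading of KVM, it returns the ID for both the ID and arbitration registers and reads an out-of-range entry as all ones. The real code also clamps the index with array_index_nospec(), which is why the file includes <linux/nospec.h>; the sketch omits this. The toy_* names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_NUM_PINS    24   /* assumed pin count */
#define TOY_VERSION_ID  0x11 /* assumed version value */
#define TOY_REG_ID      0x00
#define TOY_REG_VERSION 0x01
#define TOY_REG_ARB     0x02
#define TOY_REG_REDIR   0x10 /* first redirection-table register */

struct toy_ioapic_regs {
	uint8_t ioregsel;
	uint8_t id;                      /* 4-bit IOAPIC ID */
	uint64_t redirtbl[TOY_NUM_PINS]; /* one 64-bit entry per pin */
};

static uint32_t toy_read_indirect(const struct toy_ioapic_regs *io)
{
	switch (io->ioregsel) {
	case TOY_REG_VERSION:
		/* bits 16-23: index of the last redirection entry; bits 0-7: version */
		return (((uint32_t)(TOY_NUM_PINS - 1) & 0xff) << 16) | (TOY_VERSION_ID & 0xff);
	case TOY_REG_ID:
	case TOY_REG_ARB:
		return (uint32_t)(io->id & 0xf) << 24;
	default: {
		/* Registers below 0x10 wrap to a huge index and fall out of range. */
		unsigned int idx = (unsigned int)(io->ioregsel - TOY_REG_REDIR) >> 1;
		uint64_t entry = idx < TOY_NUM_PINS ? io->redirtbl[idx] : ~0ULL;

		/* Odd register numbers select the high 32 bits of the entry. */
		return (io->ioregsel & 1) ? (uint32_t)(entry >> 32) : (uint32_t)entry;
	}
	}
}
```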

◆ ioapic_service()

static int ioapic_service(struct kvm_ioapic *vioapic, int irq, bool line_status)

Definition at line 442 of file ioapic.c.

int kvm_irq_delivery_to_apic(struct kvm *kvm, struct kvm_lapic *src, struct kvm_lapic_irq *irq, struct dest_map *dest_map)

Definition: irq_comm.c:47

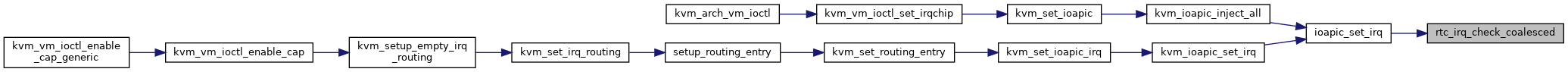


Here is the caller graph for this function:

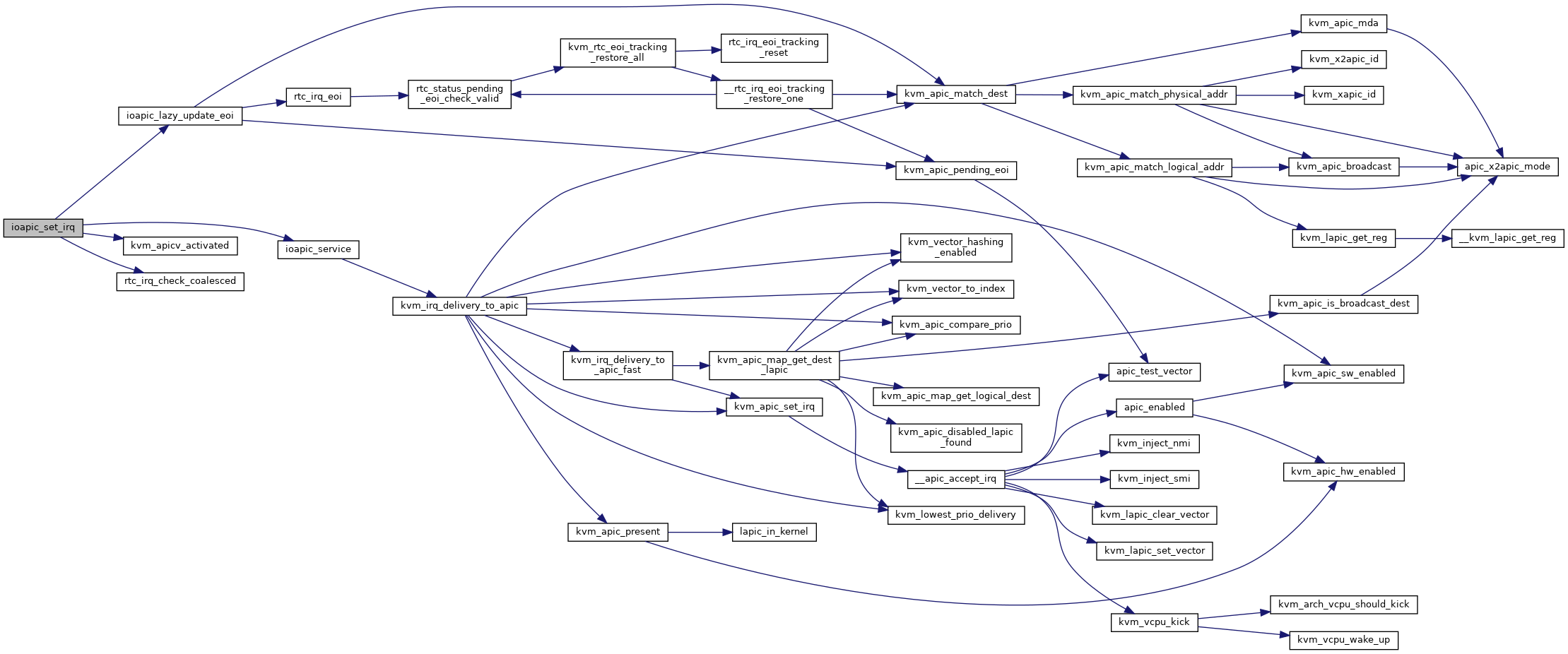

◆ ioapic_set_irq()

static int ioapic_set_irq(struct kvm_ioapic *ioapic, unsigned int irq, int irq_level, bool line_status)

Definition at line 206 of file ioapic.c.

static bool rtc_irq_check_coalesced(struct kvm_ioapic *ioapic)

Definition: ioapic.c:176

static void ioapic_lazy_update_eoi(struct kvm_ioapic *ioapic, int irq)

Definition: ioapic.c:184

static int ioapic_service(struct kvm_ioapic *vioapic, int irq, bool line_status)

Definition: ioapic.c:442

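ioapic_set_irq() and ioapic_service() handle edge- and level-triggered pins differently. The following simplified model reflects our reading of KVM:

- IRR records which lines are asserted.
- An edge-triggered pin delivers only on a 0-to-1 transition. Otherwise the interrupt is reported as coalesced (return 0).
- A level-triggered pin delivers only while Remote IRR is clear, and sets it on delivery, so the next delivery waits for the guest's EOI.

toy_eoi() models the EOI side handled by kvm_ioapic_update_eoi_one(). RTC coalescing, line_status, lazy EOI under APICv, and actual LAPIC delivery are left out, and every toy_* name is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_NUM_PINS 24

struct toy_pin {
	bool level_triggered;
	bool masked;
	bool remote_irr; /* level only: set on delivery, cleared by EOI */
};

struct toy_ioapic {
	uint32_t irr;                      /* one bit per pin: line asserted */
	struct toy_pin pins[TOY_NUM_PINS];
	unsigned int delivered;            /* interrupts sent to the LAPIC(s) */
};

/* Returns 1 if delivered, -1 if blocked by the mask or by Remote IRR. */
static int toy_service(struct toy_ioapic *io, unsigned int pin)
{
	struct toy_pin *p = &io->pins[pin];

	if (p->masked || (p->level_triggered && p->remote_irr))
		return -1;
	io->delivered++;
	if (p->level_triggered)
		p->remote_irr = true; /* wait for the guest's EOI */
	return 1;
}

/* Returns 1 on deassert or delivery, 0 if coalesced, -1 if blocked. */
static int toy_set_irq(struct toy_ioapic *io, unsigned int pin, int level)
{
	uint32_t bit, old_irr = io->irr;

	assert(pin < TOY_NUM_PINS);
	bit = 1u << pin;
	if (!level) {
		io->irr &= ~bit;
		return 1;
	}
	io->irr |= bit;
	if (!io->pins[pin].level_triggered && old_irr == io->irr)
		return 0; /* no 0->1 edge: coalesced */
	return toy_service(io, pin);
}

/* Guest EOI on a level-triggered pin: clear Remote IRR, redeliver if still asserted. */
static void toy_eoi(struct toy_ioapic *io, unsigned int pin)
{
	struct toy_pin *p = &io->pins[pin];

	p->remote_irr = false;
	if (!p->masked && (io->irr & (1u << pin)))
		toy_service(io, pin);
}
```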

◆ ioapic_write_indirect()

static void ioapic_write_indirect(struct kvm_ioapic *ioapic, u32 val)

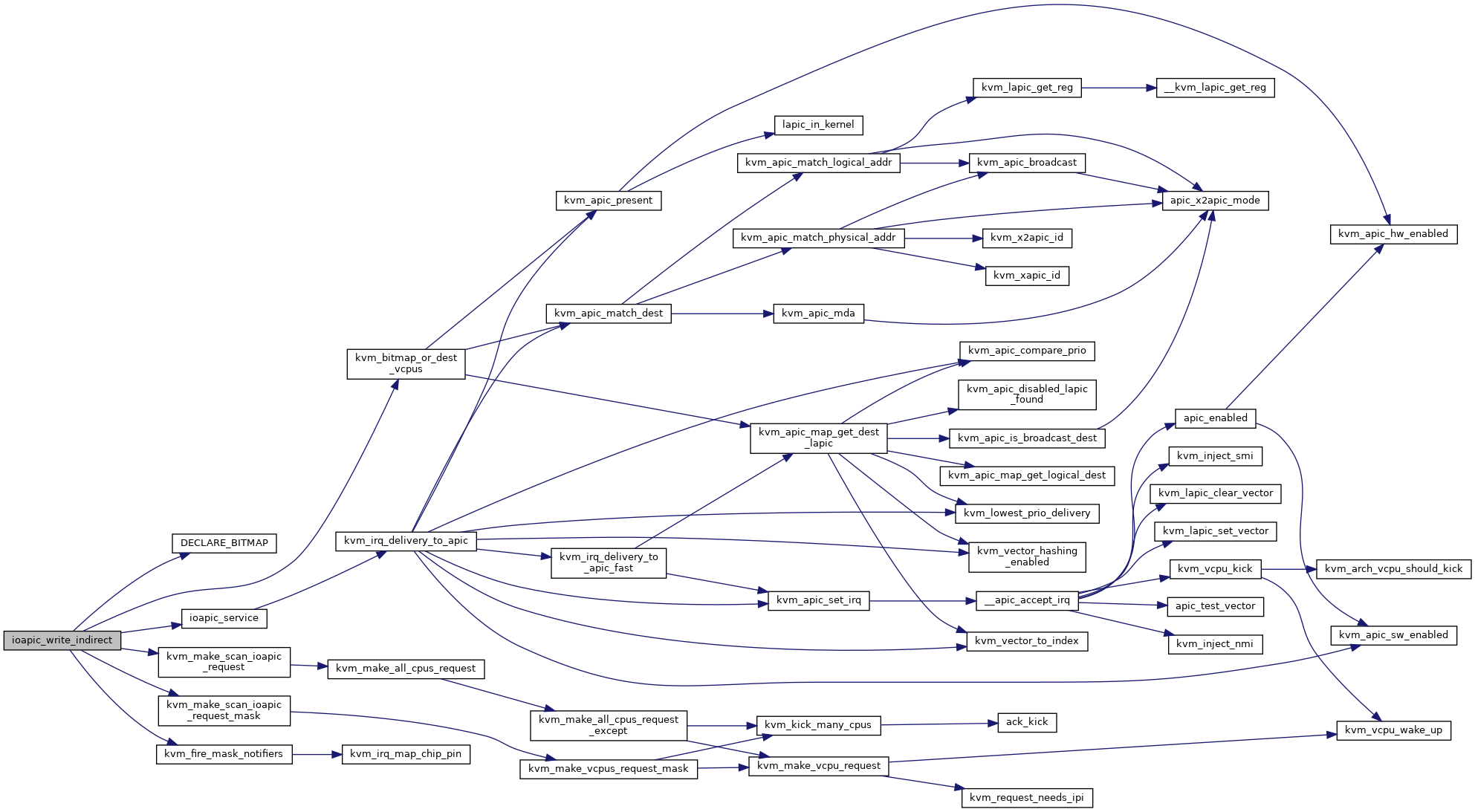

Definition at line 316 of file ioapic.c.

void kvm_fire_mask_notifiers(struct kvm *kvm, unsigned irqchip, unsigned pin, bool mask)

Definition: irq_comm.c:259

void kvm_bitmap_or_dest_vcpus(struct kvm *kvm, struct kvm_lapic_irq *irq, unsigned long *vcpu_bitmap)

Definition: lapic.c:1394


void kvm_make_scan_ioapic_request_mask(struct kvm *kvm, unsigned long *vcpu_bitmap)

Definition: x86.c:10514

void kvm_make_scan_ioapic_request(struct kvm *kvm)

Definition: x86.c:10520


Here is the caller graph for this function:

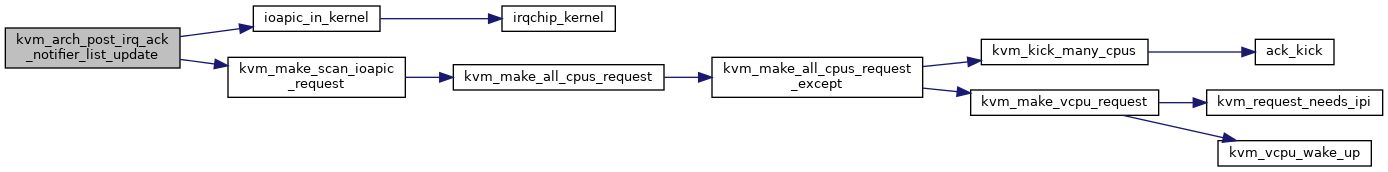

◆ kvm_arch_post_irq_ack_notifier_list_update()

void kvm_arch_post_irq_ack_notifier_list_update(struct kvm *kvm)

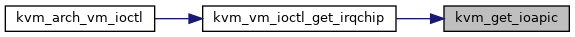

◆ kvm_get_ioapic()

void kvm_get_ioapic(struct kvm *kvm, struct kvm_ioapic_state *state)

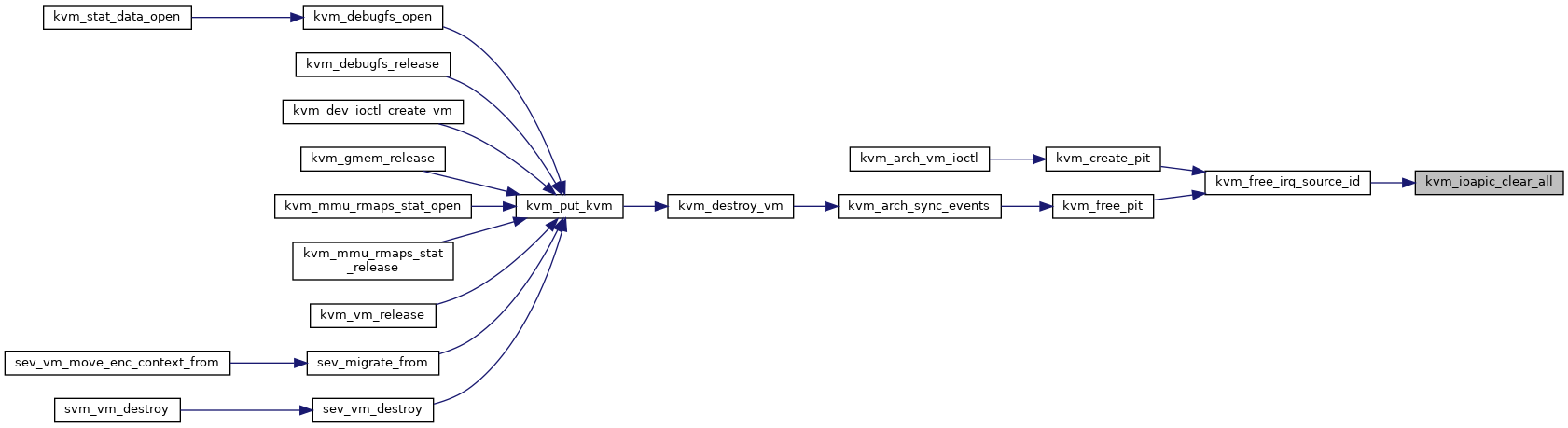

◆ kvm_ioapic_clear_all()

void kvm_ioapic_clear_all(struct kvm_ioapic *ioapic, int irq_source_id)

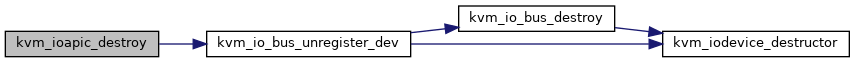

◆ kvm_ioapic_destroy()

void kvm_ioapic_destroy(struct kvm *kvm)

Definition at line 740 of file ioapic.c.

int kvm_io_bus_unregister_dev(struct kvm *kvm, enum kvm_bus bus_idx, struct kvm_io_device *dev)

Definition: kvm_main.c:5941


◆ kvm_ioapic_eoi_inject_work()

static void kvm_ioapic_eoi_inject_work(struct work_struct *work)

Definition at line 512 of file ioapic.c.

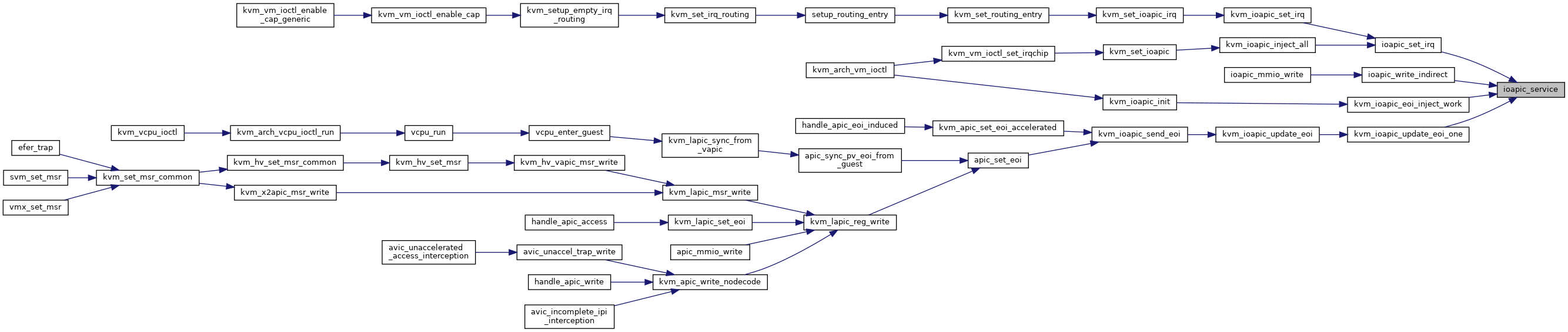

◆ kvm_ioapic_init()

int kvm_ioapic_init(struct kvm *kvm)

Definition at line 714 of file ioapic.c.

static void kvm_ioapic_eoi_inject_work(struct work_struct *work)

Definition: ioapic.c:512

static void kvm_iodevice_init(struct kvm_io_device *dev, const struct kvm_io_device_ops *ops)

Definition: iodev.h:36

int kvm_io_bus_register_dev(struct kvm *kvm, enum kvm_bus bus_idx, gpa_t addr, int len, struct kvm_io_device *dev)

Definition: kvm_main.c:5897

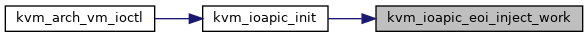

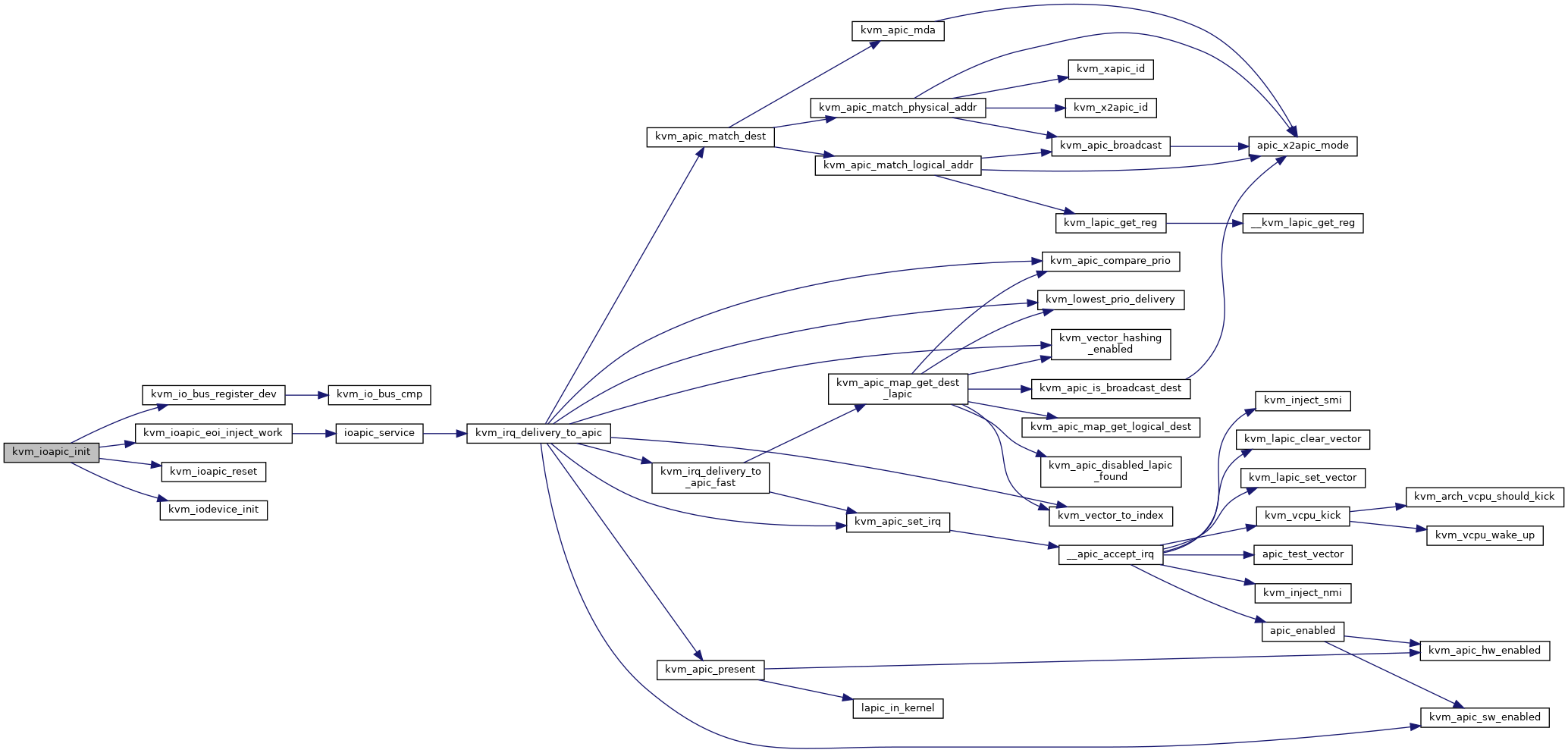


◆ kvm_ioapic_inject_all()

static void kvm_ioapic_inject_all(struct kvm_ioapic *ioapic, unsigned long irr)

Definition at line 266 of file ioapic.c.

static void rtc_irq_eoi_tracking_reset(struct kvm_ioapic *ioapic)

Definition: ioapic.c:95

static void kvm_rtc_eoi_tracking_restore_all(struct kvm_ioapic *ioapic)

Definition: ioapic.c:148

static int ioapic_set_irq(struct kvm_ioapic *ioapic, unsigned int irq, int irq_level, bool line_status)

Definition: ioapic.c:206

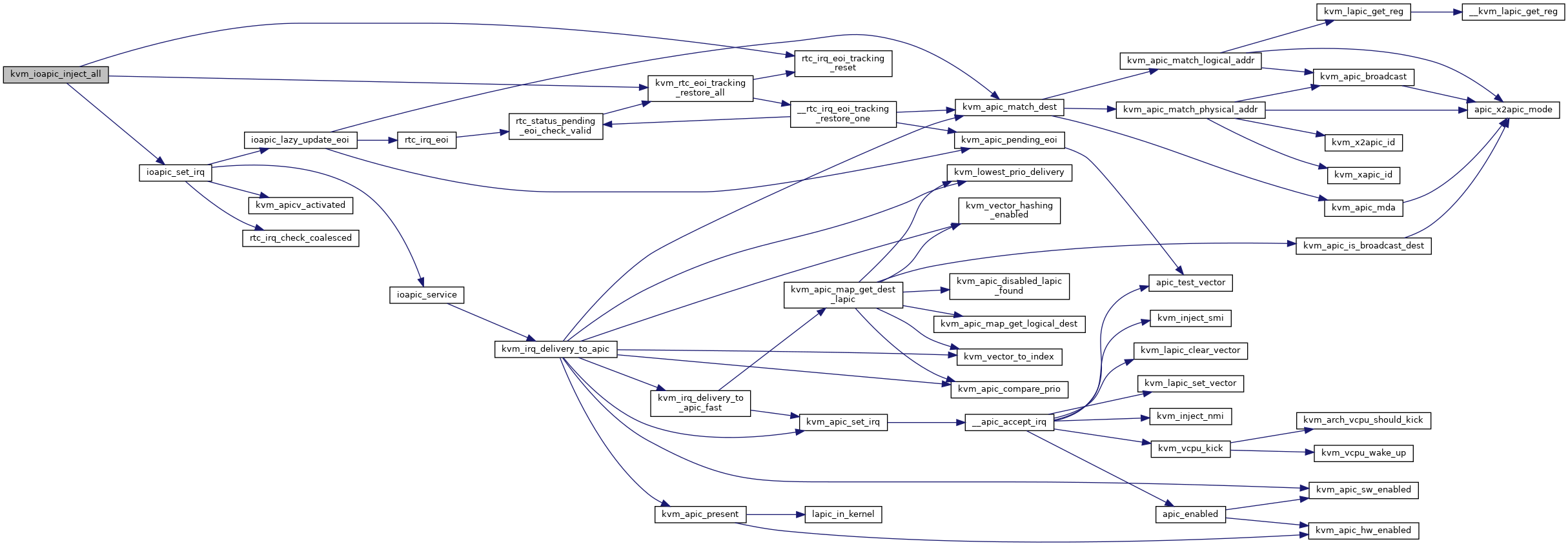

Here is the call graph for this function:

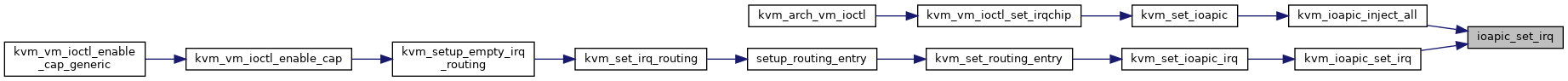


◆ kvm_ioapic_reset()

|

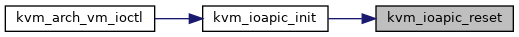

static |

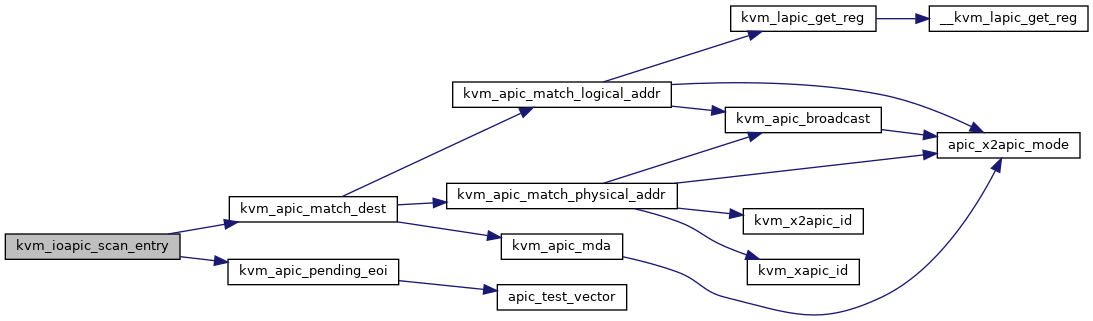

◆ kvm_ioapic_scan_entry()

void kvm_ioapic_scan_entry(struct kvm_vcpu *vcpu, ulong *ioapic_handled_vectors)
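kvm_ioapic_scan_entry() fills ioapic_handled_vectors, the set of vectors whose EOIs the vCPU must forward to the IOAPIC. In our reading of KVM, a pin qualifies if it is level-triggered, has an ack notifier, or is the RTC pin (GSI 8). Its vector is then added when the entry targets this vCPU or when an EOI for that vector is still pending. This sketch keeps only the level-triggered and RTC cases and matches physical destination IDs. Ack notifiers, logical destinations, and the pending-EOI check are omitted, and all names are hypothetical.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_NUM_PINS    24
#define TOY_NUM_VECTORS 256
#define TOY_RTC_GSI     8
#define BITS_PER_UL     (CHAR_BIT * sizeof(unsigned long))

struct toy_entry {
	uint8_t vector;
	uint8_t dest_id;      /* physical APIC ID only, in this sketch */
	bool level_triggered;
};

static void set_vec(unsigned long *bm, unsigned int v)
{
	bm[v / BITS_PER_UL] |= 1UL << (v % BITS_PER_UL);
}

static bool test_vec(const unsigned long *bm, unsigned int v)
{
	return (bm[v / BITS_PER_UL] >> (v % BITS_PER_UL)) & 1;
}

/* Mark the vectors whose EOI this vCPU must report back to the IOAPIC. */
static void toy_scan_entry(const struct toy_entry tbl[TOY_NUM_PINS],
			   uint8_t vcpu_apic_id, unsigned long *handled)
{
	for (int pin = 0; pin < TOY_NUM_PINS; pin++) {
		const struct toy_entry *e = &tbl[pin];

		if ((e->level_triggered || pin == TOY_RTC_GSI) &&
		    e->dest_id == vcpu_apic_id)
			set_vec(handled, e->vector);
	}
}
```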

◆ kvm_ioapic_set_irq()

int kvm_ioapic_set_irq(struct kvm_ioapic *ioapic, int irq, int irq_source_id, int level, bool line_status)
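kvm_ioapic_set_irq() takes an irq_source_id because several sources may drive the same pin. As we understand it, KVM keeps one bit per source for each pin and passes the logical OR of those bits to ioapic_set_irq() as the effective level. kvm_ioapic_clear_all() then clears one source's bit on every pin. The following is a minimal sketch of that bookkeeping; struct toy_line_state, toy_line_level(), and toy_clear_source() are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define TOY_NUM_PINS 24

/* One bit per IRQ source ID for each pin (hypothetical layout). */
struct toy_line_state {
	unsigned long src_bits[TOY_NUM_PINS];
};

/* Record one source's level and return the effective (ORed) line level. */
static int toy_line_level(struct toy_line_state *s, int pin, int irq_source_id, int level)
{
	assert(pin >= 0 && pin < TOY_NUM_PINS);
	assert(irq_source_id >= 0 && irq_source_id < (int)(8 * sizeof(unsigned long)));
	if (level)
		s->src_bits[pin] |= 1UL << irq_source_id;
	else
		s->src_bits[pin] &= ~(1UL << irq_source_id);
	return s->src_bits[pin] != 0;
}

/* Drop one source's contribution from every pin, as kvm_ioapic_clear_all() does. */
static void toy_clear_source(struct toy_line_state *s, int irq_source_id)
{
	for (int pin = 0; pin < TOY_NUM_PINS; pin++)
		s->src_bits[pin] &= ~(1UL << irq_source_id);
}
```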

◆ kvm_ioapic_update_eoi()

void kvm_ioapic_update_eoi(struct kvm_vcpu *vcpu, int vector, int trigger_mode)

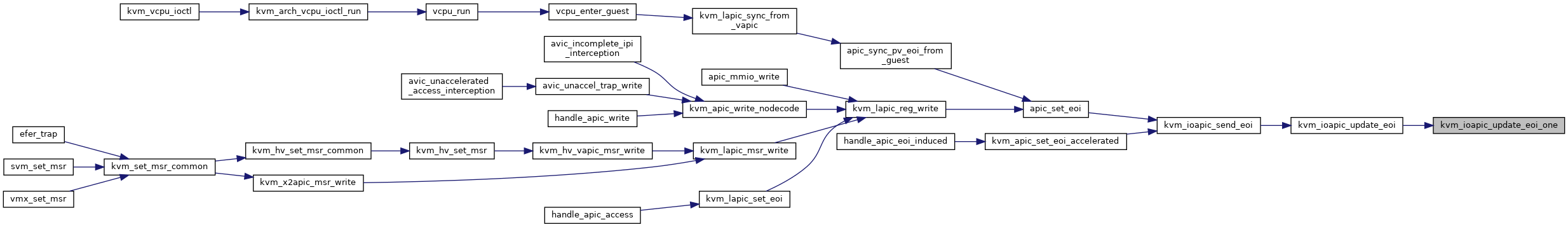

Definition at line 579 of file ioapic.c.

static void kvm_ioapic_update_eoi_one(struct kvm_vcpu *vcpu, struct kvm_ioapic *ioapic, int trigger_mode, int pin)

Definition: ioapic.c:531

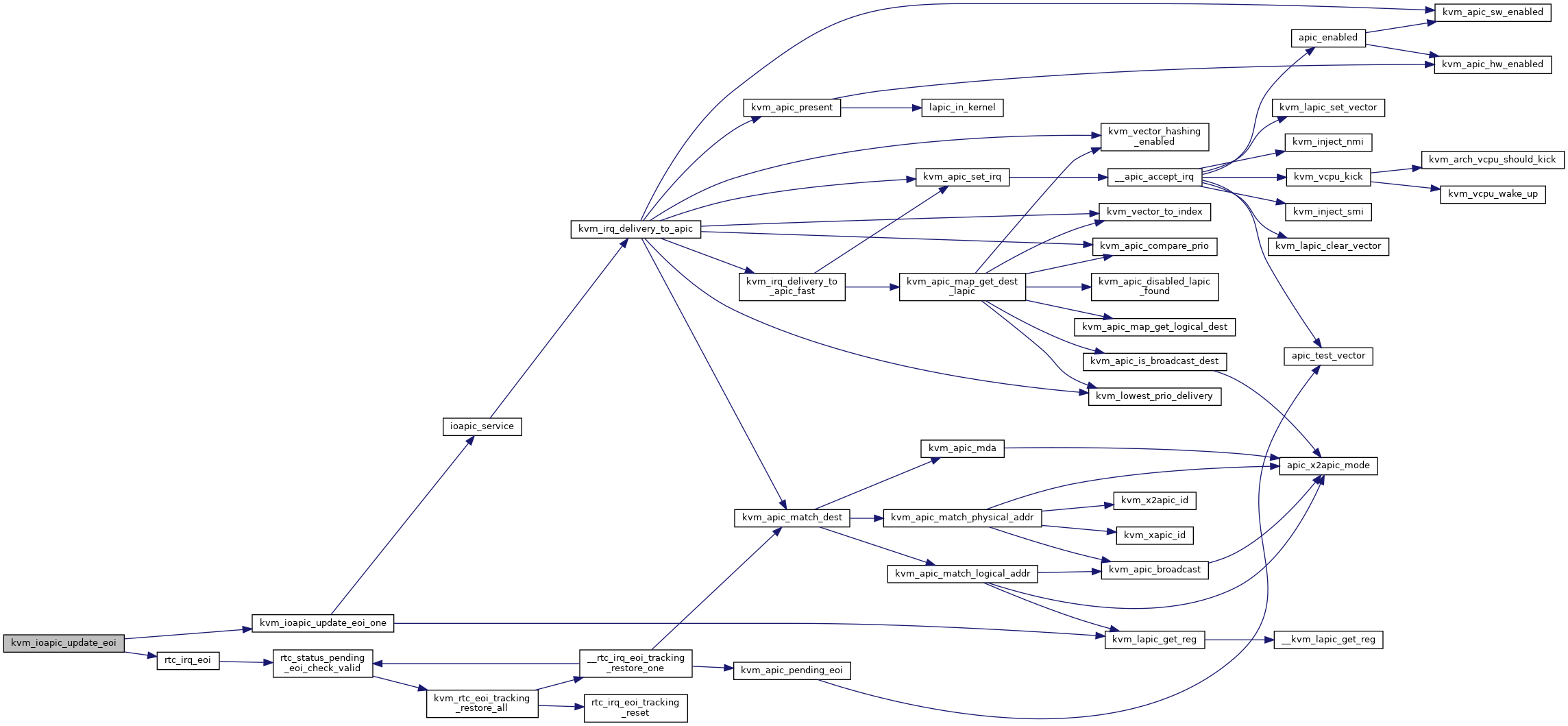

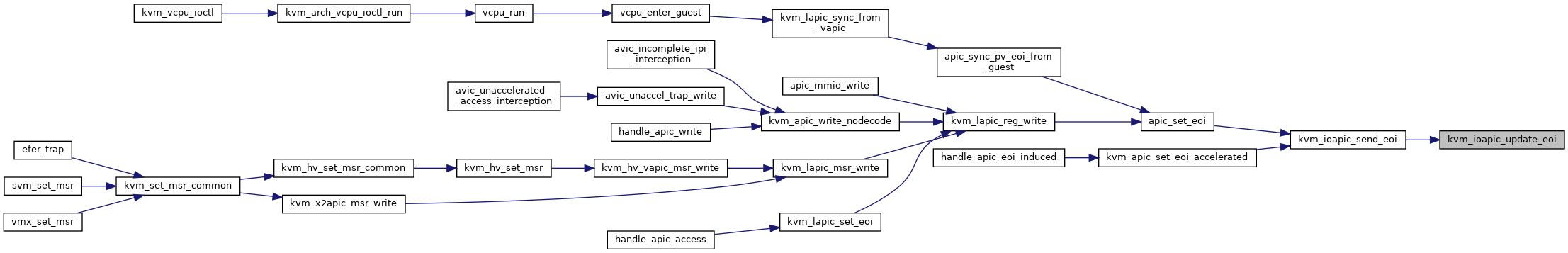


◆ kvm_ioapic_update_eoi_one()

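static void kvm_ioapic_update_eoi_one(struct kvm_vcpu *vcpu, struct kvm_ioapic *ioapic, int trigger_mode, int pin)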

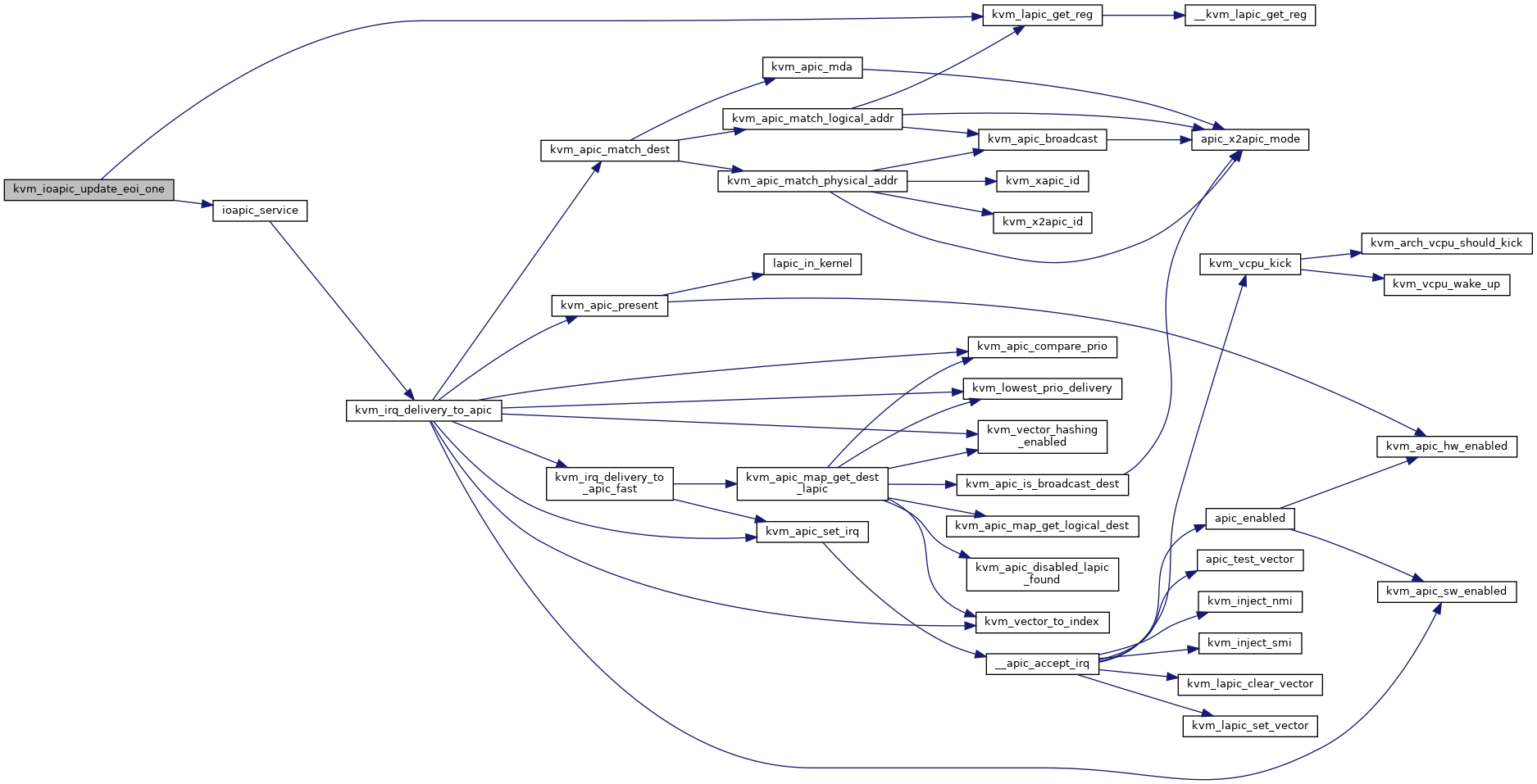

Definition at line 531 of file ioapic.c.

static u32 kvm_lapic_get_reg(struct kvm_lapic *apic, int reg_off)

Definition: lapic.h:179



Here is the caller graph for this function:

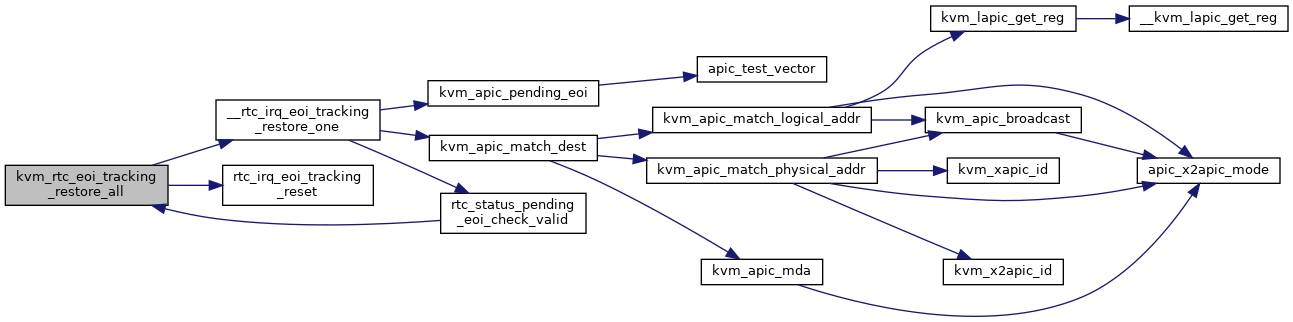

◆ kvm_rtc_eoi_tracking_restore_all()

static void kvm_rtc_eoi_tracking_restore_all(struct kvm_ioapic *ioapic)

Definition at line 148 of file ioapic.c.

static void __rtc_irq_eoi_tracking_restore_one(struct kvm_vcpu *vcpu)

Definition: ioapic.c:109

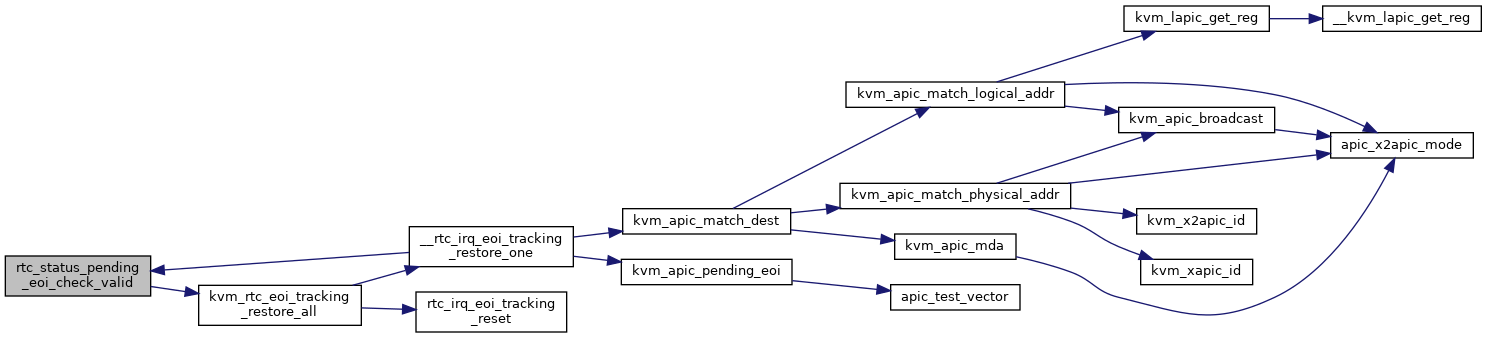

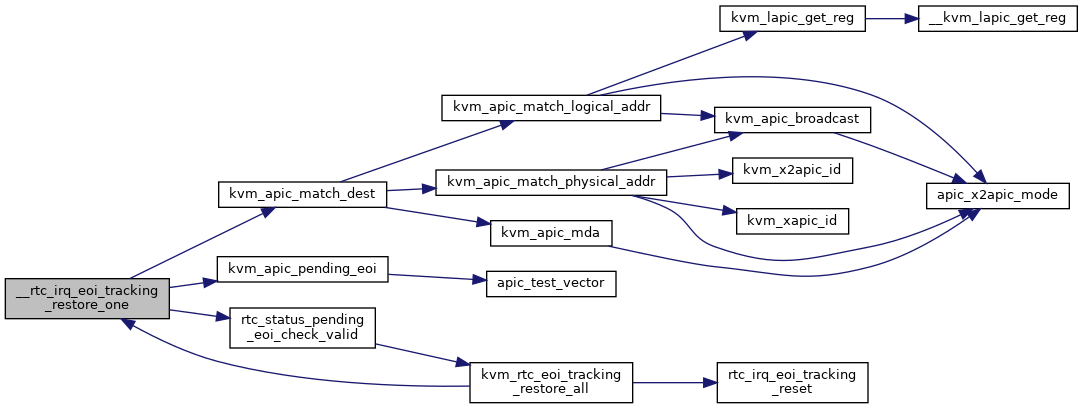


◆ kvm_rtc_eoi_tracking_restore_one()

void kvm_rtc_eoi_tracking_restore_one(struct kvm_vcpu *vcpu)

◆ kvm_set_ioapic()

void kvm_set_ioapic(struct kvm *kvm, struct kvm_ioapic_state *state)

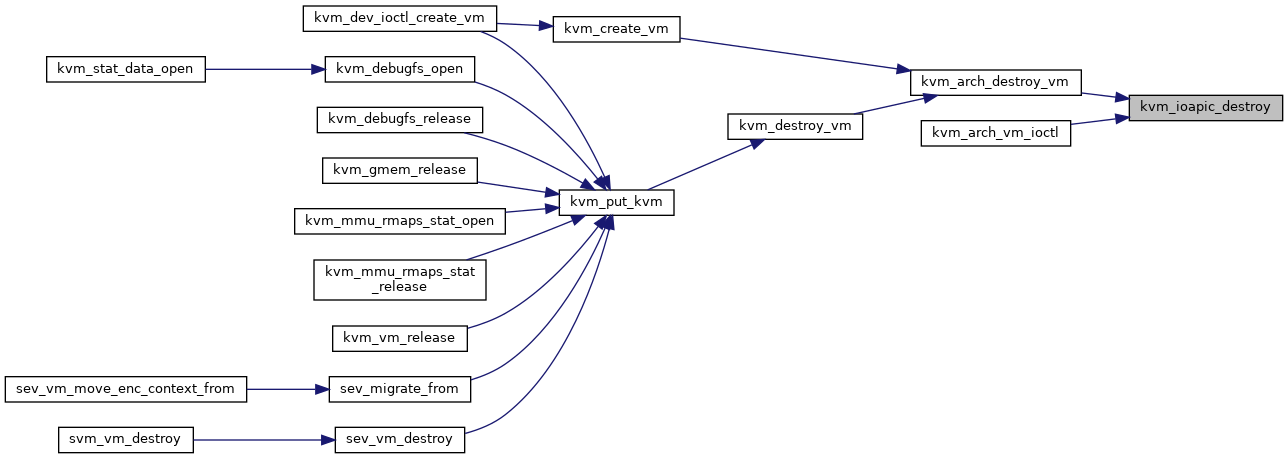

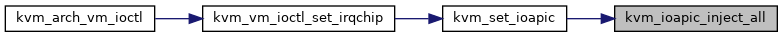

Definition at line 765 of file ioapic.c.

static void kvm_ioapic_inject_all(struct kvm_ioapic *ioapic, unsigned long irr)

Definition: ioapic.c:266

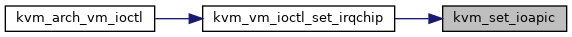


◆ rtc_irq_check_coalesced()

static bool rtc_irq_check_coalesced(struct kvm_ioapic *ioapic)

Definition at line 176 of file ioapic.c.
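rtc_irq_check_coalesced() reports a new RTC interrupt as coalesced while earlier RTC deliveries are still waiting for EOIs. This simplified sketch follows KVM's approach as we understand it:

- A delivery records which vCPUs received the vector and counts them in pending_eoi.
- rtc_irq_eoi() decrements the count once for each vCPU that EOIs the matching vector.
- The interrupt is coalesced while the count is positive.

The 64-vCPU bitmask and the toy_* names are assumptions for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Simplified RTC EOI tracking for up to 64 vCPUs (hypothetical layout). */
struct toy_rtc_status {
	int pending_eoi;   /* vCPUs that got the RTC IRQ and have not EOIed */
	uint64_t dest_map; /* bit i set: vCPU i still owes an EOI */
	uint8_t vector;    /* vector used for the last RTC delivery */
};

static bool toy_rtc_coalesced(const struct toy_rtc_status *s)
{
	return s->pending_eoi > 0;
}

/* Record a delivery of 'vector' to the vCPUs set in 'targets'. */
static void toy_rtc_deliver(struct toy_rtc_status *s, uint64_t targets, uint8_t vector)
{
	int n = 0;

	for (uint64_t t = targets; t; t &= t - 1) /* count set bits */
		n++;
	s->dest_map = targets;
	s->vector = vector;
	s->pending_eoi = n;
}

/* A vCPU EOIs 'vector'; only the first matching EOI per vCPU counts. */
static void toy_rtc_eoi(struct toy_rtc_status *s, unsigned int vcpu_id, uint8_t vector)
{
	uint64_t bit;

	assert(vcpu_id < 64);
	bit = 1ULL << vcpu_id;
	if ((s->dest_map & bit) && vector == s->vector) {
		s->dest_map &= ~bit;
		s->pending_eoi--;
		/* Sanity check, loosely analogous to rtc_status_pending_eoi_check_valid(). */
		assert(s->pending_eoi >= 0);
	}
}
```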

◆ rtc_irq_eoi()

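static void rtc_irq_eoi(struct kvm_ioapic *ioapic, struct kvm_vcpu *vcpu, int vector)

Definition at line 161 of file ioapic.c.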

◆ rtc_irq_eoi_tracking_reset()

static void rtc_irq_eoi_tracking_reset(struct kvm_ioapic *ioapic)

Definition at line 95 of file ioapic.c.

◆ rtc_status_pending_eoi_check_valid()

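static void rtc_status_pending_eoi_check_valid(struct kvm_ioapic *ioapic)

Definition at line 103 of file ioapic.c.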

◆ to_ioapic()

static inline struct kvm_ioapic *to_ioapic(struct kvm_io_device *dev)

Variable Documentation

◆ ioapic_mmio_ops

static const struct kvm_io_device_ops ioapic_mmio_ops

Initial value:

= { .read = ioapic_mmio_read, .write = ioapic_mmio_write, }

static int ioapic_mmio_read(struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, void *val)

Definition: ioapic.c:607

static int ioapic_mmio_write(struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t addr, int len, const void *val)

Definition: ioapic.c:649
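ioapic_mmio_ops is an ops table. The generic I/O bus calls .read/.write with a pointer to the struct kvm_io_device embedded in struct kvm_ioapic, and to_ioapic() recovers the enclosing IOAPIC, which to our knowledge is a container_of() on that embedded member. The sketch below reproduces this pattern in plain C. The toy_* types, the simplified handler signatures (no vCPU argument, 4-byte accesses only), and the local container_of macro are assumptions, not KVM's definitions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified container_of (the kernel's version also type-checks ptr). */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

struct toy_io_device;

/* Hypothetical ops table shaped like kvm_io_device_ops, minus the vCPU argument. */
struct toy_io_device_ops {
	int (*read)(struct toy_io_device *dev, uint64_t addr, int len, void *val);
	int (*write)(struct toy_io_device *dev, uint64_t addr, int len, const void *val);
};

struct toy_io_device {
	const struct toy_io_device_ops *ops;
};

/* Device state embeds the generic device, as struct kvm_ioapic embeds its dev. */
struct toy_ioapic {
	uint32_t ioregsel;
	struct toy_io_device dev;
};

/* Counterpart of to_ioapic(): map the embedded member back to its container. */
static struct toy_ioapic *to_toy_ioapic(struct toy_io_device *dev)
{
	return container_of(dev, struct toy_ioapic, dev);
}

static int toy_read(struct toy_io_device *dev, uint64_t addr, int len, void *val)
{
	(void)addr; /* a real handler would decode the register offset */
	if (len != 4)
		return -1;
	memcpy(val, &to_toy_ioapic(dev)->ioregsel, sizeof(uint32_t));
	return 0;
}

static int toy_write(struct toy_io_device *dev, uint64_t addr, int len, const void *val)
{
	(void)addr;
	if (len != 4)
		return -1;
	memcpy(&to_toy_ioapic(dev)->ioregsel, val, sizeof(uint32_t));
	return 0;
}

/* Same shape as ioapic_mmio_ops: one designated initializer per callback. */
static const struct toy_io_device_ops toy_ioapic_ops = {
	.read = toy_read,
	.write = toy_write,
};
```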