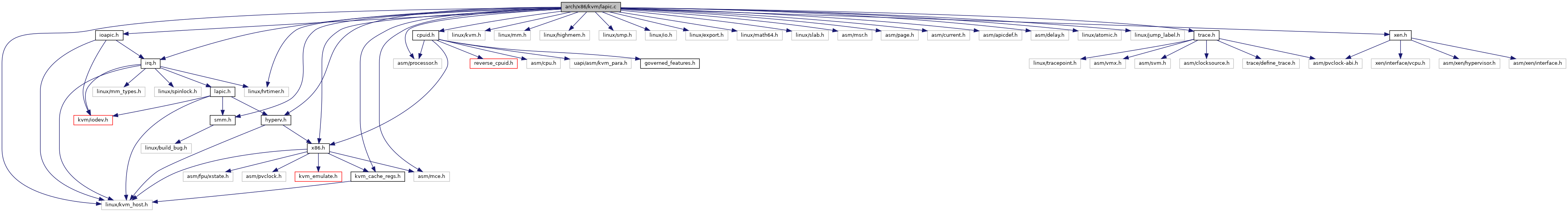

#include <linux/kvm_host.h>

#include <linux/kvm.h>

#include <linux/mm.h>

#include <linux/highmem.h>

#include <linux/smp.h>

#include <linux/hrtimer.h>

#include <linux/io.h>

#include <linux/export.h>

#include <linux/math64.h>

#include <linux/slab.h>

#include <asm/processor.h>

#include <asm/mce.h>

#include <asm/msr.h>

#include <asm/page.h>

#include <asm/current.h>

#include <asm/apicdef.h>

#include <asm/delay.h>

#include <linux/atomic.h>

#include <linux/jump_label.h>

#include "kvm_cache_regs.h"

#include "irq.h"

#include "ioapic.h"

#include "trace.h"

#include "x86.h"

#include "xen.h"

#include "cpuid.h"

#include "hyperv.h"

#include "smm.h"


Macros

| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

| #define | mod_64(x, y) ((x) - (y) * div64_u64(x, y)) |

| #define | APIC_VERSION 0x14UL |

| #define | LAPIC_MMIO_LENGTH (1 << 12) |

| #define | MAX_APIC_VECTOR 256 |

| #define | APIC_VECTORS_PER_REG 32 |

| #define | LAPIC_TIMER_ADVANCE_ADJUST_MIN 100 /* clock cycles */ |

| #define | LAPIC_TIMER_ADVANCE_ADJUST_MAX 10000 /* clock cycles */ |

| #define | LAPIC_TIMER_ADVANCE_NS_INIT 1000 |

| #define | LAPIC_TIMER_ADVANCE_NS_MAX 5000 |

| #define | LAPIC_TIMER_ADVANCE_ADJUST_STEP 8 |

| #define | LVT_MASK (APIC_LVT_MASKED | APIC_SEND_PENDING | APIC_VECTOR_MASK) |

| #define | LINT_MASK |

| #define | APIC_REG_MASK(reg) (1ull << ((reg) >> 4)) |

| #define | APIC_REGS_MASK(first, count) (APIC_REG_MASK(first) * ((1ull << (count)) - 1)) |
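`MAX_APIC_VECTOR` and `APIC_VECTORS_PER_REG` together imply that a 256-bit vector bitmap (such as IRR or ISR) is spread over eight 32-bit registers. The following user-space sketch shows that arithmetic. The helper names `vec_bit`, `vec_reg_offset` and `vec_reg_count` are hypothetical and exist only for this example, and the 16-byte register stride is an assumption based on the usual xAPIC register layout rather than something stated on this page.

```c
#include <stdint.h>

#define MAX_APIC_VECTOR      256
#define APIC_VECTORS_PER_REG 32

/* Hypothetical helpers, named for this example only. */

/* Bit position of a vector inside the 32-bit register that holds it. */
static inline unsigned int vec_bit(unsigned int vec)
{
    return vec % APIC_VECTORS_PER_REG;
}

/*
 * Byte offset of that register relative to the first register of the
 * bitmap. Assumption: consecutive registers are 0x10 bytes apart.
 */
static inline unsigned int vec_reg_offset(unsigned int vec)
{
    return (vec / APIC_VECTORS_PER_REG) * 0x10;
}

/* Number of 32-bit registers needed to cover every vector. */
static inline unsigned int vec_reg_count(void)
{
    return MAX_APIC_VECTOR / APIC_VECTORS_PER_REG;
}
```

Under these assumptions, vector 0x31 lands on bit 17 of the second register (offset 0x10), and the last vector, 255, lands on bit 31 of the register at offset 0x70.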

Enumerations

| enum | { CLEAN , UPDATE_IN_PROGRESS , DIRTY } |

Functions

| static int | kvm_lapic_msr_read (struct kvm_lapic *apic, u32 reg, u64 *data) |

| static int | kvm_lapic_msr_write (struct kvm_lapic *apic, u32 reg, u64 data) |

| static void | __kvm_lapic_set_reg (char *regs, int reg_off, u32 val) |

| static void | kvm_lapic_set_reg (struct kvm_lapic *apic, int reg_off, u32 val) |

| static __always_inline u64 | __kvm_lapic_get_reg64 (char *regs, int reg) |

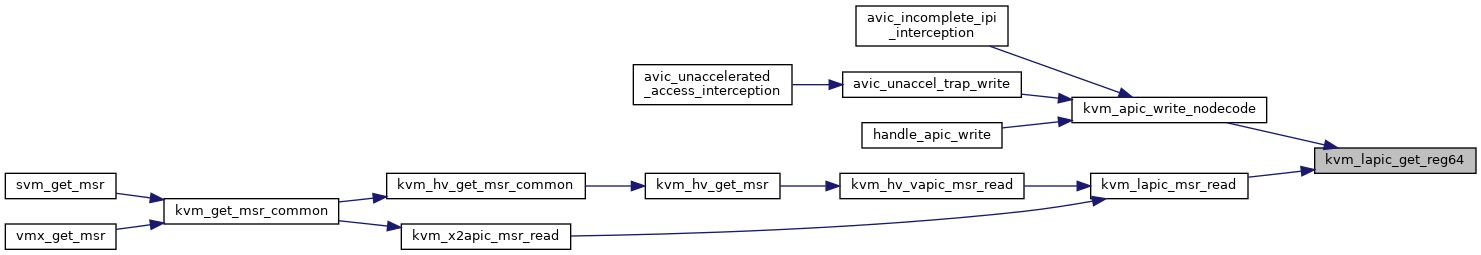

| static __always_inline u64 | kvm_lapic_get_reg64 (struct kvm_lapic *apic, int reg) |

| static __always_inline void | __kvm_lapic_set_reg64 (char *regs, int reg, u64 val) |

| static __always_inline void | kvm_lapic_set_reg64 (struct kvm_lapic *apic, int reg, u64 val) |

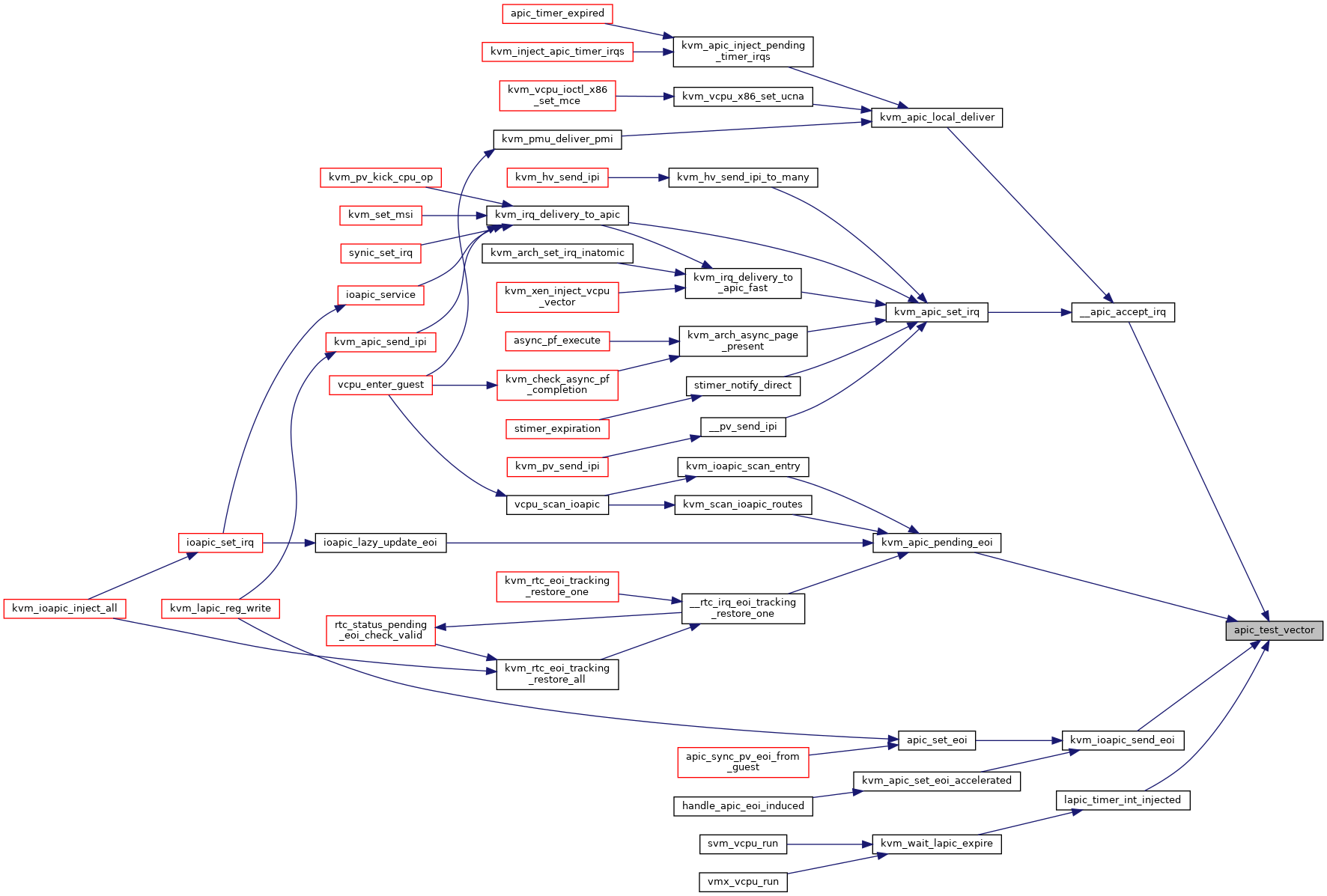

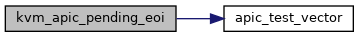

| static int | apic_test_vector (int vec, void *bitmap) |

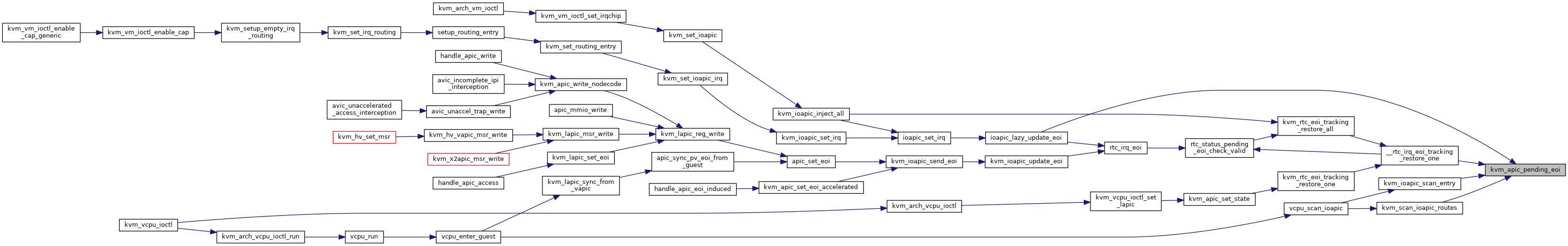

| bool | kvm_apic_pending_eoi (struct kvm_vcpu *vcpu, int vector) |

| static int | __apic_test_and_set_vector (int vec, void *bitmap) |

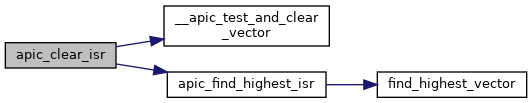

| static int | __apic_test_and_clear_vector (int vec, void *bitmap) |

| __read_mostly | DEFINE_STATIC_KEY_DEFERRED_FALSE (apic_hw_disabled, HZ) |

| __read_mostly | DEFINE_STATIC_KEY_DEFERRED_FALSE (apic_sw_disabled, HZ) |

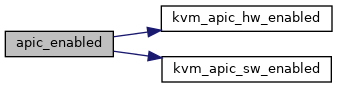

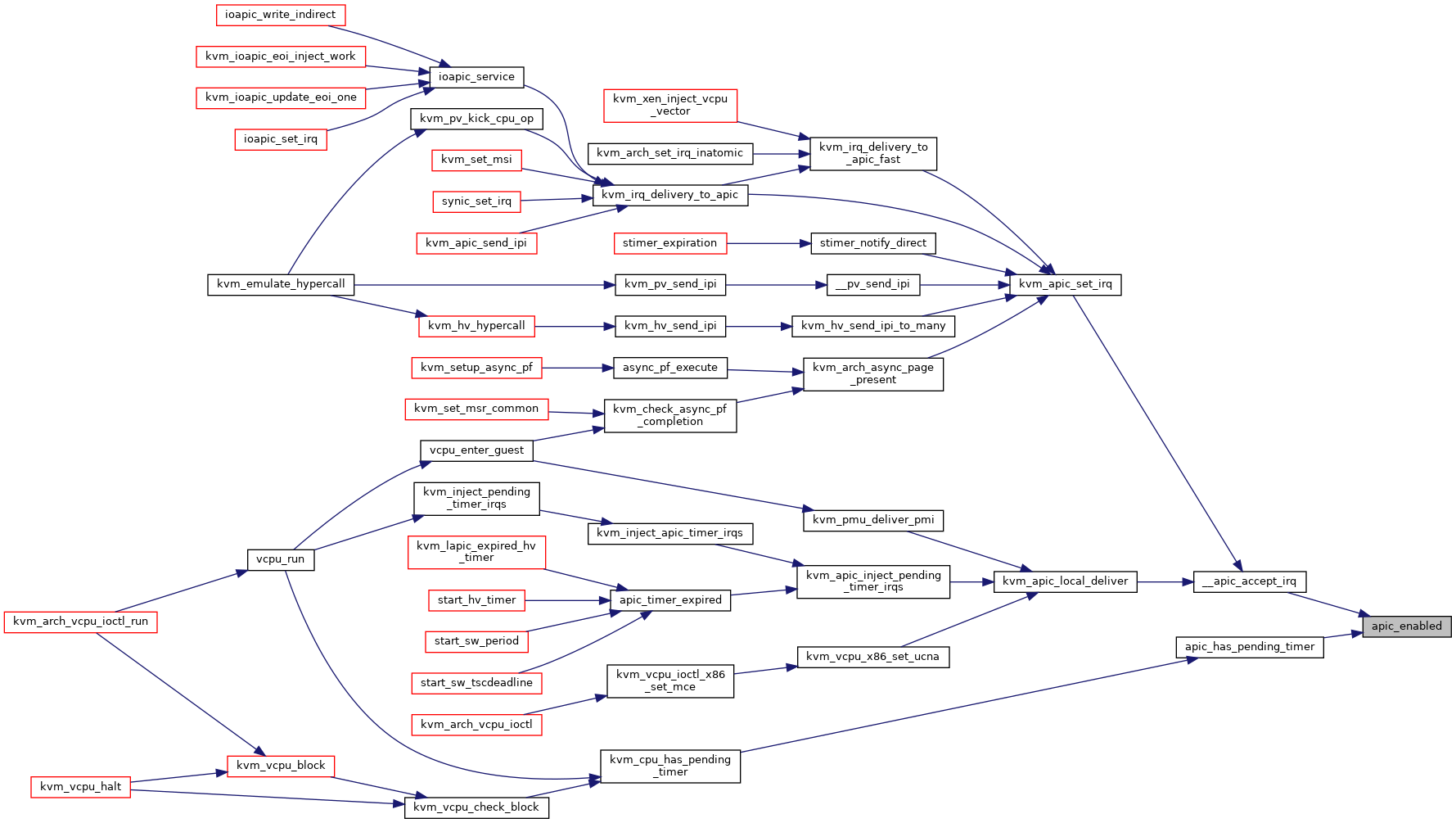

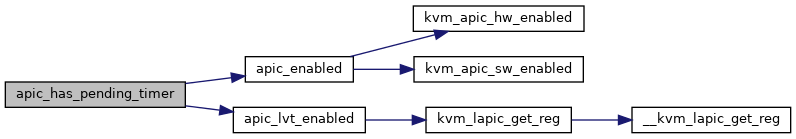

| static int | apic_enabled (struct kvm_lapic *apic) |

| static u32 | kvm_x2apic_id (struct kvm_lapic *apic) |

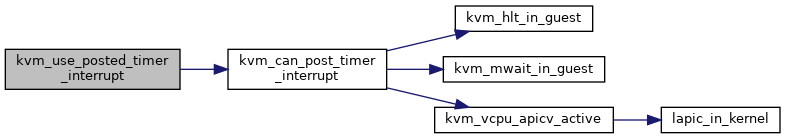

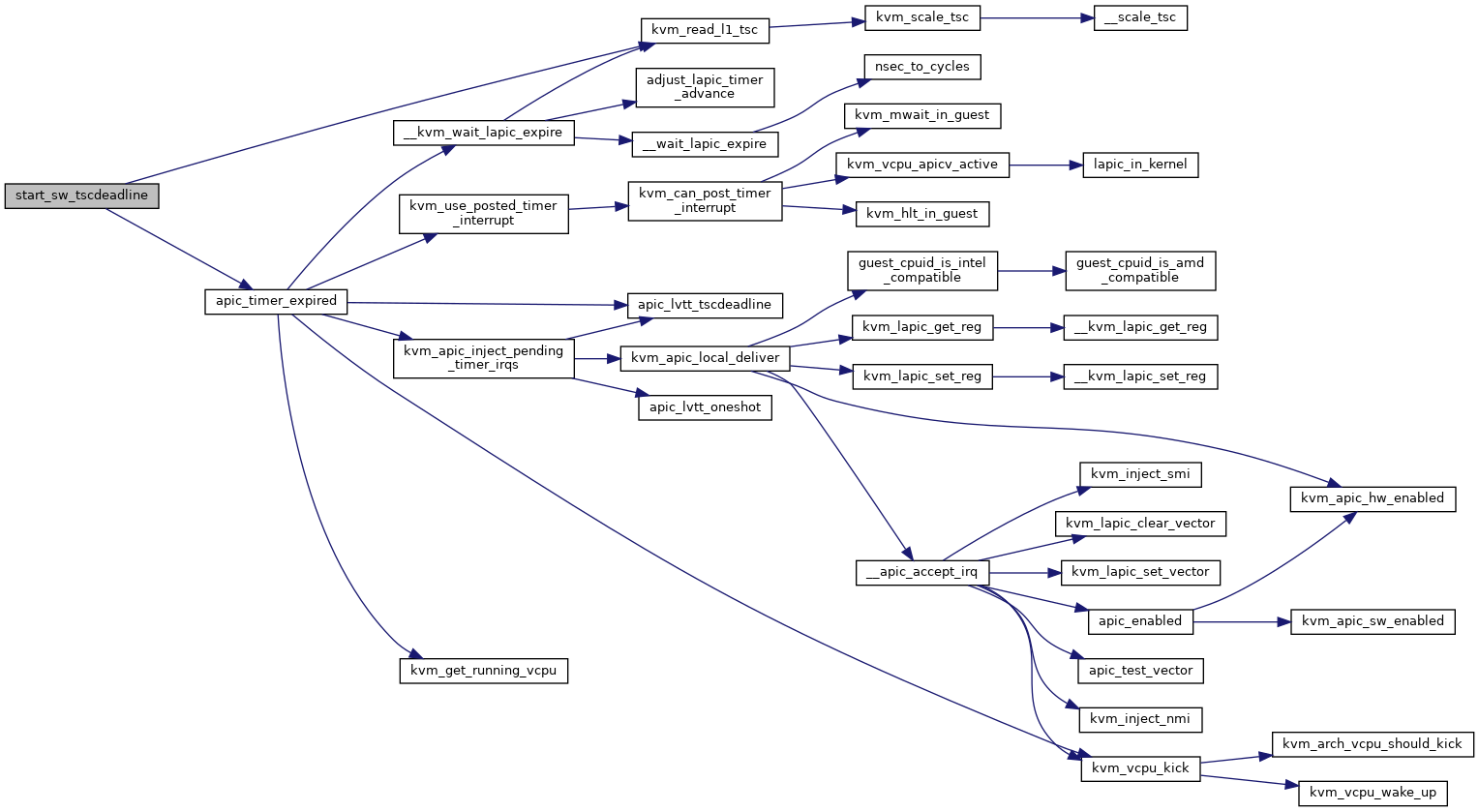

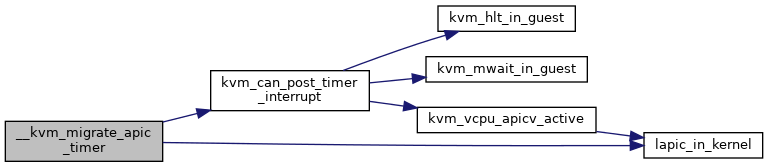

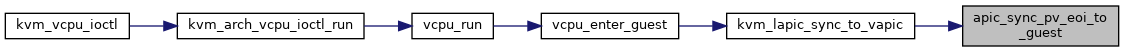

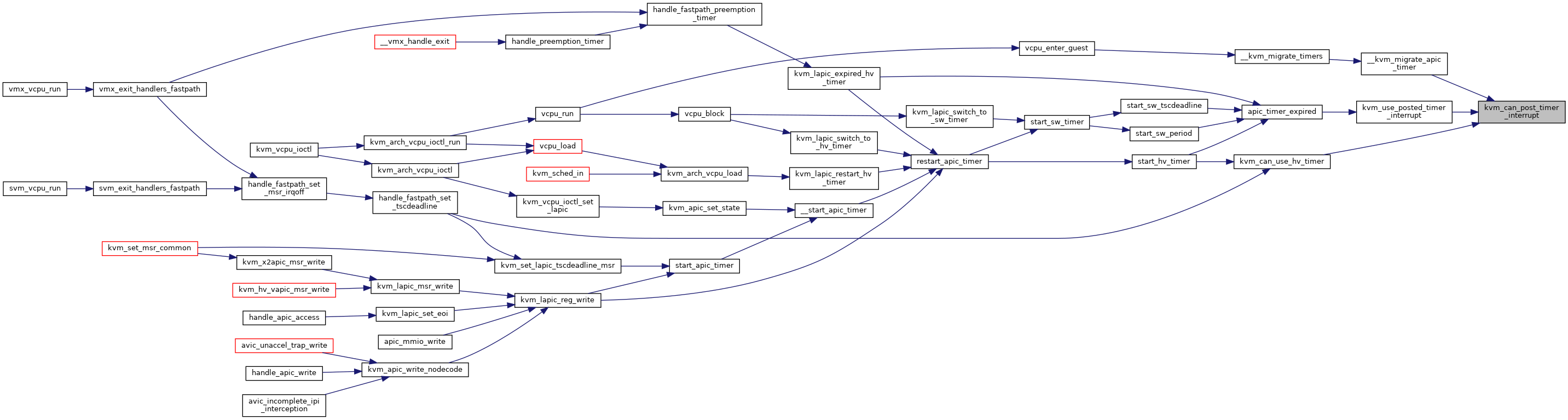

| static bool | kvm_can_post_timer_interrupt (struct kvm_vcpu *vcpu) |

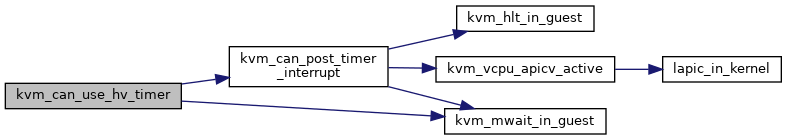

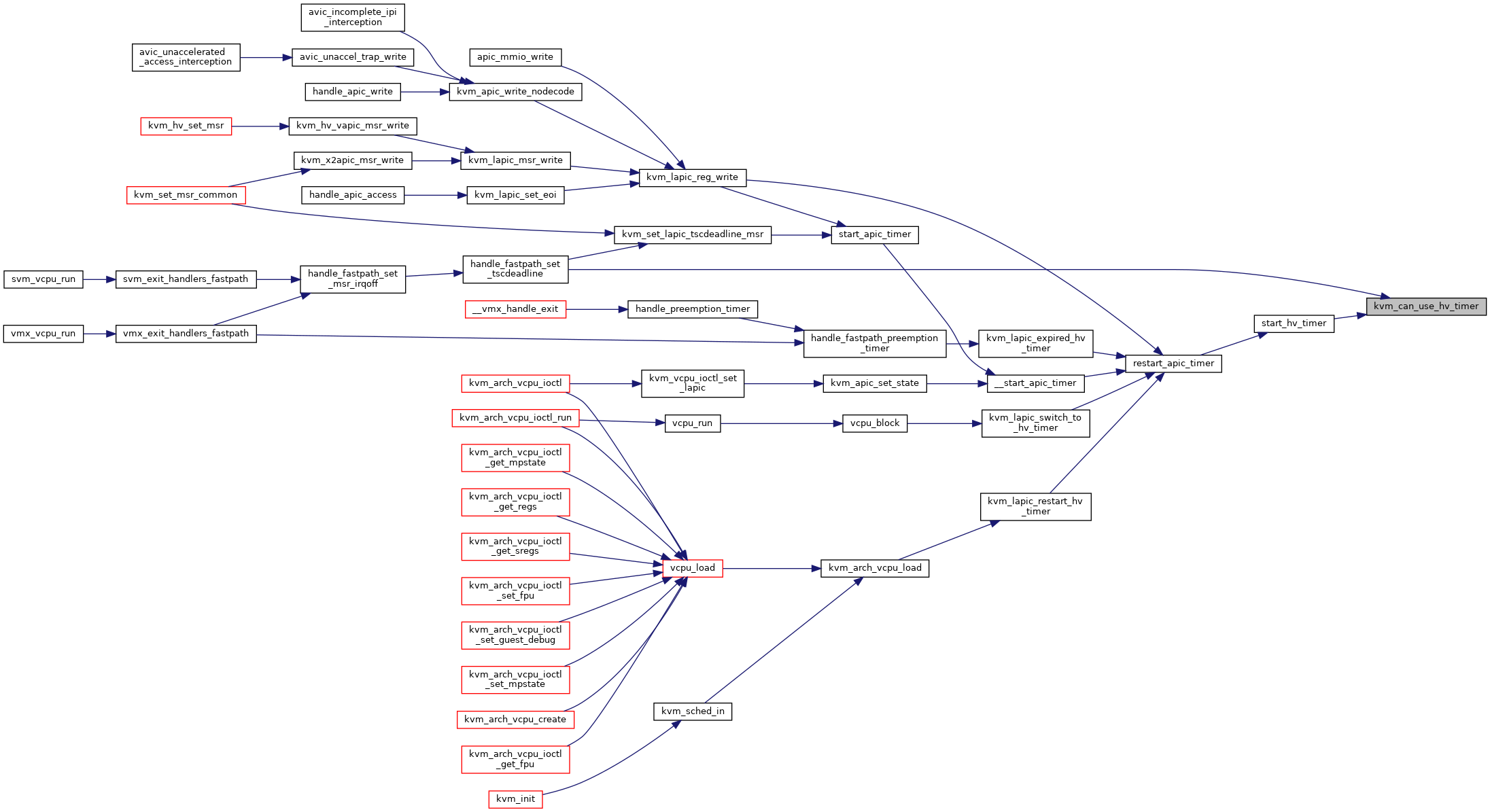

| bool | kvm_can_use_hv_timer (struct kvm_vcpu *vcpu) |

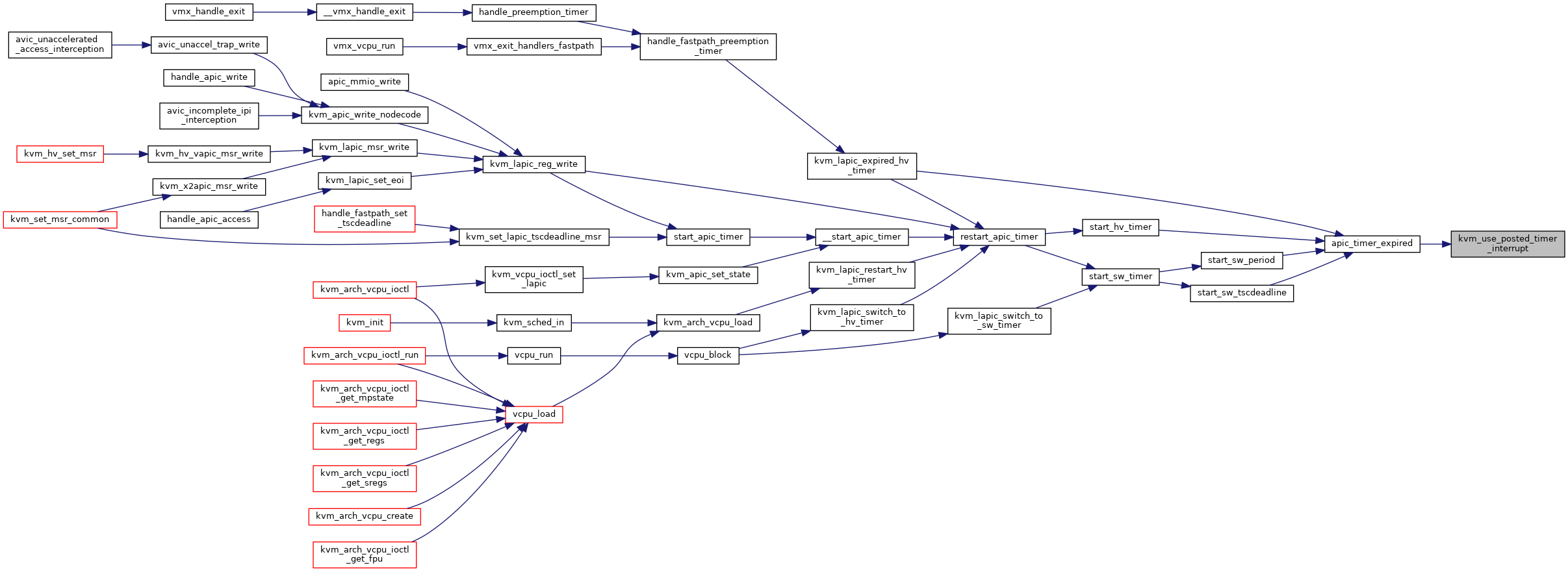

| static bool | kvm_use_posted_timer_interrupt (struct kvm_vcpu *vcpu) |

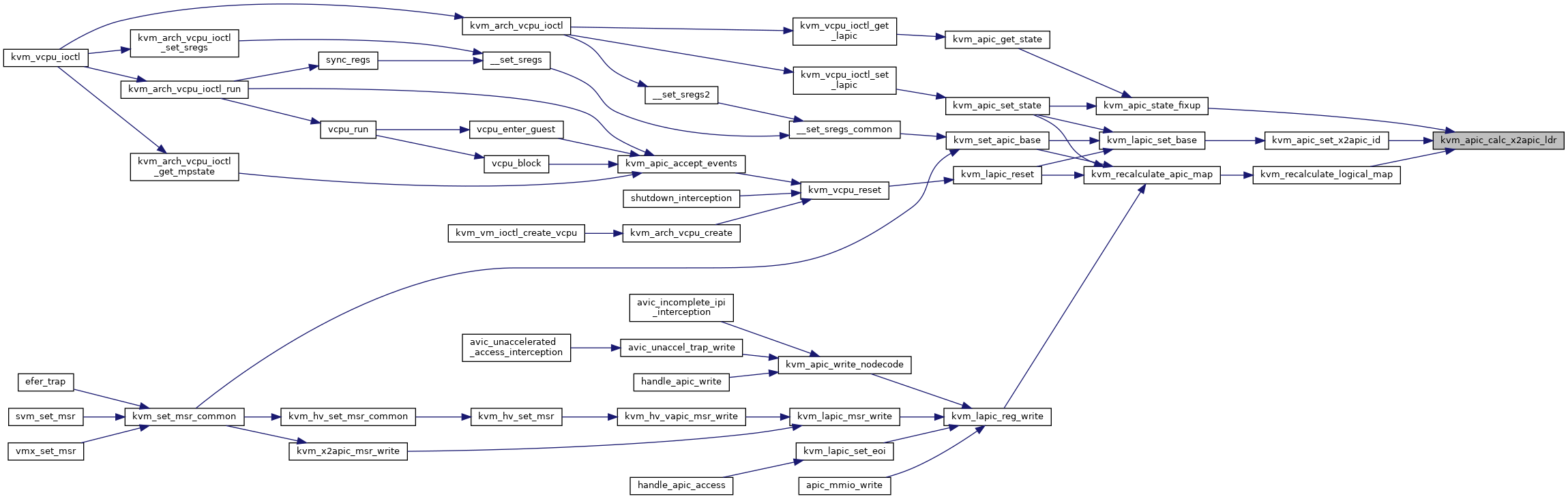

| static u32 | kvm_apic_calc_x2apic_ldr (u32 id) |

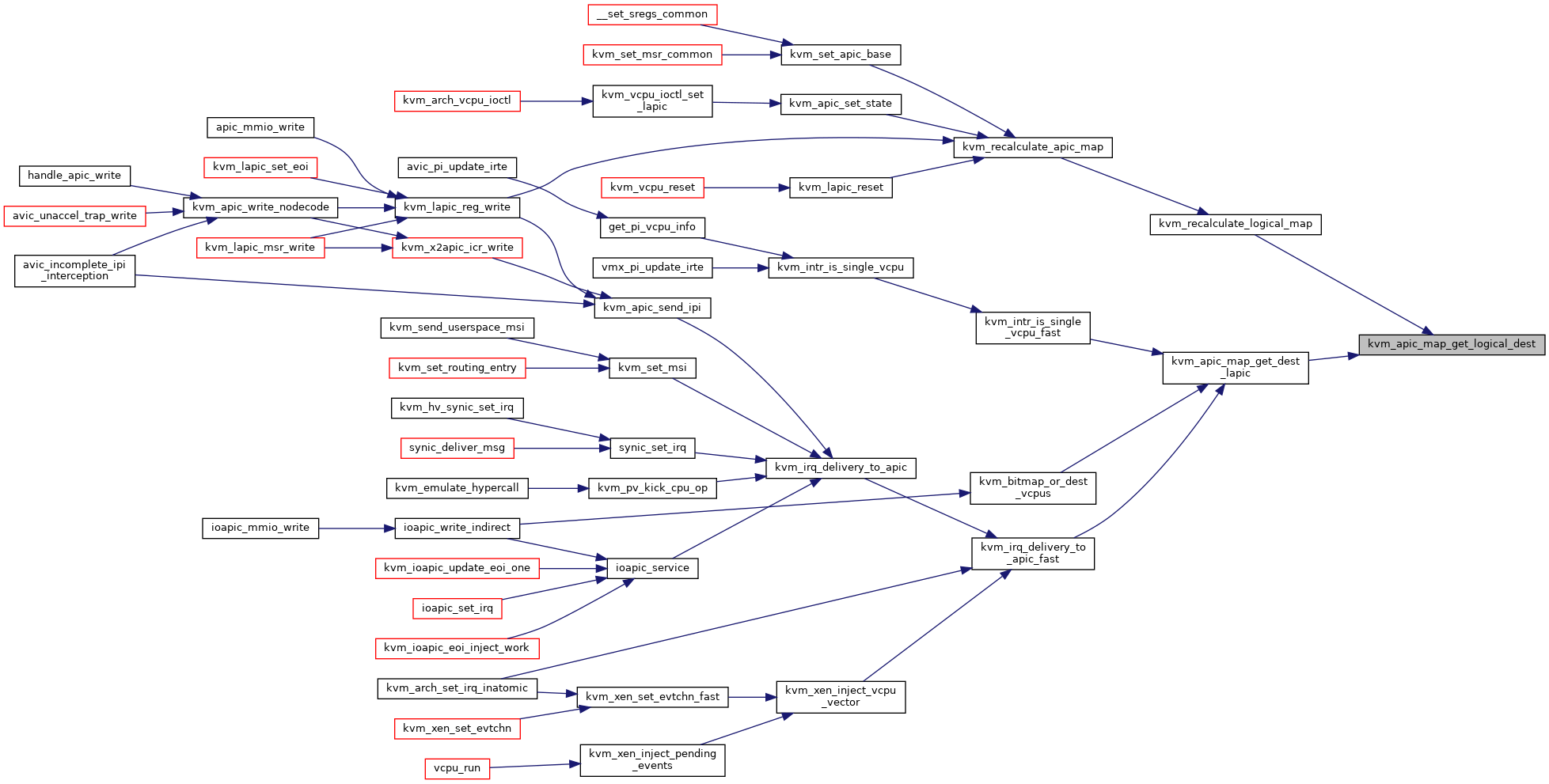

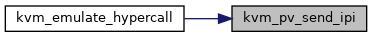

| static bool | kvm_apic_map_get_logical_dest (struct kvm_apic_map *map, u32 dest_id, struct kvm_lapic ***cluster, u16 *mask) |

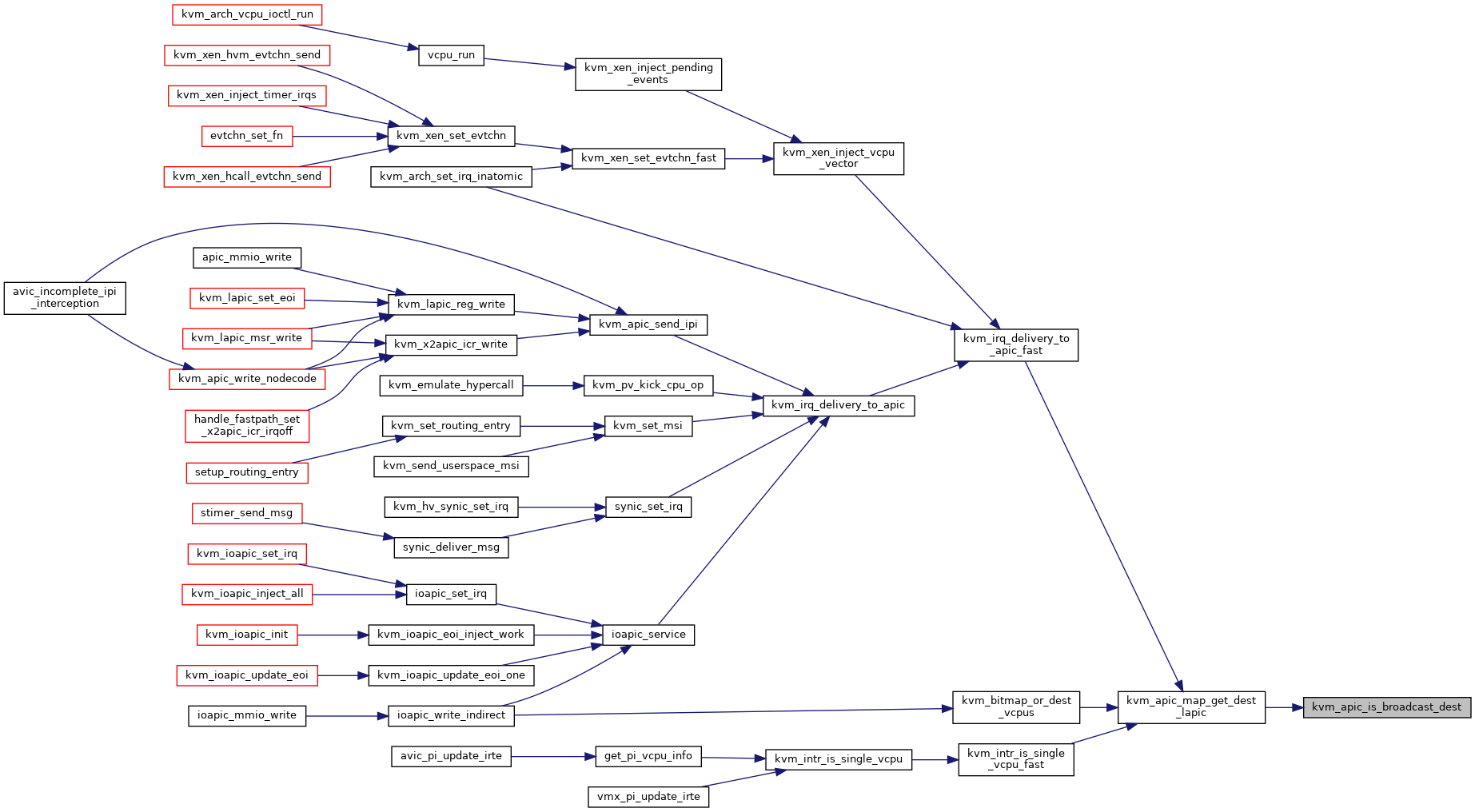

| static void | kvm_apic_map_free (struct rcu_head *rcu) |

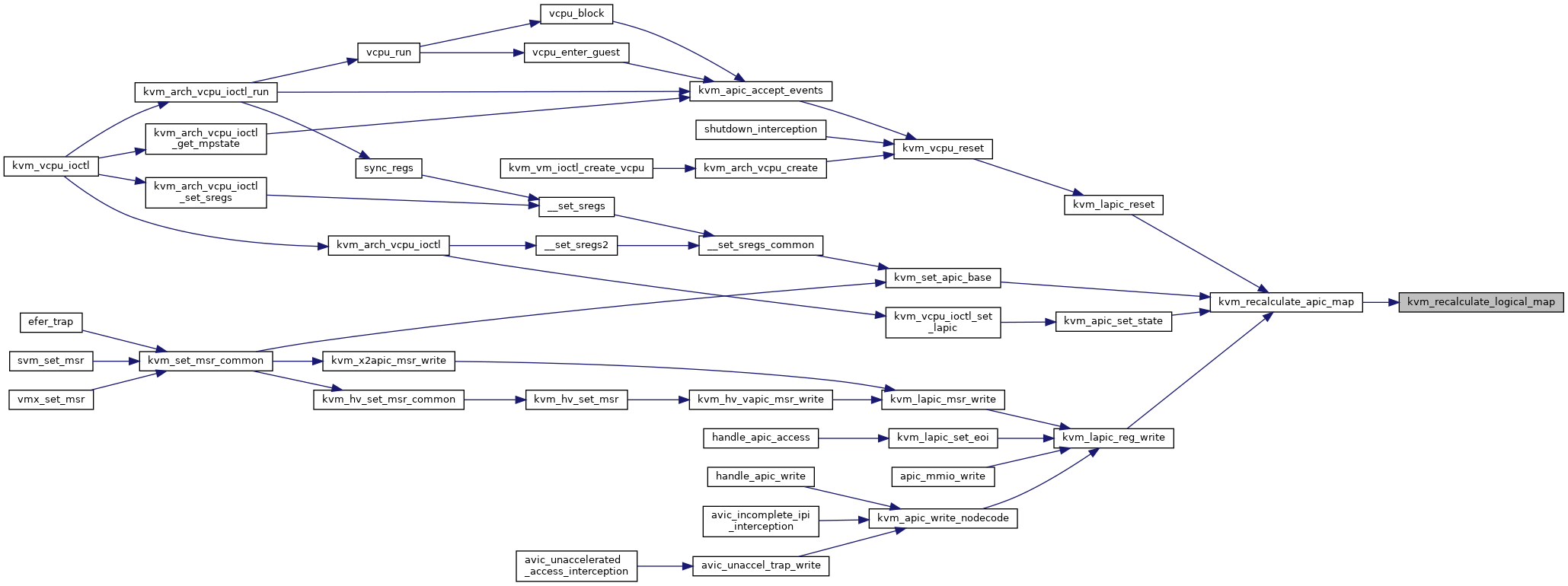

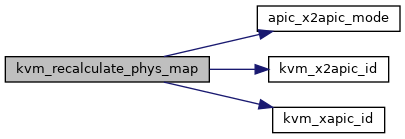

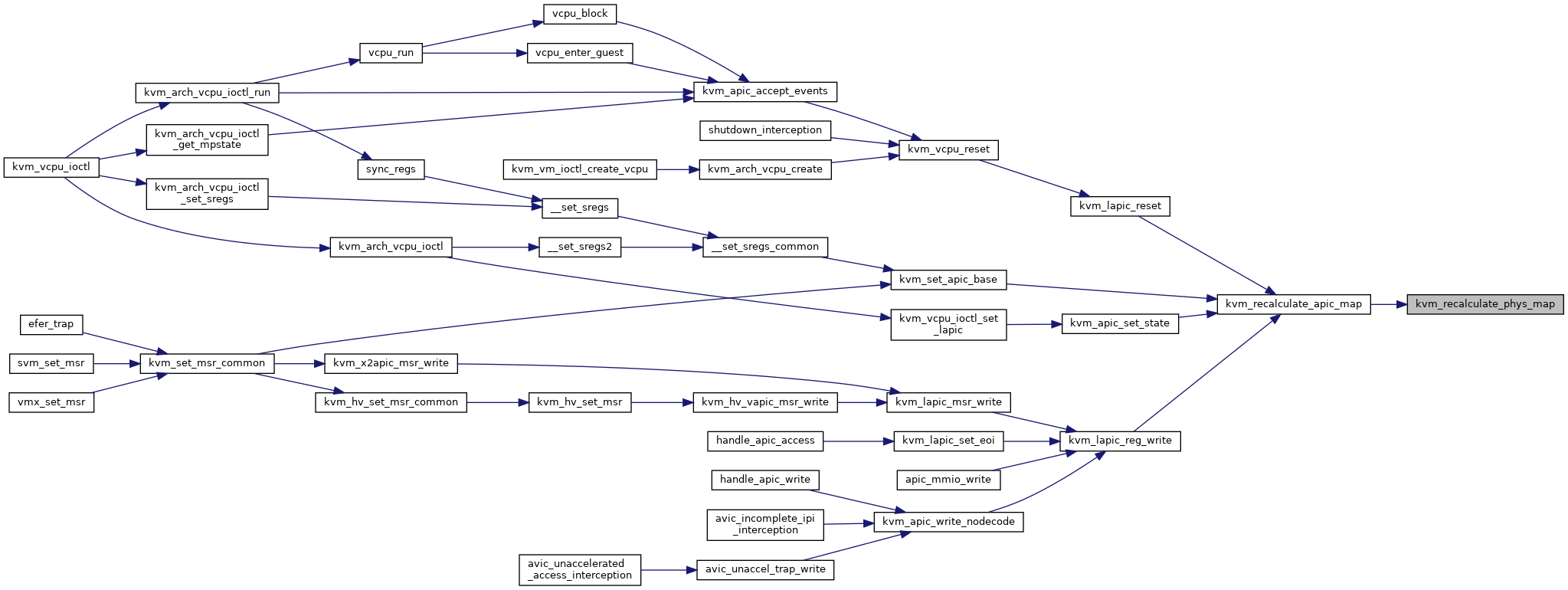

| static int | kvm_recalculate_phys_map (struct kvm_apic_map *new, struct kvm_vcpu *vcpu, bool *xapic_id_mismatch) |

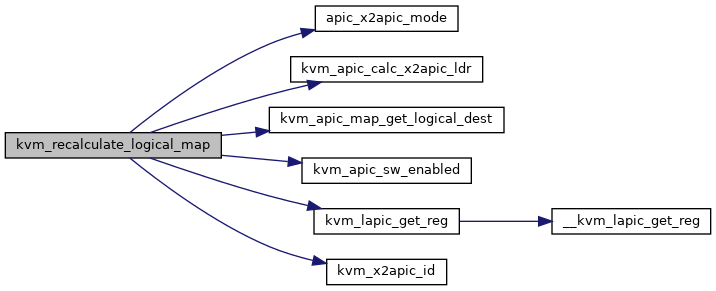

| static void | kvm_recalculate_logical_map (struct kvm_apic_map *new, struct kvm_vcpu *vcpu) |

| void | kvm_recalculate_apic_map (struct kvm *kvm) |

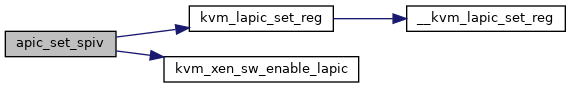

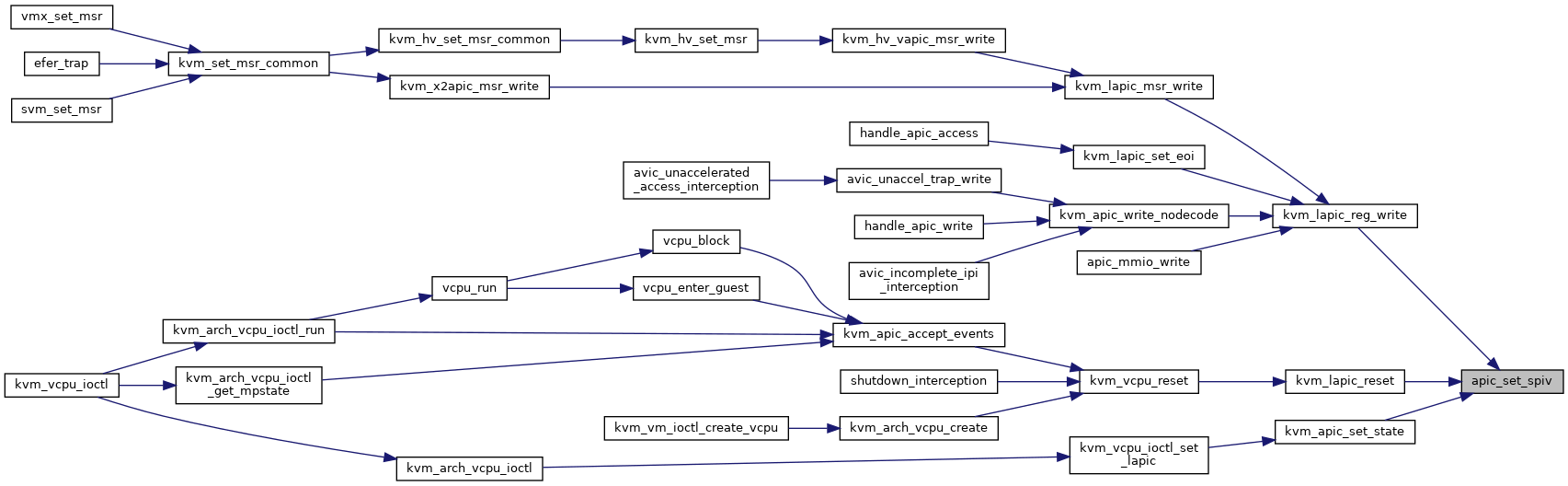

| static void | apic_set_spiv (struct kvm_lapic *apic, u32 val) |

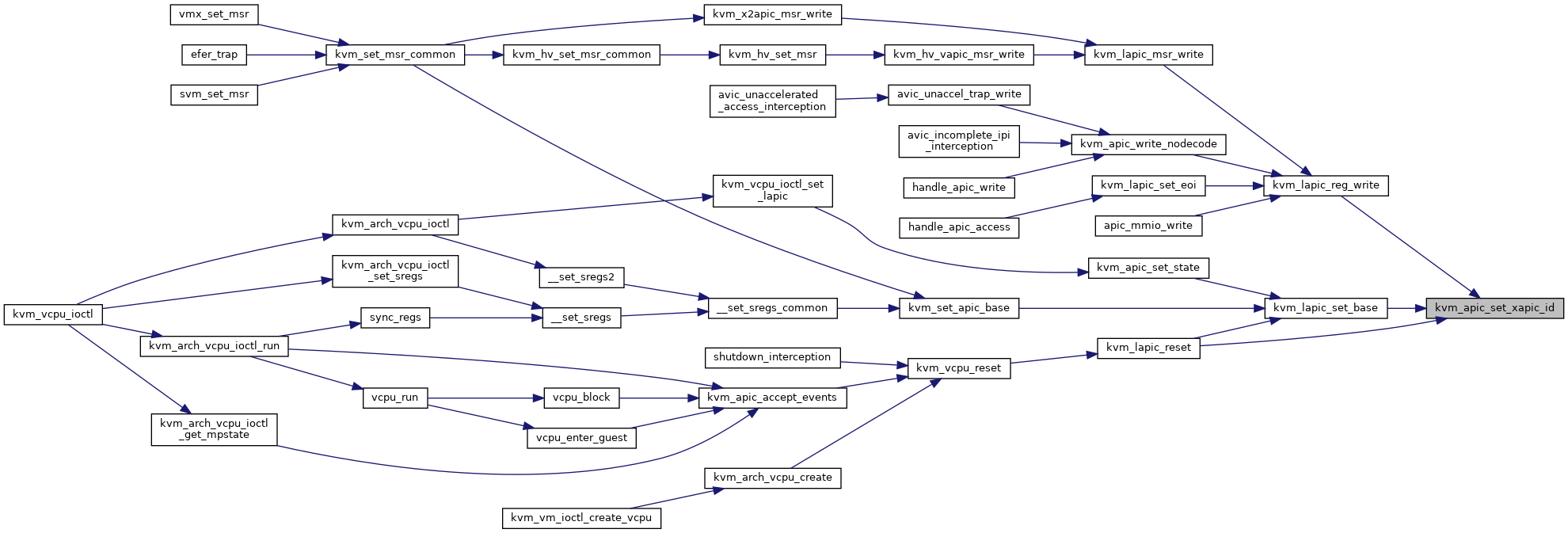

| static void | kvm_apic_set_xapic_id (struct kvm_lapic *apic, u8 id) |

| static void | kvm_apic_set_ldr (struct kvm_lapic *apic, u32 id) |

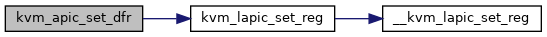

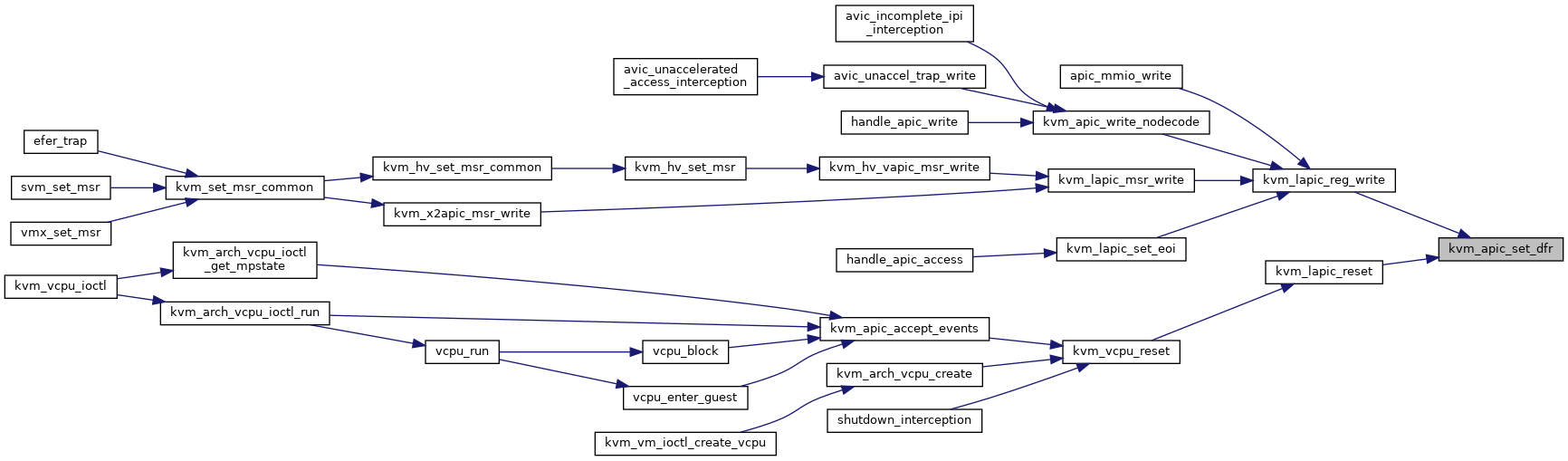

| static void | kvm_apic_set_dfr (struct kvm_lapic *apic, u32 val) |

| static void | kvm_apic_set_x2apic_id (struct kvm_lapic *apic, u32 id) |

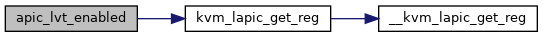

| static int | apic_lvt_enabled (struct kvm_lapic *apic, int lvt_type) |

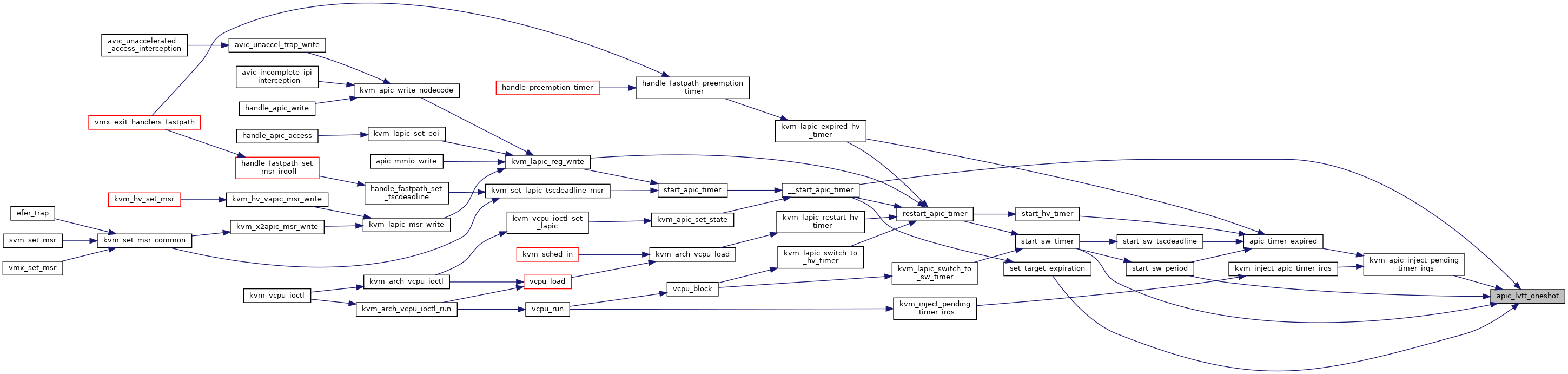

| static int | apic_lvtt_oneshot (struct kvm_lapic *apic) |

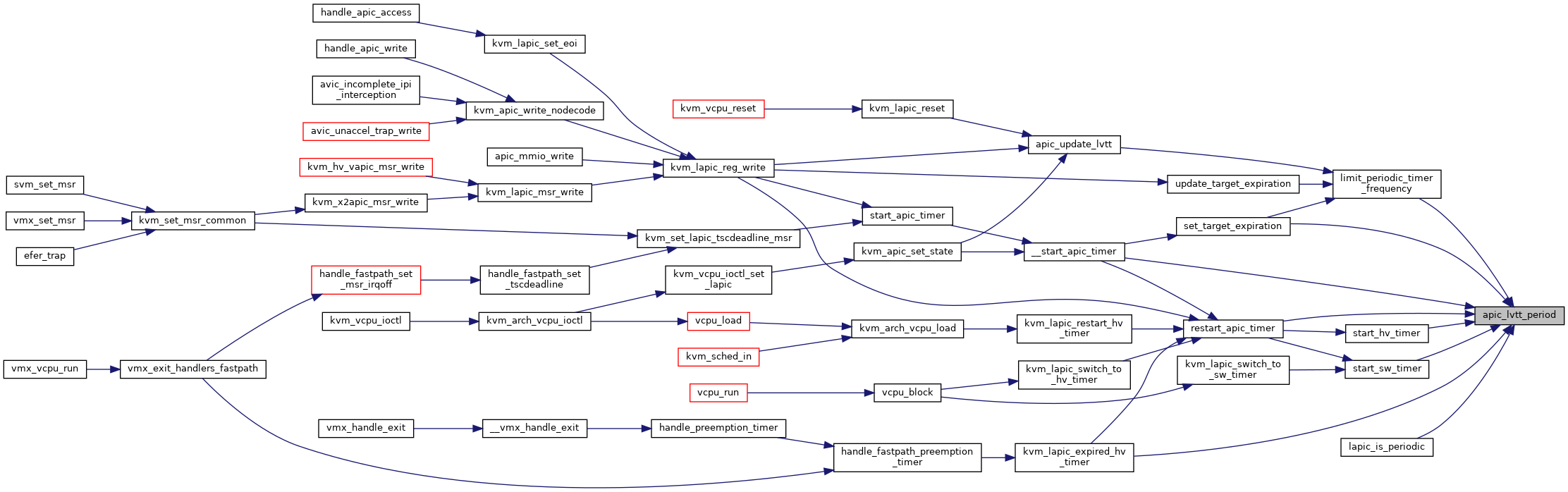

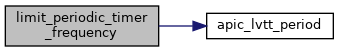

| static int | apic_lvtt_period (struct kvm_lapic *apic) |

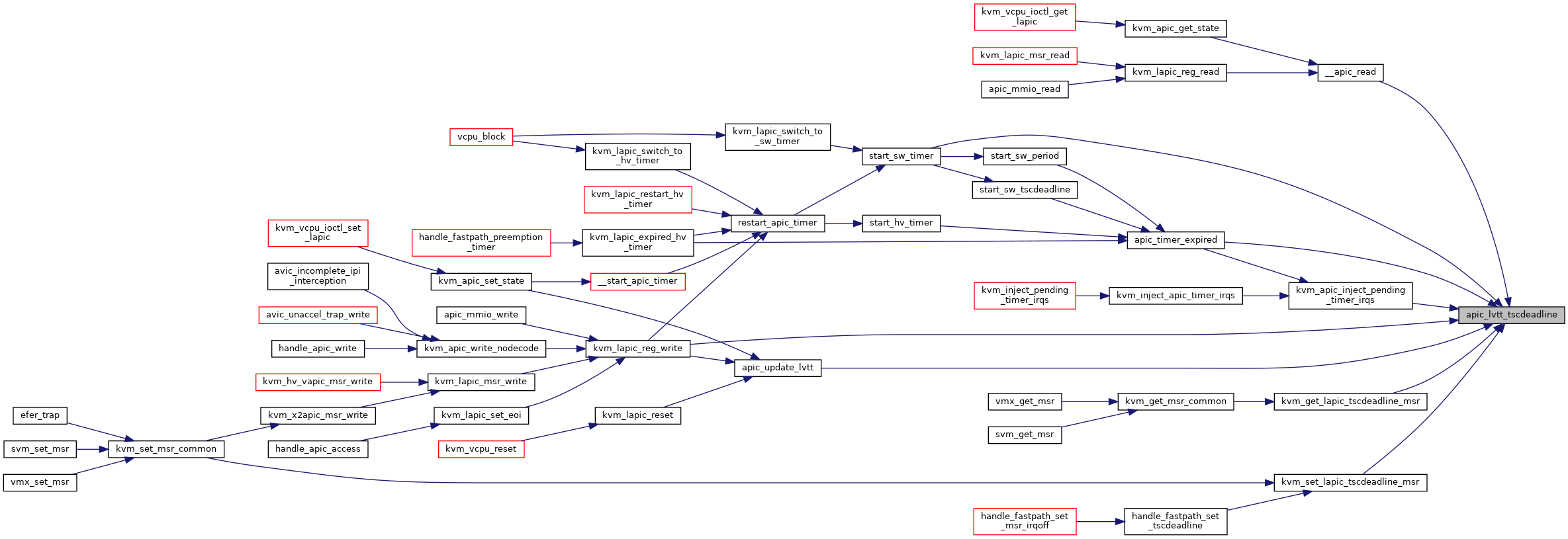

| static int | apic_lvtt_tscdeadline (struct kvm_lapic *apic) |

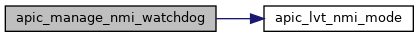

| static int | apic_lvt_nmi_mode (u32 lvt_val) |

| static bool | kvm_lapic_lvt_supported (struct kvm_lapic *apic, int lvt_index) |

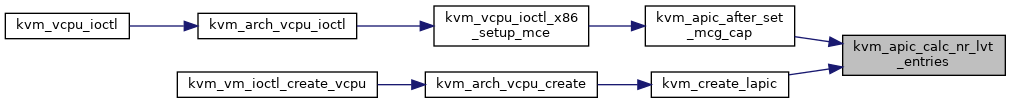

| static int | kvm_apic_calc_nr_lvt_entries (struct kvm_vcpu *vcpu) |

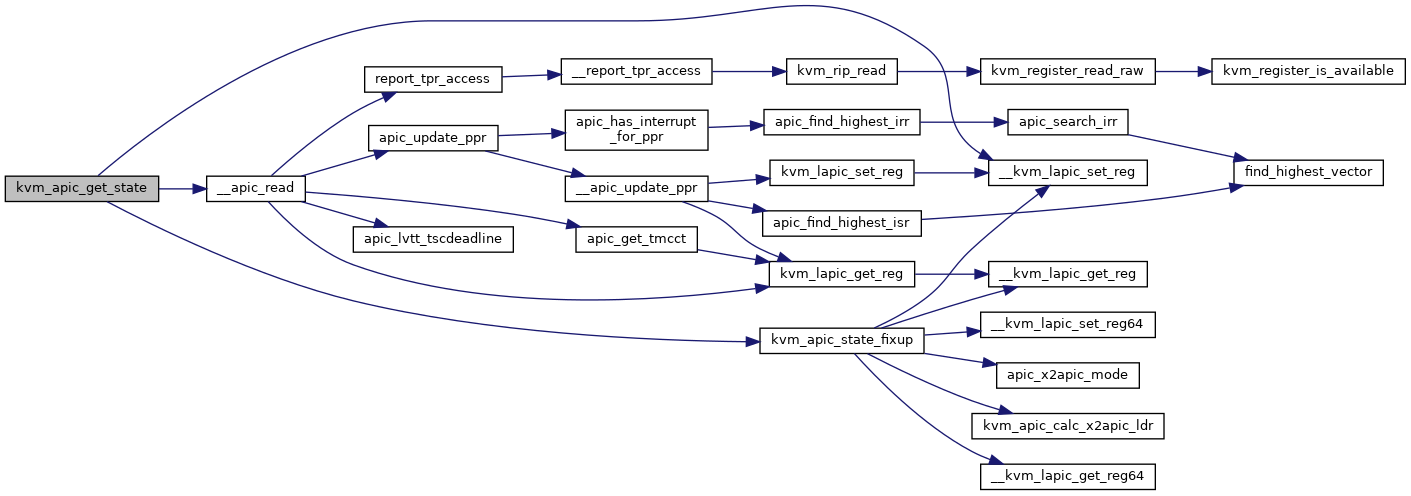

| void | kvm_apic_set_version (struct kvm_vcpu *vcpu) |

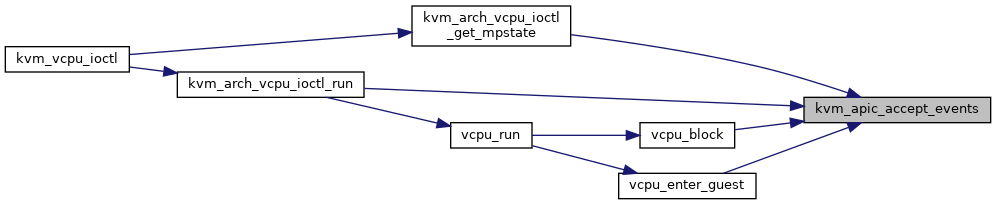

| void | kvm_apic_after_set_mcg_cap (struct kvm_vcpu *vcpu) |

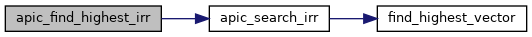

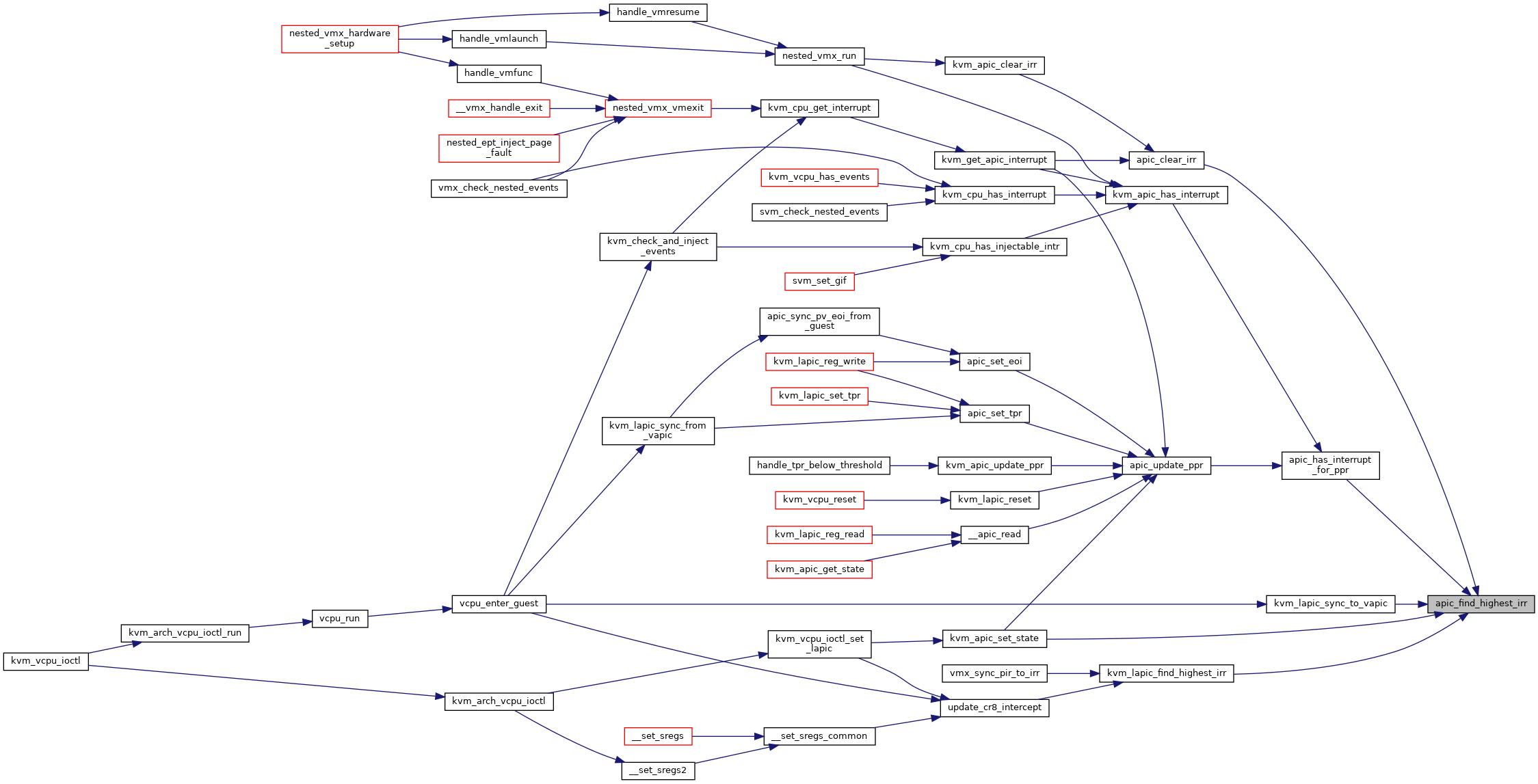

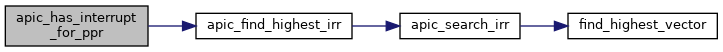

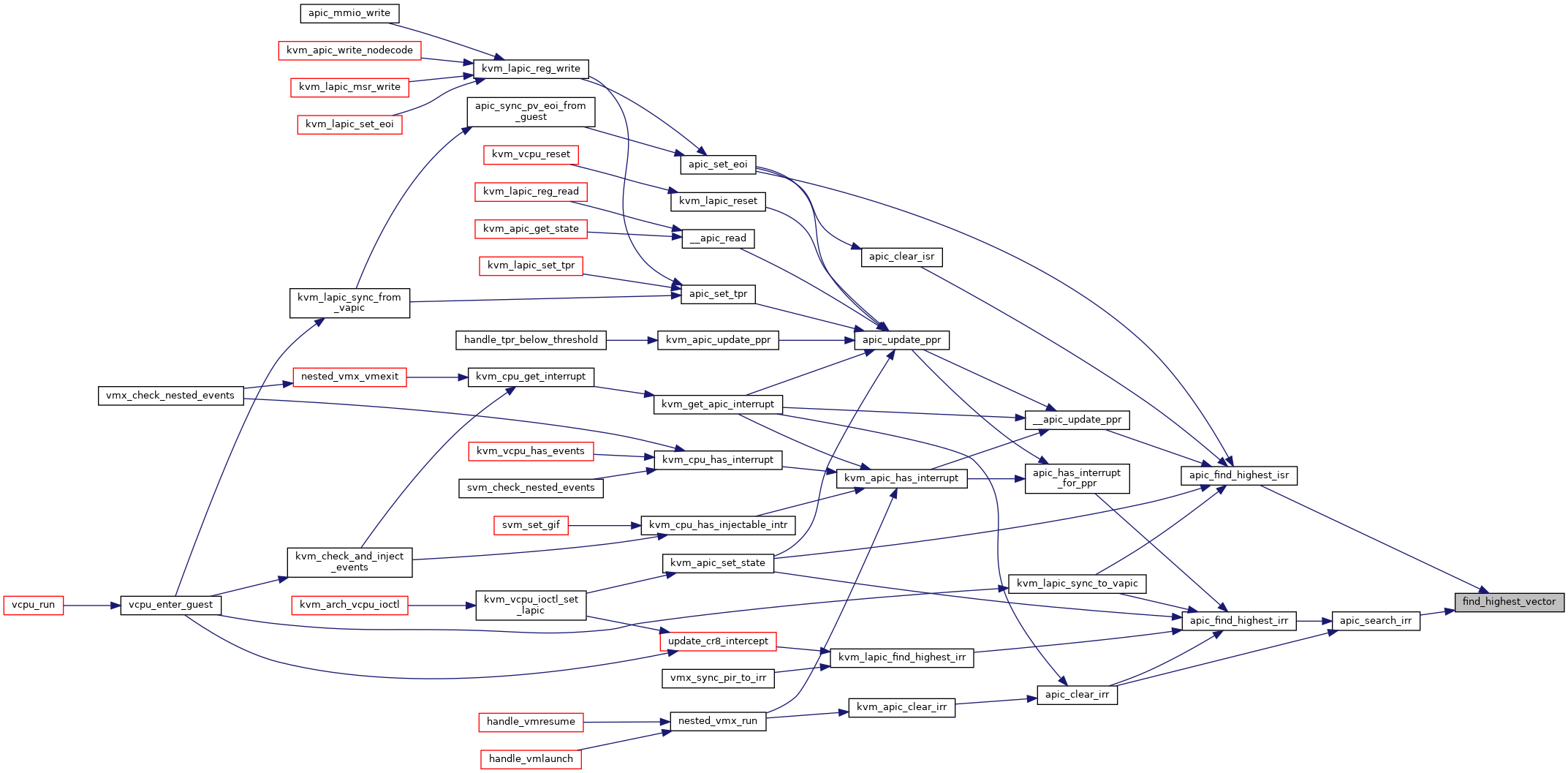

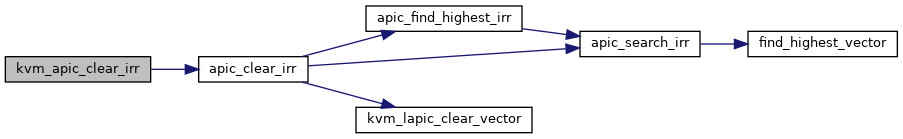

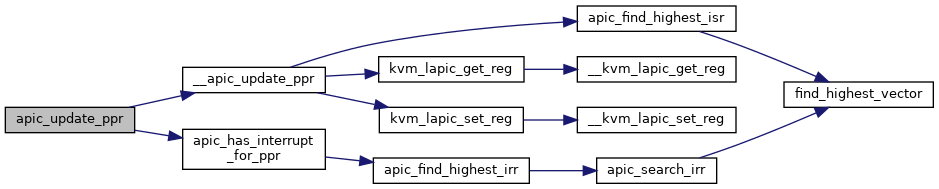

| static int | find_highest_vector (void *bitmap) |

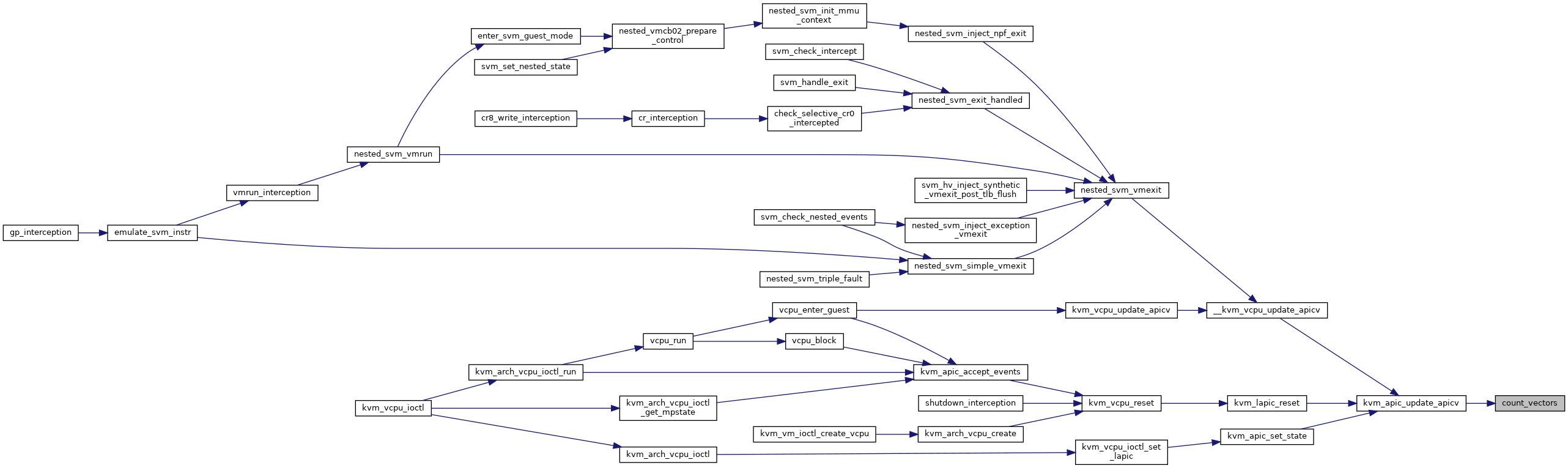

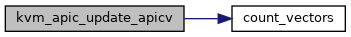

| static u8 | count_vectors (void *bitmap) |

| bool | __kvm_apic_update_irr (u32 *pir, void *regs, int *max_irr) |

| EXPORT_SYMBOL_GPL (__kvm_apic_update_irr) | |

| bool | kvm_apic_update_irr (struct kvm_vcpu *vcpu, u32 *pir, int *max_irr) |

| EXPORT_SYMBOL_GPL (kvm_apic_update_irr) | |

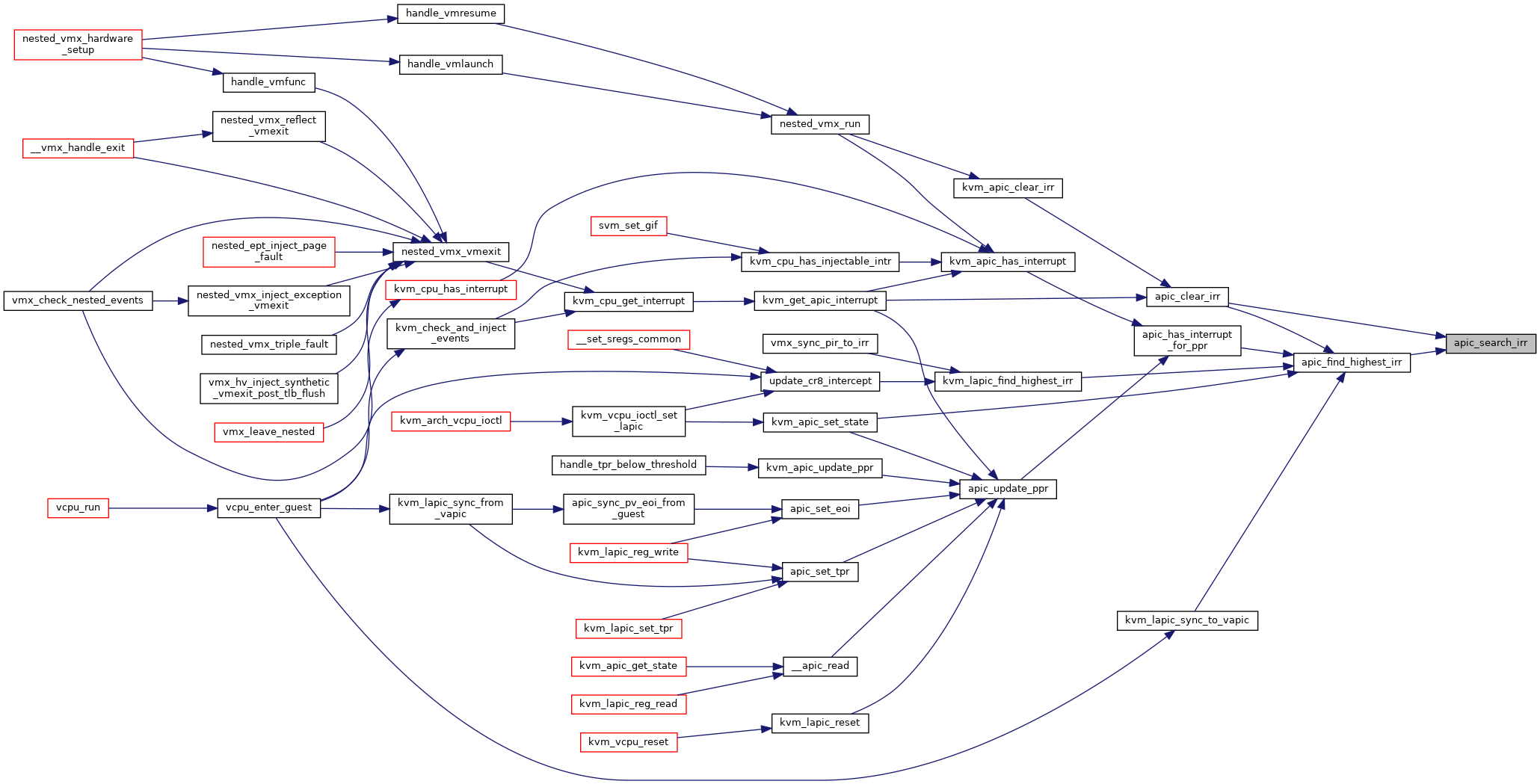

| static int | apic_search_irr (struct kvm_lapic *apic) |

| static int | apic_find_highest_irr (struct kvm_lapic *apic) |

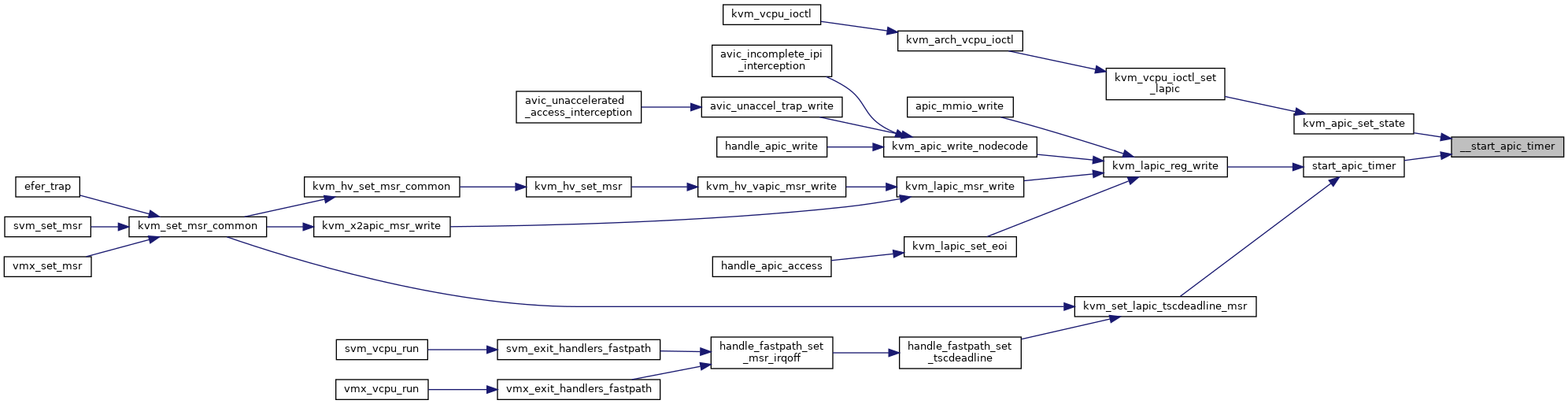

| static void | apic_clear_irr (int vec, struct kvm_lapic *apic) |

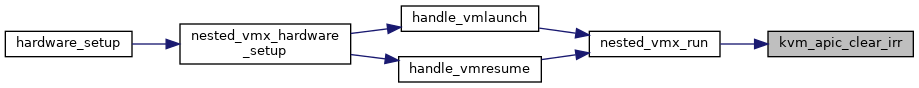

| void | kvm_apic_clear_irr (struct kvm_vcpu *vcpu, int vec) |

| EXPORT_SYMBOL_GPL (kvm_apic_clear_irr) | |

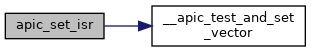

| static void | apic_set_isr (int vec, struct kvm_lapic *apic) |

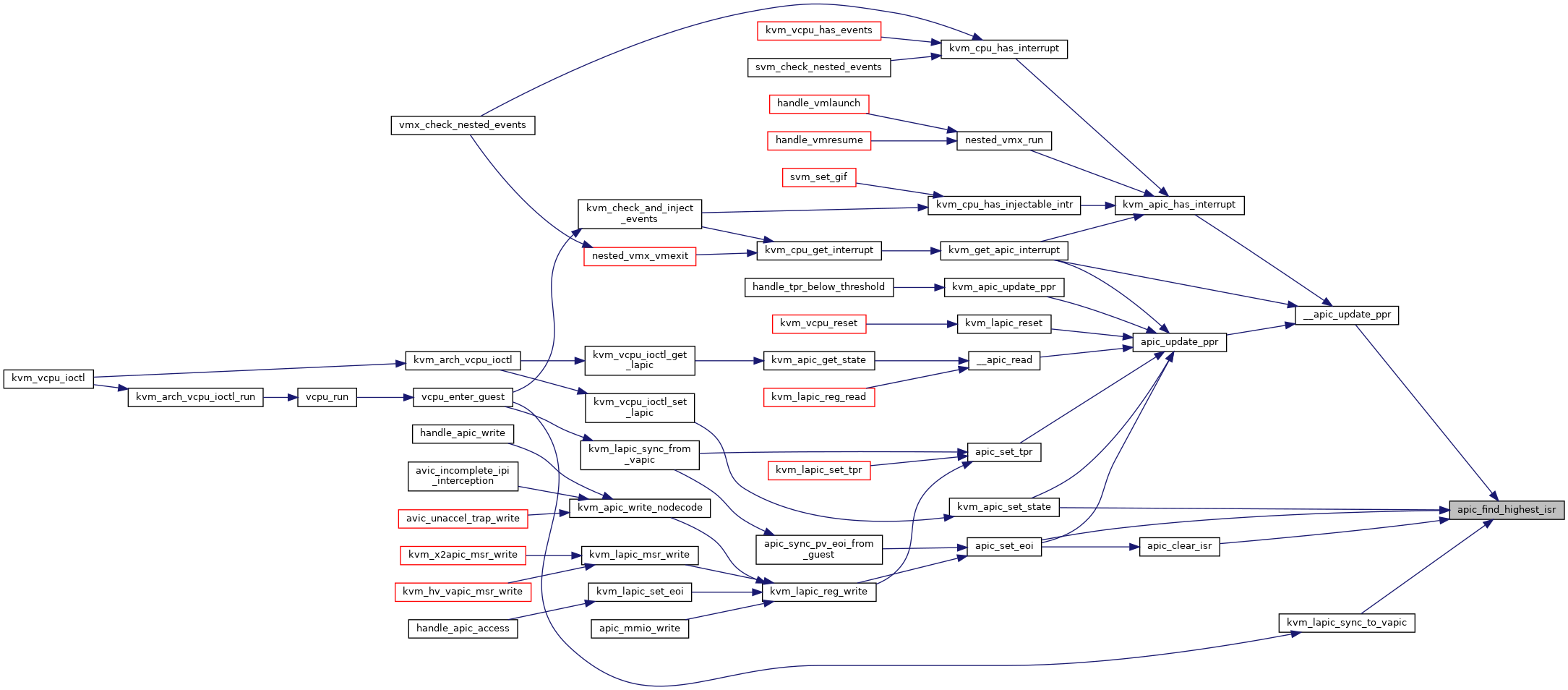

| static int | apic_find_highest_isr (struct kvm_lapic *apic) |

| static void | apic_clear_isr (int vec, struct kvm_lapic *apic) |

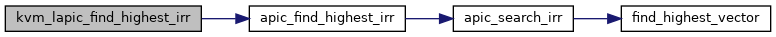

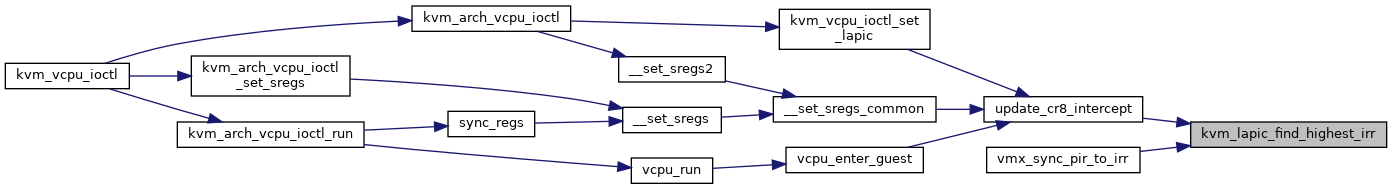

| int | kvm_lapic_find_highest_irr (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_lapic_find_highest_irr) | |

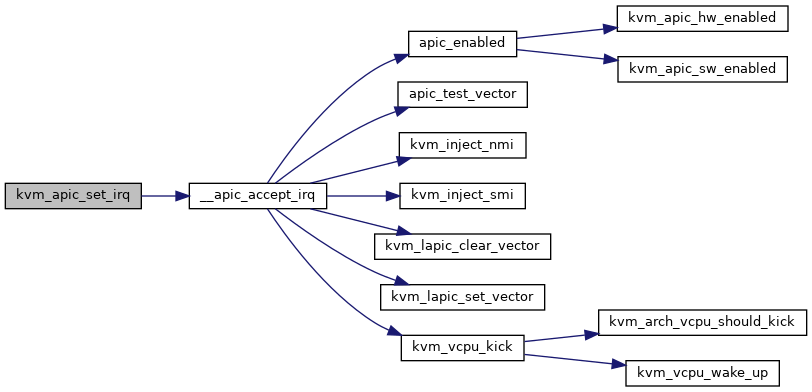

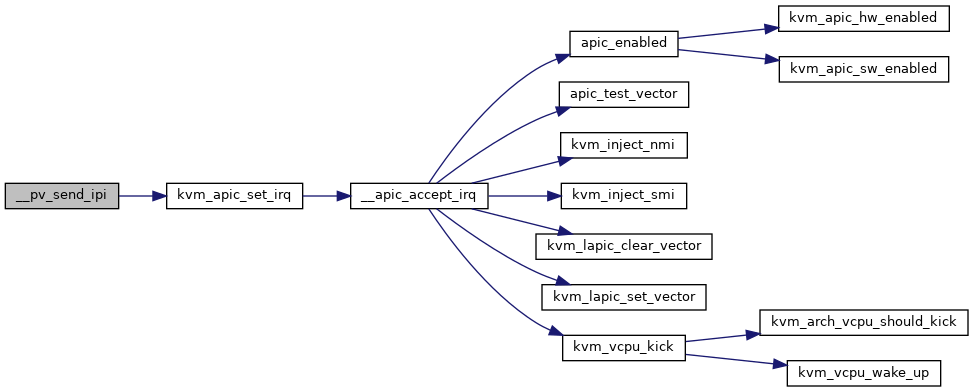

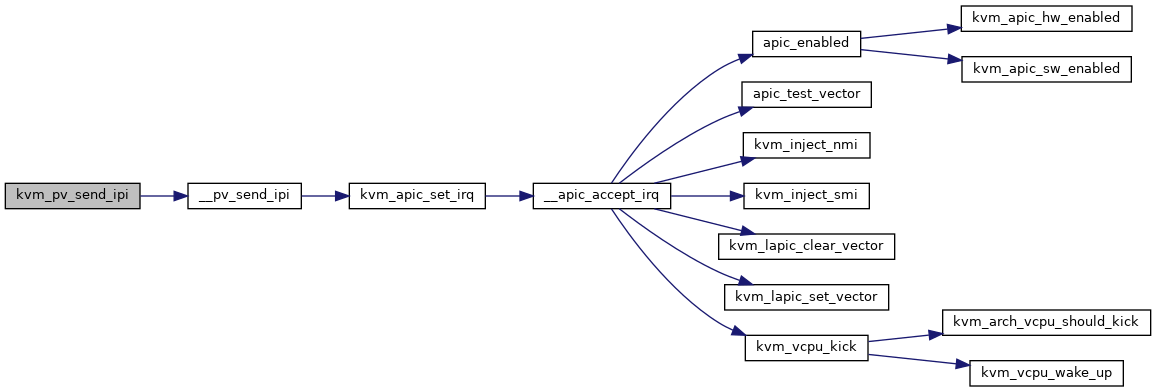

| static int | __apic_accept_irq (struct kvm_lapic *apic, int delivery_mode, int vector, int level, int trig_mode, struct dest_map *dest_map) |

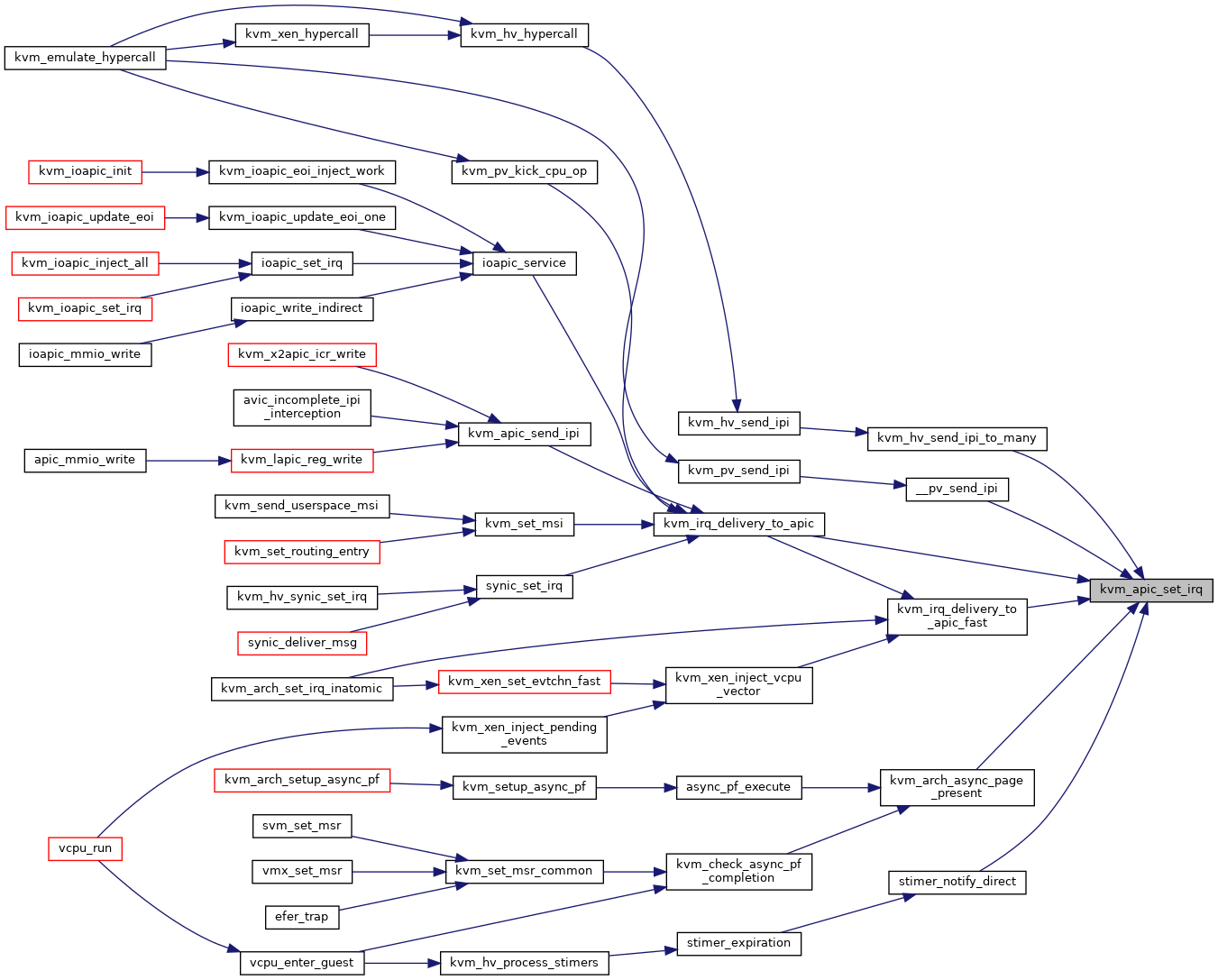

| int | kvm_apic_set_irq (struct kvm_vcpu *vcpu, struct kvm_lapic_irq *irq, struct dest_map *dest_map) |

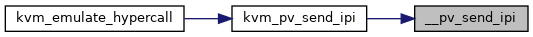

| static int | __pv_send_ipi (unsigned long *ipi_bitmap, struct kvm_apic_map *map, struct kvm_lapic_irq *irq, u32 min) |

| int | kvm_pv_send_ipi (struct kvm *kvm, unsigned long ipi_bitmap_low, unsigned long ipi_bitmap_high, u32 min, unsigned long icr, int op_64_bit) |

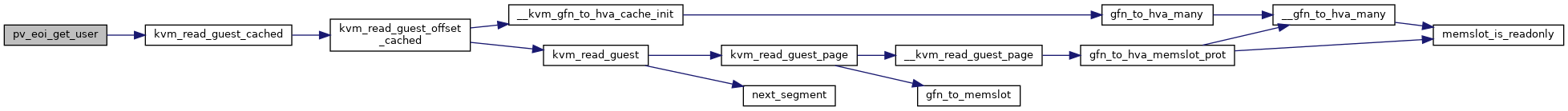

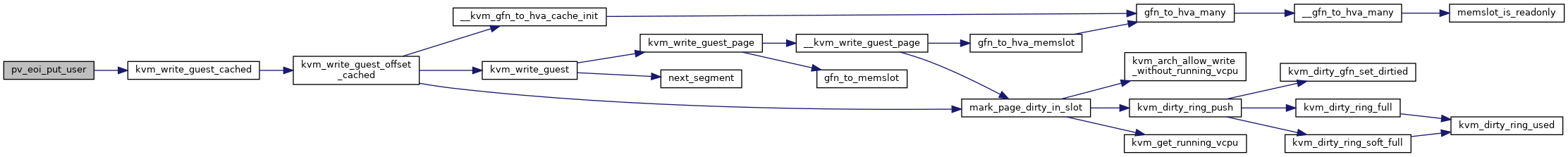

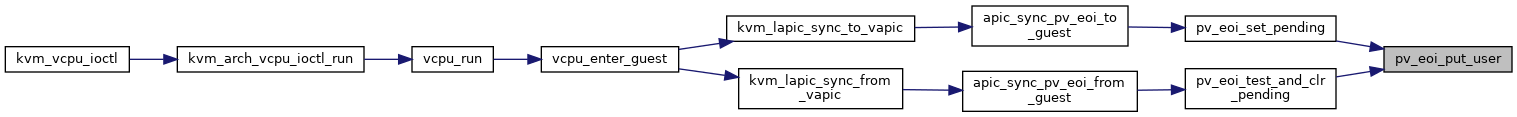

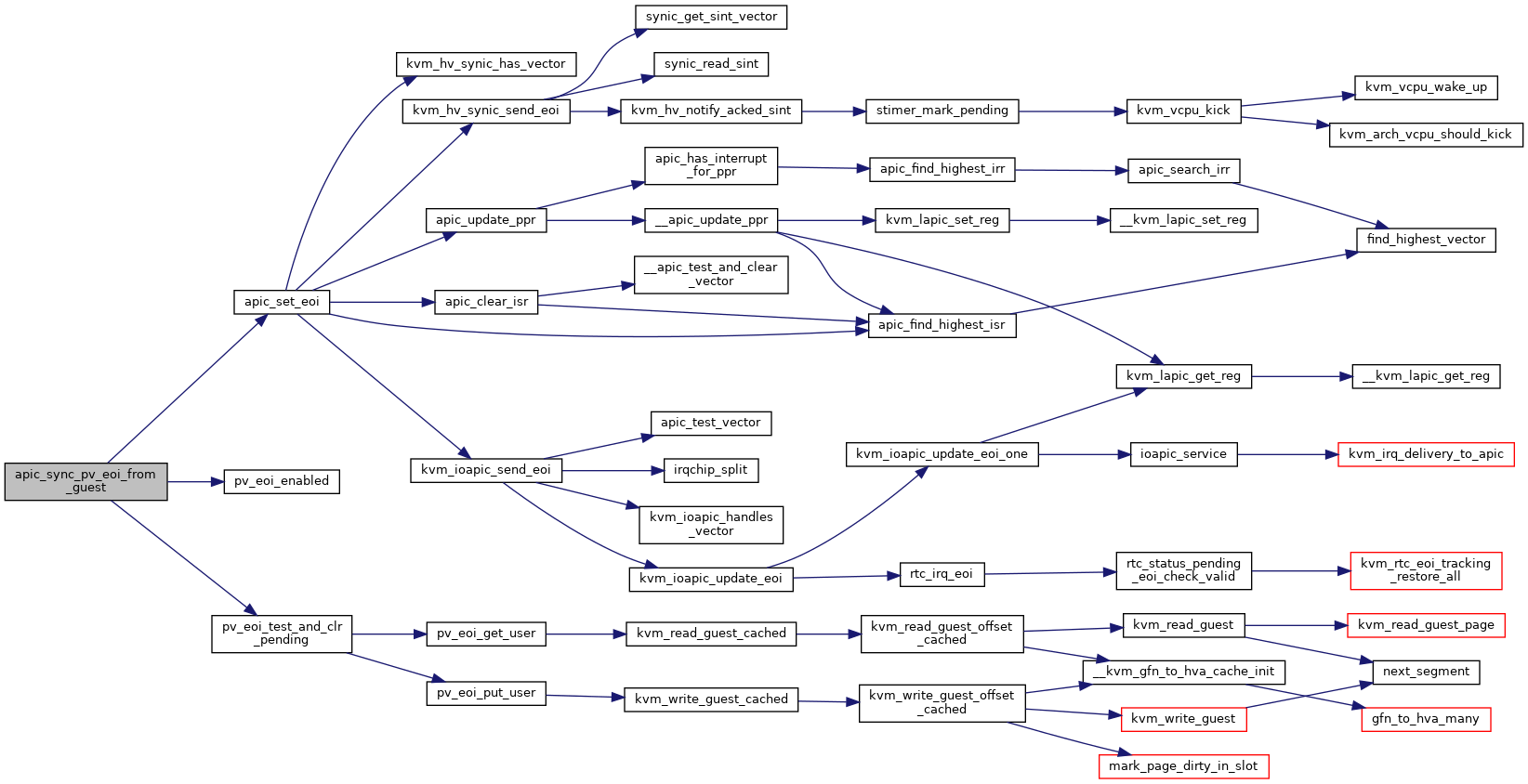

| static int | pv_eoi_put_user (struct kvm_vcpu *vcpu, u8 val) |

| static int | pv_eoi_get_user (struct kvm_vcpu *vcpu, u8 *val) |

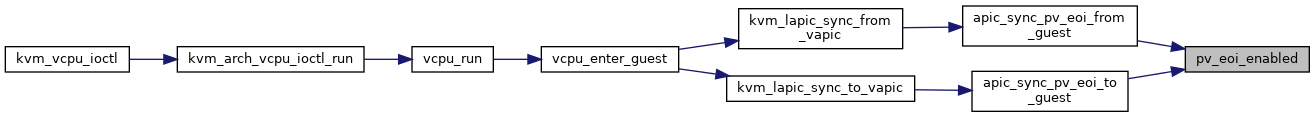

| static bool | pv_eoi_enabled (struct kvm_vcpu *vcpu) |

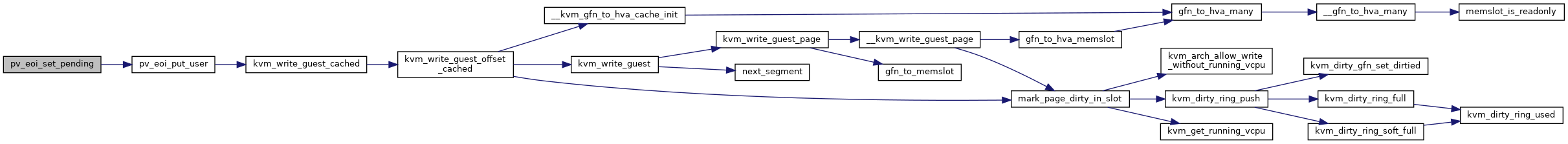

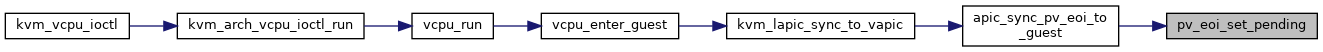

| static void | pv_eoi_set_pending (struct kvm_vcpu *vcpu) |

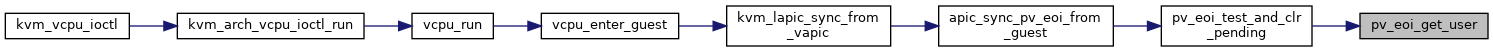

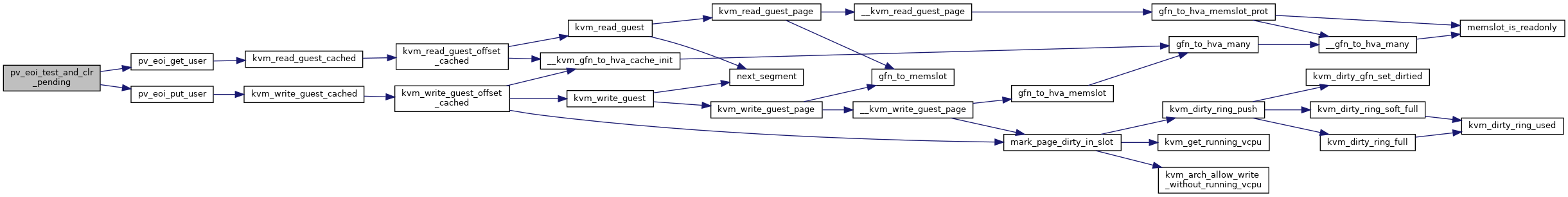

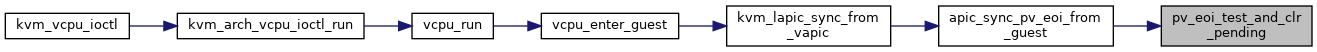

| static bool | pv_eoi_test_and_clr_pending (struct kvm_vcpu *vcpu) |

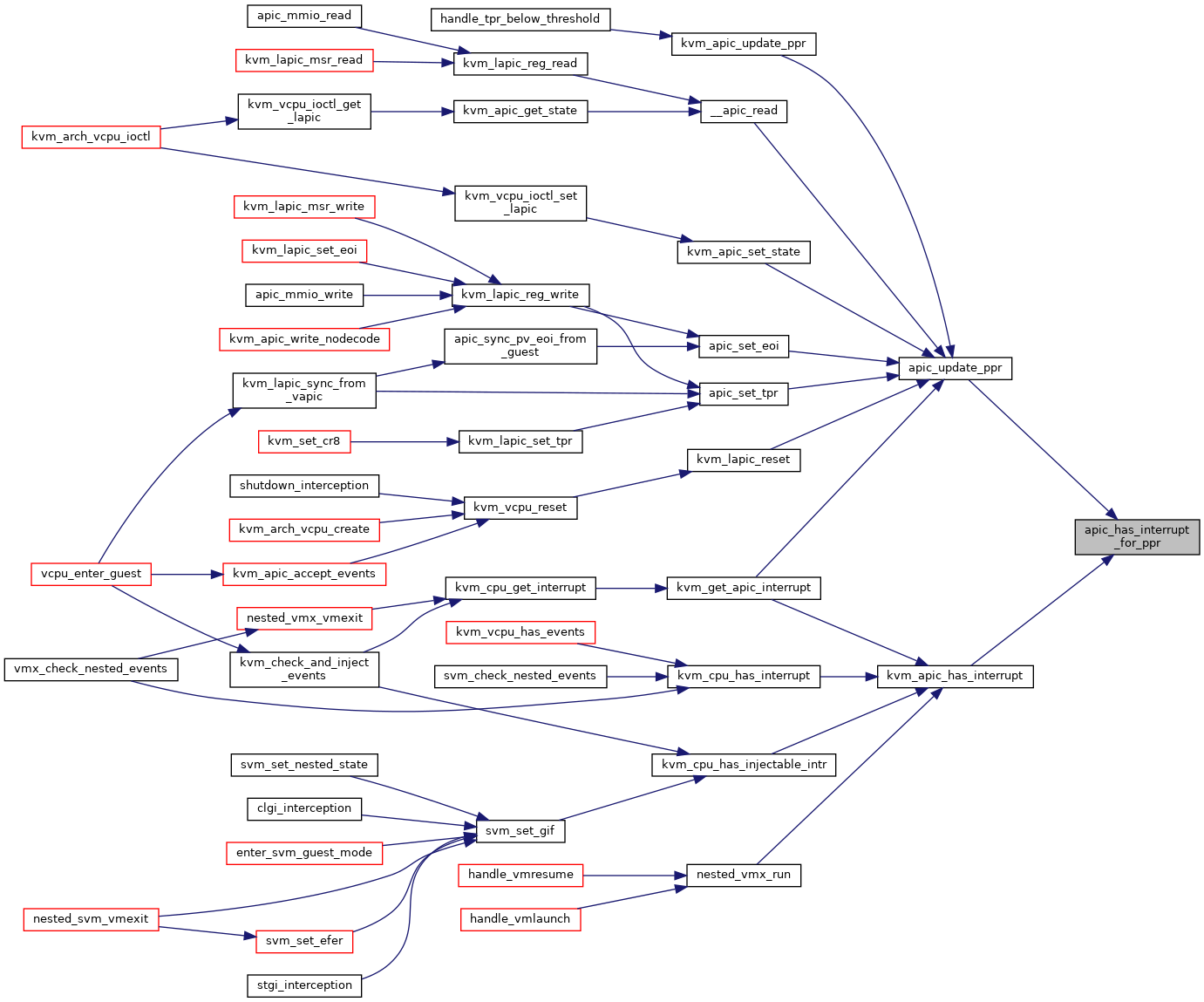

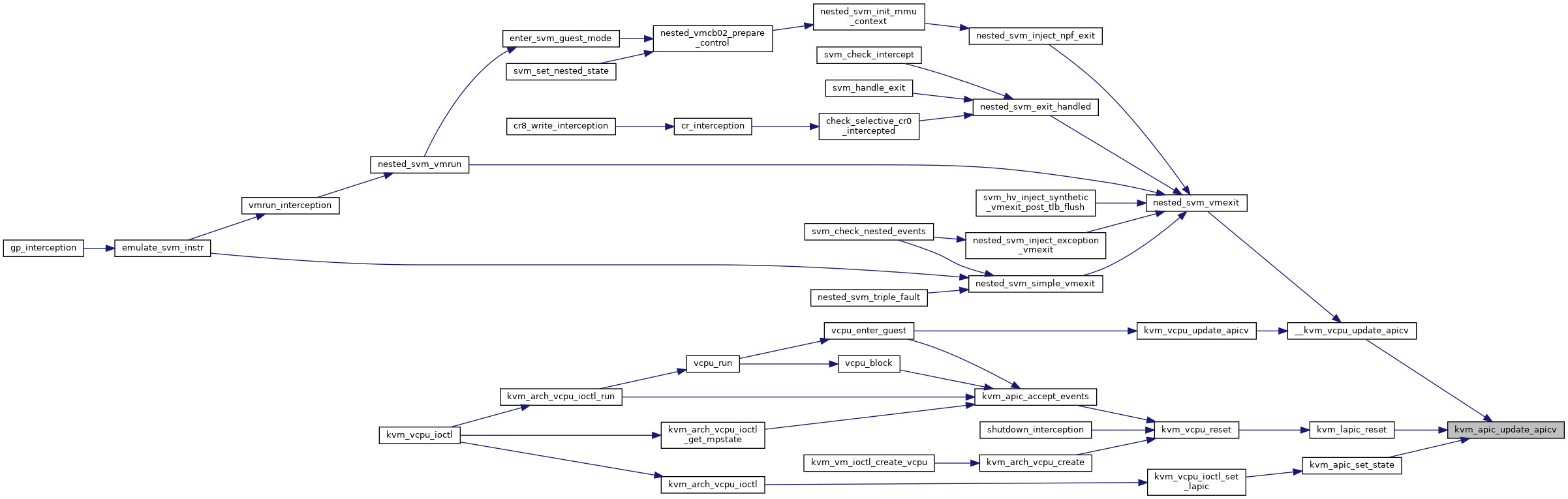

| static int | apic_has_interrupt_for_ppr (struct kvm_lapic *apic, u32 ppr) |

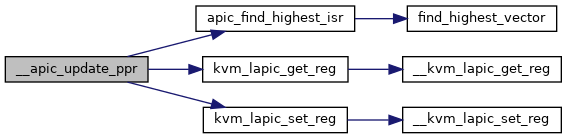

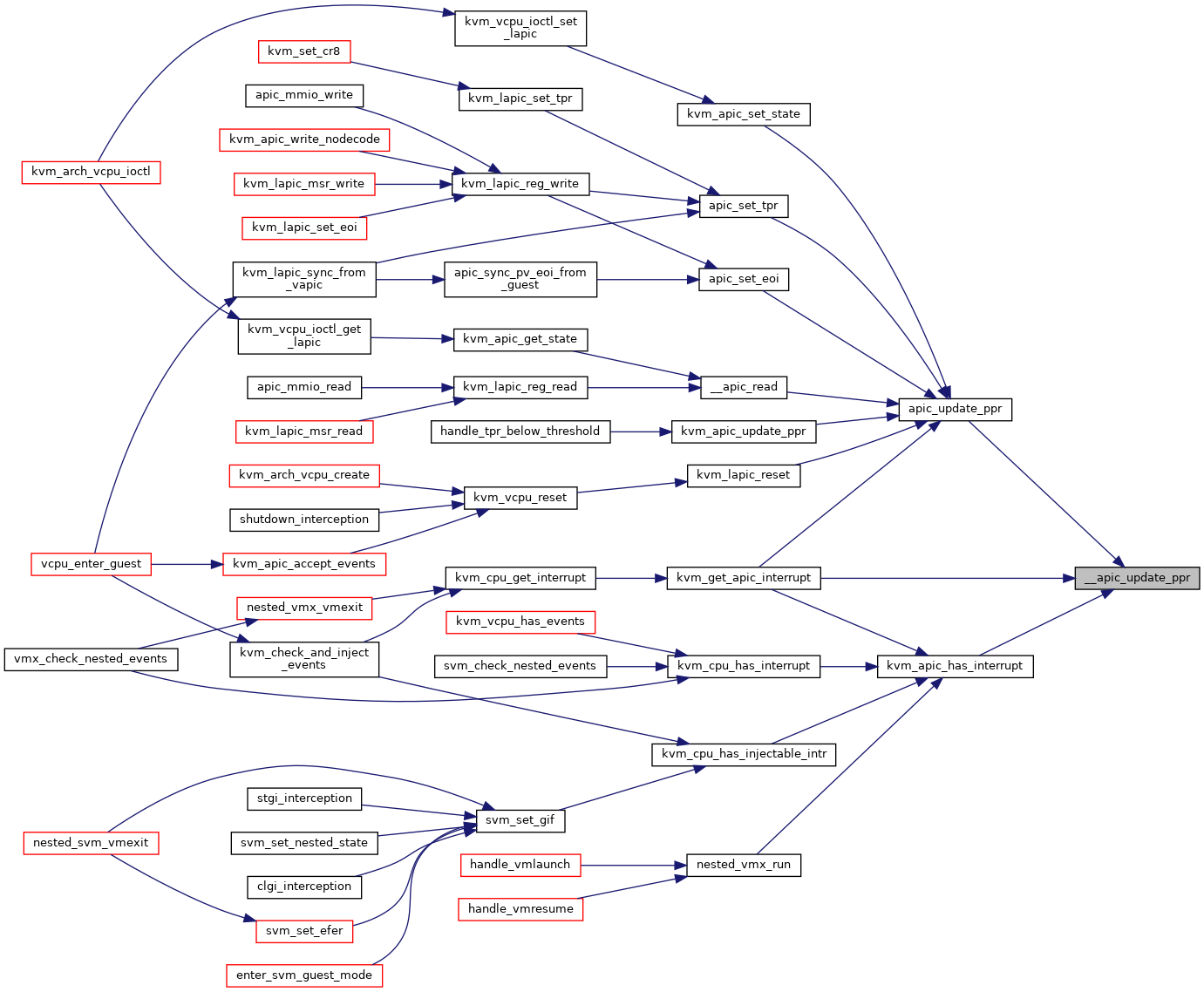

| static bool | __apic_update_ppr (struct kvm_lapic *apic, u32 *new_ppr) |

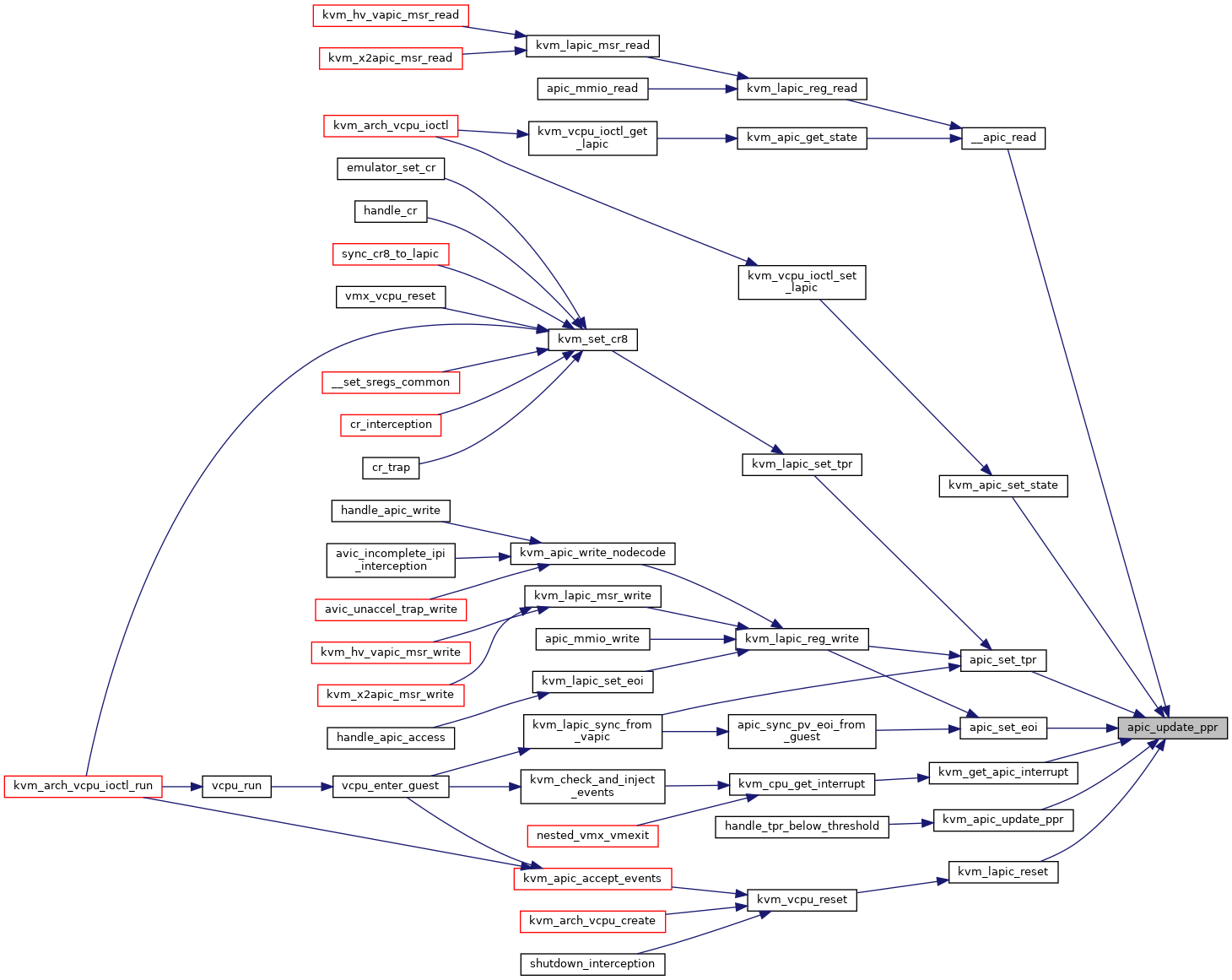

| static void | apic_update_ppr (struct kvm_lapic *apic) |

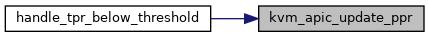

| void | kvm_apic_update_ppr (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_apic_update_ppr) | |

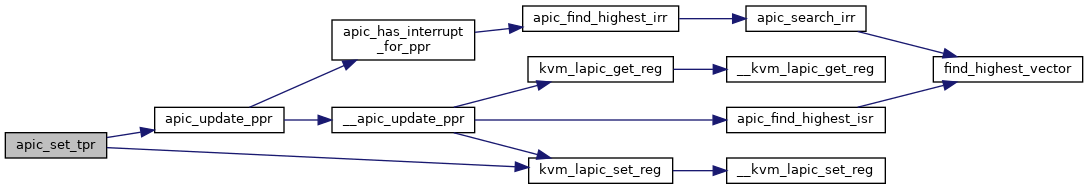

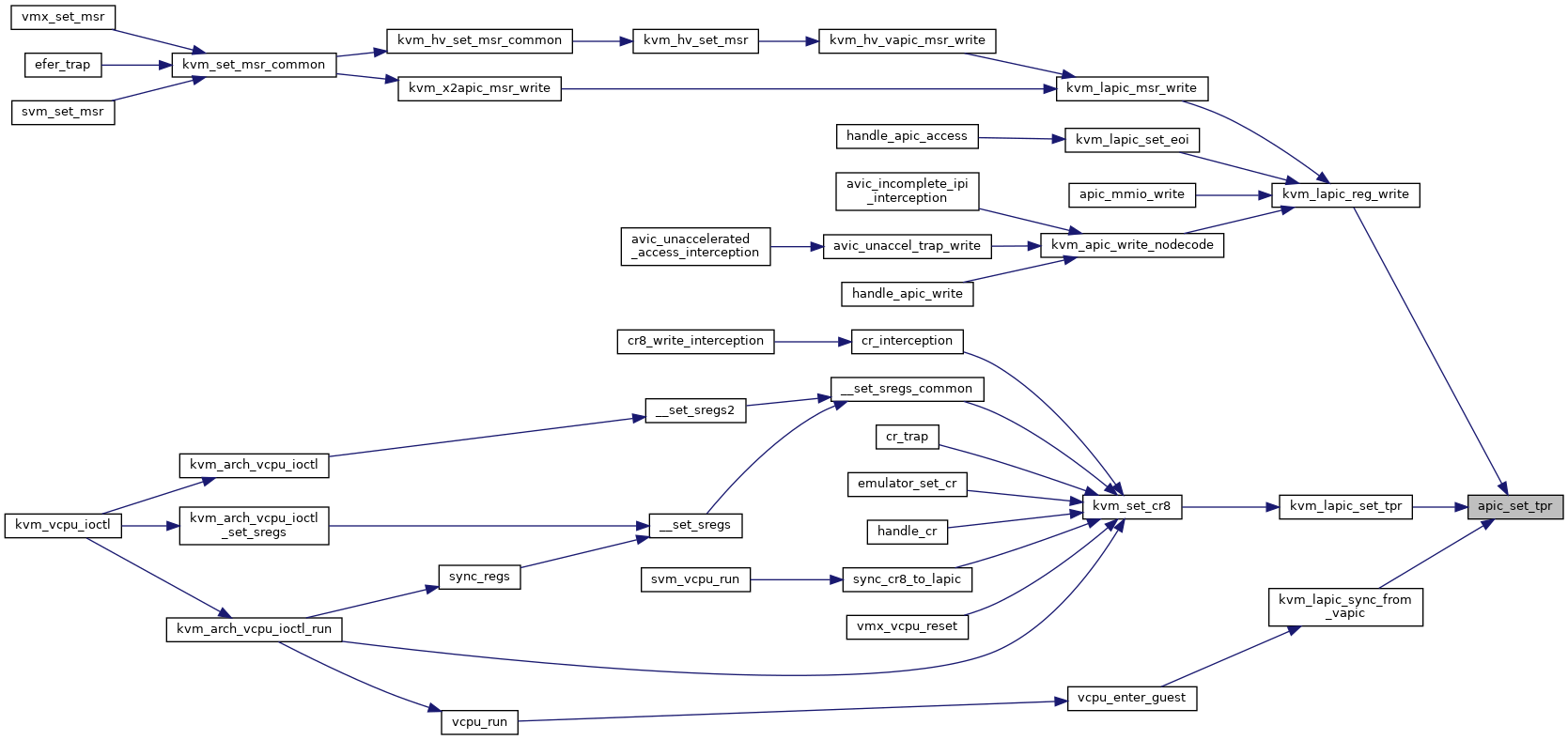

| static void | apic_set_tpr (struct kvm_lapic *apic, u32 tpr) |

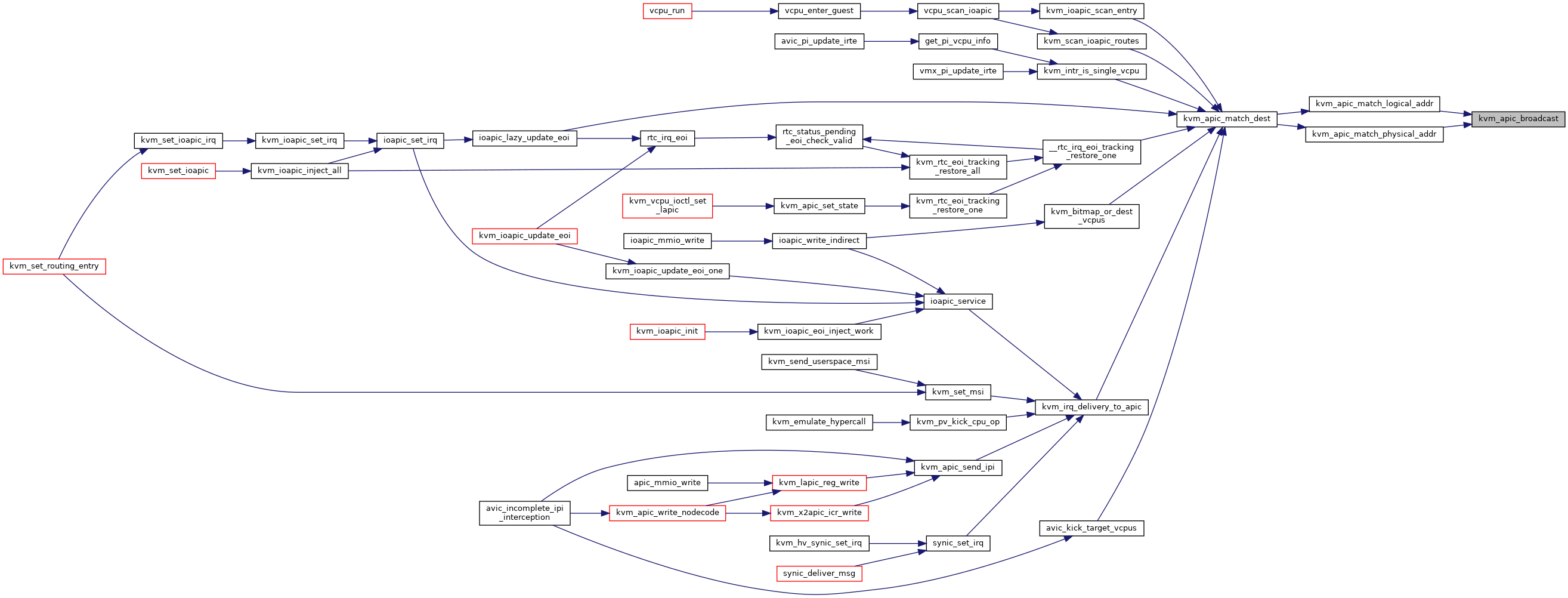

| static bool | kvm_apic_broadcast (struct kvm_lapic *apic, u32 mda) |

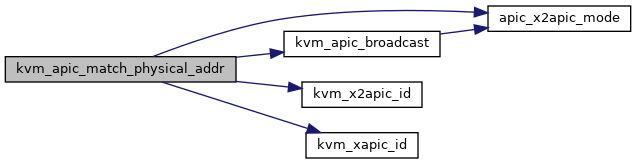

| static bool | kvm_apic_match_physical_addr (struct kvm_lapic *apic, u32 mda) |

| static bool | kvm_apic_match_logical_addr (struct kvm_lapic *apic, u32 mda) |

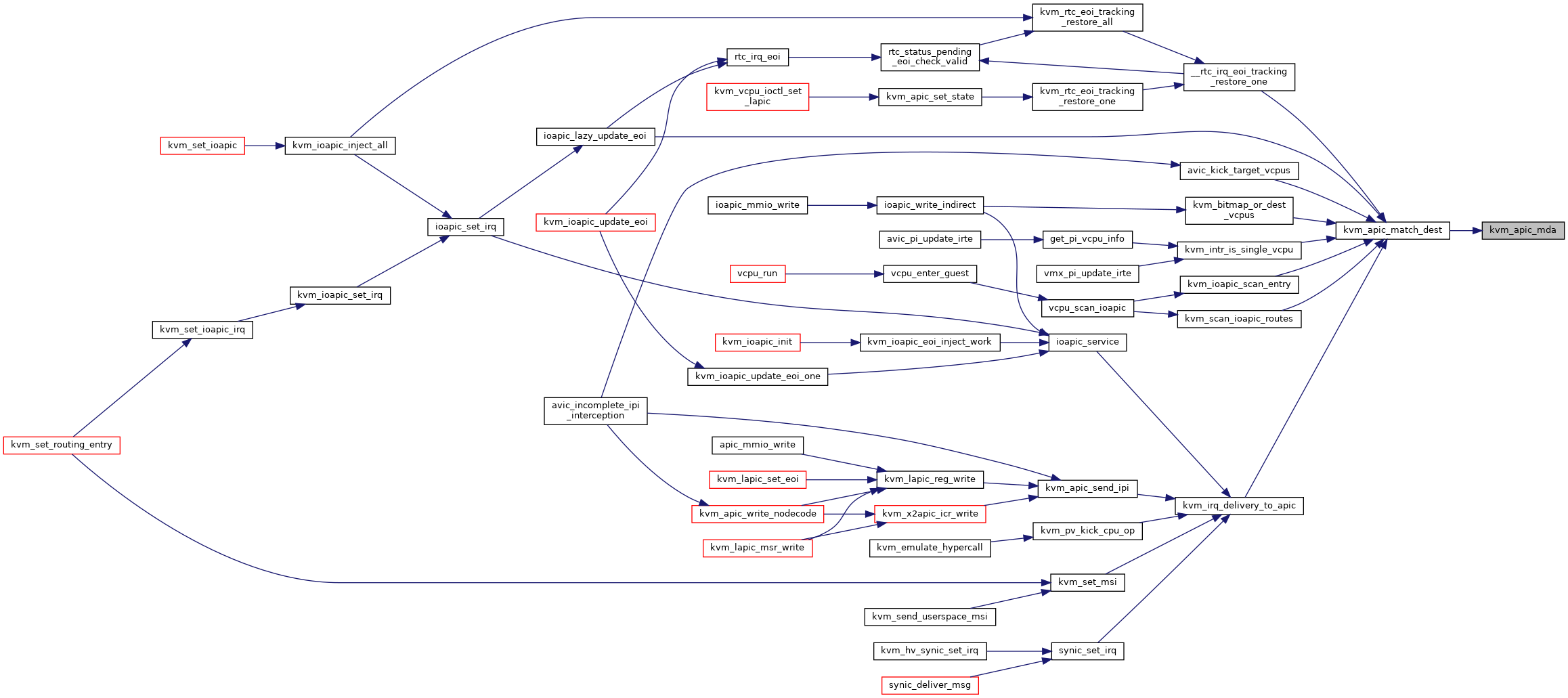

| static u32 | kvm_apic_mda (struct kvm_vcpu *vcpu, unsigned int dest_id, struct kvm_lapic *source, struct kvm_lapic *target) |

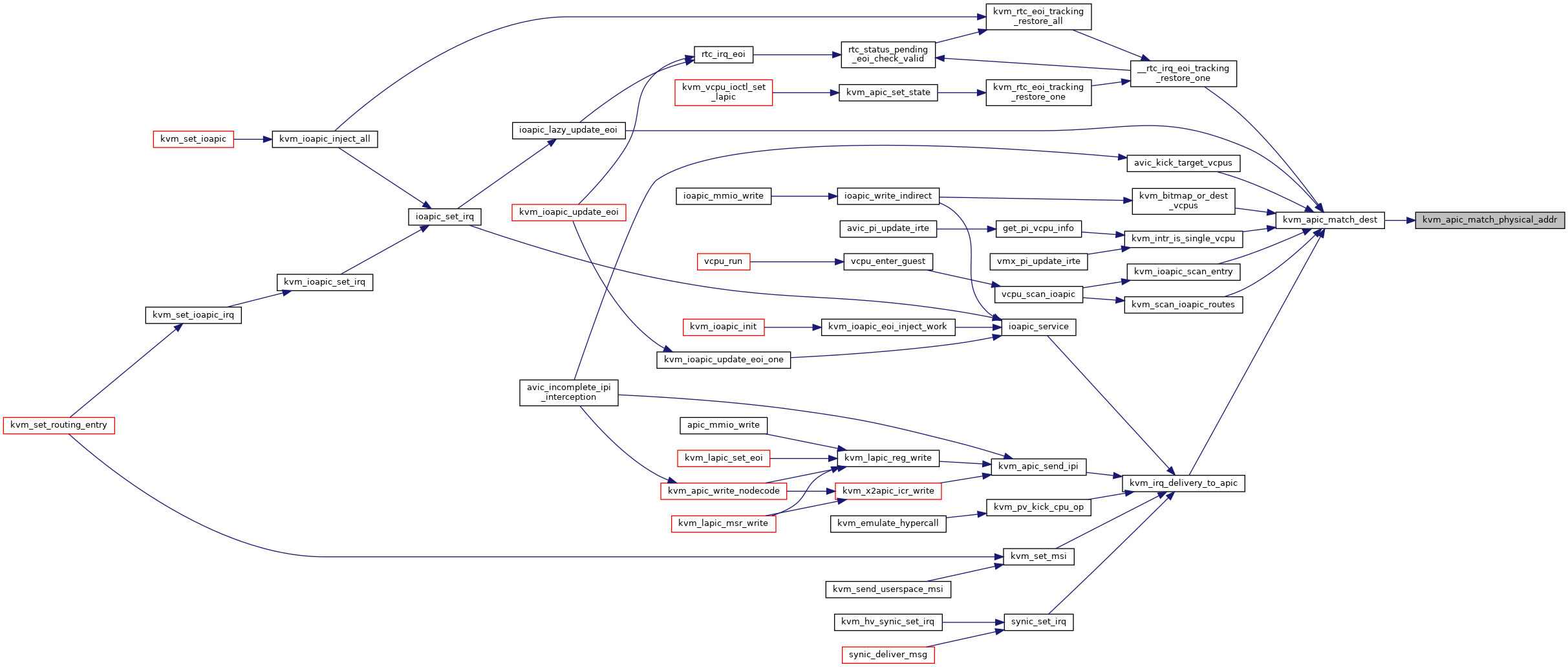

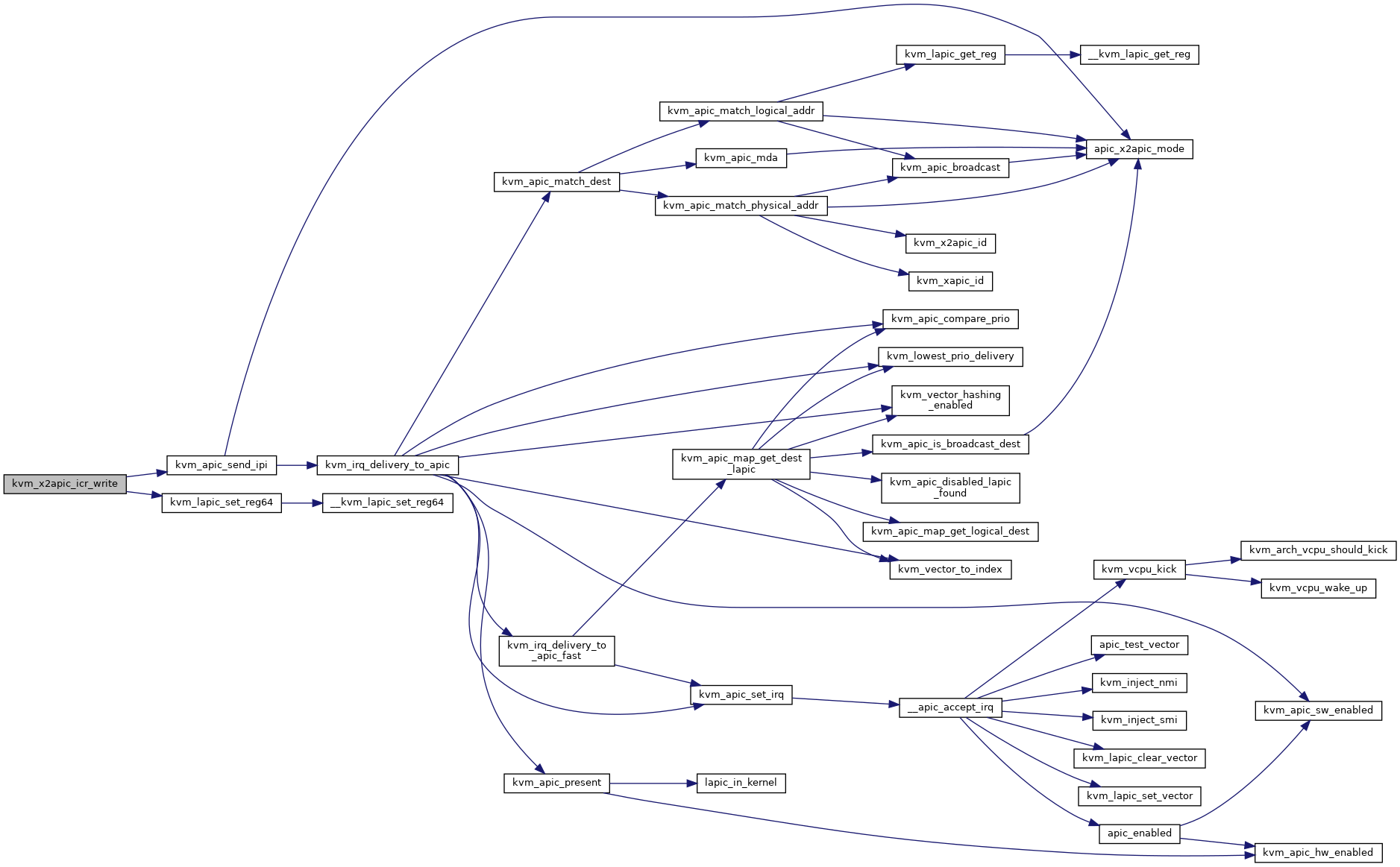

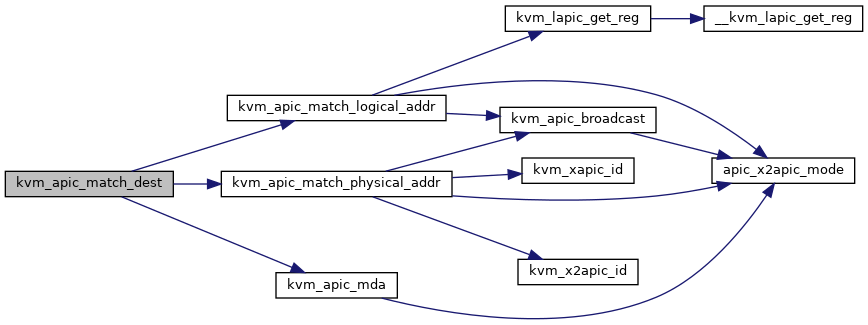

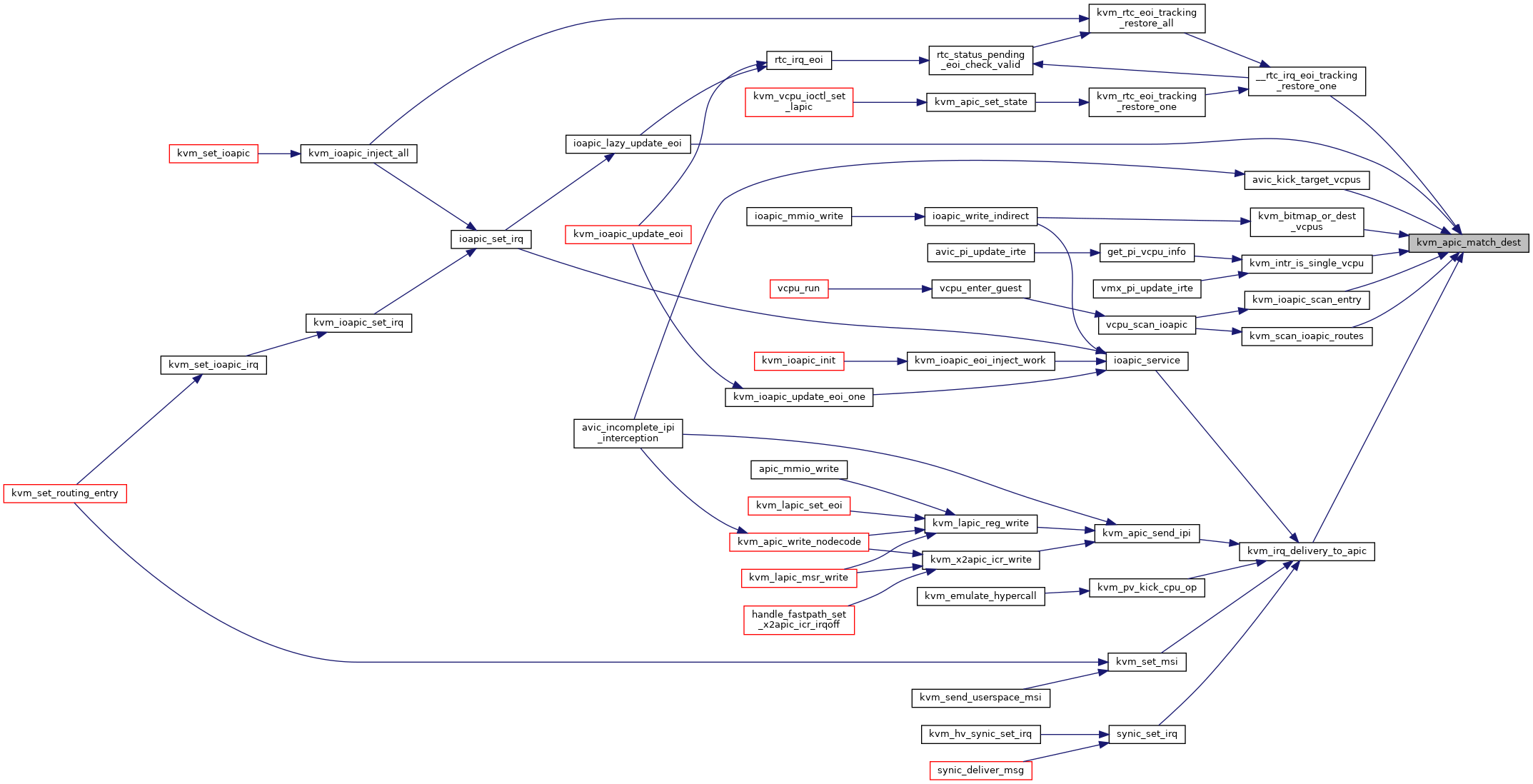

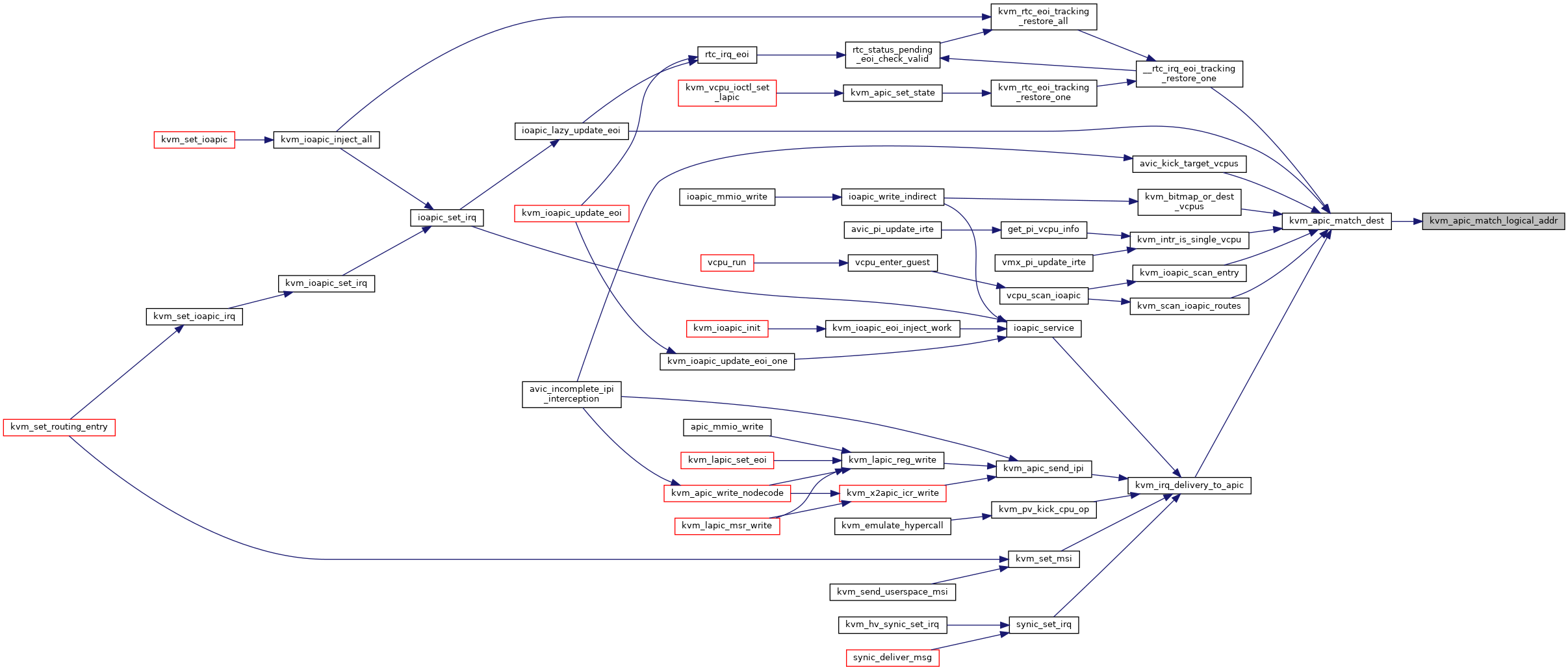

| bool | kvm_apic_match_dest (struct kvm_vcpu *vcpu, struct kvm_lapic *source, int shorthand, unsigned int dest, int dest_mode) |

| EXPORT_SYMBOL_GPL (kvm_apic_match_dest) | |

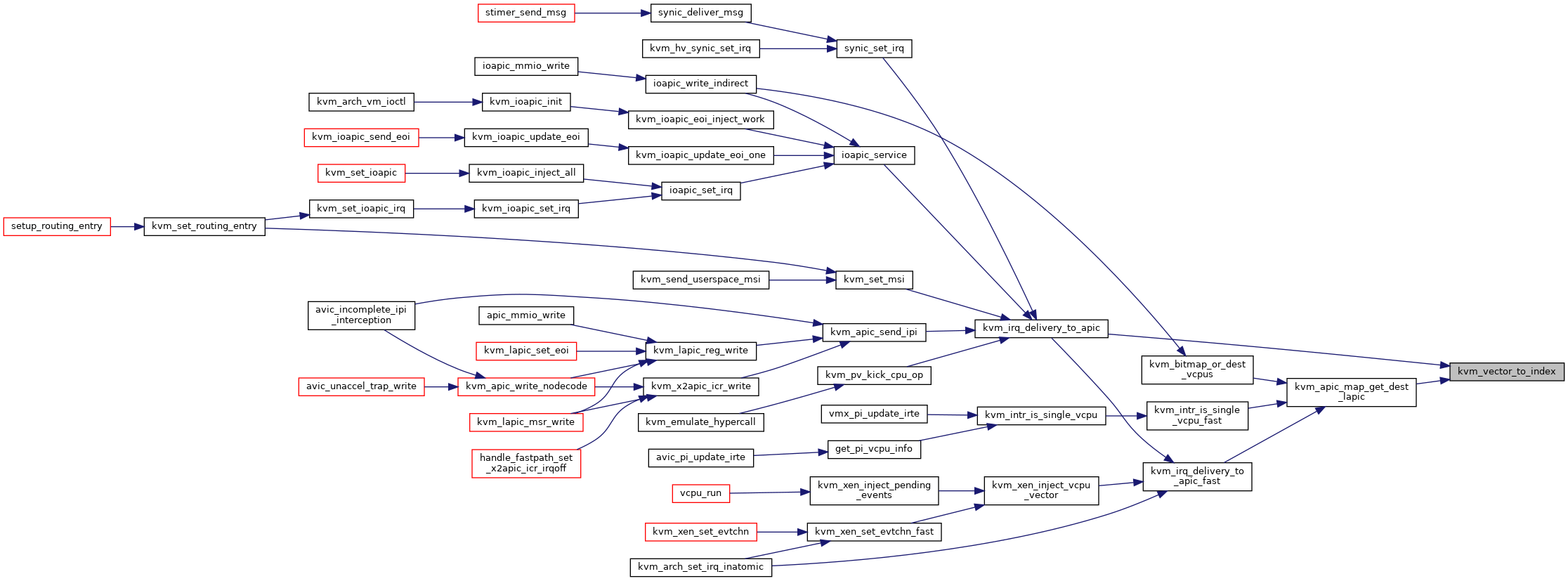

| int | kvm_vector_to_index (u32 vector, u32 dest_vcpus, const unsigned long *bitmap, u32 bitmap_size) |

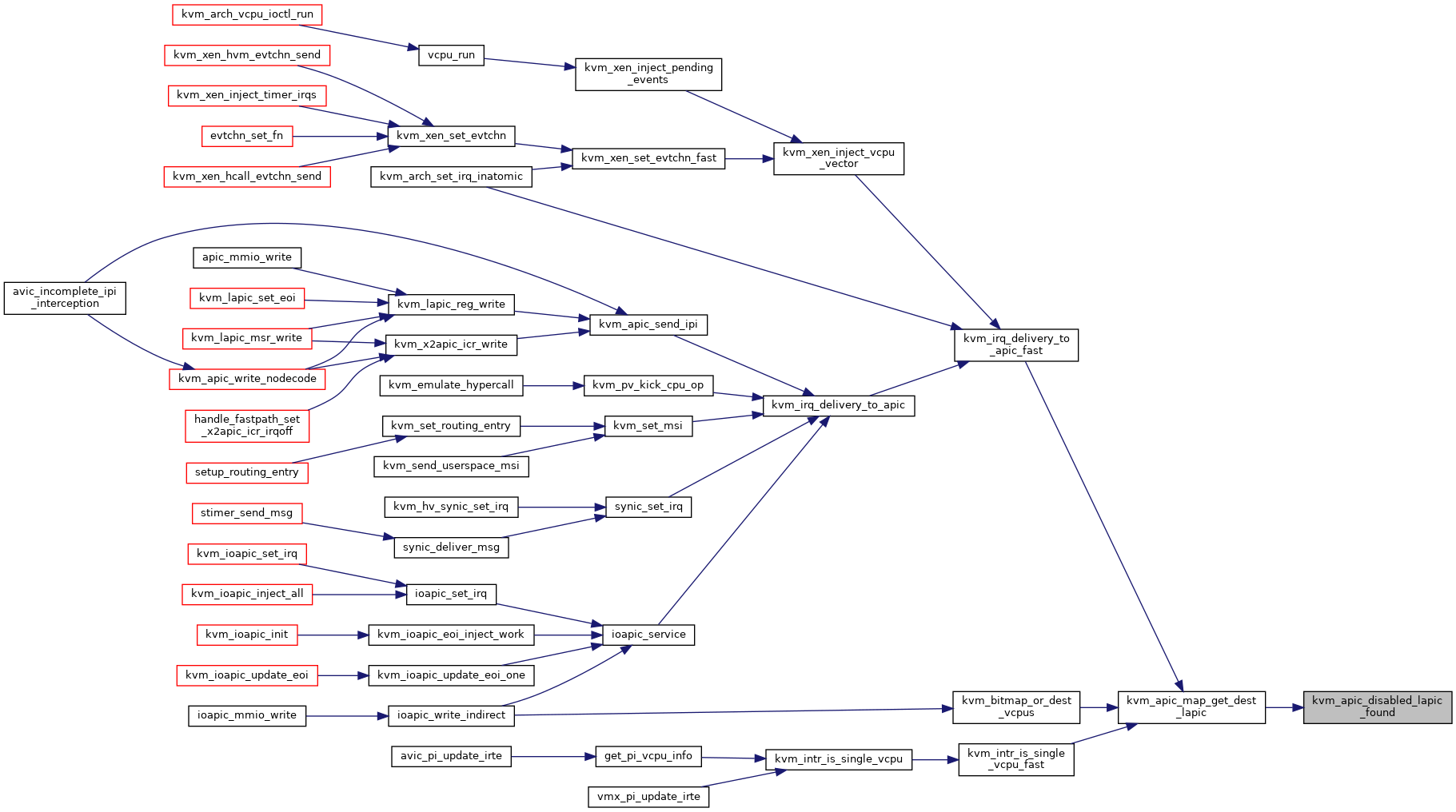

| static void | kvm_apic_disabled_lapic_found (struct kvm *kvm) |

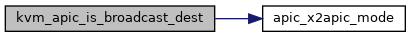

| static bool | kvm_apic_is_broadcast_dest (struct kvm *kvm, struct kvm_lapic **src, struct kvm_lapic_irq *irq, struct kvm_apic_map *map) |

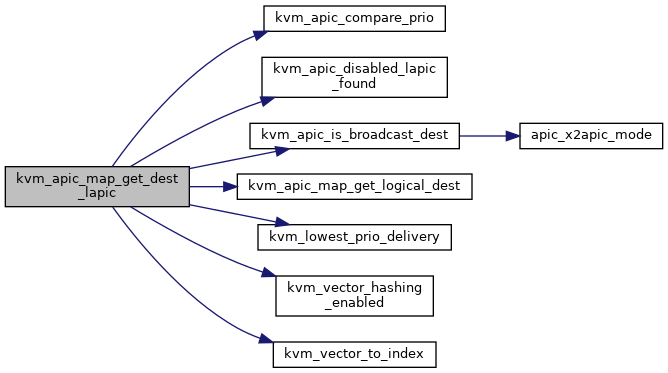

| static bool | kvm_apic_map_get_dest_lapic (struct kvm *kvm, struct kvm_lapic **src, struct kvm_lapic_irq *irq, struct kvm_apic_map *map, struct kvm_lapic ***dst, unsigned long *bitmap) |

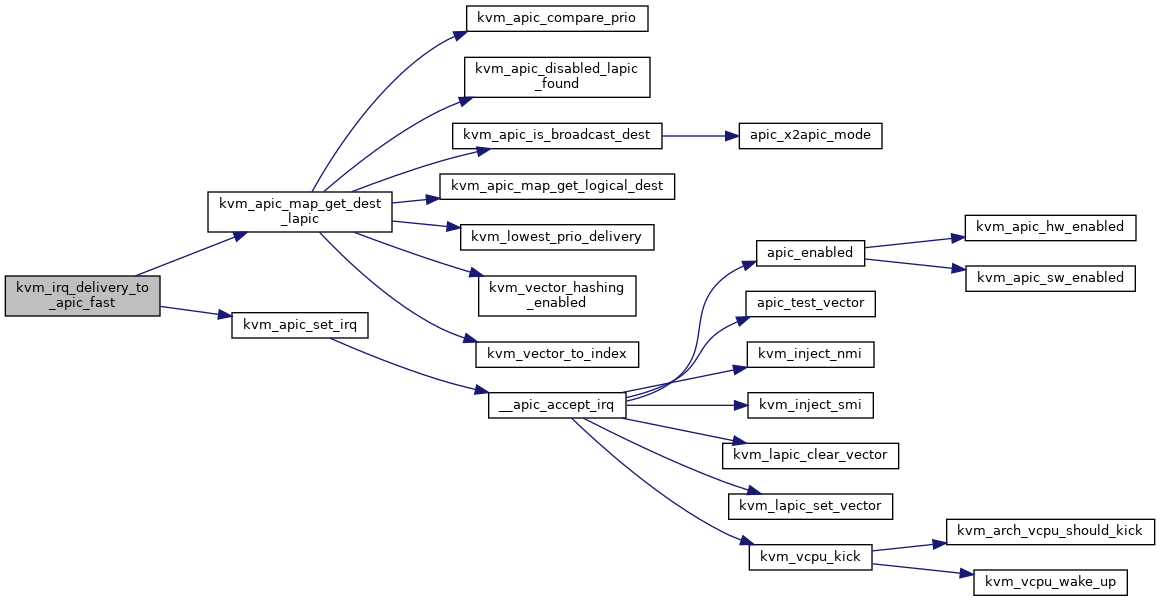

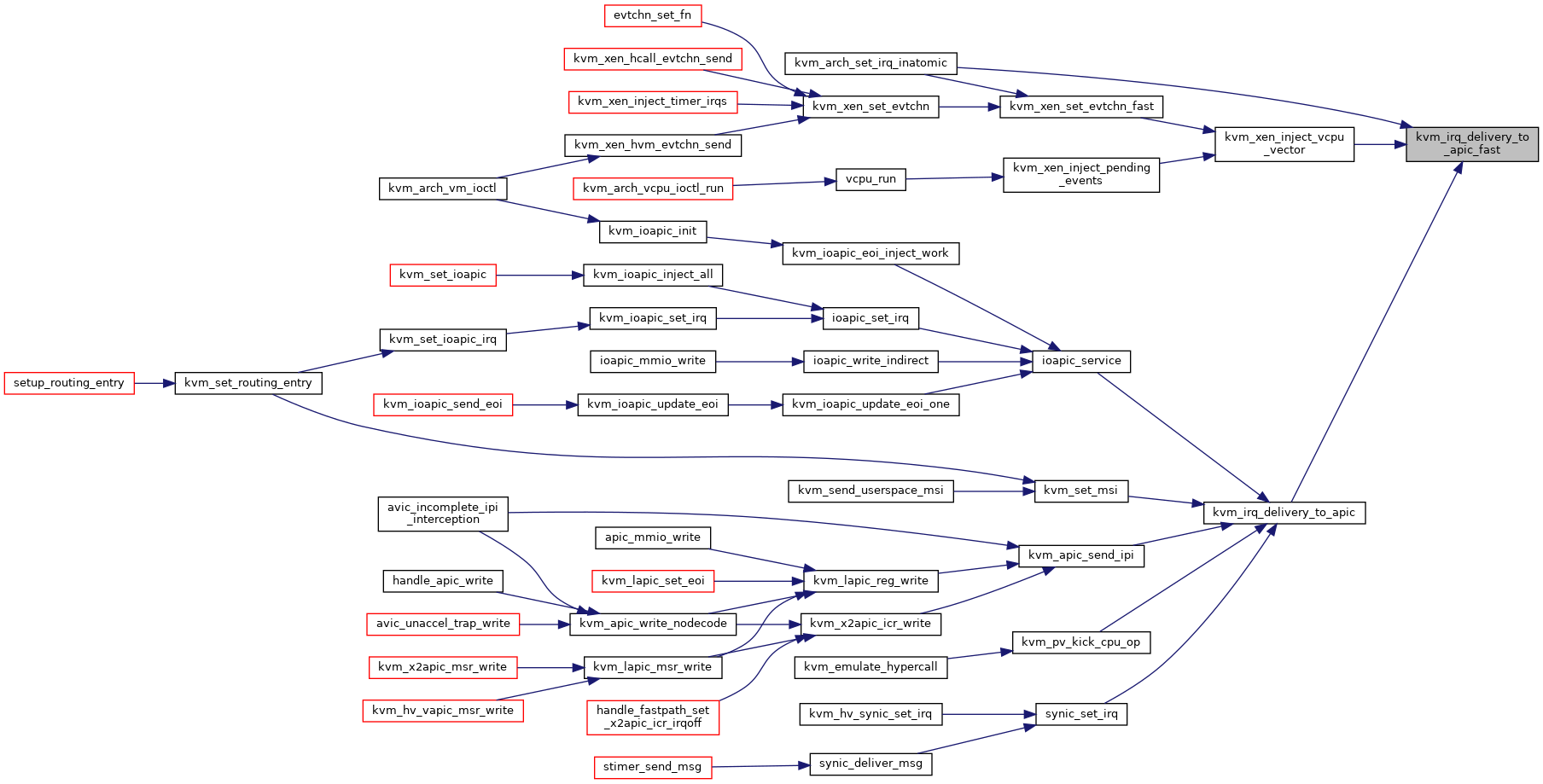

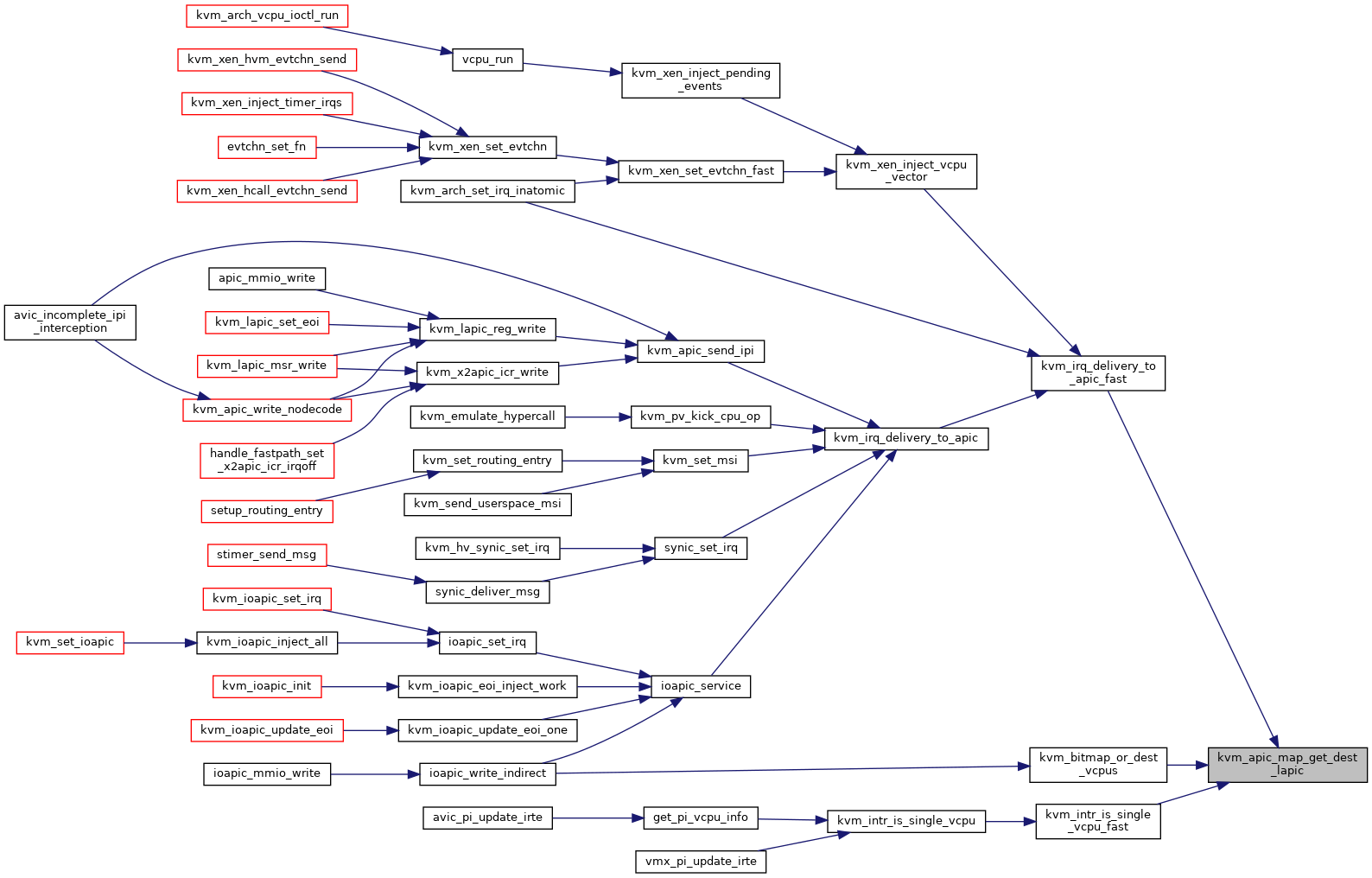

| bool | kvm_irq_delivery_to_apic_fast (struct kvm *kvm, struct kvm_lapic *src, struct kvm_lapic_irq *irq, int *r, struct dest_map *dest_map) |

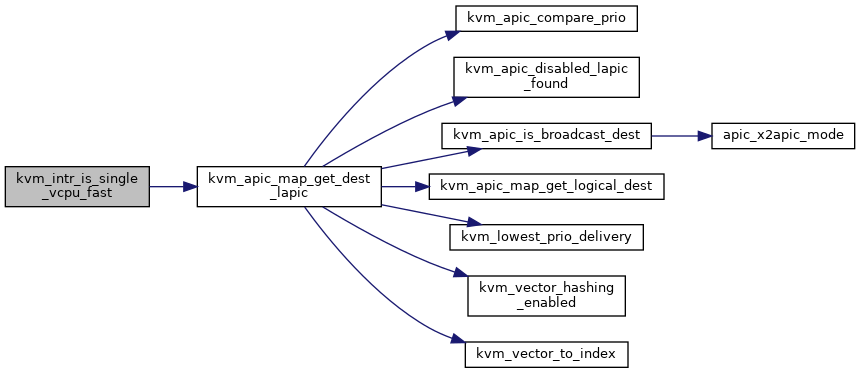

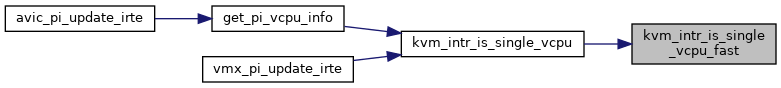

| bool | kvm_intr_is_single_vcpu_fast (struct kvm *kvm, struct kvm_lapic_irq *irq, struct kvm_vcpu **dest_vcpu) |

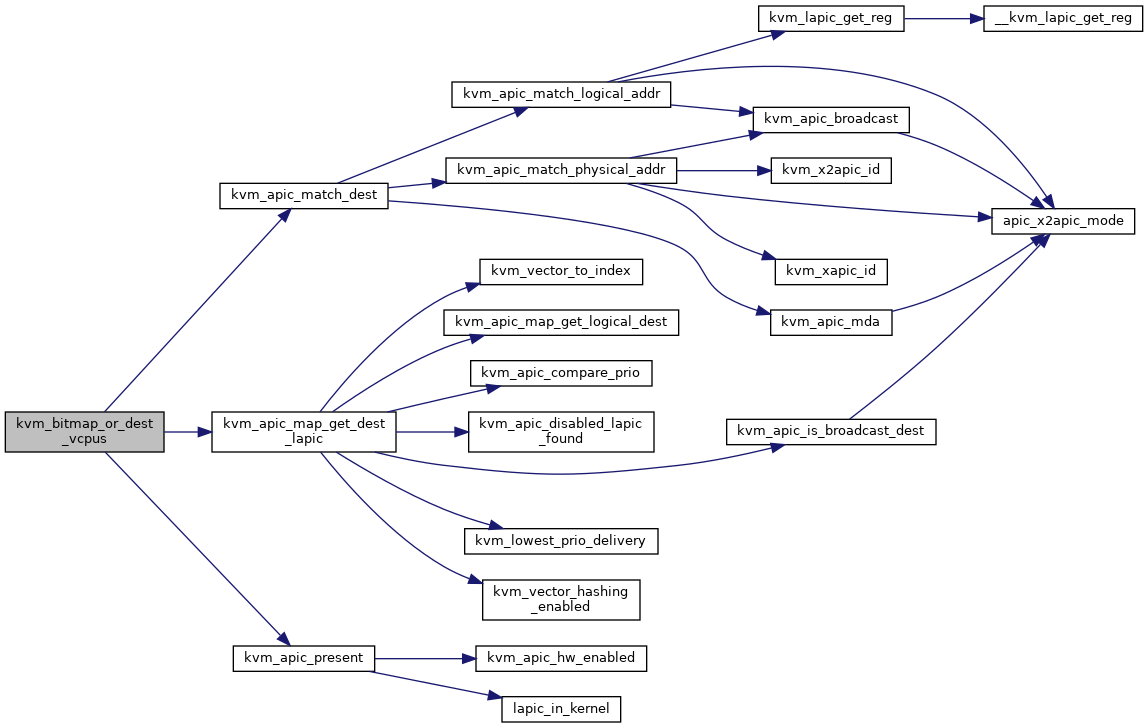

| void | kvm_bitmap_or_dest_vcpus (struct kvm *kvm, struct kvm_lapic_irq *irq, unsigned long *vcpu_bitmap) |

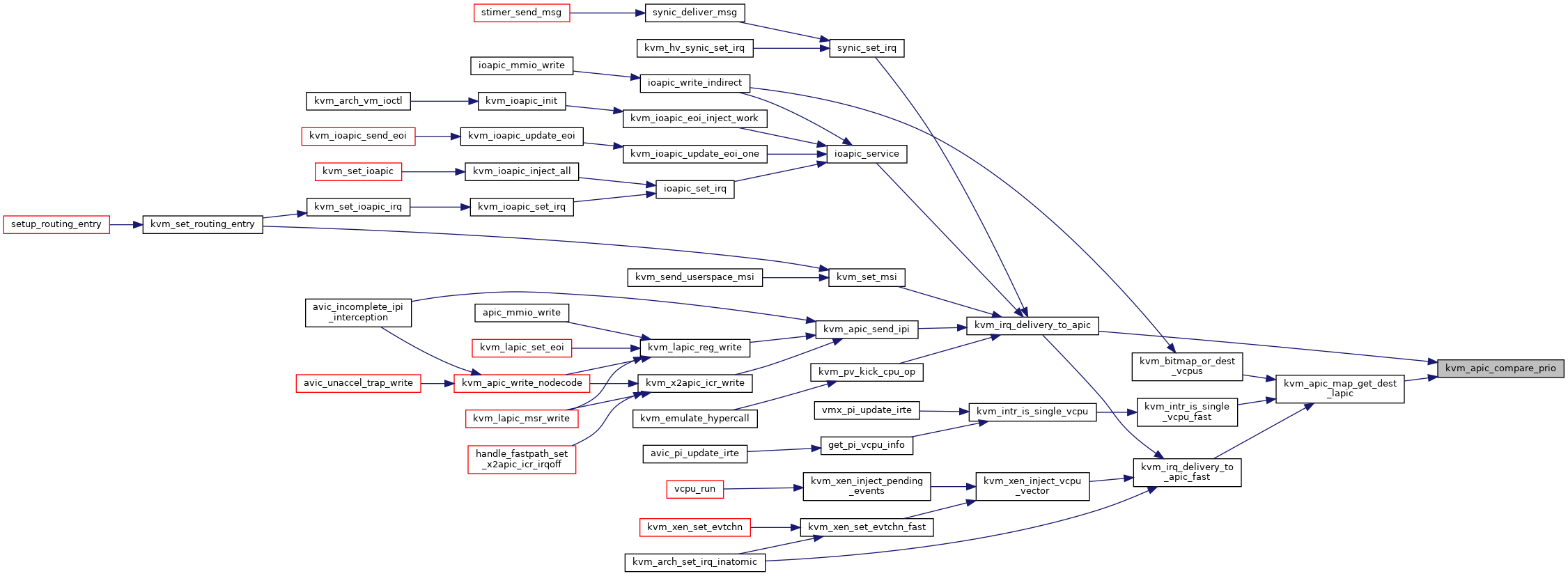

| int | kvm_apic_compare_prio (struct kvm_vcpu *vcpu1, struct kvm_vcpu *vcpu2) |

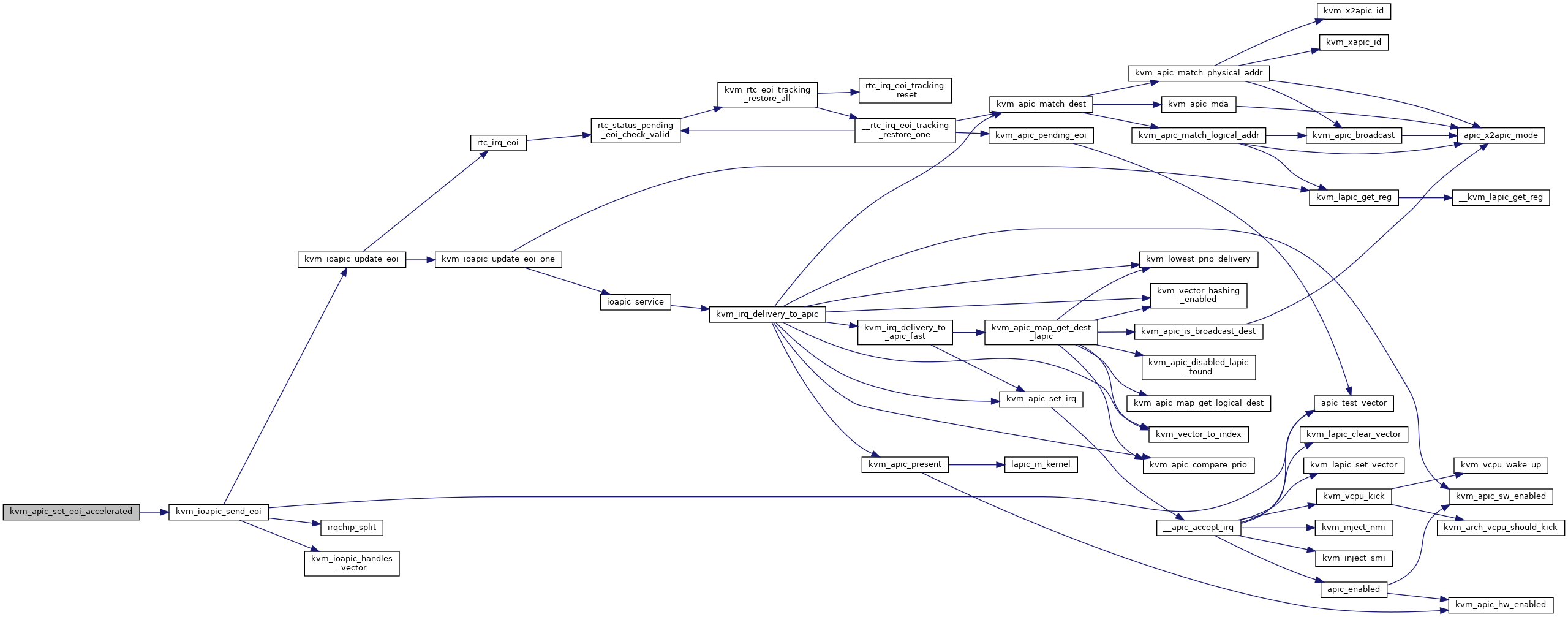

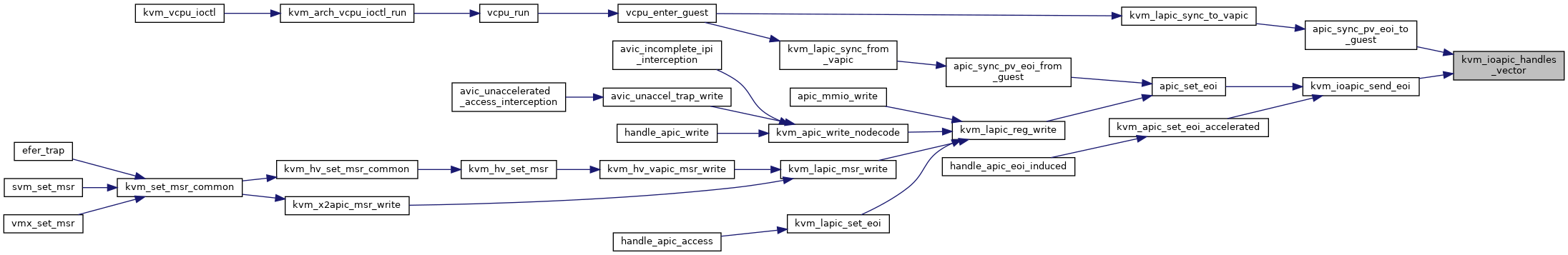

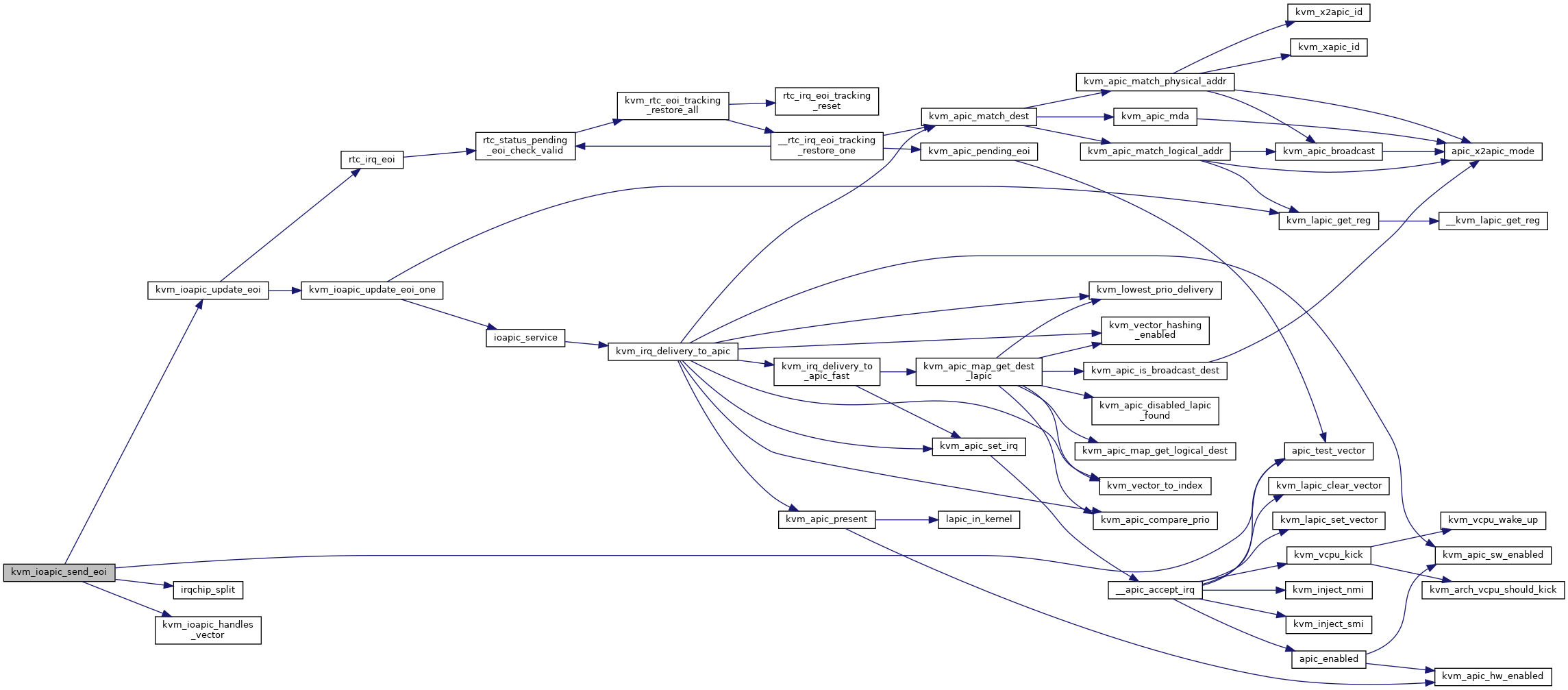

| static bool | kvm_ioapic_handles_vector (struct kvm_lapic *apic, int vector) |

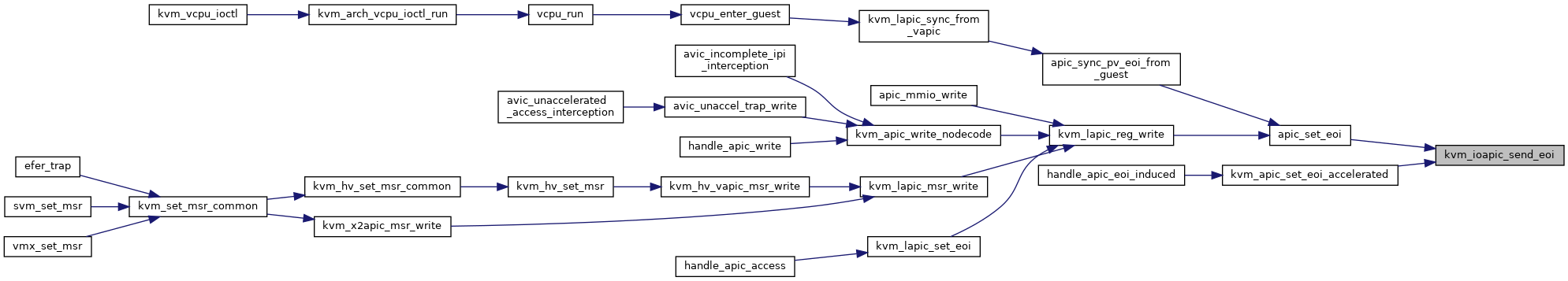

| static void | kvm_ioapic_send_eoi (struct kvm_lapic *apic, int vector) |

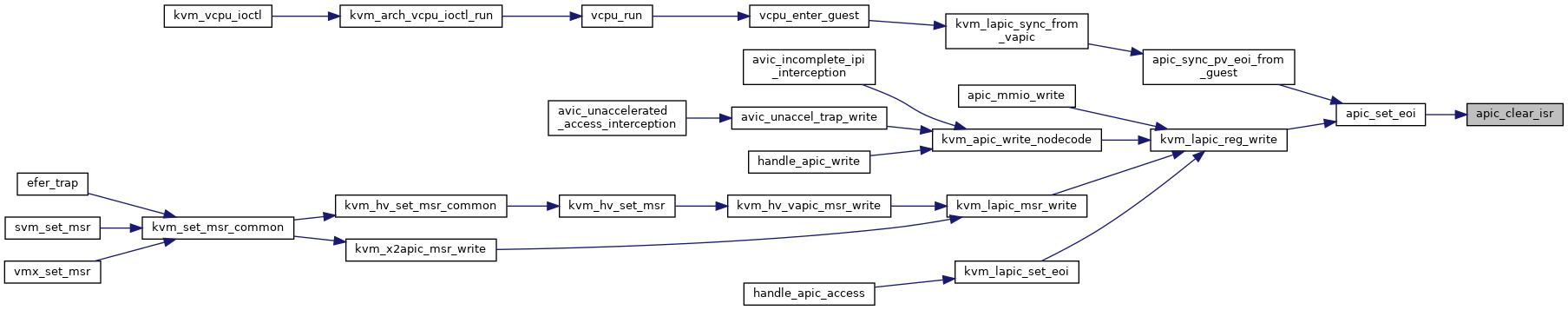

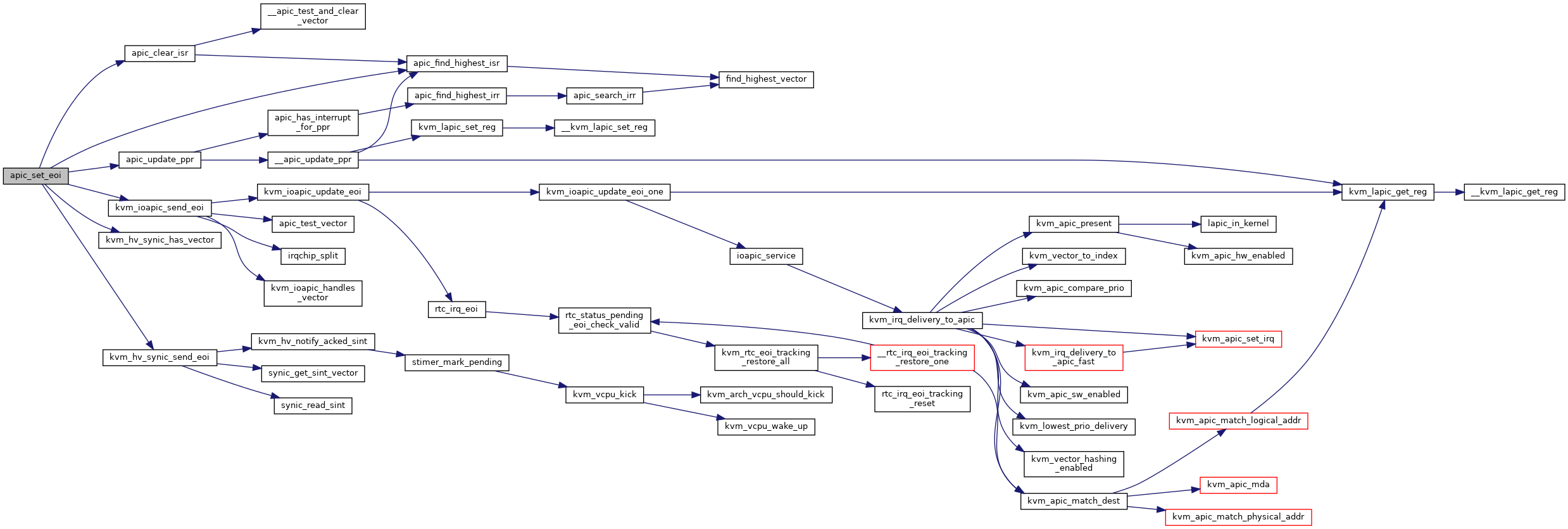

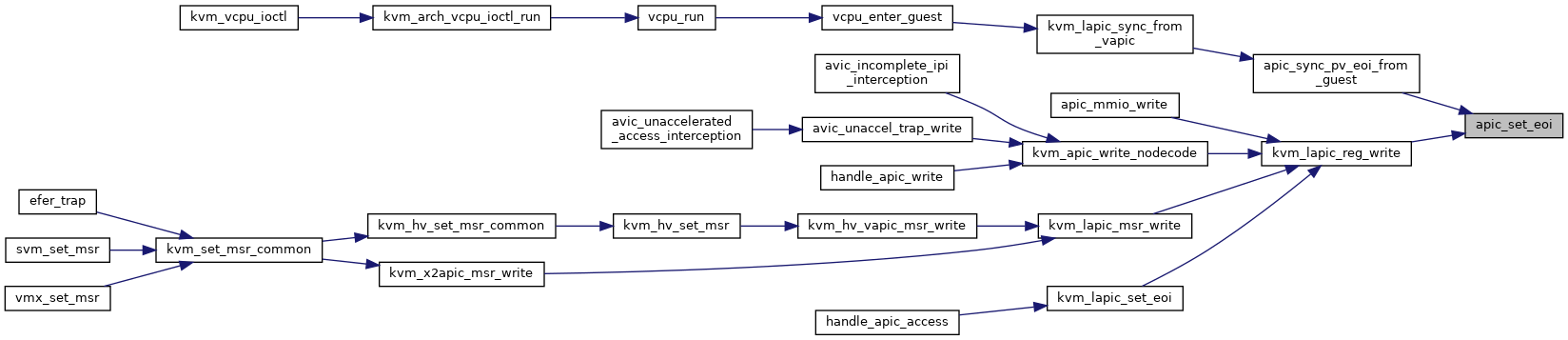

| static int | apic_set_eoi (struct kvm_lapic *apic) |

| void | kvm_apic_set_eoi_accelerated (struct kvm_vcpu *vcpu, int vector) |

| EXPORT_SYMBOL_GPL (kvm_apic_set_eoi_accelerated) | |

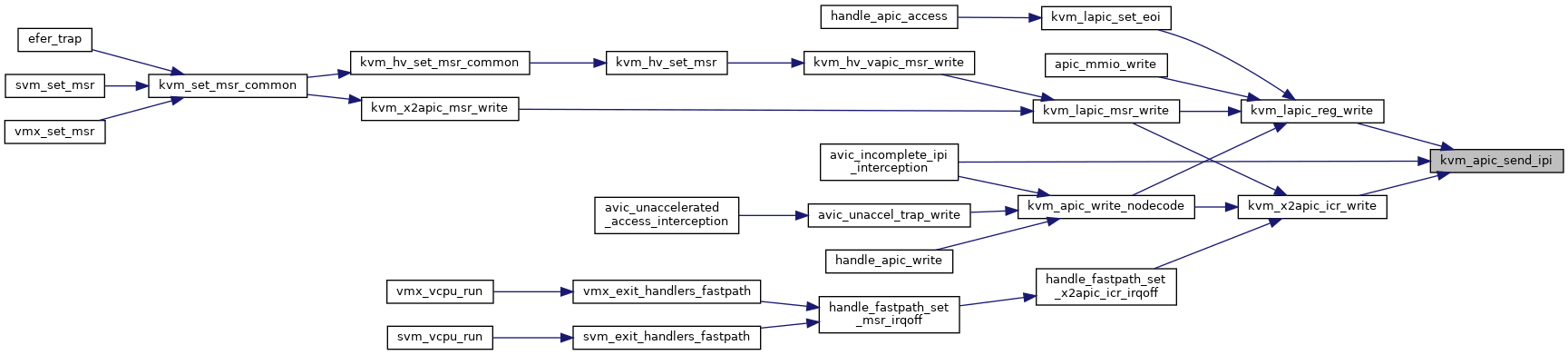

| void | kvm_apic_send_ipi (struct kvm_lapic *apic, u32 icr_low, u32 icr_high) |

| EXPORT_SYMBOL_GPL (kvm_apic_send_ipi) | |

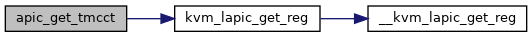

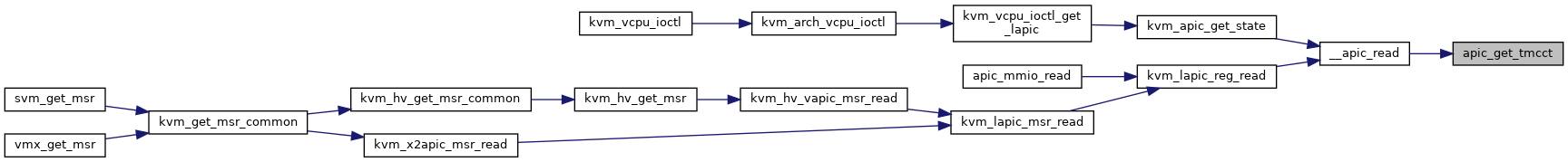

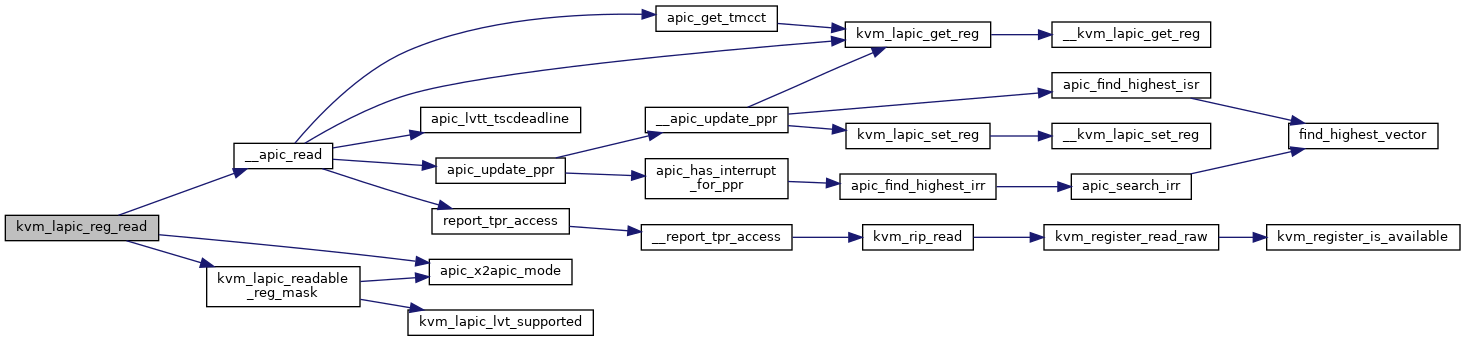

| static u32 | apic_get_tmcct (struct kvm_lapic *apic) |

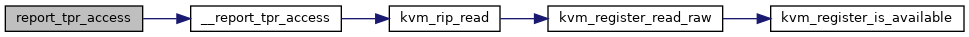

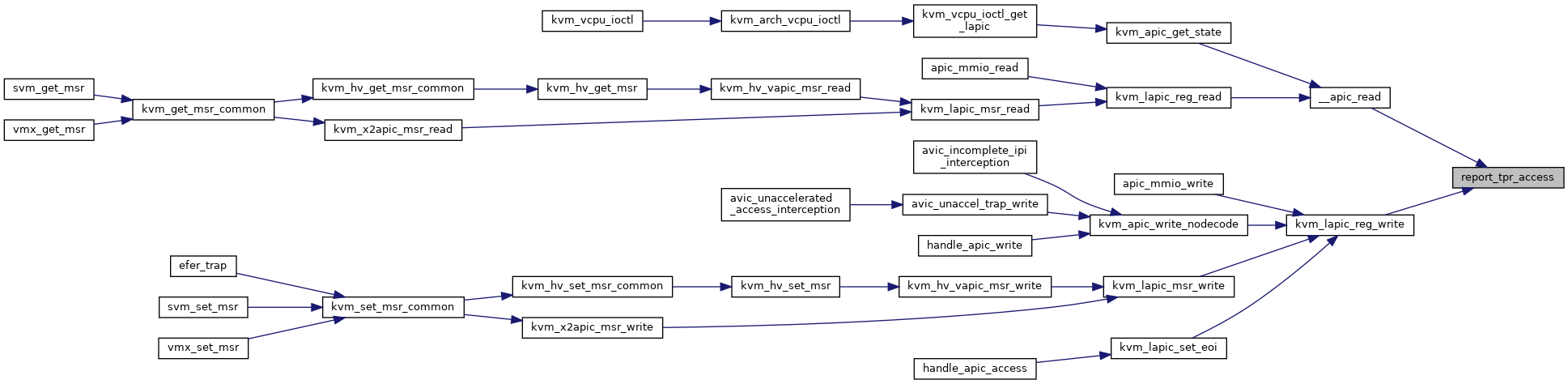

| static void | __report_tpr_access (struct kvm_lapic *apic, bool write) |

| static void | report_tpr_access (struct kvm_lapic *apic, bool write) |

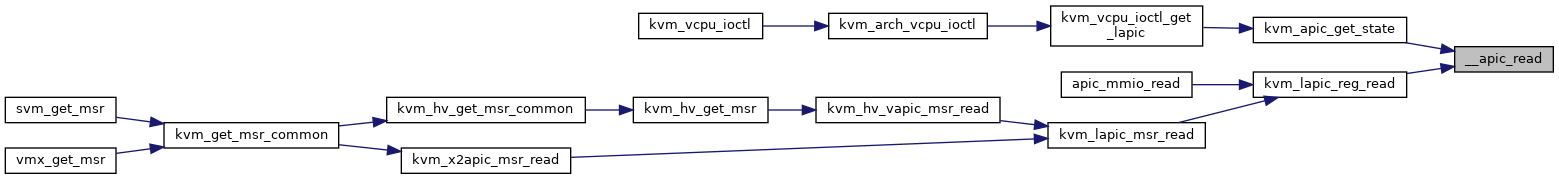

| static u32 | __apic_read (struct kvm_lapic *apic, unsigned int offset) |

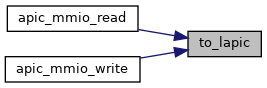

| static struct kvm_lapic * | to_lapic (struct kvm_io_device *dev) |

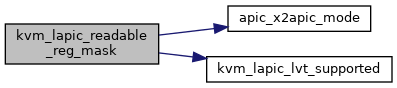

| u64 | kvm_lapic_readable_reg_mask (struct kvm_lapic *apic) |

| EXPORT_SYMBOL_GPL (kvm_lapic_readable_reg_mask) | |

| static int | kvm_lapic_reg_read (struct kvm_lapic *apic, u32 offset, int len, void *data) |

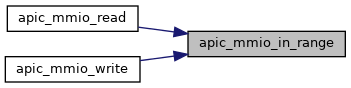

| static int | apic_mmio_in_range (struct kvm_lapic *apic, gpa_t addr) |

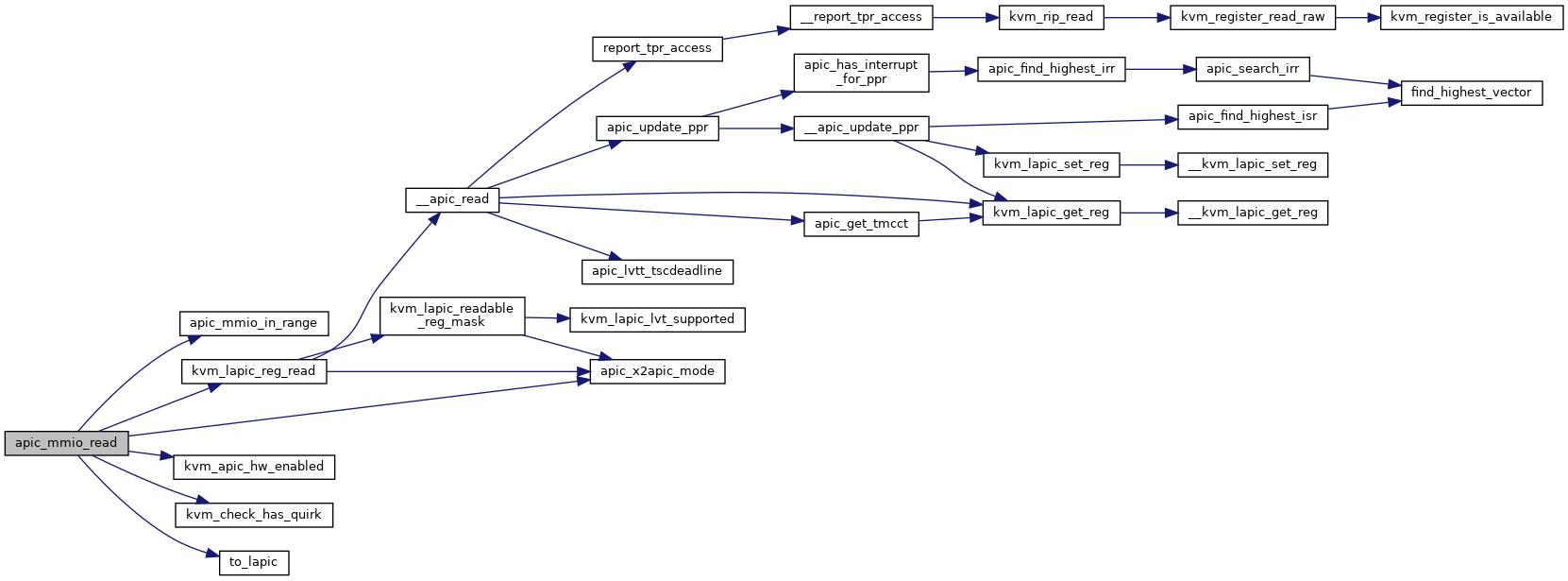

| static int | apic_mmio_read (struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t address, int len, void *data) |

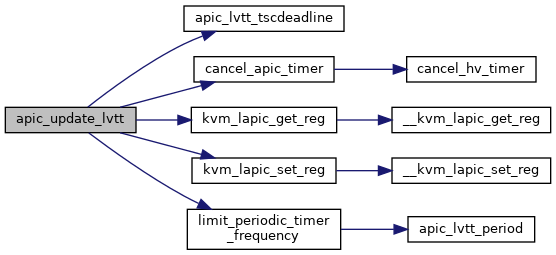

| static void | update_divide_count (struct kvm_lapic *apic) |

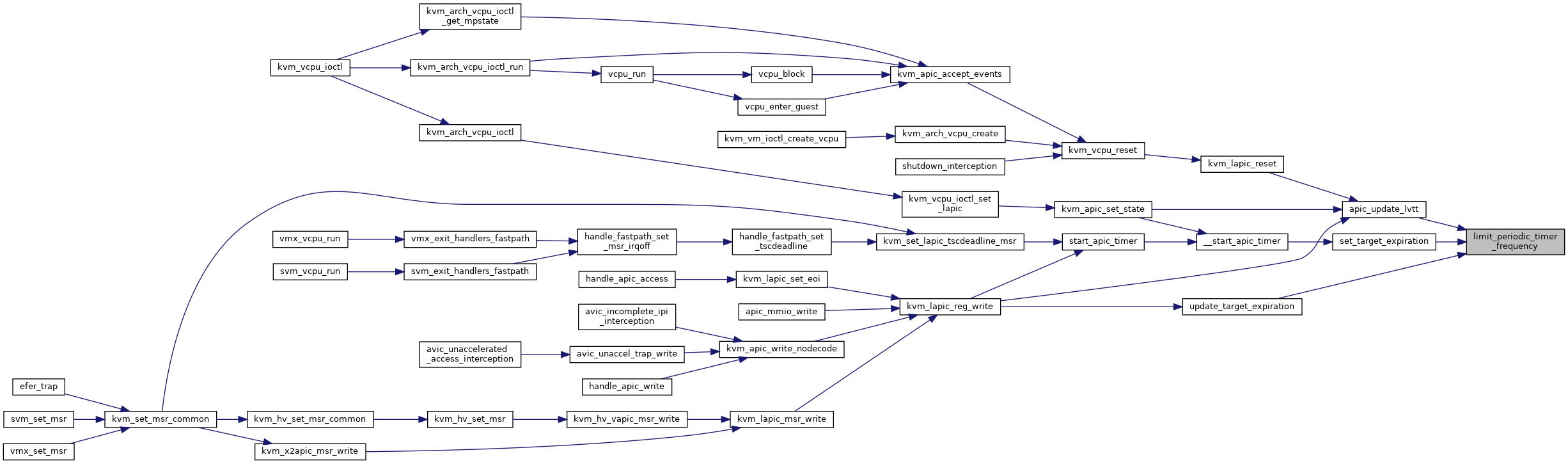

| static void | limit_periodic_timer_frequency (struct kvm_lapic *apic) |

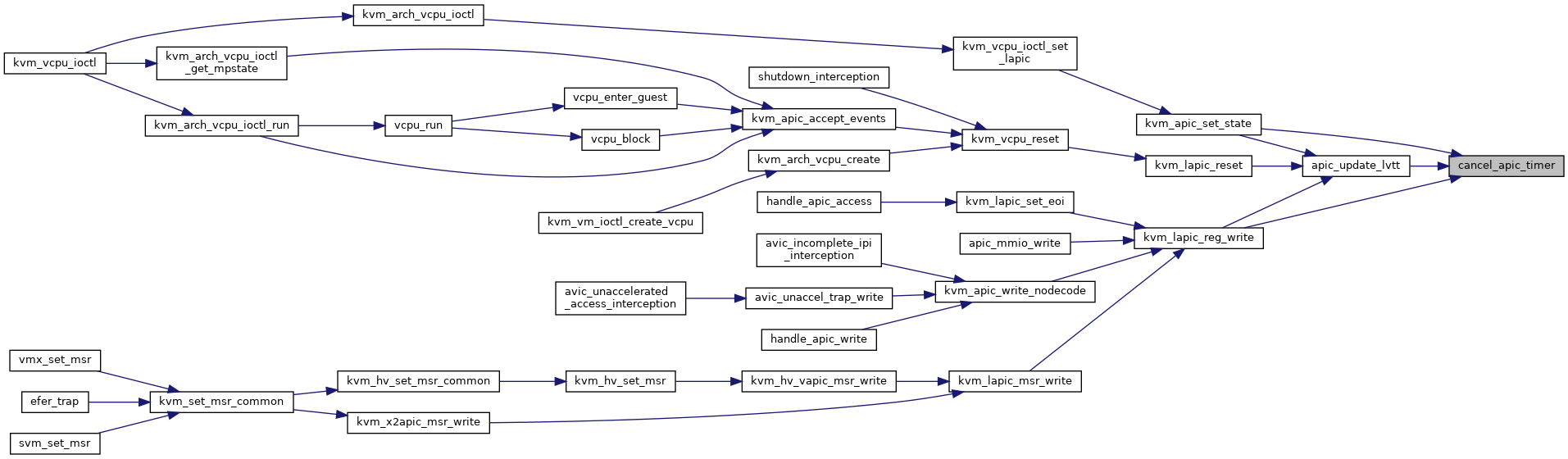

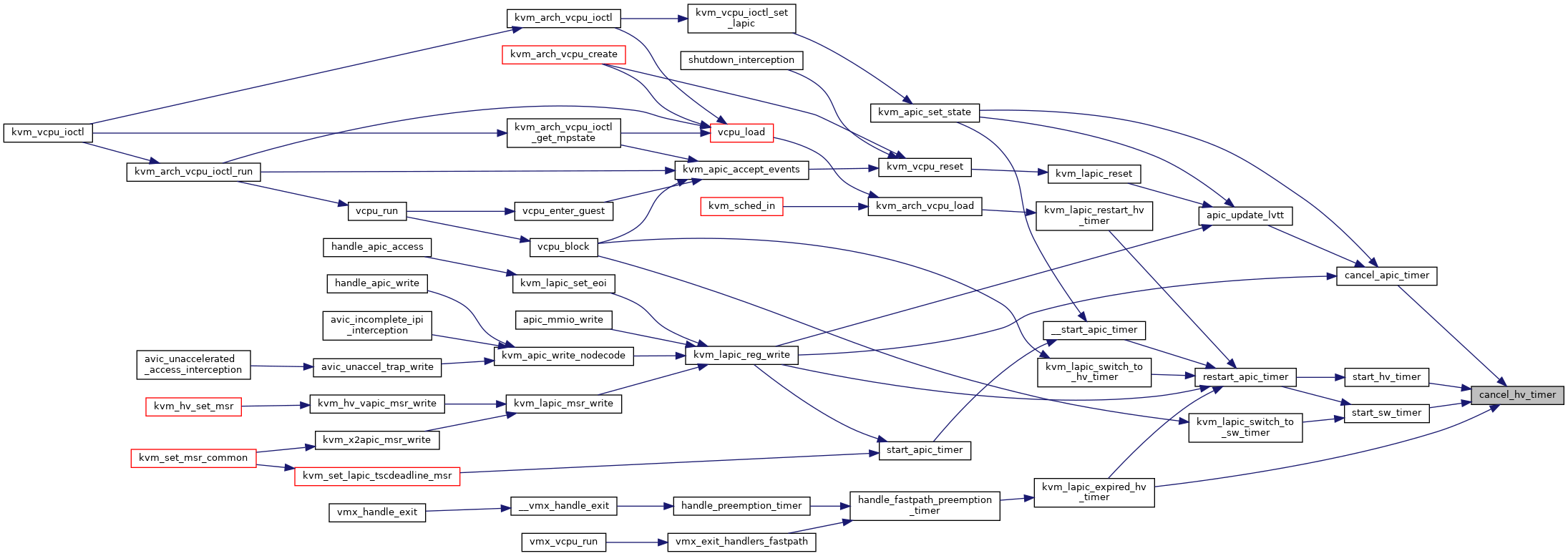

| static void | cancel_hv_timer (struct kvm_lapic *apic) |

| static void | cancel_apic_timer (struct kvm_lapic *apic) |

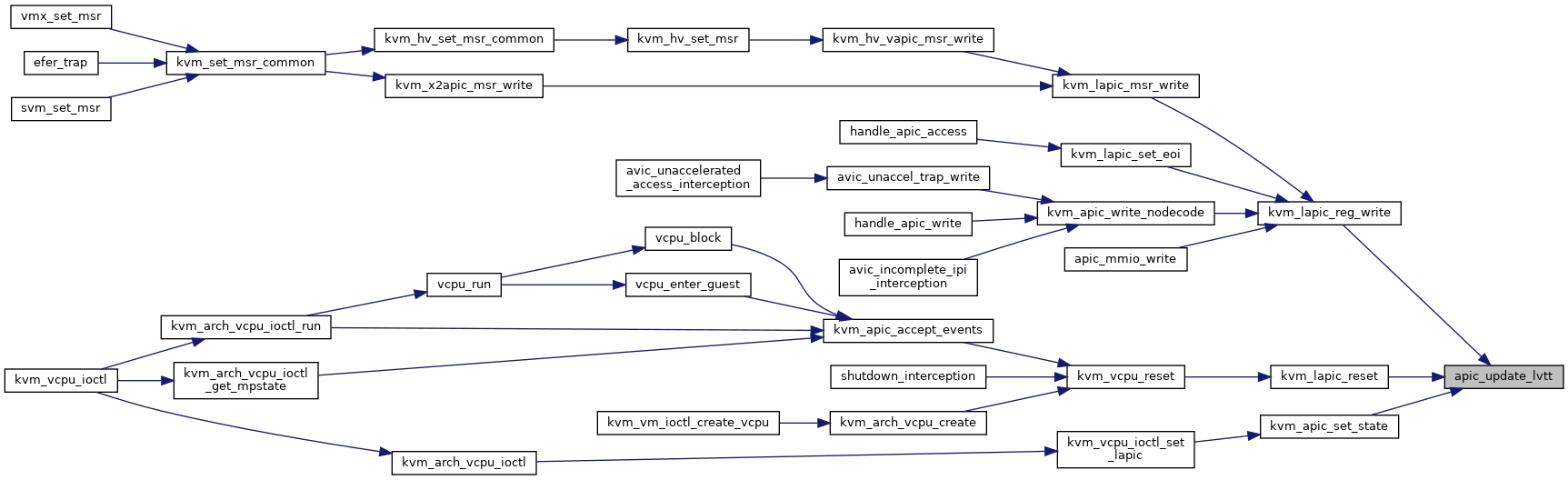

| static void | apic_update_lvtt (struct kvm_lapic *apic) |

| static bool | lapic_timer_int_injected (struct kvm_vcpu *vcpu) |

| static void | __wait_lapic_expire (struct kvm_vcpu *vcpu, u64 guest_cycles) |

| static void | adjust_lapic_timer_advance (struct kvm_vcpu *vcpu, s64 advance_expire_delta) |

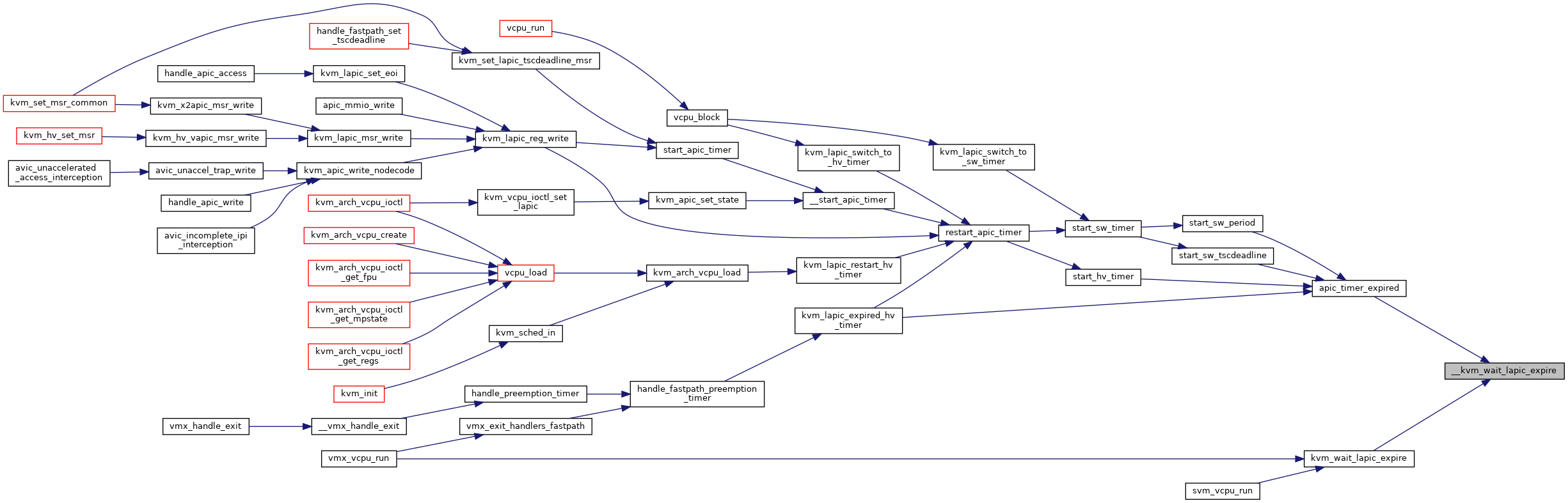

| static void | __kvm_wait_lapic_expire (struct kvm_vcpu *vcpu) |

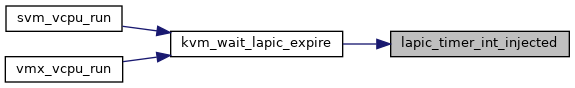

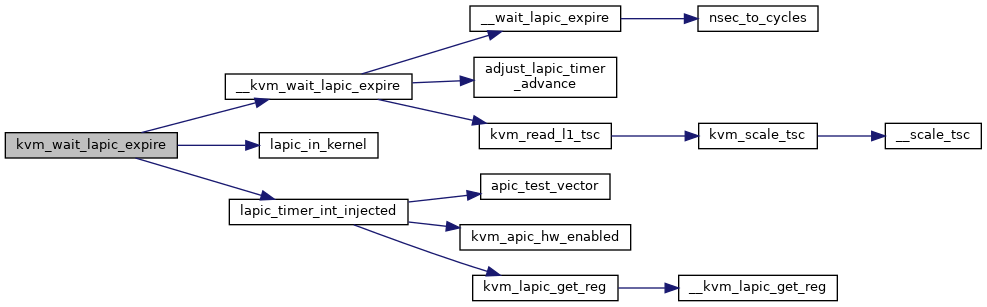

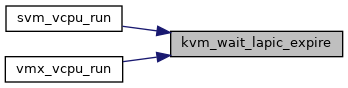

| void | kvm_wait_lapic_expire (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_wait_lapic_expire) | |

| static void | kvm_apic_inject_pending_timer_irqs (struct kvm_lapic *apic) |

| static void | apic_timer_expired (struct kvm_lapic *apic, bool from_timer_fn) |

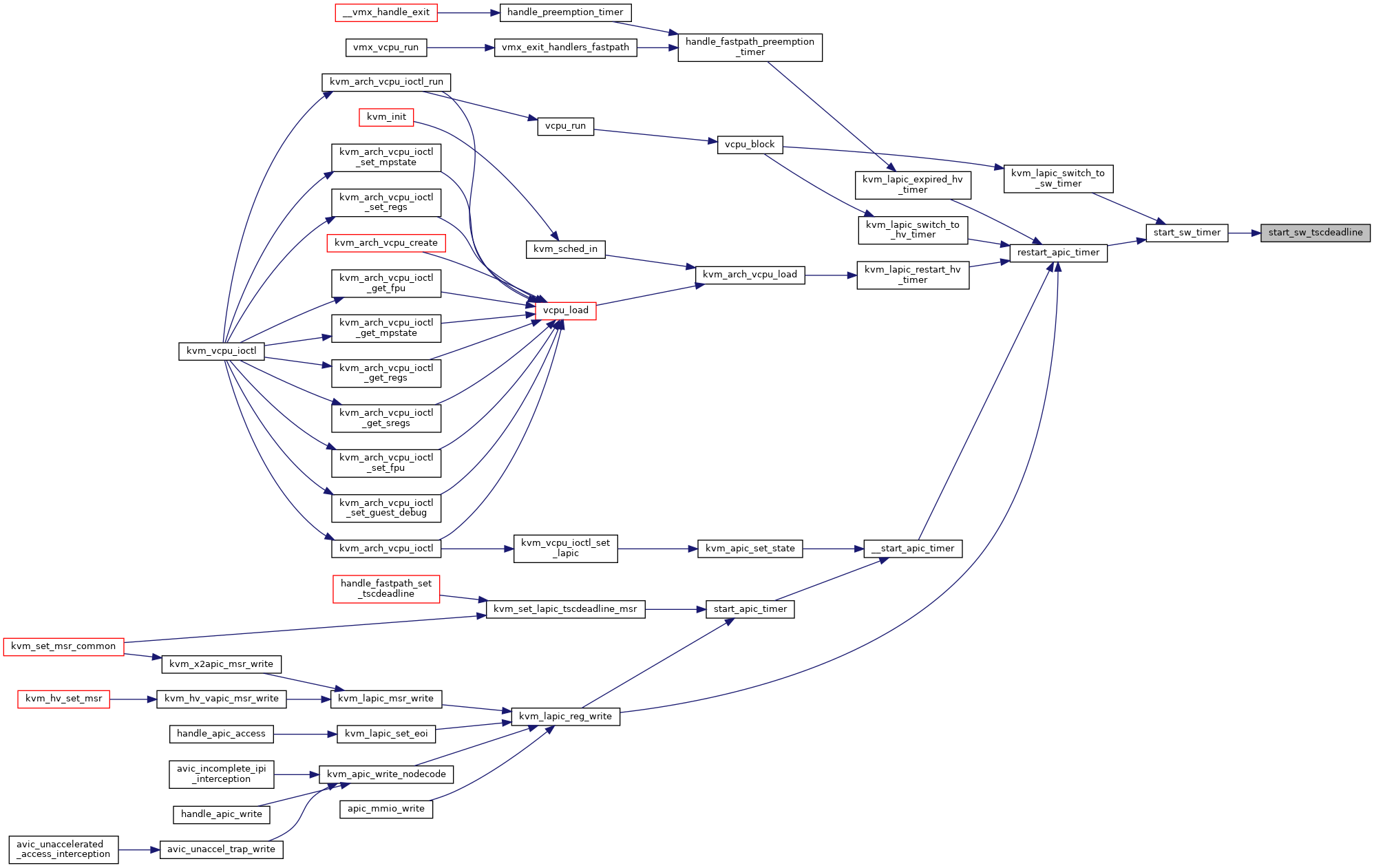

| static void | start_sw_tscdeadline (struct kvm_lapic *apic) |

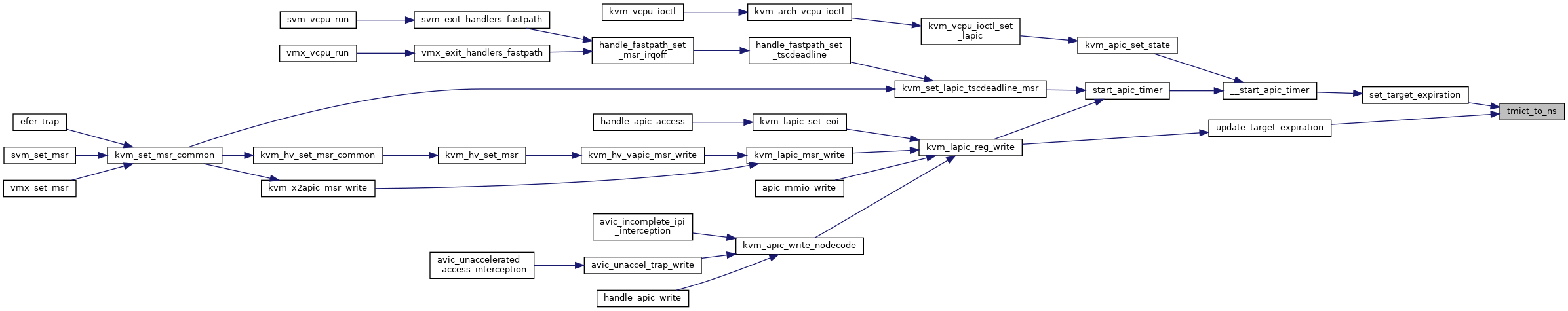

| static u64 | tmict_to_ns (struct kvm_lapic *apic, u32 tmict) |

| static void | update_target_expiration (struct kvm_lapic *apic, uint32_t old_divisor) |

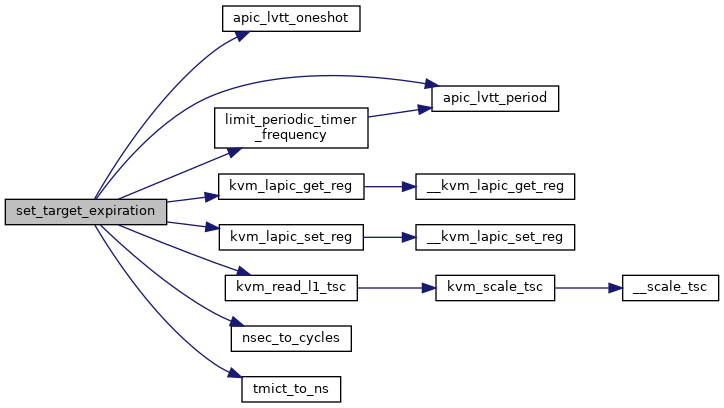

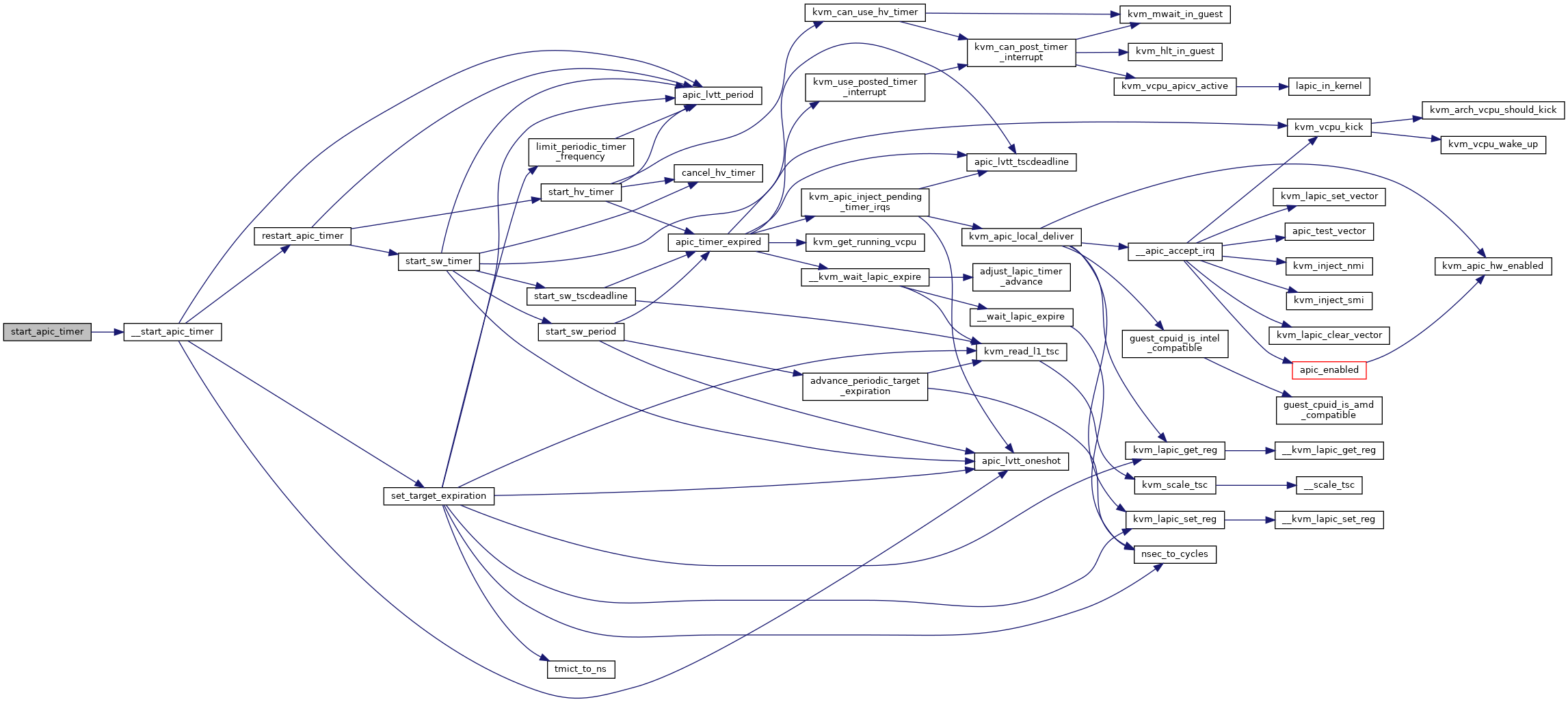

| static bool | set_target_expiration (struct kvm_lapic *apic, u32 count_reg) |

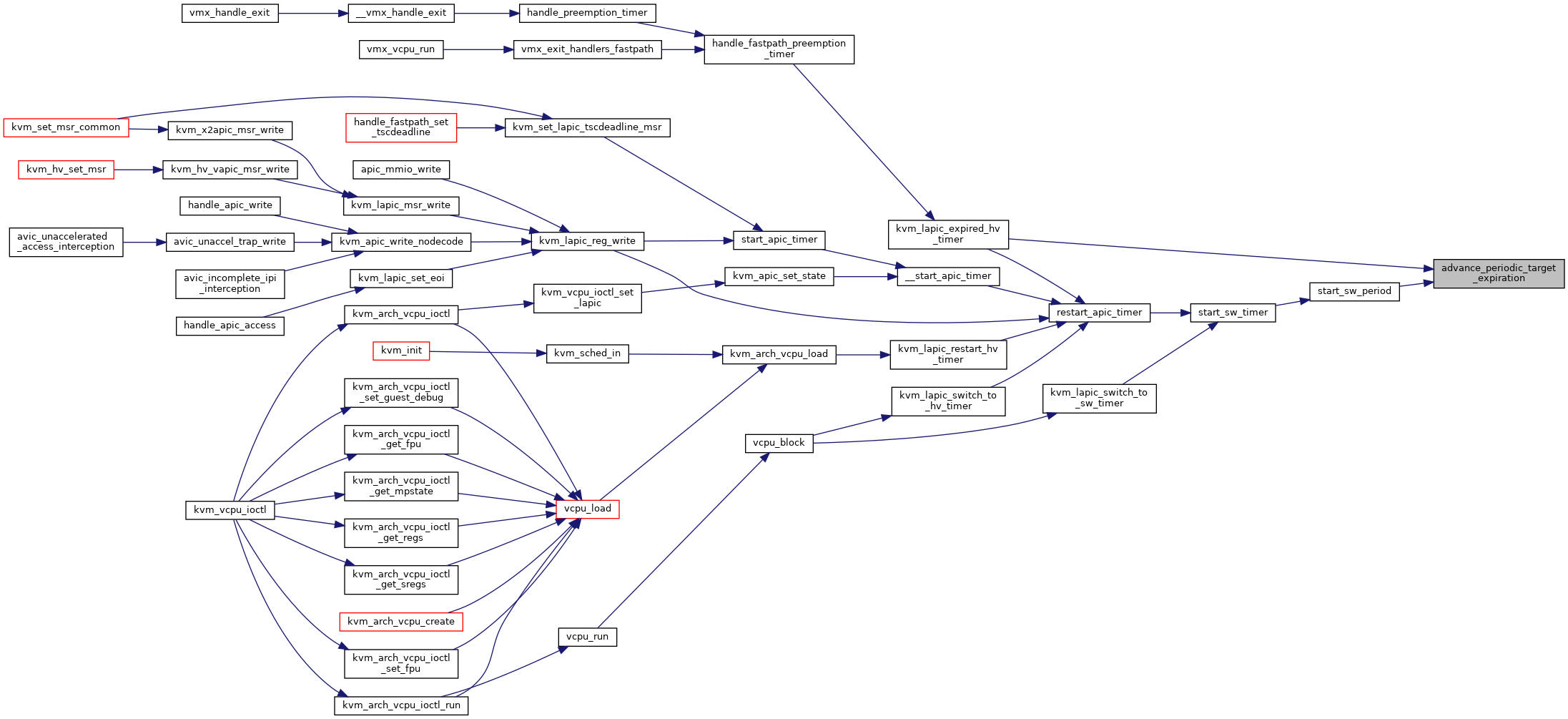

| static void | advance_periodic_target_expiration (struct kvm_lapic *apic) |

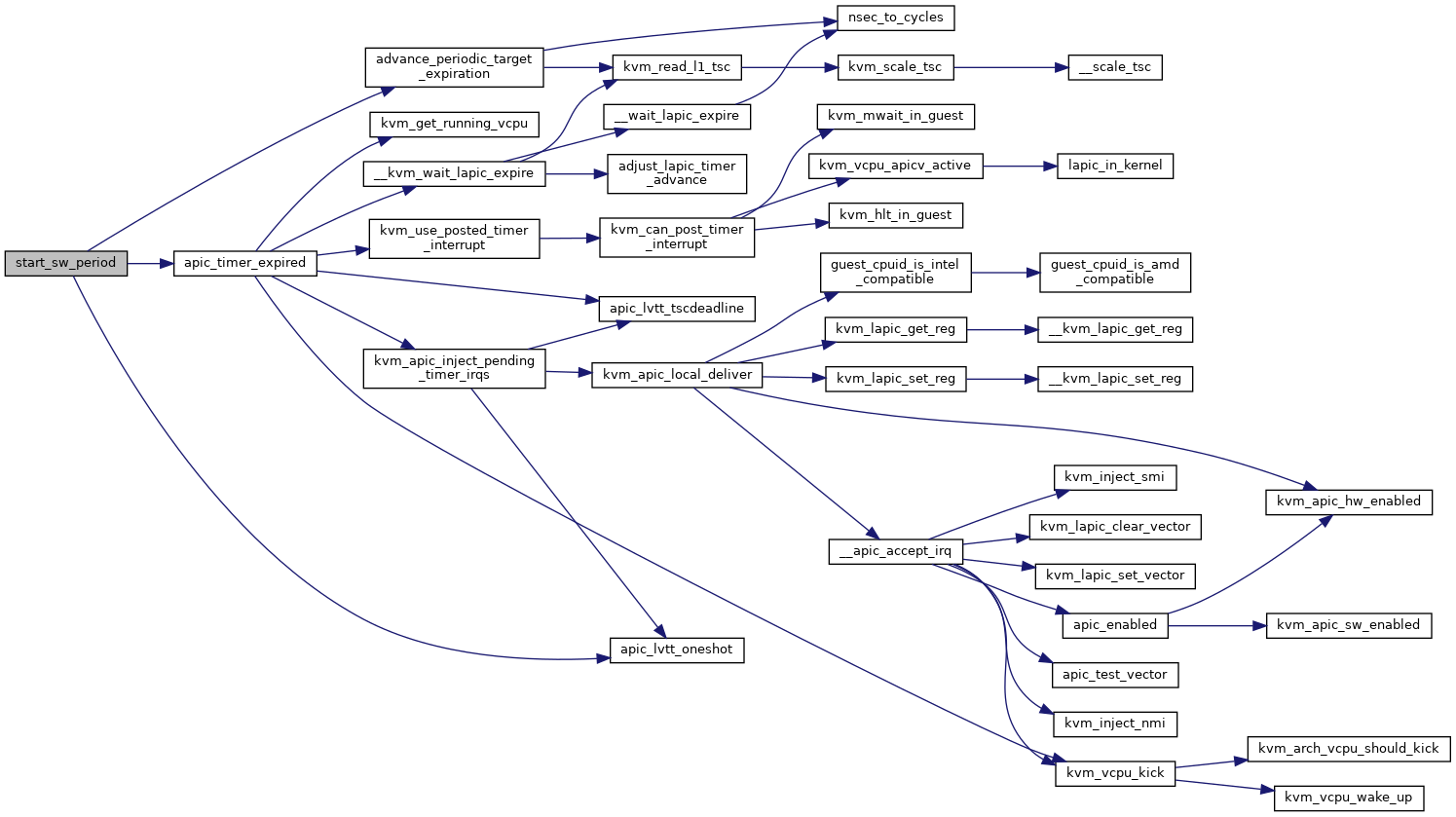

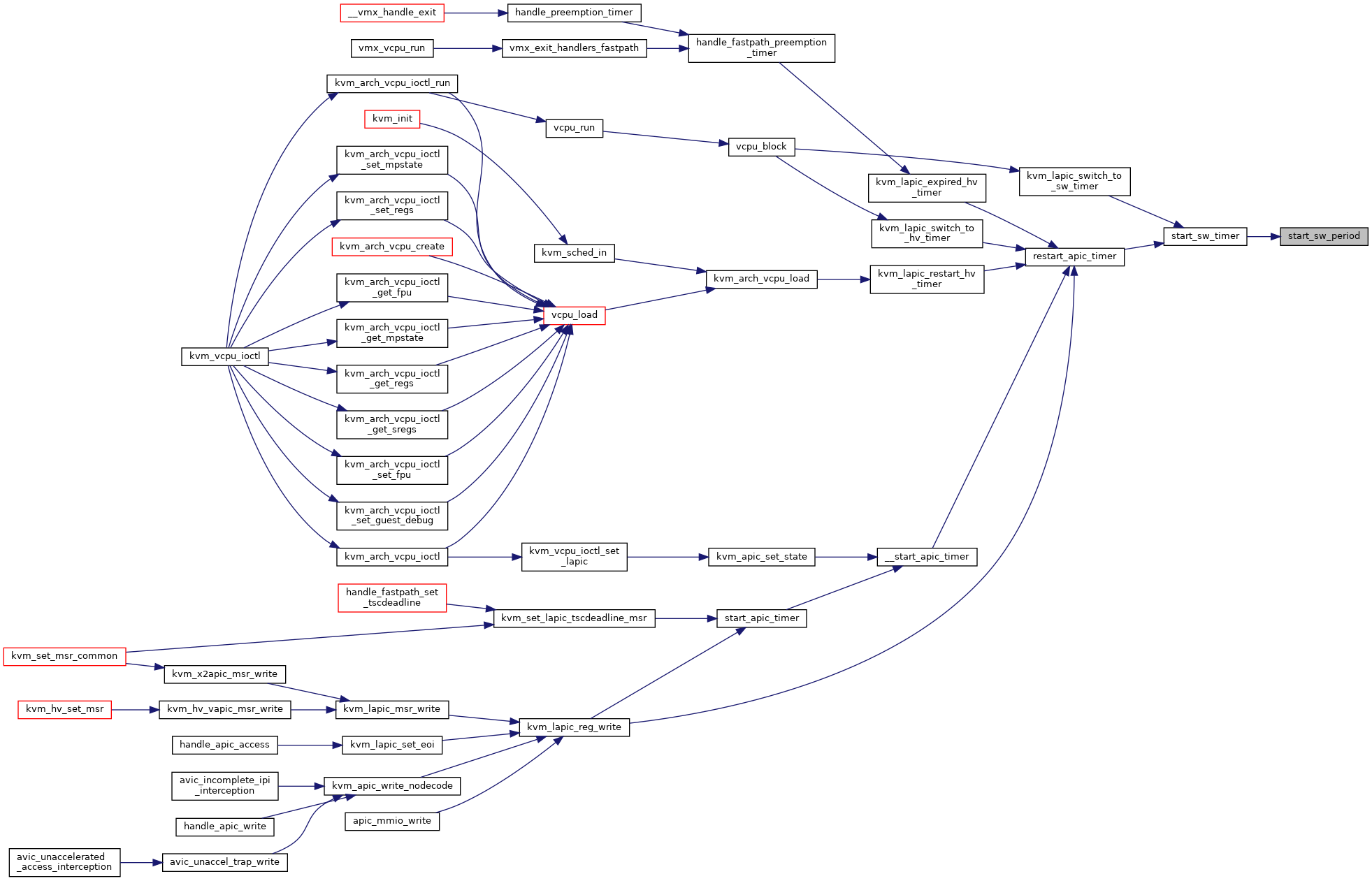

| static void | start_sw_period (struct kvm_lapic *apic) |

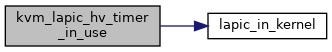

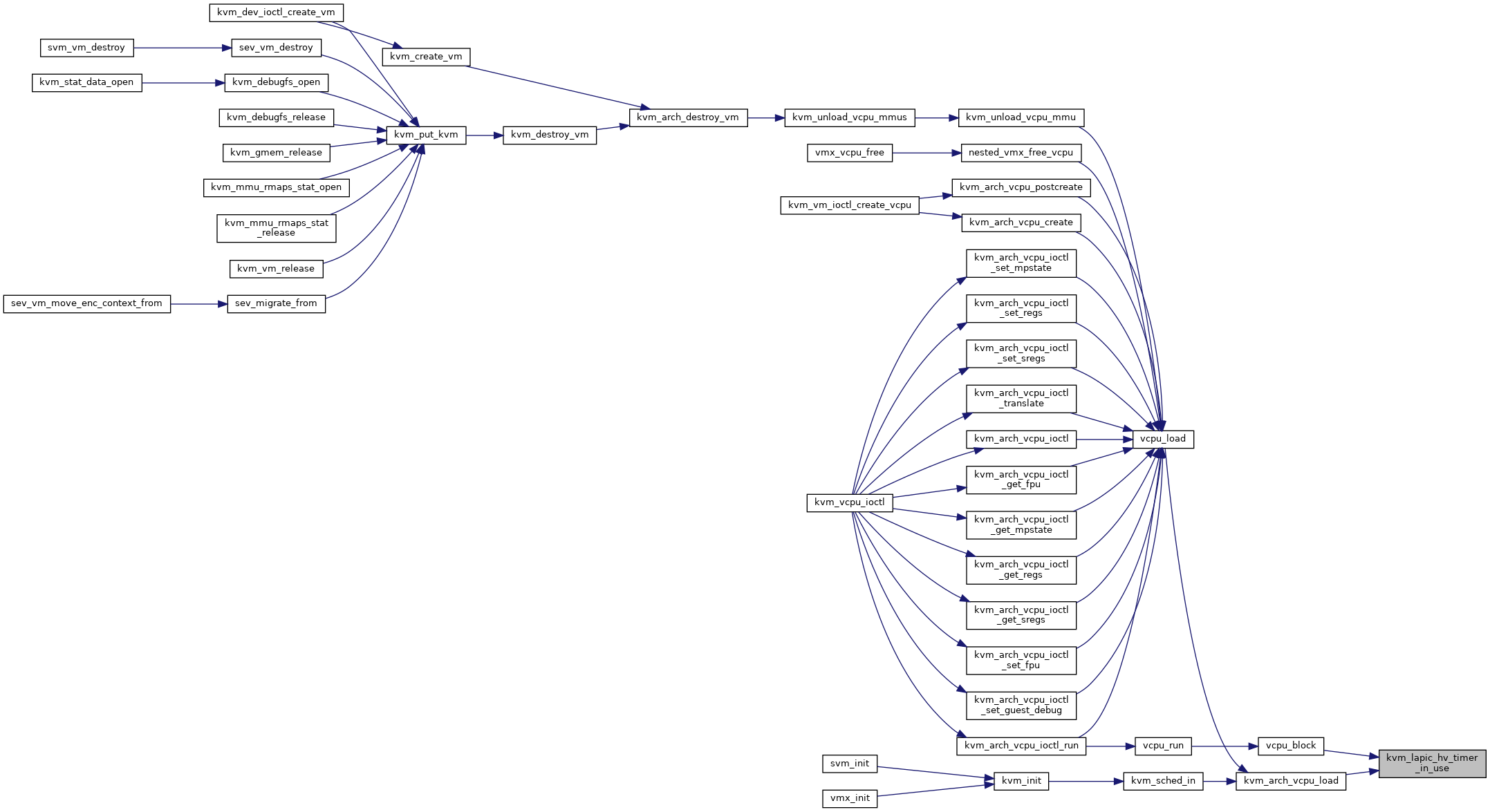

| bool | kvm_lapic_hv_timer_in_use (struct kvm_vcpu *vcpu) |

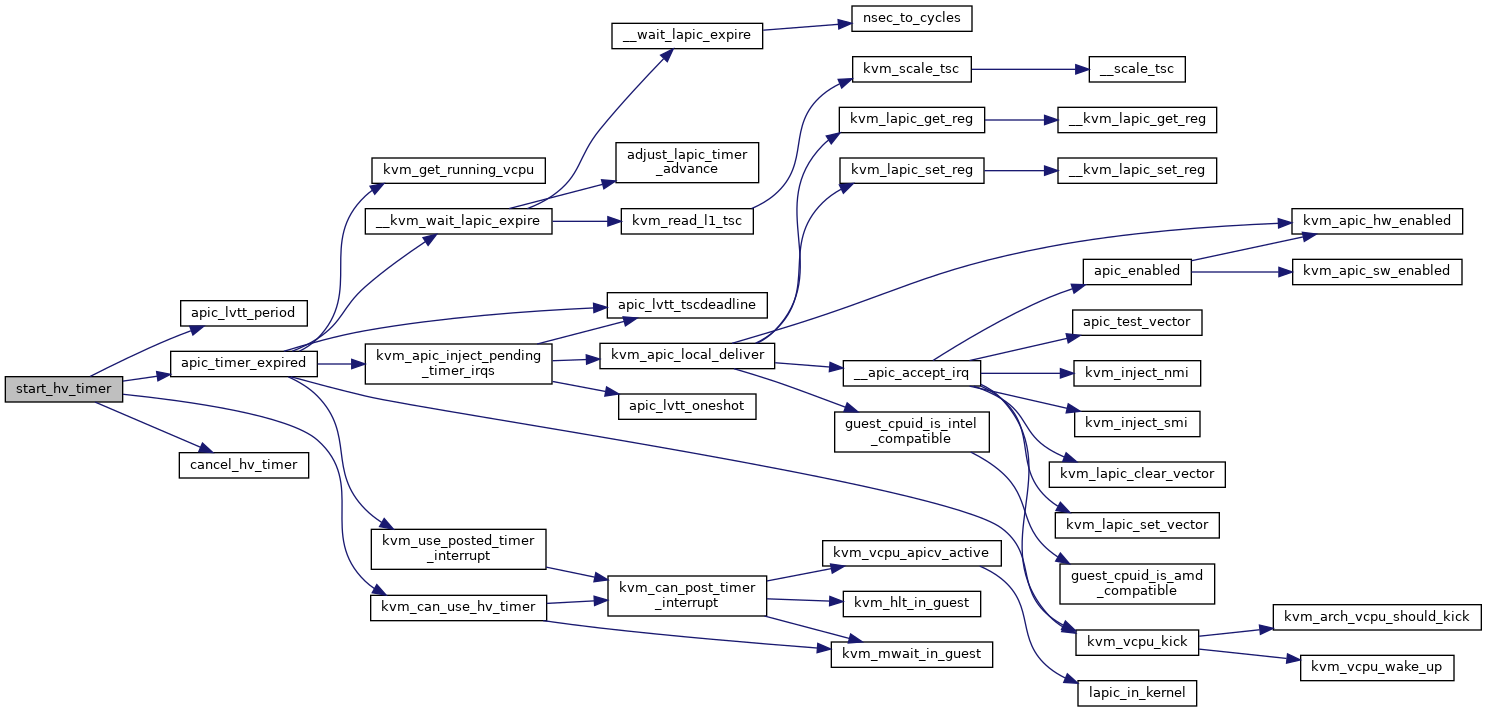

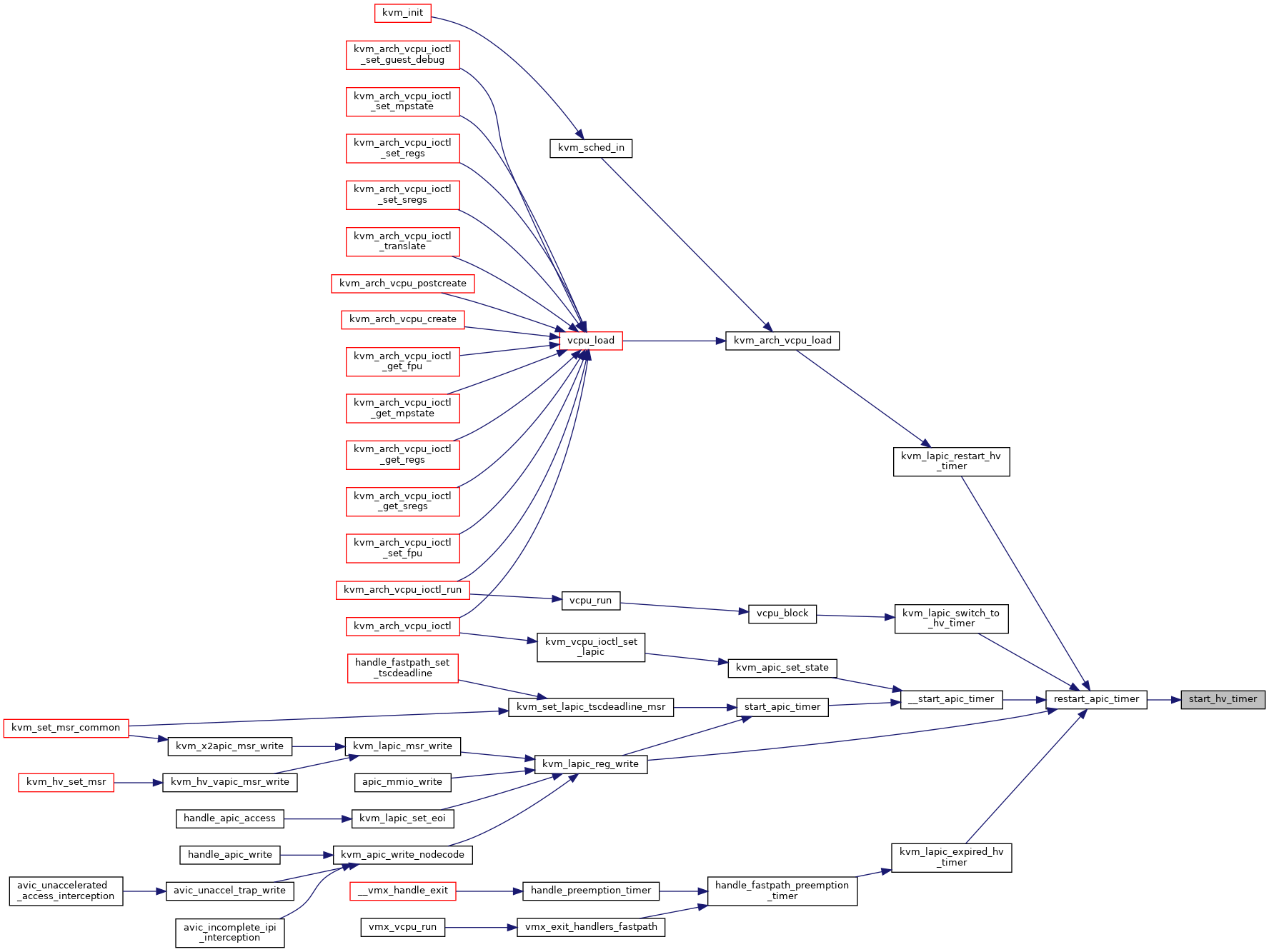

| static bool | start_hv_timer (struct kvm_lapic *apic) |

| static void | start_sw_timer (struct kvm_lapic *apic) |

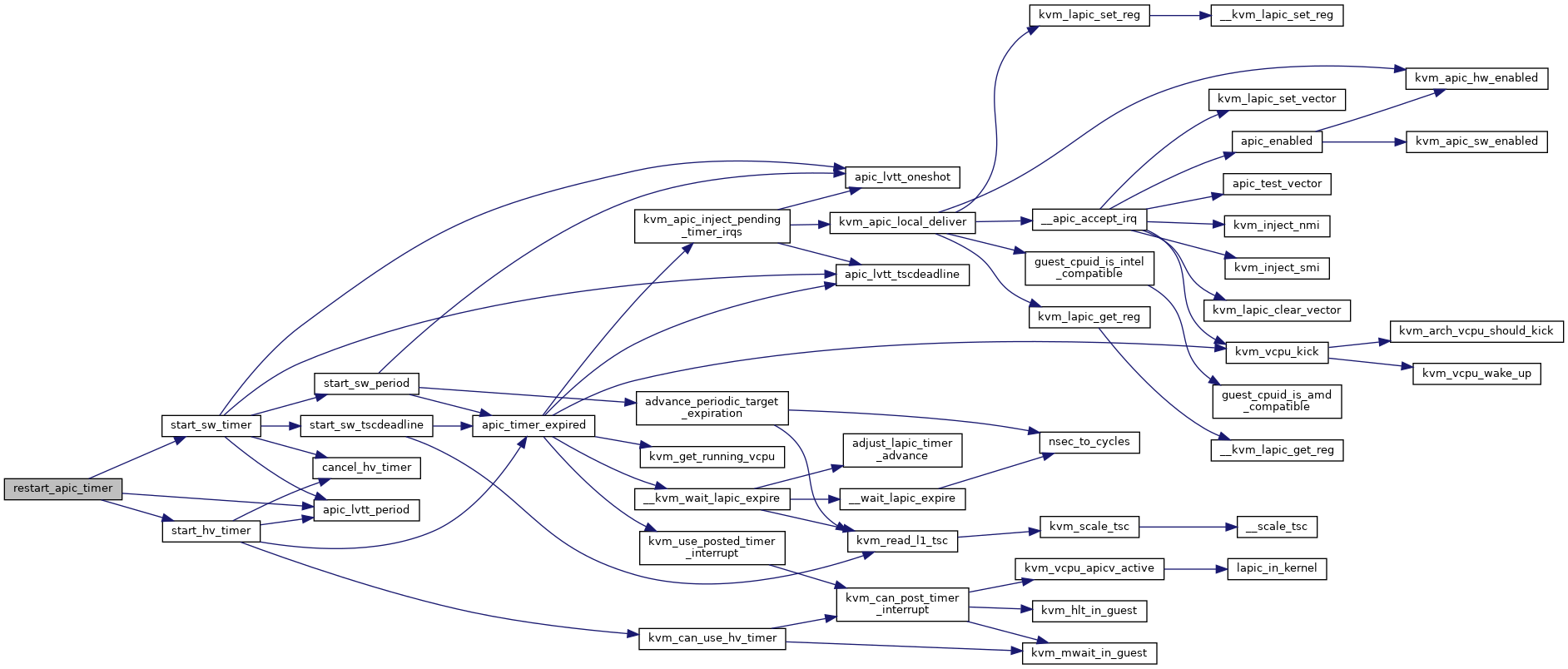

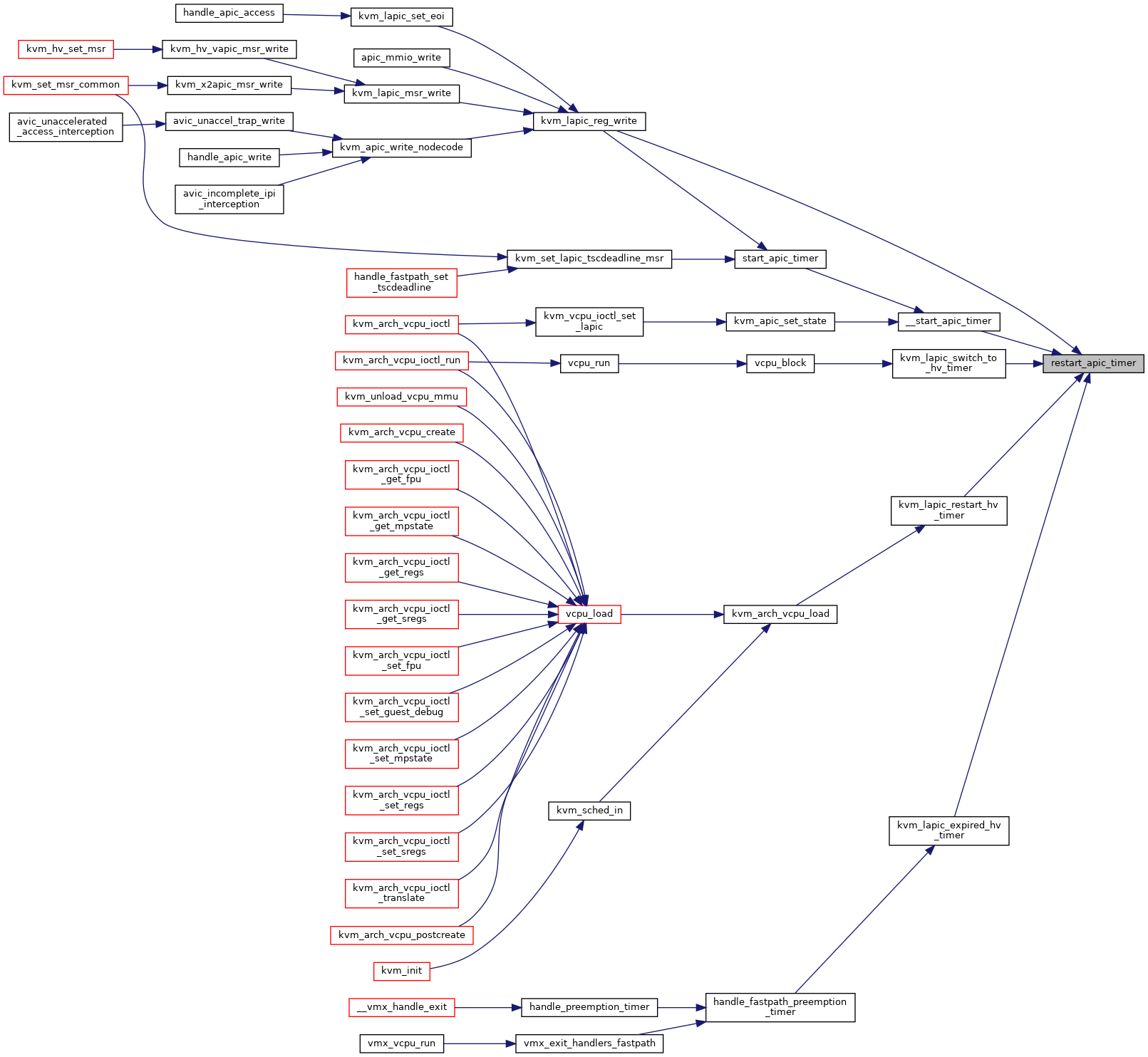

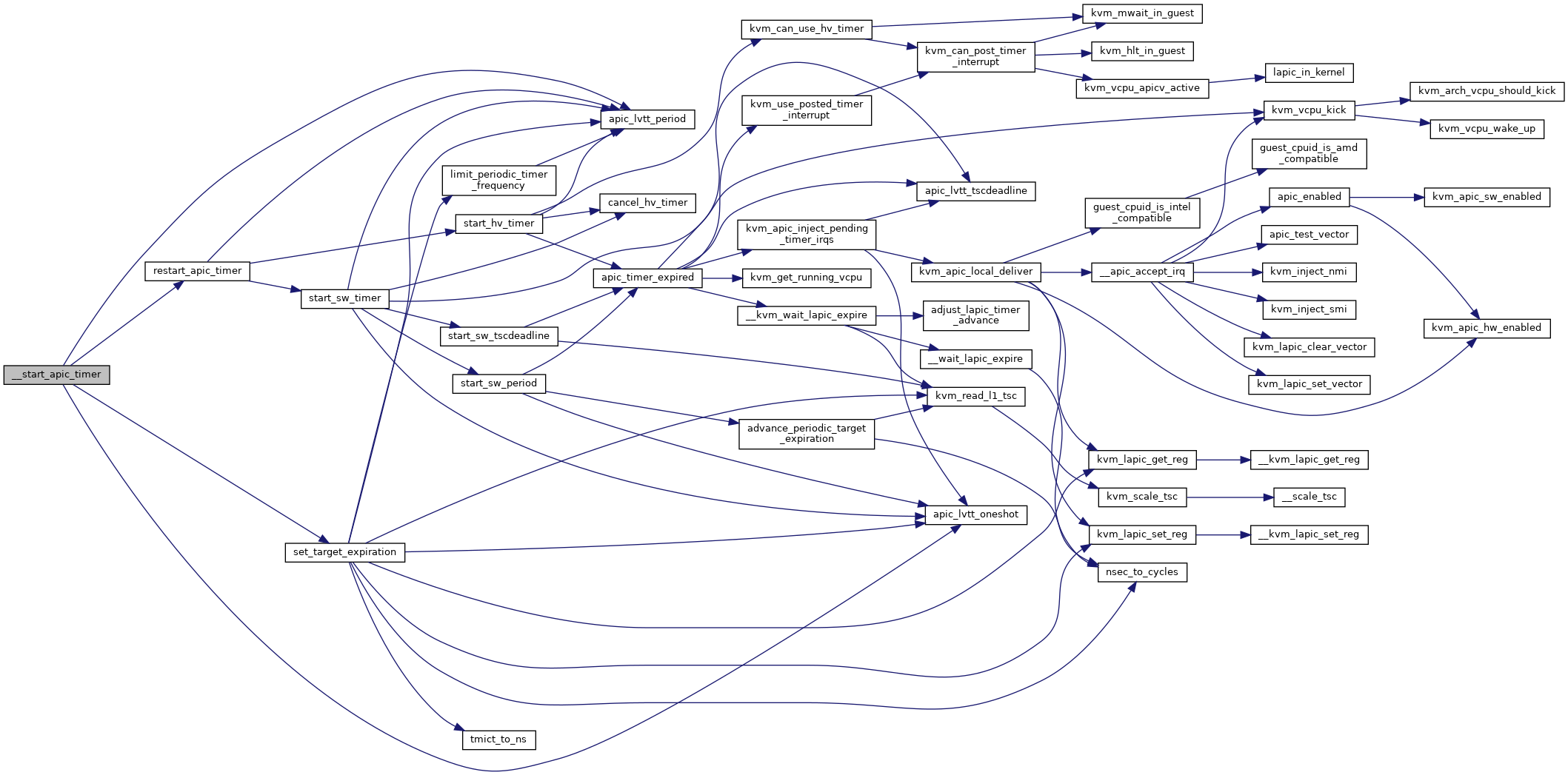

| static void | restart_apic_timer (struct kvm_lapic *apic) |

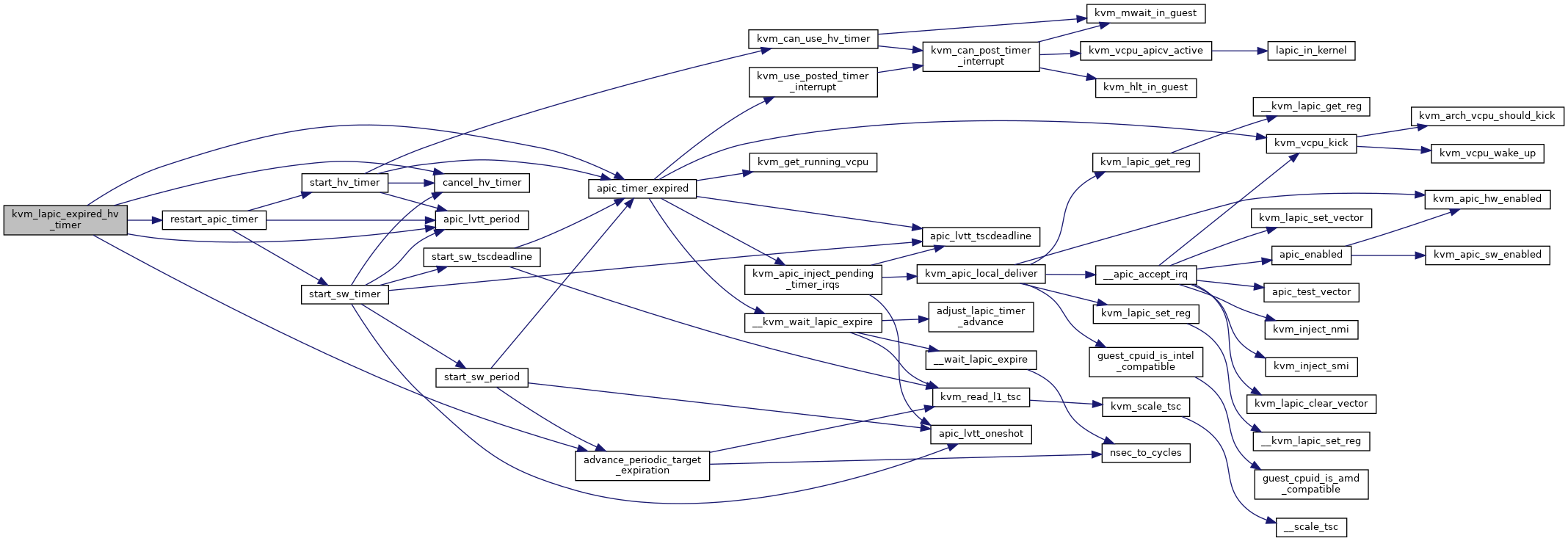

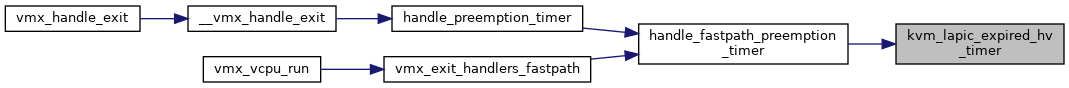

| void | kvm_lapic_expired_hv_timer (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_lapic_expired_hv_timer) | |

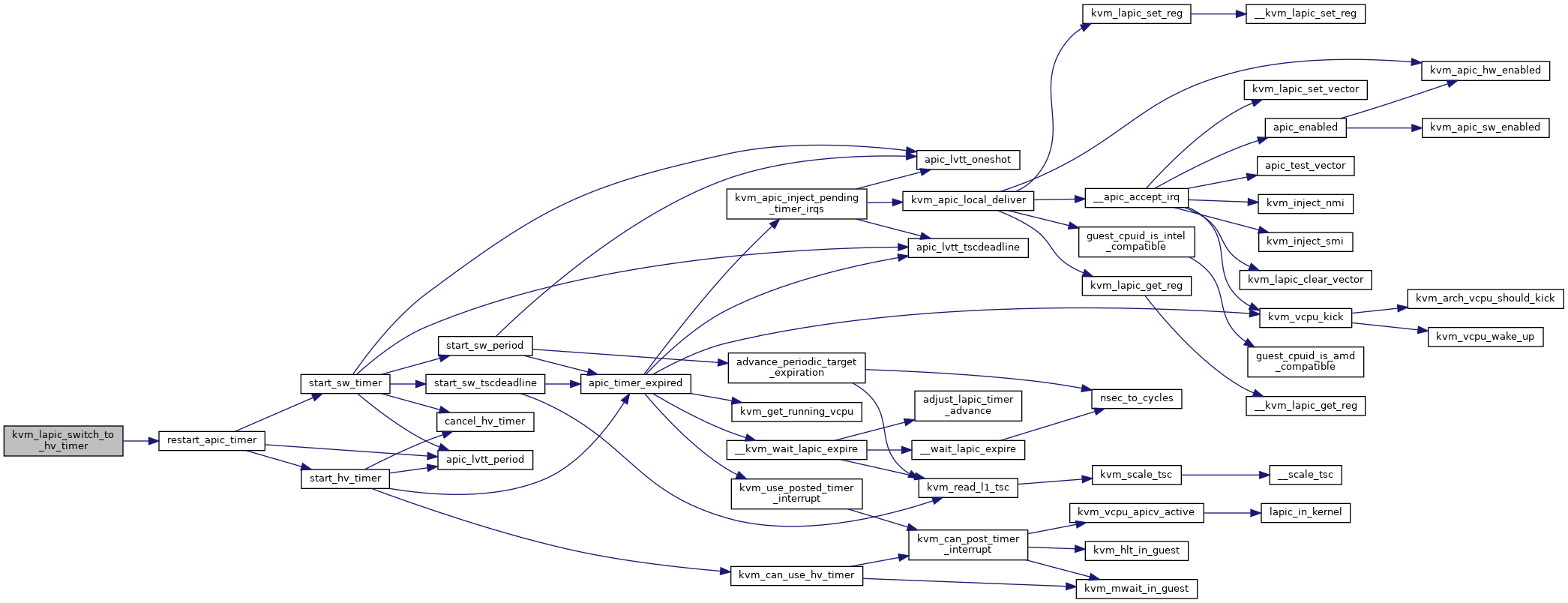

| void | kvm_lapic_switch_to_hv_timer (struct kvm_vcpu *vcpu) |

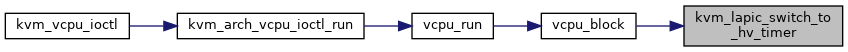

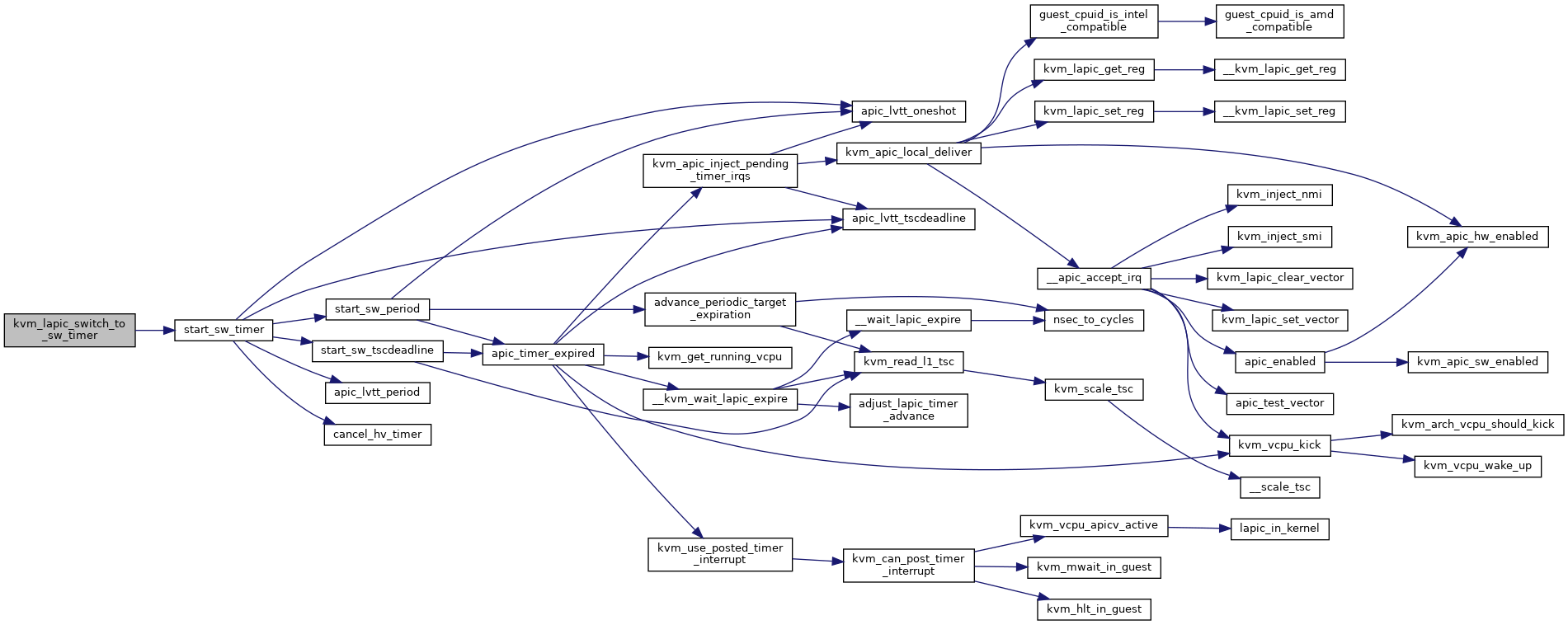

| void | kvm_lapic_switch_to_sw_timer (struct kvm_vcpu *vcpu) |

| void | kvm_lapic_restart_hv_timer (struct kvm_vcpu *vcpu) |

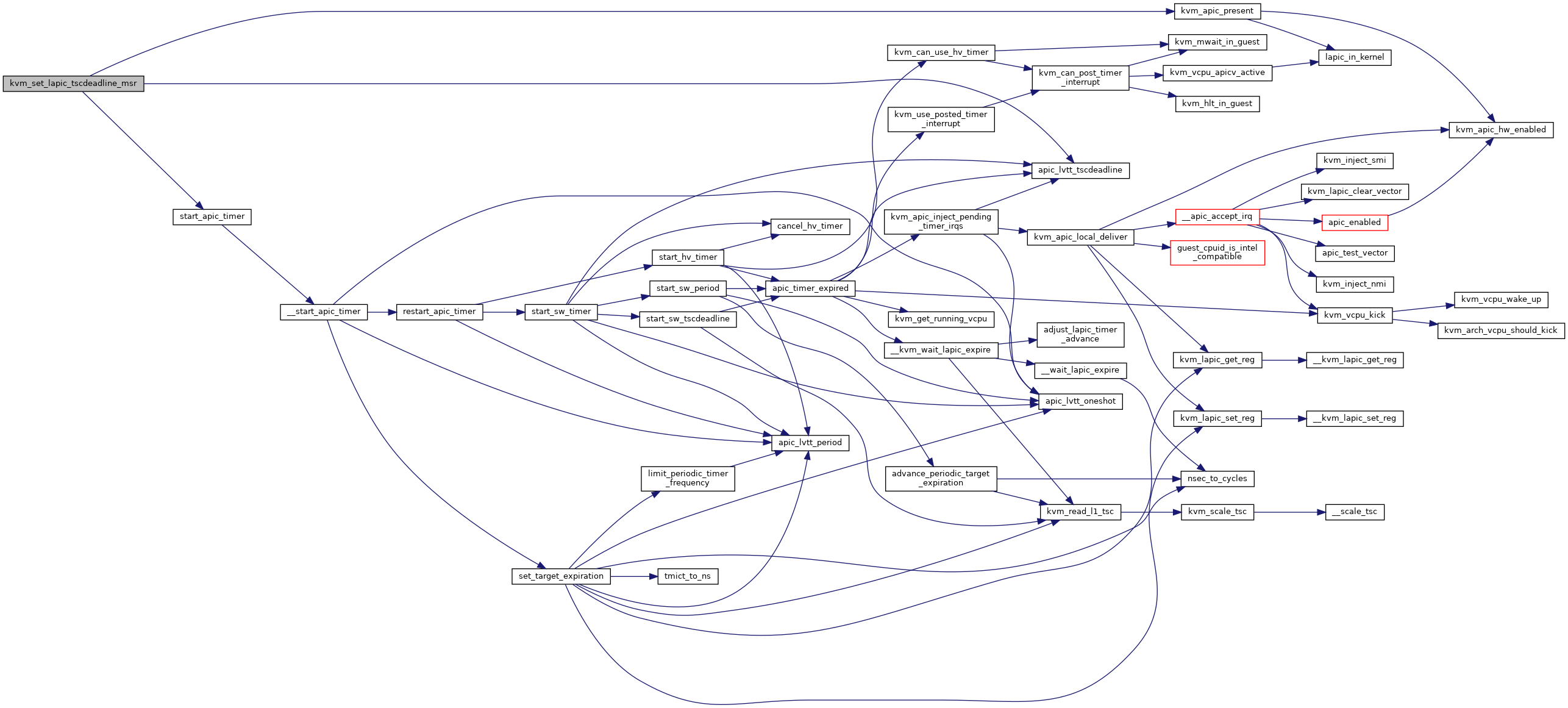

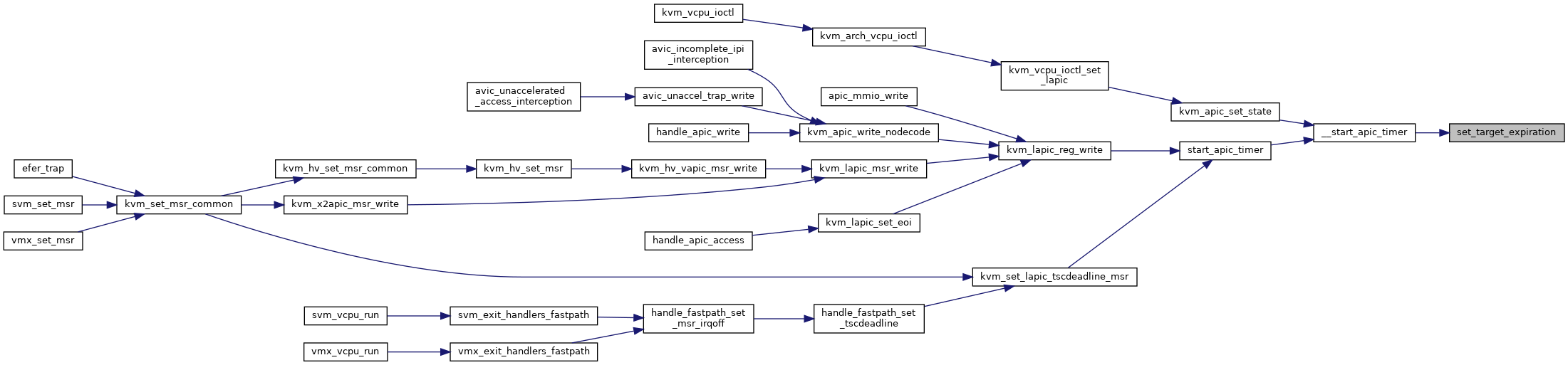

| static void | __start_apic_timer (struct kvm_lapic *apic, u32 count_reg) |

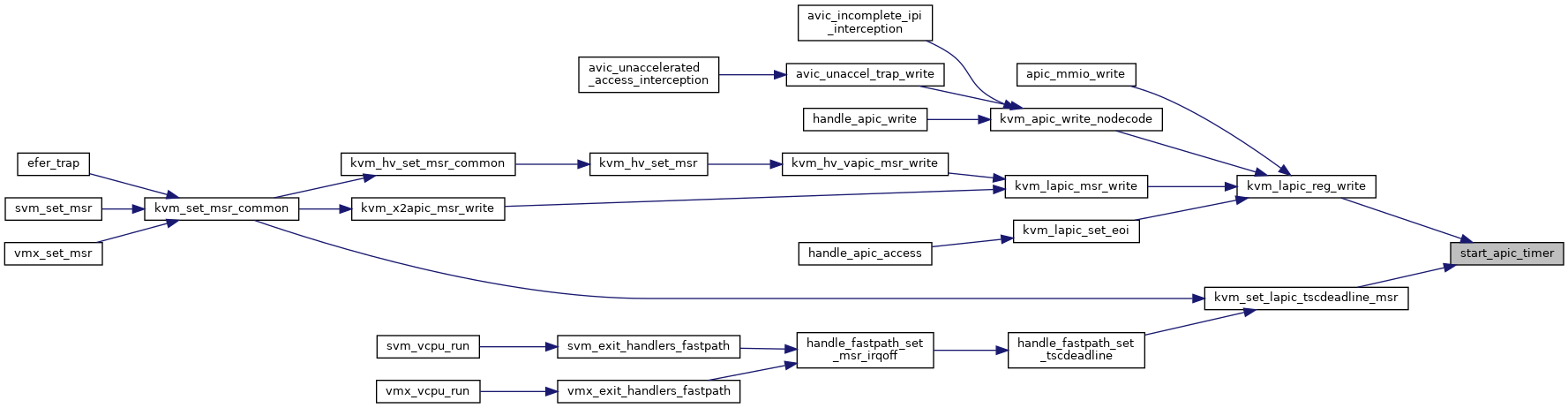

| static void | start_apic_timer (struct kvm_lapic *apic) |

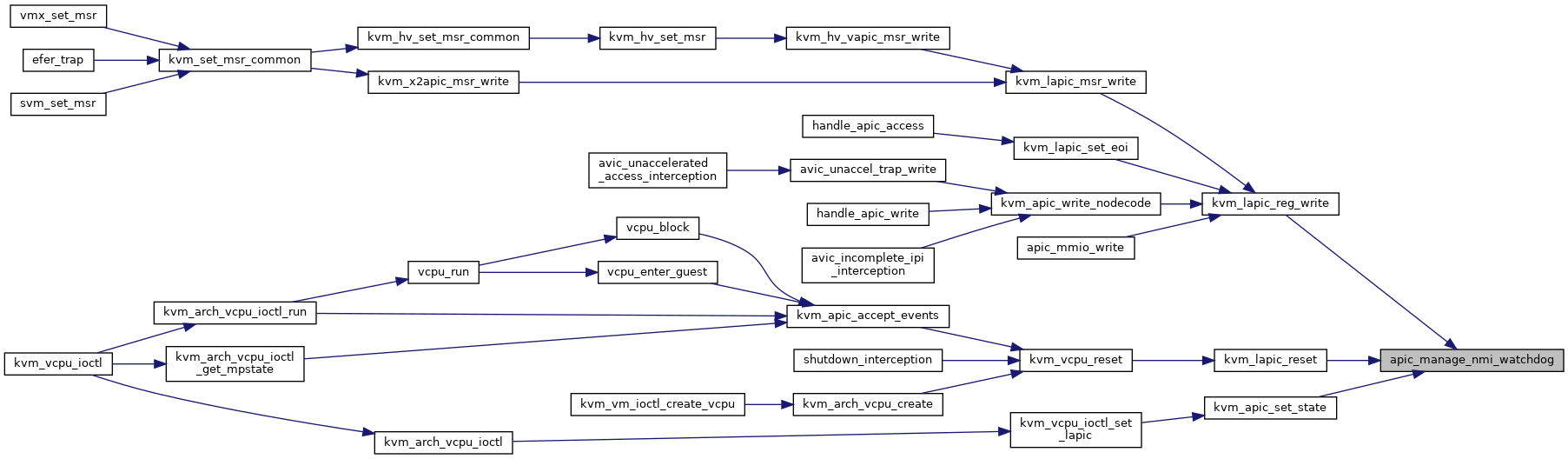

| static void | apic_manage_nmi_watchdog (struct kvm_lapic *apic, u32 lvt0_val) |

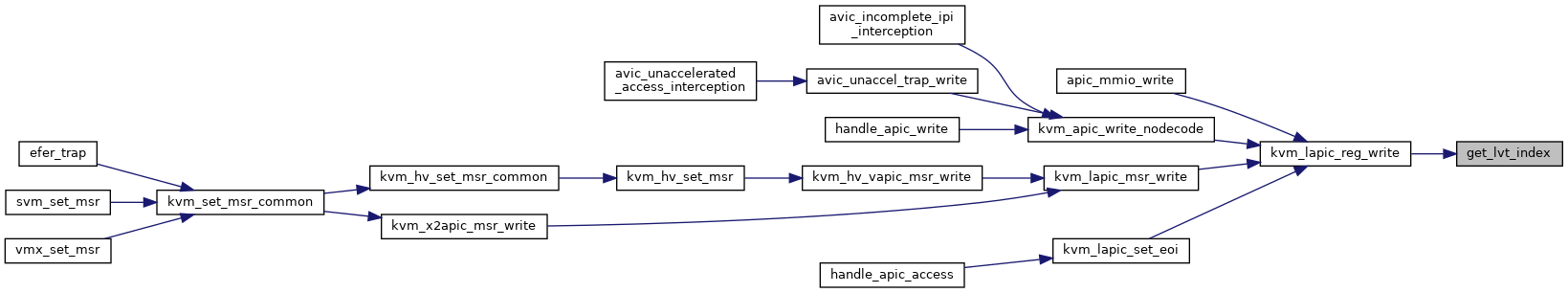

| static int | get_lvt_index (u32 reg) |

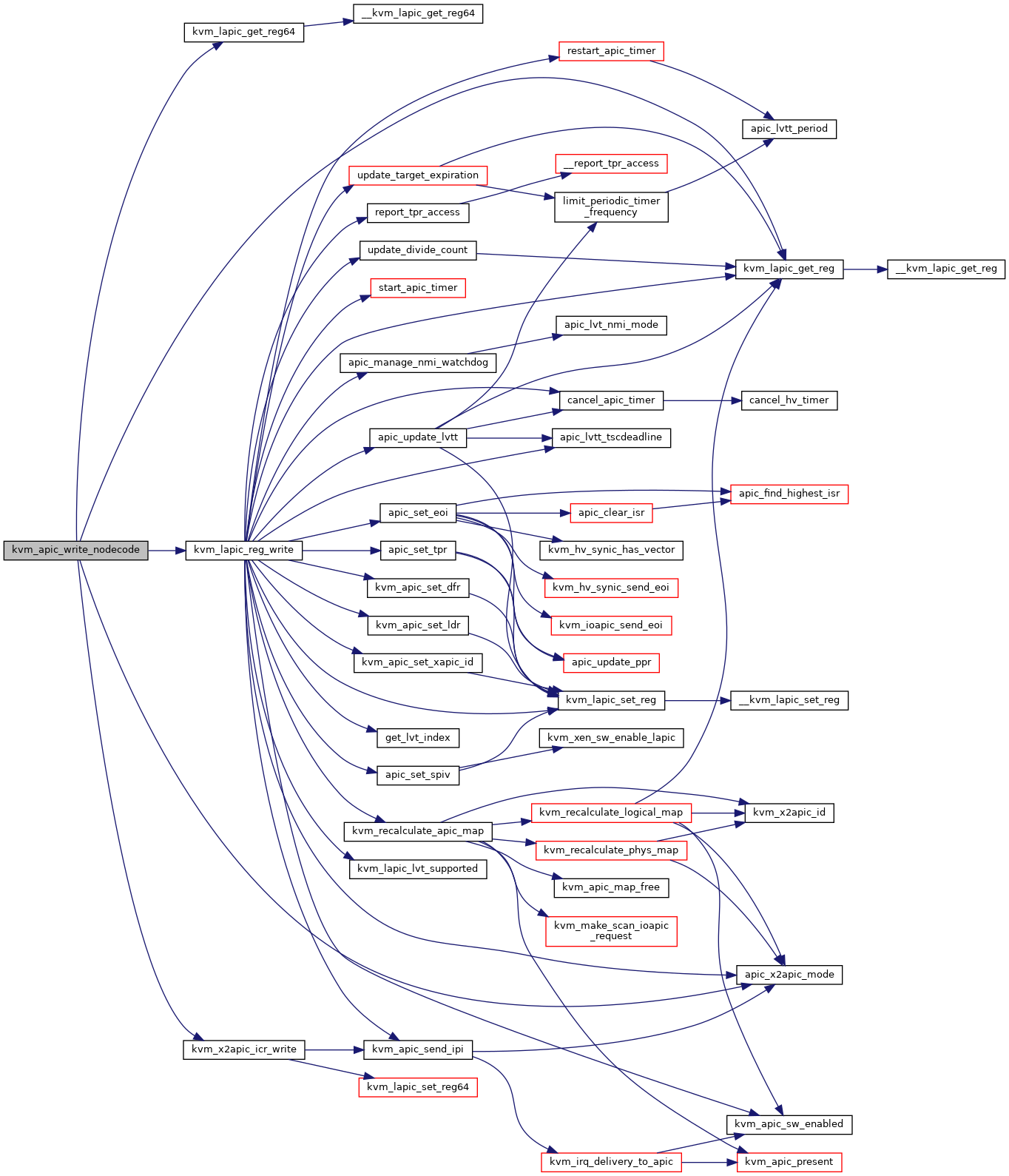

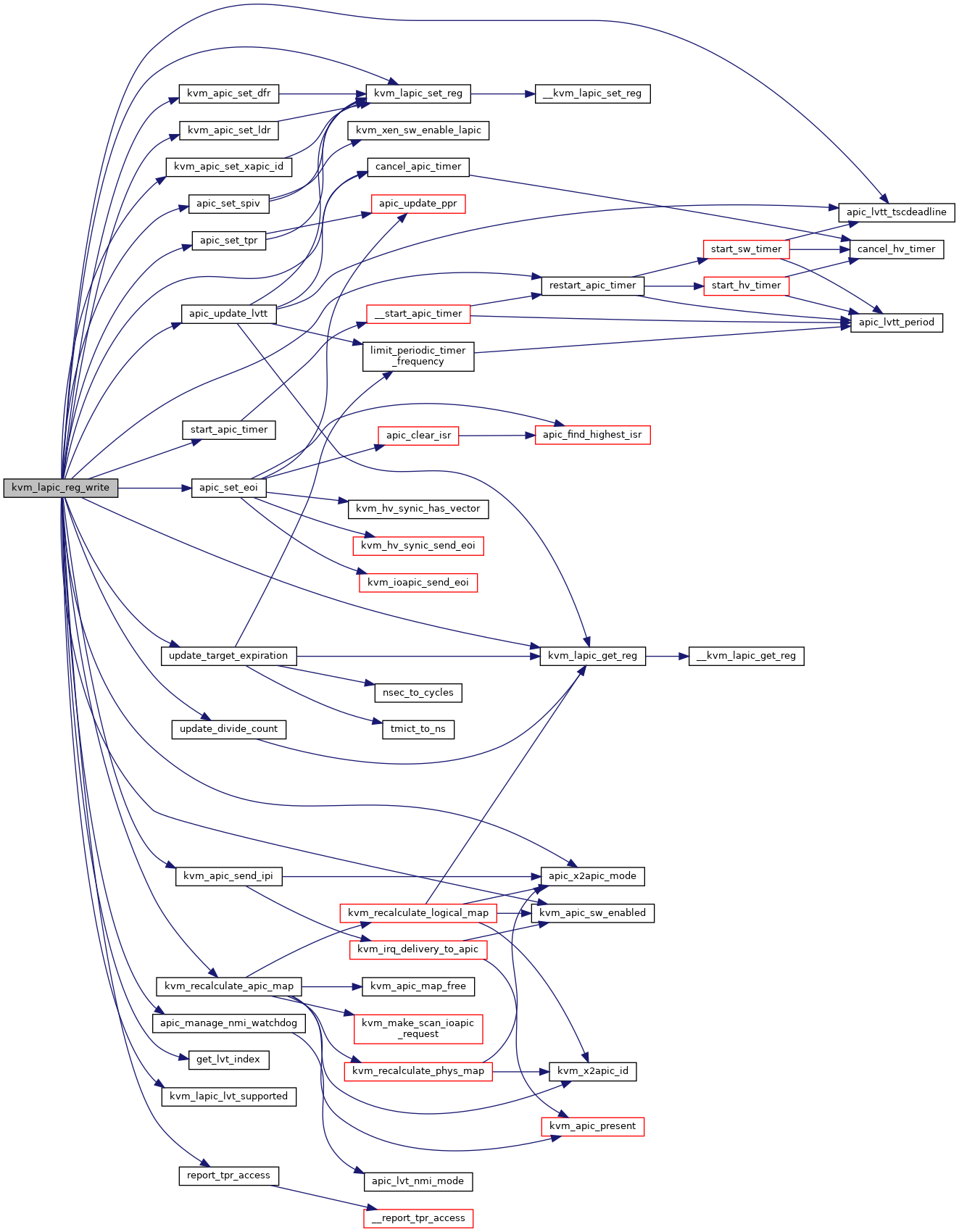

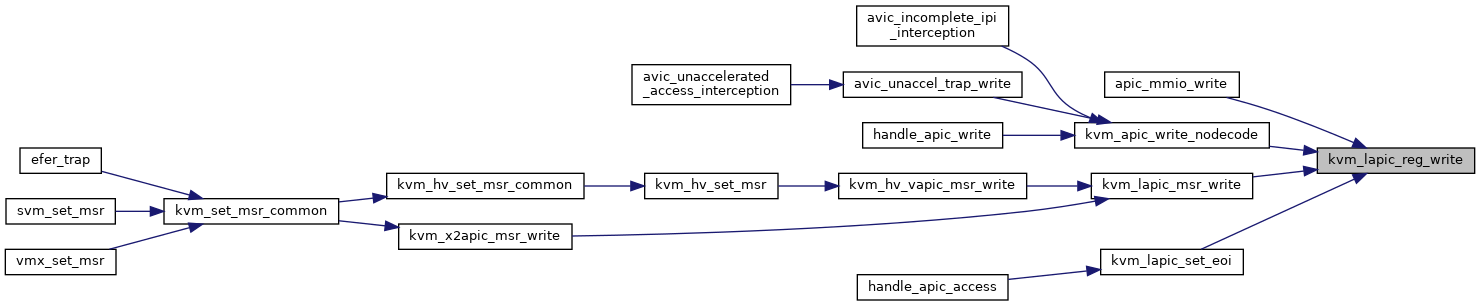

| static int | kvm_lapic_reg_write (struct kvm_lapic *apic, u32 reg, u32 val) |

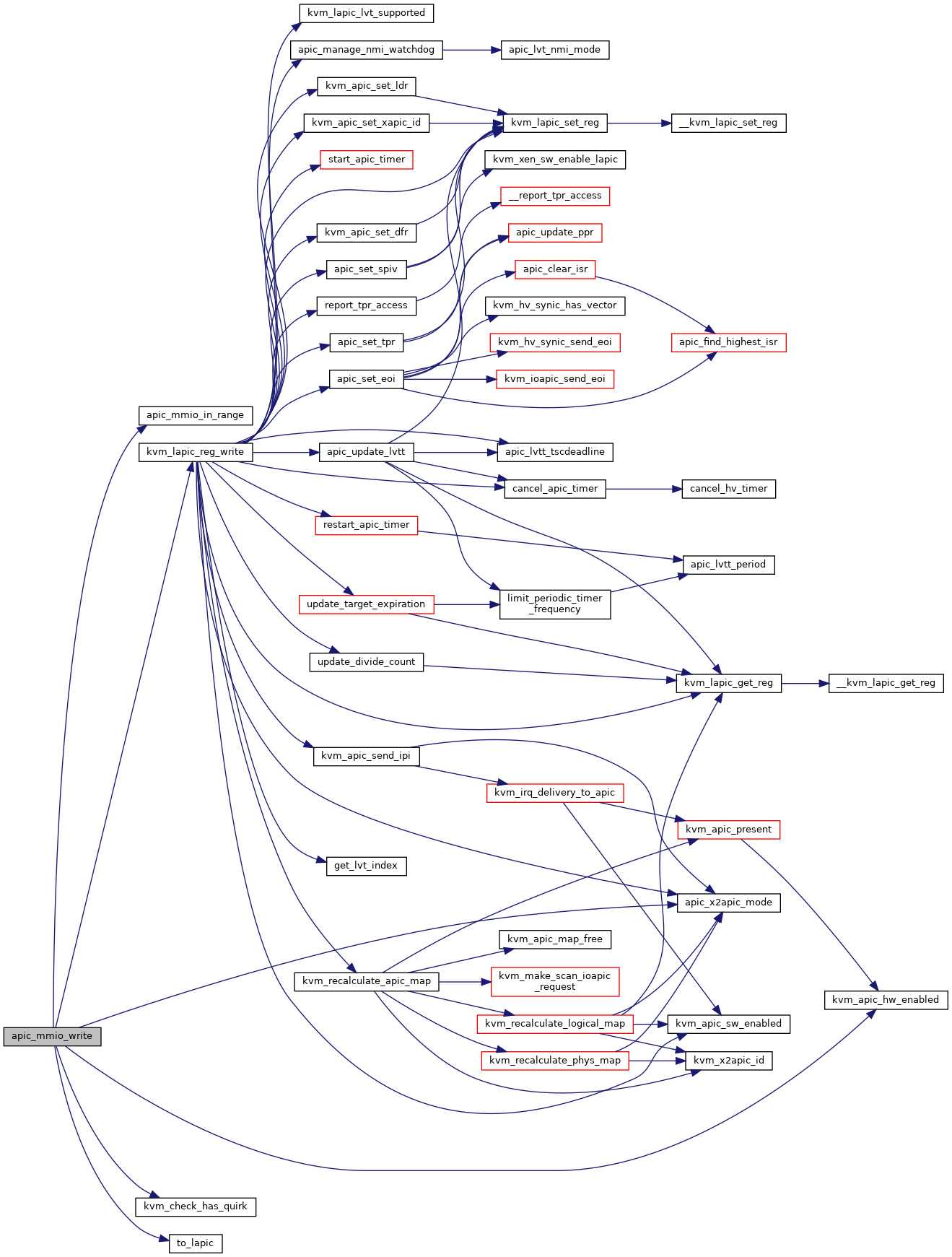

| static int | apic_mmio_write (struct kvm_vcpu *vcpu, struct kvm_io_device *this, gpa_t address, int len, const void *data) |

| void | kvm_lapic_set_eoi (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_lapic_set_eoi) | |

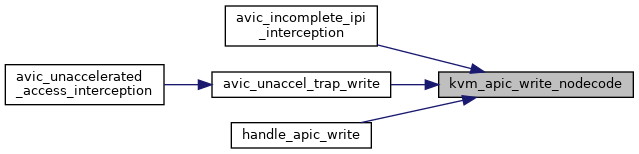

| void | kvm_apic_write_nodecode (struct kvm_vcpu *vcpu, u32 offset) |

| EXPORT_SYMBOL_GPL (kvm_apic_write_nodecode) | |

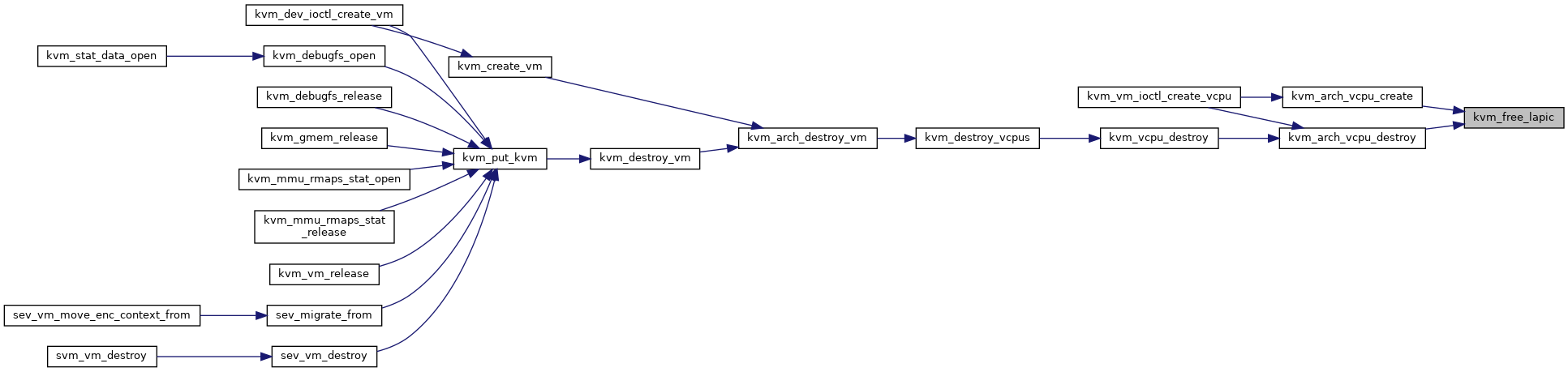

| void | kvm_free_lapic (struct kvm_vcpu *vcpu) |

| u64 | kvm_get_lapic_tscdeadline_msr (struct kvm_vcpu *vcpu) |

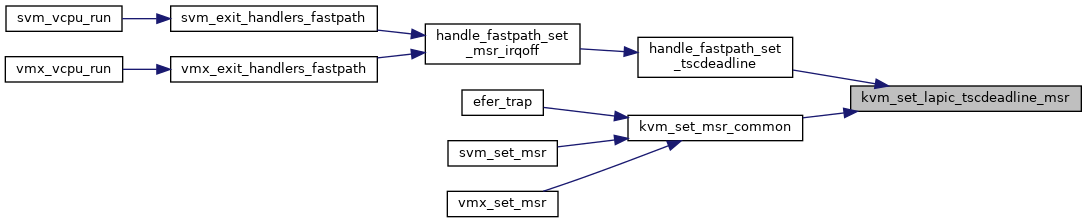

| void | kvm_set_lapic_tscdeadline_msr (struct kvm_vcpu *vcpu, u64 data) |

| void | kvm_lapic_set_tpr (struct kvm_vcpu *vcpu, unsigned long cr8) |

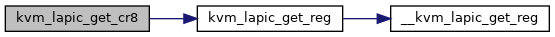

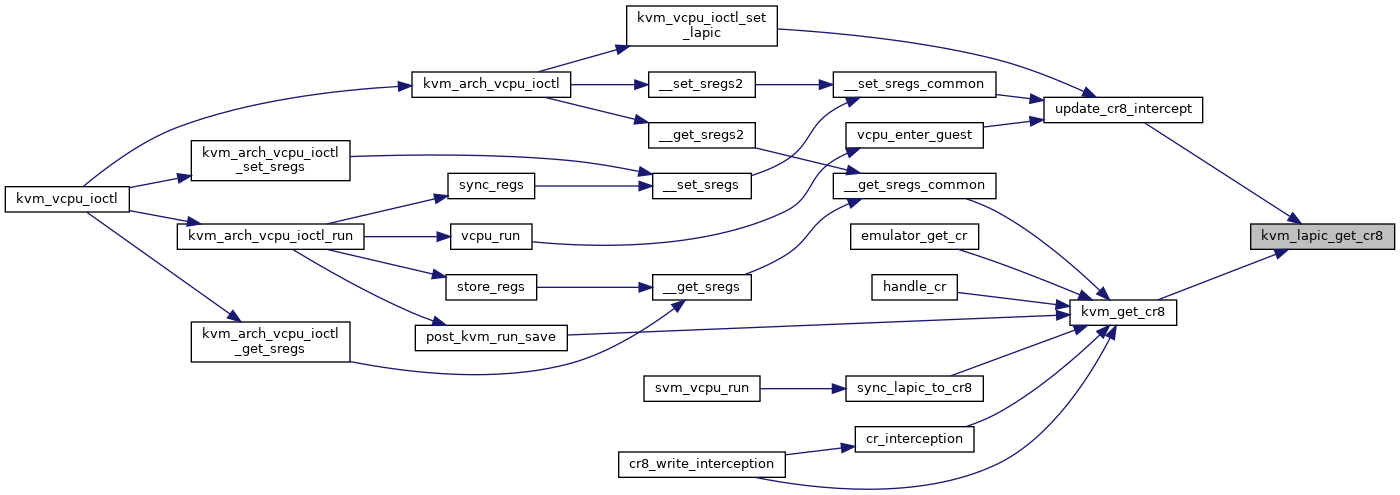

| u64 | kvm_lapic_get_cr8 (struct kvm_vcpu *vcpu) |

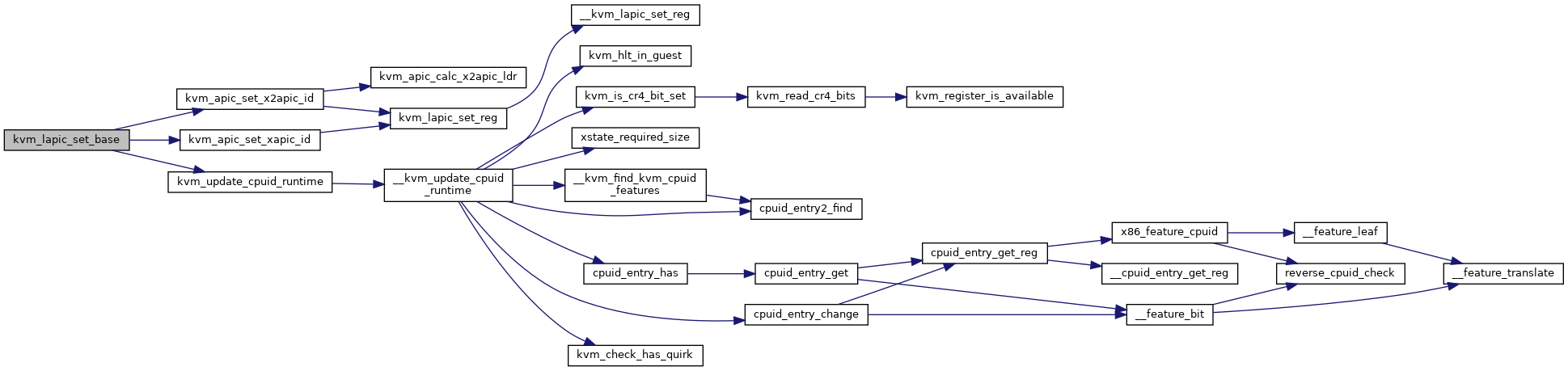

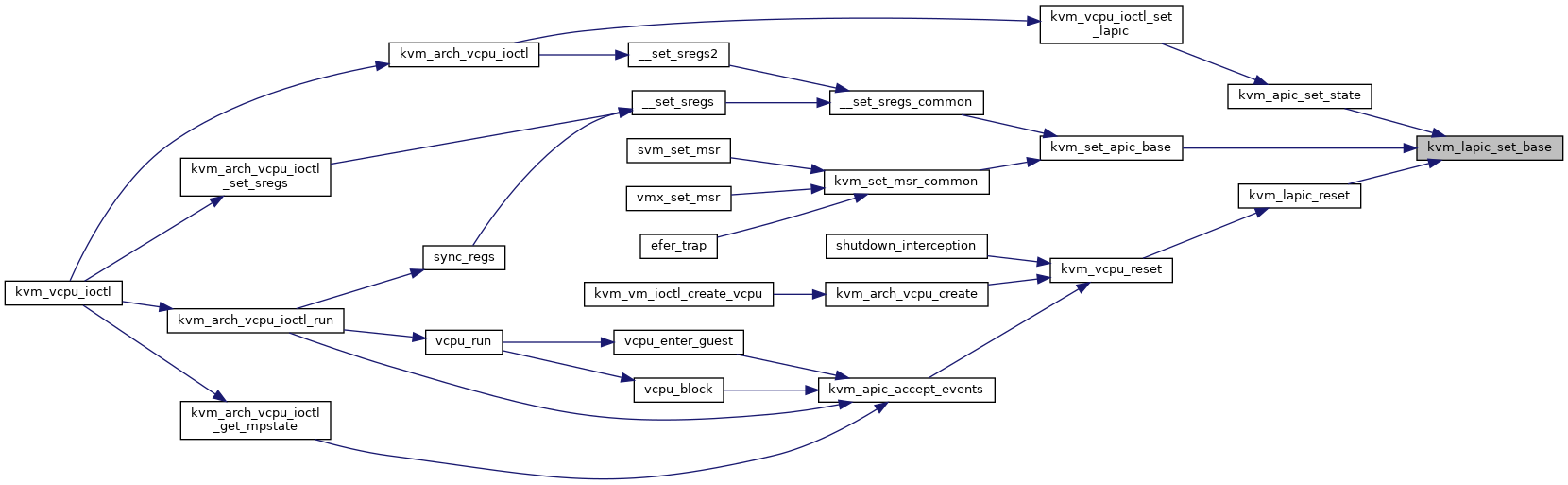

| void | kvm_lapic_set_base (struct kvm_vcpu *vcpu, u64 value) |

| void | kvm_apic_update_apicv (struct kvm_vcpu *vcpu) |

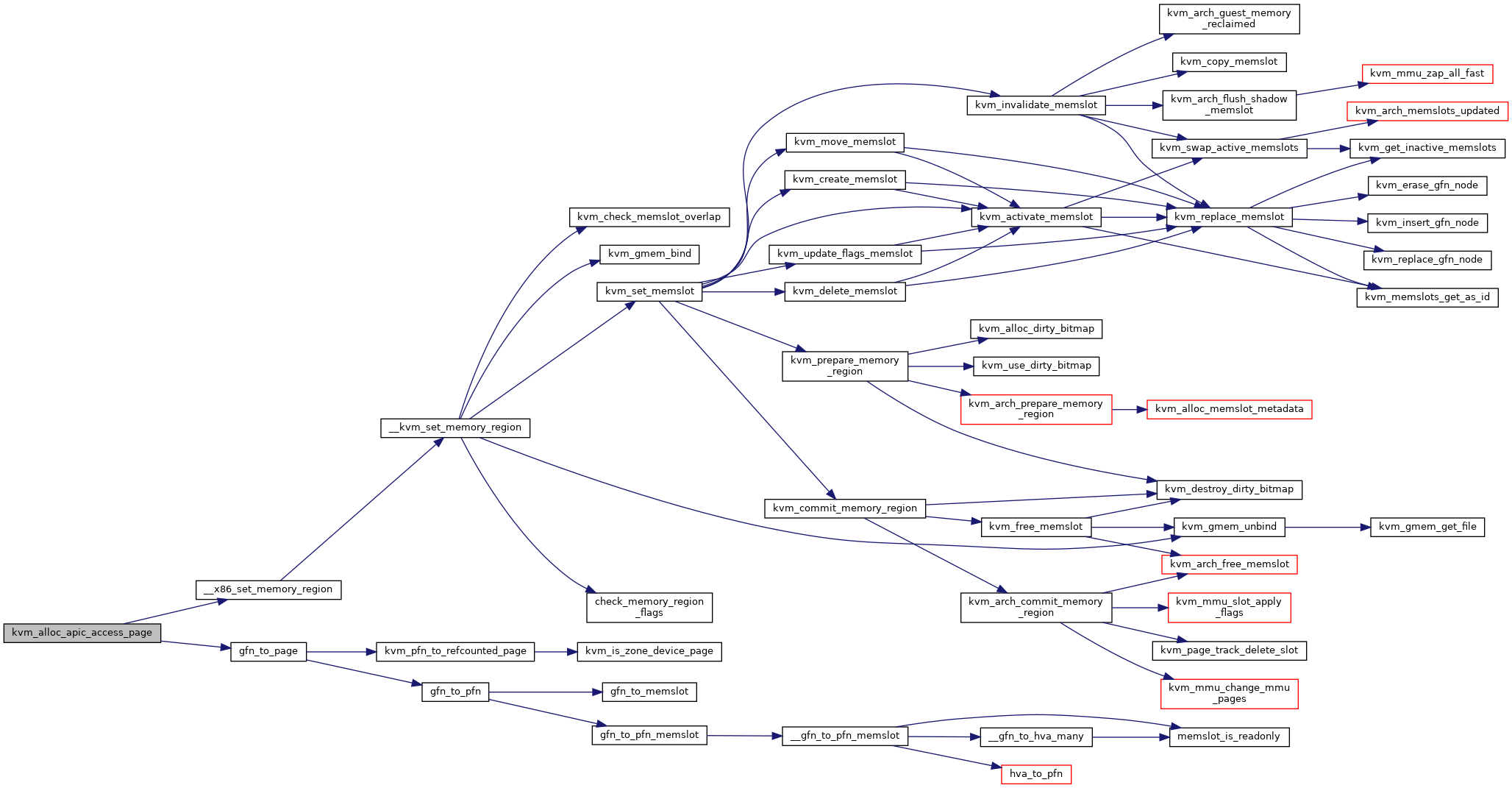

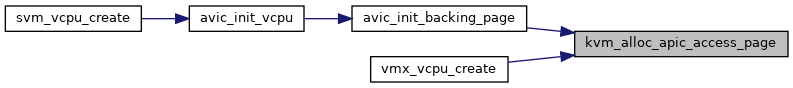

| int | kvm_alloc_apic_access_page (struct kvm *kvm) |

| EXPORT_SYMBOL_GPL (kvm_alloc_apic_access_page) | |

| void | kvm_inhibit_apic_access_page (struct kvm_vcpu *vcpu) |

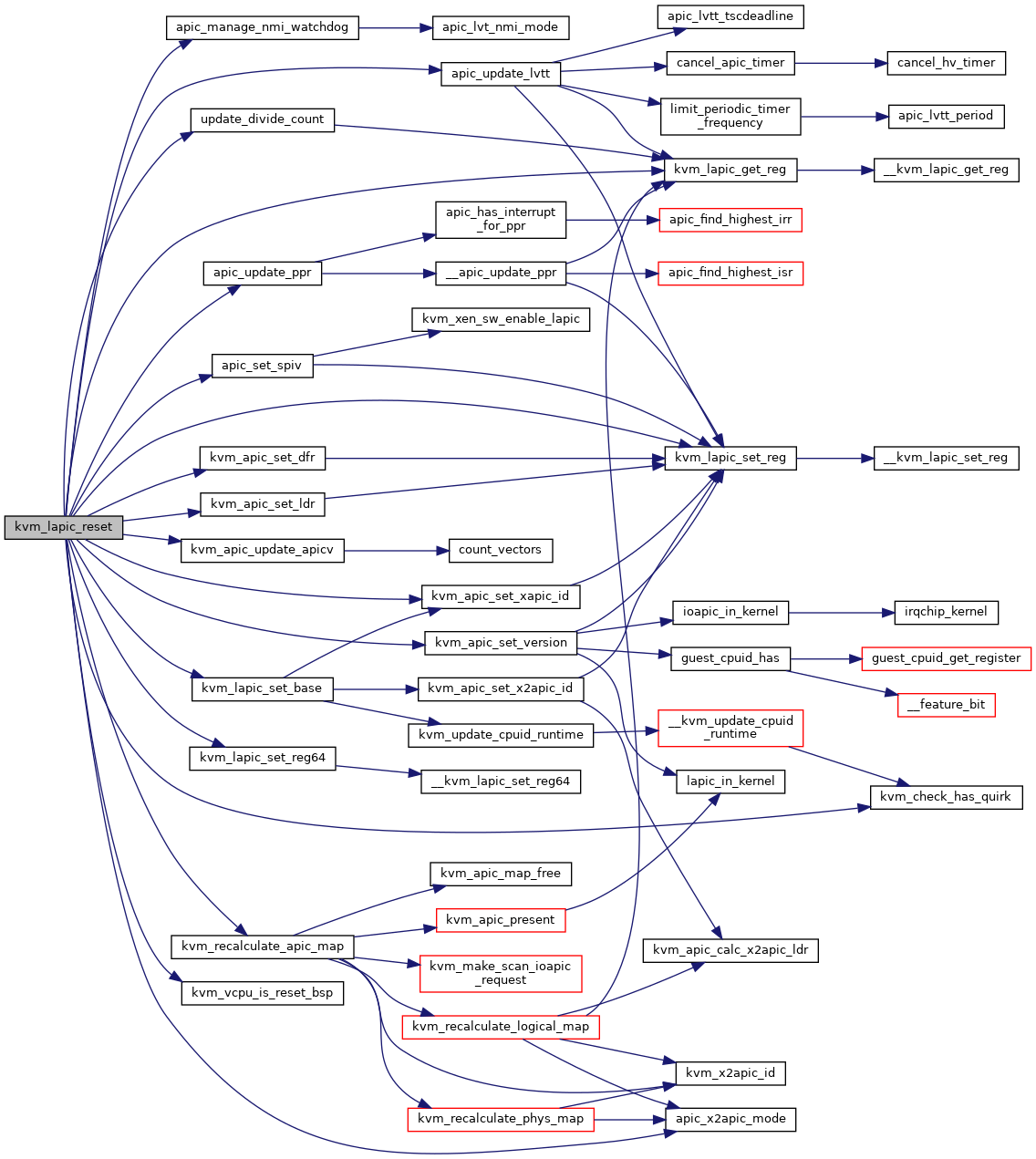

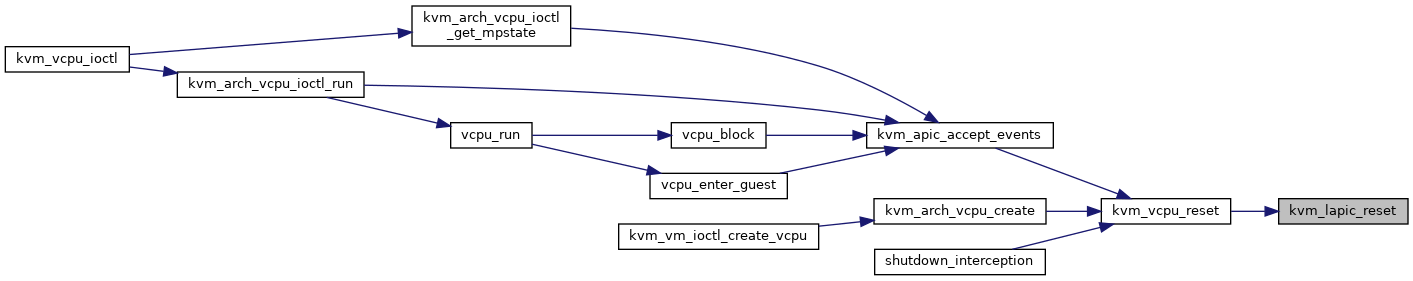

| void | kvm_lapic_reset (struct kvm_vcpu *vcpu, bool init_event) |

| static bool | lapic_is_periodic (struct kvm_lapic *apic) |

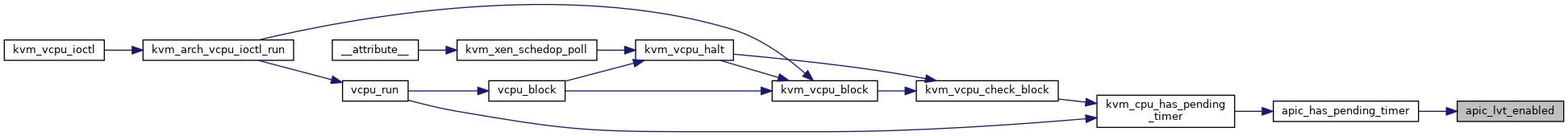

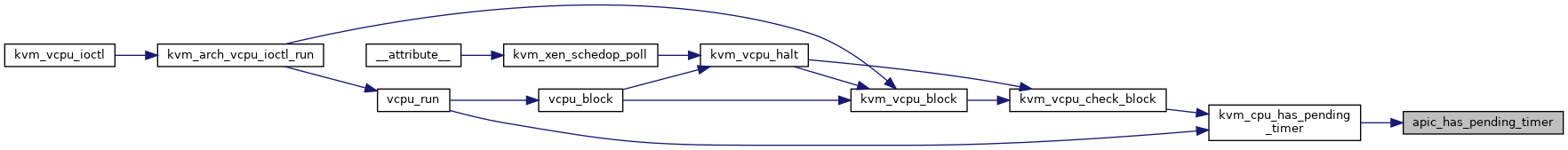

| int | apic_has_pending_timer (struct kvm_vcpu *vcpu) |

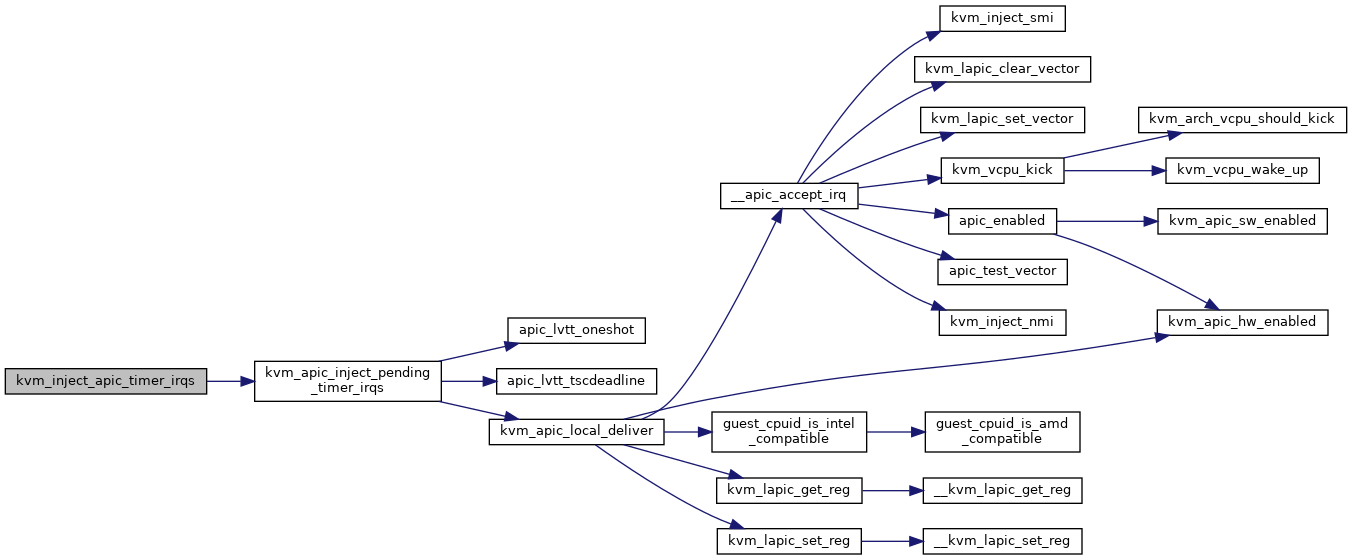

| int | kvm_apic_local_deliver (struct kvm_lapic *apic, int lvt_type) |

| void | kvm_apic_nmi_wd_deliver (struct kvm_vcpu *vcpu) |

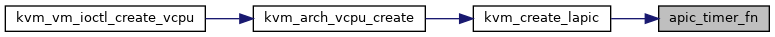

| static enum hrtimer_restart | apic_timer_fn (struct hrtimer *data) |

| int | kvm_create_lapic (struct kvm_vcpu *vcpu, int timer_advance_ns) |

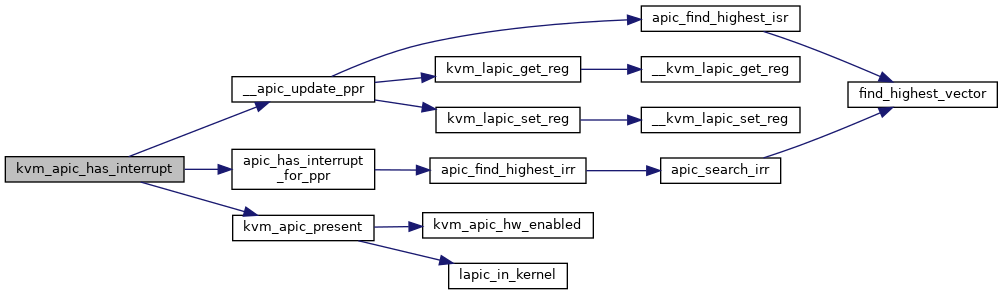

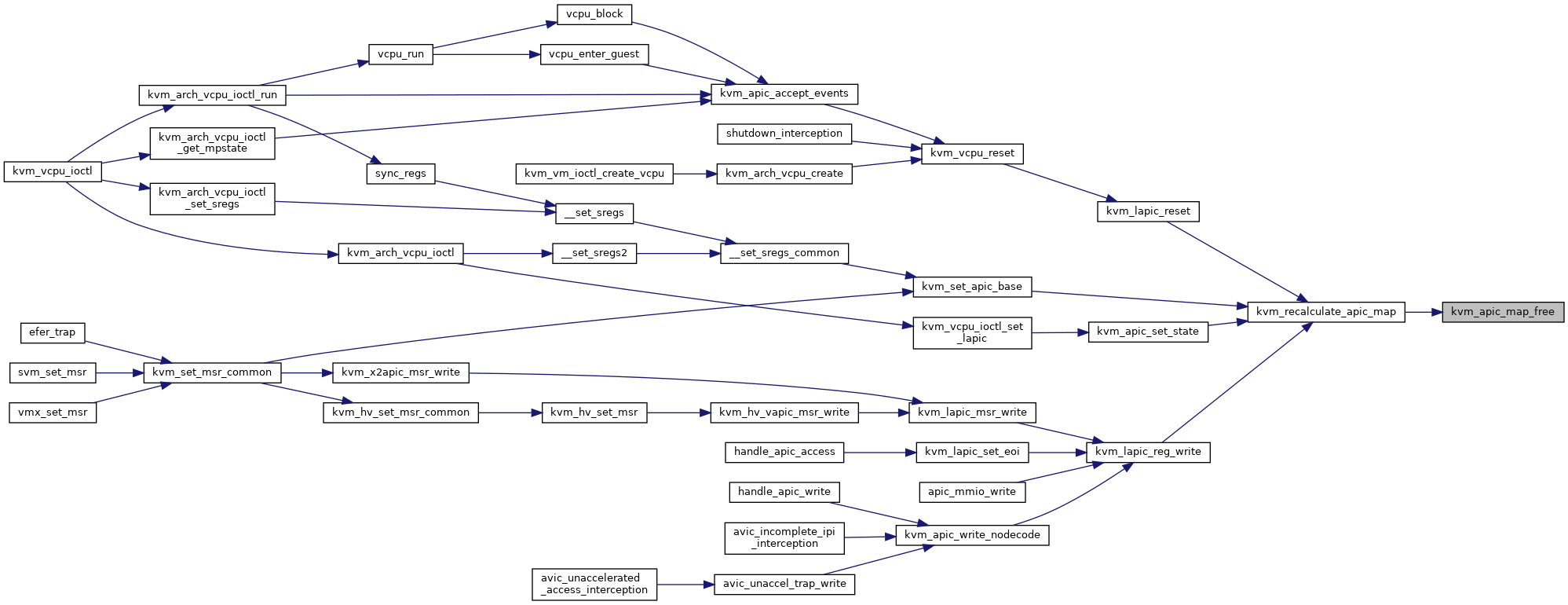

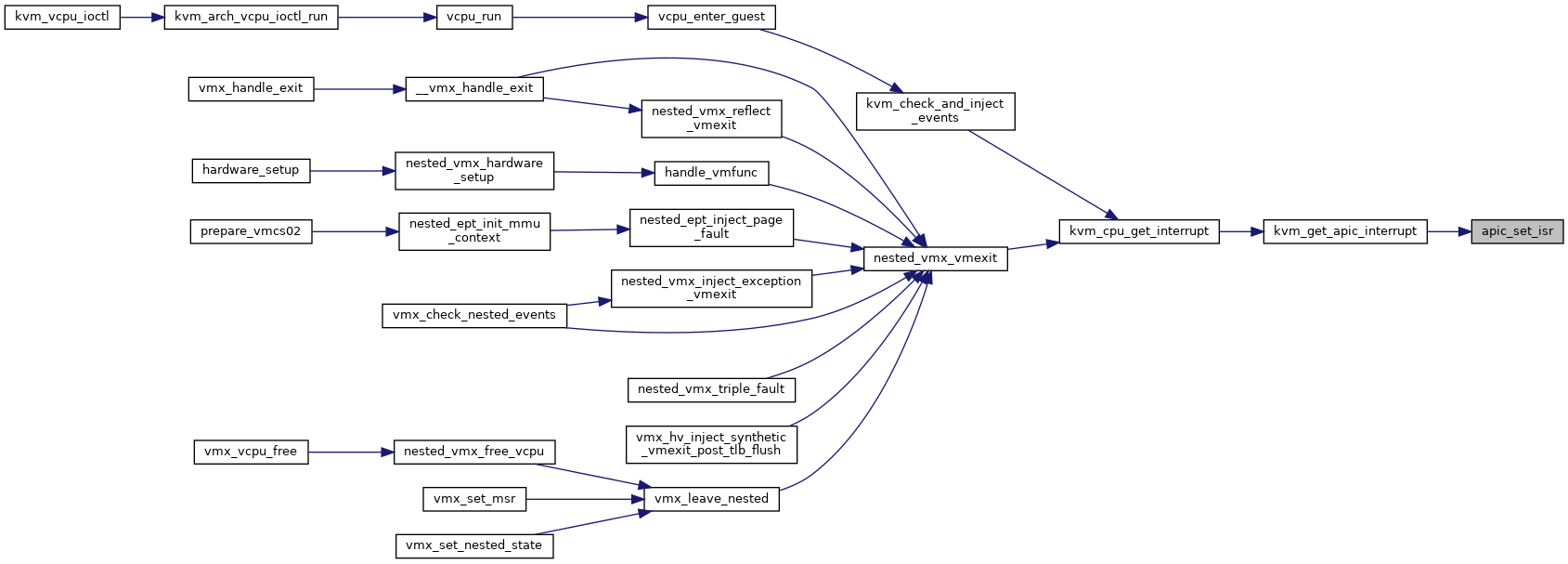

| int | kvm_apic_has_interrupt (struct kvm_vcpu *vcpu) |

| EXPORT_SYMBOL_GPL (kvm_apic_has_interrupt) | |

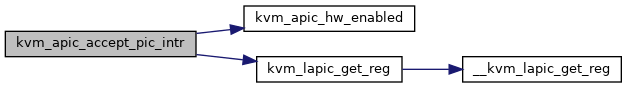

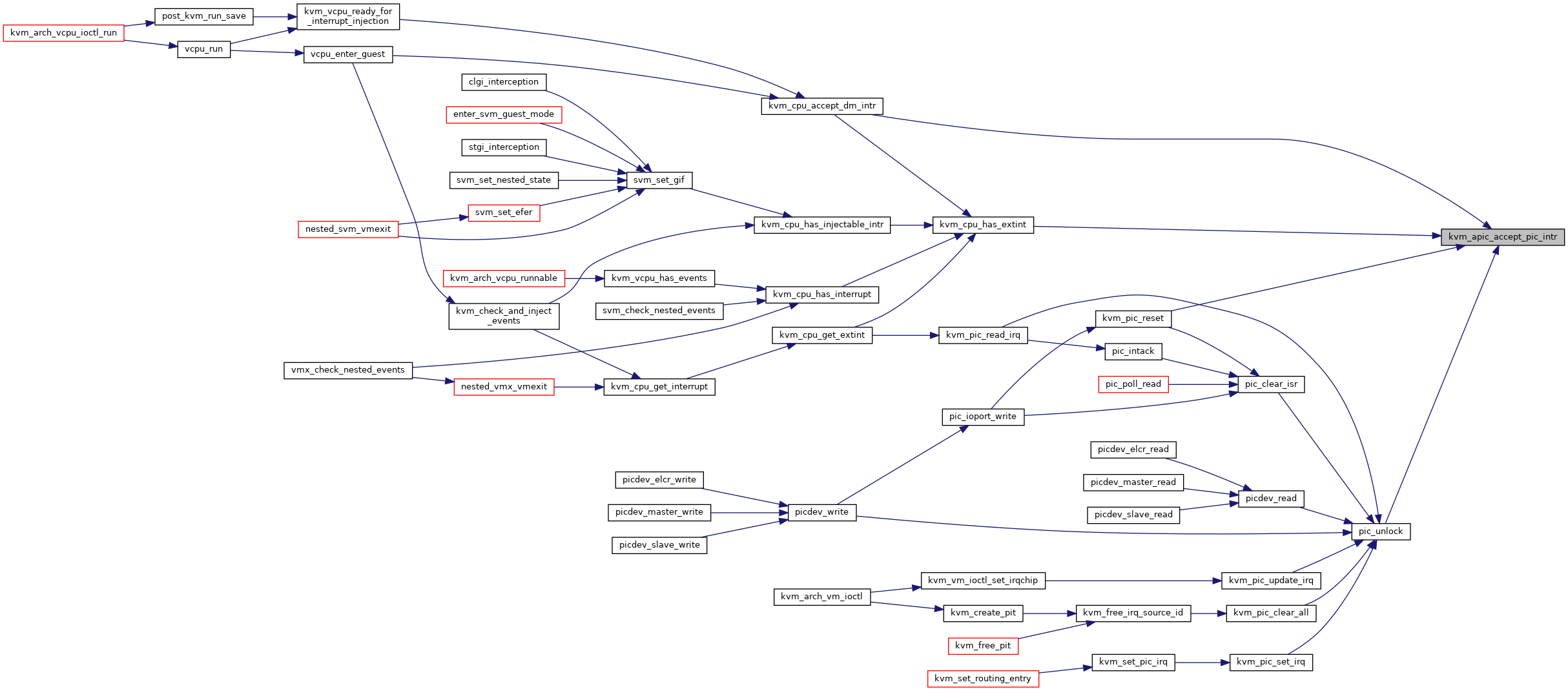

| int | kvm_apic_accept_pic_intr (struct kvm_vcpu *vcpu) |

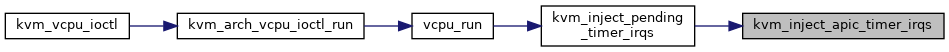

| void | kvm_inject_apic_timer_irqs (struct kvm_vcpu *vcpu) |

| int | kvm_get_apic_interrupt (struct kvm_vcpu *vcpu) |

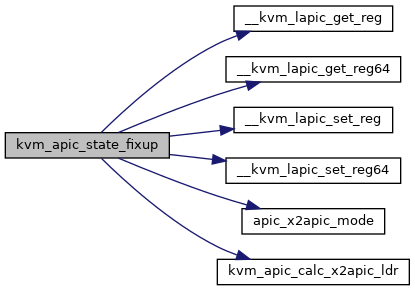

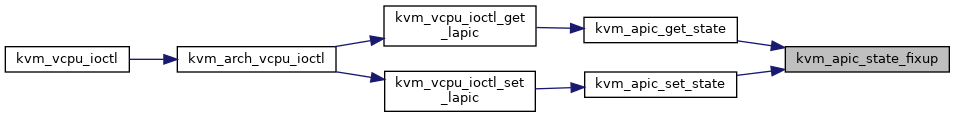

| static int | kvm_apic_state_fixup (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s, bool set) |

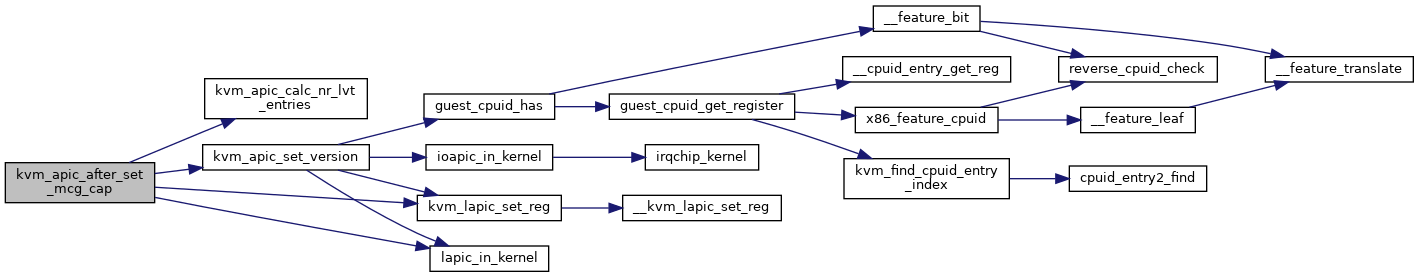

| int | kvm_apic_get_state (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

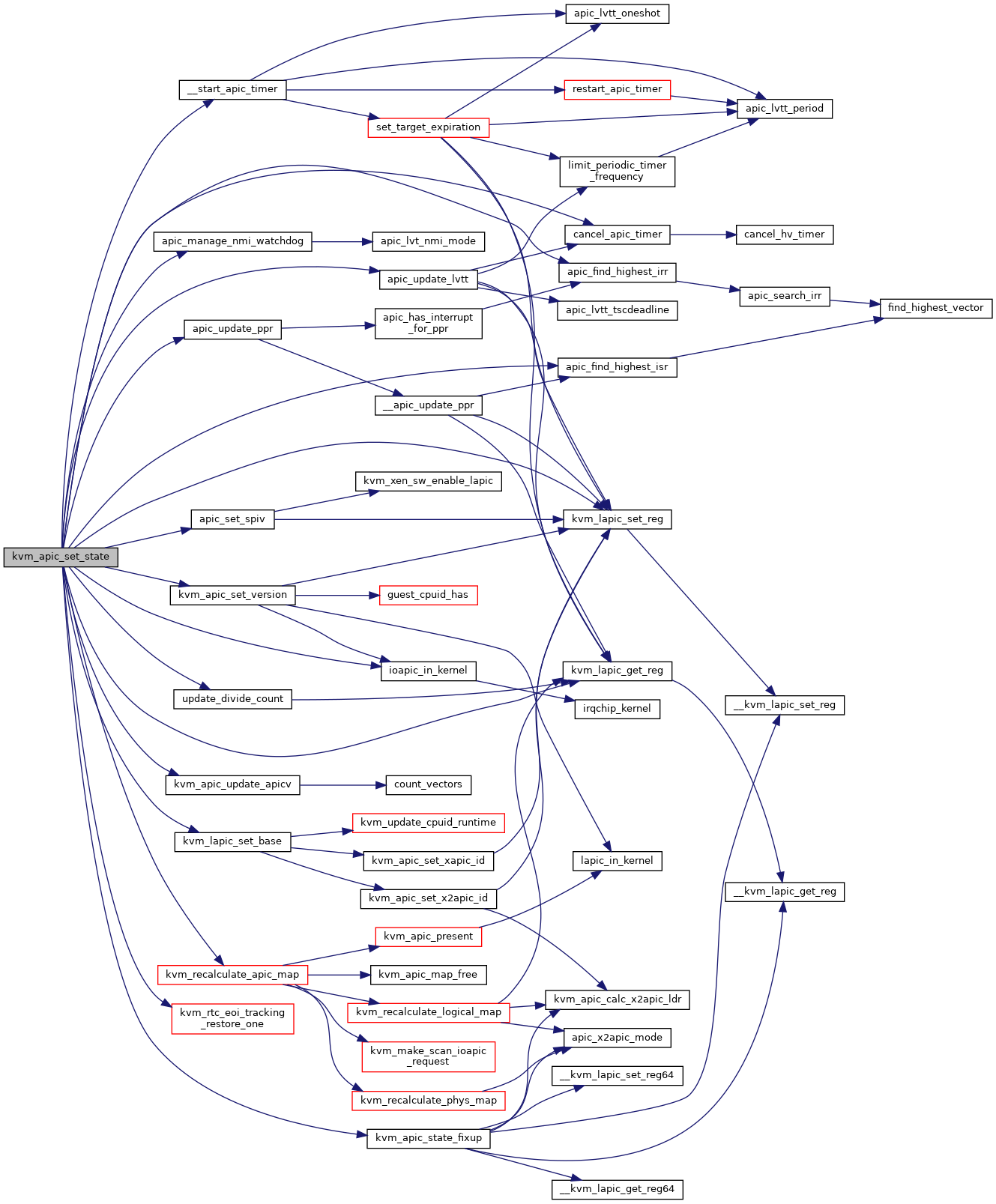

| int | kvm_apic_set_state (struct kvm_vcpu *vcpu, struct kvm_lapic_state *s) |

| void | __kvm_migrate_apic_timer (struct kvm_vcpu *vcpu) |

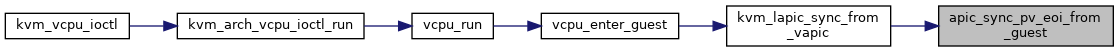

| static void | apic_sync_pv_eoi_from_guest (struct kvm_vcpu *vcpu, struct kvm_lapic *apic) |

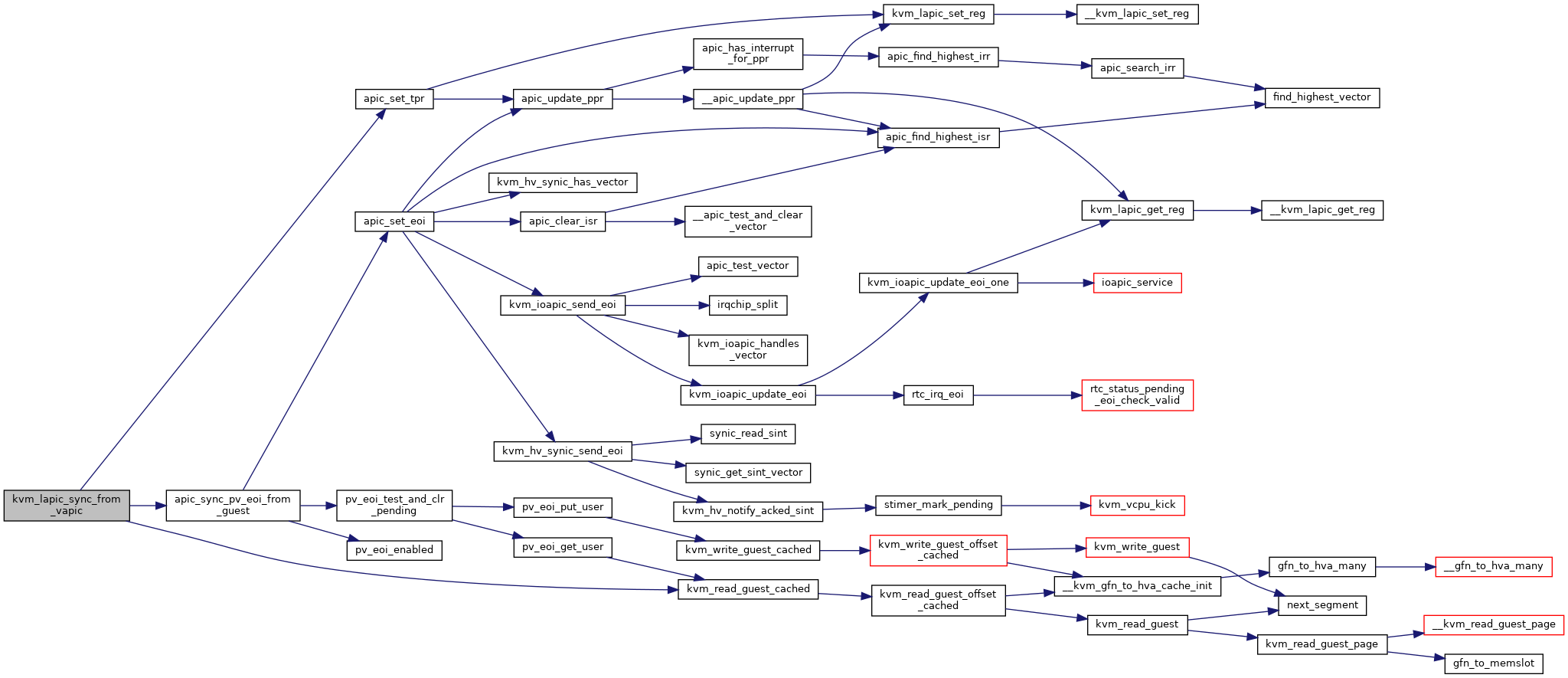

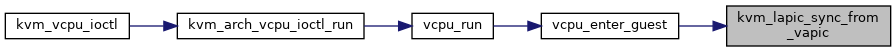

| void | kvm_lapic_sync_from_vapic (struct kvm_vcpu *vcpu) |

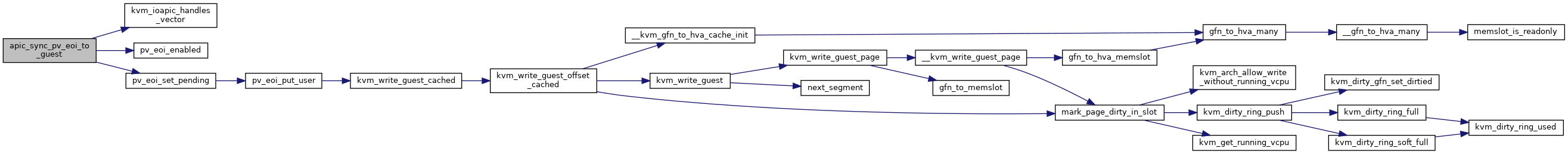

| static void | apic_sync_pv_eoi_to_guest (struct kvm_vcpu *vcpu, struct kvm_lapic *apic) |

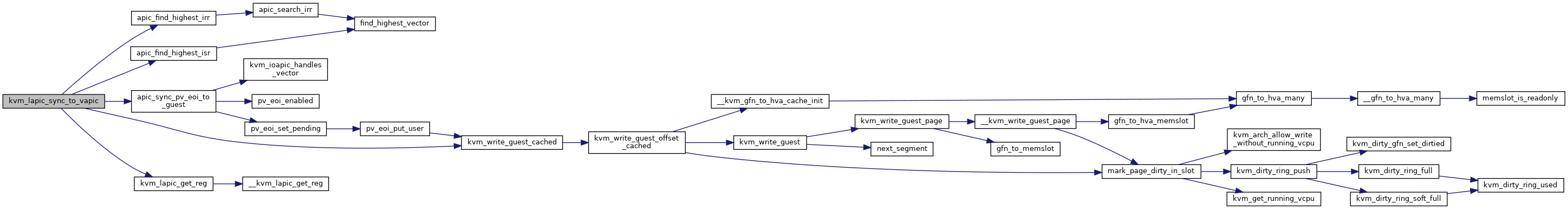

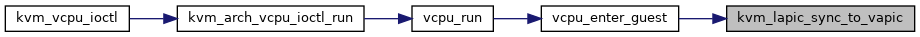

| void | kvm_lapic_sync_to_vapic (struct kvm_vcpu *vcpu) |

| int | kvm_lapic_set_vapic_addr (struct kvm_vcpu *vcpu, gpa_t vapic_addr) |

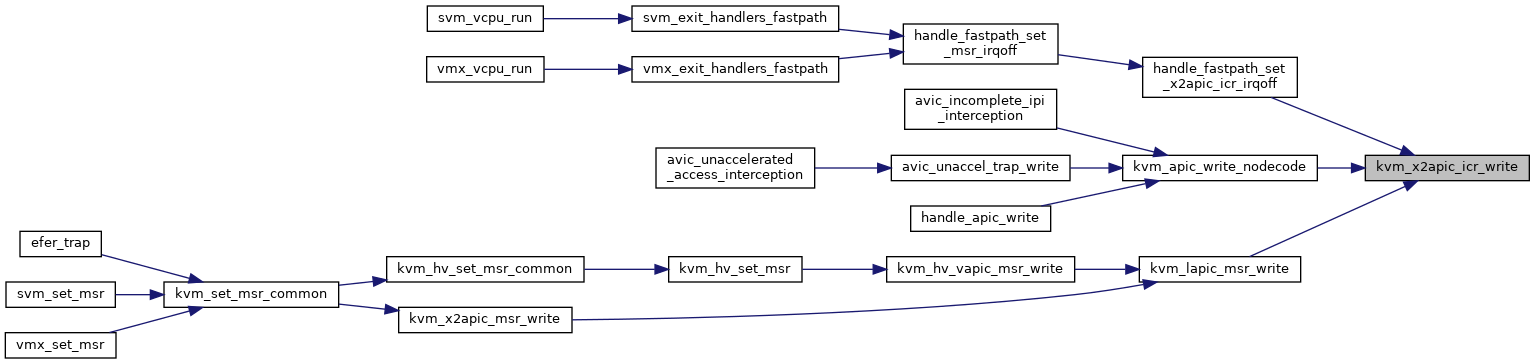

| int | kvm_x2apic_icr_write (struct kvm_lapic *apic, u64 data) |

| int | kvm_x2apic_msr_write (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| int | kvm_x2apic_msr_read (struct kvm_vcpu *vcpu, u32 msr, u64 *data) |

| int | kvm_hv_vapic_msr_write (struct kvm_vcpu *vcpu, u32 reg, u64 data) |

| int | kvm_hv_vapic_msr_read (struct kvm_vcpu *vcpu, u32 reg, u64 *data) |

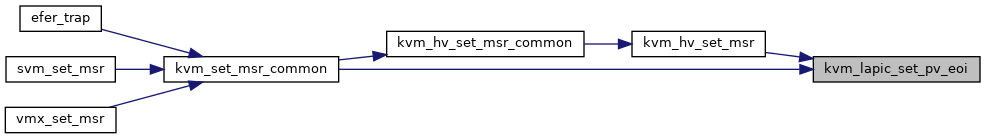

| int | kvm_lapic_set_pv_eoi (struct kvm_vcpu *vcpu, u64 data, unsigned long len) |

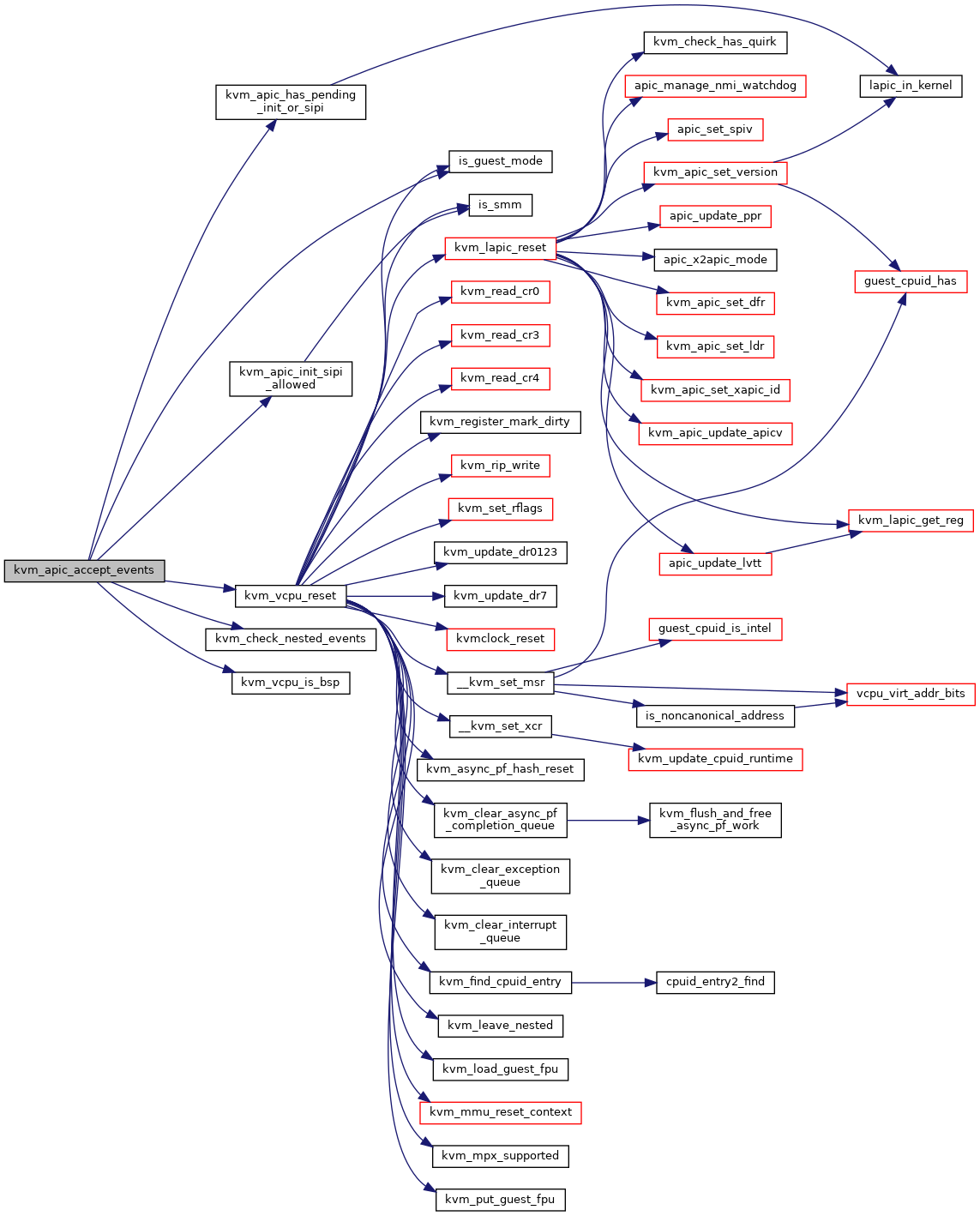

| int | kvm_apic_accept_events (struct kvm_vcpu *vcpu) |

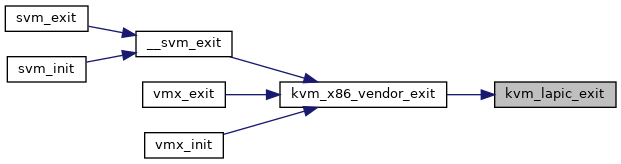

| void | kvm_lapic_exit (void) |

Variables

| static bool | lapic_timer_advance_dynamic __read_mostly |

| static const unsigned int | apic_lvt_mask [KVM_APIC_MAX_NR_LVT_ENTRIES] |

| static const struct kvm_io_device_ops | apic_mmio_ops |

Macro Definition Documentation

◆ APIC_REG_MASK

◆ APIC_REGS_MASK

| #define APIC_REGS_MASK | ( | first, | |

| count | |||

| ) | (APIC_REG_MASK(first) * ((1ull << (count)) - 1)) |
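As a rough illustration of how the two mask macros compose, the sketch below copies their definitions into a user-space file and builds a small register bitmap. Each register offset is divided by 16 to obtain a bit index, and `APIC_REGS_MASK` sets `count` consecutive bits starting at that index. The offsets `EX_APIC_ID` (0x20) and `EX_APIC_ISR` (0x100) and the helpers `reg_in_mask` and `example_mask` are assumptions introduced for this example.

```c
#include <stdint.h>

#define APIC_REG_MASK(reg) (1ull << ((reg) >> 4))
#define APIC_REGS_MASK(first, count) \
    (APIC_REG_MASK(first) * ((1ull << (count)) - 1))

/* Illustrative register offsets, assumed for this example. */
#define EX_APIC_ID  0x20
#define EX_APIC_ISR 0x100

/* Hypothetical helper: non-zero if the register at 'offset' is in 'mask'. */
static inline int reg_in_mask(uint64_t mask, unsigned int offset)
{
    return (mask & APIC_REG_MASK(offset)) != 0;
}

/* Hypothetical helper: one single register plus a run of eight registers. */
static inline uint64_t example_mask(void)
{
    return APIC_REG_MASK(EX_APIC_ID) | APIC_REGS_MASK(EX_APIC_ISR, 8);
}
```

With these inputs, `APIC_REG_MASK(0x20)` is bit 2, and `APIC_REGS_MASK(0x100, 8)` covers bits 16 through 23, i.e. the eight registers from offset 0x100 to 0x170.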

◆ APIC_VECTORS_PER_REG

◆ APIC_VERSION

◆ LAPIC_MMIO_LENGTH

◆ LAPIC_TIMER_ADVANCE_ADJUST_MAX

| #define LAPIC_TIMER_ADVANCE_ADJUST_MAX 10000 /* clock cycles */ |

◆ LAPIC_TIMER_ADVANCE_ADJUST_MIN

| #define LAPIC_TIMER_ADVANCE_ADJUST_MIN 100 /* clock cycles */ |

◆ LAPIC_TIMER_ADVANCE_ADJUST_STEP

◆ LAPIC_TIMER_ADVANCE_NS_INIT

◆ LAPIC_TIMER_ADVANCE_NS_MAX
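The five `LAPIC_TIMER_ADVANCE_*` constants suggest a bounded feedback loop: ignore deltas outside a window of clock cycles, move the advance value by a fraction of the observed error, and fall back to the initial value if the result grows too large. The following is a simplified user-space model of one plausible way the constants could interact. It is not taken from this page: the function `adjust_advance_ns`, its parameters, the clamp at zero, and the guard against a zero TSC frequency are all assumptions made for illustration.

```c
#include <stdint.h>

#define LAPIC_TIMER_ADVANCE_ADJUST_MIN  100    /* clock cycles */
#define LAPIC_TIMER_ADVANCE_ADJUST_MAX  10000  /* clock cycles */
#define LAPIC_TIMER_ADVANCE_NS_INIT     1000
#define LAPIC_TIMER_ADVANCE_NS_MAX      5000
#define LAPIC_TIMER_ADVANCE_ADJUST_STEP 8

/*
 * Hypothetical model. 'delta_cycles' is how late (positive) or early
 * (negative) the timer fired, in TSC cycles; 'tsc_khz' is the TSC rate.
 * Returns the new advance value in nanoseconds.
 */
static inline uint32_t adjust_advance_ns(uint32_t advance_ns,
                                         int64_t delta_cycles,
                                         uint32_t tsc_khz)
{
    int64_t mag = delta_cycles < 0 ? -delta_cycles : delta_cycles;
    uint64_t step;

    /* Skip tiny fluctuations and large spikes (and a zero rate: assumption). */
    if (tsc_khz == 0 ||
        mag > LAPIC_TIMER_ADVANCE_ADJUST_MAX ||
        mag < LAPIC_TIMER_ADVANCE_ADJUST_MIN)
        return advance_ns;

    /* Convert cycles to nanoseconds, then take a fraction of the error. */
    step = ((uint64_t)mag * 1000000ULL / tsc_khz) /
           LAPIC_TIMER_ADVANCE_ADJUST_STEP;

    if (delta_cycles < 0)  /* fired too early: advance less (clamped at 0) */
        advance_ns = step > advance_ns ? 0 : (uint32_t)(advance_ns - step);
    else                   /* fired too late: advance more */
        advance_ns = (uint32_t)(advance_ns + step);

    /* Fall back to the initial value if the result exceeds the cap. */
    if (advance_ns > LAPIC_TIMER_ADVANCE_NS_MAX)
        advance_ns = LAPIC_TIMER_ADVANCE_NS_INIT;

    return advance_ns;
}
```

In this model, with a 2 GHz TSC (`tsc_khz = 2000000`), a delta of 4000 cycles corresponds to 2000 ns, so each call moves the advance value by 250 ns.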

◆ LINT_MASK

| #define LINT_MASK |

◆ LVT_MASK

| #define LVT_MASK (APIC_LVT_MASKED | APIC_SEND_PENDING | APIC_VECTOR_MASK) |
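To make the combined value of `LVT_MASK` concrete, the sketch below ORs the three component flags and applies the result to a raw register value. The numeric values of `APIC_LVT_MASKED`, `APIC_SEND_PENDING` and `APIC_VECTOR_MASK` are assumed from the common x86 APIC definitions (they are not listed on this page), and the helpers `lvt_sanitize` and `lvt_is_masked` are hypothetical names.

```c
#include <stdint.h>

/* Assumed values, following the usual x86 APIC register layout. */
#define APIC_LVT_MASKED   (1u << 16)
#define APIC_SEND_PENDING (1u << 12)
#define APIC_VECTOR_MASK  0x000FFu

#define LVT_MASK (APIC_LVT_MASKED | APIC_SEND_PENDING | APIC_VECTOR_MASK)

/* Hypothetical helper: keep only the bits that LVT_MASK allows. */
static inline uint32_t lvt_sanitize(uint32_t val)
{
    return val & LVT_MASK;
}

/* Hypothetical helper: non-zero if the LVT entry has its mask bit set. */
static inline int lvt_is_masked(uint32_t val)
{
    return (val & APIC_LVT_MASKED) != 0;
}
```

Under these assumed values `LVT_MASK` evaluates to 0x110FF, so writing 0xFFFFFFFF through `lvt_sanitize` leaves only the mask bit, the pending bit and the 8-bit vector.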

◆ MAX_APIC_VECTOR

◆ mod_64
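The `mod_64(x, y)` macro computes a 64-bit remainder as `x - y * (x / y)`, using `div64_u64()` for the quotient. The sketch below reproduces it in user space. The `div64_u64` defined here is a stand-in for the kernel helper, and `ns_into_period` is a hypothetical wrapper added only to show a typical use, such as finding how far into a repeating period a timestamp falls.

```c
#include <stdint.h>

/* User-space stand-in for the kernel's div64_u64(): unsigned 64-bit quotient. */
static inline uint64_t div64_u64(uint64_t dividend, uint64_t divisor)
{
    return dividend / divisor;
}

#define mod_64(x, y) ((x) - (y) * div64_u64(x, y))

/*
 * Hypothetical wrapper: position of 'elapsed_ns' inside a repeating
 * period of 'period_ns' nanoseconds. 'period_ns' must be non-zero.
 */
static inline uint64_t ns_into_period(uint64_t elapsed_ns, uint64_t period_ns)
{
    return mod_64(elapsed_ns, period_ns);
}
```

Note that the macro evaluates both arguments twice, so arguments with side effects should be avoided.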

◆ pr_fmt

Enumeration Type Documentation

◆ anonymous enum

Function Documentation

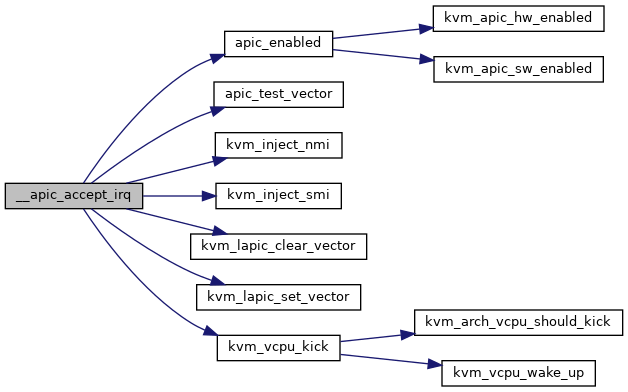

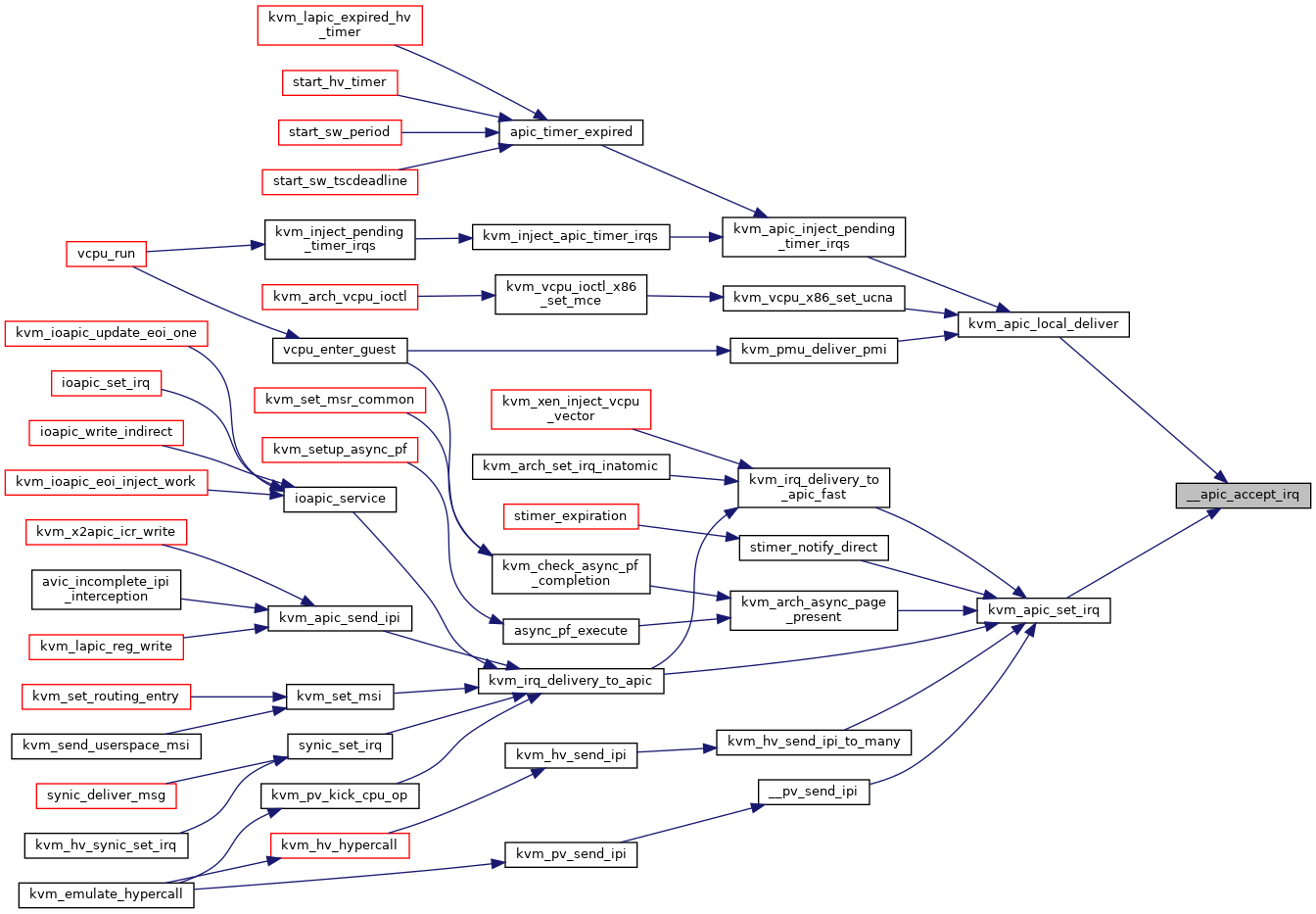

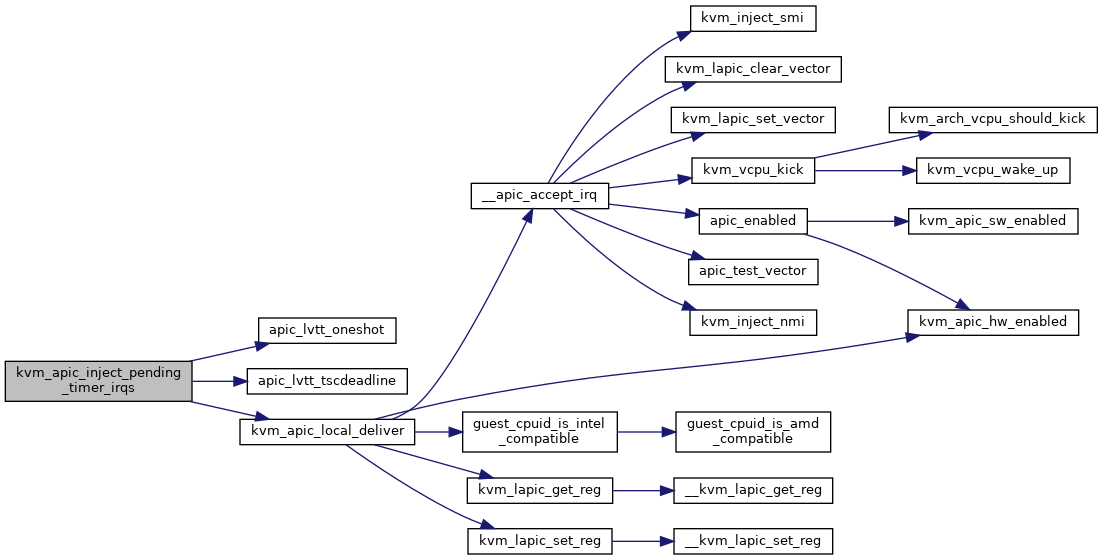

◆ __apic_accept_irq()

|

static |

Definition at line 1291 of file lapic.c.

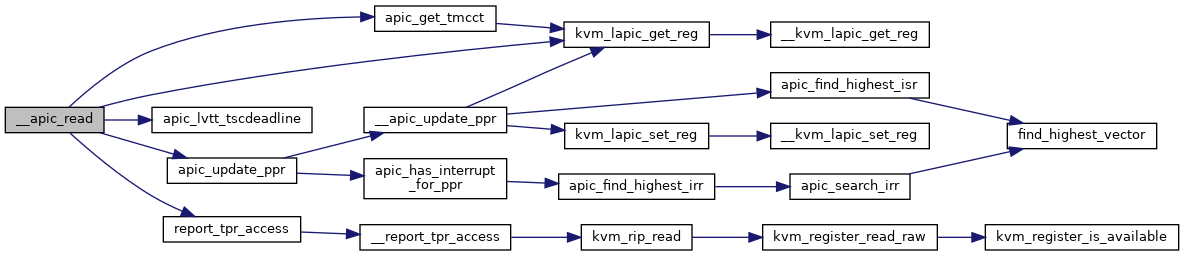

◆ __apic_read()

|

static |

Definition at line 1566 of file lapic.c.

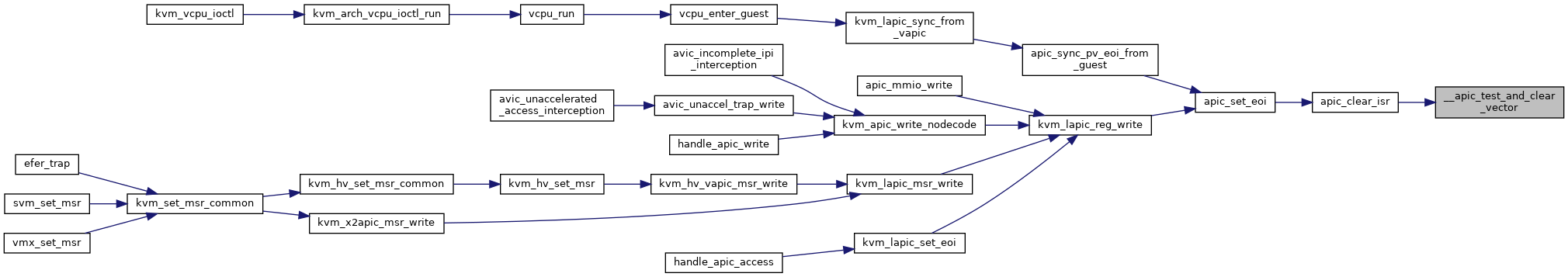

◆ __apic_test_and_clear_vector()

|

inline static |

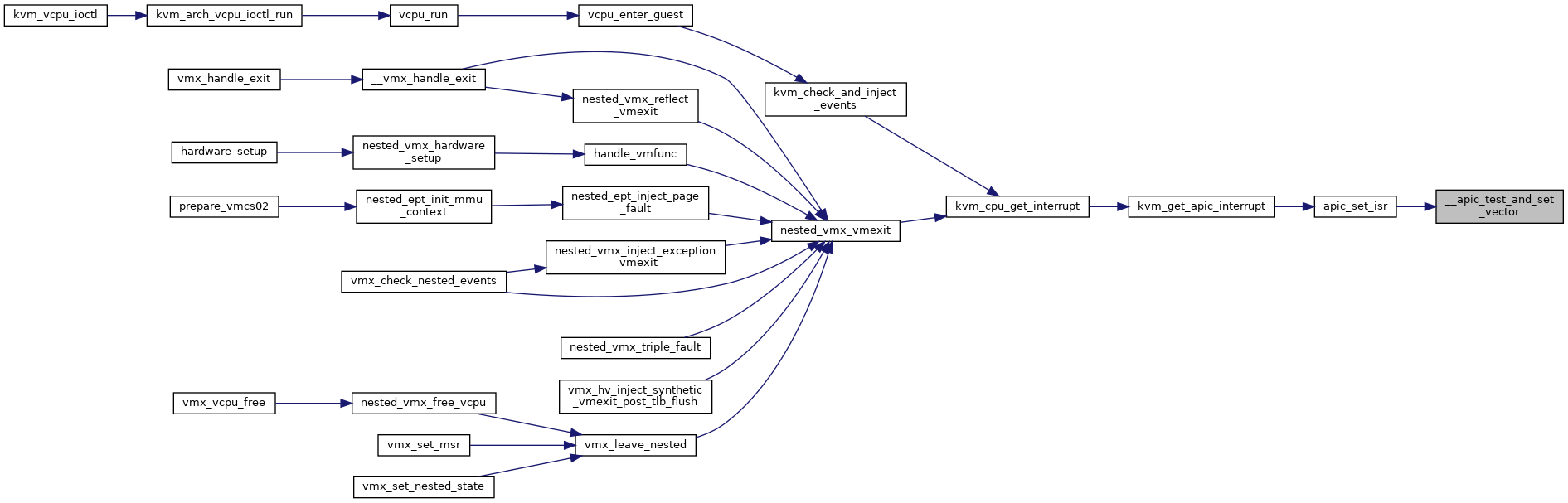

◆ __apic_test_and_set_vector()

|

inline static |

◆ __apic_update_ppr()

|

static |

Definition at line 944 of file lapic.c.

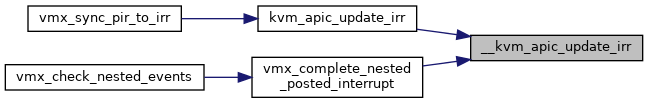

◆ __kvm_apic_update_irr()

| bool __kvm_apic_update_irr | ( | u32 * | pir, |

| void * | regs, | ||

| int * | max_irr | ||

| ) |

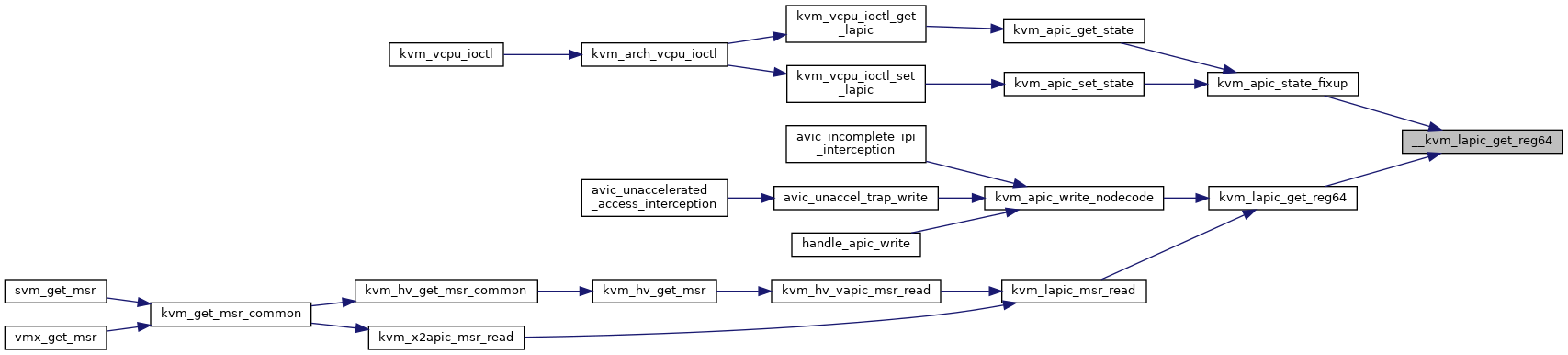

◆ __kvm_lapic_get_reg64()

|

static |

◆ __kvm_lapic_set_reg()

|

inline static |

◆ __kvm_lapic_set_reg64()

|

static |

◆ __kvm_migrate_apic_timer()

| void __kvm_migrate_apic_timer | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3029 of file lapic.c.

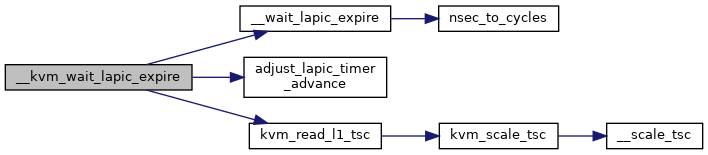

◆ __kvm_wait_lapic_expire()

|

static |

Definition at line 1844 of file lapic.c.

◆ __pv_send_ipi()

|

static |

Definition at line 832 of file lapic.c.

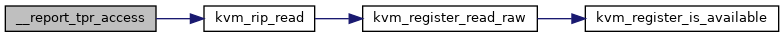

◆ __report_tpr_access()

|

static |

Definition at line 1550 of file lapic.c.

◆ __start_apic_timer()

|

static |

Definition at line 2216 of file lapic.c.

◆ __wait_lapic_expire()

|

inline static |

◆ adjust_lapic_timer_advance()

|

inline static |

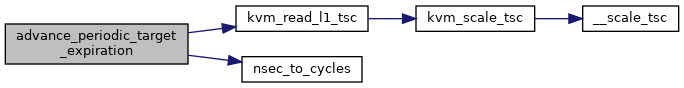

◆ advance_periodic_target_expiration()

|

static |

◆ apic_clear_irr()

|

inline static |

Definition at line 723 of file lapic.c.

◆ apic_clear_isr()

|

inline static |

Definition at line 787 of file lapic.c.

◆ apic_enabled()

|

inline static |

◆ apic_find_highest_irr()

|

inline static |

◆ apic_find_highest_isr()

|

inline static |

◆ apic_get_tmcct()

|

static |

◆ apic_has_interrupt_for_ppr()

|

static |

◆ apic_has_pending_timer()

| int apic_has_pending_timer | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2752 of file lapic.c.

◆ apic_lvt_enabled()

|

inline static |

◆ apic_lvt_nmi_mode()

|

inline static |

◆ apic_lvtt_oneshot()

|

inline static |

◆ apic_lvtt_period()

|

inline static |

◆ apic_lvtt_tscdeadline()

|

inline static |

◆ apic_manage_nmi_watchdog()

|

static |

◆ apic_mmio_in_range()

|

static |

◆ apic_mmio_read()

|

static |

Definition at line 1688 of file lapic.c.

◆ apic_mmio_write()

|

static |

Definition at line 2406 of file lapic.c.

◆ apic_search_irr()

|

inline static |

◆ apic_set_eoi()

|

static |

Definition at line 1465 of file lapic.c.

◆ apic_set_isr()

|

inline static |

Definition at line 744 of file lapic.c.

◆ apic_set_spiv()

|

inline static |

◆ apic_set_tpr()

|

static |

◆ apic_sync_pv_eoi_from_guest()

|

static |

Definition at line 3049 of file lapic.c.

◆ apic_sync_pv_eoi_to_guest()

|

static |

Definition at line 3095 of file lapic.c.

◆ apic_test_vector()

|

inline static |

◆ apic_timer_expired()

|

static |

Definition at line 1892 of file lapic.c.

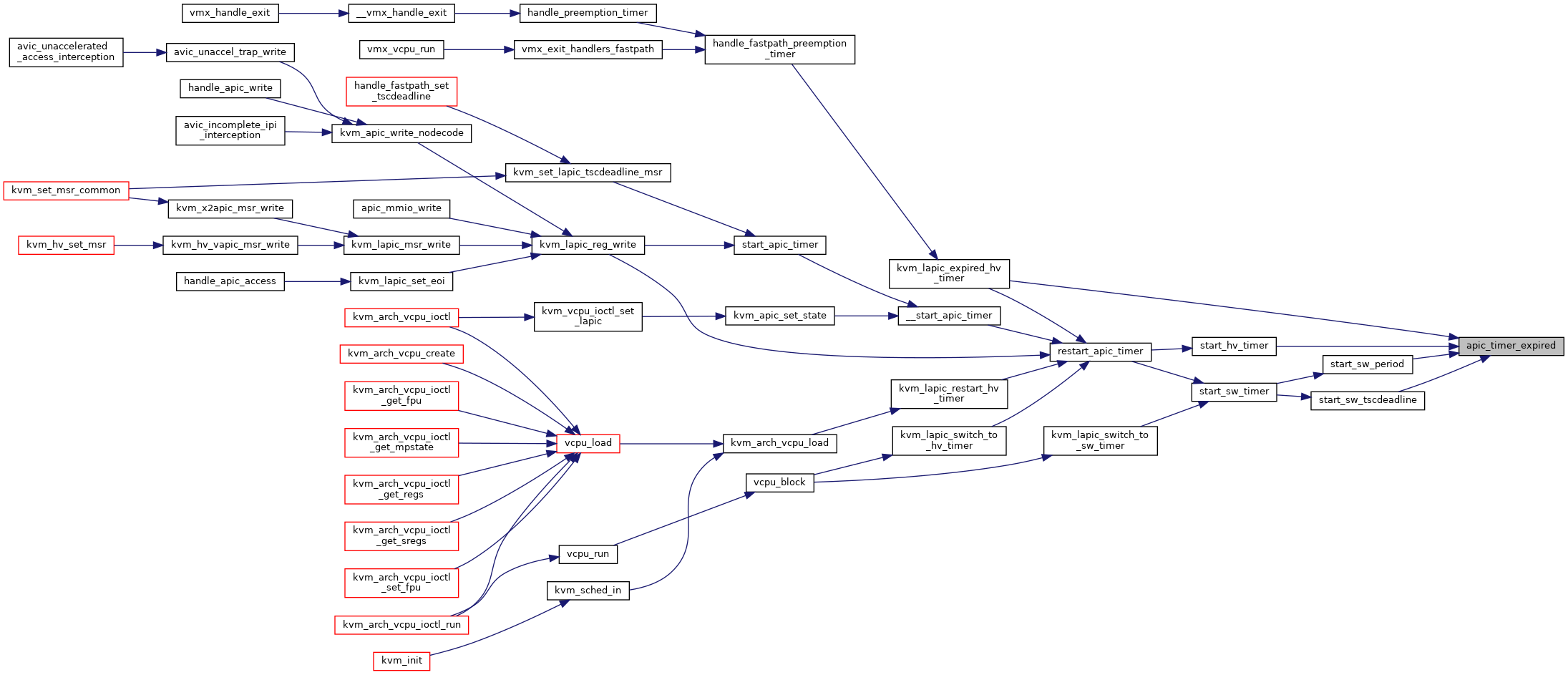

◆ apic_timer_fn()

|

static |

Definition at line 2782 of file lapic.c.

◆ apic_update_lvtt()

|

static |

Definition at line 1754 of file lapic.c.

◆ apic_update_ppr()

|

static |

Definition at line 966 of file lapic.c.

◆ cancel_apic_timer()

|

static |

◆ cancel_hv_timer()

|

static |

◆ count_vectors()

|

static |

◆ DEFINE_STATIC_KEY_DEFERRED_FALSE() [1/2]

| __read_mostly DEFINE_STATIC_KEY_DEFERRED_FALSE | ( | apic_hw_disabled | , |

| HZ | |||

| ) |

◆ DEFINE_STATIC_KEY_DEFERRED_FALSE() [2/2]

| __read_mostly DEFINE_STATIC_KEY_DEFERRED_FALSE | ( | apic_sw_disabled | , |

| HZ | |||

| ) |

◆ EXPORT_SYMBOL_GPL() [1/15]

| EXPORT_SYMBOL_GPL | ( | __kvm_apic_update_irr | ) |

◆ EXPORT_SYMBOL_GPL() [2/15]

| EXPORT_SYMBOL_GPL | ( | kvm_alloc_apic_access_page | ) |

◆ EXPORT_SYMBOL_GPL() [3/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_clear_irr | ) |

◆ EXPORT_SYMBOL_GPL() [4/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_has_interrupt | ) |

◆ EXPORT_SYMBOL_GPL() [5/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_match_dest | ) |

◆ EXPORT_SYMBOL_GPL() [6/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_send_ipi | ) |

◆ EXPORT_SYMBOL_GPL() [7/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_set_eoi_accelerated | ) |

◆ EXPORT_SYMBOL_GPL() [8/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_update_irr | ) |

◆ EXPORT_SYMBOL_GPL() [9/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_update_ppr | ) |

◆ EXPORT_SYMBOL_GPL() [10/15]

| EXPORT_SYMBOL_GPL | ( | kvm_apic_write_nodecode | ) |

◆ EXPORT_SYMBOL_GPL() [11/15]

| EXPORT_SYMBOL_GPL | ( | kvm_lapic_expired_hv_timer | ) |

◆ EXPORT_SYMBOL_GPL() [12/15]

| EXPORT_SYMBOL_GPL | ( | kvm_lapic_find_highest_irr | ) |

◆ EXPORT_SYMBOL_GPL() [13/15]

| EXPORT_SYMBOL_GPL | ( | kvm_lapic_readable_reg_mask | ) |

◆ EXPORT_SYMBOL_GPL() [14/15]

| EXPORT_SYMBOL_GPL | ( | kvm_lapic_set_eoi | ) |

◆ EXPORT_SYMBOL_GPL() [15/15]

| EXPORT_SYMBOL_GPL | ( | kvm_wait_lapic_expire | ) |

◆ find_highest_vector()

|

static |

◆ get_lvt_index()

|

static |

◆ kvm_alloc_apic_access_page()

| int kvm_alloc_apic_access_page | ( | struct kvm * | kvm | ) |

Definition at line 2598 of file lapic.c.

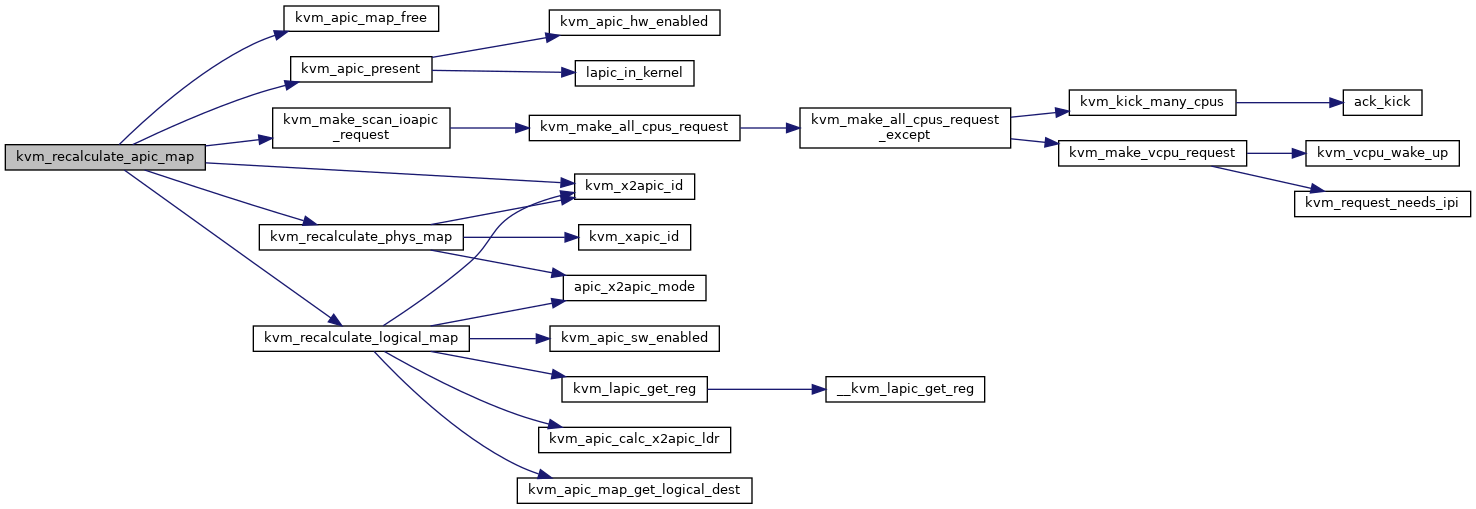

◆ kvm_apic_accept_events()

| int kvm_apic_accept_events | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3263 of file lapic.c.

◆ kvm_apic_accept_pic_intr()

| int kvm_apic_accept_pic_intr | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_apic_after_set_mcg_cap()

| void kvm_apic_after_set_mcg_cap | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 596 of file lapic.c.

◆ kvm_apic_broadcast()

|

static |

◆ kvm_apic_calc_nr_lvt_entries()

|

inline static |

◆ kvm_apic_calc_x2apic_ldr()

|

inline static |

◆ kvm_apic_clear_irr()

| void kvm_apic_clear_irr | ( | struct kvm_vcpu * | vcpu, |

| int | vec | ||

| ) |

◆ kvm_apic_compare_prio()

| int kvm_apic_compare_prio | ( | struct kvm_vcpu * | vcpu1, |

| struct kvm_vcpu * | vcpu2 | ||

| ) |

◆ kvm_apic_disabled_lapic_found()

|

static |

◆ kvm_apic_get_state()

| int kvm_apic_get_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_state * | s | ||

| ) |

Definition at line 2970 of file lapic.c.

◆ kvm_apic_has_interrupt()

| int kvm_apic_has_interrupt | ( | struct kvm_vcpu * | vcpu | ) |

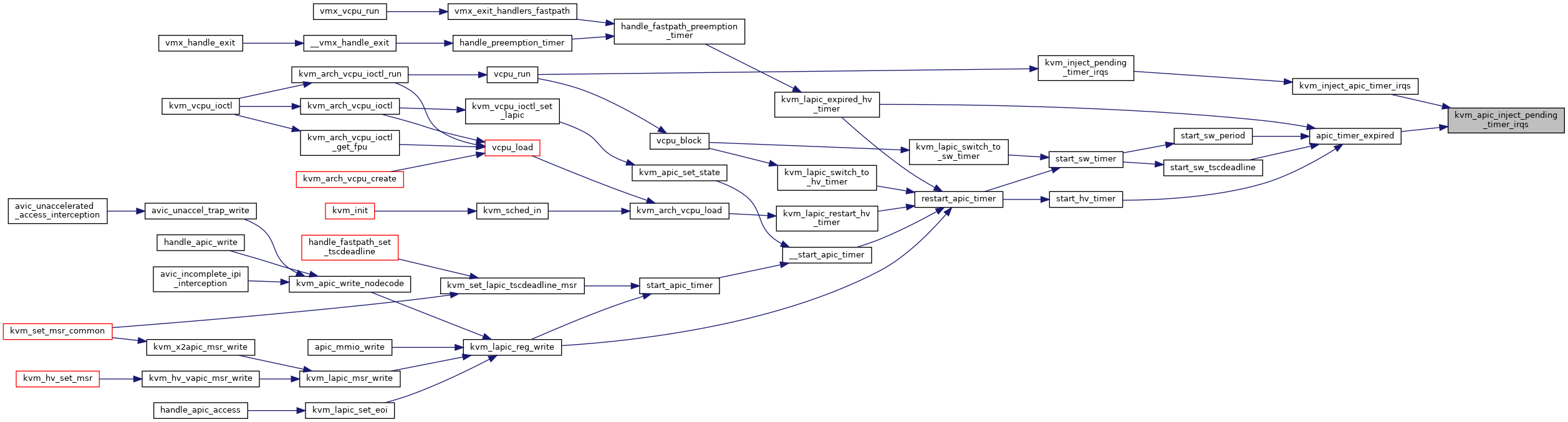

◆ kvm_apic_inject_pending_timer_irqs()

|

static |

Definition at line 1879 of file lapic.c.

◆ kvm_apic_is_broadcast_dest()

|

static |

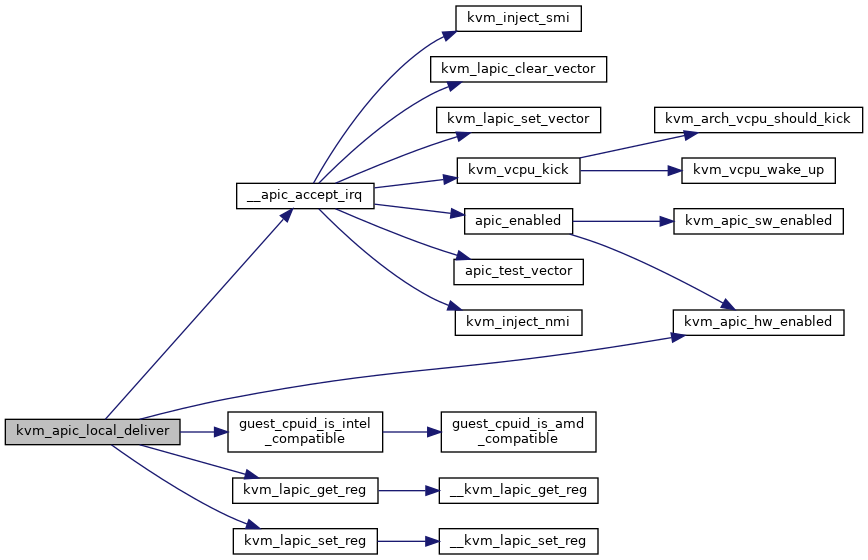

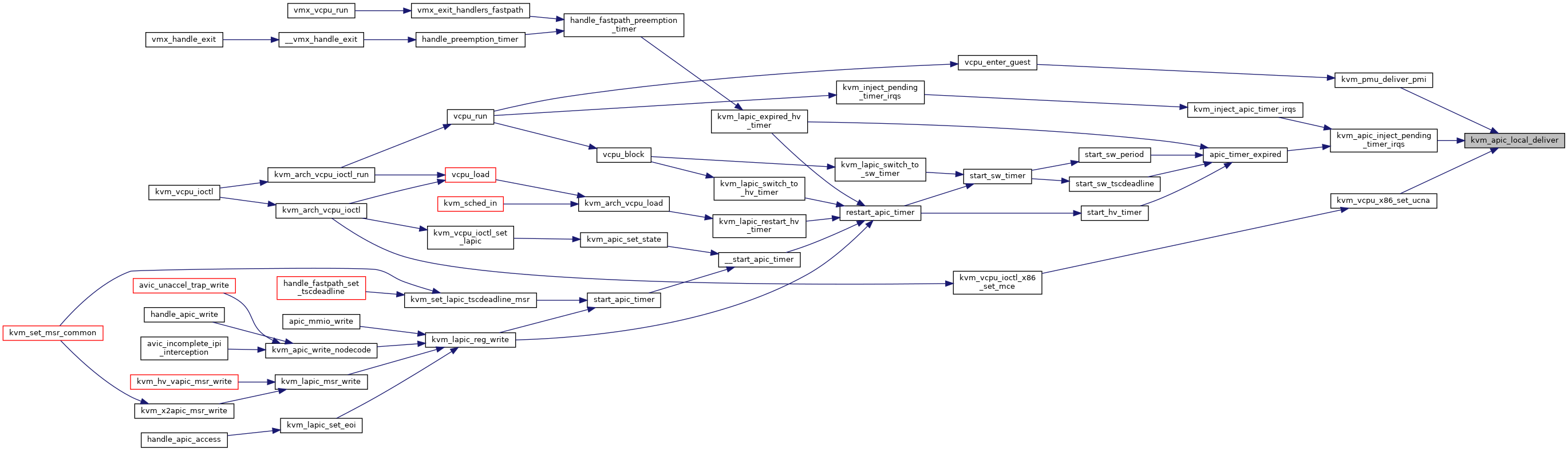

◆ kvm_apic_local_deliver()

| int kvm_apic_local_deliver | ( | struct kvm_lapic * | apic, |

| int | lvt_type | ||

| ) |

Definition at line 2762 of file lapic.c.

◆ kvm_apic_map_free()

|

static |

◆ kvm_apic_map_get_dest_lapic()

|

inline static |

Definition at line 1142 of file lapic.c.

◆ kvm_apic_map_get_logical_dest()

|

inline static |

◆ kvm_apic_match_dest()

| bool kvm_apic_match_dest | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic * | source, | ||

| int | shorthand, | ||

| unsigned int | dest, | ||

| int | dest_mode | ||

| ) |

Definition at line 1067 of file lapic.c.

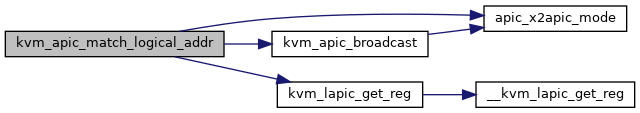

◆ kvm_apic_match_logical_addr()

|

static |

Definition at line 1013 of file lapic.c.

◆ kvm_apic_match_physical_addr()

|

static |

◆ kvm_apic_mda()

|

static |

◆ kvm_apic_nmi_wd_deliver()

| void kvm_apic_nmi_wd_deliver | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_apic_pending_eoi()

| bool kvm_apic_pending_eoi | ( | struct kvm_vcpu * | vcpu, |

| int | vector | ||

| ) |

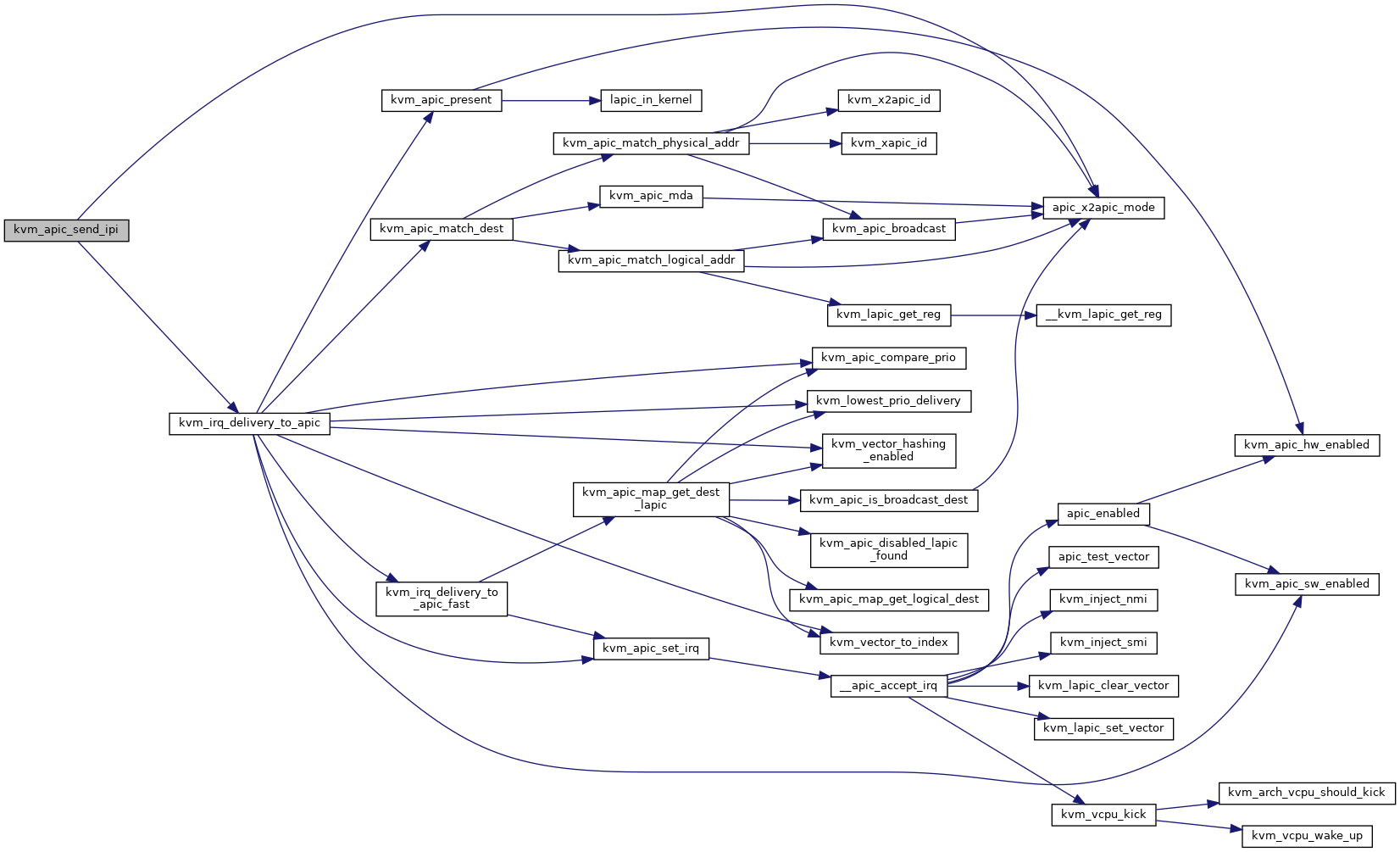

◆ kvm_apic_send_ipi()

| void kvm_apic_send_ipi | ( | struct kvm_lapic * | apic, |

| u32 | icr_low, | ||

| u32 | icr_high | ||

| ) |

Definition at line 1504 of file lapic.c.

◆ kvm_apic_set_dfr()

|

inline static |

◆ kvm_apic_set_eoi_accelerated()

| void kvm_apic_set_eoi_accelerated | ( | struct kvm_vcpu * | vcpu, |

| int | vector | ||

| ) |

◆ kvm_apic_set_irq()

| int kvm_apic_set_irq | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_irq * | irq, | ||

| struct dest_map * | dest_map | ||

| ) |

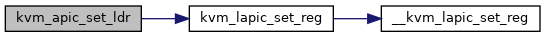

◆ kvm_apic_set_ldr()

|

inline static |

◆ kvm_apic_set_state()

| int kvm_apic_set_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_lapic_state * | s | ||

| ) |

Definition at line 2984 of file lapic.c.

◆ kvm_apic_set_version()

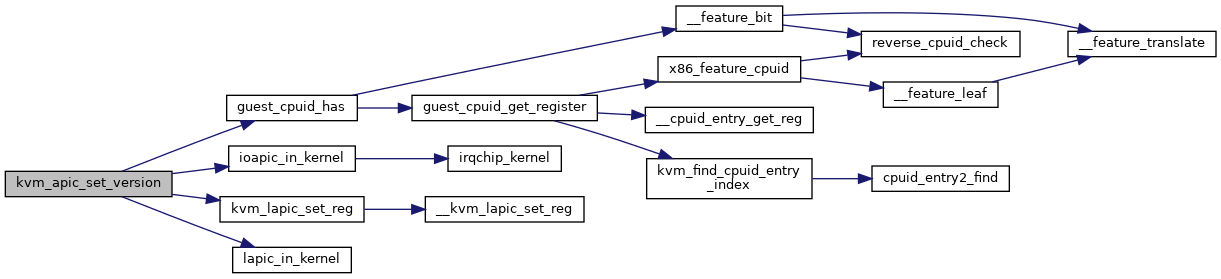

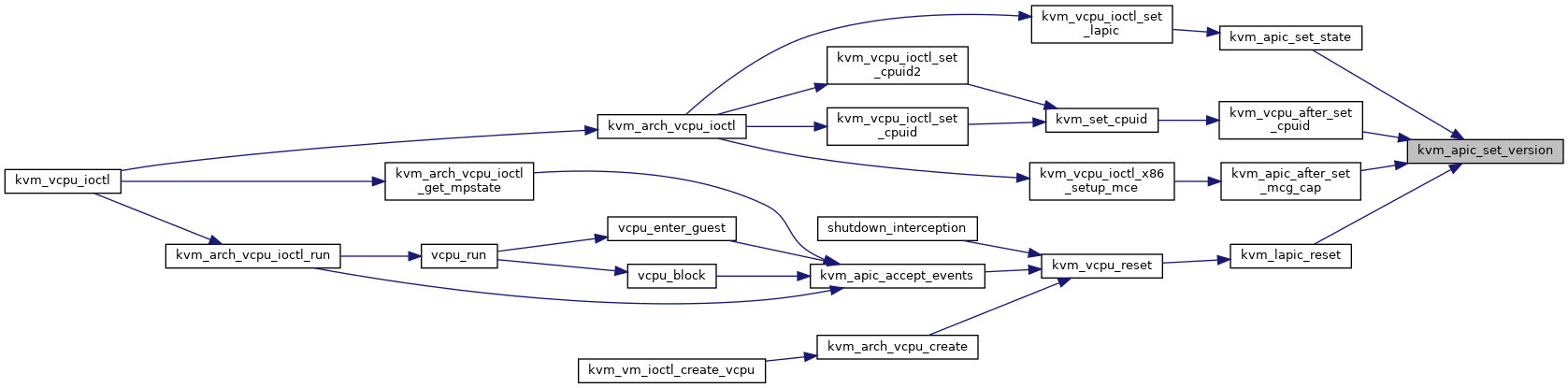

| void kvm_apic_set_version | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 573 of file lapic.c.

◆ kvm_apic_set_x2apic_id()

|

inline static |

◆ kvm_apic_set_xapic_id()

|

inline static |

◆ kvm_apic_state_fixup()

|

static |

Definition at line 2932 of file lapic.c.

◆ kvm_apic_update_apicv()

| void kvm_apic_update_apicv | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_apic_update_irr()

| bool kvm_apic_update_irr | ( | struct kvm_vcpu * | vcpu, |

| u32 * | pir, | ||

| int * | max_irr | ||

| ) |

Definition at line 690 of file lapic.c.

◆ kvm_apic_update_ppr()

| void kvm_apic_update_ppr | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_apic_write_nodecode()

| void kvm_apic_write_nodecode | ( | struct kvm_vcpu * | vcpu, |

| u32 | offset | ||

| ) |

Definition at line 2446 of file lapic.c.

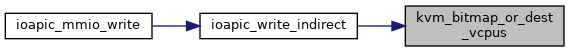

◆ kvm_bitmap_or_dest_vcpus()

| void kvm_bitmap_or_dest_vcpus | ( | struct kvm * | kvm, |

| struct kvm_lapic_irq * | irq, | ||

| unsigned long * | vcpu_bitmap | ||

| ) |

Definition at line 1394 of file lapic.c.

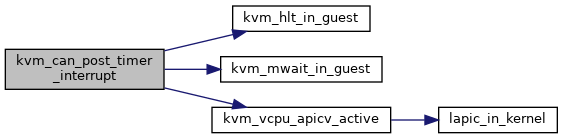

◆ kvm_can_post_timer_interrupt()

|

static |

Definition at line 148 of file lapic.c.

◆ kvm_can_use_hv_timer()

| bool kvm_can_use_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

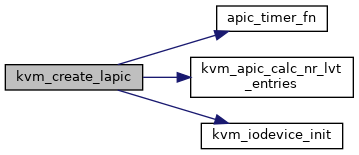

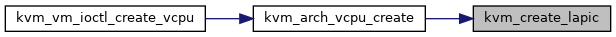

◆ kvm_create_lapic()

| int kvm_create_lapic | ( | struct kvm_vcpu * | vcpu, |

| int | timer_advance_ns | ||

| ) |

Definition at line 2810 of file lapic.c.

◆ kvm_free_lapic()

| void kvm_free_lapic | ( | struct kvm_vcpu * | vcpu | ) |

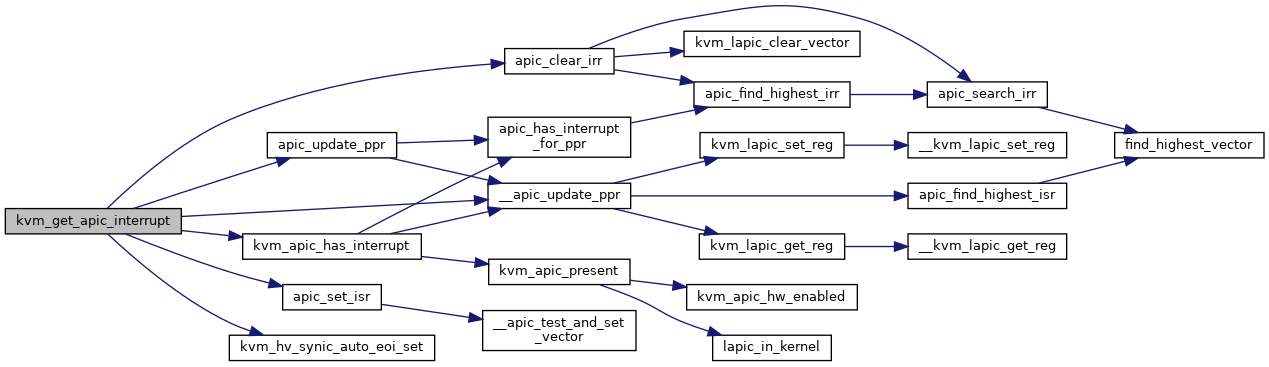

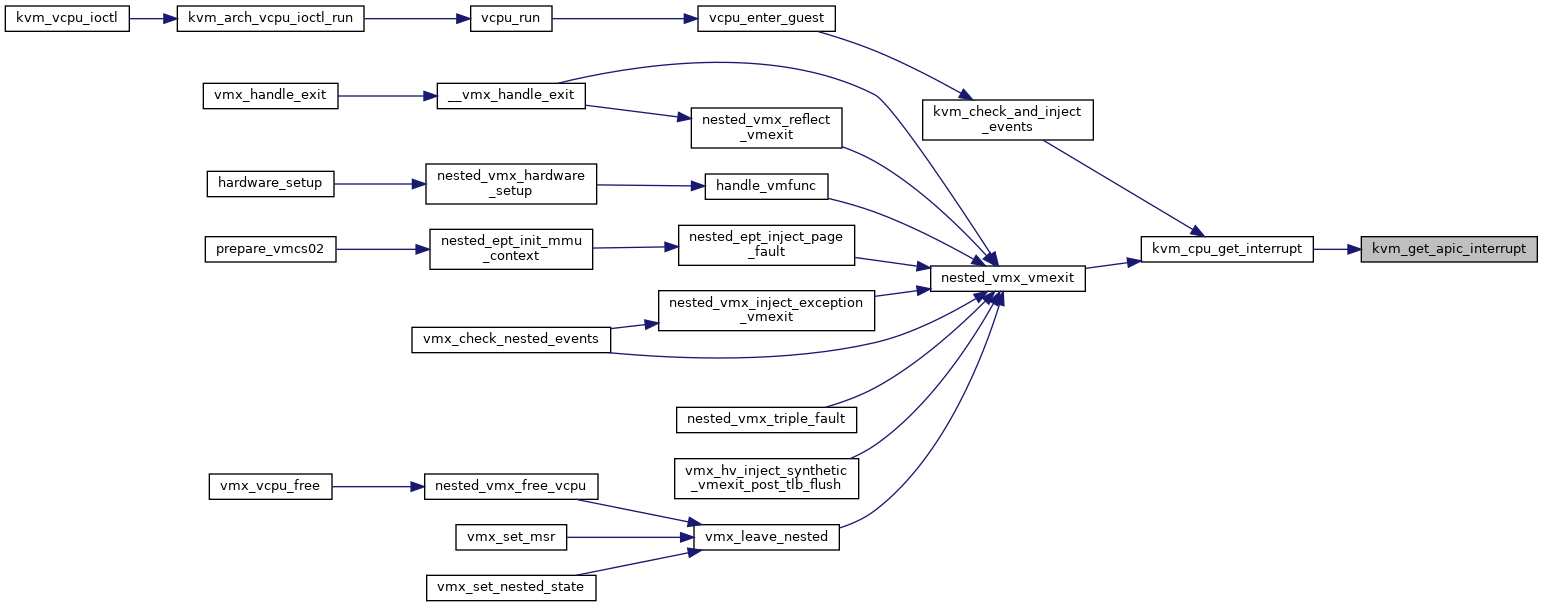

◆ kvm_get_apic_interrupt()

| int kvm_get_apic_interrupt | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2894 of file lapic.c.

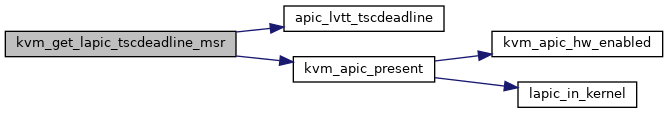

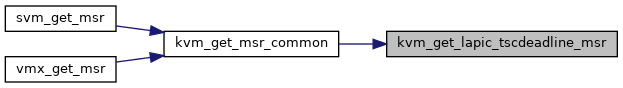

◆ kvm_get_lapic_tscdeadline_msr()

| u64 kvm_get_lapic_tscdeadline_msr | ( | struct kvm_vcpu * | vcpu | ) |

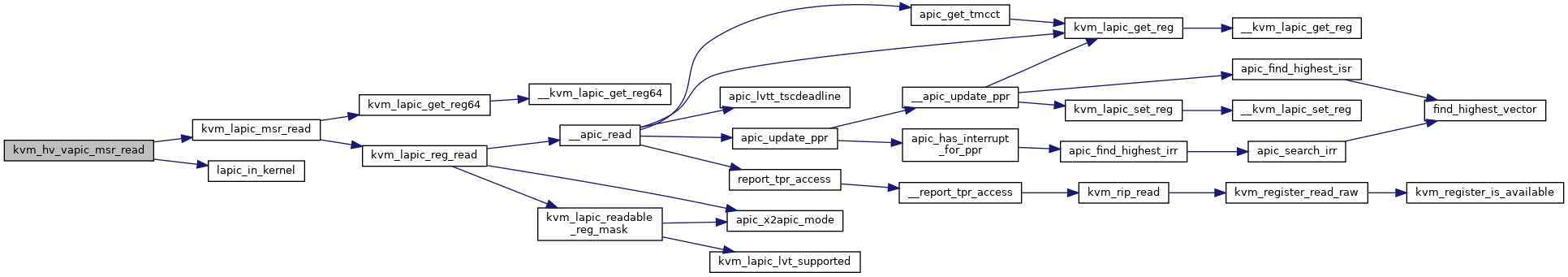

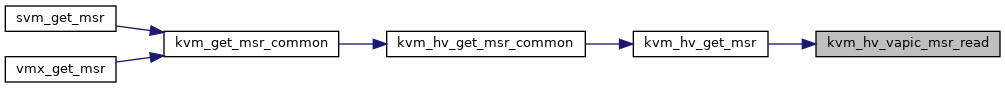

◆ kvm_hv_vapic_msr_read()

| int kvm_hv_vapic_msr_read | ( | struct kvm_vcpu * | vcpu, |

| u32 | reg, | ||

| u64 * | data | ||

| ) |

Definition at line 3229 of file lapic.c.

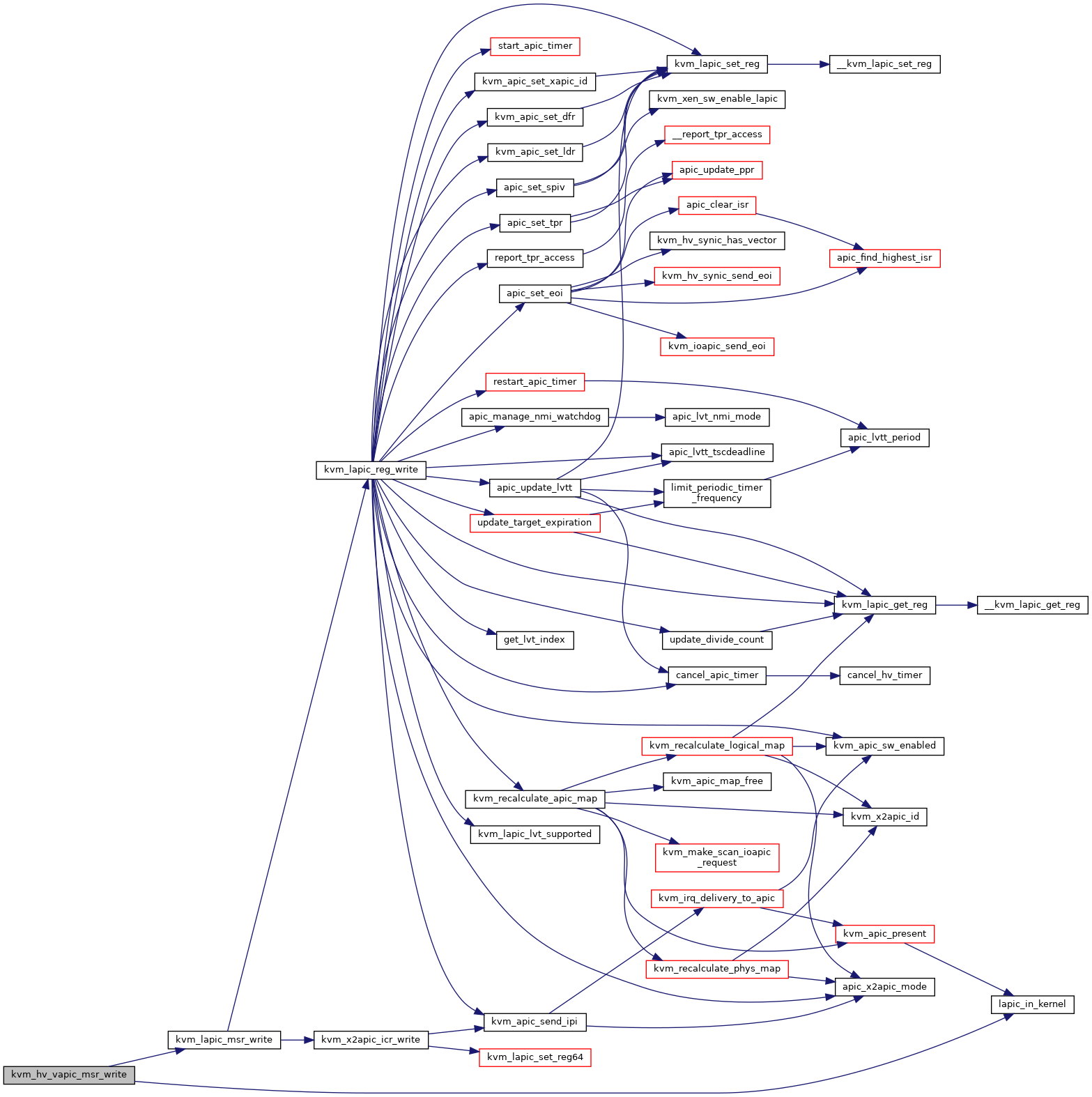

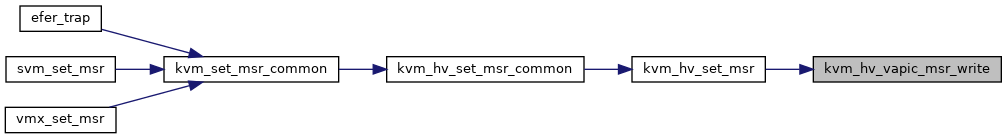

◆ kvm_hv_vapic_msr_write()

| int kvm_hv_vapic_msr_write | ( | struct kvm_vcpu * | vcpu, |

| u32 | reg, | ||

| u64 | data | ||

| ) |

Definition at line 3221 of file lapic.c.

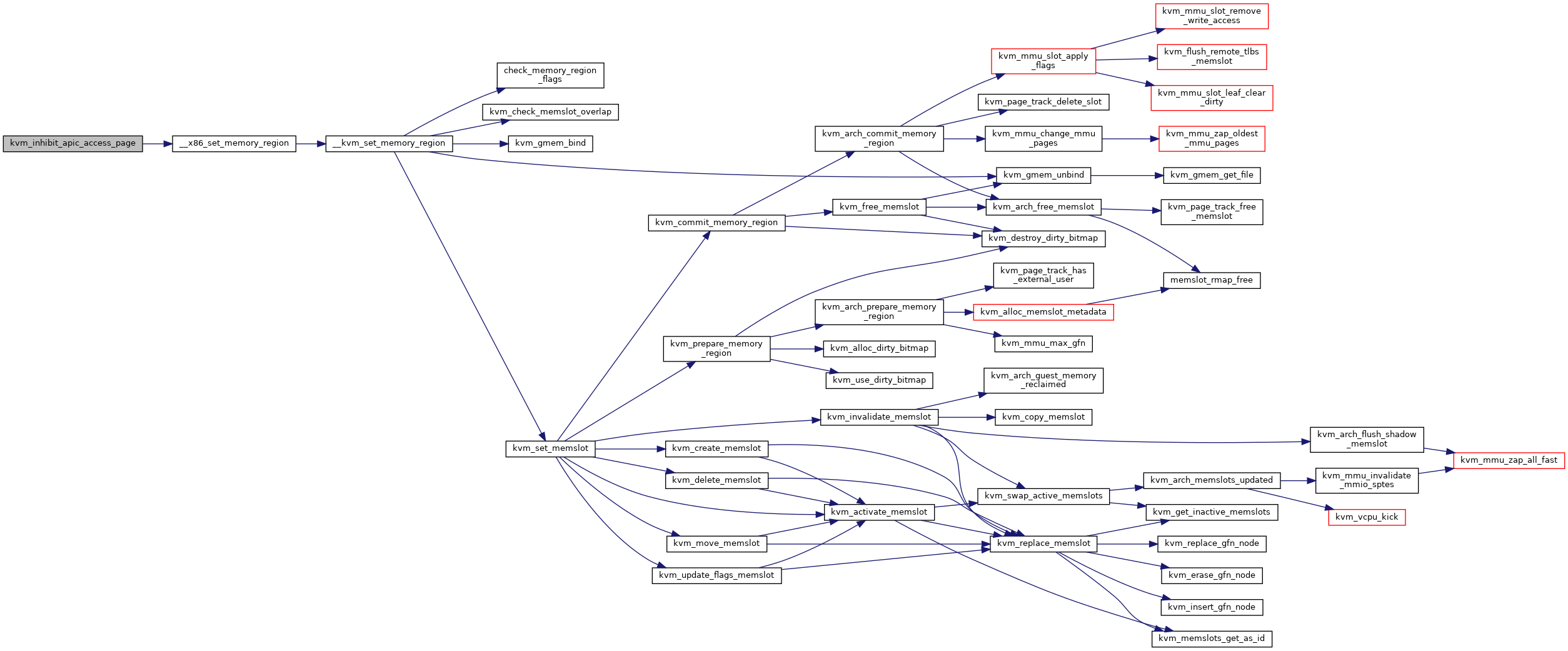

◆ kvm_inhibit_apic_access_page()

| void kvm_inhibit_apic_access_page | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_inject_apic_timer_irqs()

| void kvm_inject_apic_timer_irqs | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_intr_is_single_vcpu_fast()

| bool kvm_intr_is_single_vcpu_fast | ( | struct kvm * | kvm, |

| struct kvm_lapic_irq * | irq, | ||

| struct kvm_vcpu ** | dest_vcpu | ||

| ) |

◆ kvm_ioapic_handles_vector()

|

static |

◆ kvm_ioapic_send_eoi()

|

static |

Definition at line 1442 of file lapic.c.

◆ kvm_irq_delivery_to_apic_fast()

| bool kvm_irq_delivery_to_apic_fast | ( | struct kvm * | kvm, |

| struct kvm_lapic * | src, | ||

| struct kvm_lapic_irq * | irq, | ||

| int * | r, | ||

| struct dest_map * | dest_map | ||

| ) |

◆ kvm_lapic_exit()

| void kvm_lapic_exit | ( | void | ) |

◆ kvm_lapic_expired_hv_timer()

| void kvm_lapic_expired_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_find_highest_irr()

| int kvm_lapic_find_highest_irr | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_get_cr8()

| u64 kvm_lapic_get_cr8 | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_get_reg64()

|

static |

◆ kvm_lapic_hv_timer_in_use()

| bool kvm_lapic_hv_timer_in_use | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_lvt_supported()

|

inline static |

◆ kvm_lapic_msr_read()

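| int kvm_lapic_msr_read | ( | struct kvm_lapic * | apic, |

| u32 | reg, | ||

| u64 * | data | ||

| ) |

static |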

◆ kvm_lapic_msr_write()

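| int kvm_lapic_msr_write | ( | struct kvm_lapic * | apic, |

| u32 | reg, | ||

| u64 | data | ||

| ) |

static |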

◆ kvm_lapic_readable_reg_mask()

| u64 kvm_lapic_readable_reg_mask | ( | struct kvm_lapic * | apic | ) |

Definition at line 1607 of file lapic.c.

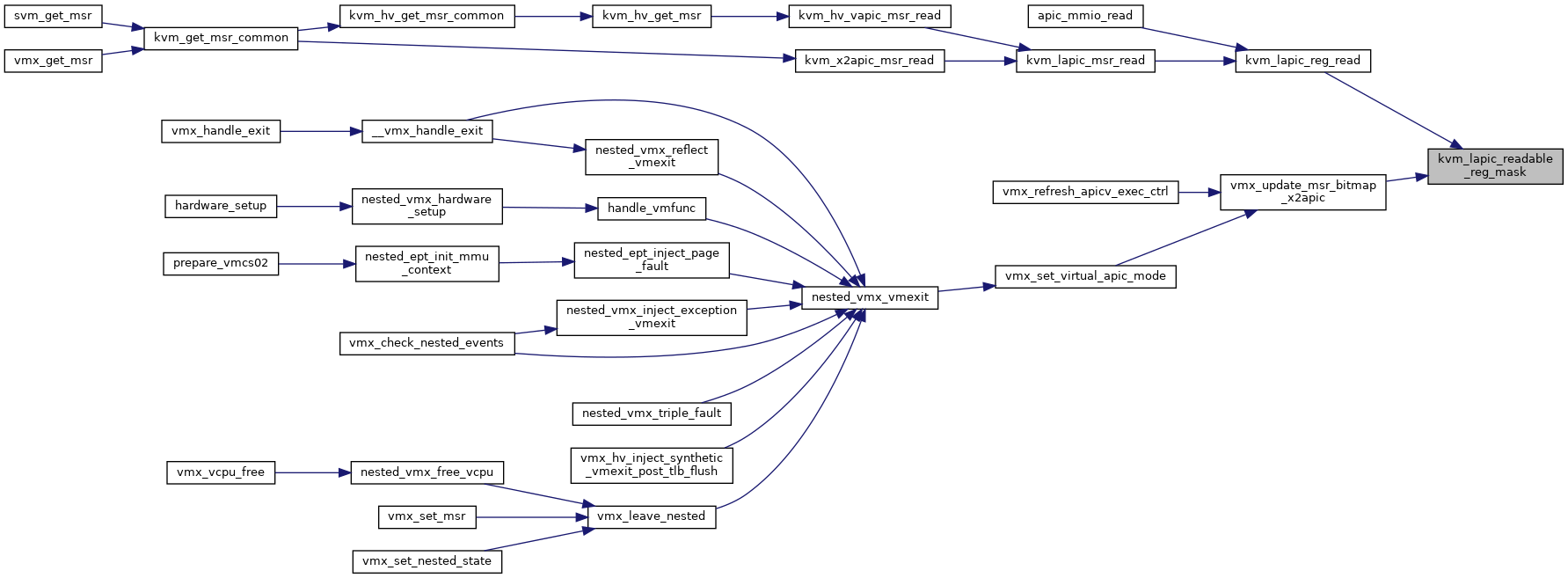

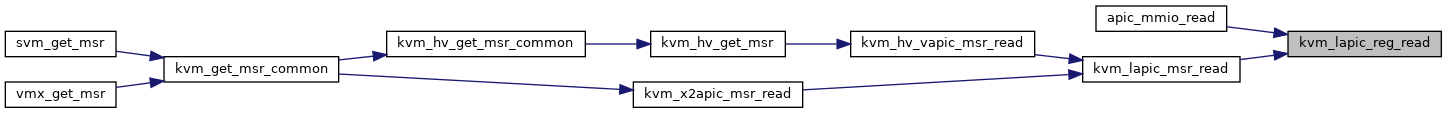
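The `u64` returned by `kvm_lapic_readable_reg_mask()` can be read as one bit per 16-byte APIC register, following the `APIC_REG_MASK` and `APIC_REGS_MASK` macros listed at the top of this file. The sketch below is a user-space illustration only, not the kernel implementation: the two macros are copied from the macro list, the register offsets are the architectural values normally supplied by `<asm/apicdef.h>`, and `example_readable_mask()` and `reg_is_readable()` are hypothetical helpers introduced here.

```c
#include <stdint.h>

/* Copied from the macro list of this file. */
#define APIC_REG_MASK(reg) (1ull << ((reg) >> 4))
#define APIC_REGS_MASK(first, count) (APIC_REG_MASK(first) * ((1ull << (count)) - 1))

/* Architectural register offsets (normally provided by <asm/apicdef.h>). */
#define APIC_ID      0x20
#define APIC_LVR     0x30
#define APIC_TASKPRI 0x80
#define APIC_ISR     0x100
#define APIC_ISR_NR  8

/*
 * Hypothetical example mask: a handful of registers only.
 * The real set is decided by kvm_lapic_readable_reg_mask().
 */
static uint64_t example_readable_mask(void)
{
    return APIC_REG_MASK(APIC_ID) |
           APIC_REG_MASK(APIC_LVR) |
           APIC_REG_MASK(APIC_TASKPRI) |
           APIC_REGS_MASK(APIC_ISR, APIC_ISR_NR); /* ISR0..ISR7 */
}

/*
 * Hypothetical helper: returns 1 if the register at 'offset' has its
 * bit set in 'mask', 0 otherwise. Registers are 16 bytes apart, so
 * bit (offset >> 4) represents the register at 'offset'.
 */
static int reg_is_readable(uint64_t mask, uint32_t offset)
{
    if (offset & 0xf)      /* not on a 16-byte register boundary */
        return 0;
    if (offset >= 0x400)   /* bit index would not fit in 64 bits */
        return 0;
    return (mask & APIC_REG_MASK(offset)) != 0;
}
```

With this example mask, `reg_is_readable(example_readable_mask(), APIC_ISR + 0x70)` is true because `APIC_REGS_MASK(APIC_ISR, APIC_ISR_NR)` sets the eight consecutive bits 16 through 23, while offset `0x180` falls just past that range and is rejected.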

◆ kvm_lapic_reg_read()

|

static |

Definition at line 1645 of file lapic.c.

◆ kvm_lapic_reg_write()

|

static |

Definition at line 2255 of file lapic.c.

◆ kvm_lapic_reset()

| void kvm_lapic_reset | ( | struct kvm_vcpu * | vcpu, |

| bool | init_event | ||

| ) |

Definition at line 2669 of file lapic.c.

◆ kvm_lapic_restart_hv_timer()

| void kvm_lapic_restart_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_set_base()

| void kvm_lapic_set_base | ( | struct kvm_vcpu * | vcpu, |

| u64 | value | ||

| ) |

Definition at line 2530 of file lapic.c.

◆ kvm_lapic_set_eoi()

| void kvm_lapic_set_eoi | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_set_pv_eoi()

| int kvm_lapic_set_pv_eoi | ( | struct kvm_vcpu * | vcpu, |

| u64 | data, | ||

| unsigned long | len | ||

| ) |

Definition at line 3237 of file lapic.c.

◆ kvm_lapic_set_reg()

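| void kvm_lapic_set_reg | ( | struct kvm_lapic * | apic, |

| int | reg_off, | ||

| u32 | val | ||

| ) |

inline static |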

◆ kvm_lapic_set_reg64()

|

static |

◆ kvm_lapic_set_tpr()

| void kvm_lapic_set_tpr | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr8 | ||

| ) |

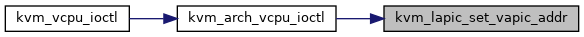

◆ kvm_lapic_set_vapic_addr()

| int kvm_lapic_set_vapic_addr | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | vapic_addr | ||

| ) |

◆ kvm_lapic_switch_to_hv_timer()

| void kvm_lapic_switch_to_hv_timer | ( | struct kvm_vcpu * | vcpu | ) |

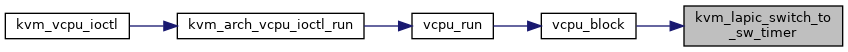

◆ kvm_lapic_switch_to_sw_timer()

| void kvm_lapic_switch_to_sw_timer | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_lapic_sync_from_vapic()

| void kvm_lapic_sync_from_vapic | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3072 of file lapic.c.

◆ kvm_lapic_sync_to_vapic()

| void kvm_lapic_sync_to_vapic | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3115 of file lapic.c.

◆ kvm_pv_send_ipi()

| int kvm_pv_send_ipi | ( | struct kvm * | kvm, |

| unsigned long | ipi_bitmap_low, | ||

| unsigned long | ipi_bitmap_high, | ||

| u32 | min, | ||

| unsigned long | icr, | ||

| int | op_64_bit | ||

| ) |

Definition at line 852 of file lapic.c.

◆ kvm_recalculate_apic_map()

| void kvm_recalculate_apic_map | ( | struct kvm * | kvm | ) |

Definition at line 374 of file lapic.c.

◆ kvm_recalculate_logical_map()

|

static |

◆ kvm_recalculate_phys_map()

|

static |

◆ kvm_set_lapic_tscdeadline_msr()

| void kvm_set_lapic_tscdeadline_msr | ( | struct kvm_vcpu * | vcpu, |

| u64 | data | ||

| ) |

◆ kvm_use_posted_timer_interrupt()

|

static |

◆ kvm_vector_to_index()

| int kvm_vector_to_index | ( | u32 | vector, |

| u32 | dest_vcpus, | ||

| const unsigned long * | bitmap, | ||

| u32 | bitmap_size | ||

| ) |

◆ kvm_wait_lapic_expire()

| void kvm_wait_lapic_expire | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1869 of file lapic.c.

◆ kvm_x2apic_icr_write()

| int kvm_x2apic_icr_write | ( | struct kvm_lapic * | apic, |

| u64 | data | ||

| ) |

◆ kvm_x2apic_id()

|

inline static |

◆ kvm_x2apic_msr_read()

| int kvm_x2apic_msr_read | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 * | data | ||

| ) |

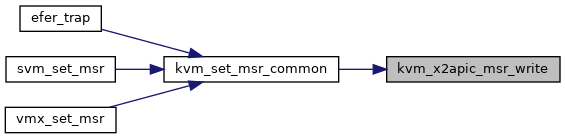

◆ kvm_x2apic_msr_write()

| int kvm_x2apic_msr_write | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 | data | ||

| ) |

◆ lapic_is_periodic()

|

static |

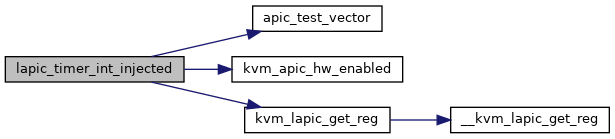

◆ lapic_timer_int_injected()

|

static |

◆ limit_periodic_timer_frequency()

|

static |

◆ pv_eoi_enabled()

|

inline static |

◆ pv_eoi_get_user()

|

static |

◆ pv_eoi_put_user()

|

static |

◆ pv_eoi_set_pending()

|

static |

◆ pv_eoi_test_and_clr_pending()

|

static |

◆ report_tpr_access()

|

inline static |

Definition at line 1560 of file lapic.c.

◆ restart_apic_timer()

|

static |

◆ set_target_expiration()

|

static |

◆ start_apic_timer()

|

static |

◆ start_hv_timer()

|

static |

◆ start_sw_period()

|

static |

◆ start_sw_timer()

|

static |

Definition at line 2141 of file lapic.c.

◆ start_sw_tscdeadline()

|

static |

◆ tmict_to_ns()

|

inline static |

◆ to_lapic()

|

inline static |

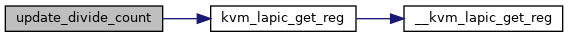

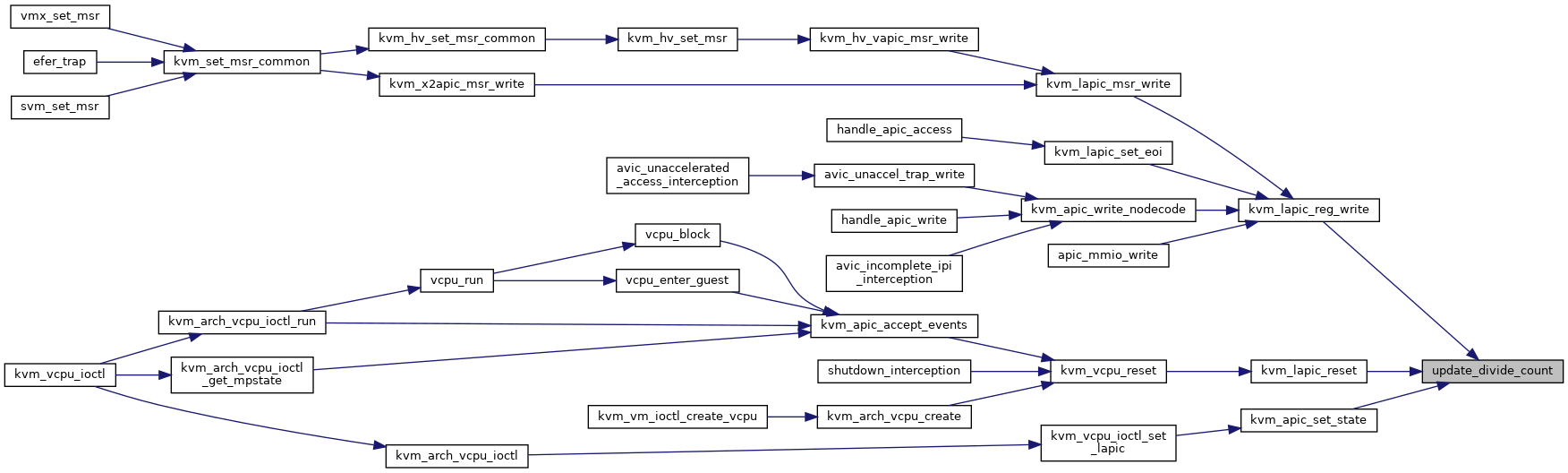

◆ update_divide_count()

|

static |

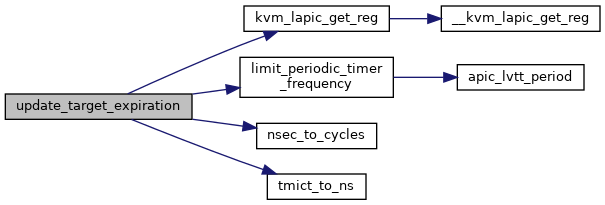

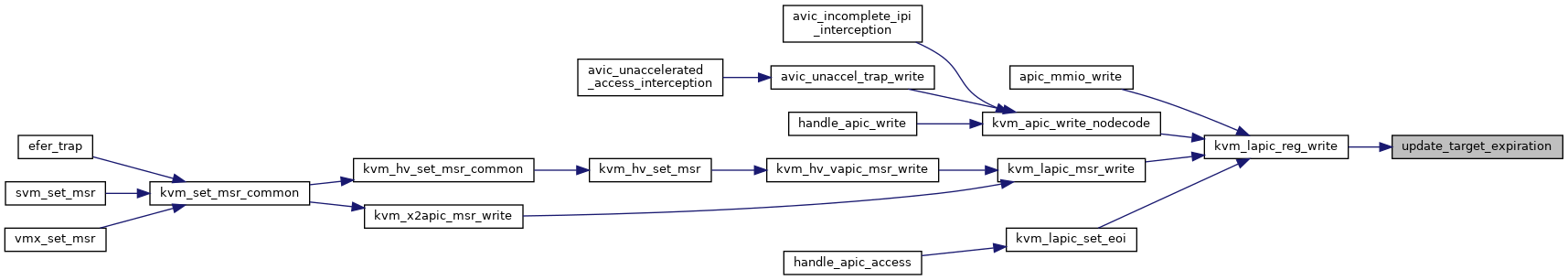

◆ update_target_expiration()

|

static |

Variable Documentation

◆ __read_mostly

◆ apic_lvt_mask

|

static |

◆ apic_mmio_ops

|

static |