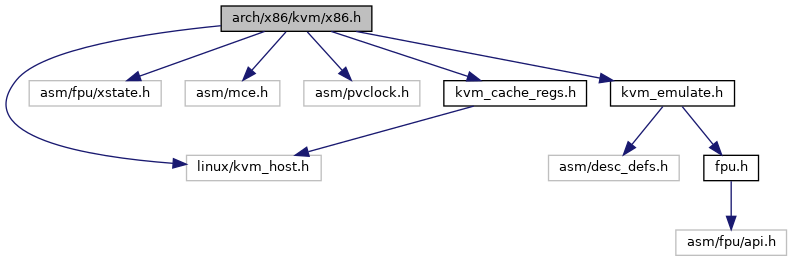

`#include <linux/kvm_host.h>`

`#include <asm/fpu/xstate.h>`

`#include <asm/mce.h>`

`#include <asm/pvclock.h>`

`#include "kvm_cache_regs.h"`

`#include "kvm_emulate.h"`


Classes

| Kind | Name |
|---|---|
| `struct` | `kvm_caps` |

Macros

| Directive | Definition |
|---|---|
| `#define` | `KVM_NESTED_VMENTER_CONSISTENCY_CHECK(consistency_check)` |
| `#define` | `KVM_FIRST_EMULATED_VMX_MSR MSR_IA32_VMX_BASIC` |
| `#define` | `KVM_LAST_EMULATED_VMX_MSR MSR_IA32_VMX_VMFUNC` |
| `#define` | `KVM_DEFAULT_PLE_GAP 128` |
| `#define` | `KVM_VMX_DEFAULT_PLE_WINDOW 4096` |
| `#define` | `KVM_DEFAULT_PLE_WINDOW_GROW 2` |
| `#define` | `KVM_DEFAULT_PLE_WINDOW_SHRINK 0` |
| `#define` | `KVM_VMX_DEFAULT_PLE_WINDOW_MAX UINT_MAX` |
| `#define` | `KVM_SVM_DEFAULT_PLE_WINDOW_MAX USHRT_MAX` |
| `#define` | `KVM_SVM_DEFAULT_PLE_WINDOW 3000` |
| `#define` | `MSR_IA32_CR_PAT_DEFAULT 0x0007040600070406ULL` |
| `#define` | `MMIO_GVA_ANY (~(gva_t)0)` |
| `#define` | `do_shl32_div32(n, base)` |
| `#define` | `KVM_MSR_RET_INVALID 2 /* in-kernel MSR emulation #GP condition */` |
| `#define` | `KVM_MSR_RET_FILTERED 3 /* #GP due to userspace MSR filter */` |
| `#define` | `__cr4_reserved_bits(__cpu_has, __c)` |

Enumerations

| Kind | Definition |
|---|---|
| `enum` | `kvm_intr_type { KVM_HANDLING_IRQ = 1, KVM_HANDLING_NMI }` |

Functions | |

| void | kvm_spurious_fault (void) |

| static unsigned int | __grow_ple_window (unsigned int val, unsigned int base, unsigned int modifier, unsigned int max) |

| static unsigned int | __shrink_ple_window (unsigned int val, unsigned int base, unsigned int modifier, unsigned int min) |

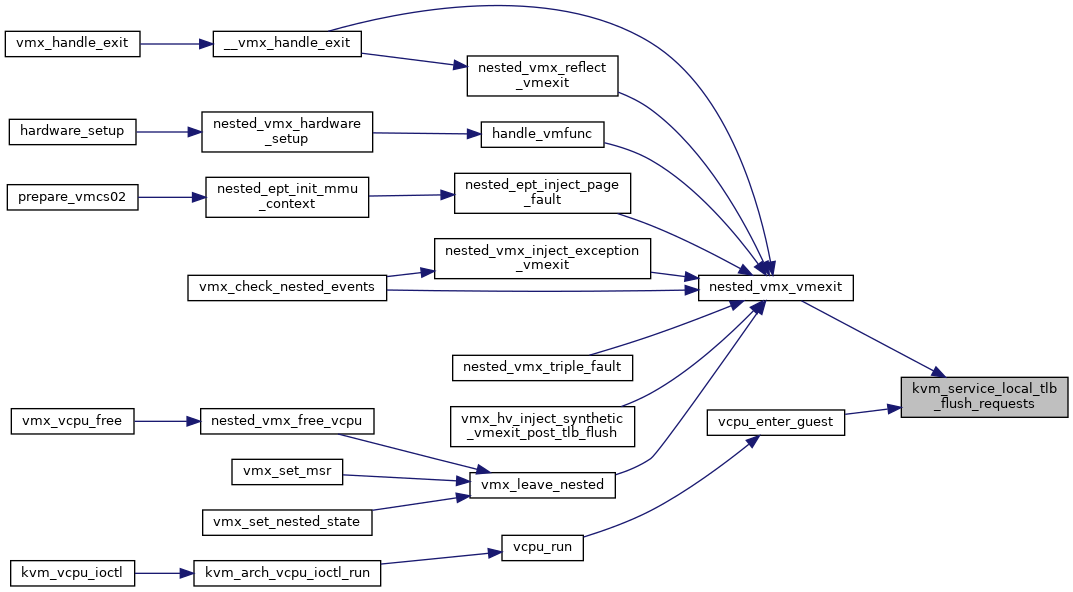

| void | kvm_service_local_tlb_flush_requests (struct kvm_vcpu *vcpu) |

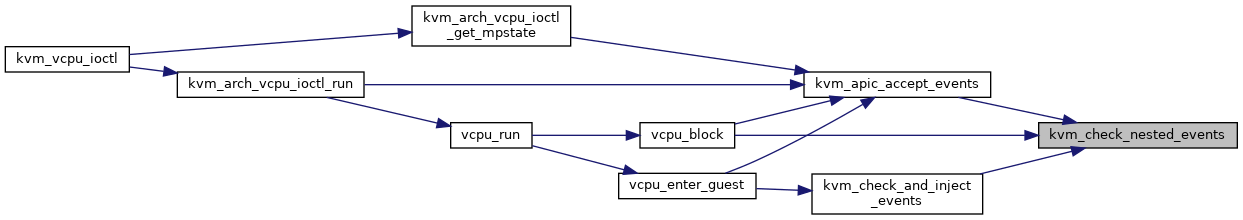

| int | kvm_check_nested_events (struct kvm_vcpu *vcpu) |

| static bool | kvm_vcpu_has_run (struct kvm_vcpu *vcpu) |

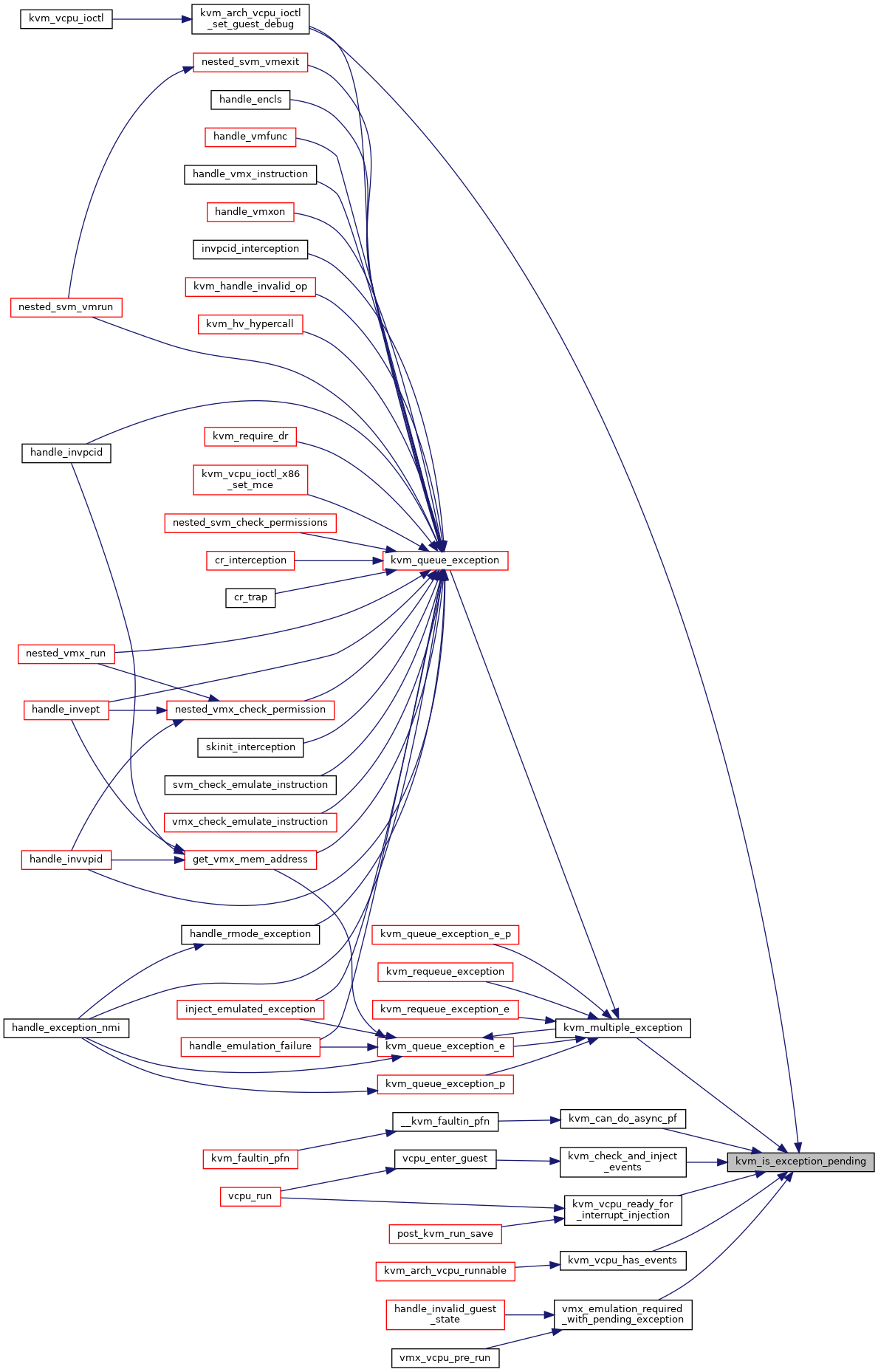

| static bool | kvm_is_exception_pending (struct kvm_vcpu *vcpu) |

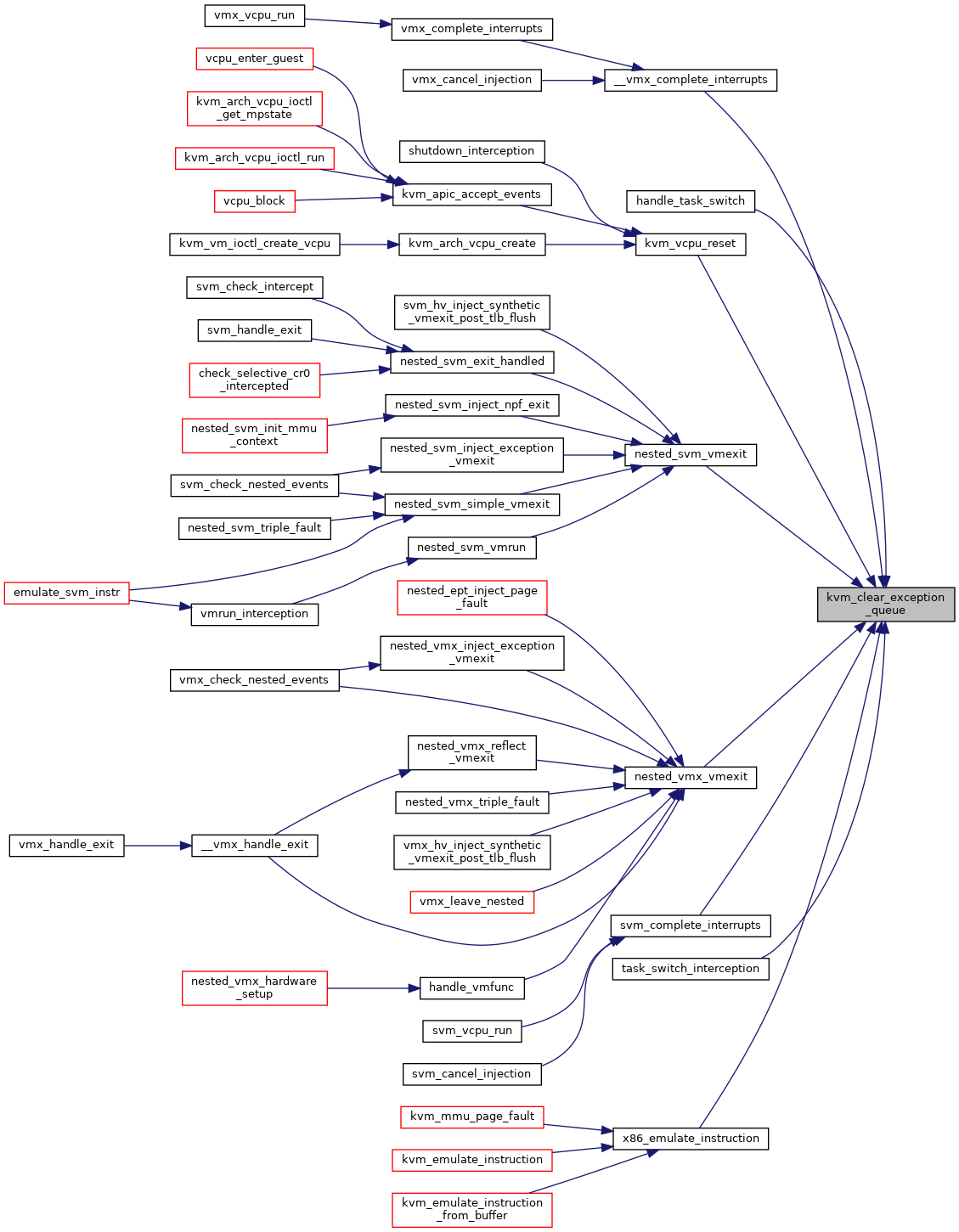

| static void | kvm_clear_exception_queue (struct kvm_vcpu *vcpu) |

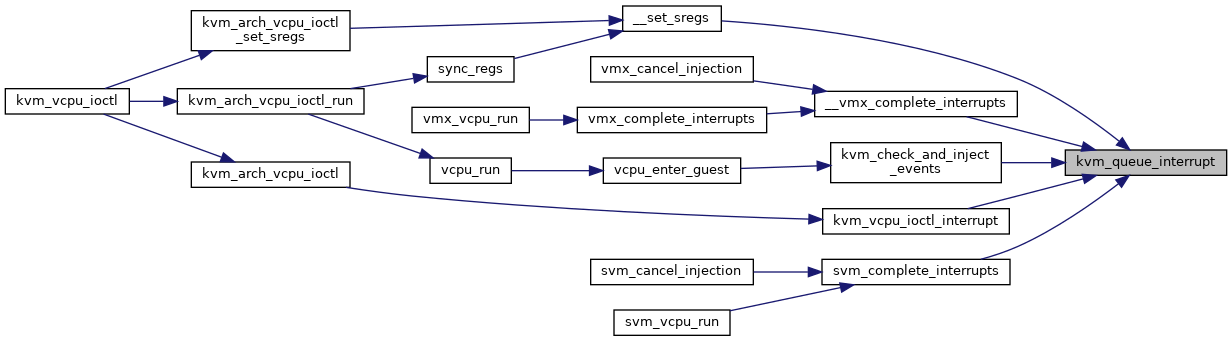

| static void | kvm_queue_interrupt (struct kvm_vcpu *vcpu, u8 vector, bool soft) |

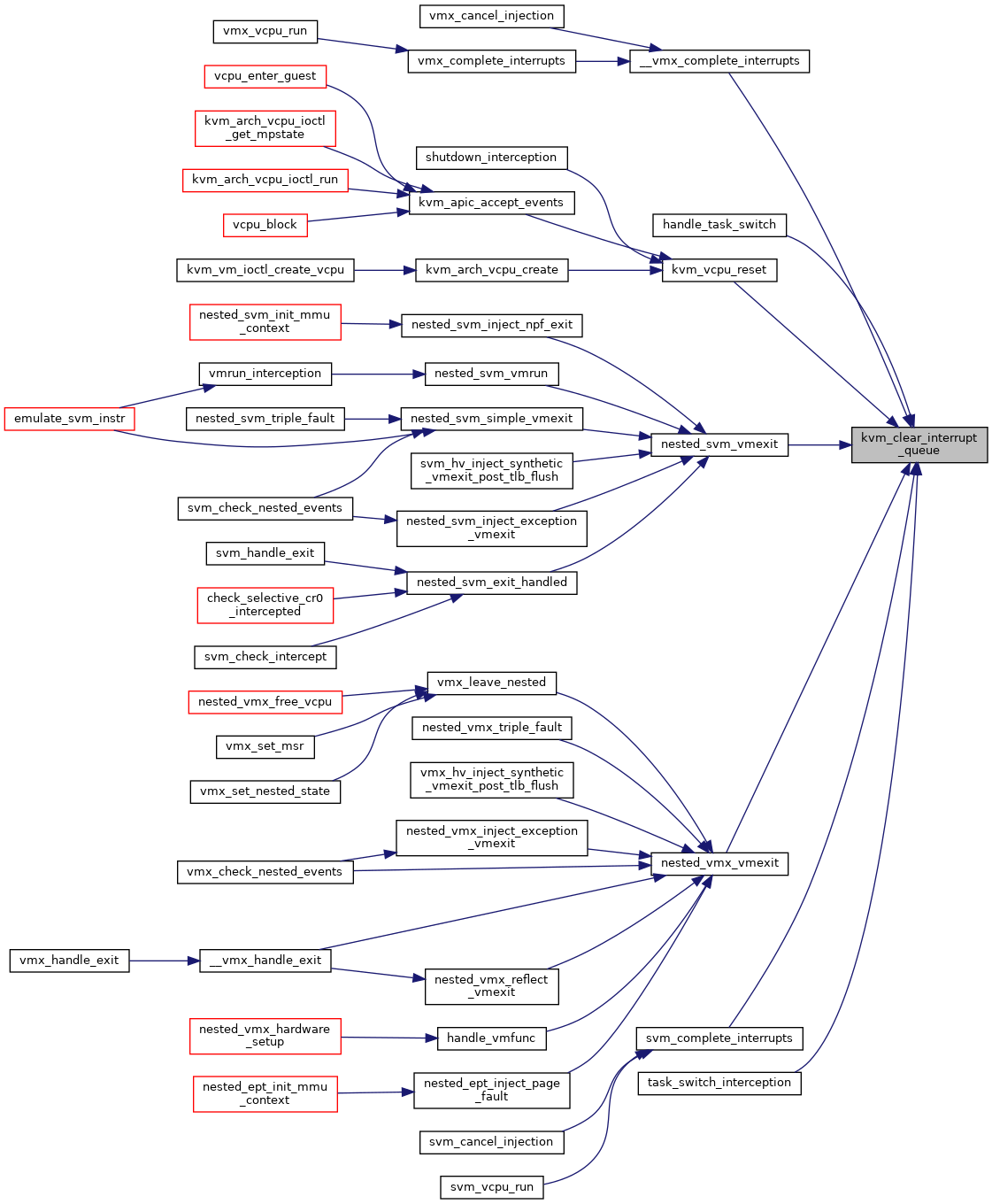

| static void | kvm_clear_interrupt_queue (struct kvm_vcpu *vcpu) |

| static bool | kvm_event_needs_reinjection (struct kvm_vcpu *vcpu) |

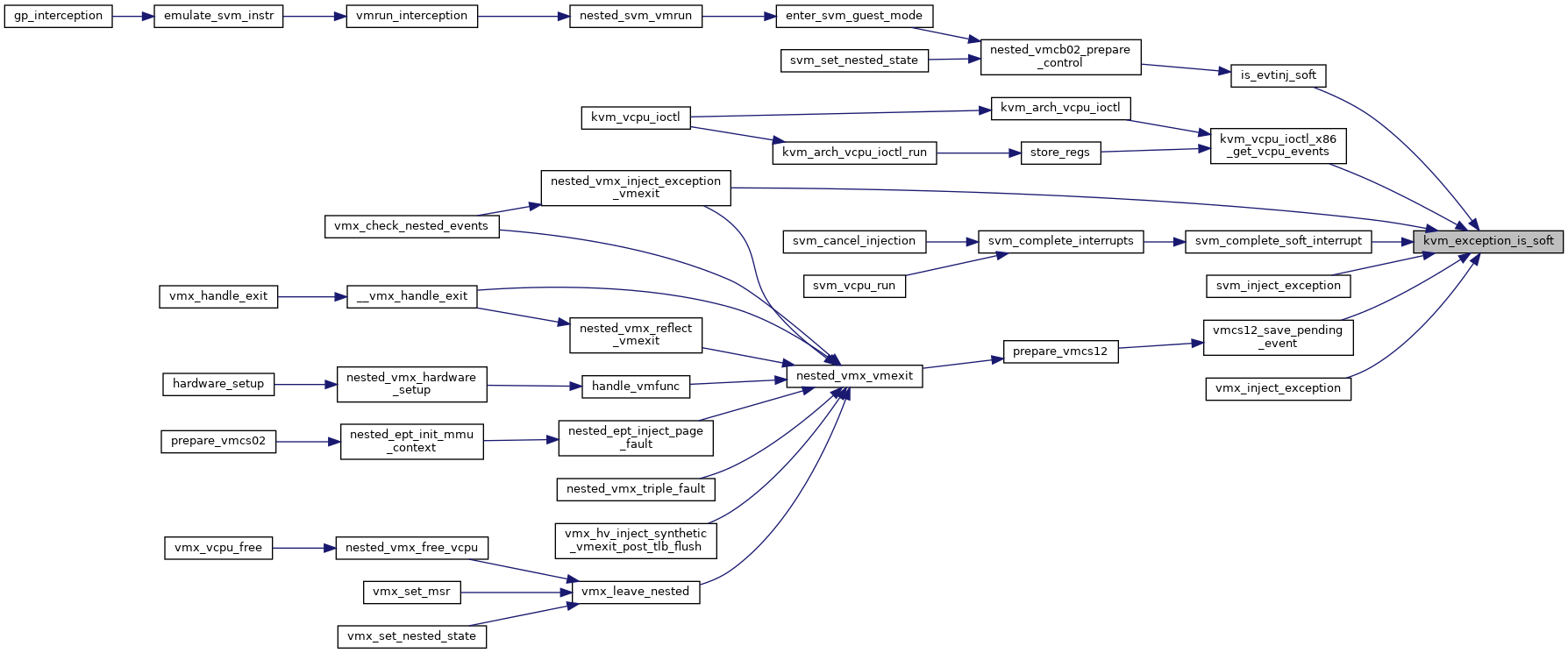

| static bool | kvm_exception_is_soft (unsigned int nr) |

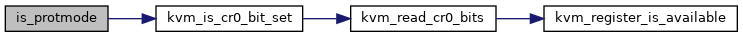

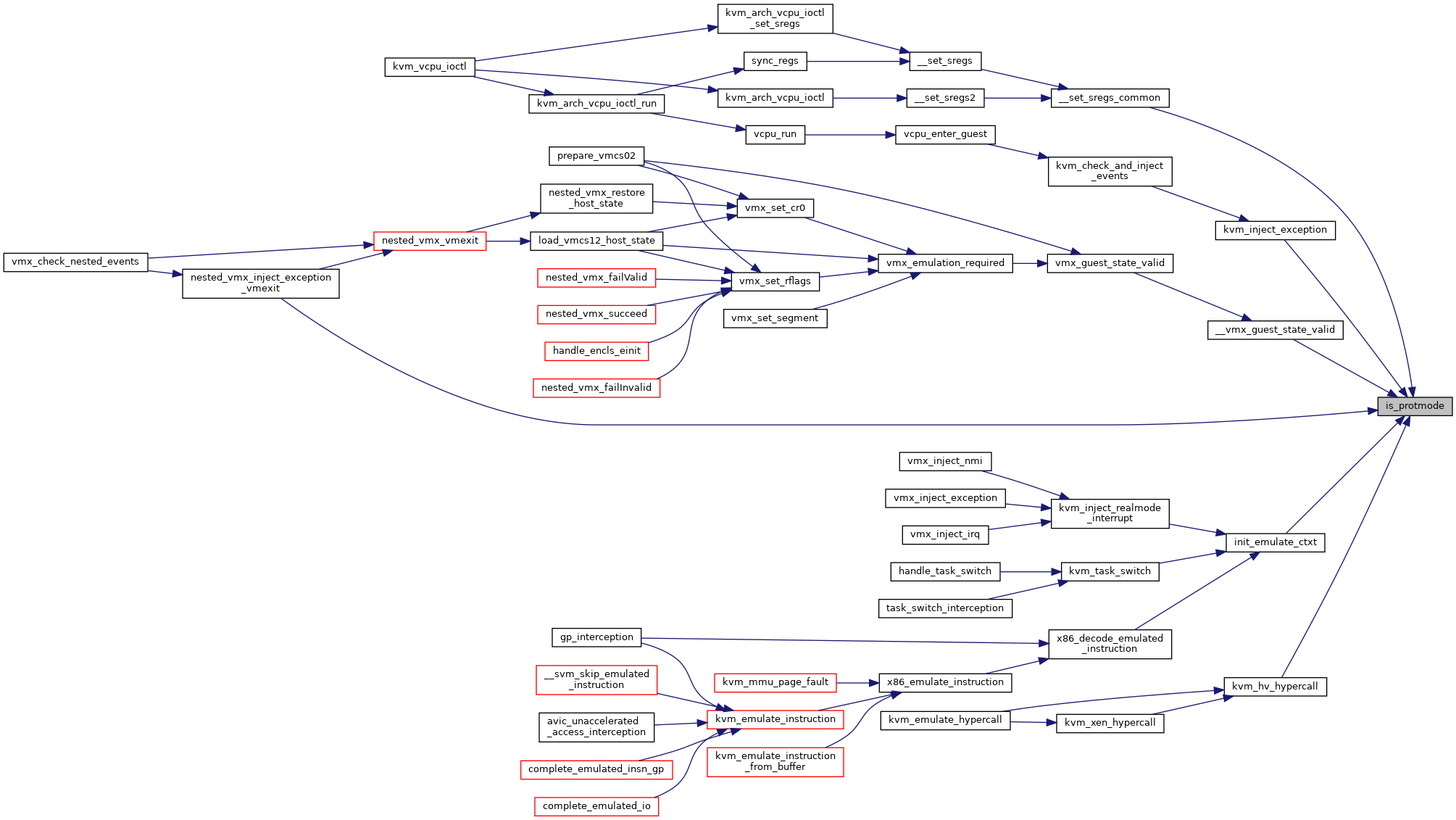

| static bool | is_protmode (struct kvm_vcpu *vcpu) |

| static bool | is_long_mode (struct kvm_vcpu *vcpu) |

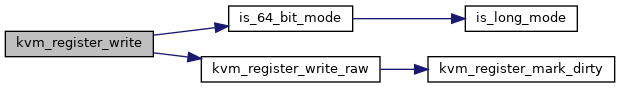

| static bool | is_64_bit_mode (struct kvm_vcpu *vcpu) |

| static bool | is_64_bit_hypercall (struct kvm_vcpu *vcpu) |

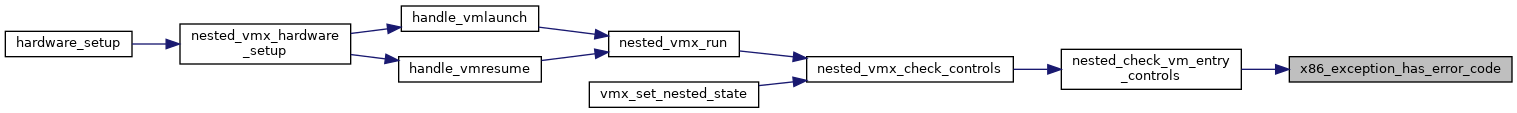

| static bool | x86_exception_has_error_code (unsigned int vector) |

| static bool | mmu_is_nested (struct kvm_vcpu *vcpu) |

| static bool | is_pae (struct kvm_vcpu *vcpu) |

| static bool | is_pse (struct kvm_vcpu *vcpu) |

| static bool | is_paging (struct kvm_vcpu *vcpu) |

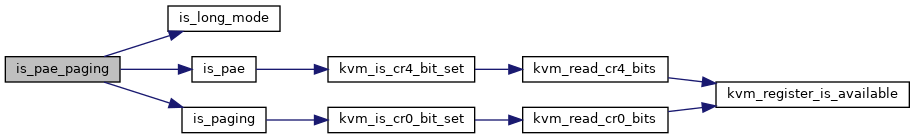

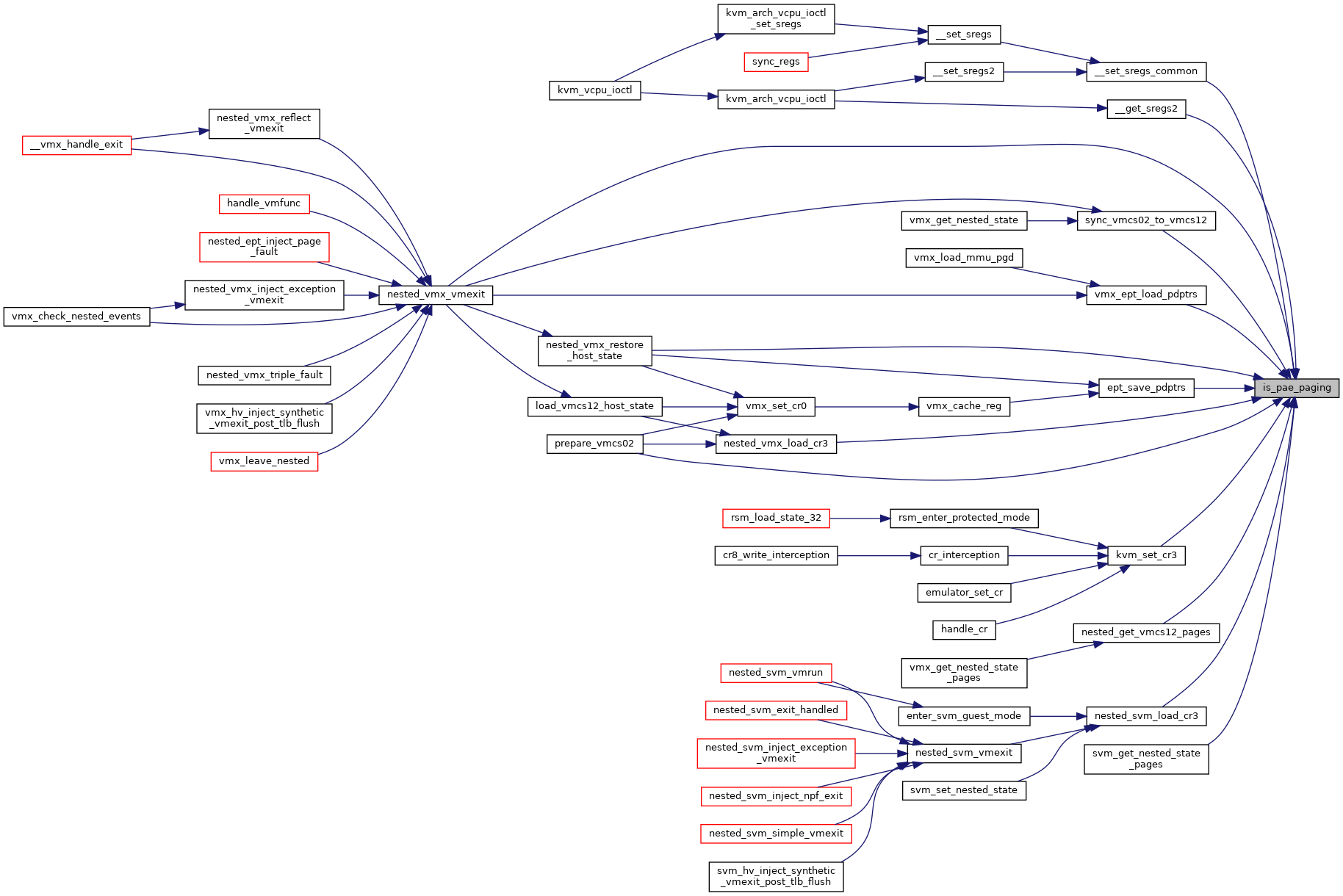

| static bool | is_pae_paging (struct kvm_vcpu *vcpu) |

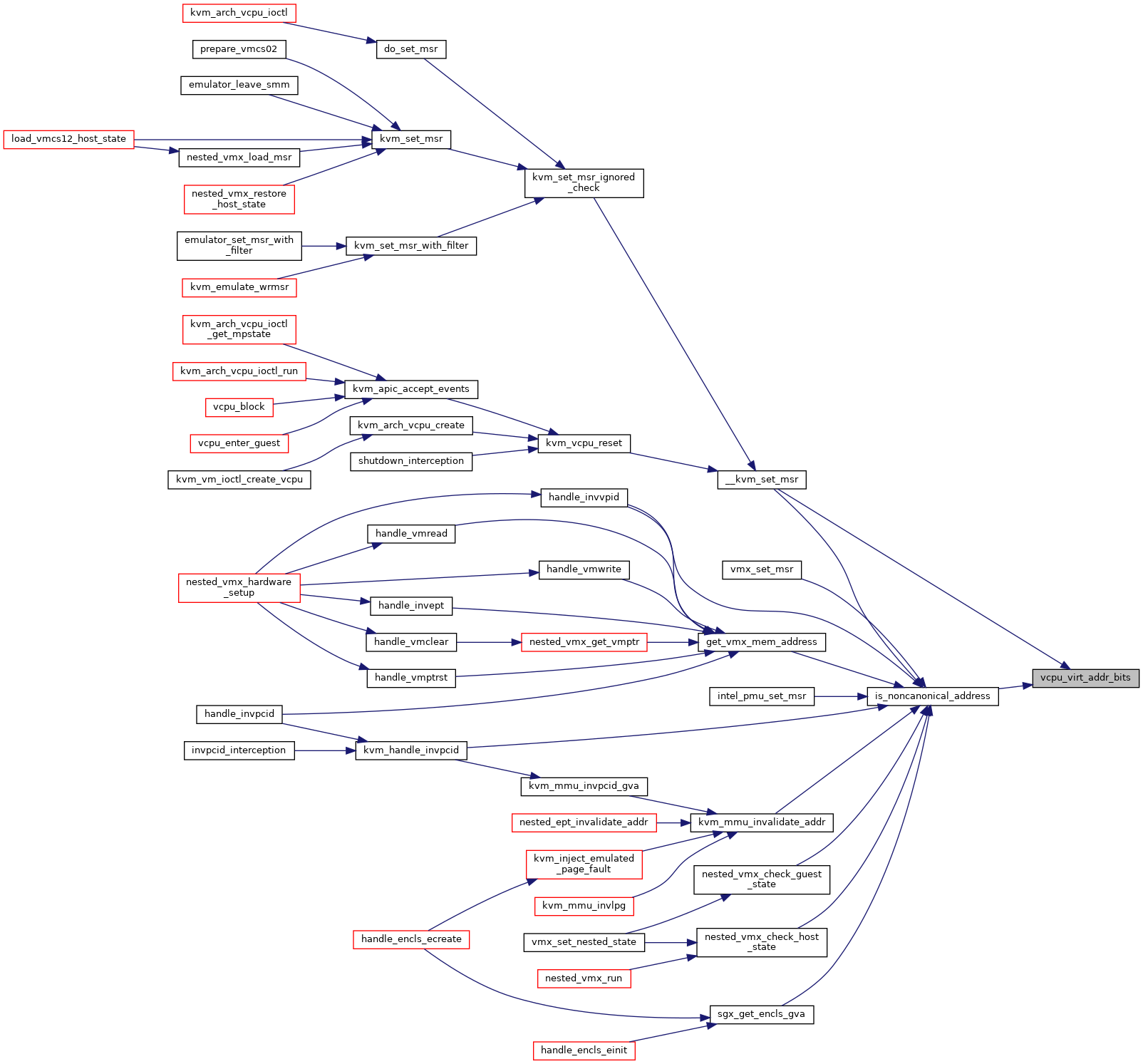

| static u8 | vcpu_virt_addr_bits (struct kvm_vcpu *vcpu) |

| static bool | is_noncanonical_address (u64 la, struct kvm_vcpu *vcpu) |

| static void | vcpu_cache_mmio_info (struct kvm_vcpu *vcpu, gva_t gva, gfn_t gfn, unsigned access) |

| static bool | vcpu_match_mmio_gen (struct kvm_vcpu *vcpu) |

| static void | vcpu_clear_mmio_info (struct kvm_vcpu *vcpu, gva_t gva) |

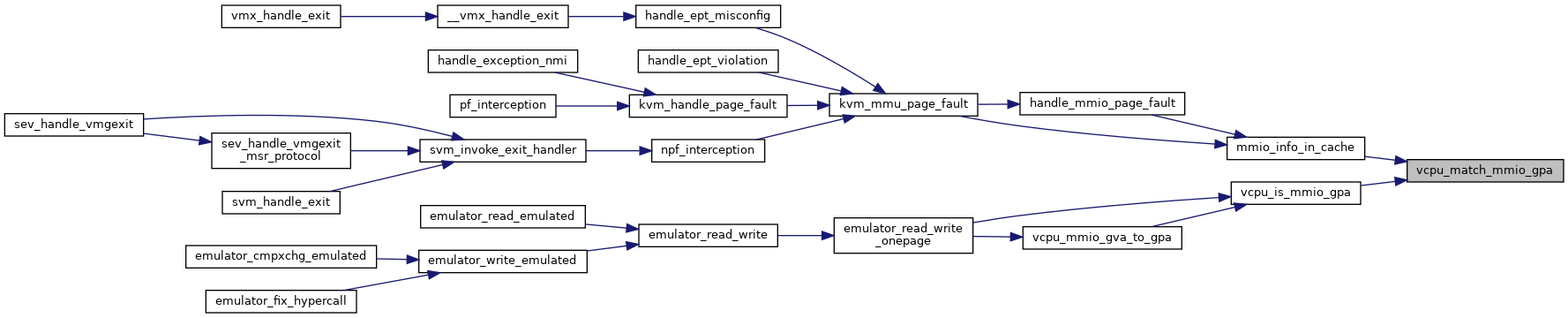

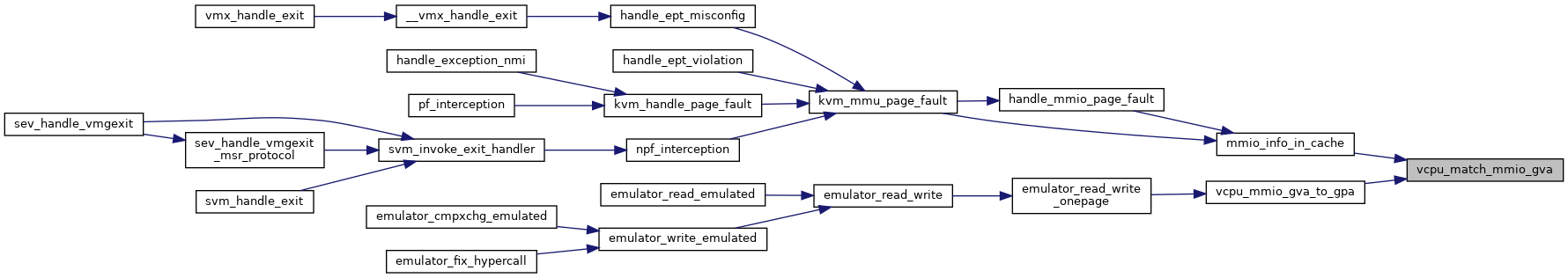

| static bool | vcpu_match_mmio_gva (struct kvm_vcpu *vcpu, unsigned long gva) |

| static bool | vcpu_match_mmio_gpa (struct kvm_vcpu *vcpu, gpa_t gpa) |

| static unsigned long | kvm_register_read (struct kvm_vcpu *vcpu, int reg) |

| static void | kvm_register_write (struct kvm_vcpu *vcpu, int reg, unsigned long val) |

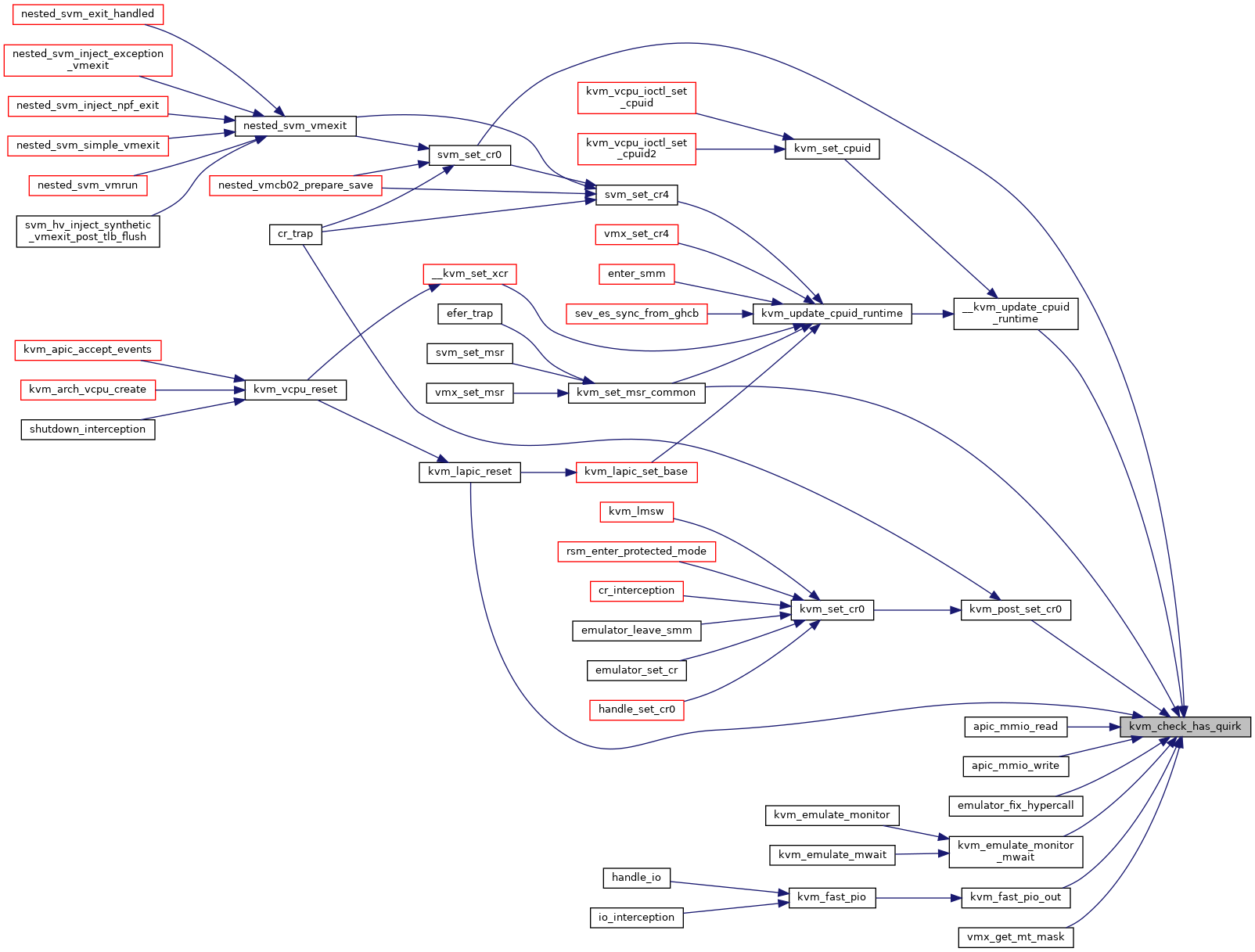

| static bool | kvm_check_has_quirk (struct kvm *kvm, u64 quirk) |

| void | kvm_inject_realmode_interrupt (struct kvm_vcpu *vcpu, int irq, int inc_eip) |

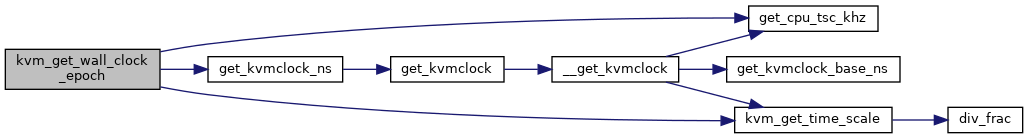

| u64 | get_kvmclock_ns (struct kvm *kvm) |

| uint64_t | kvm_get_wall_clock_epoch (struct kvm *kvm) |

| int | kvm_read_guest_virt (struct kvm_vcpu *vcpu, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception) |

| int | kvm_write_guest_virt_system (struct kvm_vcpu *vcpu, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception) |

| int | handle_ud (struct kvm_vcpu *vcpu) |

| void | kvm_deliver_exception_payload (struct kvm_vcpu *vcpu, struct kvm_queued_exception *ex) |

| void | kvm_vcpu_mtrr_init (struct kvm_vcpu *vcpu) |

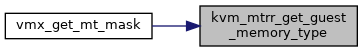

| u8 | kvm_mtrr_get_guest_memory_type (struct kvm_vcpu *vcpu, gfn_t gfn) |

| int | kvm_mtrr_set_msr (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| int | kvm_mtrr_get_msr (struct kvm_vcpu *vcpu, u32 msr, u64 *pdata) |

| bool | kvm_mtrr_check_gfn_range_consistency (struct kvm_vcpu *vcpu, gfn_t gfn, int page_num) |

| bool | kvm_vector_hashing_enabled (void) |

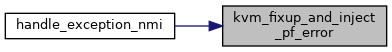

| void | kvm_fixup_and_inject_pf_error (struct kvm_vcpu *vcpu, gva_t gva, u16 error_code) |

| int | x86_decode_emulated_instruction (struct kvm_vcpu *vcpu, int emulation_type, void *insn, int insn_len) |

| int | x86_emulate_instruction (struct kvm_vcpu *vcpu, gpa_t cr2_or_gpa, int emulation_type, void *insn, int insn_len) |

| fastpath_t | handle_fastpath_set_msr_irqoff (struct kvm_vcpu *vcpu) |

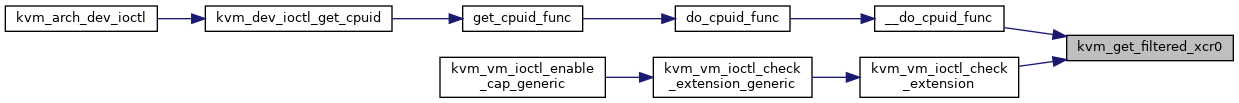

| static u64 | kvm_get_filtered_xcr0 (void) |

| static bool | kvm_mpx_supported (void) |

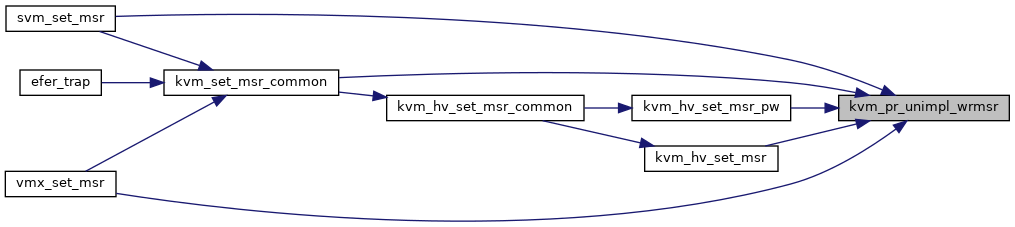

| static void | kvm_pr_unimpl_wrmsr (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

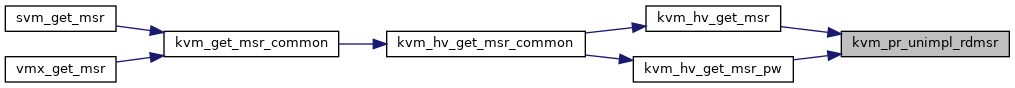

| static void | kvm_pr_unimpl_rdmsr (struct kvm_vcpu *vcpu, u32 msr) |

| static u64 | nsec_to_cycles (struct kvm_vcpu *vcpu, u64 nsec) |

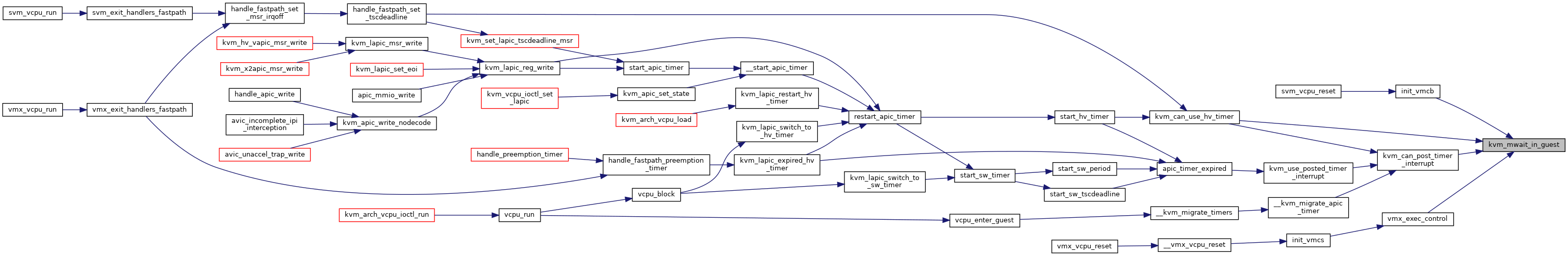

| static bool | kvm_mwait_in_guest (struct kvm *kvm) |

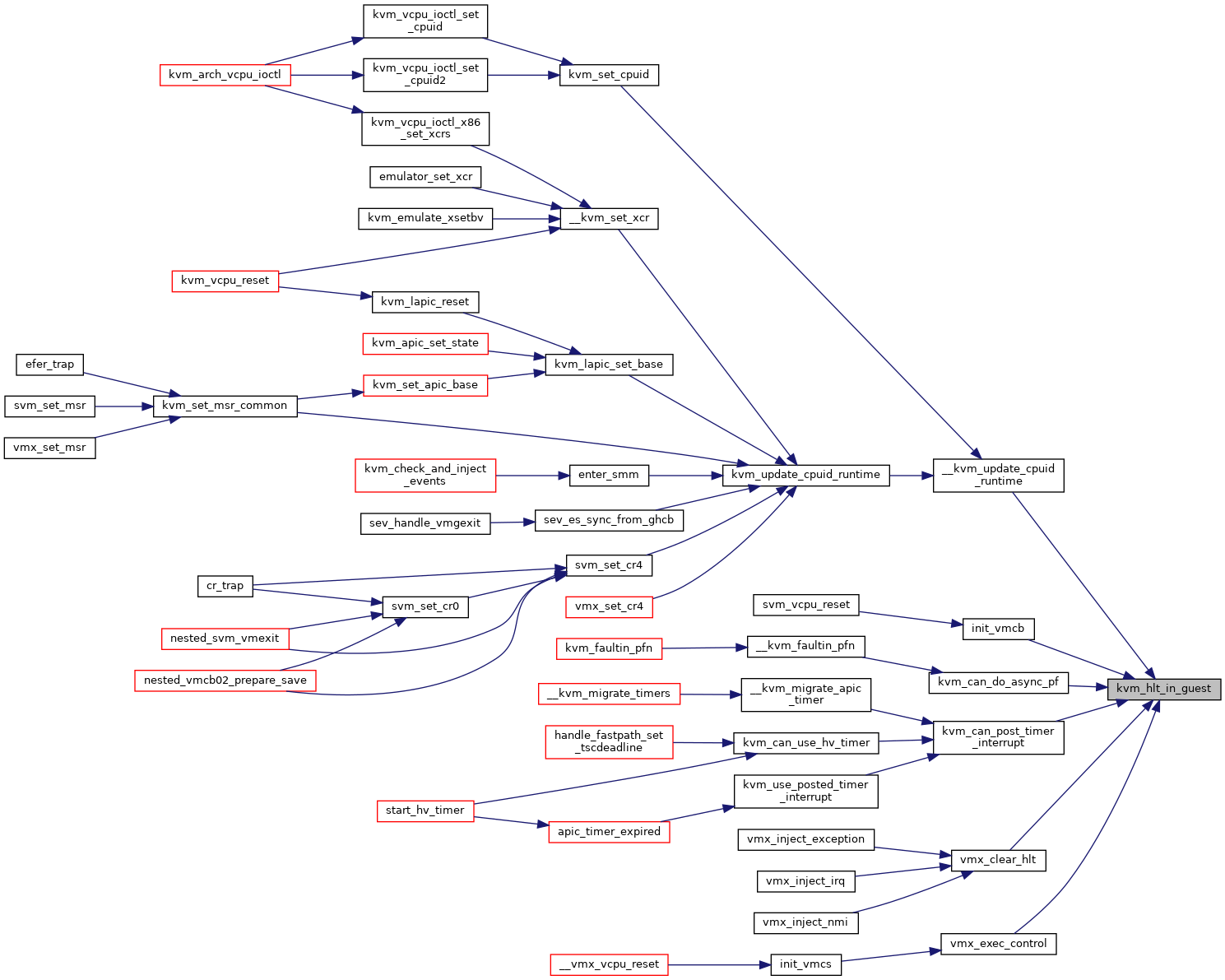

| static bool | kvm_hlt_in_guest (struct kvm *kvm) |

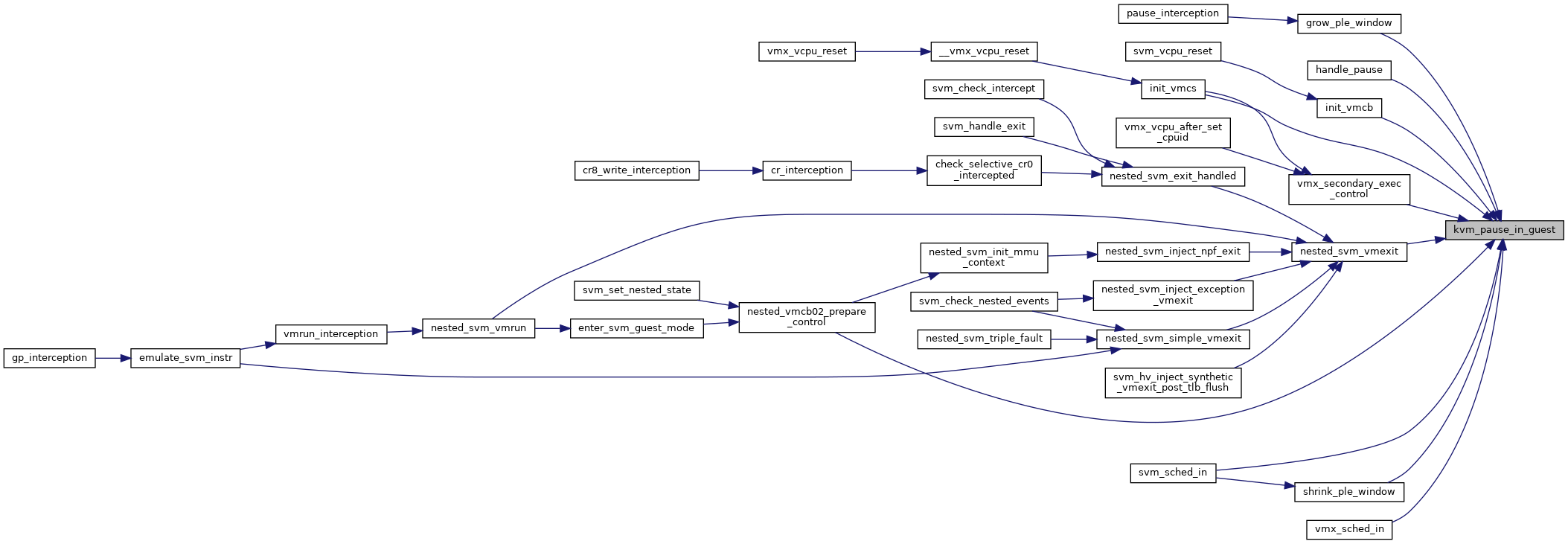

| static bool | kvm_pause_in_guest (struct kvm *kvm) |

| static bool | kvm_cstate_in_guest (struct kvm *kvm) |

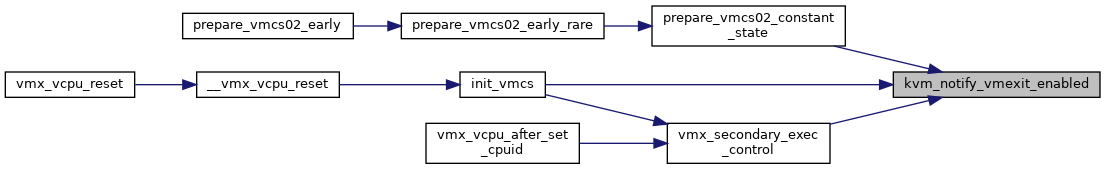

| static bool | kvm_notify_vmexit_enabled (struct kvm *kvm) |

| static __always_inline void | kvm_before_interrupt (struct kvm_vcpu *vcpu, enum kvm_intr_type intr) |

| static __always_inline void | kvm_after_interrupt (struct kvm_vcpu *vcpu) |

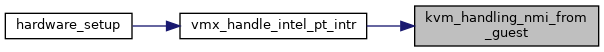

| static bool | kvm_handling_nmi_from_guest (struct kvm_vcpu *vcpu) |

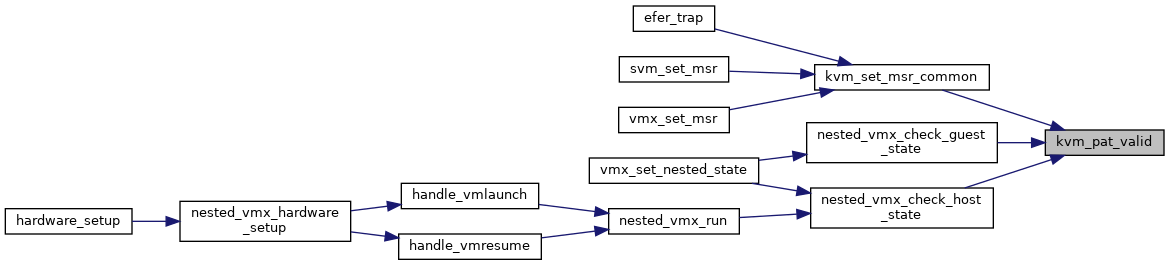

| static bool | kvm_pat_valid (u64 data) |

| static bool | kvm_dr7_valid (u64 data) |

| static bool | kvm_dr6_valid (u64 data) |

| static void | kvm_machine_check (void) |

| void | kvm_load_guest_xsave_state (struct kvm_vcpu *vcpu) |

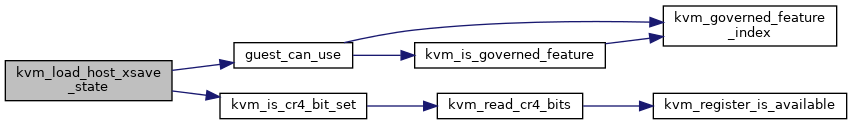

| void | kvm_load_host_xsave_state (struct kvm_vcpu *vcpu) |

| int | kvm_spec_ctrl_test_value (u64 value) |

| bool | __kvm_is_valid_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

| int | kvm_handle_memory_failure (struct kvm_vcpu *vcpu, int r, struct x86_exception *e) |

| int | kvm_handle_invpcid (struct kvm_vcpu *vcpu, unsigned long type, gva_t gva) |

| bool | kvm_msr_allowed (struct kvm_vcpu *vcpu, u32 index, u32 type) |

| int | kvm_sev_es_mmio_write (struct kvm_vcpu *vcpu, gpa_t src, unsigned int bytes, void *dst) |

| int | kvm_sev_es_mmio_read (struct kvm_vcpu *vcpu, gpa_t src, unsigned int bytes, void *dst) |

| int | kvm_sev_es_string_io (struct kvm_vcpu *vcpu, unsigned int size, unsigned int port, void *data, unsigned int count, int in) |

Variables

| Type | Variable |
|---|---|
| `u64` | `host_xcr0` |
| `u64` | `host_xss` |
| `u64` | `host_arch_capabilities` |
| `struct kvm_caps` | `kvm_caps` |
| `bool` | `enable_pmu` |
| `unsigned int` | `min_timer_period_us` |
| `bool` | `enable_vmware_backdoor` |
| `int` | `pi_inject_timer` |
| `bool` | `report_ignored_msrs` |
| `bool` | `eager_page_split` |

Macro Definition Documentation

◆ __cr4_reserved_bits

`#define __cr4_reserved_bits(__cpu_has, __c)`

◆ do_shl32_div32

`#define do_shl32_div32(n, base)`
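The macro body is not shown on this page. Assuming `do_shl32_div32(n, base)` has its usual kernel meaning (divide the 64-bit value `n << 32` by the 32-bit `base`, write the 32-bit quotient back into `n`, and evaluate to the remainder), the arithmetic can be modelled portably as below. The name `shl32_div32` is hypothetical, and plain C division stands in for the x86 `divl` instruction the real macro is understood to use. The caller must keep `n < base` so the quotient fits in 32 bits.

```c
#include <stdint.h>

/*
 * Hypothetical portable model of do_shl32_div32(n, base):
 * computes ((uint64_t)*n << 32) / base, stores the 32-bit quotient
 * back into *n and returns the 32-bit remainder.
 * Precondition: *n < base, otherwise the quotient overflows 32 bits.
 */
static uint32_t shl32_div32(uint32_t *n, uint32_t base)
{
    uint64_t dividend = (uint64_t)*n << 32;
    uint32_t quot = (uint32_t)(dividend / base);
    uint32_t rem = (uint32_t)(dividend % base);

    *n = quot;
    return rem;
}
```

For example, with `n = 1` and `base = 3` the dividend is 2^32, so `n` becomes 1431655765 and the remainder is 1.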

◆ KVM_DEFAULT_PLE_GAP

◆ KVM_DEFAULT_PLE_WINDOW_GROW

◆ KVM_DEFAULT_PLE_WINDOW_SHRINK

◆ KVM_FIRST_EMULATED_VMX_MSR

◆ KVM_LAST_EMULATED_VMX_MSR

◆ KVM_MSR_RET_FILTERED

`#define KVM_MSR_RET_FILTERED 3 /* #GP due to userspace MSR filter */`

◆ KVM_MSR_RET_INVALID

`#define KVM_MSR_RET_INVALID 2 /* in-kernel MSR emulation #GP condition */`

◆ KVM_NESTED_VMENTER_CONSISTENCY_CHECK

`#define KVM_NESTED_VMENTER_CONSISTENCY_CHECK(consistency_check)`

◆ KVM_SVM_DEFAULT_PLE_WINDOW

◆ KVM_SVM_DEFAULT_PLE_WINDOW_MAX

◆ KVM_VMX_DEFAULT_PLE_WINDOW

◆ KVM_VMX_DEFAULT_PLE_WINDOW_MAX

◆ MMIO_GVA_ANY

◆ MSR_IA32_CR_PAT_DEFAULT

Enumeration Type Documentation

◆ kvm_intr_type

`enum kvm_intr_type`

Function Documentation

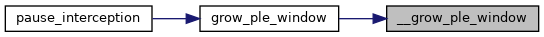

◆ __grow_ple_window()

|

inlinestatic |

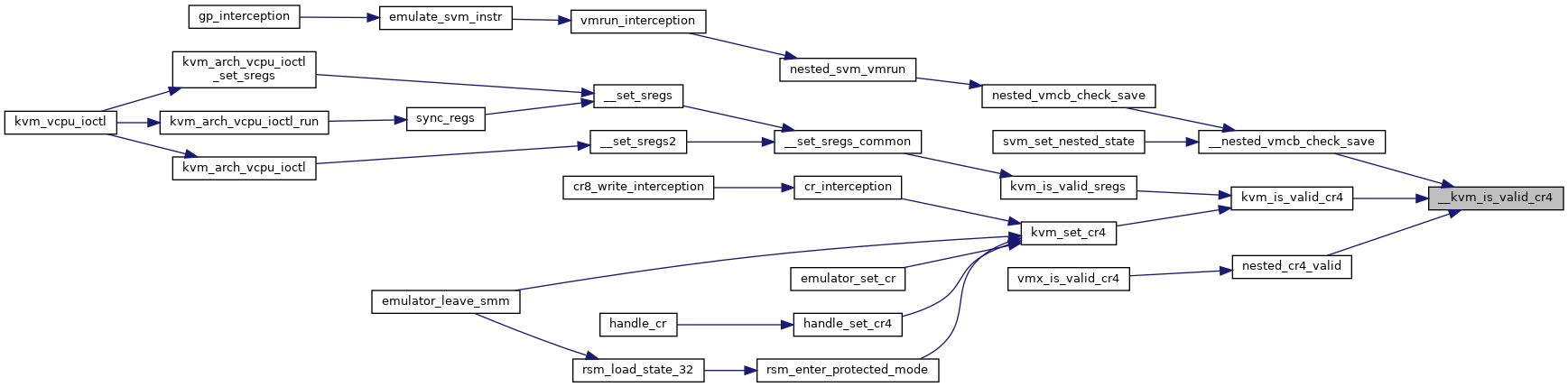

◆ __kvm_is_valid_cr4()

| bool __kvm_is_valid_cr4 | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr4 | ||

| ) |

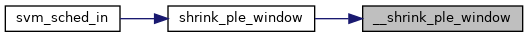

◆ __shrink_ple_window()

|

inlinestatic |
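A sketch of how the two PLE window helpers are generally understood to scale the pause-loop-exiting window: a `modifier` of 0 resets the window to `base`, a `modifier` smaller than `base` acts as a multiplier or divisor, and a larger one acts as an additive step, with the result clamped to `max` or `min`. The names `grow_ple_window` and `shrink_ple_window` are hypothetical, and the saturating subtraction is an assumption added so the sketch cannot wrap around.

```c
#include <limits.h>  /* UINT_MAX, USHRT_MAX for the window caps */
#include <stdint.h>

static unsigned int grow_ple_window(unsigned int val, unsigned int base,
                                    unsigned int modifier, unsigned int max)
{
    uint64_t ret = val;            /* widen so the multiply cannot overflow */

    if (modifier < 1)
        return base;               /* modifier 0: reset to the base window */

    if (modifier < base)
        ret *= modifier;           /* small modifier: multiplicative step */
    else
        ret += modifier;           /* large modifier: additive step */

    return ret < max ? (unsigned int)ret : max;
}

static unsigned int shrink_ple_window(unsigned int val, unsigned int base,
                                      unsigned int modifier, unsigned int min)
{
    if (modifier < 1)
        return base;               /* modifier 0: reset to the base window */

    if (modifier < base)
        val /= modifier;           /* small modifier: divide */
    else
        val = val > modifier ? val - modifier : 0;  /* assumption: saturate at 0 */

    return val > min ? val : min;
}
```

Under this sketch, the defaults `KVM_DEFAULT_PLE_WINDOW_GROW` (2) and `KVM_DEFAULT_PLE_WINDOW_SHRINK` (0) mean that growing doubles the window up to its cap, while shrinking resets it straight back to the base window.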

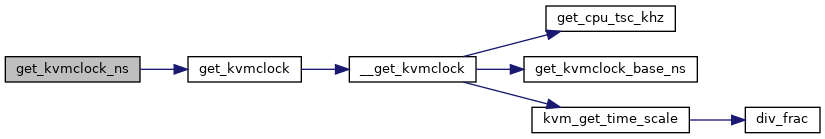

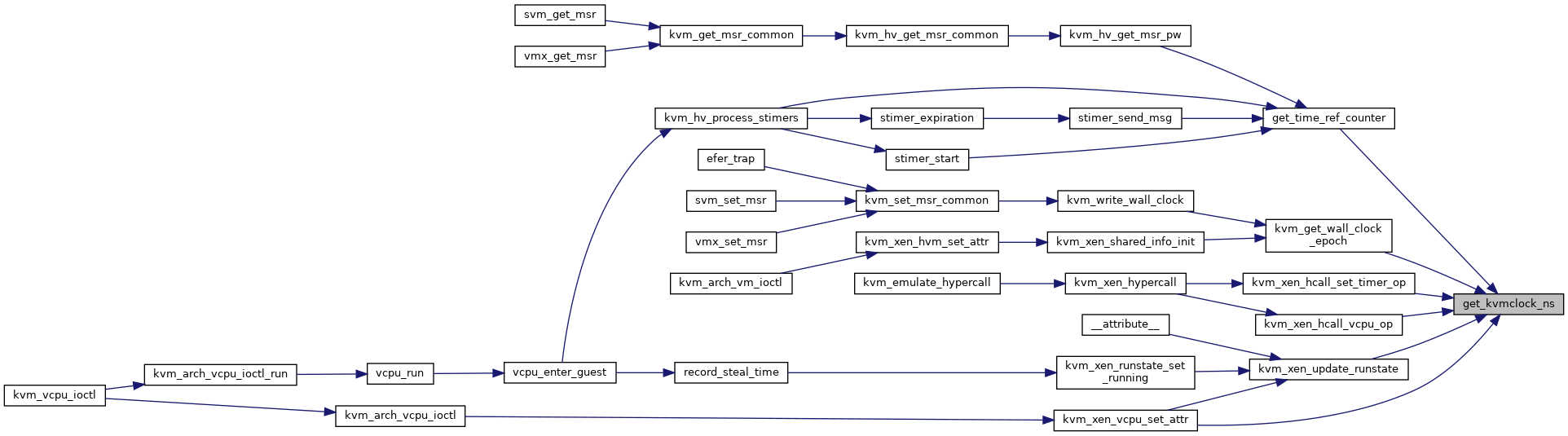

◆ get_kvmclock_ns()

| u64 get_kvmclock_ns | ( | struct kvm * | kvm | ) |

Definition at line 3105 of file x86.c.

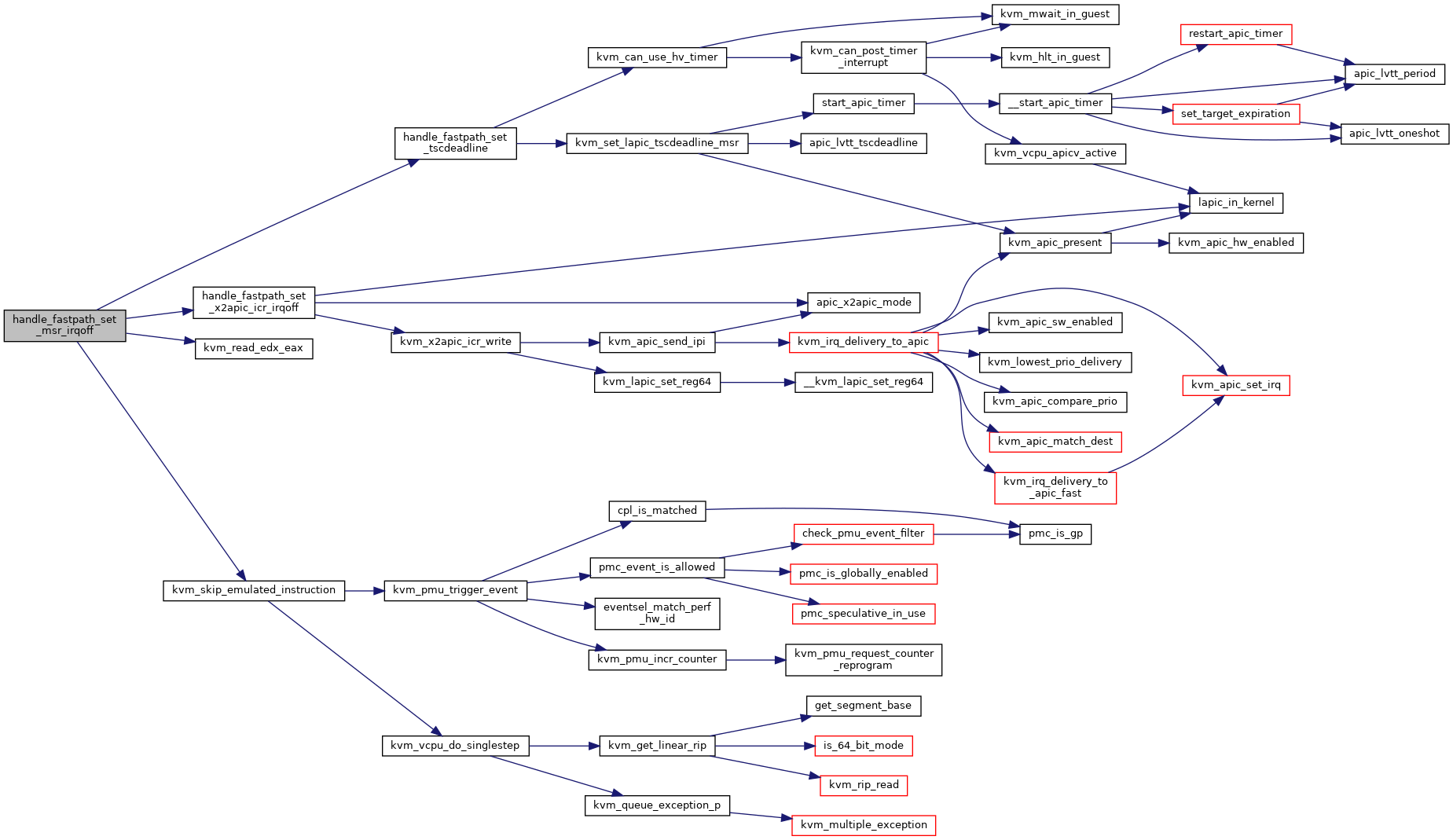

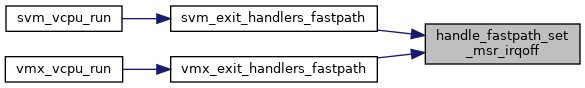

◆ handle_fastpath_set_msr_irqoff()

| fastpath_t handle_fastpath_set_msr_irqoff | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2185 of file x86.c.

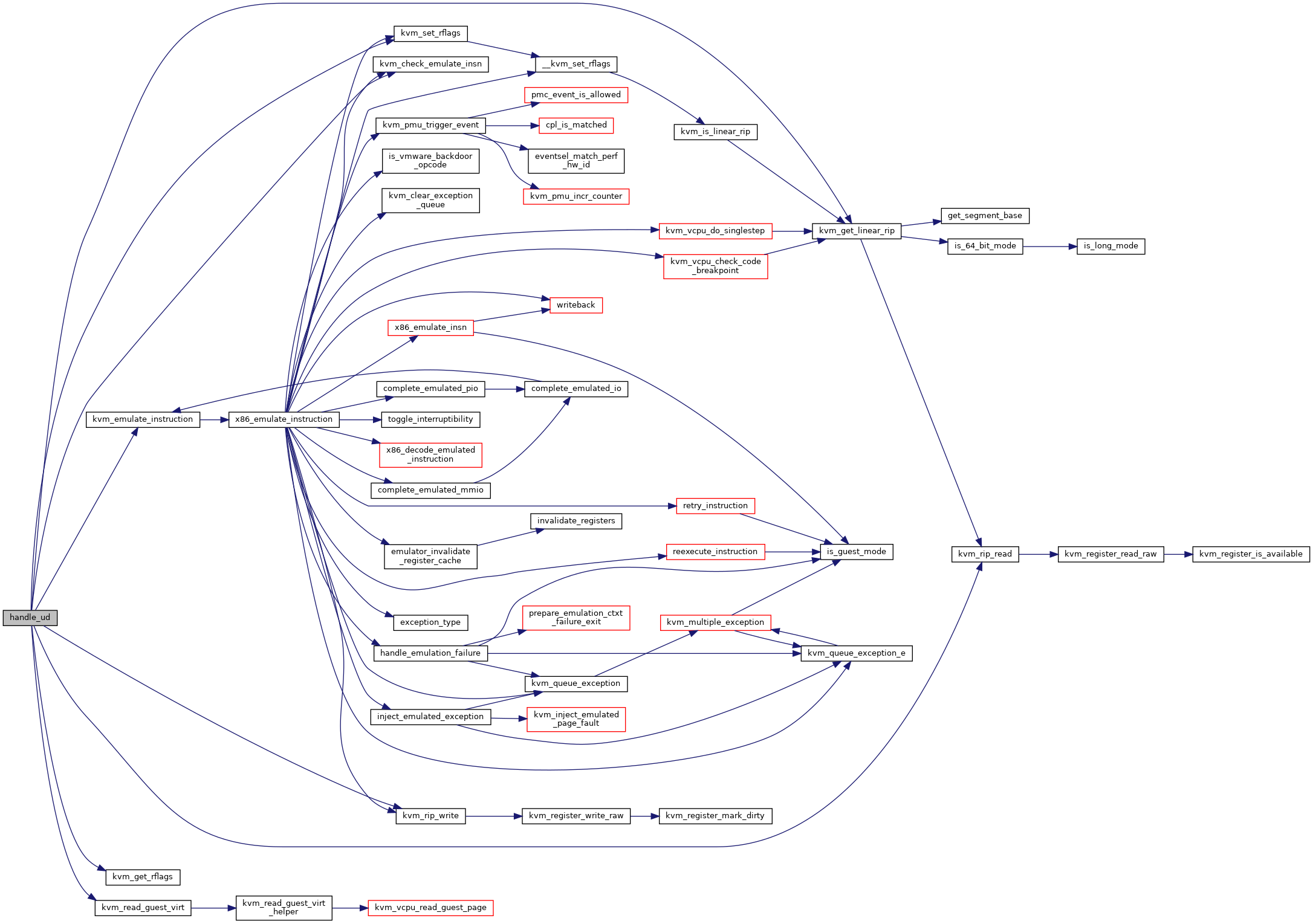

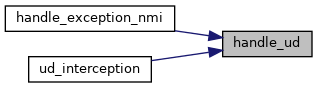

◆ handle_ud()

| int handle_ud | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 7669 of file x86.c.

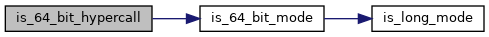

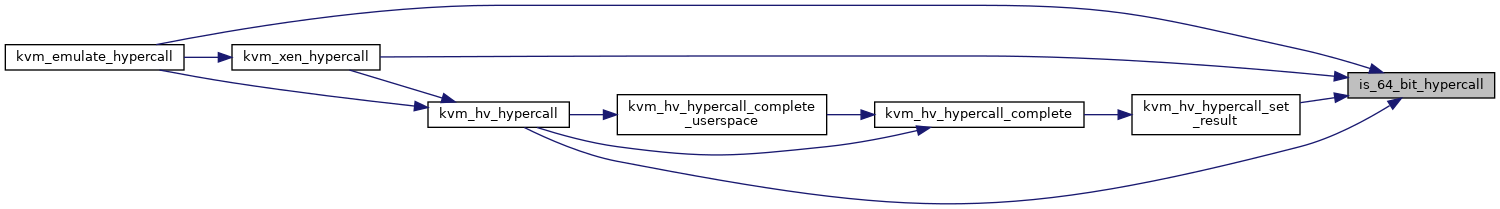

◆ is_64_bit_hypercall()

|

inlinestatic |

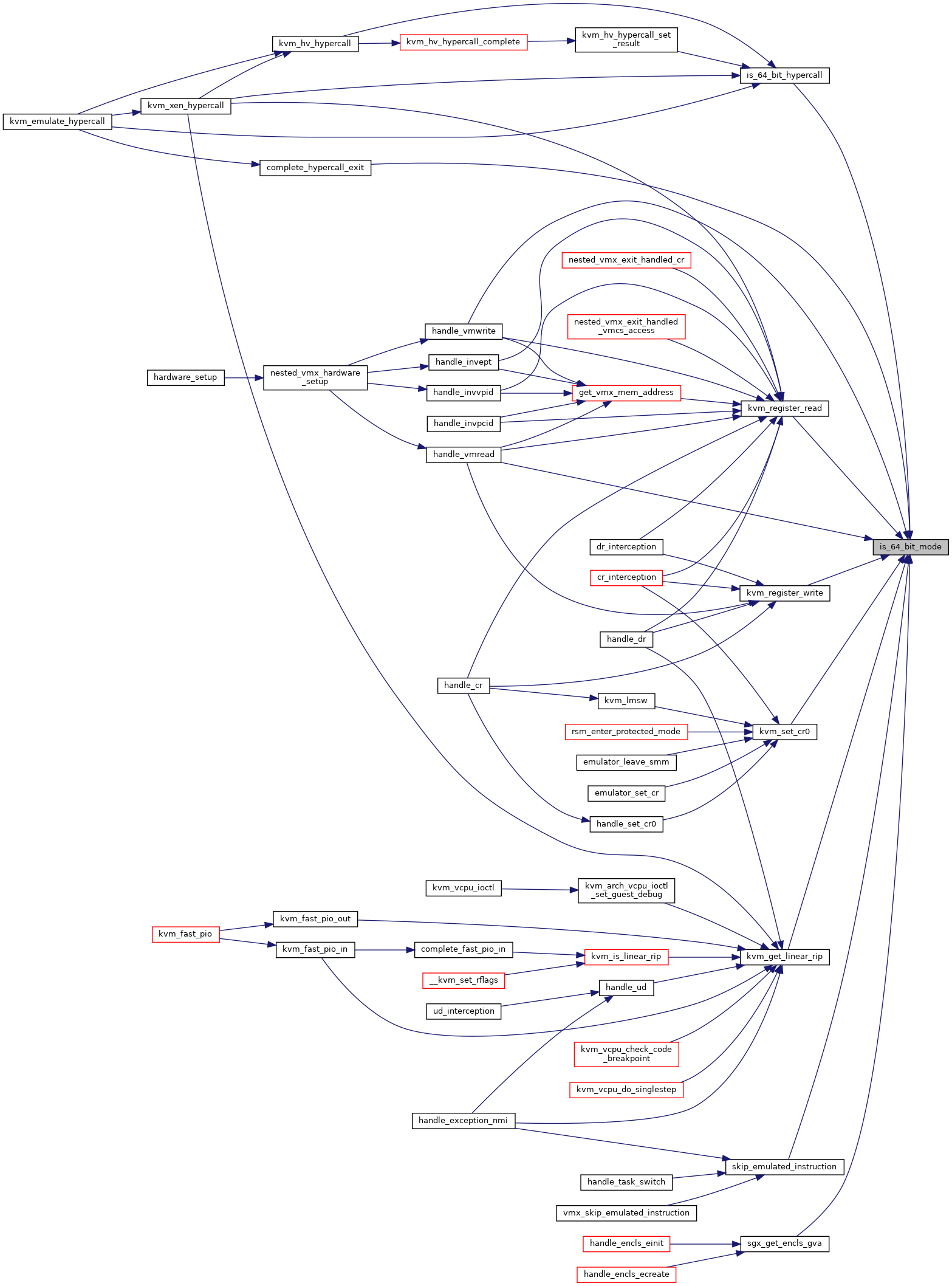

◆ is_64_bit_mode()

|

inlinestatic |

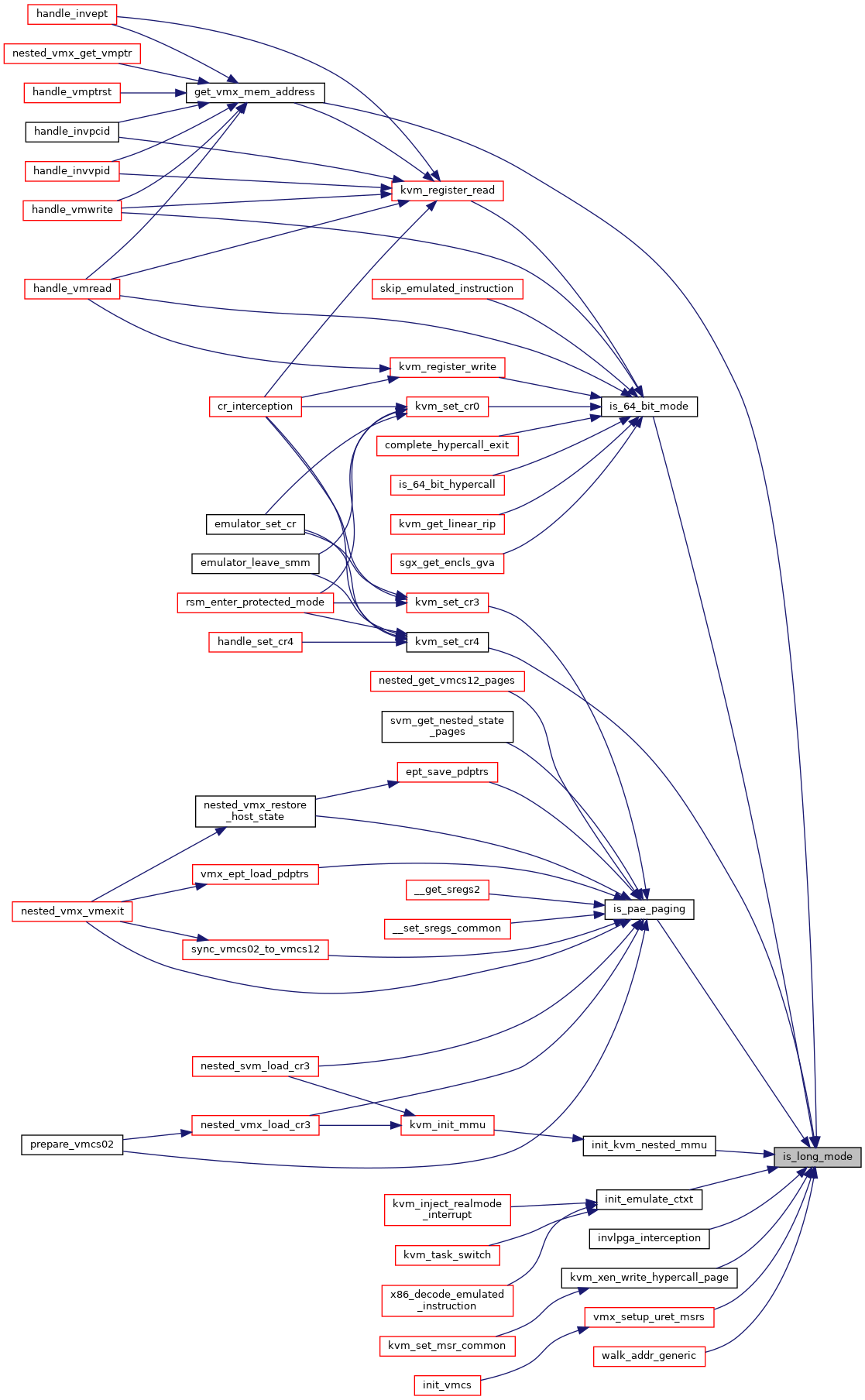

◆ is_long_mode()

|

inlinestatic |

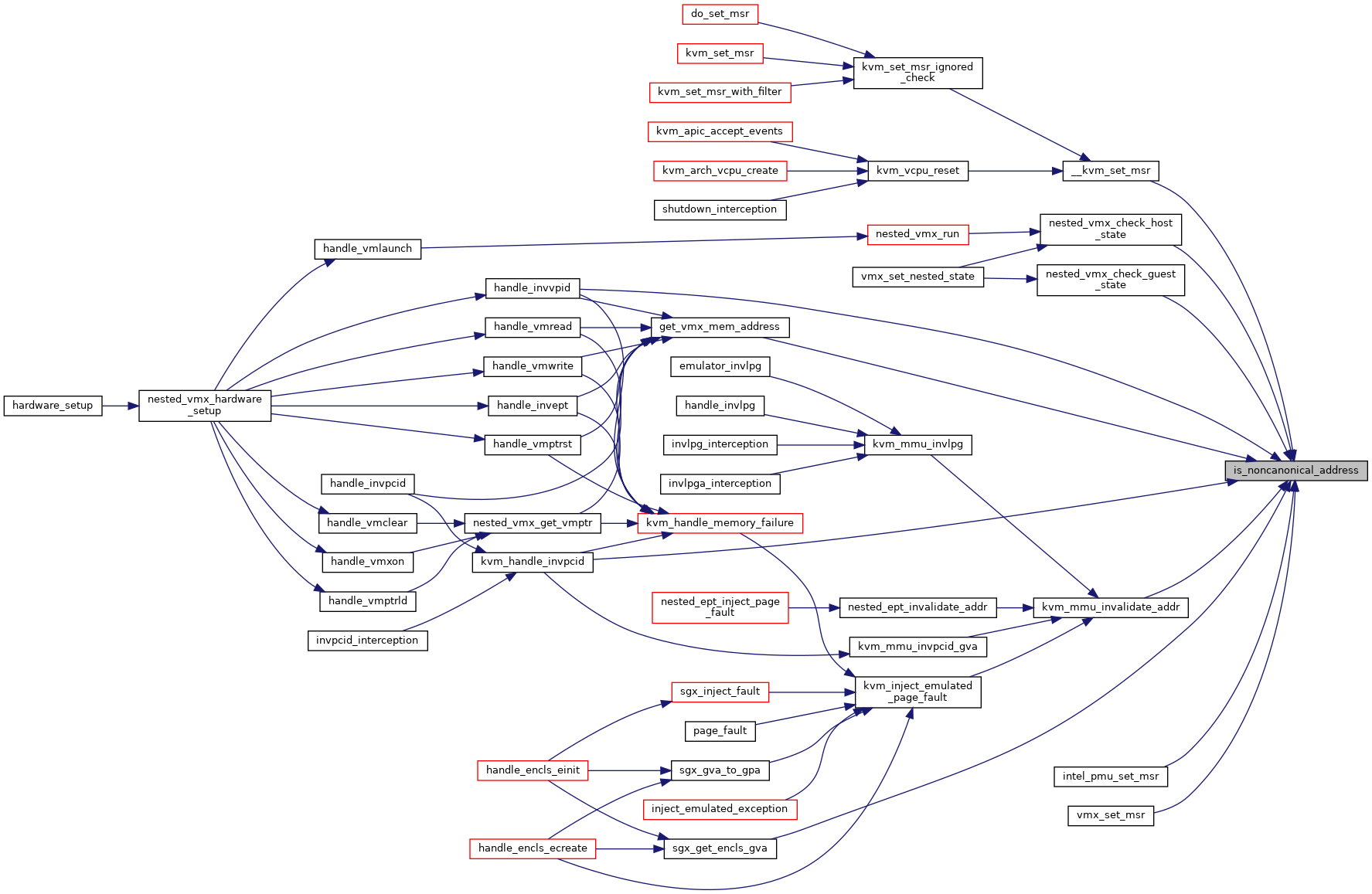

◆ is_noncanonical_address()

|

inlinestatic |
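`is_noncanonical_address()` takes the vCPU so that the address width can come from `vcpu_virt_addr_bits()`; in the kernel that width is understood to be 57 bits when the guest has CR4.LA57 set and 48 bits otherwise. The canonical-form rule itself can be sketched without any vCPU state by passing the width explicitly, as below; `is_canonical` and `is_noncanonical` are hypothetical names.

```c
#include <stdbool.h>
#include <stdint.h>

/*
 * An address is canonical for an N-bit virtual address space when
 * bits 63..N-1 are all copies of bit N-1 (all zeros or all ones).
 */
static bool is_canonical(uint64_t la, unsigned int vaddr_bits)
{
    uint64_t top = la >> (vaddr_bits - 1);  /* bits 63..N-1 */

    return top == 0 || top == (UINT64_MAX >> (vaddr_bits - 1));
}

static bool is_noncanonical(uint64_t la, unsigned int vaddr_bits)
{
    return !is_canonical(la, vaddr_bits);
}
```

Checking the top bits as an unsigned field avoids the signed shifts a sign-extension formulation would need.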

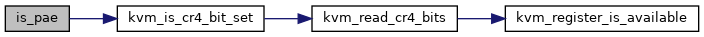

◆ is_pae()

|

inlinestatic |

Definition at line 188 of file x86.h.

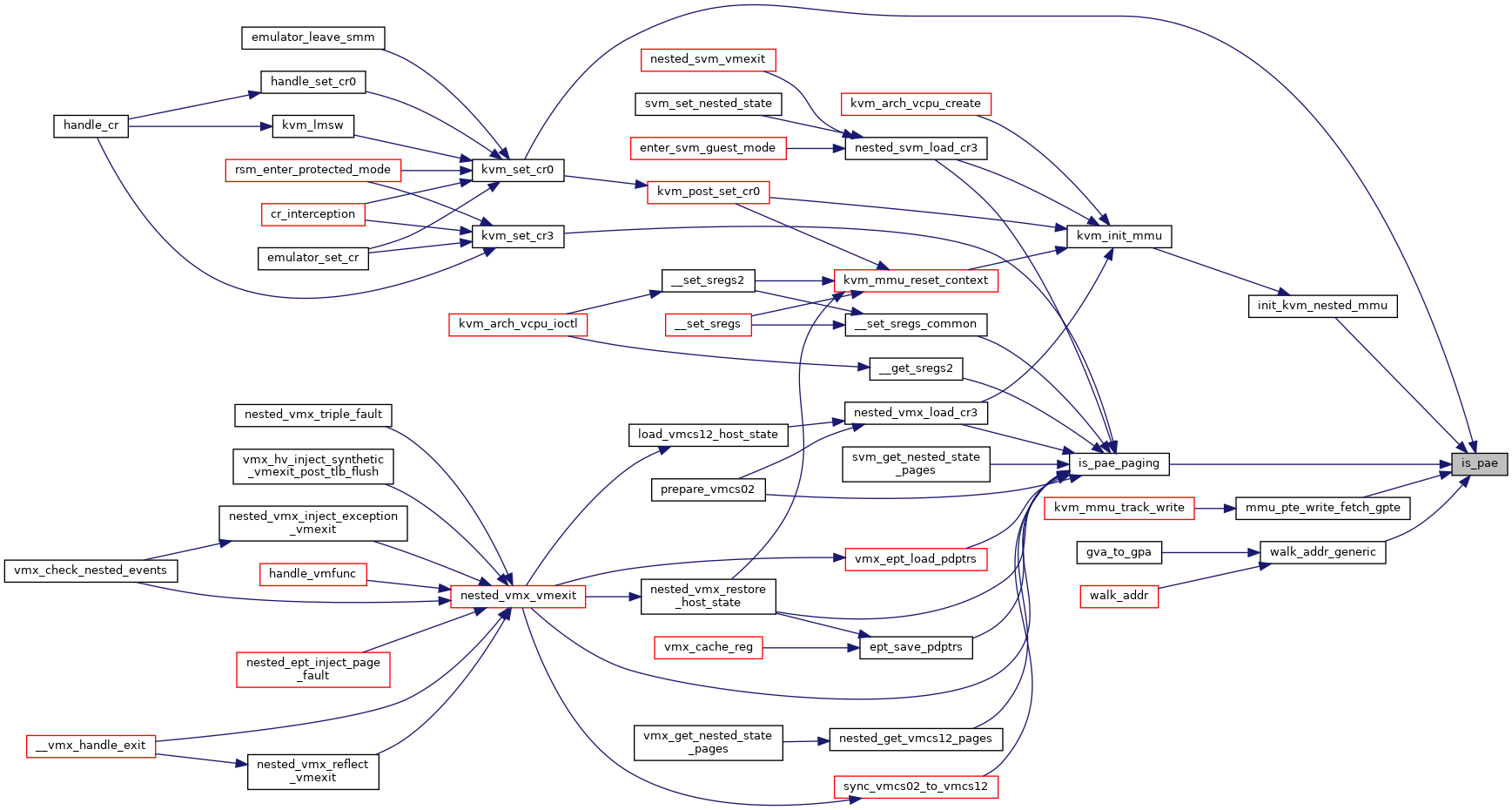

◆ is_pae_paging()

|

inlinestatic |

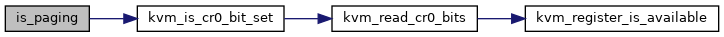

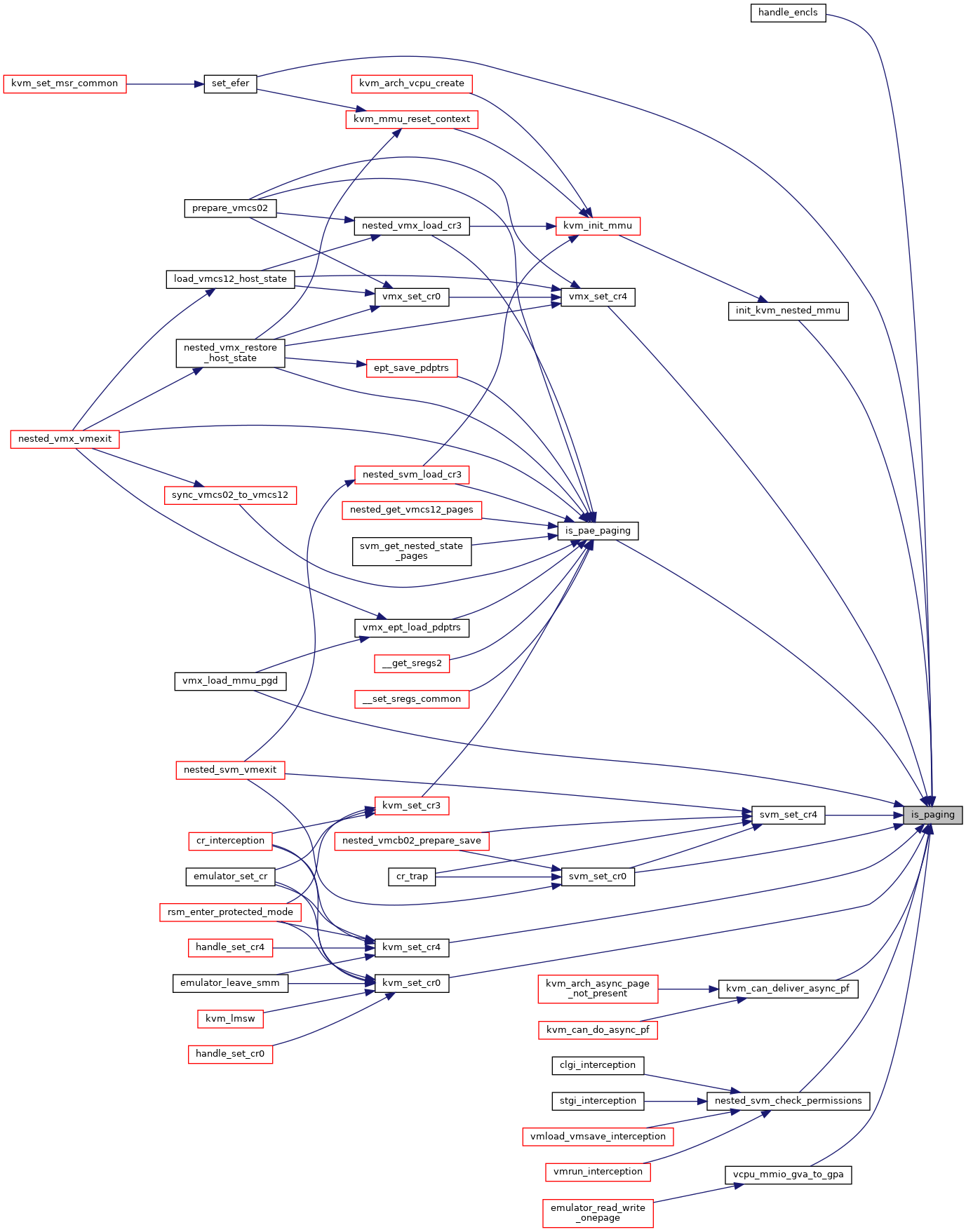

◆ is_paging()

|

inlinestatic |

Definition at line 198 of file x86.h.
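The paging-mode predicates read guest control registers. As a minimal sketch, assuming a hypothetical `struct vcpu_regs` that holds only CR0, CR4, and EFER, they reduce to architectural bit tests on CR0.PG (bit 31), CR4.PAE (bit 5), and EFER.LMA (bit 10). The `regs_*` function names are hypothetical, and the real helpers go through KVM's register cache rather than a plain struct.

```c
#include <stdbool.h>
#include <stdint.h>

/* Architectural control-register bits. */
#define CR0_PG   (1ull << 31)
#define CR4_PAE  (1ull << 5)
#define EFER_LMA (1ull << 10)

/* Hypothetical stand-in for the vCPU state the real helpers consult. */
struct vcpu_regs {
    uint64_t cr0, cr4, efer;
};

static bool regs_is_paging(const struct vcpu_regs *v)    { return v->cr0 & CR0_PG; }
static bool regs_is_pae(const struct vcpu_regs *v)       { return v->cr4 & CR4_PAE; }
static bool regs_is_long_mode(const struct vcpu_regs *v) { return v->efer & EFER_LMA; }

/* PAE paging: paging and CR4.PAE enabled, but not IA-32e (long) mode. */
static bool regs_is_pae_paging(const struct vcpu_regs *v)
{
    return !regs_is_long_mode(v) && regs_is_pae(v) && regs_is_paging(v);
}
```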

◆ is_protmode()

`static inline bool is_protmode(struct kvm_vcpu *vcpu)`

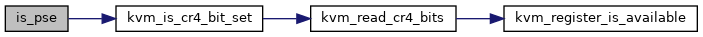

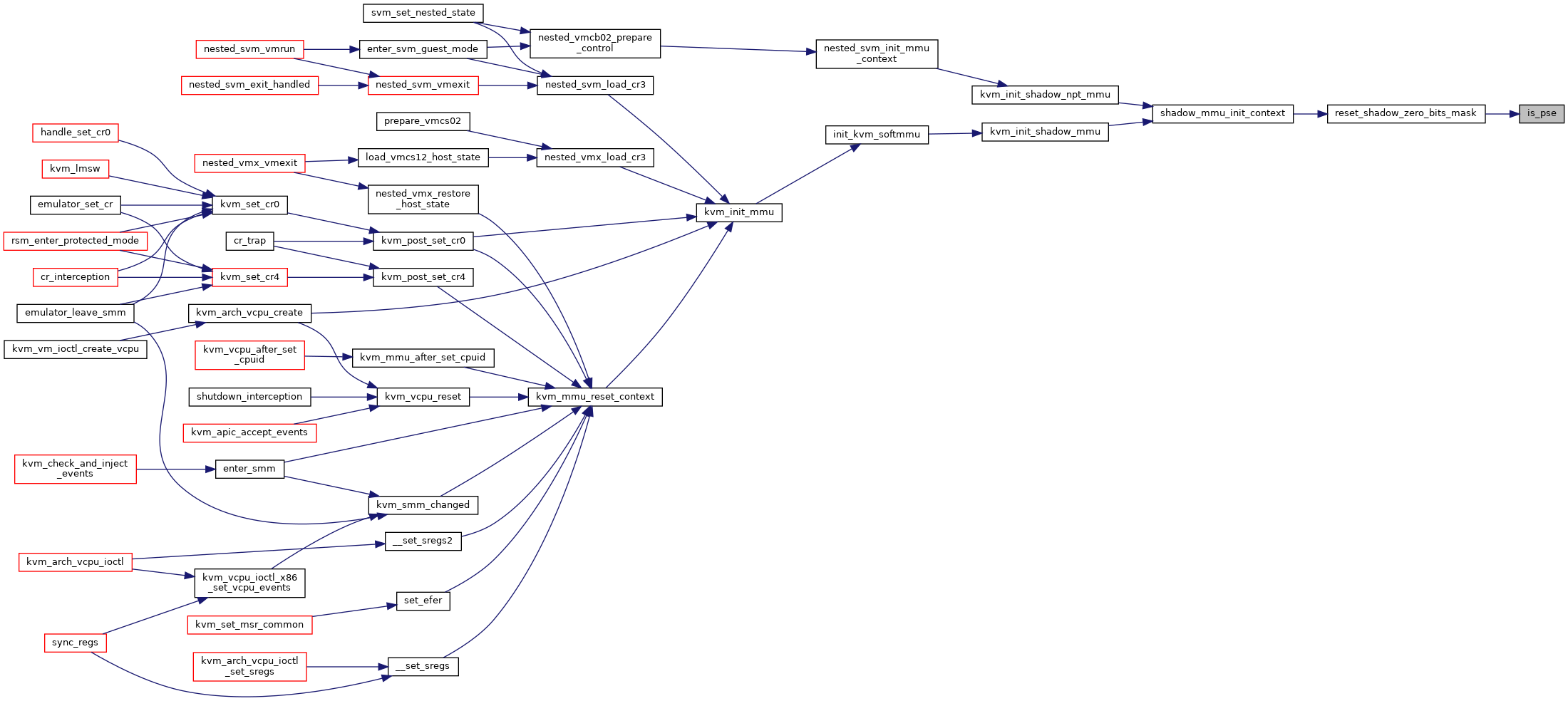

◆ is_pse()

|

inlinestatic |

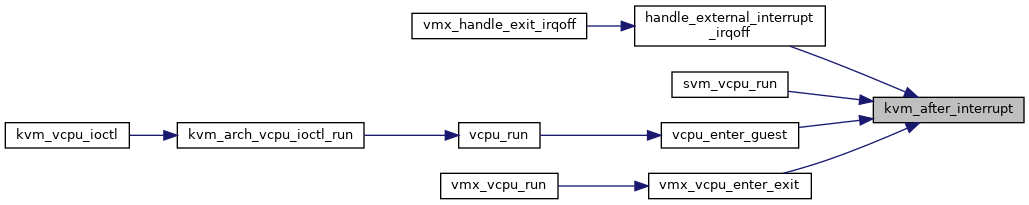

◆ kvm_after_interrupt()

|

static |

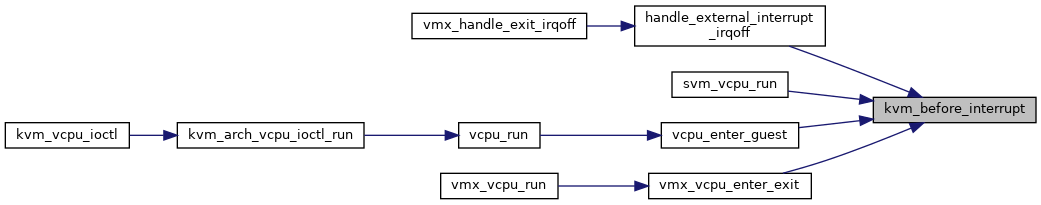

◆ kvm_before_interrupt()

|

static |

◆ kvm_check_has_quirk()

`static inline bool kvm_check_has_quirk(struct kvm *kvm, u64 quirk)`

◆ kvm_check_nested_events()

`int kvm_check_nested_events(struct kvm_vcpu *vcpu)`

◆ kvm_clear_exception_queue()

`static inline void kvm_clear_exception_queue(struct kvm_vcpu *vcpu)`

◆ kvm_clear_interrupt_queue()

`static inline void kvm_clear_interrupt_queue(struct kvm_vcpu *vcpu)`

◆ kvm_cstate_in_guest()

`static inline bool kvm_cstate_in_guest(struct kvm *kvm)`

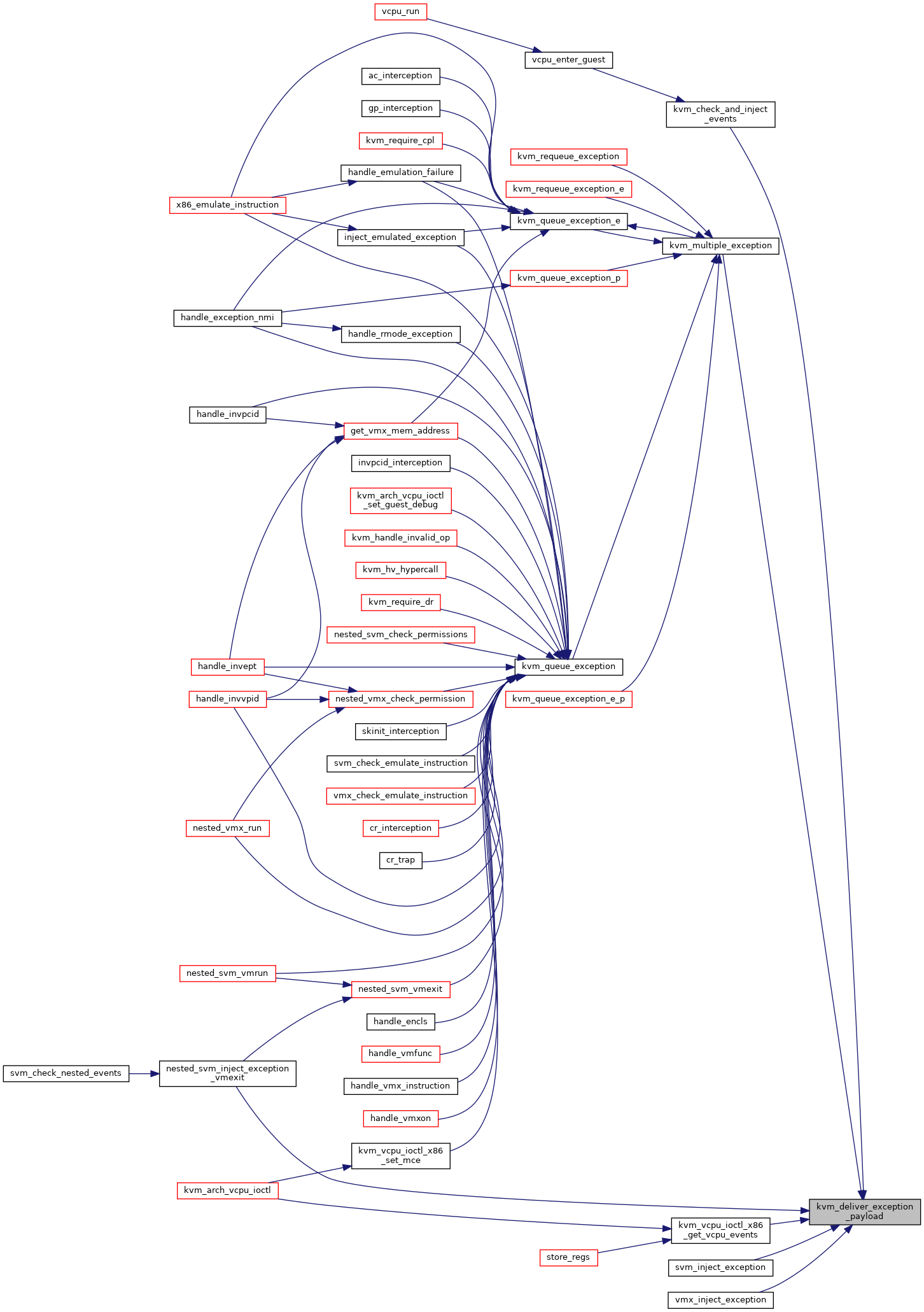

◆ kvm_deliver_exception_payload()

| void kvm_deliver_exception_payload | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_queued_exception * | ex | ||

| ) |

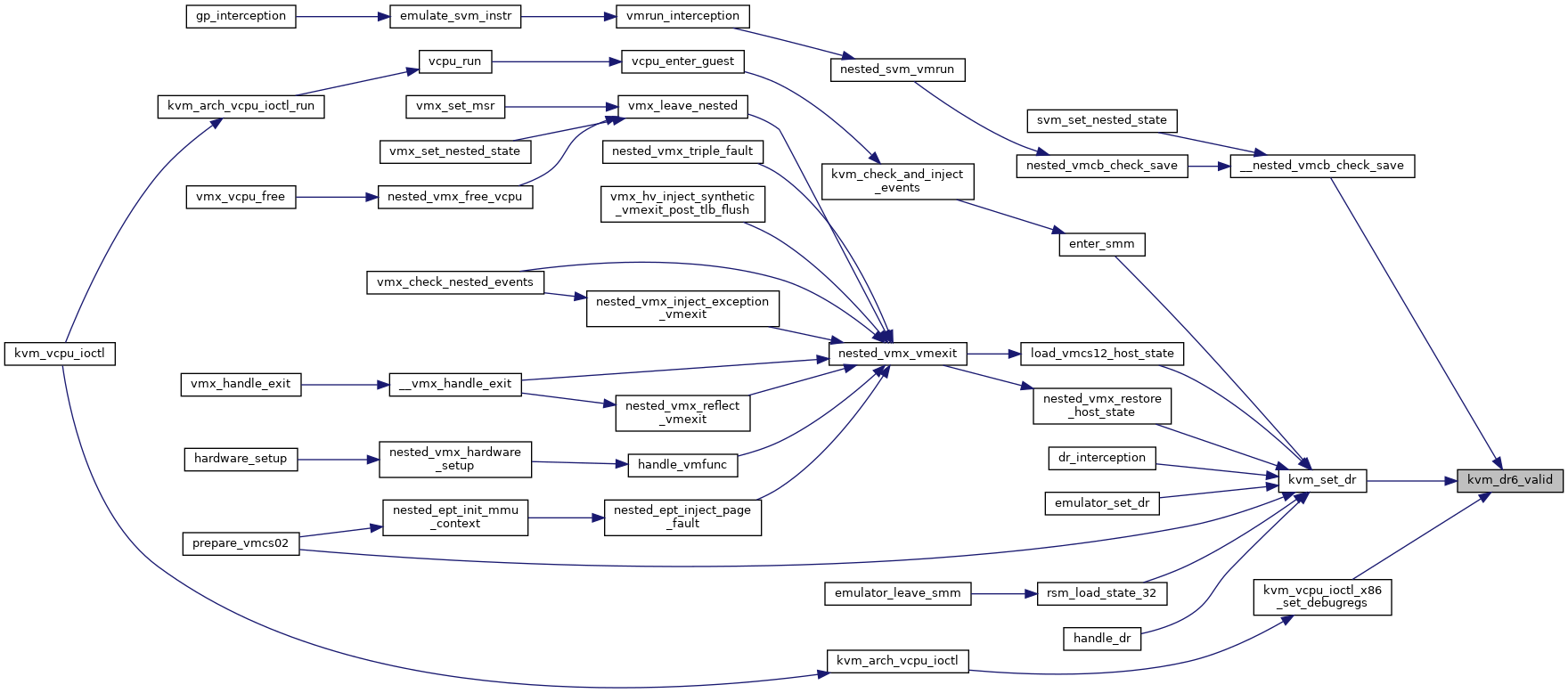

◆ kvm_dr6_valid()

|

inlinestatic |

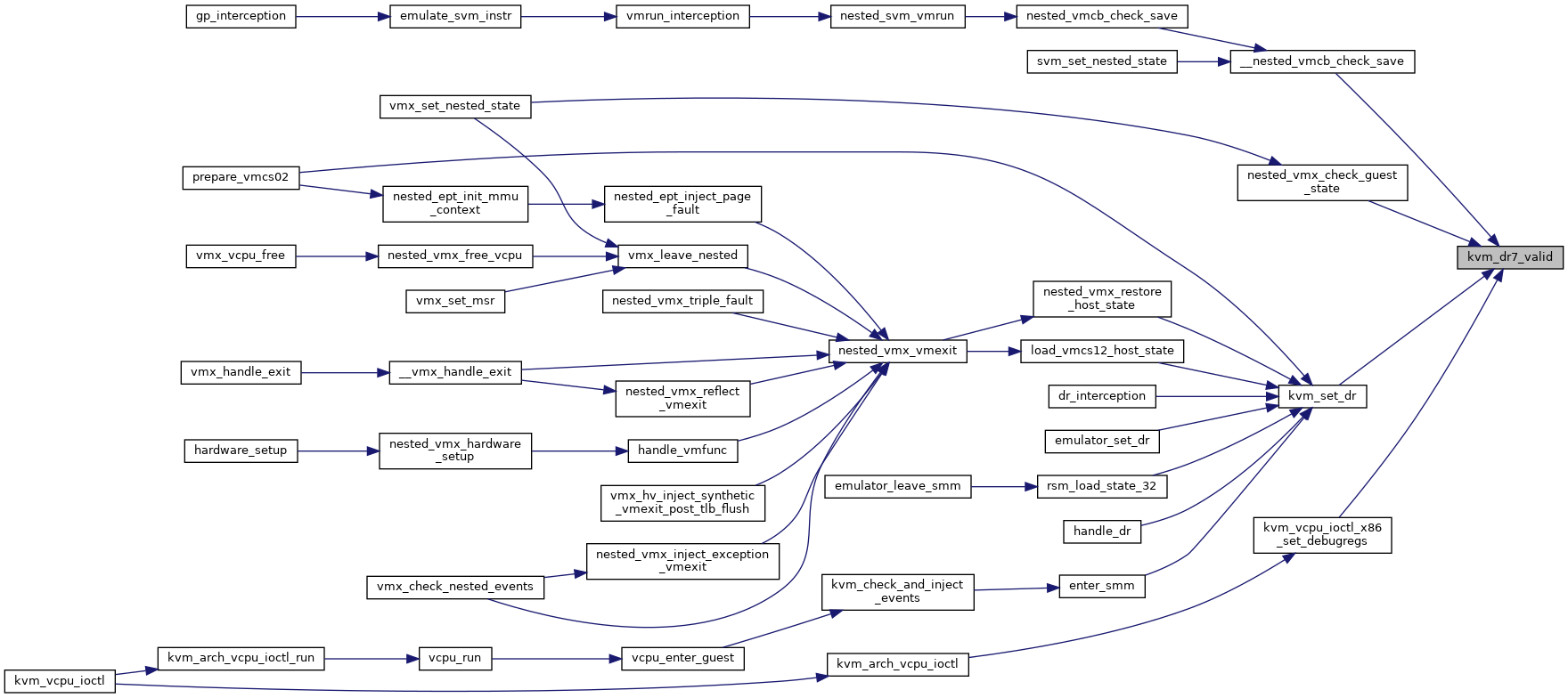

◆ kvm_dr7_valid()

|

inlinestatic |
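The page does not show the bodies of `kvm_dr6_valid()` and `kvm_dr7_valid()`. Assuming they enforce only the architectural rule that bits 63:32 of DR6 and DR7 are reserved and must be zero, each check is a single shift, sketched here with the hypothetical names `dr6_valid` and `dr7_valid`.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bits 63:32 of DR7 are reserved: accept only values with a clear upper half. */
static bool dr7_valid(uint64_t data) { return !(data >> 32); }

/* Bits 63:32 of DR6 are reserved as well. */
static bool dr6_valid(uint64_t data) { return !(data >> 32); }
```

The architectural reset values (0x400 for DR7, 0xFFFF0FF0 for DR6) both pass this check.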

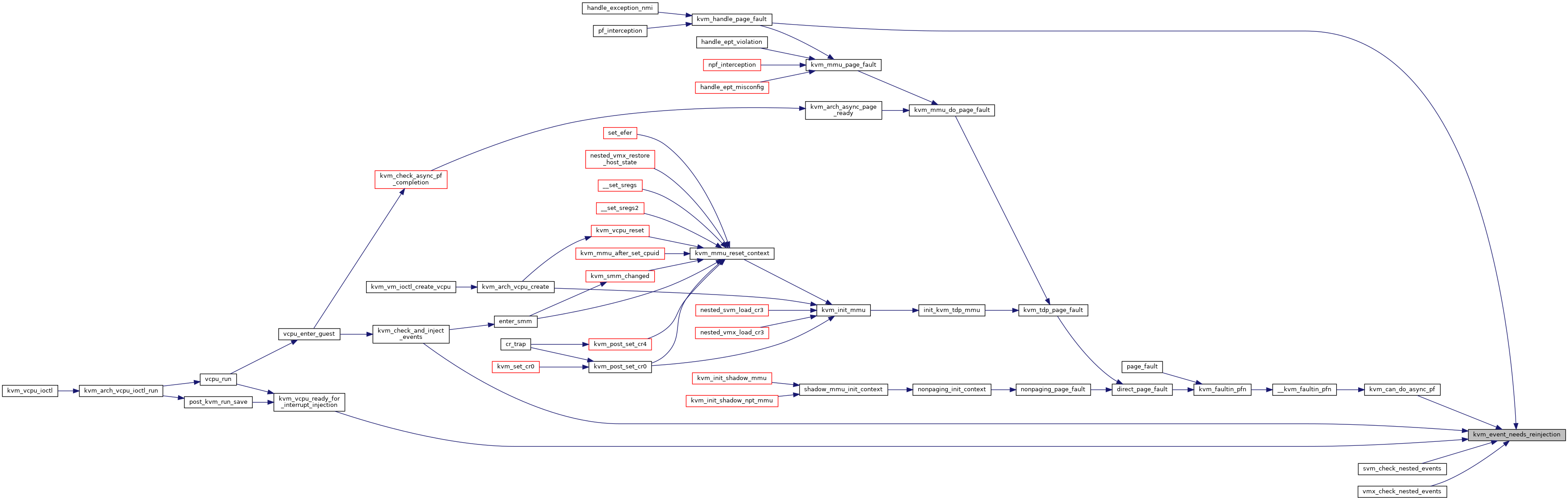

◆ kvm_event_needs_reinjection()

|

inlinestatic |

◆ kvm_exception_is_soft()

`static inline bool kvm_exception_is_soft(unsigned int nr)`
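`kvm_exception_is_soft()` takes only a vector number. A sketch follows, assuming the helper treats #BP (vector 3, raised by `INT3`) and #OF (vector 4, raised by `INTO`) as the soft exceptions, since both are generated by executing an instruction rather than by a fault condition. The name `exception_is_soft` is hypothetical.

```c
#include <stdbool.h>

#define BP_VECTOR 3  /* #BP, raised by INT3 */
#define OF_VECTOR 4  /* #OF, raised by INTO */

/* Soft exceptions are those produced by a dedicated instruction. */
static bool exception_is_soft(unsigned int nr)
{
    return nr == BP_VECTOR || nr == OF_VECTOR;
}
```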

◆ kvm_fixup_and_inject_pf_error()

`void kvm_fixup_and_inject_pf_error(struct kvm_vcpu *vcpu, gva_t gva, u16 error_code)`

◆ kvm_get_filtered_xcr0()

`static inline u64 kvm_get_filtered_xcr0(void)`

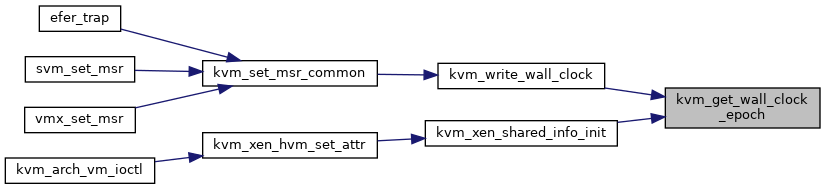

◆ kvm_get_wall_clock_epoch()

| uint64_t kvm_get_wall_clock_epoch | ( | struct kvm * | kvm | ) |

Definition at line 3298 of file x86.c.

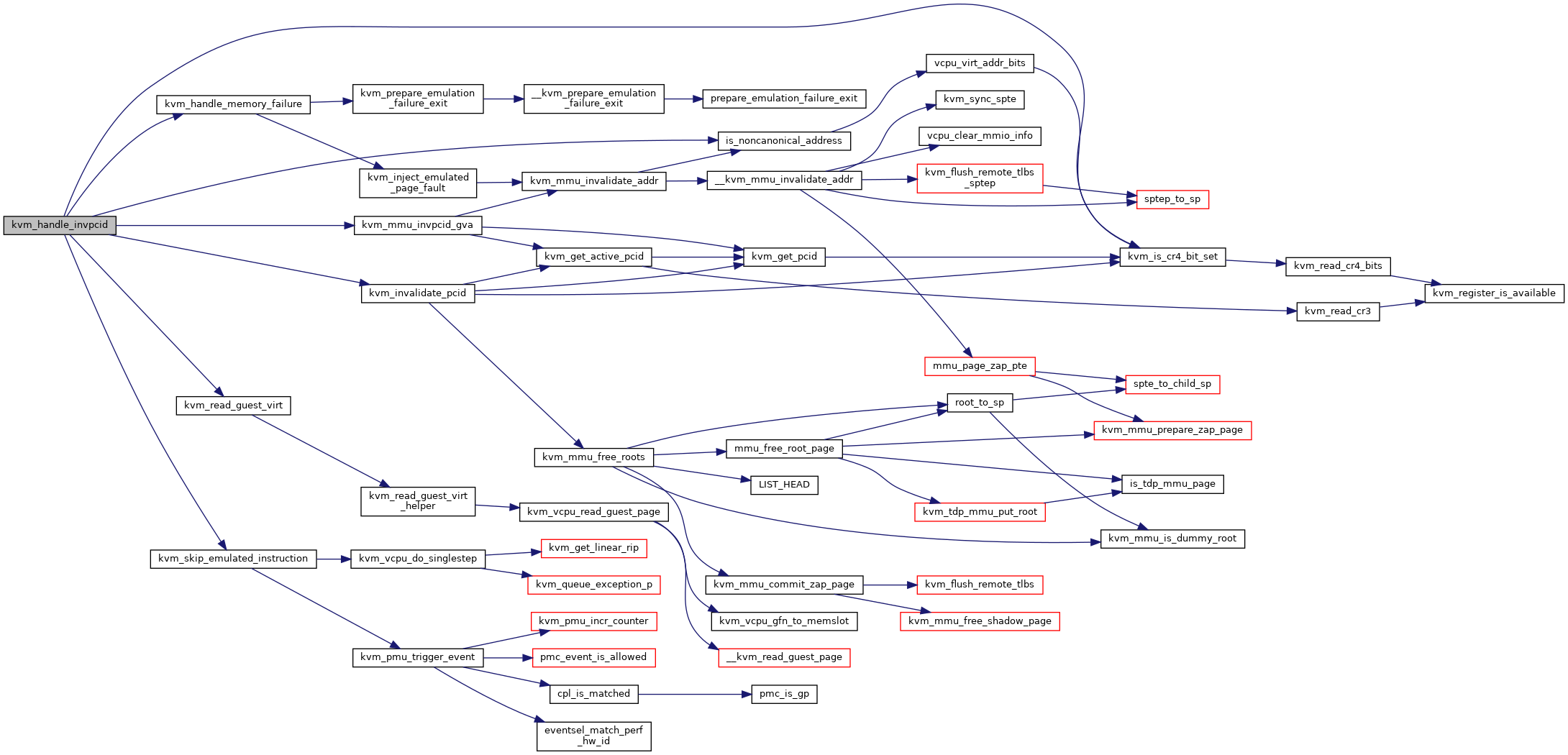

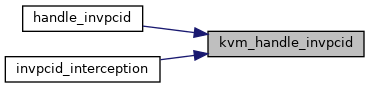

◆ kvm_handle_invpcid()

| int kvm_handle_invpcid | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | type, | ||

| gva_t | gva | ||

| ) |

Definition at line 13612 of file x86.c.

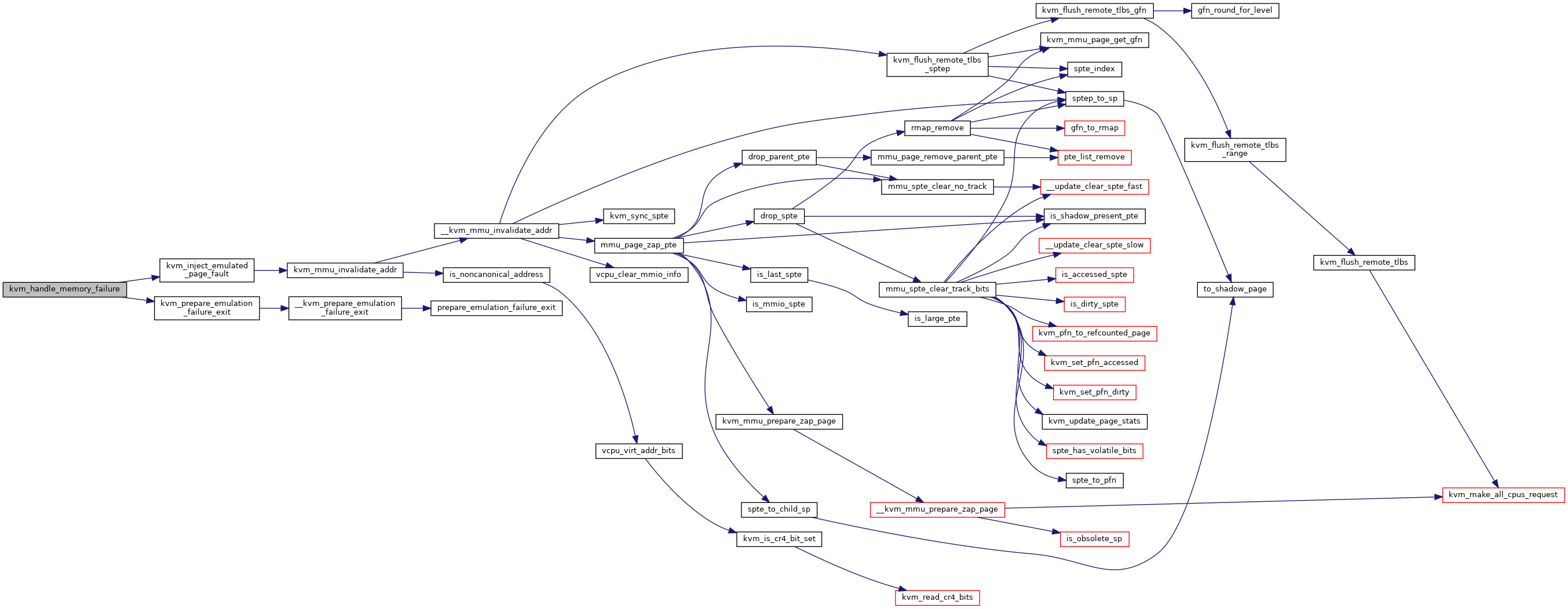

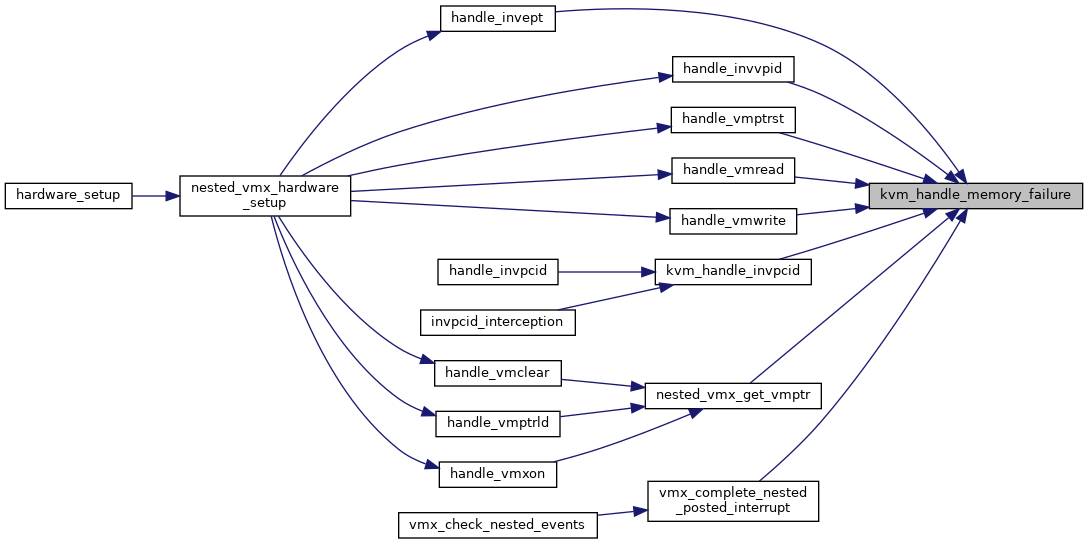

◆ kvm_handle_memory_failure()

| int kvm_handle_memory_failure | ( | struct kvm_vcpu * | vcpu, |

| int | r, | ||

| struct x86_exception * | e | ||

| ) |

Definition at line 13588 of file x86.c.

◆ kvm_handling_nmi_from_guest()

`static inline bool kvm_handling_nmi_from_guest(struct kvm_vcpu *vcpu)`

◆ kvm_hlt_in_guest()

`static inline bool kvm_hlt_in_guest(struct kvm *kvm)`

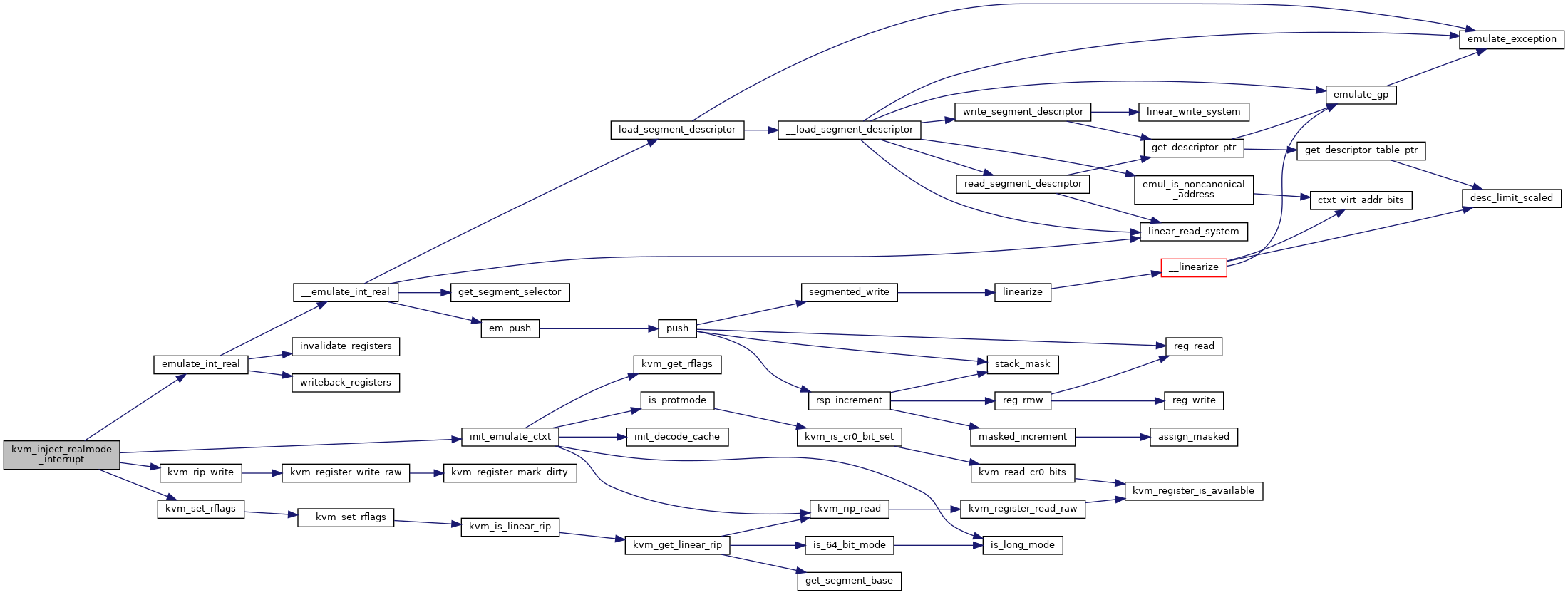

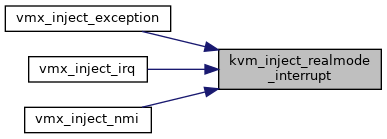

◆ kvm_inject_realmode_interrupt()

| void kvm_inject_realmode_interrupt | ( | struct kvm_vcpu * | vcpu, |

| int | irq, | ||

| int | inc_eip | ||

| ) |

Definition at line 8639 of file x86.c.

◆ kvm_is_exception_pending()

`static inline bool kvm_is_exception_pending(struct kvm_vcpu *vcpu)`

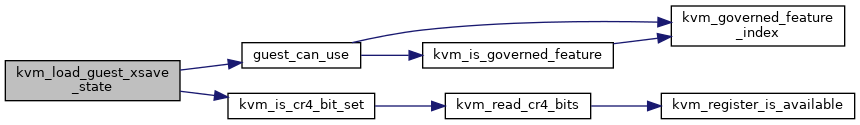

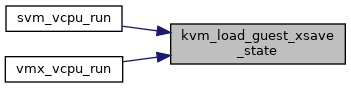

◆ kvm_load_guest_xsave_state()

| void kvm_load_guest_xsave_state | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1018 of file x86.c.

◆ kvm_load_host_xsave_state()

`void kvm_load_host_xsave_state(struct kvm_vcpu *vcpu)`

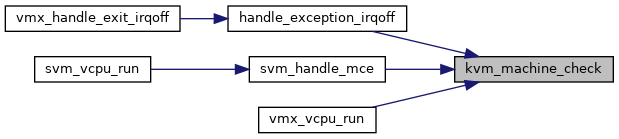

◆ kvm_machine_check()

|

inlinestatic |

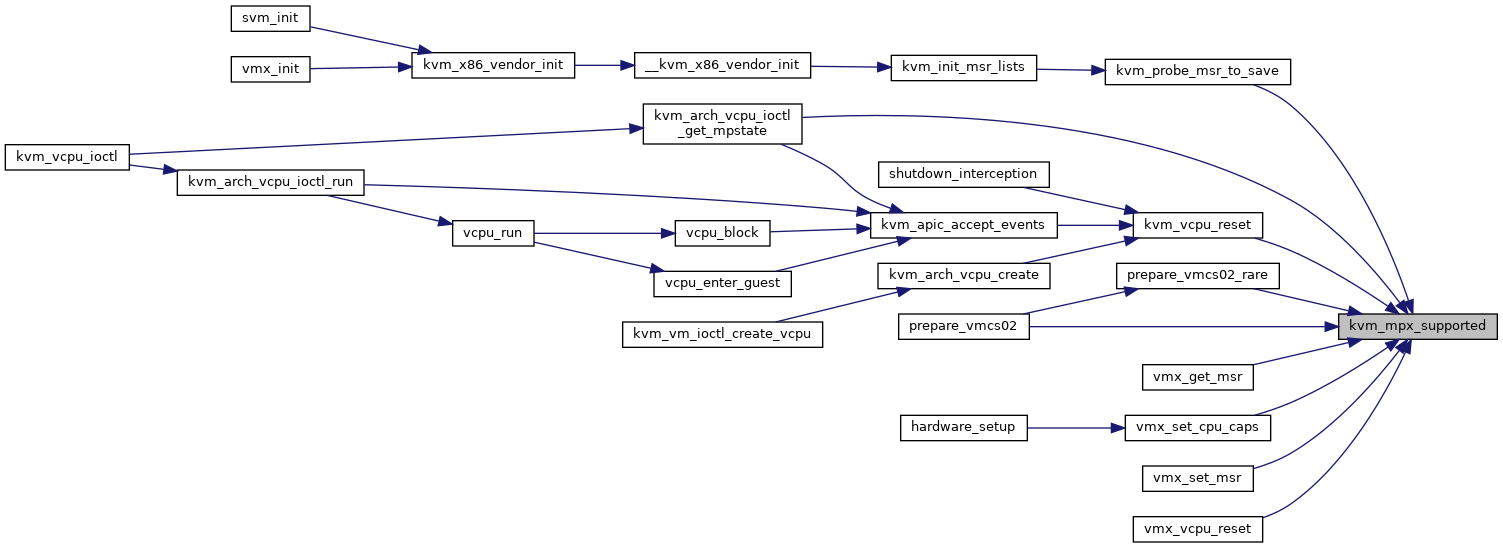

◆ kvm_mpx_supported()

|

inlinestatic |

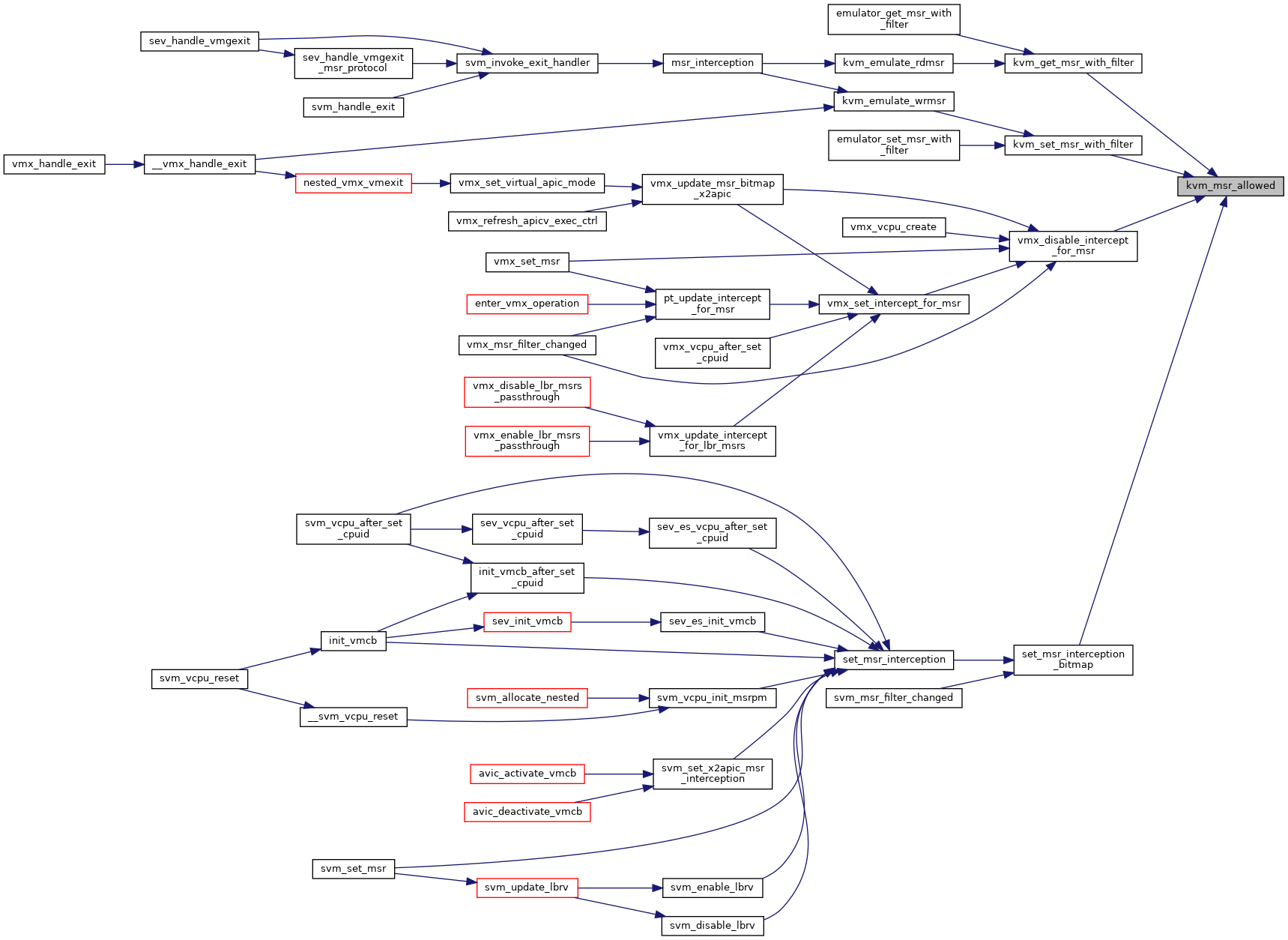

◆ kvm_msr_allowed()

| bool kvm_msr_allowed | ( | struct kvm_vcpu * | vcpu, |

| u32 | index, | ||

| u32 | type | ||

| ) |

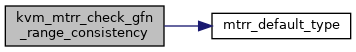

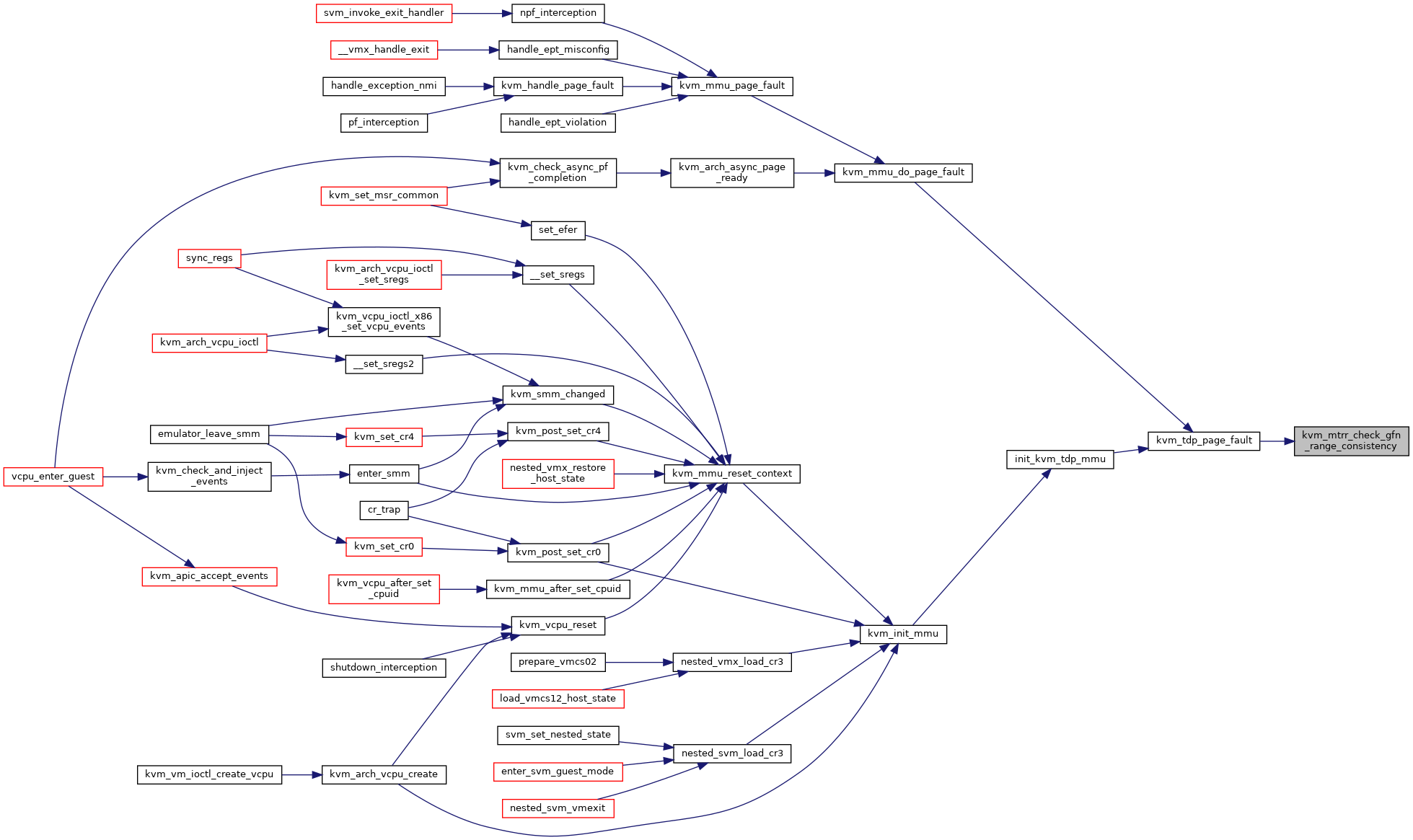

◆ kvm_mtrr_check_gfn_range_consistency()

| bool kvm_mtrr_check_gfn_range_consistency | ( | struct kvm_vcpu * | vcpu, |

| gfn_t | gfn, | ||

| int | page_num | ||

| ) |

Definition at line 690 of file mtrr.c.

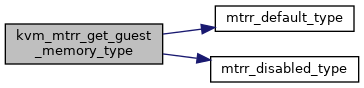

◆ kvm_mtrr_get_guest_memory_type()

| u8 kvm_mtrr_get_guest_memory_type | ( | struct kvm_vcpu * | vcpu, |

| gfn_t | gfn | ||

| ) |

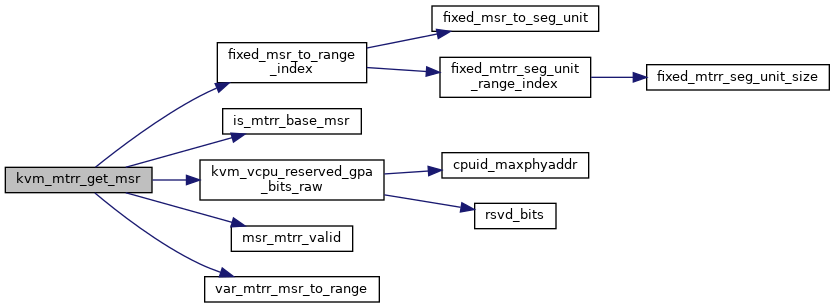

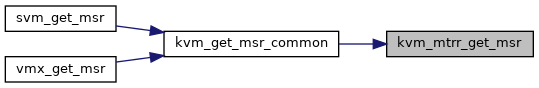

◆ kvm_mtrr_get_msr()

| int kvm_mtrr_get_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 * | pdata | ||

| ) |

Definition at line 397 of file mtrr.c.

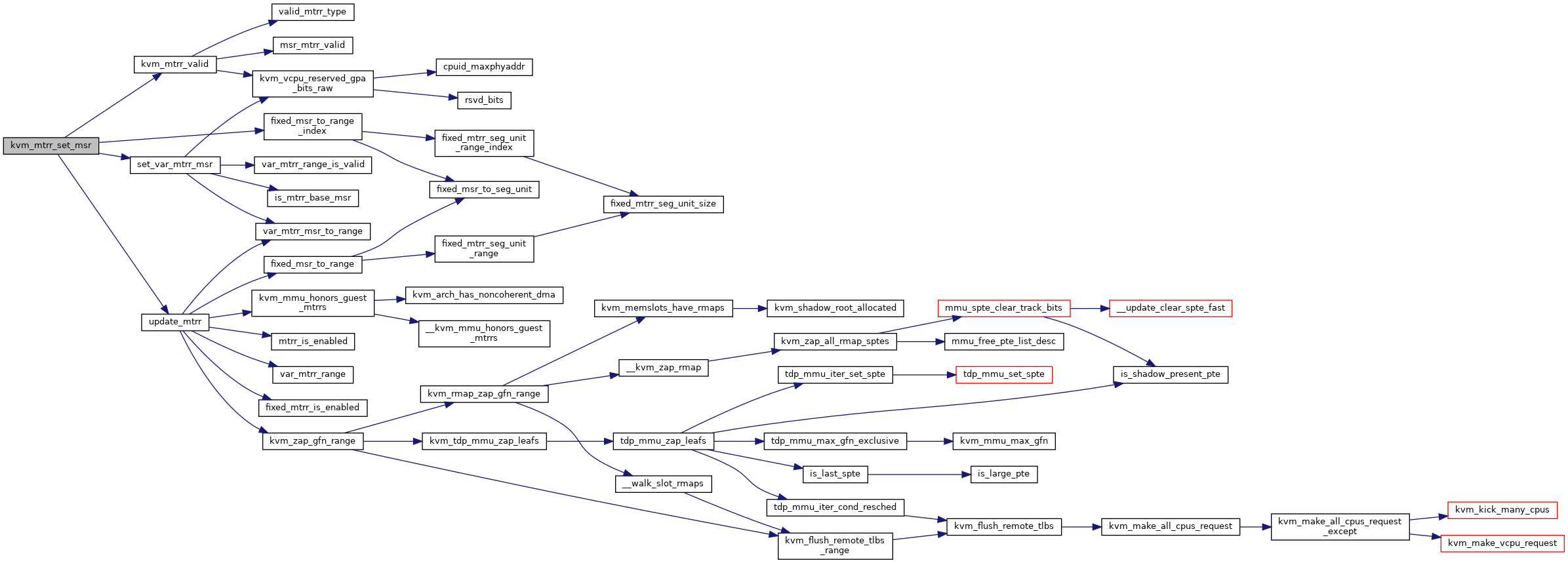

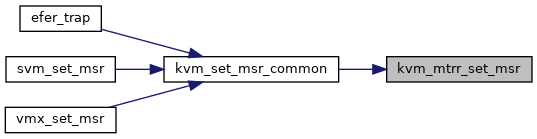

◆ kvm_mtrr_set_msr()

| int kvm_mtrr_set_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 | data | ||

| ) |

Definition at line 378 of file mtrr.c.

◆ kvm_mwait_in_guest()

`static inline bool kvm_mwait_in_guest(struct kvm *kvm)`

◆ kvm_notify_vmexit_enabled()

`static inline bool kvm_notify_vmexit_enabled(struct kvm *kvm)`

◆ kvm_pat_valid()

`static inline bool kvm_pat_valid(u64 data)`
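`MSR_IA32_CR_PAT_DEFAULT` (0x0007040600070406) is the architectural reset value of the PAT MSR. A sketch of the check that `kvm_pat_valid()` is generally understood to perform follows: every byte must hold one of the defined memory types 0 (UC), 1 (WC), 4 (WT), 5 (WP), 6 (WB), or 7 (UC-), and bits 7:3 of each byte must be clear. `pat_valid` and `PAT_DEFAULT` are hypothetical names.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAT_DEFAULT 0x0007040600070406ull  /* same value as MSR_IA32_CR_PAT_DEFAULT */

static bool pat_valid(uint64_t data)
{
    /* Bits 7:3 of every byte are reserved. */
    if (data & 0xF8F8F8F8F8F8F8F8ull)
        return false;

    /*
     * Encodings 2 and 3 are the only ones with bit 1 set and bit 2
     * clear. Copying bit 1 into bit 2 leaves valid bytes unchanged
     * but alters any byte holding 2 or 3.
     */
    return (data | ((data & 0x0202020202020202ull) << 1)) == data;
}
```

The bit trick checks all eight entries at once instead of looping over the bytes.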

◆ kvm_pause_in_guest()

`static inline bool kvm_pause_in_guest(struct kvm *kvm)`

◆ kvm_pr_unimpl_rdmsr()

`static inline void kvm_pr_unimpl_rdmsr(struct kvm_vcpu *vcpu, u32 msr)`

◆ kvm_pr_unimpl_wrmsr()

`static inline void kvm_pr_unimpl_wrmsr(struct kvm_vcpu *vcpu, u32 msr, u64 data)`

◆ kvm_queue_interrupt()

`static inline void kvm_queue_interrupt(struct kvm_vcpu *vcpu, u8 vector, bool soft)`

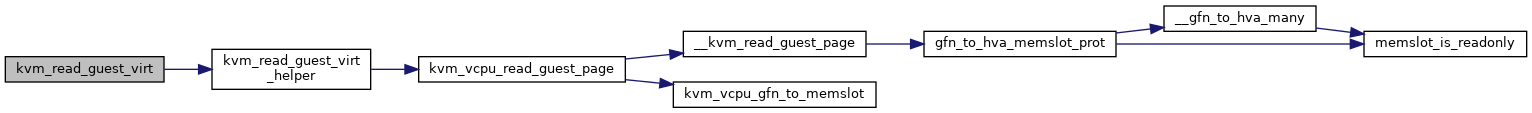

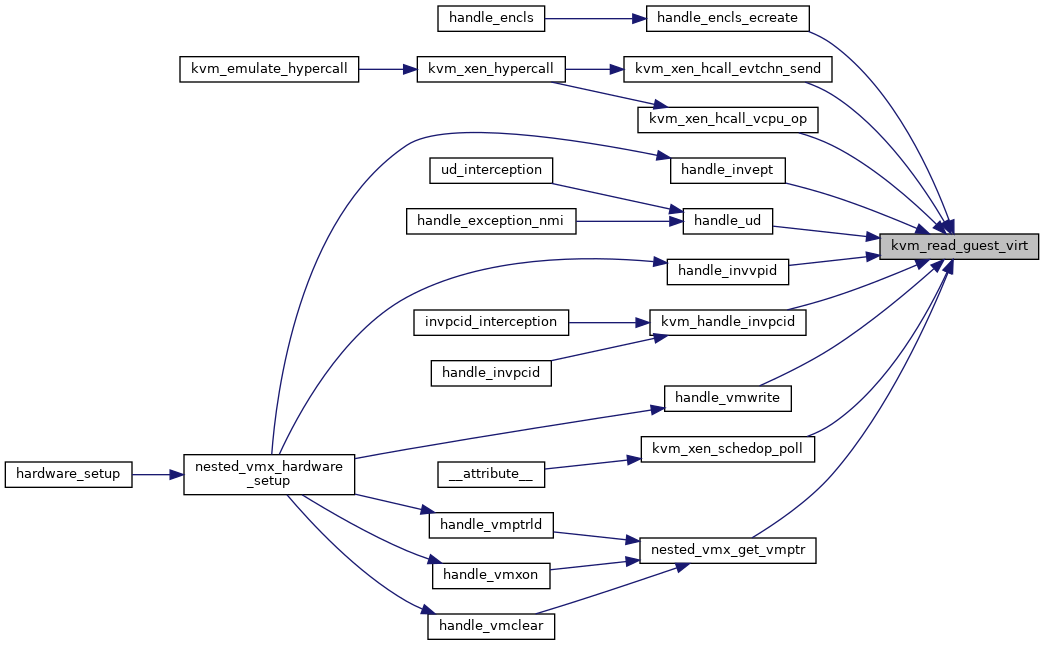

◆ kvm_read_guest_virt()

| int kvm_read_guest_virt | ( | struct kvm_vcpu * | vcpu, |

| gva_t | addr, | ||

| void * | val, | ||

| unsigned int | bytes, | ||

| struct x86_exception * | exception | ||

| ) |

Definition at line 7572 of file x86.c.

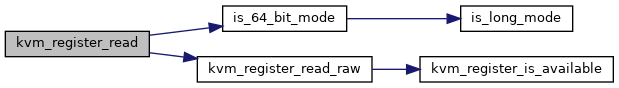

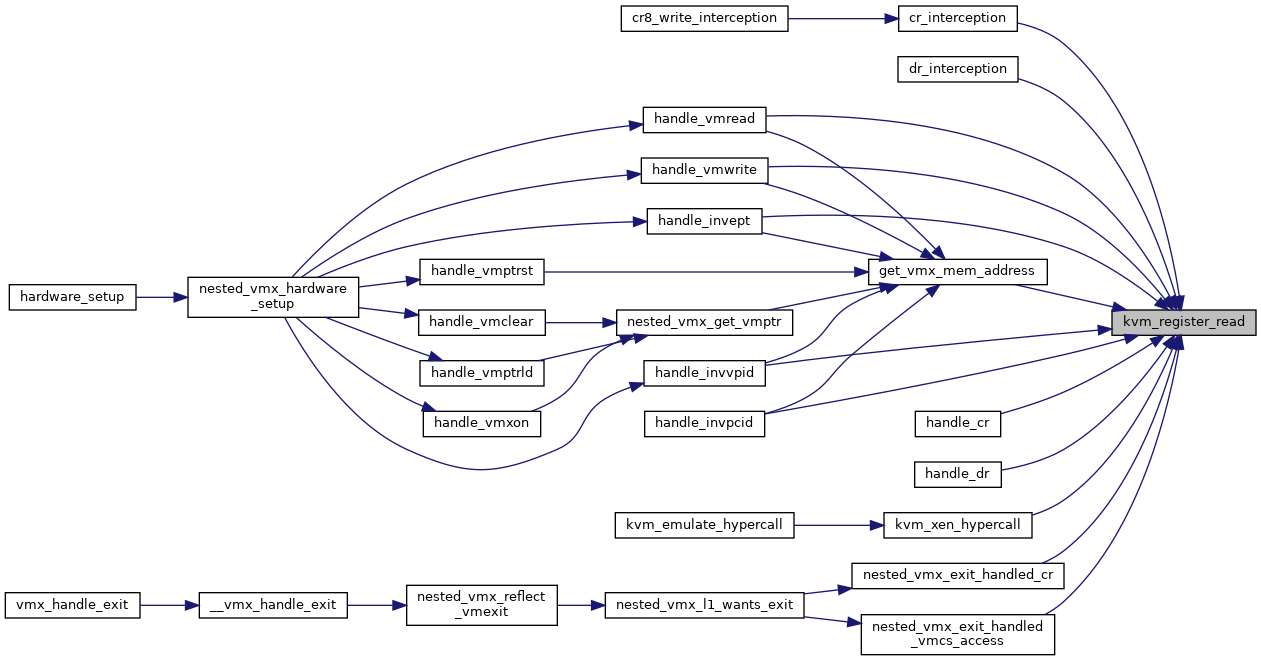

◆ kvm_register_read()

|

inlinestatic |

Definition at line 273 of file x86.h.

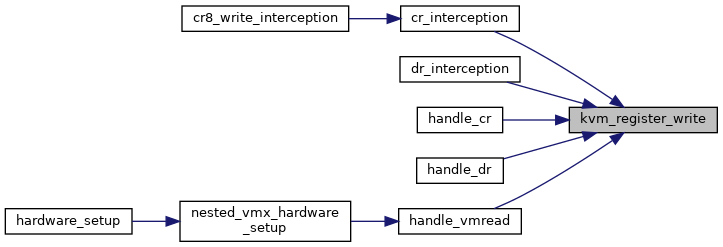

◆ kvm_register_write()

|

inlinestatic |

Definition at line 280 of file x86.h.
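`kvm_register_read()` and `kvm_register_write()` take a register index and an `unsigned long`. A sketch follows, assuming they truncate values to 32 bits whenever the vCPU is not in 64-bit mode and otherwise pass values through to the raw accessors from `kvm_cache_regs.h`. The `struct vcpu_model` type and both function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical minimal vCPU model: 16 GPRs plus a 64-bit-mode flag. */
struct vcpu_model {
    uint64_t regs[16];
    bool in_64_bit_mode;
};

/* Outside 64-bit mode only the low 32 bits of a GPR are visible. */
static uint64_t register_read(const struct vcpu_model *v, int reg)
{
    uint64_t val = v->regs[reg];

    return v->in_64_bit_mode ? val : (uint32_t)val;
}

/* Writes are truncated the same way before being stored. */
static void register_write(struct vcpu_model *v, int reg, uint64_t val)
{
    if (!v->in_64_bit_mode)
        val = (uint32_t)val;
    v->regs[reg] = val;
}
```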

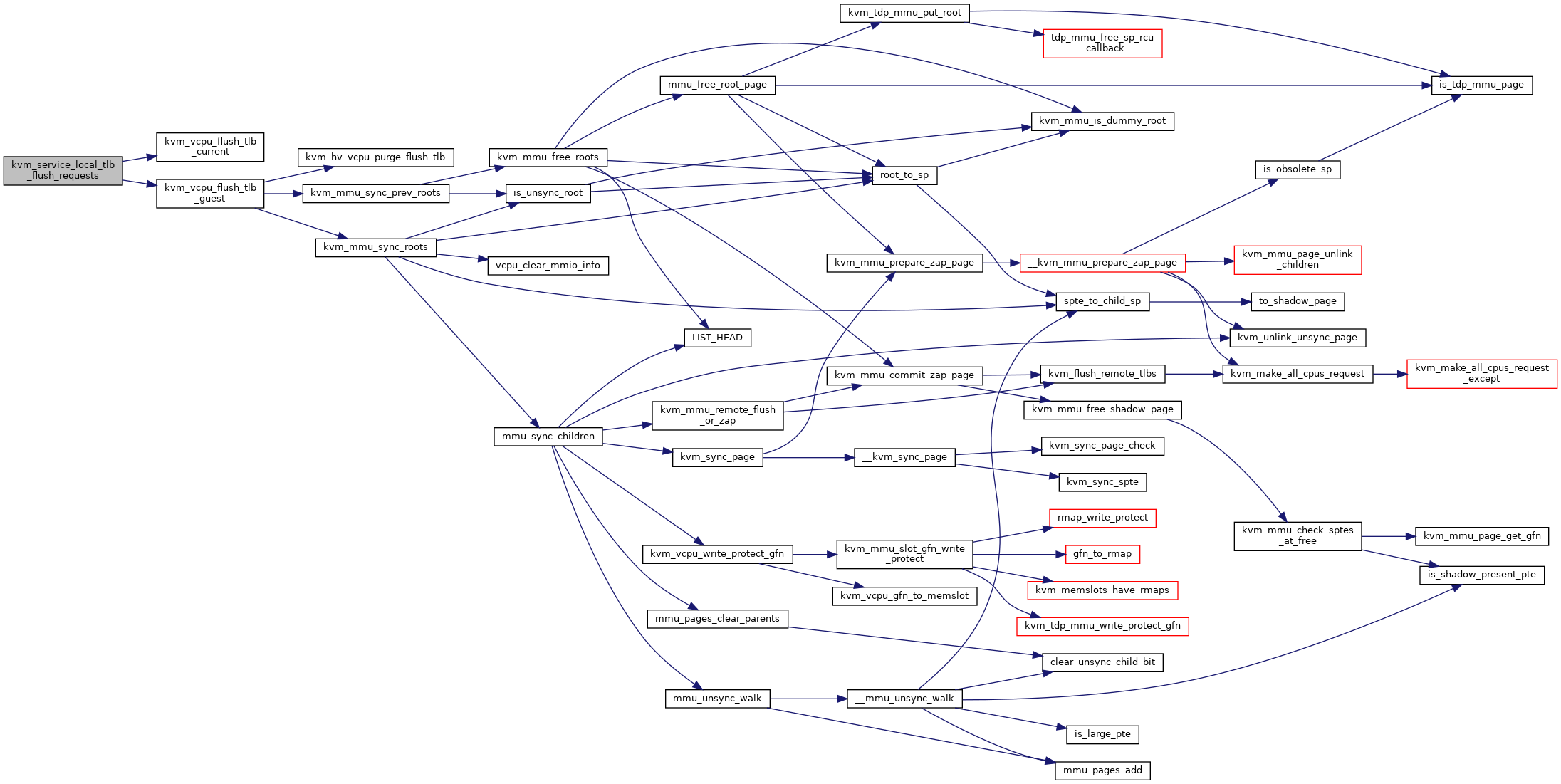

◆ kvm_service_local_tlb_flush_requests()

| void kvm_service_local_tlb_flush_requests | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3617 of file x86.c.

◆ kvm_sev_es_mmio_read()

`int kvm_sev_es_mmio_read(struct kvm_vcpu *vcpu, gpa_t src, unsigned int bytes, void *dst)`

Definition at line 13761 of file x86.c.

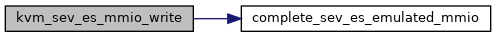

◆ kvm_sev_es_mmio_write()

| int kvm_sev_es_mmio_write | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | src, | ||

| unsigned int | bytes, | ||

| void * | dst | ||

| ) |

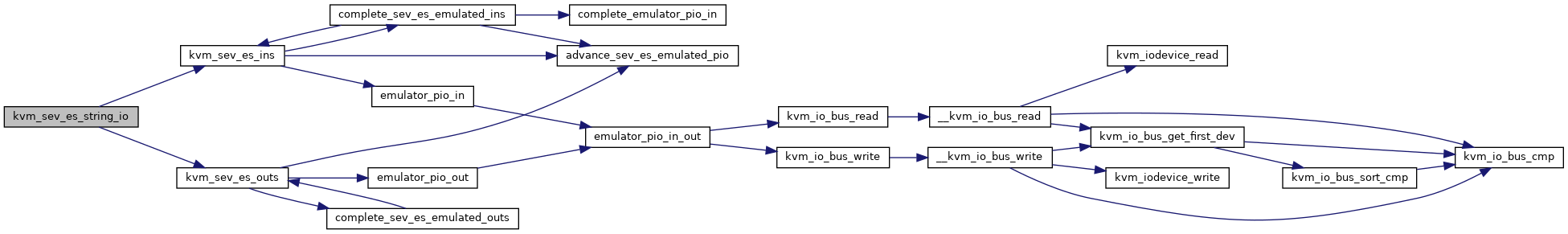

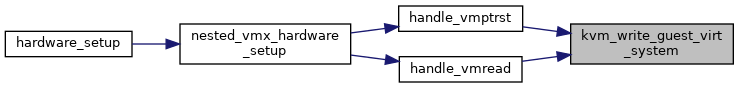

◆ kvm_sev_es_string_io()

| int kvm_sev_es_string_io | ( | struct kvm_vcpu * | vcpu, |

| unsigned int | size, | ||

| unsigned int | port, | ||

| void * | data, | ||

| unsigned int | count, | ||

| int | in | ||

| ) |

Definition at line 13876 of file x86.c.

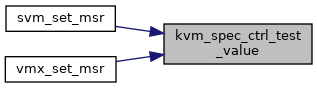

◆ kvm_spec_ctrl_test_value()

| int kvm_spec_ctrl_test_value | ( | u64 | value | ) |

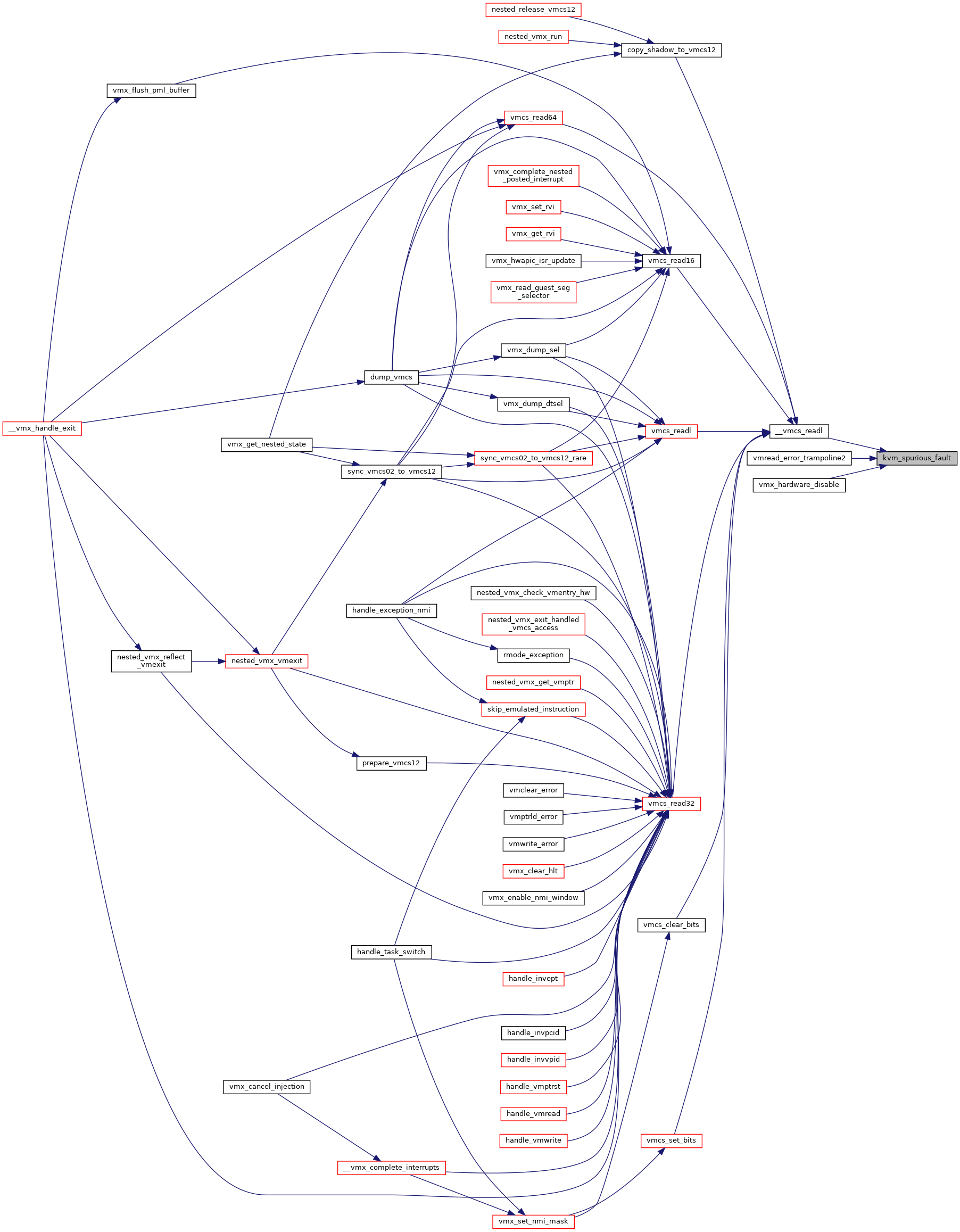

◆ kvm_spurious_fault()

| void kvm_spurious_fault | ( | void | ) |

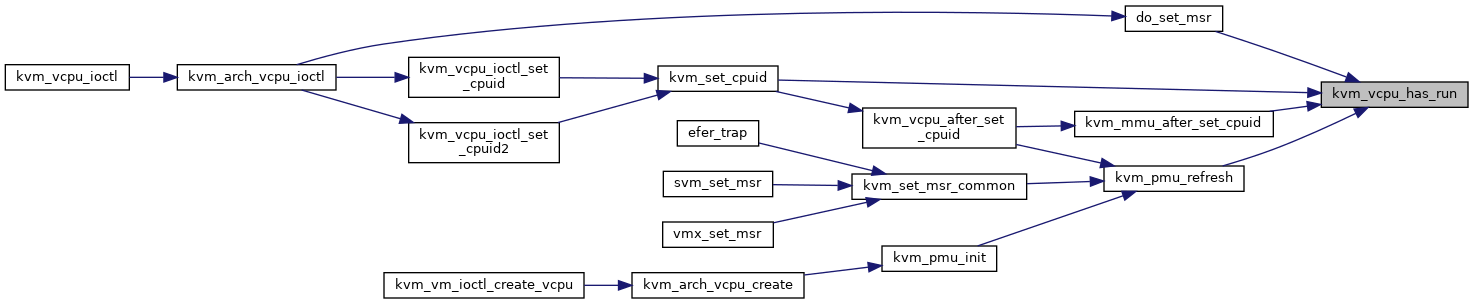

◆ kvm_vcpu_has_run()

|

inlinestatic |

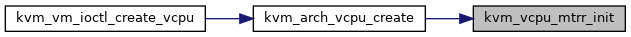

◆ kvm_vcpu_mtrr_init()

| void kvm_vcpu_mtrr_init | ( | struct kvm_vcpu * | vcpu | ) |

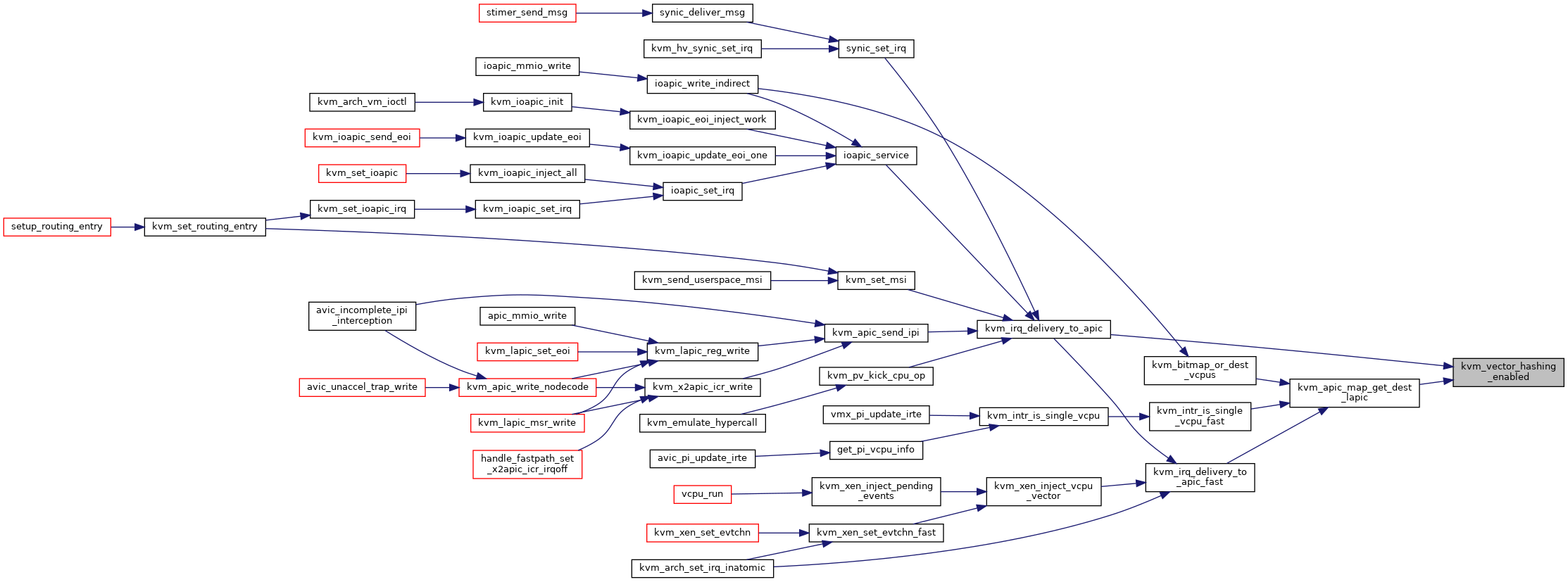

◆ kvm_vector_hashing_enabled()

| bool kvm_vector_hashing_enabled | ( | void | ) |

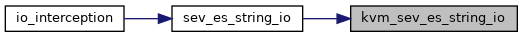

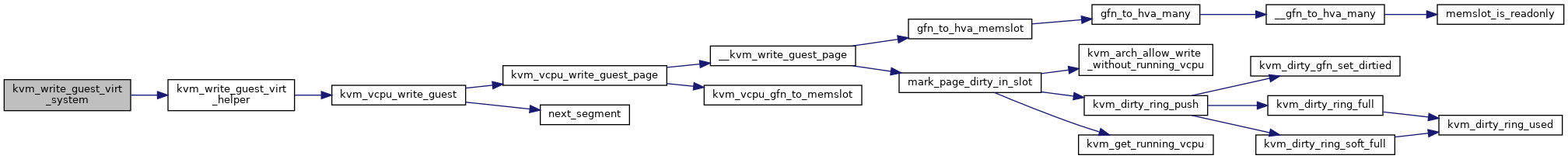

◆ kvm_write_guest_virt_system()

| int kvm_write_guest_virt_system | ( | struct kvm_vcpu * | vcpu, |

| gva_t | addr, | ||

| void * | val, | ||

| unsigned int | bytes, | ||

| struct x86_exception * | exception | ||

| ) |

Definition at line 7651 of file x86.c.

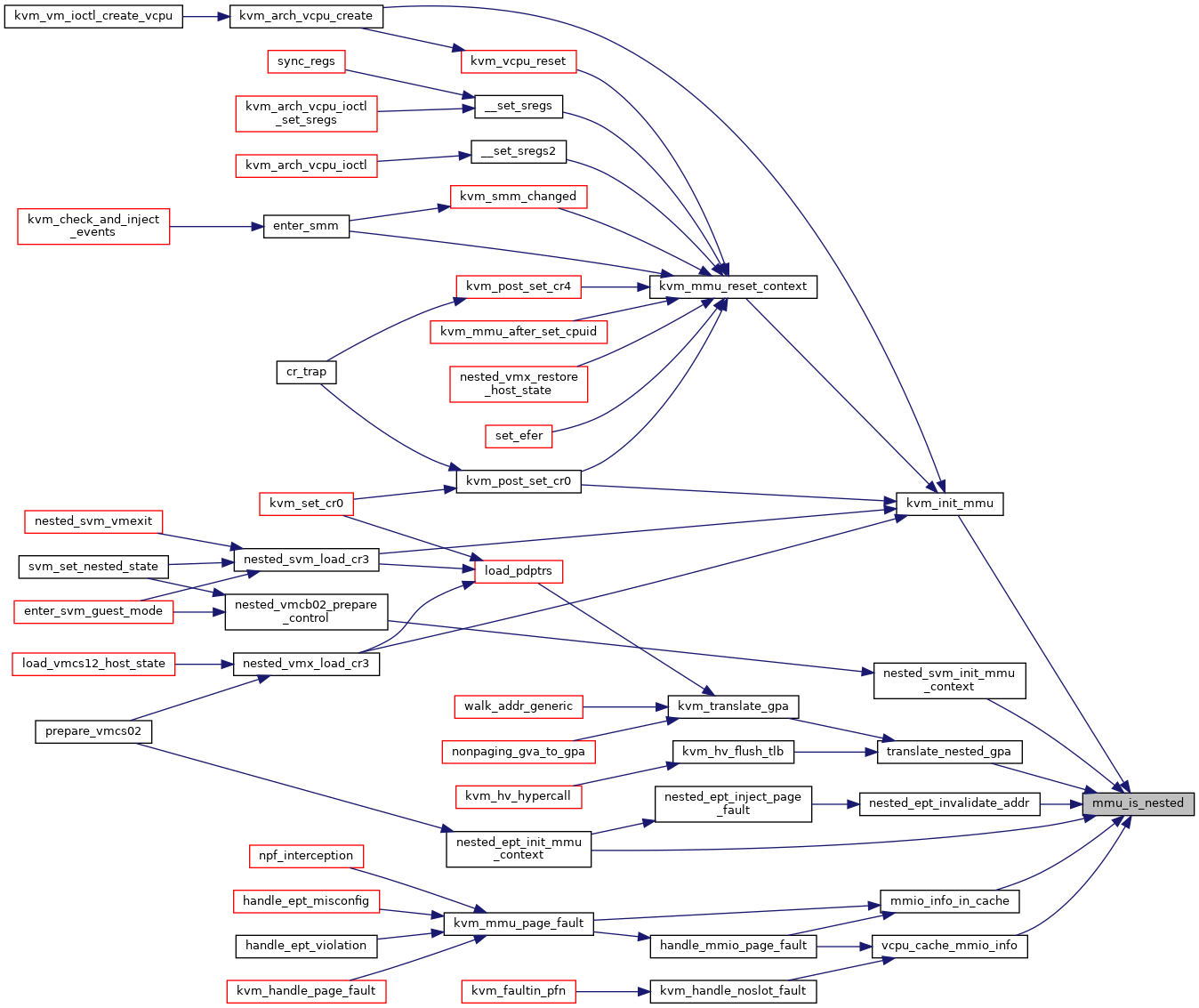

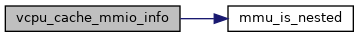

◆ mmu_is_nested()

|

inlinestatic |

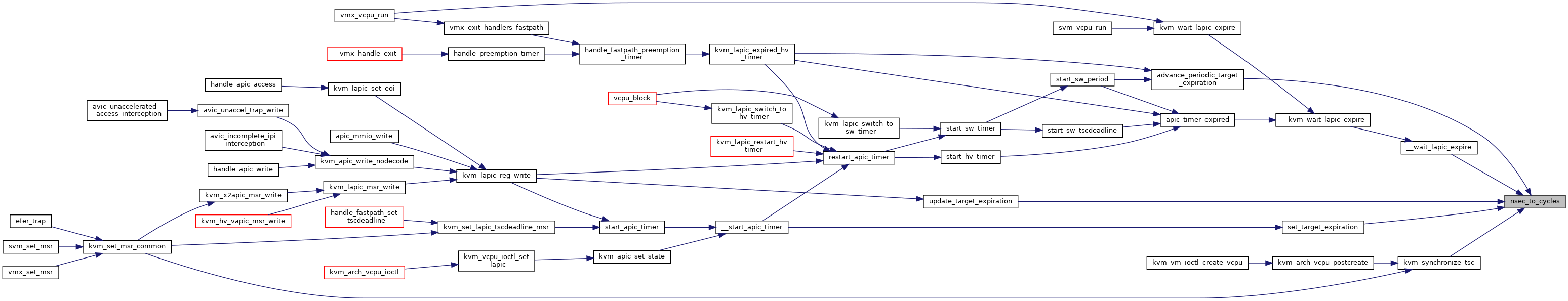

◆ nsec_to_cycles()

|

inlinestatic |
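`nsec_to_cycles()` converts nanoseconds into guest TSC cycles, and this header includes `<asm/pvclock.h>`. Assuming the conversion uses pvclock-style fixed-point scaling with a per-vCPU multiplier and shift (the input is shifted left by `shift`, or right when `shift` is negative, then multiplied by the 32-bit binary fraction `mul_frac / 2^32`), the arithmetic can be sketched as follows. `scale_delta` and `nsec_to_cycles_model` are hypothetical names.

```c
#include <stdint.h>

/*
 * Fixed-point scaling: shift delta, then multiply by mul_frac / 2^32
 * and keep the integer part. The 64x32-bit product is split into
 * high and low halves so it never overflows 64-bit arithmetic.
 */
static uint64_t scale_delta(uint64_t delta, uint32_t mul_frac, int shift)
{
    if (shift < 0)
        delta >>= -shift;
    else
        delta <<= shift;

    uint64_t hi = (delta >> 32) * mul_frac;                    /* already scaled by 2^-32 */
    uint64_t lo = ((delta & 0xffffffffull) * mul_frac) >> 32;  /* low half, truncated */

    return hi + lo;
}

/* Hypothetical: convert guest nanoseconds to guest TSC cycles. */
static uint64_t nsec_to_cycles_model(uint64_t nsec, uint32_t tsc_mult, int tsc_shift)
{
    return scale_delta(nsec, tsc_mult, tsc_shift);
}
```

For a 2 GHz guest TSC, `tsc_mult = 0x80000000` (one half) with `tsc_shift = 2` gives a net factor of 2 cycles per nanosecond.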

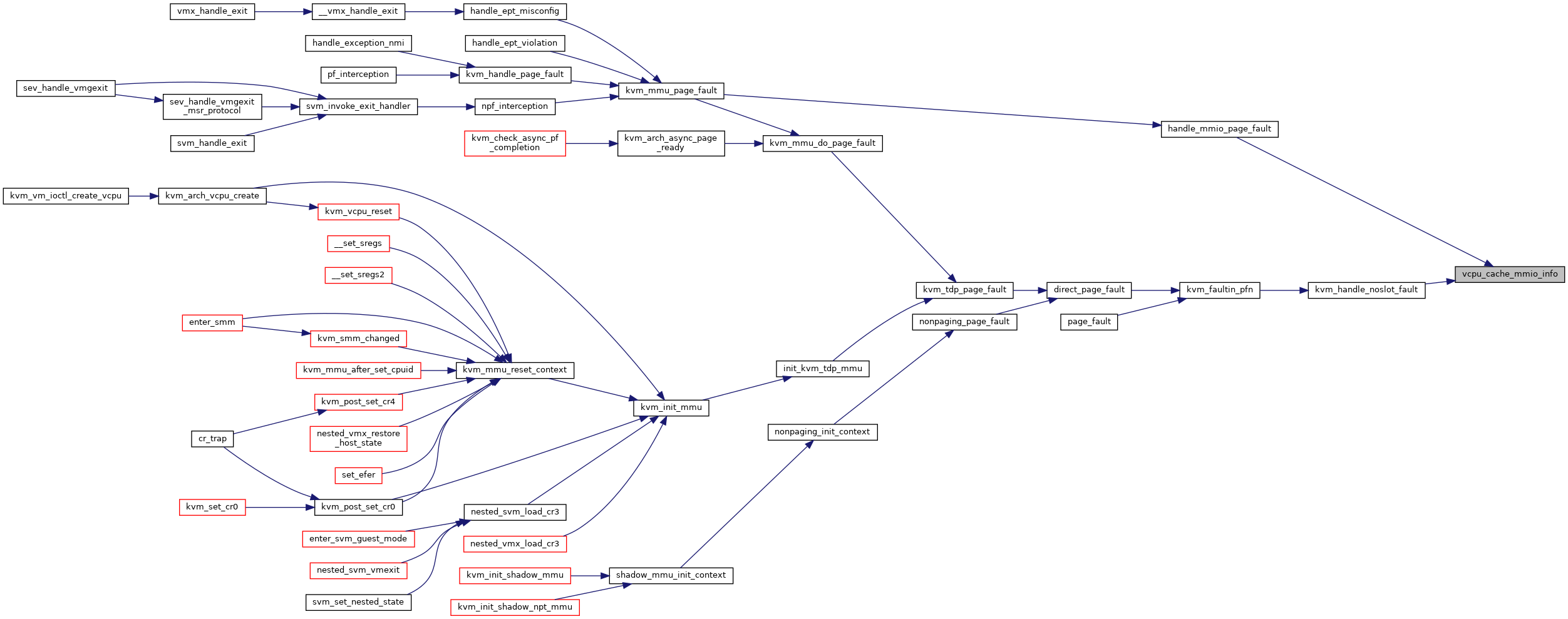

◆ vcpu_cache_mmio_info()

|

inlinestatic |

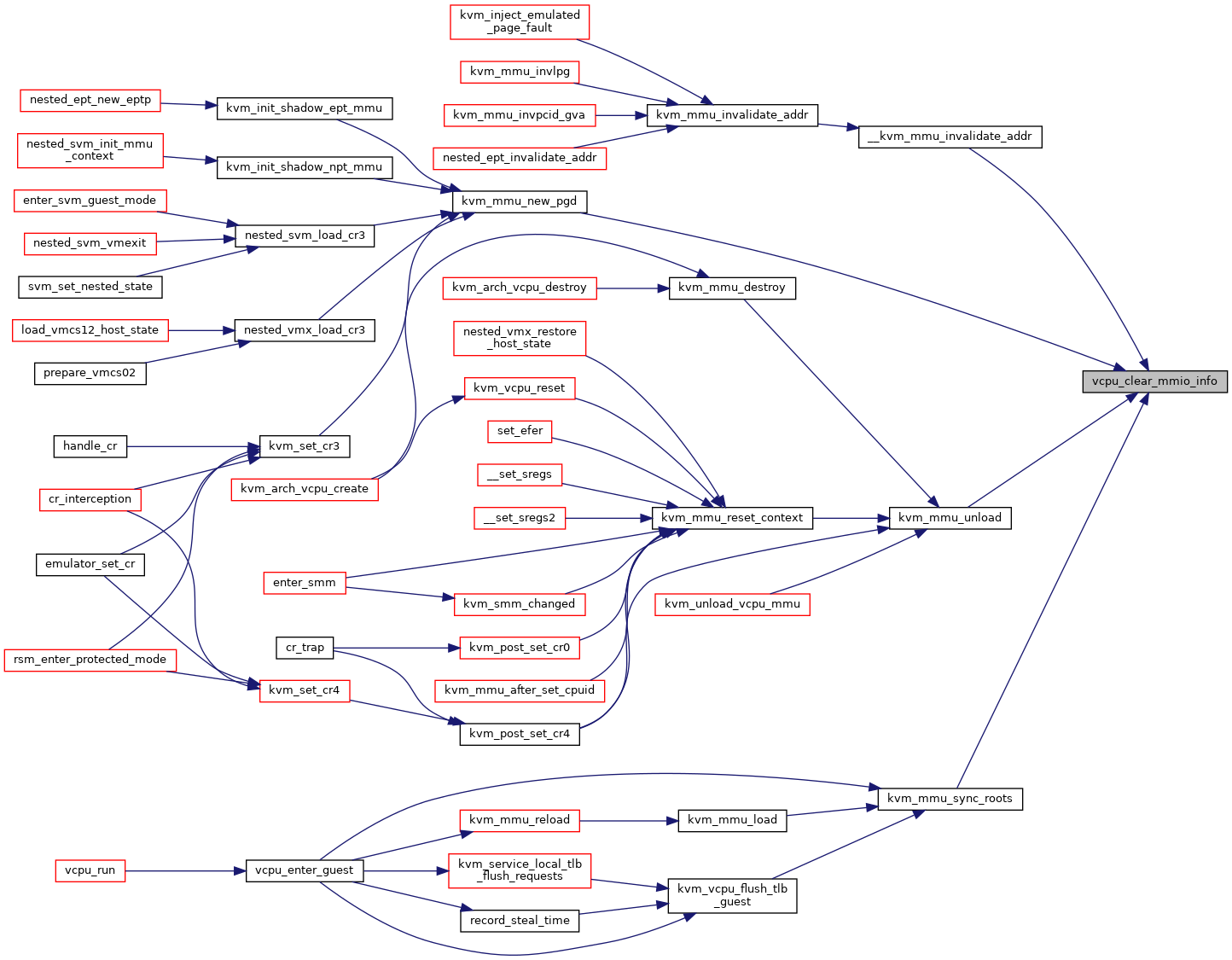

◆ vcpu_clear_mmio_info()

|

inlinestatic |
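The MMIO helpers cache a guest virtual page that is known to be MMIO, and `MMIO_GVA_ANY` (all ones) acts as a wildcard when invalidating. A reduced sketch follows, assuming a page-aligned GVA where 0 means "empty". It omits the GFN, the access bits, and the memslot-generation check that `vcpu_match_mmio_gen()` adds; `struct mmio_cache` and its three functions are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_MASK    (~(uint64_t)0xfff)  /* 4 KiB pages */
#define MMIO_GVA_ANY (~(uint64_t)0)      /* wildcard, as in the macro above */

/* Hypothetical one-entry MMIO cache: page-aligned GVA, 0 = empty. */
struct mmio_cache {
    uint64_t mmio_gva;
};

static void cache_mmio_info(struct mmio_cache *c, uint64_t gva)
{
    c->mmio_gva = gva & PAGE_MASK;
}

/* Invalidate the entry if it covers gva's page, or always for MMIO_GVA_ANY. */
static void clear_mmio_info(struct mmio_cache *c, uint64_t gva)
{
    if (gva != MMIO_GVA_ANY && c->mmio_gva != (gva & PAGE_MASK))
        return;
    c->mmio_gva = 0;
}

static bool match_mmio_gva(const struct mmio_cache *c, uint64_t gva)
{
    return c->mmio_gva && c->mmio_gva == (gva & PAGE_MASK);
}
```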

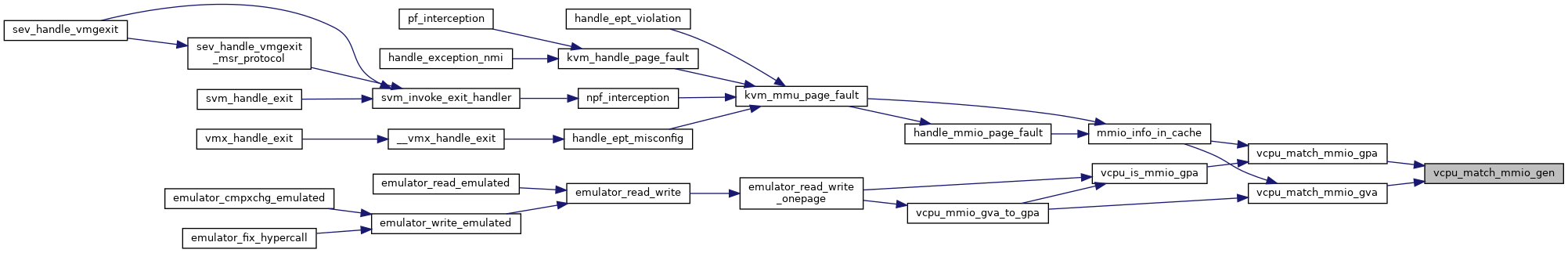

◆ vcpu_match_mmio_gen()

|

inlinestatic |

◆ vcpu_match_mmio_gpa()

`static inline bool vcpu_match_mmio_gpa(struct kvm_vcpu *vcpu, gpa_t gpa)`

◆ vcpu_match_mmio_gva()

`static inline bool vcpu_match_mmio_gva(struct kvm_vcpu *vcpu, unsigned long gva)`

◆ vcpu_virt_addr_bits()

`static inline u8 vcpu_virt_addr_bits(struct kvm_vcpu *vcpu)`

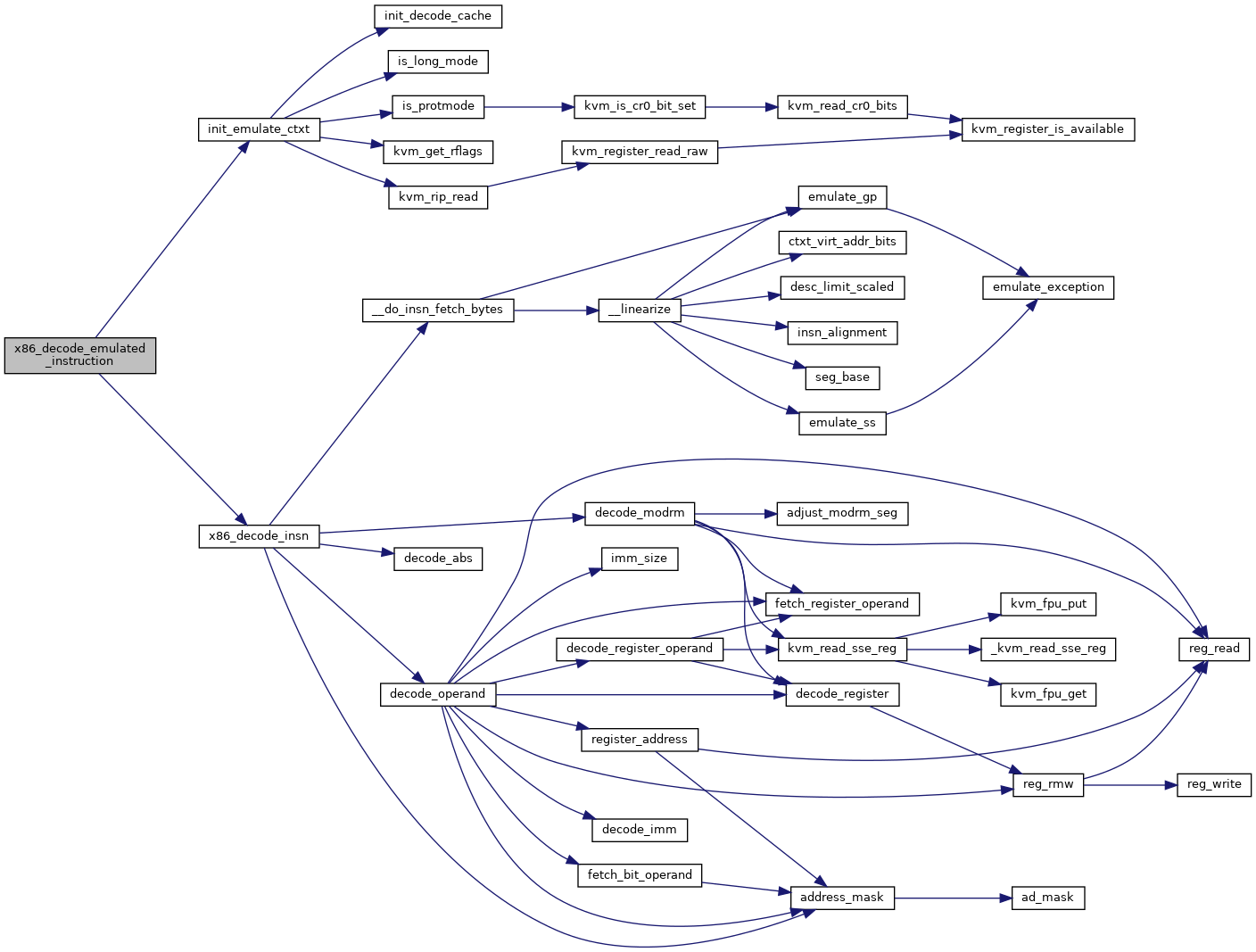

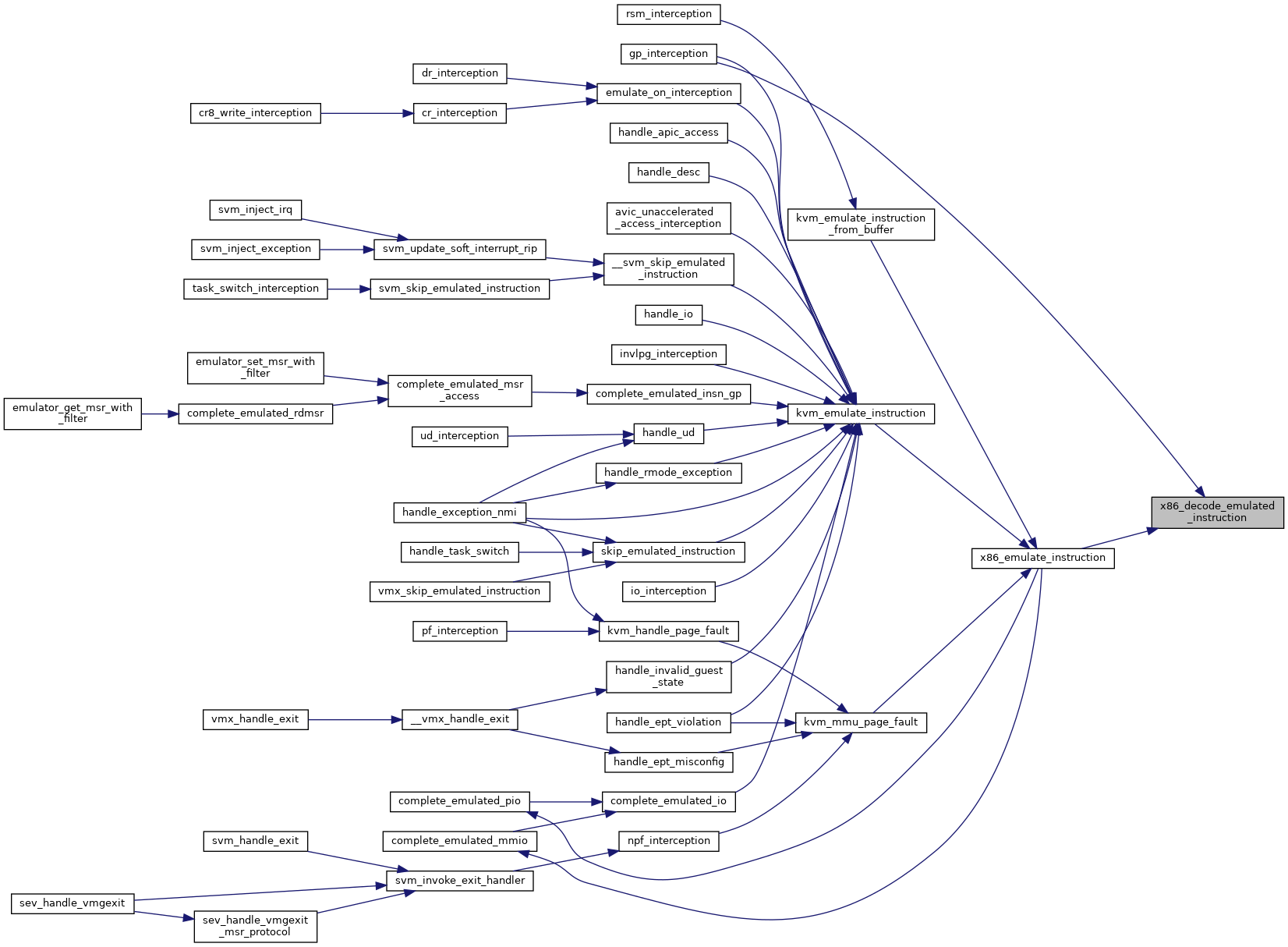

◆ x86_decode_emulated_instruction()

| int x86_decode_emulated_instruction | ( | struct kvm_vcpu * | vcpu, |

| int | emulation_type, | ||

| void * | insn, | ||

| int | insn_len | ||

| ) |

Definition at line 9057 of file x86.c.

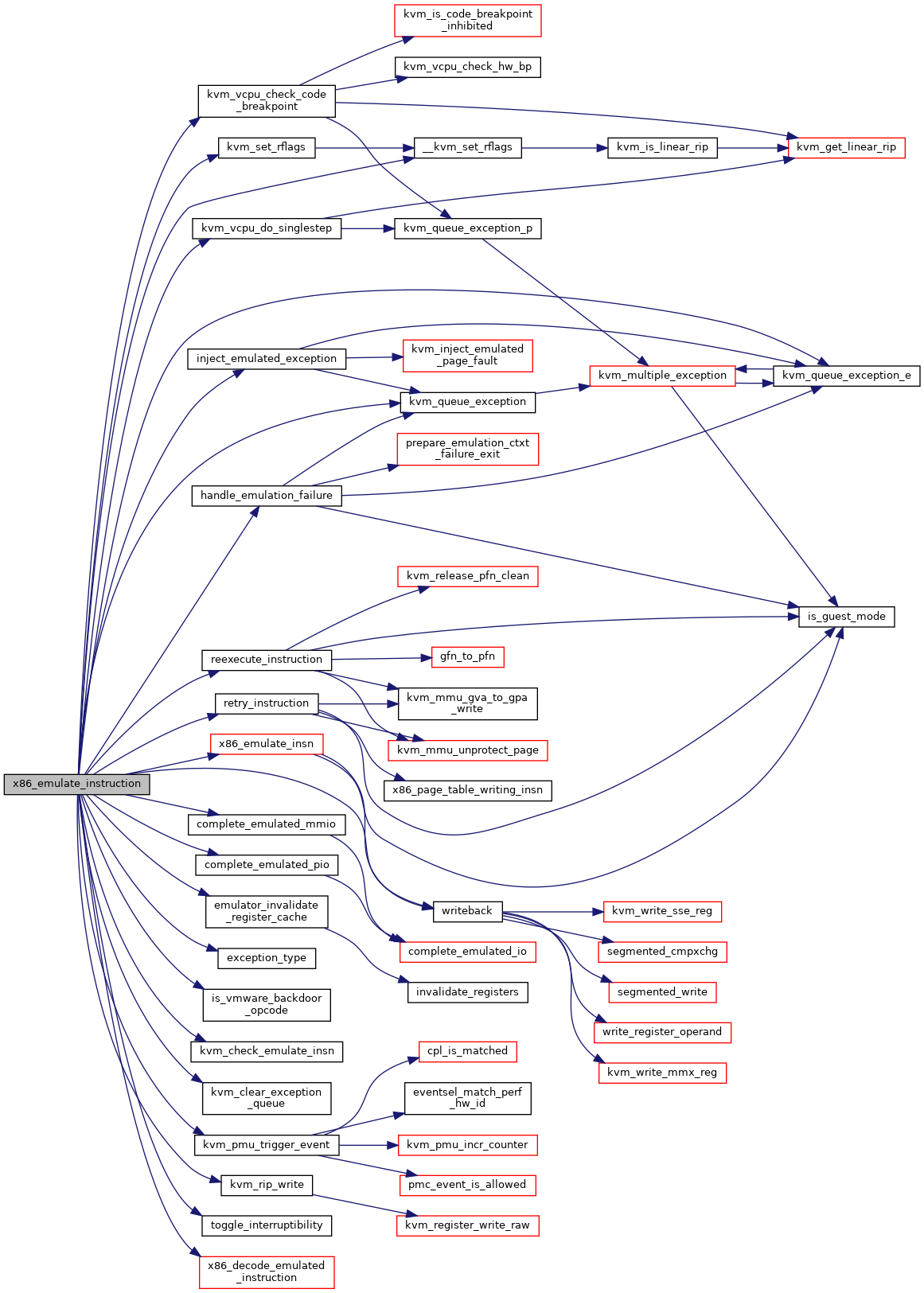

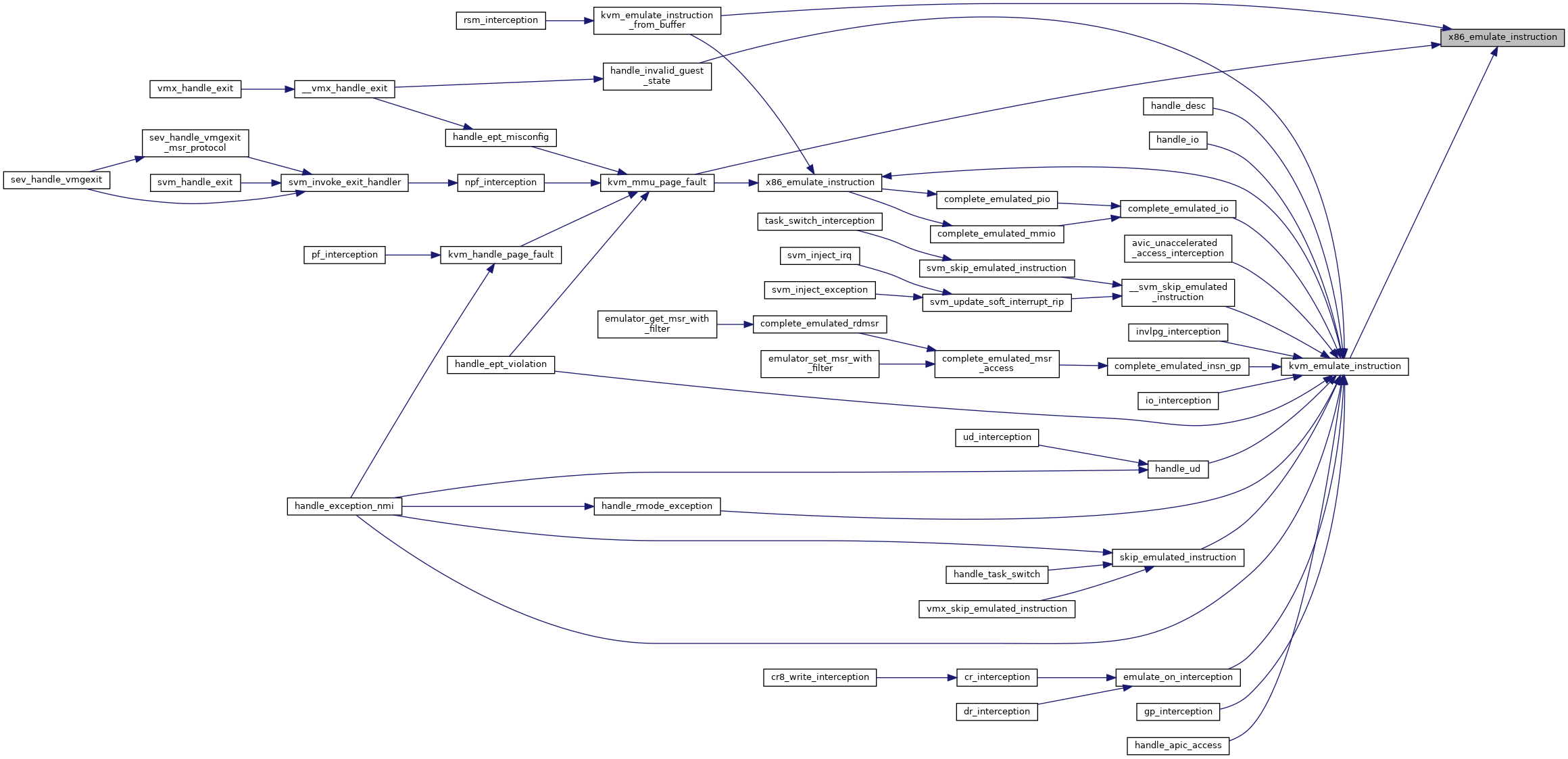

◆ x86_emulate_instruction()

| int x86_emulate_instruction | ( | struct kvm_vcpu * | vcpu, |

| gpa_t | cr2_or_gpa, | ||

| int | emulation_type, | ||

| void * | insn, | ||

| int | insn_len | ||

| ) |

Definition at line 9074 of file x86.c.

◆ x86_exception_has_error_code()

`static inline bool x86_exception_has_error_code(unsigned int vector)`
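`x86_exception_has_error_code()` answers whether delivering a given exception vector pushes an error code. The sketch below uses a bitmask of the classic error-code vectors #DF (8), #TS (10), #NP (11), #SS (12), #GP (13), #PF (14), and #AC (17). Whether the real helper also covers newer vectors such as #CP (21), which pushes an error code on hardware, is an assumption this page does not settle. The name `exception_has_error_code` is hypothetical, and the explicit range check avoids an undefined shift for vectors of 32 and above.

```c
#include <stdbool.h>
#include <stdint.h>

/* Architectural exception vectors that push an error code. */
#define DF_VECTOR 8
#define TS_VECTOR 10
#define NP_VECTOR 11
#define SS_VECTOR 12
#define GP_VECTOR 13
#define PF_VECTOR 14
#define AC_VECTOR 17

static bool exception_has_error_code(unsigned int vector)
{
    const uint32_t mask = (1u << DF_VECTOR) | (1u << TS_VECTOR) |
                          (1u << NP_VECTOR) | (1u << SS_VECTOR) |
                          (1u << GP_VECTOR) | (1u << PF_VECTOR) |
                          (1u << AC_VECTOR);

    /* Vectors 32 and above are not architectural exceptions. */
    if (vector >= 32)
        return false;
    return (1u << vector) & mask;
}
```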