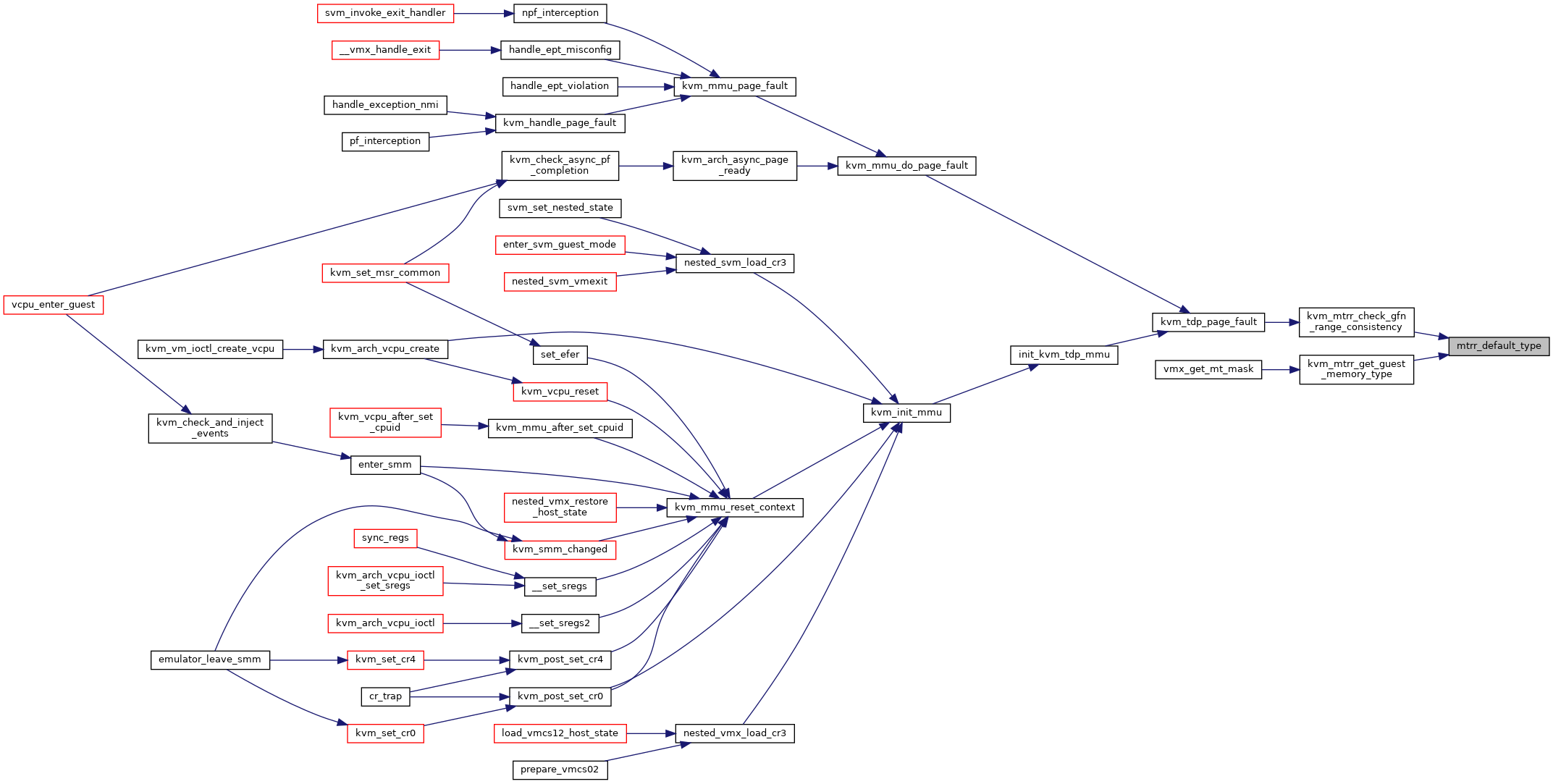

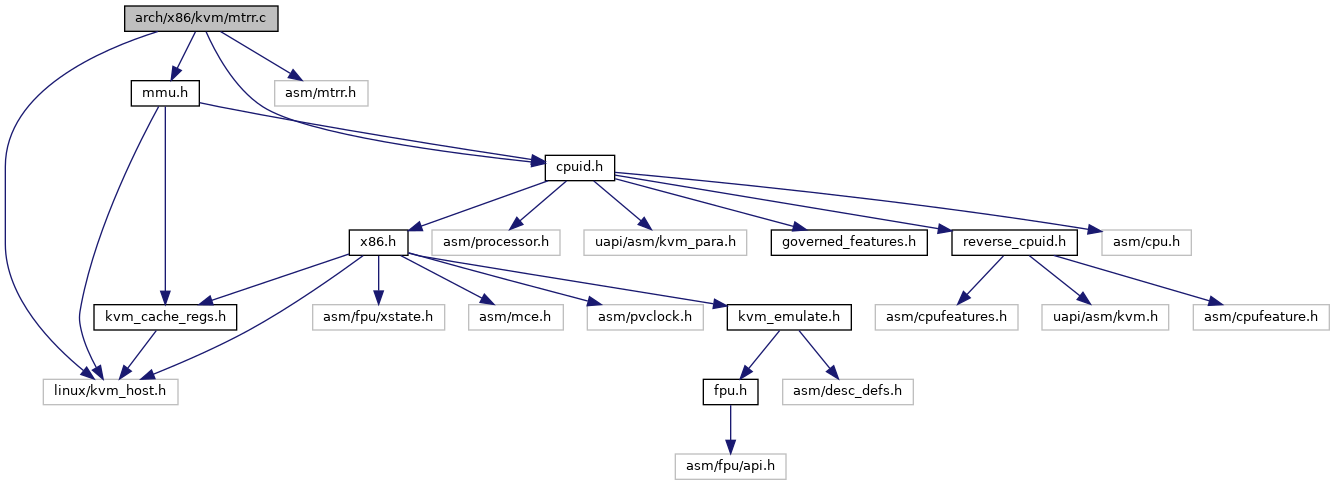

Include dependency graph for mtrr.c:

Go to the source code of this file.

| Classes | |
| --- | --- |
| struct | fixed_mtrr_segment |
| struct | mtrr_iter |

| Macros | |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |
| #define | IA32_MTRR_DEF_TYPE_E (1ULL << 11) |
| #define | IA32_MTRR_DEF_TYPE_FE (1ULL << 10) |
| #define | IA32_MTRR_DEF_TYPE_TYPE_MASK (0xff) |
| #define | mtrr_for_each_mem_type(_iter_, _mtrr_, _gpa_start_, _gpa_end_) |

Functions | |

| static bool | is_mtrr_base_msr (unsigned int msr) |

| static struct kvm_mtrr_range * | var_mtrr_msr_to_range (struct kvm_vcpu *vcpu, unsigned int msr) |

| static bool | msr_mtrr_valid (unsigned msr) |

| static bool | valid_mtrr_type (unsigned t) |

| static bool | kvm_mtrr_valid (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| static bool | mtrr_is_enabled (struct kvm_mtrr *mtrr_state) |

| static bool | fixed_mtrr_is_enabled (struct kvm_mtrr *mtrr_state) |

| static u8 | mtrr_default_type (struct kvm_mtrr *mtrr_state) |

| static u8 | mtrr_disabled_type (struct kvm_vcpu *vcpu) |

| static u64 | fixed_mtrr_seg_unit_size (int seg) |

| static bool | fixed_msr_to_seg_unit (u32 msr, int *seg, int *unit) |

| static void | fixed_mtrr_seg_unit_range (int seg, int unit, u64 *start, u64 *end) |

| static int | fixed_mtrr_seg_unit_range_index (int seg, int unit) |

| static int | fixed_mtrr_seg_end_range_index (int seg) |

| static bool | fixed_msr_to_range (u32 msr, u64 *start, u64 *end) |

| static int | fixed_msr_to_range_index (u32 msr) |

| static int | fixed_mtrr_addr_to_seg (u64 addr) |

| static int | fixed_mtrr_addr_seg_to_range_index (u64 addr, int seg) |

| static u64 | fixed_mtrr_range_end_addr (int seg, int index) |

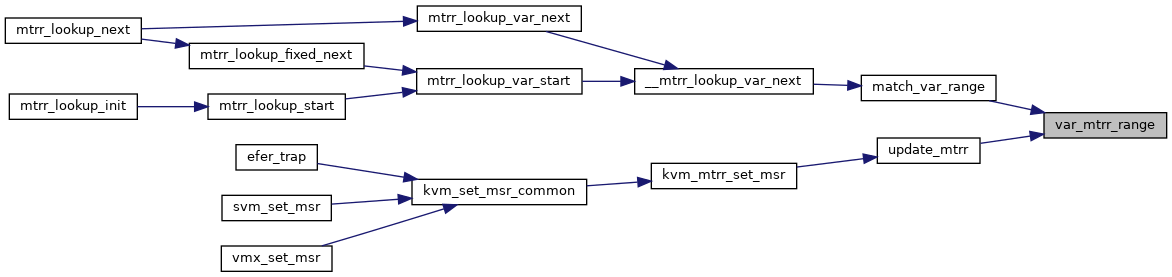

| static void | var_mtrr_range (struct kvm_mtrr_range *range, u64 *start, u64 *end) |

| static void | update_mtrr (struct kvm_vcpu *vcpu, u32 msr) |

| static bool | var_mtrr_range_is_valid (struct kvm_mtrr_range *range) |

| static void | set_var_mtrr_msr (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| int | kvm_mtrr_set_msr (struct kvm_vcpu *vcpu, u32 msr, u64 data) |

| int | kvm_mtrr_get_msr (struct kvm_vcpu *vcpu, u32 msr, u64 *pdata) |

| void | kvm_vcpu_mtrr_init (struct kvm_vcpu *vcpu) |

| static bool | mtrr_lookup_fixed_start (struct mtrr_iter *iter) |

| static bool | match_var_range (struct mtrr_iter *iter, struct kvm_mtrr_range *range) |

| static void | __mtrr_lookup_var_next (struct mtrr_iter *iter) |

| static void | mtrr_lookup_var_start (struct mtrr_iter *iter) |

| static void | mtrr_lookup_fixed_next (struct mtrr_iter *iter) |

| static void | mtrr_lookup_var_next (struct mtrr_iter *iter) |

| static void | mtrr_lookup_start (struct mtrr_iter *iter) |

| static void | mtrr_lookup_init (struct mtrr_iter *iter, struct kvm_mtrr *mtrr_state, u64 start, u64 end) |

| static bool | mtrr_lookup_okay (struct mtrr_iter *iter) |

| static void | mtrr_lookup_next (struct mtrr_iter *iter) |

| u8 | kvm_mtrr_get_guest_memory_type (struct kvm_vcpu *vcpu, gfn_t gfn) |

| EXPORT_SYMBOL_GPL (kvm_mtrr_get_guest_memory_type) | |

| bool | kvm_mtrr_check_gfn_range_consistency (struct kvm_vcpu *vcpu, gfn_t gfn, int page_num) |

| Variables | |
| --- | --- |
| static struct fixed_mtrr_segment | fixed_seg_table [] |

Macro Definition Documentation

◆ IA32_MTRR_DEF_TYPE_E

◆ IA32_MTRR_DEF_TYPE_FE

◆ IA32_MTRR_DEF_TYPE_TYPE_MASK
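The three masks describe the layout of the IA32_MTRR_DEF_TYPE MSR: bits 7:0 hold the default memory type, bit 10 (FE) enables the fixed-range MTRRs and bit 11 (E) enables MTRRs as a whole. A minimal userspace sketch of decoding a raw register value follows. The helper names are hypothetical; the equivalent checks in this file (presumably mtrr_is_enabled(), fixed_mtrr_is_enabled() and mtrr_default_type()) take a struct kvm_mtrr rather than a bare u64.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;
typedef uint8_t u8;

#define IA32_MTRR_DEF_TYPE_E         (1ULL << 11) /* MTRRs enabled */
#define IA32_MTRR_DEF_TYPE_FE        (1ULL << 10) /* fixed-range MTRRs enabled */
#define IA32_MTRR_DEF_TYPE_TYPE_MASK (0xff)       /* default memory type */

/* Hypothetical helper: is the E bit set? */
static bool deftype_mtrrs_enabled(u64 deftype)
{
	return (deftype & IA32_MTRR_DEF_TYPE_E) != 0;
}

/* Hypothetical helper: is the FE bit set? Per the Intel SDM, fixed ranges
 * only take effect while E is also set; this helper checks FE alone. */
static bool deftype_fixed_enabled(u64 deftype)
{
	return (deftype & IA32_MTRR_DEF_TYPE_FE) != 0;
}

/* Hypothetical helper: memory type used where no MTRR matches. */
static u8 deftype_default_type(u64 deftype)
{
	return (u8)(deftype & IA32_MTRR_DEF_TYPE_TYPE_MASK);
}
```

For example, 0xc06 decodes as MTRRs enabled, fixed ranges enabled and default type 6, which is write-back (WB) in the SDM encoding.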

◆ mtrr_for_each_mem_type

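#define mtrr_for_each_mem_type(_iter_, _mtrr_, _gpa_start_, _gpa_end_)

Definition at line 610 of file mtrr.c.

Value:

    for (mtrr_lookup_init(_iter_, _mtrr_, _gpa_start_, _gpa_end_); \
         mtrr_lookup_okay(_iter_); mtrr_lookup_next(_iter_))

The macro expands to a for loop that sets up the iterator with mtrr_lookup_init() for the guest-physical range from _gpa_start_ to _gpa_end_, runs the loop body while mtrr_lookup_okay() returns true, and advances with mtrr_lookup_next().

See also: static void mtrr_lookup_init(struct mtrr_iter *iter, struct kvm_mtrr *mtrr_state, u64 start, u64 end), defined at mtrr.c:573.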
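mtrr_for_each_mem_type() uses the common kernel idiom of wrapping init, condition and advance helpers in a single for statement. The toy below reproduces only that shape in userspace: every toy_* name is hypothetical, and a flat array of typed ranges stands in for the fixed and variable MTRR state that the real iterator walks. Here the condition helper also publishes the current range's memory type for the loop body to read.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint64_t u64;

/* Hypothetical typed interval [start, end). */
struct toy_range {
	u64 start, end;
	int type;
};

/* Hypothetical iterator state. */
struct toy_iter {
	const struct toy_range *ranges;
	size_t nr, pos;
	u64 start, end;   /* queried interval [start, end) */
	int mem_type;     /* type of the current overlapping range */
};

static bool toy_overlaps(const struct toy_iter *it, const struct toy_range *r)
{
	return r->start < it->end && it->start < r->end;
}

/* Skip ranges that do not overlap the query. */
static void toy_seek(struct toy_iter *it)
{
	while (it->pos < it->nr && !toy_overlaps(it, &it->ranges[it->pos]))
		it->pos++;
}

static void toy_init(struct toy_iter *it, const struct toy_range *ranges,
		     size_t nr, u64 start, u64 end)
{
	it->ranges = ranges;
	it->nr = nr;
	it->pos = 0;
	it->start = start;
	it->end = end;
	toy_seek(it);
}

/* Loop condition: true while a range is current; also publishes its type. */
static bool toy_okay(struct toy_iter *it)
{
	if (it->pos >= it->nr)
		return false;
	it->mem_type = it->ranges[it->pos].type;
	return true;
}

static void toy_next(struct toy_iter *it)
{
	it->pos++;
	toy_seek(it);
}

#define toy_for_each_mem_type(_it_, _ranges_, _nr_, _start_, _end_)       \
	for (toy_init(_it_, _ranges_, _nr_, _start_, _end_); toy_okay(_it_); \
	     toy_next(_it_))

/* Bitmask of the memory types of all ranges overlapping [start, end). */
static unsigned int toy_types_seen(const struct toy_range *ranges, size_t nr,
				   u64 start, u64 end)
{
	struct toy_iter it;
	unsigned int seen = 0;

	toy_for_each_mem_type(&it, ranges, nr, start, end)
		seen |= 1u << it.mem_type;
	return seen;
}
```

Keeping the loop mechanics in a macro lets every caller write an ordinary loop body while the iterator hides how ranges are stored and skipped.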

◆ pr_fmt

Function Documentation

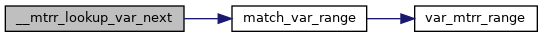

◆ __mtrr_lookup_var_next()

|

static |

Definition at line 513 of file mtrr.c.

static bool match_var_range(struct mtrr_iter *iter, struct kvm_mtrr_range *range)

Definition: mtrr.c:489

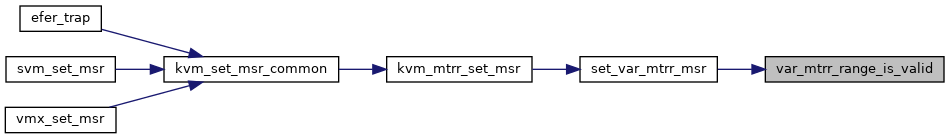

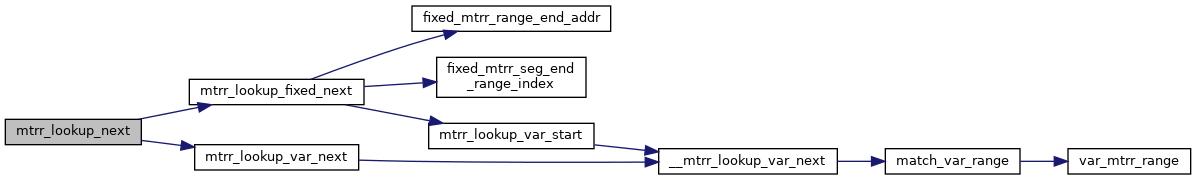

Here is the call graph for this function:

Here is the caller graph for this function:

◆ EXPORT_SYMBOL_GPL()

| EXPORT_SYMBOL_GPL | ( | kvm_mtrr_get_guest_memory_type | ) |

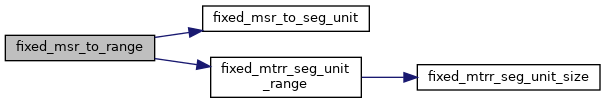

◆ fixed_msr_to_range()

|

static |

Definition at line 250 of file mtrr.c.

static void fixed_mtrr_seg_unit_range(int seg, int unit, u64 *start, u64 *end)

Definition: mtrr.c:220

static bool fixed_msr_to_seg_unit(u32 msr, int *seg, int *unit)

Definition: mtrr.c:194

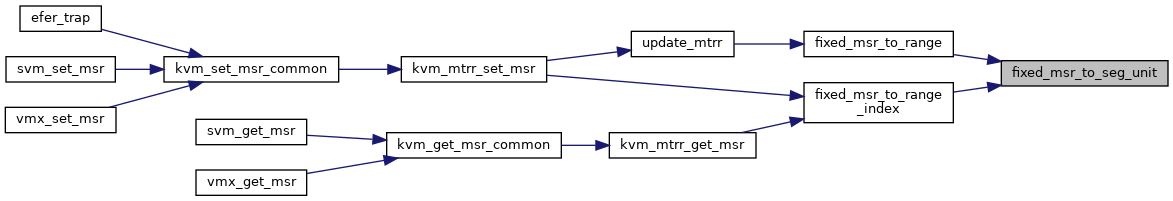

Here is the call graph for this function:

Here is the caller graph for this function:

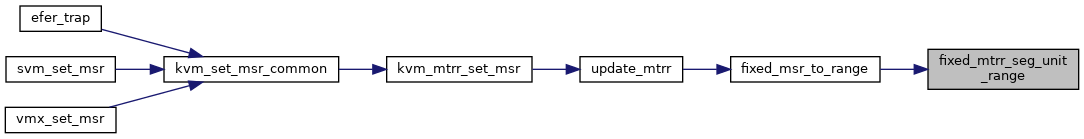

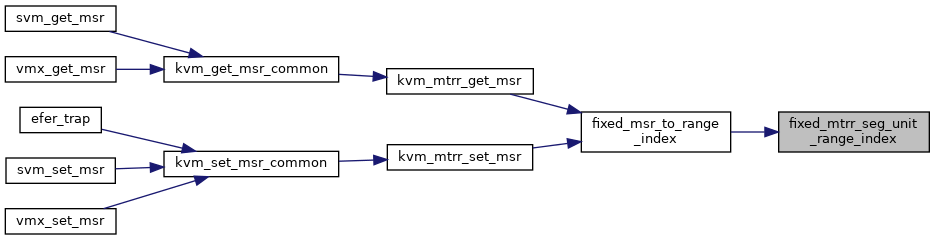

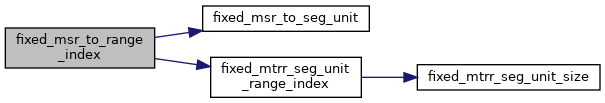
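Judging from the definitions it references, fixed_msr_to_range() composes fixed_msr_to_seg_unit() with fixed_mtrr_seg_unit_range(). The sketch below models that composition in userspace. The MSR numbers and the three segment boundaries (0x00000-0x7FFFF in 64 KiB steps, 0x80000-0xBFFFF in 16 KiB steps, 0xC0000-0xFFFFF in 4 KiB steps) are the architectural fixed-range layout from the Intel SDM; the struct, table and function names are illustrative stand-ins, not the contents of fixed_seg_table.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;
typedef uint32_t u32;

/* Architectural fixed-range MTRR MSR numbers (Intel SDM). */
#define MTRR_FIX64K_00000 0x250
#define MTRR_FIX16K_80000 0x258
#define MTRR_FIX16K_A0000 0x259
#define MTRR_FIX4K_C0000  0x268
#define MTRR_FIX4K_F8000  0x26f

/* Assumed segment layout; each MSR ("unit") covers 8 sub-ranges. */
struct seg { u64 start, end; int range_shift; };
static const struct seg segs[] = {
	{ 0x00000, 0x80000, 16 },  /* 1 MSR:  8 x 64 KiB */
	{ 0x80000, 0xc0000, 14 },  /* 2 MSRs: 16 x 16 KiB */
	{ 0xc0000, 0x100000, 12 }, /* 8 MSRs: 64 x 4 KiB */
};

/* Hypothetical: map a fixed MSR to its segment and unit within it. */
static bool msr_to_seg_unit(u32 msr, int *seg, int *unit)
{
	if (msr == MTRR_FIX64K_00000) {
		*seg = 0;
		*unit = 0;
	} else if (msr >= MTRR_FIX16K_80000 && msr <= MTRR_FIX16K_A0000) {
		*seg = 1;
		*unit = (int)(msr - MTRR_FIX16K_80000);
	} else if (msr >= MTRR_FIX4K_C0000 && msr <= MTRR_FIX4K_F8000) {
		*seg = 2;
		*unit = (int)(msr - MTRR_FIX4K_C0000);
	} else {
		return false;
	}
	return true;
}

/* Hypothetical: guest-physical interval [start, end) controlled by one MSR. */
static bool msr_to_range(u32 msr, u64 *start, u64 *end)
{
	int seg, unit;
	u64 unit_size;

	if (!msr_to_seg_unit(msr, &seg, &unit))
		return false;
	unit_size = 8ULL << segs[seg].range_shift; /* 8 sub-ranges per MSR */
	*start = segs[seg].start + (u64)unit * unit_size;
	*end = *start + unit_size;
	return true;
}
```

For instance, MSR 0x259 (MTRRfix16K_A0000) maps to [0xA0000, 0xC0000), and MSR 0x26F (MTRRfix4K_F8000) to [0xF8000, 0x100000).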

◆ fixed_msr_to_range_index()

|

static |

Definition at line 261 of file mtrr.c.

static int fixed_mtrr_seg_unit_range_index(int seg, int unit)

Definition: mtrr.c:230

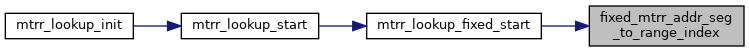

Here is the call graph for this function:

Here is the caller graph for this function:

◆ fixed_msr_to_seg_unit()

static bool fixed_msr_to_seg_unit(u32 msr, int *seg, int *unit)

Definition at line 194 of file mtrr.c.

◆ fixed_mtrr_addr_seg_to_range_index()

|

static |

Definition at line 285 of file mtrr.c.

Definition: mtrr.c:143

Here is the caller graph for this function:

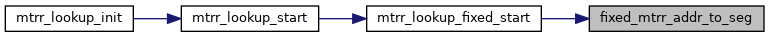
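For an address below 1 MiB, the lookup helpers locate the containing segment and then the individual sub-range. Assuming the 88 fixed sub-ranges are stored as one flat array (8 entries for the 64 KiB segment, 16 for the 16 KiB one, 64 for the 4 KiB one), the arithmetic reduces to one shift per segment. The sketch below is modelled on the signatures of fixed_mtrr_addr_to_seg(), fixed_mtrr_addr_seg_to_range_index() and fixed_mtrr_range_end_addr(); the table values and names are assumptions.

```c
#include <stdint.h>

typedef uint64_t u64;

/* Assumed layout: range_start is the segment's first index in a flat
 * array of 8 + 16 + 64 = 88 per-sub-range memory types. */
struct seg { u64 start, end; int range_shift, range_start; };
static const struct seg segs[] = {
	{ 0x00000, 0x80000, 16, 0 },
	{ 0x80000, 0xc0000, 14, 8 },
	{ 0xc0000, 0x100000, 12, 24 },
};

/* Segment containing addr, or -1 if addr is at or above 1 MiB. */
static int addr_to_seg(u64 addr)
{
	for (int seg = 0; seg < (int)(sizeof(segs) / sizeof(segs[0])); seg++)
		if (addr >= segs[seg].start && addr < segs[seg].end)
			return seg;
	return -1;
}

/* Flat index of the sub-range containing addr inside segment seg. */
static int addr_seg_to_range_index(u64 addr, int seg)
{
	return segs[seg].range_start +
	       (int)((addr - segs[seg].start) >> segs[seg].range_shift);
}

/* Exclusive end address of the sub-range with flat index `index`. */
static u64 range_end_addr(int seg, int index)
{
	u64 pos = (u64)(index - segs[seg].range_start);

	return segs[seg].start + ((pos + 1) << segs[seg].range_shift);
}
```

With these assumptions, address 0xA0000 falls in segment 1 at flat index 16, whose 16 KiB sub-range ends at 0xA4000.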

◆ fixed_mtrr_addr_to_seg()

|

static |

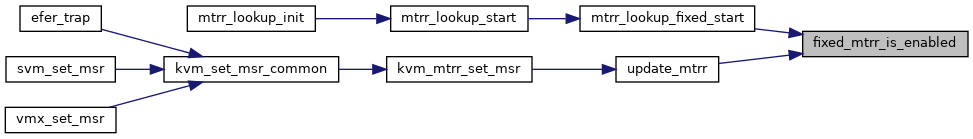

◆ fixed_mtrr_is_enabled()

|

static |

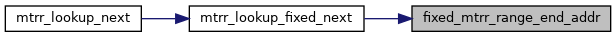

◆ fixed_mtrr_range_end_addr()

|

static |

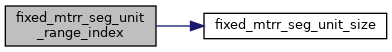

◆ fixed_mtrr_seg_end_range_index()

|

static |

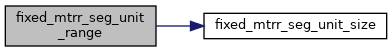

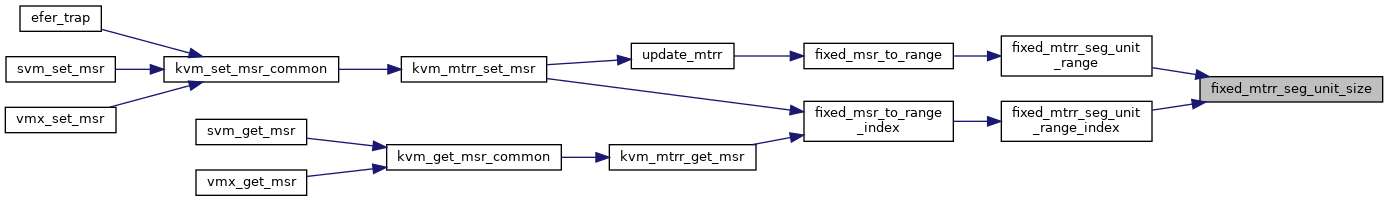

◆ fixed_mtrr_seg_unit_range()

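static void fixed_mtrr_seg_unit_range(int seg, int unit, u64 *start, u64 *end)

Definition at line 220 of file mtrr.c.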

◆ fixed_mtrr_seg_unit_range_index()

static int fixed_mtrr_seg_unit_range_index(int seg, int unit)

Definition at line 230 of file mtrr.c.

◆ fixed_mtrr_seg_unit_size()

|

static |

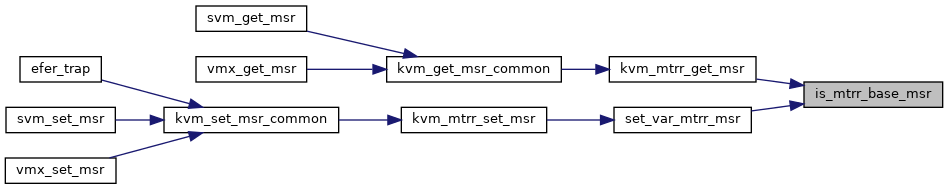

◆ is_mtrr_base_msr()

static bool is_mtrr_base_msr(unsigned int msr)
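Variable-range MTRRs come in base/mask pairs at consecutive MSR numbers: IA32_MTRR_PHYSBASEn is 0x200 + 2n and IA32_MTRR_PHYSMASKn is 0x201 + 2n (Intel SDM). That numbering is presumably what lets is_mtrr_base_msr() and var_mtrr_msr_to_range() get by with simple arithmetic. A sketch with hypothetical names, assuming the MSR has already been checked to lie in the variable-range block:

```c
#include <stdbool.h>

/* IA32_MTRR_PHYSBASE0; pairs follow at 0x200 + 2n (base) / 0x201 + 2n (mask). */
#define MTRR_PHYS_BASE0 0x200u

/* Base MSRs have even numbers, mask MSRs odd ones. */
static bool msr_is_base(unsigned int msr)
{
	return (msr & 1) == 0;
}

/* Index n of the base/mask pair an MSR belongs to. */
static unsigned int var_msr_to_index(unsigned int msr)
{
	return (msr - MTRR_PHYS_BASE0) / 2;
}
```

Both MSRs of a pair map to the same index, so a single array of base/mask records can back all variable ranges.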

◆ kvm_mtrr_check_gfn_range_consistency()

| bool kvm_mtrr_check_gfn_range_consistency | ( | struct kvm_vcpu * | vcpu, |

| gfn_t | gfn, | ||

| int | page_num | ||

| ) |

Definition at line 690 of file mtrr.c.

#define mtrr_for_each_mem_type(_iter_, _mtrr_, _gpa_start_, _gpa_end_)

Definition: mtrr.c:610

Definition: mtrr.c:439

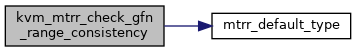

Here is the call graph for this function:

Here is the caller graph for this function:

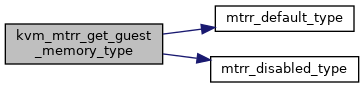
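Judging by its name and its use of mtrr_for_each_mem_type(), kvm_mtrr_check_gfn_range_consistency() asks whether every page from gfn to gfn + page_num gets the same guest memory type. A sketch of that decision on already-collected data, with hypothetical inputs: the types of all MTRR regions overlapping the range, and a flag saying whether part of the range is covered by none of them and therefore falls back to the default type.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of the consistency decision.
 * types[]: memory type of each region overlapping the queried range.
 * partial: some part of the range is covered by no region. */
static bool range_is_consistent(const int *types, size_t n, bool partial,
				int default_type)
{
	for (size_t i = 1; i < n; i++)
		if (types[i] != types[0])
			return false;      /* two overlapping regions disagree */

	if (n == 0 || !partial)
		return true;               /* all default, or one type everywhere */

	/* Uncovered holes use the default type, so it must match too. */
	return types[0] == default_type;
}
```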

◆ kvm_mtrr_get_guest_memory_type()

| u8 kvm_mtrr_get_guest_memory_type | ( | struct kvm_vcpu * | vcpu, |

| gfn_t | gfn | ||

| ) |

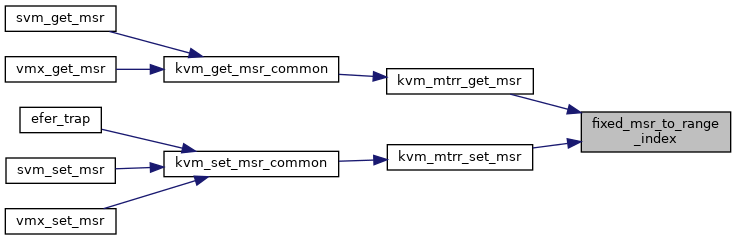

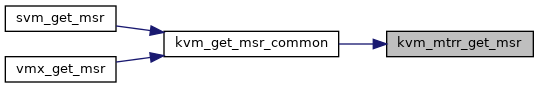
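When several variable-range MTRRs cover the same address, the Intel SDM defines the precedence: identical types combine to that type, any UC makes the result UC, and a mix of only WT and WB yields WT; any other overlap is undefined. A sketch of those rules follows, ignoring fixed ranges and the MTRRs-disabled case. Returning WB for the undefined combinations is an assumption of this sketch, not something this page states about kvm_mtrr_get_guest_memory_type().

```c
#include <stdint.h>

typedef uint8_t u8;

/* MTRR memory-type encodings (Intel SDM). */
enum { MT_UC = 0, MT_WC = 1, MT_WT = 4, MT_WP = 5, MT_WB = 6 };

/* Hypothetical: combine the types of all variable ranges covering one
 * address; default_type is used when no range matches. */
static u8 resolve_var_overlap(const u8 *types, int n, u8 default_type)
{
	int type = -1;

	for (int i = 0; i < n; i++) {
		u8 cur = types[i];

		if (type == -1 || type == cur) {
			type = cur;                 /* first match, or same type */
			continue;
		}
		if (cur == MT_UC || type == MT_UC)
			return MT_UC;               /* UC wins over everything */
		if ((type == MT_WT || type == MT_WB) &&
		    (cur == MT_WT || cur == MT_WB)) {
			type = MT_WT;               /* WT + WB -> WT */
			continue;
		}
		return MT_WB;                       /* undefined by the SDM; assumed WB */
	}
	return type == -1 ? default_type : (u8)type;
}
```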

◆ kvm_mtrr_get_msr()

| int kvm_mtrr_get_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 * | pdata | ||

| ) |

Definition at line 397 of file mtrr.c.

u64 kvm_vcpu_reserved_gpa_bits_raw(struct kvm_vcpu *vcpu)

Definition: cpuid.c:409

static struct kvm_mtrr_range * var_mtrr_msr_to_range(struct kvm_vcpu *vcpu, unsigned int msr)

Definition: mtrr.c:34

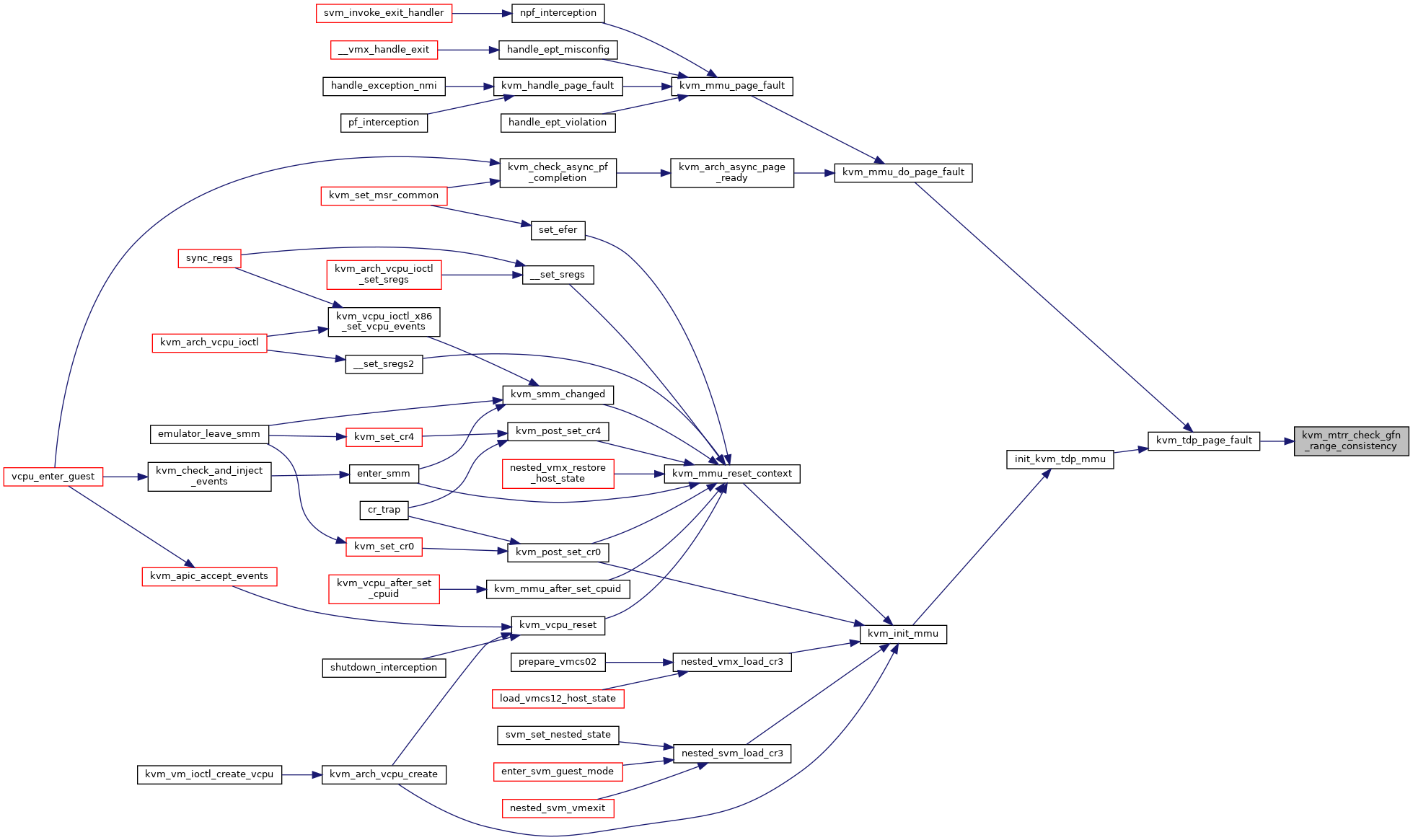

Here is the call graph for this function:

Here is the caller graph for this function:

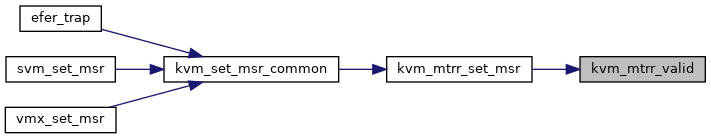

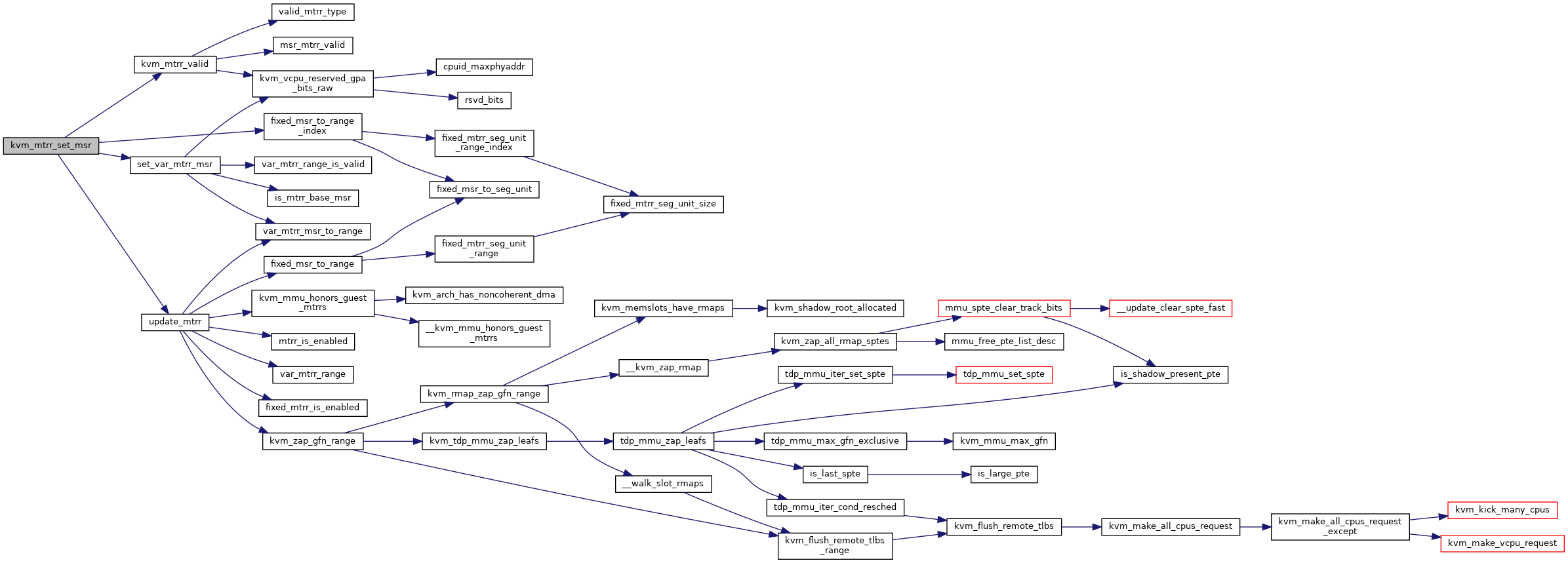

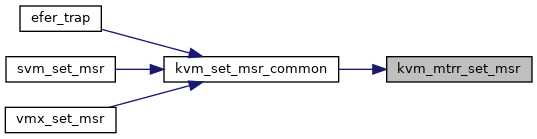

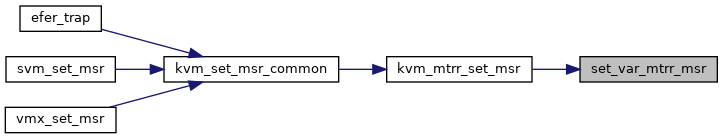

◆ kvm_mtrr_set_msr()

| int kvm_mtrr_set_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr, | ||

| u64 | data | ||

| ) |

Definition at line 378 of file mtrr.c.

static bool kvm_mtrr_valid(struct kvm_vcpu *vcpu, u32 msr, u64 data)

Definition: mtrr.c:68

static void set_var_mtrr_msr(struct kvm_vcpu *vcpu, u32 msr, u64 data)

Definition: mtrr.c:349

Here is the call graph for this function:

Here is the caller graph for this function:

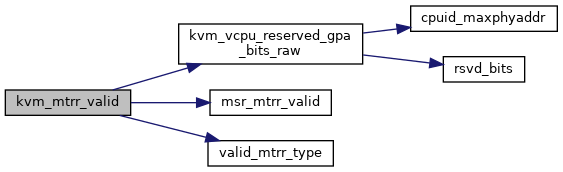

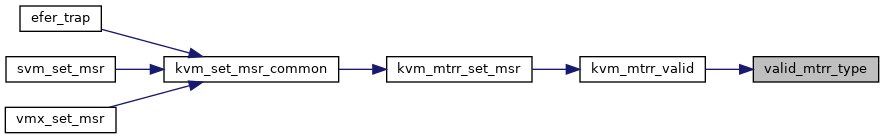
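kvm_mtrr_set_msr() references kvm_mtrr_valid() and set_var_mtrr_msr(), which suggests validate-then-store handling. For the fixed-range case, one 64-bit MSR carries eight 8-bit memory types, byte i describing the i-th sub-range it controls. The sketch below assumes a flat 88-entry type array whose segments start at indices 0, 8 and 24; that numbering is an assumption about fixed_msr_to_range_index(), and the function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;
typedef uint32_t u32;
typedef uint8_t u8;

#define NR_FIXED_RANGES 88 /* 8 + 16 + 64 sub-ranges below 1 MiB */

/* Hypothetical: first flat index controlled by a fixed MSR, or -1. */
static int fixed_msr_to_index(u32 msr)
{
	if (msr == 0x250)                        /* MTRRfix64K_00000 */
		return 0;
	if (msr >= 0x258 && msr <= 0x259)        /* MTRRfix16K_80000/A0000 */
		return 8 + 8 * (int)(msr - 0x258);
	if (msr >= 0x268 && msr <= 0x26f)        /* MTRRfix4K_C0000..F8000 */
		return 24 + 8 * (int)(msr - 0x268);
	return -1;
}

/* Hypothetical: spread one fixed MSR value over 8 one-byte types. */
static bool store_fixed_msr(u8 fixed_ranges[NR_FIXED_RANGES], u32 msr, u64 data)
{
	int index = fixed_msr_to_index(msr);

	if (index < 0)
		return false;
	for (int i = 0; i < 8; i++)
		fixed_ranges[index + i] = (u8)(data >> (8 * i));
	return true;
}
```

The byte-by-byte loop is equivalent to a little-endian 64-bit store of data at the entry's index, independent of host byte order.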

◆ kvm_mtrr_valid()

|

static |

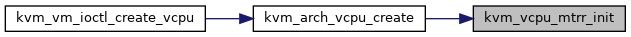
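kvm_mtrr_valid() decides whether a guest MTRR write is accepted. The architectural constraints involved are sketched below: a memory type must be UC (0), WC (1), WT (4), WP (5) or WB (6); IA32_MTRR_DEF_TYPE may only set bits 7:0, 10 and 11; every byte of a fixed-range MSR must be a valid type; and a variable base or mask must not set reserved bits. The helper names and the explicit maxphyaddr parameter (1 to 63) are hypothetical; the real function takes a struct kvm_vcpu, presumably so it can derive the guest's physical-address width.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

/* Valid types 0, 1, 4, 5, 6 as a bitmask: 0b01110011 = 0x73. */
static bool type_ok(unsigned int t)
{
	return t < 8 && ((1u << t) & 0x73);
}

/* IA32_MTRR_DEF_TYPE: only bits 7:0, 10 (FE) and 11 (E) may be set. */
static bool deftype_ok(u64 data)
{
	return (data & ~0xcffULL) == 0 && type_ok((unsigned int)(data & 0xff));
}

/* Fixed-range MSR: eight type bytes, each must be valid. */
static bool fixed_ok(u64 data)
{
	for (int i = 0; i < 8; i++)
		if (!type_ok((unsigned int)((data >> (8 * i)) & 0xff)))
			return false;
	return true;
}

/* Variable base/mask: bits >= maxphyaddr are reserved, plus bits 11:8 of
 * the base (7:0 hold the type) and bits 10:0 of the mask (11 is Valid). */
static bool var_ok(u64 data, bool is_base, int maxphyaddr)
{
	u64 rsvd = ~0ULL << maxphyaddr;

	if (is_base) {
		if (!type_ok((unsigned int)(data & 0xff)))
			return false;
		rsvd |= 0xf00;
	} else {
		rsvd |= 0x7ff;
	}
	return (data & rsvd) == 0;
}
```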

◆ kvm_vcpu_mtrr_init()

| void kvm_vcpu_mtrr_init | ( | struct kvm_vcpu * | vcpu | ) |

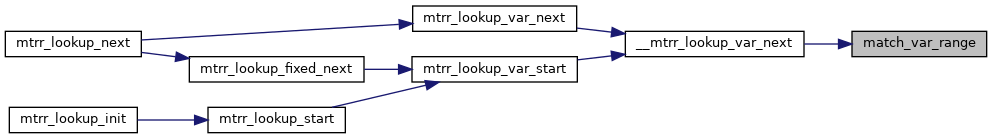

◆ match_var_range()

|

static |

Definition at line 489 of file mtrr.c.

static void var_mtrr_range(struct kvm_mtrr_range *range, u64 *start, u64 *end)

Definition: mtrr.c:304

Here is the call graph for this function:

Here is the caller graph for this function:

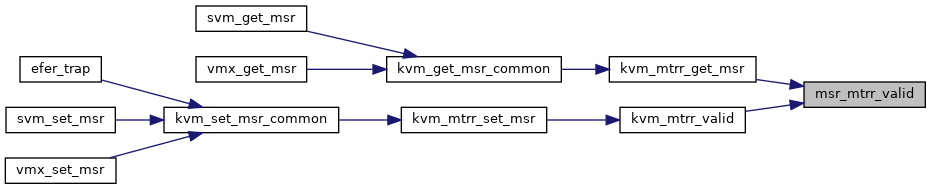
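A PhysBase/PhysMask pair normally describes an aligned power-of-two region: it starts at the page-aligned base, and the zero bits of the mask select the offsets inside it. var_mtrr_range() turns a pair into a half-open [start, end) interval, and match_var_range() then checks that interval against the range being looked up. A sketch of both computations with hypothetical names, assuming a contiguous mask whose stored form has every bit at or above the guest's MAXPHYADDR set, so that inverting it leaves only the in-region offset bits:

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define PAGE_MASK_4K (~0xfffULL)

/* [start, end) for a base/mask pair. Because the stored mask has all bits
 * >= MAXPHYADDR set, ~mask stays below 2^MAXPHYADDR and +1 cannot overflow. */
static void var_range(u64 base, u64 mask, u64 *start, u64 *end)
{
	*start = base & PAGE_MASK_4K;
	*end = (*start | ~(mask & PAGE_MASK_4K)) + 1;
}

/* Half-open overlap test between [start, end) and a query [qs, qe). */
static bool overlaps(u64 start, u64 end, u64 qs, u64 qe)
{
	return !(start >= qe || end <= qs);
}
```

For a 2 GiB write-back region at 0x80000000 on a guest with a 36-bit physical address space, the stored mask is 0xFFFFFFFF80000800 and the interval is [0x80000000, 0x100000000).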

◆ msr_mtrr_valid()

static bool msr_mtrr_valid(unsigned msr)

◆ mtrr_default_type()

|

static |

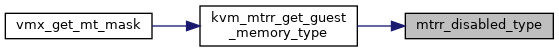

◆ mtrr_disabled_type()

|

static |

Definition at line 119 of file mtrr.c.

static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)

Definition: cpuid.h:83

Here is the caller graph for this function:

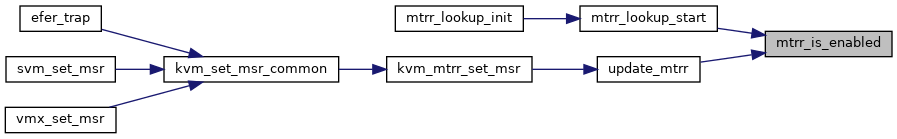
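mtrr_disabled_type() references guest_cpuid_has(), so its answer presumably depends on whether the guest's CPUID advertises MTRRs. Architecturally, clearing IA32_MTRR_DEF_TYPE.E makes all memory UC, but a guest whose CPUID hides MTRRs can never turn them on. The hypothetical helper below captures one reading of that logic, with the CPUID check reduced to a boolean:

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint8_t u8;

enum { MT_UC = 0, MT_WB = 6 };

/* Hypothetical: memory type to report while MTRRs are disabled. */
static u8 disabled_type(bool guest_has_mtrr_cpuid)
{
	/* With MTRRs advertised, follow the architecture: UC everywhere.
	 * Without them the guest could never enable MTRRs, so UC would mean
	 * running uncached forever; WB is assumed as the pragmatic choice. */
	return guest_has_mtrr_cpuid ? MT_UC : MT_WB;
}
```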

◆ mtrr_is_enabled()

|

static |

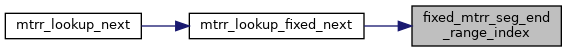

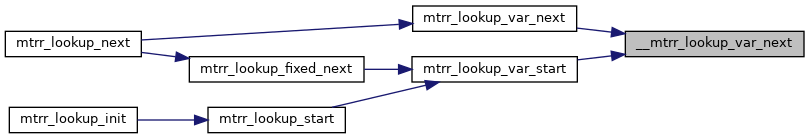

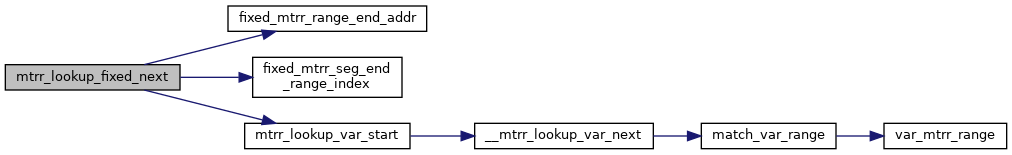

◆ mtrr_lookup_fixed_next()

|

static |

Definition at line 537 of file mtrr.c.

static void mtrr_lookup_var_start(struct mtrr_iter *iter)

Definition: mtrr.c:525

static u64 fixed_mtrr_range_end_addr(int seg, int index)

Definition: mtrr.c:296

static int fixed_mtrr_seg_end_range_index(int seg)

Definition: mtrr.c:241

Here is the call graph for this function:

Here is the caller graph for this function:

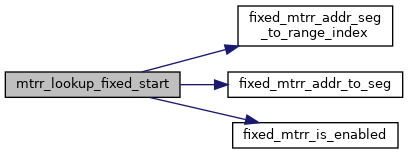

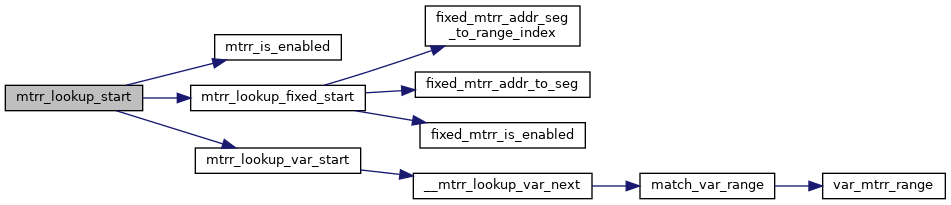

◆ mtrr_lookup_fixed_start()

|

static |

Definition at line 471 of file mtrr.c.

static bool fixed_mtrr_is_enabled(struct kvm_mtrr *mtrr_state)

Definition: mtrr.c:109

static int fixed_mtrr_addr_seg_to_range_index(u64 addr, int seg)

Definition: mtrr.c:285

Here is the call graph for this function:

Here is the caller graph for this function:

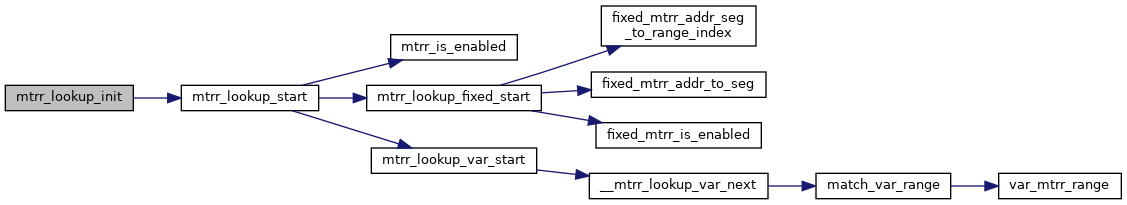

◆ mtrr_lookup_init()

|

static |

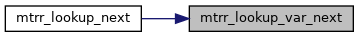

◆ mtrr_lookup_next()

static void mtrr_lookup_next(struct mtrr_iter *iter)

Definition at line 602 of file mtrr.c.

See also: static void mtrr_lookup_fixed_next(struct mtrr_iter *iter), defined at mtrr.c:537.

◆ mtrr_lookup_okay()

|

static |

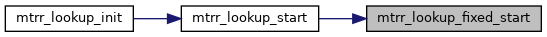

◆ mtrr_lookup_start()

|

static |

Definition at line 562 of file mtrr.c.

static bool mtrr_lookup_fixed_start(struct mtrr_iter *iter)

Definition: mtrr.c:471

Here is the call graph for this function:

Here is the caller graph for this function:

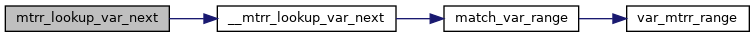

◆ mtrr_lookup_var_next()

|

static |

Definition at line 557 of file mtrr.c.

static void __mtrr_lookup_var_next(struct mtrr_iter *iter)

Definition: mtrr.c:513

Here is the call graph for this function:

Here is the caller graph for this function:

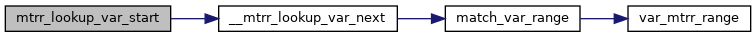

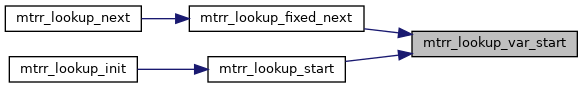

◆ mtrr_lookup_var_start()

|

static |

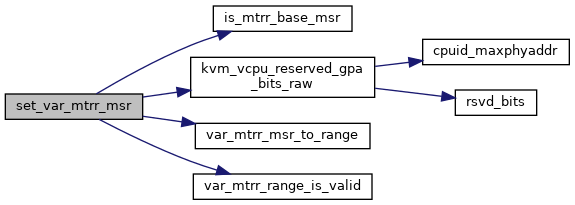

◆ set_var_mtrr_msr()

|

static |

Definition at line 349 of file mtrr.c.

static bool var_mtrr_range_is_valid(struct kvm_mtrr_range *range)

Definition: mtrr.c:344

Here is the call graph for this function:

Here is the caller graph for this function:

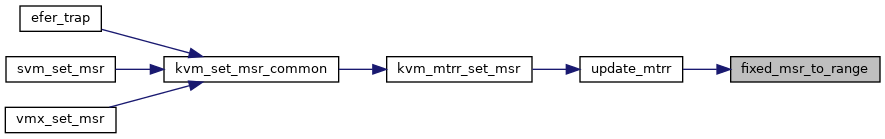

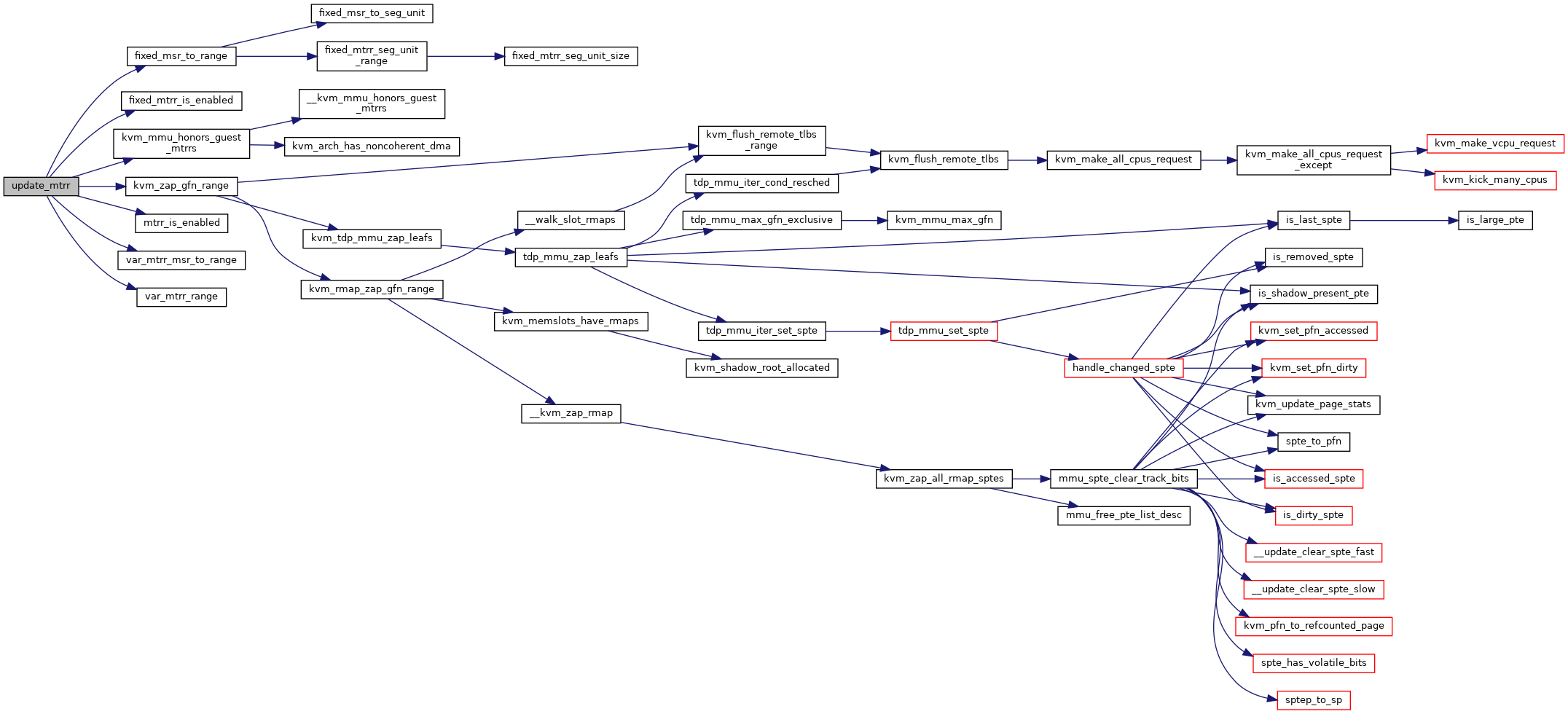

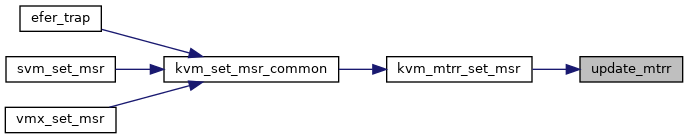
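kvm_mtrr_get_msr() references kvm_vcpu_reserved_gpa_bits_raw(), which hints that variable-range mask bits beyond the guest's physical-address width get special treatment. One consistent scheme, sketched here as an assumption: on write, force those non-existent bits to 1 so that inverting the mask never yields an address beyond MAXPHYADDR; on read, clear them again so the guest reads back exactly what it wrote. The function names are hypothetical and maxphyaddr must be between 1 and 63.

```c
#include <stdint.h>

typedef uint64_t u64;

/* Bits [maxphyaddr, 63]: guest-physical address bits that do not exist. */
static u64 rsvd_gpa_bits(int maxphyaddr)
{
	return ~0ULL << maxphyaddr;
}

/* On write: keep the mask with every non-existent address bit set. */
static u64 store_mask(u64 data, int maxphyaddr)
{
	return data | rsvd_gpa_bits(maxphyaddr);
}

/* On read: hide those bits again so the guest sees what it wrote. */
static u64 load_mask(u64 stored, int maxphyaddr)
{
	return stored & ~rsvd_gpa_bits(maxphyaddr);
}
```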

◆ update_mtrr()

static void update_mtrr(struct kvm_vcpu *vcpu, u32 msr)

Definition at line 318 of file mtrr.c.

See also: void kvm_zap_gfn_range(struct kvm *kvm, gfn_t gfn_start, gfn_t gfn_end), defined at mmu.c:6373; static bool kvm_mmu_honors_guest_mtrrs(struct kvm *kvm), defined at mmu.h:250; static bool fixed_msr_to_range(u32 msr, u64 *start, u64 *end), defined at mtrr.c:250.
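update_mtrr() references kvm_mmu_honors_guest_mtrrs(), fixed_msr_to_range() and kvm_zap_gfn_range(), which suggests that, when the MMU honors guest MTRRs at all, it drops the guest mappings whose memory type a write may have changed. Which range to zap might be decided as in the sketch below; the enum and function are hypothetical, and the exact conditions in mtrr.c may differ.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

/* Hypothetical classification of the MTRR MSR that was just written. */
enum wrote_kind { WROTE_DEFTYPE, WROTE_FIXED, WROTE_VARIABLE };

/* Decide the guest-physical interval [*start, *end) to zap; returns false
 * if nothing needs zapping. msr_start/msr_end is the interval covered by
 * the written fixed or variable MSR. */
static bool zap_range(enum wrote_kind kind, bool mtrrs_enabled,
		      bool fixed_enabled, u64 msr_start, u64 msr_end,
		      u64 *start, u64 *end)
{
	/* With MTRRs disabled, only the default-type MSR can change anything. */
	if (!mtrrs_enabled && kind != WROTE_DEFTYPE)
		return false;

	switch (kind) {
	case WROTE_DEFTYPE:        /* may change the type of all memory */
		*start = 0;
		*end = ~0ULL;
		return true;
	case WROTE_FIXED:          /* fixed ranges are ignored unless FE is set */
		if (!fixed_enabled)
			return false;
		break;
	case WROTE_VARIABLE:
		break;
	}
	*start = msr_start;
	*end = msr_end;
	return true;
}
```

A caller would then convert the byte interval to guest frame numbers before invalidating mappings.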

◆ valid_mtrr_type()

|

static |

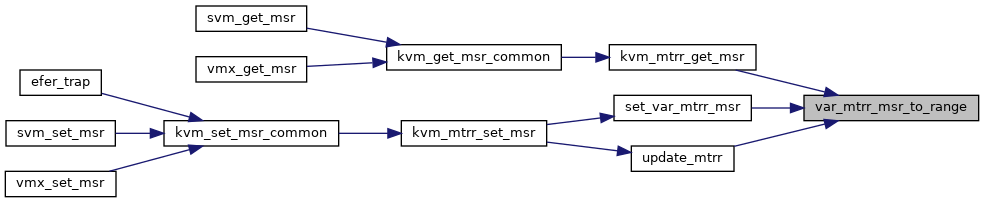

◆ var_mtrr_msr_to_range()

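static struct kvm_mtrr_range *var_mtrr_msr_to_range(struct kvm_vcpu *vcpu, unsigned int msr)

Definition at line 34 of file mtrr.c.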

◆ var_mtrr_range()

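static void var_mtrr_range(struct kvm_mtrr_range *range, u64 *start, u64 *end)

Definition at line 304 of file mtrr.c.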

◆ var_mtrr_range_is_valid()

static bool var_mtrr_range_is_valid(struct kvm_mtrr_range *range)

Definition at line 344 of file mtrr.c.

Variable Documentation

◆ fixed_seg_table

static struct fixed_mtrr_segment fixed_seg_table[]