    #include <linux/kvm_host.h>
    #include "linux/lockdep.h"
    #include <linux/export.h>
    #include <linux/vmalloc.h>
    #include <linux/uaccess.h>
    #include <linux/sched/stat.h>
    #include <asm/processor.h>
    #include <asm/user.h>
    #include <asm/fpu/xstate.h>
    #include <asm/sgx.h>
    #include <asm/cpuid.h>
    #include "cpuid.h"
    #include "lapic.h"
    #include "mmu.h"
    #include "trace.h"
    #include "pmu.h"
    #include "xen.h"

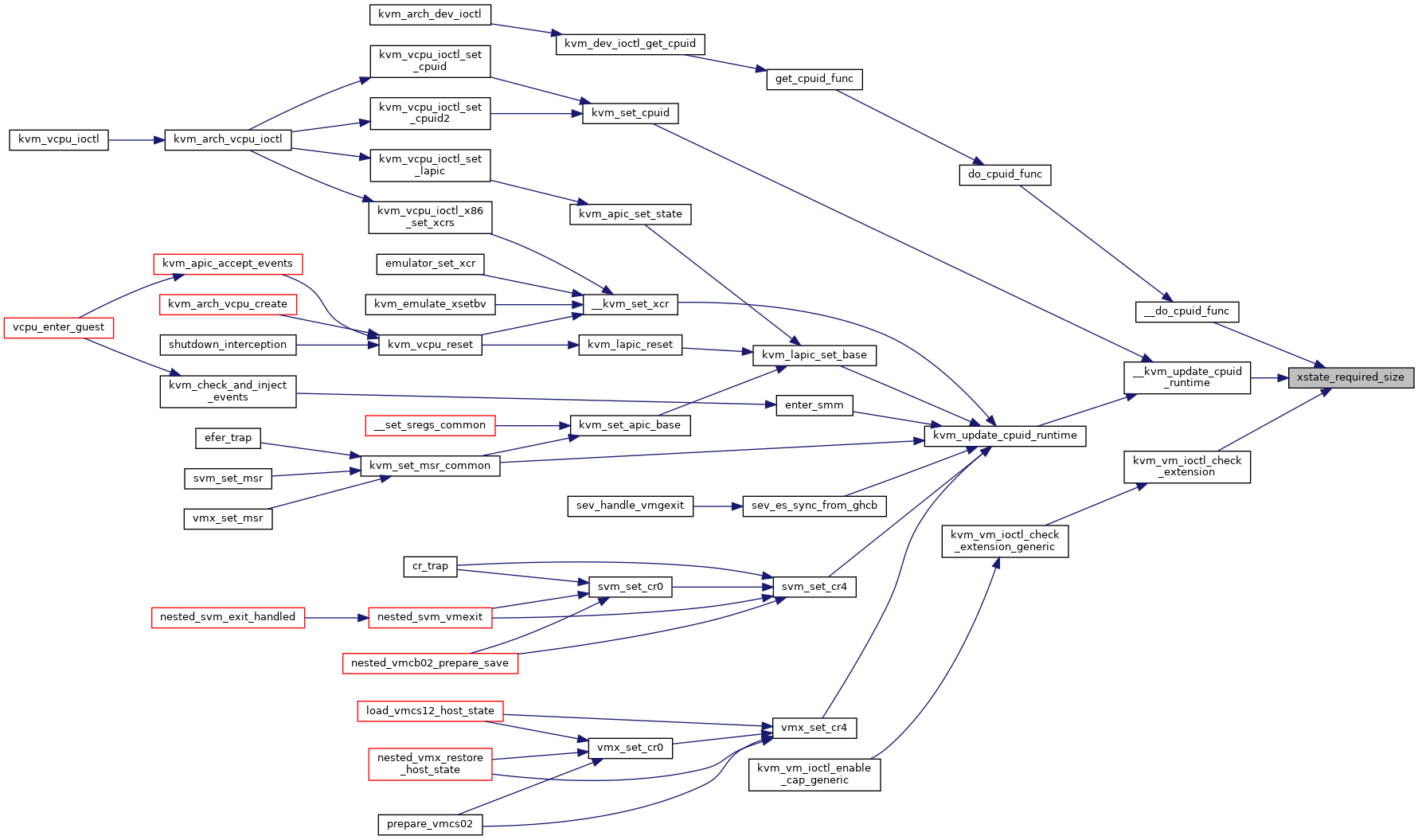

Include dependency graph for cpuid.c:

Go to the source code of this file.

Classes

| Kind | Name |
|---|---|
| `struct` | `kvm_cpuid_array` |

Macros

| Kind | Definition |
|---|---|
| `#define` | `pr_fmt(fmt) KBUILD_MODNAME ": " fmt` |
| `#define` | `F feature_bit` |
| `#define` | `SF(name)` |
| `#define` | `KVM_CPUID_INDEX_NOT_SIGNIFICANT -1ull` |
| `#define` | `CENTAUR_CPUID_SIGNATURE 0xC0000000` |

Functions

| Return type | Function |
|---|---|
| | `EXPORT_SYMBOL_GPL(kvm_cpu_caps)` |
| `u32` | `xstate_required_size(u64 xstate_bv, bool compacted)` |
| `static struct kvm_cpuid_entry2 *` | `cpuid_entry2_find(struct kvm_cpuid_entry2 *entries, int nent, u32 function, u64 index)` |
| `static int` | `kvm_check_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` |
| `static int` | `kvm_cpuid_check_equal(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)` |
| `static struct kvm_hypervisor_cpuid` | `kvm_get_hypervisor_cpuid(struct kvm_vcpu *vcpu, const char *sig)` |
| `static struct kvm_cpuid_entry2 *` | `__kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` |
| `static struct kvm_cpuid_entry2 *` | `kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu)` |
| `void` | `kvm_update_pv_runtime(struct kvm_vcpu *vcpu)` |
| `static u64` | `cpuid_get_supported_xcr0(struct kvm_cpuid_entry2 *entries, int nent)` |
| `static void` | `__kvm_update_cpuid_runtime(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` |
| `void` | `kvm_update_cpuid_runtime(struct kvm_vcpu *vcpu)` |
| | `EXPORT_SYMBOL_GPL(kvm_update_cpuid_runtime)` |
| `static bool` | `kvm_cpuid_has_hyperv(struct kvm_cpuid_entry2 *entries, int nent)` |
| `static void` | `kvm_vcpu_after_set_cpuid(struct kvm_vcpu *vcpu)` |
| `int` | `cpuid_query_maxphyaddr(struct kvm_vcpu *vcpu)` |
| `u64` | `kvm_vcpu_reserved_gpa_bits_raw(struct kvm_vcpu *vcpu)` |
| `static int` | `kvm_set_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)` |
| `int` | `kvm_vcpu_ioctl_set_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid *cpuid, struct kvm_cpuid_entry __user *entries)` |
| `int` | `kvm_vcpu_ioctl_set_cpuid2(struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries)` |
| `int` | `kvm_vcpu_ioctl_get_cpuid2(struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries)` |
| `static __always_inline void` | `__kvm_cpu_cap_mask(unsigned int leaf)` |
| `static __always_inline void` | `kvm_cpu_cap_init_kvm_defined(enum kvm_only_cpuid_leafs leaf, u32 mask)` |
| `static __always_inline void` | `kvm_cpu_cap_mask(enum cpuid_leafs leaf, u32 mask)` |
| `void` | `kvm_set_cpu_caps(void)` |
| | `EXPORT_SYMBOL_GPL(kvm_set_cpu_caps)` |
| `static struct kvm_cpuid_entry2 *` | `get_next_cpuid(struct kvm_cpuid_array *array)` |
| `static struct kvm_cpuid_entry2 *` | `do_host_cpuid(struct kvm_cpuid_array *array, u32 function, u32 index)` |
| `static int` | `__do_cpuid_func_emulated(struct kvm_cpuid_array *array, u32 func)` |
| `static int` | `__do_cpuid_func(struct kvm_cpuid_array *array, u32 function)` |
| `static int` | `do_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)` |
| `static int` | `get_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)` |
| `static bool` | `sanity_check_entries(struct kvm_cpuid_entry2 __user *entries, __u32 num_entries, unsigned int ioctl_type)` |
| `int` | `kvm_dev_ioctl_get_cpuid(struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries, unsigned int type)` |
| `struct kvm_cpuid_entry2 *` | `kvm_find_cpuid_entry_index(struct kvm_vcpu *vcpu, u32 function, u32 index)` |
| | `EXPORT_SYMBOL_GPL(kvm_find_cpuid_entry_index)` |
| `struct kvm_cpuid_entry2 *` | `kvm_find_cpuid_entry(struct kvm_vcpu *vcpu, u32 function)` |
| | `EXPORT_SYMBOL_GPL(kvm_find_cpuid_entry)` |
| `static struct kvm_cpuid_entry2 *` | `get_out_of_range_cpuid_entry(struct kvm_vcpu *vcpu, u32 *fn_ptr, u32 index)` |
| `bool` | `kvm_cpuid(struct kvm_vcpu *vcpu, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only)` |
| | `EXPORT_SYMBOL_GPL(kvm_cpuid)` |
| `int` | `kvm_emulate_cpuid(struct kvm_vcpu *vcpu)` |
| | `EXPORT_SYMBOL_GPL(kvm_emulate_cpuid)` |

Variables

| Type | Name |
|---|---|
| `u32 kvm_cpu_caps[NR_KVM_CPU_CAPS]` | `__read_mostly = -1` |

Macro Definition Documentation

◆ CENTAUR_CPUID_SIGNATURE

| #define CENTAUR_CPUID_SIGNATURE 0xC0000000 |

◆ F

| #define F feature_bit |

◆ KVM_CPUID_INDEX_NOT_SIGNIFICANT

| #define KVM_CPUID_INDEX_NOT_SIGNIFICANT -1ull |

◆ pr_fmt

| #define pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

◆ SF

| #define SF(name) |

Value:

    ({ \
            BUILD_BUG_ON(X86_FEATURE_##name >= MAX_CPU_FEATURES); \
            (boot_cpu_has(X86_FEATURE_##name) ? F(name) : 0); \
    })
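SF() produces a feature-mask bit that depends on the host. The bit is included only when `boot_cpu_has()` reports the feature, and `BUILD_BUG_ON()` rejects feature numbers that are out of range at compile time. The user-space sketch below reproduces that pattern under some stated assumptions:

- `X86_FEATURE_FOO`, `X86_FEATURE_BAR`, `host_caps`, and the `boot_cpu_has()` stand-in are hypothetical.
- `_Static_assert` takes the place of `BUILD_BUG_ON()`.
- Like the kernel macro, the sketch relies on the GNU statement-expression extension (GCC or Clang).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical feature numbers, encoded as word * 32 + bit like X86_FEATURE_*. */
#define X86_FEATURE_FOO  (3 * 32 + 5)
#define X86_FEATURE_BAR  (3 * 32 + 9)
#define MAX_CPU_FEATURES (32 * 32)

/* Hypothetical stand-in for the host's boot CPU capability words. */
static uint32_t host_caps[32];

static bool boot_cpu_has(unsigned int feature)
{
	return host_caps[feature / 32] & (UINT32_C(1) << (feature % 32));
}

/* F(name): the feature's bit within its 32-bit CPUID register. */
#define F(name) (UINT32_C(1) << (X86_FEATURE_##name & 31))

/* SF(name): compile-time range check, then the bit only if the host has it. */
#define SF(name)                                                      \
({                                                                    \
	_Static_assert(X86_FEATURE_##name < MAX_CPU_FEATURES, #name); \
	(boot_cpu_has(X86_FEATURE_##name) ? F(name) : 0);             \
})

/* A mask mixing an unconditional bit (F) with a host-dependent one (SF). */
static uint32_t example_mask(void)
{
	return F(BAR) | SF(FOO);
}
```

With an all-zero `host_caps`, `example_mask()` contains only the `BAR` bit. Once the host reports `FOO`, that bit is added as well.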

Function Documentation

◆ __do_cpuid_func()

`static inline int __do_cpuid_func(struct kvm_cpuid_array *array, u32 function)`

Definition at line 913 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *do_host_cpuid(struct kvm_cpuid_array *array, u32 function, u32 index)` (cpuid.c:835)
- `static __always_inline bool kvm_cpu_cap_has(unsigned int x86_feature)` (cpuid.h:221)
- `static __always_inline void cpuid_entry_override(struct kvm_cpuid_entry2 *entry, unsigned int leaf)` (cpuid.h:61)

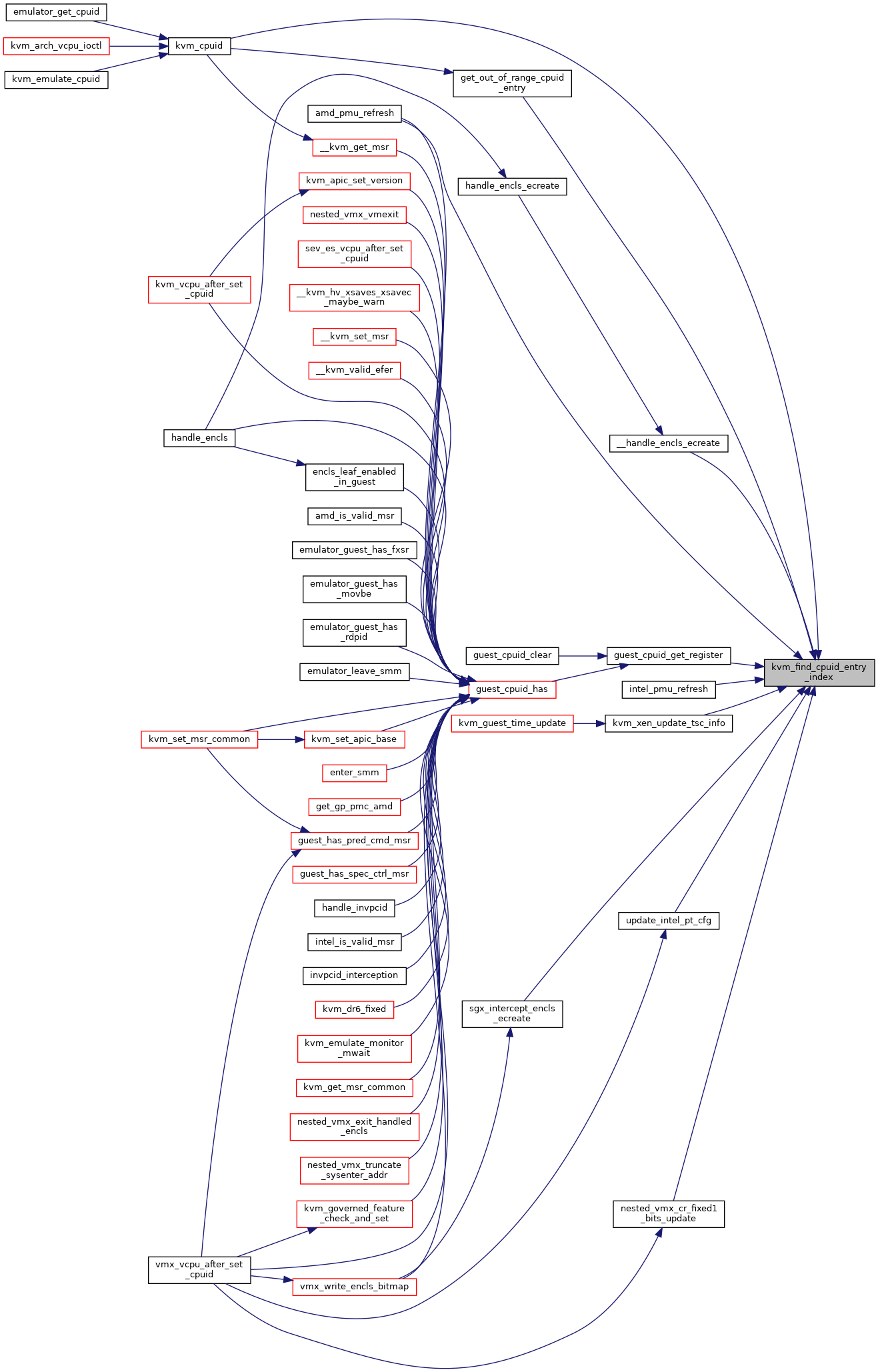

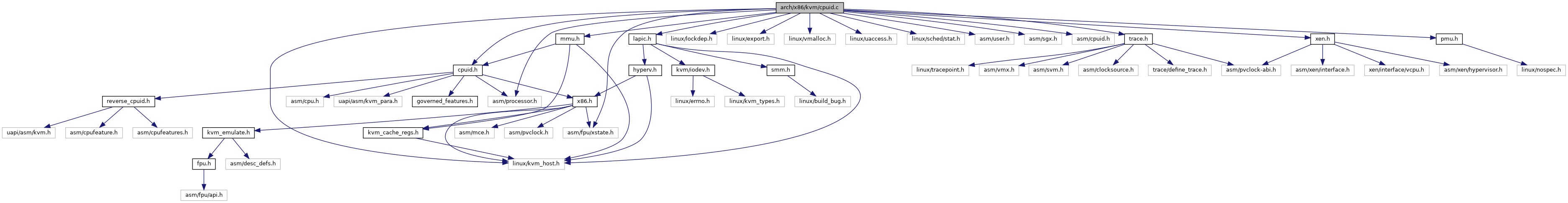

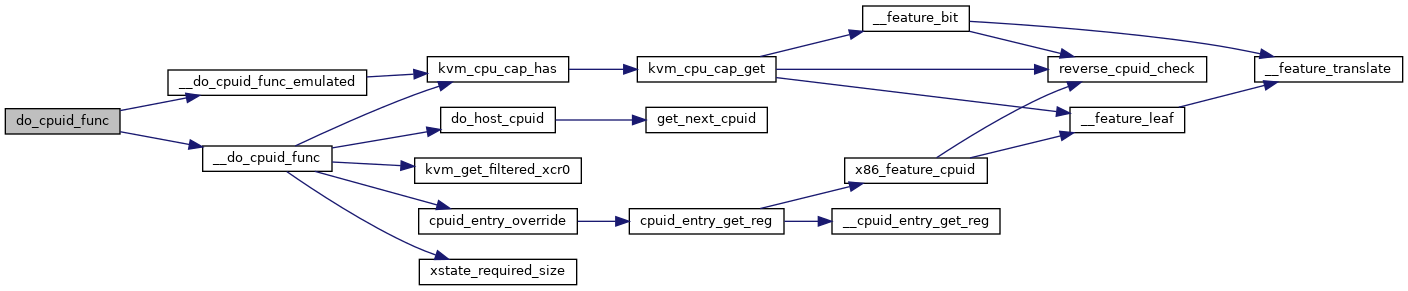

Here is the call graph for this function:

Here is the caller graph for this function:

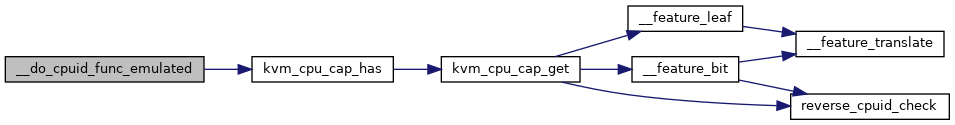

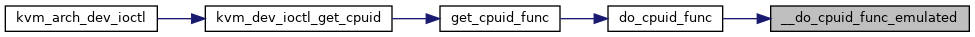

◆ __do_cpuid_func_emulated()

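`static int __do_cpuid_func_emulated(struct kvm_cpuid_array *array, u32 func)`

Definition at line 878 of file cpuid.c.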

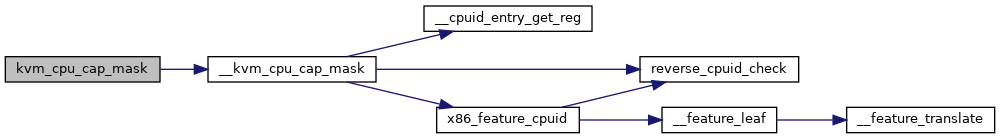

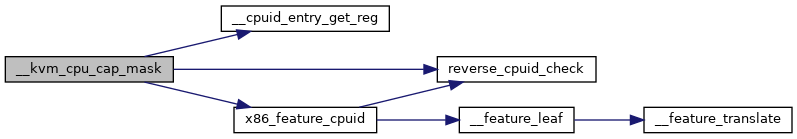

◆ __kvm_cpu_cap_mask()

`static __always_inline void __kvm_cpu_cap_mask(unsigned int leaf)`

Definition at line 551 of file cpuid.c.

Referenced symbols:

- `static __always_inline struct cpuid_reg x86_feature_cpuid(unsigned int x86_feature)` (reverse_cpuid.h:158)
- `static __always_inline u32 *__cpuid_entry_get_reg(struct kvm_cpuid_entry2 *entry, u32 reg)` (reverse_cpuid.h:166)
- `static __always_inline void reverse_cpuid_check(unsigned int x86_leaf)` (reverse_cpuid.h:103)
- definition at reverse_cpuid.h:64

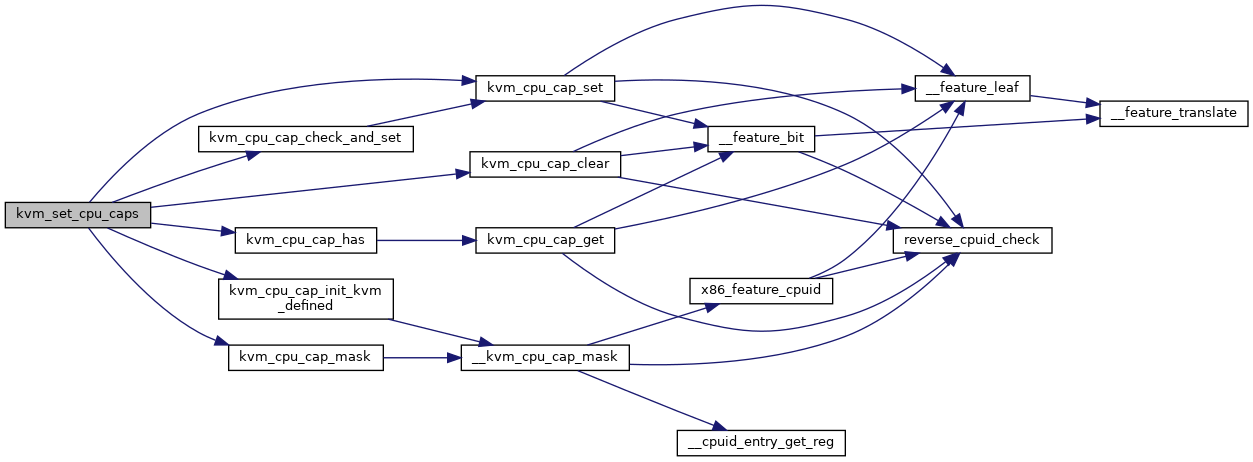

Here is the call graph for this function:

Here is the caller graph for this function:

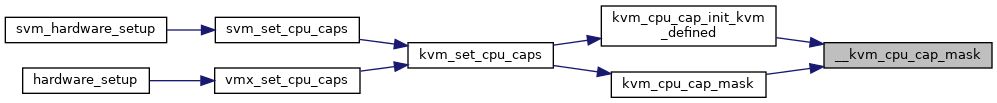

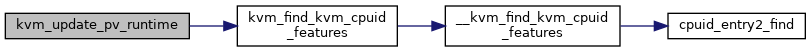

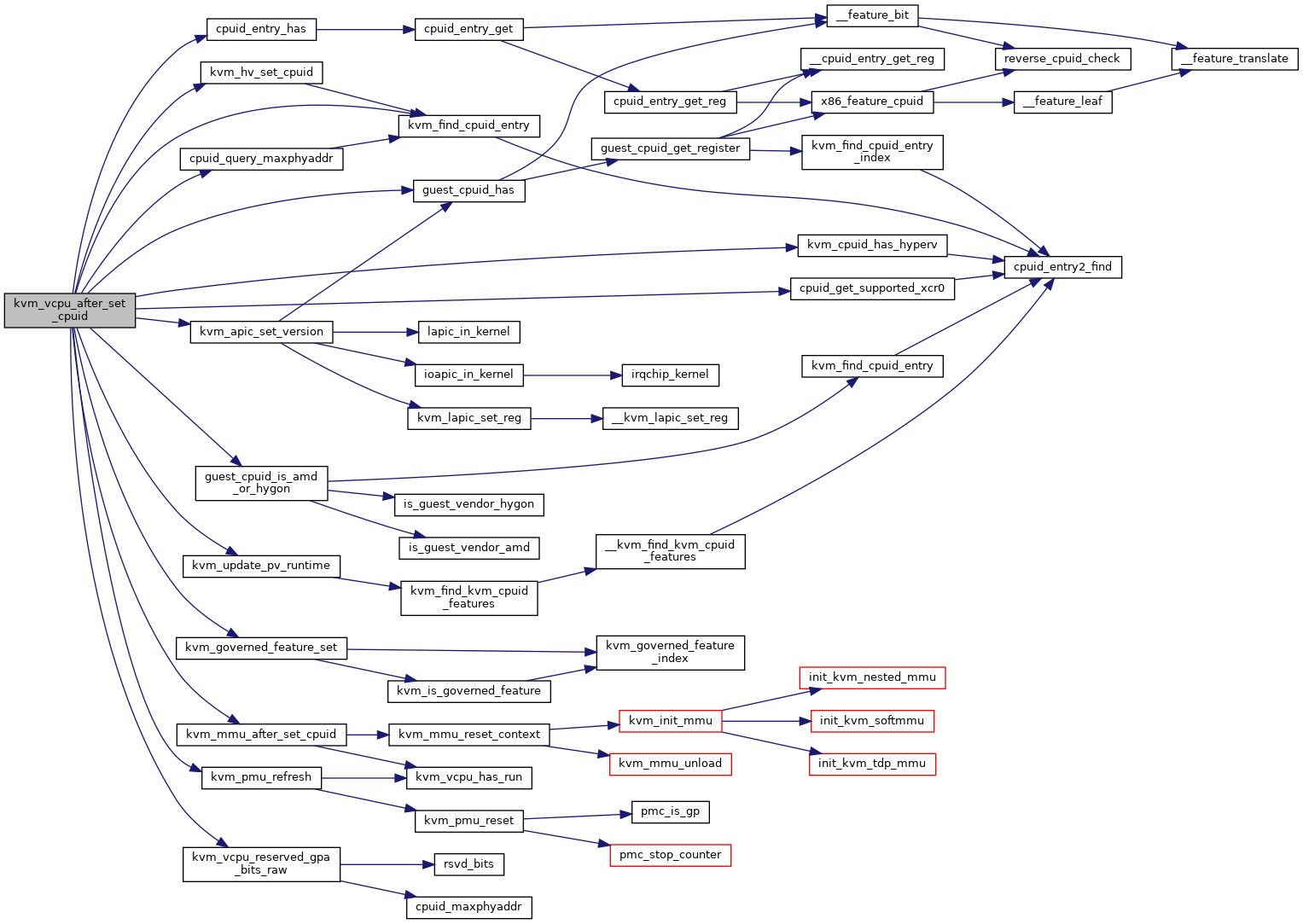

◆ __kvm_find_kvm_cpuid_features()

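`static struct kvm_cpuid_entry2 *__kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)`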

Definition at line 220 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *cpuid_entry2_find(struct kvm_cpuid_entry2 *entries, int nent, u32 function, u64 index)` (cpuid.c:82)

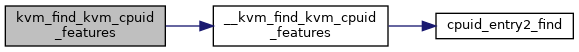

Here is the call graph for this function:

Here is the caller graph for this function:

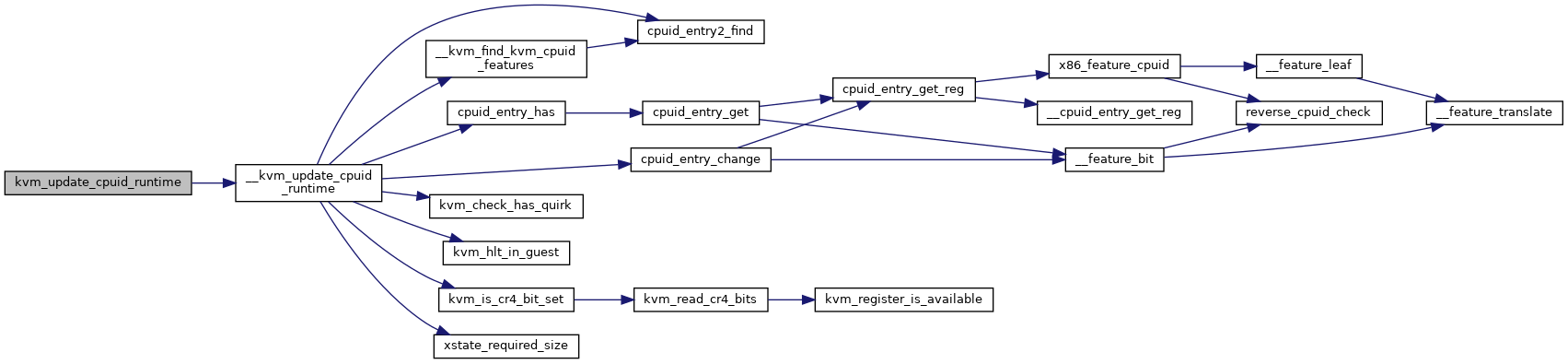

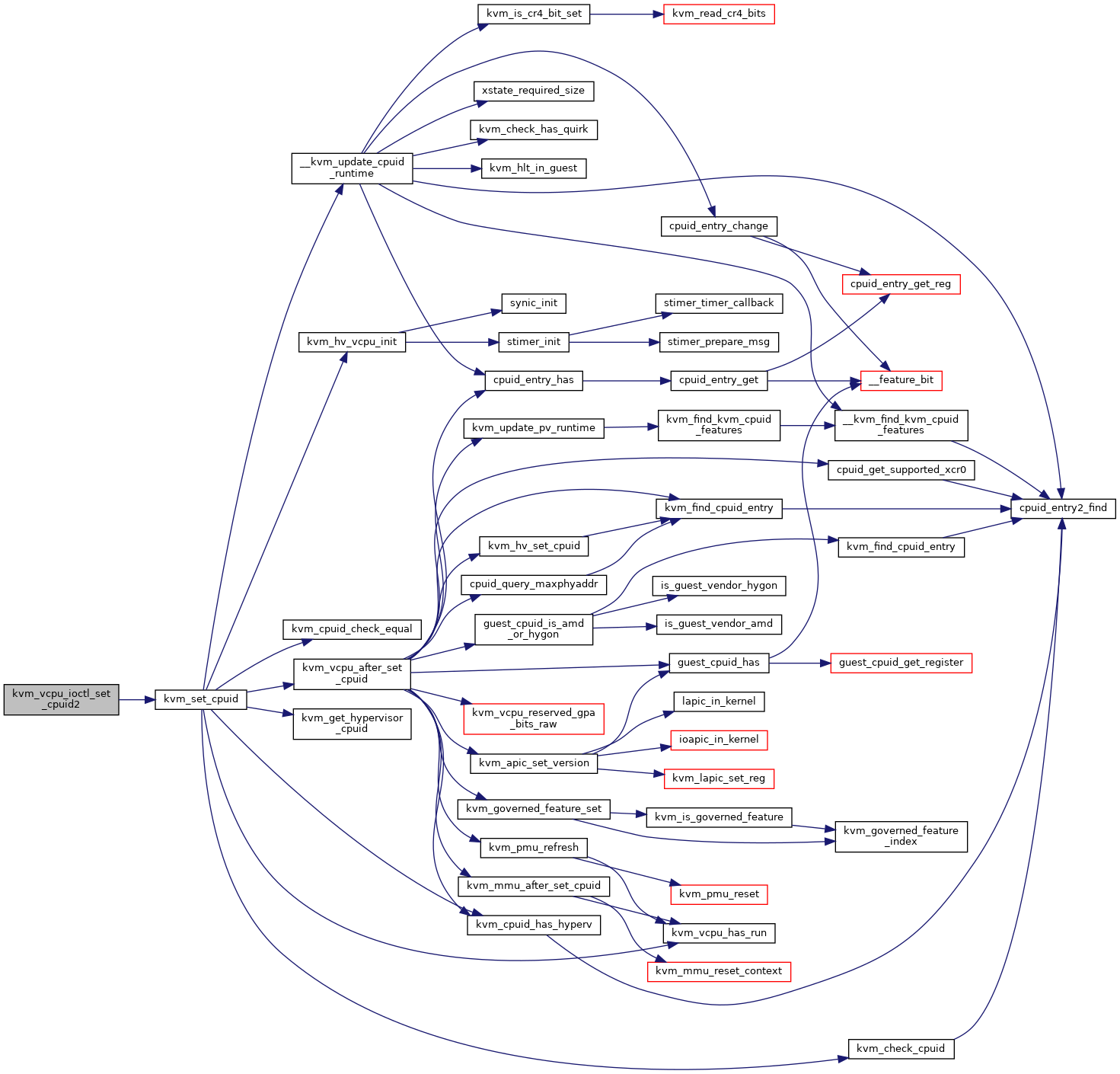

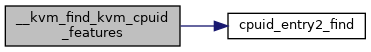

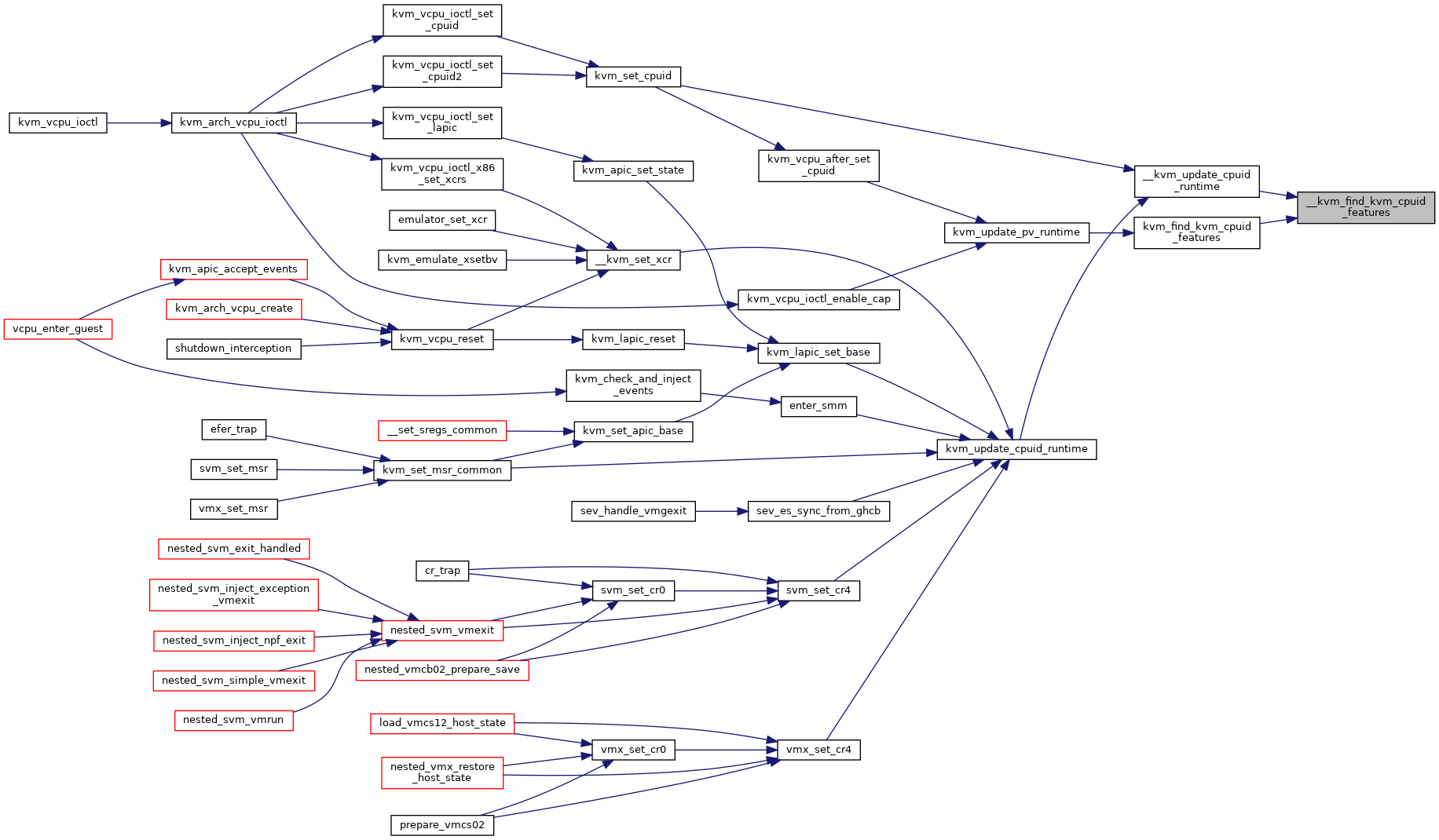

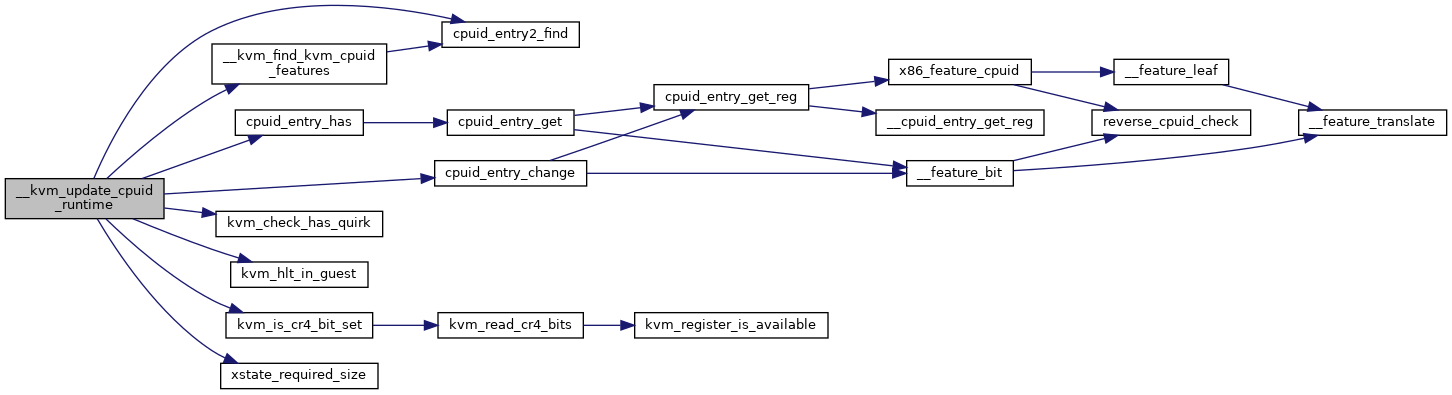

◆ __kvm_update_cpuid_runtime()

`static void __kvm_update_cpuid_runtime(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)`

Definition at line 265 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *__kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` (cpuid.c:220)
- `static __always_inline bool kvm_is_cr4_bit_set(struct kvm_vcpu *vcpu, unsigned long cr4_bit)` (kvm_cache_regs.h:182)
- `static __always_inline bool cpuid_entry_has(struct kvm_cpuid_entry2 *entry, unsigned int x86_feature)` (reverse_cpuid.h:200)
- `static __always_inline void cpuid_entry_change(struct kvm_cpuid_entry2 *entry, unsigned int x86_feature, bool set)` (reverse_cpuid.h:222)

Here is the call graph for this function:

Here is the caller graph for this function:

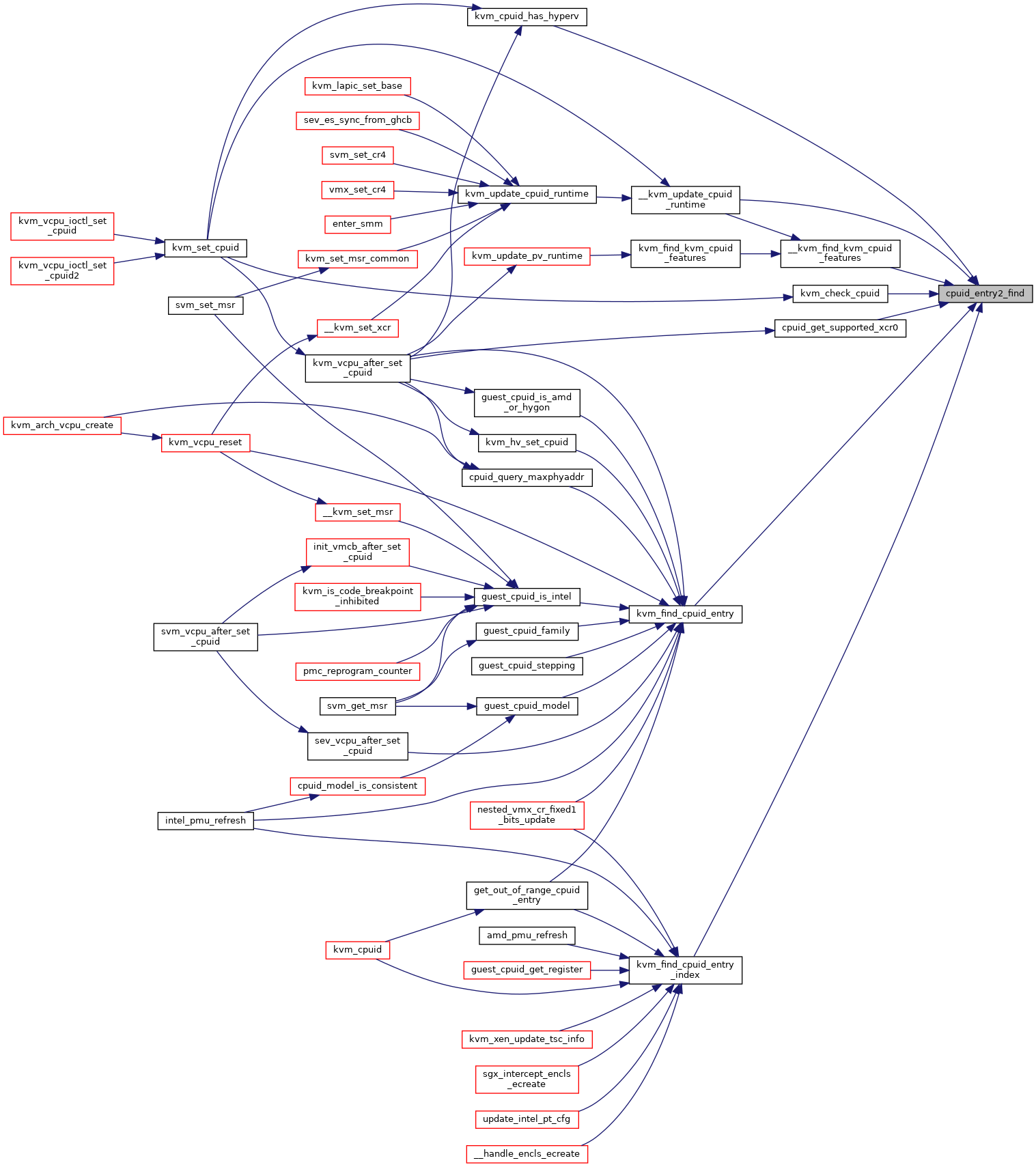

◆ cpuid_entry2_find()

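`static inline struct kvm_cpuid_entry2 *cpuid_entry2_find(struct kvm_cpuid_entry2 *entries, int nent, u32 function, u64 index)`

Definition at line 82 of file cpuid.c.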

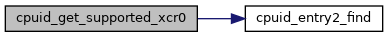

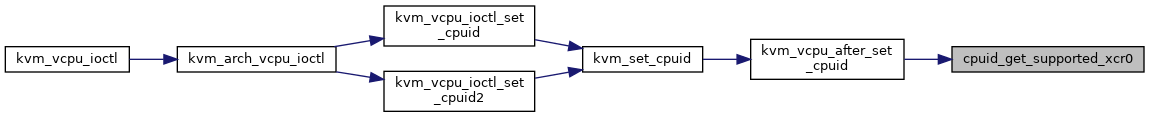
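cpuid_entry2_find() and the KVM_CPUID_INDEX_NOT_SIGNIFICANT sentinel together decide which entry answers a (function, index) query. The following is a simplified user-space sketch of that matching rule. It assumes that the first entry with the right function wins in two cases: when that entry's index is not flagged as significant, or when the caller passes the sentinel. Otherwise the index must match exactly. The struct and the `SKETCH_*` names are hypothetical stand-ins. The real code tests a flag in the `flags` field of `struct kvm_cpuid_entry2` and performs additional debug checks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for the UAPI flag and the -1ull sentinel. */
#define SKETCH_FLAG_SIGNIFICANT_INDEX (1u << 0)
#define SKETCH_INDEX_NOT_SIGNIFICANT  ((uint64_t)-1)

/* Simplified version of struct kvm_cpuid_entry2. */
struct cpuid_entry {
	uint32_t function;
	uint32_t index;
	uint32_t flags;
	uint32_t eax, ebx, ecx, edx;
};

static struct cpuid_entry *entry_find(struct cpuid_entry *entries, int nent,
				      uint32_t function, uint64_t index)
{
	for (int i = 0; i < nent; i++) {
		struct cpuid_entry *e = &entries[i];

		if (e->function != function)
			continue;

		/* Index is not significant for this entry, or it matches exactly. */
		if (!(e->flags & SKETCH_FLAG_SIGNIFICANT_INDEX) || e->index == index)
			return e;

		/* Caller asked for "any index": take the first matching function. */
		if (index == SKETCH_INDEX_NOT_SIGNIFICANT)
			return e;
	}
	return NULL;
}
```

A lookup such as `entry_find(t, n, 0x7, 1)` therefore selects subleaf 1 of leaf 7. Asking for an unlisted subleaf of an index-sensitive leaf returns NULL.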

◆ cpuid_get_supported_xcr0()

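`static u64 cpuid_get_supported_xcr0(struct kvm_cpuid_entry2 *entries, int nent)`

Definition at line 254 of file cpuid.c.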

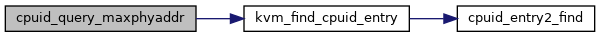

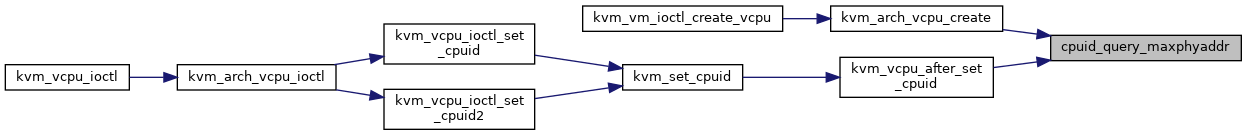

◆ cpuid_query_maxphyaddr()

`int cpuid_query_maxphyaddr(struct kvm_vcpu *vcpu)`

Definition at line 390 of file cpuid.c.

Referenced symbols:

- `struct kvm_cpuid_entry2 *kvm_find_cpuid_entry(struct kvm_vcpu *vcpu, u32 function)` (cpuid.c:1455)

[Figure: call graph and caller graph for cpuid_query_maxphyaddr()]

◆ do_cpuid_func()

`static int do_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)`

Definition at line 1342 of file cpuid.c.

Referenced symbols:

- `static int __do_cpuid_func_emulated(struct kvm_cpuid_array *array, u32 func)` (cpuid.c:878)
- `static int __do_cpuid_func(struct kvm_cpuid_array *array, u32 function)` (cpuid.c:913)

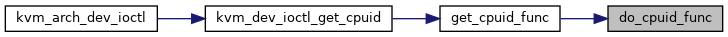

Here is the call graph for this function:

Here is the caller graph for this function:

◆ do_host_cpuid()

`static struct kvm_cpuid_entry2 *do_host_cpuid(struct kvm_cpuid_array *array, u32 function, u32 index)`

Definition at line 835 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *get_next_cpuid(struct kvm_cpuid_array *array)` (cpuid.c:827)

[Figure: call graph and caller graph for do_host_cpuid()]

◆ EXPORT_SYMBOL_GPL() [1/7]

`EXPORT_SYMBOL_GPL(kvm_cpu_caps)`

◆ EXPORT_SYMBOL_GPL() [2/7]

`EXPORT_SYMBOL_GPL(kvm_cpuid)`

◆ EXPORT_SYMBOL_GPL() [3/7]

`EXPORT_SYMBOL_GPL(kvm_emulate_cpuid)`

◆ EXPORT_SYMBOL_GPL() [4/7]

`EXPORT_SYMBOL_GPL(kvm_find_cpuid_entry)`

◆ EXPORT_SYMBOL_GPL() [5/7]

`EXPORT_SYMBOL_GPL(kvm_find_cpuid_entry_index)`

◆ EXPORT_SYMBOL_GPL() [6/7]

`EXPORT_SYMBOL_GPL(kvm_set_cpu_caps)`

◆ EXPORT_SYMBOL_GPL() [7/7]

`EXPORT_SYMBOL_GPL(kvm_update_cpuid_runtime)`

◆ get_cpuid_func()

`static int get_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)`

Definition at line 1353 of file cpuid.c.

Referenced symbols:

- `static int do_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)` (cpuid.c:1342)

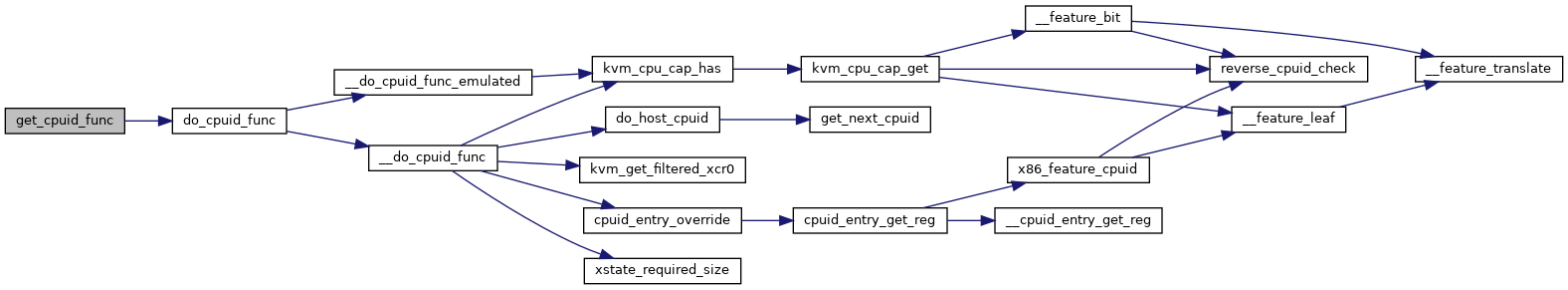

Here is the call graph for this function:

Here is the caller graph for this function:

◆ get_next_cpuid()

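`static struct kvm_cpuid_entry2 *get_next_cpuid(struct kvm_cpuid_array *array)`

Definition at line 827 of file cpuid.c.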

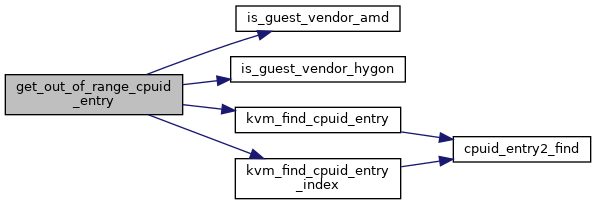
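get_next_cpuid() hands out slots from a `struct kvm_cpuid_array` and returns NULL once the array is full. Callers in this file generally turn that NULL into `-E2BIG`, which tells userspace its buffer was too small. The sketch below is a minimal user-space model of that bounded-append pattern. The structs mirror only the shape of `struct kvm_cpuid_array` (storage, capacity, fill count), and `record_leaves()` is a hypothetical caller.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

struct cpuid_entry { uint32_t function, index, eax, ebx, ecx, edx; };

/* Shape of struct kvm_cpuid_array: storage, capacity, and slots used so far. */
struct cpuid_array {
	struct cpuid_entry *entries;
	int maxnent;
	int nent;
};

/* Return the next free slot and advance nent, or NULL when the array is full. */
static struct cpuid_entry *next_entry(struct cpuid_array *array)
{
	if (array->nent >= array->maxnent)
		return NULL;
	return &array->entries[array->nent++];
}

/* Hypothetical caller: record leaves 0..count-1, failing with -E2BIG on overflow. */
static int record_leaves(struct cpuid_array *array, uint32_t count)
{
	for (uint32_t fn = 0; fn < count; fn++) {
		struct cpuid_entry *e = next_entry(array);

		if (!e)
			return -E2BIG;
		*e = (struct cpuid_entry){ .function = fn };
	}
	return 0;
}
```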

◆ get_out_of_range_cpuid_entry()

`static struct kvm_cpuid_entry2 *get_out_of_range_cpuid_entry(struct kvm_vcpu *vcpu, u32 *fn_ptr, u32 index)`

Definition at line 1492 of file cpuid.c.

Referenced symbols:

- `struct kvm_cpuid_entry2 *kvm_find_cpuid_entry_index(struct kvm_vcpu *vcpu, u32 function, u32 index)` (cpuid.c:1447)
- `static bool is_guest_vendor_hygon(u32 ebx, u32 ecx, u32 edx)` (kvm_emulate.h:428)
- `static bool is_guest_vendor_amd(u32 ebx, u32 ecx, u32 edx)` (kvm_emulate.h:418)

Here is the call graph for this function:

Here is the caller graph for this function:

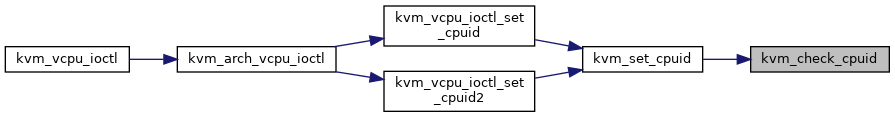
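get_out_of_range_cpuid_entry() is consulted when a guest CPUID query has no exact entry. It references the vendor checks `is_guest_vendor_amd()` and `is_guest_vendor_hygon()`. A hedged reading of its behavior:

- For guests that are not AMD or Hygon, a leaf above the maximum of its range (basic, extended, hypervisor, or Centaur) is redirected to the highest basic leaf, which mimics Intel hardware.
- AMD and Hygon guests get no redirection, and the query generally reads as zeros.

The sketch below models only the leaf-number arithmetic. The `struct leaf` table, `lookup()`, `class_base()`, and the `amd_like` flag are hypothetical simplifications.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical guest table: only each range's base leaf and its EAX (max leaf) matter. */
struct leaf { uint32_t function; uint32_t eax; };

static const struct leaf *lookup(const struct leaf *t, size_t n, uint32_t fn)
{
	for (size_t i = 0; i < n; i++)
		if (t[i].function == fn)
			return &t[i];
	return NULL;
}

/* Base leaf of the range ("class") that @function belongs to. */
static uint32_t class_base(uint32_t function)
{
	if (function >= 0x40000000 && function <= 0x4fffffff)
		return function & 0xffffff00;   /* hypervisor ranges */
	if (function >= 0xc0000000)
		return 0xc0000000;              /* Centaur range */
	return function & 0x80000000;       /* basic (0) or extended (0x80000000) */
}

/*
 * Returns true and rewrites *fn to the max basic leaf when an Intel-style
 * guest asks for an out-of-range leaf; returns false and leaves *fn alone otherwise.
 */
static bool redirect_out_of_range(const struct leaf *t, size_t n, bool amd_like,
				  uint32_t *fn)
{
	const struct leaf *basic = lookup(t, n, 0);
	const struct leaf *cls;

	if (!basic || amd_like)
		return false;
	cls = lookup(t, n, class_base(*fn));
	if (cls && *fn <= cls->eax)
		return false;           /* in range: nothing to redirect */
	*fn = basic->eax;               /* out of range: use the highest basic leaf */
	return true;
}
```

For example, with a maximum basic leaf of `0xd` and a maximum extended leaf of `0x80000008`, a query for `0x80000010` is answered from leaf `0xd`.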

◆ kvm_check_cpuid()

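`static int kvm_check_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)`

Definition at line 133 of file cpuid.c.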

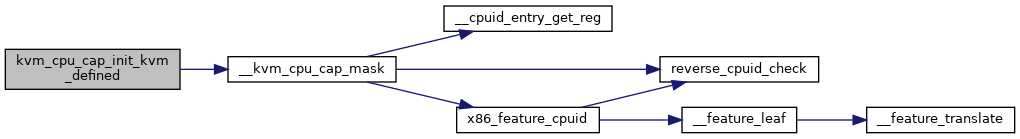

◆ kvm_cpu_cap_init_kvm_defined()

`static __always_inline void kvm_cpu_cap_init_kvm_defined(enum kvm_only_cpuid_leafs leaf, u32 mask)`

Definition at line 565 of file cpuid.c.

Referenced symbols:

- `static __always_inline void __kvm_cpu_cap_mask(unsigned int leaf)` (cpuid.c:551)

[Figure: call graph and caller graph for kvm_cpu_cap_init_kvm_defined()]

◆ kvm_cpu_cap_mask()

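`static __always_inline void kvm_cpu_cap_mask(enum cpuid_leafs leaf, u32 mask)`

Definition at line 575 of file cpuid.c.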

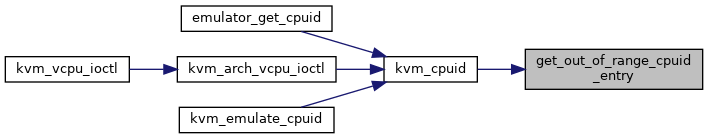
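kvm_cpu_cap_mask(), kvm_cpu_cap_init_kvm_defined(), and __kvm_cpu_cap_mask() narrow the `kvm_cpu_caps` words during kvm_set_cpu_caps(). A minimal sketch of the arithmetic, assuming the following semantics:

- A regular word (already seeded from the host's boot CPU data) is ANDed with the mask KVM supports.
- A KVM-only word starts from the mask itself.
- Both are then ANDed with the raw register value that CPUID reports on the host.

The word count, `caps[]`, and the `raw[]` table are hypothetical stand-ins for real CPUID output.

```c
#include <assert.h>
#include <stdint.h>

#define NR_WORDS 4
static uint32_t caps[NR_WORDS];   /* stand-in for kvm_cpu_caps */

/* Hypothetical raw CPUID register value backing each capability word. */
static uint32_t raw_hw_reg(unsigned int word)
{
	static const uint32_t raw[NR_WORDS] = { 0xffffffff, 0x0000ffff, 0x0f0f0f0f, 0 };
	return raw[word];
}

/* Like __kvm_cpu_cap_mask(): drop bits the hardware itself does not report. */
static void cap_mask_raw(unsigned int word)
{
	caps[word] &= raw_hw_reg(word);
}

/* Like kvm_cpu_cap_mask(): keep only KVM-supported bits, then AND with hardware. */
static void cap_mask(unsigned int word, uint32_t kvm_supported)
{
	caps[word] &= kvm_supported;
	cap_mask_raw(word);
}

/* Like kvm_cpu_cap_init_kvm_defined(): a KVM-only word starts from the mask. */
static void cap_init_kvm_defined(unsigned int word, uint32_t kvm_supported)
{
	caps[word] = kvm_supported;
	cap_mask_raw(word);
}
```

The two-step AND means a bit survives only if the host kernel reported it (for regular words), KVM supports it, and the raw CPUID output also reports it.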

◆ kvm_cpuid()

`bool kvm_cpuid(struct kvm_vcpu *vcpu, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only)`

Definition at line 1531 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *get_out_of_range_cpuid_entry(struct kvm_vcpu *vcpu, u32 *fn_ptr, u32 index)` (cpuid.c:1492)
- `static bool kvm_hv_invtsc_suppressed(struct kvm_vcpu *vcpu)` (hyperv.h:299)
- `int __kvm_get_msr(struct kvm_vcpu *vcpu, u32 index, u64 *data, bool host_initiated)` (x86.c:1925)

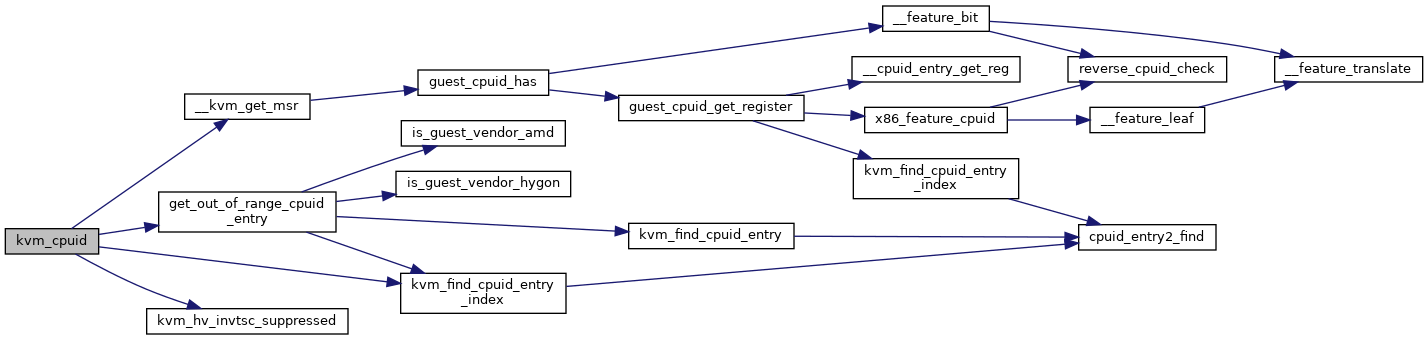

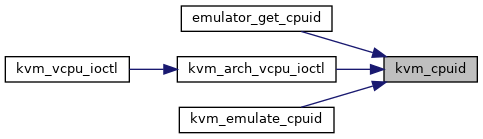

Here is the call graph for this function:

Here is the caller graph for this function:

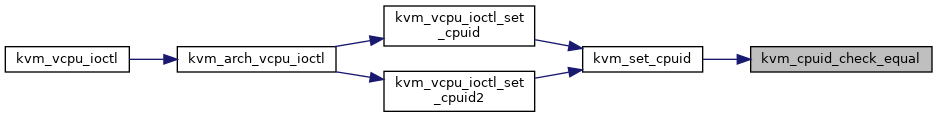

◆ kvm_cpuid_check_equal()

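`static int kvm_cpuid_check_equal(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)`

Definition at line 170 of file cpuid.c.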

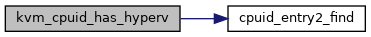

◆ kvm_cpuid_has_hyperv()

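`static bool kvm_cpuid_has_hyperv(struct kvm_cpuid_entry2 *entries, int nent)`

Definition at line 315 of file cpuid.c.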

◆ kvm_dev_ioctl_get_cpuid()

`int kvm_dev_ioctl_get_cpuid(struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries, unsigned int type)`

Definition at line 1404 of file cpuid.c.

Referenced symbols:

- `static int get_cpuid_func(struct kvm_cpuid_array *array, u32 func, unsigned int type)` (cpuid.c:1353)
- `static bool sanity_check_entries(struct kvm_cpuid_entry2 __user *entries, __u32 num_entries, unsigned int ioctl_type)` (cpuid.c:1377)
- definition at cpuid.c:821

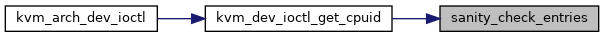

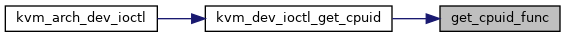

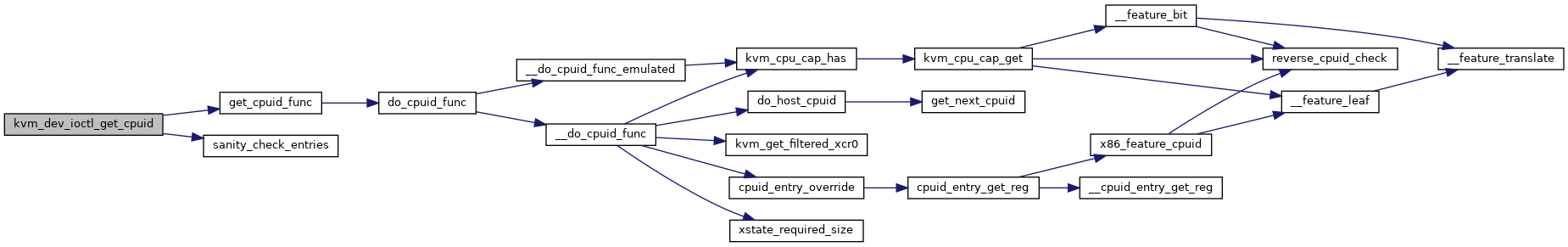

Here is the call graph for this function:

Here is the caller graph for this function:

◆ kvm_emulate_cpuid()

`int kvm_emulate_cpuid(struct kvm_vcpu *vcpu)`

Definition at line 1583 of file cpuid.c.

Referenced symbols:

- `bool kvm_cpuid(struct kvm_vcpu *vcpu, u32 *eax, u32 *ebx, u32 *ecx, u32 *edx, bool exact_only)` (cpuid.c:1531)
- `int kvm_skip_emulated_instruction(struct kvm_vcpu *vcpu)` (x86.c:8916)

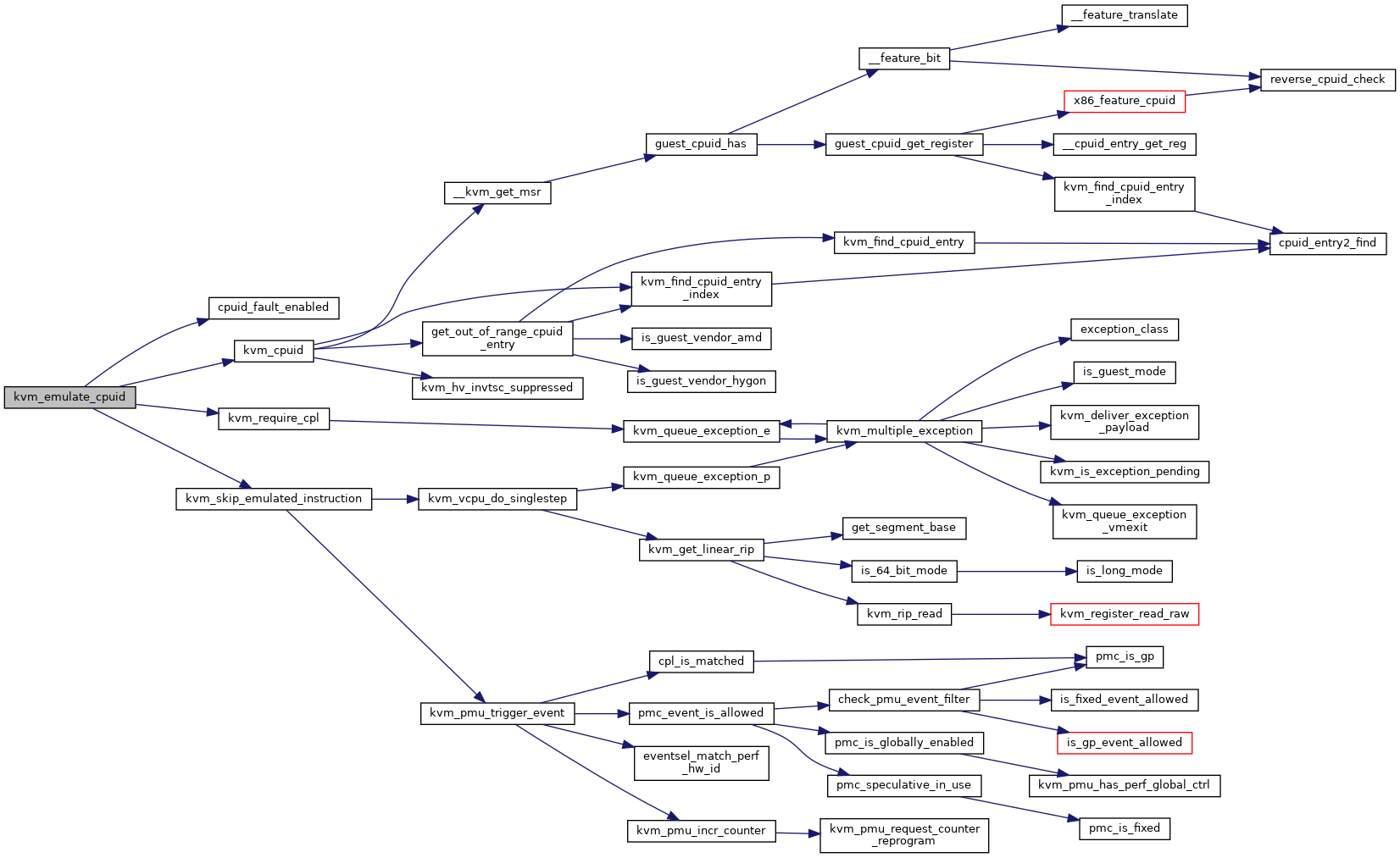

Here is the call graph for this function:

◆ kvm_find_cpuid_entry()

`struct kvm_cpuid_entry2 *kvm_find_cpuid_entry(struct kvm_vcpu *vcpu, u32 function)`

Definition at line 1455 of file cpuid.c.

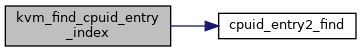

◆ kvm_find_cpuid_entry_index()

`struct kvm_cpuid_entry2 *kvm_find_cpuid_entry_index(struct kvm_vcpu *vcpu, u32 function, u32 index)`

Definition at line 1447 of file cpuid.c.

◆ kvm_find_kvm_cpuid_features()

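`static struct kvm_cpuid_entry2 *kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu)`

Definition at line 232 of file cpuid.c.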

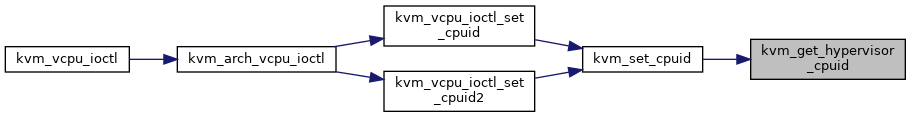

◆ kvm_get_hypervisor_cpuid()

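`static struct kvm_hypervisor_cpuid kvm_get_hypervisor_cpuid(struct kvm_vcpu *vcpu, const char *sig)`

Definition at line 192 of file cpuid.c.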

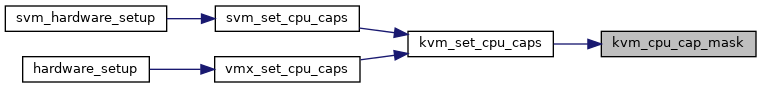

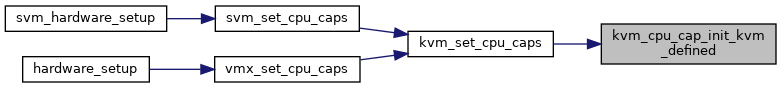

◆ kvm_set_cpu_caps()

`void kvm_set_cpu_caps(void)`

Definition at line 585 of file cpuid.c.

Referenced symbols:

- `static __always_inline void kvm_cpu_cap_init_kvm_defined(enum kvm_only_cpuid_leafs leaf, u32 mask)` (cpuid.c:565)
- `static __always_inline void kvm_cpu_cap_mask(enum cpuid_leafs leaf, u32 mask)` (cpuid.c:575)
- `static __always_inline void kvm_cpu_cap_check_and_set(unsigned int x86_feature)` (cpuid.h:226)
- `static __always_inline void kvm_cpu_cap_clear(unsigned int x86_feature)` (cpuid.h:197)
- `static __always_inline void kvm_cpu_cap_set(unsigned int x86_feature)` (cpuid.h:205)

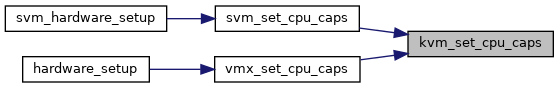

Here is the call graph for this function:

Here is the caller graph for this function:

◆ kvm_set_cpuid()

`static int kvm_set_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)`

Definition at line 414 of file cpuid.c.

Referenced symbols:

- `static bool kvm_cpuid_has_hyperv(struct kvm_cpuid_entry2 *entries, int nent)` (cpuid.c:315)
- `static int kvm_cpuid_check_equal(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)` (cpuid.c:170)
- `static struct kvm_hypervisor_cpuid kvm_get_hypervisor_cpuid(struct kvm_vcpu *vcpu, const char *sig)` (cpuid.c:192)
- `static void __kvm_update_cpuid_runtime(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` (cpuid.c:265)
- `static void kvm_vcpu_after_set_cpuid(struct kvm_vcpu *vcpu)` (cpuid.c:328)
- `static int kvm_check_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *entries, int nent)` (cpuid.c:133)

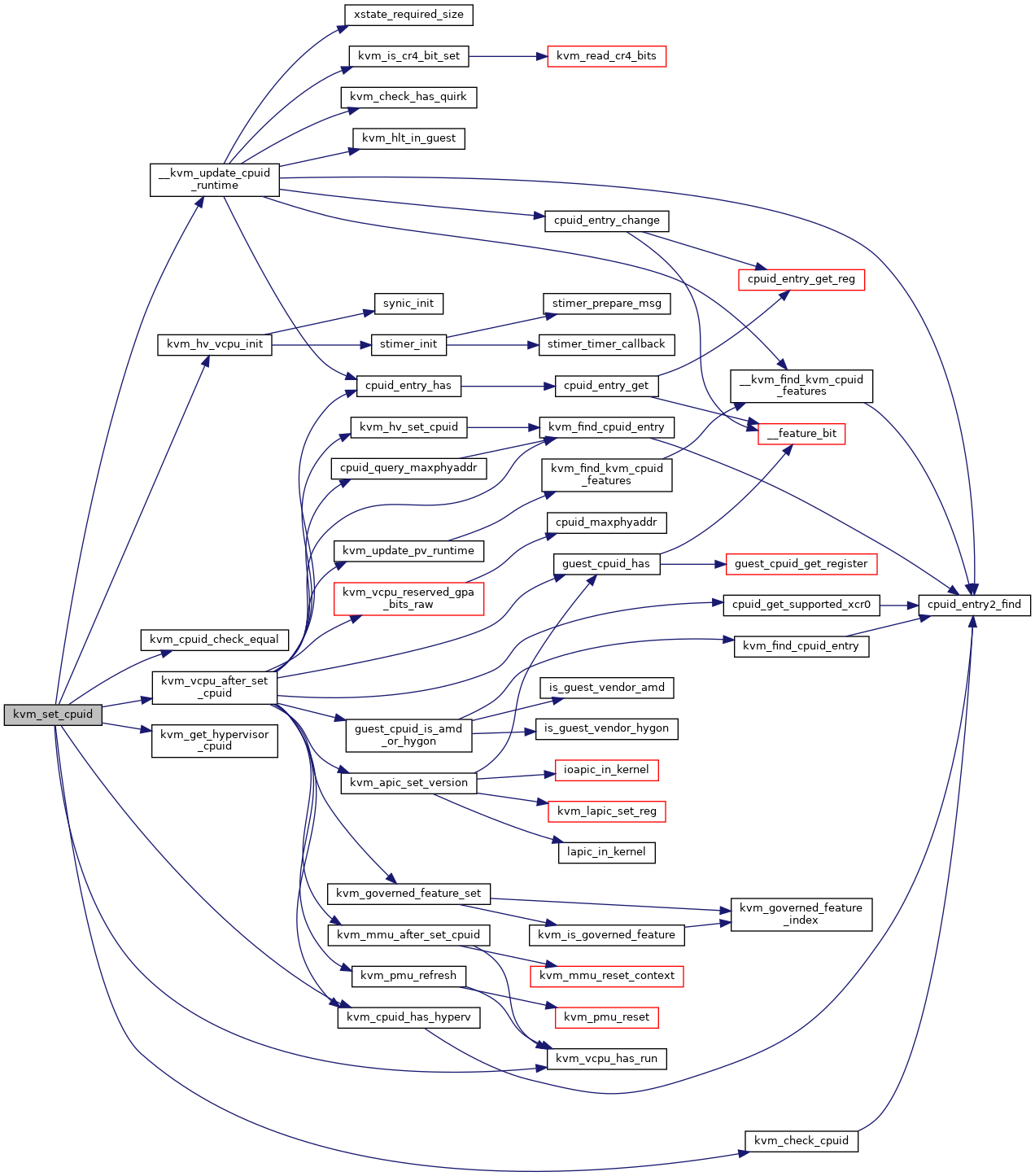

Here is the call graph for this function:

Here is the caller graph for this function:

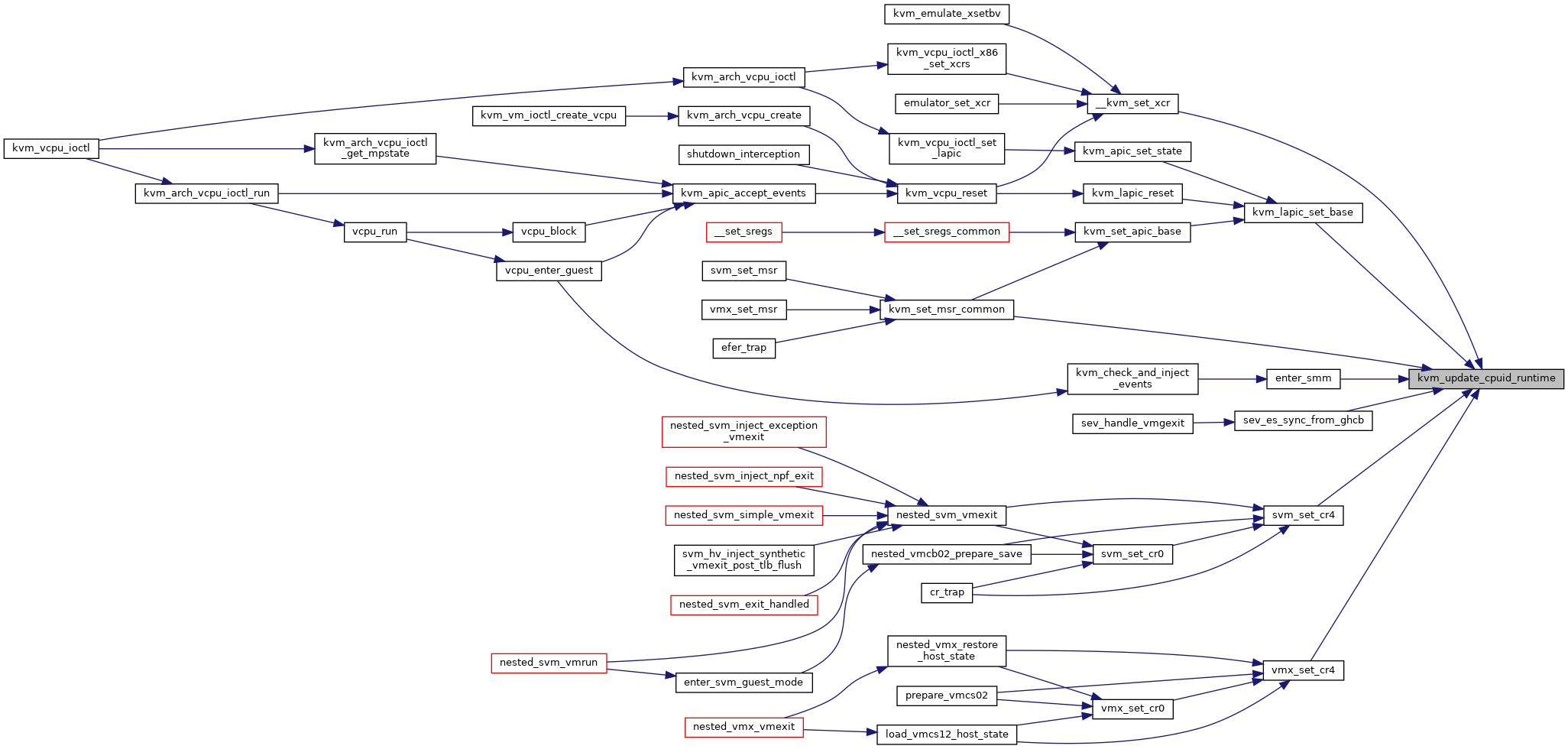

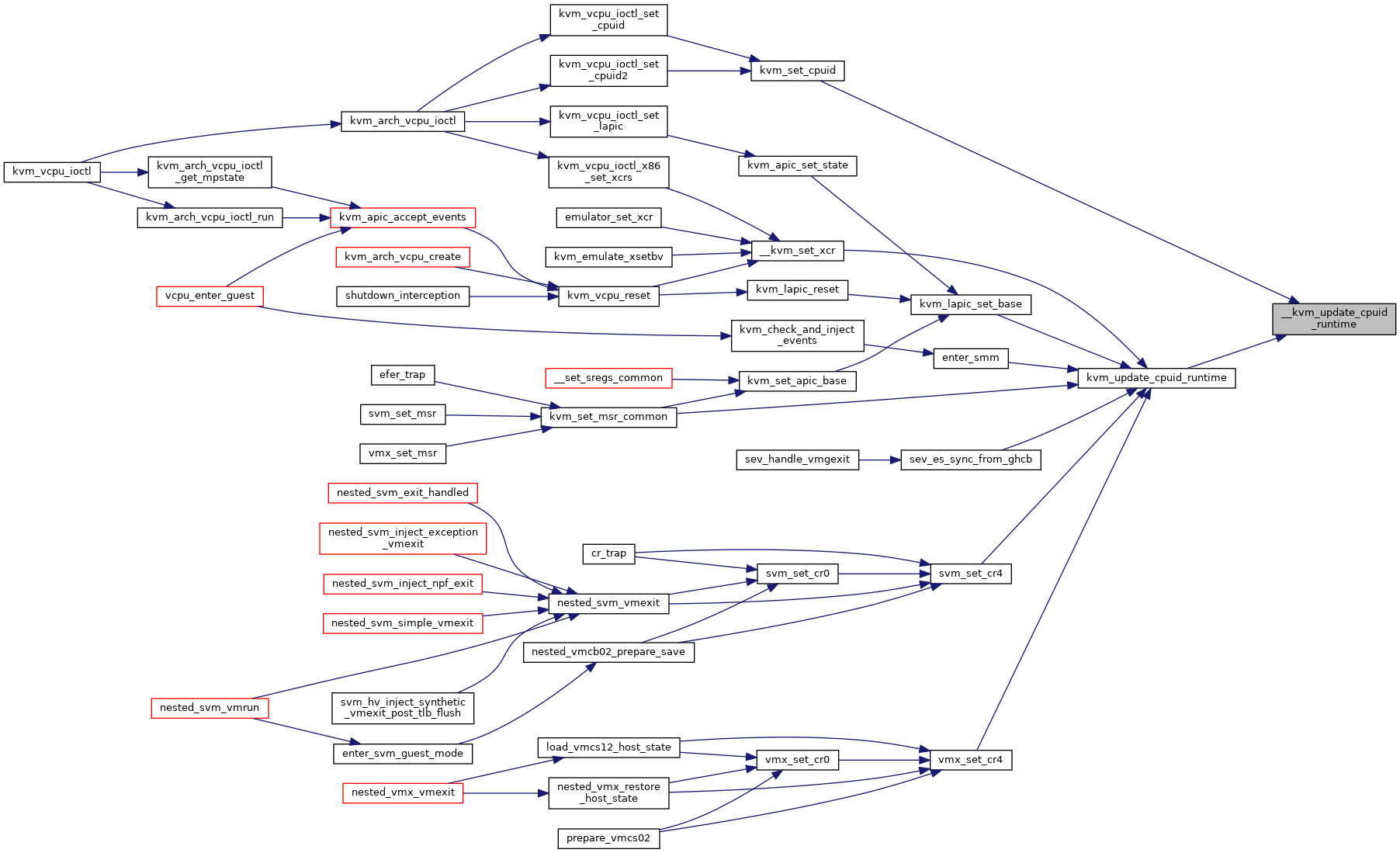

◆ kvm_update_cpuid_runtime()

`void kvm_update_cpuid_runtime(struct kvm_vcpu *vcpu)`

◆ kvm_update_pv_runtime()

`void kvm_update_pv_runtime(struct kvm_vcpu *vcpu)`

Definition at line 238 of file cpuid.c.

Referenced symbols:

- `static struct kvm_cpuid_entry2 *kvm_find_kvm_cpuid_features(struct kvm_vcpu *vcpu)` (cpuid.c:232)

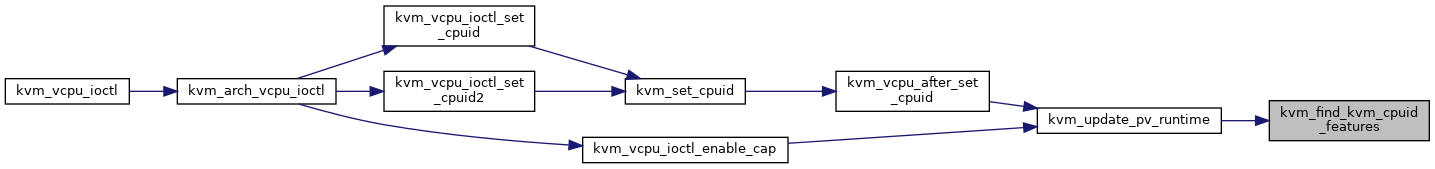

Here is the call graph for this function:

Here is the caller graph for this function:

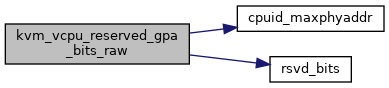

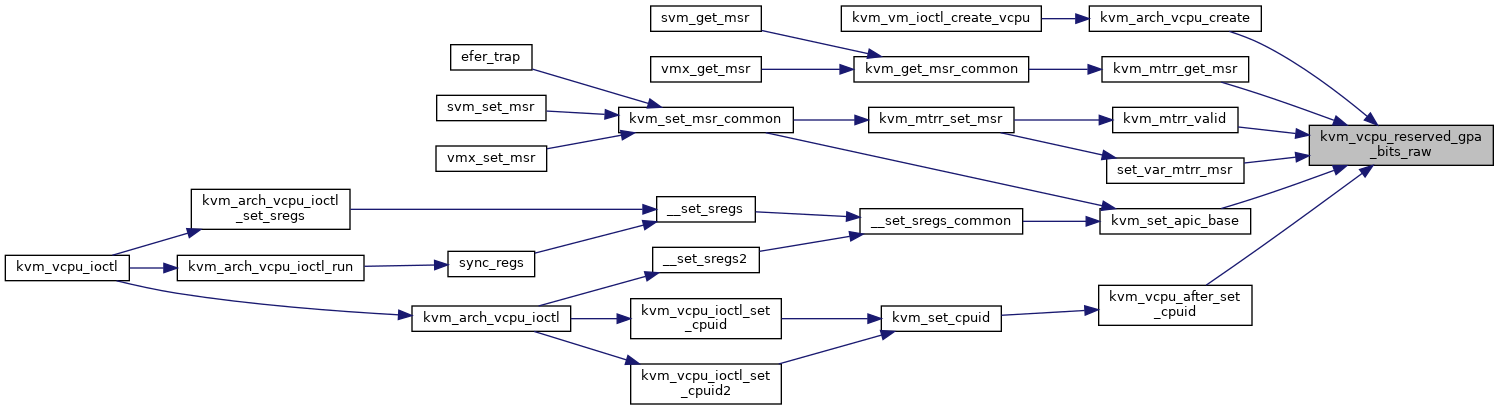

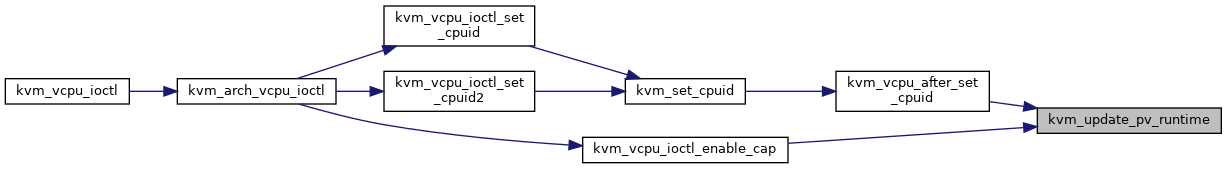

◆ kvm_vcpu_after_set_cpuid()

`static void kvm_vcpu_after_set_cpuid(struct kvm_vcpu *vcpu)`

Definition at line 328 of file cpuid.c.

Referenced symbols:

- `u64 kvm_vcpu_reserved_gpa_bits_raw(struct kvm_vcpu *vcpu)` (cpuid.c:409)
- `static u64 cpuid_get_supported_xcr0(struct kvm_cpuid_entry2 *entries, int nent)` (cpuid.c:254)
- `static bool guest_cpuid_is_amd_or_hygon(struct kvm_vcpu *vcpu)` (cpuid.h:105)
- `static __always_inline void kvm_governed_feature_set(struct kvm_vcpu *vcpu, unsigned int x86_feature)` (cpuid.h:262)
- `static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)` (cpuid.h:83)
- `void kvm_hv_set_cpuid(struct kvm_vcpu *vcpu, bool hyperv_enabled)` (hyperv.c:2297)
- definition at lapic.h:59

Here is the call graph for this function:

Here is the caller graph for this function:

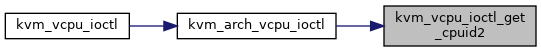

◆ kvm_vcpu_ioctl_get_cpuid2()

`int kvm_vcpu_ioctl_get_cpuid2(struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries)`

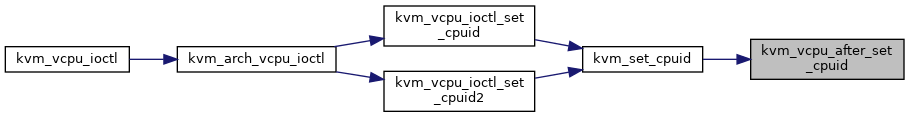

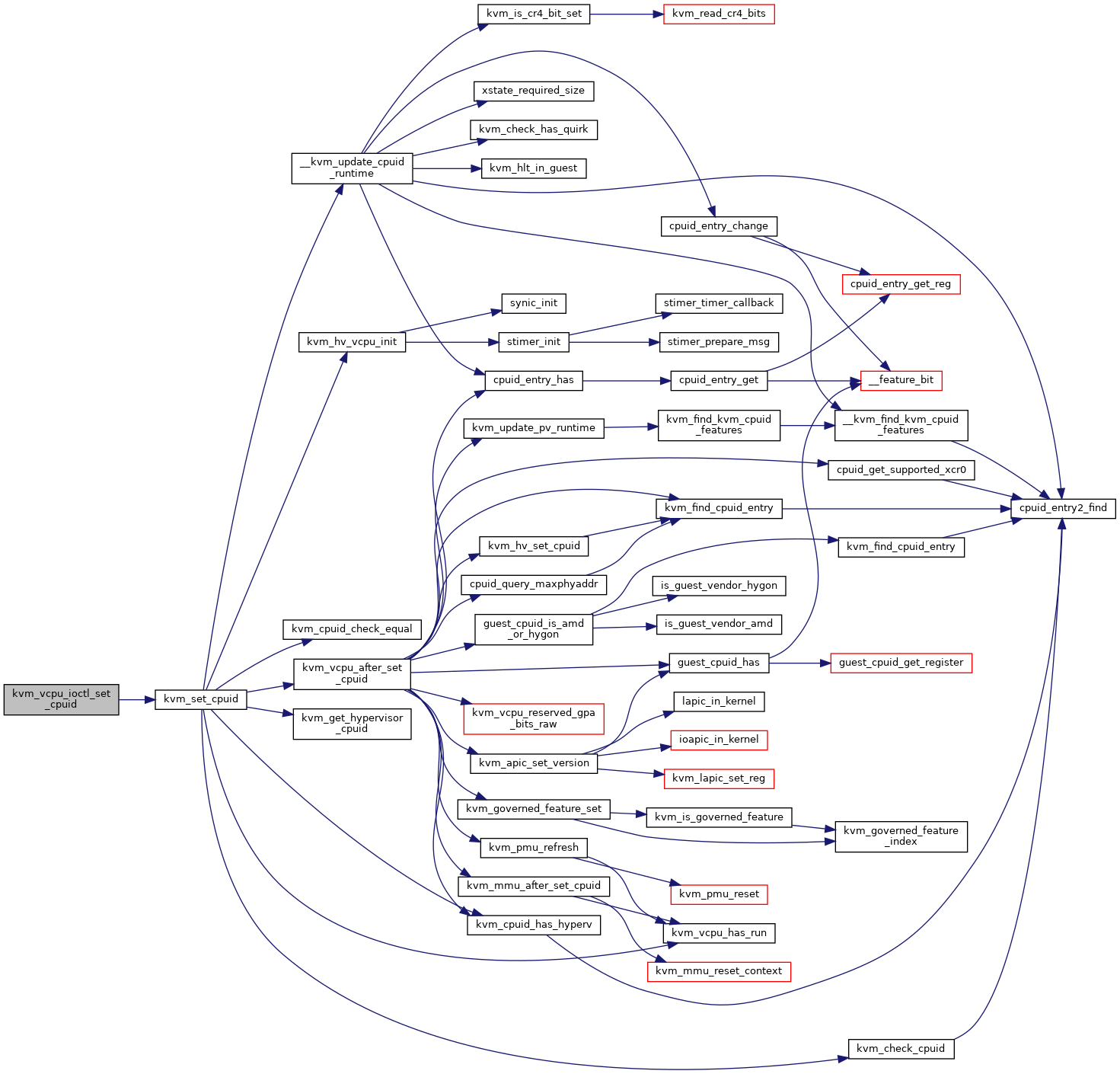

◆ kvm_vcpu_ioctl_set_cpuid()

`int kvm_vcpu_ioctl_set_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid *cpuid, struct kvm_cpuid_entry __user *entries)`

Definition at line 467 of file cpuid.c.

Referenced symbols:

- `static int kvm_set_cpuid(struct kvm_vcpu *vcpu, struct kvm_cpuid_entry2 *e2, int nent)` (cpuid.c:414)

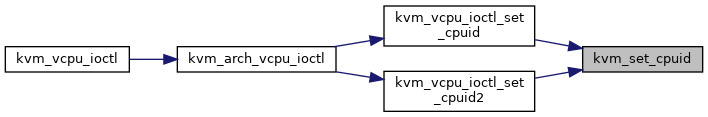

Here is the call graph for this function:

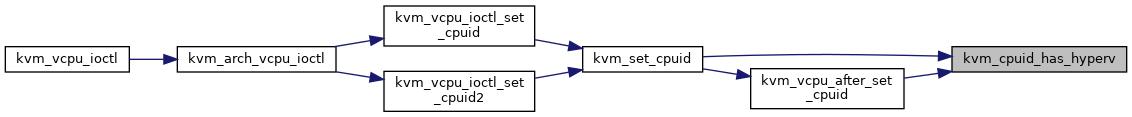

Here is the caller graph for this function:

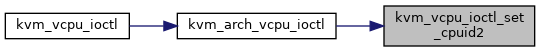

◆ kvm_vcpu_ioctl_set_cpuid2()

`int kvm_vcpu_ioctl_set_cpuid2(struct kvm_vcpu *vcpu, struct kvm_cpuid2 *cpuid, struct kvm_cpuid_entry2 __user *entries)`

◆ kvm_vcpu_reserved_gpa_bits_raw()

`u64 kvm_vcpu_reserved_gpa_bits_raw(struct kvm_vcpu *vcpu)`

Definition at line 409 of file cpuid.c.

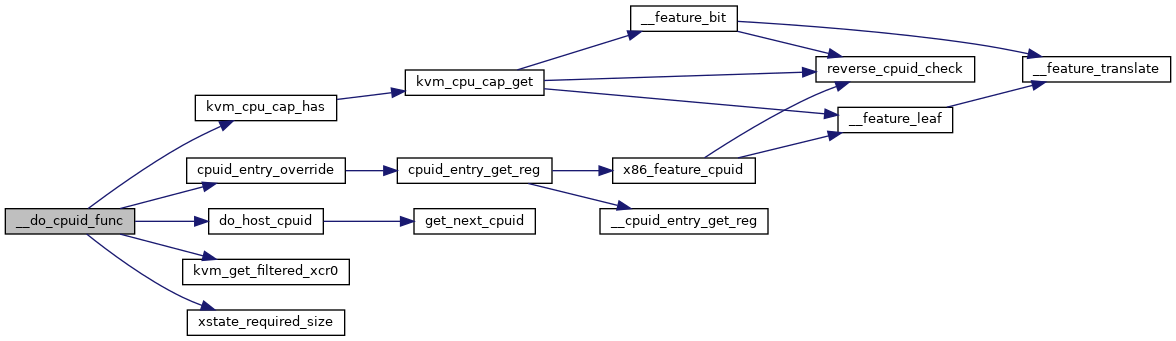

◆ sanity_check_entries()

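`static bool sanity_check_entries(struct kvm_cpuid_entry2 __user *entries, __u32 num_entries, unsigned int ioctl_type)`

Definition at line 1377 of file cpuid.c.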
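sanity_check_entries() is called from kvm_dev_ioctl_get_cpuid(). Its behavior is assumed to be as follows:

- For `KVM_GET_EMULATED_CPUID` only, it rejects a user buffer whose entries carry non-zero `padding`, which keeps that field usable for future extensions.
- A `true` result means "reject".
- The kernel reads each entry's padding from user memory with `copy_from_user()`.

The sketch below works on an already-copied array instead. The `struct entry_pad` layout and the `emulated_ioctl` flag are hypothetical simplifications.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Simplified stand-in for struct kvm_cpuid_entry2, including its 3-word padding. */
struct entry_pad {
	uint32_t function, index, flags;
	uint32_t eax, ebx, ecx, edx;
	uint32_t padding[3];
};

/* Returns true when the entries should be rejected (non-zero padding). */
static bool entries_need_rejecting(const struct entry_pad *entries, uint32_t n,
				   bool emulated_ioctl)
{
	if (!emulated_ioctl)
		return false;   /* only the emulated-CPUID path enforces clean padding */

	for (uint32_t i = 0; i < n; i++) {
		const uint32_t *pad = entries[i].padding;

		if (pad[0] || pad[1] || pad[2])
			return true;
	}
	return false;
}
```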

◆ xstate_required_size()

`u32 xstate_required_size(u64 xstate_bv, bool compacted)`
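xstate_required_size() returns the size in bytes of an XSAVE area that holds the state components selected by `xstate_bv`. As a hedged sketch of the computation:

- Start from the 512-byte legacy region plus the 64-byte XSAVE header.
- For each extended component, consult CPUID leaf 0xD: EAX gives the size, EBX the standard-format offset, and ECX[1] whether the component needs 64-byte alignment in compacted format.
- In the standard layout each component sits at its architectural offset. In the compacted layout components are packed in bit order.

The component table below is hypothetical except for the AVX entry (256 bytes at offset 576), which is the usual standard-format value. The PKRU offset is illustrative only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define XSAVE_LEGACY_SIZE 512u   /* x87/SSE legacy region */
#define XSAVE_HDR_SIZE     64u   /* XSAVE header */

/* Hypothetical CPUID.0xD.i data: size (EAX), standard offset (EBX), align bit (ECX[1]). */
struct xcomp { uint32_t size, offset; bool align64; };
static const struct xcomp comp[64] = {
	[2] = { 256, 576, false },   /* AVX */
	[9] = { 8, 2688, false },    /* PKRU; offset is illustrative */
};

static uint32_t align64(uint32_t x) { return (x + 63) & ~63u; }

static uint32_t xstate_size_sketch(uint64_t xstate_bv, bool compacted)
{
	uint32_t ret = XSAVE_LEGACY_SIZE + XSAVE_HDR_SIZE;

	xstate_bv &= ~(uint64_t)0x3;   /* x87 and SSE live in the legacy region */
	for (int bit = 0; xstate_bv; bit++, xstate_bv >>= 1) {
		uint32_t offset;

		if (!(xstate_bv & 1))
			continue;
		/* Compacted: pack after what came before; standard: fixed offset. */
		offset = compacted ? (comp[bit].align64 ? align64(ret) : ret)
				   : comp[bit].offset;
		if (offset + comp[bit].size > ret)
			ret = offset + comp[bit].size;
	}
	return ret;
}
```

With these values, x87+SSE+AVX (`0x7`) needs 832 bytes in either layout. Adding PKRU (`0x207`) needs 2696 bytes in the standard layout but only 840 bytes compacted.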