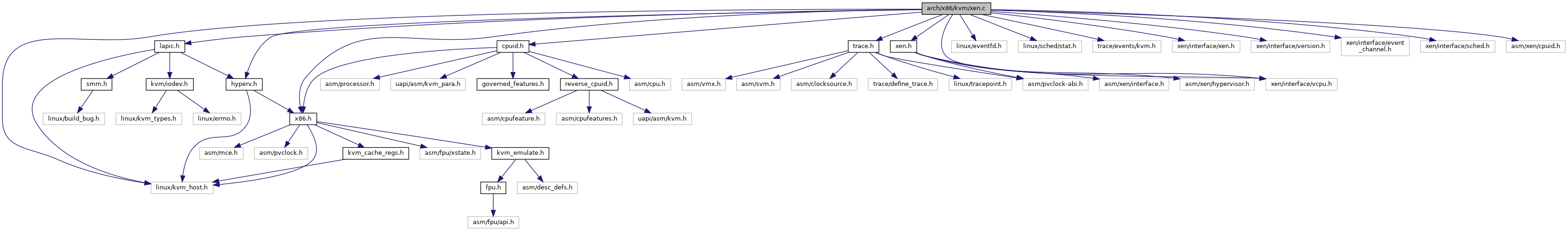

#include "x86.h"

#include "xen.h"

#include "hyperv.h"

#include "lapic.h"

#include <linux/eventfd.h>

#include <linux/kvm_host.h>

#include <linux/sched/stat.h>

#include <trace/events/kvm.h>

#include <xen/interface/xen.h>

#include <xen/interface/vcpu.h>

#include <xen/interface/version.h>

#include <xen/interface/event_channel.h>

#include <xen/interface/sched.h>

#include <asm/xen/cpuid.h>

#include "cpuid.h"

#include "trace.h"

Classes

| Kind | Name |
| --- | --- |
| struct | compat_vcpu_set_singleshot_timer |
| struct | evtchnfd |

Macros

| Directive | Definition |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

Functions | |

| static int | kvm_xen_set_evtchn (struct kvm_xen_evtchn *xe, struct kvm *kvm) |

| static int | kvm_xen_setattr_evtchn (struct kvm *kvm, struct kvm_xen_hvm_attr *data) |

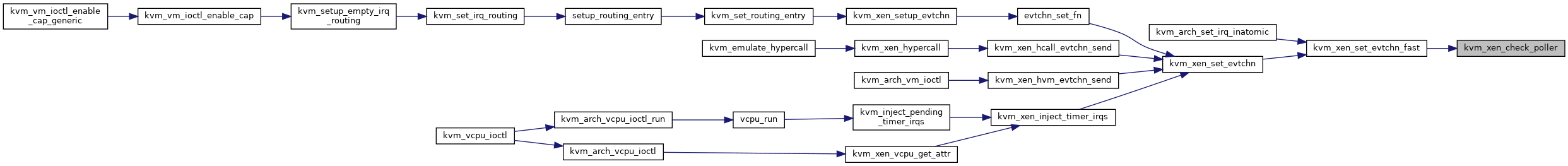

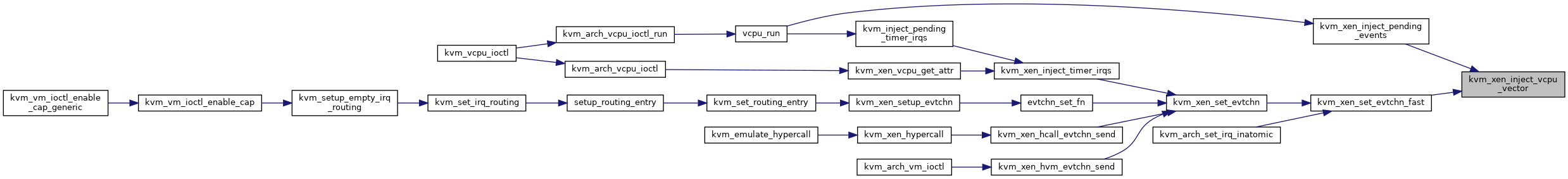

| static bool | kvm_xen_hcall_evtchn_send (struct kvm_vcpu *vcpu, u64 param, u64 *r) |

| DEFINE_STATIC_KEY_DEFERRED_FALSE (kvm_xen_enabled, HZ) | |

| static int | kvm_xen_shared_info_init (struct kvm *kvm, gfn_t gfn) |

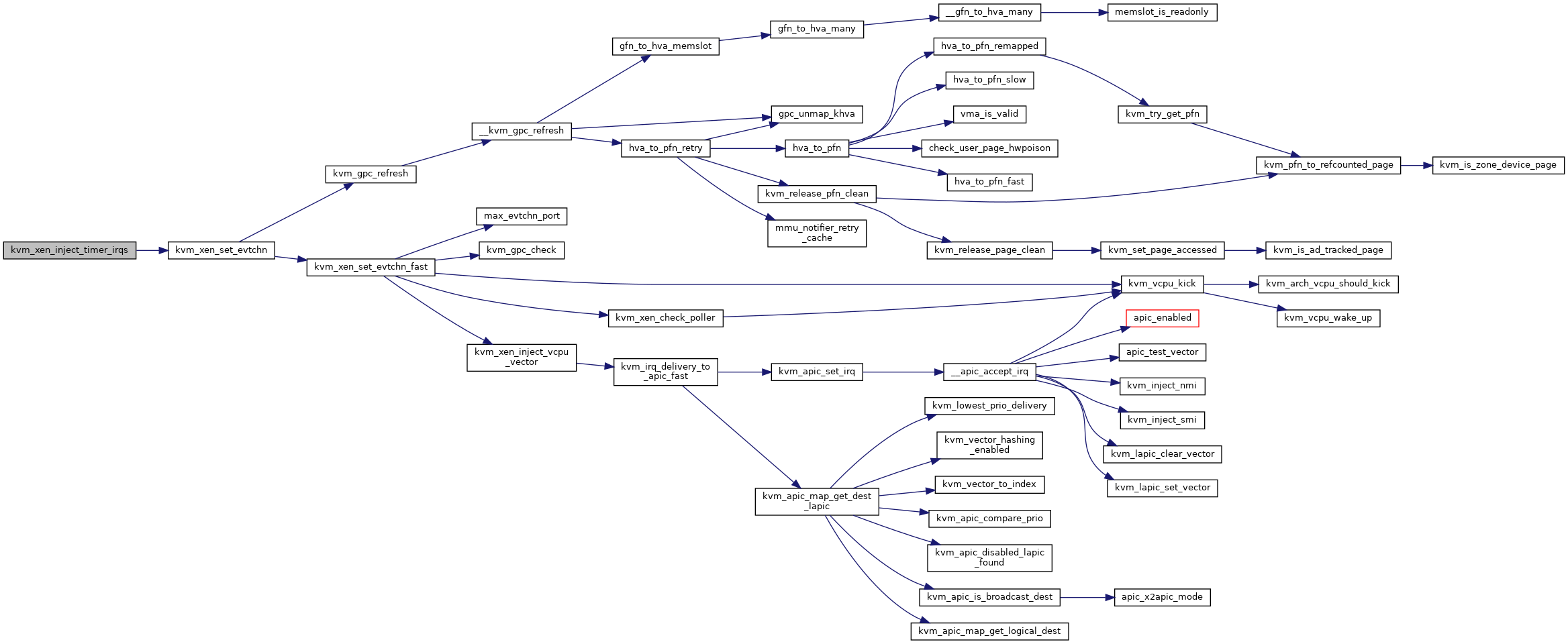

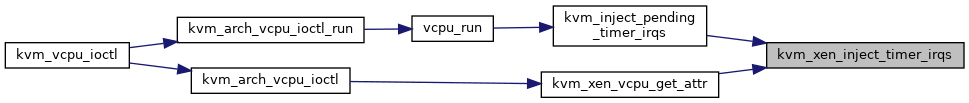

| void | kvm_xen_inject_timer_irqs (struct kvm_vcpu *vcpu) |

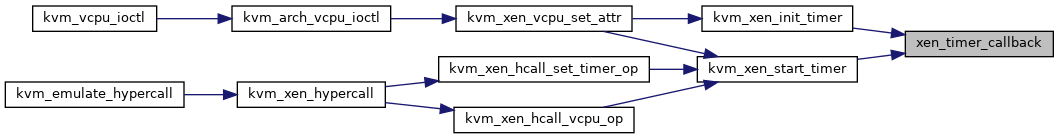

| static enum hrtimer_restart | xen_timer_callback (struct hrtimer *timer) |

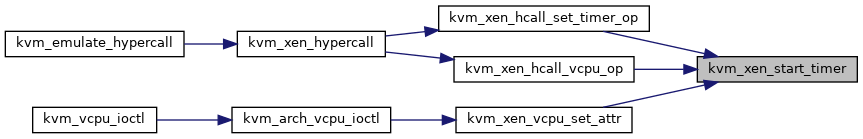

| static void | kvm_xen_start_timer (struct kvm_vcpu *vcpu, u64 guest_abs, s64 delta_ns) |

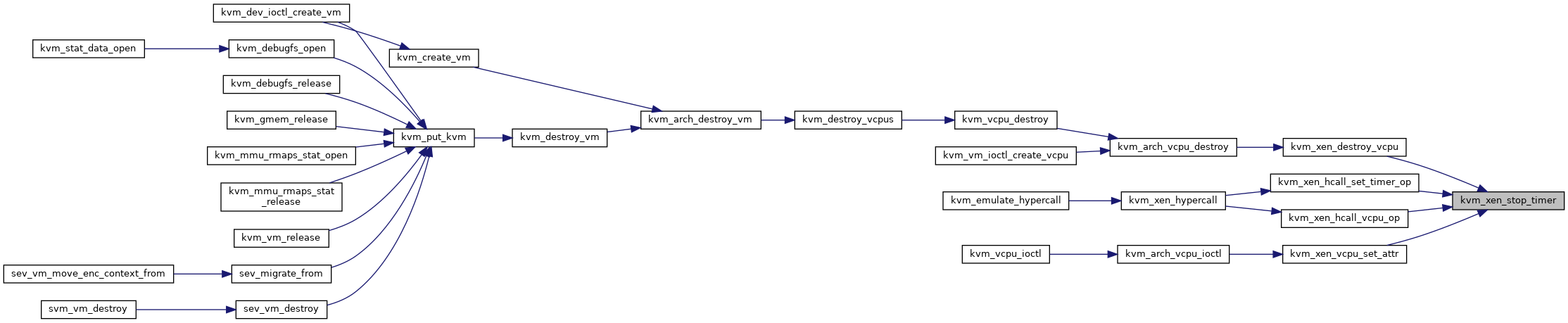

| static void | kvm_xen_stop_timer (struct kvm_vcpu *vcpu) |

| static void | kvm_xen_init_timer (struct kvm_vcpu *vcpu) |

| static void | kvm_xen_update_runstate_guest (struct kvm_vcpu *v, bool atomic) |

| void | kvm_xen_update_runstate (struct kvm_vcpu *v, int state) |

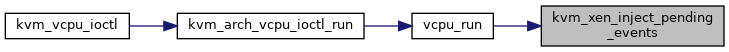

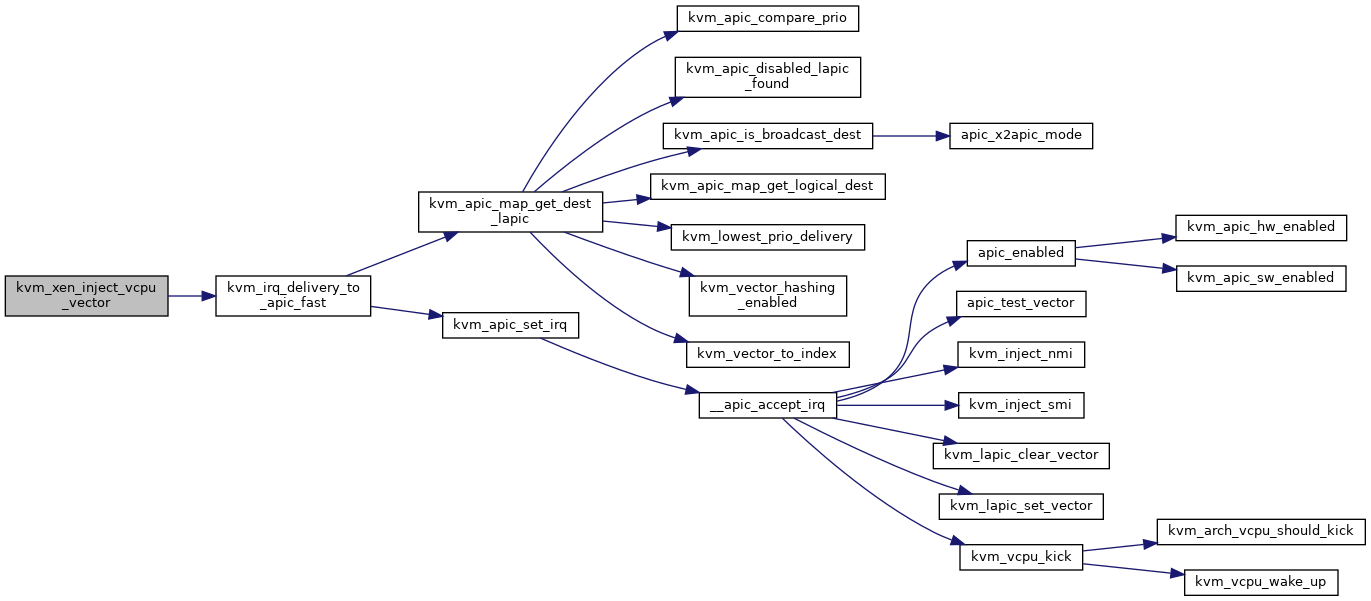

| void | kvm_xen_inject_vcpu_vector (struct kvm_vcpu *v) |

| void | kvm_xen_inject_pending_events (struct kvm_vcpu *v) |

| int | __kvm_xen_has_interrupt (struct kvm_vcpu *v) |

| int | kvm_xen_hvm_set_attr (struct kvm *kvm, struct kvm_xen_hvm_attr *data) |

| int | kvm_xen_hvm_get_attr (struct kvm *kvm, struct kvm_xen_hvm_attr *data) |

| int | kvm_xen_vcpu_set_attr (struct kvm_vcpu *vcpu, struct kvm_xen_vcpu_attr *data) |

| int | kvm_xen_vcpu_get_attr (struct kvm_vcpu *vcpu, struct kvm_xen_vcpu_attr *data) |

| int | kvm_xen_write_hypercall_page (struct kvm_vcpu *vcpu, u64 data) |

| int | kvm_xen_hvm_config (struct kvm *kvm, struct kvm_xen_hvm_config *xhc) |

| static int | kvm_xen_hypercall_set_result (struct kvm_vcpu *vcpu, u64 result) |

| static int | kvm_xen_hypercall_complete_userspace (struct kvm_vcpu *vcpu) |

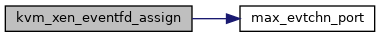

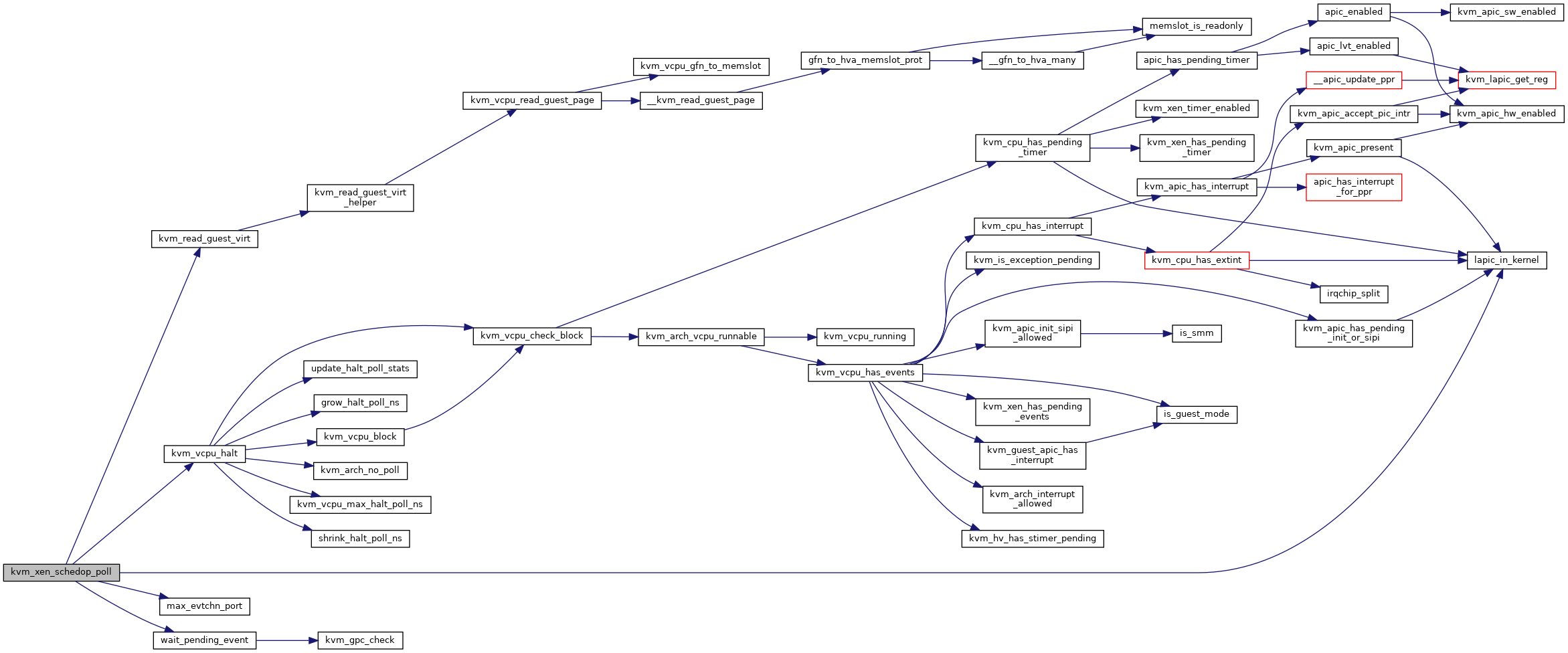

| static int | max_evtchn_port (struct kvm *kvm) |

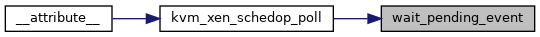

| static bool | wait_pending_event (struct kvm_vcpu *vcpu, int nr_ports, evtchn_port_t *ports) |

| static bool | kvm_xen_schedop_poll (struct kvm_vcpu *vcpu, bool longmode, u64 param, u64 *r) |

| static void | cancel_evtchn_poll (struct timer_list *t) |

| static bool | kvm_xen_hcall_sched_op (struct kvm_vcpu *vcpu, bool longmode, int cmd, u64 param, u64 *r) |

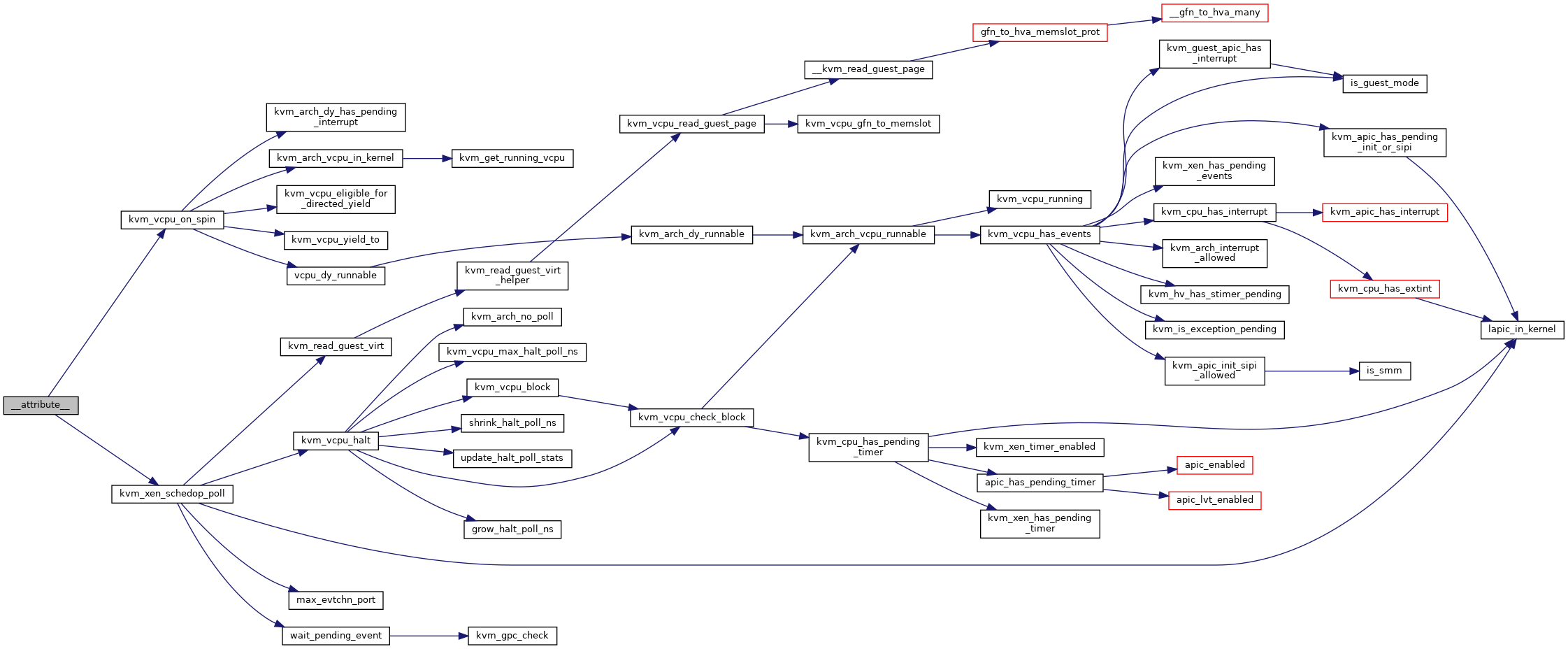

| struct compat_vcpu_set_singleshot_timer | __attribute__ ((packed)) |

| static bool | kvm_xen_hcall_vcpu_op (struct kvm_vcpu *vcpu, bool longmode, int cmd, int vcpu_id, u64 param, u64 *r) |

| static bool | kvm_xen_hcall_set_timer_op (struct kvm_vcpu *vcpu, uint64_t timeout, u64 *r) |

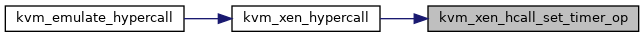

| int | kvm_xen_hypercall (struct kvm_vcpu *vcpu) |

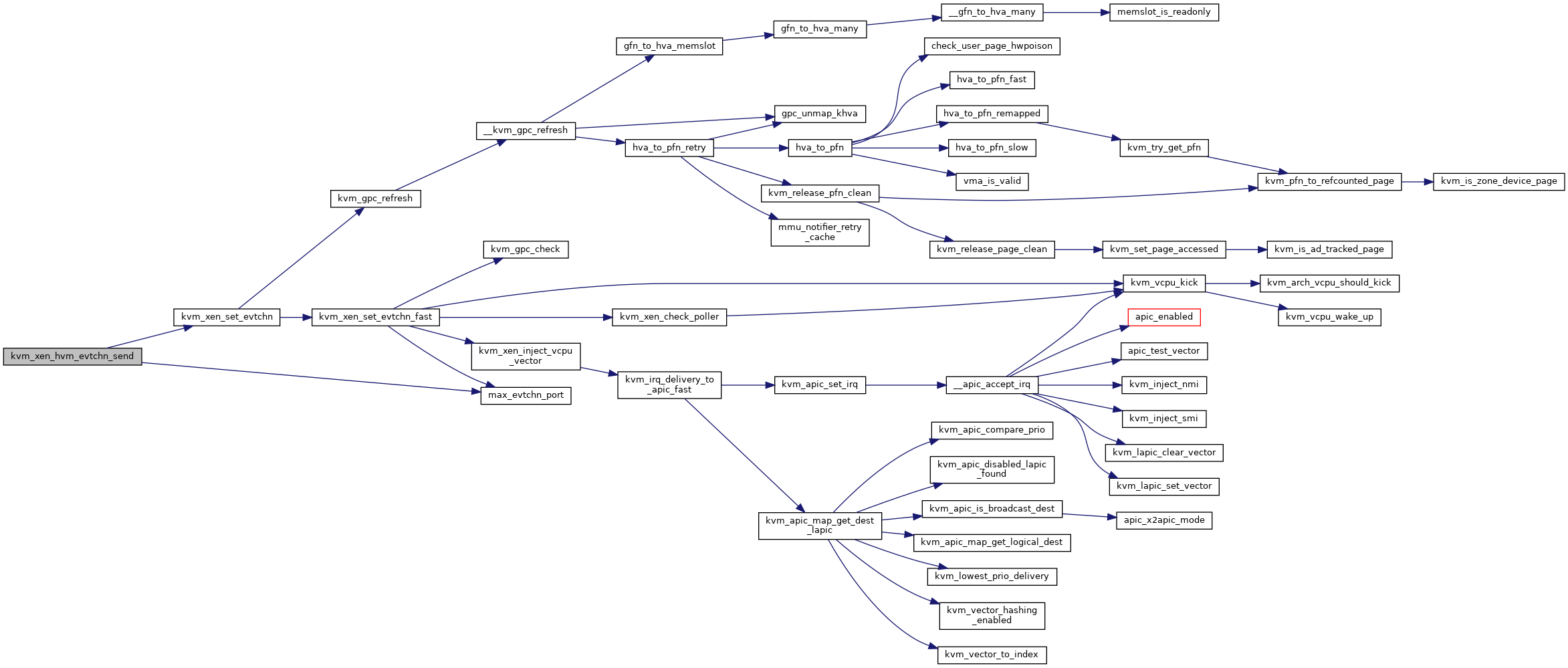

| static void | kvm_xen_check_poller (struct kvm_vcpu *vcpu, int port) |

| int | kvm_xen_set_evtchn_fast (struct kvm_xen_evtchn *xe, struct kvm *kvm) |

| static int | evtchn_set_fn (struct kvm_kernel_irq_routing_entry *e, struct kvm *kvm, int irq_source_id, int level, bool line_status) |

| int | kvm_xen_setup_evtchn (struct kvm *kvm, struct kvm_kernel_irq_routing_entry *e, const struct kvm_irq_routing_entry *ue) |

| int | kvm_xen_hvm_evtchn_send (struct kvm *kvm, struct kvm_irq_routing_xen_evtchn *uxe) |

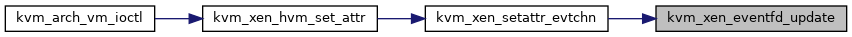

| static int | kvm_xen_eventfd_update (struct kvm *kvm, struct kvm_xen_hvm_attr *data) |

| static int | kvm_xen_eventfd_assign (struct kvm *kvm, struct kvm_xen_hvm_attr *data) |

| static int | kvm_xen_eventfd_deassign (struct kvm *kvm, u32 port) |

| static int | kvm_xen_eventfd_reset (struct kvm *kvm) |

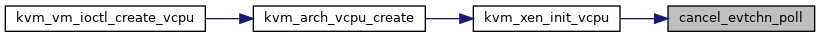

| void | kvm_xen_init_vcpu (struct kvm_vcpu *vcpu) |

| void | kvm_xen_destroy_vcpu (struct kvm_vcpu *vcpu) |

| void | kvm_xen_update_tsc_info (struct kvm_vcpu *vcpu) |

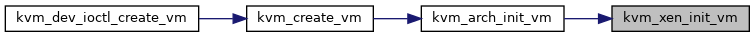

| void | kvm_xen_init_vm (struct kvm *kvm) |

| void | kvm_xen_destroy_vm (struct kvm *kvm) |

Variables

| Type | Name |
| --- | --- |
| uint64_t | timeout_abs_ns |
| uint32_t | flags |
| struct evtchnfd | __attribute__ |

Macro Definition Documentation

◆ pr_fmt

#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

Function Documentation

◆ __attribute__()

struct compat_vcpu_set_singleshot_timer __attribute__ ((packed))

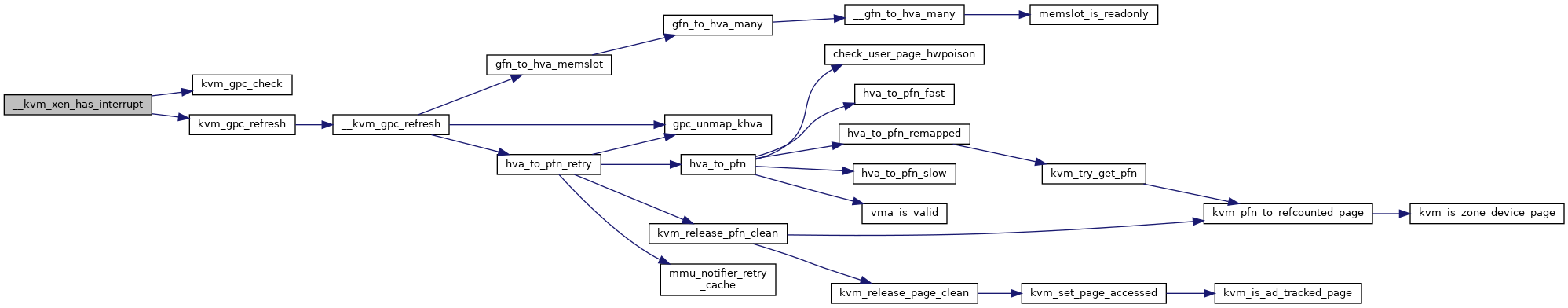

◆ __kvm_xen_has_interrupt()

int __kvm_xen_has_interrupt (struct kvm_vcpu *v)

Definition at line 577 of file xen.c.

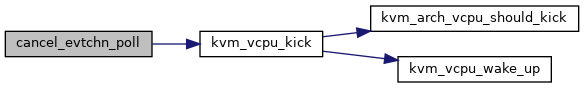

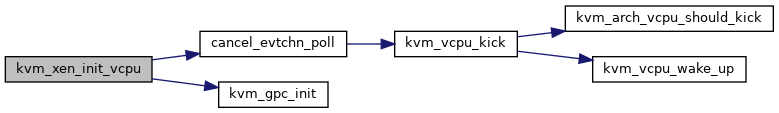

◆ cancel_evtchn_poll()

static void cancel_evtchn_poll (struct timer_list *t)

◆ DEFINE_STATIC_KEY_DEFERRED_FALSE()

DEFINE_STATIC_KEY_DEFERRED_FALSE (kvm_xen_enabled, HZ)

◆ evtchn_set_fn()

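static int evtchn_set_fn (struct kvm_kernel_irq_routing_entry *e, struct kvm *kvm, int irq_source_id, int level, bool line_status)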

Definition at line 1772 of file xen.c.

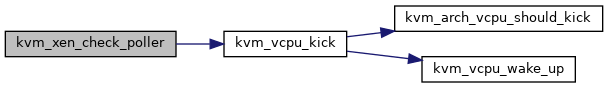

◆ kvm_xen_check_poller()

static void kvm_xen_check_poller (struct kvm_vcpu *vcpu, int port)

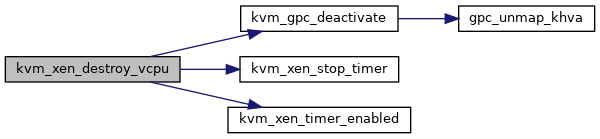

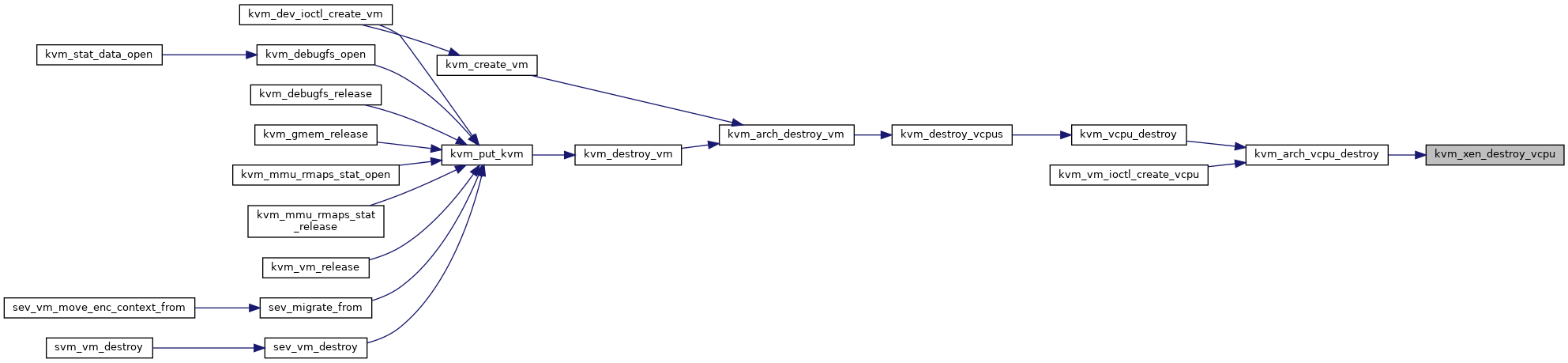

◆ kvm_xen_destroy_vcpu()

void kvm_xen_destroy_vcpu (struct kvm_vcpu *vcpu)

◆ kvm_xen_destroy_vm()

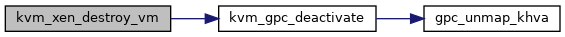

void kvm_xen_destroy_vm (struct kvm *kvm)

Definition at line 2165 of file xen.c.

◆ kvm_xen_eventfd_assign()

static int kvm_xen_eventfd_assign (struct kvm *kvm, struct kvm_xen_hvm_attr *data)

◆ kvm_xen_eventfd_deassign()

static int kvm_xen_eventfd_deassign (struct kvm *kvm, u32 port)

◆ kvm_xen_eventfd_reset()

static int kvm_xen_eventfd_reset (struct kvm *kvm)

◆ kvm_xen_eventfd_update()

static int kvm_xen_eventfd_update (struct kvm *kvm, struct kvm_xen_hvm_attr *data)

◆ kvm_xen_hcall_evtchn_send()

static bool kvm_xen_hcall_evtchn_send (struct kvm_vcpu *vcpu, u64 param, u64 *r)

Definition at line 2070 of file xen.c.

◆ kvm_xen_hcall_sched_op()

static bool kvm_xen_hcall_sched_op (struct kvm_vcpu *vcpu, bool longmode, int cmd, u64 param, u64 *r)

Definition at line 1370 of file xen.c.

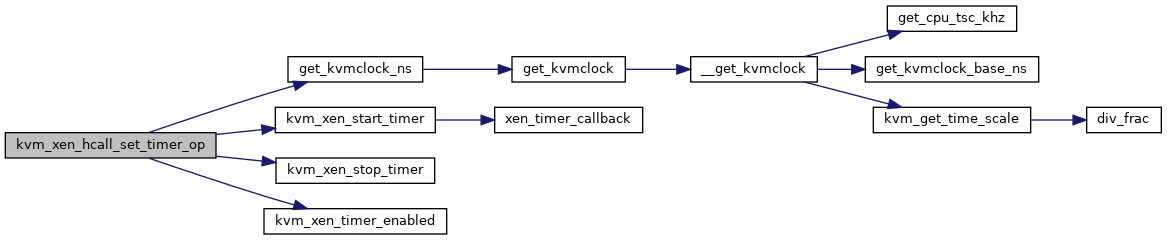

◆ kvm_xen_hcall_set_timer_op()

static bool kvm_xen_hcall_set_timer_op (struct kvm_vcpu *vcpu, uint64_t timeout, u64 *r)

◆ kvm_xen_hcall_vcpu_op()

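static bool kvm_xen_hcall_vcpu_op (struct kvm_vcpu *vcpu, bool longmode, int cmd, int vcpu_id, u64 param, u64 *r)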

Definition at line 1394 of file xen.c.

◆ kvm_xen_hvm_config()

int kvm_xen_hvm_config (struct kvm *kvm, struct kvm_xen_hvm_config *xhc)

Definition at line 1161 of file xen.c.

◆ kvm_xen_hvm_evtchn_send()

int kvm_xen_hvm_evtchn_send (struct kvm *kvm, struct kvm_irq_routing_xen_evtchn *uxe)

◆ kvm_xen_hvm_get_attr()

int kvm_xen_hvm_get_attr (struct kvm *kvm, struct kvm_xen_hvm_attr *data)

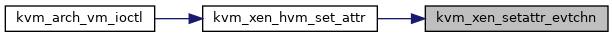

◆ kvm_xen_hvm_set_attr()

int kvm_xen_hvm_set_attr (struct kvm *kvm, struct kvm_xen_hvm_attr *data)

Definition at line 626 of file xen.c.

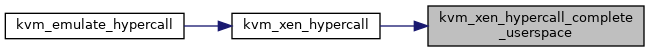

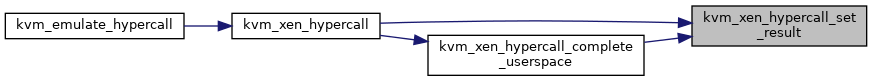

◆ kvm_xen_hypercall()

int kvm_xen_hypercall (struct kvm_vcpu *vcpu)

Definition at line 1486 of file xen.c.

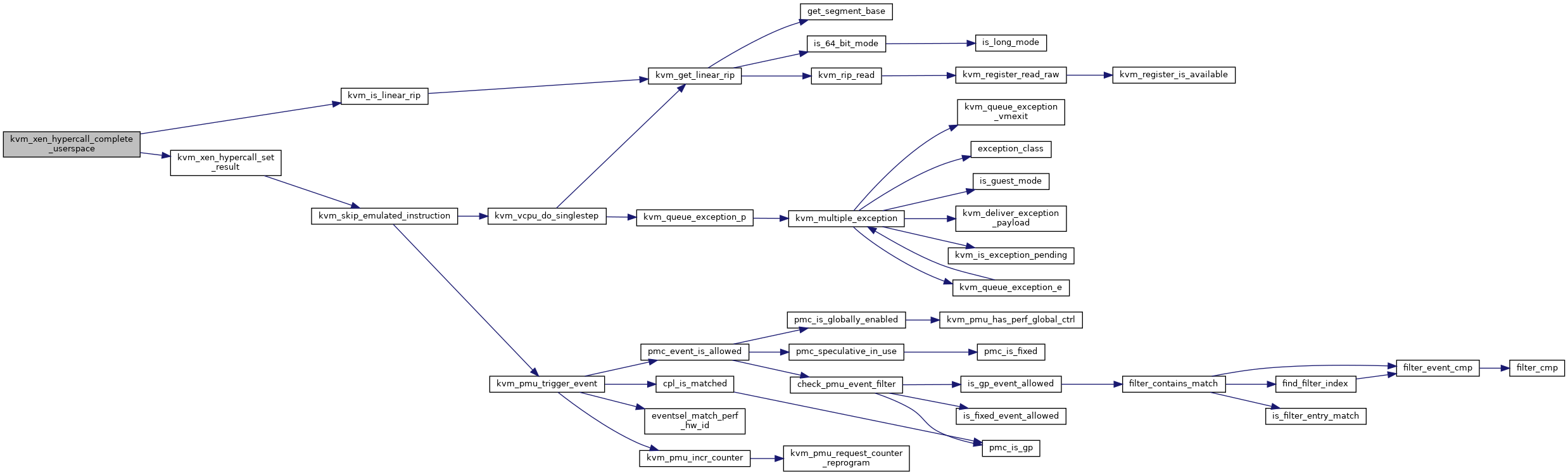

◆ kvm_xen_hypercall_complete_userspace()

static int kvm_xen_hypercall_complete_userspace (struct kvm_vcpu *vcpu)

Definition at line 1205 of file xen.c.

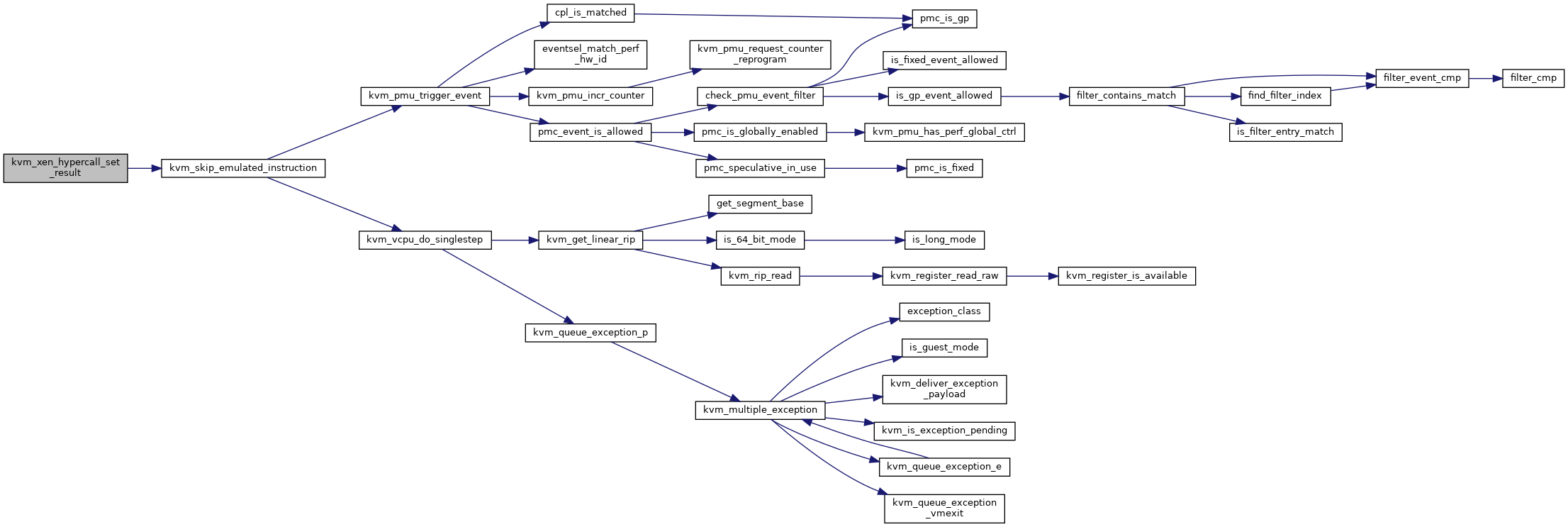

◆ kvm_xen_hypercall_set_result()

static int kvm_xen_hypercall_set_result (struct kvm_vcpu *vcpu, u64 result)

Definition at line 1199 of file xen.c.

◆ kvm_xen_init_timer()

static void kvm_xen_init_timer (struct kvm_vcpu *vcpu)

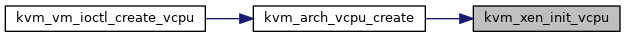

◆ kvm_xen_init_vcpu()

void kvm_xen_init_vcpu (struct kvm_vcpu *vcpu)

Definition at line 2105 of file xen.c.

◆ kvm_xen_init_vm()

void kvm_xen_init_vm (struct kvm *kvm)

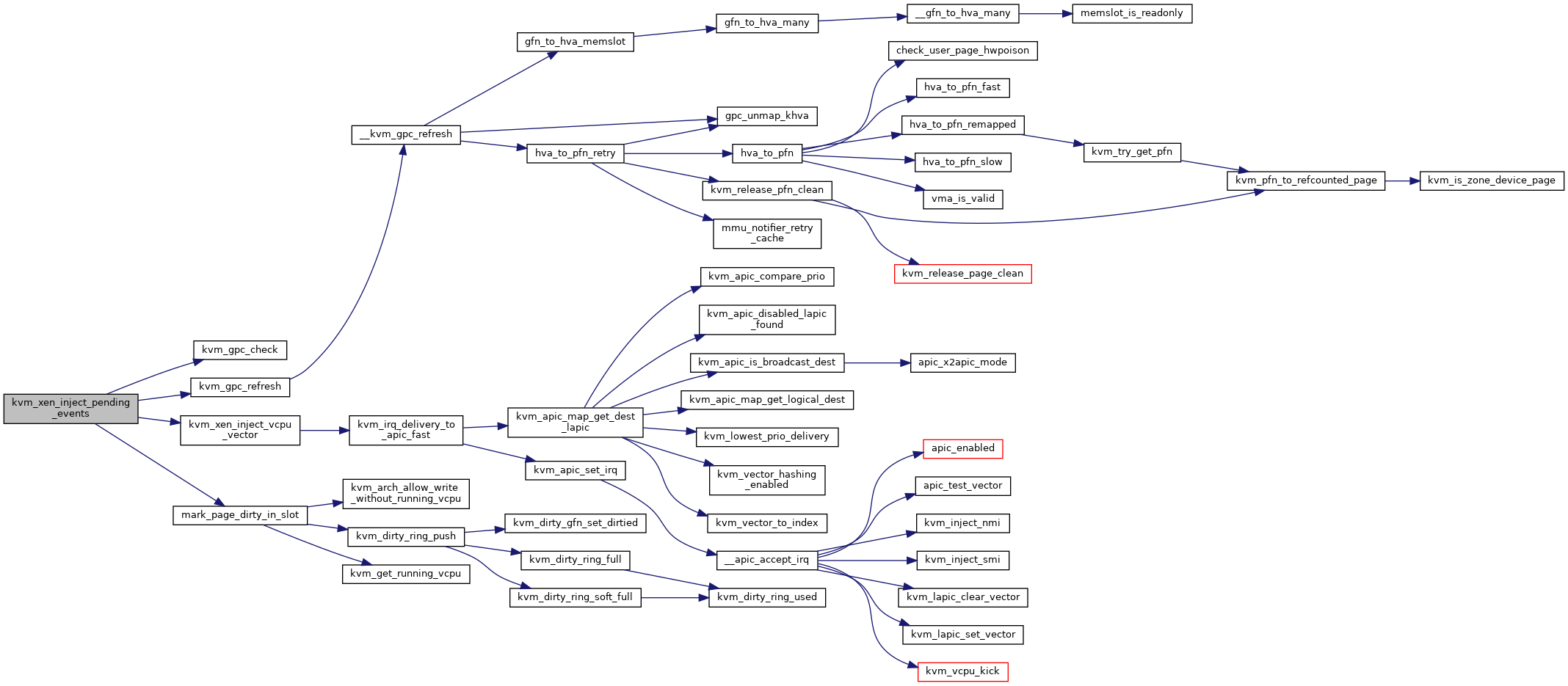

◆ kvm_xen_inject_pending_events()

void kvm_xen_inject_pending_events (struct kvm_vcpu *v)

Definition at line 519 of file xen.c.

◆ kvm_xen_inject_timer_irqs()

void kvm_xen_inject_timer_irqs (struct kvm_vcpu *vcpu)

◆ kvm_xen_inject_vcpu_vector()

void kvm_xen_inject_vcpu_vector (struct kvm_vcpu *v)

Definition at line 496 of file xen.c.

◆ kvm_xen_schedop_poll()

static bool kvm_xen_schedop_poll (struct kvm_vcpu *vcpu, bool longmode, u64 param, u64 *r)

Definition at line 1261 of file xen.c.

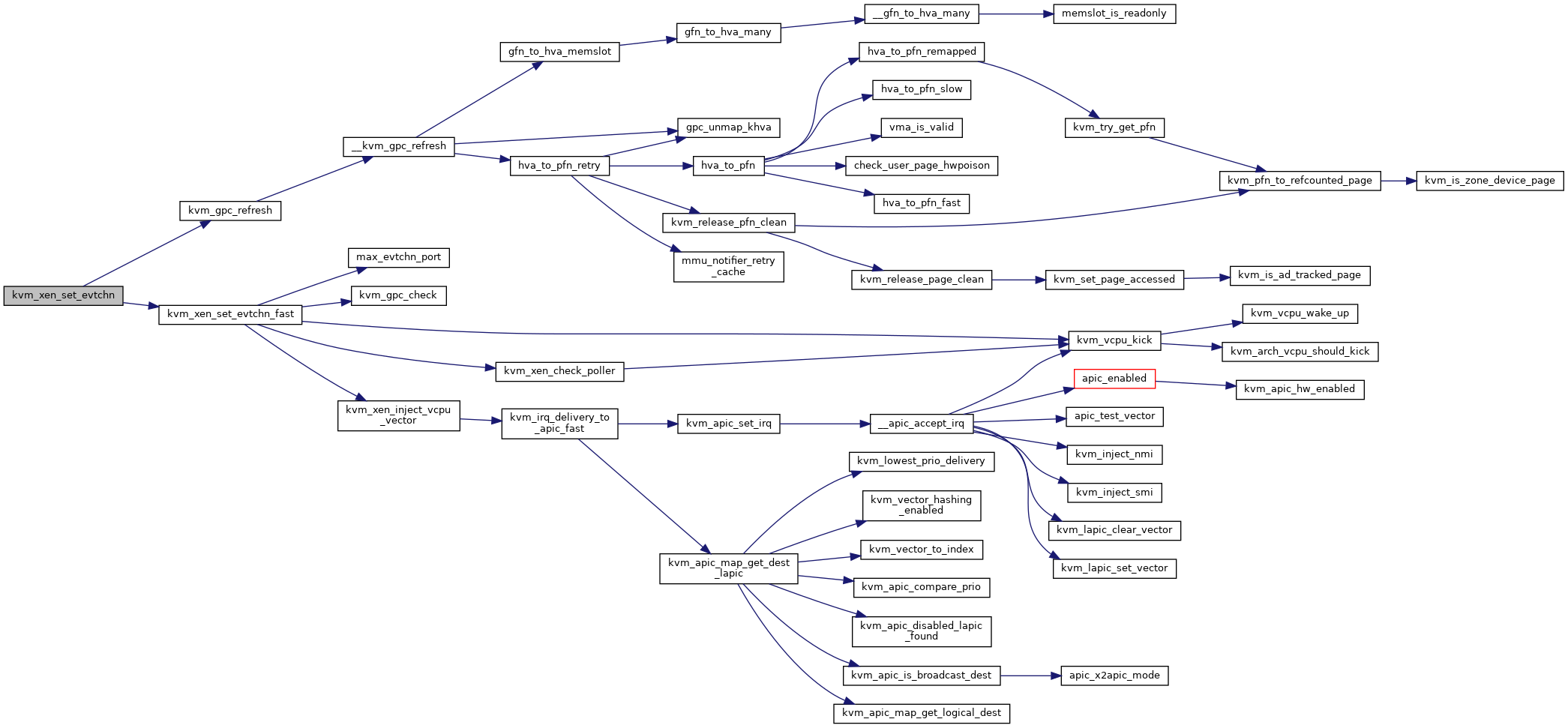

◆ kvm_xen_set_evtchn()

static int kvm_xen_set_evtchn (struct kvm_xen_evtchn *xe, struct kvm *kvm)

Definition at line 1713 of file xen.c.

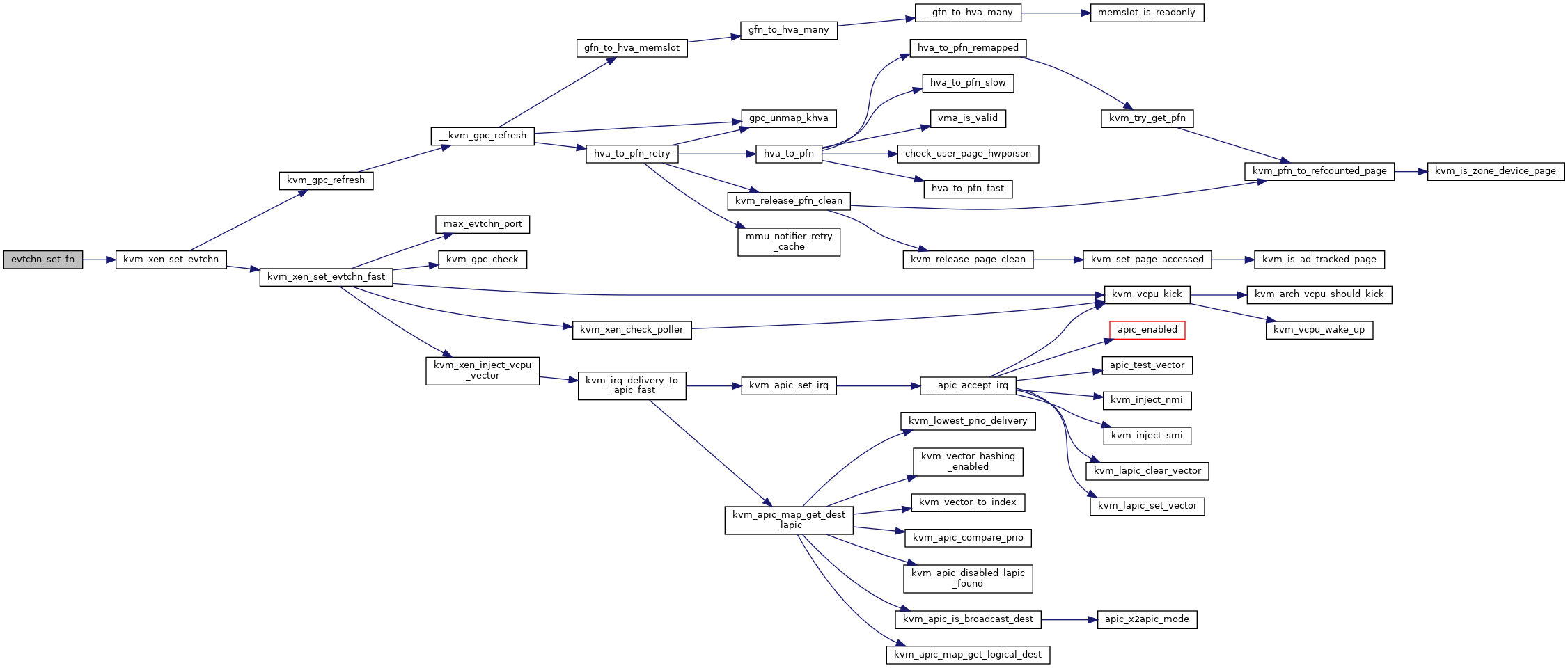

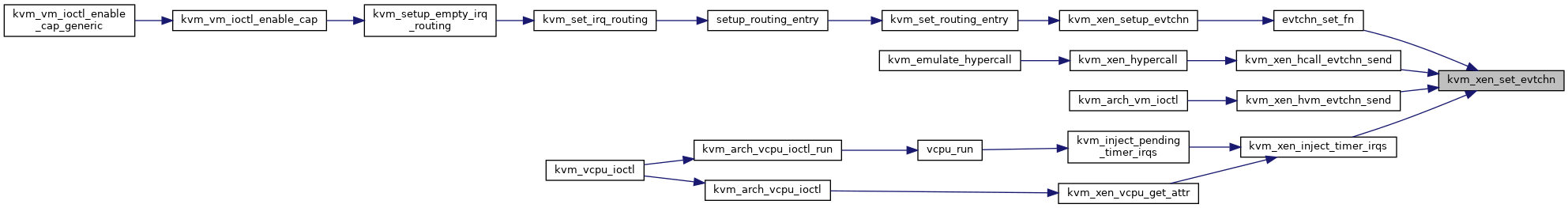

◆ kvm_xen_set_evtchn_fast()

int kvm_xen_set_evtchn_fast (struct kvm_xen_evtchn *xe, struct kvm *kvm)

Definition at line 1604 of file xen.c.

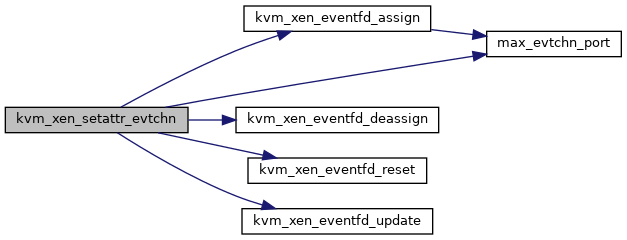

◆ kvm_xen_setattr_evtchn()

static int kvm_xen_setattr_evtchn (struct kvm *kvm, struct kvm_xen_hvm_attr *data)

Definition at line 2050 of file xen.c.

◆ kvm_xen_setup_evtchn()

int kvm_xen_setup_evtchn (struct kvm *kvm, struct kvm_kernel_irq_routing_entry *e, const struct kvm_irq_routing_entry *ue)

Definition at line 1785 of file xen.c.

◆ kvm_xen_shared_info_init()

static int kvm_xen_shared_info_init (struct kvm *kvm, gfn_t gfn)

Definition at line 37 of file xen.c.

◆ kvm_xen_start_timer()

static void kvm_xen_start_timer (struct kvm_vcpu *vcpu, u64 guest_abs, s64 delta_ns)

◆ kvm_xen_stop_timer()

static void kvm_xen_stop_timer (struct kvm_vcpu *vcpu)

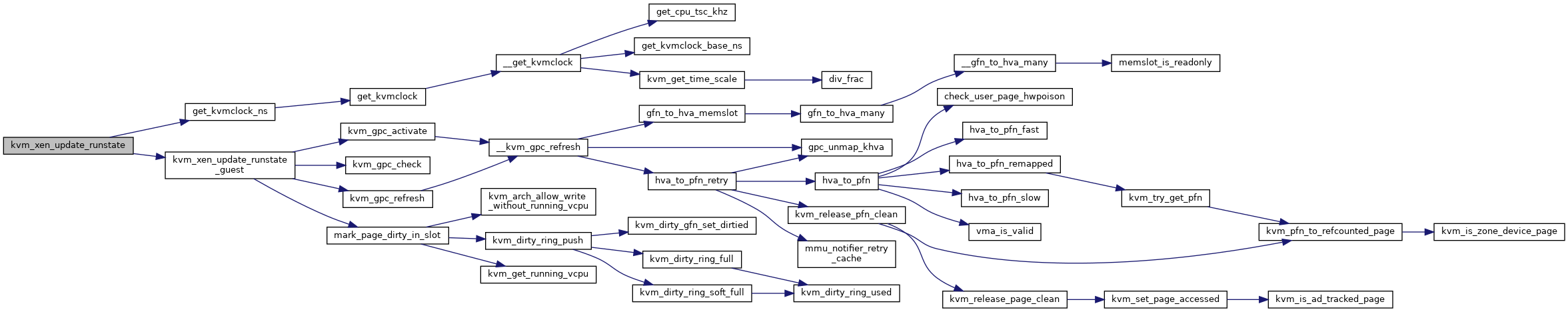

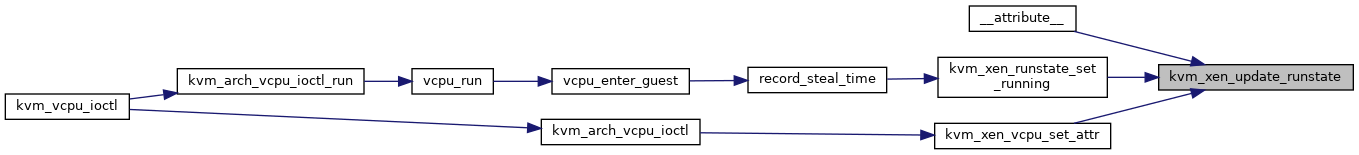

◆ kvm_xen_update_runstate()

void kvm_xen_update_runstate (struct kvm_vcpu *v, int state)

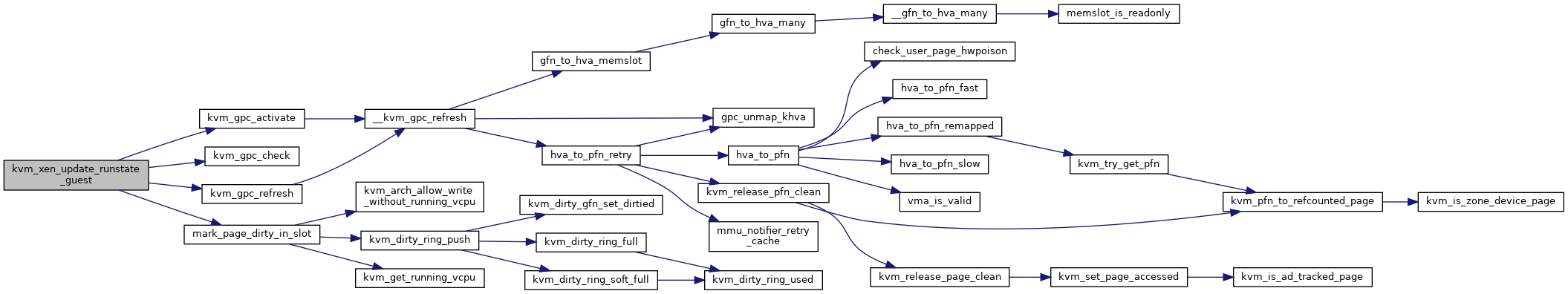

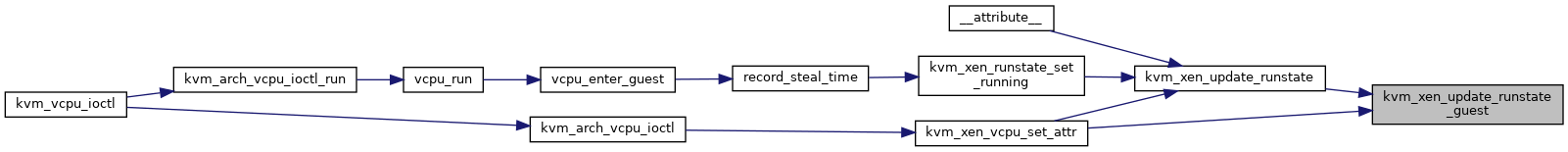

◆ kvm_xen_update_runstate_guest()

static void kvm_xen_update_runstate_guest (struct kvm_vcpu *v, bool atomic)

◆ kvm_xen_update_tsc_info()

void kvm_xen_update_tsc_info (struct kvm_vcpu *vcpu)

Definition at line 2135 of file xen.c.

◆ kvm_xen_vcpu_get_attr()

int kvm_xen_vcpu_get_attr (struct kvm_vcpu *vcpu, struct kvm_xen_vcpu_attr *data)

◆ kvm_xen_vcpu_set_attr()

int kvm_xen_vcpu_set_attr (struct kvm_vcpu *vcpu, struct kvm_xen_vcpu_attr *data)

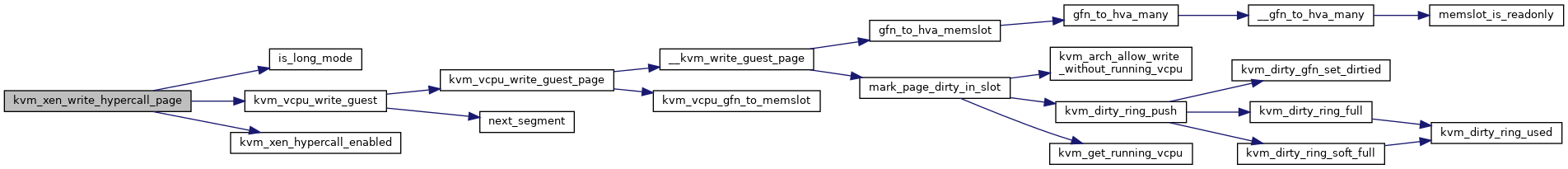

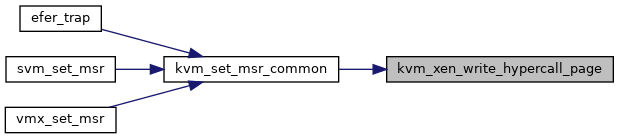

◆ kvm_xen_write_hypercall_page()

int kvm_xen_write_hypercall_page (struct kvm_vcpu *vcpu, u64 data)

Definition at line 1090 of file xen.c.

◆ max_evtchn_port()

static inline int max_evtchn_port (struct kvm *kvm)

◆ wait_pending_event()

static bool wait_pending_event (struct kvm_vcpu *vcpu, int nr_ports, evtchn_port_t *ports)

◆ xen_timer_callback()

static enum hrtimer_restart xen_timer_callback (struct hrtimer *timer)

Variable Documentation

◆ __attribute__

struct evtchnfd __attribute__