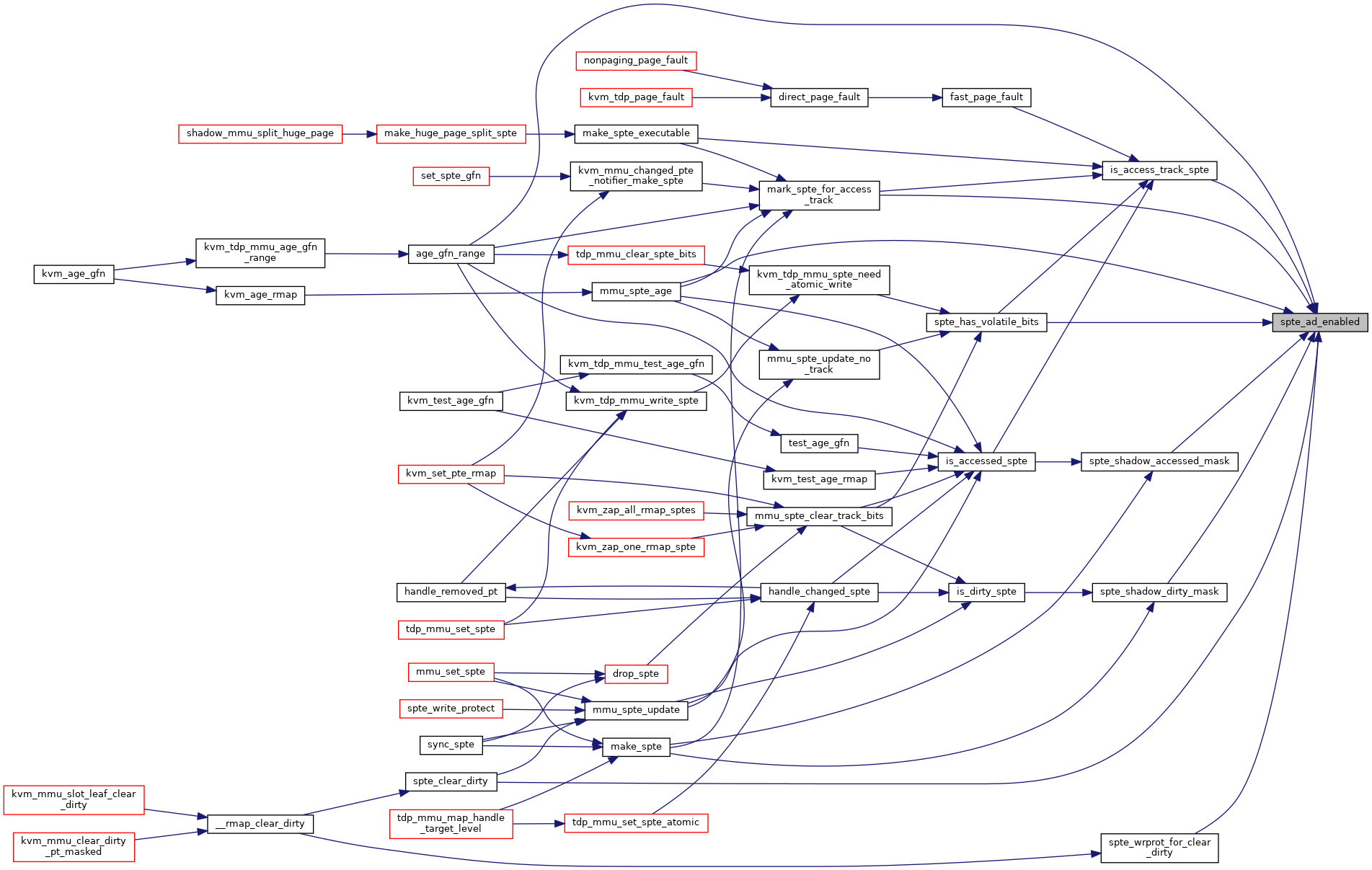

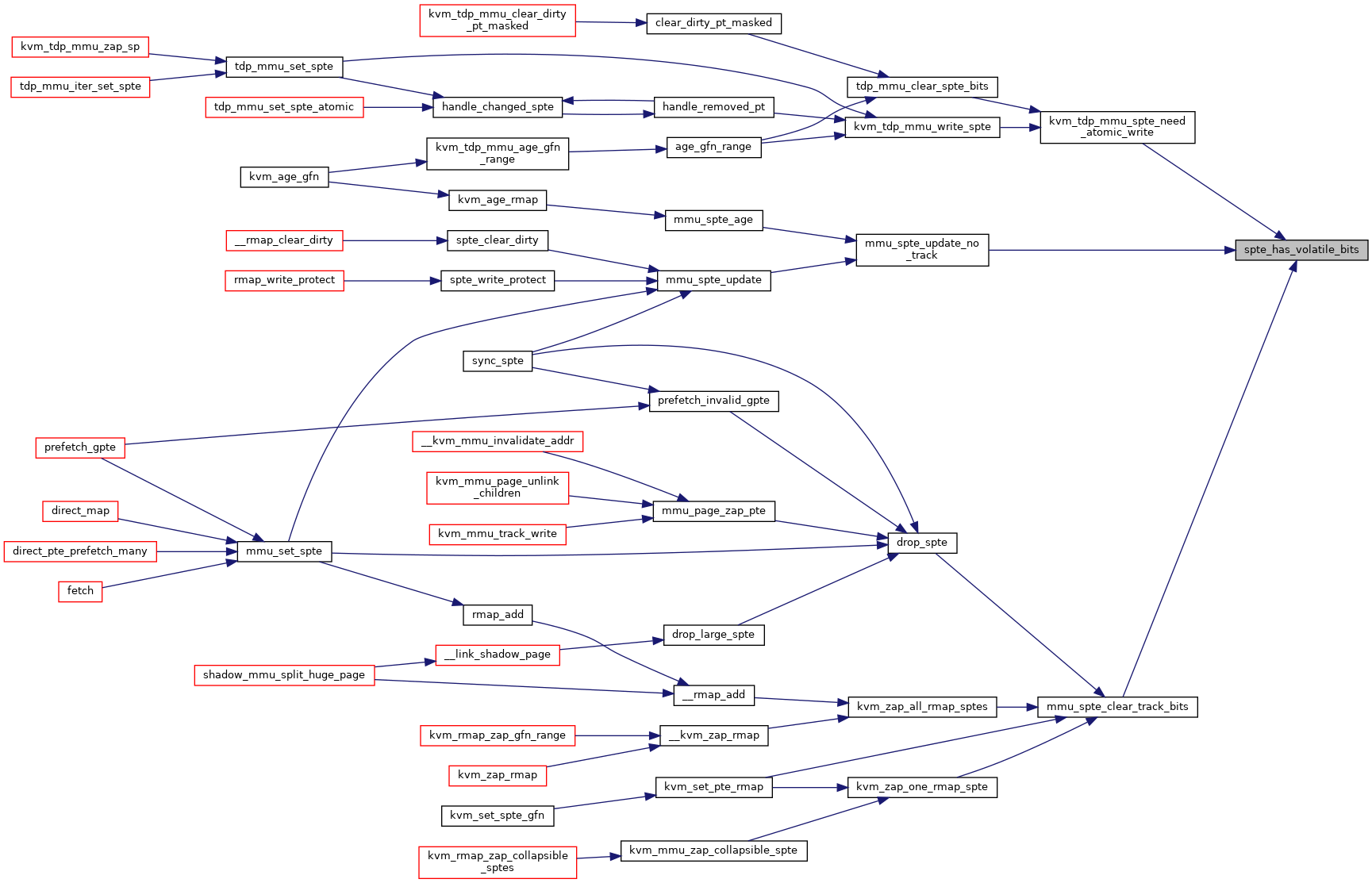

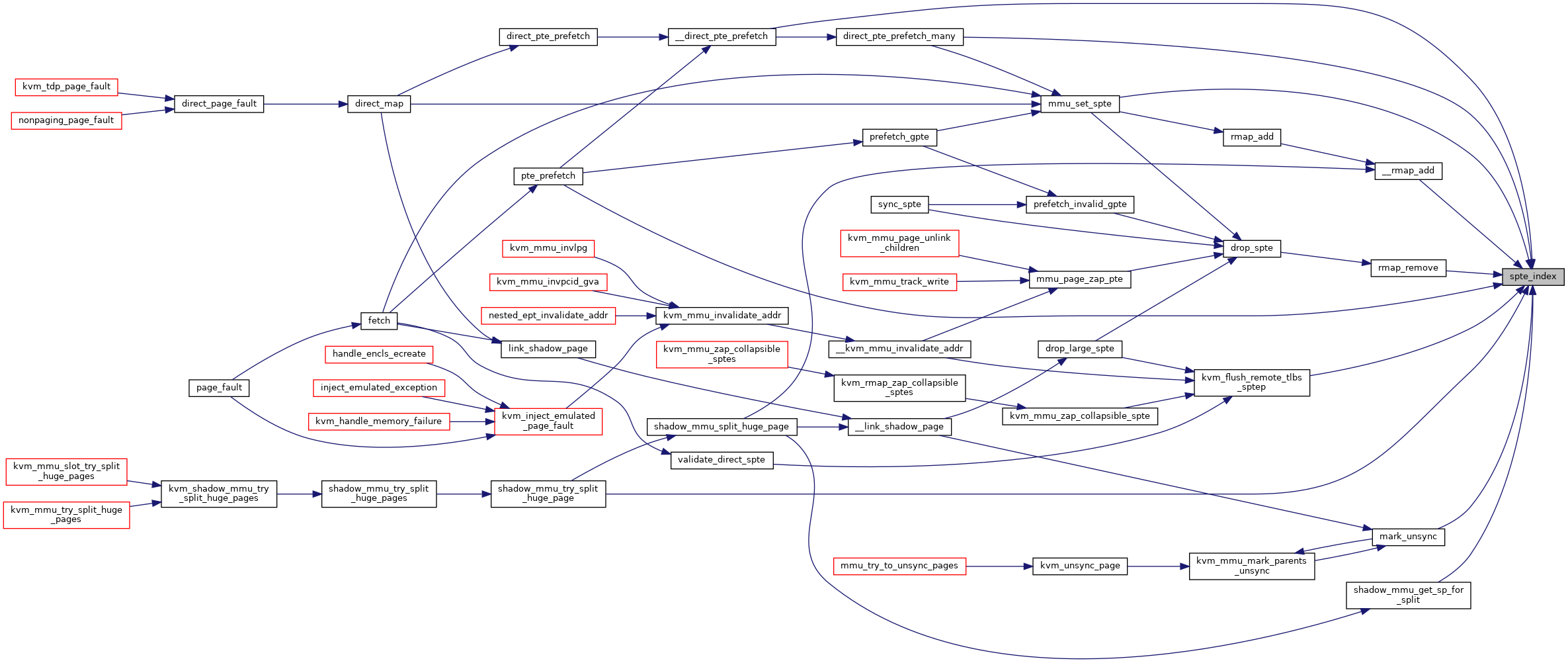

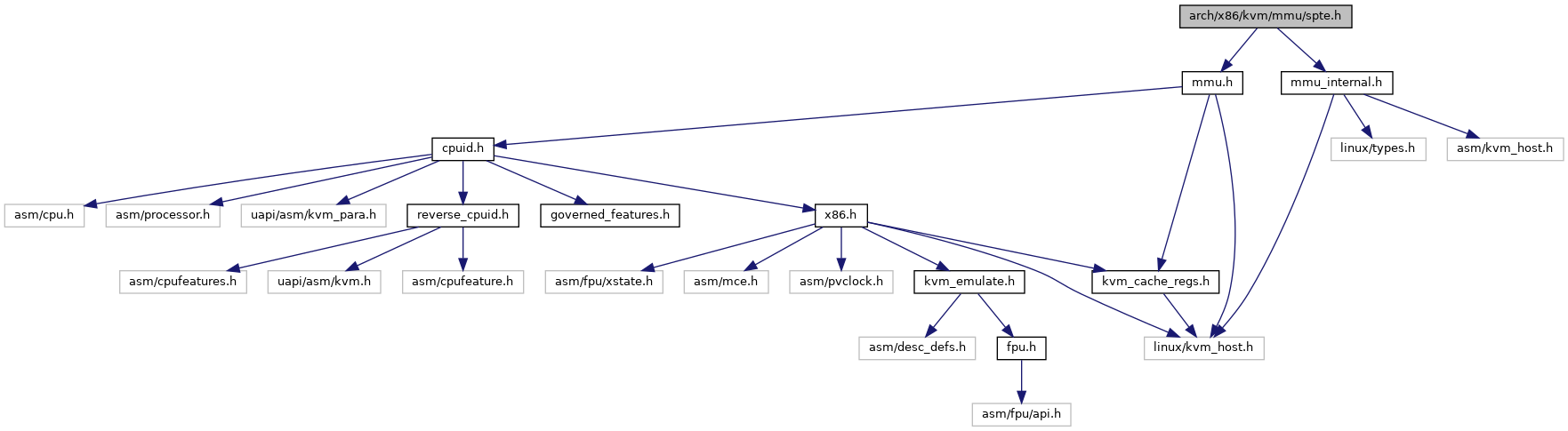

Include dependency graph for spte.h:

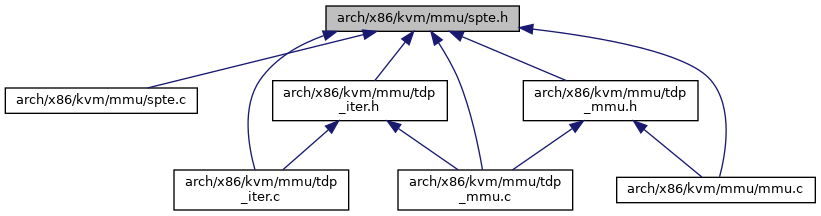

This graph shows which files directly or indirectly include this file:

Go to the source code of this file.

Functions | |

| static bool | is_removed_spte (u64 spte) |

| static int | spte_index (u64 *sptep) |

| static struct kvm_mmu_page * | to_shadow_page (hpa_t shadow_page) |

| static struct kvm_mmu_page * | spte_to_child_sp (u64 spte) |

| static struct kvm_mmu_page * | sptep_to_sp (u64 *sptep) |

| static struct kvm_mmu_page * | root_to_sp (hpa_t root) |

| static bool | is_mmio_spte (u64 spte) |

| static bool | is_shadow_present_pte (u64 pte) |

| static bool | kvm_ad_enabled (void) |

| static bool | sp_ad_disabled (struct kvm_mmu_page *sp) |

| static bool | spte_ad_enabled (u64 spte) |

| static bool | spte_ad_need_write_protect (u64 spte) |

| static u64 | spte_shadow_accessed_mask (u64 spte) |

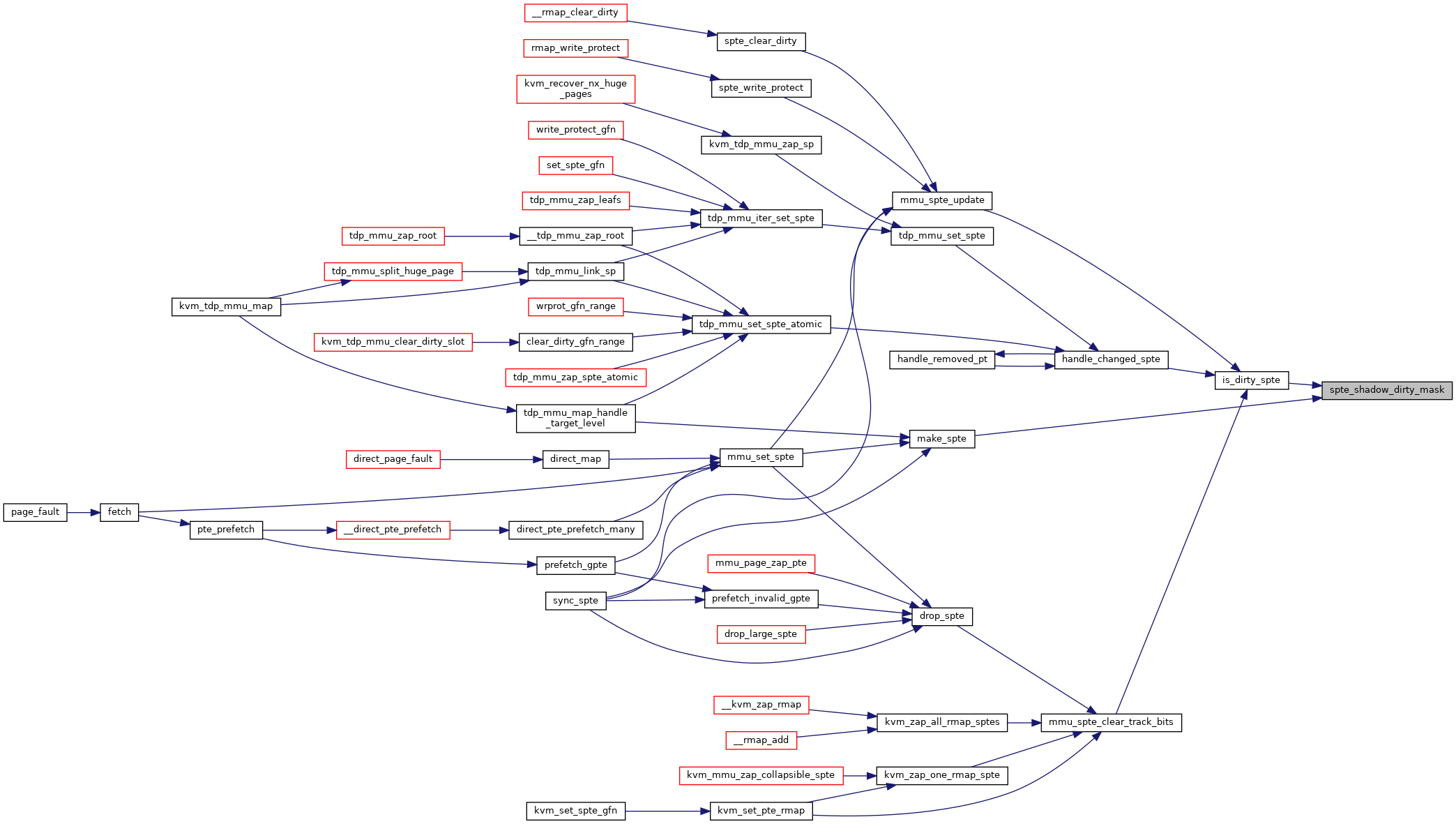

| static u64 | spte_shadow_dirty_mask (u64 spte) |

| static bool | is_access_track_spte (u64 spte) |

| static bool | is_large_pte (u64 pte) |

| static bool | is_last_spte (u64 pte, int level) |

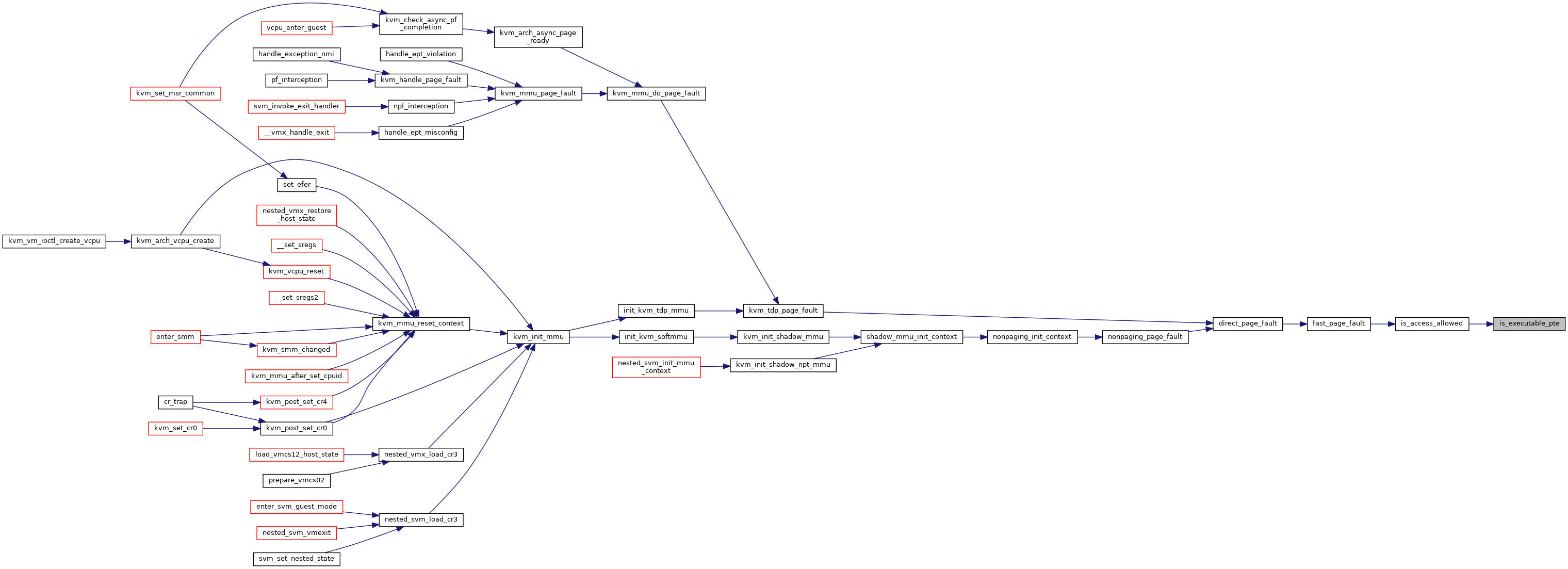

| static bool | is_executable_pte (u64 spte) |

| static kvm_pfn_t | spte_to_pfn (u64 pte) |

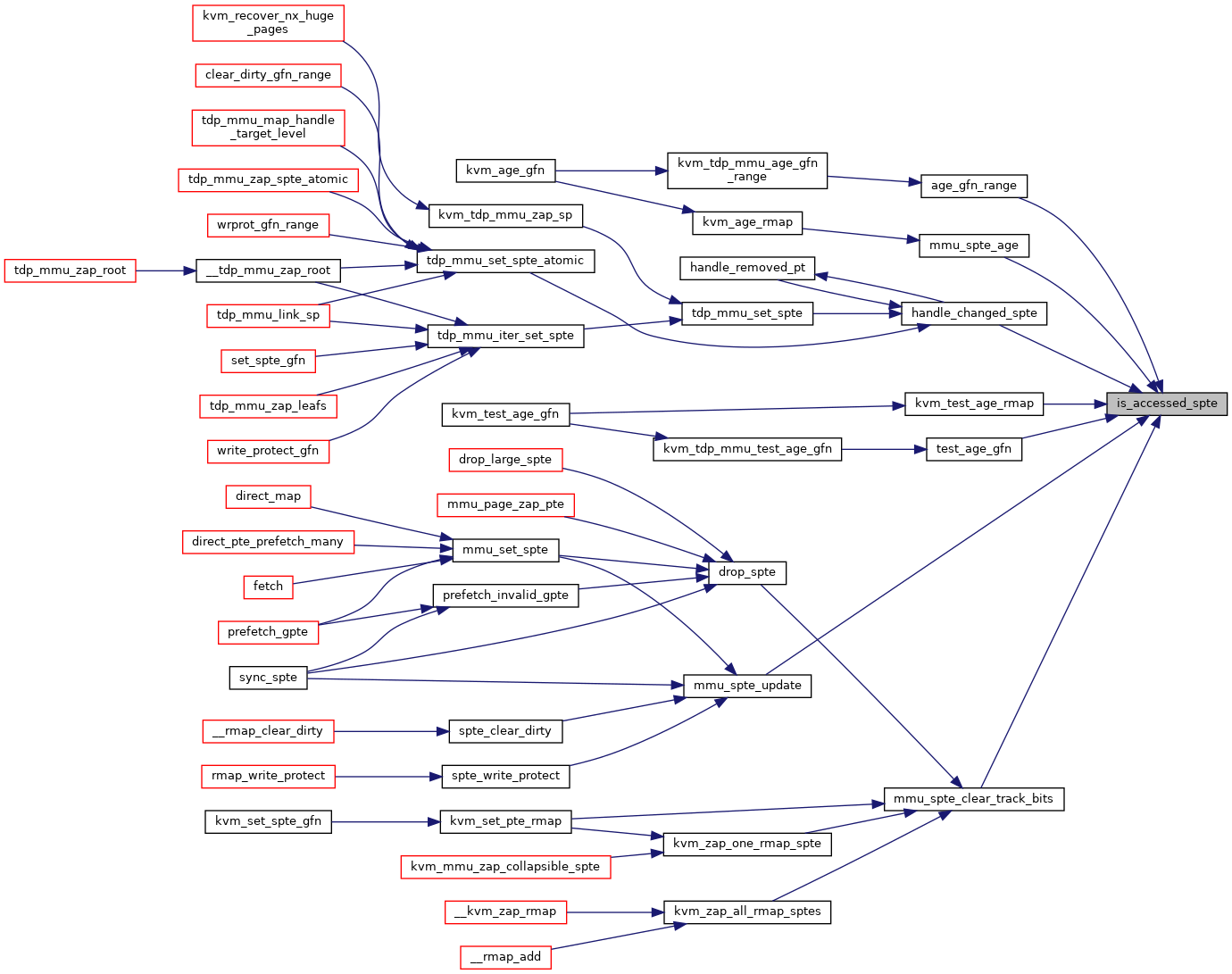

| static bool | is_accessed_spte (u64 spte) |

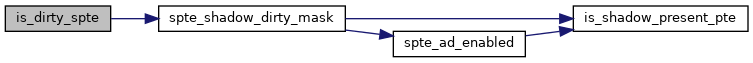

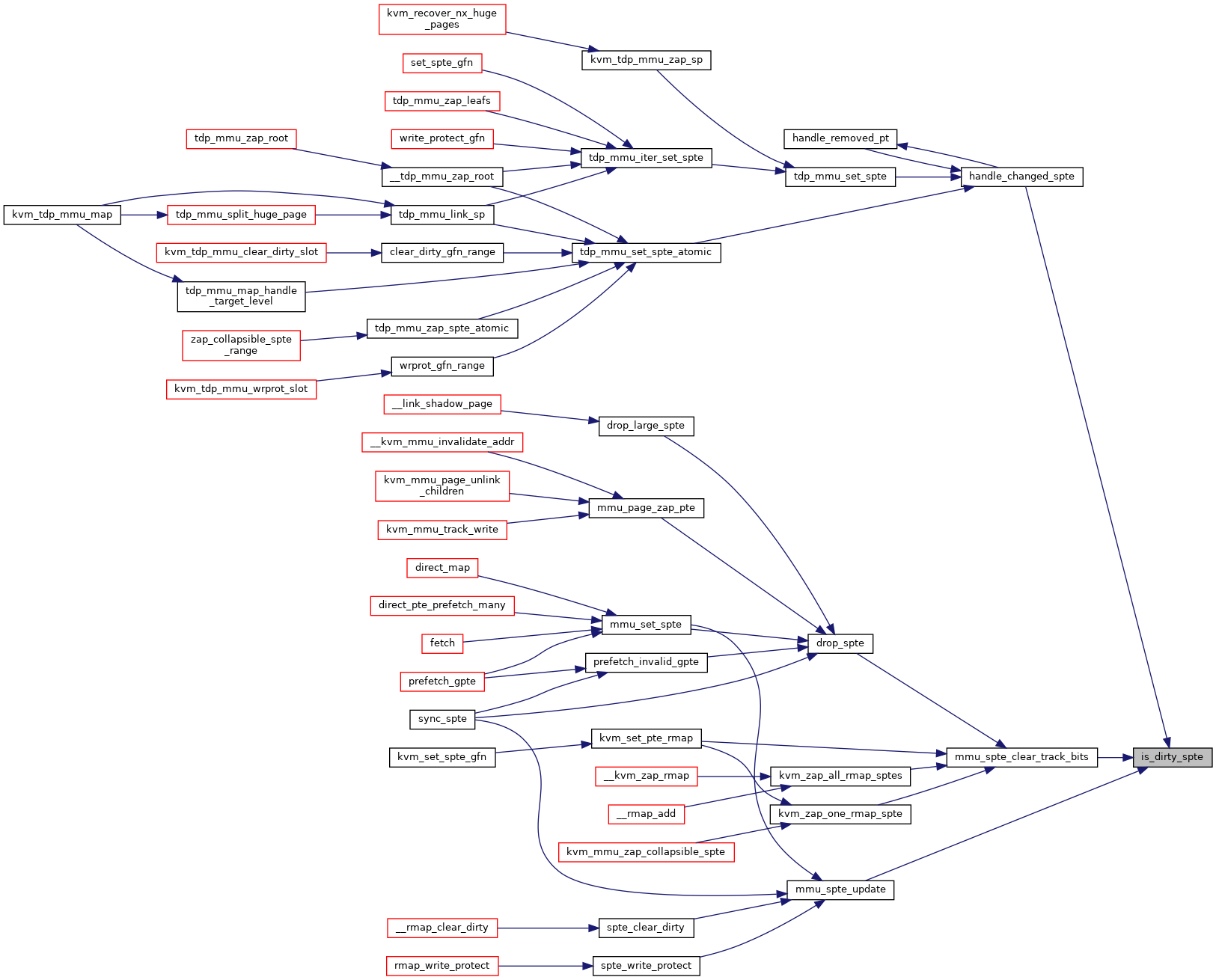

| static bool | is_dirty_spte (u64 spte) |

| static u64 | get_rsvd_bits (struct rsvd_bits_validate *rsvd_check, u64 pte, int level) |

| static bool | __is_rsvd_bits_set (struct rsvd_bits_validate *rsvd_check, u64 pte, int level) |

| static bool | __is_bad_mt_xwr (struct rsvd_bits_validate *rsvd_check, u64 pte) |

| static __always_inline bool | is_rsvd_spte (struct rsvd_bits_validate *rsvd_check, u64 spte, int level) |

| static bool | is_writable_pte (unsigned long pte) |

| static void | check_spte_writable_invariants (u64 spte) |

| static bool | is_mmu_writable_spte (u64 spte) |

| static u64 | get_mmio_spte_generation (u64 spte) |

| bool | spte_has_volatile_bits (u64 spte) |

| bool | make_spte (struct kvm_vcpu *vcpu, struct kvm_mmu_page *sp, const struct kvm_memory_slot *slot, unsigned int pte_access, gfn_t gfn, kvm_pfn_t pfn, u64 old_spte, bool prefetch, bool can_unsync, bool host_writable, u64 *new_spte) |

| u64 | make_huge_page_split_spte (struct kvm *kvm, u64 huge_spte, union kvm_mmu_page_role role, int index) |

| u64 | make_nonleaf_spte (u64 *child_pt, bool ad_disabled) |

| u64 | make_mmio_spte (struct kvm_vcpu *vcpu, u64 gfn, unsigned int access) |

| u64 | mark_spte_for_access_track (u64 spte) |

| static u64 | restore_acc_track_spte (u64 spte) |

| u64 | kvm_mmu_changed_pte_notifier_make_spte (u64 old_spte, kvm_pfn_t new_pfn) |

| void __init | kvm_mmu_spte_module_init (void) |

| void | kvm_mmu_reset_all_pte_masks (void) |

Macro Definition Documentation

◆ ACC_ALL

#define ACC_ALL (ACC_EXEC_MASK | ACC_WRITE_MASK | ACC_USER_MASK)

◆ ACC_EXEC_MASK

◆ ACC_USER_MASK

#define ACC_USER_MASK PT_USER_MASK

◆ ACC_WRITE_MASK

#define ACC_WRITE_MASK PT_WRITABLE_MASK

◆ DEFAULT_SPTE_HOST_WRITABLE

◆ DEFAULT_SPTE_MMU_WRITABLE

◆ EPT_SPTE_HOST_WRITABLE

◆ EPT_SPTE_MMU_WRITABLE

◆ MMIO_SPTE_GEN_HIGH_BITS

#define MMIO_SPTE_GEN_HIGH_BITS (MMIO_SPTE_GEN_HIGH_END - MMIO_SPTE_GEN_HIGH_START + 1)

◆ MMIO_SPTE_GEN_HIGH_END

◆ MMIO_SPTE_GEN_HIGH_MASK

#define MMIO_SPTE_GEN_HIGH_MASK GENMASK_ULL(MMIO_SPTE_GEN_HIGH_END, MMIO_SPTE_GEN_HIGH_START)

◆ MMIO_SPTE_GEN_HIGH_SHIFT

#define MMIO_SPTE_GEN_HIGH_SHIFT (MMIO_SPTE_GEN_HIGH_START - MMIO_SPTE_GEN_LOW_BITS)

◆ MMIO_SPTE_GEN_HIGH_START

◆ MMIO_SPTE_GEN_LOW_BITS

#define MMIO_SPTE_GEN_LOW_BITS (MMIO_SPTE_GEN_LOW_END - MMIO_SPTE_GEN_LOW_START + 1)

◆ MMIO_SPTE_GEN_LOW_END

◆ MMIO_SPTE_GEN_LOW_MASK

#define MMIO_SPTE_GEN_LOW_MASK GENMASK_ULL(MMIO_SPTE_GEN_LOW_END, MMIO_SPTE_GEN_LOW_START)

◆ MMIO_SPTE_GEN_LOW_SHIFT

#define MMIO_SPTE_GEN_LOW_SHIFT (MMIO_SPTE_GEN_LOW_START - 0)

◆ MMIO_SPTE_GEN_LOW_START

◆ MMIO_SPTE_GEN_MASK

#define MMIO_SPTE_GEN_MASK GENMASK_ULL(MMIO_SPTE_GEN_LOW_BITS + MMIO_SPTE_GEN_HIGH_BITS - 1, 0)

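The generation number of an MMIO SPTE is split across two bit ranges, and the LOW/HIGH shift and mask macros above move it into and out of them. The following is a minimal user-space sketch of that round trip. It assumes the upstream bit positions (low range bits 3-10, high range bits 52-62), which this page does not list, plus a local GENMASK_ULL() equivalent. gen_to_mmio_spte_bits() is a hypothetical name for the encoding step; get_mmio_spte_generation() follows the decoder of the same name documented on this page.

```c
#include <stdint.h>

typedef uint64_t u64;

/* Local stand-in for the kernel's GENMASK_ULL(): bits l..h set. */
#define GENMASK_ULL(h, l) ((~0ULL >> (63 - (h))) & (~0ULL << (l)))

/* Assumed bit positions (upstream values; not listed on this page). */
#define MMIO_SPTE_GEN_LOW_START  3
#define MMIO_SPTE_GEN_LOW_END    10
#define MMIO_SPTE_GEN_HIGH_START 52
#define MMIO_SPTE_GEN_HIGH_END   62

#define MMIO_SPTE_GEN_LOW_MASK  GENMASK_ULL(MMIO_SPTE_GEN_LOW_END, MMIO_SPTE_GEN_LOW_START)
#define MMIO_SPTE_GEN_HIGH_MASK GENMASK_ULL(MMIO_SPTE_GEN_HIGH_END, MMIO_SPTE_GEN_HIGH_START)
#define MMIO_SPTE_GEN_LOW_BITS  (MMIO_SPTE_GEN_LOW_END - MMIO_SPTE_GEN_LOW_START + 1)
#define MMIO_SPTE_GEN_HIGH_BITS (MMIO_SPTE_GEN_HIGH_END - MMIO_SPTE_GEN_HIGH_START + 1)
#define MMIO_SPTE_GEN_LOW_SHIFT  (MMIO_SPTE_GEN_LOW_START - 0)
#define MMIO_SPTE_GEN_HIGH_SHIFT (MMIO_SPTE_GEN_HIGH_START - MMIO_SPTE_GEN_LOW_BITS)
#define MMIO_SPTE_GEN_MASK GENMASK_ULL(MMIO_SPTE_GEN_LOW_BITS + MMIO_SPTE_GEN_HIGH_BITS - 1, 0)

/* Hypothetical helper: scatter a generation number into SPTE bits. */
static u64 gen_to_mmio_spte_bits(u64 gen)
{
	u64 bits;

	/* The low 8 bits of gen land in SPTE bits 3..10. */
	bits = (gen << MMIO_SPTE_GEN_LOW_SHIFT) & MMIO_SPTE_GEN_LOW_MASK;
	/* The next 11 bits of gen land in SPTE bits 52..62. */
	bits |= (gen << MMIO_SPTE_GEN_HIGH_SHIFT) & MMIO_SPTE_GEN_HIGH_MASK;
	return bits;
}

/* Gather both ranges back into one contiguous generation number. */
static u64 get_mmio_spte_generation(u64 spte)
{
	u64 gen;

	gen = (spte & MMIO_SPTE_GEN_LOW_MASK) >> MMIO_SPTE_GEN_LOW_SHIFT;
	gen |= (spte & MMIO_SPTE_GEN_HIGH_MASK) >> MMIO_SPTE_GEN_HIGH_SHIFT;
	return gen;
}
```

With these assumed positions the generation is 19 bits wide (8 low + 11 high), so MMIO_SPTE_GEN_MASK evaluates to 0x7ffff.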
◆ REMOVED_SPTE

◆ SHADOW_ACC_TRACK_SAVED_BITS_MASK

#define SHADOW_ACC_TRACK_SAVED_BITS_MASK (SPTE_EPT_READABLE_MASK | SPTE_EPT_EXECUTABLE_MASK)

◆ SHADOW_ACC_TRACK_SAVED_BITS_SHIFT

◆ SHADOW_ACC_TRACK_SAVED_MASK

#define SHADOW_ACC_TRACK_SAVED_MASK (SHADOW_ACC_TRACK_SAVED_BITS_MASK << SHADOW_ACC_TRACK_SAVED_BITS_SHIFT)

◆ SHADOW_NONPRESENT_OR_RSVD_MASK_LEN

◆ SPTE_BASE_ADDR_MASK

#define SPTE_BASE_ADDR_MASK (((1ULL << 52) - 1) & ~(u64)(PAGE_SIZE-1))

◆ SPTE_ENT_PER_PAGE

#define SPTE_ENT_PER_PAGE __PT_ENT_PER_PAGE(SPTE_LEVEL_BITS)

◆ SPTE_EPT_EXECUTABLE_MASK

◆ SPTE_EPT_READABLE_MASK

◆ SPTE_INDEX

#define SPTE_INDEX(address, level) __PT_INDEX(address, level, SPTE_LEVEL_BITS)

◆ SPTE_LEVEL_BITS

◆ SPTE_LEVEL_SHIFT

#define SPTE_LEVEL_SHIFT(level) __PT_LEVEL_SHIFT(level, SPTE_LEVEL_BITS)

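SPTE_LEVEL_SHIFT() and SPTE_INDEX() instantiate KVM's generic __PT_LEVEL_SHIFT() and __PT_INDEX() helpers with SPTE_LEVEL_BITS. A user-space sketch, assuming SPTE_LEVEL_BITS is 9 and PAGE_SHIFT is 12 (4 KiB pages, 512 entries per table) and assuming the generic helpers have the shape written out below; none of those definitions appear on this page.

```c
#include <stdint.h>

typedef uint64_t u64;

#define PAGE_SHIFT      12 /* assumed: 4 KiB pages */
#define SPTE_LEVEL_BITS 9  /* assumed: 512 entries per table */

/* Assumed generic helper: shift that brings level's index bits down to bit 0. */
#define __PT_LEVEL_SHIFT(level, bits_per_level) \
	(PAGE_SHIFT + ((level) - 1) * (bits_per_level))
/* Assumed generic helper: index of the entry translating 'address' at 'level'. */
#define __PT_INDEX(address, level, bits_per_level) \
	(((address) >> __PT_LEVEL_SHIFT(level, bits_per_level)) & \
	 ((1 << (bits_per_level)) - 1))
#define __PT_ENT_PER_PAGE(bits_per_level) (1 << (bits_per_level))

/* The SPTE-specific wrappers documented on this page. */
#define SPTE_LEVEL_SHIFT(level)    __PT_LEVEL_SHIFT(level, SPTE_LEVEL_BITS)
#define SPTE_INDEX(address, level) __PT_INDEX(address, level, SPTE_LEVEL_BITS)
#define SPTE_ENT_PER_PAGE          __PT_ENT_PER_PAGE(SPTE_LEVEL_BITS)
```

For example, a guest physical address of (3 << 30) | (5 << 21) | (7 << 12) selects entry 3 in the level-3 table, entry 5 at level 2, and entry 7 at level 1.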
◆ SPTE_MMIO_ALLOWED_MASK

#define SPTE_MMIO_ALLOWED_MASK (BIT_ULL(63) | GENMASK_ULL(51, 12) | GENMASK_ULL(2, 0))

◆ SPTE_MMU_PRESENT_MASK

◆ SPTE_PERM_MASK

#define SPTE_PERM_MASK

Value:

◆ SPTE_TDP_AD_DISABLED

#define SPTE_TDP_AD_DISABLED (1ULL << SPTE_TDP_AD_SHIFT)

◆ SPTE_TDP_AD_ENABLED

#define SPTE_TDP_AD_ENABLED (0ULL << SPTE_TDP_AD_SHIFT)

◆ SPTE_TDP_AD_MASK

#define SPTE_TDP_AD_MASK (3ULL << SPTE_TDP_AD_SHIFT)

◆ SPTE_TDP_AD_SHIFT

◆ SPTE_TDP_AD_WRPROT_ONLY

#define SPTE_TDP_AD_WRPROT_ONLY (2ULL << SPTE_TDP_AD_SHIFT)

Function Documentation

◆ __is_bad_mt_xwr()

static inline bool __is_bad_mt_xwr(struct rsvd_bits_validate *rsvd_check, u64 pte)

◆ __is_rsvd_bits_set()

static inline bool __is_rsvd_bits_set(struct rsvd_bits_validate *rsvd_check, u64 pte, int level)

Definition at line 356 of file spte.h.

References static u64 get_rsvd_bits(struct rsvd_bits_validate *rsvd_check, u64 pte, int level), defined at spte.h:348.

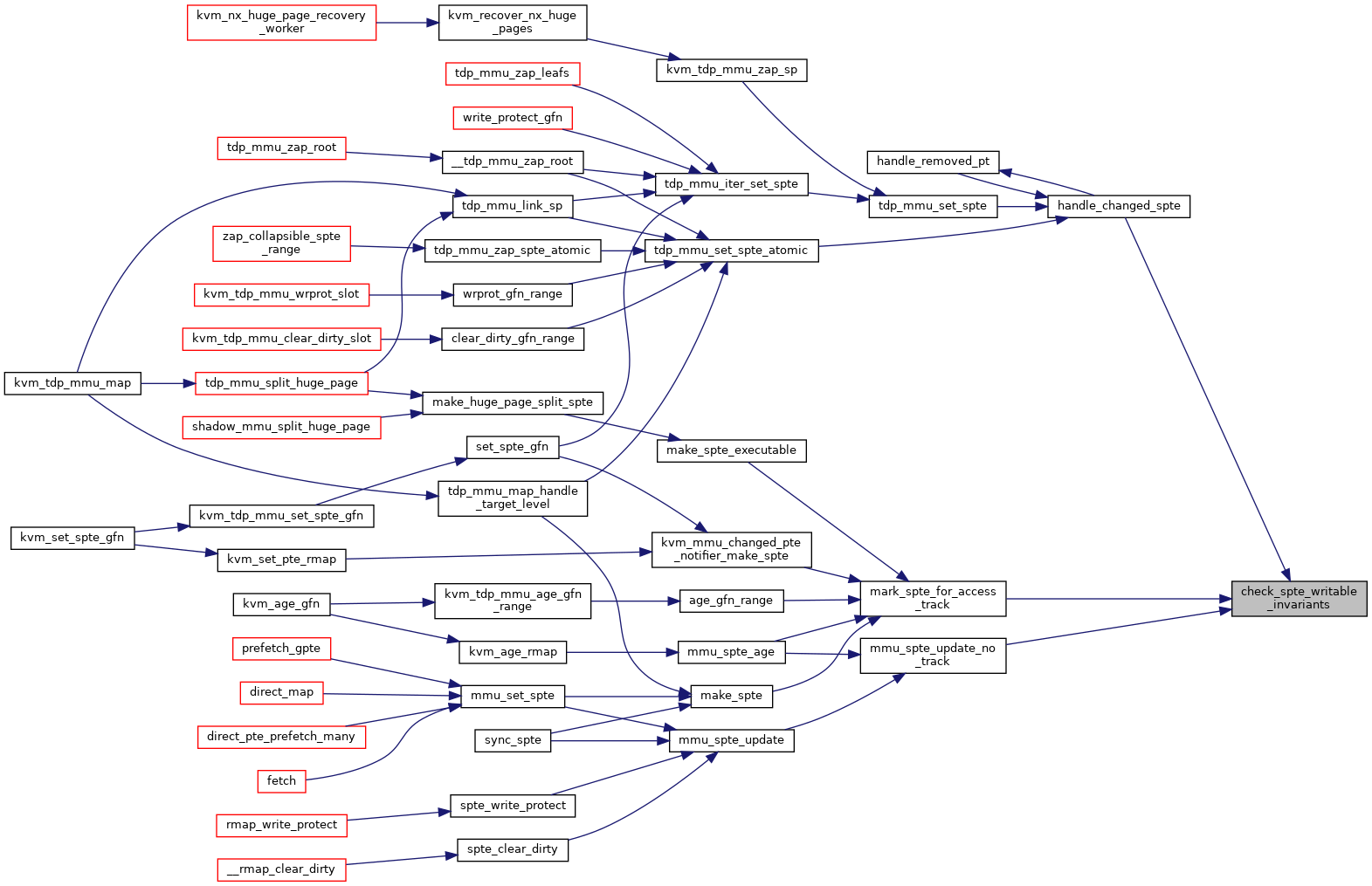

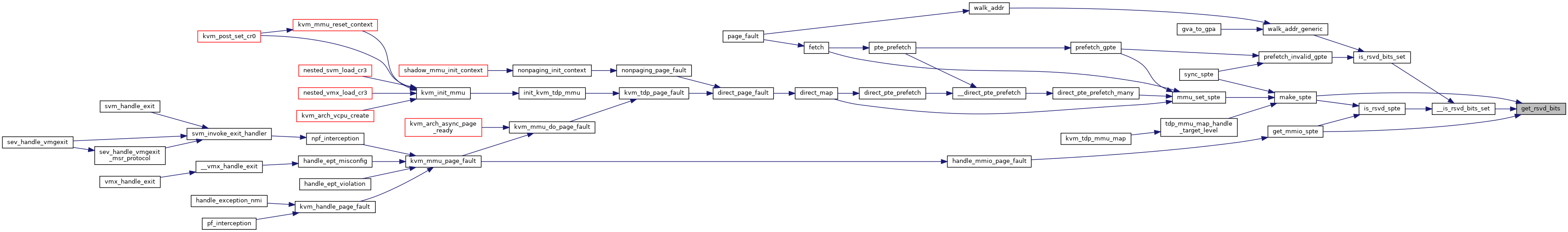

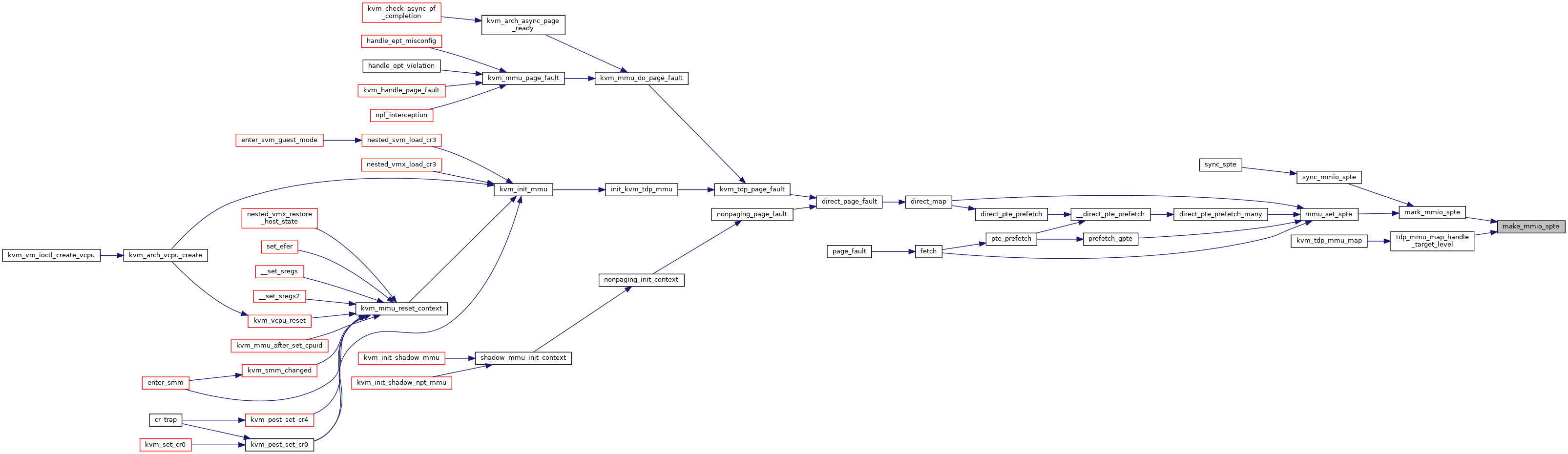

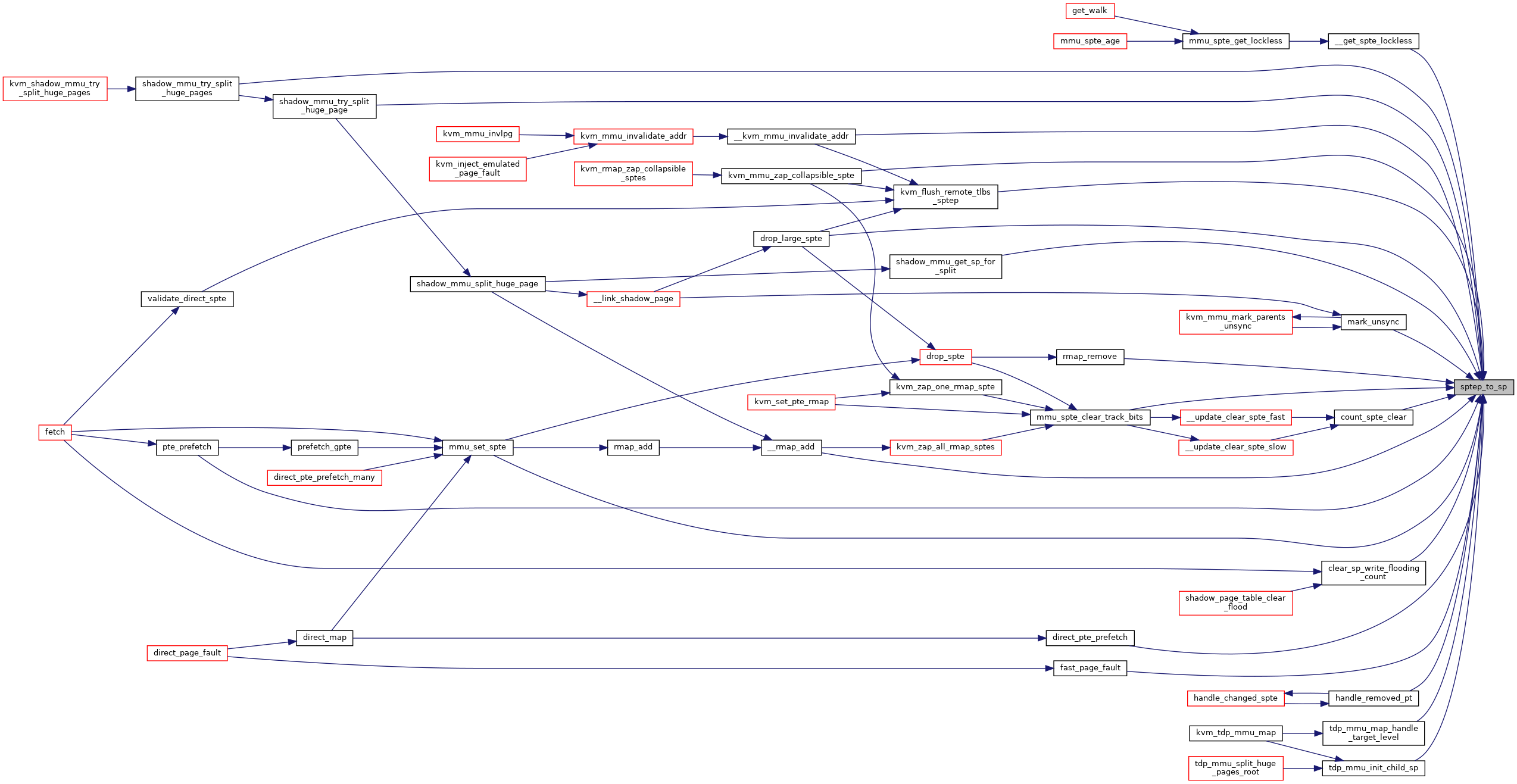

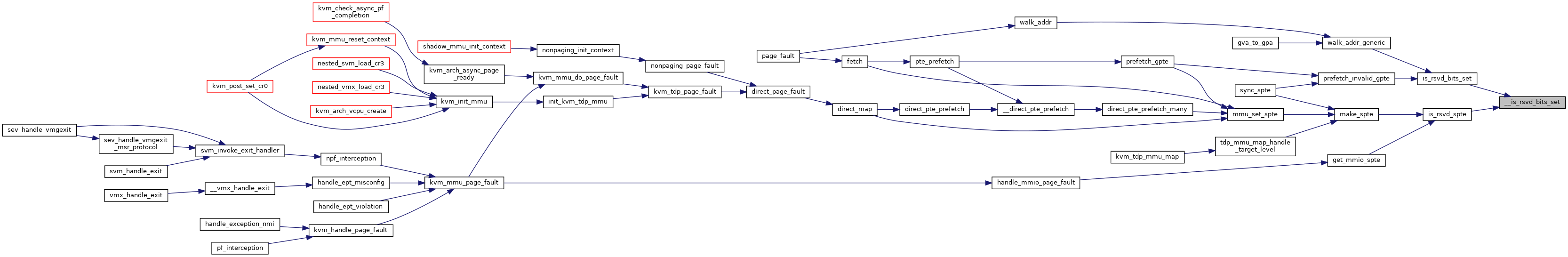

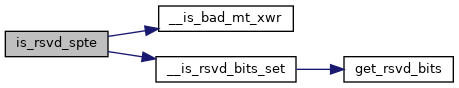

Here is the call graph for this function:

Here is the caller graph for this function:

◆ check_spte_writable_invariants()

static inline void check_spte_writable_invariants(u64 spte)

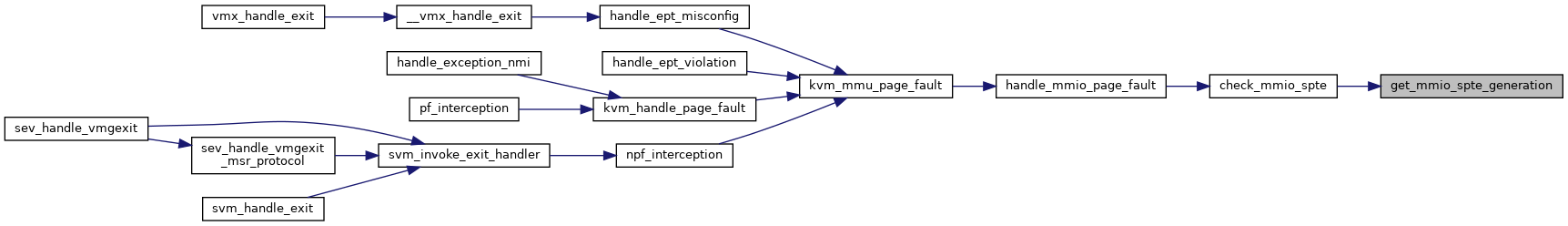

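check_spte_writable_invariants() enforces an ordering between three writable flags: a hardware-writable SPTE must be MMU-writable, and an MMU-writable SPTE must be host-writable. Below is a user-space sketch that returns false where the kernel would WARN. The bit positions (W = bit 1, host-writable = bit 57, MMU-writable = bit 58, mirroring the EPT_SPTE_HOST_WRITABLE and EPT_SPTE_MMU_WRITABLE macros above) are assumptions, and spte_writable_invariants_hold() is a hypothetical name.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define BIT_ULL(n) (1ULL << (n))

/* Assumed EPT-style bit choices; the values are not listed on this page. */
#define PT_WRITABLE_MASK BIT_ULL(1)
static const u64 shadow_host_writable_mask = BIT_ULL(57);
static const u64 shadow_mmu_writable_mask  = BIT_ULL(58);

static bool is_writable_pte(unsigned long pte)
{
	return pte & PT_WRITABLE_MASK;
}

/*
 * Hypothetical checker: returns false where the kernel would WARN.
 *   MMU-writable      => Host-writable
 *   hardware-writable => MMU-writable
 */
static bool spte_writable_invariants_hold(u64 spte)
{
	if (spte & shadow_mmu_writable_mask)
		return spte & shadow_host_writable_mask;
	return !is_writable_pte(spte);
}
```

An SPTE that is MMU-writable and host-writable but not hardware-writable passes the check: it describes a mapping that is write-protected now but may be made writable again.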
◆ get_mmio_spte_generation()

static inline u64 get_mmio_spte_generation(u64 spte)

◆ get_rsvd_bits()

static inline u64 get_rsvd_bits(struct rsvd_bits_validate *rsvd_check, u64 pte, int level)

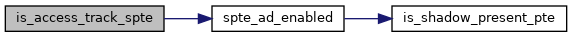

◆ is_access_track_spte()

static inline bool is_access_track_spte(u64 spte)

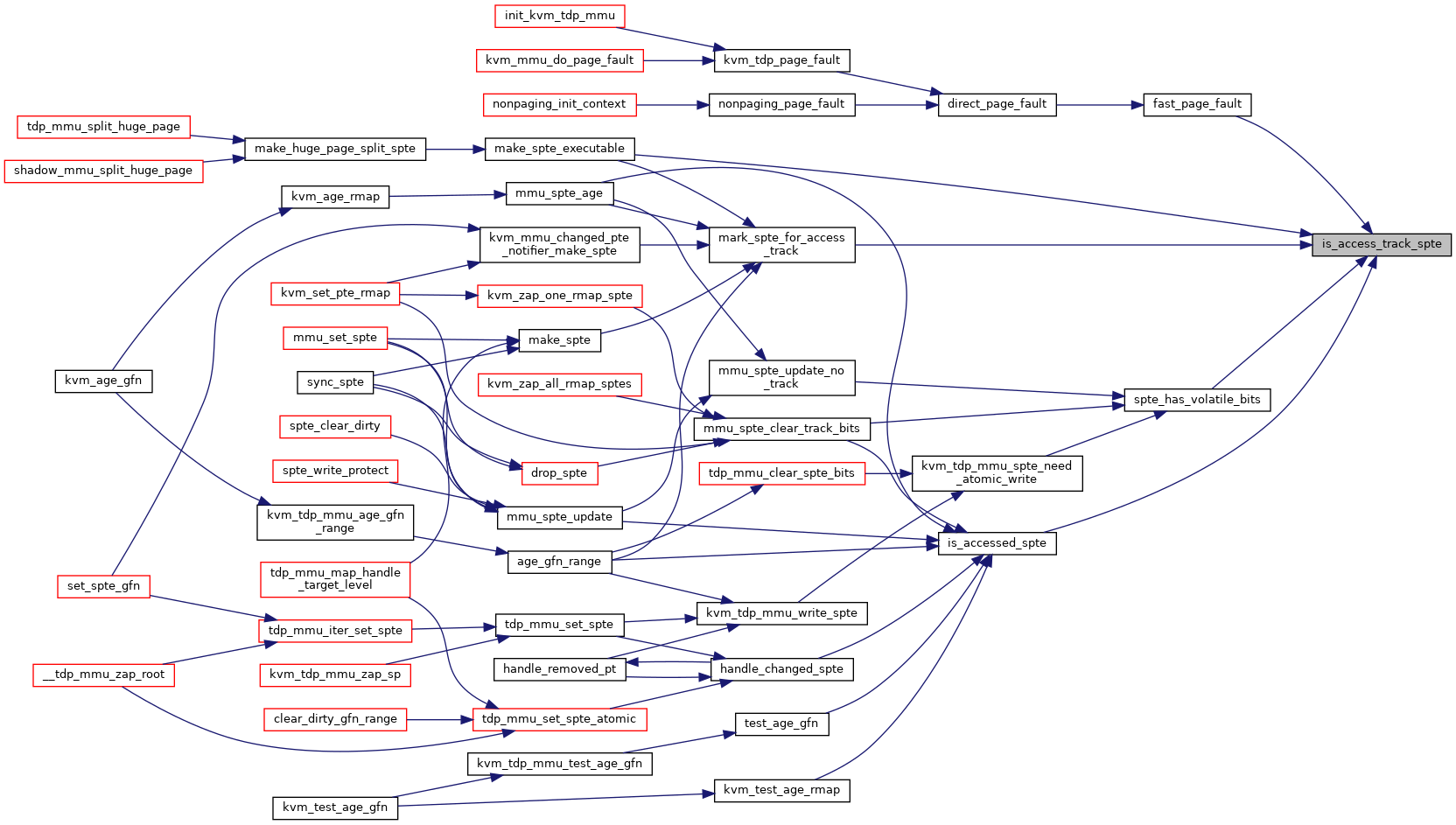

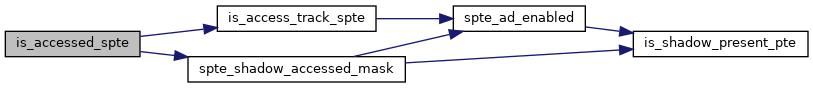

◆ is_accessed_spte()

static inline bool is_accessed_spte(u64 spte)

◆ is_dirty_spte()

static inline bool is_dirty_spte(u64 spte)

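When A/D bits are disabled for an SPTE, is_accessed_spte() and is_dirty_spte() fall back to proxies: "not access-tracked" stands in for accessed, and hardware writability stands in for dirty. A user-space sketch of that fallback logic, assuming EPT-style masks (accessed = bit 8, dirty = bit 9, access tracking clears R/W/X) and SPTE_TDP_AD_SHIFT = 52; all of these values are assumptions, and the kernel's shadow-present sanity checks are omitted.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define BIT_ULL(n) (1ULL << (n))
#define PT_WRITABLE_MASK     BIT_ULL(1)
#define SPTE_TDP_AD_SHIFT    52 /* assumed */
#define SPTE_TDP_AD_MASK     (3ULL << SPTE_TDP_AD_SHIFT)
#define SPTE_TDP_AD_DISABLED (1ULL << SPTE_TDP_AD_SHIFT)

/* Assumed EPT-style masks. */
static const u64 shadow_accessed_mask  = BIT_ULL(8);
static const u64 shadow_dirty_mask     = BIT_ULL(9);
static const u64 shadow_acc_track_mask = 0x7; /* R | W | X */

static bool spte_ad_enabled(u64 spte)
{
	return (spte & SPTE_TDP_AD_MASK) != SPTE_TDP_AD_DISABLED;
}

/* Zero means "no hardware A/D bit for this SPTE". */
static u64 spte_shadow_accessed_mask(u64 spte)
{
	return spte_ad_enabled(spte) ? shadow_accessed_mask : 0;
}

static u64 spte_shadow_dirty_mask(u64 spte)
{
	return spte_ad_enabled(spte) ? shadow_dirty_mask : 0;
}

/* Access-tracked: A/D disabled and all tracked permission bits cleared. */
static bool is_access_track_spte(u64 spte)
{
	return !spte_ad_enabled(spte) && (spte & shadow_acc_track_mask) == 0;
}

static bool is_accessed_spte(u64 spte)
{
	u64 accessed_mask = spte_shadow_accessed_mask(spte);

	return accessed_mask ? spte & accessed_mask : !is_access_track_spte(spte);
}

/* Without A/D bits, writability stands in for dirtiness. */
static bool is_dirty_spte(u64 spte)
{
	u64 dirty_mask = spte_shadow_dirty_mask(spte);

	return dirty_mask ? spte & dirty_mask : spte & PT_WRITABLE_MASK;
}
```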
◆ is_executable_pte()

static inline bool is_executable_pte(u64 spte)

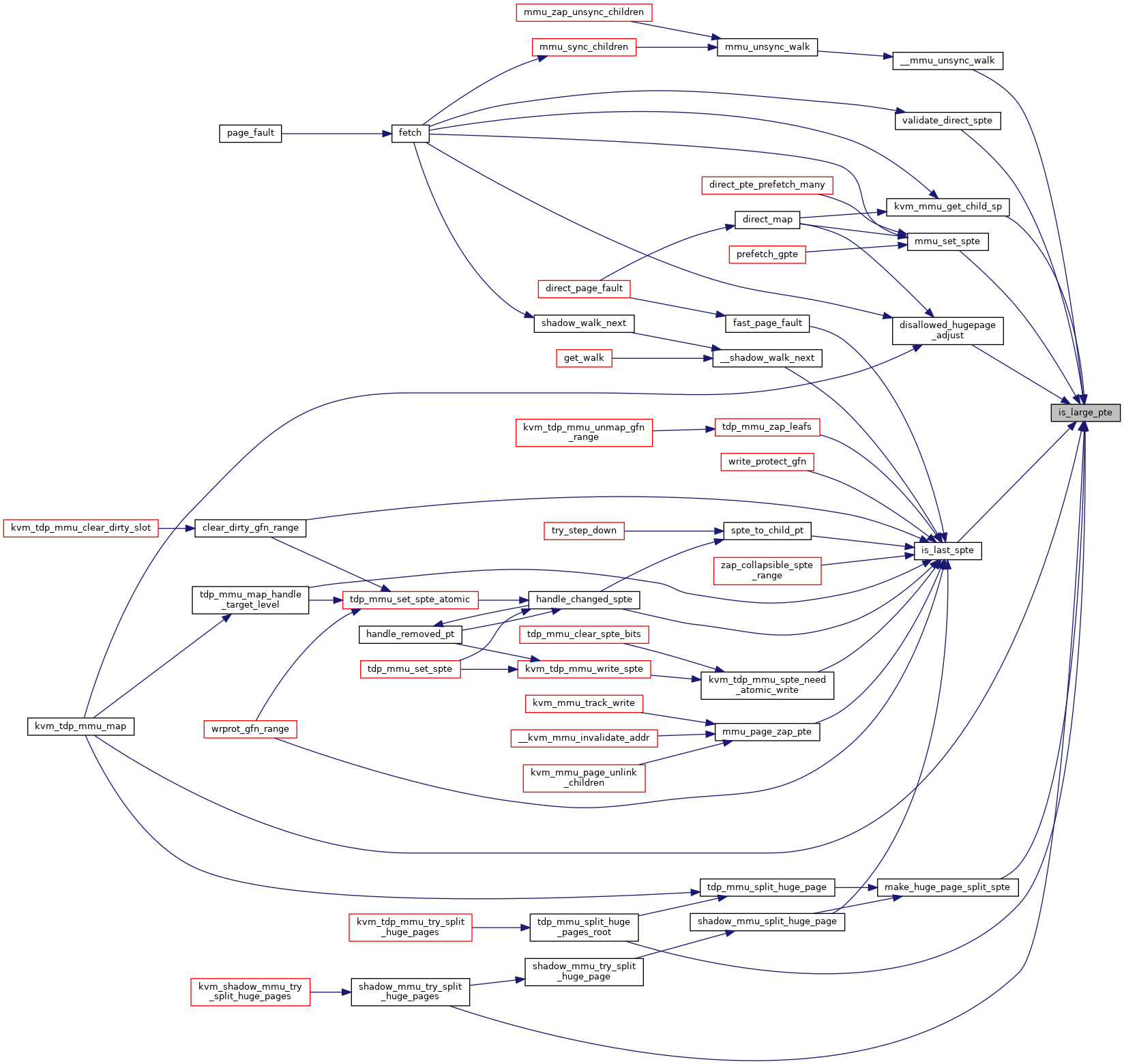

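is_executable_pte() handles both execute-permission polarities with a single comparison, because only one of shadow_x_mask and shadow_nx_mask is nonzero in a given paging mode. A small sketch, assuming EPT sets shadow_x_mask to bit 2 and non-EPT paging sets shadow_nx_mask to bit 63; here the masks are plain globals so that the test can switch modes.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define BIT_ULL(n) (1ULL << (n))

/* Exactly one of these is expected to be nonzero for a given MMU mode. */
static u64 shadow_x_mask;  /* "permit execute" bit, e.g. EPT X */
static u64 shadow_nx_mask; /* "deny execute" bit, e.g. x86 NX */

/* Executable when the permit bit (if any) is set and the deny bit (if any) is clear. */
static bool is_executable_pte(u64 spte)
{
	return (spte & (shadow_x_mask | shadow_nx_mask)) == shadow_x_mask;
}
```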
◆ is_large_pte()

static inline bool is_large_pte(u64 pte)

◆ is_last_spte()

static inline bool is_last_spte(u64 pte, int level)

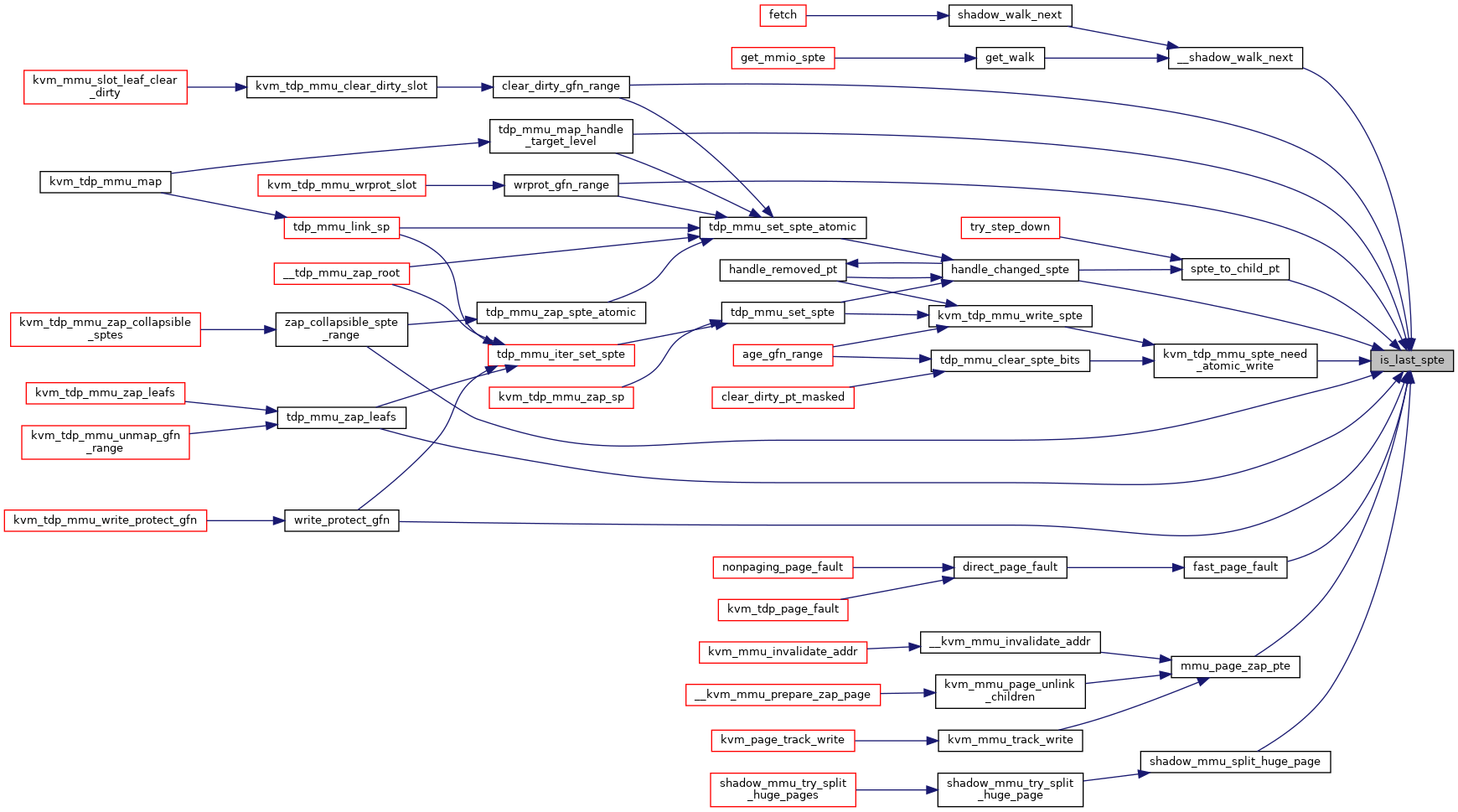

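is_last_spte() treats an SPTE as a leaf either because it sits at the 4 KiB level or because its page-size bit marks a huge mapping. A sketch assuming PG_LEVEL_4K is 1 and PT_PAGE_SIZE_MASK is bit 7 (the x86 PS bit); neither value is shown on this page.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define PG_LEVEL_4K       1           /* assumed: 4K = 1, 2M = 2, 1G = 3 */
#define PT_PAGE_SIZE_MASK (1ULL << 7) /* assumed: PS bit */

static bool is_large_pte(u64 pte)
{
	return pte & PT_PAGE_SIZE_MASK;
}

/* Leaf if at the lowest level, or if a higher-level SPTE maps a huge page. */
static bool is_last_spte(u64 pte, int level)
{
	return (level == PG_LEVEL_4K) || is_large_pte(pte);
}
```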
◆ is_mmio_spte()

static inline bool is_mmio_spte(u64 spte)

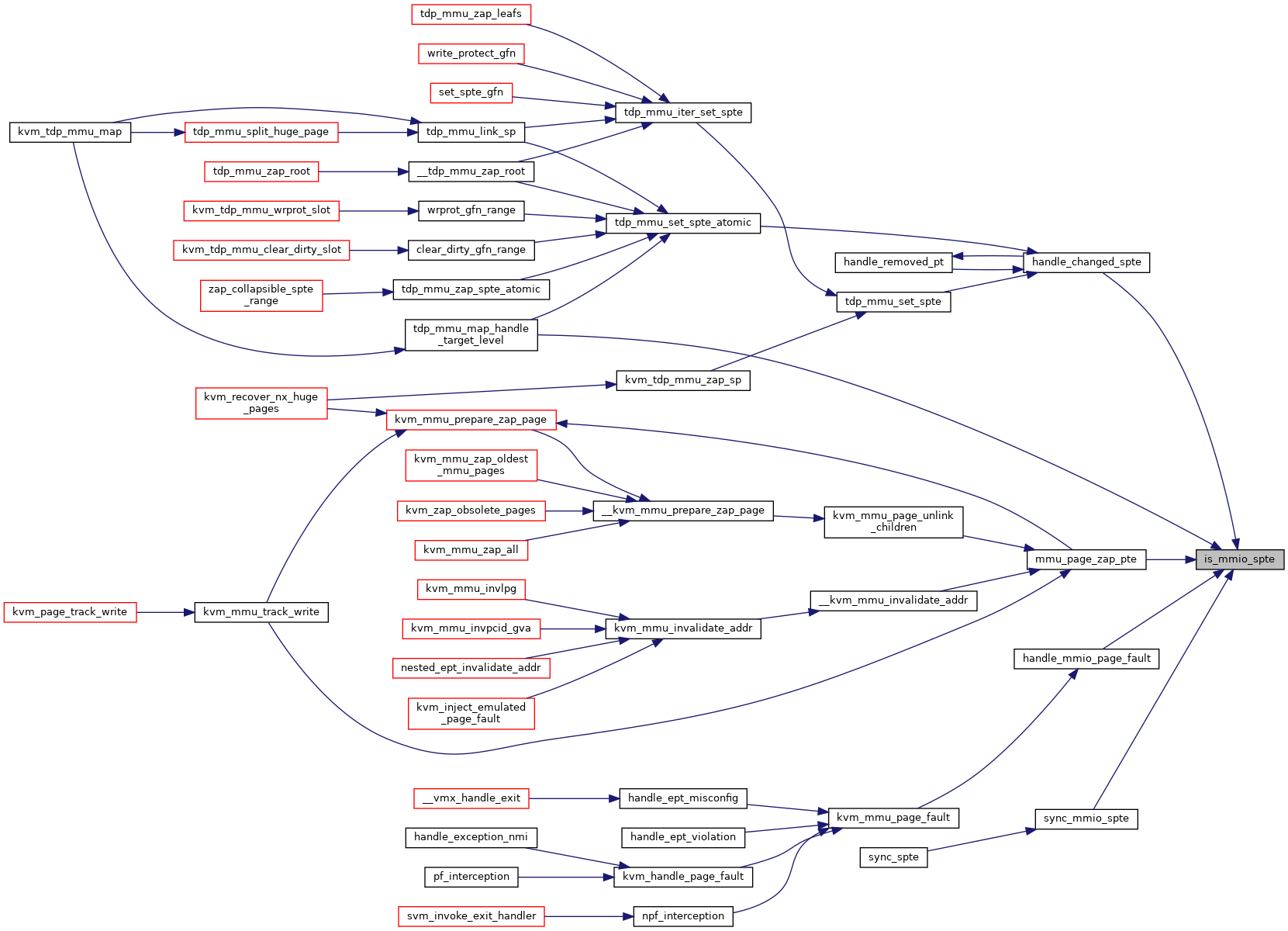

◆ is_mmu_writable_spte()

static inline bool is_mmu_writable_spte(u64 spte)

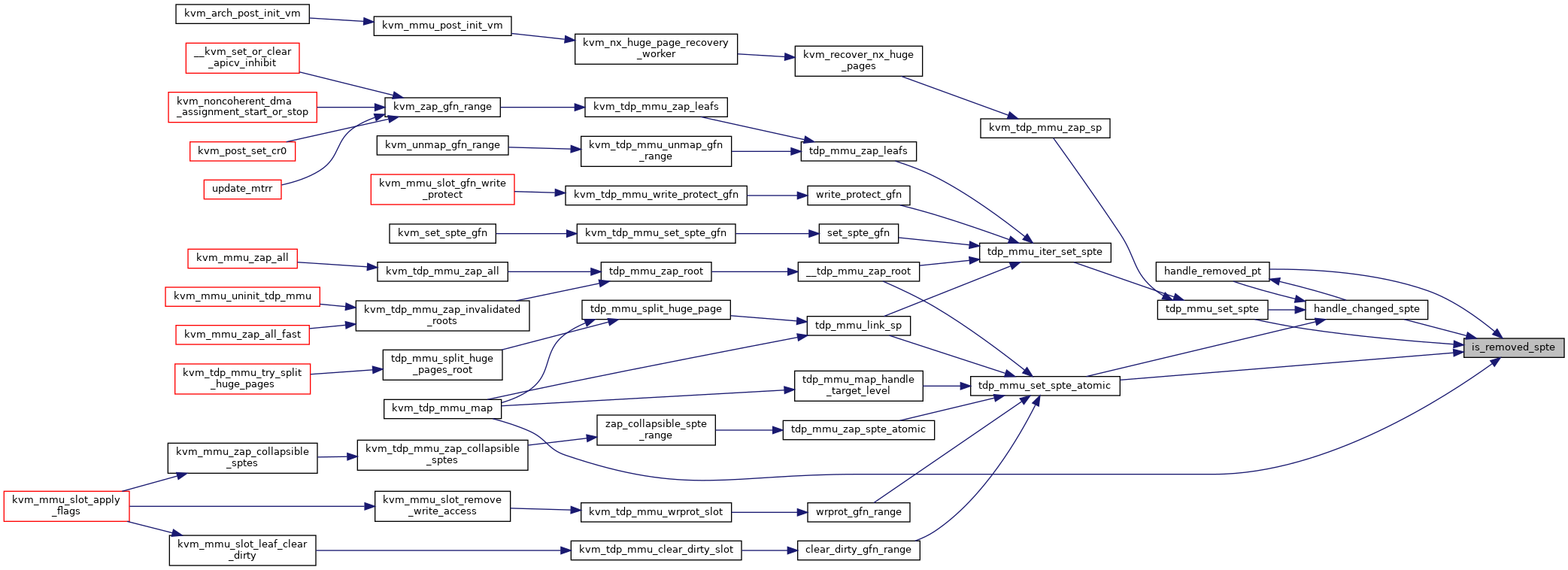

◆ is_removed_spte()

static inline bool is_removed_spte(u64 spte)

◆ is_rsvd_spte()

static __always_inline bool is_rsvd_spte(struct rsvd_bits_validate *rsvd_check, u64 spte, int level)

Definition at line 368 of file spte.h.

References static bool __is_rsvd_bits_set(struct rsvd_bits_validate *rsvd_check, u64 pte, int level), defined at spte.h:356.

References static bool __is_bad_mt_xwr(struct rsvd_bits_validate *rsvd_check, u64 pte), defined at spte.h:362.

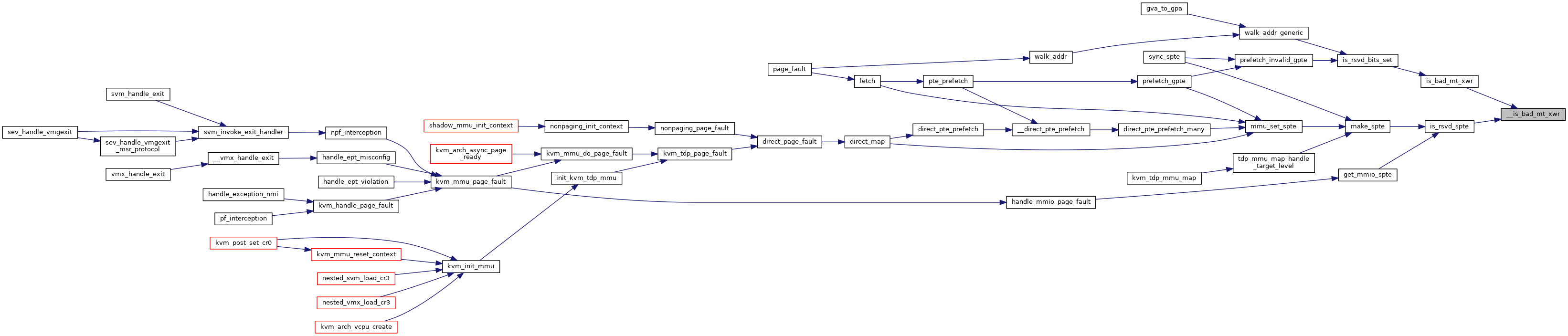

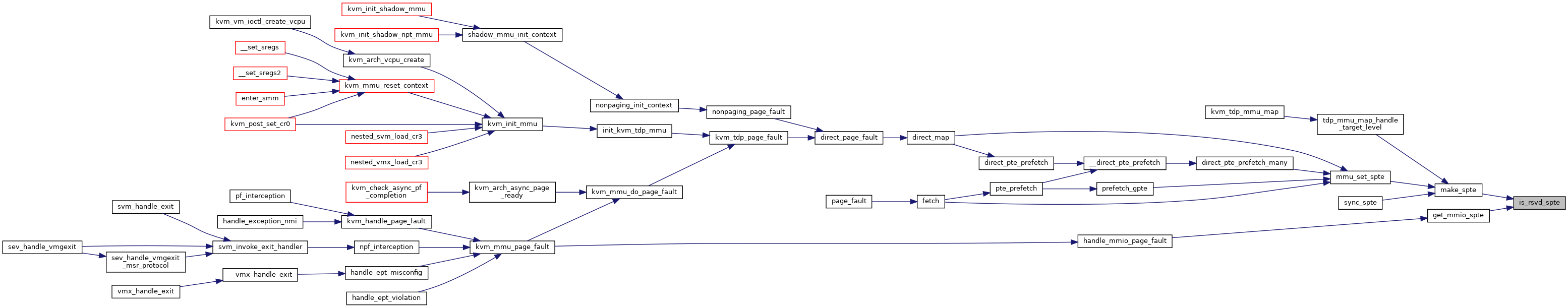

Here is the call graph for this function:

Here is the caller graph for this function:

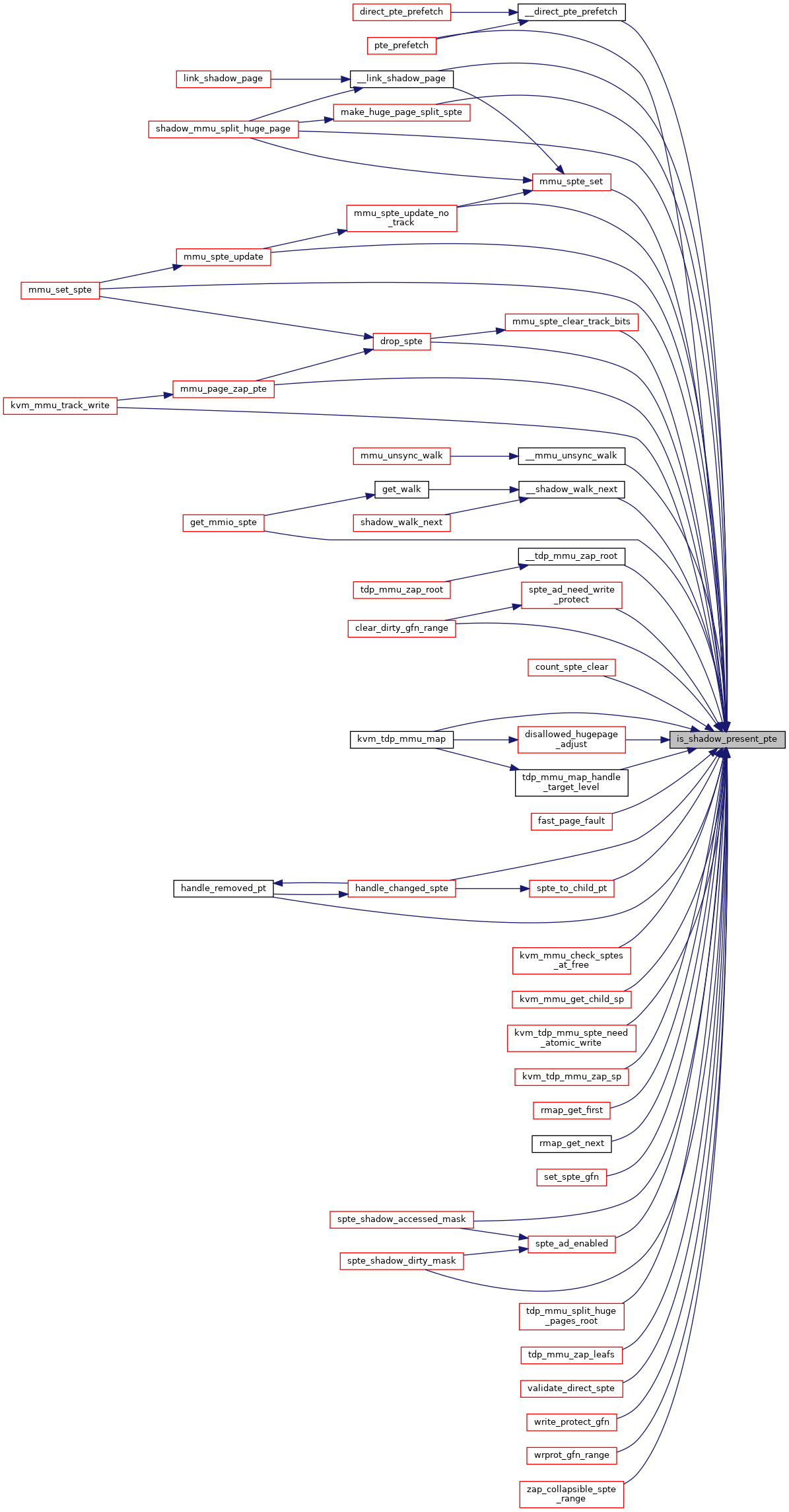

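is_rsvd_spte() combines two table lookups: a per-level reserved-bit mask selected by bit 7 of the SPTE, and a 64-entry bitmap indexed by the low six bits (EPT memory type plus XWR) that flags illegal combinations. A user-space sketch, assuming struct rsvd_bits_validate holds rsvd_bits_mask[2][PT64_ROOT_MAX_LEVEL] and bad_mt_xwr as in upstream KVM, with PT64_ROOT_MAX_LEVEL = 5; the masks used in the test are made up for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define BIT_ULL(n) (1ULL << (n))
#define PT64_ROOT_MAX_LEVEL 5 /* assumed: up to 5-level paging */

/* Assumed layout: masks indexed by [bit 7 of the SPTE][level - 1]. */
struct rsvd_bits_validate {
	u64 rsvd_bits_mask[2][PT64_ROOT_MAX_LEVEL];
	u64 bad_mt_xwr;
};

static u64 get_rsvd_bits(struct rsvd_bits_validate *rsvd_check, u64 pte, int level)
{
	int bit7 = (pte >> 7) & 1; /* selects the large-page vs. table mask */

	return rsvd_check->rsvd_bits_mask[bit7][level - 1];
}

static bool __is_rsvd_bits_set(struct rsvd_bits_validate *rsvd_check, u64 pte, int level)
{
	return pte & get_rsvd_bits(rsvd_check, pte, level);
}

/* The low 6 bits index a bitmap of forbidden memtype/XWR combinations. */
static bool __is_bad_mt_xwr(struct rsvd_bits_validate *rsvd_check, u64 pte)
{
	return rsvd_check->bad_mt_xwr & BIT_ULL(pte & 0x3f);
}

static bool is_rsvd_spte(struct rsvd_bits_validate *rsvd_check, u64 spte, int level)
{
	return __is_rsvd_bits_set(rsvd_check, spte, level) ||
	       __is_bad_mt_xwr(rsvd_check, spte);
}
```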
◆ is_shadow_present_pte()

static inline bool is_shadow_present_pte(u64 pte)

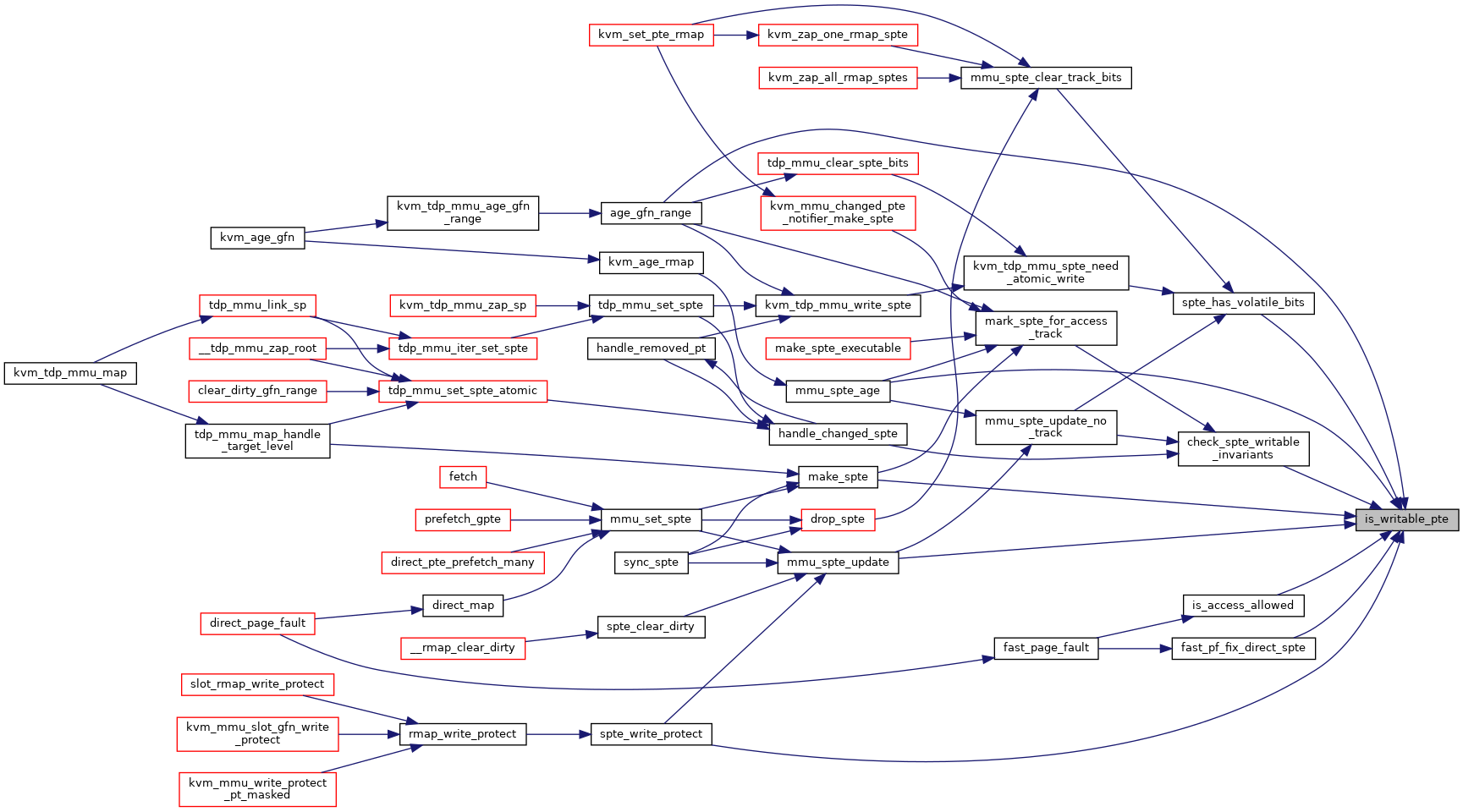

◆ is_writable_pte()

static inline bool is_writable_pte(unsigned long pte)

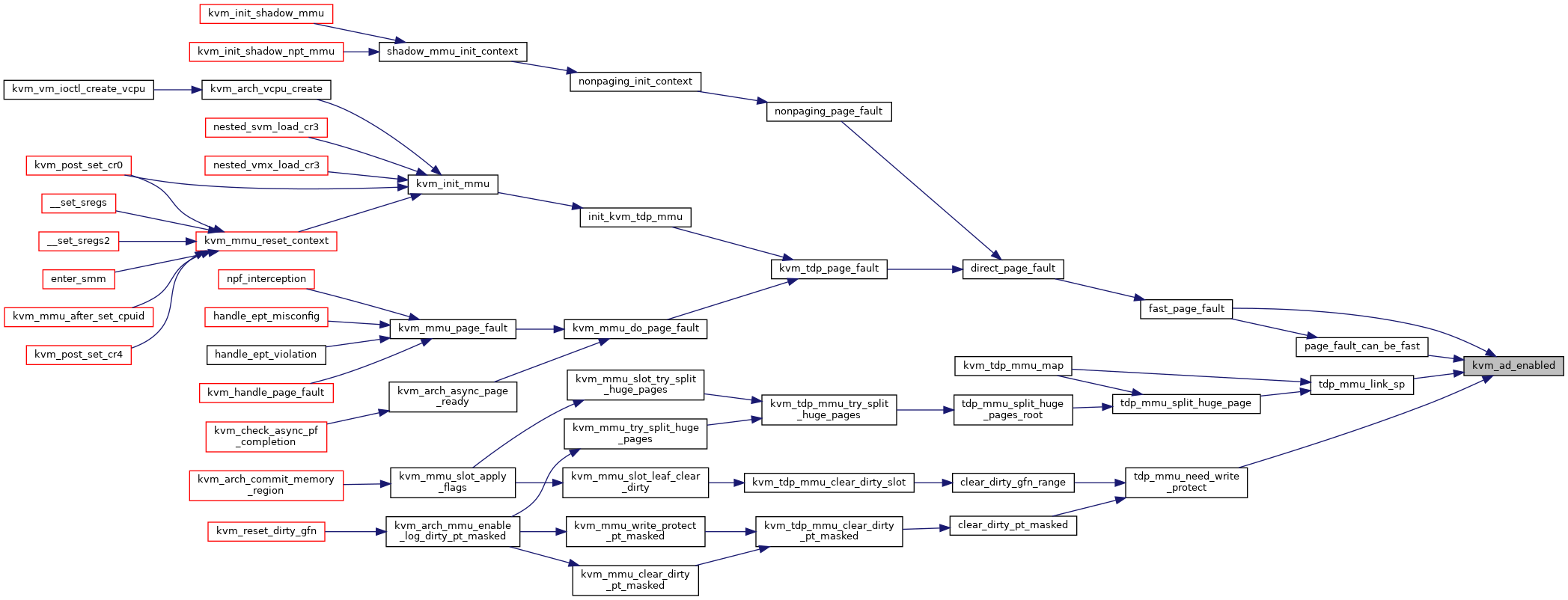

◆ kvm_ad_enabled()

static inline bool kvm_ad_enabled(void)

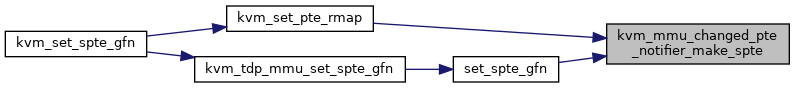

◆ kvm_mmu_changed_pte_notifier_make_spte()

u64 kvm_mmu_changed_pte_notifier_make_spte(u64 old_spte, kvm_pfn_t new_pfn)

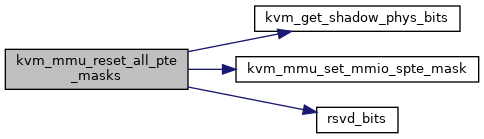

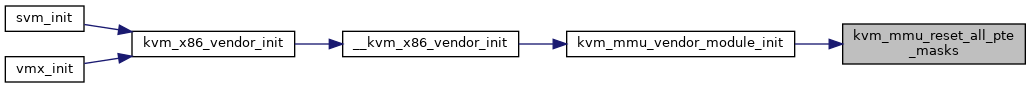

◆ kvm_mmu_reset_all_pte_masks()

void kvm_mmu_reset_all_pte_masks(void)

Definition at line 453 of file spte.c.

References void kvm_mmu_set_mmio_spte_mask(u64 mmio_value, u64 mmio_mask, u64 access_mask), defined at spte.c:362.

References u64 __read_mostly shadow_nonpresent_or_rsvd_lower_gfn_mask, defined at spte.c:44.

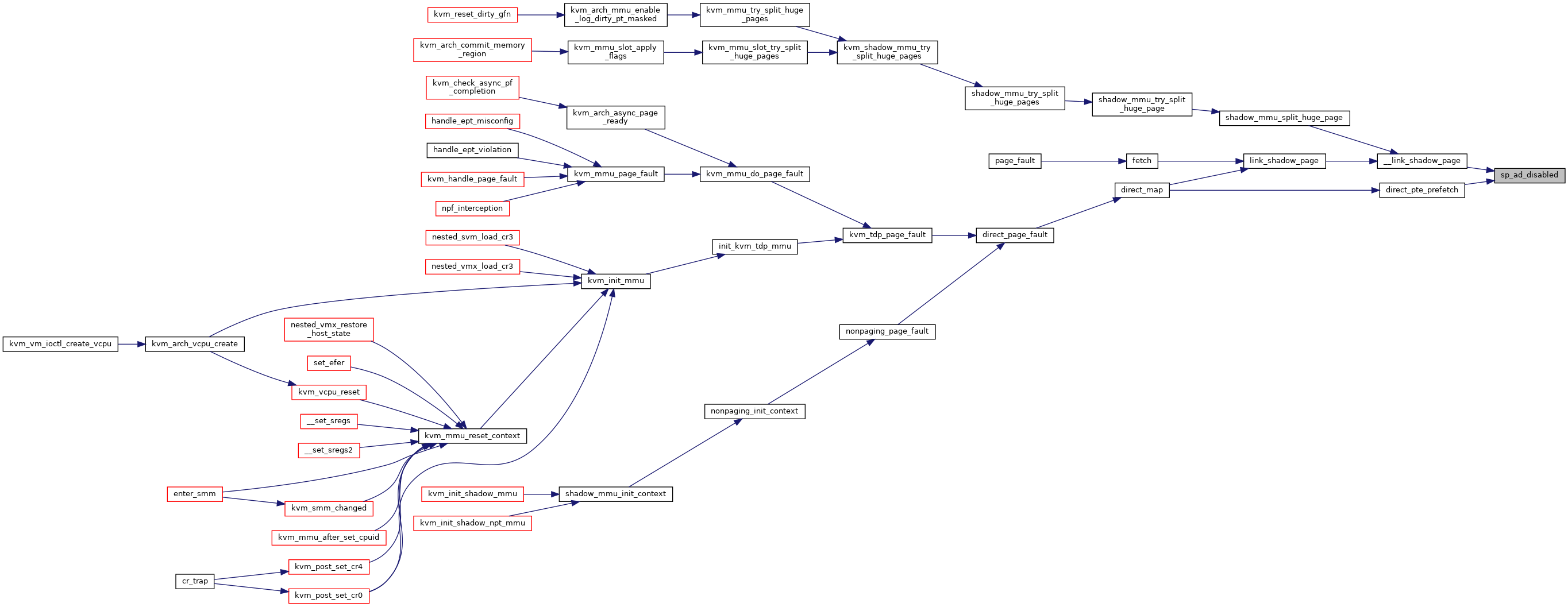

Here is the call graph for this function:

Here is the caller graph for this function:

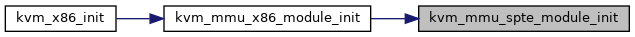

◆ kvm_mmu_spte_module_init()

void __init kvm_mmu_spte_module_init(void)

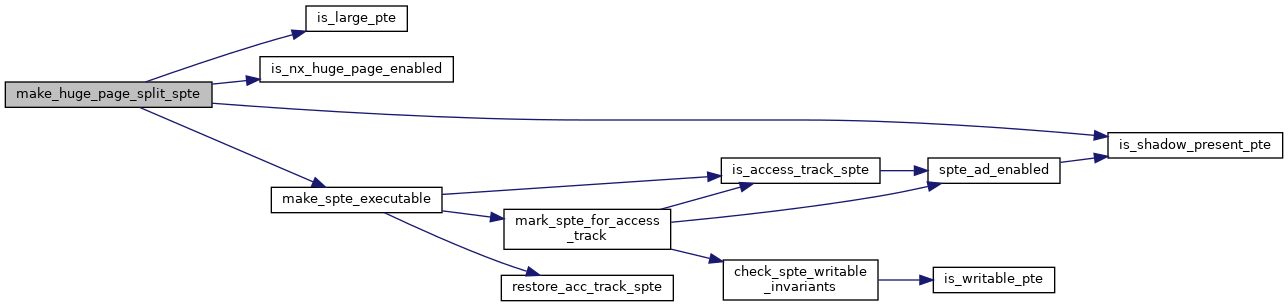

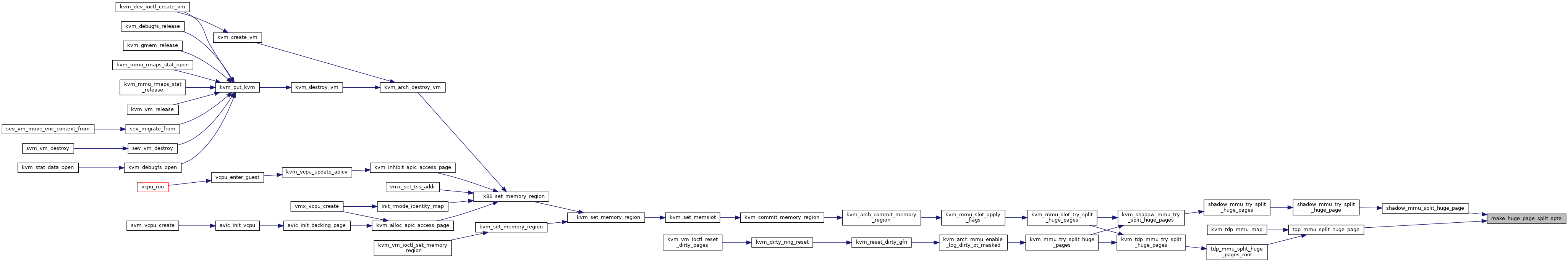

◆ make_huge_page_split_spte()

u64 make_huge_page_split_spte(struct kvm *kvm, u64 huge_spte, union kvm_mmu_page_role role, int index)

Definition at line 274 of file spte.c.

References static bool is_nx_huge_page_enabled(struct kvm *kvm), defined at mmu_internal.h:185.

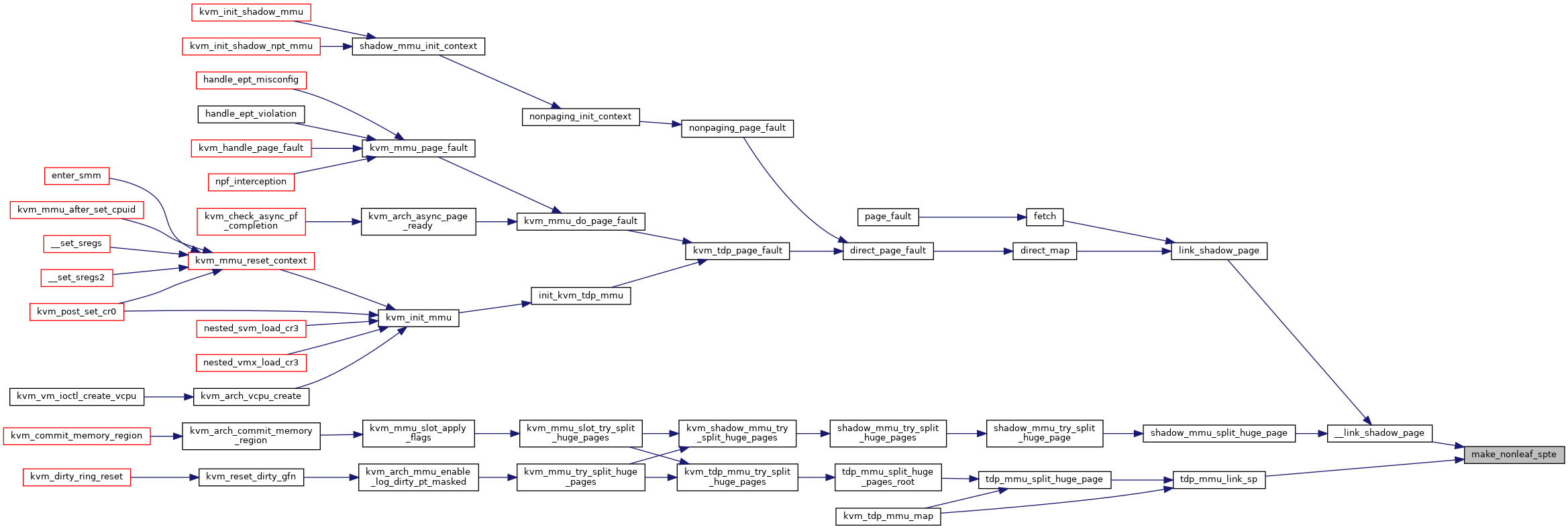

Here is the call graph for this function:

Here is the caller graph for this function:

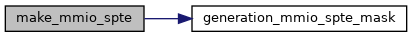

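Splitting a huge SPTE reuses the parent's bits and ORs in the address offset of the child at the requested index; at the 4 KiB level the page-size bit is also cleared. A simplified sketch, assuming 4 KiB pages, 9 index bits per level, and PT_PAGE_SIZE_MASK at bit 7. split_huge_spte() is a hypothetical name; it omits the present/large-page sanity checks and the handling tied to is_nx_huge_page_enabled() that the real function references.

```c
#include <stdint.h>

typedef uint64_t u64;

#define PAGE_SHIFT        12          /* assumed: 4 KiB pages */
#define PG_LEVEL_4K       1           /* assumed level numbering */
#define PT_PAGE_SIZE_MASK (1ULL << 7) /* assumed: PS bit */
/* 4 KiB pages covered by one SPTE at 'level' (assumed 9 bits per level). */
#define KVM_PAGES_PER_HPAGE(level) (1ULL << (((level) - 1) * 9))

/*
 * Hypothetical simplification: build entry 'index' of the child table that
 * replaces 'huge_spte'; 'child_level' is the level of the new child SPTEs.
 */
static u64 split_huge_spte(u64 huge_spte, int child_level, int index)
{
	u64 child_spte = huge_spte;

	/* The huge SPTE already holds the base address; add the child's offset. */
	child_spte |= ((u64)index * KVM_PAGES_PER_HPAGE(child_level)) << PAGE_SHIFT;

	/* A 4 KiB SPTE can never be a huge mapping. */
	if (child_level == PG_LEVEL_4K)
		child_spte &= ~PT_PAGE_SIZE_MASK;

	return child_spte;
}
```

For instance, splitting a 2 MiB mapping at 0x40000000 into 4 KiB children gives the child at index 3 the address 0x40003000, while splitting a 1 GiB mapping into 2 MiB children keeps the page-size bit set.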
◆ make_mmio_spte()

u64 make_mmio_spte(struct kvm_vcpu *vcpu, u64 gfn, unsigned int access)

◆ make_nonleaf_spte()

u64 make_nonleaf_spte(u64 *child_pt, bool ad_disabled)

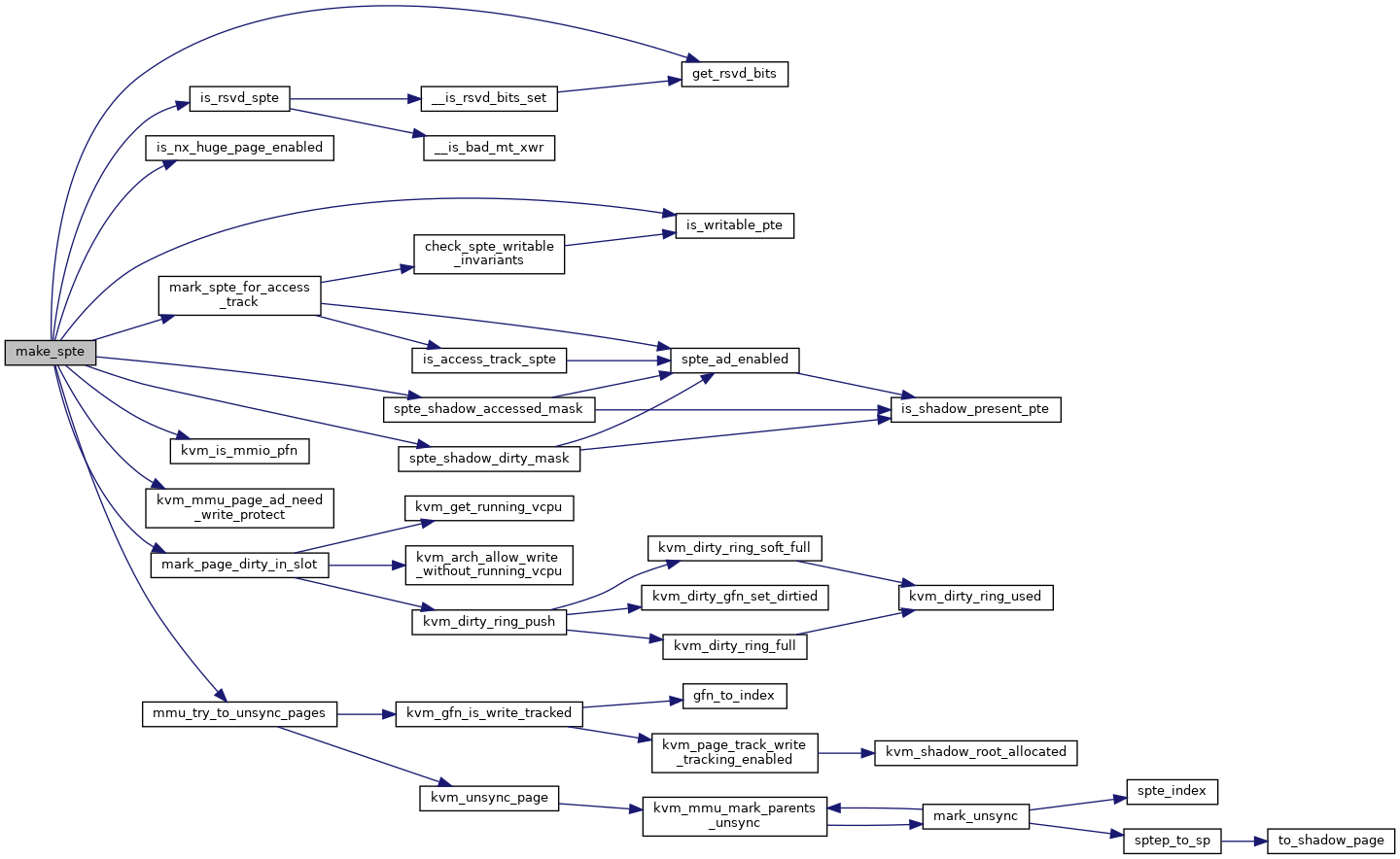

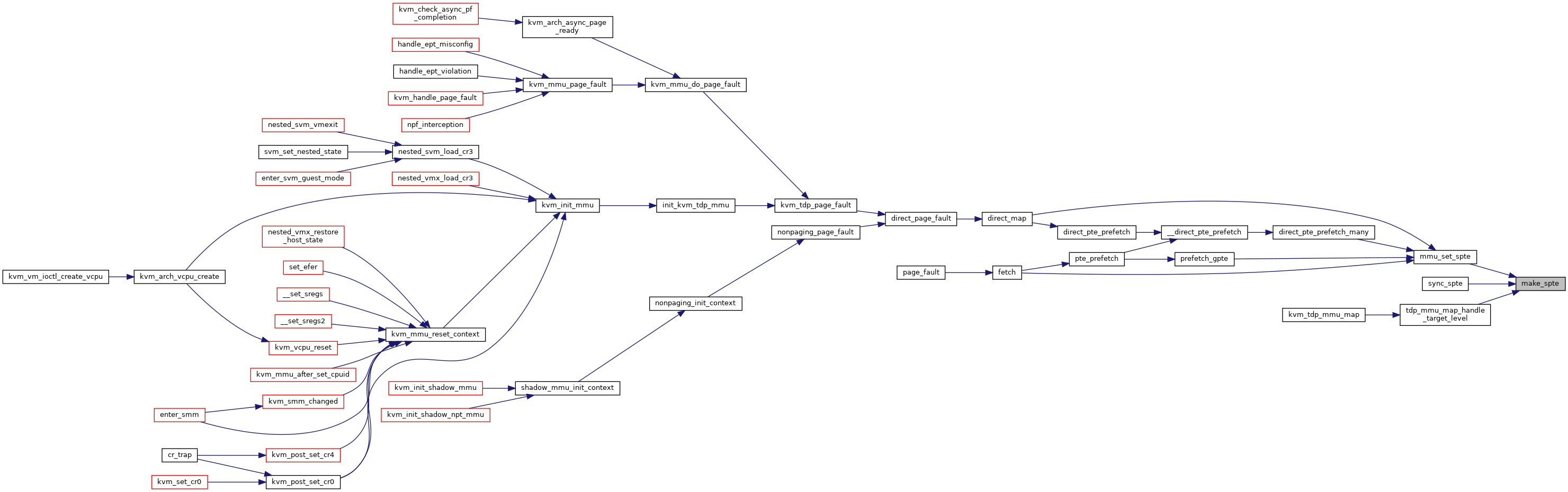

◆ make_spte()

bool make_spte(struct kvm_vcpu *vcpu, struct kvm_mmu_page *sp, const struct kvm_memory_slot *slot, unsigned int pte_access, gfn_t gfn, kvm_pfn_t pfn, u64 old_spte, bool prefetch, bool can_unsync, bool host_writable, u64 *new_spte)

Definition at line 137 of file spte.c.

References void mark_page_dirty_in_slot(struct kvm *kvm, const struct kvm_memory_slot *memslot, gfn_t gfn), defined at kvm_main.c:3635.

References int mmu_try_to_unsync_pages(struct kvm *kvm, const struct kvm_memory_slot *slot, gfn_t gfn, bool can_unsync, bool prefetch), defined at mmu.c:2805.

References static bool kvm_mmu_page_ad_need_write_protect(struct kvm_mmu_page *sp), defined at mmu_internal.h:148.

References static __always_inline bool is_rsvd_spte(struct rsvd_bits_validate *rsvd_check, u64 spte, int level), defined at spte.h:368.

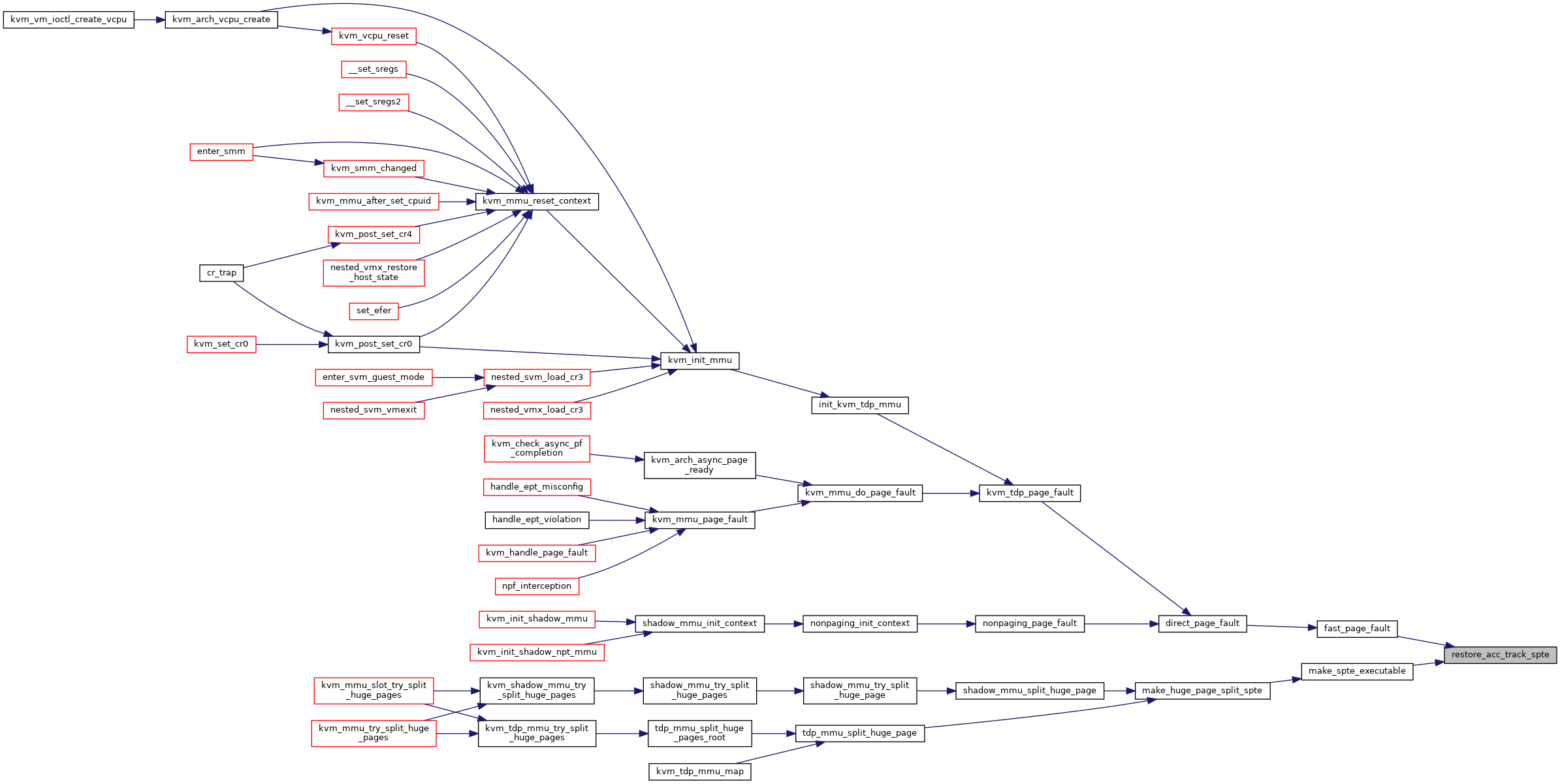

Here is the call graph for this function:

Here is the caller graph for this function:

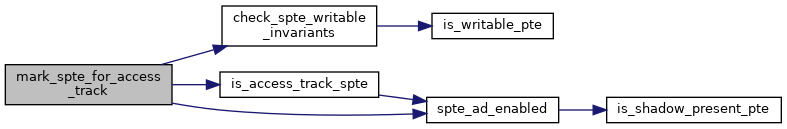

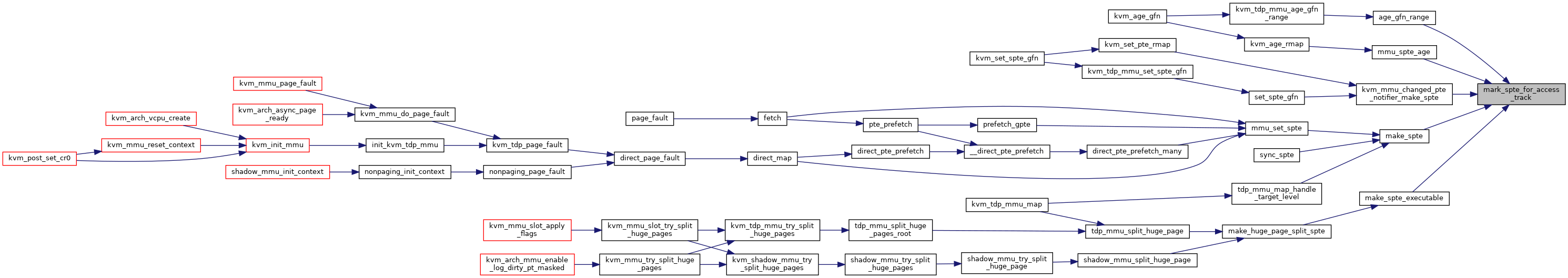

◆ mark_spte_for_access_track()

u64 mark_spte_for_access_track(u64 spte)

Definition at line 341 of file spte.c.

References static void check_spte_writable_invariants(u64 spte), defined at spte.h:447.

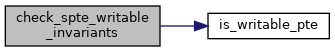

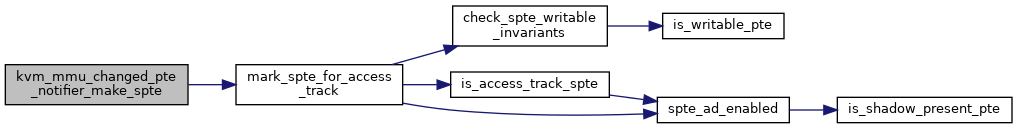

Here is the call graph for this function:

Here is the caller graph for this function:

◆ restore_acc_track_spte()

static inline u64 restore_acc_track_spte(u64 spte)

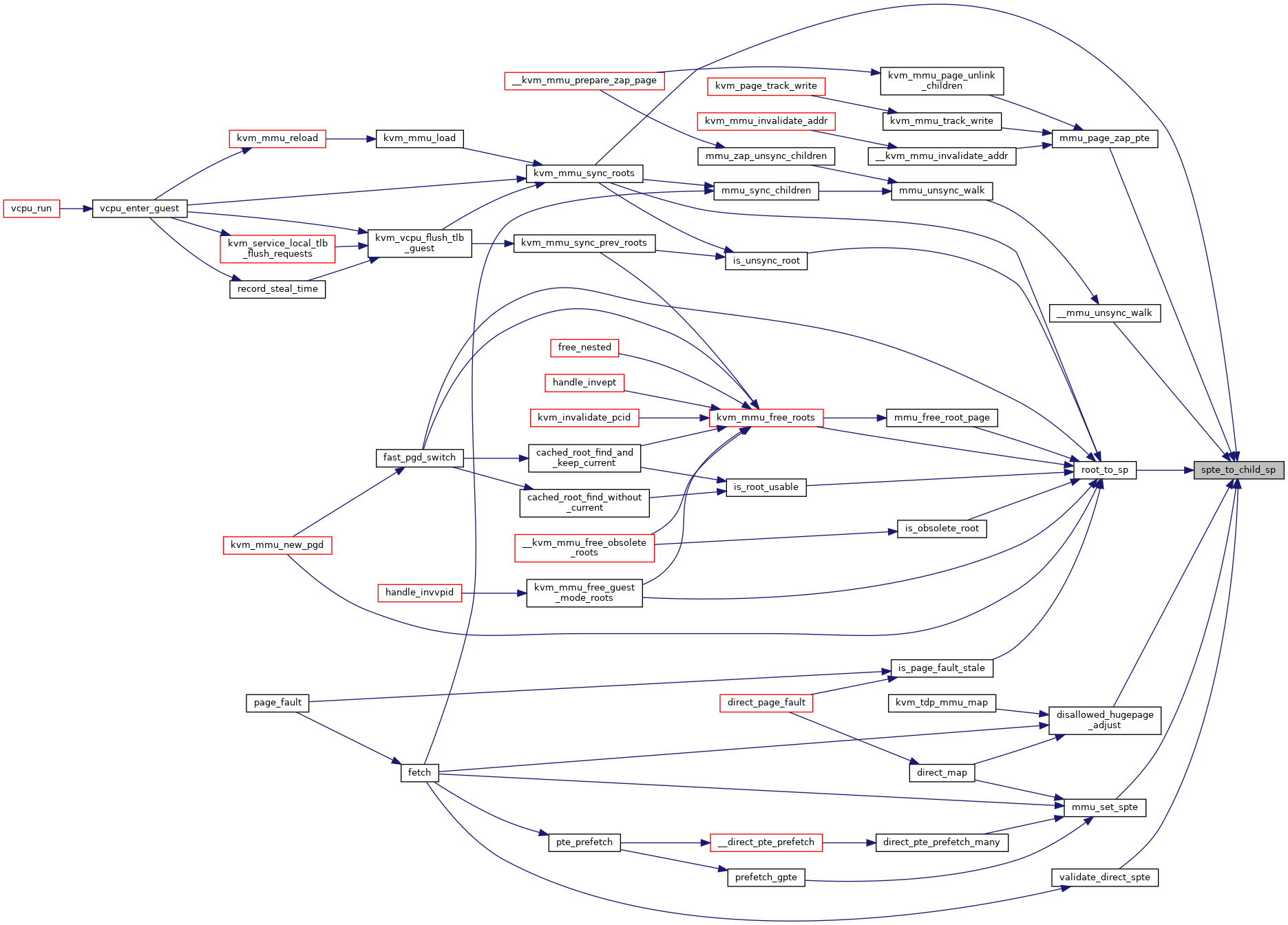

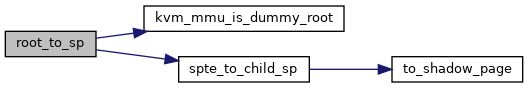

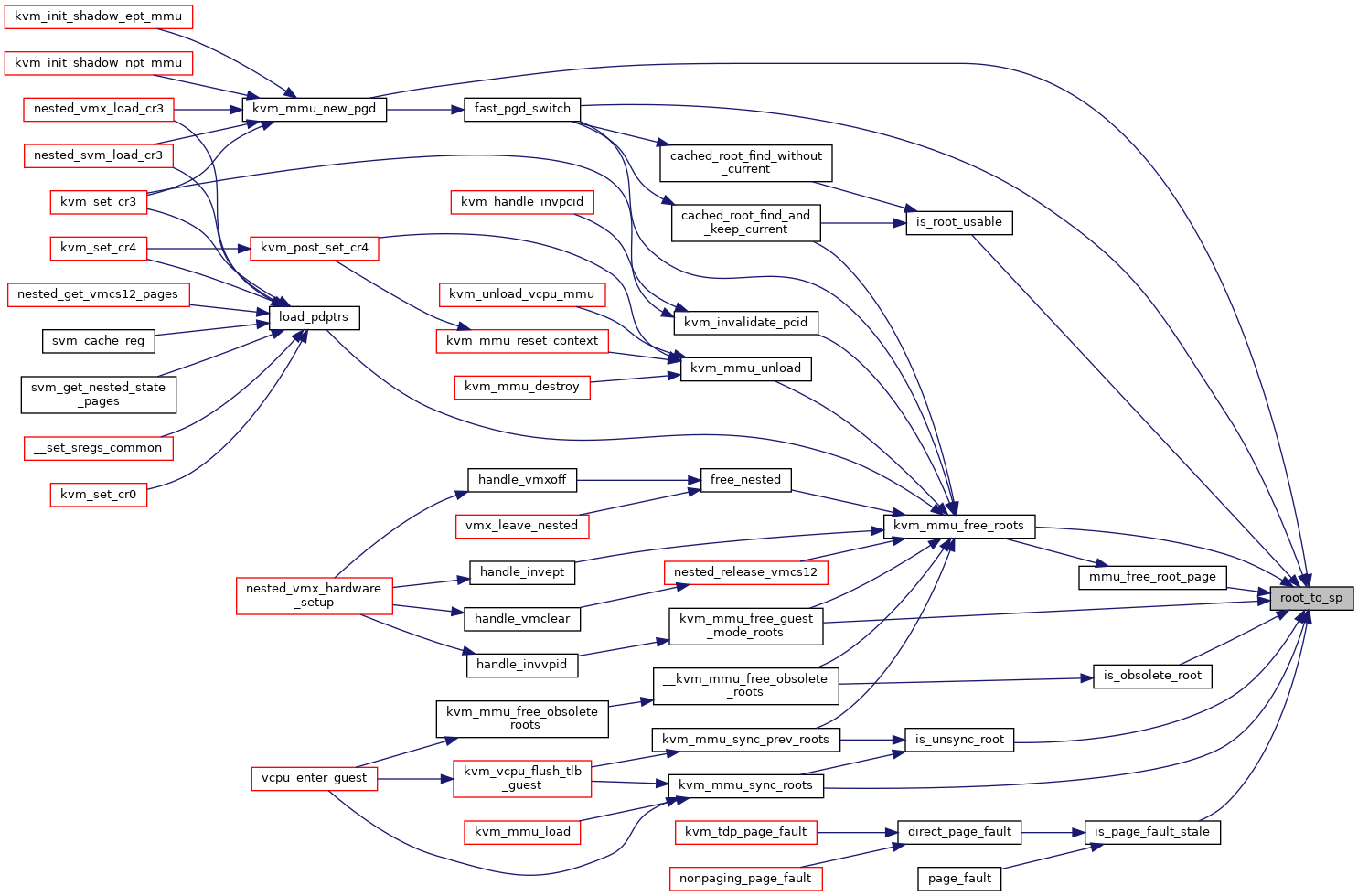

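For SPTEs without A/D bits, mark_spte_for_access_track() saves the R and X permissions into the SHADOW_ACC_TRACK_SAVED_MASK slot and clears shadow_acc_track_mask, so the next guest access faults; restore_acc_track_spte() reverses this. The sketch below is simplified and assumes EPT values (R = bit 0, X = bit 2, saved-bits shift 54, shadow_acc_track_mask = R|W|X). acc_track_mark() and acc_track_restore() are hypothetical names, and the early returns the kernel takes for A/D-enabled or already-tracked SPTEs are omitted.

```c
#include <stdint.h>

typedef uint64_t u64;

/* Assumed EPT values (not shown on this page). */
#define SPTE_EPT_READABLE_MASK   0x1ULL
#define SPTE_EPT_EXECUTABLE_MASK 0x4ULL
#define SHADOW_ACC_TRACK_SAVED_BITS_SHIFT 54
#define SHADOW_ACC_TRACK_SAVED_BITS_MASK \
	(SPTE_EPT_READABLE_MASK | SPTE_EPT_EXECUTABLE_MASK)
#define SHADOW_ACC_TRACK_SAVED_MASK \
	(SHADOW_ACC_TRACK_SAVED_BITS_MASK << SHADOW_ACC_TRACK_SAVED_BITS_SHIFT)

static const u64 shadow_acc_track_mask = 0x7; /* EPT R | W | X */

/* Hypothetical: stash R/X in the saved-bits slot, then strip R/W/X. */
static u64 acc_track_mark(u64 spte)
{
	spte |= (spte & SHADOW_ACC_TRACK_SAVED_BITS_MASK) <<
		SHADOW_ACC_TRACK_SAVED_BITS_SHIFT;
	spte &= ~shadow_acc_track_mask;
	return spte;
}

/* Hypothetical: move R/X back down and clear the saved-bits slot. */
static u64 acc_track_restore(u64 spte)
{
	u64 saved_bits = (spte >> SHADOW_ACC_TRACK_SAVED_BITS_SHIFT) &
			 SHADOW_ACC_TRACK_SAVED_BITS_MASK;

	spte &= ~shadow_acc_track_mask;
	spte &= ~SHADOW_ACC_TRACK_SAVED_MASK;
	spte |= saved_bits;
	return spte;
}
```

W is not among the saved bits, so in this sketch a writable SPTE comes back read/execute-only after a mark/restore round trip.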
◆ root_to_sp()

static inline struct kvm_mmu_page *root_to_sp(hpa_t root)

Definition at line 240 of file spte.h.

References static bool kvm_mmu_is_dummy_root(hpa_t shadow_page), defined at mmu_internal.h:45.


◆ sp_ad_disabled()

static inline bool sp_ad_disabled(struct kvm_mmu_page *sp)

◆ spte_ad_enabled()

static inline bool spte_ad_enabled(u64 spte)

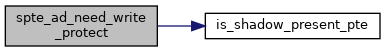

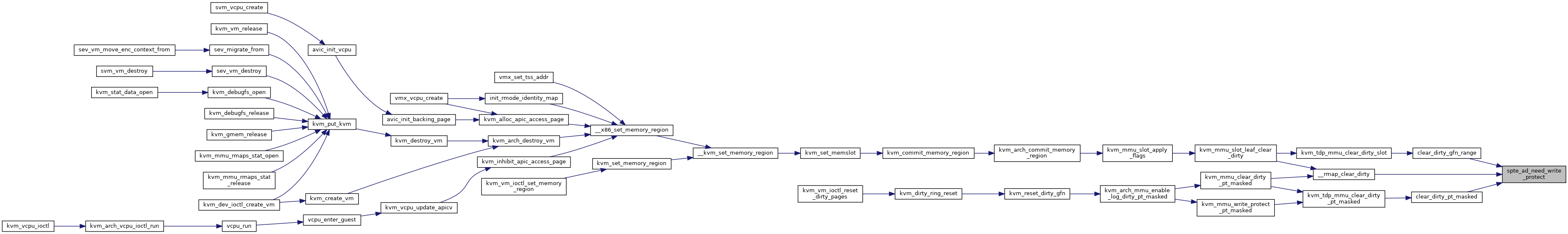

◆ spte_ad_need_write_protect()

static inline bool spte_ad_need_write_protect(u64 spte)

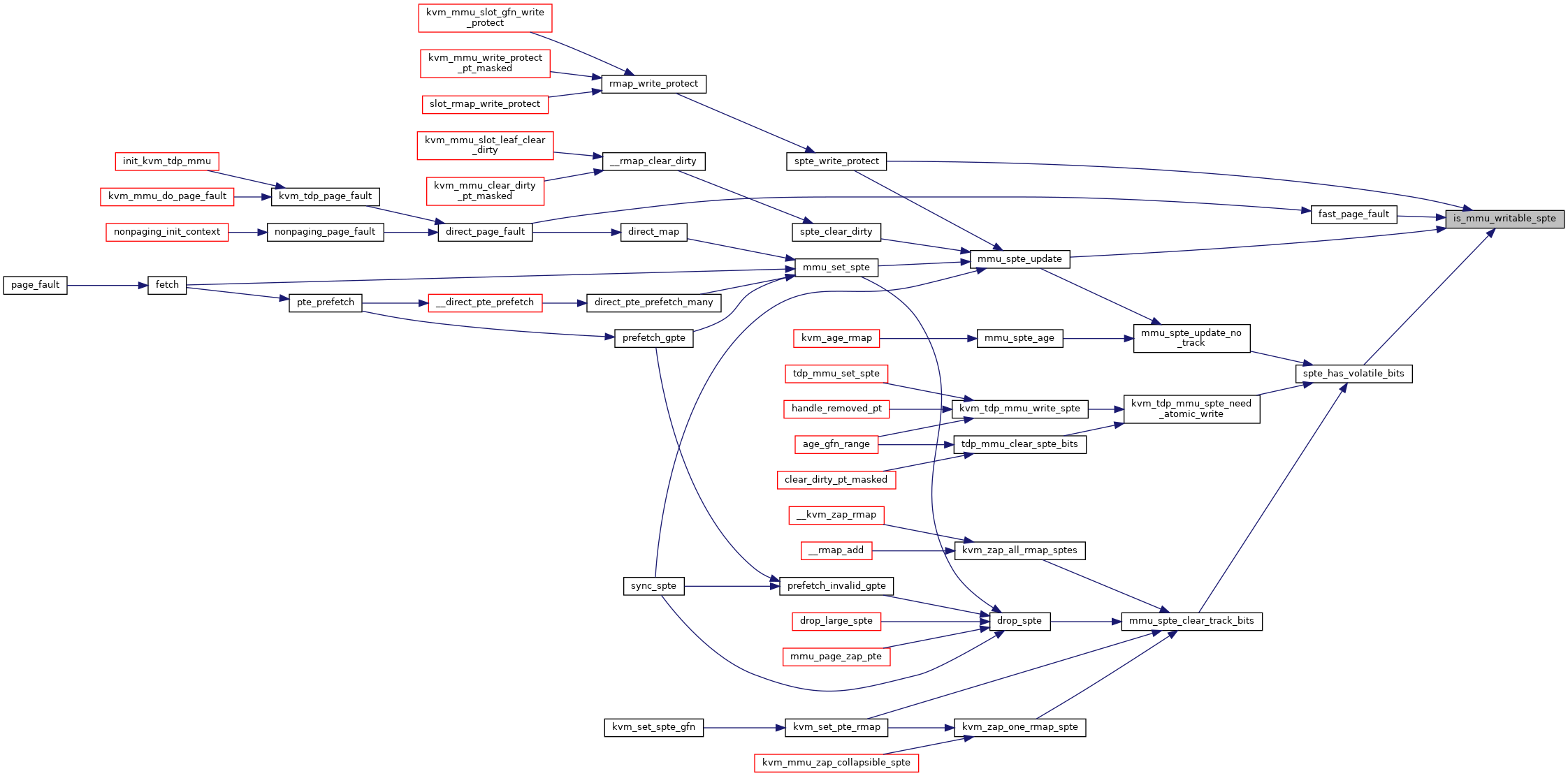

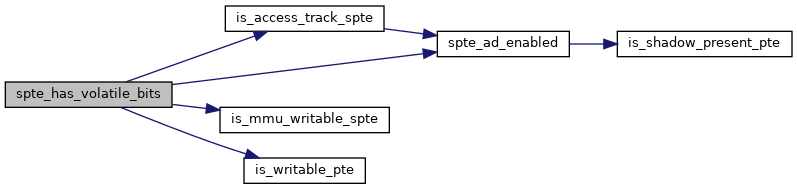

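The SPTE_TDP_AD_* values form a two-bit field. spte_ad_enabled() rejects only the DISABLED encoding, while spte_ad_need_write_protect() accepts only ENABLED, so SPTE_TDP_AD_WRPROT_ONLY means A/D bits are in use but the SPTE still needs write protection. A sketch assuming SPTE_TDP_AD_SHIFT = 52 (the value is not shown on this page), without the kernel's check that the SPTE is shadow-present.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t u64;

#define SPTE_TDP_AD_SHIFT       52 /* assumed */
#define SPTE_TDP_AD_ENABLED     (0ULL << SPTE_TDP_AD_SHIFT)
#define SPTE_TDP_AD_DISABLED    (1ULL << SPTE_TDP_AD_SHIFT)
#define SPTE_TDP_AD_WRPROT_ONLY (2ULL << SPTE_TDP_AD_SHIFT)
#define SPTE_TDP_AD_MASK        (3ULL << SPTE_TDP_AD_SHIFT)

/* A/D bits are usable unless the SPTE is explicitly tagged "disabled". */
static bool spte_ad_enabled(u64 spte)
{
	return (spte & SPTE_TDP_AD_MASK) != SPTE_TDP_AD_DISABLED;
}

/* Write protection is needed for every encoding except "fully enabled". */
static bool spte_ad_need_write_protect(u64 spte)
{
	return (spte & SPTE_TDP_AD_MASK) != SPTE_TDP_AD_ENABLED;
}
```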
◆ spte_has_volatile_bits()

bool spte_has_volatile_bits(u64 spte)

◆ spte_index()

static inline int spte_index(u64 *sptep)

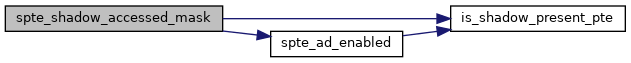

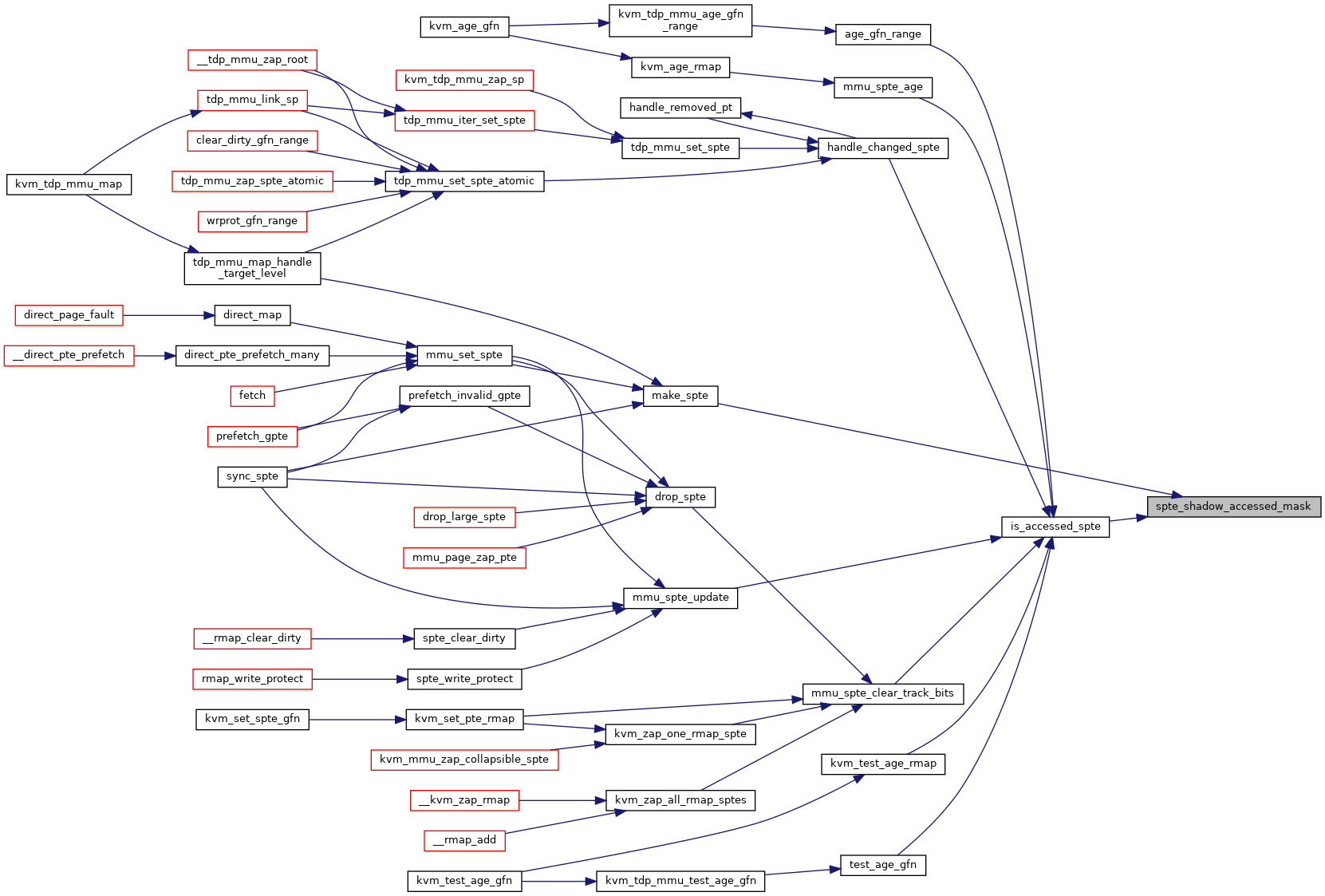

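spte_index() recovers an entry's slot number from its address alone, which works because each shadow page table occupies one page-aligned page. A sketch assuming SPTE_ENT_PER_PAGE is 512 (4 KiB tables of 8-byte entries); it casts through uintptr_t rather than unsigned long for portability.

```c
#include <stdint.h>

typedef uint64_t u64;

#define SPTE_ENT_PER_PAGE 512 /* assumed: 4 KiB table / 8-byte entries */

/* Entry offset of 'sptep' within its page-aligned table. */
static int spte_index(u64 *sptep)
{
	return ((uintptr_t)sptep / sizeof(*sptep)) & (SPTE_ENT_PER_PAGE - 1);
}
```

The result is only meaningful if the table really is page-aligned, which is why the test below declares its array with _Alignas(4096).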
◆ spte_shadow_accessed_mask()

static inline u64 spte_shadow_accessed_mask(u64 spte)

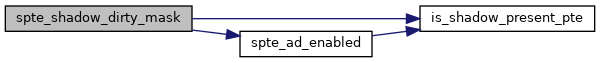

◆ spte_shadow_dirty_mask()

static inline u64 spte_shadow_dirty_mask(u64 spte)

◆ spte_to_child_sp()

static inline struct kvm_mmu_page *spte_to_child_sp(u64 spte)

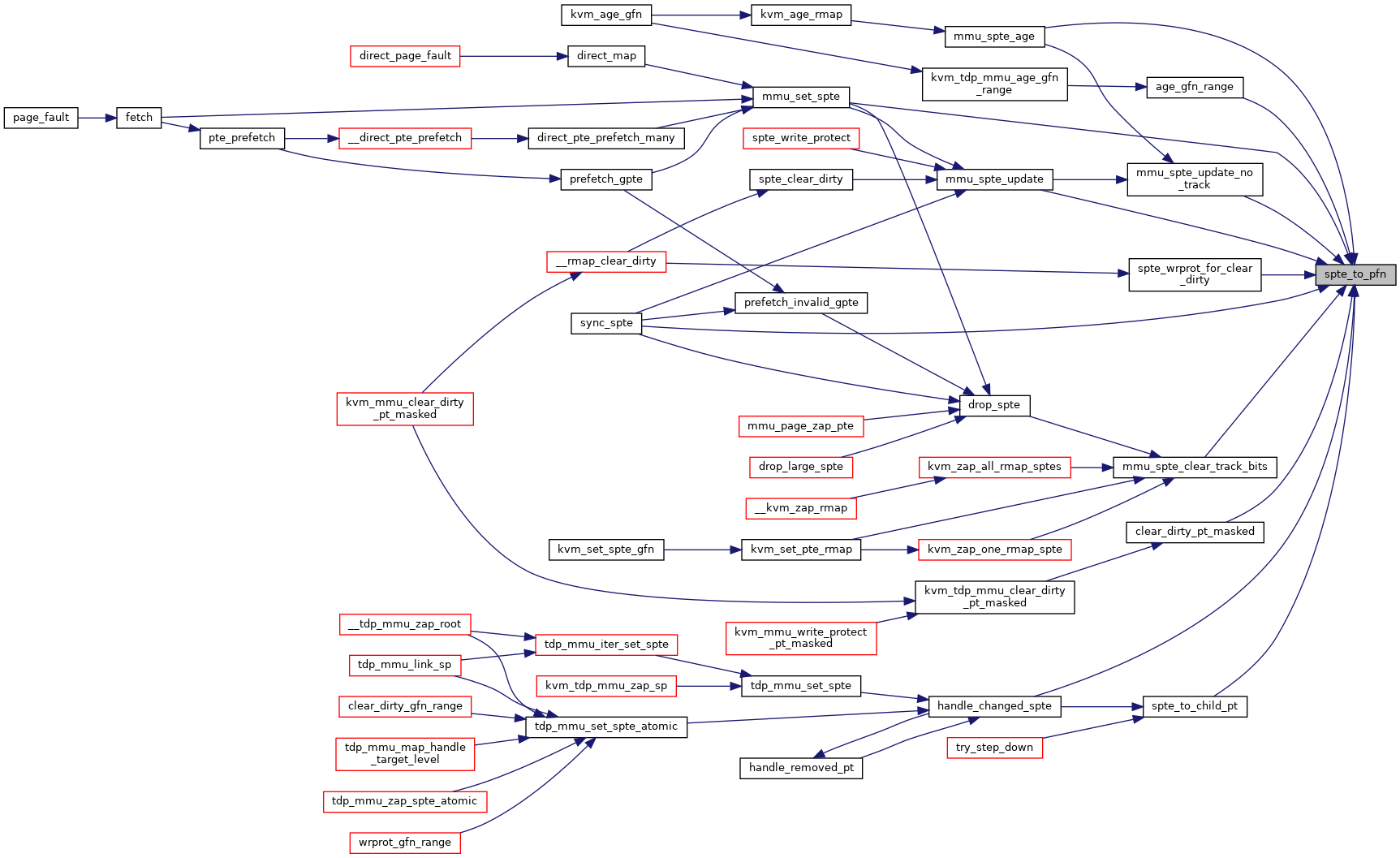

◆ spte_to_pfn()

static inline kvm_pfn_t spte_to_pfn(u64 pte)

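spte_to_pfn() masks the SPTE with SPTE_BASE_ADDR_MASK (with 4 KiB pages, physical-address bits 12-51) and shifts the result down to a page frame number, discarding permission bits at both ends. A sketch assuming PAGE_SHIFT is 12; the example SPTE value in the test is arbitrary.

```c
#include <stdint.h>

typedef uint64_t u64;
typedef u64 kvm_pfn_t;

#define PAGE_SHIFT 12 /* assumed: 4 KiB pages */
#define PAGE_SIZE  (1ULL << PAGE_SHIFT)
#define SPTE_BASE_ADDR_MASK (((1ULL << 52) - 1) & ~(u64)(PAGE_SIZE-1))

/* Keep the physical-address field, then convert the address to a frame number. */
static kvm_pfn_t spte_to_pfn(u64 pte)
{
	return (pte & SPTE_BASE_ADDR_MASK) >> PAGE_SHIFT;
}
```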
◆ sptep_to_sp()

static inline struct kvm_mmu_page *sptep_to_sp(u64 *sptep)

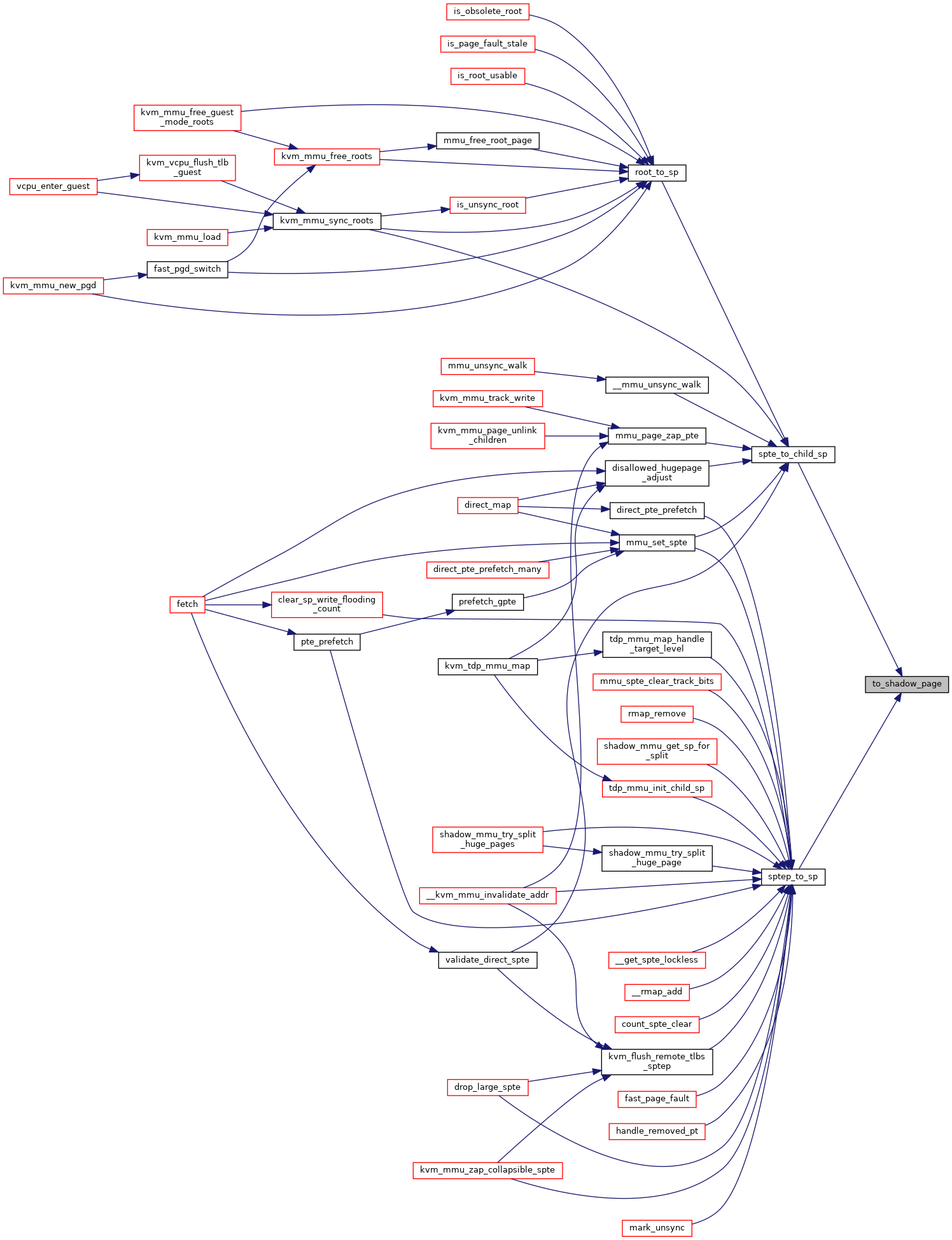

◆ to_shadow_page()

static inline struct kvm_mmu_page *to_shadow_page(hpa_t shadow_page)

Definition at line 223 of file spte.h.

Definition: mmu_internal.h:52


Variable Documentation

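◆ shadow_acc_track_mask

extern u64 __read_mostly shadow_acc_track_mask

◆ shadow_accessed_mask

extern u64 __read_mostly shadow_accessed_mask

◆ shadow_dirty_mask

extern u64 __read_mostly shadow_dirty_mask

◆ shadow_host_writable_mask

extern u64 __read_mostly shadow_host_writable_mask

◆ shadow_me_mask

extern u64 __read_mostly shadow_me_mask

◆ shadow_me_value

extern u64 __read_mostly shadow_me_value

◆ shadow_memtype_mask

extern u64 __read_mostly shadow_memtype_mask

◆ shadow_mmio_access_mask

extern u64 __read_mostly shadow_mmio_access_mask

◆ shadow_mmio_mask

extern u64 __read_mostly shadow_mmio_mask

◆ shadow_mmio_value

extern u64 __read_mostly shadow_mmio_value

◆ shadow_mmu_writable_mask

extern u64 __read_mostly shadow_mmu_writable_mask

◆ shadow_nonpresent_or_rsvd_lower_gfn_mask

extern u64 __read_mostly shadow_nonpresent_or_rsvd_lower_gfn_mask

◆ shadow_nonpresent_or_rsvd_mask

extern u64 __read_mostly shadow_nonpresent_or_rsvd_mask

◆ shadow_nx_mask

extern u64 __read_mostly shadow_nx_mask

◆ shadow_present_mask

extern u64 __read_mostly shadow_present_mask

◆ shadow_user_mask

extern u64 __read_mostly shadow_user_mask

◆ shadow_x_mask

extern u64 __read_mostly shadow_x_mask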