#include <linux/kvm_host.h>
#include "mmu.h"
#include "mmu_internal.h"
#include "x86.h"
#include "spte.h"
#include <asm/e820/api.h>
#include <asm/memtype.h>
#include <asm/vmx.h>

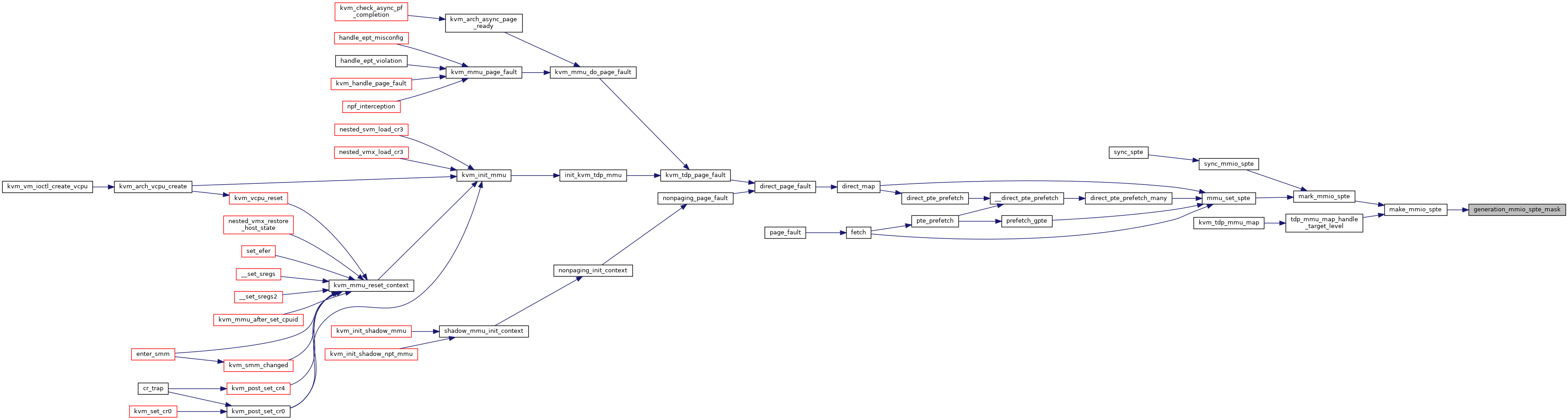

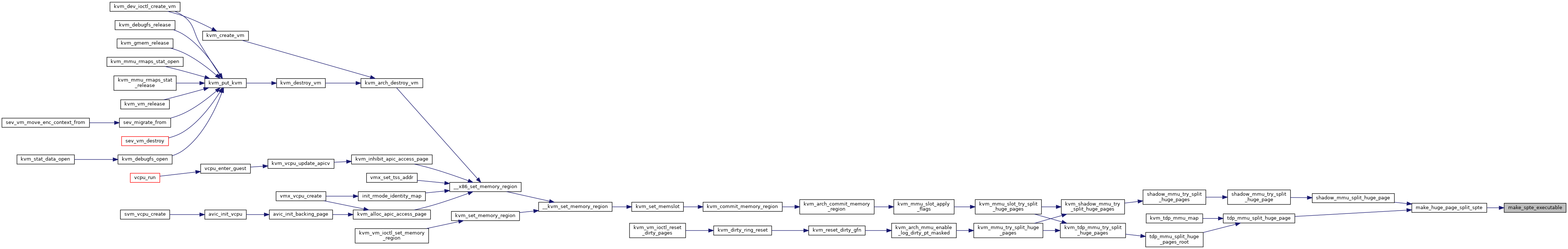

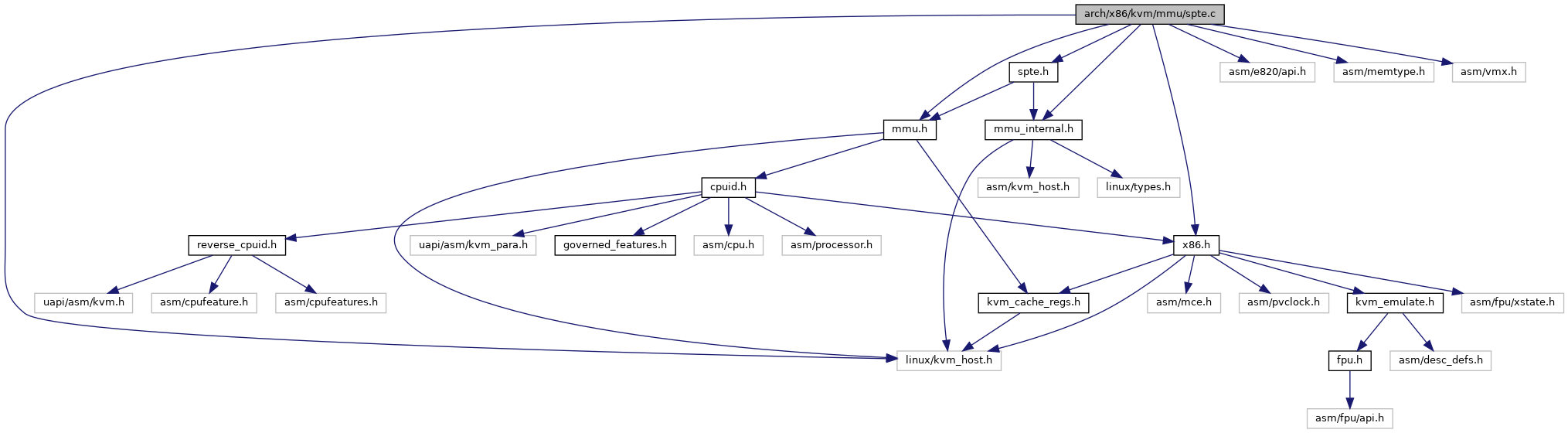

Include dependency graph for spte.c:

Macros

| Type | Name |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

Functions

| Type | Name |
| --- | --- |
| | module_param_named (mmio_caching, enable_mmio_caching, bool, 0444) |
| | EXPORT_SYMBOL_GPL (enable_mmio_caching) |
| void __init | kvm_mmu_spte_module_init (void) |
| static u64 | generation_mmio_spte_mask (u64 gen) |
| u64 | make_mmio_spte (struct kvm_vcpu *vcpu, u64 gfn, unsigned int access) |
| static bool | kvm_is_mmio_pfn (kvm_pfn_t pfn) |
| bool | spte_has_volatile_bits (u64 spte) |
| bool | make_spte (struct kvm_vcpu *vcpu, struct kvm_mmu_page *sp, const struct kvm_memory_slot *slot, unsigned int pte_access, gfn_t gfn, kvm_pfn_t pfn, u64 old_spte, bool prefetch, bool can_unsync, bool host_writable, u64 *new_spte) |
| static u64 | make_spte_executable (u64 spte) |
| u64 | make_huge_page_split_spte (struct kvm *kvm, u64 huge_spte, union kvm_mmu_page_role role, int index) |
| u64 | make_nonleaf_spte (u64 *child_pt, bool ad_disabled) |
| u64 | kvm_mmu_changed_pte_notifier_make_spte (u64 old_spte, kvm_pfn_t new_pfn) |
| u64 | mark_spte_for_access_track (u64 spte) |
| void | kvm_mmu_set_mmio_spte_mask (u64 mmio_value, u64 mmio_mask, u64 access_mask) |
| | EXPORT_SYMBOL_GPL (kvm_mmu_set_mmio_spte_mask) |
| void | kvm_mmu_set_me_spte_mask (u64 me_value, u64 me_mask) |
| | EXPORT_SYMBOL_GPL (kvm_mmu_set_me_spte_mask) |
| void | kvm_mmu_set_ept_masks (bool has_ad_bits, bool has_exec_only) |
| | EXPORT_SYMBOL_GPL (kvm_mmu_set_ept_masks) |
| void | kvm_mmu_reset_all_pte_masks (void) |

Macro Definition Documentation

◆ pr_fmt
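
The macro summary lists this macro as `pr_fmt(fmt) KBUILD_MODNAME ": " fmt`. A minimal userspace sketch of how such a prefix works: adjacent string literals are concatenated at compile time, so any format string passed through `pr_fmt` gains the module-name prefix. `KBUILD_MODNAME` is normally supplied by the kernel build system; the value `"kvm"` and the helper `sketch_format` below are assumptions made only for this illustration and are not part of spte.c.

```c
#include <stdio.h>

/* Assumption: in a real kernel build, Kbuild defines KBUILD_MODNAME. */
#ifndef KBUILD_MODNAME
#define KBUILD_MODNAME "kvm"
#endif

/* Same shape as the macro documented here. */
#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

/* Hypothetical stand-in for a pr_*() helper: formats into a buffer. */
#define sketch_format(buf, size, fmt, ...) \
	snprintf((buf), (size), pr_fmt(fmt), __VA_ARGS__)
```

Because the prefix is applied by the preprocessor, it costs nothing at run time and applies uniformly to every message that goes through the macro.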

Function Documentation

◆ EXPORT_SYMBOL_GPL() [1/4]

EXPORT_SYMBOL_GPL(enable_mmio_caching)

◆ EXPORT_SYMBOL_GPL() [2/4]

EXPORT_SYMBOL_GPL(kvm_mmu_set_ept_masks)

◆ EXPORT_SYMBOL_GPL() [3/4]

EXPORT_SYMBOL_GPL(kvm_mmu_set_me_spte_mask)

◆ EXPORT_SYMBOL_GPL() [4/4]

EXPORT_SYMBOL_GPL(kvm_mmu_set_mmio_spte_mask)

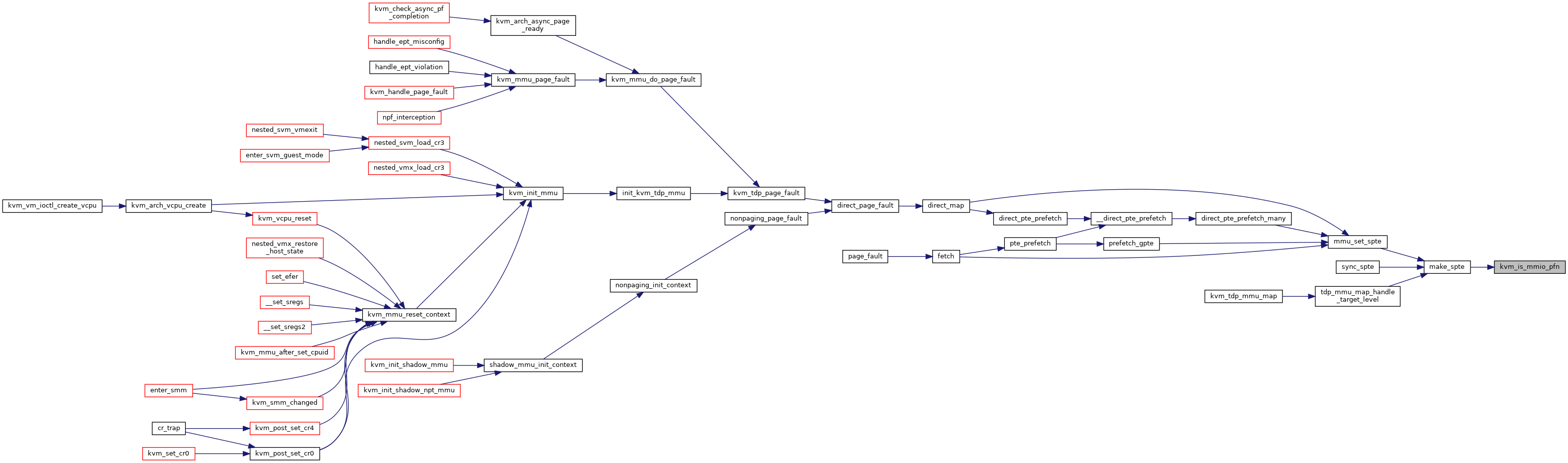

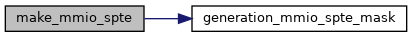

◆ generation_mmio_spte_mask()

static u64 generation_mmio_spte_mask(u64 gen)
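
The name and signature of `generation_mmio_spte_mask()` suggest that it turns a generation number into the bits stored in an MMIO SPTE. A minimal sketch of one way to do that, assuming the generation is split across two non-contiguous bit ranges: the bit positions and all `sketch_` names below are illustrative assumptions, not values taken from this page, and the authoritative layout is whatever spte.h defines.

```c
#include <stdint.h>

/*
 * Assumed bit layout, for illustration only. The real ranges are defined
 * in spte.h and may differ between kernel versions.
 */
#define SKETCH_GEN_LOW_START   3   /* low chunk occupies bits 3..10   */
#define SKETCH_GEN_LOW_BITS    8
#define SKETCH_GEN_HIGH_START  52  /* high chunk occupies bits 52..62 */
#define SKETCH_GEN_HIGH_BITS   11

#define SKETCH_LOW_FIELD   ((UINT64_C(1) << SKETCH_GEN_LOW_BITS) - 1)
#define SKETCH_HIGH_FIELD  ((UINT64_C(1) << SKETCH_GEN_HIGH_BITS) - 1)

/* Scatter a generation number into the two SPTE bit ranges. */
uint64_t sketch_gen_to_spte_bits(uint64_t gen)
{
	uint64_t low  = gen & SKETCH_LOW_FIELD;
	uint64_t high = (gen >> SKETCH_GEN_LOW_BITS) & SKETCH_HIGH_FIELD;

	return (low << SKETCH_GEN_LOW_START) | (high << SKETCH_GEN_HIGH_START);
}

/* Gather the generation number back out of an SPTE. */
uint64_t sketch_spte_bits_to_gen(uint64_t spte)
{
	uint64_t low  = (spte >> SKETCH_GEN_LOW_START) & SKETCH_LOW_FIELD;
	uint64_t high = (spte >> SKETCH_GEN_HIGH_START) & SKETCH_HIGH_FIELD;

	return low | (high << SKETCH_GEN_LOW_BITS);
}
```

Splitting the value this way leaves the bits in between free for other uses; the round trip through both helpers returns the original generation as long as it fits in the combined width.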

◆ kvm_is_mmio_pfn()

|

static |

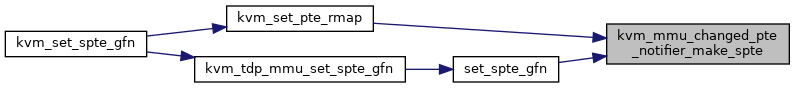

◆ kvm_mmu_changed_pte_notifier_make_spte()

| u64 kvm_mmu_changed_pte_notifier_make_spte | ( | u64 | old_spte, |

| kvm_pfn_t | new_pfn | ||

| ) |

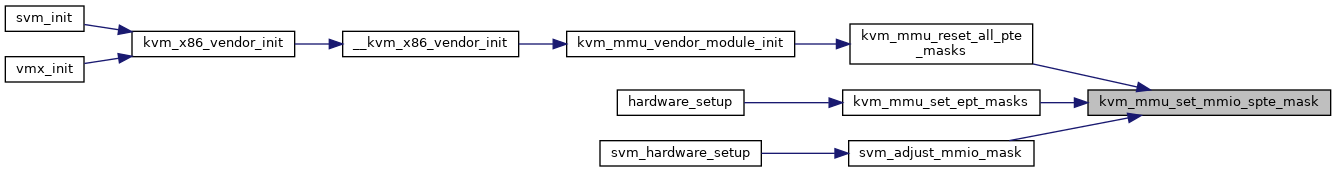

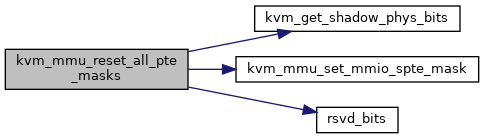

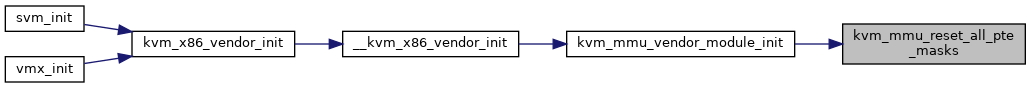

◆ kvm_mmu_reset_all_pte_masks()

void kvm_mmu_reset_all_pte_masks(void)

Definition at line 453 of file spte.c.

Referenced definitions:

void kvm_mmu_set_mmio_spte_mask(u64 mmio_value, u64 mmio_mask, u64 access_mask) (defined at spte.c:362)

u64 __read_mostly shadow_nonpresent_or_rsvd_lower_gfn_mask (defined at spte.c:44)

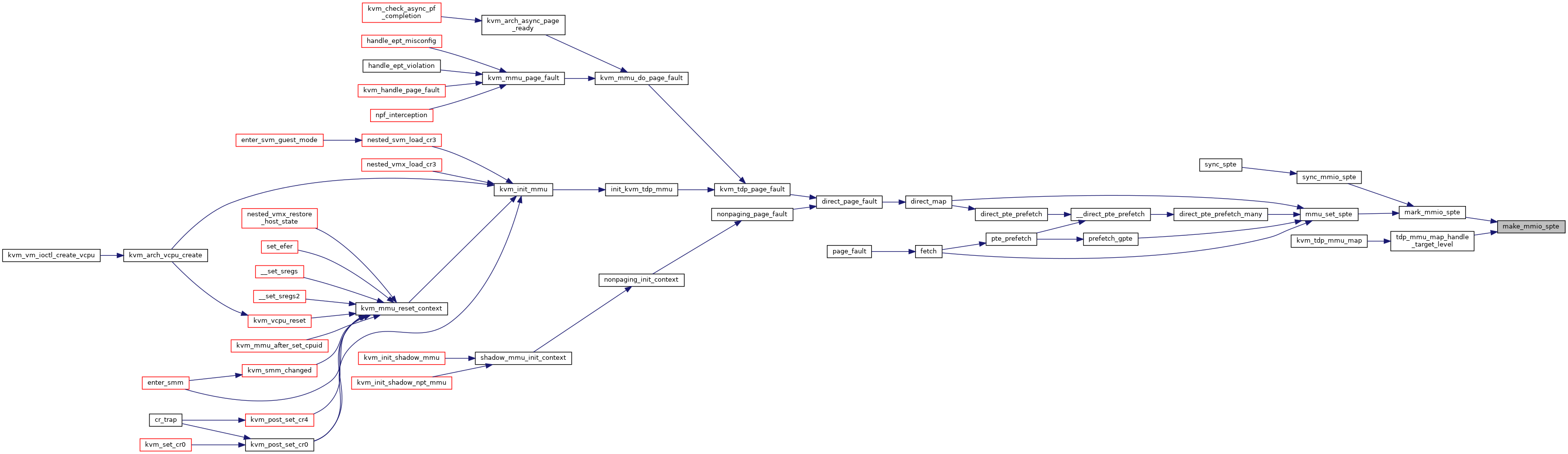

Here is the call graph for this function:

Here is the caller graph for this function:

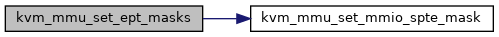

◆ kvm_mmu_set_ept_masks()

| void kvm_mmu_set_ept_masks | ( | bool | has_ad_bits, |

| bool | has_exec_only | ||

| ) |

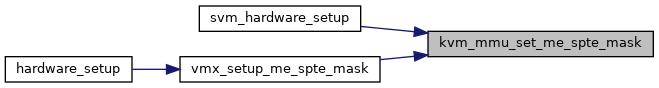

◆ kvm_mmu_set_me_spte_mask()

void kvm_mmu_set_me_spte_mask(u64 me_value, u64 me_mask)

◆ kvm_mmu_set_mmio_spte_mask()

void kvm_mmu_set_mmio_spte_mask(u64 mmio_value, u64 mmio_mask, u64 access_mask)
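
The `mmio_value` and `mmio_mask` parameters form a value/mask pair. A minimal sketch of how such a pair is typically consumed, assuming an SPTE counts as MMIO when its masked bits equal the configured value: the struct, the helper name, and the numbers in the usage note are hypothetical and chosen only for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical holder for the two values passed to the setter. */
struct sketch_mmio_cfg {
	uint64_t mmio_value;
	uint64_t mmio_mask;
};

/* An SPTE is treated as MMIO when its masked bits equal the value. */
bool sketch_is_mmio_spte(const struct sketch_mmio_cfg *cfg, uint64_t spte)
{
	return (spte & cfg->mmio_mask) == cfg->mmio_value;
}
```

With an arbitrary mask of `0x7` and value of `0x6`, an entry ending in `...6` matches while one ending in `...7` does not; the remaining bits stay available for other data.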

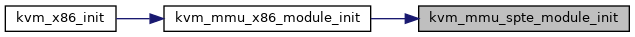

◆ kvm_mmu_spte_module_init()

| void __init kvm_mmu_spte_module_init | ( | void | ) |

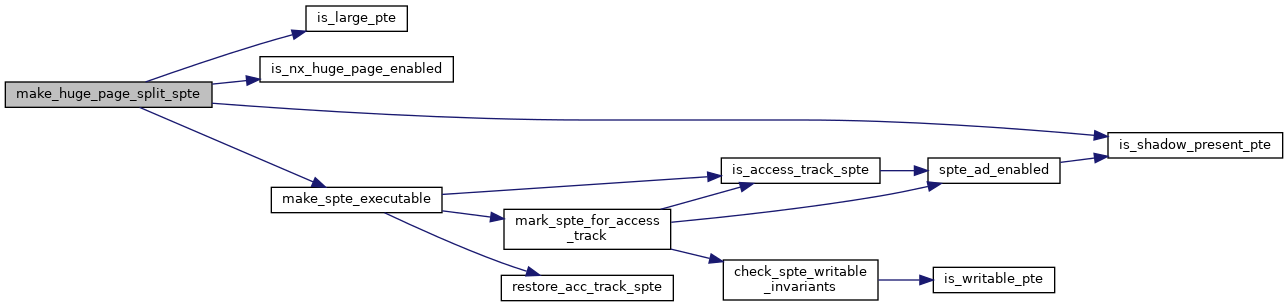

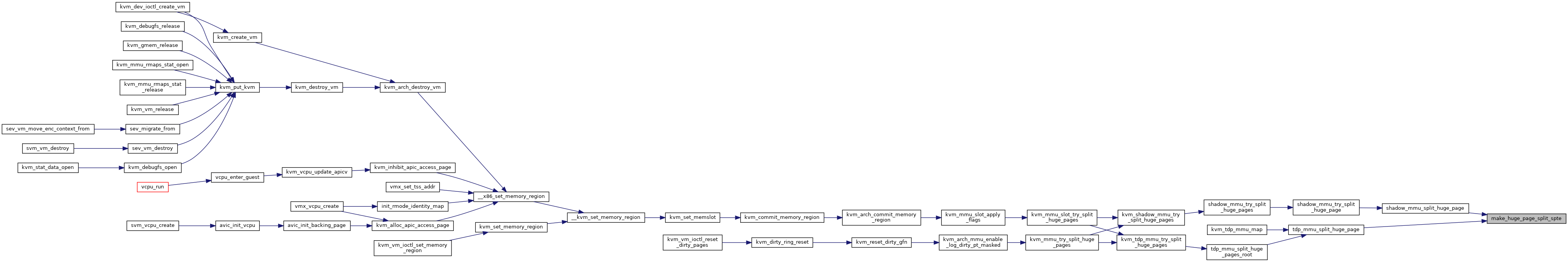

◆ make_huge_page_split_spte()

u64 make_huge_page_split_spte(struct kvm *kvm, u64 huge_spte, union kvm_mmu_page_role role, int index)

Definition at line 274 of file spte.c.

Referenced definitions:

static bool is_nx_huge_page_enabled(struct kvm *kvm) (defined at mmu_internal.h:185)
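
One step in splitting a huge mapping is deriving each child entry's address from the parent's base address and the child `index`. A minimal sketch of that arithmetic, assuming 4 KiB base pages and 512 entries per table (the usual x86-64 layout): the `sketch_` names and constants are assumptions for illustration, and this is not the kernel's implementation.

```c
#include <stdint.h>

#define SKETCH_PAGE_SHIFT   12  /* assumption: 4 KiB base pages      */
#define SKETCH_LEVEL_BITS   9   /* assumption: 512 entries per table */

/* Number of 4 KiB pages mapped by one entry at `level` (1 = 4 KiB). */
uint64_t sketch_pages_per_entry(int level)
{
	return UINT64_C(1) << ((level - 1) * SKETCH_LEVEL_BITS);
}

/*
 * Address for child number `index` when a huge mapping is split into
 * entries at `child_level`. The base address is assumed to be aligned to
 * the huge page size, so OR-ing in the offset is equivalent to adding it.
 */
uint64_t sketch_child_addr(uint64_t huge_base, int child_level, int index)
{
	uint64_t offset = ((uint64_t)index * sketch_pages_per_entry(child_level))
			  << SKETCH_PAGE_SHIFT;

	return huge_base | offset;
}
```

For example, splitting a 2 MiB mapping at `0x200000` into 4 KiB children puts child 511 at `0x3FF000`, and splitting a 1 GiB mapping at `0x40000000` into 2 MiB children puts child 1 at `0x40200000`.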

◆ make_mmio_spte()

| u64 make_mmio_spte | ( | struct kvm_vcpu * | vcpu, |

| u64 | gfn, | ||

| unsigned int | access | ||

| ) |

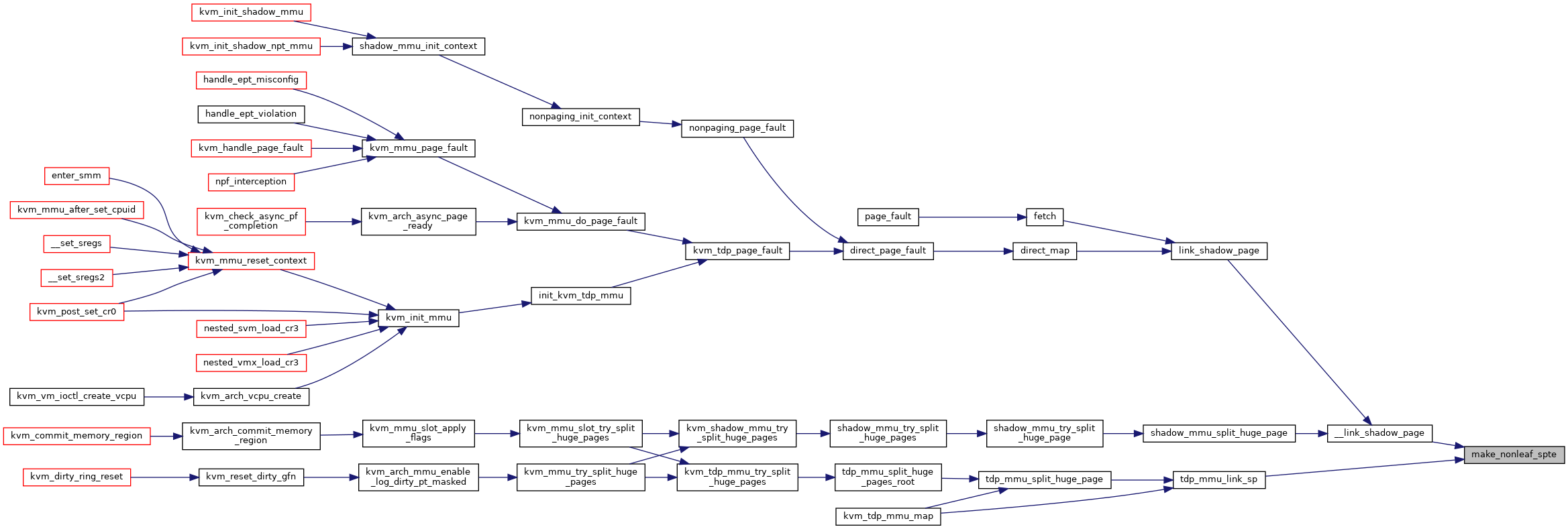

◆ make_nonleaf_spte()

u64 make_nonleaf_spte(u64 *child_pt, bool ad_disabled)

◆ make_spte()

bool make_spte(struct kvm_vcpu *vcpu, struct kvm_mmu_page *sp, const struct kvm_memory_slot *slot, unsigned int pte_access, gfn_t gfn, kvm_pfn_t pfn, u64 old_spte, bool prefetch, bool can_unsync, bool host_writable, u64 *new_spte)

Definition at line 137 of file spte.c.

Referenced definitions:

void mark_page_dirty_in_slot(struct kvm *kvm, const struct kvm_memory_slot *memslot, gfn_t gfn) (defined at kvm_main.c:3635)

int mmu_try_to_unsync_pages(struct kvm *kvm, const struct kvm_memory_slot *slot, gfn_t gfn, bool can_unsync, bool prefetch) (defined at mmu.c:2805)

static bool kvm_mmu_page_ad_need_write_protect(struct kvm_mmu_page *sp) (defined at mmu_internal.h:148)

static __always_inline bool is_rsvd_spte(struct rsvd_bits_validate *rsvd_check, u64 spte, int level) (defined at spte.h:368)

static u64 get_rsvd_bits(struct rsvd_bits_validate *rsvd_check, u64 pte, int level) (defined at spte.h:348)

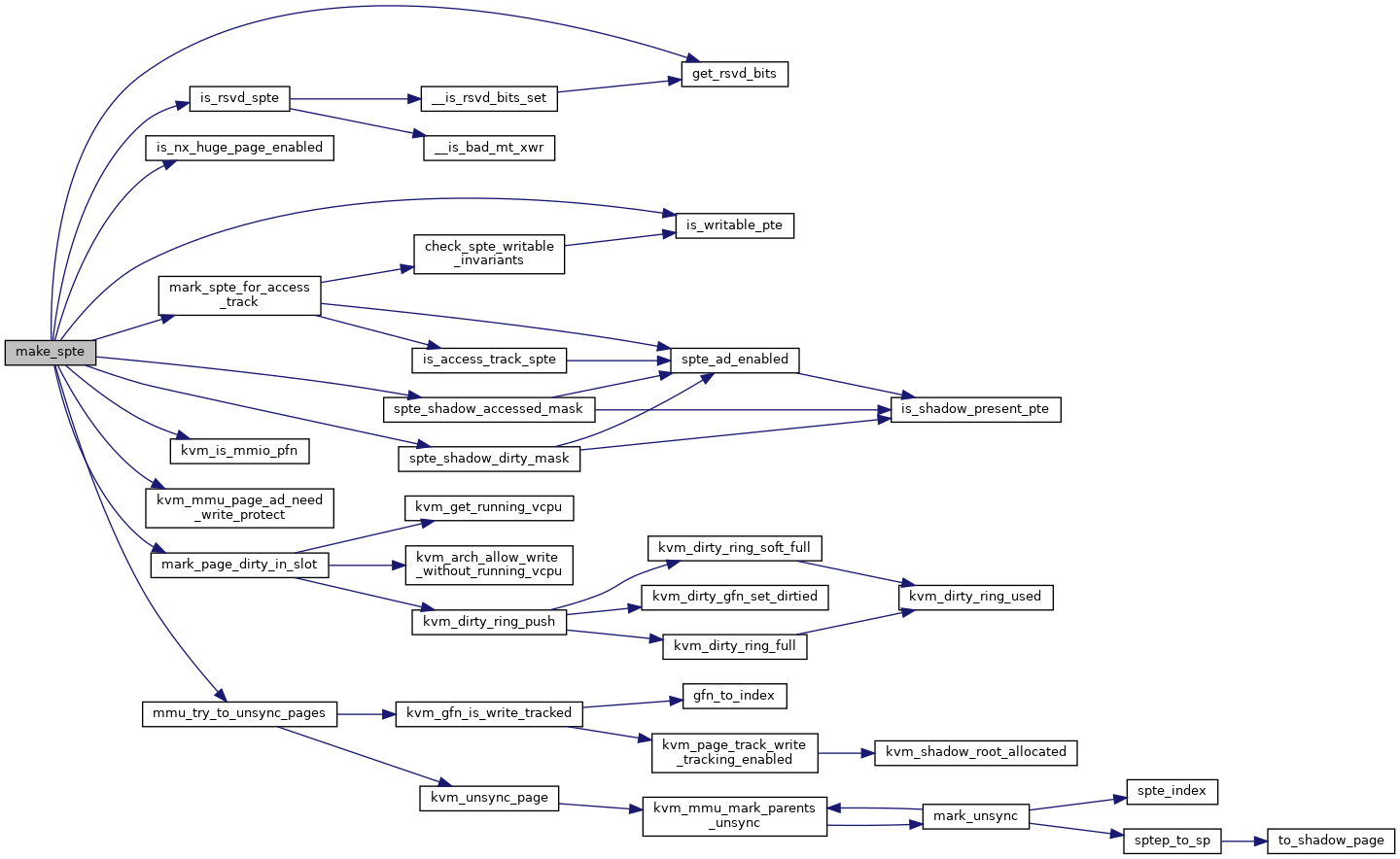

Here is the call graph for this function:

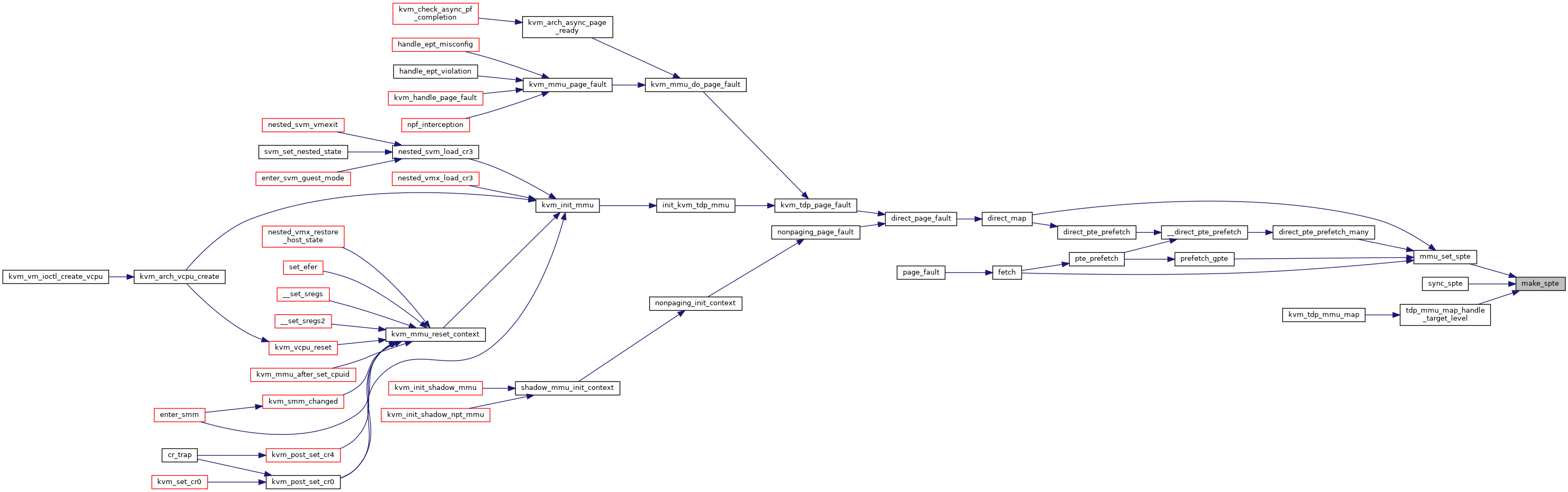

Here is the caller graph for this function:

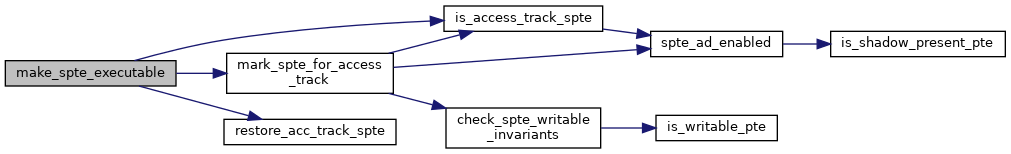

◆ make_spte_executable()

|

static |

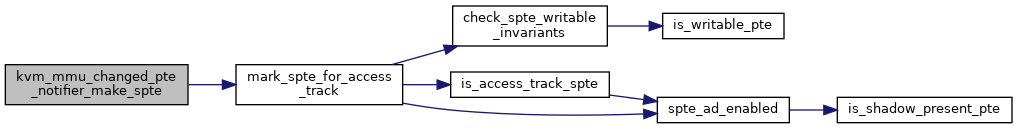

◆ mark_spte_for_access_track()

u64 mark_spte_for_access_track(u64 spte)

Definition at line 341 of file spte.c.

Referenced definitions:

static void check_spte_writable_invariants(u64 spte) (defined at spte.h:447)

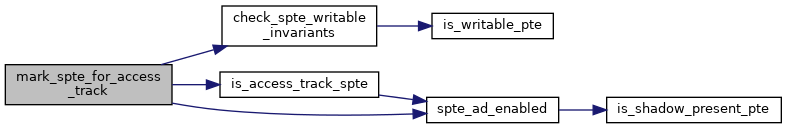

Here is the call graph for this function:

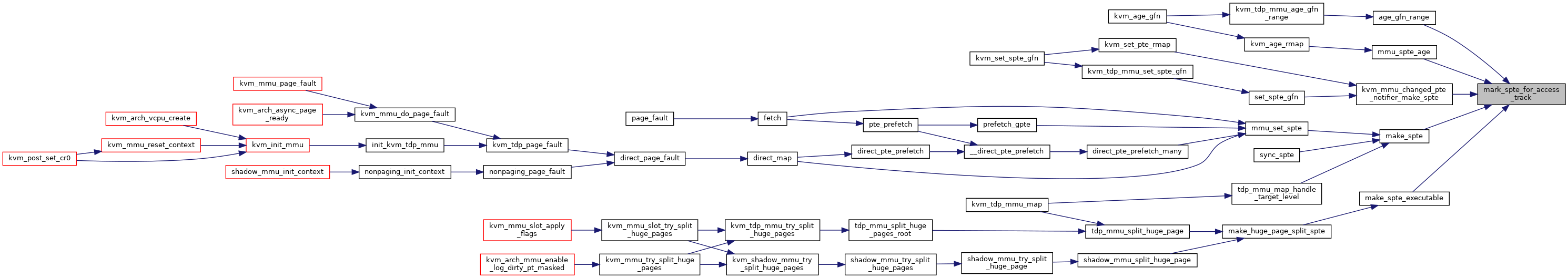

Here is the caller graph for this function:

◆ module_param_named()

| module_param_named | ( | mmio_caching | , |

| enable_mmio_caching | , | ||

| bool | , | ||

| 0444 | |||

| ) |

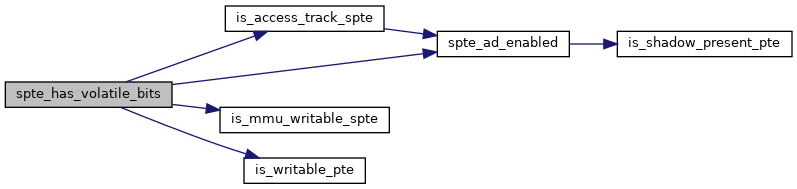

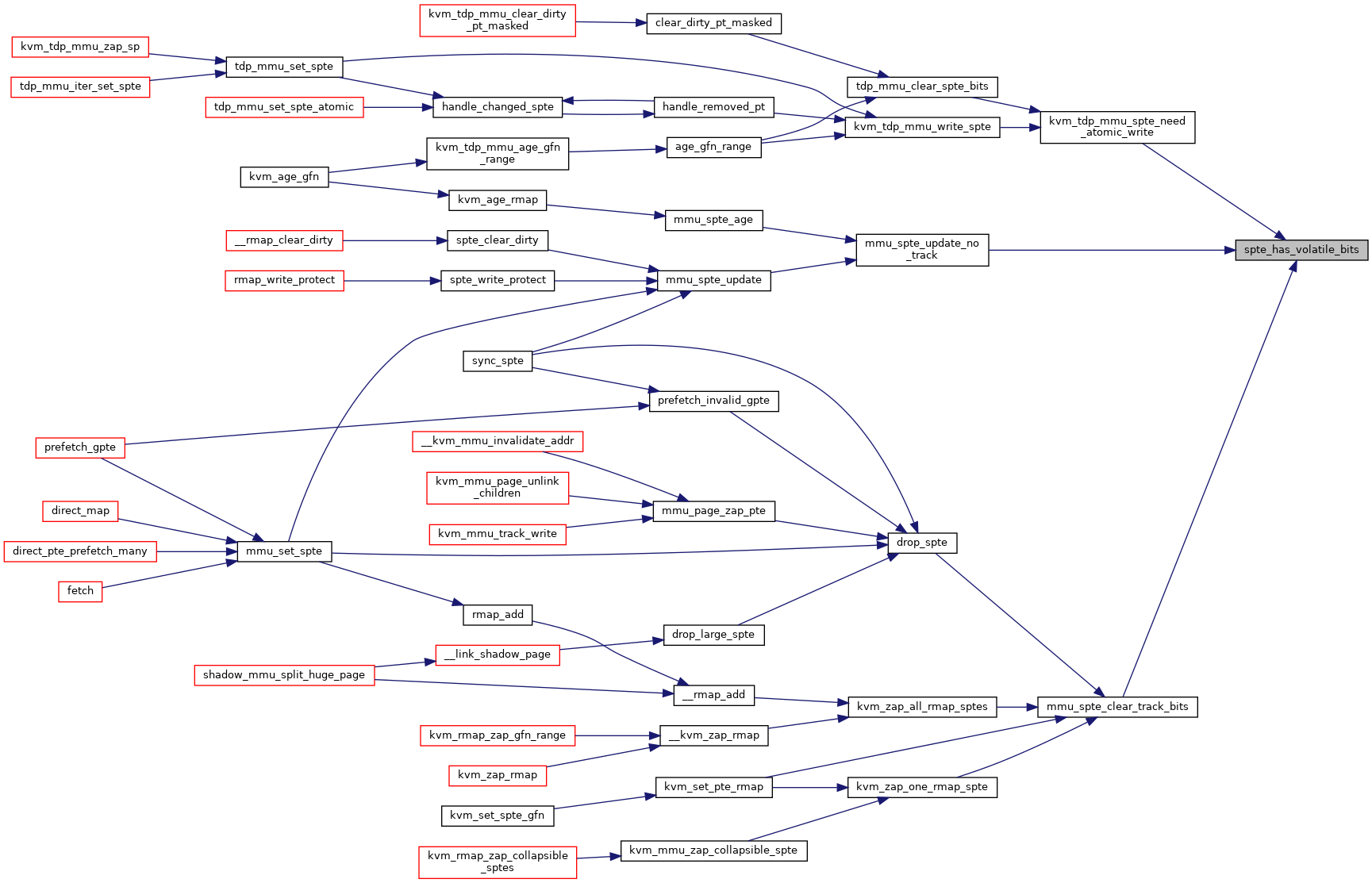

◆ spte_has_volatile_bits()

bool spte_has_volatile_bits(u64 spte)

Variable Documentation

◆ allow_mmio_caching

static

◆ enable_mmio_caching

bool __read_mostly enable_mmio_caching = true

◆ shadow_acc_track_mask

u64 __read_mostly shadow_acc_track_mask

◆ shadow_accessed_mask

u64 __read_mostly shadow_accessed_mask

◆ shadow_dirty_mask

u64 __read_mostly shadow_dirty_mask

◆ shadow_host_writable_mask

u64 __read_mostly shadow_host_writable_mask

◆ shadow_me_mask

u64 __read_mostly shadow_me_mask

◆ shadow_me_value

u64 __read_mostly shadow_me_value

◆ shadow_memtype_mask

u64 __read_mostly shadow_memtype_mask

◆ shadow_mmio_access_mask

u64 __read_mostly shadow_mmio_access_mask

◆ shadow_mmio_mask

u64 __read_mostly shadow_mmio_mask

◆ shadow_mmio_value

u64 __read_mostly shadow_mmio_value

◆ shadow_mmu_writable_mask

u64 __read_mostly shadow_mmu_writable_mask

◆ shadow_nonpresent_or_rsvd_lower_gfn_mask

u64 __read_mostly shadow_nonpresent_or_rsvd_lower_gfn_mask

◆ shadow_nonpresent_or_rsvd_mask

u64 __read_mostly shadow_nonpresent_or_rsvd_mask

◆ shadow_nx_mask

u64 __read_mostly shadow_nx_mask

◆ shadow_phys_bits

u8 __read_mostly shadow_phys_bits

◆ shadow_present_mask

u64 __read_mostly shadow_present_mask

◆ shadow_user_mask

u64 __read_mostly shadow_user_mask

◆ shadow_x_mask

u64 __read_mostly shadow_x_mask