#include <linux/kvm_host.h>

#include "x86.h"

#include "kvm_cache_regs.h"

#include "kvm_emulate.h"

#include "smm.h"

#include "cpuid.h"

#include "trace.h"

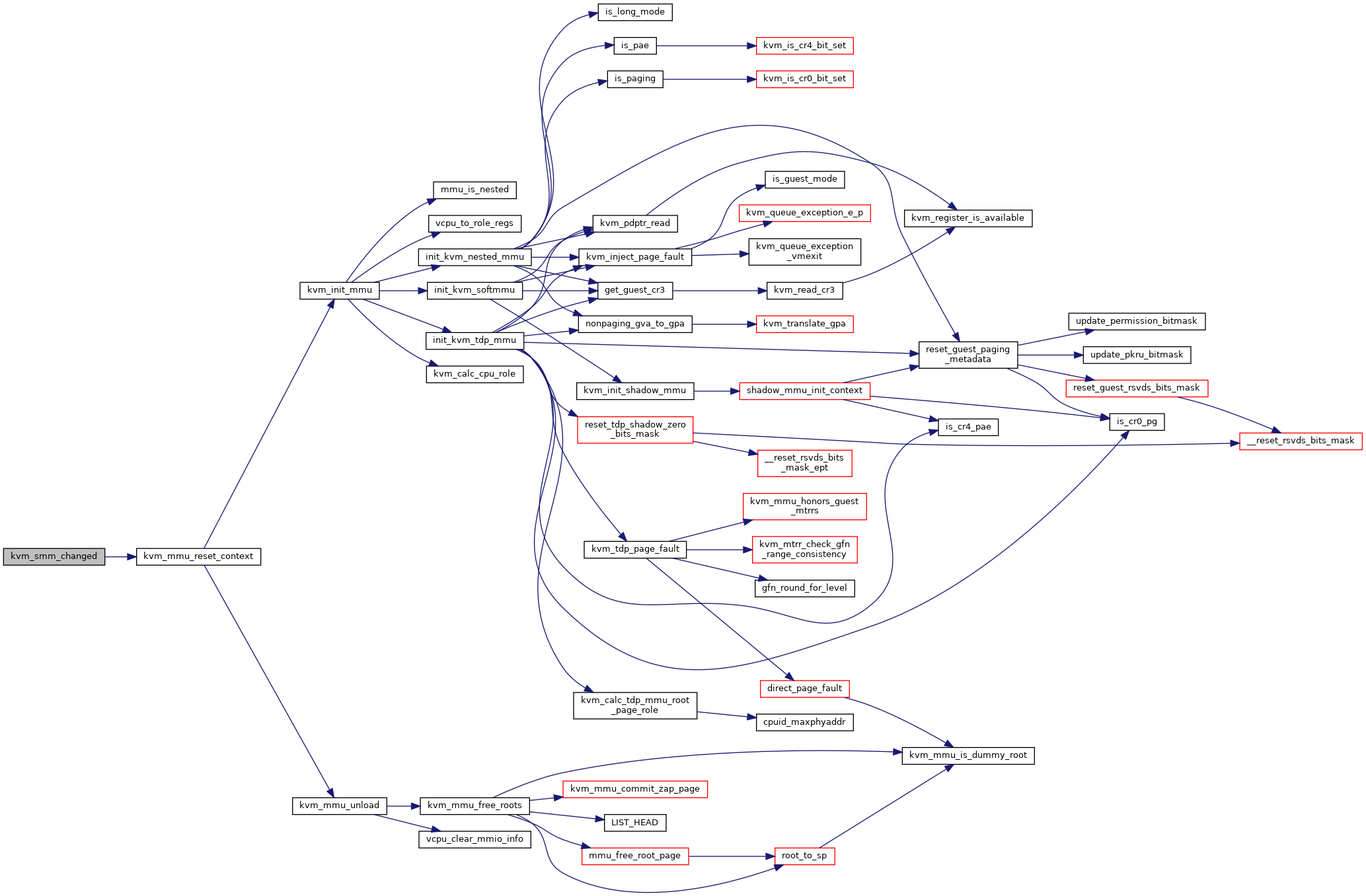

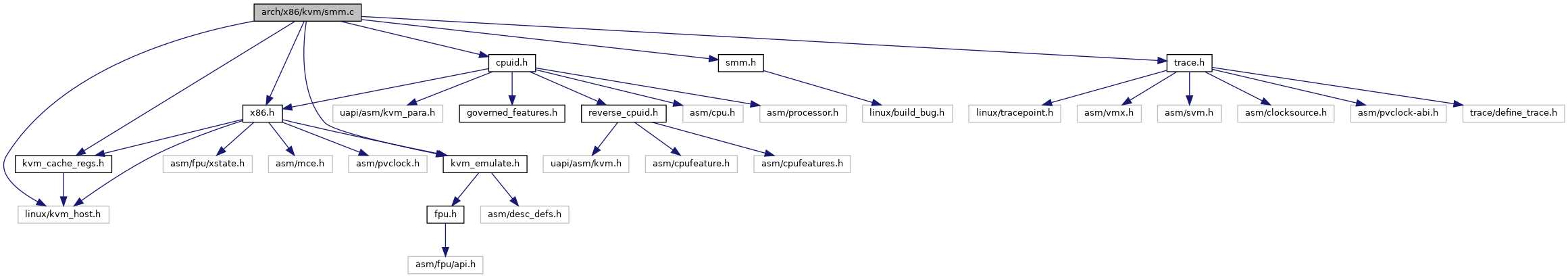

Include dependency graph for smm.c:


| Macros | |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |
| #define | CHECK_SMRAM32_OFFSET(field, offset) ASSERT_STRUCT_OFFSET(struct kvm_smram_state_32, field, offset - 0xFE00) |
| #define | CHECK_SMRAM64_OFFSET(field, offset) ASSERT_STRUCT_OFFSET(struct kvm_smram_state_64, field, offset - 0xFE00) |

| Functions | |
| --- | --- |
| static void | check_smram_offsets(void) |
| void | kvm_smm_changed(struct kvm_vcpu *vcpu, bool entering_smm) |
| void | process_smi(struct kvm_vcpu *vcpu) |
| static u32 | enter_smm_get_segment_flags(struct kvm_segment *seg) |
| static void | enter_smm_save_seg_32(struct kvm_vcpu *vcpu, struct kvm_smm_seg_state_32 *state, u32 *selector, int n) |
| static void | enter_smm_save_state_32(struct kvm_vcpu *vcpu, struct kvm_smram_state_32 *smram) |
| void | enter_smm(struct kvm_vcpu *vcpu) |
| static void | rsm_set_desc_flags(struct kvm_segment *desc, u32 flags) |
| static int | rsm_load_seg_32(struct kvm_vcpu *vcpu, const struct kvm_smm_seg_state_32 *state, u16 selector, int n) |
| static int | rsm_enter_protected_mode(struct kvm_vcpu *vcpu, u64 cr0, u64 cr3, u64 cr4) |
| static int | rsm_load_state_32(struct x86_emulate_ctxt *ctxt, const struct kvm_smram_state_32 *smstate) |
| int | emulator_leave_smm(struct x86_emulate_ctxt *ctxt) |

Macro Definition Documentation

◆ CHECK_SMRAM32_OFFSET

#define CHECK_SMRAM32_OFFSET(field, offset) ASSERT_STRUCT_OFFSET(struct kvm_smram_state_32, field, offset - 0xFE00)

◆ CHECK_SMRAM64_OFFSET

#define CHECK_SMRAM64_OFFSET(field, offset) ASSERT_STRUCT_OFFSET(struct kvm_smram_state_64, field, offset - 0xFE00)
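Both CHECK_SMRAM macros subtract 0xFE00 from the given offset before comparing it with the position of the field inside the state structure, which suggests the structures describe a region starting at offset 0xFE00. The following is a minimal, self-contained sketch of that pattern in standard C11. It is not the kernel's code: `DEMO_ASSERT_STRUCT_OFFSET`, `struct demo_smram_state`, its fields, and `demo_offset_of_b()` are all hypothetical names introduced for illustration, and the real `ASSERT_STRUCT_OFFSET` may be implemented differently.

```c
#include <assert.h>   /* static_assert (C11) */
#include <stddef.h>   /* offsetof, size_t */
#include <stdint.h>   /* uint32_t */

/*
 * Hypothetical stand-in for ASSERT_STRUCT_OFFSET: fail the build if a
 * field does not sit at the expected byte offset inside a struct.
 */
#define DEMO_ASSERT_STRUCT_OFFSET(type, field, expected)          \
	static_assert(offsetof(type, field) == (expected),        \
		      "offset of " #field " in " #type " is wrong")

/* Assumed base offset of the save area, taken from the macros above. */
#define DEMO_SMRAM_BASE 0xFE00

/*
 * Hypothetical, heavily simplified layout. The field names and
 * positions are invented and do not match kvm_smram_state_32/64.
 */
struct demo_smram_state {
	uint32_t reserved[2];  /* area offsets 0xFE00..0xFE07 */
	uint32_t demo_a;       /* area offset  0xFE08 */
	uint32_t demo_b;       /* area offset  0xFE0C */
};

/* Same shape as CHECK_SMRAM32_OFFSET: area offset minus the base. */
#define DEMO_CHECK_SMRAM_OFFSET(field, offset)                    \
	DEMO_ASSERT_STRUCT_OFFSET(struct demo_smram_state, field, \
				  (offset) - DEMO_SMRAM_BASE)

/* These checks are evaluated entirely at compile time. */
DEMO_CHECK_SMRAM_OFFSET(demo_a, 0xFE08);
DEMO_CHECK_SMRAM_OFFSET(demo_b, 0xFE0C);

/* Runtime helper so the computed offset can also be inspected. */
static size_t demo_offset_of_b(void)
{
	return offsetof(struct demo_smram_state, demo_b);
}
```

Because the checks are static assertions, a field that drifts from its documented position (for example after inserting a member above it) would stop the build rather than silently corrupting the saved state layout.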

◆ pr_fmt

#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

Function Documentation

◆ check_smram_offsets()

static void check_smram_offsets(void)

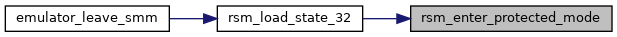

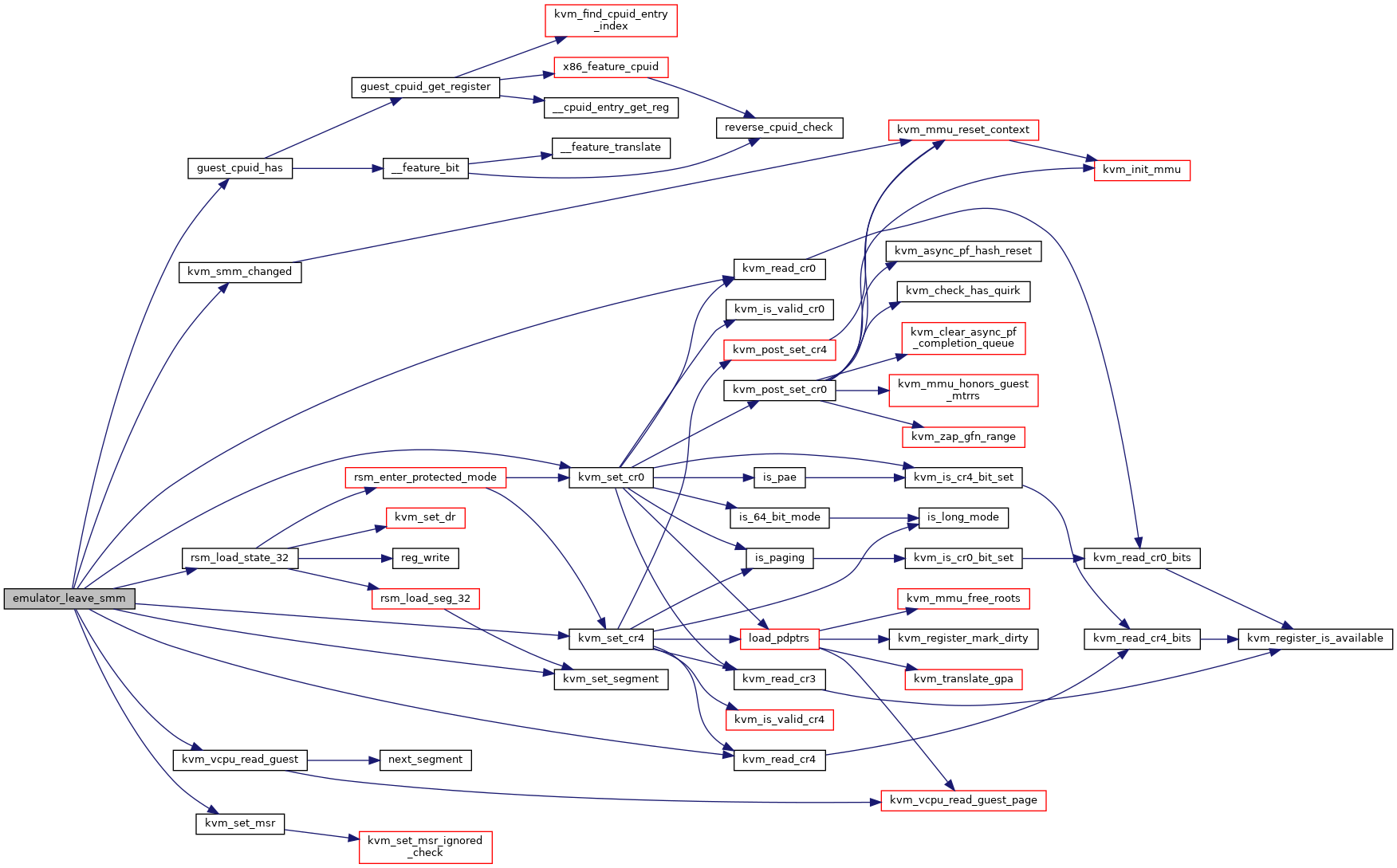

◆ emulator_leave_smm()

int emulator_leave_smm(struct x86_emulate_ctxt *ctxt)

Definition at line 571 of file smm.c.

static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)

Definition: cpuid.h:83

int kvm_vcpu_read_guest(struct kvm_vcpu *vcpu, gpa_t gpa, void *data, unsigned long len)

Definition: kvm_main.c:3366

static int rsm_load_state_32(struct x86_emulate_ctxt *ctxt, const struct kvm_smram_state_32 *smstate)

Definition: smm.c:466

void kvm_set_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition: x86.c:7456

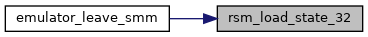

Here is the call graph for this function:

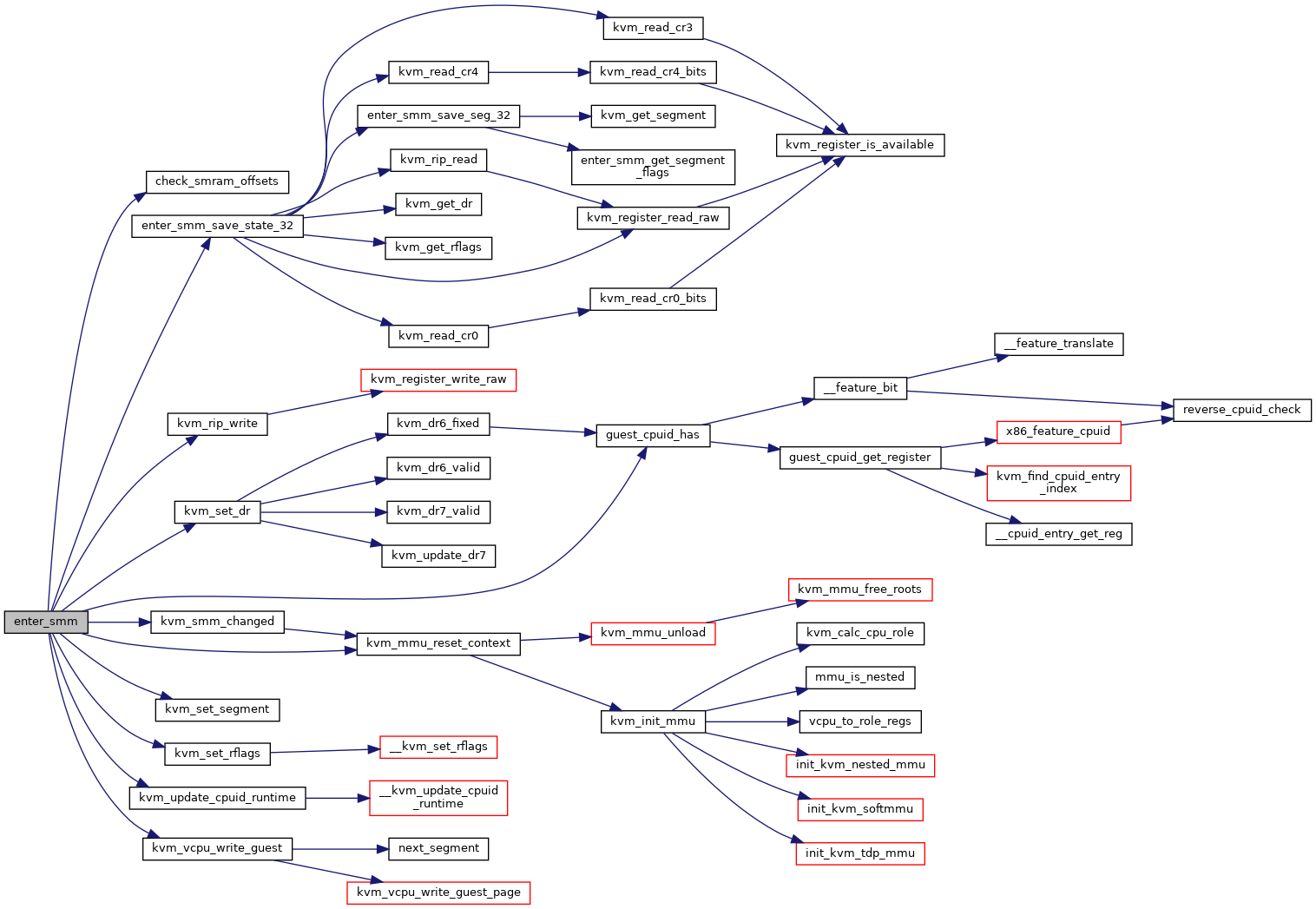

◆ enter_smm()

void enter_smm(struct kvm_vcpu *vcpu)

Definition at line 281 of file smm.c.

static void kvm_rip_write(struct kvm_vcpu *vcpu, unsigned long val)

Definition: kvm_cache_regs.h:121

int kvm_vcpu_write_guest(struct kvm_vcpu *vcpu, gpa_t gpa, const void *data, unsigned long len)

Definition: kvm_main.c:3470

static void enter_smm_save_state_32(struct kvm_vcpu *vcpu, struct kvm_smram_state_32 *smram)

Definition: smm.c:183

void kvm_set_rflags(struct kvm_vcpu *vcpu, unsigned long rflags)

Definition: x86.c:13189

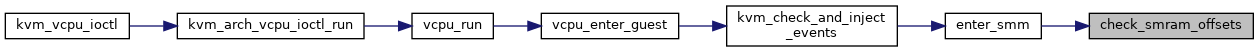

Here is the call graph for this function:

Here is the caller graph for this function:

◆ enter_smm_get_segment_flags()

static u32 enter_smm_get_segment_flags(struct kvm_segment *seg)

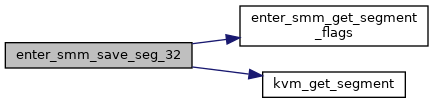

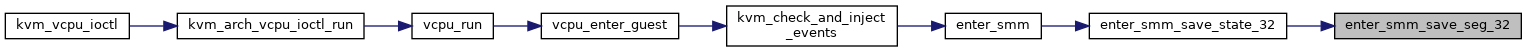

◆ enter_smm_save_seg_32()

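static void enter_smm_save_seg_32(struct kvm_vcpu *vcpu, struct kvm_smm_seg_state_32 *state, u32 *selector, int n)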

Definition at line 155 of file smm.c.

static u32 enter_smm_get_segment_flags(struct kvm_segment *seg)

Definition: smm.c:141

void kvm_get_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition: x86.c:7462

Here is the call graph for this function:

Here is the caller graph for this function:

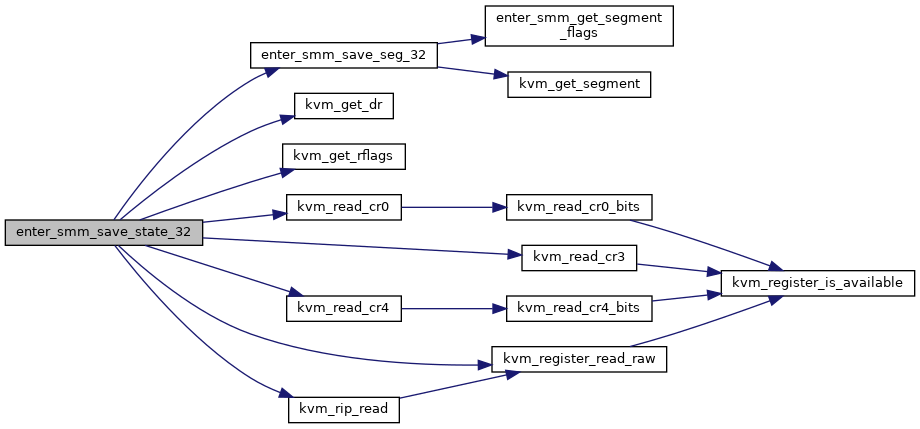

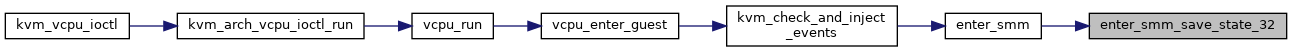

◆ enter_smm_save_state_32()

static void enter_smm_save_state_32(struct kvm_vcpu *vcpu, struct kvm_smram_state_32 *smram)

Definition at line 183 of file smm.c.

static unsigned long kvm_register_read_raw(struct kvm_vcpu *vcpu, int reg)

Definition: kvm_cache_regs.h:95

static unsigned long kvm_rip_read(struct kvm_vcpu *vcpu)

Definition: kvm_cache_regs.h:116

static void enter_smm_save_seg_32(struct kvm_vcpu *vcpu, struct kvm_smm_seg_state_32 *state, u32 *selector, int n)

Definition: smm.c:155

Here is the call graph for this function:

Here is the caller graph for this function:

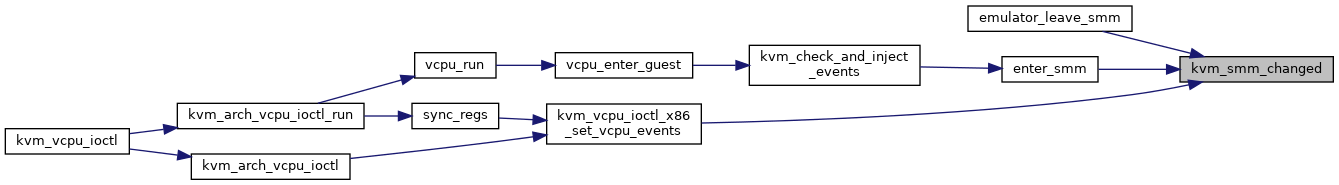

◆ kvm_smm_changed()

void kvm_smm_changed(struct kvm_vcpu *vcpu, bool entering_smm)

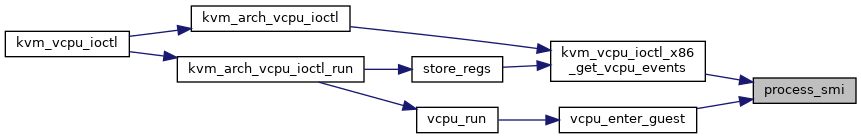

◆ process_smi()

void process_smi(struct kvm_vcpu *vcpu)

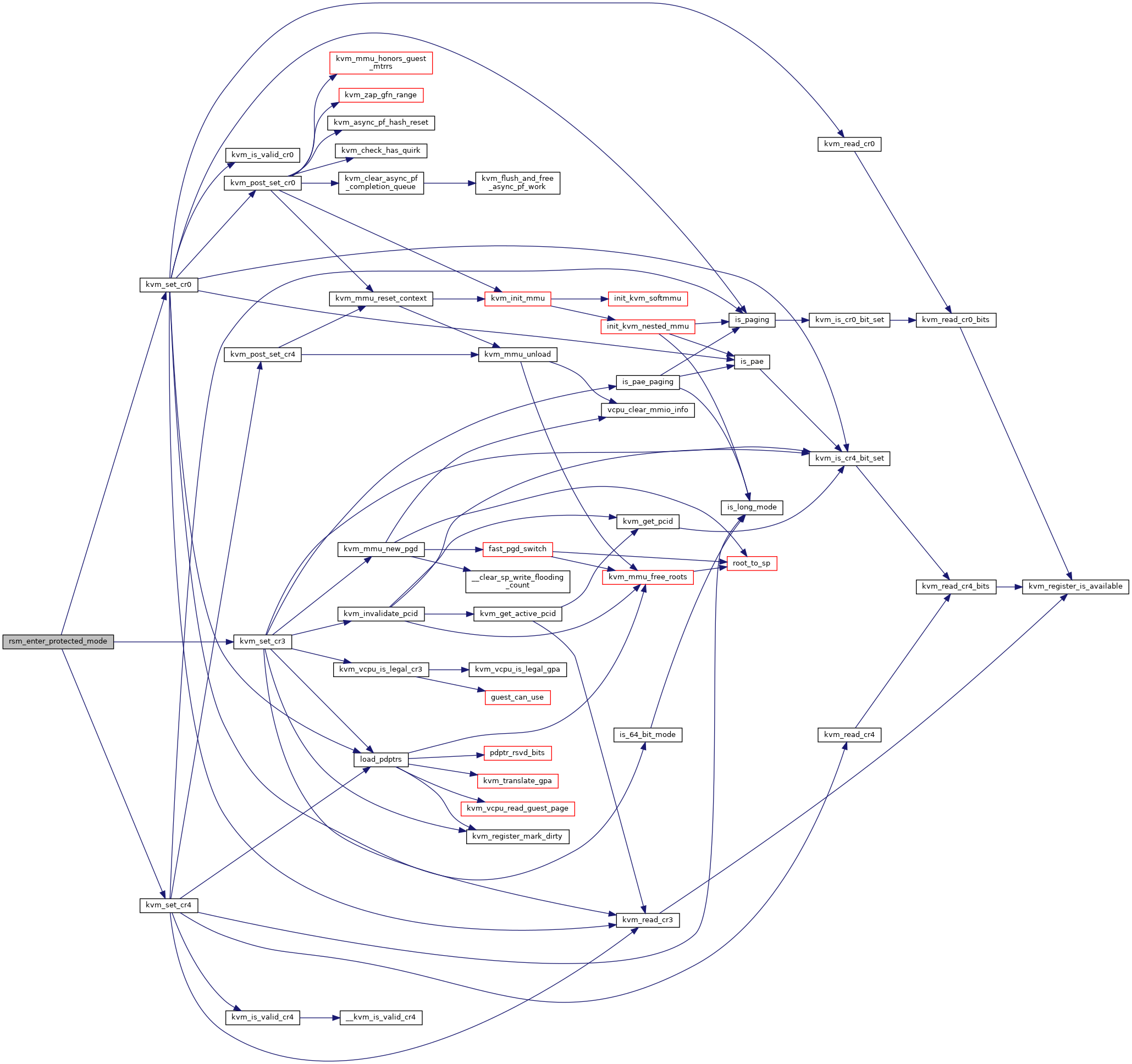

◆ rsm_enter_protected_mode()

static int rsm_enter_protected_mode(struct kvm_vcpu *vcpu, u64 cr0, u64 cr3, u64 cr4)

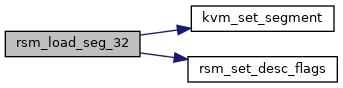

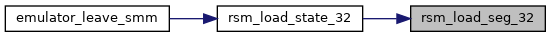

◆ rsm_load_seg_32()

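static int rsm_load_seg_32(struct kvm_vcpu *vcpu, const struct kvm_smm_seg_state_32 *state, u16 selector, int n)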

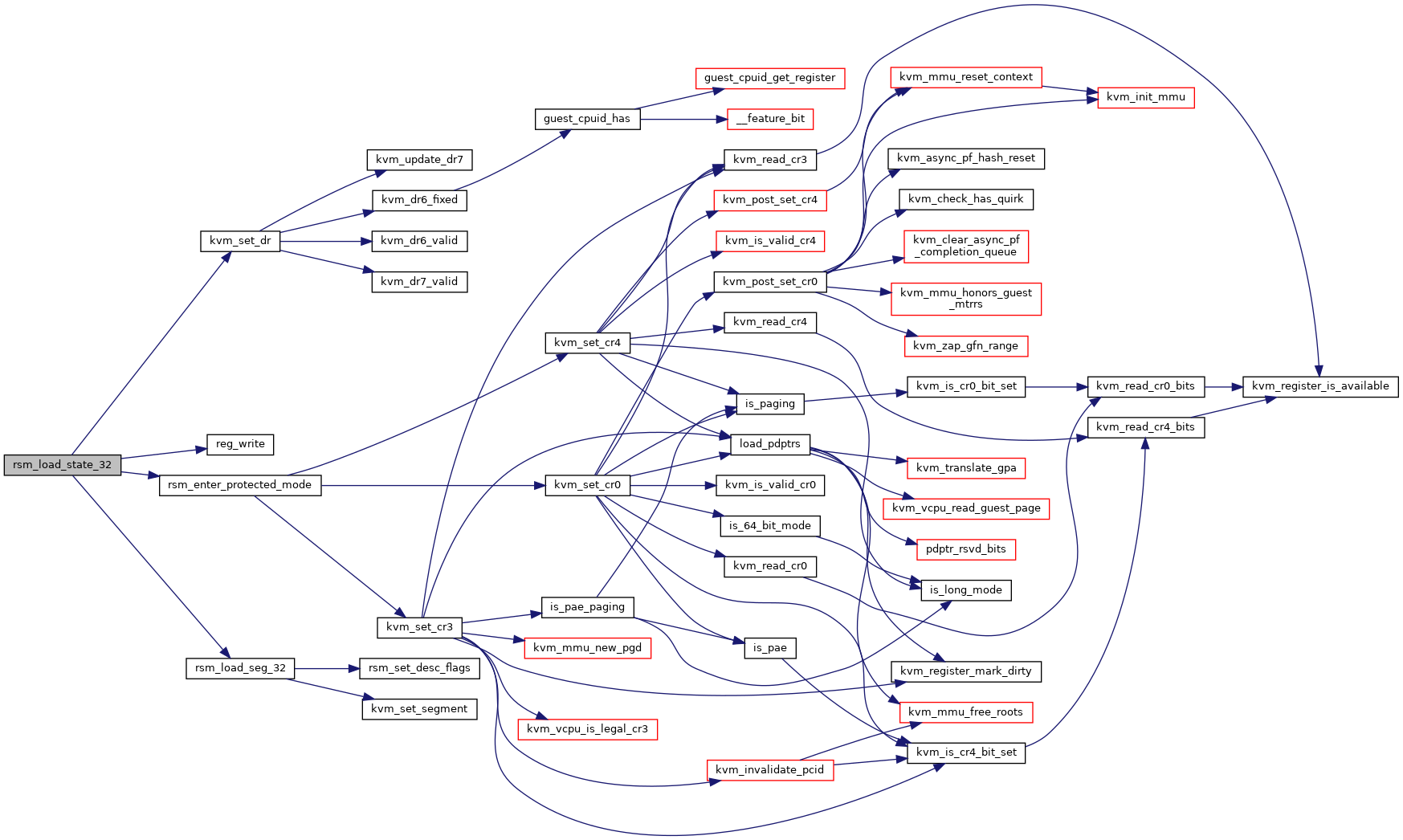

◆ rsm_load_state_32()

static int rsm_load_state_32(struct x86_emulate_ctxt *ctxt, const struct kvm_smram_state_32 *smstate)

Definition at line 466 of file smm.c.

static ulong * reg_write(struct x86_emulate_ctxt *ctxt, unsigned nr)

Definition: kvm_emulate.h:531

static int rsm_enter_protected_mode(struct kvm_vcpu *vcpu, u64 cr0, u64 cr3, u64 cr4)

Definition: smm.c:421

static int rsm_load_seg_32(struct kvm_vcpu *vcpu, const struct kvm_smm_seg_state_32 *state, u16 selector, int n)

Definition: smm.c:390
