#include <linux/nospec.h>

Include dependency graph for pmu.h:

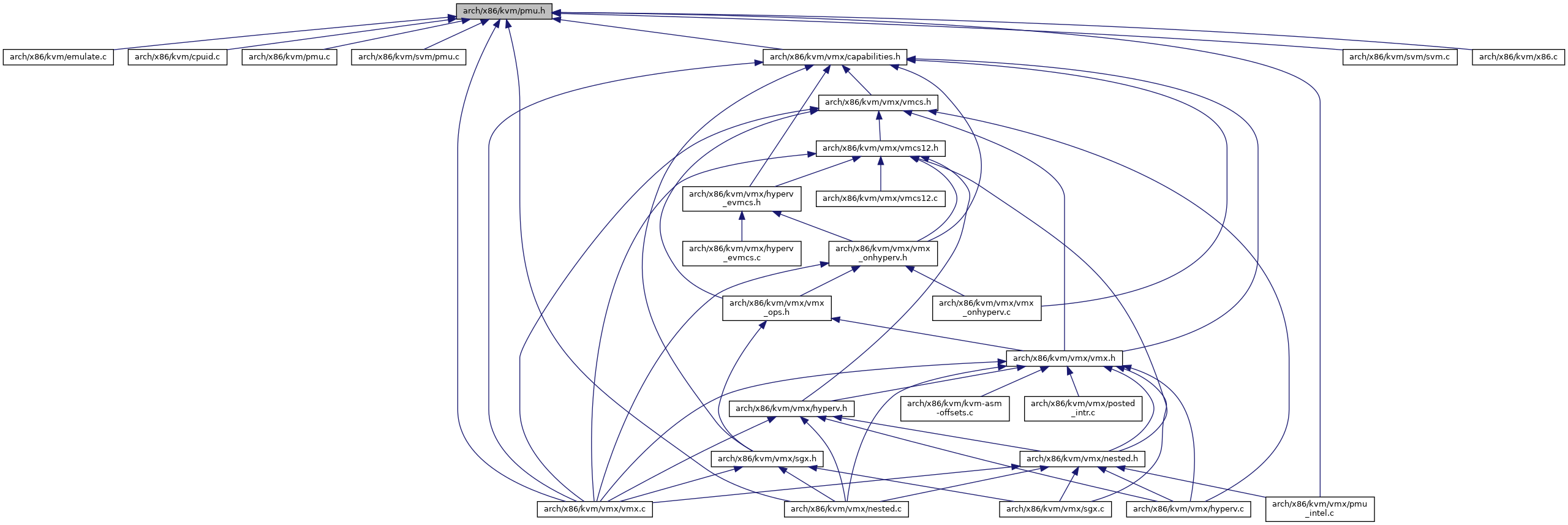


Classes

| struct | kvm_pmu_ops |

Macros

| #define | vcpu_to_pmu(vcpu) (&(vcpu)->arch.pmu) |

| #define | pmu_to_vcpu(pmu) (container_of((pmu), struct kvm_vcpu, arch.pmu)) |

| #define | pmc_to_pmu(pmc) (&(pmc)->vcpu->arch.pmu) |

| #define | MSR_IA32_MISC_ENABLE_PMU_RO_MASK |

| #define | fixed_ctrl_field(ctrl_reg, idx) (((ctrl_reg) >> ((idx)*4)) & 0xf) |

| #define | VMWARE_BACKDOOR_PMC_HOST_TSC 0x10000 |

| #define | VMWARE_BACKDOOR_PMC_REAL_TIME 0x10001 |

| #define | VMWARE_BACKDOOR_PMC_APPARENT_TIME 0x10002 |

Functions

| void | kvm_pmu_ops_update (const struct kvm_pmu_ops *pmu_ops) |

| static bool | kvm_pmu_has_perf_global_ctrl (struct kvm_pmu *pmu) |

| static u64 | pmc_bitmask (struct kvm_pmc *pmc) |

| static u64 | pmc_read_counter (struct kvm_pmc *pmc) |

| void | pmc_write_counter (struct kvm_pmc *pmc, u64 val) |

| static bool | pmc_is_gp (struct kvm_pmc *pmc) |

| static bool | pmc_is_fixed (struct kvm_pmc *pmc) |

| static bool | kvm_valid_perf_global_ctrl (struct kvm_pmu *pmu, u64 data) |

| static struct kvm_pmc * | get_gp_pmc (struct kvm_pmu *pmu, u32 msr, u32 base) |

| static struct kvm_pmc * | get_fixed_pmc (struct kvm_pmu *pmu, u32 msr) |

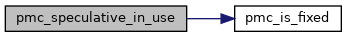

| static bool | pmc_speculative_in_use (struct kvm_pmc *pmc) |

| static void | kvm_init_pmu_capability (const struct kvm_pmu_ops *pmu_ops) |

| static void | kvm_pmu_request_counter_reprogram (struct kvm_pmc *pmc) |

| static void | reprogram_counters (struct kvm_pmu *pmu, u64 diff) |

| static bool | pmc_is_globally_enabled (struct kvm_pmc *pmc) |

| void | kvm_pmu_deliver_pmi (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_handle_event (struct kvm_vcpu *vcpu) |

| int | kvm_pmu_rdpmc (struct kvm_vcpu *vcpu, unsigned pmc, u64 *data) |

| bool | kvm_pmu_is_valid_rdpmc_ecx (struct kvm_vcpu *vcpu, unsigned int idx) |

| bool | kvm_pmu_is_valid_msr (struct kvm_vcpu *vcpu, u32 msr) |

| int | kvm_pmu_get_msr (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

| int | kvm_pmu_set_msr (struct kvm_vcpu *vcpu, struct msr_data *msr_info) |

| void | kvm_pmu_refresh (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_init (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_cleanup (struct kvm_vcpu *vcpu) |

| void | kvm_pmu_destroy (struct kvm_vcpu *vcpu) |

| int | kvm_vm_ioctl_set_pmu_event_filter (struct kvm *kvm, void __user *argp) |

| void | kvm_pmu_trigger_event (struct kvm_vcpu *vcpu, u64 perf_hw_id) |

| bool | is_vmware_backdoor_pmc (u32 pmc_idx) |

Variables

| struct x86_pmu_capability | kvm_pmu_cap |

| struct kvm_pmu_ops | intel_pmu_ops |

| struct kvm_pmu_ops | amd_pmu_ops |

Macro Definition Documentation

◆ fixed_ctrl_field

| #define fixed_ctrl_field | ( | ctrl_reg, idx | ) | (((ctrl_reg) >> ((idx)*4)) & 0xf) |
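The macro above shifts the control register right by four bits per counter index and masks the low nibble, so each fixed counter owns one 4-bit field. A minimal sketch of that arithmetic follows; the macro text is copied from this page, while `example_fixed_ctrl()` and the sample register value are hypothetical and exist only for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Copied from pmu.h: selects the 4-bit control field of fixed counter idx. */
#define fixed_ctrl_field(ctrl_reg, idx) (((ctrl_reg) >> ((idx)*4)) & 0xf)

/*
 * Hypothetical helper (not part of pmu.h): returns the 4-bit field that
 * belongs to fixed counter idx inside a packed control register value.
 */
static inline uint8_t example_fixed_ctrl(uint64_t ctrl_reg, unsigned int idx)
{
	return (uint8_t)fixed_ctrl_field(ctrl_reg, idx);
}
```

With a sample value of `0x0b3`, counter 0 maps to the field `0x3`, counter 1 to `0xb`, and counter 2 to `0x0`.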

◆ MSR_IA32_MISC_ENABLE_PMU_RO_MASK

| #define MSR_IA32_MISC_ENABLE_PMU_RO_MASK |

◆ pmc_to_pmu

| #define pmc_to_pmu | ( | pmc | ) | (&(pmc)->vcpu->arch.pmu) |

◆ pmu_to_vcpu

| #define pmu_to_vcpu | ( | pmu | ) | (container_of((pmu), struct kvm_vcpu, arch.pmu)) |
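`pmu_to_vcpu` is the inverse of `vcpu_to_pmu`: the latter takes the address of the PMU embedded in a vCPU, and the former uses `container_of` to walk back from that embedded member to the enclosing vCPU. The sketch below shows the round trip in user space. The two macros are copied from this page, but the struct layouts are simplified stand-ins (the real `struct kvm_vcpu` and `struct kvm_pmu` are far larger), and `container_of` here is a reduced version without the kernel's type checking.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified container_of; the kernel version adds compile-time type checks. */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* Stand-in types for illustration only; fields are assumptions. */
struct kvm_pmu { int version; };
struct kvm_vcpu_arch { int pad; struct kvm_pmu pmu; };
struct kvm_vcpu { int vcpu_id; struct kvm_vcpu_arch arch; };

/* Copied from pmu.h. */
#define vcpu_to_pmu(vcpu) (&(vcpu)->arch.pmu)
#define pmu_to_vcpu(pmu) (container_of((pmu), struct kvm_vcpu, arch.pmu))

/* Hypothetical check: returns 1 if the round trip recovers the same vCPU. */
static int example_round_trip(void)
{
	struct kvm_vcpu vcpu = { .vcpu_id = 3 };
	struct kvm_pmu *pmu = vcpu_to_pmu(&vcpu);

	return pmu_to_vcpu(pmu) == &vcpu && pmu_to_vcpu(pmu)->vcpu_id == 3;
}
```

The round trip works only because the PMU is embedded by value in the vCPU; a pointer member would not allow `container_of` to recover the owner.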

◆ vcpu_to_pmu

| #define vcpu_to_pmu | ( | vcpu | ) | (&(vcpu)->arch.pmu) |

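◆ VMWARE_BACKDOOR_PMC_APPARENT_TIME

| #define VMWARE_BACKDOOR_PMC_APPARENT_TIME 0x10002 |

◆ VMWARE_BACKDOOR_PMC_HOST_TSC

| #define VMWARE_BACKDOOR_PMC_HOST_TSC 0x10000 |

◆ VMWARE_BACKDOOR_PMC_REAL_TIME

| #define VMWARE_BACKDOOR_PMC_REAL_TIME 0x10001 |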

Function Documentation

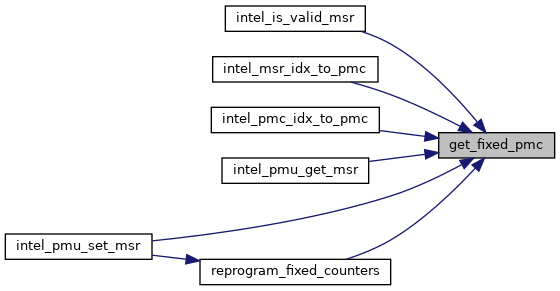

◆ get_fixed_pmc()

| static struct kvm_pmc * get_fixed_pmc | ( | struct kvm_pmu * | pmu, |

| u32 | msr | ||

| ) |

inline static

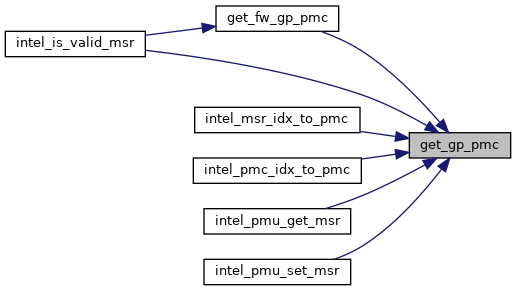

◆ get_gp_pmc()

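| static struct kvm_pmc * get_gp_pmc | ( | struct kvm_pmu * | pmu, |

| u32 | msr, | ||

| u32 | base | ||

| ) |

inline static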

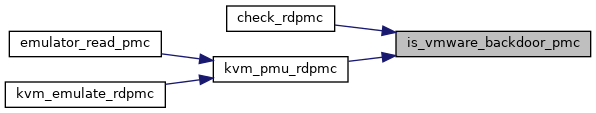

◆ is_vmware_backdoor_pmc()

| bool is_vmware_backdoor_pmc | ( | u32 | pmc_idx | ) |
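This page lists the prototype but not the body of `is_vmware_backdoor_pmc()`. One plausible reading, given the three `VMWARE_BACKDOOR_PMC_*` constants defined in this header, is a membership test against those pseudo-counter indices. The sketch below makes that assumption explicit; `example_is_vmware_backdoor_pmc()` is a hypothetical stand-in, not the kernel function.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Values copied from pmu.h. */
#define VMWARE_BACKDOOR_PMC_HOST_TSC		0x10000
#define VMWARE_BACKDOOR_PMC_REAL_TIME		0x10001
#define VMWARE_BACKDOOR_PMC_APPARENT_TIME	0x10002

/*
 * Hypothetical stand-in: assumes the check is a plain membership test
 * against the three backdoor pseudo-counter indices.
 */
static bool example_is_vmware_backdoor_pmc(uint32_t pmc_idx)
{
	switch (pmc_idx) {
	case VMWARE_BACKDOOR_PMC_HOST_TSC:
	case VMWARE_BACKDOOR_PMC_REAL_TIME:
	case VMWARE_BACKDOOR_PMC_APPARENT_TIME:
		return true;
	}
	return false;
}
```

Under this assumption, an ordinary counter index such as 0 is rejected, and so is `0x10003`, the first value past the reserved range.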

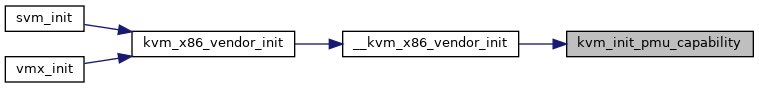

◆ kvm_init_pmu_capability()

| static void kvm_init_pmu_capability | ( | const struct kvm_pmu_ops * | pmu_ops | ) |

inline static

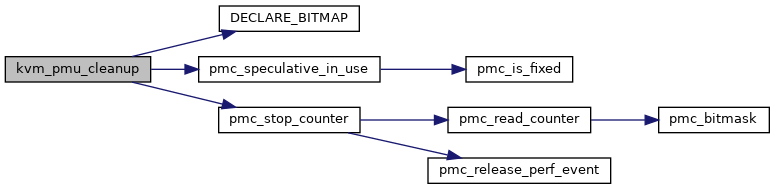

◆ kvm_pmu_cleanup()

| void kvm_pmu_cleanup | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 767 of file pmu.c.


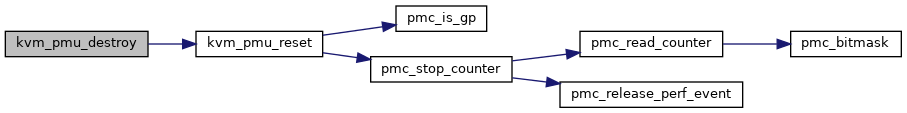

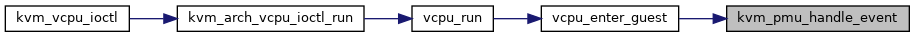

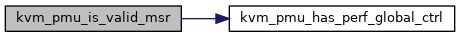

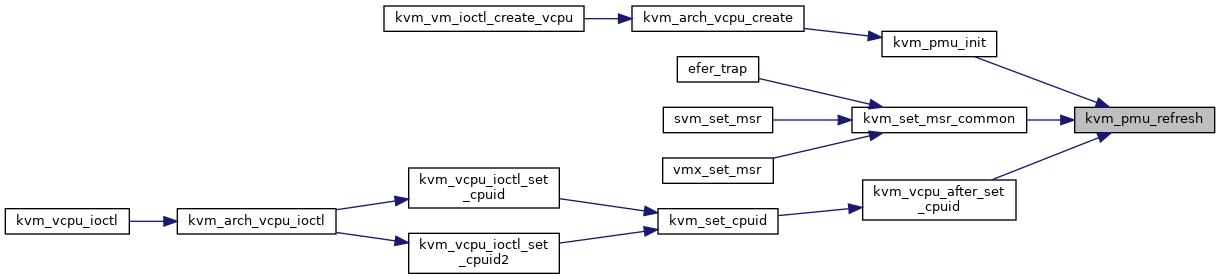

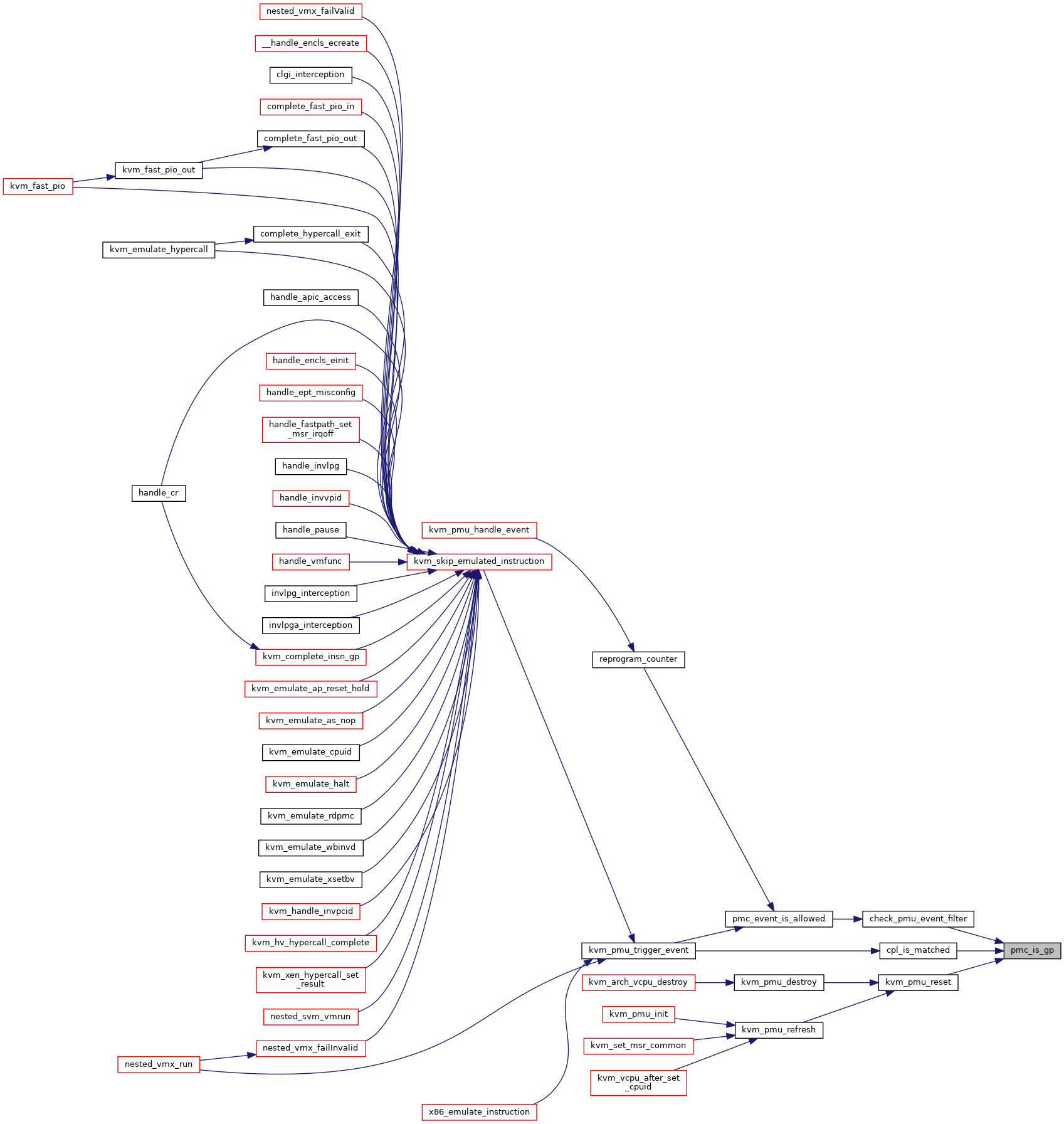

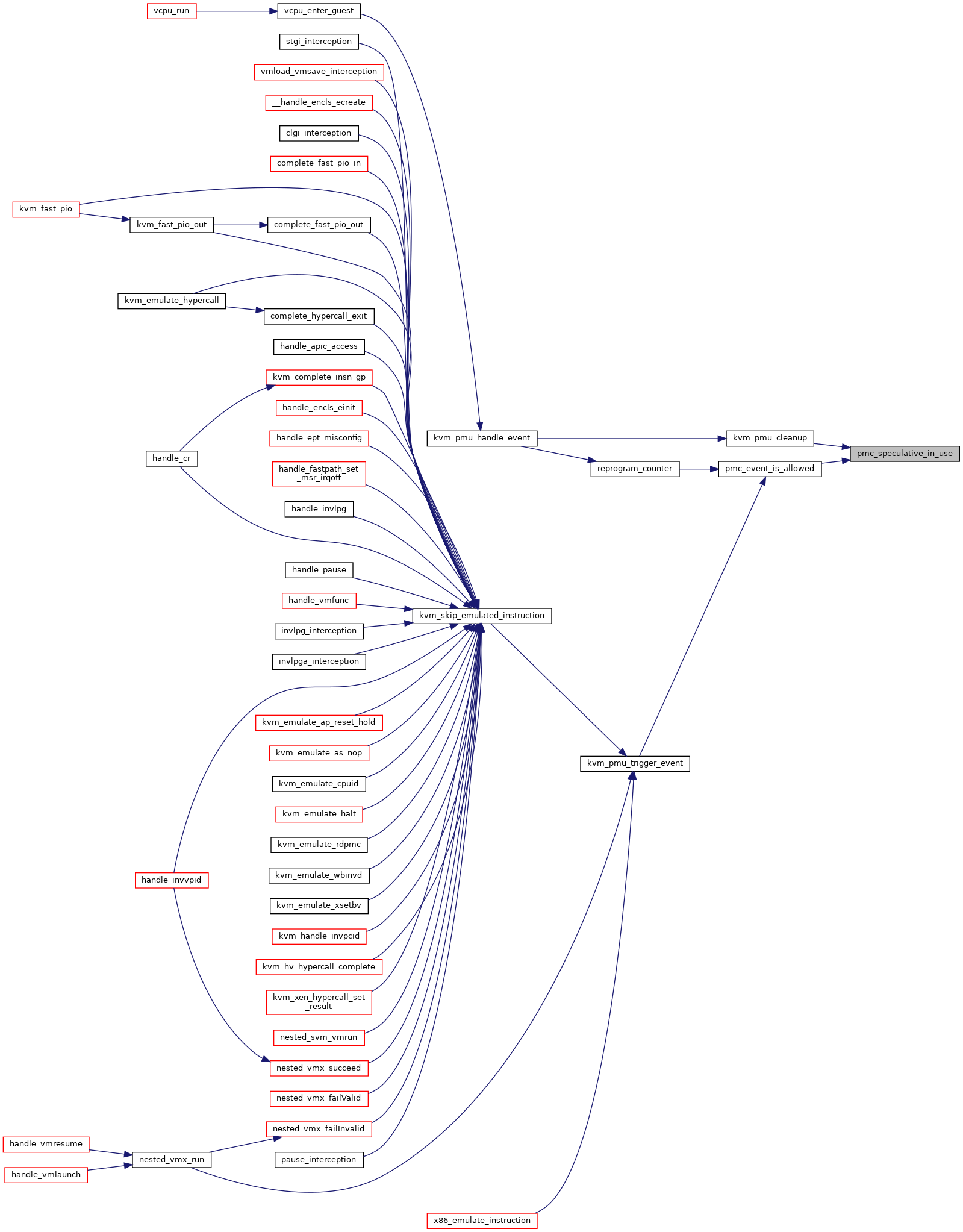

Here is the call graph for this function:

Here is the caller graph for this function:

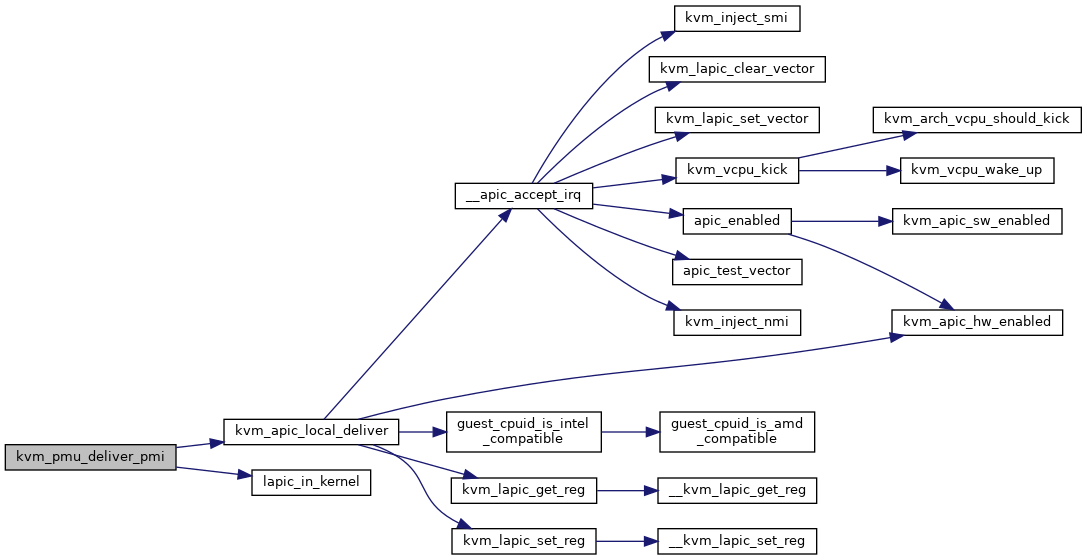

◆ kvm_pmu_deliver_pmi()

| void kvm_pmu_deliver_pmi | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 594 of file pmu.c.

int kvm_apic_local_deliver(struct kvm_lapic *apic, int lvt_type)

Definition: lapic.c:2762

Here is the call graph for this function:

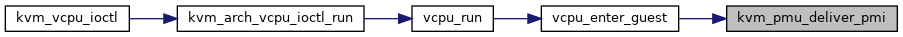

Here is the caller graph for this function:

◆ kvm_pmu_destroy()

| void kvm_pmu_destroy | ( | struct kvm_vcpu * | vcpu | ) |

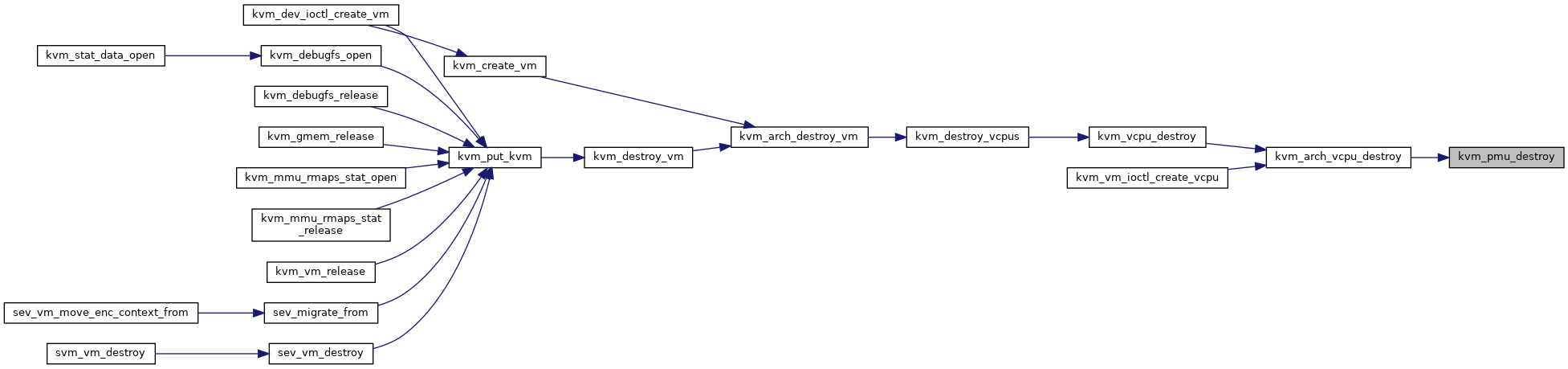

◆ kvm_pmu_get_msr()

| int kvm_pmu_get_msr | ( | struct kvm_vcpu * | vcpu, |

| struct msr_data * | msr_info | ||

| ) |

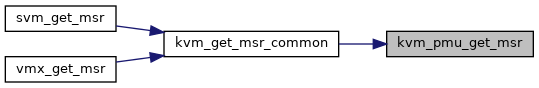

◆ kvm_pmu_handle_event()

| void kvm_pmu_handle_event | ( | struct kvm_vcpu * | vcpu | ) |

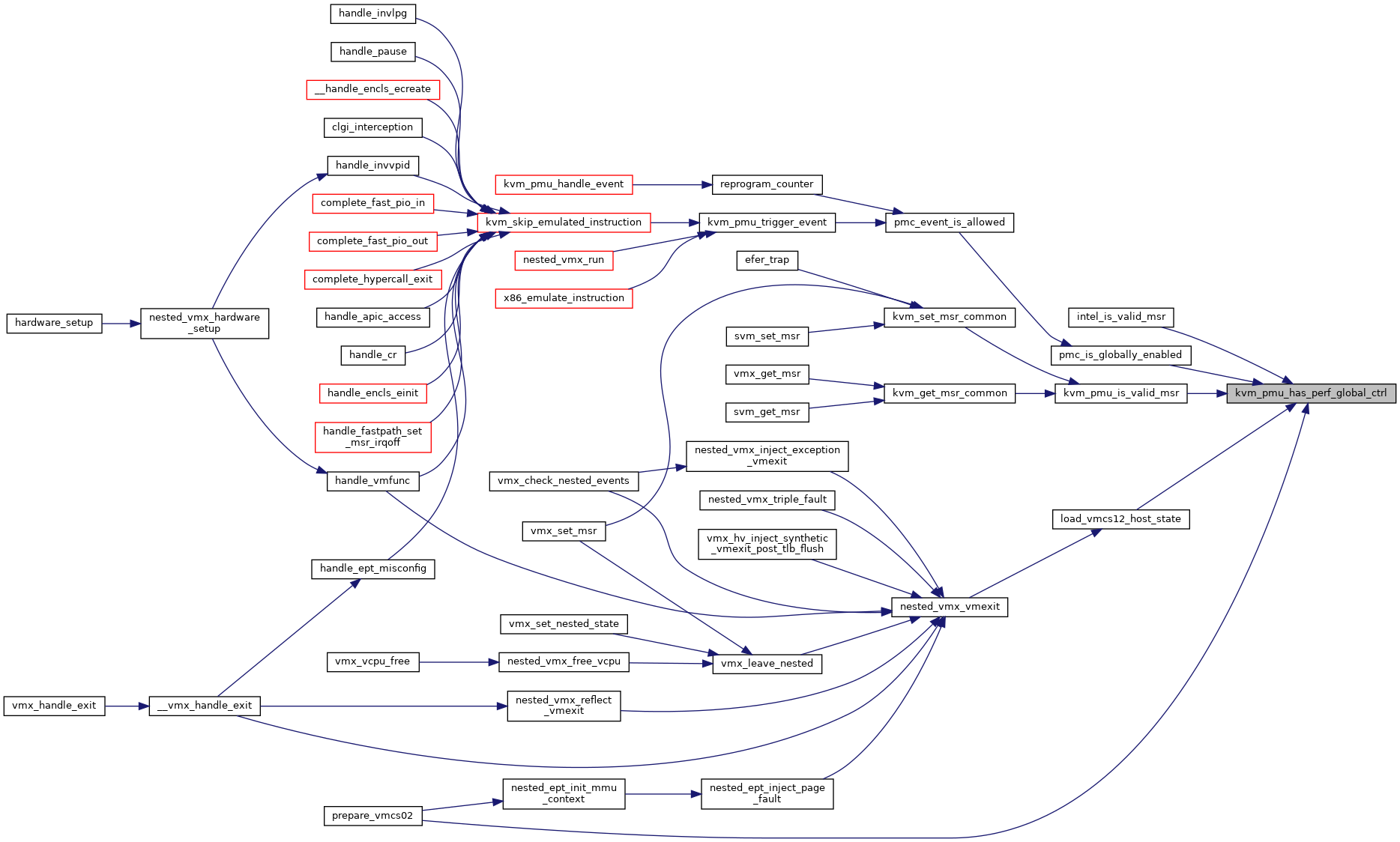

◆ kvm_pmu_has_perf_global_ctrl()

| static bool kvm_pmu_has_perf_global_ctrl | ( | struct kvm_pmu * | pmu | ) |

inline static

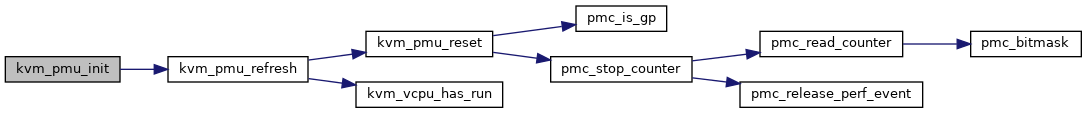

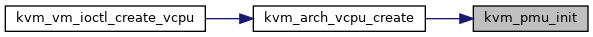

◆ kvm_pmu_init()

| void kvm_pmu_init | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_pmu_is_valid_msr()

| bool kvm_pmu_is_valid_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr | ||

| ) |

◆ kvm_pmu_is_valid_rdpmc_ecx()

| bool kvm_pmu_is_valid_rdpmc_ecx | ( | struct kvm_vcpu * | vcpu, |

| unsigned int | idx | ||

| ) |

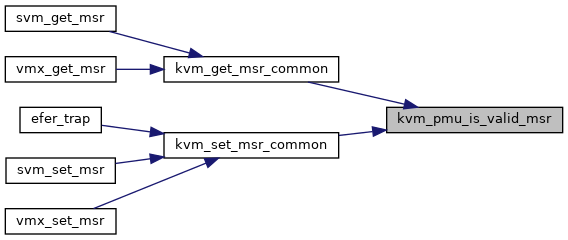

◆ kvm_pmu_ops_update()

| void kvm_pmu_ops_update | ( | const struct kvm_pmu_ops * | pmu_ops | ) |

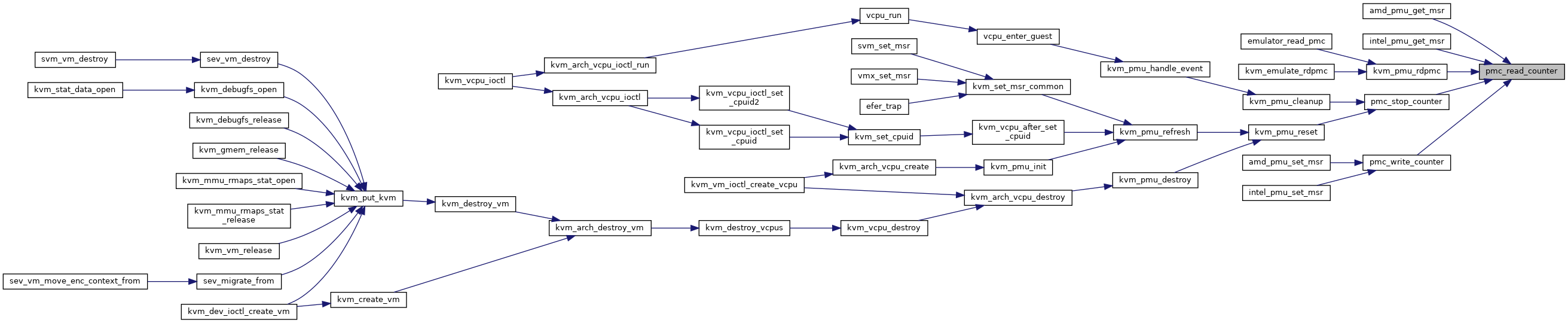

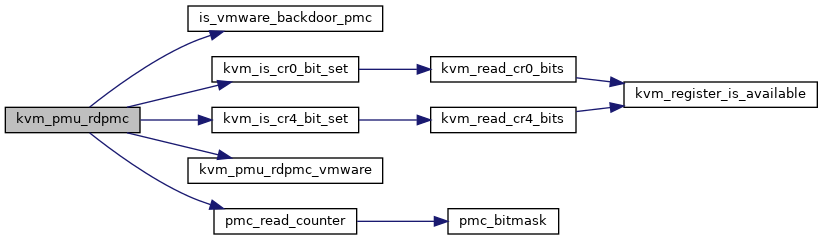

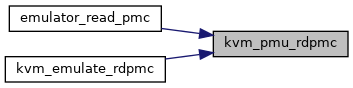

◆ kvm_pmu_rdpmc()

| int kvm_pmu_rdpmc | ( | struct kvm_vcpu * | vcpu, |

| unsigned | pmc, | ||

| u64 * | data | ||

| ) |

Definition at line 568 of file pmu.c.

static __always_inline bool kvm_is_cr0_bit_set(struct kvm_vcpu *vcpu, unsigned long cr0_bit)

Definition: kvm_cache_regs.h:160

static __always_inline bool kvm_is_cr4_bit_set(struct kvm_vcpu *vcpu, unsigned long cr4_bit)

Definition: kvm_cache_regs.h:182

static int kvm_pmu_rdpmc_vmware(struct kvm_vcpu *vcpu, unsigned idx, u64 *data)

Definition: pmu.c:545

Here is the call graph for this function:

Here is the caller graph for this function:

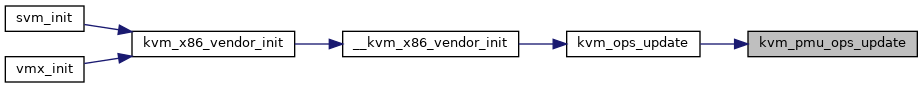

◆ kvm_pmu_refresh()

| void kvm_pmu_refresh | ( | struct kvm_vcpu * | vcpu | ) |

◆ kvm_pmu_request_counter_reprogram()

| static void kvm_pmu_request_counter_reprogram | ( | struct kvm_pmc * | pmc | ) |

inline static

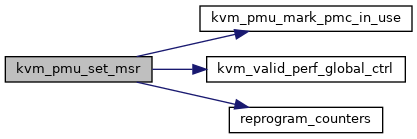

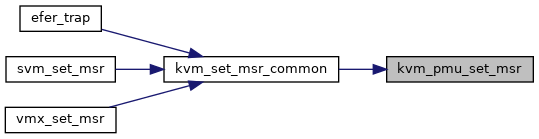

◆ kvm_pmu_set_msr()

| int kvm_pmu_set_msr | ( | struct kvm_vcpu * | vcpu, |

| struct msr_data * | msr_info | ||

| ) |

Definition at line 650 of file pmu.c.

static void kvm_pmu_mark_pmc_in_use(struct kvm_vcpu *vcpu, u32 msr)

Definition: pmu.c:616

static bool kvm_valid_perf_global_ctrl(struct kvm_pmu *pmu, u64 data)

Definition: pmu.h:90

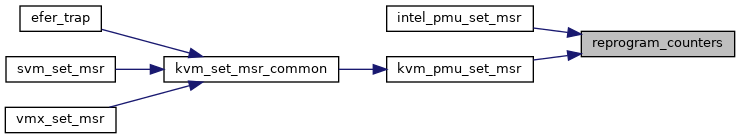

static void reprogram_counters(struct kvm_pmu *pmu, u64 diff)

Definition: pmu.h:189

Here is the call graph for this function:

Here is the caller graph for this function:

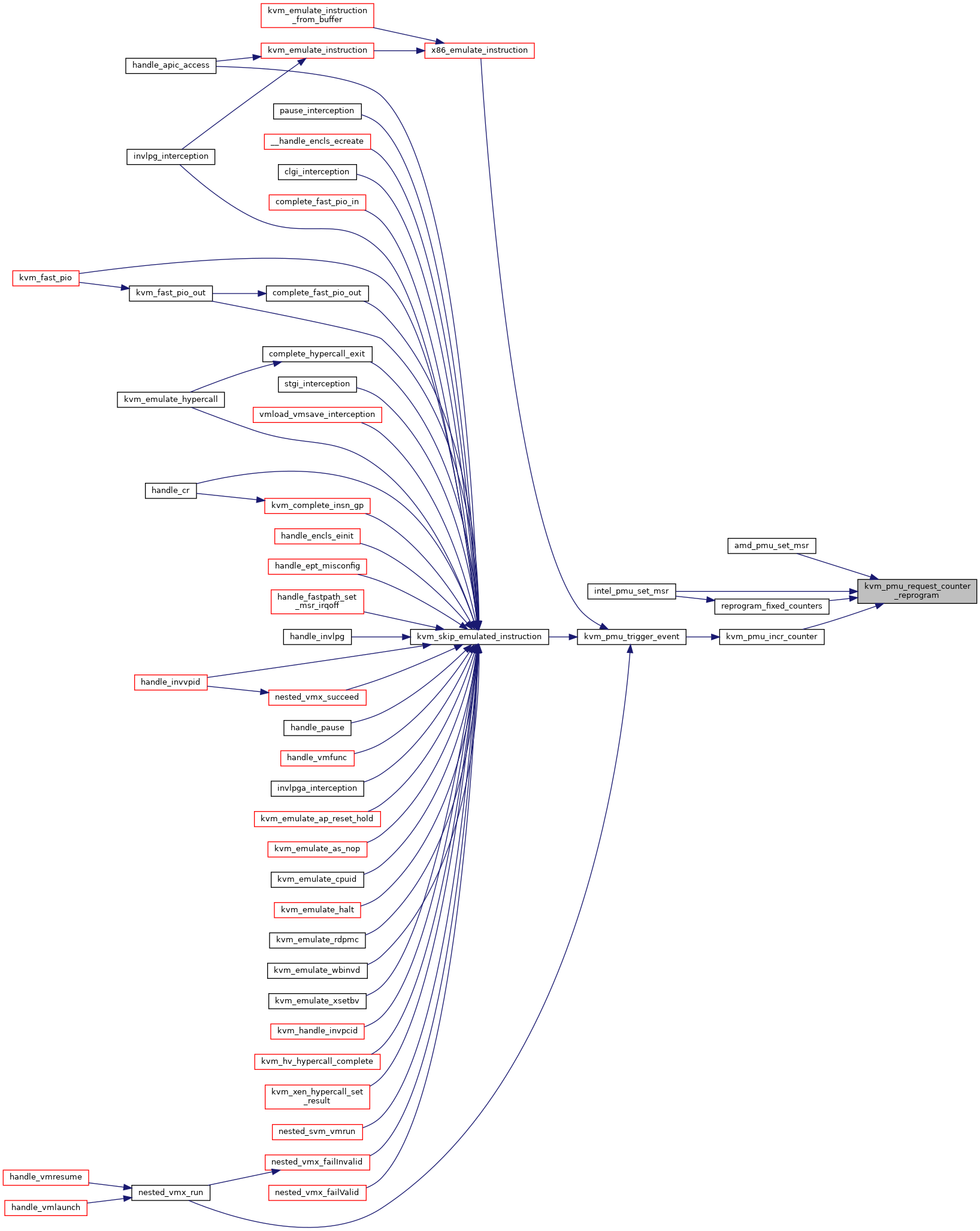

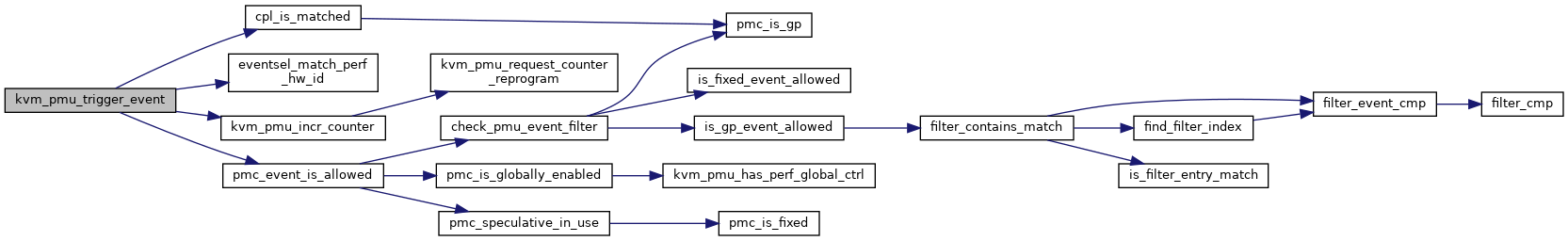

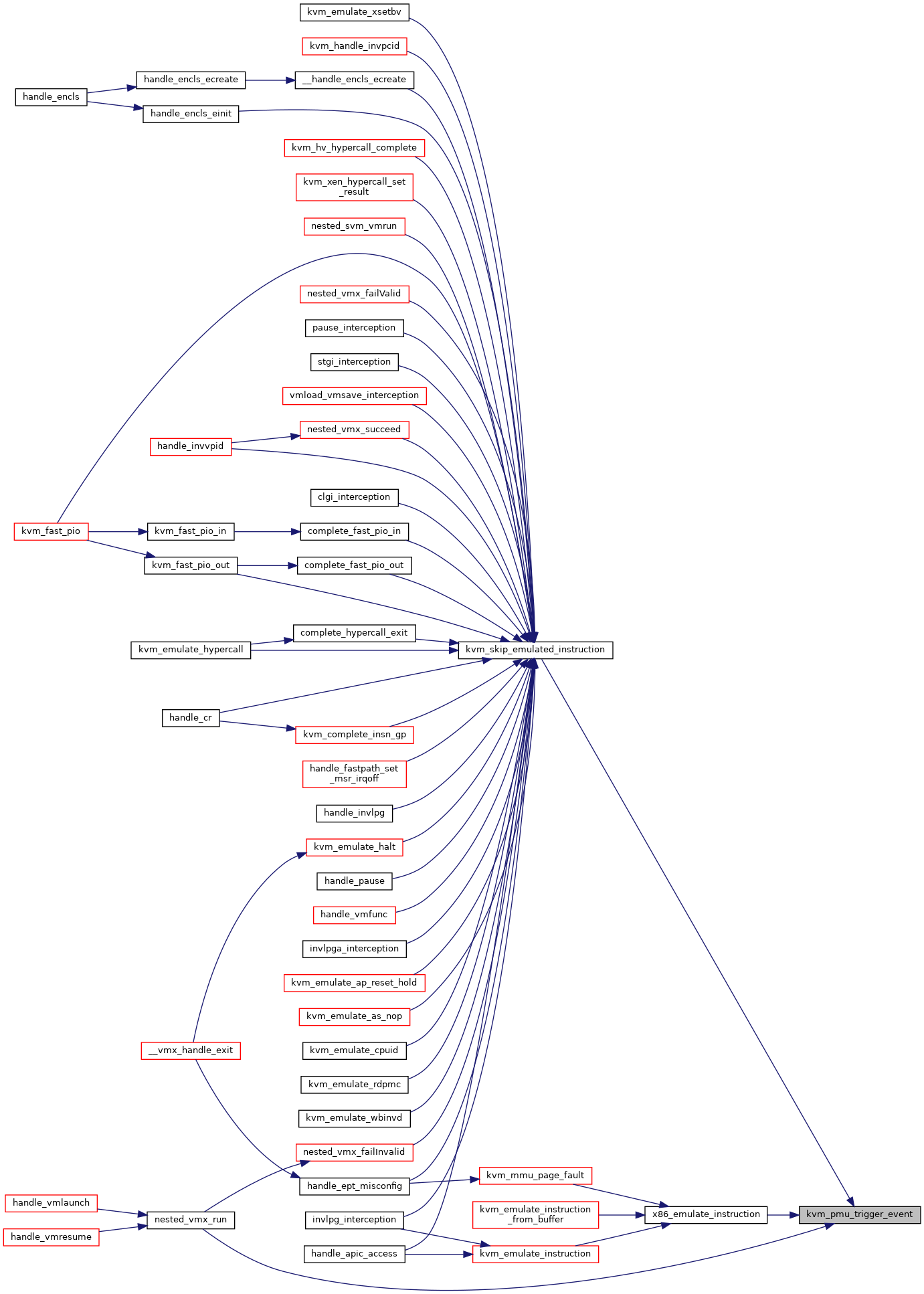

◆ kvm_pmu_trigger_event()

| void kvm_pmu_trigger_event | ( | struct kvm_vcpu * | vcpu, |

| u64 | perf_hw_id | ||

| ) |

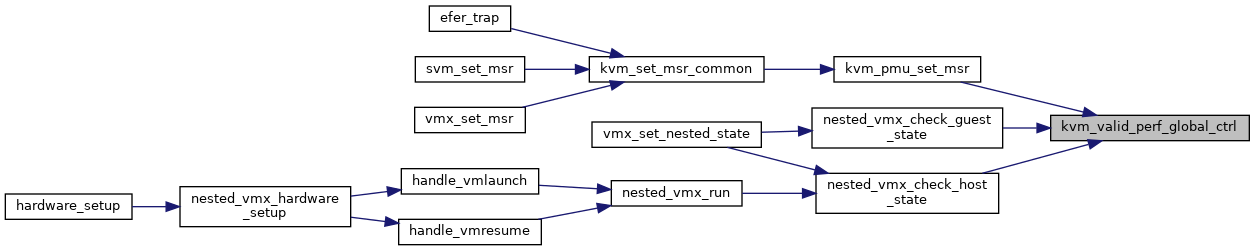

◆ kvm_valid_perf_global_ctrl()

| static bool kvm_valid_perf_global_ctrl | ( | struct kvm_pmu * | pmu, |

| u64 | data | ||

| ) |

inline static

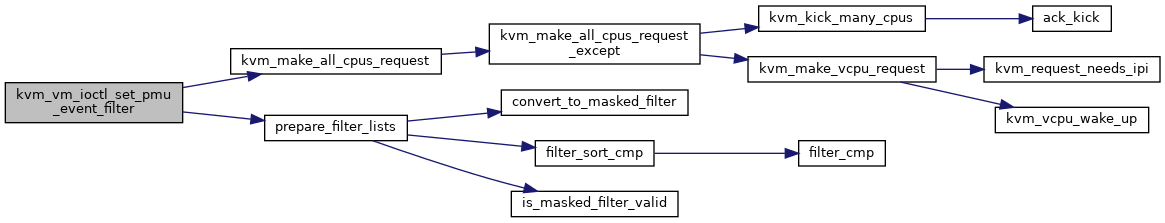

◆ kvm_vm_ioctl_set_pmu_event_filter()

| int kvm_vm_ioctl_set_pmu_event_filter | ( | struct kvm * | kvm, |

| void __user * | argp | ||

| ) |

Definition at line 928 of file pmu.c.

bool kvm_make_all_cpus_request(struct kvm *kvm, unsigned int req)

Definition: kvm_main.c:340

static int prepare_filter_lists(struct kvm_x86_pmu_event_filter *filter)

Definition: pmu.c:892

Here is the call graph for this function:

Here is the caller graph for this function:

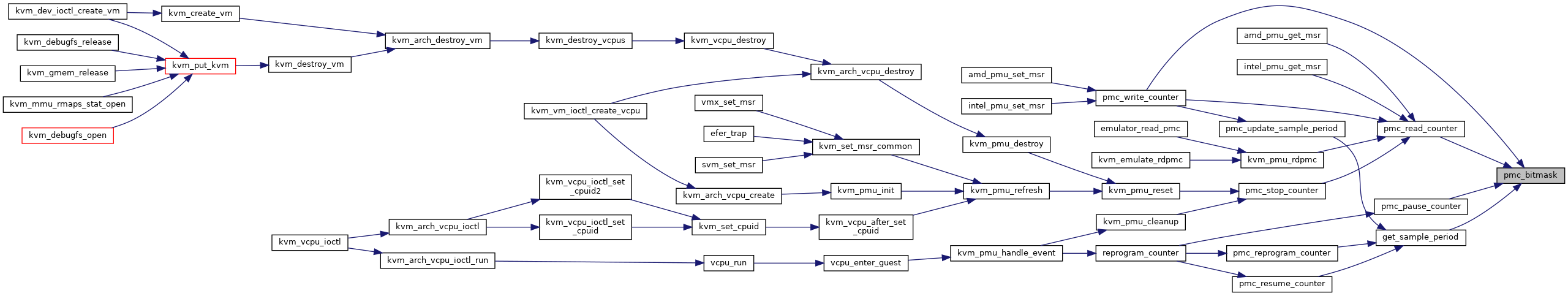

◆ pmc_bitmask()

| static u64 pmc_bitmask | ( | struct kvm_pmc * | pmc | ) |

inline static

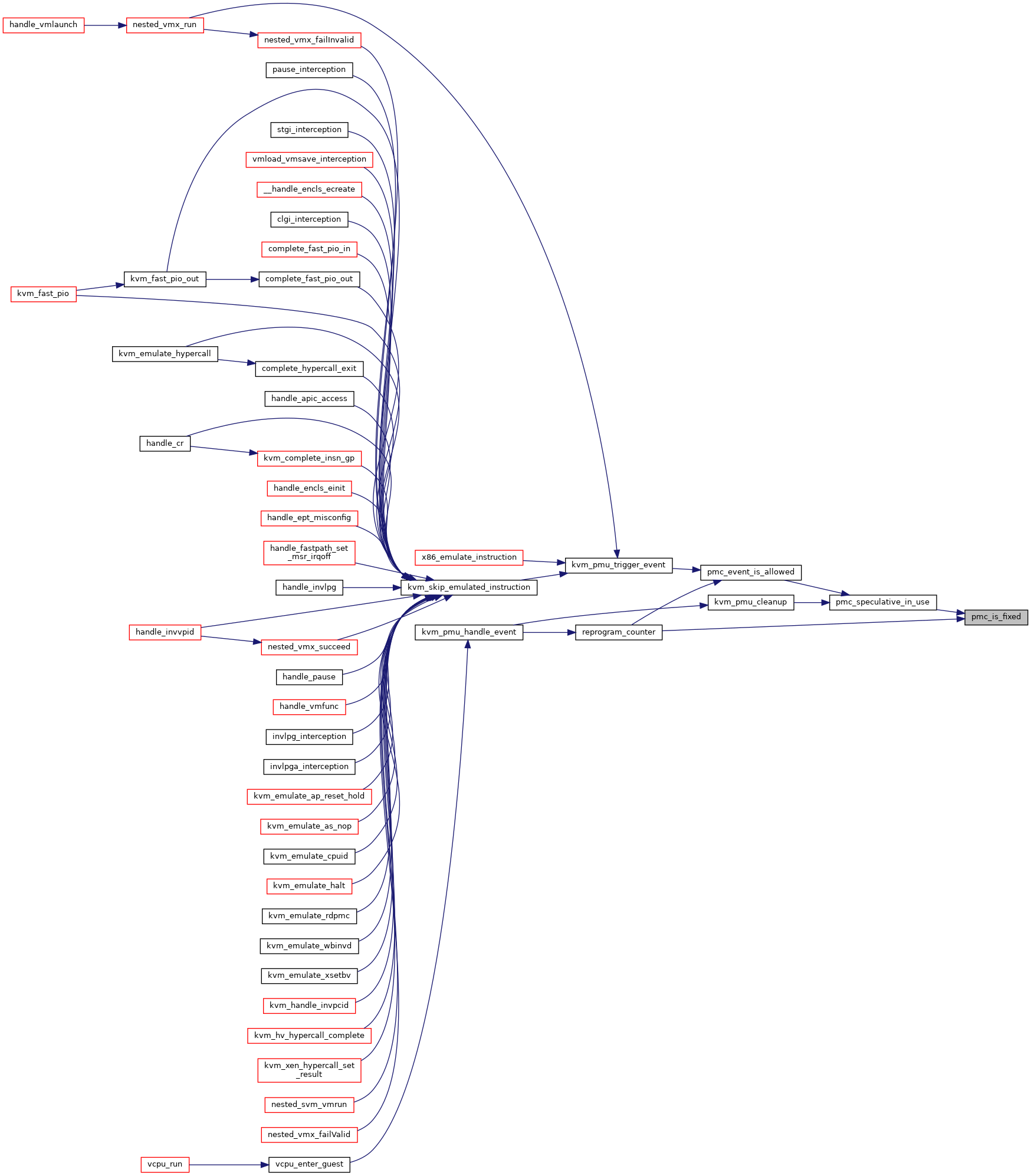

◆ pmc_is_fixed()

| static bool pmc_is_fixed | ( | struct kvm_pmc * | pmc | ) |

inline static

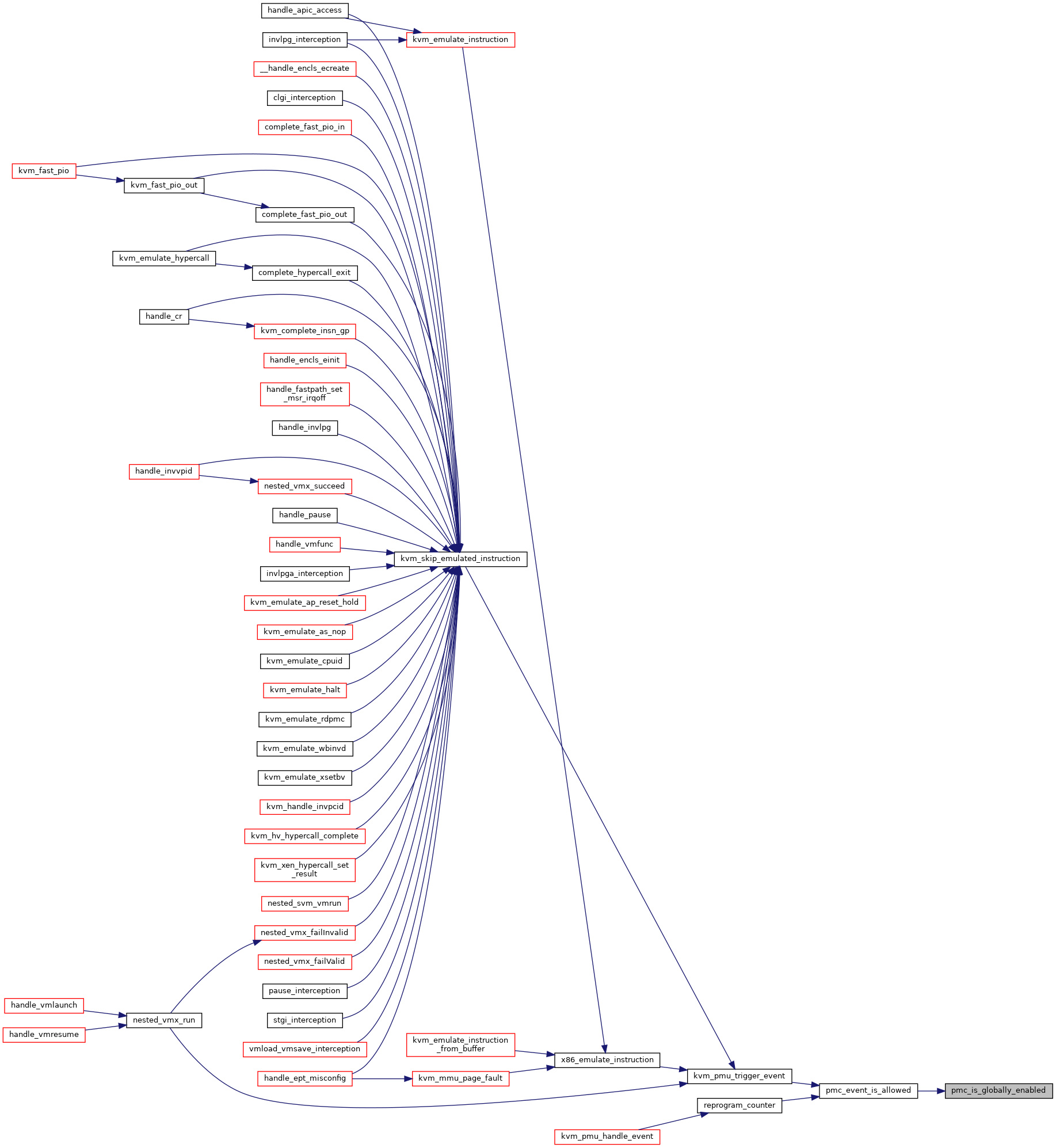

◆ pmc_is_globally_enabled()

| static bool pmc_is_globally_enabled | ( | struct kvm_pmc * | pmc | ) |

inline static

◆ pmc_is_gp()

| static bool pmc_is_gp | ( | struct kvm_pmc * | pmc | ) |

inline static

◆ pmc_read_counter()

| static u64 pmc_read_counter | ( | struct kvm_pmc * | pmc | ) |

inline static

◆ pmc_speculative_in_use()

| static bool pmc_speculative_in_use | ( | struct kvm_pmc * | pmc | ) |

inline static

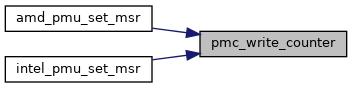

◆ pmc_write_counter()

| void pmc_write_counter | ( | struct kvm_pmc * | pmc, |

| u64 | val | ||

| ) |

◆ reprogram_counters()

| static void reprogram_counters | ( | struct kvm_pmu * | pmu, |

| u64 | diff | ||

| ) |

inline static

Variable Documentation

◆ amd_pmu_ops

| struct kvm_pmu_ops amd_pmu_ops |

extern

◆ intel_pmu_ops

| struct kvm_pmu_ops intel_pmu_ops |

extern