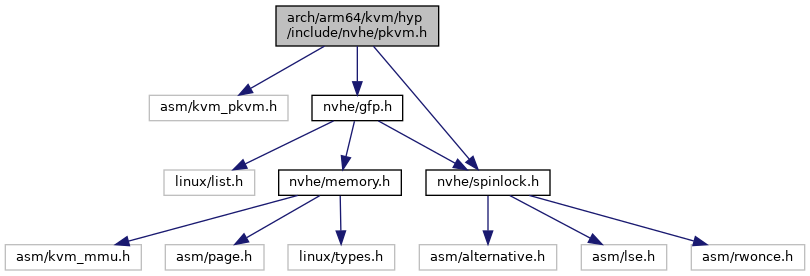

Include dependency graph for pkvm.h:

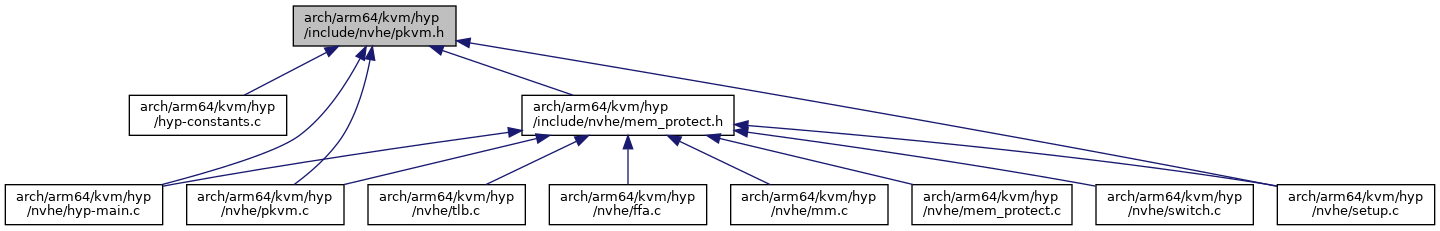

This graph shows which files directly or indirectly include this file:


Classes

| Kind | Name |
|---|---|
| struct | pkvm_hyp_vcpu |
| struct | pkvm_hyp_vm |

Functions

| Return type | Function |
|---|---|
| static struct pkvm_hyp_vm * | pkvm_hyp_vcpu_to_hyp_vm (struct pkvm_hyp_vcpu *hyp_vcpu) |
| void | pkvm_hyp_vm_table_init (void *tbl) |
| int | __pkvm_init_vm (struct kvm *host_kvm, unsigned long vm_hva, unsigned long pgd_hva) |
| int | __pkvm_init_vcpu (pkvm_handle_t handle, struct kvm_vcpu *host_vcpu, unsigned long vcpu_hva) |
| int | __pkvm_teardown_vm (pkvm_handle_t handle) |
| struct pkvm_hyp_vcpu * | pkvm_load_hyp_vcpu (pkvm_handle_t handle, unsigned int vcpu_idx) |
| void | pkvm_put_hyp_vcpu (struct pkvm_hyp_vcpu *hyp_vcpu) |

Function Documentation

◆ __pkvm_init_vcpu()

int __pkvm_init_vcpu (pkvm_handle_t handle, struct kvm_vcpu *host_vcpu, unsigned long vcpu_hva)

Definition at line 539 of file pkvm.c.

| Referenced symbol | Defined at |
|---|---|
| static void * map_donated_memory(unsigned long host_va, size_t size) | pkvm.c:421 |
| static int init_pkvm_hyp_vcpu(struct pkvm_hyp_vcpu *hyp_vcpu, struct pkvm_hyp_vm *hyp_vm, struct kvm_vcpu *host_vcpu, unsigned int vcpu_idx) | pkvm.c:313 |
| static struct pkvm_hyp_vm * get_vm_by_handle(pkvm_handle_t handle) | pkvm.c:253 |

Definition: pkvm.h:18

Definition: pkvm.h:28

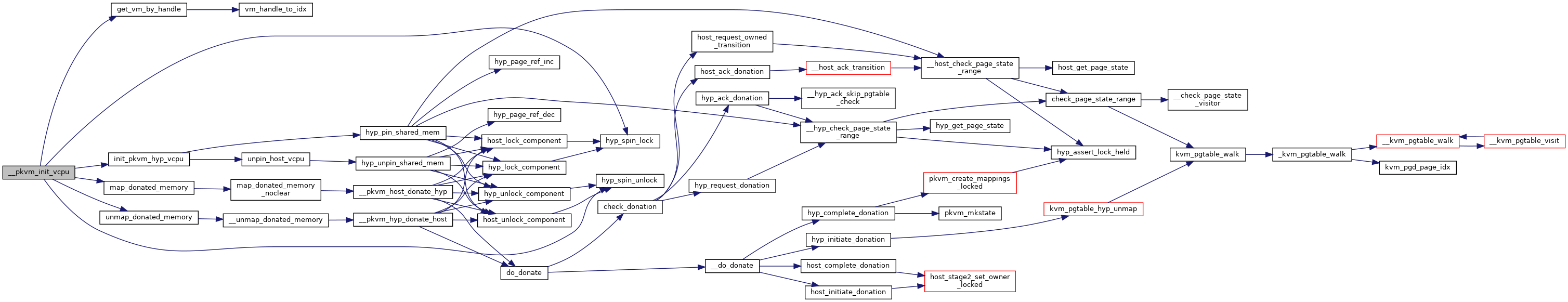

Here is the call graph for this function:

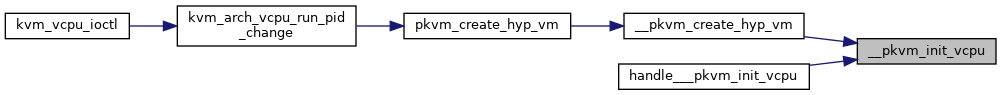

Here is the caller graph for this function:

◆ __pkvm_init_vm()

int __pkvm_init_vm (struct kvm *host_kvm, unsigned long vm_hva, unsigned long pgd_hva)

Definition at line 470 of file pkvm.c.

| Referenced symbol | Defined at |
|---|---|
| static pkvm_handle_t insert_vm_table_entry(struct kvm *host_kvm, struct pkvm_hyp_vm *hyp_vm) | pkvm.c:360 |
| static void * map_donated_memory_noclear(unsigned long host_va, size_t size) | pkvm.c:407 |
| static void init_pkvm_hyp_vm(struct kvm *host_kvm, struct pkvm_hyp_vm *hyp_vm, unsigned int nr_vcpus) | pkvm.c:305 |
| int kvm_guest_prepare_stage2(struct pkvm_hyp_vm *vm, void *pgd) | mem_protect.c:232 |

Definition: mem_protect.h:48

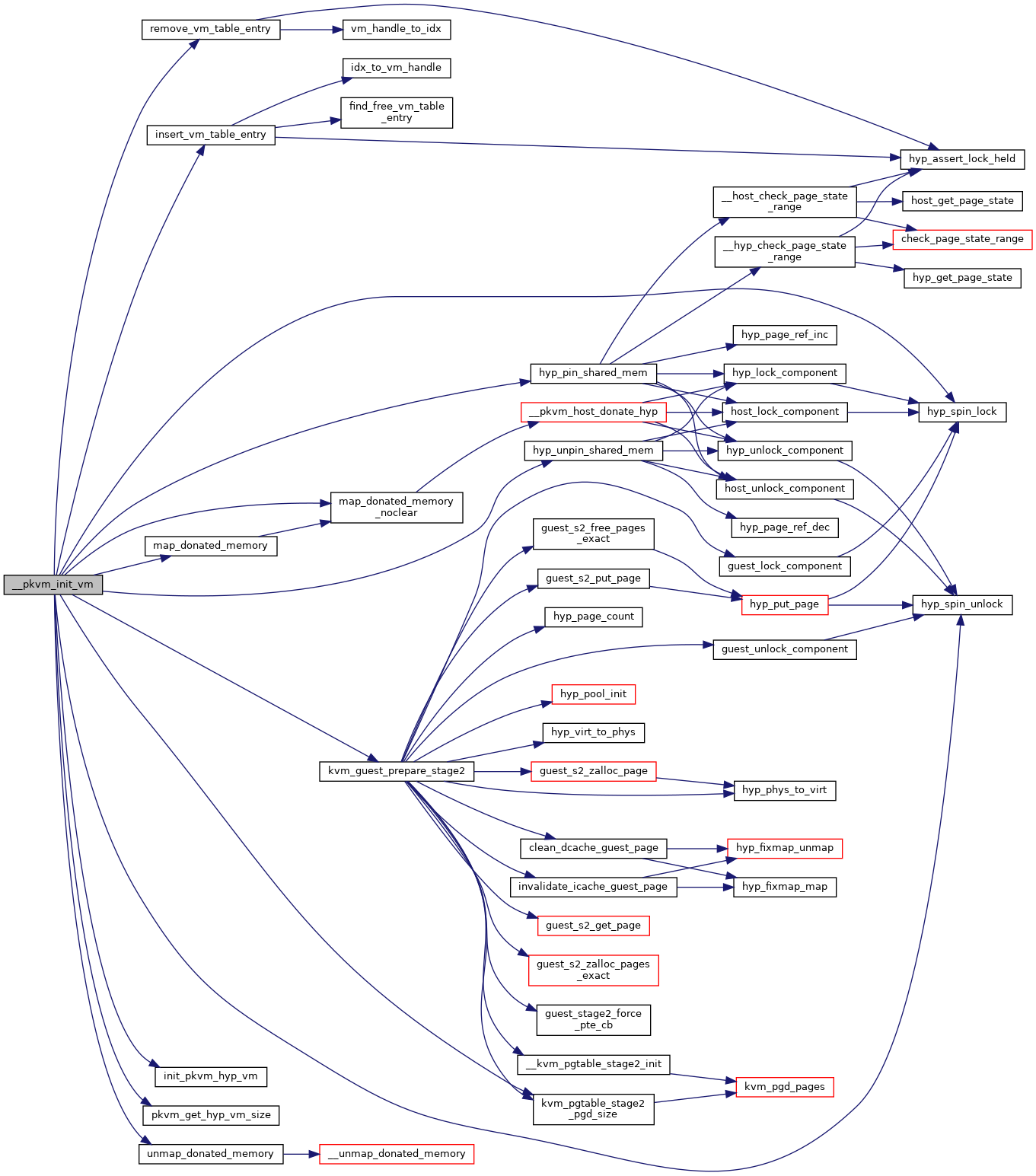

Here is the call graph for this function:

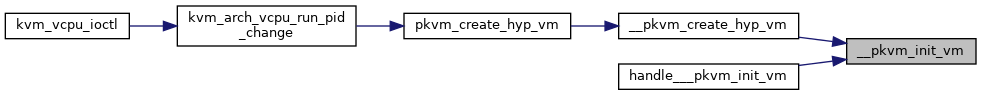

Here is the caller graph for this function:

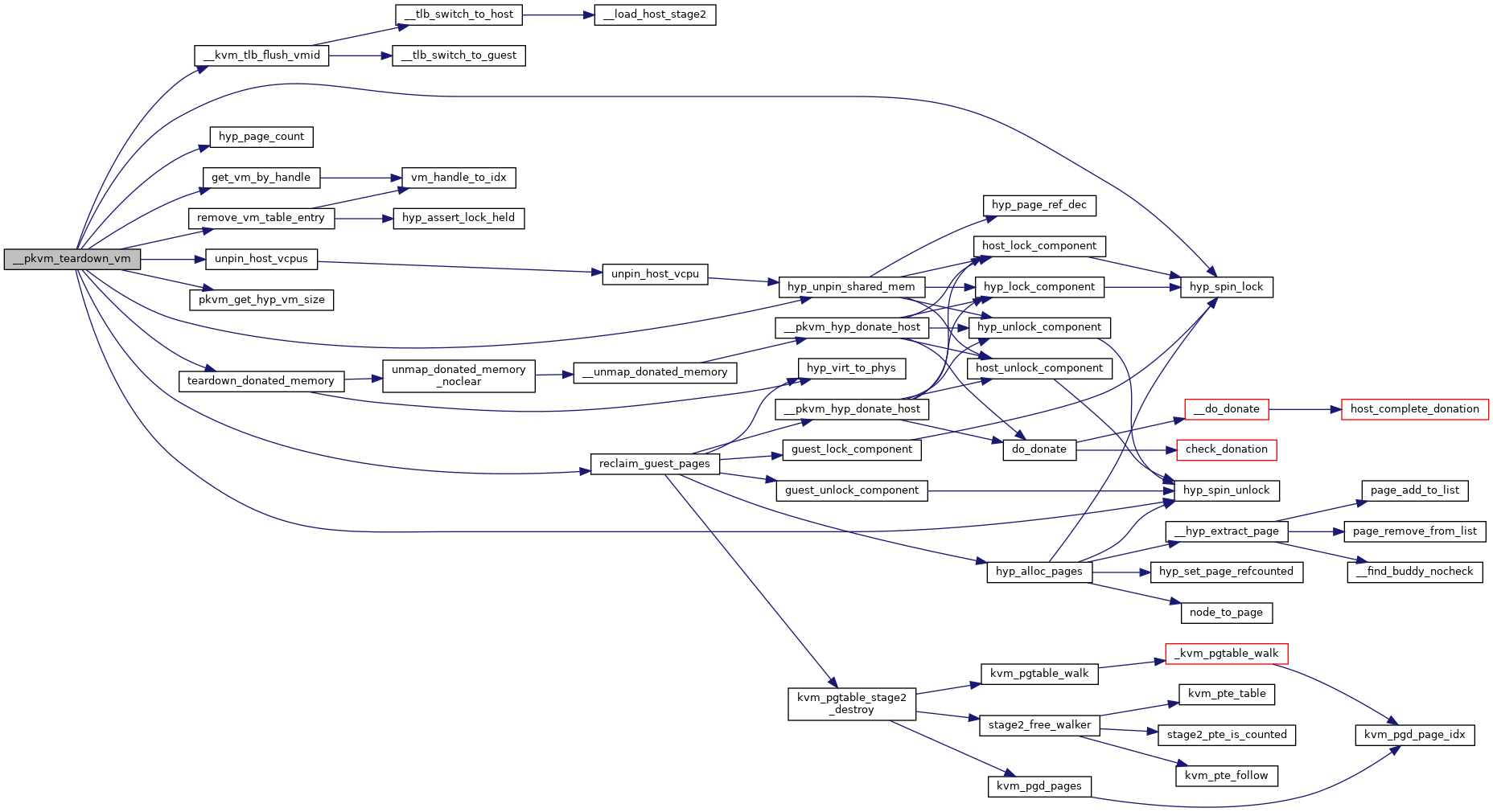

◆ __pkvm_teardown_vm()

int __pkvm_teardown_vm (pkvm_handle_t handle)

Definition at line 592 of file pkvm.c.

| Referenced symbol | Defined at |
|---|---|
| static void teardown_donated_memory(struct kvm_hyp_memcache *mc, void *addr, size_t size) | pkvm.c:581 |
| static void unpin_host_vcpus(struct pkvm_hyp_vcpu *hyp_vcpus[], unsigned int nr_vcpus) | pkvm.c:296 |
| void reclaim_guest_pages(struct pkvm_hyp_vm *vm, struct kvm_hyp_memcache *mc) | mem_protect.c:269 |

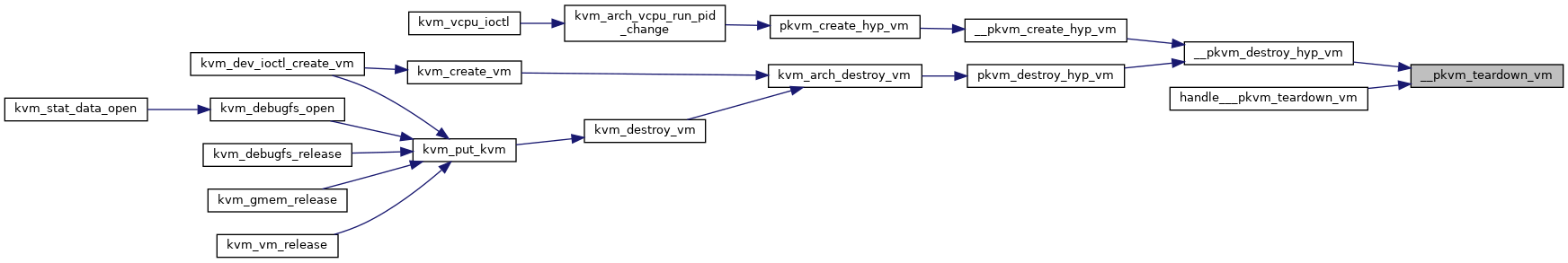

Here is the call graph for this function:

Here is the caller graph for this function:

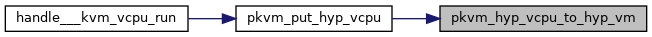

◆ pkvm_hyp_vcpu_to_hyp_vm()

static struct pkvm_hyp_vm * pkvm_hyp_vcpu_to_hyp_vm (struct pkvm_hyp_vcpu *hyp_vcpu) [inline, static]

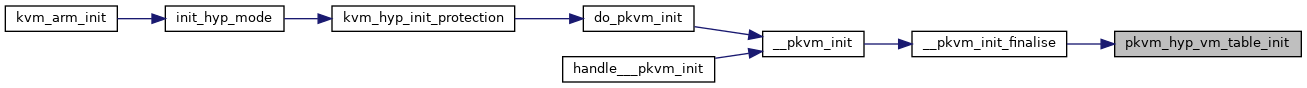
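This page does not show the body of pkvm_hyp_vcpu_to_hyp_vm(), so the following is only a userspace sketch of one plausible shape for such an accessor: recovering the enclosing VM structure from a pointer to a member embedded in it, in the style of the kernel's container_of(). The struct layouts, the local container_of macro, and the names sketch_vcpu_to_vm and hyp_vm_roundtrip_ok are all simplified assumptions for illustration, not the kernel definitions.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace stand-in for the kernel's container_of() macro. */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical, heavily simplified stand-ins for the kernel types. */
struct kvm { int id; };
struct kvm_vcpu { struct kvm *kvm; };

struct pkvm_hyp_vcpu {
	struct kvm_vcpu vcpu;	/* vCPU state; vcpu.kvm points at the owning VM's kvm */
};

struct pkvm_hyp_vm {
	unsigned int nr_vcpus;
	struct kvm kvm;		/* embedded member used to find the enclosing struct */
};

/* Assumed accessor shape: step back from the embedded kvm to its container. */
static inline struct pkvm_hyp_vm *
sketch_vcpu_to_vm(struct pkvm_hyp_vcpu *hyp_vcpu)
{
	assert(hyp_vcpu != NULL);
	return container_of(hyp_vcpu->vcpu.kvm, struct pkvm_hyp_vm, kvm);
}

/* Returns 1 when the accessor round-trips to the owning VM, 0 otherwise. */
int hyp_vm_roundtrip_ok(void)
{
	struct pkvm_hyp_vm vm = { .nr_vcpus = 1, .kvm = { .id = 7 } };
	struct pkvm_hyp_vcpu vcpu = { .vcpu = { .kvm = &vm.kvm } };

	return sketch_vcpu_to_vm(&vcpu) == &vm;
}
```

The pointer arithmetic is only valid when vcpu.kvm really points at the kvm member embedded in a struct pkvm_hyp_vm; the sketch sets that up explicitly in hyp_vm_roundtrip_ok().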

◆ pkvm_hyp_vm_table_init()

void pkvm_hyp_vm_table_init (void *tbl)

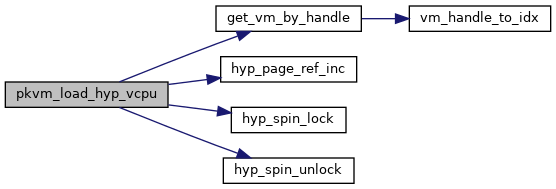

◆ pkvm_load_hyp_vcpu()

struct pkvm_hyp_vcpu * pkvm_load_hyp_vcpu (pkvm_handle_t handle, unsigned int vcpu_idx)

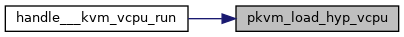

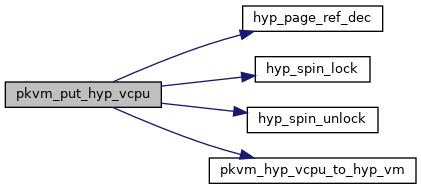

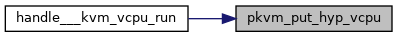

◆ pkvm_put_hyp_vcpu()

void pkvm_put_hyp_vcpu (struct pkvm_hyp_vcpu *hyp_vcpu)
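
The signatures of pkvm_load_hyp_vcpu() and pkvm_put_hyp_vcpu() suggest an acquire/release pair. The sketch below mocks that pattern in userspace so the calling discipline can be seen in isolation. It assumes that a failed load returns NULL and that a successful load takes a reference which put drops; neither behaviour is stated on this page. Every mock_* name, MOCK_HANDLE, run_vcpu_once, the pkvm_handle_t typedef, and the struct layouts are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef unsigned int pkvm_handle_t;	/* assumed width, for illustration only */

/* Hypothetical, minimal stand-ins for the hyp structures. */
struct pkvm_hyp_vcpu { unsigned int idx; };
struct pkvm_hyp_vm {
	unsigned int refs;		/* references held by loaded vCPUs */
	unsigned int nr_vcpus;
	struct pkvm_hyp_vcpu vcpus[2];
};

#define MOCK_HANDLE 0x1000u
static struct pkvm_hyp_vm mock_vm = { .nr_vcpus = 2, .vcpus = { { 0 }, { 1 } } };

/* Mock load: validate handle and index, then take a reference on the VM. */
struct pkvm_hyp_vcpu *mock_load_hyp_vcpu(pkvm_handle_t handle, unsigned int vcpu_idx)
{
	if (handle != MOCK_HANDLE || vcpu_idx >= mock_vm.nr_vcpus)
		return NULL;
	mock_vm.refs++;
	return &mock_vm.vcpus[vcpu_idx];
}

/* Mock put: drop the reference taken by the matching load. */
void mock_put_hyp_vcpu(struct pkvm_hyp_vcpu *hyp_vcpu)
{
	assert(hyp_vcpu != NULL && mock_vm.refs > 0);
	mock_vm.refs--;
}

/* Caller pattern: every successful load is balanced by exactly one put. */
int run_vcpu_once(pkvm_handle_t handle, unsigned int vcpu_idx)
{
	struct pkvm_hyp_vcpu *hyp_vcpu = mock_load_hyp_vcpu(handle, vcpu_idx);

	if (!hyp_vcpu)
		return -1;	/* nothing was acquired, so nothing to release */

	/* ... operate on hyp_vcpu here ... */

	mock_put_hyp_vcpu(hyp_vcpu);
	return 0;
}

/* Exposes the mock reference count so the balance can be checked. */
unsigned int mock_vm_refs(void)
{
	return mock_vm.refs;
}
```

The point of the pattern is that the failure path returns before any put, while the success path always reaches it, so the reference count returns to its starting value after each call to run_vcpu_once().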