Functions

| Return type | Function |
|---|---|
| void | kvm_mmu_init_tdp_mmu (struct kvm *kvm) |
| void | kvm_mmu_uninit_tdp_mmu (struct kvm *kvm) |
| hpa_t | kvm_tdp_mmu_get_vcpu_root_hpa (struct kvm_vcpu *vcpu) |
| static __must_check bool | kvm_tdp_mmu_get_root (struct kvm_mmu_page *root) |
| void | kvm_tdp_mmu_put_root (struct kvm *kvm, struct kvm_mmu_page *root) |
| bool | kvm_tdp_mmu_zap_leafs (struct kvm *kvm, gfn_t start, gfn_t end, bool flush) |
| bool | kvm_tdp_mmu_zap_sp (struct kvm *kvm, struct kvm_mmu_page *sp) |
| void | kvm_tdp_mmu_zap_all (struct kvm *kvm) |
| void | kvm_tdp_mmu_invalidate_all_roots (struct kvm *kvm) |
| void | kvm_tdp_mmu_zap_invalidated_roots (struct kvm *kvm) |
| int | kvm_tdp_mmu_map (struct kvm_vcpu *vcpu, struct kvm_page_fault *fault) |
| bool | kvm_tdp_mmu_unmap_gfn_range (struct kvm *kvm, struct kvm_gfn_range *range, bool flush) |
| bool | kvm_tdp_mmu_age_gfn_range (struct kvm *kvm, struct kvm_gfn_range *range) |
| bool | kvm_tdp_mmu_test_age_gfn (struct kvm *kvm, struct kvm_gfn_range *range) |
| bool | kvm_tdp_mmu_set_spte_gfn (struct kvm *kvm, struct kvm_gfn_range *range) |
| bool | kvm_tdp_mmu_wrprot_slot (struct kvm *kvm, const struct kvm_memory_slot *slot, int min_level) |
| bool | kvm_tdp_mmu_clear_dirty_slot (struct kvm *kvm, const struct kvm_memory_slot *slot) |
| void | kvm_tdp_mmu_clear_dirty_pt_masked (struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn, unsigned long mask, bool wrprot) |
| void | kvm_tdp_mmu_zap_collapsible_sptes (struct kvm *kvm, const struct kvm_memory_slot *slot) |
| bool | kvm_tdp_mmu_write_protect_gfn (struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn, int min_level) |
| void | kvm_tdp_mmu_try_split_huge_pages (struct kvm *kvm, const struct kvm_memory_slot *slot, gfn_t start, gfn_t end, int target_level, bool shared) |
| static void | kvm_tdp_mmu_walk_lockless_begin (void) |
| static void | kvm_tdp_mmu_walk_lockless_end (void) |
| int | kvm_tdp_mmu_get_walk (struct kvm_vcpu *vcpu, u64 addr, u64 *sptes, int *root_level) |
| u64 * | kvm_tdp_mmu_fast_pf_get_last_sptep (struct kvm_vcpu *vcpu, u64 addr, u64 *spte) |
| static bool | is_tdp_mmu_page (struct kvm_mmu_page *sp) |

Function Documentation

◆ is_tdp_mmu_page()

static bool is_tdp_mmu_page (struct kvm_mmu_page *sp) [inline, static]

◆ kvm_mmu_init_tdp_mmu()

void kvm_mmu_init_tdp_mmu (struct kvm *kvm)

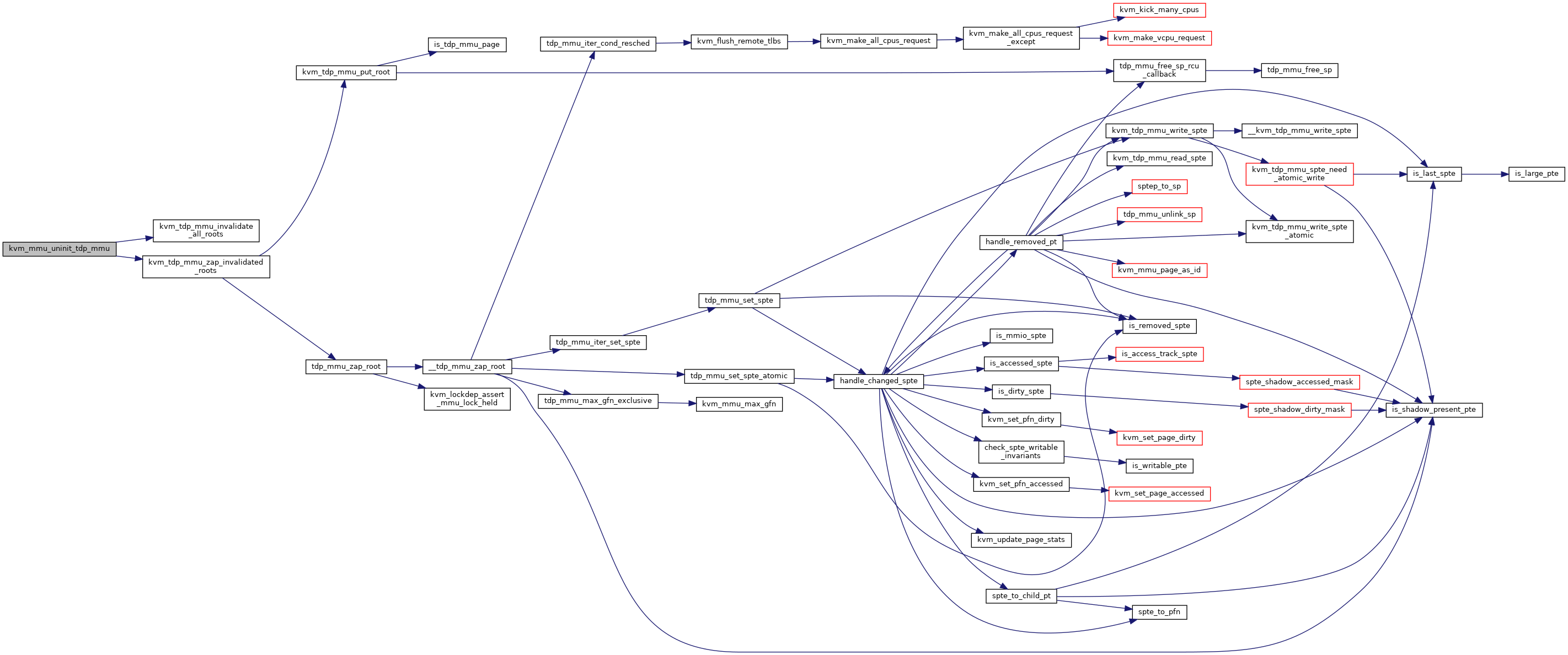

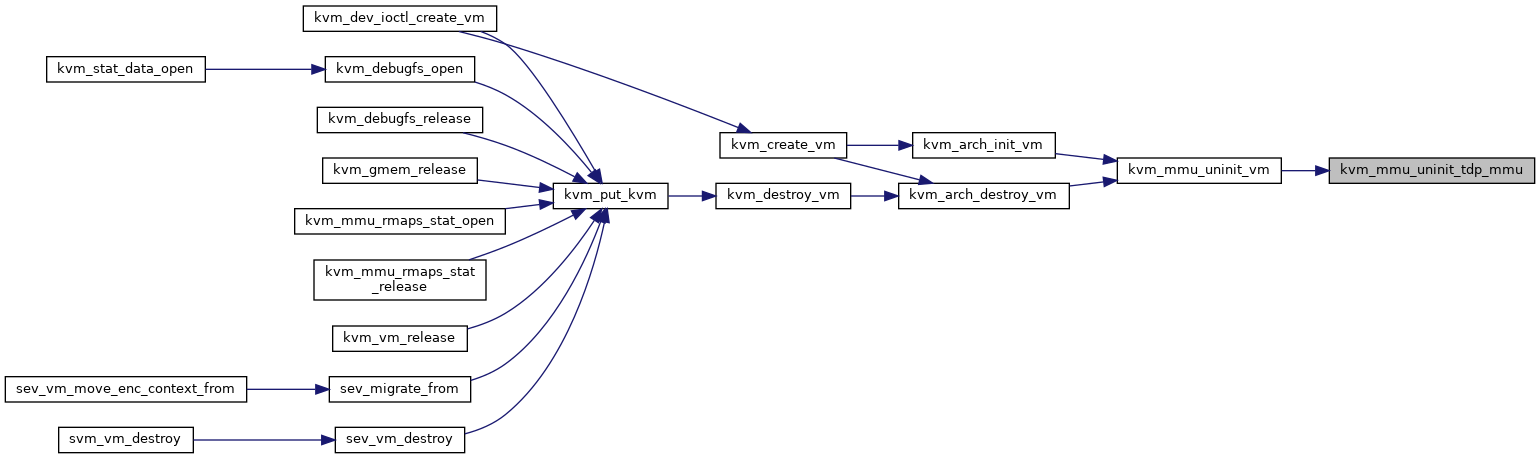

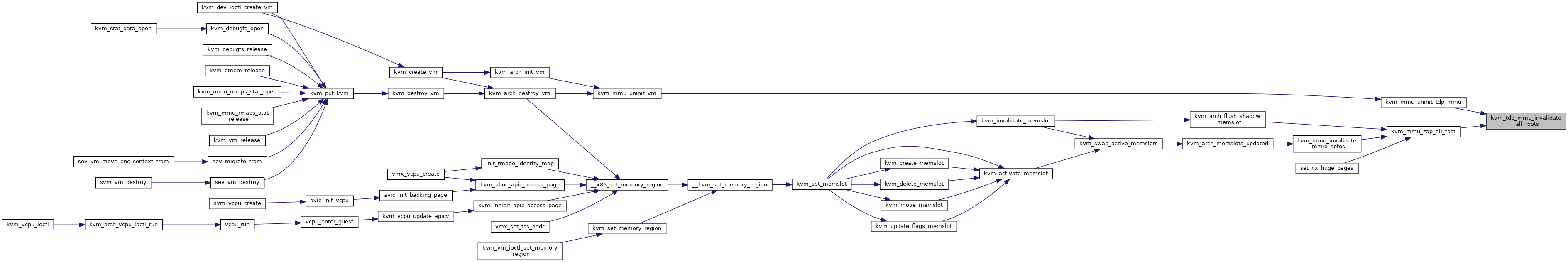

◆ kvm_mmu_uninit_tdp_mmu()

void kvm_mmu_uninit_tdp_mmu (struct kvm *kvm)

Definition at line 33 of file tdp_mmu.c.

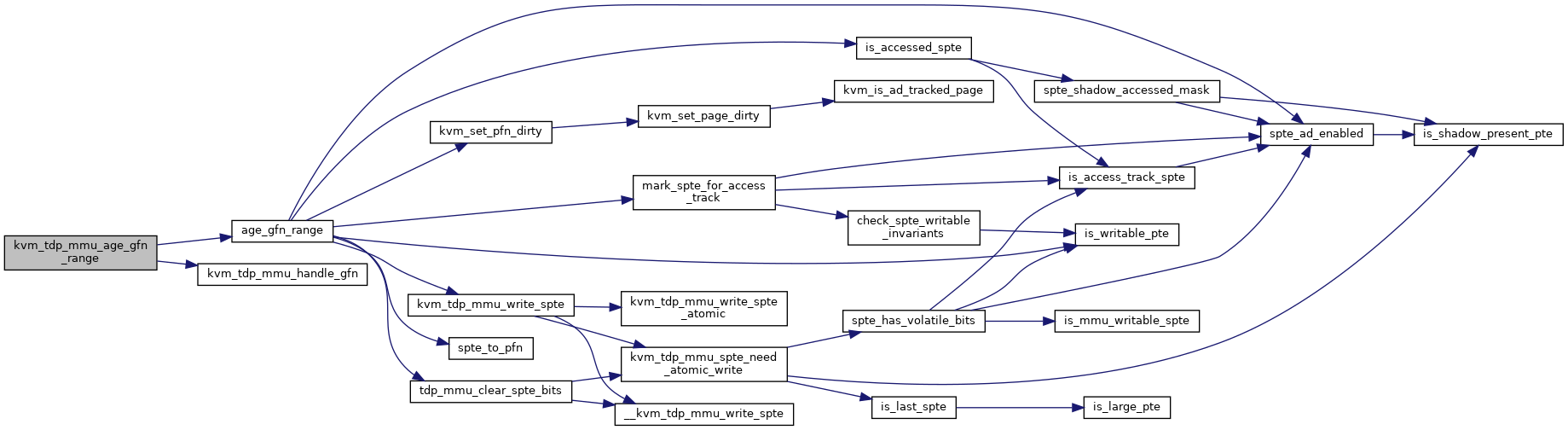

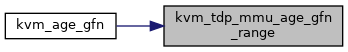

◆ kvm_tdp_mmu_age_gfn_range()

bool kvm_tdp_mmu_age_gfn_range (struct kvm *kvm, struct kvm_gfn_range *range)

Definition at line 1195 of file tdp_mmu.c.

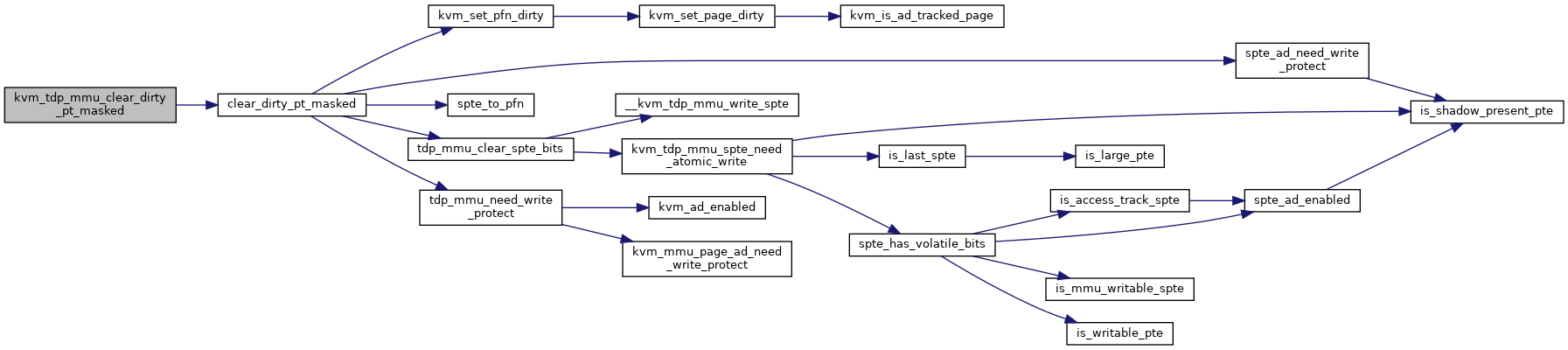

◆ kvm_tdp_mmu_clear_dirty_pt_masked()

void kvm_tdp_mmu_clear_dirty_pt_masked (struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn, unsigned long mask, bool wrprot)

Definition at line 1629 of file tdp_mmu.c.
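
The gfn and mask parameters of kvm_tdp_mmu_clear_dirty_pt_masked suggest a bitmask of pages addressed relative to a base frame number. The sketch below assumes that bit i of mask selects frame gfn + i; that reading is an assumption and is not stated on this page. The names demo_gfn_t and demo_mask_to_gfns are hypothetical and do not exist in the kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint64_t demo_gfn_t; /* hypothetical stand-in for gfn_t */

/*
 * Expand (base, mask) into the frame numbers base + i for every set bit i,
 * lowest bit first. Returns the number of entries written to out, which is
 * never more than cap.
 */
static size_t demo_mask_to_gfns(demo_gfn_t base, unsigned long mask,
                                demo_gfn_t *out, size_t cap)
{
    size_t n = 0;

    assert(out != NULL || cap == 0);

    for (unsigned int bit = 0; mask != 0 && n < cap; bit++, mask >>= 1) {
        if (mask & 1UL)
            out[n++] = base + bit;
    }
    return n;
}
```

With base 0x1000 and mask 0x5, this helper would report frames 0x1000 and 0x1002.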

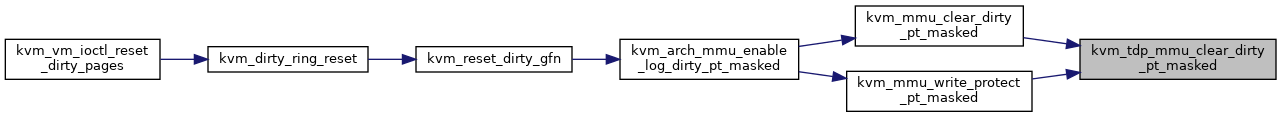

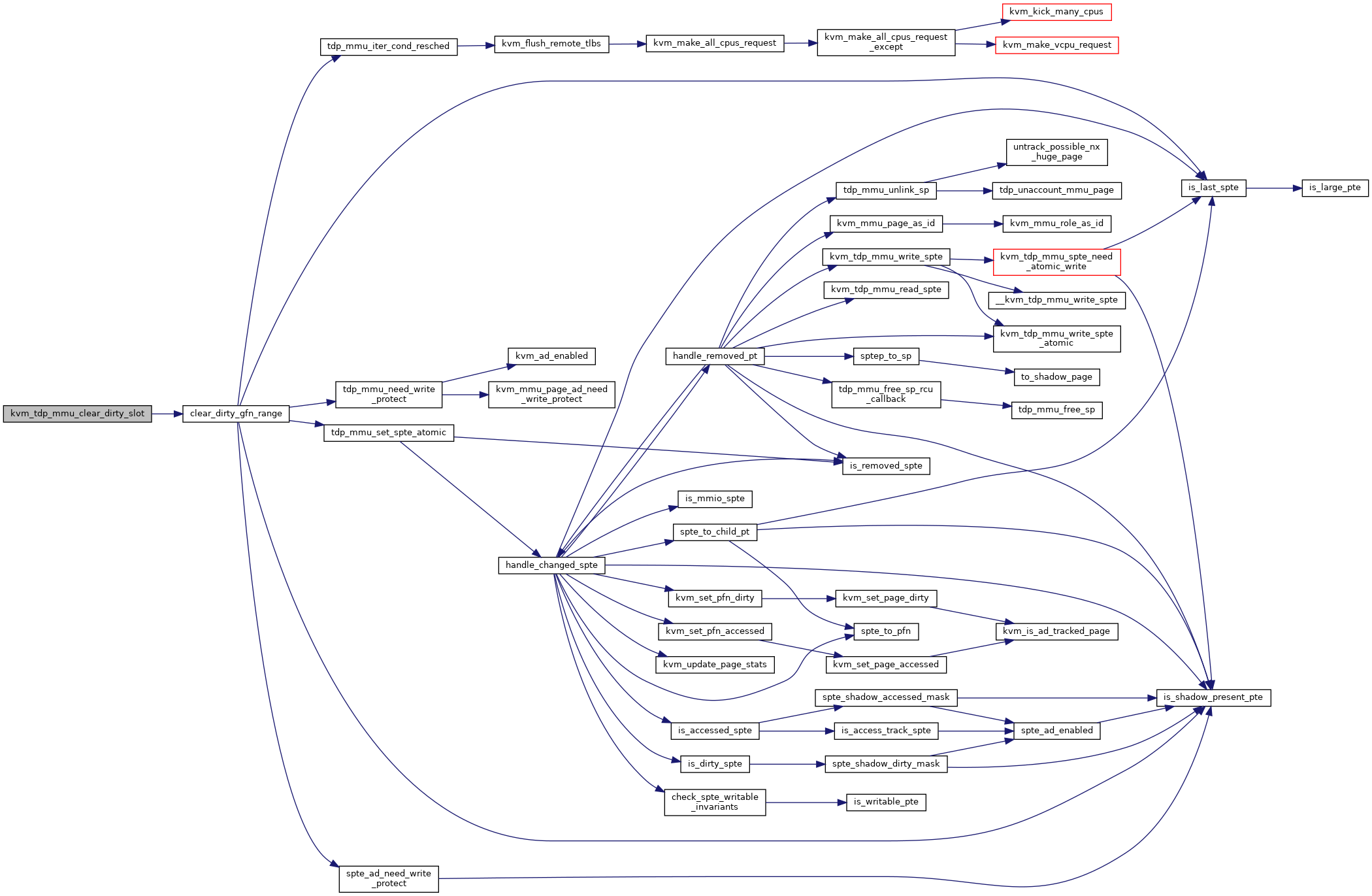

◆ kvm_tdp_mmu_clear_dirty_slot()

bool kvm_tdp_mmu_clear_dirty_slot (struct kvm *kvm, const struct kvm_memory_slot *slot)

Definition at line 1560 of file tdp_mmu.c.

◆ kvm_tdp_mmu_fast_pf_get_last_sptep()

u64 * kvm_tdp_mmu_fast_pf_get_last_sptep (struct kvm_vcpu *vcpu, u64 addr, u64 *spte)

Definition at line 1795 of file tdp_mmu.c.

◆ kvm_tdp_mmu_get_root()

static __must_check bool kvm_tdp_mmu_get_root (struct kvm_mmu_page *root) [inline, static]

◆ kvm_tdp_mmu_get_vcpu_root_hpa()

hpa_t kvm_tdp_mmu_get_vcpu_root_hpa (struct kvm_vcpu *vcpu)

Definition at line 219 of file tdp_mmu.c.

◆ kvm_tdp_mmu_get_walk()

int kvm_tdp_mmu_get_walk (struct kvm_vcpu *vcpu, u64 addr, u64 *sptes, int *root_level)
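
kvm_tdp_mmu_get_walk takes an address, an sptes array, and a root_level output, which suggests a walk that visits one entry per paging level. The sketch below only illustrates how an address could select the entry index at each level, assuming 4 KiB pages and 512-entry tables (9 index bits per level) as on x86-64. demo_index and the DEMO_ constants are hypothetical names, and this is not the kernel's walk code.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_PAGE_SHIFT     12 /* assumed 4 KiB base pages */
#define DEMO_BITS_PER_LEVEL 9  /* assumed 512 entries per table */

/*
 * Index of the entry that addr selects in the table at the given level,
 * where level 1 is the leaf level and higher levels are closer to the root.
 */
static unsigned int demo_index(uint64_t addr, int level)
{
    uint64_t gfn = addr >> DEMO_PAGE_SHIFT;

    assert(level >= 1);
    return (unsigned int)((gfn >> (DEMO_BITS_PER_LEVEL * (level - 1))) & 0x1ff);
}
```

A walk from an assumed 4-level root would call such a helper with level 4 first and stop at the lowest level that holds a mapping.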

◆ kvm_tdp_mmu_invalidate_all_roots()

void kvm_tdp_mmu_invalidate_all_roots (struct kvm *kvm)

Definition at line 901 of file tdp_mmu.c.

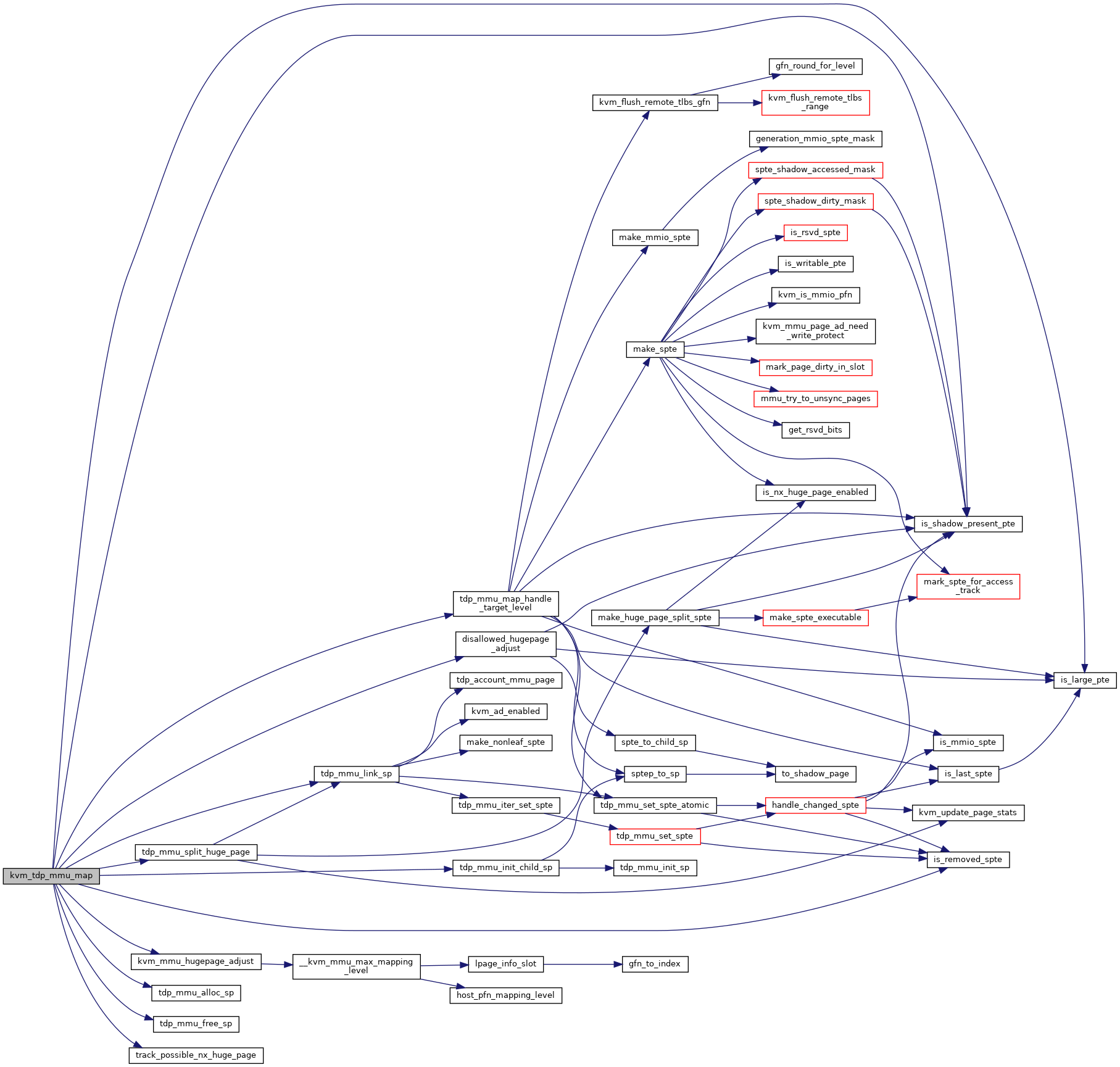

◆ kvm_tdp_mmu_map()

int kvm_tdp_mmu_map (struct kvm_vcpu *vcpu, struct kvm_page_fault *fault)

Definition at line 1032 of file tdp_mmu.c.

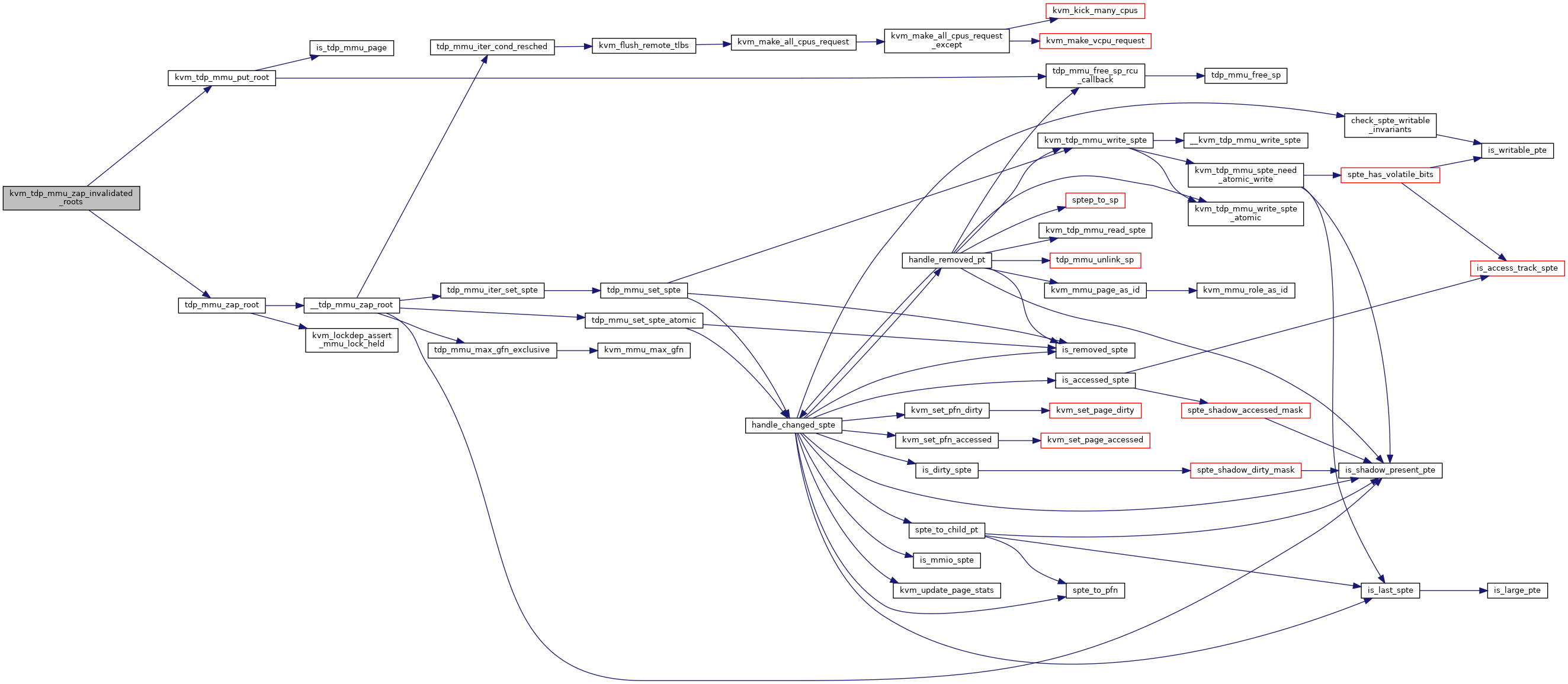

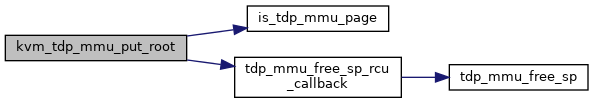

◆ kvm_tdp_mmu_put_root()

void kvm_tdp_mmu_put_root (struct kvm *kvm, struct kvm_mmu_page *root)

Definition at line 76 of file tdp_mmu.c.
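
The __must_check bool return type of kvm_tdp_mmu_get_root, paired with kvm_tdp_mmu_put_root, suggests a try-acquire reference count where taking a reference can fail once the count has dropped to zero. The user-space sketch below models that pattern with C11 atomics. struct demo_root, demo_get_root, and demo_put_root are hypothetical names, and the code is an illustration under that assumption rather than the kernel implementation.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical stand-in for a refcounted root; not struct kvm_mmu_page. */
struct demo_root {
    atomic_int refcount;
    bool freed;
};

/* Take a reference only if the count is still non-zero. */
static bool demo_get_root(struct demo_root *root)
{
    int old = atomic_load(&root->refcount);

    while (old != 0) {
        /* On failure, old is refreshed with the current count. */
        if (atomic_compare_exchange_weak(&root->refcount, &old, old + 1))
            return true;
    }
    return false;
}

/* Drop a reference; the final put marks the root as freed. */
static void demo_put_root(struct demo_root *root)
{
    int old = atomic_fetch_sub(&root->refcount, 1);

    assert(old > 0);
    if (old == 1)
        root->freed = true;
}
```

A caller would check the result of demo_get_root before touching the root and pair every successful get with exactly one put.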

◆ kvm_tdp_mmu_set_spte_gfn()

bool kvm_tdp_mmu_set_spte_gfn (struct kvm *kvm, struct kvm_gfn_range *range)

Definition at line 1247 of file tdp_mmu.c.

◆ kvm_tdp_mmu_test_age_gfn()

bool kvm_tdp_mmu_test_age_gfn (struct kvm *kvm, struct kvm_gfn_range *range)

Definition at line 1206 of file tdp_mmu.c.

◆ kvm_tdp_mmu_try_split_huge_pages()

void kvm_tdp_mmu_try_split_huge_pages (struct kvm *kvm, const struct kvm_memory_slot *slot, gfn_t start, gfn_t end, int target_level, bool shared)

Definition at line 1483 of file tdp_mmu.c.

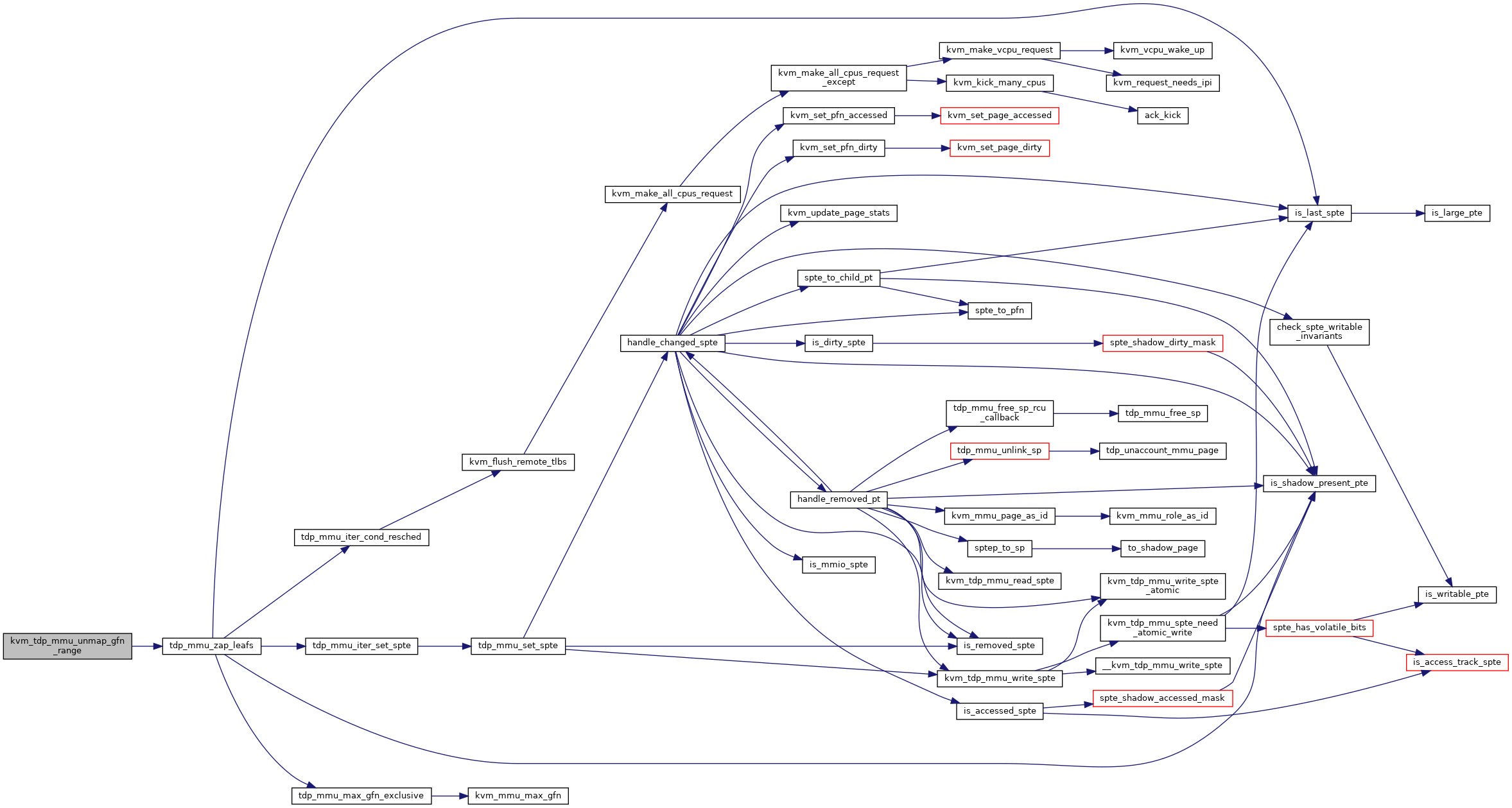

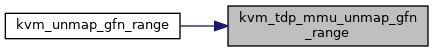

◆ kvm_tdp_mmu_unmap_gfn_range()

bool kvm_tdp_mmu_unmap_gfn_range (struct kvm *kvm, struct kvm_gfn_range *range, bool flush)

Definition at line 1114 of file tdp_mmu.c.

◆ kvm_tdp_mmu_walk_lockless_begin()

static void kvm_tdp_mmu_walk_lockless_begin (void) [inline, static]

◆ kvm_tdp_mmu_walk_lockless_end()

static void kvm_tdp_mmu_walk_lockless_end (void) [inline, static]

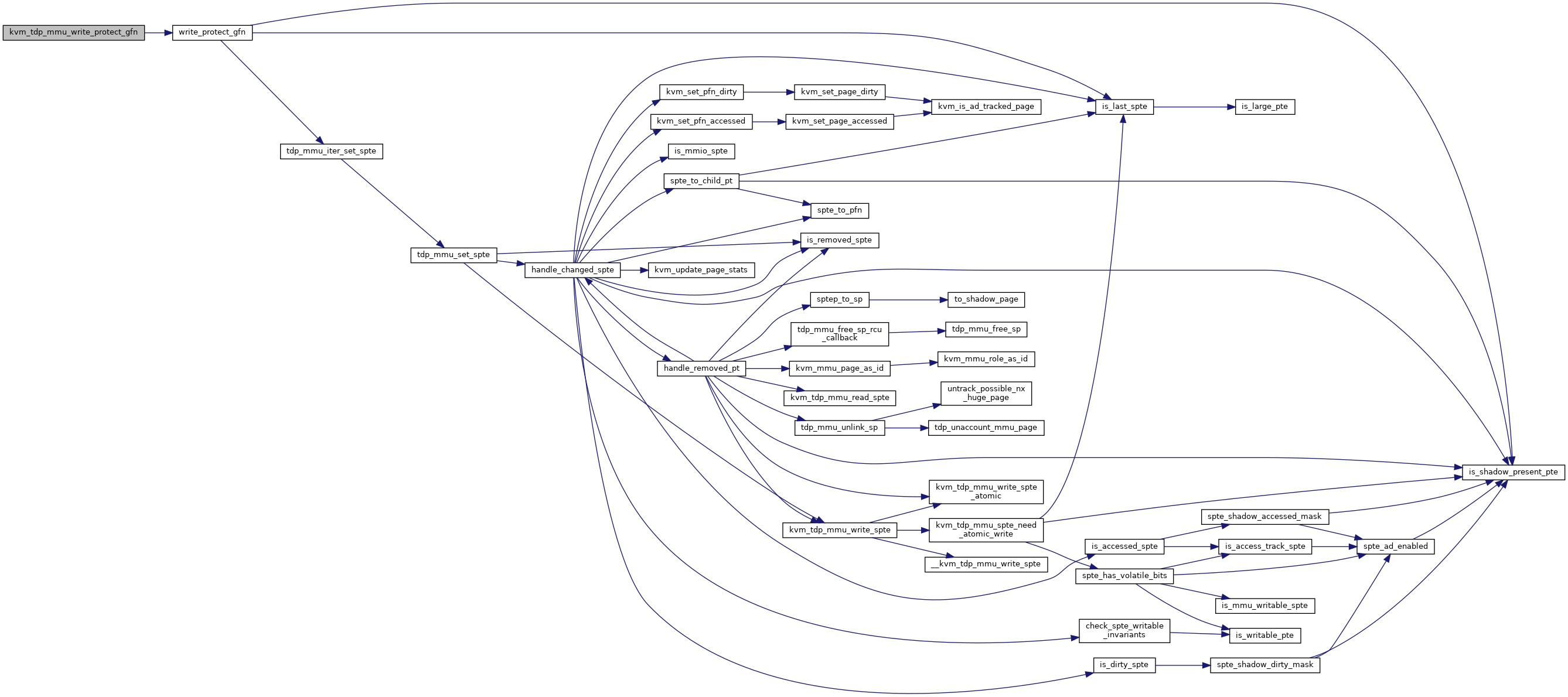

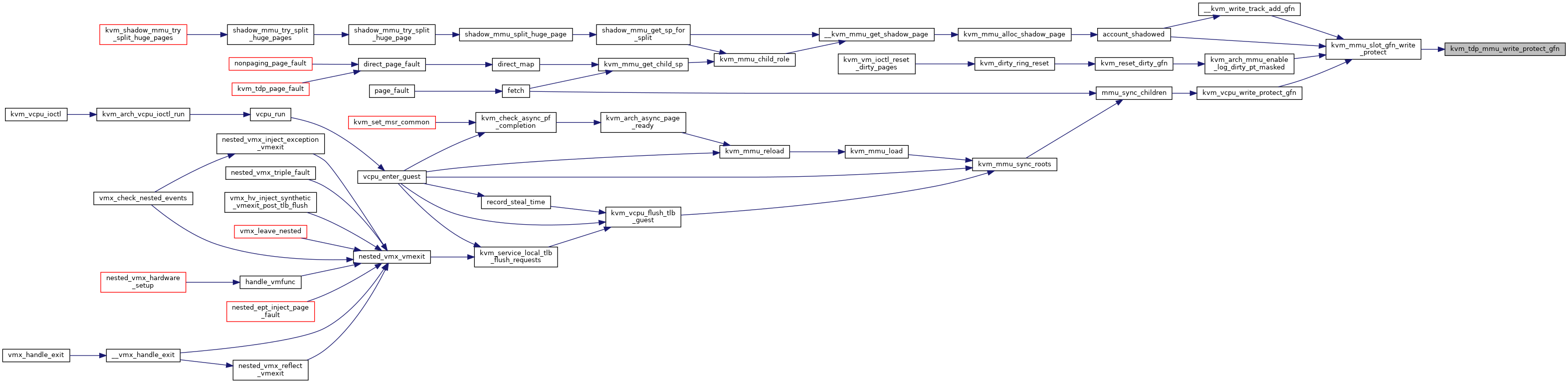

◆ kvm_tdp_mmu_write_protect_gfn()

bool kvm_tdp_mmu_write_protect_gfn (struct kvm *kvm, struct kvm_memory_slot *slot, gfn_t gfn, int min_level)

Definition at line 1746 of file tdp_mmu.c.

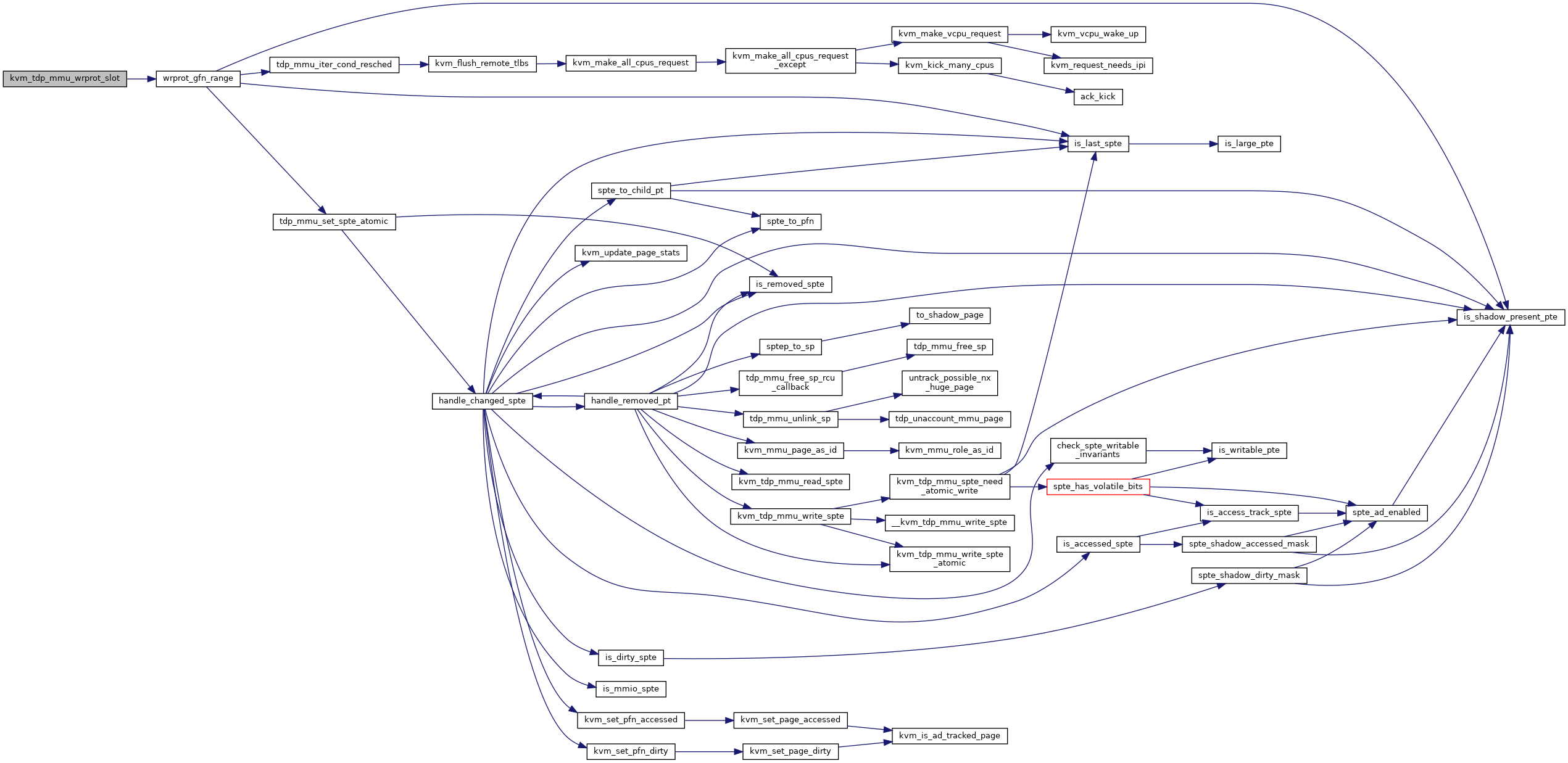

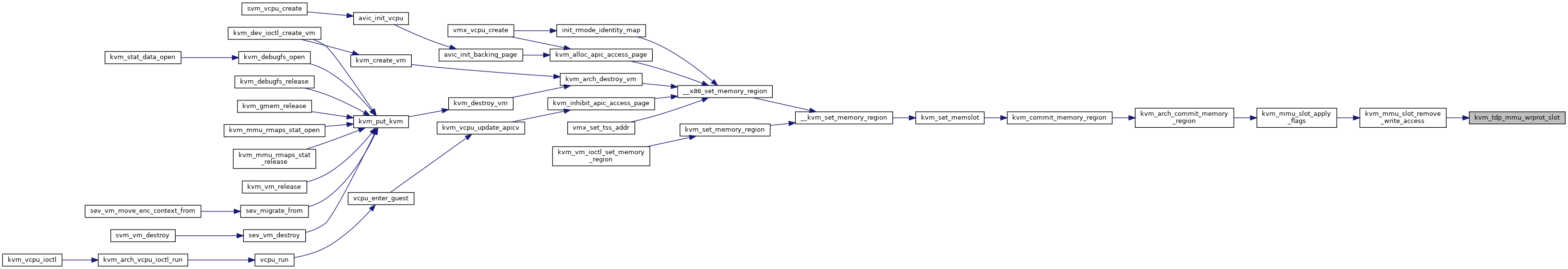

◆ kvm_tdp_mmu_wrprot_slot()

bool kvm_tdp_mmu_wrprot_slot (struct kvm *kvm, const struct kvm_memory_slot *slot, int min_level)

Definition at line 1300 of file tdp_mmu.c.

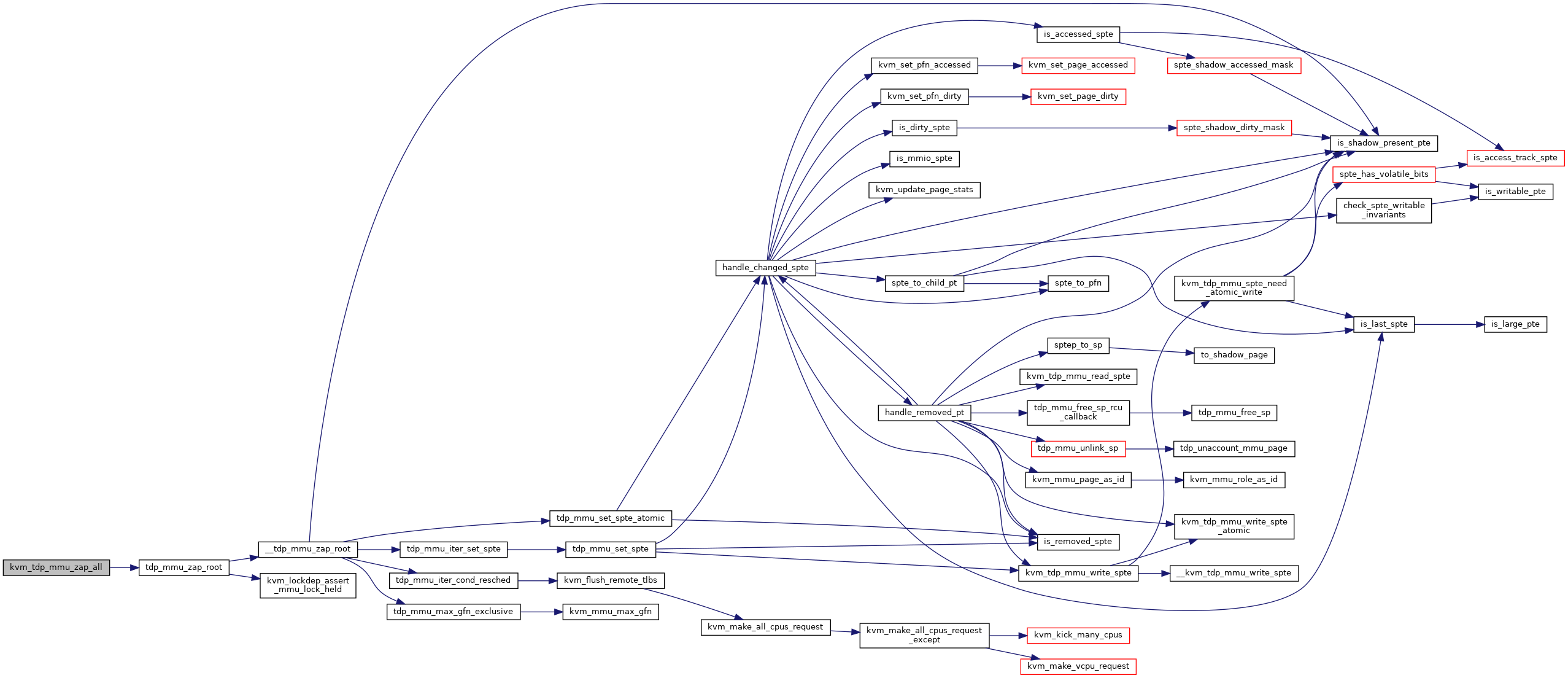

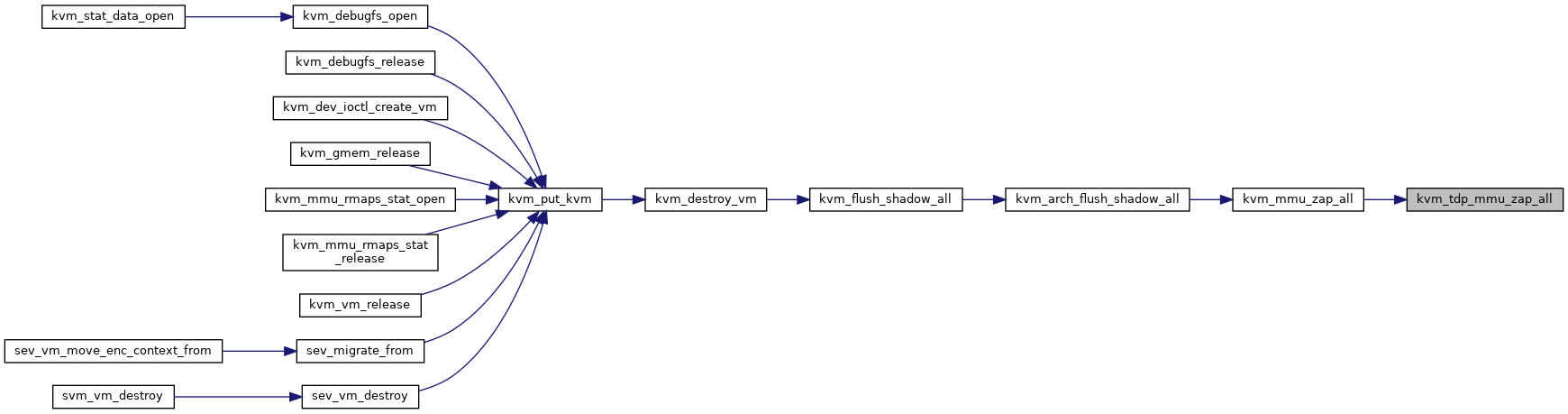

◆ kvm_tdp_mmu_zap_all()

void kvm_tdp_mmu_zap_all (struct kvm *kvm)

Definition at line 831 of file tdp_mmu.c.

◆ kvm_tdp_mmu_zap_collapsible_sptes()

void kvm_tdp_mmu_zap_collapsible_sptes (struct kvm *kvm, const struct kvm_memory_slot *slot)

Definition at line 1695 of file tdp_mmu.c.

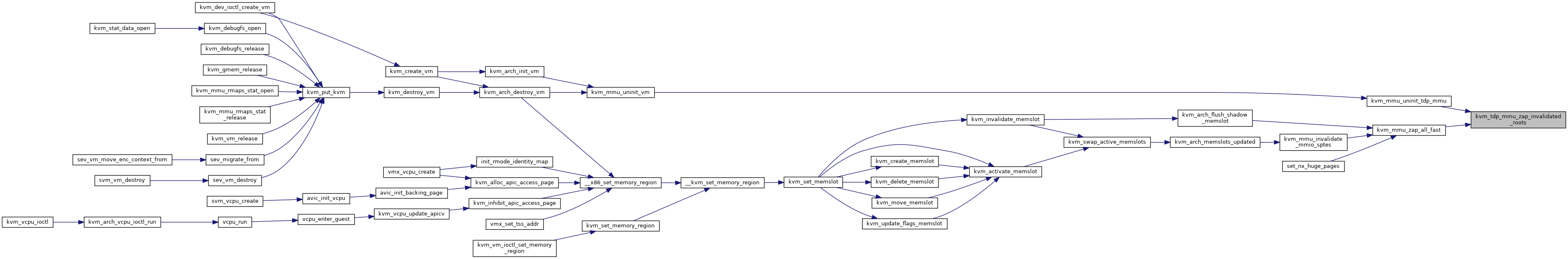

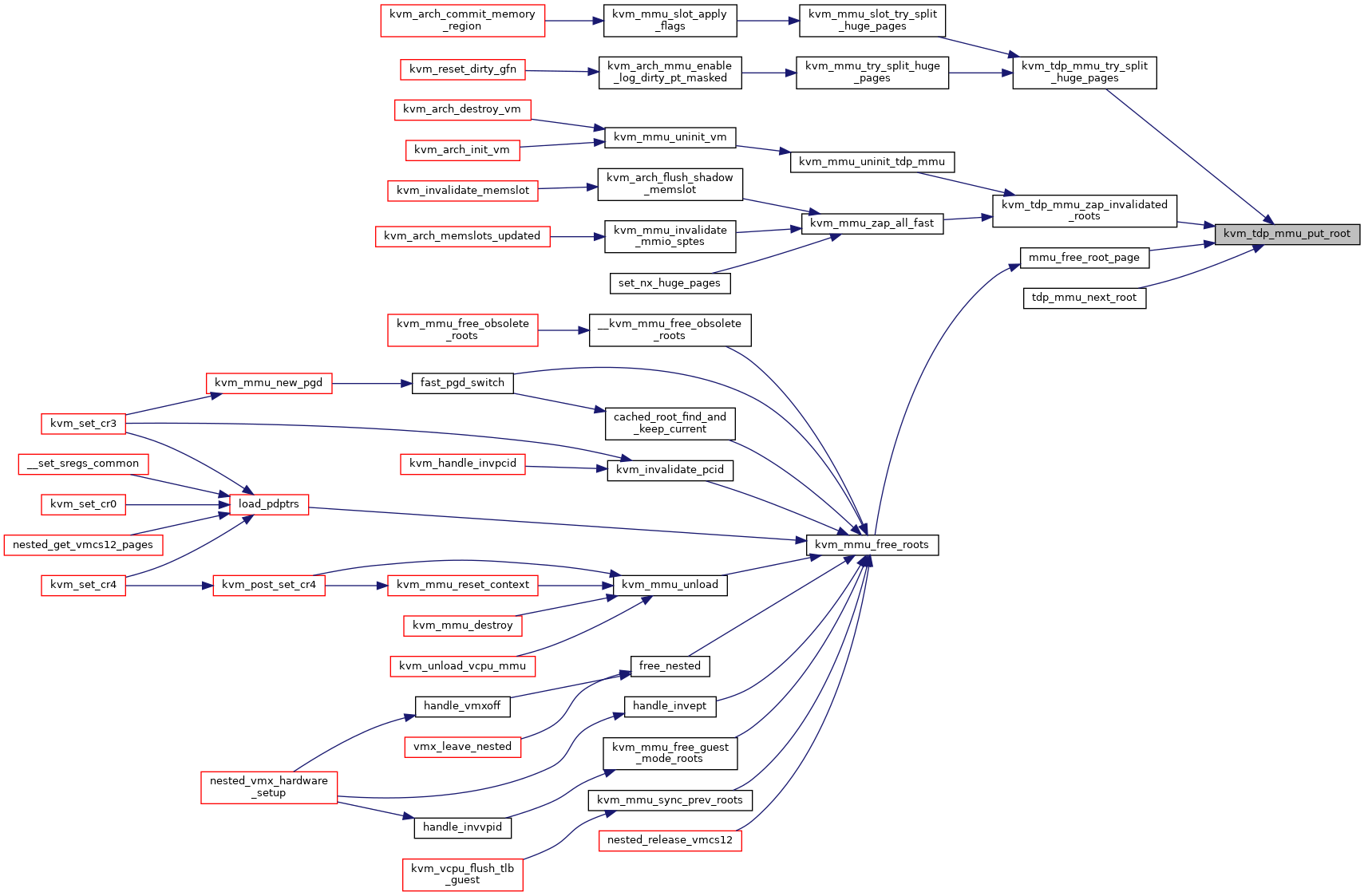

◆ kvm_tdp_mmu_zap_invalidated_roots()

void kvm_tdp_mmu_zap_invalidated_roots (struct kvm *kvm)

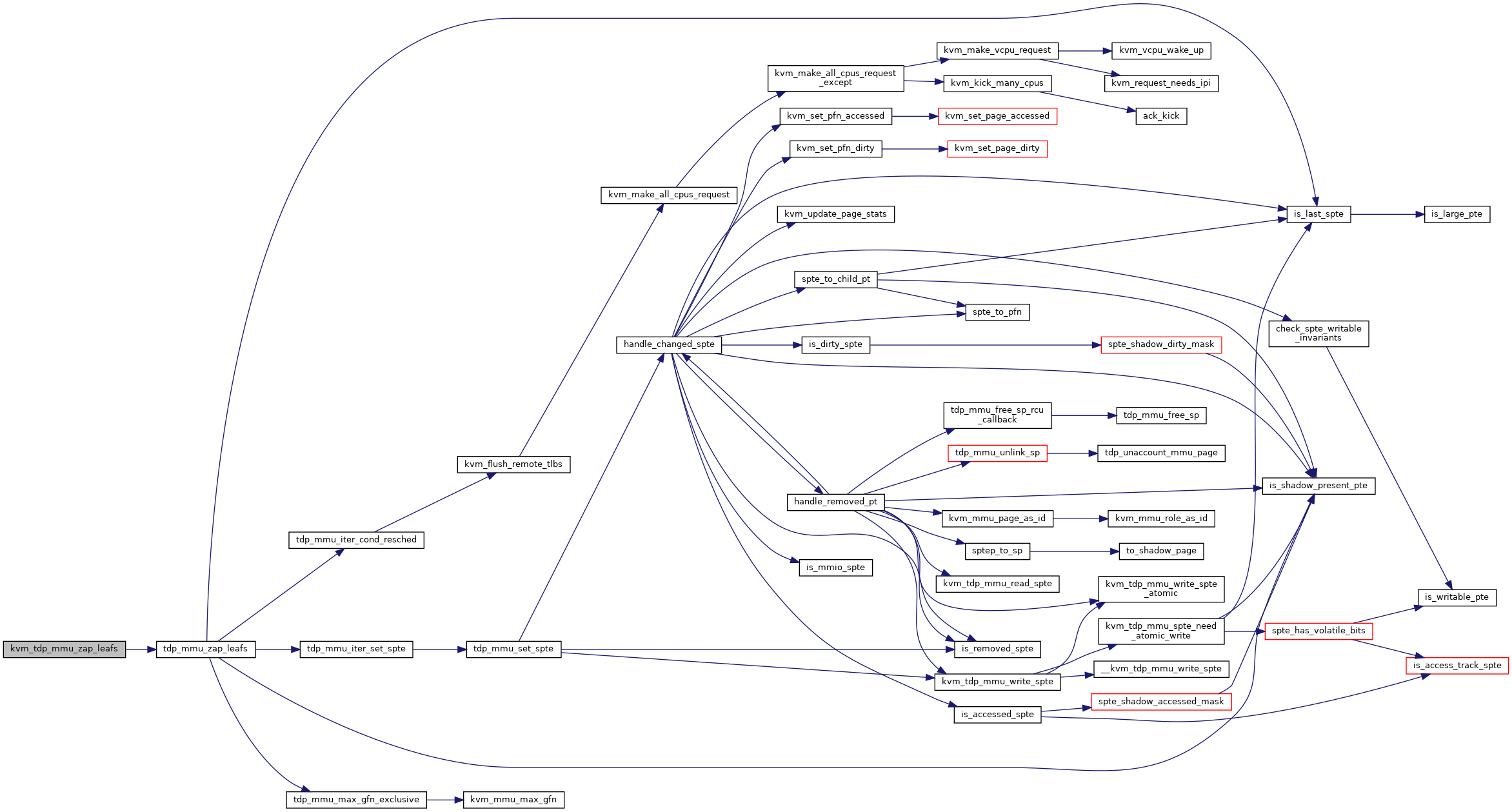

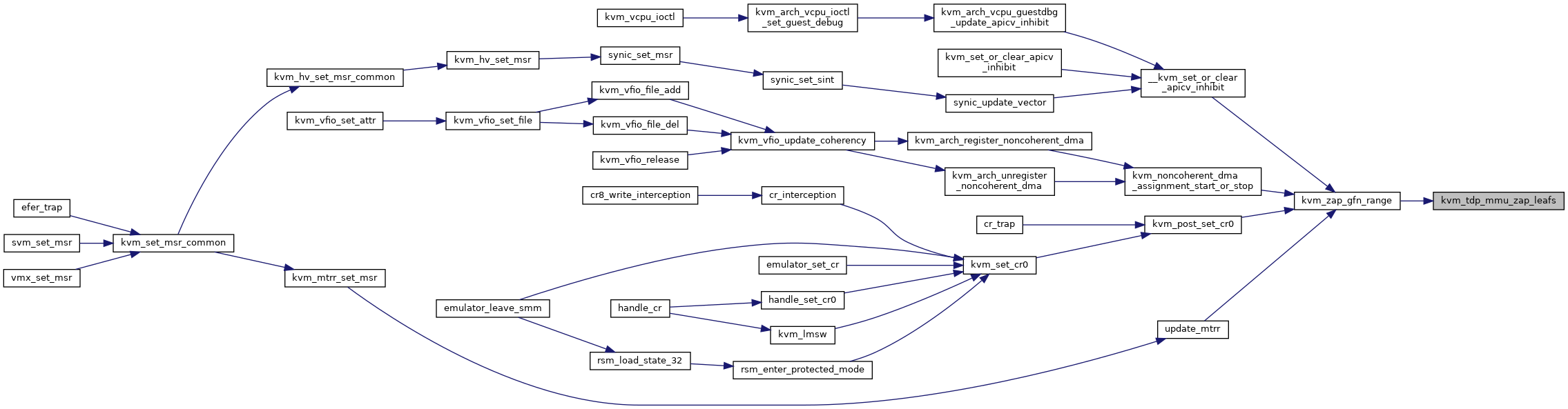

◆ kvm_tdp_mmu_zap_leafs()

bool kvm_tdp_mmu_zap_leafs (struct kvm *kvm, gfn_t start, gfn_t end, bool flush)
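
kvm_tdp_mmu_zap_leafs both accepts a flush flag and returns a bool, which suggests that callers carry a pending-flush state across several calls and flush once at the end. The sketch below models that calling pattern on a flat array. It assumes end is exclusive and that the return value means a flush is still needed; both are assumptions, and demo_gfn_t, struct demo_leaf, and demo_zap_leafs are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint64_t demo_gfn_t; /* hypothetical stand-in for gfn_t */

/* Hypothetical leaf mapping: one guest frame and whether it is mapped. */
struct demo_leaf {
    demo_gfn_t gfn;
    bool present;
};

/*
 * Clear every present leaf with start <= gfn < end (end assumed exclusive).
 * Returns true if a flush is pending: either the caller already needed one
 * or this call removed at least one mapping.
 */
static bool demo_zap_leafs(struct demo_leaf *leafs, size_t n,
                           demo_gfn_t start, demo_gfn_t end, bool flush)
{
    assert(leafs != NULL || n == 0);

    for (size_t i = 0; i < n; i++) {
        if (leafs[i].present && leafs[i].gfn >= start && leafs[i].gfn < end) {
            leafs[i].present = false;
            flush = true;
        }
    }
    return flush;
}
```

Threading the flag through each call lets a caller issue a single flush after processing many ranges instead of one per range.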

◆ kvm_tdp_mmu_zap_sp()

bool kvm_tdp_mmu_zap_sp (struct kvm *kvm, struct kvm_mmu_page *sp)

Definition at line 752 of file tdp_mmu.c.