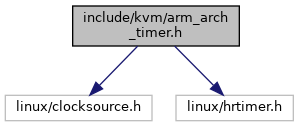

#include <linux/clocksource.h>

#include <linux/hrtimer.h>


|

| void | get_timer_map (struct kvm_vcpu *vcpu, struct timer_map *map) |

| |

| int __init | kvm_timer_hyp_init (bool has_gic) |

| |

| int | kvm_timer_enable (struct kvm_vcpu *vcpu) |

| |

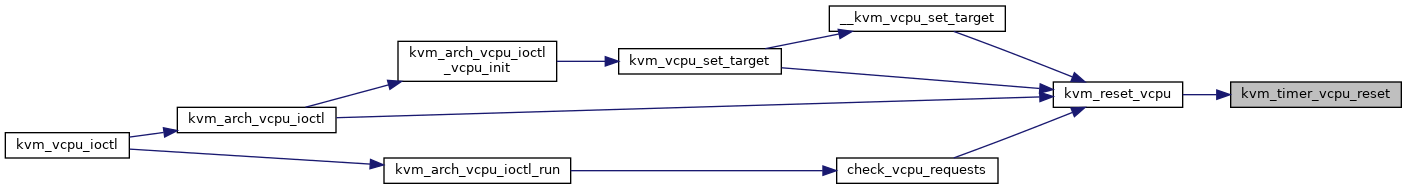

| void | kvm_timer_vcpu_reset (struct kvm_vcpu *vcpu) |

| |

| void | kvm_timer_vcpu_init (struct kvm_vcpu *vcpu) |

| |

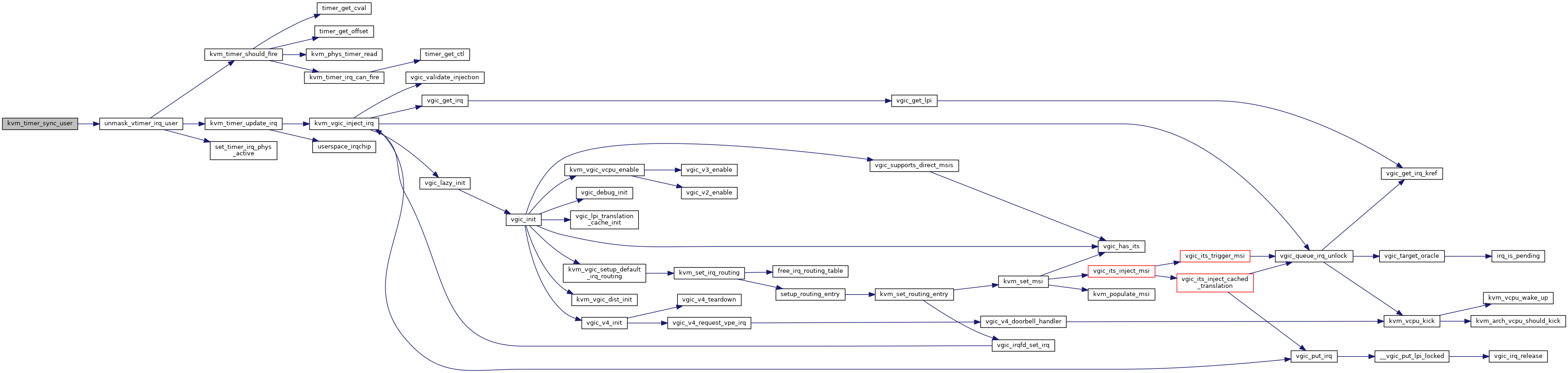

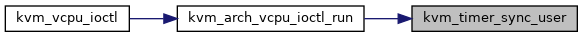

| void | kvm_timer_sync_user (struct kvm_vcpu *vcpu) |

| |

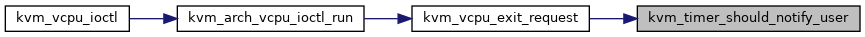

| bool | kvm_timer_should_notify_user (struct kvm_vcpu *vcpu) |

| |

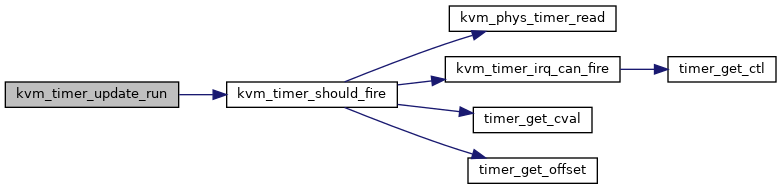

| void | kvm_timer_update_run (struct kvm_vcpu *vcpu) |

| |

| void | kvm_timer_vcpu_terminate (struct kvm_vcpu *vcpu) |

| |

| void | kvm_timer_init_vm (struct kvm *kvm) |

| |

| u64 | kvm_arm_timer_get_reg (struct kvm_vcpu *, u64 regid) |

| |

| int | kvm_arm_timer_set_reg (struct kvm_vcpu *, u64 regid, u64 value) |

| |

| int | kvm_arm_timer_set_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| |

| int | kvm_arm_timer_get_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| |

| int | kvm_arm_timer_has_attr (struct kvm_vcpu *vcpu, struct kvm_device_attr *attr) |

| |

| u64 | kvm_phys_timer_read (void) |

| |

| void | kvm_timer_vcpu_load (struct kvm_vcpu *vcpu) |

| |

| void | kvm_timer_vcpu_put (struct kvm_vcpu *vcpu) |

| |

| void | kvm_timer_init_vhe (void) |

| |

| u64 | kvm_arm_timer_read_sysreg (struct kvm_vcpu *vcpu, enum kvm_arch_timers tmr, enum kvm_arch_timer_regs treg) |

| |

| void | kvm_arm_timer_write_sysreg (struct kvm_vcpu *vcpu, enum kvm_arch_timers tmr, enum kvm_arch_timer_regs treg, u64 val) |

| |

| u32 | timer_get_ctl (struct arch_timer_context *ctxt) |

| |

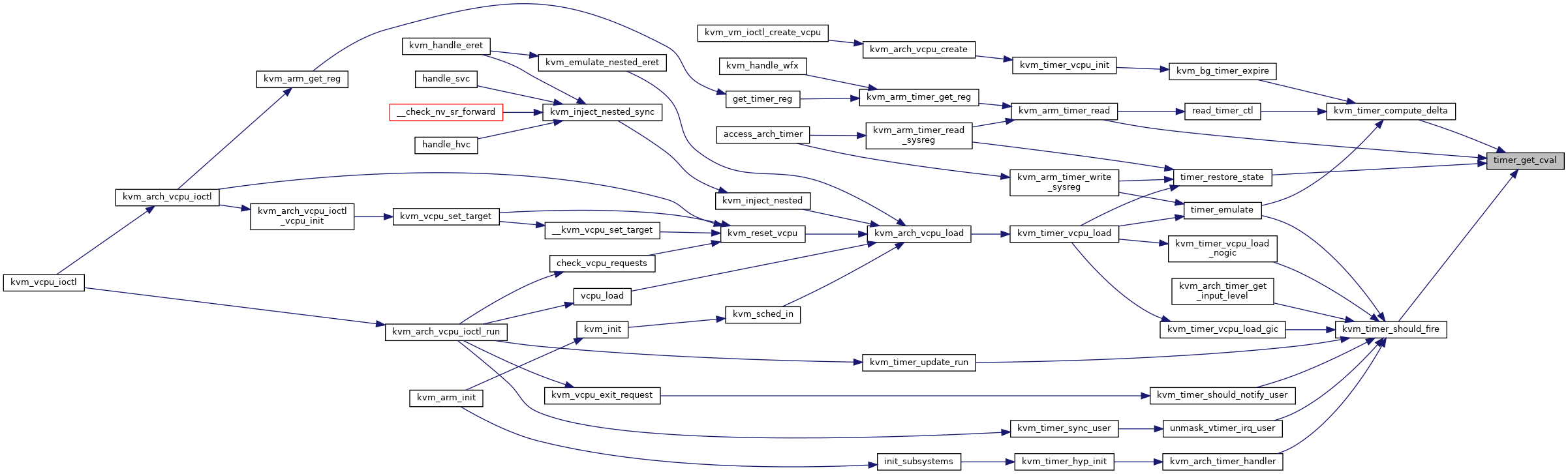

| u64 | timer_get_cval (struct arch_timer_context *ctxt) |

| |

| void | kvm_timer_cpu_up (void) |

| |

| void | kvm_timer_cpu_down (void) |

| |

| static bool | has_cntpoff (void) |

| |

◆ arch_timer_ctx_index

#define arch_timer_ctx_index(ctx) ((ctx) - vcpu_timer((ctx)->vcpu)->timers)
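The macro recovers a timer context's array index by subtracting the base of the per-vCPU `timers` array from the context pointer. A minimal standalone sketch of that idiom follows; the `demo_*` types and helpers are hypothetical stand-ins, not the kernel structures.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for the kernel structures. */
enum demo_timers { DEMO_PTIMER, DEMO_VTIMER, DEMO_NR_TIMERS };

struct demo_vcpu;

struct demo_timer_context {
	struct demo_vcpu *vcpu;	/* back-pointer to the owning vCPU */
};

struct demo_vcpu {
	struct demo_timer_context timers[DEMO_NR_TIMERS];
};

/* Same shape as arch_timer_ctx_index(): element pointer minus array base. */
#define demo_ctx_index(ctx) ((ctx) - (ctx)->vcpu->timers)

/* Wire each context back to its vCPU so the macro can find the array base. */
static void demo_vcpu_init(struct demo_vcpu *vcpu)
{
	for (int i = 0; i < DEMO_NR_TIMERS; i++)
		vcpu->timers[i].vcpu = vcpu;
}

/* Convenience wrapper returning the index as a plain int. */
static int demo_index_of(struct demo_timer_context *ctx)
{
	assert(ctx != NULL && ctx->vcpu != NULL);
	return (int)demo_ctx_index(ctx);
}
```

Because the index is derived from the pointer itself, the context needs no separate "which timer am I" field; it only needs the back-pointer to its vCPU.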

◆ timer_irq

◆ timer_vm_data

#define timer_vm_data(ctx) (&(ctx)->vcpu->kvm->arch.timer_data)

◆ vcpu_get_timer

#define vcpu_get_timer(v, t) (&vcpu_timer(v)->timers[(t)])

◆ vcpu_hptimer

#define vcpu_hptimer(v) (&(v)->arch.timer_cpu.timers[TIMER_HPTIMER])

◆ vcpu_hvtimer

#define vcpu_hvtimer(v) (&(v)->arch.timer_cpu.timers[TIMER_HVTIMER])

◆ vcpu_ptimer

#define vcpu_ptimer(v) (&(v)->arch.timer_cpu.timers[TIMER_PTIMER])

◆ vcpu_timer

#define vcpu_timer(v) (&(v)->arch.timer_cpu)

◆ vcpu_vtimer

#define vcpu_vtimer(v) (&(v)->arch.timer_cpu.timers[TIMER_VTIMER])

◆ kvm_arch_timer_regs

| Enumerator |
|---|
| TIMER_REG_CNT |
| TIMER_REG_CVAL |
| TIMER_REG_TVAL |
| TIMER_REG_CTL |
| TIMER_REG_VOFF |

Definition at line 22 of file arm_arch_timer.h.

◆ kvm_arch_timers

| Enumerator |
|---|
| TIMER_PTIMER |
| TIMER_VTIMER |
| NR_KVM_EL0_TIMERS |
| TIMER_HVTIMER |
| TIMER_HPTIMER |
| NR_KVM_TIMERS |

Definition at line 13 of file arm_arch_timer.h.
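The two `NR_*` enumerators read as count sentinels rather than timers. The sketch below shows how such an enum can yield both the EL0 count and the total count. It uses hypothetical `demo_*` names, and two points are assumptions not stated on this page: that the first EL2 timer reuses the EL0 sentinel's value, and that the timer count depends on nested virtualization (as the `nr_timers(vcpu)` calls elsewhere on this page suggest).

```c
#include <assert.h>
#include <stdbool.h>

/* Mirrors the enumerator order listed above, with hypothetical names. */
enum demo_timers {
	DEMO_PTIMER,
	DEMO_VTIMER,
	DEMO_NR_EL0_TIMERS,			/* sentinel: number of EL0 timers (2) */
	DEMO_HVTIMER = DEMO_NR_EL0_TIMERS,	/* assumption: reuses the sentinel's value */
	DEMO_HPTIMER,
	DEMO_NR_TIMERS				/* sentinel: total number of timers (4) */
};

/* Assumed policy: only nested-virt guests need the EL2 timer contexts. */
static int demo_nr_timers(bool has_nested_virt)
{
	return has_nested_virt ? DEMO_NR_TIMERS : DEMO_NR_EL0_TIMERS;
}

/* True for the EL2 timers, which sit at or after the EL0 sentinel. */
static bool demo_is_el2_timer(enum demo_timers t)
{
	assert(t < DEMO_NR_TIMERS);
	return t >= DEMO_NR_EL0_TIMERS;
}
```

With this layout a single array of `DEMO_NR_TIMERS` contexts can serve both cases, and a loop bounded by `demo_nr_timers()` visits only the contexts that are in use.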

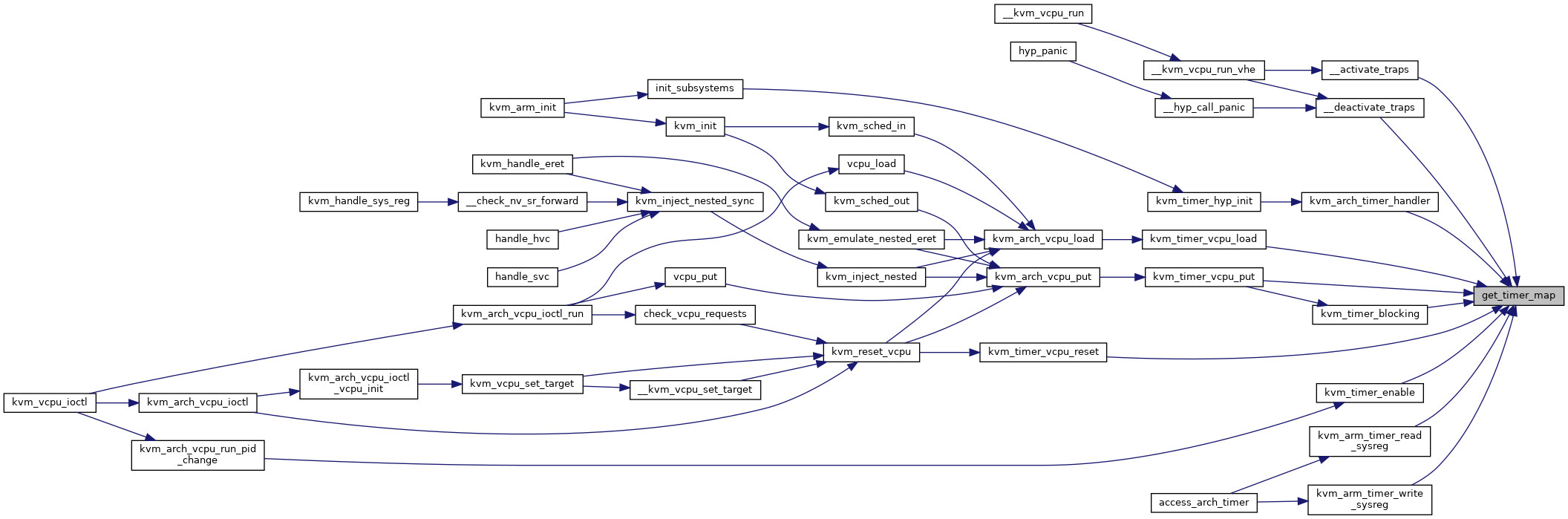

◆ get_timer_map()

void get_timer_map(struct kvm_vcpu *vcpu, struct timer_map *map)

Definition at line 178 of file arch_timer.c.

180 if (vcpu_has_nv(vcpu)) {

181 if (is_hyp_ctxt(vcpu)) {

192 } else if (has_vhe()) {

204 trace_kvm_get_timer_map(vcpu->vcpu_id, map);

struct arch_timer_context * direct_vtimer

struct arch_timer_context * direct_ptimer

struct arch_timer_context * emul_ptimer

struct arch_timer_context * emul_vtimer

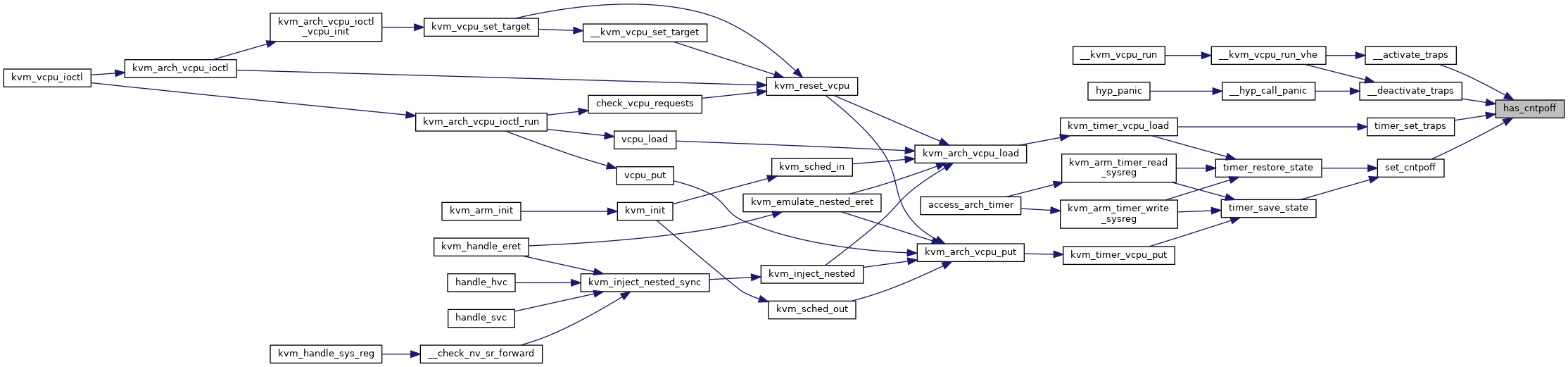

◆ has_cntpoff()

static inline bool has_cntpoff(void)

Definition at line 150 of file arm_arch_timer.h.

152 return (has_vhe() && cpus_have_final_cap(ARM64_HAS_ECV_CNTPOFF));

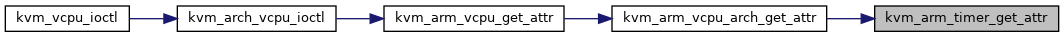

◆ kvm_arm_timer_get_attr()

int kvm_arm_timer_get_attr(struct kvm_vcpu *vcpu, struct kvm_device_attr *attr)

Definition at line 1611 of file arch_timer.c.

1613 int __user *uaddr = (int __user *)(long)attr->addr;

1617 switch (attr->attr) {

1618 case KVM_ARM_VCPU_TIMER_IRQ_VTIMER:

1621 case KVM_ARM_VCPU_TIMER_IRQ_PTIMER:

1624 case KVM_ARM_VCPU_TIMER_IRQ_HVTIMER:

1627 case KVM_ARM_VCPU_TIMER_IRQ_HPTIMER:

1635 return put_user(irq, uaddr);

struct arch_timer_context::@18 irq

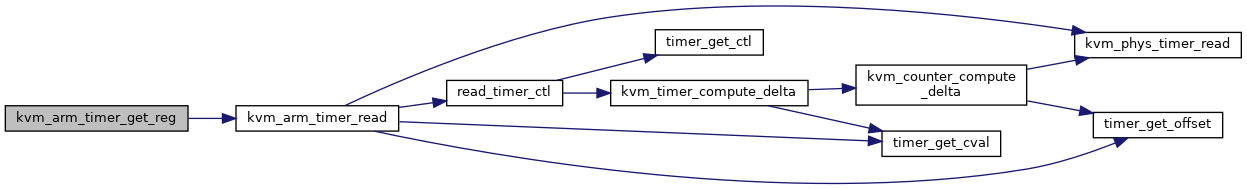

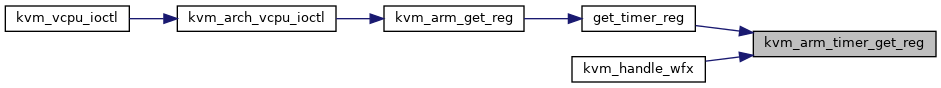

◆ kvm_arm_timer_get_reg()

u64 kvm_arm_timer_get_reg(struct kvm_vcpu *vcpu, u64 regid)

Definition at line 1107 of file arch_timer.c.

1110 case KVM_REG_ARM_TIMER_CTL:

1113 case KVM_REG_ARM_TIMER_CNT:

1116 case KVM_REG_ARM_TIMER_CVAL:

1119 case KVM_REG_ARM_PTIMER_CTL:

1122 case KVM_REG_ARM_PTIMER_CNT:

1125 case KVM_REG_ARM_PTIMER_CVAL:

static u64 kvm_arm_timer_read(struct kvm_vcpu *vcpu, struct arch_timer_context *timer, enum kvm_arch_timer_regs treg)

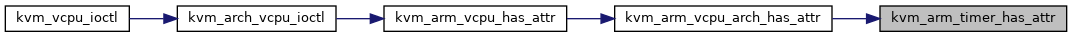

◆ kvm_arm_timer_has_attr()

int kvm_arm_timer_has_attr(struct kvm_vcpu *vcpu, struct kvm_device_attr *attr)

Definition at line 1638 of file arch_timer.c.

1640 switch (attr->attr) {

1641 case KVM_ARM_VCPU_TIMER_IRQ_VTIMER:

1642 case KVM_ARM_VCPU_TIMER_IRQ_PTIMER:

1643 case KVM_ARM_VCPU_TIMER_IRQ_HVTIMER:

1644 case KVM_ARM_VCPU_TIMER_IRQ_HPTIMER:

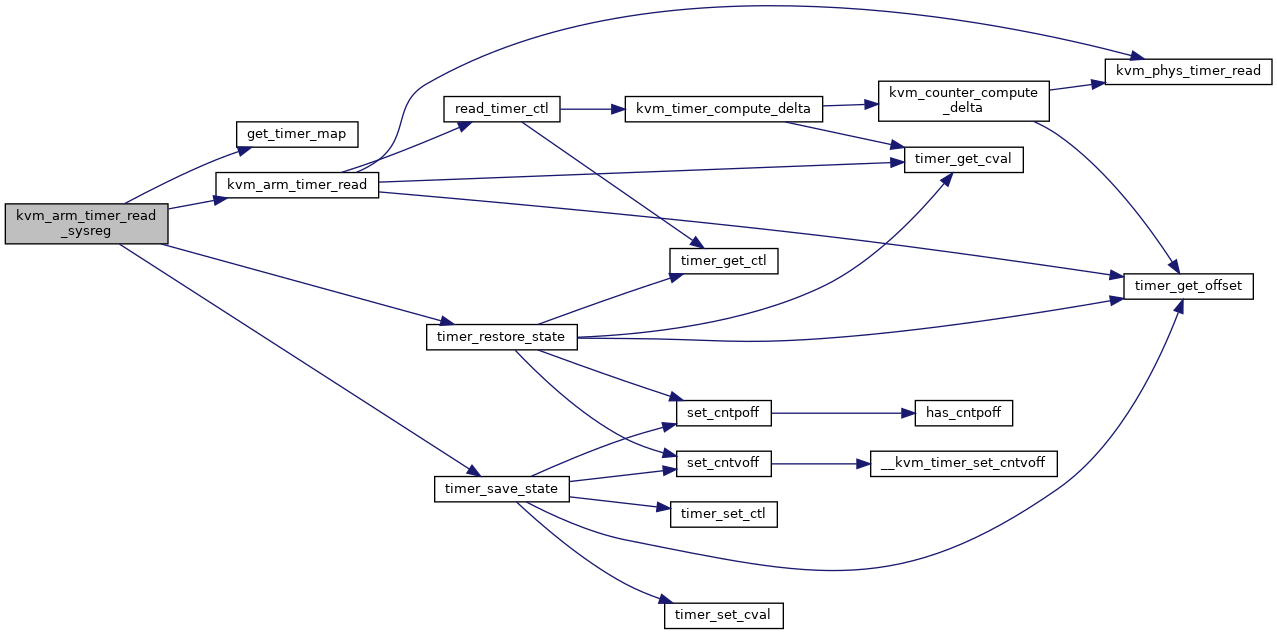

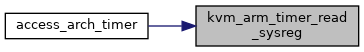

◆ kvm_arm_timer_read_sysreg()

Definition at line 1167 of file arch_timer.c.

1178 if (timer == map.emul_vtimer || timer == map.emul_ptimer)

static void timer_save_state(struct arch_timer_context *ctx)

static void timer_restore_state(struct arch_timer_context *ctx)

void get_timer_map(struct kvm_vcpu *vcpu, struct timer_map *map)

#define vcpu_get_timer(v, t)

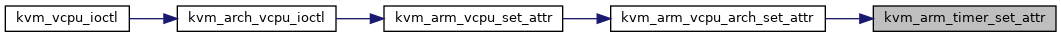

◆ kvm_arm_timer_set_attr()

int kvm_arm_timer_set_attr(struct kvm_vcpu *vcpu, struct kvm_device_attr *attr)

Definition at line 1559 of file arch_timer.c.

1561 int __user *uaddr = (int __user *)(long)attr->addr;

1562 int irq, idx, ret = 0;

1567 if (get_user(irq, uaddr))

1573 mutex_lock(&vcpu->kvm->arch.config_lock);

1575 if (test_bit(KVM_ARCH_FLAG_TIMER_PPIS_IMMUTABLE,

1576 &vcpu->kvm->arch.flags)) {

1581 switch (attr->attr) {

1582 case KVM_ARM_VCPU_TIMER_IRQ_VTIMER:

1585 case KVM_ARM_VCPU_TIMER_IRQ_PTIMER:

1588 case KVM_ARM_VCPU_TIMER_IRQ_HVTIMER:

1591 case KVM_ARM_VCPU_TIMER_IRQ_HPTIMER:

1604 vcpu->kvm->arch.timer_data.ppi[idx] = irq;

1607 mutex_unlock(&vcpu->kvm->arch.config_lock);

#define irqchip_in_kernel(k)

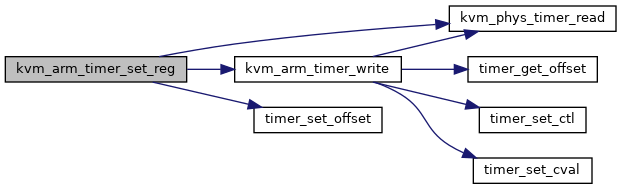

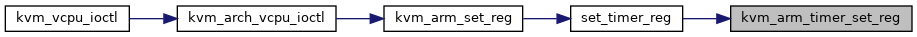

◆ kvm_arm_timer_set_reg()

int kvm_arm_timer_set_reg(struct kvm_vcpu *vcpu, u64 regid, u64 value)

Definition at line 1048 of file arch_timer.c.

1053 case KVM_REG_ARM_TIMER_CTL:

1057 case KVM_REG_ARM_TIMER_CNT:

1058 if (!test_bit(KVM_ARCH_FLAG_VM_COUNTER_OFFSET,

1059 &vcpu->kvm->arch.flags)) {

1064 case KVM_REG_ARM_TIMER_CVAL:

1068 case KVM_REG_ARM_PTIMER_CTL:

1072 case KVM_REG_ARM_PTIMER_CNT:

1073 if (!test_bit(KVM_ARCH_FLAG_VM_COUNTER_OFFSET,

1074 &vcpu->kvm->arch.flags)) {

1079 case KVM_REG_ARM_PTIMER_CVAL:

static void timer_set_offset(struct arch_timer_context *ctxt, u64 offset)

u64 kvm_phys_timer_read(void)

static void kvm_arm_timer_write(struct kvm_vcpu *vcpu, struct arch_timer_context *timer, enum kvm_arch_timer_regs treg, u64 val)

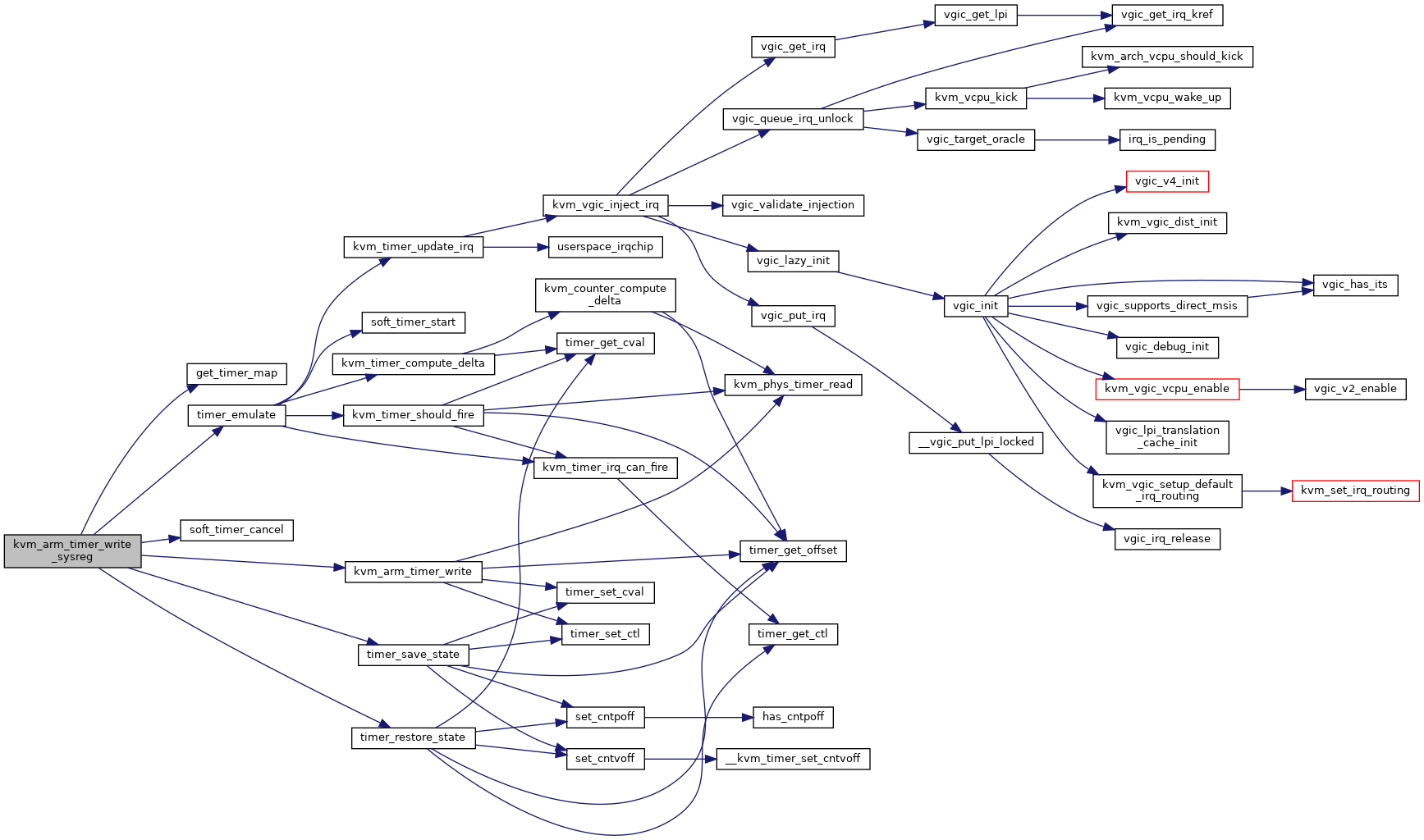

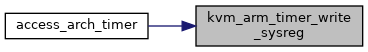

◆ kvm_arm_timer_write_sysreg()

Definition at line 1219 of file arch_timer.c.

1229 if (timer == map.emul_vtimer || timer == map.emul_ptimer) {

static void timer_emulate(struct arch_timer_context *ctx)

static void soft_timer_cancel(struct hrtimer *hrt)

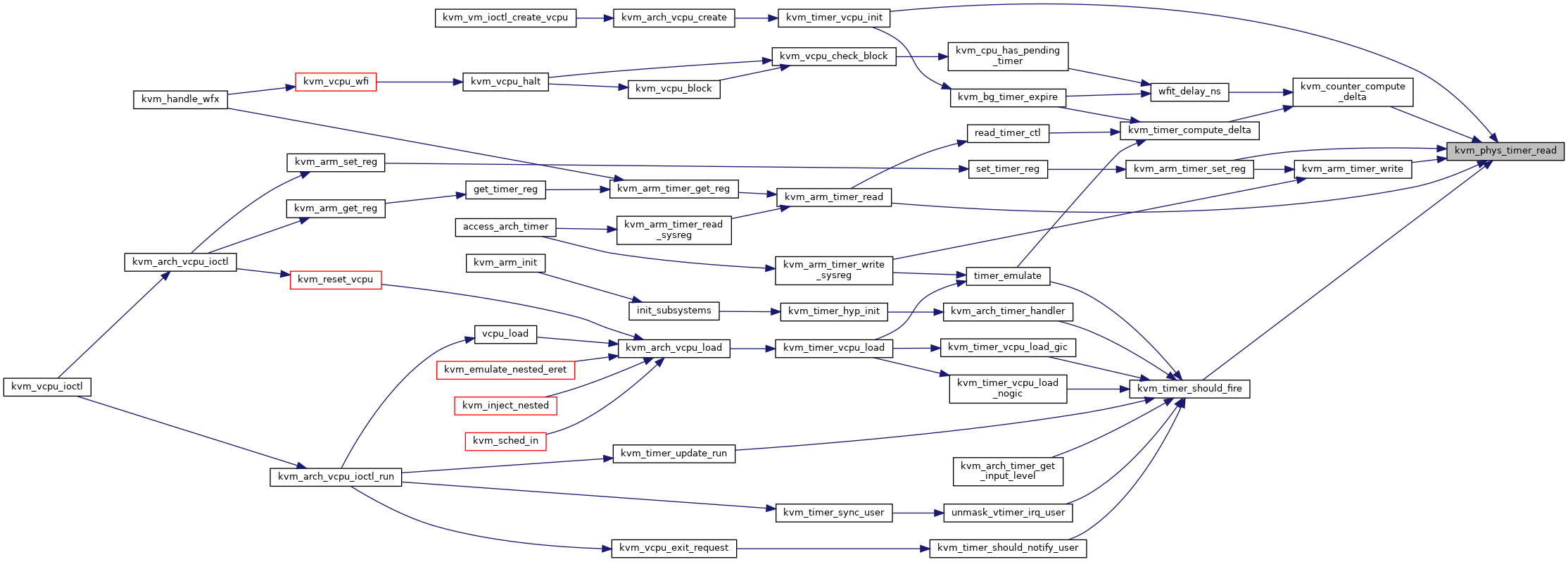

◆ kvm_phys_timer_read()

u64 kvm_phys_timer_read(void)

Definition at line 173 of file arch_timer.c.

static struct timecounter * timecounter

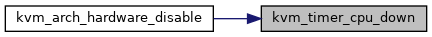

◆ kvm_timer_cpu_down()

void kvm_timer_cpu_down(void)

Definition at line 1041 of file arch_timer.c.

static unsigned int host_ptimer_irq

static unsigned int host_vtimer_irq

◆ kvm_timer_cpu_up()

void kvm_timer_cpu_up(void)

Definition at line 1034 of file arch_timer.c.

static u32 host_vtimer_irq_flags

static u32 host_ptimer_irq_flags

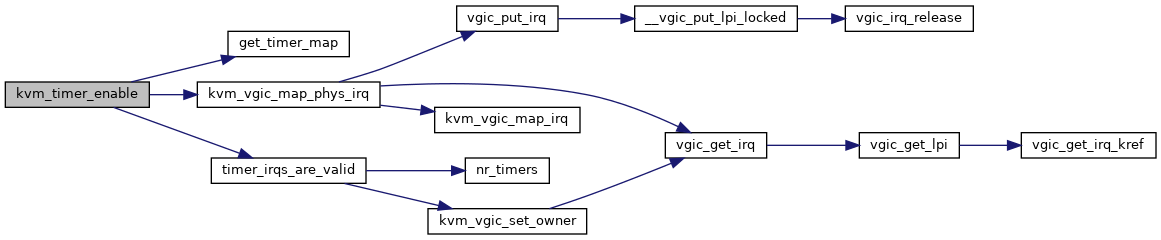

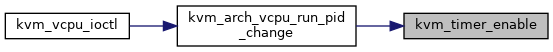

◆ kvm_timer_enable()

int kvm_timer_enable(struct kvm_vcpu *vcpu)

Definition at line 1506 of file arch_timer.c.

1524 kvm_debug("incorrectly configured timer irqs\n");

1531 map.direct_vtimer->host_timer_irq,

1537 if (map.direct_ptimer) {

1539 map.direct_ptimer->host_timer_irq,

static struct irq_ops arch_timer_irq_ops

static bool timer_irqs_are_valid(struct kvm_vcpu *vcpu)

int kvm_vgic_map_phys_irq(struct kvm_vcpu *vcpu, unsigned int host_irq, u32 vintid, struct irq_ops *ops)

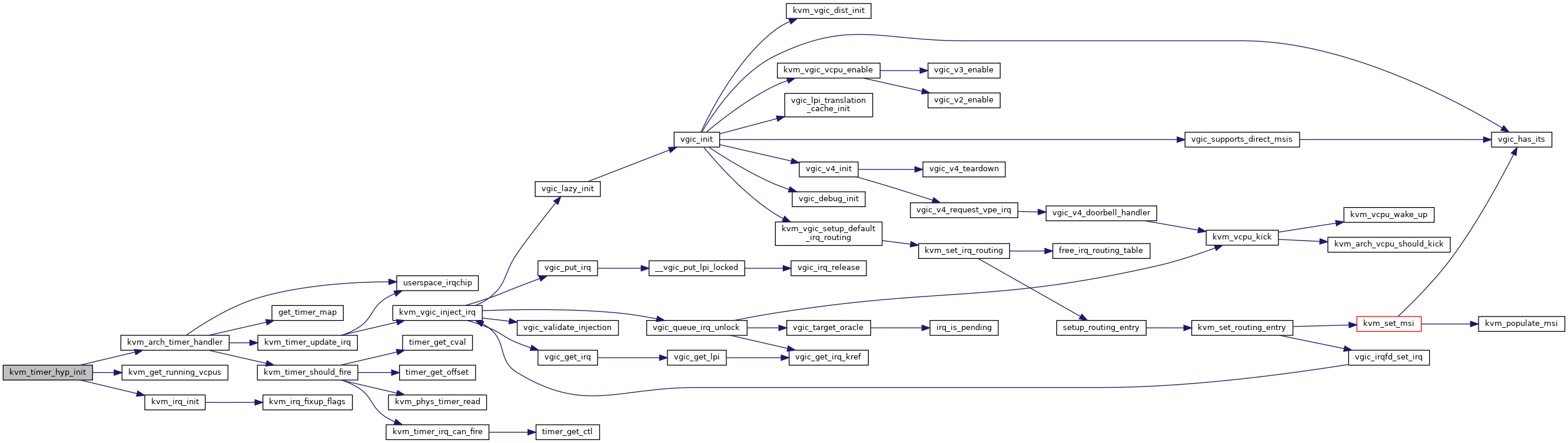

◆ kvm_timer_hyp_init()

int __init kvm_timer_hyp_init(bool has_gic)

Definition at line 1367 of file arch_timer.c.

1369 struct arch_timer_kvm_info *info;

1372 info = arch_timer_get_kvm_info();

1376 kvm_err("kvm_arch_timer: uninitialized timecounter\n");

1389 kvm_err("kvm_arch_timer: can't request vtimer interrupt %d (%d)\n",

1398 kvm_err("kvm_arch_timer: error setting vcpu affinity\n");

1399 goto out_free_vtimer_irq;

1402 static_branch_enable(&has_gic_active_state);

1409 if (info->physical_irq > 0) {

1413 kvm_err("kvm_arch_timer: can't request ptimer interrupt %d (%d)\n",

1415 goto out_free_vtimer_irq;

1422 kvm_err("kvm_arch_timer: error setting vcpu affinity\n");

1423 goto out_free_ptimer_irq;

1428 } else if (has_vhe()) {

1429 kvm_err("kvm_arch_timer: invalid physical timer IRQ: %d\n",

1430 info->physical_irq);

1432 goto out_free_vtimer_irq;

1437 out_free_ptimer_irq:

1438 if (info->physical_irq > 0)

1440 out_free_vtimer_irq:

static int kvm_irq_init(struct arch_timer_kvm_info *info)

static irqreturn_t kvm_arch_timer_handler(int irq, void *dev_id)

struct kvm_vcpu *__percpu * kvm_get_running_vcpus(void)

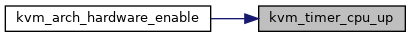

◆ kvm_timer_init_vhe()

void kvm_timer_init_vhe(void)

Definition at line 1553 of file arch_timer.c.

1555 if (cpus_have_final_cap(ARM64_HAS_ECV_CNTPOFF))

1556 sysreg_clear_set(cnthctl_el2, 0, CNTHCTL_ECV);

◆ kvm_timer_init_vm()

void kvm_timer_init_vm(struct kvm *kvm)

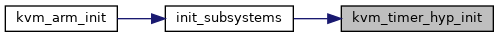

◆ kvm_timer_should_notify_user()

bool kvm_timer_should_notify_user(struct kvm_vcpu *vcpu)

Definition at line 860 of file arch_timer.c.

864 struct kvm_sync_regs *sregs = &vcpu->run->s.regs;

870 vlevel = sregs->device_irq_level & KVM_ARM_DEV_EL1_VTIMER;

871 plevel = sregs->device_irq_level & KVM_ARM_DEV_EL1_PTIMER;

static bool kvm_timer_should_fire(struct arch_timer_context *timer_ctx)
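The excerpt extracts the timer levels last reported to userspace (`vlevel`, `plevel`) and references `kvm_timer_should_fire()`. One plausible reading, sketched below as an assumption rather than the kernel's actual return expression, is that userspace needs a notification whenever a timer's current output differs from the level it was last told about. The `DEMO_*` bit values and the function name are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Illustrative bit values; the real constants live in the KVM UAPI headers. */
#define DEMO_DEV_EL1_VTIMER (1u << 0)
#define DEMO_DEV_EL1_PTIMER (1u << 1)

/*
 * recorded_level: bitmap last published to userspace.
 * vtimer_fires / ptimer_fires: stand-ins for kvm_timer_should_fire() results.
 */
static bool demo_should_notify_user(uint64_t recorded_level,
				    bool vtimer_fires, bool ptimer_fires)
{
	/* Collapse each masked bit to a bool so it compares cleanly. */
	bool vlevel = recorded_level & DEMO_DEV_EL1_VTIMER;
	bool plevel = recorded_level & DEMO_DEV_EL1_PTIMER;

	/* Assumed rule: notify when either timer's state has changed. */
	return vtimer_fires != vlevel || ptimer_fires != plevel;
}
```

For example, if the recorded bitmap has only the physical-timer bit set and only the physical timer currently fires, nothing has changed and no notification is needed.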

◆ kvm_timer_sync_user()

void kvm_timer_sync_user(struct kvm_vcpu *vcpu)

Definition at line 927 of file arch_timer.c.

static void unmask_vtimer_irq_user(struct kvm_vcpu *vcpu)

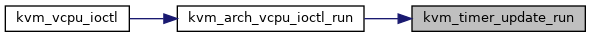

◆ kvm_timer_update_run()

void kvm_timer_update_run(struct kvm_vcpu *vcpu)

Definition at line 430 of file arch_timer.c.

434 struct kvm_sync_regs *regs = &vcpu->run->s.regs;

437 regs->device_irq_level &= ~(KVM_ARM_DEV_EL1_VTIMER |

438 KVM_ARM_DEV_EL1_PTIMER);

440 regs->device_irq_level |= KVM_ARM_DEV_EL1_VTIMER;

442 regs->device_irq_level |= KVM_ARM_DEV_EL1_PTIMER;
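The excerpt follows a clear-then-set pattern on `device_irq_level`: both timer bits are cleared first, then each bit is set again under a condition that is elided here. A minimal standalone sketch of that pattern follows. The `DEMO_*` bit values are illustrative, and the two boolean parameters are an assumption standing in for whatever the elided conditions test.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Illustrative bit values; the real constants live in the KVM UAPI headers. */
#define DEMO_DEV_EL1_VTIMER (1u << 0)
#define DEMO_DEV_EL1_PTIMER (1u << 1)

static uint64_t demo_update_irq_level(uint64_t level,
				      bool vtimer_fires, bool ptimer_fires)
{
	const uint64_t timer_bits = DEMO_DEV_EL1_VTIMER | DEMO_DEV_EL1_PTIMER;

	/* Clear both timer bits first so stale state never survives. */
	uint64_t updated = level & ~timer_bits;

	if (vtimer_fires)
		updated |= DEMO_DEV_EL1_VTIMER;
	if (ptimer_fires)
		updated |= DEMO_DEV_EL1_PTIMER;

	/* Bits outside the timer mask must pass through untouched. */
	assert((updated & ~timer_bits) == (level & ~timer_bits));
	return updated;
}
```

Clearing before setting means the result depends only on the current timer state, not on what the bitmap held previously, while unrelated device bits in the same word are preserved.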

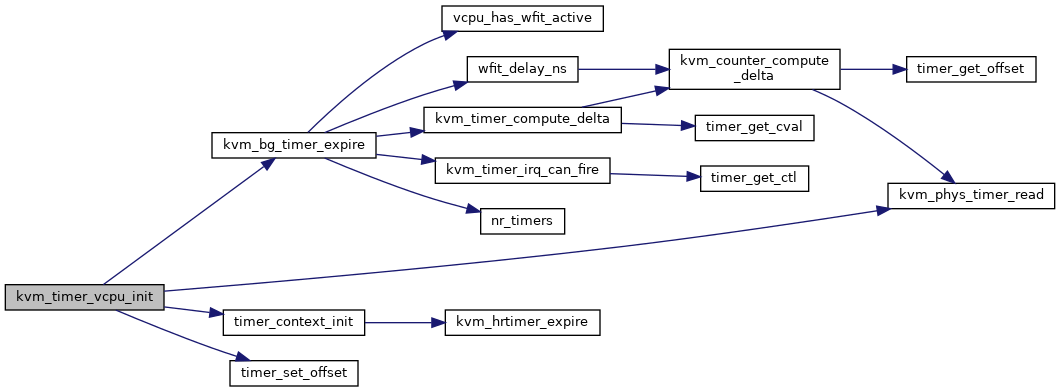

◆ kvm_timer_vcpu_init()

void kvm_timer_vcpu_init(struct kvm_vcpu *vcpu)

Definition at line 1011 of file arch_timer.c.

1019 if (!test_bit(KVM_ARCH_FLAG_VM_COUNTER_OFFSET, &vcpu->kvm->arch.flags)) {

1024 hrtimer_init(&timer->bg_timer, CLOCK_MONOTONIC, HRTIMER_MODE_ABS_HARD);

static void timer_context_init(struct kvm_vcpu *vcpu, int timerid)

static enum hrtimer_restart kvm_bg_timer_expire(struct hrtimer *hrt)

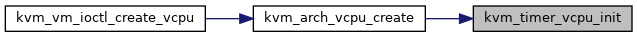

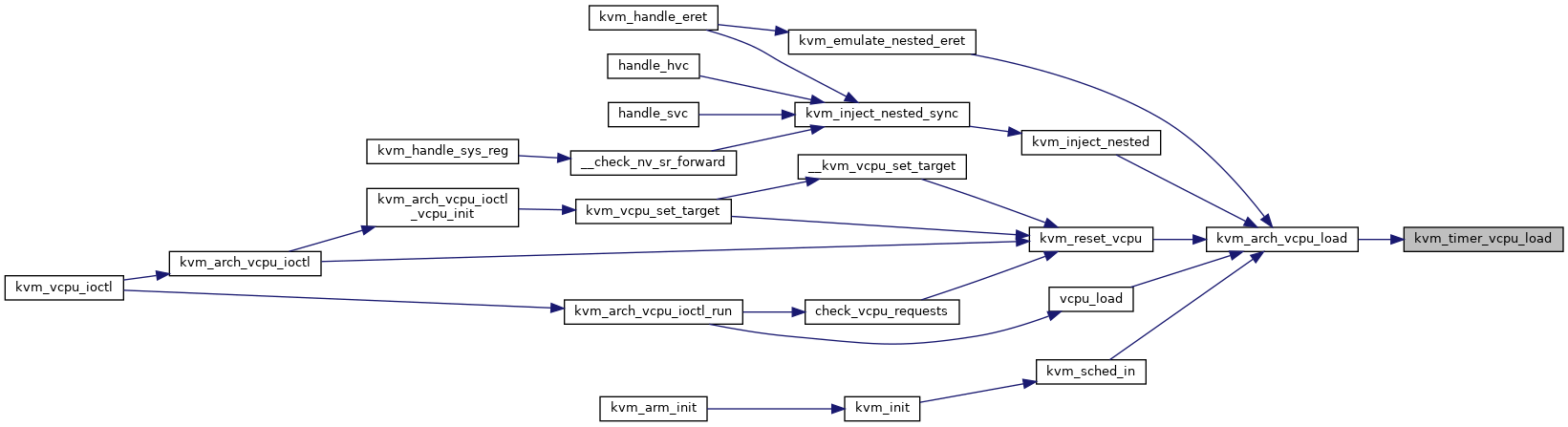

◆ kvm_timer_vcpu_load()

void kvm_timer_vcpu_load(struct kvm_vcpu *vcpu)

Definition at line 826 of file arch_timer.c.

836 if (static_branch_likely(&has_gic_active_state)) {

837 if (vcpu_has_nv(vcpu))

841 if (map.direct_ptimer)

850 if (map.direct_ptimer)

static void kvm_timer_vcpu_load_gic(struct arch_timer_context *ctx)

static void kvm_timer_vcpu_load_nogic(struct kvm_vcpu *vcpu)

static void kvm_timer_vcpu_load_nested_switch(struct kvm_vcpu *vcpu, struct timer_map *map)

static void kvm_timer_unblocking(struct kvm_vcpu *vcpu)

static void timer_set_traps(struct kvm_vcpu *vcpu, struct timer_map *map)

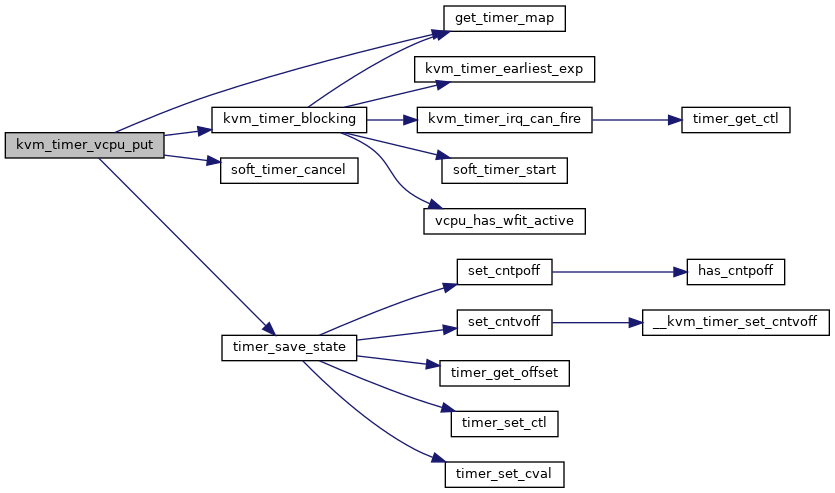

◆ kvm_timer_vcpu_put()

void kvm_timer_vcpu_put(struct kvm_vcpu *vcpu)

Definition at line 877 of file arch_timer.c.

888 if (map.direct_ptimer)

905 if (kvm_vcpu_is_blocking(vcpu))

static void kvm_timer_blocking(struct kvm_vcpu *vcpu)

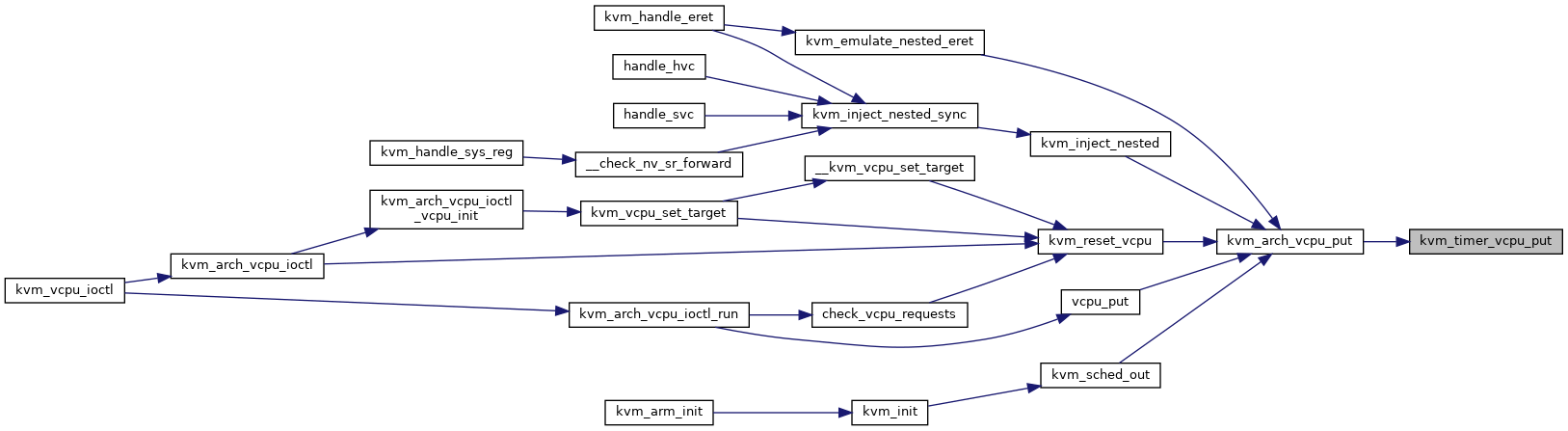

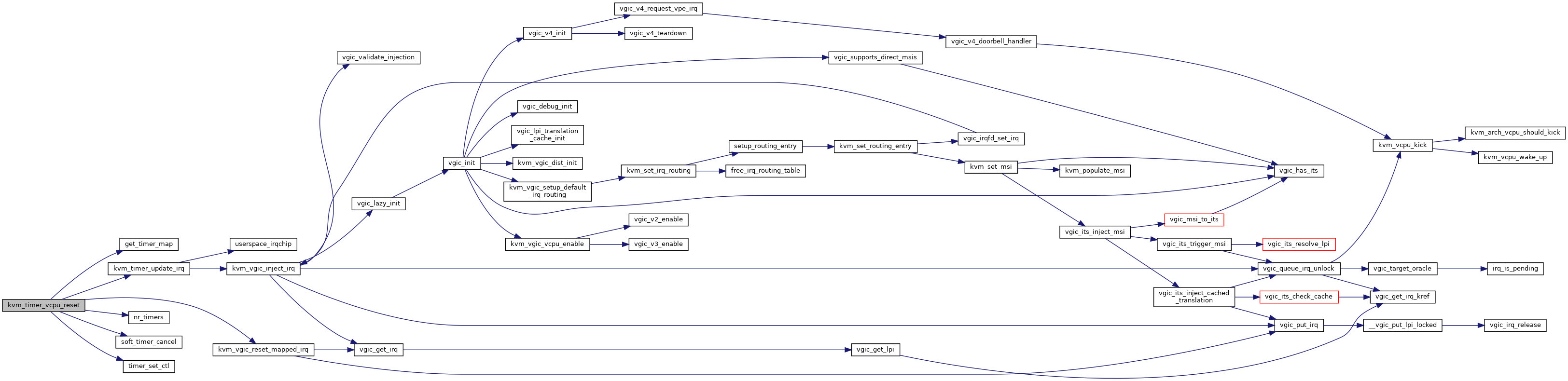

◆ kvm_timer_vcpu_reset()

void kvm_timer_vcpu_reset(struct kvm_vcpu *vcpu)

Definition at line 938 of file arch_timer.c.

951 for (int i = 0; i < nr_timers(vcpu); i++)

959 if (vcpu_has_nv(vcpu)) {

962 offs->vcpu_offset = &__vcpu_sys_reg(vcpu, CNTVOFF_EL2);

963 offs->vm_offset = &vcpu->kvm->arch.timer_data.poffset;

967 for (int i = 0; i < nr_timers(vcpu); i++)

973 if (map.direct_ptimer)

static void timer_set_ctl(struct arch_timer_context *ctxt, u32 ctl)

static void kvm_timer_update_irq(struct kvm_vcpu *vcpu, bool new_level, struct arch_timer_context *timer_ctx)

static int nr_timers(struct kvm_vcpu *vcpu)

void kvm_vgic_reset_mapped_irq(struct kvm_vcpu *vcpu, u32 vintid)

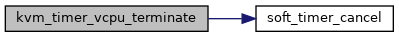

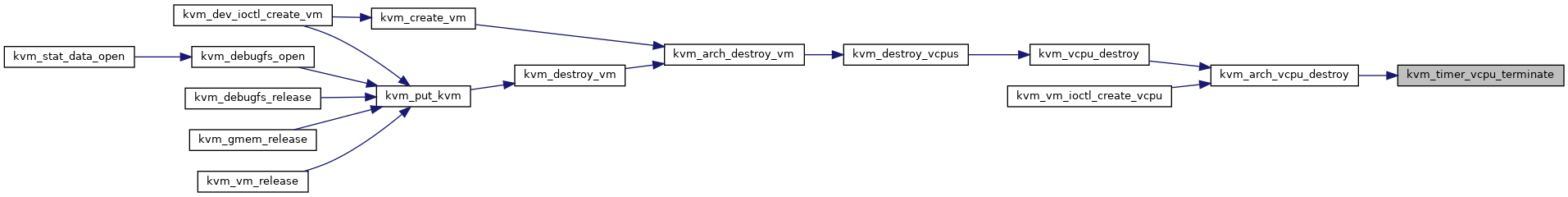

◆ kvm_timer_vcpu_terminate()

void kvm_timer_vcpu_terminate(struct kvm_vcpu *vcpu)

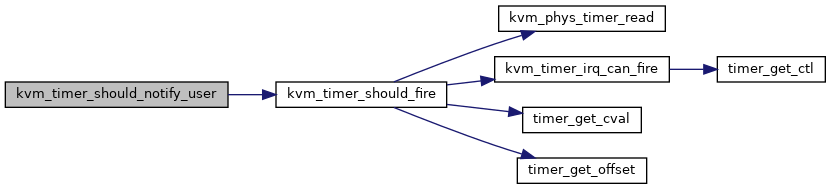

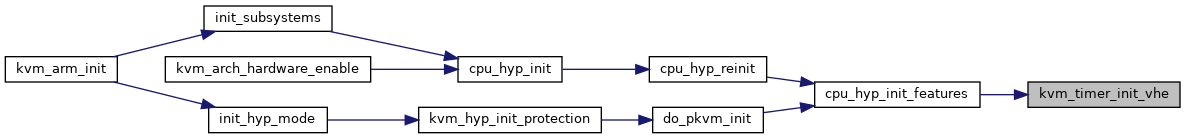

◆ timer_get_ctl()

Definition at line 66 of file arch_timer.c.

68 struct kvm_vcpu *vcpu = ctxt->vcpu;

72 return __vcpu_sys_reg(vcpu, CNTV_CTL_EL0);

74 return __vcpu_sys_reg(vcpu, CNTP_CTL_EL0);

76 return __vcpu_sys_reg(vcpu, CNTHV_CTL_EL2);

78 return __vcpu_sys_reg(vcpu, CNTHP_CTL_EL2);

#define arch_timer_ctx_index(ctx)
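The four `return` lines, together with the reference to `arch_timer_ctx_index()`, suggest a dispatch from timer index to that timer's control register. The case labels are elided in this excerpt, so the pairing below (virtual, physical, hyp virtual, hyp physical, in the order the returns appear) is an assumption. The sketch is standalone, with hypothetical `demo_*` types standing in for the vCPU system-register file.

```c
#include <assert.h>
#include <stdint.h>

enum demo_timers {
	DEMO_PTIMER, DEMO_VTIMER, DEMO_HVTIMER, DEMO_HPTIMER, DEMO_NR_TIMERS
};

/* Hypothetical register slots standing in for the vCPU sysreg file. */
enum demo_sysreg {
	DEMO_CNTV_CTL_EL0, DEMO_CNTP_CTL_EL0,
	DEMO_CNTHV_CTL_EL2, DEMO_CNTHP_CTL_EL2,
	DEMO_NR_SYSREGS
};

struct demo_vcpu {
	uint64_t sys_regs[DEMO_NR_SYSREGS];
};

/* Assumed case labels: each timer index selects its own control register. */
static uint32_t demo_timer_get_ctl(const struct demo_vcpu *vcpu,
				   enum demo_timers idx)
{
	switch (idx) {
	case DEMO_VTIMER:
		return (uint32_t)vcpu->sys_regs[DEMO_CNTV_CTL_EL0];
	case DEMO_PTIMER:
		return (uint32_t)vcpu->sys_regs[DEMO_CNTP_CTL_EL0];
	case DEMO_HVTIMER:
		return (uint32_t)vcpu->sys_regs[DEMO_CNTHV_CTL_EL2];
	case DEMO_HPTIMER:
		return (uint32_t)vcpu->sys_regs[DEMO_CNTHP_CTL_EL2];
	default:
		assert(!"unknown timer index");
		return 0;
	}
}
```

`timer_get_cval()` below lists the matching `*_CVAL_*` registers in the same order, so the same dispatch shape would apply there.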

◆ timer_get_cval()

Definition at line 85 of file arch_timer.c.

87 struct kvm_vcpu *vcpu = ctxt->vcpu;

91 return __vcpu_sys_reg(vcpu, CNTV_CVAL_EL0);

93 return __vcpu_sys_reg(vcpu, CNTP_CVAL_EL0);

95 return __vcpu_sys_reg(vcpu, CNTHV_CVAL_EL2);

97 return __vcpu_sys_reg(vcpu, CNTHP_CVAL_EL2);