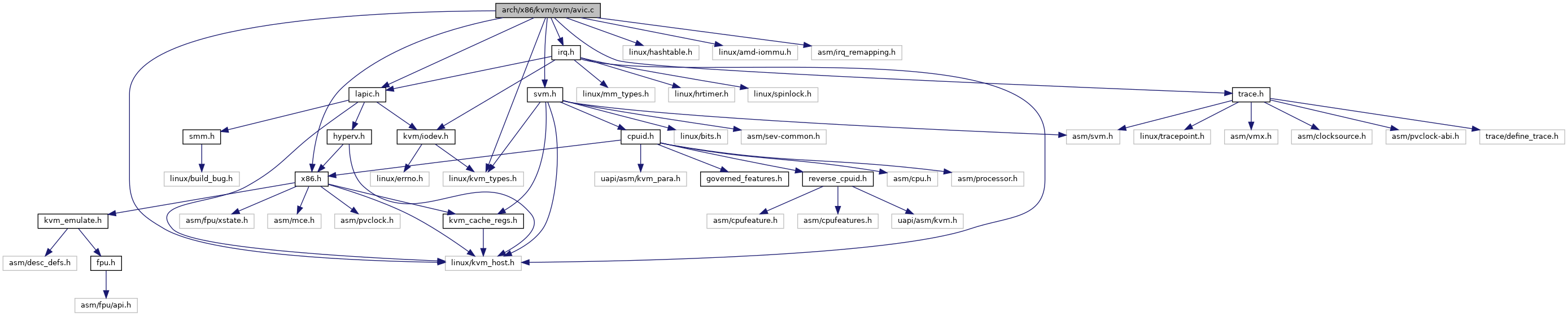

#include <linux/kvm_types.h>

#include <linux/hashtable.h>

#include <linux/amd-iommu.h>

#include <linux/kvm_host.h>

#include <asm/irq_remapping.h>

#include "trace.h"

#include "lapic.h"

#include "x86.h"

#include "irq.h"

#include "svm.h"

Classes

| Kind | Name |
| --- | --- |
| struct | amd_svm_iommu_ir |

Macros

| Kind | Definition |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |
| #define | AVIC_VCPU_ID_MASK AVIC_PHYSICAL_MAX_INDEX_MASK |
| #define | AVIC_VM_ID_SHIFT HWEIGHT32(AVIC_PHYSICAL_MAX_INDEX_MASK) |
| #define | AVIC_VM_ID_MASK (GENMASK(31, AVIC_VM_ID_SHIFT) >> AVIC_VM_ID_SHIFT) |
| #define | AVIC_GATAG_TO_VMID(x) ((x >> AVIC_VM_ID_SHIFT) & AVIC_VM_ID_MASK) |
| #define | AVIC_GATAG_TO_VCPUID(x) (x & AVIC_VCPU_ID_MASK) |
| #define | __AVIC_GATAG(vm_id, vcpu_id) |
| #define | AVIC_GATAG(vm_id, vcpu_id) |
| #define | SVM_VM_DATA_HASH_BITS 8 |

Functions | |

| module_param_unsafe (force_avic, bool, 0444) | |

| static | DEFINE_HASHTABLE (svm_vm_data_hash, SVM_VM_DATA_HASH_BITS) |

| static | DEFINE_SPINLOCK (svm_vm_data_hash_lock) |

| static void | avic_activate_vmcb (struct vcpu_svm *svm) |

| static void | avic_deactivate_vmcb (struct vcpu_svm *svm) |

| int | avic_ga_log_notifier (u32 ga_tag) |

| void | avic_vm_destroy (struct kvm *kvm) |

| int | avic_vm_init (struct kvm *kvm) |

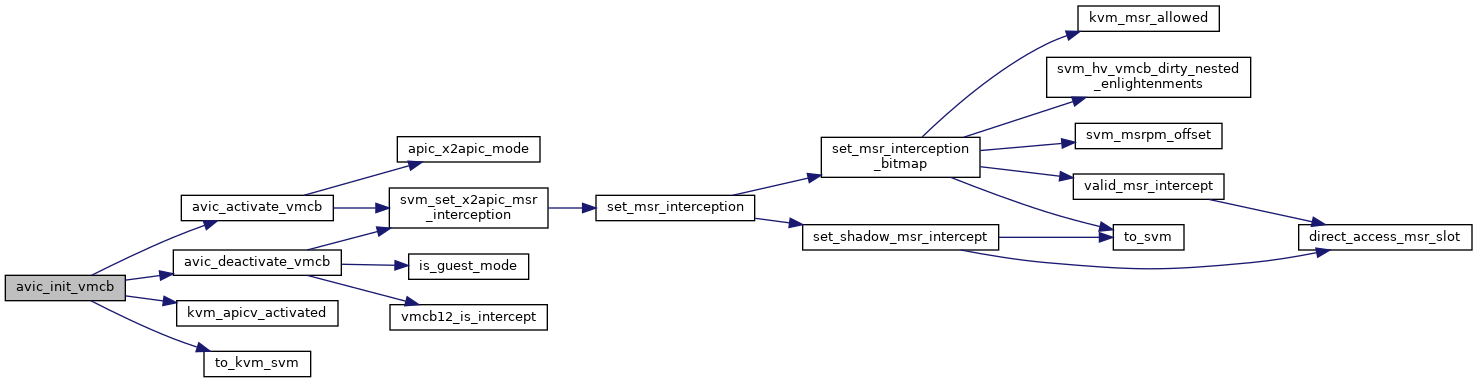

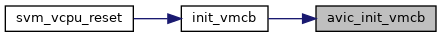

| void | avic_init_vmcb (struct vcpu_svm *svm, struct vmcb *vmcb) |

| static u64 * | avic_get_physical_id_entry (struct kvm_vcpu *vcpu, unsigned int index) |

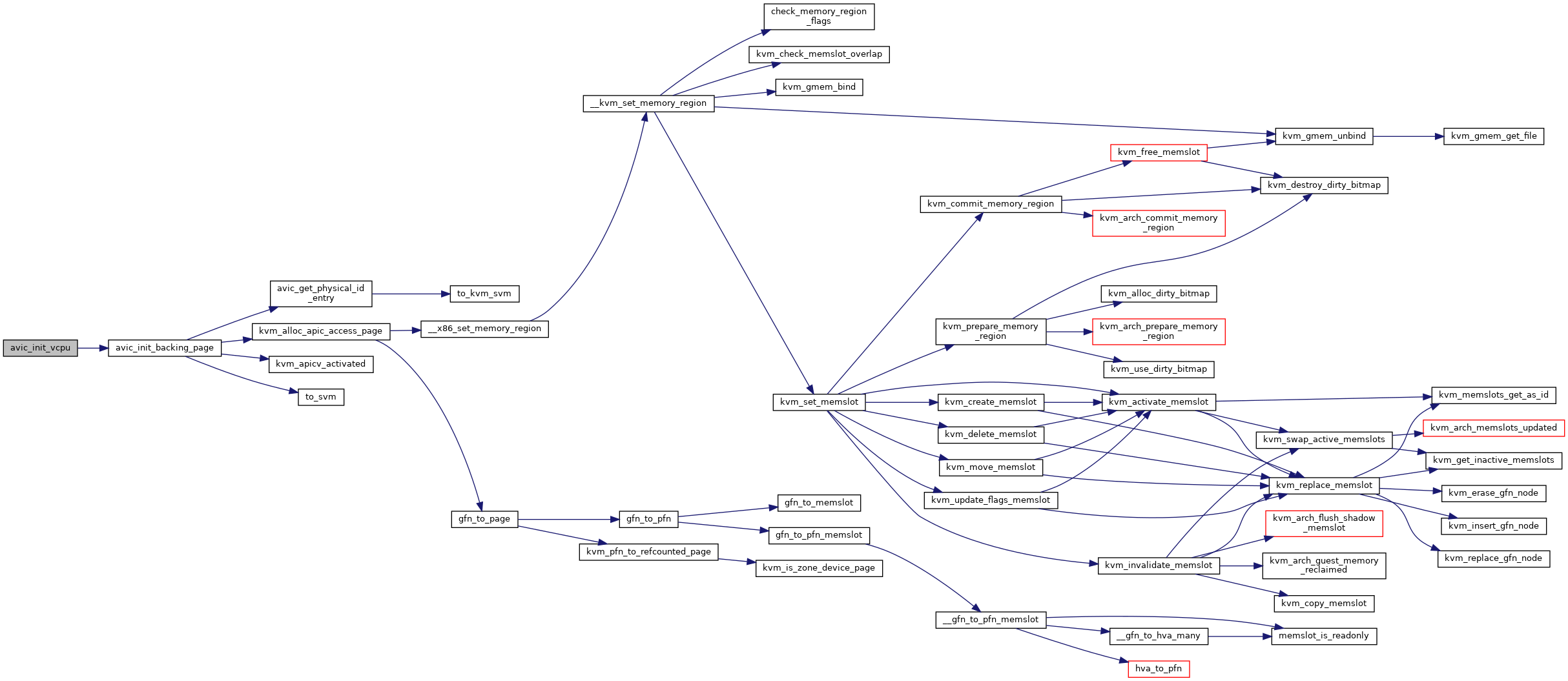

| static int | avic_init_backing_page (struct kvm_vcpu *vcpu) |

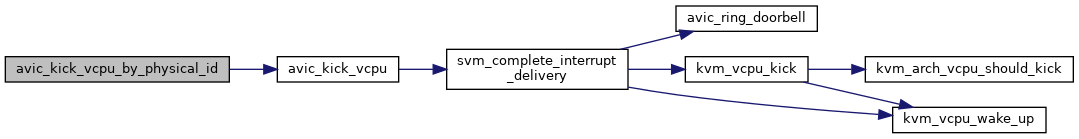

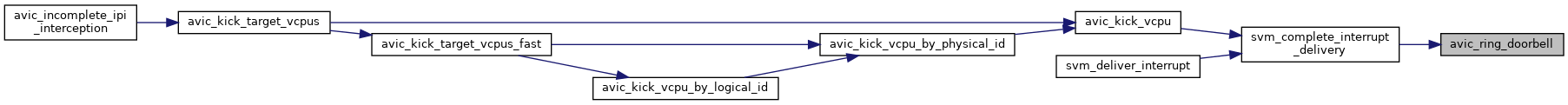

| void | avic_ring_doorbell (struct kvm_vcpu *vcpu) |

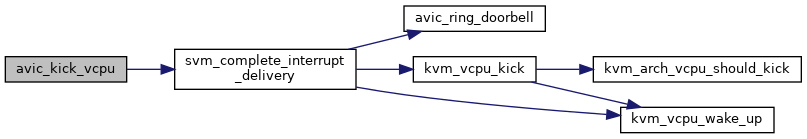

| static void | avic_kick_vcpu (struct kvm_vcpu *vcpu, u32 icrl) |

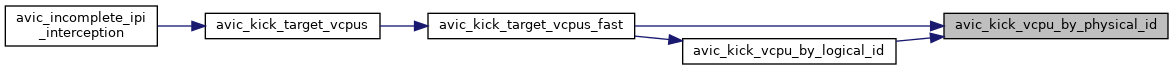

| static void | avic_kick_vcpu_by_physical_id (struct kvm *kvm, u32 physical_id, u32 icrl) |

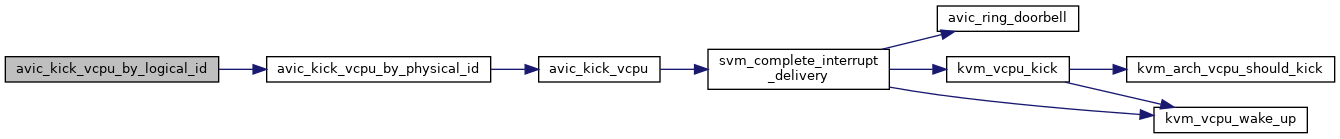

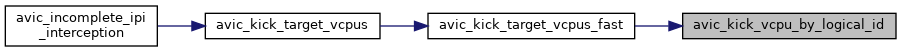

| static void | avic_kick_vcpu_by_logical_id (struct kvm *kvm, u32 *avic_logical_id_table, u32 logid_index, u32 icrl) |

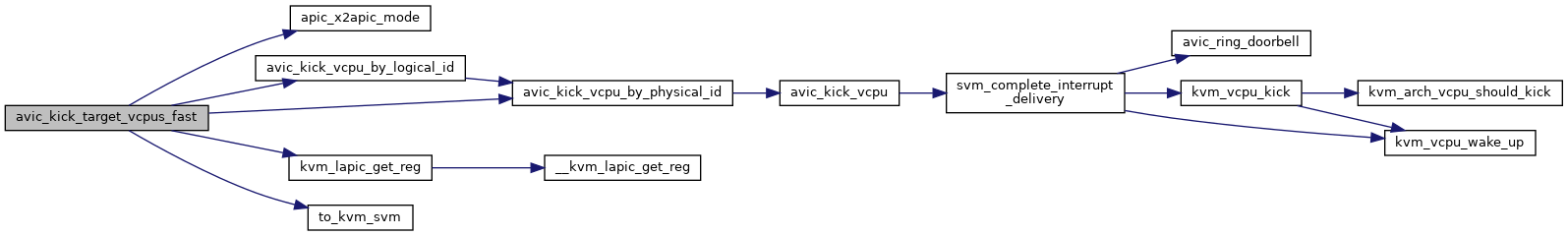

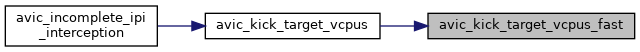

| static int | avic_kick_target_vcpus_fast (struct kvm *kvm, struct kvm_lapic *source, u32 icrl, u32 icrh, u32 index) |

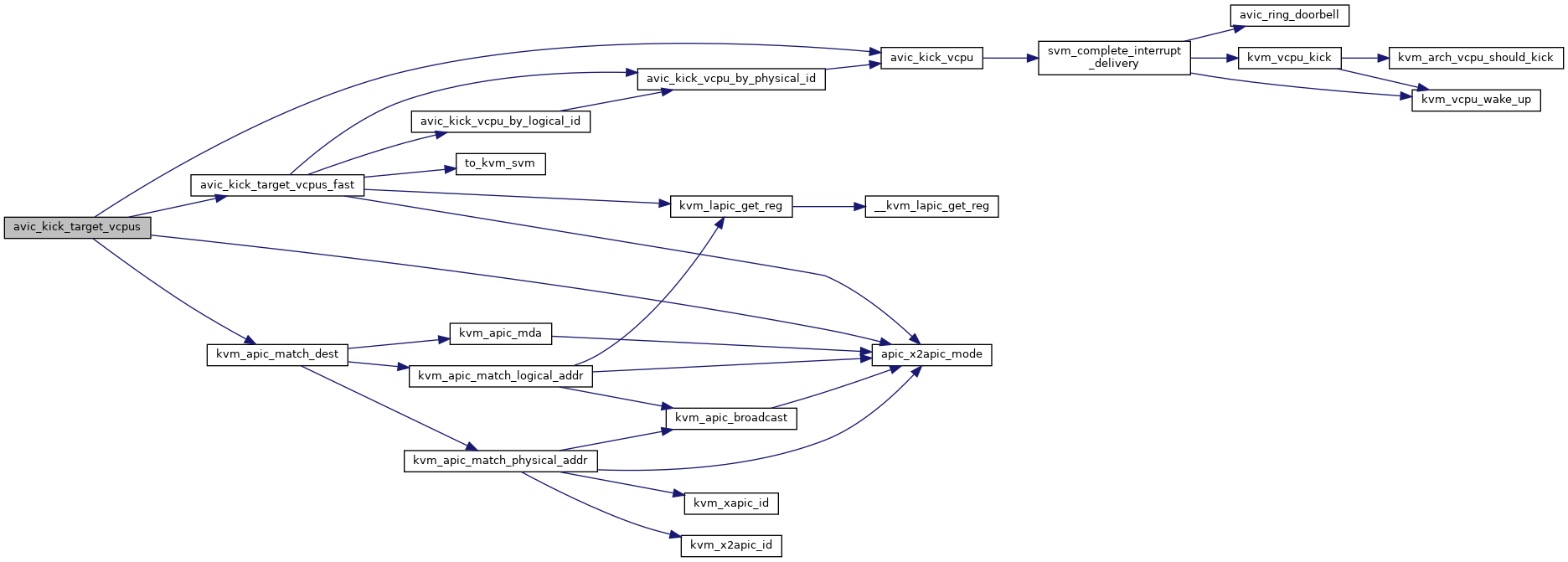

| static void | avic_kick_target_vcpus (struct kvm *kvm, struct kvm_lapic *source, u32 icrl, u32 icrh, u32 index) |

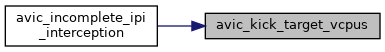

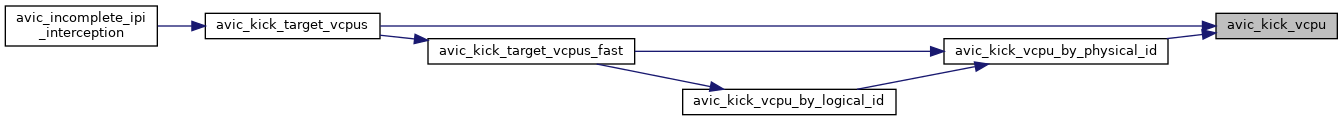

| int | avic_incomplete_ipi_interception (struct kvm_vcpu *vcpu) |

| unsigned long | avic_vcpu_get_apicv_inhibit_reasons (struct kvm_vcpu *vcpu) |

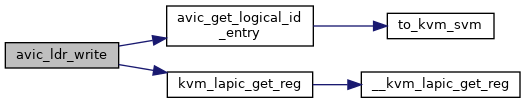

| static u32 * | avic_get_logical_id_entry (struct kvm_vcpu *vcpu, u32 ldr, bool flat) |

| static void | avic_ldr_write (struct kvm_vcpu *vcpu, u8 g_physical_id, u32 ldr) |

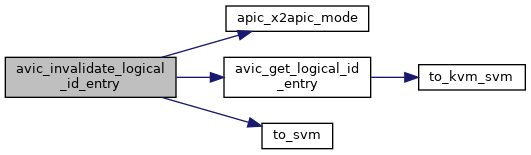

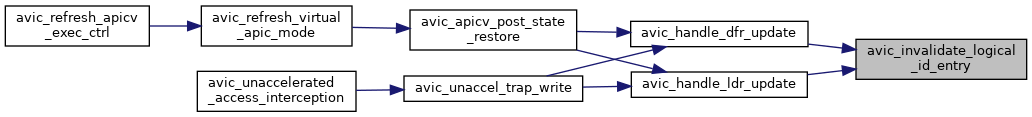

| static void | avic_invalidate_logical_id_entry (struct kvm_vcpu *vcpu) |

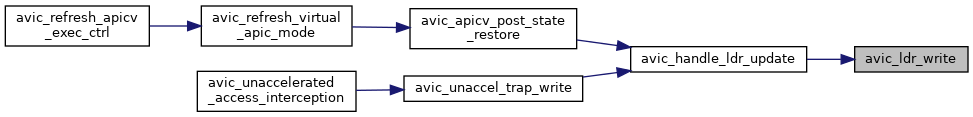

| static void | avic_handle_ldr_update (struct kvm_vcpu *vcpu) |

| static void | avic_handle_dfr_update (struct kvm_vcpu *vcpu) |

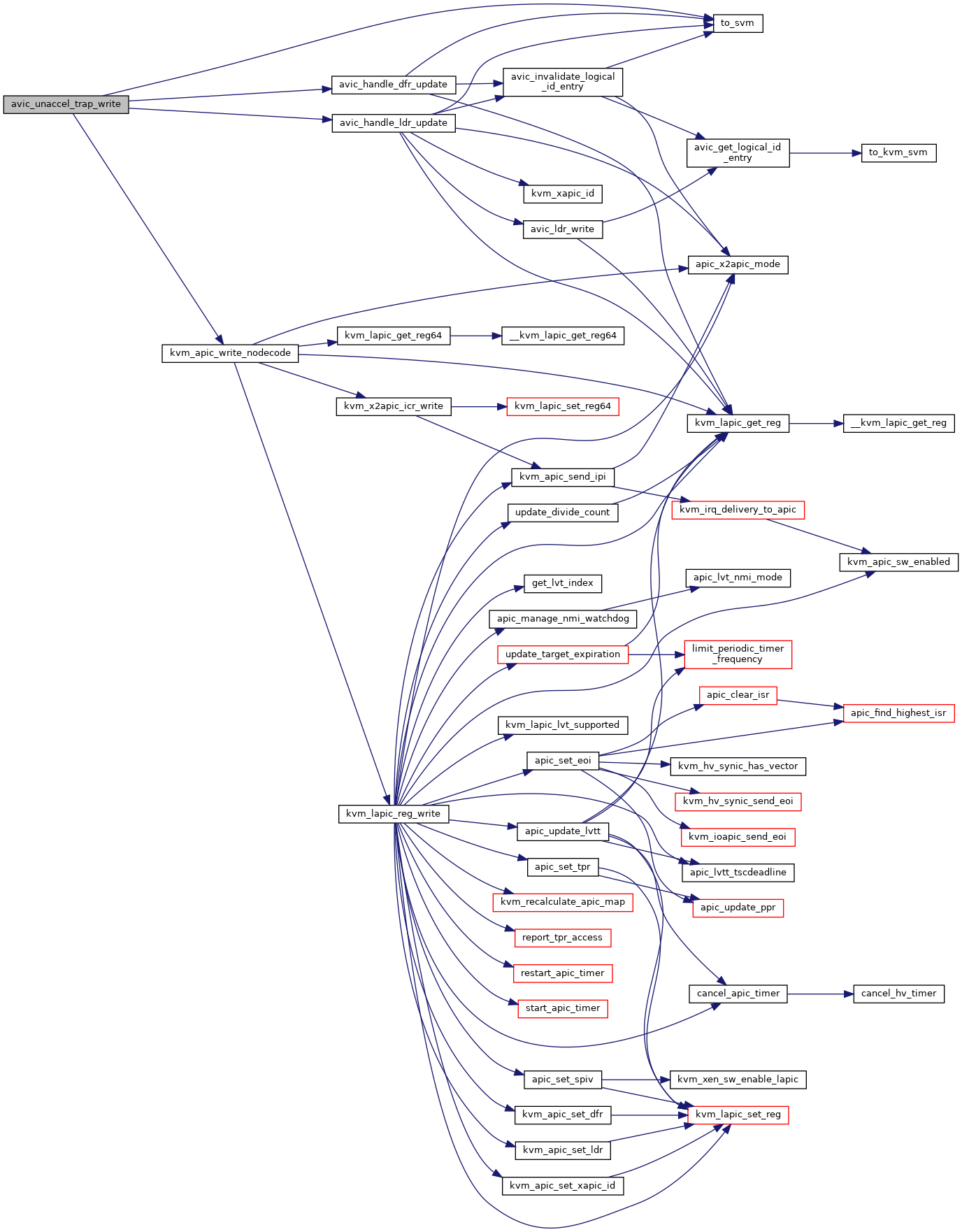

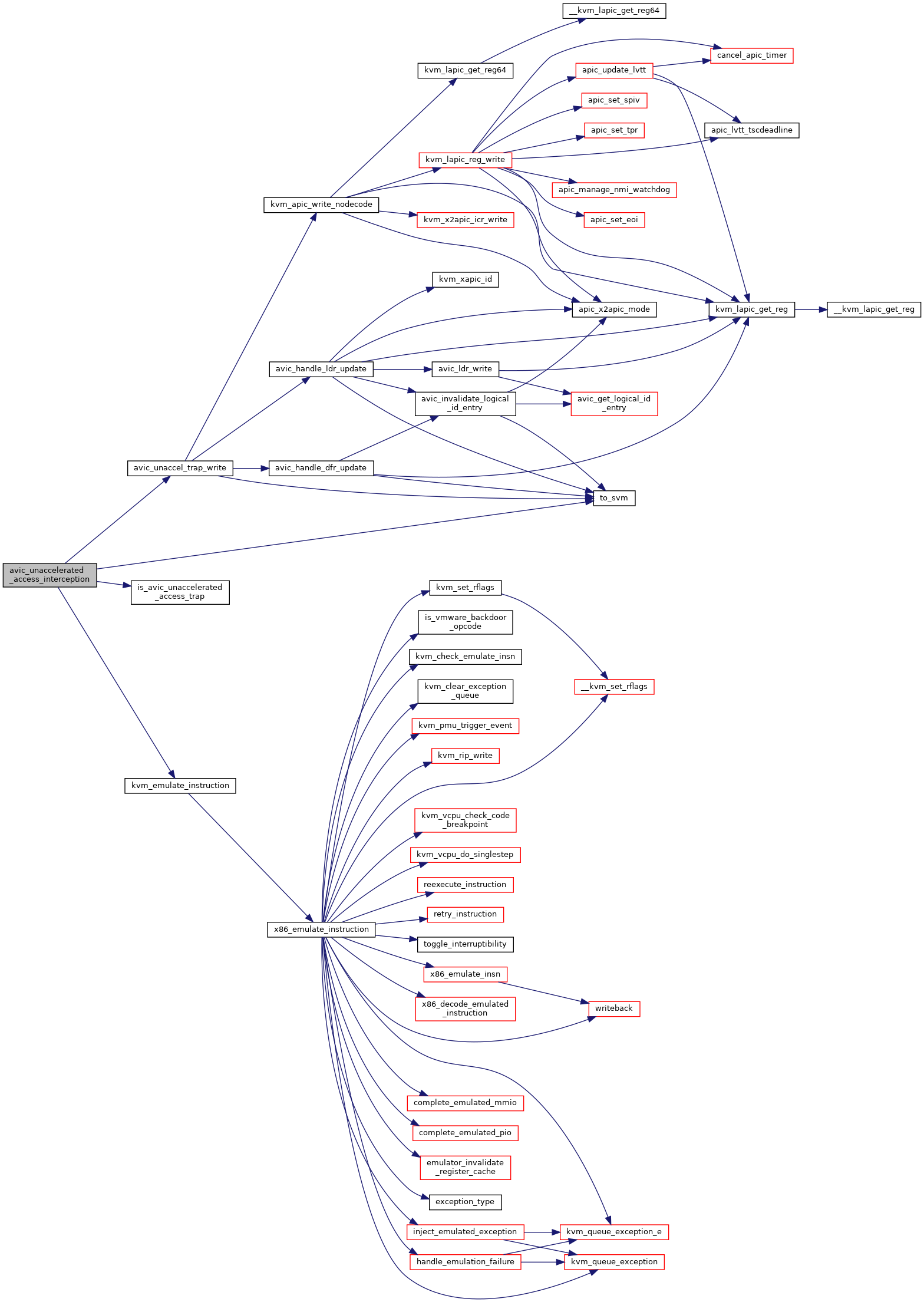

| static int | avic_unaccel_trap_write (struct kvm_vcpu *vcpu) |

| static bool | is_avic_unaccelerated_access_trap (u32 offset) |

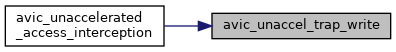

| int | avic_unaccelerated_access_interception (struct kvm_vcpu *vcpu) |

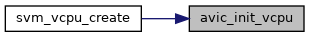

| int | avic_init_vcpu (struct vcpu_svm *svm) |

| void | avic_apicv_post_state_restore (struct kvm_vcpu *vcpu) |

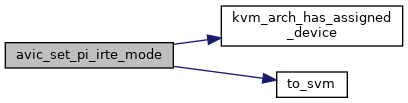

| static int | avic_set_pi_irte_mode (struct kvm_vcpu *vcpu, bool activate) |

| static void | svm_ir_list_del (struct vcpu_svm *svm, struct amd_iommu_pi_data *pi) |

| static int | svm_ir_list_add (struct vcpu_svm *svm, struct amd_iommu_pi_data *pi) |

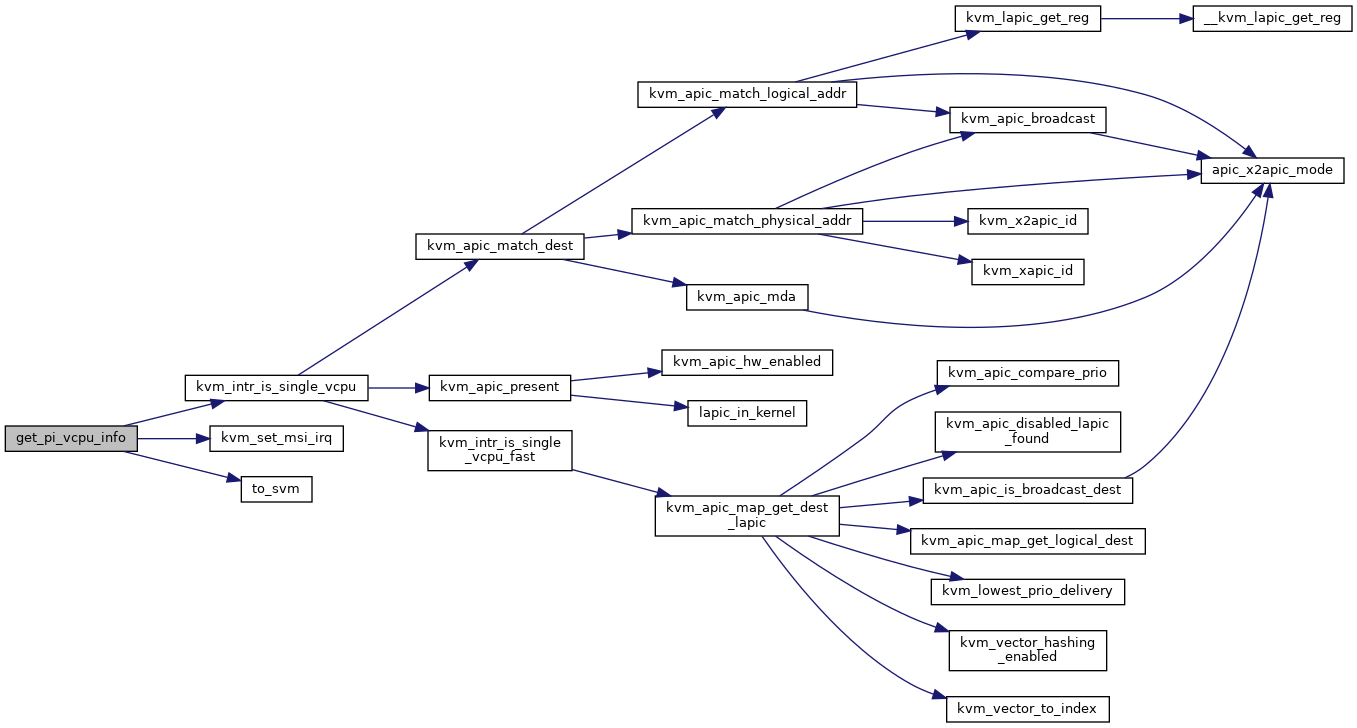

| static int | get_pi_vcpu_info (struct kvm *kvm, struct kvm_kernel_irq_routing_entry *e, struct vcpu_data *vcpu_info, struct vcpu_svm **svm) |

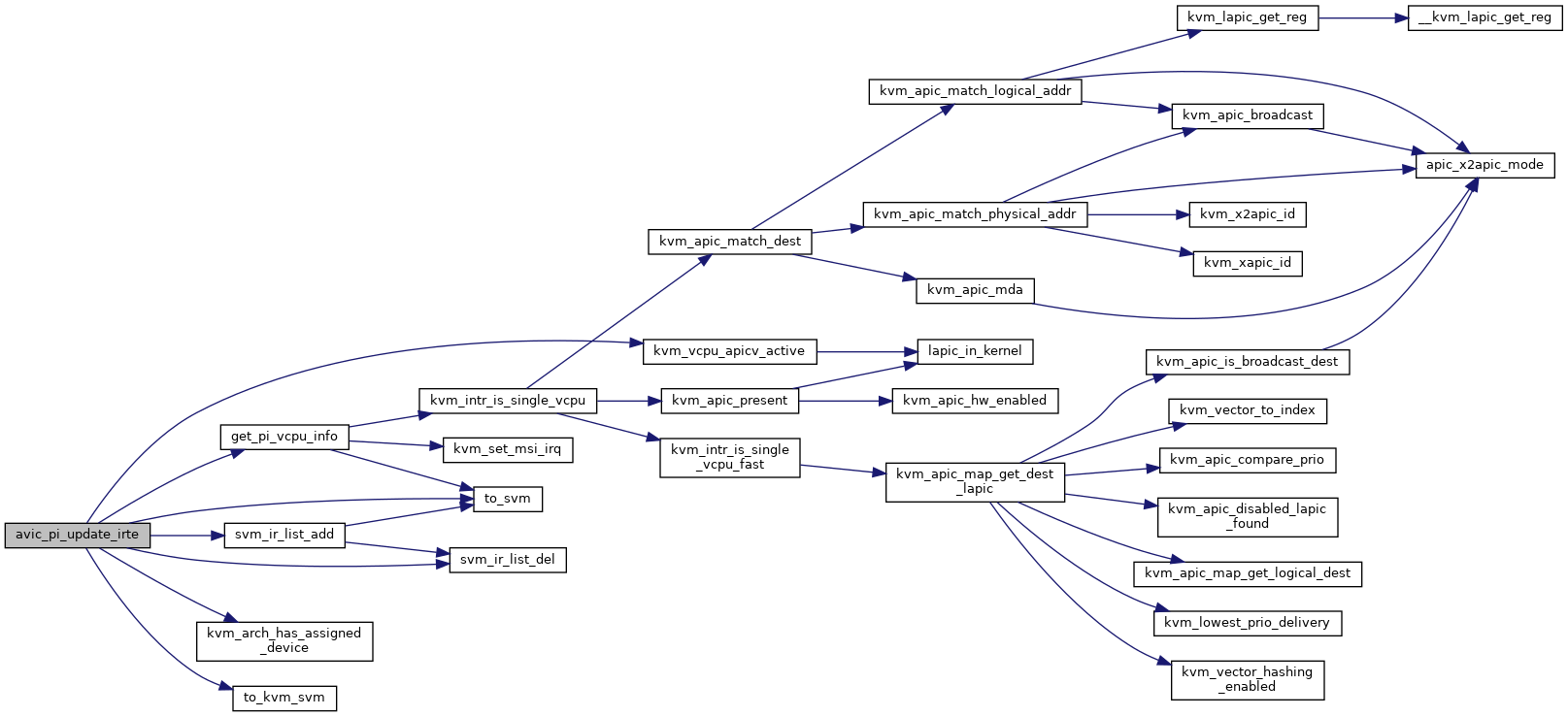

| int | avic_pi_update_irte (struct kvm *kvm, unsigned int host_irq, uint32_t guest_irq, bool set) |

| static int | avic_update_iommu_vcpu_affinity (struct kvm_vcpu *vcpu, int cpu, bool r) |

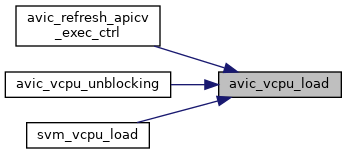

| void | avic_vcpu_load (struct kvm_vcpu *vcpu, int cpu) |

| void | avic_vcpu_put (struct kvm_vcpu *vcpu) |

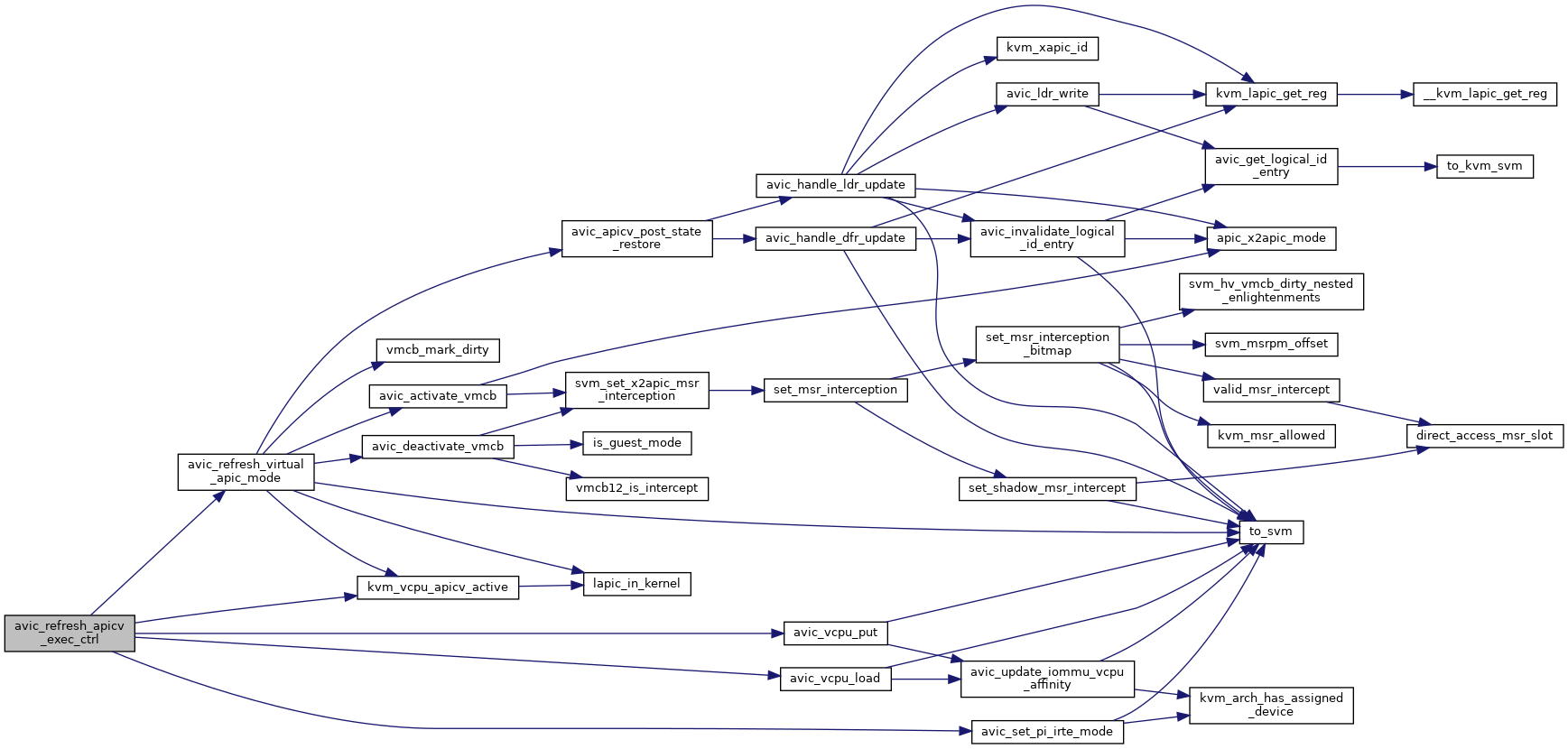

| void | avic_refresh_virtual_apic_mode (struct kvm_vcpu *vcpu) |

| void | avic_refresh_apicv_exec_ctrl (struct kvm_vcpu *vcpu) |

| void | avic_vcpu_blocking (struct kvm_vcpu *vcpu) |

| void | avic_vcpu_unblocking (struct kvm_vcpu *vcpu) |

| bool | avic_hardware_setup (void) |

Variables

| Type | Name |
| --- | --- |
| static bool | force_avic |
| static u32 | next_vm_id = 0 |
| static bool | next_vm_id_wrapped = 0 |
| bool | x2avic_enabled |

Macro Definition Documentation

◆ __AVIC_GATAG

| #define __AVIC_GATAG | ( | vm_id, | vcpu_id | ) |

◆ AVIC_GATAG

| #define AVIC_GATAG | ( | vm_id, | vcpu_id | ) |

◆ AVIC_GATAG_TO_VCPUID

| #define AVIC_GATAG_TO_VCPUID | ( | x | ) | (x & AVIC_VCPU_ID_MASK) |

◆ AVIC_GATAG_TO_VMID

| #define AVIC_GATAG_TO_VMID | ( | x | ) | ((x >> AVIC_VM_ID_SHIFT) & AVIC_VM_ID_MASK) |

◆ AVIC_VCPU_ID_MASK

| #define AVIC_VCPU_ID_MASK AVIC_PHYSICAL_MAX_INDEX_MASK |

◆ AVIC_VM_ID_MASK

| #define AVIC_VM_ID_MASK (GENMASK(31, AVIC_VM_ID_SHIFT) >> AVIC_VM_ID_SHIFT) |

◆ AVIC_VM_ID_SHIFT

| #define AVIC_VM_ID_SHIFT HWEIGHT32(AVIC_PHYSICAL_MAX_INDEX_MASK) |
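The tag macros above split a 32-bit GA tag into a VM ID (upper bits) and a vCPU ID (lower bits). The following is a minimal user-space sketch of that layout, not the kernel code. It assumes a 9-bit value for AVIC_PHYSICAL_MAX_INDEX_MASK (the real value comes from the kernel headers), and the `demo_` names, including the packing helper standing in for the elided __AVIC_GATAG body, are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: a 9-bit physical index mask. The kernel takes this value
 * from AVIC_PHYSICAL_MAX_INDEX_MASK, which this page does not show. */
#define DEMO_VCPU_ID_MASK 0x1FFu
#define DEMO_VM_ID_SHIFT  9u                                  /* HWEIGHT32(0x1FF) */
#define DEMO_VM_ID_MASK   (0xFFFFFFFFu >> DEMO_VM_ID_SHIFT)   /* GENMASK(31, 9) >> 9 */

/* Hypothetical packing helper, written as the inverse of the
 * AVIC_GATAG_TO_VMID / AVIC_GATAG_TO_VCPUID macros. */
static uint32_t demo_gatag(uint32_t vm_id, uint32_t vcpu_id)
{
    return ((vm_id & DEMO_VM_ID_MASK) << DEMO_VM_ID_SHIFT) |
           (vcpu_id & DEMO_VCPU_ID_MASK);
}

/* Mirrors AVIC_GATAG_TO_VMID(x). */
static uint32_t demo_gatag_to_vmid(uint32_t tag)
{
    return (tag >> DEMO_VM_ID_SHIFT) & DEMO_VM_ID_MASK;
}

/* Mirrors AVIC_GATAG_TO_VCPUID(x). */
static uint32_t demo_gatag_to_vcpuid(uint32_t tag)
{
    return tag & DEMO_VCPU_ID_MASK;
}

/* Round-trip self-check: aborts if packing and unpacking disagree. */
static void demo_gatag_selfcheck(uint32_t vm_id, uint32_t vcpu_id)
{
    uint32_t tag = demo_gatag(vm_id, vcpu_id);

    assert(demo_gatag_to_vmid(tag) == (vm_id & DEMO_VM_ID_MASK));
    assert(demo_gatag_to_vcpuid(tag) == (vcpu_id & DEMO_VCPU_ID_MASK));
}
```

Under this assumed width, a tag carries 23 bits of VM ID and 9 bits of vCPU ID, so a vCPU ID wider than the mask is silently truncated by the sketch.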

◆ pr_fmt

| #define pr_fmt | ( | fmt | ) | KBUILD_MODNAME ": " fmt |

◆ SVM_VM_DATA_HASH_BITS

| #define SVM_VM_DATA_HASH_BITS 8 |

Function Documentation

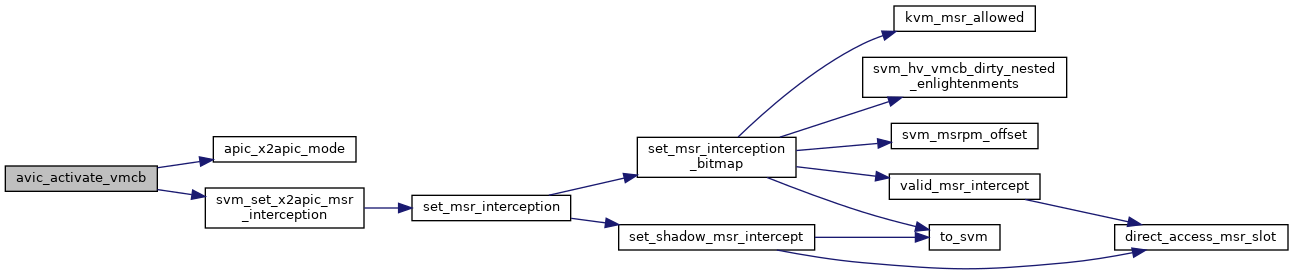

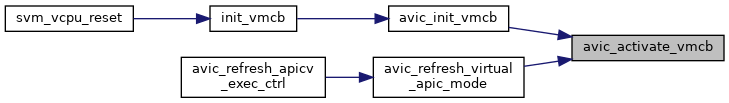

◆ avic_activate_vmcb()

|

static |

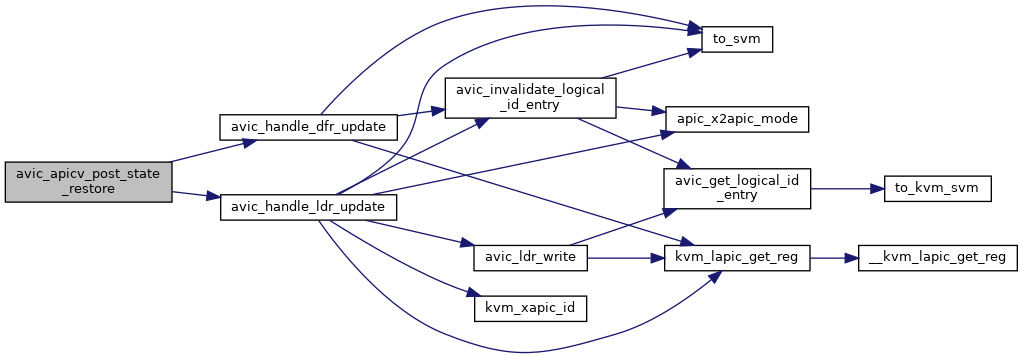

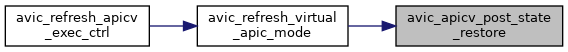

◆ avic_apicv_post_state_restore()

| void avic_apicv_post_state_restore | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 738 of file avic.c.

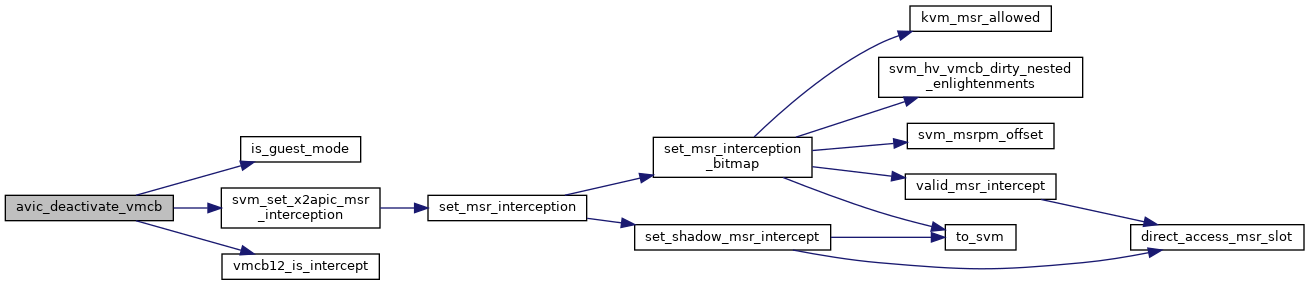

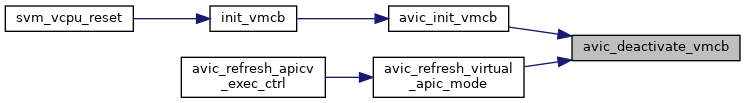

◆ avic_deactivate_vmcb()

| static void avic_deactivate_vmcb | ( | struct vcpu_svm * | svm | ) |

◆ avic_ga_log_notifier()

| int avic_ga_log_notifier | ( | u32 | ga_tag | ) |

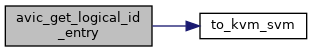

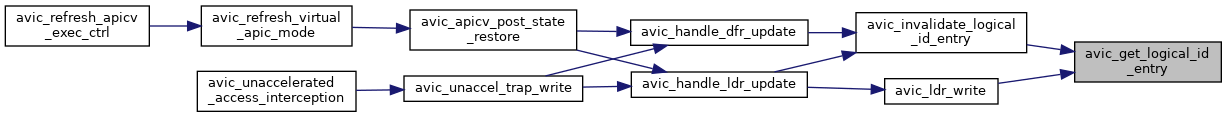

◆ avic_get_logical_id_entry()

|

static |

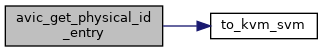

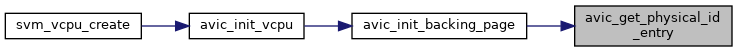

◆ avic_get_physical_id_entry()

|

static |

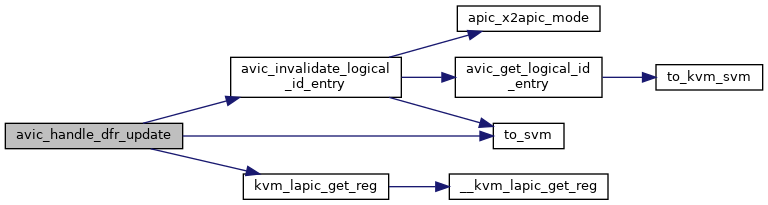

◆ avic_handle_dfr_update()

| static void avic_handle_dfr_update | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 629 of file avic.c.

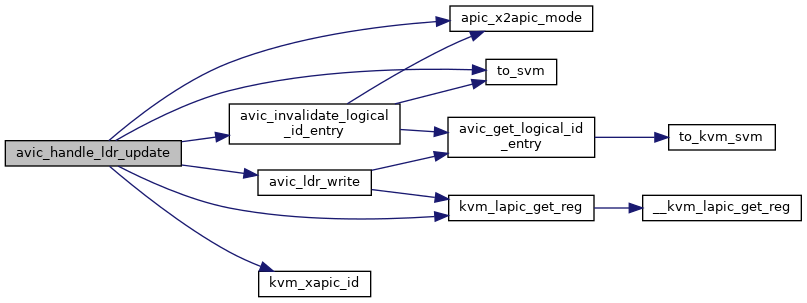

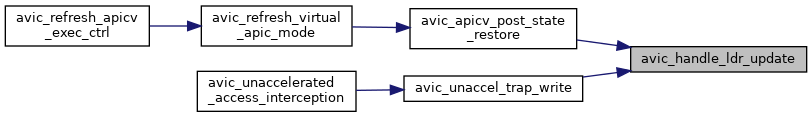

◆ avic_handle_ldr_update()

| static void avic_handle_ldr_update | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 610 of file avic.c.

◆ avic_hardware_setup()

| bool avic_hardware_setup | ( | void | ) |

◆ avic_incomplete_ipi_interception()

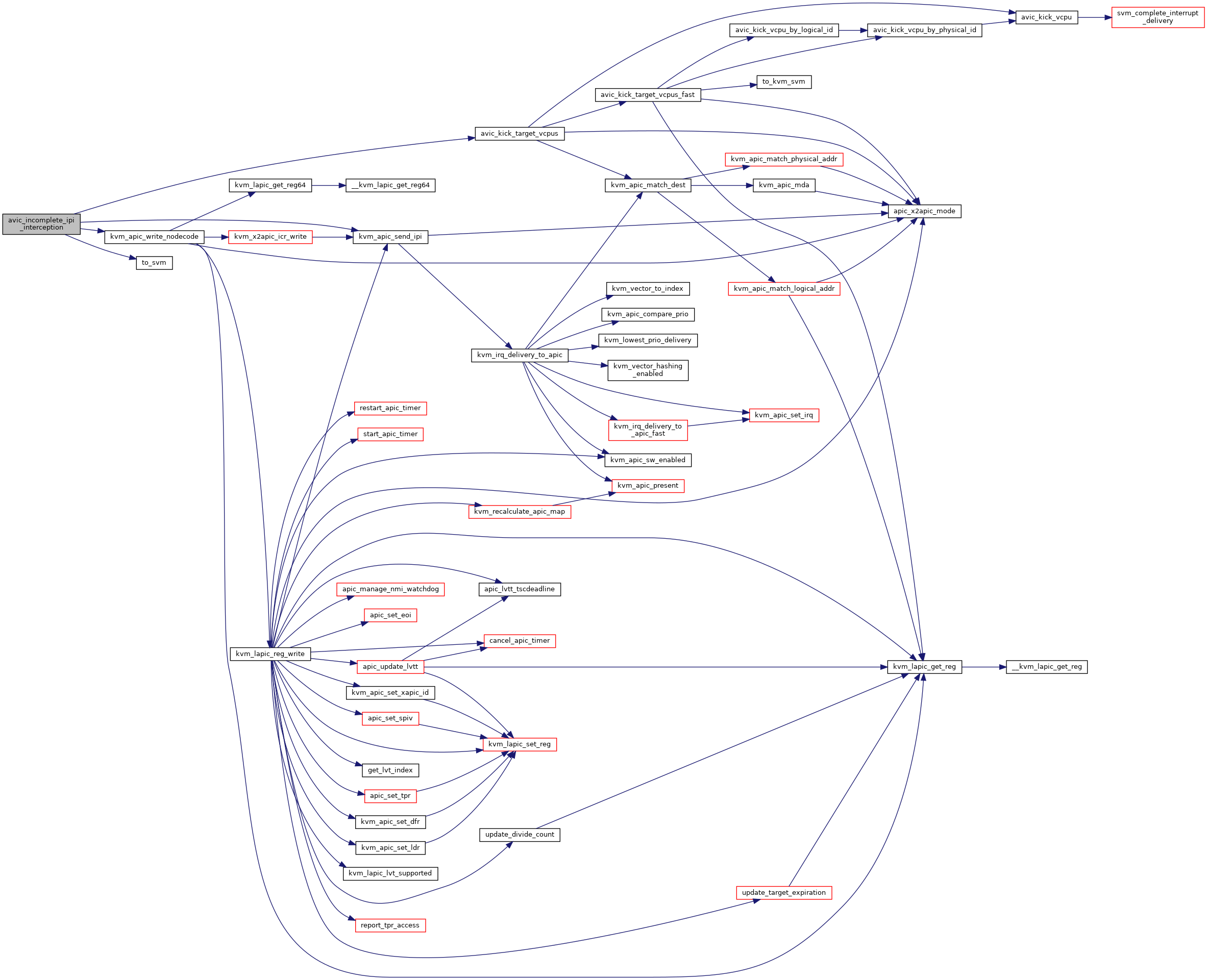

| int avic_incomplete_ipi_interception | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 490 of file avic.c.

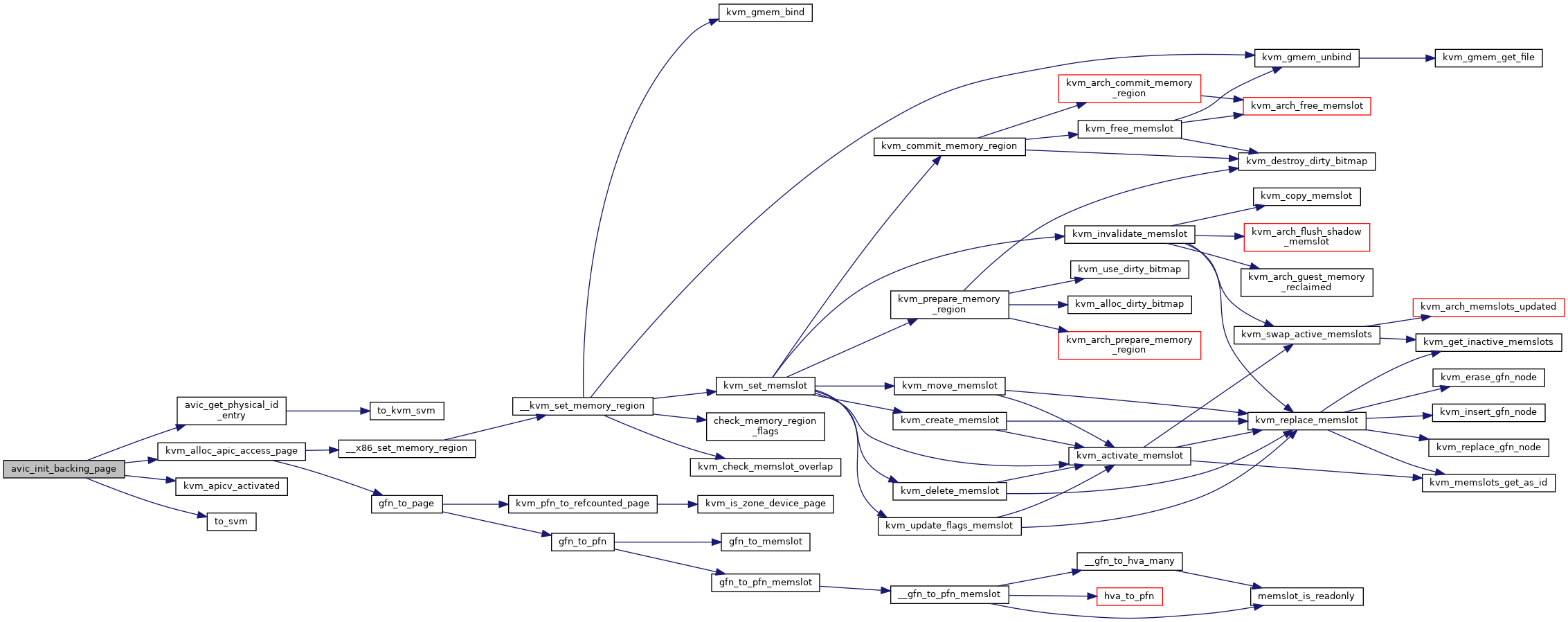

◆ avic_init_backing_page()

| static int avic_init_backing_page | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 277 of file avic.c.

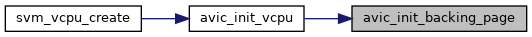

◆ avic_init_vcpu()

| int avic_init_vcpu | ( | struct vcpu_svm * | svm | ) |

Definition at line 719 of file avic.c.

◆ avic_init_vmcb()

| void avic_init_vmcb | ( | struct vcpu_svm * | svm, | struct vmcb * | vmcb | ) |

◆ avic_invalidate_logical_id_entry()

| static void avic_invalidate_logical_id_entry | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 595 of file avic.c.

◆ avic_kick_target_vcpus()

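| static void avic_kick_target_vcpus | ( | struct kvm * | kvm, | struct kvm_lapic * | source, | u32 | icrl, | u32 | icrh, | u32 | index | ) |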

Definition at line 465 of file avic.c.

◆ avic_kick_target_vcpus_fast()

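| static int avic_kick_target_vcpus_fast | ( | struct kvm * | kvm, | struct kvm_lapic * | source, | u32 | icrl, | u32 | icrh, | u32 | index | ) |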

Definition at line 397 of file avic.c.

◆ avic_kick_vcpu()

| static void avic_kick_vcpu | ( | struct kvm_vcpu * | vcpu, | u32 | icrl | ) |

Definition at line 340 of file avic.c.

◆ avic_kick_vcpu_by_logical_id()

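| static void avic_kick_vcpu_by_logical_id | ( | struct kvm * | kvm, | u32 * | avic_logical_id_table, | u32 | logid_index, | u32 | icrl | ) |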

◆ avic_kick_vcpu_by_physical_id()

| static void avic_kick_vcpu_by_physical_id | ( | struct kvm * | kvm, | u32 | physical_id, | u32 | icrl | ) |

◆ avic_ldr_write()

| static void avic_ldr_write | ( | struct kvm_vcpu * | vcpu, | u8 | g_physical_id, | u32 | ldr | ) |

◆ avic_pi_update_irte()

| int avic_pi_update_irte | ( | struct kvm * | kvm, | unsigned int | host_irq, | uint32_t | guest_irq, | bool | set | ) |

Here, we set up legacy mode in the following cases:

- The interrupt cannot be targeted at a specific vcpu.

- The posted interrupt is being unset.

- APIC virtualization is disabled for the vcpu.

- The IRQ has an incompatible delivery mode (SMI, INIT, etc.).
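The four legacy-mode cases listed above can be read as a single predicate. The sketch below is a simplified illustration, assuming the inputs have already been reduced to booleans; the struct, enum, and function names are hypothetical, and the kernel derives this information from the routing entry and vCPU state rather than from such a struct.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical flattened view of the inputs to the decision. */
struct demo_irq_request {
    bool set;                  /* false: the posted interrupt is being unset */
    bool has_single_target;    /* the interrupt resolves to one specific vCPU */
    bool apicv_active;         /* APIC virtualization is enabled for that vCPU */
    bool compatible_delivery;  /* delivery mode is not SMI, INIT, etc. */
};

enum demo_irte_mode { DEMO_IRTE_LEGACY, DEMO_IRTE_GUEST };

/* Guest (posted) mode only when none of the four legacy cases applies. */
static enum demo_irte_mode demo_pick_irte_mode(const struct demo_irq_request *r)
{
    assert(r != NULL);

    if (!r->has_single_target)    /* cannot target a specific vCPU */
        return DEMO_IRTE_LEGACY;
    if (!r->set)                  /* unsetting the posted interrupt */
        return DEMO_IRTE_LEGACY;
    if (!r->apicv_active)         /* APIC virtualization disabled */
        return DEMO_IRTE_LEGACY;
    if (!r->compatible_delivery)  /* incompatible delivery mode */
        return DEMO_IRTE_LEGACY;

    return DEMO_IRTE_GUEST;
}
```

Writing the cases as early returns keeps each condition next to the reason it forces legacy mode.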

Here, we have successfully set up vcpu affinity in IOMMU guest mode. Now, we need to store the posted interrupt information in a per-vcpu ir_list so that we can reference it directly when we update vcpu scheduling information in the IOMMU irte.

Here, pi is used to:

- Tell IOMMU to use legacy mode for this interrupt.

- Retrieve ga_tag of prior interrupt remapping data.

Check whether the posted interrupt was previously set up in guest_mode by checking whether the ga_tag was cached. If so, we need to clean up the per-vcpu ir_list.
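The cleanup check described above can be sketched as a small predicate. This is an illustration under stated assumptions, not the kernel code: the struct is a hypothetical stand-in for the posted-interrupt data, and it assumes that a `prev_ga_tag` of 0 means no tag was cached and that a return value of 0 from the IOMMU update means success.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the posted-interrupt data passed to the IOMMU. */
struct demo_pi_data {
    bool is_guest_mode;    /* false asks the IOMMU to use legacy mode */
    uint32_t prev_ga_tag;  /* tag of the prior remapping data; 0 assumed to mean none */
};

/* True when a legacy-mode update succeeded and a previously cached ga_tag
 * shows the interrupt had been set up in guest mode before. */
static bool demo_needs_ir_list_cleanup(int iommu_ret, const struct demo_pi_data *pi)
{
    assert(pi != NULL);
    return iommu_ret == 0 && !pi->is_guest_mode && pi->prev_ga_tag != 0;
}
```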

Definition at line 894 of file avic.c.

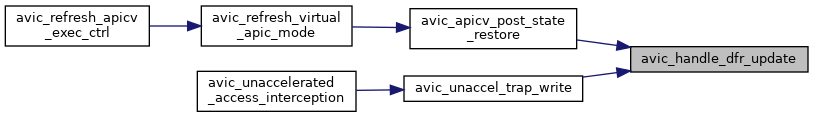

◆ avic_refresh_apicv_exec_ctrl()

| void avic_refresh_apicv_exec_ctrl | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1136 of file avic.c.

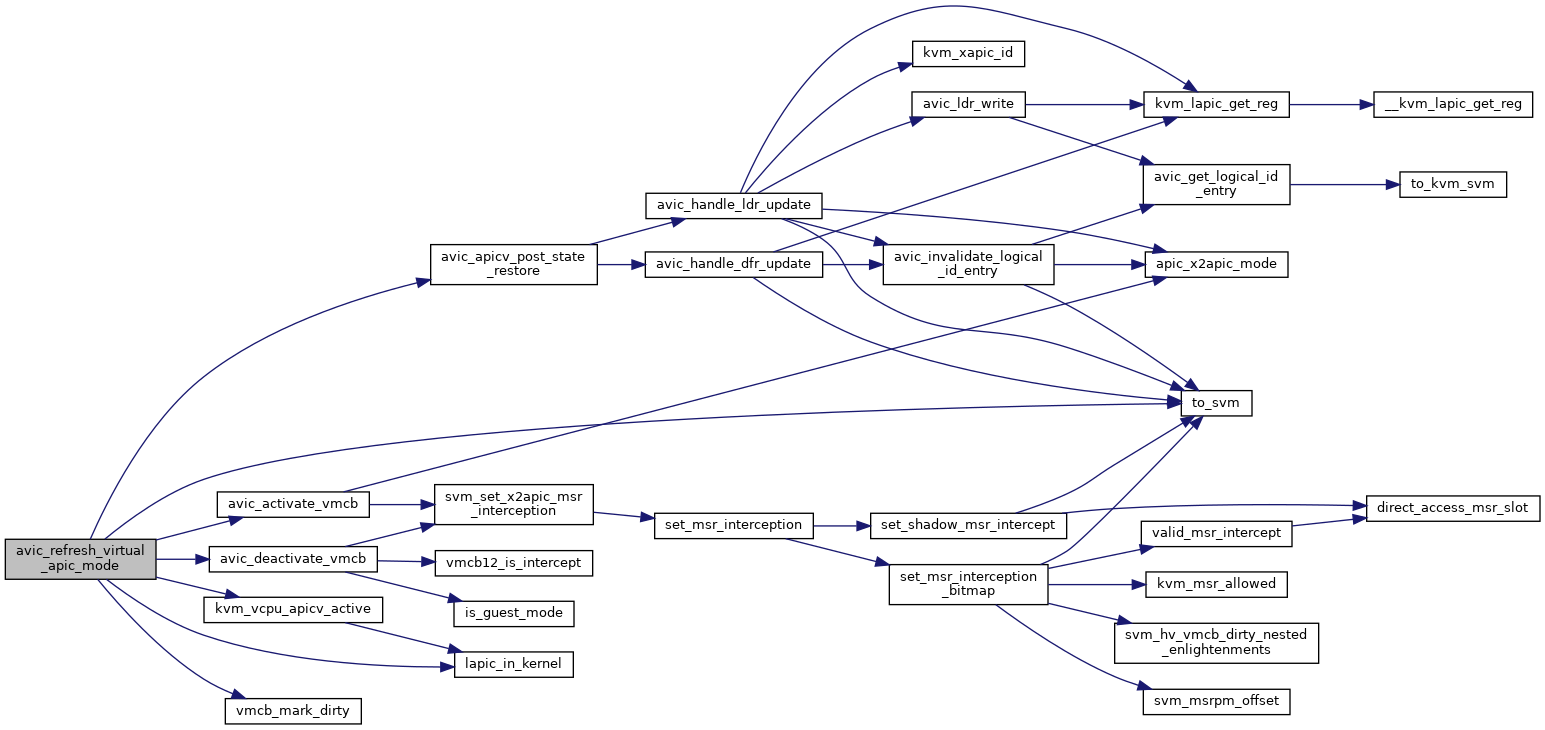

◆ avic_refresh_virtual_apic_mode()

| void avic_refresh_virtual_apic_mode | ( | struct kvm_vcpu * | vcpu | ) |

While AVIC is temporarily deactivated, the guest could update the APIC ID, DFR and LDR registers, and those writes would not be trapped by avic_unaccelerated_access_interception(). In this case, we need to check and update the AVIC logical APIC ID table accordingly before re-activating.
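The re-synchronization step described above can be illustrated with a small user-space model. It is a sketch under assumptions, not the kernel implementation: the struct fields and `demo_` functions are hypothetical, and the "table rebuild" is reduced to a counter so the effect is observable.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-vCPU state for the illustration. */
struct demo_vcpu {
    uint32_t apic_ldr, apic_dfr;      /* current guest register values */
    uint32_t cached_ldr, cached_dfr;  /* values the logical ID table was built from */
    unsigned table_rebuilds;          /* counts table updates, for demonstration */
    bool avic_active;
};

/* Update the cached values (standing in for the logical ID table) if the
 * guest changed LDR or DFR. Returns true when an update was needed. */
static bool demo_sync_logical_id(struct demo_vcpu *v)
{
    assert(v != NULL);
    if (v->apic_ldr == v->cached_ldr && v->apic_dfr == v->cached_dfr)
        return false;

    v->cached_ldr = v->apic_ldr;
    v->cached_dfr = v->apic_dfr;
    v->table_rebuilds++;
    return true;
}

/* On activation, first catch up on writes that were not trapped while
 * AVIC was deactivated, then mark AVIC active again. */
static void demo_refresh_virtual_apic_mode(struct demo_vcpu *v, bool activate)
{
    assert(v != NULL);
    if (activate)
        demo_sync_logical_id(v);
    v->avic_active = activate;
}
```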

Definition at line 1112 of file avic.c.

◆ avic_ring_doorbell()

| void avic_ring_doorbell | ( | struct kvm_vcpu * | vcpu | ) |

◆ avic_set_pi_irte_mode()

| static int avic_set_pi_irte_mode | ( | struct kvm_vcpu * | vcpu, | bool | activate | ) |

◆ avic_unaccel_trap_write()

| static int avic_unaccel_trap_write | ( | struct kvm_vcpu * | vcpu | ) |

◆ avic_unaccelerated_access_interception()

| int avic_unaccelerated_access_interception | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 693 of file avic.c.

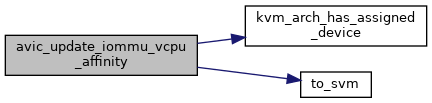

◆ avic_update_iommu_vcpu_affinity()

|

inlinestatic |

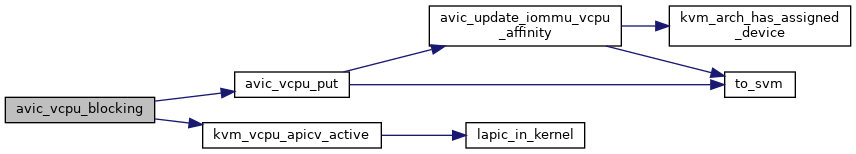

◆ avic_vcpu_blocking()

| void avic_vcpu_blocking | ( | struct kvm_vcpu * | vcpu | ) |

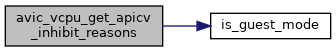

◆ avic_vcpu_get_apicv_inhibit_reasons()

| unsigned long avic_vcpu_get_apicv_inhibit_reasons | ( | struct kvm_vcpu * | vcpu | ) |

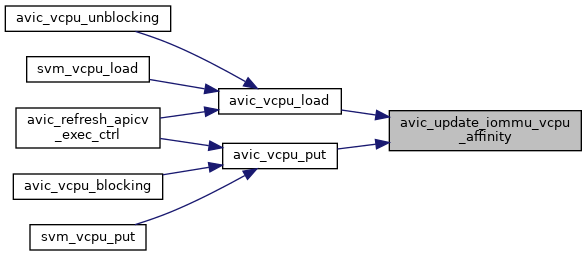

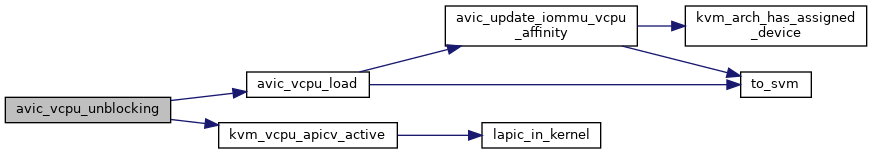

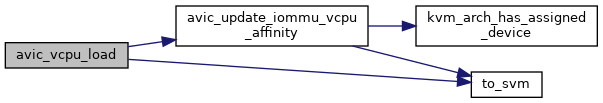

◆ avic_vcpu_load()

| void avic_vcpu_load | ( | struct kvm_vcpu * | vcpu, | int | cpu | ) |

Definition at line 1028 of file avic.c.

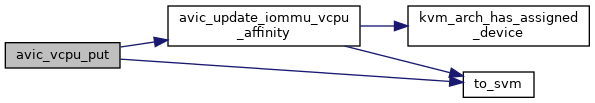

◆ avic_vcpu_put()

| void avic_vcpu_put | ( | struct kvm_vcpu * | vcpu | ) |

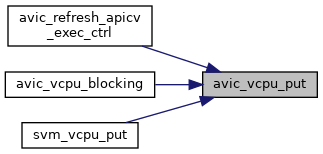

◆ avic_vcpu_unblocking()

| void avic_vcpu_unblocking | ( | struct kvm_vcpu * | vcpu | ) |

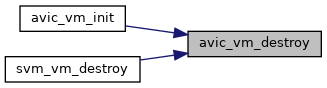

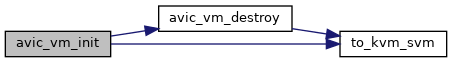

◆ avic_vm_destroy()

| void avic_vm_destroy | ( | struct kvm * | kvm | ) |

◆ avic_vm_init()

| int avic_vm_init | ( | struct kvm * | kvm | ) |

◆ DEFINE_HASHTABLE()

| static DEFINE_HASHTABLE | ( | svm_vm_data_hash, | SVM_VM_DATA_HASH_BITS | ) |

◆ DEFINE_SPINLOCK()

| static DEFINE_SPINLOCK | ( | svm_vm_data_hash_lock | ) |

◆ get_pi_vcpu_info()

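| static int get_pi_vcpu_info | ( | struct kvm * | kvm, | struct kvm_kernel_irq_routing_entry * | e, | struct vcpu_data * | vcpu_info, | struct vcpu_svm ** | svm | ) |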

Definition at line 861 of file avic.c.

◆ is_avic_unaccelerated_access_trap()

| static bool is_avic_unaccelerated_access_trap | ( | u32 | offset | ) |

◆ module_param_unsafe()

| module_param_unsafe | ( | force_avic | , |

| bool | , | ||

| 0444 | |||

| ) |

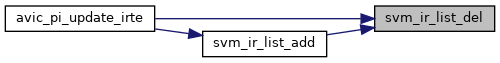

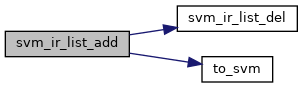

◆ svm_ir_list_add()

| static int svm_ir_list_add | ( | struct vcpu_svm * | svm, | struct amd_iommu_pi_data * | pi | ) |

In some cases, the existing irte is updated and re-set, so we need to check here whether it has already been added to the ir_list.

Allocate a new amd_iommu_pi_data, which will be added to the per-vcpu ir_list.
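The add-with-duplicate-check pattern described above can be sketched in user space as follows. This is a simplified illustration, not the kernel code: it assumes a singly linked list keyed by an opaque irte pointer, uses `malloc` in place of kernel allocation, omits locking, and handles the "already added" case by dropping the stale entry before re-adding. All `demo_` names are hypothetical.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical list node: one entry per irte that targets this vCPU. */
struct demo_ir {
    void *irte;
    struct demo_ir *next;
};

struct demo_vcpu_ir_list {
    struct demo_ir *head;
};

/* Remove the entry for irte, if one is on the list. */
static void demo_ir_list_del(struct demo_vcpu_ir_list *l, const void *irte)
{
    struct demo_ir **pp;

    assert(l != NULL);
    for (pp = &l->head; *pp != NULL; pp = &(*pp)->next) {
        if ((*pp)->irte == irte) {
            struct demo_ir *dead = *pp;

            *pp = dead->next;
            free(dead);
            return;
        }
    }
}

/* Add irte to the list. A stale entry for the same irte is dropped first,
 * so an irte that is updated and re-set never appears twice. */
static int demo_ir_list_add(struct demo_vcpu_ir_list *l, void *irte)
{
    struct demo_ir *e;

    assert(l != NULL);
    demo_ir_list_del(l, irte);

    e = malloc(sizeof(*e));
    if (e == NULL)
        return -ENOMEM;

    e->irte = irte;
    e->next = l->head;
    l->head = e;
    return 0;
}

/* Count entries; used here only to observe the list. */
static size_t demo_ir_list_len(const struct demo_vcpu_ir_list *l)
{
    const struct demo_ir *e;
    size_t n = 0;

    assert(l != NULL);
    for (e = l->head; e != NULL; e = e->next)
        n++;
    return n;
}
```

Adding the same irte twice leaves a single entry, which is the property the comment above asks for.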

Definition at line 792 of file avic.c.

◆ svm_ir_list_del()

| static void svm_ir_list_del | ( | struct vcpu_svm * | svm, | struct amd_iommu_pi_data * | pi | ) |