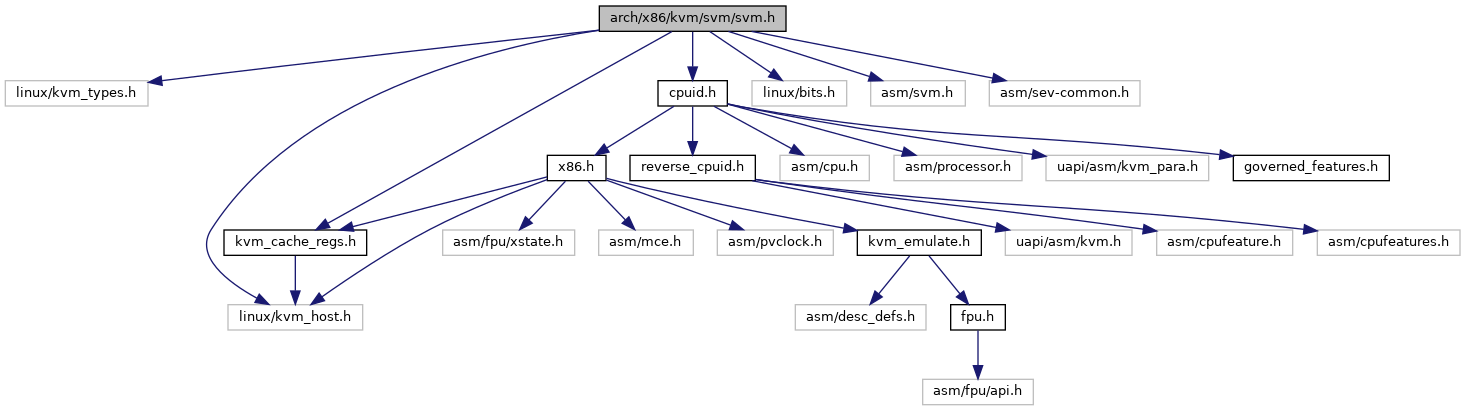

#include <linux/kvm_types.h>
#include <linux/kvm_host.h>
#include <linux/bits.h>
#include <asm/svm.h>
#include <asm/sev-common.h>
#include "cpuid.h"
#include "kvm_cache_regs.h"

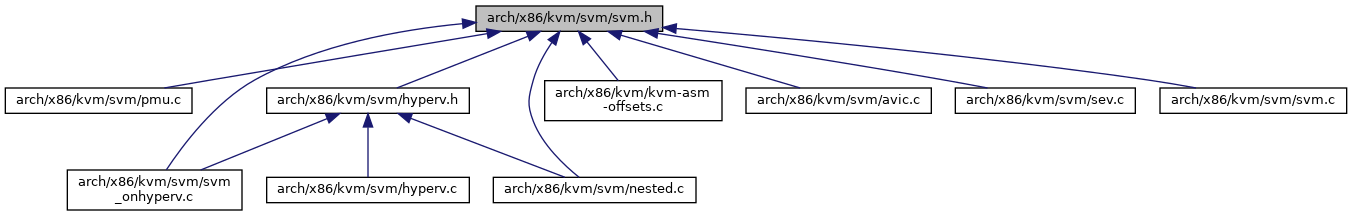

Go to the source code of this file.

Classes

| Kind | Name |
|---|---|
| struct | kvm_sev_info |
| struct | kvm_svm |
| struct | kvm_vmcb_info |
| struct | vmcb_save_area_cached |
| struct | vmcb_ctrl_area_cached |
| struct | svm_nested_state |
| struct | vcpu_sev_es_state |
| struct | vcpu_svm |
| struct | svm_cpu_data |

Macros

| Kind | Definition |
|---|---|
| #define | __sme_page_pa(x) __sme_set(page_to_pfn(x) << PAGE_SHIFT) |
| #define | IOPM_SIZE PAGE_SIZE * 3 |
| #define | MSRPM_SIZE PAGE_SIZE * 2 |
| #define | MAX_DIRECT_ACCESS_MSRS 47 |
| #define | MSRPM_OFFSETS 32 |
| #define | VMCB_ALL_CLEAN_MASK |
| #define | VMCB_ALWAYS_DIRTY_MASK ((1U << VMCB_INTR) \| (1U << VMCB_CR2)) |
| #define | SVM_REGS_LAZY_LOAD_SET (1 << VCPU_EXREG_PDPTR) |
| #define | MSR_INVALID 0xffffffffU |
| #define | DEBUGCTL_RESERVED_BITS (~(0x3fULL)) |
| #define | NESTED_EXIT_HOST 0 /* Exit handled on host level */ |
| #define | NESTED_EXIT_DONE 1 /* Exit caused nested vmexit */ |
| #define | NESTED_EXIT_CONTINUE 2 /* Further checks needed */ |
| #define | AVIC_REQUIRED_APICV_INHIBITS |
| #define | GHCB_VERSION_MAX 1ULL |
| #define | GHCB_VERSION_MIN 1ULL |
| #define | DEFINE_KVM_GHCB_ACCESSORS(field) |

Enumerations

| Kind | Enumerators |
|---|---|
| enum | { VMCB_INTERCEPTS, VMCB_PERM_MAP, VMCB_ASID, VMCB_INTR, VMCB_NPT, VMCB_CR, VMCB_DR, VMCB_DT, VMCB_SEG, VMCB_CR2, VMCB_LBR, VMCB_AVIC, VMCB_SW = 31 } |

Functions | |

| DECLARE_PER_CPU (struct svm_cpu_data, svm_data) | |

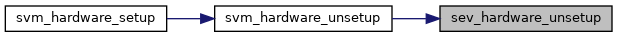

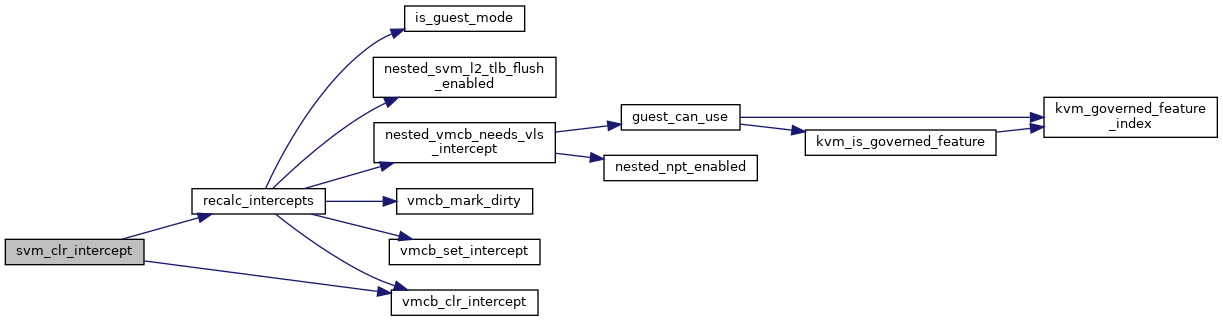

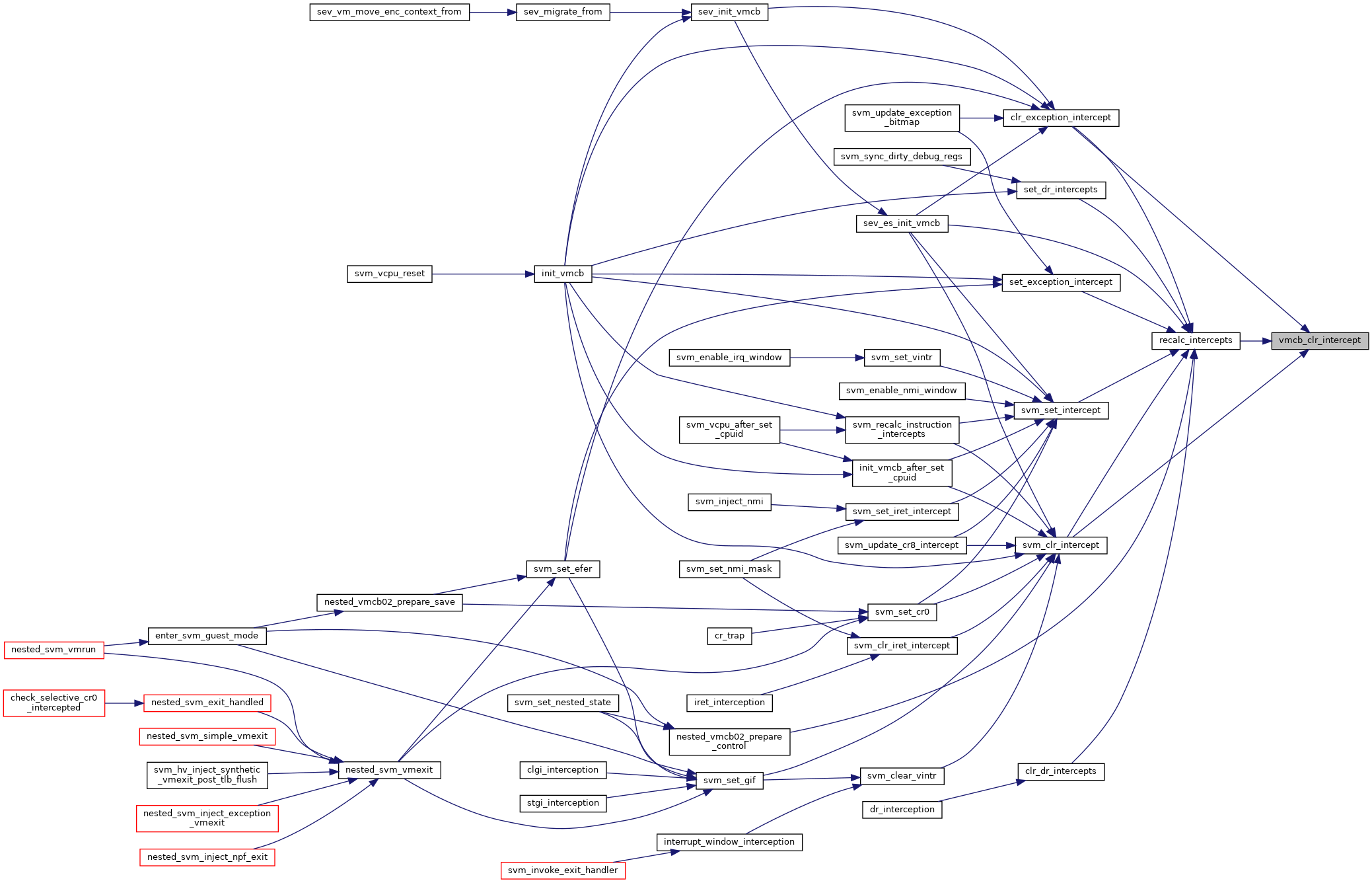

| void | recalc_intercepts (struct vcpu_svm *svm) |

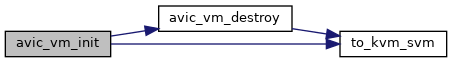

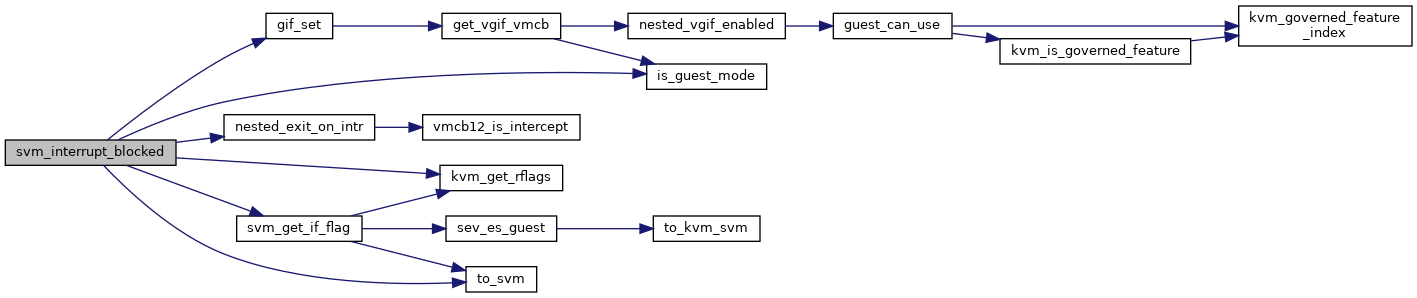

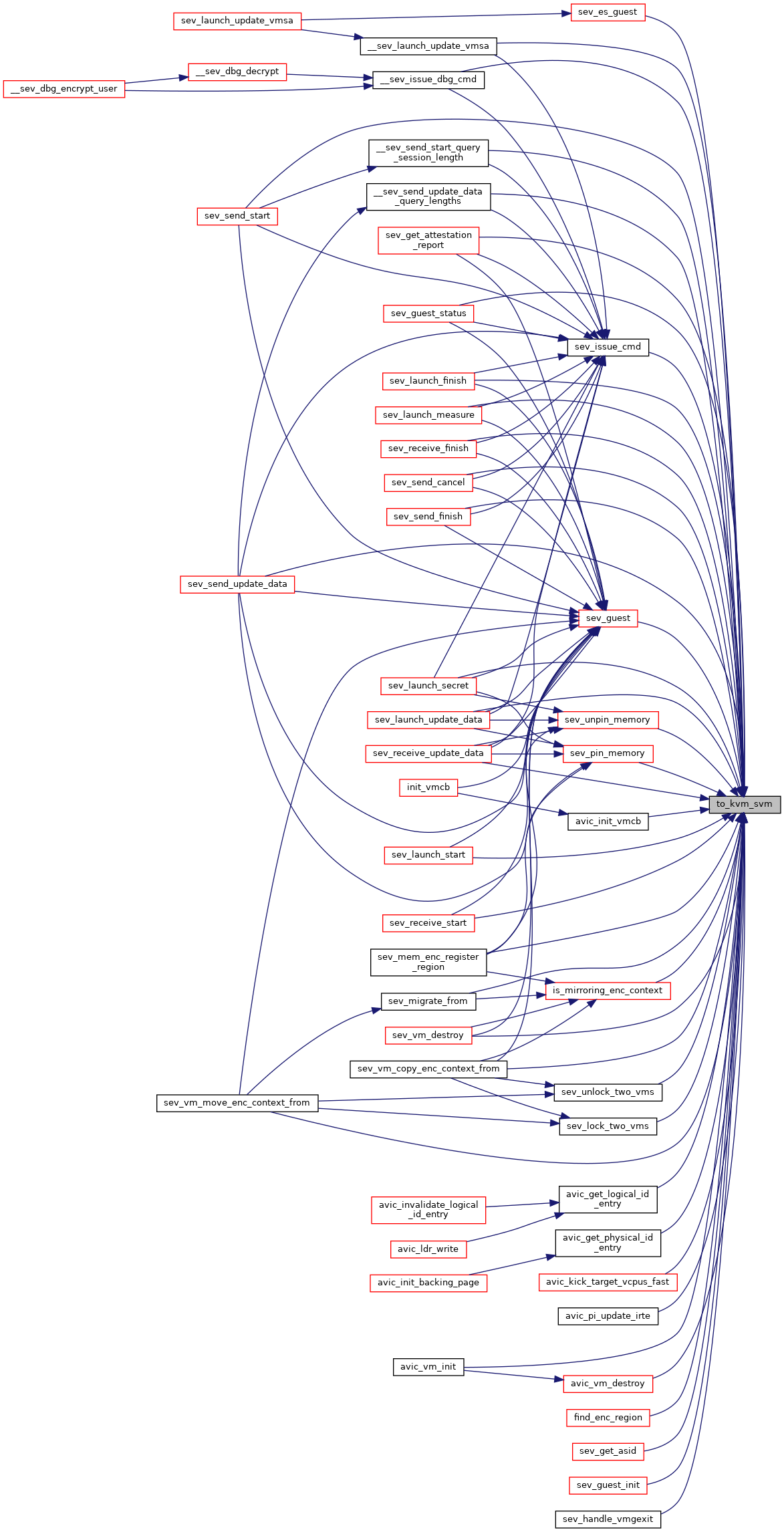

| static __always_inline struct kvm_svm * | to_kvm_svm (struct kvm *kvm) |

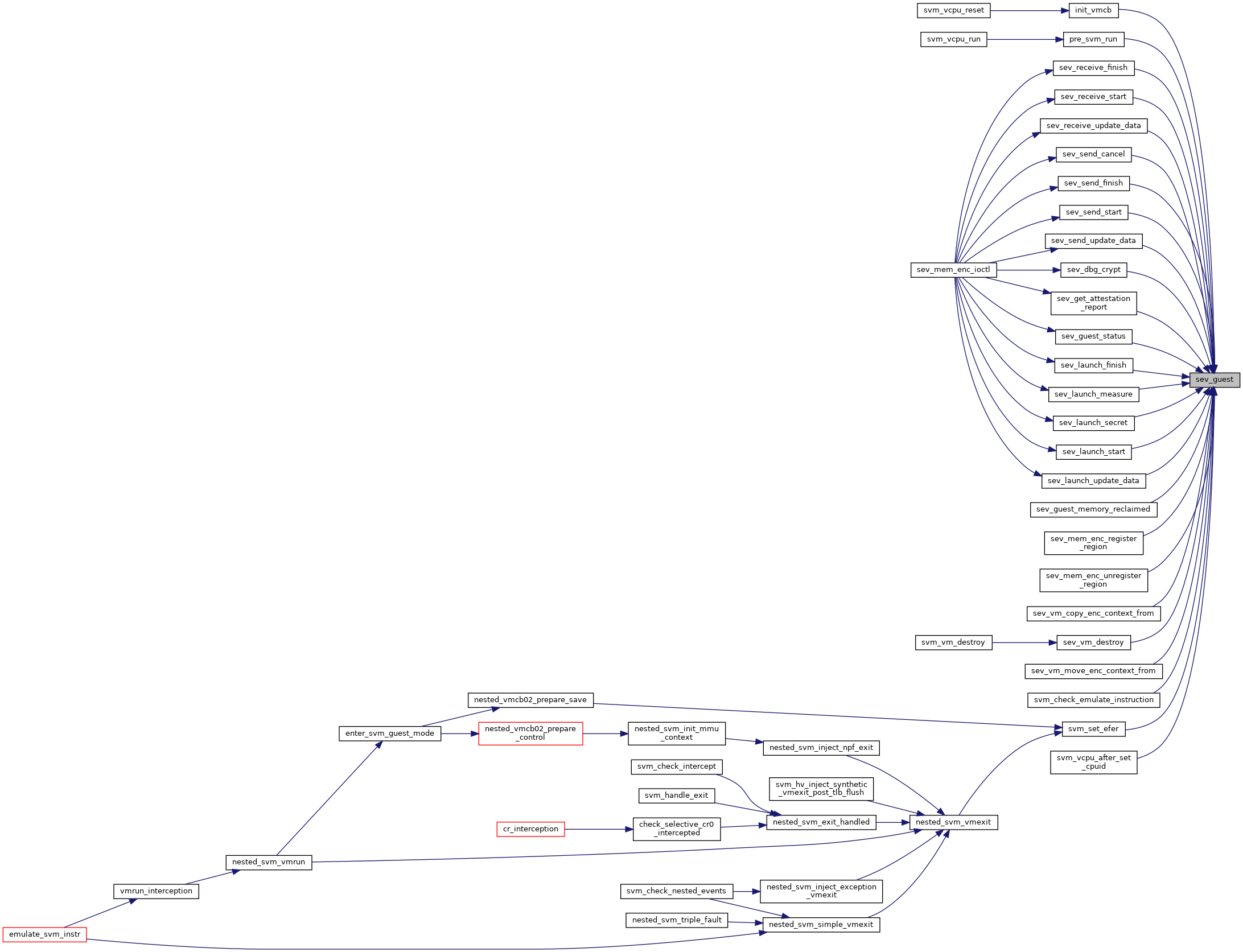

| static __always_inline bool | sev_guest (struct kvm *kvm) |

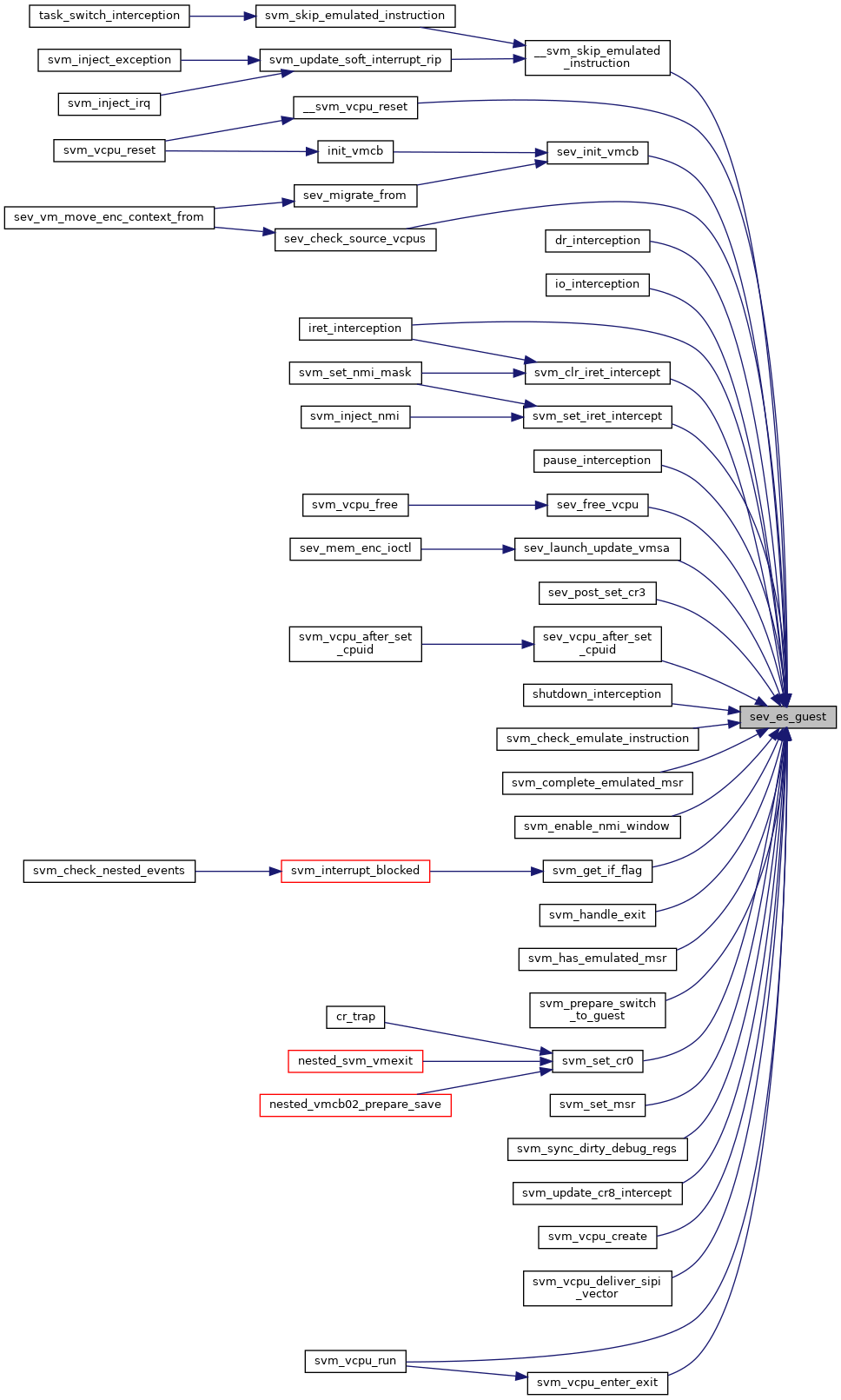

| static __always_inline bool | sev_es_guest (struct kvm *kvm) |

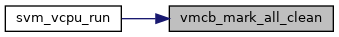

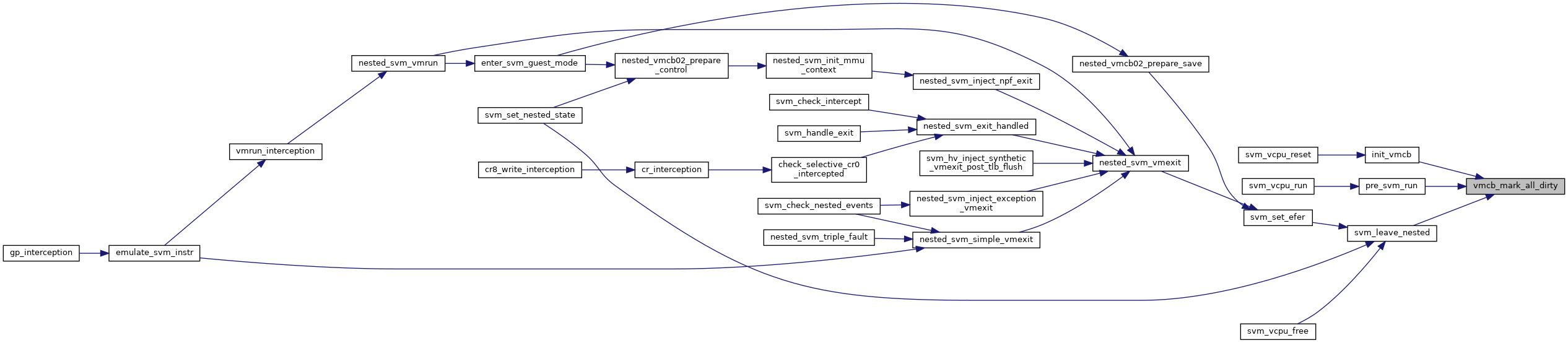

| static void | vmcb_mark_all_dirty (struct vmcb *vmcb) |

| static void | vmcb_mark_all_clean (struct vmcb *vmcb) |

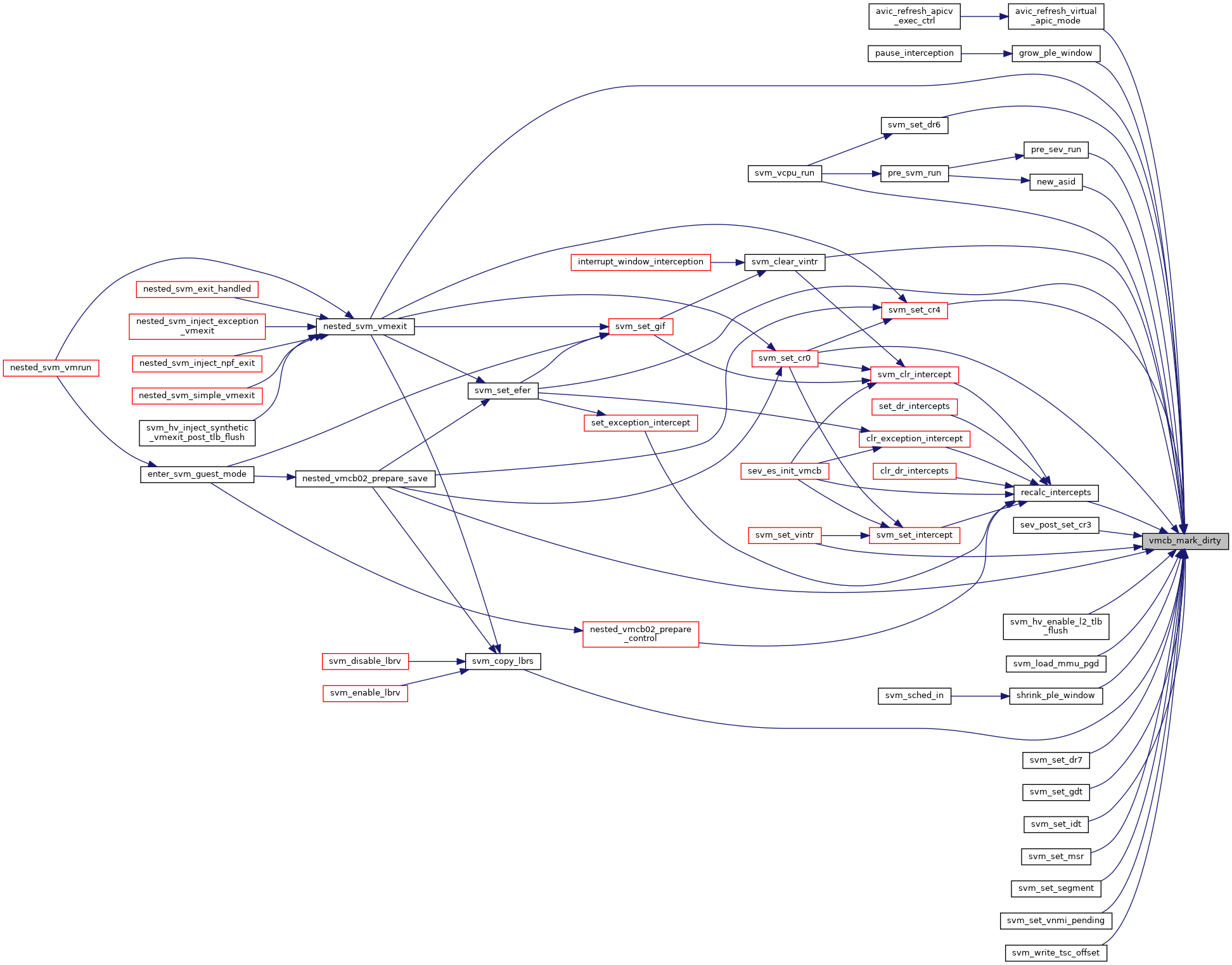

| static void | vmcb_mark_dirty (struct vmcb *vmcb, int bit) |

| static bool | vmcb_is_dirty (struct vmcb *vmcb, int bit) |

| static __always_inline struct vcpu_svm * | to_svm (struct kvm_vcpu *vcpu) |

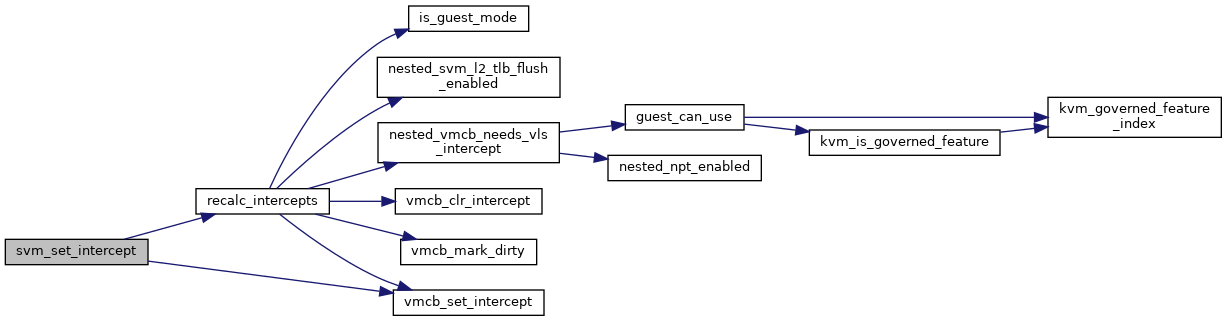

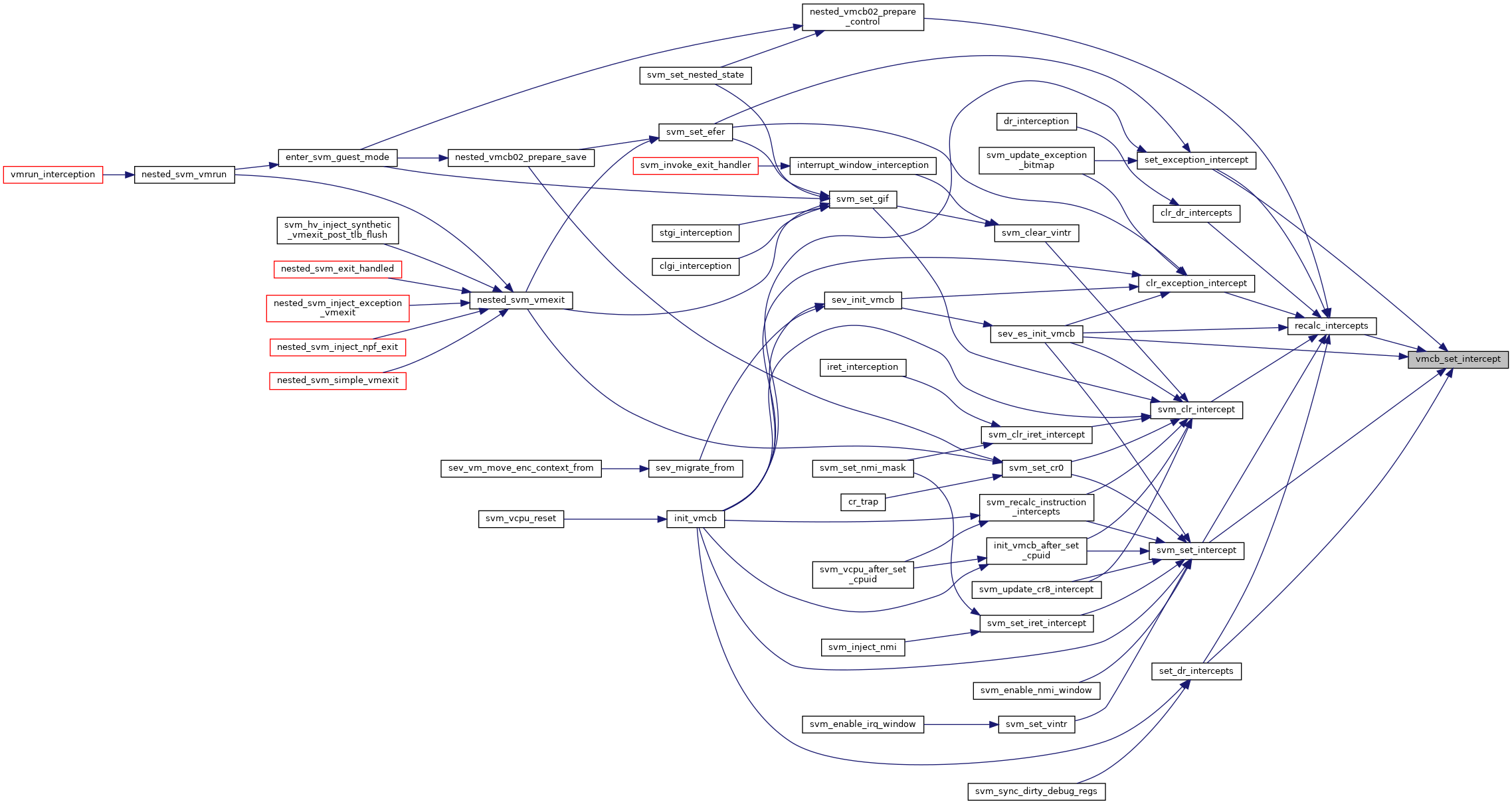

| static void | vmcb_set_intercept (struct vmcb_control_area *control, u32 bit) |

| static void | vmcb_clr_intercept (struct vmcb_control_area *control, u32 bit) |

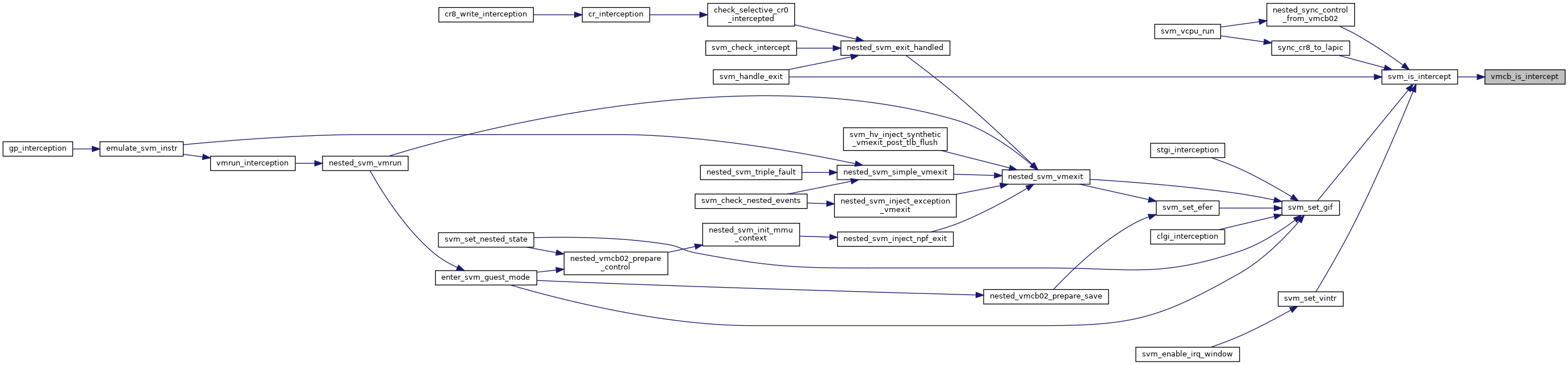

| static bool | vmcb_is_intercept (struct vmcb_control_area *control, u32 bit) |

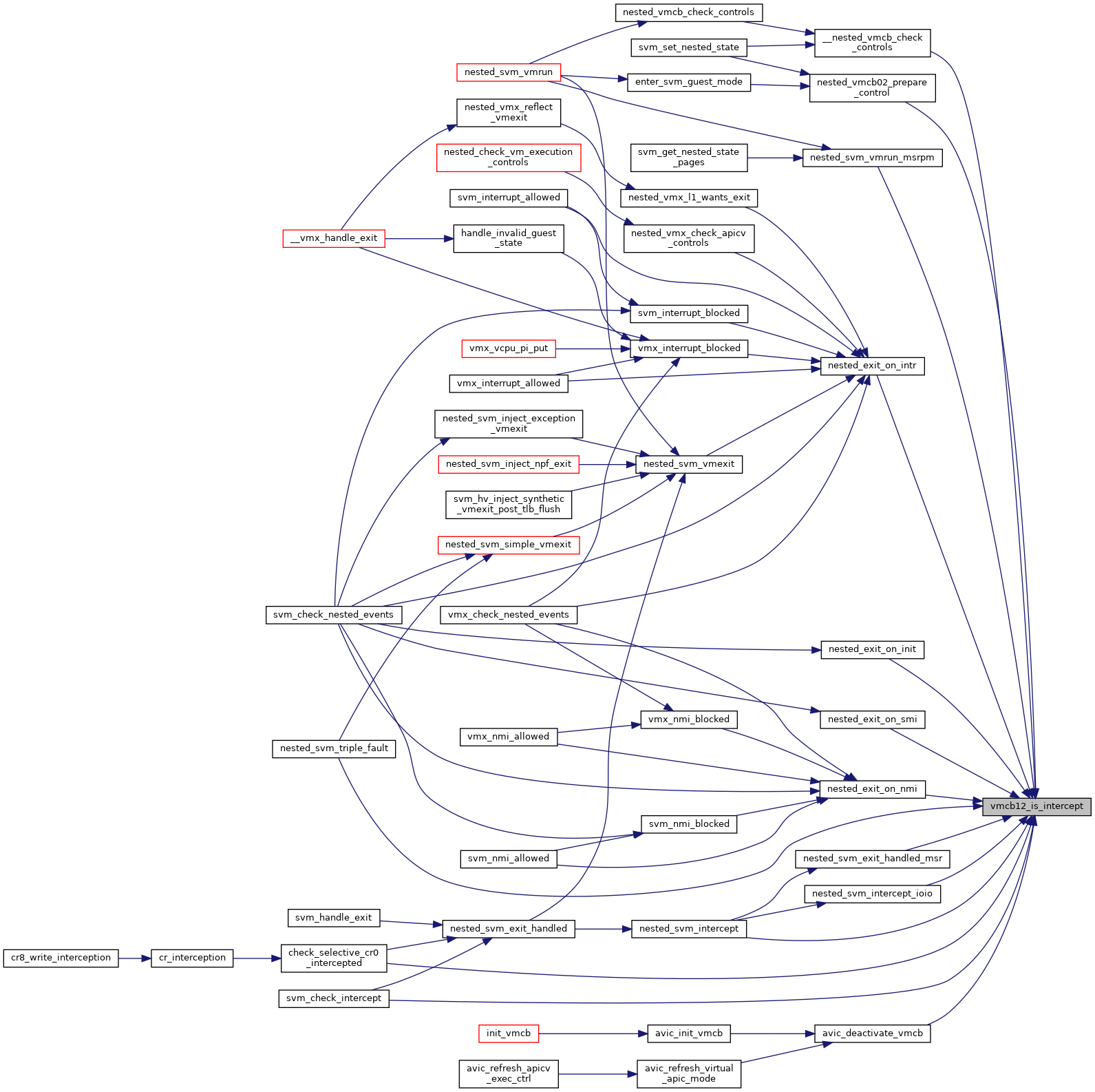

| static bool | vmcb12_is_intercept (struct vmcb_ctrl_area_cached *control, u32 bit) |

| static void | set_exception_intercept (struct vcpu_svm *svm, u32 bit) |

| static void | clr_exception_intercept (struct vcpu_svm *svm, u32 bit) |

| static void | svm_set_intercept (struct vcpu_svm *svm, int bit) |

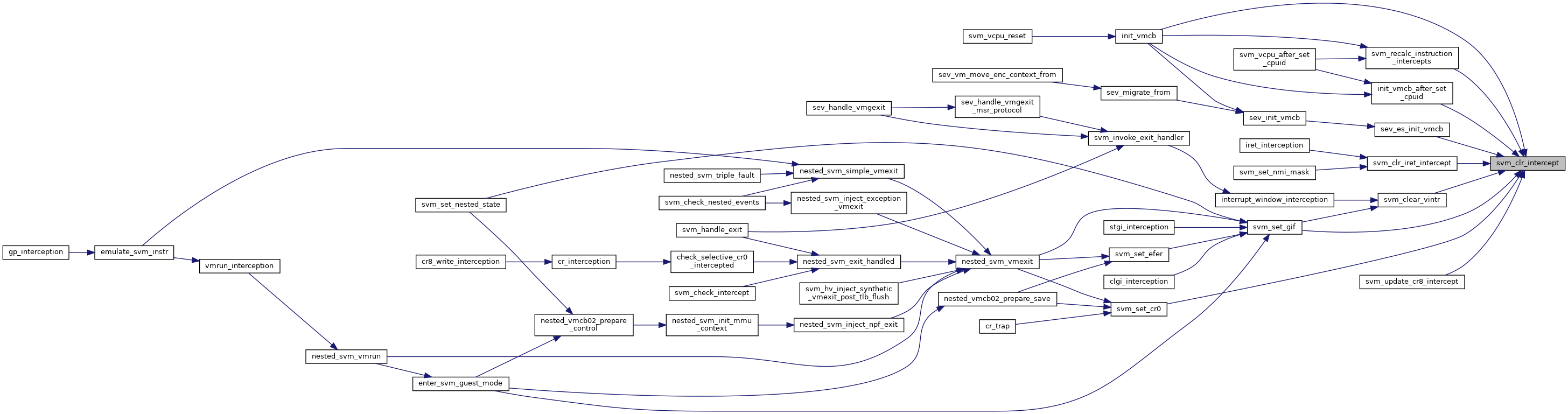

| static void | svm_clr_intercept (struct vcpu_svm *svm, int bit) |

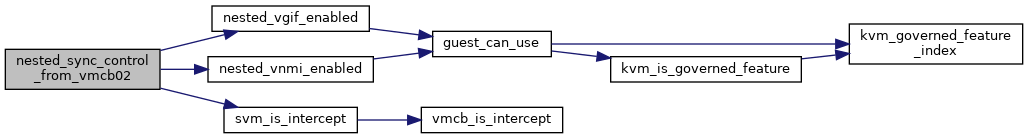

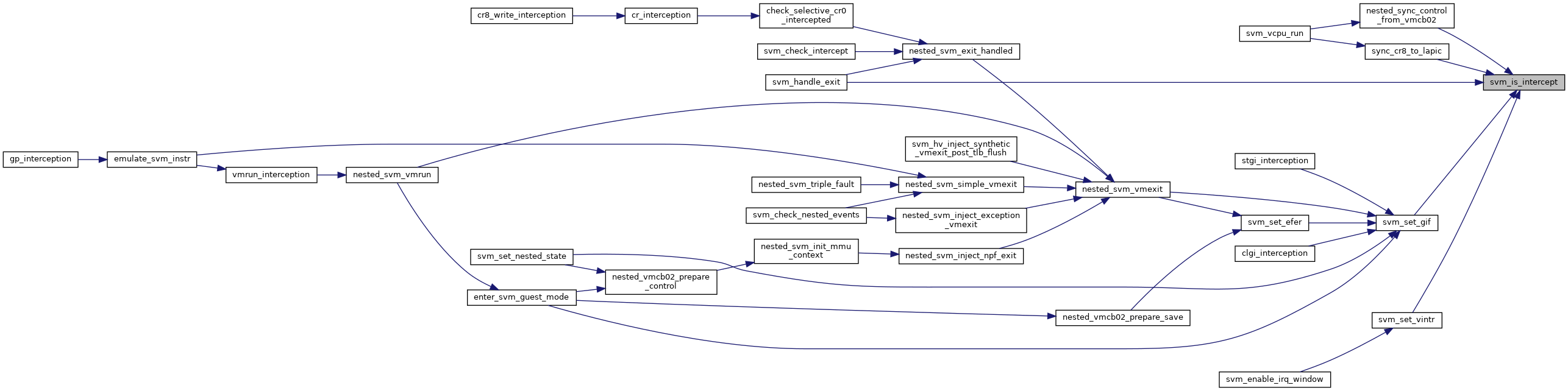

| static bool | svm_is_intercept (struct vcpu_svm *svm, int bit) |

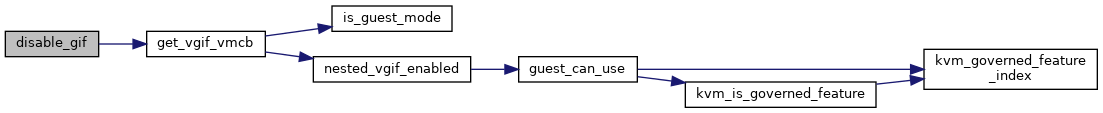

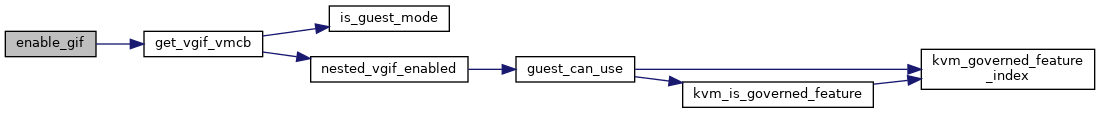

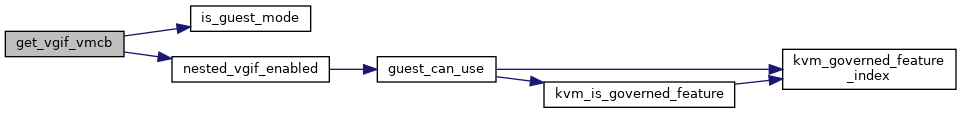

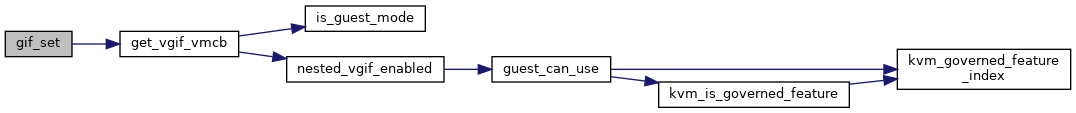

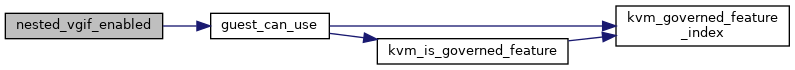

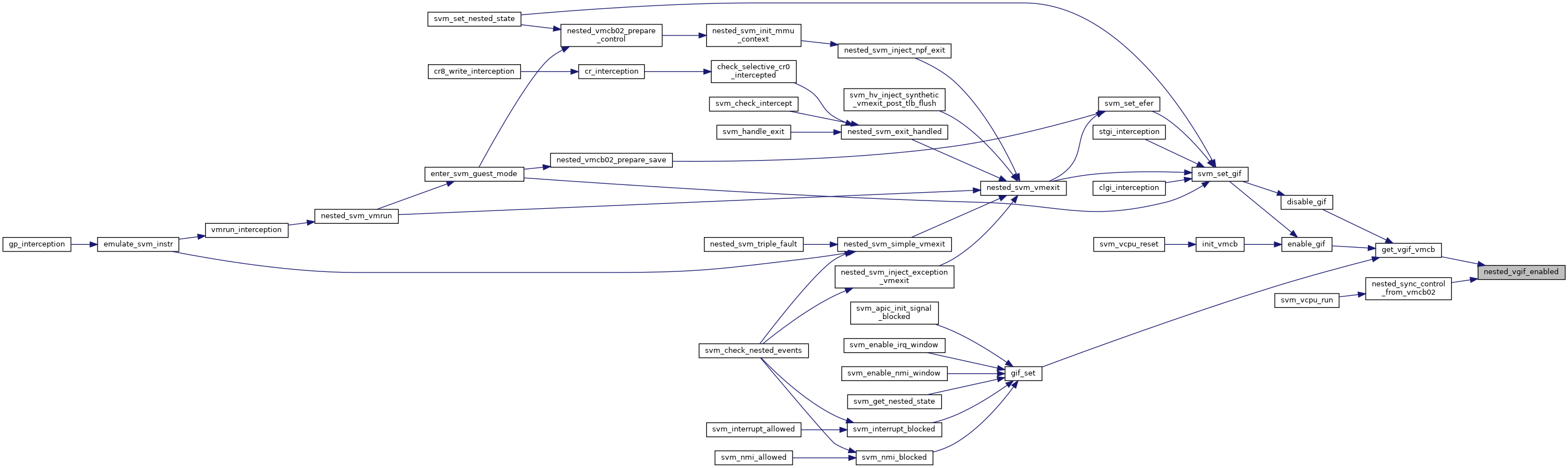

| static bool | nested_vgif_enabled (struct vcpu_svm *svm) |

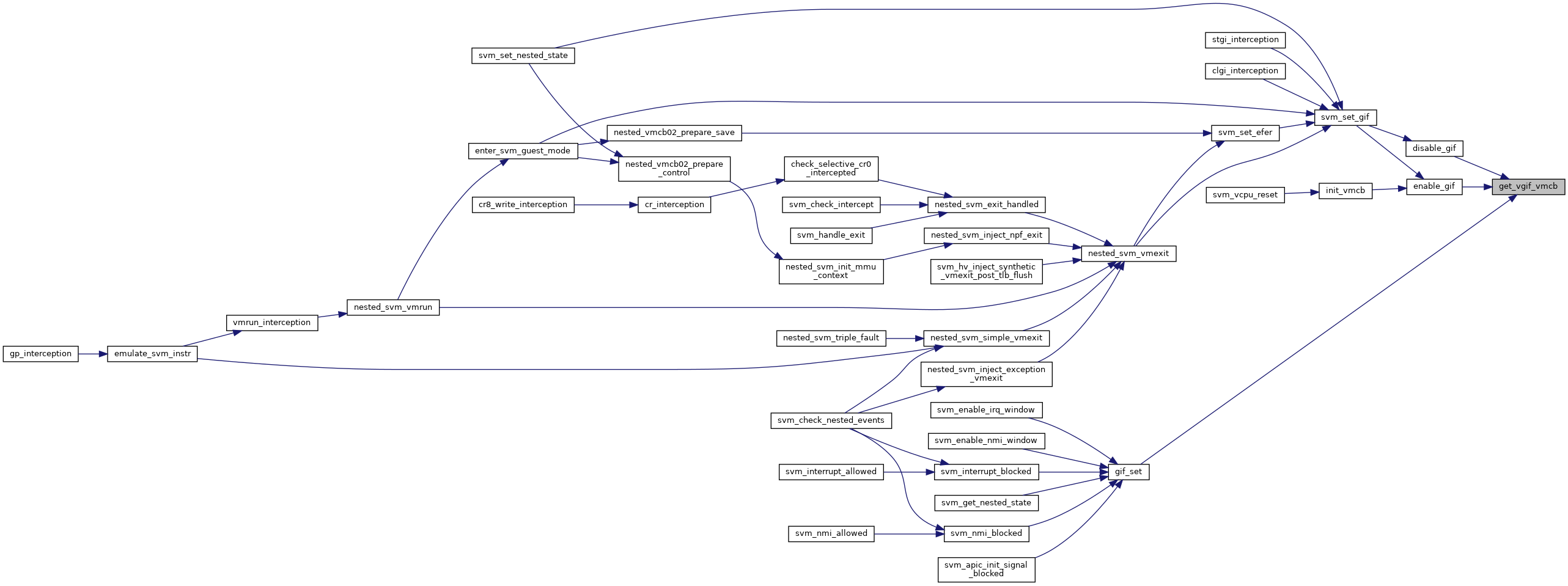

| static struct vmcb * | get_vgif_vmcb (struct vcpu_svm *svm) |

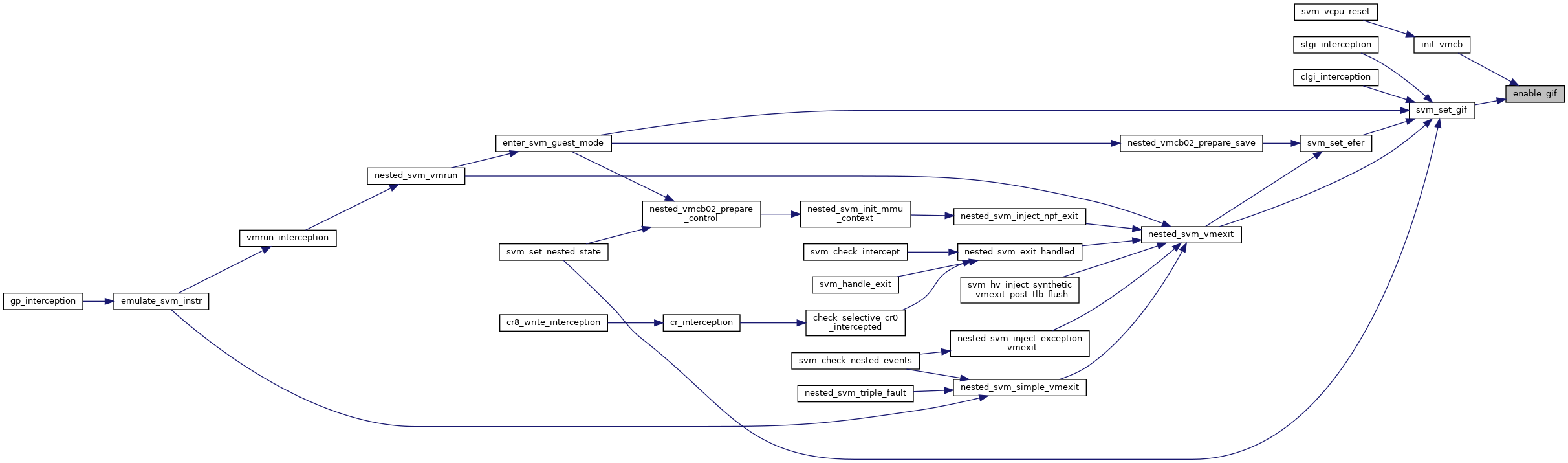

| static void | enable_gif (struct vcpu_svm *svm) |

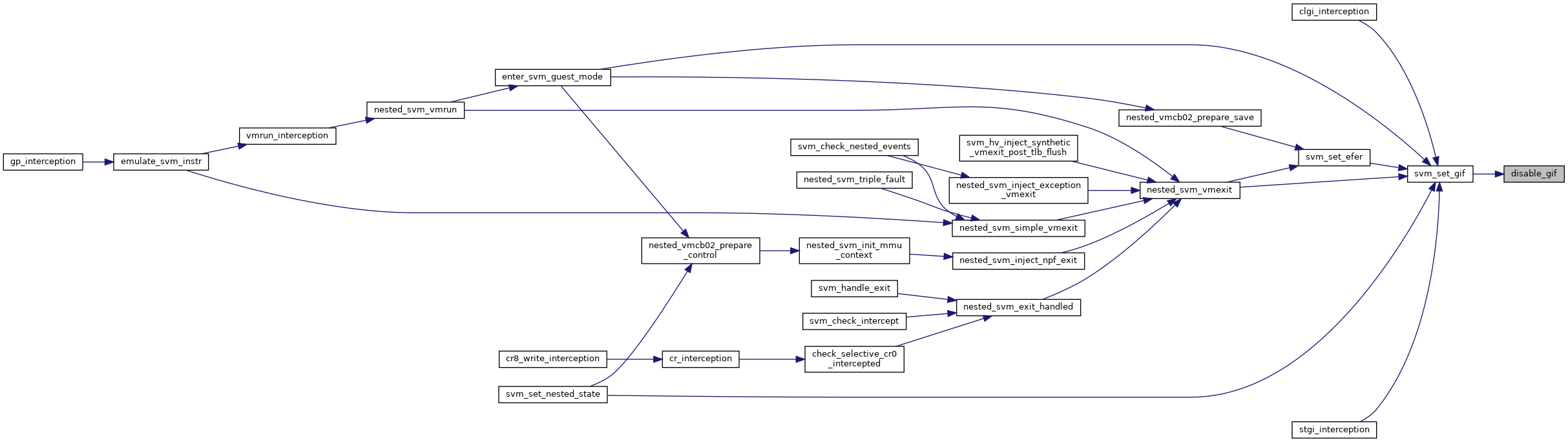

| static void | disable_gif (struct vcpu_svm *svm) |

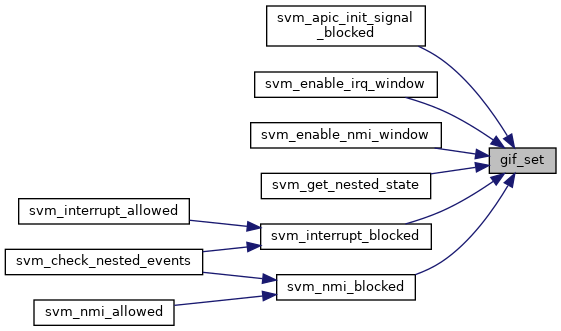

| static bool | gif_set (struct vcpu_svm *svm) |

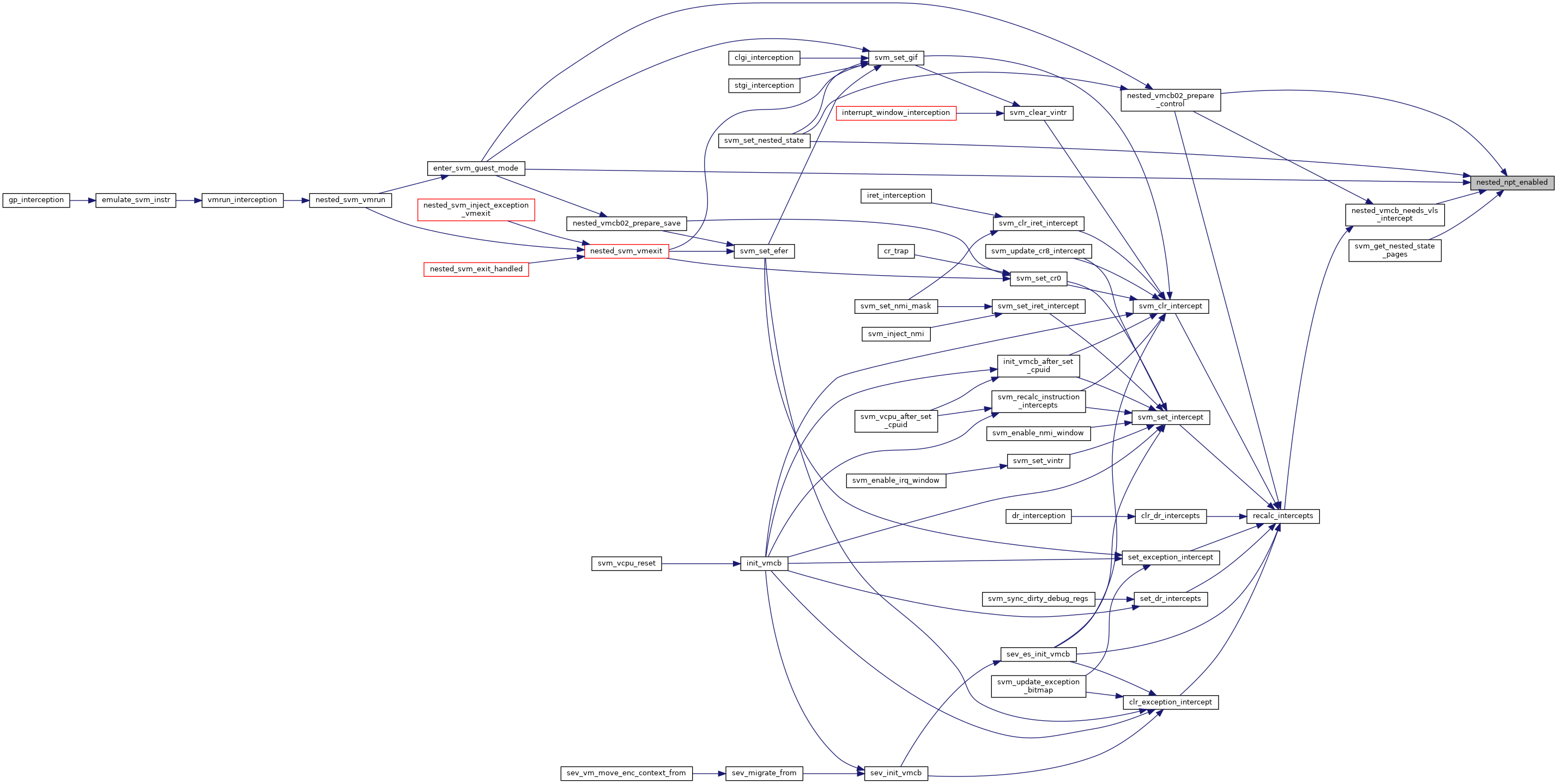

| static bool | nested_npt_enabled (struct vcpu_svm *svm) |

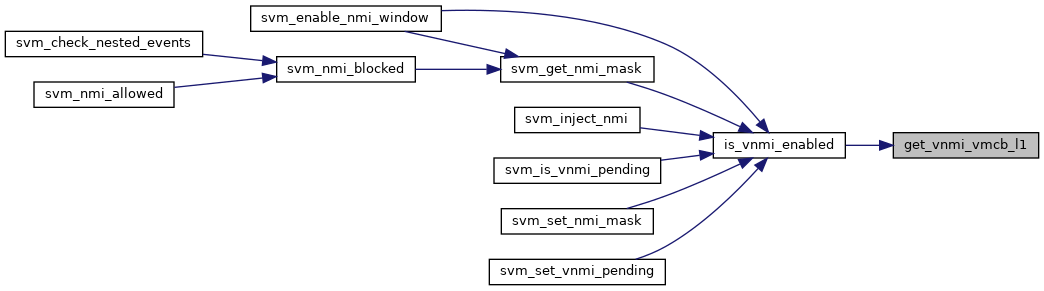

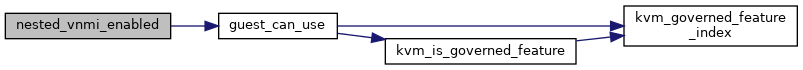

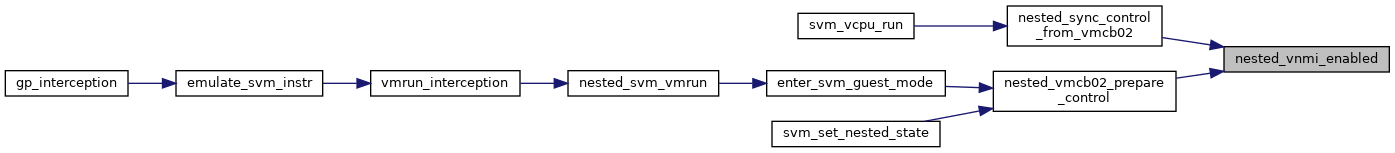

| static bool | nested_vnmi_enabled (struct vcpu_svm *svm) |

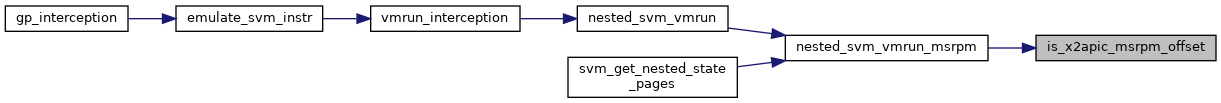

| static bool | is_x2apic_msrpm_offset (u32 offset) |

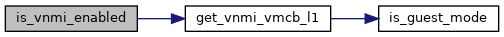

| static struct vmcb * | get_vnmi_vmcb_l1 (struct vcpu_svm *svm) |

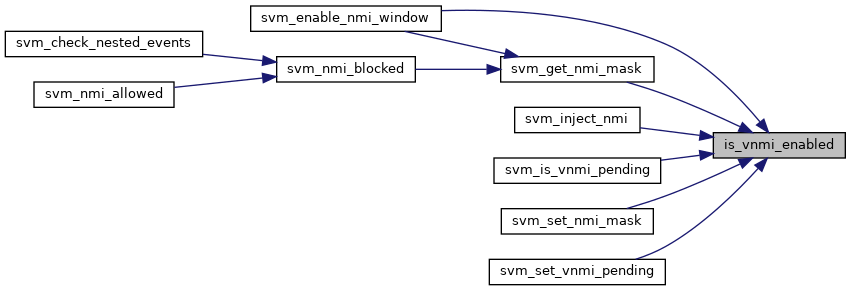

| static bool | is_vnmi_enabled (struct vcpu_svm *svm) |

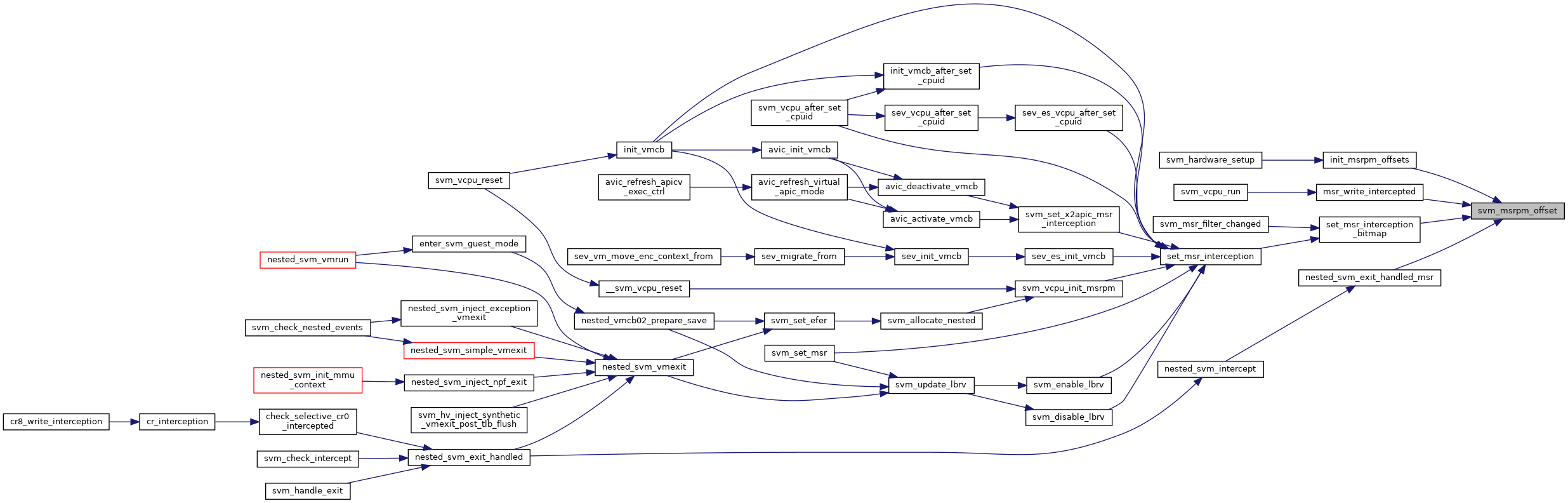

| u32 | svm_msrpm_offset (u32 msr) |

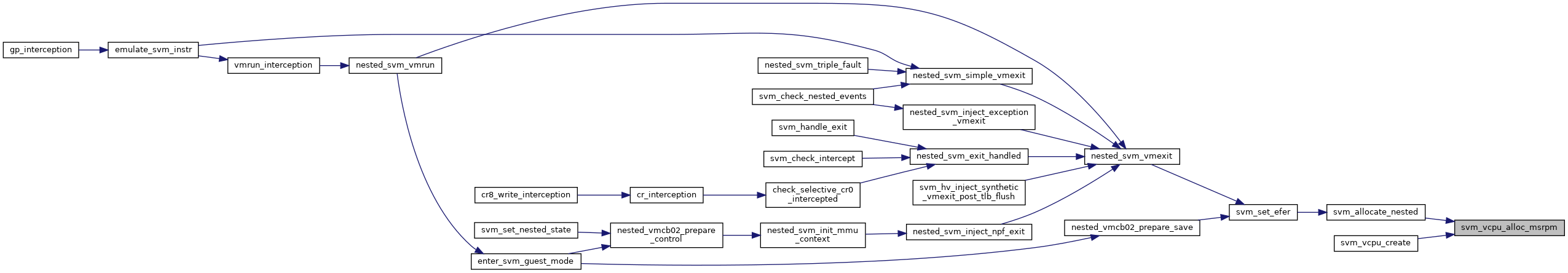

| u32 * | svm_vcpu_alloc_msrpm (void) |

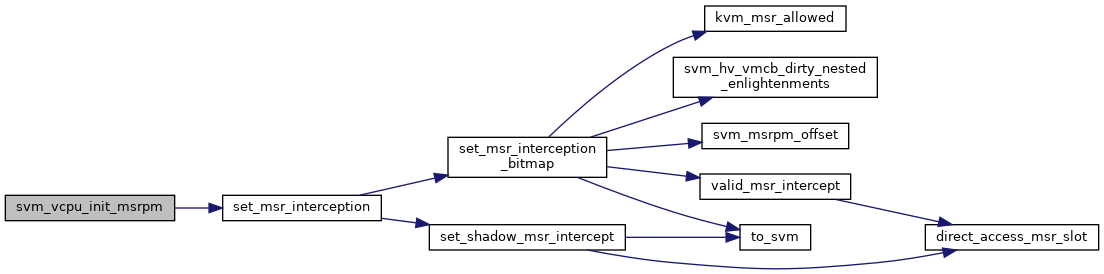

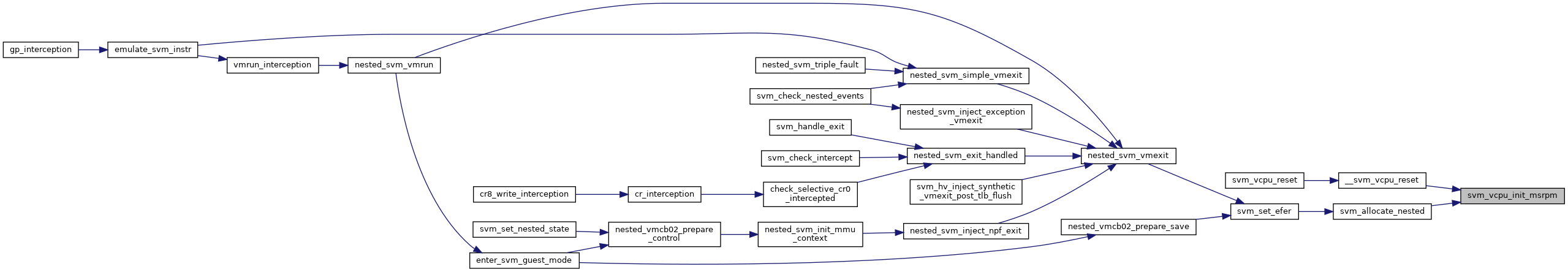

| void | svm_vcpu_init_msrpm (struct kvm_vcpu *vcpu, u32 *msrpm) |

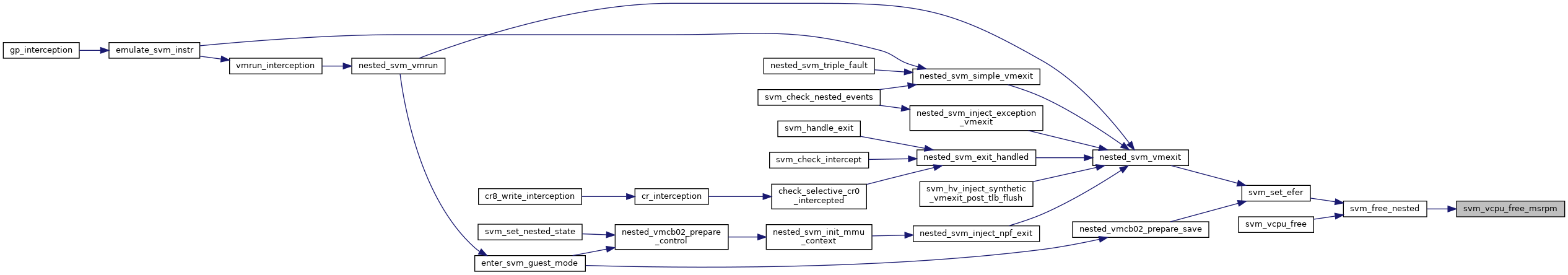

| void | svm_vcpu_free_msrpm (u32 *msrpm) |

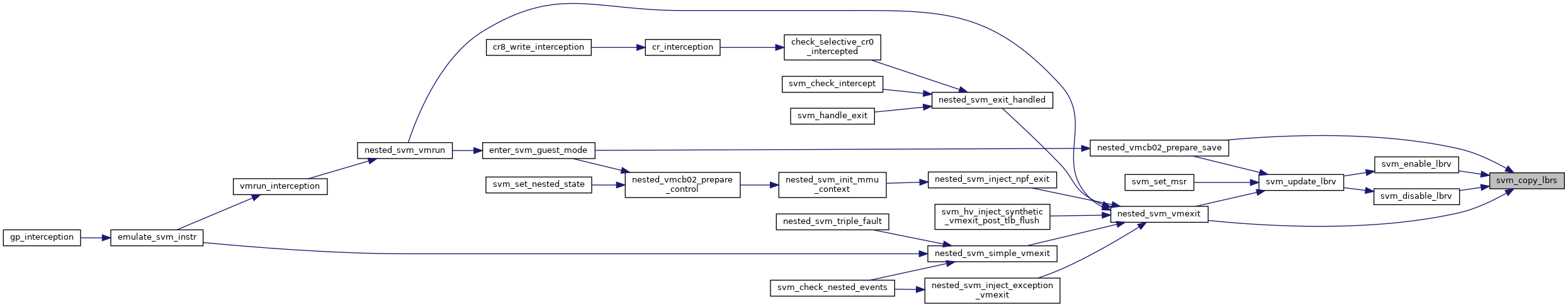

| void | svm_copy_lbrs (struct vmcb *to_vmcb, struct vmcb *from_vmcb) |

| void | svm_update_lbrv (struct kvm_vcpu *vcpu) |

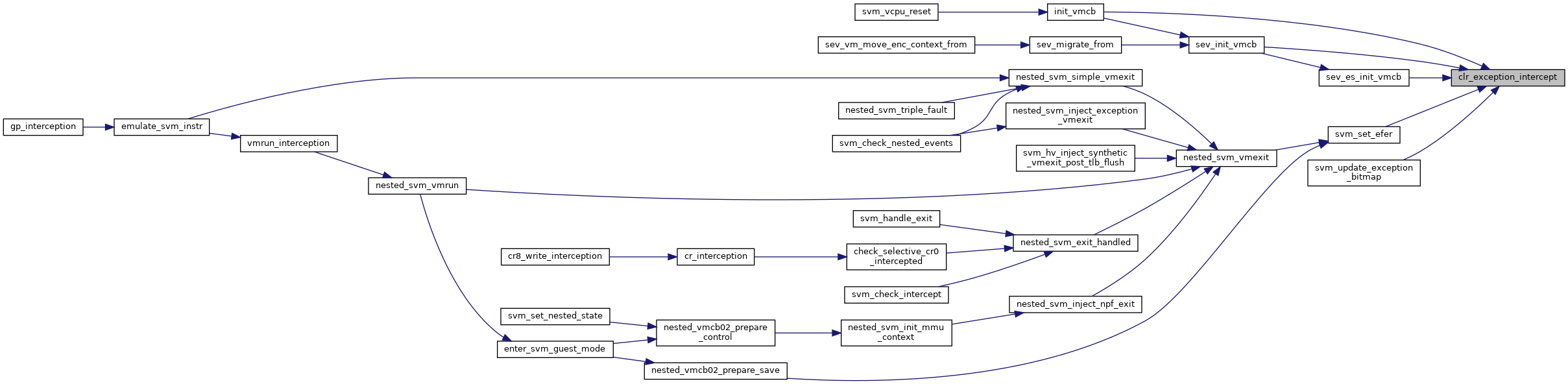

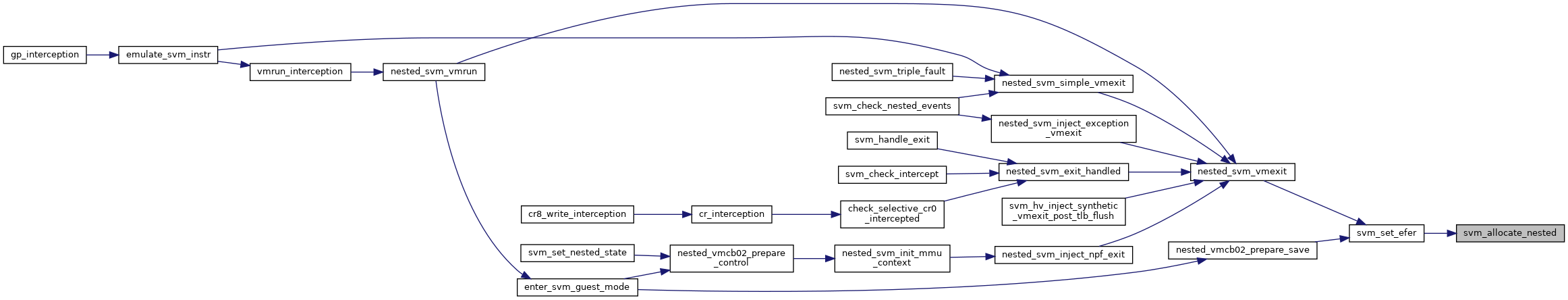

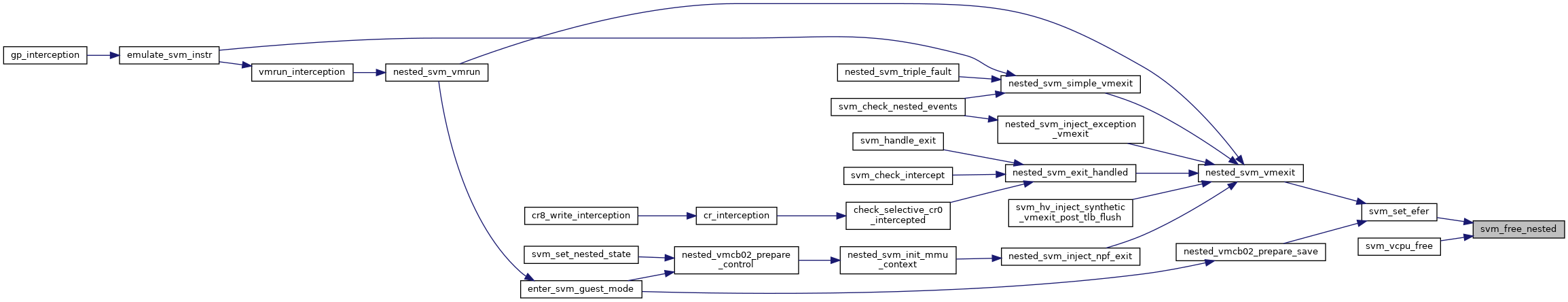

| int | svm_set_efer (struct kvm_vcpu *vcpu, u64 efer) |

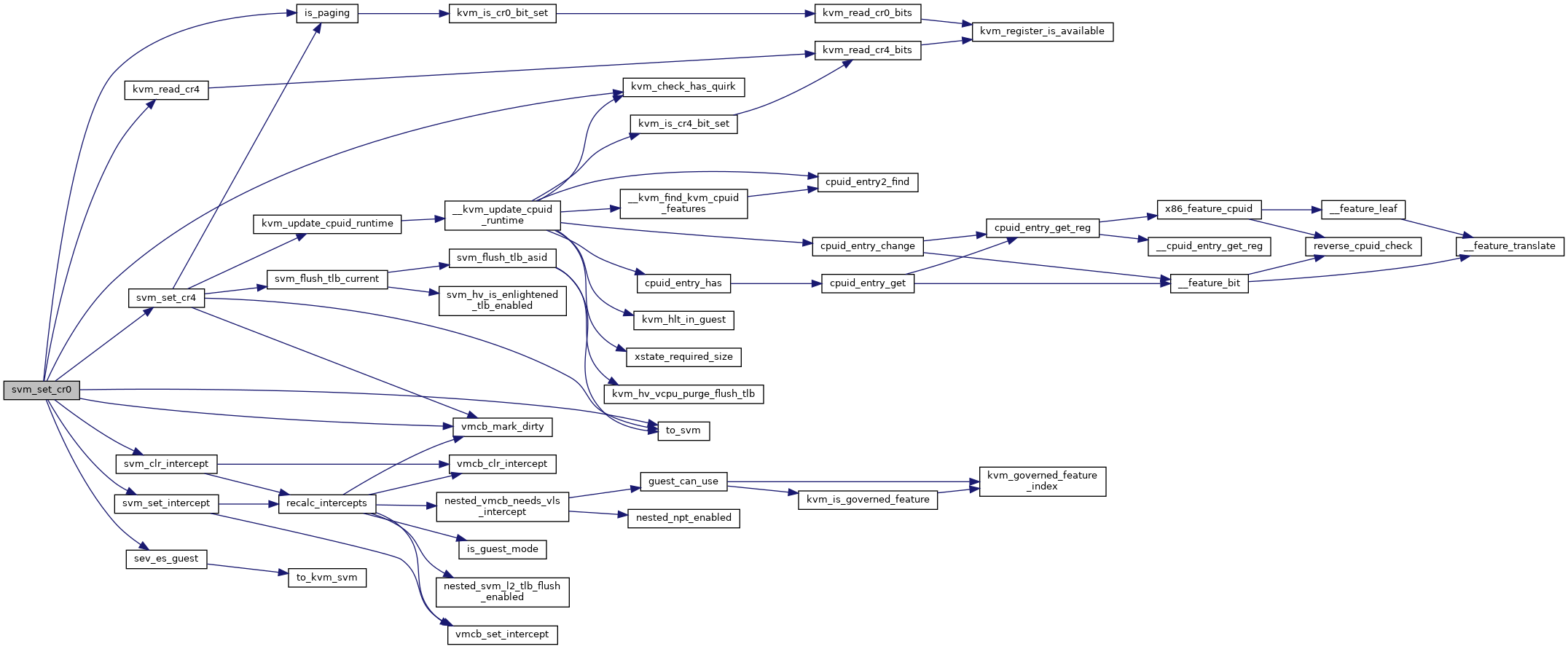

| void | svm_set_cr0 (struct kvm_vcpu *vcpu, unsigned long cr0) |

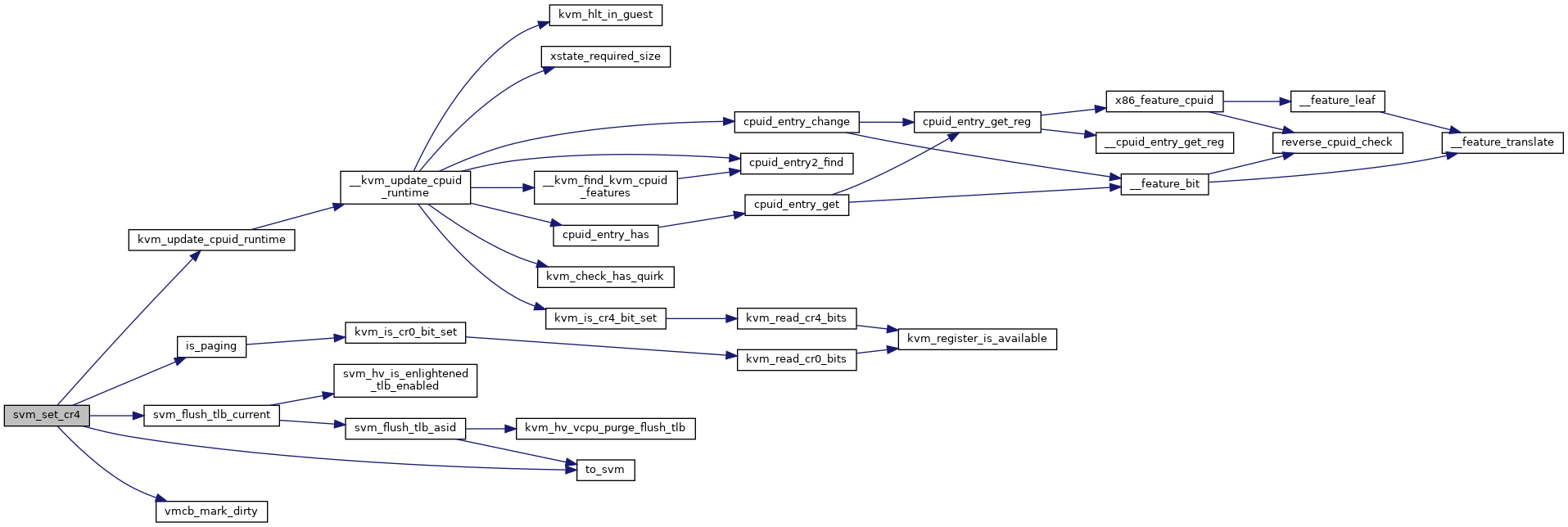

| void | svm_set_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

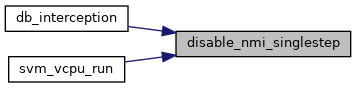

| void | disable_nmi_singlestep (struct vcpu_svm *svm) |

| bool | svm_smi_blocked (struct kvm_vcpu *vcpu) |

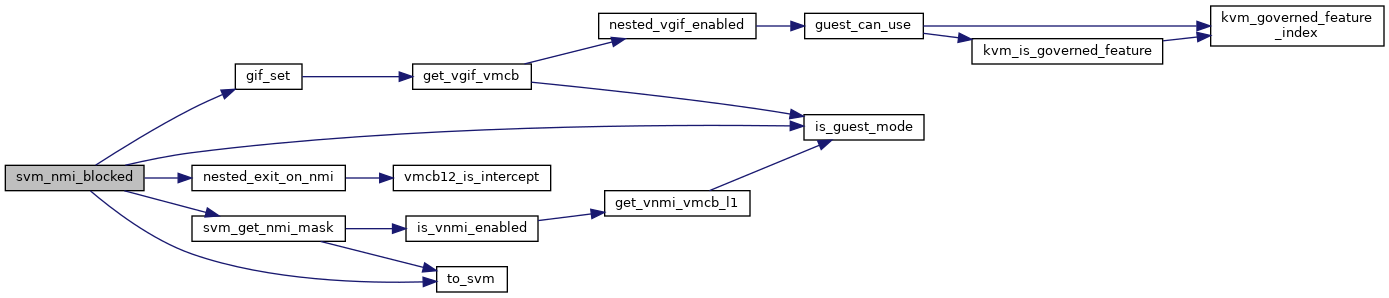

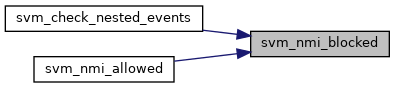

| bool | svm_nmi_blocked (struct kvm_vcpu *vcpu) |

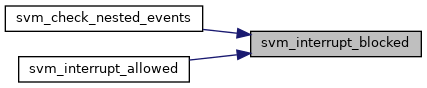

| bool | svm_interrupt_blocked (struct kvm_vcpu *vcpu) |

| void | svm_set_gif (struct vcpu_svm *svm, bool value) |

| int | svm_invoke_exit_handler (struct kvm_vcpu *vcpu, u64 exit_code) |

| void | set_msr_interception (struct kvm_vcpu *vcpu, u32 *msrpm, u32 msr, int read, int write) |

| void | svm_set_x2apic_msr_interception (struct vcpu_svm *svm, bool disable) |

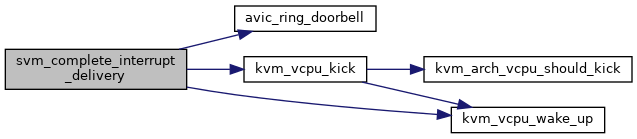

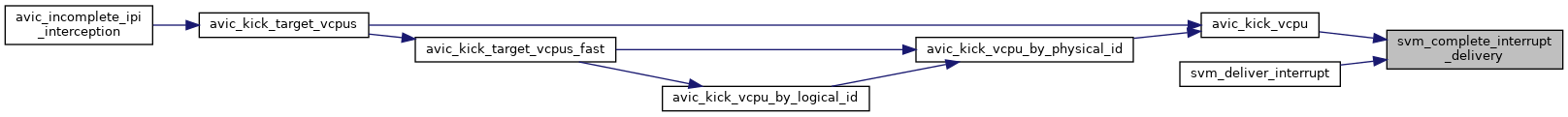

| void | svm_complete_interrupt_delivery (struct kvm_vcpu *vcpu, int delivery_mode, int trig_mode, int vec) |

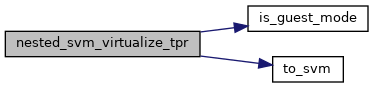

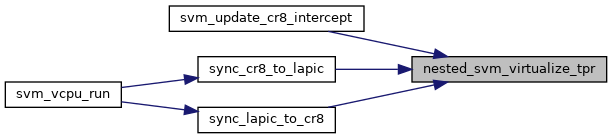

| static bool | nested_svm_virtualize_tpr (struct kvm_vcpu *vcpu) |

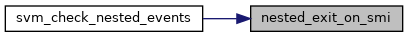

| static bool | nested_exit_on_smi (struct vcpu_svm *svm) |

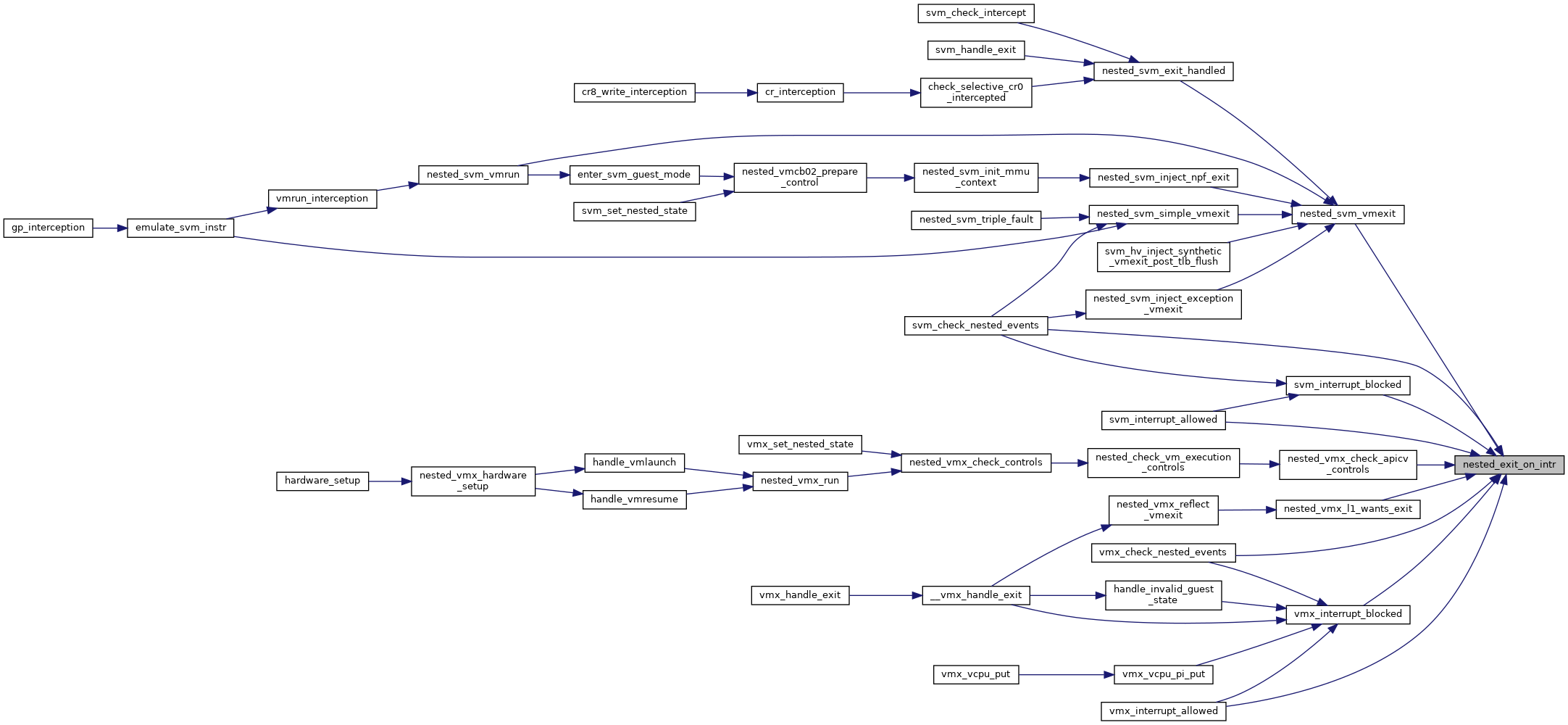

| static bool | nested_exit_on_intr (struct vcpu_svm *svm) |

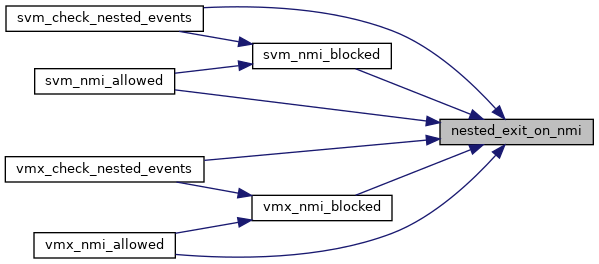

| static bool | nested_exit_on_nmi (struct vcpu_svm *svm) |

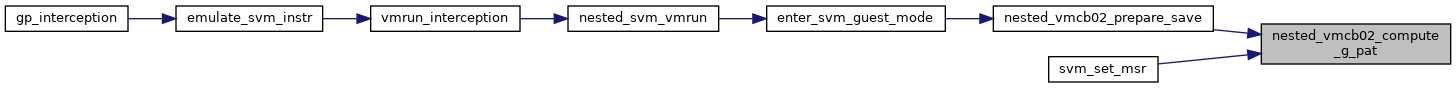

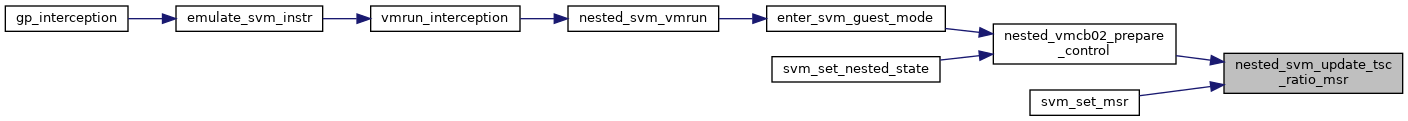

| int | enter_svm_guest_mode (struct kvm_vcpu *vcpu, u64 vmcb_gpa, struct vmcb *vmcb12, bool from_vmrun) |

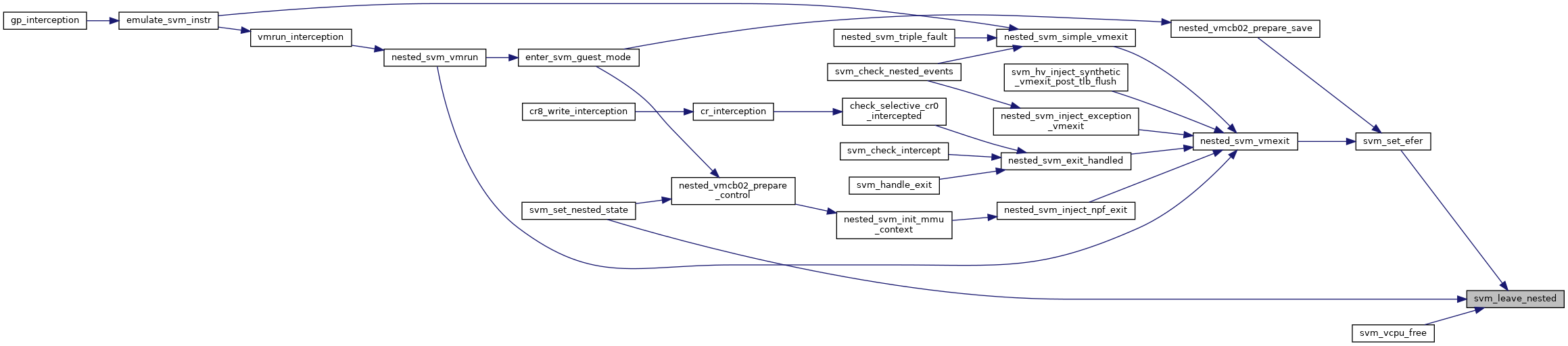

| void | svm_leave_nested (struct kvm_vcpu *vcpu) |

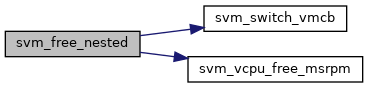

| void | svm_free_nested (struct vcpu_svm *svm) |

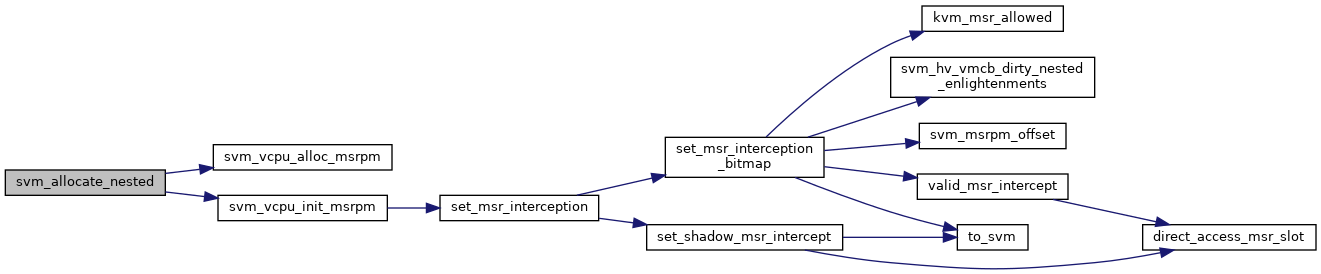

| int | svm_allocate_nested (struct vcpu_svm *svm) |

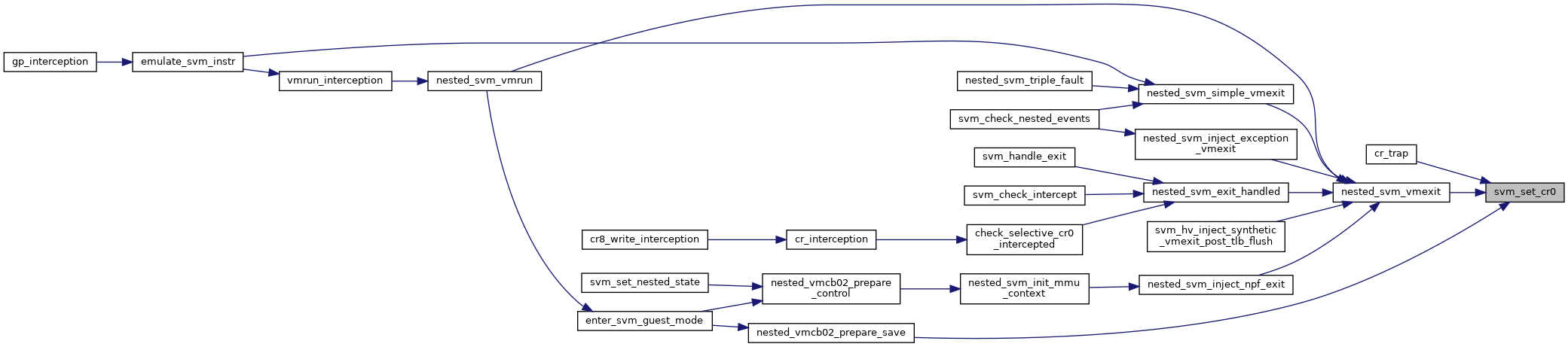

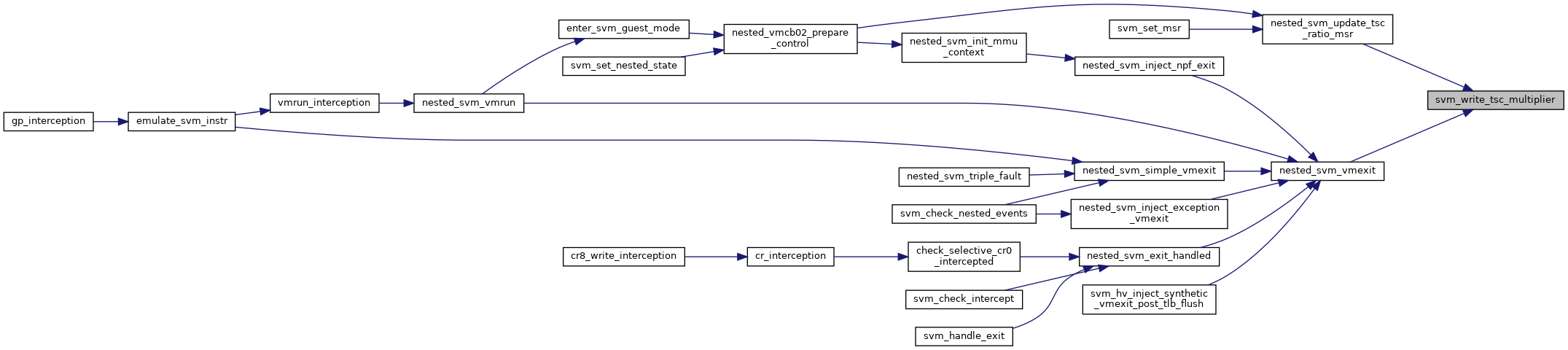

| int | nested_svm_vmrun (struct kvm_vcpu *vcpu) |

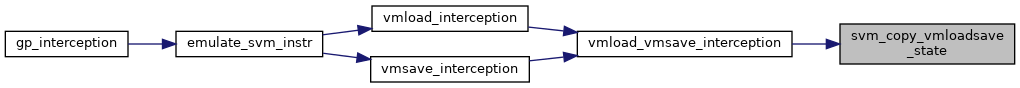

| void | svm_copy_vmrun_state (struct vmcb_save_area *to_save, struct vmcb_save_area *from_save) |

| void | svm_copy_vmloadsave_state (struct vmcb *to_vmcb, struct vmcb *from_vmcb) |

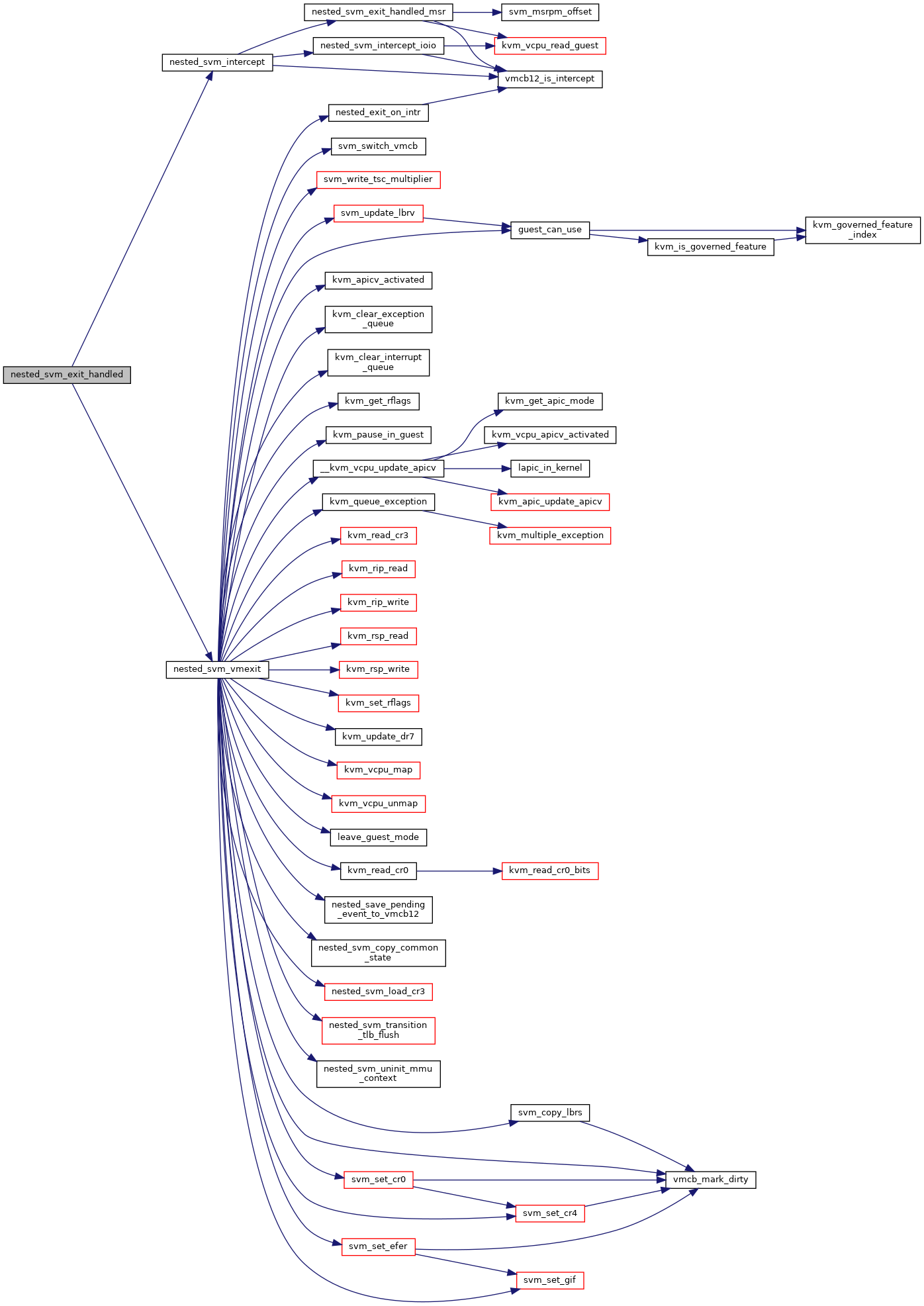

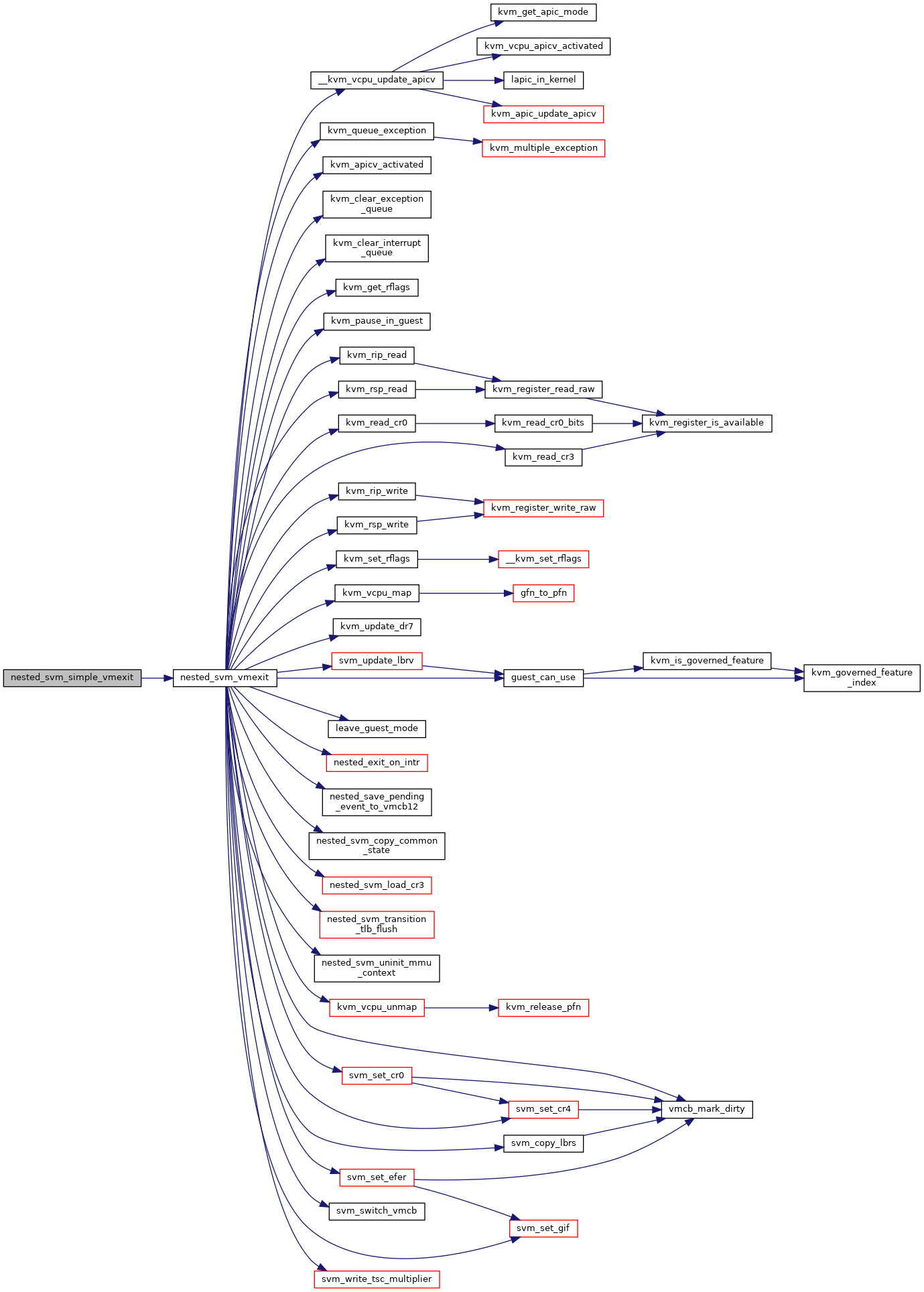

| int | nested_svm_vmexit (struct vcpu_svm *svm) |

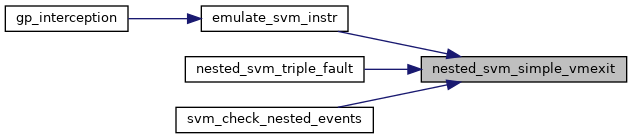

| static int | nested_svm_simple_vmexit (struct vcpu_svm *svm, u32 exit_code) |

| int | nested_svm_exit_handled (struct vcpu_svm *svm) |

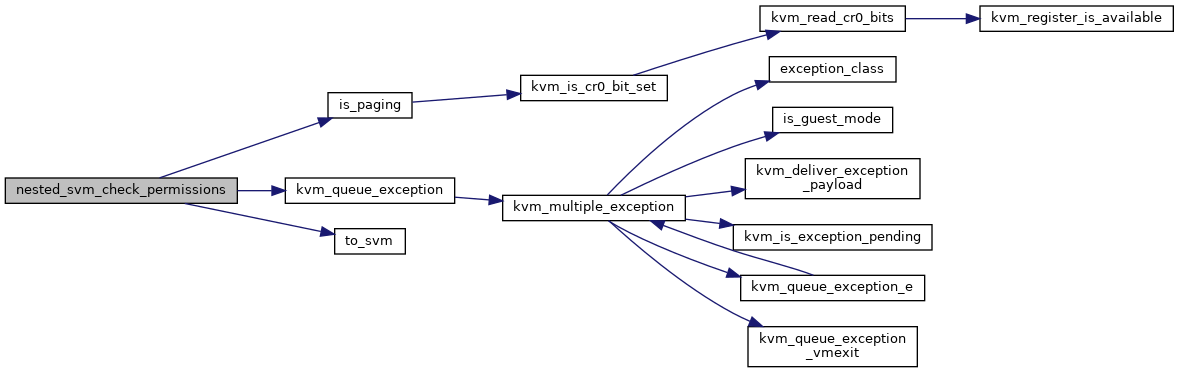

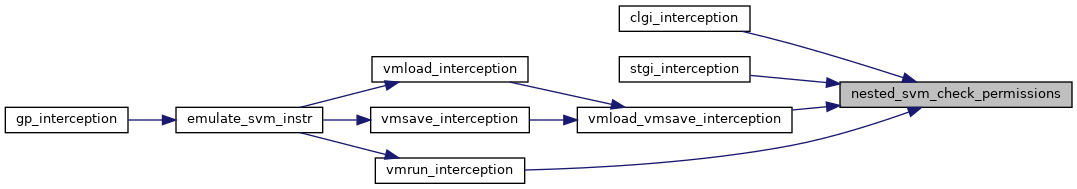

| int | nested_svm_check_permissions (struct kvm_vcpu *vcpu) |

| int | nested_svm_check_exception (struct vcpu_svm *svm, unsigned nr, bool has_error_code, u32 error_code) |

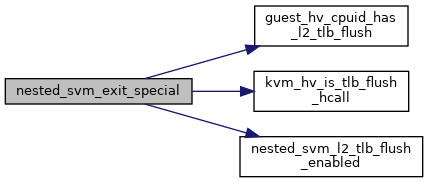

| int | nested_svm_exit_special (struct vcpu_svm *svm) |

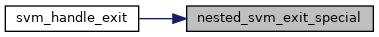

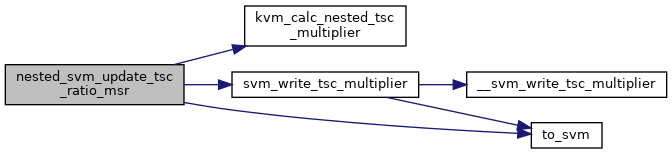

| void | nested_svm_update_tsc_ratio_msr (struct kvm_vcpu *vcpu) |

| void | svm_write_tsc_multiplier (struct kvm_vcpu *vcpu) |

| void | nested_copy_vmcb_control_to_cache (struct vcpu_svm *svm, struct vmcb_control_area *control) |

| void | nested_copy_vmcb_save_to_cache (struct vcpu_svm *svm, struct vmcb_save_area *save) |

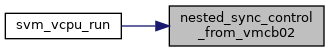

| void | nested_sync_control_from_vmcb02 (struct vcpu_svm *svm) |

| void | nested_vmcb02_compute_g_pat (struct vcpu_svm *svm) |

| void | svm_switch_vmcb (struct vcpu_svm *svm, struct kvm_vmcb_info *target_vmcb) |

| bool | avic_hardware_setup (void) |

| int | avic_ga_log_notifier (u32 ga_tag) |

| void | avic_vm_destroy (struct kvm *kvm) |

| int | avic_vm_init (struct kvm *kvm) |

| void | avic_init_vmcb (struct vcpu_svm *svm, struct vmcb *vmcb) |

| int | avic_incomplete_ipi_interception (struct kvm_vcpu *vcpu) |

| int | avic_unaccelerated_access_interception (struct kvm_vcpu *vcpu) |

| int | avic_init_vcpu (struct vcpu_svm *svm) |

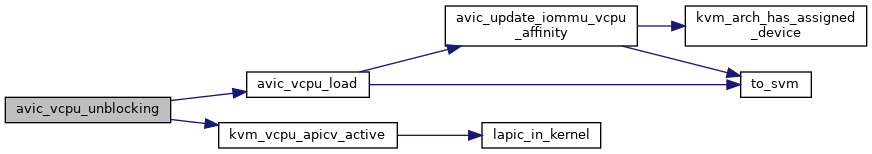

| void | avic_vcpu_load (struct kvm_vcpu *vcpu, int cpu) |

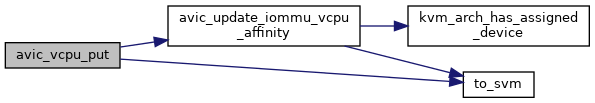

| void | avic_vcpu_put (struct kvm_vcpu *vcpu) |

| void | avic_apicv_post_state_restore (struct kvm_vcpu *vcpu) |

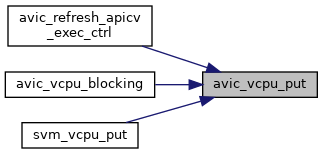

| void | avic_refresh_apicv_exec_ctrl (struct kvm_vcpu *vcpu) |

| int | avic_pi_update_irte (struct kvm *kvm, unsigned int host_irq, uint32_t guest_irq, bool set) |

| void | avic_vcpu_blocking (struct kvm_vcpu *vcpu) |

| void | avic_vcpu_unblocking (struct kvm_vcpu *vcpu) |

| void | avic_ring_doorbell (struct kvm_vcpu *vcpu) |

| unsigned long | avic_vcpu_get_apicv_inhibit_reasons (struct kvm_vcpu *vcpu) |

| void | avic_refresh_virtual_apic_mode (struct kvm_vcpu *vcpu) |

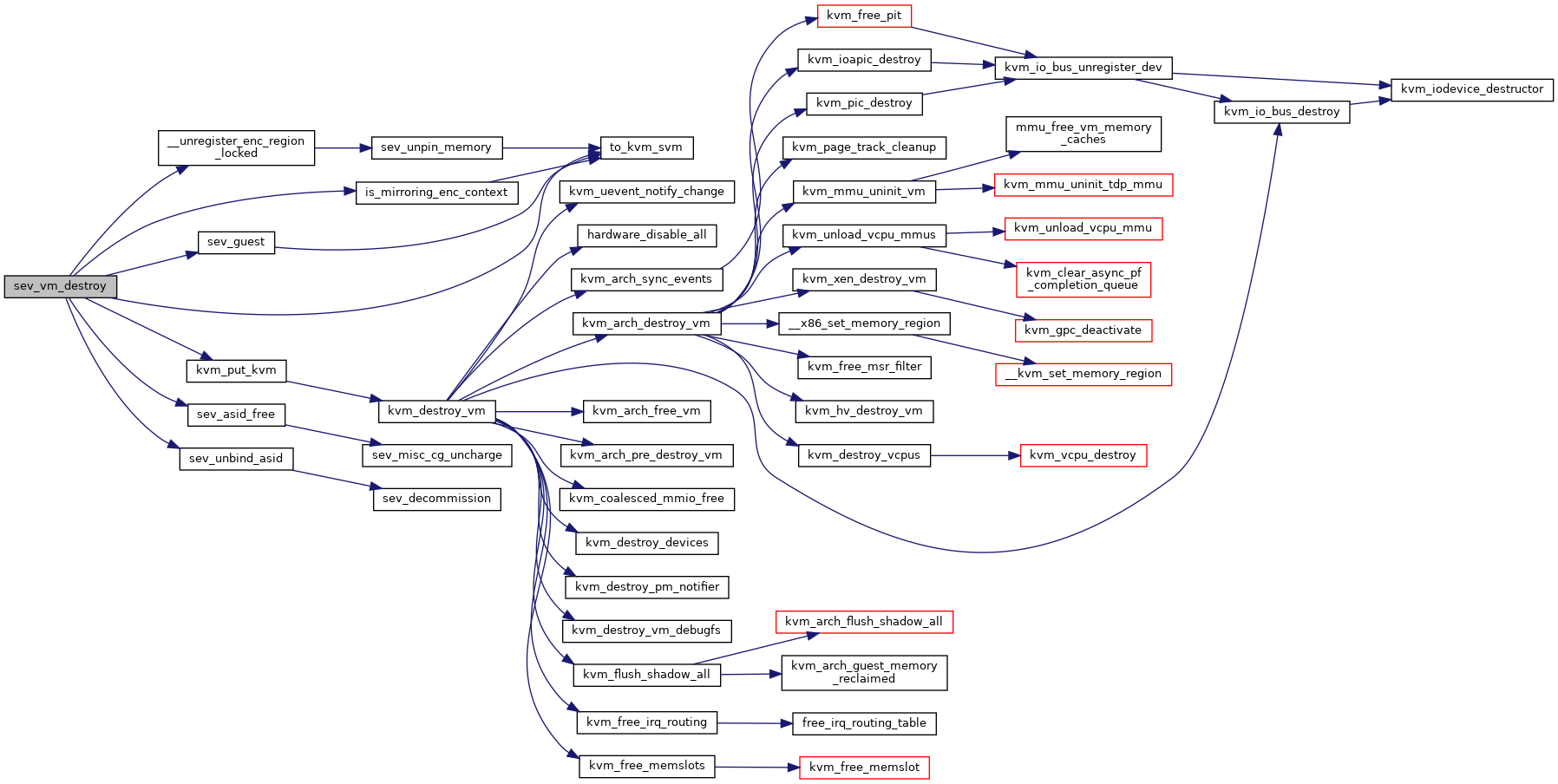

| void | sev_vm_destroy (struct kvm *kvm) |

| int | sev_mem_enc_ioctl (struct kvm *kvm, void __user *argp) |

| int | sev_mem_enc_register_region (struct kvm *kvm, struct kvm_enc_region *range) |

| int | sev_mem_enc_unregister_region (struct kvm *kvm, struct kvm_enc_region *range) |

| int | sev_vm_copy_enc_context_from (struct kvm *kvm, unsigned int source_fd) |

| int | sev_vm_move_enc_context_from (struct kvm *kvm, unsigned int source_fd) |

| void | sev_guest_memory_reclaimed (struct kvm *kvm) |

| void | pre_sev_run (struct vcpu_svm *svm, int cpu) |

| void __init | sev_set_cpu_caps (void) |

| void __init | sev_hardware_setup (void) |

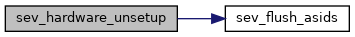

| void | sev_hardware_unsetup (void) |

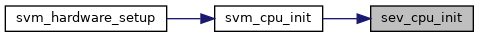

| int | sev_cpu_init (struct svm_cpu_data *sd) |

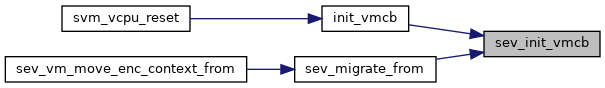

| void | sev_init_vmcb (struct vcpu_svm *svm) |

| void | sev_vcpu_after_set_cpuid (struct vcpu_svm *svm) |

| void | sev_free_vcpu (struct kvm_vcpu *vcpu) |

| int | sev_handle_vmgexit (struct kvm_vcpu *vcpu) |

| int | sev_es_string_io (struct vcpu_svm *svm, int size, unsigned int port, int in) |

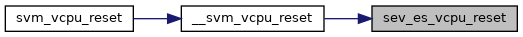

| void | sev_es_vcpu_reset (struct vcpu_svm *svm) |

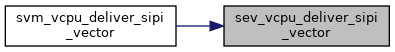

| void | sev_vcpu_deliver_sipi_vector (struct kvm_vcpu *vcpu, u8 vector) |

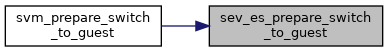

| void | sev_es_prepare_switch_to_guest (struct sev_es_save_area *hostsa) |

| void | sev_es_unmap_ghcb (struct vcpu_svm *svm) |

| void | __svm_sev_es_vcpu_run (struct vcpu_svm *svm, bool spec_ctrl_intercepted) |

| void | __svm_vcpu_run (struct vcpu_svm *svm, bool spec_ctrl_intercepted) |

Variables

| Type | Name |
|---|---|
| u32 | msrpm_offsets[MSRPM_OFFSETS] __read_mostly |
| bool | npt_enabled |
| int | nrips |
| int | vgif |
| bool | intercept_smi |
| bool | x2avic_enabled |
| bool | vnmi |
| bool | dump_invalid_vmcb |
| struct kvm_x86_nested_ops | svm_nested_ops |
| unsigned int | max_sev_asid |

Macro Definition Documentation

◆ __sme_page_pa

#define __sme_page_pa(x) __sme_set(page_to_pfn(x) << PAGE_SHIFT)
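The macro turns a page into a physical address and then ORs in the memory-encryption bit via __sme_set(). The sketch below models only that arithmetic in user space, assuming 4 KiB pages (a PAGE_SHIFT of 12) and a hypothetical encryption mask with bit 47 set; in the kernel the mask lives in sme_me_mask and the C-bit position is reported by CPUID, and here a page frame number is passed in directly instead of calling page_to_pfn().

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 4 KiB pages, as on x86-64. */
#define SKETCH_PAGE_SHIFT 12

/* Hypothetical encryption mask: C-bit at position 47 (real hardware reports it via CPUID). */
static const uint64_t sketch_sme_me_mask = 1ULL << 47;

/* Models __sme_set(): OR the encryption bit into a physical address. */
static uint64_t sketch_sme_set(uint64_t pa)
{
	return pa | sketch_sme_me_mask;
}

/* Models __sme_page_pa(): page frame number -> physical address -> encrypted address. */
static uint64_t sketch_sme_page_pa(uint64_t pfn)
{
	return sketch_sme_set(pfn << SKETCH_PAGE_SHIFT);
}
```

For example, page frame 0x1234 maps to 0x1234000 with bit 47 added on top.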

◆ AVIC_REQUIRED_APICV_INHIBITS

#define AVIC_REQUIRED_APICV_INHIBITS

◆ DEBUGCTL_RESERVED_BITS

#define DEBUGCTL_RESERVED_BITS (~(0x3fULL))
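DEBUGCTL_RESERVED_BITS is the complement of 0x3f, so only bits 0 through 5 of a DEBUGCTL value fall outside the mask. A minimal sketch of how such a mask is typically used to reject a value that touches a reserved bit; the helper name is hypothetical and this is not KVM's actual MSR-write path.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Same value as the header: every bit except 0..5 is reserved. */
#define DEBUGCTL_RESERVED_BITS (~(0x3fULL))

/* Hypothetical helper: true if the value sets no reserved bit. */
static bool debugctl_write_ok(uint64_t data)
{
	return (data & DEBUGCTL_RESERVED_BITS) == 0;
}
```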

◆ DEFINE_KVM_GHCB_ACCESSORS

#define DEFINE_KVM_GHCB_ACCESSORS(field)
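The macro body is not reproduced on this page. Judging by its name and its single field parameter, it uses token pasting to generate accessor functions for one GHCB field per invocation. The sketch below illustrates that technique with a hypothetical miniature GHCB whose fields are guarded by a valid bitmap; the struct layout, bit indices, and generated function names are assumptions for illustration, not the kernel's definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical miniature GHCB save area. */
struct sketch_ghcb_save {
	uint64_t rax;
	uint64_t rbx;
	uint64_t rcx;
};

/* Hypothetical bit index of each field in the valid bitmap. */
enum { SKETCH_IDX_rax, SKETCH_IDX_rbx, SKETCH_IDX_rcx };

struct sketch_ghcb {
	struct sketch_ghcb_save save;
	uint64_t valid_bitmap;	/* bit N set => field N was supplied by the guest */
};

/*
 * One invocation per field generates two helpers via token pasting:
 *   ghcb_<field>_is_valid()     tests the field's bit in valid_bitmap
 *   ghcb_get_<field>_if_valid() returns the field, or 0 if not valid
 */
#define SKETCH_DEFINE_GHCB_ACCESSORS(field)				\
static inline bool ghcb_##field##_is_valid(const struct sketch_ghcb *g)	\
{									\
	return (g->valid_bitmap >> SKETCH_IDX_##field) & 1;		\
}									\
static inline uint64_t							\
ghcb_get_##field##_if_valid(const struct sketch_ghcb *g)		\
{									\
	return ghcb_##field##_is_valid(g) ? g->save.field : 0;		\
}

SKETCH_DEFINE_GHCB_ACCESSORS(rax)
SKETCH_DEFINE_GHCB_ACCESSORS(rbx)
SKETCH_DEFINE_GHCB_ACCESSORS(rcx)
```

The design keeps the per-field boilerplate in one place: adding a field means adding one enum entry, one struct member, and one macro invocation.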

◆ GHCB_VERSION_MAX

#define GHCB_VERSION_MAX 1ULL

◆ GHCB_VERSION_MIN

#define GHCB_VERSION_MIN 1ULL

◆ IOPM_SIZE

#define IOPM_SIZE PAGE_SIZE * 3
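The I/O permission map uses one bit per port, so the 65536 ports need 8 KiB, while IOPM_SIZE reserves three pages. A minimal sketch of a one-bit-per-port lookup, similar in spirit to the I/O intercept check KVM performs for nested guests, shows that a 2- or 4-byte access near the top of the port range can read byte 8192, just past the first 8 KiB, which still lies inside the three-page allocation. The names and the static bitmap are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_PAGE_SIZE 4096u
#define SKETCH_IOPM_SIZE (SKETCH_PAGE_SIZE * 3) /* mirrors IOPM_SIZE */

/* Hypothetical permission map: bit N set => accesses to port N are intercepted. */
static uint8_t iopm[SKETCH_IOPM_SIZE];

static void iopm_clear_all(void)
{
	memset(iopm, 0, sizeof(iopm));
}

static void iopm_set_intercept(uint16_t port)
{
	iopm[port / 8] |= (uint8_t)(1u << (port % 8));
}

/* size is the access width in bytes (1, 2 or 4); every covered port is checked. */
static bool iopm_is_intercepted(uint16_t port, unsigned int size)
{
	assert(size == 1 || size == 2 || size == 4);

	unsigned int byte = port / 8;
	unsigned int start_bit = port % 8;
	/* A multi-byte access may straddle two bytes of the bitmap. */
	unsigned int len = (start_bit + size > 8) ? 2 : 1;
	unsigned int mask = (0xfu >> (4 - size)) << start_bit;
	unsigned int val = iopm[byte];

	if (len == 2)
		val |= (unsigned int)iopm[byte + 1] << 8;
	return (val & mask) != 0;
}
```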

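◆ MAX_DIRECT_ACCESS_MSRS

#define MAX_DIRECT_ACCESS_MSRS 47

◆ MSR_INVALID

#define MSR_INVALID 0xffffffffU

◆ MSRPM_OFFSETS

#define MSRPM_OFFSETS 32

◆ MSRPM_SIZE

#define MSRPM_SIZE PAGE_SIZE * 2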

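◆ NESTED_EXIT_CONTINUE

#define NESTED_EXIT_CONTINUE 2 /* Further checks needed */

◆ NESTED_EXIT_DONE

#define NESTED_EXIT_DONE 1 /* Exit caused nested vmexit */

◆ NESTED_EXIT_HOST

#define NESTED_EXIT_HOST 0 /* Exit handled on host level */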
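The three NESTED_EXIT_* codes let a handler report whether an exit taken while a nested (L2) guest runs stays with the host, must be reflected to L1 as a nested #VMEXIT, or needs further checks. A minimal sketch of a two-stage classifier built on those codes, roughly in the way a "special" check and an intercept check can be chained; the exit reasons and both rules are hypothetical stand-ins, not KVM's logic.

```c
#include <assert.h>
#include <stdbool.h>

#define NESTED_EXIT_HOST     0 /* Exit handled on host level */
#define NESTED_EXIT_DONE     1 /* Exit caused nested vmexit */
#define NESTED_EXIT_CONTINUE 2 /* Further checks needed */

/* Hypothetical exit reasons for the sketch. */
enum sketch_exit { SKETCH_EXIT_NPF, SKETCH_EXIT_CPUID, SKETCH_EXIT_HLT };

/* Stage 1 (hypothetical rule): NPF always stays with the host. */
static int sketch_exit_special(enum sketch_exit reason)
{
	return reason == SKETCH_EXIT_NPF ? NESTED_EXIT_HOST : NESTED_EXIT_CONTINUE;
}

/* Stage 2: reflect the exit to L1 only if L1 asked to intercept it. */
static int sketch_exit_handled(enum sketch_exit reason, unsigned int l1_intercepts)
{
	return (l1_intercepts & (1u << reason)) ? NESTED_EXIT_DONE : NESTED_EXIT_HOST;
}

/* True when the exit must become a nested #VMEXIT to L1. */
static bool sketch_reflect_to_l1(enum sketch_exit reason, unsigned int l1_intercepts)
{
	int vmexit = sketch_exit_special(reason);

	if (vmexit == NESTED_EXIT_CONTINUE)
		vmexit = sketch_exit_handled(reason, l1_intercepts);
	return vmexit == NESTED_EXIT_DONE;
}
```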

◆ SVM_REGS_LAZY_LOAD_SET

#define SVM_REGS_LAZY_LOAD_SET (1 << VCPU_EXREG_PDPTR)

◆ VMCB_ALL_CLEAN_MASK

#define VMCB_ALL_CLEAN_MASK

◆ VMCB_ALWAYS_DIRTY_MASK

#define VMCB_ALWAYS_DIRTY_MASK ((1U << VMCB_INTR) | (1U << VMCB_CR2))
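The VMCB clean field is a bitmap with one bit per state group (one enum value per group); a set bit tells the CPU the group is unchanged since the last VMRUN. A minimal user-space sketch of vmcb_mark_all_dirty(), vmcb_mark_all_clean(), vmcb_mark_dirty(), and vmcb_is_dirty(), assuming, as the macro names suggest, that "all clean" means VMCB_ALL_CLEAN_MASK minus the VMCB_ALWAYS_DIRTY_MASK groups, and that the all-clean mask covers every enumerator. The struct is a hypothetical stand-in for struct vmcb.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum {
	VMCB_INTERCEPTS, VMCB_PERM_MAP, VMCB_ASID, VMCB_INTR, VMCB_NPT,
	VMCB_CR, VMCB_DR, VMCB_DT, VMCB_SEG, VMCB_CR2, VMCB_LBR, VMCB_AVIC,
	VMCB_SW = 31
};

/* Assumption: the all-clean mask covers every enumerator above. */
#define VMCB_ALL_CLEAN_MASK						\
	((1U << VMCB_INTERCEPTS) | (1U << VMCB_PERM_MAP) |		\
	 (1U << VMCB_ASID) | (1U << VMCB_INTR) | (1U << VMCB_NPT) |	\
	 (1U << VMCB_CR) | (1U << VMCB_DR) | (1U << VMCB_DT) |		\
	 (1U << VMCB_SEG) | (1U << VMCB_CR2) | (1U << VMCB_LBR) |	\
	 (1U << VMCB_AVIC) | (1U << VMCB_SW))
#define VMCB_ALWAYS_DIRTY_MASK ((1U << VMCB_INTR) | (1U << VMCB_CR2))

/* Hypothetical stand-in for the clean field of the VMCB control area. */
struct sketch_vmcb { uint32_t clean; };

static void sketch_mark_all_dirty(struct sketch_vmcb *v)
{
	v->clean = 0;
}

static void sketch_mark_all_clean(struct sketch_vmcb *v)
{
	/* Groups in VMCB_ALWAYS_DIRTY_MASK are never reported as clean. */
	v->clean = VMCB_ALL_CLEAN_MASK & ~VMCB_ALWAYS_DIRTY_MASK;
}

static void sketch_mark_dirty(struct sketch_vmcb *v, int bit)
{
	v->clean &= ~(1U << bit);
}

static bool sketch_is_dirty(const struct sketch_vmcb *v, int bit)
{
	return !(v->clean & (1U << bit));
}
```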

Enumeration Type Documentation

◆ anonymous enum

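enum { VMCB_INTERCEPTS, VMCB_PERM_MAP, VMCB_ASID, VMCB_INTR, VMCB_NPT, VMCB_CR, VMCB_DR, VMCB_DT, VMCB_SEG, VMCB_CR2, VMCB_LBR, VMCB_AVIC, VMCB_SW = 31 }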

Function Documentation

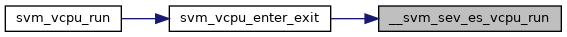

◆ __svm_sev_es_vcpu_run()

| void __svm_sev_es_vcpu_run | ( | struct vcpu_svm * | svm, |

| bool | spec_ctrl_intercepted | ||

| ) |

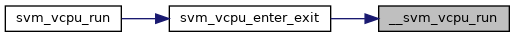

◆ __svm_vcpu_run()

| void __svm_vcpu_run | ( | struct vcpu_svm * | svm, |

| bool | spec_ctrl_intercepted | ||

| ) |

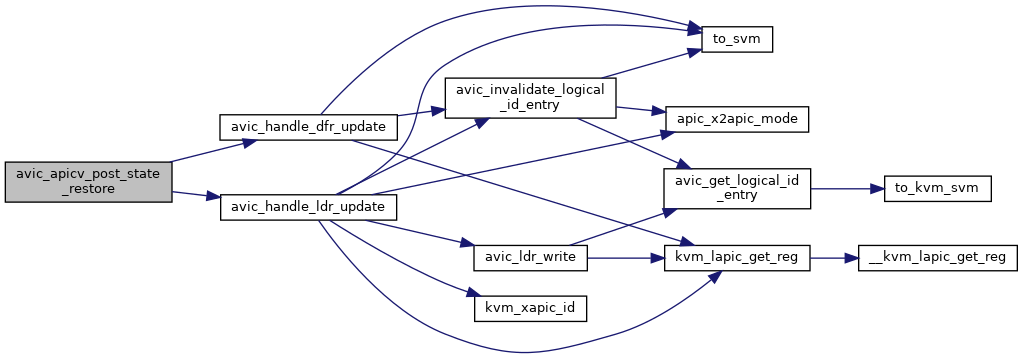

◆ avic_apicv_post_state_restore()

void avic_apicv_post_state_restore(struct kvm_vcpu *vcpu)

Definition at line 738 of file avic.c.

◆ avic_ga_log_notifier()

int avic_ga_log_notifier(u32 ga_tag)

◆ avic_hardware_setup()

| bool avic_hardware_setup | ( | void | ) |

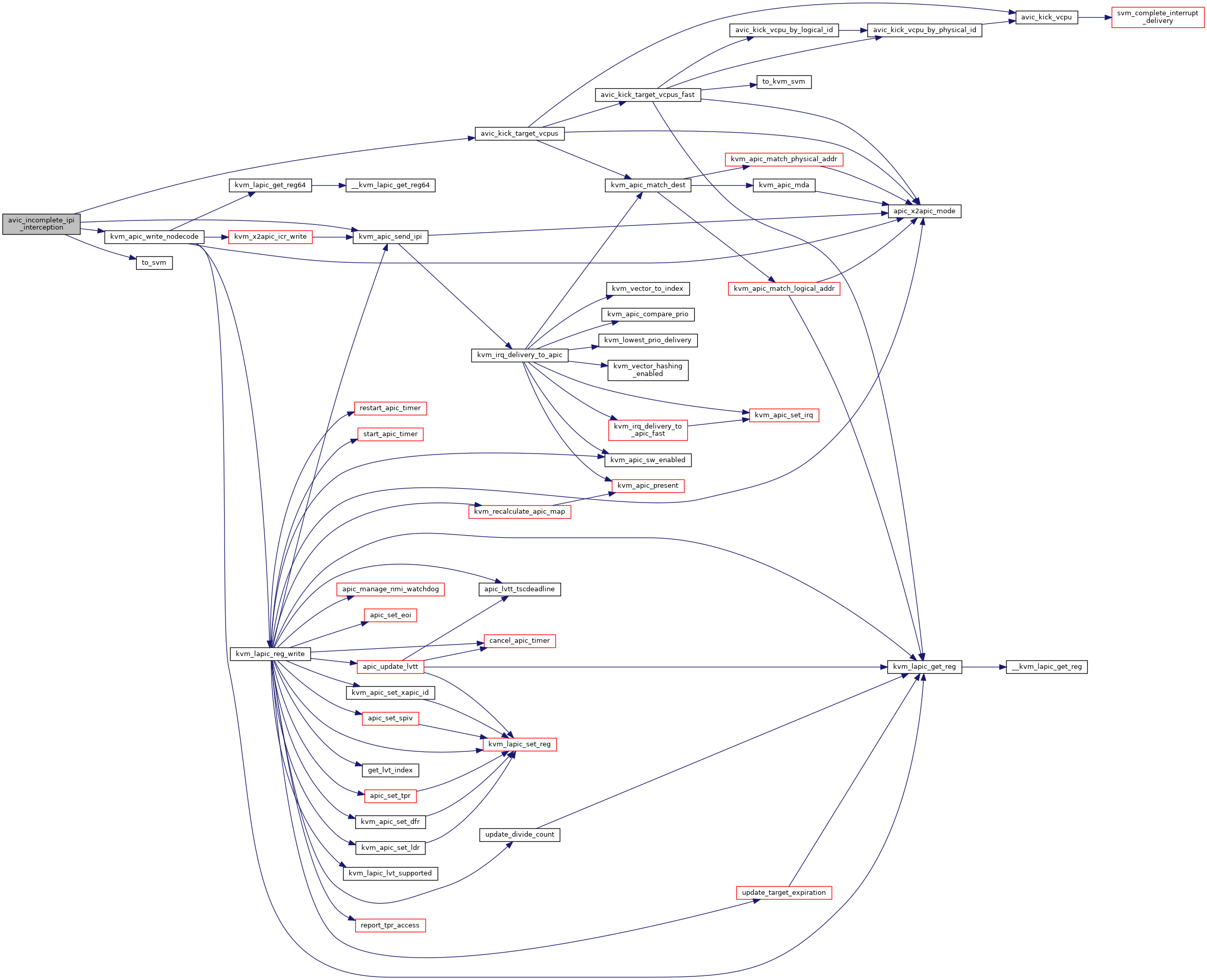

◆ avic_incomplete_ipi_interception()

int avic_incomplete_ipi_interception(struct kvm_vcpu *vcpu)

Definition at line 490 of file avic.c.

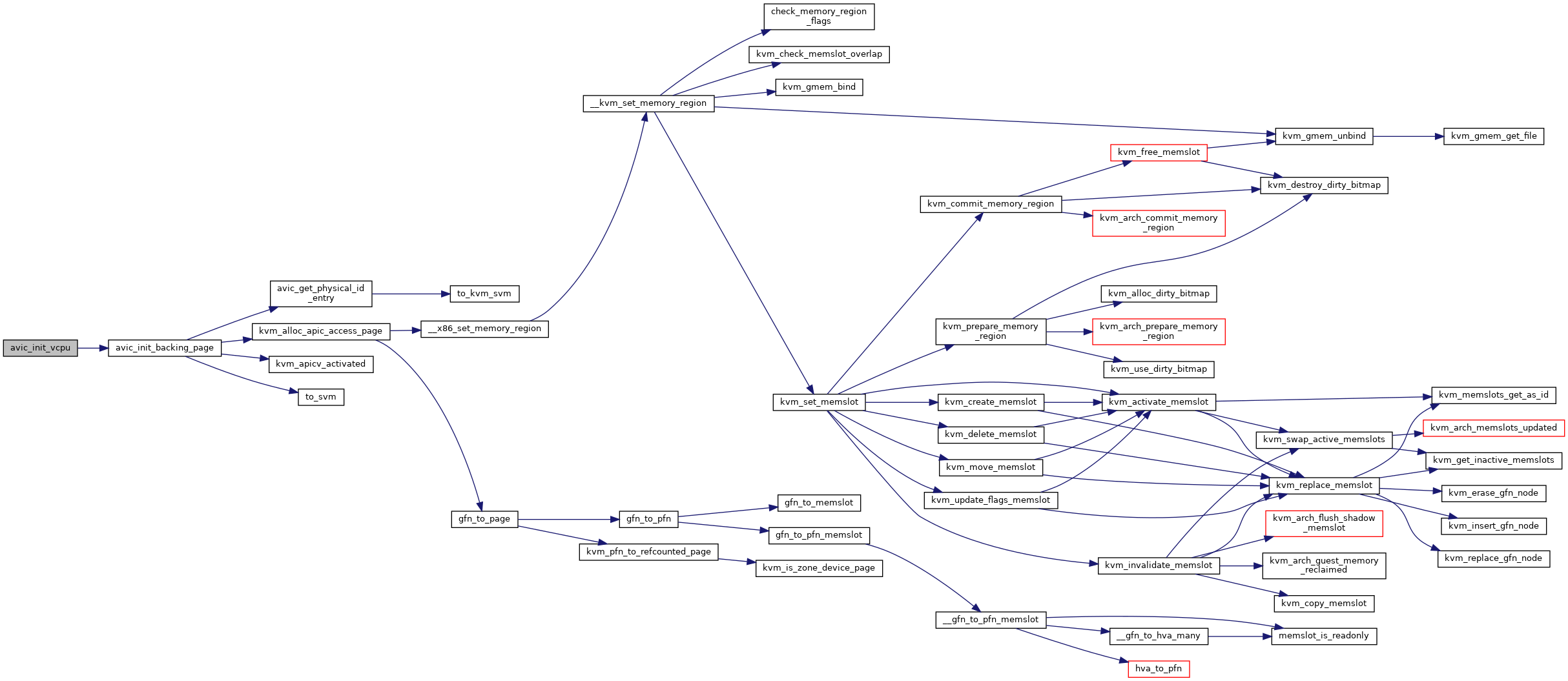

◆ avic_init_vcpu()

int avic_init_vcpu(struct vcpu_svm *svm)

Definition at line 719 of file avic.c.

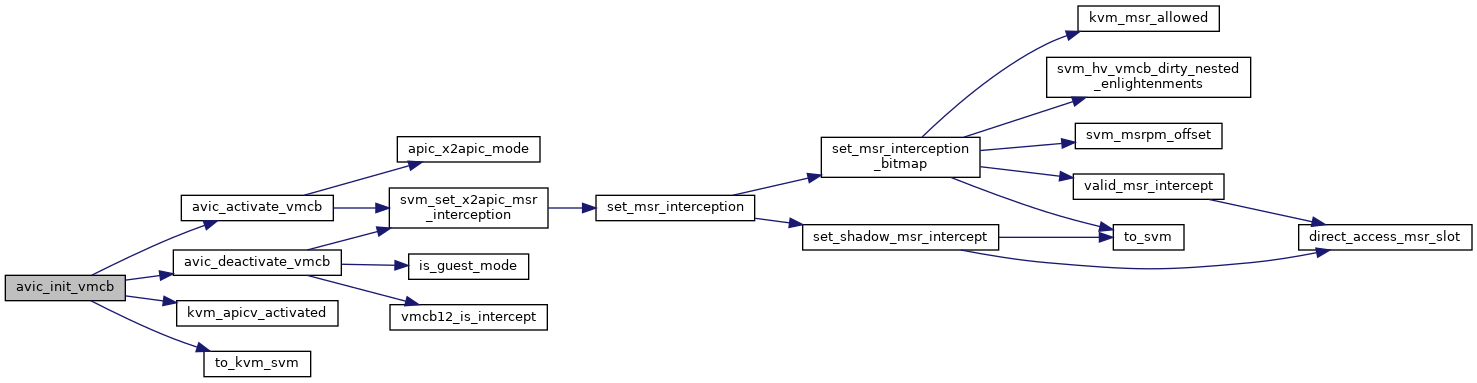

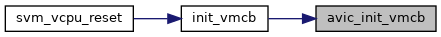

◆ avic_init_vmcb()

| void avic_init_vmcb | ( | struct vcpu_svm * | svm, |

| struct vmcb * | vmcb | ||

| ) |

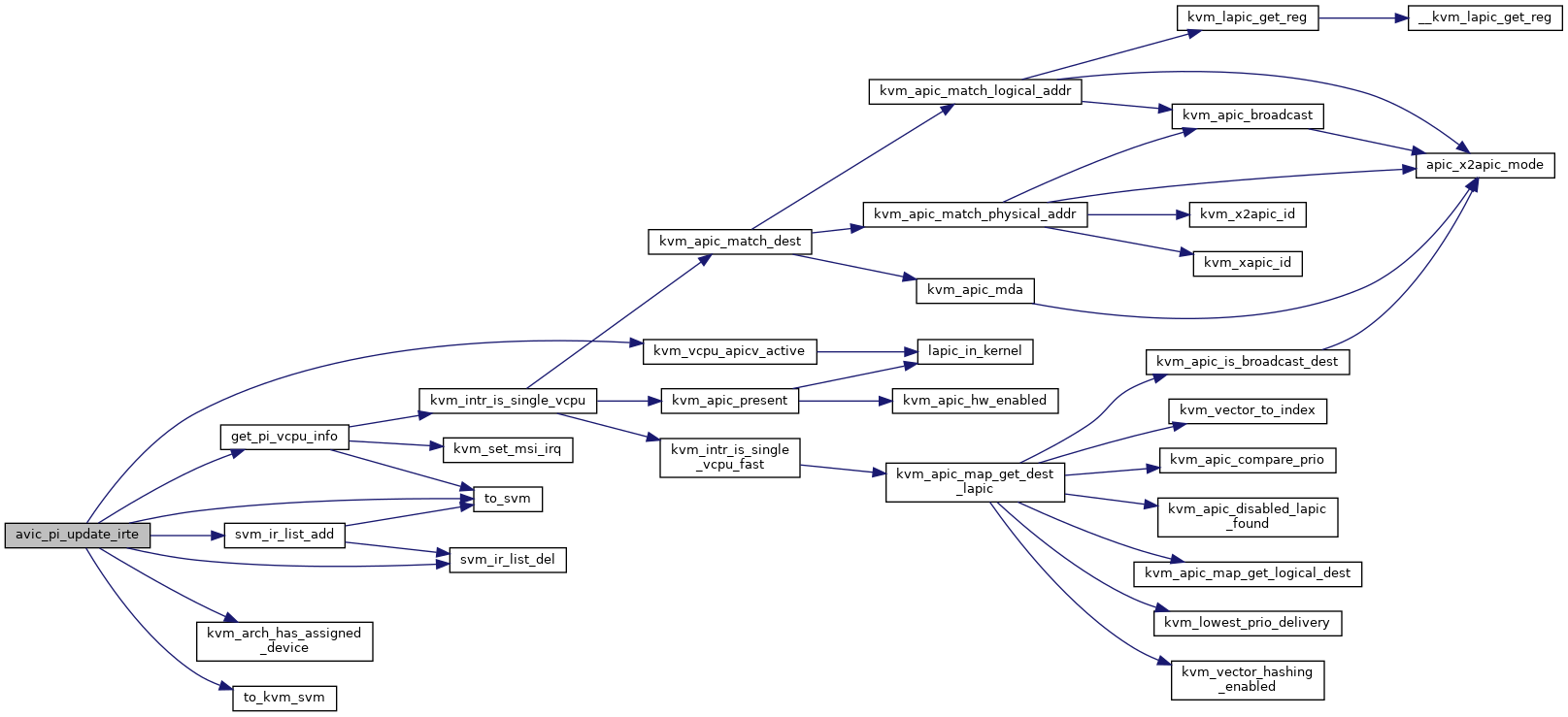

◆ avic_pi_update_irte()

int avic_pi_update_irte(struct kvm *kvm, unsigned int host_irq, uint32_t guest_irq, bool set)

Here, we set up legacy mode in the following cases:

- The interrupt cannot be targeted to a specific vCPU.

- The posted interrupt is being unset.

- APIC virtualization is disabled for the vCPU.

- The IRQ has an incompatible delivery mode (SMI, INIT, etc.).

Here, we have successfully set up vCPU affinity in IOMMU guest mode. Now we need to store the posted-interrupt information in a per-vCPU ir_list so that we can reference it directly when we update vCPU scheduling information in the IOMMU IRTE.

Here, pi is used to:

- Tell the IOMMU to use legacy mode for this interrupt.

- Retrieve the ga_tag of the prior interrupt-remapping data.

Check whether the posted interrupt was previously set up in guest mode by checking whether the ga_tag was cached. If so, we need to clean up the per-vCPU ir_list.

Definition at line 894 of file avic.c.
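The comments above describe a two-way choice: program the IOMMU IRTE in legacy mode, or use guest (posted) mode and record the interrupt on the target vCPU's ir_list. A minimal sketch of just that decision, with the four legacy-mode conditions taken from the list above; every type and field name is hypothetical, and the real function also calls into the IOMMU driver and performs the ga_tag and ir_list cleanup that this sketch omits.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical summary of what the caller knows about one guest interrupt. */
struct sketch_irq_info {
	bool set;                /* false: the posted interrupt is being unset */
	bool single_vcpu_target; /* can the interrupt be targeted to one vCPU? */
	bool vcpu_apicv_active;  /* is APIC virtualization enabled for that vCPU? */
	bool delivery_mode_ok;   /* false for incompatible modes such as SMI or INIT */
};

enum sketch_irte_mode { SKETCH_IRTE_LEGACY, SKETCH_IRTE_GUEST };

/* Returns the IRTE mode implied by the cases listed in the comments. */
static enum sketch_irte_mode sketch_pick_irte_mode(const struct sketch_irq_info *info)
{
	if (!info->set || !info->single_vcpu_target ||
	    !info->vcpu_apicv_active || !info->delivery_mode_ok)
		return SKETCH_IRTE_LEGACY;
	return SKETCH_IRTE_GUEST;
}
```

Any single failing condition forces legacy mode; guest mode is chosen only when all four hold.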

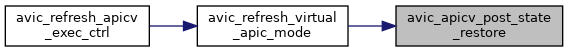

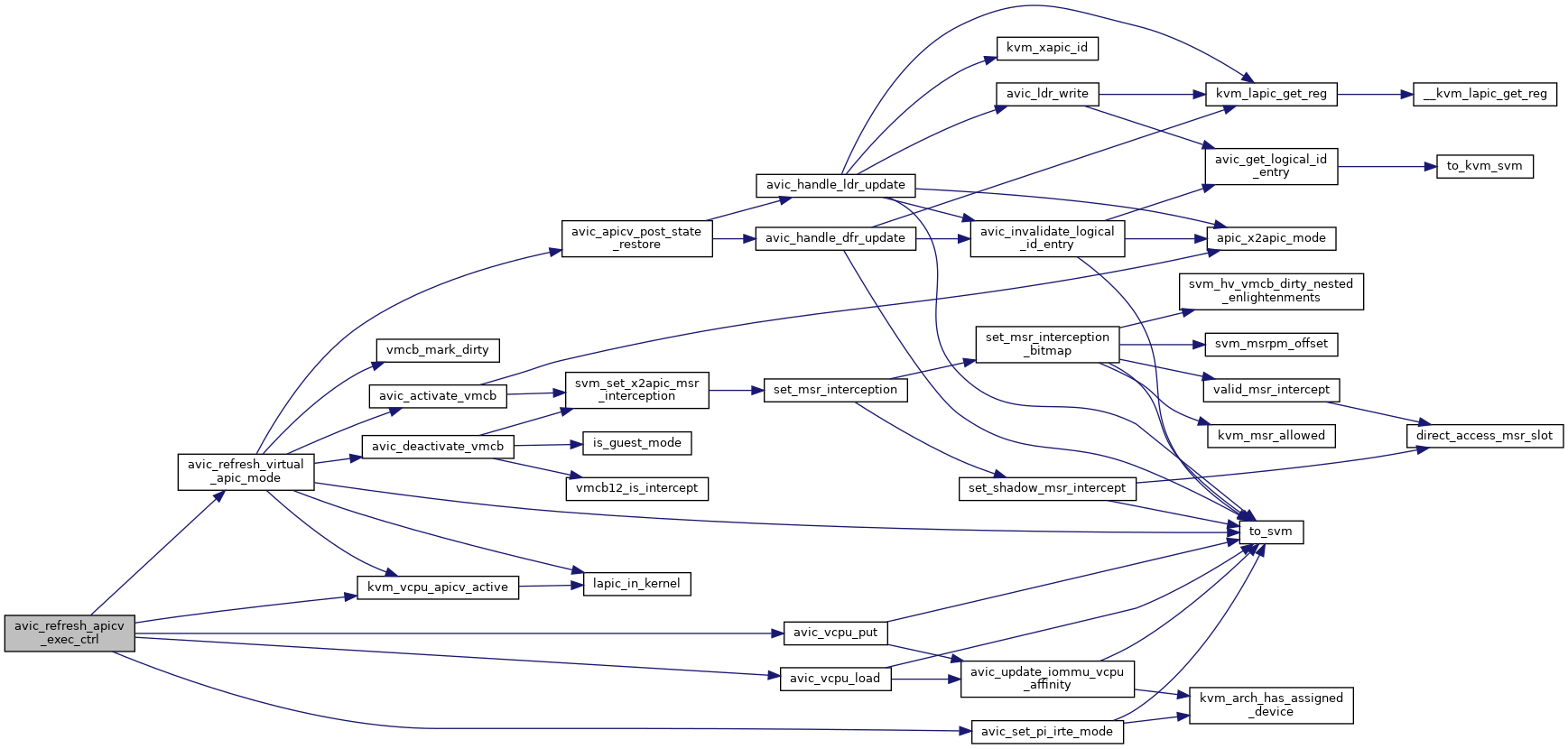

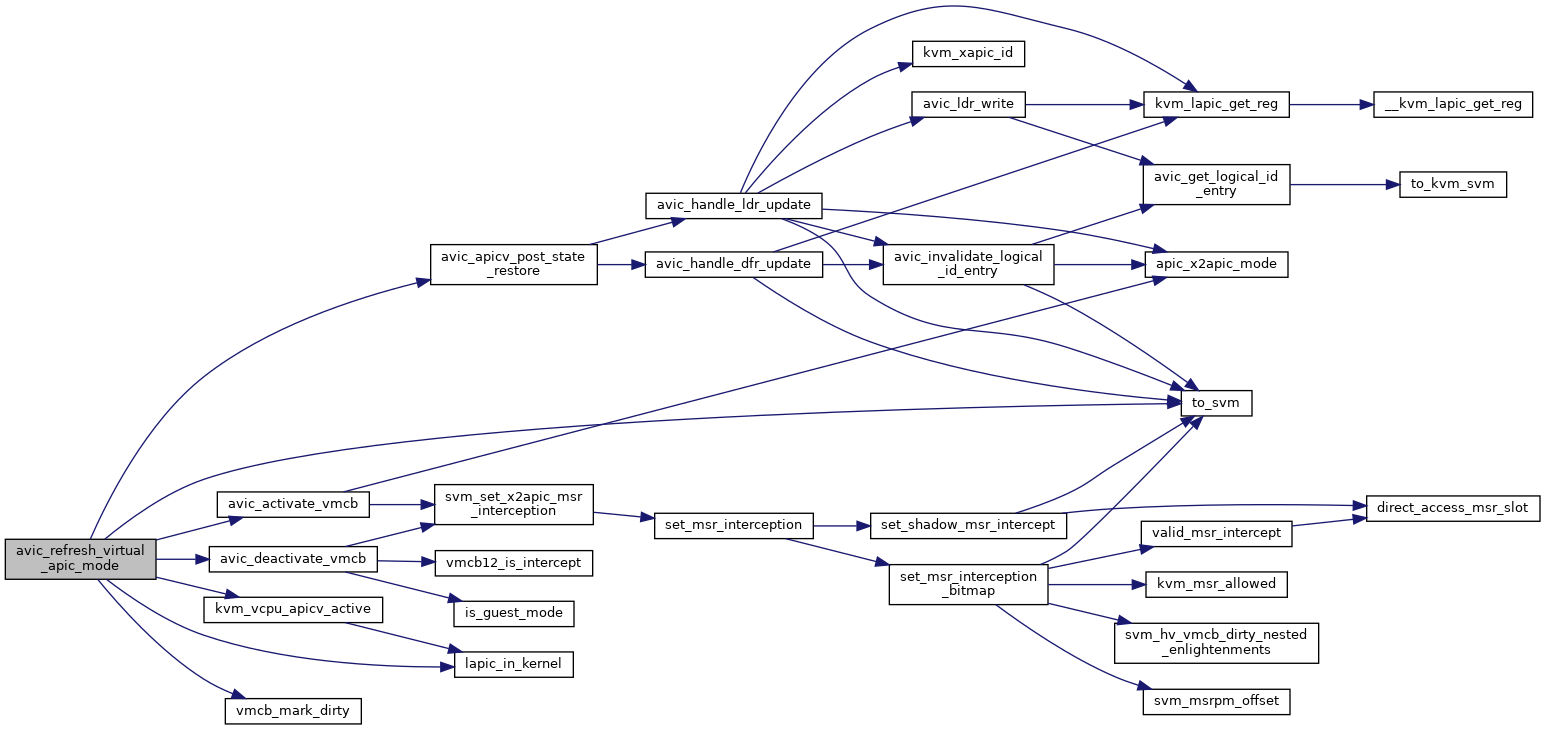

◆ avic_refresh_apicv_exec_ctrl()

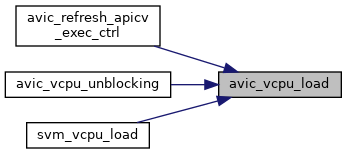

| void avic_refresh_apicv_exec_ctrl | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1136 of file avic.c.

◆ avic_refresh_virtual_apic_mode()

void avic_refresh_virtual_apic_mode(struct kvm_vcpu *vcpu)

While AVIC is temporarily deactivated, the guest could update the APIC ID, DFR, and LDR registers, and those writes would not be trapped by avic_unaccelerated_access_interception(). In that case, we need to check and update the AVIC logical APIC ID table accordingly before reactivating AVIC.

Definition at line 1112 of file avic.c.

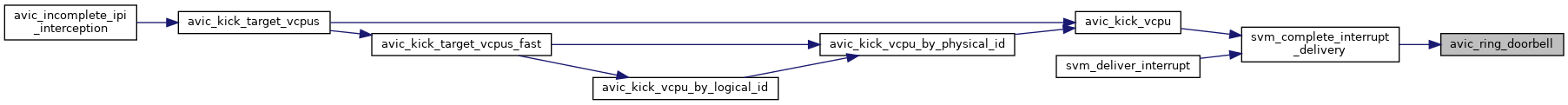

◆ avic_ring_doorbell()

| void avic_ring_doorbell | ( | struct kvm_vcpu * | vcpu | ) |

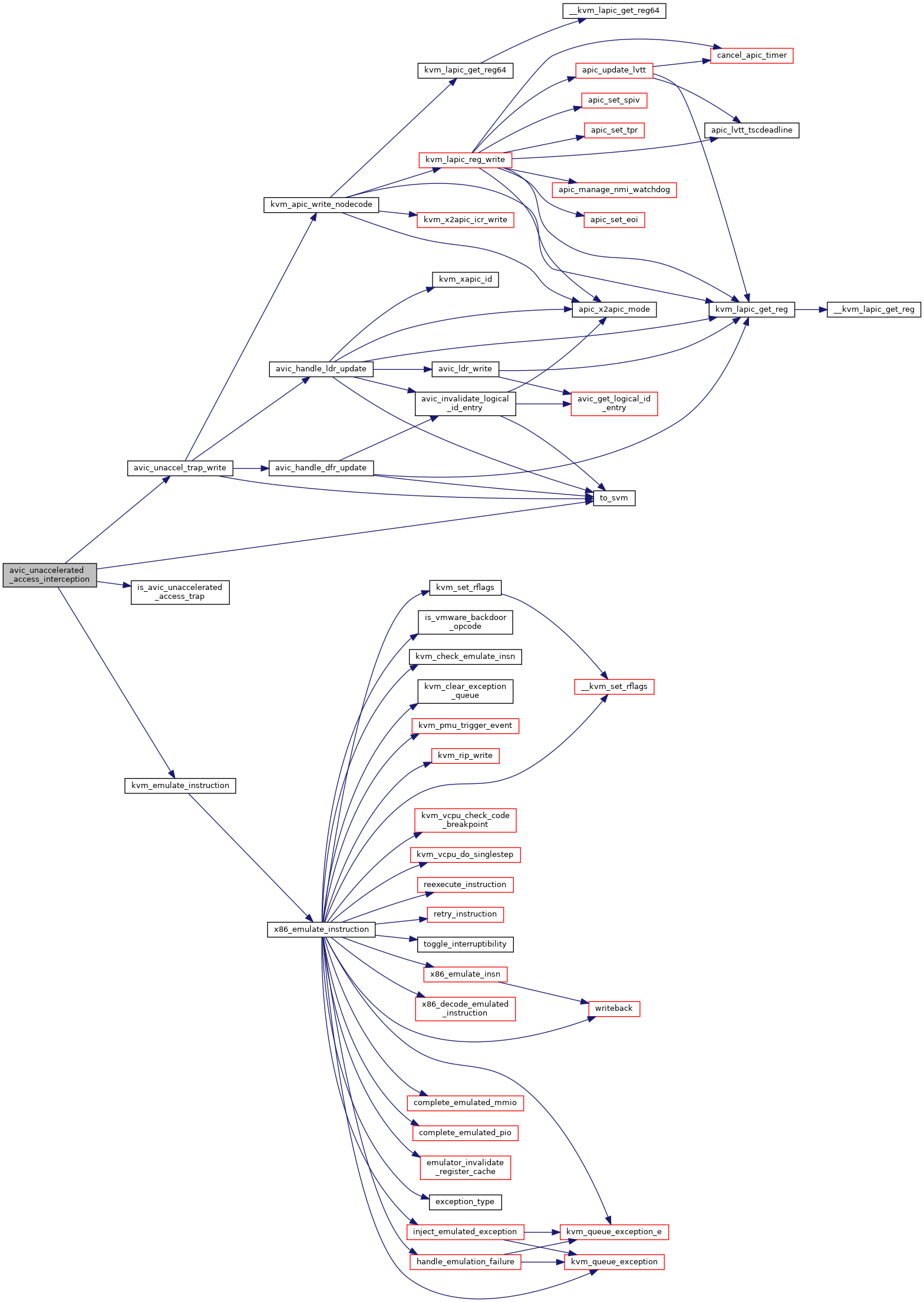

◆ avic_unaccelerated_access_interception()

int avic_unaccelerated_access_interception(struct kvm_vcpu *vcpu)

Definition at line 693 of file avic.c.

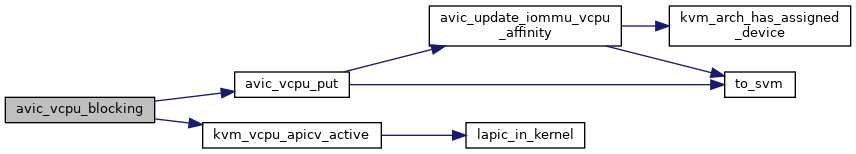

◆ avic_vcpu_blocking()

| void avic_vcpu_blocking | ( | struct kvm_vcpu * | vcpu | ) |

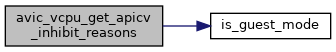

◆ avic_vcpu_get_apicv_inhibit_reasons()

| unsigned long avic_vcpu_get_apicv_inhibit_reasons | ( | struct kvm_vcpu * | vcpu | ) |

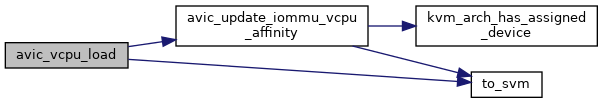

◆ avic_vcpu_load()

void avic_vcpu_load(struct kvm_vcpu *vcpu, int cpu)

Definition at line 1028 of file avic.c.

◆ avic_vcpu_put()

void avic_vcpu_put(struct kvm_vcpu *vcpu)

◆ avic_vcpu_unblocking()

| void avic_vcpu_unblocking | ( | struct kvm_vcpu * | vcpu | ) |

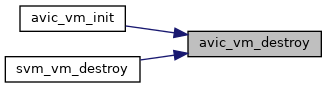

◆ avic_vm_destroy()

void avic_vm_destroy(struct kvm *kvm)

◆ avic_vm_init()

| int avic_vm_init | ( | struct kvm * | kvm | ) |

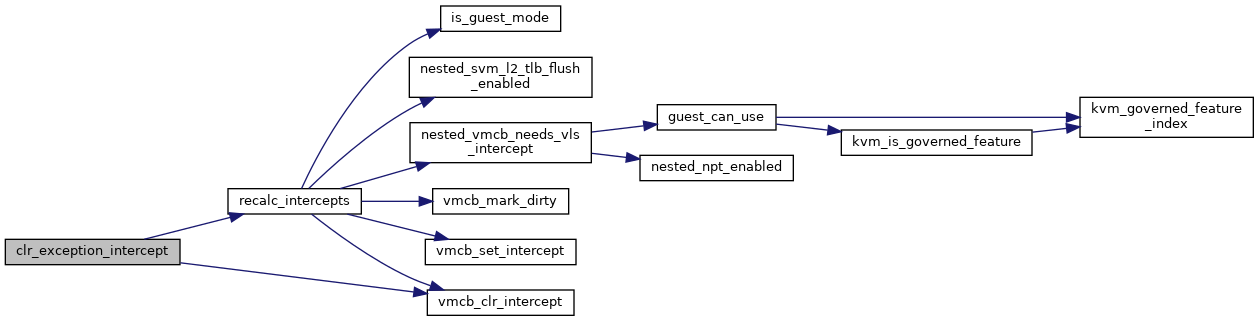

◆ clr_exception_intercept()

static inline void clr_exception_intercept(struct vcpu_svm *svm, u32 bit)

◆ DECLARE_PER_CPU()

DECLARE_PER_CPU(struct svm_cpu_data, svm_data)

◆ disable_gif()

static inline void disable_gif(struct vcpu_svm *svm)

◆ disable_nmi_singlestep()

void disable_nmi_singlestep(struct vcpu_svm *svm)

◆ enable_gif()

|

inlinestatic |

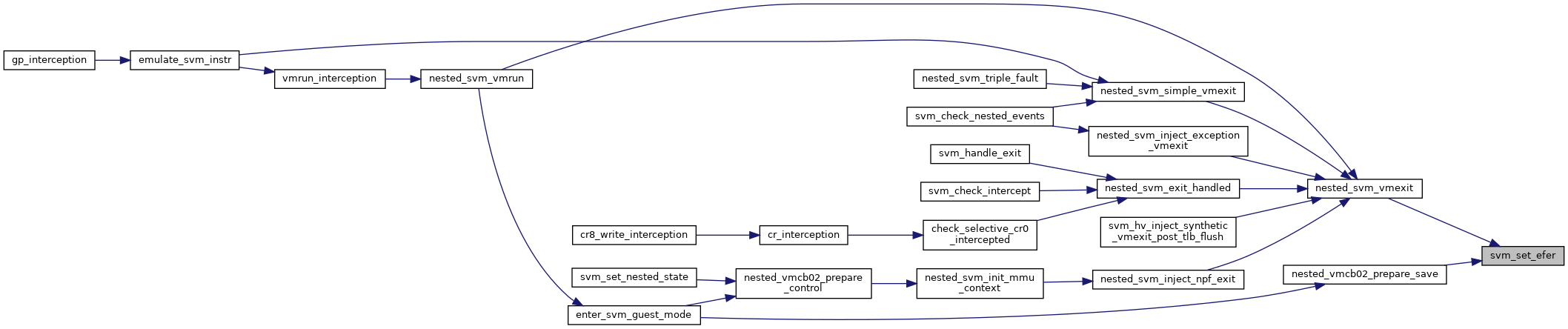

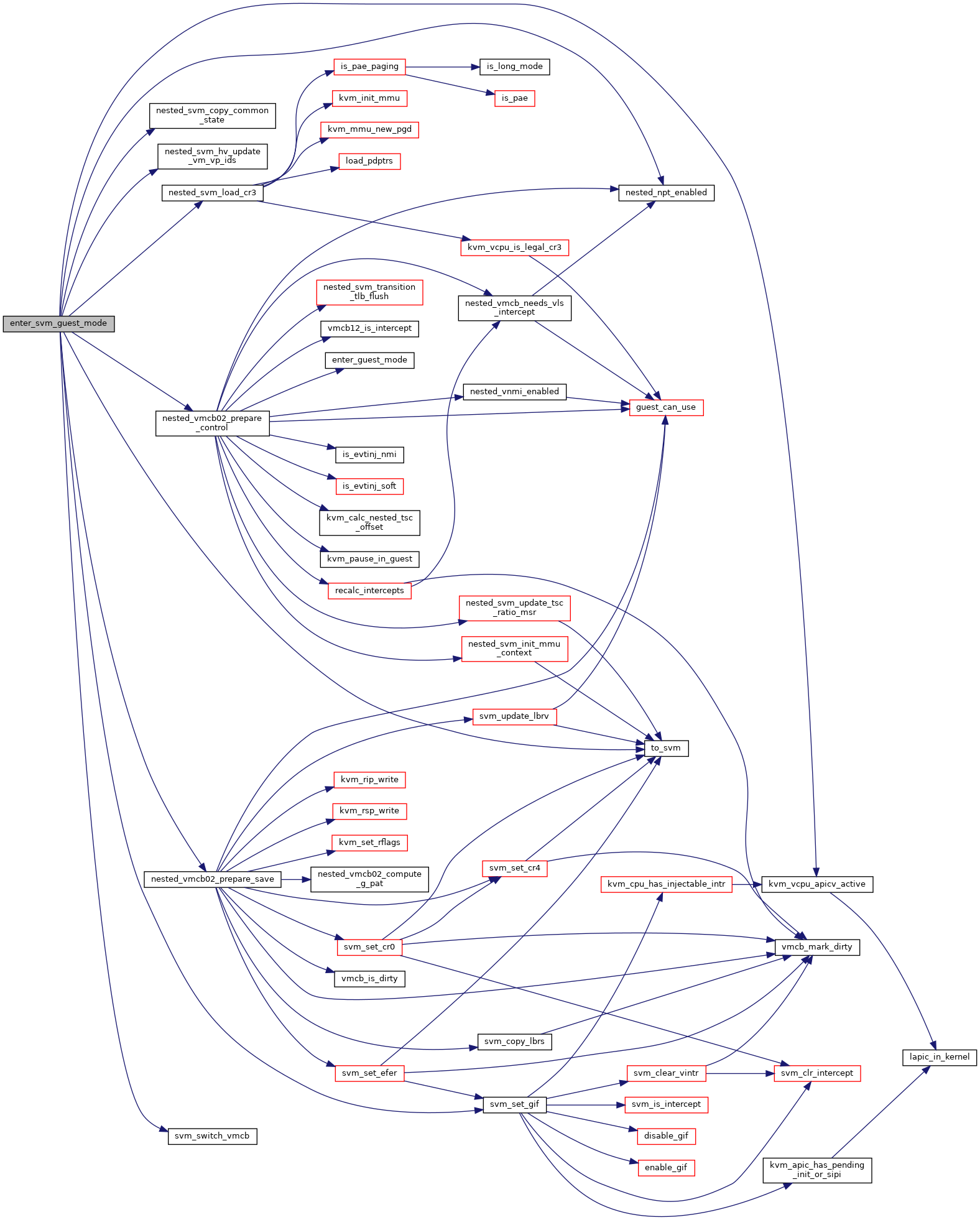

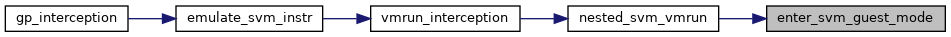

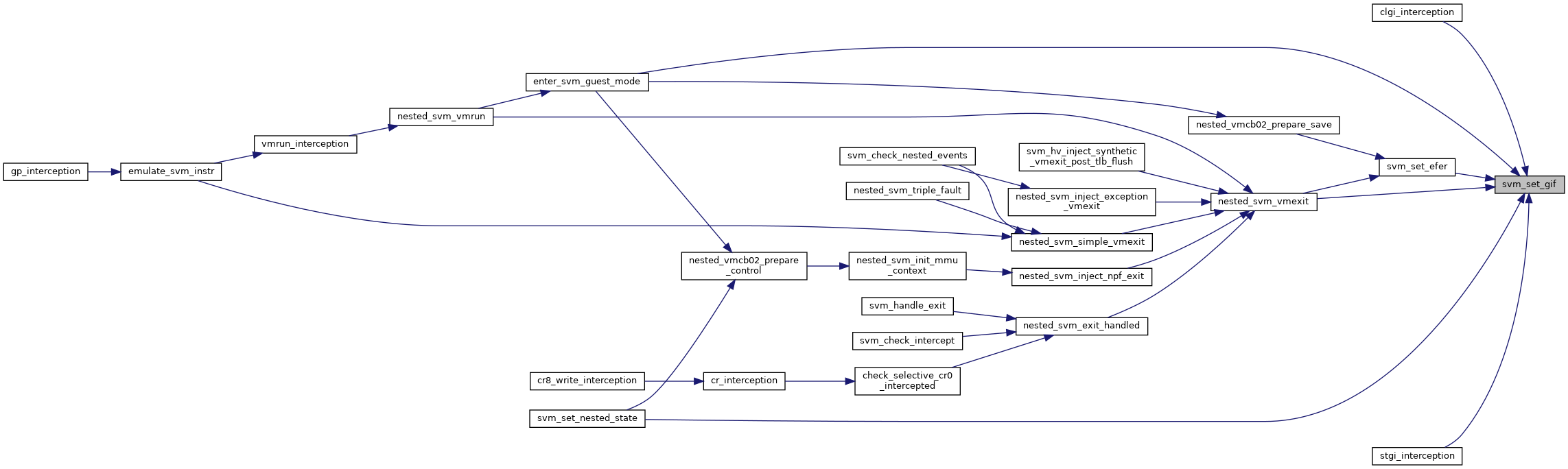

◆ enter_svm_guest_mode()

int enter_svm_guest_mode(struct kvm_vcpu *vcpu, u64 vmcb_gpa, struct vmcb *vmcb12, bool from_vmrun)

Definition at line 785 of file nested.c.

◆ get_vgif_vmcb()

static inline struct vmcb *get_vgif_vmcb(struct vcpu_svm *svm)

◆ get_vnmi_vmcb_l1()

static inline struct vmcb *get_vnmi_vmcb_l1(struct vcpu_svm *svm)

◆ gif_set()

static inline bool gif_set(struct vcpu_svm *svm)
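When virtual GIF is available, enable_gif(), disable_gif(), and gif_set() act on the V_GIF bit of the int_ctl field in the VMCB returned by get_vgif_vmcb(); otherwise GIF is tracked in a software flag. A minimal sketch of that fallback pattern; the V_GIF bit position (assumed to be bit 9), the struct layouts, and the field names are assumptions, and the choice between VMCBs that get_vgif_vmcb() makes is collapsed into a single pointer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_V_GIF_MASK (1u << 9) /* assumed position of V_GIF in int_ctl */

struct sketch_vmcb { uint32_t int_ctl; };

struct sketch_vcpu {
	struct sketch_vmcb *vgif_vmcb; /* NULL when virtual GIF is unavailable */
	bool guest_gif;                /* software copy used without vGIF */
};

static void sketch_enable_gif(struct sketch_vcpu *v)
{
	if (v->vgif_vmcb)
		v->vgif_vmcb->int_ctl |= SKETCH_V_GIF_MASK;
	else
		v->guest_gif = true;
}

static void sketch_disable_gif(struct sketch_vcpu *v)
{
	if (v->vgif_vmcb)
		v->vgif_vmcb->int_ctl &= ~SKETCH_V_GIF_MASK;
	else
		v->guest_gif = false;
}

static bool sketch_gif_set(const struct sketch_vcpu *v)
{
	if (v->vgif_vmcb)
		return v->vgif_vmcb->int_ctl & SKETCH_V_GIF_MASK;
	return v->guest_gif;
}
```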

◆ is_vnmi_enabled()

static inline bool is_vnmi_enabled(struct vcpu_svm *svm)

◆ is_x2apic_msrpm_offset()

|

inlinestatic |

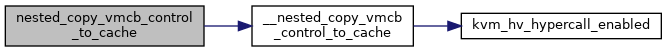
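is_x2apic_msrpm_offset() works in the opposite direction from svm_msrpm_offset(): given a u32 index into the MSR permission map, it asks whether that word covers x2APIC MSRs. Each u32 holds 2 bits for each of 16 MSRs, so for the first map range (which starts at MSR 0) multiplying the index by 16 gives the first MSR it covers. A minimal sketch, assuming the x2APIC MSRs occupy 0x800 through 0x8ff; the function name is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_APIC_BASE_MSR 0x800u /* first x2APIC MSR */

/* Each u32 of the map covers 16 MSRs (2 bits each), so offset * 16 is its first MSR. */
static bool sketch_is_x2apic_msrpm_offset(uint32_t offset)
{
	uint32_t msr = offset * 16;

	return msr >= SKETCH_APIC_BASE_MSR && msr < SKETCH_APIC_BASE_MSR + 0x100;
}
```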

◆ nested_copy_vmcb_control_to_cache()

void nested_copy_vmcb_control_to_cache(struct vcpu_svm *svm, struct vmcb_control_area *control)

Definition at line 382 of file nested.c.

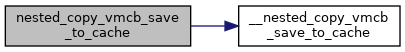

◆ nested_copy_vmcb_save_to_cache()

void nested_copy_vmcb_save_to_cache(struct vcpu_svm *svm, struct vmcb_save_area *save)

Definition at line 404 of file nested.c.

◆ nested_exit_on_intr()

static inline bool nested_exit_on_intr(struct vcpu_svm *svm)

◆ nested_exit_on_nmi()

static inline bool nested_exit_on_nmi(struct vcpu_svm *svm)

◆ nested_exit_on_smi()

static inline bool nested_exit_on_smi(struct vcpu_svm *svm)

◆ nested_npt_enabled()

static inline bool nested_npt_enabled(struct vcpu_svm *svm)

◆ nested_svm_check_exception()

int nested_svm_check_exception(struct vcpu_svm *svm, unsigned nr, bool has_error_code, u32 error_code)

◆ nested_svm_check_permissions()

| int nested_svm_check_permissions | ( | struct kvm_vcpu * | vcpu | ) |

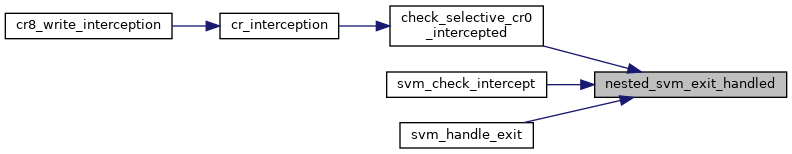

◆ nested_svm_exit_handled()

int nested_svm_exit_handled(struct vcpu_svm *svm)

◆ nested_svm_exit_special()

int nested_svm_exit_special(struct vcpu_svm *svm)

Definition at line 1496 of file nested.c.

◆ nested_svm_simple_vmexit()

static inline int nested_svm_simple_vmexit(struct vcpu_svm *svm, u32 exit_code)

◆ nested_svm_update_tsc_ratio_msr()

void nested_svm_update_tsc_ratio_msr(struct kvm_vcpu *vcpu)

Definition at line 1532 of file nested.c.

◆ nested_svm_virtualize_tpr()

|

inlinestatic |

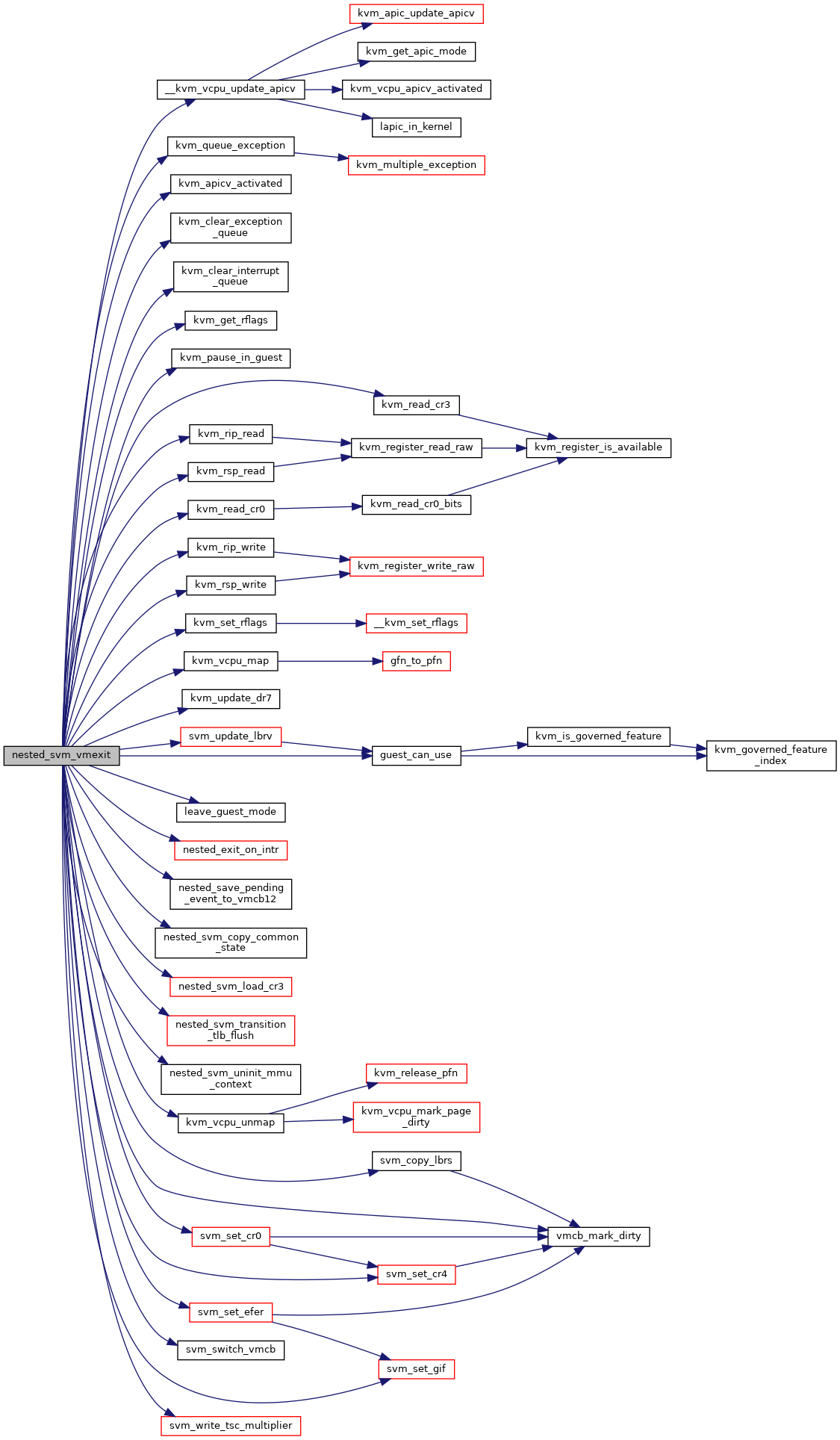

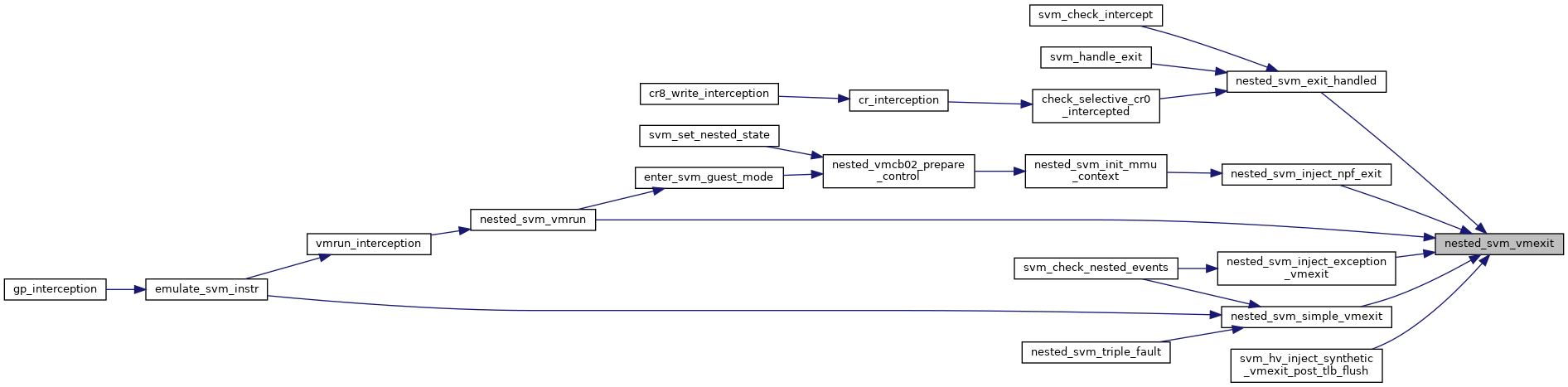

◆ nested_svm_vmexit()

int nested_svm_vmexit(struct vcpu_svm *svm)

Definition at line 967 of file nested.c.

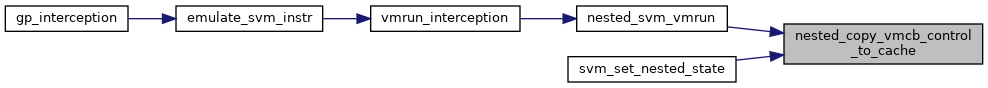

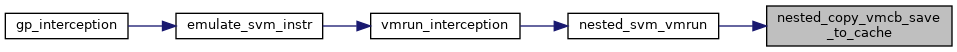

◆ nested_svm_vmrun()

int nested_svm_vmrun(struct kvm_vcpu *vcpu)

Definition at line 837 of file nested.c.

◆ nested_sync_control_from_vmcb02()

void nested_sync_control_from_vmcb02(struct vcpu_svm *svm)

◆ nested_vgif_enabled()

static inline bool nested_vgif_enabled(struct vcpu_svm *svm)

◆ nested_vmcb02_compute_g_pat()

void nested_vmcb02_compute_g_pat(struct vcpu_svm *svm)

◆ nested_vnmi_enabled()

|

inlinestatic |

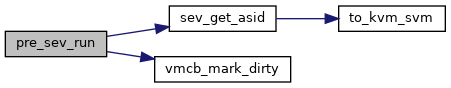

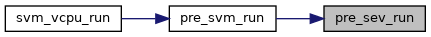

◆ pre_sev_run()

| void pre_sev_run | ( | struct vcpu_svm * | svm, |

| int | cpu | ||

| ) |

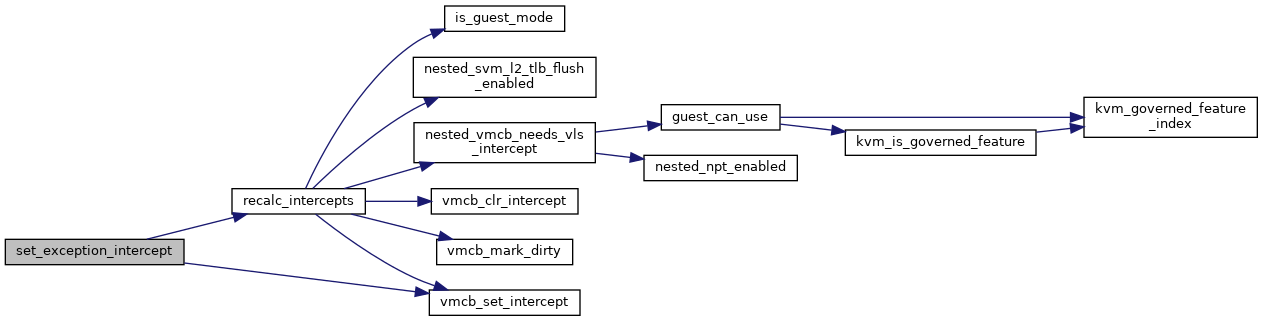

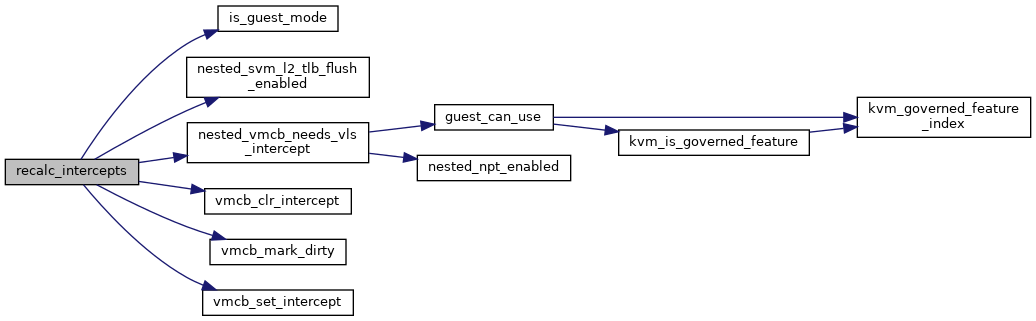

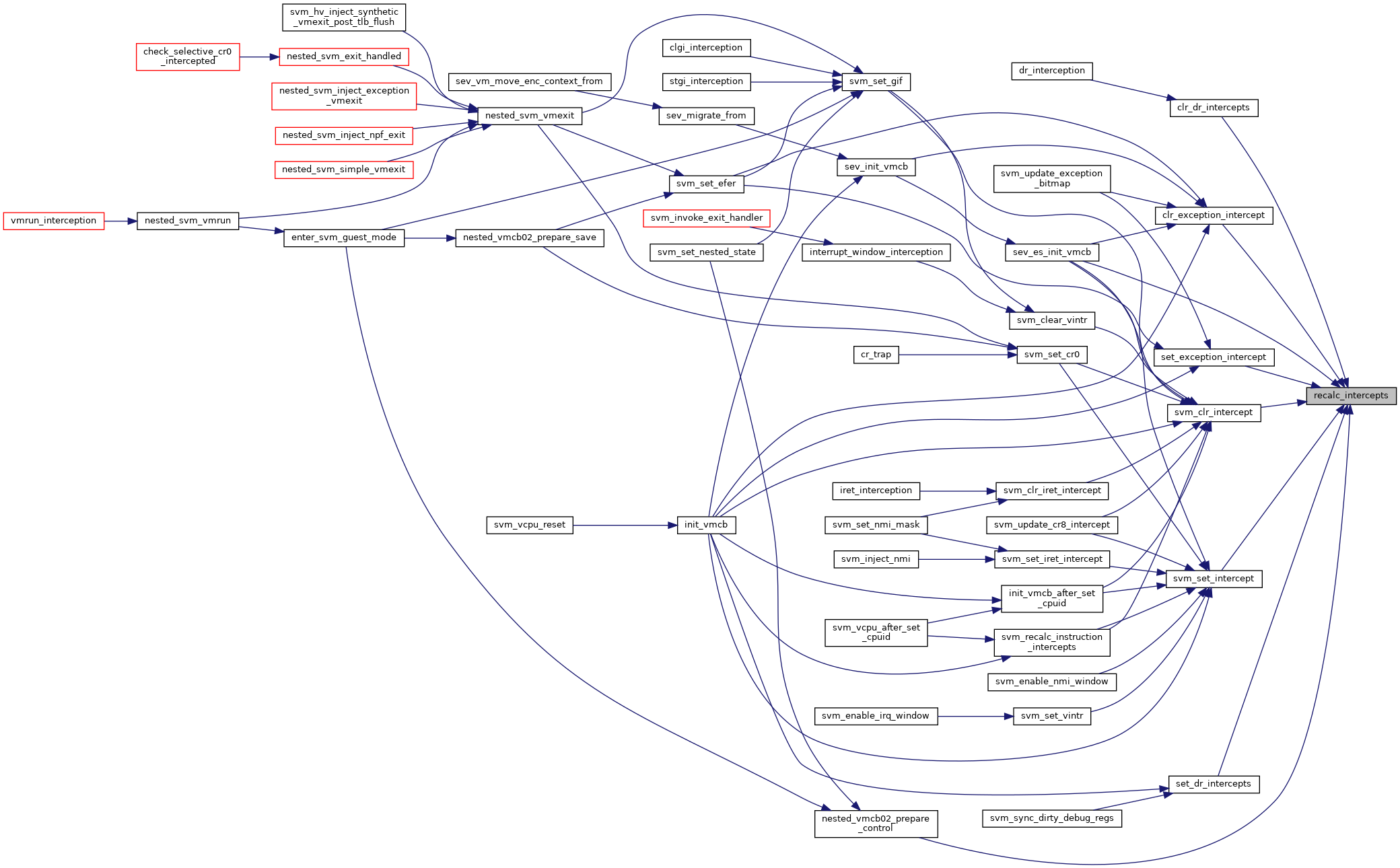

◆ recalc_intercepts()

void recalc_intercepts(struct vcpu_svm *svm)

Definition at line 122 of file nested.c.

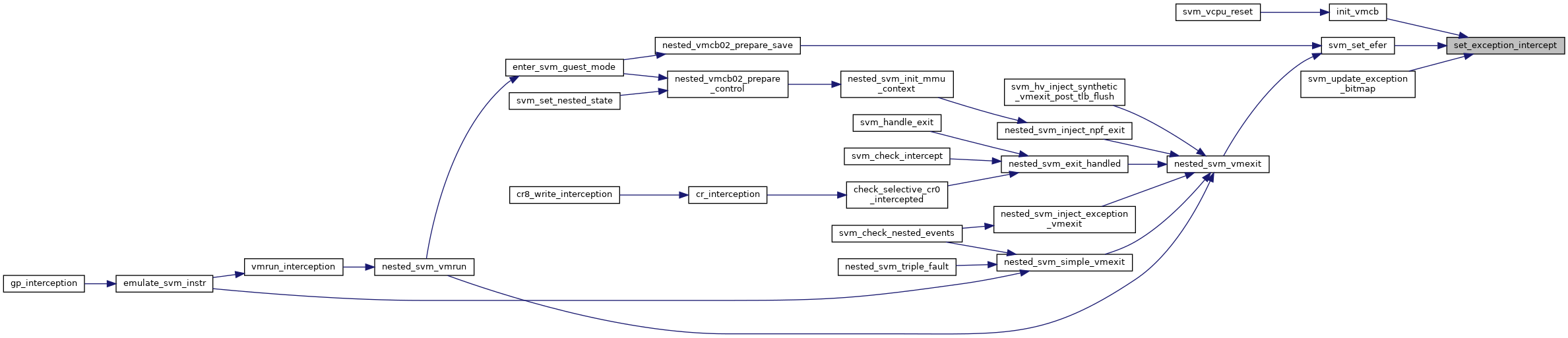

◆ set_exception_intercept()

|

inlinestatic |

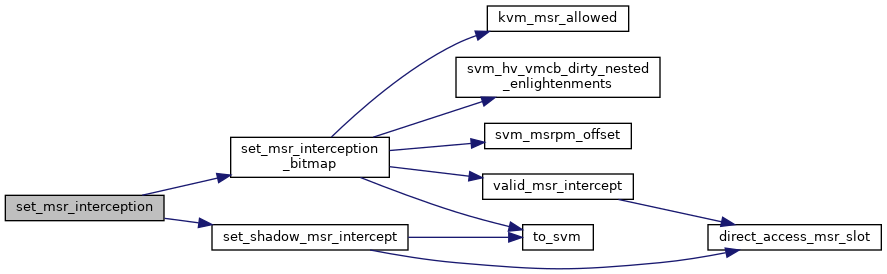

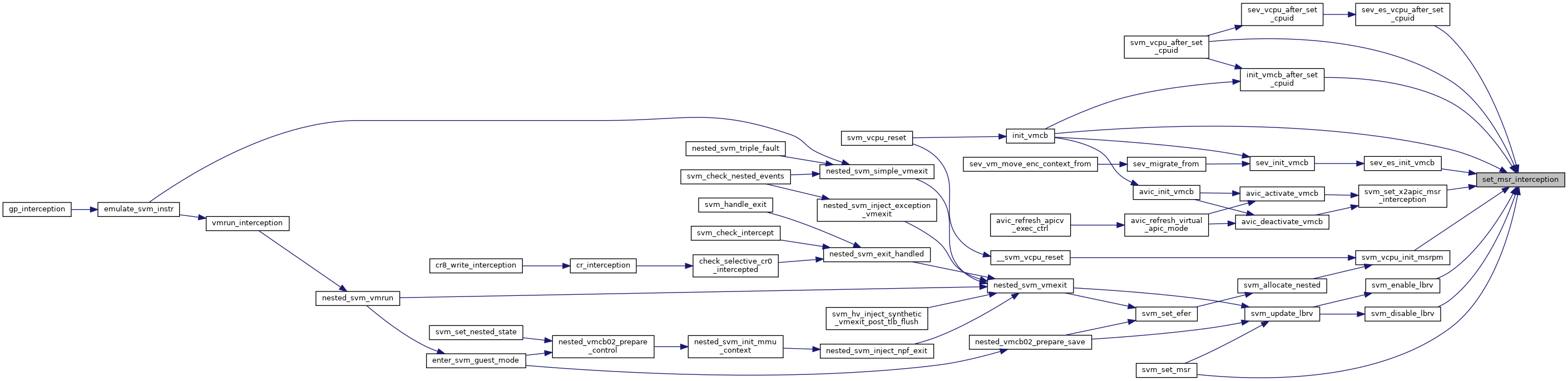

◆ set_msr_interception()

void set_msr_interception(struct kvm_vcpu *vcpu, u32 *msrpm, u32 msr, int read, int write)

Definition at line 859 of file svm.c.
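Within the u32 word of the MSR permission map that covers an MSR, bit 2 * (msr & 0xf) controls reads and the next bit controls writes, and a set bit means the access is intercepted. A minimal sketch of the two-bit update, assuming that a nonzero read or write argument means "allow the access" and therefore clears the bit; the word index is passed in directly rather than computed with svm_msrpm_offset(), and the helper name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical helper: update one MSR's read/write intercept bits inside
 * msrpm[offset]. Nonzero read/write means "pass through" (bit cleared);
 * zero means "intercept" (bit set).
 */
static void sketch_set_msr_interception(uint32_t *msrpm, uint32_t offset,
					uint32_t msr, int read, int write)
{
	uint32_t bit_read = 2 * (msr & 0x0f);
	uint32_t bit_write = bit_read + 1;
	uint32_t tmp = msrpm[offset];

	if (read)
		tmp &= ~(1u << bit_read);
	else
		tmp |= 1u << bit_read;

	if (write)
		tmp &= ~(1u << bit_write);
	else
		tmp |= 1u << bit_write;

	msrpm[offset] = tmp;
}
```

Starting from an all-ones word (everything intercepted), allowing reads of MSR 0x3 clears only bit 6.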

◆ sev_cpu_init()

int sev_cpu_init(struct svm_cpu_data *sd)

◆ sev_es_guest()

|

static |

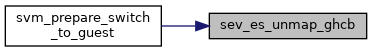

◆ sev_es_prepare_switch_to_guest()

| void sev_es_prepare_switch_to_guest | ( | struct sev_es_save_area * | hostsa | ) |

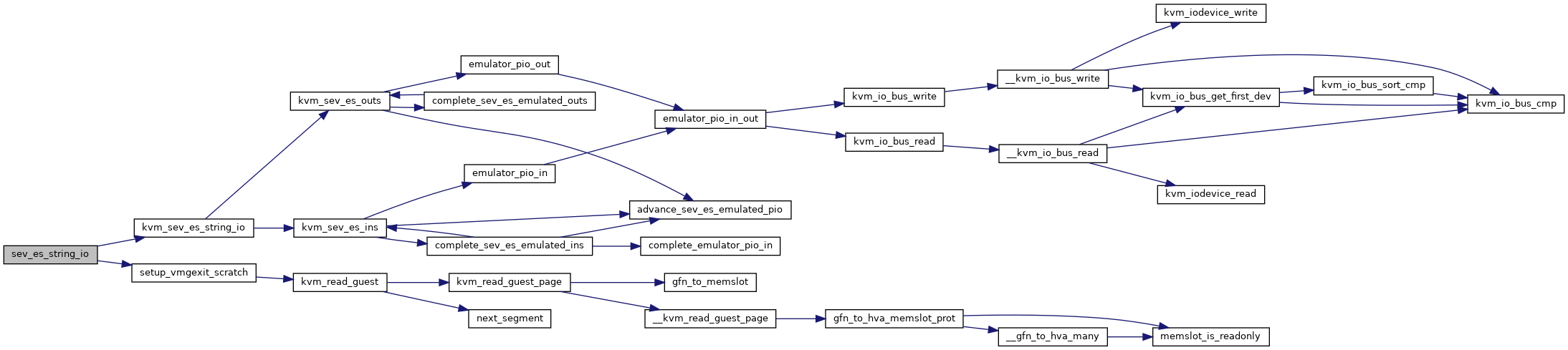

◆ sev_es_string_io()

int sev_es_string_io(struct vcpu_svm *svm, int size, unsigned int port, int in)

Definition at line 2963 of file sev.c.

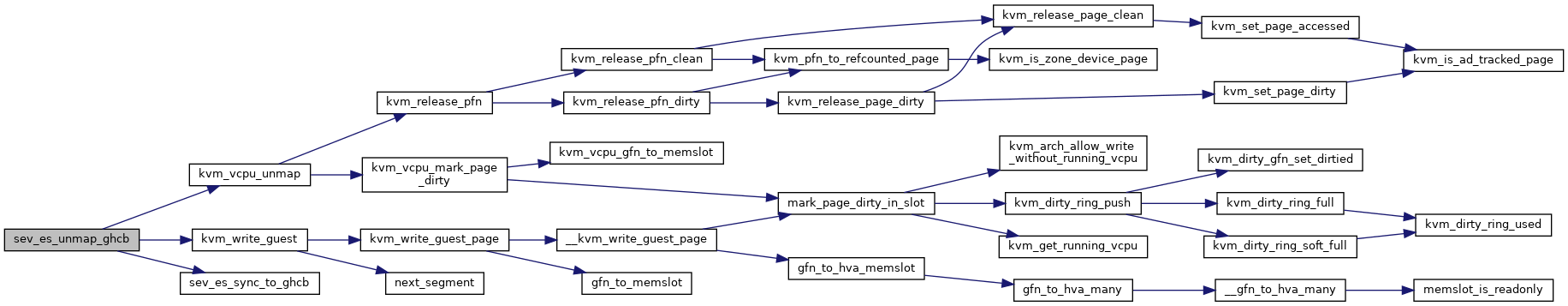

◆ sev_es_unmap_ghcb()

void sev_es_unmap_ghcb(struct vcpu_svm *svm)

Definition at line 2614 of file sev.c.

◆ sev_es_vcpu_reset()

| void sev_es_vcpu_reset | ( | struct vcpu_svm * | svm | ) |

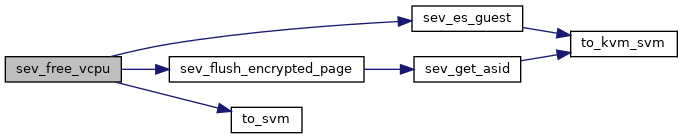

◆ sev_free_vcpu()

void sev_free_vcpu(struct kvm_vcpu *vcpu)

Definition at line 2373 of file sev.c.

◆ sev_guest()

static __always_inline bool sev_guest(struct kvm *kvm)

◆ sev_guest_memory_reclaimed()

| void sev_guest_memory_reclaimed | ( | struct kvm * | kvm | ) |

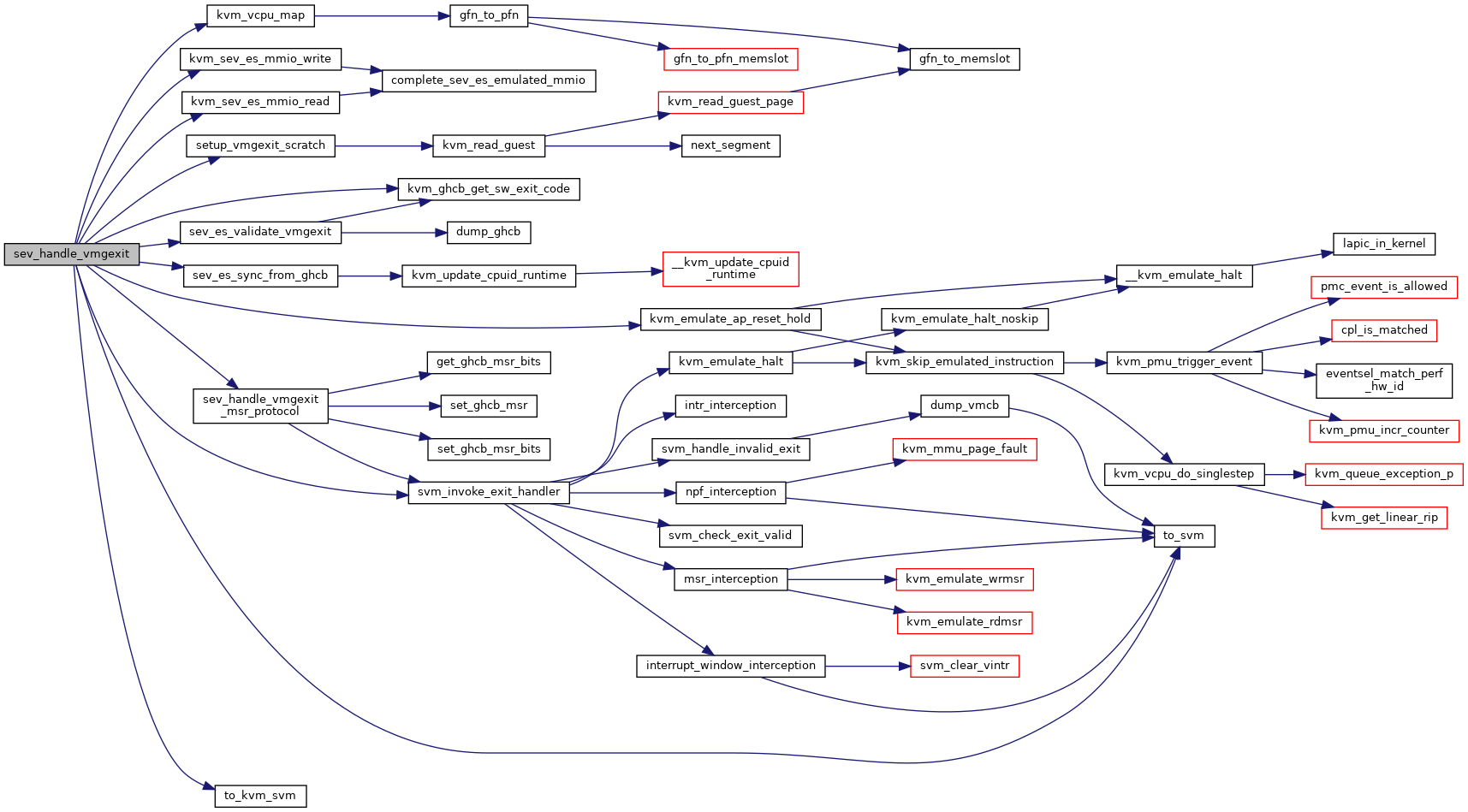

◆ sev_handle_vmgexit()

int sev_handle_vmgexit(struct kvm_vcpu *vcpu)

Definition at line 2857 of file sev.c.

◆ sev_hardware_setup()

void __init sev_hardware_setup(void)

◆ sev_hardware_unsetup()

void sev_hardware_unsetup(void)

◆ sev_init_vmcb()

| void sev_init_vmcb | ( | struct vcpu_svm * | svm | ) |

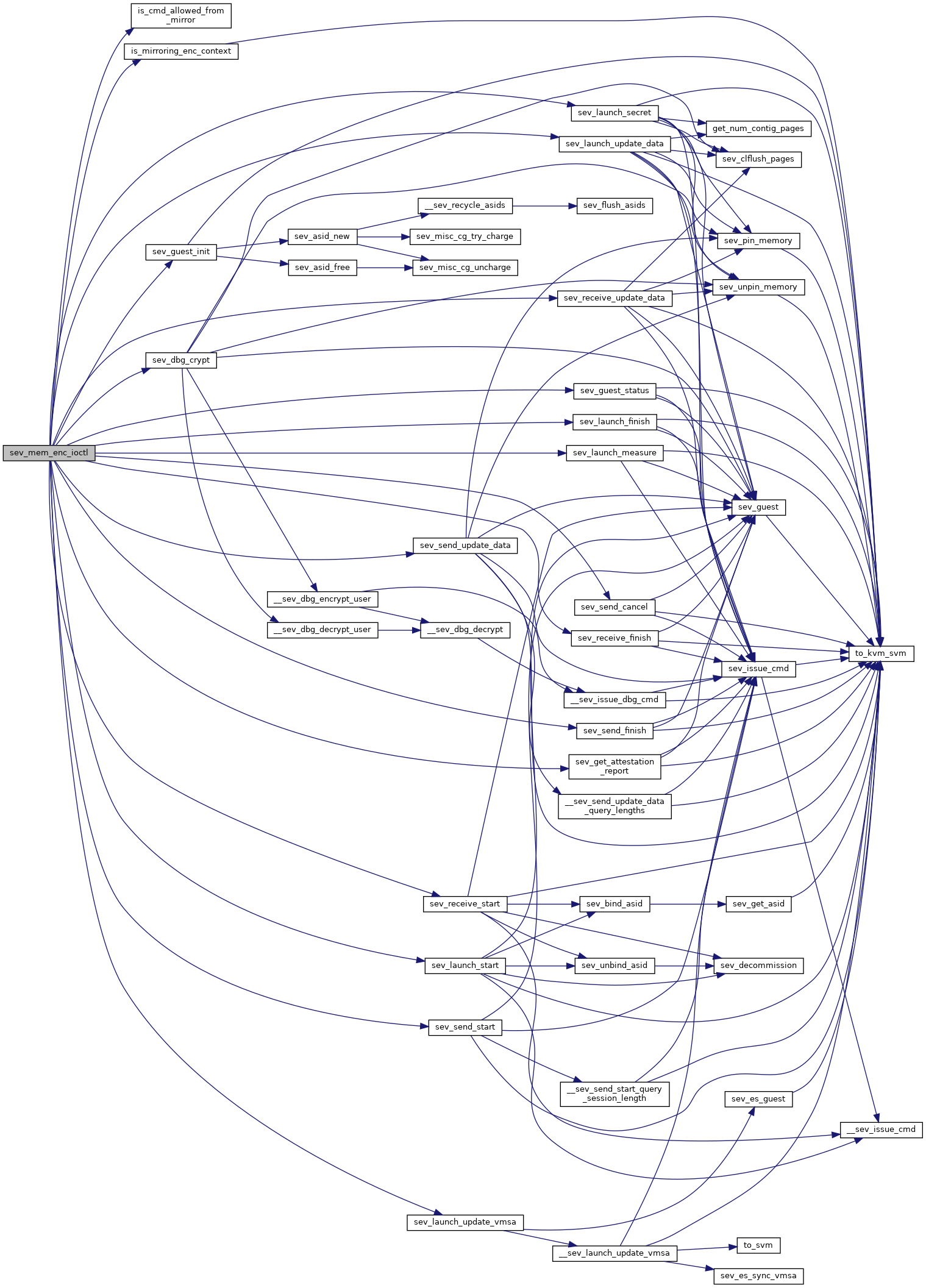

◆ sev_mem_enc_ioctl()

int sev_mem_enc_ioctl(struct kvm *kvm, void __user *argp)

Definition at line 1862 of file sev.c.

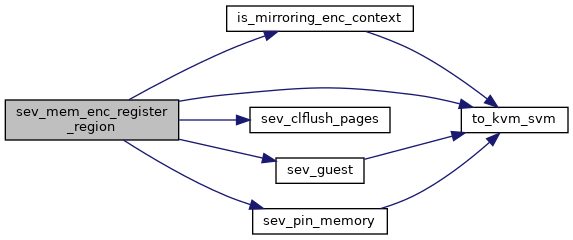

◆ sev_mem_enc_register_region()

int sev_mem_enc_register_region(struct kvm *kvm, struct kvm_enc_region *range)

Definition at line 1959 of file sev.c.

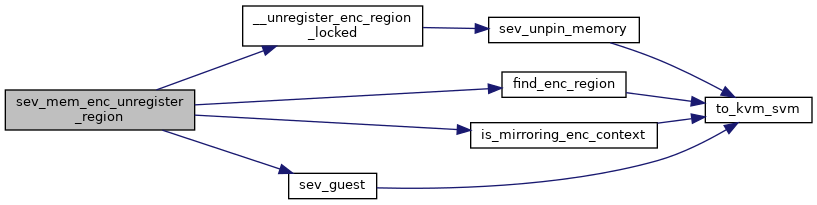

◆ sev_mem_enc_unregister_region()

int sev_mem_enc_unregister_region(struct kvm *kvm, struct kvm_enc_region *range)

Definition at line 2035 of file sev.c.

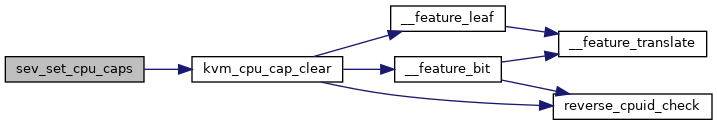

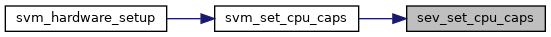

◆ sev_set_cpu_caps()

void __init sev_set_cpu_caps(void)

Definition at line 2185 of file sev.c.

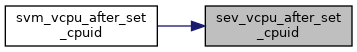

◆ sev_vcpu_after_set_cpuid()

void sev_vcpu_after_set_cpuid(struct vcpu_svm *svm)

Definition at line 3015 of file sev.c.

◆ sev_vcpu_deliver_sipi_vector()

| void sev_vcpu_deliver_sipi_vector | ( | struct kvm_vcpu * | vcpu, |

| u8 | vector | ||

| ) |

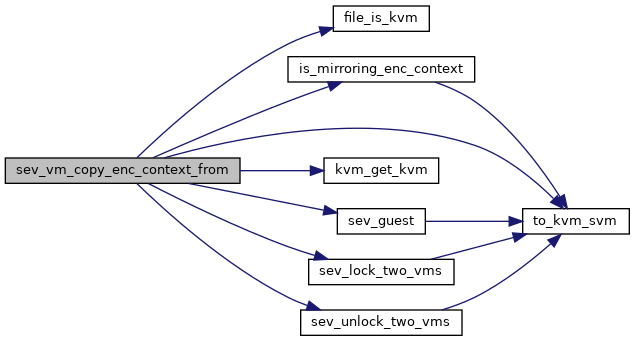

◆ sev_vm_copy_enc_context_from()

int sev_vm_copy_enc_context_from(struct kvm *kvm, unsigned int source_fd)

Definition at line 2075 of file sev.c.

◆ sev_vm_destroy()

| void sev_vm_destroy | ( | struct kvm * | kvm | ) |

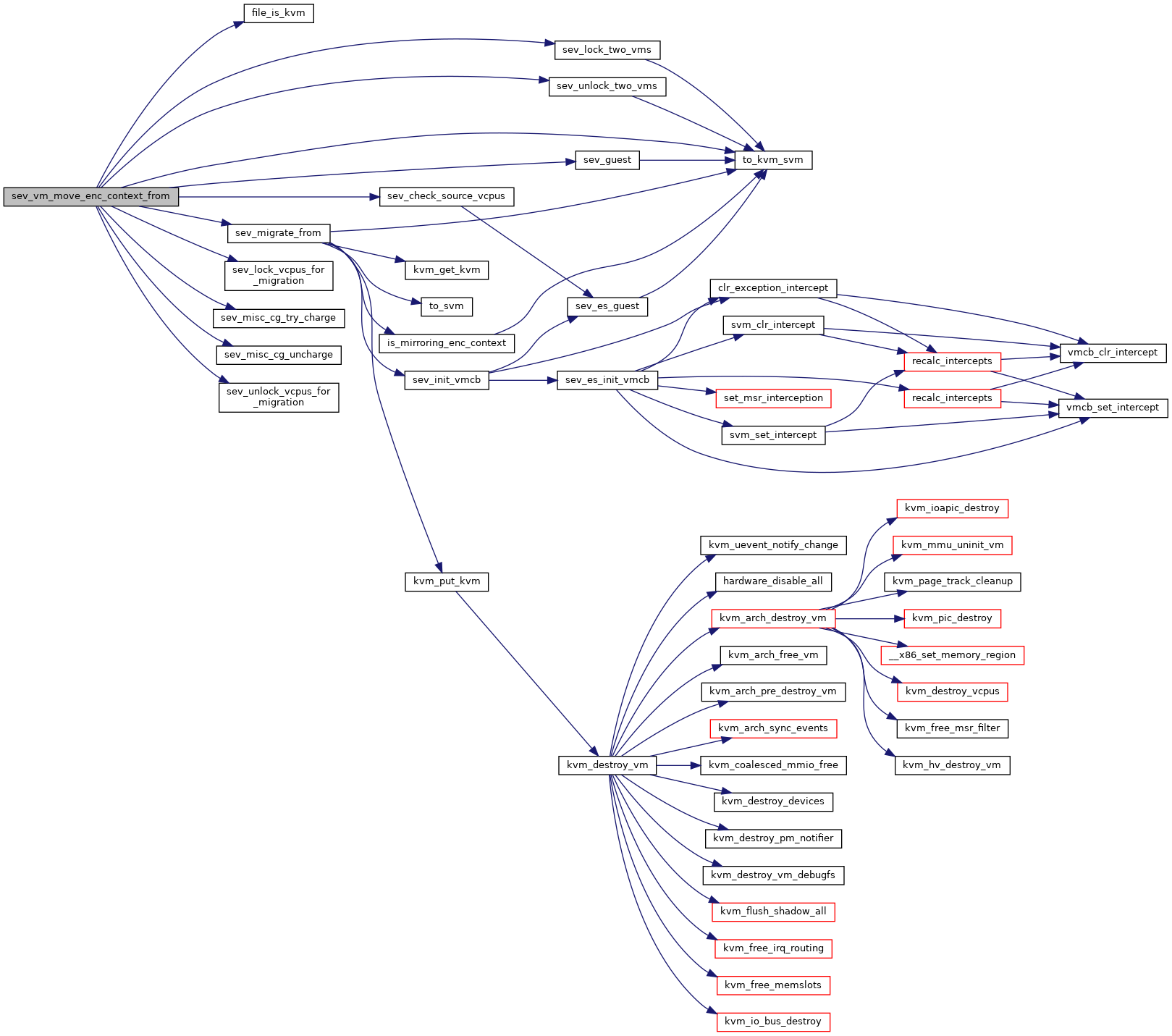

◆ sev_vm_move_enc_context_from()

int sev_vm_move_enc_context_from(struct kvm *kvm, unsigned int source_fd)

Definition at line 1791 of file sev.c.

◆ svm_allocate_nested()

int svm_allocate_nested(struct vcpu_svm *svm)

◆ svm_clr_intercept()

static inline void svm_clr_intercept(struct vcpu_svm *svm, int bit)

◆ svm_complete_interrupt_delivery()

void svm_complete_interrupt_delivery(struct kvm_vcpu *vcpu, int delivery_mode, int trig_mode, int vec)

◆ svm_copy_lbrs()

void svm_copy_lbrs(struct vmcb *to_vmcb, struct vmcb *from_vmcb)

◆ svm_copy_vmloadsave_state()

void svm_copy_vmloadsave_state(struct vmcb *to_vmcb, struct vmcb *from_vmcb)

◆ svm_copy_vmrun_state()

void svm_copy_vmrun_state(struct vmcb_save_area *to_save, struct vmcb_save_area *from_save)

◆ svm_free_nested()

void svm_free_nested(struct vcpu_svm *svm)

◆ svm_interrupt_blocked()

| bool svm_interrupt_blocked | ( | struct kvm_vcpu * | vcpu | ) |

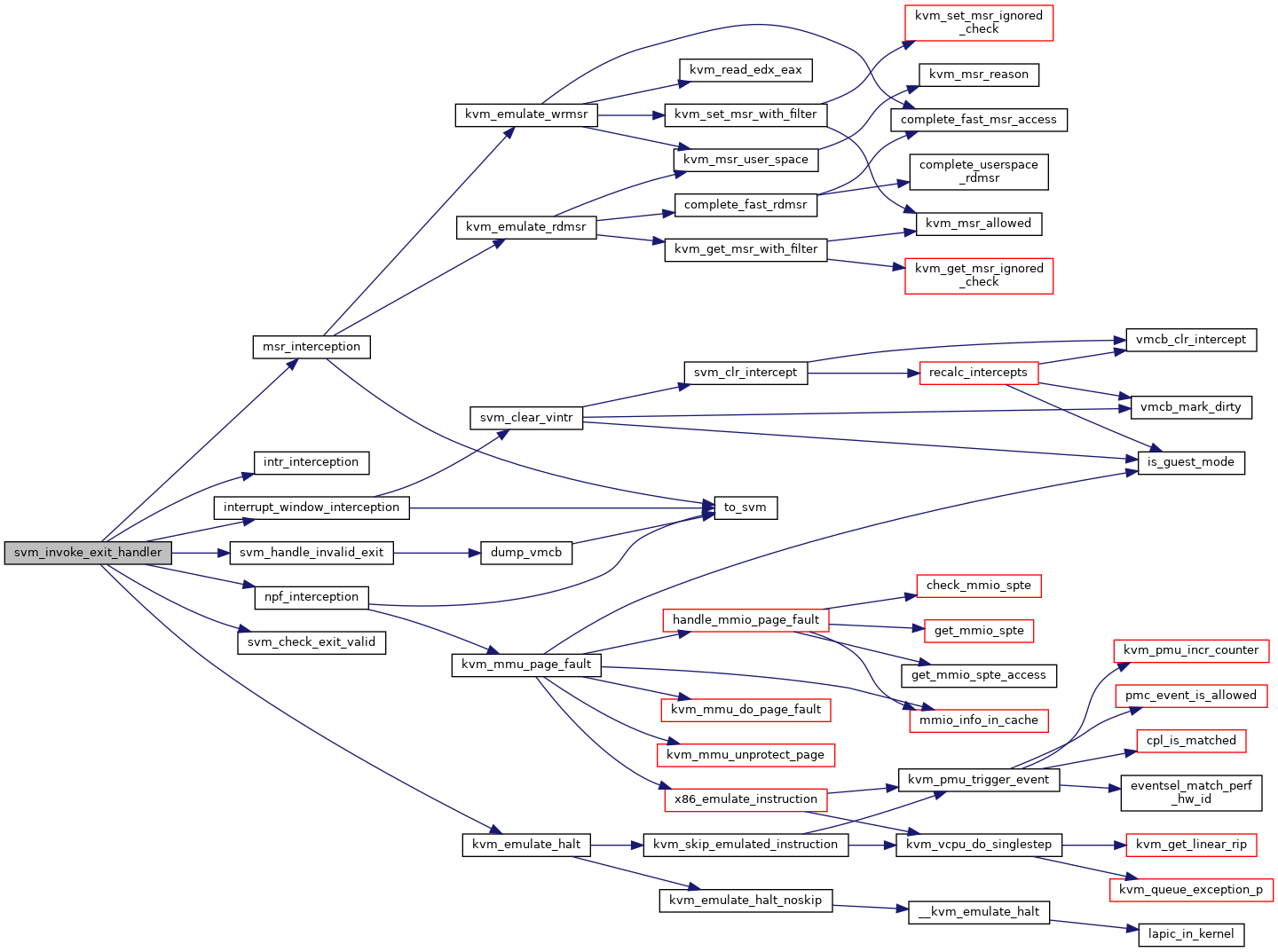

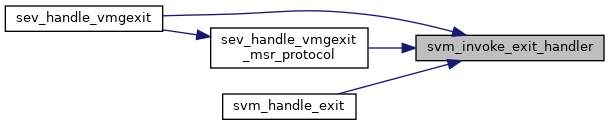

◆ svm_invoke_exit_handler()

int svm_invoke_exit_handler(struct kvm_vcpu *vcpu, u64 exit_code)

Definition at line 3453 of file svm.c.

◆ svm_is_intercept()

|

inlinestatic |

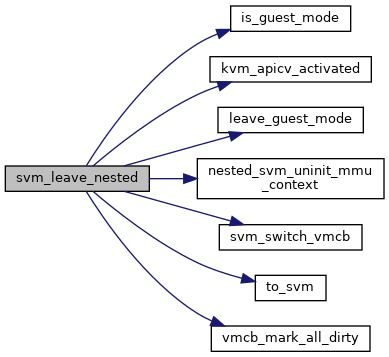

◆ svm_leave_nested()

void svm_leave_nested(struct kvm_vcpu *vcpu)

◆ svm_msrpm_offset()

u32 svm_msrpm_offset(u32 msr)
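svm_msrpm_offset() maps an MSR number to the index of the u32 in the permission map that holds its bits, returning MSR_INVALID for MSRs outside the covered ranges. A minimal sketch, assuming the layout described in the AMD APM: three 2 KiB regions covering MSRs 0x0000_0000 to 0x0000_1fff, 0xc000_0000 to 0xc000_1fff, and 0xc001_0000 to 0xc001_1fff, with 2 bits (read, write) per MSR. The table and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define MSR_INVALID 0xffffffffU

/* First MSR of each 2 KiB region of the permission map. */
static const uint32_t sketch_msrpm_ranges[] = { 0x00000000u, 0xc0000000u, 0xc0010000u };
#define SKETCH_NUM_RANGES (sizeof(sketch_msrpm_ranges) / sizeof(sketch_msrpm_ranges[0]))
#define SKETCH_RANGE_BYTES 2048u                          /* bytes per region */
#define SKETCH_MSRS_IN_RANGE (SKETCH_RANGE_BYTES * 8 / 2) /* 2 bits per MSR */

/* Returns the u32 index holding the MSR's bits, or MSR_INVALID. */
static uint32_t sketch_msrpm_offset(uint32_t msr)
{
	for (unsigned int i = 0; i < SKETCH_NUM_RANGES; i++) {
		uint32_t base = sketch_msrpm_ranges[i];

		if (msr < base || msr - base >= SKETCH_MSRS_IN_RANGE)
			continue;

		/* 4 MSRs per byte, plus the bytes of the earlier regions ... */
		uint32_t byte = (msr - base) / 4 + i * SKETCH_RANGE_BYTES;

		/* ... then convert the byte offset into a u32 index. */
		return byte / 4;
	}
	return MSR_INVALID;
}
```

For example, EFER (0xc0000080) lands at byte 2048 + 32 = 2080, which is u32 index 520.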

◆ svm_nmi_blocked()

bool svm_nmi_blocked(struct kvm_vcpu *vcpu)

◆ svm_set_cr0()

| void svm_set_cr0 | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr0 | ||

| ) |

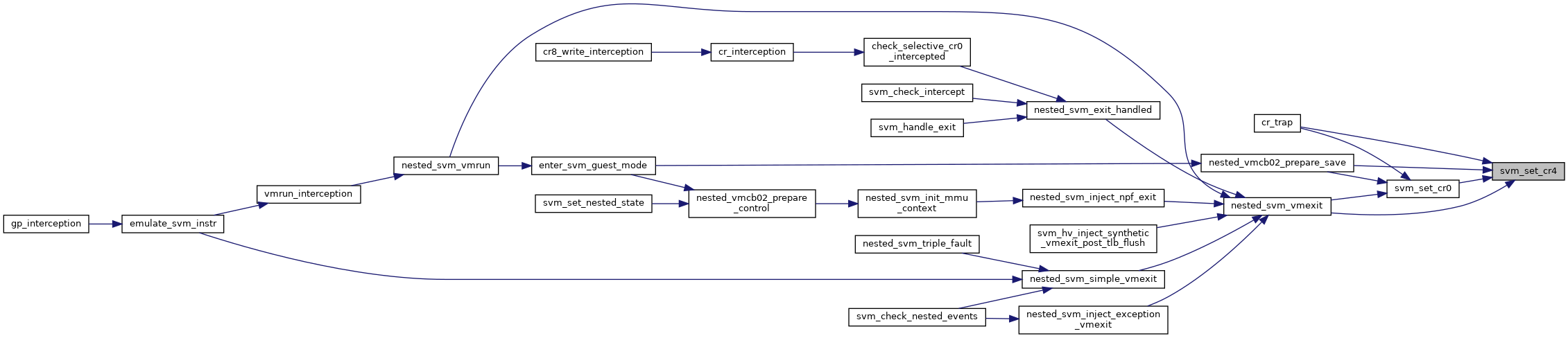

◆ svm_set_cr4()

| void svm_set_cr4 | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr4 | ||

| ) |

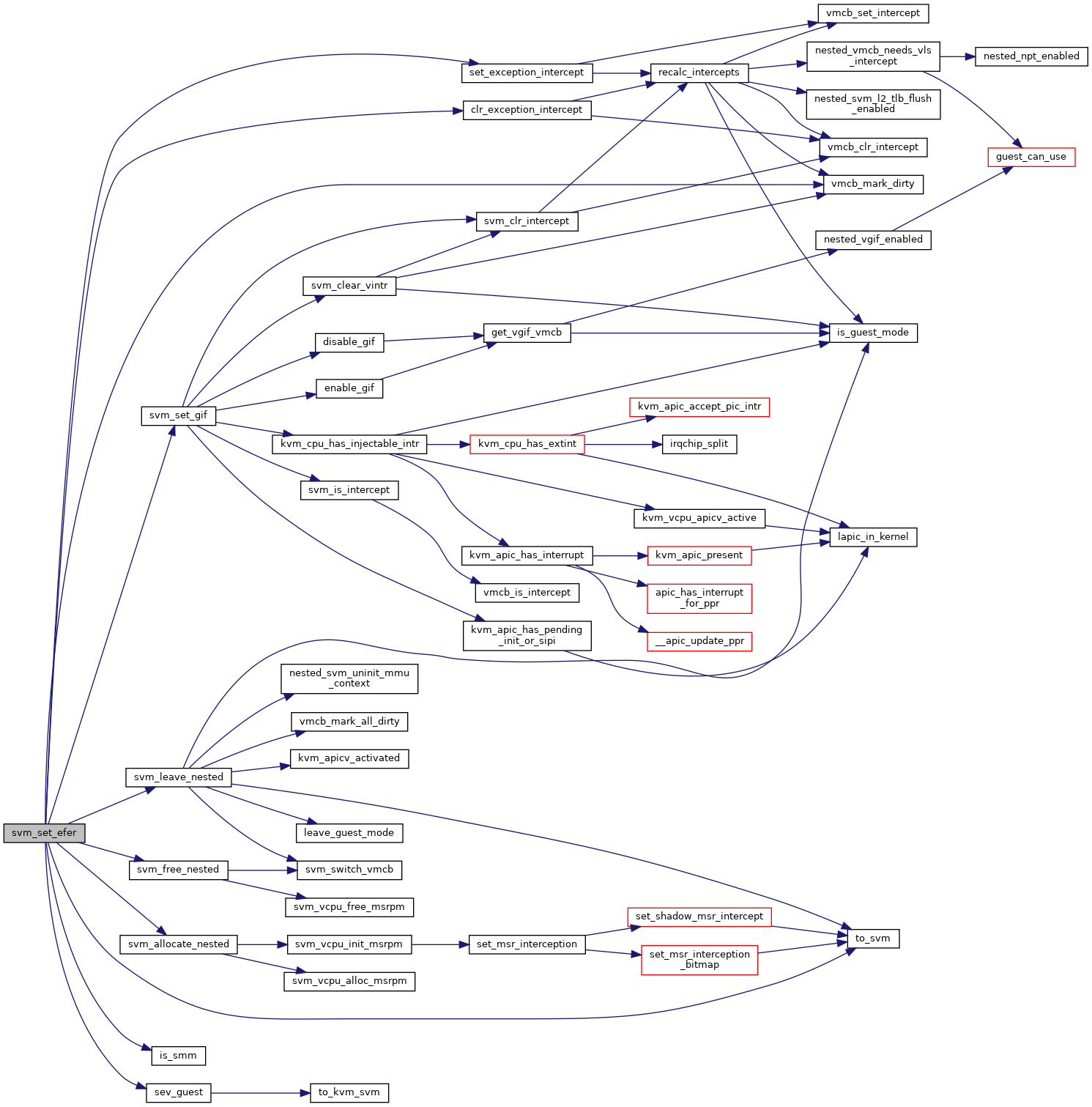

◆ svm_set_efer()

| int svm_set_efer | ( | struct kvm_vcpu * | vcpu, |

| u64 | efer | ||

| ) |

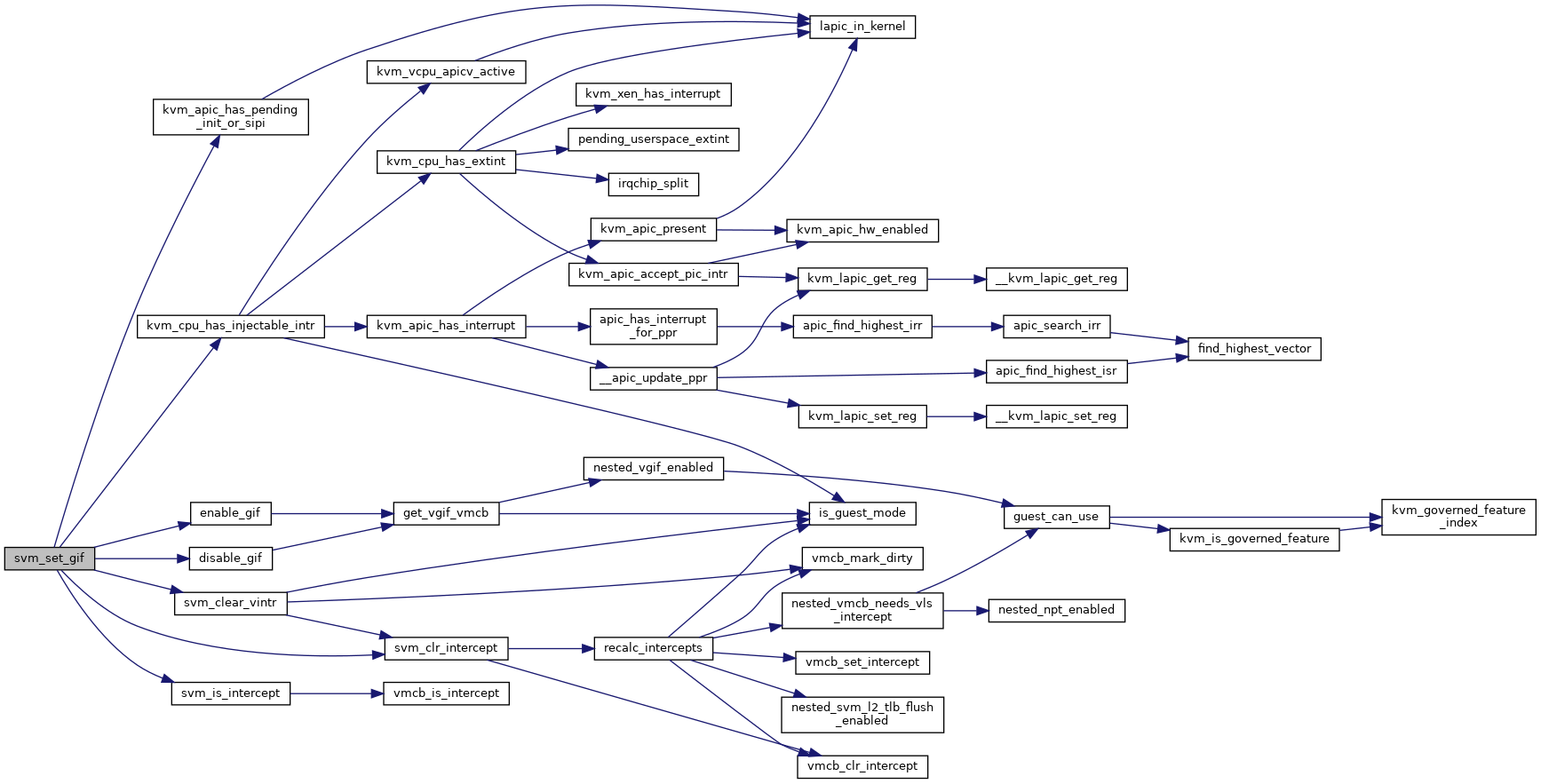

◆ svm_set_gif()

void svm_set_gif(struct vcpu_svm *svm, bool value)

Definition at line 2406 of file svm.c.

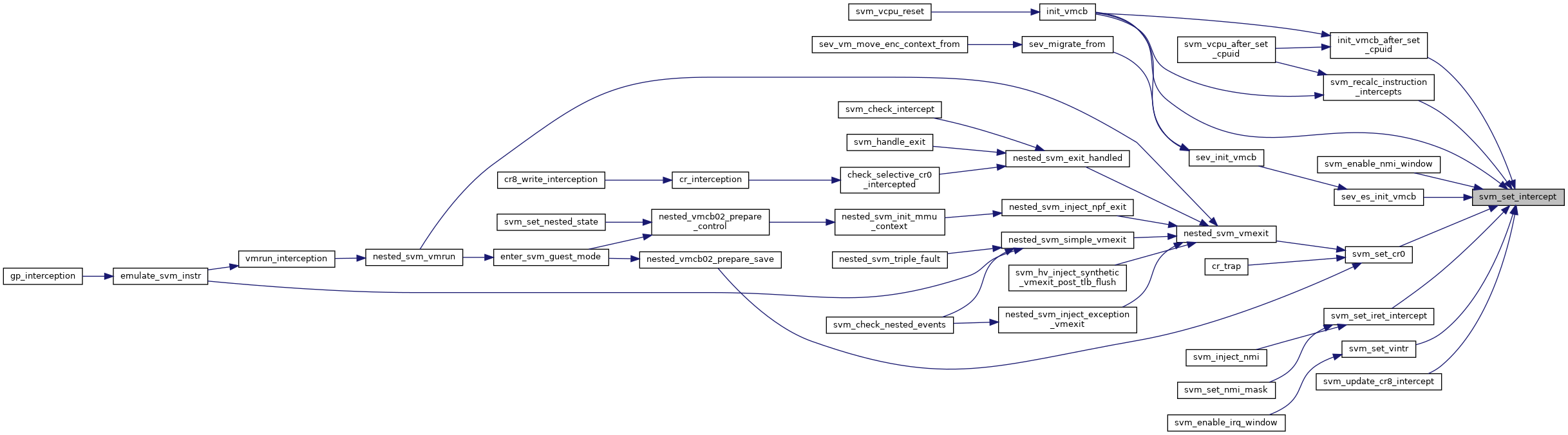

◆ svm_set_intercept()

|

inlinestatic |

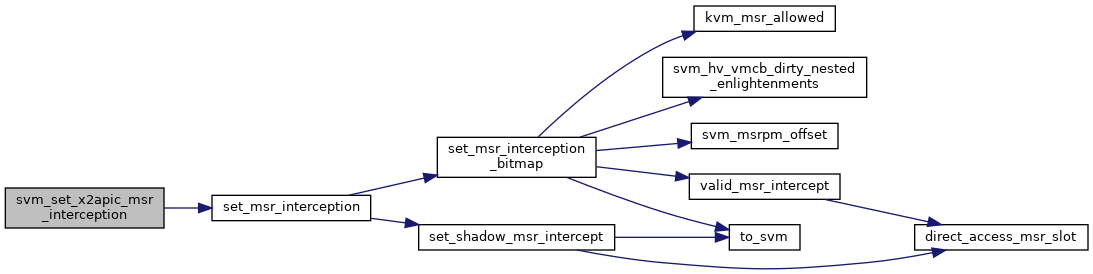

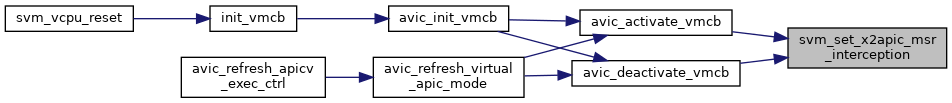

◆ svm_set_x2apic_msr_interception()

void svm_set_x2apic_msr_interception(struct vcpu_svm *svm, bool disable)

Definition at line 892 of file svm.c.

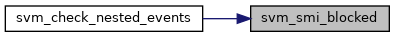

◆ svm_smi_blocked()

| bool svm_smi_blocked | ( | struct kvm_vcpu * | vcpu | ) |

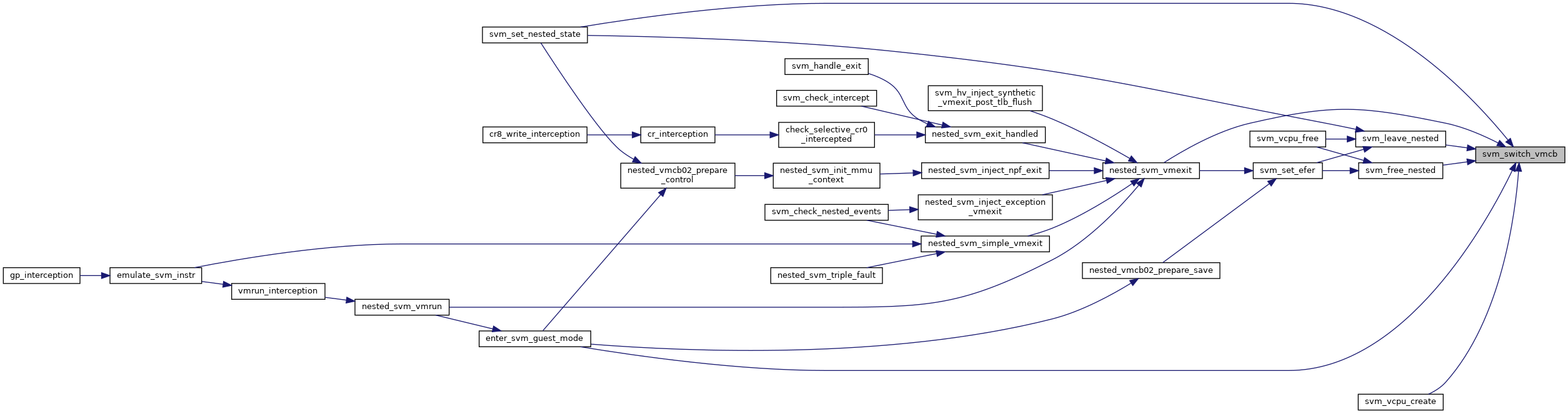

◆ svm_switch_vmcb()

| void svm_switch_vmcb | ( | struct vcpu_svm * | svm, |

| struct kvm_vmcb_info * | target_vmcb | ||

| ) |

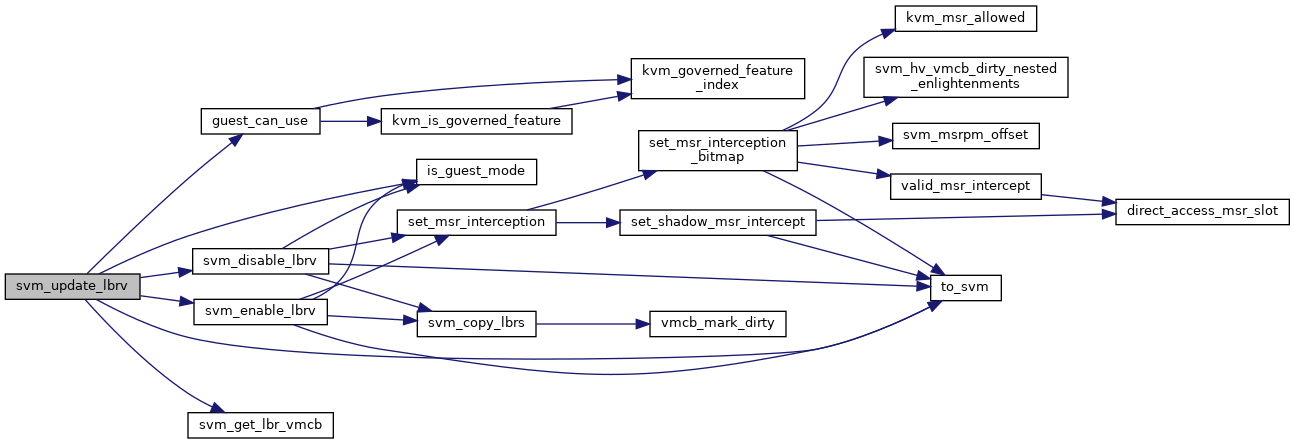

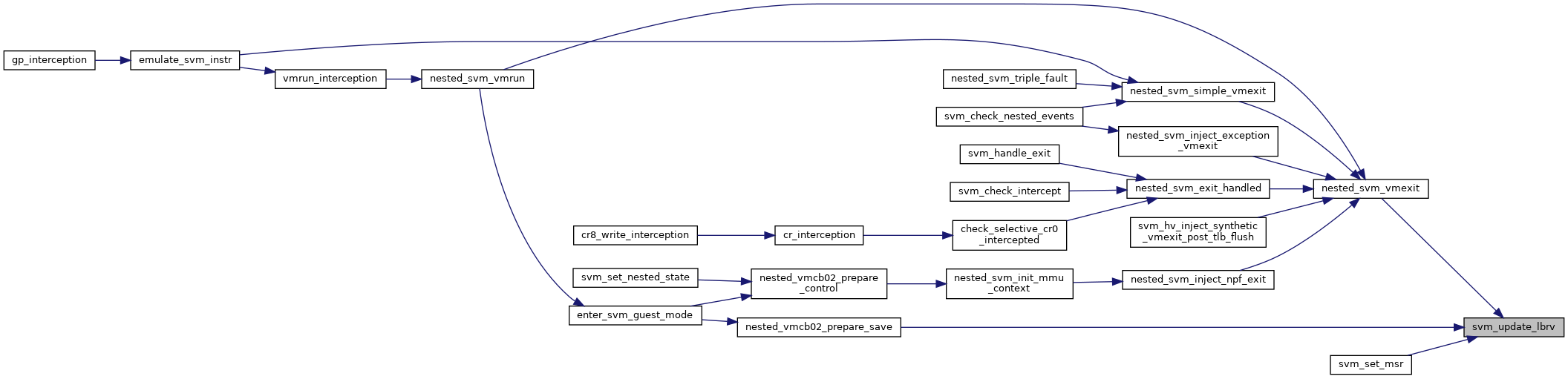

◆ svm_update_lbrv()

void svm_update_lbrv(struct kvm_vcpu *vcpu)

◆ svm_vcpu_alloc_msrpm()

u32 *svm_vcpu_alloc_msrpm(void)

◆ svm_vcpu_free_msrpm()

void svm_vcpu_free_msrpm(u32 *msrpm)

◆ svm_vcpu_init_msrpm()

| void svm_vcpu_init_msrpm | ( | struct kvm_vcpu * | vcpu, |

| u32 * | msrpm | ||

| ) |

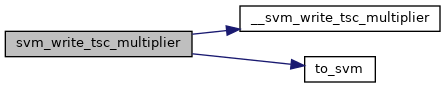

◆ svm_write_tsc_multiplier()

void svm_write_tsc_multiplier(struct kvm_vcpu *vcpu)

◆ to_kvm_svm()

static __always_inline struct kvm_svm *to_kvm_svm(struct kvm *kvm)

◆ to_svm()

static __always_inline struct vcpu_svm *to_svm(struct kvm_vcpu *vcpu)

◆ vmcb12_is_intercept()

static inline bool vmcb12_is_intercept(struct vmcb_ctrl_area_cached *control, u32 bit)

◆ vmcb_clr_intercept()

static inline void vmcb_clr_intercept(struct vmcb_control_area *control, u32 bit)

◆ vmcb_is_dirty()

static inline bool vmcb_is_dirty(struct vmcb *vmcb, int bit)

◆ vmcb_is_intercept()

static inline bool vmcb_is_intercept(struct vmcb_control_area *control, u32 bit)

◆ vmcb_mark_all_clean()

static inline void vmcb_mark_all_clean(struct vmcb *vmcb)

◆ vmcb_mark_all_dirty()

static inline void vmcb_mark_all_dirty(struct vmcb *vmcb)

◆ vmcb_mark_dirty()

static inline void vmcb_mark_dirty(struct vmcb *vmcb, int bit)

◆ vmcb_set_intercept()

static inline void vmcb_set_intercept(struct vmcb_control_area *control, u32 bit)
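vmcb_set_intercept(), vmcb_clr_intercept(), and vmcb_is_intercept() take a single intercept number and apply it to the control area's intercept words, which can be modeled as one flat bit array: the number selects word bit / 32 and bit bit % 32 within that word. A minimal sketch, assuming five 32-bit intercept words (an illustrative count, not the kernel's constant) and a hypothetical struct; the asserts are sketch-level bounds checks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_NUM_INTERCEPT_WORDS 5 /* assumption, not the kernel's value */

/* Hypothetical stand-in for the intercept words of struct vmcb_control_area. */
struct sketch_control { uint32_t intercepts[SKETCH_NUM_INTERCEPT_WORDS]; };

static void sketch_set_intercept(struct sketch_control *c, uint32_t bit)
{
	assert(bit < 32 * SKETCH_NUM_INTERCEPT_WORDS);
	c->intercepts[bit / 32] |= 1u << (bit % 32);
}

static void sketch_clr_intercept(struct sketch_control *c, uint32_t bit)
{
	assert(bit < 32 * SKETCH_NUM_INTERCEPT_WORDS);
	c->intercepts[bit / 32] &= ~(1u << (bit % 32));
}

static bool sketch_is_intercept(const struct sketch_control *c, uint32_t bit)
{
	assert(bit < 32 * SKETCH_NUM_INTERCEPT_WORDS);
	return c->intercepts[bit / 32] & (1u << (bit % 32));
}
```

For example, intercept number 70 lives in word 2 at bit 6.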

Variable Documentation

◆ __read_mostly

extern u32 msrpm_offsets[MSRPM_OFFSETS] __read_mostly