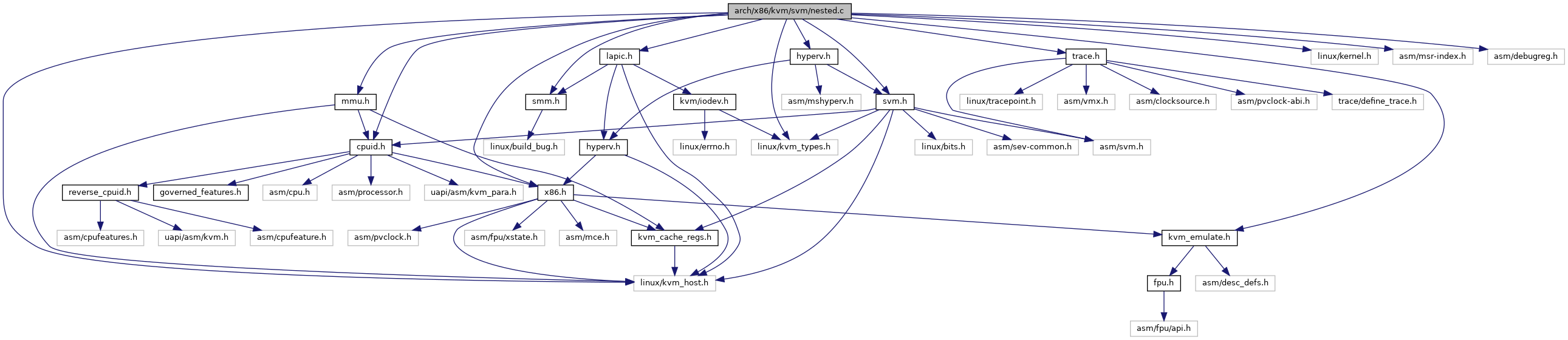

#include <linux/kvm_types.h>

#include <linux/kvm_host.h>

#include <linux/kernel.h>

#include <asm/msr-index.h>

#include <asm/debugreg.h>

#include "kvm_emulate.h"

#include "trace.h"

#include "mmu.h"

#include "x86.h"

#include "smm.h"

#include "cpuid.h"

#include "lapic.h"

#include "svm.h"

#include "hyperv.h"


Macros

| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

| #define | CC KVM_NESTED_VMENTER_CONSISTENCY_CHECK |

Functions

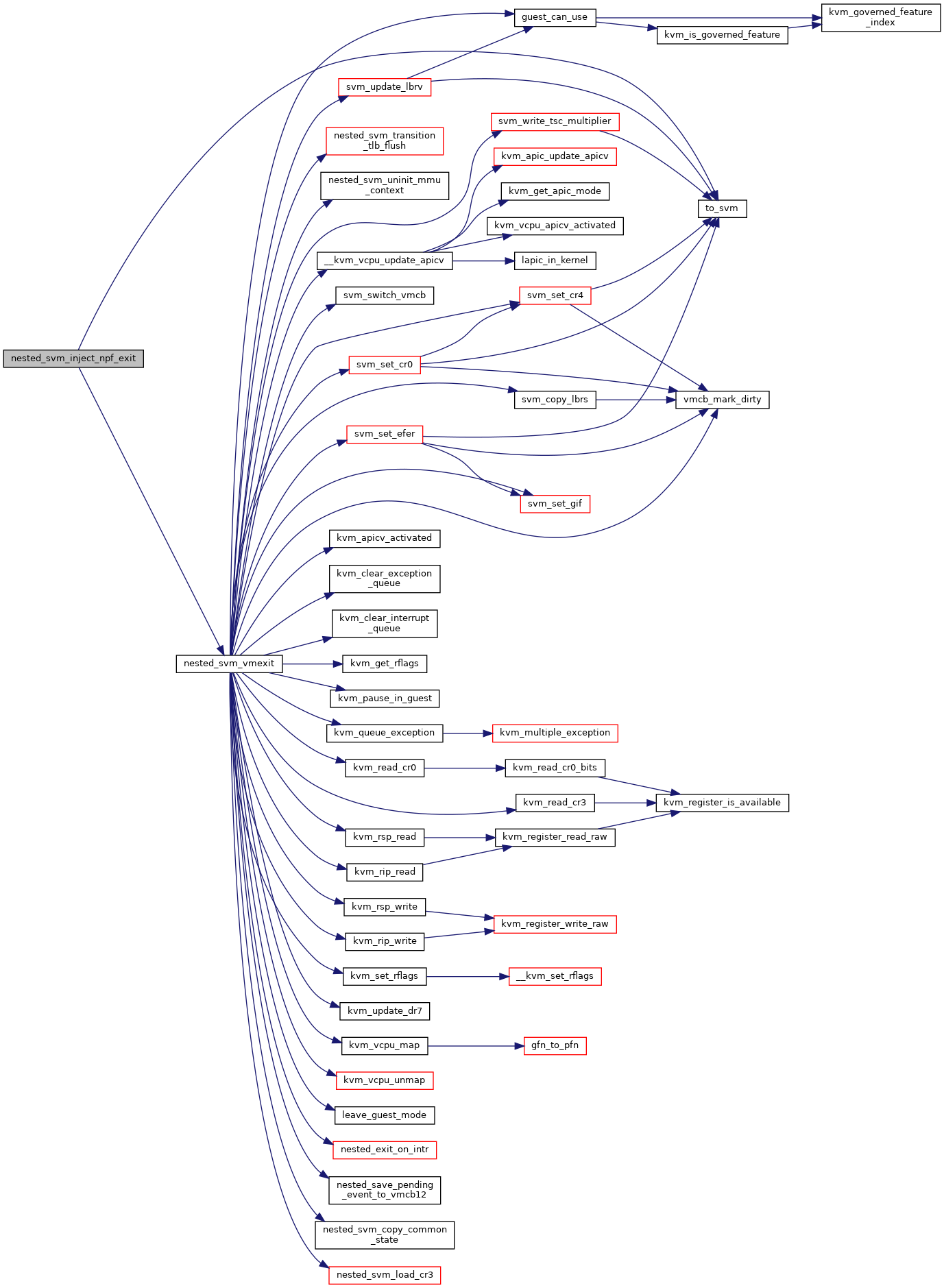

| static void | nested_svm_inject_npf_exit (struct kvm_vcpu *vcpu, struct x86_exception *fault) |

| static u64 | nested_svm_get_tdp_pdptr (struct kvm_vcpu *vcpu, int index) |

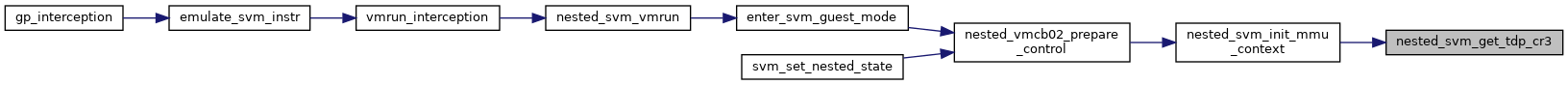

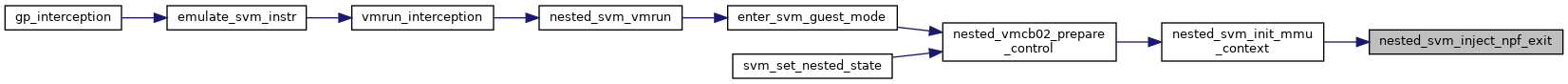

| static unsigned long | nested_svm_get_tdp_cr3 (struct kvm_vcpu *vcpu) |

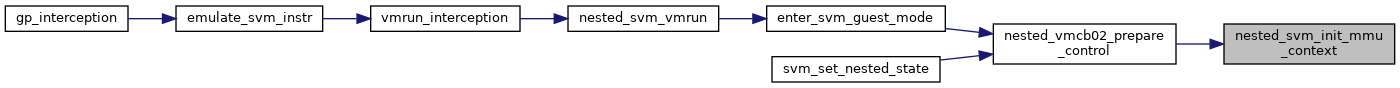

| static void | nested_svm_init_mmu_context (struct kvm_vcpu *vcpu) |

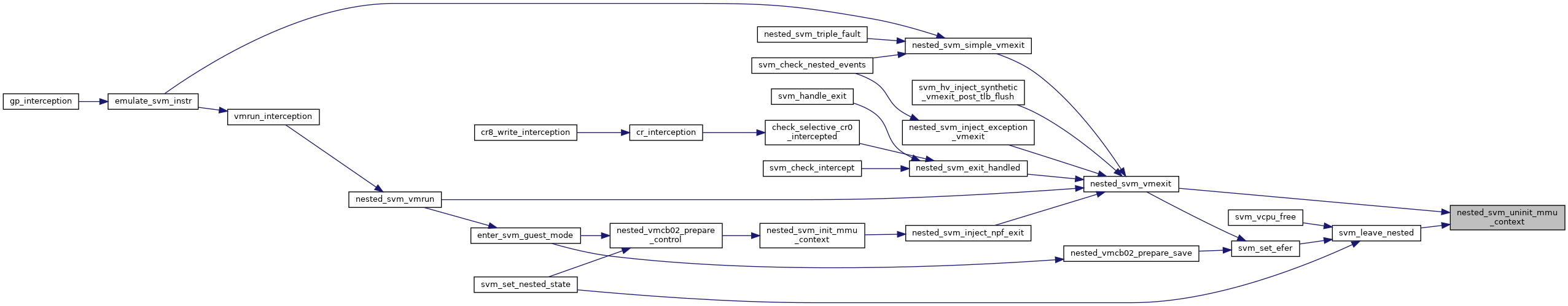

| static void | nested_svm_uninit_mmu_context (struct kvm_vcpu *vcpu) |

| static bool | nested_vmcb_needs_vls_intercept (struct vcpu_svm *svm) |

| void | recalc_intercepts (struct vcpu_svm *svm) |

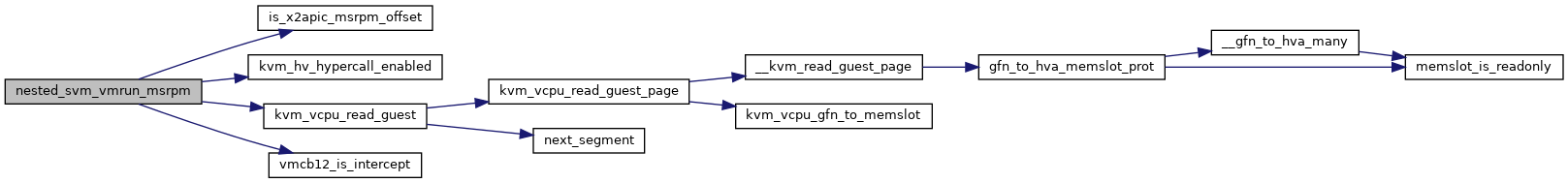

| static bool | nested_svm_vmrun_msrpm (struct vcpu_svm *svm) |

| static bool | nested_svm_check_bitmap_pa (struct kvm_vcpu *vcpu, u64 pa, u32 size) |

| static bool | __nested_vmcb_check_controls (struct kvm_vcpu *vcpu, struct vmcb_ctrl_area_cached *control) |

| static bool | __nested_vmcb_check_save (struct kvm_vcpu *vcpu, struct vmcb_save_area_cached *save) |

| static bool | nested_vmcb_check_save (struct kvm_vcpu *vcpu) |

| static bool | nested_vmcb_check_controls (struct kvm_vcpu *vcpu) |

| static void | __nested_copy_vmcb_control_to_cache (struct kvm_vcpu *vcpu, struct vmcb_ctrl_area_cached *to, struct vmcb_control_area *from) |

| void | nested_copy_vmcb_control_to_cache (struct vcpu_svm *svm, struct vmcb_control_area *control) |

| static void | __nested_copy_vmcb_save_to_cache (struct vmcb_save_area_cached *to, struct vmcb_save_area *from) |

| void | nested_copy_vmcb_save_to_cache (struct vcpu_svm *svm, struct vmcb_save_area *save) |

| void | nested_sync_control_from_vmcb02 (struct vcpu_svm *svm) |

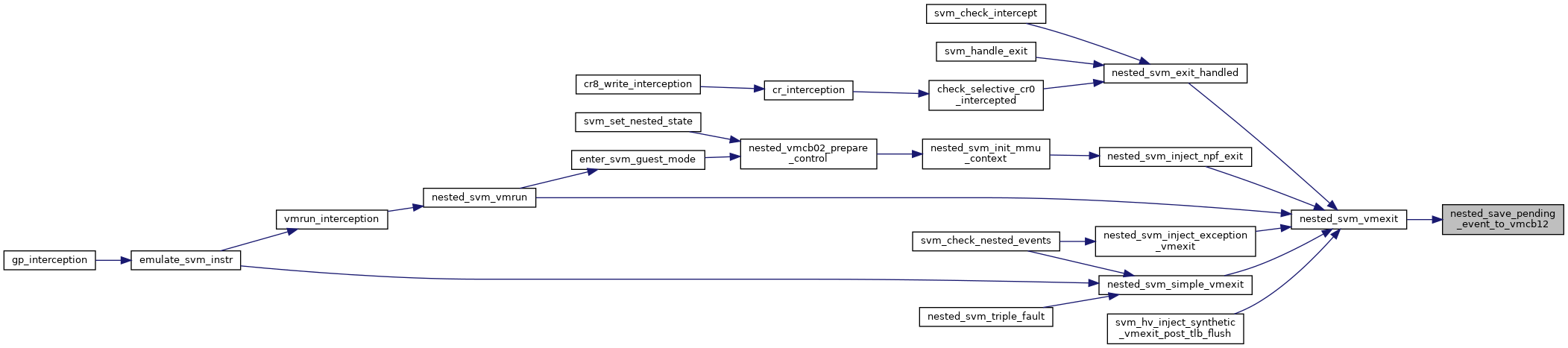

| static void | nested_save_pending_event_to_vmcb12 (struct vcpu_svm *svm, struct vmcb *vmcb12) |

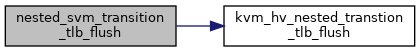

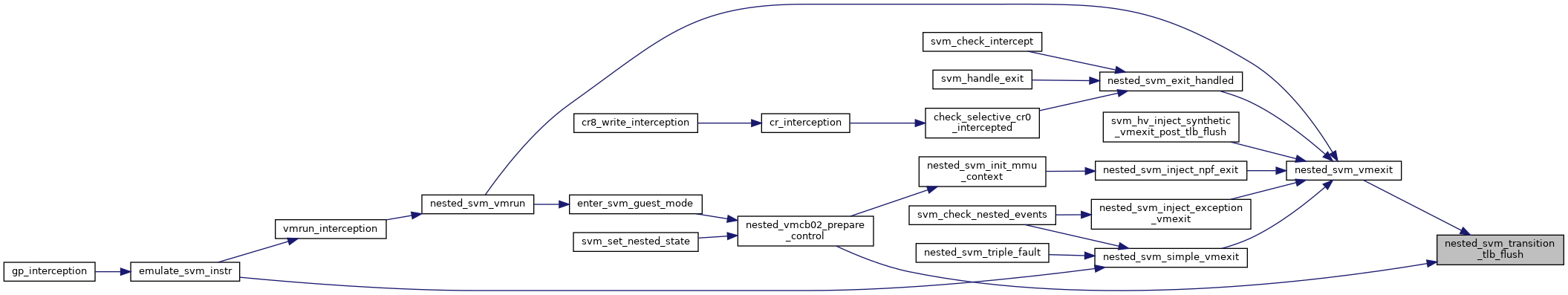

| static void | nested_svm_transition_tlb_flush (struct kvm_vcpu *vcpu) |

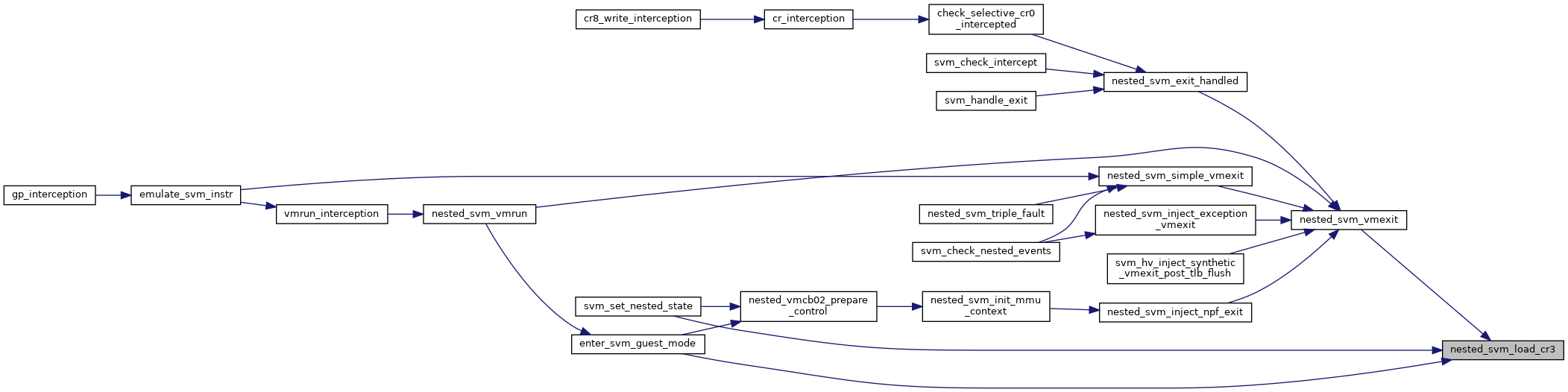

| static int | nested_svm_load_cr3 (struct kvm_vcpu *vcpu, unsigned long cr3, bool nested_npt, bool reload_pdptrs) |

| void | nested_vmcb02_compute_g_pat (struct vcpu_svm *svm) |

| static void | nested_vmcb02_prepare_save (struct vcpu_svm *svm, struct vmcb *vmcb12) |

| static bool | is_evtinj_soft (u32 evtinj) |

| static bool | is_evtinj_nmi (u32 evtinj) |

| static void | nested_vmcb02_prepare_control (struct vcpu_svm *svm, unsigned long vmcb12_rip, unsigned long vmcb12_csbase) |

| static void | nested_svm_copy_common_state (struct vmcb *from_vmcb, struct vmcb *to_vmcb) |

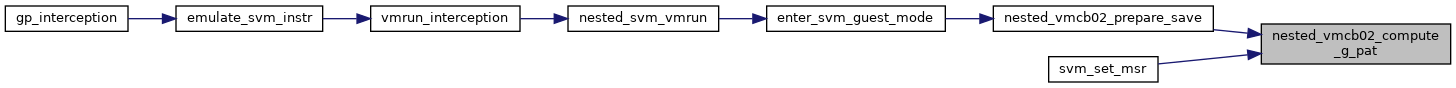

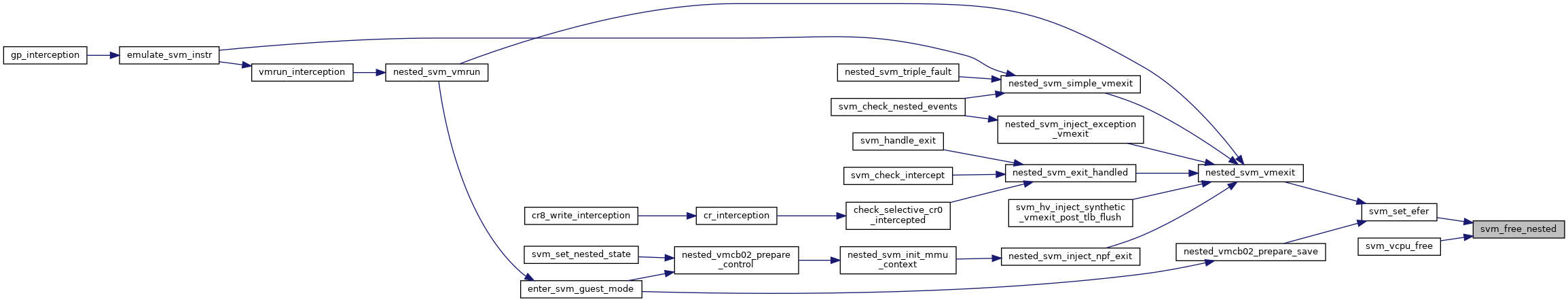

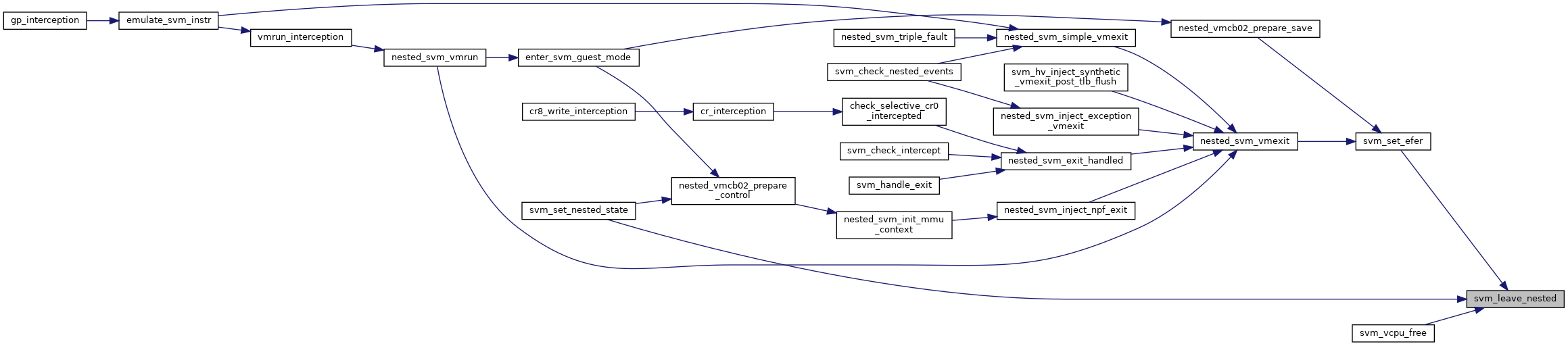

| int | enter_svm_guest_mode (struct kvm_vcpu *vcpu, u64 vmcb12_gpa, struct vmcb *vmcb12, bool from_vmrun) |

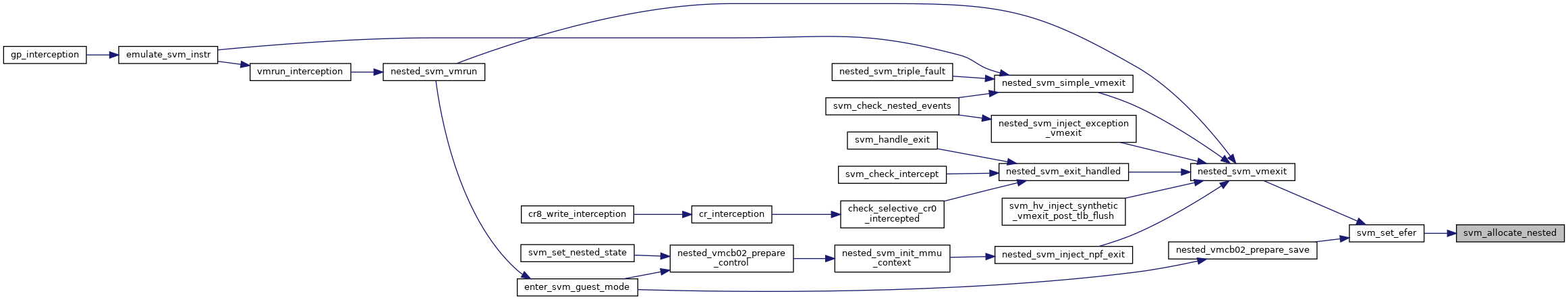

| int | nested_svm_vmrun (struct kvm_vcpu *vcpu) |

| void | svm_copy_vmrun_state (struct vmcb_save_area *to_save, struct vmcb_save_area *from_save) |

| void | svm_copy_vmloadsave_state (struct vmcb *to_vmcb, struct vmcb *from_vmcb) |

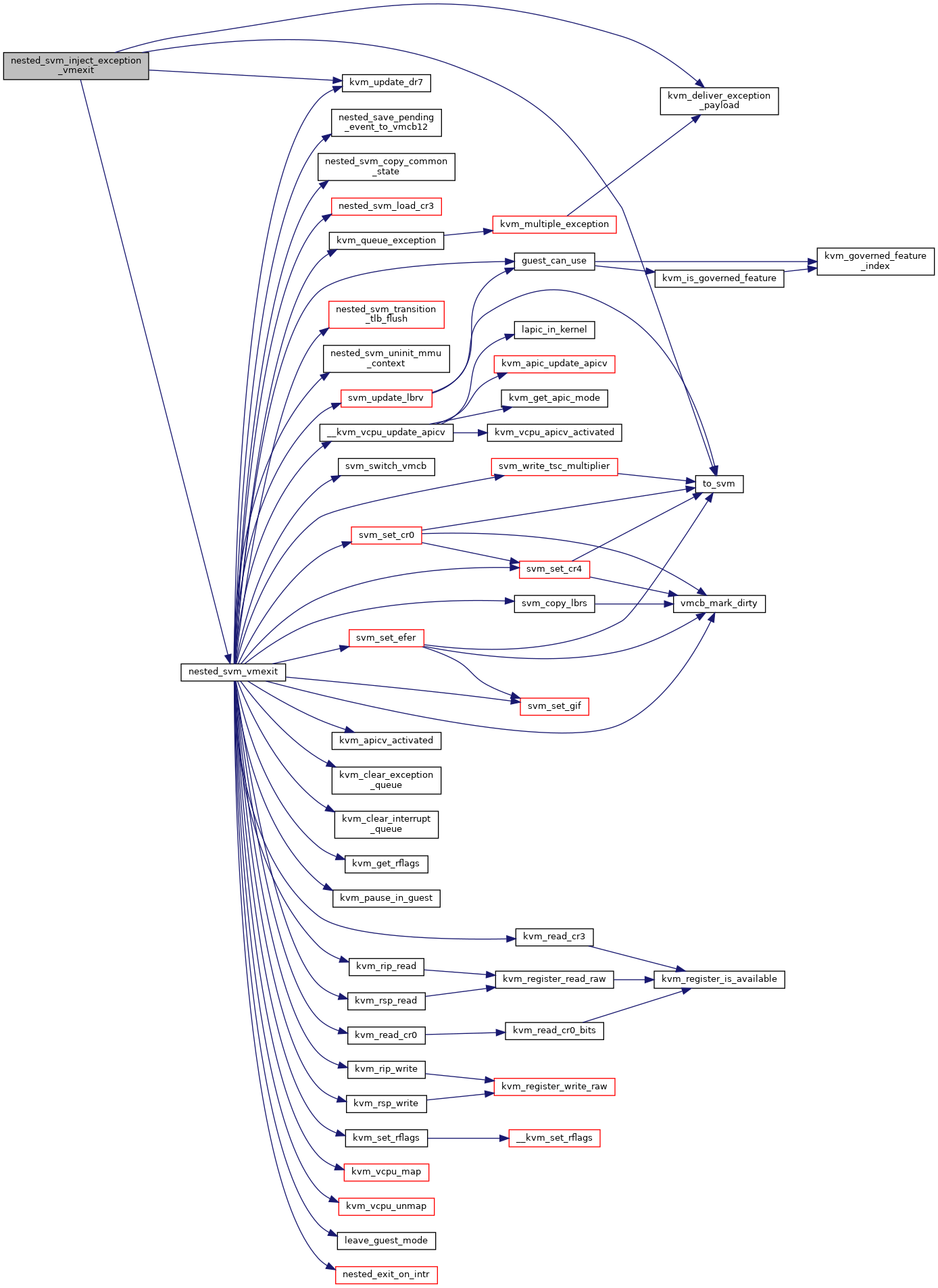

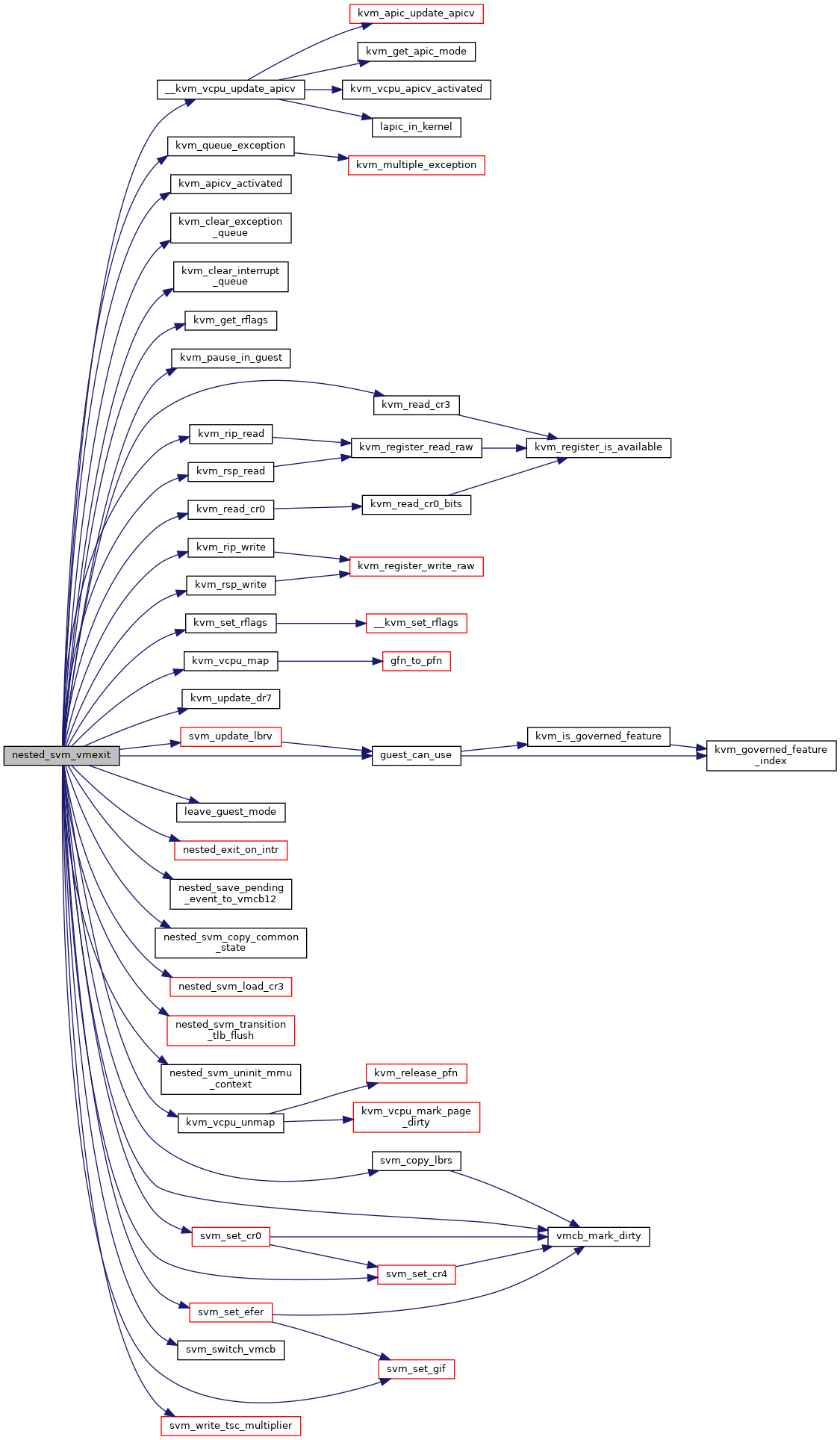

| int | nested_svm_vmexit (struct vcpu_svm *svm) |

| static void | nested_svm_triple_fault (struct kvm_vcpu *vcpu) |

| int | svm_allocate_nested (struct vcpu_svm *svm) |

| void | svm_free_nested (struct vcpu_svm *svm) |

| void | svm_leave_nested (struct kvm_vcpu *vcpu) |

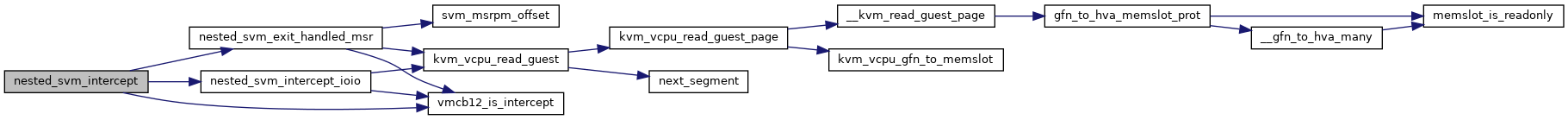

| static int | nested_svm_exit_handled_msr (struct vcpu_svm *svm) |

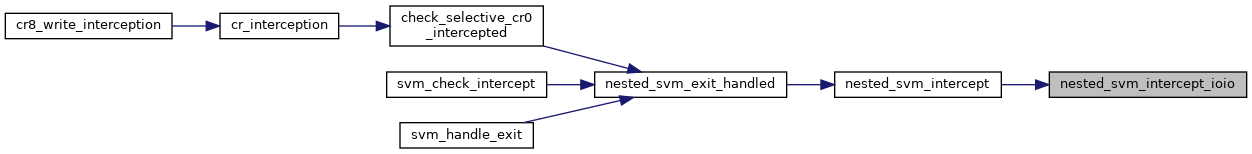

| static int | nested_svm_intercept_ioio (struct vcpu_svm *svm) |

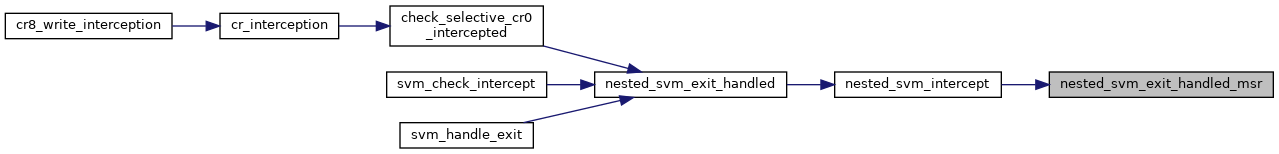

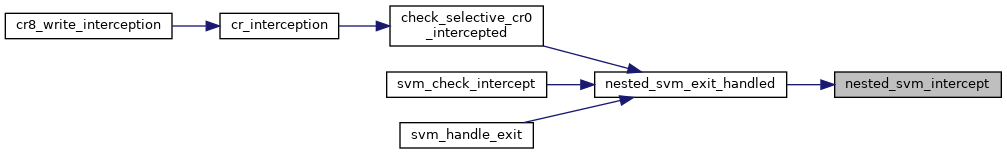

| static int | nested_svm_intercept (struct vcpu_svm *svm) |

| int | nested_svm_exit_handled (struct vcpu_svm *svm) |

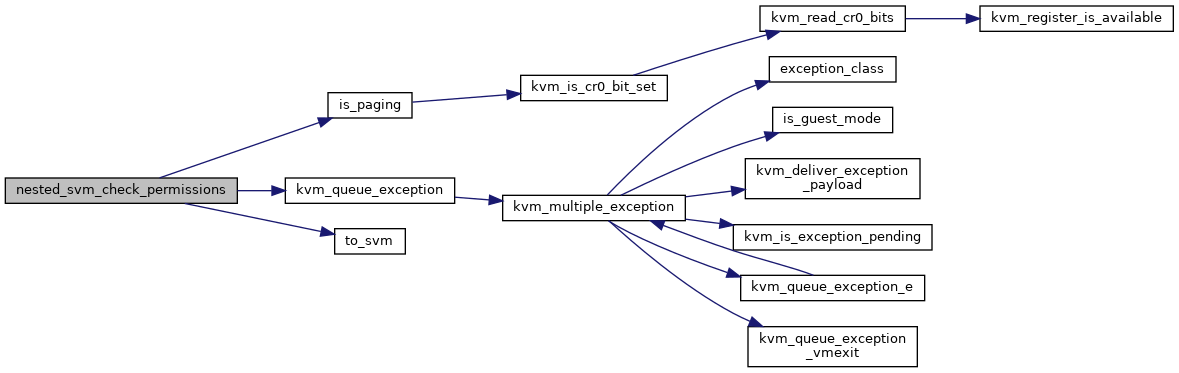

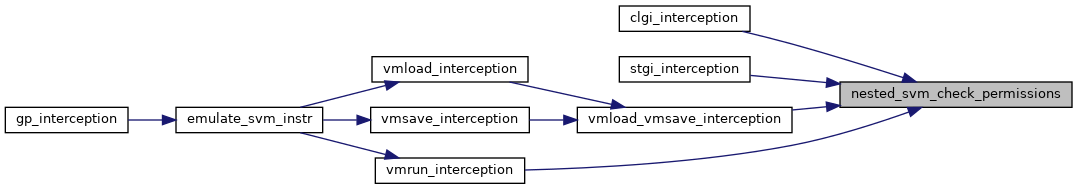

| int | nested_svm_check_permissions (struct kvm_vcpu *vcpu) |

| static bool | nested_svm_is_exception_vmexit (struct kvm_vcpu *vcpu, u8 vector, u32 error_code) |

| static void | nested_svm_inject_exception_vmexit (struct kvm_vcpu *vcpu) |

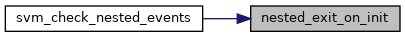

| static bool | nested_exit_on_init (struct vcpu_svm *svm) |

| static int | svm_check_nested_events (struct kvm_vcpu *vcpu) |

| int | nested_svm_exit_special (struct vcpu_svm *svm) |

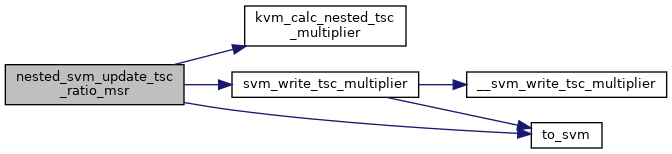

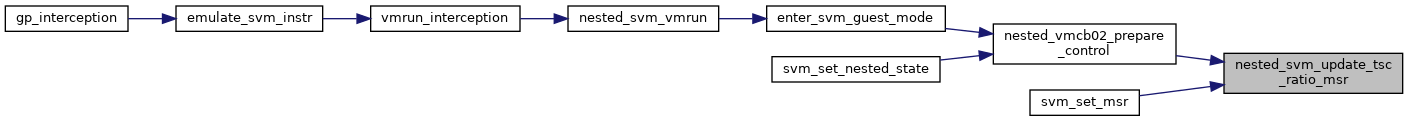

| void | nested_svm_update_tsc_ratio_msr (struct kvm_vcpu *vcpu) |

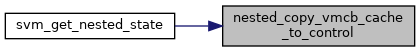

| static void | nested_copy_vmcb_cache_to_control (struct vmcb_control_area *dst, struct vmcb_ctrl_area_cached *from) |

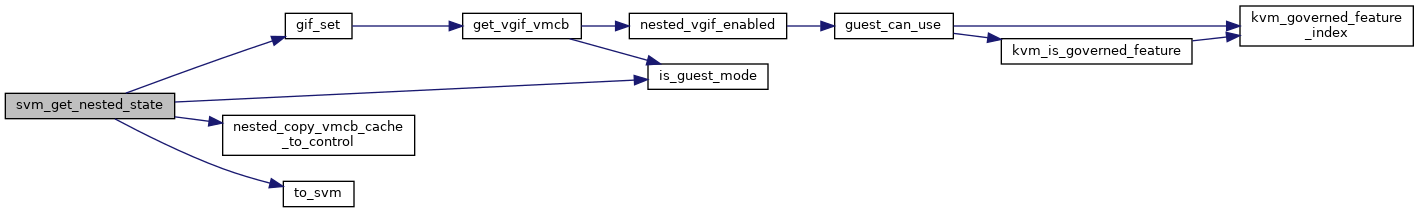

| static int | svm_get_nested_state (struct kvm_vcpu *vcpu, struct kvm_nested_state __user *user_kvm_nested_state, u32 user_data_size) |

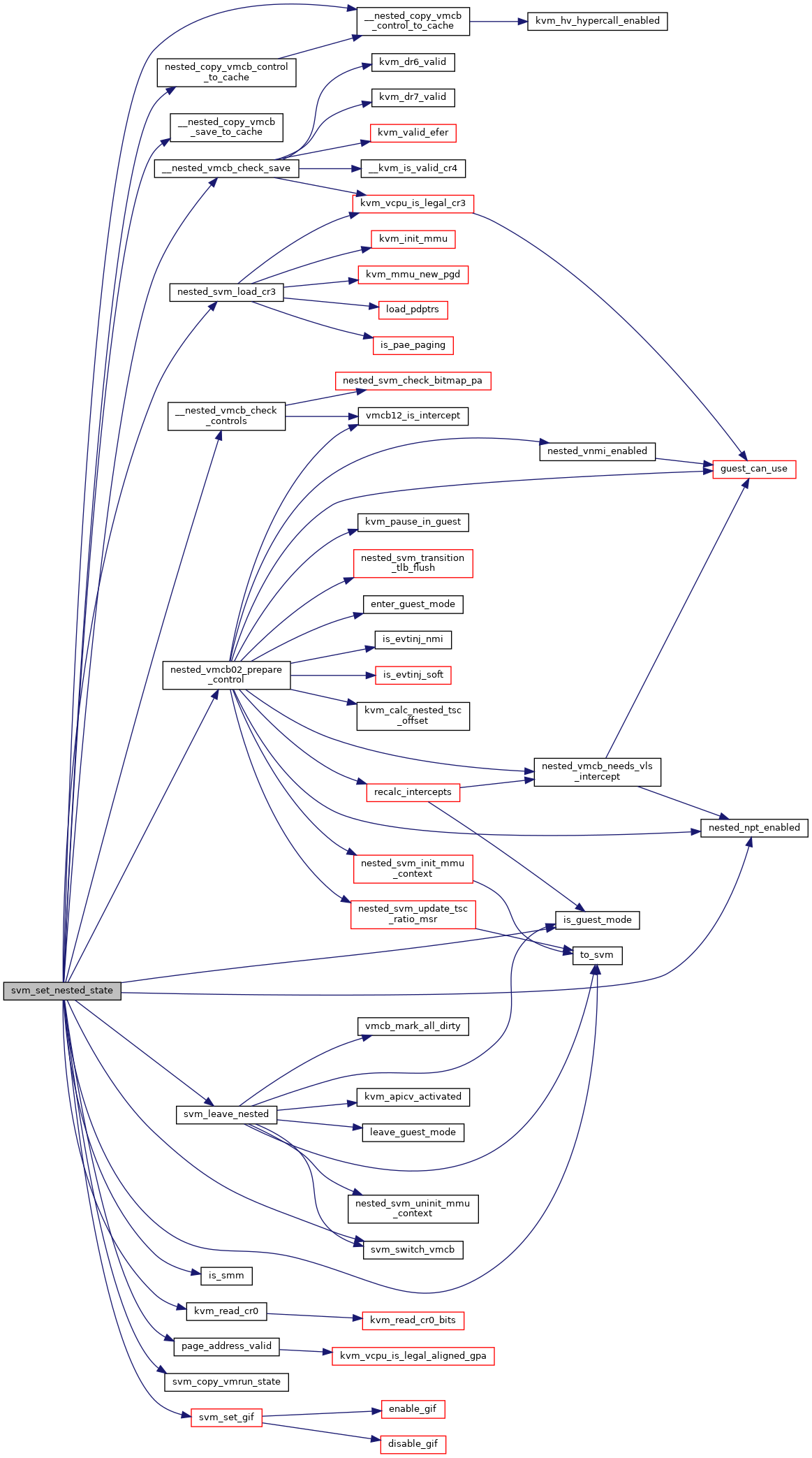

| static int | svm_set_nested_state (struct kvm_vcpu *vcpu, struct kvm_nested_state __user *user_kvm_nested_state, struct kvm_nested_state *kvm_state) |

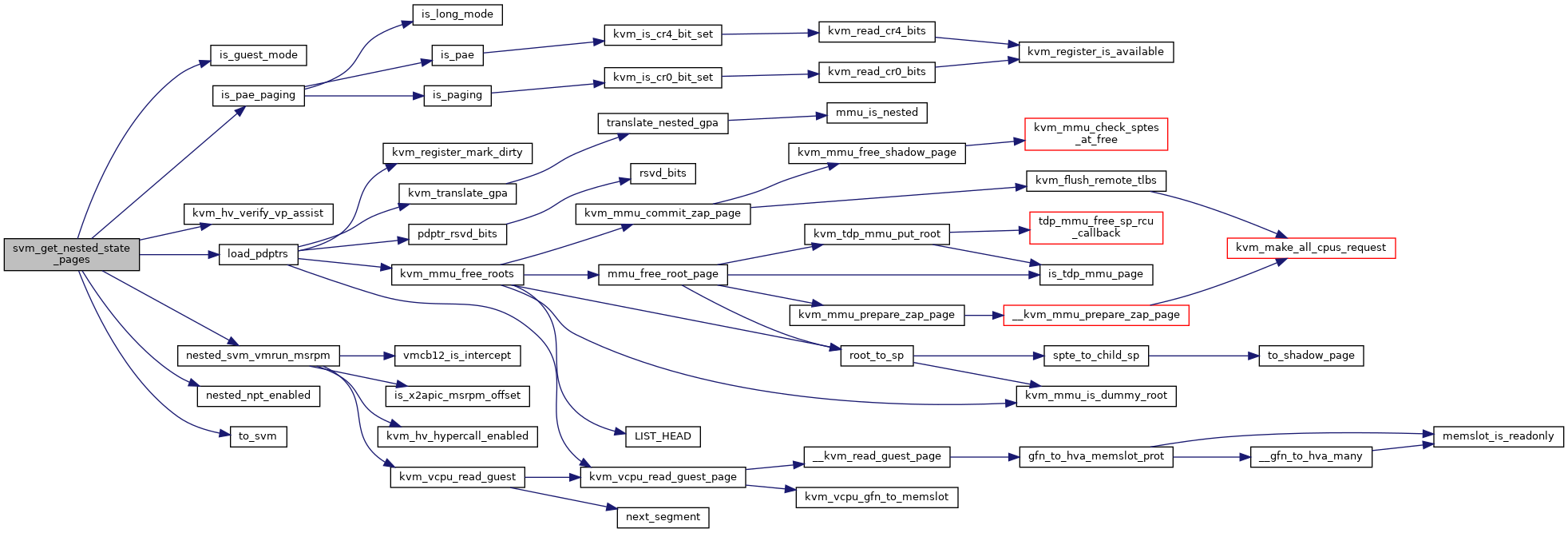

| static bool | svm_get_nested_state_pages (struct kvm_vcpu *vcpu) |

Variables

| struct kvm_x86_nested_ops | svm_nested_ops |

Macro Definition Documentation

◆ CC

| #define CC KVM_NESTED_VMENTER_CONSISTENCY_CHECK |
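`CC` is only a short alias, so the following userspace sketch may help show how such a consistency-check wrapper is typically used. Everything here is an assumption for illustration: `cc_report()`, `struct demo_controls`, and `demo_check_controls()` are hypothetical, and the stand-in for `KVM_NESTED_VMENTER_CONSISTENCY_CHECK` simply reports the stringified condition and returns it unchanged. The two rules shown (VMRUN must be intercepted, ASID must be nonzero) are taken from AMD's documented VMRUN checks, not from this file.

```c
#include <stdbool.h>
#include <stdio.h>

/*
 * Hypothetical stand-in for the reporting done by the real macro:
 * print the stringified condition when it is true (a failed check).
 */
static bool cc_report(bool failed, const char *expr)
{
	if (failed)
		fprintf(stderr, "consistency check failed: %s\n", expr);
	return failed;
}

/* Assumed userspace replacement for the kernel macro. */
#define KVM_NESTED_VMENTER_CONSISTENCY_CHECK(cond) cc_report((cond), #cond)
#define CC KVM_NESTED_VMENTER_CONSISTENCY_CHECK

/* Hypothetical, heavily simplified control area. */
struct demo_controls {
	unsigned int asid;
	bool intercept_vmrun;
};

/* Returns true only when every check passes. */
static bool demo_check_controls(const struct demo_controls *c)
{
	if (CC(!c->intercept_vmrun))
		return false;
	if (CC(c->asid == 0))
		return false;
	return true;
}
```

Writing each rule as `if (CC(...)) return false;` keeps the failing condition visible in the report without a separate message string per check.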

◆ pr_fmt

| #define pr_fmt(fmt) KBUILD_MODNAME ": " fmt |
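The `pr_fmt` macro relies on adjacent string literals being concatenated at compile time, so every format string gains a module-name prefix. A minimal userspace sketch, assuming `KBUILD_MODNAME` expands to `"kvm_amd"` (normally the build system supplies it) and using the hypothetical helpers `demo_pr()` and `demo_format()` in place of the kernel's `pr_*()` family:

```c
#include <stdio.h>

/* Assumption: the build system normally defines KBUILD_MODNAME. */
#ifndef KBUILD_MODNAME
#define KBUILD_MODNAME "kvm_amd"
#endif

#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

/* Hypothetical stand-in for a pr_*() helper; formats into a buffer. */
#define demo_pr(buf, size, fmt, ...) \
	snprintf((buf), (size), pr_fmt(fmt), __VA_ARGS__)

/* Formats "value=<n>" with the module prefix applied by pr_fmt(). */
static int demo_format(char *buf, size_t size, int value)
{
	return demo_pr(buf, size, "value=%d", value);
}
```

With the assumed module name, `demo_format(buf, sizeof(buf), 42)` leaves `kvm_amd: value=42` in `buf`.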

Function Documentation

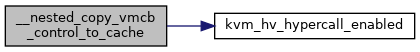

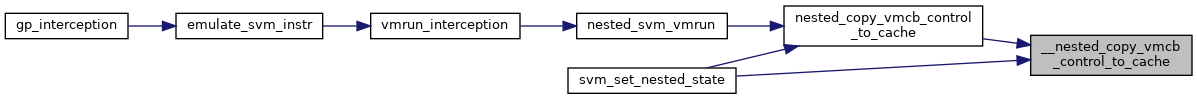

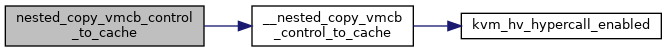

◆ __nested_copy_vmcb_control_to_cache()

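| static void __nested_copy_vmcb_control_to_cache | ( | struct kvm_vcpu * | vcpu, |

| struct vmcb_ctrl_area_cached * | to, | ||

| struct vmcb_control_area * | from | ||

| ) |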

Definition at line 336 of file nested.c.

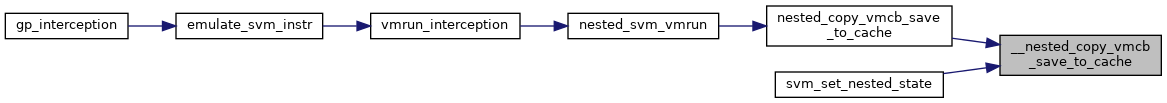

◆ __nested_copy_vmcb_save_to_cache()

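| static void __nested_copy_vmcb_save_to_cache | ( | struct vmcb_save_area_cached * | to, |

| struct vmcb_save_area * | from | ||

| ) |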

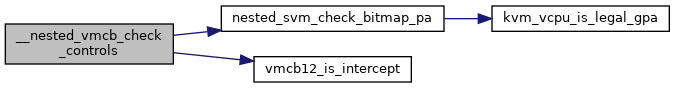

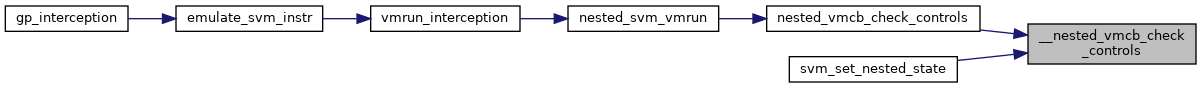

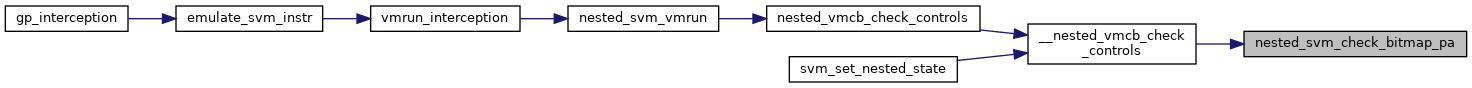

◆ __nested_vmcb_check_controls()

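| static bool __nested_vmcb_check_controls | ( | struct kvm_vcpu * | vcpu, |

| struct vmcb_ctrl_area_cached * | control | ||

| ) |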

Definition at line 256 of file nested.c.

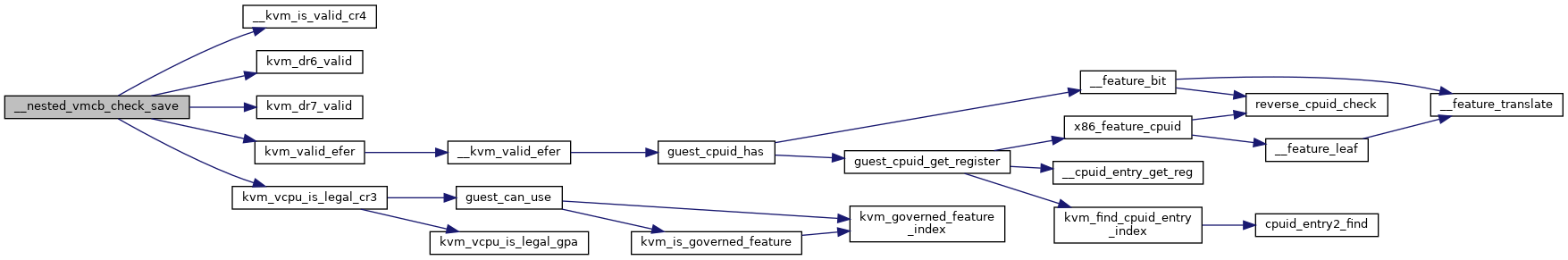

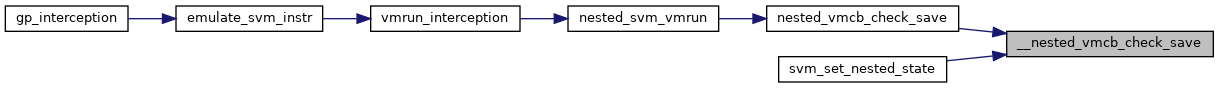

◆ __nested_vmcb_check_save()

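| static bool __nested_vmcb_check_save | ( | struct kvm_vcpu * | vcpu, |

| struct vmcb_save_area_cached * | save | ||

| ) |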

Definition at line 284 of file nested.c.

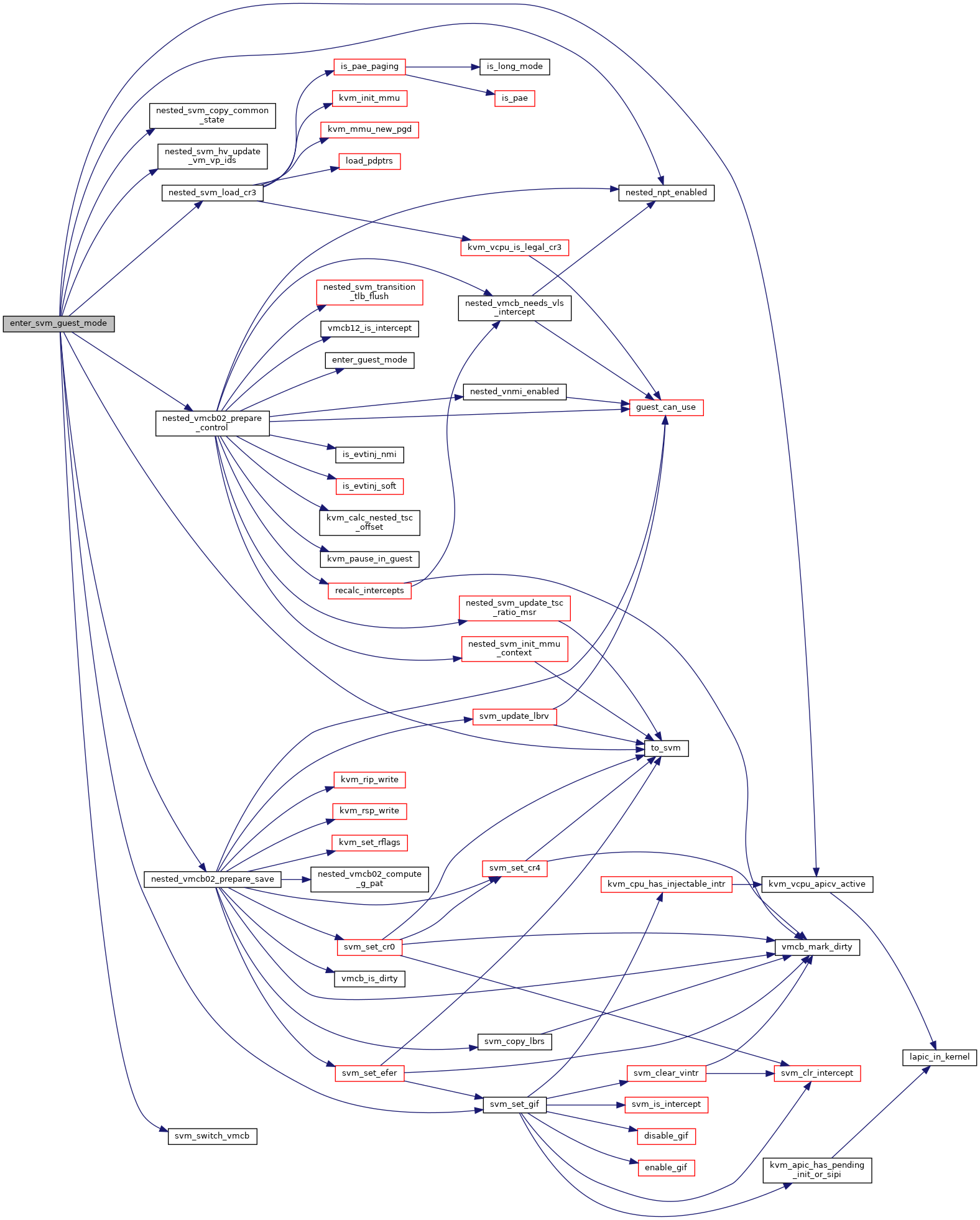

◆ enter_svm_guest_mode()

| int enter_svm_guest_mode | ( | struct kvm_vcpu * | vcpu, |

| u64 | vmcb12_gpa, | ||

| struct vmcb * | vmcb12, | ||

| bool | from_vmrun | ||

| ) |

Definition at line 785 of file nested.c.

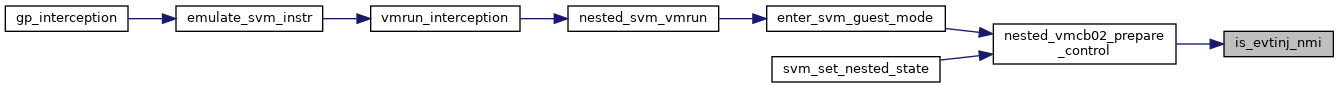

◆ is_evtinj_nmi()

| static bool is_evtinj_nmi | ( | u32 | evtinj | ) |

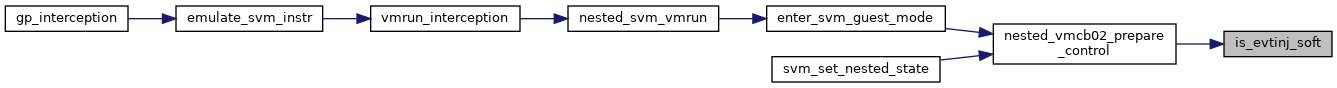

◆ is_evtinj_soft()

| static inline bool is_evtinj_soft | ( | u32 | evtinj | ) |
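Both `is_evtinj_soft()` and `is_evtinj_nmi()` take a raw `u32 evtinj` value, so a sketch of how such predicates can decode the SVM EVENTINJ field may be useful. This is an illustration under stated assumptions, not the code from `nested.c`: the bit layout (vector in bits 7:0, type in bits 10:8, valid in bit 31) follows the AMD architecture manual, the `DEMO_` names are hypothetical, and treating #BP (3) and #OF (4) as software exceptions is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>

/* EVENTINJ layout per the AMD manual; the DEMO_ names are hypothetical. */
#define DEMO_EVTINJ_VEC_MASK   0xffu
#define DEMO_EVTINJ_TYPE_MASK  (7u << 8)
#define DEMO_EVTINJ_TYPE_NMI   (2u << 8)
#define DEMO_EVTINJ_TYPE_EXEPT (3u << 8)
#define DEMO_EVTINJ_TYPE_SOFT  (4u << 8)
#define DEMO_EVTINJ_VALID      (1u << 31)

/* True when a valid event of type NMI is queued for injection. */
static bool demo_is_evtinj_nmi(uint32_t evtinj)
{
	if (!(evtinj & DEMO_EVTINJ_VALID))
		return false;
	return (evtinj & DEMO_EVTINJ_TYPE_MASK) == DEMO_EVTINJ_TYPE_NMI;
}

/* True for a valid software interrupt or software exception. */
static bool demo_is_evtinj_soft(uint32_t evtinj)
{
	uint32_t type = evtinj & DEMO_EVTINJ_TYPE_MASK;
	uint8_t vector = evtinj & DEMO_EVTINJ_VEC_MASK;

	if (!(evtinj & DEMO_EVTINJ_VALID))
		return false;
	if (type == DEMO_EVTINJ_TYPE_SOFT)
		return true;
	/* Assumption: only #BP (3) and #OF (4) are software exceptions. */
	return type == DEMO_EVTINJ_TYPE_EXEPT && (vector == 3 || vector == 4);
}
```

Checking the valid bit first means stale type or vector bits in an unused field are never misread as a pending event.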

◆ nested_copy_vmcb_cache_to_control()

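| static void nested_copy_vmcb_cache_to_control | ( | struct vmcb_control_area * | dst, |

| struct vmcb_ctrl_area_cached * | from | ||

| ) |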

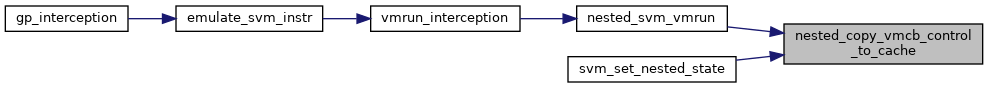

◆ nested_copy_vmcb_control_to_cache()

| void nested_copy_vmcb_control_to_cache | ( | struct vcpu_svm * | svm, |

| struct vmcb_control_area * | control | ||

| ) |

Definition at line 382 of file nested.c.

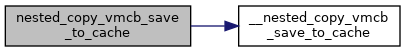

◆ nested_copy_vmcb_save_to_cache()

| void nested_copy_vmcb_save_to_cache | ( | struct vcpu_svm * | svm, |

| struct vmcb_save_area * | save | ||

| ) |

Definition at line 404 of file nested.c.

◆ nested_exit_on_init()

| static inline bool nested_exit_on_init | ( | struct vcpu_svm * | svm | ) |

◆ nested_save_pending_event_to_vmcb12()

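| static void nested_save_pending_event_to_vmcb12 | ( | struct vcpu_svm * | svm, |

| struct vmcb * | vmcb12 | ||

| ) |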

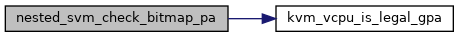

◆ nested_svm_check_bitmap_pa()

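| static bool nested_svm_check_bitmap_pa | ( | struct kvm_vcpu * | vcpu, |

| u64 | pa, | ||

| u32 | size | ||

| ) |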

Definition at line 248 of file nested.c.
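The signature of `nested_svm_check_bitmap_pa()` (a physical address plus a size, returning `bool`) suggests a range check on a permission bitmap. The sketch below is one plausible reading and not the function's confirmed body: it assumes the address is rounded up to a 4 KiB page boundary and that both the first and last byte must fit in the guest's physical address width. `demo_is_legal_gpa()`, `demo_check_bitmap_pa()`, and the `maxphyaddr` parameter are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define DEMO_PAGE_SIZE 4096ull

/* Hypothetical legality test: no bits set at or above maxphyaddr. */
static bool demo_is_legal_gpa(uint64_t gpa, unsigned int maxphyaddr)
{
	return maxphyaddr >= 64 || (gpa >> maxphyaddr) == 0;
}

/* Checks that [aligned pa, aligned pa + size - 1] is addressable. */
static bool demo_check_bitmap_pa(uint64_t pa, uint32_t size,
				 unsigned int maxphyaddr)
{
	/* Assumption: round up to the next page boundary. */
	uint64_t addr = (pa + DEMO_PAGE_SIZE - 1) & ~(DEMO_PAGE_SIZE - 1);

	return demo_is_legal_gpa(addr, maxphyaddr) &&
	       demo_is_legal_gpa(addr + size - 1, maxphyaddr);
}
```

Testing the last byte as well as the first catches a bitmap that starts inside the addressable range but runs past its end.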

◆ nested_svm_check_permissions()

| int nested_svm_check_permissions | ( | struct kvm_vcpu * | vcpu | ) |

◆ nested_svm_copy_common_state()

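| static void nested_svm_copy_common_state | ( | struct vmcb * | from_vmcb, |

| struct vmcb * | to_vmcb | ||

| ) |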

◆ nested_svm_exit_handled()

| int nested_svm_exit_handled | ( | struct vcpu_svm * | svm | ) |

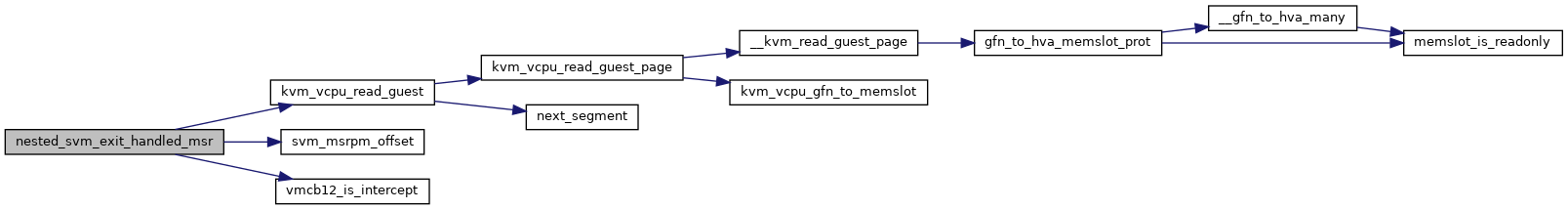

◆ nested_svm_exit_handled_msr()

| static int nested_svm_exit_handled_msr | ( | struct vcpu_svm * | svm | ) |

Definition at line 1251 of file nested.c.

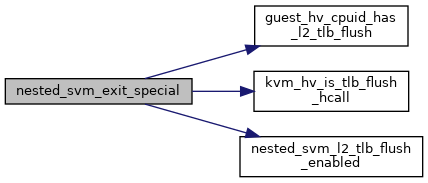

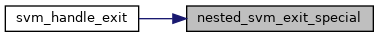

◆ nested_svm_exit_special()

| int nested_svm_exit_special | ( | struct vcpu_svm * | svm | ) |

Definition at line 1496 of file nested.c.

◆ nested_svm_get_tdp_cr3()

| static unsigned long nested_svm_get_tdp_cr3 | ( | struct kvm_vcpu * | vcpu | ) |

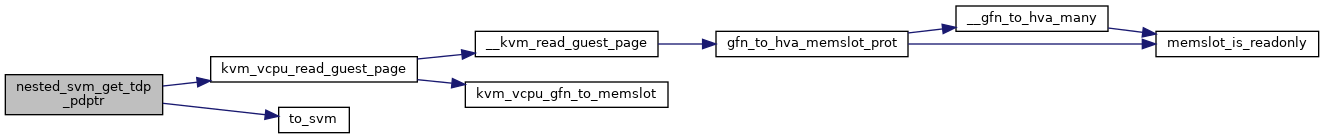

◆ nested_svm_get_tdp_pdptr()

| static u64 nested_svm_get_tdp_pdptr | ( | struct kvm_vcpu * | vcpu, |

| int | index | ||

| ) |

Definition at line 59 of file nested.c.

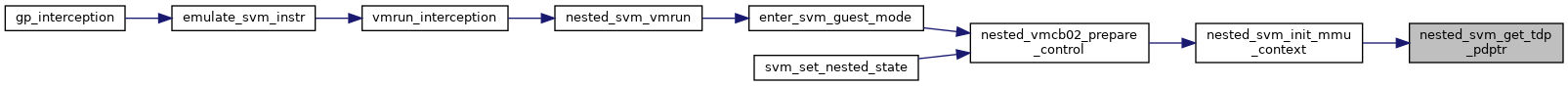

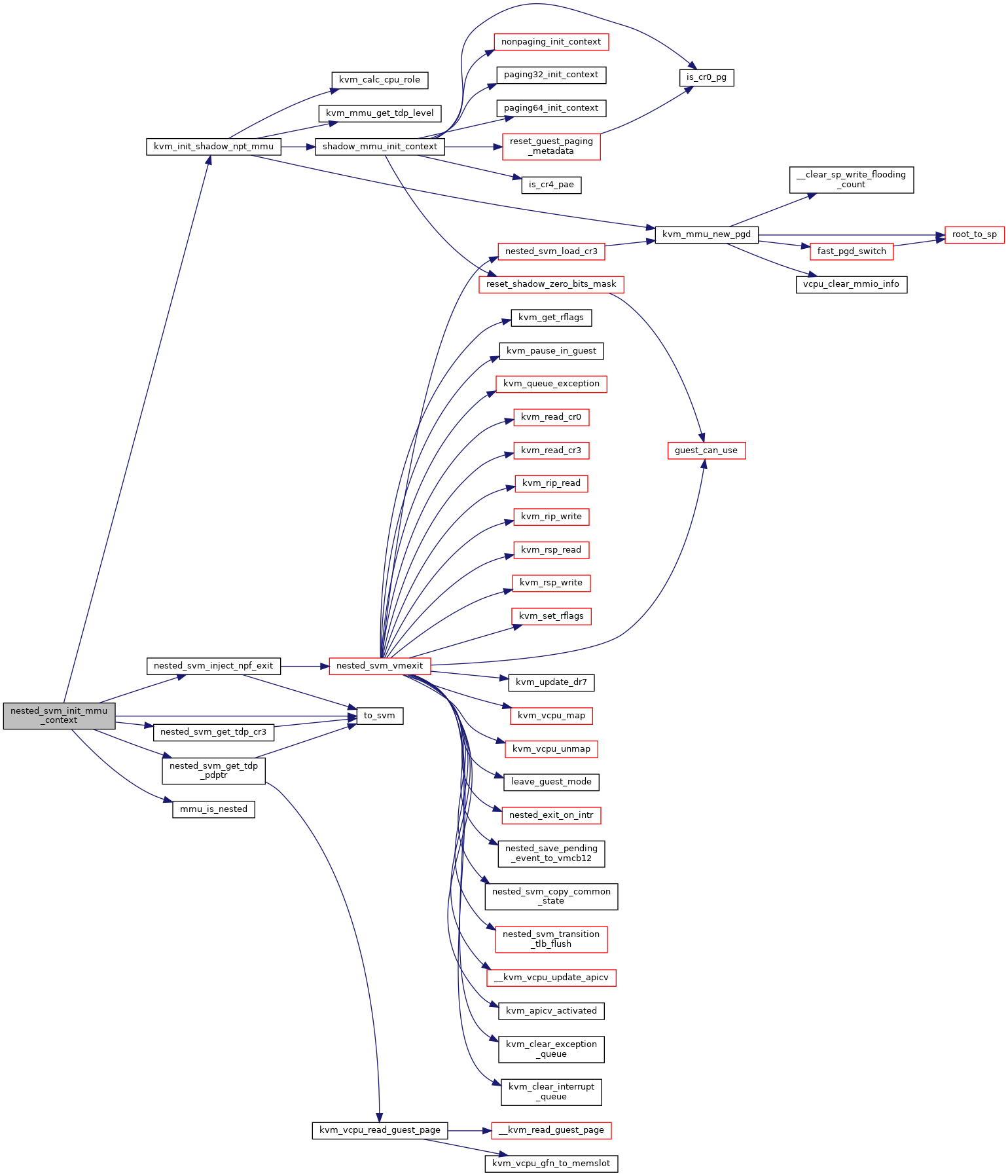

◆ nested_svm_init_mmu_context()

| static void nested_svm_init_mmu_context | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 80 of file nested.c.

◆ nested_svm_inject_exception_vmexit()

| static void nested_svm_inject_exception_vmexit | ( | struct kvm_vcpu * | vcpu | ) |

◆ nested_svm_inject_npf_exit()

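| static void nested_svm_inject_npf_exit | ( | struct kvm_vcpu * | vcpu, |

| struct x86_exception * | fault | ||

| ) |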

◆ nested_svm_intercept()

| static int nested_svm_intercept | ( | struct vcpu_svm * | svm | ) |

Definition at line 1301 of file nested.c.

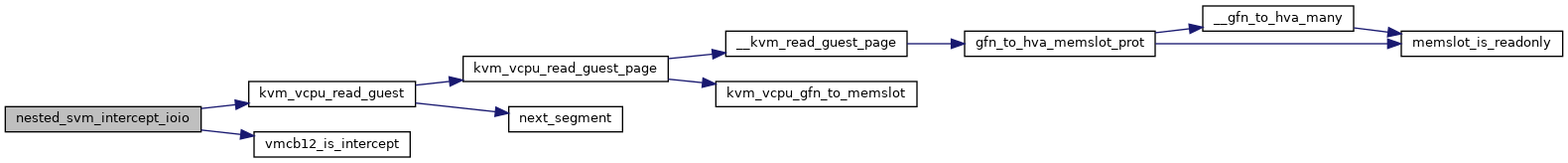

◆ nested_svm_intercept_ioio()

| static int nested_svm_intercept_ioio | ( | struct vcpu_svm * | svm | ) |

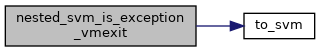

◆ nested_svm_is_exception_vmexit()

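| static bool nested_svm_is_exception_vmexit | ( | struct kvm_vcpu * | vcpu, |

| u8 | vector, | ||

| u32 | error_code | ||

| ) |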

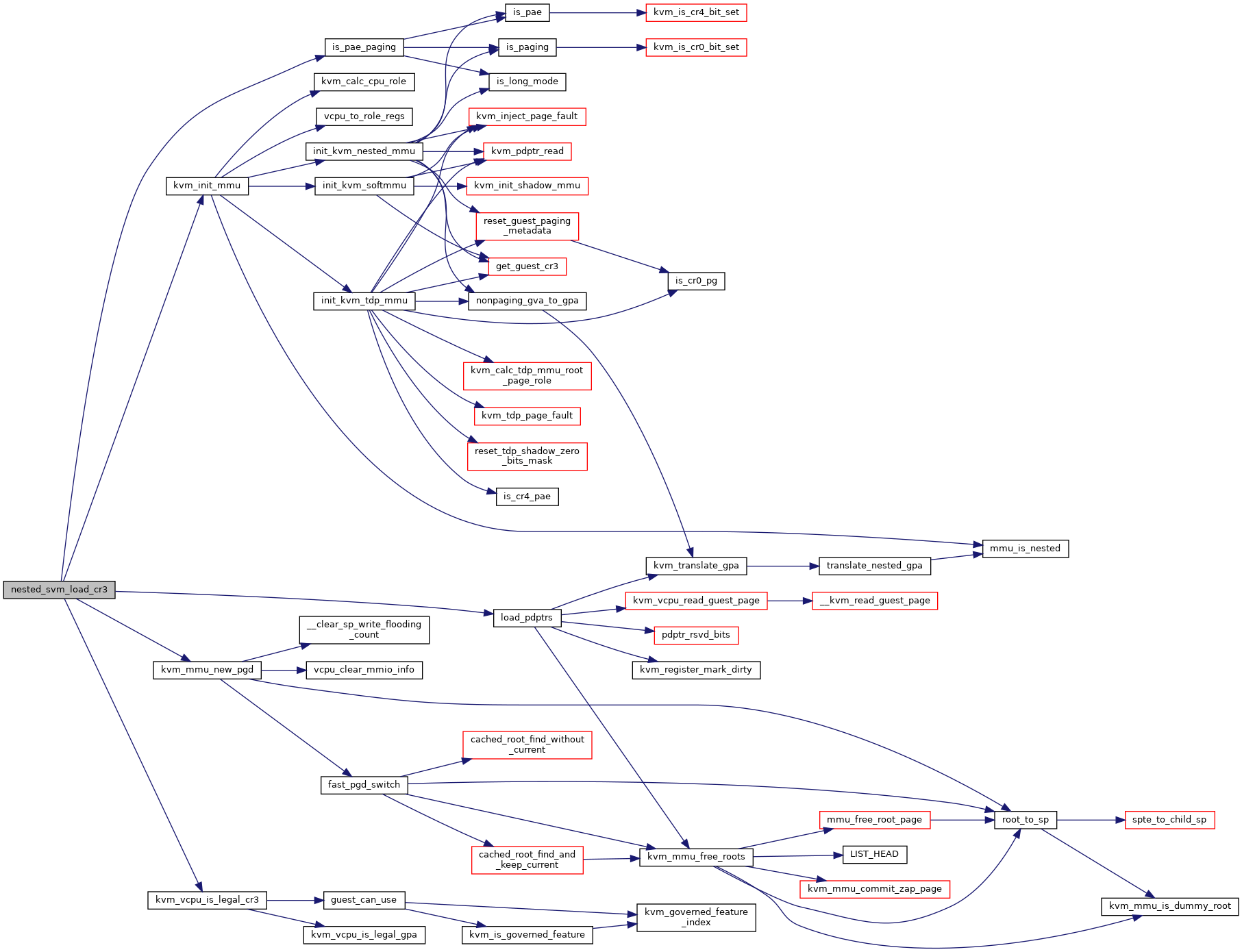

◆ nested_svm_load_cr3()

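| static int nested_svm_load_cr3 | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | cr3, | ||

| bool | nested_npt, | ||

| bool | reload_pdptrs | ||

| ) |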

◆ nested_svm_transition_tlb_flush()

| static void nested_svm_transition_tlb_flush | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 481 of file nested.c.

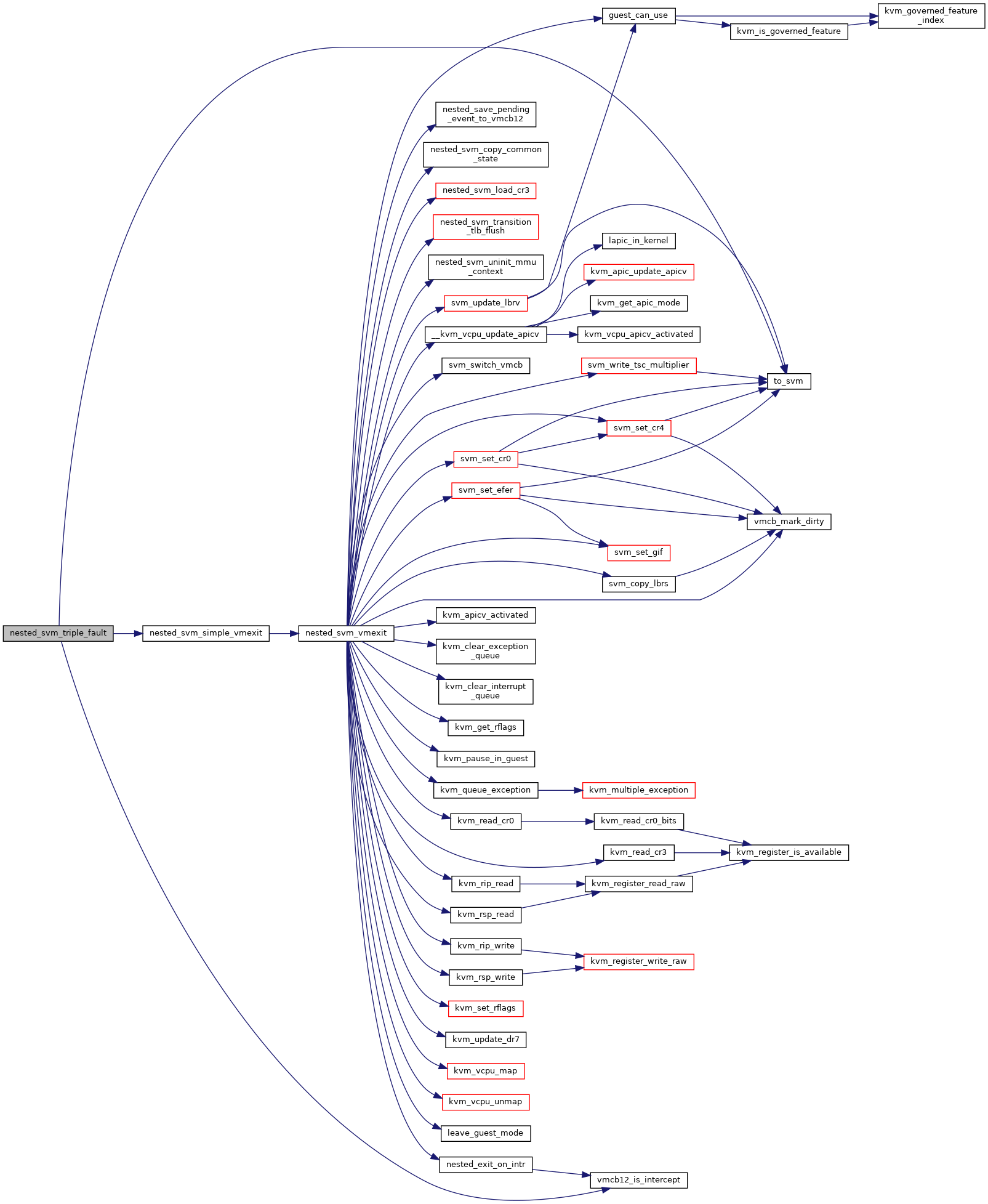

◆ nested_svm_triple_fault()

| static void nested_svm_triple_fault | ( | struct kvm_vcpu * | vcpu | ) |

◆ nested_svm_uninit_mmu_context()

| static void nested_svm_uninit_mmu_context | ( | struct kvm_vcpu * | vcpu | ) |

◆ nested_svm_update_tsc_ratio_msr()

| void nested_svm_update_tsc_ratio_msr | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1532 of file nested.c.

◆ nested_svm_vmexit()

| int nested_svm_vmexit | ( | struct vcpu_svm * | svm | ) |

Definition at line 967 of file nested.c.

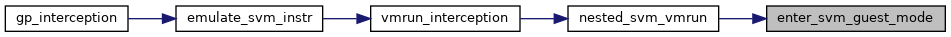

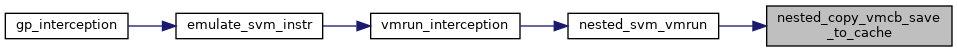

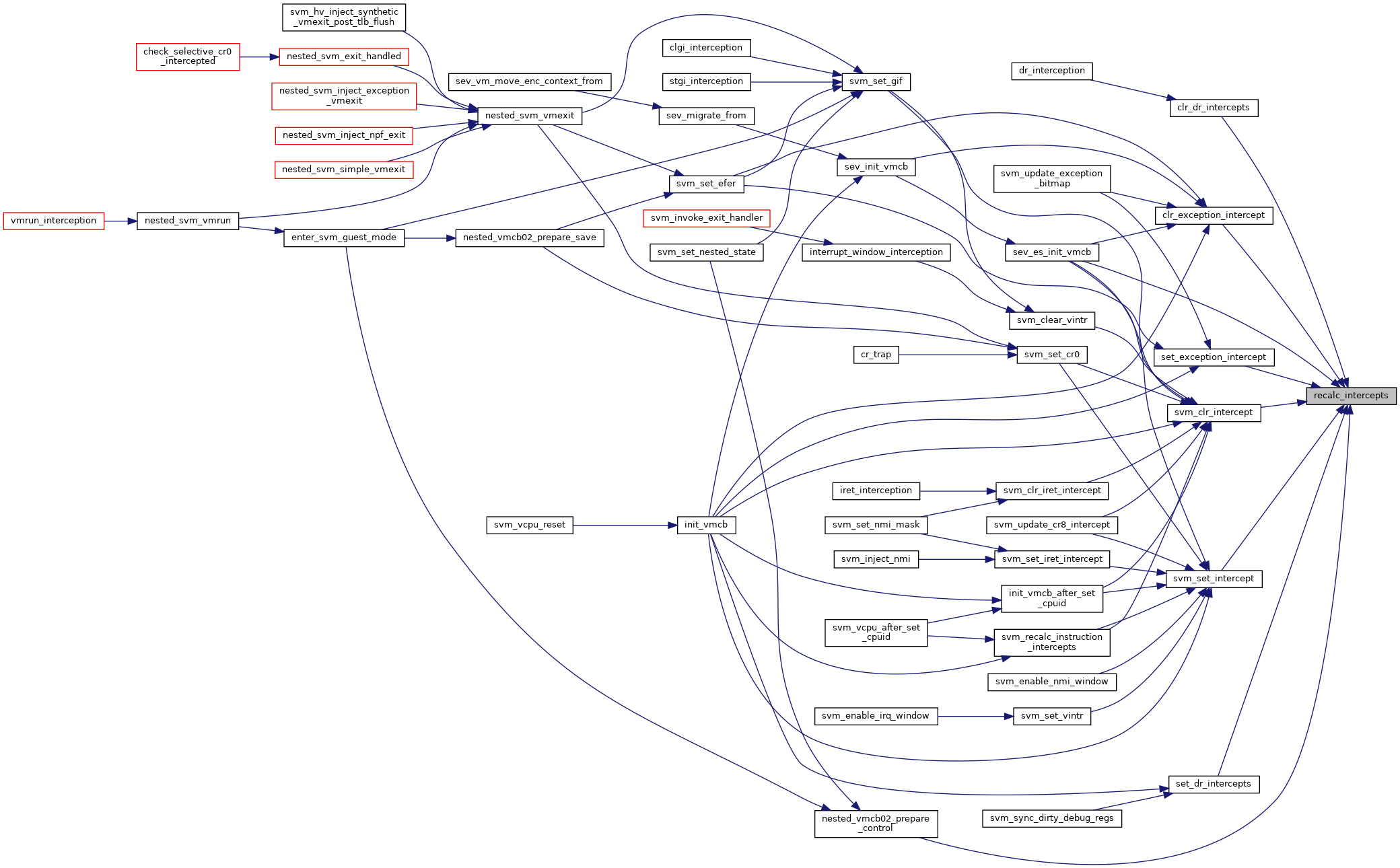

◆ nested_svm_vmrun()

| int nested_svm_vmrun | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 837 of file nested.c.

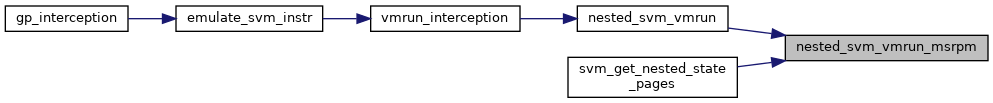

◆ nested_svm_vmrun_msrpm()

| static bool nested_svm_vmrun_msrpm | ( | struct vcpu_svm * | svm | ) |

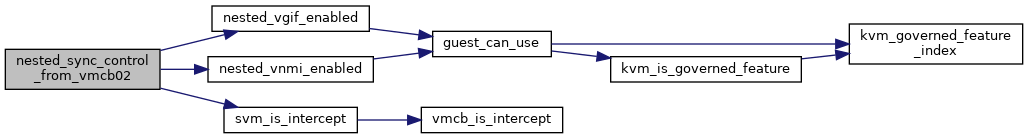

◆ nested_sync_control_from_vmcb02()

| void nested_sync_control_from_vmcb02 | ( | struct vcpu_svm * | svm | ) |

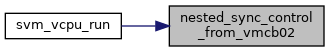

◆ nested_vmcb02_compute_g_pat()

| void nested_vmcb02_compute_g_pat | ( | struct vcpu_svm * | svm | ) |

◆ nested_vmcb02_prepare_control()

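| static void nested_vmcb02_prepare_control | ( | struct vcpu_svm * | svm, |

| unsigned long | vmcb12_rip, | ||

| unsigned long | vmcb12_csbase | ||

| ) |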

Definition at line 632 of file nested.c.

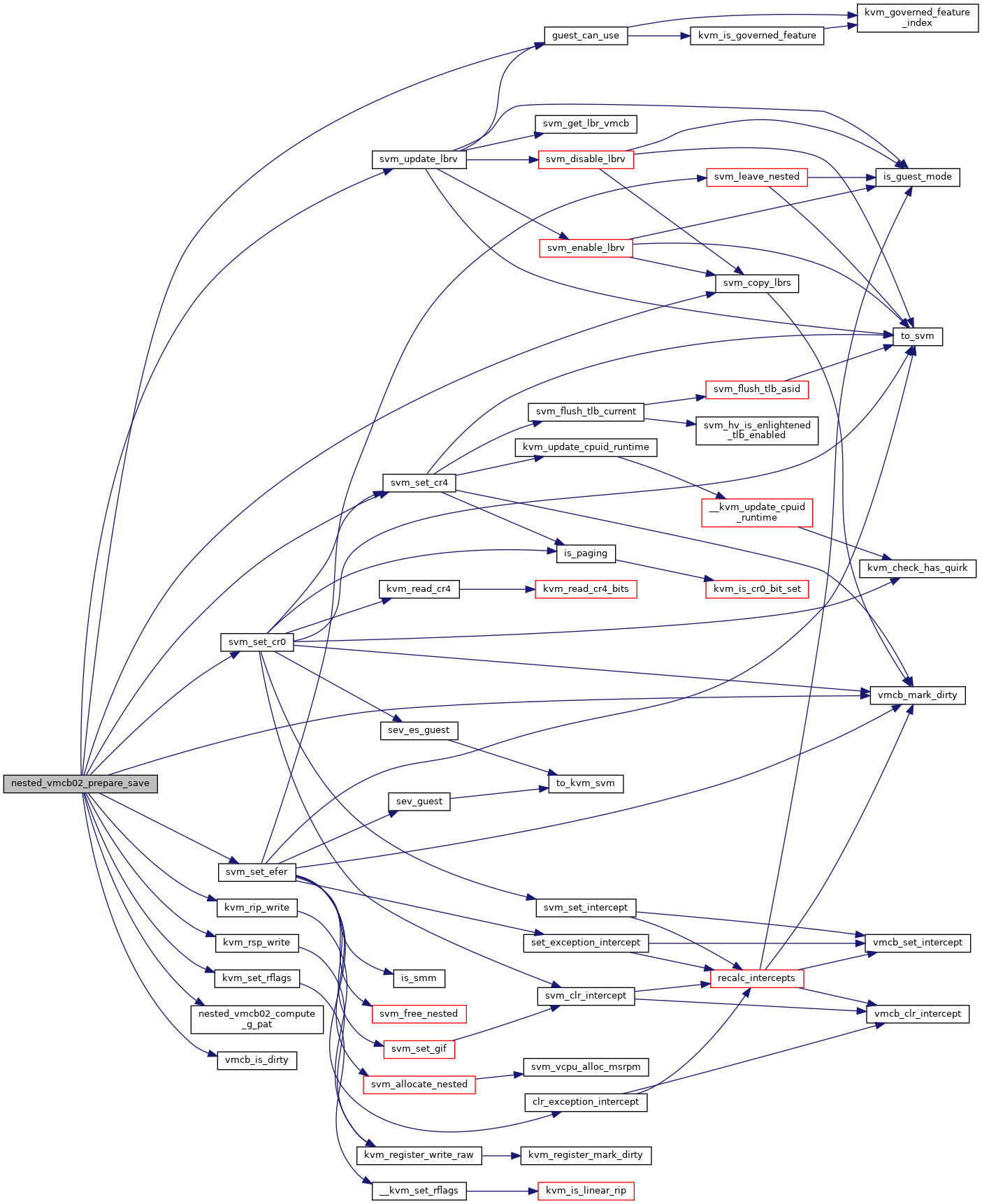

◆ nested_vmcb02_prepare_save()

| static void nested_vmcb02_prepare_save | ( | struct vcpu_svm * | svm, |

| struct vmcb * | vmcb12 | ||

| ) |

Definition at line 537 of file nested.c.

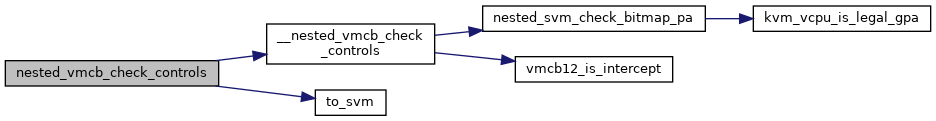

◆ nested_vmcb_check_controls()

| static bool nested_vmcb_check_controls | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 327 of file nested.c.

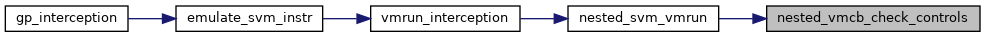

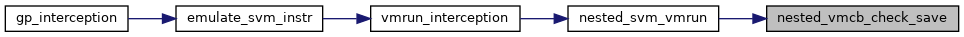

◆ nested_vmcb_check_save()

| static bool nested_vmcb_check_save | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 319 of file nested.c.

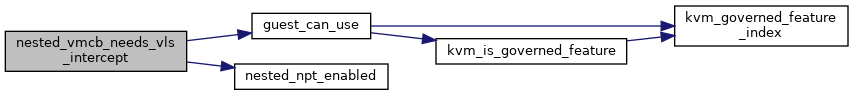

◆ nested_vmcb_needs_vls_intercept()

| static bool nested_vmcb_needs_vls_intercept | ( | struct vcpu_svm * | svm | ) |

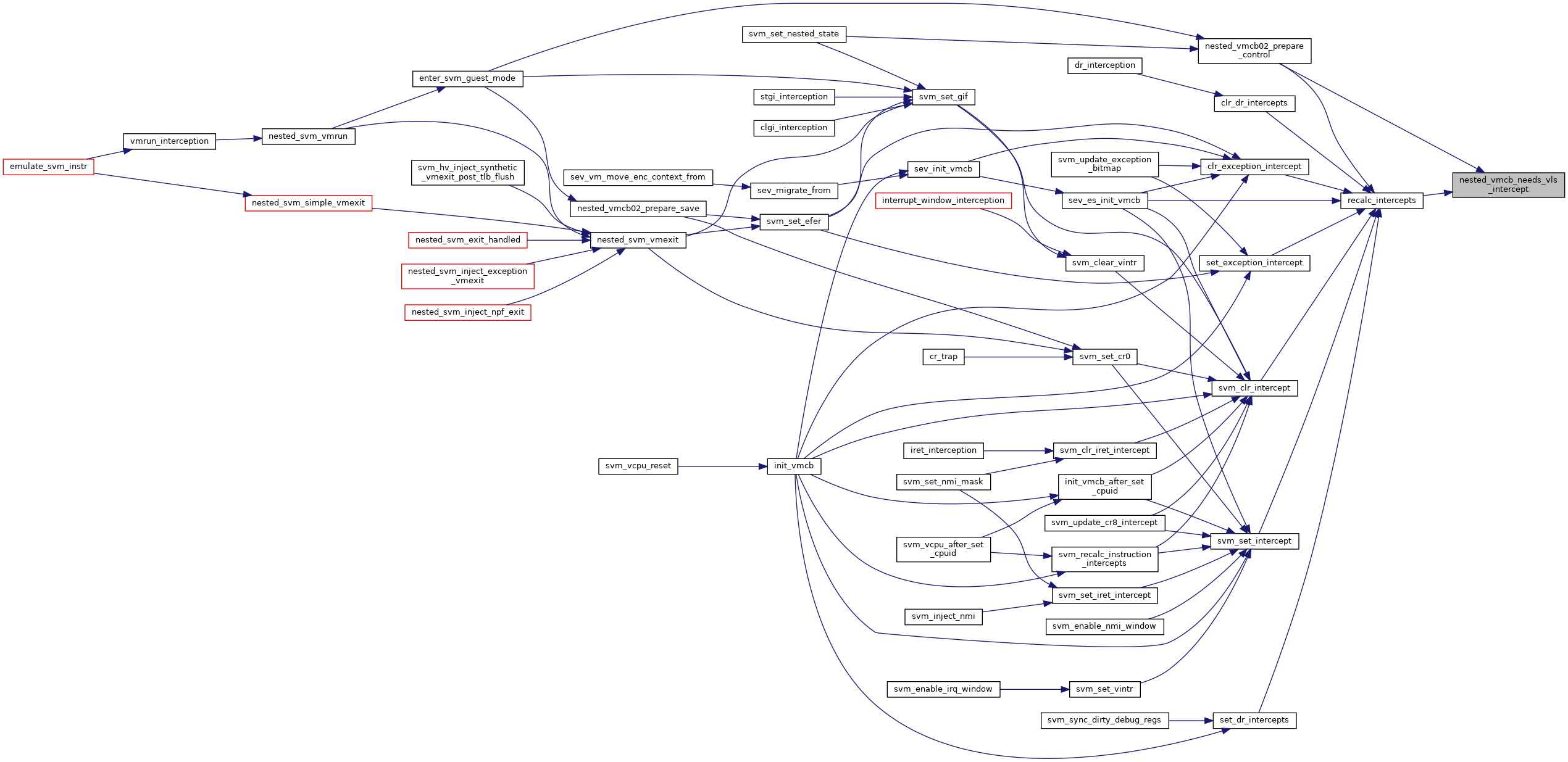

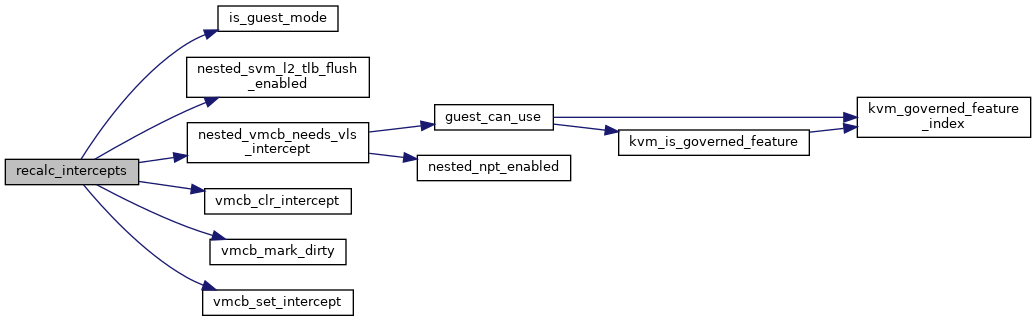

◆ recalc_intercepts()

| void recalc_intercepts | ( | struct vcpu_svm * | svm | ) |

Definition at line 122 of file nested.c.

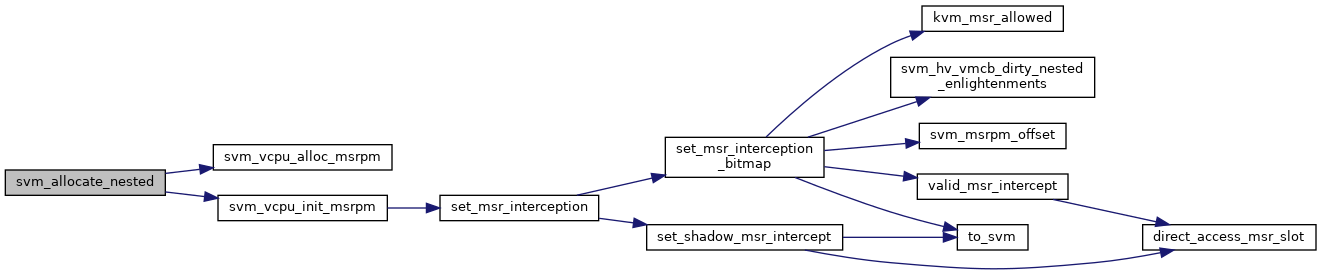
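As a rough illustration of what recalculating intercepts for a nested guest can involve, the sketch below merges two intercept bitmaps with a bitwise OR, so that an event is intercepted if either the host hypervisor or the guest hypervisor asked for it. This is a simplified assumption about the general technique, not the body of `recalc_intercepts()`, which may apply further special cases. `struct demo_intercepts`, `DEMO_INTERCEPT_WORDS`, and `demo_recalc_intercepts()` are hypothetical names.

```c
#include <stdint.h>

/* Assumption: intercepts are stored as a small array of 32-bit words. */
#define DEMO_INTERCEPT_WORDS 6

struct demo_intercepts {
	uint32_t words[DEMO_INTERCEPT_WORDS];
};

/*
 * Builds the active intercept set as the union of what the host (L0)
 * needs and what the guest hypervisor (L1) requested for its guest.
 */
static void demo_recalc_intercepts(struct demo_intercepts *active,
				   const struct demo_intercepts *host,
				   const struct demo_intercepts *guest)
{
	unsigned int i;

	for (i = 0; i < DEMO_INTERCEPT_WORDS; i++)
		active->words[i] = host->words[i] | guest->words[i];
}
```

Recomputing the union from the two inputs each time, rather than editing the active set in place, keeps the result correct after either side changes its requests.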

◆ svm_allocate_nested()

| int svm_allocate_nested | ( | struct vcpu_svm * | svm | ) |

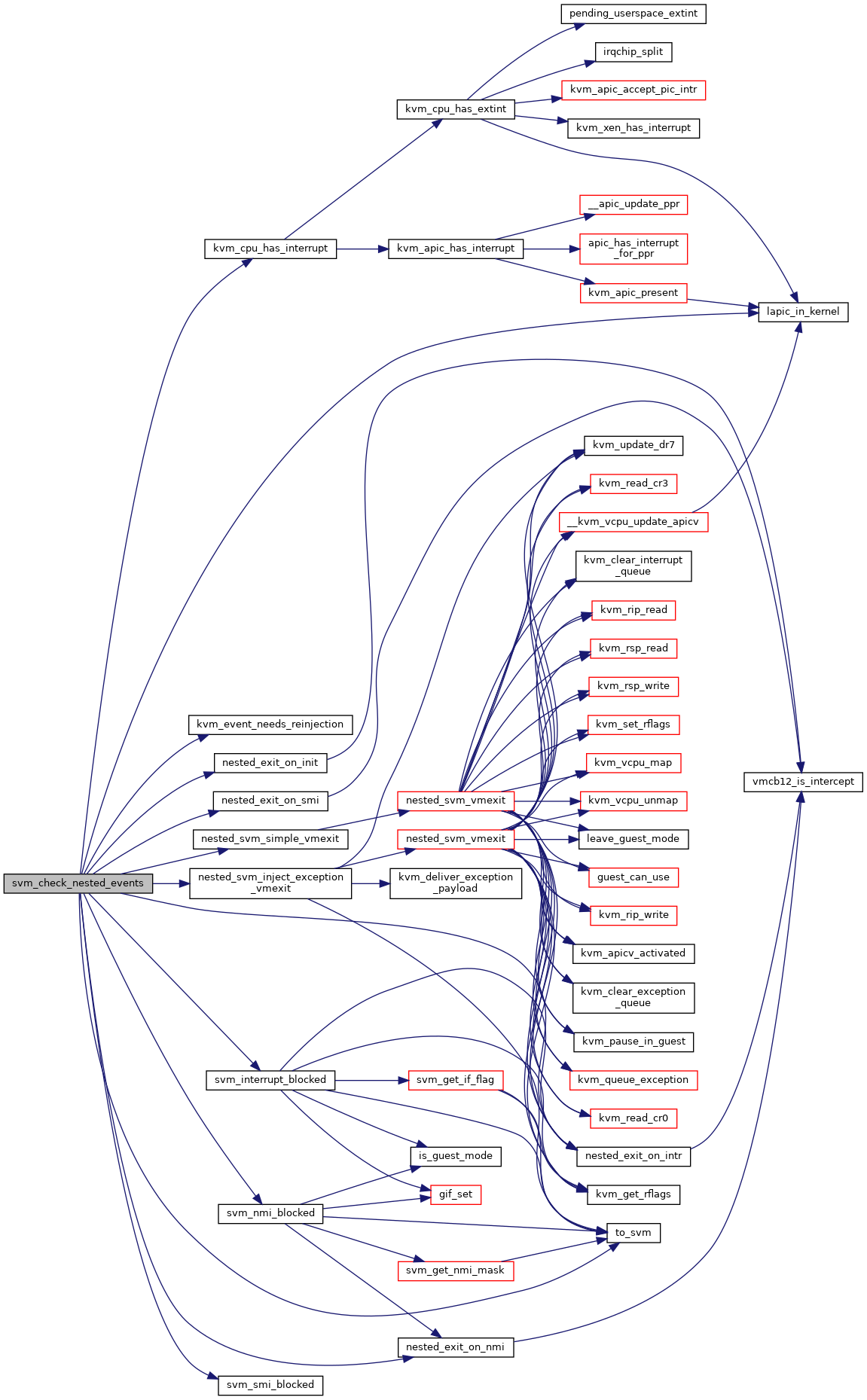

◆ svm_check_nested_events()

| static int svm_check_nested_events | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 1421 of file nested.c.

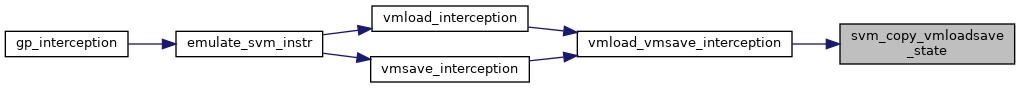

◆ svm_copy_vmloadsave_state()

| void svm_copy_vmloadsave_state | ( | struct vmcb * | to_vmcb, |

| struct vmcb * | from_vmcb | ||

| ) |

◆ svm_copy_vmrun_state()

| void svm_copy_vmrun_state | ( | struct vmcb_save_area * | to_save, |

| struct vmcb_save_area * | from_save | ||

| ) |

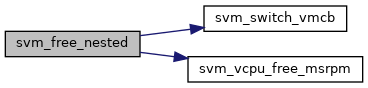

◆ svm_free_nested()

| void svm_free_nested | ( | struct vcpu_svm * | svm | ) |

◆ svm_get_nested_state()

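| static int svm_get_nested_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_nested_state __user * | user_kvm_nested_state, | ||

| u32 | user_data_size | ||

| ) |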

Definition at line 1578 of file nested.c.

◆ svm_get_nested_state_pages()

| static bool svm_get_nested_state_pages | ( | struct kvm_vcpu * | vcpu | ) |

◆ svm_leave_nested()

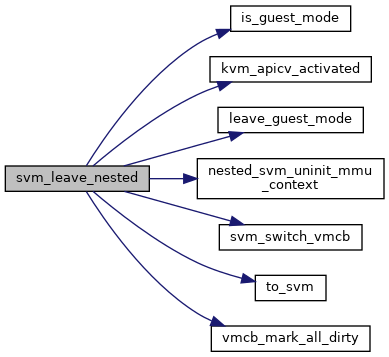

| void svm_leave_nested | ( | struct kvm_vcpu * | vcpu | ) |

◆ svm_set_nested_state()

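| static int svm_set_nested_state | ( | struct kvm_vcpu * | vcpu, |

| struct kvm_nested_state __user * | user_kvm_nested_state, | ||

| struct kvm_nested_state * | kvm_state | ||

| ) |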

Definition at line 1645 of file nested.c.

Variable Documentation

◆ svm_nested_ops

| struct kvm_x86_nested_ops svm_nested_ops |