310 u64 pt_access, pte_access;

311 unsigned index, accessed_dirty, pte_pkey;

316 u64 walk_nx_mask = 0;

317 const int write_fault = access & PFERR_WRITE_MASK;

318 const int user_fault = access & PFERR_USER_MASK;

319 const int fetch_fault = access & PFERR_FETCH_MASK;

324 trace_kvm_mmu_pagetable_walk(addr, access);

326 walker->level = mmu->cpu_role.base.level;

333 pte = mmu->get_pdptr(vcpu, (addr >> 30) & 3);

334 trace_kvm_mmu_paging_element(pte, walker->level);

347 nested_access = (have_ad ? PFERR_WRITE_MASK : 0) | PFERR_USER_MASK;

364 struct kvm_memory_slot *slot;

365 unsigned long host_addr;

367 pt_access = pte_access;

373 pte_gpa = gfn_to_gpa(table_gfn) + offset;

375 BUG_ON(walker->level < 1);

380 nested_access, &walker->fault);

392 if (unlikely(real_gpa == INVALID_GPA))

396 if (!kvm_is_visible_memslot(slot))

401 if (unlikely(kvm_is_error_hva(host_addr)))

404 ptep_user = (pt_element_t __user *)((void *)host_addr + offset);

405 if (unlikely(__get_user(pte, ptep_user)))

409 trace_kvm_mmu_paging_element(pte, walker->level);

415 pte_access = pt_access & (pte ^ walk_nx_mask);

421 errcode = PFERR_RSVD_MASK | PFERR_PRESENT_MASK;

437 if (unlikely(errcode))

445 gfn += pse36_gfn_delta(pte);

449 if (real_gpa == INVALID_GPA)

452 walker->gfn = real_gpa >> PAGE_SHIFT;

462 accessed_dirty &= pte >>

465 if (unlikely(!accessed_dirty)) {

468 if (unlikely(ret < 0))

477 errcode |= write_fault | user_fault;

478 if (fetch_fault && (is_efer_nx(mmu) || is_cr4_smep(mmu)))

479 errcode |= PFERR_FETCH_MASK;

481 walker->fault.vector = PF_VECTOR;

482 walker->fault.error_code_valid = true;

483 walker->fault.error_code = errcode;

485 #if PTTYPE == PTTYPE_EPT

499 if (!(errcode & PFERR_RSVD_MASK)) {

500 vcpu->arch.exit_qualification &= (EPT_VIOLATION_GVA_IS_VALID |

501 EPT_VIOLATION_GVA_TRANSLATED);

503 vcpu->arch.exit_qualification |= EPT_VIOLATION_ACC_WRITE;

505 vcpu->arch.exit_qualification |= EPT_VIOLATION_ACC_READ;

507 vcpu->arch.exit_qualification |= EPT_VIOLATION_ACC_INSTR;

513 vcpu->arch.exit_qualification |= (pte_access & VMX_EPT_RWX_MASK) <<

514 EPT_VIOLATION_RWX_SHIFT;

517 walker->fault.address = addr;

518 walker->fault.nested_page_fault = mmu != vcpu->arch.walk_mmu;

519 walker->fault.async_page_fault = false;

521 trace_kvm_mmu_walker_error(walker->fault.error_code);

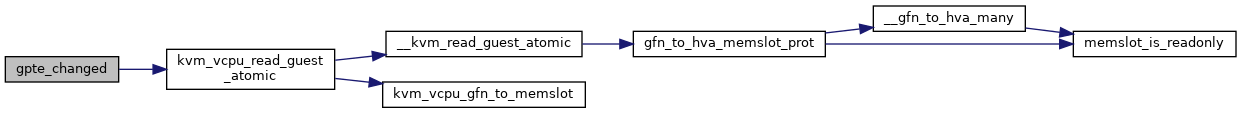

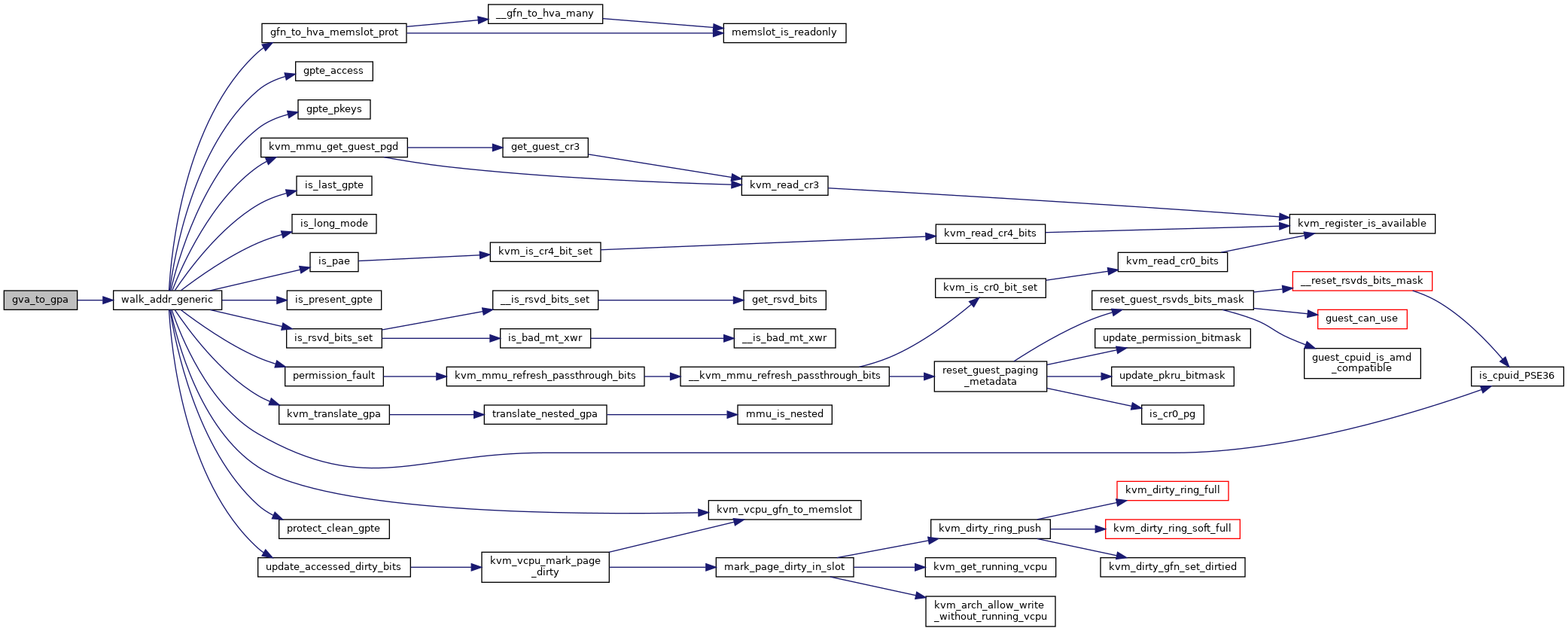

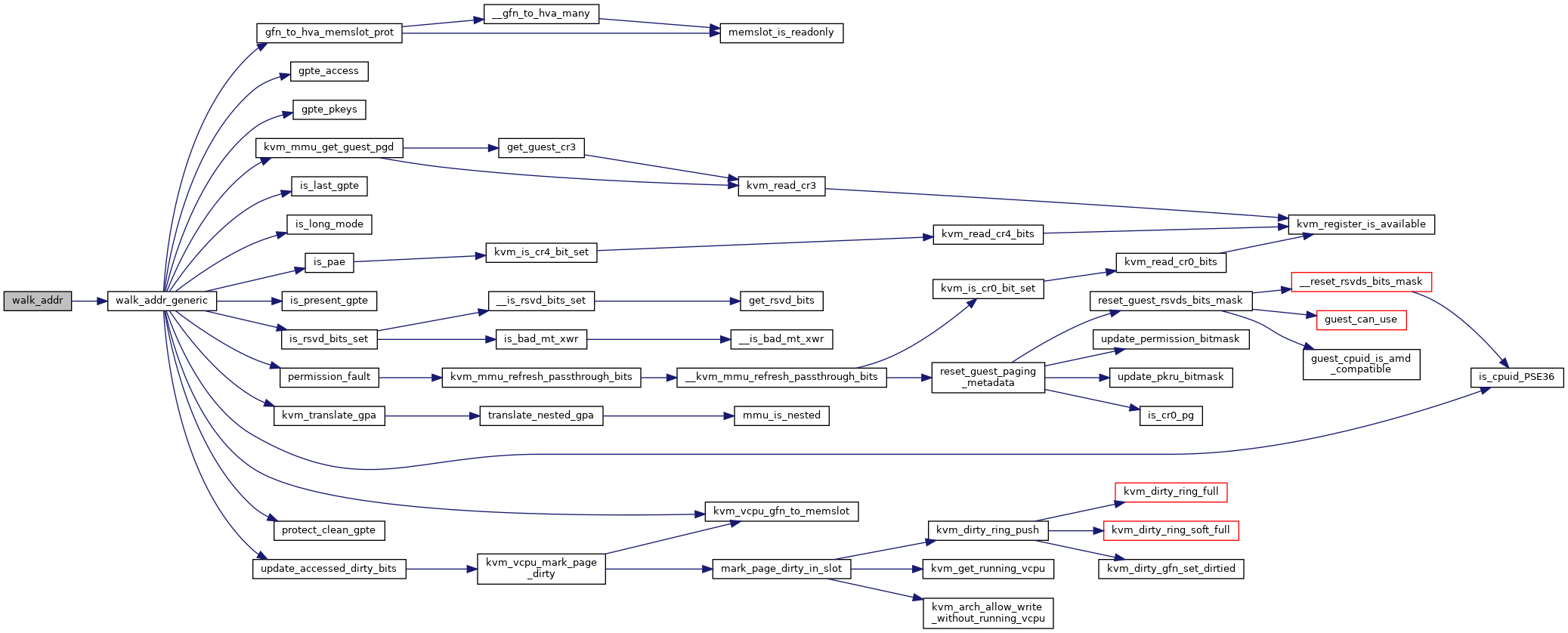

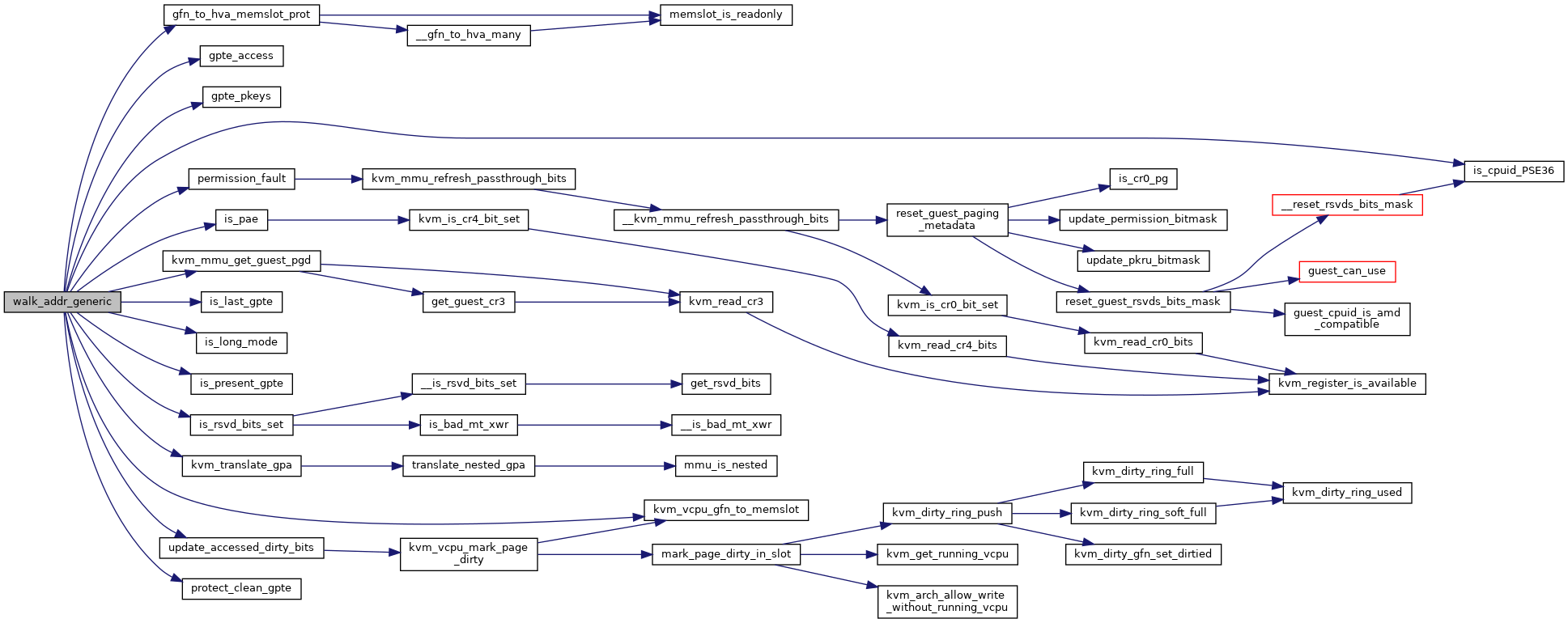

unsigned long gfn_to_hva_memslot_prot(struct kvm_memory_slot *slot, gfn_t gfn, bool *writable)

static int is_cpuid_PSE36(void)

static unsigned long kvm_mmu_get_guest_pgd(struct kvm_vcpu *vcpu, struct kvm_mmu *mmu)

static gpa_t kvm_translate_gpa(struct kvm_vcpu *vcpu, struct kvm_mmu *mmu, gpa_t gpa, u64 access, struct x86_exception *exception)

static u8 permission_fault(struct kvm_vcpu *vcpu, struct kvm_mmu *mmu, unsigned pte_access, unsigned pte_pkey, u64 access)

#define PT_LVL_OFFSET_MASK(lvl)

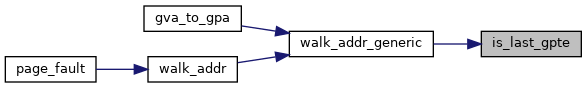

static bool FNAME(is_last_gpte)(struct kvm_mmu *mmu, unsigned int level, unsigned int gpte)

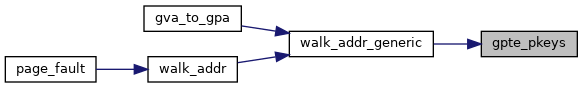

static unsigned FNAME(gpte_pkeys)(struct kvm_vcpu *vcpu, u64 gpte)

#define PT_INDEX(addr, lvl)

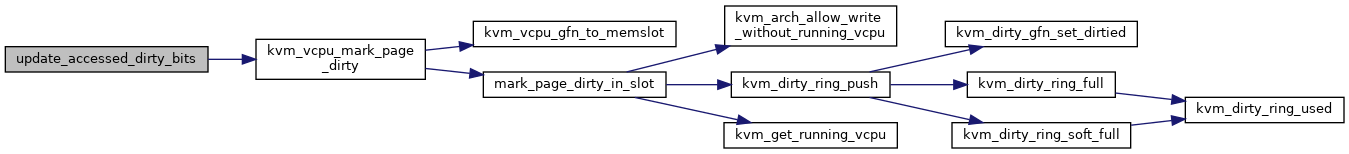

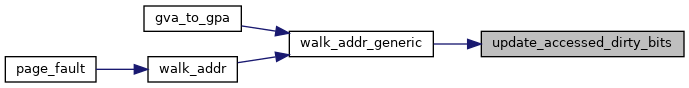

static int FNAME(update_accessed_dirty_bits)(struct kvm_vcpu *vcpu, struct kvm_mmu *mmu, struct guest_walker *walker, gpa_t addr, int write_fault)

#define PT_GUEST_ACCESSED_SHIFT

static bool is_long_mode(struct kvm_vcpu *vcpu)

static bool is_pae(struct kvm_vcpu *vcpu)