```c
struct kvm_cpuid_entry2 *entry;
union cpuid10_eax eax;
union cpuid10_edx edx;
u64 perf_capabilities;
u64 counter_mask;
int i;

/*
 * Reset the vPMU before re-deriving it from guest CPUID. In the *_mask
 * fields other than raw_event_mask, a set bit marks a reserved bit.
 */
pmu->nr_arch_gp_counters = 0;
pmu->nr_arch_fixed_counters = 0;
pmu->counter_bitmask[KVM_PMC_GP] = 0;
pmu->counter_bitmask[KVM_PMC_FIXED] = 0;
pmu->version = 0;
pmu->reserved_bits = 0xffffffff00200000ull;
pmu->raw_event_mask = X86_RAW_EVENT_MASK;
pmu->global_ctrl_mask = ~0ull;
pmu->global_status_mask = ~0ull;
pmu->fixed_ctr_ctrl_mask = ~0ull;
pmu->pebs_enable_mask = ~0ull;
pmu->pebs_data_cfg_mask = ~0ull;
```
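The reset value of `reserved_bits`, 0xffffffff00200000, reserves bits 63:32 and bit 21 (AnyThread) of an event-select MSR. Later steps clear individual bits from it. A mask of this kind is typically enforced by refusing any write that sets a reserved bit. The userspace sketch below models only that check. `eventsel_write_ok` is a hypothetical name, not a KVM function.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Reset value from the listing: bits 63:32 and bit 21 (AnyThread) reserved. */
#define EVENTSEL_RESERVED_RESET 0xffffffff00200000ULL

/* Hypothetical helper: a write is legal only if it sets no reserved bit. */
static bool eventsel_write_ok(uint64_t data, uint64_t reserved_bits)
{
	return (data & reserved_bits) == 0;
}
```

Under the reset mask, a plain enable value such as 0x43003c (event 0x3c with USR, OS and EN set) is accepted. Any value that sets bit 21 or a bit above 31 is rejected.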

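```c
/* ... */
entry = kvm_find_cpuid_entry(vcpu, 0xa);
if (!entry || !vcpu->kvm->arch.enable_pmu)
	return;
eax.full = entry->eax;
edx.full = entry->edx;

pmu->version = eax.split.version_id;
if (!pmu->version)
	return;

/* Never expose more, or wider, GP counters than the host PMU has. */
pmu->nr_arch_gp_counters = min_t(int, eax.split.num_counters,
				 kvm_pmu_cap.num_counters_gp);
eax.split.bit_width = min_t(int, eax.split.bit_width,
			    kvm_pmu_cap.bit_width_gp);
pmu->counter_bitmask[KVM_PMC_GP] = ((u64)1 << eax.split.bit_width) - 1;
```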
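CPUID leaf 0xA packs the architectural PMU description into EAX:

- bits 7:0 hold the version
- bits 15:8 hold the number of GP counters
- bits 23:16 hold the counter width
- bits 31:24 hold the length of the EBX event mask

The following userspace sketch mirrors the decode, clamp and mask steps. The host limit passed to `min_u` stands in for `kvm_pmu_cap`, and all helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Fields of CPUID.0AH:EAX, decoded with shifts instead of a bitfield union. */
struct pmu_eax {
	unsigned version, num_counters, bit_width, mask_length;
};

static struct pmu_eax decode_eax(uint32_t eax)
{
	struct pmu_eax e = {
		.version      = eax & 0xff,         /* EAX[7:0]   */
		.num_counters = (eax >> 8) & 0xff,  /* EAX[15:8]  */
		.bit_width    = (eax >> 16) & 0xff, /* EAX[23:16] */
		.mask_length  = (eax >> 24) & 0xff, /* EAX[31:24] */
	};
	return e;
}

/* Clamp a guest-requested value to a (hypothetical) host limit. */
static unsigned min_u(unsigned guest, unsigned host)
{
	return guest < host ? guest : host;
}

/* All-ones mask of 'width' bits, like ((u64)1 << width) - 1. */
static uint64_t width_mask(unsigned width)
{
	assert(width < 64); /* a 64-bit shift would be undefined behavior */
	return (1ULL << width) - 1;
}
```

For example, EAX = 0x07300805 decodes to version 5, 8 counters, a 48-bit width and a 7-bit event mask. A 48-bit width yields the counter mask 0xffffffffffff.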

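```c
eax.split.mask_length = min_t(int, eax.split.mask_length,
			      kvm_pmu_cap.events_mask_len);
/* A set bit in CPUID.0AH:EBX means that architectural event is unavailable. */
pmu->available_event_types = ~entry->ebx &
			     ((1ull << eax.split.mask_length) - 1);
```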
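Because CPUID.0AH:EBX flags *unavailable* events, the code inverts EBX and keeps only the low `mask_length` bits. A minimal sketch of that step follows. `available_events` is a hypothetical name, and the sample EBX values are made up.

```c
#include <assert.h>
#include <stdint.h>

/*
 * EBX sets a bit for each architectural event that is NOT available, so
 * invert it (as a 32-bit value) and keep only the low mask_length bits.
 */
static uint64_t available_events(uint32_t ebx, unsigned mask_length)
{
	assert(mask_length < 64);
	return ~ebx & ((1ULL << mask_length) - 1);
}
```

For instance, an EBX of 0x4 with a 7-bit mask leaves 0x7b. That is every event except bit 2 (reference cycles).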

```c
if (pmu->version == 1) {
	/* Fixed-function counters first appear in version 2. */
	pmu->nr_arch_fixed_counters = 0;
} else {
	pmu->nr_arch_fixed_counters = min_t(int, edx.split.num_counters_fixed,
					    kvm_pmu_cap.num_counters_fixed);
	edx.split.bit_width_fixed = min_t(int, edx.split.bit_width_fixed,
					  kvm_pmu_cap.bit_width_fixed);
	pmu->counter_bitmask[KVM_PMC_FIXED] =
		((u64)1 << edx.split.bit_width_fixed) - 1;
	setup_fixed_pmc_eventsel(pmu);
}
```
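From version 2 on, CPUID.0AH:EDX bits 4:0 give the number of fixed-function counters and bits 12:5 give their width. The union exposes these fields as `num_counters_fixed` and `bit_width_fixed`. The sketch below repeats the version check and both clamps in userspace. The host limits are plain parameters standing in for `kvm_pmu_cap`, and `fixed_counters` is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

struct fixed_info {
	unsigned nr;      /* number of fixed counters exposed */
	uint64_t bitmask; /* all-ones mask of the counter width */
};

/* Hypothetical helper mirroring the version check and the min_t() clamps. */
static struct fixed_info fixed_counters(unsigned version, uint32_t edx,
					unsigned host_nr, unsigned host_width)
{
	struct fixed_info f = { 0, 0 };
	unsigned nr = edx & 0x1f;           /* EDX[4:0]  */
	unsigned width = (edx >> 5) & 0xff; /* EDX[12:5] */

	if (version < 2)
		return f;                   /* v1: no fixed counters */
	f.nr = nr < host_nr ? nr : host_nr;
	width = width < host_width ? width : host_width;
	assert(width < 64);
	f.bitmask = (1ULL << width) - 1;
	return f;
}
```

With EDX = 0x603 (three 48-bit fixed counters) and version 5, the result is three counters with a 0xffffffffffff mask. With version 1, the result is zero counters.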

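```c
/*
 * Each fixed counter owns a 4-bit field in FIXED_CTR_CTRL; clearing 0xb
 * un-reserves its OS, USR and PMI bits, while AnyThread (bit 2) stays reserved.
 */
for (i = 0; i < pmu->nr_arch_fixed_counters; i++)
	pmu->fixed_ctr_ctrl_mask &= ~(0xbull << (i * 4));
/* GP enables occupy the low bits; fixed enables start at INTEL_PMC_IDX_FIXED. */
counter_mask = ~(((1ull << pmu->nr_arch_gp_counters) - 1) |
	(((1ull << pmu->nr_arch_fixed_counters) - 1) << INTEL_PMC_IDX_FIXED));
pmu->global_ctrl_mask = counter_mask;
```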
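Assume 8 GP counters, 3 fixed counters, and an `INTEL_PMC_IDX_FIXED` of 32 (its value in current kernels). The standalone recomputation below shows how both masks come out. It is for illustration only, not KVM code.

```c
#include <assert.h>
#include <stdint.h>

#define PMC_IDX_FIXED 32 /* assumed value of INTEL_PMC_IDX_FIXED */

/* Set bits are reserved: each fixed counter frees its EN_OS, EN_USR and PMI bits. */
static uint64_t fixed_ctr_ctrl_mask(unsigned nr_fixed)
{
	uint64_t mask = ~0ULL;

	for (unsigned i = 0; i < nr_fixed; i++)
		mask &= ~(0xbULL << (i * 4));
	return mask;
}

/* Set bits are reserved: GP enables at [nr_gp-1:0], fixed enables from bit 32. */
static uint64_t global_ctrl_mask(unsigned nr_gp, unsigned nr_fixed)
{
	return ~(((1ULL << nr_gp) - 1) |
		 (((1ULL << nr_fixed) - 1) << PMC_IDX_FIXED));
}
```

Under these assumptions, `fixed_ctr_ctrl_mask(3)` is ~0xbbb and `global_ctrl_mask(8, 3)` is ~0x7000000ff.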

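```c
/*
 * GLOBAL_STATUS shares the counter bits with GLOBAL_CTRL and additionally
 * allows OvfBuf and CondChgd, plus the ToPA PMI bit when Intel PT runs in
 * host-guest mode.
 */
pmu->global_status_mask = pmu->global_ctrl_mask
		& ~(MSR_CORE_PERF_GLOBAL_OVF_CTRL_OVF_BUF |
		    MSR_CORE_PERF_GLOBAL_OVF_CTRL_COND_CHGD);
if (vmx_pt_mode_is_host_guest())
	pmu->global_status_mask &=
			~MSR_CORE_PERF_GLOBAL_OVF_CTRL_TRACE_TOPA_PMI;
```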
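This sketch assumes the bit positions the kernel uses for the `MSR_CORE_PERF_GLOBAL_OVF_CTRL_*` constants: ToPA PMI at bit 55, OvfBuf at bit 62, and CondChgd at bit 63. Under that assumption, the status mask can be recomputed from the control mask. The `global_status_mask` helper is hypothetical, and `pt_host_guest` stands in for `vmx_pt_mode_is_host_guest()`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOPA_PMI  (1ULL << 55) /* assumed: ..._OVF_CTRL_TRACE_TOPA_PMI */
#define OVF_BUF   (1ULL << 62) /* assumed: ..._OVF_CTRL_OVF_BUF */
#define COND_CHGD (1ULL << 63) /* assumed: ..._OVF_CTRL_COND_CHGD */

/* Start from the GLOBAL_CTRL reserved mask and un-reserve status-only bits. */
static uint64_t global_status_mask(uint64_t global_ctrl_mask, bool pt_host_guest)
{
	uint64_t mask = global_ctrl_mask & ~(OVF_BUF | COND_CHGD);

	if (pt_host_guest)
		mask &= ~TOPA_PMI;
	return mask;
}
```

Starting from the 8 GP / 3 fixed control mask ~0x7000000ff, this yields 0x3ffffff8ffffff00. With the ToPA PMI bit also freed, it yields 0x3f7ffff8ffffff00.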

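```c
entry = kvm_find_cpuid_entry_index(vcpu, 7, 0);
/*
 * X86_FEATURE_HLE and X86_FEATURE_RTM are cpufeature indices
 * (word * 32 + bit), not CPUID.(EAX=7,ECX=0):EBX bit masks.
 */
if (entry &&
    (boot_cpu_has(X86_FEATURE_HLE) || boot_cpu_has(X86_FEATURE_RTM)) &&
    (entry->ebx & (X86_FEATURE_HLE|X86_FEATURE_RTM))) {
	/* IN_TX is reserved at this point, so the XOR un-reserves it. */
	pmu->reserved_bits ^= HSW_IN_TX;
	pmu->raw_event_mask |= (HSW_IN_TX|HSW_IN_TX_CHECKPOINTED);
}
```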
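In CPUID.(EAX=7,ECX=0):EBX, HLE is bit 4 and RTM is bit 11. The sketch below tests those bit positions directly, then applies the same two mask updates. It assumes `HSW_IN_TX` and `HSW_IN_TX_CHECKPOINTED` are bits 32 and 33 of the event selector, as in the kernel's perf headers. It is an illustration under those assumptions, not the KVM code path. `apply_tsx` and `struct evt_masks` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define CPUID7_EBX_HLE (1u << 4)
#define CPUID7_EBX_RTM (1u << 11)
#define IN_TX          (1ULL << 32) /* assumed value of HSW_IN_TX */
#define IN_TXCP        (1ULL << 33) /* assumed value of HSW_IN_TX_CHECKPOINTED */

struct evt_masks {
	uint64_t reserved_bits;
	uint64_t raw_event_mask;
};

/* Expose the TSX event qualifiers if the host has TSX and the guest has HLE or RTM. */
static void apply_tsx(struct evt_masks *m, bool host_tsx, uint32_t guest_ebx)
{
	if (host_tsx && (guest_ebx & (CPUID7_EBX_HLE | CPUID7_EBX_RTM))) {
		m->reserved_bits ^= IN_TX;          /* bit 32 was set, so this clears it */
		m->raw_event_mask |= IN_TX | IN_TXCP;
	}
}
```

Applied to the reset `reserved_bits`, the XOR turns 0xffffffff00200000 into 0xfffffffe00200000.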

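```c
/* GP counters are indexed from 0, fixed counters from INTEL_PMC_MAX_GENERIC. */
bitmap_set(pmu->all_valid_pmc_idx,
	0, pmu->nr_arch_gp_counters);
bitmap_set(pmu->all_valid_pmc_idx,
	INTEL_PMC_MAX_GENERIC, pmu->nr_arch_fixed_counters);

perf_capabilities = vcpu_get_perf_capabilities(vcpu);
if (cpuid_model_is_consistent(vcpu) &&
    (perf_capabilities & PMU_CAP_LBR_FMT))
	x86_perf_get_lbr(&lbr_desc->records);
else
	lbr_desc->records.nr = 0;

if (lbr_desc->records.nr)
	bitmap_set(pmu->all_valid_pmc_idx, INTEL_PMC_IDX_FIXED_VLBR, 1);
```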
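`all_valid_pmc_idx` is a bitmap of counter indices. GP counters start at 0, fixed counters start at `INTEL_PMC_MAX_GENERIC`, and one extra bit marks the virtual LBR index. This sketch assumes `INTEL_PMC_MAX_GENERIC` = 32 and `INTEL_PMC_IDX_FIXED_VLBR` = 58 (the GLOBAL_STATUS LBR-frozen bit). Under those assumptions, a single 64-bit word is enough to model the bitmap. `set_bits` is a hypothetical stand-in for the kernel's `bitmap_set()`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PMC_MAX_GENERIC 32 /* assumed value of INTEL_PMC_MAX_GENERIC */
#define PMC_IDX_VLBR    58 /* assumed value of INTEL_PMC_IDX_FIXED_VLBR */

/* Set 'len' consecutive bits starting at 'start' (single-word bitmap_set()). */
static uint64_t set_bits(uint64_t map, unsigned start, unsigned len)
{
	assert(start < 64 && len < 64 && start + len <= 64);
	return map | (((1ULL << len) - 1) << start);
}

/* Model of the valid-index bitmap for a given counter layout. */
static uint64_t valid_pmc_idx(unsigned nr_gp, unsigned nr_fixed, bool has_lbr)
{
	uint64_t map = 0;

	map = set_bits(map, 0, nr_gp);
	map = set_bits(map, PMC_MAX_GENERIC, nr_fixed);
	if (has_lbr)
		map = set_bits(map, PMC_IDX_VLBR, 1);
	return map;
}
```

With 8 GP and 3 fixed counters and no LBR, the word is 0x7000000ff. With LBR records available, bit 58 is added.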

```c
if (perf_capabilities & PERF_CAP_PEBS_FORMAT) {
	if (perf_capabilities & PERF_CAP_PEBS_BASELINE) {
		/* PEBS baseline: PEBS on GP and fixed counters, plus adaptive PEBS. */
		pmu->pebs_enable_mask = counter_mask;
		pmu->reserved_bits &= ~ICL_EVENTSEL_ADAPTIVE;
		for (i = 0; i < pmu->nr_arch_fixed_counters; i++) {
			pmu->fixed_ctr_ctrl_mask &=
				~(1ULL << (INTEL_PMC_IDX_FIXED + i * 4));
		}
		pmu->pebs_data_cfg_mask = ~0xff00000full;
	} else {
		/* Legacy PEBS: only GP counters may use PEBS. */
		pmu->pebs_enable_mask =
			~((1ull << pmu->nr_arch_gp_counters) - 1);
	}
}
```
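This sketch assumes the kernel's definitions `PERF_CAP_PEBS_FORMAT` = 0xf00 and `PERF_CAP_PEBS_BASELINE` = bit 14 of IA32_PERF_CAPABILITIES. It recomputes the PEBS branch in userspace:

- With baseline PEBS, `pebs_enable_mask` reuses `counter_mask`, so fixed counters may also use PEBS.
- Without baseline PEBS, only GP counters may use it.
- `pebs_data_cfg_mask` = ~0xff00000f leaves bits 3:0 and 31:24 of PEBS_DATA_CFG writable.

The `ICL_EVENTSEL_ADAPTIVE` update is left out for brevity. `compute_pebs_masks` and `struct pebs_masks` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define PEBS_FORMAT   0xf00ULL     /* assumed: PERF_CAP_PEBS_FORMAT */
#define PEBS_BASELINE (1ULL << 14) /* assumed: PERF_CAP_PEBS_BASELINE */
#define PMC_IDX_FIXED 32           /* assumed: INTEL_PMC_IDX_FIXED */

/* Reserved-bit masks (set = reserved) for the PEBS-related MSRs. */
struct pebs_masks {
	uint64_t pebs_enable, pebs_data_cfg, fixed_ctr_ctrl;
};

static struct pebs_masks compute_pebs_masks(uint64_t perf_caps, uint64_t counter_mask,
					    unsigned nr_gp, unsigned nr_fixed,
					    uint64_t fixed_ctr_ctrl)
{
	struct pebs_masks m = { ~0ULL, ~0ULL, fixed_ctr_ctrl };

	if (!(perf_caps & PEBS_FORMAT))
		return m;                     /* no PEBS at all */
	if (perf_caps & PEBS_BASELINE) {
		m.pebs_enable = counter_mask; /* GP and fixed counters */
		for (unsigned i = 0; i < nr_fixed; i++) /* adaptive-record bit per fixed counter */
			m.fixed_ctr_ctrl &= ~(1ULL << (PMC_IDX_FIXED + i * 4));
		m.pebs_data_cfg = ~0xff00000fULL;
	} else {
		m.pebs_enable = ~((1ULL << nr_gp) - 1); /* GP counters only */
	}
	return m;
}
```

With baseline PEBS and 3 fixed counters, bits 32, 36 and 40 of the FIXED_CTR_CTRL mask become writable. `pebs_data_cfg_mask` becomes 0xffffffff00fffff0.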

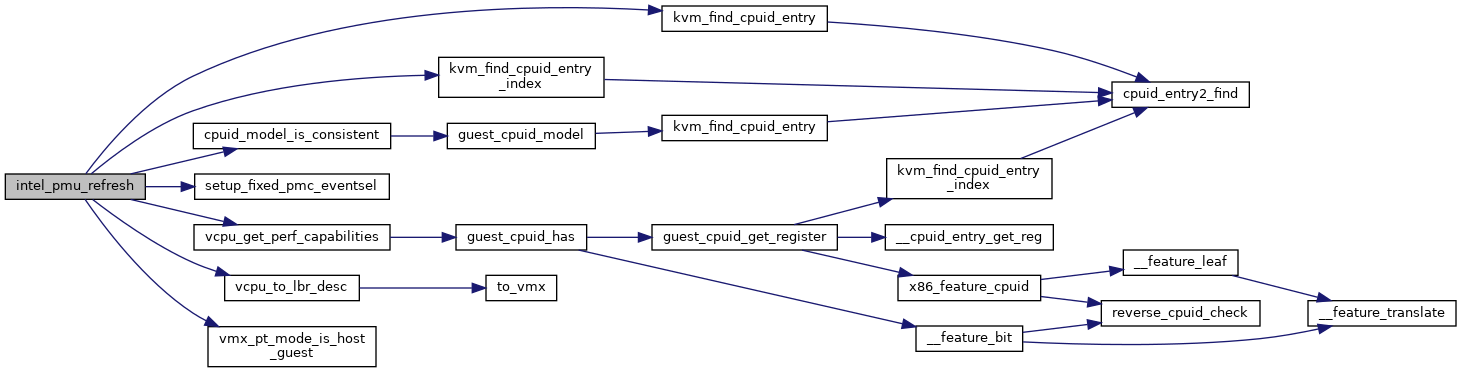

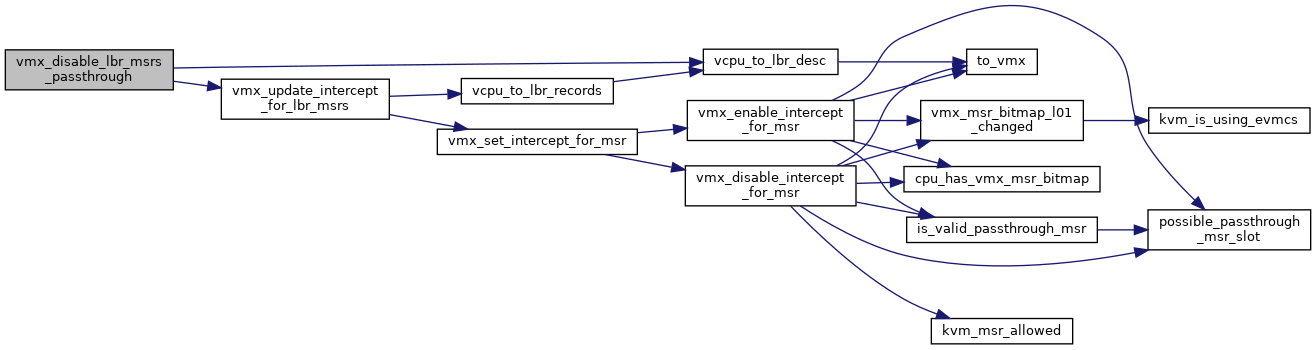

static bool vmx_pt_mode_is_host_guest(void)

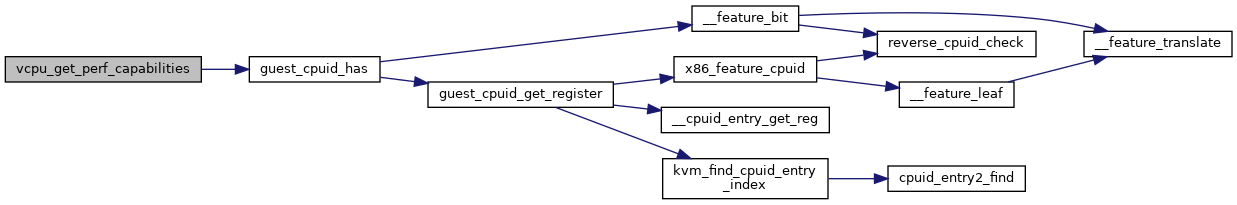

struct kvm_cpuid_entry2 * kvm_find_cpuid_entry_index(struct kvm_vcpu *vcpu, u32 function, u32 index)

struct kvm_cpuid_entry2 * kvm_find_cpuid_entry(struct kvm_vcpu *vcpu, u32 function)

static bool cpuid_model_is_consistent(struct kvm_vcpu *vcpu)

struct x86_pmu_capability __read_mostly kvm_pmu_cap

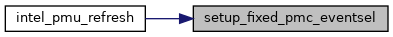

static void setup_fixed_pmc_eventsel(struct kvm_pmu *pmu)