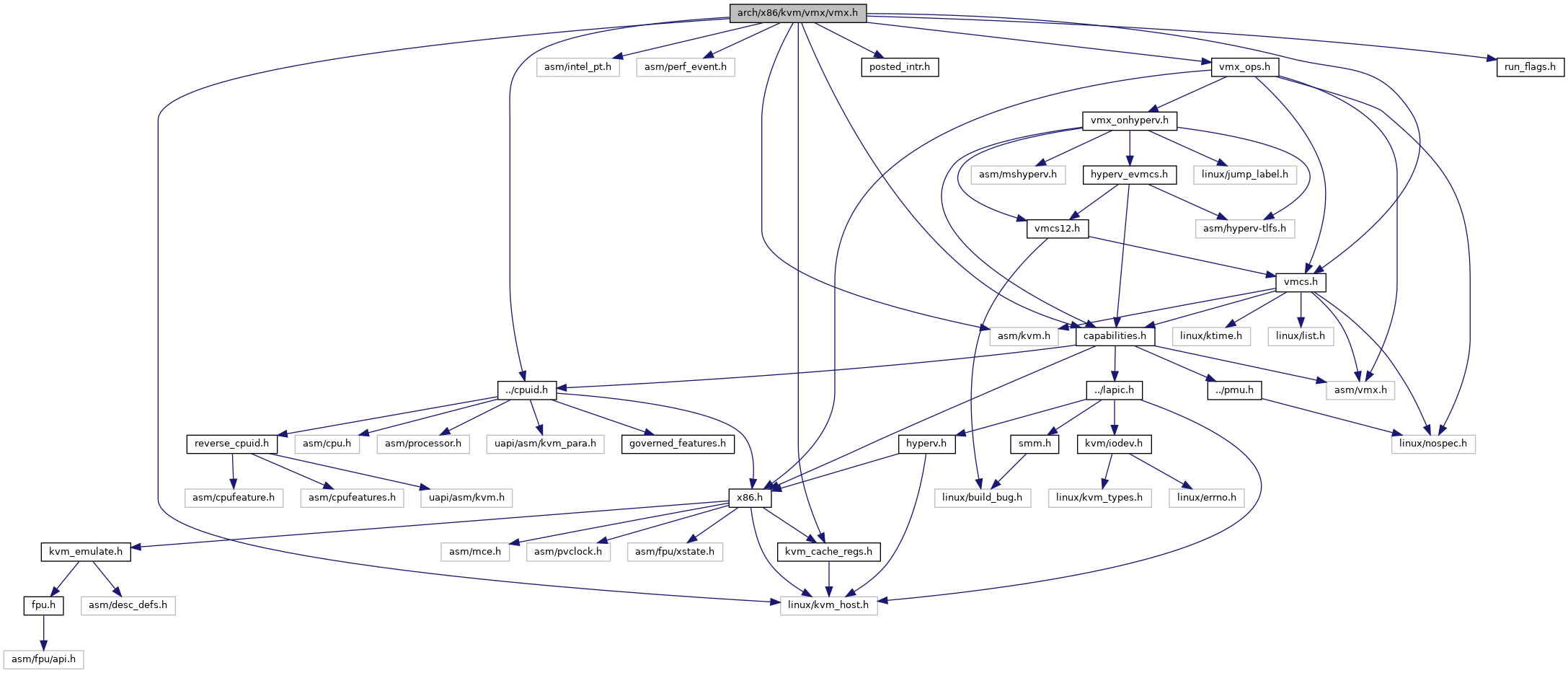

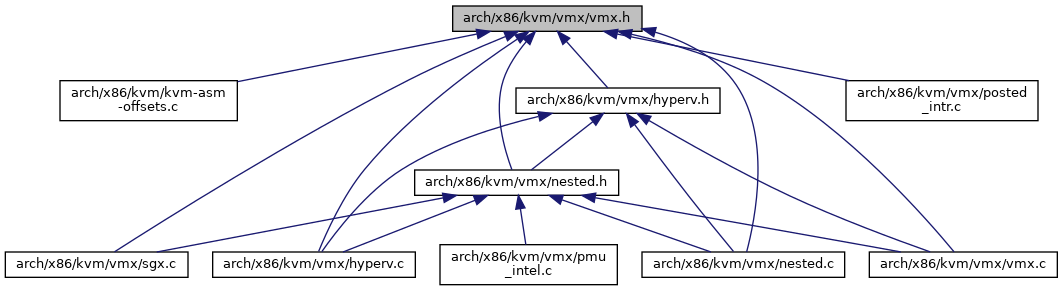

#include <linux/kvm_host.h>
#include <asm/kvm.h>
#include <asm/intel_pt.h>
#include <asm/perf_event.h>
#include "capabilities.h"
#include "../kvm_cache_regs.h"
#include "posted_intr.h"
#include "vmcs.h"
#include "vmx_ops.h"
#include "../cpuid.h"
#include "run_flags.h"

Classes

| Kind | Name |
|---|---|
| struct | vmx_msrs |
| struct | vmx_uret_msr |
| struct | pt_ctx |
| struct | pt_desc |
| union | vmx_exit_reason |
| struct | lbr_desc |
| struct | nested_vmx |
| struct | vcpu_vmx |
| struct | vcpu_vmx::msr_autoload |
| struct | vcpu_vmx::msr_autostore |
| struct | kvm_vmx |
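
The union vmx_exit_reason listed above overlays the 32-bit VM-exit reason field. A minimal userspace sketch of decoding that field follows; it uses shifts and masks rather than C bit-fields, whose layout is implementation-defined. The bit positions (basic reason in bits 15:0, enclave mode in bit 27, pending MTF exit in bit 28, exit from VMX root in bit 29, failed VM entry in bit 31) come from the Intel SDM's description of the field; the struct and function names are hypothetical and are not KVM's.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical decoded view of the 32-bit VM-exit reason field. */
struct exit_reason_view {
	uint16_t basic;          /* bits 15:0 */
	bool enclave_mode;       /* bit 27 */
	bool smi_pending_mtf;    /* bit 28 */
	bool smi_from_vmx_root;  /* bit 29 */
	bool failed_vmentry;     /* bit 31 */
};

/* Split the raw field with explicit shifts so the result does not
 * depend on how a compiler lays out bit-fields. */
static struct exit_reason_view decode_exit_reason(uint32_t full)
{
	struct exit_reason_view v = {
		.basic             = (uint16_t)(full & 0xffffu),
		.enclave_mode      = (full >> 27) & 1u,
		.smi_pending_mtf   = (full >> 28) & 1u,
		.smi_from_vmx_root = (full >> 29) & 1u,
		.failed_vmentry    = (full >> 31) & 1u,
	};
	return v;
}
```

For example, 0x80000021 decodes to basic reason 33 (VM-entry failure due to invalid guest state) with the failed-VM-entry bit set.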

Enumerations

| Kind | Name |
|---|---|
| enum | segment_cache_field { SEG_FIELD_SEL = 0, SEG_FIELD_BASE = 1, SEG_FIELD_LIMIT = 2, SEG_FIELD_AR = 3, SEG_FIELD_NR = 4 } |

Functions | |

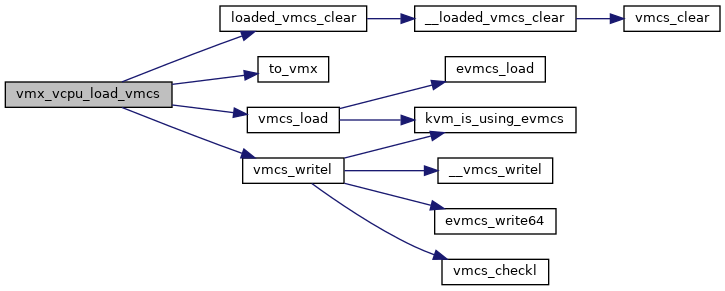

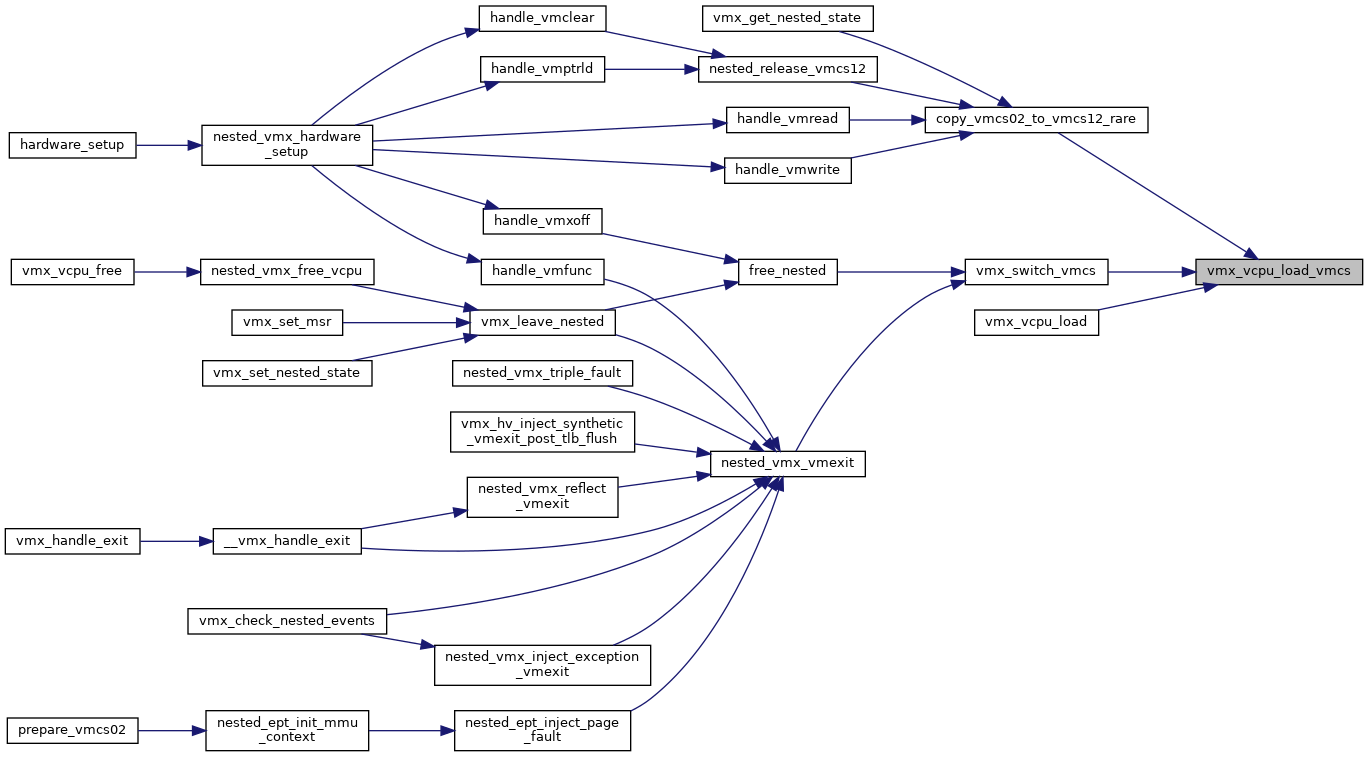

| void | vmx_vcpu_load_vmcs (struct kvm_vcpu *vcpu, int cpu, struct loaded_vmcs *buddy) |

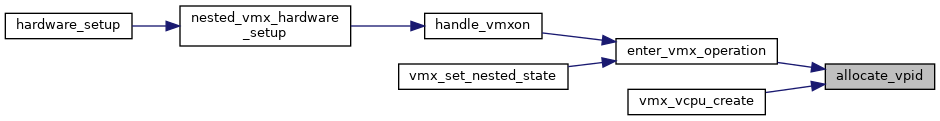

| int | allocate_vpid (void) |

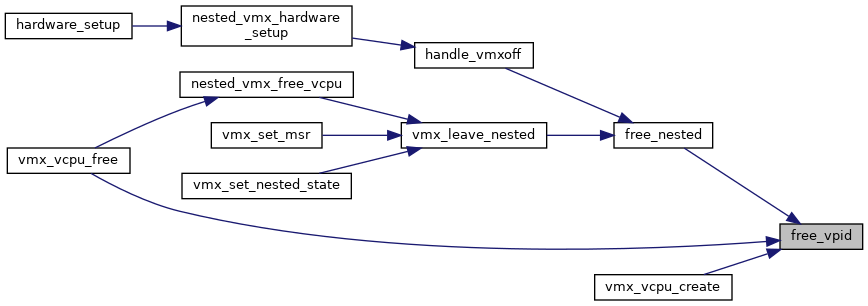

| void | free_vpid (int vpid) |

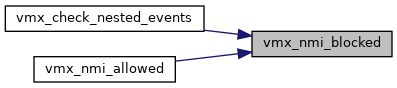

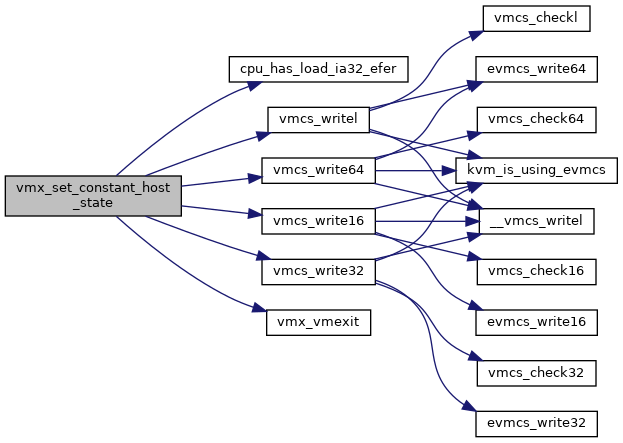

| void | vmx_set_constant_host_state (struct vcpu_vmx *vmx) |

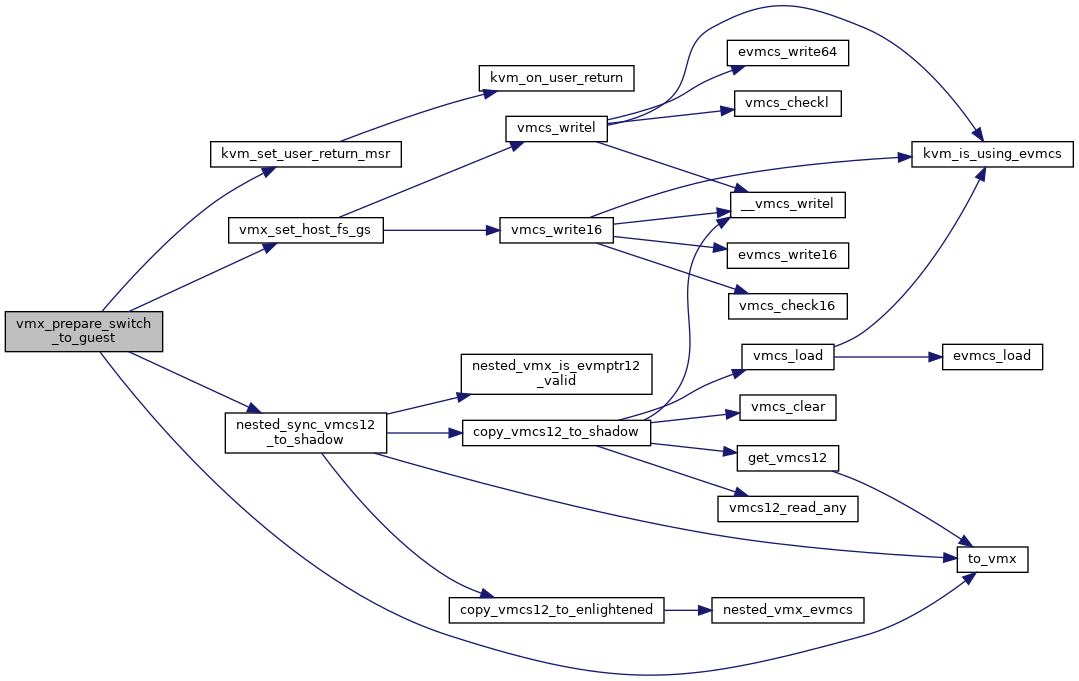

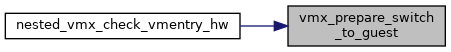

| void | vmx_prepare_switch_to_guest (struct kvm_vcpu *vcpu) |

| void | vmx_set_host_fs_gs (struct vmcs_host_state *host, u16 fs_sel, u16 gs_sel, unsigned long fs_base, unsigned long gs_base) |

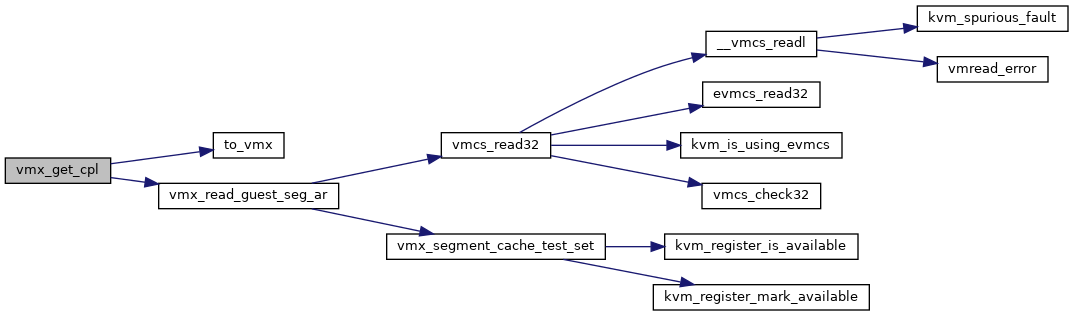

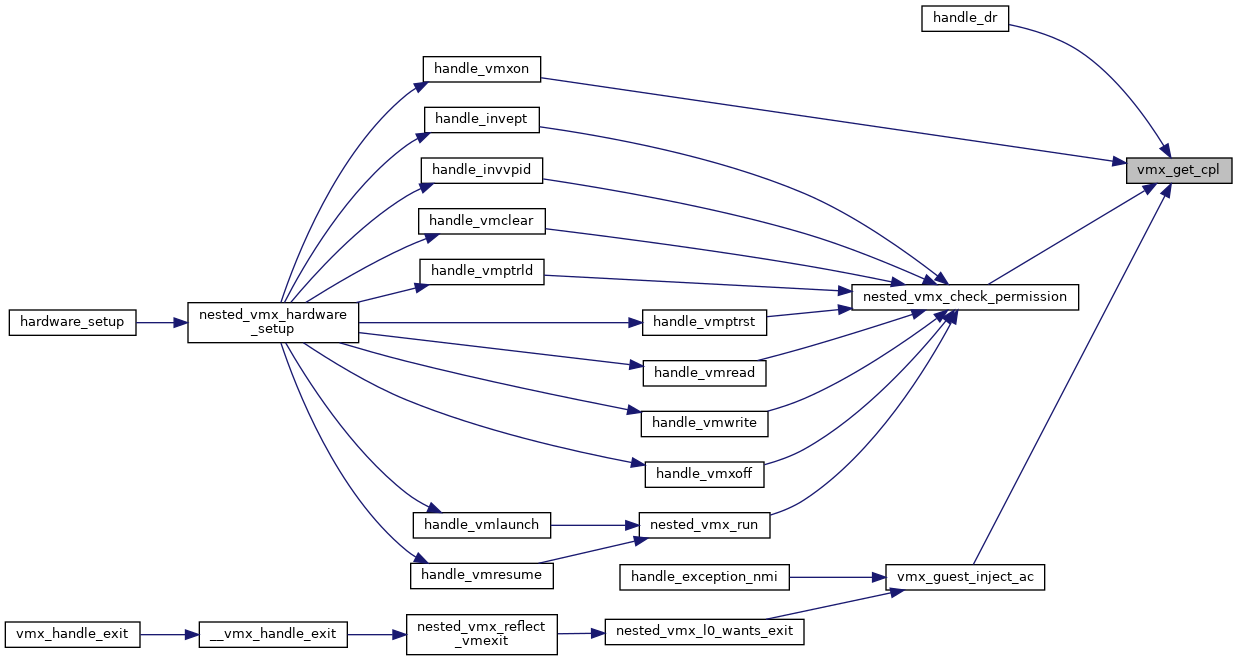

| int | vmx_get_cpl (struct kvm_vcpu *vcpu) |

| bool | vmx_emulation_required (struct kvm_vcpu *vcpu) |

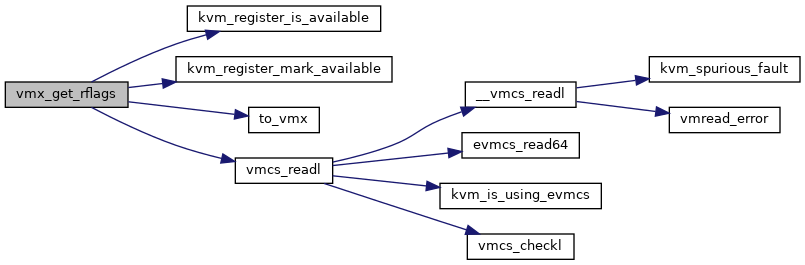

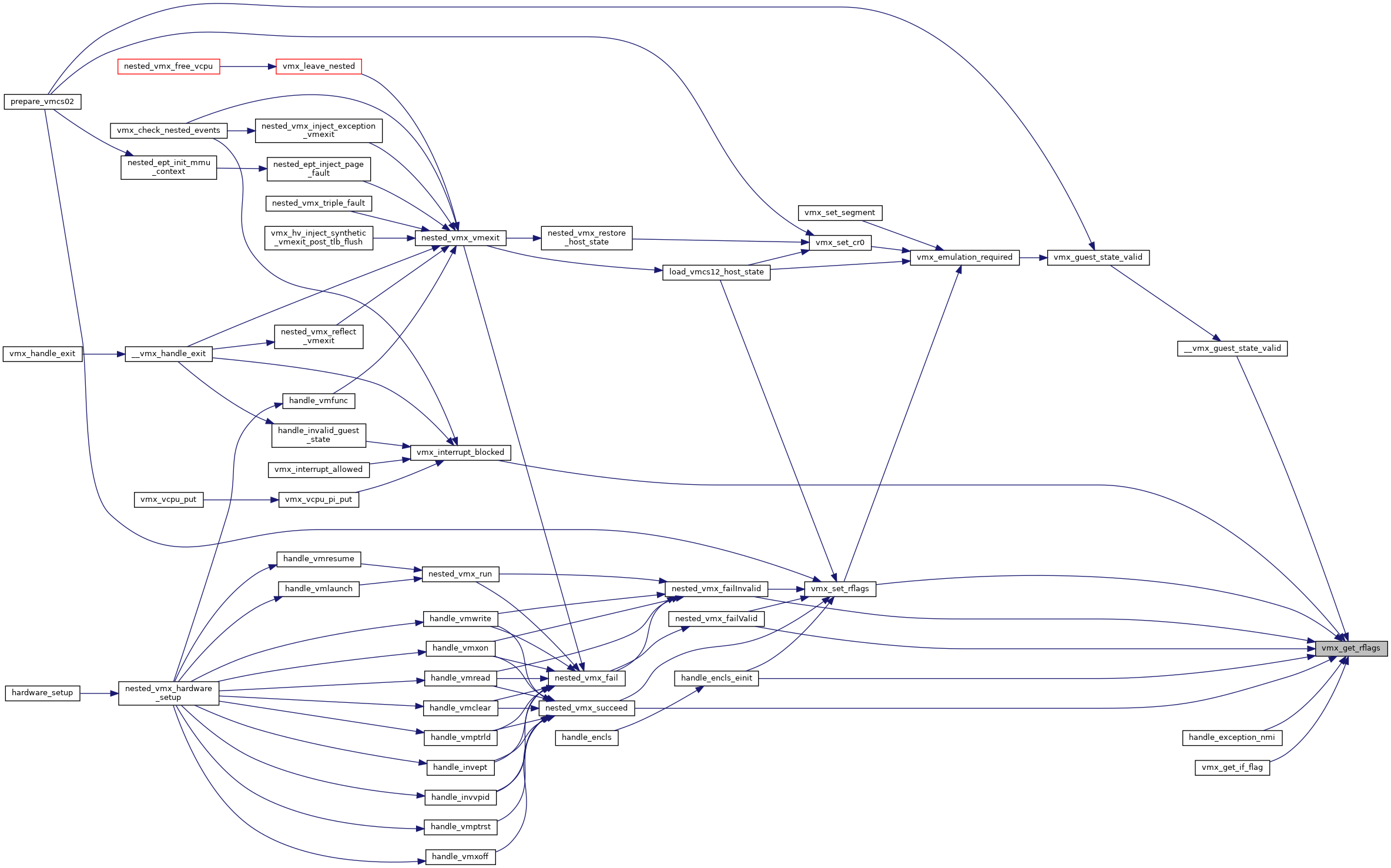

| unsigned long | vmx_get_rflags (struct kvm_vcpu *vcpu) |

| void | vmx_set_rflags (struct kvm_vcpu *vcpu, unsigned long rflags) |

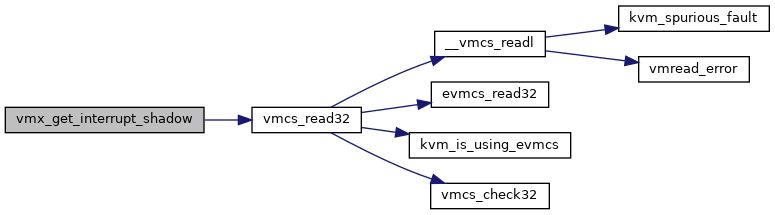

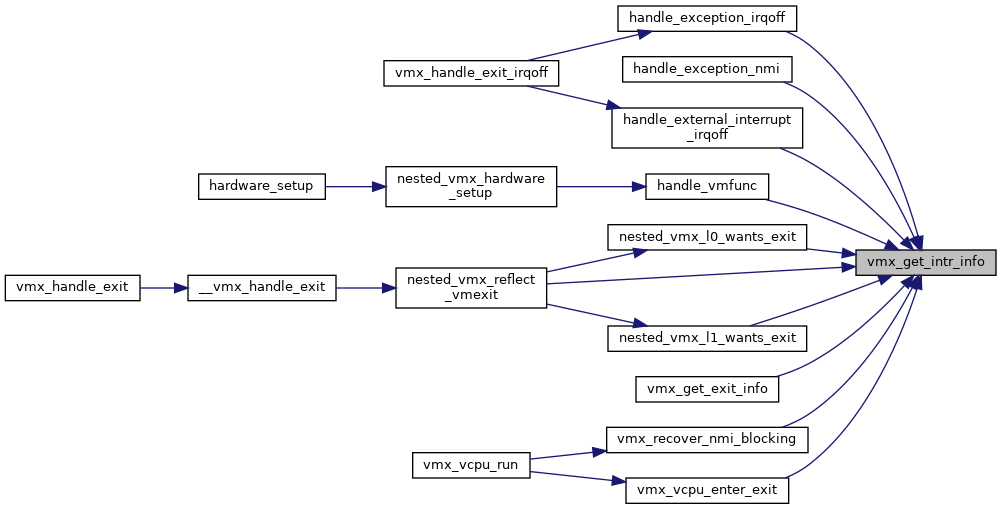

| u32 | vmx_get_interrupt_shadow (struct kvm_vcpu *vcpu) |

| void | vmx_set_interrupt_shadow (struct kvm_vcpu *vcpu, int mask) |

| int | vmx_set_efer (struct kvm_vcpu *vcpu, u64 efer) |

| void | vmx_set_cr0 (struct kvm_vcpu *vcpu, unsigned long cr0) |

| void | vmx_set_cr4 (struct kvm_vcpu *vcpu, unsigned long cr4) |

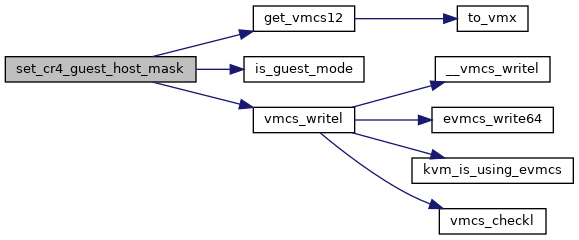

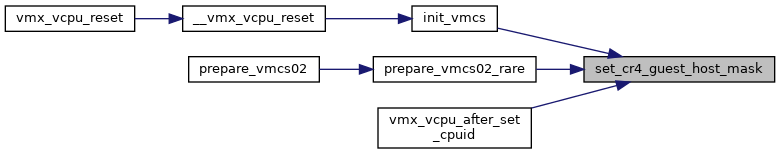

| void | set_cr4_guest_host_mask (struct vcpu_vmx *vmx) |

| void | ept_save_pdptrs (struct kvm_vcpu *vcpu) |

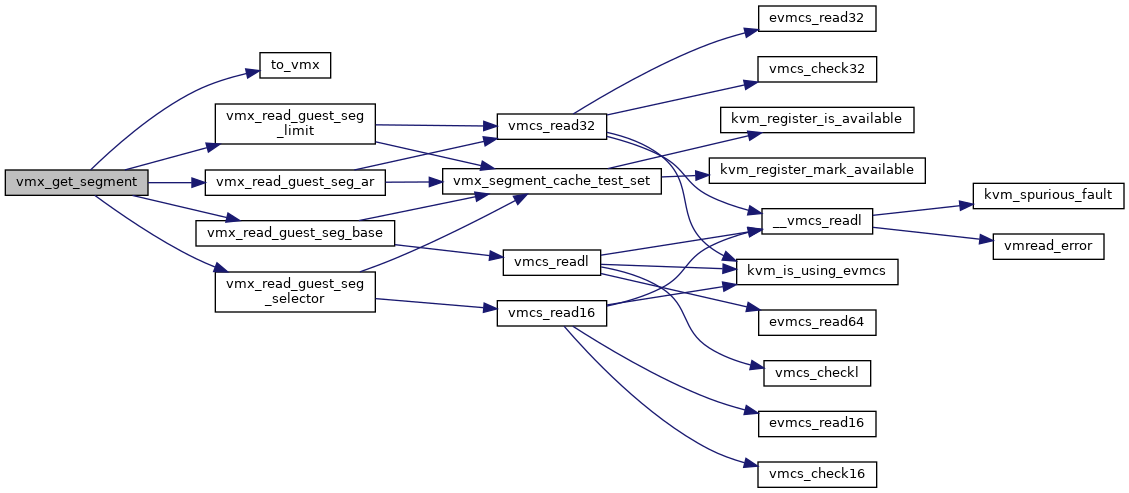

| void | vmx_get_segment (struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg) |

| void | __vmx_set_segment (struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg) |

| u64 | construct_eptp (struct kvm_vcpu *vcpu, hpa_t root_hpa, int root_level) |

| bool | vmx_guest_inject_ac (struct kvm_vcpu *vcpu) |

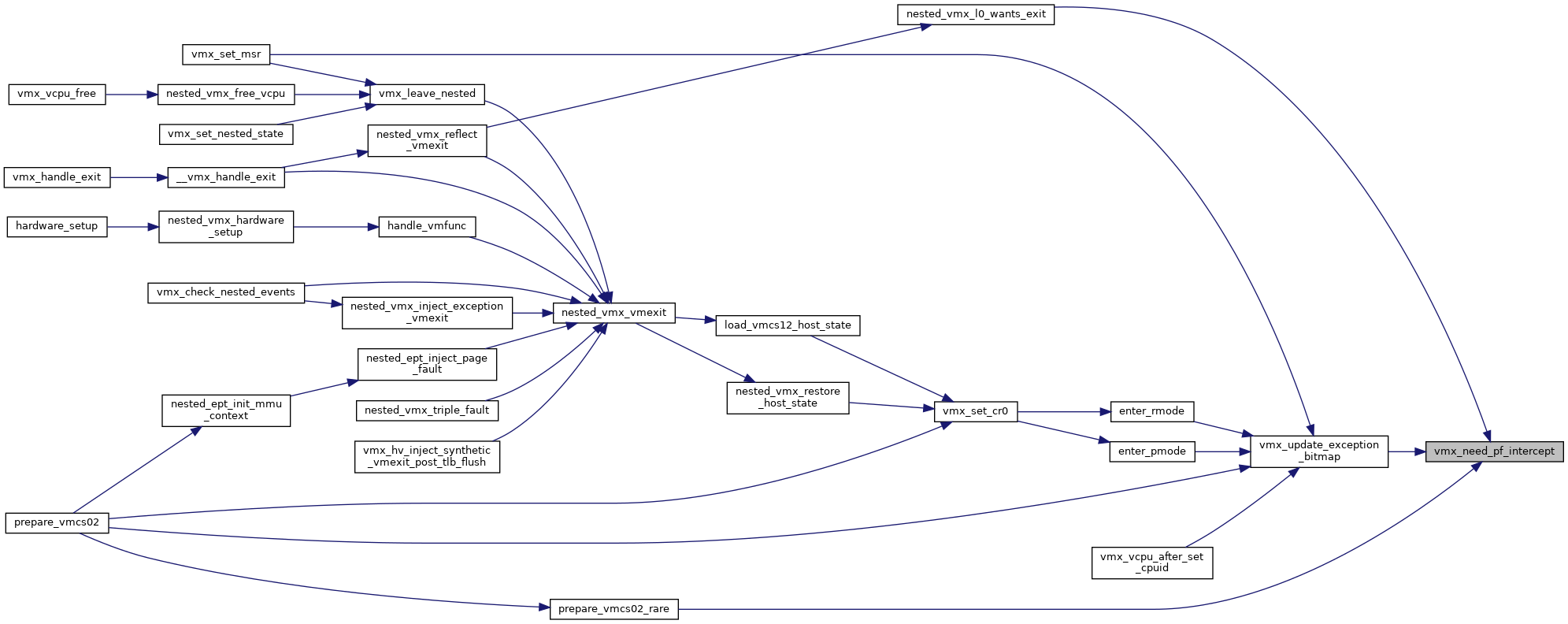

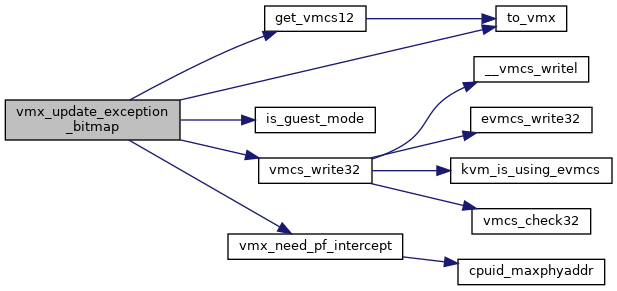

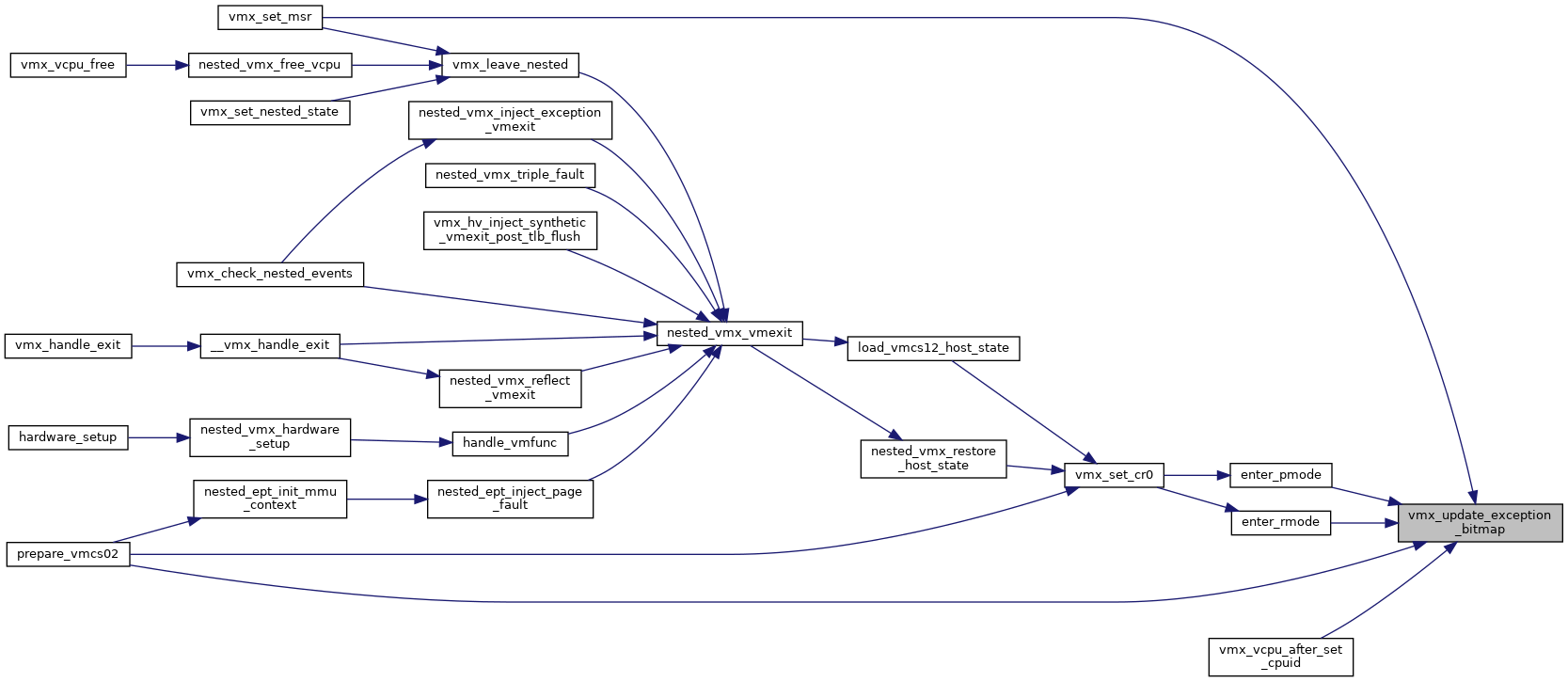

| void | vmx_update_exception_bitmap (struct kvm_vcpu *vcpu) |

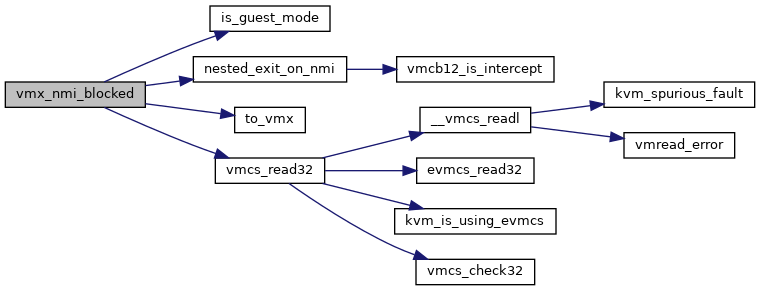

| bool | vmx_nmi_blocked (struct kvm_vcpu *vcpu) |

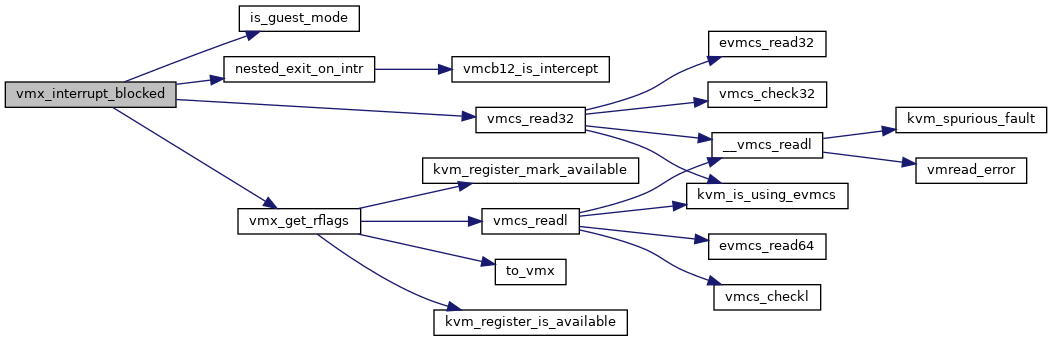

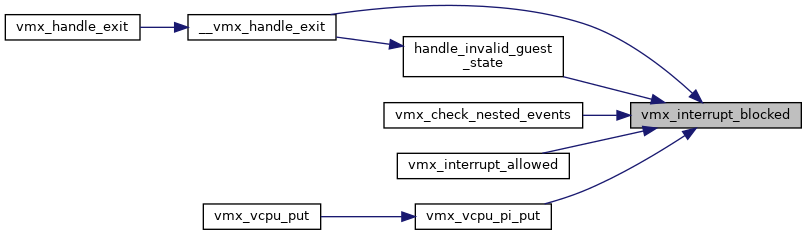

| bool | vmx_interrupt_blocked (struct kvm_vcpu *vcpu) |

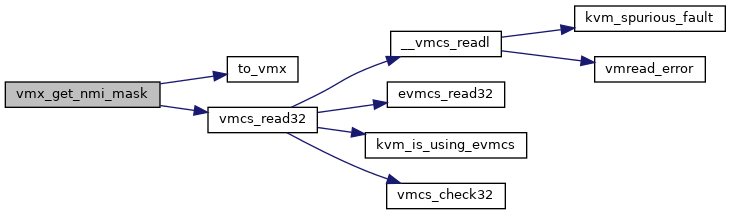

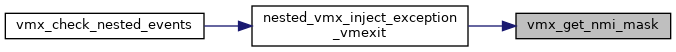

| bool | vmx_get_nmi_mask (struct kvm_vcpu *vcpu) |

| void | vmx_set_nmi_mask (struct kvm_vcpu *vcpu, bool masked) |

| void | vmx_set_virtual_apic_mode (struct kvm_vcpu *vcpu) |

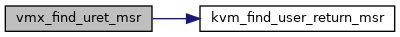

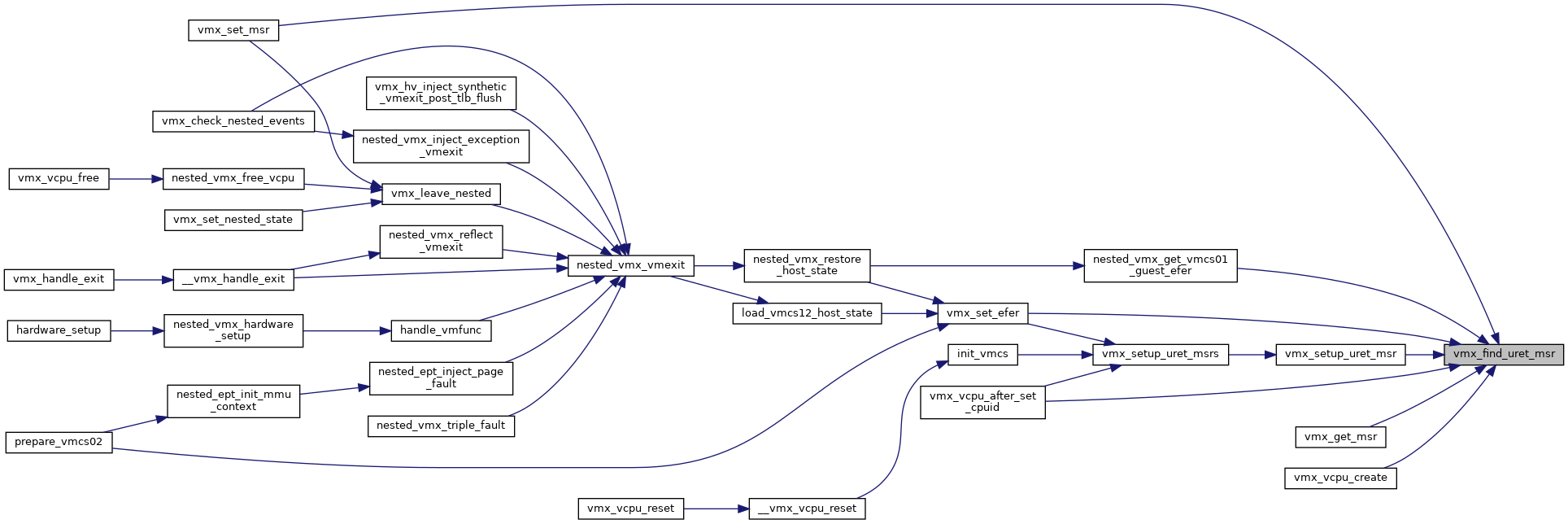

| struct vmx_uret_msr * | vmx_find_uret_msr (struct vcpu_vmx *vmx, u32 msr) |

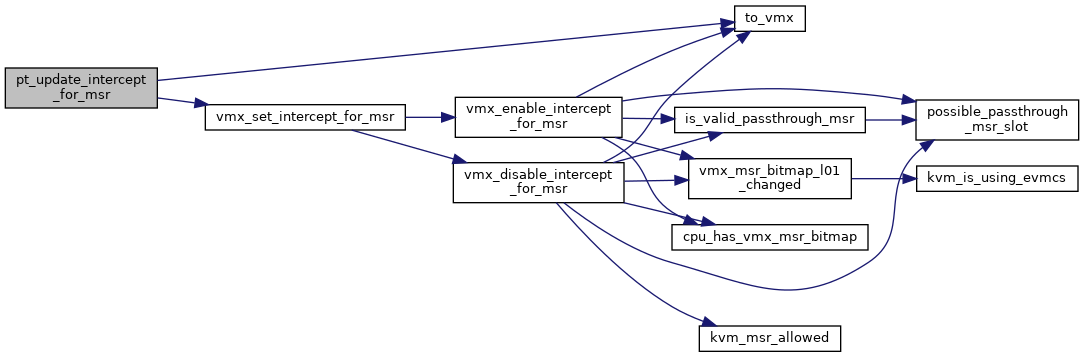

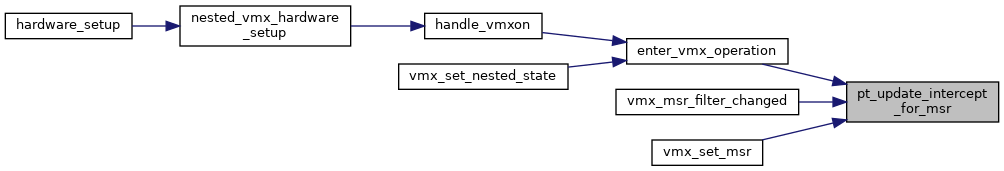

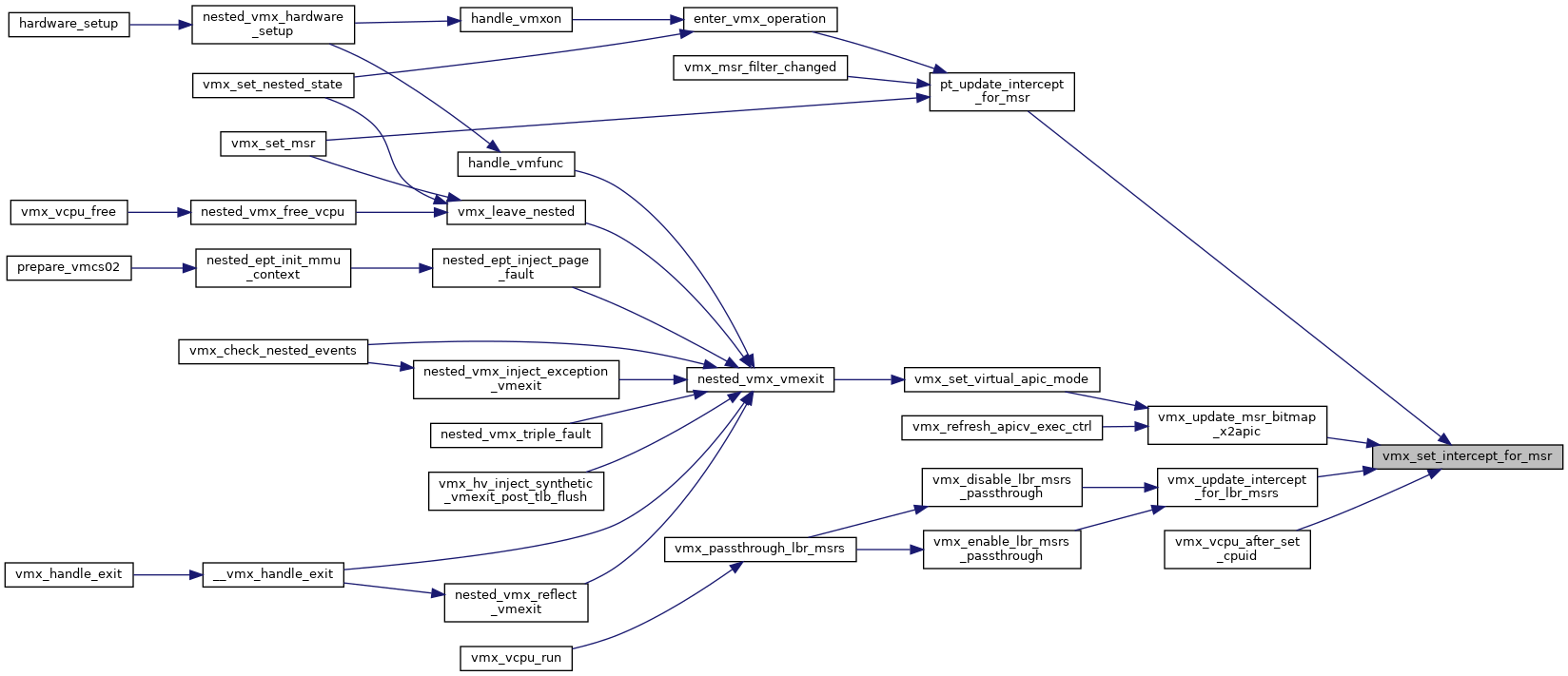

| void | pt_update_intercept_for_msr (struct kvm_vcpu *vcpu) |

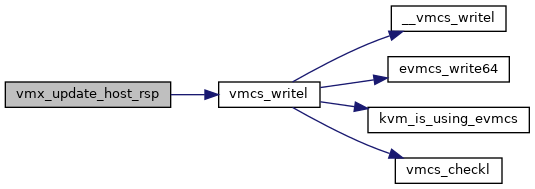

| void | vmx_update_host_rsp (struct vcpu_vmx *vmx, unsigned long host_rsp) |

| void | vmx_spec_ctrl_restore_host (struct vcpu_vmx *vmx, unsigned int flags) |

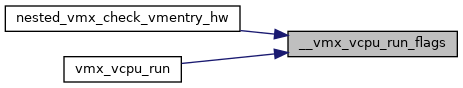

| unsigned int | __vmx_vcpu_run_flags (struct vcpu_vmx *vmx) |

| bool | __vmx_vcpu_run (struct vcpu_vmx *vmx, unsigned long *regs, unsigned int flags) |

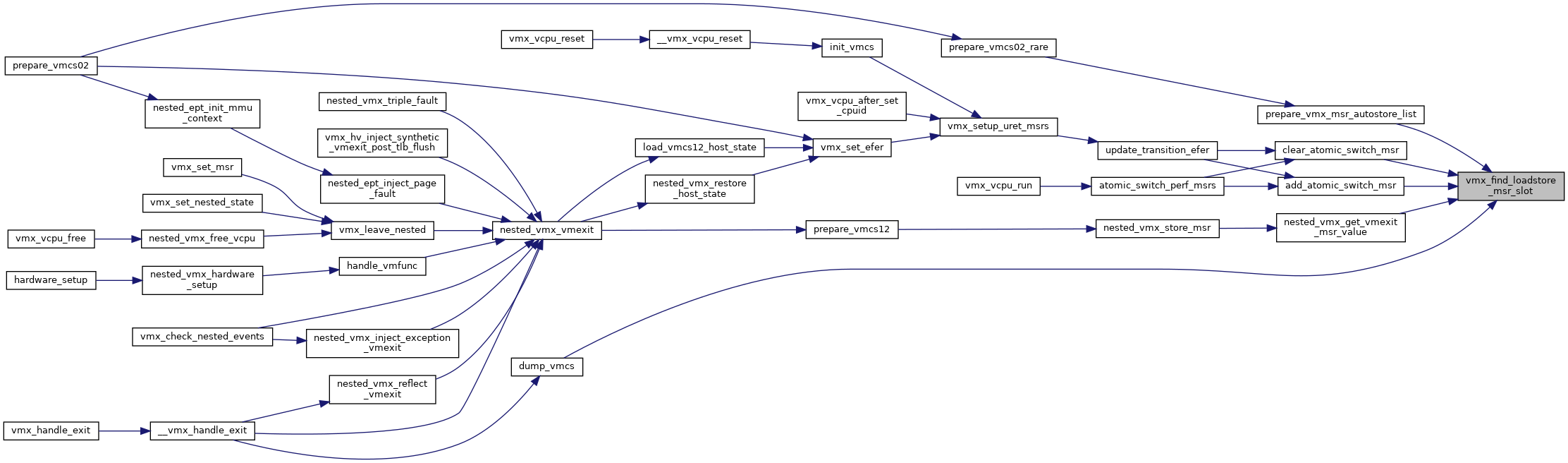

| int | vmx_find_loadstore_msr_slot (struct vmx_msrs *m, u32 msr) |

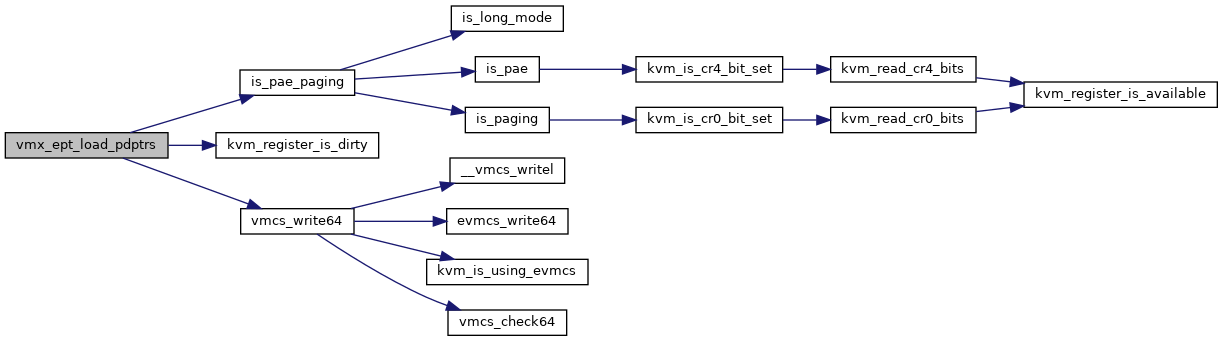

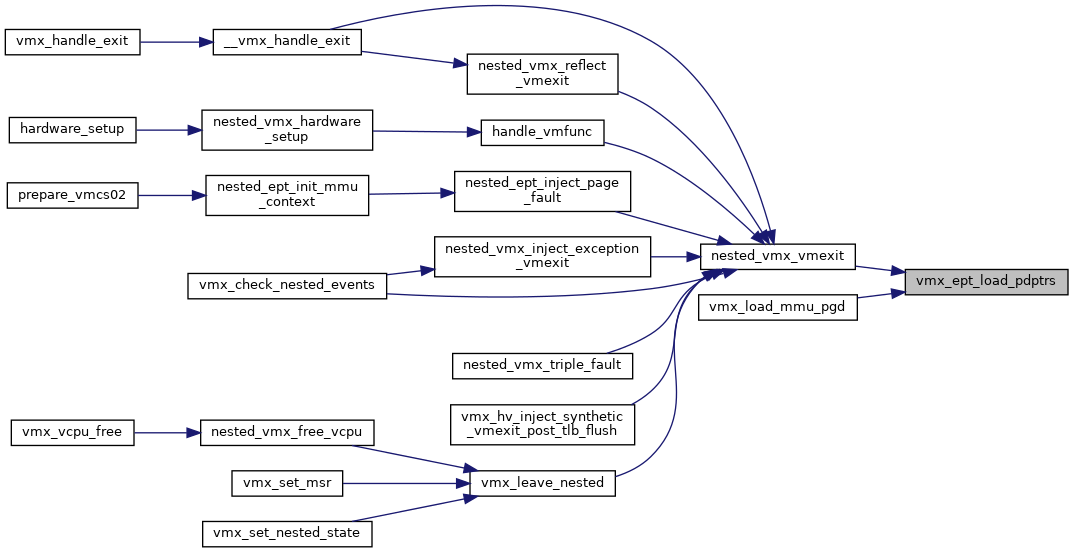

| void | vmx_ept_load_pdptrs (struct kvm_vcpu *vcpu) |

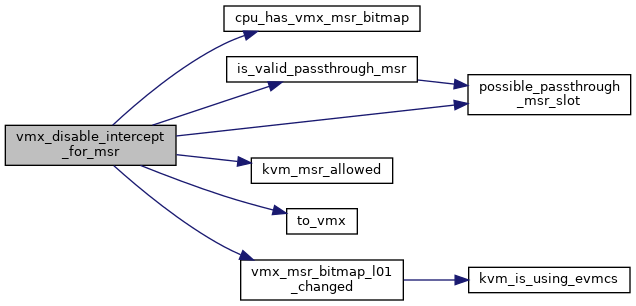

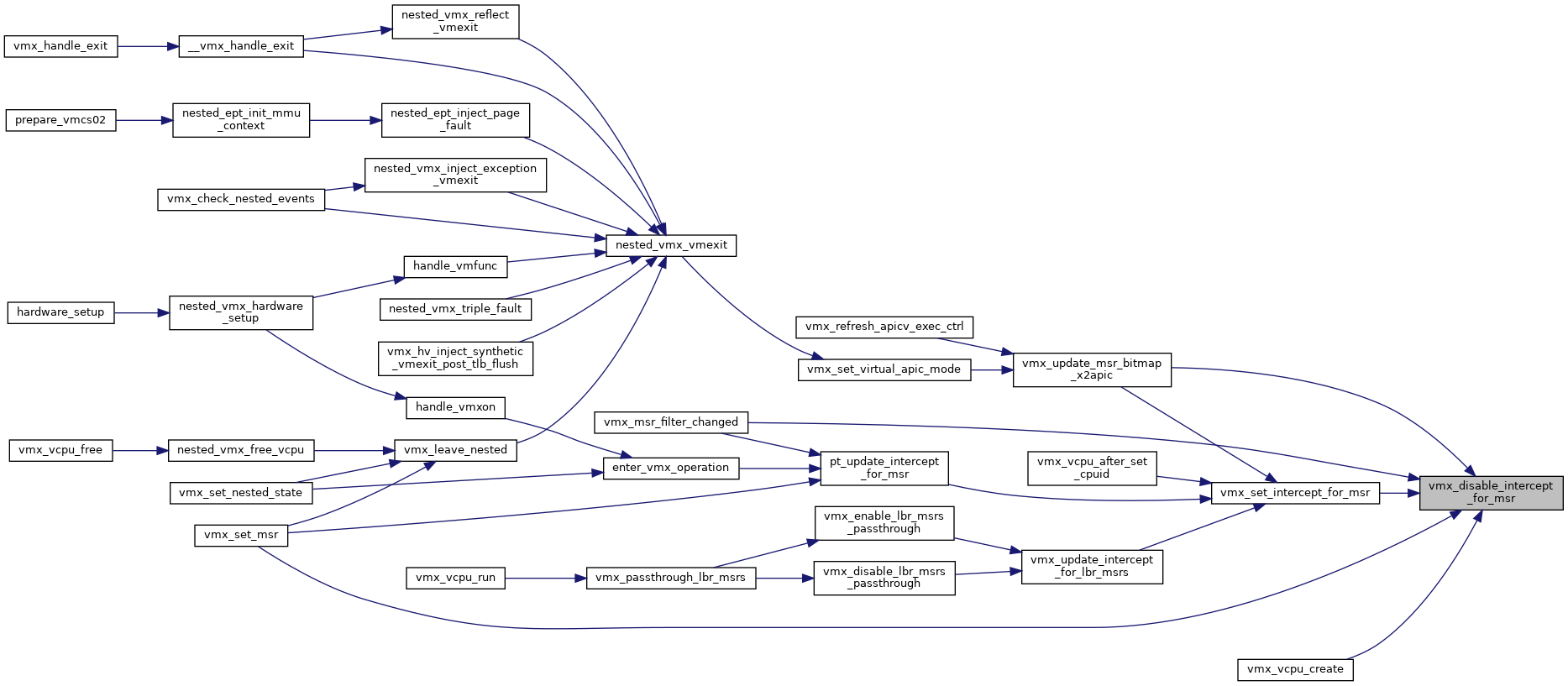

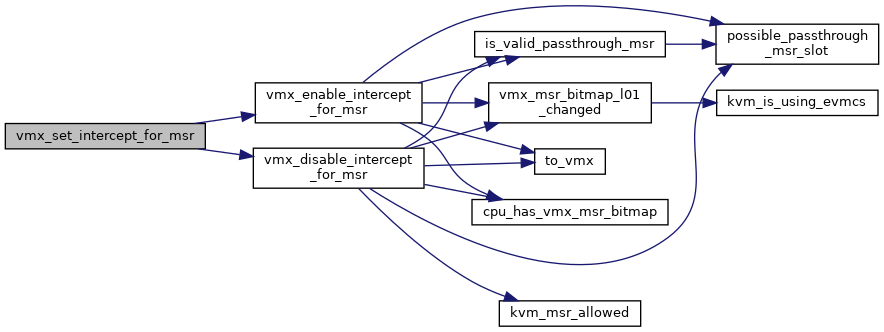

| void | vmx_disable_intercept_for_msr (struct kvm_vcpu *vcpu, u32 msr, int type) |

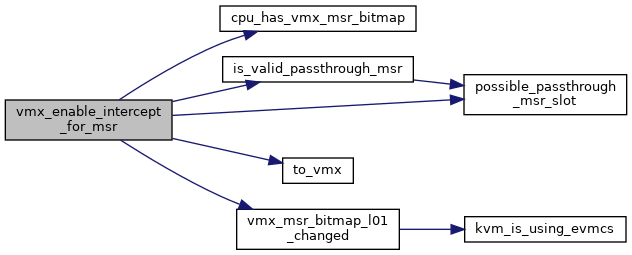

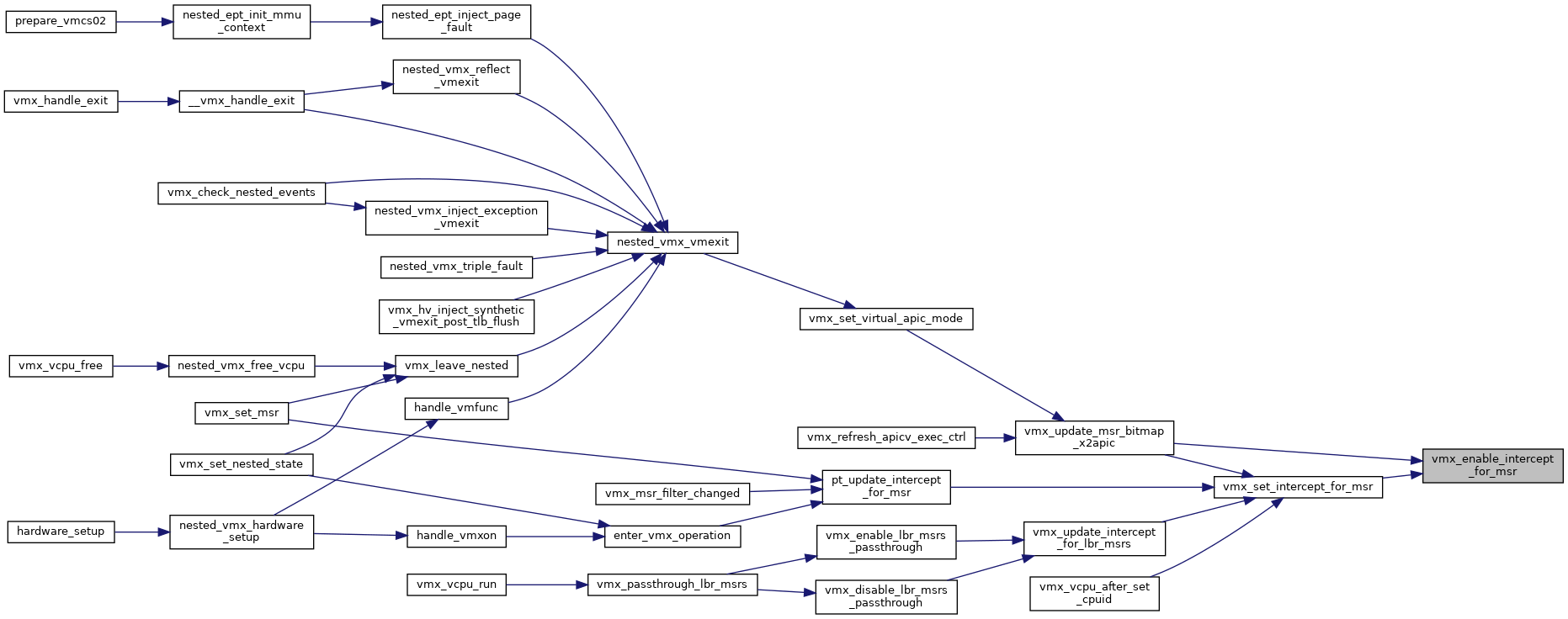

| void | vmx_enable_intercept_for_msr (struct kvm_vcpu *vcpu, u32 msr, int type) |

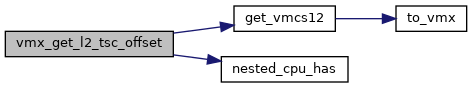

| u64 | vmx_get_l2_tsc_offset (struct kvm_vcpu *vcpu) |

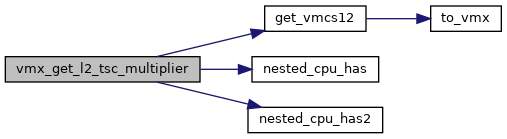

| u64 | vmx_get_l2_tsc_multiplier (struct kvm_vcpu *vcpu) |

| gva_t | vmx_get_untagged_addr (struct kvm_vcpu *vcpu, gva_t gva, unsigned int flags) |

| static void | vmx_set_intercept_for_msr (struct kvm_vcpu *vcpu, u32 msr, int type, bool value) |

| void | vmx_update_cpu_dirty_logging (struct kvm_vcpu *vcpu) |

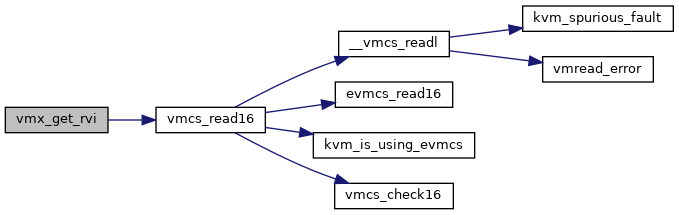

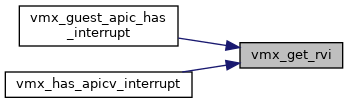

| static u8 | vmx_get_rvi (void) |

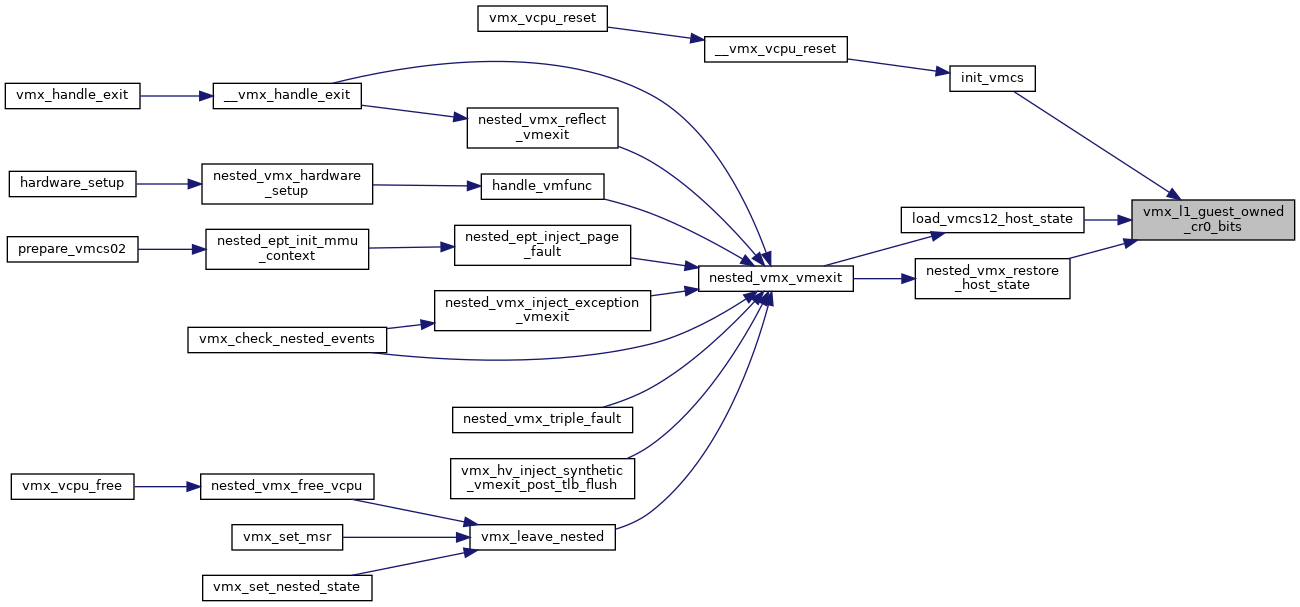

| static unsigned long | vmx_l1_guest_owned_cr0_bits (void) |

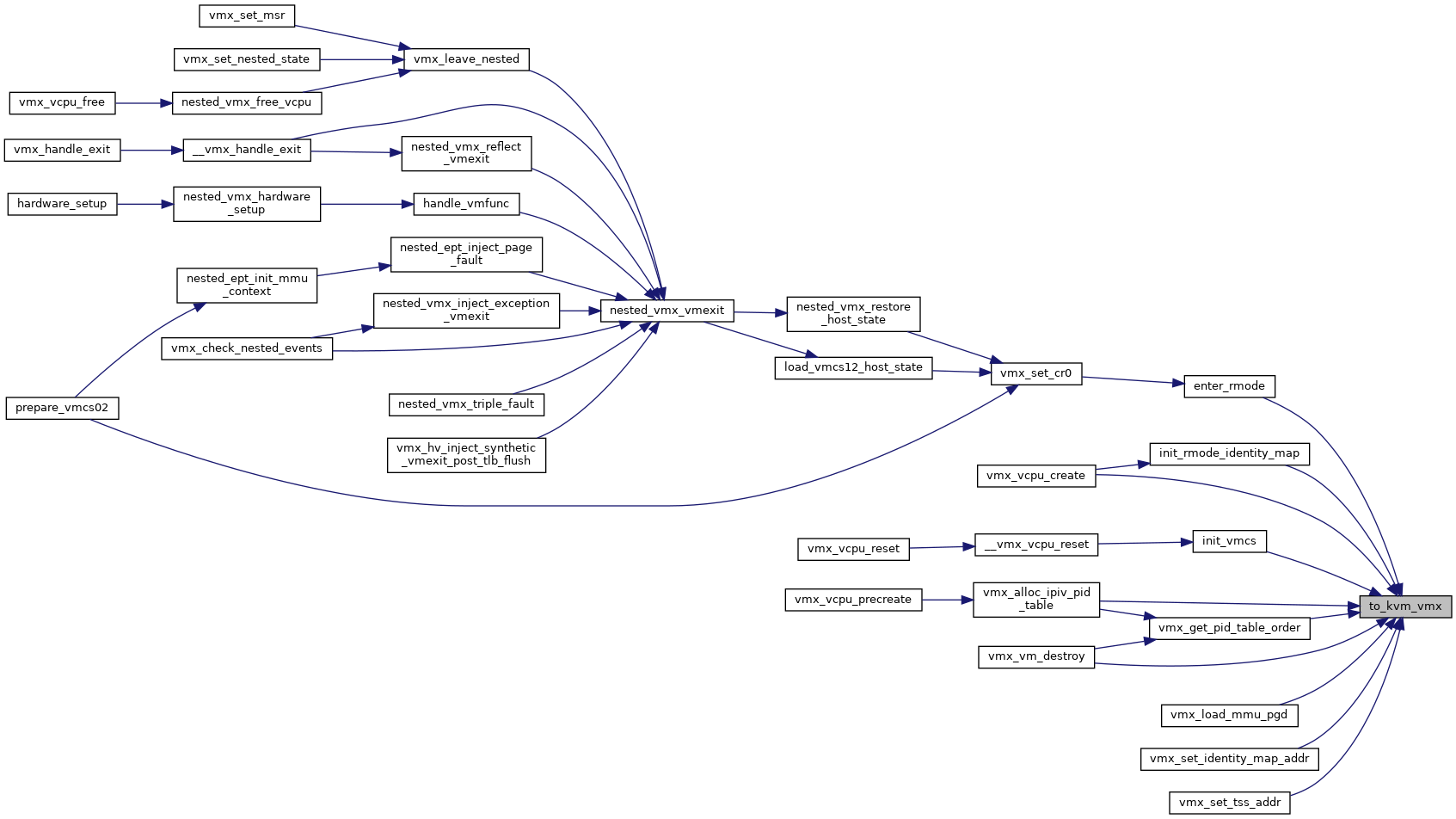

| static __always_inline struct kvm_vmx * | to_kvm_vmx (struct kvm *kvm) |

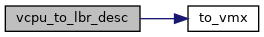

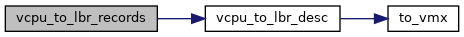

| static __always_inline struct vcpu_vmx * | to_vmx (struct kvm_vcpu *vcpu) |

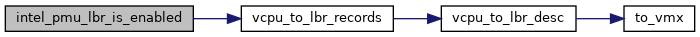

| static struct lbr_desc * | vcpu_to_lbr_desc (struct kvm_vcpu *vcpu) |

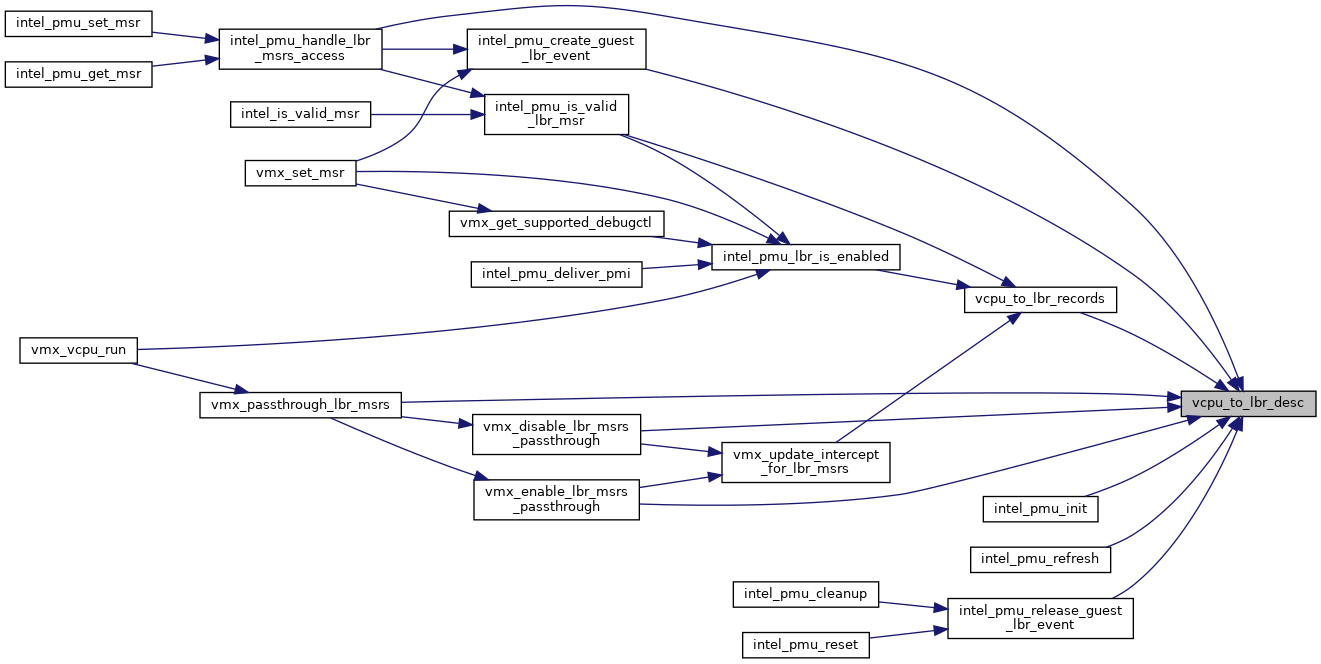

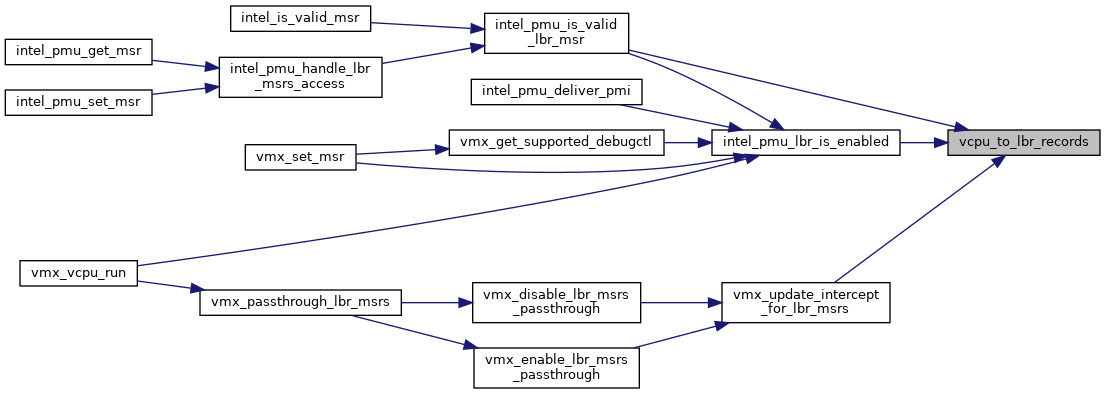

| static struct x86_pmu_lbr * | vcpu_to_lbr_records (struct kvm_vcpu *vcpu) |

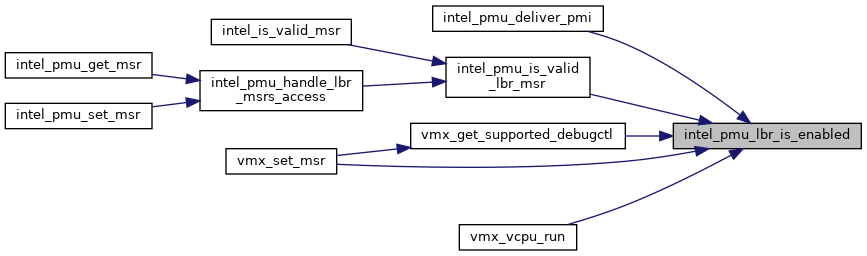

| static bool | intel_pmu_lbr_is_enabled (struct kvm_vcpu *vcpu) |

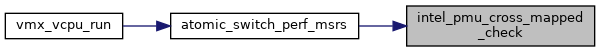

| void | intel_pmu_cross_mapped_check (struct kvm_pmu *pmu) |

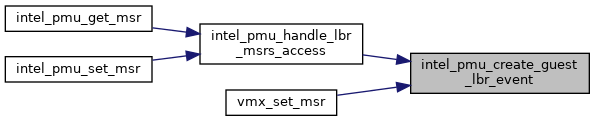

| int | intel_pmu_create_guest_lbr_event (struct kvm_vcpu *vcpu) |

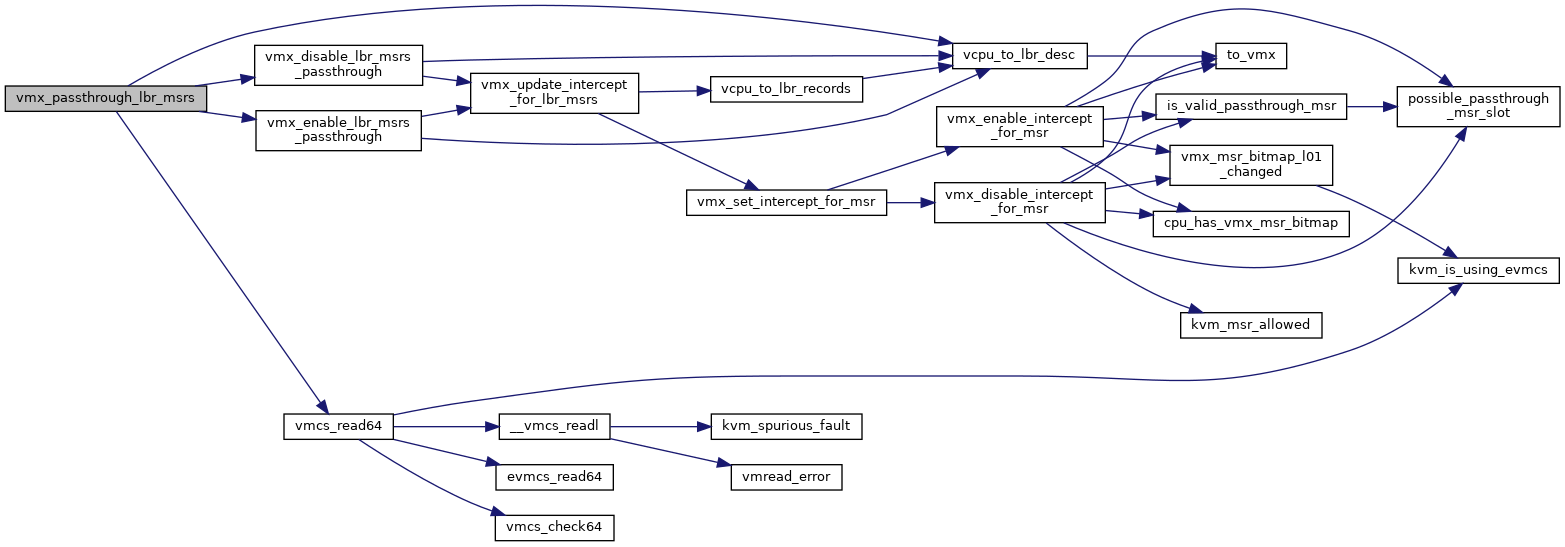

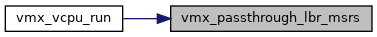

| void | vmx_passthrough_lbr_msrs (struct kvm_vcpu *vcpu) |

| static __always_inline unsigned long | vmx_get_exit_qual (struct kvm_vcpu *vcpu) |

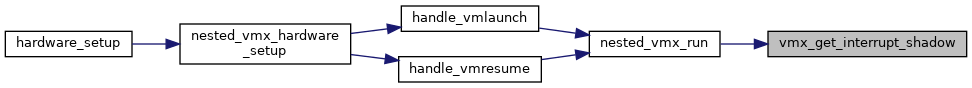

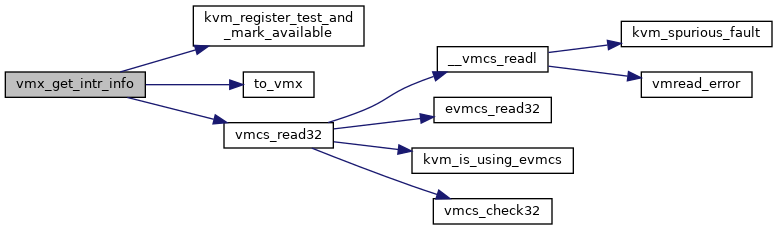

| static __always_inline u32 | vmx_get_intr_info (struct kvm_vcpu *vcpu) |

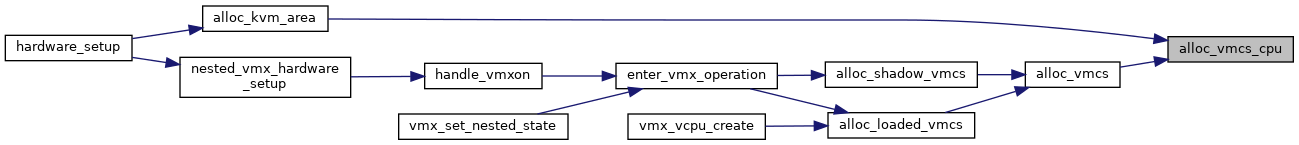

| struct vmcs * | alloc_vmcs_cpu (bool shadow, int cpu, gfp_t flags) |

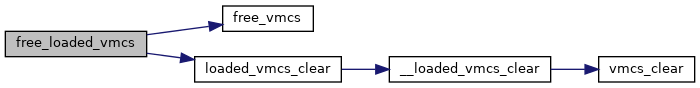

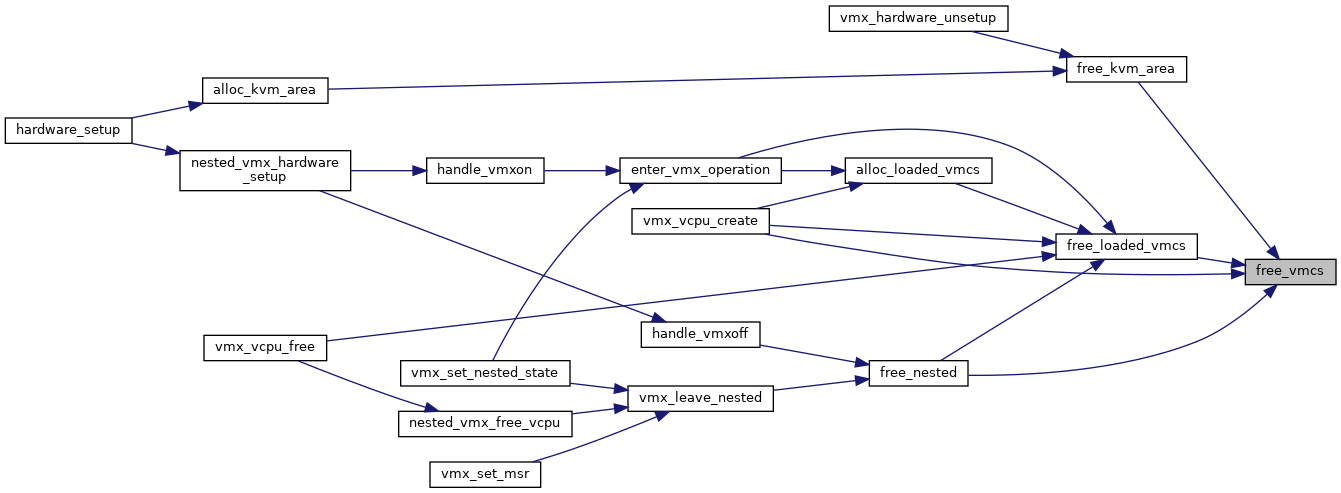

| void | free_vmcs (struct vmcs *vmcs) |

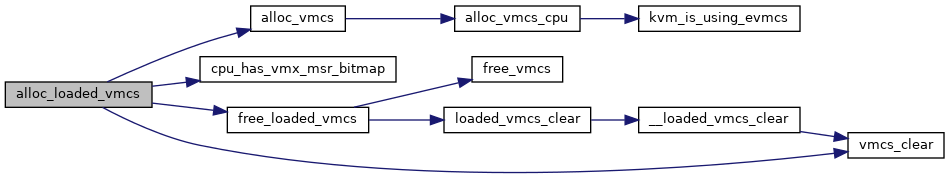

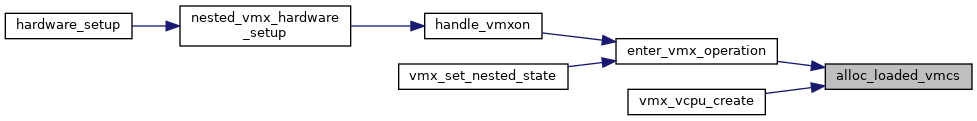

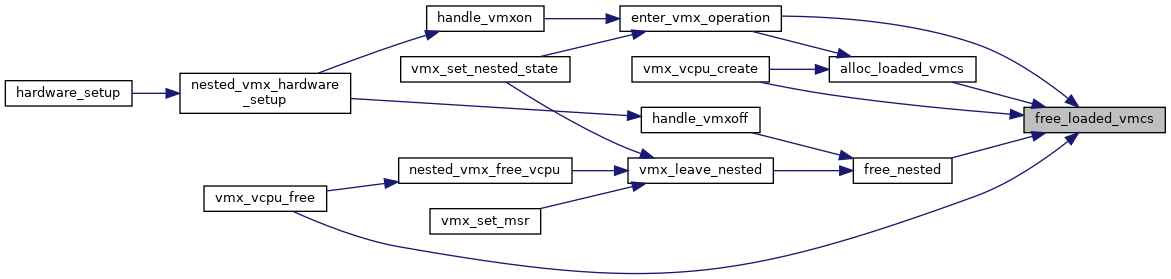

| int | alloc_loaded_vmcs (struct loaded_vmcs *loaded_vmcs) |

| void | free_loaded_vmcs (struct loaded_vmcs *loaded_vmcs) |

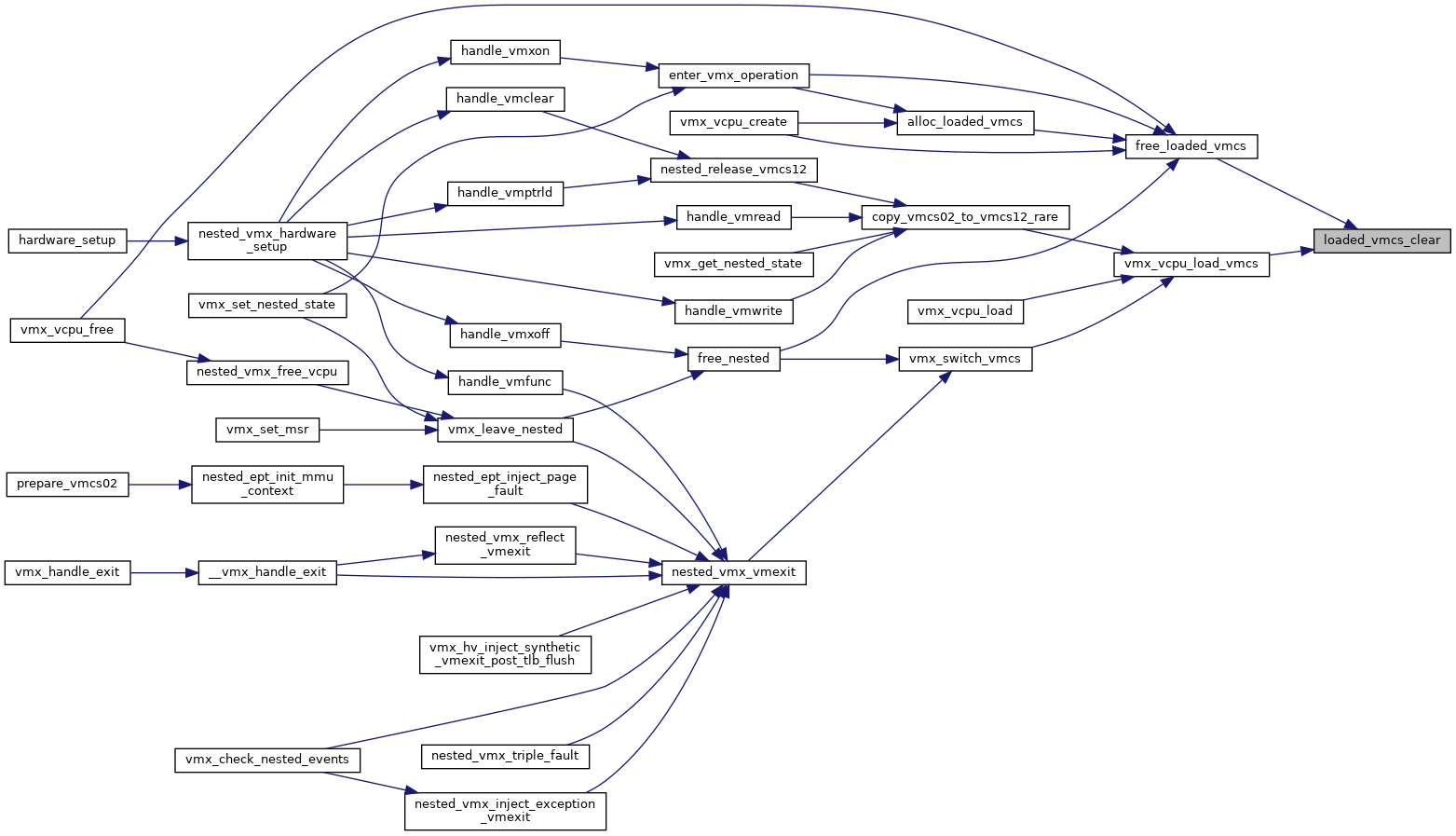

| void | loaded_vmcs_clear (struct loaded_vmcs *loaded_vmcs) |

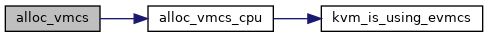

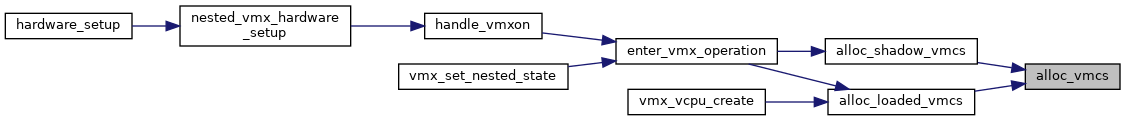

| static struct vmcs * | alloc_vmcs (bool shadow) |

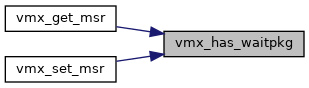

| static bool | vmx_has_waitpkg (struct vcpu_vmx *vmx) |

| static bool | vmx_need_pf_intercept (struct kvm_vcpu *vcpu) |

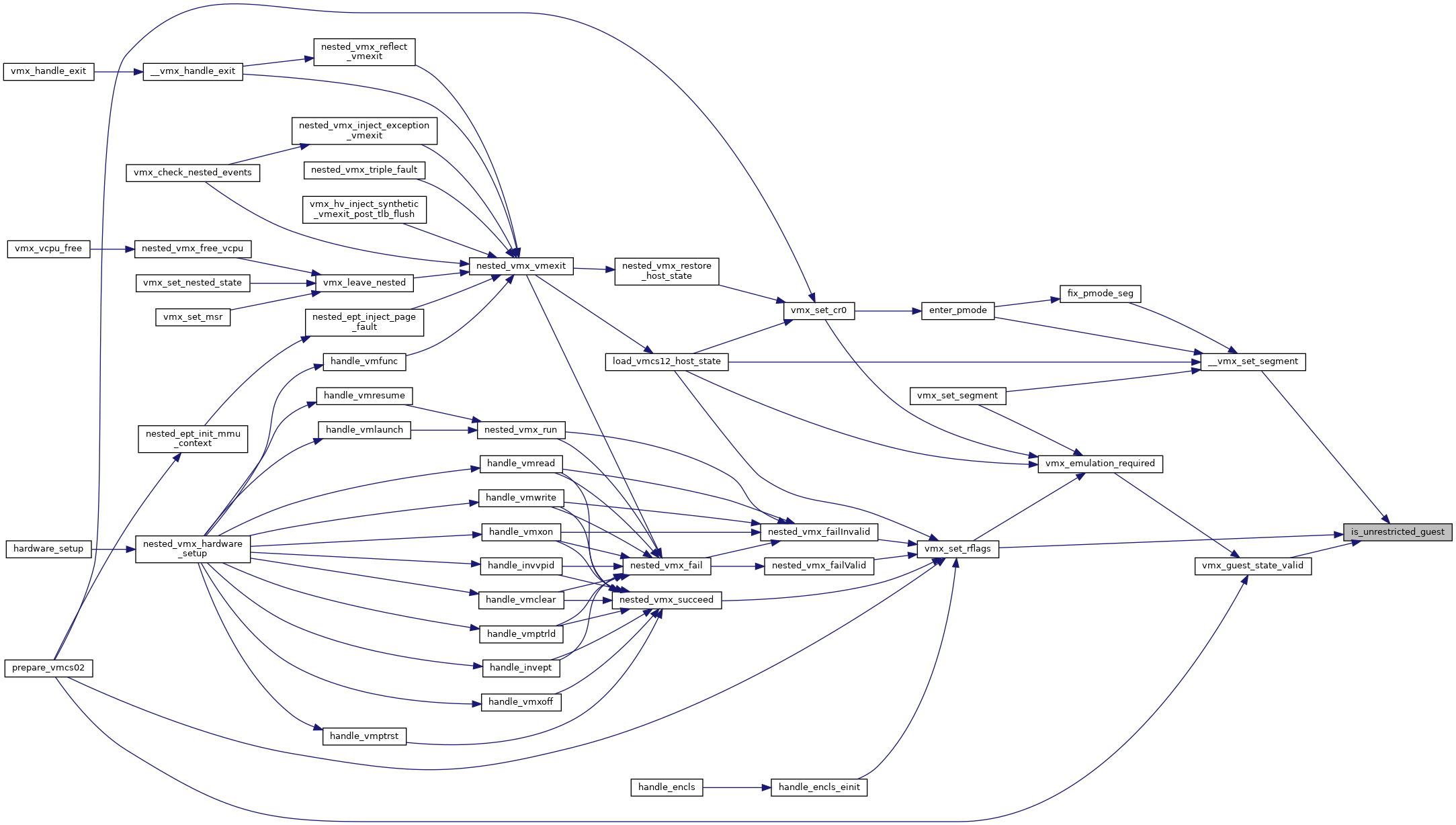

| static bool | is_unrestricted_guest (struct kvm_vcpu *vcpu) |

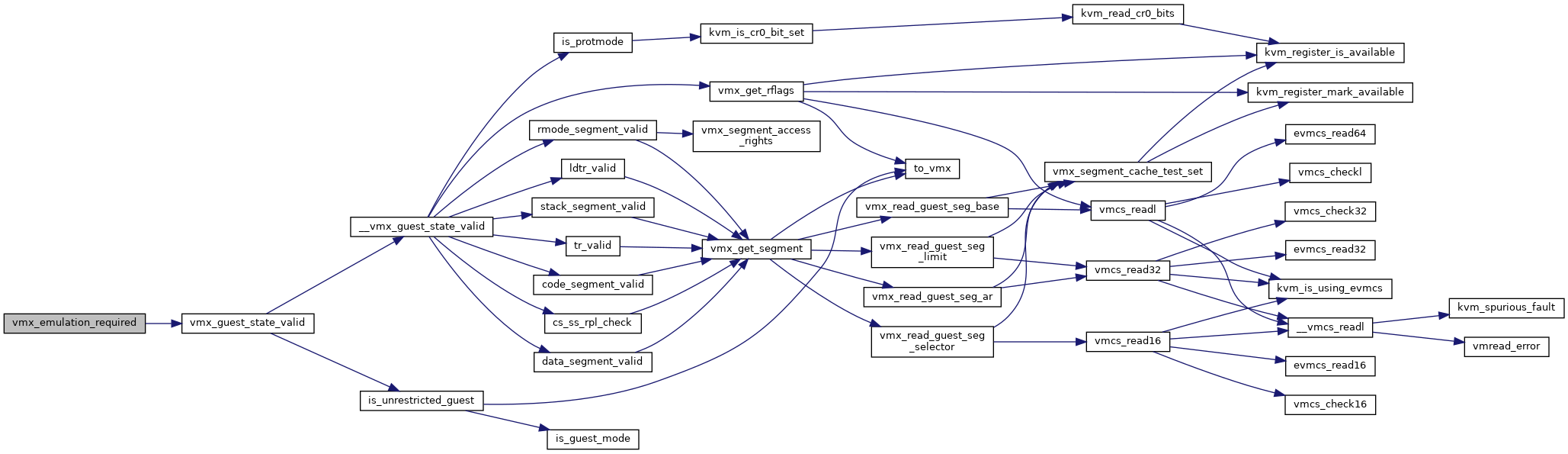

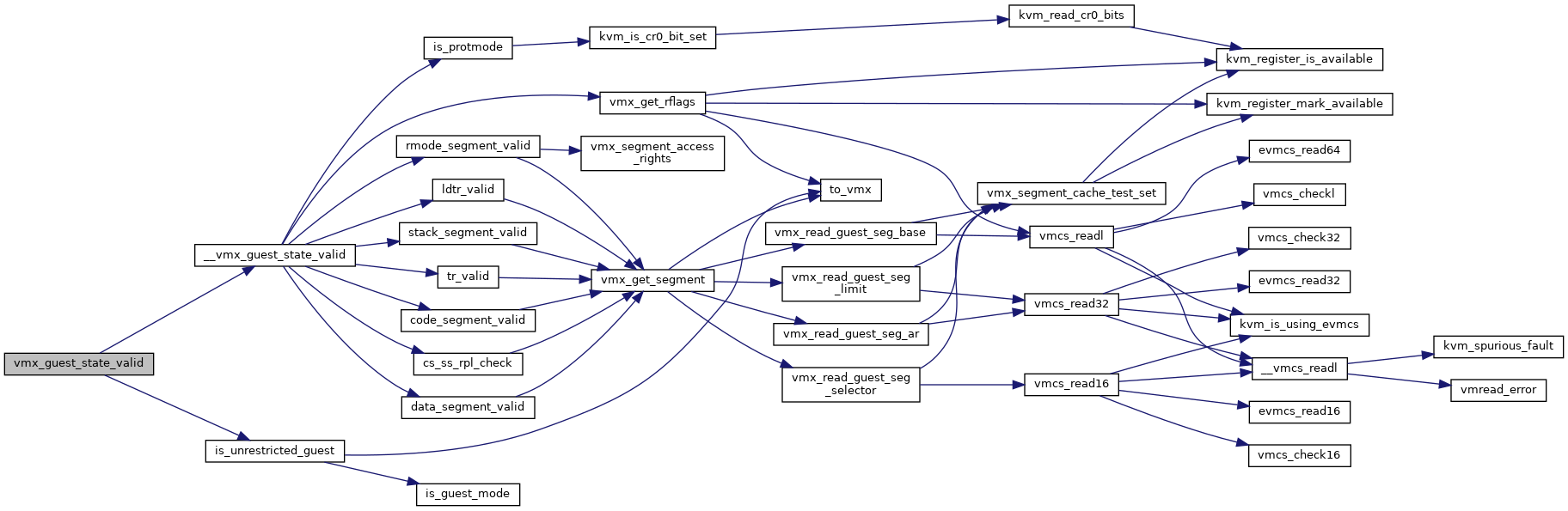

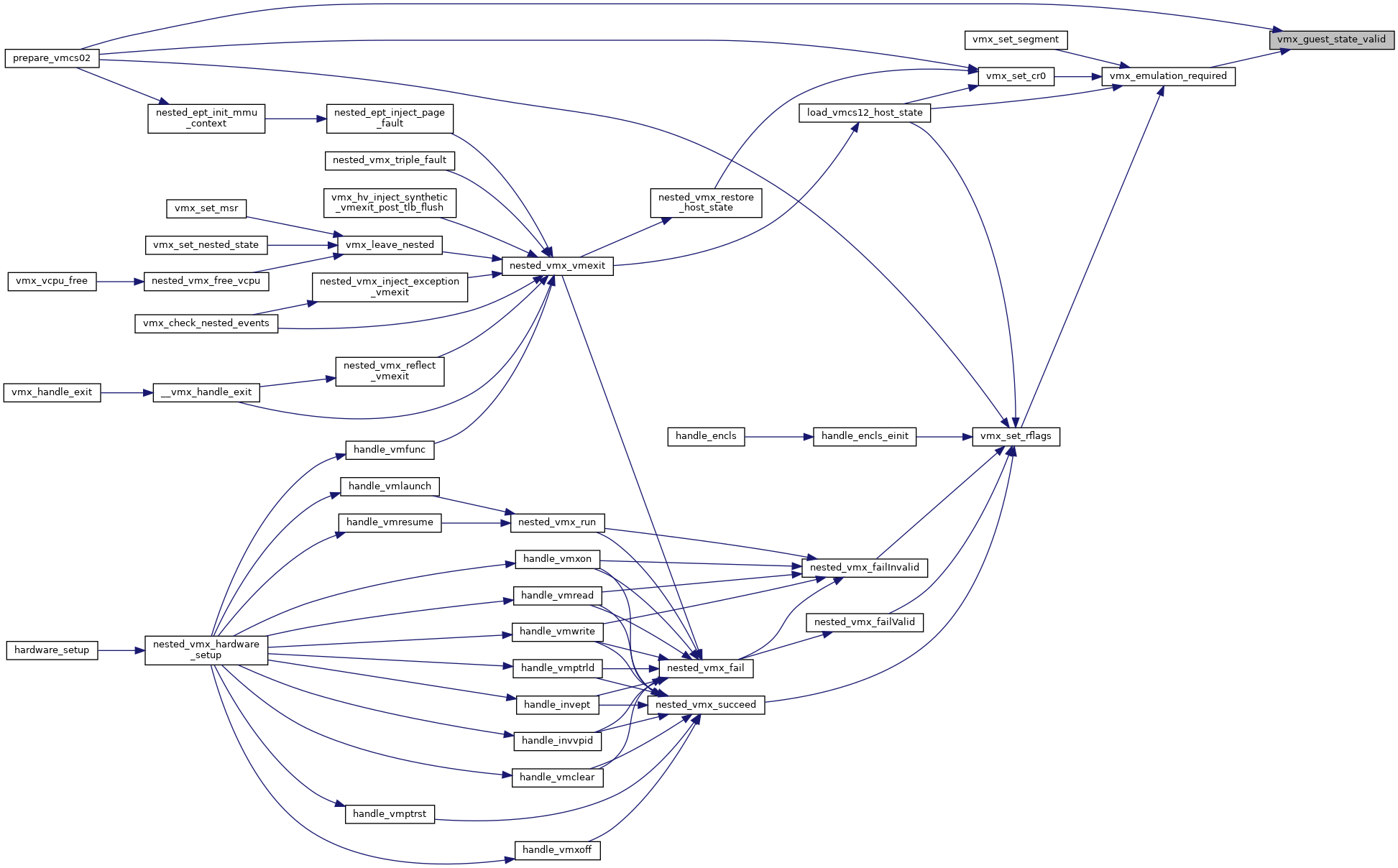

| bool | __vmx_guest_state_valid (struct kvm_vcpu *vcpu) |

| static bool | vmx_guest_state_valid (struct kvm_vcpu *vcpu) |

| void | dump_vmcs (struct kvm_vcpu *vcpu) |

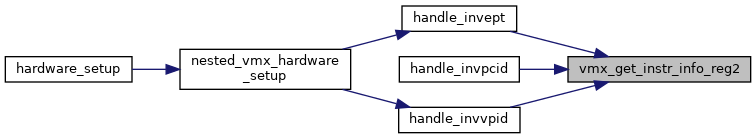

| static int | vmx_get_instr_info_reg2 (u32 vmx_instr_info) |

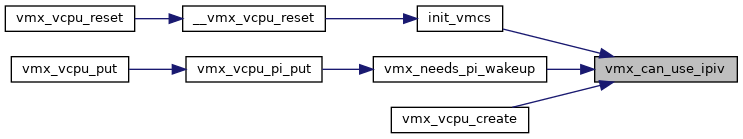

| static bool | vmx_can_use_ipiv (struct kvm_vcpu *vcpu) |

Macro Definition Documentation

◆ __BUILD_VMX_MSR_BITMAP_HELPER

#define __BUILD_VMX_MSR_BITMAP_HELPER(rtype, action, bitop, access, base)

◆ __KVM_REQUIRED_VMX_CPU_BASED_VM_EXEC_CONTROL

#define __KVM_REQUIRED_VMX_CPU_BASED_VM_EXEC_CONTROL

◆ __KVM_REQUIRED_VMX_VM_ENTRY_CONTROLS

#define __KVM_REQUIRED_VMX_VM_ENTRY_CONTROLS (VM_ENTRY_LOAD_DEBUG_CONTROLS)

◆ __KVM_REQUIRED_VMX_VM_EXIT_CONTROLS

#define __KVM_REQUIRED_VMX_VM_EXIT_CONTROLS

◆ BUILD_CONTROLS_SHADOW

#define BUILD_CONTROLS_SHADOW(lname, uname, bits)

◆ BUILD_VMX_MSR_BITMAP_HELPERS

#define BUILD_VMX_MSR_BITMAP_HELPERS(ret_type, action, bitop)
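
The parameter lists of __BUILD_VMX_MSR_BITMAP_HELPER (rtype, action, bitop, access, base) and BUILD_VMX_MSR_BITMAP_HELPERS (ret_type, action, bitop) suggest a token-pasting generator that stamps out one helper per operation and per access type over the VMX MSR bitmap. The sketch below shows that pattern in userspace; it is not the kernel's macro body, and every name in it is hypothetical. It assumes the MSR-bitmap layout from the Intel SDM: a 4 KiB page with read bitmaps for MSRs 0x00000000-0x00001FFF at offset 0x000 and for 0xC0000000-0xC0001FFF at offset 0x400, the matching write bitmaps at 0x800 and 0xC00, and a set bit meaning "intercept".

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical 4 KiB MSR bitmap, addressed bytewise for clarity. */
struct msr_bitmap {
	uint8_t bytes[4096];
};

/* Locate the bit for @msr in the region starting at @base (0x000 for
 * reads, 0x800 for writes). Returns false if @msr is in neither range. */
static bool msr_bit_pos(uint32_t msr, uint32_t base, uint32_t *byte, uint8_t *bit)
{
	uint32_t idx;

	if (msr <= 0x1fff) {
		idx = msr;                /* low MSR range */
	} else if (msr >= 0xc0000000u && msr <= 0xc0001fffu) {
		idx = msr & 0x1fff;
		base += 0x400;            /* high MSR range */
	} else {
		return false;
	}
	*byte = base + idx / 8;
	*bit = (uint8_t)(1u << (idx % 8));
	return true;
}

/* Generate test_/set_/clear_msr_<access>() for one access type. */
#define BUILD_MSR_BITMAP_HELPERS(access, base) \
static bool test_msr_##access(const struct msr_bitmap *b, uint32_t msr) \
{ \
	uint32_t byte; \
	uint8_t bit; \
	if (!msr_bit_pos(msr, (base), &byte, &bit)) \
		return true; /* outside both ranges: always intercepted */ \
	return (b->bytes[byte] & bit) != 0; \
} \
static void set_msr_##access(struct msr_bitmap *b, uint32_t msr) \
{ \
	uint32_t byte; \
	uint8_t bit; \
	if (msr_bit_pos(msr, (base), &byte, &bit)) \
		b->bytes[byte] |= bit; \
} \
static void clear_msr_##access(struct msr_bitmap *b, uint32_t msr) \
{ \
	uint32_t byte; \
	uint8_t bit; \
	if (msr_bit_pos(msr, (base), &byte, &bit)) \
		b->bytes[byte] &= (uint8_t)~bit; \
}

BUILD_MSR_BITMAP_HELPERS(read, 0x000)
BUILD_MSR_BITMAP_HELPERS(write, 0x800)
```

Accesses to MSRs outside both ranges always cause a VM exit, which is why the generated test helper reports them as intercepted.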

◆ KVM_OPTIONAL_VMX_CPU_BASED_VM_EXEC_CONTROL

#define KVM_OPTIONAL_VMX_CPU_BASED_VM_EXEC_CONTROL

◆ KVM_OPTIONAL_VMX_PIN_BASED_VM_EXEC_CONTROL

#define KVM_OPTIONAL_VMX_PIN_BASED_VM_EXEC_CONTROL

◆ KVM_OPTIONAL_VMX_SECONDARY_VM_EXEC_CONTROL

#define KVM_OPTIONAL_VMX_SECONDARY_VM_EXEC_CONTROL

◆ KVM_OPTIONAL_VMX_TERTIARY_VM_EXEC_CONTROL

#define KVM_OPTIONAL_VMX_TERTIARY_VM_EXEC_CONTROL (TERTIARY_EXEC_IPI_VIRT)

◆ KVM_OPTIONAL_VMX_VM_ENTRY_CONTROLS

#define KVM_OPTIONAL_VMX_VM_ENTRY_CONTROLS

◆ KVM_OPTIONAL_VMX_VM_EXIT_CONTROLS

#define KVM_OPTIONAL_VMX_VM_EXIT_CONTROLS

◆ KVM_REQUIRED_VMX_CPU_BASED_VM_EXEC_CONTROL

#define KVM_REQUIRED_VMX_CPU_BASED_VM_EXEC_CONTROL __KVM_REQUIRED_VMX_CPU_BASED_VM_EXEC_CONTROL

◆ KVM_REQUIRED_VMX_PIN_BASED_VM_EXEC_CONTROL

#define KVM_REQUIRED_VMX_PIN_BASED_VM_EXEC_CONTROL

◆ KVM_REQUIRED_VMX_SECONDARY_VM_EXEC_CONTROL

◆ KVM_REQUIRED_VMX_TERTIARY_VM_EXEC_CONTROL

◆ KVM_REQUIRED_VMX_VM_ENTRY_CONTROLS

#define KVM_REQUIRED_VMX_VM_ENTRY_CONTROLS __KVM_REQUIRED_VMX_VM_ENTRY_CONTROLS

◆ KVM_REQUIRED_VMX_VM_EXIT_CONTROLS

#define KVM_REQUIRED_VMX_VM_EXIT_CONTROLS __KVM_REQUIRED_VMX_VM_EXIT_CONTROLS

◆ MAX_NR_LOADSTORE_MSRS

◆ MAX_NR_USER_RETURN_MSRS

◆ MAX_POSSIBLE_PASSTHROUGH_MSRS

◆ MSR_TYPE_R

◆ MSR_TYPE_RW

◆ MSR_TYPE_W

◆ PML_ENTITY_NUM
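
PML_ENTITY_NUM sizes the page-modification log (PML) buffer; its value is not preserved on this page. The sketch below assumes 512 entries, which is what one 4 KiB page of 64-bit guest-physical addresses holds. Per the Intel SDM, the processor writes each logged (page-aligned) address at the current 16-bit PML index and then decrements the index, and a logging attempt with the index outside 0-511 causes a "page-modification log full" VM exit instead. The structure and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PML_ENTRIES 512 /* assumed: one 4 KiB page of 8-byte GPAs */

/* Hypothetical userspace model of a PML buffer and its index. */
struct pml_log {
	uint64_t gpa[PML_ENTRIES];
	uint16_t index; /* 16-bit PML index, starts at PML_ENTRIES - 1 */
};

static void pml_reset(struct pml_log *pml)
{
	pml->index = PML_ENTRIES - 1;
}

/* Models one logging attempt. Returns false where hardware would
 * instead take a "log full" VM exit (index outside 0..511). */
static bool pml_log_write(struct pml_log *pml, uint64_t gpa)
{
	if (pml->index >= PML_ENTRIES)
		return false;
	pml->gpa[pml->index] = gpa & ~UINT64_C(0xfff); /* page-aligned */
	pml->index--; /* 0 wraps to 0xffff, which means "full" */
	return true;
}

/* Number of entries logged since the last reset. */
static unsigned int pml_count(const struct pml_log *pml)
{
	return pml->index >= PML_ENTRIES ?
	       PML_ENTRIES : (unsigned int)(PML_ENTRIES - 1 - pml->index);
}
```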

◆ RTIT_ADDR_RANGE

◆ VMX_REGS_LAZY_LOAD_SET

#define VMX_REGS_LAZY_LOAD_SET

◆ X2APIC_MSR
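
The definition of X2APIC_MSR is not preserved on this page. Its name suggests the mapping from an APIC register to its x2APIC MSR, which the Intel SDM defines as 0x800 plus the register's xAPIC MMIO offset divided by 16 (for example, TPR at offset 0x80 becomes MSR 0x808). The sketch below encodes that architectural mapping under a hypothetical helper name; it is not the macro's actual text.

```c
#include <assert.h>
#include <stdint.h>

#define X2APIC_MSR_BASE 0x800u /* first MSR of the x2APIC range */

/* Hypothetical helper: x2APIC MSR index for an xAPIC MMIO offset.
 * Each 16-byte-aligned xAPIC register maps to one MSR. */
static inline uint32_t x2apic_msr_for_offset(uint32_t mmio_offset)
{
	return X2APIC_MSR_BASE + (mmio_offset >> 4);
}
```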

Enumeration Type Documentation

◆ segment_cache_field

enum segment_cache_field
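
segment_cache_field numbers the four per-segment attributes (selector, base, limit, access rights), with SEG_FIELD_NR as the count. One plausible use, assumed here rather than documented on this page, is a validity bitmask in which each (segment, field) pair owns bit seg * SEG_FIELD_NR + field, so each VMCS field is read at most once until the cache is invalidated. The sketch models that in userspace; seg_cache, seg_cache_test_set and seg_cache_invalidate are hypothetical names, and the limit of eight segments is an assumption that keeps the mask within 32 bits.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum segment_cache_field {
	SEG_FIELD_SEL = 0,
	SEG_FIELD_BASE = 1,
	SEG_FIELD_LIMIT = 2,
	SEG_FIELD_AR = 3,
	SEG_FIELD_NR = 4
};

/* Hypothetical cache: one validity bit per (segment, field) pair. */
struct seg_cache {
	uint32_t bitmask;
};

/* Returns true if the field was already cached, then marks it cached.
 * Assumes at most 8 segments, so 8 * SEG_FIELD_NR bits fit in 32. */
static bool seg_cache_test_set(struct seg_cache *c, unsigned int seg,
			       enum segment_cache_field field)
{
	uint32_t mask;
	bool was_cached;

	assert(seg < 8 && field < SEG_FIELD_NR);
	mask = UINT32_C(1) << (seg * SEG_FIELD_NR + field);
	was_cached = (c->bitmask & mask) != 0;
	c->bitmask |= mask;
	return was_cached;
}

/* Drops every cached field, e.g. after guest state may have changed. */
static void seg_cache_invalidate(struct seg_cache *c)
{
	c->bitmask = 0;
}
```

With this layout, the access-rights field of segment 2 maps to bit 2 * 4 + 3 = 11.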

Function Documentation

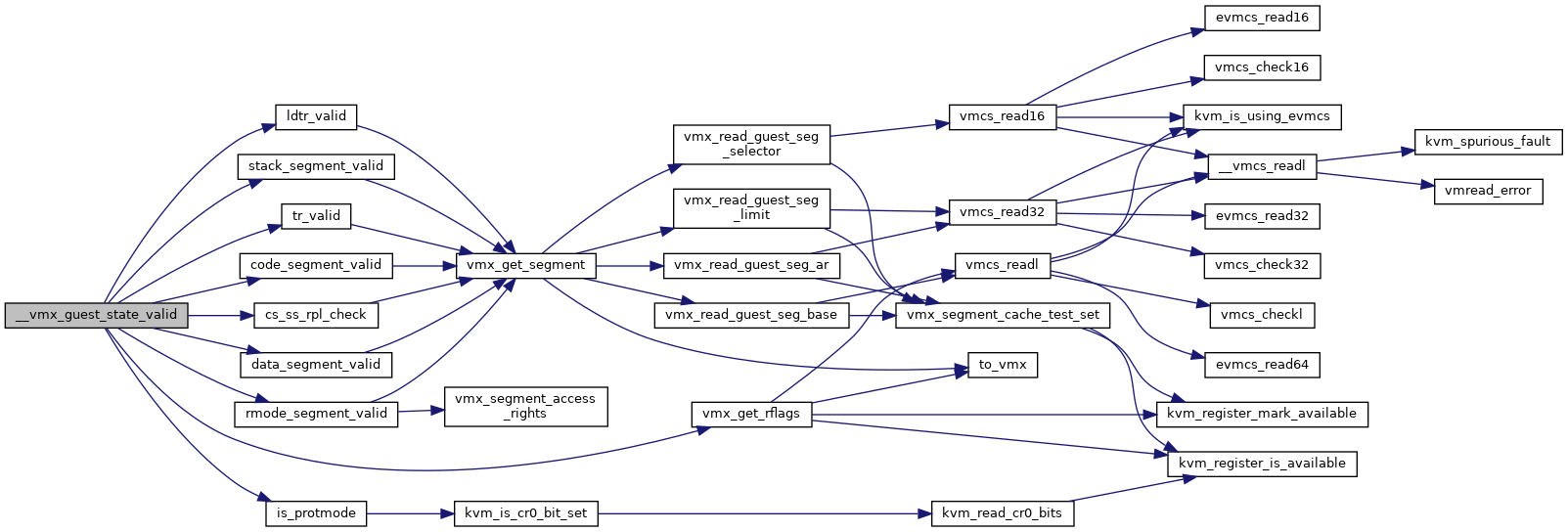

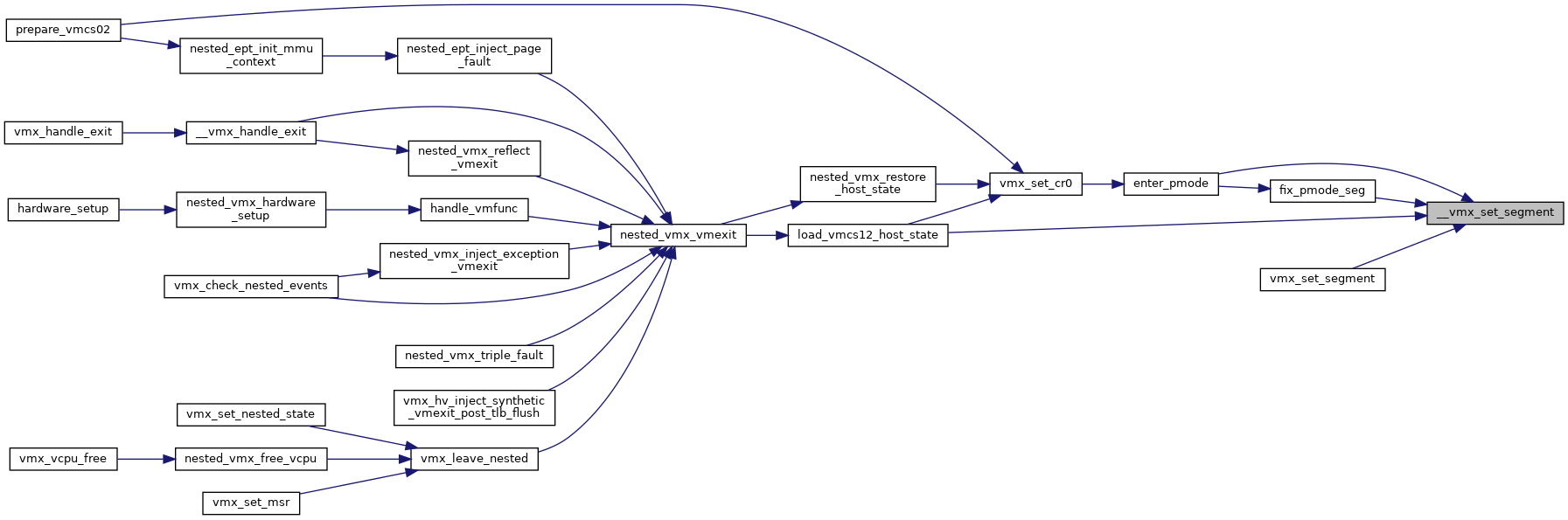

◆ __vmx_guest_state_valid()

bool __vmx_guest_state_valid(struct kvm_vcpu *vcpu)

Definition at line 3796 of file vmx.c.

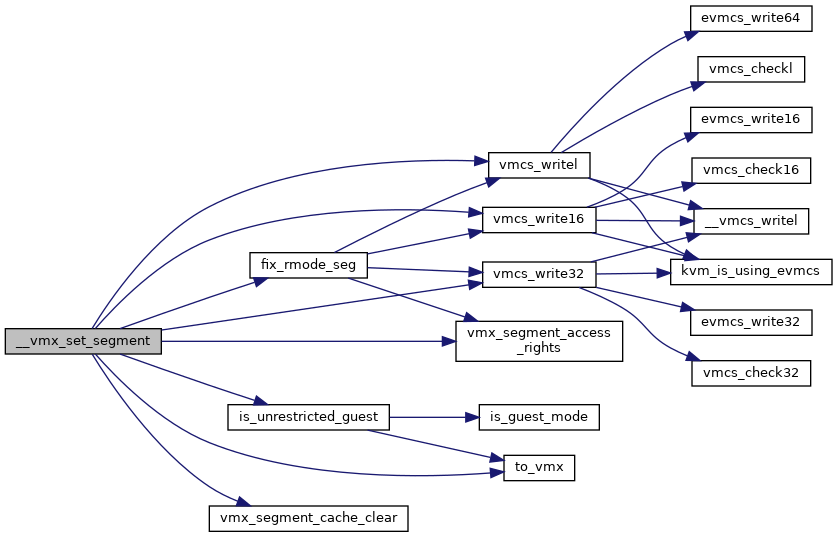

◆ __vmx_set_segment()

void __vmx_set_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition at line 3572 of file vmx.c.

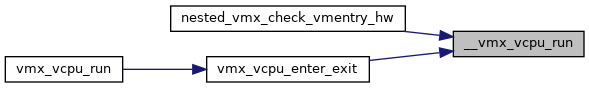

◆ __vmx_vcpu_run()

bool __vmx_vcpu_run(struct vcpu_vmx *vmx, unsigned long *regs, unsigned int flags)

◆ __vmx_vcpu_run_flags()

unsigned int __vmx_vcpu_run_flags(struct vcpu_vmx *vmx)

◆ alloc_loaded_vmcs()

int alloc_loaded_vmcs(struct loaded_vmcs *loaded_vmcs)

◆ alloc_vmcs()

struct vmcs *alloc_vmcs(bool shadow) [inline, static]

◆ alloc_vmcs_cpu()

struct vmcs *alloc_vmcs_cpu(bool shadow, int cpu, gfp_t flags)

Definition at line 2862 of file vmx.c.

◆ allocate_vpid()

int allocate_vpid(void)

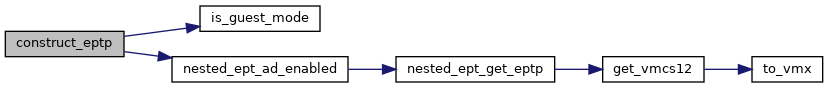

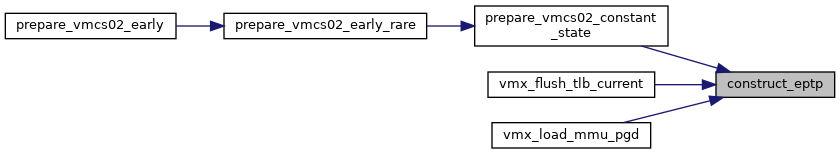

◆ construct_eptp()

u64 construct_eptp(struct kvm_vcpu *vcpu, hpa_t root_hpa, int root_level)

Definition at line 3371 of file vmx.c.
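
construct_eptp() takes a root table address and a paging level and returns a u64, the shape of an EPT pointer (EPTP). Per the Intel SDM, an EPTP holds the memory type in bits 2:0 (6 means write-back), the page-walk length minus one in bits 5:3, the accessed/dirty-flag enable in bit 6, and the 4 KiB-aligned root table address in bits 51:12. In the sketch below, build_eptp() is a hypothetical stand-in that always selects write-back and takes the A/D decision as an explicit parameter; how the real function derives those choices from vcpu state is not documented here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define EPTP_MT_WB       UINT64_C(6)                        /* bits 2:0 */
#define EPTP_PWL(levels) ((uint64_t)((levels) - 1) << 3)    /* bits 5:3 */
#define EPTP_AD_ENABLE   (UINT64_C(1) << 6)                 /* bit 6 */

/* Hypothetical stand-in for construct_eptp(). */
static uint64_t build_eptp(uint64_t root_hpa, int root_level, bool ad_bits)
{
	uint64_t eptp = EPTP_MT_WB | EPTP_PWL(root_level);

	assert(root_level == 4 || root_level == 5);
	assert((root_hpa & 0xfff) == 0); /* root table is 4 KiB aligned */
	if (ad_bits)
		eptp |= EPTP_AD_ENABLE;
	return eptp | root_hpa;
}
```

A 4-level root at 0x1000 with A/D flags enabled yields 0x105e: 0x6 (write-back) | 0x18 (walk length 4) | 0x40 (A/D) | 0x1000.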

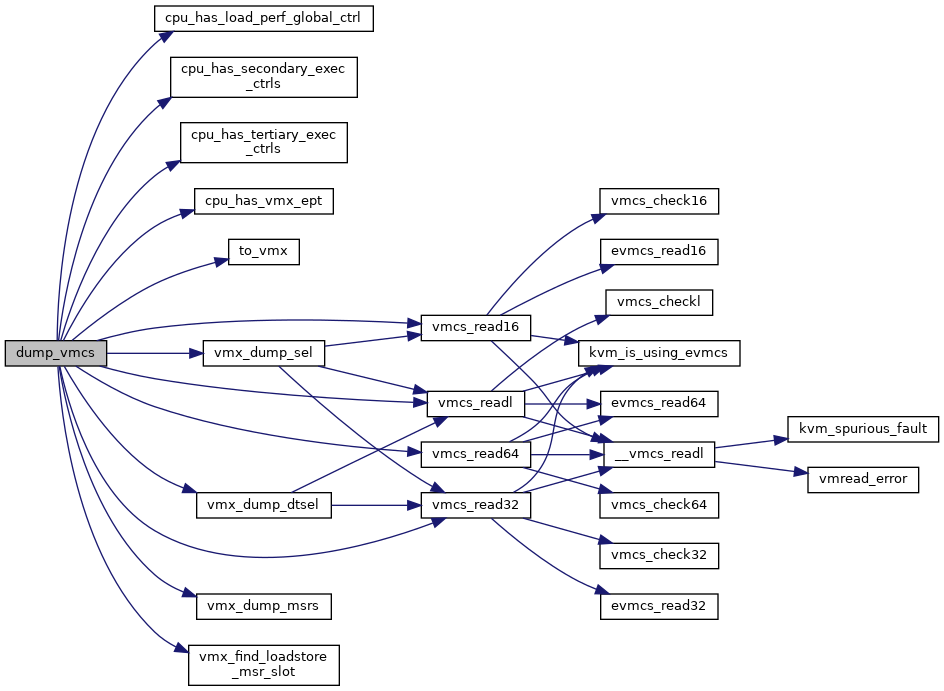

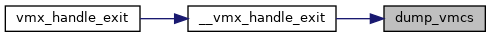

◆ dump_vmcs()

void dump_vmcs(struct kvm_vcpu *vcpu)

Definition at line 6232 of file vmx.c.

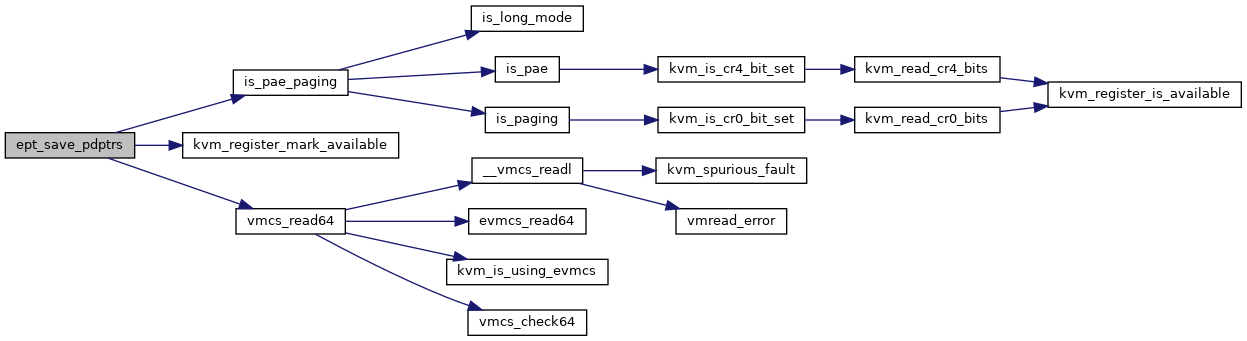

◆ ept_save_pdptrs()

void ept_save_pdptrs(struct kvm_vcpu *vcpu)

Definition at line 3246 of file vmx.c.

◆ free_loaded_vmcs()

void free_loaded_vmcs(struct loaded_vmcs *loaded_vmcs)

◆ free_vmcs()

void free_vmcs(struct vmcs *vmcs)

◆ free_vpid()

void free_vpid(int vpid)
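
allocate_vpid() and free_vpid(int vpid) manage virtual-processor identifiers (VPIDs), the 16-bit tags that let TLB entries belonging to different vCPUs coexist. The Intel SDM uses VPID 0 for VMX root operation and makes VM entry fail if VPIDs are enabled with a VPID of 0, so 0 can double as "none available". The sketch below is a single-threaded first-fit bitmap allocator with hypothetical names; returning 0 on exhaustion is its own assumption, and an in-kernel version would need a lock around the bitmap.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>

#define NR_VPIDS 65536 /* VPID is a 16-bit field */
#define BITS_PER_WORD (sizeof(unsigned long) * CHAR_BIT)

/* Bit n set means VPID n is in use. */
static unsigned long vpid_bitmap[NR_VPIDS / BITS_PER_WORD];

static bool vpid_test(int v)
{
	return vpid_bitmap[v / BITS_PER_WORD] & (1UL << (v % BITS_PER_WORD));
}

static void vpid_assign(int v, bool used)
{
	unsigned long bit = 1UL << (v % BITS_PER_WORD);

	if (used)
		vpid_bitmap[v / BITS_PER_WORD] |= bit;
	else
		vpid_bitmap[v / BITS_PER_WORD] &= ~bit;
}

/* First-fit allocation; returns 0 ("no VPID") when exhausted. */
static int model_allocate_vpid(void)
{
	vpid_assign(0, true); /* keep VPID 0 reserved for the host */
	for (int v = 1; v < NR_VPIDS; v++) {
		if (!vpid_test(v)) {
			vpid_assign(v, true);
			return v;
		}
	}
	return 0;
}

/* Freeing 0 or an out-of-range value is a no-op. */
static void model_free_vpid(int vpid)
{
	if (vpid > 0 && vpid < NR_VPIDS)
		vpid_assign(vpid, false);
}
```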

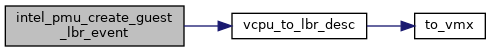

◆ intel_pmu_create_guest_lbr_event()

int intel_pmu_create_guest_lbr_event(struct kvm_vcpu *vcpu)

Definition at line 254 of file pmu_intel.c.

◆ intel_pmu_cross_mapped_check()

void intel_pmu_cross_mapped_check(struct kvm_pmu *pmu)

Definition at line 746 of file pmu_intel.c.

◆ intel_pmu_lbr_is_enabled()

bool intel_pmu_lbr_is_enabled(struct kvm_vcpu *vcpu) [inline, static]

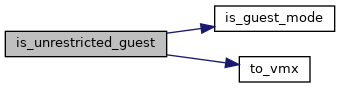

◆ is_unrestricted_guest()

bool is_unrestricted_guest(struct kvm_vcpu *vcpu) [inline, static]

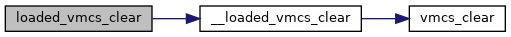

◆ loaded_vmcs_clear()

void loaded_vmcs_clear(struct loaded_vmcs *loaded_vmcs)

◆ pt_update_intercept_for_msr()

void pt_update_intercept_for_msr(struct kvm_vcpu *vcpu)

◆ set_cr4_guest_host_mask()

void set_cr4_guest_host_mask(struct vcpu_vmx *vmx)

◆ to_kvm_vmx()

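__always_inline struct kvm_vmx *to_kvm_vmx(struct kvm *kvm) [static]

◆ to_vmx()

__always_inline struct vcpu_vmx *to_vmx(struct kvm_vcpu *vcpu) [static]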
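
to_vmx() and to_kvm_vmx() convert a pointer to a generic structure (struct kvm_vcpu, struct kvm) into the VMX-specific structure that embeds it. The usual kernel idiom for this is container_of: subtract the embedded member's offset from the member's address. The sketch reproduces the idiom in userspace with a local container_of built on offsetof and deliberately reduced, hypothetical structures; the embedded member is placed second so the offset is non-zero.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace version of the kernel's container_of() idiom. */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical, heavily reduced structures. */
struct kvm_vcpu_model {
	int vcpu_id;
};

struct vcpu_vmx_model {
	unsigned long exit_qualification;
	struct kvm_vcpu_model vcpu; /* generic part, embedded by value */
};

/* Recover the enclosing VMX structure from the generic pointer. */
static inline struct vcpu_vmx_model *to_vmx_model(struct kvm_vcpu_model *vcpu)
{
	return container_of(vcpu, struct vcpu_vmx_model, vcpu);
}
```

The design keeps generic code working on struct kvm_vcpu pointers while vendor code recovers its private state without a separate lookup.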

◆ vcpu_to_lbr_desc()

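struct lbr_desc *vcpu_to_lbr_desc(struct kvm_vcpu *vcpu) [inline, static]

◆ vcpu_to_lbr_records()

struct x86_pmu_lbr *vcpu_to_lbr_records(struct kvm_vcpu *vcpu) [inline, static]

◆ vmx_can_use_ipiv()

bool vmx_can_use_ipiv(struct kvm_vcpu *vcpu) [inline, static]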

◆ vmx_disable_intercept_for_msr()

void vmx_disable_intercept_for_msr(struct kvm_vcpu *vcpu, u32 msr, int type)

Definition at line 3962 of file vmx.c.

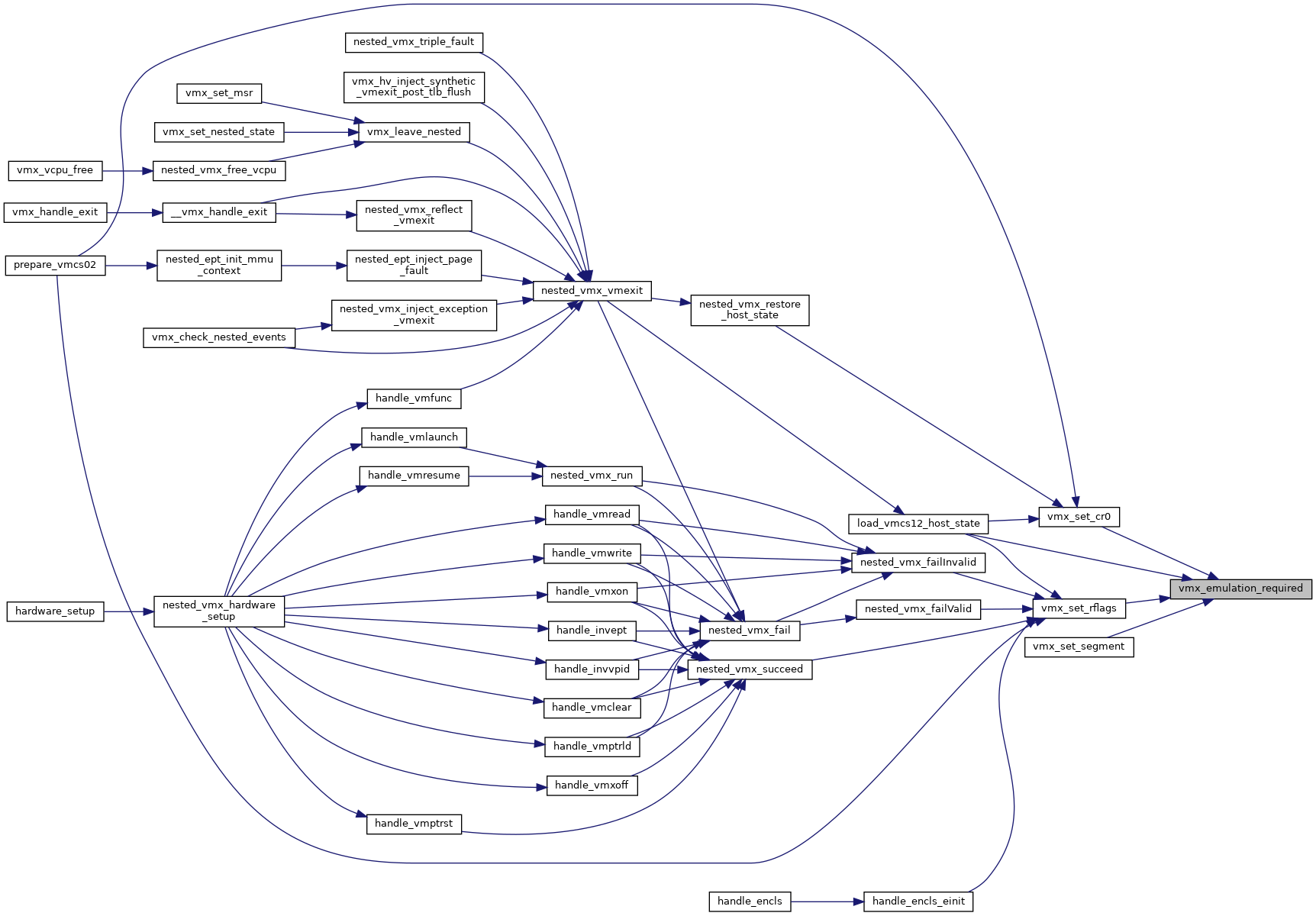

◆ vmx_emulation_required()

bool vmx_emulation_required(struct kvm_vcpu *vcpu)

◆ vmx_enable_intercept_for_msr()

void vmx_enable_intercept_for_msr(struct kvm_vcpu *vcpu, u32 msr, int type)

◆ vmx_ept_load_pdptrs()

void vmx_ept_load_pdptrs(struct kvm_vcpu *vcpu)

Definition at line 3231 of file vmx.c.

◆ vmx_find_loadstore_msr_slot()

int vmx_find_loadstore_msr_slot(struct vmx_msrs *m, u32 msr)
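
vmx_find_loadstore_msr_slot() takes a struct vmx_msrs and an MSR number and returns an int, and the MAX_NR_LOADSTORE_MSRS macro presumably bounds the lists it searches. A straightforward implementation of that contract is a linear search that returns the matching slot or a negative value. The sketch below assumes a capacity of 8 and returns -1 on a miss (the real function may use an errno value instead); the entry layout, a 32-bit MSR index, 32 reserved bits and a 64-bit value, follows the Intel SDM's format for VMX MSR-load/store areas. All names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_MAX_LOADSTORE_MSRS 8 /* assumed capacity */

/* One entry of a VMX MSR-load/store area (Intel SDM layout). */
struct msr_entry {
	uint32_t index;
	uint32_t reserved;
	uint64_t value;
};

/* Hypothetical list: @nr valid entries at the front of @val. */
struct msr_list {
	unsigned int nr;
	struct msr_entry val[MODEL_MAX_LOADSTORE_MSRS];
};

/* Linear search: slot number of @msr, or -1 if it is not in the list. */
static int find_msr_slot(const struct msr_list *m, uint32_t msr)
{
	for (unsigned int i = 0; i < m->nr; i++) {
		if (m->val[i].index == msr)
			return (int)i;
	}
	return -1;
}
```

A linear scan is reasonable here because such lists stay short; the hardware processes the same entries sequentially on VM entry and exit.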

◆ vmx_find_uret_msr()

struct vmx_uret_msr *vmx_find_uret_msr(struct vcpu_vmx *vmx, u32 msr)

◆ vmx_get_cpl()

int vmx_get_cpl(struct kvm_vcpu *vcpu)
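
vmx_get_cpl() returns the guest's current privilege level. VMX guest state has no dedicated CPL field; per the Intel SDM's description of VM entry, CPL is taken from the DPL of the guest SS segment, which the VMCS access-rights format stores in bits 6:5. The sketch below extracts that field. The real_mode flag, which forces CPL 0, is an assumption standing in for however a hypervisor handles emulated real mode, and all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* VMCS segment access-rights format: DPL lives in bits 6:5. */
#define AR_DPL_SHIFT 5
#define AR_DPL_MASK  0x3u

static inline unsigned int ar_dpl(uint32_t ar)
{
	return (ar >> AR_DPL_SHIFT) & AR_DPL_MASK;
}

/* Hypothetical model: CPL is SS.DPL; @real_mode (an assumption of this
 * sketch) stands in for emulated real mode and is reported as CPL 0. */
static unsigned int model_get_cpl(bool real_mode, uint32_t ss_ar)
{
	return real_mode ? 0 : ar_dpl(ss_ar);
}
```

For example, a flat ring-3 data segment with access rights 0xc0f3 yields CPL 3, while 0xc093 yields CPL 0.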

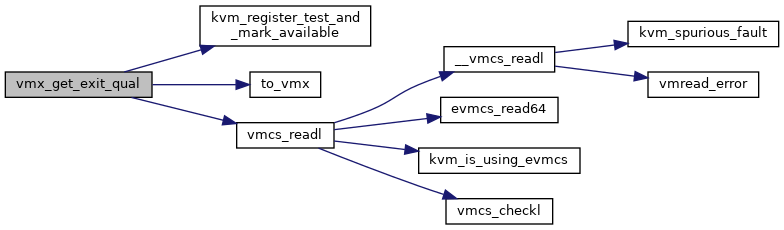

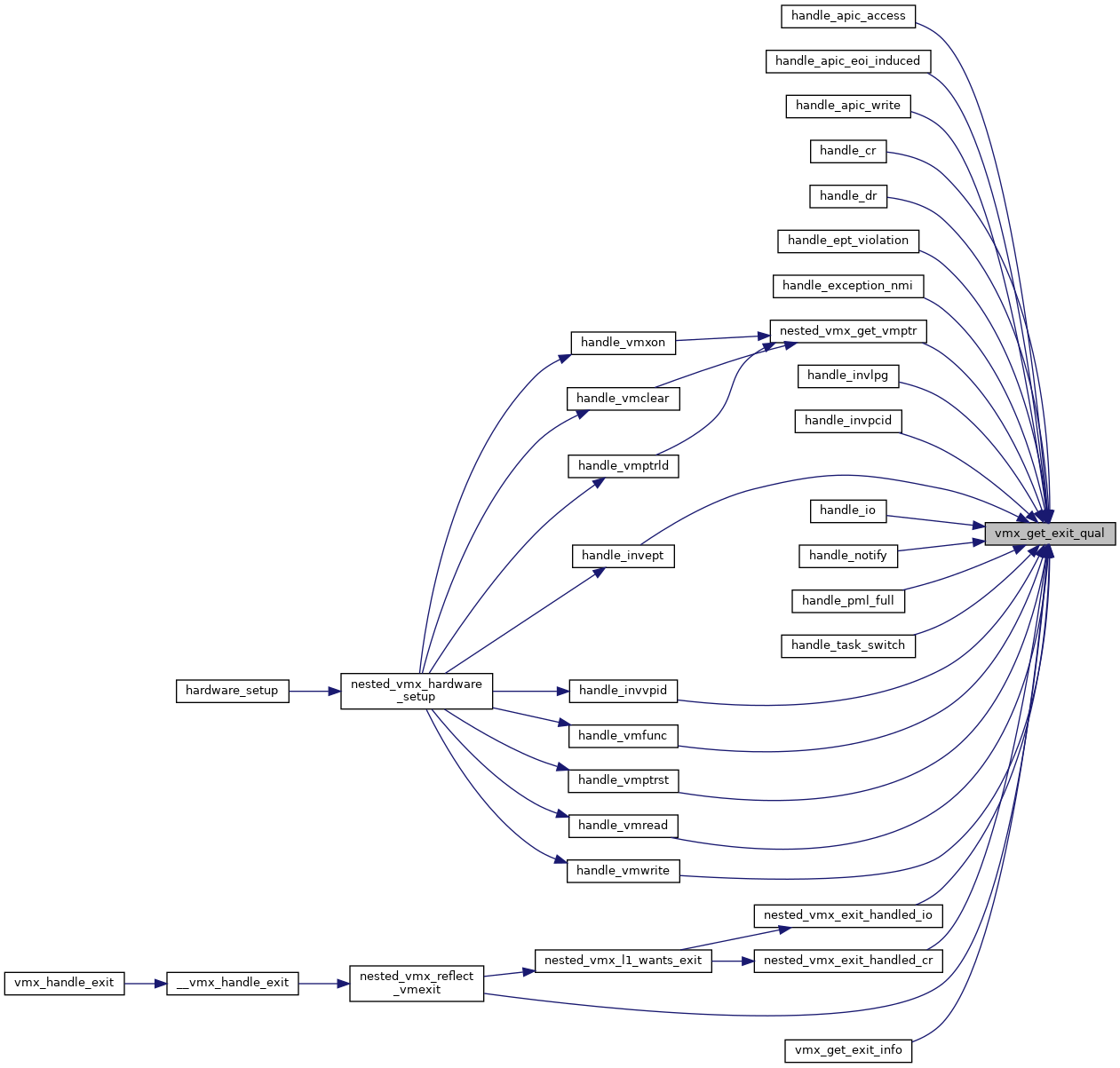

◆ vmx_get_exit_qual()

__always_inline unsigned long vmx_get_exit_qual(struct kvm_vcpu *vcpu) [static]

Definition at line 681 of file vmx.h.
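
vmx_get_exit_qual() and vmx_get_intr_info() are __always_inline accessors for per-exit VMCS fields. A common pattern for such accessors, assumed here rather than taken from this page, is to read the field lazily: perform the VMREAD on first use after a VM exit, cache the value, and serve later calls from the cache until the next exit. The sketch models that with a counter standing in for VMREAD; all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for a VMCS field read, counting accesses. */
static unsigned int vmread_calls;
static unsigned long fake_vmcs_exit_qual = 0x1234;

static unsigned long fake_vmread_exit_qual(void)
{
	vmread_calls++;
	return fake_vmcs_exit_qual;
}

struct vcpu_model {
	bool exit_qual_valid;      /* cleared on every VM exit */
	unsigned long exit_qual;   /* cached copy of the field */
};

static void on_vm_exit(struct vcpu_model *v)
{
	v->exit_qual_valid = false;
}

/* Read the field on first use after an exit; serve the cache afterwards. */
static unsigned long model_get_exit_qual(struct vcpu_model *v)
{
	if (!v->exit_qual_valid) {
		v->exit_qual = fake_vmread_exit_qual();
		v->exit_qual_valid = true;
	}
	return v->exit_qual;
}
```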

◆ vmx_get_instr_info_reg2()

int vmx_get_instr_info_reg2(u32 vmx_instr_info) [inline, static]
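
vmx_get_instr_info_reg2() takes the raw VM-exit instruction-information field. For instructions that report a second register operand (for example VMREAD, VMWRITE, INVEPT and INVVPID), the Intel SDM places that register's number (0 for RAX through 15 for R15) in bits 31:28. The sketch extracts those bits under a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* Bits 31:28 of the VM-exit instruction-information field hold the
 * "Reg2" register number for instructions that report one. */
static inline int instr_info_reg2(uint32_t instr_info)
{
	return (int)((instr_info >> 28) & 0xf);
}
```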

◆ vmx_get_interrupt_shadow()

u32 vmx_get_interrupt_shadow(struct kvm_vcpu *vcpu)

◆ vmx_get_intr_info()

__always_inline u32 vmx_get_intr_info(struct kvm_vcpu *vcpu) [static]

◆ vmx_get_l2_tsc_multiplier()

u64 vmx_get_l2_tsc_multiplier(struct kvm_vcpu *vcpu)

Definition at line 1907 of file vmx.c.

◆ vmx_get_l2_tsc_offset()

u64 vmx_get_l2_tsc_offset(struct kvm_vcpu *vcpu)

◆ vmx_get_nmi_mask()

bool vmx_get_nmi_mask(struct kvm_vcpu *vcpu)

◆ vmx_get_rflags()

unsigned long vmx_get_rflags(struct kvm_vcpu *vcpu)

Definition at line 1509 of file vmx.c.

◆ vmx_get_rvi()

u8 vmx_get_rvi(void) [inline, static]
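
vmx_get_rvi() returns a u8, matching the width of the requesting virtual interrupt (RVI) vector. With virtual-interrupt delivery, the Intel SDM defines the 16-bit guest interrupt status field as RVI in bits 7:0 and the servicing virtual interrupt (SVI) in bits 15:8. The sketch splits a raw status value accordingly; the helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Guest interrupt status (16 bits): RVI in bits 7:0, SVI in bits 15:8. */
static inline uint8_t intr_status_rvi(uint16_t status)
{
	return (uint8_t)(status & 0xff);
}

static inline uint8_t intr_status_svi(uint16_t status)
{
	return (uint8_t)(status >> 8);
}
```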

◆ vmx_get_segment()

void vmx_get_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition at line 3496 of file vmx.c.

◆ vmx_get_untagged_addr()

gva_t vmx_get_untagged_addr(struct kvm_vcpu *vcpu, gva_t gva, unsigned int flags)

Definition at line 8250 of file vmx.c.

◆ vmx_guest_inject_ac()

bool vmx_guest_inject_ac(struct kvm_vcpu *vcpu)

Definition at line 5174 of file vmx.c.

◆ vmx_guest_state_valid()

bool vmx_guest_state_valid(struct kvm_vcpu *vcpu) [inline, static]

◆ vmx_has_waitpkg()

bool vmx_has_waitpkg(struct vcpu_vmx *vmx) [inline, static]

◆ vmx_interrupt_blocked()

bool vmx_interrupt_blocked(struct kvm_vcpu *vcpu)
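
vmx_interrupt_blocked() reports whether the vCPU can currently accept a maskable interrupt. Architecturally such interrupts are held off when RFLAGS.IF is clear or during the one-instruction shadow after STI or MOV SS, and VMX records those shadows in the guest interruptibility-state field (bit 0 for STI, bit 1 for MOV SS, per the Intel SDM). The sketch models only that architectural predicate with hypothetical names; any additional cases the real function may handle, such as nested guests, are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define RFLAGS_IF          (UINT64_C(1) << 9)  /* interrupt-enable flag */
#define INTR_STATE_STI     (UINT32_C(1) << 0)  /* blocking by STI */
#define INTR_STATE_MOV_SS  (UINT32_C(1) << 1)  /* blocking by MOV SS */

/* Hypothetical model of the "maskable interrupt blocked" predicate. */
static bool model_interrupt_blocked(uint64_t rflags, uint32_t interruptibility)
{
	return !(rflags & RFLAGS_IF) ||
	       (interruptibility & (INTR_STATE_STI | INTR_STATE_MOV_SS));
}
```

NMI blocking (bit 3 of the same field) does not block maskable interrupts, so a state of 0x8 with IF set is reported as unblocked.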

◆ vmx_l1_guest_owned_cr0_bits()

unsigned long vmx_l1_guest_owned_cr0_bits(void) [inline, static]

◆ vmx_need_pf_intercept()

bool vmx_need_pf_intercept(struct kvm_vcpu *vcpu) [inline, static]

◆ vmx_nmi_blocked()

bool vmx_nmi_blocked(struct kvm_vcpu *vcpu)

◆ vmx_passthrough_lbr_msrs()

void vmx_passthrough_lbr_msrs(struct kvm_vcpu *vcpu)

Definition at line 713 of file pmu_intel.c.

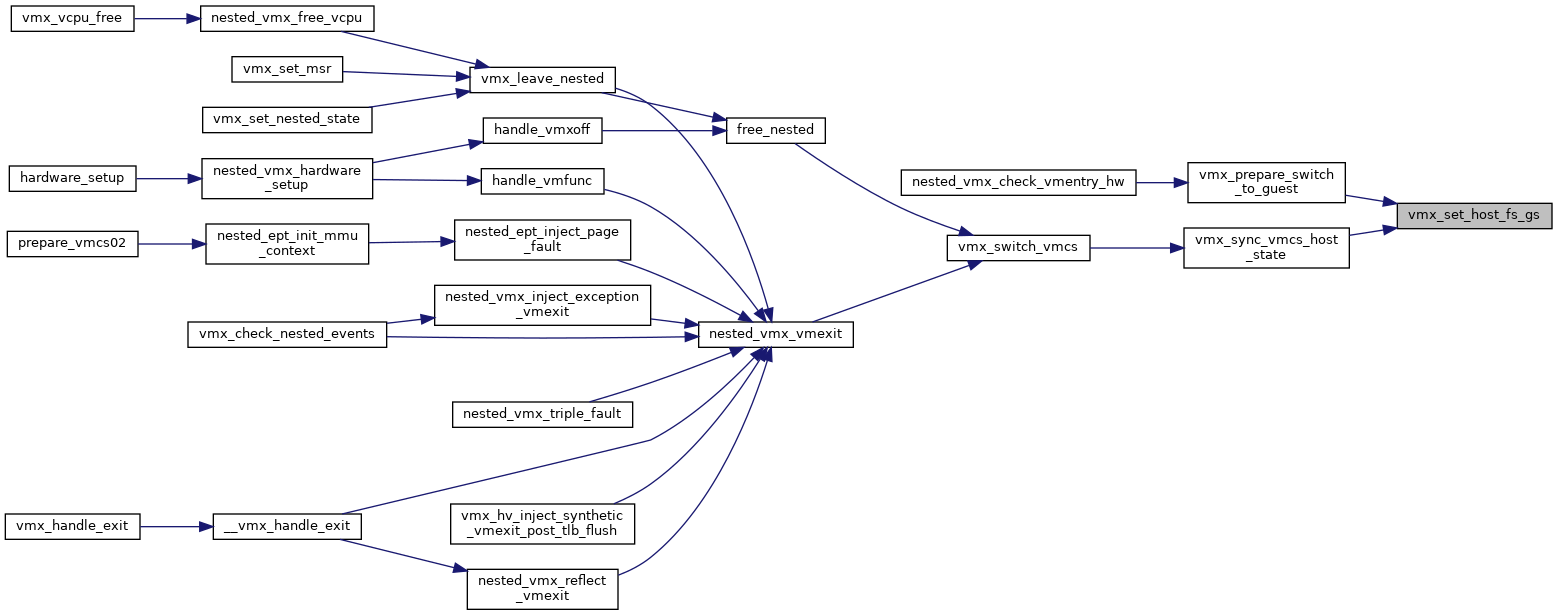

◆ vmx_prepare_switch_to_guest()

void vmx_prepare_switch_to_guest(struct kvm_vcpu *vcpu)

Definition at line 1282 of file vmx.c.

◆ vmx_set_constant_host_state()

void vmx_set_constant_host_state(struct vcpu_vmx *vmx)

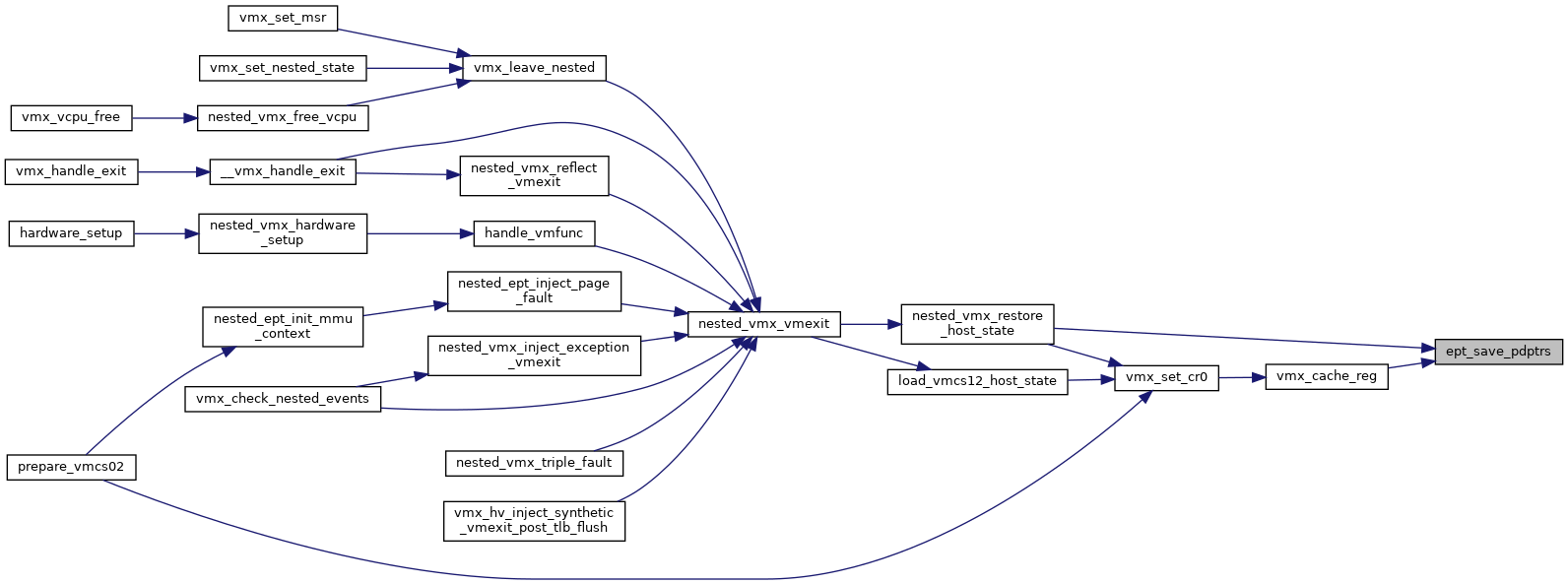

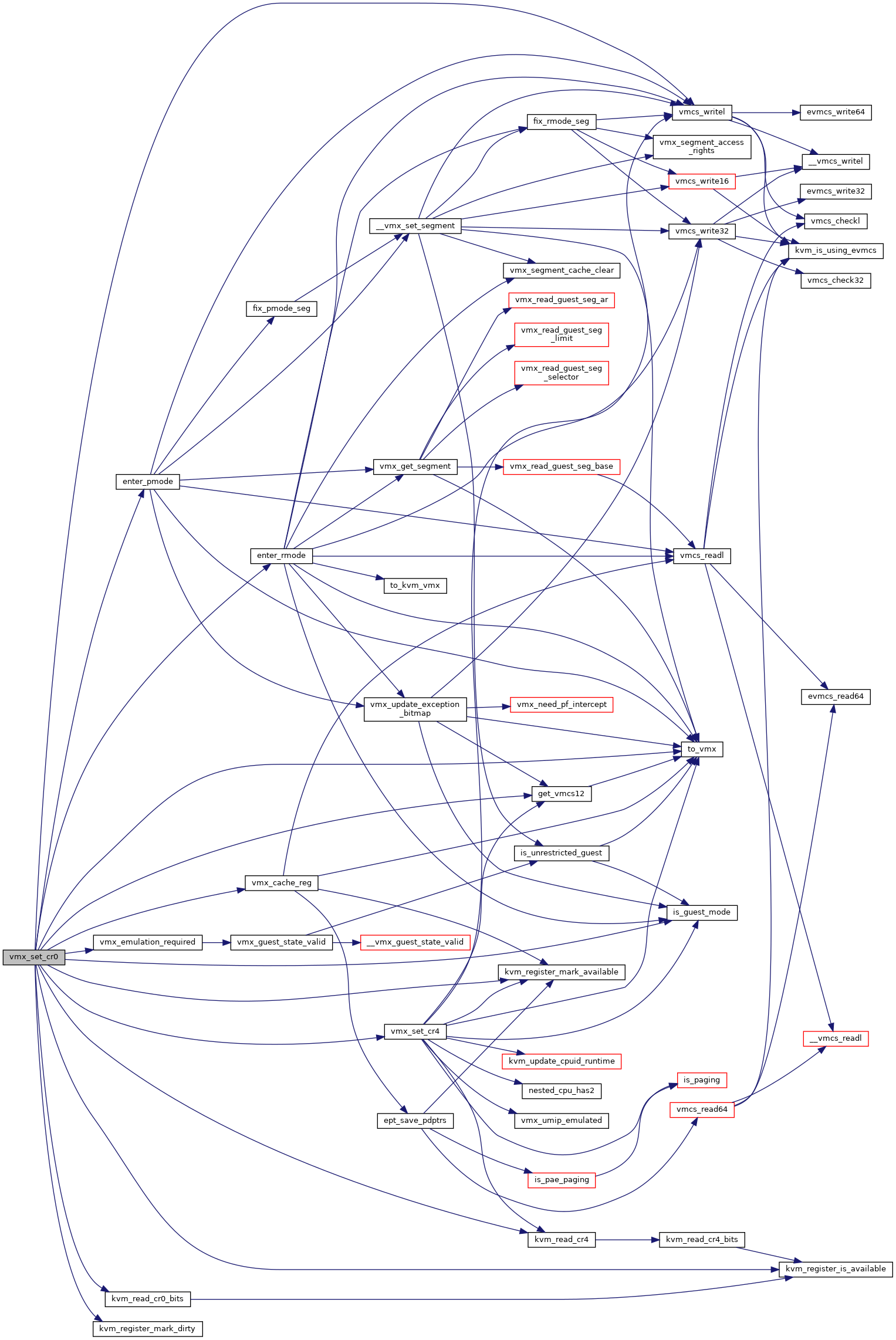

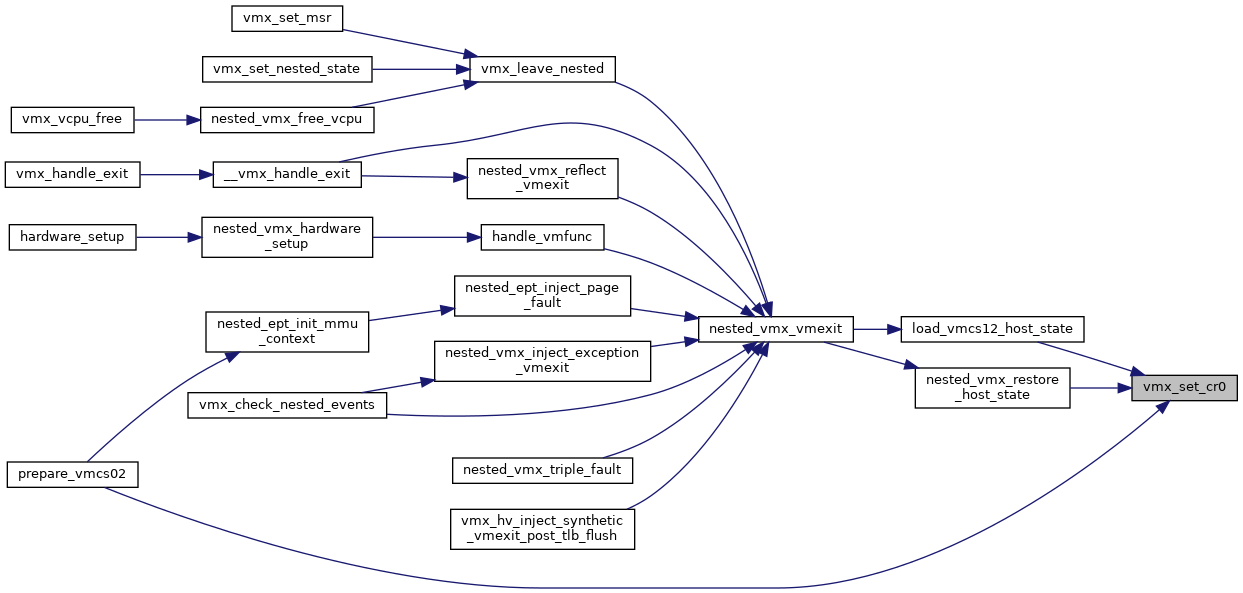

◆ vmx_set_cr0()

void vmx_set_cr0(struct kvm_vcpu *vcpu, unsigned long cr0)

Definition at line 3275 of file vmx.c.

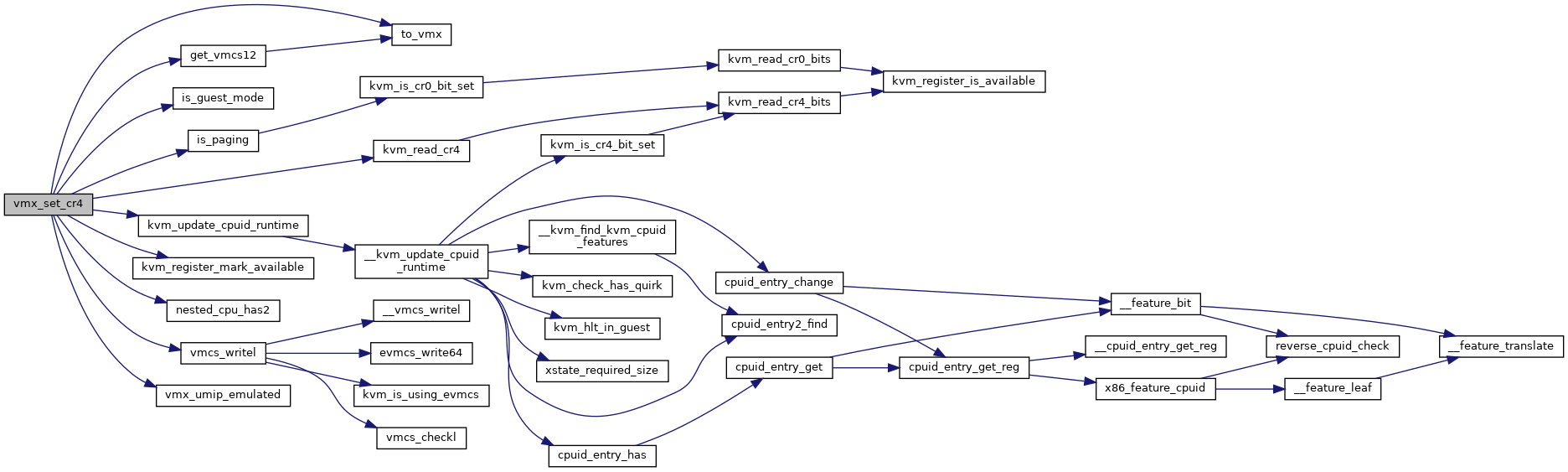

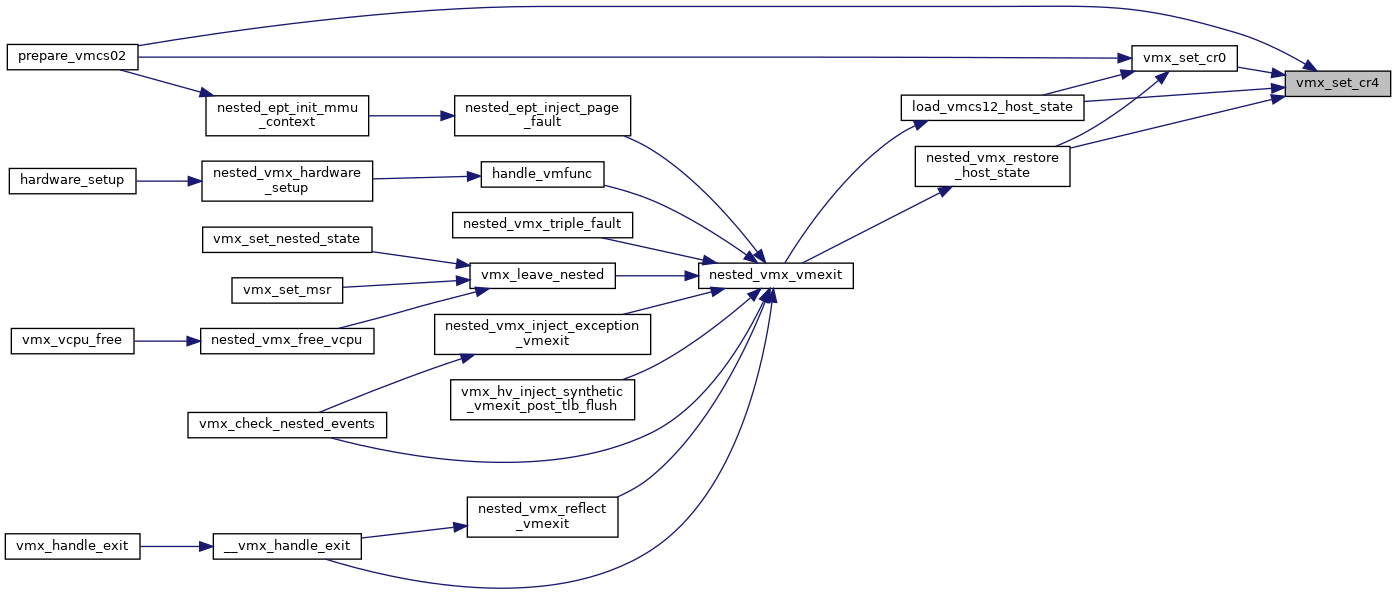

◆ vmx_set_cr4()

void vmx_set_cr4(struct kvm_vcpu *vcpu, unsigned long cr4)

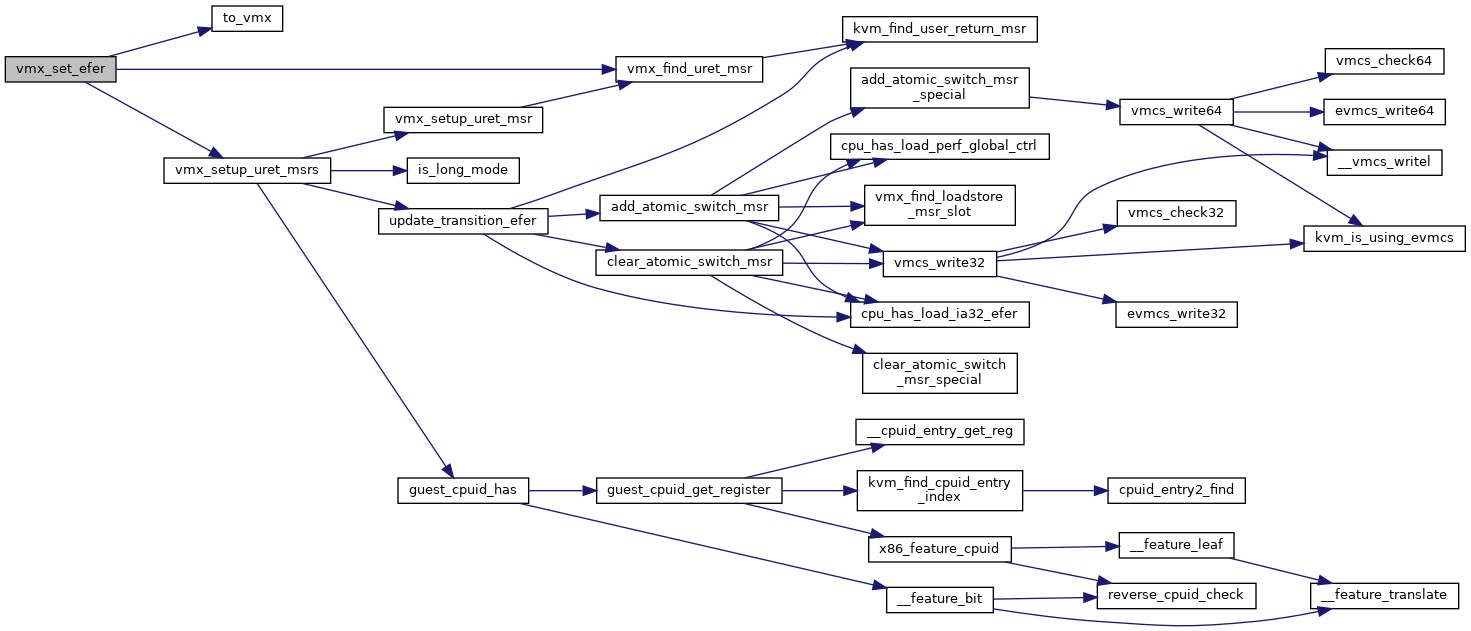

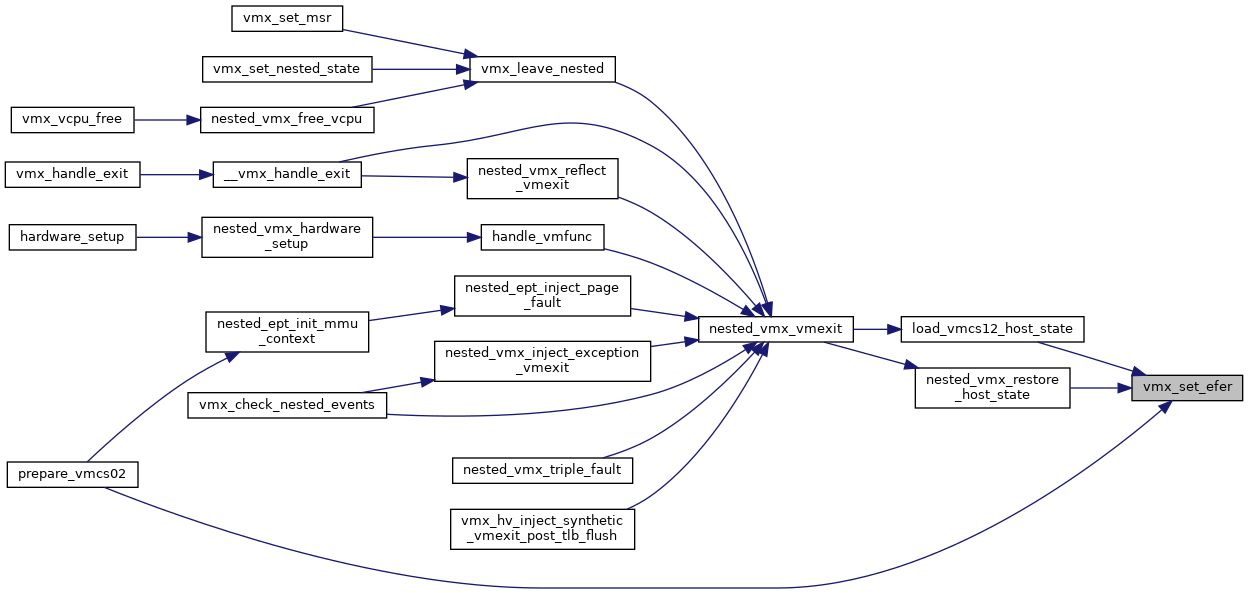

◆ vmx_set_efer()

int vmx_set_efer(struct kvm_vcpu *vcpu, u64 efer)

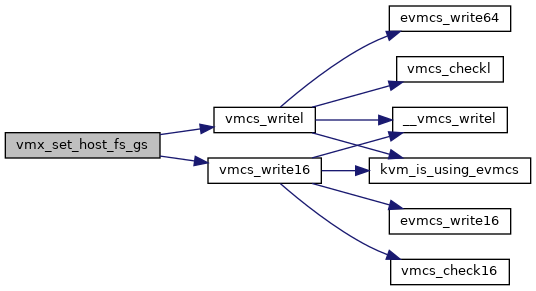

◆ vmx_set_host_fs_gs()

void vmx_set_host_fs_gs(struct vmcs_host_state *host, u16 fs_sel, u16 gs_sel, unsigned long fs_base, unsigned long gs_base)

◆ vmx_set_intercept_for_msr()

void vmx_set_intercept_for_msr(struct kvm_vcpu *vcpu, u32 msr, int type, bool value) [inline, static]

Definition at line 427 of file vmx.h.
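
vmx_set_intercept_for_msr() is a static inline in vmx.h that takes the same vcpu, msr and type arguments as vmx_enable_intercept_for_msr() and vmx_disable_intercept_for_msr(), plus a bool value, suggesting a single entry point that picks one of the two. The sketch models that dispatch in userspace over a per-MSR pair of flags. It assumes the MSR_TYPE_* values compose as bit flags (RW = R | W); the MODEL_ prefix marks them as this sketch's own constants, since their real values are not preserved on this page.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed encoding: RW is the union of the read and write bits. */
#define MODEL_MSR_TYPE_R  1
#define MODEL_MSR_TYPE_W  2
#define MODEL_MSR_TYPE_RW (MODEL_MSR_TYPE_R | MODEL_MSR_TYPE_W)

/* Hypothetical per-MSR intercept state (true = accesses cause exits). */
struct msr_intercept {
	bool read;
	bool write;
};

static void model_enable_intercept(struct msr_intercept *s, int type)
{
	if (type & MODEL_MSR_TYPE_R)
		s->read = true;
	if (type & MODEL_MSR_TYPE_W)
		s->write = true;
}

static void model_disable_intercept(struct msr_intercept *s, int type)
{
	if (type & MODEL_MSR_TYPE_R)
		s->read = false;
	if (type & MODEL_MSR_TYPE_W)
		s->write = false;
}

/* One entry point; @value selects enable (true) or disable (false). */
static void model_set_intercept(struct msr_intercept *s, int type, bool value)
{
	if (value)
		model_enable_intercept(s, type);
	else
		model_disable_intercept(s, type);
}
```

Callers that compute the desired state at run time can then pass it straight through instead of branching at every call site.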

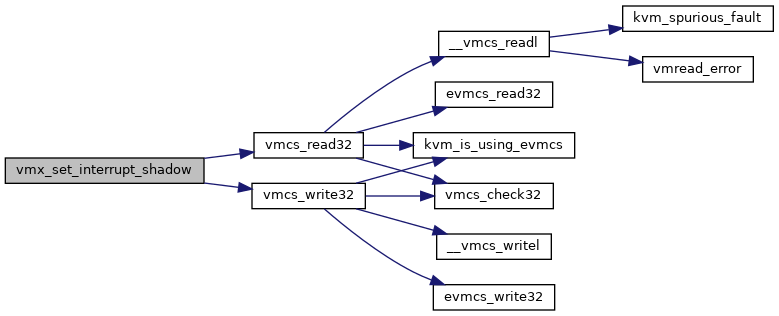

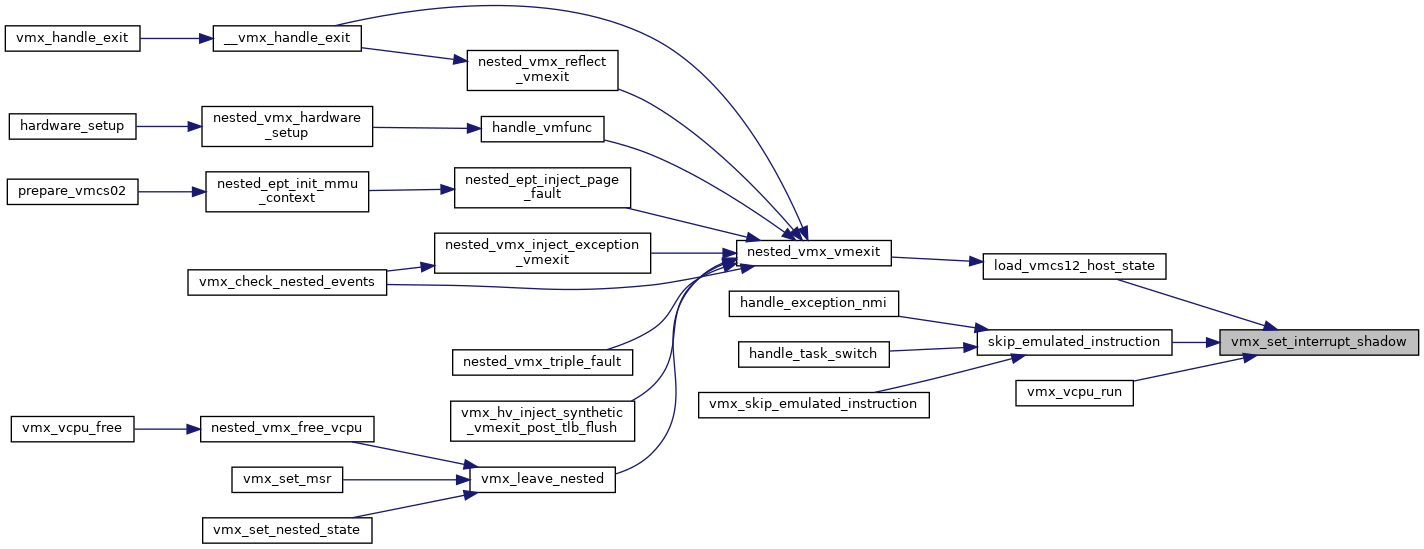

◆ vmx_set_interrupt_shadow()

void vmx_set_interrupt_shadow(struct kvm_vcpu *vcpu, int mask)
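
vmx_get_interrupt_shadow() and vmx_set_interrupt_shadow() translate between the hardware's guest interruptibility-state field and a mask of shadow flags. In that field, bit 0 marks blocking by STI and bit 1 blocking by MOV SS (Intel SDM), and VM entry rejects a state with both set. The sketch below shows one such translation; SHADOW_STI and SHADOW_MOV_SS are hypothetical flag values, and giving MOV SS precedence when both are requested is this sketch's own choice. Other bits in the field, such as NMI blocking, are preserved.

```c
#include <assert.h>
#include <stdint.h>

/* Guest interruptibility-state bits (Intel SDM). */
#define INTR_STATE_STI     (UINT32_C(1) << 0)
#define INTR_STATE_MOV_SS  (UINT32_C(1) << 1)

/* Hypothetical hypervisor-side shadow flags. */
#define SHADOW_STI     1
#define SHADOW_MOV_SS  2

static int shadow_from_intr_state(uint32_t state)
{
	int mask = 0;

	if (state & INTR_STATE_STI)
		mask |= SHADOW_STI;
	if (state & INTR_STATE_MOV_SS)
		mask |= SHADOW_MOV_SS;
	return mask;
}

/* Returns the updated field, writing at most one shadow bit because VM
 * entry rejects STI and MOV SS blocking set together. */
static uint32_t intr_state_with_shadow(uint32_t state, int mask)
{
	state &= ~(INTR_STATE_STI | INTR_STATE_MOV_SS);
	if (mask & SHADOW_MOV_SS)
		state |= INTR_STATE_MOV_SS;
	else if (mask & SHADOW_STI)
		state |= INTR_STATE_STI;
	return state;
}
```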

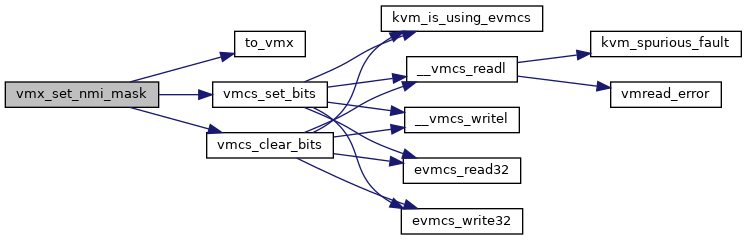

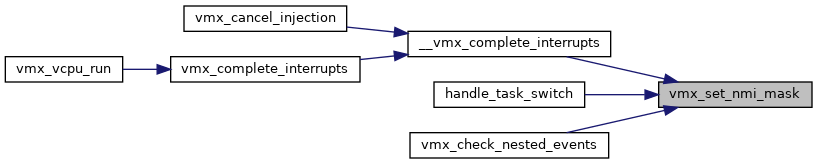

◆ vmx_set_nmi_mask()

void vmx_set_nmi_mask(struct kvm_vcpu *vcpu, bool masked)

Definition at line 5005 of file vmx.c.

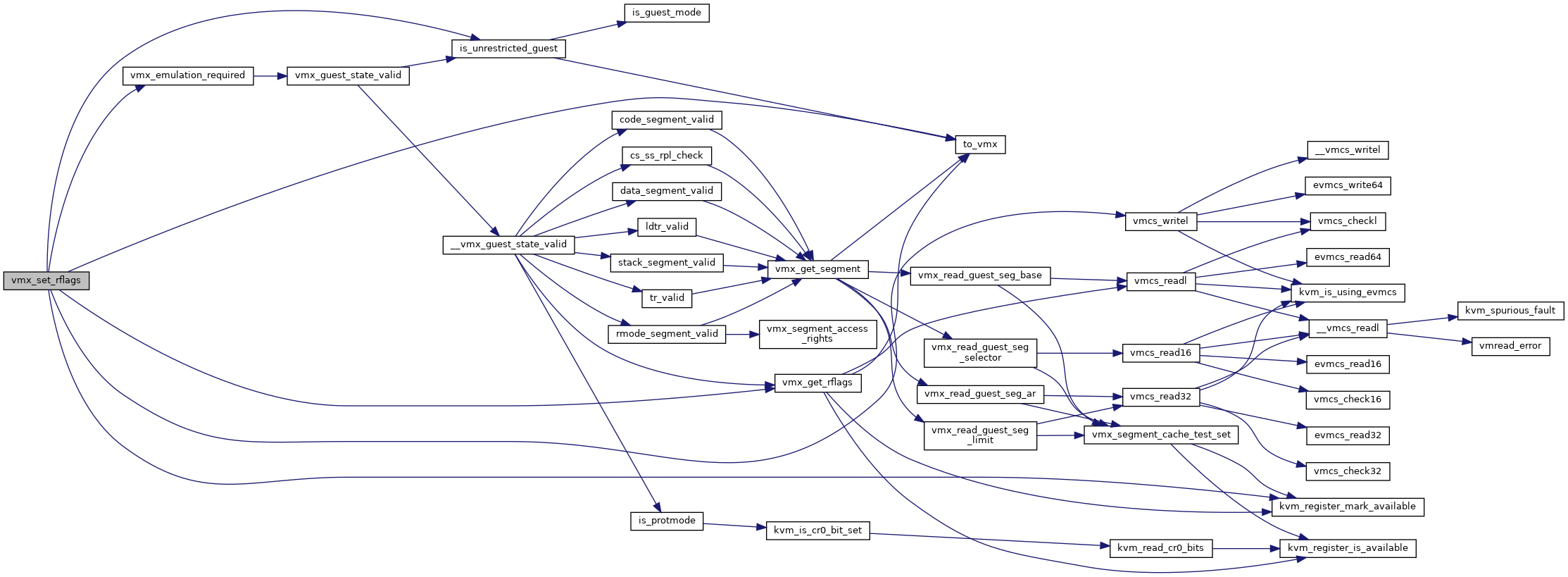

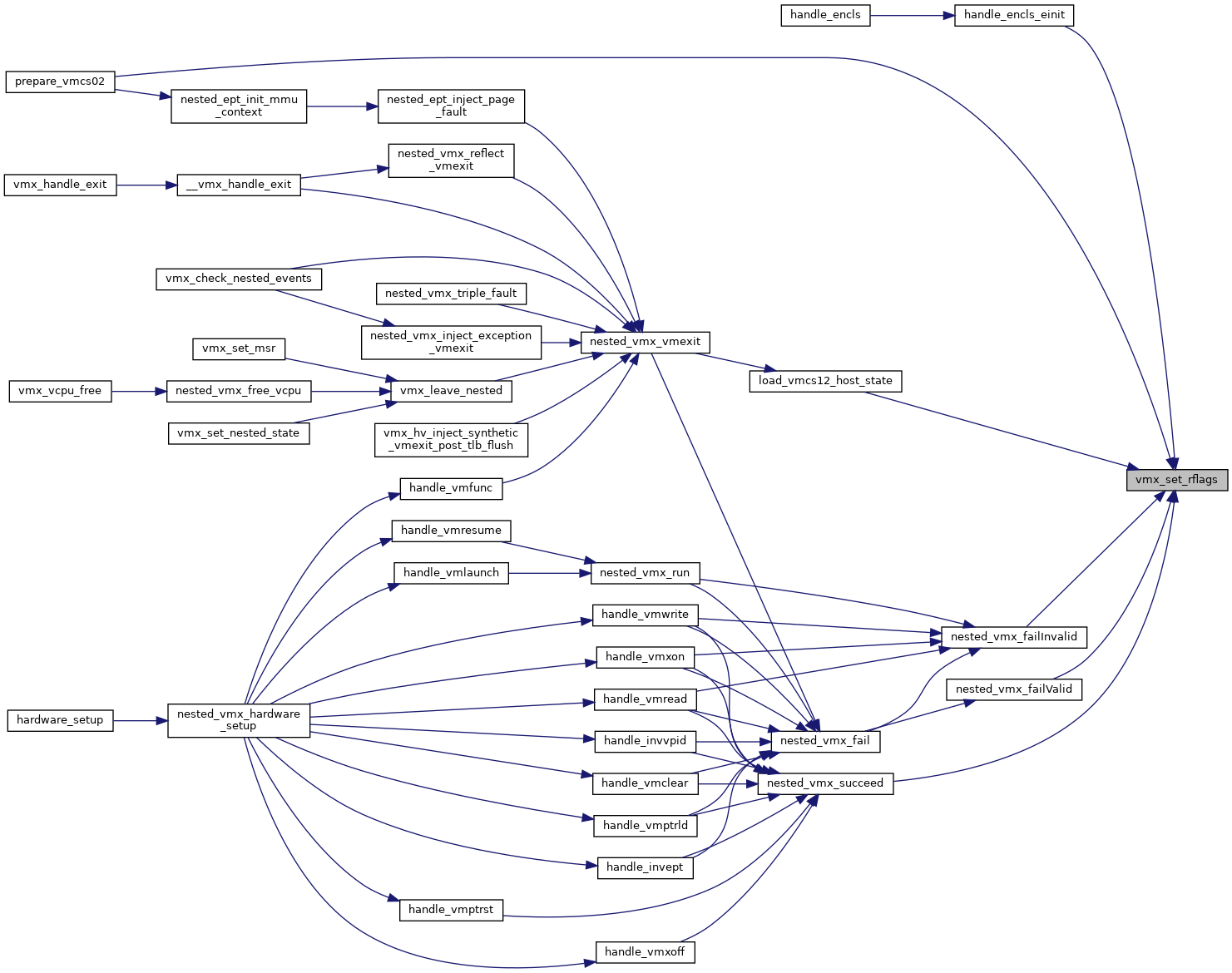

◆ vmx_set_rflags()

void vmx_set_rflags(struct kvm_vcpu *vcpu, unsigned long rflags)

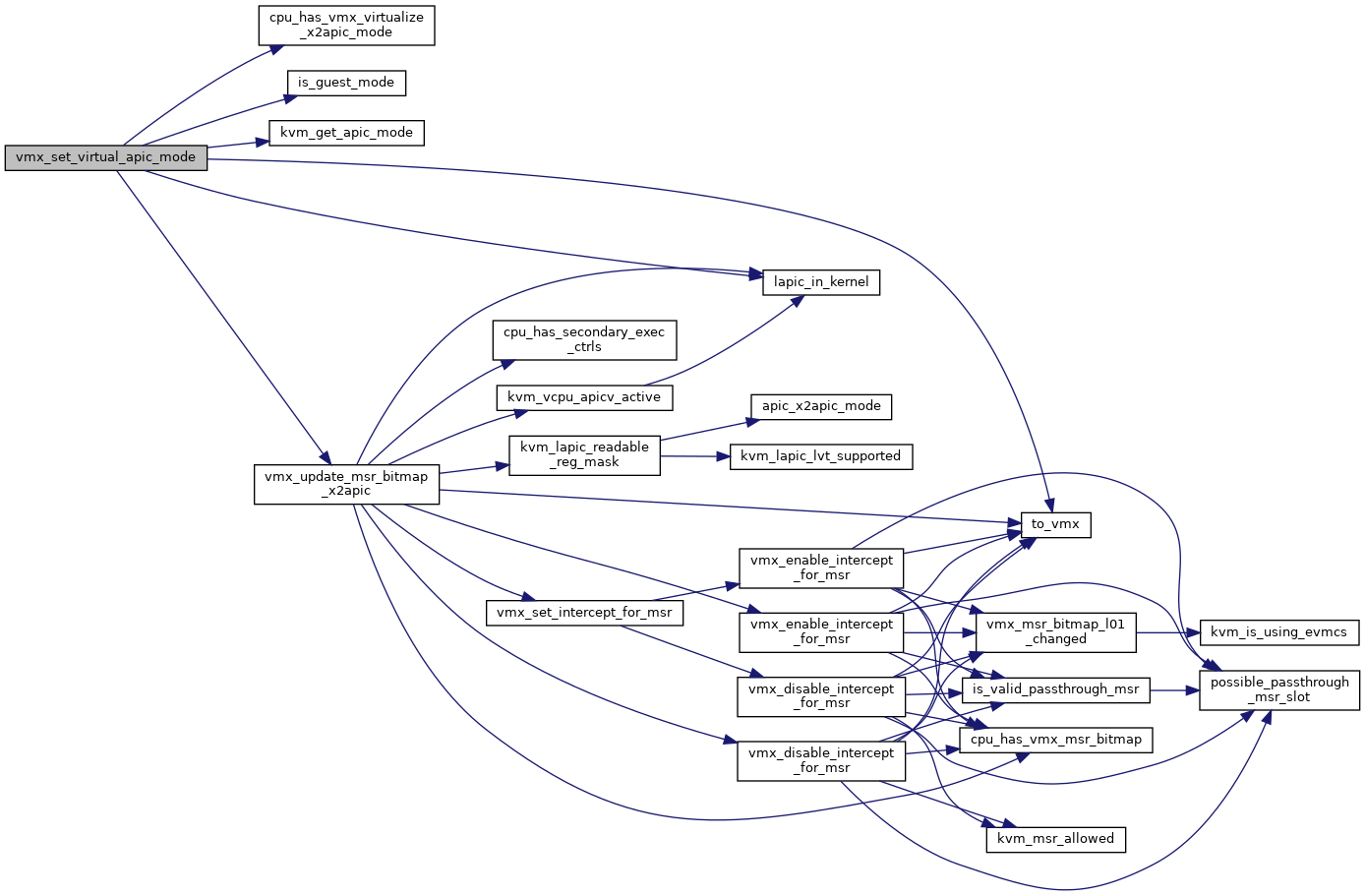

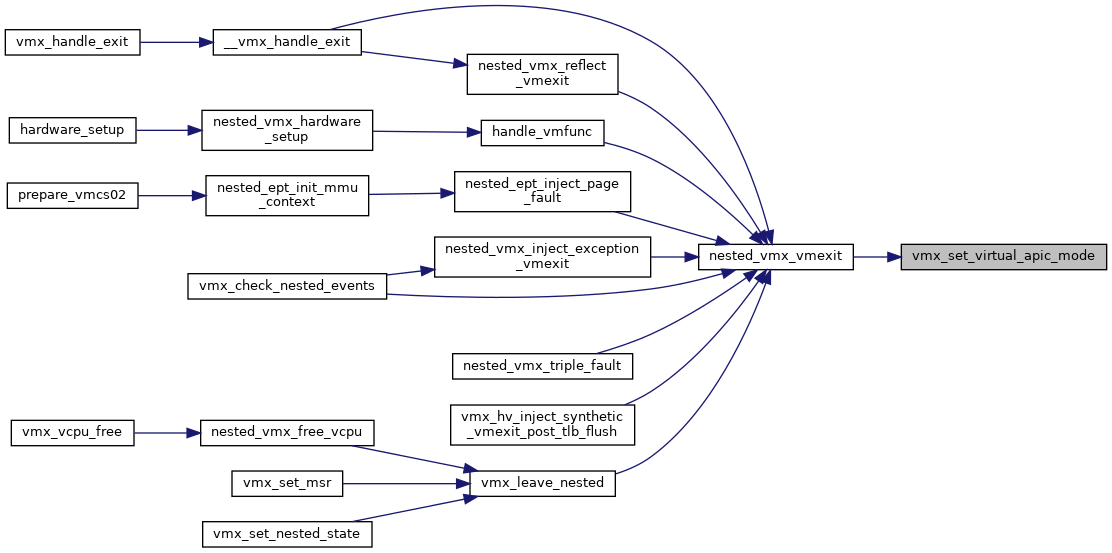

◆ vmx_set_virtual_apic_mode()

void vmx_set_virtual_apic_mode(struct kvm_vcpu *vcpu)

Definition at line 6694 of file vmx.c.

◆ vmx_spec_ctrl_restore_host()

void vmx_spec_ctrl_restore_host(struct vcpu_vmx *vmx, unsigned int flags)

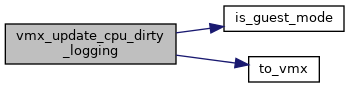

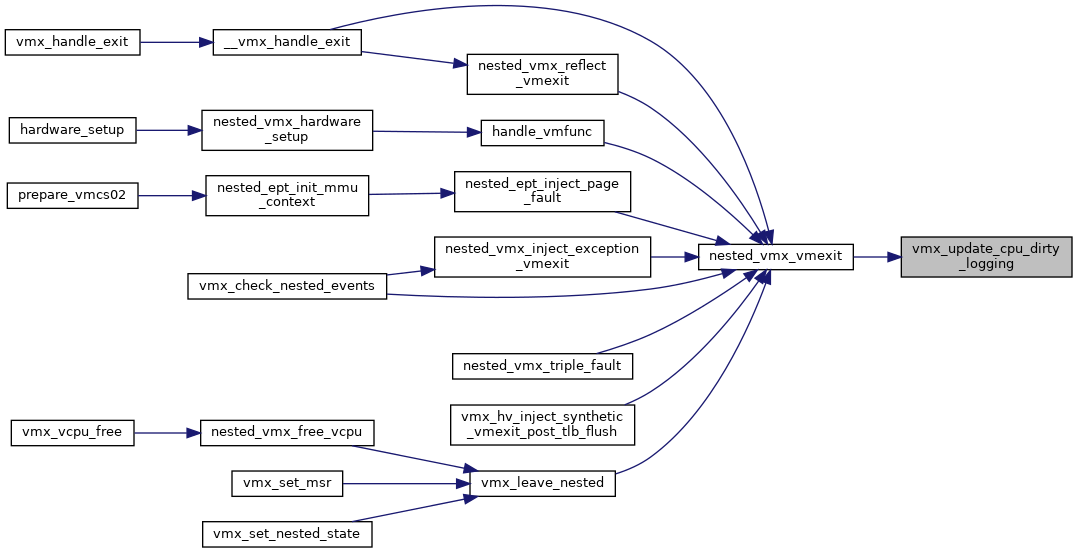

◆ vmx_update_cpu_dirty_logging()

void vmx_update_cpu_dirty_logging(struct kvm_vcpu *vcpu)

◆ vmx_update_exception_bitmap()

void vmx_update_exception_bitmap(struct kvm_vcpu *vcpu)

◆ vmx_update_host_rsp()

void vmx_update_host_rsp(struct vcpu_vmx *vmx, unsigned long host_rsp)

◆ vmx_vcpu_load_vmcs()

void vmx_vcpu_load_vmcs(struct kvm_vcpu *vcpu, int cpu, struct loaded_vmcs *buddy)