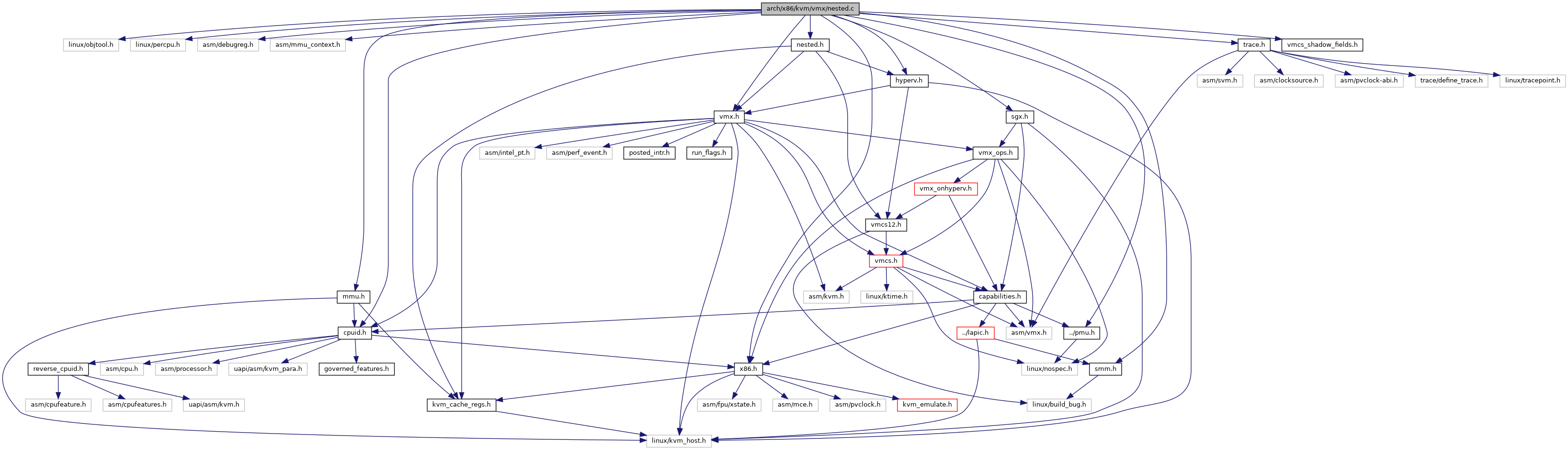

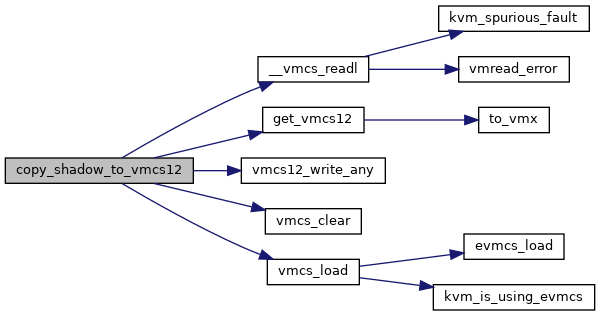

#include <linux/objtool.h>
#include <linux/percpu.h>
#include <asm/debugreg.h>
#include <asm/mmu_context.h>
#include "cpuid.h"
#include "hyperv.h"
#include "mmu.h"
#include "nested.h"
#include "pmu.h"
#include "sgx.h"
#include "trace.h"
#include "vmx.h"
#include "x86.h"
#include "smm.h"
#include "vmcs_shadow_fields.h"

Classes

| struct | shadow_vmcs_field |

Macros

| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

| #define | CC KVM_NESTED_VMENTER_CONSISTENCY_CHECK |

| #define | VMX_VPID_EXTENT_SUPPORTED_MASK |

| #define | VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE 5 |

| #define | vmx_vmread_bitmap (vmx_bitmap[VMX_VMREAD_BITMAP]) |

| #define | vmx_vmwrite_bitmap (vmx_bitmap[VMX_VMWRITE_BITMAP]) |

| #define | SHADOW_FIELD_RO(x, y) { x, offsetof(struct vmcs12, y) }, |

| #define | SHADOW_FIELD_RW(x, y) { x, offsetof(struct vmcs12, y) }, |

| #define | EPTP_PA_MASK GENMASK_ULL(51, 12) |

| #define | BUILD_NVMX_MSR_INTERCEPT_HELPER(rw) |

| #define | SHADOW_FIELD_RW(x, y) case x: |

| #define | SHADOW_FIELD_RO(x, y) case x: |

| #define | VMCS12_IDX_TO_ENC(idx) ((u16)(((u16)(idx) >> 6) | ((u16)(idx) << 10))) |

| #define | VMXON_CR0_ALWAYSON (X86_CR0_PE | X86_CR0_PG | X86_CR0_NE) |

| #define | VMXON_CR4_ALWAYSON X86_CR4_VMXE |

Enumerations

| enum | { VMX_VMREAD_BITMAP, VMX_VMWRITE_BITMAP, VMX_BITMAP_NR } |

Functions

| module_param_named (enable_shadow_vmcs, enable_shadow_vmcs, bool, S_IRUGO) | |

| module_param (nested_early_check, bool, S_IRUGO) | |

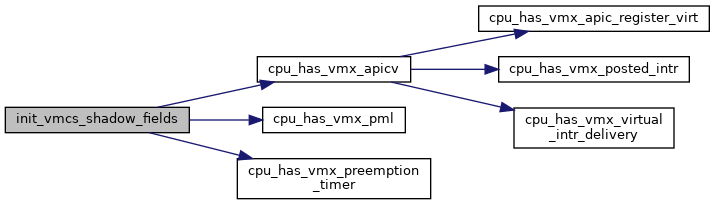

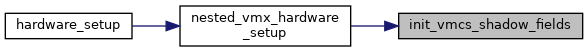

| static void | init_vmcs_shadow_fields (void) |

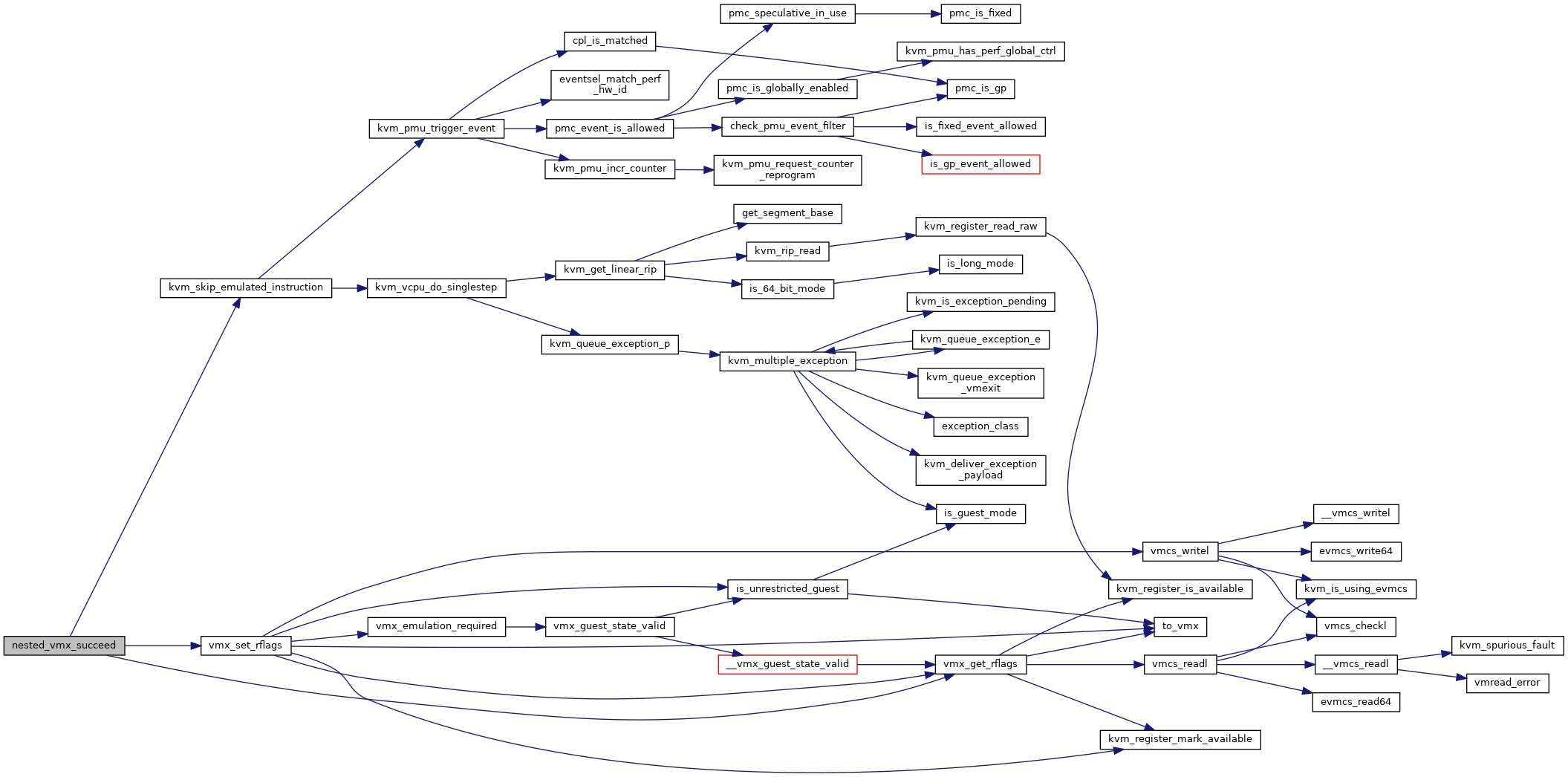

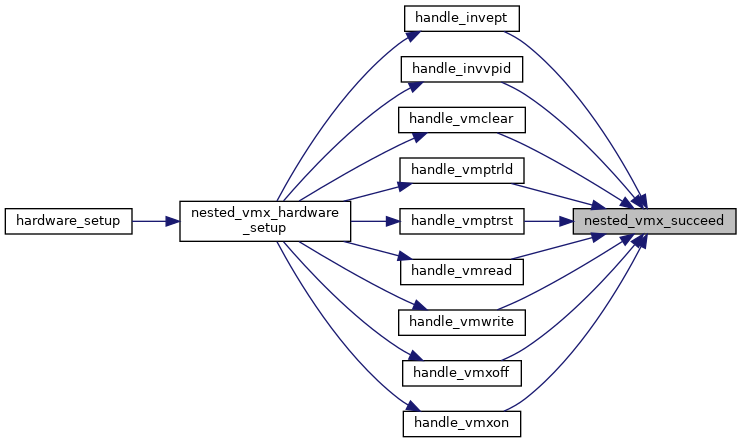

| static int | nested_vmx_succeed (struct kvm_vcpu *vcpu) |

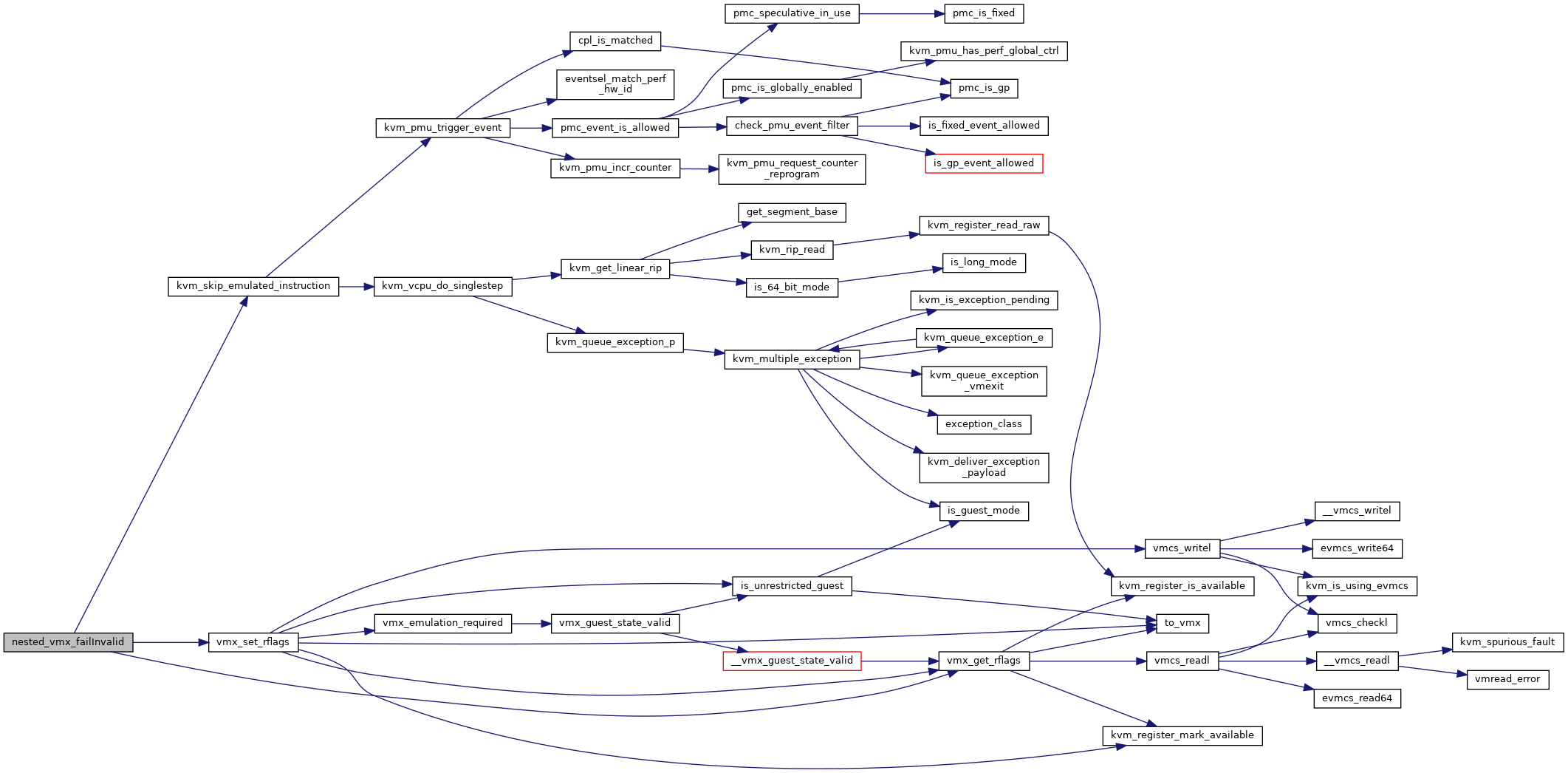

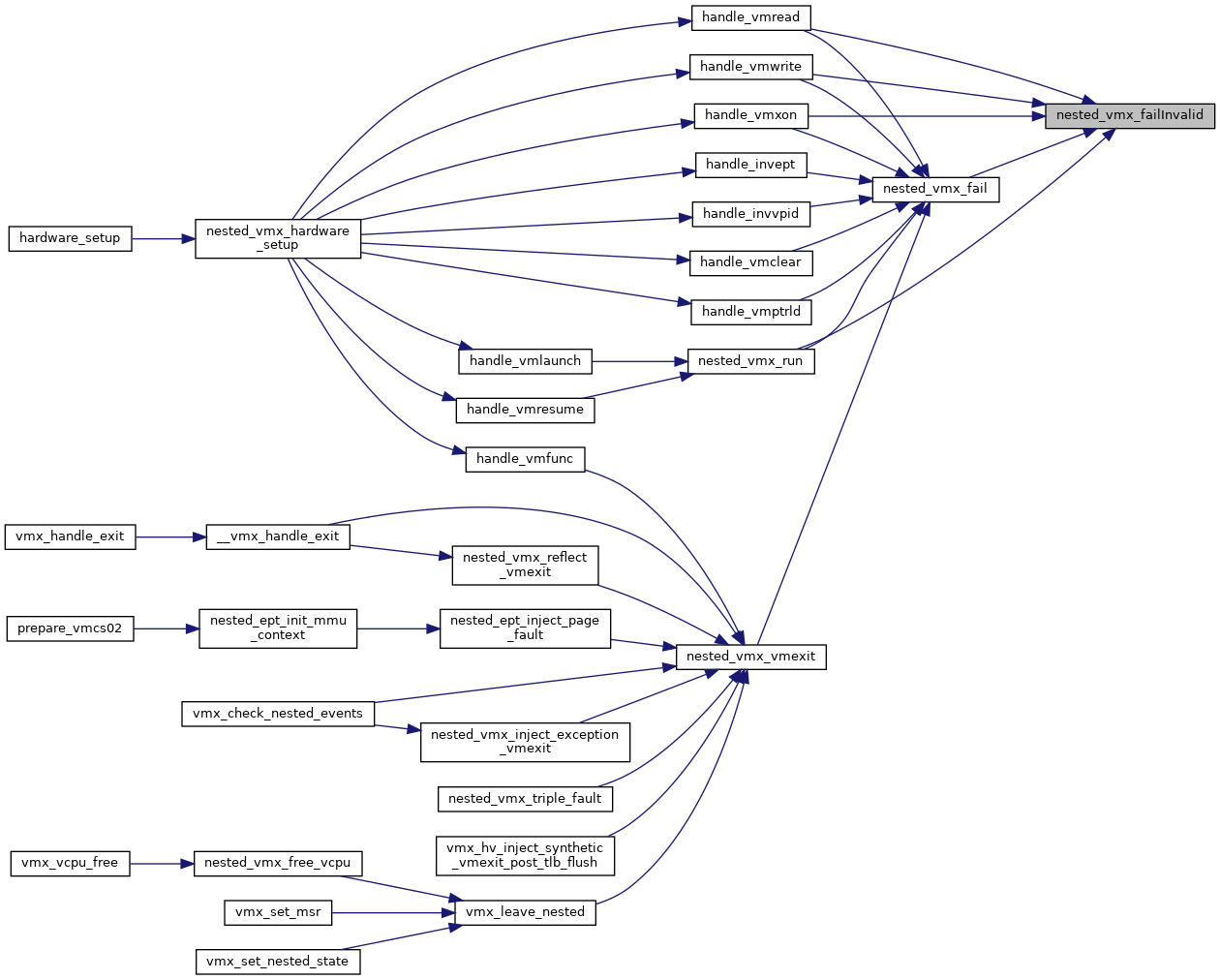

| static int | nested_vmx_failInvalid (struct kvm_vcpu *vcpu) |

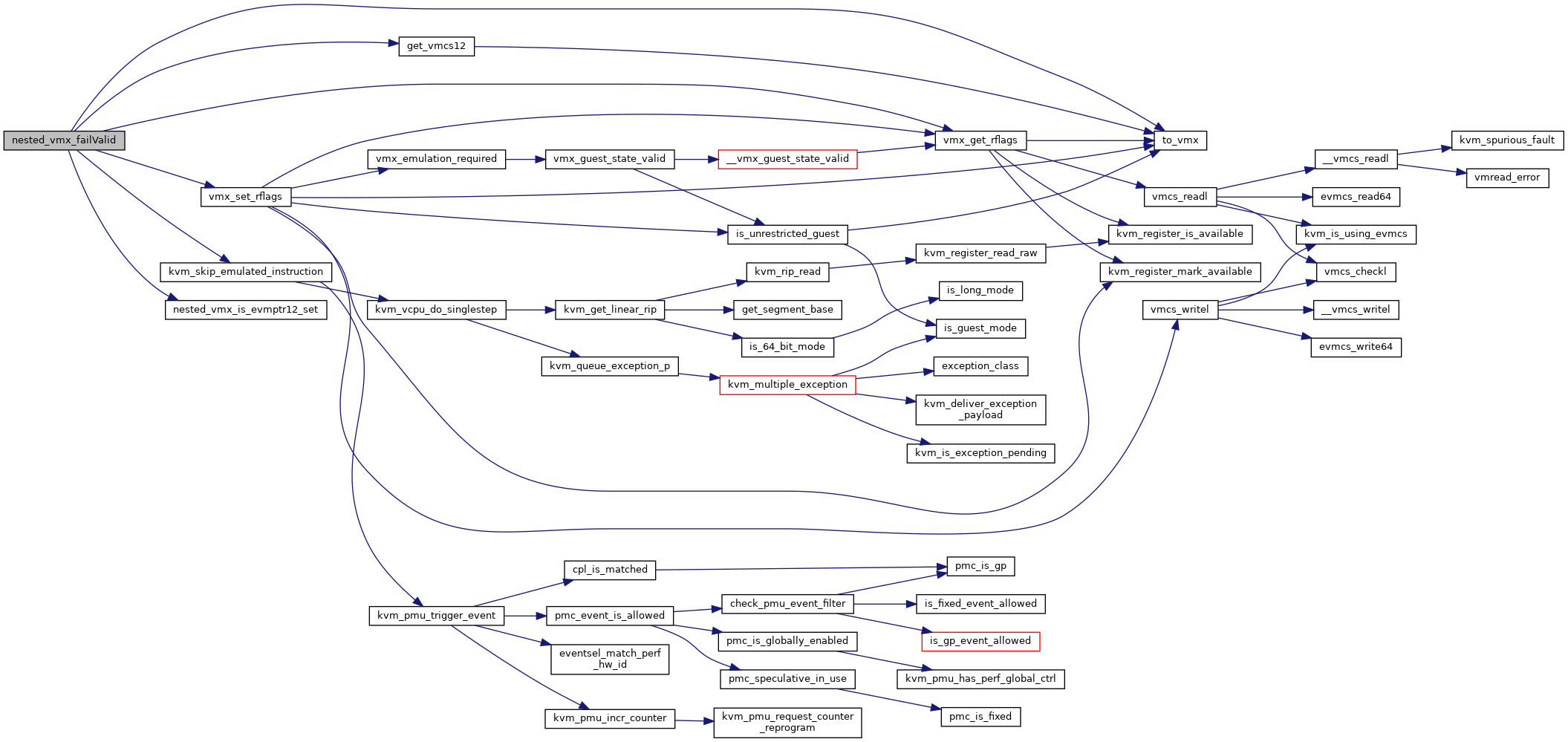

| static int | nested_vmx_failValid (struct kvm_vcpu *vcpu, u32 vm_instruction_error) |

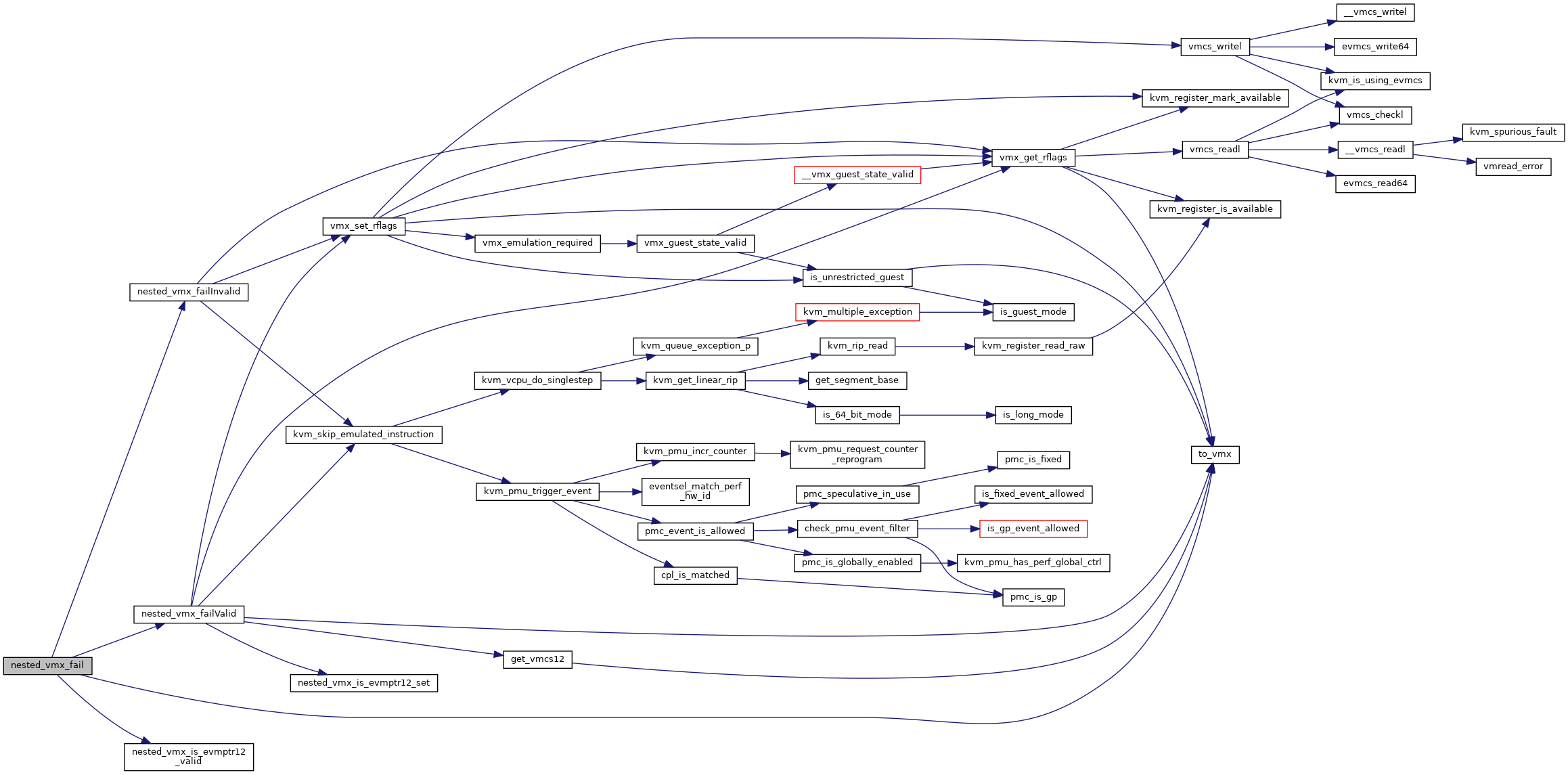

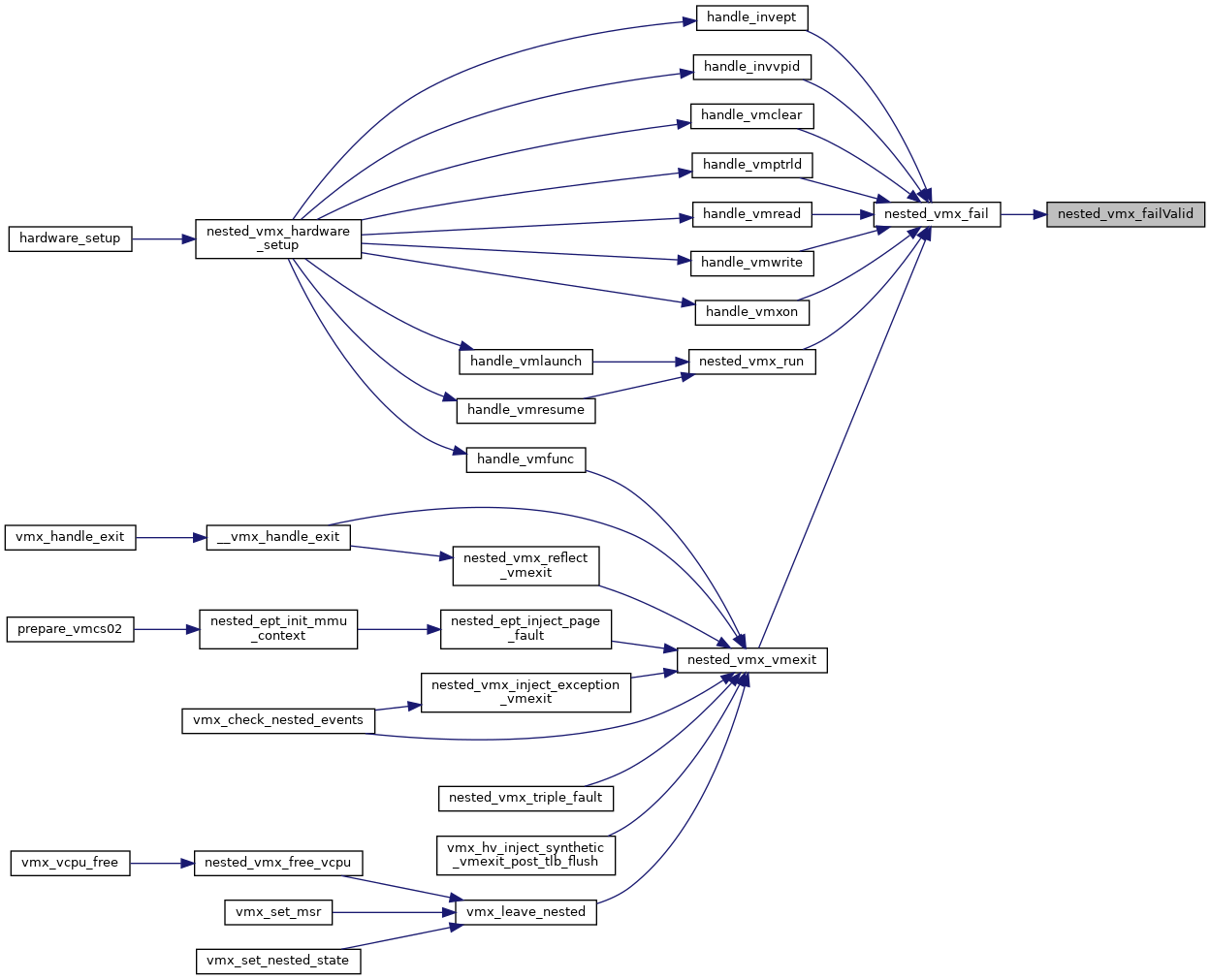

| static int | nested_vmx_fail (struct kvm_vcpu *vcpu, u32 vm_instruction_error) |

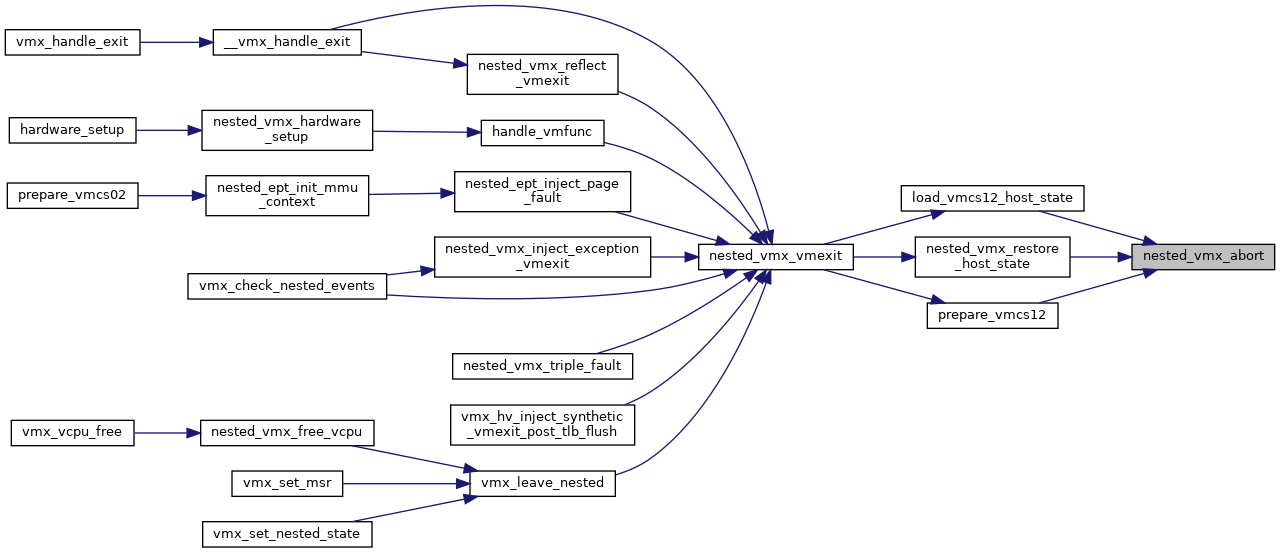

| static void | nested_vmx_abort (struct kvm_vcpu *vcpu, u32 indicator) |

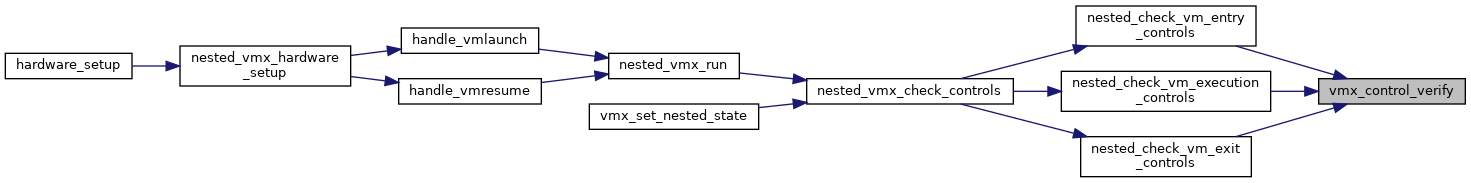

| static bool | vmx_control_verify (u32 control, u32 low, u32 high) |

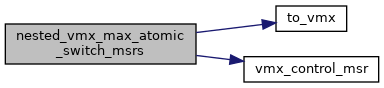

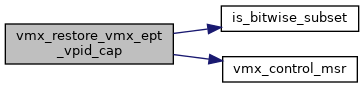

| static u64 | vmx_control_msr (u32 low, u32 high) |

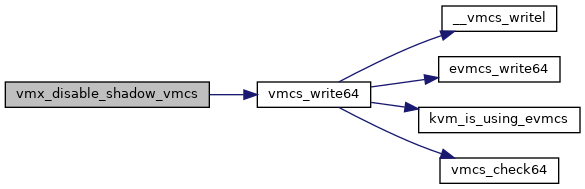

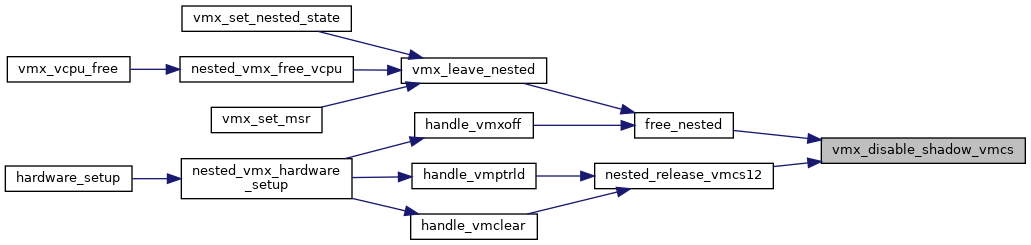

| static void | vmx_disable_shadow_vmcs (struct vcpu_vmx *vmx) |

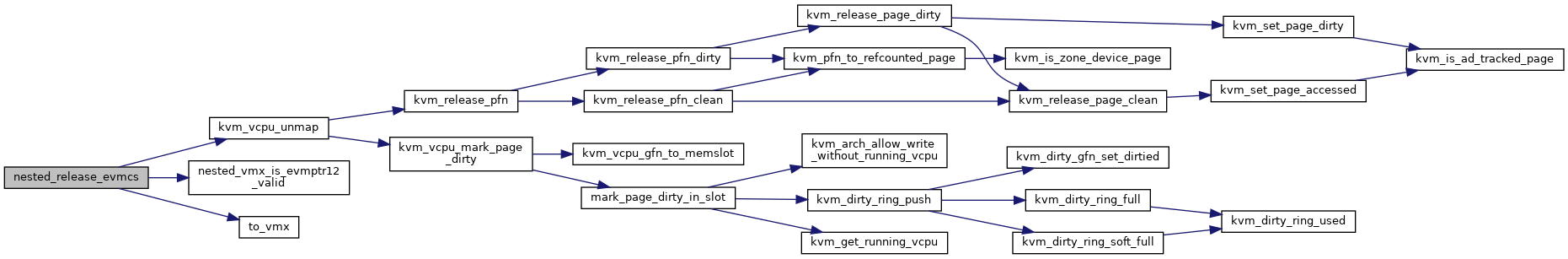

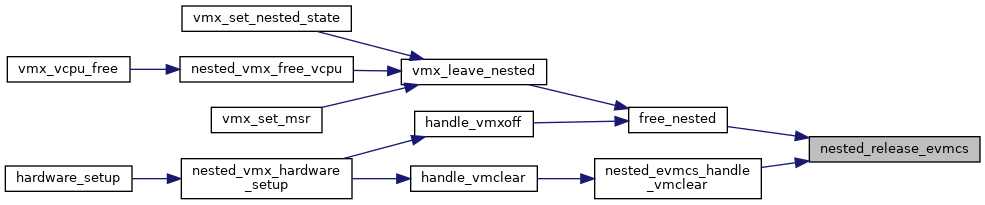

| static void | nested_release_evmcs (struct kvm_vcpu *vcpu) |

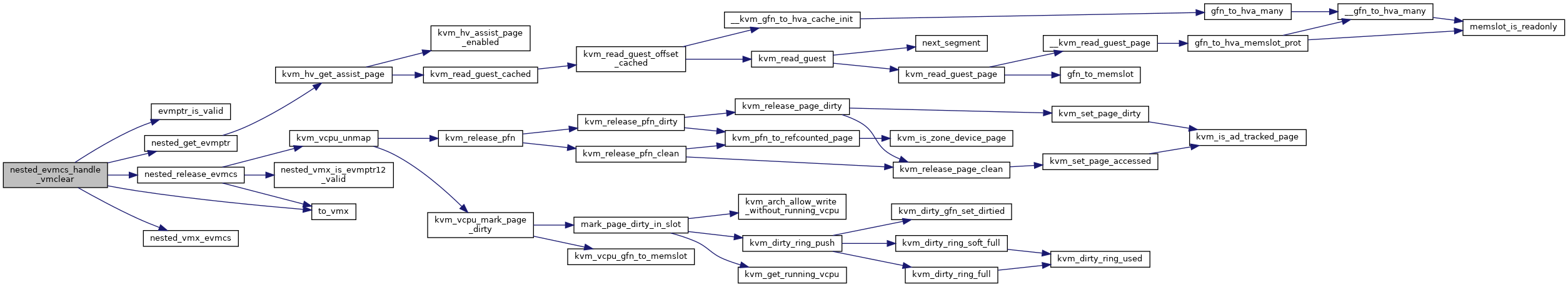

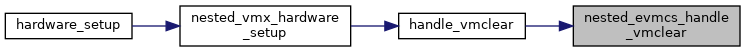

| static bool | nested_evmcs_handle_vmclear (struct kvm_vcpu *vcpu, gpa_t vmptr) |

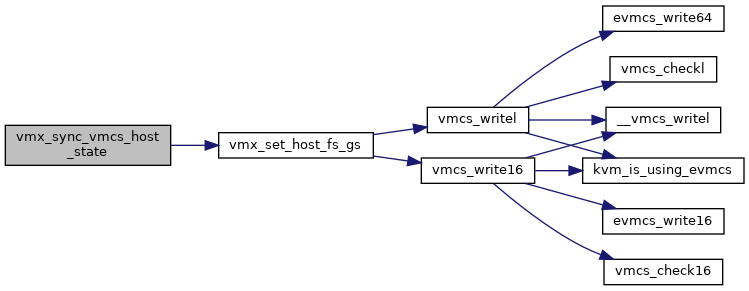

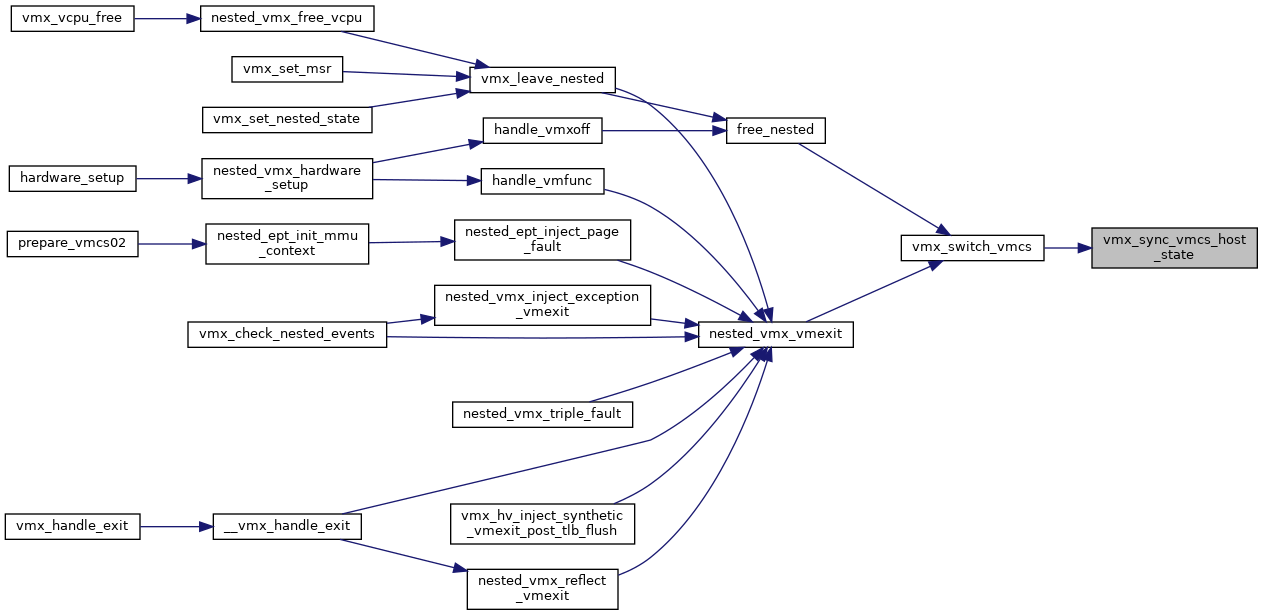

| static void | vmx_sync_vmcs_host_state (struct vcpu_vmx *vmx, struct loaded_vmcs *prev) |

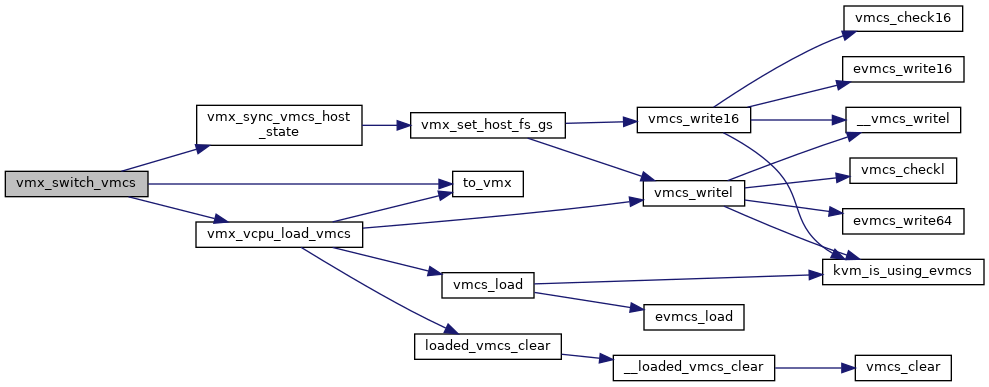

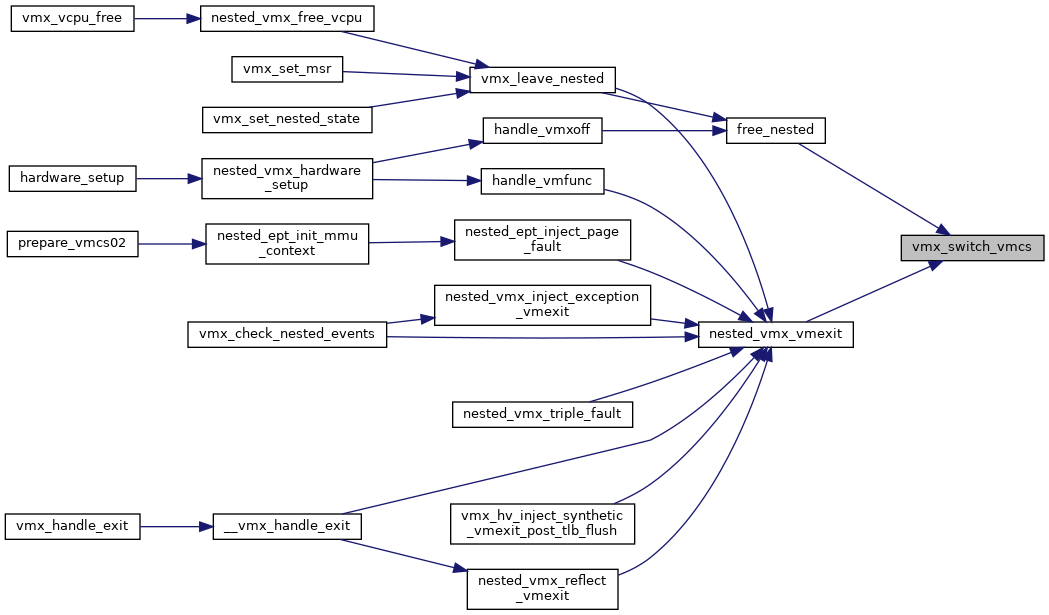

| static void | vmx_switch_vmcs (struct kvm_vcpu *vcpu, struct loaded_vmcs *vmcs) |

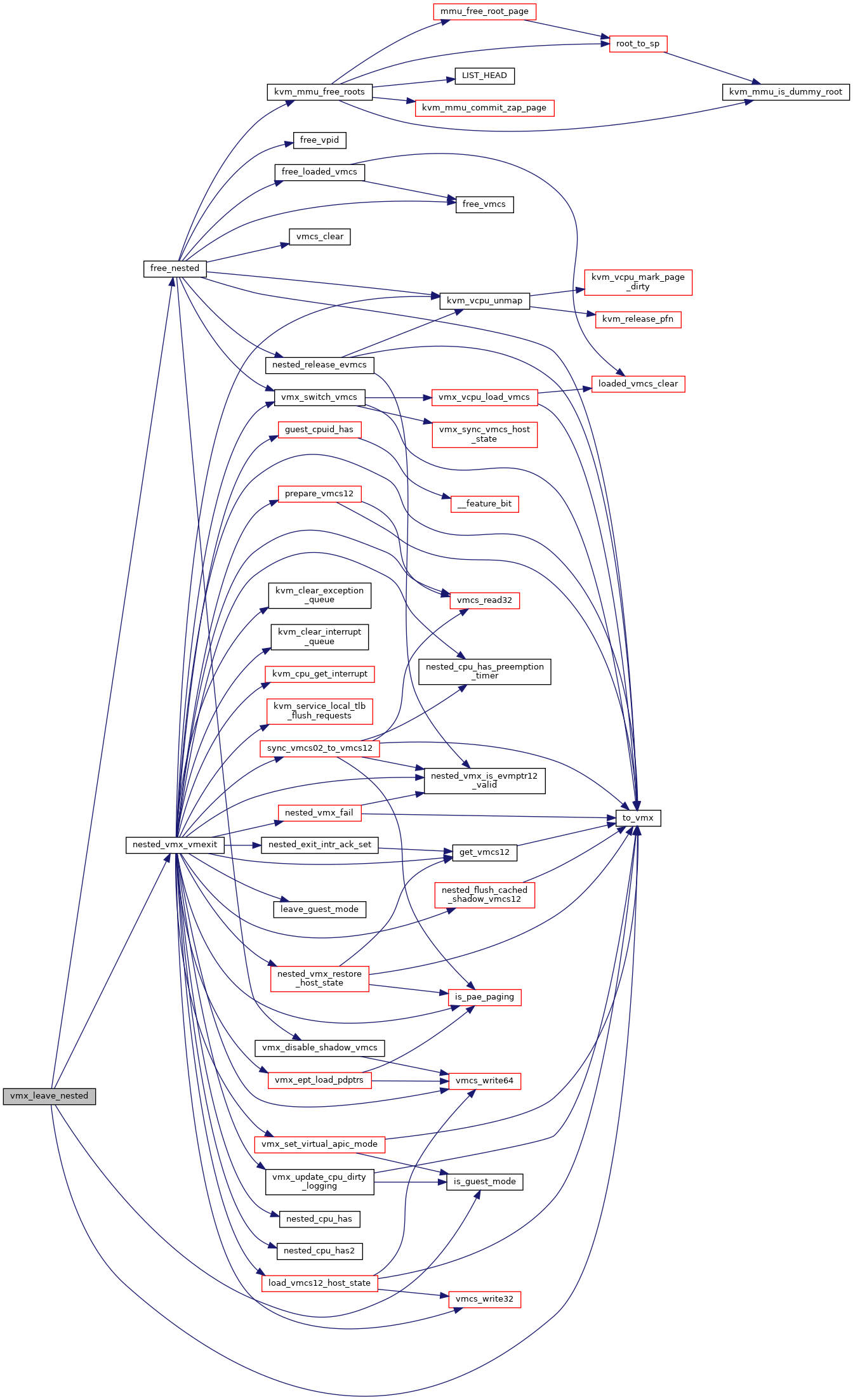

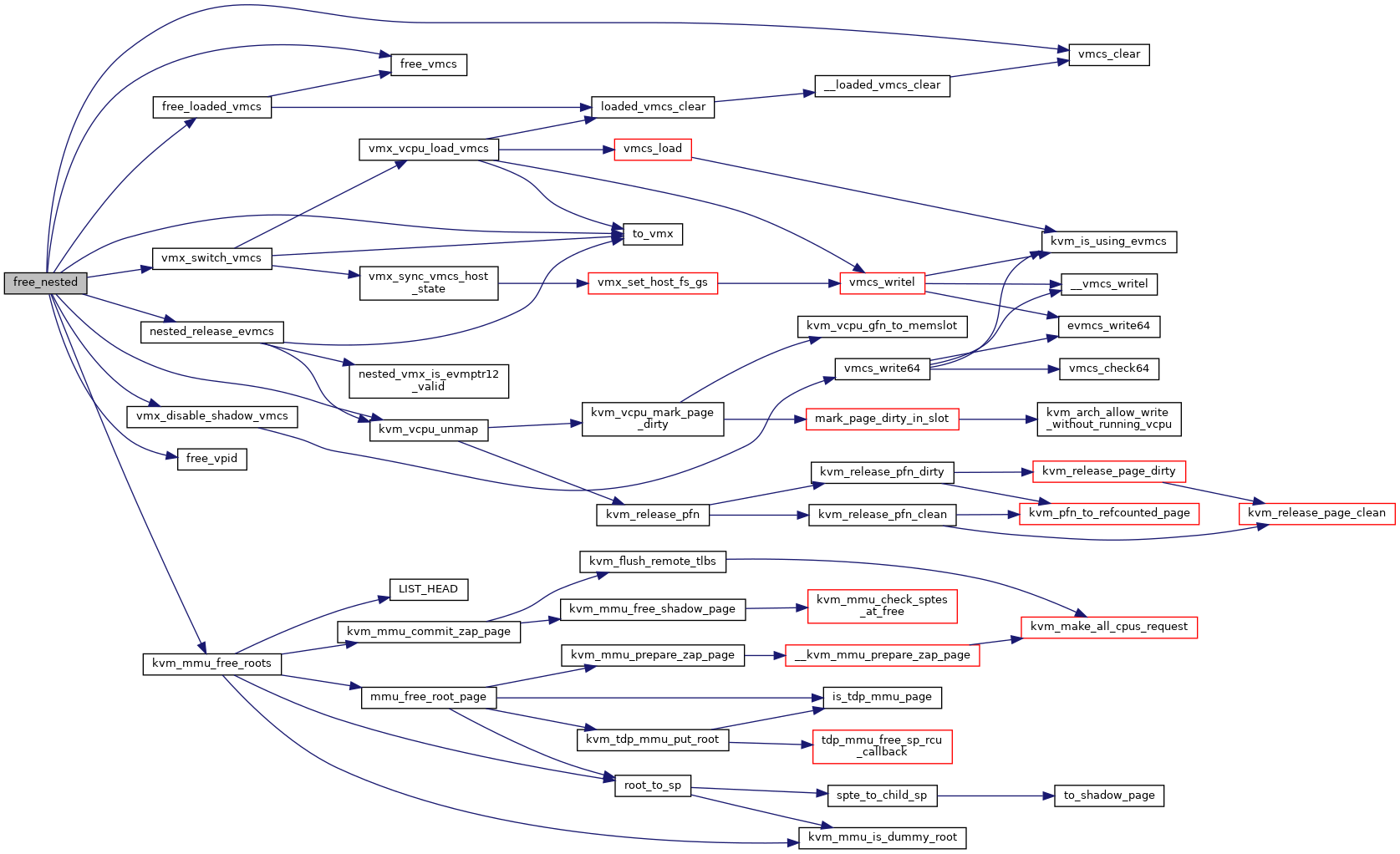

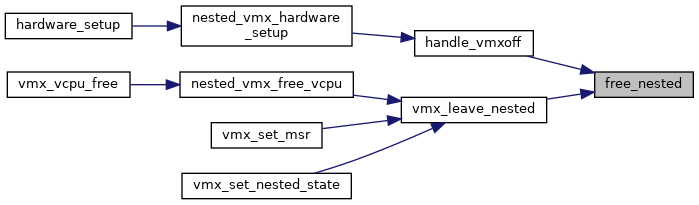

| static void | free_nested (struct kvm_vcpu *vcpu) |

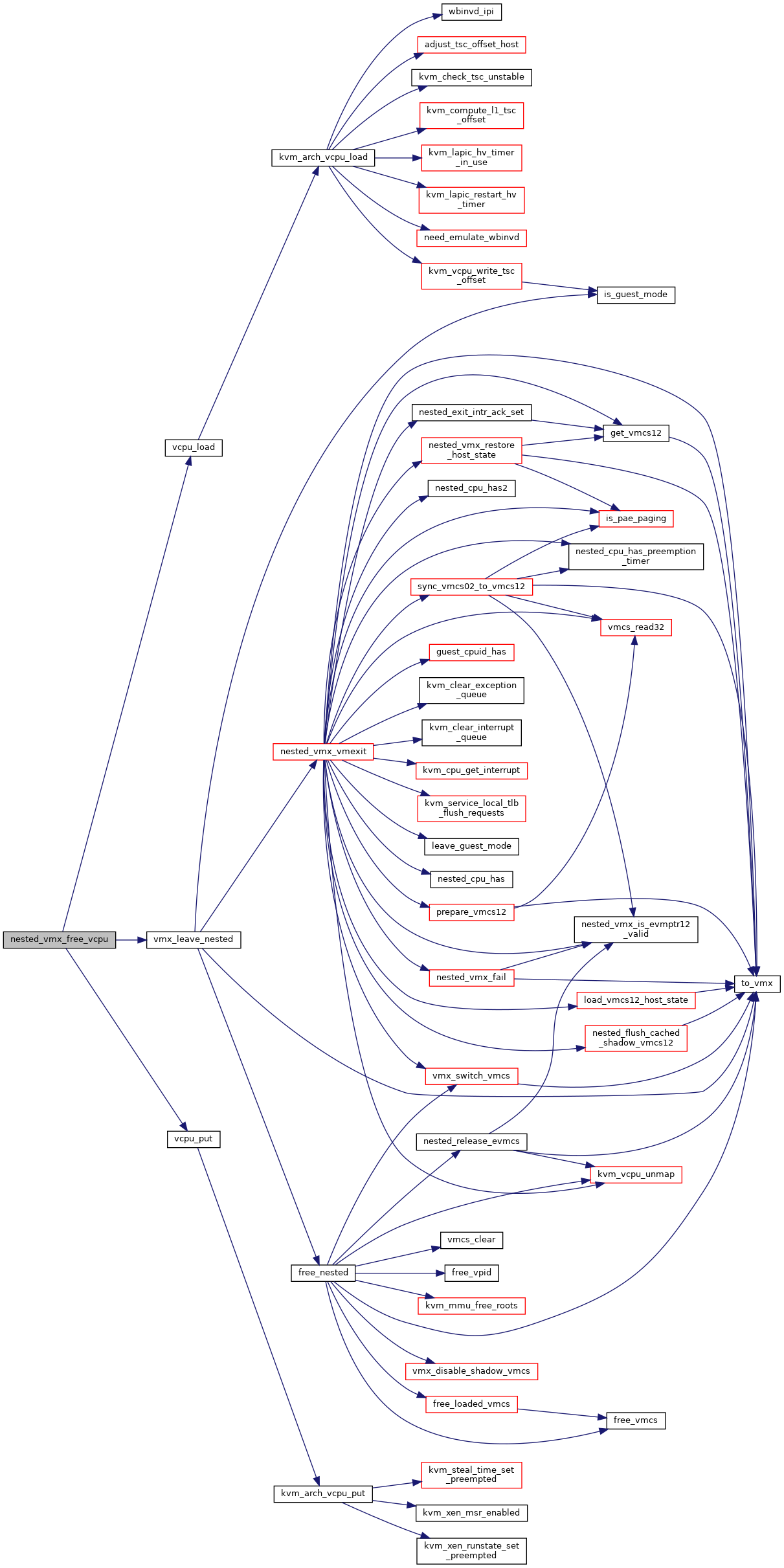

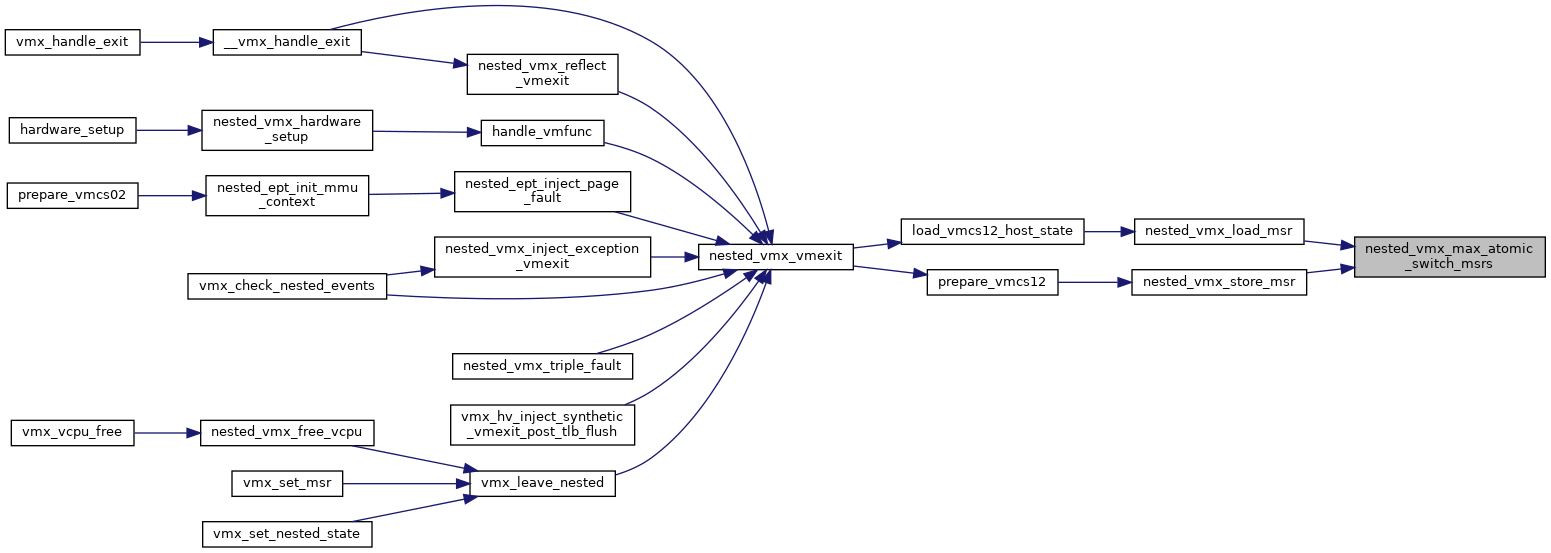

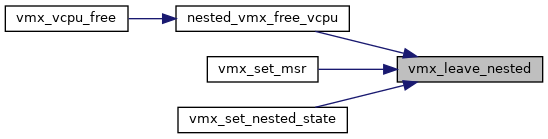

| void | nested_vmx_free_vcpu (struct kvm_vcpu *vcpu) |

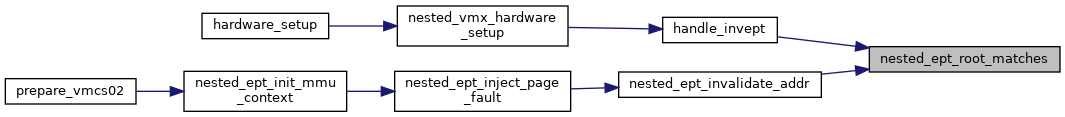

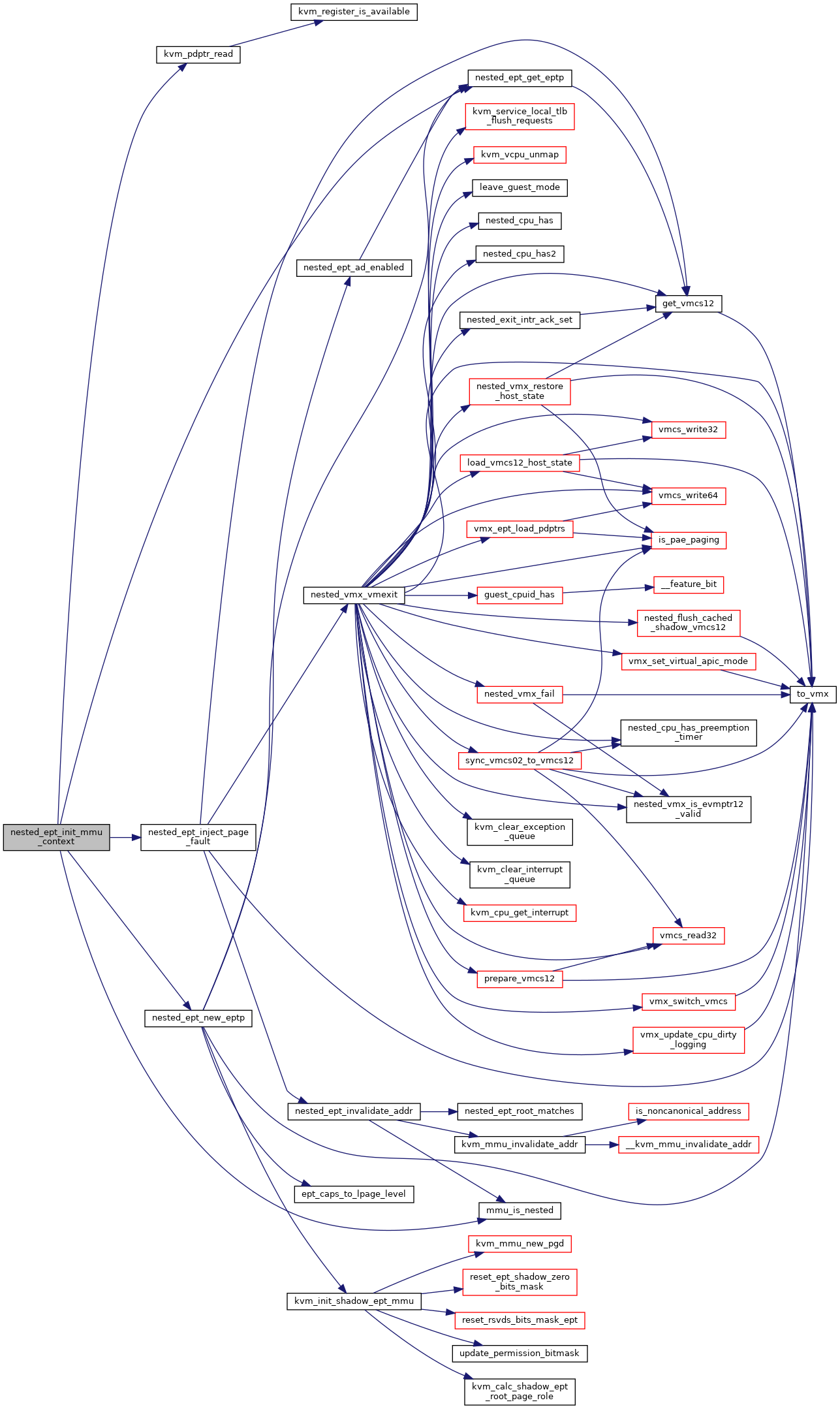

| static bool | nested_ept_root_matches (hpa_t root_hpa, u64 root_eptp, u64 eptp) |

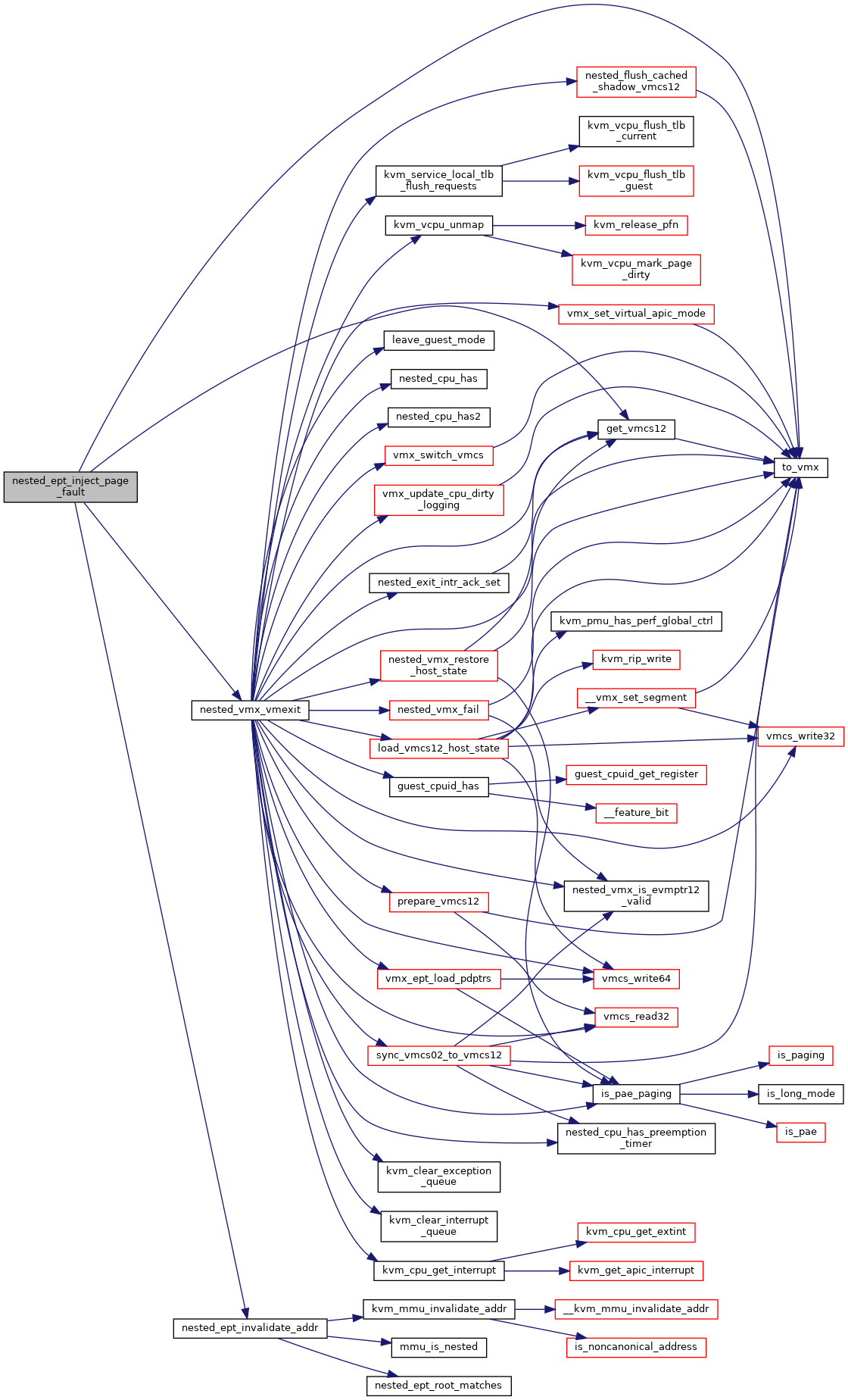

| static void | nested_ept_invalidate_addr (struct kvm_vcpu *vcpu, gpa_t eptp, gpa_t addr) |

| static void | nested_ept_inject_page_fault (struct kvm_vcpu *vcpu, struct x86_exception *fault) |

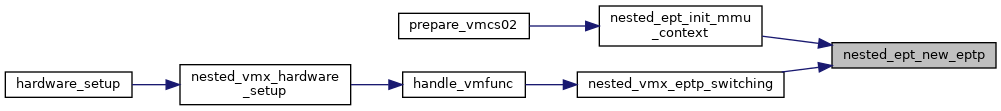

| static void | nested_ept_new_eptp (struct kvm_vcpu *vcpu) |

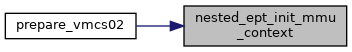

| static void | nested_ept_init_mmu_context (struct kvm_vcpu *vcpu) |

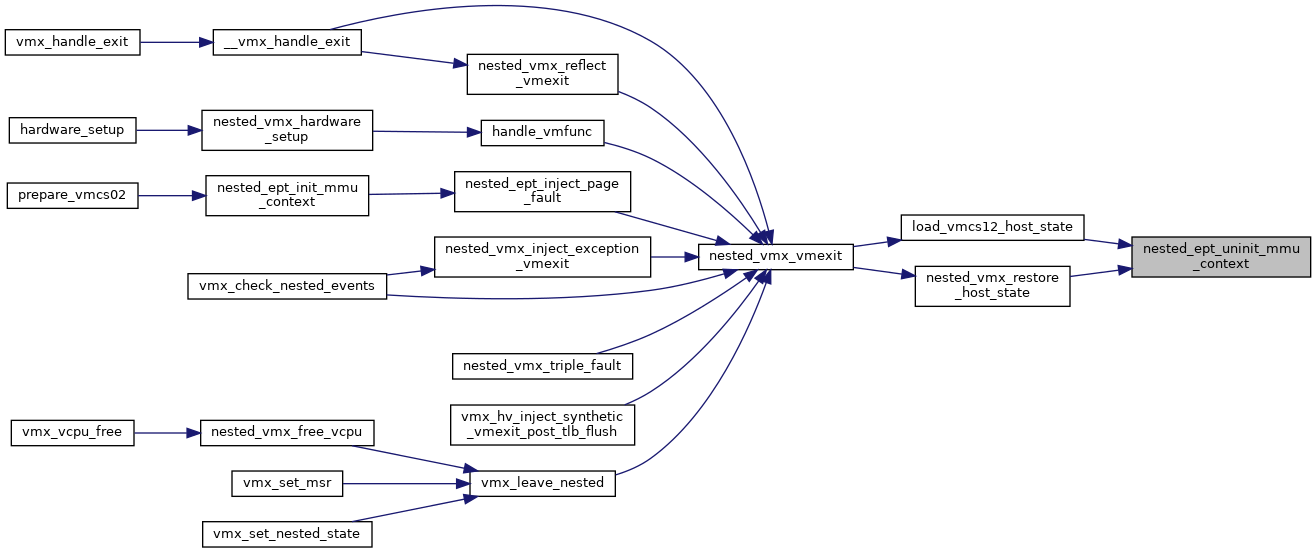

| static void | nested_ept_uninit_mmu_context (struct kvm_vcpu *vcpu) |

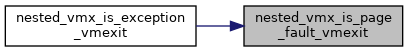

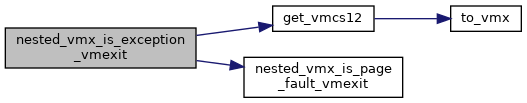

| static bool | nested_vmx_is_page_fault_vmexit (struct vmcs12 *vmcs12, u16 error_code) |

| static bool | nested_vmx_is_exception_vmexit (struct kvm_vcpu *vcpu, u8 vector, u32 error_code) |

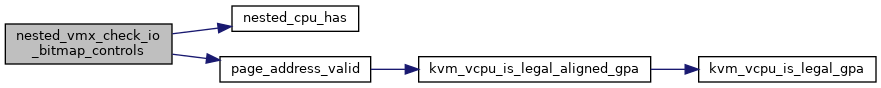

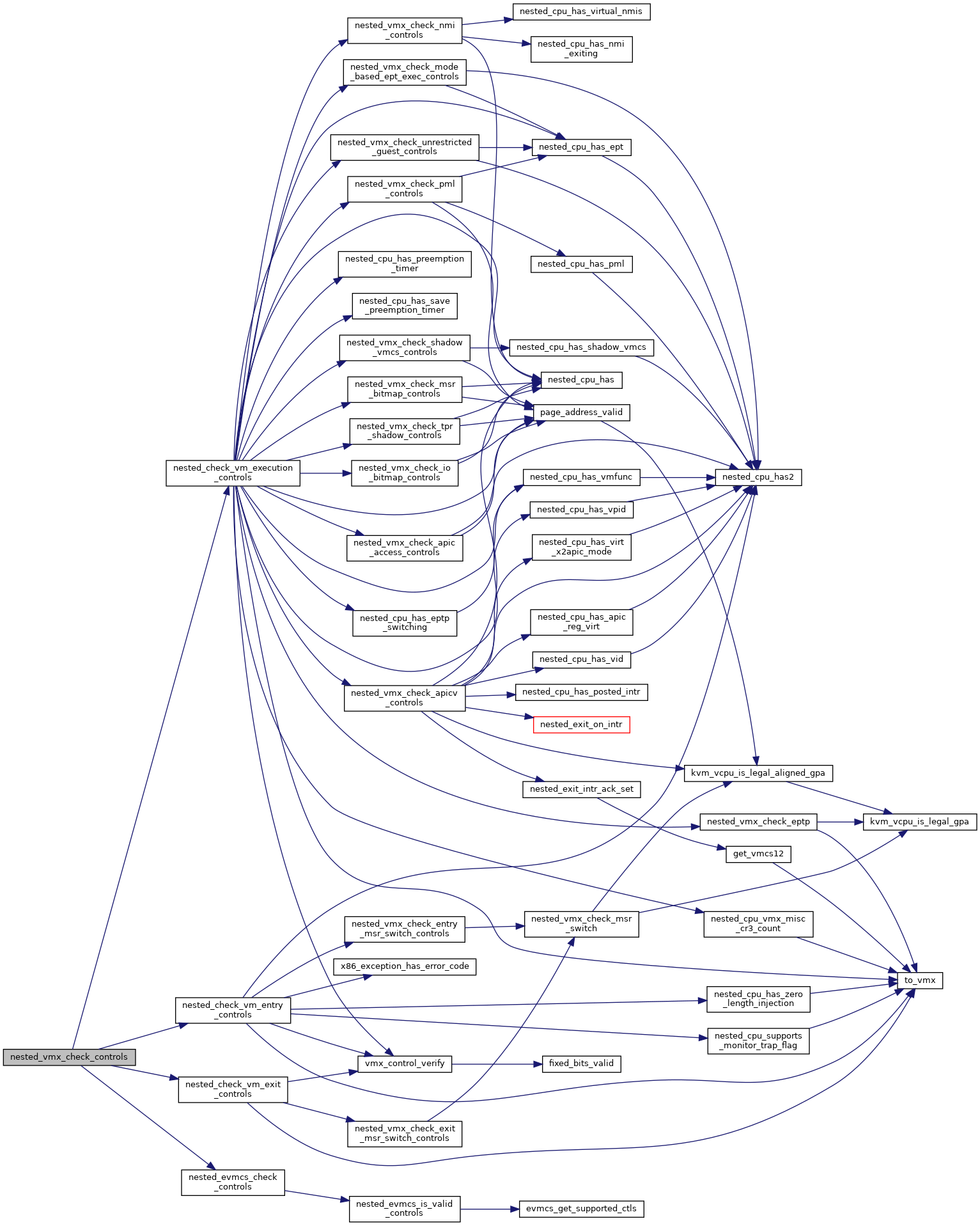

| static int | nested_vmx_check_io_bitmap_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

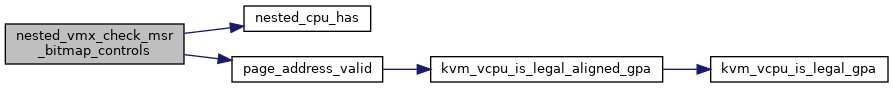

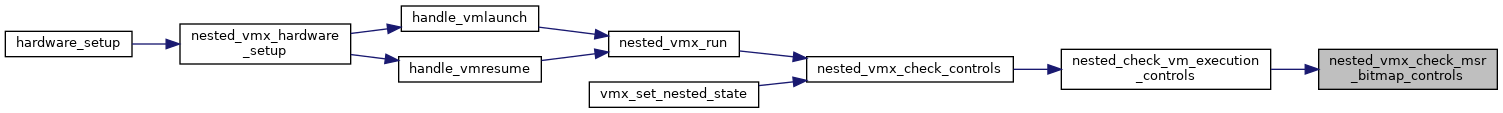

| static int | nested_vmx_check_msr_bitmap_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static int | nested_vmx_check_tpr_shadow_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static void | nested_vmx_disable_intercept_for_x2apic_msr (unsigned long *msr_bitmap_l1, unsigned long *msr_bitmap_l0, u32 msr, int type) |

| static void | enable_x2apic_msr_intercepts (unsigned long *msr_bitmap) |

| static void | nested_vmx_set_intercept_for_msr (struct vcpu_vmx *vmx, unsigned long *msr_bitmap_l1, unsigned long *msr_bitmap_l0, u32 msr, int types) |

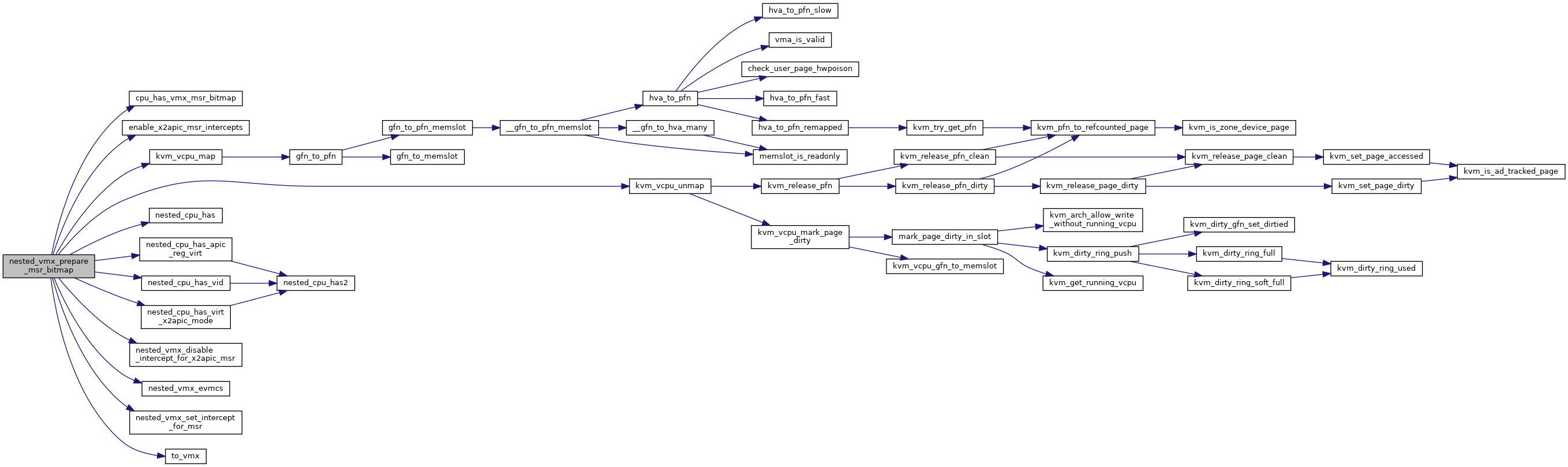

| static bool | nested_vmx_prepare_msr_bitmap (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

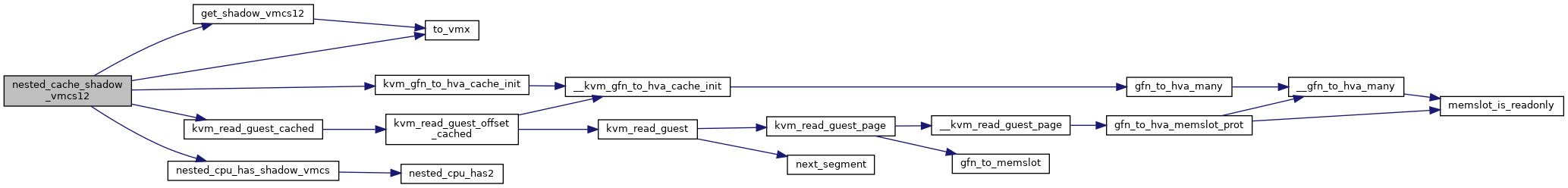

| static void | nested_cache_shadow_vmcs12 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

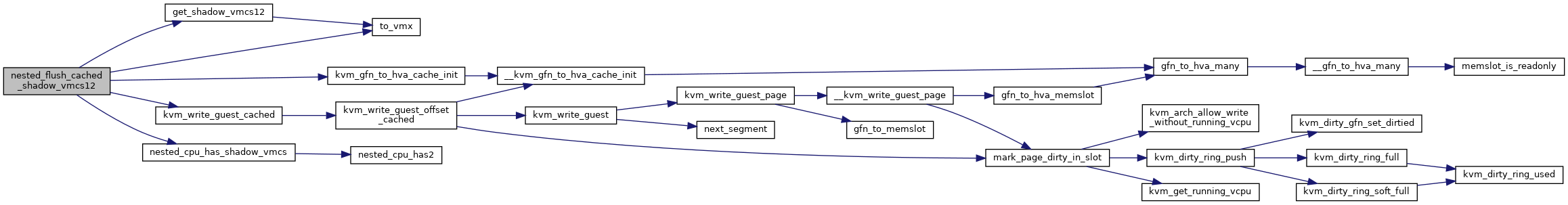

| static void | nested_flush_cached_shadow_vmcs12 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

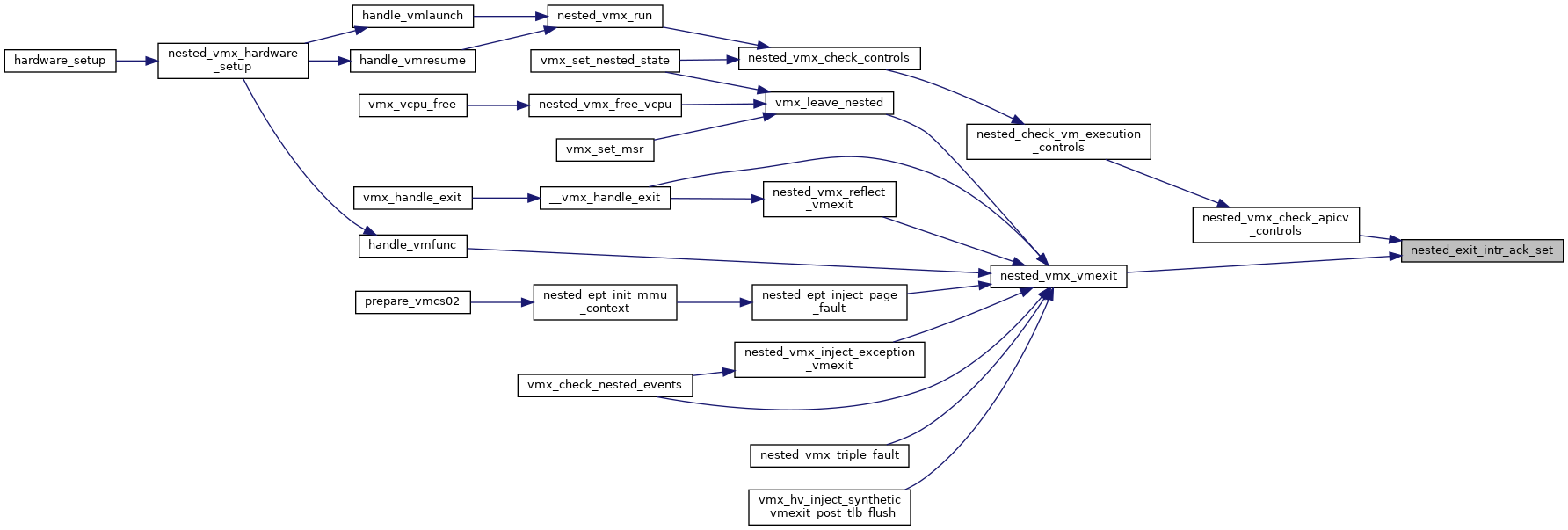

| static bool | nested_exit_intr_ack_set (struct kvm_vcpu *vcpu) |

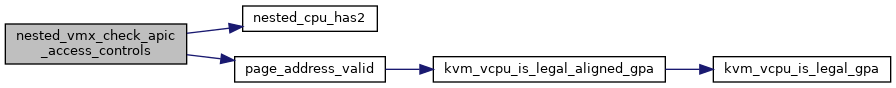

| static int | nested_vmx_check_apic_access_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

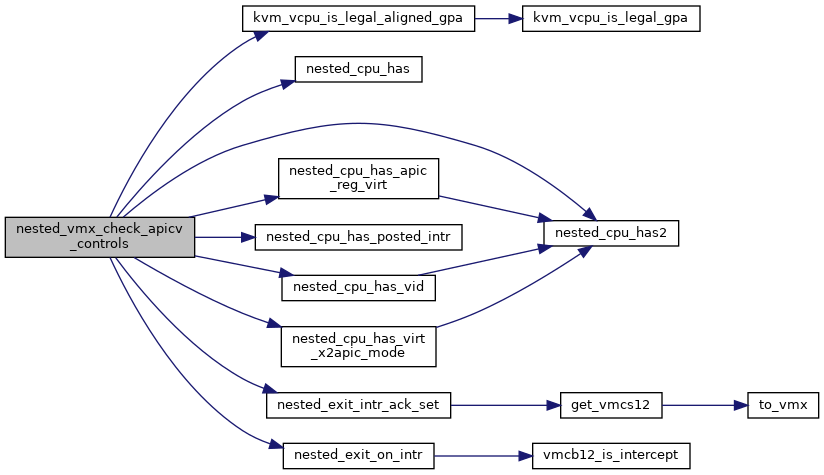

| static int | nested_vmx_check_apicv_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

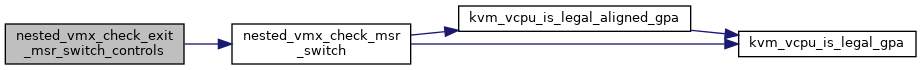

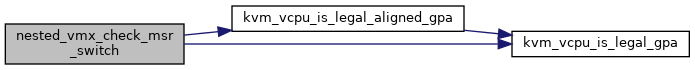

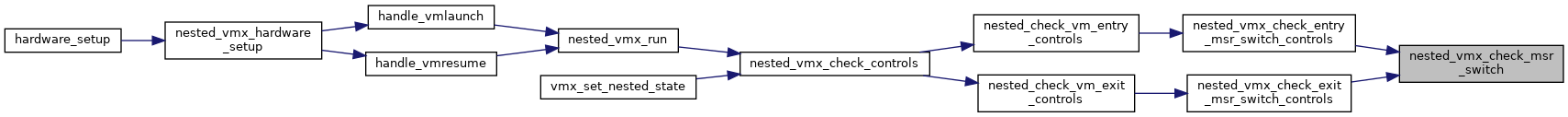

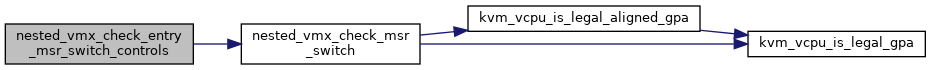

| static int | nested_vmx_check_msr_switch (struct kvm_vcpu *vcpu, u32 count, u64 addr) |

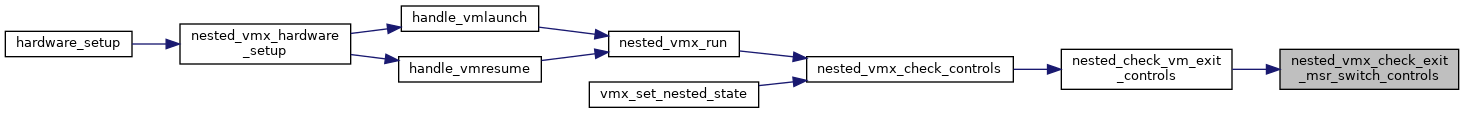

| static int | nested_vmx_check_exit_msr_switch_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static int | nested_vmx_check_entry_msr_switch_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

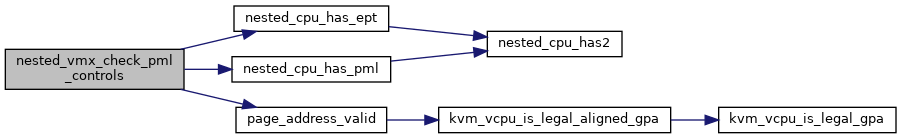

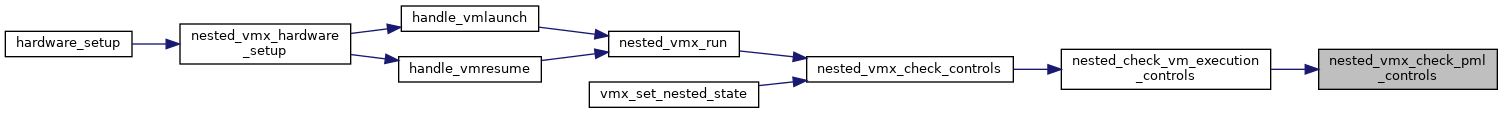

| static int | nested_vmx_check_pml_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

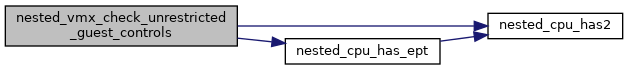

| static int | nested_vmx_check_unrestricted_guest_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

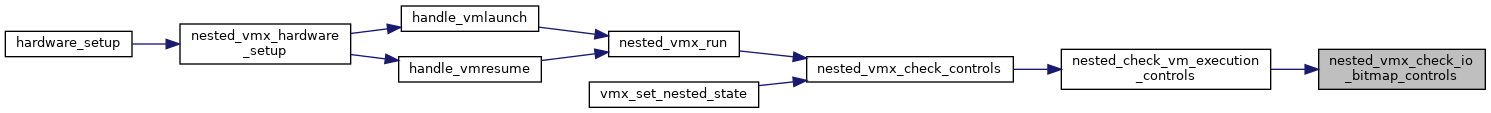

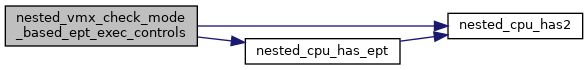

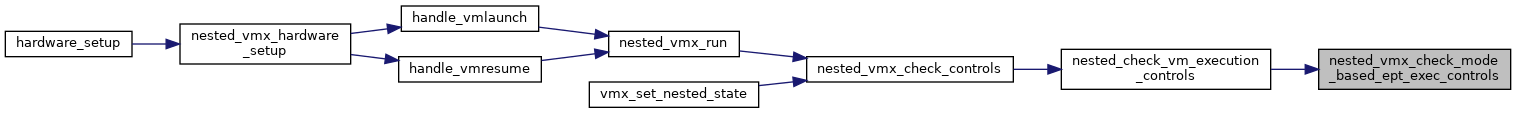

| static int | nested_vmx_check_mode_based_ept_exec_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static int | nested_vmx_check_shadow_vmcs_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

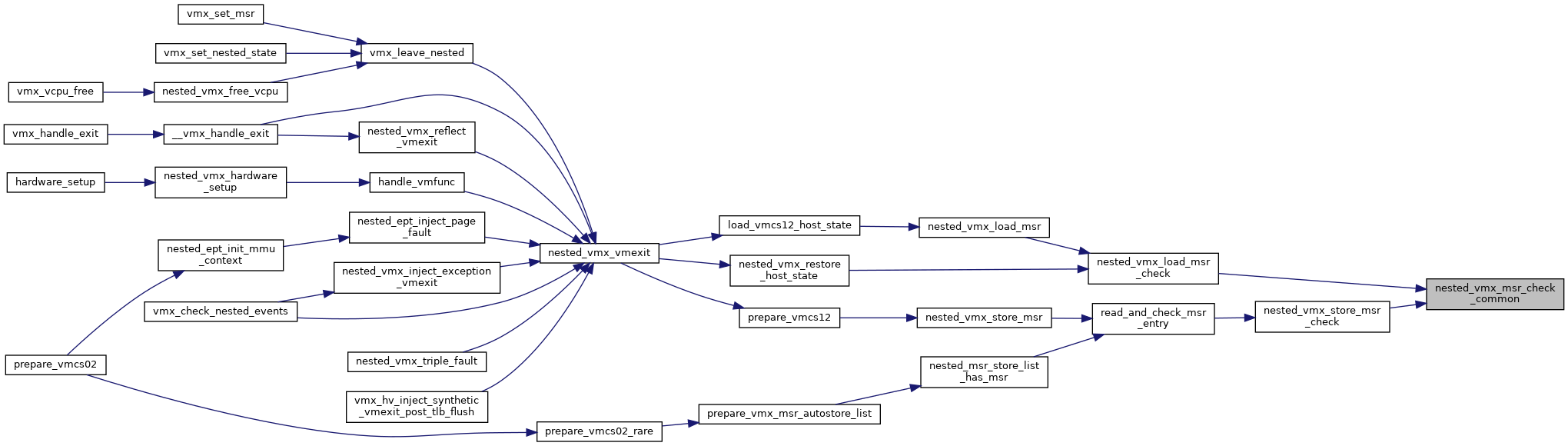

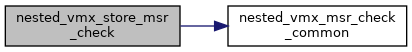

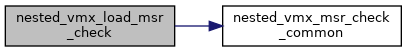

| static int | nested_vmx_msr_check_common (struct kvm_vcpu *vcpu, struct vmx_msr_entry *e) |

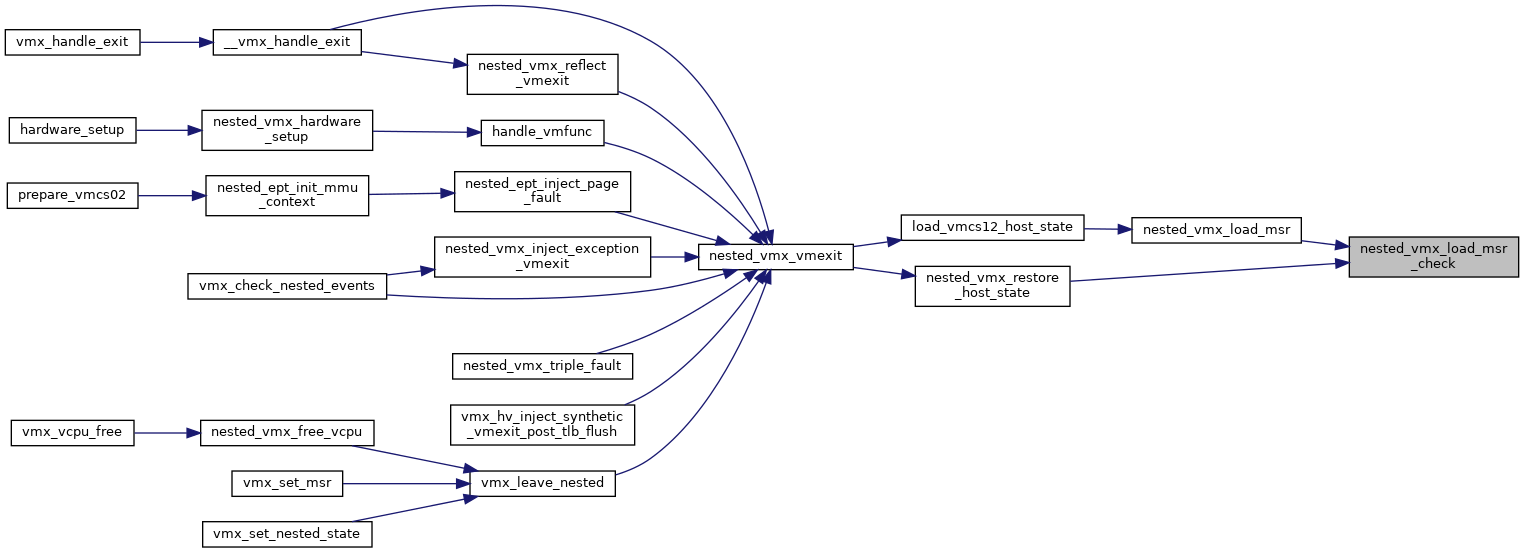

| static int | nested_vmx_load_msr_check (struct kvm_vcpu *vcpu, struct vmx_msr_entry *e) |

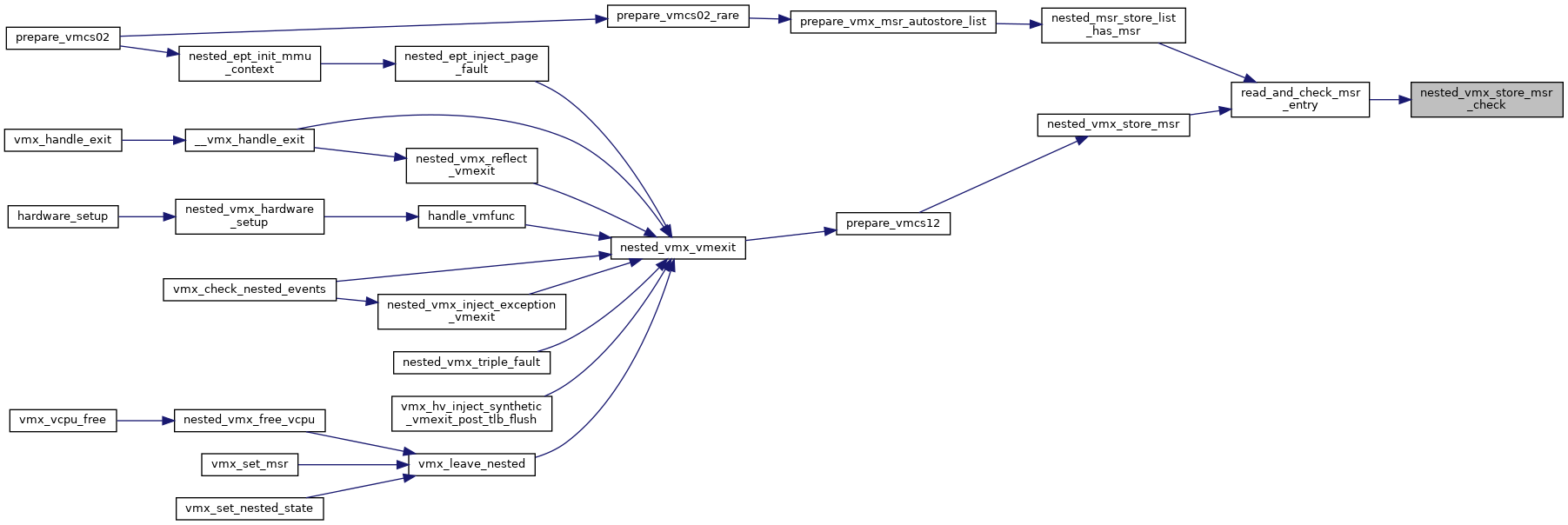

| static int | nested_vmx_store_msr_check (struct kvm_vcpu *vcpu, struct vmx_msr_entry *e) |

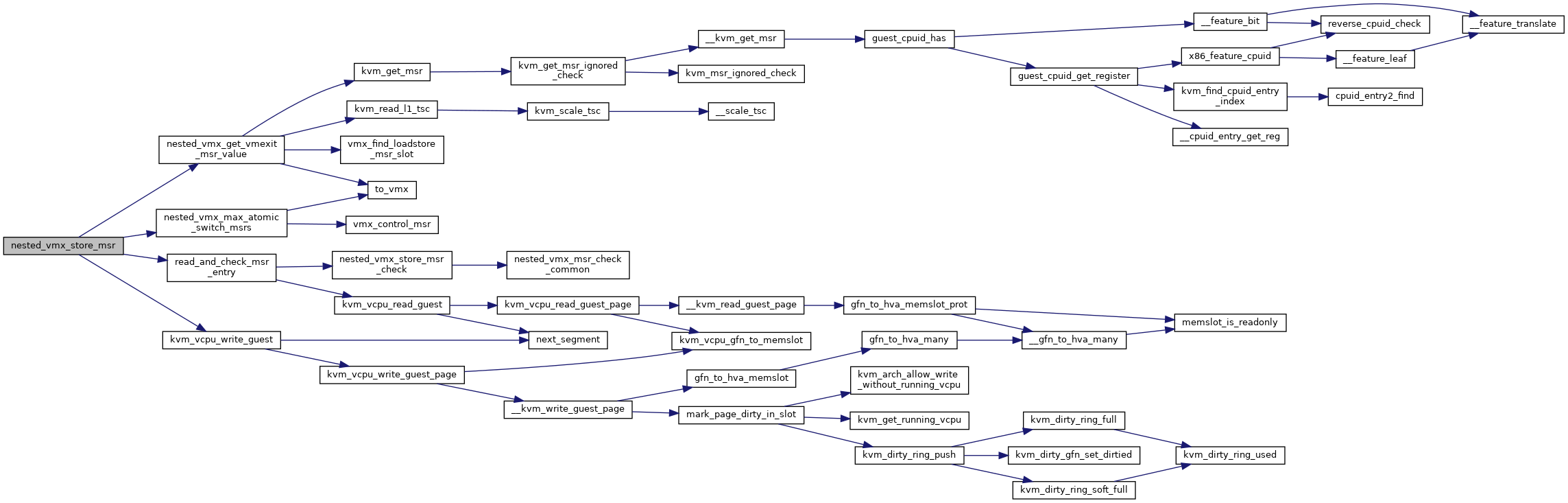

| static u32 | nested_vmx_max_atomic_switch_msrs (struct kvm_vcpu *vcpu) |

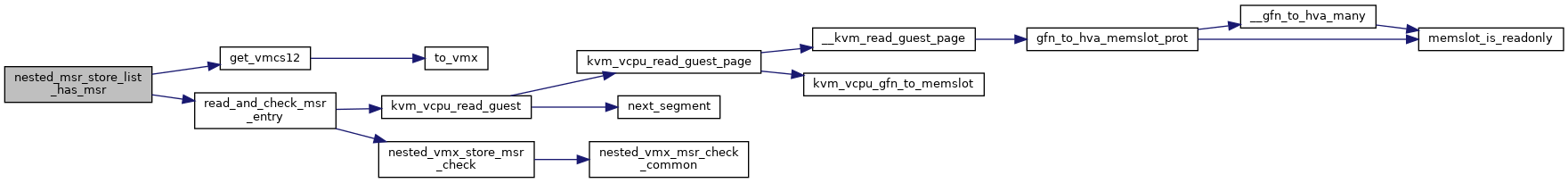

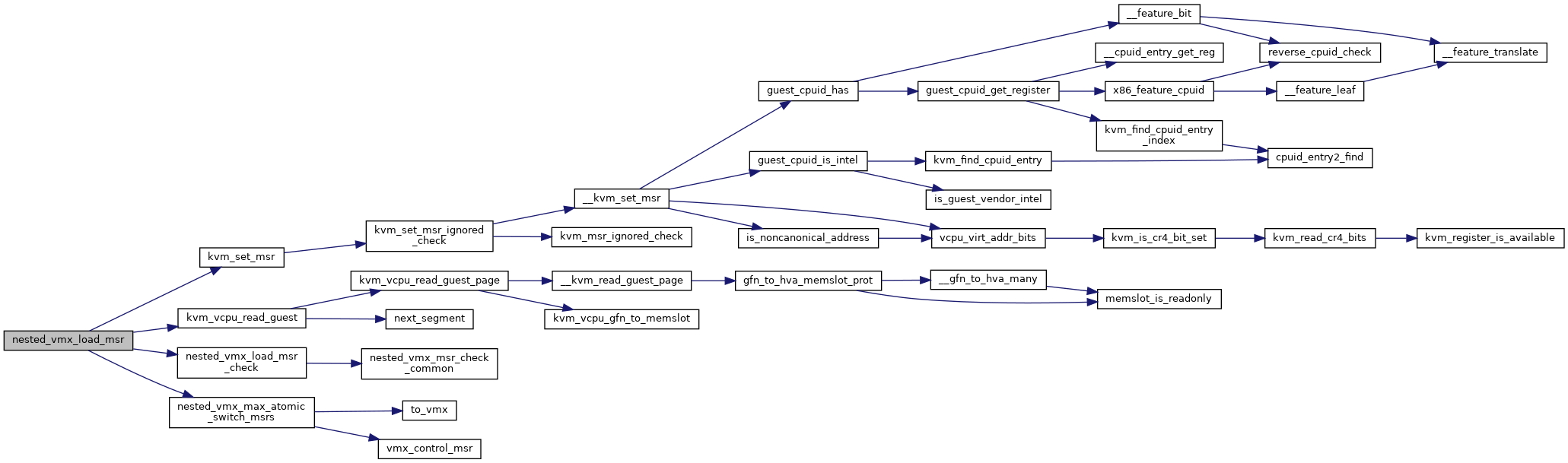

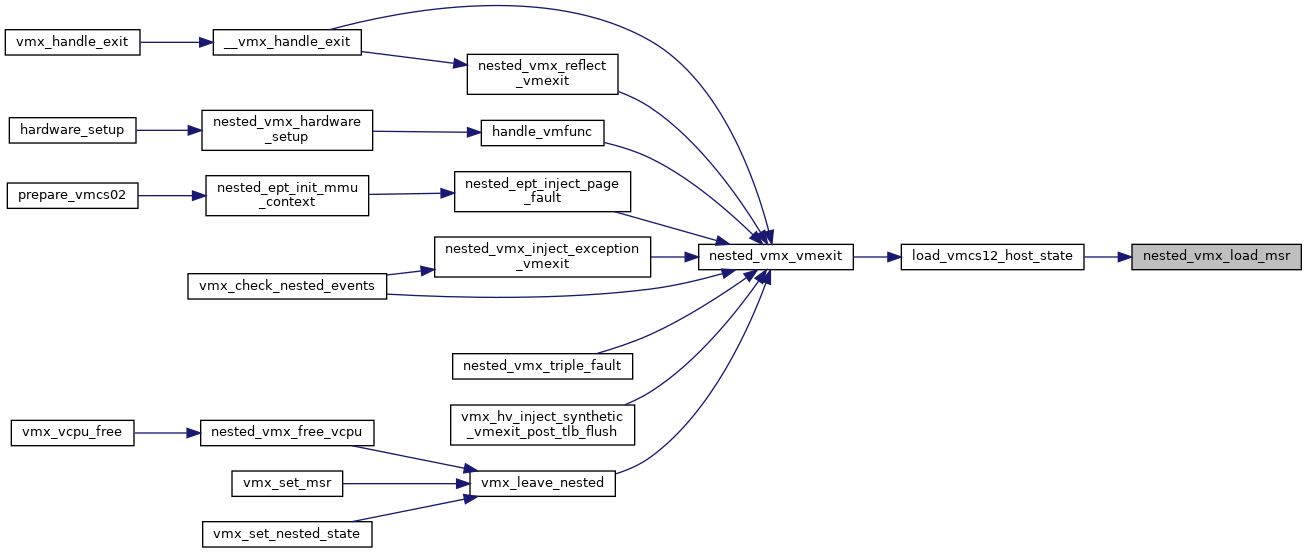

| static u32 | nested_vmx_load_msr (struct kvm_vcpu *vcpu, u64 gpa, u32 count) |

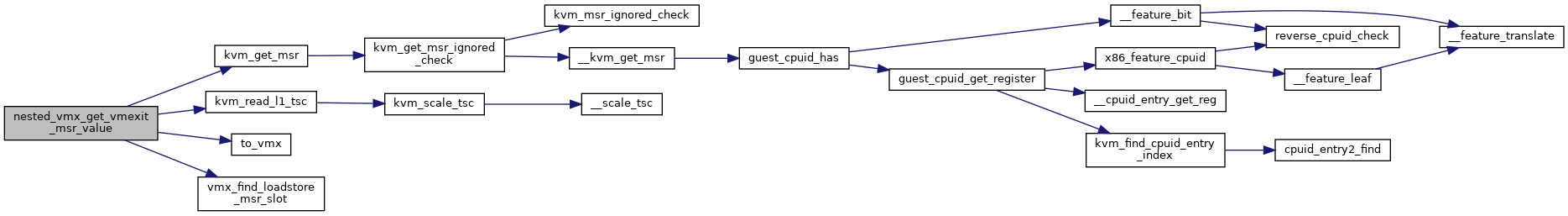

| static bool | nested_vmx_get_vmexit_msr_value (struct kvm_vcpu *vcpu, u32 msr_index, u64 *data) |

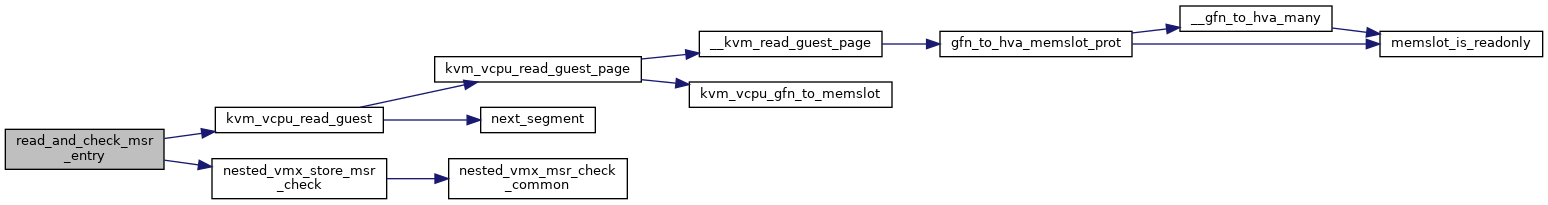

| static bool | read_and_check_msr_entry (struct kvm_vcpu *vcpu, u64 gpa, int i, struct vmx_msr_entry *e) |

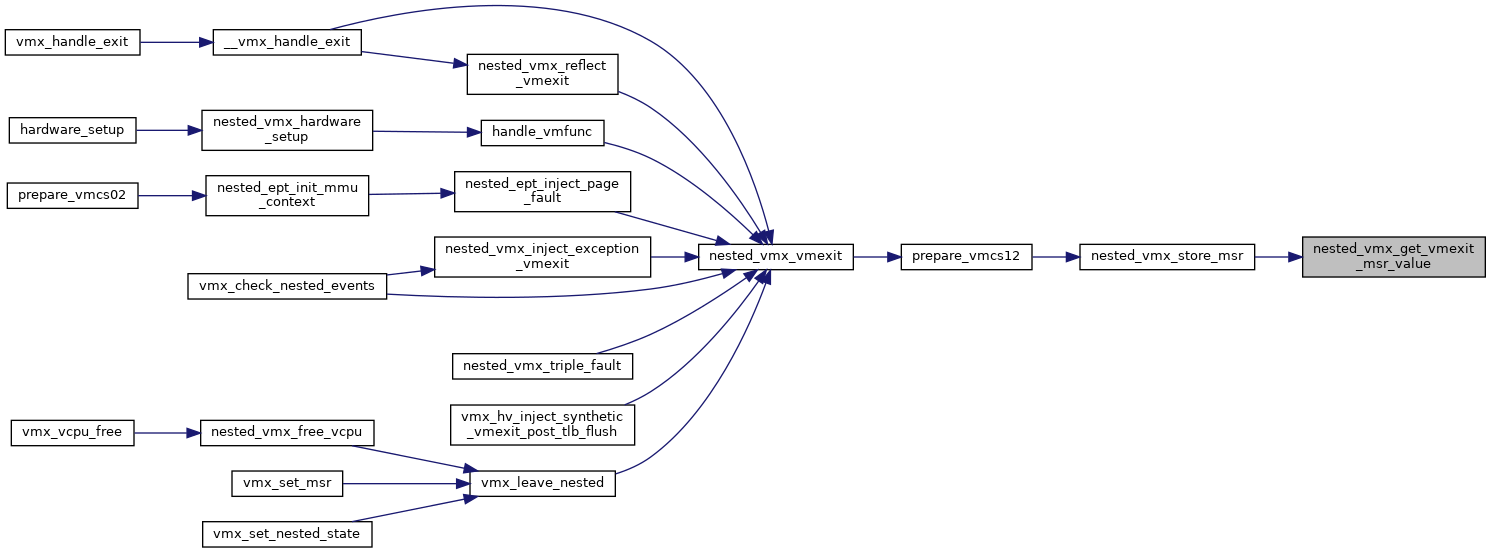

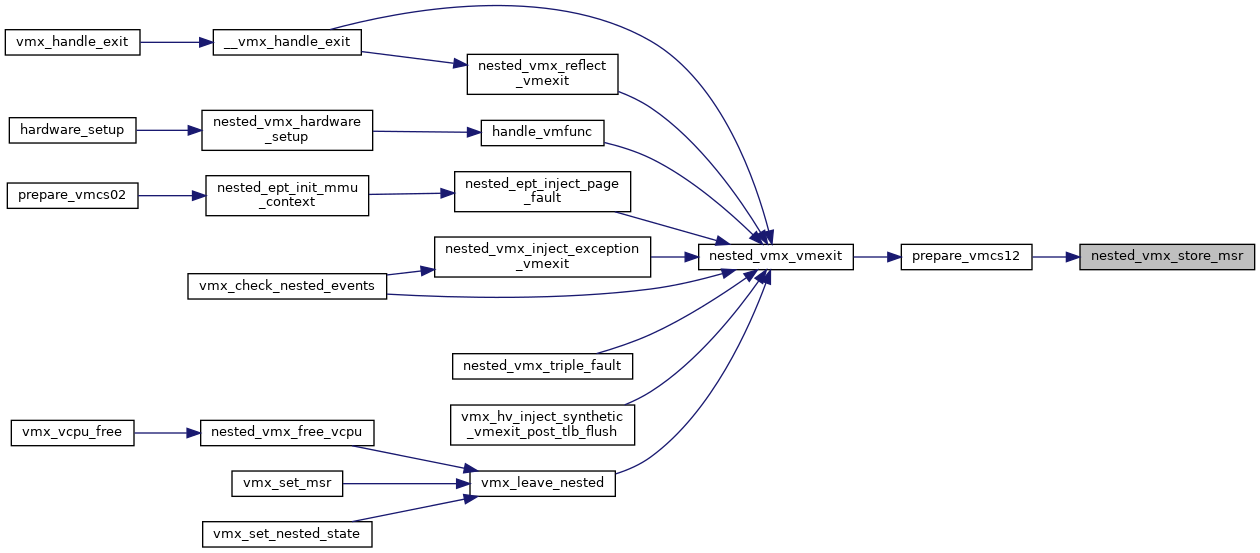

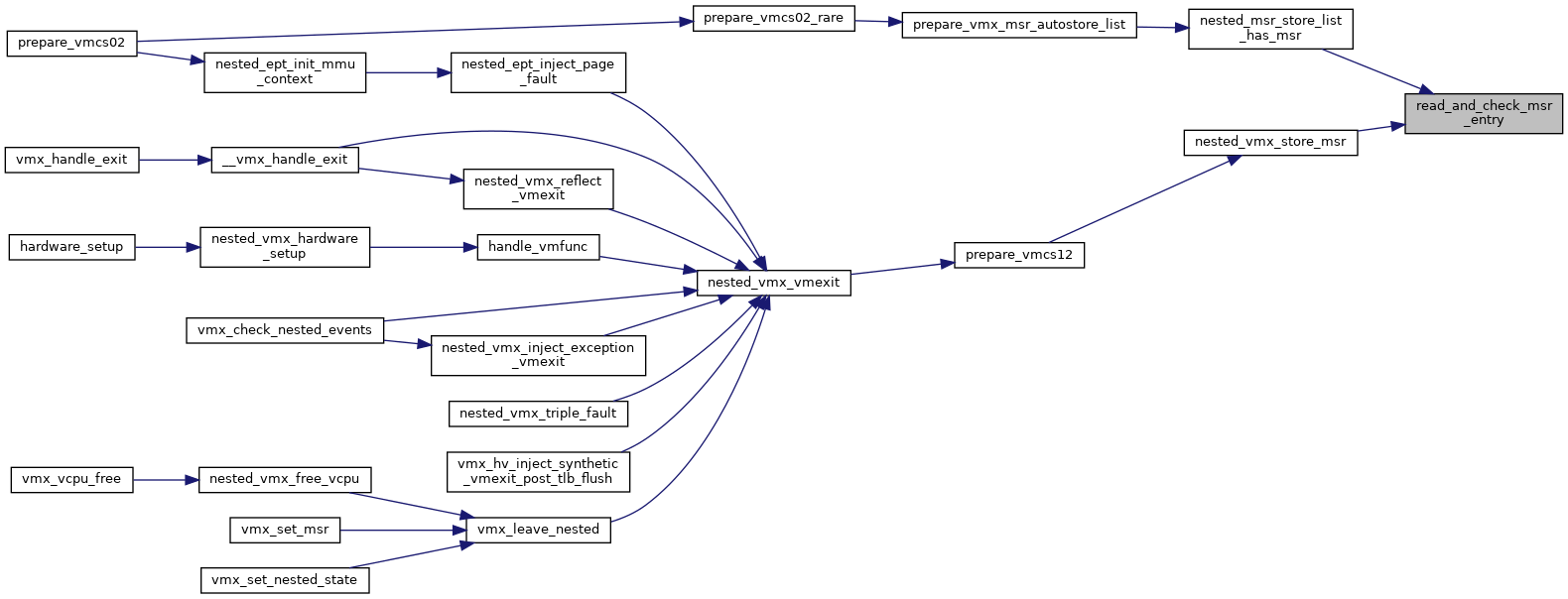

| static int | nested_vmx_store_msr (struct kvm_vcpu *vcpu, u64 gpa, u32 count) |

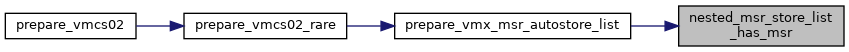

| static bool | nested_msr_store_list_has_msr (struct kvm_vcpu *vcpu, u32 msr_index) |

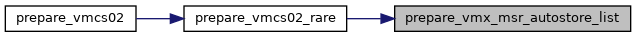

| static void | prepare_vmx_msr_autostore_list (struct kvm_vcpu *vcpu, u32 msr_index) |

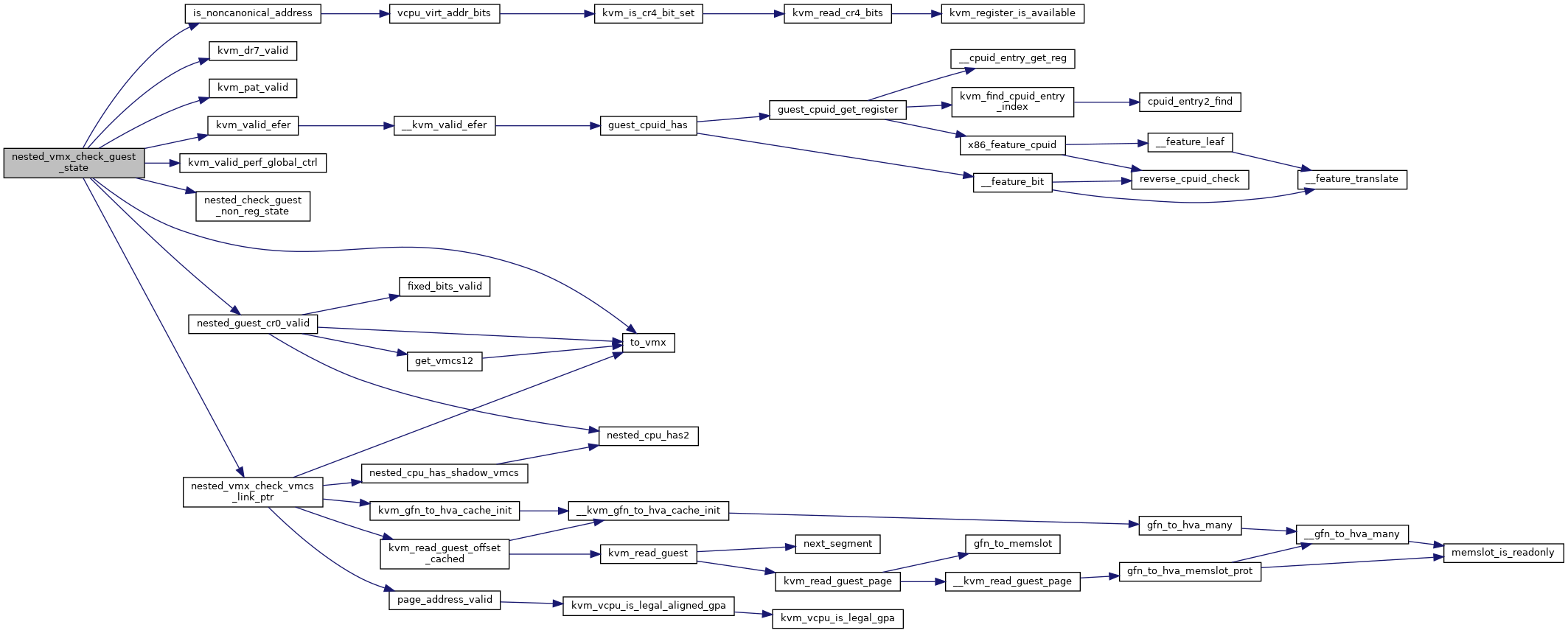

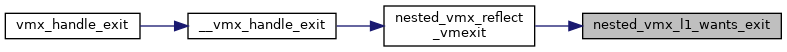

| static int | nested_vmx_load_cr3 (struct kvm_vcpu *vcpu, unsigned long cr3, bool nested_ept, bool reload_pdptrs, enum vm_entry_failure_code *entry_failure_code) |

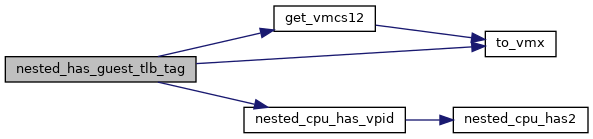

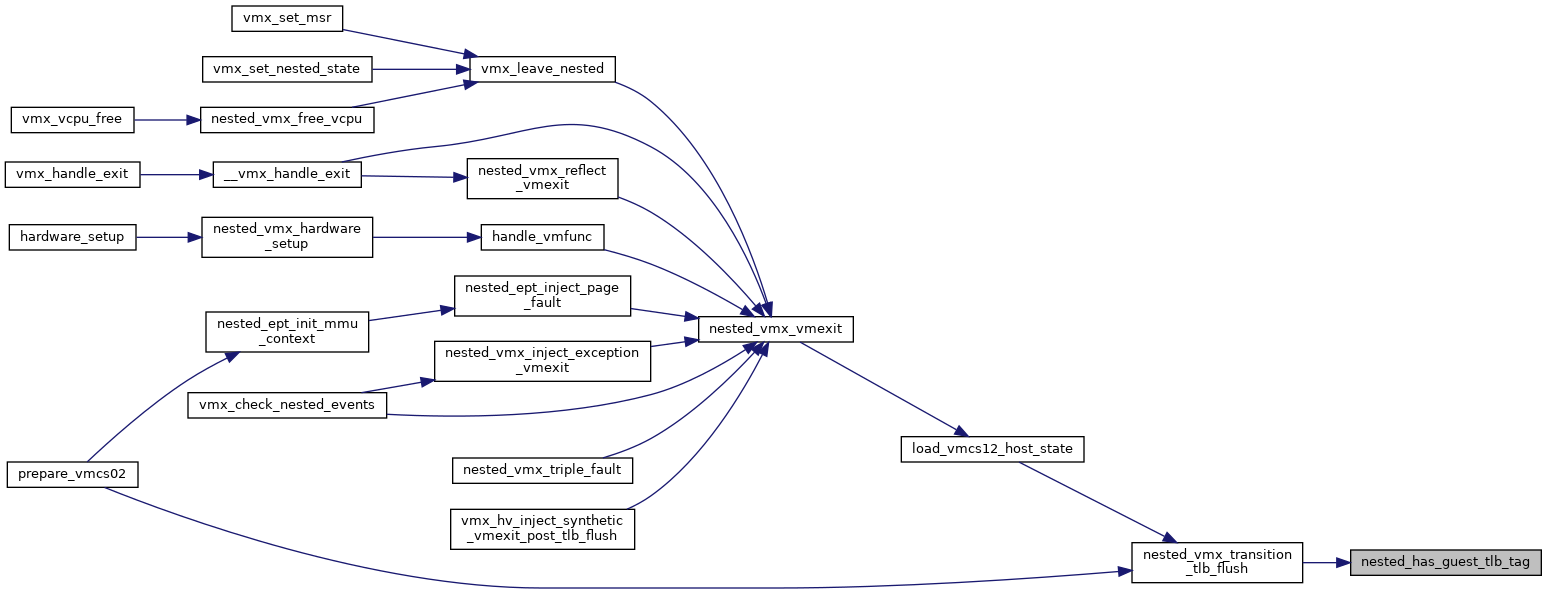

| static bool | nested_has_guest_tlb_tag (struct kvm_vcpu *vcpu) |

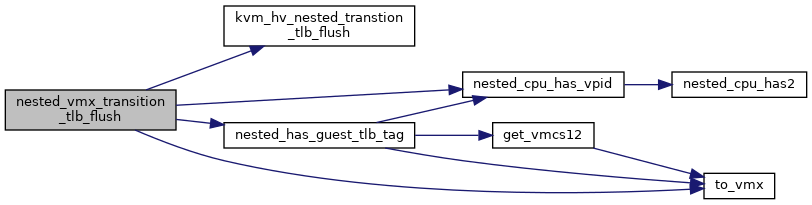

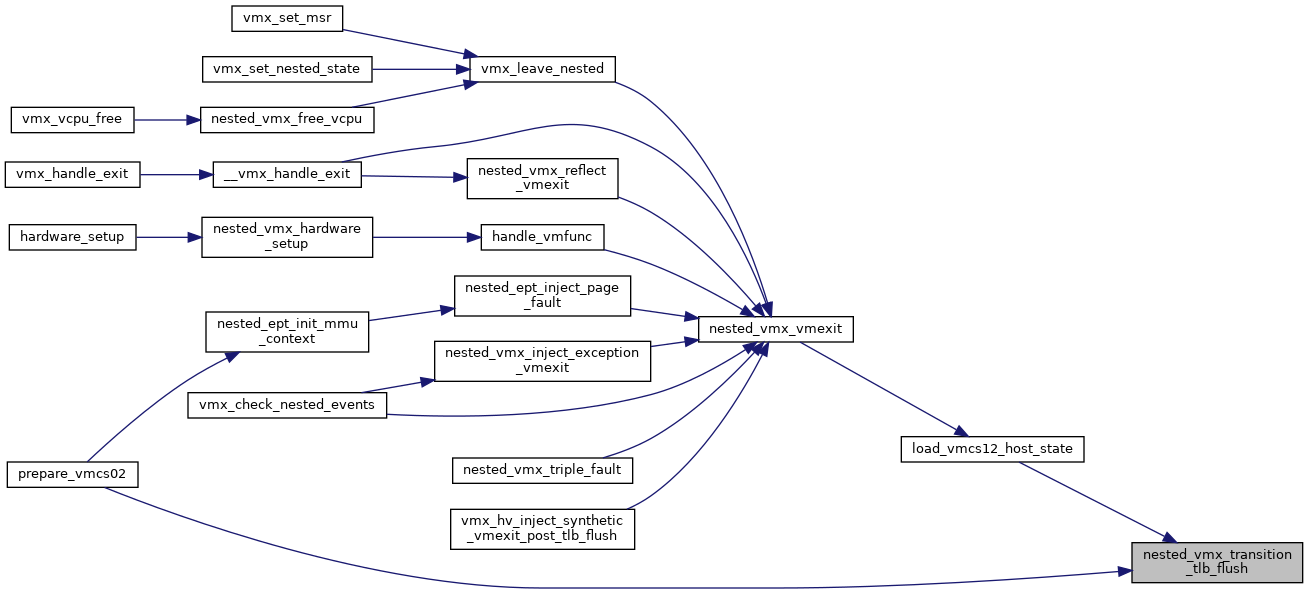

| static void | nested_vmx_transition_tlb_flush (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, bool is_vmenter) |

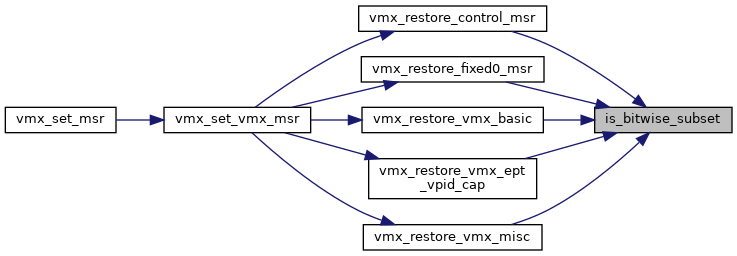

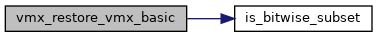

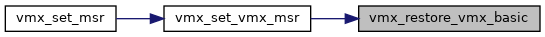

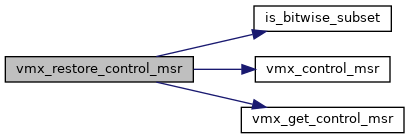

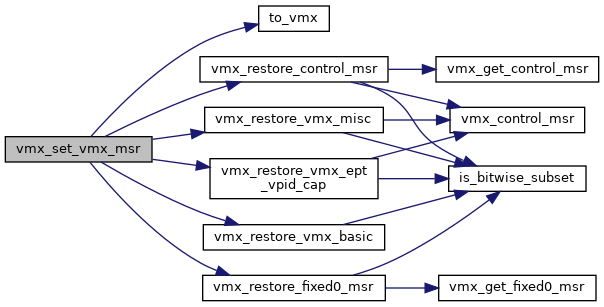

| static bool | is_bitwise_subset (u64 superset, u64 subset, u64 mask) |

| static int | vmx_restore_vmx_basic (struct vcpu_vmx *vmx, u64 data) |

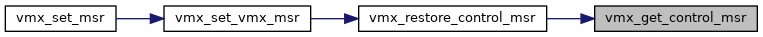

| static void | vmx_get_control_msr (struct nested_vmx_msrs *msrs, u32 msr_index, u32 **low, u32 **high) |

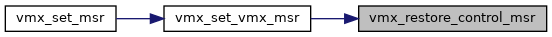

| static int | vmx_restore_control_msr (struct vcpu_vmx *vmx, u32 msr_index, u64 data) |

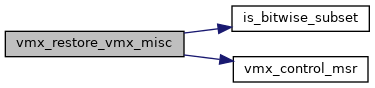

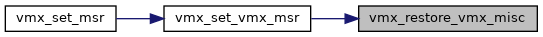

| static int | vmx_restore_vmx_misc (struct vcpu_vmx *vmx, u64 data) |

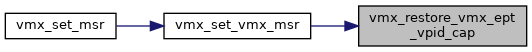

| static int | vmx_restore_vmx_ept_vpid_cap (struct vcpu_vmx *vmx, u64 data) |

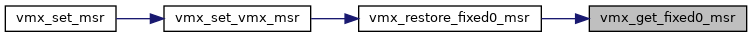

| static u64 * | vmx_get_fixed0_msr (struct nested_vmx_msrs *msrs, u32 msr_index) |

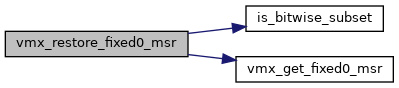

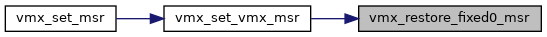

| static int | vmx_restore_fixed0_msr (struct vcpu_vmx *vmx, u32 msr_index, u64 data) |

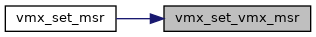

| int | vmx_set_vmx_msr (struct kvm_vcpu *vcpu, u32 msr_index, u64 data) |

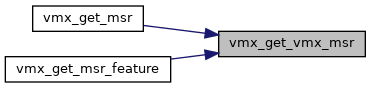

| int | vmx_get_vmx_msr (struct nested_vmx_msrs *msrs, u32 msr_index, u64 *pdata) |

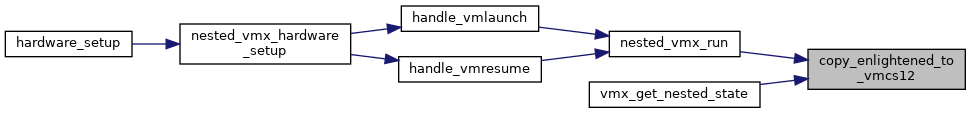

| static void | copy_shadow_to_vmcs12 (struct vcpu_vmx *vmx) |

| static void | copy_vmcs12_to_shadow (struct vcpu_vmx *vmx) |

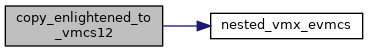

| static void | copy_enlightened_to_vmcs12 (struct vcpu_vmx *vmx, u32 hv_clean_fields) |

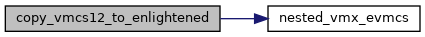

| static void | copy_vmcs12_to_enlightened (struct vcpu_vmx *vmx) |

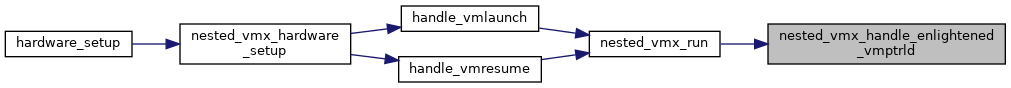

| static enum nested_evmptrld_status | nested_vmx_handle_enlightened_vmptrld (struct kvm_vcpu *vcpu, bool from_launch) |

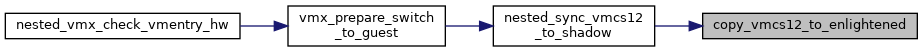

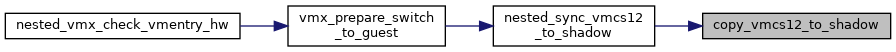

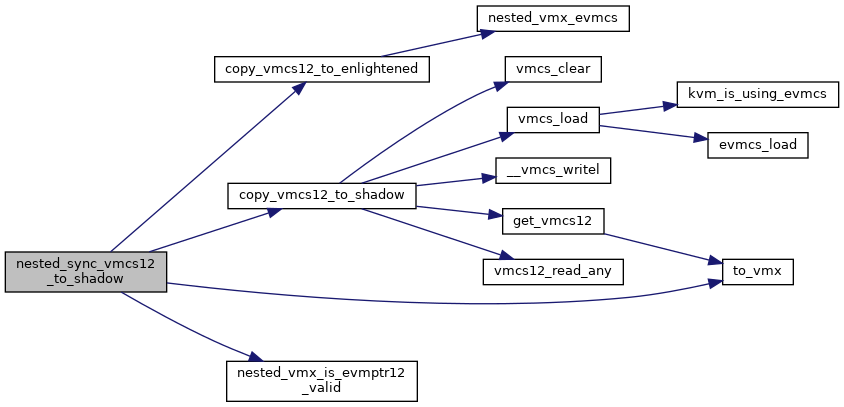

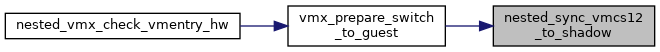

| void | nested_sync_vmcs12_to_shadow (struct kvm_vcpu *vcpu) |

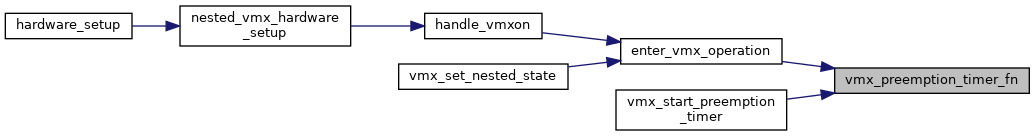

| static enum hrtimer_restart | vmx_preemption_timer_fn (struct hrtimer *timer) |

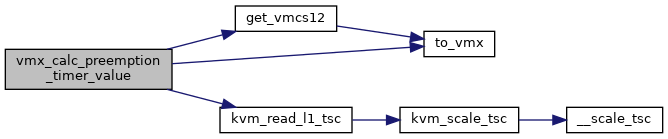

| static u64 | vmx_calc_preemption_timer_value (struct kvm_vcpu *vcpu) |

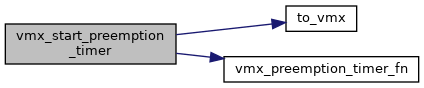

| static void | vmx_start_preemption_timer (struct kvm_vcpu *vcpu, u64 preemption_timeout) |

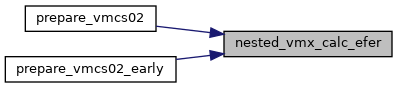

| static u64 | nested_vmx_calc_efer (struct vcpu_vmx *vmx, struct vmcs12 *vmcs12) |

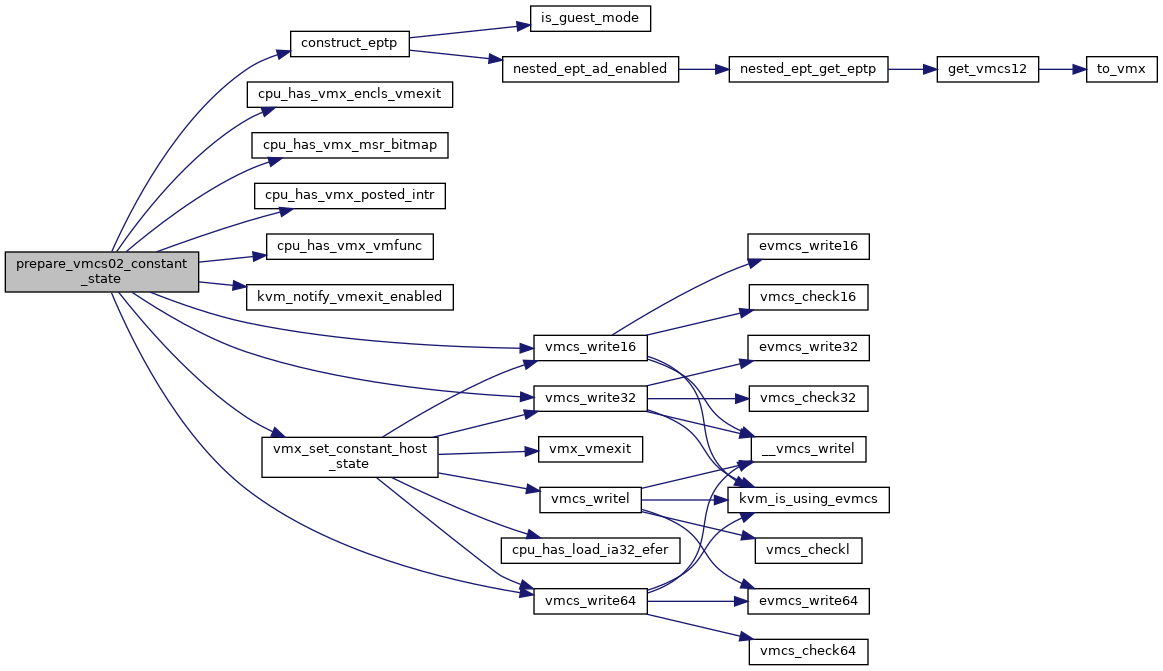

| static void | prepare_vmcs02_constant_state (struct vcpu_vmx *vmx) |

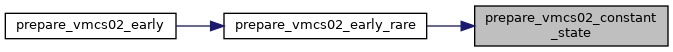

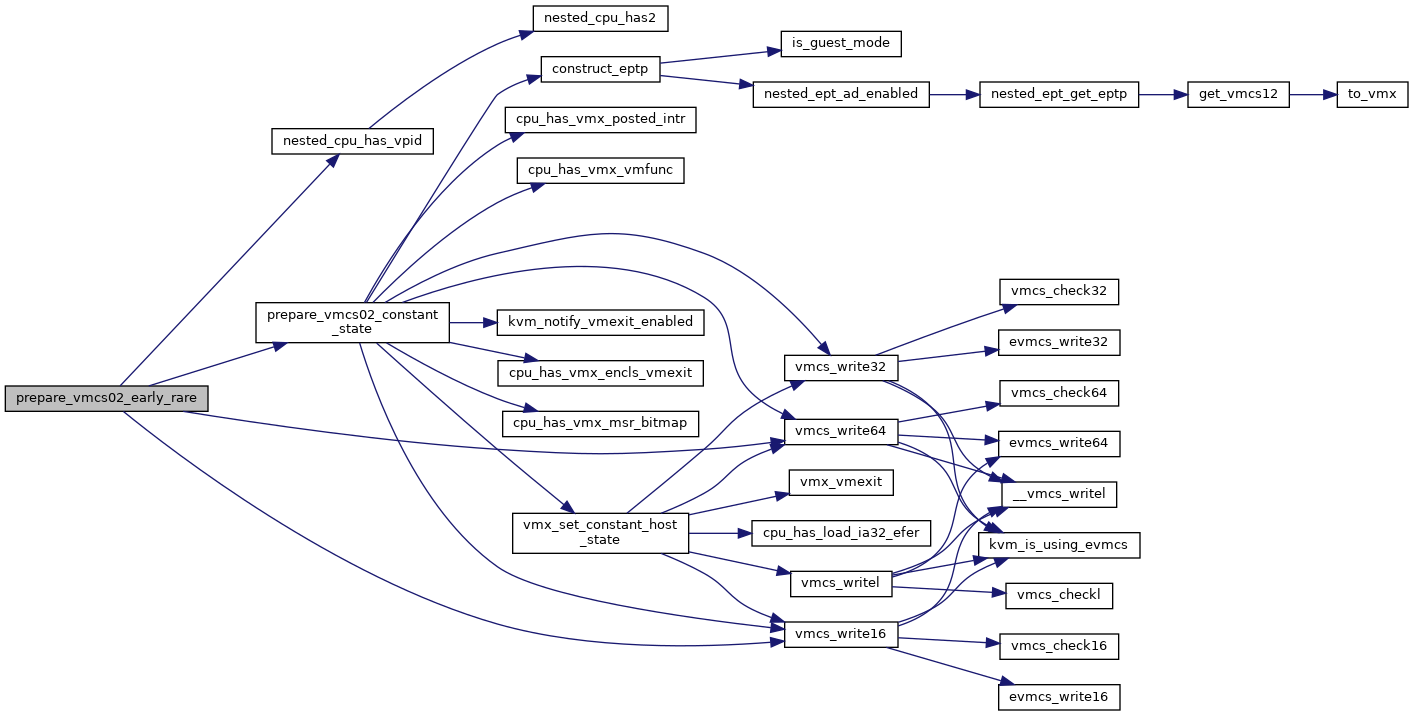

| static void | prepare_vmcs02_early_rare (struct vcpu_vmx *vmx, struct vmcs12 *vmcs12) |

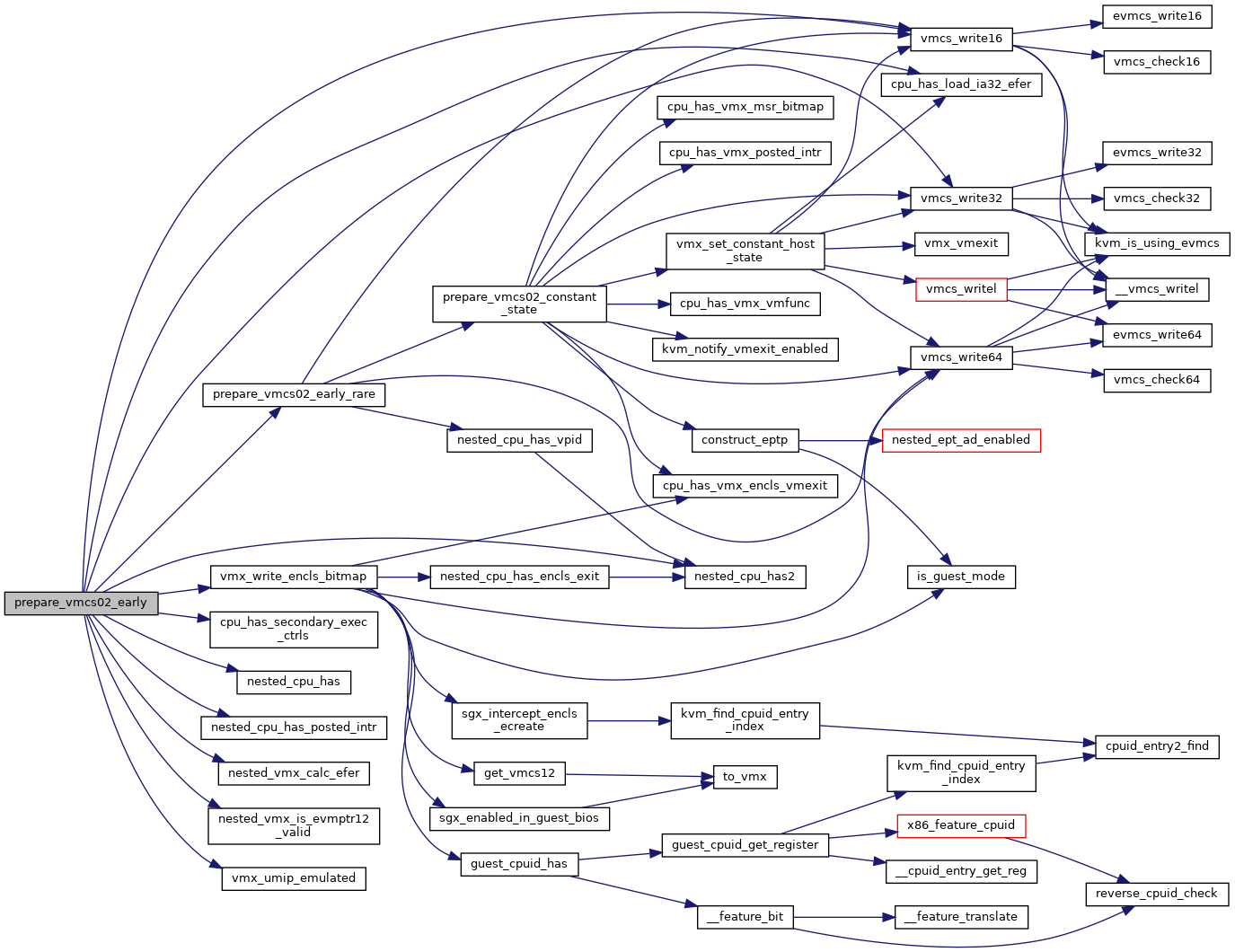

| static void | prepare_vmcs02_early (struct vcpu_vmx *vmx, struct loaded_vmcs *vmcs01, struct vmcs12 *vmcs12) |

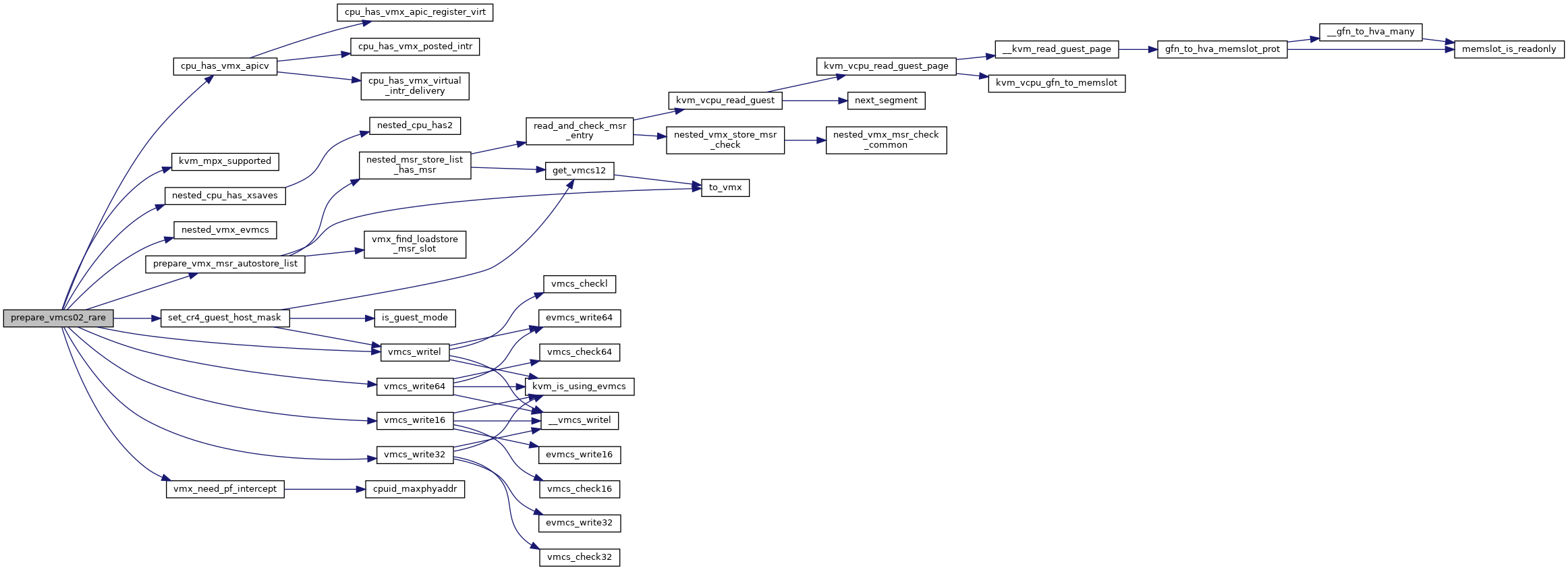

| static void | prepare_vmcs02_rare (struct vcpu_vmx *vmx, struct vmcs12 *vmcs12) |

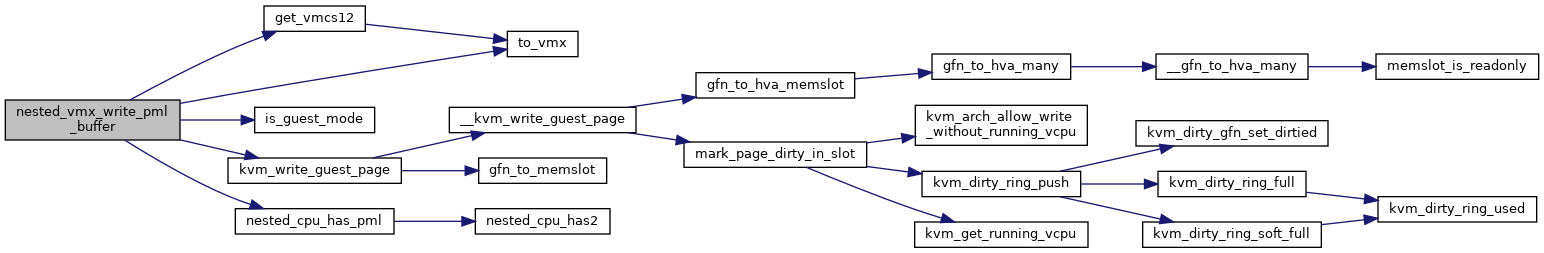

| static int | prepare_vmcs02 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, bool from_vmentry, enum vm_entry_failure_code *entry_failure_code) |

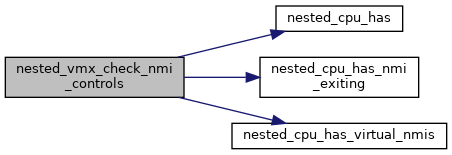

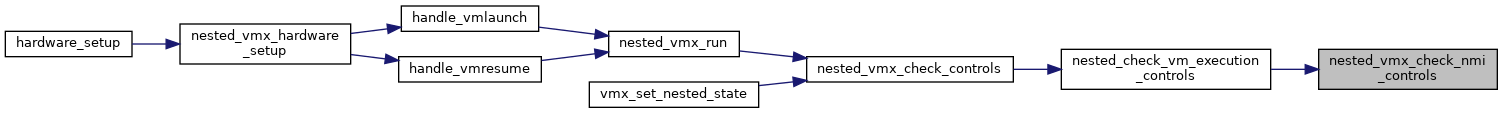

| static int | nested_vmx_check_nmi_controls (struct vmcs12 *vmcs12) |

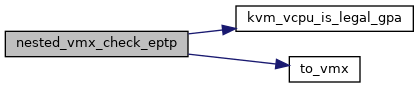

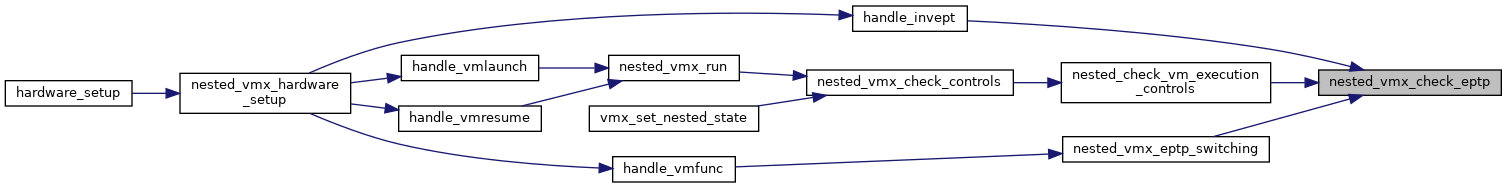

| static bool | nested_vmx_check_eptp (struct kvm_vcpu *vcpu, u64 new_eptp) |

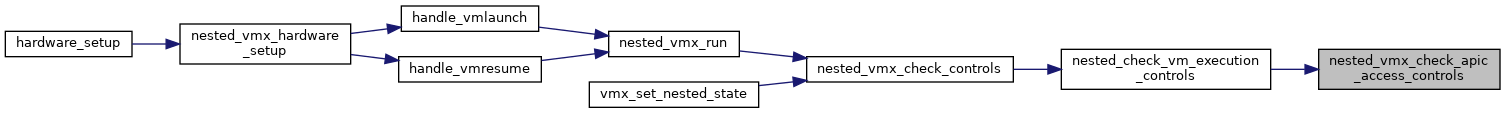

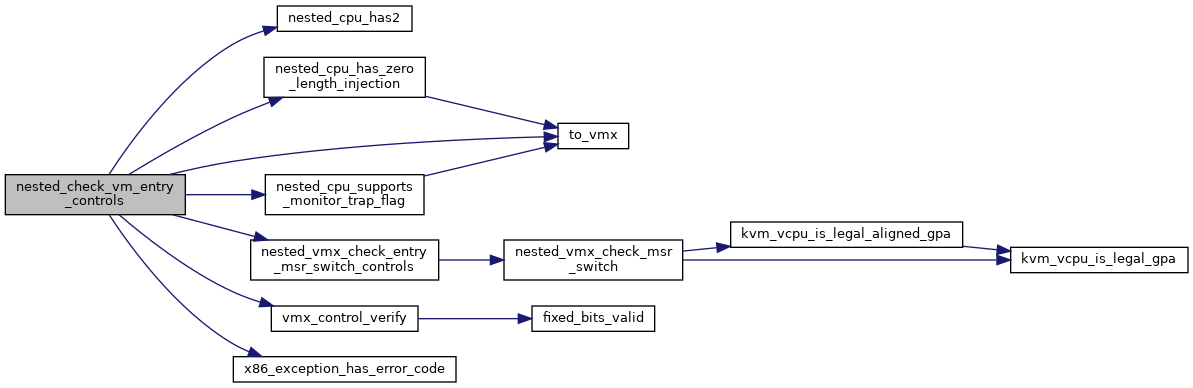

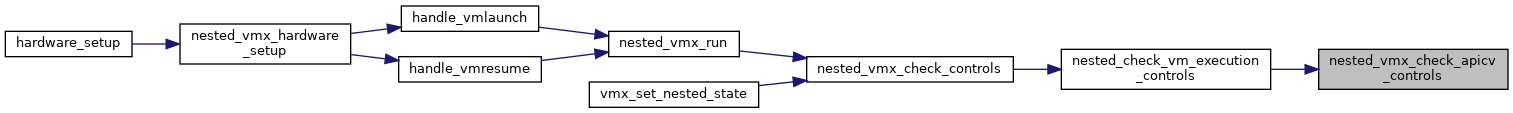

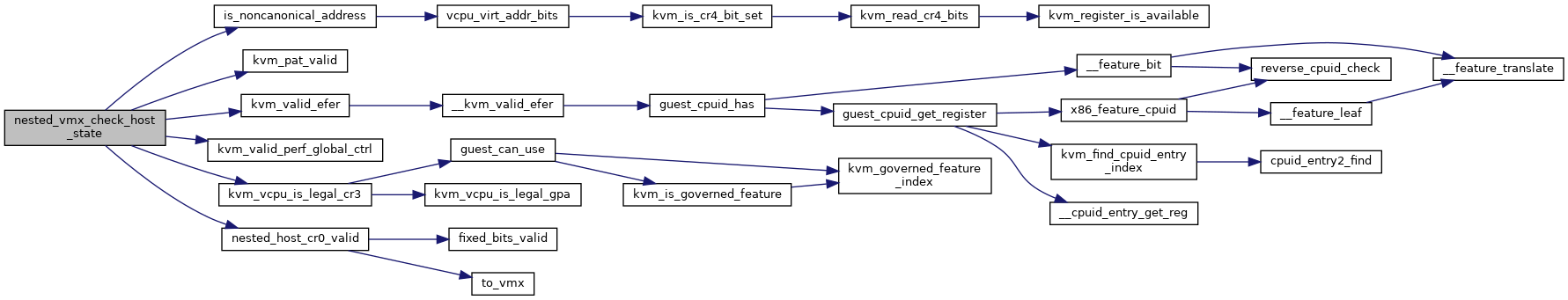

| static int | nested_check_vm_execution_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

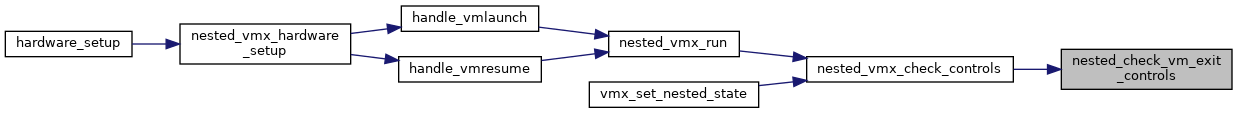

| static int | nested_check_vm_exit_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

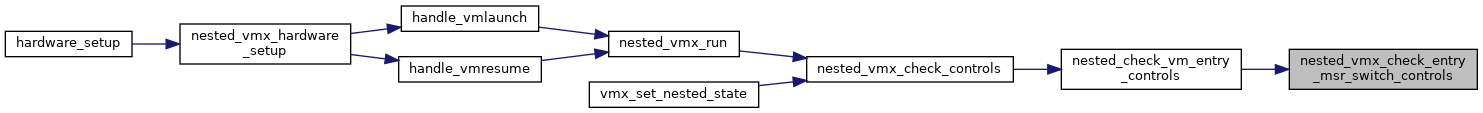

| static int | nested_check_vm_entry_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

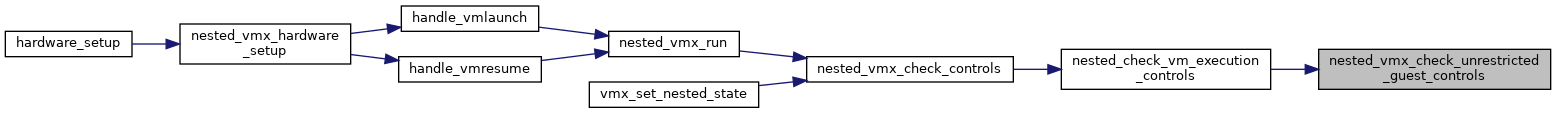

| static int | nested_vmx_check_controls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

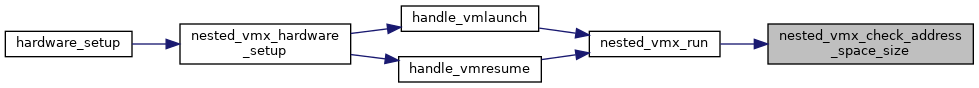

| static int | nested_vmx_check_address_space_size (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

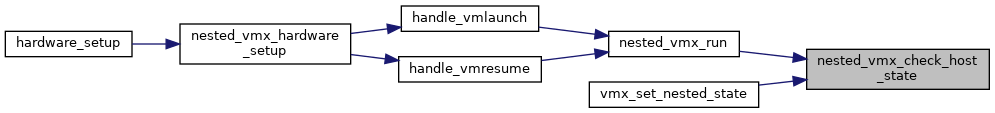

| static int | nested_vmx_check_host_state (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

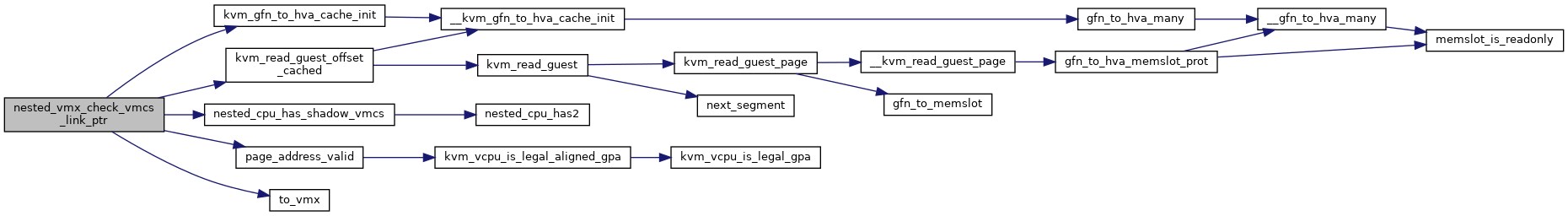

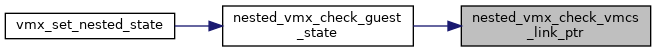

| static int | nested_vmx_check_vmcs_link_ptr (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

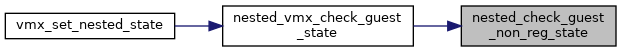

| static int | nested_check_guest_non_reg_state (struct vmcs12 *vmcs12) |

| static int | nested_vmx_check_guest_state (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, enum vm_entry_failure_code *entry_failure_code) |

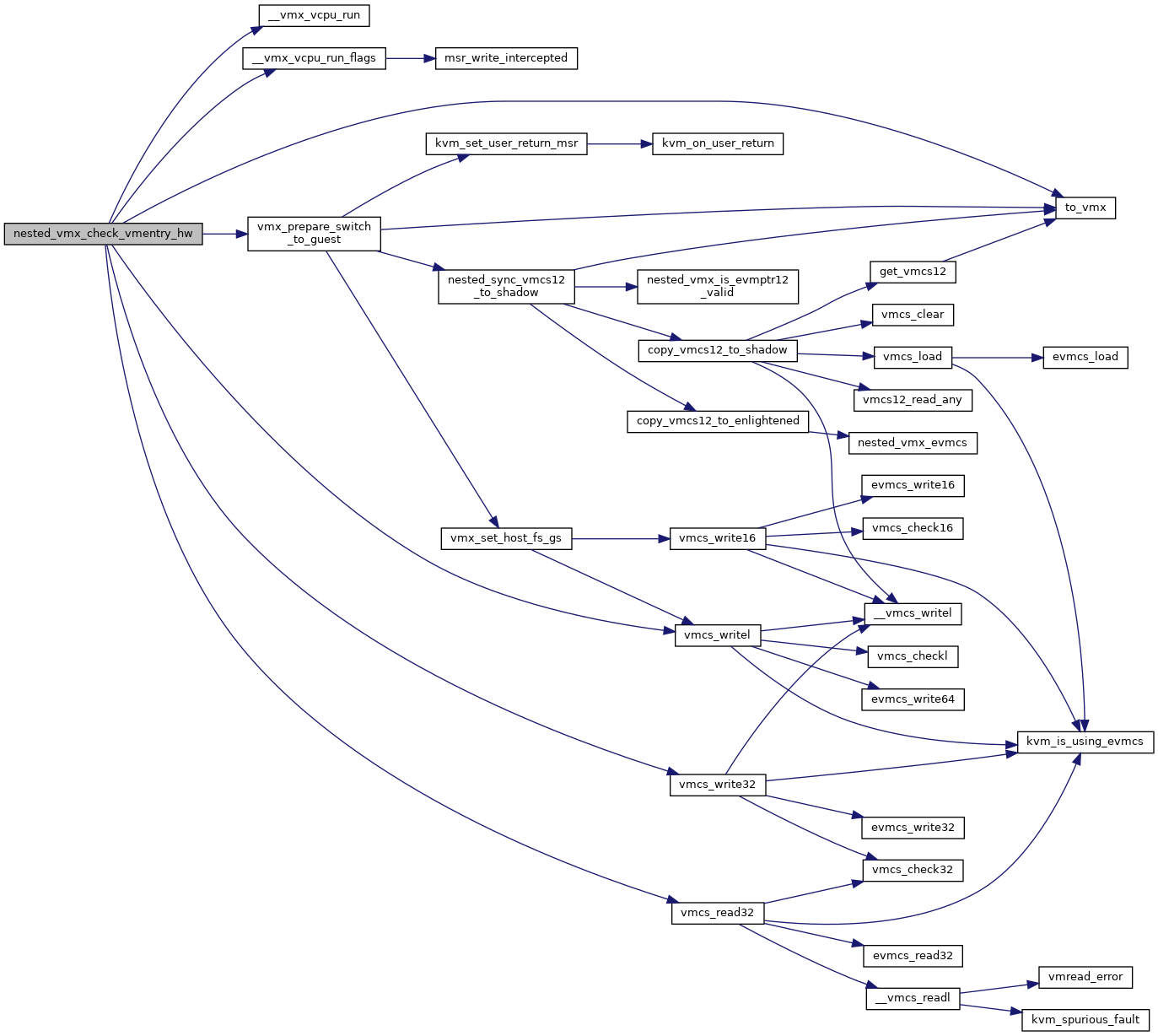

| static int | nested_vmx_check_vmentry_hw (struct kvm_vcpu *vcpu) |

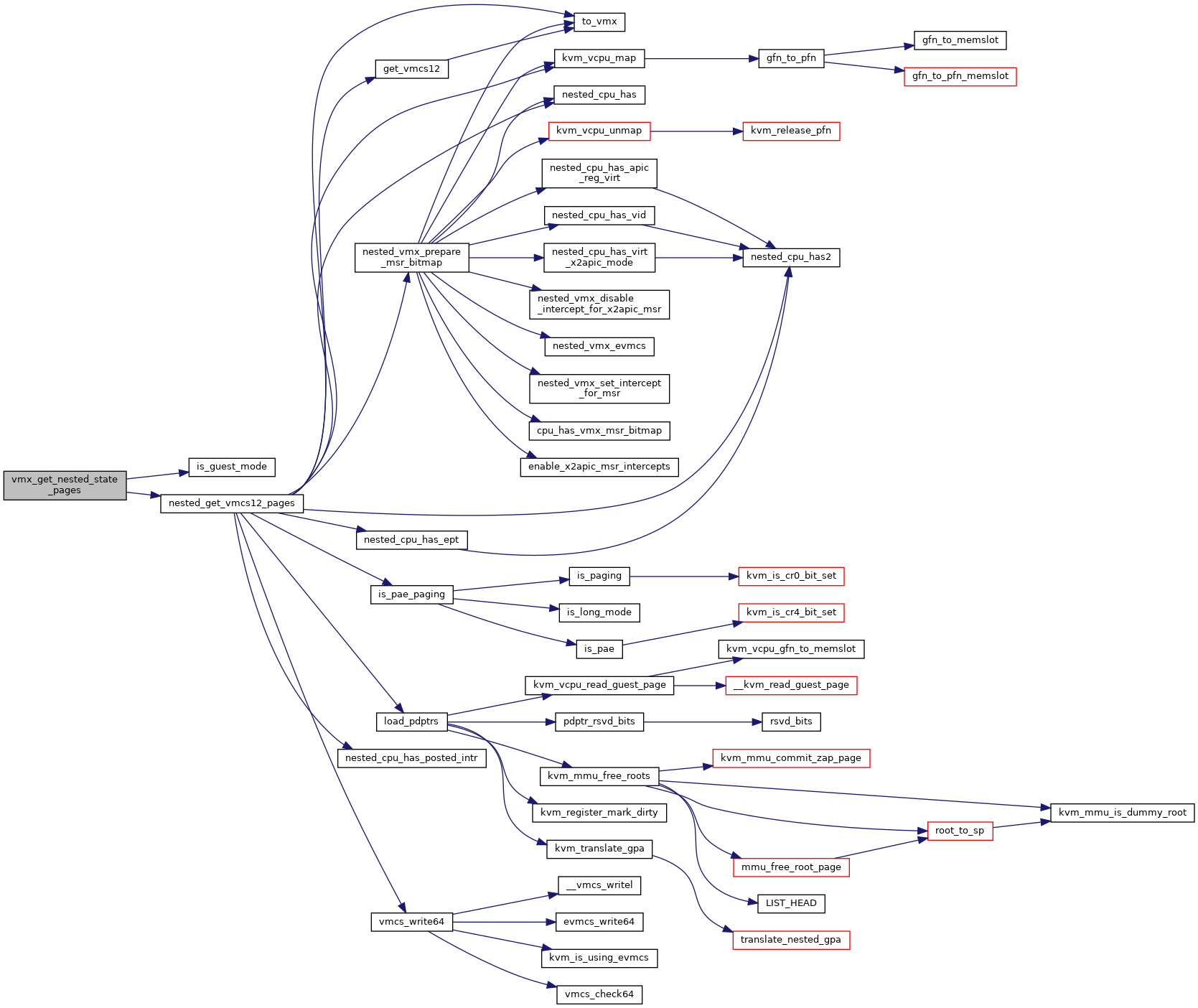

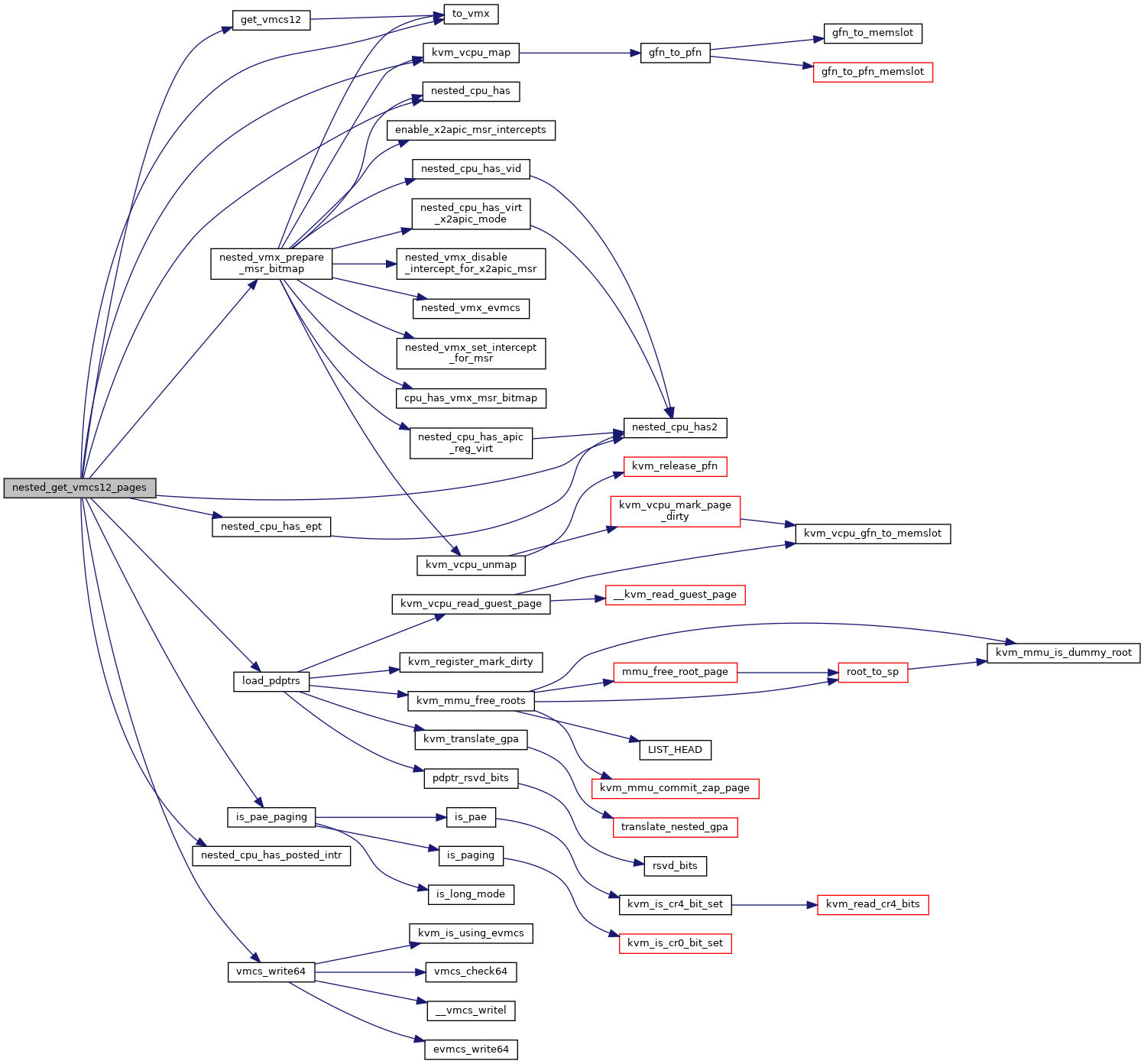

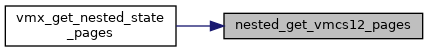

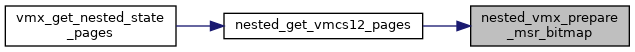

| static bool | nested_get_vmcs12_pages (struct kvm_vcpu *vcpu) |

| static bool | vmx_get_nested_state_pages (struct kvm_vcpu *vcpu) |

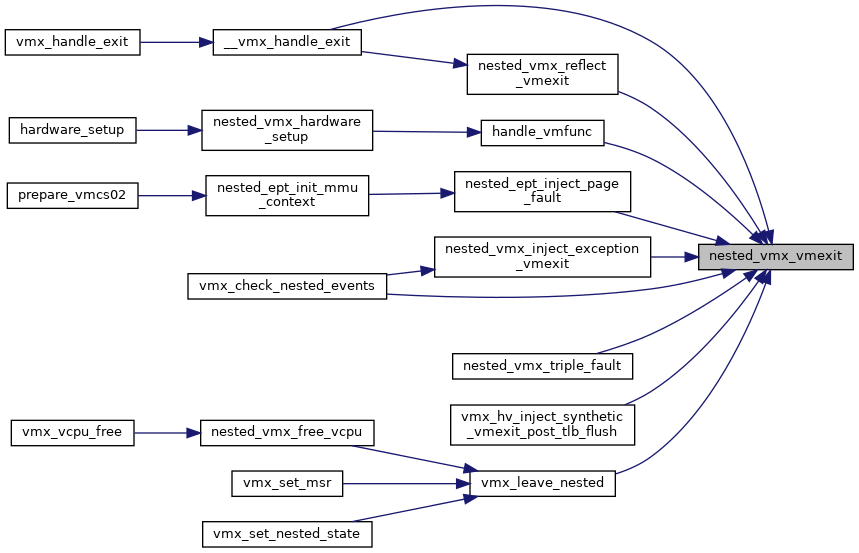

| static int | nested_vmx_write_pml_buffer (struct kvm_vcpu *vcpu, gpa_t gpa) |

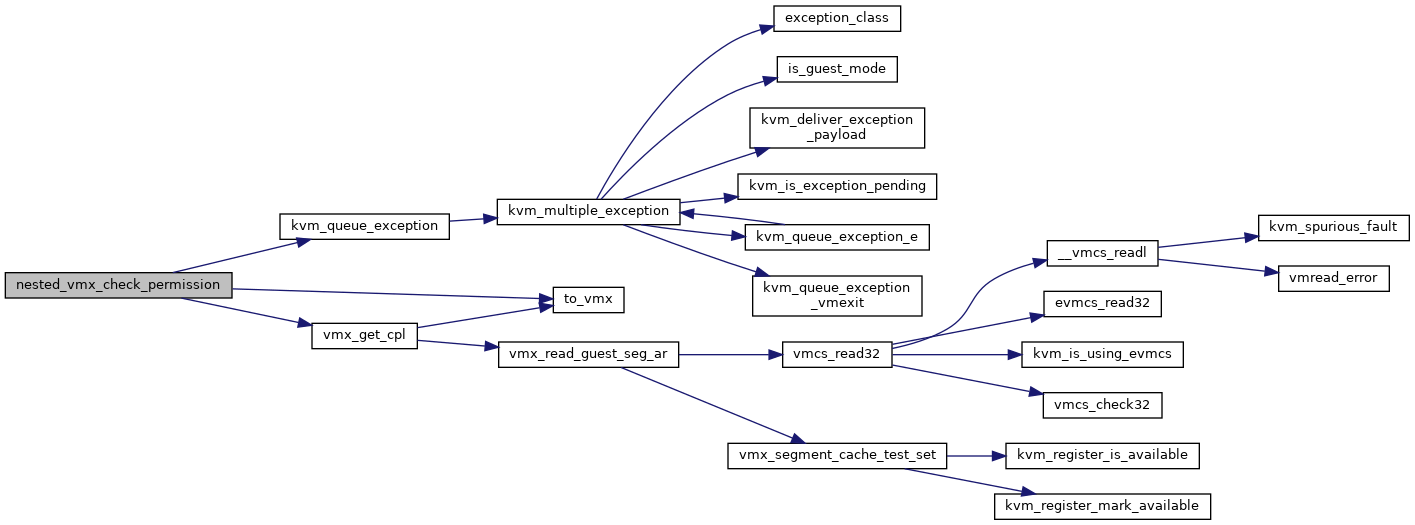

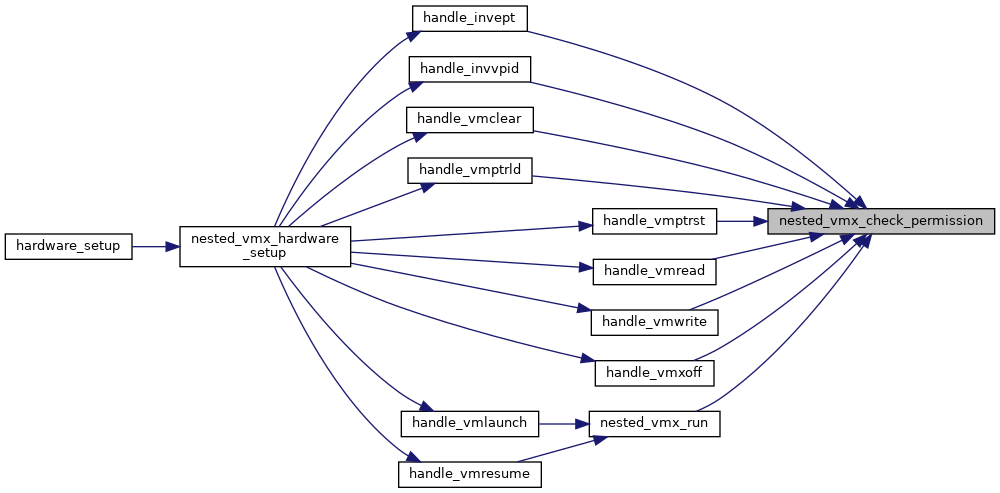

| static int | nested_vmx_check_permission (struct kvm_vcpu *vcpu) |

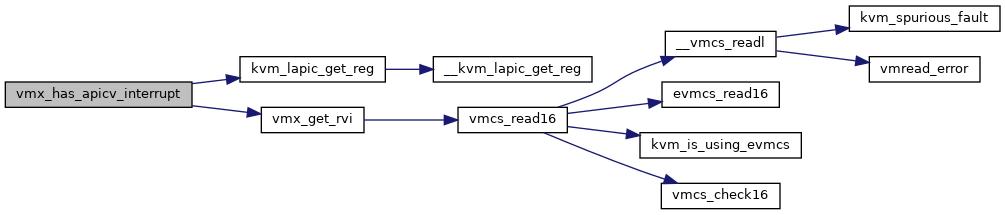

| static u8 | vmx_has_apicv_interrupt (struct kvm_vcpu *vcpu) |

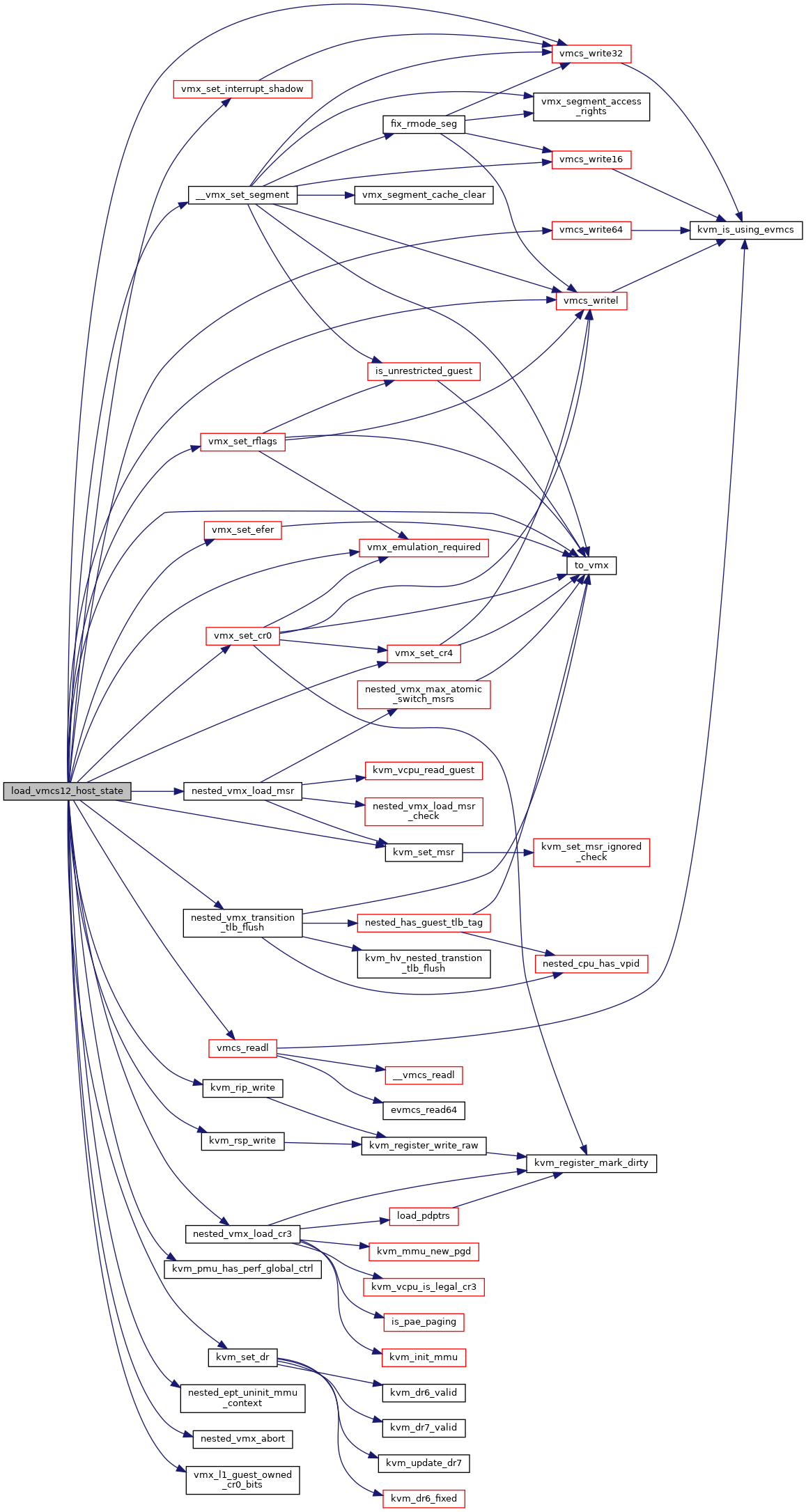

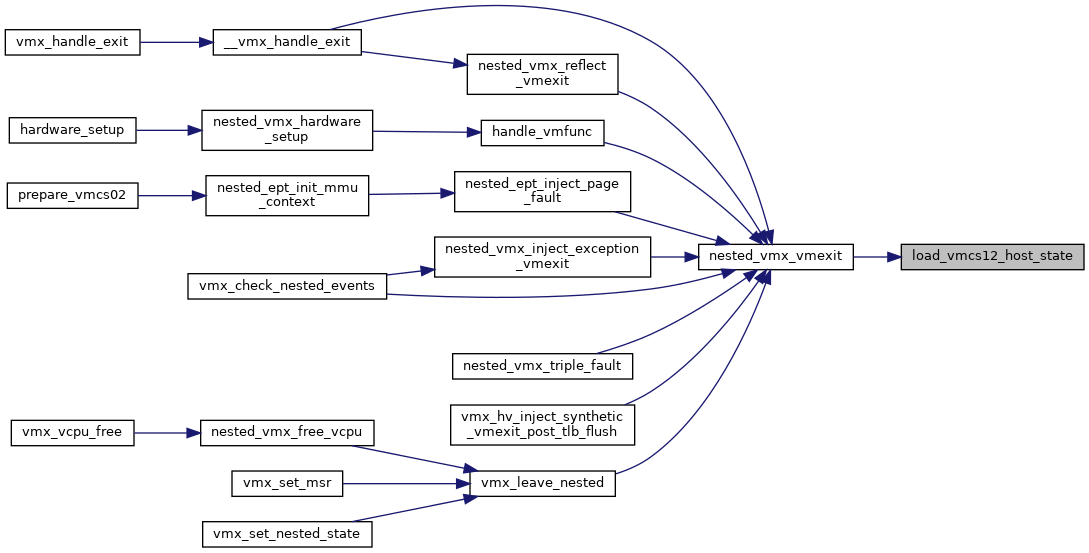

| static void | load_vmcs12_host_state (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

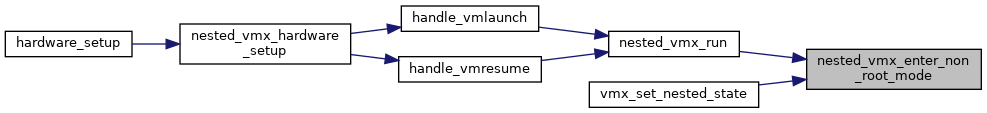

| enum nvmx_vmentry_status | nested_vmx_enter_non_root_mode (struct kvm_vcpu *vcpu, bool from_vmentry) |

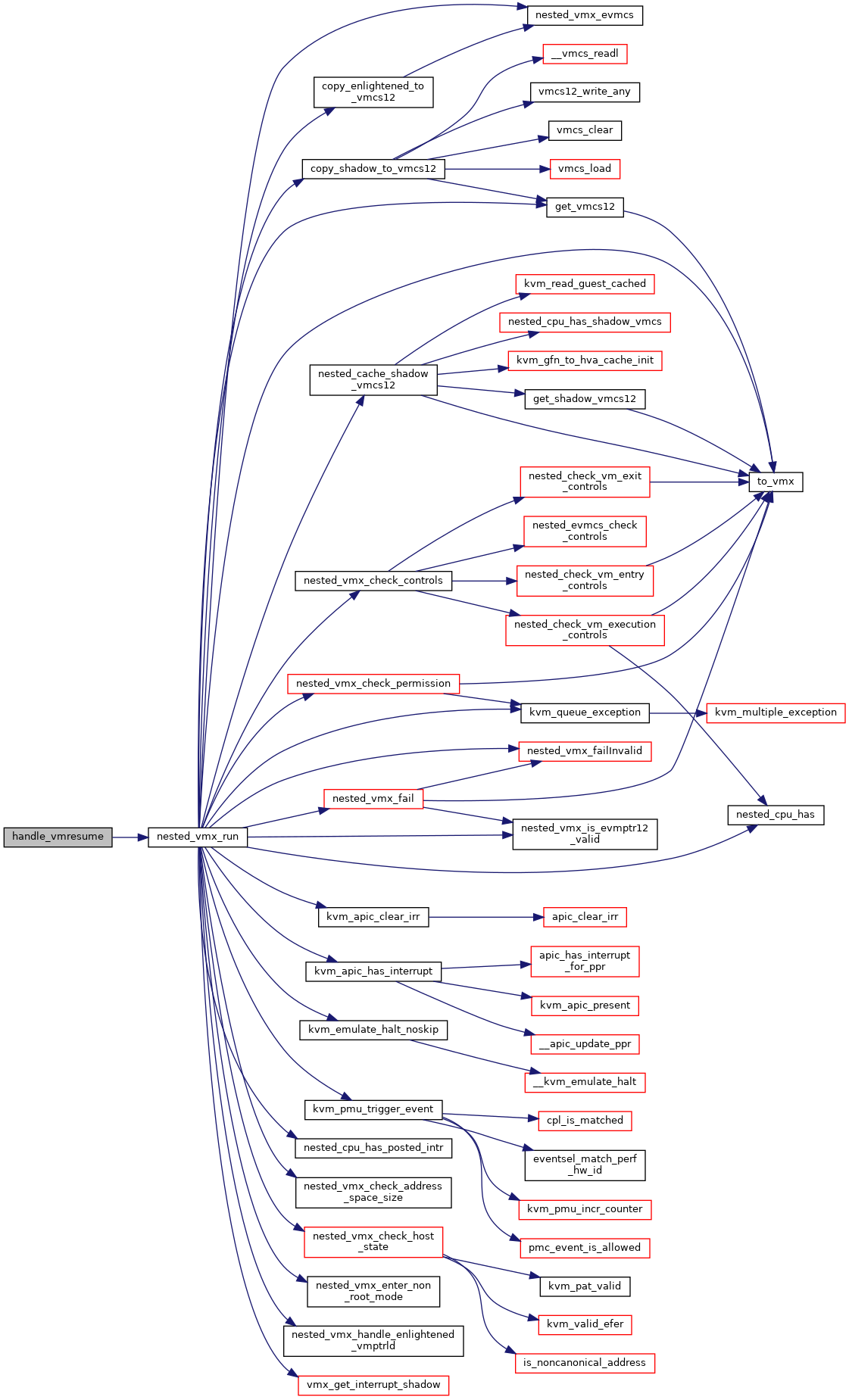

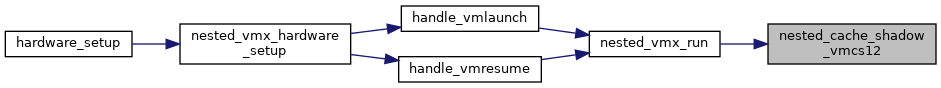

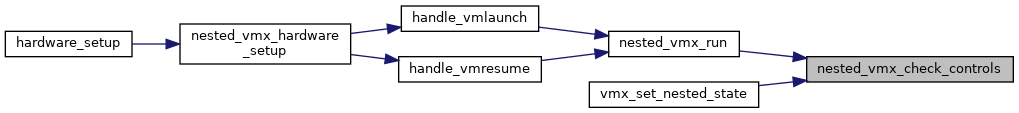

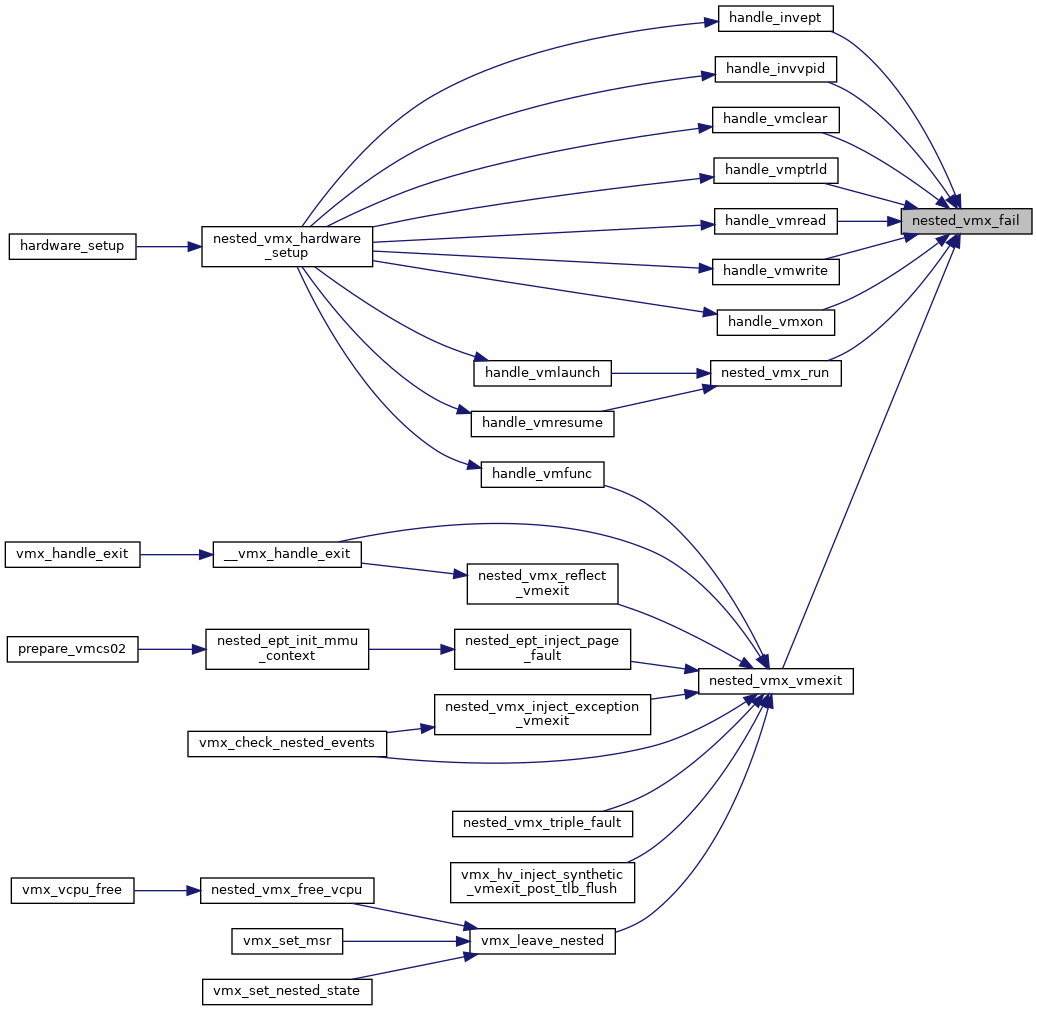

| static int | nested_vmx_run (struct kvm_vcpu *vcpu, bool launch) |

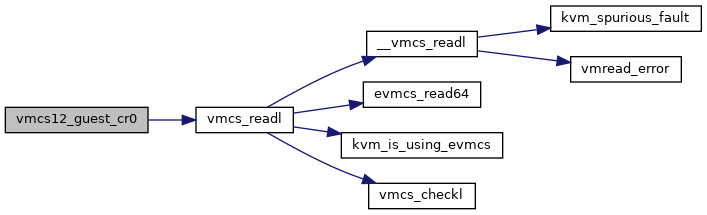

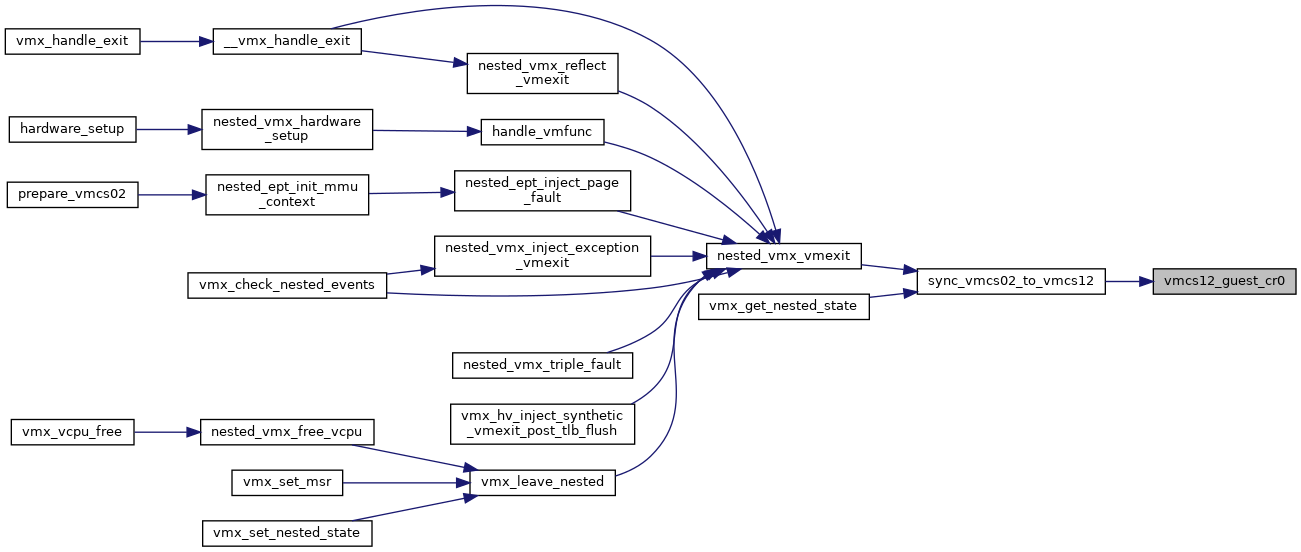

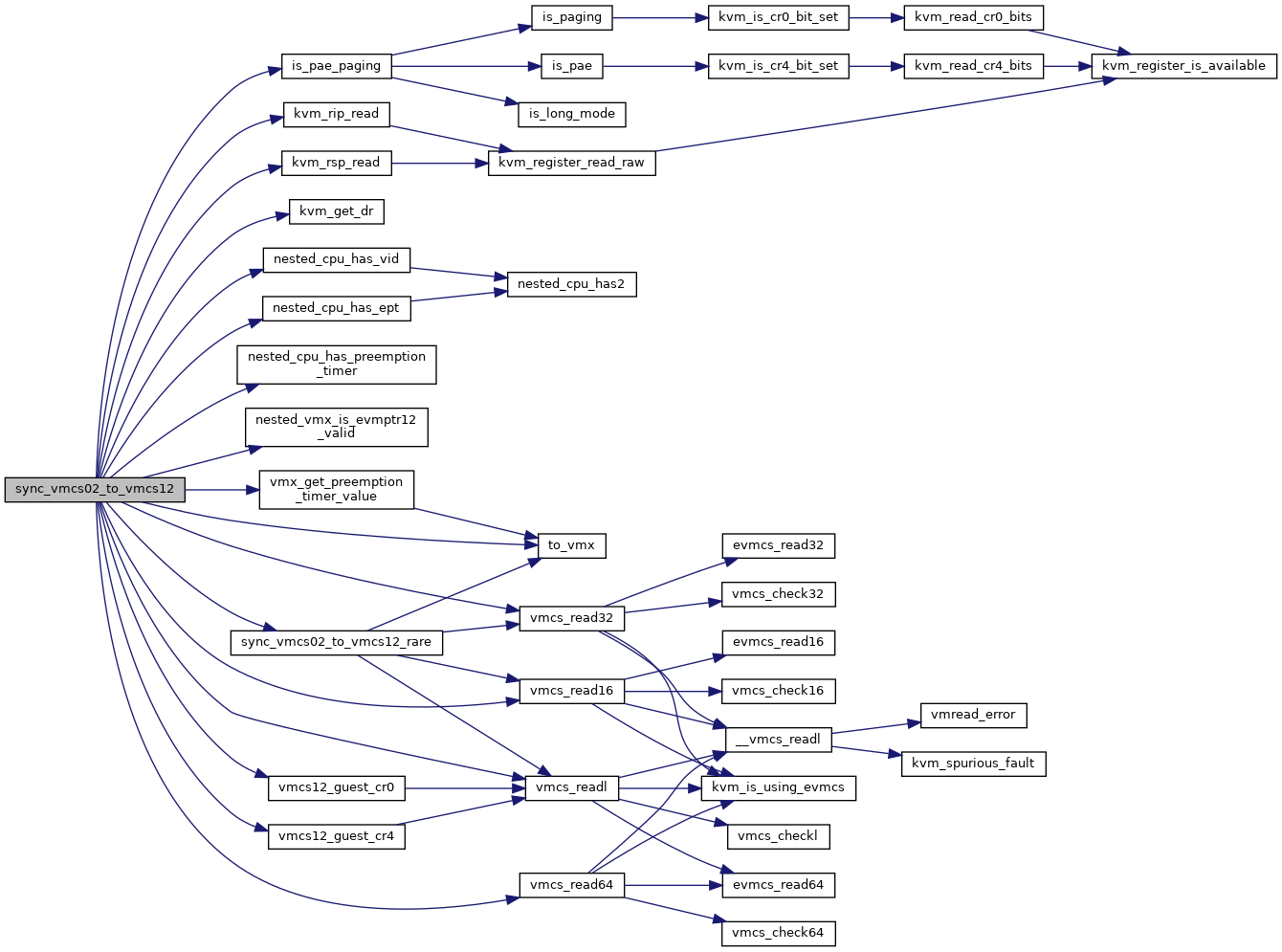

| static unsigned long | vmcs12_guest_cr0 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

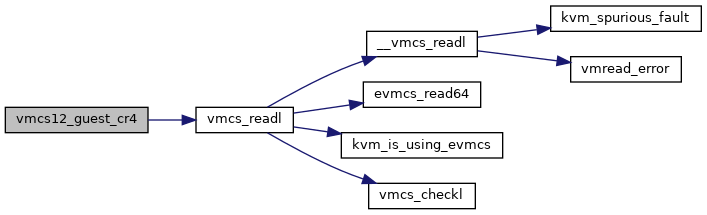

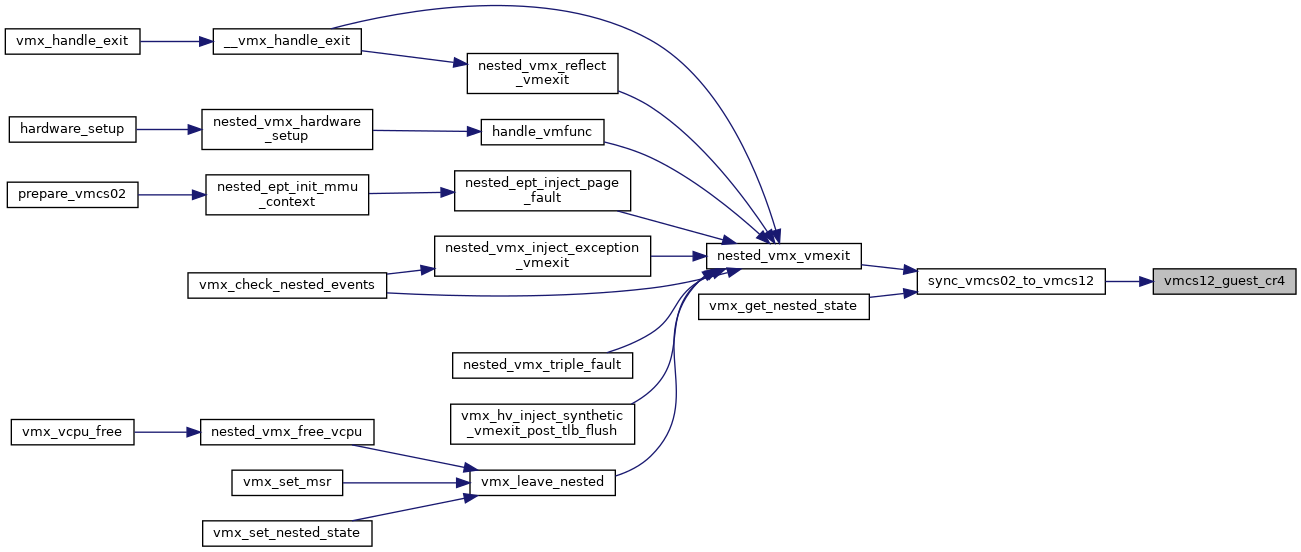

| static unsigned long | vmcs12_guest_cr4 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

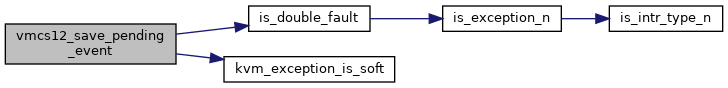

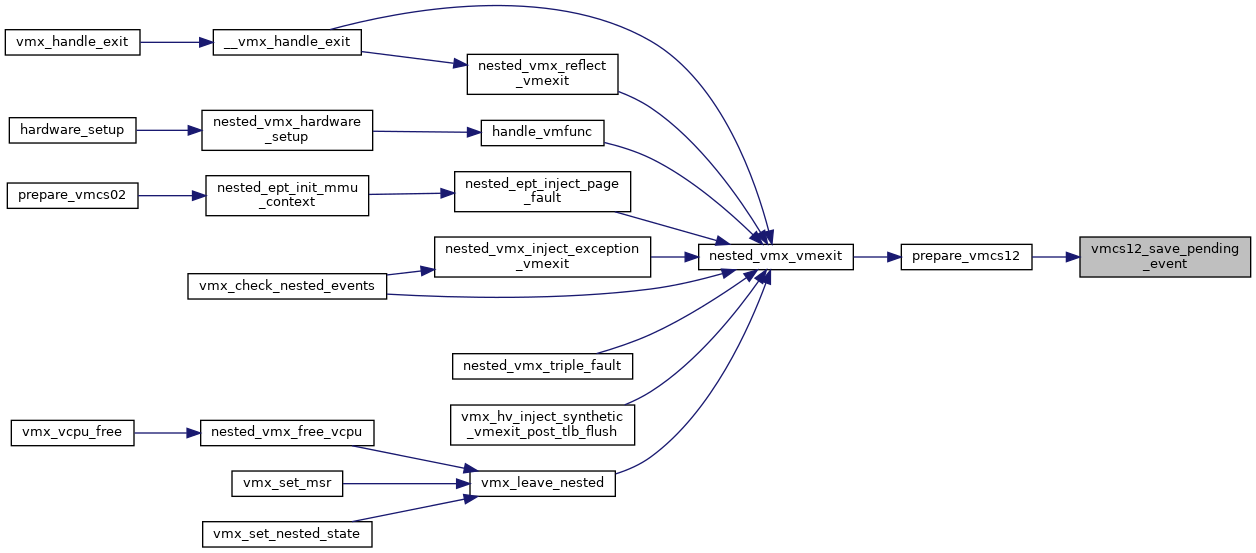

| static void | vmcs12_save_pending_event (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, u32 vm_exit_reason, u32 exit_intr_info) |

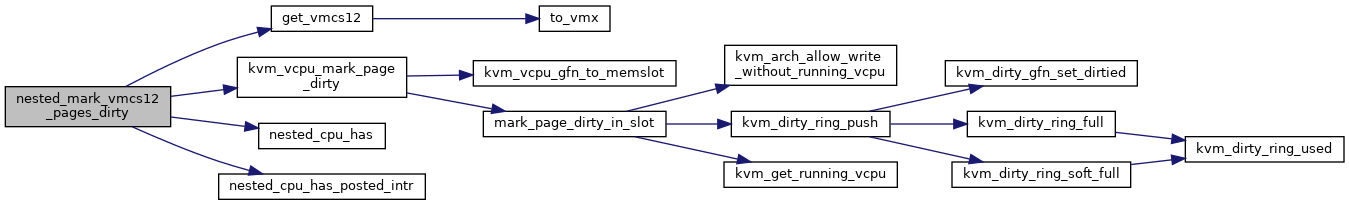

| void | nested_mark_vmcs12_pages_dirty (struct kvm_vcpu *vcpu) |

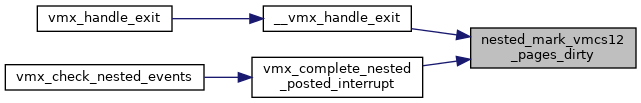

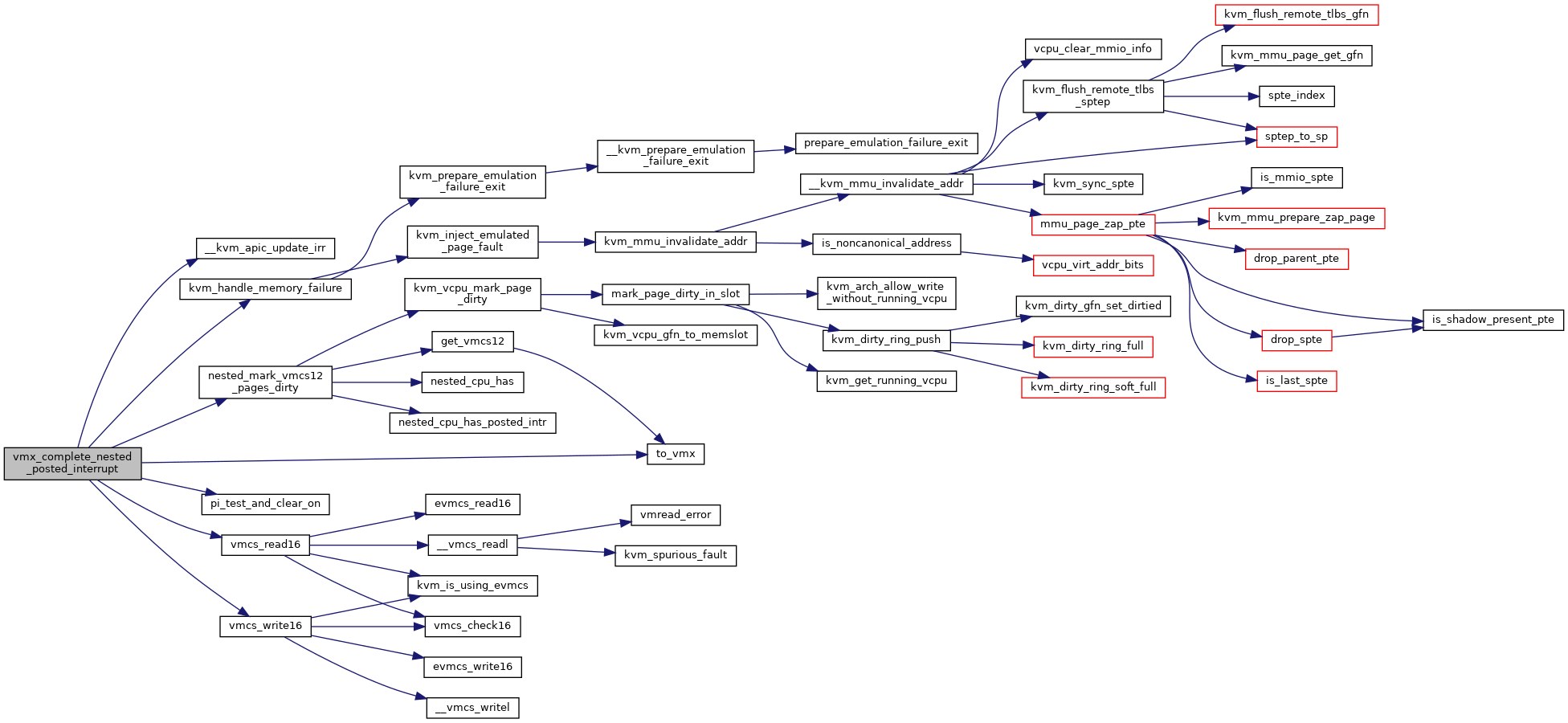

| static int | vmx_complete_nested_posted_interrupt (struct kvm_vcpu *vcpu) |

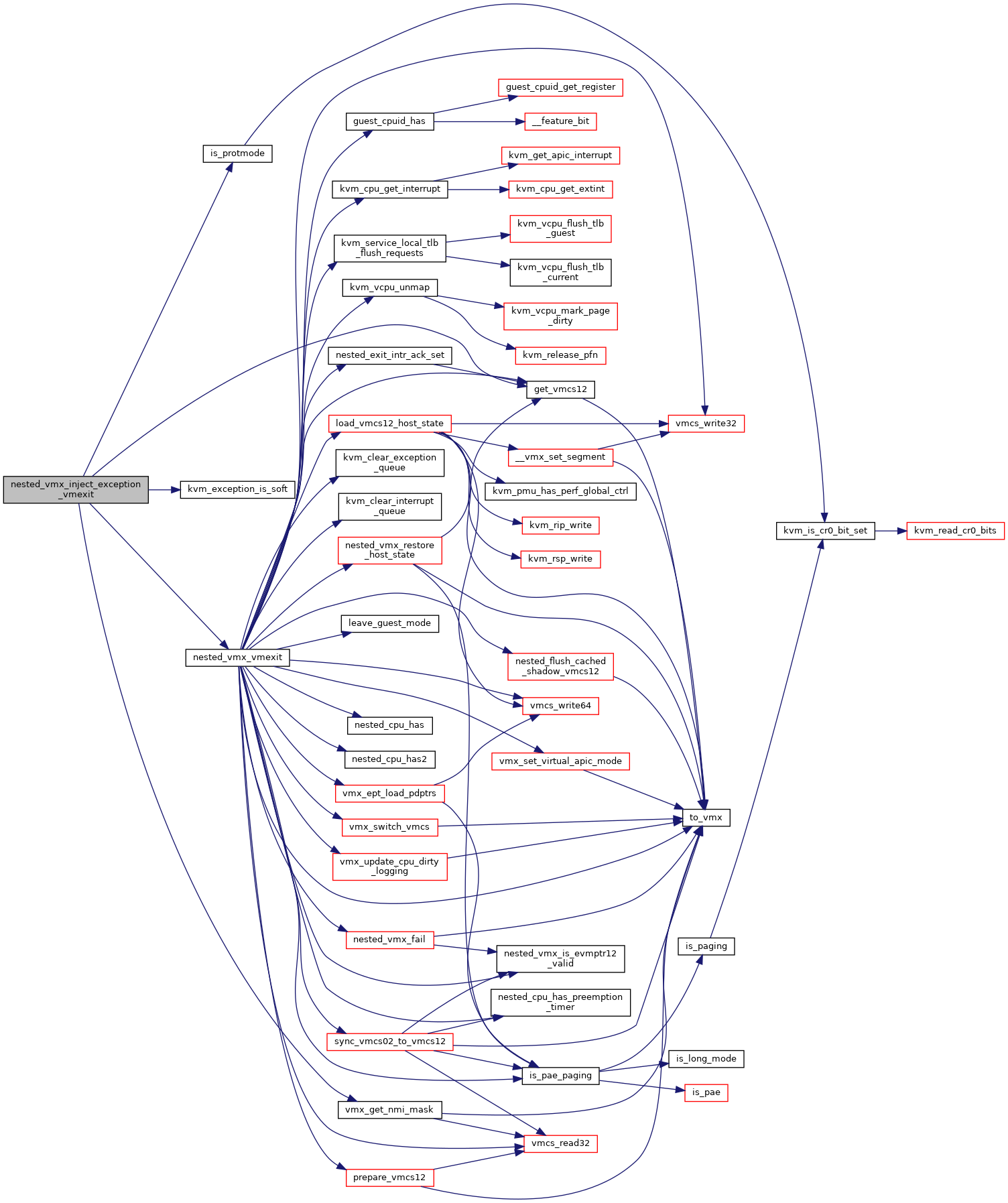

| static void | nested_vmx_inject_exception_vmexit (struct kvm_vcpu *vcpu) |

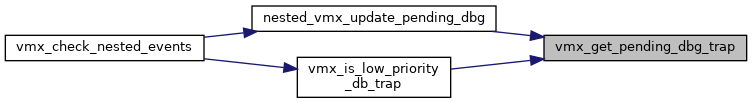

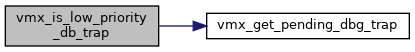

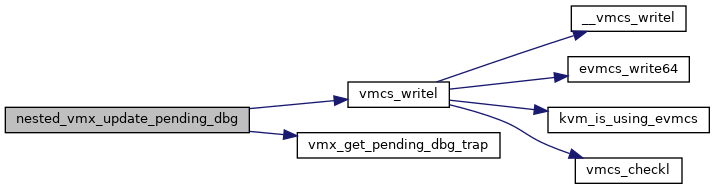

| static unsigned long | vmx_get_pending_dbg_trap (struct kvm_queued_exception *ex) |

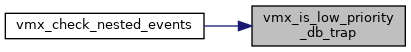

| static bool | vmx_is_low_priority_db_trap (struct kvm_queued_exception *ex) |

| static void | nested_vmx_update_pending_dbg (struct kvm_vcpu *vcpu) |

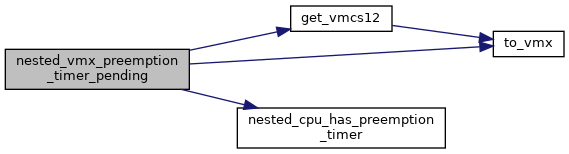

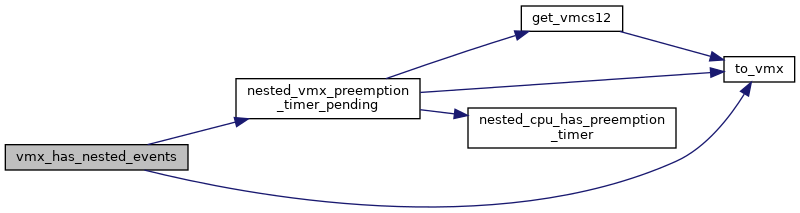

| static bool | nested_vmx_preemption_timer_pending (struct kvm_vcpu *vcpu) |

| static bool | vmx_has_nested_events (struct kvm_vcpu *vcpu) |

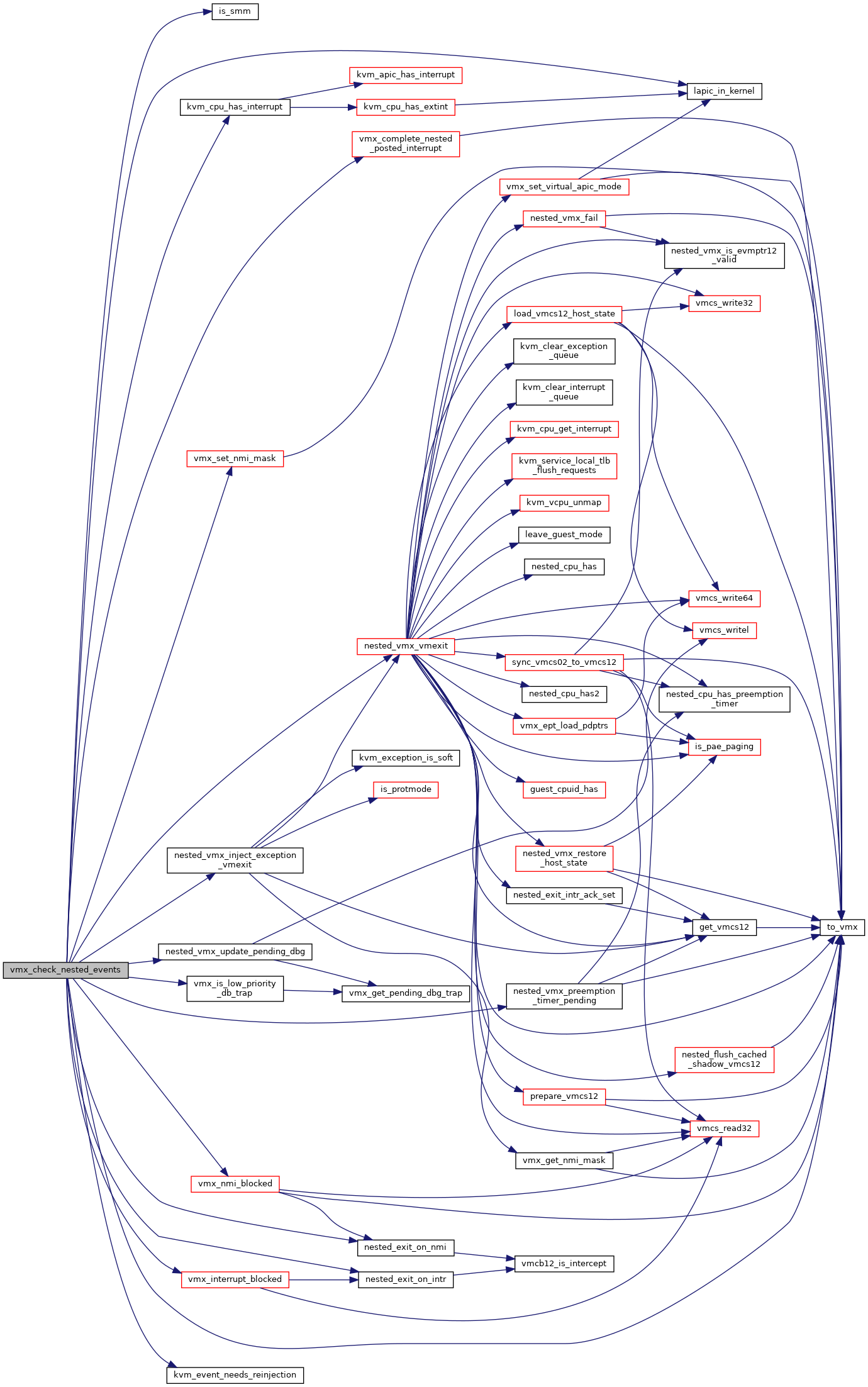

| static int | vmx_check_nested_events (struct kvm_vcpu *vcpu) |

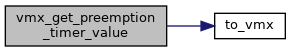

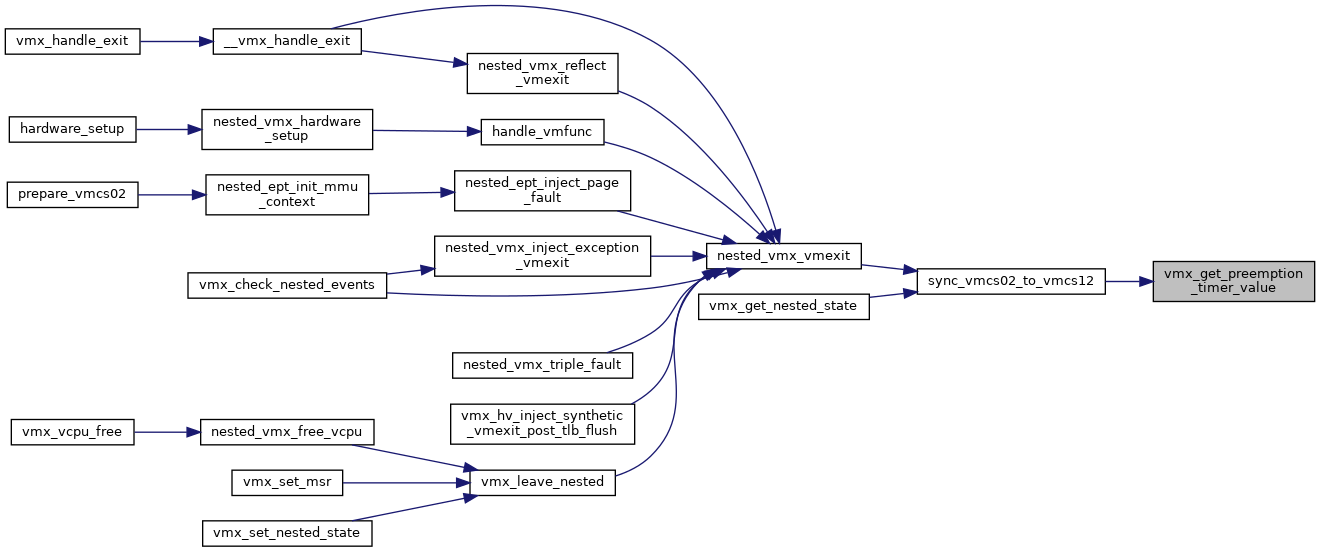

| static u32 | vmx_get_preemption_timer_value (struct kvm_vcpu *vcpu) |

| static bool | is_vmcs12_ext_field (unsigned long field) |

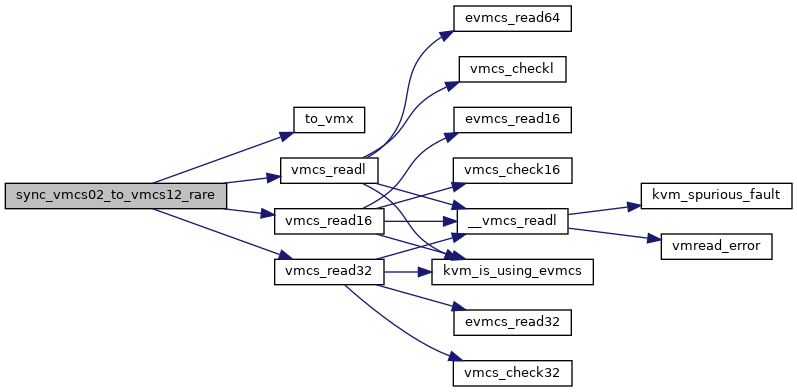

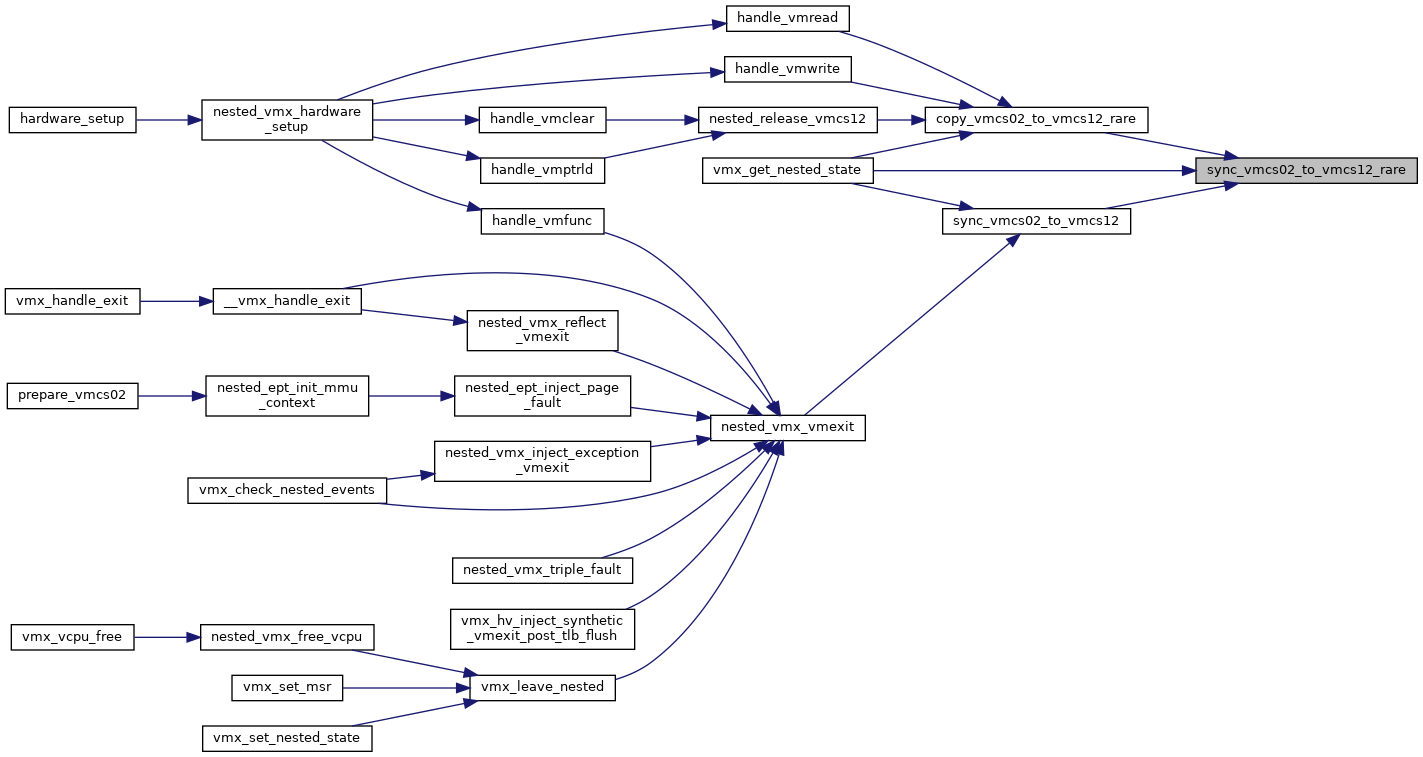

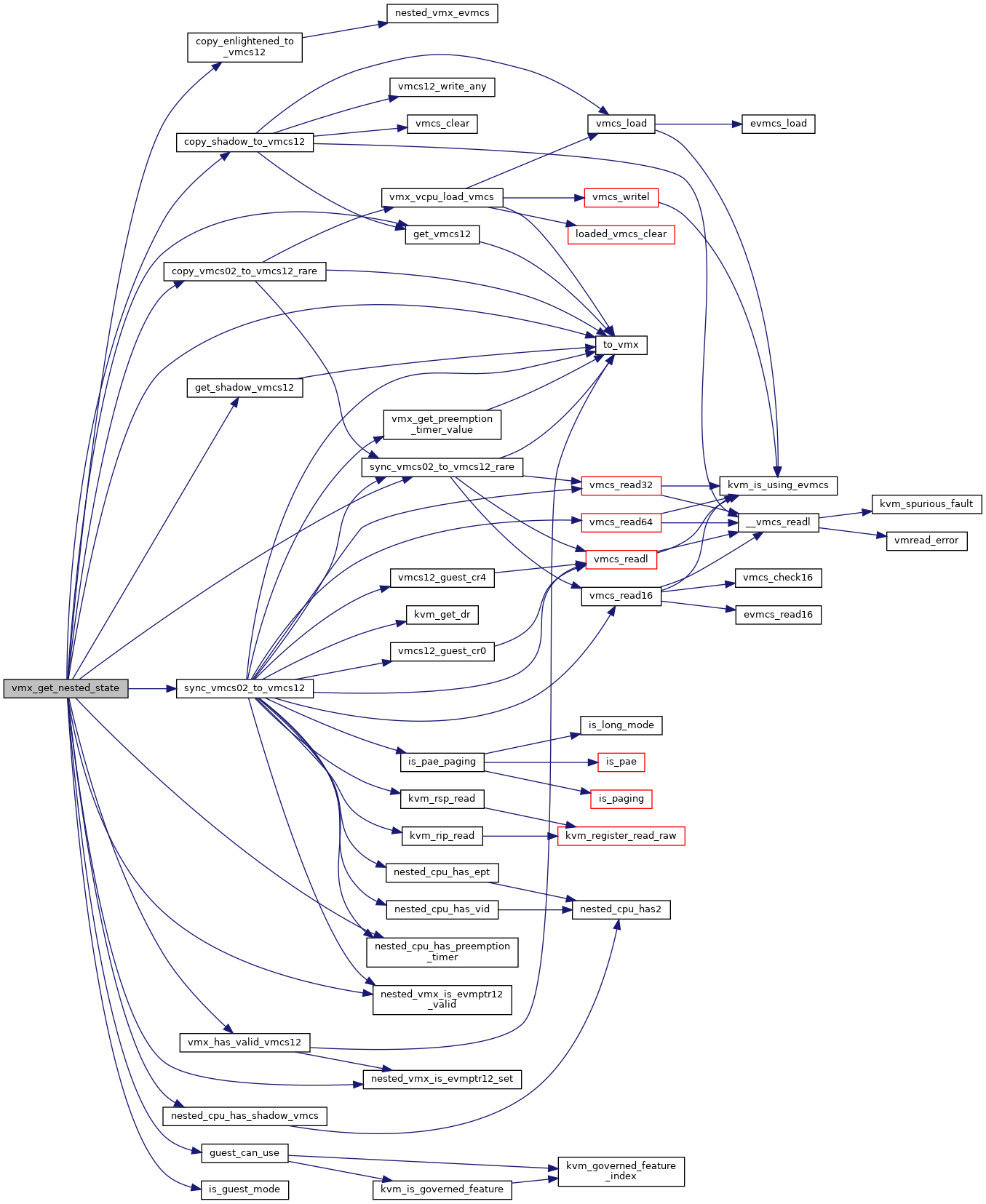

| static void | sync_vmcs02_to_vmcs12_rare (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static void | copy_vmcs02_to_vmcs12_rare (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

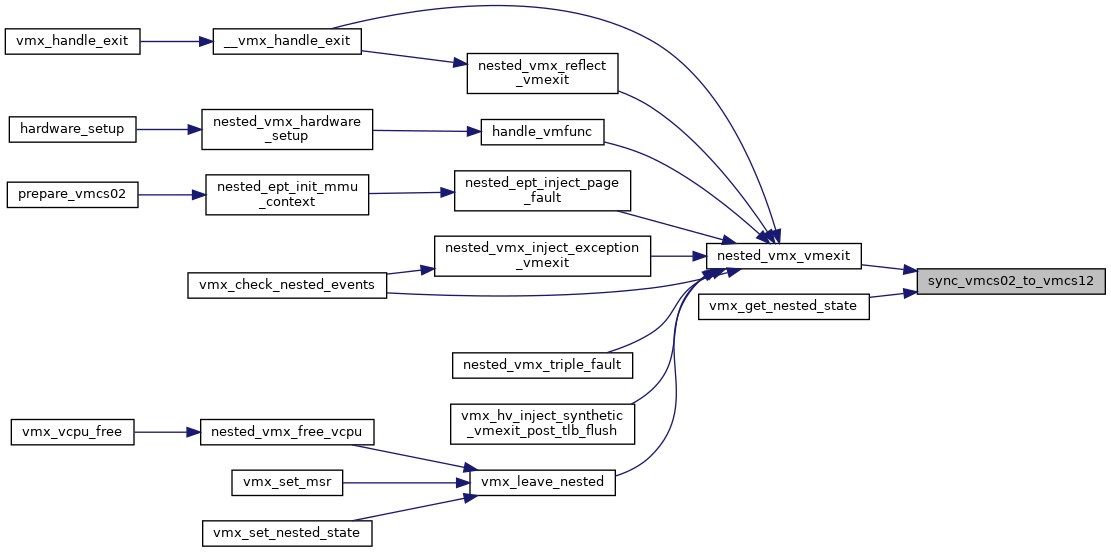

| static void | sync_vmcs02_to_vmcs12 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

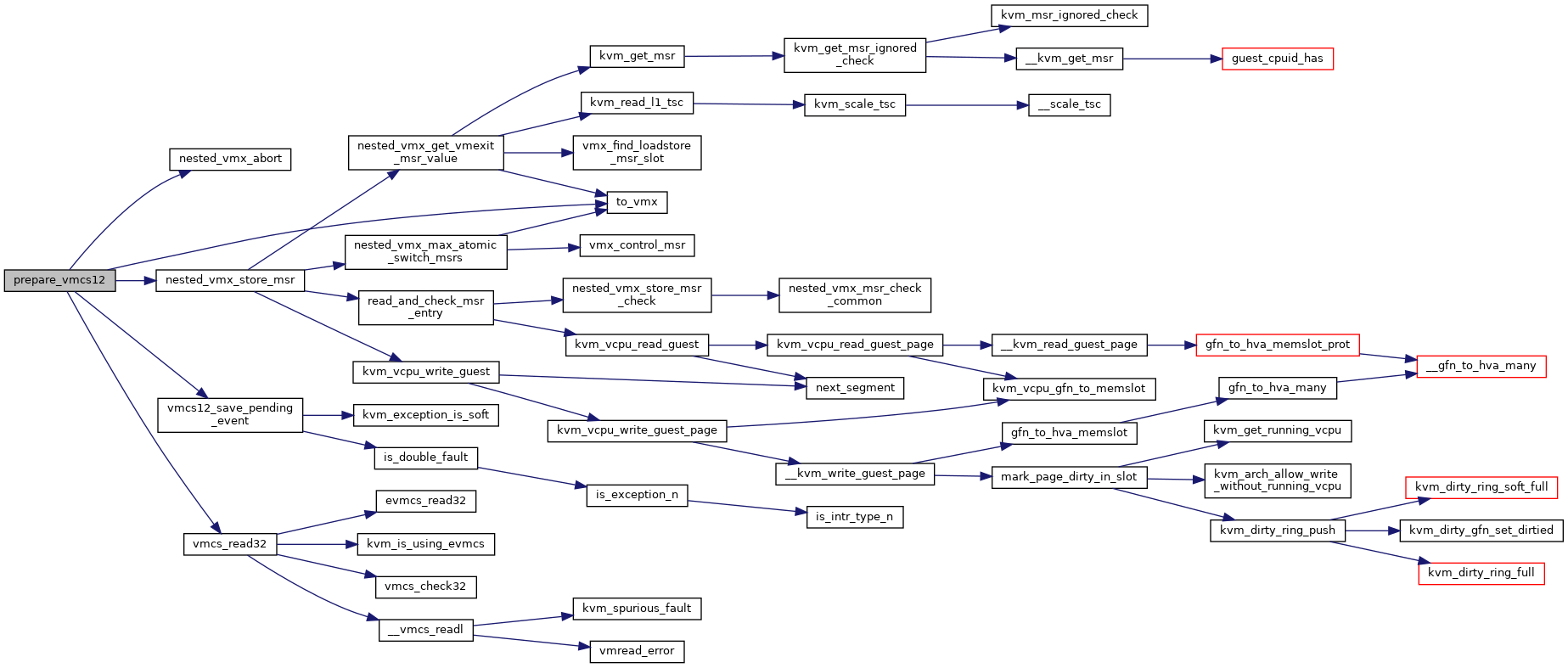

| static void | prepare_vmcs12 (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification) |

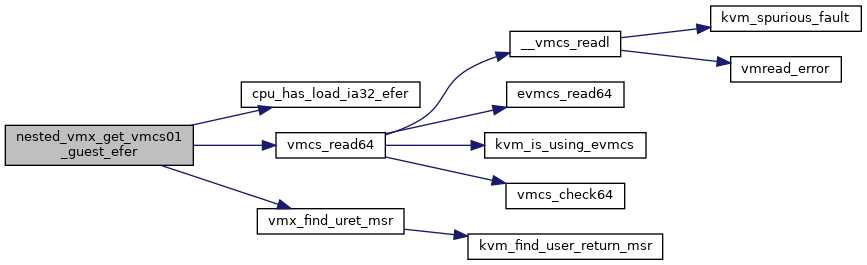

| static u64 | nested_vmx_get_vmcs01_guest_efer (struct vcpu_vmx *vmx) |

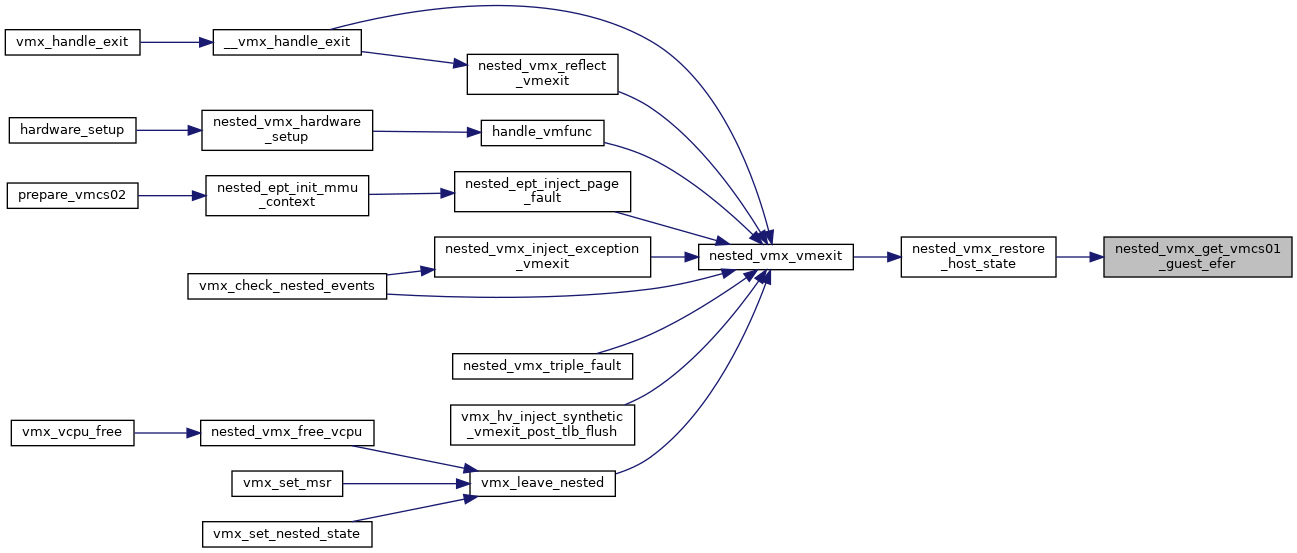

| static void | nested_vmx_restore_host_state (struct kvm_vcpu *vcpu) |

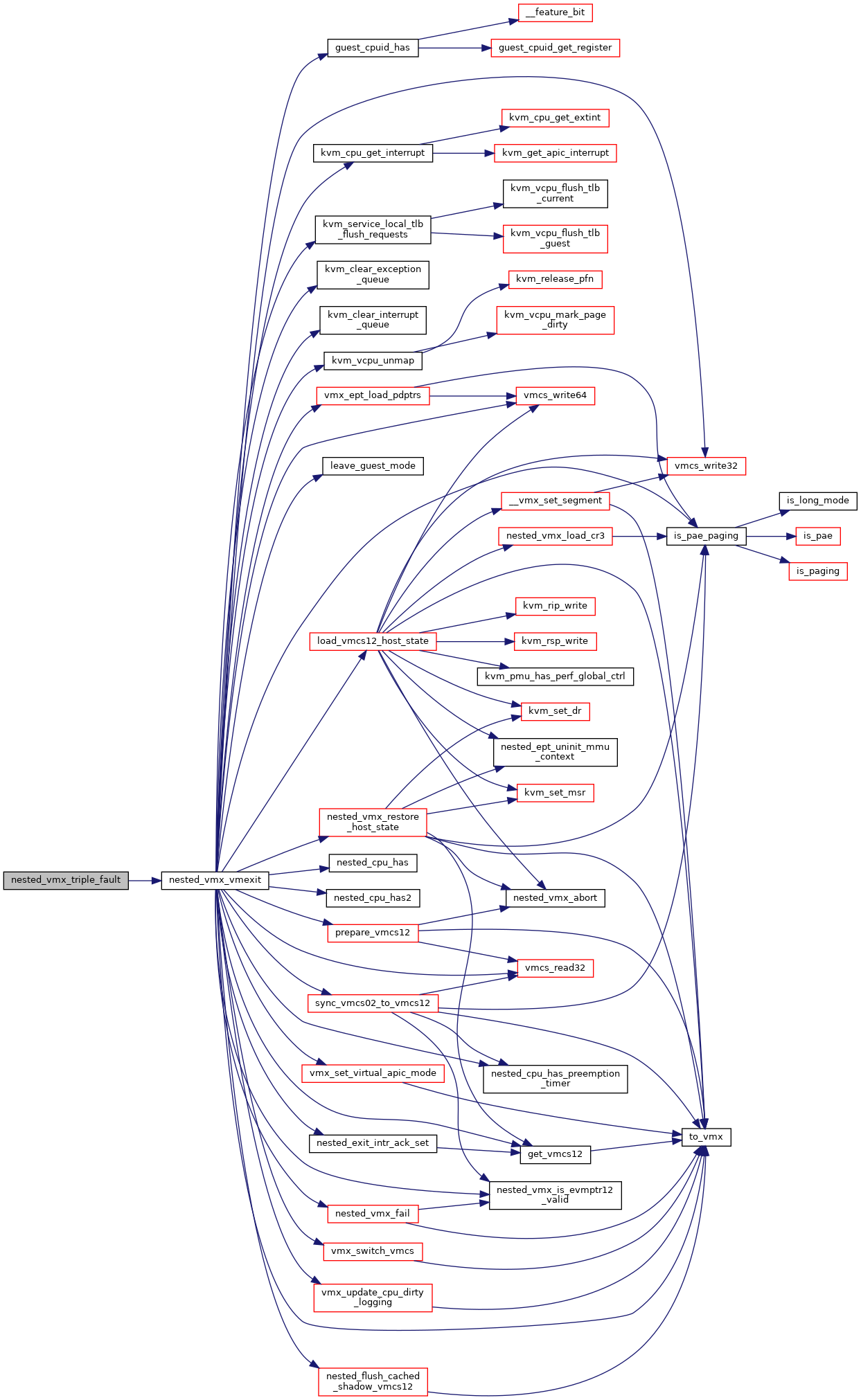

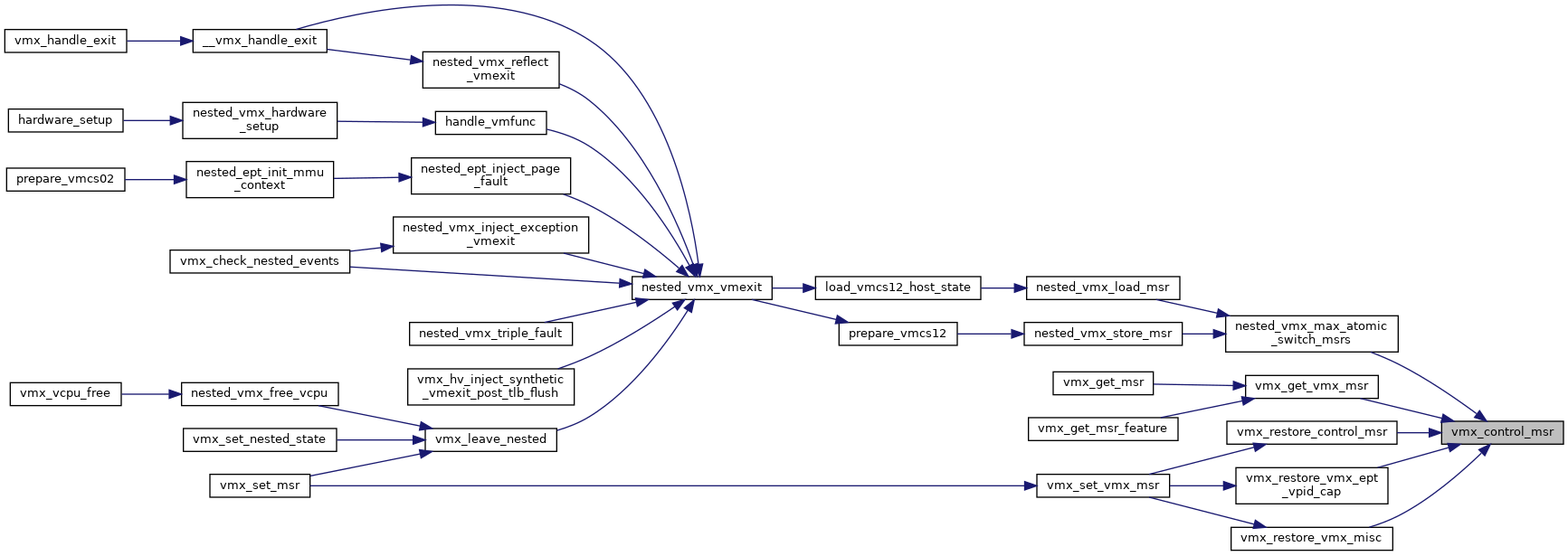

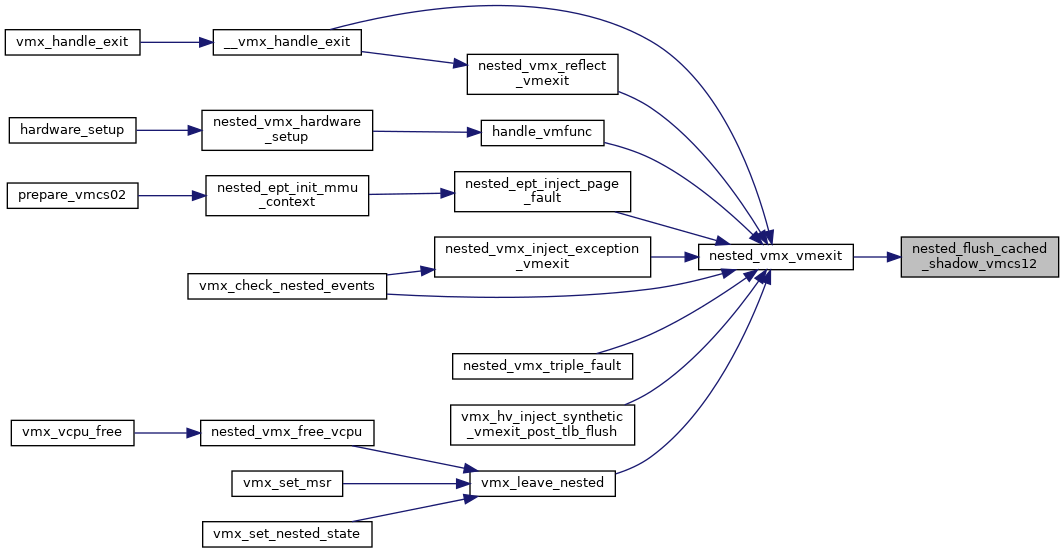

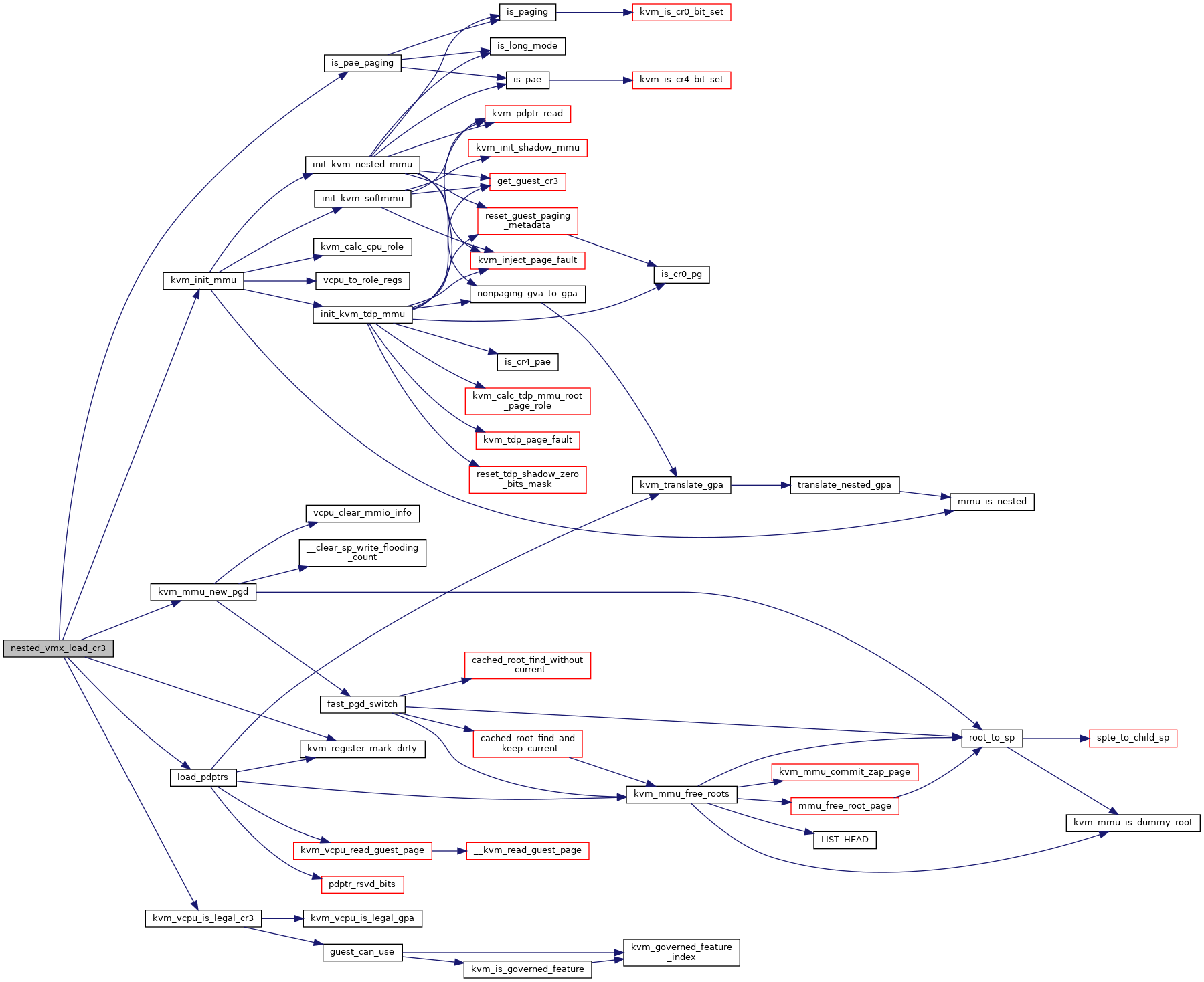

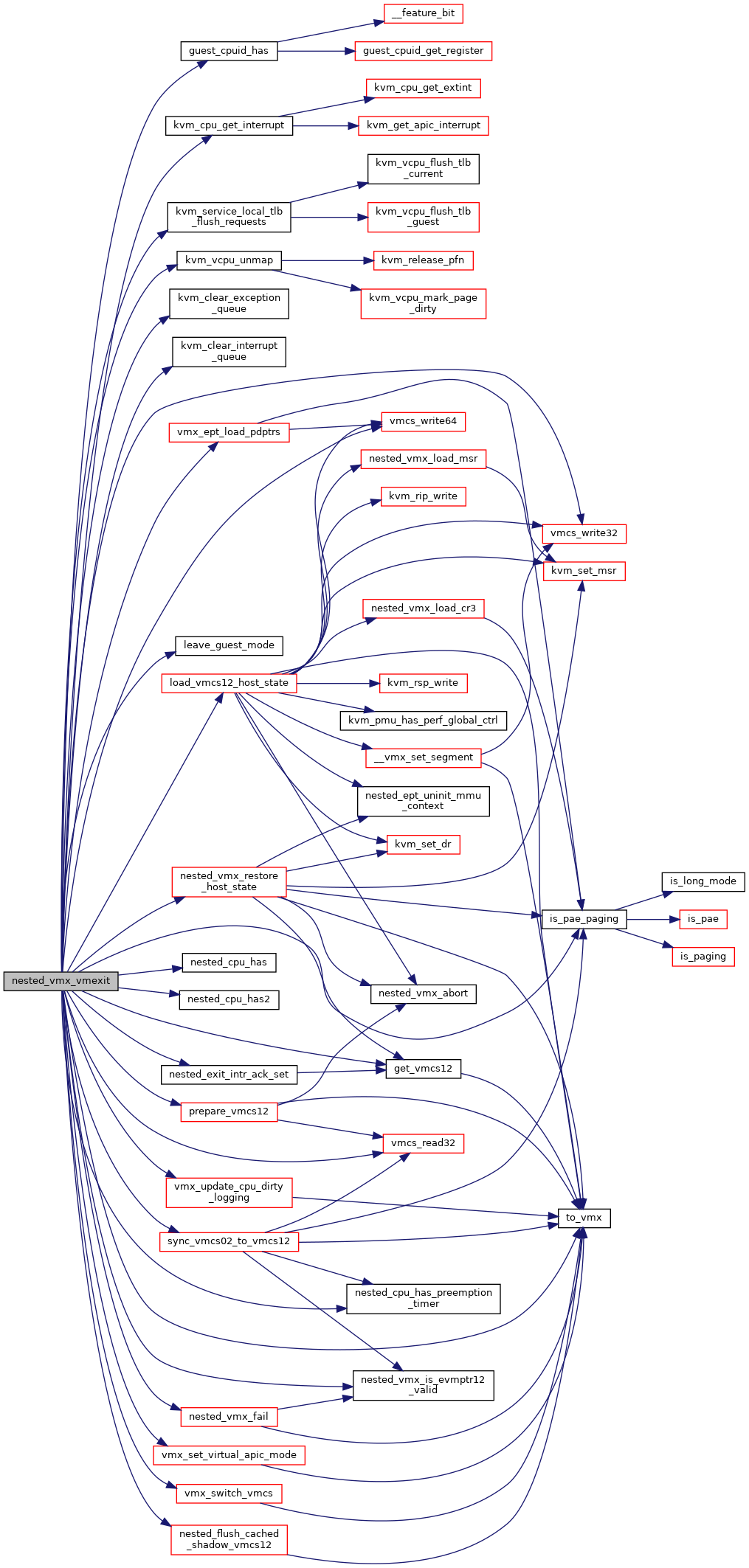

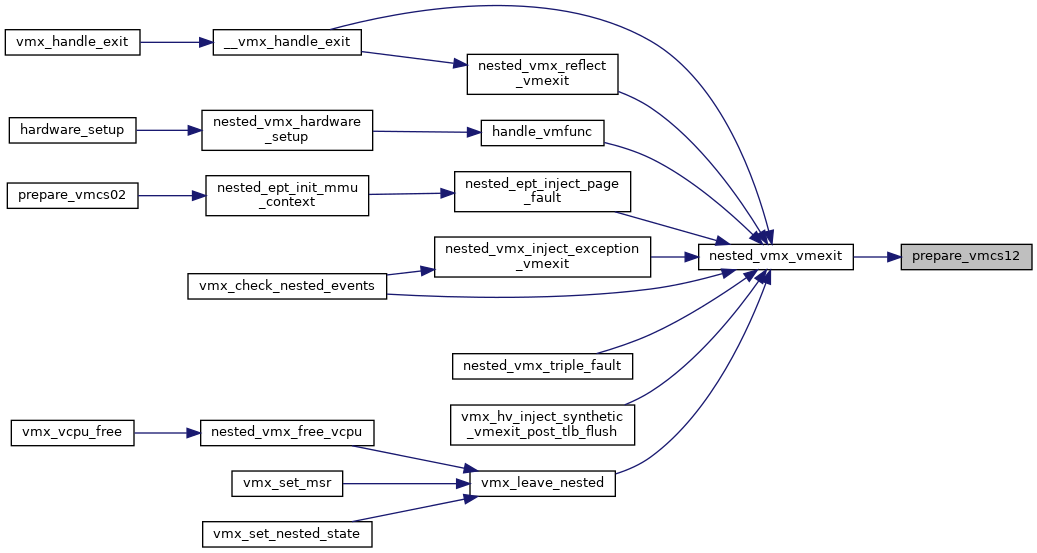

| void | nested_vmx_vmexit (struct kvm_vcpu *vcpu, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification) |

| static void | nested_vmx_triple_fault (struct kvm_vcpu *vcpu) |

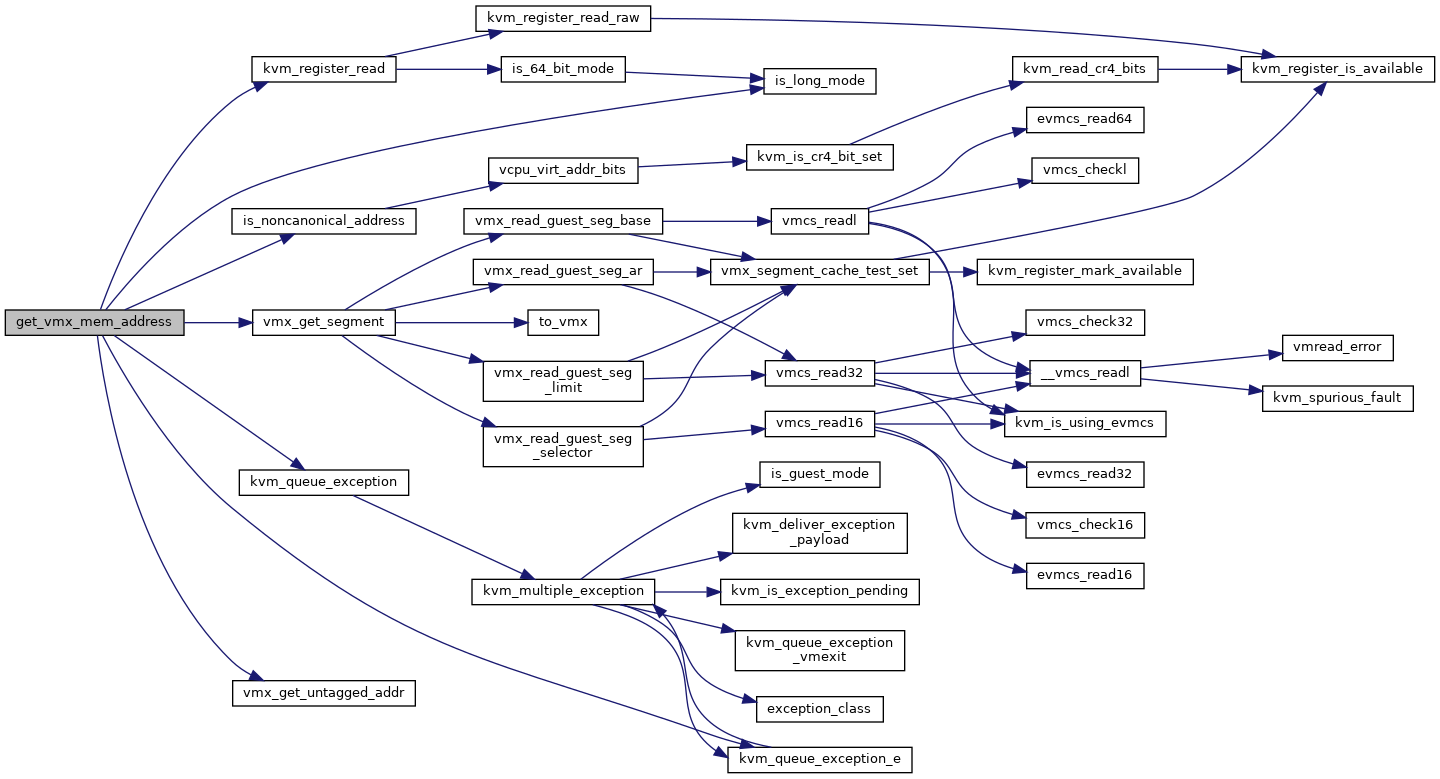

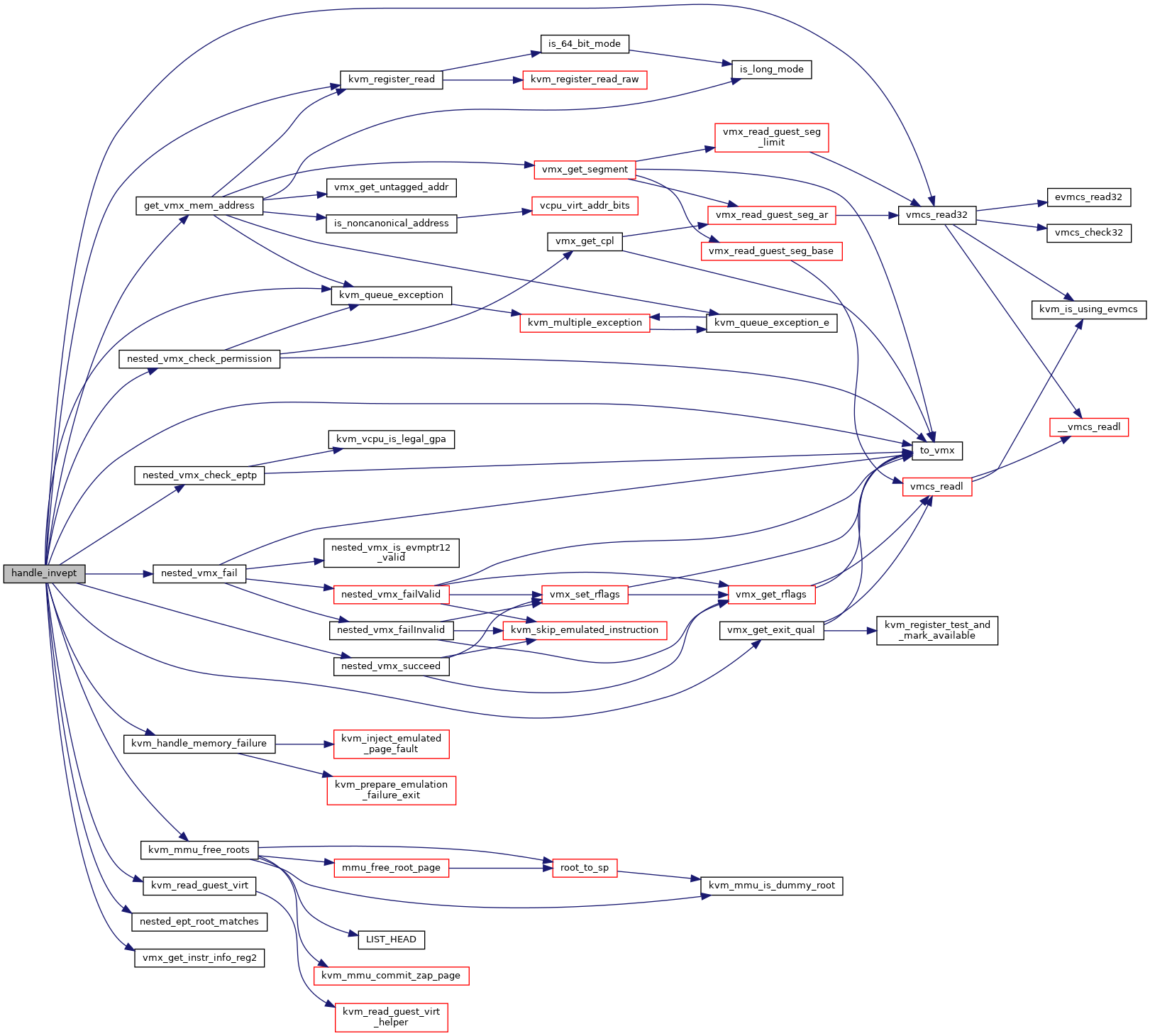

| int | get_vmx_mem_address (struct kvm_vcpu *vcpu, unsigned long exit_qualification, u32 vmx_instruction_info, bool wr, int len, gva_t *ret) |

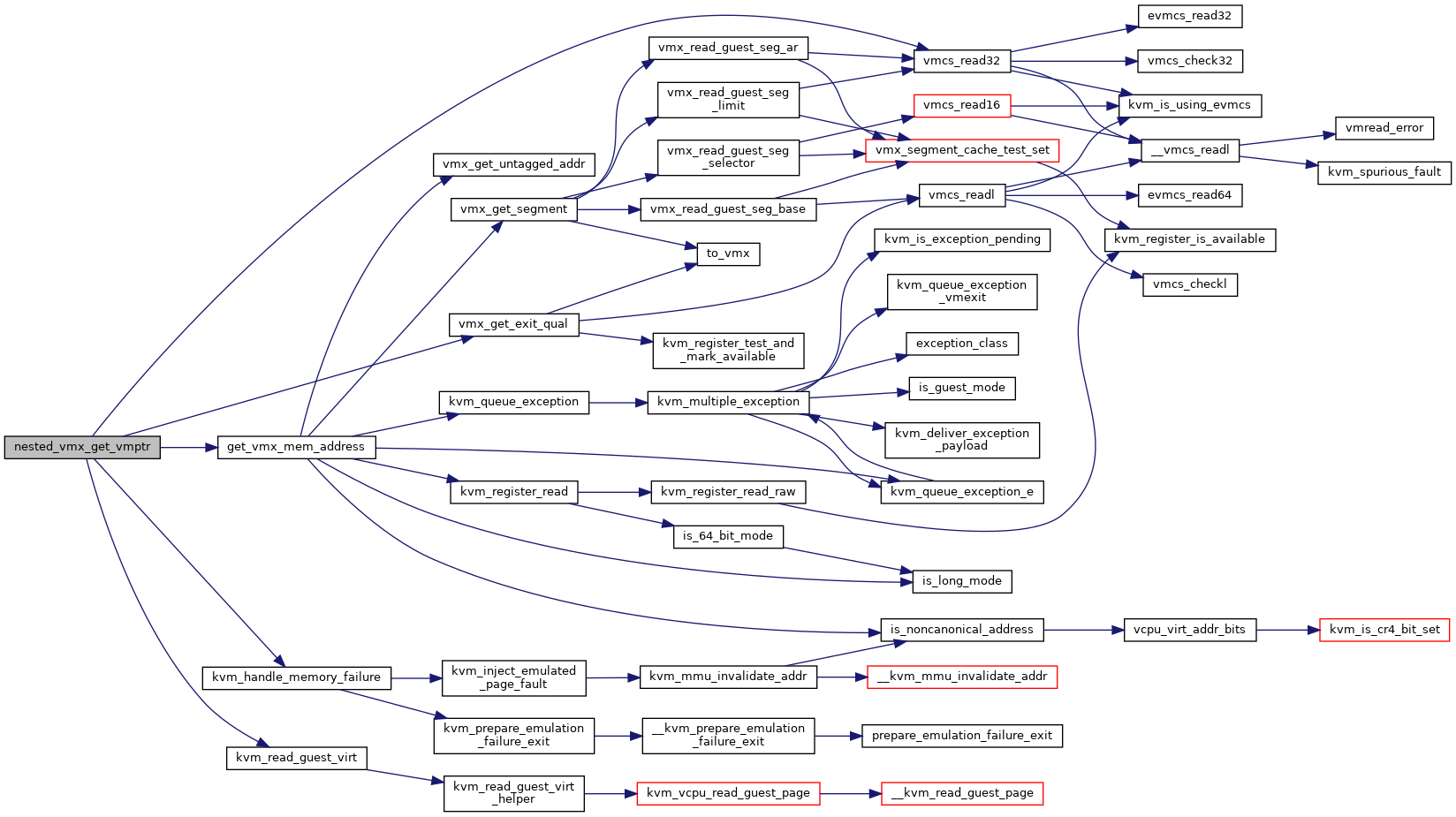

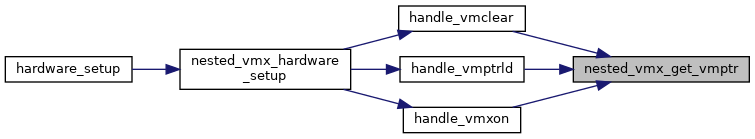

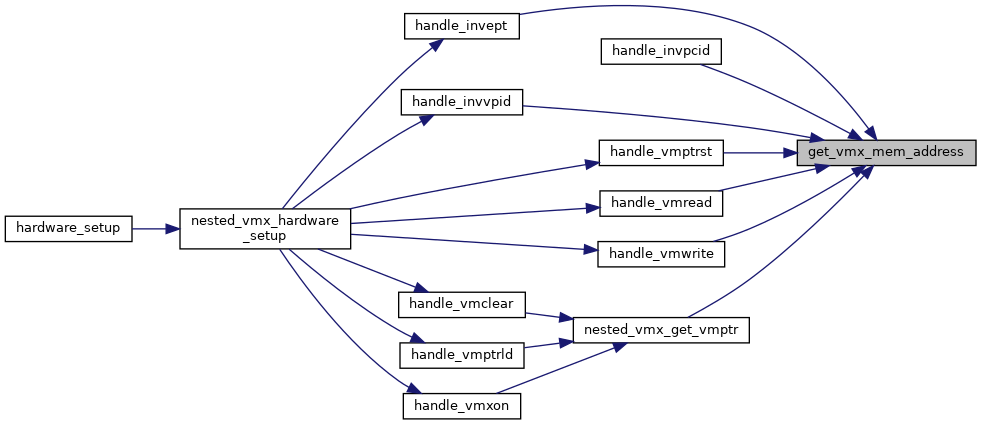

| static int | nested_vmx_get_vmptr (struct kvm_vcpu *vcpu, gpa_t *vmpointer, int *ret) |

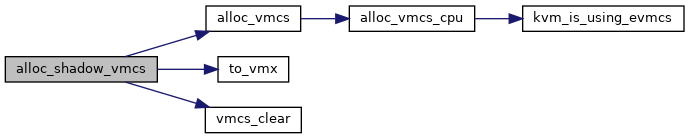

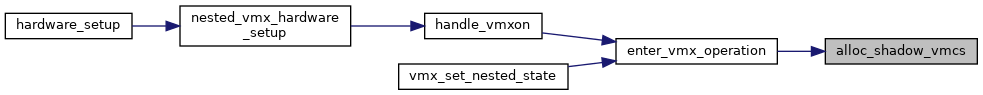

| static struct vmcs * | alloc_shadow_vmcs (struct kvm_vcpu *vcpu) |

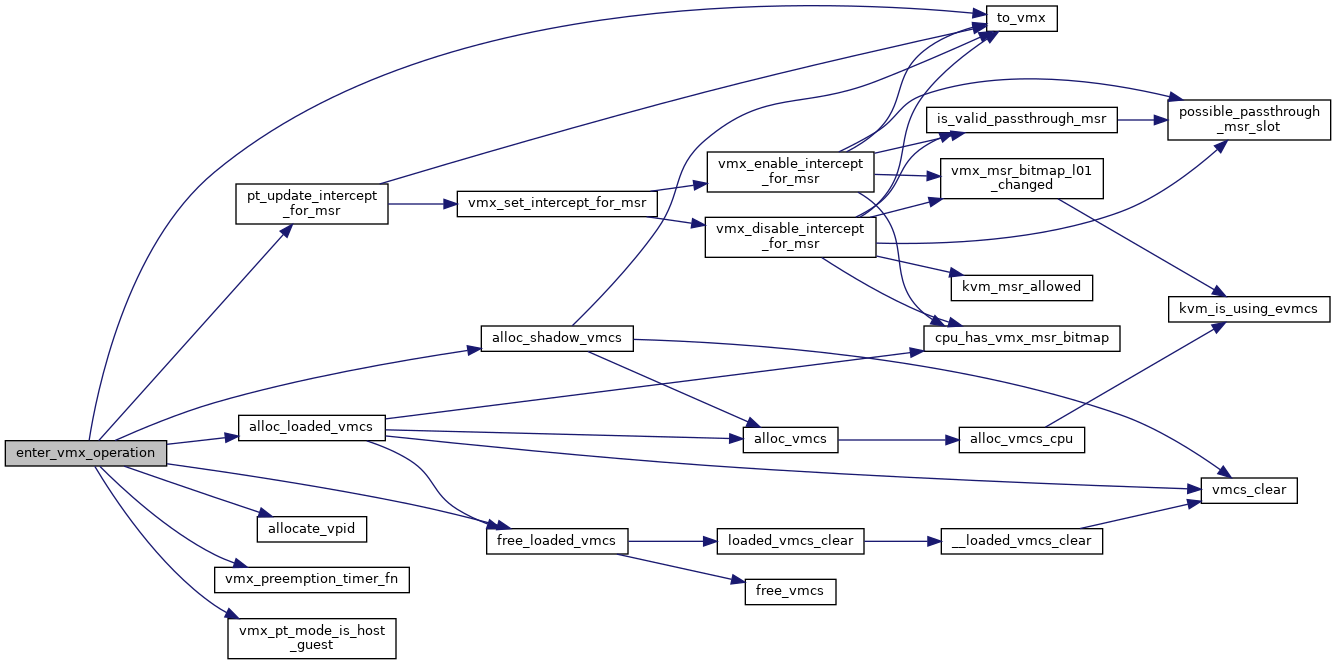

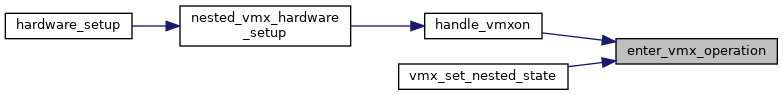

| static int | enter_vmx_operation (struct kvm_vcpu *vcpu) |

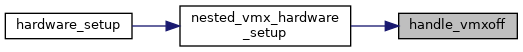

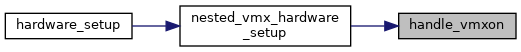

| static int | handle_vmxon (struct kvm_vcpu *vcpu) |

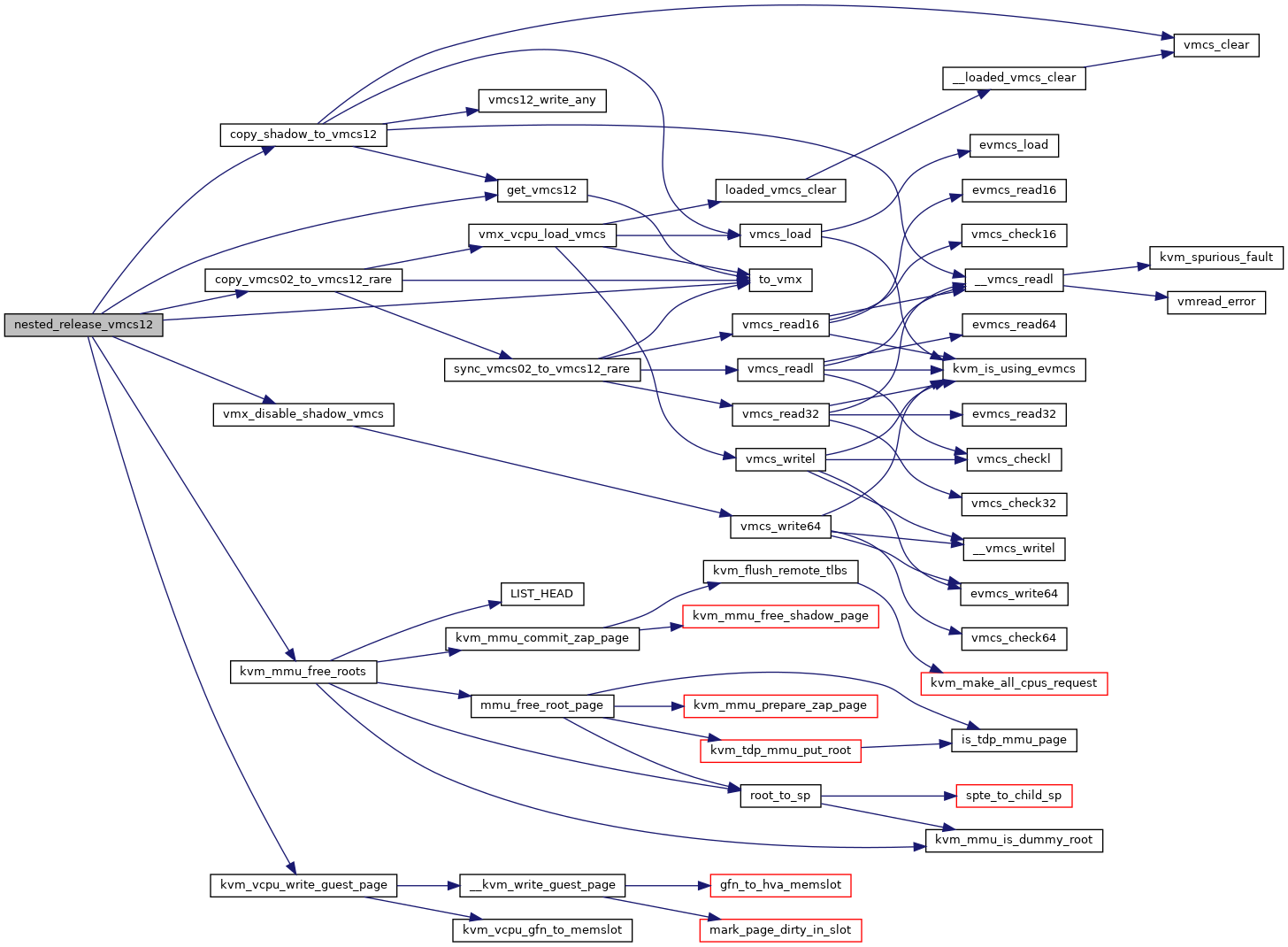

| static void | nested_release_vmcs12 (struct kvm_vcpu *vcpu) |

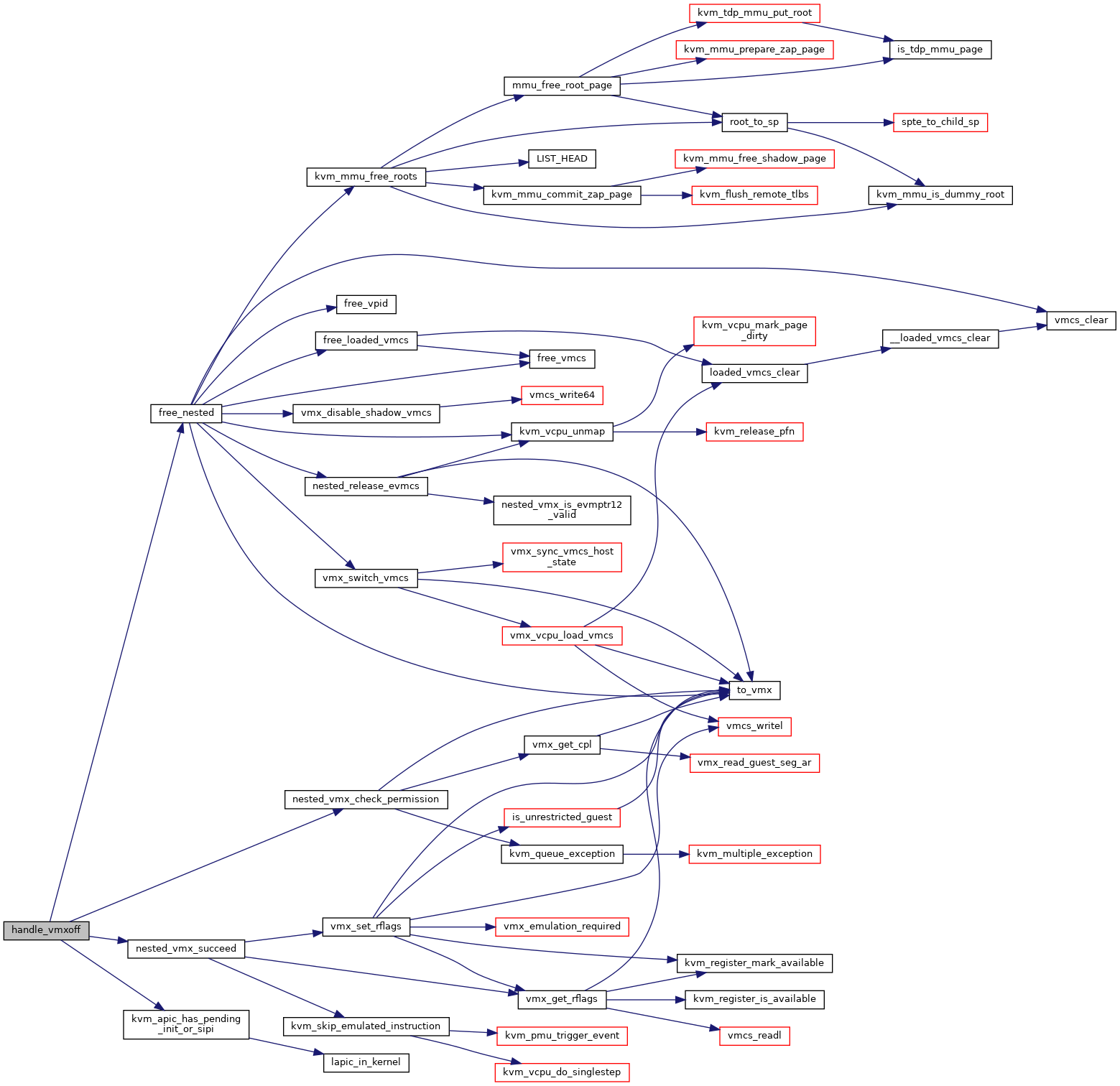

| static int | handle_vmxoff (struct kvm_vcpu *vcpu) |

| static int | handle_vmclear (struct kvm_vcpu *vcpu) |

| static int | handle_vmlaunch (struct kvm_vcpu *vcpu) |

| static int | handle_vmresume (struct kvm_vcpu *vcpu) |

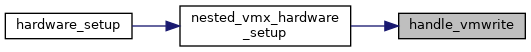

| static int | handle_vmread (struct kvm_vcpu *vcpu) |

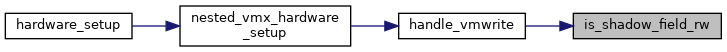

| static bool | is_shadow_field_rw (unsigned long field) |

| static bool | is_shadow_field_ro (unsigned long field) |

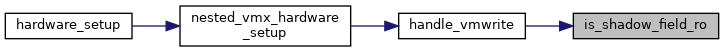

| static int | handle_vmwrite (struct kvm_vcpu *vcpu) |

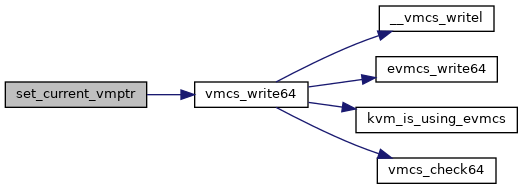

| static void | set_current_vmptr (struct vcpu_vmx *vmx, gpa_t vmptr) |

| static int | handle_vmptrld (struct kvm_vcpu *vcpu) |

| static int | handle_vmptrst (struct kvm_vcpu *vcpu) |

| static int | handle_invept (struct kvm_vcpu *vcpu) |

| static int | handle_invvpid (struct kvm_vcpu *vcpu) |

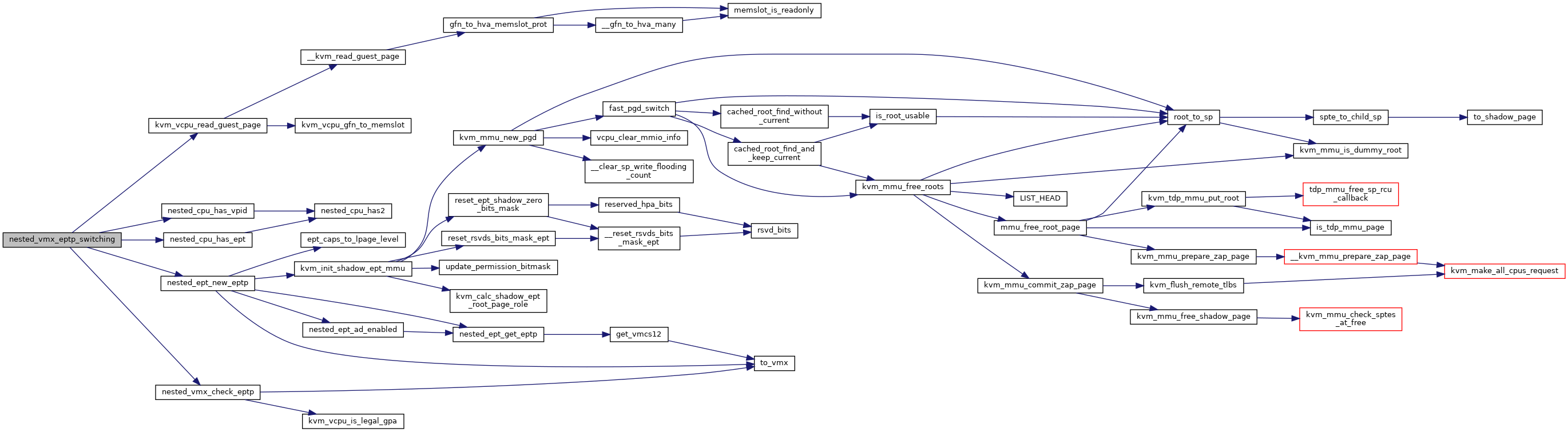

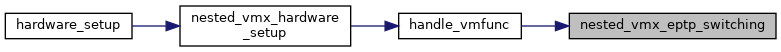

| static int | nested_vmx_eptp_switching (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| static int | handle_vmfunc (struct kvm_vcpu *vcpu) |

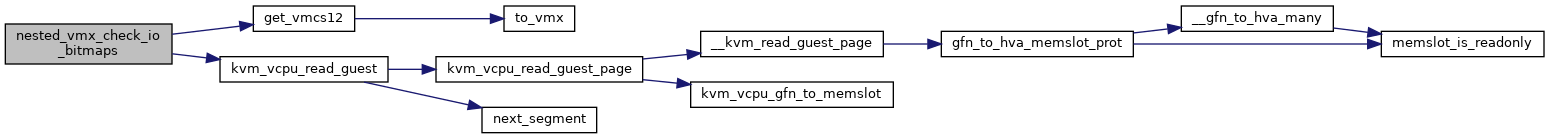

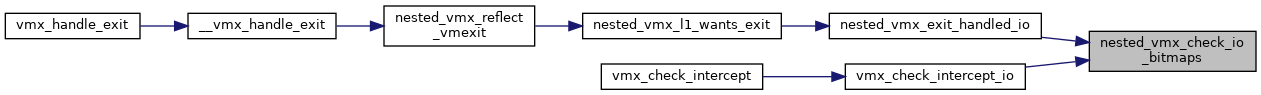

| bool | nested_vmx_check_io_bitmaps (struct kvm_vcpu *vcpu, unsigned int port, int size) |

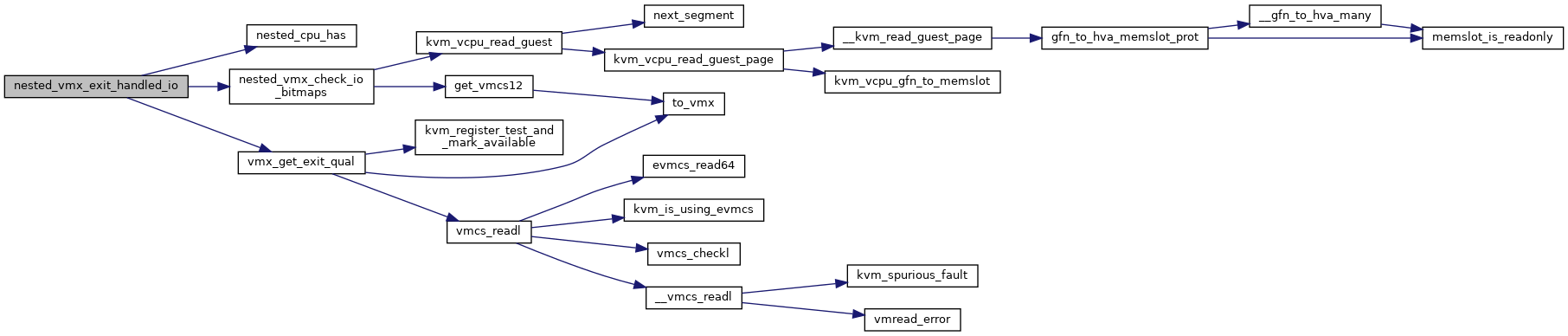

| static bool | nested_vmx_exit_handled_io (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

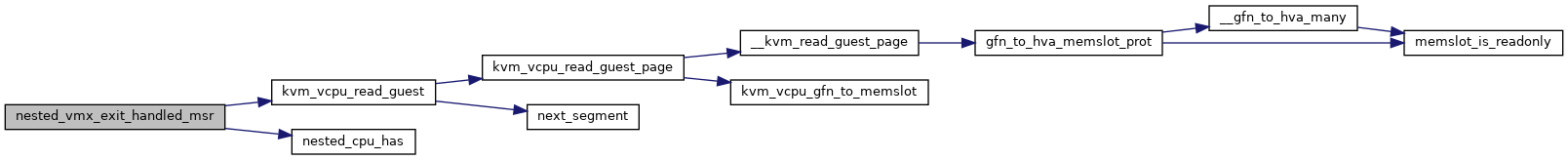

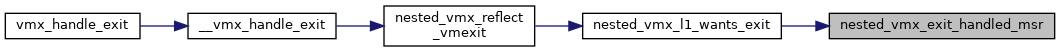

| static bool | nested_vmx_exit_handled_msr (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, union vmx_exit_reason exit_reason) |

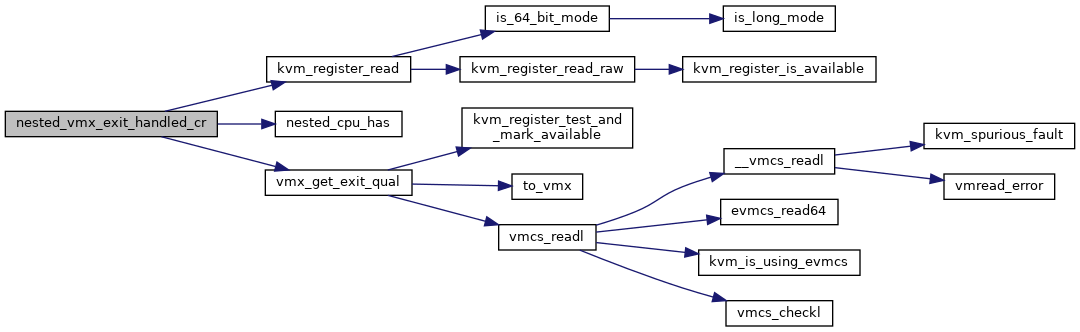

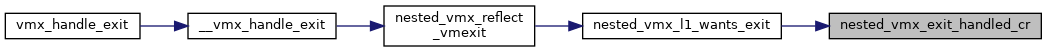

| static bool | nested_vmx_exit_handled_cr (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

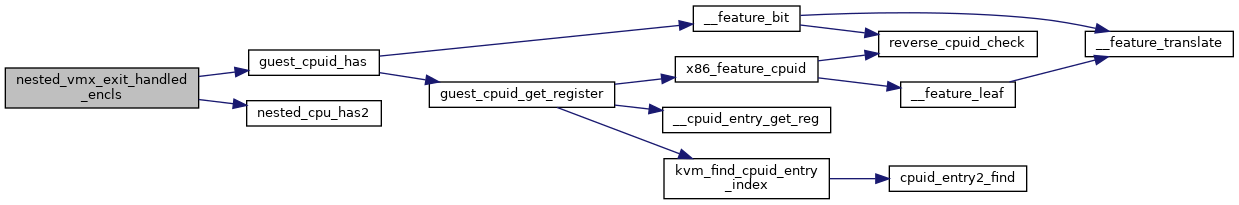

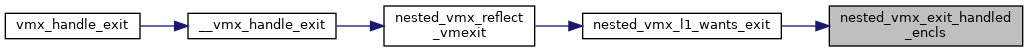

| static bool | nested_vmx_exit_handled_encls (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

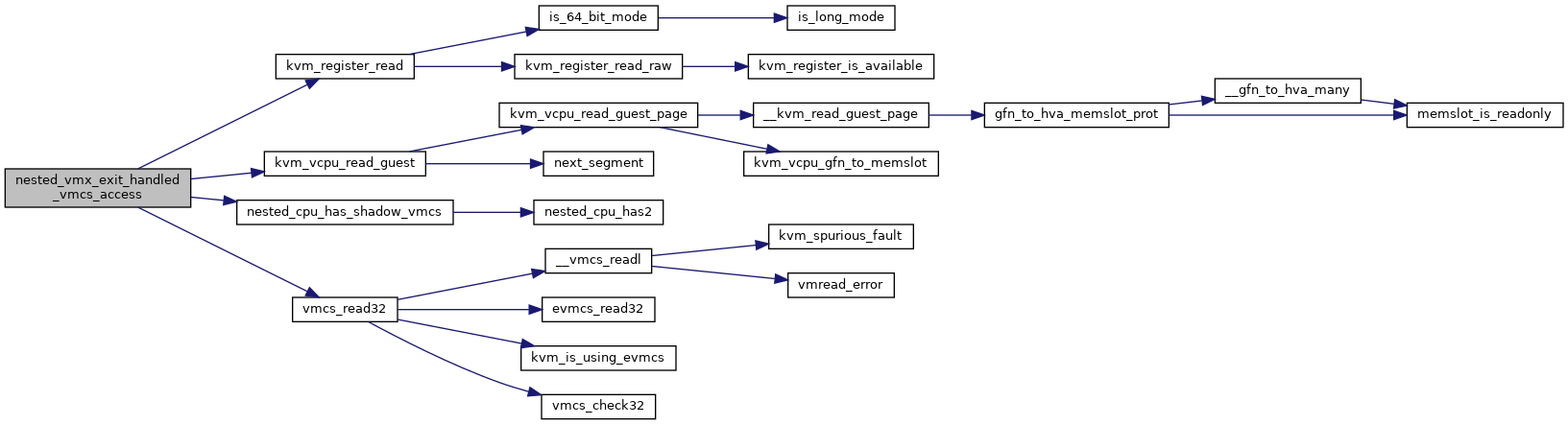

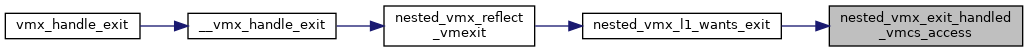

| static bool | nested_vmx_exit_handled_vmcs_access (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, gpa_t bitmap) |

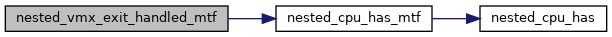

| static bool | nested_vmx_exit_handled_mtf (struct vmcs12 *vmcs12) |

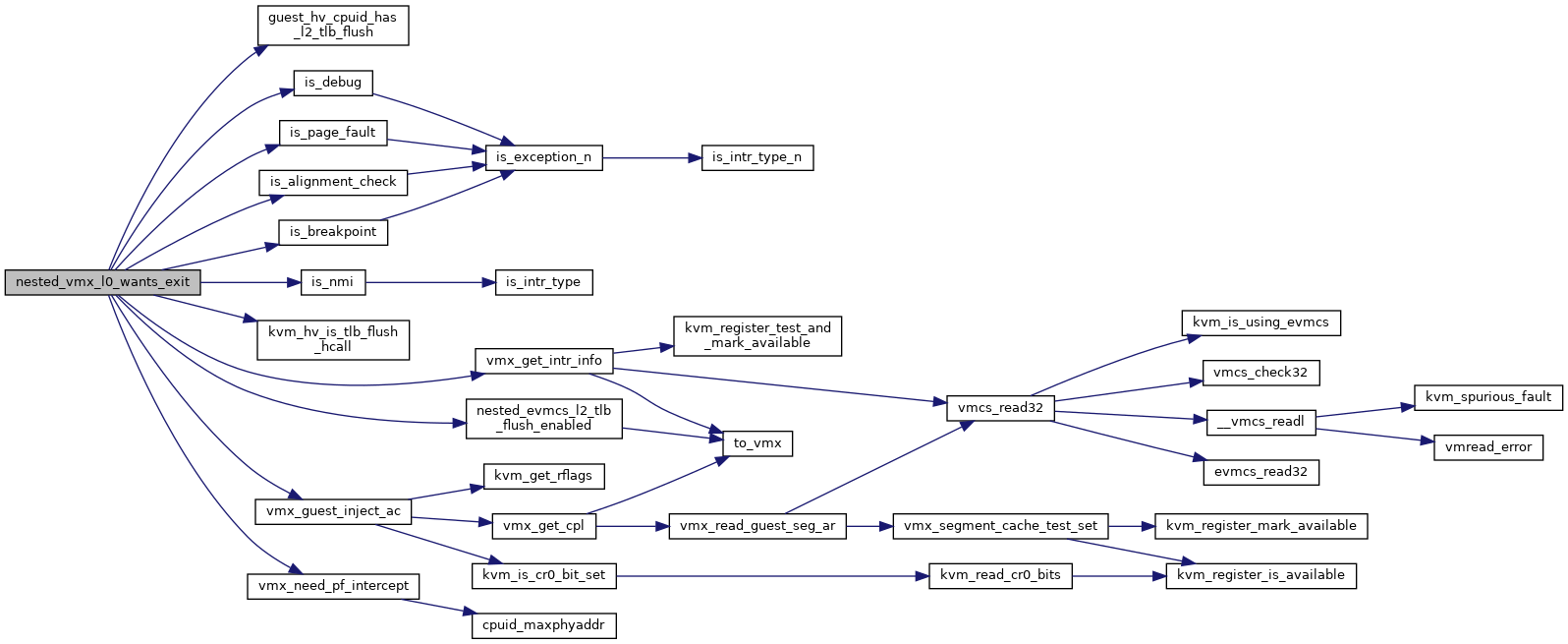

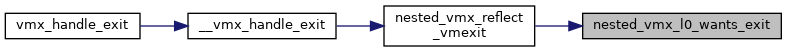

| static bool | nested_vmx_l0_wants_exit (struct kvm_vcpu *vcpu, union vmx_exit_reason exit_reason) |

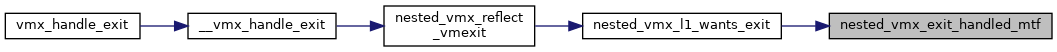

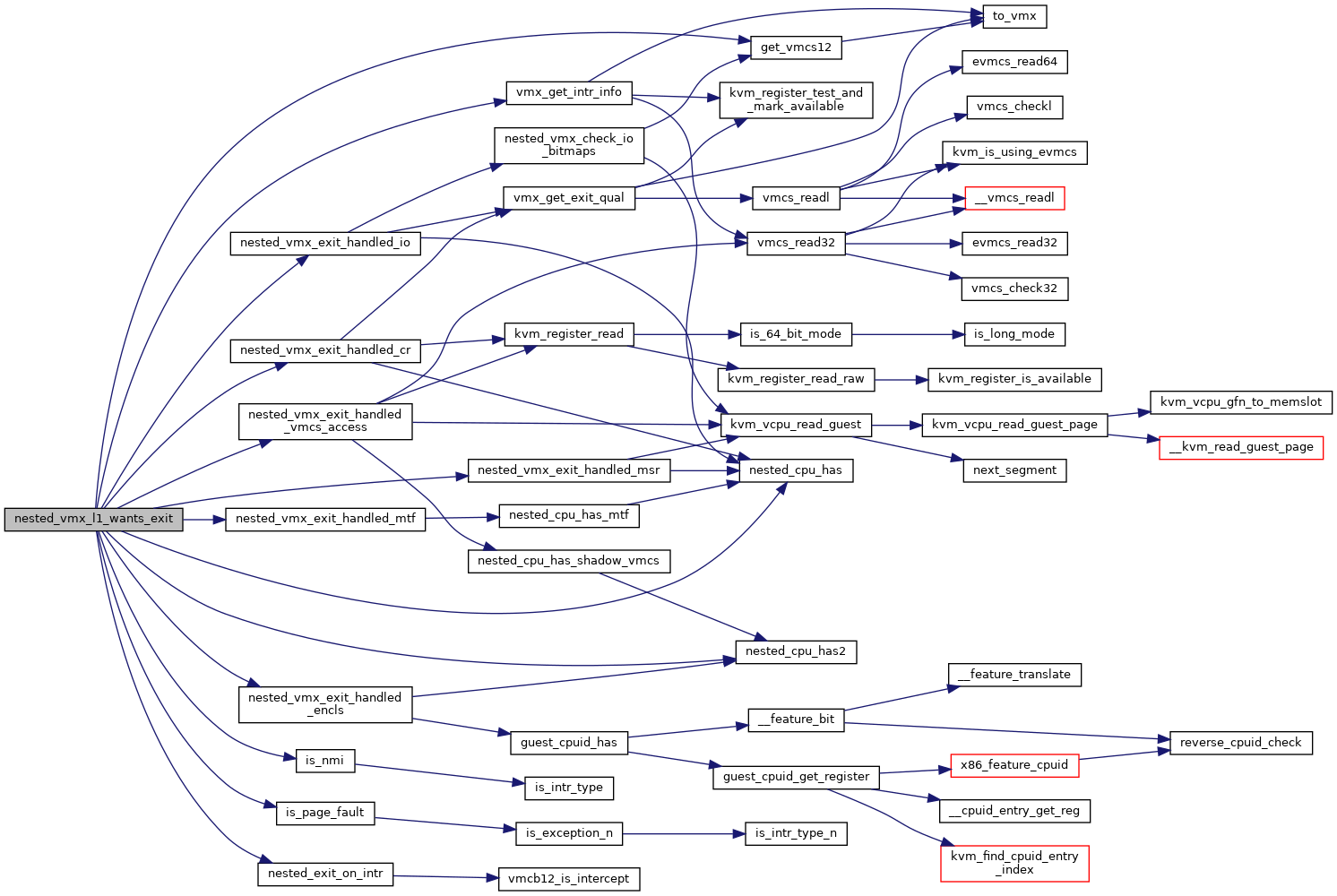

| static bool | nested_vmx_l1_wants_exit (struct kvm_vcpu *vcpu, union vmx_exit_reason exit_reason) |

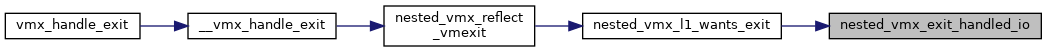

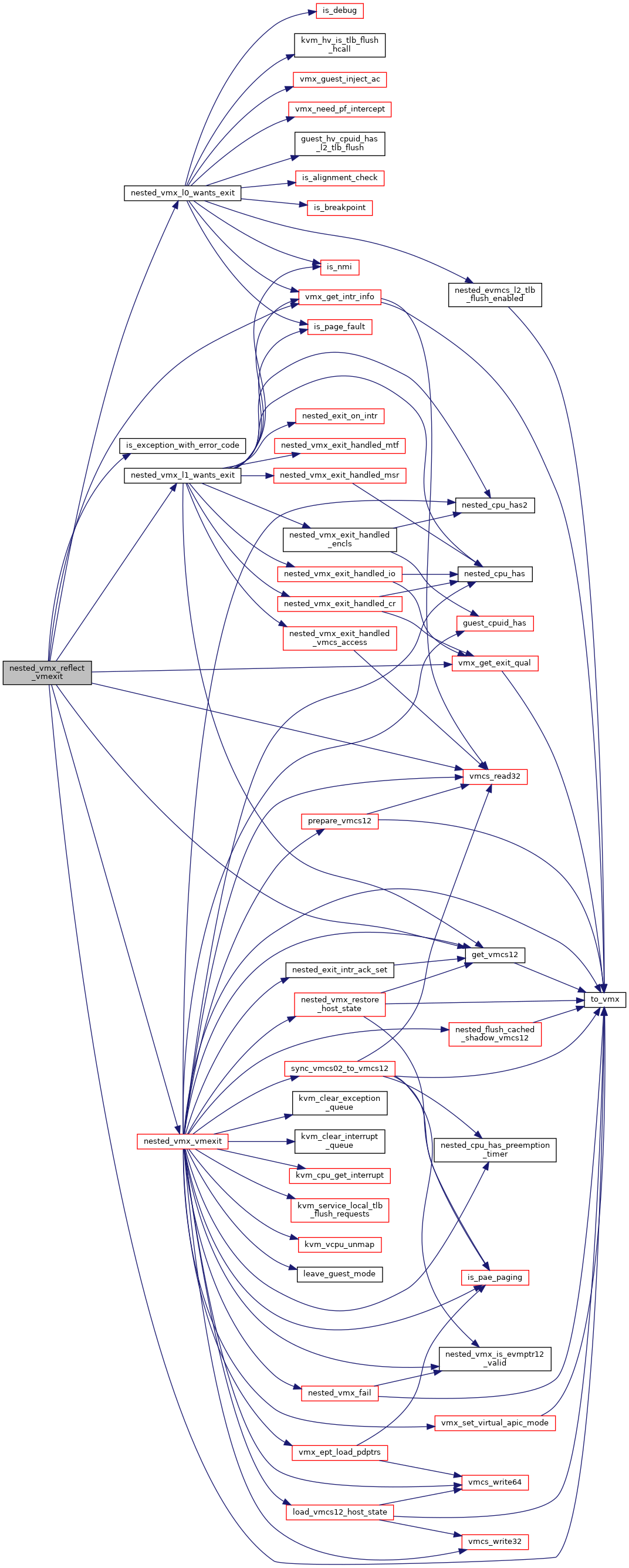

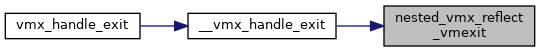

| bool | nested_vmx_reflect_vmexit (struct kvm_vcpu *vcpu) |

| static int | vmx_get_nested_state (struct kvm_vcpu *vcpu, struct kvm_nested_state __user *user_kvm_nested_state, u32 user_data_size) |

| void | vmx_leave_nested (struct kvm_vcpu *vcpu) |

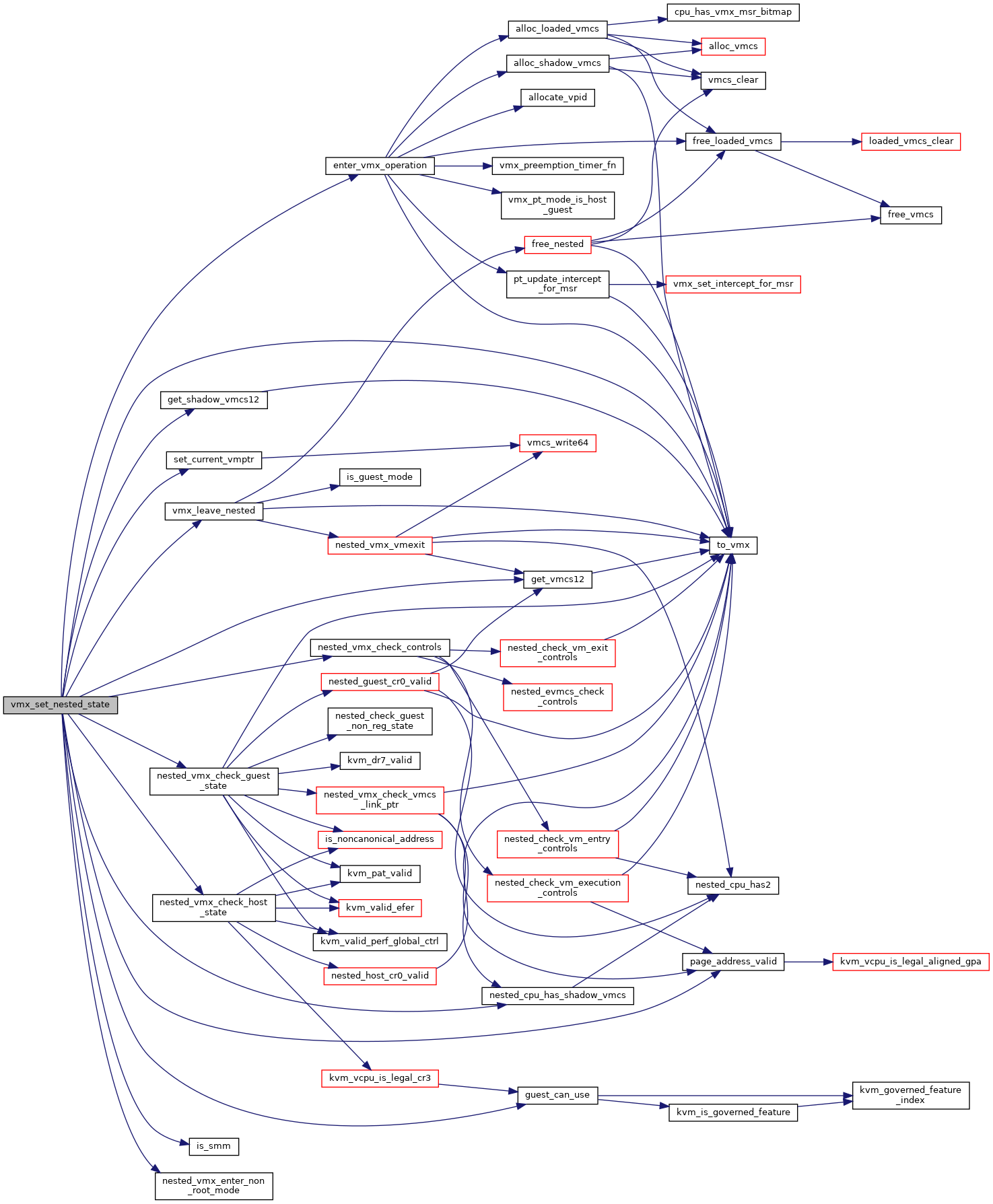

| static int | vmx_set_nested_state (struct kvm_vcpu *vcpu, struct kvm_nested_state __user *user_kvm_nested_state, struct kvm_nested_state *kvm_state) |

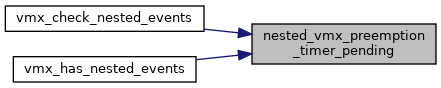

| void | nested_vmx_set_vmcs_shadowing_bitmap (void) |

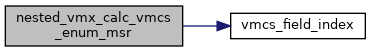

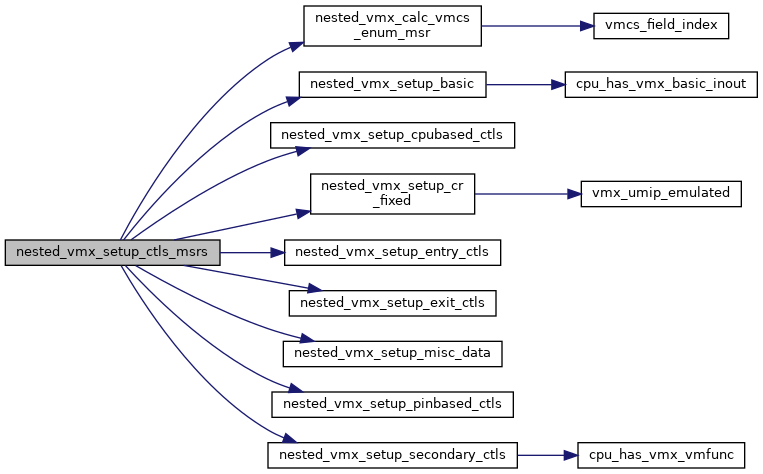

| static u64 | nested_vmx_calc_vmcs_enum_msr (void) |

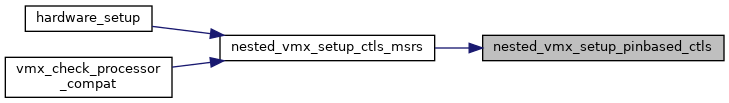

| static void | nested_vmx_setup_pinbased_ctls (struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

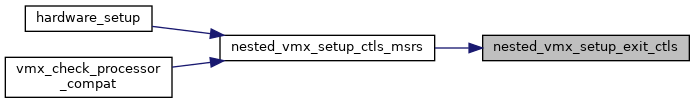

| static void | nested_vmx_setup_exit_ctls (struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

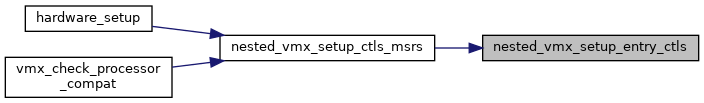

| static void | nested_vmx_setup_entry_ctls (struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

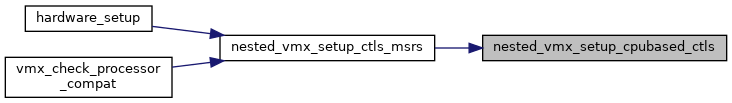

| static void | nested_vmx_setup_cpubased_ctls (struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

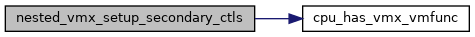

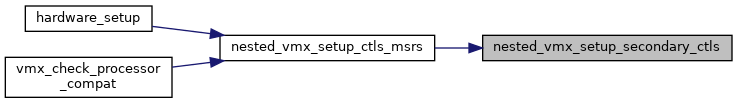

| static void | nested_vmx_setup_secondary_ctls (u32 ept_caps, struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

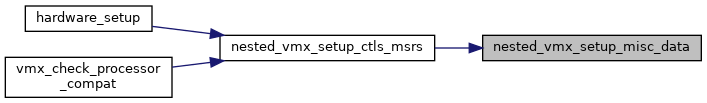

| static void | nested_vmx_setup_misc_data (struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs) |

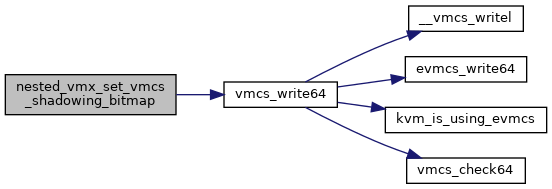

| static void | nested_vmx_setup_basic (struct nested_vmx_msrs *msrs) |

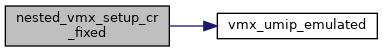

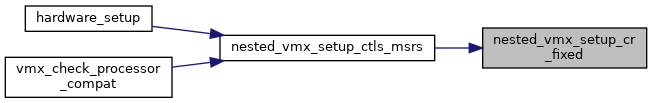

| static void | nested_vmx_setup_cr_fixed (struct nested_vmx_msrs *msrs) |

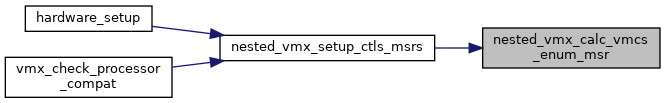

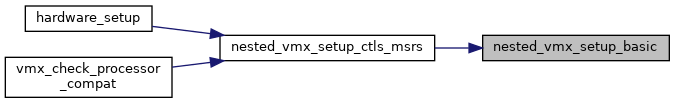

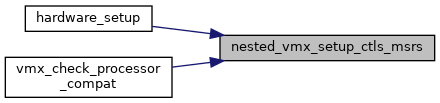

| void | nested_vmx_setup_ctls_msrs (struct vmcs_config *vmcs_conf, u32 ept_caps) |

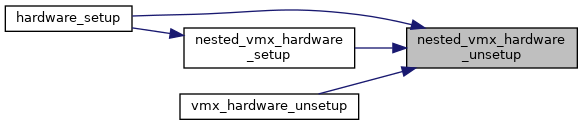

| void | nested_vmx_hardware_unsetup (void) |

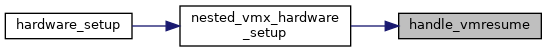

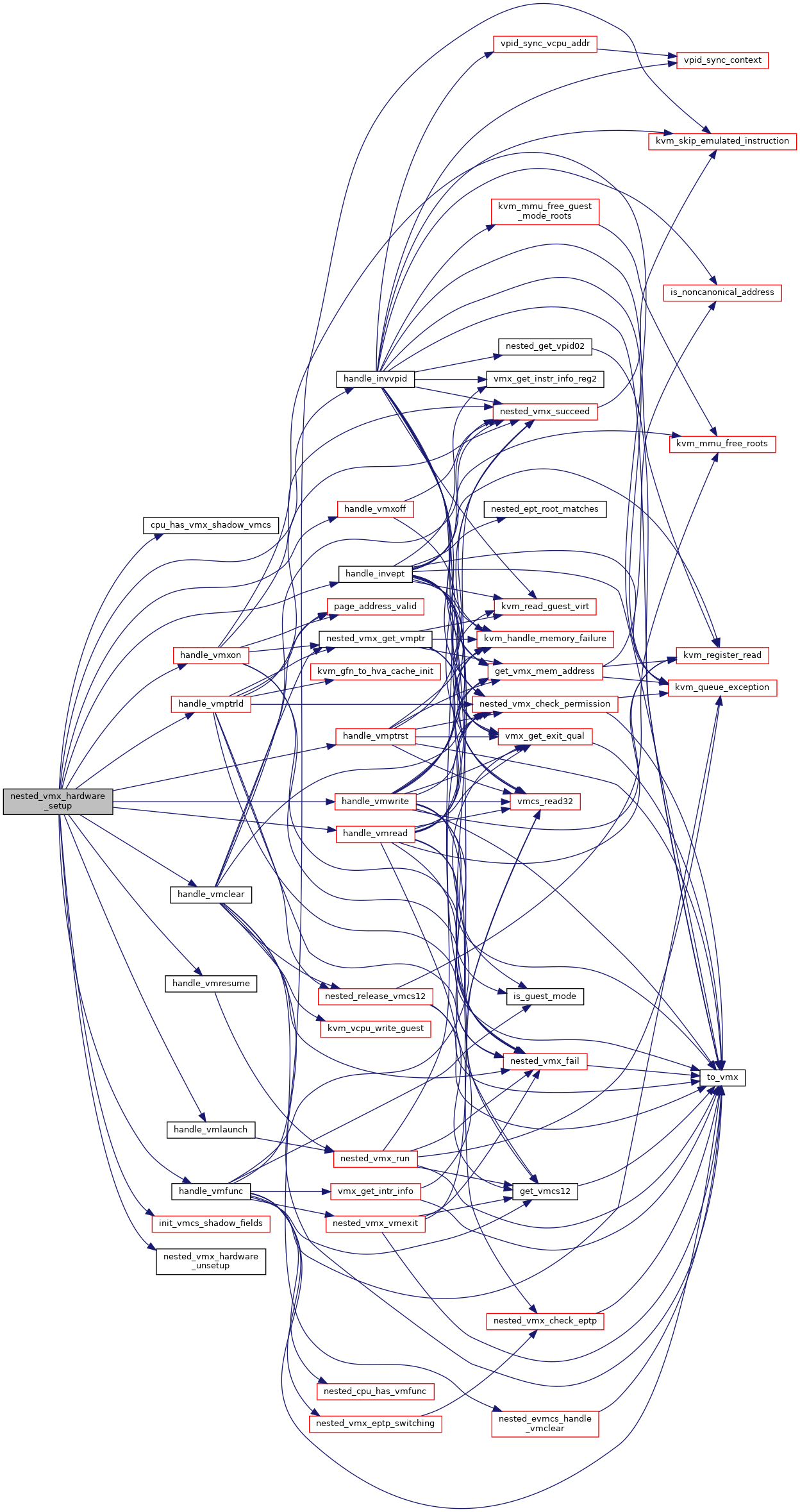

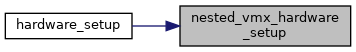

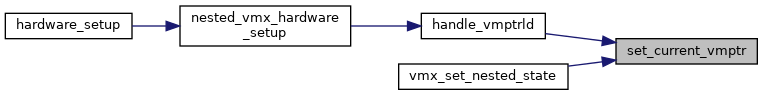

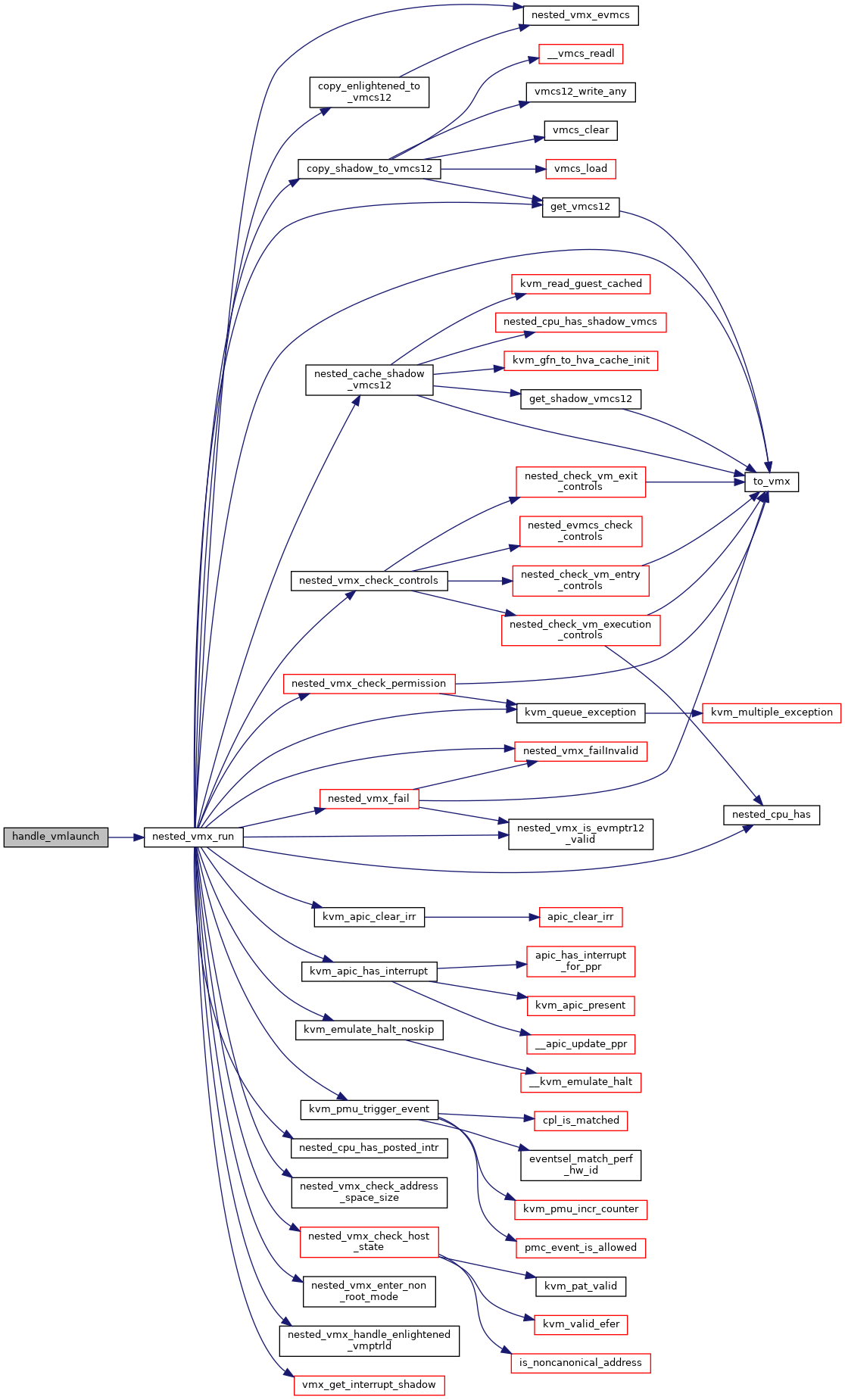

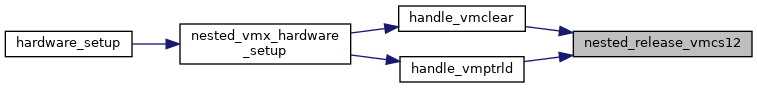

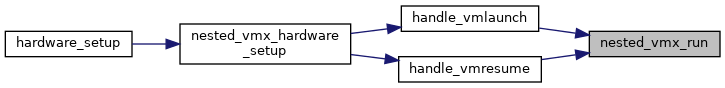

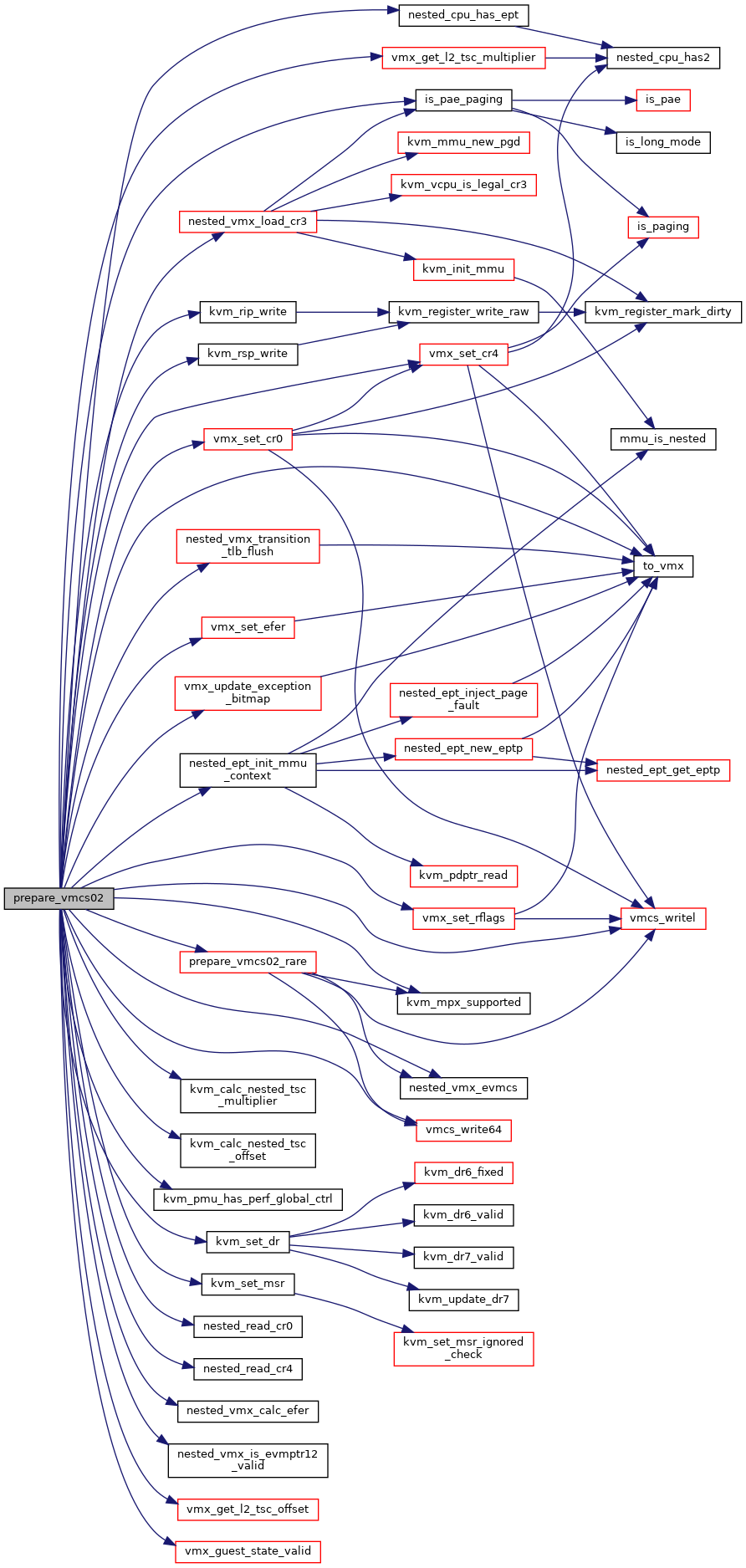

| __init int | nested_vmx_hardware_setup (int(*exit_handlers[])(struct kvm_vcpu *)) |

Variables

| static bool __read_mostly | enable_shadow_vmcs = 1 |

| static bool __read_mostly | nested_early_check = 0 |

| static unsigned long * | vmx_bitmap [VMX_BITMAP_NR] |

| static struct shadow_vmcs_field | shadow_read_only_fields [] |

| static int | max_shadow_read_only_fields |

| static struct shadow_vmcs_field | shadow_read_write_fields [] |

| static int | max_shadow_read_write_fields |

| struct kvm_x86_nested_ops | vmx_nested_ops |

Macro Definition Documentation

◆ BUILD_NVMX_MSR_INTERCEPT_HELPER

#define BUILD_NVMX_MSR_INTERCEPT_HELPER(rw)

◆ CC

#define CC KVM_NESTED_VMENTER_CONSISTENCY_CHECK

◆ EPTP_PA_MASK

◆ pr_fmt

◆ SHADOW_FIELD_RO [1/2]

#define SHADOW_FIELD_RO(x, y) { x, offsetof(struct vmcs12, y) },

◆ SHADOW_FIELD_RO [2/2]

#define SHADOW_FIELD_RO(x, y) case x:

◆ SHADOW_FIELD_RW [1/2]

#define SHADOW_FIELD_RW(x, y) { x, offsetof(struct vmcs12, y) },

◆ SHADOW_FIELD_RW [2/2]

#define SHADOW_FIELD_RW(x, y) case x:
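
The two definitions each of SHADOW_FIELD_RO and SHADOW_FIELD_RW are consistent with the X-macro pattern. The field list in vmcs_shadow_fields.h is expanded once into { encoding, offset } initializers for shadow_read_only_fields[] and shadow_read_write_fields[], and once into case labels, presumably for is_shadow_field_ro() and is_shadow_field_rw(). The minimal sketch below reproduces the pattern. It uses a hypothetical list macro (DEMO_SHADOW_FIELDS) in place of re-including a header, plus a stand-in struct. Only the two encodings (VM_EXIT_REASON = 0x4402, GUEST_RIP = 0x681e) are architectural; nothing else comes from the real header.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct vmcs12 with just two members. */
struct demo_vmcs12 {
	uint32_t vm_exit_reason;
	uint64_t guest_rip;
};

/* Hypothetical field list: VM_EXIT_REASON is read-only, GUEST_RIP is read/write. */
#define DEMO_SHADOW_FIELDS(RO, RW) \
	RO(0x4402, vm_exit_reason) \
	RW(0x681e, guest_rip)

struct demo_shadow_field {
	uint16_t encoding;
	uint16_t offset;
};

/* Per-entry expansions: a table initializer, a case label, or nothing. */
#define DEMO_AS_ENTRY(x, y) { x, offsetof(struct demo_vmcs12, y) },
#define DEMO_AS_CASE(x, y)  case x:
#define DEMO_IGNORE(x, y)

/* First expansion: {encoding, offset} tables. */
static const struct demo_shadow_field demo_ro_fields[] = {
	DEMO_SHADOW_FIELDS(DEMO_AS_ENTRY, DEMO_IGNORE)
};
static const struct demo_shadow_field demo_rw_fields[] = {
	DEMO_SHADOW_FIELDS(DEMO_IGNORE, DEMO_AS_ENTRY)
};

/* Second expansion: case labels inside a switch. */
static bool demo_is_shadow_field_ro(unsigned long field)
{
	switch (field) {
	DEMO_SHADOW_FIELDS(DEMO_AS_CASE, DEMO_IGNORE)
		return true;
	default:
		return false;
	}
}

static bool demo_is_shadow_field_rw(unsigned long field)
{
	switch (field) {
	DEMO_SHADOW_FIELDS(DEMO_IGNORE, DEMO_AS_CASE)
		return true;
	default:
		return false;
	}
}
```

Driving both the tables and the switch statements from a single list keeps them in sync. That is presumably why one header serves both sites.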

◆ VMCS12_IDX_TO_ENC

#define VMCS12_IDX_TO_ENC(idx) ((u16)(((u16)(idx) >> 6) | ((u16)(idx) << 10)))
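
VMCS12_IDX_TO_ENC rotates a 16-bit value right by 6 bits, which is the same as rotating it left by 10. Its inverse is therefore a rotate left by 6. As far as we can tell, that inverse is how vmcs12 field encodings are mapped to table indices in the first place. The sketch below pairs the macro with a hypothetical inverse (DEMO_ENC_TO_IDX) and checks the round trip, using HOST_CR0 (encoding 0x6c00) as an example.

```c
#include <stdint.h>

typedef uint16_t u16;

/* As documented above: rotate a 16-bit index right by 6 (equivalently, left by 10). */
#define VMCS12_IDX_TO_ENC(idx) ((u16)(((u16)(idx) >> 6) | ((u16)(idx) << 10)))

/* Hypothetical inverse: rotate a 16-bit field encoding left by 6. */
#define DEMO_ENC_TO_IDX(enc) ((u16)(((u16)(enc) << 6) | ((u16)(enc) >> 10)))
```

For example, DEMO_ENC_TO_IDX(0x6c00) is 0x001b, and VMCS12_IDX_TO_ENC(0x001b) gives back 0x6c00.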

◆ VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE
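
No definition is shown here, but the macro summary lists the value as 5. In KVM this is presumably the rate reported to L1 in bits 4:0 of IA32_VMX_MISC. Architecturally, a rate of X means the VMX-preemption timer counts down by one each time bit X of the TSC changes, i.e. once every 2^X TSC cycles. The minimal sketch below converts a timer value to nanoseconds under that rule. It assumes the guest TSC frequency is known in kHz, and the helper name is hypothetical.

```c
#include <stdint.h>

/* Value taken from the macro summary: one timer tick per 2^5 TSC cycles. */
#define VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE 5

/* Hypothetical helper: timer value -> nanoseconds, given the TSC frequency in kHz. */
static uint64_t demo_preemption_timer_to_ns(uint64_t timer_value, uint32_t tsc_khz)
{
	/* Each tick covers 2^RATE TSC cycles; a 32-bit timer value stays below 2^37 cycles. */
	uint64_t tsc_cycles = timer_value << VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE;

	/* cycles / (tsc_khz * 1000) seconds == cycles * 10^6 / tsc_khz nanoseconds. */
	return tsc_cycles * 1000000ULL / tsc_khz;
}
```

For example, a timer value of 1000 with a 2 GHz TSC (tsc_khz = 2000000) covers 32000 TSC cycles, which is 16000 ns.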

◆ vmx_vmread_bitmap

#define vmx_vmread_bitmap (vmx_bitmap[VMX_VMREAD_BITMAP])

◆ vmx_vmwrite_bitmap

#define vmx_vmwrite_bitmap (vmx_bitmap[VMX_VMWRITE_BITMAP])

◆ VMX_VPID_EXTENT_SUPPORTED_MASK

#define VMX_VPID_EXTENT_SUPPORTED_MASK

◆ VMXON_CR0_ALWAYSON

#define VMXON_CR0_ALWAYSON (X86_CR0_PE | X86_CR0_PG | X86_CR0_NE)

◆ VMXON_CR4_ALWAYSON

#define VMXON_CR4_ALWAYSON X86_CR4_VMXE
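
VMXON_CR0_ALWAYSON and VMXON_CR4_ALWAYSON name the control-register bits that must be 1 during VMX operation: CR0.PE, CR0.PG and CR0.NE, plus CR4.VMXE. They presumably seed the IA32_VMX_CR0_FIXED0 and IA32_VMX_CR4_FIXED0 values that KVM reports to L1. The sketch below writes out the architectural bit positions and applies the usual fixed-bits rule: bits set in FIXED0 must be 1, and bits clear in FIXED1 must be 0. The helper name is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Architectural bit positions (same names as Linux's processor-flags.h). */
#define X86_CR0_PE   (1ULL << 0)
#define X86_CR0_NE   (1ULL << 5)
#define X86_CR0_PG   (1ULL << 31)
#define X86_CR4_VMXE (1ULL << 13)

/* Copied from the macro documentation above. */
#define VMXON_CR0_ALWAYSON (X86_CR0_PE | X86_CR0_PG | X86_CR0_NE)
#define VMXON_CR4_ALWAYSON X86_CR4_VMXE

/*
 * Hypothetical helper: val is acceptable only if every bit set in fixed0
 * is set in val, and no bit that is clear in fixed1 is set in val.
 */
static bool demo_fixed_bits_valid(uint64_t val, uint64_t fixed0, uint64_t fixed1)
{
	return ((val & fixed1) | fixed0) == val;
}
```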

Enumeration Type Documentation

◆ anonymous enum

enum { VMX_VMREAD_BITMAP, VMX_VMWRITE_BITMAP, VMX_BITMAP_NR }

Function Documentation

◆ alloc_shadow_vmcs()

static struct vmcs *alloc_shadow_vmcs(struct kvm_vcpu *vcpu)

◆ copy_enlightened_to_vmcs12()

static void copy_enlightened_to_vmcs12(struct vcpu_vmx *vmx, u32 hv_clean_fields)

Definition at line 1604 of file nested.c.

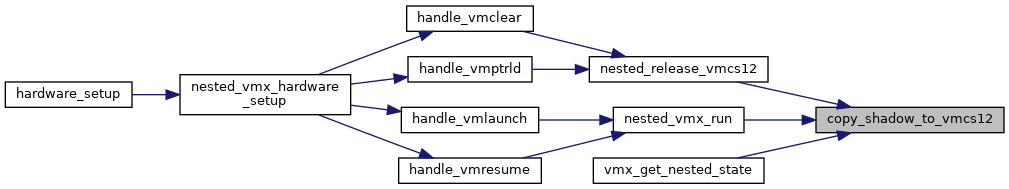

◆ copy_shadow_to_vmcs12()

static void copy_shadow_to_vmcs12(struct vcpu_vmx *vmx)

Definition at line 1543 of file nested.c.

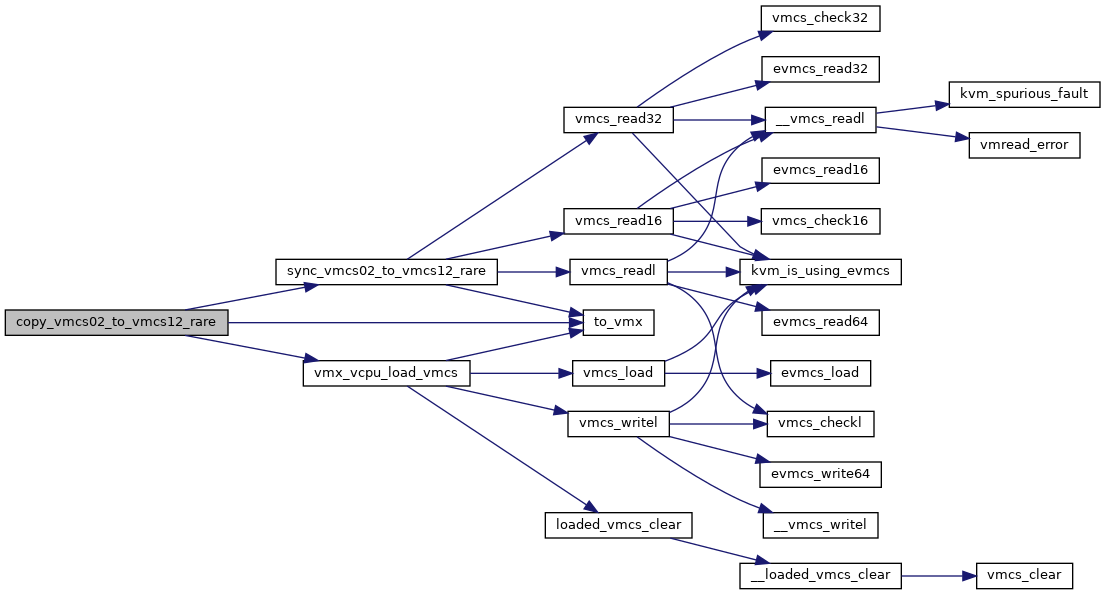

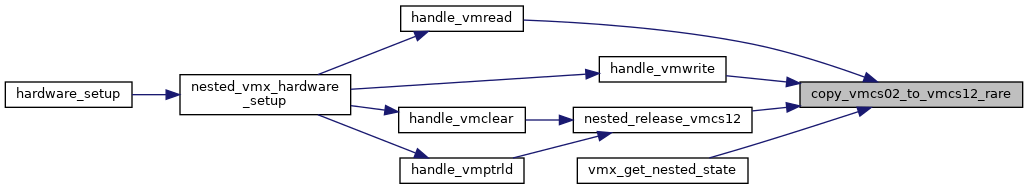

◆ copy_vmcs02_to_vmcs12_rare()

static void copy_vmcs02_to_vmcs12_rare(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 4343 of file nested.c.

◆ copy_vmcs12_to_enlightened()

static void copy_vmcs12_to_enlightened(struct vcpu_vmx *vmx)

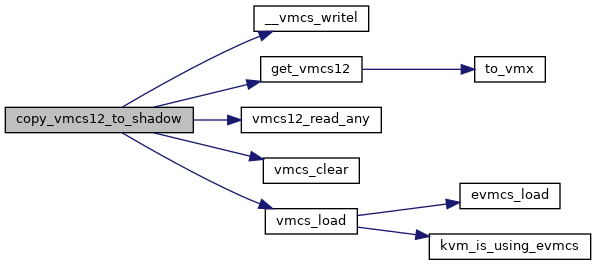

◆ copy_vmcs12_to_shadow()

static void copy_vmcs12_to_shadow(struct vcpu_vmx *vmx)

Definition at line 1570 of file nested.c.

◆ enable_x2apic_msr_intercepts()

static inline void enable_x2apic_msr_intercepts(unsigned long *msr_bitmap)

◆ enter_vmx_operation()

static int enter_vmx_operation(struct kvm_vcpu *vcpu)

Definition at line 5139 of file nested.c.

◆ free_nested()

static void free_nested(struct kvm_vcpu *vcpu)

Definition at line 323 of file nested.c.

◆ get_vmx_mem_address()

int get_vmx_mem_address(struct kvm_vcpu *vcpu, unsigned long exit_qualification, u32 vmx_instruction_info, bool wr, int len, gva_t *ret)

Definition at line 4963 of file nested.c.
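
get_vmx_mem_address() turns the VM-exit instruction-information field and the exit qualification (which holds the displacement) into a guest address. The sketch below decodes the field layout that the Intel SDM documents for VMX instructions with memory operands. It then computes only the offset: displacement + base + scaled index, truncated to the address size. The segmentation, limit and canonical checks, and any fault injection the real function performs, are omitted. The struct, the helpers and the register array are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical decoded view of the VM-exit instruction-information field. */
struct demo_insn_info {
	unsigned int scaling;   /* bits 1:0: index is scaled by 1 << scaling */
	unsigned int addr_size; /* bits 9:7: 0 = 16-bit, 1 = 32-bit, 2 = 64-bit */
	bool is_reg;            /* bit 10: operand is a register, not memory */
	unsigned int seg_reg;   /* bits 17:15: ES, CS, SS, DS, FS, GS */
	unsigned int index_reg; /* bits 21:18 */
	bool index_valid;       /* bit 22 set means "no index register" */
	unsigned int base_reg;  /* bits 26:23 */
	bool base_valid;        /* bit 27 set means "no base register" */
};

static struct demo_insn_info demo_decode(uint32_t info)
{
	struct demo_insn_info d = {
		.scaling     = info & 3,
		.addr_size   = (info >> 7) & 7,
		.is_reg      = (info >> 10) & 1,
		.seg_reg     = (info >> 15) & 7,
		.index_reg   = (info >> 18) & 0xf,
		.index_valid = !((info >> 22) & 1),
		.base_reg    = (info >> 23) & 0xf,
		.base_valid  = !((info >> 27) & 1),
	};
	return d;
}

/*
 * Offset only: displacement + base + (index << scaling), truncated to the
 * address size. regs[] holds RAX..R15 in encoding order.
 */
static uint64_t demo_effective_offset(uint32_t info, uint64_t displacement,
				      const uint64_t regs[16])
{
	struct demo_insn_info d = demo_decode(info);
	uint64_t off = displacement;

	if (d.base_valid)
		off += regs[d.base_reg];
	if (d.index_valid)
		off += regs[d.index_reg] << d.scaling;

	if (d.addr_size == 1)
		off &= 0xffffffffULL;
	else if (d.addr_size == 0)
		off &= 0xffffULL;
	return off;
}
```

For example, a 64-bit operand of the form [rax + rcx*4 + 0x20] gives RAX + (RCX << 2) + 0x20.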

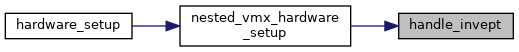

◆ handle_invept()

static int handle_invept(struct kvm_vcpu *vcpu)

Definition at line 5702 of file nested.c.

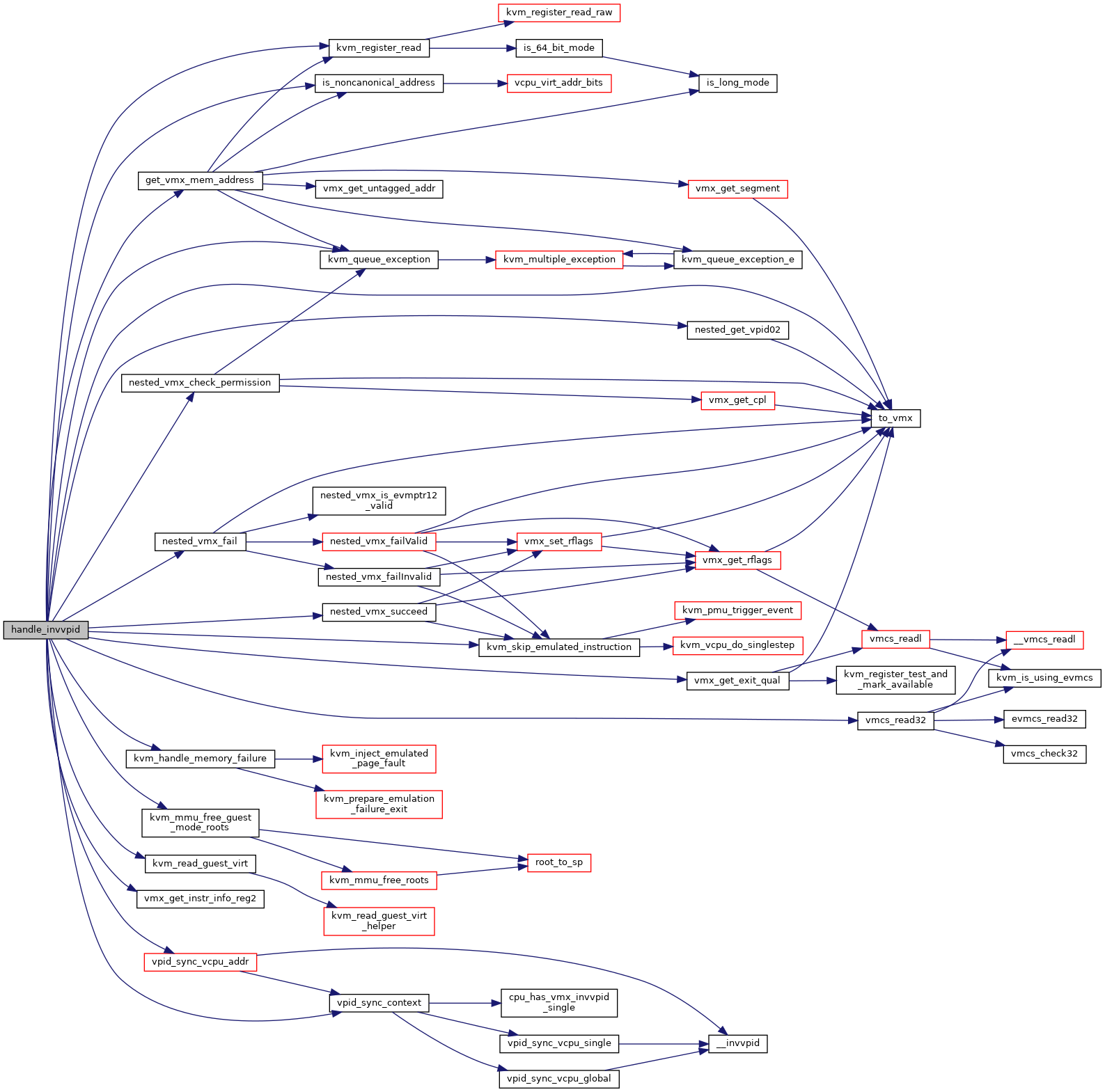

◆ handle_invvpid()

static int handle_invvpid(struct kvm_vcpu *vcpu)

Definition at line 5782 of file nested.c.

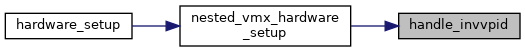

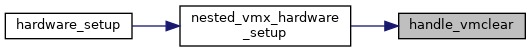

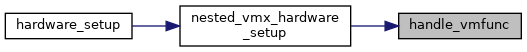

◆ handle_vmclear()

static int handle_vmclear(struct kvm_vcpu *vcpu)

Definition at line 5323 of file nested.c.

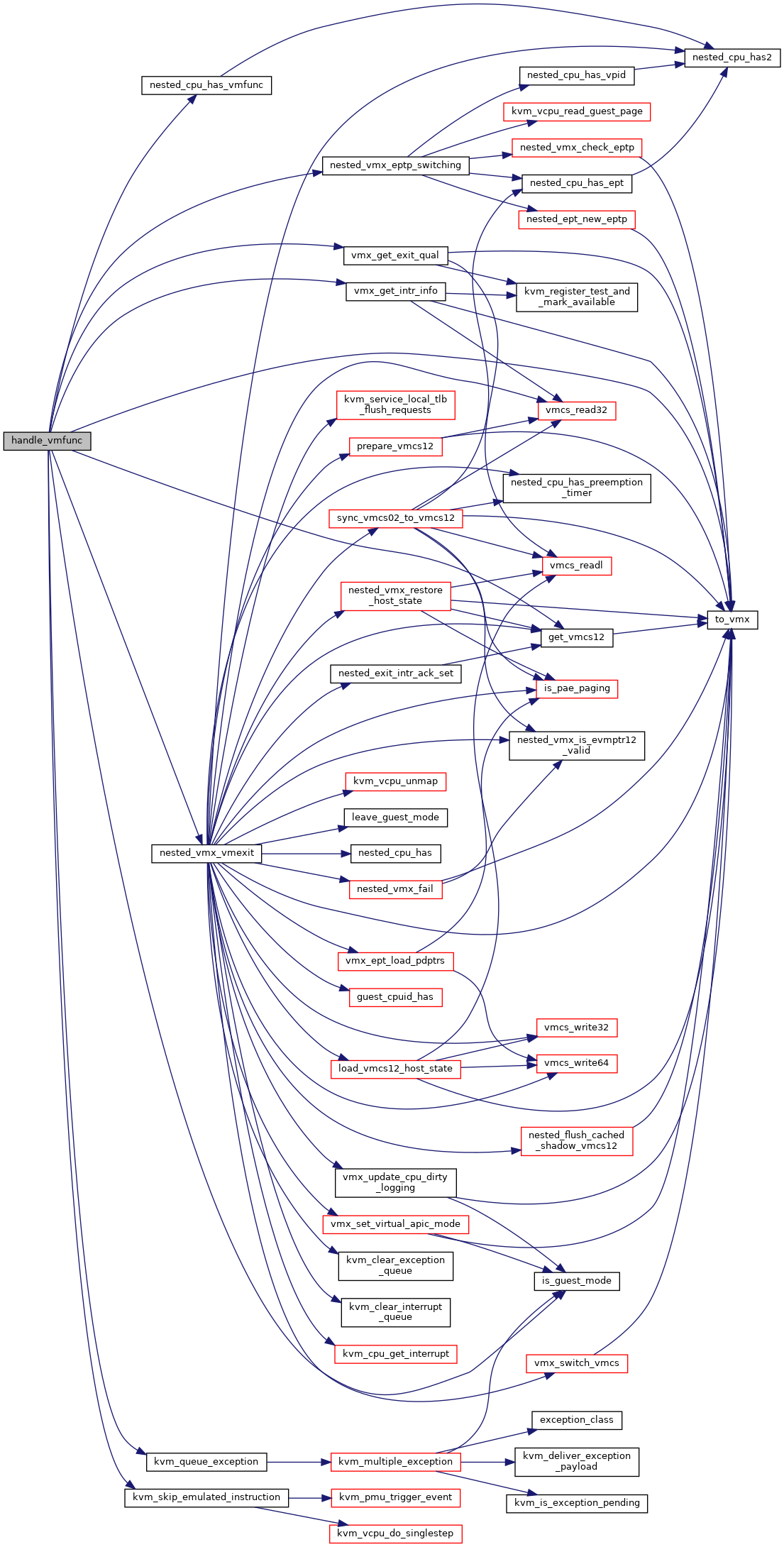

◆ handle_vmfunc()

static int handle_vmfunc(struct kvm_vcpu *vcpu)

Definition at line 5908 of file nested.c.

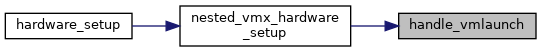

◆ handle_vmlaunch()

static int handle_vmlaunch(struct kvm_vcpu *vcpu)

Definition at line 5365 of file nested.c.

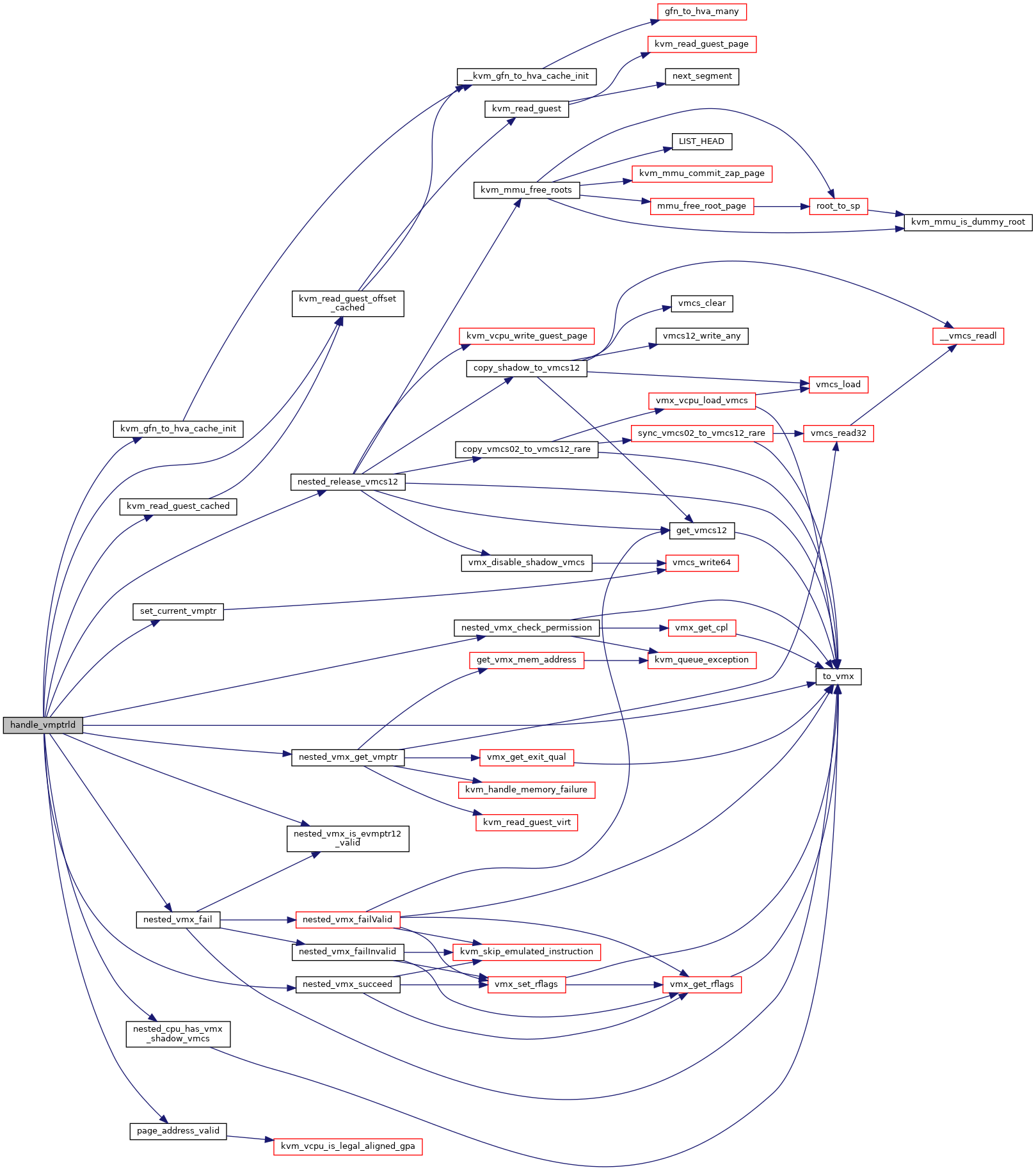

◆ handle_vmptrld()

static int handle_vmptrld(struct kvm_vcpu *vcpu)

Definition at line 5604 of file nested.c.

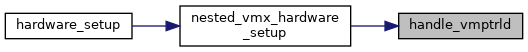

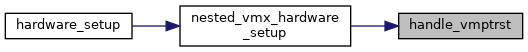

◆ handle_vmptrst()

static int handle_vmptrst(struct kvm_vcpu *vcpu)

Definition at line 5674 of file nested.c.

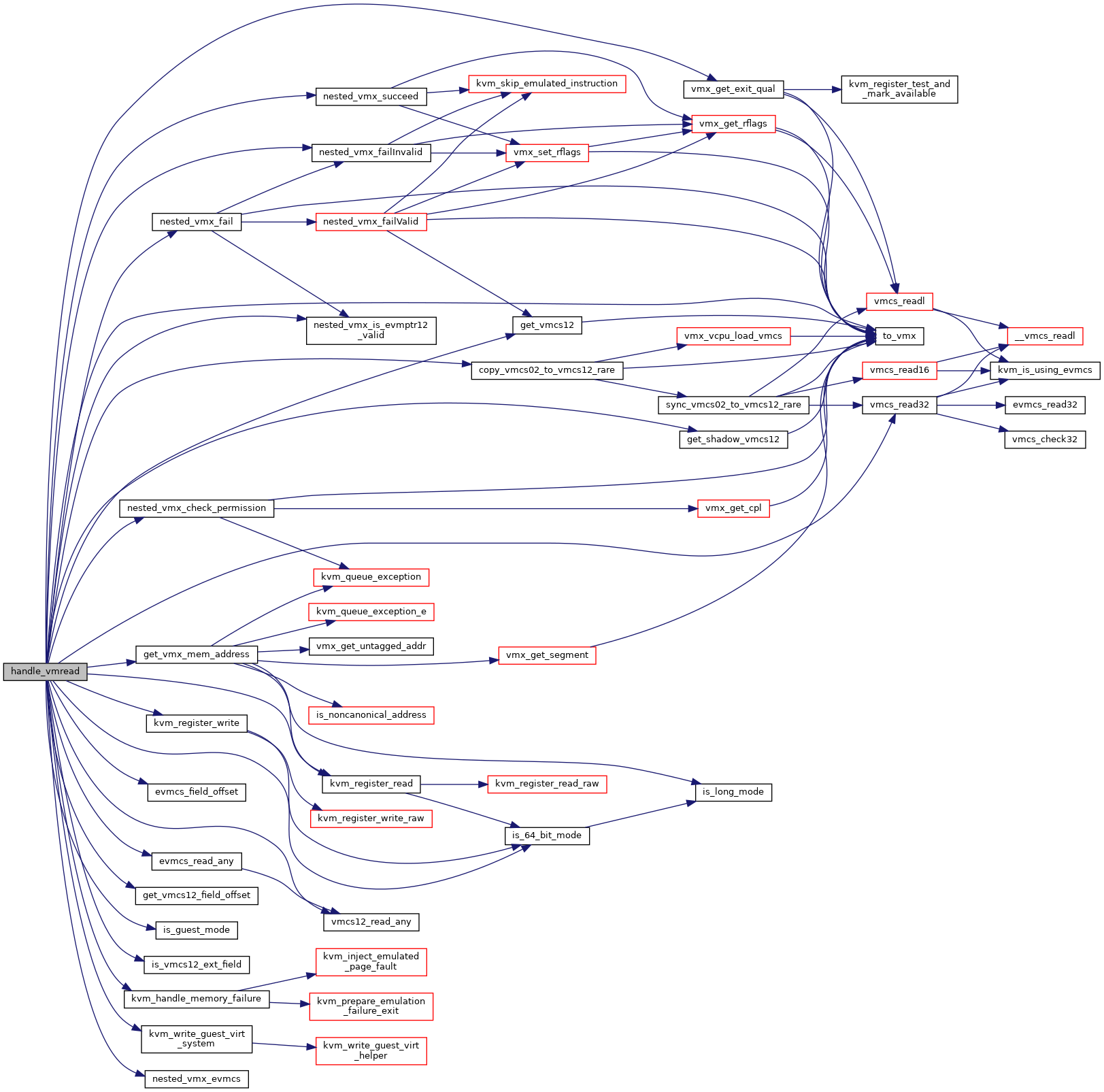

◆ handle_vmread()

static int handle_vmread(struct kvm_vcpu *vcpu)

Definition at line 5377 of file nested.c.

◆ handle_vmresume()

static int handle_vmresume(struct kvm_vcpu *vcpu)

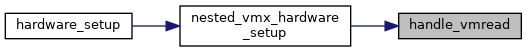

◆ handle_vmwrite()

static int handle_vmwrite(struct kvm_vcpu *vcpu)

Definition at line 5483 of file nested.c.

◆ handle_vmxoff()

static int handle_vmxoff(struct kvm_vcpu *vcpu)

Definition at line 5309 of file nested.c.

◆ handle_vmxon()

static int handle_vmxon(struct kvm_vcpu *vcpu)

Definition at line 5190 of file nested.c.

◆ init_vmcs_shadow_fields()

static void init_vmcs_shadow_fields(void)

Definition at line 69 of file nested.c.

◆ is_bitwise_subset()

static bool is_bitwise_subset(u64 superset, u64 subset, u64 mask)

◆ is_shadow_field_ro()

static bool is_shadow_field_ro(unsigned long field)

◆ is_shadow_field_rw()

static bool is_shadow_field_rw(unsigned long field)

◆ is_vmcs12_ext_field()

static bool is_vmcs12_ext_field(unsigned long field)

◆ load_vmcs12_host_state()

static void load_vmcs12_host_state(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 4509 of file nested.c.

◆ module_param()

module_param(nested_early_check, bool, S_IRUGO)

◆ module_param_named()

module_param_named(enable_shadow_vmcs, enable_shadow_vmcs, bool, S_IRUGO)

◆ nested_cache_shadow_vmcs12()

static void nested_cache_shadow_vmcs12(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 701 of file nested.c.

◆ nested_check_guest_non_reg_state()

static int nested_check_guest_non_reg_state(struct vmcs12 *vmcs12)

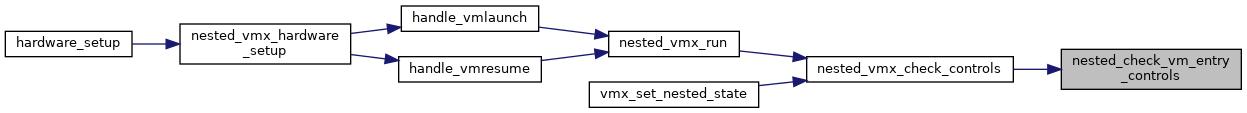

◆ nested_check_vm_entry_controls()

static int nested_check_vm_entry_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 2847 of file nested.c.

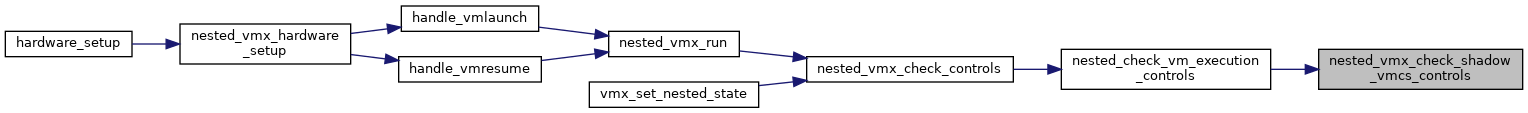

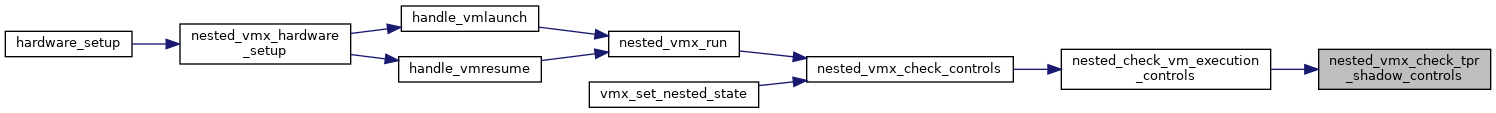

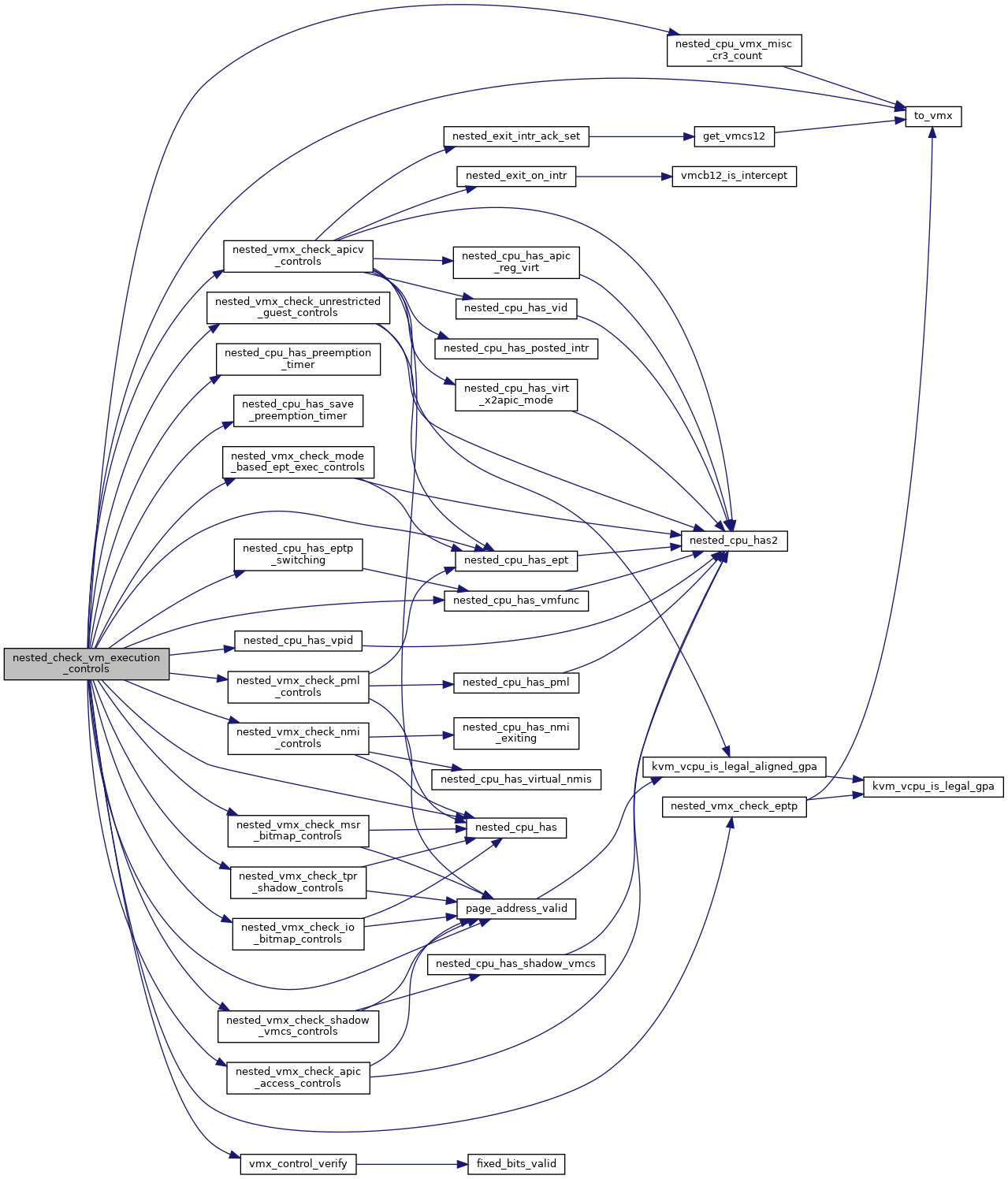

◆ nested_check_vm_execution_controls()

static int nested_check_vm_execution_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 2771 of file nested.c.

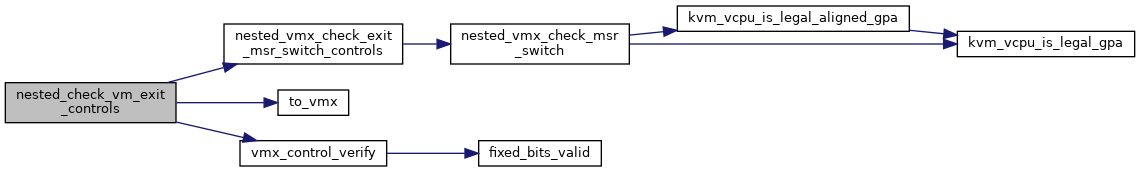

◆ nested_check_vm_exit_controls()

static int nested_check_vm_exit_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 2830 of file nested.c.

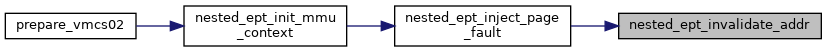

◆ nested_ept_init_mmu_context()

static void nested_ept_init_mmu_context(struct kvm_vcpu *vcpu)

Definition at line 451 of file nested.c.

◆ nested_ept_inject_page_fault()

static void nested_ept_inject_page_fault(struct kvm_vcpu *vcpu, struct x86_exception *fault)

Definition at line 407 of file nested.c.

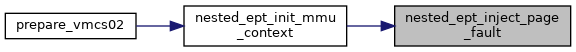

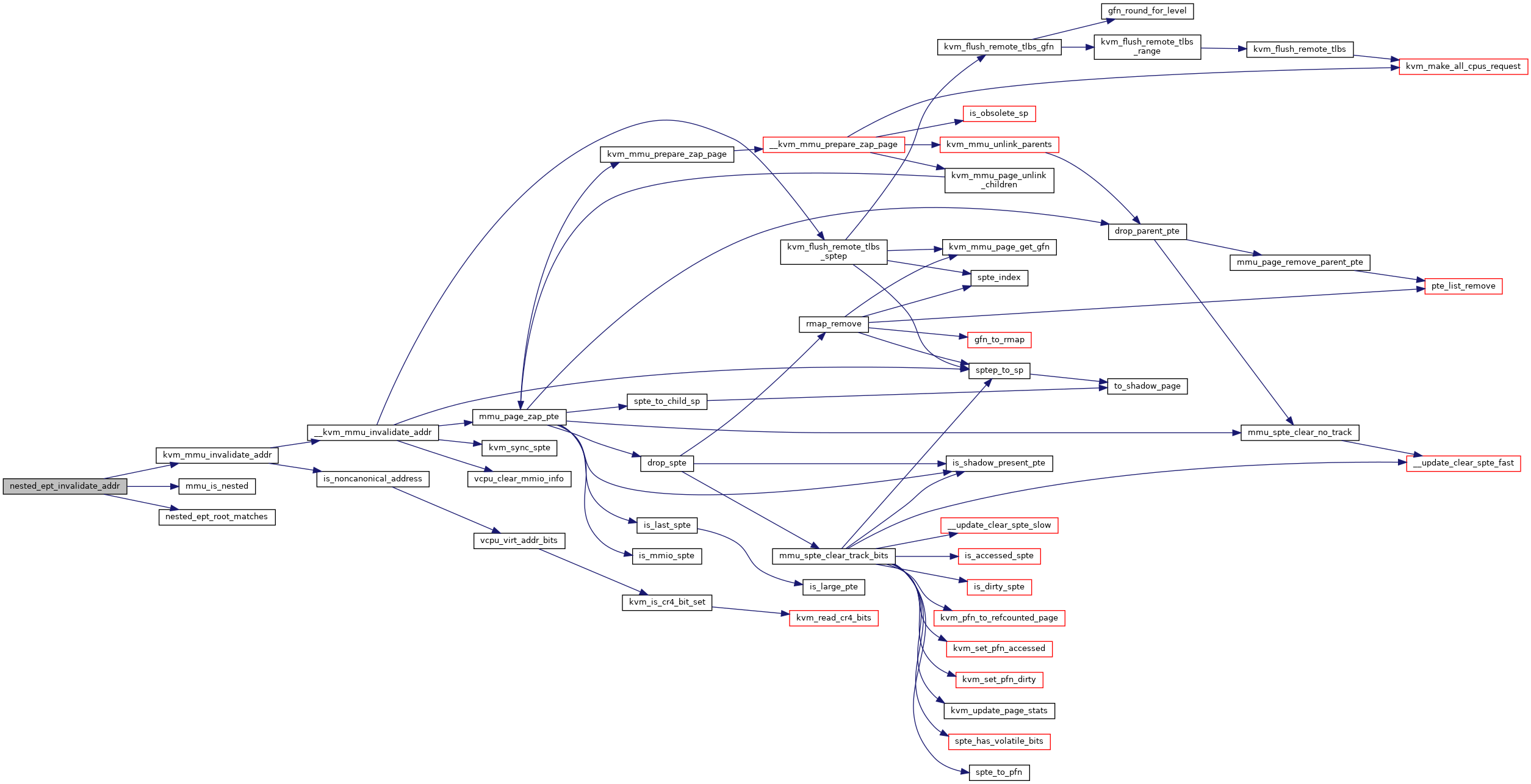

◆ nested_ept_invalidate_addr()

static void nested_ept_invalidate_addr(struct kvm_vcpu *vcpu, gpa_t eptp, gpa_t addr)

Definition at line 387 of file nested.c.

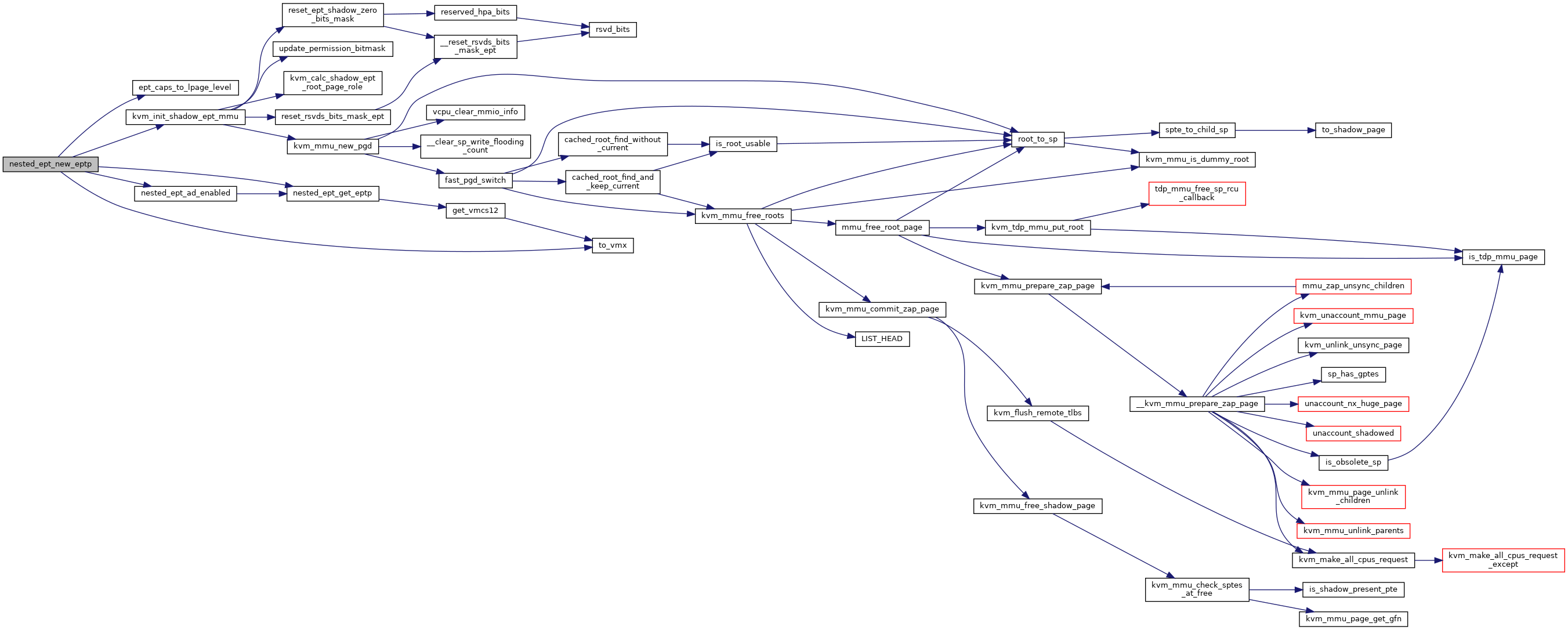

◆ nested_ept_new_eptp()

static void nested_ept_new_eptp(struct kvm_vcpu *vcpu)

Definition at line 440 of file nested.c.

◆ nested_ept_root_matches()

static bool nested_ept_root_matches(hpa_t root_hpa, u64 root_eptp, u64 eptp)
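
nested_ept_root_matches() is documented here only by its signature. Going by its name and by EPTP_PA_MASK (GENMASK_ULL(51, 12) in the macro summary), one plausible reading is as follows. A cached root matches a new EPTP when the root is valid and both EPTPs point at the same top-level table. The control bits in 11:0 (memory type, page-walk length, A/D enable) are ignored. The sketch below encodes that reading. DEMO_GENMASK_ULL and DEMO_INVALID_PAGE are simplified, hypothetical stand-ins for the kernel's GENMASK_ULL and INVALID_PAGE.

```c
#include <stdbool.h>
#include <stdint.h>

/* Simplified GENMASK_ULL(h, l): bits h..l set, assuming 0 <= l <= h <= 63. */
#define DEMO_GENMASK_ULL(h, l) ((~0ULL << (l)) & (~0ULL >> (63 - (h))))
#define EPTP_PA_MASK DEMO_GENMASK_ULL(51, 12)

/* Hypothetical "no root" sentinel. */
#define DEMO_INVALID_PAGE (~0ULL)

static bool demo_ept_root_matches(uint64_t root_hpa, uint64_t root_eptp, uint64_t eptp)
{
	/* Same top-level table address; the low control bits do not matter. */
	return root_hpa != DEMO_INVALID_PAGE &&
	       (root_eptp & EPTP_PA_MASK) == (eptp & EPTP_PA_MASK);
}
```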

◆ nested_ept_uninit_mmu_context()

static void nested_ept_uninit_mmu_context(struct kvm_vcpu *vcpu)

◆ nested_evmcs_handle_vmclear()

static bool nested_evmcs_handle_vmclear(struct kvm_vcpu *vcpu, gpa_t vmptr)

◆ nested_exit_intr_ack_set()

static bool nested_exit_intr_ack_set(struct kvm_vcpu *vcpu)

◆ nested_flush_cached_shadow_vmcs12()

static void nested_flush_cached_shadow_vmcs12(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 720 of file nested.c.

◆ nested_get_vmcs12_pages()

static bool nested_get_vmcs12_pages(struct kvm_vcpu *vcpu)

Definition at line 3232 of file nested.c.

◆ nested_has_guest_tlb_tag()

static bool nested_has_guest_tlb_tag(struct kvm_vcpu *vcpu)

◆ nested_mark_vmcs12_pages_dirty()

void nested_mark_vmcs12_pages_dirty(struct kvm_vcpu *vcpu)

Definition at line 3838 of file nested.c.

◆ nested_msr_store_list_has_msr()

static bool nested_msr_store_list_has_msr(struct kvm_vcpu *vcpu, u32 msr_index)

Definition at line 1055 of file nested.c.

◆ nested_release_evmcs()

static inline void nested_release_evmcs(struct kvm_vcpu *vcpu)

◆ nested_release_vmcs12()

static inline void nested_release_vmcs12(struct kvm_vcpu *vcpu)

Definition at line 5281 of file nested.c.

◆ nested_sync_vmcs12_to_shadow()

void nested_sync_vmcs12_to_shadow(struct kvm_vcpu *vcpu)

Definition at line 2124 of file nested.c.

◆ nested_vmx_abort()

static void nested_vmx_abort(struct kvm_vcpu *vcpu, u32 indicator)

◆ nested_vmx_calc_efer()

◆ nested_vmx_calc_vmcs_enum_msr()

static u64 nested_vmx_calc_vmcs_enum_msr(void)

◆ nested_vmx_check_address_space_size()

static int nested_vmx_check_address_space_size(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_apic_access_controls()

static int nested_vmx_check_apic_access_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_apicv_controls()

static int nested_vmx_check_apicv_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 759 of file nested.c.

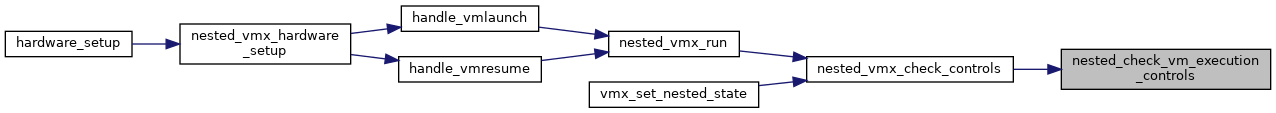

◆ nested_vmx_check_controls()

static int nested_vmx_check_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 2919 of file nested.c.

◆ nested_vmx_check_entry_msr_switch_controls()

static int nested_vmx_check_entry_msr_switch_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 831 of file nested.c.

◆ nested_vmx_check_eptp()

static bool nested_vmx_check_eptp(struct kvm_vcpu *vcpu, u64 new_eptp)

Definition at line 2723 of file nested.c.
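
nested_vmx_check_eptp() validates an EPT pointer supplied by L1. The sketch below applies the architectural EPTP layout: memory type in bits 2:0, page-walk length minus one in bits 5:3, and the A/D enable flag in bit 6. It checks these against a hypothetical capability struct that stands in for what KVM advertised to L1. Three rules are our assumption of what KVM enforces, not the full SDM rule set: only UC or WB memory types are accepted, only 4- or 5-level walks are accepted, and bits 11:7 are treated as reserved.

```c
#include <stdbool.h>
#include <stdint.h>

/* EPTP fields (Intel SDM layout). */
#define DEMO_EPTP_MT_MASK   0x7ULL
#define DEMO_EPTP_MT_UC     0x0ULL
#define DEMO_EPTP_MT_WB     0x6ULL
#define DEMO_EPTP_PWL_MASK  0x38ULL
#define DEMO_EPTP_PWL_4     0x18ULL /* (4 - 1) << 3 */
#define DEMO_EPTP_PWL_5     0x20ULL /* (5 - 1) << 3 */
#define DEMO_EPTP_AD_ENABLE (1ULL << 6)

/* Hypothetical summary of the EPT capabilities exposed to L1. */
struct demo_ept_caps {
	bool uc, wb;             /* allowed memory types */
	bool pwl4, pwl5;         /* allowed page-walk lengths */
	bool ad;                 /* accessed/dirty flags supported */
	unsigned int maxphyaddr; /* assumed < 64 */
};

static bool demo_check_eptp(const struct demo_ept_caps *caps, uint64_t eptp)
{
	switch (eptp & DEMO_EPTP_MT_MASK) {
	case DEMO_EPTP_MT_UC:
		if (!caps->uc)
			return false;
		break;
	case DEMO_EPTP_MT_WB:
		if (!caps->wb)
			return false;
		break;
	default:
		return false;
	}

	switch (eptp & DEMO_EPTP_PWL_MASK) {
	case DEMO_EPTP_PWL_4:
		if (!caps->pwl4)
			return false;
		break;
	case DEMO_EPTP_PWL_5:
		if (!caps->pwl5)
			return false;
		break;
	default:
		return false;
	}

	/* Bits 11:7 treated as reserved; the address must fit in MAXPHYADDR. */
	if (((eptp >> 7) & 0x1f) || (eptp >> caps->maxphyaddr))
		return false;

	/* A/D enable is only allowed if advertised. */
	if ((eptp & DEMO_EPTP_AD_ENABLE) && !caps->ad)
		return false;
	return true;
}
```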

◆ nested_vmx_check_exit_msr_switch_controls()

static int nested_vmx_check_exit_msr_switch_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_guest_state()

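static int nested_vmx_check_guest_state(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, enum vm_entry_failure_code *entry_failure_code)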

Definition at line 3058 of file nested.c.

◆ nested_vmx_check_host_state()

static int nested_vmx_check_host_state(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 2946 of file nested.c.

◆ nested_vmx_check_io_bitmap_controls()

static int nested_vmx_check_io_bitmap_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_io_bitmaps()

bool nested_vmx_check_io_bitmaps(struct kvm_vcpu *vcpu, unsigned int port, int size)

Definition at line 5963 of file nested.c.
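
nested_vmx_check_io_bitmaps(vcpu, port, size) decides whether an L2 I/O access must be reflected to L1 when L1 uses I/O bitmaps. Architecturally, bitmap A covers ports 0x0000-0x7fff and bitmap B covers 0x8000-0xffff, with one bit per port. A multi-byte access exits if the bit for any covered port is set. The sketch below follows those rules and also treats an access that wraps past 0xffff as an exit. It reads in-memory arrays rather than guest pages, and its name is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: true if any port in [port, port + size) is intercepted. */
static bool demo_io_access_intercepted(const uint8_t bitmap_a[4096],
				       const uint8_t bitmap_b[4096],
				       unsigned int port, int size)
{
	for (; size > 0; port++, size--) {
		const uint8_t *bitmap;

		if (port < 0x8000)
			bitmap = bitmap_a;
		else if (port < 0x10000)
			bitmap = bitmap_b;
		else
			return true; /* wrapped past 0xffff: treat as intercepted */

		/* One bit per port within the selected 32 Kbit bitmap. */
		if (bitmap[(port & 0x7fff) / 8] & (1u << (port & 7)))
			return true;
	}
	return false;
}
```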

◆ nested_vmx_check_mode_based_ept_exec_controls()

static int nested_vmx_check_mode_based_ept_exec_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_msr_bitmap_controls()

static int nested_vmx_check_msr_bitmap_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_msr_switch()

static int nested_vmx_check_msr_switch(struct kvm_vcpu *vcpu, u32 count, u64 addr)
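
nested_vmx_check_msr_switch(vcpu, count, addr) validates a VM-entry or VM-exit MSR-load/store area. Each entry is 16 bytes: a 32-bit MSR index, 32 reserved bits and a 64-bit value. A plausible check, sketched below, has three parts. An empty list is always valid. Otherwise the area must be 16-byte aligned, and both its first and last byte must lie below 2^MAXPHYADDR. The helper and its maxphyaddr parameter are hypothetical stand-ins for KVM's guest-physical-address legality checks.

```c
#include <stdbool.h>
#include <stdint.h>

/* One MSR-load/store entry (Intel SDM layout): 16 bytes. */
struct demo_vmx_msr_entry {
	uint32_t index;
	uint32_t reserved;
	uint64_t value;
};

/* Hypothetical check, assuming maxphyaddr < 64. */
static bool demo_msr_switch_area_valid(uint32_t count, uint64_t addr, unsigned int maxphyaddr)
{
	uint64_t last;

	/* An empty list is never accessed, so its address does not matter. */
	if (count == 0)
		return true;

	/* First byte: 16-byte aligned and addressable. */
	if ((addr & 0xf) || (addr >> maxphyaddr))
		return false;

	/* Last byte of the final entry must also be addressable. */
	last = addr + (uint64_t)count * sizeof(struct demo_vmx_msr_entry) - 1;
	return (last >> maxphyaddr) == 0;
}
```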

◆ nested_vmx_check_nmi_controls()

static int nested_vmx_check_nmi_controls(struct vmcs12 *vmcs12)

Definition at line 2710 of file nested.c.
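
nested_vmx_check_nmi_controls() takes only vmcs12, so it can check only the VM-execution controls themselves. The SDM's VM-entry checks include two NMI rules. First, the "virtual NMIs" pin-based control requires "NMI exiting". Second, the "NMI-window exiting" processor-based control requires "virtual NMIs". The sketch below implements those two rules with the bit positions written out. The macro names mirror Linux's vmx.h; the helper is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Pin-based bits 3 and 5, primary processor-based bit 22 (Intel SDM). */
#define PIN_BASED_NMI_EXITING        (1u << 3)
#define PIN_BASED_VIRTUAL_NMIS       (1u << 5)
#define CPU_BASED_NMI_WINDOW_EXITING (1u << 22)

static bool demo_nmi_controls_valid(uint32_t pin_based, uint32_t cpu_based)
{
	bool nmi_exiting  = pin_based & PIN_BASED_NMI_EXITING;
	bool virtual_nmis = pin_based & PIN_BASED_VIRTUAL_NMIS;
	bool nmi_window   = cpu_based & CPU_BASED_NMI_WINDOW_EXITING;

	/* "virtual NMIs" is only meaningful if NMIs cause VM exits. */
	if (virtual_nmis && !nmi_exiting)
		return false;
	/* NMI-window exiting tracks virtual-NMI blocking. */
	if (nmi_window && !virtual_nmis)
		return false;
	return true;
}
```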

◆ nested_vmx_check_permission()

static int nested_vmx_check_permission(struct kvm_vcpu *vcpu)

◆ nested_vmx_check_pml_controls()

static int nested_vmx_check_pml_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

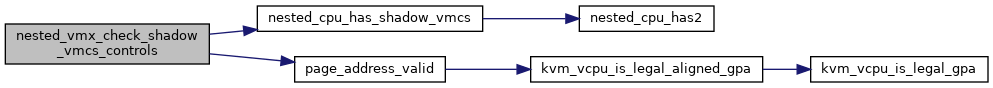

◆ nested_vmx_check_shadow_vmcs_controls()

static int nested_vmx_check_shadow_vmcs_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

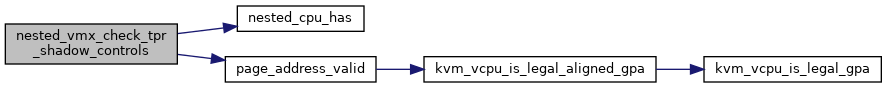

◆ nested_vmx_check_tpr_shadow_controls()

static int nested_vmx_check_tpr_shadow_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_unrestricted_guest_controls()

static int nested_vmx_check_unrestricted_guest_controls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_vmcs_link_ptr()

static int nested_vmx_check_vmcs_link_ptr(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_check_vmentry_hw()

static int nested_vmx_check_vmentry_hw(struct kvm_vcpu *vcpu)

Definition at line 3124 of file nested.c.

◆ nested_vmx_disable_intercept_for_x2apic_msr()

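static void nested_vmx_disable_intercept_for_x2apic_msr(unsigned long *msr_bitmap_l1, unsigned long *msr_bitmap_l0, u32 msr, int type)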

◆ nested_vmx_enter_non_root_mode()

enum nvmx_vmentry_status nested_vmx_enter_non_root_mode(struct kvm_vcpu *vcpu, bool from_vmentry)

Definition at line 3414 of file nested.c.

◆ nested_vmx_eptp_switching()

static int nested_vmx_eptp_switching(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 5875 of file nested.c.

◆ nested_vmx_exit_handled_cr()

static bool nested_vmx_exit_handled_cr(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

◆ nested_vmx_exit_handled_encls()

static bool nested_vmx_exit_handled_encls(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 6128 of file nested.c.

◆ nested_vmx_exit_handled_io()

static bool nested_vmx_exit_handled_io(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 5996 of file nested.c.

◆ nested_vmx_exit_handled_msr()

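static bool nested_vmx_exit_handled_msr(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, union vmx_exit_reason exit_reason)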
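
nested_vmx_exit_handled_msr() decides whether an RDMSR or WRMSR exit from L2 belongs to L1. When L1 uses MSR bitmaps, the architectural 4 KiB layout has four parts. Read bitmaps for the low MSR range (0x00000000-0x00001fff) and the high range (0xc0000000-0xc0001fff) sit at offsets 0 and 0x400. Write bitmaps for the same ranges sit at 0x800 and 0xc00. Any MSR outside both ranges always exits. The sketch below encodes that layout. It leaves out the case where L1 does not use MSR bitmaps at all (every access exits), and its in-memory bitmap stands in for L1's guest page. The helper name is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

static bool demo_msr_access_intercepted(const uint8_t bitmap[4096], uint32_t msr, bool is_write)
{
	/* Write bitmaps occupy the second 2 KiB of the page. */
	uint32_t base = is_write ? 0x800 : 0x000;

	/* High MSRs use the second 1 KiB of each half. */
	if (msr >= 0xc0000000u) {
		msr -= 0xc0000000u;
		base += 0x400;
	}

	/* Each 1 KiB bitmap covers 0x2000 MSRs; anything else always exits. */
	if (msr >= 0x2000)
		return true;

	return (bitmap[base + msr / 8] >> (msr & 7)) & 1;
}
```

For example, setting bit 0 of byte 0xc10 intercepts writes to IA32_EFER (0xc0000080) while leaving reads of it to L0.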

◆ nested_vmx_exit_handled_mtf()

static bool nested_vmx_exit_handled_mtf(struct vmcs12 *vmcs12)

◆ nested_vmx_exit_handled_vmcs_access()

static

◆ nested_vmx_fail()

static

Definition at line 188 of file nested.c.

◆ nested_vmx_failInvalid()

static

◆ nested_vmx_failValid()

static

Definition at line 169 of file nested.c.
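
nested_vmx_succeed(), nested_vmx_failInvalid(), nested_vmx_failValid() and nested_vmx_fail() correspond to the SDM's VMsucceed, VMfailInvalid, VMfailValid and VMfail conventions. These conventions report the outcome of a VMX instruction through RFLAGS. Below is a minimal sketch of those flag updates on a raw RFLAGS value. The `sketch_*` names are hypothetical, and the VM-instruction error field is reduced to a plain pointer.

```c
#include <stdint.h>

#define SK_CF (1ULL << 0)
#define SK_PF (1ULL << 2)
#define SK_AF (1ULL << 4)
#define SK_ZF (1ULL << 6)
#define SK_SF (1ULL << 7)
#define SK_OF (1ULL << 11)
#define SK_ARITH_FLAGS (SK_CF | SK_PF | SK_AF | SK_ZF | SK_SF | SK_OF)

/* VMsucceed: clear all six arithmetic flags. */
static uint64_t sketch_vmsucceed(uint64_t rflags)
{
	return rflags & ~SK_ARITH_FLAGS;
}

/* VMfailInvalid: no current VMCS, so only CF reports the failure. */
static uint64_t sketch_vmfail_invalid(uint64_t rflags)
{
	return (rflags & ~SK_ARITH_FLAGS) | SK_CF;
}

/* VMfailValid: set ZF and store the error number in the current VMCS. */
static uint64_t sketch_vmfail_valid(uint64_t rflags, uint32_t err,
				    uint32_t *vm_instruction_error)
{
	*vm_instruction_error = err;
	return (rflags & ~SK_ARITH_FLAGS) | SK_ZF;
}

/* VMfail: choose between the two depending on whether a current VMCS exists. */
static uint64_t sketch_vmfail(uint64_t rflags, int have_current_vmcs, uint32_t err,
			      uint32_t *vm_instruction_error)
{
	if (!have_current_vmcs)
		return sketch_vmfail_invalid(rflags);
	return sketch_vmfail_valid(rflags, err, vm_instruction_error);
}
```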

◆ nested_vmx_free_vcpu()

void nested_vmx_free_vcpu(struct kvm_vcpu *vcpu)

◆ nested_vmx_get_vmcs01_guest_efer()

inline static

◆ nested_vmx_get_vmexit_msr_value()

static

◆ nested_vmx_get_vmptr()

static

◆ nested_vmx_handle_enlightened_vmptrld()

static

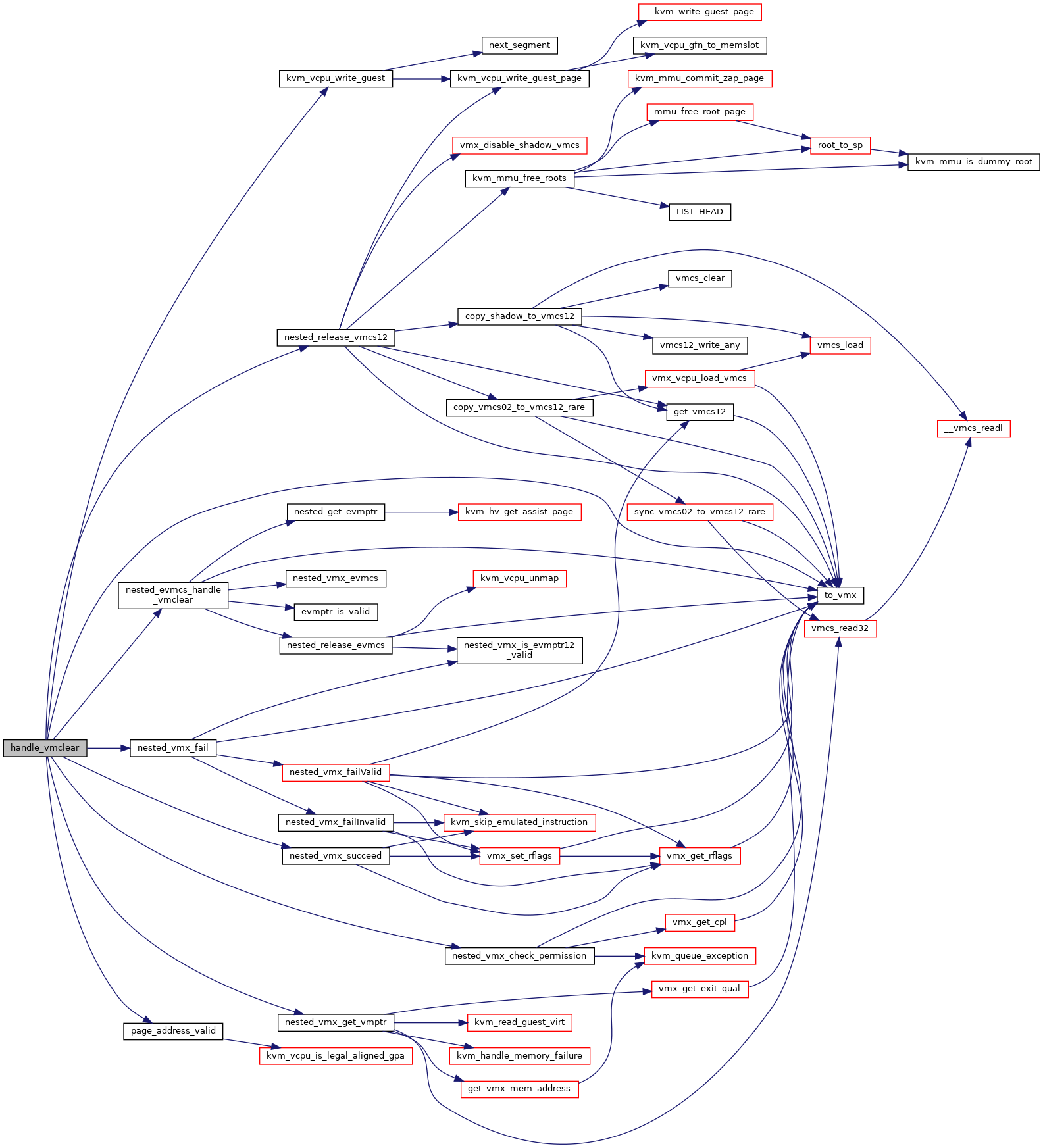

◆ nested_vmx_hardware_setup()

__init int nested_vmx_hardware_setup(int (*exit_handlers[])(struct kvm_vcpu *))

◆ nested_vmx_hardware_unsetup()

void nested_vmx_hardware_unsetup(void)

◆ nested_vmx_inject_exception_vmexit()

static

◆ nested_vmx_is_exception_vmexit()

static

Definition at line 482 of file nested.c.

◆ nested_vmx_is_page_fault_vmexit()

static

◆ nested_vmx_l0_wants_exit()

static

Definition at line 6188 of file nested.c.

◆ nested_vmx_l1_wants_exit()

static

Definition at line 6266 of file nested.c.

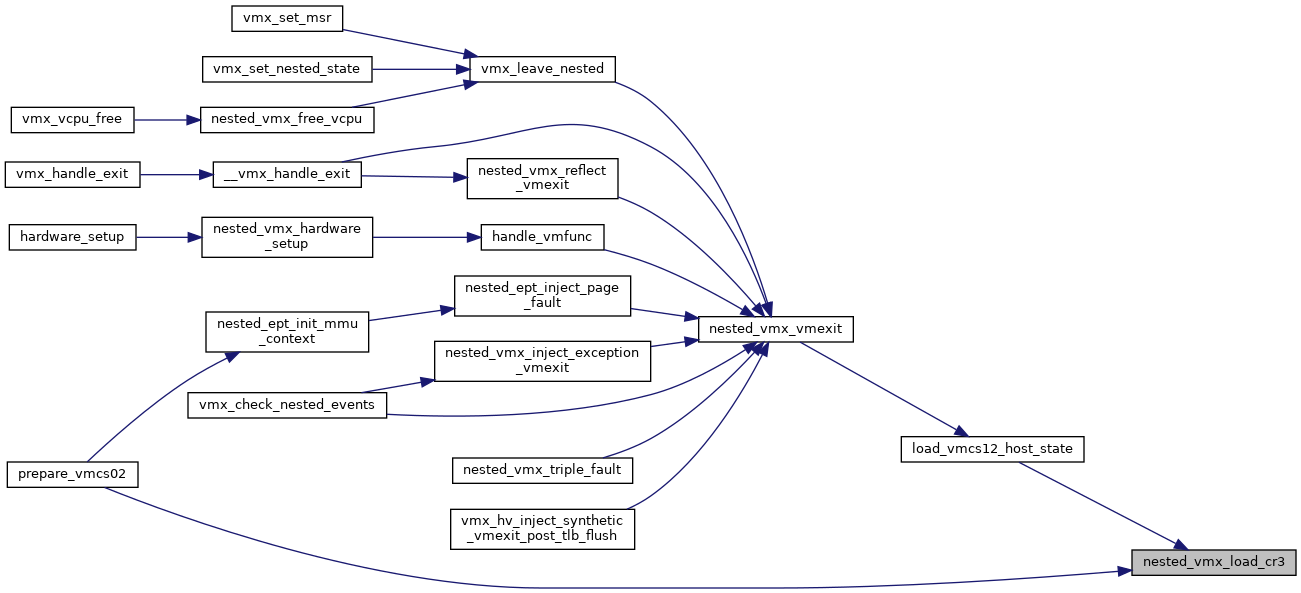

◆ nested_vmx_load_cr3()

static

Definition at line 1115 of file nested.c.

◆ nested_vmx_load_msr()

static

Definition at line 938 of file nested.c.
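
nested_vmx_load_msr() walks the VM-entry MSR-load list, and nested_vmx_max_atomic_switch_msrs() bounds its length. According to the SDM, each list entry is 16 bytes. If N is the value of bits 27:25 of IA32_VMX_MISC, the recommended maximum list size is 512 * (N + 1). Below is a sketch of that arithmetic. It also includes the SDM's address constraints on the list, which are assumed to be what nested_vmx_check_msr_switch() enforces. All names here are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* One MSR-list entry: MSR index, reserved dword, 64-bit value. */
struct sketch_msr_entry {
	uint32_t index;
	uint32_t reserved;
	uint64_t value;
};

/* Recommended maximum entries per list: 512 * (IA32_VMX_MISC[27:25] + 1). */
static uint32_t sketch_max_atomic_switch_msrs(uint64_t vmx_misc)
{
	uint32_t n = (uint32_t)((vmx_misc >> 25) & 0x7);

	return 512u * (n + 1);
}

/* A non-empty list must be 16-byte aligned and lie below MAXPHYADDR. */
static bool sketch_msr_list_addr_ok(uint64_t addr, uint32_t count,
				    unsigned int maxphyaddr)
{
	uint64_t last;

	if (count == 0)
		return true;
	if (addr & 0xf)
		return false;
	last = addr + (uint64_t)count * sizeof(struct sketch_msr_entry) - 1;
	return (last >> maxphyaddr) == 0;
}
```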

◆ nested_vmx_load_msr_check()

static

Definition at line 900 of file nested.c.

◆ nested_vmx_max_atomic_switch_msrs()

static

◆ nested_vmx_msr_check_common()

static

◆ nested_vmx_preemption_timer_pending()

static

◆ nested_vmx_prepare_msr_bitmap()

inline static

Definition at line 597 of file nested.c.

◆ nested_vmx_reflect_vmexit()

bool nested_vmx_reflect_vmexit(struct kvm_vcpu *vcpu)

Definition at line 6393 of file nested.c.

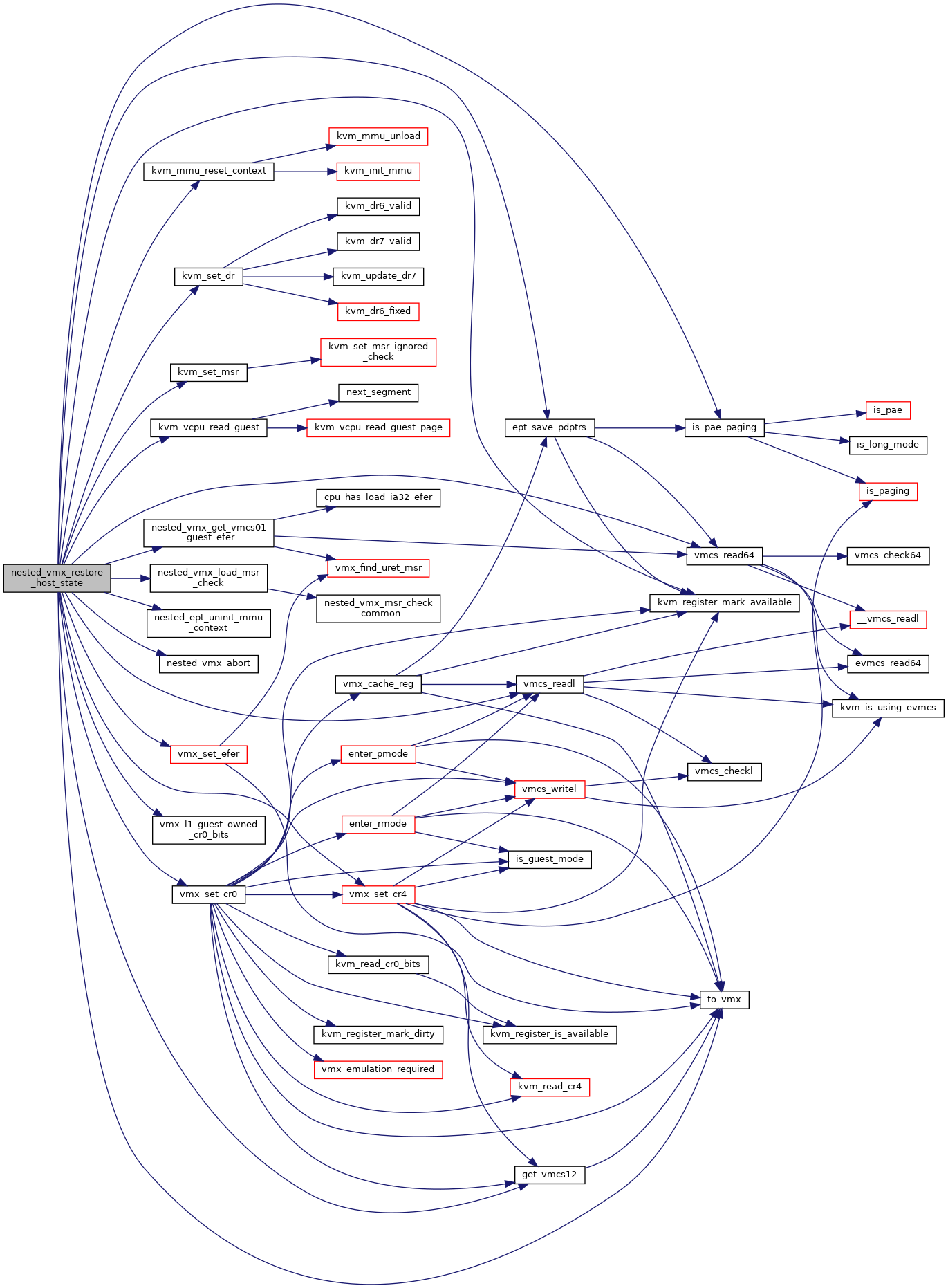

◆ nested_vmx_restore_host_state()

static

Definition at line 4657 of file nested.c.

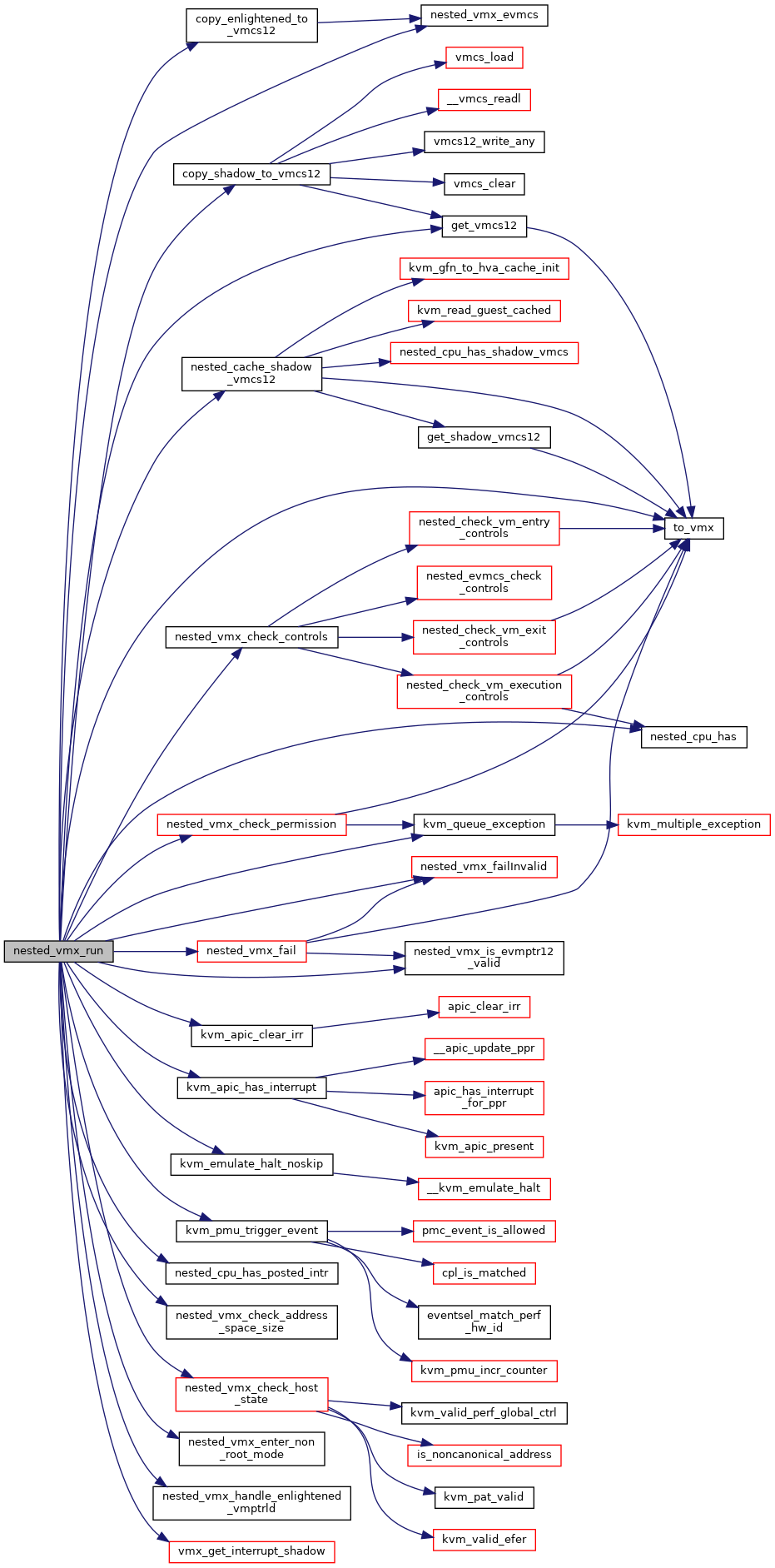

◆ nested_vmx_run()

static

Definition at line 3592 of file nested.c.

◆ nested_vmx_set_intercept_for_msr()

inline static

◆ nested_vmx_set_vmcs_shadowing_bitmap()

void nested_vmx_set_vmcs_shadowing_bitmap(void)

◆ nested_vmx_setup_basic()

static

◆ nested_vmx_setup_cpubased_ctls()

static

◆ nested_vmx_setup_cr_fixed()

static

◆ nested_vmx_setup_ctls_msrs()

void nested_vmx_setup_ctls_msrs(struct vmcs_config *vmcs_conf, u32 ept_caps)

Definition at line 7045 of file nested.c.

◆ nested_vmx_setup_entry_ctls()

static

◆ nested_vmx_setup_exit_ctls()

static

◆ nested_vmx_setup_misc_data()

static

Definition at line 6985 of file nested.c.

◆ nested_vmx_setup_pinbased_ctls()

static

◆ nested_vmx_setup_secondary_ctls()

static

◆ nested_vmx_store_msr()

static

Definition at line 1025 of file nested.c.

◆ nested_vmx_store_msr_check()

static

◆ nested_vmx_succeed()

static

◆ nested_vmx_transition_tlb_flush()

static

Definition at line 1167 of file nested.c.

◆ nested_vmx_triple_fault()

static

◆ nested_vmx_update_pending_dbg()

static

Definition at line 3994 of file nested.c.

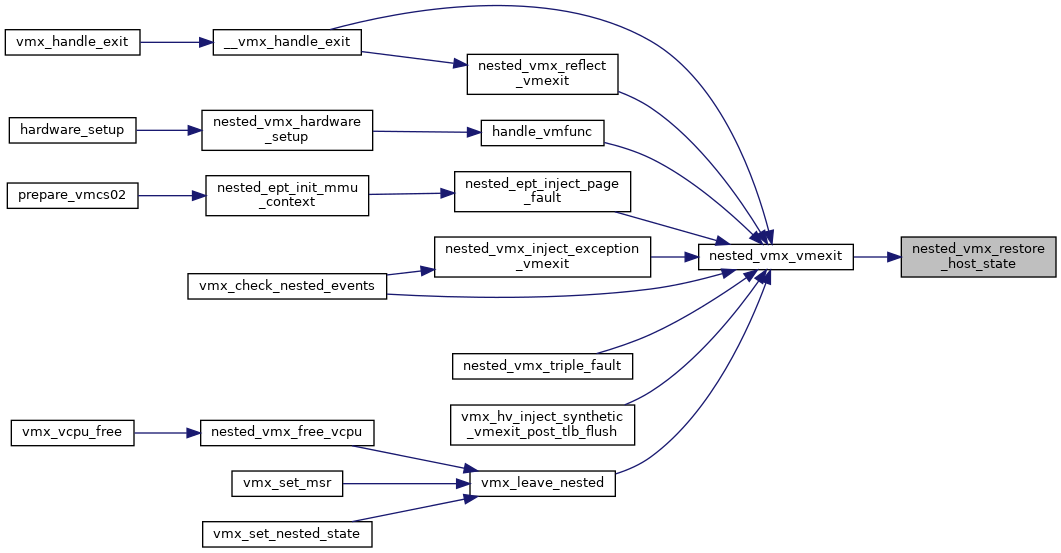

◆ nested_vmx_vmexit()

void nested_vmx_vmexit(struct kvm_vcpu *vcpu, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification)

Definition at line 4767 of file nested.c.

◆ nested_vmx_write_pml_buffer()

static

Definition at line 3347 of file nested.c.

◆ prepare_vmcs02()

static

Definition at line 2570 of file nested.c.

◆ prepare_vmcs02_constant_state()

static

Definition at line 2200 of file nested.c.

◆ prepare_vmcs02_early()

static

Definition at line 2276 of file nested.c.

◆ prepare_vmcs02_early_rare()

Definition at line 2261 of file nested.c.

◆ prepare_vmcs02_rare()

Definition at line 2441 of file nested.c.

◆ prepare_vmcs12()

static

Definition at line 4453 of file nested.c.

◆ prepare_vmx_msr_autostore_list()

static

Definition at line 1073 of file nested.c.

◆ read_and_check_msr_entry()

static

Definition at line 1005 of file nested.c.

◆ set_current_vmptr()

static

◆ sync_vmcs02_to_vmcs12()

static

Definition at line 4372 of file nested.c.

◆ sync_vmcs02_to_vmcs12_rare()

static

◆ vmcs12_guest_cr0()

inline static

◆ vmcs12_guest_cr4()

inline static

◆ vmcs12_save_pending_event()

static

◆ vmx_calc_preemption_timer_value()

static

◆ vmx_check_nested_events()

static

Definition at line 4098 of file nested.c.

◆ vmx_complete_nested_posted_interrupt()

static

Definition at line 3859 of file nested.c.

◆ vmx_control_msr()

inline static

◆ vmx_control_verify()

inline static

Definition at line 210 of file nested.c.
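
vmx_control_verify() checks a VMX control value against the allowed settings reported by a capability MSR. Bits 31:0 hold the allowed-0 settings (bits that must be 1), and bits 63:32 hold the allowed-1 settings (bits that may be 1). vmx_control_msr() packs the two halves into one value. Below is a sketch of both, assuming plain 32-bit inputs. The `sketch_*` names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Pack allowed-0 (low) and allowed-1 (high) halves as the capability MSRs do. */
static uint64_t sketch_control_msr(uint32_t low, uint32_t high)
{
	return low | ((uint64_t)high << 32);
}

/*
 * Valid if every must-be-1 bit (set in 'low') is set in 'control' and
 * no bit outside the may-be-1 set ('high') is set in 'control'.
 */
static bool sketch_control_verify(uint32_t control, uint32_t low, uint32_t high)
{
	return ((control & high) | low) == control;
}
```

A single comparison covers both constraints. Masking with `high` drops forbidden bits, and OR-ing `low` adds missing required bits, so the result equals `control` only when neither adjustment changed anything.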

◆ vmx_disable_shadow_vmcs()

static

◆ vmx_get_control_msr()

static

◆ vmx_get_fixed0_msr()

static

◆ vmx_get_nested_state()

static

Definition at line 6445 of file nested.c.

◆ vmx_get_nested_state_pages()

static

◆ vmx_get_pending_dbg_trap()

static

◆ vmx_get_preemption_timer_value()

static

◆ vmx_get_vmx_msr()

int vmx_get_vmx_msr(struct nested_vmx_msrs *msrs, u32 msr_index, u64 *pdata)

◆ vmx_has_apicv_interrupt()

static

Definition at line 3406 of file nested.c.

◆ vmx_has_nested_events()

static

◆ vmx_is_low_priority_db_trap()

static

◆ vmx_leave_nested()

void vmx_leave_nested(struct kvm_vcpu *vcpu)

◆ vmx_preemption_timer_fn()

static

◆ vmx_restore_control_msr()

static

Definition at line 1289 of file nested.c.
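
When userspace restores a VMX control MSR, vmx_restore_control_msr() is expected to accept only values that are at least as restrictive as what is supported. Below is a sketch of that rule built on a bitwise-subset helper. The helper and function names are hypothetical. The split into bits 31:0 (must-be-1) and bits 63:32 (may-be-1) follows the capability-MSR layout.

```c
#include <stdbool.h>
#include <stdint.h>

/* True if, within 'mask', every bit of 'subset' is also set in 'superset'. */
static bool sketch_is_bitwise_subset(uint64_t superset, uint64_t subset, uint64_t mask)
{
	superset &= mask;
	subset &= mask;
	return (superset | subset) == superset;
}

static bool sketch_restore_control_ok(uint64_t data, uint64_t supported)
{
	const uint64_t low_mask = 0x00000000ffffffffULL;
	const uint64_t high_mask = 0xffffffff00000000ULL;

	/* Bits 31:0: every supported must-be-1 bit must stay set in 'data'. */
	if (!sketch_is_bitwise_subset(data, supported, low_mask))
		return false;
	/* Bits 63:32: 'data' may not allow any bit the supported value forbids. */
	if (!sketch_is_bitwise_subset(supported, data, high_mask))
		return false;
	return true;
}
```

The restored value may therefore add must-be-1 bits or remove may-be-1 bits, but never the reverse.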

◆ vmx_restore_fixed0_msr()

static

Definition at line 1373 of file nested.c.

◆ vmx_restore_vmx_basic()

static

◆ vmx_restore_vmx_ept_vpid_cap()

static

◆ vmx_restore_vmx_misc()

static

◆ vmx_set_nested_state()

static

◆ vmx_set_vmx_msr()

int vmx_set_vmx_msr(struct kvm_vcpu *vcpu, u32 msr_index, u64 data)

Definition at line 1393 of file nested.c.

◆ vmx_start_preemption_timer()

static
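
vmx_start_preemption_timer() emulates L1's VMX-preemption timer with a host timer, so the timer value has to be converted to wall-clock time. This sketch assumes the timer ticks once every 2^VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE = 32 TSC cycles, since that macro is defined as 5 in this file. It also assumes the guest TSC frequency is known in kHz. The function name is hypothetical.

```c
#include <stdint.h>

/* Mirrors VMX_MISC_EMULATED_PREEMPTION_TIMER_RATE (5) from this file. */
#define SKETCH_TIMER_RATE 5

/*
 * Convert a preemption-timer value to nanoseconds.  Returns 0 when the
 * TSC frequency is unknown; this sketch simply skips the conversion then.
 */
static uint64_t sketch_preemption_timer_ns(uint32_t timer_value, uint32_t tsc_khz)
{
	uint64_t cycles;

	if (tsc_khz == 0)
		return 0;
	cycles = (uint64_t)timer_value << SKETCH_TIMER_RATE;
	/* cycles / (tsc_khz * 1000 cycles/s) * 1e9 ns/s == cycles * 1e6 / tsc_khz */
	return cycles * 1000000ULL / tsc_khz;
}
```

For instance, a timer value of 1000 at a 2 GHz TSC is 32,000 cycles, or 16 µs. Even the largest 32-bit timer value shifted by 5 and multiplied by 10^6 stays well within 64 bits.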

◆ vmx_switch_vmcs()

static

Definition at line 294 of file nested.c.

◆ vmx_sync_vmcs_host_state()

static

Definition at line 275 of file nested.c.

Variable Documentation

◆ enable_shadow_vmcs

static

◆ max_shadow_read_only_fields

static

◆ max_shadow_read_write_fields

static

◆ nested_early_check

static

◆ shadow_read_only_fields

static

◆ shadow_read_write_fields

static

◆ vmx_bitmap

static

◆ vmx_nested_ops

struct kvm_x86_nested_ops vmx_nested_ops