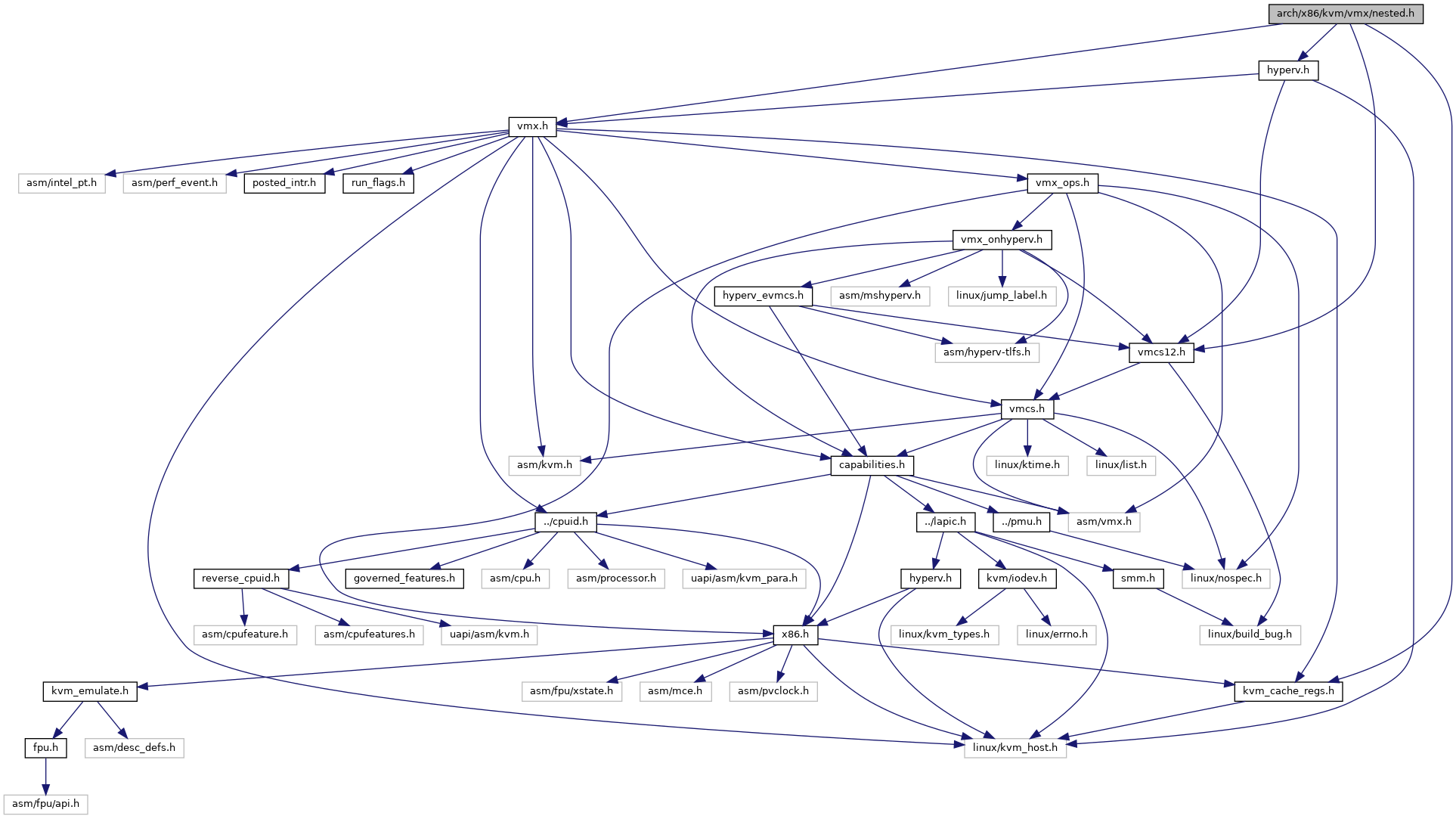

Include dependency graph for nested.h:

This graph shows which files directly or indirectly include this file:


Macros

| #define | nested_guest_cr4_valid nested_cr4_valid |

| #define | nested_host_cr4_valid nested_cr4_valid |

Enumerations

| enum | nvmx_vmentry_status { NVMX_VMENTRY_SUCCESS , NVMX_VMENTRY_VMFAIL , NVMX_VMENTRY_VMEXIT , NVMX_VMENTRY_KVM_INTERNAL_ERROR } |

Functions

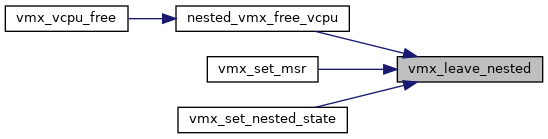

| void | vmx_leave_nested (struct kvm_vcpu *vcpu) |

| void | nested_vmx_setup_ctls_msrs (struct vmcs_config *vmcs_conf, u32 ept_caps) |

| void | nested_vmx_hardware_unsetup (void) |

| __init int | nested_vmx_hardware_setup (int(*exit_handlers[])(struct kvm_vcpu *)) |

| void | nested_vmx_set_vmcs_shadowing_bitmap (void) |

| void | nested_vmx_free_vcpu (struct kvm_vcpu *vcpu) |

| enum nvmx_vmentry_status | nested_vmx_enter_non_root_mode (struct kvm_vcpu *vcpu, bool from_vmentry) |

| bool | nested_vmx_reflect_vmexit (struct kvm_vcpu *vcpu) |

| void | nested_vmx_vmexit (struct kvm_vcpu *vcpu, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification) |

| void | nested_sync_vmcs12_to_shadow (struct kvm_vcpu *vcpu) |

| int | vmx_set_vmx_msr (struct kvm_vcpu *vcpu, u32 msr_index, u64 data) |

| int | vmx_get_vmx_msr (struct nested_vmx_msrs *msrs, u32 msr_index, u64 *pdata) |

| int | get_vmx_mem_address (struct kvm_vcpu *vcpu, unsigned long exit_qualification, u32 vmx_instruction_info, bool wr, int len, gva_t *ret) |

| void | nested_mark_vmcs12_pages_dirty (struct kvm_vcpu *vcpu) |

| bool | nested_vmx_check_io_bitmaps (struct kvm_vcpu *vcpu, unsigned int port, int size) |

| static struct vmcs12 * | get_vmcs12 (struct kvm_vcpu *vcpu) |

| static struct vmcs12 * | get_shadow_vmcs12 (struct kvm_vcpu *vcpu) |

| static int | vmx_has_valid_vmcs12 (struct kvm_vcpu *vcpu) |

| static u16 | nested_get_vpid02 (struct kvm_vcpu *vcpu) |

| static unsigned long | nested_ept_get_eptp (struct kvm_vcpu *vcpu) |

| static bool | nested_ept_ad_enabled (struct kvm_vcpu *vcpu) |

| static unsigned long | nested_read_cr0 (struct vmcs12 *fields) |

| static unsigned long | nested_read_cr4 (struct vmcs12 *fields) |

| static unsigned | nested_cpu_vmx_misc_cr3_count (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_has_vmwrite_any_field (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_has_zero_length_injection (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_supports_monitor_trap_flag (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_has_vmx_shadow_vmcs (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_has (struct vmcs12 *vmcs12, u32 bit) |

| static bool | nested_cpu_has2 (struct vmcs12 *vmcs12, u32 bit) |

| static bool | nested_cpu_has_preemption_timer (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_nmi_exiting (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_virtual_nmis (struct vmcs12 *vmcs12) |

| static int | nested_cpu_has_mtf (struct vmcs12 *vmcs12) |

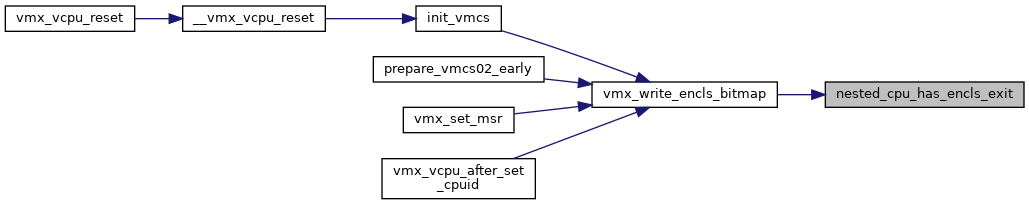

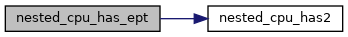

| static int | nested_cpu_has_ept (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_xsaves (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_pml (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_virt_x2apic_mode (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_vpid (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_apic_reg_virt (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_vid (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_posted_intr (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_vmfunc (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_eptp_switching (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_shadow_vmcs (struct vmcs12 *vmcs12) |

| static bool | nested_cpu_has_save_preemption_timer (struct vmcs12 *vmcs12) |

| static bool | nested_exit_on_nmi (struct kvm_vcpu *vcpu) |

| static bool | nested_exit_on_intr (struct kvm_vcpu *vcpu) |

| static bool | nested_cpu_has_encls_exit (struct vmcs12 *vmcs12) |

| static bool | fixed_bits_valid (u64 val, u64 fixed0, u64 fixed1) |

| static bool | nested_guest_cr0_valid (struct kvm_vcpu *vcpu, unsigned long val) |

| static bool | nested_host_cr0_valid (struct kvm_vcpu *vcpu, unsigned long val) |

| static bool | nested_cr4_valid (struct kvm_vcpu *vcpu, unsigned long val) |

Variables

| struct kvm_x86_nested_ops | vmx_nested_ops |

Macro Definition Documentation

◆ nested_guest_cr4_valid

| #define nested_guest_cr4_valid nested_cr4_valid |

◆ nested_host_cr4_valid

| #define nested_host_cr4_valid nested_cr4_valid |

Enumeration Type Documentation

◆ nvmx_vmentry_status

| enum nvmx_vmentry_status |
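The enumerators carry no descriptions on this page. The sketch below shows one way a caller could branch on the result of nested_vmx_enter_non_root_mode(). The enumerator names come from the listing above; the helper `describe_vmentry_status()` and the description strings are hypothetical and reflect an assumed reading of each status, not text from the source.

```c
#include <assert.h>
#include <string.h>

/* Enumerators as listed on this page. */
enum nvmx_vmentry_status {
	NVMX_VMENTRY_SUCCESS,
	NVMX_VMENTRY_VMFAIL,
	NVMX_VMENTRY_VMEXIT,
	NVMX_VMENTRY_KVM_INTERNAL_ERROR,
};

/* Hypothetical helper: maps a status to an assumed, informal meaning. */
static const char *describe_vmentry_status(enum nvmx_vmentry_status status)
{
	switch (status) {
	case NVMX_VMENTRY_SUCCESS:
		return "entered non-root mode";
	case NVMX_VMENTRY_VMFAIL:
		return "entry rejected, VMfail reported to L1";
	case NVMX_VMENTRY_VMEXIT:
		return "entry failed, VM exit reflected to L1";
	case NVMX_VMENTRY_KVM_INTERNAL_ERROR:
		return "internal error, handled outside the guest";
	}
	return "unknown";
}

/* Small self-check showing the intended use. */
void vmentry_status_demo(void)
{
	assert(strcmp(describe_vmentry_status(NVMX_VMENTRY_SUCCESS),
		      "entered non-root mode") == 0);
	assert(strcmp(describe_vmentry_status(NVMX_VMENTRY_VMFAIL),
		      "entry rejected, VMfail reported to L1") == 0);
}
```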

Function Documentation

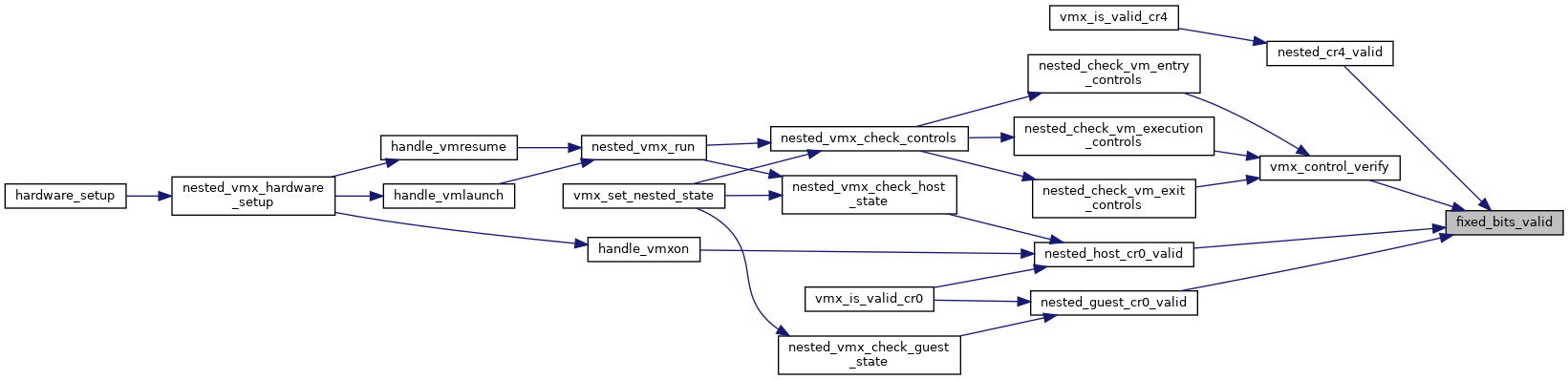

◆ fixed_bits_valid()

| static bool fixed_bits_valid (u64 val, u64 fixed0, u64 fixed1) | inline static |
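A minimal user-space sketch of a fixed-bits check of this shape follows. It assumes the usual VMX fixed-MSR convention (a bit set in `fixed0` must be 1 in `val`; a bit clear in `fixed1` must be 0 in `val`). The name `fixed_bits_valid_sketch` and the demo values are illustrative only and not taken from nested.h.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/*
 * Assumed semantics:
 *   - every bit set in fixed0 must be set in val
 *   - every bit clear in fixed1 must be clear in val
 */
static bool fixed_bits_valid_sketch(uint64_t val, uint64_t fixed0, uint64_t fixed1)
{
	/* Masking off forbidden bits and forcing required bits must not change val. */
	return ((val & fixed1) | fixed0) == val;
}

/* Hypothetical values chosen only for illustration. */
void fixed_bits_demo(void)
{
	const uint64_t fixed0 = 0x1; /* bit 0 is required */
	const uint64_t fixed1 = 0xF; /* only bits 0..3 are allowed */

	assert(fixed_bits_valid_sketch(0x5, fixed0, fixed1));   /* bits 0 and 2 */
	assert(!fixed_bits_valid_sketch(0x4, fixed0, fixed1));  /* required bit 0 missing */
	assert(!fixed_bits_valid_sketch(0x11, fixed0, fixed1)); /* bit 4 not allowed */
}
```

The single expression covers both constraints at once, which is why one comparison suffices.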

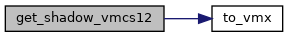

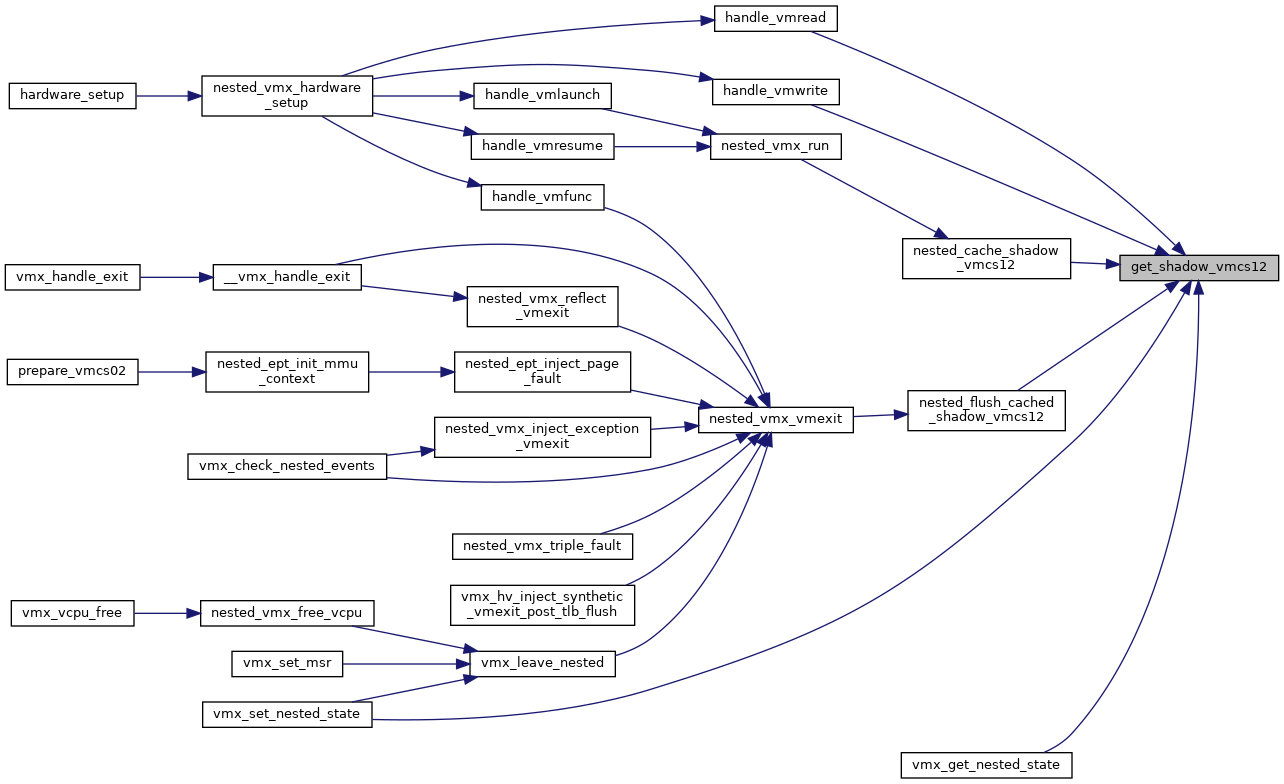

◆ get_shadow_vmcs12()

| static struct vmcs12 * get_shadow_vmcs12 (struct kvm_vcpu *vcpu) | inline static |

◆ get_vmcs12()

| static struct vmcs12 * get_vmcs12 (struct kvm_vcpu *vcpu) | inline static |

◆ get_vmx_mem_address()

| int get_vmx_mem_address | ( | struct kvm_vcpu * | vcpu, |

| unsigned long | exit_qualification, | ||

| u32 | vmx_instruction_info, | ||

| bool | wr, | ||

| int | len, | ||

| gva_t * | ret | ||

| ) |

Definition at line 4963 of file nested.c.

gva_t vmx_get_untagged_addr(struct kvm_vcpu *vcpu, gva_t gva, unsigned int flags)

Definition: vmx.c:8250

void vmx_get_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition: vmx.c:3496

void kvm_queue_exception_e(struct kvm_vcpu *vcpu, unsigned nr, u32 error_code)

Definition: x86.c:824

void kvm_queue_exception(struct kvm_vcpu *vcpu, unsigned nr)

Definition: x86.c:731

static unsigned long kvm_register_read(struct kvm_vcpu *vcpu, int reg)

Definition: x86.h:273

static bool is_noncanonical_address(u64 la, struct kvm_vcpu *vcpu)

Definition: x86.h:213
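The `vmx_instruction_info` argument packs the memory-operand description of the faulting VMX instruction. The sketch below decodes it under an assumed bit layout (as understood from the Intel SDM's VM-exit instruction-information format for VMX instructions). The struct `vmx_mem_operand_sketch` and the function `decode_vmx_instruction_info()` are hypothetical names used only here; the actual function additionally applies segment bases, displacement, and canonical/limit checks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical container for the decoded fields. */
struct vmx_mem_operand_sketch {
	unsigned int scaling;   /* bits 1:0, index is shifted left by this */
	unsigned int addr_size; /* bits 9:7, 0 = 16-bit, 1 = 32-bit, 2 = 64-bit */
	bool is_reg;            /* bit 10, operand is a register, not memory */
	unsigned int seg_reg;   /* bits 17:15 */
	unsigned int index_reg; /* bits 21:18 */
	bool index_is_valid;    /* bit 22 clear means an index register is used */
	unsigned int base_reg;  /* bits 26:23 */
	bool base_is_valid;     /* bit 27 clear means a base register is used */
};

static struct vmx_mem_operand_sketch decode_vmx_instruction_info(uint32_t info)
{
	struct vmx_mem_operand_sketch op;

	op.scaling        = info & 3;
	op.addr_size      = (info >> 7) & 7;
	op.is_reg         = (info & (1u << 10)) != 0;
	op.seg_reg        = (info >> 15) & 7;
	op.index_reg      = (info >> 18) & 0xf;
	op.index_is_valid = (info & (1u << 22)) == 0;
	op.base_reg       = (info >> 23) & 0xf;
	op.base_is_valid  = (info & (1u << 27)) == 0;
	return op;
}

/* Illustrative encoding: scale 4, 64-bit addressing, seg 3, index 1, base 5. */
void decode_demo(void)
{
	uint32_t info = 2u | (2u << 7) | (3u << 15) | (1u << 18) | (5u << 23);
	struct vmx_mem_operand_sketch op = decode_vmx_instruction_info(info);

	assert(op.scaling == 2 && op.addr_size == 2 && !op.is_reg);
	assert(op.seg_reg == 3);
	assert(op.index_reg == 1 && op.index_is_valid);
	assert(op.base_reg == 5 && op.base_is_valid);
}
```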

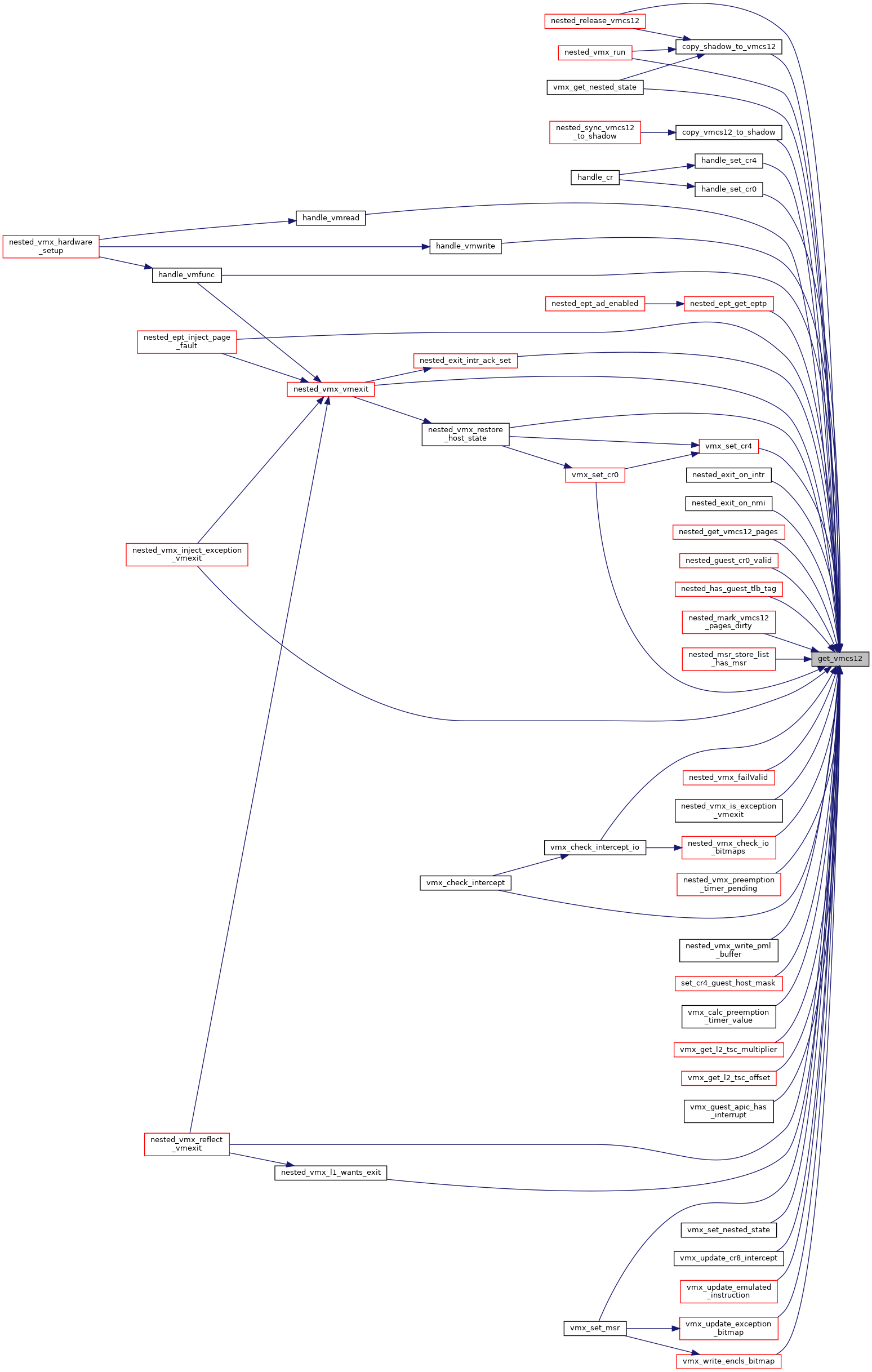

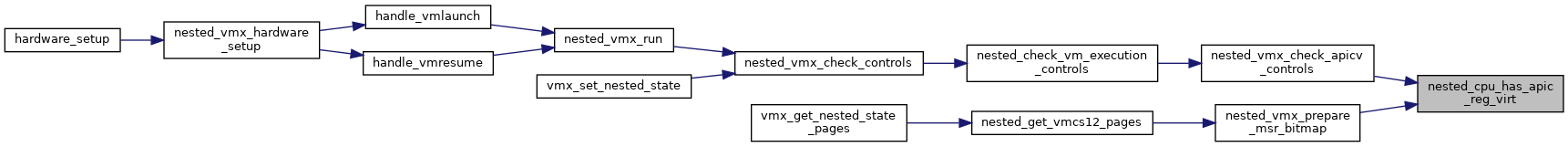

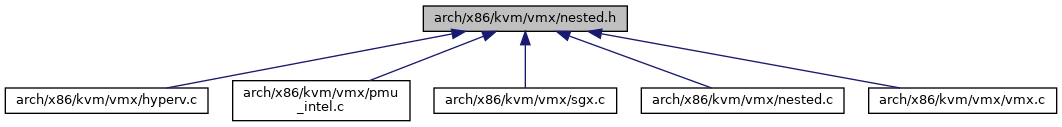

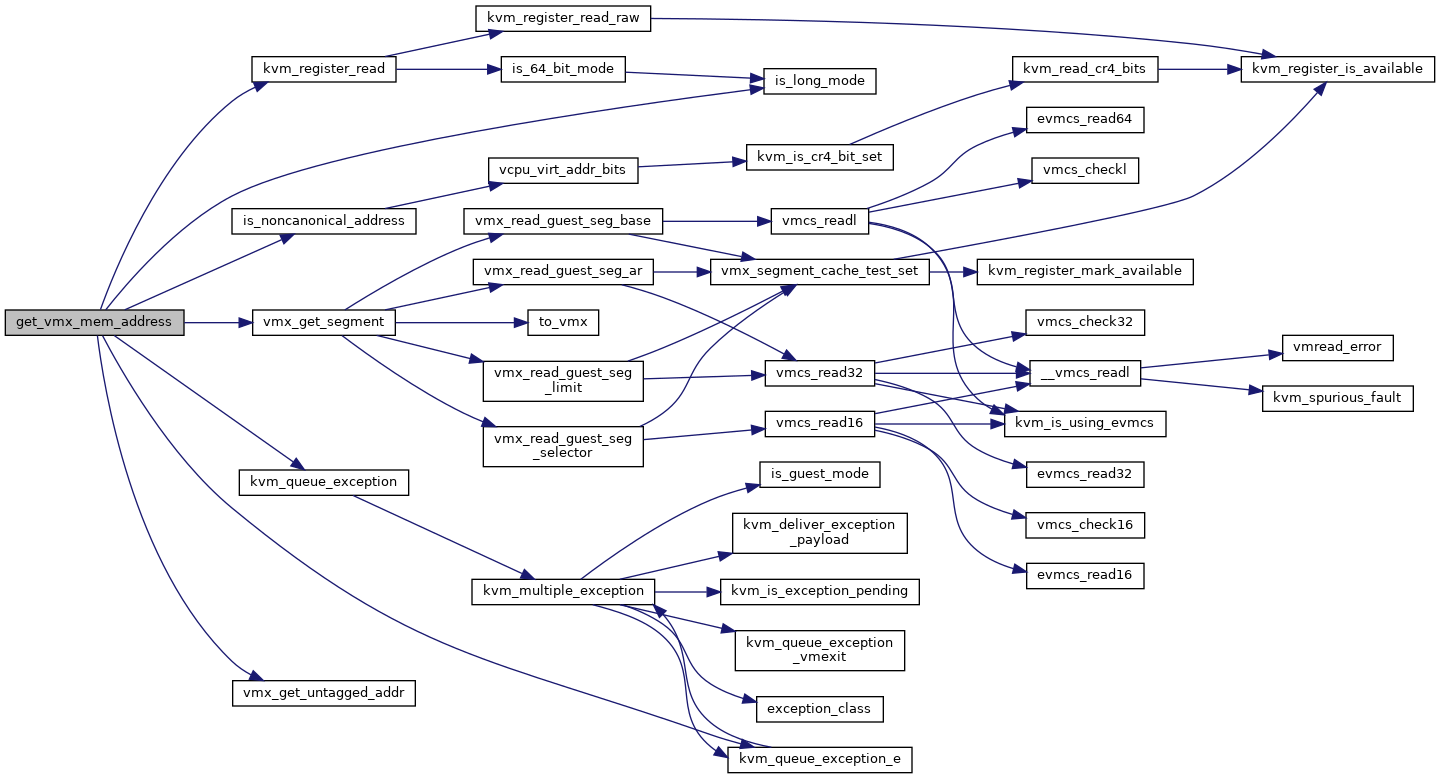

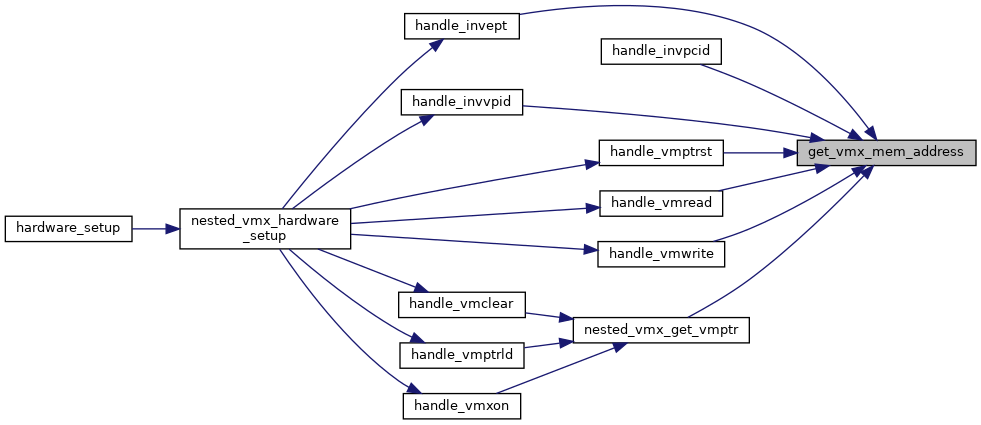

Here is the call graph for this function:

Here is the caller graph for this function:

◆ nested_cpu_has()

| static bool nested_cpu_has (struct vmcs12 *vmcs12, u32 bit) | inline static |

Definition at line 132 of file nested.h.

Definition: vmcs12.h:27

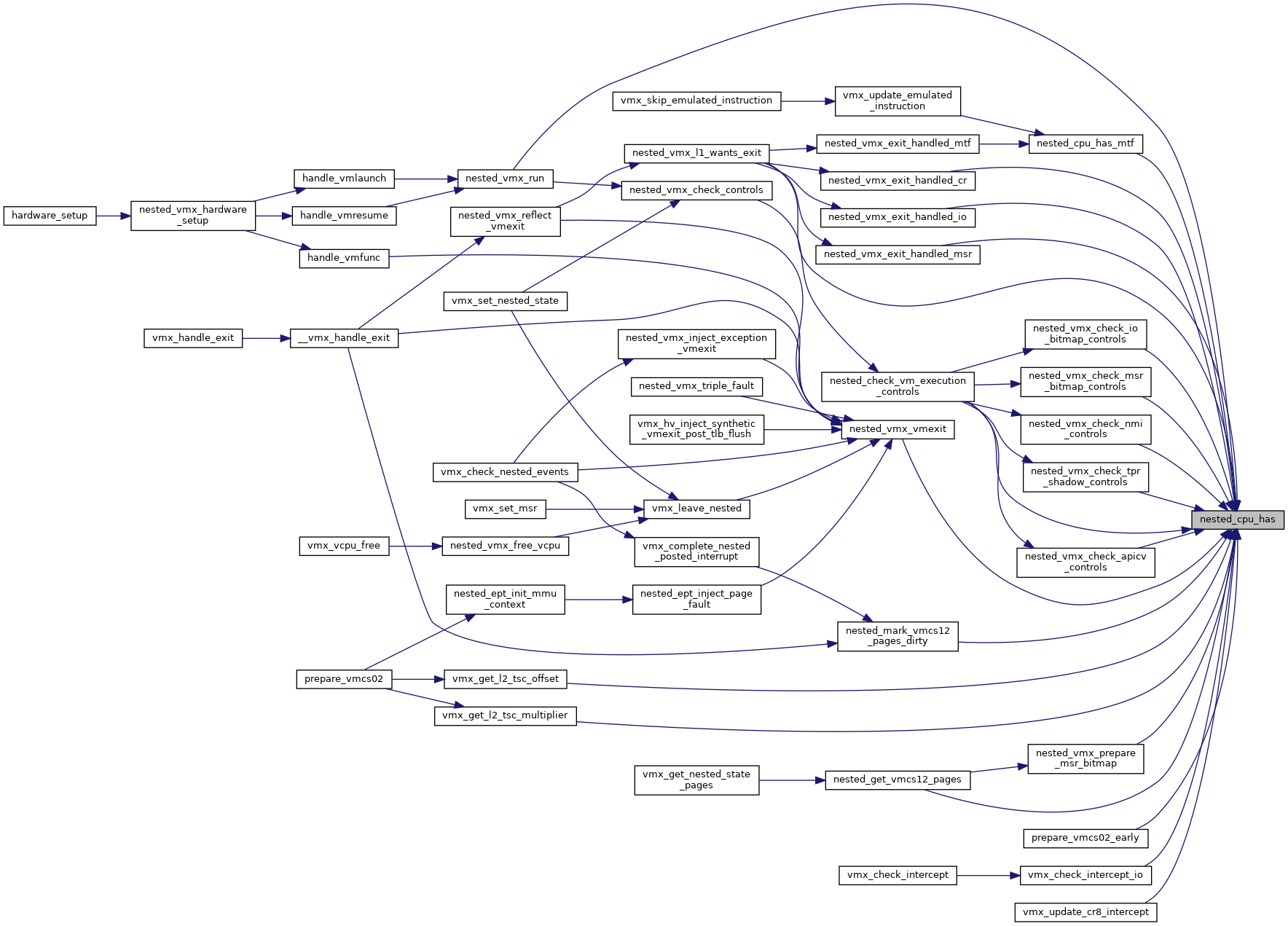

Here is the caller graph for this function:

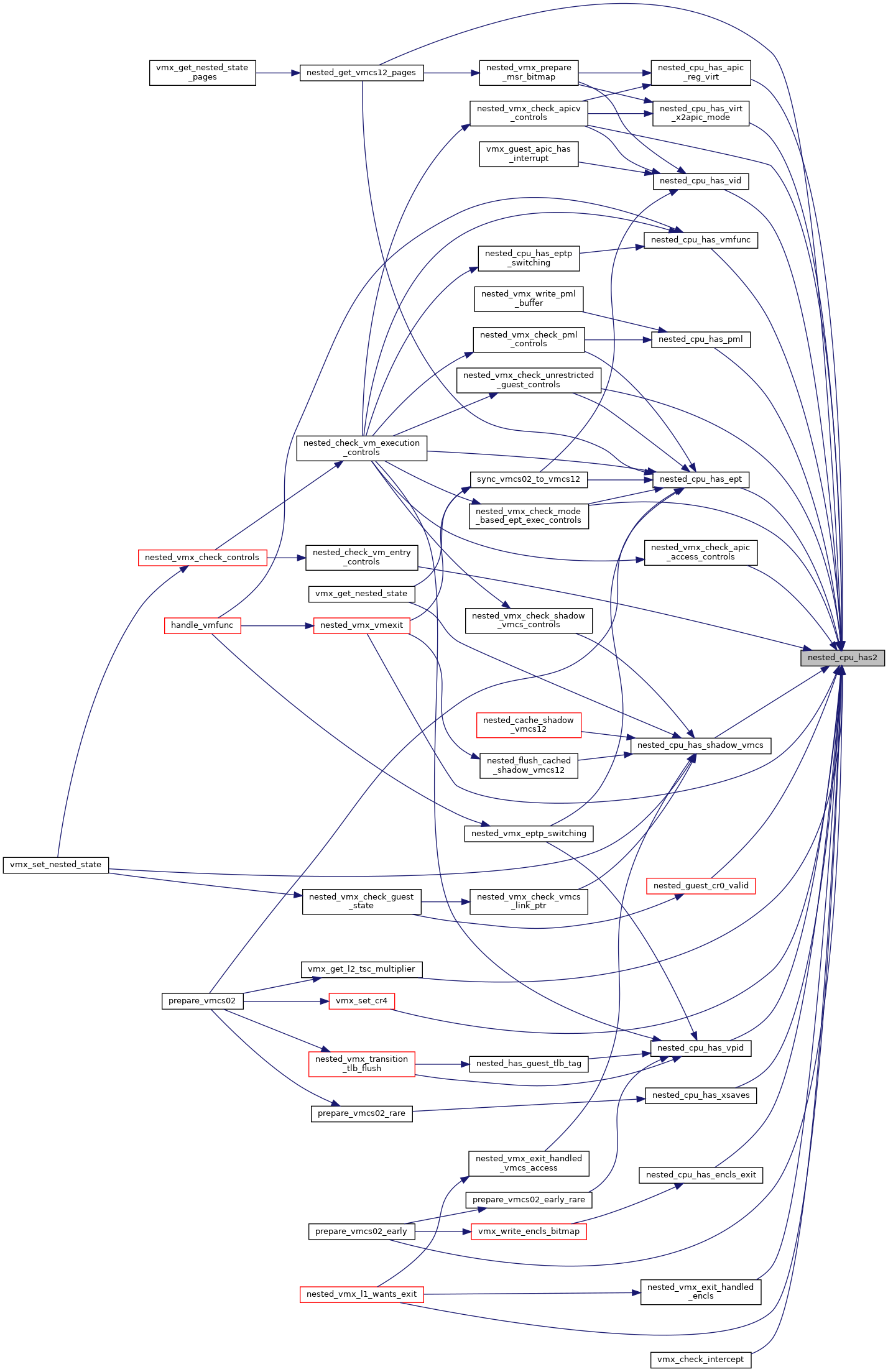

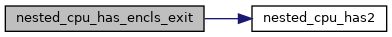

◆ nested_cpu_has2()

| static bool nested_cpu_has2 (struct vmcs12 *vmcs12, u32 bit) | inline static |
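The two predicates nested_cpu_has() and nested_cpu_has2() take a control bit and test it against a vmcs12. The sketch below illustrates the pattern such helpers typically follow: a primary control is tested directly, while a secondary control only counts if the "activate secondary controls" bit is also set. The reduced struct, the `SK_`-prefixed constants, and the function names are assumptions made for this example and are not copied from nested.h or vmcs12.h.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, reduced stand-in for struct vmcs12. */
struct vmcs12_sketch {
	uint32_t cpu_based_vm_exec_control; /* primary processor-based controls */
	uint32_t secondary_vm_exec_control; /* secondary processor-based controls */
};

/* Bit positions as assumed from the Intel SDM; names are local to this sketch. */
#define SK_CPU_BASED_MONITOR_TRAP_FLAG  (1u << 27)
#define SK_CPU_BASED_ACTIVATE_SECONDARY (1u << 31)
#define SK_SECONDARY_EXEC_ENABLE_EPT    (1u << 1)

static bool cpu_has_sketch(const struct vmcs12_sketch *v, uint32_t bit)
{
	return (v->cpu_based_vm_exec_control & bit) != 0;
}

static bool cpu_has2_sketch(const struct vmcs12_sketch *v, uint32_t bit)
{
	/* A secondary control is ignored unless secondary controls are activated. */
	return cpu_has_sketch(v, SK_CPU_BASED_ACTIVATE_SECONDARY) &&
	       (v->secondary_vm_exec_control & bit) != 0;
}

void cpu_has_demo(void)
{
	struct vmcs12_sketch v = {
		.cpu_based_vm_exec_control = SK_CPU_BASED_MONITOR_TRAP_FLAG,
		.secondary_vm_exec_control = SK_SECONDARY_EXEC_ENABLE_EPT,
	};

	assert(cpu_has_sketch(&v, SK_CPU_BASED_MONITOR_TRAP_FLAG));
	/* EPT bit is set, but secondary controls are not activated yet. */
	assert(!cpu_has2_sketch(&v, SK_SECONDARY_EXEC_ENABLE_EPT));

	v.cpu_based_vm_exec_control |= SK_CPU_BASED_ACTIVATE_SECONDARY;
	assert(cpu_has2_sketch(&v, SK_SECONDARY_EXEC_ENABLE_EPT));
}
```

Most of the `nested_cpu_has_*` helpers listed on this page can be read as thin wrappers that pass one specific control bit to a predicate of this kind.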

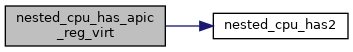

◆ nested_cpu_has_apic_reg_virt()

| static bool nested_cpu_has_apic_reg_virt (struct vmcs12 *vmcs12) | inline static |

◆ nested_cpu_has_encls_exit()

| static bool nested_cpu_has_encls_exit (struct vmcs12 *vmcs12) | inline static |

◆ nested_cpu_has_ept()

| static int nested_cpu_has_ept (struct vmcs12 *vmcs12) | inline static |

◆ nested_cpu_has_eptp_switching()

| static bool nested_cpu_has_eptp_switching (struct vmcs12 *vmcs12) | inline static |

Definition at line 210 of file nested.h.

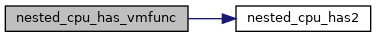

static bool nested_cpu_has_vmfunc(struct vmcs12 *vmcs12)

Definition: nested.h:205

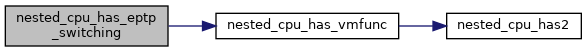

Here is the call graph for this function:

Here is the caller graph for this function:

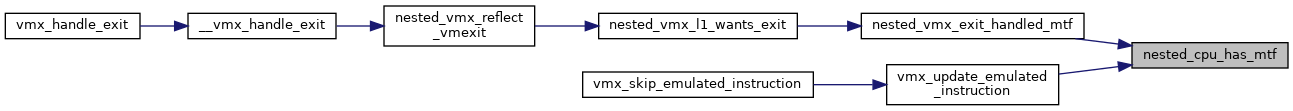

◆ nested_cpu_has_mtf()

| static int nested_cpu_has_mtf (struct vmcs12 *vmcs12) | inline static |

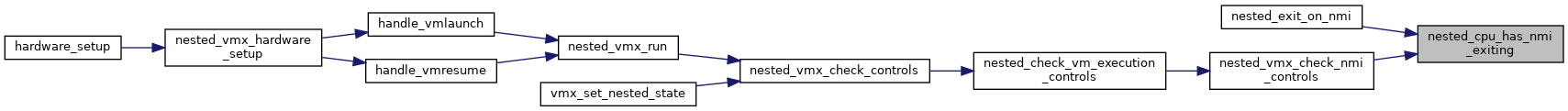

◆ nested_cpu_has_nmi_exiting()

| static bool nested_cpu_has_nmi_exiting (struct vmcs12 *vmcs12) | inline static |

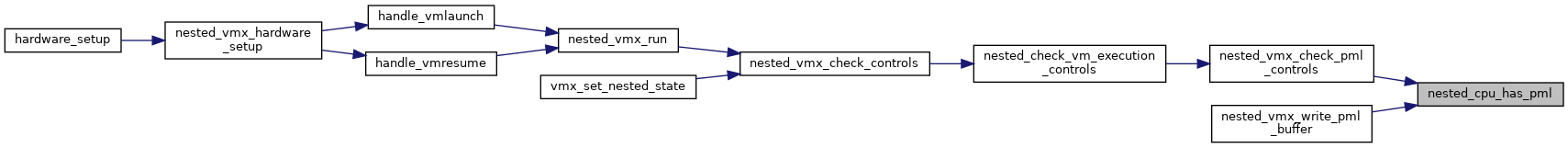

◆ nested_cpu_has_pml()

| static bool nested_cpu_has_pml (struct vmcs12 *vmcs12) | inline static |

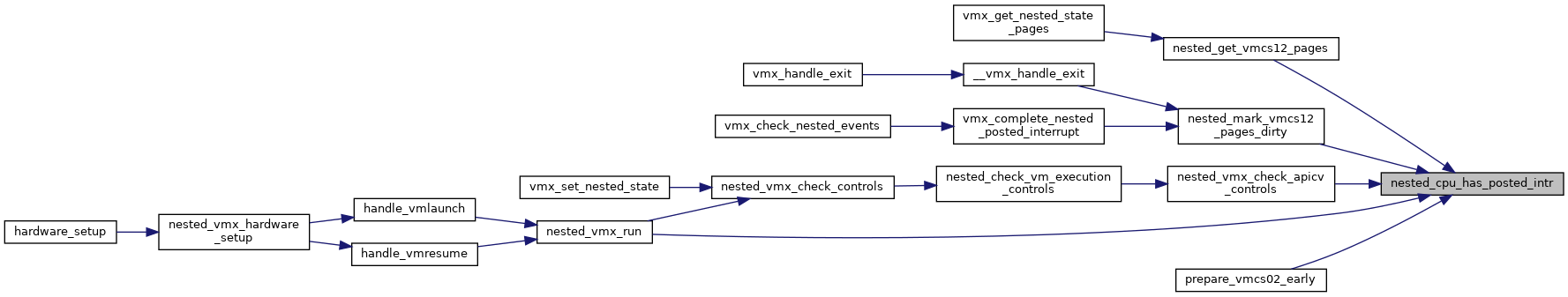

◆ nested_cpu_has_posted_intr()

| static bool nested_cpu_has_posted_intr (struct vmcs12 *vmcs12) | inline static |

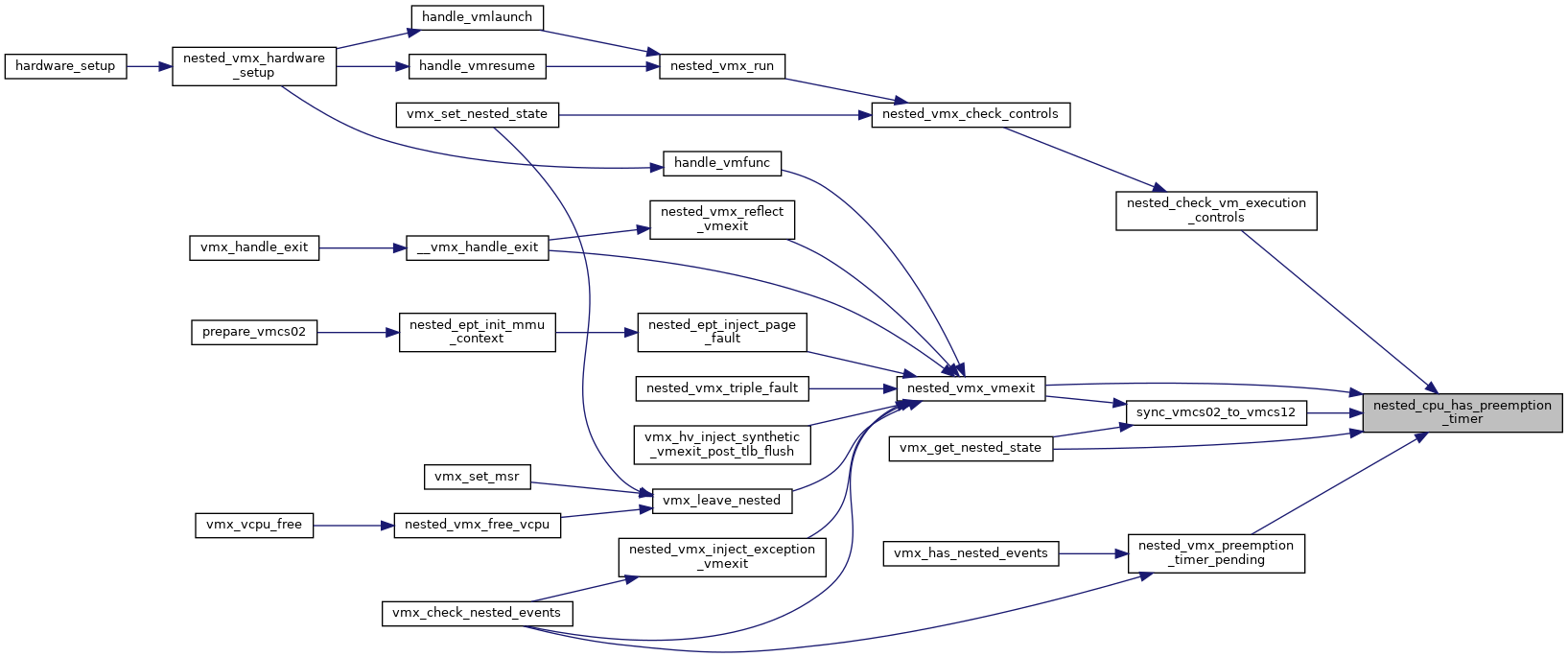

◆ nested_cpu_has_preemption_timer()

| static bool nested_cpu_has_preemption_timer (struct vmcs12 *vmcs12) | inline static |

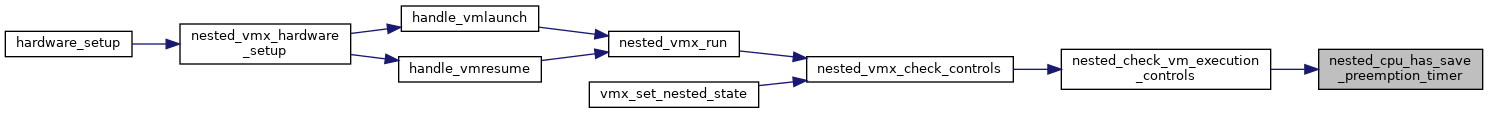

◆ nested_cpu_has_save_preemption_timer()

| static bool nested_cpu_has_save_preemption_timer (struct vmcs12 *vmcs12) | inline static |

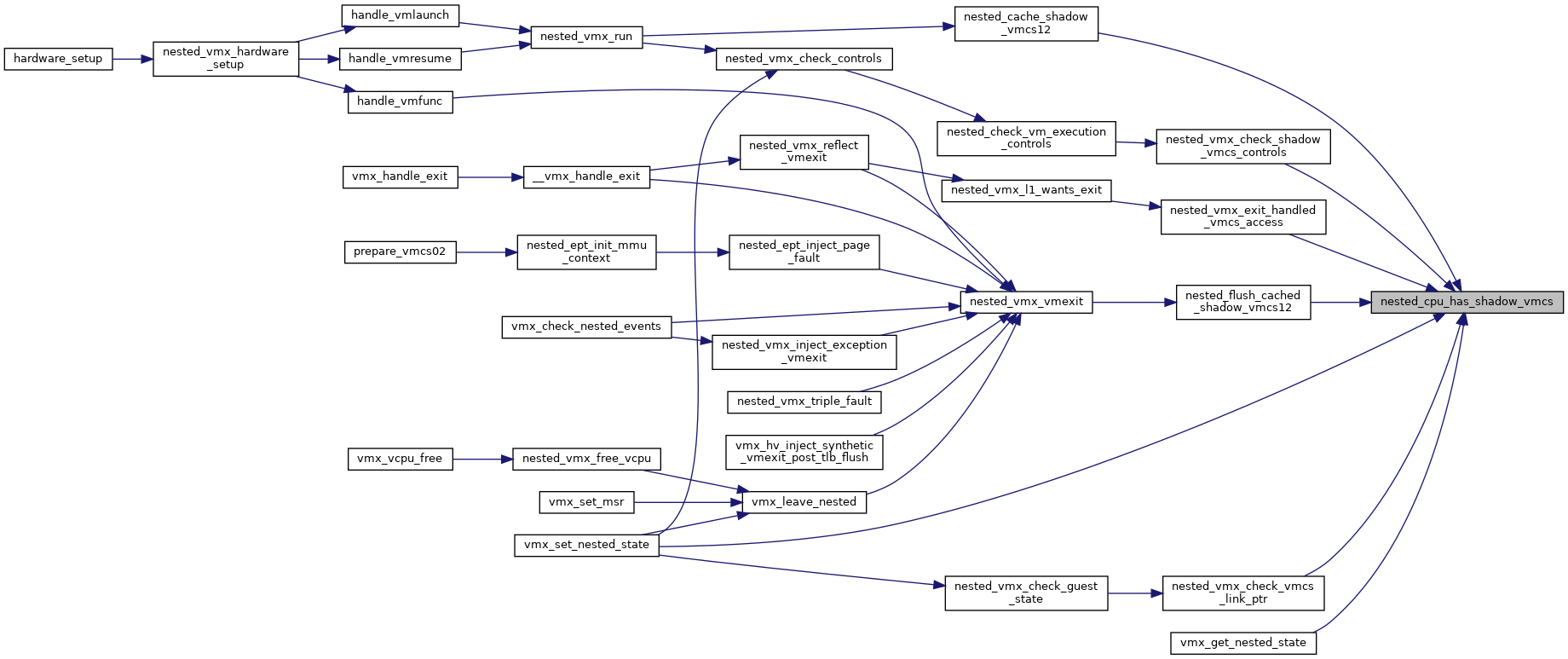

◆ nested_cpu_has_shadow_vmcs()

| static bool nested_cpu_has_shadow_vmcs (struct vmcs12 *vmcs12) | inline static |

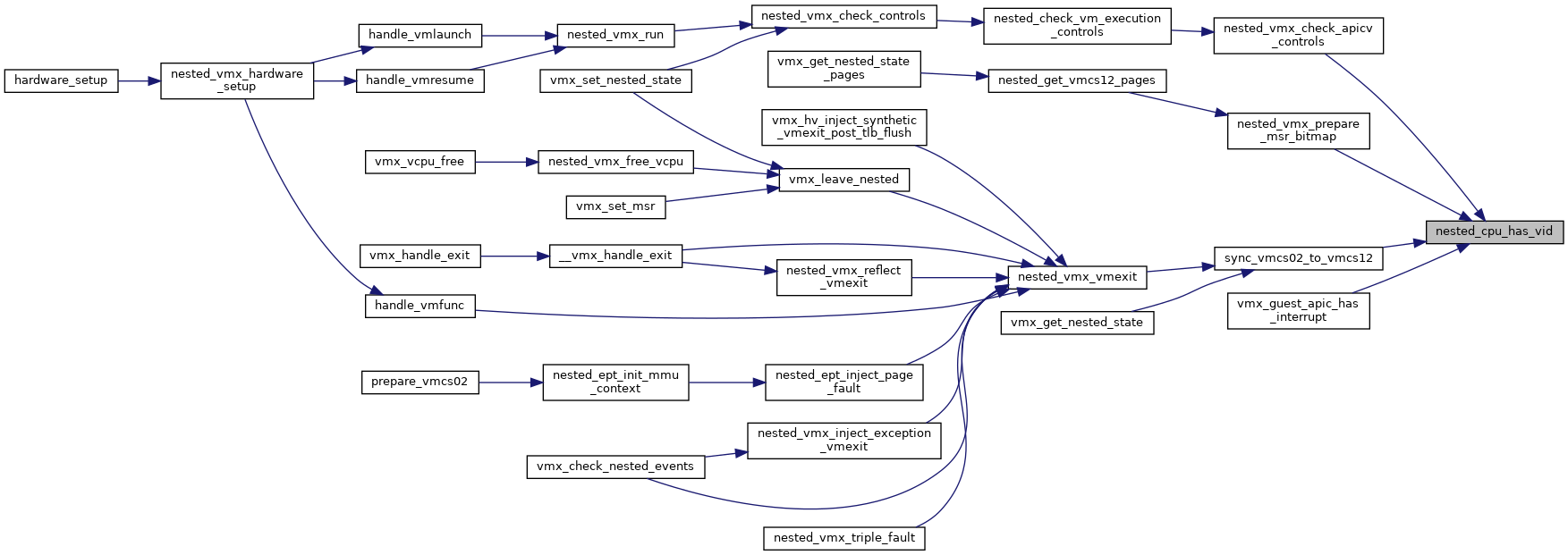

◆ nested_cpu_has_vid()

| static bool nested_cpu_has_vid (struct vmcs12 *vmcs12) | inline static |

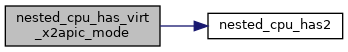

◆ nested_cpu_has_virt_x2apic_mode()

| static bool nested_cpu_has_virt_x2apic_mode (struct vmcs12 *vmcs12) | inline static |

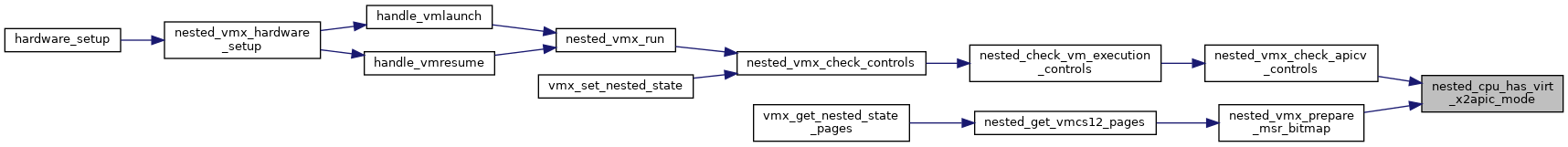

◆ nested_cpu_has_virtual_nmis()

| static bool nested_cpu_has_virtual_nmis (struct vmcs12 *vmcs12) | inline static |

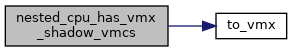

◆ nested_cpu_has_vmfunc()

| static bool nested_cpu_has_vmfunc (struct vmcs12 *vmcs12) | inline static |

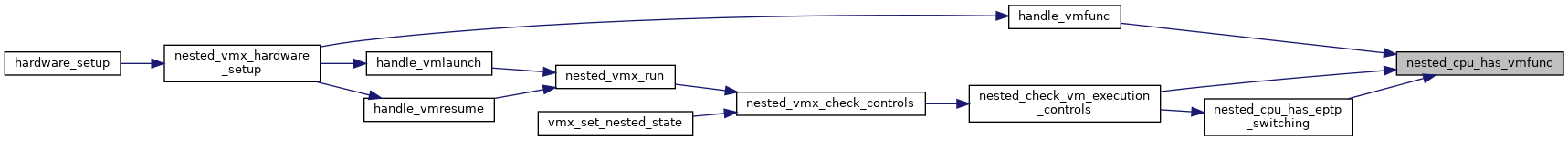

◆ nested_cpu_has_vmwrite_any_field()

| static bool nested_cpu_has_vmwrite_any_field (struct kvm_vcpu *vcpu) | inline static |

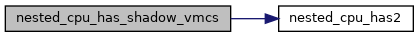

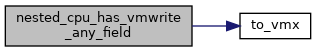

◆ nested_cpu_has_vmx_shadow_vmcs()

| static bool nested_cpu_has_vmx_shadow_vmcs (struct kvm_vcpu *vcpu) | inline static |

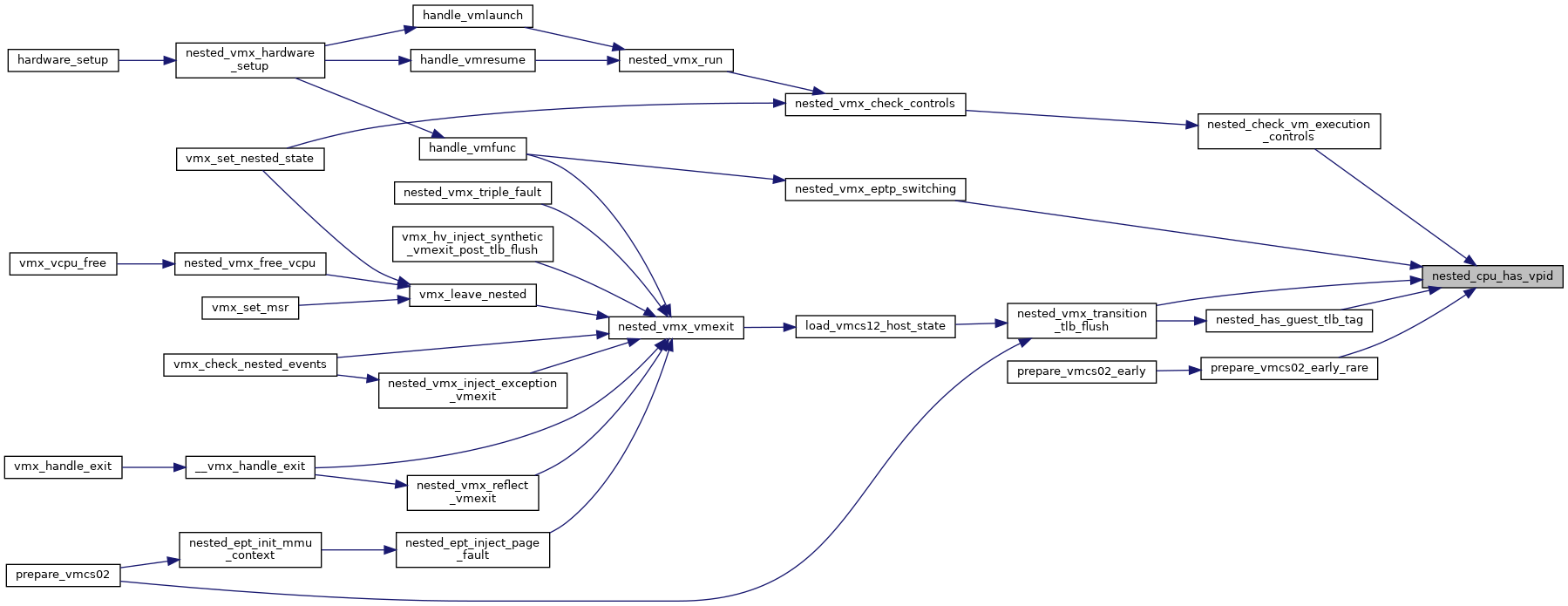

◆ nested_cpu_has_vpid()

| static bool nested_cpu_has_vpid (struct vmcs12 *vmcs12) | inline static |

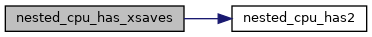

◆ nested_cpu_has_xsaves()

| static bool nested_cpu_has_xsaves (struct vmcs12 *vmcs12) | inline static |

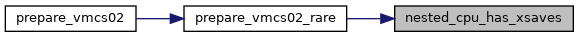

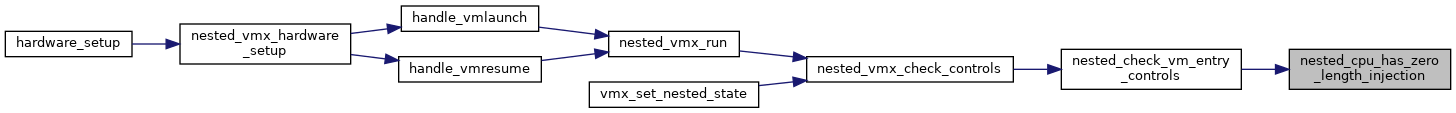

◆ nested_cpu_has_zero_length_injection()

| static bool nested_cpu_has_zero_length_injection (struct kvm_vcpu *vcpu) | inline static |

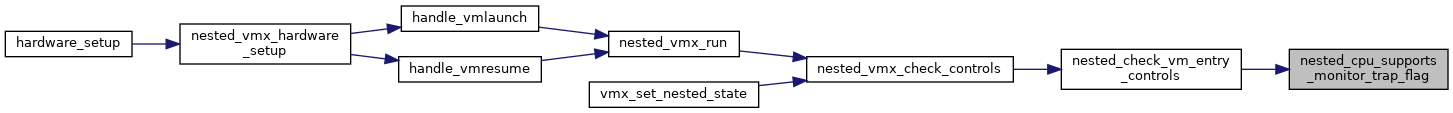

◆ nested_cpu_supports_monitor_trap_flag()

| static bool nested_cpu_supports_monitor_trap_flag (struct kvm_vcpu *vcpu) | inline static |

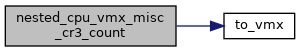

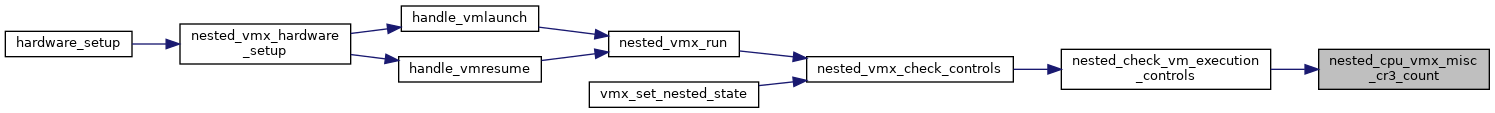

◆ nested_cpu_vmx_misc_cr3_count()

| static unsigned nested_cpu_vmx_misc_cr3_count (struct kvm_vcpu *vcpu) | inline static |

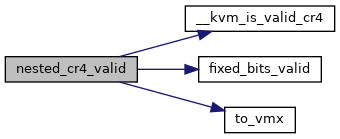

◆ nested_cr4_valid()

| static bool nested_cr4_valid (struct kvm_vcpu *vcpu, unsigned long val) | inline static |

Definition at line 279 of file nested.h.

static bool fixed_bits_valid(u64 val, u64 fixed0, u64 fixed1)

Definition: nested.h:252

bool __kvm_is_valid_cr4(struct kvm_vcpu *vcpu, unsigned long cr4)

Definition: x86.c:1132

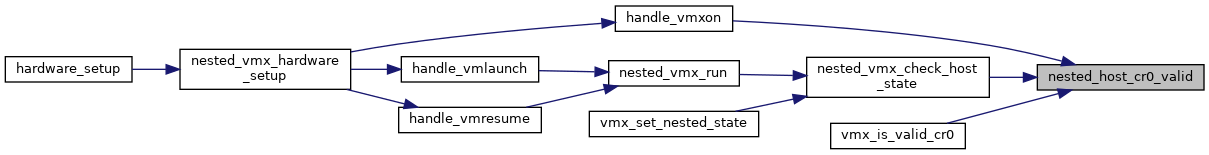

Here is the call graph for this function:

Here is the caller graph for this function:

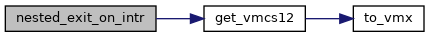

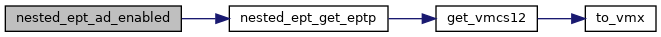

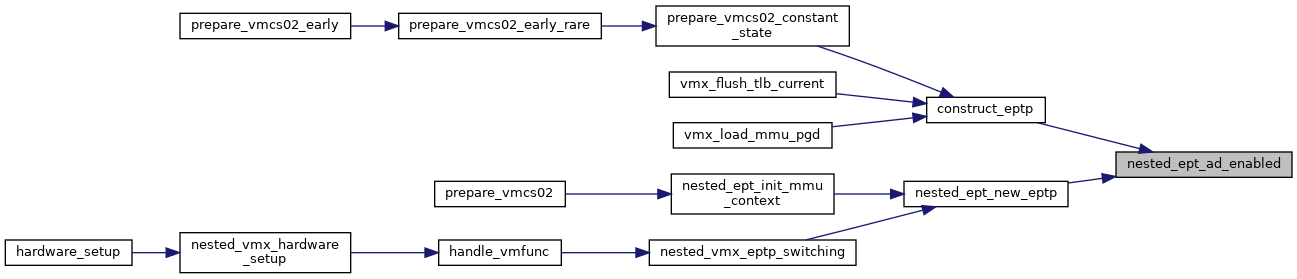

◆ nested_ept_ad_enabled()

| static bool nested_ept_ad_enabled (struct kvm_vcpu *vcpu) | inline static |

Definition at line 77 of file nested.h.

static unsigned long nested_ept_get_eptp(struct kvm_vcpu *vcpu)

Definition: nested.h:71

Here is the call graph for this function:

Here is the caller graph for this function:

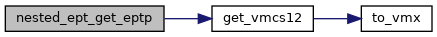

◆ nested_ept_get_eptp()

| static unsigned long nested_ept_get_eptp (struct kvm_vcpu *vcpu) | inline static |

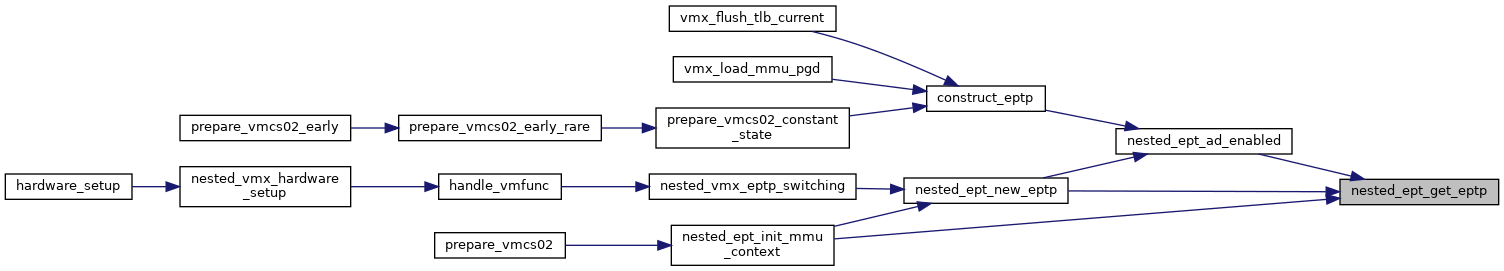

◆ nested_exit_on_intr()

| static bool nested_exit_on_intr (struct kvm_vcpu *vcpu) | inline static |

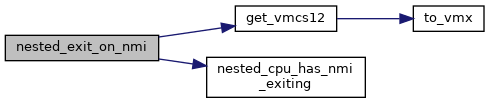

◆ nested_exit_on_nmi()

| static bool nested_exit_on_nmi (struct kvm_vcpu *vcpu) | inline static |

Definition at line 228 of file nested.h.

static bool nested_cpu_has_nmi_exiting(struct vmcs12 *vmcs12)

Definition: nested.h:150

Here is the call graph for this function:

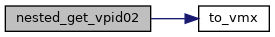

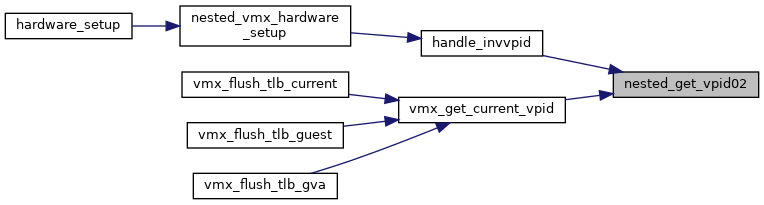

◆ nested_get_vpid02()

| static u16 nested_get_vpid02 (struct kvm_vcpu *vcpu) | inline static |

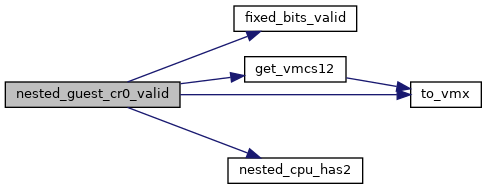

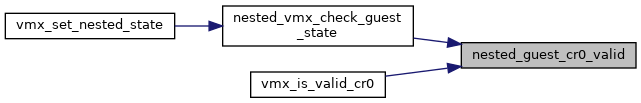

◆ nested_guest_cr0_valid()

| static bool nested_guest_cr0_valid (struct kvm_vcpu *vcpu, unsigned long val) | inline static |

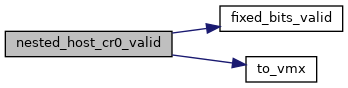

◆ nested_host_cr0_valid()

| static bool nested_host_cr0_valid (struct kvm_vcpu *vcpu, unsigned long val) | inline static |

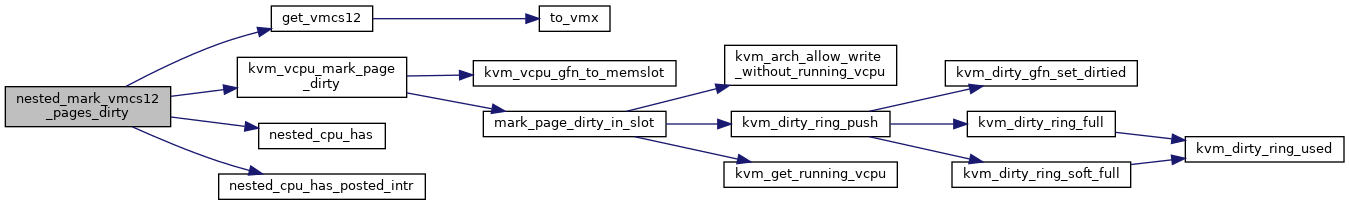

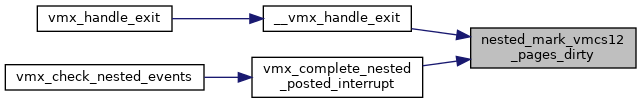

◆ nested_mark_vmcs12_pages_dirty()

| void nested_mark_vmcs12_pages_dirty | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 3838 of file nested.c.

void kvm_vcpu_mark_page_dirty(struct kvm_vcpu *vcpu, gfn_t gfn)

Definition: kvm_main.c:3669

static bool nested_cpu_has_posted_intr(struct vmcs12 *vmcs12)

Definition: nested.h:200

Here is the call graph for this function:

Here is the caller graph for this function:

◆ nested_read_cr0()

| static unsigned long nested_read_cr0 (struct vmcs12 *fields) | inline static |

◆ nested_read_cr4()

| static unsigned long nested_read_cr4 (struct vmcs12 *fields) | inline static |

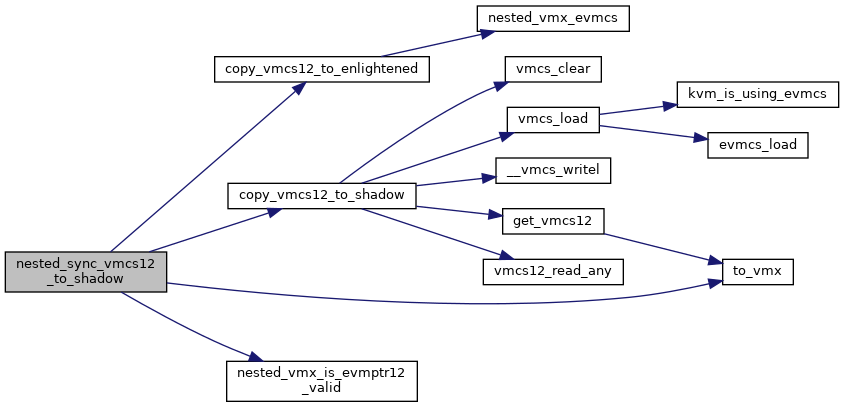

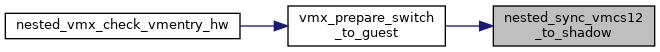

◆ nested_sync_vmcs12_to_shadow()

| void nested_sync_vmcs12_to_shadow | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 2124 of file nested.c.

static bool nested_vmx_is_evmptr12_valid(struct vcpu_vmx *vmx)

Definition: hyperv.h:69

static void copy_vmcs12_to_shadow(struct vcpu_vmx *vmx)

Definition: nested.c:1570

static void copy_vmcs12_to_enlightened(struct vcpu_vmx *vmx)

Definition: nested.c:1852

Here is the call graph for this function:

Here is the caller graph for this function:

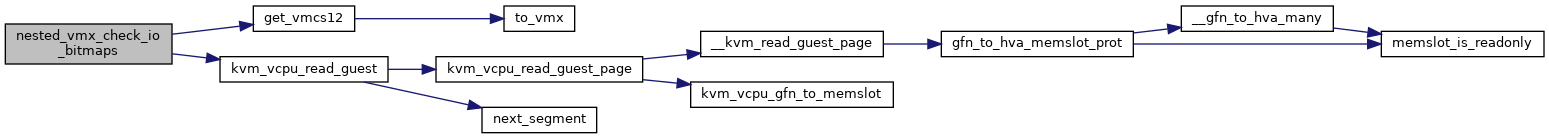

◆ nested_vmx_check_io_bitmaps()

| bool nested_vmx_check_io_bitmaps | ( | struct kvm_vcpu * | vcpu, |

| unsigned int | port, | ||

| int | size | ||

| ) |

Definition at line 5963 of file nested.c.

int kvm_vcpu_read_guest(struct kvm_vcpu *vcpu, gpa_t gpa, void *data, unsigned long len)

Definition: kvm_main.c:3366
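As a rough illustration of what an I/O bitmap check of this kind involves, the sketch below tests each port touched by an access against two in-memory bitmaps, one bit per port. It assumes the architectural split (bitmap A for ports 0x0000 to 0x7fff, bitmap B for 0x8000 to 0xffff) and treats an access running past port 0xffff as intercepted. The struct and function names are hypothetical; the real function reads the bitmap bytes from guest memory (see kvm_vcpu_read_guest() above) rather than from local arrays.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define IO_BITMAP_BYTES 4096u /* 0x8000 ports, one bit per port */

/* Hypothetical local copy of the two I/O bitmaps. */
struct io_bitmaps_sketch {
	uint8_t a[IO_BITMAP_BYTES]; /* ports 0x0000..0x7fff */
	uint8_t b[IO_BITMAP_BYTES]; /* ports 0x8000..0xffff */
};

/* Returns true if any byte of the access [port, port + size) is intercepted. */
static bool io_access_intercepted(const struct io_bitmaps_sketch *bm,
				  unsigned int port, int size)
{
	while (size > 0) {
		const uint8_t *bitmap;
		unsigned int idx;

		if (port < 0x8000)
			bitmap = bm->a;
		else if (port < 0x10000)
			bitmap = bm->b;
		else
			return true; /* access wrapped past the last port */

		idx = port & 0x7fff;
		if (bitmap[idx / 8] & (1u << (idx & 7)))
			return true;

		port++;
		size--;
	}
	return false;
}

void io_bitmap_demo(void)
{
	static struct io_bitmaps_sketch bm;

	memset(&bm, 0, sizeof(bm));
	bm.a[0x80 / 8] |= 1u << (0x80 & 7); /* intercept port 0x80 */

	assert(io_access_intercepted(&bm, 0x80, 1));
	assert(!io_access_intercepted(&bm, 0x81, 1));
	assert(io_access_intercepted(&bm, 0x7f, 2)); /* spans 0x7f and 0x80 */
}
```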

Here is the call graph for this function:

Here is the caller graph for this function:

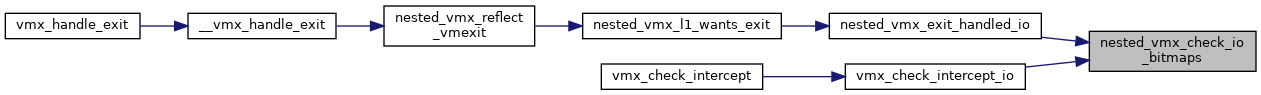

◆ nested_vmx_enter_non_root_mode()

| enum nvmx_vmentry_status nested_vmx_enter_non_root_mode | ( | struct kvm_vcpu * | vcpu, |

| bool | from_vmentry | ||

| ) |

Definition at line 3414 of file nested.c.

static unsigned long kvm_rip_read(struct kvm_vcpu *vcpu)

Definition: kvm_cache_regs.h:116

static bool kvm_apic_has_pending_init_or_sipi(struct kvm_vcpu *vcpu)

Definition: lapic.h:231

static bool kvm_vcpu_apicv_active(struct kvm_vcpu *vcpu)

Definition: lapic.h:226

static bool nested_cpu_has_preemption_timer(struct vmcs12 *vmcs12)

Definition: nested.h:144

Definition: vmx.h:73

static void load_vmcs12_host_state(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition: nested.c:4509

static int prepare_vmcs02(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, bool from_vmentry, enum vm_entry_failure_code *entry_failure_code)

Definition: nested.c:2570

static void prepare_vmcs02_early(struct vcpu_vmx *vmx, struct loaded_vmcs *vmcs01, struct vmcs12 *vmcs12)

Definition: nested.c:2276

static bool nested_get_vmcs12_pages(struct kvm_vcpu *vcpu)

Definition: nested.c:3232

static u32 nested_vmx_load_msr(struct kvm_vcpu *vcpu, u64 gpa, u32 count)

Definition: nested.c:938

static void vmx_switch_vmcs(struct kvm_vcpu *vcpu, struct loaded_vmcs *vmcs)

Definition: nested.c:294

static int nested_vmx_check_guest_state(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, enum vm_entry_failure_code *entry_failure_code)

Definition: nested.c:3058

static int nested_vmx_check_vmentry_hw(struct kvm_vcpu *vcpu)

Definition: nested.c:3124

static u8 vmx_has_apicv_interrupt(struct kvm_vcpu *vcpu)

Definition: nested.c:3406

static u64 vmx_calc_preemption_timer_value(struct kvm_vcpu *vcpu)

Definition: nested.c:2148

static void vmx_start_preemption_timer(struct kvm_vcpu *vcpu, u64 preemption_timeout)

Definition: nested.c:2164

static __always_inline void vmcs_writel(unsigned long field, unsigned long value)

Definition: vmx_ops.h:258

void kvm_service_local_tlb_flush_requests(struct kvm_vcpu *vcpu)

Definition: x86.c:3617

Here is the caller graph for this function:

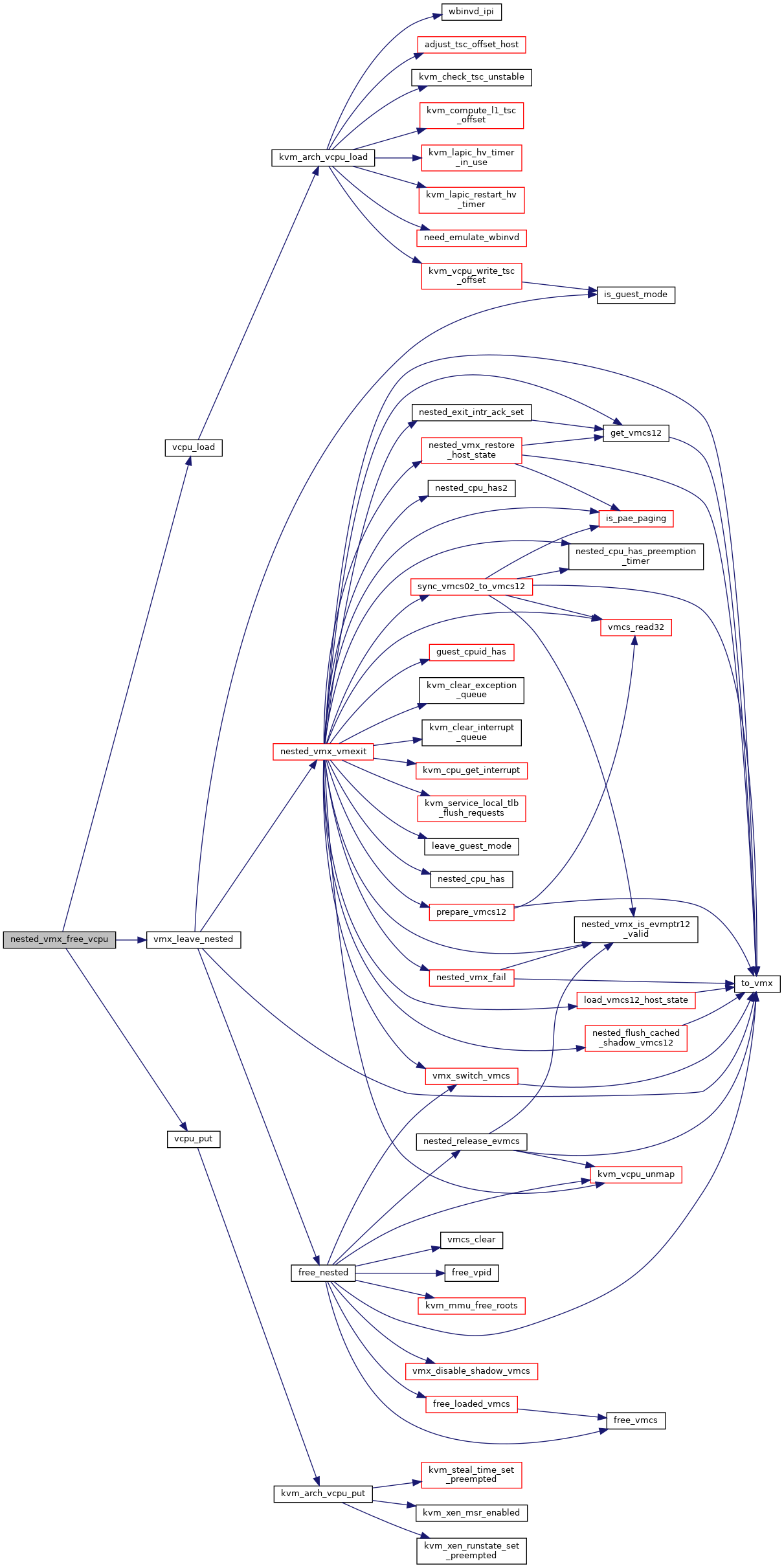

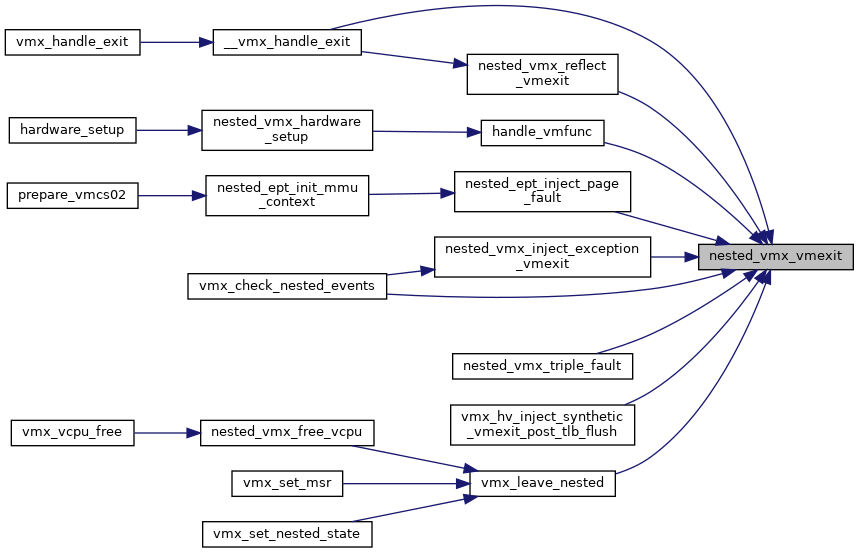

◆ nested_vmx_free_vcpu()

| void nested_vmx_free_vcpu | ( | struct kvm_vcpu * | vcpu | ) |

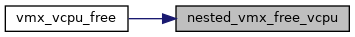

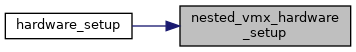

◆ nested_vmx_hardware_setup()

| __init int nested_vmx_hardware_setup | ( | int(*[])(struct kvm_vcpu *) | exit_handlers | ) |

◆ nested_vmx_hardware_unsetup()

| void nested_vmx_hardware_unsetup | ( | void | ) |

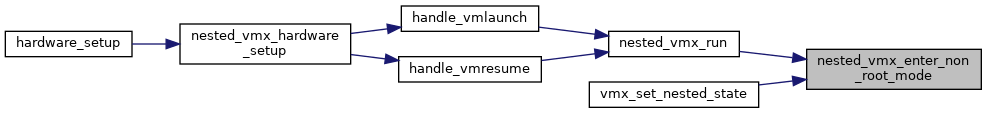

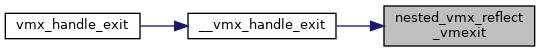

◆ nested_vmx_reflect_vmexit()

| bool nested_vmx_reflect_vmexit | ( | struct kvm_vcpu * | vcpu | ) |

Definition at line 6393 of file nested.c.

static bool is_exception_with_error_code(u32 intr_info)

Definition: vmcs.h:159

static bool nested_vmx_l1_wants_exit(struct kvm_vcpu *vcpu, union vmx_exit_reason exit_reason)

Definition: nested.c:6266

void nested_vmx_vmexit(struct kvm_vcpu *vcpu, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification)

Definition: nested.c:4767

static bool nested_vmx_l0_wants_exit(struct kvm_vcpu *vcpu, union vmx_exit_reason exit_reason)

Definition: nested.c:6188

static __always_inline unsigned long vmx_get_exit_qual(struct kvm_vcpu *vcpu)

Definition: vmx.h:681

static __always_inline u32 vmx_get_intr_info(struct kvm_vcpu *vcpu)

Definition: vmx.h:691

Here is the call graph for this function:

Here is the caller graph for this function:

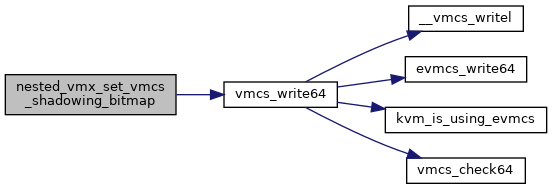

◆ nested_vmx_set_vmcs_shadowing_bitmap()

| void nested_vmx_set_vmcs_shadowing_bitmap | ( | void | ) |

Definition at line 6764 of file nested.c.

static __always_inline void vmcs_write64(unsigned long field, u64 value)

Definition: vmx_ops.h:246

Here is the call graph for this function:

Here is the caller graph for this function:

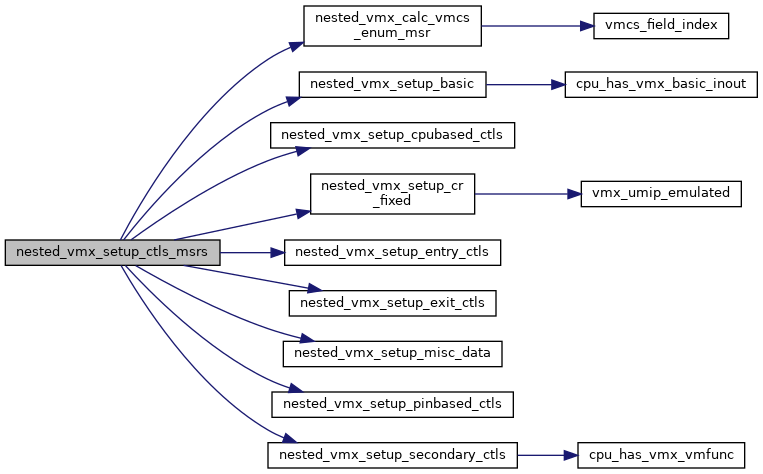

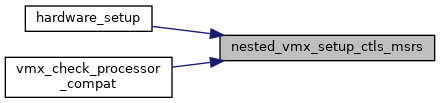

◆ nested_vmx_setup_ctls_msrs()

| void nested_vmx_setup_ctls_msrs | ( | struct vmcs_config * | vmcs_conf, |

| u32 | ept_caps | ||

| ) |

Definition at line 7045 of file nested.c.

Definition: capabilities.h:27

static void nested_vmx_setup_pinbased_ctls(struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6806

static void nested_vmx_setup_basic(struct nested_vmx_msrs *msrs)

Definition: nested.c:6997

static void nested_vmx_setup_entry_ctls(struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6846

static void nested_vmx_setup_cpubased_ctls(struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6866

static void nested_vmx_setup_exit_ctls(struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6823

static u64 nested_vmx_calc_vmcs_enum_msr(void)

Definition: nested.c:6778

static void nested_vmx_setup_cr_fixed(struct nested_vmx_msrs *msrs)

Definition: nested.c:7015

static void nested_vmx_setup_misc_data(struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6985

static void nested_vmx_setup_secondary_ctls(u32 ept_caps, struct vmcs_config *vmcs_conf, struct nested_vmx_msrs *msrs)

Definition: nested.c:6902

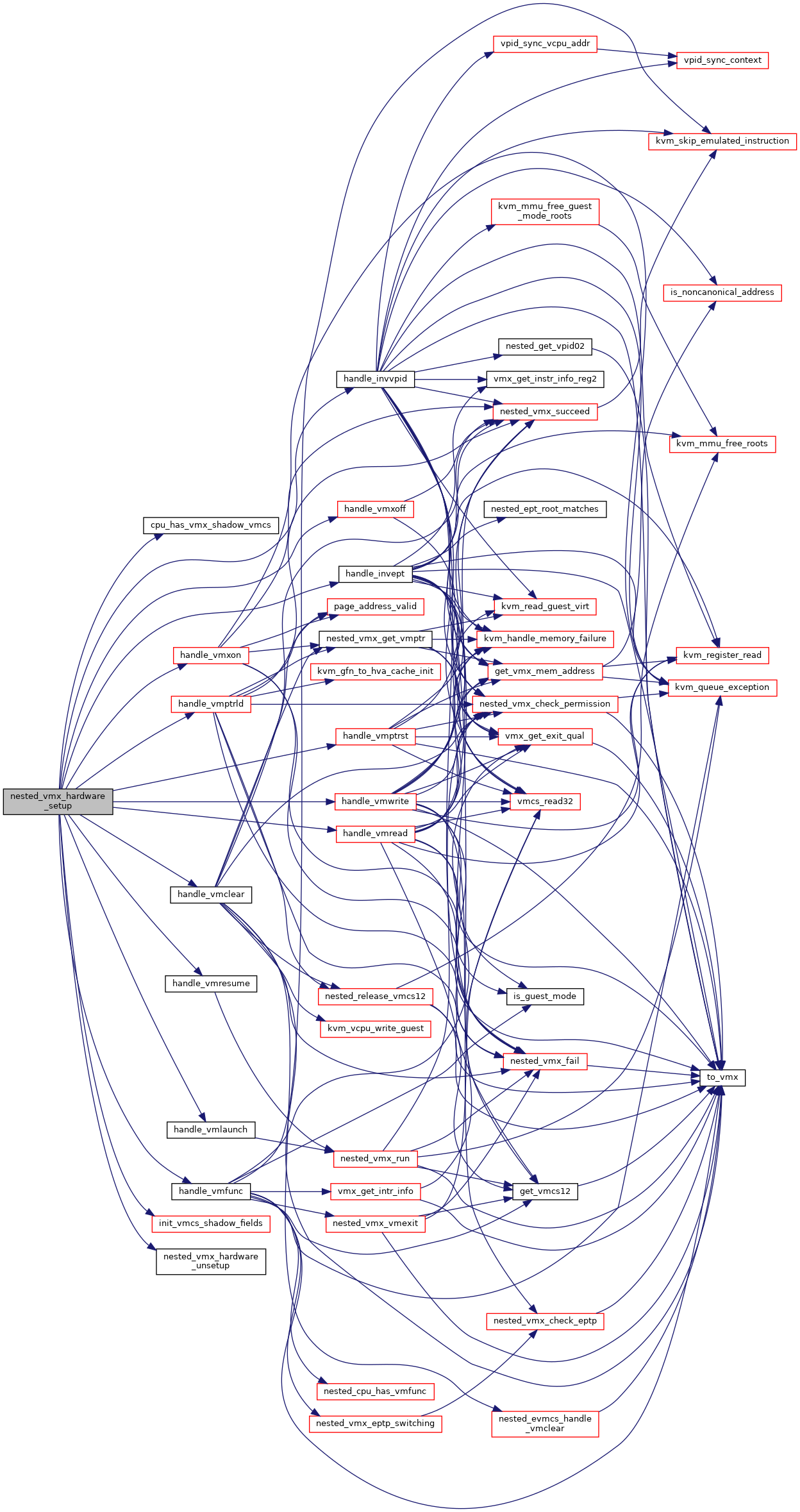

Here is the call graph for this function:

Here is the caller graph for this function:

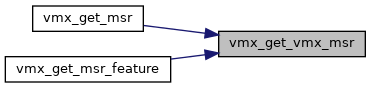

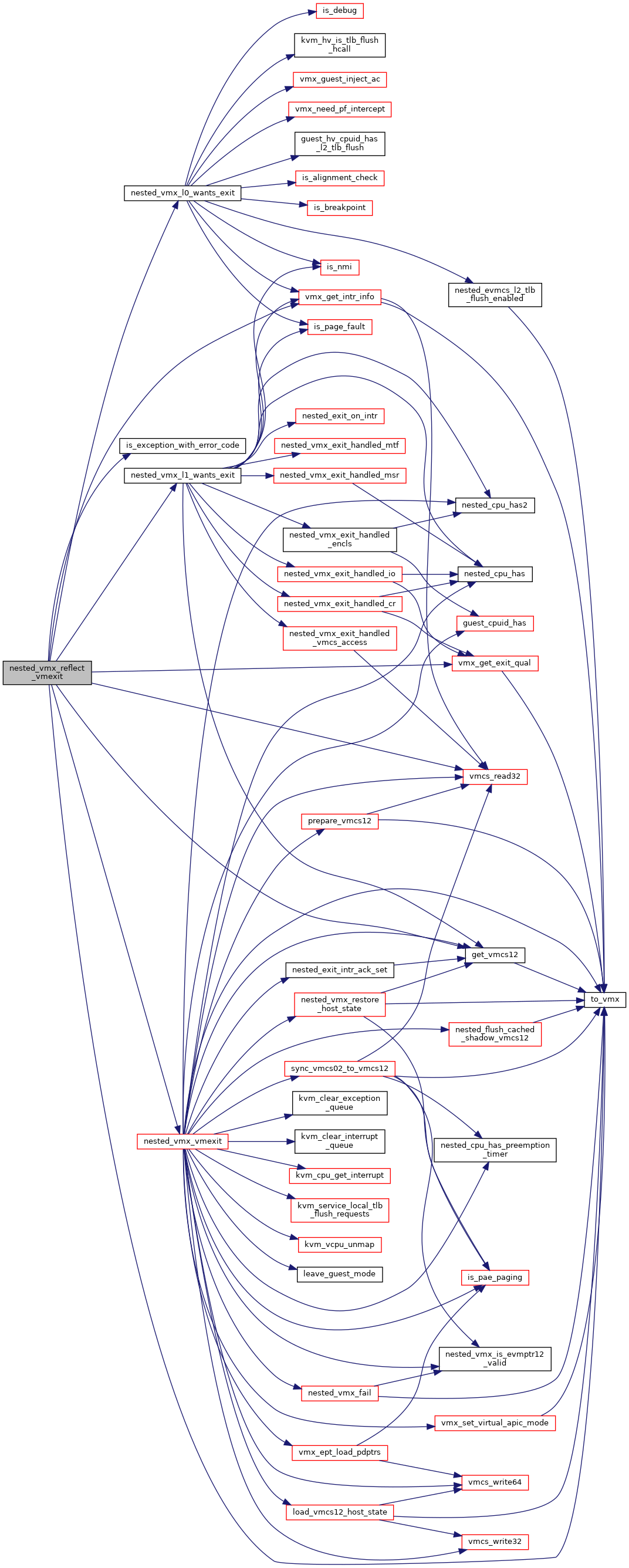

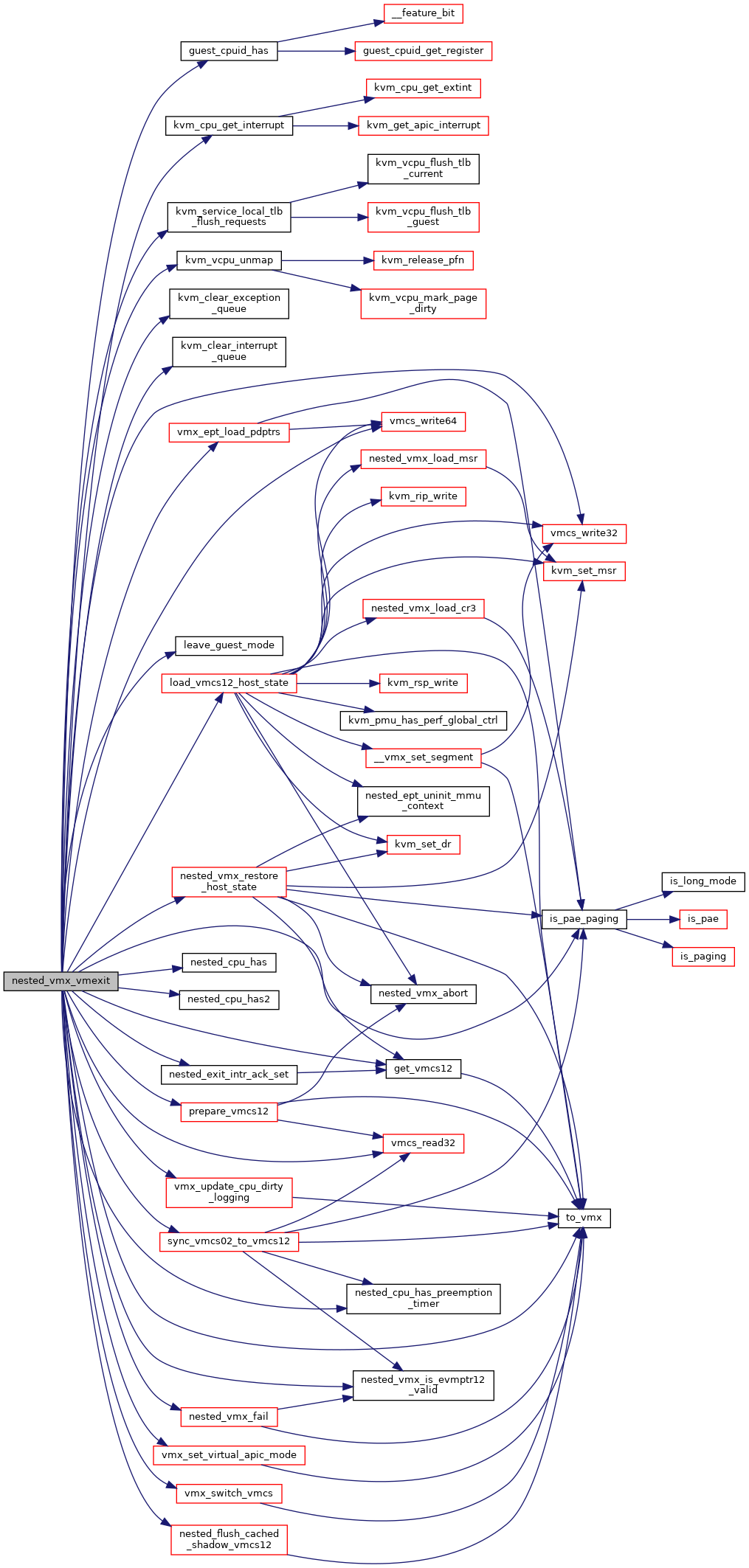

◆ nested_vmx_vmexit()

| void nested_vmx_vmexit | ( | struct kvm_vcpu * | vcpu, |

| u32 | vm_exit_reason, | ||

| u32 | exit_intr_info, | ||

| unsigned long | exit_qualification | ||

| ) |

Definition at line 4767 of file nested.c.

static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)

Definition: cpuid.h:83

void kvm_vcpu_unmap(struct kvm_vcpu *vcpu, struct kvm_host_map *map, bool dirty)

Definition: kvm_main.c:3186

bool update_vmcs01_cpu_dirty_logging

Definition: vmx.h:178

bool change_vmcs01_virtual_apic_mode

Definition: vmx.h:176

struct vcpu_vmx::msr_autoload msr_autoload

static void nested_vmx_restore_host_state(struct kvm_vcpu *vcpu)

Definition: nested.c:4657

static void nested_flush_cached_shadow_vmcs12(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition: nested.c:720

static void sync_vmcs02_to_vmcs12(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition: nested.c:4372

static int nested_vmx_fail(struct kvm_vcpu *vcpu, u32 vm_instruction_error)

Definition: nested.c:188

static void prepare_vmcs12(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12, u32 vm_exit_reason, u32 exit_intr_info, unsigned long exit_qualification)

Definition: nested.c:4453

static bool nested_exit_intr_ack_set(struct kvm_vcpu *vcpu)

Definition: nested.c:743

void vmx_set_virtual_apic_mode(struct kvm_vcpu *vcpu)

Definition: vmx.c:6694

void vmx_update_cpu_dirty_logging(struct kvm_vcpu *vcpu)

Definition: vmx.c:8110

static __always_inline void vmcs_write32(unsigned long field, u32 value)

Definition: vmx_ops.h:237

static void kvm_clear_interrupt_queue(struct kvm_vcpu *vcpu)

Definition: x86.h:122

static void kvm_clear_exception_queue(struct kvm_vcpu *vcpu)

Definition: x86.h:107

Here is the call graph for this function:

Here is the caller graph for this function:

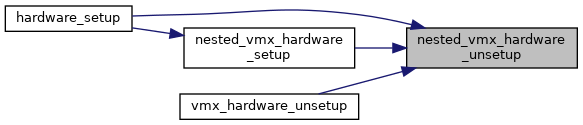

◆ vmx_get_vmx_msr()

| int vmx_get_vmx_msr | ( | struct nested_vmx_msrs * | msrs, |

| u32 | msr_index, | ||

| u64 * | pdata | ||

| ) |

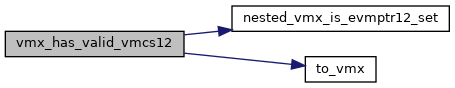

◆ vmx_has_valid_vmcs12()

| static int vmx_has_valid_vmcs12 (struct kvm_vcpu *vcpu) | inline static |

Definition at line 55 of file nested.h.

static bool nested_vmx_is_evmptr12_set(struct vcpu_vmx *vmx)

Definition: hyperv.h:79

Here is the call graph for this function:

Here is the caller graph for this function:

◆ vmx_leave_nested()

| void vmx_leave_nested | ( | struct kvm_vcpu * | vcpu | ) |

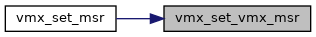

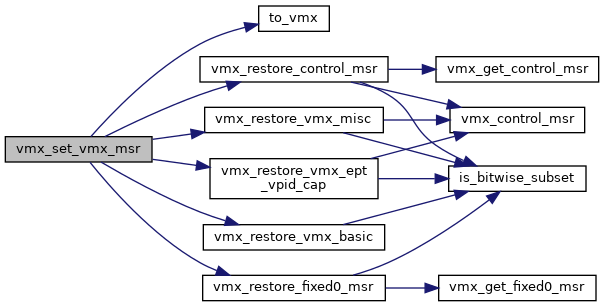

◆ vmx_set_vmx_msr()

| int vmx_set_vmx_msr | ( | struct kvm_vcpu * | vcpu, |

| u32 | msr_index, | ||

| u64 | data | ||

| ) |

Definition at line 1393 of file nested.c.

Definition: capabilities.h:56

static int vmx_restore_control_msr(struct vcpu_vmx *vmx, u32 msr_index, u64 data)

Definition: nested.c:1289

static int vmx_restore_vmx_ept_vpid_cap(struct vcpu_vmx *vmx, u64 data)

Definition: nested.c:1347

static int vmx_restore_vmx_basic(struct vcpu_vmx *vmx, u64 data)

Definition: nested.c:1229

static int vmx_restore_fixed0_msr(struct vcpu_vmx *vmx, u32 msr_index, u64 data)

Definition: nested.c:1373

static int vmx_restore_vmx_misc(struct vcpu_vmx *vmx, u64 data)

Definition: nested.c:1312

Here is the call graph for this function:

Here is the caller graph for this function: