#include <asm/sgx.h>

#include "cpuid.h"

#include "kvm_cache_regs.h"

#include "nested.h"

#include "sgx.h"

#include "vmx.h"

#include "x86.h"

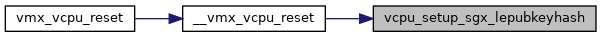

Include dependency graph for sgx.c:


| Macros | |
| --- | --- |
| #define | pr_fmt(fmt) KBUILD_MODNAME ": " fmt |

| Functions | |
| --- | --- |
| | module_param_named (sgx, enable_sgx, bool, 0444) |
| static int | sgx_get_encls_gva (struct kvm_vcpu *vcpu, unsigned long offset, int size, int alignment, gva_t *gva) |
| static void | sgx_handle_emulation_failure (struct kvm_vcpu *vcpu, u64 addr, unsigned int size) |
| static int | sgx_read_hva (struct kvm_vcpu *vcpu, unsigned long hva, void *data, unsigned int size) |
| static int | sgx_gva_to_gpa (struct kvm_vcpu *vcpu, gva_t gva, bool write, gpa_t *gpa) |
| static int | sgx_gpa_to_hva (struct kvm_vcpu *vcpu, gpa_t gpa, unsigned long *hva) |
| static int | sgx_inject_fault (struct kvm_vcpu *vcpu, gva_t gva, int trapnr) |
| static int | __handle_encls_ecreate (struct kvm_vcpu *vcpu, struct sgx_pageinfo *pageinfo, unsigned long secs_hva, gva_t secs_gva) |
| static int | handle_encls_ecreate (struct kvm_vcpu *vcpu) |
| static int | handle_encls_einit (struct kvm_vcpu *vcpu) |
| static bool | encls_leaf_enabled_in_guest (struct kvm_vcpu *vcpu, u32 leaf) |
| static bool | sgx_enabled_in_guest_bios (struct kvm_vcpu *vcpu) |
| int | handle_encls (struct kvm_vcpu *vcpu) |
| void | setup_default_sgx_lepubkeyhash (void) |
| void | vcpu_setup_sgx_lepubkeyhash (struct kvm_vcpu *vcpu) |
| static bool | sgx_intercept_encls_ecreate (struct kvm_vcpu *vcpu) |
| void | vmx_write_encls_bitmap (struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12) |

| Variables | |
| --- | --- |
| bool __read_mostly | enable_sgx = 1 |
| static u64 sgx_pubkey_hash[4] | __ro_after_init |

Macro Definition Documentation

◆ pr_fmt

#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt
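The `pr_fmt` macro listed under Macros prefixes each log format string in this file with the module name. The following is a minimal user-space sketch of that mechanism, not the kernel's own code. It assumes a hypothetical value `kvm_intel` for `KBUILD_MODNAME` (the kernel build system supplies the real one), and `format_sample()`, `has_module_prefix()`, and the message text are illustrative names that do not appear in sgx.c.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Assumption: the kernel build system defines KBUILD_MODNAME per module;
 * "kvm_intel" is a hypothetical stand-in for this sketch. */
#define KBUILD_MODNAME "kvm_intel"

/* Same shape as the macro documented for sgx.c. Adjacent string literals
 * are concatenated at compile time, so the prefix has no run-time cost. */
#define pr_fmt(fmt) KBUILD_MODNAME ": " fmt

/* Hypothetical stand-in for a pr_*() call: formats a prefixed message
 * into buf instead of writing to the kernel log. Returns what snprintf
 * returns (the length the full message needs, excluding the NUL). */
int format_sample(char *buf, size_t len, int leaf)
{
	assert(buf != NULL && len > 0);
	return snprintf(buf, len, pr_fmt("unsupported ENCLS leaf %d\n"), leaf);
}

/* Reports whether msg starts with the "<module name>: " prefix. */
int has_module_prefix(const char *msg)
{
	const char *prefix = KBUILD_MODNAME ": ";

	return strncmp(msg, prefix, strlen(prefix)) == 0;
}
```

Calling `format_sample(buf, sizeof(buf), 1)` on a 64-byte buffer leaves `kvm_intel: unsupported ENCLS leaf 1` followed by a newline in `buf`.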

Function Documentation

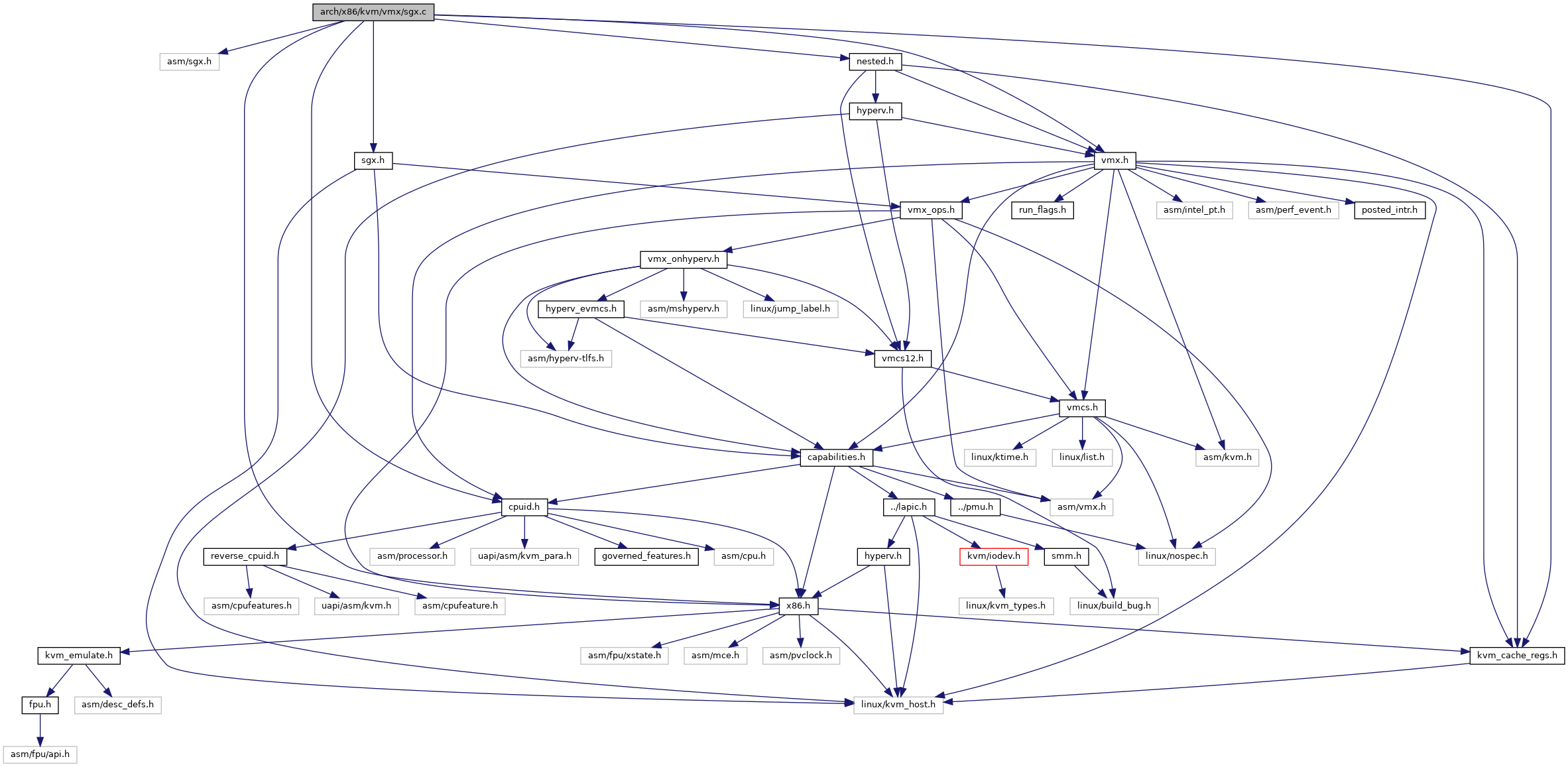

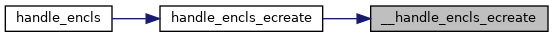

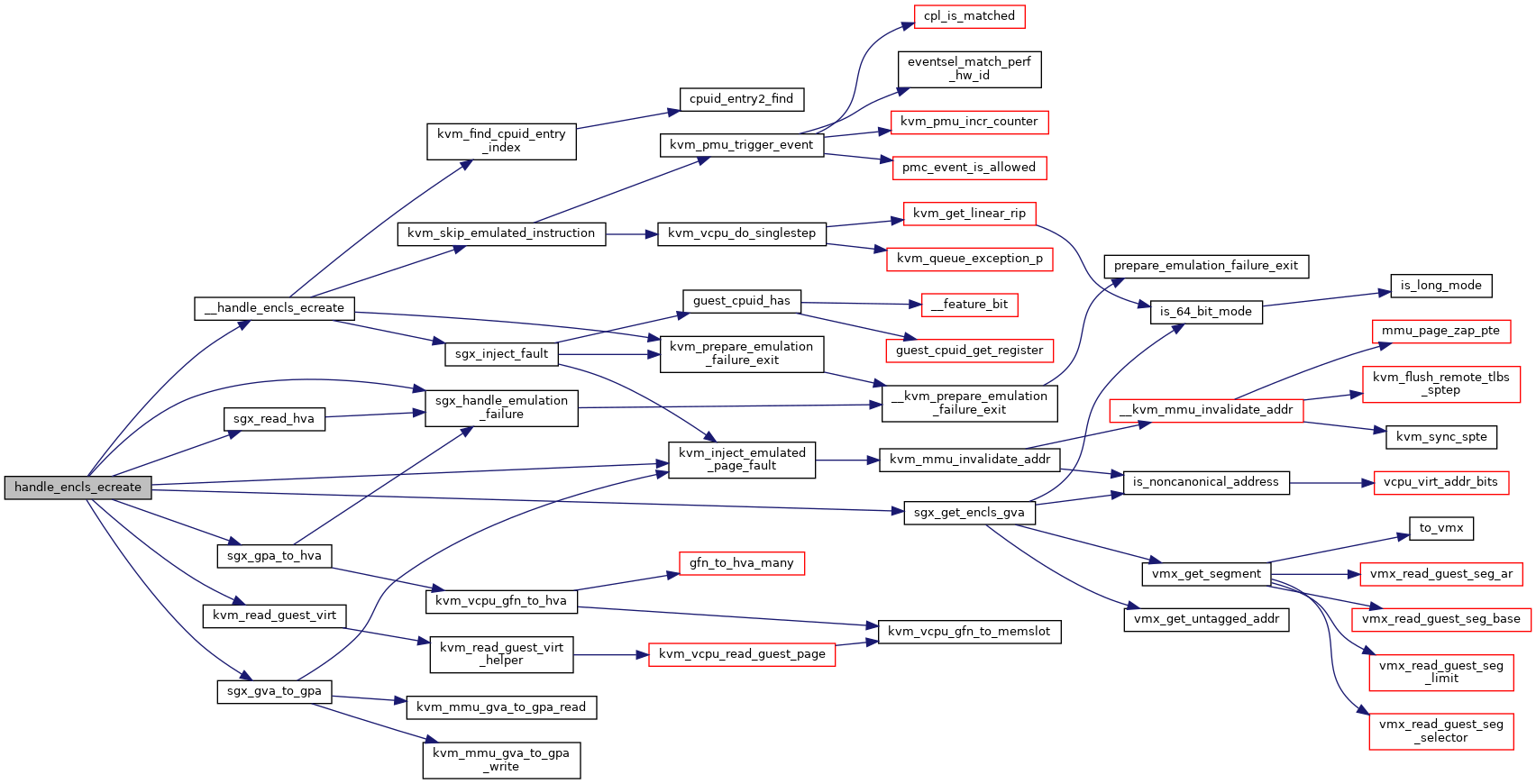

◆ __handle_encls_ecreate()

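static int __handle_encls_ecreate(struct kvm_vcpu *vcpu, struct sgx_pageinfo *pageinfo, unsigned long secs_hva, gva_t secs_gva)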

Definition at line 141 of file sgx.c.

struct kvm_cpuid_entry2 * kvm_find_cpuid_entry_index(struct kvm_vcpu *vcpu, u32 function, u32 index)

Definition: cpuid.c:1447

static int sgx_inject_fault(struct kvm_vcpu *vcpu, gva_t gva, int trapnr)

Definition: sgx.c:105

int kvm_skip_emulated_instruction(struct kvm_vcpu *vcpu)

Definition: x86.c:8916

void kvm_prepare_emulation_failure_exit(struct kvm_vcpu *vcpu)

Definition: x86.c:8727

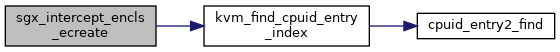

Here is the call graph for this function:

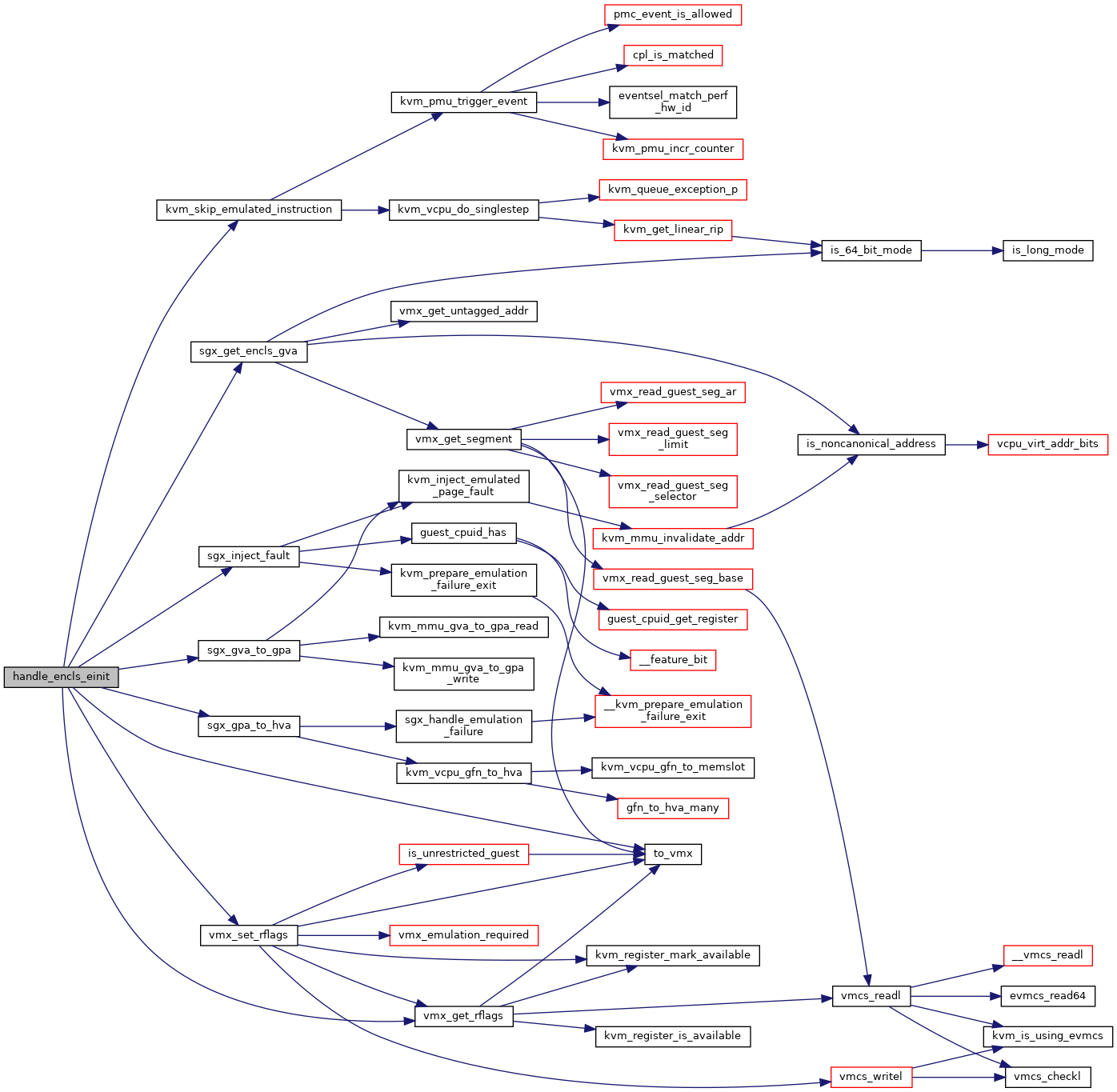

Here is the caller graph for this function:

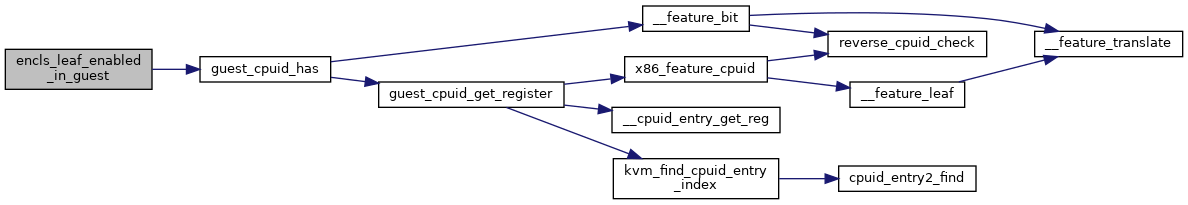

◆ encls_leaf_enabled_in_guest()

static inline bool encls_leaf_enabled_in_guest(struct kvm_vcpu *vcpu, u32 leaf)

Definition at line 359 of file sgx.c.

static __always_inline bool guest_cpuid_has(struct kvm_vcpu *vcpu, unsigned int x86_feature)

Definition: cpuid.h:83

Here is the call graph for this function:

Here is the caller graph for this function:

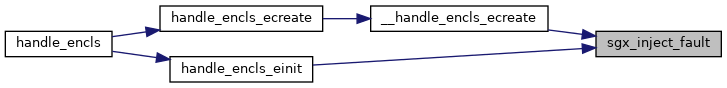

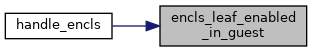

◆ handle_encls()

int handle_encls(struct kvm_vcpu *vcpu)

Definition at line 381 of file sgx.c.

static bool sgx_enabled_in_guest_bios(struct kvm_vcpu *vcpu)

Definition: sgx.c:374

static bool encls_leaf_enabled_in_guest(struct kvm_vcpu *vcpu, u32 leaf)

Definition: sgx.c:359

void kvm_queue_exception(struct kvm_vcpu *vcpu, unsigned nr)

Definition: x86.c:731

Here is the call graph for this function:

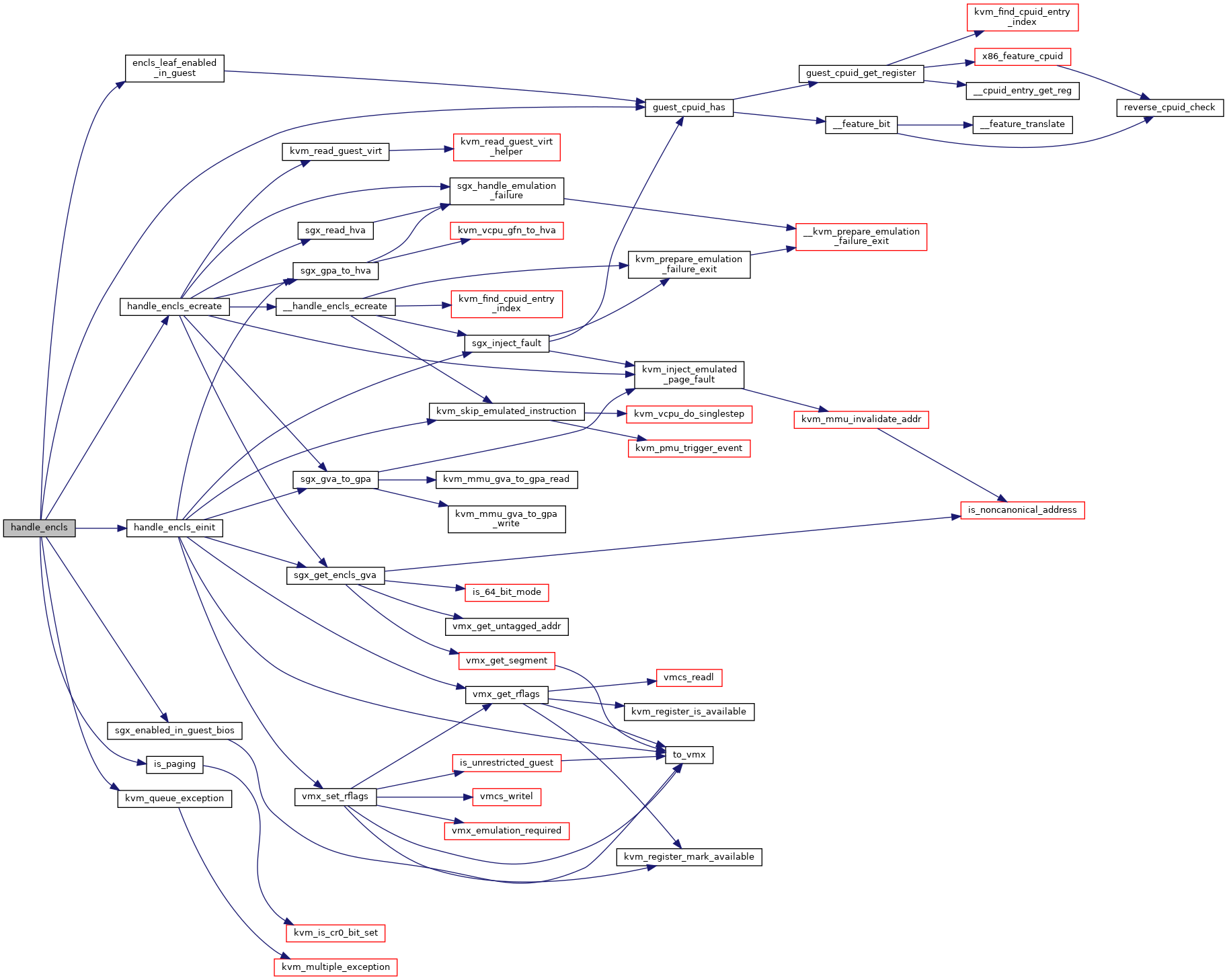

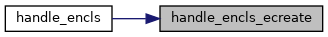

◆ handle_encls_ecreate()

static int handle_encls_ecreate(struct kvm_vcpu *vcpu)

Definition at line 217 of file sgx.c.

static int sgx_gpa_to_hva(struct kvm_vcpu *vcpu, gpa_t gpa, unsigned long *hva)

Definition: sgx.c:92

static int __handle_encls_ecreate(struct kvm_vcpu *vcpu, struct sgx_pageinfo *pageinfo, unsigned long secs_hva, gva_t secs_gva)

Definition: sgx.c:141

static int sgx_get_encls_gva(struct kvm_vcpu *vcpu, unsigned long offset, int size, int alignment, gva_t *gva)

Definition: sgx.c:24

static int sgx_gva_to_gpa(struct kvm_vcpu *vcpu, gva_t gva, bool write, gpa_t *gpa)

Definition: sgx.c:74

static int sgx_read_hva(struct kvm_vcpu *vcpu, unsigned long hva, void *data, unsigned int size)

Definition: sgx.c:63

static void sgx_handle_emulation_failure(struct kvm_vcpu *vcpu, u64 addr, unsigned int size)

Definition: sgx.c:55

Definition: kvm_emulate.h:22

int kvm_read_guest_virt(struct kvm_vcpu *vcpu, gva_t addr, void *val, unsigned int bytes, struct x86_exception *exception)

Definition: x86.c:7572

void kvm_inject_emulated_page_fault(struct kvm_vcpu *vcpu, struct x86_exception *fault)

Definition: x86.c:796

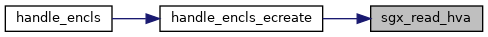

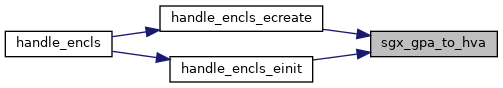

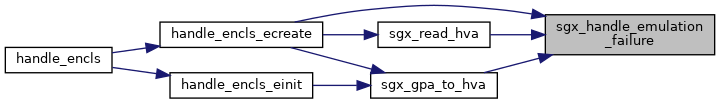

Here is the call graph for this function:

Here is the caller graph for this function:

◆ handle_encls_einit()

static int handle_encls_einit(struct kvm_vcpu *vcpu)

Definition at line 297 of file sgx.c.

void vmx_set_rflags(struct kvm_vcpu *vcpu, unsigned long rflags)

Definition: vmx.c:1527

Here is the call graph for this function:

Here is the caller graph for this function:

◆ module_param_named()

module_param_named(sgx, enable_sgx, bool, 0444)
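The last argument to `module_param_named()` is the sysfs permission mode of the parameter file. Octal `0444` grants read access to owner, group, and others and write access to no one, so the `sgx` parameter can be read at run time but not changed. The sketch below decodes such a mode in user space. `mode_to_string()` is a hypothetical helper for illustration and is not part of the kernel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: renders the low nine permission bits of a mode as
 * an "rwxrwxrwx"-style string. out must hold at least 10 bytes. */
void mode_to_string(unsigned int mode, char out[10])
{
	static const char flags[] = "rwxrwxrwx";

	assert(out != NULL);
	for (int i = 0; i < 9; i++) {
		/* Bit 8 is owner-read; bit 0 is other-execute. */
		out[i] = (mode & (1u << (8 - i))) ? flags[i] : '-';
	}
	out[9] = '\0';
}
```

For the mode `0444` the helper produces `r--r--r--`: readable by everyone, writable by no one.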

◆ setup_default_sgx_lepubkeyhash()

void setup_default_sgx_lepubkeyhash(void)

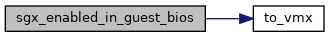

◆ sgx_enabled_in_guest_bios()

static inline bool sgx_enabled_in_guest_bios(struct kvm_vcpu *vcpu)

Definition at line 374 of file sgx.c.

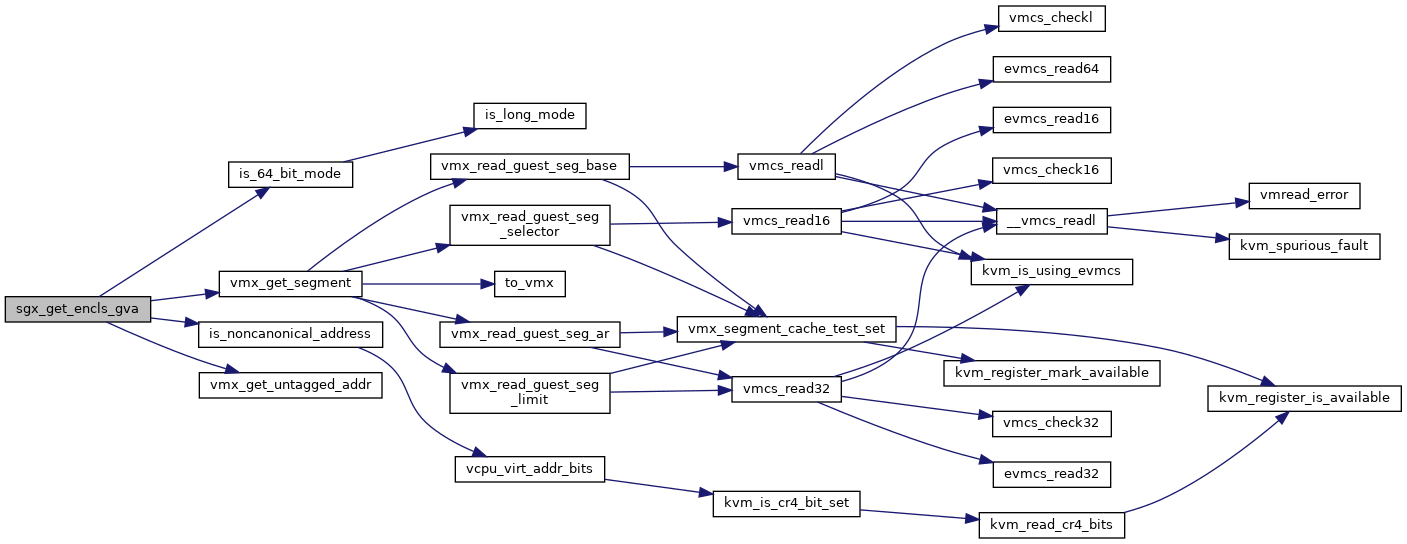

◆ sgx_get_encls_gva()

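static int sgx_get_encls_gva(struct kvm_vcpu *vcpu, unsigned long offset, int size, int alignment, gva_t *gva)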

Definition at line 24 of file sgx.c.

gva_t vmx_get_untagged_addr(struct kvm_vcpu *vcpu, gva_t gva, unsigned int flags)

Definition: vmx.c:8250

void vmx_get_segment(struct kvm_vcpu *vcpu, struct kvm_segment *var, int seg)

Definition: vmx.c:3496

static bool is_noncanonical_address(u64 la, struct kvm_vcpu *vcpu)

Definition: x86.h:213

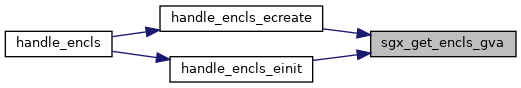

Here is the call graph for this function:

Here is the caller graph for this function:

◆ sgx_gpa_to_hva()

static int sgx_gpa_to_hva(struct kvm_vcpu *vcpu, gpa_t gpa, unsigned long *hva)

Definition at line 92 of file sgx.c.

unsigned long kvm_vcpu_gfn_to_hva(struct kvm_vcpu *vcpu, gfn_t gfn)

Definition: kvm_main.c:2748

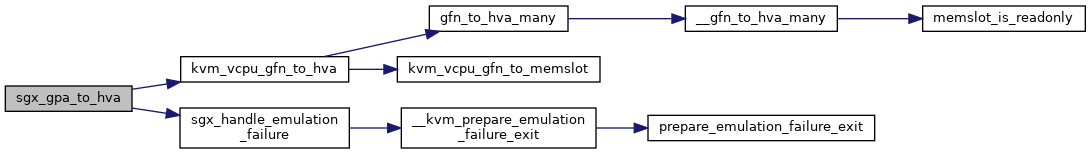

Here is the call graph for this function:

Here is the caller graph for this function:

◆ sgx_gva_to_gpa()

static int sgx_gva_to_gpa(struct kvm_vcpu *vcpu, gva_t gva, bool write, gpa_t *gpa)

Definition at line 74 of file sgx.c.

gpa_t kvm_mmu_gva_to_gpa_read(struct kvm_vcpu *vcpu, gva_t gva, struct x86_exception *exception)

Definition: x86.c:7483

gpa_t kvm_mmu_gva_to_gpa_write(struct kvm_vcpu *vcpu, gva_t gva, struct x86_exception *exception)

Definition: x86.c:7493

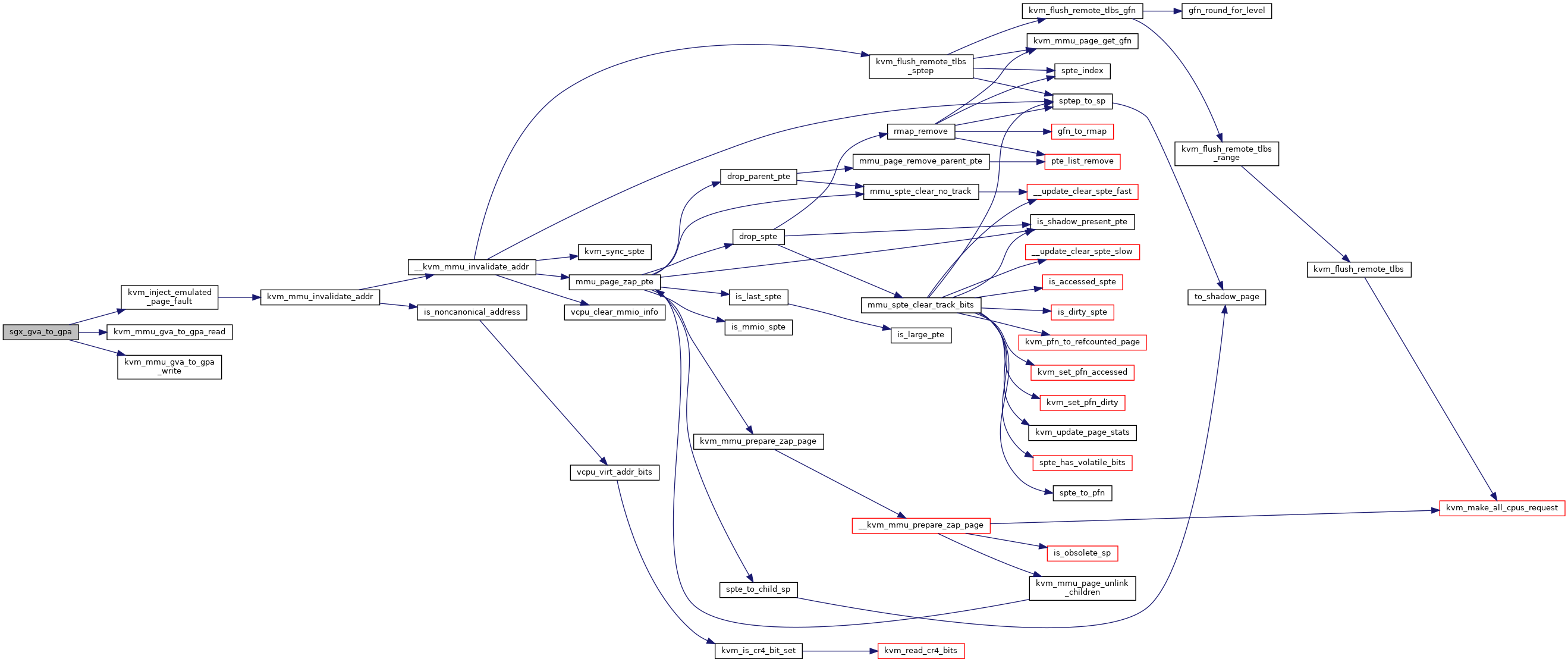

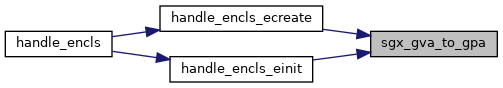

Here is the call graph for this function:

Here is the caller graph for this function:

◆ sgx_handle_emulation_failure()

static void sgx_handle_emulation_failure(struct kvm_vcpu *vcpu, u64 addr, unsigned int size)

Definition at line 55 of file sgx.c.

void __kvm_prepare_emulation_failure_exit(struct kvm_vcpu *vcpu, u64 *data, u8 ndata)

Definition: x86.c:8720

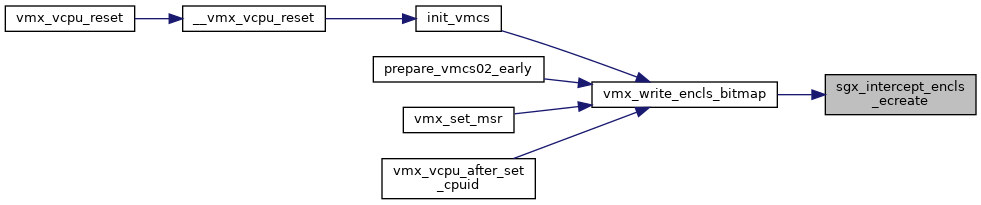

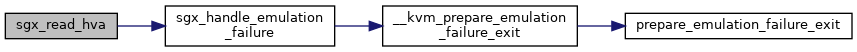

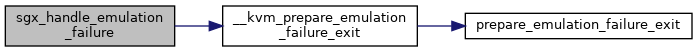

Here is the call graph for this function:

Here is the caller graph for this function:

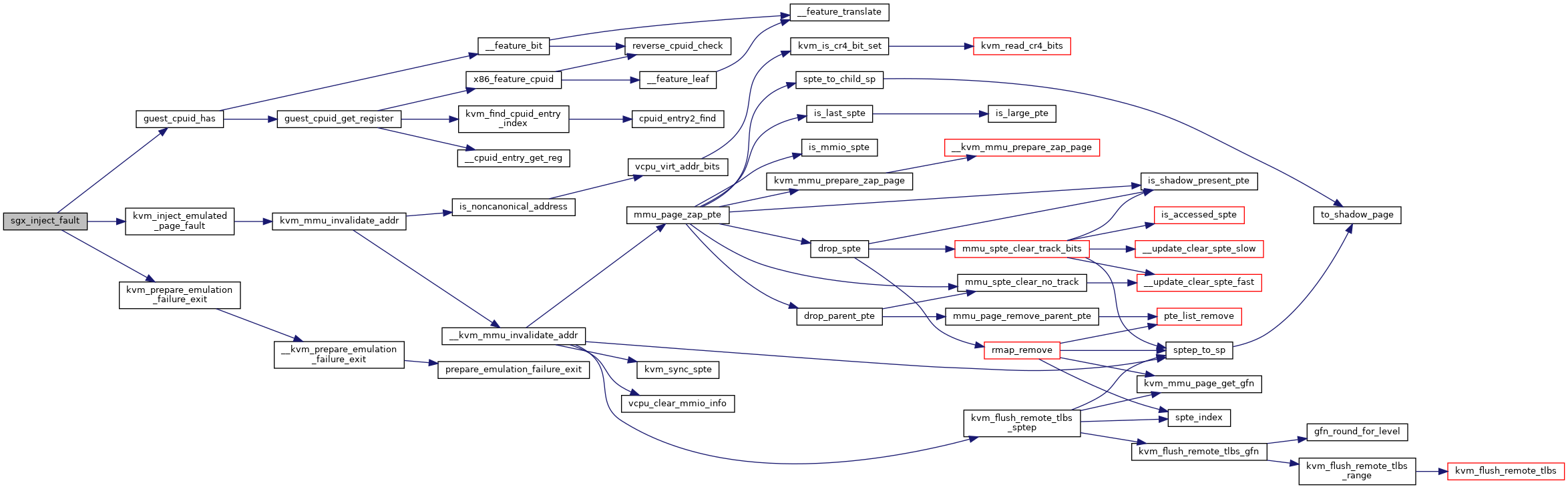

◆ sgx_inject_fault()

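static int sgx_inject_fault(struct kvm_vcpu *vcpu, gva_t gva, int trapnr)

Definition at line 105 of file sgx.c.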

◆ sgx_intercept_encls_ecreate()

static bool sgx_intercept_encls_ecreate(struct kvm_vcpu *vcpu)

Definition at line 440 of file sgx.c.

◆ sgx_read_hva()

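static int sgx_read_hva(struct kvm_vcpu *vcpu, unsigned long hva, void *data, unsigned int size)

Definition at line 63 of file sgx.c.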

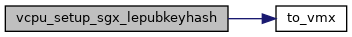

◆ vcpu_setup_sgx_lepubkeyhash()

void vcpu_setup_sgx_lepubkeyhash(struct kvm_vcpu *vcpu)

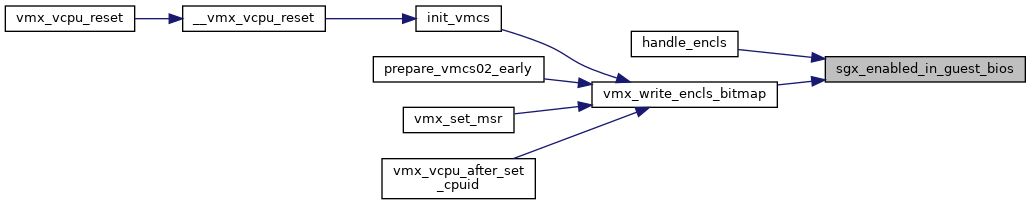

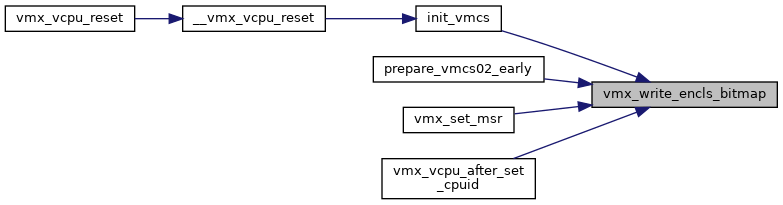

◆ vmx_write_encls_bitmap()

void vmx_write_encls_bitmap(struct kvm_vcpu *vcpu, struct vmcs12 *vmcs12)

Definition at line 468 of file sgx.c.

static bool nested_cpu_has_encls_exit(struct vmcs12 *vmcs12)

Definition: nested.h:243

static bool sgx_intercept_encls_ecreate(struct kvm_vcpu *vcpu)

Definition: sgx.c:440

Definition: vmcs12.h:27

static __always_inline void vmcs_write64(unsigned long field, u64 value)

Definition: vmx_ops.h:246

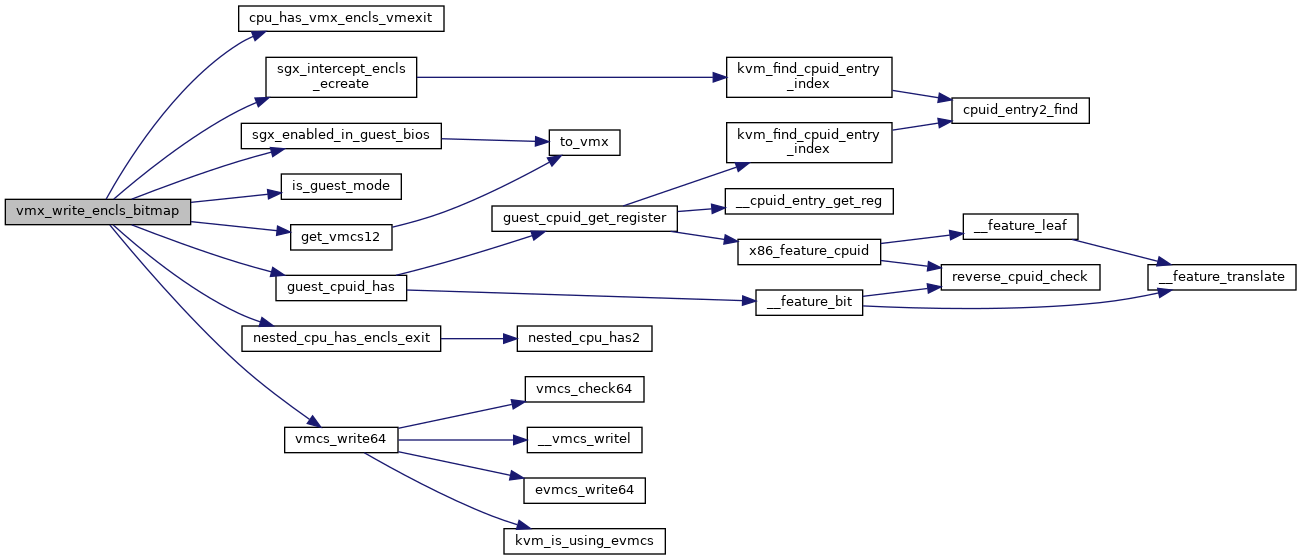

Here is the call graph for this function:

Here is the caller graph for this function:

Variable Documentation

◆ __ro_after_init

static u64 sgx_pubkey_hash[4] __ro_after_init

◆ enable_sgx

bool __read_mostly enable_sgx = 1